mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

Merge remote-tracking branch 'refs/remotes/origin/patch-1'

This commit is contained in:

commit

4f0f58d451

@ -0,0 +1,162 @@

|

||||

网络与安全方面的最佳开源软件

|

||||

================================================================================

|

||||

InfoWorld 在部署、运营和保障网络安全领域精选出了年度开源工具获奖者。

|

||||

|

||||

|

||||

|

||||

### 最佳开源网络和安全软件 ###

|

||||

|

||||

[BIND](https://en.wikipedia.org/wiki/BIND), [Sendmail](https://en.wikipedia.org/wiki/Sendmail), [OpenSSH](https://en.wikipedia.org/wiki/OpenSSH), [Cacti](https://en.wikipedia.org/wiki/Cactus), [Nagios](https://en.wikipedia.org/wiki/Nagios), [Snort](https://en.wikipedia.org/wiki/Snort_%28software%29) -- 这些为了网络而生的开源软件,好些家伙们老而弥坚。今年在这个范畴的最佳选择中,你会发现中坚、支柱、新人和新贵云集,他们正在完善网络管理,安全监控,漏洞评估,[rootkit](https://en.wikipedia.org/wiki/Rootkit) 检测,以及很多方面。

|

||||

|

||||

|

||||

|

||||

### Icinga 2 ###

|

||||

|

||||

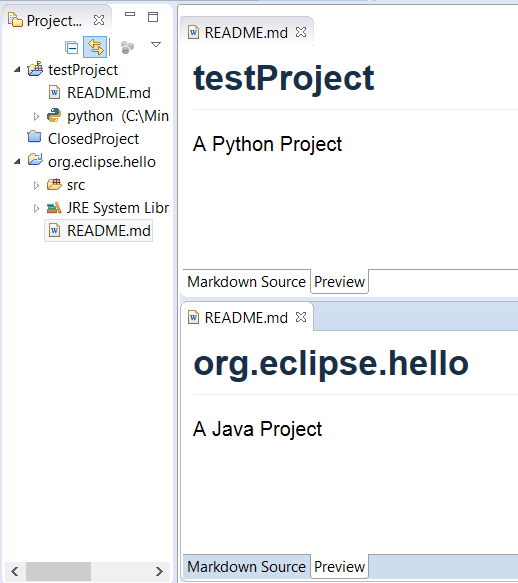

Icinga 起先只是系统监控应用 Nagios 的一个衍生分支。[Icinga 2][1] 经历了完全的重写,为用户带来了时尚的界面、对多数据库的支持,以及一个集成了众多扩展的 API。凭借着开箱即用的负载均衡、通知和配置文件,Icinga 2 缩短了在复杂环境下安装的时间。Icinga 2 原生支持 [Graphite](https://github.com/graphite-project/graphite-web)(系统监控应用),轻松为管理员呈现实时性能图表。不过真的让 Icinga 今年重新火起来的原因是 Icinga Web 2 的发布,那是一个支持可拖放定制的 仪表盘 和一些流式监控工具的前端图形界面系统。

|

||||

|

||||

管理员可以查看、过滤、并按优先顺序排列发现的问题,同时可以跟踪已经采取的动作。一个新的矩阵视图使管理员能够在单一页面上查看主机和服务。你可以通过查看特定时间段的事件或筛选事件类型来了解哪些事件需要立即关注。虽然 Icinga Web 2 有着全新界面和更为强劲的性能,不过对于传统版 Icinga 和 Web 版 Icinga 的所有常用命令还是照旧支持的。这意味着学习新版工具不耗费额外的时间。

|

||||

|

||||

-- Fahmida Rashid

|

||||

|

||||

|

||||

|

||||

### Zenoss Core ###

|

||||

|

||||

这是另一个强大的开源软件,[Zenoss Core][2] 为网络管理员提供了一个完整的、一站式解决方案来跟踪和管理所有的应用程序、服务器、存储、网络组件、虚拟化工具、以及企业基础架构的其他元素。管理员可以确保硬件的运行效率并利用 ZenPacks 中模块化设计的插件来扩展功能。

|

||||

|

||||

在2015年二月发布的 Zenoss Core 5 保留了已经很强大的工具,并进一步改进以增强用户界面和扩展 仪表盘。基于 Web 的控制台和 仪表盘 可以高度可定制并动态调整,而现在的新版本还能让管理员混搭多个组件图表到一个图表中。想来这应该是一个更好的根源分析和因果分析的工具。

|

||||

|

||||

Portlets 为网络映射、设备问题、守护进程、产品状态、监视列表和事件视图等等提供了深入的分析。而且新版 HTML5 图表可以从工具导出。Zenoss 的控制中心支持带外管理并且可监控所有 Zenoss 组件。Zenoss Core 现在拥有一些新工具,用于在线备份和恢复、快照和回滚以及多主机部署等方面。更重要的是,凭借对 Docker 的全面支持,部署起来更快了。

|

||||

|

||||

-- Fahmida Rashid

|

||||

|

||||

|

||||

|

||||

### OpenNMS ###

|

||||

|

||||

作为一个非常灵活的网络管理解决方案,[OpenNMS][3] 可以处理任何网络管理任务,无论是设备管理、应用性能监控、库存控制,或事件管理。凭借对 IPv6 的支持、强大的警报系统和记录用户脚本来测试 Web 应用程序的能力,OpenNMS 拥有网络管理员和测试人员需要的一切。OpenNMS 现在变得像一款移动版 仪表盘,称之为 OpenNMS Compass,可让网络专家随时,甚至在外出时都可以监视他们的网络。

|

||||

|

||||

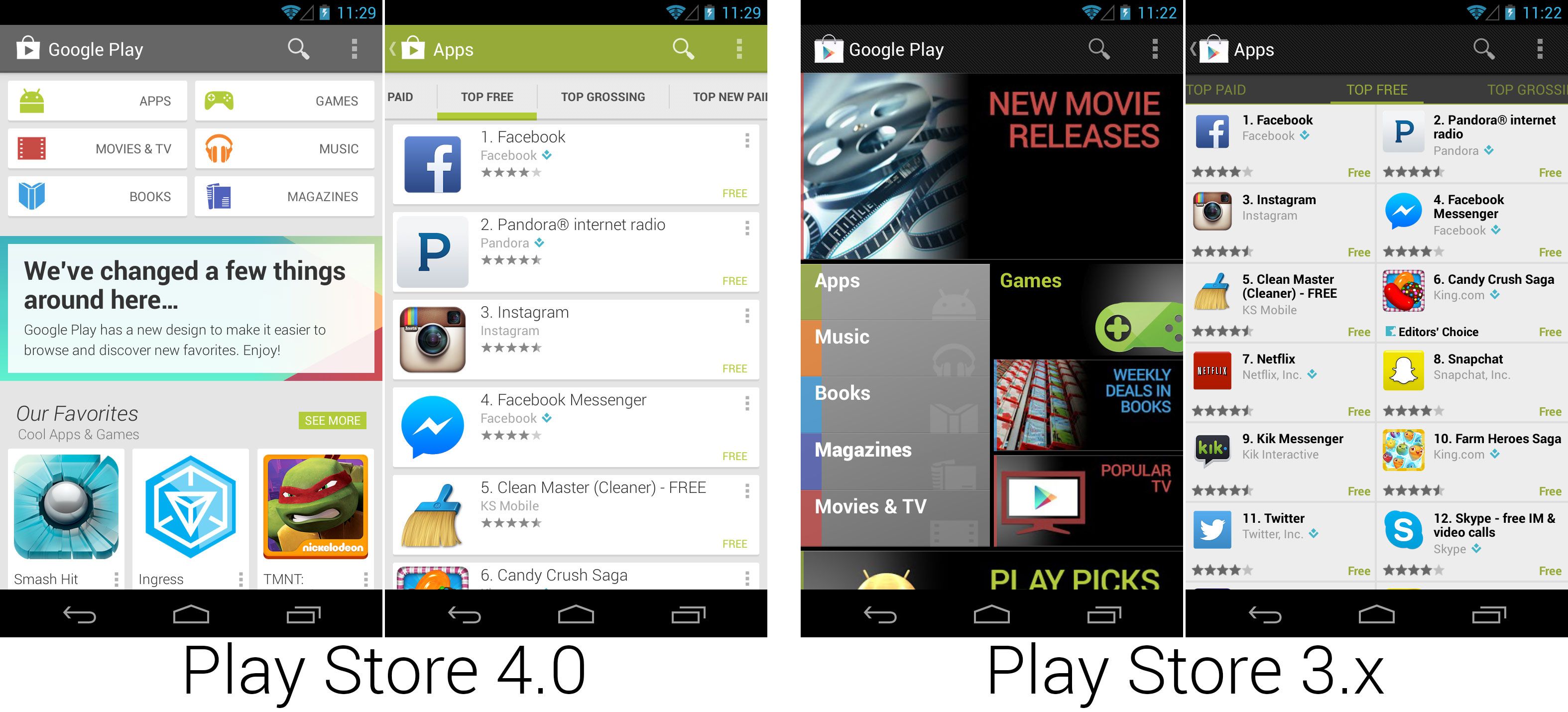

该应用程序的 IOS 版本,可从 [iTunes App Store][4] 上获取,可以显示故障、节点和告警。下一个版本将提供更多的事件细节、资源图表、以及关于 IP 和 SNMP 接口的信息。安卓版可从 [Google Play][5] 上获取,可在 仪表盘 上显示网络可用性,故障和告警,以及可以确认、提升或清除告警。移动客户端与 OpenNMS Horizon 1.12 或更高版本以及 OpenNMS Meridian 2015.1.0 或更高版本兼容。

|

||||

|

||||

-- Fahmida Rashid

|

||||

|

||||

|

||||

|

||||

### Security Onion ###

|

||||

|

||||

如同一个洋葱,网络安全监控是由许多层组成。没有任何一个单一的工具可以让你洞察每一次攻击,为你显示对你的公司网络中的每一次侦查或是会话的足迹。[Security Onion][6] 在一个简单易用的 Ubuntu 发行版中打包了许多久经考验的工具,可以让你看到谁留在你的网络里,并帮助你隔离这些坏家伙。

|

||||

|

||||

无论你是采取主动式的网络安全监测还是追查可能的攻击,Security Onion 都可以帮助你。Onion 由传感器、服务器和显示层组成,结合了基于网络和基于主机的入侵检测,全面的网络数据包捕获,并提供了所有类型的日志以供检查和分析。

|

||||

|

||||

这是一个众星云集的的网络安全工具链,包括用于网络抓包的 [Netsniff-NG](http://www.netsniff-ng.org/)、基于规则的网络入侵检测系统 Snort 和 [Suricata](https://en.wikipedia.org/wiki/Suricata_%28software%29),基于分析的网络监控系统 Bro,基于主机的入侵检测系统 OSSEC 和用于显示、分析和日志管理的 Sguil、Squert、Snorby 和 ELSA (企业日志搜索和归档(Enterprise Log Search and Archive))。它是一个经过精挑细选的工具集,所有的这些全被打包进一个向导式的安装程序并有完整的文档支持,可以帮助你尽可能快地上手监控。

|

||||

|

||||

-- Victor R. Garza

|

||||

|

||||

|

||||

|

||||

### Kali Linux ###

|

||||

|

||||

[Kali Linux][7] 背后的团队今年为这个流行的安全 Linux 发行版发布了新版本,使其更快,更全能。Kali 采用全新 4.0 版的内核,改进了对硬件和无线驱动程序的支持,并且界面更为流畅。最常用的工具都可从屏幕的侧边栏上轻松找到。而最大的改变是 Kali Linux 现在是一个滚动发行版,具有持续不断的软件更新。Kali 的核心系统是基于 Debian Jessie,而且该团队会不断地从 Debian 测试版拉取最新的软件包,并持续的在上面添加 Kali 风格的新特性。

|

||||

|

||||

该发行版仍然配备了很多的渗透测试,漏洞分析,安全审查,网络应用分析,无线网络评估,逆向工程,和漏洞利用工具。现在该发行版具有上游版本检测系统,当有个别工具可更新时系统会自动通知用户。该发行版还提过了一系列 ARM 设备的镜像,包括树莓派、[Chromebook](https://en.wikipedia.org/wiki/Chromebook) 和 [Odroid](https://en.wikipedia.org/wiki/ODROID),同时也更新了 Android 设备上运行的 [NetHunter](https://www.kali.org/kali-linux-nethunter/) 渗透测试平台。还有其他的变化:Metasploit 的社区版/专业版不再包括在内,因为 Kali 2.0 还没有 [Rapid7 的官方支持][8]。

|

||||

|

||||

-- Fahmida Rashid

|

||||

|

||||

|

||||

|

||||

### OpenVAS ###

|

||||

|

||||

开放式漏洞评估系统(Open Vulnerability Assessment System) [OpenVAS][9],是一个整合多种服务和工具来提供漏洞扫描和漏洞管理的软件框架。该扫描器可以使用每周更新一次的网络漏洞测试数据,或者你也可以使用商业服务的数据。该软件框架包括一个命令行界面(以使其可以用脚本调用)和一个带 SSL 安全机制的基于 [Greenbone 安全助手][10] 的浏览器界面。OpenVAS 提供了用于附加功能的各种插件。扫描可以预定运行或按需运行。

|

||||

|

||||

可通过单一的主控来控制多个安装好 OpenVAS 的系统,这使其成为了一个可扩展的企业漏洞评估工具。该项目兼容的标准使其可以将扫描结果和配置存储在 SQL 数据库中,这样它们可以容易地被外部报告工具访问。客户端工具通过基于 XML 的无状态 OpenVAS 管理协议访问 OpenVAS 管理器,所以安全管理员可以扩展该框架的功能。该软件能以软件包或源代码的方式安装在 Windows 或 Linux 上运行,或者作为一个虚拟应用下载。

|

||||

|

||||

-- Matt Sarrel

|

||||

|

||||

|

||||

|

||||

### OWASP ###

|

||||

|

||||

[OWASP][11](开放式 Web 应用程序安全项目(Open Web Application Security Project))是一个专注于提高软件安全性的在全球各地拥有分会的非营利组织。这个社区性的组织提供测试工具、文档、培训和几乎任何你可以想象到的开发安全软件相关的软件安全评估和最佳实践。有一些 OWASP 项目已成为很多安全从业者工具箱中的重要组件:

|

||||

|

||||

[ZAP][12](ZED 攻击代理项目(Zed Attack Proxy Project))是一个在 Web 应用程序中寻找漏洞的渗透测试工具。ZAP 的设计目标之一是使之易于使用,以便于那些并非安全领域专家的开发人员和测试人员能便于使用。ZAP 提供了自动扫描和一套手动测试工具集。

|

||||

|

||||

[Xenotix XSS Exploit Framework][13] 是一个先进的跨站点脚本漏洞检测和漏洞利用框架,该框架通过在浏览器引擎内执行扫描以获取真实的结果。Xenotix 扫描模块使用了三个智能模糊器( intelligent fuzzers),使其可以运行近 5000 种不同的 XSS 有效载荷。它有个 API 可以让安全管理员扩展和定制漏洞测试工具包。

|

||||

|

||||

[O-Saft][14](OWASP SSL 高级审查工具(OWASP SSL advanced forensic tool))是一个查看 SSL 证书详细信息和测试 SSL 连接的 SSL 审计工具。这个命令行工具可以在线或离线运行来评估 SSL ,比如算法和配置是否安全。O-Saft 内置提供了常见漏洞的检查,你可以容易地通过编写脚本来扩展这些功能。在 2015 年 5 月加入了一个简单的图形用户界面作为可选的下载项。

|

||||

|

||||

[OWTF][15](攻击性 Web 测试框架(Offensive Web Testing Framework))是一个遵循 OWASP 测试指南和 NIST 和 PTES 标准的自动化测试工具。该框架同时支持 Web 用户界面和命令行,用于探测 Web 和应用服务器的常见漏洞,如配置失当和软件未打补丁。

|

||||

|

||||

-- Matt Sarrel

|

||||

|

||||

|

||||

|

||||

### BeEF ###

|

||||

|

||||

Web 浏览器已经成为用于针对客户端的攻击中最常见的载体。[BeEF][15] (浏览器漏洞利用框架项目(Browser Exploitation Framework Project)),是一种广泛使用的用以评估 Web 浏览器安全性的渗透工具。BeEF 通过浏览器来启动客户端攻击,帮助你暴露出客户端系统的安全弱点。BeEF 建立了一个恶意网站,安全管理员用想要测试的浏览器访问该网站。然后 BeEF 发送命令来攻击 Web 浏览器并使用命令在客户端机器上植入软件。随后管理员就可以把客户端机器看作不设防般发动攻击了。

|

||||

|

||||

BeEF 自带键盘记录器、端口扫描器和 Web 代理这样的常用模块,此外你可以编写你自己的模块或直接将命令发送到被控制的测试机上。BeEF 带有少量的演示网页来帮你快速入门,使得编写更多的网页和攻击模块变得非常简单,让你可以因地适宜的自定义你的测试。BeEF 是一个非常有价值的评估浏览器和终端安全、学习如何发起基于浏览器攻击的测试工具。可以使用它来向你的用户综合演示,那些恶意软件通常是如何感染客户端设备的。

|

||||

|

||||

-- Matt Sarrel

|

||||

|

||||

|

||||

|

||||

### Unhide ###

|

||||

|

||||

[Unhide][16] 是一个用于定位开放的 TCP/UDP 端口和隐藏在 UNIX、Linux 和 Windows 上的进程的审查工具。隐藏的端口和进程可能是由于运行 Rootkit 或 LKM(可加载的内核模块(loadable kernel module)) 导致的。Rootkit 可能很难找到并移除,因为它们就是专门针对隐蔽性而设计的,可以在操作系统和用户前隐藏自己。一个 Rootkit 可以使用 LKM 隐藏其进程或冒充其他进程,让它在机器上运行很长一段时间而不被发现。而 Unhide 则可以使管理员们确信他们的系统是干净的。

|

||||

|

||||

Unhide 实际上是两个单独的脚本:一个用于进程,一个用于端口。该工具查询正在运行的进程、线程和开放的端口并将这些信息与系统中注册的活动比较,报告之间的差异。Unhide 和 WinUnhide 是非常轻量级的脚本,可以运行命令行而产生文本输出。它们不算优美,但是极为有用。Unhide 也包括在 [Rootkit Hunter][17] 项目中。

|

||||

|

||||

-- Matt Sarrel

|

||||

|

||||

|

||||

|

||||

### 查看更多的开源软件优胜者 ###

|

||||

|

||||

InfoWorld 网站的 2015 年最佳开源奖由下至上表扬了 100 多个开源项目。通过以下链接可以查看更多开源软件中的翘楚:

|

||||

|

||||

[2015 Bossie 评选:最佳开源应用程序][18]

|

||||

|

||||

[2015 Bossie 评选:最佳开源应用程序开发工具][19]

|

||||

|

||||

[2015 Bossie 评选:最佳开源大数据工具][20]

|

||||

|

||||

[2015 Bossie 评选:最佳开源数据中心和云计算软件][21]

|

||||

|

||||

[2015 Bossie 评选:最佳开源桌面和移动端软件][22]

|

||||

|

||||

[2015 Bossie 评选:最佳开源网络和安全软件][23]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.infoworld.com/article/2982962/open-source-tools/bossie-awards-2015-the-best-open-source-networking-and-security-software.html

|

||||

|

||||

作者:[InfoWorld staff][a]

|

||||

译者:[robot527](https://github.com/robot527)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.infoworld.com/author/InfoWorld-staff/

|

||||

[1]:https://www.icinga.org/icinga/icinga-2/

|

||||

[2]:http://www.zenoss.com/

|

||||

[3]:http://www.opennms.org/

|

||||

[4]:https://itunes.apple.com/us/app/opennms-compass/id968875097?mt=8

|

||||

[5]:https://play.google.com/store/apps/details?id=com.opennms.compass&hl=en

|

||||

[6]:http://blog.securityonion.net/p/securityonion.html

|

||||

[7]:https://www.kali.org/

|

||||

[8]:https://community.rapid7.com/community/metasploit/blog/2015/08/12/metasploit-on-kali-linux-20

|

||||

[9]:http://www.openvas.org/

|

||||

[10]:http://www.greenbone.net/

|

||||

[11]:https://www.owasp.org/index.php/Main_Page

|

||||

[12]:https://www.owasp.org/index.php/OWASP_Zed_Attack_Proxy_Project

|

||||

[13]:https://www.owasp.org/index.php/O-Saft

|

||||

[14]:https://www.owasp.org/index.php/OWASP_OWTF

|

||||

[15]:http://www.beefproject.com/

|

||||

[16]:http://www.unhide-forensics.info/

|

||||

[17]:http://www.rootkit.nl/projects/rootkit_hunter.html

|

||||

[18]:http://www.infoworld.com/article/2982622/bossie-awards-2015-the-best-open-source-applications.html

|

||||

[19]:http://www.infoworld.com/article/2982920/bossie-awards-2015-the-best-open-source-application-development-tools.html

|

||||

[20]:http://www.infoworld.com/article/2982429/bossie-awards-2015-the-best-open-source-big-data-tools.html

|

||||

[21]:http://www.infoworld.com/article/2982923/bossie-awards-2015-the-best-open-source-data-center-and-cloud-software.html

|

||||

[22]:http://www.infoworld.com/article/2982630/bossie-awards-2015-the-best-open-source-desktop-and-mobile-software.html

|

||||

[23]:http://www.infoworld.com/article/2982962/bossie-awards-2015-the-best-open-source-networking-and-security-software.html

|

||||

@ -0,0 +1,156 @@

|

||||

如何在 Linux 上使用 Gmail SMTP 服务器发送邮件通知

|

||||

================================================================================

|

||||

假定你想配置一个 Linux 应用,用于从你的服务器或桌面客户端发送邮件信息。邮件信息可能是邮件简报、状态更新(如 [Cachet][1])、监控警报(如 [Monit][2])、磁盘时间(如 [RAID mdadm][3])等等。当你要建立自己的 [邮件发送服务器][4] 传递信息时 ,你可以替代使用一个免费的公共 SMTP 服务器,从而避免遭受维护之苦。

|

||||

|

||||

谷歌的 Gmail 服务就是最可靠的 **免费 SMTP 服务器** 之一。想要从应用中发送邮件通知,你仅需在应用中添加 Gmail 的 SMTP 服务器地址和你的身份凭证即可。

|

||||

|

||||

使用 Gmail 的 SMTP 服务器会遇到一些限制,这些限制主要用于阻止那些经常滥用服务器来发送垃圾邮件和使用邮件营销的家伙。举个例子,你一次只能给至多 100 个地址发送信息,并且一天不能超过 500 个收件人。同样,如果你不想被标为垃圾邮件发送者,你就不能发送过多的不可投递的邮件。当你达到任何一个限制,你的 Gmail 账户将被暂时的锁定一天。简而言之,Gmail 的 SMTP 服务器对于你个人的使用是非常棒的,但不适合商业的批量邮件。

|

||||

|

||||

说了这么多,是时候向你们展示 **如何在 Linux 环境下使用 Gmail 的 SMTP 服务器** 了。

|

||||

|

||||

### Google Gmail SMTP 服务器设置 ###

|

||||

|

||||

如果你想要通过你的应用使用 Gmail 的 SMTP 服务器发送邮件,请牢记接下来的详细说明。

|

||||

|

||||

- **邮件发送服务器 (SMTP 服务器)**: smtp.gmail.com

|

||||

- **使用认证**: 是

|

||||

- **使用安全连接**: 是

|

||||

- **用户名**: 你的 Gmail 账户 ID (比如 "alice" ,如果你的邮箱为 alice@gmail.com)

|

||||

- **密码**: 你的 Gmail 密码

|

||||

- **端口**: 587

|

||||

|

||||

确切的配置根据应用会有所不同。在本教程的剩余部分,我将向你展示一些在 Linux 上使用 Gmail SMTP 服务器的应用示例。

|

||||

|

||||

### 从命令行发送邮件 ###

|

||||

|

||||

作为第一个例子,让我们尝试最基本的邮件功能:使用 Gmail SMTP 服务器从命令行发送一封邮件。为此,我将使用一个称为 mutt 的命令行邮件客户端。

|

||||

|

||||

先安装 mutt:

|

||||

|

||||

对于 Debian-based 系统:

|

||||

|

||||

$ sudo apt-get install mutt

|

||||

|

||||

对于 Red Hat based 系统:

|

||||

|

||||

$ sudo yum install mutt

|

||||

|

||||

创建一个 mutt 配置文件(~/.muttrc),并和下面一样,在文件中指定 Gmail SMTP 服务器信息。将 \<gmail-id> 替换成自己的 Gmail ID。注意该配置只是为了发送邮件而已(而非接收邮件)。

|

||||

|

||||

$ vi ~/.muttrc

|

||||

|

||||

----------

|

||||

|

||||

set from = "<gmail-id>@gmail.com"

|

||||

set realname = "Dan Nanni"

|

||||

set smtp_url = "smtp://<gmail-id>@smtp.gmail.com:587/"

|

||||

set smtp_pass = "<gmail-password>"

|

||||

|

||||

一切就绪,使用 mutt 发送一封邮件:

|

||||

|

||||

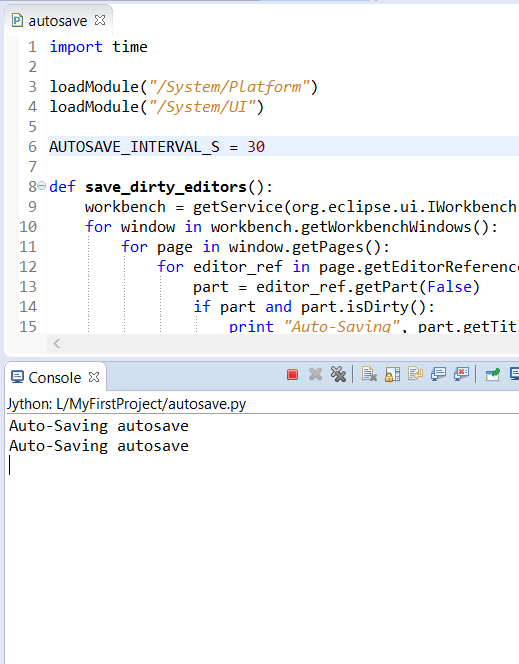

$ echo "This is an email body." | mutt -s "This is an email subject" alice@yahoo.com

|

||||

|

||||

想在一封邮件中添加附件,使用 "-a" 选项

|

||||

|

||||

$ echo "This is an email body." | mutt -s "This is an email subject" alice@yahoo.com -a ~/test_attachment.jpg

|

||||

|

||||

|

||||

|

||||

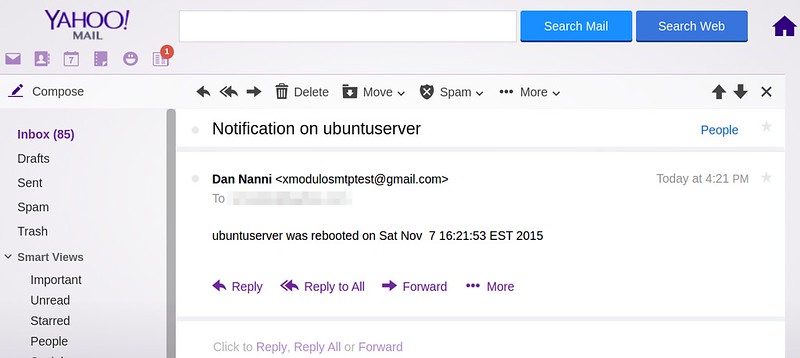

使用 Gmail SMTP 服务器意味着邮件将显示是从你 Gmail 账户发出的。换句话说,收件人将视你的 Gmail 地址为发件人地址。如果你想要使用自己的域名作为邮件发送方,你需要使用 Gmail SMTP 转发服务。

|

||||

|

||||

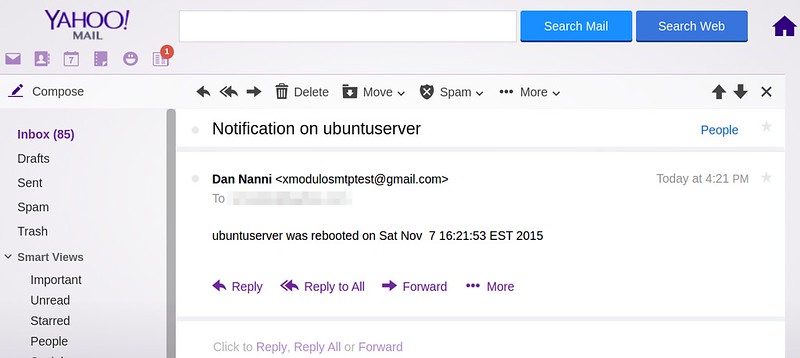

### 当服务器重启时发送邮件通知 ###

|

||||

|

||||

如果你在 [虚拟专用服务器(VPS)][5] 上跑了些重要的网站,建议监控 VPS 的重启行为。作为一个更为实用的例子,让我们研究如何在你的 VPS 上为每一次重启事件建立邮件通知。这里假设你的 VPS 上使用的是 systemd,并向你展示如何为自动邮件通知创建一个自定义的 systemd 启动服务。

|

||||

|

||||

首先创建下面的脚本 reboot_notify.sh,用于负责邮件通知。

|

||||

|

||||

$ sudo vi /usr/local/bin/reboot_notify.sh

|

||||

|

||||

----------

|

||||

|

||||

#!/bin/sh

|

||||

|

||||

echo "`hostname` was rebooted on `date`" | mutt -F /etc/muttrc -s "Notification on `hostname`" alice@yahoo.com

|

||||

|

||||

----------

|

||||

|

||||

$ sudo chmod +x /usr/local/bin/reboot_notify.sh

|

||||

|

||||

在这个脚本中,我使用 "-F" 选项,用于指定系统级的 mutt 配置文件位置。因此不要忘了创建 /etc/muttrc 文件,并如前面描述的那样填入 Gmail SMTP 信息。

|

||||

|

||||

现在让我们创建如下一个自定义的 systemd 服务。

|

||||

|

||||

$ sudo mkdir -p /usr/local/lib/systemd/system

|

||||

$ sudo vi /usr/local/lib/systemd/system/reboot-task.service

|

||||

|

||||

----------

|

||||

|

||||

[Unit]

|

||||

Description=Send a notification email when the server gets rebooted

|

||||

DefaultDependencies=no

|

||||

Before=reboot.target

|

||||

|

||||

[Service]

|

||||

Type=oneshot

|

||||

ExecStart=/usr/local/bin/reboot_notify.sh

|

||||

|

||||

[Install]

|

||||

WantedBy=reboot.target

|

||||

|

||||

在创建服务后,添加并启动该服务。

|

||||

|

||||

$ sudo systemctl enable reboot-task

|

||||

$ sudo systemctl start reboot-task

|

||||

|

||||

从现在起,在每次 VPS 重启时,你将会收到一封通知邮件。

|

||||

|

||||

|

||||

|

||||

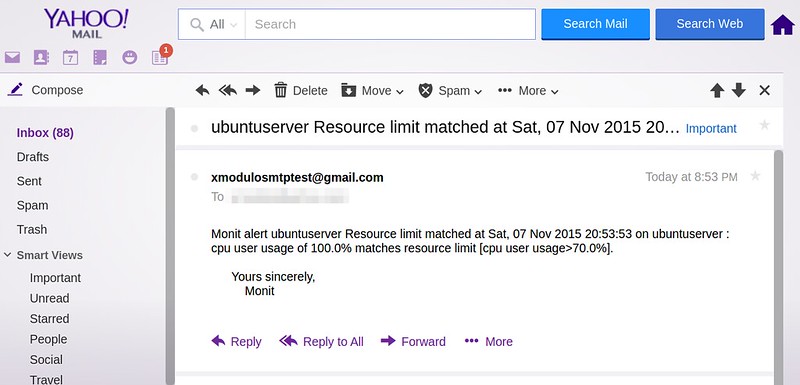

### 通过服务器使用监控发送邮件通知 ###

|

||||

|

||||

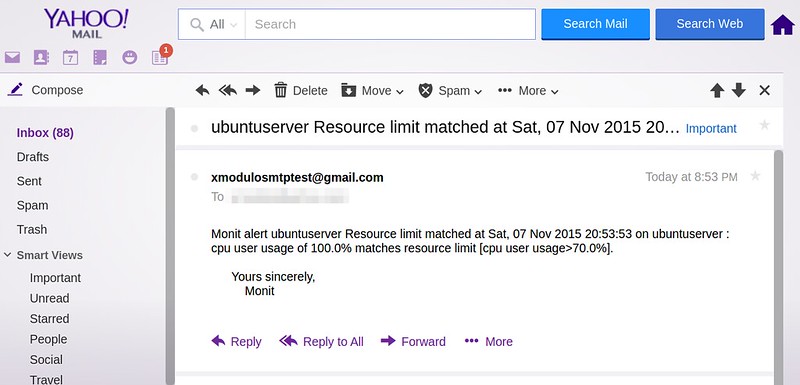

作为最后一个例子,让我展示一个现实生活中的应用程序,[Monit][6],这是一款极其有用的服务器监控应用程序。它带有全面的 [VPS][7] 监控能力(比如 CPU、内存、进程、文件系统)和邮件通知功能。

|

||||

|

||||

如果你想要接收 VPS 上由 Monit 产生的任何事件的邮件通知,你可以在 Monit 配置文件中添加以下 SMTP 信息。

|

||||

|

||||

set mailserver smtp.gmail.com port 587

|

||||

username "<your-gmail-ID>" password "<gmail-password>"

|

||||

using tlsv12

|

||||

|

||||

set mail-format {

|

||||

from: <your-gmail-ID>@gmail.com

|

||||

subject: $SERVICE $EVENT at $DATE on $HOST

|

||||

message: Monit $ACTION $SERVICE $EVENT at $DATE on $HOST : $DESCRIPTION.

|

||||

|

||||

Yours sincerely,

|

||||

Monit

|

||||

}

|

||||

|

||||

# the person who will receive notification emails

|

||||

set alert alice@yahoo.com

|

||||

|

||||

这是一个因为 CPU 负载超载而由 Monit 发送的邮件通知的例子。

|

||||

|

||||

|

||||

|

||||

### 总结 ###

|

||||

|

||||

如你所见,类似 Gmail 这样免费的 SMTP 服务器有着这么多不同的运用方式 。但再次重申,请牢记免费的 SMTP 服务器不适用于商业用途,仅仅适用于个人项目。无论你正在哪款应用中使用 Gmail SMTP 服务器,欢迎自由分享你的用例。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/send-email-notifications-gmail-smtp-server-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[cposture](https://github.com/cposture)

|

||||

校对:[martin2011qi](https://github.com/martin2011qi), [wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/nanni

|

||||

[1]:http://xmodulo.com/setup-system-status-page.html

|

||||

[2]:http://xmodulo.com/server-monitoring-system-monit.html

|

||||

[3]:http://xmodulo.com/create-software-raid1-array-mdadm-linux.html

|

||||

[4]:http://xmodulo.com/mail-server-ubuntu-debian.html

|

||||

[5]:http://xmodulo.com/go/digitalocean

|

||||

[6]:http://xmodulo.com/server-monitoring-system-monit.html

|

||||

[7]:http://xmodulo.com/go/digitalocean

|

||||

65

published/20151204 Review EXT4 vs. Btrfs vs. XFS.md

Normal file

65

published/20151204 Review EXT4 vs. Btrfs vs. XFS.md

Normal file

@ -0,0 +1,65 @@

|

||||

如何选择文件系统:EXT4、Btrfs 和 XFS

|

||||

================================================================================

|

||||

|

||||

|

||||

老实说,人们最不曾思考的问题之一是他们的个人电脑中使用了什么文件系统。Windows 和 Mac OS X 用户更没有理由去考虑,因为对于他们的操作系统,只有一种选择,那就是 NTFS 和 HFS+。相反,对于 Linux 系统而言,有很多种文件系统可以选择,现在默认的是广泛采用的 ext4。然而,现在也有改用一种称为 btrfs 文件系统的趋势。那是什么使得 btrfs 更优秀,其它的文件系统又是什么,什么时候我们又能看到 Linux 发行版作出改变呢?

|

||||

|

||||

首先让我们对文件系统以及它们真正干什么有个总体的认识,然后我们再对一些有名的文件系统做详细的比较。

|

||||

|

||||

### 文件系统是干什么的? ###

|

||||

|

||||

如果你不清楚文件系统是干什么的,一句话总结起来也非常简单。文件系统主要用于控制所有程序在不使用数据时如何存储数据、如何访问数据以及有什么其它信息(元数据)和数据本身相关,等等。听起来要编程实现并不是轻而易举的事情,实际上也确实如此。文件系统一直在改进,包括了更多的功能、更高效地完成它需要做的事情。总而言之,它是所有计算机的基本需求、但并不像听起来那么简单。

|

||||

|

||||

### 为什么要分区? ###

|

||||

|

||||

由于每个操作系统都能创建或者删除分区,很多人对分区都有模糊的认识。Linux 操作系统即便使用标准安装过程,在同一块磁盘上仍使用多个分区,这看起来很奇怪,因此需要一些解释。拥有不同分区的一个主要目的就是为了在灾难发生时能获得更好的数据安全性。

|

||||

|

||||

通过将硬盘划分为分区,数据会被分隔以及重组。当事故发生的时候,只有存储在被损坏分区上的数据会被破坏,很大可能上其它分区的数据能得以保留。这个原因可以追溯到 Linux 操作系统还没有日志文件系统、任何电力故障都有可能导致灾难发生的时候。

|

||||

|

||||

使用分区也考虑到了安全和健壮性原因,因此操作系统部分损坏并不意味着整个计算机就有风险或者会受到破坏。这也是当前采用分区的一个最重要因素。举个例子,用户创建了一些会填满磁盘的脚本、程序或者 web 应用,如果该磁盘只有一个大的分区,如果磁盘满了那么整个系统就不能工作。如果用户把数据保存在不同的分区,那么就只有那个分区会受到影响,而系统分区或者其它数据分区仍能正常运行。

|

||||

|

||||

记住,拥有一个日志文件系统只能在掉电或者和存储设备意外断开连接时提供数据安全性,并不能在文件系统出现坏块或者发生逻辑错误时保护数据。对于这种情况,用户可以采用廉价磁盘冗余阵列(RAID:Redundant Array of Inexpensive Disks)的方案。

|

||||

|

||||

### 为什么要切换文件系统? ###

|

||||

|

||||

ext4 文件系统由 ext3 文件系统改进而来,而后者又是从 ext2 文件系统改进而来。虽然 ext4 文件系统已经非常稳定,是过去几年中绝大部分发行版的默认选择,但它是基于陈旧的代码开发而来。另外, Linux 操作系统用户也需要很多 ext4 文件系统本身不提供的新功能。虽然通过某些软件能满足这种需求,但性能会受到影响,在文件系统层次做到这些能获得更好的性能。

|

||||

|

||||

### Ext4 文件系统 ###

|

||||

|

||||

ext4 还有一些明显的限制。最大文件大小是 16 tebibytes(大概是 17.6 terabytes),这比普通用户当前能买到的硬盘还要大的多。使用 ext4 能创建的最大卷/分区是 1 exbibyte(大概是 1,152,921.5 terabytes)。通过使用多种技巧, ext4 比 ext3 有很大的速度提升。类似一些最先进的文件系统,它是一个日志文件系统,意味着它会对文件在磁盘中的位置以及任何其它对磁盘的更改做记录。纵观它的所有功能,它还不支持透明压缩、重复数据删除或者透明加密。技术上支持了快照,但该功能还处于实验性阶段。

|

||||

|

||||

### Btrfs 文件系统 ###

|

||||

|

||||

btrfs 有很多不同的叫法,例如 Better FS、Butter FS 或者 B-Tree FS。它是一个几乎完全从头开发的文件系统。btrfs 出现的原因是它的开发者起初希望扩展文件系统的功能使得它包括快照、池化(pooling)、校验以及其它一些功能。虽然和 ext4 无关,它也希望能保留 ext4 中能使消费者和企业受益的功能,并整合额外的能使每个人,尤其是企业受益的功能。对于使用大型软件以及大规模数据库的企业,让多种不同的硬盘看起来一致的文件系统能使他们受益并且使数据整合变得更加简单。删除重复数据能降低数据实际使用的空间,当需要镜像一个单一而巨大的文件系统时使用 btrfs 也能使数据镜像变得简单。

|

||||

|

||||

用户当然可以继续选择创建多个分区从而无需镜像任何东西。考虑到这种情况,btrfs 能横跨多种硬盘,和 ext4 相比,它能支持 16 倍以上的磁盘空间。btrfs 文件系统一个分区最大是 16 exbibytes,最大的文件大小也是 16 exbibytes。

|

||||

|

||||

### XFS 文件系统 ###

|

||||

|

||||

XFS 文件系统是扩展文件系统(extent file system)的一个扩展。XFS 是 64 位高性能日志文件系统。对 XFS 的支持大概在 2002 年合并到了 Linux 内核,到了 2009 年,红帽企业版 Linux 5.4 也支持了 XFS 文件系统。对于 64 位文件系统,XFS 支持最大文件系统大小为 8 exbibytes。XFS 文件系统有一些缺陷,例如它不能压缩,删除大量文件时性能低下。目前RHEL 7.0 文件系统默认使用 XFS。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

不幸的是,还不知道 btrfs 什么时候能到来。官方说,其下一代文件系统仍然被归类为“不稳定”,但是如果用户下载最新版本的 Ubuntu,就可以选择安装到 btrfs 分区上。什么时候 btrfs 会被归类到 “稳定” 仍然是个谜, 直到真的认为它“稳定”之前,用户也不应该期望 Ubuntu 会默认采用 btrfs。有报道说 Fedora 18 会用 btrfs 作为它的默认文件系统,因为到了发布它的时候,应该有了 btrfs 文件系统校验器。由于还没有实现所有的功能,另外和 ext4 相比性能上也比较缓慢,btrfs 还有很多的工作要做。

|

||||

|

||||

那么,究竟使用哪个更好呢?尽管性能几乎相同,但 ext4 还是赢家。为什么呢?答案在于易用性以及广泛性。对于桌面或者工作站, ext4 仍然是一个很好的文件系统。由于它是默认提供的文件系统,用户可以在上面安装操作系统。同时, ext4 支持最大 1 exabytes 的卷和 16 terabytes 的文件,因此考虑到大小,它也还有很大的进步空间。

|

||||

|

||||

btrfs 能提供更大的高达 16 exabytes 的卷以及更好的容错,但是,到现在为止,它感觉更像是一个附加的文件系统,而部署一个集成到 Linux 操作系统的文件系统。比如,尽管 btrfs 支持不同的发行版,使用 btrfs 格式化硬盘之前先要有 btrfs-tools 工具,这意味着安装 Linux 操作系统的时候它并不是一个可选项,即便不同发行版之间会有所不同。

|

||||

|

||||

尽管传输速率非常重要,评价一个文件系统除了文件传输速度之外还有很多因素。btrfs 有很多好用的功能,例如写复制(Copy-on-Write)、扩展校验、快照、清洗、自修复数据、冗余删除以及其它保证数据完整性的功能。和 ZFS 相比 btrfs 缺少 RAID-Z 功能,因此对于 btrfs, RAID 还处于实验性阶段。对于单纯的数据存储,和 ext4 相比 btrfs 似乎更加优秀,但时间会验证一切。

|

||||

|

||||

迄今为止,对于桌面系统而言,ext4 似乎是一个更好的选择,因为它是默认的文件系统,传输文件时也比 btrfs 更快。btrfs 当然值得尝试、但要在桌面 Linux 上完全取代 ext4 可能还需要一些时间。数据场和大存储池会揭示关于 ext4、XCF 以及 btrfs 不同的场景和差异。

|

||||

|

||||

如果你有不同或者其它的观点,在下面的评论框中告诉我们吧。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.unixmen.com/review-ext4-vs-btrfs-vs-xfs/

|

||||

|

||||

作者:[M.el Khamlichi][a]

|

||||

译者:[ictlyh](http://mutouxiaogui.cn/blog/)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.unixmen.com/author/pirat9/

|

||||

@ -0,0 +1,371 @@

|

||||

怎样在 ubuntu 和 debian 中通过命令行管理 KVM

|

||||

================================================================================

|

||||

|

||||

有很多不同的方式去管理运行在 KVM 管理程序上的虚拟机。例如,virt-manager 就是一个流行的基于图形界面的前端虚拟机管理工具。然而,如果你想要在没有图形窗口的服务器环境下使用 KVM ,那么基于图形界面的解决方案显然是行不通的。事实上,你可以单纯使用包装了 kvm 命令行脚本的命令行来管理 KVM 虚拟机。作为替代方案,你可以使用 virsh 这个容易使用的命令行程序来管理客户虚拟机。在 virsh 中,它通过和 libvirtd 服务通信来达到控制虚拟机的目的,而 libvirtd 可以控制多个不同的虚拟机管理器,包括 KVM,Xen,QEMU,LXC 和 OpenVZ。

|

||||

|

||||

当你想要对虚拟机的前期准备和后期管理实现自动化操作时,像 virsh 这样的命令行管理工具是非常有用的。同样,virsh 支持多个管理器也就意味着你可以通过相同的 virsh 接口去管理不同的虚拟机管理器。

|

||||

|

||||

在这篇文章中,我会示范**怎样在 ubuntu 和 debian 上通过使用 virsh 命令行去运行 KVM**。

|

||||

|

||||

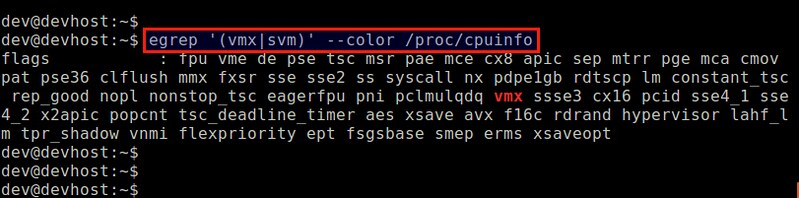

### 第一步:确认你的硬件平台支持虚拟化 ###

|

||||

|

||||

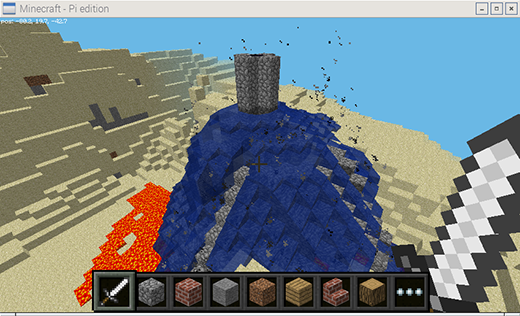

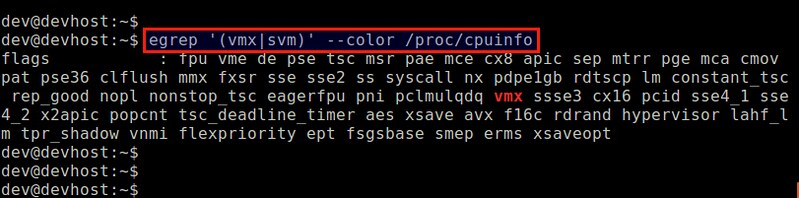

第一步,首先要确认你的 CPU 支持硬件虚拟化扩展(e.g.,Intel VT 或者 AMD-V),这是 KVM 对硬件的要求。下面的命令可以检查硬件是否支持虚拟化。

|

||||

|

||||

```

|

||||

$ egrep '(vmx|svm)' --color /proc/cpuinfo

|

||||

```

|

||||

|

||||

|

||||

|

||||

如果在输出中不包含 vmx 或者 svm 标识,那么就意味着你的 cpu 不支持硬件虚拟化。因此你不能在你的机器上使用 KVM 。确认了 cpu 支持 vmx 或者 svm 之后,接下来开始安装 KVM。

|

||||

|

||||

对于 KVM 来说,它不要求运行在拥有 64 位内核系统的主机上,但是通常我们会推荐在 64 位系统的主机上面运行 KVM。

|

||||

|

||||

### 第二步:安装KVM ###

|

||||

|

||||

使用 `apt-get` 安装 KVM 和相关的用户空间工具。

|

||||

|

||||

```

|

||||

$ sudo apt-get install qemu-kvm libvirt-bin

|

||||

```

|

||||

|

||||

安装期间,libvirtd 用户组(在 debian 上是 libvirtd-qemu 用户组)将会被创建,并且你的用户 id 将会被自动添加到该组中。这样做的目的是让你可以以一个普通用户而不是 root 用户的身份去管理虚拟机。你可以使用 `id` 命令来确认这一点,下面将会告诉你怎么去显示你的组 id:

|

||||

|

||||

```

|

||||

$ id <your-userID>

|

||||

```

|

||||

|

||||

|

||||

|

||||

如果因为某些原因,libvirt(在 debian 中是 libvirt-qemu)没有在你的组 id 中被找到,你也可以手动将你自己添加到对应的组中,如下所示:

|

||||

|

||||

在 ubuntu 上:

|

||||

|

||||

```

|

||||

$ sudo adduser [youruserID] libvirtd

|

||||

```

|

||||

|

||||

在 debian 上:

|

||||

|

||||

```

|

||||

$ sudo adduser [youruserID] libvirt-qemu

|

||||

```

|

||||

|

||||

按照如下命令重新载入更新后的组成员关系。如果要求输入密码,那么输入你的登陆密码即可。

|

||||

|

||||

```

|

||||

$ exec su -l $USER

|

||||

```

|

||||

|

||||

这时,你应该可以以普通用户的身份去执行 virsh 了。做一个如下所示的测试,这个命令将会以列表的形式列出可用的虚拟机(当前的列表是空的)。如果你没有遇到权限问题,那意味着到目前为止一切都是正常的。

|

||||

|

||||

$ virsh list

|

||||

|

||||

----------

|

||||

|

||||

Id Name State

|

||||

----------------------------------------------------

|

||||

|

||||

### 第三步:配置桥接网络 ###

|

||||

|

||||

为了使 KVM 虚拟机能够访问外部网络,一种方法是通过在 KVM 宿主机上创建 Linux 桥来实现。创建之后的桥能够将虚拟机的虚拟网卡和宿主机的物理网卡连接起来,因此,虚拟机能够发送和接收由物理网卡传输的数据包。这种方式叫做网络桥接。

|

||||

|

||||

下面将告诉你如何创建并且配置网桥,我们创建一个网桥称它为 br0。

|

||||

|

||||

首先,安装一个必需的包,然后用命令行创建一个网桥。

|

||||

|

||||

```

|

||||

$ sudo apt-get install bridge-utils

|

||||

$ sudo brctl addbr br0

|

||||

```

|

||||

|

||||

下一步就是配置已经创建好的网桥,即修改位于 `/etc/network/interfaces` 的配置文件。我们需要将该桥接网卡设置成开机启动。为了修改该配置文件,你需要关闭你的操作系统上的网络管理器(如果你在使用它的话)。跟随[操作指南][1]的说明去关闭网络管理器。

|

||||

|

||||

关闭网络管理器之后,接下来就是通过修改配置文件来配置网桥了。

|

||||

|

||||

```

|

||||

#auto eth0

|

||||

#iface eth0 inet dhcp

|

||||

|

||||

auto br0

|

||||

iface br0 inet dhcp

|

||||

bridge_ports eth0

|

||||

bridge_stp off

|

||||

bridge_fd 0

|

||||

bridge_maxwait 0

|

||||

```

|

||||

|

||||

在上面的配置中,我假设 eth0 是主要网卡,它也是连接到外网的网卡,同样,我假设 eth0 将会通过 DHCP 协议自动获取 ip 地址。注意,之前在 `/etc/network/interfaces` 中还没有对 eth0 进行任何配置。桥接网卡 br0 引用了 eth0 的配置,而 eth0 也会受到 br0 的制约。

|

||||

|

||||

重启网络服务,并确认网桥已经被成功的配置好。如果成功的话,br0 的 ip 地址将会是 eth0 自动分配的 ip 地址,而且 eth0 不会被分配任何 ip 地址。

|

||||

|

||||

```

|

||||

$ sudo /etc/init.d/networking restart

|

||||

$ ifconfig

|

||||

```

|

||||

|

||||

如果因为某些原因,eth0 仍然保留了之前分配给了 br0 的 ip 地址,那么你可能必须手动删除 eth0 的 ip 地址。

|

||||

|

||||

|

||||

|

||||

### 第四步:用命令行创建一个虚拟机 ###

|

||||

|

||||

对于虚拟机来说,它的配置信息被存储在它对应的xml文件中。因此,创建一个虚拟机的第一步就是准备一个与虚拟机对应的 xml 文件。

|

||||

|

||||

下面是一个示例 xml 文件,你可以根据需要手动修改它。

|

||||

|

||||

```

|

||||

<domain type='kvm'>

|

||||

<name>alice</name>

|

||||

<uuid>f5b8c05b-9c7a-3211-49b9-2bd635f7e2aa</uuid>

|

||||

<memory>1048576</memory>

|

||||

<currentMemory>1048576</currentMemory>

|

||||

<vcpu>1</vcpu>

|

||||

<os>

|

||||

<type>hvm</type>

|

||||

<boot dev='cdrom'/>

|

||||

</os>

|

||||

<features>

|

||||

<acpi/>

|

||||

</features>

|

||||

<clock offset='utc'/>

|

||||

<on_poweroff>destroy</on_poweroff>

|

||||

<on_reboot>restart</on_reboot>

|

||||

<on_crash>destroy</on_crash>

|

||||

<devices>

|

||||

<emulator>/usr/bin/kvm</emulator>

|

||||

<disk type="file" device="disk">

|

||||

<driver name="qemu" type="raw"/>

|

||||

<source file="/home/dev/images/alice.img"/>

|

||||

<target dev="vda" bus="virtio"/>

|

||||

<address type="pci" domain="0x0000" bus="0x00" slot="0x04" function="0x0"/>

|

||||

</disk>

|

||||

<disk type="file" device="cdrom">

|

||||

<driver name="qemu" type="raw"/>

|

||||

<source file="/home/dev/iso/CentOS-6.5-x86_64-minimal.iso"/>

|

||||

<target dev="hdc" bus="ide"/>

|

||||

<readonly/>

|

||||

<address type="drive" controller="0" bus="1" target="0" unit="0"/>

|

||||

</disk>

|

||||

<interface type='bridge'>

|

||||

<source bridge='br0'/>

|

||||

<mac address="00:00:A3:B0:56:10"/>

|

||||

</interface>

|

||||

<controller type="ide" index="0">

|

||||

<address type="pci" domain="0x0000" bus="0x00" slot="0x01" function="0x1"/>

|

||||

</controller>

|

||||

<input type='mouse' bus='ps2'/>

|

||||

<graphics type='vnc' port='-1' autoport="yes" listen='0.0.0.0'/>

|

||||

<console type='pty'>

|

||||

<target port='0'/>

|

||||

</console>

|

||||

</devices>

|

||||

</domain>

|

||||

```

|

||||

|

||||

上面的主机xml配置文件定义了如下的虚拟机内容。

|

||||

|

||||

- 1GB内存,一个虚拟cpu和一个硬件驱动

|

||||

|

||||

- 磁盘镜像:`/home/dev/images/alice.img`

|

||||

|

||||

- 从 CD-ROM 引导(`/home/dev/iso/CentOS-6.5-x86_64-minomal.iso`)

|

||||

|

||||

- 网络:一个桥接到 br0 的虚拟网卡

|

||||

|

||||

- 通过 VNC 远程访问

|

||||

|

||||

`<uuid></uuid>` 中的 UUID 字符串可以随机生成。为了得到一个随机的 uuid 字符串,你可能需要使用 uuid 命令行工具。

|

||||

|

||||

```

|

||||

$ sudo apt-get install uuid

|

||||

$ uuid

|

||||

```

|

||||

|

||||

生成一个主机 xml 配置文件的方式就是通过一个已经存在的虚拟机来导出它的 xml 配置文件。如下所示。

|

||||

|

||||

```

|

||||

$ virsh dumpxml alice > bob.xml

|

||||

```

|

||||

|

||||

|

||||

|

||||

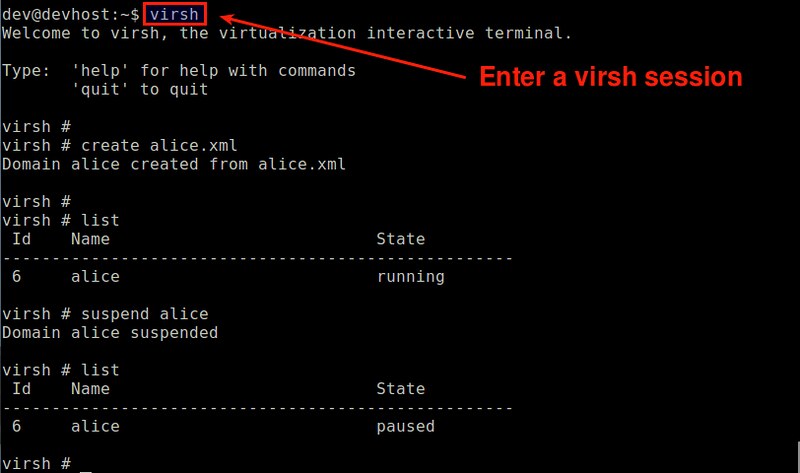

### 第五步:使用命令行启动虚拟机 ###

|

||||

|

||||

在启动虚拟机之前,我们需要创建它的初始磁盘镜像。为此,你需要使用 qemu-img 命令来生成一个 qemu-kvm 镜像。下面的命令将会创建 10 GB 大小的空磁盘,并且它是 qcow2 格式的。

|

||||

|

||||

```

|

||||

$ qemu-img create -f qcow2 /home/dev/images/alice.img 10G

|

||||

```

|

||||

|

||||

使用 qcow2 格式的磁盘镜像的好处就是它在创建之初并不会给它分配全部大小磁盘容量(这里是 10 GB),而是随着虚拟机中文件的增加而逐渐增大。因此,它对空间的使用更加有效。

|

||||

|

||||

现在,你可以通过使用之前创建的 xml 配置文件启动你的虚拟机了。下面的命令将会创建一个虚拟机,然后自动启动它。

|

||||

|

||||

```

|

||||

$ virsh create alice.xml

|

||||

Domain alice created from alice.xml

|

||||

```

|

||||

|

||||

**注意**: 如果你对一个已经存在的虚拟机执行了了上面的命令,那么这个操作将会在没有任何警告的情况下抹去那个已经存在的虚拟机的全部信息。如果你已经创建了一个虚拟机,你可能会使用下面的命令来启动虚拟机。

|

||||

|

||||

```

|

||||

$ virsh start alice.xml

|

||||

```

|

||||

|

||||

使用如下命令确认一个新的虚拟机已经被创建并成功的被启动。

|

||||

|

||||

```

|

||||

$ virsh list

|

||||

```

|

||||

|

||||

Id Name State

|

||||

----------------------------------------------------

|

||||

3 alice running

|

||||

|

||||

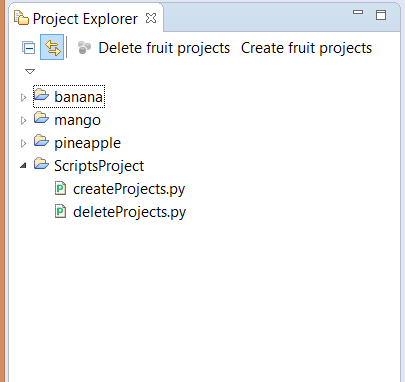

同样,使用如下命令确认你的虚拟机的虚拟网卡已经被成功的添加到了你先前创建的 br0 网桥中。

|

||||

|

||||

$ sudo brctl show

|

||||

|

||||

|

||||

|

||||

### 远程连接虚拟机 ###

|

||||

|

||||

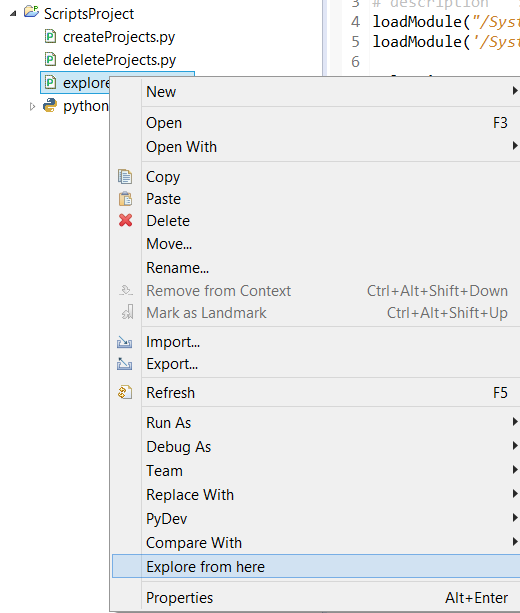

为了远程访问一个正在运行的虚拟机的控制台,你可以使用VNC客户端。

|

||||

|

||||

首先,你需要使用如下命令找出用于虚拟机的VNC端口号。

|

||||

|

||||

```

|

||||

$ sudo netstat -nap | egrep '(kvm|qemu)'

|

||||

```

|

||||

|

||||

|

||||

|

||||

在这个例子中,用于 alice 虚拟机的 VNC 端口号是 5900。 然后启动一个VNC客户端,连接到一个端口号为5900的VNC服务器。在我们的例子中,虚拟机支持由CentOS光盘文件启动。

|

||||

|

||||

|

||||

|

||||

### 使用 virsh 管理虚拟机 ###

|

||||

|

||||

下面列出了 virsh 命令的常规用法:

|

||||

|

||||

创建客户机并且启动虚拟机:

|

||||

|

||||

```

|

||||

$ virsh create alice.xml

|

||||

```

|

||||

|

||||

停止虚拟机并且删除客户机:

|

||||

|

||||

```

|

||||

$ virsh destroy alice

|

||||

```

|

||||

|

||||

关闭虚拟机(不用删除它):

|

||||

|

||||

```

|

||||

$ virsh shutdown alice

|

||||

```

|

||||

|

||||

暂停虚拟机:

|

||||

|

||||

```

|

||||

$ virsh suspend alice

|

||||

```

|

||||

|

||||

恢复虚拟机:

|

||||

|

||||

```

|

||||

$ virsh resume alice

|

||||

```

|

||||

|

||||

访问正在运行的虚拟机的控制台:

|

||||

|

||||

```

|

||||

$ virsh console alice

|

||||

```

|

||||

|

||||

设置虚拟机开机启动:

|

||||

|

||||

```

|

||||

$ virsh autostart alice

|

||||

```

|

||||

|

||||

查看虚拟机的详细信息:

|

||||

|

||||

```

|

||||

$ virsh dominfo alice

|

||||

```

|

||||

|

||||

编辑虚拟机的配置文件:

|

||||

|

||||

```

|

||||

$ virsh edit alice

|

||||

```

|

||||

|

||||

上面的这个命令将会使用一个默认的编辑器来调用主机配置文件。该配置文件中的任何改变都将自动被libvirt验证其正确性。

|

||||

|

||||

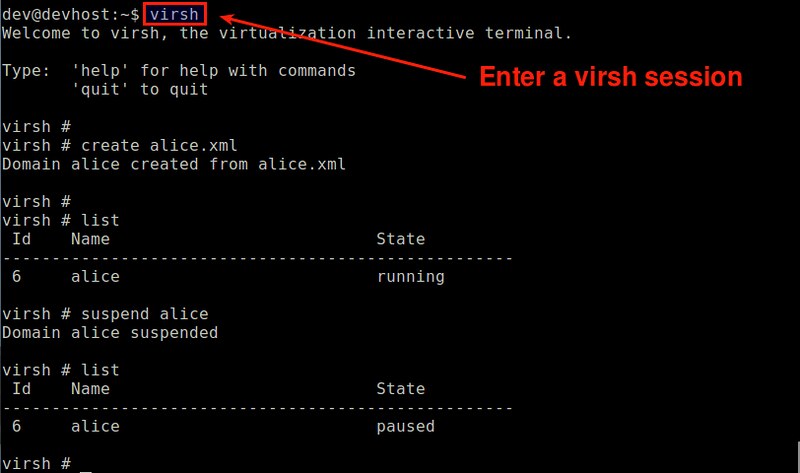

你也可以在一个virsh会话中管理虚拟机。下面的命令会创建并进入到一个virsh会话中:

|

||||

|

||||

```

|

||||

$ virsh

|

||||

```

|

||||

|

||||

在 virsh 提示中,你可以使用任何 virsh 命令。

|

||||

|

||||

|

||||

|

||||

### 问题处理 ###

|

||||

|

||||

1. 我在创建虚拟机的时候遇到了一个错误:

|

||||

|

||||

error: internal error: no supported architecture for os type 'hvm'

|

||||

|

||||

如果你的硬件不支持虚拟化的话你可能就会遇到这个错误。(例如,Intel VT或者AMD-V),这是运行KVM所必需的。如果你遇到了这个错误,而你的cpu支持虚拟化,那么这里可以给你一些可用的解决方案:

|

||||

|

||||

首先,检查你的内核模块是否丢失。

|

||||

|

||||

```

|

||||

$ lsmod | grep kvm

|

||||

```

|

||||

|

||||

如果内核模块没有加载,你必须按照如下方式加载它。

|

||||

|

||||

```

|

||||

$ sudo modprobe kvm_intel (for Intel processor)

|

||||

$ sudo modprobe kvm_amd (for AMD processor)

|

||||

```

|

||||

|

||||

第二个解决方案就是添加 `--connect qemu:///system` 参数到 `virsh` 命令中,如下所示。当你正在你的硬件平台上使用超过一个虚拟机管理器的时候就需要添加这个参数(例如,VirtualBox,VMware)。

|

||||

|

||||

```

|

||||

$ virsh --connect qemu:///system create alice.xml

|

||||

```

|

||||

|

||||

2. 当我试着访问我的虚拟机的登陆控制台的时候遇到了错误:

|

||||

|

||||

```

|

||||

$ virsh console alice

|

||||

error: internal error: cannot find character device <null>

|

||||

```

|

||||

|

||||

这个错误发生的原因是你没有在你的虚拟机配置文件中定义控制台设备。在 xml 文件中加上下面的内部设备部分即可。

|

||||

|

||||

```

|

||||

<console type='pty'>

|

||||

<target port='0'/>

|

||||

</console>

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/use-kvm-command-line-debian-ubuntu.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[kylepeng93](https://github.com/kylepeng93 )

|

||||

校对:[Ezio](https://github.com/oska874)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/nanni

|

||||

[1]:http://xmodulo.com/disable-network-manager-linux.html

|

||||

@ -0,0 +1,28 @@

|

||||

我们能通过快速、开放的研究来战胜寨卡病毒吗?

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

关于寨卡病毒,最主要的问题就是我们对于它了解的太少了。这意味着我们需要尽快对它作出很多研究。

|

||||

|

||||

寨卡病毒现已严重威胁到世界人民的健康。在 2015 年爆发之后,它就成为了全球性的突发公共卫生事件,并且这个病毒也可能与儿童的出生缺陷有关。根据[维基百科][4],在这个病毒在 40 年代末期被发现以后,早在 1952 年就被观测到了对于人类的影响。

|

||||

|

||||

在 2 月 10 日,开放杂志 PLoS [发布了一个声明][1],这个是声明是关于有关公众紧急状况的信息共享。在此之后,刊登在研究期刊 F1000 的一篇文章 [对于能治疗寨卡病毒的开源药物(Open drug)的研究][2],它讨论了寨卡病毒及其开放研究的状况。那篇来自 PLoS 的声明列出了超过 30 个开放了对于寨卡病毒的研究进度的数据的重要组织。并且世界卫生组织实施了一个特别规定,以[创作共用许可证][3]公布提交到他们那里的资料。

|

||||

|

||||

快速公布,无限制的重复利用,以及强调研究结果的传播是推动开放科研社区的战略性一步。看到我们所关注的突发公共卫生事件能够以这样的方式开始是很令人受鼓舞的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/life/16/2/how-rapid-open-science-could-change-game-zika-virus

|

||||

|

||||

作者:[Marcus D. Hanwell][a]

|

||||

译者:[name1e5s](https://github.com/name1e5s)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/mhanwell

|

||||

[1]: http://blogs.plos.org/plos/2016/02/statement-on-data-sharing-in-public-health-emergencies/

|

||||

[2]: http://f1000research.com/articles/5-150/v1

|

||||

[3]: https://creativecommons.org/licenses/by/3.0/igo/

|

||||

[4]: https://en.wikipedia.org/wiki/Zika_virus

|

||||

@ -0,0 +1,90 @@

|

||||

2016:如何选择 Linux 桌面环境

|

||||

=============================================

|

||||

|

||||

|

||||

|

||||

Linux 创建了一个友好的环境,为我们提供了选择的可能。比方说,现代大多数的 Linux 发行版都提供不同桌面环境给我们来选择。在本文中,我将挑选一些你可能会在 Linux 中见到的最棒的桌面环境来介绍。

|

||||

|

||||

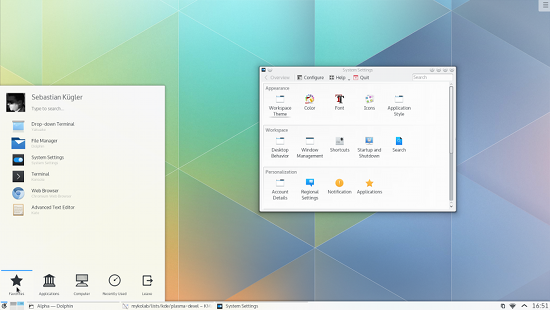

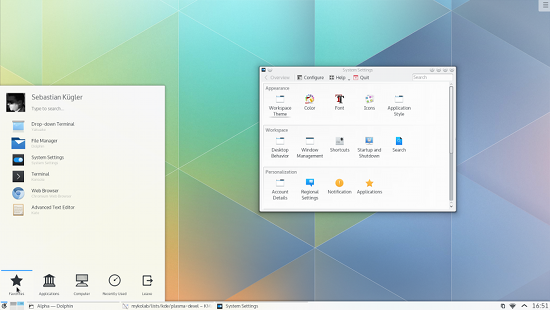

## Plasma

|

||||

|

||||

我认为,[KDE 的 Plasma 桌面](https://www.kde.org/workspaces/plasmadesktop/) 是最先进的桌面环境 (LCTT 译注:译者认为,没有什么是最好的,只有最合适的,毕竟每个人的喜好都不可能完全相同)。它是我见过功能最完善和定制性最高的桌面环境;在用户完全自主控制方面,即使是 Mac OS X 和 Windows 也无法与之比拟。

|

||||

|

||||

我爱 Plasma,因为它自带了一个非常好的文件管理器 —— Dolphin。而相对应 Gnome 环境,我更喜欢 Plasma 的原因就在于这个文件管理器。使用 Gnome 最大的痛苦就是,它的文件管理器——Files——使我无法完成一些基本任务,比如说,批量文件重命名操作。而这个操作对我来说相当重要,因为我喜欢拍摄,但 Gnome 却让我无法批量重命名这些图像文件。而使用 Dolphin 的话,这个操作就像在公园散步一样简单。

|

||||

|

||||

而且,你可以通过插件来增强 Plasma 的功能。Plasma 有大量的基础软件,如 Krita、Kdenlive、Calligra 办公套件、digiKam、Kwrite 以及由 KDE 社区开发维护的大量应用。

|

||||

|

||||

Plasma 桌面环境唯一的缺陷就是它默认的邮件客户端——Kmail。它的设置比较困难,我希望 Kmail 设置可以配置地址簿和日历。

|

||||

|

||||

包括 openSUSE 在内的多数主流发行版多使用 Plasma 作为默认桌面。

|

||||

|

||||

## GNOME

|

||||

|

||||

[GNOME](https://www.gnome.org/) (GNU Network Object Model Environment,GNU 网络对象模型环境) 由 [Miguel de Icaza](https://en.wikipedia.org/wiki/Miguel_de_Icaza) 和 Federico Mena 在 1997 年的时候创立,这是因为 KDE 使用了 Qt 工具包,而这个工具包是使用专属许可证 (proprietary license) 发布的。不像提供了大量定制的 KDE,GNOME 专注于让事情变得简单。因为其自身的简单性和易用性,GNOME 变得相当流行。而我认为 GNOME 之所以流行的原因在于,Ubuntu——使用 GNOME 作为默认桌面的主流 Linux 发行版之一——对其有着巨大的推动作用。

|

||||

|

||||

随着时代变化,GNOME 也需要作出相应的改变了。因此,开发者在 GNOME 3 中推出了 GNOME 3 Shell,从而引出了它的全新设计规范。但这同时与 Canonical 的 Ubuntu 计划存在者一些冲突,所以 Canonical 为 GNOME 开发了叫做 Unity 的自己的 Shell。最初,GNOME 3 Shell 因很多争议 (issues) 而困扰不已——最明显的是,升级之后会导致很多扩展无法正常工作。由于设计上的重大改版以及各种问题的出现,GNOME 便产生了很多分支(fork),比如 Cinnamon 和 Mate 桌面。

|

||||

|

||||

另外,使得 GNOME 让人感兴趣的是,它针对触摸设备做了优化,所以,如果你有一台触屏笔记本电脑的话,GNOME 则是最合适你这台电脑的桌面环境。

|

||||

|

||||

在 3.18 版本中,GNOME 已经作出了一些令人印象深刻的改动。其中他们所做的最让人感兴趣的是集成了 Google Drive,用户可以把他们的 Google Drive 挂载为远程存储设备,这样就不必再使用浏览器来查看里边的文件了。我也很喜欢 GNOME 里边自带的那个优秀的邮件客户端,它带有日历和地址簿功能。尽管有这么多些优秀的特性,但它的文件管理器使我不再使用 GNOME ,因为我无法处理批量文件重命名。我会坚持使用 Plasma,一直到 GNOME 的开发者修复了这个小缺陷。

|

||||

|

||||

|

||||

|

||||

## Unity

|

||||

|

||||

从技术上来说,[Unity](https://unity.ubuntu.com/) 并不是一个桌面环境,它只是 Canonical 为 Ubuntu 开发的一个图形化 Shell。Unity 运行于 GNOME 桌面之上,并使用很多 GNOME 的应用和工具。Ubuntu 团队分支了一些 GNOME 组件,以便更好的满足 Unity 用户的需求。

|

||||

|

||||

Unity 在 Ubuntu 的融合(convergence)计划中扮演着重要角色, 在 Unity 8 中,Canonical 公司正在努力将电脑桌面和移动世界结合到一起。Canonical 同时还为 Unity 开发了许多的有趣技术,比如 HUD (Head-up Display,平视显示)。他们还在 lenses 和 scopes 中通过一种独特的技术来让用户方便地找到特定内容。

|

||||

|

||||

即将发行的 Ubuntu 16.04,将会搭载 Unity 8,那时候用户就可以完全体验开发者为该开源软件添加的所有特性了。其中最大的争议之一,Unity 可选取消集成了 Amazon Ads 和其他服务。而在即将发行的版本,Canonical 从 Dash 移除了 Amazon ads,但却默认保证了系统的隐私性。

|

||||

|

||||

## Cinnamon

|

||||

|

||||

最初,[Cinnamon](https://en.wikipedia.org/wiki/Cinnamon_(software\)) 由 [Linux Mint](http://www.linuxmint.com/) 开发 —— 这是 DistroWatch.com 上统计出来最流行的发行版。就像 Unity,Cinnamon 是 GNOME Shell 的一个分支。但最后进化为一个独立的桌面环境,这是因为 Linux Mint 的开发者分支了 GNOME 桌面中很多的组件到 Cinnamon,包括 Files ——以满足自身用户的需求。

|

||||

|

||||

由于 Linux Mint 基于普通版本的 Ubuntu,开发者仍需要去完成 Ubuntu 尚未完成的目标。结果,尽管前途光明,但 Cinnamon 却充满了 Bugs 和问题。随着 17.x 本版的发布,Linux Mint 开始转移到 Ubuntu 的 LTS 版本上,从而他们可以专注于开发 Cinnamon 的核心组件,而不必再去担心代码库。转移到 LTS 的好处是,Cinnamon 变得非常稳定并且基本没有 Bugs 出现。现在,开发者已经开始向桌面环境中添加更多的新特性了。

|

||||

|

||||

对于那些更喜欢在 GNOME 基础上有一个很好的类 Windows 用户界面的用户来说,Cinnamon 是他们最好的桌面环境。

|

||||

|

||||

## MATE 桌面

|

||||

|

||||

[MATE 桌面](http://mate-desktop.com/) 同样是 GNOME 的一个分支,然而,它并不像 Cinnamon 那样由 GNOME 3 分支而来,而是现在已经没有人维护的 GNOME 2 代码库的一个分支。MATE 桌面中的一些开发者并不喜欢 GNOME 3 并且想要“继续坚持” GNOME 2,所以他们使用这个代码库来创建来 MATE。为避免和 GNOME 3 的冲突,他们重命名了全部的包:Nautilus 改为 Caja、Gedit 改为 Pluma 以及 Evince 改为 Atril 等。

|

||||

|

||||

尽管 MATE 延续了 GNOME 2,但这并不意味着他们使用过时的技术;相反,他们使用了更新的技术来提供一个现代的 GNOME 2 体验。

|

||||

|

||||

拥有相当高的资源使用率才是 MATE 最令人印象深刻之处。你可将它运行在老旧硬件或者更新一些的但不太强大的硬件上,如树梅派 (Raspberry Pi) 或者 Chromebook Flip。使得它更有让人感兴趣的是,把它运行在一些强大的硬件上,可以节省大多数的资源给其他应用,而桌面环境本身只占用很少的资源。

|

||||

|

||||

## LXQt

|

||||

|

||||

[LXQt](http://lxqt.org/) 继承了 LXDE ——最轻量级的桌面环境之一。它融合了 LXDE 和 Razor-Qt 两个开源项目。LXQt 的首个可用本版(v 0.9)发布于 2015 年。最初,开发者使用了 Qt4 ,之后为了加快开发速度,而放弃了兼容性,他们移动到 Qt5 和 KDE 框架上。我也在自己的 Arch 系统上尝试使用了 LXQt,它的确是一个非常好的轻量级桌面环境。但在完全接过 LXDE 的传承之前,LXQt 仍有一段很长的路需要走。

|

||||

|

||||

## Xfce

|

||||

|

||||

[Xfce](http://www.xfce.org/) 早于 KDE 桌面环境,它是最古老和最轻量级的桌面环境。Xfce 的最新版本是 4.15,发布于 2015 年,使用了诸如 GTK+ 3 的大量的现代科技。很多发行版都使用了 Xfce 环境以满足特定需求,比如 Ubuntu Studio ——与 MATE 类似——尽量节省系统资源给其他的应用。并且,许多的著名的 Linux 发行版——包括 Manjaro Linux、PC/OS、Salix 和 Mythbuntu ——都把它作为默认桌面环境。

|

||||

|

||||

## Budgie

|

||||

|

||||

[Budgie](https://solus-project.com/budgie/) 是一个新型的桌面环境,由 Solus Linux 团队开发和维护。Solus 是一个从零开始构建的新型发行版,而 Budgie 则是它的一个核心组件。Budgie 使用了大量的 GNOME 组件,从而提供一个华丽的用户界面。由于没有该桌面环境的更多信息,我特地联系了 Solus 的核心开发者—— Ikey Doherty。他解释说:“我们搭载了自己的桌面环境—— Budgie 桌面。与其他桌面环境不同的是,Budgie 并不是其他桌面的一个分支,它的目标是彻底融入到 GNOME 协议栈之中。它完全从零开始编写,并特意设计来迎合 Solus 提供的体验。我们会尽可能的和 GNOME 的上游团队协同工作,修复 Bugs,并提倡和支持他们的工作”。

|

||||

|

||||

## Pantheon

|

||||

|

||||

我想,[Pantheon](https://elementary.io/) 不需要特别介绍了吧,那个优美的 elementary OS 就使用它作为桌面。类似于 Budgie,很多人都认为 Pantheon 也不是 GNOME 的一个分支。elementary OS 团队大多拥有良好的设计从业背景,所以他们会近距离关注每一个细节,这使得 Pantheon 成为一个非常优美的桌面环境。偶尔,它可能缺少像 Plasma 等桌面中的某些特性,但开发者实际上是尽其所能的去坚持设计原则。

|

||||

|

||||

|

||||

|

||||

## 结论

|

||||

|

||||

当我写完本文后,我突然意识到来开源和 Linux 的重大好处。总有一些东西适合你。就像 Jon “maddog” Hall 在最近的 SCaLE 14 上说的那样:“是的,现在有 300 多个 Linux 发行版。我可以一个一个去尝试,然后坚持使用我最喜欢的那一个”。

|

||||

|

||||

所以,尽情享受 Linux 的多样性吧,最后使用最合你意的那一个。

|

||||

|

||||

------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linux.com/news/software/applications/881107-best-linux-desktop-environments-for-2016

|

||||

|

||||

作者:[Swapnil Bhartiya][a]

|

||||

译者:[GHLandy](https://github.com/GHLandy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.linux.com/community/forums/person/61003

|

||||

@ -0,0 +1,24 @@

|

||||

Ubuntu 16.04 为更好支持容器化而采用 ZFS

|

||||

=======================================================

|

||||

|

||||

|

||||

|

||||

Ubuntu 开发者正在为 [Ubuntu 16.04 加上 ZFS 支持](http://www.phoronix.com/scan.php?page=news_item&px=ZFS-For-Ubuntu-16.04) ,并且对该文件系统的所有支持都已经准备就绪。

|

||||

|

||||

Ubuntu 16.04 的默认安装将会继续是 ext4,但是 ZFS 支持将会自动构建进 Ubuntu 发布中,模块将在需要时自动加载,zfsutils-linux 将放到 Ubuntu 主分支内,并且通过 Canonical 对商业客户提供支持。

|

||||

|

||||

对于那些对 Ubuntu 中的 ZFS 感兴趣的人,Canonical 的 Dustin Kirkland 已经写了[一篇新的博客](http://blog.dustinkirkland.com/2016/02/zfs-is-fs-for-containers-in-ubuntu-1604.html),介绍了一些细节及为何“ZFS 是 Ubuntu 16.04 中面向容器使用的文件系统!”

|

||||

|

||||

------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.phoronix.com/scan.php?page=news_item&px=Ubuntu-ZFS-Continues-16.04&utm_source=feedburner&utm_medium=feed&utm_campaign=Feed%3A+Phoronix+%28Phoronix%29

|

||||

|

||||

作者:[Michael Larabel][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.michaellarabel.com/

|

||||

|

||||

|

||||

@ -1,14 +1,16 @@

|

||||

Linux最大版本4.1.18 LTS发布,带来大量修改

|

||||

Linux 4.1 系列的最大版本 4.1.18 LTS发布,带来大量修改

|

||||

=================================================================================

|

||||

(LCTT 译注:这是一则过期的消息,但是为了披露更新内容,还是发布出来给大家参考)

|

||||

|

||||

**著名的内核维护者Greg Kroah-Hartman貌似正在度假中,因为Sasha Levin有幸在今天,2016年2月16日,的早些时候来[宣布](http://lkml.iu.edu/hypermail/linux/kernel/1602.2/00520.html),第十八个Linux内核维护版本Linux Kernel 4.1 LTS通用版本正式发布。**

|

||||

**著名的内核维护者Greg Kroah-Hartman貌似正在度假中,因为Sasha Levin2016年2月16日的早些时候来[宣布](http://lkml.iu.edu/hypermail/linux/kernel/1602.2/00520.html),第十八个Linux内核维护版本Linux Kernel 4.1 LTS通用版本正式发布。**

|

||||

|

||||

作为长期支持的内核分支,Linux 4.1将再多几年接收到更新和补丁,而今天的维护构建版本也证明一点,就是内核开发者们正致力于统保持该系列在所有使用该版本的GNU/Linux操作系统上稳定和可靠。Linux Kernel 4.1.18 LTS是一个大规模发行版,它带来了总计达228个文件修改,这些修改包含了多达5304个插入修改和1128个删除修改。

|

||||

作为长期支持的内核分支,Linux 4.1还会在几年内得到更新和补丁,而今天的维护构建版本也证明一点,就是内核开发者们正致力于保持该系列在所有使用该版本的GNU/Linux操作系统上稳定和可靠。Linux Kernel 4.1.18 LTS是一个大的发布版本,它带来了总计达228个文件修改,这些修改包含了多达5304个插入修改和1128个删除修改。

|

||||

|

||||

Linux Kernel 4.1.18 LTS更新了什么呢?好吧,首先是对ARM,ARM64(AArch64),MIPS,PA-RISC,m32r,PowerPC(PPC),s390以及x86等硬件架构的改进。此外,还有对Btrfs,CIFS,NFS,XFS,OCFS2,OverlayFS以及UDF文件系统的加强。对网络堆栈的修复,尤其是对mac80211的修复。同时,还有多核心、加密和mm等方面的改进和对声音的更新。

|

||||

|

||||

“我宣布4.1.18内核正式发布,所有4.1内核系列的用户都应当升级。”Sasha Levin说,“更新的4.1.y git树可以在这里找到:git://git.kernel.org/pub/scm/linux/kernel/git/stable/linux-stable.git linux-4.1.y,并且可以在常规kernel.org git网站浏览器:http://git.kernel.org/?p=linux/kernel/git/stable/linux-stable.git;a=summary 进行浏览。”

|

||||

## 大量驱动被更新

|

||||

“我宣布4.1.18内核正式发布,所有4.1内核系列的用户都应当升级。”Sasha Levin说,“更新的4.1.y git树可以在这里找到:git://git.kernel.org/pub/scm/linux/kernel/git/stable/linux-stable.git linux-4.1.y,并且可以在 kernel.org 的 git 网站上浏览:http://git.kernel.org/?p=linux/kernel/git/stable/linux-stable.git;a=summary 进行浏览。”

|

||||

|

||||

### 大量驱动被更新

|

||||

|

||||

除了架构、文件系统、声音、网络、加密、mm和核心内核方面的改进之外,Linux Kernel 4.1.18 LTS更新了各个驱动,以提供更好的硬件支持,特别是像蓝牙、DMA、EDAC、GPU(主要是Radeon和Intel i915)、无限带宽技术、IOMMU、IRQ芯片、MD、MMC、DVB、网络(主要是无线)、PCI、SCSI、USB、散热、暂存和Virtio等此类东西。

|

||||

|

||||

@ -18,9 +20,9 @@ Linux Kernel 4.1.18 LTS更新了什么呢?好吧,首先是对ARM,ARM64(A

|

||||

|

||||

via: http://news.softpedia.com/news/linux-kernel-4-1-18-lts-is-the-biggest-in-the-series-with-hundreds-of-changes-500500.shtml

|

||||

|

||||

作者:[Marius Nestor ][a]

|

||||

作者:[Marius Nestor][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,27 @@

|

||||

Xubuntu 16.04 Beta 1 开发者版本发布

|

||||

============================================

|

||||

|

||||

Ubuntu 发布团队宣布为选定的社区版本而准备的最新的 beta 测试镜像已经可以使用了。新的发布版本名称是 16.04 beta 1 ,这个版本只推荐给测试人员测试用,并不适合普通人进行日常使用。

|

||||

|

||||

|

||||

|

||||

“这个 beta 特性的镜像主要是为 [Lubuntu][1], Ubuntu Cloud, [Ubuntu GNOME][2], [Ubuntu MATE][3], [Ubuntu Kylin][4], [Ubuntu Studio][5] 和 [Xubuntu][6] 这几个发布版准备的。Xenial Xerus (LCTT 译注: ubuntu 16.04 的开发代号)的这个预发布版本并不推荐那些需要稳定版本的人员使用,同时那些不希望在系统运行中偶尔或频繁的出现 bug 的人也不建议使用这个版本。这个版本主要还是推荐给那些喜欢 ubuntu 的开发人员,以及那些想协助我们测试、报告 bug 和修改 bug 的人使用,和我们一起努力让这个发布版本早日准备就绪。” 更多的信息可以从 [发布日志][7] 获取。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/news/software/applications/888731-development-release-xubuntu-1604-beta-1

|

||||

|

||||

作者:[DistroWatch][a]

|

||||

译者:[Ezio](https://github.com/oska874)

|

||||

校对:[Ezio](https://github.com/oska874)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.linux.com/community/forums/person/284

|

||||

[1]: http://distrowatch.com/lubuntu

|

||||

[2]: http://distrowatch.com/ubuntugnome

|

||||

[3]: http://distrowatch.com/ubuntumate

|

||||

[4]: http://distrowatch.com/ubuntukylin

|

||||

[5]: http://distrowatch.com/ubuntustudio

|

||||

[6]: http://distrowatch.com/xubuntu

|

||||

[7]: https://lists.ubuntu.com/archives/ubuntu-devel-announce/2016-February/001173.html

|

||||

@ -0,0 +1,30 @@

|

||||

OpenSSH 7.2发布,支持 SHA-256/512 的 RSA 签名

|

||||

========================================================

|

||||

|

||||

**2016.2.29,OpenBSD 项目很高兴地宣布 OpenSSH 7.2发布了,并且很块可在所有支持的平台下载。**

|

||||

|

||||

根据内部[发布公告][1],OpenSSH 7.2 主要是 bug 修复,修改了自 OpenSSH 7.1p2 以来由用户报告和开发团队发现的问题,但是我们可以看到几个新功能。

|

||||

|

||||

这其中,我们可以提到使用了 SHA-256 或者 SHA-256 512 哈希算法的 RSA 签名;增加了一个 AddKeysToAgent 客户端选项,以添加用于身份验证的 ssh-agent 的私钥;和实现了一个“restrict”级别的 authorized_keys 选项,用于存储密钥限制。

|

||||

|

||||

此外,现在 ssh_config 中 CertificateFile 选项可以明确列出证书,ssh-keygen 现在能够改变所有支持的格式的密钥注释、密钥指纹现在可以来自标准输入,多个公钥可以放到一个文件。

|

||||

|

||||

### ssh-keygen 现在支持多证书

|

||||

|

||||

除了上面提到的,OpenSSH 7.2 增加了 ssh-keygen 多证书的支持,一个一行,实现了 sshd_config ChrootDirectory 及Foreground 的“none”参数,“-c”标志允许 ssh-keyscan 获取证书而不是文本密钥。

|

||||

|

||||

最后但并非最不重要的,OpenSSH 7.3 不再默认启用 rijndael-cbc(即 AES),blowfish-cbc、cast128-cbc 等古老的算法,同样的还有基于 MD5 和截断的 HMAC 算法。在 Linux 中支持 getrandom() 系统调用。[下载 OpenSSH 7.2][2] 并查看更新日志中的更多细节。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/openssh-7-2-out-now-with-support-for-rsa-signatures-using-sha-256-512-algorithms-501111.shtml

|

||||

|

||||

作者:[Marius Nestor][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: http://news.softpedia.com/editors/browse/marius-nestor

|

||||

[1]: http://www.openssh.com/txt/release-7.2

|

||||

[2]: http://linux.softpedia.com/get/Security/OpenSSH-4474.shtml

|

||||

@ -0,0 +1,25 @@

|

||||

逾千万使用 https 的站点受到新型解密攻击的威胁

|

||||

===========================================================================

|

||||

|

||||

|

||||

|

||||

|

||||

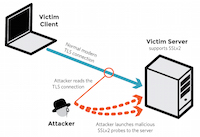

低成本的 DROWN 攻击能在数小时内完成数据解密,该攻击对采用了 TLS 的邮件服务器也同样奏效。

|

||||

|

||||

一个国际研究小组于周二发出警告,据称逾 1100 万家网站和邮件服务采用的用以保证服务安全的 [传输层安全协议 TLS][1],对于一种新发现的、成本低廉的攻击而言异常脆弱,这种攻击会在几个小时内解密敏感的通信,在某些情况下解密甚至能瞬间完成。 前一百万家最大的网站中有超过 81,000 个站点正处于这种脆弱的 HTTPS 协议保护之下。

|

||||

|

||||

这种攻击主要针对依赖于 [RSA 加密系统][2]的 TLS 所保护的通信,密钥会间接的通过 SSLv2 暴露,这是一种在 20 年前就因为自身缺陷而退休了的 TLS 前代协议。该漏洞允许攻击者可以通过反复使用 SSLv2 创建与服务器连接的方式,解密截获的 TLS 连接。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/news/software/applications/889455--more-than-11-million-https-websites-imperiled-by-new-decryption-attack

|

||||

|

||||

作者:[ArsTechnica][a]

|

||||

译者:[Ezio](https://github.com/oska874)

|

||||

校对:[martin2011qi](https://github.com/martin2011qi), [wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.linux.com/community/forums/person/112

|

||||

[1]: https://en.wikipedia.org/wiki/Transport_Layer_Security

|

||||

[2]: https://en.wikipedia.org/wiki/RSA_(cryptosystem)

|

||||

@ -0,0 +1,72 @@

|

||||

Zephyr Project for Internet of Things, releases from Facebook, IBM, Yahoo, and more news

|

||||

===========================================================================================

|

||||

|

||||

|

||||

|

||||

In this week's edition of our open source news roundup, we take a look at the new IoT project from the Linux Foundation, three big corporations releasing open source, and more.

|

||||

|

||||

**News roundup for February 21 - 26, 2016**

|

||||

|

||||

### Linux Foundation unveils the Zephyr Project

|

||||

|

||||

The Internet of Things (IoT) is shaping up to be the next big thing in consumer technology. At the moment, most IoT solutions are proprietary and closed source. Open source is making numerous in-roads into the IoT world, and that's undoubtedly going to accelerate now that the Linux Foundation has [announced the Zephyr Project][1].

|

||||

|

||||

The Zephyr Project, according to ZDNet, "hopes to bring vendors and developers together under a single operating system which could make the development of connected devices an easier, less expensive and more stable process." The Project "aims to incorporate input from the open source and embedded developer communities and to encourage collaboration on the RTOS (real-time operating system)," according to the [Linux Foundation's press release][2].

|

||||

|

||||

Currently, Intel Corporation, NXP Semiconductors N.V., Synopsys, Inc., and UbiquiOS Technology Limited are the main supporters of the project. The Linux Foundation intends to attract other IoT vendors to this effort as well.

|

||||

|

||||

### Releases from Facebook, IBM, Yahoo

|

||||

|

||||

As we all know, open source isn't just about individuals or small groups hacking on code and hardware. Quite a few large corporations have significant investments in open source. This past week, three of them affirmed their commitment to open source.

|

||||

|

||||

Yahoo again waded into open source waters this week with the [release of CaffeOnSpark][3] artificial intelligence software under an Apache 2.0 license. CaffeOnSpark performs "a popular type of AI called 'deep learning' on the vast swaths of data kept in its Hadoop open-source file system for storing big data," according to VentureBeat. If you're curious, you can [find the source code on GitHub][4].

|

||||

|

||||

Earlier this week, Facebook "[unveiled a new project that seeks not only to accelerate the evolution of technologies that drive our mobile networks, but to freely share this work with the world’s telecoms][5]," according to Wired. The company plans to build "everything from new wireless radios to nee optical fiber equipment." The designs, according to Facebook, will be open source so any telecom firm can use them.

|

||||

|

||||

As part of the [Open Mainframe Project][6], IBM has open sourced the code for its Anomaly Detection Engine (ADE) for Linux logs. [According to IBM][7], "ADE detects anomalous time slices and messages in Linux logs using statistical learning" to detect suspicious behaviour. You can grab the [source code for ADE][8] from GitHub.

|

||||

|

||||

### European Union to fund research

|

||||

|

||||

The European Research Council, the European Union's science and technology funding body, is [funding four open source research projects][9] to the tune of about €2 million. According to joinup.ec.europa.eu, the projects being funded are:

|

||||

|

||||

- A code audit of Mozilla's open source Rust programming language

|

||||

|

||||

- An initiative at INRIA (France's national computer science research center) studying secure programming

|

||||

|

||||

- A project at Austria's Technische Universitat Graz testing "ways to secure code against attacks that exploit certain properties of the computer hardware"

|

||||

|

||||

- The "development of techniques to prove popular cryptographic protocols and schemes" at IST Austria

|

||||

|

||||

### In other news

|

||||

|

||||

- [Infosys' newest weapon: open source][10]

|

||||

|

||||

- [Intel demonstrates Android smartphone running a Linux desktop][11]

|

||||

|

||||

- [BeeGFS file system goes open source][12]

|

||||

|

||||

A big thanks, as always, to the Opensource.com moderators and staff for their help this week.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/life/16/2/weekly-news-feb-26

|

||||

|

||||

作者:[Scott Nesbitt][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/scottnesbitt

|

||||

[1]: http://www.zdnet.com/article/the-linux-foundations-zephyr-project-building-an-operating-system-for-iot-devices/

|

||||

[2]: http://www.linuxfoundation.org/news-media/announcements/2016/02/linux-foundation-announces-project-build-real-time-operating-system

|

||||

[3]: http://venturebeat.com/2016/02/24/yahoo-open-sources-caffeonspark-deep-learning-framework-for-hadoop/

|

||||

[4]: https://github.com/yahoo/CaffeOnSpark

|

||||

[5]: http://www.wired.com/2016/02/facebook-open-source-wireless-gear-forge-5g-world/

|

||||

[6]: https://www.openmainframeproject.org/

|

||||

[7]: http://openmainframeproject.github.io/ade/

|

||||

[8]: https://github.com/openmainframeproject/ade

|

||||

[9]: https://joinup.ec.europa.eu/node/149541

|

||||

[10]: http://www.businessinsider.in/Exclusive-Infosys-is-using-Open-Source-as-its-mostlethal-weapon-yet/articleshow/51109129.cms

|

||||

[11]: http://www.theregister.co.uk/2016/02/23/move_over_continuum_intel_shows_android_smartphone_powering_bigscreen_linux/

|

||||

[12]: http://insidehpc.com/2016/02/beegfs-parallel-file-system-now-open-source/

|

||||

@ -0,0 +1,42 @@

|

||||

Node.js 5.7 released ahead of impending OpenSSL updates

|

||||

=============================================================

|

||||

|

||||

|

||||

|

||||

>Once again, OpenSSL fixes must be evaluated by keepers of the popular server-side JavaScript platform

|

||||

|

||||

The Node.js Foundation is gearing up this week for fixes to OpenSSL that could mean updates to Node.js itself.

|

||||

|

||||

Releases to OpenSSL due on Tuesday will fix defects deemed to be of "high" severity, Rod Vagg, foundation technical steering committee director, said [in a blog post][1] on Monday. Within a day of the OpenSSL releases, the Node.js crypto team will assess their impacts, saying, "Please be prepared for the possibility of important updates to Node.js v0.10, v0.12, v4 and v5 soon after Tuesday, the 1st of March."

|

||||

|

||||

[ Deep Dive: [How to rethink security for the new world of IT][2]. | Discover how to secure your systems with InfoWorld's [Security newsletter][3]. ]

|

||||

|

||||

The high severity status actually means the issues are of lower risks than critical, perhaps affecting less-common configurations or less likely to be exploitable. Due to an embargo, the exact nature of these fixes and their impact on Node.js remain uncertain, said Vagg. "Node.js v0.10 and v0.12 both use OpenSSL v1.0.1, and Node.js v4 and v5 both use OpenSSL v1.0.2, and releases from nodejs.org and some other popular distribution sources are statically compiled. Therefore, all active release lines are impacted by this update." OpenSSL also impacted Node.js in December, [when two critical vulnerabilities were fixed][4].

|

||||

|

||||

The latest OpenSSL developments follow [the release of Node.js 5.7.0][5], which is clearing a path for the upcoming Node.js 6. Version 5 is the main focus for active development, said foundation representative Mikeal Rogers, "However, v5 won't be supported long-term, and most users will want to wait for v6, which will be released by the end of April, for the new features that are landing in v5."

|

||||

|

||||

Release 5.7 has more predictability for C++ add-ons' interactions with JavaScript. Node.js can invoke JavaScript code from C++ code, and in version 5.7, the C++ node::MakeCallback() API is now re-entrant; calling it from inside another MakeCallback() call no longer causes the nextTick queue or Promises microtask queue to be processed out of order, [according to release notes][6].

|

||||

|

||||

Also fixed is an HTTP bug where handling headers mistakenly trigger an "upgrade" event where the server just advertises protocols. The bug can prevent HTTP clients from communicating with HTTP2-enabled servers. Version 5.7 performance improvements are featured in the path, querystring, streams, and process.nextTick modules.

|

||||

|

||||

This story, "Node.js 5.7 released ahead of impending OpenSSL updates" was originally published by [InfoWorld][7].

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.itworld.com/article/3039005/security/nodejs-57-released-ahead-of-impending-openssl-updates.html

|

||||

|

||||

作者:[Paul Krill][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.itworld.com/author/Paul-Krill/

|

||||

[1]: https://nodejs.org/en/blog/vulnerability/openssl-march-2016/

|

||||

[2]: http://www.infoworld.com/resources/17273/security-management/how-to-rethink-security-for-the-new-world-of-it#tk.ifw-infsb

|

||||

[3]: http://www.infoworld.com/newsletters/signup.html#tk.ifw-infsb

|

||||

[4]: http://www.infoworld.com/article/3012157/security/why-nodejs-waited-for-openssl-security-update-before-patching.html

|

||||

[5]: https://nodejs.org/en/blog/release/v5.7.0/

|

||||

[6]: https://nodejs.org/en/blog/release/v5.7.0/

|

||||

[7]: http://www.infoworld.com/

|

||||

@ -1,165 +0,0 @@

|

||||

robot527 translating

|

||||

|

||||

|

||||

Bossie Awards 2015: The best open source networking and security software

|

||||

================================================================================

|

||||

InfoWorld's top picks of the year among open source tools for building, operating, and securing networks

|

||||

|

||||

|

||||

|

||||

### The best open source networking and security software ###

|

||||

|

||||

BIND, Sendmail, OpenSSH, Cacti, Nagios, Snort -- open source software seems to have been invented for networks, and many of the oldies and goodies are still going strong. Among our top picks in the category this year, you'll find a mix of stalwarts, mainstays, newcomers, and upstarts perfecting the arts of network management, security monitoring, vulnerability assessment, rootkit detection, and much more.

|

||||

|

||||

|

||||

|

||||

### Icinga 2 ###

|

||||

|

||||

Icinga began life as a fork of system monitoring application Nagios. [Icinga 2][1] was completely rewritten to give users a modern interface, support for multiple databases, and an API to integrate numerous extensions. With out-of-the-box load balancing, notifications, and configuration, Icinga 2 shortens the time to installation for complex environments. Icinga 2 supports Graphite natively, giving administrators real-time performance graphing without any fuss. But what puts Icinga back on the radar this year is its release of Icinga Web 2, a graphical front end with drag-and-drop customizable dashboards and streamlined monitoring tools.

|

||||

|

||||

Administrators can view, filter, and prioritize problems, while keeping track of which actions have already been taken. A new matrix view lets administrators view hosts and services on one page. You can view events over a particular time period or filter incidents to understand which ones need immediate attention. Icinga Web 2 may boast a new interface and zippier performance, but all the usual commands from Icinga Classic and Icinga Web are still available. That means there is no downtime trying to learn a new version of the tool.

|

||||

|

||||

-- Fahmida Rashid

|

||||

|

||||

|

||||

|

||||

### Zenoss Core ###

|

||||

|

||||

Another open source stalwart, [Zenoss Core][2] gives network administrators a complete, one-stop solution for tracking and managing all of the applications, servers, storage, networking components, virtualization tools, and other elements of an enterprise infrastructure. Administrators can make sure the hardware is running efficiently and take advantage of the modular design to plug in ZenPacks for extended functionality.

|

||||

|

||||

Zenoss Core 5, released in February of this year, takes the already powerful tool and improves it further, with an enhanced user interface and expanded dashboard. The Web-based console and dashboards were already highly customizable and dynamic, and the new version now lets administrators mash up multiple component charts onto a single chart. Think of it as the tool for better root cause and cause/effect analysis.

|

||||

|

||||

Portlets give additional insights for network mapping, device issues, daemon processes, production states, watch lists, and event views, to name a few. And new HTML5 charts can be exported outside the tool. The Zenoss Control Center allows out-of-band management and monitoring of all Zenoss components. Zenoss Core has new tools for online backup and restore, snapshots and rollbacks, and multihost deployment. Even more important, deployments are faster with full Docker support.

|

||||

|

||||

-- Fahmida Rashid

|

||||

|

||||

|

||||

|

||||

### OpenNMS ###

|

||||

|

||||

An extremely flexible network management solution, [OpenNMS][3] can handle any network management task, whether it's device management, application performance monitoring, inventory control, or events management. With IPv6 support, a robust alerts system, and the ability to record user scripts to test Web applications, OpenNMS has everything network administrators and testers need. OpenNMS has become, as now a mobile dashboard, called OpenNMS Compass, lets networking pros keep an eye on their network even when they're out and about.

|

||||

|

||||

The iOS version of the app, which is available on the [iTunes App Store][4], displays outages, nodes, and alarms. The next version will offer additional event details, resource graphs, and information about IP and SNMP interfaces. The Android version, available on [Google Play][5], displays network availability, outages, and alarms on the dashboard, as well as the ability to acknowledge, escalate, or clear alarms. The mobile clients are compatible with OpenNMS Horizon 1.12 or greater and OpenNMS Meridian 2015.1.0 or greater.

|

||||

|

||||

-- Fahmida Rashid

|

||||

|

||||

|

||||

|

||||

### Security Onion ###

|

||||

|

||||

Like an onion, network security monitoring is made of many layers. No single tool will give you visibility into every attack or show you every reconnaissance or foot-printing session on your company network. [Security Onion][6] bundles scores of proven tools into one handy Ubuntu distro that will allow you to see who's inside your network and help keep the bad guys out.

|

||||