mirror of https://github.com/LCTT/TranslateProject.git

synced 2025-03-30 02:40:11 +08:00

commit 4d0c57231d

published

- 20141211 How to use matplotlib for scientific plotting on Linux.md
- 20150104 How To Install Websvn In CentOS 7.md
- 20150114 Why Mac users don't switch to Linux.md

sources/tech

- 20150114 How to Configure Chroot Environment in Ubuntu 14.04.md
- 20150126 Improve system performance by moving your log files to RAM Using Ramlog.md
- 20150128 Docker-1 Moving to Docker.md
- 20150209 Linux FAQs with Answers--How to fix 'fatal error--x264.h--No such file or directory' on Linux.md
- 20150211 25 Tips for Intermediate Git Users.md
- 20150211 Best Known Linux Archive or Compress Tools.md
- 20150211 How To Protect Ubuntu Server Against the GHOST Vulnerability.md
- 20150211 Install Linux-Dash (Web Based Monitoring tool) on Ubntu 14.10.md
- 20150211 Install Mumble in Ubuntu an Opensource VoIP Apps.md
- 20150211 Protect Apache Against Brute Force or DDoS Attacks Using Mod_Security and Mod_evasive Modules.md
- 20150211 Simple Steps Migration From MySQL To MariaDB On Linux.md

translated/tech

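@ -0,0 +1,164 @@

Using matplotlib for Scientific Plotting on Linux
================================================================================

If you want an efficient, automatable, high-quality solution for scientific plotting on Linux, you should consider giving the matplotlib library a try. Matplotlib is an open-source, Python-based scientific plotting package released under a Python Software Foundation license. Extensive documentation and examples, integration with Python and the NumPy scientific computing package, and automation capabilities are just a few of the reasons it is a reliable choice for scientific plotting in a Linux environment. This tutorial provides several examples of plotting with matplotlib.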

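###Features###

- Supports many chart types: bar, box, contour, histogram, scatter, line plots, and more
- Python-based syntax
- Integration with the NumPy scientific computing package
- Data sources can be Python lists, key-value pairs (dictionaries), and arrays
- Customizable plot formatting (axis scaling, tick positions, tick labels, ...)
- Customizable text (font, size, position, ...)
- TeX formatting support (equations, symbols, Greek characters, ...)
- Compatible with IPython (lets you interact with plots from a Python shell)
- Automation (create plots iteratively with Python loops)
- Save figures as image files in formats such as PNG, PDF, PS, EPS, and SVG

Matplotlib's Python-based syntax is the foundation for many of its features and for its efficient workflow. There are many scientific plotting packages that can produce high-quality plots, but do they let you work directly from within your Python code? On top of that, do they let you create plots that can be saved as image files? Matplotlib lets you do all of this, which saves time: you can produce more plots in less time.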
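To make the automation point concrete, here is a minimal sketch (not part of the original tutorial) that creates several plots in a Python loop and saves each one as a PNG file. It assumes a headless machine, so it selects matplotlib's non-interactive Agg backend. The function name `save_power_plots`, the `power_N.png` file names and the temporary output directory are hypothetical choices for illustration.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # non-interactive backend: render straight to files, no display needed
import matplotlib.pyplot as plt
import numpy as np


def save_power_plots(out_dir, powers=(1, 2, 3)):
    """Plot y = x**p for each exponent p in a loop and save each figure as a PNG."""
    x = np.linspace(0, 10, 50)
    paths = []
    for p in powers:
        fig, ax = plt.subplots(figsize=(4, 3))
        ax.plot(x, x ** p, label="y = x**%d" % p)
        ax.set_title("Power %d" % p, size=14)
        ax.legend(loc="upper left")
        path = os.path.join(out_dir, "power_%d.png" % p)
        fig.savefig(path, format="png")
        plt.close(fig)  # release each figure so memory does not grow with the loop
        paths.append(path)
    return paths


if __name__ == "__main__":
    for saved in save_power_plots(tempfile.mkdtemp()):
        print(saved)
```

Closing each figure inside the loop matters once you generate hundreds of plots. pyplot keeps every open figure alive until it is closed.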

|

||||

|

||||

###安装###

|

||||

安装Python和Numpy包是使用Matplotlib的前提,安装Numpy的指引请见[该链接][1]。

|

||||

|

||||

|

||||

可以通过如下命令在Debian或Ubuntu中安装Matplotlib:

|

||||

|

||||

$ sudo apt-get install python-matplotlib

|

||||

|

||||

在Fedora或CentOS/RHEL环境则可用如下命令:

|

||||

|

||||

$ sudo yum install python-matplotlib

|

||||

|

||||

|

||||

###Matplotlib 例子###

|

||||

|

||||

本教程会提供几个绘图例子演示如何使用matplotlib:

|

||||

|

||||

- 离散图和线性图

|

||||

- 柱状图

|

||||

- 饼状图

|

||||

|

||||

在这些例子中我们将用Python脚本来执行Mapplotlib命令。注意numpy和matplotlib模块需要通过import命令在脚本中进行导入。

|

||||

|

||||

np为nuupy模块的命名空间引用,plt为matplotlib.pyplot的命名空间引用:

|

||||

|

||||

import numpy as np

|

||||

import matplotlib.pyplot as plt

|

||||

|

||||

|

||||

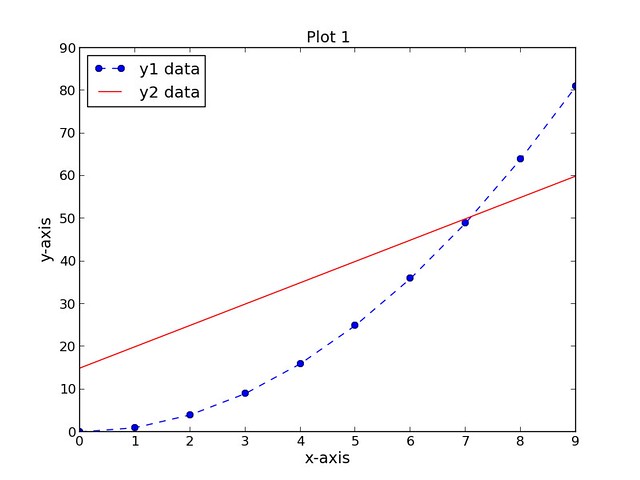

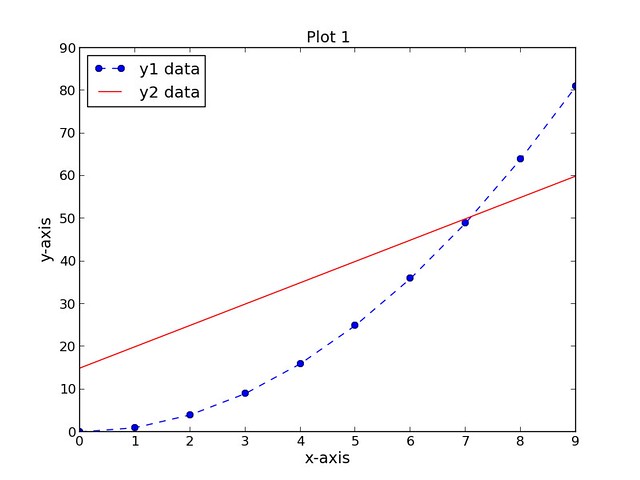

###例1:离散和线性图###

|

||||

|

||||

第一个脚本,script1.py 完成如下任务:

|

||||

|

||||

- 创建3个数据集(xData,yData1和yData2)

|

||||

- 创建一个宽8英寸、高6英寸的图(赋值1)

|

||||

- 设置图画的标题、x轴标签、y轴标签(字号均为14)

|

||||

- 绘制第一个数据集:yData1为xData数据集的函数,用圆点标识的离散蓝线,标识为"y1 data"

|

||||

- 绘制第二个数据集:yData2为xData数据集的函数,采用红实线,标识为"y2 data"

|

||||

- 把图例放置在图的左上角

|

||||

- 保存图片为PNG格式文件

|

||||

|

||||

script1.py的内容如下:

|

||||

|

||||

import numpy as np

|

||||

import matplotlib.pyplot as plt

|

||||

|

||||

xData = np.arange(0, 10, 1)

|

||||

yData1 = xData.__pow__(2.0)

|

||||

yData2 = np.arange(15, 61, 5)

|

||||

plt.figure(num=1, figsize=(8, 6))

|

||||

plt.title('Plot 1', size=14)

|

||||

plt.xlabel('x-axis', size=14)

|

||||

plt.ylabel('y-axis', size=14)

|

||||

plt.plot(xData, yData1, color='b', linestyle='--', marker='o', label='y1 data')

|

||||

plt.plot(xData, yData2, color='r', linestyle='-', label='y2 data')

|

||||

plt.legend(loc='upper left')

|

||||

plt.savefig('images/plot1.png', format='png')

|

||||

|

||||

|

||||

所画之图如下:

|

||||

|

||||

|

||||

|

||||

|

||||

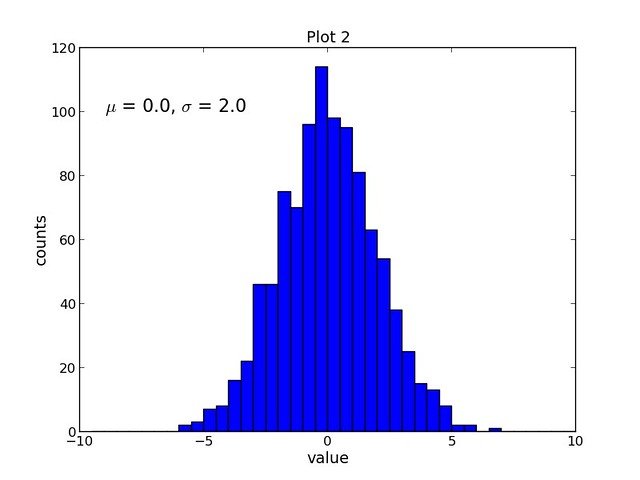

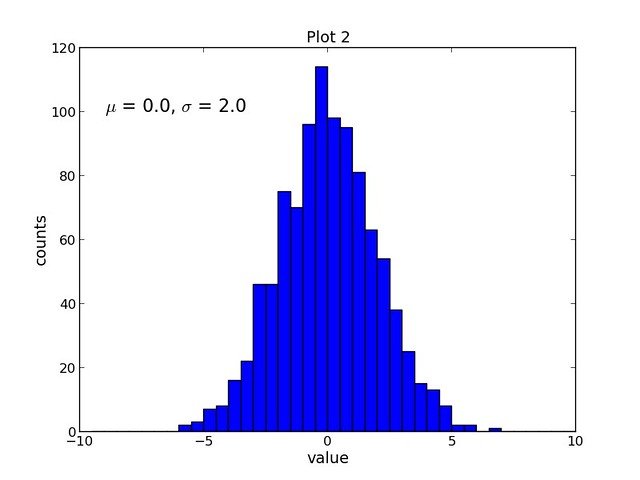
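script1.py uses pyplot's state-based interface. For comparison, here is a sketch of the same plot written against matplotlib's object-oriented interface, where `plt.subplots()` returns a Figure and an Axes that you call methods on directly. This rewrite is not from the original tutorial. It assumes the Agg backend and writes to a caller-supplied path instead of `images/plot1.png`. The function name `make_plot1` is hypothetical.

```python
import os
import tempfile

import matplotlib
matplotlib.use("Agg")  # render to image files without a display
import matplotlib.pyplot as plt
import numpy as np


def make_plot1(path):
    """Recreate Plot 1 from script1.py with the object-oriented API and save it as PNG."""
    xData = np.arange(0, 10, 1)
    yData1 = xData ** 2.0
    yData2 = np.arange(15, 61, 5)

    fig, ax = plt.subplots(figsize=(8, 6))  # one Figure containing a single Axes
    ax.set_title("Plot 1", size=14)
    ax.set_xlabel("x-axis", size=14)
    ax.set_ylabel("y-axis", size=14)
    ax.plot(xData, yData1, color="b", linestyle="--", marker="o", label="y1 data")
    ax.plot(xData, yData2, color="r", linestyle="-", label="y2 data")
    ax.legend(loc="upper left")
    fig.savefig(path, format="png")
    plt.close(fig)
    return fig, ax


if __name__ == "__main__":
    out = os.path.join(tempfile.mkdtemp(), "plot1.png")
    make_plot1(out)
    print("saved", out)
```

Keeping explicit `fig`/`ax` handles makes it easier to build several independent figures in one script without relying on which figure pyplot currently considers "active".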

###例2:柱状图###

|

||||

|

||||

第二个脚本,script2.py 完成如下任务:

|

||||

|

||||

- 创建一个包含1000个随机样本的正态分布数据集。

|

||||

- 创建一个宽8英寸、高6英寸的图(赋值1)

|

||||

- 设置图的标题、x轴标签、y轴标签(字号均为14)

|

||||

- 用samples这个数据集画一个40个柱状,边从-10到10的柱状图

|

||||

- 添加文本,用TeX格式显示希腊字母mu和sigma(字号为16)

|

||||

- 保存图片为PNG格式。

|

||||

|

||||

script2.py代码如下:

|

||||

|

||||

import numpy as np

|

||||

import matplotlib.pyplot as plt

|

||||

|

||||

mu = 0.0

|

||||

sigma = 2.0

|

||||

samples = np.random.normal(loc=mu, scale=sigma, size=1000)

|

||||

plt.figure(num=1, figsize=(8, 6))

|

||||

plt.title('Plot 2', size=14)

|

||||

plt.xlabel('value', size=14)

|

||||

plt.ylabel('counts', size=14)

|

||||

plt.hist(samples, bins=40, range=(-10, 10))

|

||||

plt.text(-9, 100, r'$\mu$ = 0.0, $\sigma$ = 2.0', size=16)

|

||||

plt.savefig('images/plot2.png', format='png')

|

||||

|

||||

|

||||

结果见如下链接:

|

||||

|

||||

|

||||

|

||||

|

||||

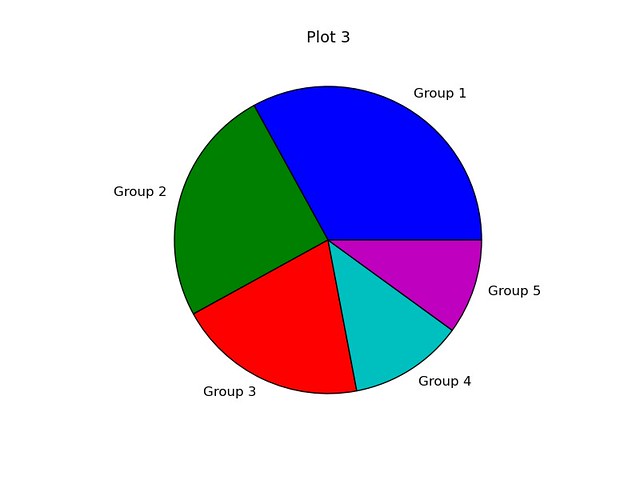

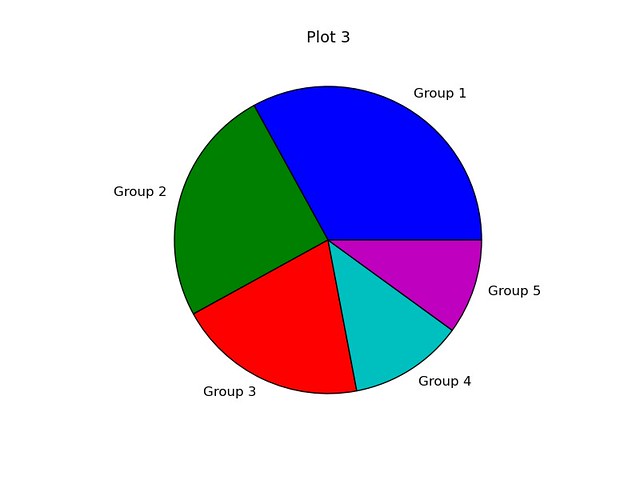
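With `bins=40` and `range=(-10, 10)`, the histogram splits the interval into 40 equal-width bins, each (10 - (-10)) / 40 = 0.5 wide. You can inspect those bin edges and counts without drawing anything by using NumPy's `np.histogram`, which accepts the same `bins` and `range` arguments. The sketch below does this. The seeded generator is an assumption added here so that reruns produce the same samples. script2.py itself calls `np.random.normal`.

```python
import numpy as np

mu, sigma = 0.0, 2.0
# Seeded generator (an assumption, for reproducible output); script2.py uses np.random.normal
rng = np.random.default_rng(seed=0)
samples = rng.normal(loc=mu, scale=sigma, size=1000)

# Same binning as plt.hist(samples, bins=40, range=(-10, 10)) in script2.py
counts, edges = np.histogram(samples, bins=40, range=(-10, 10))
bin_width = edges[1] - edges[0]

print("bins:", len(counts))              # 40 bins ...
print("edges:", len(edges))              # ... need 41 edges
print("bin width:", bin_width)           # (10 - (-10)) / 40
print("samples counted:", counts.sum())  # samples outside [-10, 10] are not counted
```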

###Example 3: Pie Chart###

The third script, script3.py, does the following:

- Creates a list of five integers
- Creates a new figure (assigned number 1) that is 6 inches wide and 6 inches tall
- Adds axes with an aspect ratio of 1
- Sets the plot title (font size 14)
- Plots a labeled pie chart of the data list
- Saves the plot as a PNG file

script3.py looks like this:

    import numpy as np
    import matplotlib.pyplot as plt

    data = [33, 25, 20, 12, 10]
    plt.figure(num=1, figsize=(6, 6))
    # Aspect ratio 1 so the pie is drawn as a circle rather than an ellipse
    plt.axes(aspect=1)
    plt.title('Plot 3', size=14)
    plt.pie(data, labels=('Group 1', 'Group 2', 'Group 3', 'Group 4', 'Group 5'))
    plt.savefig('images/plot3.png', format='png')

The result is shown below:
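`plt.pie` sizes each wedge by its share of the total. The five values in `data` happen to sum to 100, so they map directly onto percentages. The plain-Python sketch below spells out that arithmetic for each group, giving its percentage and its wedge angle in degrees. If you want the percentages printed on the chart, `plt.pie` also accepts an `autopct` argument, for example `autopct='%1.1f%%'`.

```python
data = [33, 25, 20, 12, 10]
labels = ('Group 1', 'Group 2', 'Group 3', 'Group 4', 'Group 5')

total = sum(data)
# Each wedge's share of the whole, as a percentage and as an angle in degrees
shares = {label: 100.0 * value / total for label, value in zip(labels, data)}
angles = {label: 360.0 * value / total for label, value in zip(labels, data)}

for label in labels:
    print("%s: %.1f%% of the pie, %.1f degrees" % (label, shares[label], angles[label]))
```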

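###Summary###

This tutorial has presented several examples of plotting with the matplotlib scientific plotting package. Matplotlib is an excellent solution for scientific plotting on Linux because it integrates seamlessly with Python and NumPy, can be automated, and produces a wide variety of customizable, high-quality plots. Documentation and examples for the matplotlib package are available [here][2].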

--------------------------------------------------------------------------------

via: http://xmodulo.com/matplotlib-scientific-plotting-linux.html

Author: [Joshua Reed][a]

Translator: [ideas4u](https://github.com/ideas4u)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/)

[a]:http://xmodulo.com/author/joshua
[1]:http://xmodulo.com/numpy-scientific-computing-linux.html
[2]:http://matplotlib.org/

@ -1,6 +1,6 @@

Installing Websvn in CentOS 7
================================================================================

**WebSVN** offers a view of your Subversion repositories that is designed to map onto Subversion's full set of functions. You can view the log of any file or directory and see a list of all the files modified, added, or deleted in any given revision. You can also view the differences between two versions of the same file to see exactly what changed in a particular revision.

### Features ###

@ -8,31 +8,25 @@ WebSVN offers the following features:

- Easy-to-use interface;
- Customizable templating system;
- Colorized file listings;
- Blame view;
- Log message search;
- RSS feed support;

### Installation ###

I followed the link below to install Subversion on CentOS 7.

- [How to install Subversion on CentOS 7][1]

**1 – Download websvn to /var/www/html.**

    cd /var/www/html

----------

    wget http://websvn.tigris.org/files/documents/1380/49057/websvn-2.3.3.zip

**2 – Extract the zip archive.**

    unzip websvn-2.3.3.zip

----------

    mv websvn-2.3.3 websvn

**3 – Install PHP on your system.**

@ -42,13 +36,7 @@ WebSVN offers the following features:

**4 – Edit the websvn configuration.**

    cd /var/www/html/websvn/include

----------

    cp distconfig.php config.php

----------

    vi config.php

----------

@ -96,7 +84,7 @@ via: http://www.unixmen.com/install-websvn-centos-7/

Author: [M.el Khamlichi][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/)

@ -1,147 +0,0 @@

[bazz2222222]

How to Configure Chroot Environment in Ubuntu 14.04
================================================================================

There are many situations in which you may wish to isolate certain applications, users, or environments within a Linux system. Different operating systems have different methods of achieving isolation, and in Linux a classic way is through a `chroot` environment.

In this guide, we'll show you step by step how to set up an isolated environment using chroot in order to create a barrier between your regular operating system and a contained environment. This is mainly useful for testing purposes. We will walk through the steps on an **Ubuntu 14.04** VPS instance.

Most system administrators will benefit from knowing how to set up a quick and easy chroot environment, and it is a valuable skill to have.

### The chroot environment ###

chroot is an operating system call that temporarily changes the root location to a new folder. Normally, the operating system's conception of the root directory is the actual root located at "/". However, with `chroot`, you can specify another directory to serve as the top-level directory for the duration of a chroot.

In principle, any applications run from within the `chroot` will be unable to see the rest of the operating system.

#### Advantages of Chroot Environment ####

> - Test applications without the risk of compromising the entire host system.
>
> - From the security point of view, whatever happens in the chroot environment won't affect the host system (not even as the root user).
>
> - A different operating system running on the same hardware.

For instance, it allows you to build, install, and test software in an environment that is separated from your normal operating system. It can also be used as a way of **running 32-bit applications in a 64-bit environment**.

But while chroot environments certainly make additional work for an unprivileged user, they should be considered a hardening feature rather than a security feature: they reduce the number of attack vectors instead of providing a complete solution. If you need full isolation, consider a more complete solution, such as Linux containers, Docker, or vservers.

### Debootstrap and Schroot ###

The packages needed to set up the chroot environment are **debootstrap** and **schroot**, which are available in the Ubuntu repository. The schroot command is used to set up the chroot environment.

**Debootstrap** lets you install a fresh copy of any Debian (or Debian-based) system from a repository into a directory, with all the basic commands and binaries needed to run a basic instance of the operating system.

**schroot** gives normal users access to chroots using the same mechanism, but with permission checking, and it allows additional automated setup of the chroot environment, such as mounting additional filesystems and other configuration tasks.

These are the steps to implement this functionality in Ubuntu 14.04 LTS:

### 1. Installing the Packages ###

First, we're going to install debootstrap and schroot on our Ubuntu 14.04 LTS host.

    $ sudo apt-get install debootstrap
    $ sudo apt-get install schroot

### 2. Configuring Schroot ###

Now that we have the appropriate tools, we just need to specify a directory that we want to use as our chroot environment. We will create a directory called linoxide in our root directory and set up the chroot there:

    sudo mkdir /linoxide

We need to configure schroot to suit our needs by adding the required information to its configuration file:

    sudo nano /etc/schroot/schroot.conf

We are currently on an Ubuntu 14.04 LTS (Trusty Tahr) system, but let's say that we want to test out some packages available on Ubuntu 13.10, code-named "Saucy Salamander". We can do that by creating an entry that looks like this:

    [saucy]
    description=Ubuntu Saucy
    location=/linoxide
    priority=3
    users=arun
    root-groups=root

Modify the values of the configuration parameters in the above example to fit your system.
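If you maintain several chroots, generating the entry can be less error-prone than typing it by hand. The sketch below is a hypothetical helper, not part of schroot, that renders the same `[saucy]` section with Python's standard `configparser`. It uses exactly the keys shown above. The default values are the example's (`/linoxide`, user `arun`), and you should replace them with your own.

```python
import configparser
import io


def schroot_entry(name, description, location, users, root_groups="root", priority=3):
    """Render one schroot.conf section as text (hypothetical helper)."""
    config = configparser.ConfigParser()
    config.optionxform = str  # keep key names exactly as written, e.g. root-groups
    config[name] = {
        "description": description,
        "location": location,
        "priority": str(priority),
        "users": users,
        "root-groups": root_groups,
    }
    buf = io.StringIO()
    config.write(buf, space_around_delimiters=False)  # key=value, as in the example above
    return buf.getvalue()


print(schroot_entry("saucy", "Ubuntu Saucy", "/linoxide", "arun"))
```

The generated text can then be appended to /etc/schroot/schroot.conf as root.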

### 3. Installing 32 bit Ubuntu with debootstrap ###

Debootstrap downloads and installs a minimal operating system inside your **chroot environment**. You can install any Debian-based distro of your choice, as long as you have a repository available.

Above, we placed the chroot environment under the directory **/linoxide**, which is the root directory of the chroot environment. So we'll need to run debootstrap inside that directory, which we have already created:

    cd /linoxide
    sudo debootstrap --variant=buildd --arch amd64 saucy /linoxide/ http://archive.ubuntu.com/ubuntu/
    sudo chroot /linoxide /debootstrap/debootstrap --second-stage

You can replace amd64 in --arch with i386 or any other architecture available in the repository. You can also replace the mirror http://archive.ubuntu.com/ubuntu/ above with the one closest to you. You can find it on the official [Ubuntu Mirror Page][1].

**Note: If you choose to set up an i386 chroot on your 64-bit Ubuntu host, you will need to add --foreign to the debootstrap command above, as follows:**

    sudo debootstrap --variant=buildd --foreign --arch i386 saucy /linoxide/ http://archive.ubuntu.com/ubuntu/
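The two debootstrap invocations differ only in the architecture and in the `--foreign` flag. Below is a small, hypothetical Python helper that assembles the argument list in the same order as the commands above. It makes that difference explicit through a `foreign` parameter, which is off by default and which you turn on in the i386-on-64-bit case described in the note. It only builds the command. To actually run it you would pass the list to something like `subprocess.run(cmd, check=True)`.

```python
def debootstrap_cmd(arch, suite="saucy", target="/linoxide/",
                    mirror="http://archive.ubuntu.com/ubuntu/", foreign=False):
    """Build the debootstrap argument list used above (does not execute it)."""
    cmd = ["sudo", "debootstrap", "--variant=buildd"]
    if foreign:
        # Per the note above: needed for an i386 chroot on a 64-bit host
        cmd.append("--foreign")
    cmd += ["--arch", arch, suite, target, mirror]
    return cmd


print(" ".join(debootstrap_cmd("amd64")))
print(" ".join(debootstrap_cmd("i386", foreign=True)))
```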

It takes some time (depending on your bandwidth) to download, install, and configure the complete system. A minimal installation takes about 500 MB.

### 4. Finalizing the chroot environment ###

After the system is installed, we'll need to do some final configuration to make sure the system functions correctly. First, we'll want to make sure our host `fstab` is aware of some pseudo-filesystems in our guest.

    sudo nano /etc/fstab

Add lines like the following to the bottom of your fstab:

    proc /linoxide/proc proc defaults 0 0
    sysfs /linoxide/sys sysfs defaults 0 0

Save and close the file.
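If you script this step, check that the lines are not already present before appending them. Otherwise, running the script twice duplicates the entries. The sketch below uses two hypothetical helpers. The first builds the two entries for a given chroot root. The second returns only the ones missing from an existing fstab. It compares lines with whitespace normalized and skips comment lines. It only works on text, so writing the result to /etc/fstab is left to you (as root).

```python
def chroot_fstab_lines(root="/linoxide"):
    """Return the fstab entries for the chroot's proc and sysfs mounts."""
    root = root.rstrip("/")
    return [
        "proc %s/proc proc defaults 0 0" % root,
        "sysfs %s/sys sysfs defaults 0 0" % root,
    ]


def missing_lines(fstab_text, wanted):
    """Return the wanted entries that are not yet present in fstab_text."""
    present = {
        " ".join(line.split())  # collapse runs of spaces/tabs for comparison
        for line in fstab_text.splitlines()
        if line.strip() and not line.lstrip().startswith("#")
    }
    return [line for line in wanted if " ".join(line.split()) not in present]


current = "# /etc/fstab\nproc   /linoxide/proc  proc  defaults  0  0\n"
print(missing_lines(current, chroot_fstab_lines("/linoxide")))
```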

Now we need to mount these filesystems within our guest:

    $ sudo mount proc /linoxide/proc -t proc
    $ sudo mount sysfs /linoxide/sys -t sysfs

We'll also want to copy our /etc/hosts file so that we will have access to the correct network information:

    $ sudo cp /etc/hosts /linoxide/etc/hosts

Finally, you can list the available chroot environments using the schroot command.

    $ schroot -l

We can enter the chroot environment with a command like this:

    $ sudo chroot /linoxide/ /bin/bash

You can test the chroot environment by checking the version of the installed distribution.

    # lsb_release -a
    # uname -a

To finish this tutorial: in order to run a graphical application from the chroot, you have to export the DISPLAY environment variable.

    $ DISPLAY=:0.0 ./apps

We have now successfully installed a chrooted Ubuntu 13.10 (Saucy Salamander) on our Ubuntu 14.04 LTS (Trusty Tahr) host.

You can exit the chroot environment by running the command below:

    # exit

Afterwards, we need to unmount our proc and sys filesystems:

    $ sudo umount /linoxide/proc
    $ sudo umount /linoxide/sys

--------------------------------------------------------------------------------

via: http://linoxide.com/ubuntu-how-to/configure-chroot-environment-ubuntu-14-04/

Author: [Arun Pyasi][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/)

[a]:http://linoxide.com/author/arunp/
[1]:https://launchpad.net/ubuntu/+archivemirrors

@ -1,3 +1,4 @@

translating by soooogreen

Improve system performance by moving your log files to RAM Using Ramlog
================================================================================

Ramlog acts as a system daemon. On startup it creates a ramdisk, copies the files from /var/log into the ramdisk, and mounts the ramdisk as /var/log. From then on, all logs are updated on the ramdisk. The logs on the hard drive are kept in the folder /var/log.hdd, which is updated when ramlog is restarted or stopped. On shutdown, it saves the log files back to the hard disk so that the logs stay consistent. Ramlog 2.x uses tmpfs by default; ramfs and the kernel ramdisk are supported as well. The rsync program is used for log synchronization.
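The copy-in / sync-back cycle described above can be modeled in a few lines. This is a simplified sketch, not Ramlog's code. Two plain directories stand in for /var/log.hdd and the tmpfs mounted on /var/log, and Python's `shutil.copytree` stands in for rsync. Unlike rsync, `copytree` copies every file rather than only the changed ones, and it does not delete files that disappeared from the source. Nothing is mounted.

```python
import os
import shutil
import tempfile


def load_logs(disk_dir, ram_dir):
    """Startup step: copy the on-disk logs into the RAM-backed log directory."""
    shutil.copytree(disk_dir, ram_dir, dirs_exist_ok=True)


def sync_logs(ram_dir, disk_dir):
    """Stop/restart/shutdown step: write the RAM copy back so the disk copy is consistent."""
    shutil.copytree(ram_dir, disk_dir, dirs_exist_ok=True)


if __name__ == "__main__":
    base = tempfile.mkdtemp()
    disk = os.path.join(base, "log.hdd")  # stands in for /var/log.hdd
    ram = os.path.join(base, "log")       # stands in for the tmpfs mounted on /var/log
    os.makedirs(disk)
    with open(os.path.join(disk, "syslog"), "w") as f:
        f.write("entry written before boot\n")
    load_logs(disk, ram)
    with open(os.path.join(ram, "syslog"), "a") as f:
        f.write("entry written while running\n")  # while running, logging goes to RAM only
    sync_logs(ram, disk)
    with open(os.path.join(disk, "syslog")) as f:
        print(f.read(), end="")
```

The sketch also shows the trade-off. Anything logged after the last sync exists only in RAM, so an unclean shutdown loses it.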

@ -109,4 +110,4 @@ via: http://www.ubuntugeek.com/improve-system-performance-by-moving-your-log-fil

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/)

[a]:http://www.ubuntugeek.com/author/ubuntufix
[1]:http://www.tremende.com/ramlog/download/ramlog_2.0.0_all.deb

@ -1,82 +0,0 @@

Translating by mtunique

Moving to Docker
================================================================================

[TL;DR] This is the first post in a series of 3 on how my company moved its infrastructure from PaaS to Docker-based deployment. If you want, you can skip the intro (this post) and head directly to the technical topics (links at the bottom of the page).

----------

In the last month I've been struggling with devops. This is my very personal story and experience in trying to streamline the deployment process of a Rails app with Docker.

When I started my company – [Touchware][1] – in 2012 I was a lone developer. Things were small and uncomplicated; they didn't require a lot of maintenance, nor did they need to scale all that much. During the course of last year, though, we grew quite a lot (we are now a team of 10 people) and our server-side applications and API grew in both scope and scale.

### Step 1 - Heroku ###

We are still a very small team and we need to keep things going and running as smoothly as possible. When we looked for possible solutions, we decided to stick with something that would take the burden of managing hardware off our shoulders. Since we develop mainly Rails-based applications and Heroku has great support for RoR and various kinds of DBs and caches (Postgres / Mongo / Redis etc.), the smartest choice seemed to be going with [Heroku][2]. And that's what we did.

Heroku has great support and great documentation, and deploying apps is just so snappy! The only problem is that when you start growing, you need piles of cash around to pay the bills. Not the best deal, really.

### Step 2 - Dokku ###

In a rush to try and cut costs, we decided to try Dokku. [Dokku][3], quoting the GitHub repo, is a

> Docker powered mini-Heroku in around 100 lines of Bash

We launched some instances on [DigitalOcean][4] with Dokku pre-installed and gave it a spin. Dokku is very much like Heroku, but when you have complex applications for which you need to tweak params, or where you need certain dependencies, it's just not going to work out. We had an app where we needed to apply multiple transformations on images, and we couldn't find a way to install the correct version of imagemagick into the Dokku-based Docker container that was hosting our Rails app. We still have a couple of very simple apps running on Dokku, but we had to move some of them back to Heroku.

### Step 3 - Docker ###

A couple of months ago, since the problem of devops and managing production apps was resurfacing, I decided to try out [Docker][5]. Docker, in simple terms, allows developers to containerize applications and eases deployment. Since a Docker container basically has all the dependencies it needs to run your app, if everything runs fine on your laptop, you can be sure it'll also run like a champ in production on a remote server, be it an AWS EC2 instance or a VPS on DigitalOcean.

Docker IMHO is particularly interesting for the following reasons:

- it promotes modularization and separation of concerns: you need to start thinking about your apps in terms of logical components (load balancer: 1 container, DB: 1 container, webapp: 1 container etc.);
- it's very flexible in terms of deployment options: containers can be deployed to a wide variety of HW and can be easily redeployed to different servers / providers;
- it allows for very fine-grained tuning of your app environment: you build the images your containers run from, so you have plenty of options for configuring your environment exactly as you would like.

There are, however, some downsides:

- the learning curve is quite steep (this is probably a very personal problem, but I'm talking as a software dev and not as a skilled operations professional);
- setup is not simple, especially if you want to have a private registry / repository (more about this later).

Following are some tips I put together during the course of the last week, with the findings of someone who is new to the game.

----------

In the following articles we'll see how to set up a semi-automated Docker-based deployment system.

- [Setting up a private Docker registry][6]
- [Configuring a Rails app for semi-automated deployment][7]

--------------------------------------------------------------------------------

via: http://cocoahunter.com/2015/01/23/docker-1/

Author: [Michelangelo Chasseur][a]

Translator: [mtunique](https://github.com/mtunique)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/)

[a]:http://cocoahunter.com/author/michelangelo/
[1]:http://www.touchwa.re/
[2]:http://www.heroku.com
[3]:https://github.com/progrium/dokku
[4]:http://www.digitalocean.com
[5]:http://www.docker.com/
[6]:http://cocoahunter.com/2015/01/23/docker-2/
[7]:http://cocoahunter.com/2015/01/23/docker-3/

@ -1,91 +0,0 @@

|

||||

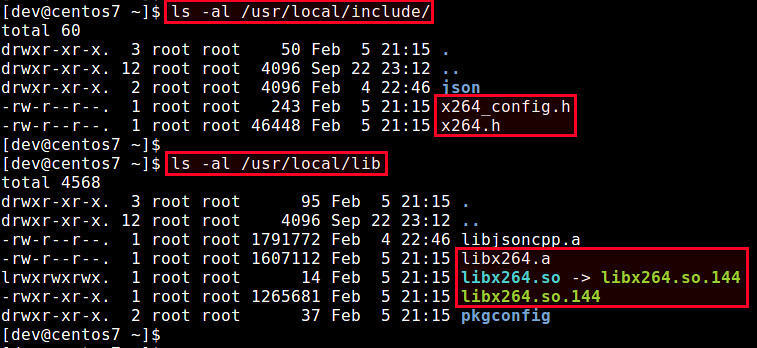

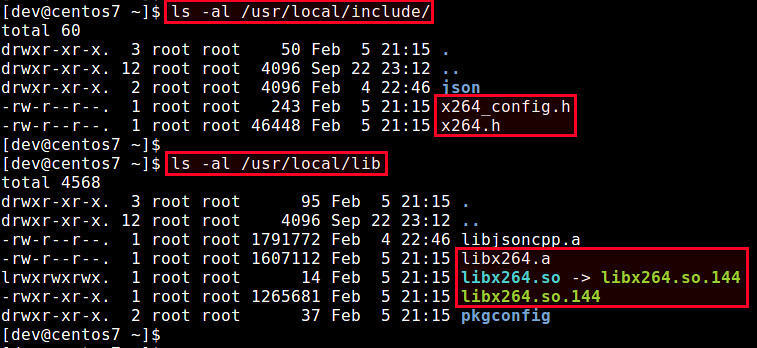

Linux FAQs with Answers--How to fix “fatal error: x264.h: No such file or directory” on Linux

|

||||

================================================================================

|

||||

> **Question**: I am trying to build a video encoding application from the source on Linux. However, during compilation, I am encountering the error: "fatal error: x264.h: No such file or directory" How can I fix this error?

|

||||

|

||||

The following compilation error indicates that you do not have x264 library's development files installed on your Linux system.

|

||||

|

||||

fatal error: x264.h: No such file or directory

|

||||

|

||||

[x264][1] is an H.264/MPEG-4 AVC encoder library licensed with GNU GPL. The x264 library is popularly used by many video encoder/transcoder programs such as Avidemux, [FFmpeg][2], [HandBrake][3], OpenShot, MEncode and more.

|

||||

|

||||

To solve the above compilation error, you need to install development files for x264 library. Here is how you can do it.
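Before and after installing, you can check whether the header the compiler is complaining about is actually present. The minimal sketch below searches a list of include directories for `x264.h`. The two default directories are assumptions. `/usr/include` is where distribution packages usually put headers, and `/usr/local/include` is where a source build with the default prefix (`make install`) puts them. Your compiler may search other paths as well. The function name `find_header` is hypothetical.

```python
import os

DEFAULT_INCLUDE_DIRS = ("/usr/include", "/usr/local/include")


def find_header(name="x264.h", include_dirs=DEFAULT_INCLUDE_DIRS):
    """Return the first path where the header exists, or None if it is missing."""
    for directory in include_dirs:
        candidate = os.path.join(directory, name)
        if os.path.isfile(candidate):
            return candidate
    return None


if __name__ == "__main__":
    found = find_header()
    print(found or "x264.h not found: install the x264 development files")
```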

### Install x264 Library and its Development Files on Debian, Ubuntu or Linux Mint ###

On Debian-based systems, the x264 library is already included in the base repositories, so installing it with apt-get is straightforward:

    $ sudo apt-get install libx264-dev

### Install x264 Library and its Development Files on Fedora, CentOS/RHEL ###

On Red Hat-based distributions such as Fedora or CentOS, the x264 library is available from the free repository of RPM Fusion, so you need to install [RPM Fusion (free)][4] first.

Once RPM Fusion is set up, you can install the x264 development files as follows.

    $ sudo yum --enablerepo=rpmfusion-free install x264-devel

Note that the RPM Fusion repository is not yet available for CentOS 7, so the above method does not work on CentOS 7. On CentOS 7, you can build and install the x264 library from source, as explained below.

### Compile x264 Library from the Source on Debian, Ubuntu or Linux Mint ###

If the libx264 package that comes with your distribution is not up to date, you can compile the latest x264 library from source as follows.

    $ sudo apt-get install g++ automake autoconf libtool yasm nasm git
    $ git clone git://git.videolan.org/x264.git
    $ cd x264
    $ ./configure --enable-static --enable-shared
    $ make
    $ sudo make install

The x264 library will be installed in /usr/local/lib. To allow other applications to use the library, you need to complete one last step.

Open /etc/ld.so.conf with a text editor, and append the following line.

    $ sudo vi /etc/ld.so.conf

----------

    /usr/local/lib

Finally, reload all shared libraries by running:

    $ sudo ldconfig
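Editing /etc/ld.so.conf by hand is easy to get wrong when the step is repeated, for example when rebuilding x264 later. Here is a sketch of an idempotent version of that edit. It appends `/usr/local/lib` only if the file does not already list it, and it first adds a newline if the file lacks a trailing one. The function name `ensure_lib_dir` is hypothetical, and the function takes the config path as a parameter so it can be tried on a scratch file. On a real system it must run as root, followed by `sudo ldconfig` as above.

```python
import os
import tempfile


def ensure_lib_dir(conf_path, lib_dir="/usr/local/lib"):
    """Append lib_dir to an ld.so.conf-style file unless it is already listed.

    Returns True if the file was modified, False if the entry was already there.
    """
    content = ""
    if os.path.exists(conf_path):
        with open(conf_path) as f:
            content = f.read()
    if lib_dir in (line.strip() for line in content.splitlines()):
        return False
    with open(conf_path, "a") as f:
        if content and not content.endswith("\n"):
            f.write("\n")  # keep the new entry on its own line
        f.write(lib_dir + "\n")
    return True


if __name__ == "__main__":
    scratch = os.path.join(tempfile.mkdtemp(), "ld.so.conf")
    with open(scratch, "w") as f:
        f.write("include /etc/ld.so.conf.d/*.conf")  # no trailing newline
    print(ensure_lib_dir(scratch))  # True: entry appended
    print(ensure_lib_dir(scratch))  # False: already present
```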

### Compile x264 Library from the Source on Fedora, CentOS/RHEL ###

If the x264 library is not available on your Linux distribution (e.g., CentOS 7), or the available x264 library is not up to date, you can build the latest x264 library from source as follows.

    $ sudo yum install gcc gcc-c++ automake autoconf libtool yasm nasm git
    $ git clone git://git.videolan.org/x264.git
    $ cd x264
    $ ./configure --enable-static --enable-shared
    $ make
    $ sudo make install

Finally, to allow other applications to use the x264 library installed in /usr/local/lib, add the following line to /etc/ld.so.conf:

    $ sudo vi /etc/ld.so.conf

----------

    /usr/local/lib

and reload all shared libraries by running:

    $ sudo ldconfig

--------------------------------------------------------------------------------

via: http://ask.xmodulo.com/fatal-error-x264-h-no-such-file-or-directory.html

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/)

[1]:http://www.videolan.org/developers/x264.html
[2]:http://ask.xmodulo.com/compile-ffmpeg-centos-fedora-rhel.html
[3]:http://xmodulo.com/how-to-install-handbrake-on-linux.html
[4]:http://xmodulo.com/how-to-install-rpm-fusion-on-fedora.html

469

sources/tech/20150211 25 Tips for Intermediate Git Users.md

Normal file


@ -0,0 +1,469 @@

25 Tips for Intermediate Git Users
================================================================================

I’ve been using git for about 18 months now and thought I knew it pretty well. Then we had [Scott Chacon][1] from GitHub over to do some training at [LVS, a supplier/developer of betting/gaming software][2] (where I was contracted until 2013) and I learnt a ton on the first day.

As someone who’s always felt fairly comfortable in Git, I thought sharing some of the nuggets I learnt with the community might help someone find an answer without needing to do lots of research.

### Basic Tips ###

#### 1. First Steps After Install ####

After installing Git, the first thing you should do is configure your name and email, as every commit will have these details:

    $ git config --global user.name "Some One"
    $ git config --global user.email "someone@gmail.com"

#### 2. Git is Pointer-Based ####

Everything stored in git is in a file. When you create a commit, git creates a file containing your commit message and associated data (name, email, date/time, previous commit, etc.) and links it to a tree file. The tree file contains a list of objects or other trees. The object, or blob, is the actual content associated with the commit (a file, if you will, although the filename isn’t stored in the object, but in the tree). All of these files are stored with a filename that is the SHA-1 hash of the object.
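Here is how the SHA-1 naming works for a blob. Git hashes a short header followed by the file's bytes. The header is the word `blob`, a space, the content length in bytes, and a NUL byte. The sketch below reproduces this with Python's `hashlib`, and the result should match what `git hash-object` prints for the same bytes. It covers blobs only. Trees and commits are hashed the same way but with their own type name in the header.

```python
import hashlib


def git_blob_sha1(content: bytes) -> str:
    """Return the object ID Git assigns to a blob with this content."""
    header = b"blob %d\0" % len(content)  # "blob <size>" followed by a NUL byte
    return hashlib.sha1(header + content).hexdigest()


# Same as: echo 'hello world' | git hash-object --stdin
print(git_blob_sha1(b"hello world\n"))
```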

From there, branches and tags are simply files containing (basically) a SHA-1 hash that points to the commit. Using these references allows for a lot of flexibility and speed, as creating a new branch is as simple as creating a file with the name of the branch and the SHA-1 reference to the commit you’re branching from. Of course, you’d never do that by hand, as you’d use the Git command line tools (or a GUI), but it’s that simple.

You may have heard references to the HEAD. This is simply a file containing the SHA-1 reference of the commit you’re currently pointing to. If you’re resolving a merge conflict and see HEAD, that has nothing to do with a particular branch, or necessarily a particular point on the branch; it is simply where you currently are.

All the branch pointers are kept in .git/refs/heads, HEAD is in .git/HEAD and tags are in .git/refs/tags – feel free to have a look in there.
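Because refs are just small text files, you can resolve HEAD yourself. The simplified sketch below does this. It reads `.git/HEAD`. If HEAD is a symbolic ref such as `ref: refs/heads/master` (the usual case when a branch is checked out), the sketch follows it to the branch file and returns the SHA-1 stored there. Otherwise HEAD is detached and holds the SHA-1 directly. One assumption to note is that the sketch ignores packed refs (`.git/packed-refs`, where Git can move refs during housekeeping), so on a real repository `git rev-parse HEAD` is the reliable way to do this. The function name `resolve_head` is hypothetical.

```python
import os
import tempfile


def resolve_head(git_dir):
    """Return the SHA-1 that HEAD points to, following one symbolic ref (loose refs only)."""
    with open(os.path.join(git_dir, "HEAD")) as f:
        head = f.read().strip()
    if head.startswith("ref: "):
        # Symbolic ref such as "ref: refs/heads/master": read that branch's file
        ref_name = head[len("ref: "):]
        with open(os.path.join(git_dir, *ref_name.split("/"))) as f:
            return f.read().strip()
    return head  # detached HEAD: the file holds the SHA-1 itself


if __name__ == "__main__":
    # Throwaway, minimal .git layout; the hash below is made up for the demo
    git_dir = tempfile.mkdtemp()
    os.makedirs(os.path.join(git_dir, "refs", "heads"))
    with open(os.path.join(git_dir, "HEAD"), "w") as f:
        f.write("ref: refs/heads/master\n")
    with open(os.path.join(git_dir, "refs", "heads", "master"), "w") as f:
        f.write("0123456789abcdef0123456789abcdef01234567\n")
    print(resolve_head(git_dir))
```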

#### 3. Two Parents – of course! ####

When viewing a merge commit in a log, you will see two parents (as opposed to the usual one for a regular commit). The first parent is the branch you were on and the second is the one you merged into it.
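The parents are recorded as `parent` lines in the commit object itself, in order. This is the text that `git cat-file -p <commit>` prints. The sketch below extracts those lines. The sample merge commit is made up, with placeholder hashes and timestamp, purely to show the format. The name and email come from the configuration example in tip 1.

```python
def commit_parents(raw_commit: str):
    """Return parent SHA-1s in order: first parent (branch you were on), then merged branch(es)."""
    parents = []
    for line in raw_commit.splitlines():
        if not line:
            break  # headers end at the first blank line; the commit message follows
        if line.startswith("parent "):
            parents.append(line.split(" ", 1)[1])
    return parents


# Hypothetical merge commit as printed by `git cat-file -p`
SAMPLE_MERGE = """tree 1111111111111111111111111111111111111111
parent 2222222222222222222222222222222222222222
parent 3333333333333333333333333333333333333333
author Some One <someone@gmail.com> 1423612800 +0000
committer Some One <someone@gmail.com> 1423612800 +0000

Merge branch 'feature132'
"""

first, second = commit_parents(SAMPLE_MERGE)
print("first parent (branch you were on):", first)
print("second parent (branch merged in):", second)
```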

|

||||

|

||||
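
Here is a minimal sketch of that, using a throwaway repository and a hypothetical `feature` branch. After a non-fast-forward merge:

- `git log -1 --format=%P` prints both parent hashes.
- `HEAD^1` picks out the first parent (the branch you were on).
- `HEAD^2` picks out the second parent (the branch merged in).

```shell
#!/bin/sh
# Sketch: a merge commit records two parents (throwaway repo)
set -e
repo=$(mktemp -d)
cd "$repo"
git -c init.defaultBranch=master init -q
git config user.name "Some One"
git config user.email "someone@example.com"

echo "base" > file.txt
git add file.txt
git commit -q -m "Base"

# Work on a hypothetical feature branch...
git checkout -q -b feature
echo "feature" > feature.txt
git add feature.txt
git commit -q -m "Feature work"

# ...and on master, so the histories diverge and a real merge is needed
git checkout -q master
echo "more" >> file.txt
git commit -q -am "Master work"

master_tip=$(git rev-parse master)
feature_tip=$(git rev-parse feature)
git merge -q --no-edit feature

parents=$(git log -1 --format=%P)   # both parent hashes, space separated
first=$(git rev-parse HEAD^1)       # the branch we were on
second=$(git rev-parse HEAD^2)      # the branch we merged in
echo "$parents"
```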

#### 4. Merge Conflicts ####

By now I'm sure you have had a merge conflict and had to resolve it. This is normally done by editing the file, removing the <<<<, ====, >>>> markers and keeping the code you want to store. Sometimes it's nice to see how your resolution compares with both sides of the conflict. This is one command away:

    $ git diff --merge
    diff --cc dummy.rb
    index 5175dde,0c65895..4a00477
    --- a/dummy.rb
    +++ b/dummy.rb
    @@@ -1,5 -1,5 +1,5 @@@
      class MyFoo
        def say
    -     puts "Bonjour"
     -    puts "Hello world"
    ++    puts "Annyong Haseyo"
        end
      end

If the file is binary, diffing files isn't so easy… What you'll normally want to do is try each version of the binary file and decide which one to use, or manually copy portions over in the binary file's editor. To pull a copy of the file from a particular branch (say you're merging master and feature132):

    $ git checkout master flash/foo.fla # or...
    $ git checkout feature132 flash/foo.fla
    $ # Then...
    $ git add flash/foo.fla

Another way is to cat the file from Git. You can write each version to another filename, then copy the correct one over to the normal filename once you've decided which it is:

    $ git show master:flash/foo.fla > master-foo.fla
    $ git show feature132:flash/foo.fla > feature132-foo.fla
    $ # Check out master-foo.fla and feature132-foo.fla
    $ # Let's say we decide that feature132's is correct
    $ rm flash/foo.fla
    $ mv feature132-foo.fla flash/foo.fla
    $ rm master-foo.fla
    $ git add flash/foo.fla

UPDATE: Thanks to Carl in the comments on the original blog post for the reminder. You can actually use `git checkout --ours flash/foo.fla` and `git checkout --theirs flash/foo.fla` to check out a particular version without remembering which branches you merged in. During a merge, "ours" is the branch you were on and "theirs" is the branch being merged in. Personally I prefer to be more explicit, but the option is there…

Remember to add the file after resolving the merge conflict (as I do above).
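
If you want the code from *before* either change, there is one more option. During a conflicted merge, Git keeps each version in the index as a numbered stage:

- `:1:` is the common ancestor.
- `:2:` is your side ("ours").
- `:3:` is the branch being merged in ("theirs").

The sketch below manufactures such a conflict in a throwaway repository, using the `dummy.rb` example. The file contents are made up for illustration.

```shell
#!/bin/sh
# Sketch: inspect each side of a merge conflict via index stages
set -e
repo=$(mktemp -d)
cd "$repo"
git -c init.defaultBranch=master init -q
git config user.name "Some One"
git config user.email "someone@example.com"

# Common ancestor
printf 'class MyFoo\n  def say\n    puts "Hello"\n  end\nend\n' > dummy.rb
git add dummy.rb
git commit -q -m "Base"

# Change the same line differently on each branch
git checkout -q -b feature132
printf 'class MyFoo\n  def say\n    puts "Hello world"\n  end\nend\n' > dummy.rb
git commit -q -am "English"
git checkout -q master
printf 'class MyFoo\n  def say\n    puts "Bonjour"\n  end\nend\n' > dummy.rb
git commit -q -am "French"

# The merge stops with a conflict, so don't let set -e abort here
git merge feature132 > /dev/null 2>&1 || true

base=$(git show :1:dummy.rb)     # common ancestor ("Hello")
ours=$(git show :2:dummy.rb)     # master ("Bonjour")
theirs=$(git show :3:dummy.rb)   # feature132 ("Hello world")
printf '%s\n---\n%s\n---\n%s\n' "$base" "$ours" "$theirs"

# Resolve by taking their version, then add and commit as usual
git checkout --theirs dummy.rb
git add dummy.rb
git commit -q --no-edit
```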

### Servers, Branching and Tagging ###

#### 5. Remote Servers ####

One of the most powerful features of Git is the ability to have more than one remote server, on top of always having a local repository. You don't always need write access either: you may have multiple servers you read from (to merge work in) and then write to another. Adding a new remote server is simple:

    $ git remote add john git@github.com:johnsomeone/someproject.git

If you want to see information about your remote servers you can do:

    # shows URLs of each remote server
    $ git remote -v

    # gives more details about each (replace "name" with the remote's name, e.g. john)
    $ git remote show name

You can always see the differences between a local branch and a remote branch:

    $ git diff master..john/master

You can also see the changes on HEAD that aren't on that remote branch:

    $ git log remote/branch..
    # Note: nothing after the .., so HEAD is used
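
Here is a self-contained sketch of the `remote/branch..` trick. A local bare repository stands in for the server, since Git accepts a plain path as a remote URL. The paths and the two extra commits are illustrative.

```shell
#!/bin/sh
# Sketch: list local commits that aren't on the remote branch yet.
# A bare repository in a temp directory plays the part of the server.
set -e
work=$(mktemp -d)
git -c init.defaultBranch=master init -q --bare "$work/server.git"

git -c init.defaultBranch=master init -q "$work/local"
cd "$work/local"
git config user.name "Some One"
git config user.email "someone@example.com"
git remote add origin "$work/server.git"

echo "v1" > app.txt
git add app.txt
git commit -q -m "Shared commit"
git push -q origin master      # also updates origin/master locally

# Two commits that exist only locally
for n in 2 3; do
    echo "v$n" > app.txt
    git commit -q -am "Local commit $n"
done

git remote -v                                   # shows the URL of each remote
unpushed=$(git log --oneline origin/master..)   # nothing after .. means HEAD
echo "$unpushed"
```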

#### 6. Tagging ####

In Git there are two types of tag: a lightweight tag and an annotated tag. Bearing in mind Tip 2 about Git being pointer-based, the difference between the two is simple:

- A lightweight tag is simply a named pointer to a commit. You can always change it to point to another commit.
- An annotated tag is a named pointer to a tag object, which has its own message, tagger and date. As it has its own message, it can be GPG-signed if required.

Creating the two types of tag is easy, and differs by only one command line switch:

    $ git tag to-be-tested
    $ git tag -a v1.1.0 # Prompts for a tag message
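
Following on from Tip 2, you can check which kind of object each tag name points at with `git cat-file -t`. The sketch below runs in a throwaway repository and reuses the tag names above. The `-m` switch supplies the message so the annotated tag doesn't prompt.

```shell
#!/bin/sh
# Sketch: lightweight vs annotated tags
set -e
repo=$(mktemp -d)
cd "$repo"
git -c init.defaultBranch=master init -q
git config user.name "Some One"
git config user.email "someone@example.com"
echo "v1" > app.txt
git add app.txt
git commit -q -m "Release candidate"

git tag to-be-tested                  # lightweight: points straight at the commit
git tag -a v1.1.0 -m "Version 1.1.0"  # annotated: points at a new tag object

light_type=$(git cat-file -t to-be-tested)   # "commit"
annot_type=$(git cat-file -t v1.1.0)         # "tag"
# Peeling the annotated tag leads back to the same commit
peeled=$(git rev-parse 'v1.1.0^{commit}')
echo "$light_type $annot_type"
```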

#### 7. Creating Branches ####

Creating branches in Git is very easy, and lightning quick, because Git only needs to create a file of less than 100 bytes. The longhand way of creating a new branch and switching to it:

    $ git branch feature132
    $ git checkout feature132

Of course, if you know you're going to want to switch to it straight away, you can do it in one command:

    $ git checkout -b feature132

If you want to rename a local branch, it's as easy as this (the long way, to show what happens):

    $ git checkout -b twitter-experiment feature132
    $ git branch -d feature132

Update: Or you can use the -m switch to `git branch` to do it in one step, as Brian Palmer points out in the comments on the original blog post. As Mike points out, if you only specify one branch, it renames your current branch:

    $ git branch -m twitter-experiment
    $ git branch -m feature132 twitter-experiment

#### 8. Merging Branches ####

At some point in the future, you're going to want to merge your changes back in. There are two ways to do this:

    $ git checkout master
    $ git merge feature83 # Or...
    $ git rebase feature83

The two commands work differently:

- Merge tries to resolve the changes and create a new commit that blends them, unless it can simply fast-forward.
- Rebase takes your changes since you last diverged from the other branch and replays them on top of the HEAD of the other branch.

However, don't rebase after you've pushed a branch to a remote server. Rewriting published commits can cause confusion and problems for anyone who has already pulled them.

If you aren't sure which branches still have unique work on them (so you know which you need to merge and which ones can be removed), there are two switches to `git branch` that help:

    # Shows branches that are all merged into your current branch
    $ git branch --merged

    # Shows branches that are not merged into your current branch
    $ git branch --no-merged
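
Here is a quick sketch of `--merged` and `--no-merged` in a throwaway repository with two hypothetical branches:

- `done-feature`, whose work is already on master.
- `wip`, which has a commit that master doesn't.

```shell
#!/bin/sh
# Sketch: which branches still carry unique work?
set -e
repo=$(mktemp -d)
cd "$repo"
git -c init.defaultBranch=master init -q
git config user.name "Some One"
git config user.email "someone@example.com"
echo "base" > app.txt
git add app.txt
git commit -q -m "Base"

git branch done-feature          # points at a commit master already has

git checkout -q -b wip           # gets a commit that master lacks
echo "wip" > wip.txt
git add wip.txt
git commit -q -m "Work in progress"
git checkout -q master

merged=$(git branch --merged)
unmerged=$(git branch --no-merged)
printf 'merged:\n%s\nnot merged:\n%s\n' "$merged" "$unmerged"
```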

#### 9. Remote Branches ####

If you have a local branch that you'd like to appear on a remote server, you can push it up with one command:

    $ git push origin twitter-experiment:refs/heads/twitter-experiment
    # Where origin is our server name and twitter-experiment is the branch

Update: Thanks to Erlend in the comments on the original blog post. This is actually the same as doing `git push origin twitter-experiment`. The full syntax shows that you can use different names on each end: your local branch can be `add-ssl-support` while the remote name is `issue-1723`.

If you want to delete a branch from the server (note the colon before the branch name):

    $ git push origin :twitter-experiment

If you want to show the state of all remote branches, you can view them like this:

    $ git remote show origin

This may list some branches that used to exist on the server but no longer do. If so, you can easily remove them from your local checkout. Note that `git remote prune` needs the remote's name:

    $ git remote prune origin

Finally, if you have a remote branch that you want to track locally, the longhand way is:

    $ git branch --track myfeature origin/myfeature
    $ git checkout myfeature

However, newer versions of Git automatically set up tracking if you use the -b flag to checkout with a remote branch as the starting point:

    $ git checkout -b myfeature origin/myfeature

### Storing Content in Stashes, Index and File System ###

#### 10. Stashing ####

In Git you can drop your current work state onto a temporary storage stack and then re-apply it later. The simple case is as follows:

    $ git stash
    # Do something...
    $ git stash pop

A lot of people recommend using `git stash apply` instead of pop. However, if you do this you end up with a long list of stashes left hanging around. `pop` will only remove the stash from the stack if it applies cleanly. If you've used `git stash apply`, you can still remove the last item from the stack using:

    $ git stash drop

Git will automatically create a comment based on the current commit message. If you'd prefer to use a custom message (as it may have nothing to do with the previous commit):

    $ git stash save "My stash message"

In newer versions of Git, `save` is deprecated in favour of `git stash push -m "My stash message"`.

If you want to apply a particular stash from your list (not necessarily the last one), you can list them and apply it like this:

    $ git stash list
    stash@{0}: On master: Changed to German
    stash@{1}: On master: Language is now Italian
    $ git stash apply stash@{1}
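
Here is a sketch that reproduces the listing above in a throwaway repository. It uses `git stash push -m`, the newer spelling of `git stash save`, which assumes Git 2.13 or later. The file name is illustrative.

```shell
#!/bin/sh
# Sketch: stash two changes, then apply the older one by name
set -e
repo=$(mktemp -d)
cd "$repo"
git -c init.defaultBranch=master init -q
git config user.name "Some One"
git config user.email "someone@example.com"
echo "English" > lang.txt
git add lang.txt
git commit -q -m "Base"

echo "Italian" > lang.txt
git stash push -q -m "Language is now Italian"
after_first=$(cat lang.txt)      # the working copy is clean again

echo "German" > lang.txt
git stash push -q -m "Changed to German"

git stash list                   # the newest stash is stash@{0}
count=$(git stash list | wc -l)

# Apply the older stash; "apply" leaves it in the list, unlike "pop"
git stash apply -q 'stash@{1}'
applied=$(cat lang.txt)
```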

#### 11. Adding Interactively ####

In the Subversion world you change files and then just commit everything that has changed. In Git you have a LOT more power to commit just certain files or even certain patches. To commit certain files or parts of files, you need to go into interactive mode.

    $ git add -i
               staged     unstaged path

    *** Commands ***
      1: status       2: update       3: revert       4: add untracked
      5: patch        6: diff         7: quit         8: help
    What now>

This drops you into a menu-based interactive prompt. To enter a mode, type the command's number or its highlighted letter (if you have colour highlighting turned on). Then it's normally a matter of typing the numbers of the files you want to apply that action to. You can use formats like 1, 1-4 or 2,4,7.

If you want to go to patch mode ('p' or '5' from interactive mode), you can also go straight into that mode:

    $ git add -p
    diff --git a/dummy.rb b/dummy.rb
    index 4a00477..f856fb0 100644
    --- a/dummy.rb
    +++ b/dummy.rb
    @@ -1,5 +1,5 @@
     class MyFoo
       def say
    -    puts "Annyong Haseyo"
    +    puts "Guten Tag"
       end
     end
    Stage this hunk [y,n,q,a,d,/,e,?]?

As you can see, you then get a set of options at the bottom for choosing to add this changed part of the file, all changes from this file, etc. Using the '?' command will explain the options.

#### 12. Storing/Retrieving from the File System ####

Some projects (the Git project itself, for example) store additional files directly in the Git file system without them necessarily being checked-in files.

Let's start off by storing a random file in Git:

    $ echo "Foo" | git hash-object -w --stdin
    51fc03a9bb365fae74fd2bf66517b30bf48020cb

At this point the object is in the database, but if nothing points to it, it will be garbage collected. The easiest way to keep it is to tag it:

    $ git tag myfile 51fc03a9bb365fae74fd2bf66517b30bf48020cb

Note that here we've used the tag myfile. When we need to retrieve the file, we can do it with:

    $ git cat-file blob myfile

This can be useful for utility files that developers may need (passwords, GPG keys, etc.) but that you don't want to check out onto disk every time (particularly in production).
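
To demystify the hash: a blob's name is the SHA-1 of a short header plus the content. The header is `blob <size>` followed by a NUL byte. The sketch below checks this against `git hash-object` and round-trips the tagged blob. It assumes:

- GNU coreutils' `sha1sum` is available.
- The repository uses the default SHA-1 object format.

```shell
#!/bin/sh
# Sketch: store a blob, name it with a tag, read it back
set -e
repo=$(mktemp -d)
cd "$repo"
git -c init.defaultBranch=master init -q

sha=$(echo "Foo" | git hash-object -w --stdin)

# The same hash computed by hand: "Foo\n" is 4 bytes
manual=$(printf 'blob 4\0Foo\n' | sha1sum | cut -d' ' -f1)

git tag myfile "$sha"                 # keeps the blob from being garbage collected
type=$(git cat-file -t myfile)        # "blob"
content=$(git cat-file blob myfile)   # "Foo"
echo "$sha $type $content"
```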

### Logging and What Changed? ###

#### 13. Viewing a Log ####

You can't use Git for long without using `git log` to view your recent commits. However, there are some tips on how to use it better. For example, you can view a patch of what changed in each commit with:

    $ git log -p

Or you can just view a summary of which files changed with:

    $ git log --stat

There's a nice alias you can set up which shows abbreviated commits and a nice graph of branches with the messages on a single line (like gitk, but on the command line):

    $ git config --global alias.lol "log --pretty=oneline --abbrev-commit --graph --decorate"
    $ git lol
    * 4d2409a (master) Oops, meant that to be in Korean
    * 169b845 Hello world

#### 14. Searching in the Log ####

If you want to search for a particular author, you can specify that:

    $ git log --author=Andy

Update: Thanks to Johannes in the comments, I've cleared up some of the confusion here.

Or if you have a search term that appears in the commit message:

    $ git log --grep="Something in the message"

There's also a more powerful command called the pickaxe. It looks for the commits that add or remove a particular piece of content, i.e. when it first appeared or was removed. This can tell you when a line was added, but not whether a character on that line was later changed:

    $ git log -S "TODO: Check for admin status"

What about if you changed a particular file, e.g. `lib/foo.rb`?

    $ git log lib/foo.rb

Let's say you have a `feature/132` branch and a `feature/145` branch, and you want to view the commits on those branches that aren't on master (note the ^ meaning not):

    $ git log feature/132 feature/145 ^master

You can also narrow it down to a date range using ActiveSupport-style dates:

    $ git log --since=2.months.ago --until=1.day.ago

By default it will use OR to combine the query, but you can easily change it to use AND (if you have more than one criterion):

    $ git log --since=2.months.ago --until=1.day.ago --author=andy -S "something" --all-match
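
Here is a small sketch of the pickaxe in a throwaway repository with made-up commits. Only the commits that add or remove the TODO line show up under `-S`. The unrelated commit in between doesn't.

```shell
#!/bin/sh
# Sketch: find the commits that introduced and removed a line
set -e
repo=$(mktemp -d)
cd "$repo"
git -c init.defaultBranch=master init -q
git config user.name "Some One"
git config user.email "someone@example.com"

echo "def admin?; end" > app.rb
git add app.rb
git commit -q -m "Initial"

echo "# TODO: Check for admin status" >> app.rb
git commit -q -am "Add a reminder"

echo "def login; end" >> app.rb
git commit -q -am "Unrelated change"

grep -v "TODO" app.rb > app.tmp && mv app.tmp app.rb
git commit -q -am "Check admin status"

# Commits where the number of occurrences of the string changed
hits=$(git log -S "TODO: Check for admin status" --format=%s)
echo "$hits"
```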

#### 15. Selecting Revisions to View/Change ####

There are a number of items you can specify when referring to a revision, depending on what you know about it:

    $ git show 12a86bc38 # By revision
    $ git show v1.0.1 # By tag
    $ git show feature132 # By branch name
    $ git show 12a86bc38^ # Parent of a commit
    $ git show 12a86bc38~2 # Grandparent of a commit
    $ git show feature132@{yesterday} # Time relative
    $ git show feature132@{2.hours.ago} # Time relative

Note that unlike the previous section, a caret at the end means the parent of that commit, while a caret at the start means "not on this branch".

#### 16. Selecting a Range ####

The easiest way is to use:

    $ git log origin/master..new
    # [old]..[new] - everything you haven't pushed yet

You can also omit the [new] and it will use your current HEAD.

### Rewinding Time & Fixing Mistakes ###

#### 17. Resetting changes ####

You can easily unstage a change if you haven't committed it using:

    $ git reset HEAD lib/foo.rb

Often this is aliased to 'unstage', as it's a bit non-obvious.

    $ git config --global alias.unstage "reset HEAD"
    $ git unstage lib/foo.rb

If you've committed the file already, you can do two things. If it's the last commit, you can just amend it:

    $ git commit --amend

This replaces the last commit with a new one that also includes whatever you currently have staged. It opens your editor so you can change the commit message.

If you've committed more than once and just want to completely undo those commits, you can reset the branch back to a previous point in time. Be careful: `--hard` also discards any uncommitted changes in your working copy.

    $ git checkout feature132
    $ git reset --hard HEAD~2

If you actually want a branch to point to a completely different SHA-1 (maybe you're bringing the HEAD of a branch to another branch, or to a further commit), the long way is:

    $ git checkout FOO
    $ git reset --hard SHA

There's actually a quicker way, because it doesn't change your working copy back to the state of FOO first and then forward to SHA. Use it on a branch you don't currently have checked out; otherwise your working copy won't be updated to match.

    $ git update-ref refs/heads/FOO SHA
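
Here is a sketch comparing the two approaches in a throwaway repository with three commits. `FOO` is a placeholder branch name, as above.

- `git update-ref` moves a branch you don't have checked out, without touching your working copy.
- `git reset --hard` moves the current branch and rewrites the working copy to match.

```shell
#!/bin/sh
# Sketch: moving branch pointers with update-ref and reset --hard
set -e
repo=$(mktemp -d)
cd "$repo"
git -c init.defaultBranch=master init -q
git config user.name "Some One"
git config user.email "someone@example.com"

for n in 1 2 3; do
    echo "$n" > counter.txt
    git add counter.txt
    git commit -q -m "Commit $n"
done
first=$(git rev-parse HEAD~2)

# FOO is not checked out, so update-ref only rewrites its pointer
git branch FOO
git update-ref refs/heads/FOO "$first"

# On the current branch, reset --hard moves the pointer AND the files
# (any uncommitted changes would be lost)
git reset -q --hard HEAD~2
echo "FOO:    $(git rev-parse --short FOO)"
echo "master: $(git rev-parse --short master)"
```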

#### 18. Committing to the Wrong Branch ####

OK, let's assume you committed to master but should have created a topic branch called experimental instead. To move those changes over, create a branch at your current point, rewind master and then check out your new branch:

    $ git branch experimental # Creates a pointer to the current master state
    $ git reset --hard master~3 # Moves the master branch pointer back to 3 revisions ago
    $ git checkout experimental

This can be more complex if you've made the changes on a branch of a branch of a branch, etc. In that case, you need to rebase the change on that branch onto somewhere else:

    $ git branch newtopic STARTPOINT
    $ git rebase oldtopic --onto newtopic
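
Here is a sketch of the three-step fix in a throwaway repository. It assumes exactly three commits landed on master by mistake, hence `master~3`.

```shell
#!/bin/sh
# Sketch: move the last three master commits onto a new branch
set -e
repo=$(mktemp -d)
cd "$repo"
git -c init.defaultBranch=master init -q
git config user.name "Some One"
git config user.email "someone@example.com"
echo "stable" > app.txt
git add app.txt
git commit -q -m "Stable"
stable=$(git rev-parse HEAD)

# Oops: three experimental commits made directly on master
for n in 1 2 3; do
    echo "experiment $n" >> app.txt
    git commit -q -am "Experiment $n"
done
tip=$(git rev-parse HEAD)

git branch experimental          # pointer to the current master state
git reset -q --hard master~3     # move master back three commits
git checkout -q experimental
```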

#### 19. Interactive Rebasing ####

This is a cool feature I'd seen demoed before but never actually understood. Now it's easy. Let's say you've made 3 commits but you want to re-order them, edit them or combine them:

    $ git rebase -i master~3

Your editor then pops open with some instructions. All you have to do is change each commit's instruction to pick, squash or edit (or remove the line), then save and exit. After editing, you can run `git rebase --continue` to keep stepping through each of your instructions.

If you choose to edit a commit, Git will leave you in the state you were in when you made that commit, so you need to use `git commit --amend` to edit it.

**Note: other than amending a commit you chose to edit, DO NOT COMMIT DURING REBASE. Only add, then use --continue, --skip or --abort.**

#### 20. Cleaning Up ####

If you've committed some content to your branch (maybe you've imported an old repo from SVN) and you want to remove all occurrences of a file from the history:

    $ git filter-branch --tree-filter 'rm -f *.class' HEAD

Note that the shell glob `*.class` only matches files in the top-level directory.

If you've already pushed to origin but have committed the rubbish since then, you can also do this for just your local commits before pushing:

    $ git filter-branch --tree-filter 'rm -f *.class' origin/master..HEAD

### Miscellaneous Tips ###

#### 21. Previous References You've Viewed ####

If you know you've previously viewed a SHA-1 but have since done some resetting/rewinding, you can use the reflog commands. They show the SHA-1s that HEAD has recently pointed to:

    $ git reflog
    $ git log -g # Same as above, but shows in 'log' format

#### 22. Branch Naming ####

A lovely little tip: don't forget that branch names aren't limited to a-z and 0-9. It can be quite nice to use / and . in names for fake namespacing or versioning, for example:

    $ # Generate a changelog of Release 132
    $ git shortlog release/132 ^release/131
    $ # Tag this as v1.0.1
    $ git tag v1.0.1 release/132

#### 23. Finding Who Dunnit ####

Often it can be useful to find out who changed a line of code in a file. The simple command to do this is:

    $ git blame FILE

Sometimes the change has come from a previous file (if you've combined two files, or you've moved a function), so you can use:

    $ # shows which file names the content came from
    $ git blame -C FILE

Sometimes it's nice to track this down by clicking through changes and going further and further back. There's a nice built-in GUI for this:

    $ git gui blame FILE

#### 24. Database Maintenance ####

Git doesn't generally require a lot of maintenance; it pretty much takes care of itself. However, you can view the statistics of your database using:

    $ git count-objects -v

If the number of loose objects (`count`) is high, you can choose to garbage collect your clone. This won't affect pushes or other people, but it can make some of your commands run much faster and take less space:

    $ git gc

It also might be worth running a consistency check every so often:

    $ git fsck --full

You can also add an `--auto` parameter to `git gc`, which is useful if you're running it frequently or daily from crontab on your server. It will then only garbage collect if the stats show it's necessary.

When checking, getting "dangling" or "unreachable" is fine; this is often a result of rewinding heads or rebasing. Getting "missing" or "sha1 mismatch" is bad… Get professional help!

#### 25. Recovering a Lost Branch ####

If you delete a branch experimental with -D, you can recreate it with:

    $ git branch experimental SHA1_OF_HASH

You can often find the SHA-1 hash using `git reflog` if you've accessed it recently.

Another way is to use `git fsck --lost-found`. A dangling commit here is the lost HEAD. Only the tip of the deleted branch shows up as dangling, because its parent (HEAD^) is still referenced by that tip.
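
Here is a sketch of the reflog route in a throwaway repository. It relies on HEAD's reflog: right after switching back to master, `HEAD@{1}` is where HEAD was before that switch, i.e. the tip of the branch we're about to delete. In real life you'd read the right entry off `git reflog` rather than assume it's `@{1}`.

```shell
#!/bin/sh
# Sketch: recreate a deleted branch from the reflog
set -e
repo=$(mktemp -d)
cd "$repo"
git -c init.defaultBranch=master init -q
git config user.name "Some One"
git config user.email "someone@example.com"
echo "base" > app.txt
git add app.txt
git commit -q -m "Base"

git checkout -q -b experimental
echo "idea" >> app.txt
git commit -q -am "Experiment"
lost=$(git rev-parse HEAD)

git checkout -q master
git branch -q -D experimental    # oops

git reflog -n 3                  # the "commit: Experiment" entry is still listed
git branch experimental 'HEAD@{1}'
```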

### Done! ###

Wow, the longest blog post I've ever written. I hope someone finds it useful. If you did, or if you have any questions, let me know in the comments…

--------------------------------------------------------------------------------

via: https://www.andyjeffries.co.uk/25-tips-for-intermediate-git-users/

Author: [Andy Jeffries][a]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

Originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China (Linux中国)](http://linux.cn/)

[a]:https://www.andyjeffries.co.uk/author/andy-jeffries/
[1]:http://gitcasts.com/about
[2]:http://www.lvs.co.uk/


Best Known Linux Archive / Compress Tools
================================================================================

Sending and receiving large files and pictures over the internet is often a headache. Compression and decompression tools are meant to address this problem. Let's take a quick overview of a few open source tools that are available to make our jobs simpler.

- Tar
- gzip, gunzip
- bzip2, bunzip2
- 7-Zip

### Tar ###

Tar is derived from 'Tape archiver', as it was initially used for archiving and storing files on magnetic tapes. It is GNU software. It can combine a set of files into an archive (and, with options such as -z or -j, compress it), extract files from archives and manipulate archives that already exist. It is useful for storing, backing up and transporting files. Tar can preserve file and directory structure while creating the archives. Files archived using tar have a '.tar' extension.

**Basic Usage**

#### a) Creating an archive (c / --create) ####

    tar --create --verbose --file=archive.tar file1 file2 file3

OR

    tar cvf archive.tar file1 file2 file3

(Screenshot: creating an archive)

#### b) Listing an archive (t / --list) ####

    tar --list --file=archive.tar

(Screenshot: listing the contents)

#### c) Extracting an archive (x / --extract) ####

    tar xvf archive.tar

To extract only the files with a *.c extension from the archive:

    tar xvf archive.tar --wildcards '*.c'

(Screenshot: extracting files)

(Screenshot: extracting only the required files)

#### d) Updating an archive (u / --update) ####

    tar uvf archive.tar newfile.c

This updates the archive by appending newfile.c if it is newer than the copy already in the archive (or is not in the archive yet).

(Screenshot: updating an archive)

#### e) Delete from an archive (--delete) ####

    tar --delete -f archive.tar file1.c

This deletes 'file1.c' from the tarball 'archive.tar'. It works only on uncompressed archives.

(Screenshot: deleting files)

Refer to the [tar home page][1] for its detailed usage.
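
The tar commands above can be exercised end to end in a scratch directory. This sketch assumes GNU tar, because the `--wildcards` and `--delete` options are GNU extensions. It uses made-up file names and drops the `v` flag to keep the output quiet.

```shell
#!/bin/sh
# Sketch: create, list, extract, update and delete with GNU tar
set -e
work=$(mktemp -d)
cd "$work"
printf 'int main(void) { return 0; }\n' > main.c
printf 'some notes\n' > notes.txt

tar -cf archive.tar main.c notes.txt           # c: create, f: archive file
tar -tf archive.tar                            # t: list contents

mkdir out
tar -xf archive.tar -C out --wildcards '*.c'   # x: extract only *.c files into out/

printf 'void helper(void) {}\n' > newfile.c
tar -uf archive.tar newfile.c                  # u: append if newer or not yet present
tar --delete -f archive.tar notes.txt          # remove a member (uncompressed archives only)

contents=$(tar -tf archive.tar | sort | tr '\n' ' ')
echo "$contents"
```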

### Gzip / Gunzip ###

Gzip stands for GNU zip. It is a compression utility that is commonly available on Linux operating systems. Compressed files have a '.gz' extension.

**Basic Usage**

#### a) Compressing files ####

    gzip file(s)

Each file gets compressed individually.

(Screenshot: compressing files)

By default this deletes the original files after compression. We can keep the original file by using the -c option, which writes the compressed data to standard output:

    gzip -c file > file.gz

(Screenshot: keeping the original files after compressing)

We can also compress the contents of a group of files into a single file. Note that this simply concatenates them, so decompressing gives back one combined file rather than the separate originals (use tar if you need those):

    cat file1 file2 file3 | gzip > archive.gz

(Screenshot: compressing a group of files)

#### b) Checking compression ratio ####

The compression ratio of the compressed file(s) can be verified using the '-l' option.

    gzip -l archive.gz

(Screenshot: checking the compression ratio)

#### c) Unzipping files ####

Gunzip is used for unzipping files. Here too, the compressed files are deleted after decompression. Use the -c option to write the output to standard output and retain the compressed files:

    gunzip -c archive.gz

(Screenshot: unzipping files)

Using the '-d' option with the gzip command has the same effect as gunzip on compressed files.

More details can be obtained from the [gzip home page][2].
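
Here is a short sketch of the gzip behaviour described above, run in a scratch directory with made-up files. It also shows why `cat ... | gzip` isn't a replacement for tar: decompressing gives back a single stream with the files' contents run together.

```shell
#!/bin/sh
# Sketch: keeping originals with -c, and what concatenation really does
set -e
work=$(mktemp -d)
cd "$work"
printf 'first\n' > file1
printf 'second\n' > file2

gzip -c file1 > file1.gz             # -c writes to stdout, so file1 is kept
cat file1 file2 | gzip > archive.gz  # one compressed stream, no file boundaries
gzip -l archive.gz                   # compressed/uncompressed sizes and ratio

restored=$(gunzip -c archive.gz)     # -c: decompress to stdout, keep archive.gz
same=$(gzip -dc file1.gz)            # gzip -d behaves like gunzip
printf '%s\n' "$restored"
```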

### Bzip2 / Bunzip2 ###

[Bzip2][3] is also a compression tool like gzip, but it can usually compress files to smaller sizes than gzip does. The drawback is that it is slower than gzip.

**Basic Usage**

#### a) File Compression ####

Generally, no options are used for compression, and the files to be compressed are passed as arguments. Each file gets compressed individually, and compressed files will have the extension '.bz2'.

    bzip2 file1 file2 file3

(Screenshot: file compression)

Use the '-k' option to keep the original files after compression / decompression.

(Screenshot: retaining the original files after compression)

The '-d' option forces decompression, so `bzip2 -d` behaves like bunzip2.

(Screenshot: decompressing files using the -d option)

#### b) Decompression ####

    bunzip2 filename

(Screenshot: decompressing files)

bunzip2 can decompress files with the extensions .bz2, .bz, .tbz2 and .tbz. Files with the .tbz2 and .tbz extensions will end up with a '.tar' extension after decompression.

`bzip2 -dc` decompresses files to standard output.

### 7-zip ###

[7-zip][4] is another open source file archiver. It uses the 7z format, a newer compression format that provides a high compression ratio. For that reason, it is often considered better than the previously mentioned compression tools.

It is available under Linux as the p7zip package. The package includes three binaries: 7z, 7za and 7zr. Refer to the [p7zip wiki][5] for the differences between these binaries. In this article, we will use 7zr to explain the usage. Archived files will have a '.7z' extension.

**Basic usage**

#### a) Creating an archive ####

    7zr a archive-name.7z file-name(s) / directory-name(s)

(Screenshot: creating an archive)

#### b) Listing an archive ####

    7zr l archive-name.7z

(Screenshot: listing an archive)

#### c) Extracting an archive ####

    7zr e archive-name.7z

Note that `e` extracts all files into the current directory without their directory structure. Use `7zr x archive-name.7z` to extract with full paths.

(Screenshot: extracting an archive)

#### d) Updating an archive ####

    7zr u archive-name.7z new-file

(Screenshot: updating an archive)

#### e) Deleting files from an archive ####

    7zr d archive-name.7z file-to-be-deleted

(Screenshot: deleting files)

(Screenshot: verifying file deletion)

--------------------------------------------------------------------------------