mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-03 23:40:14 +08:00

commit

4ccc3e860e

@ -0,0 +1,74 @@

|

|||||||

|

5 个提升你开源项目贡献者基数的方法

|

||||||

|

============================================================

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

图片提供:opensource.com

|

||||||

|

|

||||||

|

许多自由和开源软件项目因解决问题而出现,人们开始为它们做贡献,是因为他们也想修复遇到的问题。当项目的最终用户发现它对他们的需求有用,该项目就开始增长。并且出于分享的目的把人们吸引到同一个项目社区。

|

||||||

|

|

||||||

|

就像任何事物都是有寿命的,增长既是项目成功的标志也是来源。那么项目领导者和维护者如何激励贡献者基数的增长?这里有五种方法。

|

||||||

|

|

||||||

|

### 1、 提供好的文档

|

||||||

|

|

||||||

|

人们经常低估项目[文档][2]的重要性。它是项目贡献者的主要信息来源,它会激励他们努力。信息必须是正确和最新的。它应该包括如何构建该软件、如何提交补丁、编码风格指南等步骤。

|

||||||

|

|

||||||

|

查看经验丰富的科技作家、编辑 Bob Reselman 的 [7 个创建世界级文档的规则][3]。

|

||||||

|

|

||||||

|

开发人员文档的一个很好的例子是 [Python 开发人员指南][4]。它包括清晰简洁的步骤,涵盖 Python 开发的各个方面。

|

||||||

|

|

||||||

|

### 2、 降低进入门槛

|

||||||

|

|

||||||

|

如果你的项目有[工单或 bug 追踪工具][5],请确保将初级任务标记为一个“小 bug” 或“起点”。新的贡献者可以很容易地通过解决这些问题进入项目。追踪工具也是标记非编程任务(如平面设计、图稿和文档改进)的地方。有许多项目成员不是每天都编码,但是却通过这种方式成为推动力。

|

||||||

|

|

||||||

|

Fedora 项目维护着一个这样的[易修复和入门级问题的追踪工具][6]。

|

||||||

|

|

||||||

|

### 3、 为补丁提供常规反馈

|

||||||

|

|

||||||

|

确认每个补丁,即使它只有一行代码,并给作者反馈。提供反馈有助于吸引潜在的候选人,并指导他们熟悉项目。所有项目都应有一个邮件列表和[聊天功能][7]进行通信。问答可在这些媒介中发生。大多数项目不会在一夜之间成功,但那些繁荣的列表和沟通渠道为增长创造了环境。

|

||||||

|

|

||||||

|

### 4、 推广你的项目

|

||||||

|

|

||||||

|

始于解决问题的项目实际上可能对其他开发人员也有用。作为项目的主要贡献者,你的责任是为你的的项目建立文档并推广它。写博客文章,并在社交媒体上分享项目的进展。你可以从简要描述如何成为项目的贡献者开始,并在该描述中提供主要开发者文档的参考连接。此外,请务必提供有关路线图和未来版本的信息。

|

||||||

|

|

||||||

|

为了你的听众,看看由 Opensource.com 的社区经理 Rikki Endsley 写的[写作提示][8]。

|

||||||

|

|

||||||

|

### 5、 保持友好

|

||||||

|

|

||||||

|

友好的对话语调和迅速的回复将加强人们对你的项目的兴趣。最初,这些问题只是为了寻求帮助,但在未来,新的贡献者也可能会提出想法或建议。让他们有信心他们可以成为项目的贡献者。

|

||||||

|

|

||||||

|

记住你一直在被人评头论足!人们会观察项目开发者是如何在邮件列表或聊天上交谈。这些意味着对新贡献者的欢迎和开放程度。当使用技术时,我们有时会忘记人文关怀,但这对于任何项目的生态系统都很重要。考虑一个情况,项目是很好的,但项目维护者不是很受欢迎。这样的管理员可能会驱使用户远离项目。对于有大量用户基数的项目而言,不被支持的环境可能导致分裂,一部分用户可能决定复刻项目并启动新项目。在开源世界中有这样的先例。

|

||||||

|

|

||||||

|

另外,拥有不同背景的人对于开源项目的持续增长和源源不断的点子是很重要的。

|

||||||

|

|

||||||

|

最后,项目负责人有责任维持和帮助项目成长。指导新的贡献者是项目的关键,他们将成为项目和社区未来的领导者。

|

||||||

|

|

||||||

|

阅读:由红帽的内容战略家 Nicole Engard 写的 [7 种让新的贡献者感到受欢迎的方式][1]。

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

作者简介:

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Kushal Das - Kushal Das 是 Python 软件基金会的一名 CPython 核心开发人员和主管。他是一名长期的 FOSS 贡献者和导师,他帮助新人进入贡献世界。他目前在 Red Hat 担任 Fedora 云工程师。他的博客在 https://kushaldas.in 。你也可以在 Twitter @kushaldas 上找到他

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://opensource.com/article/17/1/expand-project-contributor-base

|

||||||

|

|

||||||

|

作者:[Kushal Das][a]

|

||||||

|

译者:[geekpi](https://github.com/geekpi)

|

||||||

|

校对:[Bestony](https://github.com/bestony)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://opensource.com/users/kushaldas

|

||||||

|

[1]:https://opensource.com/life/16/5/sumana-harihareswara-maria-naggaga-oscon

|

||||||

|

[2]:https://opensource.com/tags/documentation

|

||||||

|

[3]:https://opensource.com/business/16/1/scale-14x-interview-bob-reselman

|

||||||

|

[4]:https://docs.python.org/devguide/

|

||||||

|

[5]:https://opensource.com/tags/bugs-and-issues

|

||||||

|

[6]:https://fedoraproject.org/easyfix/

|

||||||

|

[7]:https://opensource.com/alternatives/slack

|

||||||

|

[8]:https://opensource.com/business/15/10/what-stephen-king-can-teach-tech-writers

|

||||||

@ -1,42 +1,35 @@

|

|||||||

如何在 Linux 中捕获并流式传输你的游戏会话

|

如何在 Linux 中捕获并流式传输你的游戏过程

|

||||||

============================================================

|

============================================================

|

||||||

|

|

||||||

### 在本页中

|

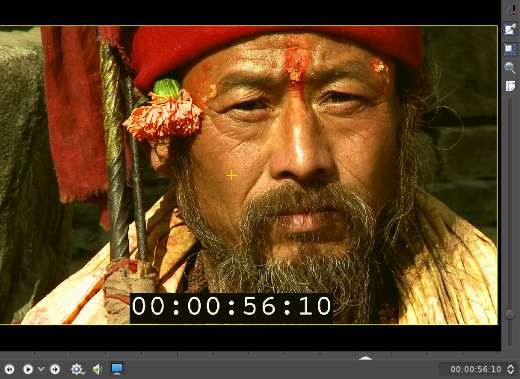

也许没有那么多铁杆的游戏玩家使用 Linux,但肯定有很多 Linux 用户喜欢玩游戏。如果你是其中之一,并希望向世界展示 Linux 游戏不再是一个笑话,那么你会喜欢下面这个关于如何捕捉并且/或者以流式播放游戏的快速教程。我在这将用一个名为 “[Open Broadcaster Software Studio][5]” 的软件,这可能是我们所能找到最好的一种。

|

||||||

|

|

||||||

1. [捕获设置][1]

|

|

||||||

2. [设置源][2]

|

|

||||||

3. [过渡][3]

|

|

||||||

4. [总结][4]

|

|

||||||

|

|

||||||

也许没有许多铁杆玩家使用 Linux,但现在肯定有很多 Linux 用户喜欢玩游戏。如果你是其中之一,并希望向世界展示 Linux 游戏不再是一个笑话,那么你会发现下面这个关于如何捕捉并且/或者流式播放游戏的快速教程。我在这将用一个名为 “[Open Broadcaster Software Studio][5]” 的软件,这可能是我们找到最好的一种。

|

|

||||||

|

|

||||||

### 捕获设置

|

### 捕获设置

|

||||||

|

|

||||||

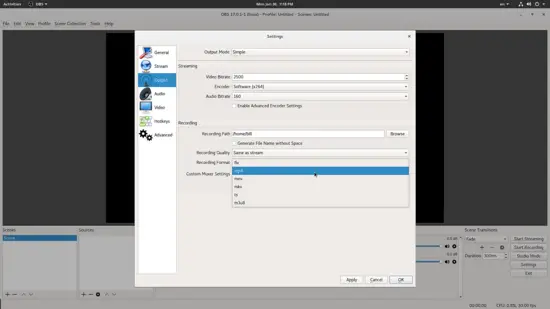

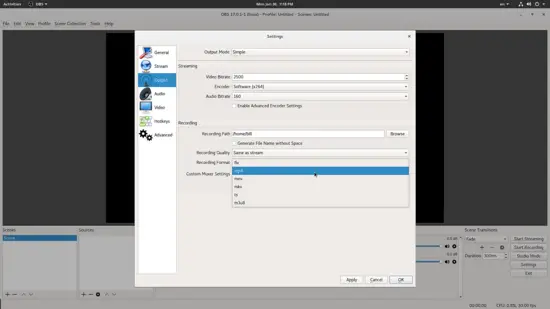

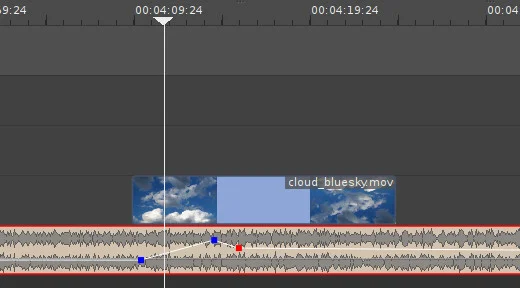

在顶层菜单中,我们选择 File → Settings,然后我们选择 “Output” 来设置要生成的文件的选项。这里我们可以设置想要的音频和视频的比特率、新创建的文件的目标路径和文件格式。这上面还提供了粗略的质量设置。

|

在顶层菜单中,我们选择 “File” → “Settings”,然后我们选择 “Output” 来设置要生成的文件的选项。这里我们可以设置想要的音频和视频的比特率、新创建的文件的目标路径和文件格式。这上面还提供了粗略的质量设置。

|

||||||

|

|

||||||

[

|

[

|

||||||

|

|

||||||

][6]

|

][6]

|

||||||

|

|

||||||

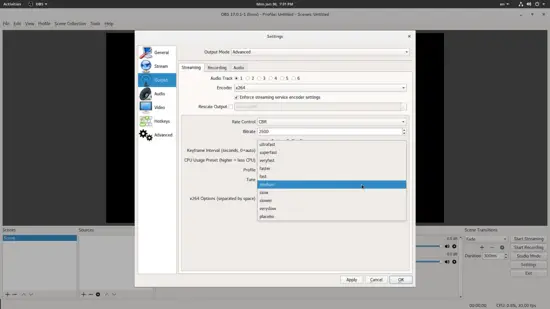

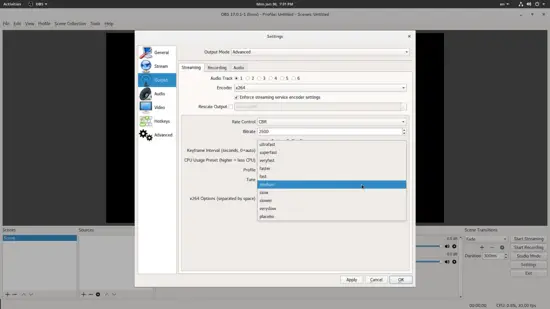

如果我们将顶部的输出模式从 “Simple” 更改为 “Advanced”,我们就能够设置 CPU 负载,使 OBS 能够控制系统。根据所选的质量,CPU 能力和捕获的游戏,存在一个 CPU 负载设置不会导致帧丢失。你可能需要做一些试验才能找到最佳设置,但如果质量设置为低,则不用担心。

|

如果我们将顶部的输出模式从 “Simple” 更改为 “Advanced”,我们就能够设置 CPU 负载,以控制 OBS 对系统的影响。根据所选的质量、CPU 能力和捕获的游戏,可以设置一个不会导致丢帧的 CPU 负载设置。你可能需要做一些试验才能找到最佳设置,但如果将质量设置为低,则不用太多设置。

|

||||||

|

|

||||||

[

|

[

|

||||||

|

|

||||||

][7]

|

][7]

|

||||||

|

|

||||||

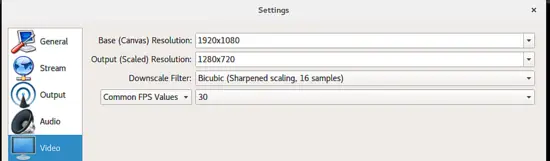

接下来,我们转到设置的 “Video” 部分,我们可以设置我们想要的输出视频分辨率。注意缩小过滤方法,因为它使最终的质量有所不同。

|

接下来,我们转到设置的 “Video” 部分,我们可以设置我们想要的输出视频分辨率。注意缩小过滤(downscaling filtering )方式,因为它使最终的质量有所不同。

|

||||||

|

|

||||||

[

|

[

|

||||||

|

|

||||||

][8]

|

][8]

|

||||||

|

|

||||||

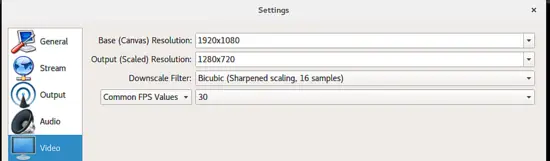

你可能还需要绑定热键以启动、暂停和停止录制。这是特别有用的,因为你可以在录制时看到游戏的屏幕。为此,请在设置中选择 “Hotkeys” 部分,并在相应的框中分配所需的按键。当然,你不必每个框都填写,你只需要填写所需的。

|

你可能还需要绑定热键以启动、暂停和停止录制。这特别有用,这样你就可以在录制时看到游戏的屏幕。为此,请在设置中选择 “Hotkeys” 部分,并在相应的框中分配所需的按键。当然,你不必每个框都填写,你只需要填写所需的。

|

||||||

|

|

||||||

[

|

[

|

||||||

|

|

||||||

][9]

|

][9]

|

||||||

|

|

||||||

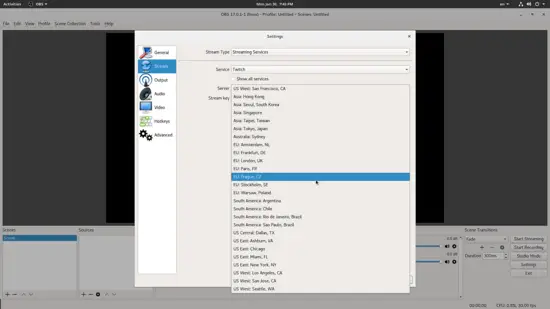

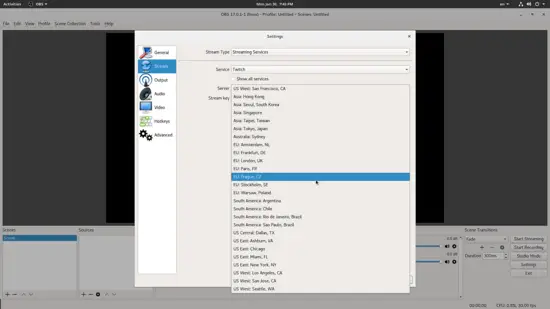

如果你对流式传输感兴趣,而不仅仅是录制,请选择 “Stream” 分类的设置,然后你可以选择支持的 30 种流媒体服务,包括Twitch、Facebook Live 和 Youtube,然后选择服务器并输入 流密钥。

|

如果你对流式传输感兴趣,而不仅仅是录制,请选择 “Stream” 分类的设置,然后你可以选择支持的 30 种流媒体服务,包括 Twitch、Facebook Live 和 Youtube,然后选择服务器并输入 流密钥。

|

||||||

|

|

||||||

[

|

[

|

||||||

|

|

||||||

@ -44,17 +37,17 @@

|

|||||||

|

|

||||||

### 设置源

|

### 设置源

|

||||||

|

|

||||||

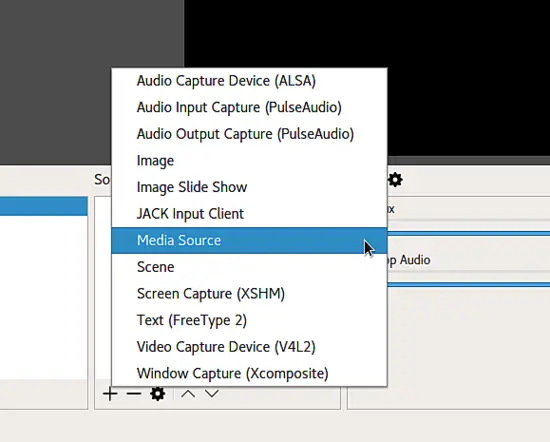

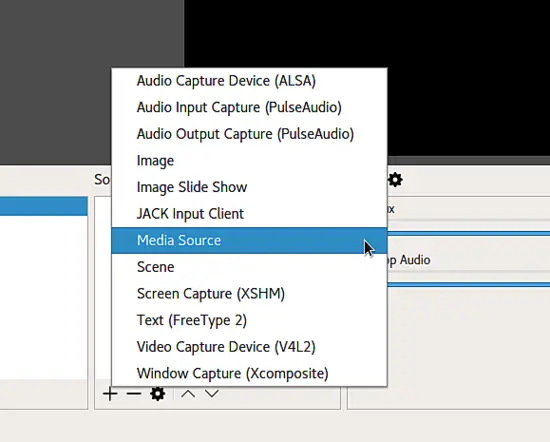

在左下方,你会发现一个名为 “Sources” 的框。我们按下加号好添加一个新的源,它本质上就是我们录制的媒体源。在这你可以设置音频和视频源,但是图像甚至文本也是可以的。

|

在左下方,你会发现一个名为 “Sources” 的框。我们按下加号来添加一个新的源,它本质上就是我们录制的媒体源。在这你可以设置音频和视频源,但是图像甚至文本也是可以的。

|

||||||

|

|

||||||

[

|

[

|

||||||

|

|

||||||

][11]

|

][11]

|

||||||

|

|

||||||

前三个是关于音频源,接下来的两个是图像,JACK 选项用于从乐器捕获的实时音频,媒体源用于添加文件等。这里我们感兴趣的是 “Screen Capture (XSHM)”、“Video Capture Device (V4L2)” 和 “Window Capture (Xcomposite)” 选项。

|

前三个是关于音频源,接下来的两个是图像,JACK 选项用于从乐器捕获的实时音频, Media Source 用于添加文件等。这里我们感兴趣的是 “Screen Capture (XSHM)”、“Video Capture Device (V4L2)” 和 “Window Capture (Xcomposite)” 选项。

|

||||||

|

|

||||||

屏幕捕获选项让你选择要捕获的屏幕(包括活动屏幕),以便记录所有内容。如工作区更改、窗口最小化等。对于标准批量录制来说,这是一个适合的选项,它可在发布之前进行编辑。

|

屏幕捕获选项让你选择要捕获的屏幕(包括活动屏幕),以便记录所有内容。如工作区更改、窗口最小化等。对于标准批量录制来说,这是一个适合的选项,它可在发布之前进行编辑。

|

||||||

|

|

||||||

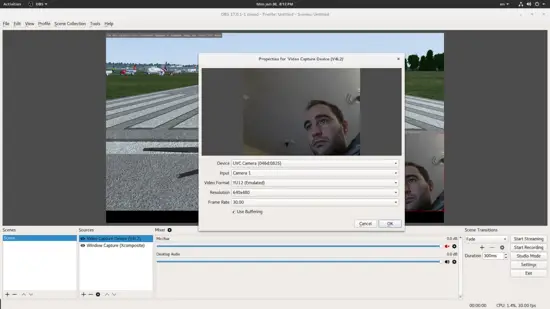

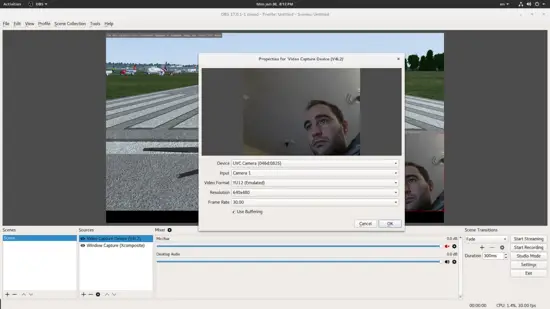

我们来探讨另外两个。Window Capture 将让我们选择一个活动窗口并将其放入捕获监视器。为了将我们的脸放在一个角落,视频捕获设备是有用的,这样人们可以在我们说话时看到我们。当然,每个添加的源都提供了一组选项来供我们实现我们最后要的效果。

|

我们来探讨另外两个。Window Capture 将让我们选择一个活动窗口并将其放入捕获监视器。为了将我们的脸放在一个角落,视频捕获设备用于人们可以在我们说话时可以看到我们。当然,每个添加的源都提供了一组选项来供我们实现我们最后要的效果。

|

||||||

|

|

||||||

[

|

[

|

||||||

|

|

||||||

@ -68,7 +61,7 @@

|

|||||||

|

|

||||||

### 过渡

|

### 过渡

|

||||||

|

|

||||||

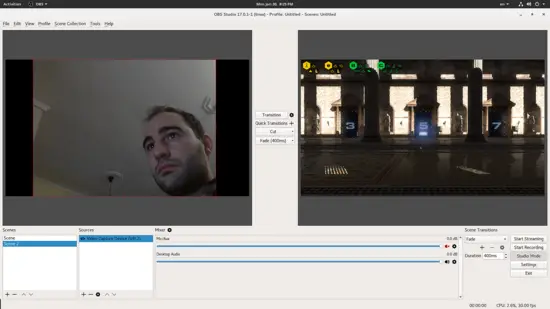

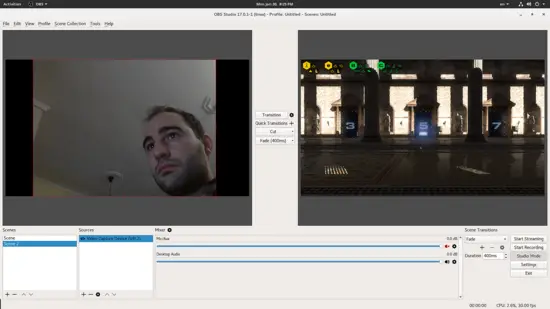

最后,我们假设你正在流式传输游戏会话,并希望能够在游戏视图和自己(或任何其他来源)之间切换。为此,请从右下角切换为“Studio Mode”,并添加一个分配给另一个源的场景。你还可以通过取消选中 “Duplicate scene” 并检查 “Transitions” 旁边的齿轮图标上的 “Duplicate sources” 来切换。 当你想在简短评论中显示你的脸部时,这很有帮助。

|

最后,如果你正在流式传输游戏会话时希望能够在游戏视图和自己(或任何其他来源)之间切换。为此,请从右下角切换为“Studio Mode”,并添加一个分配给另一个源的场景。你还可以通过取消选中 “Duplicate scene” 并检查 “Transitions” 旁边的齿轮图标上的 “Duplicate sources” 来切换。 当你想在简短评论中显示你的脸部时,这很有帮助。

|

||||||

|

|

||||||

[

|

[

|

||||||

|

|

||||||

@ -84,9 +77,9 @@ OBS Studio 是一个功能强大的免费软件,它工作稳定,使用起来

|

|||||||

|

|

||||||

via: https://www.howtoforge.com/tutorial/how-to-capture-and-stream-your-gaming-session-on-linux/

|

via: https://www.howtoforge.com/tutorial/how-to-capture-and-stream-your-gaming-session-on-linux/

|

||||||

|

|

||||||

作者:[Bill Toulas ][a]

|

作者:[Bill Toulas][a]

|

||||||

译者:[geekpi](https://github.com/geekpi)

|

译者:[geekpi](https://github.com/geekpi)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

@ -1,28 +1,28 @@

|

|||||||

如何在 CentOS 7 中安装、配置 SFTP - [全面指南]

|

完全指南:如何在 CentOS 7 中安装、配置和安全加固 FTP 服务

|

||||||

============================================================

|

============================================================

|

||||||

|

|

||||||

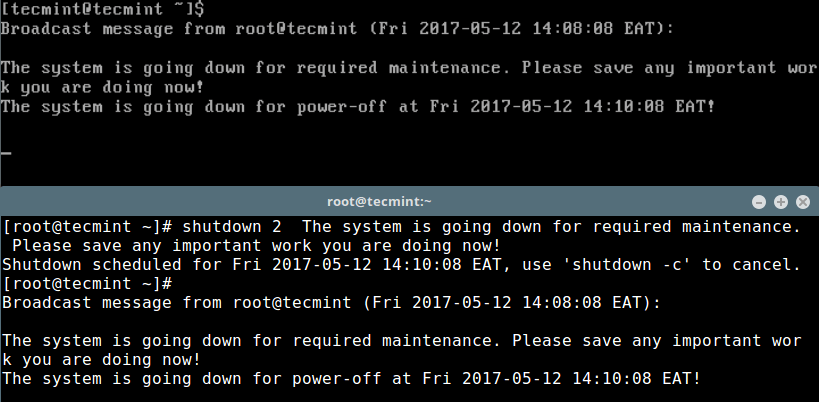

FTP(文件传输协议)是一种用于通过网络[在服务器和客户端之间传输文件][1]的传统并广泛使用的标准工具,特别是在不需要身份验证的情况下(允许匿名用户连接到服务器)。我们必须明白,默认情况下 FTP 是不安全的,因为它不加密传输用户凭据和数据。

|

FTP(文件传输协议)是一种用于通过网络[在服务器和客户端之间传输文件][1]的传统并广泛使用的标准工具,特别是在不需要身份验证的情况下(允许匿名用户连接到服务器)。我们必须明白,默认情况下 FTP 是不安全的,因为它不加密传输用户凭据和数据。

|

||||||

|

|

||||||

在本指南中,我们将介绍在 CentOS/RHEL7 和 Fedora 发行版中安装、配置和保护 FTP 服务器( VSFTPD 代表 “Very Secure FTP Daemon”)的步骤。

|

在本指南中,我们将介绍在 CentOS/RHEL7 和 Fedora 发行版中安装、配置和保护 FTP 服务器( VSFTPD 代表 “Very Secure FTP Daemon”)的步骤。

|

||||||

|

|

||||||

请注意,本指南中的所有命令将以 root 身份运行,以防你不使用 root 帐户操作服务器,请使用 [sudo命令][2] 获取 root 权限。

|

请注意,本指南中的所有命令将以 root 身份运行,如果你不使用 root 帐户操作服务器,请使用 [sudo命令][2] 获取 root 权限。

|

||||||

|

|

||||||

### 步骤 1:安装 FTP 服务器

|

### 步骤 1:安装 FTP 服务器

|

||||||

|

|

||||||

1. 安装 vsftpd 服务器很直接,只要在终端运行下面的命令。

|

1、 安装 vsftpd 服务器很直接,只要在终端运行下面的命令。

|

||||||

|

|

||||||

```

|

```

|

||||||

# yum install vsftpd

|

# yum install vsftpd

|

||||||

```

|

```

|

||||||

|

|

||||||

2. 安装完成后,服务会先被禁用,因此我们需要手动启动,并设置在下次启动时自动启用:

|

2、 安装完成后,服务先是被禁用的,因此我们需要手动启动,并设置在下次启动时自动启用:

|

||||||

|

|

||||||

```

|

```

|

||||||

# systemctl start vsftpd

|

# systemctl start vsftpd

|

||||||

# systemctl enable vsftpd

|

# systemctl enable vsftpd

|

||||||

```

|

```

|

||||||

|

|

||||||

3. 接下来,为了允许从外部系统访问 FTP 服务,我们需要打开 FTP 守护进程监听 21 端口:

|

3、 接下来,为了允许从外部系统访问 FTP 服务,我们需要打开 FTP 守护进程监听的 21 端口:

|

||||||

|

|

||||||

```

|

```

|

||||||

# firewall-cmd --zone=public --permanent --add-port=21/tcp

|

# firewall-cmd --zone=public --permanent --add-port=21/tcp

|

||||||

@ -32,7 +32,7 @@ FTP(文件传输协议)是一种用于通过网络[在服务器和客户端

|

|||||||

|

|

||||||

### 步骤 2: 配置 FTP 服务器

|

### 步骤 2: 配置 FTP 服务器

|

||||||

|

|

||||||

4. 现在,我们会进行一些配置来设置并加密我们的 FTP 服务器,让我们先备份一下原始配置文件 /etc/vsftpd/vsftpd.conf:

|

4、 现在,我们会进行一些配置来设置并加密我们的 FTP 服务器,让我们先备份一下原始配置文件 `/etc/vsftpd/vsftpd.conf`:

|

||||||

|

|

||||||

```

|

```

|

||||||

# cp /etc/vsftpd/vsftpd.conf /etc/vsftpd/vsftpd.conf.orig

|

# cp /etc/vsftpd/vsftpd.conf /etc/vsftpd/vsftpd.conf.orig

|

||||||

@ -41,30 +41,30 @@ FTP(文件传输协议)是一种用于通过网络[在服务器和客户端

|

|||||||

接下来,打开上面的文件,并将下面的选项设置相关的值:

|

接下来,打开上面的文件,并将下面的选项设置相关的值:

|

||||||

|

|

||||||

```

|

```

|

||||||

anonymous_enable=NO # disable anonymous login

|

anonymous_enable=NO ### 禁用匿名登录

|

||||||

local_enable=YES # permit local logins

|

local_enable=YES ### 允许本地用户登录

|

||||||

write_enable=YES # enable FTP commands which change the filesystem

|

write_enable=YES ### 允许对文件系统做改动的 FTP 命令

|

||||||

local_umask=022 # value of umask for file creation for local users

|

local_umask=022 ### 本地用户创建文件所用的 umask 值

|

||||||

dirmessage_enable=YES # enable showing of messages when users first enter a new directory

|

dirmessage_enable=YES ### 当用户首次进入一个新目录时显示一个消息

|

||||||

xferlog_enable=YES # a log file will be maintained detailing uploads and downloads

|

xferlog_enable=YES ### 用于记录上传、下载细节的日志文件

|

||||||

connect_from_port_20=YES # use port 20 (ftp-data) on the server machine for PORT style connections

|

connect_from_port_20=YES ### 使用端口 20 (ftp-data)用于 PORT 风格的连接

|

||||||

xferlog_std_format=YES # keep standard log file format

|

xferlog_std_format=YES ### 使用标准的日志格式

|

||||||

listen=NO # prevent vsftpd from running in standalone mode

|

listen=NO ### 不要让 vsftpd 运行在独立模式

|

||||||

listen_ipv6=YES # vsftpd will listen on an IPv6 socket instead of an IPv4 one

|

listen_ipv6=YES ### vsftpd 将监听 IPv6 而不是 IPv4

|

||||||

pam_service_name=vsftpd # name of the PAM service vsftpd will use

|

pam_service_name=vsftpd ### vsftpd 使用的 PAM 服务名

|

||||||

userlist_enable=YES # enable vsftpd to load a list of usernames

|

userlist_enable=YES ### vsftpd 支持载入用户列表

|

||||||

tcp_wrappers=YES # turn on tcp wrappers

|

tcp_wrappers=YES ### 使用 tcp wrappers

|

||||||

```

|

```

|

||||||

|

|

||||||

5. 现在基于用户列表文件 `/etc/vsftpd.userlist` 来配置 FTP 允许/拒绝用户访问。

|

5、 现在基于用户列表文件 `/etc/vsftpd.userlist` 来配置 FTP 来允许/拒绝用户的访问。

|

||||||

|

|

||||||

默认情况下,如果设置了 userlist_enable=YES,当 userlist_deny 选项设置为 YES 的时候,`userlist_file=/etc/vsftpd.userlist` 中的用户列表被拒绝登录。

|

默认情况下,如果设置了 `userlist_enable=YES`,当 `userlist_deny` 选项设置为 `YES` 的时候,`userlist_file=/etc/vsftpd.userlist` 中列出的用户被拒绝登录。

|

||||||

|

|

||||||

然而, userlist_deny=NO 更改了设置,意味着只有在 userlist_file=/etc/vsftpd.userlist 显式指定的用户才允许登录。

|

然而, 更改配置为 `userlist_deny=NO`,意味着只有在 `userlist_file=/etc/vsftpd.userlist` 显式指定的用户才允许登录。

|

||||||

|

|

||||||

```

|

```

|

||||||

userlist_enable=YES # vsftpd will load a list of usernames, from the filename given by userlist_file

|

userlist_enable=YES ### vsftpd 将从 userlist_file 给出的文件中载入用户名列表

|

||||||

userlist_file=/etc/vsftpd.userlist # stores usernames.

|

userlist_file=/etc/vsftpd.userlist ### 存储用户名的文件

|

||||||

userlist_deny=NO

|

userlist_deny=NO

|

||||||

```

|

```

|

||||||

|

|

||||||

@ -72,30 +72,30 @@ userlist_deny=NO

|

|||||||

|

|

||||||

接下来,我们将介绍如何将 FTP 用户 chroot 到 FTP 用户的家目录(本地 root)中的两种可能情况,如下所述。

|

接下来,我们将介绍如何将 FTP 用户 chroot 到 FTP 用户的家目录(本地 root)中的两种可能情况,如下所述。

|

||||||

|

|

||||||

6. 接下来添加下面的选项来限制 FTP 用户到它们自己的家目录。

|

6、 接下来添加下面的选项来限制 FTP 用户到它们自己的家目录。

|

||||||

|

|

||||||

```

|

```

|

||||||

chroot_local_user=YES

|

chroot_local_user=YES

|

||||||

allow_writeable_chroot=YES

|

allow_writeable_chroot=YES

|

||||||

```

|

```

|

||||||

|

|

||||||

chroot_local_user=YES 意味着用户可以设置 chroot jail,默认是登录后的家目录。

|

`chroot_local_user=YES` 意味着用户可以设置 chroot jail,默认是登录后的家目录。

|

||||||

|

|

||||||

同样默认的是,出于安全原因,vsftpd 不会允许 chroot jail 目录可写,然而,我们可以添加 allow_writeable_chroot=YES 来覆盖这个设置。

|

同样默认的是,出于安全原因,vsftpd 不会允许 chroot jail 目录可写,然而,我们可以添加 `allow_writeable_chroot=YES` 来覆盖这个设置。

|

||||||

|

|

||||||

保存并关闭文件。

|

保存并关闭文件。

|

||||||

|

|

||||||

### 用 SELinux 加密 FTP 服务器

|

### 步骤 3: 用 SELinux 加密 FTP 服务器

|

||||||

|

|

||||||

7. 现在,让我们设置下面的 SELinux 布尔值来允许 FTP 能读取用户家目录下的文件。请注意,这最初是使用以下命令完成的:

|

7、现在,让我们设置下面的 SELinux 布尔值来允许 FTP 能读取用户家目录下的文件。请注意,这原本是使用以下命令完成的:

|

||||||

|

|

||||||

```

|

```

|

||||||

# setsebool -P ftp_home_dir on

|

# setsebool -P ftp_home_dir on

|

||||||

```

|

```

|

||||||

|

|

||||||

然而,`ftp_home_dir` 指令由于这个 bug 报告:[https://bugzilla.redhat.com/show_bug.cgi?id=1097775][3] 默认是禁用的。

|

然而,由于这个 bug 报告:[https://bugzilla.redhat.com/show_bug.cgi?id=1097775][3],`ftp_home_dir` 指令默认是禁用的。

|

||||||

|

|

||||||

现在,我们会使用 semanage 命令来设置 SELinux 规则来允许 FTP 读取/写入用户的家目录。

|

现在,我们会使用 `semanage` 命令来设置 SELinux 规则来允许 FTP 读取/写入用户的家目录。

|

||||||

|

|

||||||

```

|

```

|

||||||

# semanage boolean -m ftpd_full_access --on

|

# semanage boolean -m ftpd_full_access --on

|

||||||

@ -109,21 +109,21 @@ chroot_local_user=YES 意味着用户可以设置 chroot jail,默认是登录

|

|||||||

|

|

||||||

### 步骤 4: 测试 FTP 服务器

|

### 步骤 4: 测试 FTP 服务器

|

||||||

|

|

||||||

8. 现在我们会用[ useradd 命令][4]创建一个 FTP 用户来测试 FTP 服务器。

|

8、 现在我们会用 [useradd 命令][4]创建一个 FTP 用户来测试 FTP 服务器。

|

||||||

|

|

||||||

```

|

```

|

||||||

# useradd -m -c “Ravi Saive, CEO” -s /bin/bash ravi

|

# useradd -m -c “Ravi Saive, CEO” -s /bin/bash ravi

|

||||||

# passwd ravi

|

# passwd ravi

|

||||||

```

|

```

|

||||||

|

|

||||||

之后,我们如下使用[ echo 命令][5]添加用户 ravi 到文件 /etc/vsftpd.userlist 中:

|

之后,我们如下使用 [echo 命令][5]添加用户 ravi 到文件 `/etc/vsftpd.userlist` 中:

|

||||||

|

|

||||||

```

|

```

|

||||||

# echo "ravi" | tee -a /etc/vsftpd.userlist

|

# echo "ravi" | tee -a /etc/vsftpd.userlist

|

||||||

# cat /etc/vsftpd.userlist

|

# cat /etc/vsftpd.userlist

|

||||||

```

|

```

|

||||||

|

|

||||||

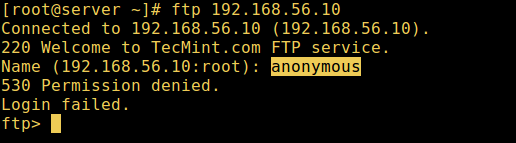

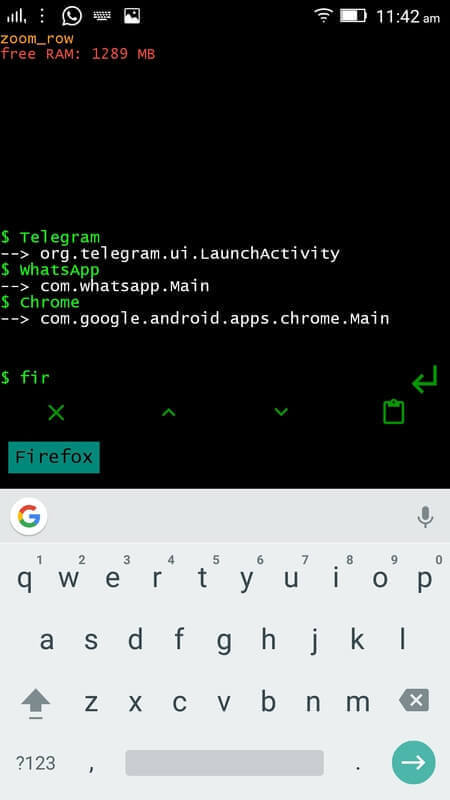

9. 现在是时候测试我们上面的设置是否可以工作了。让我们使用匿名登录测试,我们可以从下面的截图看到匿名登录不被允许。

|

9、 现在是时候测试我们上面的设置是否可以工作了。让我们使用匿名登录测试,我们可以从下面的截图看到匿名登录没有被允许。

|

||||||

|

|

||||||

```

|

```

|

||||||

# ftp 192.168.56.10

|

# ftp 192.168.56.10

|

||||||

@ -134,13 +134,14 @@ Name (192.168.56.10:root) : anonymous

|

|||||||

Login failed.

|

Login failed.

|

||||||

ftp>

|

ftp>

|

||||||

```

|

```

|

||||||

|

|

||||||

[

|

[

|

||||||

|

|

||||||

][6]

|

][6]

|

||||||

|

|

||||||

测试 FTP 匿名登录

|

*测试 FTP 匿名登录*

|

||||||

|

|

||||||

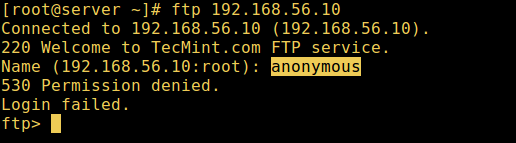

10. 让我们也测试一下没有列在 /etc/vsftpd.userlist 中的用户是否有权限登录,这不是下面截图中的例子:

|

10、 让我们也测试一下没有列在 `/etc/vsftpd.userlist` 中的用户是否有权限登录,下面截图是没有列入的情况:

|

||||||

|

|

||||||

```

|

```

|

||||||

# ftp 192.168.56.10

|

# ftp 192.168.56.10

|

||||||

@ -155,9 +156,9 @@ ftp>

|

|||||||

|

|

||||||

][7]

|

][7]

|

||||||

|

|

||||||

FTP 用户登录失败

|

*FTP 用户登录失败*

|

||||||

|

|

||||||

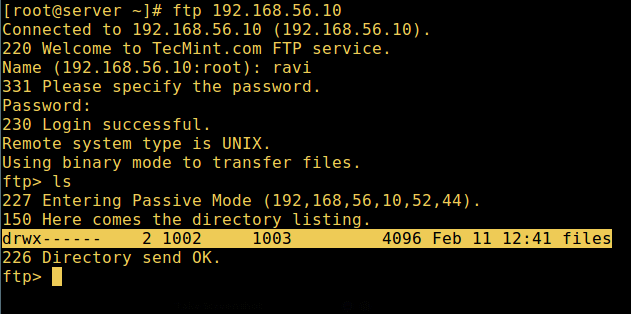

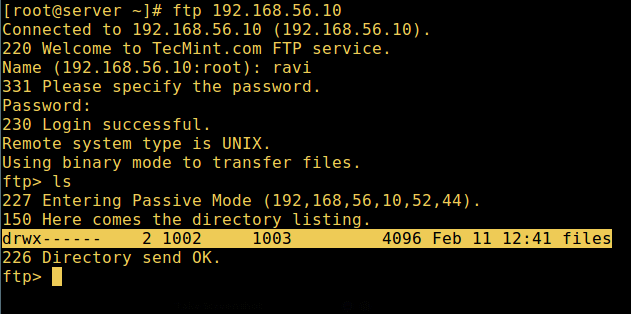

11. 现在最后测试一下列在 /etc/vsftpd.userlis 中的用户是否在登录后真的进入了他/她的家目录:

|

11、 现在最后测试一下列在 `/etc/vsftpd.userlist` 中的用户是否在登录后真的进入了他/她的家目录:

|

||||||

|

|

||||||

```

|

```

|

||||||

# ftp 192.168.56.10

|

# ftp 192.168.56.10

|

||||||

@ -171,21 +172,22 @@ Remote system type is UNIX.

|

|||||||

Using binary mode to transfer files.

|

Using binary mode to transfer files.

|

||||||

ftp> ls

|

ftp> ls

|

||||||

```

|

```

|

||||||

|

|

||||||

[

|

[

|

||||||

|

|

||||||

][8]

|

][8]

|

||||||

|

|

||||||

用户成功登录

|

*用户成功登录*

|

||||||

|

|

||||||

警告:使用 `allow_writeable_chroot=YES' 有一定的安全隐患,特别是用户具有上传权限或 shell 访问权限时。

|

警告:使用 `allow_writeable_chroot=YES` 有一定的安全隐患,特别是用户具有上传权限或 shell 访问权限时。

|

||||||

|

|

||||||

只有当你完全知道你正做什么时才激活此选项。重要的是要注意,这些安全性影响并不是 vsftpd 特定的,它们适用于所有 FTP 守护进程,它们也提供将本地用户置于 chroot jail中。

|

只有当你完全知道你正做什么时才激活此选项。重要的是要注意,这些安全性影响并不是 vsftpd 特定的,它们适用于所有提供了将本地用户置于 chroot jail 中的 FTP 守护进程。

|

||||||

|

|

||||||

因此,我们将在下一节中看到一种更安全的方法来设置不同的不可写本地根目录。

|

因此,我们将在下一节中看到一种更安全的方法来设置不同的不可写本地根目录。

|

||||||

|

|

||||||

### 步骤 5: 配置不同的 FTP 家目录

|

### 步骤 5: 配置不同的 FTP 家目录

|

||||||

|

|

||||||

12. 再次打开 vsftpd 配置文件,并将下面不安全的选项注释掉:

|

12、 再次打开 vsftpd 配置文件,并将下面不安全的选项注释掉:

|

||||||

|

|

||||||

```

|

```

|

||||||

#allow_writeable_chroot=YES

|

#allow_writeable_chroot=YES

|

||||||

@ -199,7 +201,7 @@ ftp> ls

|

|||||||

# chmod a-w /home/ravi/ftp

|

# chmod a-w /home/ravi/ftp

|

||||||

```

|

```

|

||||||

|

|

||||||

13. 接下来,在用户存储他/她的文件的本地根目录下创建一个文件夹:

|

13、 接下来,在用户存储他/她的文件的本地根目录下创建一个文件夹:

|

||||||

|

|

||||||

```

|

```

|

||||||

# mkdir /home/ravi/ftp/files

|

# mkdir /home/ravi/ftp/files

|

||||||

@ -207,11 +209,11 @@ ftp> ls

|

|||||||

# chmod 0700 /home/ravi/ftp/files/

|

# chmod 0700 /home/ravi/ftp/files/

|

||||||

```

|

```

|

||||||

|

|

||||||

、接着在 vsftpd 配置文件中添加/修改这些选项:

|

接着在 vsftpd 配置文件中添加/修改这些选项:

|

||||||

|

|

||||||

```

|

```

|

||||||

user_sub_token=$USER # 在本地根目录下插入用户名

|

user_sub_token=$USER ### 在本地根目录下插入用户名

|

||||||

local_root=/home/$USER/ftp # 定义任何用户的本地根目录

|

local_root=/home/$USER/ftp ### 定义任何用户的本地根目录

|

||||||

```

|

```

|

||||||

|

|

||||||

保存并关闭文件。再说一次,有新的设置后,让我们重启服务:

|

保存并关闭文件。再说一次,有新的设置后,让我们重启服务:

|

||||||

@ -220,7 +222,7 @@ local_root=/home/$USER/ftp # 定义任何用户的本地根目录

|

|||||||

# systemctl restart vsftpd

|

# systemctl restart vsftpd

|

||||||

```

|

```

|

||||||

|

|

||||||

14. 现在最后在测试一次查看用户本地根目录就是我们在他的家目录创建的 FTP 目录。

|

14、 现在最后在测试一次查看用户本地根目录就是我们在他的家目录创建的 FTP 目录。

|

||||||

|

|

||||||

```

|

```

|

||||||

# ftp 192.168.56.10

|

# ftp 192.168.56.10

|

||||||

@ -238,7 +240,7 @@ ftp> ls

|

|||||||

|

|

||||||

][9]

|

][9]

|

||||||

|

|

||||||

FTP 用户家目录登录成功

|

*FTP 用户家目录登录成功*

|

||||||

|

|

||||||

就是这样了!在本文中,我们介绍了如何在 CentOS 7 中安装、配置以及加密的 FTP 服务器,使用下面的评论栏给我们回复,或者分享关于这个主题的任何有用信息。

|

就是这样了!在本文中,我们介绍了如何在 CentOS 7 中安装、配置以及加密的 FTP 服务器,使用下面的评论栏给我们回复,或者分享关于这个主题的任何有用信息。

|

||||||

|

|

||||||

@ -258,7 +260,7 @@ via: http://www.tecmint.com/install-ftp-server-in-centos-7/

|

|||||||

|

|

||||||

作者:[Aaron Kili][a]

|

作者:[Aaron Kili][a]

|

||||||

译者:[geekpi](https://github.com/geekpi)

|

译者:[geekpi](https://github.com/geekpi)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

@ -273,5 +275,5 @@ via: http://www.tecmint.com/install-ftp-server-in-centos-7/

|

|||||||

[7]:http://www.tecmint.com/wp-content/uploads/2017/02/FTP-User-Login-Failed.png

|

[7]:http://www.tecmint.com/wp-content/uploads/2017/02/FTP-User-Login-Failed.png

|

||||||

[8]:http://www.tecmint.com/wp-content/uploads/2017/02/FTP-User-Login.png

|

[8]:http://www.tecmint.com/wp-content/uploads/2017/02/FTP-User-Login.png

|

||||||

[9]:http://www.tecmint.com/wp-content/uploads/2017/02/FTP-User-Home-Directory-Login-Successful.png

|

[9]:http://www.tecmint.com/wp-content/uploads/2017/02/FTP-User-Home-Directory-Login-Successful.png

|

||||||

[10]:http://www.tecmint.com/install-proftpd-in-centos-7/

|

[10]:https://linux.cn/article-8504-1.html

|

||||||

[11]:http://www.tecmint.com/secure-vsftpd-using-ssl-tls-on-centos/

|

[11]:http://www.tecmint.com/secure-vsftpd-using-ssl-tls-on-centos/

|

||||||

@ -0,0 +1,125 @@

|

|||||||

|

4 个拥有绝佳命令行界面的终端程序

|

||||||

|

============================================================

|

||||||

|

|

||||||

|

> 让我们来看几个精心设计的 CLI 程序,以及如何解决一些可发现性问题。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

>图片提供: opensource.com

|

||||||

|

|

||||||

|

在本文中,我会指出命令行界面的<ruby>可发现性<rt>discoverability</rt></ruby>缺点以及克服这些问题的几种方法。

|

||||||

|

|

||||||

|

我喜欢命令行。我第一次接触命令行是在 1997 的 DOS 6.2 上。我学习了各种命令的语法,并展示了如何在目录中列出隐藏的文件(`attrib`)。我会每次仔细检查命令中的每个字符。 当我犯了一个错误,我会从头开始重新输入命令。直到有一天,有人向我展示了如何使用向上和向下箭头按键遍历命令行历史,我被震惊了。

|

||||||

|

|

||||||

|

后来当我接触到 Linux 时,让我感到惊喜的是,上下箭头保留了它们遍历历史记录的能力。我仍然很仔细地打字,但是现在,我了解如何盲打,并且我能打的很快,每分钟可以达到 55 个单词的速度。接着有人向我展示了 tab 补完,再一次改变了我的生活。

|

||||||

|

|

||||||

|

在 GUI 应用程序中,菜单、工具提示和图标用于向用户展示功能。而命令行缺乏这种能力,但是有办法克服这个问题。在深入解决方案之前,我会来看看几个有问题的 CLI 程序:

|

||||||

|

|

||||||

|

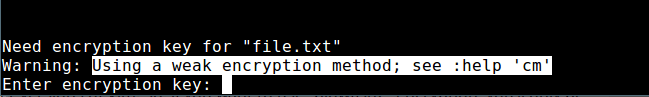

**1、 MySQL**

|

||||||

|

|

||||||

|

首先让我们看看我们所钟爱的 MySQL REPL。我经常发现自己在输入 `SELECT * FROM` 然后按 `Tab` 的习惯。MySQL 会询问我是否想看到所有的 871 种可能性。我的数据库中绝对没有 871 张表。如果我选择 `yes`,它会显示一堆 SQL 关键字、表、函数等。(LCTT 译注:REPL —— Read-Eval-Print Loop,交互式开发环境)

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

**2、 Python**

|

||||||

|

|

||||||

|

我们来看另一个例子,标准的 Python REPL。我开始输入命令,然后习惯按 `Tab` 键。瞧,插入了一个 `Tab` 字符,考虑到 `Tab` 在 Python 源代码中没有特定作用,这是一个问题。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### 好的用户体验

|

||||||

|

|

||||||

|

让我看下设计良好的 CLI 程序以及它们是如何克服这些可发现性问题的。

|

||||||

|

|

||||||

|

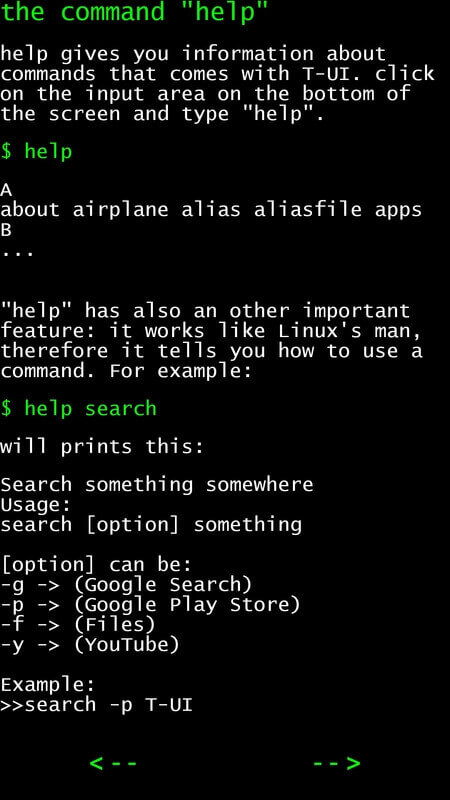

#### 自动补全: bpython

|

||||||

|

|

||||||

|

[Bpython][15] 是对 Python REPL 的一个很好的替代。当我运行 bpython 并开始输入时,建议会立即出现。我没用通过特殊的键盘绑定触发它,甚至没有按下 `Tab` 键。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

当我出于习惯按下 `Tab` 键时,它会用列表中的第一个建议补全。这是给 CLI 设计带来可发现性性的一个很好的例子。

|

||||||

|

|

||||||

|

bpython 的另一个方面是可以展示模块和函数的文档。当我输入一个函数的名字时,它会显示这个函数附带的签名以及文档字符串。这是一个多么令人难以置信的周到设计啊。

|

||||||

|

|

||||||

|

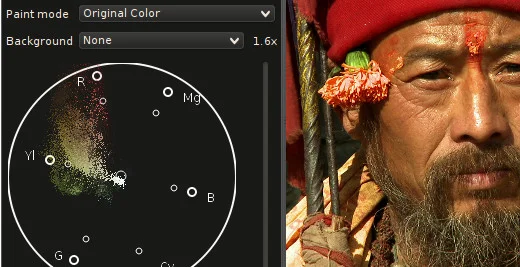

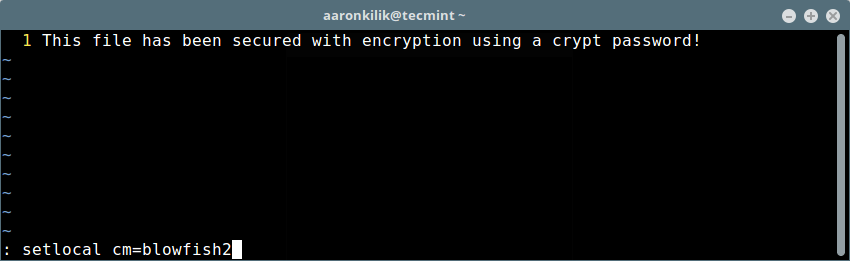

#### 上下文感知补全:mycli

|

||||||

|

|

||||||

|

[mycli][16] 是默认的 MySQL 客户端的现代替代品。这个工具对 MySQL 来说就像 bpython 之于标准 Python REPL 一样。mycli 将在你输入时自动补全关键字、表名、列和函数。

|

||||||

|

|

||||||

|

补全建议是上下文相关的。例如,在 `SELECT * FROM` 之后,只有来自当前数据库的表才会列出,而不是所有可能的关键字。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

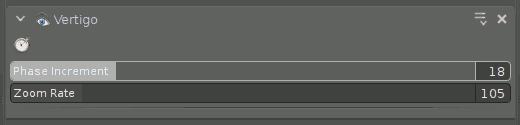

#### 模糊搜索和在线帮助: pgcli

|

||||||

|

|

||||||

|

如果您正在寻找 PostgreSQL 版本的 mycli,请看看 [pgcli][17]。 与 mycli 一样,它提供了上下文感知的自动补全。菜单中的项目使用模糊搜索缩小范围。模糊搜索允许用户输入整体字符串中的任意子字符串来尝试找到正确的匹配项。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

pgcli 和 mycli 在其 CLI 中都实现了这个功能。斜杠命令的文档也作为补全菜单的一部分展示。

|

||||||

|

|

||||||

|

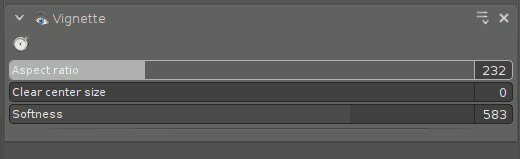

#### 可发现性: fish

|

||||||

|

|

||||||

|

在传统的 Unix shell(Bash、zsh 等)中,有一种搜索历史记录的方法。此搜索模式由 `Ctrl-R` 触发。当再次调用你上周运行过的命令时,例如 **ssh**或 **docker**,这是一个令人难以置信的有用的工具。 一旦你知道这个功能,你会发现自己经常会使用它。

|

||||||

|

|

||||||

|

如果这个功能是如此有用,那为什么不每次都搜索呢?这正是 [**fish** shell][18] 所做的。一旦你开始输入命令,**fish** 将开始建议与历史记录类似的命令。然后,你可以按右箭头键接受该建议。

|

||||||

|

|

||||||

|

### 命令行规矩

|

||||||

|

|

||||||

|

我已经回顾了一些解决可发现性的问题的创新方法,但也有一些基本的命令行功能应该作为每个 REPL 所实现基础功能的一部分:

|

||||||

|

|

||||||

|

* 确保 REPL 有可通过箭头键调用的历史记录。确保会话之间的历史持续存在。

|

||||||

|

* 提供在编辑器中编辑命令的方法。不管你的补全是多么棒,有时用户只需要一个编辑器来制作完美的命令来删除生产环境中所有的表。

|

||||||

|

* 使用分页器(`pager`)来管道输出。不要让用户滚动他们的终端。哦,要为分页器设置个合理的默认值。(记得添加选项来处理颜色代码。)

|

||||||

|

* 提供一种通过 `Ctrl-R` 界面或者 fish 式的自动搜索来搜索历史记录的方法。

|

||||||

|

|

||||||

|

### 总结

|

||||||

|

|

||||||

|

在第 2 节中,我将来看看 Python 中使你能够实现这些技术的特定库。同时,请查看其中一些精心设计的命令行应用程序:

|

||||||

|

|

||||||

|

* [bpython][5]或 [ptpython][6]:具有自动补全支持的 Python REPL。

|

||||||

|

* [http-prompt][7]:交互式 HTTP 客户端。

|

||||||

|

* [mycli][8]:MySQL、MariaDB 和 Percona 的命令行界面,具有自动补全和语法高亮。

|

||||||

|

* [pgcli][9]:具有自动补全和语法高亮,是对 [psql][10] 的替代工具。

|

||||||

|

* [wharfee][11]:用于管理 Docker 容器的 shell。

|

||||||

|

|

||||||

|

_了解更多: Amjith Ramanujam 在 5 月 20 日在波特兰俄勒冈州举办的 [PyCon US 2017][12] 上的谈话“[神奇的命令行工具][13]”。_

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

|

||||||

|

作者简介:

|

||||||

|

|

||||||

|

Amjith Ramanujam - Amjith Ramanujam 是 pgcli 和 mycli 的创始人。人们认为它们很酷,他表示笑纳赞誉。他喜欢用 Python、Javascript 和 C 编程。他喜欢编写简单易懂的代码,它们有时甚至会成功。

|

||||||

|

|

||||||

|

-----------------------

|

||||||

|

|

||||||

|

via: https://opensource.com/article/17/5/4-terminal-apps

|

||||||

|

|

||||||

|

作者:[Amjith Ramanujam][a]

|

||||||

|

译者:[geekpi](https://github.com/geekpi)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://opensource.com/users/amjith

|

||||||

|

[1]:https://opensource.com/tags/python?src=programming_resource_menu

|

||||||

|

[2]:https://opensource.com/tags/javascript?src=programming_resource_menu

|

||||||

|

[3]:https://opensource.com/tags/perl?src=programming_resource_menu

|

||||||

|

[4]:https://developers.redhat.com/?intcmp=7016000000127cYAAQ&src=programming_resource_menu

|

||||||

|

[5]:http://bpython-interpreter.org/

|

||||||

|

[6]:http://github.com/jonathanslenders/ptpython/

|

||||||

|

[7]:https://github.com/eliangcs/http-prompt

|

||||||

|

[8]:http://mycli.net/

|

||||||

|

[9]:http://pgcli.com/

|

||||||

|

[10]:https://www.postgresql.org/docs/9.2/static/app-psql.html

|

||||||

|

[11]:http://wharfee.com/

|

||||||

|

[12]:https://us.pycon.org/2017/

|

||||||

|

[13]:https://us.pycon.org/2017/schedule/presentation/518/

|

||||||

|

[14]:https://opensource.com/article/17/5/4-terminal-apps?rate=3HL0zUQ8_dkTrinonNF-V41gZvjlRP40R0RlxTJQ3G4

|

||||||

|

[15]:https://bpython-interpreter.org/

|

||||||

|

[16]:http://mycli.net/

|

||||||

|

[17]:http://pgcli.com/

|

||||||

|

[18]:https://fishshell.com/

|

||||||

|

[19]:https://opensource.com/user/125521/feed

|

||||||

|

[20]:https://opensource.com/article/17/5/4-terminal-apps#comments

|

||||||

|

[21]:https://opensource.com/users/amjith

|

||||||

@ -1,83 +0,0 @@

|

|||||||

How is your community promoting diversity?

|

|

||||||

============================================================

|

|

||||||

|

|

||||||

> Open source foundation leaders weigh in.

|

|

||||||

|

|

||||||

|

|

||||||

Image by : opensource.com

|

|

||||||

|

|

||||||

Open source software is a great enabler for technology innovation. Diversity unlocks innovation and drives market growth. Open source and diversity seem like the ultimate winning combination, yet ironically open source communities are among the least diverse tech communities. This is especially true when it comes to inherent diversity: traits such as gender, age, ethnicity, and sexual orientation.

|

|

||||||

|

|

||||||

It is hard to get a true picture of the diversity of our communities in all the various dimensions. Gender diversity, by virtue of being noticeably lacking and more straight forward to measure, is the starting point and current yardstick for measuring diversity in tech communities.

|

|

||||||

|

|

||||||

For example, it is estimated that around 25% of all software developers are women, but [only 3% work][5] in free and open software. These figures are consistent with my personal experience working in open source for over 10 years.

|

|

||||||

|

|

||||||

Even when individuals in the community are [doing their best][6] (and I have worked with many who are), it seems to make little difference. And little has changed in the last ten years. However, we are, as a community, starting to have a better understanding of some of the factors that maintain this status quo, things like [unconscious bias][7] or [social graph and privilege][8] problems.

|

|

||||||

|

|

||||||

In order to overcome the gravity of these forces in open source, we need combined efforts that are sustained over the long term and that really work. There is no better example of how diversity can be improved rapidly in a relatively short space of time than the Python community. PyCon 2011 consisted of just 1% women speakers. Yet in 2014, 33% of speakers at PyCon were women. Now Python conferences regularly lay out [their diversity targets and how they intend to meet them][9].

|

|

||||||

|

|

||||||

What did it take to make that dramatic improvement in women speaker numbers? In her great talk at PyCon 2014, [Outreach Program for Women: Lessons in Collaboration][10], Marina Zhurakhinskaya outlines the key ingredients:

|

|

||||||

|

|

||||||

* The importance of having a Diversity Champion to spearhead the changes over the long term; in the Python community Jessica McKellar was the driving force behind the big improvement in diversity figures

|

|

||||||

* Specifically marketing to under-represented groups; for example, how GNOME used outreach programs, such as [Outreachy][1], to market to women specifically

|

|

||||||

|

|

||||||

We know diversity issues, while complex are imminently fixable. In this way, open source foundations can play a huge role in the sustaining efforts to promote initiatives. Are other open source communities also putting efforts into diversity? To find out, we asked a few open source foundation leaders:

|

|

||||||

|

|

||||||

### How does your foundation promote diversity in its open source community?

|

|

||||||

|

|

||||||

**Mike Milinkovich, executive director of the Eclipse Foundation:**

|

|

||||||

|

|

||||||

> "The Eclipse Foundation is committed to promoting diversity in its open source community. But that commitment does not mean that we are satisfied with where we are today. We have a long way to go, particularly in the area of gender diversity. That said, some of the tangible steps we've taken in the last couple of years are: (a) we put into place a [Community Code of Conduct][2] that covers all of our activities, (b) we are consciously recruiting women for our conference program committees, (c) we are consciously looking for women speakers for our conferences, including keynotes, and (d) we are supporting community channels to discuss diversity topics. It's been great to see members of our community step up to assume leadership roles on this topic, and we're looking forward to making a lot of progress in 2017."

|

|

||||||

|

|

||||||

**Abby Kearns, executive director for the Cloud Foundry:**

|

|

||||||

|

|

||||||

> "For Cloud Foundry we promote diversity in a variety of ways. For our community, this includes a heavy focus on diversity events at our summit, and on our keynote stage. I'm proud to say we doubled the representation by women and people of color at our last event. For our contributors, this takes on a slightly different meaning and includes diversification across company and role."

|

|

||||||

|

|

||||||

A recent Cloud Foundry Summit featured a [diversity luncheon][11] as well as a [keynote on diversity][12], which highlighted how [gender parity had been achieved][13] by one member company's team.

|

|

||||||

|

|

||||||

**Chris Aniszczyk, COO of the Cloud Native Computing Foundation:**

|

|

||||||

|

|

||||||

> "The Cloud Native Computing Foundation (CNCF) is a very young foundation still, and although we are only one year old as of December 2016, we've had promoting diversity as a goal since our inception. First, every conference hosted by CNCF has [diversity scholarships][3] available, and there are usually special diversity lunches or events at the conference to promote inclusion. We've also sponsored "[contribute your first patch][4]" style events to promote new contributors from all over. These are just some small things we currently do. In the near future, we are discussing launching a Diversity Workgroup within CNCF, and also as we ramp up our certification and training programs, we are discussing offering scholarships for folks from under-representative backgrounds."

|

|

||||||

|

|

||||||

Additionally, Cloud Native Computing Foundation is part of the [Linux Foundation][14] as a formal Collaborative Projects (along with other foundations, including Cloud Foundry Foundation). The Linux Foundation has extensive [Diversity Programs][15] and as an example, recently [partnered with the Girls In Tech][16] not-for-profit to improve diversity in open source. In the future, the CNCF actively plans to participate in these Linux Foundation wide initiatives as they arise.

|

|

||||||

|

|

||||||

For open source to thrive, companies need to foster the right environment for innovation. Diversity is a big part of this. Seeing open source foundations making the conscious decision to take action is encouraging. Dedicated time, money, and resources to diversity is making a difference within communities, and we are slowly but surely starting to see the effects. Going forward, communities can collaborate and learn from each other about what works and makes a real difference.

|

|

||||||

|

|

||||||

If you work in open source, be sure to ask and find out what is being done in your community as a whole to foster and promote diversity. Then commit to supporting these efforts and taking the steps toward making a real difference. It is exciting to think that the next ten years might be a huge improvement over the last 10, and we can start to envision a future of truly diverse open source communities, the ultimate winning combination.

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

|

||||||

|

|

||||||

作者简介:

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Tracy Miranda - Tracy Miranda is a software developer and founder of Kichwa Coders, a software consultancy specializing in Eclipse tools for scientific and embedded software. Tracy has been using Eclipse since 2003 and is actively involved in the community, particularly the Eclipse Science Working Group. Tracy has a background in electronics system design. She mentors young coders at the festival of code for Young Rewired State. Follow Tracy on Twitter @tracymiranda.

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

|

||||||

|

|

||||||

|

|

||||||

via: https://opensource.com/article/17/1/take-action-diversity-tech

|

|

||||||

|

|

||||||

作者:[ Tracy Miranda][a]

|

|

||||||

译者:[译者ID](https://github.com/译者ID)

|

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

|

||||||

|

|

||||||

[a]:https://opensource.com/users/tracymiranda

|

|

||||||

[1]:https://www.gnome.org/outreachy/

|

|

||||||

[2]:https://www.eclipse.org/org/documents/Community_Code_of_Conduct.php

|

|

||||||

[3]:http://events.linuxfoundation.org/events/cloudnativecon-and-kubecon-north-america/attend/scholarship-opportunities

|

|

||||||

[4]:http://conferences.oreilly.com/oscon/oscon-tx-2016/public/schedule/detail/53257

|

|

||||||

[5]:https://www.linux.com/blog/how-bring-more-women-free-and-open-source-software

|

|

||||||

[6]:https://trishagee.github.io/post/what_can_men_do/

|

|

||||||

[7]:https://opensource.com/life/16/3/sxsw-diversity-google-org

|

|

||||||

[8]:https://opensource.com/life/15/8/5-year-plan-improving-diversity-tech

|

|

||||||

[9]:http://2016.pyconuk.org/diversity-target/

|

|

||||||

[10]:https://www.youtube.com/watch?v=CA8HN20NnII

|

|

||||||

[11]:https://www.youtube.com/watch?v=LSRrc5B1an0&list=PLhuMOCWn4P9io8gtd6JSlI9--q7Gw3epW&index=48

|

|

||||||

[12]:https://www.youtube.com/watch?v=FjF8EK2zQU0&list=PLhuMOCWn4P9io8gtd6JSlI9--q7Gw3epW&index=50

|

|

||||||

[13]:https://twitter.com/ab415/status/781036893286854656

|

|

||||||

[14]:https://www.linuxfoundation.org/about/diversity

|

|

||||||

[15]:https://www.linuxfoundation.org/about/diversity

|

|

||||||

[16]:https://www.linux.com/blog/linux-foundation-partners-girls-tech-increase-diversity-open-source

|

|

||||||

@ -1,111 +0,0 @@

|

|||||||

Be the open source supply chain

|

|

||||||

============================================================

|

|

||||||

|

|

||||||

### Learn why you should be a supply chain influencer.

|

|

||||||

|

|

||||||

|

|

||||||

Image by :

|

|

||||||

|

|

||||||

opensource.com

|

|

||||||

|

|

||||||

I would bet that whoever is best at managing and influencing the open source supply chain will be best positioned to create the most innovative products. In this article, I’ll explain why you should be a supply chain influencer, and how your organization can be an active participant in your supply chain.

|

|

||||||

|

|

||||||

In my previous article, [Open source and the software supply chain][2], I discussed the basics of supply chain management, and where open source fits in this model. I left readers with this illustration of the model:

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

The question to ask your employer and team(s) is: How do we best take advantage of this? After all, if Apple can set the stage for its dominance by creating a better hardware supply chain, then surely one can do the same with software supply chains.

|

|

||||||

|

|

||||||

### Evaluating supply chains

|

|

||||||

|

|

||||||

Having worked with developers and product teams in many companies, I learned that the process for selecting components that go into a product is haphazard. Sometimes there is an official bake-off of one or two components against each other, but the developers often choose to work with a product based on "feel". When determining the best components, you must evaluate based on those projects’ longevity, stage of development, and enough other metrics to form the basis of a "build vs. buy" decision. Number of users, interested parties, commercial activity, involvement of development team in support, and so on are a few considerations in the decision-making process.

|

|

||||||

|

|

||||||

Over time, technology and business needs change, and in the world of open source software, even more so. Not only must an engineering and product team be able to select the best component at that time, they must also be able to switch it out for something else when the time comes—for example, when the community managing the old component moves on, or when a new component with better features emerges.

|

|

||||||

|

|

||||||

### What not to do

|

|

||||||

|

|

||||||

When evaluating supply chain components, teams are prone to make a number of mistakes, including these common ones:

|

|

||||||

|

|

||||||

* **Not Invented Here (NIH)**: I can’t tell you how many times engineering teams decided to "fix" shortcomings in existing supply chain components by deciding to write it themselves. I won’t say "never ever do that," but I will warn that if you take on the responsibility of writing an infrastructure component, understand that you’re chucking away all the advantages of the open source supply chain—namely upstream testing and upstream engineering—and deciding to take on those tasks, immediately saddling your team (and your product) with technical debt that will only grow over time. You’re making the choice to be less efficient, and you had better have a compelling reason for doing so.

|

|

||||||

* **Carrying patches forward**: Any open source-savvy team understands the value of contributing patches to their respective upstream projects. When doing so, contributed code goes through that project’s automated testing procedures, which, when combined with your own team’s existing testing infrastructure, makes for a more hardened end product. Unfortunately, not all teams are open source-savvy. Sometimes these teams are faced with onerous legal requirements that deter them from seeking permission to contribute fixes upstream. In that case, encourage (i.e., nag) your manager to get blanket legal approval for such things, because the alternative is carrying all those changes forward, incurring significant technical debt, and applying patches until the day your project (or you) dies.

|

|

||||||

* **Think you’re only a user**: Using open source components as part of your software supply chain is only the first step. To reap the rewards of open source supply chains, you must dive in and be an influencer. (More on that shortly.)

|

|

||||||

|

|

||||||

### Effective supply chain management example: Red Hat

|

|

||||||

|

|

||||||

Because of its upstream-first policies, [Red Hat][3] is an example of how both to utilize and influence software supply chains. To understand the Red Hat model, you must view their products through a supply chain perspective.

|

|

||||||

|

|

||||||

Products supported by Red Hat are composed of open source components often vetted by multiple upstream communities, and changes made to these components are pushed to their respective upstream projects, often before they land in a supported product from Red Hat. The work flow look somewhat like:

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

There are multiple reasons for this kind of workflow:

|

|

||||||

|

|

||||||

* Testing, testing, testing: By offloading some initial testing, a company like Red Hat benefits from both the upstream community’s testing, as well as the testing done by other ecosystem participants, including competitors.

|

|

||||||

* Upstream viability: The Red Hat model only works as long as upstream suppliers are viable and self-sustaining. Thus, it’s in Red Hat’s interest to make sure those communities stay healthy.

|

|

||||||

* Engineering efficiency: Because Red Hat offloads common tasks to upstream communities, their engineers spend more time adding value to products for customers.

|

|

||||||

|

|

||||||

To understand the Red Hat approach to supply chain, let’s look at their approach to product development with OpenStack.

|

|

||||||

|

|

||||||

Curiously, Red Hat’s start with OpenStack was not to create a product or even to announce one; rather, they started pushing engineering resources into strategic projects in OpenStack (starting with Nova, Keystone, and Cinder). This list grew to include several other projects in the OpenStack community. A more traditional product management executive might look at this approach and think, "Why on earth would we contribute so much engineering to something that isn’t established and has no product? Why are we giving our competitors our work for free?"

|

|

||||||

|

|

||||||

Instead, here is the open source supply chain thought process:

|

|

||||||

|

|

||||||

### Step 1

|

|

||||||

|

|

||||||

Look at growth areas in the business or largest product gaps that need filling. Is there an open source community that fits a strategic gap? Or can we build a new project from scratch to do the same? In this case, Red Hat looked at the OpenStack community and eventually determined that it would fill a gap in the product portfolio.

|

|

||||||

|

|

||||||

### Step 2

|

|

||||||

|

|

||||||

Gradually turn up the dial on engineering resources. This does a couple of things. First, it helps the engineering team get a sense of the respective projects’ prospects for success. If prospects aren’t not good, the company can stop contributing, with minimal investment spent. Once the project is determined to be worth in the investment, to the company can ensure its engineers will influence current and future development. This helps the project with quality code development, and ensures that the code meets future product requirements and acceptance criteria. Red Hat spent a lot of time slinging code in OpenStack repositories before ever announcing an OpenStack product, much less releasing one. But this was a fraction of the investment that would have been made if the company had developed an IaaS product from scratch.

|

|

||||||

|

|

||||||

### Step 3

|

|

||||||

|

|

||||||

Once the engineering investments begin, start a product management roadmap and marketing release plan. Once the code reaches a minimum level of quality, fork the upstream repository and start working on product-specific code. Bug fixes are pushed upstream to openstack.org and into product branches. (Remember: Red Hat’s model depends on upstream viability, so it makes no sense not to push fixes upstream.)

|

|

||||||

|

|

||||||

Lather, rinse, repeat. This is how you manage an open source software supply chain.

|

|

||||||

|

|

||||||

### Don't accumulate technical debt

|

|

||||||

|

|

||||||

If needed, Red Hat could decide that it would simply depend on upstream code, supply necessary proprietary product glue, and then release that as a product. This is, in fact, what most companies do with upstream open source code; however, this misses a crucial point I made previously. To develop a really great product, being heavily involved in the development process helps. How can an organization make sure that the code base meets its core product criteria if they’re not involved in the day-to-day architecture discussions?

|

|

||||||

|

|

||||||

To make matters worse, in an effort to protect backwards compatibility and interoperability, many companies fork the upstream code, make changes and don't contribute them upstream, choosing instead to carry them forward internally. That is a big no-no, saddling your engineering team forever with accumulated technical debt that will only grow over time. In that scenario, all the gains made from upstream testing, development and release go away in a whiff of stupidity.

|

|

||||||

|

|

||||||

### Red Hat and OpenShift

|

|

||||||

|

|

||||||

Once you begin to understand Red Hat’s approach to supply chain, which you can see manifested in its approach to OpenStack, you can understand its approach to OpenShift. Red Hat first released OpenShift as a proprietary product that was also open sourced. Everything was homegrown, built by a team that joined Red Hat as part of the [Makara acquisition][4] in 2010.

|

|

||||||

|

|

||||||

The technology initially suffered from NIH—using its own homegrown clustering and container management technologies, in spite of the recent (at the time) release of new projects: Kubernetes, Mesos, and Docker. What Red Hat did next is a testament to the company’s commitment to its open source supply chain model: Between OpenShift versions 2 and 3, developers rewrote it to utilize and take advantage of new developments from the Kubernetes and Docker communities, ditching their NIH approach. By restructuring the project in that way, the company took advantage of economies of scale that resulted from the burgeoning developer communities for both projects. I

|

|

||||||

|

|

||||||

Instead of Red Hat fashioning a complete QC/QA testing environment for the entire OpenShift stack, they could rely on testing infrastructure supplied by the Docker and Kubernetes communities. Thus, Red Hat contributions to both the Docker and Kubernetes code bases would undergo a few rounds of testing before ever reaching the company’s own product branches:

|

|

||||||

|

|

||||||

1. The first round of testing is by the Docker and Kubernetes communities .

|

|

||||||

2. Further testing is done by ecosystem participants building products on either or both projects.

|

|

||||||

3. More testing happens on downstream code distributions or products that "embed" both projects.

|

|

||||||

4. Final testing happens in Red Hat’s own product branch.

|

|

||||||

|

|

||||||

The amount of upstream (from Red Hat) testing done on the code ensures a level of quality that would be much more expensive for the company to do comprehensively and from scratch. This is the trick to open source supply chain management: Don’t just consume upstream code, minimally shimming it into a product. That approach won’t give you any of the advantages offered by open source development practices and direct participation for solving your customers’ problems.

|

|

||||||

|

|

||||||

To get the most benefit from the open source software supply chain, you must **be** the open source software supply chain.

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

|

||||||

|

|

||||||

作者简介:

|

|

||||||

|

|

||||||

John Mark Walker - John Mark Walker is Director of Product Management at Dell EMC and is responsible for managing the ViPR Controller product as well as the CoprHD open source community. He has led many open source community efforts, including ManageIQ,

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

|

||||||

|

|

||||||

via: https://opensource.com/article/17/1/be-open-source-supply-chain

|

|

||||||

|

|

||||||

作者:[John Mark Walker][a]

|

|

||||||

译者:[译者ID](https://github.com/译者ID)

|

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

|

||||||

|

|

||||||

[a]:https://opensource.com/users/johnmark

|

|

||||||

[1]:https://opensource.com/article/17/1/be-open-source-supply-chain?rate=sz6X6GSpIX1EeYBj4B8PokPU1Wy-ievIcBeHAv0Rv2I

|

|

||||||

[2]:https://opensource.com/article/16/12/open-source-software-supply-chain

|

|

||||||

[3]:https://www.redhat.com/en

|

|

||||||

[4]:https://www.redhat.com/en/about/press-releases/makara

|

|

||||||

[5]:https://opensource.com/user/11815/feed

|

|

||||||

@ -1,74 +0,0 @@

|

|||||||

Developing open leaders

|

|

||||||

============================================================

|

|

||||||

|

|

||||||

> "Off-the-shelf" leadership training can't sufficiently groom tomorrow's organizational leaders. Here's how we're doing it.

|

|

||||||

|

|

||||||

|

|

||||||

Image by : opensource.com

|

|

||||||

|

|

||||||

At Red Hat, we have a saying: Not everyone needs to be a people manager, but everyone is expected to be a leader.

|

|

||||||

|

|

||||||

For many people, that requires a profound mindset shift in how to think about leaders. Yet in some ways, it's what we all intuitively know about how organizations really work. As Red Hat CEO Jim Whitehurst has pointed out, in any organization, you have the thermometers—people who reflect the organizational "temperature" and sentiment and direction—and then you have the thermostats—people who _set_ those things for the organization.

|

|

||||||

|

|

||||||

Leadership is about maximizing influence and impact. But how do you develop leadership for an open organization?

|

|

||||||

|

|

||||||

In the first installment of this series, I will share the journey, from my perspective, on how we began to build a leadership development system at Red Hat to enable our growth while sustaining the best parts of our unique culture.

|

|

||||||

|

|

||||||

### Nothing 'off the shelf'

|

|

||||||

|

|

||||||

In an open organization, you can't just buy leadership development training "off the shelf" and expect it to resonate with people—or to reflect and reinforce your unique culture. But you also probably won't have the capacity and resources to build a great leadership development system entirely from scratch.

|

|

||||||

|

|

||||||

Early on in our journey at Red Hat, our leadership development efforts focused on understanding our own philosophy and approach, then taking a bit of an open source approach: sifting through the what people had created for conventional organizations, then configuring those ideas in a way that made them feasible for an open organization.

|

|

||||||

|

|

||||||

Looking back, I can also see we spent a lot of energy looking for ways to plug specific capability gaps.

|

|

||||||

|

|

||||||

Many of our people managers were engineers and other subject matter experts who stepped into management roles because that's what our organization needed. Yet the reality was, many had little experience leading a team or group. So we had some big gaps in basic management skills.

|

|

||||||

|

|

||||||

We also had gaps—not just among managers but also among individual contributors—when it came to navigating tough conversations with respect. In a company where passion runs high and people love to engage in open and heated debate, making your voice heard without shouting others down wasn't always easy.

|

|

||||||

|

|

||||||

We couldn't find any end-to-end leadership development systems that would help train people for leading in a culture that favors flatness and meritocracy over hierarchy and seniority. And while we could build some of those things ourselves, we couldn't build everything fast enough to meet our growing organization's needs.

|

|

||||||

|

|

||||||

So when we saw a need for improved goal setting, we introduced some of the best offerings available—like Closing the Execution Gap and the concept of SMART goals (i.e. specific, measurable, attainable, relevant, and time-bound). To make these work for Red Hat, we configured them to pull through themes from our own culture that could be used in tandem to make the concepts resonate and become even more powerful.

|

|

||||||

|

|

||||||

### Considering meritocracy

|

|

||||||

|

|

||||||

In a culture that values meritocracy, being able to influence others is critical. Yet the passionate open communication and debate that we love at Red Hat sometimes created hard feelings between individuals or teams. We introduced [Crucial Conversations][2] to help everyone navigate those heated and impassioned topics, and also to help them recognize that those kinds of conversations provide the greatest opportunity for influence.

|

|

||||||

|

|

||||||

After building that foundation with Crucial Conversations, we introduced [Influencer Training][3] to help entire teams and organizations communicate and gain traction for their ideas across boundaries.

|

|

||||||

|

|

||||||

We also found a lot of value in Marcus Buckingham's strengths-based approach to leadership development, rather than the conventional models that encouraged people to spend their energy shoring up weaknesses.

|

|

||||||

|

|

||||||

Early on, we made a decision to make our leadership offerings available to individual contributors as well as managers, because we saw that these skills were important for everyone in an open organization.

|

|

||||||

|

|

||||||

Looking back, I can see that this gave us the added benefit of developing a shared understanding and language for talking about leadership throughout our organization. It helped us build and sustain a culture where leadership is expected at all levels and in any role.

|

|

||||||

|

|

||||||

At the same time, training was only part of the solution. We also began developing processes that would help entire departments develop important organizational capabilities, such as talent assessment and succession planning.

|

|

||||||

|

|

||||||

Piece by piece, our open leadership system was beginning to take shape. The story of how it came together is pretty remarkable—at least to me!—and over the next few months, I'll share the journey with you. I look forward to hearing about the journeys of other open organizations, too.

|

|

||||||

|

|

||||||

_(An earlier version of this article appeared in _[The Open Organization Leaders Manual][4]_, now available as a free download from Opensource.com.)_

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

|

||||||

|

|

||||||

作者简介:

|

|

||||||

|

|

||||||

DeLisa Alexander - DeLisa Alexander | DeLisa is Executive Vice President and Chief People Officer at Red Hat. Under her leadership, this team focuses on acquiring, developing, and retaining talent and enhancing the Red Hat culture and brand. In her nearly 15 years with the company, DeLisa has also worked in the Office of General Counsel, where she wrote Red Hat's first subscription agreement and closed the first deals with its OEMs.

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

|

||||||

|

|

||||||

via: https://opensource.com/open-organization/17/1/developing-open-leaders

|

|

||||||

|

|

||||||

作者:[DeLisa Alexander][a]

|

|

||||||

译者:[译者ID](https://github.com/译者ID)

|

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

|

||||||

|

|

||||||

[a]:https://opensource.com/users/delisa

|

|

||||||

[1]:https://opensource.com/open-organization/17/1/developing-open-leaders?rate=VU560k86SWs0OAchgX-ge2Avg041EOeU8BrlKgxEwqQ

|

|

||||||

[2]:https://www.vitalsmarts.com/products-solutions/crucial-conversations/

|

|

||||||

[3]:https://www.vitalsmarts.com/products-solutions/influencer/

|

|

||||||

[4]:https://opensource.com/open-organization/resources/leaders-manual

|

|

||||||

[5]:https://opensource.com/user/10594/feed

|

|

||||||

[6]:https://opensource.com/open-organization/17/1/developing-open-leaders#comments

|

|

||||||

[7]:https://opensource.com/users/delisa

|

|

||||||

@ -1,93 +0,0 @@

|

|||||||

4 questions to answer when choosing community metrics to measure

|

|

||||||

============================================================

|

|

||||||

|

|

||||||

> When evaluating a specific metric that you are considering including in your metrics plan, you should answer four questions.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

Image by :

|

|

||||||

|

|

||||||

[Internet Archive Book Images][4]. Modified by Opensource.com. [CC BY-SA 4.0][5]

|

|

||||||

|

|

||||||

Thus far in the [Community Metrics Playbook][6] column, I've discussed the importance of [setting goals][7] to guide the metrics process, outlined the general [types of metrics][8] that are useful for studying your community, and reviewed technical details of [available tools][9]. As you are deciding which metrics to track for your community, having a deeper understanding of each area is important so you not only choose good metrics, but also understand and plan for what to do when the numbers don't line up with expectations.

|

|

||||||

|

|

||||||

When evaluating a specific metric that you are thinking about including in your metrics plan, you should answer four questions:

|

|

||||||

|

|

||||||

* Does it help achieve my goals?

|

|

||||||

* How accurate is it?

|

|

||||||

* What is its relationship to other metrics?

|

|

||||||

* What will I do if the metrics goes "bad"?

|

|

||||||

|

|

||||||

### Goal-appropriate

|

|

||||||

|

|

||||||

This one should be obvious by now from my [previous discussion on goals][10]: Why do you need to know this metric? Does this metric have a relationship to your project's goals? If not, then you should consider ignoring it—or at least placing much less emphasis on it. Metrics that do not help measure your progress toward goals waste time and resources that could be better spent developing better metrics.

|

|

||||||

|

|

||||||

One thing to consider are intermediate metrics. These are metrics that may not have an obvious, direct relationship to your goals. They can be dangerous when considered alone and can lead to undesirable behavior simply to "meet the number," but when combined with and interpreted in the context of other intermediates, can help projects improve.

|

|

||||||

|

|

||||||

### Accuracy

|

|

||||||

|

|

||||||

Accuracy is defined as the quality or state of being correct or precise. Gauging accuracy for metrics that have built-in subjectivity and bias, such as survey questions, is difficult, so for this discussion I'll talk about objective metrics obtained by computers, which are for the most part highly precise and accurate. [Data can't lie][11], so why are we even discussing accuracy of computed metrics? The potential for inaccurate metrics stems from their human interpretation. The classic example here is _number of downloads_. This metric can be measured easily—often as part of a download site's built-in metrics—but will not be accurate if your software is split into multiple packages, or known systemic processes produce artificially inflated (or deflated) numbers, such as automated testing systems that execute repeated downloads.

|

|

||||||

|

|

||||||