mirror of https://github.com/LCTT/TranslateProject.git

synced 2025-03-27 02:30:10 +08:00

commit 4b7250f52b

published
  20171117 5 open source fonts ideal for programmers.md
  20190129 7 Methods To Identify Disk Partition-FileSystem UUID On Linux.md
  20190313 How to contribute to the Raspberry Pi community.md
  20190314 14 days of celebrating the Raspberry Pi.md
  20190314 A Look Back at the History of Firefox.md
  20190315 Sweet Home 3D- An open source tool to help you decide on your dream home.md
  20190317 How To Configure sudo Access In Linux.md
  20190402 Parallel computation in Python with Dask.md
  20190405 Streaming internet radio with RadioDroid.md
  20190408 Bash vs. Python- Which language should you use.md
  20190409 Cisco, Google reenergize multicloud-hybrid cloud joint development.md
  20190409 How To Install And Enable Flatpak Support On Linux.md
  20190410 How To Check The List Of Open Ports In Linux.md
  20190413 The Fargate Illusion.md

sources
  talk
    20190208 Which programming languages should you learn.md
    20190327 Why DevOps is the most important tech strategy today.md
    20190412 Gov-t warns on VPN security bug in Cisco, Palo Alto, F5, Pulse software.md
    20190415 Nyansa-s Voyance expands to the IoT.md
    20190416 Two tools to help visualize and simplify your data-driven operations.md
    20190416 What SDN is and where it-s going.md
  tech
    20171214 Build a game framework with Python using the module Pygame.md
    20180601 Get Started with Snap Packages in Linux.md
    20180629 100 Best Ubuntu Apps.md
    20180823 Getting started with Sensu monitoring.md
    20190204 Enjoy Netflix- You Should Thank FreeBSD.md
    20190205 Install Apache, MySQL, PHP (LAMP) Stack On Ubuntu 18.04 LTS.md
    20190313 How to contribute to the Raspberry Pi community.md
    20190325 Getting started with Vim- The basics.md
    20190328 How to run PostgreSQL on Kubernetes.md
    20190402 Parallel computation in Python with Dask.md
    20190402 Using Square Brackets in Bash- Part 2.md
    20190409 Cisco, Google reenergize multicloud-hybrid cloud joint development.md
    20190409 Enhanced security at the edge.md
    20190409 Four Methods To Add A User To Group In Linux.md
    20190410 Managing Partitions with sgdisk.md
    20190412 Designing posters with Krita, Scribus, and Inkscape.md
    20190412 How libraries are adopting open source.md
    20190412 Joe Doss- How Do You Fedora.md
    20190412 Linux Server Hardening Using Idempotency with Ansible- Part 2.md
    20190412 What-s your primary backup strategy for the -home directory in Linux.md
    20190414 Working with Microsoft Exchange from your Linux Desktop.md
    20190415 12 Single Board Computers- Alternative to Raspberry Pi.md
    20190415 Blender short film, new license for Chef, ethics in open source, and more news.md
    20190415 Getting started with Mercurial for version control.md
    20190415 How To Enable (UP) And Disable (DOWN) A Network Interface Port (NIC) In Linux.md
    20190415 Inter-process communication in Linux- Shared storage.md
    20190415 Kubernetes on Fedora IoT with k3s.md
    20190415 Troubleshooting slow WiFi on Linux.md
    20190416 Building a DNS-as-a-service with OpenStack Designate.md
    20190416 Can schools be agile.md
    20190416 Detecting malaria with deep learning.md
    20190416 How to Install MySQL in Ubuntu Linux.md
    20190416 Inter-process communication in Linux- Using pipes and message queues.md
    20190416 Linux Foundation Training Courses Sale - Discount Coupon.md
    20190416 Linux Server Hardening Using Idempotency with Ansible- Part 3.md

translated
  talk
    20190208 Which programming languages should you learn.md
    20190327 Why DevOps is the most important tech strategy today.md
  tech
    20171117 5 open source fonts ideal for programmers.md
    20171214 Build a game framework with Python using the module Pygame.md
    20180823 Getting started with Sensu monitoring.md
    20190204 Enjoy Netflix- You Should Thank FreeBSD.md
    20190314 14 days of celebrating the Raspberry Pi.md
    20190325 Getting started with Vim- The basics.md
    20190328 How to run PostgreSQL on Kubernetes.md
    20190402 Using Square Brackets in Bash- Part 2.md
    20190405 Streaming internet radio with RadioDroid.md
    20190409 Enhanced security at the edge.md
    20190409 Four Methods To Add A User To Group In Linux.md
    20190410 Managing Partitions with sgdisk.md
    20190413 How to Zip Files and Folders in Linux -Beginner Tip.md
    20190415 How to identify duplicate files on Linux.md
    20190417 HTTPie - A Modern Command Line HTTP Client For Curl And Wget Alternative.md

@ -0,0 +1,99 @@

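5 open source fonts ideal for programmers
======

> Programming fonts have some characteristics that ordinary fonts lack. Here are five worth a look.

What is the best programming font? First, consider that fonts are designed for different purposes. When choosing a font for casual reading, the reader wants the letters to flow smoothly into one another for a relaxed, pleasant experience. Each character of a standard font is like a piece of a jigsaw puzzle: it must be carefully designed to fit with the rest of the typeface.

When writing code, however, the requirements for a font are usually more functional. That is why most programmers prefer fixed-width, monospaced fonts. It also matters that the font has easily distinguishable numerals and punctuation, is aesthetically pleasing, and carries a license that meets your needs.

Certain features make a font better suited to programming. First, it helps to understand what makes monospaced fonts look so orderly. Compare the letter `w` with the letter `i`. When choosing a font, consider both the letters themselves and the whitespace around them. In printed books and newspapers, where efficient use of space is paramount, it makes sense to give the narrow `i` less room and the wide `w` more.

In a terminal, however, you do not have those constraints, and giving every character equal space is very useful. The first benefit is that you can glance over a piece of code and roughly estimate its length. The second is that characters and punctuation line up easily, which makes highlighting stand out more clearly. In addition, monospaced fonts printed on paper are easier to recognize with OCR than proportional fonts.

In this article, we will explore five excellent open source fonts that are ideal for programming and writing code.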

### 1. FiraCode: The best all-round programming font

![FiraCode example][1]

*FiraCode, Andrew Lekashman*

The first font on our list is [FiraCode][3], a programming font that truly goes above and beyond the call of duty. FiraCode is an extension of Fira, the open source font family commissioned by Mozilla. What sets FiraCode apart is that it renders common multi-character symbol combinations in code as ligatures, which makes them more readable. The font comes in several styles, notably including a Retina option. You can find examples of it used with many programming languages on its [GitHub][3] page.

![FiraCode compared to Fira Mono][2]

*FiraCode compared to Fira Mono, [Nikita Prokopov][3], via GitHub*

### 2. Inconsolata: Elegant and created by a brilliant designer

![Inconsolata example][4]

*Inconsolata, Andrew Lekashman*

[Inconsolata][5] is one of the most beautiful monospaced fonts. It has been open source and freely available since 2006. Its creator, Raph Levien, designed Inconsolata on one basic principle: monospaced fonts do not have to be ugly. Two things make Inconsolata stand out: its `0` and `o` are clearly distinct, and its punctuation is specially designed.

### 3. DejaVu Sans Mono: A standard in many Linux distributions, with huge glyph coverage

![DejaVu Sans Mono example][6]

*DejaVu Sans Mono, Andrew Lekashman*

Inspired by the copyrighted Bitstream Vera font used in GNOME, [DejaVu Sans Mono][7] is an extremely popular programming font that ships with almost every modern Linux distribution. Its Book variant contains a whopping 3,310 glyphs, whereas a typical font has around 100. You will not run short of characters while working: it covers a broad range of Unicode and is still actively growing.

### 4. Source Code Pro: Elegant and readable, by a small but talented team at Adobe

![Source Code Pro example][8]

*Source Code Pro, Andrew Lekashman*

Designed by Paul Hunt and Teo Tuominen, [Source Code Pro][9] was [created by Adobe][10] as one of its first open source fonts. Source Code Pro is notable for being highly readable and for clearly distinguishing characters and punctuation that are easily confused. It is also a font family, available in seven weights (Extralight, Light, Regular, Medium, Semibold, Bold, and Black), each with an italic variant.

![Differentiating potentially confusable characters][11]

*Differentiating potentially confusable characters, [Paul D. Hunt][10], via the Adobe Typekit blog.*

![Metacharacters with special meaning in computer languages][12]

*Metacharacters with special meaning in computer languages, [Paul D. Hunt][10], via the Adobe Typekit blog.*

### 5. Noto Mono: Enormous language coverage, by a large team at Google

![Noto Mono example][13]

*Noto Mono, Andrew Lekashman*

The last font on our list is [Noto Mono][14], the monospaced member of Google's expansive Noto font family. Although it was not designed specifically for programming, it is available in 209 languages (including emoji!) and is actively maintained and updated. The project is enormous and extends Google's stated mission to "organize the world's information." If you want to learn more, see this excellent [video about the fonts][15].

### Choosing the right font

Whichever font you choose, you will likely spend hours a day looking at it, so make sure it resonates with you on an aesthetic and philosophical level. Choosing the right open source font is an important part of ensuring you have the best possible working environment. All of these fonts are great choices, and each has powerful features that set it apart.

--------------------------------------------------------------------------------

via: https://opensource.com/article/17/11/how-select-open-source-programming-font

Author: [Andrew Lekashman][a]
Translator: [FSSlc](https://github.com/FSSlc)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://opensource.com
[1]:https://opensource.com/sites/default/files/u128651/firacode.png (FiraCode example)
[2]:https://opensource.com/sites/default/files/u128651/firacode2.png (FiraCode compared to Fira Mono)
[3]:https://github.com/tonsky/FiraCode
[4]:https://opensource.com/sites/default/files/u128651/inconsolata.png (Inconsolata example)
[5]:http://www.levien.com/type/myfonts/inconsolata.html
[6]:https://opensource.com/sites/default/files/u128651/dejavu_sans_mono.png (DejaVu Sans Mono example)
[7]:https://dejavu-fonts.github.io/
[8]:https://opensource.com/sites/default/files/u128651/source_code_pro.png (Source Code Pro example)
[9]:https://github.com/adobe-fonts/source-code-pro
[10]:https://blog.typekit.com/2012/09/24/source-code-pro/
[11]:https://opensource.com/sites/default/files/u128651/source_code_pro2.png (Differentiating potentially confusable characters)
[12]:https://opensource.com/sites/default/files/u128651/source_code_pro3.png (Metacharacters with special meaning in computer languages)
[13]:https://opensource.com/sites/default/files/u128651/noto.png (Noto Mono example)
[14]:https://www.google.com/get/noto/#mono-mono
[15]:https://www.youtube.com/watch?v=AAzvk9HSi84

@ -1,8 +1,8 @@

[#]: collector: (lujun9972)
[#]: translator: (liujing97)
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10727-1.html)
[#]: subject: (7 Methods To Identify Disk Partition/FileSystem UUID On Linux)
[#]: via: (https://www.2daygeek.com/check-partitions-uuid-filesystem-uuid-universally-unique-identifier-linux/)
[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

@ -10,27 +10,23 @@

7 Methods To Identify Disk Partition/FileSystem UUID On Linux
======

As a Linux system administrator, you should know how to check a partition's UUID or a filesystem's UUID.

This is because most Linux systems mount partitions by UUID. You can verify this in the `/etc/fstab` file.

As a Linux system administrator, you should know how to check a partition's UUID or a filesystem's UUID, because most Linux systems now mount partitions by UUID. You can verify this in the `/etc/fstab` file.
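
As a rough illustration of what "mounted by UUID" looks like in `/etc/fstab`, the sketch below lists every entry whose device field starts with `UUID=`. The function name `list_fstab_uuids` and the sample file contents are assumptions made for this example (the filesystem types in particular are made up); on a real system you would pass `/etc/fstab` instead of the sample file.

```shell
#!/bin/sh
# Print "UUID mountpoint" for every fstab entry that is mounted by UUID.
# list_fstab_uuids is a hypothetical helper name, not a standard tool.
list_fstab_uuids() {
    awk '$1 ~ /^UUID=/ { sub(/^UUID=/, "", $1); print $1, $2 }' "$1"
}

# Illustrative sample only; pass /etc/fstab on a real system.
sample=$(mktemp)
cat > "$sample" <<'EOF'
# <device> <mount point> <type> <options> <dump> <pass>
UUID=d92fa769-e00f-4fd7-b6ed-ecf7224af7fa / ext4 defaults,noatime 0 1
UUID=a2092b92-af29-4760-8e68-7a201922573b swap swap defaults,noatime 0 2
/dev/sdb1 /data ext4 defaults 0 2
EOF

list_fstab_uuids "$sample"
rm -f "$sample"
```

Entries that name a device path directly, such as the `/dev/sdb1` line in the sample, are skipped, which makes it easy to spot partitions that are not yet mounted by UUID.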

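There are many utilities for checking a UUID. In this article we show you several ways to do it, so you can pick the one that suits you.

### What is a UUID?

UUID stands for Universally Unique Identifier. It helps a Linux system identify a disk partition rather than a block device file.

UUID means Universally Unique Identifier. It helps a Linux system identify a disk partition by its identifier instead of by its block device file.

libuuid is part of the util-linux-ng package since version 2.15.1, and it is installed by default on Linux systems.

libuuid has been part of the util-linux-ng package since version 2.15.1 and is installed by default on Linux systems. UUIDs generated by this library can reasonably be considered unique within a system, and unique across all systems.

UUIDs generated by this library can reasonably be considered unique within a system, and unique across all systems.

A UUID is a 128-bit number used to identify information in computer systems. UUIDs were originally used in the Apollo Network Computing System (NCS) and were later standardized by the Open Software Foundation (OSF) as part of the Distributed Computing Environment (DCE).

Computer systems use a 128-bit number to identify information. UUIDs were originally used in the Apollo Network Computing System (NCS); later they were standardized by the Open Software Foundation (OSF) as part of the Distributed Computing Environment (DCE).

A UUID is written as 32 hexadecimal digits, displayed in five groups separated by hyphens in the form 8-4-4-4-12, for a total of 36 characters (32 alphanumeric characters and 4 hyphens).

A UUID is written as 32 hexadecimal (base 16) digits, displayed in five groups separated by hyphens in the form 8-4-4-4-12, for a total of 36 characters (32 alphanumeric characters and 4 hyphens).

For example: `d92fa769-e00f-4fd7-b6ed-ecf7224af7fa`

For example: d92fa769-e00f-4fd7-b6ed-ecf7224af7fa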
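
The 8-4-4-4-12 layout described above can be checked mechanically. The following is a minimal sketch, assuming a helper named `is_uuid` (a name invented for this example) that tests a string against that layout with `grep -E`. Note that some filesystems, such as FAT and NTFS, report shorter identifiers that will not match this pattern.

```shell
#!/bin/sh
# Succeed if $1 has the canonical 8-4-4-4-12 hexadecimal UUID layout.
# is_uuid is a hypothetical helper name used only for this illustration.
is_uuid() {
    printf '%s\n' "$1" |
        grep -Eq '^[0-9A-Fa-f]{8}-[0-9A-Fa-f]{4}-[0-9A-Fa-f]{4}-[0-9A-Fa-f]{4}-[0-9A-Fa-f]{12}$'
}

if is_uuid "d92fa769-e00f-4fd7-b6ed-ecf7224af7fa"; then
    echo "valid"
else
    echo "invalid"
fi
```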

A sample of my /etc/fstab file.

A sample of my `/etc/fstab` file:

```
# cat /etc/fstab

@ -48,19 +44,17 @@ UUID=a2092b92-af29-4760-8e68-7a201922573b swap swap defaults,noatime 0 2

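We can use the following 7 commands to check it.

* **`blkid command:`** Locates or prints block device attributes.
* **`lsblk command:`** lsblk lists information about all available or the specified block devices.
* **`hwinfo command:`** hwinfo stands for hardware information tool and is another great utility for probing the hardware present in the system.
* **`udevadm command:`** The udev management tool.
* **`tune2fs command:`** Adjusts tunable filesystem parameters on ext2/ext3/ext4 filesystems.
* **`dumpe2fs command:`** Queries information about ext2/ext3/ext4 filesystems.
* **`Using the by-uuid path:`** This directory contains the UUIDs and the real block device files; each UUID is linked to its real block device file.

* `blkid` command: locates or prints block device attributes.
* `lsblk` command: lists information about all available or the specified block devices.
* `hwinfo` command: the hardware information tool, another great utility for probing the hardware present in the system.
* `udevadm` command: the udev management tool.
* `tune2fs` command: adjusts tunable filesystem parameters on ext2/ext3/ext4 filesystems.
* `dumpe2fs` command: queries information about ext2/ext3/ext4 filesystems.
* Using the `by-uuid` path: this directory contains the UUIDs and the real block device files; each UUID is linked to its real block device file.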

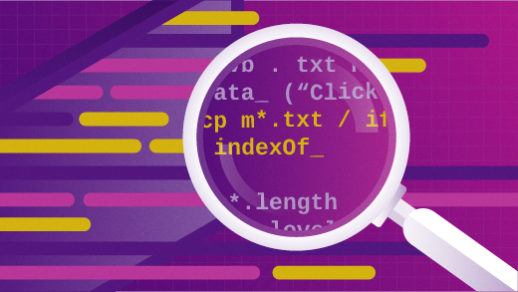

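### How to check the UUID of a disk partition or filesystem on Linux using the blkid command?

blkid is a command-line utility to locate or print block device attributes. It uses the libblkid library to obtain the UUID of a disk partition on a Linux system.

`blkid` is a command-line utility to locate or print block device attributes. It uses the libblkid library to obtain the UUID of a disk partition on a Linux system.

```
# blkid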

@ -72,24 +66,24 @@ blkid 是定位或打印块设备属性的命令行实用工具。它利用 libb

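### How to check the UUID of a disk partition or filesystem on Linux using the lsblk command?

lsblk lists information about all available or the specified block devices. The lsblk command reads the sysfs filesystem and the udev database to gather information.

`lsblk` lists information about all available or the specified block devices. The `lsblk` command reads the sysfs filesystem and the udev database to gather information.

If the udev database is not available, or the lsblk that was built does not support udev, it tries to read the LABEL, UUID, and filesystem type from the block device. In that case, you must be root. By default, the command prints all block devices (except RAM disks) in a tree-like format.

If the udev database is not available, or lsblk was compiled without udev support, it tries to read the label, UUID, and filesystem type from the block device. In that case, it must be run as root. By default, the command prints all block devices (except RAM disks) in a tree-like format.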

```
# lsblk -o name,mountpoint,size,uuid
NAME   MOUNTPOINT  SIZE UUID
sda                 30G
└─sda1 /            20G d92fa769-e00f-4fd7-b6ed-ecf7224af7fa
sdb                 10G
sdc                 10G
├─sdc1               1G d17e3c31-e2c9-4f11-809c-94a549bc43b7
├─sdc3               1G ca307aa4-0866-49b1-8184-004025789e63
├─sdc4               1K
└─sdc5               1G
sdd                 10G
sde                 10G
sr0               1024M
```
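
When only the devices that actually carry a UUID are of interest, the `lsblk` output can be filtered. The sketch below is one possible approach, not the article's own method: it asks `lsblk` for raw, heading-free `NAME UUID` pairs (`-r`, `-n`, `-o`) and keeps the rows where the UUID column is present. The helper name `only_with_uuid` is an assumption made for this example.

```shell
#!/bin/sh
# Keep only rows that have a second (UUID) column.
# Input is "NAME UUID" pairs, as produced by `lsblk -rno NAME,UUID`.
# only_with_uuid is a hypothetical helper name.
only_with_uuid() {
    awk 'NF >= 2 { print $1, $2 }'
}

# Run against the real system only when lsblk is available.
if command -v lsblk >/dev/null 2>&1; then
    lsblk -rno NAME,UUID | only_with_uuid ||
        echo "lsblk could not read block devices here" >&2
fi
```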

### How to check the UUID of a disk partition or filesystem on Linux using the by-uuid path?
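
Each entry under `/dev/disk/by-uuid` is a symbolic link named after a UUID that points at the real block device file. As a hedged sketch of how those links can be resolved, the function below prints one `UUID -> device` pair per link. The name `list_by_uuid` and its optional directory argument are assumptions added so the function can also be tried on a scratch directory.

```shell
#!/bin/sh
# Print "UUID -> device" for every symlink in a by-uuid style directory.
# The directory defaults to /dev/disk/by-uuid; list_by_uuid is a
# hypothetical helper name used only for this illustration.
list_by_uuid() {
    dir=${1:-/dev/disk/by-uuid}
    for link in "$dir"/*; do
        # Skip anything that is not a symlink (including an unmatched glob).
        [ -L "$link" ] || continue
        printf '%s -> %s\n' "$(basename "$link")" "$(readlink -f "$link")"
    done
}

list_by_uuid
```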

|

||||

@ -106,7 +100,7 @@ lrwxrwxrwx 1 root root 10 Jan 29 08:34 d92fa769-e00f-4fd7-b6ed-ecf7224af7fa -> .

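### How to check the UUID of a disk partition or filesystem on Linux using the hwinfo command?

**[hwinfo][1]** stands for hardware information tool and is another great utility. It probes for the hardware present in the system and displays detailed information about the various hardware components in a human-readable format.

[hwinfo][1], the hardware information tool, is another great utility. It probes for the hardware present in the system and displays detailed information about the various hardware components in a human-readable format.

```
# hwinfo --block | grep by-uuid | awk '{print $3,$7}'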

@ -117,16 +111,16 @@ lrwxrwxrwx 1 root root 10 Jan 29 08:34 d92fa769-e00f-4fd7-b6ed-ecf7224af7fa -> .

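### How to check the UUID of a disk partition or filesystem on Linux using the udevadm command?

udevadm expects a command and command-specific options. It controls the runtime behavior of systemd-udevd, requests kernel events, manages the event queue, and provides simple debugging mechanisms.

`udevadm` expects a command and command-specific options. It controls the runtime behavior of systemd-udevd, requests kernel events, manages the event queue, and provides simple debugging mechanisms.

```
udevadm info -q all -n /dev/sdc1 | grep -i by-uuid | head -1
# udevadm info -q all -n /dev/sdc1 | grep -i by-uuid | head -1
S: disk/by-uuid/d17e3c31-e2c9-4f11-809c-94a549bc43b7
```

### How to check the UUID of a disk partition or filesystem on Linux using the tune2fs command?

tune2fs allows the system administrator to adjust various tunable filesystem parameters on Linux ext2, ext3, ext4 filesystems. The current values of these options can be displayed with the -l option.

`tune2fs` allows the system administrator to adjust various tunable filesystem parameters on Linux ext2, ext3, and ext4 filesystems. The current values of these options can be displayed with the `-l` option.

```
# tune2fs -l /dev/sdc1 | grep UUID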

@ -135,7 +129,7 @@ Filesystem UUID: d17e3c31-e2c9-4f11-809c-94a549bc43b7

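### How to check the UUID of a disk partition or filesystem on Linux using the dumpe2fs command?

dumpe2fs prints the superblock and block group information for the filesystem present on the device.

`dumpe2fs` prints the superblock and block group information for the filesystem present on the device.

```
# dumpe2fs /dev/sdc1 | grep UUID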

@ -150,7 +144,7 @@ via: https://www.2daygeek.com/check-partitions-uuid-filesystem-uuid-universally-

Author: [Magesh Maruthamuthu][a]
Topic selection: [lujun9972][b]
Translator: [liujing97](https://github.com/liujing97)
Proofreader: [proofreader ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

@ -0,0 +1,53 @@

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10731-1.html)
[#]: subject: (How to contribute to the Raspberry Pi community)
[#]: via: (https://opensource.com/article/19/3/contribute-raspberry-pi-community)
[#]: author: (Anderson Silva (Red Hat) https://opensource.com/users/ansilva/users/kepler22b/users/ansilva)

Getting started with Raspberry Pi: How to contribute to the Raspberry Pi community
======

> In the 13th article in our getting-started series, discover ways to get involved in the Raspberry Pi community.

![][1]

This series is winding down. As much fun as I have had writing it, I mostly hope it has helped people use the Raspberry Pi for education or entertainment. Maybe these articles convinced you to buy your first Raspberry Pi, or helped you rediscover the one gathering dust in a drawer. If any of that is true, I will consider the series a success.

If you want to get one and spread the word about how versatile this little green board is, here are a few ways to connect with the Raspberry Pi community:

* Help improve the [official documentation][2]
* Contribute code to the [projects][3] it depends on
* Report [bugs][4] in Raspbian
* Report bugs in the various ARM-architecture distributions
* Take a look at the Raspberry Pi Foundation's [Code Club][5] in the UK or [Code Club International][6] outside the UK to help kids learn to code
* Help with [translation][7]
* Volunteer at a [Raspberry Jam][8]

These are just a few of the ways you can contribute to the Raspberry Pi community. Last but not least, you can join me and [contribute articles][9] to your favorite open source website, [Opensource.com][10]. :-)

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/3/contribute-raspberry-pi-community

Author: [Anderson Silva (Red Hat)][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/ansilva/users/kepler22b/users/ansilva
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/raspberry_pi_community.jpg?itok=dcKwb5et
[2]: https://www.raspberrypi.org/documentation/CONTRIBUTING.md
[3]: https://www.raspberrypi.org/github/
[4]: https://www.raspbian.org/RaspbianBugs
[5]: https://www.codeclub.org.uk/
[6]: https://www.codeclubworld.org/
[7]: https://www.raspberrypi.org/translate/
[8]: https://www.raspberrypi.org/jam/
[9]: https://opensource.com/participate
[10]: http://Opensource.com

@ -0,0 +1,75 @@

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10734-1.html)
[#]: subject: (14 days of celebrating the Raspberry Pi)
[#]: via: (https://opensource.com/article/19/3/happy-pi-day)
[#]: author: (Anderson Silva (Red Hat) https://opensource.com/users/ansilva)

Getting started with Raspberry Pi: 14 days of celebrating the Raspberry Pi
======

> In the 14th and final article in our series on getting started with the Raspberry Pi, take a look back at everything we have learned.

![][1]

### Happy Pi Day!

Every year on March 14, we geeks celebrate Pi Day. Written in the `MMDD` date format, March 14 becomes 03/14, which numerically reminds us of 3.14, the first three digits of [π][2]. What many Americans do not realize is that almost no other country in the world uses this [date format][3], so Pi Day pretty much only works in the US, even though it is celebrated globally.

Wherever you are in the world, let's celebrate the Raspberry Pi and wrap up this series by reviewing the topics we have covered over the past two weeks:

* Day 1: [Which Raspberry Pi should you choose?][4]
* Day 2: [How to buy a Raspberry Pi][5]
* Day 3: [How to boot up a new Raspberry Pi][6]
* Day 4: [Learn Linux with the Raspberry Pi][7]
* Day 5: [5 ways to teach kids to program with the Raspberry Pi][8]
* Day 6: [3 popular programming languages you can learn with the Raspberry Pi][9]
* Day 7: [How to keep your Raspberry Pi updated][10]
* Day 8: [How to use your Raspberry Pi for entertainment][11]
* Day 9: [Emulators and native Linux games on the Raspberry Pi][12]
* Day 10: [Entering the physical world: how to use the Raspberry Pi's GPIO pins][13]
* Day 11: [Learn about computer security with the Raspberry Pi and Kali Linux][14]
* Day 12: [Do advanced math with Mathematica on the Raspberry Pi][15]
* Day 13: [How to contribute to the Raspberry Pi community][16]

![Pi Day illustration][18]

I will end this series by thanking everyone who followed along, especially those who learned something from it over the past 14 days! I also want to encourage everyone to keep expanding their knowledge of the Raspberry Pi and of all the open (and closed) source technology built around it.

I also encourage you to learn about other cultures, philosophies, religions, and worldviews. What makes us human is this amazing (and sometimes amusing) ability to adapt not only to external environments but also to intellectual ones.

No matter what you do, keep learning!

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/3/happy-pi-day

Author: [Anderson Silva (Red Hat)][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/ansilva
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/raspberry-pi-juggle.png?itok=oTgGGSRA
[2]: https://www.piday.org/million/
[3]: https://en.wikipedia.org/wiki/Date_format_by_country
[4]: https://linux.cn/article-10611-1.html
[5]: https://linux.cn/article-10615-1.html
[6]: https://linux.cn/article-10644-1.html
[7]: https://linux.cn/article-10645-1.html
[8]: https://linux.cn/article-10653-1.html
[9]: https://linux.cn/article-10661-1.html
[10]: https://linux.cn/article-10665-1.html
[11]: https://linux.cn/article-10669-1.html
[12]: https://linux.cn/article-10682-1.html
[13]: https://linux.cn/article-10687-1.html
[14]: https://linux.cn/article-10690-1.html
[15]: https://linux.cn/article-10711-1.html
[16]: https://linux.cn/article-10731-1.html
[17]: /file/426561
[18]: https://opensource.com/sites/default/files/uploads/raspberrypi_14_piday.jpg (Pi Day illustration)

@ -92,7 +92,7 @@ via: https://itsfoss.com/history-of-firefox

[3]: https://en.wikipedia.org/wiki/Tim_Berners-Lee
[4]: https://www.w3.org/DesignIssues/TimBook-old/History.html
[5]: http://viola.org/
[6]: https://en.wikipedia.org/wiki/Mosaic_(web_browser
[6]: https://en.wikipedia.org/wiki/Mosaic_(web_browser)
[7]: http://www.computinghistory.org.uk/det/1789/Marc-Andreessen/
[8]: http://www.davetitus.com/mozilla/
[9]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/03/Mozilla_boxing.jpg?ssl=1

@ -110,7 +110,7 @@ via: https://itsfoss.com/history-of-firefox

[21]: https://en.wikipedia.org/wiki/Usage_share_of_web_browsers
[22]: http://gs.statcounter.com/browser-market-share/desktop/worldwide/#monthly-201901-201901-bar
[23]: https://en.wikipedia.org/wiki/Red_panda
[24]: https://en.wikipedia.org/wiki/Flock_(web_browser
[24]: https://en.wikipedia.org/wiki/Flock_(web_browser)
[25]: https://www.windowscentral.com/microsoft-building-chromium-powered-web-browser-windows-10
[26]: https://itsfoss.com/why-firefox/
[27]: https://itsfoss.com/firefox-quantum-ubuntu/

@ -1,24 +1,24 @@

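[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10732-1.html)
[#]: subject: (Sweet Home 3D: An open source tool to help you decide on your dream home)
[#]: via: (https://opensource.com/article/19/3/tool-find-home)
[#]: author: (Jeff Macharyas (Community Moderator) )

Sweet Home 3D: An open source tool to help you decide on your dream home
Sweet Home 3D: An open source tool to help you find your dream home
======

An interior design application makes it easy to render your favorite house, whether real or imaginary.

> An interior design application makes it easy to render your favorite house, whether real or imaginary.

![Houses in a row][1]

I recently accepted a new job in Virginia. Since my wife was still working in New York and watching over our house there until it sold, it was my responsibility to go out and find a new house for us and our cat. A house she would not see until we moved in!

I recently accepted a new job in Virginia. Since my wife was still working in New York and watching over our house there until it sold, it was my responsibility to go out and find a new house for us and our cat. She would not be able to see the new house until we moved in.

I signed up with a real estate agent and looked at a few houses, taking many pictures and making scribbled notes. At night, I would upload the photos to a Google Drive folder, and my wife and I would look through them together over the phone while I tried to remember whether a room was on the right or the left, whether it had a fan, and so on.

I signed up with a real estate agent and looked at a few houses, taking many pictures and making scribbled notes. At night, I would upload the photos to a Google Drive folder, and my wife and I would look through them together over the phone while I also had to remember whether a room was on the right or the left, whether it had a fan, and so on.

Since this was a rather tedious and not very accurate way to present my findings, I went looking for an open source solution that could better show what our future dream house would look like without relying on my fuzzy memory and blurry photos.

Since this was a rather tedious and not very accurate way of presenting my findings, I went looking for an open source solution that could better show what our future dream house would look like without relying on my fuzzy memory and blurry photos.

[Sweet Home 3D][2] did exactly what I wanted. Sweet Home 3D is available on Sourceforge and is released under the GNU General Public License. Its [website][3] is very informative, and I was able to get it up and running right away. Sweet Home 3D was developed by Emmanuel Puybaret of Paris-based eTeks.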

@ -32,19 +32,19 @@ Sweet Home 3D:一个帮助你决定梦想家庭的开源工具

|

||||

|

||||

现在我画完了“内墙”,我从网站下载了各种“家具”,其中包括实际的家具以及门、窗、架子等。每个项目都以 ZIP 文件的形式下载,因此我创建了一个包含所有未压缩文件的文件夹。我可以自定义每件家具和重复的物品比如门,可以方便地复制粘贴到指定的地方。

|

||||

|

||||

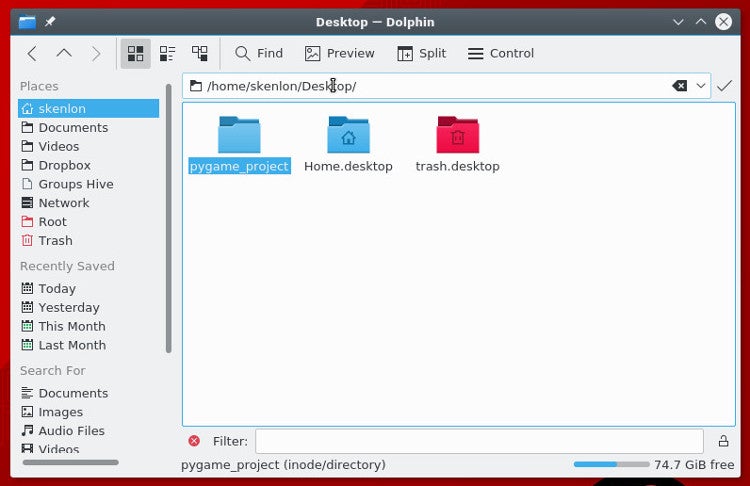

在我将所有墙壁和门窗都布置完后,我就使用应用的 3D 视图浏览房屋。根据照片和记忆,我对所有物体进行了调整直到接近房屋的样子。我可以花更多时间添加纹理,附属家具和物品,但这已经达到了我需要的程度。

|

||||

在我将所有墙壁和门窗都布置完后,我就使用这个应用的 3D 视图浏览房屋。根据照片和记忆,我对所有物体进行了调整,直到接近房屋的样子。我可以花更多时间添加纹理,附属家具和物品,但这已经达到了我需要的程度。

|

||||

|

||||

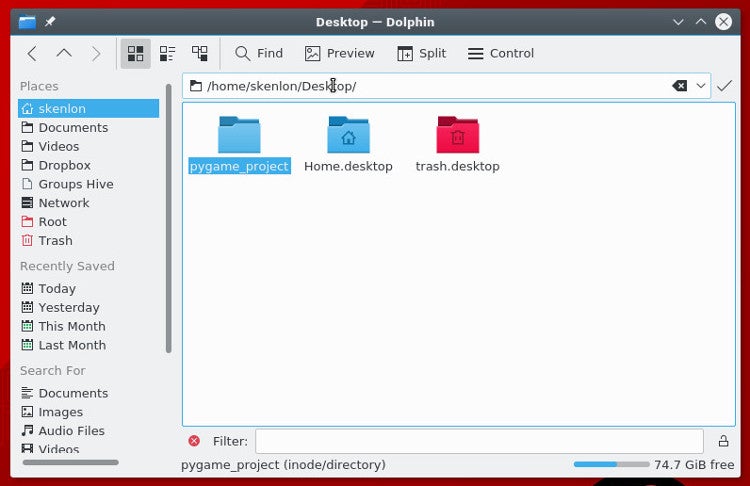

![Sweet Home 3D floorplan][7]

|

||||

|

||||

完成之后,我将计划导出为 OBJ 文件,它可在各种程序中打开,例如 [Blender][8] 和 Mac 上的 Preview,方便旋转房屋并从各个角度查看。视频功能最有用,我可以创建一个起点,然后在房子中绘制一条路径,并记录“旅程”。我将视频导出为 MOV 文件,并使用 QuickTime 在 Mac 上打开和查看。

|

||||

完成之后,我将该项目导出为 OBJ 文件,它可在各种程序中打开,例如 [Blender][8] 和 Mac 上的“预览”中,方便旋转房屋并从各个角度查看。视频功能最有用,我可以创建一个起点,然后在房子中绘制一条路径,并记录“旅程”。我将视频导出为 MOV 文件,并使用 QuickTime 在 Mac 上打开和查看。

|

||||

|

||||

我的妻子能够(几乎)所有我看到的,我们甚至可以开始在搬家前布置家具。现在,我所要做的就是装上卡车搬到新家。

|

||||

我的妻子能够(几乎)能看到所有我看到的,我们甚至可以开始在搬家前布置家具。现在,我所要做的就是把行李装上卡车搬到新家。

|

||||

|

||||

Sweet Home 3D 在我的新工作中也是有用的。我正在寻找一种方法来改善学院建筑的地图,并计划在 [Inkscape][9] 或 Illustrator 或其他软件中重新绘制它。但是,由于我有平面地图,我可以使用 Sweet Home 3D 创建平面图的 3D 版本并将其上传到我们的网站以便更方便地找到地方。

|

||||

|

||||

### 开源犯罪现场?

|

||||

|

||||

一件有趣的事:根据 [Sweet Home 3D 的博客][10],“法国法医办公室(科学警察)最近选择 Sweet Home 3D 作为设计计划表示路线和犯罪现场的工具。这是法国政府建议优先考虑免费开源解决方案的具体应用。“

|

||||

一件有趣的事:根据 [Sweet Home 3D 的博客][10],“法国法医办公室(科学警察)最近选择 Sweet Home 3D 作为设计规划表示路线和犯罪现场的工具。这是法国政府建议优先考虑自由开源解决方案的具体应用。“

|

||||

|

||||

这是公民和政府如何利用开源解决方案创建个人项目、解决犯罪和建立世界的又一点证据。

|

||||

|

||||

@ -55,11 +55,11 @@ via: https://opensource.com/article/19/3/tool-find-home

Author: [Jeff Macharyas (Community Moderator)][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [proofreader ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:
[a]: https://opensource.com/users/jeffmacharyas
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/house_home_colors_live_building.jpg?itok=HLpsIfIL (Houses in a row)
[2]: https://sourceforge.net/projects/sweethome3d/

@ -1,18 +1,16 @@

[#]: collector: (lujun9972)
[#]: translator: (liujing97)
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10746-1.html)
[#]: subject: (How To Configure sudo Access In Linux?)
[#]: via: (https://www.2daygeek.com/how-to-configure-sudo-access-in-linux/)
[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

How to configure sudo access in Linux?
How to configure sudo access in Linux
======

The root user has full control over a Linux system.

root is the most powerful user on a Linux system and can perform any action on it.

The root user has full control over a Linux system. root is the most powerful user on the system and can perform any action on it.

If other users need to perform certain actions, you cannot give root access to everyone, because if one of them makes a mistake there is no way to undo it.

@ -20,43 +18,40 @@ Linux 系统中 root 是拥有最高权力的用户,可以在系统中实施

We can overcome this by granting sudo permission to the appropriate users.

The sudo command provides a mechanism for giving trusted users administrative access to the system without sharing the root user's password.

The `sudo` command provides a mechanism for giving trusted users administrative access to the system without sharing the root user's password.

They can perform most administrative operations, but they do not have full privileges the way root does.

### What is sudo?

sudo is a program that lets a normal user execute a command as the superuser or as another user, as specified by the security policy.

`sudo` is a program that lets a normal user execute a command as the superuser or as another user, as specified by the security policy.

Access for sudo users is controlled by the `/etc/sudoers` file.

### What are the advantages of sudo users?

On a Linux system, sudo is a safe way to run a command you are not familiar with.

On a Linux system, `sudo` is a safe way to run a command you are not familiar with.

* The Linux system keeps logs in the `/var/log/secure` and `/var/log/auth.log` files, where you can verify what actions a sudo user performed.
* It prompts for a password each time for the current operation, so you have time to verify that the operation is what you intend. If you realize it is not, you can safely exit without performing it.

This differs between RHEL-based systems (such as Red Hat (RHEL), CentOS, and Oracle Enterprise Linux (OEL)) and Debian-based systems (such as Debian, Ubuntu, and LinuxMint).

We will show you how to do this on both of the distribution families covered in this article.

There are three methods that apply to both distribution families.

* Add the user to the appropriate group. On RHEL-based systems, add the user to the `wheel` group. On Debian-based systems, add the user to the `sudo` or `admin` group.
* Add the user to the `/etc/group` file manually.
* Add the user to the `/etc/sudoers` file with the visudo command.

* Add the user to the appropriate group. On RHEL-based systems, add the user to the `wheel` group. On Debian-based systems, add the user to the `sudo` or `admin` group.
* Add the user to the `/etc/group` file manually.
* Add the user to the `/etc/sudoers` file with the `visudo` command.

### How to configure sudo access on RHEL/CentOS/OEL systems?

On RHEL-based systems (such as Red Hat (RHEL), CentOS, and Oracle Enterprise Linux (OEL)), any of the following three methods will do it.

### Method 1: How to grant superuser access to a normal user on Linux using the wheel group?

#### Method 1: How to grant superuser access to a normal user on Linux using the wheel group?

Wheel is a special group on RHEL-based systems that provides additional privileges, allowing a user to run restricted commands as the superuser.

wheel is a special group on RHEL-based systems that provides additional privileges, allowing a user to run restricted commands as the superuser.

Note that the `wheel` group must be enabled in the `/etc/sudoers` file to gain this access.

@ -70,7 +65,7 @@ Wheel 是基于 RHEL 的系统中的一个特殊组,它提供额外的权限

Assume we have already created a user account to perform these actions. In this article, I will use the `daygeek` account.

Run the following command to add the user to the wheel group.

Run the following command to add the user to the `wheel` group.

```
# usermod -aG wheel daygeek

@ -87,10 +82,10 @@ wheel:x:10:daygeek

```
$ tail -5 /var/log/secure
tail: cannot open _/var/log/secure_ for reading: Permission denied
tail: cannot open /var/log/secure for reading: Permission denied
```

I got an error when I tried to access the `/var/log/secure` file as a normal user. I am going to access the same file with sudo; let's see the magic.

I got an error when I tried to access the `/var/log/secure` file as a normal user. I am going to access the same file with `sudo`; let's see the magic.

```
$ sudo tail -5 /var/log/secure

@ -102,9 +97,9 @@ Mar 17 07:05:10 CentOS7 sudo: daygeek : TTY=pts/0 ; PWD=/home/daygeek ; USER=roo

|

||||

Mar 17 07:05:10 CentOS7 sudo: pam_unix(sudo:session): session opened for user root by daygeek(uid=0)

|

||||

```

|

||||

|

||||

### 方法 2:在 RHEL/CentOS/OEL 中如何使用 /etc/group 文件为普通用户授予超级用户访问权限?

|

||||

#### 方法 2:在 RHEL/CentOS/OEL 中如何使用 /etc/group 文件为普通用户授予超级用户访问权限?

|

||||

|

||||

We can also add a user to the wheel group manually by editing the `/etc/group` file.

|

||||

We can also add a user to the `wheel` group manually by editing the `/etc/group` file.

|

||||

|

||||

Just open the file and append the user to the end of the appropriate group's line.

|

||||

|

||||

@ -115,7 +110,7 @@ wheel:x:10:daygeek,user1

|

||||

|

||||

In this example, I'm going to use the `user1` account.
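The `wheel:x:10:daygeek,user1` entry shown in the hunk header above follows the standard `/etc/group` layout of four colon-separated fields: group name, password placeholder, GID, and a comma-separated member list. As a rough sketch (the helper name `parse_group_entry` is made up for illustration, and the line is parsed from a string rather than read from the real file), membership can be checked like this:

```python
def parse_group_entry(line):
    """Split one /etc/group line into its four colon-separated fields."""
    name, password, gid, members = line.strip().split(":")
    return {
        "name": name,
        "password": password,
        "gid": int(gid),
        # An empty member field means the group has no supplementary members.
        "members": [m for m in members.split(",") if m],
    }

# Sample line taken from the diff above, not read from a live system.
entry = parse_group_entry("wheel:x:10:daygeek,user1")
print("user1 in wheel:", "user1" in entry["members"])
```

On a live system the same information is usually easier to get from `id user1` or `getent group wheel`; the sketch only makes the file format explicit.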

|

||||

|

||||

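I'm going to check whether `user1` has sudo access by restarting the `Apache` service on the system. Let's see the magic.

|

||||

I'm going to check whether `user1` has sudo access by restarting the Apache httpd service on the system. Let's see the magic.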

|

||||

|

||||

```

|

||||

$ sudo systemctl restart httpd

|

||||

@ -128,11 +123,11 @@ Mar 17 07:10:40 CentOS7 sudo: user1 : TTY=pts/0 ; PWD=/home/user1 ; USER=root ;

|

||||

Mar 17 07:12:35 CentOS7 sudo: user1 : TTY=pts/0 ; PWD=/home/user1 ; USER=root ; COMMAND=/bin/grep -i httpd /var/log/secure

|

||||

```
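Each command that `sudo` records in the log above carries its details as `KEY=value` pairs separated by ` ; `. The sketch below pulls those fields out of one such line. The regular expression is inferred only from the sample entries in this article, not from any sudo specification, and `parse_sudo_entry` is a hypothetical helper name:

```python
import re

# Pattern inferred from the sample log lines in this article (an assumption).
SUDO_LINE = re.compile(
    r"sudo:\s+(?P<user>\S+) : TTY=(?P<tty>\S+) ; PWD=(?P<pwd>\S+) ; "
    r"USER=(?P<runas>\S+) ; COMMAND=(?P<command>.+)$"
)

def parse_sudo_entry(line):
    """Return the fields of a sudo command log line, or None if it does not match."""
    match = SUDO_LINE.search(line)
    return match.groupdict() if match else None

sample = ("Mar 17 07:12:35 CentOS7 sudo: user1 : TTY=pts/0 ; PWD=/home/user1 ; "
          "USER=root ; COMMAND=/bin/grep -i httpd /var/log/secure")
entry = parse_sudo_entry(sample)
print(entry["user"], "ran", entry["command"], "as", entry["runas"])
```

Lines written by `pam_unix` (session opened or closed) do not have this shape, so the helper returns `None` for them.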

|

||||

|

||||

### Method 3: How to grant superuser access to a normal user in Linux using the /etc/sudoers file?

|

||||

#### Method 3: How to grant superuser access to a normal user in Linux using the /etc/sudoers file?

|

||||

|

||||

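A user's sudo access is controlled by the `/etc/sudoers` file. So, simply add the user to the sudoers file, below the wheel group entry.

|

||||

A user's sudo access is controlled by the `/etc/sudoers` file. So, simply add the user to the `sudoers` file, below the `wheel` group entry.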

|

||||

|

||||

Just append the desired user to the /etc/sudoers file using the visudo command.

|

||||

Just append the desired user to the `/etc/sudoers` file using the `visudo` command.

|

||||

|

||||

```

|

||||

# grep -i user2 /etc/sudoers

|

||||

@ -141,7 +136,7 @@ user2 ALL=(ALL) ALL

|

||||

|

||||

In this example, I'm going to use the `user2` account.
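The rule `user2 ALL=(ALL) ALL` shown in the hunk header above reads as: who the rule applies to, on which hosts, as which user (and optionally group) commands may run, and which commands are allowed. The sketch below splits a rule of exactly this simple shape into those parts. It is a deliberately simplified illustration: real sudoers syntax (aliases, tags, multiple specifications) is much richer, and `parse_simple_rule` is a hypothetical name.

```python
import re

# Handles only rules shaped like "user HOSTS=(RUNAS) COMMANDS" (an assumption).
RULE = re.compile(
    r"^(?P<who>\S+)\s+(?P<hosts>\S+?)\s*=\s*\((?P<runas>[^)]*)\)\s*(?P<commands>.+)$"
)

def parse_simple_rule(line):
    """Split a simple sudoers user specification into its parts, or return None."""
    match = RULE.match(line.strip())
    if match is None:
        return None
    rule = match.groupdict()
    # "(ALL:ALL)" means run-as user and run-as group; "(ALL)" names only the user.
    runas_user, _, runas_group = rule.pop("runas").partition(":")
    rule["runas_user"] = runas_user
    rule["runas_group"] = runas_group or None
    return rule

print(parse_simple_rule("user2 ALL=(ALL) ALL"))
```

This is only meant for reading a rule; edits to the real file should still go through `visudo`, which checks the syntax before saving.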

|

||||

|

||||

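I'm going to check whether `user2` has sudo access by restarting the `MariaDB` service on the system. Let's see the magic.

|

||||

I'm going to check whether `user2` has sudo access by restarting the MariaDB service on the system. Let's see the magic.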

|

||||

|

||||

```

|

||||

$ sudo systemctl restart mariadb

|

||||

@ -155,11 +150,11 @@ Mar 17 07:26:52 CentOS7 sudo: user2 : TTY=pts/0 ; PWD=/home/user2 ; USER=root ;

|

||||

|

||||

### 在 Debian/Ubuntu 系统中如何配置 sudo 访问权限?

|

||||

|

||||

在基于 Debian 的系统中(如 Debian, Ubuntu 和 LinuxMint),使用下面的三个方法就可以做到。

|

||||

在基于 Debian 的系统中(如 Debian、Ubuntu 和 LinuxMint),使用下面的三个方法就可以做到。

|

||||

|

||||

### 方法 1:在 Linux 中如何使用 sudo 或 admin 组为普通用户授予超级用户访问权限?

|

||||

#### 方法 1:在 Linux 中如何使用 sudo 或 admin 组为普通用户授予超级用户访问权限?

|

||||

|

||||

sudo 或 admin 是基于 Debian 的系统中的特殊组,它提供额外的权限,可以授权用户像超级用户一样执行受到限制的命令。

|

||||

`sudo` 或 `admin` 是基于 Debian 的系统中的特殊组,它提供额外的权限,可以授权用户像超级用户一样执行受到限制的命令。

|

||||

|

||||

注意,应该在 `/etc/sudoers` 文件中激活 `sudo` 或 `admin` 组来获得该访问权限。

|

||||

|

||||

@ -175,7 +170,7 @@ sudo 或 admin 是基于 Debian 的系统中的特殊组,它提供额外的权

|

||||

|

||||

假设我们已经创建了一个用户账号来执行这些操作。在此,我将会使用 `2gadmin` 这个用户账号。

|

||||

|

||||

执行下面的命令,添加用户到 sudo 组。

|

||||

执行下面的命令,添加用户到 `sudo` 组。

|

||||

|

||||

```

|

||||

# usermod -aG sudo 2gadmin

|

||||

@ -195,7 +190,7 @@ $ less /var/log/auth.log

|

||||

/var/log/auth.log: Permission denied

|

||||

```

|

||||

|

||||

当我试图以普通用户身份访问 `/var/log/auth.log` 文件时出现错误。 我将要使用 sudo 访问同一个文件,让我们看看这个魔术。

|

||||

当我试图以普通用户身份访问 `/var/log/auth.log` 文件时出现错误。 我将要使用 `sudo` 访问同一个文件,让我们看看这个魔术。

|

||||

|

||||

```

|

||||

$ sudo tail -5 /var/log/auth.log

|

||||

@ -209,7 +204,7 @@ Mar 17 20:40:48 Ubuntu18 sudo: pam_unix(sudo:session): session opened for user r

|

||||

|

||||

或者,我们可以通过添加用户到 `admin` 组来执行相同的操作。

|

||||

|

||||

运行下面的命令,添加用户到 admin 组。

|

||||

运行下面的命令,添加用户到 `admin` 组。

|

||||

|

||||

```

|

||||

# usermod -aG admin user1

|

||||

@ -231,9 +226,9 @@ Mar 17 20:53:36 Ubuntu18 sudo: user1 : TTY=pts/0 ; PWD=/home/user1 ; USER=root ;

|

||||

Mar 17 20:53:36 Ubuntu18 sudo: pam_unix(sudo:session): session opened for user root by user1(uid=0)

|

||||

```

|

||||

|

||||

### 方法 2:在 Debian/Ubuntu 中如何使用 /etc/group 文件为普通用户授予超级用户访问权限?

|

||||

#### 方法 2:在 Debian/Ubuntu 中如何使用 /etc/group 文件为普通用户授予超级用户访问权限?

|

||||

|

||||

我们可以通过编辑 `/etc/group` 文件来手动地添加用户到 sudo 组或 admin 组。

|

||||

我们可以通过编辑 `/etc/group` 文件来手动地添加用户到 `sudo` 组或 `admin` 组。

|

||||

|

||||

只需打开该文件,并在恰当的组后追加相应的用户就可完成这一点。

|

||||

|

||||

@ -244,7 +239,7 @@ sudo:x:27:2gadmin,user2

|

||||

|

||||

在该例中,我将使用 `user2` 这个用户账号。

|

||||

|

||||

我将要通过在系统中重启 `Apache` 服务来检查用户 `user2` 是不是拥有 sudo 访问权限。让我们看看这个魔术。

|

||||

我将要通过在系统中重启 Apache httpd 服务来检查用户 `user2` 是不是拥有 `sudo` 访问权限。让我们看看这个魔术。

|

||||

|

||||

```

|

||||

$ sudo systemctl restart apache2

|

||||

@ -257,11 +252,11 @@ Mar 17 21:01:04 Ubuntu18 systemd: pam_unix(systemd-user:session): session opened

|

||||

Mar 17 21:01:33 Ubuntu18 sudo: user2 : TTY=pts/0 ; PWD=/home/user2 ; USER=root ; COMMAND=/bin/systemctl restart apache2

|

||||

```

|

||||

|

||||

### 方法 3:在 Linux 中如何使用 /etc/sudoers 文件为普通用户授予超级用户访问权限?

|

||||

#### 方法 3:在 Linux 中如何使用 /etc/sudoers 文件为普通用户授予超级用户访问权限?

|

||||

|

||||

sudo 用户的访问权限是被 `/etc/sudoers` 文件控制的。因此,只需将用户添加到 sudo 或 admin 组下的 sudoers 文件中即可。

|

||||

sudo 用户的访问权限是被 `/etc/sudoers` 文件控制的。因此,只需将用户添加到 `sudoers` 文件中的 `sudo` 或 `admin` 组下即可。

|

||||

|

||||

只需通过 visudo 命令将期望的用户追加到 /etc/sudoers 文件中。

|

||||

只需通过 `visudo` 命令将期望的用户追加到 `/etc/sudoers` 文件中。

|

||||

|

||||

```

|

||||

# grep -i user3 /etc/sudoers

|

||||

@ -270,7 +265,7 @@ user3 ALL=(ALL:ALL) ALL

|

||||

|

||||

在该例中,我将使用 `user3` 这个用户账号。

|

||||

|

||||

我将要通过在系统中重启 `MariaDB` 服务来检查用户 `user3` 是不是拥有 sudo 访问权限。让我们看看这个魔术。

|

||||

我将要通过在系统中重启 MariaDB 服务来检查用户 `user3` 是不是拥有 `sudo` 访问权限。让我们看看这个魔术。

|

||||

|

||||

```

|

||||

$ sudo systemctl restart mariadb

|

||||

@ -285,6 +280,7 @@ Mar 17 21:12:53 Ubuntu18 sudo: pam_unix(sudo:session): session closed for user r

|

||||

Mar 17 21:13:08 Ubuntu18 sudo: user3 : TTY=pts/0 ; PWD=/home/user3 ; USER=root ; COMMAND=/usr/bin/tail -f /var/log/auth.log

|

||||

Mar 17 21:13:08 Ubuntu18 sudo: pam_unix(sudo:session): session opened for user root by user3(uid=0)

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/how-to-configure-sudo-access-in-linux/

|

||||

@ -292,7 +288,7 @@ via: https://www.2daygeek.com/how-to-configure-sudo-access-in-linux/

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[liujing97](https://github.com/liujing97)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,74 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10742-1.html)

|

||||

[#]: subject: (Parallel computation in Python with Dask)

|

||||

[#]: via: (https://opensource.com/article/19/4/parallel-computation-python-dask)

|

||||

[#]: author: (Moshe Zadka (Community Moderator) https://opensource.com/users/moshez)

|

||||

|

||||

Parallel computation in Python with Dask

|

||||

======

|

||||

|

||||

> The Dask library scales Python computation to multiple cores or even to multiple machines.

|

||||

|

||||

![Pair programming][1]

|

||||

|

||||

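A common complaint about Python performance is the [global interpreter lock][2] (GIL). Because of the GIL, only one thread can execute Python bytecode at a time. As a consequence, using threads does not speed up computation, even on modern multi-core machines.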

|

||||

|

||||

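But when you need to parallelize across many cores, you don't have to give up Python: the [Dask][3] library scales computation to multiple cores or even to multiple machines. Some setups configure Dask on thousands of machines, each with multiple cores. There are scaling limits, but they are rarely reached.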

|

||||

|

||||

While Dask has many built-in array operations, as an example of something that is not built in, we can calculate the [skewness][4]:

|

||||

|

||||

```
import numpy
from dask import array as darray

# my_data is assumed to be an existing one-dimensional sequence of numbers
arr = darray.from_array(numpy.array(my_data), chunks=(1000,))
mean = darray.mean(arr)
stddev = darray.std(arr)
unnormalized_moment = darray.mean(arr * arr * arr)
# See the formula on Wikipedia:
skewness = ((unnormalized_moment - (3 * mean * stddev ** 2) - mean ** 3) /
            stddev ** 3)
# Everything above is lazy; compute() evaluates the task graph
result = skewness.compute()
```

|

||||

|

||||

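Notice that each operation will use as many cores as needed. This parallelizes the work across all cores, even when calculating over billions of elements.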
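The last line of the skewness example relies on the identity E[(X - μ)³] = E[X³] - 3μσ² - μ³, which holds when σ is the population standard deviation. As a small sanity check that does not need Dask at all, the sketch below compares that form with the direct definition using only the standard library. The function names and the sample data are made up for illustration.

```python
import statistics

def skewness_direct(data):
    """Skewness as the mean of the cubed standardized deviations."""
    mu = statistics.fmean(data)
    sigma = statistics.pstdev(data)  # population standard deviation (ddof = 0)
    return statistics.fmean(((x - mu) / sigma) ** 3 for x in data)

def skewness_from_raw_moment(data):
    """Skewness via (E[X^3] - 3*mu*sigma^2 - mu^3) / sigma^3."""
    mu = statistics.fmean(data)
    sigma = statistics.pstdev(data)
    third_raw_moment = statistics.fmean(x ** 3 for x in data)
    return (third_raw_moment - 3 * mu * sigma ** 2 - mu ** 3) / sigma ** 3

sample = [1.0, 2.0, 2.0, 3.0, 9.0]  # made-up data with a long right tail
print(skewness_direct(sample), skewness_from_raw_moment(sample))
```

The two printed values should agree up to floating-point rounding, and both are positive for this right-skewed sample.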

|

||||

|

||||

Of course, not all of our operations can be parallelized by the library; sometimes we need to implement the parallelism ourselves.

|

||||

|

||||

For that, Dask has a "delayed" feature:

|

||||

|

||||

```
import dask

def is_palindrome(s):
    return s == s[::-1]

# string_list is assumed to be an existing list of strings
# Each call becomes a lazy task; nothing runs until compute() is called
palindromes = [dask.delayed(is_palindrome)(s) for s in string_list]
total = dask.delayed(sum)(palindromes)
result = total.compute()
```

|

||||

|

||||

This checks, in parallel, whether each string is a palindrome and returns the number of palindromes.

|

||||

|

||||

While Dask was created for data scientists, it is by no means limited to data science. Whenever we need to parallelize tasks in Python, we can turn to Dask, GIL or no GIL.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/4/parallel-computation-python-dask

|

||||

|

||||

作者:[Moshe Zadka (Community Moderator)][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/moshez

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/collab-team-pair-programming-code-keyboard.png?itok=kBeRTFL1 (Pair programming)

|

||||

[2]: https://wiki.python.org/moin/GlobalInterpreterLock

|

||||

[3]: https://github.com/dask/dask

|

||||

[4]: https://en.wikipedia.org/wiki/Skewness#Definition

|

||||

@ -0,0 +1,89 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (tomjlw)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10741-1.html)

|

||||

[#]: subject: (Streaming internet radio with RadioDroid)

|

||||

[#]: via: (https://opensource.com/article/19/4/radiodroid-internet-radio-player)

|

||||

[#]: author: (Chris Hermansen (Community Moderator) https://opensource.com/users/clhermansen)

|

||||

|

||||

使用 RadioDroid 流传输网络广播

|

||||

======

|

||||

|

||||

> 通过简单的设置使用你家中的音响收听你最爱的网络电台。

|

||||

|

||||

![][1]

|

||||

|

||||

最近网络媒体对 [Google 的 Chromecast 音频设备的下架][2]发出叹息。该设备在音频媒体界备受[好评][3],因此我已经在考虑入手一个。基于 Chromecast 退场的消息,我决定在它们全部被打包扔进垃圾堆之前以一个合理价位买一个。

|

||||

|

||||

我在 [MobileFun][4] 上找到一个放进我的订单中。这个设备最终到货了。它被包在一个普普通通、简简单单的 Google 包装袋中,外面打印着非常简短的使用指南。

|

||||

|

||||

![Google Chromecast 音频][5]

|

||||

|

||||

我通过我的数模转换器的光纤 S/PDIF 连接接入到家庭音响,希望以此能提供最佳的音质。

|

||||

|

||||

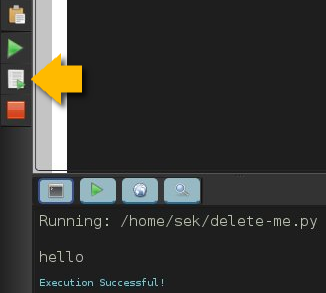

安装过程并无纰漏,在五分钟后我就可以播放一些音乐了。我知道一些安卓应用支持 Chromecast,因此我决定用 Google Play Music 测试它。意料之中,它工作得不错,音乐效果听上去也相当好。然而作为一个具有开源精神的人,我决定看看我能找到什么开源播放器能兼容 Chromecast。

|

||||

|

||||

### RadioDroid 的救赎

|

||||

|

||||

[RadioDroid 安卓应用][6] 满足条件。它是开源的,并且可从 [GitHub][7]、Google Play 以及 [F-Droid][8] 上获取。根据帮助文档,RadioDroid 从 [Community Radio Browser][9] 网页寻找播放流。因此我决定在我的手机上安装尝试一下。

|

||||

|

||||

![RadioDroid][10]

|

||||

|

||||

安装过程快速顺利,RadioDroid 打开展示当地电台十分迅速。你可以在这个屏幕截图的右上方附近看到 Chromecast 按钮(看上去像一个有着波阵面的长方形图标)。

|

||||

|

||||

我尝试了几个当地电台。这个应用可靠地在我手机喇叭上播放了音乐。但是我不得不摆弄 Chromecast 按钮来通过 Chromecast 把音乐传到流上。但是它确实可以做到流传输。

|

||||

|

||||

我决定找一下我喜爱的网络广播电台:法国马赛的 [格雷诺耶广播电台][11]。在 RadioDroid 上有许多找到电台的方法。其中一种是使用标签——“当地”、“最流行”等——就在电台列表上方。其中一个标签是国家,我找到法国,在其 1500 个电台中划来划去寻找格雷诺耶广播电台。另一种办法是使用屏幕上方的查询按钮;查询迅速找到了那家美妙的电台。我尝试了其它几次查询它们都返回了合理的信息。

|

||||

|

||||

回到“当地”标签,我在列表中翻来覆去,发现“当地”的定义似乎是“在同一个国家”。因此尽管西雅图、波特兰、旧金山、洛杉矶和朱诺比多伦多更靠近我的家,我并没有在“当地”标签中看到它们。然而通过使用查询功能,我可以发现所有名字中带有西雅图的电台。

|

||||

|

||||

“语言”标签使我找到所有用葡语(及葡语方言)播报的电台。我很快发现了另一个最爱的电台 [91 Rock Curitiba][12]。

|

||||

|

||||

接着灵感来了,虽然现在是春天了,但又如何呢?让我们听一些圣诞音乐。意料之中,搜寻圣诞把我引到了 [181.FM – Christmas Blender][13]。不错,一两分钟的欣赏对我就够了。

|

||||

|

||||

因此总的来说,我推荐把 RadioDroid 和 Chromecast 的组合作为一种用家庭音响以合理价位播放网络电台的良好方式。

|

||||

|

||||

### 对于音乐方面……

|

||||

|

||||

最近我从 [Blue Coast Music][16] 商店里选了一个 [Qua Continuum][15] 创作的叫作 [Continuum One][14] 的有趣的氛围(甚至无节拍)音乐专辑。

|

||||

|

||||

Blue Coast 有许多可提供给开源音乐爱好者的。音乐可以无需通过那些奇怪的平台专用下载管理器下载(有时以物理形式)。它通常提供几种形式,包括 WAV、FLAC 和 DSD;WAV 和 FLAC 还提供不同的字长和比特率,包括 16/44.1、24/96 和 24/192,针对 DSD 则有 2.8、5.6 和 11.2 MHz。音乐是用优秀的仪器精心录制的。不幸的是,我并没有找到许多符合我口味的音乐,尽管我喜欢 Blue Coast 上能获取的几个艺术家,包括 Qua Continuum,[Art Lande][17] 以及 [Alex De Grassi][18]。

|

||||

|

||||

在 [Bandcamp][19] 上,我挑选了 [Emancipator's Baralku][20] 和 [Framework's Tides][21],两个都是我喜欢的。两位艺术家创作的音乐符合我的口味——电音但又(总体来说)不是舞蹈,它们的音乐旋律优美,副歌也很好听。有许多可以让开源音乐发烧友爱上 Bandcamp 的东西,比如买前试听整首歌的服务;没有垃圾软件下载器;与大量音乐家的合作;以及对 [Creative Commons music][22] 的支持。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/4/radiodroid-internet-radio-player

|

||||

|

||||

作者:[Chris Hermansen (Community Moderator)][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[tomjlw](https://github.com/tomjlw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/clhermansen

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/programming-code-keyboard-laptop-music-headphones.png?itok=EQZ2WKzy (woman programming)

|

||||

[2]: https://www.theverge.com/2019/1/11/18178751/google-chromecast-audio-discontinued-sale

|

||||

[3]: https://www.whathifi.com/google/chromecast-audio/review

|

||||

[4]: https://www.mobilefun.com/google-chromecast-audio-black-70476

|

||||

[5]: https://opensource.com/sites/default/files/uploads/internet-radio_chromecast.png (Google Chromecast Audio)

|

||||

[6]: https://play.google.com/store/apps/details?id=net.programmierecke.radiodroid2

|

||||

[7]: https://github.com/segler-alex/RadioDroid

|

||||

[8]: https://f-droid.org/en/packages/net.programmierecke.radiodroid2/

|

||||

[9]: http://www.radio-browser.info/gui/#!/

|

||||

[10]: https://opensource.com/sites/default/files/uploads/internet-radio_radiodroid.png (RadioDroid)

|

||||

[11]: http://www.radiogrenouille.com/

|

||||

[12]: https://91rock.com.br/

|

||||

[13]: http://player.181fm.com/?station=181-xblender

|

||||

[14]: https://www.youtube.com/watch?v=PqLCQXPS8iQ

|

||||

[15]: https://bluecoastmusic.com/artists/qua-continuum

|

||||

[16]: https://bluecoastmusic.com/store

|

||||

[17]: https://bluecoastmusic.com/store?f%5B0%5D=search_api_multi_aggregation_1%3Aart%20lande

|

||||

[18]: https://bluecoastmusic.com/store?f%5B0%5D=search_api_multi_aggregation_1%3Aalex%20de%20grassi

|

||||

[19]: https://bandcamp.com/

|

||||

[20]: https://emancipator.bandcamp.com/album/baralku

|

||||

[21]: https://frameworksuk.bandcamp.com/album/tides

|

||||

[22]: https://bandcamp.com/tag/creative-commons

|

||||

@ -1,44 +1,45 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (MjSeven)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10725-1.html)

|

||||

[#]: subject: (Bash vs. Python: Which language should you use?)

|

||||

[#]: via: (https://opensource.com/article/19/4/bash-vs-python)

|

||||

[#]: author: (Archit Modi Red Hat https://opensource.com/users/architmodi/users/greg-p/users/oz123)

|

||||

|

||||

|

||||

Bash vs Python: 该使用哪种语言?

|

||||

Bash vs Python:你该使用哪个?

|

||||

======

|

||||

两种编程语言都各有优缺点,一些任务使得它们在某些方面互有胜负。

|

||||

|

||||

> 两种编程语言都各有优缺点,它们在某些任务方面互有胜负。

|

||||

|

||||

![][1]

|

||||

|

||||

[Bash][2] 和 [Python][3] 是大多数自动化工程师最喜欢的编程语言。它们都各有优缺点,有时很难选择应该使用哪一个。诚实的答案是:这取决于任务、范围、背景和任务的复杂性。

|

||||

[Bash][2] 和 [Python][3] 是大多数自动化工程师最喜欢的编程语言。它们都各有优缺点,有时很难选择应该使用哪一个。所以,最诚实的答案是:这取决于任务、范围、背景和任务的复杂性。

|

||||

|

||||

让我们来比较一下这两种语言,以便更好地理解它们各自的优点。

|

||||

|

||||

### Bash

|

||||

|

||||

* 是一种 Linux/Unix shell 命令语言

|

||||

* 非常适合编写使用命令行界面(CLI)实用程序的 shell 脚本,利用一个命令的输出传递给另一个命令(管道),以及执行简单的任务(最多 100 行代码)

|

||||

* 非常适合编写使用命令行界面(CLI)实用程序的 shell 脚本,利用一个命令的输出传递给另一个命令(管道),以及执行简单的任务(可以多达 100 行代码)

|

||||

* 可以按原样使用命令行命令和实用程序

|

||||

* 启动时间比 Python 快,但执行时间性能差

|

||||

* Windows 默认没有安装。你的脚本可能不会兼容多个操作系统,但是 Bash 是大多数 Linux/Unix 系统的默认 shell

|

||||

* 与其它 shell (如 csh、zsh、fish) _不_ 完全兼容。

|

||||

* 管道 ("|") CLI 实用程序如 sed、awk、grep 等可以降低其性能

|

||||

* 缺少很多函数、对象、数据结构和多线程,这限制了它在复杂脚本或编程中的使用

|

||||

* 启动时间比 Python 快,但执行时性能差

|

||||

* Windows 中默认没有安装。你的脚本可能不会兼容多个操作系统,但是 Bash 是大多数 Linux/Unix 系统的默认 shell

|

||||

* 与其它 shell (如 csh、zsh、fish) *不* 完全兼容。

|

||||

* 通过管道(`|`)传递 CLI 实用程序如 `sed`、`awk`、`grep` 等会降低其性能

|

||||

* 缺少很多函数、对象、数据结构和多线程支持,这限制了它在复杂脚本或编程中的使用

|

||||

* 缺少良好的调试工具和实用程序

|

||||

|

||||

### Python

|

||||

|

||||

* 是一种面对对象编程语言(OOP),因此它比 Bash 更加通用

|

||||

* 几乎可以用于任何任务

|

||||

* 适用于大多数操作系统,默认情况下它安装在大多数 Unix/Linux 系统中

|

||||

* 适用于大多数操作系统,默认情况下它在大多数 Unix/Linux 系统中都有安装

|

||||

* 与伪代码非常相似

|

||||

* 具有简单、清晰、易于学习和阅读的语法

|

||||

* 拥有大量的库、文档以及一个活跃的社区

|

||||

* 提供比 Bash 更友好的错误处理特性

|

||||

* 有比 Bash 更好的调试工具和实用程序,这使得它在开发涉及很多行代码的复杂软件应用程序中是一种很棒的语言

|

||||

* 有比 Bash 更好的调试工具和实用程序,这使得它在开发涉及到很多行代码的复杂软件应用程序时是一种很棒的语言

|

||||

* Applications (or scripts) may contain many third-party dependencies, which must be installed before execution

|

||||

* For simple tasks, it requires more code than Bash
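To make the last point concrete: counting the lines of a file that contain a fixed string is a one-liner in a shell (`grep -c -F` with the string and the file name), while a Python version needs a few explicit steps. The sketch below is only an illustration; the function name and the sample lines are invented, and it works on an in-memory list so that it runs anywhere.

```python
def count_matching_lines(lines, needle):
    """Count the lines that contain needle as a plain substring."""
    return sum(1 for line in lines if needle in line)

# Invented sample data standing in for the contents of a log file.
log_lines = [
    "sudo: session opened for user root",
    "sshd: accepted connection",
    "sudo: session closed for user root",
]
print(count_matching_lines(log_lines, "sudo"))
```

The extra lines buy things the shell version lacks, such as a reusable function that can be tested and extended, which is the trade-off the two lists describe.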

|

||||

|

||||

@ -53,7 +54,7 @@ via: https://opensource.com/article/19/4/bash-vs-python

|

||||

作者:[Archit Modi (Red Hat)][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,74 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (tomjlw)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10747-1.html)

|

||||

[#]: subject: (Cisco, Google reenergize multicloud/hybrid cloud joint development)

|

||||

[#]: via: (https://www.networkworld.com/article/3388218/cisco-google-reenergize-multicloudhybrid-cloud-joint-development.html#tk.rss_all)

|

||||

[#]: author: (Michael Cooney https://www.networkworld.com/author/Michael-Cooney/)

|

||||

|

||||

思科、谷歌重新赋能多/混合云共同开发

|

||||

======

|

||||

> Cisco, VMware, HPE and others have begun adopting the new Google Cloud Anthos cloud technology.

|

||||

|

||||

![Thinkstock][1]

|

||||

|

||||

思科与谷歌已扩展它们的混合云开发活动,以帮助其客户可以在从本地数据中心到公共云上的任何地方更轻松地搭建安全的多云以及混合云应用。

|

||||

|

||||

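This expansion centers on Google's new open source hybrid cloud package called Anthos, which was introduced at the Google Next event this week. Anthos is based on, and replaces, Google's existing Google Cloud Services beta. Anthos will let customers run applications, unmodified, on existing on-premises hardware or in the public cloud. According to Google, it is available on [Google Cloud Platform][5] (GCP) with [Google Kubernetes Engine][6] (GKE), and in data centers with [GKE On-Prem][7]. Google says Anthos will, for the first time, also let customers manage workloads running on third-party clouds such as AWS and Azure from the Google platform, without requiring administrators and developers to learn different environments and APIs.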

|

||||

|

||||

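The key point is that Anthos offers a single managed service that lets customers manage and deploy workloads across clouds without having to worry about different environments or APIs.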

|

||||

|

||||

作为首秀的一部分,谷歌也宣布一个叫做 [Anthos Migrate][8] 的测试计划,它能够从本地环境或者其它云自动迁移虚拟机到 GKE 上的容器中。谷歌说,“这种独特的迁移技术使你无须修改原来的虚拟机或者应用就能以一种行云流水般的方式迁移、更新你的基础设施”。谷歌称它给予了公司按客户节奏转移本地应用到云环境的灵活性。

|

||||

|

||||

### 思科和谷歌

|

||||

|

||||

就思科来说,它宣布对 Anthos 的支持并承诺将它紧密集成进思科的数据中心技术中,例如 HyperFlex 超融合包、应用中心基础设施(思科的旗舰 SDN 方案)、SD-WAN 和 StealthWatch 云。思科说,无论是本地的还是在云端的,这次集成将通过自动更新到最新版本和安全补丁,给予一种一致的、云般的感觉。

|

||||

|

||||

“谷歌云在容器(Kubernetes)和<ruby>服务网格<rt>service mesh</rt></ruby>(Istio)上的专业与它们在开发者社区的领导力,再加上思科的企业级网络、计算、存储和安全产品及服务,将为我们的顾客促成一次强强联合。”思科的云平台和解决方案集团资深副总裁 Kip Compton 这样[写道][9],“思科对于 Anthos 的集成将会帮助顾客跨本地数据中心和公共云搭建、管理多云/混合云应用,让他们专注于创新和灵活性,同时不会影响安全性或增加复杂性。”

|

||||

|

||||

### 谷歌云和思科

|

||||

|

||||

谷歌云工程副总裁 Eyal Manor [写道][10] 通过思科对 Anthos 的支持,客户将能够:

|

||||

|

||||

* 受益于全托管服务例如 GKE 以及思科的超融合基础设施、网络和安全技术;

|

||||

* 在企业数据中心和云中一致运行

|

||||

* 在企业数据中心使用云服务

|

||||

* 用最新的云技术更新本地基础设施

|

||||

|

||||

思科和谷歌从 2017 年 10 月就在紧密合作,当时他们表示正在开发一个能够连接本地基础设施和云环境的开放混合云平台。该套件,即[思科为谷歌云打造的混合云平台][11],大致在 2018 年 9 月上市。它使得客户们能通过谷歌云托管 Kubernetes 容器开发企业级功能,包含思科网络和安全技术以及来自 Istio 的服务网格监控。

|

||||

|

||||

谷歌说开源的 Istio 的容器和微服务优化技术给开发者提供了一种一致的方式,通过服务级的 mTLS (双向传输层安全)身份验证访问控制来跨云连接、保护、管理和监听微服务。因此,客户能够轻松实施新的可移植的服务,并集中配置和管理这些服务。

|

||||

|

||||

思科不是唯一宣布对 Anthos 支持的供应商。谷歌表示,至少 30 家大型合作商包括 [VMware][12]、[Dell EMC][13]、[HPE][14]、Intel 和联想致力于为他们的客户在它们自己的超融合基础设施上提供 Anthos 服务。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3388218/cisco-google-reenergize-multicloudhybrid-cloud-joint-development.html

|

||||

|

||||

作者:[Michael Cooney][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[tomjlw](https://github.com/tomjlw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.networkworld.com/author/Michael-Cooney/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://images.techhive.com/images/article/2016/12/hybrid_cloud-100700390-large.jpg

|

||||

[2]: https://www.networkworld.com/article/3233132/cloud-computing/what-is-hybrid-cloud-computing.html

|

||||

[3]: https://www.networkworld.com/article/3252775/hybrid-cloud/multicloud-mania-what-to-know.html

|

||||

[4]: https://www.networkworld.com/newsletters/signup.html

|

||||

[5]: https://cloud.google.com/

|

||||

[6]: https://cloud.google.com/kubernetes-engine/

|

||||

[7]: https://cloud.google.com/gke-on-prem/

|

||||

[8]: https://cloud.google.com/contact/

|

||||

[9]: https://blogs.cisco.com/news/next-phase-cisco-google-cloud

|

||||

[10]: https://cloud.google.com/blog/topics/partners/google-cloud-partners-with-cisco-on-hybrid-cloud-next19?utm_medium=unpaidsocial&utm_campaign=global-googlecloud-liveevent&utm_content=event-next

|

||||

[11]: https://cloud.google.com/cisco/

|

||||

[12]: https://blogs.vmware.com/networkvirtualization/2019/04/vmware-and-google-showcase-hybrid-cloud-deployment.html/

|

||||

[13]: https://www.dellemc.com/en-us/index.htm

|

||||

[14]: https://www.hpe.com/us/en/newsroom/blog-post/2019/04/hpe-and-google-cloud-join-forces-to-accelerate-innovation-with-hybrid-cloud-solutions-optimized-for-containerized-applications.html

|

||||

[15]: https://www.facebook.com/NetworkWorld/

|

||||

[16]: https://www.linkedin.com/company/network-world

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (MjSeven)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10751-1.html)

|

||||

[#]: subject: (How To Install And Enable Flatpak Support On Linux?)

|

||||

[#]: via: (https://www.2daygeek.com/how-to-install-and-enable-flatpak-support-on-linux/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

@ -10,35 +10,30 @@

|

||||

如何在 Linux 上安装并启用 Flatpak 支持?

|

||||

======

|

||||

|

||||

<to 校正:之前似乎发表过跟这个类似的一篇 https://linux.cn/article-10459-1.html>

|

||||

|

||||

目前,我们都在使用 Linux 发行版的官方软件包管理器来安装所需的软件包。

|

||||

|

||||

在 Linux 中,它做得很好,没有任何问题。(它很好地完成了它应该做的工作,同时它没有任何妥协)

|

||||

在 Linux 中,它做得很好,没有任何问题。(它不打折扣地很好的完成了它应该做的工作)

|

||||

|

||||

在一些方面它也有一些限制,所以会让我们考虑其他替代解决方案来解决。

|

||||

但在一些方面它也有一些限制,所以会让我们考虑其他替代解决方案来解决。

|

||||

|

||||

是的,默认情况下,我们不会从发行版官方软件包管理器获取最新版本的软件包,因为这些软件包是在构建当前 OS 版本时构建的。它们只会提供安全更新,直到下一个主要版本发布。

|

||||

是的,默认情况下,我们不能从发行版官方软件包管理器获取到最新版本的软件包,因为这些软件包是在构建当前 OS 版本时构建的。它们只会提供安全更新,直到下一个主要版本发布。

|

||||

|

||||

那么,这种情况有什么解决办法吗?

|

||||

|

||||

是的,我们有多种解决方案,而且我们大多数人已经开始使用其中的一些了。

|

||||

那么,这种情况有什么解决办法吗?是的,我们有多种解决方案,而且我们大多数人已经开始使用其中的一些了。

|

||||

|

||||

有些什么呢,它们有什么好处?

|

||||

|

||||

* **对于基于 Ubuntu 的系统:** PPAs

|

||||

* **对于基于 RHEL 的系统:** [EPEL Repository][1]、[ELRepo Repository][2]、[nux-dextop Repository][3]、[IUS Community Repo][4]、[RPMfusion Repository][5] 和 [Remi Repository][6]

|

||||

* **对于基于 Ubuntu 的系统:** PPA

|

||||

* **对于基于 RHEL 的系统:** [EPEL 仓库][1]、[ELRepo 仓库][2]、[nux-dextop 仓库][3]、[IUS 社区仓库][4]、[RPMfusion 仓库][5] 和 [Remi 仓库][6]

|

||||

|

||||

|

||||

使用上面的仓库,我们将获得最新的软件包。这些软件包通常都得到了很好的维护,还有大多数社区的建议。但这对于操作系统来说应该是适当的,因为它们可能并不安全。

|

||||

使用上面的仓库,我们将获得最新的软件包。这些软件包通常都得到了很好的维护,还有大多数社区的推荐。但这些只是建议,可能并不总是安全的。

|

||||

|

||||

In recent years, several universal package formats have emerged and become widely used.

|

||||

|

||||

* **`Flatpak:`** 它是独立于发行版的包格式,主要贡献者是 Fedora 项目团队。大多数主要的 Linux 发行版都采用了 Flatpak 框架。

|

||||

* **`Snaps:`** Snappy 是一种通用的软件包封装格式,最初由 Canonical 为 Ubuntu 手机及其操作系统设计和构建的。后来,大多数发行版都进行了改编。

|

||||

* **`AppImage:`** AppImage 是一种可移植的包格式,可以在不安装或不需要 root 权限的情况下运行。

|

||||

* Flatpak:它是独立于发行版的包格式,主要贡献者是 Fedora 项目团队。大多数主要的 Linux 发行版都采用了 Flatpak 框架。

|

||||

* Snaps:Snappy 是一种通用的软件包封装格式,最初由 Canonical 为 Ubuntu 手机及其操作系统设计和构建的。后来,更多的发行版都接纳了它。

|

||||

* AppImage:AppImage 是一种可移植的包格式,可以在不安装和不需要 root 权限的情况下运行。

|

||||

|

||||

我们之前已经介绍过 **[Snap 包管理器和包封装格式][7]**。今天我们将讨论 Flatpak 包封装格式。

|

||||

我们之前已经介绍过 [Snap 包管理器和包封装格式][7]。今天我们将讨论 Flatpak 包封装格式。

|

||||

|

||||

### 什么是 Flatpak?

|

||||

|

||||

@ -56,13 +51,13 @@ Flatpak 的一个缺点是不像 Snap 和 AppImage 那样支持服务器操作

|

||||

|

||||

大多数 Linux 发行版官方仓库都提供 Flatpak 软件包。因此,可以使用它们来进行安装。

|

||||

|

||||

对于 **`Fedora`** 系统,使用 **[DNF 命令][8]** 来安装 flatpak。

|

||||

对于 Fedora 系统,使用 [DNF 命令][8] 来安装 flatpak。

|

||||

|

||||

```

|

||||

$ sudo dnf install flatpak

|

||||

```

|

||||

|

||||

对于 **`Debian/Ubuntu`** 系统,使用 **[APT-GET 命令][9]** 或 **[APT 命令][10]** 来安装 flatpak。

|

||||

对于 Debian/Ubuntu 系统,使用 [APT-GET 命令][9] 或 [APT 命令][10] 来安装 flatpak。

|

||||

|

||||

```

|

||||

$ sudo apt install flatpak

|

||||

@ -76,19 +71,19 @@ $ sudo apt update

|

||||

$ sudo apt install flatpak

|

||||

```

|

||||

|

||||

对于基于 **`Arch Linux`** 的系统,使用 **[Pacman 命令][11]** 来安装 flatpak。

|

||||

对于基于 Arch Linux 的系统,使用 [Pacman 命令][11] 来安装 flatpak。

|

||||

|

||||

```

|

||||

$ sudo pacman -S flatpak

|

||||

```

|

||||

|

||||

对于 **`RHEL/CentOS`** 系统,使用 **[YUM 命令][12]** 来安装 flatpak。

|

||||

对于 RHEL/CentOS 系统,使用 [YUM 命令][12] 来安装 flatpak。

|

||||

|

||||

```

|

||||

$ sudo yum install flatpak

|

||||

```

|

||||

|

||||

对于 **`openSUSE Leap`** 系统,使用 **[Zypper 命令][13]** 来安装 flatpak。

|

||||

对于 openSUSE Leap 系统,使用 [Zypper 命令][13] 来安装 flatpak。

|

||||

|

||||

```

|

||||

$ sudo zypper install flatpak

|

||||

@ -96,9 +91,7 @@ $ sudo zypper install flatpak

|

||||

|

||||

### 如何在 Linux 上启用 Flathub 支持?

|

||||

|

||||

Flathub 网站是一个应用程序商店,你可以在其中找到 flatpak。

|

||||

|

||||

它是一个中央仓库,所有的 flatpak 应用程序都可供用户使用。

|

||||

Flathub 网站是一个应用程序商店,你可以在其中找到 flatpak 软件包。它是一个中央仓库,所有的 flatpak 应用程序都可供用户使用。

|

||||

|

||||

运行以下命令在 Linux 上启用 Flathub 支持:

|

||||

|

||||

@ -226,7 +219,7 @@ org.gnome.Platform/x86_64/3.30 system,runtime

|

||||

|

||||

### 如何查看有关已安装应用程序的详细信息?

|

||||

|

||||

运行以下命令以查看有关已安装应用程序的详细信息。

|

||||

运行以下命令以查看有关已安装应用程序的详细信息:

|

||||

|

||||

```

|

||||

$ flatpak info com.github.muriloventuroso.easyssh

|

||||

@ -264,6 +257,7 @@ $ flatpak update com.github.muriloventuroso.easyssh

|

||||

### 如何移除已安装的应用程序?

|

||||

|

||||

运行以下命令来移除已安装的应用程序:

|

||||

|

||||

```

|

||||

$ sudo flatpak uninstall com.github.muriloventuroso.easyssh

|

||||

```

|

||||

@ -281,7 +275,7 @@ via: https://www.2daygeek.com/how-to-install-and-enable-flatpak-support-on-linux

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,45 +1,35 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (heguangzhi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-10736-1.html)

|

||||

[#]: subject: (How To Check The List Of Open Ports In Linux?)

|

||||

[#]: via: (https://www.2daygeek.com/linux-scan-check-open-ports-using-netstat-ss-nmap/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

|

||||

|

||||

如何检查Linux中的开放端口列表?

|

||||

How to check the list of open ports in Linux?

|

||||

======

|

||||

|

||||

最近,我们就同一主题写了两篇文章。

|

||||

Recently, we wrote two articles on this same topic. They show how to check whether a given port is open on a remote server.

|

||||

|

||||

这些文章内容帮助您如何检查远程服务器中给定的端口是否打开。

|

||||

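If you want to [check whether a port is open on a remote Linux system][1], follow that link. If you want to [check whether a port is open on multiple remote Linux systems][2], follow that link. If you want to [check the status of multiple ports on multiple remote Linux systems][2], follow that link.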

|

||||

|

||||

如果您想 **[检查远程 Linux 系统上的端口是否打开][1]** 请点击链接浏览。

|

||||

This article, however, helps you check the list of open ports on the local system.

|

||||

|

||||

如果您想 **[检查多个远程 Linux 系统上的端口是否打开][2]** 请点击链接浏览。

|

||||

Linux has a handful of utilities for this purpose. Here, I cover the four most important Linux commands for checking this.

|

||||

|

||||

如果您想 **[检查多个远程Linux系统上的多个端口状态][2]** 请点击链接浏览。

|

||||

|

||||

但是本文帮助您检查本地系统上的开放端口列表。

|

||||

|

||||

在 Linux 中很少有用于此目的的实用程序。

|

||||

|

||||

然而,我提供了四个最重要的 Linux 命令来检查这一点。

|

||||

|

||||

You can use the following four commands to do this. They are well known and widely used by Linux administrators.

|

||||

|

||||

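* **`netstat:`** netstat ("network statistics") is a command-line tool that displays information about network connections (both incoming and outgoing), such as routing tables, masquerade connections, multicast memberships, and network ports.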

|

||||

* **`nmap:`** Nmap ("Network Mapper") is an open source tool for network exploration and security auditing. It was designed to scan large networks quickly.

|

||||

* **`ss:`** ss is used to dump socket statistics. It can be used much like netstat, and it can display more TCP state information than other tools.

|

||||

* **`lsof:`** lsof stands for List Open File. It lists all the files opened by a process.
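The tools above list ports by inspecting the system's socket tables or by scanning. For checking one specific port from a script, a plain TCP connection attempt is often enough. The sketch below is a minimal version of that idea using only the standard library. It is not how `netstat`, `ss` or `lsof` work internally; `is_port_open` is a hypothetical helper, and port 22 is just an example value.

```python
import socket

def is_port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to (host, port) succeeds within the timeout."""
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        # connect_ex returns 0 on success and an error number otherwise
        return sock.connect_ex((host, port)) == 0

# Port 22 (SSH) is only an illustrative choice; substitute any port of interest.
print("port 22 open:", is_port_open("127.0.0.1", 22))
```

A successful connection only shows that something is listening on that port; to see which process owns it, the commands listed above are still the right tools.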

|

||||