+

+常用的Linux内核原生支持IPv4和IPv6的TCP MD5选项。因此,如果你从全新的[Linux机器][3]构建了一台Quagga路由器,TCP的MD5功能会自动启用。剩下来的事情,仅仅是配置Quagga以使用它的功能。但是,如果你使用的是FreeBSD机器或者为Quagga构建了一个自定义内核,请确保内核开启了TCP的MD5支持(如,Linux中的CONFIG_TCP_MD5SIG选项)。

+

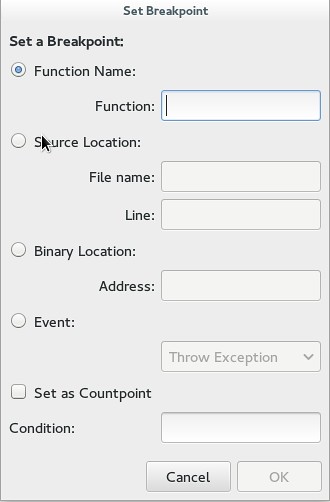

+### 配置Router-A验证功能 ###

+

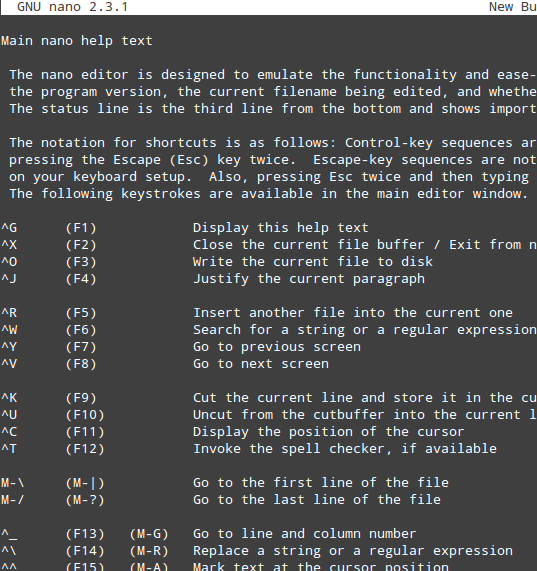

+我们将使用Quagga的CLI Shell来配置路由器,我们将使用的唯一的一个新命令是‘password’。

+

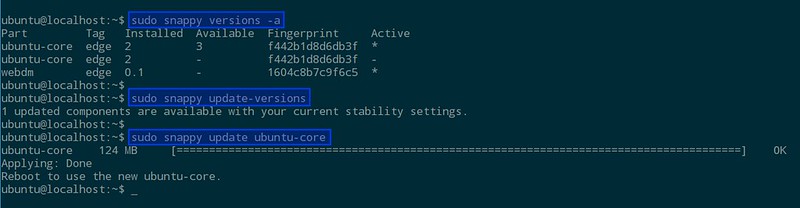

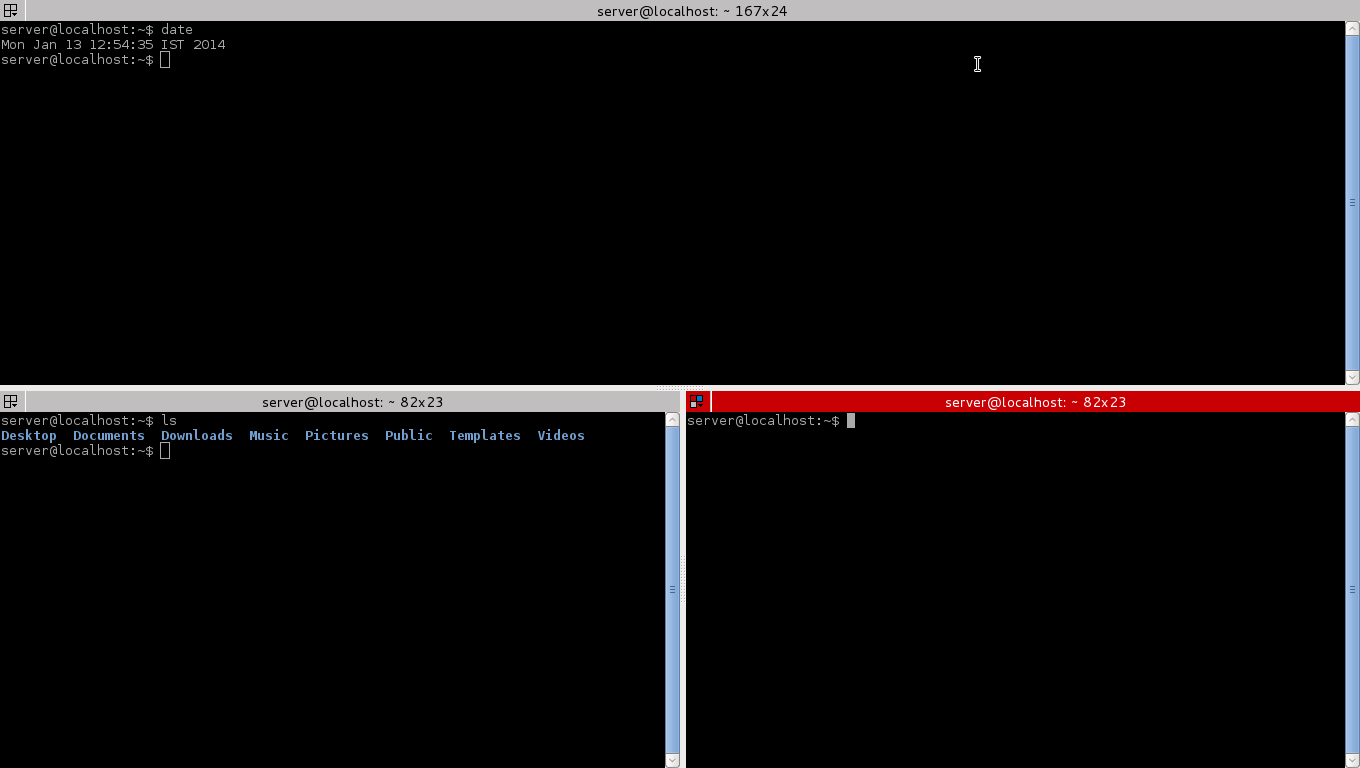

+ [root@router-a ~]# vtysh

+ router-a# conf t

+ router-a(config)# router bgp 100

+ router-a(config-router)# network 192.168.100.0/24

+ router-a(config-router)# neighbor 10.10.12.2 remote-as 200

+ router-a(config-router)# neighbor 10.10.12.2 password xmodulo

+

+本例中使用的预共享密钥是‘xmodulo’。很明显,在生产环境中,你需要选择一个更健壮的密钥。

+

+**注意**: 在Quagga中,‘service password-encryption’命令被用做加密配置文件中所有明文密码(如,登录密码)。然而,当我使用该命令时,我注意到BGP配置中的预共享密钥仍然是明文的。我不确定这是否是Quagga的限制,还是版本自身的问题。

+

+### 配置Router-B验证功能 ###

+

+我们将以类似的方式配置router-B。

+

+ [root@router-b ~]# vtysh

+ router-b# conf t

+ router-b(config)# router bgp 200

+ router-b(config-router)# network 192.168.200.0/24

+ router-b(config-router)# neighbor 10.10.12.1 remote-as 100

+ router-b(config-router)# neighbor 10.10.12.1 password xmodulo

+

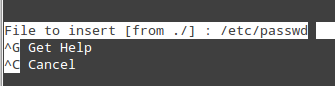

+### 验证BGP会话 ###

+

+如果一切配置正确,那么BGP会话就应该起来了,两台路由器应该能交换路由表。这时候,TCP会话中的所有流出包都会携带一个MD5摘要的包内容和一个密钥,而摘要信息会被另一端自动验证。

+

+我们可以像平时一样通过查看BGP的概要来验证活跃的BGP会话。MD5校验和的验证在Quagga内部是透明的,因此,你在BGP级别是无法看到的。

+

+

+

+如果你想要测试BGP验证,你可以配置一个邻居路由,设置其密码为空,或者故意使用错误的预共享密钥,然后查看发生了什么。你也可以使用包嗅探器,像tcpdump或者Wireshark等,来分析通过BGP会话的包。例如,带有“-M ”选项的tcpdump将验证TCP选项字段的MD5摘要。

+

+###小结###

+

+在本教程中,我们演示了怎样简单地加固两台路由间的BGP会话安全。相对于其它协议而言,配置过程非常简明。强烈推荐你加固BGP会话安全,尤其是当你用另一个AS配置BGP会话的时候。预共享密钥也应该安全地保存。

+

+--------------------------------------------------------------------------------

+

+via: http://xmodulo.com/bgp-authentication-quagga.html

+

+作者:[Sarmed Rahman][a]

+译者:[GOLinux](https://github.com/GOLinux)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://xmodulo.com/author/sarmed

+[1]:http://research.dyn.com/2008/02/pakistan-hijacks-youtube-1/

+[2]:http://tools.ietf.org/html/rfc2385

+[3]:https://linux.cn/article-4232-1.html

diff --git a/published/201505/20150409 4 Tools Send Email with Subject, Body and Attachment in Linux.md b/published/201505/20150409 4 Tools Send Email with Subject, Body and Attachment in Linux.md

new file mode 100644

index 0000000000..00003eaa19

--- /dev/null

+++ b/published/201505/20150409 4 Tools Send Email with Subject, Body and Attachment in Linux.md

@@ -0,0 +1,261 @@

+4个可以发送完整电子邮件的命令行工具

+================================================================================

+今天的文章里我们会讲到一些使用Linux命令行工具来发送带附件的电子邮件的方法。它有很多用处,比如在应用程序所在服务器上,使用电子邮件发送一个文件过来,或者你可以在脚本中使用这些命令来做一些自动化操作。在本文的例子中,我们会使用foo.tar.gz文件作为附件。

+

+有不同的命令行工具可以发送邮件,这里我分享几个多数用户会使用的工具,如`mailx`、`mutt`和`swaks`。

+

+我们即将呈现的这些工具都是非常有名的,并且存在于多数Linux发行版默认的软件仓库中,你可以使用如下命令安装:

+

+在 **Debian / Ubuntu** 系统

+

+ apt-get install mutt

+ apt-get install swaks

+ apt-get install mailx

+ apt-get install sharutils

+

+在基于Red Hat的系统,如 **CentOS** 或者 **Fedora**

+

+ yum install mutt

+ yum install swaks

+ yum install mailx

+ yum install sharutils

+

+### 1) 使用 mail / mailx ###

+

+`mailx`工具在多数Linux发行版中是默认的邮件程序,现在已经支持发送附件了。如果它不在你的系统中,你可以使用上边的命令安装。有一点需要注意,老版本的mailx可能不支持发送附件,运行如下命令查看是否支持。

+

+ $ man mail

+

+第一行看起来是这样的:

+

+ mailx [-BDdEFintv~] [-s subject] [-a attachment ] [-c cc-addr] [-b bcc-addr] [-r from-addr] [-h hops] [-A account] [-S variable[=value]] to-addr . . .

+

+如果你看到它支持`-a`的选项(-a 文件名,将文件作为附件添加到邮件)和`-s`选项(-s 主题,指定邮件的主题),那就是支持的。可以使用如下的几个例子发送邮件。

+

+**a) 简单的邮件**

+

+运行`mail`命令,然后`mailx`会等待你输入邮件内容。你可以按回车来换行。当输入完成后,按Ctrl + D,`mailx`会显示EOT表示结束。

+

+然后`mailx`会自动将邮件发送给收件人。

+

+ $ mail user@example.com

+

+ HI,

+ Good Morning

+ How are you

+ EOT

+

+**b) 发送有主题的邮件**

+

+ $ echo "Email text" | mail -s "Test Subject" user@example.com

+

+`-s`的用处是指定邮件的主题。

+

+**c) 从文件中读取邮件内容并发送**

+

+ $ mail -s "message send from file" user@example.com < /path/to/file

+

+**d) 将从管道获取到的`echo`命令输出作为邮件内容发送**

+

+ $ echo "This is message body" | mail -s "This is Subject" user@example.com

+

+**e) 发送带附件的邮件**

+

+ $ echo “Body with attachment "| mail -a foo.tar.gz -s "attached file" user@example.com

+

+`-a`选项用于指定附件。

+

+### 2) mutt ###

+

+Mutt是类Unix系统上的一个文本界面邮件客户端。它有20多年的历史,在Linux历史中也是一个很重要的部分,它是最早支持进程打分和多线程处理的客户端程序之一。按照如下的例子来发送邮件。

+

+**a) 带有主题,从文件中读取邮件的正文,并发送**

+

+ $ mutt -s "Testing from mutt" user@example.com < /tmp/message.txt

+

+**b) 通过管道获取`echo`命令输出作为邮件内容发送**

+

+ $ echo "This is the body" | mutt -s "Testing mutt" user@example.com

+

+**c) 发送带附件的邮件**

+

+ $ echo "This is the body" | mutt -s "Testing mutt" user@example.com -a /tmp/foo.tar.gz

+

+**d) 发送带有多个附件的邮件**

+

+ $ echo "This is the body" | mutt -s "Testing" user@example.com -a foo.tar.gz –a bar.tar.gz

+

+### 3) swaks ###

+

+Swaks(Swiss Army Knife,瑞士军刀)是SMTP服务上的瑞士军刀,它是一个功能强大、灵活、可编程、面向事务的SMTP测试工具,由John Jetmore开发和维护。你可以使用如下语法发送带附件的邮件:

+

+ $ swaks -t "foo@bar.com" --header "Subject: Subject" --body "Email Text" --attach foo.tar.gz

+

+关于Swaks一个重要的地方是,它会为你显示整个邮件发送过程,所以如果你想调试邮件发送过程,它是一个非常有用的工具。

+

+它会给你提供了邮件发送过程的所有细节,包括邮件接收服务器的功能支持、两个服务器之间的每一步交互。

+

+### 4) uuencode ###

+

+邮件传输系统最初是被设计来传送7位编码(类似ASCII)的内容的。这就意味这它是用来发送文本内容,而不能发会使用8位的二进制内容(如程序文件或者图片)。`uuencode`(“UNIX to UNIX encoding”,UNIX之间使用的编码方式)程序用来解决这个限制。使用`uuencode`,发送端将二进制格式的转换成文本格式来传输,接收端再转换回去。

+

+我们可以简单地使用`uuencode`和`mailx`或者`mutt`配合,来发送二进制内容,类似这样:

+

+ $ uuencode example.jpeg example.jpeg | mail user@example.com

+

+### Shell脚本:解释如何发送邮件 ###

+

+ #!/bin/bash

+

+ FROM=""

+ SUBJECT=""

+ ATTACHMENTS=""

+ TO=""

+ BODY=""

+

+ # 检查文件名对应的文件是否存在

+ function check_files()

+ {

+ output_files=""

+ for file in $1

+ do

+ if [ -s $file ]

+ then

+ output_files="${output_files}${file} "

+ fi

+ done

+ echo $output_files

+ }

+

+ echo "*********************"

+ echo "E-mail sending script."

+ echo "*********************"

+ echo

+

+ # 读取用户输入的邮件地址

+ while [ 1 ]

+ do

+ if [ ! $FROM ]

+ then

+ echo -n -e "Enter the e-mail address you wish to send mail from:\n[Enter] "

+ else

+ echo -n -e "The address you provided is not valid:\n[Enter] "

+ fi

+

+ read FROM

+ echo $FROM | grep -E '^.+@.+$' > /dev/null

+ if [ $? -eq 0 ]

+ then

+ break

+ fi

+ done

+

+ echo

+

+ # 读取用户输入的收件人地址

+ while [ 1 ]

+ do

+ if [ ! $TO ]

+ then

+ echo -n -e "Enter the e-mail address you wish to send mail to:\n[Enter] "

+ else

+ echo -n -e "The address you provided is not valid:\n[Enter] "

+ fi

+

+ read TO

+ echo $TO | grep -E '^.+@.+$' > /dev/null

+ if [ $? -eq 0 ]

+ then

+ break

+ fi

+ done

+

+ echo

+

+ # 读取用户输入的邮件主题

+ echo -n -e "Enter e-mail subject:\n[Enter] "

+ read SUBJECT

+

+ echo

+

+ if [ "$SUBJECT" == "" ]

+ then

+ echo "Proceeding without the subject..."

+ fi

+

+ # 读取作为附件的文件名

+ echo -e "Provide the list of attachments. Separate names by space.

+ If there are spaces in file name, quote file name with \"."

+ read att

+

+ echo

+

+ # 确保文件名指向真实文件

+ attachments=$(check_files "$att")

+ echo "Attachments: $attachments"

+

+ for attachment in $attachments

+ do

+ ATTACHMENTS="$ATTACHMENTS-a $attachment "

+ done

+

+ echo

+

+ # 读取完整的邮件正文

+ echo "Enter message. To mark the end of message type ;; in new line."

+ read line

+

+ while [ "$line" != ";;" ]

+ do

+ BODY="$BODY$line\n"

+ read line

+ done

+

+ SENDMAILCMD="mutt -e \"set from=$FROM\" -s \"$SUBJECT\" \

+ $ATTACHMENTS -- \"$TO\" <<< \"$BODY\""

+ echo $SENDMAILCMD

+

+ mutt -e "set from=$FROM" -s "$SUBJECT" $ATTACHMENTS -- $TO <<< $BODY

+

+** 脚本输出 **

+

+ $ bash send_mail.sh

+ *********************

+ E-mail sending script.

+ *********************

+

+ Enter the e-mail address you wish to send mail from:

+ [Enter] test@gmail.com

+

+ Enter the e-mail address you wish to send mail to:

+ [Enter] test@gmail.com

+

+ Enter e-mail subject:

+ [Enter] Message subject

+

+ Provide the list of attachments. Separate names by space.

+ If there are spaces in file name, quote file name with ".

+ send_mail.sh

+

+ Attachments: send_mail.sh

+

+ Enter message. To mark the end of message type ;; in new line.

+ This is a message

+ text

+ ;;

+

+### 总结 ###

+

+有很多方法可以使用命令行/Shell脚本来发送邮件,这里我们只分享了其中4个类Unix系统可用的工具。希望你喜欢我们的文章,并且提供您的宝贵意见,让我们知道您想了解哪些新工具。

+

+--------------------------------------------------------------------------------

+

+via: http://linoxide.com/linux-shell-script/send-email-subject-body-attachment-linux/

+

+作者:[Bobbin Zachariah][a]

+译者:[goreliu](https://github.com/goreliu)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://linoxide.com/author/bobbin/

\ No newline at end of file

diff --git a/published/201505/20150409 Install Inkscape - Open Source Vector Graphic Editor.md b/published/201505/20150409 Install Inkscape - Open Source Vector Graphic Editor.md

new file mode 100644

index 0000000000..c6361e780b

--- /dev/null

+++ b/published/201505/20150409 Install Inkscape - Open Source Vector Graphic Editor.md

@@ -0,0 +1,95 @@

+Inkscape - 开源适量图形编辑器

+================================================================================

+Inkscape是一款开源矢量图形编辑工具,并不同于Xara X、Corel Draw和Adobe Illustrator等竞争对手,它使用的是可缩放矢量图形(SVG)图形格式。SVG是一个广泛部署、免版税使用的图形格式,由W3C SVG工作组开发和维护。这是一个跨平台工具,完美运行于Linux、Windows和Mac OS上。

+

+Inkscape始于2003年,起初它的bug跟踪系统托管于Sourceforge上,但是后来迁移到了Launchpad上。当前它最新的一个稳定版本是0.91,它不断地在发展和修改中。我们将在本文里了解一下它的突出特点和安装过程。

+

+### 显著特性 ###

+

+让我们直接来了解这款应用程序的显著特性。

+

+#### 创建对象 ####

+

+- 用铅笔工具来画出不同颜色、大小和形状的手绘线,用贝塞尔曲线(笔式)工具来画出直线和曲线,通过书法工具来应用到手写的书法笔画上等等

+- 用文本工具来创建、选择、编辑和格式化文本。在纯文本框、在路径上或在形状里操作文本

+- 方便绘制各种形状,像矩形、椭圆形、圆形、弧线、多边形、星形和螺旋形等等并调整其大小、旋转并修改(圆角化)它们

+- 用简单地命令创建并嵌入位图

+

+#### 对象处理 ####

+

+- 通过交互式操作和调整参量来扭曲、移动、测量、旋转目标

+- 可以对 Z 轴进行提升或降低操作。

+- 通过对象组合和取消组合可以创建一个虚拟层用来编辑或处理

+- 图层采用层次结构树的结构,并且能锁定或以各式各样的处理方式来重新布置

+- 分布与对齐指令

+

+#### 填充与边框 ####

+

+- 可以复制/粘贴不同风格

+- 取色工具

+- 用RGB, HSL, CMS, CMYK和色盘这四种不同的方式选色

+- 渐变层编辑器能创建和管理多停点渐变层

+- 使用图像或其它选择区作为花纹填充

+- 用一些预定义点状花纹进行笔触填充

+- 通过路径标示器标示开始、对折和结束点

+

+#### 路径上的操作 ####

+

+- 节点编辑:移动节点和贝塞尔曲线控制点,节点的对齐和分布等等

+- 布尔运算(是或否)

+- 运用可变的路径起迄点可简化路径

+- 路径插入和增设连同动态和链接偏移对象

+- 通过路径追踪把位图图像转换成路径(彩色或单色路径)

+

+#### 文本处理 ####

+

+- 所有安装好的框线字体都能用,甚至可以从右至左对齐对象

+- 格式化文本、调整字母间距、行间距或列间距

+- 路径上和形状上的文本中的文本、路径或形状都可以被编辑和修改

+

+#### 渲染 ####

+

+- Inkscape完全支持抗锯齿显示,这是一种通过柔化边界上的像素从而减少或消除凹凸锯齿的技术。

+- 支持alpha透明显示和PNG格式图片的导出

+

+### 在Ubuntu 14.04和14.10上安装Inkscape ###

+

+为了在Ubuntu上安装Inkscape,我们首先需要 [添加它的稳定版Personal Package Archive][1] (PPA) 至Advanced Package Tool (APT) 库中。打开终端并运行一下命令来添加它的PPA:

+

+ sudo add-apt-repository ppa:inkscape.dev/stable

+

+

+

+PPA添加到APT库中后,我们要用以下命令进行更新:

+

+ sudo apt-get update

+

+

+

+更新好库之后,我们准备用以下命令来完成安装:

+

+ sudo apt-get install inkscape

+

+

+

+恭喜,现在Inkscape已经被安装好了,我们可以充分利用它的丰富功能特点来编辑制作图像了。

+

+

+

+### 结论 ###

+

+Inkscape是一款特点鲜明的图形编辑工具,它给予用户充分发挥自己艺术能力的权利。它还是一款自由安装和自定义的开源应用,并且支持各种文件类型,包括JPEG, PNG, GIF和PDF及更多。访问它的 [官方网站][2] 来获取更多新闻和应用更新。

+

+--------------------------------------------------------------------------------

+

+via: http://linoxide.com/tools/install-inkscape-open-source-vector-graphic-editor/

+

+作者:[Aun Raza][a]

+译者:[ZTinoZ](https://github.com/ZTinoZ)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://linoxide.com/author/arunrz/

+[1]:https://launchpad.net/~inkscape.dev/+archive/ubuntu/stable

+[2]:https://inkscape.org/en/

diff --git a/published/201505/20150410 This tool can alert you about evil twin access points in the area.md b/published/201505/20150410 This tool can alert you about evil twin access points in the area.md

new file mode 100644

index 0000000000..a43aa8206f

--- /dev/null

+++ b/published/201505/20150410 This tool can alert you about evil twin access points in the area.md

@@ -0,0 +1,41 @@

+EvilAP_Defender:可以警示和攻击 WIFI 热点陷阱的工具

+===============================================================================

+

+**开发人员称,EvilAP_Defender甚至可以攻击流氓Wi-Fi接入点**

+

+这是一个新的开源工具,可以定期扫描一个区域,以防出现恶意 Wi-Fi 接入点,同时如果发现情况会提醒网络管理员。

+

+这个工具叫做 EvilAP_Defender,是为监测攻击者所配置的恶意接入点而专门设计的,这些接入点冒用合法的名字诱导用户连接上。

+

+这类接入点被称做假面猎手(evil twin),使得黑客们可以从所接入的设备上监听互联网信息流。这可以被用来窃取证书、钓鱼网站等等。

+

+大多数用户设置他们的计算机和设备可以自动连接一些无线网络,比如家里的或者工作地方的网络。通常,当面对两个同名的无线网络时,即SSID相同,有时候甚至连MAC地址(BSSID)也相同,这时候大多数设备会自动连接信号较强的一个。

+

+这使得假面猎手攻击容易实现,因为SSID和BSSID都可以伪造。

+

+[EvilAP_Defender][1]是一个叫Mohamed Idris的人用Python语言编写,公布在GitHub上面。它可以使用一个计算机的无线网卡来发现流氓接入点,这些坏蛋们复制了一个真实接入点的SSID,BSSID,甚至是其他的参数如通道,密码,隐私协议和认证信息等等。

+

+该工具首先以学习模式运行,以便发现合法的接入点[AP],并且将其加入白名单。然后可以切换到正常模式,开始扫描未认证的接入点。

+

+如果一个恶意[AP]被发现了,该工具会用电子邮件提醒网络管理员,但是开发者也打算在未来加入短信提醒功能。

+

+该工具还有一个保护模式,在这种模式下,应用会发起一个denial-of-service [DoS]攻击反抗恶意接入点,为管理员采取防卫措施赢得一些时间。

+

+“DoS 将仅仅针对有着相同SSID的而BSSID(AP的MAC地址)不同或者不同信道的流氓 AP,”Idris在这款工具的文档中说道。“这是为了避免攻击到你的正常网络。”

+

+尽管如此,用户应该切记在许多国家,攻击别人的接入点很多时候都是非法的,甚至是一个看起来像是攻击者操控的恶意接入点。

+

+要能够运行这款工具,需要Aircrack-ng无线网套装,一个支持Aircrack-ng的无线网卡,MySQL和Python运行环境。

+

+--------------------------------------------------------------------------------

+

+via: http://www.infoworld.com/article/2905725/security0/this-tool-can-alert-you-about-evil-twin-access-points-in-the-area.html

+

+作者:[Lucian Constantin][a]

+译者:[wi-cuckoo](https://github.com/wi-cuckoo)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://www.infoworld.com/author/Lucian-Constantin/

+[1]:https://github.com/moha99sa/EvilAP_Defender/blob/master/README.TXT

diff --git a/published/201505/20150410 What is a good alternative to wget or curl on Linux.md b/published/201505/20150410 What is a good alternative to wget or curl on Linux.md

new file mode 100644

index 0000000000..3af223b631

--- /dev/null

+++ b/published/201505/20150410 What is a good alternative to wget or curl on Linux.md

@@ -0,0 +1,145 @@

+用腻了 wget 或 curl,有什么更好的替代品吗?

+================================================================================

+

+如果你经常需要通过终端以非交互模式访问网络服务器(例如,从网络上下载文件,或者是测试 RESTful 网络服务接口),可能你会选择的工具是 wget 或 curl。通过大量的命令行选项,这两种工具都可以处理很多非交互网络访问的情况(比如[这里][1]、[这里][2],还有[这里][3])。然而,即使像这些一样的强大的工具,你也只能发挥你所了解的那些选项的功能。除非你很精通那些繁冗的语法细节,这些工具对于你来说只不过是简单的网络下载器而已。

+

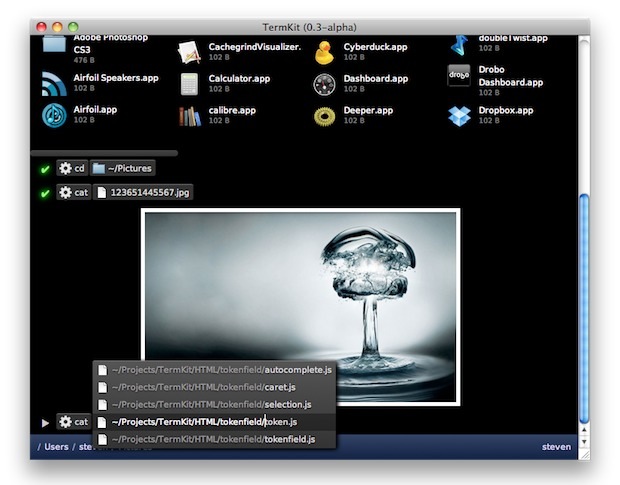

+就像其宣传的那样,“给人用 curl 类工具”,[HTTPie][4] 设计用来增强 wget 和 curl 的可用性。它的主要目标是使通过命令行与网络服务器进行交互的过程变得尽可能的人性化。为此,HTTPie 支持具有表现力、但又很简单很直观的语法。它以彩色模式显示响应,并且还有一些不错的优点,比如对 JSON 的良好支持,和持久性会话用以作业流程化。

+

+我知道很多人对把像 wget 和 curl 这样的无处不在的、可用的、完美的工具换成完全没听说过的软件心存疑虑。这种观点是好的,特别是如果你是一个系统管理员、要处理很多不同的硬件的话。然而,对于开发者和终端用户来说,重要的是效率。如果我发现了一个工具的用户更佳替代品,那么我认为采用易于使用的版本来节省宝贵的时间是毫无疑问的。没有必要对替换掉的工具保持信仰忠诚。毕竟,对于 Linux 来说,最好的事情就是可以选择。

+

+在这篇文章中,让我们来了解并展示一下我所说的 HTTPie,一个用户友好的 wget 和 curl 的替代。

+

+

+

+### 在 Linux 上安装 HTTPie ###

+

+HTTPie 是用 Python 写的,所以你可以在几乎所有地方(Linux,MacOSX,Windows)安装它。而且,在大多数的 Linux 发行版中都有编译好的安装包。

+

+#### Debian,Ubuntu 或者 Linux Mint: ####

+

+ $ sudo apt-get install httpie

+

+#### Fedora: ####

+

+ $ sudo yum install httpie

+

+#### CentOS/RHEL: ####

+

+首先,启用[EPEL 仓库][5],然后运行:

+

+ $ sudo yum install httpie

+

+对于任何 Linux 发行版,另一个安装方法时使用[pip][6]。

+

+ $ sudo pip install --upgrade httpie

+

+### HTTPie 的例子 ###

+

+当你安装完 HTTPie 后,你可以通过输入 http 命令来调用它。在这篇文章的剩余部分,我会展示几个有用的 http 命令的例子。

+

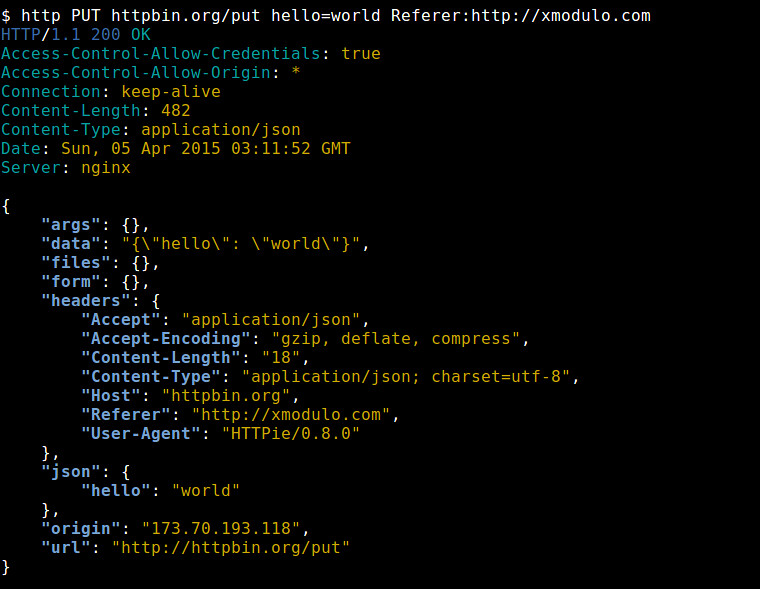

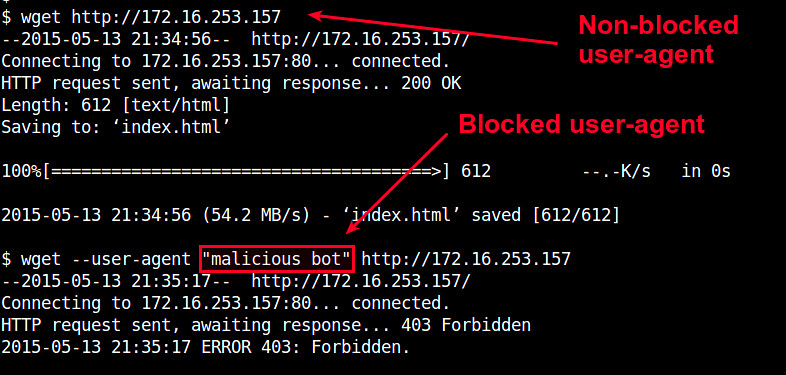

+#### 例1:定制头部 ####

+

+你可以使用 <header:value> 的格式来定制头部。例如,我们发送一个 HTTP GET 请求到 www.test.com ,使用定制用户代理(user-agent)和来源(referer),还有定制头部(比如 MyParam)。

+

+ $ http www.test.com User-Agent:Xmodulo/1.0 Referer:http://xmodulo.com MyParam:Foo

+

+注意到当使用 HTTP GET 方法时,就无需明确指定 HTTP 方法。

+

+这个 HTTP 请求看起来如下:

+

+ GET / HTTP/1.1

+ Host: www.test.com

+ Accept: */*

+ Referer: http://xmodulo.com

+ Accept-Encoding: gzip, deflate, compress

+ MyParam: Foo

+ User-Agent: Xmodulo/1.0

+

+#### 例2:下载文件 ####

+

+你可以把 http 作为文件下载器来使用。你需要像下面一样把输出重定向到文件。

+

+ $ http www.test.com/my_file.zip > my_file.zip

+

+或者:

+

+ $ http --download www.test.com/my_file.zip

+

+#### 例3:定制 HTTP 方法 ####

+

+除了默认的 GET 方法,你还可以使用其他方法(比如 PUT,POST,HEAD)。例如,发送一个 HTTP PUT 请求:

+

+ $ http PUT www.test.com name='Dan Nanni' email=dan@email.com

+

+#### 例4:提交表单 ####

+

+使用 http 命令提交表单很容易,如下:

+

+ $ http -f POST www.test.com name='Dan Nanni' comment='Hi there'

+

+'-f' 选项使 http 命令序列化数据字段,并将 'Content-Type' 设置为 "application/x-www-form-urlencoded; charset=utf-8"。

+

+这个 HTTP POST 请求看起来如下:

+

+ POST / HTTP/1.1

+ Host: www.test.com

+ Content-Length: 31

+ Content-Type: application/x-www-form-urlencoded; charset=utf-8

+ Accept-Encoding: gzip, deflate, compress

+ Accept: */*

+ User-Agent: HTTPie/0.8.0

+

+ name=Dan+Nanni&comment=Hi+there

+

+####例5:JSON 支持

+

+HTTPie 内置 JSON(一种日渐普及的数据交换格式)支持。事实上,HTTPie 默认使用的内容类型(content-type)就是 JSON。因此,当你不指定内容类型发送数据字段时,它们会自动序列化为 JSON 对象。

+

+ $ http POST www.test.com name='Dan Nanni' comment='Hi there'

+

+这个 HTTP POST 请求看起来如下:

+

+ POST / HTTP/1.1

+ Host: www.test.com

+ Content-Length: 44

+ Content-Type: application/json; charset=utf-8

+ Accept-Encoding: gzip, deflate, compress

+ Accept: application/json

+ User-Agent: HTTPie/0.8.0

+

+ {"name": "Dan Nanni", "comment": "Hi there"}

+

+#### 例6:输出重定向 ####

+

+HTTPie 的另外一个用户友好特性是输入重定向,你可以使用缓冲数据提供 HTTP 请求内容。例如:

+

+ $ http POST api.test.com/db/lookup < my_info.json

+

+或者:

+

+ $ echo '{"name": "Dan Nanni"}' | http POST api.test.com/db/lookup

+

+### 结束语 ###

+

+在这篇文章中,我介绍了 HTTPie,一个 wget 和 curl 的可能替代工具。除了这里展示的几个简单的例子,你可以在其[官方网站][7]上找到 HTTPie 的很多有趣的应用。再次重复一遍,一款再强大的工具也取决于你对它的了解程度。从个人而言,我更倾向于 HTTPie,因为我在寻找一种更简洁的测试复杂网络接口的方法。

+

+你怎么看?

+

+--------------------------------------------------------------------------------

+

+via: http://xmodulo.com/wget-curl-alternative-linux.html

+

+作者:[Dan Nanni][a]

+译者:[wangjiezhe](https://github.com/wangjiezhe)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://xmodulo.com/author/nanni

+[1]:http://xmodulo.com/how-to-download-multiple-files-with-wget.html

+[2]:http://xmodulo.com/how-to-use-custom-http-headers-with-wget.html

+[3]:https://linux.cn/article-4957-1.html

+[4]:https://github.com/jakubroztocil/httpie

+[5]:https://linux.cn/article-2324-1.html

+[6]:http://ask.xmodulo.com/install-pip-linux.html

+[7]:https://github.com/jakubroztocil/httpie

diff --git a/published/201505/20150413 A Walk Through Some Important Docker Commands.md b/published/201505/20150413 A Walk Through Some Important Docker Commands.md

new file mode 100644

index 0000000000..d58dda2d54

--- /dev/null

+++ b/published/201505/20150413 A Walk Through Some Important Docker Commands.md

@@ -0,0 +1,106 @@

+一些重要 Docker 命令的简单介绍

+================================================================================

+大家好,今天我们来学习一些在你使用 Docker 之前需要了解的重要的 Docker 命令。[Docker][1] 是一个开源项目,提供了一个可以打包、装载和运行任何应用的轻量级容器的开放平台。它没有语言支持、框架和打包系统的限制,从小型的家用电脑到高端服务器,在何时何地都可以运行。这使它们可以不依赖于特定软件栈和供应商,像一块块积木一样部署和扩展网络应用、数据库和后端服务。

+

+Docker 命令简单易学,也很容易实现或实践。这是一些你运行 Docker 并充分利用它需要知道的简单 Docker 命令。

+

+### 1. 拉取 Docker 镜像 ###

+

+由于容器是由 Docker 镜像构建的,首先我们需要拉取一个 docker 镜像来开始。我们可以从 Docker Registry Hub 获取所需的 docker 镜像。在我们使用 pull 命令拉取任何镜像之前,为了避免 pull 命令的一些恶意风险,我们需要保护我们的系统。为了保护我们的系统不受这个风险影响,我们需要添加 **127.0.0.1 index.docker.io** 到 /etc/hosts 条目。我们可以通过使用喜欢的文本编辑器完成。

+

+ # nano /etc/hosts

+

+现在,增加下面的一行到文件并保存退出。

+

+ 127.0.0.1 index.docker.io

+

+

+

+要拉取一个 docker 镜像,我们需要运行下面的命令。

+

+ # docker pull registry.hub.docker.com/busybox

+

+

+

+我们可以检查本地是否有可用的 Docker 镜像。

+

+ # docker images

+

+

+

+### 2. 运行 Docker 容器 ###

+

+现在,成功地拉取要求的或所需的 Docker 镜像之后,我们当然想运行这个 Docker 镜像。我们可以用 docker run 命令在镜像上运行一个 docker 容器。在 Docker 镜像上运行一个 docker 容器时我们有很多选项和标记。我们使用 -t 和 -i 选项来运行一个 docker 镜像并进入容器,如下面所示。

+

+ # docker run -it busybox

+

+

+

+从上面的命令中,我们进入了容器并可以通过交互 shell 访问它的内容。我们可以键入 **Ctrl-D** 从shell中退出。

+

+现在,在后台运行容器,我们用 -d 标记分离 shell,如下所示。

+

+ # docker run -itd busybox

+

+

+

+如果你想进入到一个正在运行的容器,我们可以使用 attach 命令加一个容器 id。可以使用 **docker ps** 命令获取容器 id。

+

+ # docker attach

+

+

+

+### 3. 检查容器运行 ###

+

+不论容器是否运行,查看日志文件都很简单。我们可以使用下面的命令去检查是否有 docker 容器在实时运行。

+

+ # docker ps

+

+现在,查看正在运行的或者之前运行的容器的日志,我们需要运行以下的命令。

+

+ # docker ps -a

+

+

+

+### 4. 查看容器信息 ###

+

+我们可以使用 inspect 命令查看一个 Docker 容器的各种信息。

+

+ # docker inspect

+

+

+

+### 5. 杀死或删除 ###

+

+我们可以使用容器 id 杀死或者停止 docker 容器(进程),如下所示。

+

+ # docker stop

+

+要停止每个正在运行的容器,我们需要运行下面的命令。

+

+ # docker kill $(docker ps -q)

+

+现在,如我我们希望移除一个 docker 镜像,运行下面的命令。

+

+ # docker rm

+

+如果我们想一次性移除所有 docker 镜像,我们可以运行以下命令。

+

+ # docker rm $(docker ps -aq)

+

+### 结论 ###

+

+这些都是充分学习和使用 Docker 很基本的 docker 命令。有了这些命令,Docker 变得很简单,可以提供给最终用户一个易用的计算平台。根据上面的教程,任何人学习 Docker 命令都非常简单。如果你有任何问题,建议,反馈,请写到下面的评论框中以便我们改进和更新内容。多谢! 希望你喜欢 :-)

+

+--------------------------------------------------------------------------------

+

+via: http://linoxide.com/linux-how-to/important-docker-commands/

+

+作者:[Arun Pyasi][a]

+译者:[ictlyh](https://github.com/ictlyh)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://linoxide.com/author/arunp/

+[1]:https://www.docker.com/

\ No newline at end of file

diff --git a/published/201505/20150413 Linux FAQs with Answers--How to change PATH environment variable on Linux.md b/published/201505/20150413 Linux FAQs with Answers--How to change PATH environment variable on Linux.md

new file mode 100644

index 0000000000..321ce4b2d3

--- /dev/null

+++ b/published/201505/20150413 Linux FAQs with Answers--How to change PATH environment variable on Linux.md

@@ -0,0 +1,73 @@

+Linux有问必答:如何在Linux中修改环境变量PATH

+================================================================================

+> **提问**: 当我试着运行一个程序时,它提示“command not found”。 但这个程序就在/usr/local/bin下。我该如何添加/usr/local/bin到我的PATH变量下,这样我就可以不用指定路径来运行这个命令了。

+

+在Linux中,PATH环境变量保存了一系列的目录用于用户在输入的时候搜索命令。PATH变量的值由一系列的由分号分隔的绝对路径组成。每个用户都有特定的PATH环境变量(由系统级的PATH变量初始化)。

+

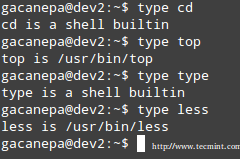

+要检查用户的环境变量,用户模式下运行下面的命令:

+

+ $ echo $PATH

+

+----------

+

+ /usr/lib64/qt-3.3/bin:/bin:/usr/bin:/usr/sbin:/sbin:/home/xmodulo/bin

+

+或者运行:

+

+ $ env | grep PATH

+

+----------

+

+ PATH=/usr/lib64/qt-3.3/bin:/bin:/usr/bin:/usr/sbin:/sbin:/home/xmodulo/bin

+

+如果你的命令不存在于上面任何一个目录内,shell就会抛出一个错误信息:“command not found”。

+

+如果你想要添加一个另外的目录(比如:/usr/local/bin)到你的PATH变量中,你可以用下面这些命令。

+

+### 为特定用户修改PATH环境变量 ###

+

+如果你只想在当前的登录会话中临时地添加一个新的目录(比如:/usr/local/bin)给用户的默认搜索路径,你只需要输入下面的命令。

+

+ $ PATH=$PATH:/usr/local/bin

+

+检查PATH是否已经更新:

+

+ $ echo $PATH

+

+----------

+

+ /usr/lib64/qt-3.3/bin:/bin:/usr/bin:/usr/sbin:/sbin:/home/xmodulo/bin:/usr/local/bin

+

+更新后的PATH会在当前的会话一直有效。然而,更改将在新的会话中失效。

+

+如果你想要永久更改PATH变量,用编辑器打开~/.bashrc (或者 ~/.bash_profile),接着在最后添加下面这行。

+

+ export PATH=$PATH:/usr/local/bin

+

+接着运行下面这行永久激活更改:

+

+ $ source ~/.bashrc (或者 source ~/.bash_profile)

+

+### 改变系统级的环境变量 ###

+

+如果你想要永久添加/usr/local/bin到系统级的PATH变量中,像下面这样编辑/etc/profile。

+

+ $ sudo vi /etc/profile

+

+----------

+

+ export PATH=$PATH:/usr/local/bin

+

+你重新登录后,更新的环境变量就会生效了。

+

+--------------------------------------------------------------------------------

+

+via: http://ask.xmodulo.com/change-path-environment-variable-linux.html

+

+作者:[Dan Nanni][a]

+译者:[geekpi](https://github.com/geekpi)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://ask.xmodulo.com/author/nanni

diff --git a/published/201505/20150413 [Solved] Ubuntu Does Not Remember Brightness Settings.md b/published/201505/20150413 [Solved] Ubuntu Does Not Remember Brightness Settings.md

new file mode 100644

index 0000000000..d0248ff2c2

--- /dev/null

+++ b/published/201505/20150413 [Solved] Ubuntu Does Not Remember Brightness Settings.md

@@ -0,0 +1,32 @@

+如何解决 Ubuntu 下不能记住亮度设置的问题

+================================================================================

+

+

+在[解决亮度控制在Ubuntu和Linux Mint下不工作的问题][1]这篇教程里,一些用户提到虽然问题已经得到解决,但是**Ubuntu无法记住亮度设置**,同样的情况在Linux Mint下也会发生。每次开机或从睡眠状态下唤醒,亮度会恢复至最大值或最小值。我知道这种情况很烦。不过幸好我们有很简单的方法来解决**Ubuntu和Linux Mint下的亮度问题**。

+

+### 解决Ubuntu和Linux下不能记住亮度设置 ###

+

+[Norbert][2]写了一个脚本,能让Ubuntu和Linux Mint记住亮度设置,不论是开机还是唤醒之后。为了能让你使用这个脚本更简单方便,他把这个适用于Ubuntu 12.04、14.04和14.10的PPA挂在了网上。你需要做的就是输入以下命令:

+

+ sudo add-apt-repository ppa:nrbrtx/sysvinit-backlight

+ sudo apt-get update

+ sudo apt-get install sysvinit-backlight

+

+安装好之后,重启你的系统。现在就来看看亮度设置有没有被保存下来吧。

+

+希望这篇小贴士能帮助到你。如果你有任何问题,就[来这儿][3]提bug吧。

+

+--------------------------------------------------------------------------------

+

+via: http://itsfoss.com/ubuntu-mint-brightness-settings/

+

+作者:[Abhishek][a]

+译者:[ZTinoZ](https://github.com/ZTinoZ)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://itsfoss.com/author/abhishek/

+[1]:http://itsfoss.com/fix-brightness-ubuntu-1310/

+[2]:https://launchpad.net/~nrbrtx/+archive/ubuntu/sysvinit-backlight/+packages

+[3]:https://launchpad.net/~nrbrtx/+archive/ubuntu/sysvinit-backlight/+packages

diff --git a/published/201505/20150415 Strong SSL Security on nginx.md b/published/201505/20150415 Strong SSL Security on nginx.md

new file mode 100644

index 0000000000..094c50bd37

--- /dev/null

+++ b/published/201505/20150415 Strong SSL Security on nginx.md

@@ -0,0 +1,290 @@

+增强 nginx 的 SSL 安全性

+================================================================================

+[][1]

+

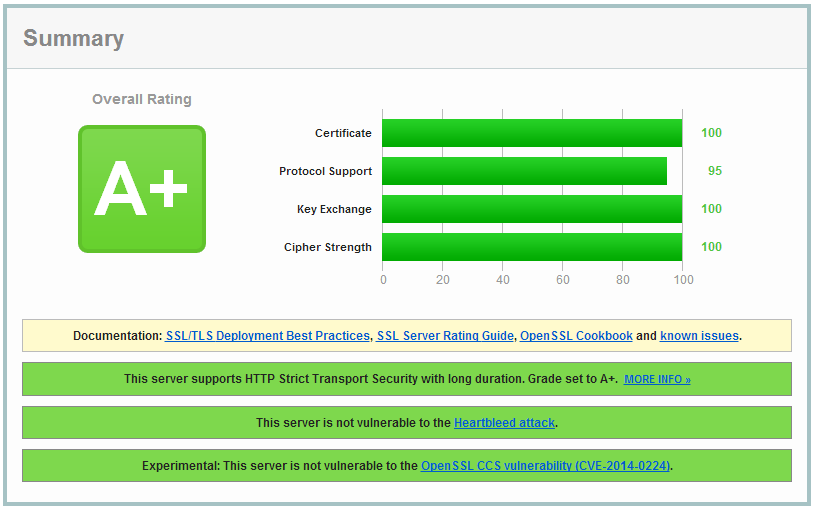

+本文向你介绍如何在 nginx 服务器上设置健壮的 SSL 安全机制。我们通过禁用 SSL 压缩来降低 CRIME 攻击威胁;禁用协议上存在安全缺陷的 SSLv3 及更低版本,并设置更健壮的加密套件(cipher suite)来尽可能启用前向安全性(Forward Secrecy);此外,我们还启用了 HSTS 和 HPKP。这样我们就拥有了一个健壮而可经受考验的 SSL 配置,并可以在 Qually Labs 的 SSL 测试中得到 A 级评分。

+

+如果不求甚解的话,可以从 [https://cipherli.st][2] 上找到 nginx 、Apache 和 Lighttpd 的安全设置,复制粘帖即可。

+

+本教程在 Digital Ocean 的 VPS 上测试通过。如果你喜欢这篇教程,想要支持作者的站点的话,购买 Digital Ocean 的 VPS 时请使用如下链接:[https://www.digitalocean.com/?refcode=7435ae6b8212][3] 。

+

+本教程可以通过[发布于 2014/1/21 的][4] SSL 实验室测试的严格要求(我之前就通过了测试,如果你按照本文操作就可以得到一个 A+ 评分)。

+

+- [本教程也可用于 Apache ][5]

+- [本教程也可用于 Lighttpd ][6]

+- [本教程也可用于 FreeBSD, NetBSD 和 OpenBSD 上的 nginx ,放在 BSD Now 播客上][7]: [http://www.bsdnow.tv/tutorials/nginx][8]

+

+你可以从下列链接中找到这方面的进一步内容:

+

+- [野兽攻击(BEAST)][9]

+- [罪恶攻击(CRIME)][10]

+- [怪物攻击(FREAK )][11]

+- [心血漏洞(Heartbleed)][12]

+- [完备的前向安全性(Perfect Forward Secrecy)][13]

+- [RC4 和 BEAST 的处理][14]

+

+我们需要编辑 nginx 的配置,在 Ubuntu/Debian 上是 `/etc/nginx/sited-enabled/yoursite.com`,在 RHEL/CentOS 上是 `/etc/nginx/conf.d/nginx.conf`。

+

+本文中,我们需要编辑443端口(SSL)的 `server` 配置中的部分。在文末你可以看到完整的配置例子。

+

+*在编辑之前切记备份一下配置文件!*

+

+### 野兽攻击(BEAST)和 RC4 ###

+

+简单的说,野兽攻击(BEAST)就是通过篡改一个加密算法的 CBC(密码块链)的模式,从而可以对部分编码流量悄悄解码。更多信息参照上面的链接。

+

+针对野兽攻击(BEAST),较新的浏览器已经启用了客户端缓解方案。推荐方案是禁用 TLS 1.0 的所有加密算法,仅允许 RC4 算法。然而,[针对 RC4 算法的攻击也越来越多](http://www.isg.rhul.ac.uk/tls/) ,很多已经从理论上逐步发展为实际可行的攻击方式。此外,有理由相信 NSA 已经实现了他们所谓的“大突破”——攻破 RC4 。

+

+禁用 RC4 会有几个后果。其一,当用户使用老旧的浏览器时,比如 Windows XP 上的 IE 会用 3DES 来替代 RC4。3DES 要比 RC4 更安全,但是它的计算成本更高,你的服务器就需要为这些用户付出更多的处理成本。其二,RC4 算法能减轻 野兽攻击(BEAST)的危害,如果禁用 RC4 会导致 TLS 1.0 用户会换到更容易受攻击的 AES-CBC 算法上(通常服务器端的对野兽攻击(BEAST)的“修复方法”是让 RC4 优先于其它算法)。我认为 RC4 的风险要高于野兽攻击(BEAST)的风险。事实上,有了客户端缓解方案(Chrome 和 Firefox 提供了缓解方案),野兽攻击(BEAST)就不是什么大问题了。而 RC4 的风险却在增长:随着时间推移,对加密算法的破解会越来越多。

+

+### 怪物攻击(FREAK) ###

+

+怪物攻击(FREAK)是一种中间人攻击,它是由来自 [INRIA、微软研究院和 IMDEA][15] 的密码学家们所发现的。怪物攻击(FREAK)的缩写来自“Factoring RSA-EXPORT Keys(RSA 出口密钥因子分解)”

+

+这个漏洞可上溯到上世纪九十年代,当时美国政府禁止出口加密软件,除非其使用编码密钥长度不超过512位的出口加密套件。

+

+这造成了一些现在的 TLS 客户端存在一个缺陷,这些客户端包括: 苹果的 SecureTransport 、OpenSSL。这个缺陷会导致它们会接受出口降级 RSA 密钥,即便客户端并没有要求使用出口降级 RSA 密钥。这个缺陷带来的影响很讨厌:在客户端存在缺陷,且服务器支持出口降级 RSA 密钥时,会发生中间人攻击,从而导致连接的强度降低。

+

+攻击分为两个组成部分:首先是服务器必须接受“出口降级 RSA 密钥”。

+

+中间人攻击可以按如下流程:

+

+- 在客户端的 Hello 消息中,要求标准的 RSA 加密套件。

+- 中间人攻击者修改该消息为‘export RSA’(输出级 RSA 密钥)。

+- 服务器回应一个512位的输出级 RSA 密钥,并以其长期密钥签名。

+- 由于 OpenSSL/SecureTransport 的缺陷,客户端会接受这个弱密钥。

+- 攻击者根据 RSA 模数分解因子来恢复相应的 RSA 解密密钥。

+- 当客户端编码‘pre-master secret’(预主密码)给服务器时,攻击者现在就可以解码它并恢复 TLS 的‘master secret’(主密码)。

+- 从这里开始,攻击者就能看到了传输的明文并注入任何东西了。

+

+本文所提供的加密套件不启用输出降级加密,请确认你的 OpenSSL 是最新的,也强烈建议你将客户端也升级到新的版本。

+

+### 心血漏洞(Heartbleed) ###

+

+心血漏洞(Heartbleed) 是一个于2014年4月公布的 OpenSSL 加密库的漏洞,它是一个被广泛使用的传输层安全(TLS)协议的实现。无论是服务器端还是客户端在 TLS 中使用了有缺陷的 OpenSSL,都可以被利用该缺陷。由于它是因 DTLS 心跳扩展(RFC 6520)中的输入验证不正确(缺少了边界检查)而导致的,所以该漏洞根据“心跳”而命名。这个漏洞是一种缓存区超读漏洞,它可以读取到本不应该读取的数据。

+

+哪个版本的 OpenSSL 受到心血漏洞(Heartbleed)的影响?

+

+各版本情况如下:

+

+- OpenSSL 1.0.1 直到 1.0.1f (包括)**存在**该缺陷

+- OpenSSL 1.0.1g **没有**该缺陷

+- OpenSSL 1.0.0 分支**没有**该缺陷

+- OpenSSL 0.9.8 分支**没有**该缺陷

+

+这个缺陷是2011年12月引入到 OpenSSL 中的,并随着 2012年3月14日 OpenSSL 发布的 1.0.1 而泛滥。2014年4月7日发布的 OpenSSL 1.0.1g 修复了该漏洞。

+

+升级你的 OpenSSL 就可以避免该缺陷。

+

+### SSL 压缩(罪恶攻击 CRIME) ###

+

+罪恶攻击(CRIME)使用 SSL 压缩来完成它的魔法,SSL 压缩在下述版本是默认关闭的: nginx 1.1.6及更高/1.0.9及更高(如果使用了 OpenSSL 1.0.0及更高), nginx 1.3.2及更高/1.2.2及更高(如果使用较旧版本的 OpenSSL)。

+

+如果你使用一个早期版本的 nginx 或 OpenSSL,而且你的发行版没有向后移植该选项,那么你需要重新编译没有一个 ZLIB 支持的 OpenSSL。这会禁止 OpenSSL 使用 DEFLATE 压缩方式。如果你禁用了这个,你仍然可以使用常规的 HTML DEFLATE 压缩。

+

+### SSLv2 和 SSLv3 ###

+

+SSLv2 是不安全的,所以我们需要禁用它。我们也禁用 SSLv3,因为 TLS 1.0 在遭受到降级攻击时,会允许攻击者强制连接使用 SSLv3,从而禁用了前向安全性(forward secrecy)。

+

+如下编辑配置文件:

+

+ ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

+

+### 卷毛狗攻击(POODLE)和 TLS-FALLBACK-SCSV ###

+

+SSLv3 会受到[卷毛狗漏洞(POODLE)][16]的攻击。这是禁用 SSLv3 的主要原因之一。

+

+Google 提出了一个名为 [TLS\_FALLBACK\_SCSV][17] 的SSL/TLS 扩展,它用于防止强制 SSL 降级。如果你升级 到下述的 OpenSSL 版本会自动启用它。

+

+- OpenSSL 1.0.1 带有 TLS\_FALLBACK\_SCSV 1.0.1j 及更高。

+- OpenSSL 1.0.0 带有 TLS\_FALLBACK\_SCSV 1.0.0o 及更高。

+- OpenSSL 0.9.8 带有 TLS\_FALLBACK\_SCSV 0.9.8zc 及更高。

+

+[更多信息请参照 NGINX 文档][18]。

+

+### 加密套件(cipher suite) ###

+

+前向安全性(Forward Secrecy)用于在长期密钥被破解时确保会话密钥的完整性。PFS(完备的前向安全性)是指强制在每个/每次会话中推导新的密钥。

+

+这就是说,泄露的私钥并不能用来解密(之前)记录下来的 SSL 通讯。

+

+提供PFS(完备的前向安全性)功能的是那些使用了一种 Diffie-Hellman 密钥交换的短暂形式的加密套件。它们的缺点是系统开销较大,不过可以使用椭圆曲线的变体来改进。

+

+以下两个加密套件是我推荐的,之后[Mozilla 基金会][19]也推荐了。

+

+推荐的加密套件:

+

+ ssl_ciphers 'AES128+EECDH:AES128+EDH';

+

+向后兼容的推荐的加密套件(IE6/WinXP):

+

+ ssl_ciphers "ECDHE-RSA-AES256-GCM-SHA384:ECDHE-RSA-AES128-GCM-SHA256:DHE-RSA-AES256-GCM-SHA384:DHE-RSA-AES128-GCM-SHA256:ECDHE-RSA-AES256-SHA384:ECDHE-RSA-AES128-SHA256:ECDHE-RSA-AES256-SHA:ECDHE-RSA-AES128-SHA:DHE-RSA-AES256-SHA256:DHE-RSA-AES128-SHA256:DHE-RSA-AES256-SHA:DHE-RSA-AES128-SHA:ECDHE-RSA-DES-CBC3-SHA:EDH-RSA-DES-CBC3-SHA:AES256-GCM-SHA384:AES128-GCM-SHA256:AES256-SHA256:AES128-SHA256:AES256-SHA:AES128-SHA:DES-CBC3-SHA:HIGH:!aNULL:!eNULL:!EXPORT:!DES:!MD5:!PSK:!RC4";

+

+如果你的 OpenSSL 版本比较旧,不可用的加密算法会自动丢弃。应该一直使用上述的完整套件,让 OpenSSL 选择一个它所支持的。

+

+加密套件的顺序是非常重要的,因为其决定了优先选择哪个算法。上述优先推荐的算法中提供了PFS(完备的前向安全性)。

+

+较旧版本的 OpenSSL 也许不能支持这个算法的完整列表,AES-GCM 和一些 ECDHE 算法是相当新的,在 Ubuntu 和 RHEL 中所带的绝大多数 OpenSSL 版本中不支持。

+

+#### 优先顺序的逻辑 ####

+

+- ECDHE+AESGCM 加密是首选的。它们是 TLS 1.2 加密算法,现在还没有广泛支持。当前还没有对它们的已知攻击。

+- PFS 加密套件好一些,首选 ECDHE,然后是 DHE。

+- AES 128 要好于 AES 256。有一个关于 AES256 带来的安全提升程度是否值回成本的[讨论][20],结果是显而易见的。目前,AES128 要更值一些,因为它提供了不错的安全水准,确实很快,而且看起来对时序攻击更有抵抗力。

+- 在向后兼容的加密套件里面,AES 要优于 3DES。在 TLS 1.1及其以上,减轻了针对 AES 的野兽攻击(BEAST)的威胁,而在 TLS 1.0上则难以实现该攻击。在非向后兼容的加密套件里面,不支持 3DES。

+- RC4 整个不支持了。3DES 用于向后兼容。参看 [#RC4\_weaknesses][21] 中的讨论。

+

+#### 强制丢弃的算法 ####

+

+- aNULL 包含了非验证的 Diffie-Hellman 密钥交换,这会受到中间人(MITM)攻击

+- eNULL 包含了无加密的算法(明文)

+- EXPORT 是老旧的弱加密算法,是被美国法律标示为可出口的

+- RC4 包含的加密算法使用了已弃用的 ARCFOUR 算法

+- DES 包含的加密算法使用了弃用的数据加密标准(DES)

+- SSLv2 包含了定义在旧版本 SSL 标准中的所有算法,现已弃用

+- MD5 包含了使用已弃用的 MD5 作为哈希算法的所有算法

+

+### 更多设置 ###

+

+确保你也添加了如下行:

+

+ ssl_prefer_server_ciphers on;

+ ssl_session_cache shared:SSL:10m;

+

+在一个 SSLv3 或 TLSv1 握手过程中选择一个加密算法时,一般使用客户端的首选算法。如果设置了上述配置,则会替代地使用服务器端的首选算法。

+

+- [关于 ssl\_prefer\_server\_ciphers 的更多信息][22]

+- [关于 ssl\_ciphers 的更多信息][23]

+

+### 前向安全性和 Diffie Hellman Ephemeral (DHE)参数 ###

+

+前向安全性(Forward Secrecy)的概念很简单:客户端和服务器协商一个永不重用的密钥,并在会话结束时销毁它。服务器上的 RSA 私钥用于客户端和服务器之间的 Diffie-Hellman 密钥交换签名。从 Diffie-Hellman 握手中获取的预主密钥会用于之后的编码。因为预主密钥是特定于客户端和服务器之间建立的某个连接,并且只用在一个限定的时间内,所以称作短暂模式(Ephemeral)。

+

+使用了前向安全性,如果一个攻击者取得了一个服务器的私钥,他是不能解码之前的通讯信息的。这个私钥仅用于 Diffie Hellman 握手签名,并不会泄露预主密钥。Diffie Hellman 算法会确保预主密钥绝不会离开客户端和服务器,而且不能被中间人攻击所拦截。

+

+所有版本的 nginx(如1.4.4)都依赖于 OpenSSL 给 Diffie-Hellman (DH)的输入参数。不幸的是,这意味着 Diffie-Hellman Ephemeral(DHE)将使用 OpenSSL 的默认设置,包括一个用于密钥交换的1024位密钥。因为我们正在使用2048位证书,DHE 客户端就会使用一个要比非 DHE 客户端更弱的密钥交换。

+

+我们需要生成一个更强壮的 DHE 参数:

+

+ cd /etc/ssl/certs

+ openssl dhparam -out dhparam.pem 4096

+

+然后告诉 nginx 将其用作 DHE 密钥交换:

+

+ ssl_dhparam /etc/ssl/certs/dhparam.pem;

+

+### OCSP 装订(Stapling) ###

+

+当连接到一个服务器时,客户端应该使用证书吊销列表(CRL)或在线证书状态协议(OCSP)记录来校验服务器证书的有效性。CRL 的问题是它已经增长的太大了,永远也下载不完了。

+

+OCSP 更轻量级一些,因为我们每次只请求一条记录。但是副作用是当连接到一个服务器时必须对第三方 OCSP 响应器发起 OCSP 请求,这就增加了延迟和带来了潜在隐患。事实上,CA 所运营的 OCSP 响应器非常不可靠,浏览器如果不能及时收到答复,就会静默失败。攻击者通过 DoS 攻击一个 OCSP 响应器可以禁用其校验功能,这样就降低了安全性。

+

+解决方法是允许服务器在 TLS 握手中发送缓存的 OCSP 记录,以绕开 OCSP 响应器。这个机制节省了客户端和 OCSP 响应器之间的通讯,称作 OCSP 装订。

+

+客户端会在它的 CLIENT HELLO 中告知其支持 status\_request TLS 扩展,服务器仅在客户端请求它的时候才发送缓存的 OCSP 响应。

+

+大多数服务器最多会缓存 OCSP 响应48小时。服务器会按照常规的间隔连接到 CA 的 OCSP 响应器来获取刷新的 OCSP 记录。OCSP 响应器的位置可以从签名的证书中的授权信息访问(Authority Information Access)字段中获得。

+

+- [阅读我的教程:在 NGINX 中启用 OCSP 装订][24]

+

+### HTTP 严格传输安全(HSTS) ###

+

+如有可能,你应该启用 [HTTP 严格传输安全(HSTS)][25],它会引导浏览器和你的站点之间的通讯仅通过 HTTPS。

+

+- [阅读我关于 HSTS 的文章,了解如何配置它][26]

+

+### HTTP 公钥固定扩展(HPKP) ###

+

+你也应该启用 [HTTP 公钥固定扩展(HPKP)][27]。

+

+公钥固定的意思是一个证书链必须包括一个白名单中的公钥。它确保仅有白名单中的 CA 才能够为某个域名签署证书,而不是你的浏览器中存储的任何 CA。

+

+我已经写了一篇[关于 HPKP 的背景理论及在 Apache、Lighttpd 和 NGINX 中配置例子的文章][28]。

+

+### 配置范例 ###

+

+ server {

+

+ listen [::]:443 default_server;

+

+ ssl on;

+ ssl_certificate_key /etc/ssl/cert/raymii_org.pem;

+ ssl_certificate /etc/ssl/cert/ca-bundle.pem;

+

+ ssl_ciphers 'AES128+EECDH:AES128+EDH:!aNULL';

+

+ ssl_protocols TLSv1 TLSv1.1 TLSv1.2;

+ ssl_session_cache shared:SSL:10m;

+

+ ssl_stapling on;

+ ssl_stapling_verify on;

+ resolver 8.8.4.4 8.8.8.8 valid=300s;

+ resolver_timeout 10s;

+

+ ssl_prefer_server_ciphers on;

+ ssl_dhparam /etc/ssl/certs/dhparam.pem;

+

+ add_header Strict-Transport-Security max-age=63072000;

+ add_header X-Frame-Options DENY;

+ add_header X-Content-Type-Options nosniff;

+

+ root /var/www/;

+ index index.html index.htm;

+ server_name raymii.org;

+

+ }

+

+### 结尾 ###

+

+如果你使用了上述配置,你需要重启 nginx:

+

+ # 首先检查配置文件是否正确

+ /etc/init.d/nginx configtest

+ # 然后重启

+ /etc/init.d/nginx restart

+

+现在使用 [SSL Labs 测试][29]来看看你是否能得到一个漂亮的“A”。当然了,你也得到了一个安全的、强壮的、经得起考验的 SSL 配置!

+

+- [参考 Mozilla 关于这方面的内容][30]

+

+--------------------------------------------------------------------------------

+

+via: https://raymii.org/s/tutorials/Strong_SSL_Security_On_nginx.html

+

+作者:[Remy van Elst][a]

+译者:[wxy](https://github.com/wxy)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:https://raymii.org/

+[1]:https://www.ssllabs.com/ssltest/analyze.html?d=raymii.org

+[2]:https://cipherli.st/

+[3]:https://www.digitalocean.com/?refcode=7435ae6b8212

+[4]:http://blog.ivanristic.com/2014/01/ssl-labs-stricter-security-requirements-for-2014.html

+[5]:https://raymii.org/s/tutorials/Strong_SSL_Security_On_Apache2.html

+[6]:https://raymii.org/s/tutorials/Pass_the_SSL_Labs_Test_on_Lighttpd_%28Mitigate_the_CRIME_and_BEAST_attack_-_Disable_SSLv2_-_Enable_PFS%29.html

+[7]:http://www.bsdnow.tv/episodes/2014_08_20-engineering_nginx

+[8]:http://www.bsdnow.tv/tutorials/nginx

+[9]:https://en.wikipedia.org/wiki/Transport_Layer_Security#BEAST_attack

+[10]:https://en.wikipedia.org/wiki/CRIME_%28security_exploit%29

+[11]:http://blog.cryptographyengineering.com/2015/03/attack-of-week-freak-or-factoring-nsa.html

+[12]:http://heartbleed.com/

+[13]:https://en.wikipedia.org/wiki/Perfect_forward_secrecy

+[14]:https://en.wikipedia.org/wiki/Transport_Layer_Security#Dealing_with_RC4_and_BEAST

+[15]:https://www.smacktls.com/

+[16]:https://raymii.org/s/articles/Check_servers_for_the_Poodle_bug.html

+[17]:https://tools.ietf.org/html/draft-ietf-tls-downgrade-scsv-00

+[18]:http://wiki.nginx.org/HttpSslModule#ssl_protocols

+[19]:https://wiki.mozilla.org/Security/Server_Side_TLS

+[20]:http://www.mail-archive.com/dev-tech-crypto@lists.mozilla.org/msg11247.html

+[21]:https://wiki.mozilla.org/Security/Server_Side_TLS#RC4_weaknesses

+[22]:http://wiki.nginx.org/HttpSslModule#ssl_prefer_server_ciphers

+[23]:http://wiki.nginx.org/HttpSslModule#ssl_ciphers

+[24]:https://raymii.org/s/tutorials/OCSP_Stapling_on_nginx.html

+[25]:https://en.wikipedia.org/wiki/HTTP_Strict_Transport_Security

+[26]:https://linux.cn/article-5266-1.html

+[27]:https://wiki.mozilla.org/SecurityEngineering/Public_Key_Pinning

+[28]:https://linux.cn/article-5282-1.html

+[29]:https://www.ssllabs.com/ssltest/

+[30]:https://wiki.mozilla.org/Security/Server_Side_TLS

\ No newline at end of file

diff --git a/published/201505/20150417 14 Useful Examples of Linux 'sort' Command--Part 1.md b/published/201505/20150417 14 Useful Examples of Linux 'sort' Command--Part 1.md

new file mode 100644

index 0000000000..c483c4035f

--- /dev/null

+++ b/published/201505/20150417 14 Useful Examples of Linux 'sort' Command--Part 1.md

@@ -0,0 +1,132 @@

+Linux 的 ‘sort’命令的14个有用的范例(一)

+=============================================================

+Sort是用于对单个或多个文本文件内容进行排序的Linux程序。Sort命令以空格作为字段分隔符,将一行分割为多个关键字对文件进行排序。需要注意的是除非你将输出重定向到文件中,否则Sort命令并不对文件内容进行实际的排序(即文件内容没有修改),只是将文件内容按有序输出。

+

+本文的目标是通过14个实际的范例让你更深刻的理解如何在Linux中使用sort命令。

+

+1、 首先我们将会创建一个用于执行‘sort’命令的文本文件(tecmint.txt)。工作路径是‘/home/$USER/Desktop/tecmint’。

+

+下面命令中的‘-e’选项将启用‘\\’转义,将‘\n’解析成换行

+

+ $ echo -e "computer\nmouse\nLAPTOP\ndata\nRedHat\nlaptop\ndebian\nlaptop" > tecmint.txt

+

+

+

+2、 在开始学习‘sort’命令前,我们先看看文件的内容及其显示方式。

+

+ $ cat tecmint.txt

+

+

+

+3、 现在,使用如下命令对文件内容进行排序。

+

+ $ sort tecmint.txt

+

+

+

+**注意**:上面的命令并不对文件内容进行实际的排序,仅仅是将其内容按有序方式输出。

+

+4、 对文件‘tecmint.txt’文件内容排序,并将排序后的内容输出到名为sorted.txt的文件中,然后使用[cat][1]命令查看验证sorted.txt文件的内容。

+

+ $ sort tecmint.txt > sorted.txt

+ $ cat sorted.txt

+

+

+

+5、 现在使用‘-r’参数对‘tecmint.txt’文件内容进行逆序排序,并将输出内容重定向到‘reversesorted.txt’文件中,并使用cat命令查看文件的内容。

+

+ $ sort -r tecmint.txt > reversesorted.txt

+ $ cat reversesorted.txt

+

+

+

+6、 创建一个新文件(lsl.txt),文件内容为在home目录下执行‘ls -l’命令的输出。

+

+ $ ls -l /home/$USER > /home/$USER/Desktop/tecmint/lsl.txt

+ $ cat lsl.txt

+

+

+

+我们将会看到对其他字段进行排序的例子,而不是对默认的开始字符进行排序。

+

+7、 基于第二列(符号连接的数量)对文件‘lsl.txt’进行排序。

+

+ $ sort -nk2 lsl.txt

+

+**注意**:上面例子中的‘-n’参数表示对数值内容进行排序。当想基于文件中的数值列对文件进行排序时,必须要使用‘-n’参数。

+

+

+

+8、 基于第9列(文件和目录的名称,非数值)对文件‘lsl.txt’进行排序。

+

+ $ sort -k9 lsl.txt

+

+

+

+9、 sort命令并非仅能对文件进行排序,我们还可以通过管道将命令的输出内容重定向到sort命令中。

+

+ $ ls -l /home/$USER | sort -nk5

+

+

+

+10、 对文件tecmint.txt进行排序,并删除重复的行。然后检查重复的行是否已经删除了。

+

+ $ cat tecmint.txt

+ $ sort -u tecmint.txt

+

+

+

+目前我们发现的排序规则:

+

+除非指定了‘-r’参数,否则排序的优先级按下面规则排序

+

+ - 以数字开头的行优先级最高

+ - 以小写字母开头的行优先级次之

+ - 待排序内容按字典序进行排序

+ - 默认情况下,‘sort’命令将带排序内容的每行关键字当作一个字符串进行字典序排序(数字优先级最高,参看规则 1)

+

+11、 在当前位置创建第三个文件‘lsla.txt’,其内容用‘ls -lA’命令的输出内容填充。

+

+ $ ls -lA /home/$USER > /home/$USER/Desktop/tecmint/lsla.txt

+ $ cat lsla.txt

+

+

+

+了解ls命令的读者都知道‘ls -lA’ 等于 ‘ls -l’ + 隐藏文件,所以这两个文件的大部分内容都是相同的。

+

+12、 对上面两个文件内容进行排序输出。

+

+ $ sort lsl.txt lsla.txt

+

+

+

+注意文件和目录的重复

+

+13、 现在我们看看怎样对两个文件进行排序、合并,并且删除重复行。

+

+ $ sort -u lsl.txt lsla.txt

+

+

+

+此时,我们注意到重复的行已经被删除了,我们可以将输出内容重定向到文件中。

+

+14、 我们同样可以基于多列对文件内容进行排序。基于第2,5(数值)和9(非数值)列对‘ls -l’命令的输出进行排序。

+

+ $ ls -l /home/$USER | sort -t "," -nk2,5 -k9

+

+

+

+先到此为止了,在接下来的文章中我们将会学习到‘sort’命令更多的详细例子。届时敬请关注我们。保持分享精神。若喜欢本文,敬请将本文分享给你的朋友。

+

+--------------------------------------------------------------------------------

+

+via: http://www.tecmint.com/sort-command-linux/

+

+作者:[Avishek Kumar][a]

+译者:[cvsher](https://github.com/cvsher)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://www.tecmint.com/author/avishek/

+[1]:http://www.tecmint.com/13-basic-cat-command-examples-in-linux/

diff --git a/published/201505/20150417 How to Configure MariaDB Replication on CentOS Linux.md b/published/201505/20150417 How to Configure MariaDB Replication on CentOS Linux.md

new file mode 100644

index 0000000000..702d3d7521

--- /dev/null

+++ b/published/201505/20150417 How to Configure MariaDB Replication on CentOS Linux.md

@@ -0,0 +1,363 @@

+如何在 CentOS Linux 中配置 MariaDB 复制

+================================================================================

+这是一个创建数据库重复版本的过程。复制过程不仅仅是复制一个数据库,同时也包括从主节点到一个从节点的更改同步。但这并不意味着从数据库就是和主数据库完全相同的副本,因为复制可以配置为只复制表结构、行或者列,这叫做局部复制。复制保证了特定的配置对象在不同的数据库之间保持一致。

+

+### Mariadb 复制概念 ###

+

+**备份** :复制可以用来进行数据库备份。例如,当你做了主->从复制。如果主节点数据丢失(比如硬盘损坏),你可以从从节点中恢复你的数据库。

+

+**扩展** :你可以使用主->从复制作为扩展解决方案。例如,如果你有一些大的数据库以及SQL查询,使用复制你可以将这些查询分离到每个复制节点。写入操作的SQL应该只在主节点进行,而只读查询可以在从节点上进行。

+

+**分发解决方案** :你可以用复制来进行分发。例如,你可以将不同的销售数据分发到不同的数据库。

+

+**故障解决方案** : 假如你建立有主节点->从节点1->从节点2->从节点3的复制结构。你可以为主节点写脚本监控,如果主节点出故障了,脚本可以快速的将从节点1切换为新的主节点,这样复制结构变成了主节点->从节点1->从节点2,你的应用可以继续工作而不会停机。

+

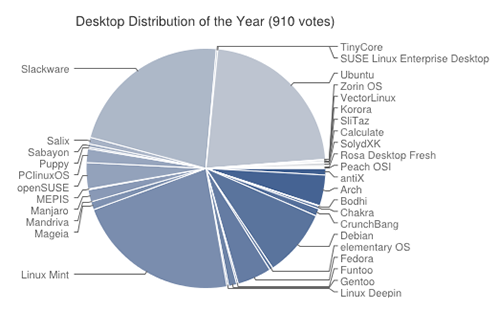

+### 复制的简单图解示范 ###

+

+

+

+开始之前,你应该知道什么是**二进制日志文件**以及 Ibdata1。

+

+二进制日志文件中包括关于数据库,数据和结构的所有更改的记录,以及每条语句的执行了多长时间。二进制日志文件包括一系列日志文件和一个索引文件。这意味着主要的SQL语句,例如CREATE, ALTER, INSERT, UPDATE 和 DELETE 会放到这个日志文件中;而例如SELECT这样的语句就不会被记录,它们可以被记录到普通的query.log文件中。

+

+而 **Ibdata1** 简单的说据是一个包括所有表和所有数据库信息的文件。

+

+### 主服务器配置 ###

+

+首先升级服务器

+

+ sudo yum install update -y && sudo yum install upgrade -y

+

+我们工作在centos7 服务器上

+

+ sudo cat /etc/redhat-release

+

+ CentOS Linux release 7.0.1406 (Core)

+

+安装 MariaDB

+

+ sudo yum install mariadb-server -y

+

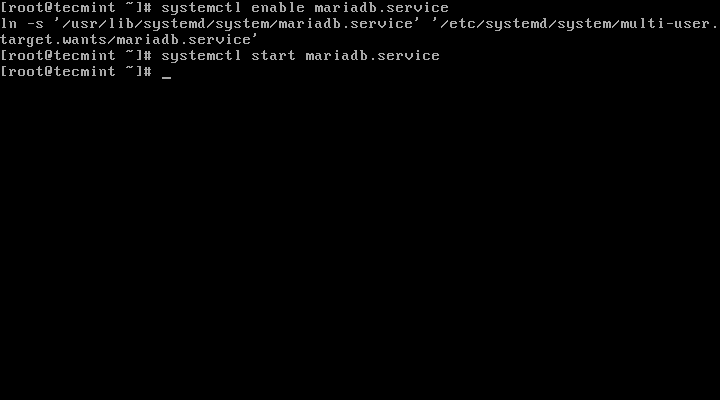

+启动 MariaDB 并启用随服务器启动

+

+ sudo systemctl start mariadb.service

+ sudo systemctl enable mariadb.service

+

+输出如下:

+

+ ln -s '/usr/lib/systemd/system/mariadb.service' '/etc/systemd/system/multi-user.target.wants/mariadb.service'

+

+检查 MariaDB 状态

+

+ sudo service mariadb status

+

+或者使用

+

+ sudo systemctl is-active mariadb.service

+

+输出如下:

+

+ Redirecting to /bin/systemctl status mariadb.service

+ mariadb.service - MariaDB database server

+ Loaded: loaded (/usr/lib/systemd/system/mariadb.service; enabled)

+

+设置 MariaDB 密码

+

+ mysql -u root

+ mysql> use mysql;

+ mysql> update user set password=PASSWORD("SOME_ROOT_PASSWORD") where User='root';

+ mysql> flush privileges;

+ mysql> exit

+

+这里 SOME_ROOT_PASSWORD 是你的 root 密码。 例如我用"q"作为密码,然后尝试登录:

+

+ sudo mysql -u root -pSOME_ROOT_PASSWORD

+

+输出如下:

+

+ Welcome to the MariaDB monitor. Commands end with ; or \g.

+ Your MariaDB connection id is 5

+ Server version: 5.5.41-MariaDB MariaDB Server

+ Copyright (c) 2000, 2014, Oracle, MariaDB Corporation Ab and others.

+

+输入 'help;' 或 '\h' 查看帮助信息。 输入 '\c' 清空当前输入语句。

+

+让我们创建包括一些数据的表的数据库

+

+创建数据库/模式

+

+ sudo mysql -u root -pSOME_ROOT_PASSWORD

+ mysql> create database test_repl;

+

+其中:

+

+ test_repl - 将要被复制的模式的名字

+

+输出:如下

+

+ Query OK, 1 row affected (0.00 sec)

+

+创建 Persons 表

+

+ mysql> use test_repl;

+

+ CREATE TABLE Persons (

+ PersonID int,

+ LastName varchar(255),

+ FirstName varchar(255),

+ Address varchar(255),

+ City varchar(255)

+ );

+

+输出如下:

+

+ mysql> MariaDB [test_repl]> CREATE TABLE Persons (

+ -> PersonID int,

+ -> LastName varchar(255),

+ -> FirstName varchar(255),

+ -> Address varchar(255),

+ -> City varchar(255)

+ -> );

+ Query OK, 0 rows affected (0.01 sec)

+

+插入一些数据

+

+ mysql> INSERT INTO Persons VALUES (1, "LastName1", "FirstName1", "Address1", "City1");

+ mysql> INSERT INTO Persons VALUES (2, "LastName2", "FirstName2", "Address2", "City2");

+ mysql> INSERT INTO Persons VALUES (3, "LastName3", "FirstName3", "Address3", "City3");

+ mysql> INSERT INTO Persons VALUES (4, "LastName4", "FirstName4", "Address4", "City4");

+ mysql> INSERT INTO Persons VALUES (5, "LastName5", "FirstName5", "Address5", "City5");

+

+输出如下:

+

+ Query OK, 5 row affected (0.00 sec)

+

+检查数据

+

+ mysql> select * from Persons;

+

+输出如下:

+

+ +----------+-----------+------------+----------+-------+

+ | PersonID | LastName | FirstName | Address | City |

+ +----------+-----------+------------+----------+-------+

+ | 1 | LastName1 | FirstName1 | Address1 | City1 |

+ | 1 | LastName1 | FirstName1 | Address1 | City1 |

+ | 2 | LastName2 | FirstName2 | Address2 | City2 |

+ | 3 | LastName3 | FirstName3 | Address3 | City3 |

+ | 4 | LastName4 | FirstName4 | Address4 | City4 |

+ | 5 | LastName5 | FirstName5 | Address5 | City5 |

+ +----------+-----------+------------+----------+-------+

+

+### 配置 MariaDB 复制 ###

+

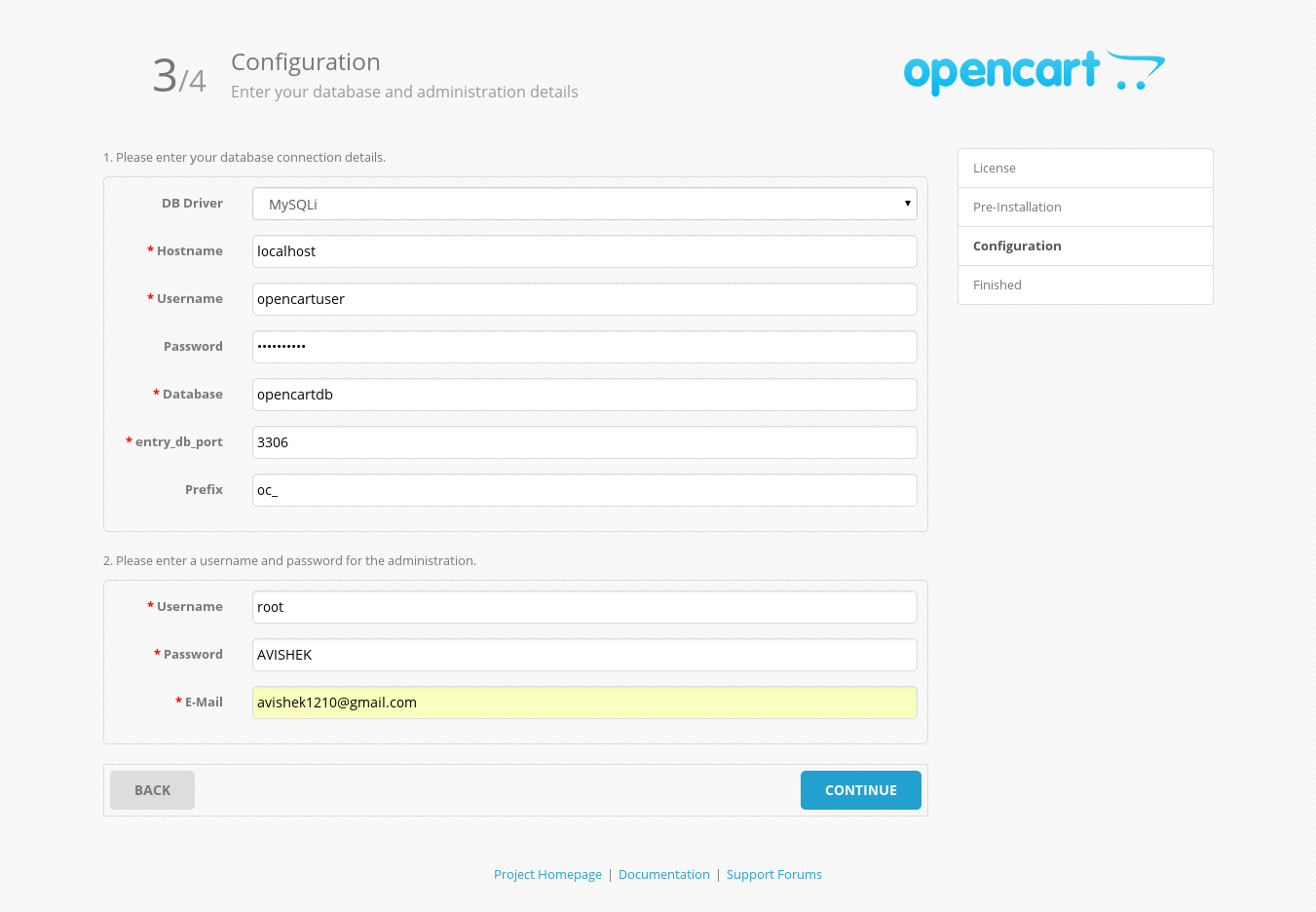

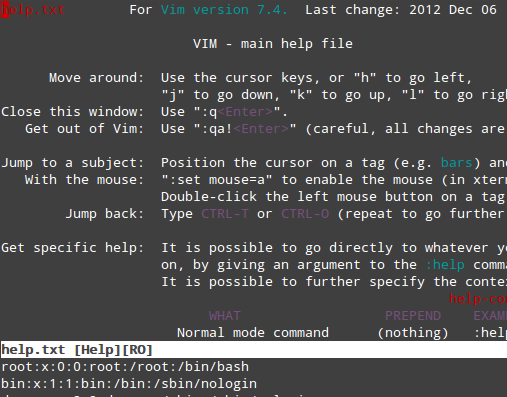

+你需要在主节点服务器上编辑 my.cnf文件来启用二进制日志以及设置服务器id。我会使用vi文本编辑器,但你可以使用任何你喜欢的,例如nano,joe。

+

+ sudo vi /etc/my.cnf

+

+将下面的一些行写到[mysqld]部分。

+

+

+ log-basename=master

+ log-bin

+ binlog-format=row

+ server_id=1

+

+输出如下:

+

+

+

+然后重启 MariaDB:

+

+ sudo service mariadb restart

+

+登录到 MariaDB 并查看二进制日志文件:

+

+sudo mysql -u root -pq test_repl

+

+mysql> SHOW MASTER STATUS;

+

+输出如下:

+

+ +--------------------+----------+--------------+------------------+

+ | File | Position | Binlog_Do_DB | Binlog_Ignore_DB |

+ +--------------------+----------+--------------+------------------+

+ | mariadb-bin.000002 | 3913 | | |

+ +--------------------+----------+--------------+------------------+

+

+**记住** : "File" 和 "Position" 的值。在从节点中你需要使用这些值

+

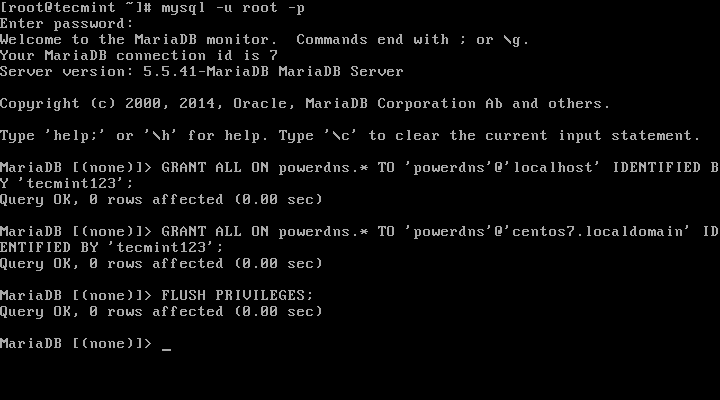

+创建用来复制的用户

+

+ mysql> GRANT REPLICATION SLAVE ON *.* TO replication_user IDENTIFIED BY 'bigs3cret' WITH GRANT OPTION;

+ mysql> flush privileges;

+

+输出如下:

+

+ Query OK, 0 rows affected (0.00 sec)

+ Query OK, 0 rows affected (0.00 sec)

+

+在数据库中检查用户

+

+ mysql> select * from mysql.user WHERE user="replication_user"\G;

+

+输出如下:

+

+ mysql> select * from mysql.user WHERE user="replication_user"\G;

+ *************************** 1. row ***************************

+ Host: %

+ User: replication_user

+ Password: *2AF30E7AEE9BF3AF584FB19653881D2D072FA49C

+ Select_priv: N

+ .....

+

+从主节点创建 DB dump (将要被复制的所有数据的快照)

+

+ mysqldump -uroot -pSOME_ROOT_PASSWORD test_repl > full-dump.sql

+

+其中:

+

+ SOME_ROOT_PASSWORD - 你设置的root用户的密码

+ test_repl - 将要复制的数据库的名称;

+

+你需要在从节点中恢复 mysql dump (full-dump.sql)。重复需要这个。

+

+### 从节点配置 ###

+

+所有这些命令需要在从节点中进行。

+

+假设我们已经更新/升级了包括有最新的MariaDB服务器的 CentOS 7.x,而且你可以用root账号登陆到MariaDB服务器(这在这篇文章的第一部分已经介绍过)

+

+登录到Maria 数据库控制台并创建数据库

+

+ mysql -u root -pSOME_ROOT_PASSWORD;

+ mysql> create database test_repl;

+ mysql> exit;

+

+在从节点恢复主节点的数据

+

+ mysql -u root -pSOME_ROOT_PASSWORD test_repl < full-dump.sql

+

+其中:

+

+full-dump.sql - 你在测试服务器中创建的DB Dump。

+

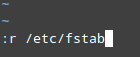

+登录到Maria 数据库并启用复制

+

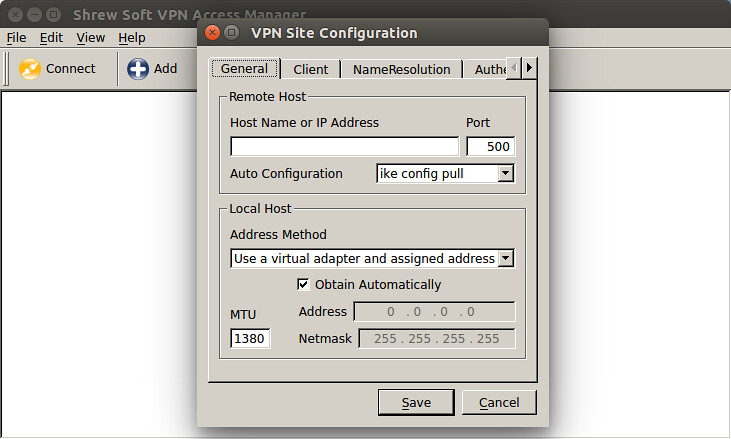

+ mysql> CHANGE MASTER TO

+ MASTER_HOST='82.196.5.39',

+ MASTER_USER='replication_user',

+ MASTER_PASSWORD='bigs3cret',

+ MASTER_PORT=3306,

+ MASTER_LOG_FILE='mariadb-bin.000002',

+ MASTER_LOG_POS=3913,

+ MASTER_CONNECT_RETRY=10;

+

+

+

+其中:

+

+ MASTER_HOST - 主节点服务器的IP

+ MASTER_USER - 主节点服务器中的复制用户

+ MASTER_PASSWORD - 复制用户密码

+ MASTER_PORT - 主节点中的mysql端口

+ MASTER_LOG_FILE - 主节点中的二进制日志文件名称

+ MASTER_LOG_POS - 主节点中的二进制日志文件位置

+

+开启从节点模式

+

+ mysql> slave start;

+

+输出如下:

+

+ Query OK, 0 rows affected (0.00 sec)

+

+检查从节点状态

+

+ mysql> show slave status\G;

+

+输出如下:

+

+ *************************** 1. row ***************************

+ Slave_IO_State: Waiting for master to send event

+ Master_Host: 82.196.5.39

+ Master_User: replication_user

+ Master_Port: 3306

+ Connect_Retry: 10

+ Master_Log_File: mariadb-bin.000002

+ Read_Master_Log_Pos: 4175

+ Relay_Log_File: mariadb-relay-bin.000002

+ Relay_Log_Pos: 793

+ Relay_Master_Log_File: mariadb-bin.000002

+ Slave_IO_Running: Yes

+ Slave_SQL_Running: Yes

+ Replicate_Do_DB:

+ Replicate_Ignore_DB:

+ Replicate_Do_Table:

+ Replicate_Ignore_Table:

+ Replicate_Wild_Do_Table:

+ Replicate_Wild_Ignore_Table:

+ Last_Errno: 0

+ Last_Error:

+ Skip_Counter: 0

+ Exec_Master_Log_Pos: 4175

+ Relay_Log_Space: 1089

+ Until_Condition: None

+ Until_Log_File:

+ Until_Log_Pos: 0

+ Master_SSL_Allowed: No

+ Master_SSL_CA_File:

+ Master_SSL_CA_Path:

+ Master_SSL_Cert:

+ Master_SSL_Cipher:

+ Master_SSL_Key:

+ Seconds_Behind_Master: 0

+ Master_SSL_Verify_Server_Cert: No

+ Last_IO_Errno: 0

+ Last_IO_Error:

+ Last_SQL_Errno: 0

+ Last_SQL_Error:

+ Replicate_Ignore_Server_Ids:

+ Master_Server_Id: 1

+ 1 row in set (0.00 sec)

+

+到这里所有步骤都应该没问题,也不应该出现错误。

+

+### 测试复制 ###

+

+在主节点服务器中添加一些条目到数据库

+

+ mysql -u root -pSOME_ROOT_PASSWORD test_repl

+

+ mysql> INSERT INTO Persons VALUES (6, "LastName6", "FirstName6", "Address6", "City6");

+ mysql> INSERT INTO Persons VALUES (7, "LastName7", "FirstName7", "Address7", "City7");

+ mysql> INSERT INTO Persons VALUES (8, "LastName8", "FirstName8", "Address8", "City8");

+

+到从节点服务器中查看复制数据

+

+ mysql -u root -pSOME_ROOT_PASSWORD test_repl

+

+ mysql> select * from Persons;

+

+ +----------+-----------+------------+----------+-------+

+ | PersonID | LastName | FirstName | Address | City |

+ +----------+-----------+------------+----------+-------+

+ ...................

+ | 6 | LastName6 | FirstName6 | Address6 | City6 |

+ | 7 | LastName7 | FirstName7 | Address7 | City7 |

+ | 8 | LastName8 | FirstName8 | Address8 | City8 |

+ +----------+-----------+------------+----------+-------+

+

+你可以看到数据已经被复制到从节点。这意味着复制能正常工作。希望你能喜欢这篇文章。如果你有任何问题请告诉我们。

+

+--------------------------------------------------------------------------------

+

+via: http://linoxide.com/how-tos/configure-mariadb-replication-centos-linux/

+

+作者:[Bobbin Zachariah][a]

+译者:[ictlyh](https://github.com/ictlyh)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://linoxide.com/author/bobbin/

\ No newline at end of file

diff --git a/published/201505/20150417 sshuttle--A transparent proxy-based VPN using ssh.md b/published/201505/20150417 sshuttle--A transparent proxy-based VPN using ssh.md

new file mode 100644

index 0000000000..953fd343b8

--- /dev/null

+++ b/published/201505/20150417 sshuttle--A transparent proxy-based VPN using ssh.md

@@ -0,0 +1,92 @@

+sshuttle:一个使用ssh的基于VPN的透明代理

+================================================================================

+sshuttle 允许你通过 ssh 创建一条从你电脑连接到任何远程服务器的 VPN 连接,只要你的服务器支持 python2.3 或则更高的版本。你必须有本机的 root 权限,但是你可以在服务端有普通账户即可。

+

+你可以在一台机器上同时运行多次 sshuttle 来连接到不同的服务器上,这样你就可以同时使用多个 VPN, sshuttle可以转发你子网中所有流量到VPN中。

+

+### 在Ubuntu中安装sshuttle ###

+

+在终端中输入下面的命令

+

+ sudo apt-get install sshuttle

+

+### 使用 sshuttle ###

+

+#### sshuttle 语法 ####

+

+ sshuttle [options...] [-r [username@]sshserver[:port]] [subnets]

+

+#### 选项细节 ####

+

+-r, —remote=[username@]sshserver[:port]

+

+远程主机名和可选的用户名,用于连接远程服务器的ssh端口号。比如example.com、testuser@example.com、testuser@example.com:2222或者example.com:2244。

+

+#### sshuttle 例子 ####

+

+在机器中使用下面的命令:

+

+ sudo sshuttle -r username@sshserver 0.0.0.0/0 -vv

+

+当开始后,sshuttle会创建一个ssh会话到由-r指定的服务器。如果-r被丢了,它会在本地运行客户端和服务端,这个有时会在测试时有用。

+

+连接到远程服务器后,sshuttle会上传它的(python)源码到远程服务器并执行。所以,你就不需要在远程服务器上安装sshuttle,并且客户端和服务器端间不会存在sshuttle版本冲突。

+

+#### 手册中的更多例子 ####

+

+代理所有的本地连接用于本地测试,没有使用ssh:

+

+ $ sudo sshuttle -v 0/0

+

+ Starting sshuttle proxy.

+ Listening on (‘0.0.0.0′, 12300).

+ [local sudo] Password:

+ firewall manager ready.

+ c : connecting to server...

+ s: available routes:

+ s: 192.168.42.0/24

+ c : connected.

+ firewall manager: starting transproxy.

+ c : Accept: ‘192.168.42.106':50035 -> ‘192.168.42.121':139.

+ c : Accept: ‘192.168.42.121':47523 -> ‘77.141.99.22':443.

+ ...etc...

+ ^C

+ firewall manager: undoing changes.

+ KeyboardInterrupt

+ c : Keyboard interrupt: exiting.

+ c : SW#8:192.168.42.121:47523: deleting

+ c : SW#6:192.168.42.106:50035: deleting

+

+测试到远程服务器上的连接,自动猜测主机名和子网:

+

+ $ sudo sshuttle -vNHr example.org

+

+ Starting sshuttle proxy.

+ Listening on (‘0.0.0.0′, 12300).

+ firewall manager ready.

+ c : connecting to server...

+ s: available routes:

+ s: 77.141.99.0/24

+ c : connected.

+ c : seed_hosts: []

+ firewall manager: starting transproxy.

+ hostwatch: Found: testbox1: 1.2.3.4

+ hostwatch: Found: mytest2: 5.6.7.8

+ hostwatch: Found: domaincontroller: 99.1.2.3

+ c : Accept: ‘192.168.42.121':60554 -> ‘77.141.99.22':22.

+ ^C

+ firewall manager: undoing changes.

+ c : Keyboard interrupt: exiting.

+ c : SW#6:192.168.42.121:60554: deleting

+

+--------------------------------------------------------------------------------

+

+via: http://www.ubuntugeek.com/sshuttle-a-transparent-proxy-based-vpn-using-ssh.html

+

+作者:[ruchi][a]

+译者:[geekpi](https://github.com/geekpi)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://www.ubuntugeek.com/author/ubuntufix

diff --git a/published/201505/20150420 7 Interesting Linux 'sort' Command Examples--Part 2.md b/published/201505/20150420 7 Interesting Linux 'sort' Command Examples--Part 2.md

new file mode 100644

index 0000000000..476274b495

--- /dev/null

+++ b/published/201505/20150420 7 Interesting Linux 'sort' Command Examples--Part 2.md

@@ -0,0 +1,134 @@

+Linux 的 'sort'命令的七个有趣实例(二)

+================================================================================

+

+在[上一篇文章][1]里,我们已经探讨了关于sort命令的多个例子,如果你错过了这篇文章,可以点击下面的链接进行阅读。今天的这篇文章作为上一篇文章的继续,将讨论关于sort命令的剩余用法,与上一篇一起作为Linux ‘sort’命令的完整指南。

+

+- [Linux 的 ‘sort’命令的14个有用的范例(一)][1]

+

+在我们继续深入之前,先创建一个文本文档‘month.txt’,并且将上一次给出的数据填进去。

+

+ $ echo -e "mar\ndec\noct\nsep\nfeb\naug" > month.txt

+ $ cat month.txt

+

+

+

+15、 通过使用’M‘选项,对’month.txt‘文件按照月份顺序进行排序。

+

+ $ sort -M month.txt

+

+**注意**:‘sort’命令需要至少3个字符来确认月份名称。

+

+

+

+16、 把数据整理成方便人们阅读的形式,比如1K、2M、3G、2T,这里面的K、G、M、T代表千、兆、吉、梯。

+(LCTT 译注:此处命令有误,ls 命令应该增加 -h 参数,径改之)

+

+ $ ls -lh /home/$USER | sort -h -k5

+

+

+

+17、 在上一篇文章中,我们在例子4中创建了一个名为‘sorted.txt’的文件,在例子6中创建了一个‘lsl.txt’。‘sorted.txt'已经排好序了而’lsl.txt‘还没有。让我们使用sort命令来检查两个文件是否已经排好序。

+

+ $ sort -c sorted.txt

+

+

+

+如果它返回0,则表示文件已经排好序。

+

+ $ sort -c lsl.txt

+

+

+

+报告无序。存在矛盾……

+

+18、 如果文字之间的分隔符是空格,sort命令自动地将空格后的东西当做一个新文字单元,如果分隔符不是空格呢?

+

+考虑这样一个文本文件,里面的内容可以由除了空格之外的任何符号分隔,比如‘|’,‘\’,‘+’,‘.’等……

+

+创建一个分隔符为+的文本文件。使用‘cat‘命令查看文件内容。

+

+ $ echo -e "21+linux+server+production\n11+debian+RedHat+CentOS\n131+Apache+Mysql+PHP\n7+Shell Scripting+python+perl\n111+postfix+exim+sendmail" > delimiter.txt

+

+----------

+

+ $ cat delimiter.txt

+

+

+

+现在基于由数字组成的第一个域来进行排序。

+

+ $ sort -t '+' -nk1 delimiter.txt

+

+

+

+然后再基于非数字的第四个域排序。

+

+

+

+如果分隔符是制表符,你需要在’+‘的位置上用$’\t’代替,如上例所示。

+

+19、 对主用户目录下使用‘ls -l’命令得到的结果基于第五列(‘文件大小’)进行一个乱序排列。

+

+ $ ls -l /home/avi/ | sort -k5 -R

+

+

+

+每一次你运行上面的脚本,你得到结果可能都不一样,因为结果是随机生成的。

+

+正如我在上一篇文章中提到的规则2所说——sort命令会将以小写字母开始的行排在大写字母开始的行前面。看一下上一篇文章的例3,字符串‘laptop’在‘LAPTOP’前出现。

+

+20、 如何覆盖默认的排序优先权?在这之前我们需要先将环境变量LC_ALL的值设置为C。在命令行提示栏中运行下面的代码。

+

+ $ export LC_ALL=C

+

+然后以非默认优先权的方式对‘tecmint.txt’文件重新排序。

+

+ $ sort tecmint.txt

+

+

+

+*覆盖排序优先权*

+

+不要忘记与example 3中得到的输出结果做比较,并且你可以使用‘-f’,又叫‘-ignore-case’(忽略大小写)的选项来获取更有序的输出。

+

+ $ sort -f tecmint.txt

+

+

+

+21、 给两个输入文件进行‘sort‘,然后把它们连接成一行!

+

+我们创建两个文本文档’file1.txt‘以及’file2.txt‘,并用数据填充,如下所示,并用’cat‘命令查看文件的内容。

+

+ $ echo -e “5 Reliable\n2 Fast\n3 Secure\n1 open-source\n4 customizable” > file1.txt

+ $ cat file1.txt

+

+

+

+用如下数据填充’file2.txt‘。

+

+ $ echo -e “3 RedHat\n1 Debian\n5 Ubuntu\n2 Kali\n4 Fedora” > file2.txt

+ $ cat file2.txt

+

+

+

+现在我们对两个文件进行排序并连接。

+

+ $ join <(sort -n file1.txt) <(sort file2.txt)

+

+

+

+

+我所要讲的全部内容就在这里了,希望与各位保持联系,也希望各位经常来逛逛。有反馈就在下面评论吧。

+

+--------------------------------------------------------------------------------

+

+via: http://www.tecmint.com/linux-sort-command-examples/

+

+作者:[Avishek Kumar][a]

+译者:[DongShuaike](https://github.com/DongShuaike)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://www.tecmint.com/author/avishek/

+[1]:http://www.tecmint.com/sort-command-linux/

diff --git a/published/201505/20150423 uperTuxKart 0.9 Released--The Best Racing Game on Linux Just Got Even Better.md b/published/201505/20150423 uperTuxKart 0.9 Released--The Best Racing Game on Linux Just Got Even Better.md

new file mode 100644

index 0000000000..70355325b6

--- /dev/null

+++ b/published/201505/20150423 uperTuxKart 0.9 Released--The Best Racing Game on Linux Just Got Even Better.md

@@ -0,0 +1,35 @@

+SuperTuxKart 0.9 已发行 —— Linux 中最好的竞速类游戏越来越棒了!

+================================================================================

+**热门竞速类游戏 SuperTuxKart 的新版本已经[打包发行][1]登陆下载服务器**

+

+

+

+*Super Tux Kart 0.9 发行海报*

+

+SuperTuxKart 0.9 相较前一版本做了巨大的升级,内部运行着刚出炉的新引擎(有个炫酷的名字叫‘Antarctica(南极洲)’),目的是要呈现更加炫酷的图形环境,从阴影到场景的纵深,外加卡丁车更好的物理效果。

+

+突出的图形表现也增加了对显卡的要求。SuperTuxKart 开发人员给玩家的建议是,要有图像处理能力比得上(或者,想要完美的话,要超过) Intel HD Graphics 3000, NVIDIA GeForce 8600 或 AMD Radeon HD 3650 的显卡。

+

+### 其他改变 ###

+

+SuperTuxKart 0.9 中与图像的改善同样吸引人眼球的是一对**全新赛道**,新的卡丁车,新的在线账户可以记录和分享**全新推出的成就系统**里赢得的徽章,以及大量的改装和涂装的微调。

+

+点击播放下面的官方发行视频,看看基于调色器的 STK 0.9 所散发的光辉吧。(youtube 视频:https://www.youtube.com/0FEwDH7XU9Q )

+

+Ubuntu 用户可以从项目网站上下载新发行版已编译的二进制文件。

+

+- [下载 SuperTuxKart 0.9][2]

+

+--------------------------------------------------------------------------------

+

+via: http://www.omgubuntu.co.uk/2015/04/supertuxkart-0-9-released

+

+作者:[Joey-Elijah Sneddon][a]

+译者:[H-mudcup](https://github.com/H-mudcup)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:https://plus.google.com/117485690627814051450/?rel=author

+[1]:http://supertuxkart.blogspot.co.uk/2015/04/supertuxkart-09-released.html

+[2]:http://supertuxkart.sourceforge.net/Downloads

diff --git a/published/201505/20150429 Docker 1.6 Released--How to Upgrade on Fedora or CentOS.md b/published/201505/20150429 Docker 1.6 Released--How to Upgrade on Fedora or CentOS.md

new file mode 100644

index 0000000000..6b6141211f

--- /dev/null

+++ b/published/201505/20150429 Docker 1.6 Released--How to Upgrade on Fedora or CentOS.md

@@ -0,0 +1,167 @@

+如何在Fedora / CentOS上面升级Docker 1.6

+=============================================================================

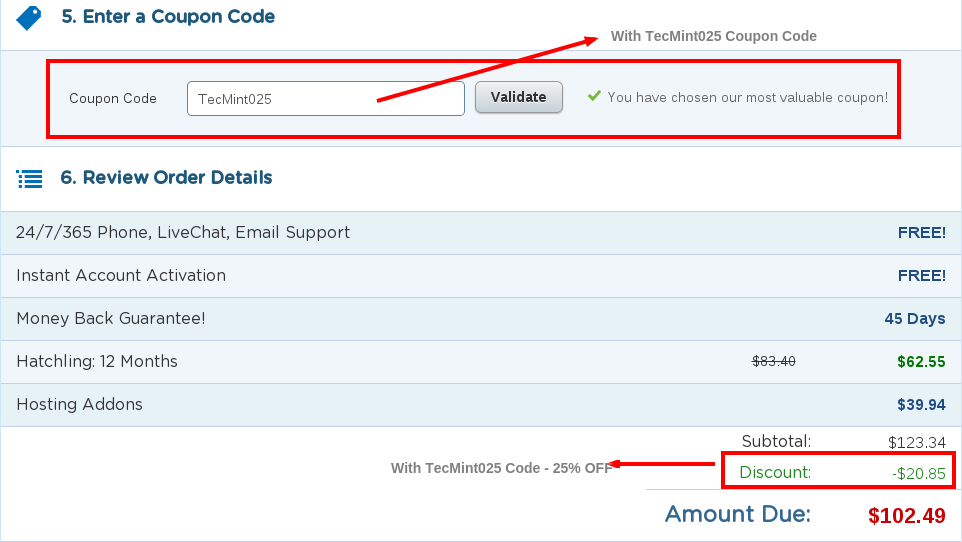

+Docker,一个流行的将软件打包的开源容器平台,已经有了新的1.6版,增加了许多新的特性。该版本主要更新了Docker Registry、Engine、 Swarm、 Compose 和 Machine等方面。这次发布旨在提升性能、改善开发者和系统管理员的体验。让我们来快速看看有哪些新特性吧。

+

+**Docker Registry (2.0)**是一项推送Docker镜像用于存储和分享的服务,因为面临加载下的体验问题而经历了架构的改变。它仍然向后兼容。Docker Registry的编写语言现在从Python改为Google的Go语言了,以提升性能。与Docker Engine 1.6结合后,拉取镜像的能力更快了。早先的镜像是队列式输送的,而现在是并行的啦。

+

+**Docker Engine (1.6)**相比之前的版本有很大的提高。目前支持容器与镜像的标签。通过标签,你可以附加用户自定义的元数据到镜像和容器上,而镜像和容器反过来可以被其他工具使用。标签对正在运行的应用是不可见的,可以用来加速搜索容器和镜像。

+

+Windows版本的Docker客户端可以连接到远程的运行在linux上的Docker Engine。

+

+Docker目前支持日志驱动API,这允许我们发送容器日志给系统如Syslog,或者第三方。这将会使得系统管理员受益。

+

+**Swarm (0.2)**是一个Docker集群工具,可以将一个Docker主机池转换为一个虚拟主机。在新特性里,容器甚至被放在了可用的节点上。通过添加更多的Docker命令,努力支持完整的Docker API。将来,使用第三方驱动来集群会成为可能。

+

+**Compose (1.2)** 是一个Docker里定义和运行复杂应用的工具, 也得到了升级。在新版本里,可以创建多个子文件,而不是用一个没有结构的文件描述一个多容器应用。

+

+通过**Machine (0.2)**,我们可以很容易地在本地计算机、云和数据中心上搭建Docker主机。新的发布版本为开发者提供了一个相对干净地驱动界面来编写驱动。Machine集中控制供给,而不是每个独立的驱动。增加了新的命令,可以用来生成主机的TLS证书,以提高安全性。

+

+### 在Fedora / CentOS 上的升级指导 ###

+

+在这一部分里,我们将会学习如何在Fedora和CentOS上升级已有的docker到最新版本。请注意,目前的Docker仅运行在64位的架构上,Fedora和CentOS都源于RedHat,命令的使用是差不多相同的,除了在Fedora20和CentOS6.5里Docker包被叫做“docker-io”。

+

+如果你系统之前没有安装Docker,使用下面命令安装:

+

+ "yum install docker-io" – on Fedora20 / CentOS6.5

+

+ "yum install docker" - on Fedora21 / CentOS7

+

+在升级之前,备份一下docker镜像和容器卷是个不错的主意。

+

+参考[“将文件系统打成 tar 包”][1]与[“卷备份、恢复或迁移”][2],获取更多信息。

+

+目前,测试系统安装了Docker1.5。样例输出显示是来自一个Fedora20的系统。

+

+验证当前系统安装的Docker版本

+

+ [root@TestNode1 ~]#sudo docker -v

+

+ Docker version 1.5.0, build a8a31ef/1.5.0

+

+如果Docker正在运行,先停掉。

+

+ [root@TestNode1 ~]# sudo systemctl stop docker

+

+使用yum update升级到最新版,但是写这篇文章的时候,仓库并不是最新版本(1.6),因此你需要使用二进制的升级方法。

+

+ [root@TestNode1 ~]#sudo yum -y update docker-io

+

+ No packages marked for update

+

+ [root@TestNode1 ~]#sudo wget https://get.docker.com/builds/Linux/x86_64/docker-latest -O /usr/bin/docker

+

+ --2015-04-19 13:40:48-- https://get.docker.com/builds/Linux/x86_64/docker-latest

+

+ Resolving get.docker.com (get.docker.com)... 162.242.195.82

+

+ Connecting to get.docker.com (get.docker.com)|162.242.195.82|:443... connected.

+

+ HTTP request sent, awaiting response... 200 OK

+

+ Length: 15443598 (15M) [binary/octet-stream]

+

+ Saving to: /usr/bin/docker

+

+ 100%[======================================>] 15,443,598 8.72MB/s in 1.7s

+

+ 2015-04-19 13:40:50 (8.72 MB/s) - /usr/bin/docker saved

+

+检查更新后的版本

+

+ [root@TestNode1 ~]#sudo docker -v

+

+ Docker version 1.6.0, build 4749651

+

+重启docker服务

+

+ [root@TestNode1 ~]# sudo systemctl start docker

+

+确认Docker在运行

+

+ [root@TestNode1 ~]# docker images

+

+ REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

+

+ fedora latest 834629358fe2 3 months ago 241.3 MB

+

+ [root@TestNode1 ~]# docker run fedora /bin/echo Hello World

+

+ Hello World

+

+CentOS安装时需要**注意**,在CentOS上安装完Docker后,当你试图启动Docker服务的时候,你可能会得到错误的信息,如下所示:

+

+ docker.service - Docker Application Container Engine

+

+ Loaded: loaded (/usr/lib/systemd/system/docker.service; disabled)

+

+ Active: failed (Result: exit-code) since Mon 2015-04-20 03:24:24 EDT; 6h ago

+

+ Docs: http://docs.docker.com

+

+ Process: 21069 ExecStart=/usr/bin/docker -d $OPTIONS $DOCKER_STORAGE_OPTIONS $DOCKER_NETWORK_OPTIONS $ADD_REGISTRY $BLOCK_REGISTRY $INSECURE_REGISTRY (code=exited, status=127)

+

+ Main PID: 21069 (code=exited, status=127)

+

+ Apr 20 03:24:24 centos7 systemd[1]: Starting Docker Application Container E.....

+

+ Apr 20 03:24:24 centos7 docker[21069]: time="2015-04-20T03:24:24-04:00" lev...)"

+

+ Apr 20 03:24:24 centos7 docker[21069]: time="2015-04-20T03:24:24-04:00" lev...)"

+

+ Apr 20 03:24:24 centos7 docker[21069]: /usr/bin/docker: relocation error: /...ce

+

+ Apr 20 03:24:24 centos7 systemd[1]: docker.service: main process exited, co.../a

+

+ Apr 20 03:24:24 centos7 systemd[1]: Failed to start Docker Application Cont...e.

+

+ Apr 20 03:24:24 centos7 systemd[1]: Unit docker.service entered failed state.

+

+这是一个已知的bug([https://bugzilla.redhat.com/show_bug.cgi?id=1207839][3]),需要将设备映射升级到最新。

+

+ [root@centos7 ~]# rpm -qa device-mapper

+

+ device-mapper-1.02.84-14.el7.x86_64

+

+ [root@centos7 ~]# yum update device-mapper

+

+ [root@centos7 ~]# rpm -qa device-mapper

+

+ device-mapper-1.02.93-3.el7.x86_64

+

+ [root@centos7 ~]# systemctl start docker

+

+### 总结 ###

+

+尽管docker技术出现时间不长,但很快就变得非常流行了。它使得开发者的生活变得轻松,运维团队可以快速独立地创建和部署应用。通过该公司的发布,Docker的快速更新,产品质量的提升,满足用户需求,未来对于Docker来说一片光明。

+

+--------------------------------------------------------------------------------

+

+via: http://linoxide.com/linux-how-to/docker-1-6-features-upgrade-fedora-centos/

+

+作者:[B N Poornima][a]

+译者:[wi-cuckoo](https://github.com/wi-cuckoo)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:http://linoxide.com/author/bnpoornima/

+[1]:http://docs.docker.com/reference/commandline/cli/#export

+[2]:http://docs.docker.com/userguide/dockervolumes/#backup-restore-or-migrate-data-volumes

+[3]:https://bugzilla.redhat.com/show_bug.cgi?id=1207839

+[4]:

+[5]:

+[6]:

+[7]:

+[8]:

+[9]:

+[10]:

+[11]:

+[12]:

+[13]:

+[14]:

+[15]:

+[16]:

+[17]:

+[18]:

+[19]:

+[20]:

diff --git a/published/201505/20150429 Synfig Studio 1.0--Open Source Animation Gets Serious.md b/published/201505/20150429 Synfig Studio 1.0--Open Source Animation Gets Serious.md

new file mode 100644

index 0000000000..a216674d9b

--- /dev/null

+++ b/published/201505/20150429 Synfig Studio 1.0--Open Source Animation Gets Serious.md

@@ -0,0 +1,38 @@

+Synfig Studio 1.0:开源动画动真格的了

+================================================================================

+

+

+**现在可以下载 Synfig Studio 这个自由、开源的2D动画软件的全新版本了。 **

+

+在这个跨平台的软件首次发行一年之后,Synfig Studio 1.0 带着一套全新改进过的功能,实现它所承诺的“创造电影级的动画的工业级解决方案”。

+

+在众多功能之上的是一个改进过的用户界面,据项目开发者说那是个用起来‘更简单’、‘更直观’的界面。客户端添加了新的**单窗口模式**,让界面更整洁,而且**使用了最新的 GTK3 库重制**。

+

+在功能方面有几个值得注意的变化,包括新加的全功能骨骼系统。

+

+这套**关节和转轴的‘骨骼’构架**非常适合2D剪纸动画,再配上这个版本新加的复杂的变形控制系统或是 Synfig 受欢迎的‘关键帧自动插入’(即:帧到帧之间的变形)应该会变得非常有效率的。(youtube视频 https://www.youtube.com/M8zW1qCq8ng )

+

+新的无损剪切工具,摩擦力效果和对逐帧位图动画的支持,可能会有助于释放开源动画师们的创造力,更别说新加的用于同步动画的时间线和声音的声效层!

+

+### 下载 Synfig Studio 1.0 ###

+

+Synfig Studio 并不是任何人都能用的工具套件,这最新发行版的最新一批改进应该能吸引一些动画制作者试一试这个软件。

+

+如果你想看看开源动画制作软件是什么样的,你可以通过下面的链接直接从工程的 Sourceforge 页下载一个适用于 Ubuntu 的最新版本的安装器。

+

+- [下载 Synfig 1.0 (64bit) .deb 安装器][1]

+- [下载 Synfig 1.0 (32bit) .deb 安装器][2]

+

+--------------------------------------------------------------------------------

+

+via: http://www.omgubuntu.co.uk/2015/04/synfig-studio-new-release-features

+

+作者:[Joey-Elijah Sneddon][a]

+译者:[H-mudcup](https://github.com/H-mudcup)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

+

+[a]:https://plus.google.com/117485690627814051450/?rel=author

+[1]:http://sourceforge.net/projects/synfig/files/releases/1.0/linux/synfigstudio_1.0_amd64.deb/download

+[2]:http://sourceforge.net/projects/synfig/files/releases/1.0/linux/synfigstudio_1.0_x86.deb/download

diff --git a/published/201505/20150429 web caching basics terminology http headers and caching strategies.md b/published/201505/20150429 web caching basics terminology http headers and caching strategies.md

new file mode 100644

index 0000000000..52e4e4e01f

--- /dev/null

+++ b/published/201505/20150429 web caching basics terminology http headers and caching strategies.md

@@ -0,0 +1,181 @@

+Web缓存基础:术语、HTTP报头和缓存策略

+=====================================================================

+

+### 简介

+

+对于您的站点的访问者来说,智能化的内容缓存是提高用户体验最有效的方式之一。缓存,或者对之前的请求的临时存储,是HTTP协议实现中最核心的内容分发策略之一。分发路径中的组件均可以缓存内容来加速后续的请求,这受控于对该内容所声明的缓存策略。

+

+在这份指南中,我们将讨论一些Web内容缓存的基本概念。这主要包括如何选择缓存策略以保证互联网范围内的缓存能够正确的处理您的内容。我们将谈一谈缓存带来的好处、副作用以及不同的策略能带来的性能和灵活性的最大结合。

+

+###什么是缓存(caching)?

+

+缓存(caching)是一个描述存储可重用资源以便加快后续请求的行为的术语。有许多不同类型的缓存,每种都有其自身的特点,应用程序缓存和内存缓存由于其对特定回复的加速,都很常用。

+

+这份指南的主要讲述的Web缓存是一种不同类型的缓存。Web缓存是HTTP协议的一个核心特性,它能最小化网络流量,并且提升用户所感知的整个系统响应速度。内容从服务器到浏览器的传输过程中,每个层面都可以找到缓存的身影。

+

+Web缓存根据特定的规则缓存相应HTTP请求的响应。对于缓存内容的后续请求便可以直接由缓存满足而不是重新发送请求到Web服务器。

+

+###好处

+

+有效的缓存技术不仅可以帮助用户,还可以帮助内容的提供者。缓存对内容分发带来的好处有:

+

+- **减少网络开销**:内容可以在从内容提供者到内容消费者网络路径之间的许多不同的地方被缓存。当内容在距离内容消费者更近的地方被缓存时,由于缓存的存在,请求将不会消耗额外的网络资源。

+- **加快响应速度**:由于并不是必须通过整个网络往返,缓存可以使内容的获得变得更快。缓存放在距用户更近的地方,例如浏览器缓存,使得内容的获取几乎是瞬时的。

+- **在同样的硬件上提高速度**:对于保存原始内容的服务器来说,更多的性能可以通过允许激进的缓存策略从硬件上压榨出来。内容拥有者们可以利用分发路径上某个强大的服务器来应对特定内容负载的冲击。

+- **网络中断时内容依旧可用**:使用某种策略,缓存可以保证在原始服务器变得不可用时,相应的内容对用户依旧可用。

+

+###术语

+

+在面对缓存时,您可能对一些经常遇到的术语可能不太熟悉。一些常见的术语如下:

+

+- **原始服务器**:原始服务器是内容的原始存放地点。如果您是Web服务器管理员,它就是您所管理的机器。它负责为任何不能从缓存中得到的内容进行回复,并且负责设置所有内容的缓存策略。

+- **缓存命中率**:一个缓存的有效性依照缓存的命中率进行度量。它是可以从缓存中得到数据的请求数与所有请求数的比率。缓存命中率高意味着有很高比例的数据可以从缓存中获得。这通常是大多数管理员想要的结果。

+- **新鲜度**:新鲜度用来描述一个缓存中的项目是否依旧适合返回给客户端。缓存中的内容只有在由缓存策略指定的新鲜期内才会被返回。

+- **过期内容**:缓存中根据缓存策略的新鲜期设置已过期的内容。过期的内容被标记为“陈旧”。通常,过期内容不能用于回复客户端的请求。必须重新从原始服务器请求新的内容或者至少验证缓存的内容是否仍然准确。

+- **校验**:缓存中的过期内容可以验证是否有效以便刷新过期时间。验证过程包括联系原始服务器以检查缓存的数据是否依旧代表了最近的版本。

+- **失效**:失效是依据过期日期从缓存中移除内容的过程。当内容在原始服务器上已被改变时就必须这样做,缓存中过期的内容会导致客户端发生问题。

+

+还有许多其他的缓存术语,不过上面的这些应该能帮助您开始。

+

+###什么能被缓存?

+

+某些特定的内容比其他内容更容易被缓存。对大多数站点来说,一些适合缓存的内容如下:

+

+- Logo和商标图像

+- 普通的不变化的图像(例如,导航图标)

+- CSS样式表

+- 普通的Javascript文件

+- 可下载的内容

+- 媒体文件

+

+这些文件更倾向于不经常改变,所以长时间的对它们进行缓存能获得好处。

+

+一些项目在缓存中必须加以注意:

+

+- HTML页面

+- 会替换改变的图像

+- 经常修改的Javascript和CSS文件

+- 需要有认证后的cookies才能访问的内容

+

+一些内容从来不应该被缓存:

+

+- 与敏感信息相关的资源(银行数据,等)

+- 用户相关且经常更改的数据

+

+除上面的通用规则外,通常您需要指定一些规则以便于更好地缓存不同种类的内容。例如,如果登录的用户都看到的是同样的网站视图,就应该在任何地方缓存这个页面。如果登录的用户会在一段时间内看到站点中用户特定的视图,您应该让用户的浏览器缓存该数据而不应让任何中介节点缓存该视图。

+