mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-03 01:10:13 +08:00

commit

4aed32d8ec

64

published/20151013 DFileManager--Cover Flow File Manager.md

Normal file

64

published/20151013 DFileManager--Cover Flow File Manager.md

Normal file

@ -0,0 +1,64 @@

|

||||

DFileManager:封面流(CoverFlow)文件管理器

|

||||

================================================================================

|

||||

|

||||

这个一个 Ubuntu 标准软件仓库中缺失的像宝石般的、有着其独特的功能的文件管理器。这是 DFileManager 在推特中的宣称。

|

||||

|

||||

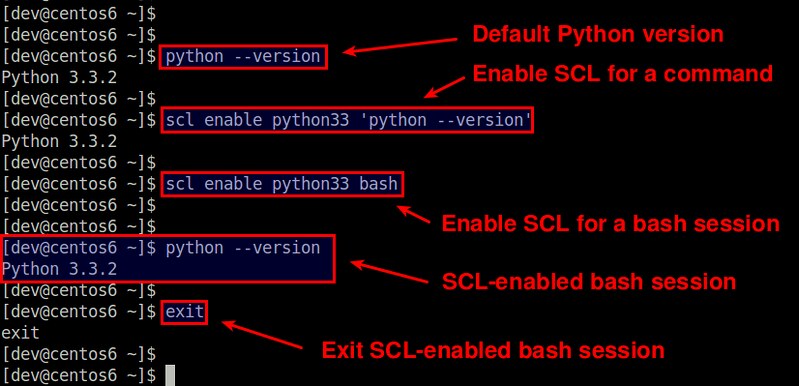

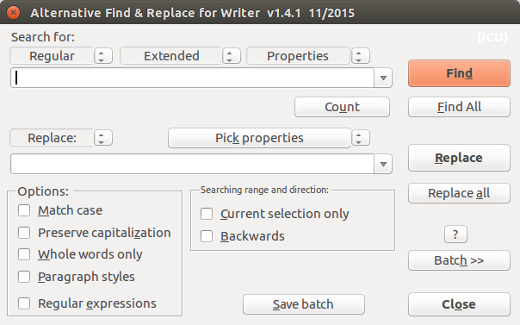

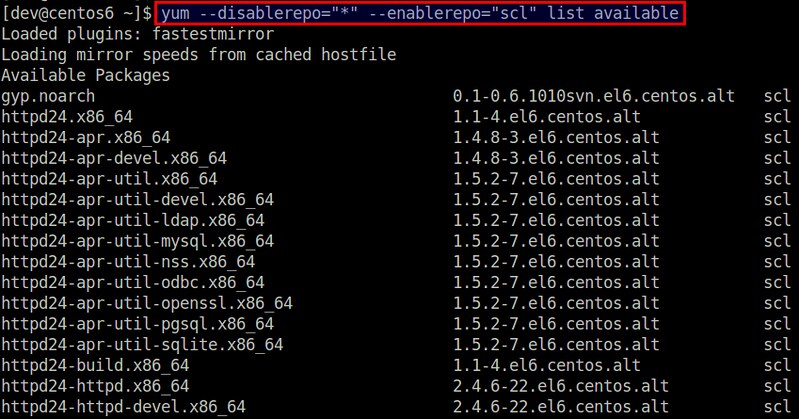

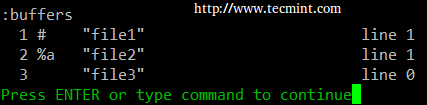

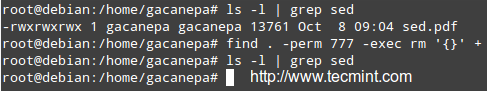

有一个不好回答的问题,如何知道到底有多少个 Linux 的开源软件?好奇的话,你可以在 Shell 里输入如下命令:

|

||||

|

||||

~$ for f in /var/lib/apt/lists/*Packages; do printf '%5d %s\n' $(grep '^Package: ' "$f" | wc -l) ${f##*/} done | sort -rn

|

||||

|

||||

在我的 Ubuntu 15.04 系统上,产生结果如下:

|

||||

|

||||

|

||||

|

||||

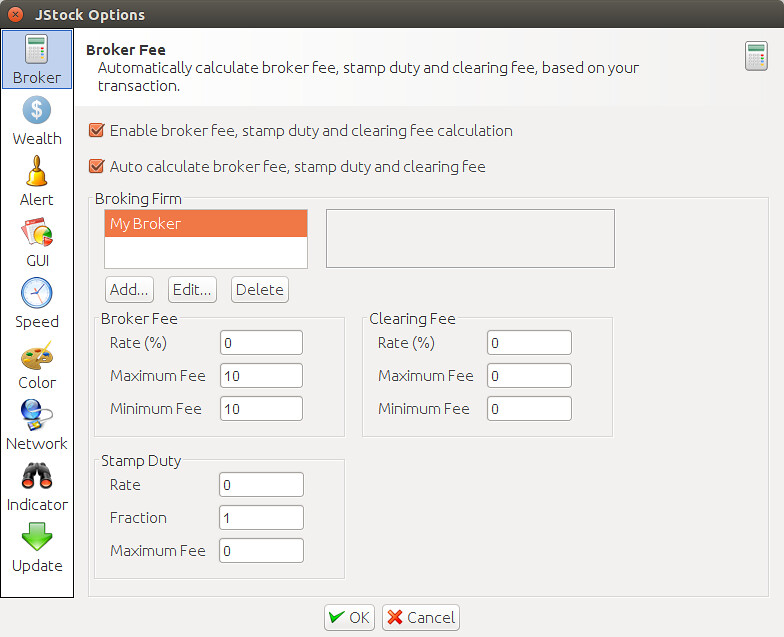

正如上面的截图所示,在 Universe 仓库中,大约有39000个包,在 main 仓库中大约有8500个包。这听起来很多。但是这些包括了开源应用、工具、库,有很多不是由 Ubuntu 开发者打包的。更重要的是,有很多重要的软件不在库中,只能通过源代码编译。DFileManager 就是这样一个软件。它是仍处在开发早期的一个基于 QT 的跨平台文件管理器。QT提供单一源码下的跨平台可移植性。

|

||||

|

||||

现在还没有二进制文件包,用户需要编译源代码才行。对于一些工具来说,这个可能会产生很大的问题,特别是如果这个应用依赖于某个复杂的依赖库,或者需要与已经安装在系统中的软件不兼容的某个版本。

|

||||

|

||||

### 安装 ###

|

||||

|

||||

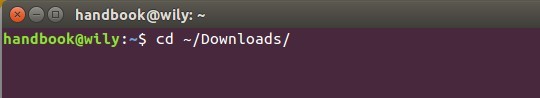

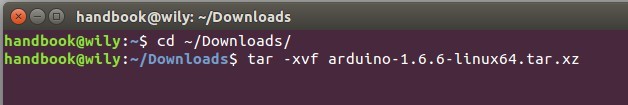

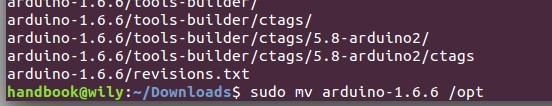

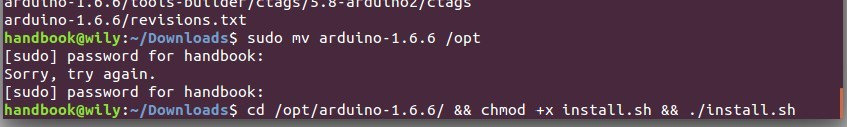

幸运的是,DFileManager 非常容易编译。对于我的老 Ubutnu 机器来说,在开发者网站上的安装介绍提供了大部分的重要步骤,不过少量的基础包没有列出(为什么总是这样?虽然许多库会让文件系统变得一团糟!)。在我的系统上,从github 下载源代码并且编译这个软件,我在 Shell 里输入了以下命令:

|

||||

|

||||

~$ sudo apt-get install qt5-default qt5-qmake libqt5x11extras5-dev

|

||||

~$ git clone git://git.code.sf.net/p/dfilemanager/code dfilemanager-code

|

||||

~$ cd dfilemananger-code

|

||||

~$ mkdir build

|

||||

~$ cd build

|

||||

~$ cmake ../ -DCMAKE_INSTALL_PREFIX=/usr

|

||||

~$ make

|

||||

~$ sudo make install

|

||||

|

||||

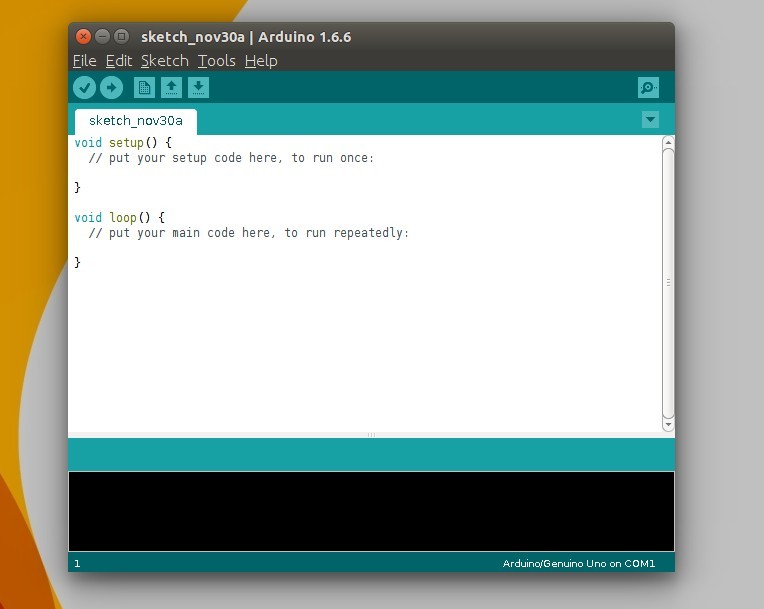

你可以通过在shell中输入如下命令来启动它:

|

||||

|

||||

~$ dfm

|

||||

|

||||

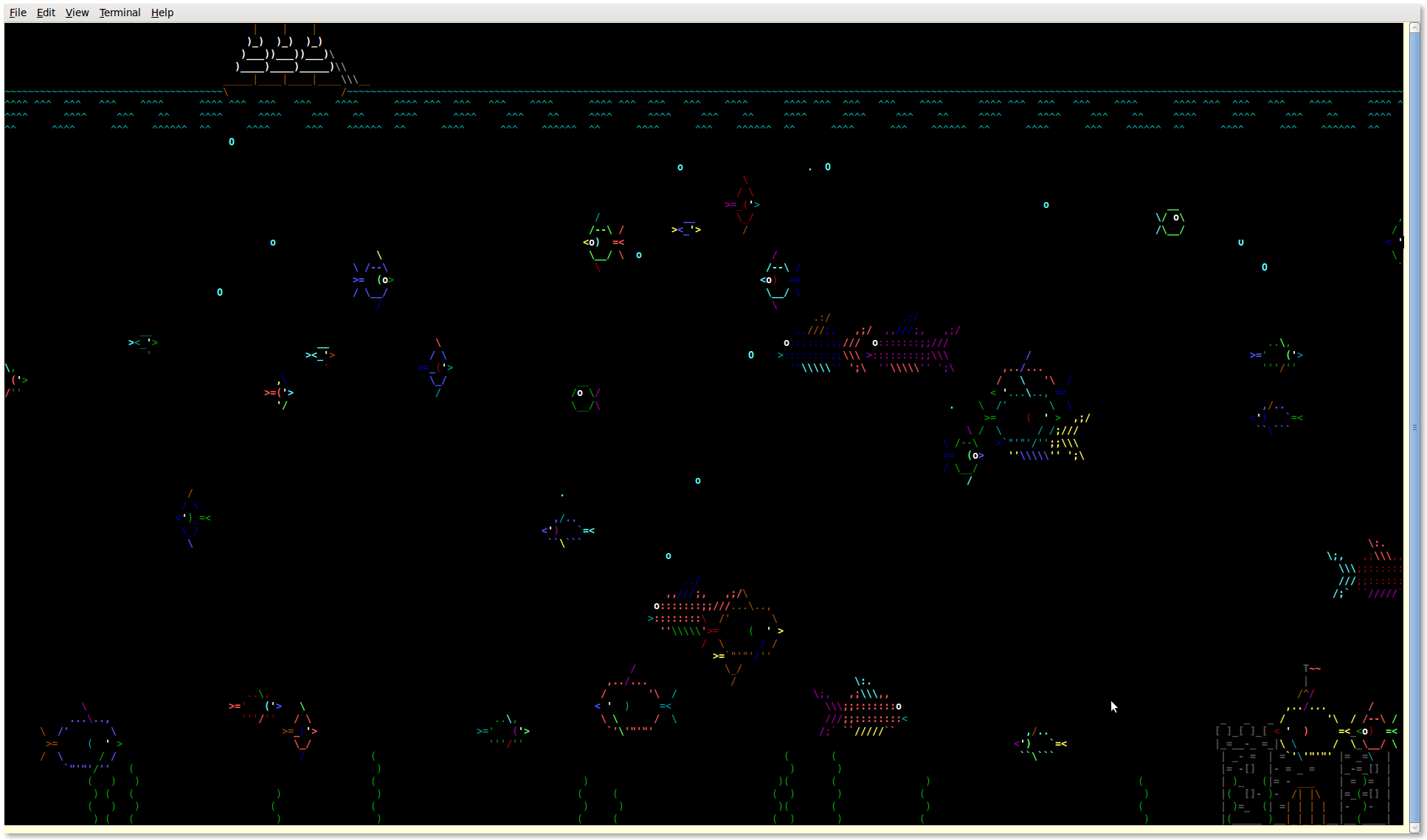

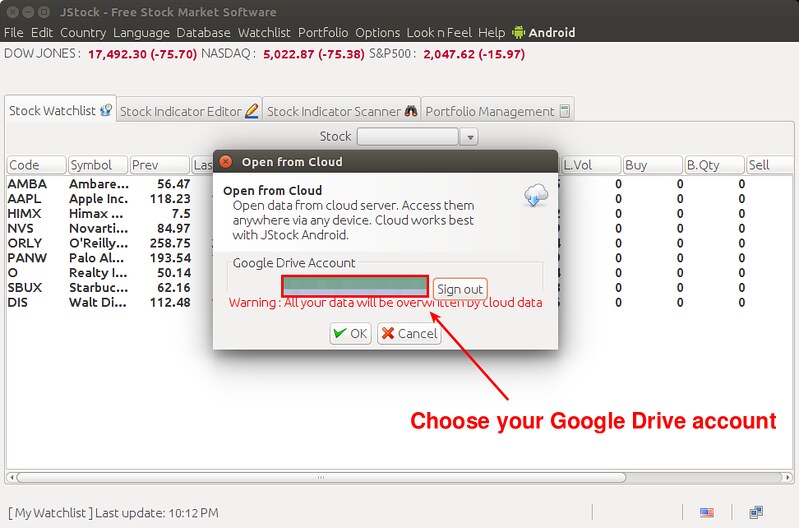

下面是运行中的 DFileManager,完全展示了其最吸引人的地方:封面流(Cover Flow)视图。可以在当前文件夹的项目间滑动,提供了一个相当有吸引力的体验。这是看图片的理想选择。这个文件管理器酷似 Finder(苹果操作系统下的默认文件管理器),可能会吸引你。

|

||||

|

||||

|

||||

|

||||

### 特点: ###

|

||||

|

||||

- 4种视图:图标、详情、列视图和封面流

|

||||

- 按位置和设备归类书签

|

||||

- 标签页

|

||||

- 简单的搜索和过滤

|

||||

- 自定义文件类型的缩略图,包括多媒体文件

|

||||

- 信息栏可以移走

|

||||

- 单击打开文件和目录

|

||||

- 可以排队 IO 操作

|

||||

- 记住每个文件夹的视图属性

|

||||

- 显示隐藏文件

|

||||

|

||||

DFileManager 不是 KDE 的 Dolphin 的替代品,但是能做相同的事情。这个是一个真正能够帮助人们的浏览文件的文件管理器。还有,别忘了反馈信息给开发者,任何人都可以做出这样的贡献。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://gofk.tumblr.com/post/131014089537/dfilemanager-cover-flow-file-manager-a-real-gem

|

||||

|

||||

作者:[gofk][a]

|

||||

译者:[bestony](https://github.com/bestony)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://gofk.tumblr.com/

|

||||

@ -0,0 +1,32 @@

|

||||

黑客们成功地在土豆上安装了 Linux !

|

||||

================================================================================

|

||||

|

||||

来自荷兰阿姆斯特丹的消息称,LinuxOnAnything.nl 网站的黑客们成功地在土豆上安装了 Linux!这是该操作系统第一次在根用蔬菜(root vegetable)上安装成功(LCTT 译注:root vetetable,一语双关,root 在 Linux 是指超级用户)。

|

||||

|

||||

|

||||

|

||||

“土豆没有 CPU,内存和存储器,这真的是个挑战,” Linux On Anything (LOA) 小组的 Johan Piest 说。“显然我们不能使用一个像 Fedora 或 Ubuntu 这些体量较大的发行版,所以我们用的是 Damn Small Linux。”

|

||||

|

||||

在尝试了几周之后,LOA 小组的的同学们弄出了一个适合土豆的 Linux 内核,这玩艺儿上面可以用 vi 来编辑小的文本文件。这个 Linux 通过一个小型的 U 盘加载到土豆上,并通过一组红黑线以二进制的方式向这个土豆发送命令。

|

||||

|

||||

LOA 小组是一个不断壮大的黑客组织的分支;这个组织致力于将 Linux 安装到所有物体上;他们先是将 Linux 装到Gameboy 和 iPod 等电子产品上,不过最近他们在挑战一些高难度的东西,譬如将Linux安装到灯泡和小狗身上!

|

||||

|

||||

LOA 小组在与另一个黑客小组 Stuttering Monarchs 竞赛,看谁先拿到土豆这一分。“土豆是一种每个人都会接触到的蔬菜,它的用途就像 Linux 一样极其广泛。无论你是想煮捣烹炸还是别的都可以” Piest 说道,“你也许认为我们完成这个挑战是为了获得某些好处,而我们只是追求逼格而已。”

|

||||

|

||||

LOA 是第一个将 Linux 安装到一匹设德兰矮种马上的小组,但这五年来竞争愈演愈烈,其它黑客小组的进度已经反超了他们。

|

||||

|

||||

“我们本来可以成为在饼干上面安装 Linux 的第一个小组,但是那群来自挪威的混蛋把我们击败了。” Piest 说。

|

||||

|

||||

第一个成功安装了 Linux 的蔬菜是一头卷心菜,它是由一个土耳其的一个黑客小组完成的。

|

||||

|

||||

(好啦——是不是已经目瞪口呆,事实上,这是一篇好几年前的恶搞文,你看出来了吗?哈哈哈哈)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.bbspot.com/news/2008/12/linux-on-a-potato.html

|

||||

|

||||

作者:[Brian Briggs](briggsb@bbspot.com)

|

||||

译者:[StdioA](https://github.com/StdioA), [hittlle](https://github.com/hittlle)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

@ -1,6 +1,7 @@

|

||||

在 Ubuntu 15.10 上为单个网卡设置多个 IP 地址

|

||||

================================================================================

|

||||

有时候你可能想在你的网卡上使用多个 IP 地址。遇到这种情况你会怎么办呢?买一个新的网卡并分配一个新的 IP?不,这没有必要(至少在小网络中)。现在我们可以在 Ubuntu 系统中为一个网卡分配多个 IP 地址。想知道怎么做到的?跟着我往下看,其实并不难。

|

||||

|

||||

有时候你可能想在你的网卡上使用多个 IP 地址。遇到这种情况你会怎么办呢?买一个新的网卡并分配一个新的 IP?不,没有这个必要(至少在小型网络中)。现在我们可以在 Ubuntu 系统中为一个网卡分配多个 IP 地址。想知道怎么做到的?跟着我往下看,其实并不难。

|

||||

|

||||

这个方法也适用于 Debian 以及它的衍生版本。

|

||||

|

||||

@ -12,7 +13,7 @@

|

||||

|

||||

sudo ip addr

|

||||

|

||||

**事例输出:**

|

||||

**样例输出:**

|

||||

|

||||

1: lo: <LOOPBACK,UP,LOWER_UP> mtu 65536 qdisc noqueue state UNKNOWN group default

|

||||

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

|

||||

@ -31,7 +32,7 @@

|

||||

|

||||

sudo ifconfig

|

||||

|

||||

**事例输出:**

|

||||

**样例输出:**

|

||||

|

||||

enp0s3 Link encap:Ethernet HWaddr 08:00:27:2a:03:4b

|

||||

inet addr:192.168.1.103 Bcast:192.168.1.255 Mask:255.255.255.0

|

||||

@ -50,7 +51,7 @@

|

||||

collisions:0 txqueuelen:0

|

||||

RX bytes:38793 (38.7 KB) TX bytes:38793 (38.7 KB)

|

||||

|

||||

正如你在上面看到的,我的网卡名称是 **enp0s3**,它的 IP 地址是 **192.168.1.103**。

|

||||

正如你在上面输出中看到的,我的网卡名称是 **enp0s3**,它的 IP 地址是 **192.168.1.103**。

|

||||

|

||||

现在让我们来为网卡添加一个新的 IP 地址,例如说 **192.168.1.104**。

|

||||

|

||||

@ -73,7 +74,7 @@

|

||||

inet6 fe80::a00:27ff:fe2a:34e/64 scope link

|

||||

valid_lft forever preferred_lft forever

|

||||

|

||||

类似地,你可以添加想要的任意多的 IP 地址。

|

||||

类似地,你可以添加任意数量的 IP 地址,只要你想要。

|

||||

|

||||

让我们 ping 一下这个 IP 地址验证一下。

|

||||

|

||||

@ -108,7 +109,7 @@

|

||||

|

||||

可以看到已经没有了!!

|

||||

|

||||

也许你已经知道,你重启系统后会丢失这些设置。那么怎么设置才能永久有效呢?这也很简单。

|

||||

正如你所知,重启系统后这些设置会失效。那么怎么设置才能永久有效呢?这也很简单。

|

||||

|

||||

### 添加永久 IP 地址 ###

|

||||

|

||||

@ -138,7 +139,7 @@ Ubuntu 系统的网卡配置文件是 **/etc/network/interfaces**。

|

||||

|

||||

sudo nano /etc/network/interfaces

|

||||

|

||||

按照黑色字体标注的添加额外的 IP 地址。

|

||||

如下添加额外的 IP 地址。

|

||||

|

||||

# This file describes the network interfaces available on your system

|

||||

# and how to activate them. For more information, see interfaces(5).

|

||||

@ -154,7 +155,7 @@ Ubuntu 系统的网卡配置文件是 **/etc/network/interfaces**。

|

||||

|

||||

保存并关闭文件。

|

||||

|

||||

无需重启运行下面的命令使更改生效。

|

||||

运行下面的命令使更改无需重启即生效。

|

||||

|

||||

sudo ifdown enp0s3 && sudo ifup enp0s3

|

||||

|

||||

@ -182,7 +183,7 @@ Ubuntu 系统的网卡配置文件是 **/etc/network/interfaces**。

|

||||

DHCPACK of 192.168.1.103 from 192.168.1.1

|

||||

bound to 192.168.1.103 -- renewal in 35146 seconds.

|

||||

|

||||

**注意**:如果你从远程连接到服务器,把上面的两个命令放到**一行**中**非常重要**,因为第一个命令会断掉你的连接。而采用这种方式可以存活你的 ssh 会话。

|

||||

**注意**:如果你从远程连接到服务器,把上面的两个命令放到**一行**中**非常重要**,因为第一个命令会断掉你的连接。而采用这种方式可以保留你的 ssh 会话。

|

||||

|

||||

现在,让我们用下面的命令来检查一下是否添加了新的 IP:

|

||||

|

||||

@ -217,10 +218,9 @@ Ubuntu 系统的网卡配置文件是 **/etc/network/interfaces**。

|

||||

|

||||

想知道怎么给 CentOS/RHEL/Scientific Linux/Fedora 系统添加额外的 IP 地址,可以点击下面的链接。

|

||||

|

||||

注:此篇文章以前做过选题:20150205 Linux Basics--Assign Multiple IP Addresses To Single Network Interface Card On CentOS 7.md

|

||||

- [Assign Multiple IP Addresses To Single Network Interface Card On CentOS 7][1]

|

||||

- [在CentOS 7上给一个网卡分配多个IP地址][1]

|

||||

|

||||

周末愉快!

|

||||

工作愉快!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -228,9 +228,9 @@ via: http://www.unixmen.com/assign-multiple-ip-addresses-to-one-interface-on-ubu

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[ictlyh](http://mutouxiaogui.cn/blog/)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.unixmen.com/author/sk/

|

||||

[1]:http://www.unixmen.com/linux-basics-assign-multiple-ip-addresses-single-network-interface-card-centos-7/

|

||||

[1]:https://linux.cn/article-5127-1.html

|

||||

@ -0,0 +1,131 @@

|

||||

如何在 Ubuntu 14/15 上配置 Apache Solr

|

||||

================================================================================

|

||||

|

||||

大家好,欢迎来阅读我们今天这篇 Apache Solr 的文章。简单的来说,Apache Solr 是一个最负盛名的开源搜索平台,配合运行在网站后端的 Apache Lucene,能够让你轻松创建搜索引擎来搜索网站、数据库和文件。它能够索引和搜索多个网站并根据搜索文本的相关内容返回搜索建议。

|

||||

|

||||

Solr 使用 HTTP 可扩展标记语言(XML),可以为 JSON、Python 和 Ruby 等提供应用程序接口(API)。根据Apache Lucene 项目所述,Solr 提供了非常多的功能,很受管理员们的欢迎:

|

||||

|

||||

- 全文检索

|

||||

- 分面导航(Faceted Navigation)

|

||||

- 拼写建议/自动完成

|

||||

- 自定义文档排序/排列

|

||||

|

||||

#### 前提条件: ####

|

||||

|

||||

在一个使用最小化安装包的全新 Ubuntu 14/15 系统上,你仅仅需要少量的准备,就开始安装 Apache Solor.

|

||||

|

||||

### 1)System Update 系统更新###

|

||||

|

||||

使用一个具有 sudo 权限的非 root 用户登录你的 Ubuntu 服务器,在接下来的所有安装和使用 Solr 的步骤中都会使用它。

|

||||

|

||||

登录成功后,使用下面的命令,升级你的系统到最新的更新及补丁:

|

||||

|

||||

$ sudo apt-get update

|

||||

|

||||

### 2) 安装 JRE###

|

||||

|

||||

要安装 Solr,首先需要安装 JRE(Java Runtime Environment)作为基础环境,因为 solr 和 tomcat 都是基于Java.所以,我们需要安装最新版的 Java 并配置 Java 本地环境.

|

||||

|

||||

要想安装最新版的 Java 8,我们需要通过以下命令安装 Python Software Properties 工具包

|

||||

|

||||

$ sudo apt-get install python-software-properties

|

||||

|

||||

完成后,配置最新版 Java 8的仓库

|

||||

|

||||

$ sudo add-apt-repository ppa:webupd8team/java

|

||||

|

||||

现在你可以通过以下命令更新包源列表,使用‘apt-get’来安装最新版本的 Oracle Java 8。

|

||||

|

||||

$ sudo apt-get update

|

||||

|

||||

$ sudo apt-get install oracle-java8-installer

|

||||

|

||||

在安装和配置过程中,点击'OK'按钮接受 Java SE Platform 和 JavaFX 的 Oracle 二进制代码许可协议(Oracle Binary Code License Agreement)。

|

||||

|

||||

在安装完成后,运行下面的命令,检查是否安装成功以及查看安装的版本。

|

||||

|

||||

kash@solr:~$ java -version

|

||||

java version "1.8.0_66"

|

||||

Java(TM) SE Runtime Environment (build 1.8.0_66-b17)

|

||||

Java HotSpot(TM) 64-Bit Server VM (build 25.66-b17, mixed mode)

|

||||

|

||||

执行结果表明我们已经成功安装了 Java,并达到安装 Solr 最基本的要求了,接着我们进行下一步。

|

||||

|

||||

### 安装 Solr###

|

||||

|

||||

有两种不同的方式可以在 Ubuntu 上安装 Solr,在本文中我们只用最新的源码包来演示源码安装。

|

||||

|

||||

要使用源码安装 Solr,先要从[官网][1]下载最新的可用安装包。复制以下链接,然后使用 'wget' 命令来下载。

|

||||

|

||||

$ wget http://www.us.apache.org/dist/lucene/solr/5.3.1/solr-5.3.1.tgz

|

||||

|

||||

运行下面的命令,将这个已归档的服务解压到 /bin 目录。

|

||||

|

||||

$ tar -xzf solr-5.3.1.tgz solr-5.3.1/bin/install_solr_service.sh --strip-components=2

|

||||

|

||||

运行脚本来启动 Solr 服务,这将会先创建一个 solr 的用户,然后将 Solr 安装成服务。

|

||||

|

||||

$ sudo bash ./install_solr_service.sh solr-5.3.1.tgz

|

||||

|

||||

|

||||

|

||||

使用下面的命令来检查 Solr 服务的状态。

|

||||

|

||||

$ service solr status

|

||||

|

||||

|

||||

|

||||

### 创建 Solr 集合: ###

|

||||

|

||||

我们现在可以使用 Solr 用户添加多个集合。就像下图所示的那样,我们只需要在命令行中指定集合名称和指定其配置集就可以创建多个集合了。

|

||||

|

||||

$ sudo su - solr -c "/opt/solr/bin/solr create -c myfirstcollection -n data_driven_schema_configs"

|

||||

|

||||

|

||||

|

||||

我们已经成功的为我们的第一个集合创建了新核心实例目录,并可以将数据添加到里面。要查看库中的默认模式文件,可以在这里找到: '/opt/solr/server/solr/configsets/data_driven_schema_configs/conf' 。

|

||||

|

||||

### 使用 Solr Web###

|

||||

|

||||

可以使用默认的端口8983连接 Apache Solr。打开浏览器,输入 http://your\_server\_ip:8983/solr 或者 http://your-domain.com:8983/solr. 确保你的防火墙允许8983端口.

|

||||

|

||||

http://172.25.10.171:8983/solr/

|

||||

|

||||

|

||||

|

||||

在 Solr 的 Web 控制台左侧菜单点击 'Core Admin' 按钮,你将会看见我们之前使用命令行方式创建的集合。你可以点击 'Add Core' 按钮来创建新的核心。

|

||||

|

||||

|

||||

|

||||

就像下图中所示,你可以选择某个集合并指向文档来向里面添加内容或从文档中查询数据。如下显示的那样添加指定格式的数据。

|

||||

|

||||

{

|

||||

"number": 1,

|

||||

"Name": "George Washington",

|

||||

"birth_year": 1989,

|

||||

"Starting_Job": 2002,

|

||||

"End_Job": "2009-04-30",

|

||||

"Qualification": "Graduation",

|

||||

"skills": "Linux and Virtualization"

|

||||

}

|

||||

|

||||

添加文件后点击 'Submit Document'按钮.

|

||||

|

||||

|

||||

|

||||

### 总结###

|

||||

|

||||

在 Ubuntu 上安装成功后,你就可以使用 Solr Web 接口插入或查询数据。如果你想通过 Solr 来管理更多的数据和文件,可以创建更多的集合。希望你能喜欢这篇文章并且希望它能够帮到你。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/ubuntu-how-to/configure-apache-solr-ubuntu-14-15/

|

||||

|

||||

作者:[Kashif][a]

|

||||

译者:[taichirain](https://github.com/taichirain)

|

||||

校对:[Caroline](https://github.com/carolinewuyan)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://linoxide.com/author/kashifs/

|

||||

[1]:http://lucene.apache.org/solr/

|

||||

@ -1,61 +1,61 @@

|

||||

|

||||

如何在FreeBSD 10.2上安装Nginx作为Apache的反向代理

|

||||

如何在 FreeBSD 10.2 上安装 Nginx 作为 Apache 的反向代理

|

||||

================================================================================

|

||||

Nginx是一款免费的,开源的HTTP和反向代理服务器, 以及一个代理POP3/IMAP的邮件服务器. Nginx是一款高性能的web服务器,其特点是丰富的功能,简单的结构以及低内存的占用. 第一个版本由 Igor Sysoev在2002年发布,然而到现在为止很多大的科技公司都在使用,包括 Netflix, Github, Cloudflare, WordPress.com等等

|

||||

|

||||

在这篇教程里我们会 "**在freebsd 10.2系统上,安装和配置Nginx网络服务器作为Apache的反向代理**". Apache 会用PHP在8080端口上运行,并且我们需要在80端口配置Nginx的运行,用来接收用户/访问者的请求.如果网页的用户请求来自于浏览器的80端口, 那么Nginx会用Apache网络服务器和PHP来通过这个请求,并运行在8080端口.

|

||||

Nginx 是一款自由开源的 HTTP 和反向代理服务器,也可以用作 POP3/IMAP 的邮件代理服务器。Nginx 是一款高性能的 web 服务器,其特点是功能丰富,结构简单以及内存占用低。 第一个版本由 Igor Sysoev 发布于2002年,到现在有很多大型科技公司在使用,包括 Netflix、 Github、 Cloudflare、 WordPress.com 等等。

|

||||

|

||||

在这篇教程里我们会“**在 freebsd 10.2 系统上,安装和配置 Nginx 网络服务器作为 Apache 的反向代理**”。 Apache 将在8080端口上运行 PHP ,而我们会配置 Nginx 运行在80端口以接收用户/访问者的请求。如果80端口接收到用户浏览器的网页请求,那么 Nginx 会将该请求传递给运行在8080端口上的 Apache 网络服务器和 PHP。

|

||||

|

||||

#### 前提条件 ####

|

||||

|

||||

- FreeBSD 10.2.

|

||||

- Root 权限.

|

||||

- FreeBSD 10.2

|

||||

- Root 权限

|

||||

|

||||

### 步骤 1 - 更新系统 ###

|

||||

|

||||

使用SSH证书登录到你的FreeBSD服务器以及使用下面命令来更新你的系统 :

|

||||

使用 SSH 认证方式登录到你的 FreeBSD 服务器,使用下面命令来更新你的系统:

|

||||

|

||||

freebsd-update fetch

|

||||

freebsd-update install

|

||||

|

||||

### 步骤 2 - 安装 Apache ###

|

||||

|

||||

Apache是现在使用范围最广的网络服务器以及开源的HTTP服务器.在FreeBSD里Apache是未被默认安装的, 但是我们可以直接从端口下载,或者解压包在"/usr/ports/www/apache24" 目录下,再或者直接从PKG命令的FreeBSD系统信息库安装。在本教程中,我们将使用PKG命令从FreeBSD的库中安装:

|

||||

Apache 是开源的、使用范围最广的 web 服务器。在 FreeBSD 里默认没有安装 Apache, 但是我们可以直接通过 /usr/ports/www/apache24 下的 ports 或软件包来安装,也可以直接使用 pkg 命令从 FreeBSD 软件库中安装。在本教程中,我们将使用 pkg 命令从 FreeBSD 软件库中安装:

|

||||

|

||||

pkg install apache24

|

||||

|

||||

### 步骤 3 - 安装 PHP ###

|

||||

|

||||

一旦成功安装Apache, 接着将会安装PHP并由一个用户处理一个PHP的文件请求. 我们将会用到如下的PKG命令来安装PHP :

|

||||

一旦成功安装 Apache,接着将会安装 PHP ,它来负责处理用户对 PHP 文件的请求。我们将会用到如下的 pkg 命令来安装 PHP:

|

||||

|

||||

pkg install php56 mod_php56 php56-mysql php56-mysqli

|

||||

|

||||

### 步骤 4 - 配置 Apache 和 PHP ###

|

||||

|

||||

一旦所有都安装好了, 我们将会配置Apache在8080端口上运行, 并让PHP与Apache一同工作. 为了配置Apache,我们可以编辑 "httpd.conf"这个配置文件, 然而PHP我们只需要复制PHP的配置文件 php.ini 在 "/usr/local/etc/"目录下.

|

||||

一旦所有都安装好了,我们将会配置 Apache 运行在8080端口上, 并让 PHP 与 Apache 一同工作。 要想配置Apache,我们可以编辑“httpd.conf”这个配置文件, 对于 PHP 我们只需要复制 “/usr/local/etc/”目录下的 PHP 配置文件 php.ini。

|

||||

|

||||

进入到 "/usr/local/etc/" 目录 并且复制 php.ini-production 文件到 php.ini :

|

||||

进入到“/usr/local/etc/”目录,并且复制 php.ini-production 文件到 php.ini :

|

||||

|

||||

cd /usr/local/etc/

|

||||

cp php.ini-production php.ini

|

||||

|

||||

下一步, 在Apache目录下通过编辑 "httpd.conf"文件来配置Apache :

|

||||

下一步,在 Apache 目录下通过编辑“httpd.conf”文件来配置 Apache:

|

||||

|

||||

cd /usr/local/etc/apache24

|

||||

nano -c httpd.conf

|

||||

|

||||

端口配置在第 **52**行 :

|

||||

端口配置在第**52**行 :

|

||||

|

||||

Listen 8080

|

||||

|

||||

服务器名称配置在第 **219** 行:

|

||||

服务器名称配置在第**219**行:

|

||||

|

||||

ServerName 127.0.0.1:8080

|

||||

|

||||

在第 **277**行,如果目录需要,添加的DirectoryIndex文件,Apache将直接作用于它 :

|

||||

在第**277**行,添加 DirectoryIndex 文件,Apache 将用它来服务对目录的请求:

|

||||

|

||||

DirectoryIndex index.php index.html

|

||||

|

||||

在第 **287**行下,配置Apache通过添加脚本来支持PHP :

|

||||

在第**287**行下,配置 Apache ,添加脚本支持:

|

||||

|

||||

<FilesMatch "\.php$">

|

||||

SetHandler application/x-httpd-php

|

||||

@ -64,49 +64,49 @@ Apache是现在使用范围最广的网络服务器以及开源的HTTP服务器.

|

||||

SetHandler application/x-httpd-php-source

|

||||

</FilesMatch>

|

||||

|

||||

保存然后退出.

|

||||

保存并退出。

|

||||

|

||||

现在用sysrc命令,来添加Apache作为开机启动项目 :

|

||||

现在用 sysrc 命令,来添加 Apache 为开机启动项目:

|

||||

|

||||

sysrc apache24_enable=yes

|

||||

|

||||

然后用下面的命令测试Apache的配置 :

|

||||

然后用下面的命令测试 Apache 的配置:

|

||||

|

||||

apachectl configtest

|

||||

|

||||

如果到这里都没有问题的话,那么就启动Apache吧 :

|

||||

如果到这里都没有问题的话,那么就启动 Apache 吧:

|

||||

|

||||

service apache24 start

|

||||

|

||||

如果全部完毕, 在"/usr/local/www/apache24/data" 目录下,创建一个phpinfo文件是验证PHP在Apache下完美运行的好方法 :

|

||||

如果全部完毕,在“/usr/local/www/apache24/data”目录下创建一个 phpinfo 文件来验证 PHP 在 Apache 下顺利运行:

|

||||

|

||||

cd /usr/local/www/apache24/data

|

||||

echo "<?php phpinfo(); ?>" > info.php

|

||||

|

||||

现在就可以访问 freebsd 的服务器 IP : 192.168.1.123:8080/info.php.

|

||||

现在就可以访问 freebsd 的服务器 IP : 192.168.1.123:8080/info.php 。

|

||||

|

||||

|

||||

|

||||

Apache 是使用 PHP 在 8080端口下运行的.

|

||||

Apache 及 PHP 运行在 8080 端口。

|

||||

|

||||

### 步骤 5 - 安装 Nginx ###

|

||||

|

||||

Nginx 以低内存的占用作为一款高性能的web服务器以及反向代理服务器.在这个步骤里,我们将会使用Nginx作为Apache的反向代理, 因此让我们用pkg命令来安装它吧 :

|

||||

Nginx 可以以较低内存占用提供高性能的 Web 服务器和反向代理服务器。在这个步骤里,我们将会使用 Nginx 作为Apache 的反向代理,因此让我们用 pkg 命令来安装它吧:

|

||||

|

||||

pkg install nginx

|

||||

|

||||

### 步骤 6 - 配置 Nginx ###

|

||||

|

||||

一旦 Nginx 安装完毕, 在 "**nginx.conf**" 文件里,我们需要做一个新的配置文件来替换掉原来的nginx文件. 更改到 "/usr/local/etc/nginx/"目录下 并且默认备份到 nginx.conf 文件:

|

||||

一旦 Nginx 安装完毕,在“**nginx.conf**”文件里,我们需要做一个新的配置文件来替换掉原来的 nginx 配置文件。切换到“/usr/local/etc/nginx/”目录下,并且备份默认 nginx.conf 文件:

|

||||

|

||||

cd /usr/local/etc/nginx/

|

||||

mv nginx.conf nginx.conf.oroginal

|

||||

|

||||

现在就可以创建一个新的 nginx 配置文件了 :

|

||||

现在就可以创建一个新的 nginx 配置文件了:

|

||||

|

||||

nano -c nginx.conf

|

||||

|

||||

然后粘贴下面的配置:

|

||||

然后粘贴下面的配置:

|

||||

|

||||

user www;

|

||||

worker_processes 1;

|

||||

@ -164,14 +164,14 @@ Nginx 以低内存的占用作为一款高性能的web服务器以及反向代

|

||||

|

||||

}

|

||||

|

||||

保存退出.

|

||||

保存并退出。

|

||||

|

||||

下一步, 在nginx目录下面,创建一个 **proxy.conf** 文件,使其作为反向代理 :

|

||||

下一步,在 nginx 目录下面,创建一个 **proxy.conf** 文件,使其作为反向代理 :

|

||||

|

||||

cd /usr/local/etc/nginx/

|

||||

nano -c proxy.conf

|

||||

|

||||

粘贴如下配置 :

|

||||

粘贴如下配置:

|

||||

|

||||

proxy_buffering on;

|

||||

proxy_redirect off;

|

||||

@ -186,27 +186,27 @@ Nginx 以低内存的占用作为一款高性能的web服务器以及反向代

|

||||

proxy_buffers 100 8k;

|

||||

add_header X-Cache $upstream_cache_status;

|

||||

|

||||

保存退出.

|

||||

保存并退出。

|

||||

|

||||

最后一步, 为 nginx 的高速缓存创建一个 "/var/nginx/cache"的新目录 :

|

||||

最后一步,为 nginx 的高速缓存创建一个“/var/nginx/cache”的新目录:

|

||||

|

||||

mkdir -p /var/nginx/cache

|

||||

|

||||

### 步骤 7 - 配置 Nginx 的虚拟主机 ###

|

||||

|

||||

在这个步骤里面,我们需要创建一个新的虚拟主机域 "saitama.me", 以跟文件 "/usr/local/www/saitama.me" 和日志文件一同放在 "/var/log/nginx" 目录下.

|

||||

在这个步骤里面,我们需要创建一个新的虚拟主机域“saitama.me”,其文档根目录为“/usr/local/www/saitama.me”,日志文件放在“/var/log/nginx”目录下。

|

||||

|

||||

我们必须做的第一件事情就是创建新的目录来存放虚拟主机文件, 在这里我们将用到一个"**vhost**"的新文件. 并创建它 :

|

||||

我们必须做的第一件事情就是创建新的目录来存放虚拟主机配置文件,我们创建的新目录名为“**vhost**”。创建它:

|

||||

|

||||

cd /usr/local/etc/nginx/

|

||||

mkdir vhost

|

||||

|

||||

创建好vhost 目录, 那么我们就进入这个目录并创建一个新的虚拟主机文件. 这里我取名为 "**saitama.conf**" :

|

||||

创建好 vhost 目录,然后我们就进入这个目录并创建一个新的虚拟主机文件。这里我取名为“**saitama.conf**”:

|

||||

|

||||

cd vhost/

|

||||

nano -c saitama.conf

|

||||

|

||||

粘贴如下虚拟主机的配置 :

|

||||

粘贴如下虚拟主机的配置:

|

||||

|

||||

server {

|

||||

# Replace with your freebsd IP

|

||||

@ -252,67 +252,67 @@ Nginx 以低内存的占用作为一款高性能的web服务器以及反向代

|

||||

|

||||

}

|

||||

|

||||

保存退出.

|

||||

保存并退出。

|

||||

|

||||

下一步, 为nginx和虚拟主机创建一个新的日志目录 "/var/log/" :

|

||||

下一步,为 nginx 和虚拟主机创建一个新的日志目录“/var/log/”:

|

||||

|

||||

mkdir -p /var/log/nginx/

|

||||

|

||||

如果一切顺利, 在文件的根目录下创建文件 saitama.me :

|

||||

如果一切顺利,在文件的根目录下创建目录 saitama.me 用作文档根:

|

||||

|

||||

cd /usr/local/www/

|

||||

mkdir saitama.me

|

||||

|

||||

### 步骤 8 - 测试 ###

|

||||

|

||||

在这个步骤里面,我们只是测试我们的nginx和虚拟主机的配置.

|

||||

在这个步骤里面,我们只是测试我们的 nginx 和虚拟主机的配置。

|

||||

|

||||

用如下命令测试nginx的配置 :

|

||||

用如下命令测试 nginx 的配置:

|

||||

|

||||

nginx -t

|

||||

|

||||

如果一切都没有问题, 用 sysrc 命令添加nginx为启动项,并且启动nginx和重启apache:

|

||||

如果一切都没有问题,用 sysrc 命令添加 nginx 为开机启动项,并且启动 nginx 和重启 apache:

|

||||

|

||||

sysrc nginx_enable=yes

|

||||

service nginx start

|

||||

service apache24 restart

|

||||

|

||||

一切完毕后, 在 saitama.me 目录下,添加一个新的phpinfo文件来验证php的正常运行 :

|

||||

一切完毕后,在 saitama.me 目录下,添加一个新的 phpinfo 文件来验证 php 的正常运行:

|

||||

|

||||

cd /usr/local/www/saitama.me

|

||||

echo "<?php phpinfo(); ?>" > info.php

|

||||

|

||||

然后便访问这个文档 : **www.saitama.me/info.php**.

|

||||

然后访问这个域名: **www.saitama.me/info.php**。

|

||||

|

||||

|

||||

|

||||

Nginx 作为Apache的反向代理正在运行了,PHP也同样在进行工作了.

|

||||

Nginx 作为 Apache 的反向代理运行了,PHP 也同样工作了。

|

||||

|

||||

这是另一种结果 :

|

||||

这是另一个结果:

|

||||

|

||||

Test .html 文件无缓存.

|

||||

测试无缓存的 .html 文件。

|

||||

|

||||

curl -I www.saitama.me

|

||||

|

||||

|

||||

|

||||

Test .css 文件只有三十天的缓存.

|

||||

测试有三十天缓存的 .css 文件。

|

||||

|

||||

curl -I www.saitama.me/test.css

|

||||

|

||||

|

||||

|

||||

Test .php 文件正常缓存 :

|

||||

测试缓存的 .php 文件:

|

||||

|

||||

curl -I www.saitama.me/info.php

|

||||

|

||||

|

||||

|

||||

全部完成.

|

||||

全部搞定。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

Nginx 是最广泛的 HTTP 和反向代理的服务器. 拥有丰富的高性能和低内存/RAM的使用功能. Nginx使用了太多的缓存, 我们可以在网络上缓存静态文件使得网页加速, 并且在用户需要的时候再缓存php文件. 这样Nginx 的轻松配置和使用,可以让它用作HTTP服务器 或者 apache的反向代理.

|

||||

Nginx 是最受欢迎的 HTTP 和反向代理服务器,拥有丰富的功能、高性能、低内存/RAM 占用。Nginx 也用于缓存, 我们可以在网络上缓存静态文件使得网页加速,并且缓存用户请求的 php 文件。 Nginx 容易配置和使用,可以将它用作 HTTP 服务器或者 apache 的反向代理。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -320,7 +320,7 @@ via: http://linoxide.com/linux-how-to/install-nginx-reverse-proxy-apache-freebsd

|

||||

|

||||

作者:[Arul][a]

|

||||

译者:[KnightJoker](https://github.com/KnightJoker)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[Caroline](https://github.com/carolinewuyan),[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,162 @@

|

||||

在 Debian Linux 上安装配置 ISC DHCP 服务器

|

||||

================================================================================

|

||||

|

||||

动态主机控制协议(Dynamic Host Control Protocol,DHCP)给网络管理员提供了一种便捷的方式,为不断变化的网络主机或是动态网络提供网络层地址。其中最常用的 DHCP 服务工具是 ISC DHCP Server。DHCP 服务的目的是给主机提供必要的网络信息以便能够和其他连接在网络中的主机互相通信。DHCP 服务提供的信息包括:DNS 服务器信息,网络地址(IP),子网掩码,默认网关信息,主机名等等。

|

||||

|

||||

本教程介绍运行在 Debian 7.7 上 4.2.4 版的 ISC-DHCP-Server 如何管理多个虚拟局域网(VLAN),也可以非常容易应用到单一网络上。

|

||||

|

||||

测试用的网络是通过思科路由器使用传统的方式来管理 DHCP 租约地址的。目前有 12 个 VLAN 需要通过集中式服务器来管理。把 DHCP 的任务转移到一个专用的服务器上,路由器可以收回相应的资源,把资源用到更重要的任务上,比如路由寻址,访问控制列表,流量监测以及网络地址转换等。

|

||||

|

||||

另一个将 DHCP 服务转移到专用服务器的好处,以后会讲到,它可以建立动态域名服务器(DDNS),这样当主机从服务器请求 DHCP 地址的时候,这样新主机的主机名就会被添加到 DNS 系统里面。

|

||||

|

||||

### 安装和配置 ISC DHCP 服务器###

|

||||

|

||||

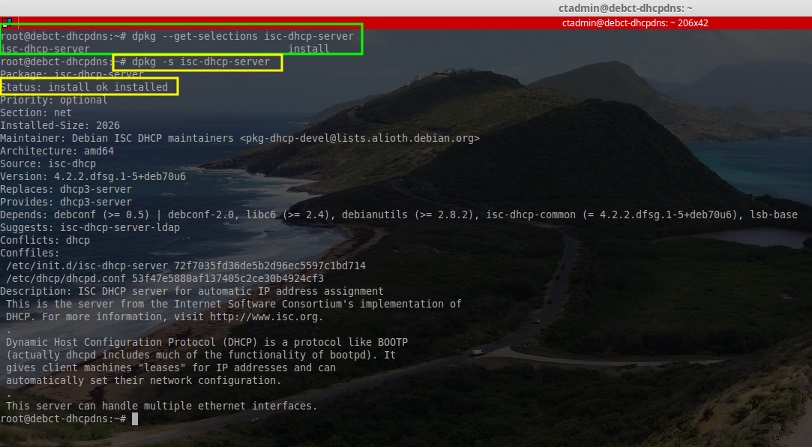

1、使用 apt 工具用来安装 Debian 软件仓库中的 ISC 软件,来创建这个多宿主服务器。与其他教程一样需要使用 root 或者 sudo 访问权限。请适当的修改,以便使用下面的命令。(LCTT 译注:下面中括号里面是注释,使用的时候请删除,#表示使用的 root 权限)

|

||||

|

||||

# apt-get install isc-dhcp-server [安装 the ISC DHCP Server 软件]

|

||||

# dpkg --get-selections isc-dhcp-server [确认软件已经成功安装]

|

||||

# dpkg -s isc-dhcp-server [用另一种方式确认成功安装]

|

||||

|

||||

|

||||

|

||||

2、 确认服务软件已经安装完成,现在需要提供网络信息来配置服务器,这样服务器才能够根据我们的需要来分发网络信息。作为管理员最起码需要了解的 DHCP 信息如下:

|

||||

|

||||

- 网络地址

|

||||

- 子网掩码

|

||||

- 动态分配的地址范围

|

||||

|

||||

其他一些服务器动态分配的有用信息包括:

|

||||

|

||||

- 默认网关

|

||||

- DNS 服务器 IP 地址

|

||||

- 域名

|

||||

- 主机名

|

||||

- 网络广播地址

|

||||

|

||||

这只是能让 ISC DHCP 服务器处理的选项中非常少的一部分。如果你想查看所有选项及其描述需要在安装好软件后输入以下命令:

|

||||

|

||||

# man dhcpd.conf

|

||||

|

||||

3、 一旦管理员已经确定了这台服务器分发的所有必要信息,那么是时候配置服务器并且分配必要的地址池了。在配置任何地址池或服务器配置之前,必须配置 DHCP 服务器侦听这台服务器上面的一个接口。

|

||||

|

||||

在这台特定的服务器上,设置好网卡后,DHCP 会侦听名称名为`'bond0'`的接口。请适根据你的实际情况来更改服务器以及网络环境。下面的配置都是针对本教程的。

|

||||

|

||||

|

||||

|

||||

这行指定的是 DHCP 服务侦听接口(一个或多个)上的 DHCP 流量。修改主配置文件,分配适合的 DHCP 地址池到所需要的网络上。主配置文件在 /etc/dhcp/dhcpd.conf。用文本编辑器打开这个文件

|

||||

|

||||

# nano /etc/dhcp/dhcpd.conf

|

||||

|

||||

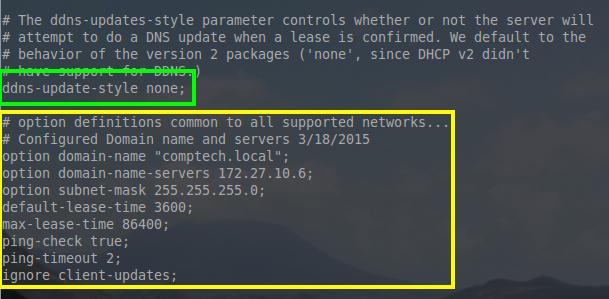

这个配置文件可以配置我们所需要的地址池/主机。文件顶部有 ‘ddns-update-style‘ 这样一句,在本教程中它设置为 ‘none‘。在以后的教程中会讲到动态 DNS,ISC-DHCP-Server 将会与 BIND9 集成,它能够使主机名更新指向到 IP 地址。

|

||||

|

||||

4、 接下来的部分是管理员配置全局网络设置,如 DNS 域名,默认的租约时间,IP地址,子网的掩码,以及其它。如果你想了解所有的选项,请阅读 man 手册中的 dhcpd.conf 文件,命令如下:

|

||||

|

||||

# man dhcpd.conf

|

||||

|

||||

对于这台服务器,我们需要在配置文件顶部配置一些全局网络设置,这样就不用到每个地址池中去单独设置了。

|

||||

|

||||

|

||||

|

||||

我们花一点时间来解释一下这些选项,在本教程中虽然它们是一些全局设置,但是也可以单独的为某一个地址池进行配置。

|

||||

|

||||

- option domain-name “comptech.local”; – 所有使用这台 DHCP 服务器的主机,都将成为 DNS 域 “comptech.local” 的一员

|

||||

|

||||

- option domain-name-servers 172.27.10.6; DHCP 向所有配置这台 DHCP 服务器的的网络主机分发 DNS 服务器地址为 172.27.10.6

|

||||

|

||||

- option subnet-mask 255.255.255.0; – 每个网络设备都分配子网掩码 255.255.255.0 或 /24

|

||||

|

||||

- default-lease-time 3600; – 默认有效的地址租约时间(单位是秒)。如果租约时间耗尽,那么主机可以重新申请租约。如果租约完成,那么相应的地址也将被尽快回收。

|

||||

|

||||

- max-lease-time 86400; – 这是一台主机所能租用的最大的租约时间(单位为秒)。

|

||||

|

||||

- ping-check true; – 这是一个额外的测试,以确保服务器分发出的网络地址不是当前网络中另一台主机已使用的网络地址。

|

||||

|

||||

- ping-timeout; – 在判断地址以前没有使用过前,服务器将等待 ping 响应多少秒。

|

||||

|

||||

- ignore client-updates; 现在这个选项是可以忽略的,因为 DDNS 在前面已在配置文件中已经被禁用,但是当 DDNS 运行时,这个选项会忽略主机更新其 DNS 主机名的请求。

|

||||

|

||||

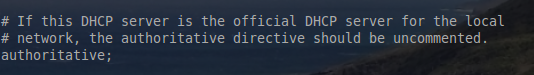

5、 文件中下面一行是权威 DHCP 所在行。这行的意义是如果服务器是为文件中所配置的网络分发地址的服务器,那么取消对该权威关键字(authoritative stanza) 的注释。

|

||||

|

||||

通过去掉关键字 authoritative 前面的 ‘#’,取消注释全局权威关键字。这台服务器将是它所管理网络里面的唯一权威。

|

||||

|

||||

|

||||

|

||||

默认情况下服务器被假定为**不是**网络上的权威服务器。之所以这样做是出于安全考虑。如果有人因为不了解 DHCP 服务的配置,导致配置不当或配置到一个不该出现的网络里面,这都将带来非常严重的连接问题。这行还可用在每个网络中单独配置使用。也就是说如果这台服务器不是整个网络的 DHCP 服务器,authoritative 行可以用在每个单独的网络中,而不是像上面截图中那样的全局配置。

|

||||

|

||||

6、 这一步是配置服务器将要管理的所有 DHCP 地址池/网络。简短起见,本教程只讲到配置的地址池之一。作为管理员需要收集一些必要的网络信息(比如域名,网络地址,有多少地址能够被分发等等)

|

||||

|

||||

以下这个地址池所用到的信息都是管理员收集整理的:网络 ID 172.27.60.0, 子网掩码 255.255.255.0 或 /24, 默认子网网关 172.27.60.1,广播地址 172.27.60.255.0 。

|

||||

|

||||

以上这些信息对于构建 dhcpd.conf 文件中新网络非常重要。使用文本编辑器修改配置文件添加新网络进去,这里我们需要使用 root 或 sudo 访问权限。

|

||||

|

||||

# nano /etc/dhcp/dhcpd.conf

|

||||

|

||||

|

||||

|

||||

当前这个例子是给用 VMWare 创建的虚拟服务器分配 IP 地址。第一行显示是该网络的子网掩码。括号里面的内容是 DHCP 服务器应该提供给网络上面主机的所有选项。

|

||||

|

||||

第一行, range 172.27.60.50 172.27.60.254; 这一行显示的是,DHCP 服务在这个网络上能够给主机动态分发的地址范围。

|

||||

|

||||

第二行,option routers 172.27.60.1; 这里显示的是给网络里面所有的主机分发的默认网关地址。

|

||||

|

||||

最后一行, option broadcast-address 172.27.60.255; 显示当前网络的广播地址。这个地址不能被包含在要分发放的地址范围内,因为广播地址不能分配到一个主机上面。

|

||||

|

||||

必须要强调的是每行的结尾必须要用(;)来结束,所有创建的网络必须要在 {} 里面。

|

||||

|

||||

7、 如果要创建多个网络,继续创建完它们的相应选项后保存文本文件即可。配置完成以后如果有更改,ISC-DHCP-Server 进程需要重启来使新的更改生效。重启进程可以通过下面的命令来完成:

|

||||

|

||||

# service isc-dhcp-server restart

|

||||

|

||||

这条命令将重启 DHCP 服务,管理员能够使用几种不同的方式来检查服务器是否已经可以处理 dhcp 请求。最简单的方法是通过 [lsof 命令][1]来查看服务器是否在侦听67端口,命令如下:

|

||||

|

||||

# lsof -i :67

|

||||

|

||||

|

||||

|

||||

这里输出的结果表明 dhcpd(DHCP 服务守护进程)正在运行并且侦听67端口。由于在 /etc/services 文件中67端口的映射,所以输出中的67端口实际上被转换成了 “bootps”。

|

||||

|

||||

在大多数的系统中这是非常常见的,现在服务器应该已经为网络连接做好准备,我们可以将一台主机接入网络请求DHCP地址来验证服务是否正常。

|

||||

|

||||

### 测试客户端连接 ###

|

||||

|

||||

8、 现在许多系统使用网络管理器来维护网络连接状态,因此这个设备应该预先配置好的,只要对应的接口处于活跃状态就能够获取 DHCP。

|

||||

|

||||

然而当一台设备无法使用网络管理器时,它可能需要手动获取 DHCP 地址。下面的几步将演示怎样手动获取以及如何查看服务器是否已经按需要分发地址。

|

||||

|

||||

‘[ifconfig][2]‘工具能够用来检查接口的配置。这台被用来测试的 DHCP 服务器的设备,它只有一个网络适配器(网卡),这块网卡被命名为 ‘eth0‘。

|

||||

|

||||

# ifconfig eth0

|

||||

|

||||

|

||||

|

||||

从输出结果上看,这台设备目前没有 IPv4 地址,这样很便于测试。我们把这台设备连接到 DHCP 服务器并发出一个请求。这台设备上已经安装了一个名为 ‘dhclient‘ 的DHCP客户端工具。因为操作系统各不相同,所以这个客户端软件也是互不一样的。

|

||||

|

||||

# dhclient eth0

|

||||

|

||||

|

||||

|

||||

当前 `'inet addr:'` 字段中显示了属于 172.27.60.0 网络地址范围内的 IPv4 地址。值得欣慰的是当前网络还配置了正确的子网掩码并且分发了广播地址。

|

||||

|

||||

到这里看起来还都不错,让我们来测试一下,看看这台设备收到新 IP 地址是不是由服务器发出的。这里我们参照服务器的日志文件来完成这个任务。虽然这个日志的内容有几十万条,但是里面只有几条是用来确定服务器是否正常工作的。这里我们使用一个工具 ‘tail’,它只显示日志文件的最后几行,这样我们就可以不用拿一个文本编辑器去查看所有的日志文件了。命令如下:

|

||||

|

||||

# tail /var/log/syslog

|

||||

|

||||

|

||||

|

||||

OK!服务器记录表明它分发了一个地址给这台主机 (HRTDEBXENSRV)。服务器按预期运行,给它充当权威服务器的网络分发了适合的网络地址。至此 DHCP 服务器搭建成功并且运行。如果有需要你可以继续配置其他的网络,排查故障,确保安全。

|

||||

|

||||

在以后的Debian教程中我会讲一些新的 ISC-DHCP-Server 功能。有时间的话我将写一篇关于 Bind9 和 DDNS 的教程,融入到这篇文章里面。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/install-and-configure-multihomed-isc-dhcp-server-on-debian-linux/

|

||||

|

||||

作者:[Rob Turner][a]

|

||||

译者:[ivo-wang](https://github.com/ivo-wang)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/robturner/

|

||||

[1]:http://www.tecmint.com/10-lsof-command-examples-in-linux/

|

||||

[2]:http://www.tecmint.com/ifconfig-command-examples/

|

||||

220

published/201512/20150917 A Repository with 44 Years of Unix Evolution.md

Executable file

220

published/201512/20150917 A Repository with 44 Years of Unix Evolution.md

Executable file

@ -0,0 +1,220 @@

|

||||

一个涵盖 Unix 44 年进化史的版本仓库

|

||||

=============================================================================

|

||||

|

||||

http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html

|

||||

|

||||

This is an HTML rendering of a working paper draft that led to a publication. The publication should always be cited in preference to this draft using the following reference:

|

||||

|

||||

- **Diomidis Spinellis**. [A repository with 44 years of Unix evolution](http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html). In MSR '15: Proceedings of the 12th Working Conference on Mining Software Repositories, pages 13-16. IEEE, 2015. Best Data Showcase Award. ([doi:10.1109/MSR.2015.6](http://dx.doi.org/10.1109/MSR.2015.6))

|

||||

|

||||

This document is also available in [PDF format](http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.pdf).

|

||||

|

||||

The document's metadata is available in [BibTeX format](http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c-bibtex.html).

|

||||

|

||||

This material is presented to ensure timely dissemination of scholarly and technical work. Copyright and all rights therein are retained by authors or by other copyright holders. All persons copying this information are expected to adhere to the terms and constraints invoked by each author's copyright. In most cases, these works may not be reposted without the explicit permission of the copyright holder.

|

||||

|

||||

[Diomidis Spinellis Publications](http://www.dmst.aueb.gr/dds/pubs/)

|

||||

|

||||

© 2015 IEEE. Personal use of this material is permitted. However, permission to reprint/republish this material for advertising or promotional purposes or for creating new collective works for resale or redistribution to servers or lists, or to reuse any copyrighted component of this work in other works must be obtained from the IEEE.

|

||||

|

||||

### 摘要 ###

|

||||

|

||||

Unix 操作系统的进化历史,可以从一个版本控制仓库中窥见,时间跨度从 1972 年的 5000 行内核代码开始,到 2015 年成为一个含有 26,000,000 行代码的被广泛使用的系统。该仓库包含 659,000 条提交,和 2306 次合并。仓库部署了被普遍采用的 Git 系统用于储存其代码,并且在时下流行的 GitHub 上建立了存档。它由来自贝尔实验室(Bell Labs),伯克利大学(Berkeley University),386BSD 团队所开发的系统软件的 24 个快照综合定制而成,这包括两个老式仓库和一个开源 FreeBSD 系统的仓库。总的来说,可以确认其中的 850 位个人贡献者,更早些时候的一批人主要做基础研究。这些数据可以用于一些经验性的研究,在软件工程,信息系统和软件考古学领域。

|

||||

|

||||

### 1、介绍 ###

|

||||

|

||||

Unix 操作系统作为一个主要的工程上的突破而脱颖而出,得益于其模范的设计、大量的技术贡献、它的开发模型及广泛的使用。Unix 编程环境的设计已经被视为一个提供非常简洁、强大而优雅的设计 [[1][1]] 。在技术方面,许多对 Unix 有直接贡献的,或者因 Unix 而流行的特性就包括 [[2][2]] :用高级语言编写的可移植部署的内核;一个分层式设计的文件系统;兼容的文件,设备,网络和进程间 I/O;管道和过滤架构;虚拟文件系统;和作为普通进程的可由用户选择的不同 shell。很早的时候,就有一个庞大的社区为 Unix 贡献软件 [[3][3]] ,[[4][4],pp. 65-72] 。随时间流逝,这个社区不断壮大,并且以现在称为开源软件开发的方式在工作着 [[5][5],pp. 440-442] 。Unix 和其睿智的晚辈们也将 C 和 C++ 编程语言、分析程序和词法分析生成器(*yacc*,*lex*)、文档编制工具(*troff*,*eqn*,*tbl*)、脚本语言(*awk*,*sed*,*Perl*)、TCP/IP 网络、和配置管理系统(configuration management system)(*SCSS*,*RCS*,*Subversion*,*Git*)发扬广大了,同时也形成了现代互联网基础设施和网络的最大的部分。

|

||||

|

||||

幸运的是,一些重要的具有历史意义的 Unix 材料已经保存下来了,现在保持对外开放。尽管 Unix 最初是由相对严格的协议发行,但在早期的开发中,很多重要的部分是通过 Unix 的版权拥有者之一(Caldera International) (LCTT 译注:2002年改名为 SCO Group)以一个自由的协议发行。通过将这些部分再结合上由加州大学伯克利分校(University of California, Berkeley)和 FreeBSD 项目组开发或发布的开源软件,贯穿了从 1972 年六月二十日开始到现在的整个系统的开发。

|

||||

|

||||

通过规划和处理这些可用的快照以及或旧或新的配置管理仓库,将这些可用数据的大部分重建到一个新合成的 Git 仓库之中。这个仓库以数字的形式记录了过去44年来最重要的数字时代产物的详细的进化。下列章节描述了该仓库的结构和内容(第[2][6]节)、创建方法(第[3][7]节)和该如何使用(第[4][8]节)。

|

||||

|

||||

### 2、数据概览 ###

|

||||

|

||||

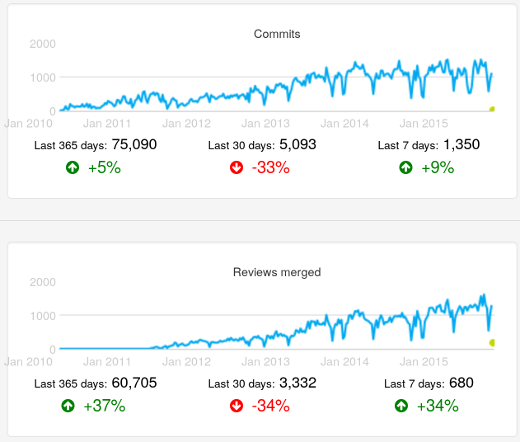

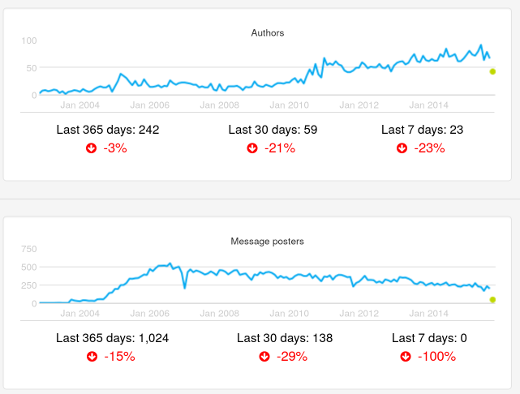

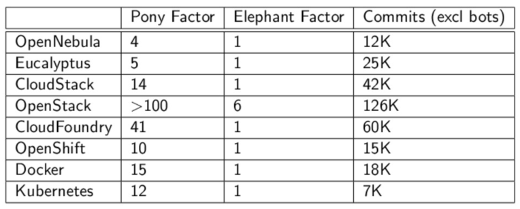

这 1GB 的 Unix 历史仓库可以从 [GitHub][9] 上克隆^[1][10] 。如今^[2][11] ,这个仓库包含来自 850 个贡献者的 659,000 个提交和 2,306 个合并。贡献者有来自贝尔实验室(Bell Labs)的 23 个员工,伯克利大学(Berkeley University)的计算机系统研究组(Computer Systems Research Group)(CSRG)的 158 个人,和 FreeBSD 项目的 660 个成员。

|

||||

|

||||

这个仓库的生命始于一个 *Epoch* 的标签,这里面只包含了证书信息和现在的 README 文件。其后各种各样的标签和分支记录了很多重要的时刻。

|

||||

|

||||

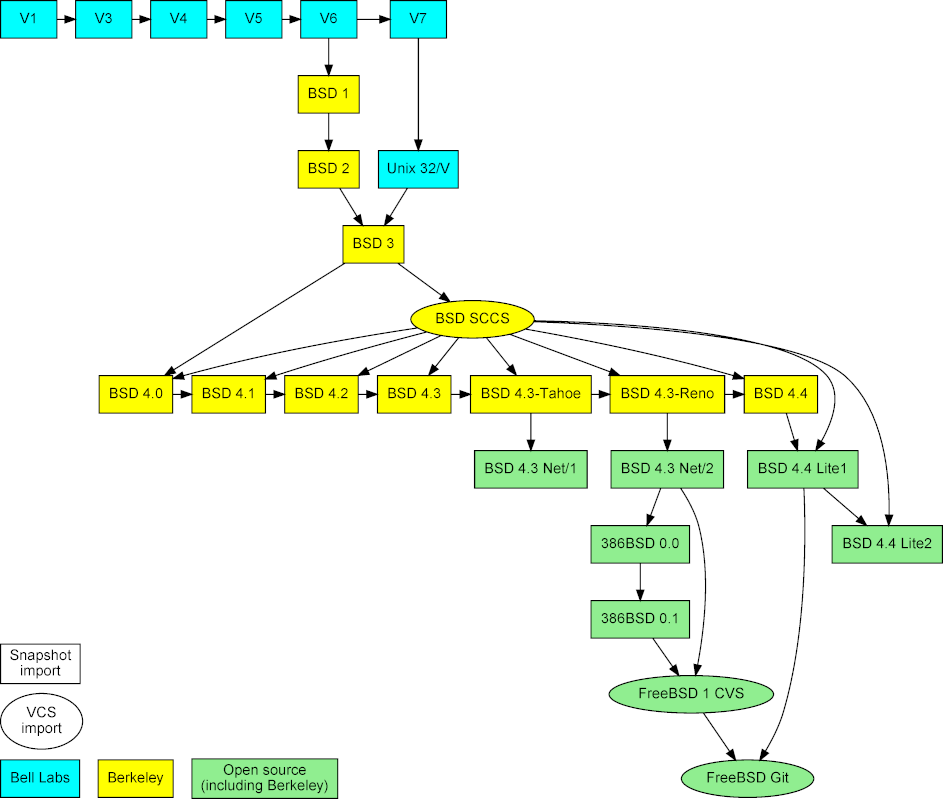

- *Research-VX* 标签对应来自贝尔实验室(Bell Labs)六个研究版本。从 *Research-V1* (4768 行 PDP-11 汇编代码)开始,到以 *Research-V7* (大约 324,000 行代码,1820 个 C 文件)结束。

|

||||

- *Bell-32V* 是第七个版本 Unix 在 DEC/VAX 架构上的移植。

|

||||

- *BSD-X* 标签对应伯克利大学(Berkeley University)释出的 15 个快照。

|

||||

- *386BSD-X* 标签对应该系统的两个开源版本,主要是 Lynne 和 William Jolitz 写的适用于 Intel 386 架构的内核代码。

|

||||

- *FreeBSD-release/X* 标签和分支标记了来自 FreeBSD 项目的 116 个发行版。

|

||||

|

||||

另外,以 *-Snapshot-Development* 为后缀的分支,表示该提交由来自一个以时间排序的快照文件序列而合成;而以一个 *-VCS-Development* 为后缀的标签,标记了有特定发行版出现的历史分支的时刻。

|

||||

|

||||

仓库的历史包含从系统开发早期的一些提交,比如下面这些。

|

||||

|

||||

commit c9f643f59434f14f774d61ee3856972b8c3905b1

|

||||

Author: Dennis Ritchie <research!dmr>

|

||||

Date: Mon Dec 2 18:18:02 1974 -0500

|

||||

Research V5 development

|

||||

Work on file usr/sys/dmr/kl.c

|

||||

|

||||

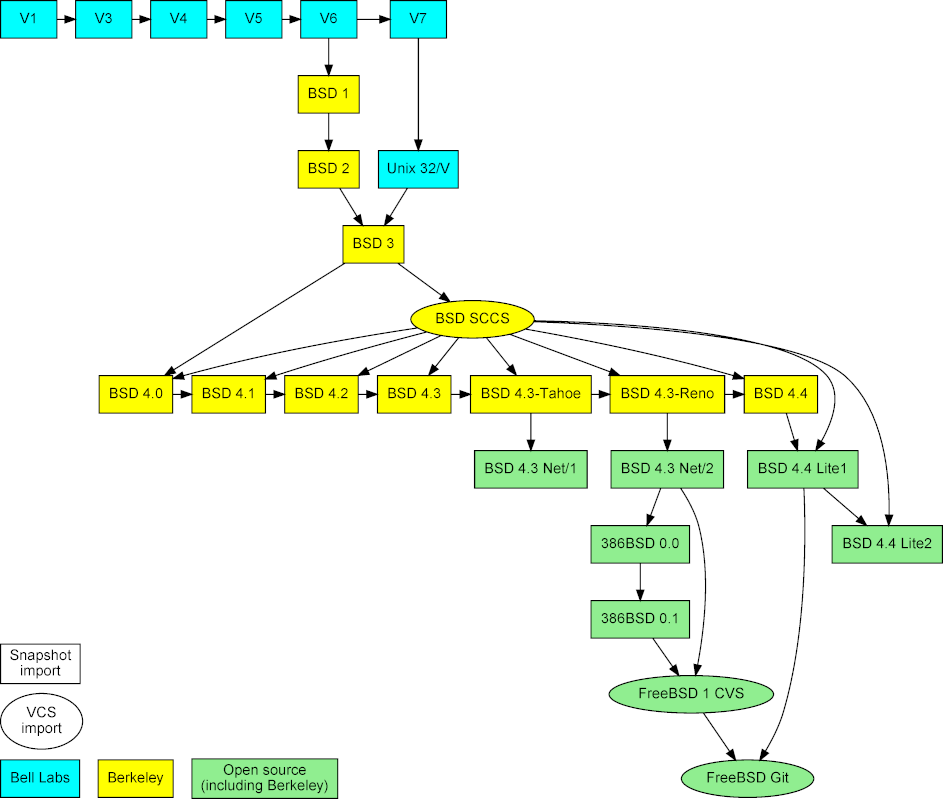

两个发布之间的合并代表着系统发生了进化,比如 BSD 3 的开发来自 BSD2 和 Unix 32/V,它在 Git 仓库里正是被表示为带两个父节点的图形节点。

|

||||

|

||||

更为重要的是,以这种方式构造的仓库允许 **git blame**,就是可以给源代码行加上注释,如版本、日期和它们第一次出现相关联的作者,这样可以知道任何代码的起源。比如说,检出 **BSD-4** 这个标签,并在内核的 *pipe.c* 文件上运行一下 git blame,就会显示出由 Ken Thompson 写于 1974,1975 和 1979年的代码行,和 Bill Joy 写于 1980 年的。这就可以自动(尽管计算上比较费事)检测出任何时刻出现的代码。

|

||||

|

||||

|

||||

|

||||

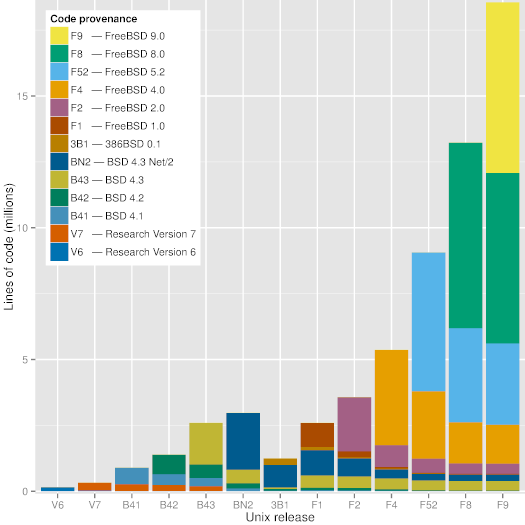

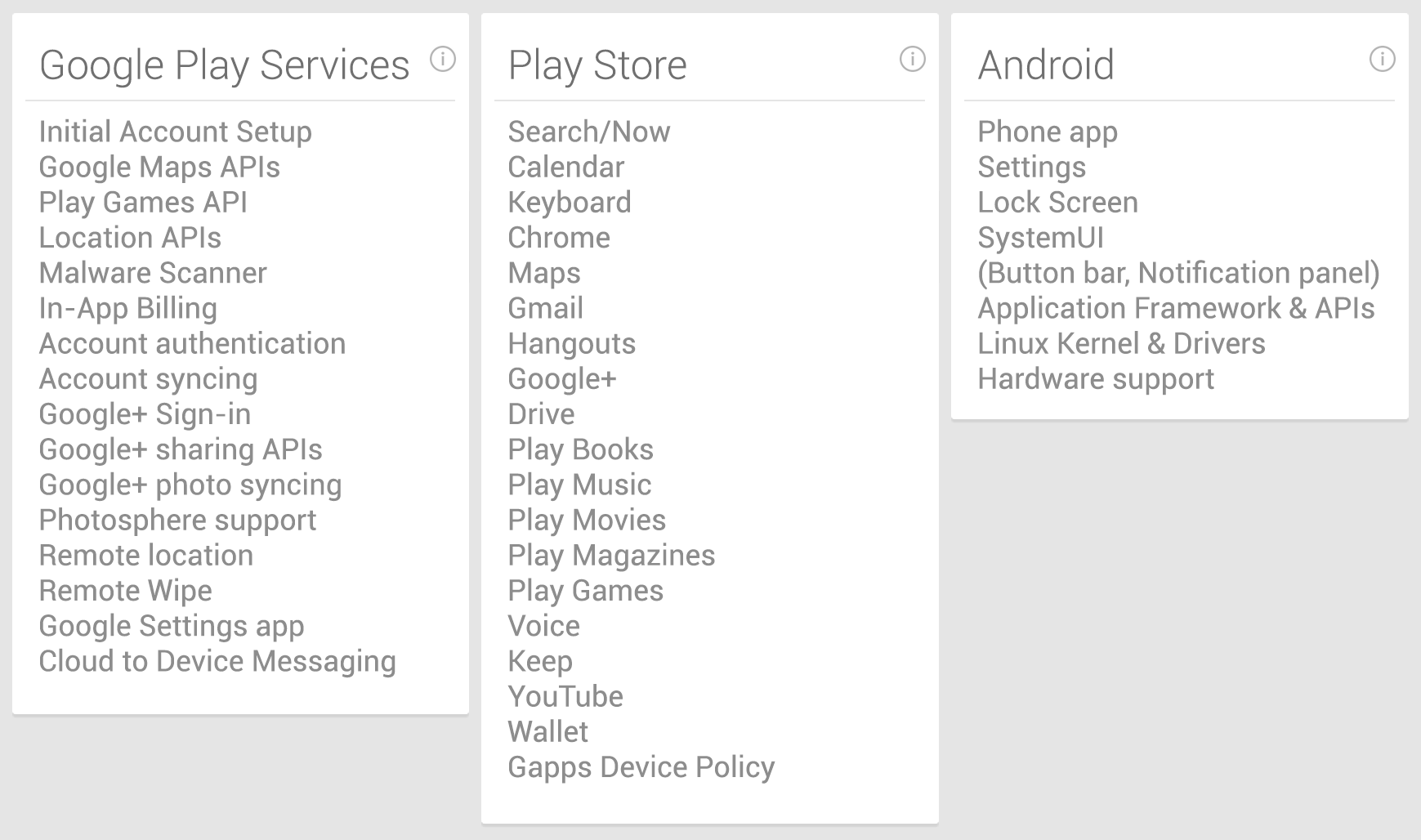

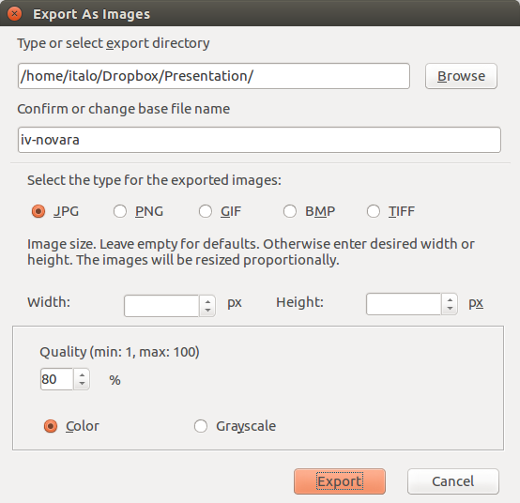

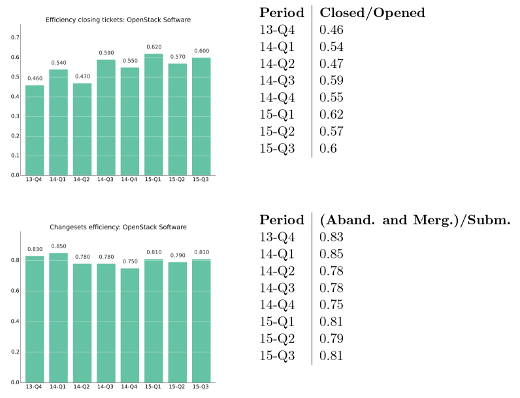

*图1:各个重大 Unix 发行版的代码来源*

|

||||

|

||||

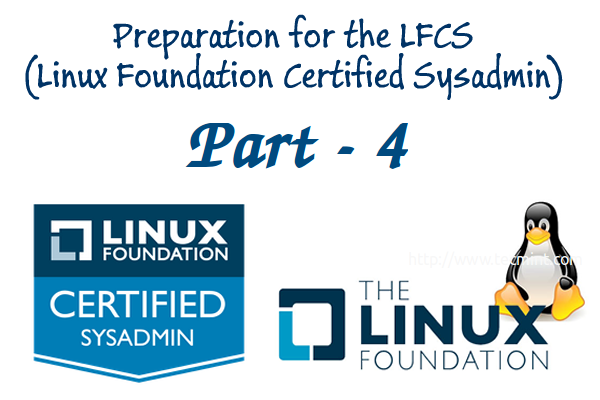

如[上图][12]所示,现代版本的 Unix(FreeBSD 9)依然有相当部分的来自 BSD 4.3,BSD 4.3 Net/2 和 BSD 2.0 的代码块。有趣的是,这图片显示有部分代码好像没有保留下来,当时激进地要创造一个脱离于伯克利(386BSD 和 FreeBSD 1.0)所释出代码的开源操作系统。FreeBSD 9 中最古老的代码是一个 18 行的队列,在 C 库里面的 timezone.c 文件里,该文件也可以在第七版的 Unix 文件里找到,同样的名字,时间戳是 1979 年一月十日 - 36 年前。

|

||||

|

||||

### 3、数据收集和处理 ###

|

||||

|

||||

这个项目的目的是以某种方式巩固从数据方面说明 Unix 的进化,通过将其并入一个现代的版本仓库,帮助人们对系统进化的研究。项目工作包括收录数据,分类并综合到一个单独的 Git 仓库里。

|

||||

|

||||

|

||||

|

||||

*图2:导入 Unix 快照、仓库及其合并*

|

||||

|

||||

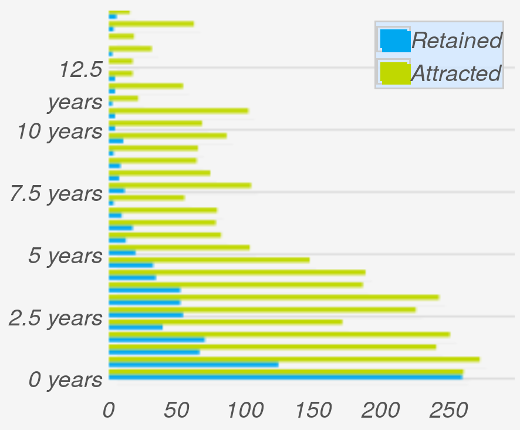

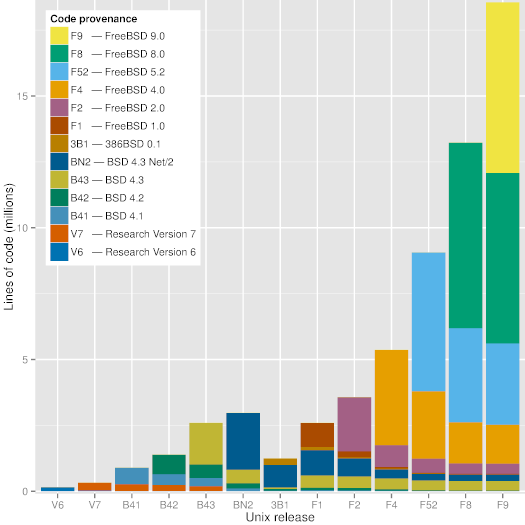

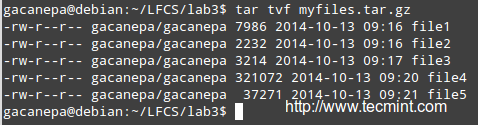

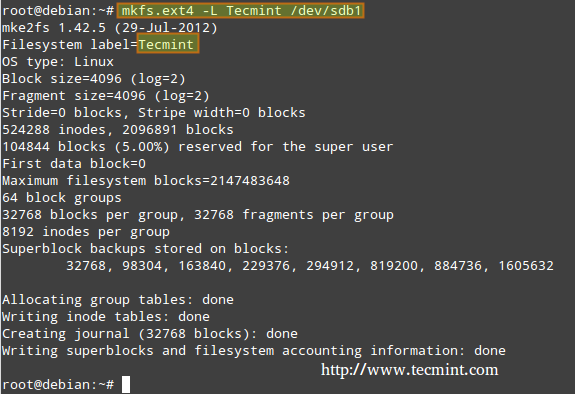

项目以三种数据类型为基础(见[图2][13])。首先,早期发布版本的快照,获取自 [Unix 遗产社会归档(Unix Heritage Society archive)][14]^[3][15] 、包括了 CSRG 全部的源代码归档的 [CD-ROM 镜像][16]^[4][17] , [Oldlinux 网站][18]^[5][19] 和 [FreeBSD 归档][20]^[6][21] 。 其次,以前的和现在的仓库,即 CSRG SCCS [[6][22]] 仓库,FreeBSD 1 CVS 仓库,和[现代 FreeBSD 开发的 Git 镜像][23]^[7][24] 。前两个都是从和快照相同的来源获得的。

|

||||

|

||||

最后,也是最费力的数据源是 **初步研究(primary research)**。释出的快照并没有提供关于它们的源头和每个文件贡献者的信息。因此,这些信息片段需要通过初步研究(primary research)验证。至于作者信息主要通过作者的自传,研究论文,内部备忘录和旧文档扫描件;通过阅读并且自动处理源代码和帮助页面补充;通过与那个年代的人用电子邮件交流;在 *StackExchange* 网站上贴出疑问;查看文件的位置(在早期的内核版本的源代码,分为 `usr/sys/dmr` 和 `/usr/sys/ken` 两个位置);从研究论文和帮助手册披露的作者找到源代码,从一个又一个的发行版中获取。(有趣的是,第一和第二的研究版(Research Edition)帮助页面都有一个 “owner” 部分,列出了作者(比如,*Ken*)及对应的系统命令、文件、系统调用或库函数。在第四版中这个部分就没了,而在 BSD 发行版中又浮现了 “Author” 部分。)关于作者信息更为详细地写在了项目的文件中,这些文件被用于匹配源代码文件和它们的作者和对应的提交信息。最后,关于源代码库之间的合并信息是获取自[ NetBSD 项目所维护的 BSD 家族树][25]^[8][26] 。

|

||||

|

||||

作为本项目的一部分而开发的软件和数据文件,现在可以[在线获取][27]^[9][28] ,并且,如果有合适的网络环境,CPU 和磁盘资源,可以用来从头构建这样一个仓库。关于主要发行版的作者信息,都存储在本项目的 `author-path` 目录下的文件里。它们的内容中带有正则表达式的文件路径后面指出了相符的作者。可以指定多个作者。正则表达式是按线性处理的,所以一个文件末尾的匹配一切的表达式可以指定一个发行版的默认作者。为避免重复,一个以 `.au` 后缀的独立文件专门用于映射作者的识别号(identifier)和他们的名字及 email。这样一个文件为每个与该系统进化相关的社区都建立了一个:贝尔实验室(Bell Labs),伯克利大学(Berkeley University),386BSD 和 FreeBSD。为了真实性的需要,早期贝尔实验室(Bell Labs)发行版的 emails 都以 UUCP 注释(UUCP notation)方式列出(例如, `research!ken`)。FreeBSD 作者的识别映射,需要导入早期的 CVS 仓库,通过从如今项目的 Git 仓库里拆解对应的数据构建。总的来说,由 1107 行构成了注释作者信息的文件(828 个规则),并且另有 640 行用于映射作者的识别号到名字。

|

||||

|

||||

现在项目的数据源被编码成了一个 168 行的 `Makefile`。它包括下面的步骤。

|

||||

|

||||

**Fetching** 从远程站点复制和克隆大约 11GB 的镜像、归档和仓库。

|

||||

|

||||

**Tooling** 从 2.9 BSD 中为旧的 PDP-11 归档获取一个归档器,并调整它以在现代的 Unix 版本下编译;编译 4.3 BSD 的 *compress* 程序来解压 386BSD 发行版,这个程序不再是现代 Unix 系统的组成部分了。

|

||||

|

||||

**Organizing** 用 *tar* 和 *cpio* 解压缩包;合并第六个研究版的三个目录;用旧的 PDP-11 归档器解压全部一个 BSD 归档;挂载 CD-ROM 镜像,这样可以作为文件系统处理;合并第 8 和 62 的 386BSD 磁盘镜像为两个独立的文件。

|

||||

|

||||

**Cleaning** 恢复第一个研究版的内核源代码文件,这个可以通过 OCR 从打印件上得到近似其原始状态的的格式;给第七个研究版的源代码文件打补丁;移除发行后被添加进来的元数据和其他文件,为避免得到错误的时间戳信息;修复毁坏的 SCCS 文件;用一个定制的 Perl 脚本移除指定到多个版本的 CVS 符号、删除与现在冲突的 CVS *Attr* 文件、用 *cvs2svn* 将 CVS 仓库转换为 Git 仓库,以处理早期的 FreeBSD CVS 仓库。

|

||||

|

||||

在仓库再现(representation)中有一个很有意思的部分就是,如何导入那些快照,并以一种方式联系起来,使得 *git blame* 可以发挥它的魔力。快照导入到仓库是基于每个文件的时间戳作为一系列的提交实现的。当所有文件导入后,就被用对应发行版的名字给标记了。然后,可以删除那些文件,并开始导入下一个快照。注意 *git blame* 命令是通过回溯一个仓库的历史来工作的,并使用启发法(heuristics)来检测文件之间或文件内的代码移动和复制。因此,删除掉的快照间会产生中断,以防止它们之间的代码被追踪。

|

||||

|

||||

相反,在下一个快照导入之前,之前快照的所有文件都被移动到了一个隐藏的后备目录里,叫做 `.ref`(引用)。它们保存在那,直到下个快照的所有文件都被导入了,这时候它们就会被删掉。因为 `.ref` 目录下的每个文件都精确对应一个原始文件,*git blame* 可以知道多少源代码通过 `.ref` 文件从一个版本移到了下一个,而不用显示出 `.ref` 文件。为了更进一步帮助检测代码起源,同时增加再现(representation)的真实性,每个发行版都被再现(represented)为一个有增量文件的分支(*-Development*)与之前发行版之间的合并。

|

||||

|

||||

上世纪 80 年代时期,只有伯克利(Berkeley) 开发的文件的一个子集是用 SCCS 版本控制的。在那个期间,我们的统一仓库里包含了来自 SCCS 的提交和快照的增量文件的导入数据。对于每个发行版,可用最近的时间戳找到该 SCCS 提交,并被标记为一个与发行版增量导入分支的合并。这些合并可以在[图2][29] 的中间看到。

|

||||

|

||||

将各种数据资源综合到一个仓库的工作,主要是用两个脚本来完成的。一个 780 行的 Perl 脚本(`import-dir.pl`)可以从一个单独的数据源(快照目录、SCCS 仓库,或者 Git 仓库)中,以 *Git fast export* 格式导出(真实的或者综合的)提交历史。输出是一个简单的文本格式,Git 工具用这个来导入和导出提交。其他方面,这个脚本以一些东西为参数,如文件到贡献者的映射、贡献者登录名和他们的全名间的映射、哪个导入的提交会被合并、哪些文件要处理和忽略、以及“引用”文件的处理。一个 450 行的 Shell 脚本创建 Git 仓库,并调用带适当参数的 Perl 脚本,来导入 27 个可用的历史数据资源。Shell 脚本也会运行 30 个测试,比较特定标签的仓库和对应的数据源,核对查看的目录中出现的和没出现的,并回溯查看分支树和合并的数量,*git blame* 和 *git log* 的输出。最后,调用 *git* 作垃圾收集和仓库压缩,从最初的 6GB 降到分发的 1GB 大小。

|

||||

|

||||

### 4、数据使用 ###

|

||||

|

||||

该数据可以用于软件工程、信息系统和软件考古学(software archeology)领域的经验性研究。鉴于它从不间断而独一无二的存在了超过了 40 年,可以供软件进化和跨代更迭参考。从那时以来,处理速度已经成千倍地增长、存储容量扩大了百万倍,该数据同样可以用于软件和硬件技术交叉进化(co-evolution)的研究。软件开发从研究中心到大学,到开源社区的转移,可以用来研究组织文化对于软件开发的影响。该仓库也可以用于学习著名人物的实际编程,比如 Turing 奖获得者(Dennis Ritchie 和 Ken Thompson)和 IT 产业的大佬(Bill Joy 和 Eric Schmidt)。另一个值得学习的现象是代码的长寿,无论是单行的水平,或是作为那个时代随 Unix 发布的完整的系统(Ingres、 Lisp、 Pascal、 Ratfor、 Snobol、 TMP),和导致代码存活或消亡的因素。最后,因为该数据让 Git 感到了压力,底层的软件仓库存储技术达到了其极限,这会推动版本管理系统领域的工程进度。

|

||||

|

||||

|

||||

|

||||

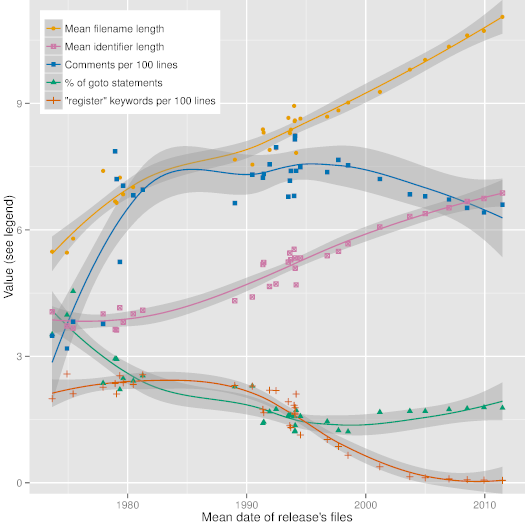

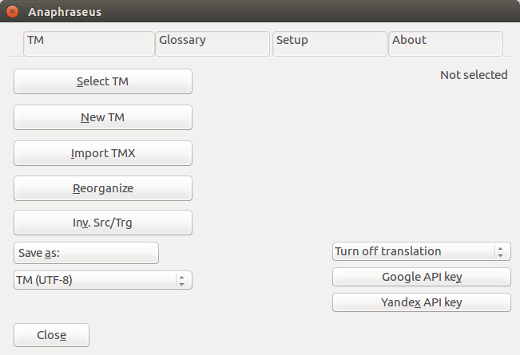

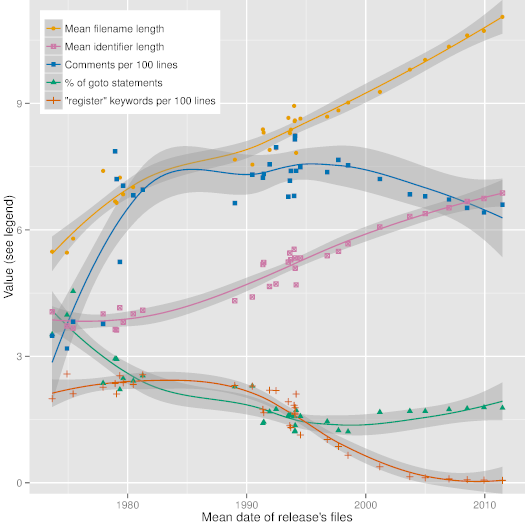

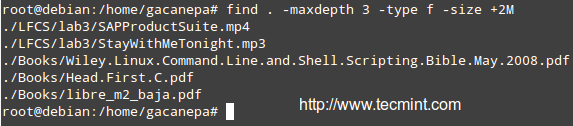

*图3:Unix 发行版的代码风格进化*

|

||||

|

||||

[图3][30] 根据 36 个主要 Unix 发行版描述了一些有趣的代码统计的趋势线(用 R 语言的局部多项式回归拟合函数生成),验证了代码风格和编程语言的使用在很长的时间尺度上的进化。这种进化是软硬件技术的需求和支持、软件构筑理论,甚至社会力量所驱动的。图片中的日期计算了出现在一个给定发行版中的所有文件的平均日期。正如可以从中看到,在过去的 40 年中,标示符和文件名字的长度已经稳步从 4 到 6 个字符增长到 7 到 11 个字符。我们也可以看到注释数量的少量稳步增加,以及 *goto* 语句的使用量减少,同时 *register* 这个类型修饰符的消失。

|

||||

|

||||

### 5、未来的工作 ###

|

||||

|

||||

可以做很多事情去提高仓库的正确性和有效性。创建过程以开源代码共享了,通过 GitHub 的拉取请求(pull request),可以很容易地贡献更多代码和修复。最有用的社区贡献将使得导入的快照文件的覆盖面增长,以便归属于某个具体的作者。现在,大约 90,000 个文件(在 160,000 总量之外)通过默认规则指定了作者。类似地,大约有 250 个作者(最初 FreeBSD 那些)仅知道其识别号。两个都列在了 build 仓库的 unmatched 目录里,欢迎贡献数据。进一步,BSD SCCS 和 FreeBSD CVS 的提交共享相同的作者和时间戳,这些可以结合成一个单独的 Git 提交。导入 SCCS 文件提交的支持会被添加进来,以便引入仓库对应的元数据。最后,也是最重要的,开源系统的更多分支会添加进来,比如 NetBSD、 OpenBSD、DragonFlyBSD 和 *illumos*。理想情况下,其他历史上重要的 Unix 发行版,如 System III、System V、 NeXTSTEP 和 SunOS 等的当前版权拥有者,也会在一个允许他们的合作伙伴使用仓库用于研究的协议下释出他们的系统。

|

||||

|

||||

### 鸣谢 ###

|

||||

|

||||

本文作者感谢很多付出努力的人们。 Brian W. Kernighan, Doug McIlroy 和 Arnold D. Robbins 在贝尔实验室(Bell Labs)的登录识别号方面提供了帮助。 Clem Cole, Era Erikson, Mary Ann Horton, Kirk McKusick, Jeremy C. Reed, Ingo Schwarze 和 Anatole Shaw 在 BSD 的登录识别号方面提供了帮助。BSD SCCS 的导入代码是基于 H. Merijn Brand 和 Jonathan Gray 的工作。

|

||||

|

||||

这次研究由欧盟 ( 欧洲社会基金(European Social Fund,ESF)) 和 希腊国家基金(Greek national funds)通过国家战略参考框架( National Strategic Reference Framework ,NSRF) 的 Operational Program " Education and Lifelong Learning" - Research Funding Program: Thalis - Athens University of Economics and Business - Software Engineering Research Platform ,共同出资赞助。

|

||||

|

||||

### 引用 ###

|

||||

|

||||

[[1]][31]

|

||||

M. D. McIlroy, E. N. Pinson, and B. A. Tague, "UNIX time-sharing system: Foreword," *The Bell System Technical Journal*, vol. 57, no. 6, pp. 1899-1904, July-August 1978.

|

||||

|

||||

[[2]][32]

|

||||

D. M. Ritchie and K. Thompson, "The UNIX time-sharing system," *Bell System Technical Journal*, vol. 57, no. 6, pp. 1905-1929, July-August 1978.

|

||||

|

||||

[[3]][33]

|

||||

D. M. Ritchie, "The evolution of the UNIX time-sharing system," *AT&T Bell Laboratories Technical Journal*, vol. 63, no. 8, pp. 1577-1593, Oct. 1984.

|

||||

|

||||

[[4]][34]

|

||||

P. H. Salus, *A Quarter Century of UNIX*. Boston, MA: Addison-Wesley, 1994.

|

||||

|

||||

[[5]][35]

|

||||

E. S. Raymond, *The Art of Unix Programming*. Addison-Wesley, 2003.

|

||||

|

||||

[[6]][36]

|

||||

M. J. Rochkind, "The source code control system," *IEEE Transactions on Software Engineering*, vol. SE-1, no. 4, pp. 255-265, 1975.

|

||||

|

||||

----------

|

||||

|

||||

#### 脚注 ####

|

||||

|

||||

[1][37] - [https://github.com/dspinellis/unix-history-repo][38]

|

||||

|

||||

[2][39] - Updates may add or modify material. To ensure replicability the repository's users are encouraged to fork it or archive it.

|

||||

|

||||

[3][40] - [http://www.tuhs.org/archive_sites.html][41]

|

||||

|

||||

[4][42] - [https://www.mckusick.com/csrg/][43]

|

||||

|

||||

[5][44] - [http://www.oldlinux.org/Linux.old/distributions/386BSD][45]

|

||||

|

||||

[6][46] - [http://ftp-archive.freebsd.org/pub/FreeBSD-Archive/old-releases/][47]

|

||||

|

||||

[7][48] - [https://github.com/freebsd/freebsd][49]

|

||||

|

||||

[8][50] - [http://ftp.netbsd.org/pub/NetBSD/NetBSD-current/src/share/misc/bsd-family-tree][51]

|

||||

|

||||

[9][52] - [https://github.com/dspinellis/unix-history-make][53]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html

|

||||

|

||||

作者:Diomidis Spinellis

|

||||

译者:[wi-cuckoo](https://github.com/wi-cuckoo)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#MPT78

|

||||

[2]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#RT78

|

||||

[3]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#Rit84

|

||||

[4]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#Sal94

|

||||

[5]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#Ray03

|

||||

[6]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#sec:data

|

||||

[7]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#sec:dev

|

||||

[8]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#sec:use

|

||||

[9]:https://github.com/dspinellis/unix-history-repo

|

||||

[10]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFtNtAAB

|

||||

[11]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFtNtAAC

|

||||

[12]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#fig:provenance

|

||||

[13]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#fig:branches

|

||||

[14]:http://www.tuhs.org/archive_sites.html

|

||||

[15]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFtNtAAD

|

||||

[16]:https://www.mckusick.com/csrg/

|

||||

[17]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFtNtAAE

|

||||

[18]:http://www.oldlinux.org/Linux.old/distributions/386BSD

|

||||

[19]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFtNtAAF

|

||||

[20]:http://ftp-archive.freebsd.org/pub/FreeBSD-Archive/old-releases/

|

||||

[21]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFtNtAAG

|

||||

[22]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#SCCS

|

||||

[23]:https://github.com/freebsd/freebsd

|

||||

[24]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFtNtAAH

|

||||

[25]:http://ftp.netbsd.org/pub/NetBSD/NetBSD-current/src/share/misc/bsd-family-tree

|

||||

[26]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFtNtAAI

|

||||

[27]:https://github.com/dspinellis/unix-history-make

|

||||

[28]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFtNtAAJ

|

||||

[29]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#fig:branches

|

||||

[30]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#fig:metrics

|

||||

[31]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#CITEMPT78

|

||||

[32]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#CITERT78

|

||||

[33]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#CITERit84

|

||||

[34]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#CITESal94

|

||||

[35]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#CITERay03

|

||||

[36]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#CITESCCS

|

||||

[37]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFrefAAB

|

||||

[38]:https://github.com/dspinellis/unix-history-repo

|

||||

[39]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFrefAAC

|

||||

[40]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFrefAAD

|

||||

[41]:http://www.tuhs.org/archive_sites.html

|

||||

[42]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFrefAAE

|

||||

[43]:https://www.mckusick.com/csrg/

|

||||

[44]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFrefAAF

|

||||

[45]:http://www.oldlinux.org/Linux.old/distributions/386BSD

|

||||

[46]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFrefAAG

|

||||

[47]:http://ftp-archive.freebsd.org/pub/FreeBSD-Archive/old-releases/

|

||||

[48]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFrefAAH

|

||||

[49]:https://github.com/freebsd/freebsd

|

||||

[50]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFrefAAI

|

||||

[51]:http://ftp.netbsd.org/pub/NetBSD/NetBSD-current/src/share/misc/bsd-family-tree

|

||||

[52]:http://www.dmst.aueb.gr/dds/pubs/conf/2015-MSR-Unix-History/html/Spi15c.html#tthFrefAAJ

|

||||

[53]:https://github.com/dspinellis/unix-history-make

|

||||

@ -1,19 +1,18 @@

|

||||

|

||||

提高 WordPress 性能的9个技巧

|

||||

深入浅出讲述提升 WordPress 性能的九大秘笈

|

||||

================================================================================

|

||||

|

||||

关于建站和 web 应用程序交付,WordPress 是全球最大的一个平台。全球大约 [四分之一][1] 的站点现在正在使用开源 WordPress 软件,包括 eBay, Mozilla, RackSpace, TechCrunch, CNN, MTV,纽约时报,华尔街日报。

|

||||

在建站和 web 应用程序交付方面,WordPress 是全球最大的一个平台。全球大约[四分之一][1] 的站点现在正在使用开源 WordPress 软件,包括 eBay、 Mozilla、 RackSpace、 TechCrunch、 CNN、 MTV、纽约时报、华尔街日报 等等。

|

||||

|

||||

WordPress.com,对于用户创建博客平台是最流行的,其也运行在WordPress 开源软件上。[NGINX powers WordPress.com][2]。许多 WordPress 用户刚开始在 WordPress.com 上建站,然后移动到搭载着 WordPress 开源软件的托管主机上;其中大多数站点都使用 NGINX 软件。

|

||||

最流行的个人博客平台 WordPress.com,其也运行在 WordPress 开源软件上。[而 NGINX 则为 WordPress.com 提供了动力][2]。在 WordPress.com 的用户当中,许多站点起步于 WordPress.com,然后换成了自己运行 WordPress 开源软件;它们中越来越多的站点也使用了 NGINX 软件。

|

||||

|

||||

WordPress 的吸引力是它的简单性,无论是安装启动或者对于终端用户的使用。然而,当使用量不断增长时,WordPress 站点的体系结构也存在一定的问题 - 这里几个方法,包括使用缓存以及组合 WordPress 和 NGINX,可以解决这些问题。

|

||||

WordPress 的吸引力源于其简单性,无论是对于最终用户还是安装架设。然而,当使用量不断增长时,WordPress 站点的体系结构也存在一定的问题 - 这里有几个方法,包括使用缓存,以及将 WordPress 和 NGINX 组合起来,可以解决这些问题。

|

||||

|

||||

在这篇博客中,我们提供了9个技巧来进行优化,以帮助你解决 WordPress 中一些常见的性能问题:

|

||||

在这篇博客中,我们提供了九个提速技巧来帮助你解决 WordPress 中一些常见的性能问题:

|

||||

|

||||

- [缓存静态资源][3]

|

||||

- [缓存动态文件][4]

|

||||

- [使用 NGINX][5]

|

||||

- [添加支持 NGINX 的链接][6]

|

||||

- [迁移到 NGINX][5]

|

||||

- [添加 NGINX 静态链接支持][6]

|

||||

- [为 NGINX 配置 FastCGI][7]

|

||||

- [为 NGINX 配置 W3_Total_Cache][8]

|

||||

- [为 NGINX 配置 WP-Super-Cache][9]

|

||||

@ -22,39 +21,39 @@ WordPress 的吸引力是它的简单性,无论是安装启动或者对于终

|

||||

|

||||

### 在 LAMP 架构下 WordPress 的性能 ###

|

||||

|

||||

大多数 WordPress 站点都运行在传统的 LAMP 架构下:Linux 操作系统,Apache Web 服务器软件,MySQL 数据库软件 - 通常是一个单独的数据库服务器 - 和 PHP 编程语言。这些都是非常著名的,广泛应用的开源工具。大多数人都将 WordPress “称为” LAMP,并且很容易寻求帮助和支持。

|

||||

大多数 WordPress 站点都运行在传统的 LAMP 架构下:Linux 操作系统,Apache Web 服务器软件,MySQL 数据库软件(通常是一个单独的数据库服务器)和 PHP 编程语言。这些都是非常著名的,广泛应用的开源工具。在 WordPress 世界里,很多人都用的是 LAMP,所以很容易寻求帮助和支持。

|

||||

|

||||

当用户访问 WordPress 站点时,浏览器为每个用户创建六到八个连接来运行 Linux/Apache 的组合。当用户请求连接时,每个页面的 PHP 文件开始飞速的从 MySQL 数据库争夺资源来响应请求。

|

||||

当用户访问 WordPress 站点时,浏览器为每个用户创建六到八个连接来连接到 Linux/Apache 上。当用户请求连接时,PHP 即时生成每个页面,从 MySQL 数据库获取资源来响应请求。

|

||||

|

||||

LAMP 对于数百个并发用户依然能照常工作。然而,流量突然增加是常见的并且 - 通常是 - 一件好事。

|

||||

LAMP 或许对于数百个并发用户依然能照常工作。然而,流量突然增加是常见的,并且通常这应该算是一件好事。

|

||||

|

||||

但是,当 LAMP 站点变得繁忙时,当同时在线的用户达到数千个时,它的瓶颈就会被暴露出来。瓶颈存在主要是两个原因:

|

||||

|

||||

1. Apache Web 服务器 - Apache 为每一个连接需要消耗大量资源。如果 Apache 接受了太多的并发连接,内存可能会耗尽,性能急剧降低,因为数据必须使用磁盘进行交换。如果以限制连接数来提高响应时间,新的连接必须等待,这也导致了用户体验变得很差。

|

||||

1. Apache Web 服务器 - Apache 的每个/每次连接需要消耗大量资源。如果 Apache 接受了太多的并发连接,内存可能会耗尽,从而导致性能急剧降低,因为数据必须交换到磁盘了。如果以限制连接数来提高响应时间,新的连接必须等待,这也导致了用户体验变得很差。

|

||||

|

||||

1. PHP/MySQL 的交互 - 总之,一个运行 PHP 和 MySQL 数据库服务器的应用服务器上每秒的请求量不能超过最大限制。当请求的数量超过最大连接数时,用户必须等待。超过最大连接数时也会增加所有用户的响应时间。超过其两倍以上时会出现明显的性能问题。

|

||||

1. PHP/MySQL 的交互 - 一个运行 PHP 和 MySQL 数据库服务器的应用服务器上每秒的请求量有一个最大限制。当请求的数量超过这个最大限制时,用户必须等待。超过这个最大限制时也会增加所有用户的响应时间。超过其两倍以上时会出现明显的性能问题。

|

||||

|

||||

LAMP 架构的网站一般都会出现性能瓶颈,这时就需要升级硬件了 - 加 CPU,扩大磁盘空间等等。当 Apache 和 PHP/MySQL 的架构负载运行后,在硬件上不断的提升无法保证对系统资源指数增长的需求。

|

||||

LAMP 架构的网站出现性能瓶颈是常见的情况,这时就需要升级硬件了 - 增加 CPU,扩大磁盘空间等等。当 Apache 和 PHP/MySQL 的架构超载后,在硬件上不断的提升却跟不上系统资源指数增长的需求。

|

||||

|

||||

最先取代 LAMP 架构的是 LEMP 架构 – Linux, NGINX, MySQL, 和 PHP。 (这是 LEMP 的缩写,E 代表着 “engine-x.” 的发音。) 我们在 [技巧 3][12] 中会描述 LEMP 架构。

|

||||

首选替代 LAMP 架构的是 LEMP 架构 – Linux, NGINX, MySQL, 和 PHP。 (这是 LEMP 的缩写,E 代表着 “engine-x.” 的发音。) 我们在 [技巧 3][12] 中会描述 LEMP 架构。

|

||||

|

||||

### 技巧 1. 缓存静态资源 ###

|

||||

|

||||

静态资源是指不变的文件,像 CSS,JavaScript 和图片。这些文件往往在网页的数据中占半数以上。页面的其余部分是动态生成的,像在论坛中评论,仪表盘的性能,或个性化的内容(可以看看Amazon.com 产品)。

|

||||

静态资源是指不变的文件,像 CSS,JavaScript 和图片。这些文件往往在网页的数据中占半数以上。页面的其余部分是动态生成的,像在论坛中评论,性能仪表盘,或个性化的内容(可以看看 Amazon.com 产品)。

|

||||

|

||||

缓存静态资源有两大好处:

|

||||

|

||||

- 更快的交付给用户 - 用户从他们浏览器的缓存或者从互联网上离他们最近的缓存服务器获取静态文件。有时候文件较大,因此减少等待时间对他们来说帮助很大。

|

||||

- 更快的交付给用户 - 用户可以从它们浏览器的缓存或者从互联网上离它们最近的缓存服务器获取静态文件。有时候文件较大,因此减少等待时间对它们来说帮助很大。

|

||||

|

||||

- 减少应用服务器的负载 - 从缓存中检索到的每个文件会让 web 服务器少处理一个请求。你的缓存越多,用户等待的时间越短。

|

||||

|

||||

要让浏览器缓存文件,需要早在静态文件中设置正确的 HTTP 首部。当看到 HTTP Cache-Control 首部时,特别设置了 max-age,Expires 首部,以及 Entity 标记。[这里][13] 有详细的介绍。

|

||||

要让浏览器缓存文件,需要在静态文件中设置正确的 HTTP 首部。看看 HTTP Cache-Control 首部,特别是设置了 max-age 参数,Expires 首部,以及 Entity 标记。[这里][13] 有详细的介绍。

|

||||

|

||||

当启用本地缓存然后用户请求以前访问过的文件时,浏览器首先检查该文件是否在缓存中。如果在,它会询问 Web 服务器该文件是否改变过。如果该文件没有改变,Web 服务器将立即响应一个304状态码(未改变),这意味着该文件没有改变,而不是返回状态码200 OK,然后继续检索并发送已改变的文件。

|

||||

当启用本地缓存,然后用户请求以前访问过的文件时,浏览器首先检查该文件是否在缓存中。如果在,它会询问 Web 服务器该文件是否改变过。如果该文件没有改变,Web 服务器将立即响应一个304状态码(未改变),这意味着该文件没有改变,而不是返回状态码200 OK 并检索和发送已改变的文件。

|

||||

|

||||

为了支持浏览器以外的缓存,可以考虑下面的方法,内容分发网络(CDN)。CDN 是一种流行且强大的缓存工具,但我们在这里不详细描述它。可以想一下 CDN 背后的支撑技术的实现。此外,当你的站点从 HTTP/1.x 过渡到 HTTP/2 协议时,CDN 的用处可能不太大;根据需要调查和测试,找到你网站需要的正确方法。

|

||||

要在浏览器之外支持缓存,可以考虑下面讲到的技巧,以及考虑使用内容分发网络(CDN)。CDN 是一种流行且强大的缓存工具,但我们在这里不详细描述它。在你实现了这里讲到的其它技术之后可以考虑 CDN。此外,当你的站点从 HTTP/1.x 过渡到 HTTP/2 协议时,CDN 的用处可能不太大;根据需要调查和测试,找到你网站需要的正确方法。

|

||||

|

||||

如果你转向 NGINX Plus 或开源的 NGINX 软件作为架构的一部分,建议你考虑 [技巧 3][14],然后配置 NGINX 缓存静态资源。使用下面的配置,用你 Web 服务器的 URL 替换 www.example.com。

|

||||

如果你转向 NGINX Plus 或将开源的 NGINX 软件作为架构的一部分,建议你考虑 [技巧 3][14],然后配置 NGINX 缓存静态资源。使用下面的配置,用你 Web 服务器的 URL 替换 www.example.com。

|

||||

|

||||

server {

|

||||

# substitute your web server's URL for www.example.com

|

||||

@ -86,63 +85,63 @@ LAMP 对于数百个并发用户依然能照常工作。然而,流量突然增

|

||||

|

||||

### 技巧 2. 缓存动态文件 ###

|

||||

|

||||

WordPress 是动态生成的网页,这意味着每次请求时它都要生成一个给定的网页(即使和前一次的结果相同)。这意味着用户随时获得的是最新内容。

|

||||

WordPress 动态地生成网页,这意味着每次请求时它都要生成一个给定的网页(即使和前一次的结果相同)。这意味着用户随时获得的是最新内容。

|

||||

|

||||

想一下,当用户访问一个帖子时,并在文章底部有用户的评论时。你希望用户能够看到所有的评论 - 即使评论刚刚发布。动态内容就是处理这种情况的。

|

||||

|

||||

但现在,当帖子每秒出现十几二十几个请求时。应用服务器可能每秒需要频繁生成页面导致其压力过大,造成延误。为了给用户提供最新的内容,每个访问理论上都是新的请求,因此他们也不得不在首页等待。

|

||||

但现在,当帖子每秒出现十几二十几个请求时。应用服务器可能每秒需要频繁生成页面导致其压力过大,造成延误。为了给用户提供最新的内容,每个访问理论上都是新的请求,因此它们不得不在原始出处等待很长时间。

|

||||

|

||||

为了防止页面由于负载过大变得缓慢,需要缓存动态文件。这需要减少文件的动态内容来提高整个系统的响应速度。

|

||||

为了防止页面由于不断提升的负载而变得缓慢,需要缓存动态文件。这需要减少文件的动态内容来提高整个系统的响应速度。

|

||||

|

||||

要在 WordPress 中启用缓存中,需要使用一些流行的插件 - 如下所述。WordPress 的缓存插件需要刷新页面,然后将其缓存短暂时间 - 也许只有几秒钟。因此,如果该网站每秒中只有几个请求,那大多数用户获得的页面都是缓存的副本。这也有助于提高所有用户的检索时间:

|

||||

要在 WordPress 中启用缓存中,需要使用一些流行的插件 - 如下所述。WordPress 的缓存插件会请求最新的页面,然后将其缓存短暂时间 - 也许只有几秒钟。因此,如果该网站每秒中会有几个请求,那大多数用户获得的页面都是缓存的副本。这也有助于提高所有用户的检索时间:

|

||||

|

||||

- 大多数用户获得页面的缓存副本。应用服务器没有做任何工作。

|

||||

- 用户很快会得到一个新的副本。应用服务器只需每隔一段时间刷新页面。当服务器产生一个新的页面(对于第一个用户访问后,缓存页过期),它这样做要快得多,因为它的请求不会超载。

|

||||

- 用户会得到一个之前的崭新副本。应用服务器只需每隔一段时间生成一个崭新页面。当服务器产生一个崭新页面(对于缓存过期后的第一个用户访问),它这样做要快得多,因为它的请求并没有超载。

|

||||

|

||||

你可以缓存运行在 LAMP 架构或者 [LEMP 架构][15] 上 WordPress 的动态文件(在 [技巧 3][16] 中说明了)。有几个缓存插件,你可以在 WordPress 中使用。这里有最流行的缓存插件和缓存技术,从最简单到最强大的:

|

||||

你可以缓存运行在 LAMP 架构或者 [LEMP 架构][15] 上 WordPress 的动态文件(在 [技巧 3][16] 中说明了)。有几个缓存插件,你可以在 WordPress 中使用。运用到了最流行的缓存插件和缓存技术,从最简单到最强大的:

|

||||

|

||||

- [Hyper-Cache][17] 和 [Quick-Cache][18] – 这两个插件为每个 WordPress 页面创建单个 PHP 文件。它支持的一些动态函数会绕过多个 WordPress 与数据库的连接核心处理,创建一个更快的用户体验。他们不会绕过所有的 PHP 处理,所以使用以下选项他们不能给出相同的性能提升。他们也不需要修改 NGINX 的配置。

|

||||

- [Hyper-Cache][17] 和 [Quick-Cache][18] – 这两个插件为每个 WordPress 页面创建单个 PHP 文件。它支持绕过多个 WordPress 与数据库的连接核心处理的一些动态功能,创建一个更快的用户体验。它们不会绕过所有的 PHP 处理,所以并不会如下面那些取得同样的性能提升。它们也不需要修改 NGINX 的配置。

|

||||

|

||||

- [WP Super Cache][19] – 最流行的 WordPress 缓存插件。它有许多功能,它的界面非常简洁,如下图所示。我们展示了 NGINX 一个简单的配置实例在 [技巧 7][20] 中。

|

||||

- [WP Super Cache][19] – 最流行的 WordPress 缓存插件。在它易用的界面易用上提供了许多功能,如下所示。我们在 [技巧 7][20] 中展示了一个简单的 NGINX 配置实例。

|

||||

|

||||

- [W3 Total Cache][21] – 这是第二大最受欢迎的 WordPress 缓存插件。它比 WP Super Cache 的功能更强大,但它有些配置选项比较复杂。一个 NGINX 的简单配置,请看 [技巧 6][22]。

|

||||

- [W3 Total Cache][21] – 这是第二流行的 WordPress 缓存插件。它比 WP Super Cache 的功能更强大,但它有些配置选项比较复杂。样例 NGINX 配置,请看 [技巧 6][22]。

|

||||

|

||||

- [FastCGI][23] – CGI 代表通用网关接口,在因特网上发送请求和接收文件。它不是一个插件只是一种能直接使用缓存的方法。FastCGI 可以被用在 Apache 和 Nginx 上,它也是最流行的动态缓存方法;我们在 [技巧 5][24] 中描述了如何配置 NGINX 来使用它。

|

||||

- [FastCGI][23] – CGI 的意思是通用网关接口( Common Gateway Interface),在因特网上发送请求和接收文件的一种通用方式。它不是一个插件,而是一种与缓存交互缓存的方法。FastCGI 可以被用在 Apache 和 Nginx 上,它也是最流行的动态缓存方法;我们在 [技巧 5][24] 中描述了如何配置 NGINX 来使用它。

|

||||

|

||||

这些插件的技术文档解释了如何在 LAMP 架构中配置它们。配置选项包括数据库和对象缓存;也包括使用 HTML,CSS 和 JavaScript 来构建 CDN 集成环境。对于 NGINX 的配置,请看列表中的提示技巧。

|

||||

这些插件和技术的文档解释了如何在典型的 LAMP 架构中配置它们。配置方式包括数据库和对象缓存;最小化 HTML、CSS 和 JavaScript;集成流行的 CDN 集成环境。对于 NGINX 的配置,请看列表中的提示技巧。

|

||||

|

||||

**注意**:WordPress 不能缓存用户的登录信息,因为它们的 WordPress 页面都是不同的。(对于大多数网站来说,只有一小部分用户可能会登录),大多数缓存不会对刚刚评论过的用户显示缓存页面,只有当用户刷新页面时才会看到他们的评论。若要缓存页面的非个性化内容,如果它对整体性能来说很重要,可以使用一种称为 [fragment caching][25] 的技术。

|

||||

**注意**:缓存不会用于已经登录的 WordPress 用户,因为他们的 WordPress 页面都是不同的。(对于大多数网站来说,只有一小部分用户可能会登录)此外,大多数缓存不会对刚刚评论过的用户显示缓存页面,因为当用户刷新页面时希望看到他们的评论。若要缓存页面的非个性化内容,如果它对整体性能来说很重要,可以使用一种称为 [碎片缓存(fragment caching)][25] 的技术。

|

||||

|

||||

### 技巧 3. 使用 NGINX ###

|

||||

|

||||

如上所述,当并发用户数超过某一值时 Apache 会导致性能问题 – 可能数百个用户同时使用。Apache 对于每一个连接会消耗大量的资源,因而容易耗尽内存。Apache 可以配置连接数的值来避免耗尽内存,但是这意味着,超过限制时,新的连接请求必须等待。

|

||||

如上所述,当并发用户数超过某一数量时 Apache 会导致性能问题 – 可能是数百个用户同时使用。Apache 对于每一个连接会消耗大量的资源,因而容易耗尽内存。Apache 可以配置连接数的值来避免耗尽内存,但是这意味着,超过限制时,新的连接请求必须等待。

|

||||

|

||||

此外,Apache 使用 mod_php 模块将每一个连接加载到内存中,即使只有静态文件(图片,CSS,JavaScript 等)。这使得每个连接消耗更多的资源,从而限制了服务器的性能。

|

||||

此外,Apache 为每个连接加载一个 mod_php 模块副本到内存中,即使只有服务于静态文件(图片,CSS,JavaScript 等)。这使得每个连接消耗更多的资源,从而限制了服务器的性能。

|

||||

|

||||

开始解决这些问题吧,从 LAMP 架构迁到 LEMP 架构 – 使用 NGINX 取代 Apache 。NGINX 仅消耗很少量的内存就能处理成千上万的并发连接数,所以你不必经历颠簸,也不必限制并发连接数。

|

||||

要解决这些问题,从 LAMP 架构迁到 LEMP 架构 – 使用 NGINX 取代 Apache 。NGINX 在一定的内存之下就能处理成千上万的并发连接数,所以你不必经历颠簸,也不必限制并发连接数到很小的数量。

|

||||

|

||||

NGINX 处理静态文件的性能也较好,它有内置的,简单的 [缓存][26] 控制策略。减少应用服务器的负载,你的网站的访问速度会更快,用户体验更好。

|

||||

NGINX 处理静态文件的性能也较好,它有内置的,容易调整的 [缓存][26] 控制策略。减少应用服务器的负载,你的网站的访问速度会更快,用户体验更好。

|

||||

|

||||

你可以在部署的所有 Web 服务器上使用 NGINX,或者你可以把一个 NGINX 服务器作为 Apache 的“前端”来进行反向代理 - NGINX 服务器接收客户端请求,将请求的静态文件直接返回,将 PHP 请求转发到 Apache 上进行处理。

|

||||

你可以在部署环境的所有 Web 服务器上使用 NGINX,或者你可以把一个 NGINX 服务器作为 Apache 的“前端”来进行反向代理 - NGINX 服务器接收客户端请求,将请求的静态文件直接返回,将 PHP 请求转发到 Apache 上进行处理。

|

||||

|

||||

对于动态页面的生成 - WordPress 核心体验 - 选择一个缓存工具,如 [技巧 2][27] 中描述的。在下面的技巧中,你可以看到 FastCGI,W3_Total_Cache 和 WP-Super-Cache 在 NGINX 上的配置示例。 (Hyper-Cache 和 Quick-Cache 不需要改变 NGINX 的配置。)

|

||||

对于动态页面的生成,这是 WordPress 核心体验,可以选择一个缓存工具,如 [技巧 2][27] 中描述的。在下面的技巧中,你可以看到 FastCGI,W3\_Total\_Cache 和 WP-Super-Cache 在 NGINX 上的配置示例。 (Hyper-Cache 和 Quick-Cache 不需要改变 NGINX 的配置。)

|

||||

|

||||

**技巧** 缓存通常会被保存到磁盘上,但你可以用 [tmpfs][28] 将缓存放在内存中来提高性能。

|

||||

|

||||

为 WordPress 配置 NGINX 很容易。按照这四个步骤,其详细的描述在指定的技巧中:

|

||||

为 WordPress 配置 NGINX 很容易。仅需四步,其详细的描述在指定的技巧中:

|

||||

|

||||

1.添加永久的支持 - 添加对 NGINX 的永久支持。此步消除了对 **.htaccess** 配置文件的依赖,这是 Apache 特有的。参见 [技巧 4][29]

|

||||

2.配置缓存 - 选择一个缓存工具并安装好它。可选择的有 FastCGI cache,W3 Total Cache, WP Super Cache, Hyper Cache, 和 Quick Cache。请看技巧 [5][30], [6][31], 和 [7][32].

|

||||

3.落实安全防范措施 - 在 NGINX 上采用对 WordPress 最佳安全的做法。参见 [技巧 8][33]。

|

||||

4.配置 WordPress 多站点 - 如果你使用 WordPress 多站点,在 NGINX 下配置子目录,子域,或多个域的结构。见 [技巧9][34]。

|

||||

1. 添加永久链接的支持 - 让 NGINX 支持永久链接。此步消除了对 **.htaccess** 配置文件的依赖,这是 Apache 特有的。参见 [技巧 4][29]。

|

||||

2. 配置缓存 - 选择一个缓存工具并安装好它。可选择的有 FastCGI cache,W3 Total Cache, WP Super Cache, Hyper Cache, 和 Quick Cache。请看技巧 [5][30]、 [6][31] 和 [7][32]。

|

||||

3. 落实安全防范措施 - 在 NGINX 上采用对 WordPress 最佳安全的做法。参见 [技巧 8][33]。

|

||||

4. 配置 WordPress 多站点 - 如果你使用 WordPress 多站点,在 NGINX 下配置子目录,子域,或多域名架构。见 [技巧9][34]。

|

||||

|

||||

### 技巧 4. 添加支持 NGINX 的链接 ###

|

||||

### 技巧 4. 让 NGINX 支持永久链接 ###

|

||||

|

||||

许多 WordPress 网站依靠 **.htaccess** 文件,此文件依赖 WordPress 的多个功能,包括永久支持,插件和文件缓存。NGINX 不支持 **.htaccess** 文件。幸运的是,你可以使用 NGINX 的简单而全面的配置文件来实现大部分相同的功能。

|

||||

许多 WordPress 网站依赖于 **.htaccess** 文件,此文件为 WordPress 的多个功能所需要,包括永久链接支持、插件和文件缓存。NGINX 不支持 **.htaccess** 文件。幸运的是,你可以使用 NGINX 的简单而全面的配置文件来实现大部分相同的功能。

|

||||

|

||||

你可以在使用 NGINX 的 WordPress 中通过在主 [server][36] 块下添加下面的 location 块中启用 [永久链接][35]。(此 location 块在其他代码示例中也会被包括)。

|

||||

你可以在你的主 [server][36] 块下添加下面的 location 块中为使用 NGINX 的 WordPress 启用 [永久链接][35]。(此 location 块在其它代码示例中也会被包括)。

|

||||

|

||||

**try_files** 指令告诉 NGINX 检查请求的 URL 在根目录下是作为文件(**$uri**)还是目录(**$uri/**),**/var/www/example.com/htdocs**。如果都不是,NGINX 将重定向到 **/index.php**,通过查询字符串参数判断是否作为参数。

|

||||

**try_files** 指令告诉 NGINX 检查请求的 URL 在文档根目录(**/var/www/example.com/htdocs**)下是作为文件(**$uri**)还是目录(**$uri/**) 存在的。如果都不是,NGINX 将重定向到 **/index.php**,并传递查询字符串参数作为参数。

|

||||

|

||||

server {

|

||||

server_name example.com www.example.com;

|

||||

@ -159,17 +158,17 @@ NGINX 处理静态文件的性能也较好,它有内置的,简单的 [缓存

|

||||

|

||||

### 技巧 5. 在 NGINX 中配置 FastCGI ###

|

||||

|

||||

NGINX 可以从 FastCGI 应用程序中缓存响应,如 PHP 响应。此方法可提供最佳的性能。

|

||||

NGINX 可以缓存来自 FastCGI 应用程序的响应,如 PHP 响应。此方法可提供最佳的性能。

|

||||

|

||||

对于开源的 NGINX,第三方模块 [ngx_cache_purge][37] 提供了缓存清除能力,需要手动编译,配置代码如下所示。NGINX Plus 已经包含了此代码的实现。

|

||||

对于开源的 NGINX,编译入第三方模块 [ngx\_cache\_purge][37] 可以提供缓存清除能力,配置代码如下所示。NGINX Plus 已经包含了它自己实现此代码。

|

||||

|

||||

当使用 FastCGI 时,我们建议你安装 [NGINX 辅助插件][38] 并使用下面的配置文件,尤其是要使用 **fastcgi_cache_key** 并且 location 块下要包括 **fastcgi_cache_purge**。当页面被发布或有改变时,甚至有新评论被发布时,该插件会自动清除你的缓存,你也可以从 WordPress 管理控制台手动清除。

|

||||

当使用 FastCGI 时,我们建议你安装 [NGINX 辅助插件][38] 并使用下面的配置文件,尤其是要注意 **fastcgi\_cache\_key** 的使用和包括 **fastcgi\_cache\_purge** 的 location 块。当页面发布或有改变时,有新评论被发布时,该插件会自动清除你的缓存,你也可以从 WordPress 管理控制台手动清除。

|

||||

|

||||

NGINX 的辅助插件还可以添加一个简短的 HTML 代码到你网页的底部,确认缓存是否正常并显示一些统计工作。(你也可以使用 [$upstream_cache_status][39] 确认缓存功能是否正常。)

|

||||

NGINX 的辅助插件还可以在你网页的底部添加一个简短的 HTML 代码,以确认缓存是否正常并显示一些统计数据。(你也可以使用 [$upstream\_cache\_status][39] 确认缓存功能是否正常。)

|

||||

|

||||