mirror of https://github.com/LCTT/TranslateProject.git

synced 2025-03-18 02:00:18 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

commit 4720607372

@ -0,0 +1,199 @@

How to Find Out When a Linux System Was Last Rebooted
======

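How do you find out the date and time your Linux or Unix-like system was last rebooted? How do you display the date and time of the last shutdown? The `last` command lists the sessions of specified users, terminals, and hostnames in reverse chronological order (most recent first), and it can also list the users who were logged in at a specified date and time. Each line of output includes the user name, the session's terminal, the hostname, the session's start and end times, and its duration. To view the reboot and shutdown dates and times of a Linux or Unix-like system, use the following commands:

- `last` command
- `who` command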

### Use the who command to find the system reboot time/date

Run the [who][1] command in a terminal to print who is logged on to the system. `who` can also display the time of the last system boot. To see the date and time of the last boot, run:

```
$ who -b
```

|

||||

|

||||

示例输出:

|

||||

|

||||

```

|

||||

system boot 2017-06-20 17:41

|

||||

```

|

||||

|

||||

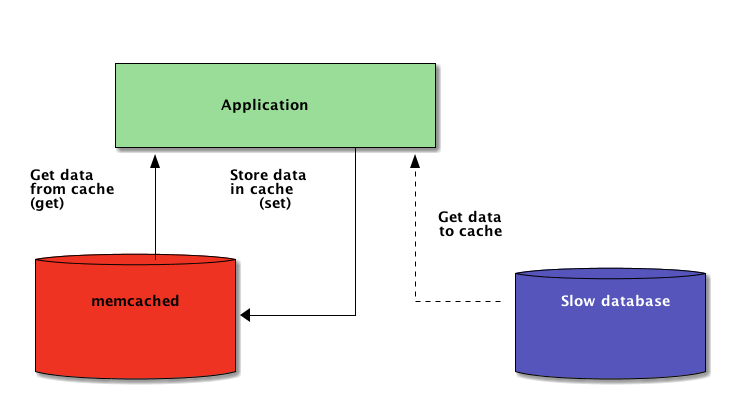

Use the `last` command to display the users who logged in most recently, along with the dates and times of system reboots. Type:

```
$ last reboot | less
```

Sample output:

[![Fig.01: last command in action][2]][2]

Or, try:

```
$ last reboot | head -1
```

Sample output:

```
reboot system boot 4.9.0-3-amd64 Sat Jul 15 19:19 still running
```

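The `last` command searches back through the `/var/log/wtmp` file and displays all users who have logged in (and out) since that file was created. The pseudo-user `reboot` logs in each time the system is rebooted, so `last reboot` shows every reboot recorded since the log file was created.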
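If you only want the timestamp of the most recent boot, a small filter can extract it from that output. The sketch below assumes the classic Linux `last` layout shown in the sample output above, where fields 5 to 8 of a `reboot` line hold the weekday, month, day, and time. `latest_reboot` is a hypothetical helper name. Other `last` implementations, or options such as `--time-format`, may lay the fields out differently.

```sh
# latest_reboot (hypothetical helper): read `last reboot`-style text on
# stdin and print the boot timestamp of the first (most recent) entry.
# Assumes the Linux layout from the sample output above:
#   reboot  system boot  <kernel>  <Wkd> <Mon> <DD> <HH:MM>  ...
latest_reboot() {
    # awk's default field splitting collapses runs of spaces;
    # `exit` stops at the first match, much like `head -1`.
    awk '$1 == "reboot" { print $5, $6, $7, $8; exit }'
}

# Example on a live system (needs a readable /var/log/wtmp):
#   last reboot | latest_reboot
```

Lines that do not start with `reboot`, such as the trailing `wtmp begins ...` line, are ignored.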

### Find out the system's last shutdown date and time

Use the following command to display the date and time of the last shutdown:

```
$ last -x | grep shutdown | head -1
```

Sample output:

```
shutdown system down 2.6.15.4 Sun Apr 30 13:31 - 15:08 (01:37)
```

|

||||

|

||||

命令中,

|

||||

|

||||

* `-x`:显示系统关机和运行等级改变信息

|

||||

|

||||

|

||||

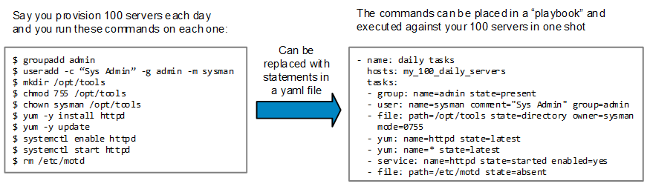

Here are some other `last` command options:

```
$ last
$ last -x
$ last -x reboot
$ last -x shutdown
```

Sample output:

![Fig.01: How to view last Linux System Reboot Date/Time ][3]

### Find out the system uptime

Another command, suggested by readers in the comments, is:

```
$ uptime -s
```

Sample output:

```
2017-06-20 17:41:51
```
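`uptime -s` is provided by procps-ng, so it may be missing on minimal or BusyBox-based systems. A rough fallback on Linux is to subtract the seconds-since-boot counter in `/proc/uptime` from the current time. This is a sketch under stated assumptions, not a copy of `uptime`'s own code. It assumes a Linux `/proc` and GNU `date`; BSD and macOS `date` use `-r EPOCH` instead of `-d @EPOCH`. Because of rounding, the result can differ from `uptime -s` by a second. Both helper names are hypothetical.

```sh
# boot_epoch NOW UPTIME (hypothetical helper): drop the fractional part of
# UPTIME (seconds since boot) and subtract it from NOW (Unix epoch seconds).
boot_epoch() {
    echo $(( $1 - ${2%.*} ))
}

# boot_time (hypothetical helper): print the boot time in the same
# YYYY-MM-DD HH:MM:SS form that `uptime -s` uses.
# Assumes Linux /proc/uptime and GNU date's `-d @EPOCH` syntax.
boot_time() {
    read -r up _ < /proc/uptime    # first field: seconds since boot
    date -d "@$(boot_epoch "$(date +%s)" "$up")" '+%Y-%m-%d %H:%M:%S'
}

# Example:
#   boot_time    # compare with: uptime -s
```

The arithmetic is kept in its own function so it can be checked without depending on the machine's actual uptime.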

### OS X/Unix/FreeBSD commands to find the last reboot and shutdown times

Type the following command in a terminal:

```
$ last reboot
```

Sample output on OS X:

```
reboot ~ Fri Dec 18 23:58
reboot ~ Mon Dec 14 09:54
reboot ~ Wed Dec 9 23:21
reboot ~ Tue Nov 17 21:52
reboot ~ Tue Nov 17 06:01
reboot ~ Wed Nov 11 12:14
reboot ~ Sat Oct 31 13:40
reboot ~ Wed Oct 28 15:56
reboot ~ Wed Oct 28 11:35
reboot ~ Tue Oct 27 00:00
reboot ~ Sun Oct 18 17:28
reboot ~ Sun Oct 18 17:11
reboot ~ Mon Oct 5 09:35
reboot ~ Sat Oct 3 18:57

wtmp begins Sat Oct 3 18:57
```

To view the shutdown date and time, type:

```
$ last shutdown
```

Sample output:

```
shutdown ~ Fri Dec 18 23:57
shutdown ~ Mon Dec 14 09:53
shutdown ~ Wed Dec 9 23:20
shutdown ~ Tue Nov 17 14:24
shutdown ~ Mon Nov 16 21:15
shutdown ~ Tue Nov 10 13:15
shutdown ~ Sat Oct 31 13:40
shutdown ~ Wed Oct 28 03:10
shutdown ~ Sun Oct 18 17:27
shutdown ~ Mon Oct 5 09:23

wtmp begins Sat Oct 3 18:57
```

### How do I find out who rebooted or shut down the machine?

You need to [enable the psacct service and then run the following commands][4] to see previously executed commands, including user names. Type the [lastcomm][5] command in a terminal to view this information:

```
# lastcomm userNameHere
# lastcomm commandNameHere
# lastcomm | more
# lastcomm reboot
# lastcomm shutdown
### Or see both reboot and shutdown times
# lastcomm | grep -E 'reboot|shutdown'
```

Sample output:

```
reboot S X root pts/0 0.00 secs Sun Dec 27 23:49
shutdown S root pts/1 0.00 secs Sun Dec 27 23:45
```

We can see that the root user rebooted the machine from pts/0 at 23:49 local time on Sunday, December 27.

### See also

* For more information, see the man page (`man last`) and the article [How to see historical and statistical uptime on a Linux server with the tuptime command][6].

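### About the author

The author is the creator of nixCraft, a seasoned sysadmin, and a trainer for the Linux operating system and Unix shell scripting. He has worked with clients around the world across many industries, including IT, education, defense and space research, and the nonprofit sector. Follow him on [Twitter][7], [Facebook][8], and [Google+][9].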

--------------------------------------------------------------------------------

via: https://www.cyberciti.biz/tips/linux-last-reboot-time-and-date-find-out.html

Author: [Vivek Gite][a]

Translator: [amwps290](https://github.com/amwps290)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.cyberciti.biz/
[1]:https://www.cyberciti.biz/faq/unix-linux-who-command-examples-syntax-usage/ "See Linux/Unix who command examples for more info"
[2]:https://www.cyberciti.biz/tips/wp-content/uploads/2006/04/last-reboot.jpg
[3]:https://www.cyberciti.biz/media/new/tips/2006/04/check-last-time-system-was-rebooted.jpg
[4]:https://www.cyberciti.biz/tips/howto-log-user-activity-using-process-accounting.html
[5]:https://www.cyberciti.biz/faq/linux-unix-lastcomm-command-examples-usage-syntax/ "See Linux/Unix lastcomm command examples for more info"
[6]:https://www.cyberciti.biz/hardware/howto-see-historical-statistical-uptime-on-linux-server/
[7]:https://twitter.com/nixcraft
[8]:https://facebook.com/nixcraft
[9]:https://plus.google.com/+CybercitiBiz

@ -1,10 +1,11 @@

My Experience Live Coding on Twitch
============================================================

I did my first livestream last July. Unlike most people on Twitch, who stream games, I wanted to stream the open source work I do in my spare time. I do a fair amount of work on NodeJS hardware libraries, most of which I taught myself. Since I already had a spot on Twitch, why not set up an even smaller, more specialized one, such as JavaScript-powered hardware ;) I signed up for [my own channel][1], and I have been streaming regularly ever since.

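I'm certainly not the first to do this. [Handmade Hero][2] was one of the first live programming streams I watched. Vlambeer soon popularized this style of streaming with its [Nuclear Throne live][3] streams on Twitch. I was especially fascinated by Vlambeer.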

My friend [Nolan Lawson][4] is the one who got me to _actually do_ this, rather than just _want to do_ it. I watched him [livestream his open source work on the weekends][5], and it was great. He explained everything he was doing. Yes, everything: replying to GitHub issues, triaging bugs, debugging code in branches, you name it. This fascinated me, because Nolan's open source libraries are widely used. His open source life is completely different from mine.

You can even see the comment I left under his video:

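@ -14,27 +15,27 @@

The handful of viewers I had that Saturday encouraged me a great deal, so I kept going. I now have over a thousand followers, and some of them have formed a lovely little community that watches my streams regularly. I call them the "noopkat family".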

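We have a lot of fun. I like to call the live coding part "massively multiplayer online pair programming". I'm genuinely touched by the kindness and talent of every one of them. Once, a community member pointed out that my Arduino board wasn't working with my software because the chip was missing from the board. That was one of the funniest moments ever.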

I often pause the stream to check my inbox and see whether anyone has opened a pull request for work I've mentioned but no longer have time to finish. Thank you to my Twitch community for all the help and encouragement.

I'd love to talk about everything streaming on Twitch has done for me, but there's too much to cover here, so I'll save it for my next blog post. What I want to share here are the lessons I've learned about doing live coding yourself. Several developers have recently asked me how to start streaming, so I'm sharing the advice I gave them!

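First, here's a guide that helped me a lot: ["Streaming and Finding Success on Twitch"][7]. It focuses on Twitch and game streaming, but much of it applies to what we're doing too. I recommend reading it first and then thinking about the details of setting up your channel, such as choosing your hardware and software.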

Below is my own setup. It grew out of plenty of trial and error, with thanks to the wisdom and advice of my fellow streamers. (Yes, you know who you are!)

### Software

There's plenty of free streaming software out there. I use [Open Broadcaster Software (OBS)][8], which runs on most platforms. I found it intuitive and easy to get started with, but the more advanced features take a while to learn. It pays off once you do! Here's a screenshot of my OBS desktop from today's stream:

While streaming, you switch between different "scenes". A scene is a collection of "sources" stacked and composited together. A source can be a camera, a microphone, your desktop, a web page, live text, an image, and so on. OBS is a very powerful piece of software.

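The desktop scene at the top is my coding environment, and it's where I spend most of my time while streaming. I use iTerm and vim, and keep a browser window handy that I can switch to for looking up documentation and triaging things on GitHub.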

The black rectangle at the bottom is my webcam, which gives people a more personal connection while they watch me work.

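My scenes contain a few "labels", many of which relate to status or the top bar. The top bar just adds personality, and it is a nice source of continuity during the stream. It's an image I made in [GIMP][9], loaded into the scene as a source. Some labels are dynamic content pulled from text files, such as my latest follower. Another is a [custom one I made][10] that shows the live temperature and humidity of the room I stream from.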

@ -62,7 +63,7 @@

### Hardware

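I started out with cheap gear and only gradually replaced it with better equipment once I realized I was going to stick with streaming for the long term. When you're starting out, use whatever you already have, even if that's just your computer's built-in camera and microphone.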

I now use a Logitech Pro C920 webcam and a Blue Yeti microphone on a boom arm. It was money well spent: the quality of my stream is completely different now.

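@ -116,7 +117,7 @@

When you're just starting out, it will feel strange and uncomfortable. You'll be nervous with people watching you write code. That's normal! Even though I had done public speaking before, I felt awkward and out of place at first. I felt like I had nowhere to hide, and that scared me. I thought, "Everyone probably thinks my code is terrible and that I'm a terrible developer." That thought has followed me through my _entire career_, so it's nothing new to me. I knew that on stream I couldn't quietly double-check my code before pushing it to GitHub, which is usually the safer way to protect my reputation as a developer.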

Streaming on Twitch has taught me a lot about my coding style. I now know that my style is definitely "make it work, then make it readable, then make it fast". I no longer rehearse a stream the night before (I did for the first three or four), so the code I write on Twitch is pretty rough, and I still have to make sure it runs. When I'm not following the chat and discussion, I can write my best code, and that's fine. But I always forget the names of methods I've used countless times, and I make "silly" mistakes in every stream. Generally speaking, it's not an environment in which you'll be at your most productive.

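My Twitch community has never been hard on me about this. On the contrary, they've helped me a lot. They understand that I'm juggling several things at once, and they offer plenty of practical advice and suggestions. Sometimes they help me find a solution, and sometimes I have to explain why their suggestion doesn't fit the problem. It really is a lot like regular pair programming!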

@ -128,7 +129,7 @@

If you'd like to join my streams on Sundays, you can [subscribe to my Twitch channel][13] :)

Finally, I'd like to personally thank [Mattias Johansson][14] for his advice and encouragement when I was starting out. His [FunFunFunction YouTube channel][15] is also an inspiring channel with regular streams.

P.S. Many people have asked what my keyboard and other gear look like, so [here's the full list of the equipment I use][16]. Thanks for reading!

@ -136,9 +137,9 @@

via: https://medium.freecodecamp.org/lessons-from-my-first-year-of-live-coding-on-twitch-41a32e2f41c1

Author: [Suz Hinton][a]

Translator: [lonaparte](https://github.com/lonaparte)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

103

published/20180131 Why you should use named pipes on Linux.md

Normal file

@ -0,0 +1,103 @@

Why You Should Use Named Pipes on Linux
======

> Named pipes aren't used very often, but they offer some interesting features for inter-process communication.

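Just about every Linux user is familiar with using the "|" symbol to pass data from one process to another. It makes it easy to send the output of one command to another and filter out just the data you want, without writing a script to do the selecting, reformatting, and so on.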

There is another kind of pipe, though. It shares the name "pipe" but has very different properties, and you may never have used it or even heard of it: the named pipe.

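One of the key differences between regular pipes and named pipes is that named pipes actually exist in the file system. That's right: they show up as files. But unlike other files, a named pipe never seems to have any contents. Even if you write a lot of data into a named pipe, the file still looks empty.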

### How to create a named pipe on Linux

Before we dig into these empty named pipes, let's go back to the beginning and look at how named pipes are created. You create them with a command called `mkfifo`. Why "FIFO"? Because a named pipe is also known as a FIFO special file. The term "FIFO" refers to its first-in, first-out behavior. If you pile ice cream into a dish and then eat it, you're performing a LIFO (last-in, first-out) operation. If you drink a milkshake through a straw, you're performing a FIFO operation. Now, here's an example of creating a named pipe:

```
$ mkfifo mypipe
$ ls -l mypipe
prw-r-----. 1 shs staff 0 Jan 31 13:59 mypipe
```

Note the special file type marker "p" and the file size of 0. You can redirect data into a named pipe, and the file size will still be 0.
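In a script, you can check for a named pipe without opening it. This matters because opening one can block. The sketch below uses the standard `test` operators `-p` (the path is a FIFO) and `-s` (the size is greater than zero). `is_empty_fifo` is a hypothetical helper name, and the temporary directory only keeps the demo self-contained.

```sh
# is_empty_fifo PATH (hypothetical helper): succeed if PATH is a named pipe
# whose reported size is 0. test(1) only inspects the file's metadata, so
# this never opens the pipe and therefore never blocks.
is_empty_fifo() {
    [ -p "$1" ] && [ ! -s "$1" ]
}

# Self-contained demo in a throwaway directory.
demo_dir=$(mktemp -d)
mkfifo "$demo_dir/mypipe"
: > "$demo_dir/regular"    # an ordinary, empty file for contrast
is_empty_fifo "$demo_dir/mypipe"  && echo "mypipe: FIFO, size 0"
is_empty_fifo "$demo_dir/regular" || echo "regular: not a FIFO"
rm -r "$demo_dir"
```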

```
$ echo "Can you read this?" > mypipe
```

After you press Enter, nothing seems to happen (LCTT translator's note: the command prompt does not come back).

Open another terminal and check the size of the named pipe. It is still 0:

```
$ ls -l mypipe
prw-r-----. 1 shs staff 0 Jan 31 13:59 mypipe
```

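Perhaps counterintuitively, the text you typed is headed into the named pipe, but you're still stuck on the input end. You, or someone else, needs to be waiting at the output end, ready to read the data put into the pipe. Let's read it now:

```
$ cat mypipe
Can you read this?
```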

Once it has been read, the contents of the pipe are gone.

Another way to see how a named pipe works is to do both operations yourself (put data into the pipe and read it from the other end) by running the writing step in the background:

```
$ echo "Can you read this?" > mypipe &
[1] 79302
$ cat mypipe
Can you read this?
[1]+ Done echo "Can you read this?" > mypipe
```

Once a pipe has been read, or "drained", it is empty, though we can still see it and use it again. But why go to all this trouble?

### Why use named pipes?

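There seem to be good reasons why named pipes are rarely used. After all, on Unix systems there are always several ways to do the same thing. There are many ways to write to a file, read a file, and empty a file, though named pipes have an efficiency edge over them.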

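It's worth noting that the contents of a named pipe reside in memory rather than being written to disk. Data is passed only when both the input and the output ends are open. You can write to a pipe multiple times before its output end is opened. With named pipes, you can set up a flow in which one process writes to the pipe and another reads from it, without worrying about synchronizing their timing.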
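The sketch below runs both ends of the exchange from one script so you can watch the rendezvous described above. The background writer cannot finish opening the pipe until the reader opens the other end, and the reader sees end-of-file once the writer closes. The function name `fifo_demo` and the temporary directory are illustrative assumptions, not part of the original article.

```sh
# fifo_demo (hypothetical helper): one writer and one reader connected by a
# named pipe in a private temporary directory.
fifo_demo() {
    dir=$(mktemp -d) || return 1
    pipe="$dir/mypipe"
    mkfifo "$pipe" || { rm -r "$dir"; return 1; }

    # Writer, in the background: opening the FIFO for writing blocks until
    # some process opens it for reading.
    printf '%s\n' "Can you read this?" "Yes, I can." > "$pipe" &

    # Reader: consumes lines until the writer closes its end (end-of-file).
    while IFS= read -r line; do
        printf 'got: %s\n' "$line"
    done < "$pipe"

    wait          # reap the background writer
    rm -r "$dir"  # removes the FIFO node; no data was stored on disk
}

# Example:
#   fifo_demo
```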

You can create a process that simply waits for data to appear at the output end of the pipe and then acts on it once it arrives. In the following command, we use `tail` to wait for data:

```
$ tail -f mypipe
```

Once the process feeding the pipe has finished, we see some output:

```
$ tail -f mypipe
Uranus replicated to WCDC7
Saturn replicated to WCDC8
Pluto replicated to WCDC9
Server replication operation completed
```
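`tail -f` is one way to keep a reader waiting on the pipe. Another is a small read loop, sketched below. An ordinary reader gets end-of-file as soon as the last writer closes, so this sketch opens the pipe read-write on file descriptor 3. The reader then counts as a writer too, so it keeps waiting across any number of separate writers until it sees a `quit` line. Opening a FIFO read-write works on Linux, but POSIX leaves it undefined. The `fifo_logger` name and the `quit` sentinel are assumptions made for this example.

```sh
# fifo_logger PIPE (hypothetical helper): print every line written to PIPE,
# from any number of separate writers, until a line reading "quit" arrives.
fifo_logger() {
    # Open read-write on fd 3 (works on Linux; undefined in POSIX). Holding
    # a write end ourselves means a writer closing never causes end-of-file.
    exec 3<>"$1"
    while IFS= read -r line <&3; do
        [ "$line" = "quit" ] && break
        printf 'log: %s\n' "$line"
    done
    exec 3<&-    # close fd 3; anything still buffered in the pipe is dropped
}

# Example, with three independent writers feeding one long-running reader:
#   mkfifo mypipe
#   fifo_logger mypipe &
#   echo "Uranus replicated to WCDC7" > mypipe
#   echo "Saturn replicated to WCDC8" > mypipe
#   echo quit > mypipe
```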

If you look at the process that's writing to the named pipe, you may be surprised by how few resources it uses. In the `ps` output below, the only significant resource use is virtual memory (the VSZ column):

```
$ ps u -P 80038
USER PID %CPU %MEM VSZ RSS TTY STAT START TIME COMMAND
shs 80038 0.0 0.0 108488 764 pts/4 S 15:25 0:00 -bash
```

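Named pipes are different enough from the more common Unix/Linux pipes to warrant a different name, but "pipe" does capture the image of how they move data between processes, so "named pipe" is a fitting name. Perhaps you'll benefit from this clever Unix/Linux feature in your own work.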

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3251853/linux/why-use-named-pipes-on-linux.html

Author: [Sandra Henry-Stocker][a]

Translator: [YPBlib](https://github.com/YPBlib)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.networkworld.com/author/Sandra-Henry_Stocker/
[1]:http://www.networkworld.com/article/2926630/linux/11-pointless-but-awesome-linux-terminal-tricks.html#tk.nww-fsb

@ -1,3 +1,5 @@

Being translated by XLCYun

Manjaro Gaming: Gaming on Linux Meets Manjaro’s Awesomeness
======

[![Meet Manjaro Gaming, a Linux distro designed for gamers with the power of Manjaro][1]][1]

95

sources/talk/20180201 IT automation- How to make the case.md

Normal file

@ -0,0 +1,95 @@

IT automation: How to make the case
======

At the start of any significant project or change initiative, IT leaders face a proverbial fork in the road.

Path #1 might seem to offer the shortest route from A to B: Simply force-feed the project to everyone by executive mandate, essentially saying, “You’re going to do this – or else.”

Path #2 might appear less direct, because on this journey you take the time to explain the strategy and the reasons behind it. In fact, you’re going to be making pit stops along this route, rather than marathoning from start to finish: “Here’s what we’re doing – and why we’re doing it.”

Guess which path bears better results?

If you said #2, you’ve traveled both paths before – and experienced the results first-hand. Getting people on board with major changes beforehand is almost always the smarter choice.

IT leaders know as well as anyone that with significant change often comes [significant fear][1], skepticism, and other challenges. It may be especially true with IT automation. The term alone sounds scary to some people, and it is often tied to misconceptions. Helping people understand the what, why, and how of your company’s automation strategy is a necessary step to achieving your goals associated with that strategy.

[ **Read our related article,** [**IT automation best practices: 7 keys to long-term success**][2]. ]

With that in mind, we asked a variety of IT leaders for their advice on making the case for automation in your organization:

## 1. Show people what’s in it for them

Let’s face it: Self-interest and self-preservation are natural instincts. Tapping into that human tendency is a good way to get people on board: Show people how your automation strategy will benefit them and their jobs. Will automating a particular process in the software pipeline mean fewer middle-of-the-night calls for team members? Will it enable some people to dump low-skill, manual tasks in favor of more strategic, higher-order work – the sort that helps them take the next step in their career?

“Convey what’s in it for them, and how it will benefit clients and the whole company,” advises Vipul Nagrath, global CIO at [ADP][3]. “Compare the current state to a brighter future state, where the company enjoys greater stability, agility, efficiency, and security.”

The same approach holds true when making the case outside of IT; just lighten up on the jargon when explaining the benefits to non-technical stakeholders, Nagrath says.

Setting up a before-and-after picture is a good storytelling device for helping people see the upside.

“You want to paint a picture of the current state that people can relate to,” Nagrath says. “Present what’s working, but also highlight what’s causing teams to be less than agile.” Then explain how automating certain processes will improve that current state.

## 2. Connect automation to specific business goals

Part of making a strong case entails making sure people understand that you’re not just trend-chasing. If you’re automating simply for the sake of automating, people will sniff that out and become more resistant – perhaps especially within IT.

“The case for automation needs to be driven by a business demand signal, such as revenue or operating expense,” says David Emerson, VP and deputy CISO at [Cyxtera][4]. “No automation endeavor is self-justifying, and no technical feat, generally, should be a means unto itself, unless it’s a core competency of the company.”

Like Nagrath, Emerson recommends promoting the incentives associated with achieving the business goals of automation, and working toward these goals (and corresponding incentives) in an iterative, step-by-step fashion.

## 3. Break the automation plan into manageable pieces

Even if your automation strategy is literally “automate everything,” that’s a tough sell (and probably unrealistic) for most organizations. You’ll make a stronger case with a plan that approaches automation manageable piece by manageable piece, and that enables greater flexibility to adapt along the way.

“When making a case for automation, I recommend clearly illustrating the incentive to move to an automated process, and allowing iteration toward that goal to introduce and prove the benefits at lower risk,” Emerson says.

Sergey Zuev, founder at [GA Connector][5], shares an in-the-trenches account of why automating incrementally is crucial – and how it will help you build a stronger, longer-lasting argument for your strategy. Zuev should know: His company’s tool automates the import of data from CRM applications into Google Analytics. But it was actually the company’s internal experience automating its own customer onboarding process that led to a lightbulb moment.

“At first, we tried to build the whole onboarding funnel at once, and as a result, the project dragged [on] for months,” Zuev says. “After realizing that it [was] going nowhere, we decided to select small chunks that would have the biggest immediate effect, and start with that. As a result, we managed to implement one of the email sequences in just a week, and are already reaping the benefits of the decreased manual effort.”

## 4. Sell the big-picture benefits too

A step-by-step approach does not preclude painting a bigger picture. Just as it’s a good idea to make the case at the individual or team level, it’s also a good idea to help people understand the company-wide benefits.

Eric Kaplan, CTO at [AHEAD][6], agrees that using small wins to show automation’s value is a smart strategy for winning people over. But the value those so-called “small” wins reveal can actually help you sharpen the big picture for people. Kaplan points to the value of individual and organizational time as an area everyone can connect with easily.

“The best place to do this is where you can show savings in terms of time,” Kaplan says. “If we can accelerate the time it takes for the business to get what it needs, it will silence the skeptics.”

Time and scalability are powerful benefits that business and IT colleagues, both charged with growing the business, can grasp.

“The result of automation is scalability – less effort per person to maintain and grow your IT environment,” as [Red Hat][7] VP, Global Services John Allessio recently [noted][8]. “If adding manpower is the only way to grow your business, then scalability is a pipe dream. Automation reduces your manpower requirements and provides the flexibility required for continued IT evolution.” (See his full article, [What DevOps teams really need from a CIO][8].)

## 5. Promote the heck out of your results

At the outset of your automation strategy, you’ll likely be making the case based on goals and the anticipated benefits of achieving those goals. But as your automation strategy evolves, there’s no case quite as convincing as one grounded in real-world results.

“Seeing is believing,” says Nagrath, ADP’s CIO. “Nothing quiets skeptics like a track record of delivery.”

That means, of course, not only achieving your goals, but also doing so on time – another good reason for the iterative, step-by-step approach.

While quantitative results such as percentage improvements or cost savings can speak loudly, Nagrath advises his fellow IT leaders not to stop there when telling their automation story.

“Making a case for automation is also a qualitative discussion, where we can promote the issues prevented, overall business continuity, reductions in failures/errors, and associates taking on [greater] responsibility as they tackle more value-added tasks.”

--------------------------------------------------------------------------------

via: https://enterprisersproject.com/article/2018/1/how-make-case-it-automation

Author: [Kevin Casey][a]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://enterprisersproject.com/user/kevin-casey
[1]:https://enterprisersproject.com/article/2017/10/how-beat-fear-and-loathing-it-change
[2]:https://enterprisersproject.com/article/2018/1/it-automation-best-practices-7-keys-long-term-success?sc_cid=70160000000h0aXAAQ
[3]:https://www.adp.com/
[4]:https://www.cyxtera.com/
[5]:http://gaconnector.com/
[6]:https://www.thinkahead.com/
[7]:https://www.redhat.com/en?intcmp=701f2000000tjyaAAA
[8]:https://enterprisersproject.com/article/2017/12/what-devops-teams-really-need-cio
[9]:https://enterprisersproject.com/email-newsletter?intcmp=701f2000000tsjPAAQ

@ -0,0 +1,108 @@

Open source is 20: How it changed programming and business forever
======

![][1]

Every company in the world now uses open-source software. Microsoft, once its greatest enemy, is [now an enthusiastic open supporter][2]. Even [Windows is now built using open-source techniques][3]. And if you ever searched on Google, bought a book from Amazon, watched a movie on Netflix, or looked at your friend's vacation pictures on Facebook, you're an open-source user. Not bad for a technology approach that turns 20 on February 3.

Now, free software has been around since the first computers, but the philosophies of free software and open source are both much newer. In the 1970s and 80s, companies rose up that sought to profit by making proprietary software. In the nascent PC world, no one even knew about free software. But on the Internet, which was dominated by Unix and ITS systems, it was a different story.

In the late 70s, [Richard M. Stallman][6], also known as RMS, then an MIT programmer, created a free printer utility based on the printer's source code. But then a new laser printer arrived on the campus, and he found he could no longer get the source code, so he couldn't recreate the utility. Angered, [RMS created the concept of "Free Software."][7]

RMS's goal was to create a free operating system, [Hurd][8]. To make this happen, in September 1983 [he announced the creation of the GNU project][9] (GNU stands for GNU's Not Unix -- a recursive acronym). By January 1984, he was working full-time on the project. To help build it, he created the grandfather of all free software/open-source compiler systems, [GCC][10], along with other operating system utilities. Early in 1985, he published "[The GNU Manifesto][11]," which was the founding charter of the free software movement, and launched the [Free Software Foundation (FSF)][12].

This went well for a few years, but inevitably, [RMS collided with proprietary companies][13]. The company Unipress took the code to a variation of his [EMACS][14] programming editor and turned it into a proprietary program. RMS never wanted that to happen again, so he created the [GNU General Public License (GPL)][15] in 1989. This was the first copyleft license. It gave users the right to use, copy, distribute, and modify a program's source code. But if you make source code changes and distribute the program to others, you must share the modified code. While there had been earlier free licenses, such as [1980's four-clause BSD license][16], the GPL was the one that sparked the free-software, open-source revolution.

In 1997, [Eric S. Raymond][17] published his vital essay, "[The Cathedral and the Bazaar][18]." In it, he showed the advantages of the free-software development methodologies using GCC, the Linux kernel, and his experiences with his own [Fetchmail][19] project as examples. This essay did more than show the advantages of free software. The programming principles he described led the way for both [Agile][20] development and [DevOps][21]. Twenty-first century programming owes a large debt to Raymond.

Like all revolutions, free software quickly divided its supporters. On one side, as John Mark Walker, open-source expert and Strategic Advisor at Glyptodon, recently wrote, "[Free software is a social movement][22], with nary a hint of business interests -- it exists in the realm of religion and philosophy. Free software is a way of life with a strong moral code."

On the other were numerous people who wanted to bring "free software" to business. They would become the founders of "open source." They argued that such phrases as "Free as in freedom" and "Free speech, not beer," left most people confused about what that really meant for software.

The [release of the Netscape web browser source code][23] sparked a meeting of free software leaders and experts at [a strategy session held on February 3rd][24], 1998 in Palo Alto, CA. There, Eric S. Raymond, Michael Tiemann, Todd Anderson, Jon "maddog" Hall, Larry Augustin, Sam Ockman, and Christine Peterson hammered out the first steps to open source.

Peterson coined the term "open source." She remembered:

> [The introduction of the term "open source software" was a deliberate effort][25] to make this field of endeavor more understandable to newcomers and to business, which was viewed as necessary to its spread to a broader community of users. The problem with the main earlier label, "free software," was not its political connotations, but that -- to newcomers -- its seeming focus on price is distracting. A term was needed that focuses on the key issue of source code and that does not immediately confuse those new to the concept. The first term that came along at the right time and fulfilled these requirements was rapidly adopted: open source.

To help clarify what open source was, and wasn't, Raymond and Bruce Perens founded the [Open Source Initiative (OSI)][26]. Its purpose was, and still is, to define what are real open-source software licenses and what aren't.

Stallman was enraged by open source. He wrote:

> The two terms describe almost the same method/category of software, but they stand for [views based on fundamentally different values][27]. Open source is a development methodology; free software is a social movement. For the free software movement, free software is an ethical imperative, essential respect for the users' freedom. By contrast, the philosophy of open source considers issues in terms of how to make software 'better' -- in a practical sense only. It says that non-free software is an inferior solution to the practical problem at hand. Most discussion of "open source" pays no attention to right and wrong, only to popularity and success.

He saw open source as kowtowing to business and taking the focus away from the personal freedom of being able to have free access to the code. Twenty years later, he's still angry about it.

|

||||

|

||||

In a recent e-mail to me, Stallman said, it is a "common error is connecting me or my work or free software in general with the term 'Open Source.' That is the slogan adopted in 1998 by people who reject the philosophy of the Free Software Movement." In another message, he continued, "I rejected 'open source' because it was meant to bury the "free software" ideas of freedom. Open source inspired the release ofu seful free programs, but what's missing is the idea that users deserve control of their computing. We libre-software activists say, 'Software you can't change and share is unjust, so let's escape to our free replacement.' Open source says only, 'If you let users change your code, they might fix bugs.' What it does says is not wrong, but weak; it avoids saying the deeper point."

Philosophical conflicts aside, open source has indeed become the model for practical software development. Larry Augustin, CEO of [SugarCRM][28], the open-source customer relationship management (CRM) Software-as-a-Service (SaaS) company, was one of the first to practice open source in a commercial software business. Augustin showed that a successful business could be built on open-source software.

Other companies quickly embraced this model. Besides Linux companies such as [Canonical][29], [Red Hat][30], and [SUSE][31], technology businesses such as [IBM][32] and [Oracle][33] also adopted it. This, in turn, led to open source's commercial success. More recently, companies you would never think of as open-source businesses, like [Wal-Mart][34] and [Verizon][35], now rely on open-source programs and have their own open-source projects.

As Jim Zemlin, director of [The Linux Foundation][36], observed in 2014:

> A [new business model][37] has emerged in which companies are joining together across industries to share development resources and build common open-source code bases on which they can differentiate their own products and services.

Looking back today, Hall said, "I look at 'closed source' as a blip in time." Raymond is unsurprised at open source's success. In an e-mail interview, Raymond said, "Oh, yeah, it *has* been 20 years -- and that's not a big deal because we won most of the fights we needed to quite a while ago, like in the first decade after 1998."

"Ever since," he continued, "we've been mainly dealing with the problems of success rather than those of failure. And a whole new class of issues, like IoT devices without upgrade paths -- doesn't help so much for the software to be open if you can't patch it."

In other words, he concludes, "The reward of victory is often another set of battles."

These are battles that open source is poised to win. Jim Whitehurst, Red Hat's CEO and president, told me:

> The future of open source is bright. We are on the cusp of a new wave of innovation that will come about because information is being separated from physical objects thanks to the Internet of Things. Over the next decade, we will see entire industries based on open-source concepts, like the sharing of information and joint innovation, become mainstream. We'll see this impact every sector, from non-profits, like healthcare, education and government, to global corporations who realize sharing information leads to better outcomes. Open and participative innovation will become a key part of increasing productivity around the world.

Others see open source extending beyond software development methods. Nick Hopman, Red Hat's senior director of emerging technology practices, said:

> Open-source is much more than just a process to develop and expose technology. Open-source is a catalyst to drive change in every facet of society -- government, policy, medical diagnostics, process re-engineering, you name it -- and can leverage open principles that have been perfected through the experiences of open-source software development to create communities that drive change and innovation. Looking forward, open-source will continue to drive technology innovation, but I am even more excited to see how it changes the world in ways we have yet to even consider.

Indeed. Open source has turned twenty, but its influence, and not just on software and business, will continue for decades to come.

--------------------------------------------------------------------------------

via: http://www.zdnet.com/article/open-source-turns-20/

Author: [Steven J. Vaughan-Nichols][a]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.zdnet.com/meet-the-team/us/steven-j-vaughan-nichols/
[1]:https://zdnet1.cbsistatic.com/hub/i/r/2018/01/08/d9527281-2972-4cb7-bd87-6464d8ad50ae/thumbnail/570x322/9d4ef9007b3a3ce34de0cc39d2b15b0c/5a4faac660b22f2aba08fc3f-1280x7201jan082018150043poster.jpg
[2]:http://www.zdnet.com/article/microsoft-the-open-source-company/
[3]:http://www.zdnet.com/article/microsoft-uses-open-source-software-to-create-windows/
[4]:https://zdnet1.cbsistatic.com/hub/i/r/2016/11/18/a55b3c0c-7a8e-4143-893f-44900cb2767a/resize/220x165/6cd4e37b1904743ff1f579cb10d9e857/linux-open-source-money-penguin.jpg
[5]:http://www.zdnet.com/article/how-do-linux-and-open-source-companies-make-money-from-free-software/
[6]:https://stallman.org/
[7]:https://opensource.com/article/18/2/pivotal-moments-history-open-source
[8]:https://www.gnu.org/software/hurd/hurd.html
[9]:https://groups.google.com/forum/#!original/net.unix-wizards/8twfRPM79u0/1xlglzrWrU0J
[10]:https://gcc.gnu.org/
[11]:https://www.gnu.org/gnu/manifesto.en.html
[12]:https://www.fsf.org/
[13]:https://www.free-soft.org/gpl_history/
[14]:https://www.gnu.org/s/emacs/
[15]:https://www.gnu.org/licenses/gpl-3.0.en.html
[16]:http://www.linfo.org/bsdlicense.html
[17]:http://www.catb.org/esr/
[18]:http://www.catb.org/esr/writings/cathedral-bazaar/
[19]:http://www.fetchmail.info/
[20]:https://www.agilealliance.org/agile101/
[21]:https://aws.amazon.com/devops/what-is-devops/
[22]:https://opensource.com/business/16/11/open-source-not-free-software?sc_cid=70160000001273HAAQ
[23]:http://www.zdnet.com/article/the-beginning-of-the-peoples-web-20-years-of-netscape/
[24]:https://opensource.org/history
[25]:https://opensource.com/article/18/2/coining-term-open-source-software
[26]:https://opensource.org
[27]:https://www.gnu.org/philosophy/open-source-misses-the-point.html
[28]:https://www.sugarcrm.com/
[29]:https://www.canonical.com/
[30]:https://www.redhat.com/en
[31]:https://www.suse.com/
[32]:https://developer.ibm.com/code/open/
[33]:http://www.oracle.com/us/technologies/open-source/overview/index.html
[34]:http://www.zdnet.com/article/walmart-relies-on-openstack/
[35]:https://www.networkworld.com/article/3195490/lan-wan/verizon-taps-into-open-source-white-box-fervor-with-new-cpe-offering.html
[36]:http://www.linuxfoundation.org/
[37]:http://www.zdnet.com/article/it-takes-an-open-source-village-to-make-commercial-software/

@ -1,3 +1,4 @@
##Name1e5s Translating##
Open source software: 20 years and counting
============================================================

@ -1,160 +0,0 @@
Linux Find Out Last System Reboot Time and Date Command
======

So, how do you find out when your Linux or UNIX-like system was last rebooted? How do you display the system shutdown date and time? The last utility either lists the sessions of specified users, ttys, and hosts in reverse time order, or lists the users logged in at a specified date and time. Each line of output contains the user name, the tty from which the session was conducted, any hostname, the start and stop times for the session, and the duration of the session. To view the Linux or Unix system reboot and shutdown date and time stamps, use the following commands:

* last command
* who command

### Use who command to find last system reboot time/date

You need to use the [who command][1] to print who is logged on. It also displays the time of the last system boot. To display the date and time of the last system boot, run:

`$ who -b`

Sample outputs:

```
system boot 2017-06-20 17:41
```
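
If you only need the boot timestamp itself, for example inside a script, awk can strip the `system boot` label. This is a minimal sketch that assumes the `who -b` layout shown above (two label words followed by a date and a time); the function name `last_boot_from_who` is hypothetical.

```shell
# Hypothetical helper: read `who -b` output on stdin and print only
# the date and time fields, e.g. "2017-06-20 17:41".
last_boot_from_who() {
    # Fields 1-2 are "system" and "boot"; fields 3-4 are the date and time.
    awk '$1 == "system" && $2 == "boot" { print $3, $4 }'
}

# Usage on a live system:
#   who -b | last_boot_from_who
```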

Use the last command to display a listing of recently logged-in users and the system's reboot dates and times. For reboots only, enter:

`$ last reboot | less`

Sample outputs:

[![Fig.01: last command in action][2]][2]

Or, better yet, try:

`$ last reboot | head -1`

Sample outputs:

```
reboot system boot 4.9.0-3-amd64 Sat Jul 15 19:19 still running
```

The last command searches back through the file /var/log/wtmp and displays a list of all users logged in (and out) since that file was created. The pseudo user reboot logs in each time the system is rebooted. Thus, the `last reboot` command shows a log of all reboots since the log file was created.
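
Since the reboot pseudo user logs in on every boot, counting its entries gives the number of reboots recorded in wtmp. A minimal sketch, assuming each reboot line starts with the word `reboot` as in the sample above; the function name `count_reboots` is hypothetical, and the rotated file name `/var/log/wtmp.1` in the usage comment is only an example that may not exist on your system.

```shell
# Hypothetical helper: count lines of `last` output (on stdin) whose
# first field is exactly "reboot"; prints 0 when there are none.
count_reboots() {
    awk '$1 == "reboot" { n++ } END { print n + 0 }'
}

# Usage on a live system:
#   last reboot | count_reboots
# An older, rotated log (if present) can be read with last's -f option:
#   last -f /var/log/wtmp.1 reboot | count_reboots
```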

### Finding a system's last shutdown date and time

To display the last shutdown date and time, use the following command:

`$ last -x | grep shutdown | head -1`

Sample outputs:

```
shutdown system down 2.6.15.4 Sun Apr 30 13:31 - 15:08 (01:37)
```

Where,

* **-x** : Display the system shutdown entries and run level changes.
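
Note that `grep shutdown` matches the word anywhere on a line, so it could also match, for example, a host name that contains "shutdown". A slightly stricter sketch, assuming the first field of each `last -x` line names the entry type as in the sample above, compares only that field and stops at the first (most recent) match. The function name `latest_entry` is hypothetical.

```shell
# Hypothetical helper: print the most recent line of `last -x` output (stdin)
# whose first field equals the given entry type, e.g. "shutdown" or "reboot".
latest_entry() {
    # last lists the newest entries first, so the first match is the latest.
    awk -v type="$1" '$1 == type { print; exit }'
}

# Usage on a live system:
#   last -x | latest_entry shutdown
#   last -x | latest_entry reboot
```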

Here are a few more ways to use the last command:

```
$ last
$ last -x
$ last -x reboot
$ last -x shutdown
```

Sample outputs:

![Fig.01: How to view last Linux System Reboot Date/Time ][3]

### Find out Linux system up since…

Another option, suggested by readers, is to run the following command:

`$ uptime -s`

Sample outputs:

```
2017-06-20 17:41:51
```
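
On Linux, the "up since" time is essentially the current time minus the number of seconds since boot, which the kernel exposes as the first field of /proc/uptime. A minimal sketch of that arithmetic, assuming GNU `date` (for `-d @SECONDS`); the function name `boot_time` is hypothetical, and its result may differ from `uptime -s` by a second because the fractional part of the uptime is dropped.

```shell
# Hypothetical helper: print the boot time given the current epoch time ($1)
# and the uptime in whole seconds ($2). Requires GNU date for "-d @N".
boot_time() {
    date -d "@$(( $1 - $2 ))" '+%Y-%m-%d %H:%M:%S'
}

# Usage on a live Linux system (cut drops the fractional seconds):
#   boot_time "$(date +%s)" "$(cut -d. -f1 /proc/uptime)"
```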

### OS X/Unix/FreeBSD find out last reboot and shutdown time command examples

Type the following command:

`$ last reboot`

Sample outputs from OS X:

```
reboot ~ Fri Dec 18 23:58
reboot ~ Mon Dec 14 09:54
reboot ~ Wed Dec 9 23:21
reboot ~ Tue Nov 17 21:52
reboot ~ Tue Nov 17 06:01
reboot ~ Wed Nov 11 12:14
reboot ~ Sat Oct 31 13:40
reboot ~ Wed Oct 28 15:56
reboot ~ Wed Oct 28 11:35
reboot ~ Tue Oct 27 00:00
reboot ~ Sun Oct 18 17:28
reboot ~ Sun Oct 18 17:11
reboot ~ Mon Oct 5 09:35
reboot ~ Sat Oct 3 18:57

wtmp begins Sat Oct 3 18:57
```

To see the shutdown dates and times, enter:

`$ last shutdown`

Sample outputs:

```
shutdown ~ Fri Dec 18 23:57
shutdown ~ Mon Dec 14 09:53
shutdown ~ Wed Dec 9 23:20
shutdown ~ Tue Nov 17 14:24
shutdown ~ Mon Nov 16 21:15
shutdown ~ Tue Nov 10 13:15
shutdown ~ Sat Oct 31 13:40
shutdown ~ Wed Oct 28 03:10
shutdown ~ Sun Oct 18 17:27
shutdown ~ Mon Oct 5 09:23

wtmp begins Sat Oct 3 18:57
```

### How do I find who rebooted or shut down the Linux box?

You need [to enable the psacct service][4], which records information about executed commands, including the user name. Then type the following [lastcomm command][5] to see that information:

```
# lastcomm userNameHere
# lastcomm commandNameHere
# lastcomm | more
# lastcomm reboot
# lastcomm shutdown
### OR see both reboot and shutdown time
# lastcomm | grep -E 'reboot|shutdown'
```

Sample outputs:

```
reboot S X root pts/0 0.00 secs Sun Dec 27 23:49
shutdown S root pts/1 0.00 secs Sun Dec 27 23:45
```

So the root user rebooted the box from `pts/0` on Sunday, December 27th, at 23:49 local time.
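
If you only want to know who ran reboot or shutdown, and from which terminal, you can pick out those fields. The number of flag columns varies between lines (`S X` versus `S` in the sample), so this sketch counts fields from the end of the line instead. It assumes the exact column layout of the sample output above; the function name `who_ran` is hypothetical.

```shell
# Hypothetical helper: summarize reboot/shutdown lines from lastcomm (stdin).
# Counting from the end ($NF) avoids the variable number of flag columns.
who_ran() {
    awk '$1 == "reboot" || $1 == "shutdown" {
        # From the end: user, tty, cpu time, "secs", weekday, month, day, time
        printf "%s by %s on %s at %s %s %s %s\n",
            $1, $(NF-7), $(NF-6), $(NF-3), $(NF-2), $(NF-1), $NF
    }'
}

# Usage on a system with process accounting enabled:
#   lastcomm | who_ran
```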

### See also

* For more information, read last(1) and [learn how to use the tuptime command on Linux server to see the historical and statistical uptime][6].

### about the author

The author is the creator of nixCraft and a seasoned sysadmin and a trainer for the Linux operating system/Unix shell scripting. He has worked with global clients and in various industries, including IT, education, defense and space research, and the nonprofit sector. Follow him on [Twitter][7], [Facebook][8], [Google+][9].

--------------------------------------------------------------------------------

via: https://www.cyberciti.biz/tips/linux-last-reboot-time-and-date-find-out.html

Author: [Vivek Gite][a]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.cyberciti.biz/
[1]:https://www.cyberciti.biz/faq/unix-linux-who-command-examples-syntax-usage/ (See Linux/Unix who command examples for more info)
[2]:https://www.cyberciti.biz/tips/wp-content/uploads/2006/04/last-reboot.jpg
[3]:https://www.cyberciti.biz/media/new/tips/2006/04/check-last-time-system-was-rebooted.jpg
[4]:https://www.cyberciti.biz/tips/howto-log-user-activity-using-process-accounting.html
[5]:https://www.cyberciti.biz/faq/linux-unix-lastcomm-command-examples-usage-syntax/ (See Linux/Unix lastcomm command examples for more info)
[6]:https://www.cyberciti.biz/hardware/howto-see-historical-statistical-uptime-on-linux-server/
[7]:https://twitter.com/nixcraft
[8]:https://facebook.com/nixcraft
[9]:https://plus.google.com/+CybercitiBiz

@ -0,0 +1,82 @@
How to use lftp to accelerate ftp/https download speed on Linux/UNIX
======

lftp is a file transfer program. It allows sophisticated FTP, HTTP/HTTPS, and other connections. If a site URL is specified, lftp connects to that site; otherwise, a connection has to be established with the open command. It is an essential tool for all Linux/Unix command-line users. I have already written about [Linux ultra fast command line download accelerators][1] such as Axel and prozilla. lftp is another tool for the same job, with more features. lftp can handle eight file access methods:

1. ftp
2. ftps
3. http
4. https
5. hftp
6. fish
7. sftp
8. file

### So what is unique about lftp?

* Every operation in lftp is reliable; that is, any non-fatal error is ignored and the operation is repeated. So if a download breaks, it is automatically restarted from the point where it stopped. Even if the FTP server does not support the REST command, lftp will try to retrieve the file from the very beginning until the file is transferred completely.
* lftp has shell-like command syntax allowing you to launch several commands in parallel in the background.
* lftp has a built-in mirror which can download or update a whole directory tree. There is also a reverse mirror (mirror -R) which uploads or updates a directory tree on the server. The mirror can also synchronize directories between two remote servers, using FXP if available.

### How to use lftp as a download accelerator

lftp has a pget command that downloads files in parallel. The syntax is:

`lftp -e 'pget -n NUM -c url; exit'`

For example, download the <http://kernel.org/pub/linux/kernel/v2.6/linux-2.6.22.2.tar.bz2> file using pget in 5 parts:

```
$ cd /tmp
$ lftp -e 'pget -n 5 -c http://kernel.org/pub/linux/kernel/v2.6/linux-2.6.22.2.tar.bz2'
```

Sample outputs:

```
45108964 bytes transferred in 57 seconds (775.3K/s)
lftp :~>quit
```

Where,

1. pget – Download files in parallel
2. -n 5 – Set maximum number of connections to 5
3. -c – Continue a broken transfer if `lfile.lftp-pget-status` (where lfile is the local file name) exists in the current directory
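
If you call pget from your own scripts, it can help to build the `-e` command string in one place so the connection count is validated and the trailing `exit` is never forgotten. A minimal sketch; the function name `pget_script` and its argument check are hypothetical, while `pget -n`, `-c`, and `exit` are the lftp commands described above.

```shell
# Hypothetical helper: print an lftp -e command string that downloads URL ($2)
# with N ($1) parallel connections, continues a broken transfer, then quits.
pget_script() {
    # Reject empty, non-numeric, or zero connection counts.
    case $1 in
        ''|*[!0-9]*) return 1 ;;
    esac
    [ "$1" -gt 0 ] || return 1
    printf 'pget -n %s -c %s; exit\n' "$1" "$2"
}

# Usage (network access required):
#   cd /tmp
#   lftp -e "$(pget_script 5 http://kernel.org/pub/linux/kernel/v2.6/linux-2.6.22.2.tar.bz2)"
```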

### How to use lftp to accelerate ftp/https download on Linux/Unix

Here is another example, this time with an added exit command so that lftp quits once the download finishes:

`$ lftp -e 'pget -n 10 -c https://cdn.kernel.org/pub/linux/kernel/v4.x/linux-4.15.tar.xz; exit'`

[Linux-lftp-command-demo](https://www.cyberciti.biz/tips/wp-content/uploads/2007/08/Linux-lftp-command-demo.mp4)

### A note about parallel downloading

Please note that by using a download accelerator you put extra load on the remote host. Also note that lftp may not work with sites that do not support multi-source downloads or that block such requests at the firewall level.

The lftp command offers many other features. Refer to the [lftp][2] man page for more information:

`man lftp`

### about the author

The author is the creator of nixCraft and a seasoned sysadmin and a trainer for the Linux operating system/Unix shell scripting. He has worked with global clients and in various industries, including IT, education, defense and space research, and the nonprofit sector. Follow him on [Twitter][3], [Facebook][4], [Google+][5]. Get the **latest tutorials on SysAdmin, Linux/Unix and open source topics via [my RSS/XML feed][6]**.

--------------------------------------------------------------------------------

via: https://www.cyberciti.biz/tips/linux-unix-download-accelerator.html

Author: [Vivek Gite][a]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.cyberciti.biz
[1]:https://www.cyberciti.biz/tips/download-accelerator-for-linux-command-line-tools.html
[2]:https://lftp.yar.ru/
[3]:https://twitter.com/nixcraft
[4]:https://facebook.com/nixcraft
[5]:https://plus.google.com/+CybercitiBiz
[6]:https://www.cyberciti.biz/atom/atom.xml

@ -0,0 +1,141 @@
How to use yum-cron to automatically update RHEL/CentOS Linux
======

The yum command line tool is used to install and update software packages under RHEL / CentOS Linux server. I know how to apply updates using the [yum update command line][1], but I would like to use cron to apply updates automatically instead of running them manually. How do I configure yum to install software patches/updates [automatically with cron][2]?

You need to install the yum-cron package. It provides the files needed to run yum updates as a cron job. Install this package if you want automatic nightly yum updates via cron.

### How to install yum-cron on CentOS/RHEL 6.x/7.x

Type the following [yum command][3]:

`$ sudo yum install yum-cron`

Turn on the service using the systemctl command on **CentOS/RHEL 7.x**:

```
$ sudo systemctl enable yum-cron.service
$ sudo systemctl start yum-cron.service
$ sudo systemctl status yum-cron.service
```

If you are using **CentOS/RHEL 6.x**, run:

```
$ sudo chkconfig yum-cron on
$ sudo service yum-cron start
```

yum-cron is an alternate interface to yum and a very convenient way to call yum from cron. It provides methods to keep repository metadata up to date, and to check for, download, and apply updates. Rather than accepting many different command line arguments, the different functions of yum-cron can be accessed through config files.

### How to configure yum-cron to automatically update RHEL/CentOS Linux

You need to edit the /etc/yum/yum-cron.conf and /etc/yum/yum-cron-hourly.conf files using a text editor such as vi:

`$ sudo vi /etc/yum/yum-cron.conf`

Make sure updates are applied when they are available:

`apply_updates = yes`

You can set the address to send email messages from. Please note that ‘localhost’ will be replaced with the value of system_name.

`email_from = root@localhost`

Set the list of addresses to send messages to:

`email_to = your-it-support@some-domain-name`

Set the name of the host to connect to when sending email messages:

`email_host = localhost`

If you [do not want to update the kernel package, add the following on CentOS/RHEL 7.x][4]:

`exclude=kernel*`
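
After editing, you may want to confirm which values the file actually contains. A minimal sketch of a `key = value` lookup, assuming the simple INI-style layout used above; the function name `conf_get` is hypothetical, it ignores `[section]` headers, and it returns only the first match.

```shell
# Hypothetical helper: print the value of KEY ($2) from an INI-style FILE ($1).
# Lines starting with # or ; are skipped; lines without "=" are ignored.
conf_get() {
    awk -v key="$2" '
        /^[ \t]*[#;]/ { next }
        {
            eq = index($0, "=")
            if (eq == 0) next
            k = substr($0, 1, eq - 1)
            v = substr($0, eq + 1)
            gsub(/^[ \t]+|[ \t]+$/, "", k)   # trim the key
            gsub(/^[ \t]+|[ \t]+$/, "", v)   # trim the value
            if (k == key) { print v; exit }
        }' "$1"
}

# Usage:
#   conf_get /etc/yum/yum-cron.conf apply_updates    # "yes" after the edit above
```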

For RHEL/CentOS 6.x, add [the following to exclude the kernel package from updating][5]:

`YUM_PARAMETER=kernel*`

[Save and close the file in vi/vim][6]. You also need to update the /etc/yum/yum-cron-hourly.conf file if you want to apply updates hourly. Otherwise, the /etc/yum/yum-cron.conf settings are applied once a day by the daily cron job. Use the [cat command][7] to view the hourly and daily cron jobs:

`$ cat /etc/cron.hourly/0yum-hourly.cron`

Sample outputs:

```
#!/bin/bash

# Only run if this flag is set. The flag is created by the yum-cron init
# script when the service is started -- this allows one to use chkconfig and
# the standard "service stop|start" commands to enable or disable yum-cron.
if [[ ! -f /var/lock/subsys/yum-cron ]]; then
    exit 0
fi

# Action!
exec /usr/sbin/yum-cron /etc/yum/yum-cron-hourly.conf
[root@centos7-box yum]# cat /etc/cron.daily/0yum-daily.cron
#!/bin/bash

# Only run if this flag is set. The flag is created by the yum-cron init
# script when the service is started -- this allows one to use chkconfig and
# the standard "service stop|start" commands to enable or disable yum-cron.
if [[ ! -f /var/lock/subsys/yum-cron ]]; then
    exit 0
fi

# Action!
exec /usr/sbin/yum-cron
```

That is all. Now your system will update automatically every day using yum-cron. See the man page of yum-cron for more details:

`$ man yum-cron`

### Method 2 – Use shell scripts

**Warning**: The following method is outdated. Do not use it on RHEL/CentOS 6.x/7.x. I have kept it below for historical reasons only; I used it on CentOS/RHEL 4.x/5.x.

Let us see how to configure CentOS/RHEL for automatic yum update retrieval and installation of security packages. You can use the yum-updatesd service provided with CentOS / RHEL servers. However, this service adds some overhead. Instead, you can apply updates daily or weekly with the following shell script. Create:

* **/etc/cron.daily/yumupdate.sh** to apply updates once a day.
* **/etc/cron.weekly/yumupdate.sh** to apply updates once a week.

#### Sample shell script to update system

A shell script that instructs yum to update any packages it finds via [cron][8]:

```
#!/bin/bash
YUM=/usr/bin/yum
# Update yum itself first (random delay of up to 120 minutes, minimal output)
$YUM -y -R 120 -d 0 -e 0 update yum
# Then apply all remaining system updates (random delay of up to 10 minutes)
$YUM -y -R 10 -e 0 -d 0 update
```

(Code listing -01: /etc/cron.daily/yumupdate.sh)

Where,

1. The first command updates yum itself and the second applies system updates.
2. **-R 120** : Sets the maximum amount of time (in minutes) yum will wait before performing a command.
3. **-e 0** : Sets the error level to 0 (range 0 – 10). 0 means print only critical errors about which you must be told.
4. **-d 0** : Sets the debugging level to 0, which turns up or down the amount of things that are printed (range: 0 – 10).
5. **-y** : Assume yes; assume that the answer to any question which would be asked is yes.

Make sure you set the executable permission:

`# chmod +x /etc/cron.daily/yumupdate.sh`

### about the author

The author is the creator of nixCraft and a seasoned sysadmin and a trainer for the Linux operating system/Unix shell scripting. He has worked with global clients and in various industries, including IT, education, defense and space research, and the nonprofit sector. Follow him on [Twitter][9], [Facebook][10], [Google+][11]. Get the **latest tutorials on SysAdmin, Linux/Unix and open source topics via [my RSS/XML feed][12]**.

--------------------------------------------------------------------------------

via: https://www.cyberciti.biz/faq/fedora-automatic-update-retrieval-installation-with-cron/

Author: [Vivek Gite][a]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.cyberciti.biz/
[1]:https://www.cyberciti.biz/faq/rhel-centos-fedora-linux-yum-command-howto/
[2]:https://www.cyberciti.biz/faq/how-do-i-add-jobs-to-cron-under-linux-or-unix-oses
[3]:https://www.cyberciti.biz/faq/rhel-centos-fedora-linux-yum-command-howto/ (See Linux/Unix yum command examples for more info)
[4]:https://www.cyberciti.biz/faq/yum-update-except-kernel-package-command/
[5]:https://www.cyberciti.biz/faq/redhat-centos-linux-yum-update-exclude-packages/
[6]:https://www.cyberciti.biz/faq/linux-unix-vim-save-and-quit-command/
[7]:https://www.cyberciti.biz/faq/linux-unix-appleosx-bsd-cat-command-examples/ (See Linux/Unix cat command examples for more info)
[8]:https://www.cyberciti.biz/faq/how-do-i-add-jobs-to-cron-under-linux-or-unix-oses
[9]:https://twitter.com/nixcraft
[10]:https://facebook.com/nixcraft
[11]:https://plus.google.com/+CybercitiBiz
[12]:https://www.cyberciti.biz/atom/atom.xml

@ -1,4 +1,4 @@
How to Use the ZFS Filesystem on Ubuntu Linux
======
There are a myriad of [filesystems available for Linux][1]. So why try a new one? They all work, right? They're not all the same, and some have some very distinct advantages, like ZFS.

sources/tech/20171101 -dev-[u]random- entropy explained.md

@ -0,0 +1,108 @@
/dev/[u]random: entropy explained
======
### Entropy

When the topic of /dev/random and /dev/urandom comes up, you always hear this word: “Entropy”. Everyone seems to have their own analogy for it. So why not me? I like to think of Entropy as “Random juice”. It is juice, required for random to be more random.

If you have ever generated an SSL certificate, or a GPG key, you may have seen something like:

```
We need to generate a lot of random bytes. It is a good idea to perform
some other action (type on the keyboard, move the mouse, utilize the
disks) during the prime generation; this gives the random number
generator a better chance to gain enough entropy.
++++++++++..+++++.+++++++++++++++.++++++++++...+++++++++++++++...++++++
+++++++++++++++++++++++++++++.+++++..+++++.+++++.+++++++++++++++++++++++++>.
++++++++++>+++++...........................................................+++++
Not enough random bytes available. Please do some other work to give
the OS a chance to collect more entropy! (Need 290 more bytes)
```

By typing on the keyboard, and moving the mouse, you help generate Entropy, or Random Juice.

You might be asking yourself… Why do I need Entropy? And why is it so important for random to be actually random? Well, let's say our Entropy was limited to keyboard, mouse, and disk IO. But our system is a server, so I know there is no mouse and keyboard input. This means the only factor is your IO. If it is a single disk that was barely used, you will have low Entropy. This means your system's ability to be random is weak. In other words, I could play the probability game, and significantly decrease the amount of time it would take to crack things like your ssh keys, or decrypt what you thought was an encrypted session.

Okay, but that is pretty unrealistic, right? No, actually it isn’t. Take a look at this [Debian OpenSSH Vulnerability][1]. This particular issue was caused by someone removing some of the code responsible for adding Entropy. Rumor has it they removed it because it was causing valgrind to throw warnings. However, in doing that, random became MUCH less random. In fact, so much less that brute-forcing the generated private ssh keys became a feasible attack vector.

Hopefully by now we understand how important Entropy is to security, whether you realize you are using it or not.

### /dev/random & /dev/urandom

/dev/urandom is a Pseudo Random Number Generator, and it **does not** block if you run out of Entropy.

/dev/random is a True Random Number Generator, and it **does** block if you run out of Entropy.

Most often, if we are dealing with something pragmatic, and it doesn’t contain the keys to your nukes, /dev/urandom is the right choice. Otherwise, if you go with /dev/random, then when the system runs out of Entropy your application is just going to behave funny. Whether it outright fails, or just hangs until it has enough, depends on how you wrote your application.
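
In practice, reading from /dev/urandom is as simple as reading a file. A minimal sketch that prints N random bytes as hex, assuming the common `head -c` and `od` utilities are available; the function name `rand_hex` is hypothetical.

```shell
# Hypothetical helper: print $1 random bytes from /dev/urandom as lowercase hex.
# Per the description above, /dev/urandom does not block when entropy runs low.
rand_hex() {
    head -c "$1" /dev/urandom | od -An -v -tx1 | tr -d ' \n'
    echo
}

# Usage:
#   rand_hex 16    # 32 hex characters, different on every run
```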

### Checking the Entropy

So, how much Entropy do you have?

```
[root@testbox test]# cat /proc/sys/kernel/random/poolsize
4096
[root@testbox test]# cat /proc/sys/kernel/random/entropy_avail
2975
[root@testbox test]#
```
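
Both entropy_avail and poolsize are reported in bits, so their ratio shows how full the pool is. A minimal sketch, assuming both files exist as in the listing above; the function name `entropy_pct` is hypothetical. With the sample values above (2975 of 4096), it prints 72.

```shell
# Hypothetical helper: integer percentage of available entropy ($1)
# relative to the pool size ($2); fails if the pool size is not positive.
entropy_pct() {
    [ "$2" -gt 0 ] || return 1
    echo $(( $1 * 100 / $2 ))
}

# Usage on a live Linux system:
#   entropy_pct "$(cat /proc/sys/kernel/random/entropy_avail)" \
#               "$(cat /proc/sys/kernel/random/poolsize)"
```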
