Mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-03-12 01:40:10 +08:00, commit 46aa7dccbb)

After the Apple Watch, Will the Next Smartwatch Run Ubuntu?
===

**With the launch of the Apple Watch, Apple has confirmed long-running rumours that it was moving into wearable electronics.**

Ubuntu smartwatch - a good idea?

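Backed by an array of stable features, hardware solutions and app partnerships, wrist-worn devices are being hailed by many companies as "a new chapter in the relationship between people and technology".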

Their arrival, together with growing user interest, could mean that Ubuntu needs to follow suit with a version of Ubuntu tailored to smartwatches.

### Broadly successful ###

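Apple has joined the fast-growing smartwatch field at the right time. The limits of what a wrist-worn device can do are still far from settled. Failed designs, crude user interfaces and wearable features poorly tailored to mainstream users all show how fragile this hardware category still is. That fragility is what has allowed Cupertino to take its time over the Apple Watch.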

> "Analysts say more than 22 million smartwatches will be sold this year"

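Last year, worldwide sales of wearable devices (including fitness trackers) came to just 10 million. This year, analysts expect sales to exceed 22 million. That figure excludes the Apple Watch, which does not go on sale until early 2015.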

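In fact, it is easy to see where the growth is coming from. The IFA 2014 trade show, held in Berlin in early September, featured a range of wearables from major manufacturers, including Sony and Asus. Most of them run Google's newly released Android Wear platform.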

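Another sign of maturity is that Android Wear devices move beyond the novelty arguments that have dogged the form factor and present a consistent, compelling user proposition. Like the new Apple Watch, they are tightly tied to an existing smartphone ecosystem.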

It is still unclear, however, whether an Ubuntu wrist-wear system could match this and offer a practical use case.

#### No plans for an Ubuntu smartwatch yet ####

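The versatility of the Ubuntu operating system brings the strict requirements of many different devices together under one unified goal, and Canonical has already set its sights on smart TVs, tablets and smartphones. The company's own display server, Mir, is even meant to power interfaces on screens of every size (though admittedly not 1.5" ones).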

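Earlier this year, Canonical community manager Jono Bacon was asked whether there were any plans to make an Ubuntu smartwatch. Bacon gave his view: "Adding additional form factors to the [Ubuntu Touch] roadmap would only slow down existing progress."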

The Ubuntu phone has now been in the making for close to two years, so we're inclined to agree with him.

### There's a B-side too! ###

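That does not mean there is no hope. In a [phone interview a few months later][1], Ubuntu founder Mark Shuttleworth said that wearable technology is part of the company's plans, alongside smart TVs, tablets and smartphones.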

> "Ubuntu is unique in being designed for the phone, but its design can also serve other ecosystems, from wearables to the PC."

This is not a concrete commitment. It is more of a pointer, and it gives some grounds for optimism about where things may go.

#### Unlikely, but here's why ####

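Canonical is not averse to entering markets dominated by entrenched incumbents. In fact, doing so is part of the company's DNA: think RHEL on servers, Windows on the desktop, Android on smartphones...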

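Ubuntu for devices is built to scale and adapt to smaller screens, even ones as small as a watch face. A common code base is already in place across phones, tablets, desktops and TVs, so it would be surprising not to see community efforts heading in the same direction.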

My reason for thinking it won't come from Canonical, at least not yet, rests on the point Jono Bacon made earlier this year: time and effort.

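In his keynote, Tim Cook said: "*We didn't just take the iPhone, shrink the user interface and strap it onto your wrist.*" It's a telling statement. Designing UI and UX models for such a small screen, working out the interaction principles, and doing justice to the hardware and input methods are all hard tasks.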

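Wearable technology is still an emerging market. At this stage, Canonical could squander development, design and business resources on exploration. If that caused it to fall behind in more pressing areas, the consequences would be far more serious than missing out on short-term gains.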

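Playing the long game means waiting patiently to see which efforts succeed and which fail. It is the harder route, but it suits Ubuntu better, just as it does today. Until new products emerge, Canonical is better off putting its energy into its existing products (some of which are already overdue).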

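For a taste of what an Ubuntu smartwatch might look like, watch the [video][2] below. It shows an interactive Unity-style theme running on Tizen, which already supports the Samsung Galaxy Gear smartwatch.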

---

via: http://www.omgubuntu.co.uk/2014/09/ubuntu-smartwatch-apple-iwatch

Author: [Joey-Elijah Sneddon][a]

Translator: [su-kaiyao](https://github.com/su-kaiyao)

Proofreader: [Mr小眼儿](https://github.com/tinyeyeser)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:https://plus.google.com/117485690627814051450/?rel=author
[1]:http://www.omgubuntu.co.uk/2014/03/ubuntu-tablets-coming-year
[2]:https://www.youtube.com/embed/8Zf5dktXzEs?feature=oembed

IPv6: Why Should I Pay for IPv4's Mistakes?
================================================================================

IPv6 was supposed to take off in a big way over the past decade, but that never happened. As a result, most people know very little about IPv6: what it is, how to use it, and why it exists at all.

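Differences between IPv4 and IPv6

We have been using **IPv4** ever since the RFC 791 standard was published in 1981. At the time, computers were big, expensive and rare, and the **4 billion or so IP addresses** that IPv4 offered seemed like an enormous number. Unfortunately, those addresses were not used efficiently, and gaps were left between allocations. For example, a company might be given **254 (2^8-2)** addresses but use only 25 of them, leaving the other 229 idle for future needs. Because of the way network routing works, those idle addresses cannot serve the users who actually need them. The end result is that a number that seemed huge in 1981 looks tiny in 2014.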
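The arithmetic above is easy to check with Python's standard `ipaddress` module. This is only an illustrative sketch: the /24 block `192.0.2.0/24` is a documentation prefix chosen as a stand-in, and the 25 addresses in use come from the article's example.

```python
import ipaddress

# The entire IPv4 space: 32-bit addresses.
total_ipv4 = 2 ** 32

# A /24 block has 8 host bits. hosts() skips the all-zeros (network)
# and all-ones (broadcast) addresses, which cannot be given to hosts.
block = ipaddress.ip_network("192.0.2.0/24")  # stand-in documentation prefix
usable = len(list(block.hosts()))

in_use = 25              # the company in the example uses only 25
idle = usable - in_use   # the rest sit unused

print(f"IPv4 addresses in total: {total_ipv4:,}")
print(f"usable in a /24: {usable}, in use: {in_use}, idle: {idle}")
```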

In the early 1990s, the Internet Engineering Task Force (**IETF**) recognized this problem and came up with two solutions: Classless Inter-Domain Routing (**CIDR**) and private IP addresses. Before CIDR, you could choose from only three network sizes, with a host part of **24 bits** (16,777,214 usable addresses), **16 bits** (65,534 usable addresses) or **8 bits** (254 usable addresses). With CIDR, you can divide a network further into multiple subnets.
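Here is a minimal Python sketch of the two ideas in this paragraph. A host part of h bits yields 2^h - 2 usable addresses. CIDR lets a block be split at any prefix length, not just at fixed class boundaries. The `10.0.0.0/16` block and the /18 split are arbitrary examples, not taken from the article.

```python
import ipaddress

def usable_hosts(host_bits: int) -> int:
    """Assignable IPv4 addresses for a host part of `host_bits` bits.

    The all-zeros (network) and all-ones (broadcast) values are excluded.
    """
    return 2 ** host_bits - 2

print(f"{usable_hosts(24):,}")  # 16,777,214
print(f"{usable_hosts(16):,}")  # 65,534

# With CIDR the prefix length is no longer tied to fixed classes:
# here a /16 is split into four /18 subnets.
block = ipaddress.ip_network("10.0.0.0/16")
subnets = list(block.subnets(new_prefix=18))
for net in subnets:
    print(net, usable_hosts(32 - net.prefixlen))
```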

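For example, if you need **5 IP addresses**, your ISP gives you a subnet with a 3-bit host part, which provides at most **6 addresses**. (LCTT note: leaving aside the subnet's network part, 3 host bits can represent 8 addresses, 0 through 7. The first and the last are the network and broadcast addresses, which have special purposes and cannot be assigned to users, so you get at most 6.) This lets ISPs allocate IP addresses as efficiently as possible. The "private address" solution lets you build your own network whose hosts can reach hosts outside it. Outside hosts, however, find it hard to reach the hosts on your network, because your network is private and invisible to others. Such a network can be very large, since up to 16,777,214 host addresses are available, and you can split it into smaller subnets to make it easier to manage.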
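The ISP's reasoning can be sketched in Python. The idea is to find the smallest host part that still leaves enough usable addresses once the network and broadcast addresses are set aside. The helper name `prefix_for_hosts` and the `192.0.2.8/29` block are hypothetical and serve only as an illustration.

```python
import ipaddress

def prefix_for_hosts(hosts: int) -> int:
    """Longest IPv4 prefix whose subnet still offers `hosts` usable addresses.

    We need the smallest h with 2**h - 2 >= hosts, i.e. 2**h >= hosts + 2,
    and (hosts + 1).bit_length() is exactly that h.
    """
    if hosts < 1:
        raise ValueError("need at least one host")
    host_bits = (hosts + 1).bit_length()
    return 32 - host_bits

prefix = prefix_for_hosts(5)                           # 3 host bits -> /29
subnet = ipaddress.ip_network(f"192.0.2.8/{prefix}")   # hypothetical assignment
hosts = [str(h) for h in subnet.hosts()]               # network/broadcast excluded
print(prefix, subnet, hosts)
```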

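You may well be using private addresses right now. Look at your own IP address: if it falls within **10.0.0.0 – 10.255.255.255**, **172.16.0.0 – 172.31.255.255** or **192.168.0.0 – 192.168.255.255**, you are using a private address. These two solutions postponed the "IPv4 address exhaustion" disaster for a long time, but they were only stopgaps, and now we face the final reckoning.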
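Here is a quick way to run the check described above, sketched in Python with the standard `ipaddress` module. The three blocks are the RFC 1918 private ranges listed in the paragraph. The module's built-in `is_private` flag would also work, but it also covers other ranges such as loopback and link-local, so the sketch checks the three blocks explicitly. The helper name `is_rfc1918` is made up for this example.

```python
import ipaddress

# The three private blocks listed above (RFC 1918).
PRIVATE_BLOCKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]

def is_rfc1918(address: str) -> bool:
    """True if `address` falls inside one of the RFC 1918 private blocks."""
    ip = ipaddress.ip_address(address)
    return any(ip in block for block in PRIVATE_BLOCKS)

# Block sizes: 2**24, 2**20 and 2**16 addresses respectively.
for block in PRIVATE_BLOCKS:
    print(block, f"{block.num_addresses:,} addresses")

print(is_rfc1918("192.168.1.20"))  # True
print(is_rfc1918("172.32.0.1"))    # False: just past 172.31.255.255
```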

**IPv4** has another problem: its header length is variable. That is manageable when routing is done in software, but routing is now done in hardware, and variable-length headers are hard for hardware to handle. A large router processes huge numbers of packets from all over the world, so its load is very heavy. Clearly, a fixed header length was needed.
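A variable-length header complicates forwarding from the very first step. Before an IPv4 router knows where the header ends, it has to read the 4-bit IHL field. Below is a minimal Python sketch of that step. The sample packets are synthetic byte strings rather than captured traffic, and the function name is hypothetical.

```python
def ipv4_header_length(packet: bytes) -> int:
    """Return the length in bytes of the IPv4 header at the start of `packet`.

    The low 4 bits of the first byte (IHL) give the header length in
    32-bit words: 5 words (20 bytes) with no options, up to 15 words (60 bytes).
    """
    if not packet:
        raise ValueError("empty packet")
    version = packet[0] >> 4
    if version != 4:
        raise ValueError(f"not IPv4 (version field is {version})")
    ihl = packet[0] & 0x0F
    if ihl < 5:
        raise ValueError("IHL below the 5-word minimum")
    return ihl * 4

print(ipv4_header_length(bytes([0x45]) + bytes(19)))  # 20: no options
print(ipv4_header_length(bytes([0x46]) + bytes(23)))  # 24: one 4-byte option word
```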

There was another problem with how IP addresses were allocated: the Internet was invented by Americans (LCTT note: and that wicked capitalist country grabbed a huge share of the IP addresses). Other countries got only fragments of the address space. We needed a new architecture in which contiguous blocks of IP addresses are grouped geographically, so that routing tables can be smaller (LCTT note: just think, the network would surely be faster).

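Yet another problem, which may sound hard to believe, is that IPv4 is relatively difficult to configure and hard to change. You may never run into it, because your router takes care of this for you, but it has long been a headache for your ISP.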

### IPv6 and its advantages ###

In December 1995, the **IETF** published the next-generation IP standard, called IPv6. Why not IPv5? Because version number 5 had already been taken by another project, the experimental Internet Stream Protocol. The advantages of IPv6 are:

- 128-bit addresses (3.402823669×10³⁸ addresses in total)
- Addresses are logically aggregated under its architecture
- Fixed header length
- Support for automatically configuring and reconfiguring your network
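The 128-bit figure in the first bullet can be checked with a few lines of Python. The comparison with IPv4 is there only to give a sense of scale.

```python
import ipaddress

ipv6_total = 2 ** 128
ipv4_total = 2 ** 32

print(f"{ipv6_total:,}")     # exact count
print(f"{ipv6_total:.9e}")   # 3.402823669e+38, as in the list above
# The same number via the ipaddress module: the size of ::/0.
print(ipaddress.ip_network("::/0").num_addresses == ipv6_total)
# For scale: IPv6 addresses per IPv4 address.
print(ipv6_total // ipv4_total == 2 ** 96)
```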

#### Aggregation ####

With this many addresses, they can be allocated to hosts sparsely, which makes routing packets more efficient. Let's do the math: your ISP is given an **80-bit** address space. Of those bits, 16 form the ISP's subnet address, and the remaining 64 are given to you as host addresses. This way, your ISP can allocate 65,536 subnets.
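The 16 + 64 bit budget described above can be sketched with Python's `ipaddress` module. The prefix `2001:db8:1234::/48` is a hypothetical allocation from the documentation range. With 16 subnet bits there are 2^16 = 65,536 possible /64 subnets, and each one holds 2^64 addresses.

```python
import ipaddress
from itertools import islice

# Hypothetical allocation: a /48 taken from the 2001:db8::/32 documentation range.
isp_block = ipaddress.ip_network("2001:db8:1234::/48")
customer_prefix = 64  # 16 subnet bits + 64 host bits = 80 bits below the /48

subnet_count = 2 ** (customer_prefix - isp_block.prefixlen)
hosts_per_subnet = 2 ** (128 - customer_prefix)

print(f"{subnet_count:,} subnets of {hosts_per_subnet:,} addresses each")
# subnets() is a generator, so only the first few /64s are produced here.
for net in islice(isp_block.subnets(new_prefix=customer_prefix), 3):
    print(net)
```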

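These allocations are not set in stone, however. If an ISP wants more, smaller subnets, it can have them (and a deep-pocketed ISP might, of course, ask for another 80-bit space). The top 48 bits are independent of each other. Two ISPs may each receive an 80-bit space of the same size, but the two spaces are kept separate, and the benefit is that the addresses within each space stay aggregated together.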

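The **IPv4** header length is variable, but the **IPv6** header is fixed at 40 bytes. In IPv4, extra options make the header longer. In IPv6, any extra options go in a separate area immediately after the header, which routers along the way normally do not process. When the packet reaches its destination, software extracts those options.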

The IPv6 header has a field called the "flow label", a 20-bit pseudo-random number intended to simplify how routers forward packets. If a packet carries a flow label, a router can use that value as an index into its routing table instead of slowly walking the whole table to find a route. This makes **IPv6** easier to route.
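Every field of the IPv6 header sits at a fixed offset, so the whole 40-byte header can be packed and unpacked with a single fixed-size `struct` format. The Python sketch below illustrates that layout. It includes the 20-bit flow label, which shares the first 32-bit word with the version and traffic class fields. The function names, addresses, next-header value (17, UDP) and hop limit are sample choices, not anything the article specifies.

```python
import ipaddress
import struct

# Fixed IPv6 header layout (40 bytes):
#   4 bits version | 8 bits traffic class | 20 bits flow label  (one 32-bit word)
#   16 bits payload length | 8 bits next header | 8 bits hop limit
#   128-bit source address | 128-bit destination address
HEADER = struct.Struct("!IHBB16s16s")

def build_ipv6_header(src: str, dst: str, flow_label: int, payload_len: int,
                      next_header: int = 17, hop_limit: int = 64,
                      traffic_class: int = 0) -> bytes:
    if not 0 <= flow_label < 2 ** 20:
        raise ValueError("flow label must fit in 20 bits")
    if not 0 <= traffic_class <= 0xFF:
        raise ValueError("traffic class must fit in 8 bits")
    first_word = (6 << 28) | (traffic_class << 20) | flow_label
    return HEADER.pack(first_word, payload_len, next_header, hop_limit,
                       ipaddress.IPv6Address(src).packed,
                       ipaddress.IPv6Address(dst).packed)

def parse_ipv6_header(header: bytes) -> dict:
    first_word, payload_len, next_header, hop_limit, src, dst = HEADER.unpack(header[:HEADER.size])
    return {
        "version": first_word >> 28,
        "traffic_class": (first_word >> 20) & 0xFF,
        "flow_label": first_word & 0xFFFFF,   # low 20 bits of the first word
        "payload_len": payload_len,
        "next_header": next_header,
        "hop_limit": hop_limit,
        "src": str(ipaddress.IPv6Address(src)),
        "dst": str(ipaddress.IPv6Address(dst)),
    }

hdr = build_ipv6_header("2001:db8::1", "2001:db8::2", flow_label=0xABCDE, payload_len=8)
print(HEADER.size, len(hdr))                      # 40 40
print(hex(parse_ipv6_header(hdr)["flow_label"]))  # 0xabcde
```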

#### Autoconfiguration ####

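In **IPv6**, when a host boots, it checks the local network to see whether any other host is already using its IP address. If the address is free, the host looks for a local IPv6 router and, once it finds one, requests an IPv6 address from it. The host can then connect to the Internet: it has its own IP address and its own default router.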

If that default router goes down, the host looks for other routers to use as backups. This is very hard to do in IPv4. Similarly, if a router wants to change its own address, it simply changes it. Hosts discover the router automatically and update the router's address. The router keeps both the old and the new address until every host has switched to the new one.
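The article does not explain how the host actually forms its address. In stateless autoconfiguration, one classic method works like this: the host takes the /64 prefix that the router advertises and appends an interface identifier derived from its MAC address (the "modified EUI-64" format). The Python sketch below assumes that method. The MAC address and prefixes are made up, and many current systems use randomized or stable-privacy identifiers instead.

```python
import ipaddress

def modified_eui64(mac: str) -> int:
    """64-bit interface identifier derived from a 48-bit MAC address.

    Insert ff:fe between the two halves of the MAC and flip the
    universal/local bit (0x02) of the first octet.
    """
    octets = bytes(int(part, 16) for part in mac.split(":"))
    if len(octets) != 6:
        raise ValueError("expected a 48-bit MAC like 00:1a:2b:3c:4d:5e")
    eui = bytes([octets[0] ^ 0x02]) + octets[1:3] + b"\xff\xfe" + octets[3:]
    return int.from_bytes(eui, "big")

def slaac_address(prefix: str, mac: str) -> ipaddress.IPv6Address:
    """Combine an advertised /64 prefix with the EUI-64 interface ID."""
    net = ipaddress.IPv6Network(prefix)
    if net.prefixlen != 64:
        raise ValueError("EUI-64 autoconfiguration expects a /64 prefix")
    return net.network_address + modified_eui64(mac)

mac = "00:1a:2b:3c:4d:5e"                      # made-up MAC address
print(slaac_address("fe80::/64", mac))          # link-local address
print(slaac_address("2001:db8:1:2::/64", mac))  # address from an advertised prefix
```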

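IPv6 autoconfiguration is not a complete solution. To use the Internet effectively, a host needs other things as well: name servers, time servers, and perhaps a file server. That is where **dhcp6** comes in. It provides the same services as DHCP. The only difference is that a dhcp6 machine can boot up in a routable state, and a single dhcp process can serve a large number of networks.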

#### The one big problem ####

If IPv6 is so much better than IPv4, why hasn't it been widely adopted yet (in **May 2014**, Google estimated IPv6 adoption at **4%**)? The most basic reason is a chicken-and-egg problem. Service providers want their servers to reach as many customers as possible, which means they must deploy **IPv4** addresses.

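Of course, they could run IPv4 and IPv6 side by side, but few customers would use IPv6, and the software would also need some small changes to support it. Another headache is that many home routers do not support IPv6 at all. ISPs are reluctant to support IPv6 as well. When I asked my ISP about it, the answer was that they would only deploy IPv6 if customers explicitly asked for it. I then asked how many people had asked so far. The answer: one, and that one was me.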

In stark contrast, all major operating systems (Windows, OS X and Linux) have supported IPv6 by default for years. They even provide software that wraps IPv6 packets inside IPv4. This gets the data past hosts that would otherwise drop IPv6 packets.

### Summary ###

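IPv4 has served us for a long time, but its shortcomings will soon run into problems that cannot be overcome. IPv6 solves these problems neatly. It changes the address allocation rules, simplifies packet routing, and simplifies configuration when a host first joins a network.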

via: http://www.tecmint.com/ipv4-and-ipv6-comparison/

Author: [Jeff Silverman][a]

Translator: [bazz2](https://github.com/bazz2)

Proofreader: [Mr小眼儿](https://github.com/tinyeyeser)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

ChromeOS vs. Linux: The Good, the Bad and the Ugly
================================================================================

> In the battle between ChromeOS and Linux, both desktop environments have strengths and weaknesses. So how do the two really compare?

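Anyone paying even a little attention can see that Google is not just "playing around" on the desktop. In recent years, [Google Chromebooks][2] running [ChromeOS][1] have made quite a splash. Popular with buyers on sites such as Amazon, ChromeOS looks all but unstoppable.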

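In this article, we'll look at the market ChromeOS is aimed at, how ChromeOS is affecting Linux's share, and whether ChromeOS as a whole is good or bad for the Linux community. I'll also discuss some major problems and why nobody is doing anything about them.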

### ChromeOS is not really Linux ###

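Whenever friends ask me whether ChromeOS is a Linux distribution, I give the same answer: ChromeOS is to Linux what OS X is to BSD. In other words, I consider ChromeOS an operating system derived from Linux, with the Linux kernel under the hood. Most of the operating system itself, though, consists of Google's proprietary code and software.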

Although ChromeOS uses the Linux kernel under the hood, it is still very different from today's popular Linux distributions.

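In fact, what makes ChromeOS increasingly distinct is the apps it offers end users, web applications included. Everything on ChromeOS starts from a browser window, so Linux users may find the experience very different. Users with no Linux experience, however, will not find it much different from the old computers they used before.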

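Take anyone whose lifestyle revolves around Google products. They will feel right at home on ChromeOS, especially if they have already embraced the Chrome browser, Google Drive cloud storage and Gmail. Over time, using ChromeOS becomes second nature to them, because it is built around the Chrome browser they already know.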

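For Linux enthusiasts, however, these constraints are immediately uncomfortable. Software choice is limited and locked down, and gaming and VoIP are completely out of the question. Sorry, but [GooglePlus Hangouts][3] is no replacement for [VoIP][4] software. Not by a long shot.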

### ChromeOS or the Linux desktop ###

Some have argued that ChromeOS can only make a dent in Linux's desktop share in two cases: when Linux pauses to come up for air, or when the user in question is non-technical.

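Yes, desktop Linux is a great fit for most casual users. However, it needs someone to help install the operating system and provide "maintenance" services, just as we see in the Windows and OS X camps. Disappointingly, this is exactly where Linux is lacking in the United States. That is why we are seeing ChromeOS gradually come into view.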

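I find that the Linux desktop works best in environments where technical support is close at hand. Examples include advanced users who can handle maintenance and updates at home, and government or school IT departments. In these environments, the Linux desktop can be set up for people of any skill level and background.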

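ChromeOS, by contrast, was built from the outset to be completely maintenance-free. No third-party help is needed: you just allow updates and let them complete silently. This is possible partly because ChromeOS is designed for specific hardware, much as Apple builds its own computers. Google's ChromeOS ships together with its hardware, so you mostly don't have to worry about wrong drivers or compatibility issues. For some people, that is wonderful.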

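Others see this as a serious problem, but the funny thing is that these people are not in ChromeOS's target market at all. Put simply, this is just hardcore Linux enthusiasts nitpicking at ChromeOS. If you ask me, this pointless criticism should stop.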

The key point is this: ChromeOS and the Linux desktop will serve different markets for a long time to come. That may change in the future, but for now the two camps will remain at a standoff.

### ChromeOS usage is growing ###

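Whatever you think of ChromeOS, the fact is that its usage is growing. New computers designed for ChromeOS keep coming out. Dell recently released one too, the [Dell Chromebox][5], which poses yet another challenge to traditional devices. It has no optical drive, needs no antivirus software, and updates itself seamlessly in the background. For ordinary users, the Chromebox and Chromebook are becoming a dependable choice, especially for people who do their work in a web browser.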

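Despite this rapid growth, ChromeOS devices still face a serious problem: storage. Local disk space is limited and the system relies heavily on cloud storage, so ChromeOS falls short for people who need more than basic browser functionality.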

### Similarities and differences between ChromeOS and Linux ###

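Earlier, I noted that ChromeOS and the Linux desktop occupy two completely different markets. This is because the Linux community has a terrible track record when it comes to in-person (offline) desktop support.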

Yes, every so often someone may stumble upon this "Linux thing" for the first time. But nobody then follows up to make sure their questions are answered and that they get more help with Linux.

In reality, the offline failure usually plays out like this:

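- A user happens to discover Linux at a local Linux event.
- They take home a DVD or USB stick and try to install the operating system.
- Some are lucky enough to complete the installation, but as far as I can tell, most are not.
- Disappointingly, these people can only hope to find help by searching online forums. They struggle to solve their problems with mainstream computing experience or video tutorials.
- Eventually they have had enough. Many of these frustrated users later take their computers to a local Windows shop to be "repaired". Besides having Windows reinstalled, they are often told that "Linux isn't for you" and that they should stay away from it.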

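Some will surely say the example above is exaggerated. Let me tell you: this really happens around me, and it happens often. Wake up, Linux community: our outreach model is long out of date and no longer works.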

### Great platforms, lousy marketing, and final thoughts ###

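ChromeOS and the Linux desktop share the Linux kernel. If they have one other thing in common, it is that both are great products with dreadful marketing. Google, for its part, believes its advantage lies in being able to pour large amounts of money into building a huge online presence.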

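Google believes it has "the online advantage" and that offline problems don't matter much. That is astonishingly short-sighted, and it has become one of Google's biggest mistakes. Local Linux retailers, meanwhile, are convinced that people who rarely go online will naturally not be swayed by Google's massive online presence.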

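My suggestion is that Linux can push into ChromeOS's market through offline efforts that bring the Linux desktop to people. In practice, the Linux community needs to raise funds to take part in trade shows and mall exhibitions during the holidays, and to run free classes in the community. That would immediately put the Linux desktop in front of people. Otherwise, the device they end up seeing will be a ChromeOS one.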

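If local offline marketing doesn't happen the way I describe, don't worry. The Linux desktop market will keep growing, just as ChromeOS will. At worst, the current standoff between the two will simply continue.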

--------------------------------------------------------------------------------

via: http://www.datamation.com/open-source/chromeos-vs-linux-the-good-the-bad-and-the-ugly-1.html

Author: [Matt Hartley][a]

Translator: [barney-ro](https://github.com/barney-ro)

Proofreader: [Mr小眼儿](https://github.com/tinyeyeser)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://www.datamation.com/author/Matt-Hartley-3080.html
[1]:http://en.wikipedia.org/wiki/Chrome_OS
[2]:http://www.google.com/chrome/devices/features/
[3]:https://plus.google.com/hangouts
[4]:http://en.wikipedia.org/wiki/Voice_over_IP
[5]:http://www.pcworld.com/article/2602845/dell-brings-googles-chrome-os-to-desktops.html

Some Good Subtitle Editors for Linux
================================================================================

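If you watch a lot of foreign films, you probably prefer the subtitled version to the dubbed one. I grew up in France, and my childhood memories are full of Disney films, but the French dubbing always made them sound odd. Now that I can watch them in the original version, I realize that many people still need subtitles, and I even enjoy making subtitles for my family. Happily, Linux has no shortage of fancy, open-source subtitle editors. What follows is a non-exhaustive list of subtitle editors for Linux. Let me know which one you think is best.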

|

||||

|

||||

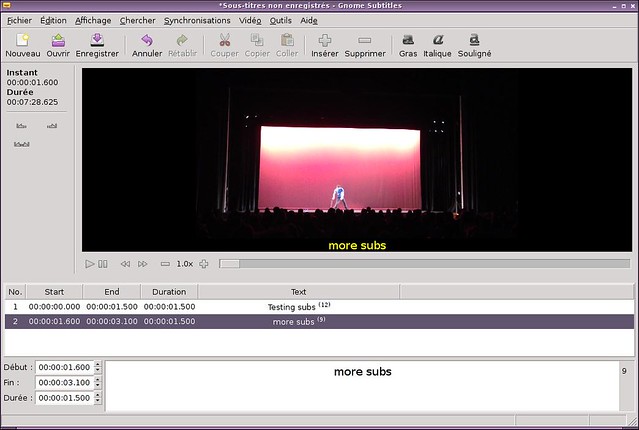

### 1. Gnome Subtitles ###

|

||||

|

||||

|

||||

|

||||

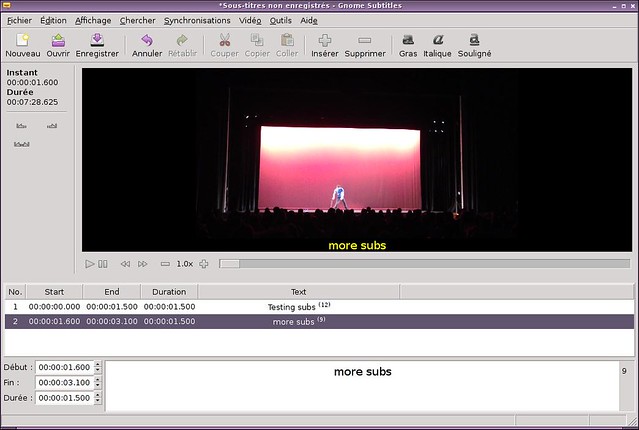

当有现有字幕需要快速编辑时,[Gnome Subtitles][1] 是我的一个选择。你可以载入视频,载入字幕文本,然后就可以即刻开始了。我很欣赏其对于易用性和高级特性之间的平衡。它带有一个同步工具以及一个拼写检查工具。最后但同样重要的的一点,这么好用最主要的是因为它的快捷键:当你编辑很多的台词的时候,你最好把你的手放在键盘上,使用其内置的快捷键来移动。

|

||||

|

||||

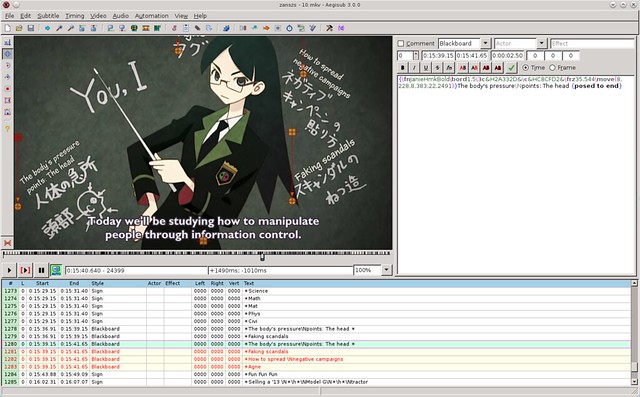

### 2. Aegisub ###

|

||||

|

||||

|

||||

|

||||

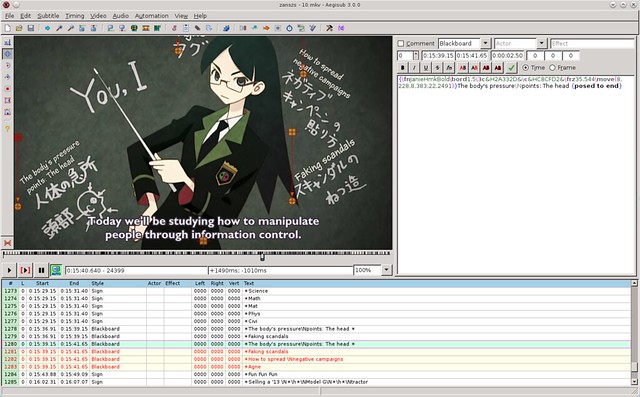

[Aegisub][2] 已经是一款高级别的复杂字幕编辑器。仅仅是界面就反映出了一定的学习曲线。但是,除了它吓人的样子以外,Aegisub 是一个非常完整的软件,提供的工具远远超出你能想象的。和Gnome Subtitles 一样,Aegisub也采用了所见即所得(WYSIWYG:what you see is what you get)的处理方式。但是是一个全新的高度:可以再屏幕上任意拖动字幕,也可以在另一边查看音频的频谱,并且可以利用快捷键做任何的事情。除此以外,它还带有一个汉字工具,有一个kalaok模式,并且你可以导入lua 脚本让它自动完成一些任务。我希望你在用之前,先去阅读下它的[指南][3]。

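### 3. Gaupol ###

Another fairly complex program is [Gaupol][4]. Unlike Aegisub, however, Gaupol is easy to pick up, and its interface is very similar to that of Gnome Subtitles. Behind that relative simplicity it has all the essential tools: keyboard shortcuts, third-party extensions, spell checking, and even speech recognition (courtesy of [CMU Sphinx][5]). One drawback is that it occasionally became sluggish during my testing. It was nothing serious, but it gave me one more reason to prefer Gnome Subtitles.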

|

||||

|

||||

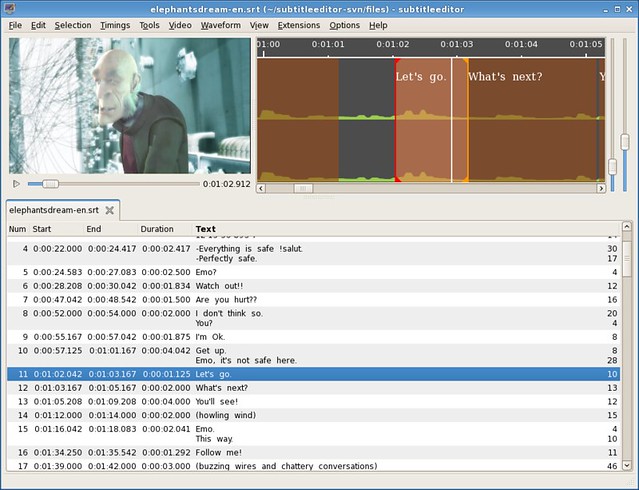

### 4. Subtitle Editor ###

|

||||

|

||||

|

||||

|

||||

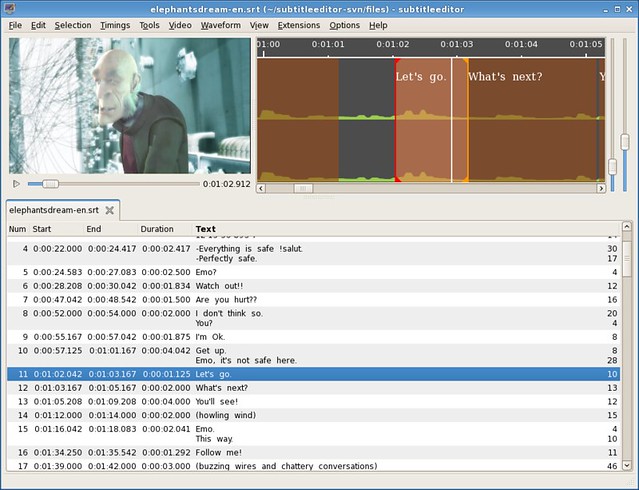

[Subtitle Editor][6]和 Gaupol 很像,但是它的界面有点不太直观,特性也只是稍微的高级一点点。我很欣赏的一点是,它可以定义“关键帧”,而且提供所有的同步选项。然而,多一点的图标,或者是少一点的文字都能提供界面的特性。作为一个值得称赞的字幕编辑器,Subtitle Editor 可以模仿“作家”打字的效果,虽然我不确定它是否特别有用。最后但同样重要的一点,重定义快捷键的功能很实用。

|

||||

|

||||

### 5. Jubler ###

|

||||

|

||||

|

||||

|

||||

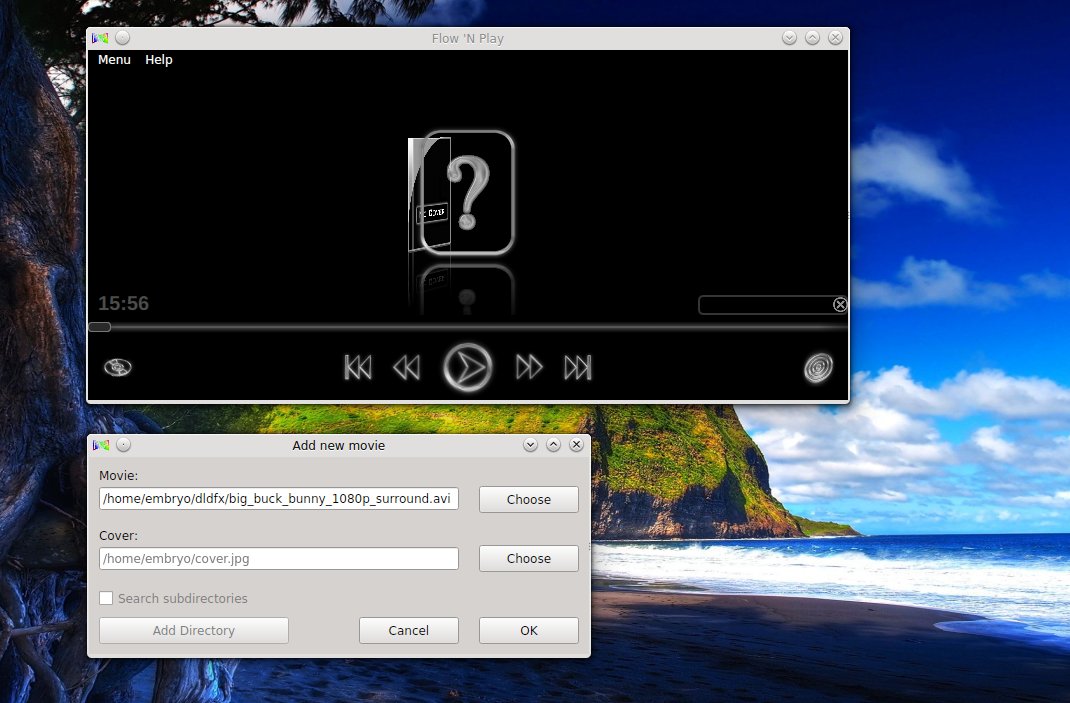

[Jubler][7]是一个用Java编写并有多平台支持的字幕编辑器。我对它的界面印象特别深刻。在上面我确实看出了Java特点的东西,但是,它仍然是经过精心的构造和构思的。像Aegisub 一样,你可以再屏幕上任意的拖动字幕,让你有愉快的体验而不单单是打字。它也可以为字幕自定义一个风格,在另外的一个轨道播放音频,翻译字幕,或者是是做拼写检查。不过,要注意的是,你需要事先安装好媒体播放器并且正确的配置,如果你想完整的使用Jubler。我把这些归功于在[官方页面][8]下载了脚本以后其简便的安装方式。

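### 6. Subtitle Composer ###

[Subtitle Composer][9] is billed as "the KDE subtitle composer", and it brings to mind many of the classic features covered above. With a KDE interface, our expectations were high. Naturally it has keyboard shortcuts, which I particularly like. Beyond that, the biggest difference from the editors mentioned above is that Subtitle Composer can run scripts written in JavaScript, Python or even Ruby. It ships with a few examples, which should help you get to grips with the syntax for these features.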

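Finally, you may want to edit a few subtitles for your family, resynchronize an entire track, or start from scratch. Whatever the task, Linux has good tools for it. For me, shortcuts and ease of use are what set these tools apart. For more advanced use, scripting and speech recognition become very handy features.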

Which subtitle editor do you use, and why? Is there a better one that isn't mentioned here? Let us know in the comments.

--------------------------------------------------------------------------------

via: http://xmodulo.com/good-subtitle-editor-linux.html

Author: [Adrien Brochard][a]

Translator: [barney-ro](https://github.com/barney-ro)

Proofreader: [Caroline](https://github.com/carolinewuyan)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://xmodulo.com/author/adrien
[1]:http://gnomesubtitles.org/
[2]:http://www.aegisub.org/
[3]:http://docs.aegisub.org/3.2/Main_Page/
[4]:http://home.gna.org/gaupol/
[5]:http://cmusphinx.sourceforge.net/
[6]:http://home.gna.org/subtitleeditor/
[7]:http://www.jubler.org/
[8]:http://www.jubler.org/download.html
[9]:http://sourceforge.net/projects/subcomposer/

Linux Users: Do You Really Understand Open-Source Hardware?
================================================================================

> Linux users who know nothing about how open-source hardware gets made are bound to keep ending up disappointed.

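Commercial software and free software have been intertwined for many years, yet the two often misunderstand each other. That's no surprise: for one side it's a business, while for the other it's a way of life. But these misunderstandings can be painful, which is why it is worth exposing what goes on behind the scenes.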

An increasingly common pattern is the stream of attempts at open-source hardware, whether from Canonical, Jolla, MakePlayLive or other companies. Commentators and end users alike, free software users usually greet the announcement of a new hardware platform with excessive enthusiasm. They grow disillusioned as the delays pile up, and finally give up on the product altogether.

Nobody benefits from this cycle, and it often breeds distrust. The root cause is that the average Linux user has no idea what happens behind the headlines.

My own experience of bringing products to market is limited, but I have yet to hear of anyone who has made a breakthrough. Bringing open-source hardware, or any other product, to market is still a brutal business, and one heavily stacked against newcomers.

### Finding partners ###

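Both the manufacture and the distribution of digital products are controlled by a relatively small number of companies, which sometimes have to be booked months in advance. Profit margins are thin. So, much like film studios buying up old sitcoms, manufacturers generally want to replicate the success of whatever is selling well now. Aaron Seigo made this point when he told me about his efforts to develop the Vivaldi tablet: manufacturers would much rather someone else took on the risk of developing a new product.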

Not only that, they prefer to work with people who already have a sales track record and are likely to bring long-term, repeat business.

Moreover, a typical newcomer deals in volumes of only a few thousand units. Chipmakers would rather work with companies like Apple or Samsung, whose orders are likely to run into the millions.

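Faced with this situation, open-source hardware makers may find themselves buried at the bottom of factories' lists. Their only way out is to find a second- or third-tier manufacturer willing to try a small production run of a new product.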

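This inevitably draws criticism from potential users, but open-source hardware makers have no choice but to compromise their vision. Looking for a different manufacturer doesn't solve the problem. Partly that is because it means more delays, but mostly it is because completely royalty-free hardware doesn't exist. Industry giants like Samsung have no interest in free hardware, and as newcomers, open-source hardware makers have no leverage to demand anything.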

What's more, even when free hardware exists, a manufacturer cannot guarantee it will be used in the next production run. Makers can easily find themselves fighting exactly the same battle every time they need another batch produced.

As if that weren't enough, open-source hardware makers may by this point have spent 6-12 months haggling. By the time the opportunity finally comes, industry standards have moved on, so they may have to start over just to upgrade the product's specifications.

### A short and brutal shelf life ###

### Weighing up the whole strange business ###

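I have only sketched the process roughly here, but anyone who has been involved in manufacturing will recognize what I have described as industry standard. Worse, open-source hardware makers usually only come to realize this after going through it themselves. Inevitably they make mistakes along the way, which cause further delays.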

The point, though, is that once you understand the process, your reaction to news of yet another open-source hardware attempt will change. Unless a company is operating in strict stealth mode, any announcement that a product will ship within six months will soon prove to be outdated speculation. Twelve to eighteen months is far more likely, and the difficulties described above may well mean that the product never actually ships.

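For example, as I write this, people are waiting for the first generation of Steam Machines, Linux-based gaming consoles, to arrive. They believe Steam Machines will completely transform Linux and gaming.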

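As a market category, Steam Machines may have an edge over other new products, because at least the people developing them have experience building software products. Yet a full year on, Steam Machine development has produced nothing but prototypes, and the machines may not be available to buy until mid-2015. Given the realities of hardware manufacturing, we will be lucky if even half of them see the light of day. Realistically, 2-4 of them actually shipping may be the more likely outcome.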

I make this prediction without taking individual efforts into account. But because it rests on how hardware manufacturing works, I'd say it is more reliable than prophecies of a golden age of Linux and gaming. I'll be happy to be proven wrong, but the fact remains: what is surprising is not that so many Linux-related hardware products fail, but that some succeed, however briefly.

--------------------------------------------------------------------------------

via: http://www.datamation.com/open-source/what-linux-users-should-know-about-op

Author: [Bruce Byfield][a]

Translator: [zpl1025](https://github.com/zpl1025)

Proofreader: [Mr小眼儿](https://github.com/tinyeyeser)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

via: http://xmodulo.com/configure-peer-to-peer-vpn-linux.html

Author: [Dan Nanni][a]

Translator: [felixonmars](https://github.com/felixonmars)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

Turn Your CentOS System into a BGP Router with Quagga
================================================================================

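In a [previous tutorial][1], I described how to easily turn a CentOS system into a full-fledged OSPF router using Quagga, an open-source routing software suite. In this tutorial, I will focus on **how to turn a Linux system into a BGP router, again using Quagga**, and demonstrate how to set up BGP peering with other BGP routers.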

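Before we get into the details, some background on BGP is in order. The Border Gateway Protocol (BGP) is the de facto standard inter-domain routing protocol of the Internet. In BGP terms, the global Internet is made up of tens of thousands of interconnected Autonomous Systems (ASes). Each AS represents an administrative domain of networks run by a particular operator ([reportedly][2], even former US President George W. Bush has his own AS number).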

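To make its network reachable worldwide, each AS needs to know how to reach every other AS on the Internet. That is where BGP comes in. BGP is the language an AS uses to exchange routing information with its neighboring ASes. This routing information is usually called BGP routes or BGP prefixes. It includes the AS number (ASN, a globally unique number) and the associated IP address blocks. Once all the BGP routes have been learned and recorded in the local BGP routing table, each AS knows how to reach any public IP address on the Internet.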

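The ability to route between different domains (ASes) is the main reason BGP is called an Exterior Gateway Protocol (EGP) or inter-domain protocol. Routing protocols such as OSPF, IS-IS, RIP and EIGRP, by contrast, are Interior Gateway Protocols (IGPs), or intra-domain routing protocols, which handle routing within a single domain.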

|

||||

|

||||

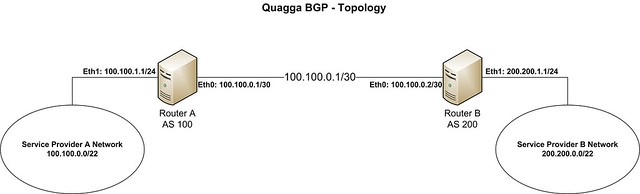

### 测试方案 ###

|

||||

|

||||

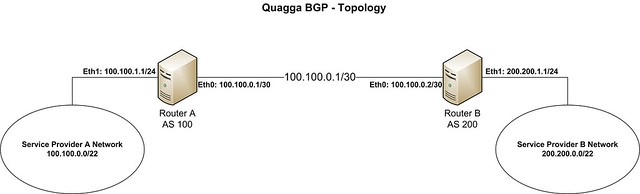

在这个教程中,让我们来使用以下拓扑。

Let's assume that provider A wants to set up BGP peering with provider B to exchange routes. Their AS numbers and IP address spaces are as follows:

- **Provider A**: ASN (100), IP address space (100.100.0.0/22), IP address assigned to the BGP router's eth1 interface (100.100.1.1)
- **Provider B**: ASN (200), IP address space (200.200.0.0/22), IP address assigned to the BGP router's eth1 interface (200.200.1.1)

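Router A and router B connect to each other over the 100.100.0.0/30 subnet. In theory, any subnet that both providers can reach could be used for the interconnection. In a real-world deployment, a /30 subnet from public IP address space is the recommended way to link provider A and provider B.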
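A /30 leaves exactly two usable addresses, one for each end of a point-to-point link. Below is a quick Python check of this scenario's addressing using the standard `ipaddress` module. The two addresses it prints, 100.100.0.1 and 100.100.0.2, are the ones configured on Router-A and Router-B later in this tutorial.

```python
import ipaddress

link = ipaddress.ip_network("100.100.0.0/30")
provider_a = ipaddress.ip_network("100.100.0.0/22")
provider_b = ipaddress.ip_network("200.200.0.0/22")

print([str(h) for h in link.hosts()])  # ['100.100.0.1', '100.100.0.2']
print(link.broadcast_address)          # 100.100.0.3
# The link subnet is carved out of provider A's /22.
print(link.subnet_of(provider_a))      # True
# Each router's eth1 address lies inside the block its AS will advertise.
print(ipaddress.ip_address("100.100.1.1") in provider_a)  # True
print(ipaddress.ip_address("200.200.1.1") in provider_b)  # True
```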

### Installing Quagga on CentOS ###

If Quagga is not installed yet, install it with yum:

```
# yum install quagga
```

If you are running CentOS 7, you need to apply the following SELinux policy. Otherwise SELinux will prevent the Zebra daemon from writing to its configuration directory. On CentOS 6 you can skip this step.

```
# setsebool -P zebra_write_config 1
```

The Quagga suite consists of several daemons that work together. For BGP routing, we will focus on setting up the following two:

- **Zebra**: the core daemon, responsible for kernel interfaces and static routes.
- **BGPd**: the BGP daemon.

### Configuring logging ###

Once Quagga is installed, the next step is to configure Zebra to manage the network interfaces of the BGP router. We start by creating a Zebra configuration file and enabling logging.

```
# cp /usr/share/doc/quagga-XXXXX/zebra.conf.sample /etc/quagga/zebra.conf
```

On CentOS 6:

```
# service zebra start
# chkconfig zebra on
```

On CentOS 7:

```
# systemctl start zebra
# systemctl enable zebra
```

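Quagga provides its own command-line shell called vtysh. It accepts commands similar to those of major router vendors such as Cisco and Juniper. We will use the vtysh shell to configure BGP routing for the rest of this tutorial.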

To launch the vtysh shell, type:

```
# vtysh
```

The prompt changes to the hostname, which shows that you are inside the vtysh shell.

```
Router-A#
```

Now configure the log file for Zebra with the following commands:

```
Router-A# configure terminal
Router-A(config)# log file /var/log/quagga/quagga.log
Router-A(config)# exit
```

Save the Zebra configuration permanently:

```
Router-A# write
```

Repeat the same steps on Router-B.

### Configuring the peering IP addresses ###

Next, configure the peering IP addresses on the available interfaces. First, display the interface information:

```
Router-A# show interface
Interface eth0 is up, line protocol detection is disabled
. . . . .
Interface eth1 is up, line protocol detection is disabled
. . . . .
```

Configure the eth0 interface:

```
Router-A# configure terminal
Router-A(config)# interface eth0
Router-A(config-if)# ip address 100.100.0.1/30
Router-A(config-if)# description "to Router-B"
Router-A(config-if)# no shutdown
Router-A(config-if)# exit
```

Then configure the eth1 interface and leave configuration mode:

```
Router-A(config)# interface eth1
Router-A(config-if)# ip address 100.100.1.1/24
Router-A(config-if)# description "test ip from provider A network"
Router-A(config-if)# no shutdown
Router-A(config-if)# exit
Router-A(config)# exit
```

Now verify the configuration:

```
Router-A# show interface
Interface eth0 is up, line protocol detection is disabled
  Description: "to Router-B"
  inet 100.100.0.1/30 broadcast 100.100.0.3
Interface eth1 is up, line protocol detection is disabled
  Description: "test ip from provider A network"
  inet 100.100.1.1/24 broadcast 100.100.1.255
```

To display only the interface descriptions:

```
Router-A# show interface description
Interface       Status  Protocol  Description
eth0            up      unknown   "to Router-B"
eth1            up      unknown   "test ip from provider A network"
```

If everything looks fine, don't forget to save the configuration.

```
Router-A# write
```

Repeat the equivalent configuration on Router-B.

Before moving on, make sure the two routers can ping each other's IP addresses.

```
Router-A# ping 100.100.0.2
PING 100.100.0.2 (100.100.0.2) 56(84) bytes of data.
64 bytes from 100.100.0.2: icmp_seq=1 ttl=64 time=0.616 ms
```

Next, we move on to configuring BGP peering and prefix advertisement.

### Configuring BGP peering ###

The Quagga daemon responsible for BGP is bgpd. First, prepare its configuration file.

```
# cp /usr/share/doc/quagga-XXXXXXX/bgpd.conf.sample /etc/quagga/bgpd.conf
```

On CentOS 6:

```
# service bgpd start
# chkconfig bgpd on
```

On CentOS 7:

```
# systemctl start bgpd
# systemctl enable bgpd
```

Now enter the Quagga shell.

```
# vtysh
```

First, make sure that no BGP session is already configured. In some versions you may find a preconfigured BGP session with AS number 7675. We don't need it, so we will remove it.

```
Router-A# show running-config
... ... ...
router bgp 7675
 bgp router-id 200.200.1.1
... ... ...
```

Remove the preconfigured BGP session and create the one we need in its place:

```
Router-A# configure terminal
Router-A(config)# no router bgp 7675
Router-A(config)# router bgp 100
Router-A(config-router)# no auto-summary
Router-A(config-router)# no synchronization
Router-A(config-router)# neighbor 100.100.0.2 remote-as 200
Router-A(config-router)# neighbor 100.100.0.2 description "provider B"
Router-A(config-router)# exit
Router-A(config)# exit
Router-A# write
```

Router-B is configured the same way. Its configuration is shown below for reference:

```
Router-B# configure terminal
Router-B(config)# no router bgp 7675
Router-B(config)# router bgp 200
Router-B(config-router)# no auto-summary
Router-B(config-router)# no synchronization
Router-B(config-router)# neighbor 100.100.0.1 remote-as 100
Router-B(config-router)# neighbor 100.100.0.1 description "provider A"
Router-B(config-router)# exit
Router-B(config)# exit
Router-B# write
```

|

||||

|

||||

|

||||

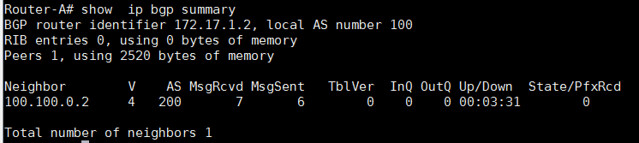

当相关的路由器都被配置好,两台路由器之间的对等将被建立。现在让我们通过运行下面的命令来确认:

|

||||

|

||||

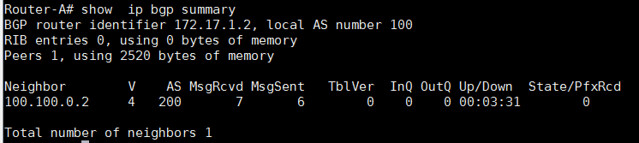

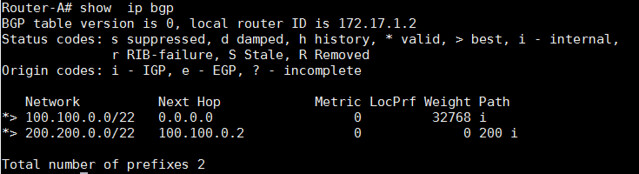

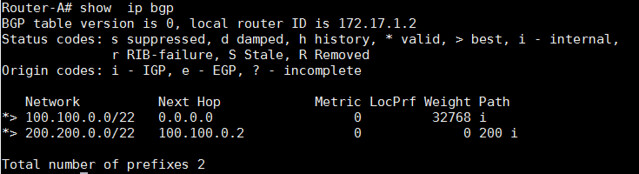

Router-A# show ip bgp summary

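In the output, look at the "State/PfxRcd" column. If the peering is down, it shows "Idle" or "Active". Remember that the word "Active" is always bad news on a router: it means the router is still actively looking for a neighbor, prefix or route. When the peering is up, the "State/PfxRcd" column shows the number of prefixes received from that neighbor.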

In this example output, the BGP peering between AS 100 and AS 200 is up, but no prefixes are being advertised yet, so the rightmost column shows 0.

### Configuring prefix advertisements ###

As mentioned at the beginning, AS 100 will advertise 100.100.0.0/22, and in our example AS 200 will advertise 200.200.0.0/22. Add these prefixes to the BGP configuration as follows.

On Router-A:

```
Router-A# configure terminal
Router-A(config)# router bgp 100
Router-A(config-router)# network 100.100.0.0/22
Router-A(config-router)# exit
Router-A(config)# exit
Router-A# write
```

On Router-B:

```
Router-B# configure terminal
Router-B(config)# router bgp 200
Router-B(config-router)# network 200.200.0.0/22
Router-B(config-router)# exit
Router-B(config)# exit
Router-B# write
```

At this point, both routers should start advertising their prefixes.

|

||||

|

||||

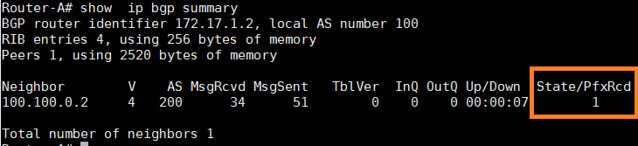

### 测试前缀通告 ###

|

||||

|

||||

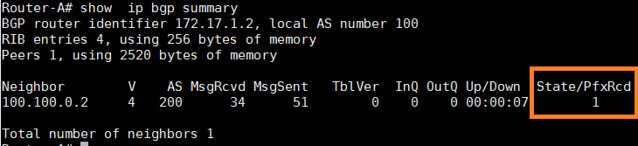

首先,让我们来确认前缀的数量是否被改变了。

|

||||

|

||||

Router-A# show ip bgp summary

|

||||

|

||||

|

||||

|

||||

为了查看所接收的更多前缀细节,我们可以使用以下命令,这个命令用于显示邻居100.100.0.2所接收到的前缀总数。

|

||||

|

||||

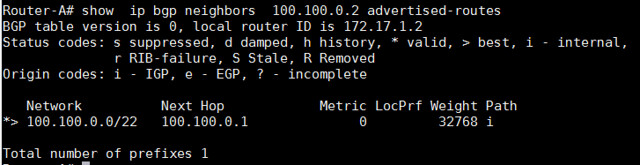

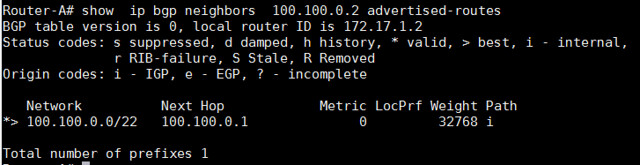

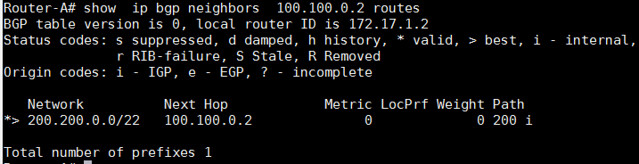

Router-A# show ip bgp neighbors 100.100.0.2 advertised-routes

|

||||

|

||||

|

||||

|

||||

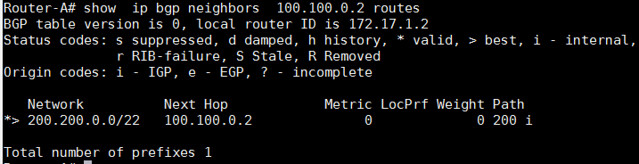

查看哪一个前缀是我们从邻居接收到的:

|

||||

|

||||

Router-A# show ip bgp neighbors 100.100.0.2 routes

We can also view all BGP routes:

```
Router-A# show ip bgp
```

The following commands can be used to check which routes in the routing table were learned via BGP.

```
Router-A# show ip route
Codes: K - kernel route, C - connected, S - static, R - RIP, O - OSPF,
       I - IS-IS, B - BGP, > - selected route, * - FIB route

C>* 100.100.0.0/30 is directly connected, eth0
C>* 100.100.1.0/24 is directly connected, eth1
B>* 200.200.0.0/22 [20/0] via 100.100.0.2, eth0, 00:06:45
```

```
Router-A# show ip route bgp
B>* 200.200.0.0/22 [20/0] via 100.100.0.2, eth0, 00:08:13
```

Routes learned via BGP also show up in the Linux routing table.

```
[root@Router-A~]# ip route
100.100.0.0/30 dev eth0 proto kernel scope link src 100.100.0.1
100.100.1.0/24 dev eth1 proto kernel scope link src 100.100.1.1
200.200.0.0/22 via 100.100.0.2 dev eth0 proto zebra
```
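The kernel chooses among these routes by longest-prefix match. The simplified Python sketch below performs that lookup over the three routes shown above. It shows why traffic to Router-B's 200.200.1.1 leaves through eth0 towards 100.100.0.2. It ignores metrics, policy routing and everything else the real kernel does, and the `ROUTES` table and `lookup` helper are purely illustrative.

```python
import ipaddress

# Routes as they appear in the `ip route` output above.
# A next hop of None means the network is directly connected.
ROUTES = [
    ("100.100.0.0/30", None, "eth0"),
    ("100.100.1.0/24", None, "eth1"),
    ("200.200.0.0/22", "100.100.0.2", "eth0"),
]

def lookup(destination: str):
    """Longest-prefix match of `destination` against ROUTES.

    Returns (prefix, next_hop, device), or None if nothing matches
    (this table has no default route).
    """
    ip = ipaddress.ip_address(destination)
    candidates = [
        (ipaddress.ip_network(prefix), next_hop, dev)
        for prefix, next_hop, dev in ROUTES
        if ip in ipaddress.ip_network(prefix)
    ]
    if not candidates:
        return None
    net, next_hop, dev = max(candidates, key=lambda c: c[0].prefixlen)
    return str(net), next_hop, dev

print(lookup("200.200.1.1"))  # ('200.200.0.0/22', '100.100.0.2', 'eth0')
print(lookup("100.100.0.2"))  # ('100.100.0.0/30', None, 'eth0')
print(lookup("8.8.8.8"))      # None
```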

Finally, test connectivity with ping. The ping should succeed.

```
[root@Router-A~]# ping 200.200.1.1 -c 2
```

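To sum up, this tutorial focused on running a basic BGP router on CentOS. It should get you started with BGP configuration. More advanced topics, such as setting up filters, tuning BGP attributes, local preference and AS-path prepending, will be covered in a future tutorial.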

|

||||

|

||||

希望这篇教程能给大家一些帮助。

|

||||

|

||||

--------------------------------------------------------------------------------

via: http://xmodulo.com/centos-bgp-router-quagga.html

Author: [Sarmed Rahman][a]
Translator: [disylee](https://github.com/disylee)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](http://linux.cn/)

[a]:http://xmodulo.com/author/sarmed
[1]:http://linux.cn/article-4232-1.html
[2]:http://weibo.com/3181671860/BngyXxEUF

297

published/20141027 ntpq -p output.md

Normal file


@ -0,0 +1,297 @@

All About Network Time, with ntpq Explained
================================================================================

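The [Gentoo][1] (and perhaps other distributions too?) [man page for "ntpq -p"][2] has only a brief description: "*Print a list of the peers known to the server as well as a summary of their state.*"

I haven't seen this command documented anywhere, so here is a summary that could be added to the "[man ntpq][3]" man page. More details are in "[ntpq – standard NTP query program][4]" (by the original author) and in [other examples of man ntpq][5].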

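[NTP][6] is a protocol designed to synchronize computer clocks over a [udp][9] network ([WAN][7] or [LAN][8]). Quoting [Wikipedia – NTP][10]:

> The Network Time Protocol (NTP) is a protocol and software implementation for synchronizing the clocks of computer systems over packet-switched, variable-latency data networks. Originally designed by David L. Mills of the University of Delaware, and still maintained by him and a team of volunteers, it has been in use since before 1985 and is one of the oldest Internet protocols.

For more about time and NTP, see "[The NTP FAQ, Time, what Time?][11]" and the [RFCs for NTP][12]. The earlier "Network Time Protocol (Version 3) RFC" ([txt][13], or [pdf][14], Appendix E, The NTP Timescale and its Chronometry, p70) includes an interesting explanation of how our timekeeping systems have changed, and how they relate, over the past 5000 years. The Wikipedia articles [Time][15] and [Calendar][16] give a wider perspective.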

The command "ntpq -p" outputs a table like this:

```
     remote           refid      st t when poll reach   delay   offset  jitter
==============================================================================
 LOCAL(0)        .LOCL.          10 l  96h   64    0    0.000    0.000   0.000
*ns2.example.com 10.193.2.20      2 u  936 1024  377   31.234    3.353   3.096
```

### More details ###

#### The table header ####

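- **remote** – the remote peer or server being synced to. "LOCAL" is this local host (shown when no remote server is available)
- **refid** – the upstream source that the remote peer or server is itself synchronized to
- **st** – the [Stratum][17] of the remote peer or server (NTP time synchronization is hierarchical)
- **t** – type (u: [unicast][18] or [manycast][19] client, b: [broadcast][20] or [multicast][21] client, l: local reference clock, s: symmetric peer (used as a backup), A: manycast server, B: broadcast server, M: multicast server; see "[Automatic Server Discovery][22]")
- **when** – time since a packet was last received from the peer (seconds by default; "h" means hours, "d" means days)
- **poll** – polling interval: [rfc5905][23] suggests that in NTPv4 this ranges from 4 (16 s) to 17 (36 h) as a power-of-2 exponent of seconds, but in practice the value displayed is in seconds and covers a much smaller range, from 64 (2^6) to 1024 (2^10) seconds
- **reach** – an 8-bit left-shift register recording the most recent polls (each poll shifts the register left; the new bit is set if the server answered and cleared if it did not), displayed in [octal][24]
- **delay** – round-trip communication delay to the remote peer or server (milliseconds)
- **offset** – offset between the local clock and the remote peer or server; the closer it is to 0, the closer the host's time is to the NTP server's (expressed as [RMS][25], milliseconds)
- **jitter** – mean deviation of the time reported by the remote peer (the spread of the offset across multiple time samples, milliseconds); the smaller this value, the more precise the host's time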
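The columns above can be decoded mechanically. The following minimal Python sketch parses one data row of `ntpq -p` and decodes `reach`, `poll` and `when` as described in the list. It assumes the whitespace-separated layout of the sample rows in the table above, with the first character of each row being the tally column (a space when there is no status); it is an illustration, not part of the NTP distribution.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass
class Peer:
    tally: str      # first column, e.g. "*" or " "
    remote: str
    refid: str
    stratum: int
    type: str
    when: str
    poll: int       # seconds
    reach: int      # decoded from octal
    delay: float    # ms
    offset: float   # ms
    jitter: float   # ms


def parse_peer(line: str) -> Peer:
    """Parse one data row of `ntpq -p`; line[0] must be the tally column."""
    f = line[1:].split()
    return Peer(line[0], f[0], f[1], int(f[2]), f[3], f[4], int(f[5]),
                int(f[6], 8), float(f[7]), float(f[8]), float(f[9]))


def reach_history(reach: int) -> str:
    """The last 8 polls as bits, oldest on the left; '1' means a reply arrived."""
    return format(reach, "08b")


def poll_exponent(poll_seconds: int) -> int:
    """The poll interval is a power of two; return its log2 exponent."""
    return int(math.log2(poll_seconds))


def when_seconds(when: str) -> Optional[int]:
    """Convert the `when` column ("936", "96h", "2d") to seconds; "-" means never."""
    if when == "-":
        return None
    units = {"h": 3600, "d": 86400}
    if when[-1] in units:
        return int(when[:-1]) * units[when[-1]]
    return int(when)


peer = parse_peer("*ns2.example.com 10.193.2.20 2 u 936 1024 377 31.234 3.353 3.096")
print(peer.remote, reach_history(peer.reach), poll_exponent(peer.poll))
```

A `reach` of 377 decodes to `11111111`: all of the last eight polls were answered. A value of 376 would decode to `11111110`, meaning only the most recent poll went unanswered.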

#### Tally codes ####

The first character of each row (the tally code) is a status flag (see [Peer Status Word][26]): " ", "x", "-", "#", "+", "*" or "o":

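- " " – no state indicated, for:
  - non-communicating remote machines
  - "LOCAL", i.e. this local host
  - (unused) high-stratum servers
  - remote machines that are themselves using this host as their synchronization reference
- "**x**" – out of tolerance, not used (discarded by the intersection algorithm)
- "**-**" – out of tolerance, not used (discarded by the cluster algorithm)
- "**#**" – good remote peer or server, but not used (not among the first six peers sorted by synchronization distance; kept as a backup source)
- "**+**" – good and preferred remote peer or server (included by the combine algorithm)
- "**\***" – the remote peer or server currently used as the primary synchronization reference
- "**o**" – PPS peer (when the prefer peer is valid). The actual system synchronization is derived from a pulse-per-second (PPS) signal, either via the PPS reference clock driver or via the kernel interface.

See [Clock Select Algorithm][27].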
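A monitoring script usually only needs to know which row carries `*` (or `o`) and which rows feed the combine algorithm. The following minimal Python sketch does that; the short meanings in the table follow the NTP "Peer Status Word" documentation, and the function names are hypothetical.

```python
from typing import Iterable, List, Optional

# One-line meanings of the tally codes in the first column of `ntpq -p`.
TALLY_CODES = {
    " ": "no state (unreachable, LOCAL, unused high stratum, or a client of this host)",
    "x": "falseticker, discarded by the intersection algorithm",
    "-": "outlier, discarded by the cluster algorithm",
    "#": "good but not used, kept as a backup",
    "+": "candidate, included by the combine algorithm",
    "*": "system peer, the current primary reference",
    "o": "PPS peer, system peer disciplined by a pulse-per-second signal",
}


def system_peer(lines: Iterable[str]) -> Optional[str]:
    """Return the remote name of the row marked '*' or 'o', if any."""
    for line in lines:
        if line[:1] in ("*", "o"):
            return line[1:].split()[0]
    return None


def combined_peers(lines: Iterable[str]) -> List[str]:
    """Rows whose offsets feed the combine algorithm: the system peer plus '+' rows."""
    return [line[1:].split()[0] for line in lines if line[:1] in ("*", "o", "+")]


rows = [
    " LOCAL(0) .LOCL. 10 l 96h 64 0 0.000 0.000 0.000",
    "*ns2.example.com 10.193.2.20 2 u 936 1024 377 31.234 3.353 3.096",
]
print(system_peer(rows), "->", TALLY_CODES["*"])
```

If `system_peer` returns `None`, the daemon has not (yet) selected any source, which is worth alerting on.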

#### refid ####

**refid** can take the following values:

- an IP address – the [IP address][28] of a remote peer or server
- **.LOCL.** – this local host (when no remote peer or server is available)
- **.PPS.** – "[Pulse Per Second][29]" from a time standard
- **.IRIG.** – [Inter-Range Instrumentation Group][30] time code
- **.ACTS.** – American [NIST time standard][31] telephone modem
- **.NIST.** – American NIST time standard telephone modem
- **.PTB.** – German [PTB][32] time standard telephone modem
- **.USNO.** – American [USNO time standard][33] telephone modem
- **.CHU.** – [CHU][34] ([HF][35], Ottawa, ON, Canada) time standard radio receiver
- **.DCFa.** – [DCF77][36] ([LF][37], Mainflingen, Germany) time standard radio receiver
- **.HBG.** – [HBG][38] (LF, Prangins, Switzerland) time standard radio receiver
- **.JJY.** – [JJY][39] (LF, Fukushima, Japan) time standard radio receiver
- **.LORC.** – [LORAN][40]-C station ([MF][41]) time standard radio receiver. Note: [no longer available][42] (superseded by [eLORAN][43])
- **.MSF.** – [MSF][44] (LF, Anthorn, Great Britain) time standard radio receiver
- **.TDF.** – [TDF][45] (MF, Allouis, France) time standard radio receiver
- **.WWV.** – [WWV][46] (HF, Ft. Collins, CO, America) time standard radio receiver
- **.WWVB.** – [WWVB][47] (LF, Ft. Collins, CO, America) time standard radio receiver
- **.WWVH.** – [WWVH][48] (HF, Kauai, HI, America) time standard radio receiver
- **.GOES.** – American [Geostationary Operational Environmental Satellite][49]
- **.GPS.** – American [GPS][50]
- **.GAL.** – [Galileo][51] European [GNSS][52]
- **.ACST.** – manycast server
- **.AUTH.** – authentication error
- **.AUTO.** – Autokey (an NTP authentication scheme) sequence error
- **.BCST.** – broadcast server
- **.CRYPT.** – Autokey protocol error
- **.DENY.** – access denied by the server
- **.INIT.** – association initialized
- **.MCST.** – multicast server
- **.RATE.** – (polling) rate exceeded
- **.TIME.** – association timeout
- **.STEP.** – step time change: the offset is smaller than the panic threshold (1000 ms) but larger than the step threshold (125 ms)
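When post-processing `ntpq -p` output, it helps to tell the three kinds of refid apart: an upstream address, a reference clock, or a status code. The following minimal Python sketch classifies a refid using only the codes from the list above; any dotted name it does not recognize is assumed to be a reference clock, which is a simplification.

```python
import ipaddress

# Status codes taken from the refid list above; other dotted names are treated as clocks.
STATUS_CODES = {"ACST", "AUTH", "AUTO", "BCST", "CRYPT", "DENY",
                "INIT", "MCST", "RATE", "TIME", "STEP"}


def classify_refid(refid: str) -> str:
    """Return 'upstream address', 'local clock', 'status code' or 'reference clock'."""
    try:
        ipaddress.ip_address(refid)
        return "upstream address"
    except ValueError:
        pass
    code = refid.strip(".")
    if code == "LOCL":
        return "local clock"
    if code in STATUS_CODES:
        return "status code"
    return "reference clock"


for refid in ("10.193.2.20", ".LOCL.", ".GPS.", ".INIT."):
    print(refid, "->", classify_refid(refid))
```

A peer stuck on `.INIT.` for a long time usually means the association never completed, so it is a useful value to flag.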

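#### Operation notes ####

A time server only reports time information and does not accept time updates from its clients (unidirectional updates), whereas a peer can update its fellow peers so that they converge on a mutually agreed time (bidirectional updates).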

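During [initial startup][53]:

> Unless the iburst option is used, the client normally takes a few minutes to synchronize with a server. If at startup the client's time differs from NTP time by more than 1000 seconds, the daemon exits with a message to the system log, directing the operator to manually set the time to within 1000 seconds and restart. If the difference is less than 1000 seconds but more than 128 seconds, a step correction occurs and the daemon restarts automatically.

> When started for the first time with no frequency file present (usually the ntp.drift file, which records the clock's frequency offset), the daemon enters a special mode to calibrate the frequency. This takes 900 seconds, during which the time is not [disciplined][54]. When calibration is complete, the daemon creates the frequency file, enters normal mode and gradually corrects whatever residual offset remains.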

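NTP stratum 0 devices such as atomic clocks (caesium, rubidium), GPS clocks or other standard-time radio clocks provide the time signal for stratum 1 time servers. NTP reports only [UTC][55] (Coordinated Universal Time). Client programs use [time zones][56] to derive local time from UTC.

The NTP protocol is highly precise: its timestamps have a resolution finer than a nanosecond (2^-32 seconds). The host's time precision and other parameters (limited by hardware and operating system) can be viewed with the command "ntpq -c rl" (see the [rfc1305][57] common variables and [rfc5905][58]).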

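#### "ntpq -c rl" output parameters ####

- **precision** – rounded to a power of 2 and reported as the exponent, so the precision is 2^precision (seconds)
- **rootdelay** – total round-trip delay to the primary reference source at the root of the synchronization subnet. Note that this value can be positive or negative, depending on clock precision.
- **rootdisp** – dispersion relative to the primary reference source at the root of the synchronization subnet (seconds)
- **tc** – NTP algorithm [PLL][59] (phase-locked loop) or [FLL][60] (frequency-locked loop) time constant
- **mintc** – NTP algorithm PLL/FLL minimum time constant, or "fastest response"
- **offset** – system clock offset determined by the combine algorithm (milliseconds)
- **frequency** – system clock frequency
- **sys_jitter** – system clock jitter determined by the combine algorithm (milliseconds)
- **clk_jitter** – clock jitter (milliseconds)
- **clk_wander** – clock frequency wander ([PPM][61] – parts per million)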

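Jitter (also called timing jitter) refers to short-term variations in frequency with components above 10 Hz, while wander refers to long-term variations in frequency with components below 10 Hz. (Stability refers to the systematic variation of frequency over time and is synonymous with aging, drift, trends, etc.)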
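The exponent and PPM values above are easier to judge once converted into ordinary units. The following minimal Python sketch does the arithmetic; it assumes only what the list states (precision is a log2 exponent, wander is in parts per million) plus the 32-bit fraction of an NTP timestamp.

```python
def precision_seconds(precision: int) -> float:
    """`precision` in `ntpq -c rl` is a log2 exponent: resolution = 2**precision seconds."""
    return 2.0 ** precision


def drift_seconds_per_day(ppm: float) -> float:
    """Seconds gained or lost per day by a clock whose frequency is off by `ppm`."""
    return ppm * 1e-6 * 86_400


# NTP timestamps carry a 32-bit binary fraction of a second.
NTP_TIMESTAMP_RESOLUTION = 2.0 ** -32

print(f"precision=-20 -> {precision_seconds(-20) * 1e6:.3f} us")
print(f"1 PPM -> {drift_seconds_per_day(1.0) * 1e3:.1f} ms/day")
print(f"timestamp resolution -> {NTP_TIMESTAMP_RESOLUTION * 1e12:.0f} ps")
```

For example, a precision of -20 is roughly one microsecond, and an uncorrected error of 1 PPM adds up to about 86.4 ms per day, which is why NTP keeps a continuously updated frequency correction.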

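#### Operation notes (continued) ####

The NTP software maintains a continuously updated correction for frequency drift. For a correctly configured, stable system on a non-congested network, NTP synchronization on modern hardware typically keeps the clock within a millisecond of UTC. (What accuracy can be achieved on a gigabit LAN?)

For UTC, a [leap second][62] can be inserted twice a year to keep in step with variations in the Earth's rotation. Note that local time shifts by an hour at [daylight saving time][63] changes. Until it resynchronizes, a client keeps its own independent UTC time unless its offset has been calibrated.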

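#### [What happens during a leap second][64] ####

> During a leap second, one second is either added to or removed from the current day. The adjustment happens at the last second of the UTC day. If a second is added, UTC shows 23:59:60, i.e. it actually takes 2 seconds to get from 23:59:59 to 0:00:00. If a second is removed, time jumps from 23:59:58 to 0:00:00. See also [The Kernel Discipline][65].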

So... what is the real value of the step threshold: 125 ms or 128 ms? What unit is the PLL/FLL tc in (log2 s? ms?)? What accuracy can be achieved between peers on a non-congested gigabit LAN?

Thanks to Camilo M and Chris B for their comments. Corrections and further details are welcome.

Thanks,

Martin

### Appendix ###

- The [NTP epoch][66] starts in 1900, whereas the UNIX epoch starts in 1970
- [Time corrections][67] are applied gradually, so full synchronization can take several hours
- [Peer status][68] can be logged to [summarise/plot time offsets and errors][69]
- [RMS][70] – root mean square
- [PLL][71] – phase-locked loop
- [FLL][72] – frequency-locked loop
- [PPM][73] – parts per million, used to describe frequency variation
- [man ntpq (Gentoo brief version)][74]
- [man ntpq (long version)][75]
- [man ntpq (Gentoo long version)][76]
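The difference between the two epochs is a fixed number of seconds, so converting an NTP timestamp to Unix time is a subtraction plus scaling of the 32-bit fraction. The following minimal Python sketch derives the offset from the calendar instead of hard-coding it; it deliberately ignores NTP era rollover (the 32-bit seconds field wraps in 2036).

```python
from datetime import datetime, timezone

# Seconds between the NTP epoch (1900-01-01) and the Unix epoch (1970-01-01), both UTC.
NTP_UNIX_OFFSET = int(
    (datetime(1970, 1, 1, tzinfo=timezone.utc)
     - datetime(1900, 1, 1, tzinfo=timezone.utc)).total_seconds()
)


def ntp_to_unix(seconds: int, fraction: int = 0) -> float:
    """Convert an NTP timestamp (32-bit seconds + 32-bit fraction) to Unix time.

    Assumes era 0, i.e. it does not handle the 2036 rollover.
    """
    return seconds - NTP_UNIX_OFFSET + fraction / 2**32


print(NTP_UNIX_OFFSET)
print(ntp_to_unix(NTP_UNIX_OFFSET, 2**31))
```

The offset works out to 2208988800 seconds: 70 years, 17 of them leap years, between 1900 and 1970.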

### See also ###

- [ntpq – standard NTP query program][77]
- [The Network Time Protocol (NTP) Distribution][78]
- A brief [history][79] of NTP
- A more detailed brief history: "Mills, D.L., A brief history of NTP time: confessions of an Internet timekeeper. Submitted for publication; please do not cite or redistribute" ([pdf][80])
- [NTP RFC][81] standards documents
- Network Time Protocol (Version 3) RFC – [txt][82], or [pdf][83]. Appendix E, The NTP Timescale and its Chronometry, p70, includes an interesting explanation of how our timekeeping systems have changed, and how they relate, over the past 5000 years.
- Wikipedia: [Time][84] and [Calendar][85]
- [John Harrison and the Longitude problem][86]
- [Clock of the Long Now][87] – The 10,000 Year Clock
- John C Taylor – [Chronophage][88]
- [Orders of magnitude of time][89]
- [Greenwich Time Signal][90]

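### Others ###

SNTP (Simple Network Time Protocol, [RFC 4330][91]) is basically NTP, but without some of the internal algorithms of [RFC 1305][92]-based NTP implementations that it does not need.

The Win32 [Windows Time Service][93] is a non-standard implementation of SNTP, with no accuracy guarantees and an assumed accuracy in the range of 1-2 seconds (because it has no system clock drift correction).

There is also [PTP (IEEE 1588)][95], the Precision Time Protocol. See Wikipedia: [Precision Time Protocol][96]. A software implementation is [PTPd][97]. Its key feature is that it is a high-precision master/slave synchronization system for a [LAN][98], with microsecond-level accuracy, using [International Atomic Time][99] (TAI, [monotonic][100], no leap seconds). Packet timestamping needs to be enabled in the network interface card. PTP-aware networks timestamp packets to reduce the effect of switches and routers. PTP can also be used on networks that do not timestamp packets, but it may then fail to synchronize because the timing errors are too large. Using PTP therefore requires setting up the network accordingly.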

### Older time synchronization protocols ###

- DTSS – Digital Time Synchronization Service from Digital Equipment Corporation, superseded by NTP. Example: [DTSS VMS C code c2000][101]. (Are there any articles or documents about DTSS?)
- [DAYTIME protocol][102], synchronizing over [TCP][103] or [UDP][104] port 13
- [ICMP Timestamp][105] and [ICMP Timestamp Reply][106], synchronizing via the [ICMP][107] protocol
- [Time Protocol][108], synchronizing over TCP or UDP port 37

--------------------------------------------------------------------------------

via: http://nlug.ml1.co.uk/2012/01/ntpq-p-output/831

Author: Martin L
Translator: [Liao](https://github.com/liaosishere)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](http://linux.cn/)

[1]:http://www.gentoo.org/
[2]:http://nlug.ml1.co.uk/2012/01/man-ntpq-gentoo-brief-version/853
[3]:http://www.thelinuxblog.com/linux-man-pages/1/ntpq
[4]:http://www.eecis.udel.edu/~mills/ntp/html/ntpq.html
[5]:http://linux.die.net/man/8/ntpq
[6]:http://www.ntp.org/
[7]:http://en.wikipedia.org/wiki/Wide_area_network
[8]:http://en.wikipedia.org/wiki/Local_area_network
[9]:http://en.wikipedia.org/wiki/User_Datagram_Protocol
[10]:http://en.wikipedia.org/wiki/Network_Time_Protocol
[11]:http://www.ntp.org/ntpfaq/NTP-s-time.htm
[12]:http://www.ntp.org/rfc.html
[13]:http://www.ietf.org/rfc/rfc1305.txt
[14]:http://www.rfc-editor.org/rfc/rfc1305.pdf
[15]:http://en.wikipedia.org/wiki/Time
[16]:http://en.wikipedia.org/wiki/Calendar
[17]:http://en.wikipedia.org/wiki/Network_Time_Protocol#Clock_strata
[18]:http://en.wikipedia.org/wiki/Unicast
[19]:http://www.eecis.udel.edu/~mills/ntp/html/manyopt.html#mcst
[20]:http://en.wikipedia.org/wiki/Broadcasting_%28computing%29
[21]:http://en.wikipedia.org/wiki/Multicast
[22]:http://www.eecis.udel.edu/~mills/ntp/html/manyopt.html
[23]:http://www.ietf.org/rfc/rfc5905.txt
[24]:http://en.wikipedia.org/wiki/Octal#In_computers
[25]:http://en.wikipedia.org/wiki/Root_mean_square
[26]:http://www.eecis.udel.edu/~mills/ntp/html/decode.html#peer
[27]:http://www.eecis.udel.edu/~mills/ntp/html/select.html
[28]:http://en.wikipedia.org/wiki/Ip_address
[29]:http://en.wikipedia.org/wiki/Pulse_per_second
[30]:http://en.wikipedia.org/wiki/Inter-Range_Instrumentation_Group
[31]:http://en.wikipedia.org/wiki/Standard_time_and_frequency_signal_service
[32]:http://www.ptb.de/index_en.html
[33]:http://en.wikipedia.org/wiki/United_States_Naval_Observatory#Time_service
[34]:http://en.wikipedia.org/wiki/CHU_%28radio_station%29
[35]:http://en.wikipedia.org/wiki/High_frequency
[36]:http://en.wikipedia.org/wiki/DCF77
[37]:http://en.wikipedia.org/wiki/Low_frequency
[38]:http://en.wikipedia.org/wiki/HBG_%28time_signal%29
[39]:http://en.wikipedia.org/wiki/JJY#Time_standards
[40]:http://en.wikipedia.org/wiki/LORAN#Timing_and_synchronization
[41]:http://en.wikipedia.org/wiki/Medium_frequency
[42]:http://en.wikipedia.org/wiki/LORAN#The_future_of_LORAN
[43]:http://en.wikipedia.org/wiki/LORAN#eLORAN
[44]:http://en.wikipedia.org/wiki/Time_from_NPL#The_.27MSF_signal.27_and_the_.27Rugby_clock.27
[45]:http://en.wikipedia.org/wiki/T%C3%A9l%C3%A9_Distribution_Fran%C3%A7aise
[46]:http://en.wikipedia.org/wiki/WWV_%28radio_station%29#Time_signals
[47]:http://en.wikipedia.org/wiki/WWVB
[48]:http://en.wikipedia.org/wiki/WWVH
[49]:http://en.wikipedia.org/wiki/GOES#Further_reading
[50]:http://en.wikipedia.org/wiki/Gps#Timekeeping
[51]:http://en.wikipedia.org/wiki/Galileo_%28satellite_navigation%29#The_concept
[52]:http://en.wikipedia.org/wiki/Gnss
[53]:http://www.eecis.udel.edu/~mills/ntp/html/debug.html
[54]:http://www.ntp.org/ntpfaq/NTP-s-algo-kernel.htm
[55]:http://en.wikipedia.org/wiki/Coordinated_Universal_Time
[56]:http://en.wikipedia.org/wiki/Time_zone
[57]:http://www.ietf.org/rfc/rfc1305.txt
[58]:http://www.ietf.org/rfc/rfc5905.txt
[59]:http://en.wikipedia.org/wiki/PLL
[60]:http://en.wikipedia.org/wiki/Frequency-locked_loop
[61]:http://en.wikipedia.org/wiki/Parts_per_million
[62]:http://en.wikipedia.org/wiki/Leap_second
[63]:http://en.wikipedia.org/wiki/Daylight_saving_time
[64]:http://www.ntp.org/ntpfaq/NTP-s-time.htm#Q-TIME-LEAP-SECOND
[65]:http://www.ntp.org/ntpfaq/NTP-s-algo-kernel.htm
[66]:http://www.ntp.org/ntpfaq/NTP-s-algo.htm#AEN1895
[67]:http://www.ntp.org/ntpfaq/NTP-s-algo.htm#Q-ACCURATE-CLOCK
[68]:http://www.ntp.org/ntpfaq/NTP-s-trouble.htm#Q-TRB-MON-STATFIL
[69]:http://www.ntp.org/ntpfaq/NTP-s-trouble.htm#AEN5086
[70]:http://en.wikipedia.org/wiki/Root_mean_square
[71]:http://en.wikipedia.org/wiki/PLL
[72]:http://en.wikipedia.org/wiki/Frequency-locked_loop
[73]:http://en.wikipedia.org/wiki/Parts_per_million
[74]:http://nlug.ml1.co.uk/2012/01/man-ntpq-gentoo-brief-version/853
[75]:http://nlug.ml1.co.uk/2012/01/man-ntpq-long-version/855
[76]:http://nlug.ml1.co.uk/2012/01/man-ntpq-gentoo-long-version/856
[77]:http://www.eecis.udel.edu/~mills/ntp/html/ntpq.html
[78]:http://www.eecis.udel.edu/~mills/ntp/html/index.html
[79]:http://www.ntp.org/ntpfaq/NTP-s-def-hist.htm
[80]:http://www.eecis.udel.edu/~mills/database/papers/history.pdf
[81]:http://www.ntp.org/rfc.html
[82]:http://www.ietf.org/rfc/rfc1305.txt
[83]:http://www.rfc-editor.org/rfc/rfc1305.pdf
[84]:http://en.wikipedia.org/wiki/Time
[85]:http://en.wikipedia.org/wiki/Calendar
[86]:http://www.rmg.co.uk/harrison
[87]:http://longnow.org/clock/
[88]:http://johnctaylor.com/
[89]:http://en.wikipedia.org/wiki/Orders_of_magnitude_%28time%29
[90]:http://en.wikipedia.org/wiki/Greenwich_Time_Signal
[91]:http://tools.ietf.org/html/rfc4330
[92]:http://tools.ietf.org/html/rfc1305
[93]:http://en.wikipedia.org/wiki/Network_Time_Protocol#Microsoft_Windows
[94]:http://en.wikipedia.org/wiki/Personal_computer
[95]:http://www.nist.gov/el/isd/ieee/ieee1588.cfm
[96]:http://en.wikipedia.org/wiki/IEEE_1588
[97]:http://ptpd.sourceforge.net/
[98]:http://en.wikipedia.org/wiki/Local_area_network
[99]:http://en.wikipedia.org/wiki/International_Atomic_Time
[100]:http://en.wikipedia.org/wiki/Monotonic_function
[101]:http://antinode.info/ftp/dtss_ntp/
[102]:http://en.wikipedia.org/wiki/DAYTIME
[103]:http://en.wikipedia.org/wiki/Transmission_Control_Protocol
[104]:http://en.wikipedia.org/wiki/User_Datagram_Protocol
[105]:http://en.wikipedia.org/wiki/ICMP_Timestamp
[106]:http://en.wikipedia.org/wiki/ICMP_Timestamp_Reply
[107]:http://en.wikipedia.org/wiki/Internet_Control_Message_Protocol
[108]:http://en.wikipedia.org/wiki/Time_Protocol

@ -1,6 +1,6 @@

5 Best Open Source Backup Tools for Linux and Unix-like Systems
================================================================================

The most basic purpose of a good backup is to be able to recover from errors such as:

- Human error
- RAID or disk failure

@ -13,7 +13,7 @@ Linux 和类Unix 系统上5个极品的开源软件备份工具

Make sure the software you deploy has the following features:

1. **Open source software** - You must choose software whose source code is freely available and can be modified. Make sure you can recover your data even if the software vendor/project stops maintaining the software or refuses to keep providing patches for it.
2. **Cross-platform support** - Make sure the backup software runs well on all the desktop and server operating systems you need to deploy.

@ -21,21 +21,21 @@ Linux 和类Unix 系统上5个极品的开源软件备份工具

4. **Autochanger** - Autochangers are simply a variety of backup devices, including tape libraries, near-line storage and autoloaders. An autochanger lets you automate tasks such as loading, mounting and labeling backup media like tapes.
5. **Backup media** - Make sure you can back up to tape, disk, DVD and cloud storage such as AWS.
6. **Encrypted data stream** - Make sure all client-to-server traffic is encrypted, to keep transfers over the LAN/WAN/Internet secure.
7. **Database support** - Make sure the backup software can back up databases such as MySQL or Oracle.
8. **Backups can span multiple volumes** - The backup software (when dumping files) can split each backup file into several parts, allowing each part to reside on a different volume. This ensures that very large backups (such as a 100TB file) can be stored on devices that are individually smaller, such as hard disks and disk volumes.
9. **VSS (Volume Shadow Copy)** - This is [Microsoft's Volume Shadow Copy Service (VSS)][1], which backs up data by creating snapshots of it. Make sure the backup software supports VSS for MS-Windows clients/servers.
10. **Deduplication** - A data compression technique that eliminates duplicate copies of repeated data (for example, images).
11. **License and cost** - Make sure you [understand how the license used by the backup software works][3].
12. **Commercial support** - Open source software can come with community support (such as mailing lists and forums) and professional support (such as paid support offered by distributions). You can use paid professional support for training and consulting.
13. **Reporting and alerts** - Finally, you must be able to see backup reports and the current job status, and get alerts when a backup fails.
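The deduplication idea in item 10 can be illustrated in a few lines. The following minimal Python sketch splits data into fixed-size chunks, stores each distinct chunk once under its SHA-256 digest, and keeps an ordered list of digests to rebuild the original. It is not how any of the tools below implement it; many real tools use variable-size (content-defined) chunking instead, and the function names are hypothetical.

```python
import hashlib
from typing import Dict, List, Tuple


def dedup(data: bytes, chunk_size: int = 4096) -> Tuple[Dict[str, bytes], List[str]]:
    """Split data into fixed-size chunks and keep one copy of each distinct chunk."""
    store: Dict[str, bytes] = {}   # digest -> chunk contents, stored once
    recipe: List[str] = []         # ordered digests needed to rebuild the data
    for start in range(0, len(data), chunk_size):
        chunk = data[start:start + chunk_size]
        digest = hashlib.sha256(chunk).hexdigest()
        store.setdefault(digest, chunk)
        recipe.append(digest)
    return store, recipe


def restore(store: Dict[str, bytes], recipe: List[str]) -> bytes:
    """Reassemble the original data from the chunk store."""
    return b"".join(store[d] for d in recipe)


data = b"A" * 8192 + b"B" * 4096   # two identical "A" chunks and one "B" chunk
store, recipe = dedup(data)
print(len(recipe), "chunks referenced,", len(store), "stored")
assert restore(store, recipe) == data
```

Fixed-size chunking is the simplest choice, but inserting a single byte near the start of a file shifts every later chunk boundary, which is the main reason content-defined chunking is preferred in practice.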

@ -59,7 +59,7 @@ Linux 和类Unix 系统上5个极品的开源软件备份工具

### Amanda - Yet another client/server backup tool ###

AMANDA is short for Advanced Maryland Automatic Network Disk Archiver. It lets a system administrator set up a single backup server that backs up the data of other hosts on the network to tape drives, disks or autochangers.

- Operating system: cross-platform.
- Backup levels: full, differential, incremental, consolidated.

@ -75,7 +75,7 @@ AMANDA 是 Advanced Maryland Automatic Network Disk Archiver 的缩写。它允

|

||||

|

||||

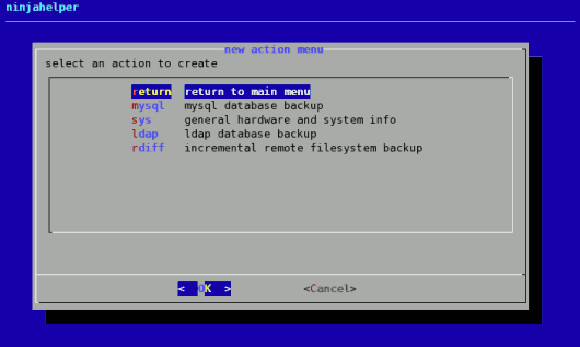

### Backupninja - 轻量级备份系统 ###

|

||||

|

||||

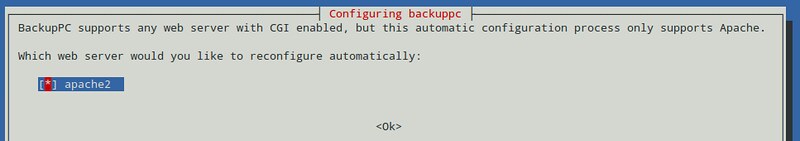

Backupninja 是一个简单易用的备份系统。你可以简单的拖放配置文件到 /etc/backup.d/ 目录来备份多个主机。

|

||||

Backupninja 是一个简单易用的备份系统。你可以简单的拖放一个配置文件到 /etc/backup.d/ 目录来备份到多个主机。

|

||||

|

||||

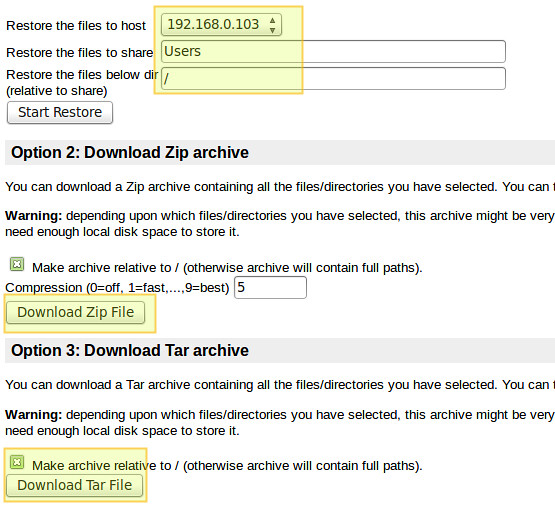

|

||||

|

||||

@ -93,7 +93,7 @@ Backupninja 是一个简单易用的备份系统。你可以简单的拖放配

|

||||

|

||||

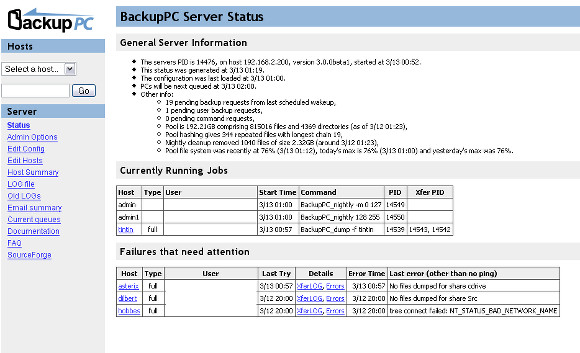

### Backuppc - 高效的客户端服务器备份工具###

|

||||

|

||||

Backuppc 可以用来备份基于LInux 和Windows 系统的主服务器硬盘。它配备了一个巧妙的池计划来最大限度的减少磁盘储存,磁盘I/O 和网络I/O。

|

||||

Backuppc 可以用来备份基于Linux 和Windows 系统的主服务器硬盘。它配备了一个巧妙的池计划来最大限度的减少磁盘储存、磁盘 I/O 和网络I/O。

|

||||

|

||||

|

||||

|

||||

@ -111,7 +111,7 @@ Backuppc 可以用来备份基于LInux 和Windows 系统的主服务器硬盘。

|

||||

|

||||

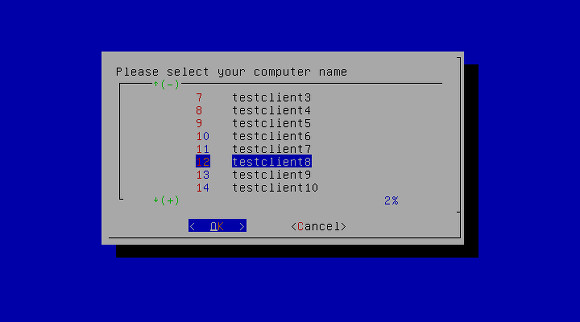

### UrBackup - 最容易配置的客户端服务器系统 ###

|

||||

|

||||

UrBackup 是一个非常容易配置的开源客户端服务器备份系统,通过图像和文件备份的组合完成了数据安全性和快速的恢复。你的文件可以通过Web界面或者是在Windows资源管理器中恢复,而驱动卷的备份用引导CD或者是USB 棒来恢复(逻辑恢复)。一个Web 界面使得配置你自己的备份服务变得非常简单。

|

||||

UrBackup 是一个非常容易配置的开源客户端服务器备份系统,通过镜像 方式和文件备份的组合完成了数据安全性和快速的恢复。磁盘卷备份可以使用可引导 CD 或U盘,通过Web界面或Windows资源管理器来恢复你的文件(硬恢复)。一个 Web 界面使得配置你自己的备份服务变得非常简单。

|

||||

|

||||

|

||||

|

||||

@ -129,19 +129,19 @@ UrBackup 是一个非常容易配置的开源客户端服务器备份系统,

### Other excellent open source backup software for your consideration ###

Amanda, Bacula and the software mentioned above are all feature-rich, but can be complicated to set up for a small network or a single server. I recommend you learn and use the following backup software:

1. [Rsnapshot][10] - I recommend it as a snapshot tool for local and remote filesystems. See how to set up and use it [on Debian and Ubuntu Linux][11] and [on CentOS/RHEL-based operating systems][12].
2. [rdiff-backup][13] - Another great remote incremental backup tool for Unix-like systems.
3. [Burp][14] - Burp is a network backup and restore program. It uses librsync to save network traffic and reduce the space taken by each backup. It also uses VSS (Volume Shadow Copy Service) to take snapshots when backing up Windows computers.
4. [Duplicity][15] - Great encrypted and bandwidth-efficient backup for Unix-like operating systems. See how to [install Duplicity for encrypted cloud backup][16] for more information.
5. [SafeKeep][17] - SafeKeep is a centralized, easy-to-use backup application that combines the best features of mirror and incremental backups.
6. [DREBS][18] - DREBS is a tool for taking periodic EBS snapshots. It is designed to run on the EC2 host to which the EBS volumes being snapshotted are attached.
7. Good old Unix programs such as rsync, tar, cpio, mt and dump.

### Conclusion ###

I hope you find this article useful for backing up your data. Don't forget to verify your backups and keep multiple copies of your data. Note that RAID is not a backup solution! Use any of the programs above to back up your servers, desktops, laptops and personal mobile devices. If you know of any other open source backup software I didn't mention, please share it in the comments.

--------------------------------------------------------------------------------

@ -149,7 +149,7 @@ via: http://www.cyberciti.biz/open-source/awesome-backup-software-for-linux-unix

Author: [nixCraft][a]
Translator: [barney-ro](https://github.com/barney-ro)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](http://linux.cn/)

66

published/20141108 When hackers grow old.md

Normal file


@ -0,0 +1,66 @@

ESR: When Hackers Grow Old
================================================================================

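Lately I've been wrestling with several members of a venerable open source development group. People who follow me closely will probably guess which group it is after reading this, but I'm not going to name it here.

Why is it so hard to drag some people into the 21st century? Sheesh...

I'm nearly 56, which is the age at which most young people would expect me to bellow hysterical things like "Get off my lawn!" at them now and then. But that's not how it is: I find that, especially in technical contexts, I have become quite unlike my age.

Most people my age really do turn into crotchety, hidebound old fogeys. And, awkwardly, I'm occasionally the one who interrupts the conversation to point out that some method that was a good fit in 1995 (or, in some particular cases, 1985)... is no longer a good method decades later.

Why me? Because young people have little credibility among my peers. If anyone is going to change those old guys' minds, he first has to be one of the more enlightened members of their own generation. Even so, I spend far more time fighting habits than you might expect.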

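Young people can be forgiven for mistakes made out of ignorance. They're young. Youth means lack of experience, and lack of experience usually leads to one-sided judgment. I find it much harder to forgive people who have been around long enough to know better, yet are so blinded by *long-habituated thinking* that they can't see what's right in front of them.

(Just to be clear: I'm really not a conservative partisan. The people who argue politics with me, conservatives or not, have failed to notice this, which I find rather ironic.)

Now, let's talk about GNU ChangeLog files. In 1985 they were a good idea, arguably even a necessary one. The idea was to use a single ChangeLog entry to record changes to several related files. For projects with no version control, or only very primitive version control, this worked well. I was *there* at the time, so I know.

But even in 1995, and into the early 2000s, many version control systems hadn't improved much. That is, they did not group the changes to a batch of files into a single record; instead they recorded each changed file separately, in different places. CVS, the most widely used version control system of the time, only simulated changesets, and did it so badly that most people stopped relying on the feature. Even so, ChangeLog files were still necessary.

But then came Subversion, with a beta in 2003 and a 1.0 release in 2004. Subversion really implemented changesets and was widely adopted. Together with the distributed version control systems (DVCS) that took off a year later, it set off fierce debate in the mainstream. Because if your project uses both changeset-based version control and ChangeLog files, the two end up competing for control of the same metadata, producing unpredictable conflicts.

There are several ways to compromise. One is to keep treating the ChangeLog as the authoritative record of code changes. In that case, you basically get only sketchy, pro-forma commit comments.

The other is to make the commit comment log authoritative. If you do that, you'll soon start wondering why you're still writing ChangeLog entries at all. Commit metadata is a much better fit for the changed code; after all, that is what it was designed for.

(Now imagine a project where two groups of people, each equally intent on doing what's best for the project, made completely different choices. You would then have to read both the ChangeLog and the commit log to understand what actually happened. Better to settle the problem before the conflict escalates...)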

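The third approach is to try to do both: duplicate the commit comment data, in a slightly altered format, as a ChangeLog entry included in the commit. This causes all kinds of problems you wouldn't expect, the most typical being that it violates the "single point of truth" principle. As soon as one of the copies gets corrupted, or a ChangeLog entry gets edited, it is no longer just a matter of data not matching when you sync; it badly confuses anyone who comes along later trying to figure out what people were thinking. (LCTT note: *[The Pragmatic Programmer][1]*: every piece of knowledge must have a single, unambiguous, authoritative representation within a system. Following Brian Kernighan's suggestion, this principle is called the "Single Point of Truth", or SPOT, rule.)

Or, as this *particular project that I won't name* has done, its senior developers recently declared in an email that a commit may contain several ChangeLog entries, and that commit metadata has nothing to do with the ChangeLog. Which means we still have to keep writing them to this day.

Reading that email nearly made me throw up. What kind of fool fails to realize this is asking for trouble? In fact, DVCSes come with good browsing tools for reliable commit logs, so the whole custom apparatus around ChangeLog files is nothing but a burden and a drag.

Alas, this is a special kind of fool: the aging hacker whose thinking has ossified. He will fiercely resist every rationalizing reform. The way he does things worked decades ago, but today it is counterproductive. If you try to explain to him that this isn't only about git summary lines, but about properly adapting to the current toolset so the ChangeLog no longer has to be maintained entry by entry... well, get ready for an unbearable, unimaginably crazy conversation.

Indeed, it succeeded in making me angry. This kind of nonsense turns the project into hard going. And the same rot shows up in how they attract young developers, which I think is the real problem. The related Google+ community has four-digit membership, mostly kids who haven't grown up yet. Clearly the outside world has taken away this message: the project's developers are tribal chieftains with deeply entrenched status, best worshipped from a distance.

What struck me most about all this is that every time I tangle with these tribal chieftains, I wonder: will I be like this someday? Or worse, am I just looking at a mirror image of myself without realizing it? I mean, judging from the impression I got from his website, this particular fool is younger than me. At least 15 years younger.

I've always thought of my thinking as clear. I don't get frustrated dealing with people smarter than me; I only get frustrated by people who can't keep up with my line of thought or can't see the facts. But this confidence may just be the Dunning-Kruger effect working on me, and I'm not sure what that means. Very few things frighten me; this one is near the top of the list.

Another unsettling thing is that as I grow older, clashes like this happen more and more often. Somehow I had hoped that my fellow hackers would age more gracefully, keeping a young mind even as their bodies grow old. Some do; sadly, most don't. It's truly sad.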

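I'm not sure my career will end well. If I do end up avoiding mental ossification (note that I said *if*), I think I know part of the reason, but I'm not sure the pattern can be replicated; achieving it may take some complicated chemistry going on in your head. Still, right or wrong, here is my advice to young hackers and other aspiring young people.

You (yes, you too) will not keep a good mind into middle and old age unless you deliberately take charge of it. You have to keep training your mind and pursuing your goals while you are young, and turn that into a habit that lasts until you are old.

There's a saying that the best time to start exercising for middle age is before 30. I think the same applies here: sticking to the habits I described can keep your mind flexible at 56, or even at 65. Push your limits and make constantly challenging yourself a habit. Leave your comfort zone now, so that later, when you really need one, you can build a comfort zone of your own.

You need to be clear about this; one alternative challenge is to pick an achievable goal and keep working toward it. This month I'm going to learn the Go language. Not the game; I played that long ago (though not very well). Not because my work requires it, but because I feel it's time to stretch myself.

Keep up the habit. Never give up.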

--------------------------------------------------------------------------------

via: http://esr.ibiblio.org/?p=6485

Author: [Eric Raymond][a]
Translator: [Stevearzh](https://github.com/Stevearzh)
Proofreader: [Mr小眼儿](https://github.com/tinyeyeser)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](http://linux.cn/)

[a]:http://esr.ibiblio.org/?author=2
[1]:http://book.51cto.com/art/200809/88490.htm

@ -2,7 +2,7 @@

|

||||

================================================================================

|

||||

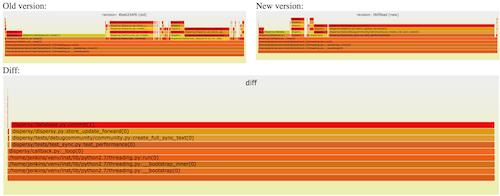

你能快速定位CPU性能回退的问题么? 如果你的工作环境非常复杂且变化快速,那么使用现有的工具是来定位这类问题是很具有挑战性的。当你花掉数周时间把根因找到时,代码已经又变更了好几轮,新的性能问题又冒了出来。

|

||||

|

||||

辛亏有了[CPU火焰图][1](flame graphs),CPU使用率的问题一般都比较好定位。但要处理性能回退问题,就要在修改前后的火焰图间,不断切换对比,来找出问题所在,这感觉就是像在太阳系中搜寻冥王星。虽然,这种方法可以解决问题,但我觉得应该会有更好的办法。

|

||||

幸亏有了[CPU火焰图][1](flame graphs),CPU使用率的问题一般都比较好定位。但要处理性能回退问题,就要在修改前后的火焰图之间,不断切换对比,来找出问题所在,这感觉就是像在太阳系中搜寻冥王星。虽然,这种方法可以解决问题,但我觉得应该会有更好的办法。

|

||||

|

||||

所以,下面就隆重介绍**红/蓝差分火焰图(red/blue differential flame graphs)**:

|

||||

|

||||

@ -14,7 +14,7 @@

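The shapes and sizes of the flames in this graph are the same as in the CPU flame graph for the second profile. (The y-axis is stack depth, the x-axis spans the sample population, the width of a frame shows the proportion of the profile in which that function was present, the top edge shows the function running on-CPU, and beneath it is its ancestry.)

The following example shows a workload whose CPU usage increased after a system upgrade. Here is the corresponding CPU flame graph ([SVG format][4]):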

<p><object data="http://www.brendangregg.com/blog/images/2014/zfs-flamegraph-after.svg" type="image/svg+xml" width=720 height=296>


<img src="http://www.brendangregg.com/blog/images/2014/zfs-flamegraph-after.svg" width=720 />


@ -22,7 +22,7 @@

Normally, the colors of frames and towers in a standard flame graph are chosen at random. In a red/blue differential flame graph, colors are used to show the differences between the two profiles.

In the second profile, deflate_slow() and the functions it calls ran more often than before, so this frame is colored red in the graph above. The cause turned out to be that ZFS compression had been enabled, whereas it was disabled before the upgrade.

This example is so simple that I could have analyzed it without a differential flame graph. But imagine analyzing a small regression, say under 5%, in more complex code; then it is much harder.

@ -69,7 +69,9 @@ difffolded.p只能对“折叠”过的堆栈profile文件进行操作,折叠

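In the example above, "func_a()->func_b()->func_c()" represents a call stack that appears 31 times in profile 1 and 33 times in profile 2. The flamegraph.pl script then processes these 3 columns and automatically generates a red/blue differential flame graph.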
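The three-column output can be reproduced in a few lines. The following is a Python re-sketch of the idea, not a port of the Perl difffolded.pl script; it assumes the semicolon-separated folded format (`frame;frame;frame COUNT`) produced by the FlameGraph stackcollapse tools, and `func_d` is a hypothetical extra stack added to show a path that disappears.

```python
from collections import defaultdict
from typing import Dict, List, Tuple


def read_folded(text: str) -> Dict[str, int]:
    """Parse folded stacks, one 'frame;frame;frame COUNT' entry per line."""
    counts: Dict[str, int] = defaultdict(int)
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        stack, _, count = line.rpartition(" ")
        counts[stack] += int(count)
    return dict(counts)


def diff_folded(before: Dict[str, int], after: Dict[str, int]) -> List[Tuple[str, int, int]]:
    """Emit (stack, count in profile 1, count in profile 2); a missing stack counts as 0."""
    stacks = sorted(set(before) | set(after))
    return [(s, before.get(s, 0), after.get(s, 0)) for s in stacks]


profile1 = read_folded("func_a;func_b;func_c 31\nfunc_a;func_d 5")  # func_d: hypothetical
profile2 = read_folded("func_a;func_b;func_c 33")
for stack, c1, c2 in diff_folded(profile1, profile2):
    print(stack, c1, c2)
```

The hypothetical `func_a;func_d` row comes out as `5 0`: it exists only in the first profile, which is exactly the case the "Limitations" section below is concerned with.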

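### Other options ###

Some more useful options:

**difffolded.pl -n**: This normalizes the data in the two profiles so that they match up. If you don't do this, the stack counts will almost certainly differ between the two profiles, because they were captured at different times and under different CPU loads; everything would then look either red (load increased) or blue (load decreased). The -n option normalizes the first profile, so you get the full red/blue spectrum.

**difffolded.pl -x**: This strips hex addresses. Profilers sometimes can't translate addresses into symbols, leaving hex addresses in the stacks. If these addresses differ between the two profiles, the stacks are treated as different even though they are actually the same. Use the -x option to fix this.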
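The effect of the two options can be sketched on stack-count dictionaries like the ones produced by a folded-stack parser. In the following Python sketch, scaling profile 1 to the total of profile 2 and deleting `0x...` tokens are assumptions that mirror the descriptions above; difffolded.pl's exact rounding and address pattern may differ, and the function names are hypothetical.

```python
import re
from collections import defaultdict
from typing import Dict

HEX_ADDR = re.compile(r"0x[0-9a-fA-F]+")


def strip_hex(counts: Dict[str, int]) -> Dict[str, int]:
    """Delete raw hex addresses so stacks that differ only by address merge (like -x)."""
    merged: Dict[str, int] = defaultdict(int)
    for stack, n in counts.items():
        merged[HEX_ADDR.sub("", stack)] += n
    return dict(merged)


def normalize_first(before: Dict[str, int], after: Dict[str, int]) -> Dict[str, int]:
    """Scale profile 1 so that its total sample count matches profile 2 (like -n)."""
    total1, total2 = sum(before.values()), sum(after.values())
    if total1 == 0:
        return dict(before)
    scale = total2 / total1
    return {stack: round(n * scale) for stack, n in before.items()}


print(normalize_first({"a": 10, "b": 10}, {"a": 30, "b": 10}))
print(strip_hex({"main;0x4005d0": 3, "main;0x4005f8": 2}))
```

With these made-up counts, profile 2 has twice as many samples overall; without normalization both stacks would look like they grew. After `normalize_first`, stack `a` goes from 20 to 30 (red) and `b` from 20 to 10 (blue), which is the relative shift that matters.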

@ -77,6 +79,7 @@ difffolded.p只能对“折叠”过的堆栈profile文件进行操作,折叠

**flamegraph.pl --negate**: Inverts the red/blue color scheme. This is used in the following section.
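The coloring rule described in this article (red where a stack gained samples, blue where it lost them, with `--negate` swapping the two) can be sketched as a small function. This is an approximation of the idea, not flamegraph.pl's actual palette formula: `max_delta` is assumed to be the largest absolute change in the graph, and the pale level of 210 is an arbitrary choice.

```python
def diff_color(count1: int, count2: int, max_delta: int, negate: bool = False) -> str:
    """Color one frame: red if it gained samples, blue if it lost them, white if unchanged."""
    delta = count2 - count1
    if negate:                        # like --negate: swap the meaning of red and blue
        delta = -delta
    if delta == 0 or max_delta <= 0:
        return "rgb(255,255,255)"
    # Larger changes get stronger color: fade 210 is pale, 0 is fully saturated.
    fade = max(0, int(210 * (1 - abs(delta) / max_delta)))
    if delta > 0:
        return f"rgb(255,{fade},{fade})"
    return f"rgb({fade},{fade},255)"


print(diff_color(31, 33, max_delta=2))               # the func_a;func_b;func_c stack grew
print(diff_color(31, 33, max_delta=2, negate=True))  # same stack, colors inverted
```

Scaling the saturation by the largest change keeps small regressions visible as pale tints while the biggest movers stand out.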

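### Limitations ###

While my red/blue differential flame graphs are useful, they do have a problem: if a code path disappears completely, there is nowhere in the flame graph to color it blue. You can only see the current CPU usage, not why it changed.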