mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-16 00:21:12 +08:00

commit

466410f241

@ -60,6 +60,7 @@ LCTT 的组成

|

||||

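* 2017/03/13 Created the LCTT home page, member list and member pages; the LCTT home page will move to https://linux.cn/lctt .
* 2017/03/16 Promoted GHLandy, bestony and rusking to Core members. Created the Comic group.
* 2017/04/11 Introduced the title system and awarded titles to key members.
* 2017/11/21 In view of qhwdw's fast, high-quality translations, promoted qhwdw to Core member.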

|

||||

|

||||

Core members

|

||||

-------------------------------

|

||||

@ -86,6 +87,7 @@ LCTT 的组成

|

||||

- Core member @Locez,
- Core member @ucasFL,
- Core member @rusking,
- Core member @qhwdw,
- Former topic selector @DeadFire,
- Former proofreader @reinoir222,
- Former proofreader @PurlingNayuki,

|

||||

|

||||

@ -0,0 +1,347 @@

|

||||

Understanding firewalld in Multi-Zone Configurations
============================================================

|

||||

|

||||

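Today's news is full of stories about compromised servers and stolen data. For someone who has read an informative blog post, it is not difficult to gain control of a system through a misconfigured service, a recently exposed vulnerability or a stolen password. Any of the many internet services found on a typical Linux server may harbor a vulnerability that grants unauthorized access to the system.

Because hardening a system at the application level against every possible threat is an impossible task, firewalls provide security by limiting access to the system. A firewall filters incoming packets based on their source IP, destination port and protocol. This way, only a few IP/port/protocol combinations interact with the system, and everything else is filtered out.

Linux firewalls are handled by netfilter, a kernel-level framework. For more than a decade, iptables has served as the userland abstraction layer for netfilter (LCTT translator's note: a basic UNIX system consists of the kernel and the userland; everything outside the kernel is called userland). iptables subjects packets to a series of rules; if a packet matches a rule's IP/port/protocol combination, the rule is applied to it, and the packet is accepted, rejected or dropped.

Firewalld is a newer userland abstraction layer for netfilter. Unfortunately, its power and flexibility are underappreciated because of a lack of documentation describing multi-zone configurations. This article provides examples to remedy that situation.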

|

||||

|

||||

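### Design Goals of firewalld

The designers of firewalld realized that most iptables use cases involve only a few unicast source IPs, for each of which a whitelist of services is allowed and everything else is denied. To take advantage of this pattern, firewalld sorts incoming traffic into zones defined by the source IP and/or network interface. Each zone has its own configuration to accept or deny packets based on specified criteria.

Another improvement is a simpler syntax than iptables. firewalld is easier to use because it specifies a service by name rather than by its ports and protocols, for example, samba rather than UDP ports 137 and 138 and TCP ports 139 and 445. It simplifies the syntax further by removing the dependence on statement order found in iptables.

Finally, firewalld allows netfilter to be modified interactively, so the running firewall can change independently of the permanent configuration stored in XML. Thus, the following is a temporary modification that will be overwritten at the next reload: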

|

||||

|

||||

```

|

||||

# firewall-cmd <some modification>

|

||||

```

|

||||

|

||||

By contrast, the following change is permanent and survives reloads and reboots:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent <some modification>

|

||||

# firewall-cmd --reload

|

||||

```
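As a rough illustration of the runtime/permanent split, the commands below compare the two configurations for the `public` zone. This is only a sketch: it assumes root privileges and a running firewalld, and `--runtime-to-permanent` may not be available in older releases, so treat the last line as optional.

```shell
# Services allowed right now (runtime configuration).
firewall-cmd --zone=public --list-services

# Services stored in the permanent configuration.
firewall-cmd --permanent --zone=public --list-services

# Optionally save the current runtime configuration as the permanent one.
firewall-cmd --runtime-to-permanent
```

If the two lists differ, a change was made without `--permanent` or has not yet been reloaded.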

|

||||

|

||||

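### Zones

The top layer of organization in firewalld is the zone. A packet is part of a zone if it matches that zone's associated network interface or source IP/mask. Several predefined zones are available: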

|

||||

|

||||

```

|

||||

# firewall-cmd --get-zones

|

||||

block dmz drop external home internal public trusted work

|

||||

```

|

||||

|

||||

Any zone that has an **interface** and/or a **source** assigned to it is an active zone. To list the active zones:

|

||||

|

||||

```

|

||||

# firewall-cmd --get-active-zones

|

||||

public

|

||||

interfaces: eno1 eno2

|

||||

```

|

||||

|

||||

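**Interfaces** are the system's names for hardware and virtual network adapters, as you can see in the example above. Every active interface is assigned to a zone, either the default zone or a user-specified one. However, an interface cannot be assigned to more than one zone.

In its default configuration, firewalld assigns all interfaces to the `public` zone and does not set up sources for any zone. As a result, `public` is the only active zone.

**Sources** are incoming IP address ranges, which can also be assigned to zones. A source (or overlapping sources) cannot be assigned to multiple zones. Doing so results in undefined behavior, because it would not be clear which rules should be applied to that source.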
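A quick way to see which zone a source or an interface belongs to is sketched below. The range 192.0.2.0/24 is a documentation prefix chosen purely for illustration, and the commands assume root privileges and a running firewalld.

```shell
# Temporarily assign an example source range to the internal zone.
firewall-cmd --zone=internal --add-source=192.0.2.0/24

# Ask firewalld which zone that source and a given interface belong to.
firewall-cmd --get-zone-of-source=192.0.2.0/24
firewall-cmd --get-zone-of-interface=eno1

# Undo the example assignment.
firewall-cmd --zone=internal --remove-source=192.0.2.0/24
```

Because `--permanent` is omitted, the assignment lives only in the runtime configuration.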

|

||||

|

||||

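Because specifying a source is not required, every packet will have a zone with a matching interface, but not necessarily a zone with a matching source. This implies some form of precedence, with priority going to the more specific source zones; more on that later. First, let's inspect how the `public` zone is configured: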

|

||||

|

||||

```

|

||||

# firewall-cmd --zone=public --list-all

|

||||

public (default, active)

|

||||

interfaces: eno1 eno2

|

||||

sources:

|

||||

services: dhcpv6-client ssh

|

||||

ports:

|

||||

masquerade: no

|

||||

forward-ports:

|

||||

icmp-blocks:

|

||||

rich rules:

|

||||

# firewall-cmd --permanent --zone=public --get-target

|

||||

default

|

||||

```

|

||||

|

||||

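Going line by line through the output:

* `public (default, active)` indicates that the `public` zone is the default zone (interfaces default to it when they come up), and that it is active because it has at least one interface or source associated with it.
* `interfaces: eno1 eno2` lists the interfaces associated with the zone.
* `sources:` lists the sources for the zone. There are none now, but if there were, they would be of the form xxx.xxx.xxx.xxx/xx.
* `services: dhcpv6-client ssh` lists the services allowed through the firewall. You can get an exhaustive list of firewalld's predefined services by running `firewall-cmd --get-services`.
* `ports:` lists the destination ports allowed through the firewall. This is useful when you need to allow a service that is not defined in firewalld.
* `masquerade: no` indicates that IP masquerading is disabled for this zone. If enabled, it would allow IP forwarding, with your computer acting as a router.
* `forward-ports:` lists the ports that are forwarded.
* `icmp-blocks:` is a blacklist of blocked icmp traffic.
* `rich rules:` lists advanced configurations, which are processed first in a zone.
* `default` is the target of the zone, which determines the action taken on a packet that matches the zone but is not explicitly handled by one of the settings above.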
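To make the `ports:` and `icmp-blocks:` fields less abstract, here is a small sketch of how they might be populated. Port 8080/tcp and the `echo-request` block are arbitrary examples rather than part of this article's configuration; the commands assume root privileges and a running firewalld.

```shell
# Allow a port that has no predefined service, then list the zone's ports.
firewall-cmd --zone=public --add-port=8080/tcp
firewall-cmd --zone=public --list-ports

# Show the icmp types firewalld knows about and block one of them.
firewall-cmd --get-icmptypes
firewall-cmd --zone=public --add-icmp-block=echo-request
```

Without `--permanent`, both changes disappear at the next reload.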

|

||||

|

||||

### A Simple Single-Zone Example

If you simply want to lock down your firewall, remove the services currently allowed by the `public` zone and reload:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=public --remove-service=dhcpv6-client

|

||||

# firewall-cmd --permanent --zone=public --remove-service=ssh

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

These commands result in the following firewall:

|

||||

|

||||

```

|

||||

# firewall-cmd --zone=public --list-all

|

||||

public (default, active)

|

||||

interfaces: eno1 eno2

|

||||

sources:

|

||||

services:

|

||||

ports:

|

||||

masquerade: no

|

||||

forward-ports:

|

||||

icmp-blocks:

|

||||

rich rules:

|

||||

# firewall-cmd --permanent --zone=public --get-target

|

||||

default

|

||||

```

|

||||

|

||||

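In the spirit of keeping security as tight as possible, if you need to open a temporary hole in your firewall (say, for ssh), you can add the service to the current session only (omit `--permanent`) and instruct firewalld to revert the modification after a specified amount of time: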

|

||||

|

||||

```

|

||||

# firewall-cmd --zone=public --add-service=ssh --timeout=5m

|

||||

```

|

||||

|

||||

The `--timeout` option takes a time value in seconds (`s`), minutes (`m`) or hours (`h`).
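To show how those units relate, the helper below converts a timeout value into seconds. The function name `timeout_to_seconds` is hypothetical and not part of firewalld; it is only a sketch of the arithmetic, treating a bare number as seconds.

```shell
# Convert a timeout value such as 90, 30s, 5m or 1h to seconds.
timeout_to_seconds() {
  local value="$1"
  local number="${value%[smh]}"   # numeric part without the unit suffix
  case "$value" in
    *h) echo $(( $number * 3600 )) ;;
    *m) echo $(( $number * 60 )) ;;
    *)  echo $(( $number )) ;;      # "s" suffix or a bare number of seconds
  esac
}

timeout_to_seconds 5m    # prints 300
timeout_to_seconds 1h    # prints 3600
timeout_to_seconds 30s   # prints 30
```

So `--timeout=5m` in the command above keeps ssh open for 300 seconds.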

|

||||

|

||||

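### Targets

When a zone processes a packet because of its source or interface, but no rule explicitly handles the packet, the zone's target determines the behavior:

* `ACCEPT`: accept the packet.
* `%%REJECT%%`: reject the packet, returning a reject reply.
* `DROP`: drop the packet, returning no reply.
* `default`: do nothing. The zone washes its hands of the problem and kicks it "upstairs".

firewalld 0.3.9 had a bug (fixed in 0.3.10) affecting source zones whose target is anything other than `default`: the target was applied regardless of which services were allowed. For example, a source zone with the target `DROP` would drop all packets, even whitelisted ones. Unfortunately, this version of firewalld was packaged for RHEL7 and its derivatives, making it a fairly common bug. The examples in this article avoid situations that would trigger this behavior.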

|

||||

|

||||

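### Precedence

Active zones fulfill two different roles. Zones with associated interfaces act as interface zones, and zones with associated sources act as source zones (a zone can fulfill both roles). firewalld handles a packet in the following order:

1. The corresponding source zone. Zero or one such zone may exist. If the source zone deals with the packet, because the packet satisfies a rich rule, the service is whitelisted, or the target is not `default`, processing ends here. Otherwise, the packet is passed on.
2. The corresponding interface zone. Exactly one such zone always exists. If the interface zone deals with the packet, processing ends here. Otherwise, the packet is passed on.
3. The firewalld default action: accept icmp packets and reject everything else.

The take-home message is that source zones take precedence over interface zones. Therefore, the general design pattern for multi-zone firewalld configurations is to create a privileged source zone that gives specific IPs elevated access to system services, and a restrictive interface zone that limits access for everyone else.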
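The processing order can be modelled as a small decision function. What follows is a toy sketch in plain shell, not how firewalld is implemented: the function name `decide` and the hard-coded zone contents (an `internal` source zone for 1.1.1.1 allowing ssh, a `drop` source zone for 3.3.3.3, and a `public` interface zone allowing http) are assumptions for illustration.

```shell
# Toy model of firewalld's zone precedence (illustration only).
# Zone contents are hard-coded assumptions, not read from firewalld.
decide() {
  local src="$1" service="$2"
  local zone="" allowed="" target="default"

  # Step 1: at most one source zone matches the packet's source IP.
  case "$src" in
    1.1.1.1) zone="internal"; allowed="ssh"; target="default" ;;
    3.3.3.3) zone="drop";     allowed="";    target="DROP" ;;
  esac

  if [ -n "$zone" ]; then
    # A whitelisted service is accepted by the source zone.
    if [ -n "$allowed" ] && [ "$service" = "$allowed" ]; then
      echo "ACCEPT (source zone $zone)"; return
    fi
    # A non-default target ends processing in the source zone.
    if [ "$target" != "default" ]; then
      echo "$target (source zone $zone)"; return
    fi
  fi

  # Step 2: the interface zone (public, target default) allows only http.
  if [ "$service" = "http" ]; then
    echo "ACCEPT (interface zone public)"; return
  fi

  # Step 3: firewalld default action: accept icmp, reject the rest.
  if [ "$service" = "icmp" ]; then
    echo "ACCEPT (default action)"
  else
    echo "REJECT (default action)"
  fi
}

decide 1.1.1.1 ssh    # ACCEPT (source zone internal)
decide 2.2.2.2 ssh    # REJECT (default action)
decide 1.1.1.1 http   # ACCEPT (interface zone public)
decide 3.3.3.3 http   # DROP (source zone drop)
```

The third call is the interesting one: the source zone does not allow http, but because its target is `default` the packet falls through to the interface zone.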

|

||||

|

||||

### A Simple Multi-Zone Example

To demonstrate precedence, let's replace `ssh` with `http` in the `public` zone and set up the default `internal` zone for our favorite IP address, 1.1.1.1. The following commands accomplish this:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=public --remove-service=ssh

|

||||

# firewall-cmd --permanent --zone=public --add-service=http

|

||||

# firewall-cmd --permanent --zone=internal --add-source=1.1.1.1

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

These commands result in the following configuration:

|

||||

|

||||

```

|

||||

# firewall-cmd --zone=public --list-all

|

||||

public (default, active)

|

||||

interfaces: eno1 eno2

|

||||

sources:

|

||||

services: dhcpv6-client http

|

||||

ports:

|

||||

masquerade: no

|

||||

forward-ports:

|

||||

icmp-blocks:

|

||||

rich rules:

|

||||

# firewall-cmd --permanent --zone=public --get-target

|

||||

default

|

||||

# firewall-cmd --zone=internal --list-all

|

||||

internal (active)

|

||||

interfaces:

|

||||

sources: 1.1.1.1

|

||||

services: dhcpv6-client mdns samba-client ssh

|

||||

ports:

|

||||

masquerade: no

|

||||

forward-ports:

|

||||

icmp-blocks:

|

||||

rich rules:

|

||||

# firewall-cmd --permanent --zone=internal --get-target

|

||||

default

|

||||

```

|

||||

|

||||

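With the above configuration, if someone attempts to `ssh` in from 1.1.1.1, the request succeeds because the source zone (`internal`) is applied first, and it allows `ssh` access.

If someone attempts to `ssh` in from somewhere else, say 2.2.2.2, there is no source zone, because no zone matches that source. The request therefore goes directly to the interface zone (`public`), which does not explicitly handle `ssh`. Since the target of `public` is `default`, the request passes to the firewalld default action, which rejects it.

What if 1.1.1.1 attempts `http` access? The source zone (`internal`) doesn't allow it, but its target is `default`, so the request passes to the interface zone (`public`), which grants access.

Now, suppose someone from 3.3.3.3 is trolling your website. To restrict access for that IP, simply add it to the preconfigured `drop` zone, aptly named because it drops all connections: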

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=drop --add-source=3.3.3.3

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

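The next time 3.3.3.3 attempts to access your website, firewalld sends the request first to the source zone (`drop`). Because the target is `DROP`, the request is dropped and never reaches the interface zone (`public`), where it would have been accepted.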

|

||||

|

||||

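### A Practical Multi-Zone Example

Suppose you are setting up a firewall for a server at your organization. You want the entire world to have `http` and `https` access, your organization (1.1.0.0/16) and workgroup (1.1.1.0/8) to have `ssh` access, and your workgroup to have `samba` access. Using zones in firewalld, you can set up this configuration in an intuitive manner.

Given the naming, it seems logical to commandeer the `public` zone for world access and the `internal` zone for local use. Start by replacing the `dhcpv6-client` and `ssh` services in the `public` zone with `http` and `https`: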

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=public --remove-service=dhcpv6-client

|

||||

# firewall-cmd --permanent --zone=public --remove-service=ssh

|

||||

# firewall-cmd --permanent --zone=public --add-service=http

|

||||

# firewall-cmd --permanent --zone=public --add-service=https

|

||||

```

|

||||

|

||||

Then trim the `mdns`, `samba-client` and `dhcpv6-client` services out of the `internal` zone (leaving only `ssh`) and add your organization as the source:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=internal --remove-service=mdns

|

||||

# firewall-cmd --permanent --zone=internal --remove-service=samba-client

|

||||

# firewall-cmd --permanent --zone=internal --remove-service=dhcpv6-client

|

||||

# firewall-cmd --permanent --zone=internal --add-source=1.1.0.0/16

|

||||

```

|

||||

|

||||

To accommodate the elevated `samba` privileges for your workgroup, add a rich rule:

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=internal --add-rich-rule='rule family=ipv4 source address="1.1.1.0/8" service name="samba" accept'

|

||||

```

|

||||

|

||||

Finally, reload to pull the changes into the running session:

|

||||

|

||||

```

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

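A few finishing touches remain. Attempting to `ssh` in to your server from an IP outside the `internal` zone results in a reject message, which is the firewalld default. It is more secure to exhibit the behavior of an inactive IP and drop the connection instead. Change the target of the `public` zone to `DROP` rather than `default` to accomplish this: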

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=public --set-target=DROP

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

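But wait, you can no longer ping, even from the internal zone! And icmp (the protocol ping uses) is not on the list of services that firewalld can whitelist. That is because icmp is a layer 3 IP protocol with no concept of ports, unlike services that are tied to ports. Before the `public` zone was set to `DROP`, ping could pass through the firewall because both of your `default` targets passed it on to the firewalld default action, which allowed it. Now it is dropped.

To restore ping for the internal network, use a rich rule: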

|

||||

|

||||

```

|

||||

# firewall-cmd --permanent --zone=internal --add-rich-rule='rule protocol value="icmp" accept'

|

||||

# firewall-cmd --reload

|

||||

```

|

||||

|

||||

In summary, here is the configuration of the two active zones:

|

||||

|

||||

```

|

||||

# firewall-cmd --zone=public --list-all

|

||||

public (default, active)

|

||||

interfaces: eno1 eno2

|

||||

sources:

|

||||

services: http https

|

||||

ports:

|

||||

masquerade: no

|

||||

forward-ports:

|

||||

icmp-blocks:

|

||||

rich rules:

|

||||

# firewall-cmd --permanent --zone=public --get-target

|

||||

DROP

|

||||

# firewall-cmd --zone=internal --list-all

|

||||

internal (active)

|

||||

interfaces:

|

||||

sources: 1.1.0.0/16

|

||||

services: ssh

|

||||

ports:

|

||||

masquerade: no

|

||||

forward-ports:

|

||||

icmp-blocks:

|

||||

rich rules:

|

||||

rule family=ipv4 source address="1.1.1.0/8" service name="samba" accept

|

||||

rule protocol value="icmp" accept

|

||||

# firewall-cmd --permanent --zone=internal --get-target

|

||||

default

|

||||

```

|

||||

|

||||

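This setup demonstrates a three-layer nested firewall. The outermost layer, `public`, is an interface zone and spans the entire world. The next layer, `internal`, is a source zone and spans your organization, which is a subset of `public`. Finally, a rich rule adds the innermost layer, spanning your workgroup, which is a subset of `internal`.

The take-home message here is that when a scenario can be broken down into nested layers, the broadest layer should use an interface zone, the next layer should use a source zone, and additional layers should use rich rules within the source zone.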
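Whether one address range really is a subset of another can be checked mechanically. The sketch below does the CIDR arithmetic in plain shell; the function names are hypothetical, and 1.1.1.0/24 is an assumed workgroup range used only for comparison, while the other prefixes are taken as printed in this article.

```shell
# Pack a dotted-quad IPv4 address into a single integer.
ip_to_int() {
  local IFS=.
  set -- $1                      # split the address on "." into four octets
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Succeed if CIDR block $1 lies entirely inside CIDR block $2.
cidr_within() {
  local inner_ip="${1%/*}" inner_len="${1#*/}"
  local outer_ip="${2%/*}" outer_len="${2#*/}"
  # A shorter prefix covers more addresses, so it cannot be nested.
  [ "$inner_len" -ge "$outer_len" ] || return 1
  local mask=$(( (0xFFFFFFFF << (32 - $outer_len)) & 0xFFFFFFFF ))
  local inner_net=$(( $(ip_to_int "$inner_ip") & $mask ))
  local outer_net=$(( $(ip_to_int "$outer_ip") & $mask ))
  [ "$inner_net" -eq "$outer_net" ]
}

cidr_within 1.1.0.0/16 0.0.0.0/0  && echo "1.1.0.0/16 is inside 0.0.0.0/0"
cidr_within 1.1.1.0/24 1.1.0.0/16 && echo "1.1.1.0/24 is inside 1.1.0.0/16"
cidr_within 1.1.1.0/8 1.1.0.0/16  || echo "1.1.1.0/8 is not inside 1.1.0.0/16"
```

A /8 prefix is broader than a /16, so for the innermost layer to be a true subset of the organization, the workgroup range needs a prefix longer than 16 bits.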

|

||||

|

||||

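### Debugging

firewalld employs intuitive paradigms for designing a firewall, yet it gives rise to ambiguity more easily than its predecessor, iptables. Should unexpected behavior occur, or to understand better how firewalld works, it can be useful to obtain an iptables description of how netfilter has been configured. Output for the previous example follows, with forward, output and logging lines trimmed for simplicity: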

|

||||

|

||||

```

|

||||

# iptables -S

|

||||

-P INPUT ACCEPT

|

||||

... (forward and output lines) ...

|

||||

-N INPUT_ZONES

|

||||

-N INPUT_ZONES_SOURCE

|

||||

-N INPUT_direct

|

||||

-N IN_internal

|

||||

-N IN_internal_allow

|

||||

-N IN_internal_deny

|

||||

-N IN_public

|

||||

-N IN_public_allow

|

||||

-N IN_public_deny

|

||||

-A INPUT -m conntrack --ctstate RELATED,ESTABLISHED -j ACCEPT

|

||||

-A INPUT -i lo -j ACCEPT

|

||||

-A INPUT -j INPUT_ZONES_SOURCE

|

||||

-A INPUT -j INPUT_ZONES

|

||||

-A INPUT -p icmp -j ACCEPT

|

||||

-A INPUT -m conntrack --ctstate INVALID -j DROP

|

||||

-A INPUT -j REJECT --reject-with icmp-host-prohibited

|

||||

... (forward and output lines) ...

|

||||

-A INPUT_ZONES -i eno1 -j IN_public

|

||||

-A INPUT_ZONES -i eno2 -j IN_public

|

||||

-A INPUT_ZONES -j IN_public

|

||||

-A INPUT_ZONES_SOURCE -s 1.1.0.0/16 -g IN_internal

|

||||

-A IN_internal -j IN_internal_deny

|

||||

-A IN_internal -j IN_internal_allow

|

||||

-A IN_internal_allow -p tcp -m tcp --dport 22 -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_internal_allow -s 1.1.1.0/8 -p udp -m udp --dport 137 -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_internal_allow -s 1.1.1.0/8 -p udp -m udp --dport 138 -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_internal_allow -s 1.1.1.0/8 -p tcp -m tcp --dport 139 -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_internal_allow -s 1.1.1.0/8 -p tcp -m tcp --dport 445 -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_internal_allow -p icmp -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_public -j IN_public_deny

|

||||

-A IN_public -j IN_public_allow

|

||||

-A IN_public -j DROP

|

||||

-A IN_public_allow -p tcp -m tcp --dport 80 -m conntrack --ctstate NEW -j ACCEPT

|

||||

-A IN_public_allow -p tcp -m tcp --dport 443 -m conntrack --ctstate NEW -j ACCEPT

|

||||

```

|

||||

|

||||

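In the iptables output above, new chains (lines starting with `-N`) are declared first. The remaining lines are rules appended to chains (lines starting with `-A`). Established connections and local traffic are accepted, and incoming packets go to the `INPUT_ZONES_SOURCE` chain, where each source IP is sent to its corresponding zone, if one exists. After that, traffic goes to the `INPUT_ZONES` chain, where it is routed to an interface zone. If it is not handled there, icmp is accepted, invalid packets are dropped, and everything else is rejected.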
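When the full dump is too long to read, individual chains can be inspected on their own. This is a sketch assuming root privileges and the chain names shown in the output above; on systems where firewalld uses the nftables backend these chains may not appear in iptables at all, and `nft list ruleset` is the rough equivalent.

```shell
# Show only the rules of the top-level INPUT chain.
iptables -S INPUT

# Show how source IPs are dispatched to zones.
iptables -S INPUT_ZONES_SOURCE

# List the allow rules of the public zone with packet counters.
iptables -L IN_public_allow -n -v
```

The packet counters from `-v` help confirm which rule a test connection actually hit.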

|

||||

|

||||

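### Conclusion

firewalld is an under-documented firewall configuration tool with more potential than many people realize. With its innovative paradigm of zones, firewalld allows the system administrator to break traffic up into categories, each with its own treatment, which simplifies the configuration process. Because of its intuitive design and syntax, it is practical for both simple single-zone and complex multi-zone configurations.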

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linuxjournal.com/content/understanding-firewalld-multi-zone-configurations?page=0,0

|

||||

|

||||

Author: [Nathan Vance][a]
Translator: [qhwdw](https://github.com/qhwdw)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:https://www.linuxjournal.com/users/nathan-vance

|

||||


|

||||

@ -1,35 +1,28 @@

|

||||

#Translating by aiwhj

|

||||

|

||||

Ubuntu Core in LXD containers

|

||||

使用 LXD 容器运行 Ubuntu Core

|

||||

============================================================

|

||||

|

||||

|

||||

### Share or save

|

||||

|

||||

|

||||

|

||||

### What’s Ubuntu Core?

|

||||

### Ubuntu Core 是什么?

|

||||

|

||||

Ubuntu Core is a version of Ubuntu that’s fully transactional and entirely based on snap packages.

|

||||

Ubuntu Core 是完全基于 snap 包构建,并且完全事务化的 Ubuntu 版本。

|

||||

|

||||

Most of the system is read-only. All installed applications come from snap packages and all updates are done using transactions. Meaning that should anything go wrong at any point during a package or system update, the system will be able to revert to the previous state and report the failure.

|

||||

该系统大部分是只读的,所有已安装的应用全部来自 snap 包,完全使用事务化更新。这意味着不管在系统更新还是安装软件的时候遇到问题,整个系统都可以回退到之前的状态并且记录这个错误。

|

||||

|

||||

The current release of Ubuntu Core is called series 16 and was released in November 2016.

|

||||

最新版是在 2016 年 11 月发布的 Ubuntu Core 16。

|

||||

|

||||

Note that on Ubuntu Core systems, only snap packages using confinement can be installed (no “classic” snaps) and that a good number of snaps will not fully work in this environment or will require some manual intervention (creating user and groups, …). Ubuntu Core gets improved on a weekly basis as new releases of snapd and the “core” snap are put out.

|

||||

注意,Ubuntu Core 限制只能够安装 snap 包(而非 “传统” 软件包),并且有相当数量的 snap 包在当前环境下不能正常运行,或者需要人工干预(创建用户和用户组等)才能正常运行。随着新版的 snapd 和 “core” snap 包发布,Ubuntu Core 每周都会得到改进。

|

||||

|

||||

### Requirements

|

||||

### 环境需求

|

||||

|

||||

As far as LXD is concerned, Ubuntu Core is just another Linux distribution. That being said, snapd does require unprivileged FUSE mounts and AppArmor namespacing and stacking, so you will need the following:

|

||||

就 LXD 而言,Ubuntu Core 仅仅相当于另一个 Linux 发行版。也就是说,snapd 需要挂载无特权的 FUSE 和 AppArmor 命名空间以及软件栈,像下面这样:

|

||||

|

||||

* An up to date Ubuntu system using the official Ubuntu kernel

|

||||

* 一个新版的使用 Ubuntu 官方内核的系统

|

||||

* 一个新版的 LXD

|

||||

|

||||

* An up to date version of LXD

|

||||

### 创建一个 Ubuntu Core 容器

|

||||

|

||||

### Creating an Ubuntu Core container

|

||||

|

||||

The Ubuntu Core images are currently published on the community image server.

|

||||

You can launch a new container with:

|

||||

当前 Ubuntu Core 镜像发布在社区的镜像服务器。你可以像这样启动一个新的容器:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc launch images:ubuntu-core/16 ubuntu-core

|

||||

@ -37,9 +30,9 @@ Creating ubuntu-core

|

||||

Starting ubuntu-core

|

||||

```

|

||||

|

||||

The container will take a few seconds to start, first executing a first stage loader that determines what read-only image to use and setup the writable layers. You don’t want to interrupt the container in that stage and “lxc exec” will likely just fail as pretty much nothing is available at that point.

|

||||

这个容器启动需要一点点时间,它会先执行第一阶段的加载程序,加载程序会确定使用哪一个镜像(镜像是只读的),并且在系统上设置一个可读层,你不要在这一阶段中断容器执行,这个时候什么都没有,所以执行 `lxc exec` 将会出错。

|

||||

|

||||

Seconds later, “lxc list” will show the container IP address, indicating that it’s booted into Ubuntu Core:

|

||||

几秒钟之后,执行 `lxc list` 将会展示容器的 IP 地址,这表明已经启动了 Ubuntu Core:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc list

|

||||

@ -50,7 +43,7 @@ stgraber@dakara:~$ lxc list

|

||||

+-------------+---------+----------------------+----------------------------------------------+------------+-----------+

|

||||

```

|

||||

|

||||

You can then interact with that container the same way you would any other:

|

||||

之后你就可以像使用其他的交互一样和这个容器进行交互:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc exec ubuntu-core bash

|

||||

@ -62,11 +55,11 @@ pc-kernel 4.4.0-45-4 37 canonical -

|

||||

root@ubuntu-core:~#

|

||||

```

|

||||

|

||||

### Updating the container

|

||||

### 更新容器

|

||||

|

||||

If you’ve been tracking the development of Ubuntu Core, you’ll know that those versions above are pretty old. That’s because the disk images that are used as the source for the Ubuntu Core LXD images are only refreshed every few months. Ubuntu Core systems will automatically update once a day and then automatically reboot to boot onto the new version (and revert if this fails).

|

||||

如果你一直关注着 Ubuntu Core 的开发,你应该知道上面的版本已经很老了。这是因为被用作 Ubuntu LXD 镜像的代码每隔几个月才会更新。Ubuntu Core 系统在重启时会检查更新并进行自动更新(更新失败会回退)。

|

||||

|

||||

If you want to immediately force an update, you can do it with:

|

||||

如果你想现在强制更新,你可以这样做:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc exec ubuntu-core bash

|

||||

@ -80,7 +73,7 @@ series 16

|

||||

root@ubuntu-core:~#

|

||||

```

|

||||

|

||||

And then reboot the system and check the snapd version again:

|

||||

然后重启一下 Ubuntu Core 系统,然后看看 snapd 的版本。

|

||||

|

||||

```

|

||||

root@ubuntu-core:~# reboot

|

||||

@ -94,7 +87,7 @@ series 16

|

||||

root@ubuntu-core:~#

|

||||

```

|

||||

|

||||

You can get an history of all snapd interactions with

|

||||

你也可以像下面这样查看所有 snapd 的历史记录:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc exec ubuntu-core snap changes

|

||||

@ -104,9 +97,9 @@ ID Status Spawn Ready Summary

|

||||

3 Done 2017-01-31T05:21:30Z 2017-01-31T05:22:45Z Refresh all snaps in the system

|

||||

```

|

||||

|

||||

### Installing some snaps

|

||||

### 安装 Snap 软件包

|

||||

|

||||

Let’s start with the simplest snaps of all, the good old Hello World:

|

||||

以一个最简单的例子开始,经典的 Hello World:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc exec ubuntu-core bash

|

||||

@ -116,7 +109,7 @@ root@ubuntu-core:~# hello-world

|

||||

Hello World!

|

||||

```

|

||||

|

||||

And then move on to something a bit more useful:

|

||||

接下来让我们看一些更有用的:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc exec ubuntu-core bash

|

||||

@ -124,9 +117,9 @@ root@ubuntu-core:~# snap install nextcloud

|

||||

nextcloud 11.0.1snap2 from 'nextcloud' installed

|

||||

```

|

||||

|

||||

Then hit your container over HTTP and you’ll get to your newly deployed Nextcloud instance.

|

||||

之后通过 HTTP 访问你的容器就可以看到刚才部署的 Nextcloud 实例。

|

||||

|

||||

If you feel like testing the latest LXD straight from git, you can do so with:

|

||||

如果你想直接通过 git 测试最新版 LXD,你可以这样做:

|

||||

|

||||

```

|

||||

stgraber@dakara:~$ lxc config set ubuntu-core security.nesting true

|

||||

@ -155,7 +148,7 @@ What IPv6 address should be used (CIDR subnet notation, “auto” or “none”

|

||||

LXD has been successfully configured.

|

||||

```

|

||||

|

||||

And because container inception never gets old, lets run Ubuntu Core 16 inside Ubuntu Core 16:

|

||||

已经设置过的容器不能回退版本,但是可以在 Ubuntu Core 16 中运行另一个 Ubuntu Core 16 容器:

|

||||

|

||||

```

|

||||

root@ubuntu-core:~# lxc launch images:ubuntu-core/16 nested-core

|

||||

@ -169,28 +162,29 @@ root@ubuntu-core:~# lxc list

|

||||

+-------------+---------+---------------------+-----------------------------------------------+------------+-----------+

|

||||

```

|

||||

|

||||

### Conclusion

|

||||

### 写在最后

|

||||

|

||||

If you ever wanted to try Ubuntu Core, this is a great way to do it. It’s also a great tool for snap authors to make sure their snap is fully self-contained and will work in all environments.

|

||||

如果你只是想试用一下 Ubuntu Core,这是一个不错的方法。对于 snap 包开发者来说,这也是一个不错的工具来测试你的 snap 包能否在不同的环境下正常运行。

|

||||

|

||||

Ubuntu Core is a great fit for environments where you want to ensure that your system is always up to date and is entirely reproducible. This does come with a number of constraints that may or may not work for you.

|

||||

如果你希望你的系统总是最新的,并且整体可复制,Ubuntu Core 是一个很不错的方案,不过这也会带来一些相应的限制,所以可能不太适合你。

|

||||

|

||||

And lastly, a word of warning. Those images are considered as good enough for testing, but aren’t officially supported at this point. We are working towards getting fully supported Ubuntu Core LXD images on the official Ubuntu image server in the near future.

|

||||

最后是一个警告,对于测试来说,这些镜像是足够的,但是当前并没有被正式的支持。在不久的将来,官方的 Ubuntu server 可以完整的支持 Ubuntu Core LXD 镜像。

|

||||

|

||||

### Extra information

|

||||

### 附录

|

||||

|

||||

The main LXD website is at: [https://linuxcontainers.org/lxd][2] Development happens on Github at: [https://github.com/lxc/lxd][3]

|

||||

Mailing-list support happens on: [https://lists.linuxcontainers.org][4]

|

||||

IRC support happens in: #lxcontainers on irc.freenode.net

|

||||

Try LXD online: [https://linuxcontainers.org/lxd/try-it][5]

|

||||

- LXD 主站:[https://linuxcontainers.org/lxd][2]

|

||||

- Github:[https://github.com/lxc/lxd][3]

|

||||

- 邮件列表:[https://lists.linuxcontainers.org][4]

|

||||

- IRC:#lxcontainers on irc.freenode.net

|

||||

- 在线试用:[https://linuxcontainers.org/lxd/try-it][5]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://insights.ubuntu.com/2017/02/27/ubuntu-core-in-lxd-containers/

|

||||

|

||||

作者:[Stéphane Graber ][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

作者:[Stéphane Graber][a]

|

||||

译者:[aiwhj](https://github.com/aiwhj)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,48 @@

|

||||

Kent Beck: The Life-Changing Magic of Tidying Up Code
==========

|

||||

|

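> The author, Kent Beck, was among the first to study patterns and refactoring in software development, is one of the founders of agile development, and created Extreme Programming and test-driven development. He also created SUnit, the Smalltalk testing framework, and co-authored JUnit, and has had a profound influence on how software is developed today. He now works at Facebook.

I've been tidying Facebook code this week, and I've been loving it. I've spent thousands of hours of my career tidying code, and I have a set of rules that make such tidying safer, more fun and more efficient.

Tidying proceeds in tiny, safe steps. In fact, Rule 1 is **If it's hard, don't do it**. I used to do crossword puzzles at night. If I got stuck and went to sleep, the next night the clues I had missed were often easy. Instead of getting hooked on the big prize, be prepared to stop when the going gets tough.

Tidying puts me in a zone, a feeling of going from success to success that I had been missing (more on this later). Rule 2 is **Start when you're fresh and stop when you're tired**. Get up and walk around. If you are still not refreshed, you are done for the day.

Tidying can be done in parallel with development, but only if you carefully keep track of other changes (I messed this up with my latest diff). Rule 3 is **Land each session's work immediately**. Unlike feature development, where landing only makes sense once a whole chunk of work is done, tidying is time-based.

Tidying requires little effort at any step, so I am willing to discard any step at the first sign of trouble. Rule 4, then, is **Two reds is a revert**. If I tidy, run the tests and hit a failing test, I fix it immediately if I can. If my fix fails, I immediately revert to the last known good state.

Tidying works even without a vision of a shiny new design. Sometimes, though, I want to see how things should go, so Rule 5 is **Practice**. Execute a sequence of tidyings, revert, and apply them again. The second time will go faster, and you will be more aware of which potholes to avoid.

Tidying only works if the risk of collateral damage is low and the cost of reviewing tidying changes is also low. Rule 6 is **Isolate tidying**. This can be hard when you miss the opportunity to tidy in the middle of writing code. Either finish and then tidy, or revert, tidy, and then make your change.

Try it. Move the declaration of a temporary variable next to its first use. Simplify a boolean expression (`return expression == True`, anyone?). Extract a helper. Reduce the scope of logic or state to where it is actually used.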

|

||||

|

||||

### Rules

- Rule 1: If it's hard, don't do it
- Rule 2: Start when you're fresh and stop when you're tired
- Rule 3: Land each session's work immediately
- Rule 4: Two reds is a revert
- Rule 5: Practice
- Rule 6: Isolate tidying

|

||||

|

||||

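### Coda

I have changed architectures and extracted frameworks by strictly tidying. Big changes can be made safely this way. I think this is because, while the cost per tidying is constant, the payoff is exponential, but I will need data and a model to demonstrate this hypothesis.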

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.facebook.com/notes/kent-beck/the-life-changing-magic-of-tidying-up-code/1544047022294823/

|

||||

|

||||

Author: [KENT BECK][a]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:https://www.facebook.com/kentlbeck

|

||||


|

||||

@ -0,0 +1,33 @@

|

||||

Let's Encrypt: Wildcard Certificates Coming January 2018
============================================================

|

||||

|

||||

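Let's Encrypt will begin issuing wildcard certificates in January 2018. Wildcard certificates are a commonly requested feature, and we understand that there are use cases where they make HTTPS deployment easier. Our hope is that offering wildcards will help accelerate the Web's progress towards 100% HTTPS.

Let's Encrypt currently secures 47 million domains via our fully automated DV certificate issuance and management API. Since Let's Encrypt's service became available in December 2015, this has contributed heavily to the share of encrypted page loads rising from 40% to 58%. If you are excited about wildcard availability and our mission to reach a 100% encrypted Web, we ask that you contribute to our [summer fundraising campaign][1] (LCTT translator's note: this refers to an earlier summer campaign; the original article was published this summer).

A wildcard certificate can secure any number of subdomains of a base domain (e.g. *.example.com). This allows administrators to use a single certificate and key pair for a domain and all of its subdomains, which can make HTTPS deployment easier.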
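To make the matching concrete, here is a small sketch of how a host name can be compared against a wildcard name. The function name `matches_wildcard` is hypothetical, and the sketch assumes the usual convention that `*` stands for exactly one leftmost label, so the base domain itself and deeper names are not covered.

```shell
# Succeed if host $2 is covered by wildcard name $1 (e.g. *.example.com).
matches_wildcard() {
  local pattern="$1" host="$2"
  local base="${pattern#\*.}"   # pattern without the leading "*."
  local label="${host%%.*}"     # leftmost label of the host
  local rest="${host#*.}"       # everything after the first dot
  [ "$pattern" != "$base" ] && [ -n "$label" ] &&
    [ "$host" != "$rest" ] && [ "$rest" = "$base" ]
}

matches_wildcard '*.example.com' www.example.com && echo "www.example.com: covered"
matches_wildcard '*.example.com' example.com     || echo "example.com: not covered"
matches_wildcard '*.example.com' a.b.example.com || echo "a.b.example.com: not covered"
```

In practice this means a certificate intended to cover both `example.com` and its subdomains would need both names listed.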

|

||||

|

||||

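Wildcard certificates will be offered free of charge via our [upcoming ACME v2 API endpoint][2]. Initially we will support only base domain validation via DNS for wildcard certificates, but we may explore additional validation options over time. We encourage people to ask any questions about wildcard certificate support on our [community forums][3].

We decided to announce this exciting development during our summer fundraising campaign because we are a nonprofit that exists thanks to the generous support of the community that uses our services. If you would like to support a more secure and privacy-respecting Web, [donate today][4]!

We would like to thank our [community][5] and our [sponsors][6] for making everything we have done possible. If your company or organization is able to sponsor Let's Encrypt, please email us at [sponsor@letsencrypt.org][7].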

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://letsencrypt.org/2017/07/06/wildcard-certificates-coming-jan-2018.html

|

||||

|

||||

Author: [Josh Aas][a]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:https://letsencrypt.org/2017/07/06/wildcard-certificates-coming-jan-2018.html

|

||||

[1]:https://letsencrypt.org/donate/

|

||||

[2]:https://letsencrypt.org/2017/06/14/acme-v2-api.html

|

||||

[3]:https://community.letsencrypt.org/

|

||||

[4]:https://letsencrypt.org/donate/

|

||||

[5]:https://letsencrypt.org/getinvolved/

|

||||

[6]:https://letsencrypt.org/sponsors/

|

||||

[7]:mailto:sponsor@letsencrypt.org

|

||||

@ -0,0 +1,149 @@

|

||||

A Handy Tool for Linux Users: Guide to Linux
============================================================

|

||||

|

||||

|

||||

|

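> The "Guide to Linux" app is not perfect, but it is a very good tool to help you learn Linux commands.

Remember your first time using Linux? For some, the learning curve can be a challenge. For example, many commands live in `/usr/bin`. On my current Elementary OS system, the count is 1944. Of course, not all of those are true commands (or commands I would ever use), but the number is significant.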
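If you are curious how your own system compares, a one-line count along these lines should do. It simply counts directory entries, so the total will differ between distributions and, as noted, includes things that are not commands in the usual sense.

```shell
# Count the entries in /usr/bin (the total differs from system to system).
count=$(( $(ls /usr/bin | wc -l) ))
echo "Entries in /usr/bin: $count"
```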

|

||||

|

||||

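Because of that (and because it differs from platform to platform), new users (and some seasoned users) need a little help.

For every administrator, these skills are must-haves:

* Familiarity with the platform
* Understanding commands
* Writing shell scripts

When seeking help, sometimes you need to RTFM (Read the Fine/Freaking/Funky Manual), but that doesn't help when you don't even know what you are looking for. At that point, you will be glad to have a mobile app like [Guide to Linux][15].

Unlike most of the content you find on Linux.com, this article is about an Android app. Why? Because this particular app is designed to help users learn Linux.

And it does a fine job.

Let me be clear about this app: it is not perfect. Guide to Linux is filled with broken English and bad punctuation, and (if you are a purist) it never mentions GNU. On top of that, one particular feature (one that would normally be very helpful to users) doesn't function well enough to be useful (LCTT translator's note: the terminal emulator, explained below). Aside from that, I dare say Guide to Linux may be one of the best mobile "pocket guides" to the Linux platform.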

|

||||

|

||||

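With this app, you will enjoy the following features:

* Offline use
* A Linux tutorial
* Details of basic and advanced Linux commands
* Command examples and syntax
* A dedicated shell script module

Beyond that, Guide to Linux is free (although it contains ads). If you want to get rid of the ads, an in-app purchase ($2.99 USD/year) removes them.

Let's install the app and take a look at what it offers.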

|

||||

|

||||

### Installation

Like every Android app, Guide to Linux is simple to install. Just follow these steps:

1. Open the Google Play Store on your Android device
2. Search for Guide to Linux
3. Locate and tap the entry by Essence Infotech
4. Tap Install
5. Allow the installation to complete

Once it is installed, you will find the Guide to Linux launcher in your App Drawer or on your home screen (or both). Tap the icon to launch the app.

|

||||

|

||||

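### Usage

Let's look at each feature of Guide to Linux. I find some features more helpful than others; your experience may differ. Before going through them one by one, I want to highlight the interface: the developers have done a good job of making the app easy to use.

From the main window (Figure 1), you can reach four easily accessible features.

*Figure 1: The Guide to Linux main window. [Used with permission][1]*

Tap any of the four icons to launch a feature, and get ready to learn.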

|

||||

|

||||

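### Tutorial

Let's start with the most newbie-friendly feature of the app, the tutorial. Open the "Tutorial" feature and you will see the welcome section of the tutorial, "Introduction to the Linux Operating System" (Figure 2).

*Figure 2: The tutorial begins. [Used with permission][2]*

If you tap the "hamburger menu" (three horizontal lines in the top left corner), the table of contents appears (Figure 3), so you can select any available section of the tutorial.

*Figure 3: The tutorial's table of contents. [Used with permission][3]*

If you haven't noticed by now, the tutorial section of Guide to Linux is a collection of short essays on each topic. The essays include images and (sometimes) links that take you to specific websites (as the topic requires). There is no interaction, only reading. Still, it is a great starting point, because the developers have done a good job of describing the various sections (grammar problems aside).

Although you will see a search option at the top of the window, I haven't found that feature to work at all, but you can give it a try.

For Linux newbies who want to gain Linux administration skills, reading through the whole tutorial is worthwhile. Once done, move on to the next topic.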

|

||||

|

||||

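### Commands

The Commands feature is like having man pages on your phone for a large number of frequently used Linux commands. When you first open it, a welcome page explains in detail the benefits of using commands.

After reading it, you can tap the right-facing arrow (at the bottom of the screen) or tap the "hamburger menu" and then select another command you want to learn from the sidebar (Figure 4).

*Figure 4: The Commands sidebar lets you browse the listed commands. [Used with permission][4]*

Tap any command to read its explanation. Each explanation page covers the command and its options and provides examples of how to use it.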

|

||||

|

||||

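### Shell Scripting

By this point you are starting to get familiar with Linux and have a reasonable grasp of commands. Now it is time to get to know shell scripting. This section is laid out the same way as the Tutorial and Commands sections.

You can open the table-of-contents sidebar and then open any section of the shell scripting tutorial (Figure 5).

*Figure 5: The shell scripting section should look familiar. [Used with permission][5]*

The developer has done a good job of explaining how to get the most out of shell scripting. For anyone interested in learning the details of shell scripting, this is a good place to start.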

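### Terminal

Now we come to something new: the developer has included a terminal emulator in the app. Unfortunately, when you install the app on an Android device without root access, you find yourself confined to a read-only file system where most commands simply do not work. That said, on a Pixel 2 (with Guide to Linux installed from the Android app store) I was able to use more of the feature, though still only a small part of it. On a (non-rooted) OnePlus 3, no matter which directory I changed into, I got the same "permission denied" error, even for a simple command.

On a Chromebook, however, everything works as expected (Figure 6). Well, as far as it can: you are still working in a read-only operating system, so you cannot do real work with it or create new files.

*Figure 6: Working (more or less) perfectly with the terminal emulator. [Used with permission][6]*

Remember, this is not a full-blown terminal, but it is a way for new users to get a feel for how a terminal works. Unfortunately, most users will just end up frustrated by the terminal feature, simply because they cannot put to use what they learned in the other sections. The developer might consider rebuilding the terminal feature as a sandboxed Linux file system, so users could really use it to learn. Every time the user opened the tool, it would revert to its original state. Just a thought.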

### Final thoughts…

Even though the terminal feature is hamstrung by the read-only file system (almost to the point of being useless), Guide to Linux is still a great tool for newcomers learning Linux. With Guide to Linux you will learn a lot about Linux, its commands, and shell scripting, which gives you a good head start before you install your first distribution.

--------------------------------------------------------------------------------

via: https://www.linux.com/learn/intro-to-linux/2017/8/guide-linux-app-handy-tool-every-level-linux-user

Author: [JACK WALLEN][a]

Translator: [qhwdw](https://github.com/qhwdw)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn (Linux中国)](https://linux.cn/)

[a]:https://www.linux.com/users/jlwallen
[1]:https://www.linux.com/licenses/category/used-permission
[2]:https://www.linux.com/licenses/category/used-permission
[3]:https://www.linux.com/licenses/category/used-permission
[4]:https://www.linux.com/licenses/category/used-permission
[5]:https://www.linux.com/licenses/category/used-permission
[6]:https://www.linux.com/licenses/category/used-permission
[7]:https://www.linux.com/licenses/category/used-permission
[8]:https://www.linux.com/files/images/guidetolinux1jpg
[9]:https://www.linux.com/files/images/guidetolinux2jpg
[10]:https://www.linux.com/files/images/guidetolinux3jpg-0
[11]:https://www.linux.com/files/images/guidetolinux4jpg
[12]:https://www.linux.com/files/images/guidetolinux-5-newjpg
[13]:https://www.linux.com/files/images/guidetolinux6jpg-0
[14]:https://www.linux.com/files/images/guide-linuxpng
[15]:https://play.google.com/store/apps/details?id=com.essence.linuxcommands
[16]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux
[17]:https://www.addtoany.com/share#url=https%3A%2F%2Fwww.linux.com%2Flearn%2Fintro-to-linux%2F2017%2F8%2Fguide-linux-app-handy-tool-every-level-linux-user&title=Guide%20to%20Linux%20App%20Is%20a%20Handy%20Tool%20for%20Every%20Level%20of%20Linux%20User

@ -1,53 +1,51 @@

[Give Away Your Code, but Never Your Time][23]

Give Away Your Code, but Never Your Time
============================================================

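As software developers, I think we can agree that open-source code has [changed the world][9]. Its public nature removes the obstacles that keep software from being the best it can be. The problem is that too many valuable projects stall because their leaders' energy runs out:

As software developers, I think we can agree that open-source code^Note 1 has [changed the world][9]. Its public nature tears down the walls that keep software from becoming the best it can be. The problem, though, is that too many valuable projects stagnate when their leaders burn out:

> "I do not have the time or energy to invest in open source any more. I am not being paid at all for my open-source work, so the time I spend on it is time I could be spending on "life stuff", or writing... Because of this, I have decided to end all my open-source work now."
>
> "I do not have the time or energy to invest in open source any more. I am not being paid at all for my open-source work, so the time I spend on it is time I could be spending on 'life stuff', or on writing… Because of this, I have decided to end all my open-source work now."
>
> -- [Ryan Bigg, former maintainer of several Ruby and Elixir projects][1]
>
> "It is also a huge opportunity cost: there are many things I cannot learn or get done at the same time, because FubuMVC takes up so much of my time, and that is the main reason it has to stop now."
>
> "It is also a huge opportunity cost. I cannot learn or finish many things at once, and FubuMVC takes up so much of my time; that is the main reason it has to stop now."
>
> -- [Jeremy Miller, former project lead of FubuMVC][2]
>
> "When we decide to have kids, I will probably give up open source. I expect that to be the eventual solution to my problem: the final option."
>
> "When we decide to have kids, I will probably give up open source. I expect the eventual solution to my problem will be: the nuclear option."
>
> -- [Nolan Lawson, one of the maintainers of PouchDB][3]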

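What we need is a new industry norm: that project leaders will _always_ be compensated for their time. We also need to discard the idea that any developer who submits an issue or pull request is automatically entitled to the maintainer's attention.

What we need is a new industry norm: that project leaders will _always_ be compensated for the time they put in. We also need to discard the idea that any developer who submits an issue or pull request is automatically entitled to the maintainer's attention.

Let's first review how an open-source library functions in the marketplace. It is a building block. It is [utility software][10], a cost a business must bear in order to make a profit elsewhere. If users are able to understand the code and find it more valuable than the alternatives (closed-source proprietary, custom in-house solutions, and so on), the community around the software will grow. It can be better, cheaper, or both.

Let's first review how open-source code functions in the marketplace. It is a building block. It is [utility software][10], a cost that businesses must bear in order to make a profit elsewhere. If users are able to understand what the code is for and find it more valuable than the alternatives (closed-source proprietary, custom in-house solutions, and so on), the community around that software will grow. It can be better, cheaper, or both.

If an organization needs to improve the code, they are free to hire any developer they want. It is usually [in their interest][11] to contribute the improvement back to the community because, given the complexity of merging, that is the only way they can easily receive future improvements from other users. This "gravity" tends to hold communities together.

If an organization needs to improve that code, they are free to hire any developer they want. It is usually [in their interest][11] to contribute the improvement back to the community because, given the complexity of merging code, that is the only way they can easily receive future improvements from other users. This "gravity" tends to hold communities together.

But it also burdens project maintainers, since they have to respond to these improvements. And what do they get in return? At best, a community contribution may be something they can use in the future, but not now. At worst, it is nothing more than a selfish request wearing the mask of altruism.

But it also burdens project maintainers, since they have to respond to these improvements. And what do they get in return? At best, these community contributions may be something they can use in the future, but not now. At worst, they are nothing more than a selfish request wearing the mask of altruism.

One class of open-source projects has avoided this trap. What do Linux, MySQL, Android, Chromium, and .NET Core have in common, besides being famous? They are all _strategically important_ to one or more big companies, because they serve those companies' interests. [Smart companies commoditize their complements][12], and there is no commodity cheaper than open-source software. Red Hat needs companies using Linux in order to sell Enterprise Linux, Oracle uses MySQL to sell MySQL Enterprise, Google wants everyone in the world to have a phone and a browser, and Microsoft is trying to hook developers on a platform and then pull them into the Azure cloud. These projects are all directly funded by the respective companies.

There is one class of open-source projects that has avoided this trap. What do Linux, MySQL, Android, Chromium, and .NET Core have in common, besides being famous? They are all _strategically important_ to one or more big companies, because they serve those companies' interests. [Smart companies commoditize their complements][12], and nothing is cheaper than open-source software. Red Hat needs companies that use Linux in order to sell Enterprise Linux, Oracle uses MySQL as a gateway to selling MySQL Enterprise, Google wants everyone in the world to have a phone and a browser, and Microsoft is trying to hook developers on a platform and then pull them into the Azure cloud. These projects are all directly funded by the respective companies.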

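But what about the other projects, the ones that are not at the center of a big player's strategy?

If you are the leader of one of these projects, charge an annual fee for community members. _Open source, closed community_. The message to users should be "do whatever you want with the code, but _pay us for our time_ if you want to influence the project's future." Lock non-paying users out of the forum and issue tracker, and ignore their emails. People who do not pay should feel like they are missing out on the party.

If you are the leader of one of these projects, charge community members an annual fee. _Open source, closed community_. The message to users should be "do whatever you want with the code, but _pay us for our time_ if you want to influence the project's future." Lock non-paying users out of the forum and issue tracker, and ignore their emails. People who do not pay should feel like they are missing out on the party.

Also charge contributors for the time it takes to merge nontrivial pull requests. If a particular submission will not immediately benefit you, charge full price for your time. Be disciplined and [remember YAGNI][13].

Also charge contributors for the time it takes to merge their nontrivial pull requests. If a particular submission will not immediately benefit you, charge full price for your time. Be principled and [remember YAGNI][13].

Will this lead to a drastically smaller community and more forks? Absolutely. But if you persevere in building out your vision, and it delivers value to others, they will pay as soon as they have something to contribute. _Your willingness to merge contributions is [the scarce resource][4]_. Without it, users must repeatedly reconcile their changes with every new version you release.

Will this lead to a drastically smaller community and more forks? Absolutely. But if you persevere in building out your vision, and it delivers value to others, they will pay for the contributions they want to make as soon as they can. _Your willingness to merge contributions is [the scarce resource][4]_. Without it, users must repeatedly reconcile their changes with every new version you release.

Restricting the community is especially important if you want to maintain a high level of [conceptual integrity][14] in the code base. Leaderless projects with [lenient contribution policies][15] have no need to charge.

Restricting the community is especially important if you want to maintain a high level of [conceptual integrity][14] in the code base. Leaderless projects with [liberal contribution policies][15] have no need to charge.

To realize larger parts of the vision, ones that do not pay for themselves through your own business alone but may benefit others, turn to [crowdfunding][16]. There are many success stories:

To realize larger parts of the vision, ones that do not pay for themselves through your own business alone but may benefit others, go and [crowdfund][16]. There are many success stories:

> [Font Awesome 5][5]
>
> [Ruby enVironment Management (RVM)][6]
>
> [Django REST framework 3][7]

- [Font Awesome 5][5]
- [Ruby enVironment Management (RVM)][6]
- [Django REST framework 3][7]

[Crowdfunding has limitations][17]. It [does not work][18] for [huge projects][19]. But, again, open-source code is utility software, which does not need ambitious, risky game changers. It has already [permeated every industry][20], with only incremental updates.

[Crowdfunding has limitations][17]. It [does not work][18] for [huge projects][19]. But, again, open-source code is utility software, which does not need ambitious, risky game changers. It has already [permeated every industry][20], little by little.

These ideas represent a sustainable path forward, and they could also fix the [diversity problem in open source][21], which may be rooted in its historically unpaid nature. But above all, let's remember that [we only have so many keystrokes left in our lives][22], and that we will someday regret the ones we waste.

_When I say "open source", I mean code [licensed][8] in a way that it can be used to build proprietary things. This usually means a permissive license (MIT or Apache or BSD), but not always. Linux is the core of today's tech industry, yet it is licensed under the GPL._

- Note 1: When I say "open source", I mean code [licensed][8] in a way that it can be used to build proprietary things. This usually means a permissive license (MIT or Apache or BSD), but not always. Linux is the core of today's tech industry, yet it is licensed under the GPL.

Thanks to Jason Haley, Don McNamara, Bryan Hogan, and Nadia Eghbal for reading drafts of this post.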

@ -57,7 +55,7 @@ via: http://wgross.net/essays/give-away-your-code-but-never-your-time

Author: [William Gross][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [proofreader ID](https://github.com/校对者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn (Linux中国)](https://linux.cn/)

97

published/20171026 But I dont know what a container is .md

Normal file

@ -0,0 +1,97 @@

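Sadly, I Don't Know What a Container Is Either!
========================

> The cover image is an abstract take on how similar, and yet how different, containers and virtual machines are!

At recent conferences and seminars I have been talking about security in DevOps (also known as DevSecOps)^Note 1. I usually start by asking, "Does anyone here know what a container is?" Usually not many hands go up^Note 2, so I begin with a brief introduction to what containers are^Note 3 before moving on to deeper discussion.

To be precise, you do not need containers to do DevOps or DevSecOps. But containers fit well into DevOps and DevSecOps approaches, so although you can do DevOps without containers, I still assume that most people will use them.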

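### What is a container?

At a meeting a few months ago, a colleague was giving a demo on containers. Since not everyone there was an expert in the area, he started simply and said something like, "There is no mention of containers anywhere in the Linux kernel source code." In that particular crowd, a statement like this turns out to be dangerous. Within seconds, my boss (sitting next to me) and I had downloaded the latest kernel source and counted the occurrences of the word "container". Clearly, the colleague's statement was not accurate. To be precise, in a slightly older kernel (4.9.2) I found 15,273 lines of code that contain the word "container"^Note 4. My boss and I shared a knowing smile, confirmed that he was wrong, and corrected him during the break.

Later we worked out what he had meant: the Linux kernel has no explicit concept of a container. In other words, containers use a number of concepts, components, tools, and mechanisms from the Linux kernel, but none of them is special to containers; they can all be used for other purposes too^Note 5. Hence the claim that "from the point of view of the Linux kernel, there is no such thing as a container."

So what is a container? I come from a virtualization background (hypervisors and virtual machines), and to me a container is both like and unlike a virtual machine (VM). I know that does not sound helpful, so let me explain.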

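### How are containers like virtual machines?

A major way in which a container resembles a VM is that it is a unit of execution. You bundle files into an image, which can then run on a suitable host platform. As with a VM, it runs on a host and is constrained by that host. The host platform provides the software environment and the hardware resources (CPU, network, storage, and so on) that the container needs to run. In addition, the host is responsible for the following:

1. Protecting each workload (here, a VM or a container) from the others, so that a malicious, compromised, or poorly written workload cannot affect any other workload.
2. Protecting itself (the host) from malicious or malfunctioning workloads.

VMs and containers achieve this isolation in different ways. VMs are isolated by a hypervisor that partitions hardware resources, whereas containers are isolated by software features provided by the Linux kernel^Note 6. This software control uses separate "namespaces" to ensure that each container's files, users, network connections, and so on are invisible to other containers, and likewise between containers and the host. Software such as SELinux can add to this, providing further isolation between containers.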
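
To make the namespace idea a little more concrete, here is a minimal Python sketch, assuming a Linux host with `/proc` mounted. It lists the namespaces the current process belongs to by reading the symbolic links under `/proc/self/ns`. The function name `list_namespaces` is invented for this illustration and is not part of any container tool. Roughly speaking, two processes share a view of files, users, or network connections only when the identifier for the corresponding entry is the same for both.

```python
import os


def list_namespaces(pid="self"):
    """Return {namespace name: identifier} for a process, or {} if unavailable.

    Assumes a Linux /proc file system; on other systems the result is empty.
    """
    ns_dir = f"/proc/{pid}/ns"
    namespaces = {}
    try:
        names = os.listdir(ns_dir)
    except OSError:
        return namespaces            # not Linux, or no such process
    for name in sorted(names):
        try:
            # Each entry is a symlink whose target names the namespace instance.
            namespaces[name] = os.readlink(os.path.join(ns_dir, name))
        except OSError:
            continue                 # entry not readable; skip it
    return namespaces


if __name__ == "__main__":
    for name, ident in list_namespaces().items():
        print(name, ident)
```

Running the script once on the host and once inside a container would typically show different identifiers for entries such as `mnt`, `net`, and `pid`, which is the isolation described above.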

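### How are containers unlike virtual machines?

The problem with the description above is that, if you are even slightly hazy on how hypervisors work, you might conclude that a container is just a VM. It is not.

First and foremost^Note 7, a container is a packaging format. "What?" you may object, "didn't you just say a container is some kind of executable?" Yes, it is an executable unit, but a major reason containers are so attractive is that it is easy to build instantiated images that are much smaller than a VM. Because of this, containers use very little memory and can start and stop very quickly. You can start a container in minutes or seconds (or even milliseconds), which you cannot do with a VM.

Precisely because containers are so lightweight and easy to replace, people use them to build microservices: the smallest components an application can be split into, which can be combined with one or more other microservices to form whatever application you want. Provided you run only one specific function or task in a container, you can also keep the container very small, which makes it easy to throw away old containers and create new ones. I will follow up on this, and on its possible security implications, in a later article, along with DevSecOps, of course.

I hope this has been a useful introduction to containers, and that it motivates you to learn about DevSecOps (and if it does not, pretending is fine too).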

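---

- Note 1: I find that DevSecOps reads a little oddly, and DevOpsSec tends to get pluralised, at which point you are on a different topic altogether.
- Note 2: I should note that this is not only true of British audiences, who are reserved and dislike drawing attention to themselves; it also holds for Canadian and US audiences, who tend not to be.
- Note 3: Of course, I am only talking about Linux containers here. I know there is history behind the term, so it is worth noting, in case of pedantry.
- Note 4: If you are interested, the command I used was `grep -ir container linux-4.9.2 | wc -l`
- Note 5: To be fair, at a quick glance a number of those uses have nothing to do with containers in the sense we are discussing, namely Linux containers; they refer to abstractions that can be said to contain other elements and are therefore, logically enough, called containers.
- Note 6: There are also clever ways to combine containers and VMs to get the benefits of both; that is beyond the scope of today's topic.
- Note 7: Apart from the execution bit that we just covered, obviously.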
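
The count in note 4 can be approximated without `grep`. The sketch below is a rough Python equivalent, written under a few assumptions: the function name `count_matching_lines` is made up for this example, and the result may differ slightly from `grep -ir … | wc -l`, because `grep` prints a single "binary file matches" line for binary files whereas this sketch counts every matching line it reads.

```python
import os
import re


def count_matching_lines(root, word="container"):
    """Count lines under `root` that contain `word`, ignoring case."""
    pattern = re.compile(re.escape(word).encode(), re.IGNORECASE)
    total = 0
    for dirpath, _dirnames, filenames in os.walk(root):
        for filename in filenames:
            path = os.path.join(dirpath, filename)
            try:
                # Read as bytes so undecodable source files do not raise errors.
                with open(path, "rb") as handle:
                    total += sum(1 for line in handle if pattern.search(line))
            except OSError:
                continue             # unreadable file; skip it, as grep would warn
    return total
```

For example, `count_matching_lines("linux-4.9.2")` would walk an unpacked kernel tree of that name, assuming one exists in the current directory.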

*Originally published at [Alice, Eve, and Bob - a security blog][7]; please credit the source when republishing.*

(Cover image: opensource.com)

---

**About the author**:

Mike Bursell, the author of the original article, is an open-source enthusiast and whisky lover living in the UK, and Chief Security Architect at Red Hat. He has lived and worked with Linux since he first encountered open source in 1997 (though it has not always been easy). For more, see his blog at https://aliceevebob.com , where he posts about security from time to time.

---

via: https://opensource.com/article/17/10/what-are-containers

Author: [Mike Bursell][a]

Translator: [jrglinux](https://github.com/jrglinux)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn (Linux中国)](https://linux.cn/)

[a]: https://opensource.com/users/mikecamel
[1]: https://opensource.com/resources/what-are-linux-containers?utm_campaign=containers&intcmp=70160000000h1s6AAA
[2]: https://opensource.com/resources/what-docker?utm_campaign=containers&intcmp=70160000000h1s6AAA
[3]: https://opensource.com/resources/what-is-kubernetes?utm_campaign=containers&intcmp=70160000000h1s6AAA
[4]: https://developers.redhat.com/blog/2016/01/13/a-practical-introduction-to-docker-container-terminology/?utm_campaign=containers&intcmp=70160000000h1s6AAA
[5]: https://opensource.com/article/17/10/what-are-containers?rate=sPHuhiD4Z3D3vJ6ZqDT-wGp8wQjcQDv-iHf2OBG_oGQ
[6]: https://opensource.com/article/17/10/what-are-containers#*******
[7]: https://aliceevebob.wordpress.com/2017/07/04/but-i-dont-know-what-a-container-is/
[8]: https://opensource.com/user/105961/feed
[9]: https://opensource.com/article/17/10/what-are-containers#*
[10]: https://opensource.com/article/17/10/what-are-containers#**
[11]: https://opensource.com/article/17/10/what-are-containers#***
[12]: https://opensource.com/article/17/10/what-are-containers#******
[13]: https://opensource.com/article/17/10/what-are-containers#*****
[14]: https://opensource.com/users/mikecamel
[15]: https://opensource.com/users/mikecamel
[16]: https://opensource.com/article/17/10/what-are-containers#****

198

published/20171118 Getting started with OpenFaaS on minikube.md

Normal file

@ -0,0 +1,198 @@

Getting Started with OpenFaaS on minikube
============================================================

This post shows how to set up OpenFaaS (Serverless Functions Made Simple) on Kubernetes 1.8 with [minikube][4]. minikube is a [Kubernetes][5] distribution that lets you run a Kubernetes cluster on your laptop. It is available for Mac and Linux, but is most commonly used on macOS.

> This post is based on the latest version of our [official Kubernetes deployment guide][6]

### Install and set up minikube

1. Install the [xhyve driver][1] or [VirtualBox][2], which will host the Linux virtual machine that runs minikube. In my experience, VirtualBox is the more stable option.

2. Install minikube by [following the official documentation][3].

3. Install `faas-cli` with `brew` or with `curl -sL cli.openfaas.com | sudo sh`.

4. Install the `helm` command line with `brew install kubernetes-helm`.

5. Start minikube: `minikube start`.

> Docker Captain's tip: Docker for Mac and Docker for Windows now come with integrated Kubernetes support, so you no longer need to install extra software to use Kubernetes.

### Deploy OpenFaaS to minikube

1. Create a service account for tiller, Helm's server component:

```
kubectl -n kube-system create sa tiller \
  && kubectl create clusterrolebinding tiller \
  --clusterrole cluster-admin \
  --serviceaccount=kube-system:tiller
```

2. Install tiller, Helm's server component:

```
helm init --skip-refresh --upgrade --service-account tiller
```

3. Clone faas-netes, the Kubernetes driver for OpenFaaS:

```
git clone https://github.com/openfaas/faas-netes && cd faas-netes
```

4. Minikube is not configured for RBAC, so we need to turn RBAC off here:

```
helm upgrade --install --debug --reset-values --set async=false --set rbac=false openfaas openfaas/
```

(LCTT translator's note: RBAC (role-based access control) is widely used in access management; for details see https://en.wikipedia.org/wiki/Role-based_access_control )

现在,你可以看到 OpenFaaS pod 已经在你的 minikube 集群上运行起来了。输入 `kubectl get pods` 以查看 OpenFaaS pod:

|

||||

|

||||

```

|

||||

NAME READY STATUS RESTARTS AGE

|

||||

alertmanager-6dbdcddfc4-fjmrf 1/1 Running 0 1m

|

||||

faas-netesd-7b5b7d9d4-h9ftx 1/1 Running 0 1m

|

||||

gateway-965d6676d-7xcv9 1/1 Running 0 1m

|

||||

prometheus-64f9844488-t2mvn 1/1 Running 0 1m

|

||||

```

|

||||

|

||||

30,000ft:

|

||||

|

||||

该 API 网关包含了一个 [用于测试功能的最小化 UI][7],同时开放了用于功能管理的 [RESTful API][8] 。

|

||||

faas-netesd 守护进程是一种 Kubernetes 控制器,用来连接 Kubernetes API 服务器来管理服务、部署和密码。

|

||||

|

||||

Prometheus 和 AlertManager 进程协同工作,实现 OpenFaaS Function 的弹性缩放,以满足业务需求。通过 Prometheus 指标我们可以查看系统的整体运行状态,还可以用来开发功能强悍的仪表盘。

|

||||

|

||||

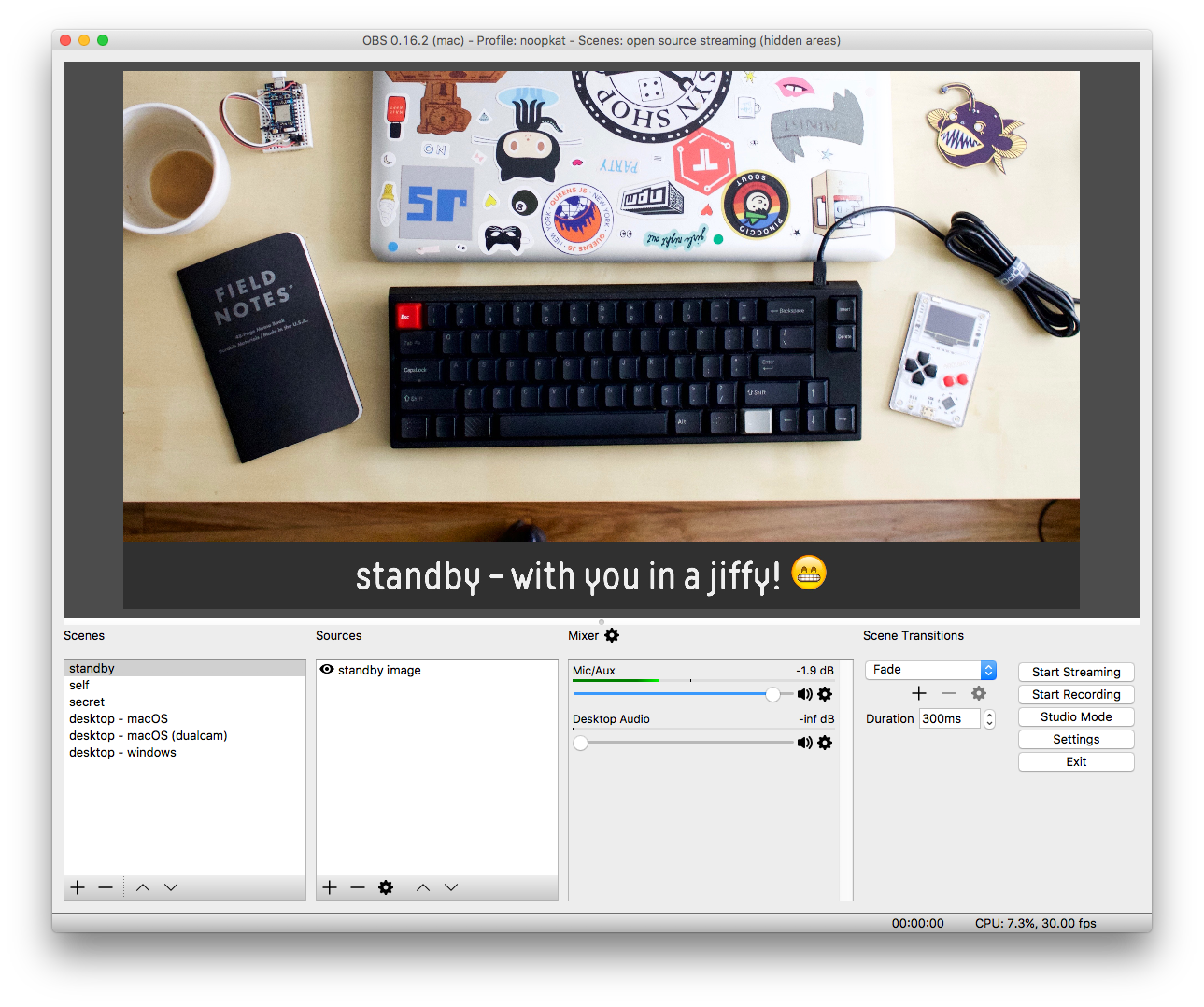

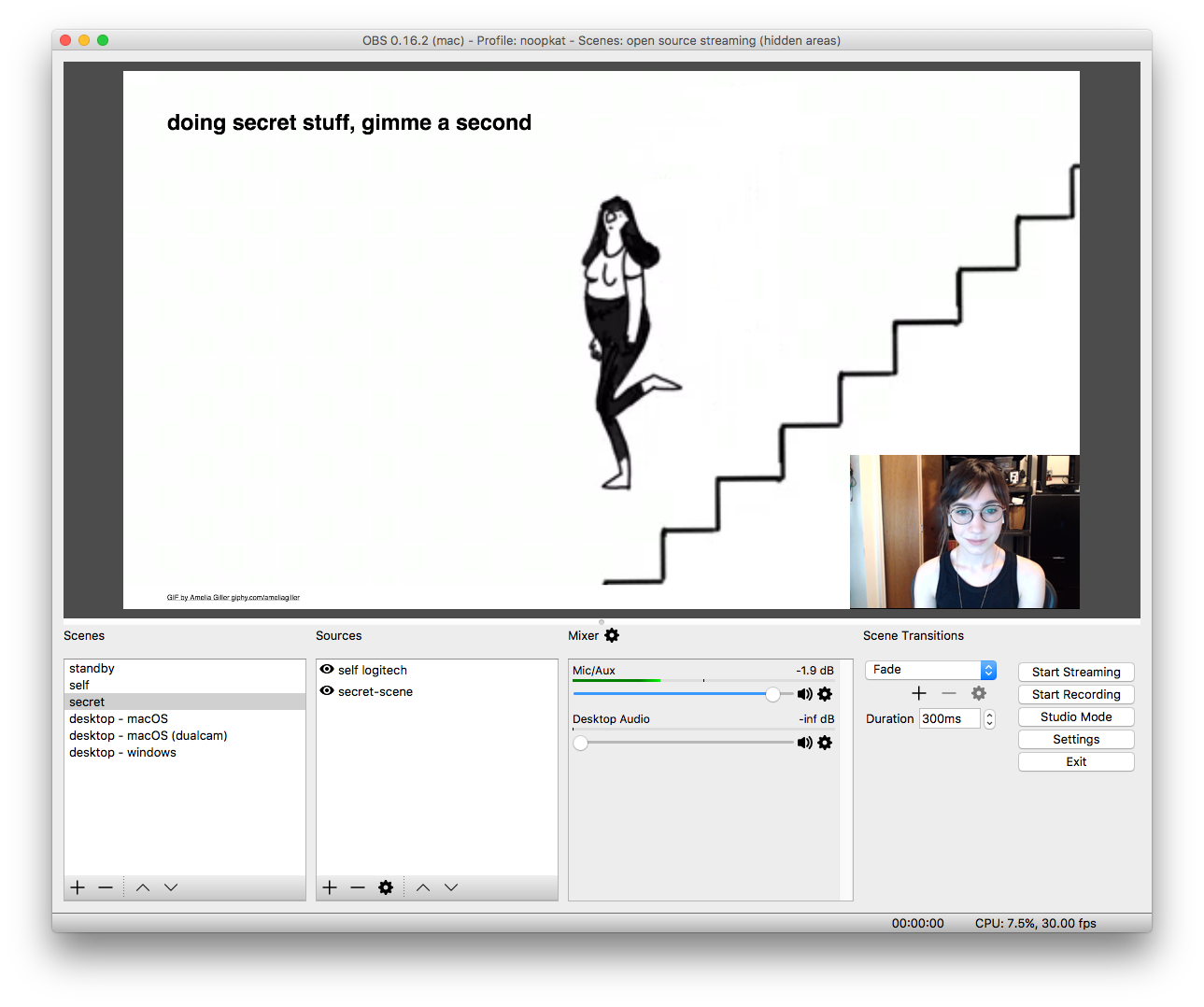

Prometheus 仪表盘示例:

|

||||

|

||||

|

||||

|

||||

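### Build/ship/run

Unlike many other FaaS projects, OpenFaaS uses the Docker image format to create and version functions, which means that in production you can use OpenFaaS together with:

* Vulnerability scanning (LCTT translator's note: I think this should be read as patching vulnerabilities faster)
* CI/CD
* Rolling updates

You can also deploy OpenFaaS onto an existing production cluster and make use of spare capacity. The core services have a memory footprint of roughly 10-30 MB.

> A key advantage of OpenFaaS is that it works through the container orchestrator's API, which allows native integration with Kubernetes and Docker Swarm. And because functions are versioned in a Docker registry, they can be scaled on demand without the extra latency of frameworks that build functions on demand.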

### Create a new function

```
faas-cli new --lang python hello
```

This creates the file `hello.yml` and a handler folder containing two files: `handler.py`, and `requirements.txt` for any pip modules you may need. You can edit these at any time without worrying about maintaining a Dockerfile; we maintain it for you and take care of:

* Multi-stage builds
* A non-root user
* An image based on the official Docker Alpine Linux image (this can be swapped out)
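
As a rough idea of what goes into `handler.py`, here is a minimal sketch. It assumes the Python template calls a function named `handle` with the request body as a string and uses the return value as the response; the generated template in your version of `faas-cli` may differ (some versions print the result instead of returning it), so treat the signature as an assumption and check the file that was generated for you.

```python
def handle(req):
    """Build a greeting from the request body.

    Assumption: the function template passes the raw request body in `req`
    and sends whatever is returned back to the caller.
    """
    name = req.strip() or "world"    # fall back when the body is empty
    return f"Hello, {name}!"
```

With this handler, a request body of `test` would produce the response `Hello, test!`.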

### Build your function

The function is built on your local machine first and then pushed to a Docker registry. We use Docker Hub here; open `hello.yml` and put your account name into the image name:

```
provider:
  name: faas
  gateway: http://localhost:8080

functions:
  hello:
    lang: python
    handler: ./hello
    image: alexellis2/hello
```

Now run the build. You need Docker installed on your local system.

```
faas-cli build -f hello.yml
```

Push the Docker image containing your function to Docker Hub. If you have not logged in to Docker Hub yet, run `docker login` before continuing.

```
faas-cli push -f hello.yml
```

When you have more than one function, you can use `--parallel=N` to build or push across several cores at once. The command also supports options such as `--no-cache` and `--squash`.

### Deploy and test your function

Now you can deploy the function, list it, and invoke it. Each time you invoke the function, metrics are collected and made available through Prometheus.

```
$ export gw=http://$(minikube ip):31112
$ faas-cli deploy -f hello.yml --gateway $gw
Deploying: hello.
No existing function to remove
Deployed.
URL: http://192.168.99.100:31112/function/hello
```

The deployment output gives you the standard URL for invoking the function. You can also invoke it with the CLI:

```
$ echo test | faas-cli invoke hello --gateway $gw
```
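
The URL printed by the deploy step can also be called from code. The sketch below uses only the Python standard library and assumes the gateway address and the `/function/<name>` route shown in the deploy output above; the helper names `function_url` and `invoke` are made up for this example and are not part of `faas-cli`.

```python
import urllib.request


def function_url(gateway, name):
    """Build the invocation URL, e.g. http://192.168.99.100:31112/function/hello."""
    return f"{gateway.rstrip('/')}/function/{name}"


def invoke(gateway, name, payload=b"", timeout=10):
    """POST `payload` to the function and return the raw response body."""
    request = urllib.request.Request(
        function_url(gateway, name), data=payload, method="POST"
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read()


# Example (needs a running gateway, so it is left commented out):
# print(invoke("http://192.168.99.100:31112", "hello", b"test"))
```

The IP address is whatever `minikube ip` reports on your machine; 192.168.99.100 is simply the value from the output above.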

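Now list the deployed functions with the following command; you will see that the invocation counter has gone up.

```
$ faas-cli list --gateway $gw
Function    Invocations    Replicas
hello       1              1
```

_Tip: this command also accepts a `--verbose` flag for more information._

Since we are running OpenFaaS on a remote cluster (a Linux VM), we set an environment variable and pass it with `--gateway` to override the default gateway address. The same approach works for a remote host on a cloud platform. Instead of passing the flag, you can also edit the `gateway` value in the .yml file.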

### Moving beyond minikube

Once you are comfortable running OpenFaaS on minikube, you can deploy it to any Kubernetes cluster running on Linux hosts. The image below is an OpenFaaS demo by Stefan Prodan of WeaveWorks, running on Kubernetes on Google GKE. It shows OpenFaaS's built-in auto-scaling in action:

### Keep learning

There are plenty of guides and blog posts on our GitHub page to get you up and running; bookmark it: [openfaas/faas][9].

I gave an overview talk on serverless and OpenFaaS at the Moby Summit at DockerCon 2017 in Copenhagen. Here is the video; it is short, about 15 minutes.

[YouTube video](https://youtu.be/UaReIKa2of8)

Finally, do not forget to follow [OpenFaaS on Twitter][11] for the latest news, cool tech, and demos.

--------------------------------------------------------------------------------

via: https://medium.com/@alexellisuk/getting-started-with-openfaas-on-minikube-634502c7acdf

Author: [Alex Ellis][a]

Translator: [mandeler](https://github.com/mandeler)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn (Linux中国)](https://linux.cn/)

[a]:https://medium.com/@alexellisuk?source=post_header_lockup
[1]:https://git.k8s.io/minikube/docs/drivers.md#xhyve-driver
[2]:https://www.virtualbox.org/wiki/Downloads
[3]:https://kubernetes.io/docs/tasks/tools/install-minikube/
[4]:https://kubernetes.io/docs/getting-started-guides/minikube/
[5]:https://kubernetes.io/
[6]:https://github.com/openfaas/faas/blob/master/guide/deployment_k8s.md
[7]:https://github.com/openfaas/faas/blob/master/TestDrive.md
[8]:https://github.com/openfaas/faas/tree/master/api-docs
[9]:https://github.com/openfaas/faas/tree/master/guide
[10]:https://github.com/openfaas/faas/tree/master/guide
[11]:https://twitter.com/openfaas

@ -0,0 +1,84 @@

# [The One in Which I Call Out Hacker News][14]

> “Implementing caching would take thirty hours. Do you have thirty extra hours? No, you don’t. I actually have no idea how long it would take. Maybe it would take five minutes. Do you have five minutes? No. Why? Because I’m lying. It would take much longer than five minutes. That’s the eternal optimism of programmers.”
>
> — Professor [Owen Astrachan][1] during 23 Feb 2004 lecture for [CPS 108][2]

[Accusing open-source software of being a royal pain to use][5] is not a new argument; it’s been said before, by those much more eloquent than I, and even by some who are highly sympathetic to the open-source movement. Why go over it again?

On Hacker News on Monday, I was amused to read some people saying that [writing StackOverflow was hilariously easy][6]—and proceeding to back up their claim by [promising to clone it over July 4th weekend][7]. Others chimed in, pointing to [existing][8] [clones][9] as a good starting point.

Let’s assume, for sake of argument, that you decide it’s okay to write your StackOverflow clone in ASP.NET MVC, and that I, after being hypnotized with a pocket watch and a small club to the head, have decided to hand you the StackOverflow source code, page by page, so you can retype it verbatim. We’ll also assume you type like me, at a cool 100 WPM ([a smidge over eight characters per second][10]), and unlike me, _you_ make zero mistakes. StackOverflow’s *.cs, *.sql, *.css, *.js, and *.aspx files come to 2.3 MB. So merely typing the source code back into the computer will take you about eighty hours if you make zero mistakes.
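
For anyone who wants to check that arithmetic, here is a quick back-of-the-envelope sketch in Python. It makes two assumptions that are mine rather than stated facts: that "2.3 MB" means mebibytes, and that 100 WPM uses the usual five-characters-per-word convention (which is where "a smidge over eight characters per second" comes from). The function name `typing_hours` is just for illustration.

```python
MEBIBYTE = 1024 * 1024


def typing_hours(num_chars, words_per_minute=100, chars_per_word=5):
    """Hours needed to type `num_chars` characters without a single mistake."""
    chars_per_second = words_per_minute * chars_per_word / 60   # about 8.33
    return num_chars / chars_per_second / 3600


copy_hours = typing_hours(2.3 * MEBIBYTE)
print(f"retyping verbatim: {copy_hours:.0f} hours")
print(f"at ten times that: {10 * copy_hours / (24 * 7):.1f} weeks, around the clock")
```

Under those assumptions the verbatim copy comes out at roughly eighty hours, and ten times that is a little under five weeks of typing around the clock.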

Except, of course, you’re not doing that; you’re going to implement StackOverflow from scratch. So even assuming that it took you a mere ten times longer to design, type out, and debug your own implementation than it would take you to copy the real one, that already has you coding for several weeks straight—and I don’t know about you, but I am okay admitting I write new code _considerably_ less than one tenth as fast as I copy existing code.

_Well, okay_, I hear you relent. *So not the whole thing. But I can do **most** of it.*

Okay, so what’s “most”? There’s simply asking and responding to questions—that part’s easy. Well, except you have to implement voting questions and answers up and down, and the questioner should be able to accept a single answer for each question. And you can’t let people upvote or accept their own answers, so you need to block that. And you need to make sure that users don’t upvote or downvote another user too many times in a certain amount of time, to prevent spambots. Probably going to have to implement a spam filter, too, come to think of it, even in the basic design, and you also need to support user icons, and you’re going to have to find a sanitizing HTML library you really trust and that interfaces well with Markdown (provided you do want to reuse [that awesome editor][11] StackOverflow has, of course). You’ll also need to purchase, design, or find widgets for all the controls, plus you need at least a basic administration interface so that moderators can moderate, and you’ll need to implement that scaling karma thing so that you give users steadily increasing power to do things as they go.
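
To give a feel for how quickly even the "easy" voting rules pile up, here is a minimal in-memory sketch in Python. Everything in it is hypothetical: the class and method names, and the limit of three votes per hour on the same author, are invented for illustration and say nothing about how StackOverflow actually does it.

```python
from collections import defaultdict, deque


class VoteRejected(Exception):
    """Raised when a vote breaks one of the rules."""


class VoteBook:
    def __init__(self, max_votes_on_same_author=3, window_seconds=3600):
        self.max_votes_on_same_author = max_votes_on_same_author
        self.window_seconds = window_seconds
        self.votes = {}                     # (voter, post_id) -> +1 or -1
        self.recent = defaultdict(deque)    # (voter, author) -> vote timestamps

    def cast(self, voter, author, post_id, value, now):
        if value not in (1, -1):
            raise VoteRejected("a vote is +1 or -1")
        if voter == author:
            raise VoteRejected("no voting on your own posts")
        if (voter, post_id) in self.votes:
            raise VoteRejected("one vote per post")
        stamps = self.recent[(voter, author)]
        while stamps and now - stamps[0] >= self.window_seconds:
            stamps.popleft()                # forget votes outside the window
        if len(stamps) >= self.max_votes_on_same_author:
            raise VoteRejected("too many votes on the same user")
        stamps.append(now)
        self.votes[(voter, post_id)] = value

    def score(self, post_id):
        return sum(v for (_, pid), v in self.votes.items() if pid == post_id)
```

That is three of the rules from the paragraph above, with no persistence, no accepted answers, no spam filter, and no karma.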

But if you do _all that_, you _will_ be done.

Except…except, of course, for the full-text search, especially its appearance in the search-as-you-ask feature, which is kind of indispensable. And user bios, and having comments on answers, and having a main page that shows you important questions but that bubbles down steadily à la reddit. Plus you’ll totally need to implement bounties, and support multiple OpenID logins per user, and send out email notifications for pertinent events, and add a tagging system, and allow administrators to configure badges by a nice GUI. And you’ll need to show users’ karma history, upvotes, and downvotes. And the whole thing has to scale really well, since it could be slashdotted/reddited/StackOverflown at any moment.

But _then_! **Then** you’re done!

…right after you implement upgrades, internationalization, karma caps, a CSS design that makes your site not look like ass, AJAX versions of most of the above, and G-d knows what else that’s lurking just beneath the surface that you currently take for granted, but that will come to bite you when you start to do a real clone.

Tell me: which of those features do you feel you can cut and still have a compelling offering? Which ones go under “most” of the site, and which can you punt?

Developers think cloning a site like StackOverflow is easy for the same reason that open-source software remains such a horrible pain in the ass to use. When you put a developer in front of StackOverflow, they don’t really _see_ StackOverflow. What they actually _see_ is this:

```
create table QUESTION (ID identity primary key,
                       TITLE varchar(255), --- why do I know you thought 255?
                       BODY text,
                       UPVOTES integer not null default 0,
                       DOWNVOTES integer not null default 0,
                       USER integer references USER(ID));
create table RESPONSE (ID identity primary key,
                       BODY text,
                       UPVOTES integer not null default 0,
                       DOWNVOTES integer not null default 0,
                       QUESTION integer references QUESTION(ID))
```

If you then tell a developer to replicate StackOverflow, what goes into his head are the above two SQL tables and enough HTML to display them without formatting, and that really _is_ completely doable in a weekend. The smarter ones will realize that they need to implement login and logout, and comments, and that the votes need to be tied to a user, but that’s still totally doable in a weekend; it’s just a couple more tables in a SQL back-end, and the HTML to show their contents. Use a framework like Django, and you even get basic users and comments for free.
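
As an illustration of that "couple more tables" version, here is a small sketch using Python's built-in `sqlite3` module. The table and column names are my own invention, and SQLite stands in for whatever database you would really use. The only point is that tying votes to users is, at the schema level, one extra table with a composite primary key.

```python
import sqlite3

SCHEMA = """
create table users (
    id   integer primary key,
    name text not null unique
);
create table question (
    id     integer primary key,
    title  text not null,
    body   text not null,
    author integer not null references users(id)
);
create table vote (
    voter    integer not null references users(id),
    question integer not null references question(id),
    value    integer not null check (value in (1, -1)),
    primary key (voter, question)   -- one vote per user per question
);
"""


def open_db(path=":memory:"):
    """Open a database and create the sketch schema in it."""
    conn = sqlite3.connect(path)
    conn.execute("pragma foreign_keys = on")
    conn.executescript(SCHEMA)
    return conn


def score(conn, question_id):
    """Net score of a question: upvotes minus downvotes."""
    row = conn.execute(
        "select coalesce(sum(value), 0) from vote where question = ?",
        (question_id,),
    ).fetchone()
    return row[0]
```

A second vote by the same user on the same question raises `sqlite3.IntegrityError`, which is about as far as the schema alone gets you.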

But that’s _not_ what StackOverflow is about. Regardless of what your feelings may be on StackOverflow in general, most visitors seem to agree that the user experience is smooth, from start to finish. They feel that they’re interacting with a polished product. Even if I didn’t know better, I would guess that very little of what actually makes StackOverflow a continuing success has to do with the database schema, and having had a chance to read through StackOverflow’s source code, I know how little really does. There is a _tremendous_ amount of spit and polish that goes into making a major website highly usable. A developer, asked how hard something will be to clone, simply _does not think about the polish_, because _the polish is incidental to the implementation._

That is why an open-source clone of StackOverflow will fail. Even if someone were to manage to implement most of StackOverflow “to spec,” there are some key areas that would trip them up. Badges, for example, if you’re targeting end-users, either need a GUI to configure rules, or smart developers to determine which badges are generic enough to go on all installs. What will actually happen is that the developers will bitch and moan about how you can’t implement a really comprehensive GUI for something like badges, and then bikeshed any proposals for standard badges so far into the ground that they’ll hit escape velocity coming out the other side. They’ll ultimately come up with the same solution that bug trackers like Roundup use for their workflow: the developers implement a generic mechanism by which anyone, truly anyone at all, who feels totally comfortable working with the system API in Python or PHP or whatever, can easily add their own customizations. And when PHP and Python are so easy to learn and so much more flexible than a GUI could ever be, why bother with anything else?
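
For the sake of illustration, the "generic mechanism" usually ends up looking something like the sketch below: a registry that anyone comfortable with Python can hook a rule into. All of the names here (`badge`, `BADGE_RULES`, `badges_for`, the shape of the `user` dictionary, the score threshold) are hypothetical; this is not Roundup's API or anyone else's.

```python
BADGE_RULES = {}


def badge(name):
    """Decorator that registers a rule function under a badge name."""
    def register(rule):
        BADGE_RULES[name] = rule
        return rule
    return register


@badge("Nice Answer")
def nice_answer(user):
    # Hypothetical rule: any answer with a score of 10 or more earns the badge.
    return any(score >= 10 for score in user.get("answer_scores", []))


def badges_for(user):
    """Names of all registered badges whose rule accepts this user."""
    return sorted(name for name, rule in BADGE_RULES.items() if rule(user))
```

It is a dozen lines, it is flexible, and it is of no use whatsoever to an administrator who does not write Python.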