Mirror of https://github.com/LCTT/TranslateProject.git, synced 2024-12-26 21:30:55 +08:00

Commit 4595f594cc

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11499-1.html)

|

||||

[#]: subject: (How writers can get work done better with Git)

|

||||

[#]: via: (https://opensource.com/article/19/4/write-git)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

@ -12,7 +12,7 @@

|

||||

|

||||

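> If you're a writer, you can benefit from using Git too. Learn about Git's lesser-known uses in this series.

![Writing Hand][1]

[Git][2] is one of those rare applications that packs so much of modern computing into one program that it ends up serving as the computational engine for many other applications. It is best known for tracking source code changes in software development, but it has many other uses that can make your life easier and more organized. In this series, we share seven lesser-known ways to use Git.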

|

||||

|

||||

@ -20,7 +20,7 @@

|

||||

|

||||

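### Git for writers

Some people write fiction; others write academic papers, poetry, screenplays, technical manuals, or articles about open source. Many do a little of each. What they have in common is that, as a writer, you may well benefit from using Git. Although Git is famously a highly technical tool used by computer programmers, it is also well suited to the modern writer, and this article demonstrates how it can change the way you write and why you would want it to.

Before talking about Git, though, it's important to talk about what *copy* (or *content*, in the digital age) really is, and why it is different from your delivery *medium*. This is the 21st century, and the tool of choice for most writers is a computer. While computers appear deceptively good at combining processes such as editing and laying out copy, writers are (re)discovering that separating content from style is a good idea. That means you should write on a computer as you would on a typewriter, not as you would in a word processor. In computer terms, that means writing in *plaintext*.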

|

||||

|

||||

@ -30,13 +30,13 @@

|

||||

|

||||

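You write your content word for word and leave the delivery to the publisher. Even if you self-publish, treating your words as a kind of source code for your written work is a smarter, more efficient way to work, because when it's time to publish you can use the same source (your plaintext) to generate output suited to each target (PDF for print, EPUB for e-books, HTML for websites, and so on).

Writing in plaintext means not only that you don't have to worry about layout or text styling, but also that you no longer need specialized tools. Anything that can produce text is a valid "word processor" for you, whether it's a basic notepad app on your phone or tablet, the text editor that came with your computer, or a free editor downloaded from the internet. You can write on nearly any device, wherever you are and whatever you're doing, and the text you produce integrates with your project without any modification.

And Git specializes in managing plaintext.

### The Atom editor

When you write in plaintext, a word processor is overkill. A text editor is easier to use because it doesn't try to "helpfully" restructure your input. It lets you type the words in your head onto the screen without interference. Better still, text editors are often designed around a plugin architecture, so the application itself is very basic (it edits text), but you can build an environment around it to meet your every need.

The [Atom][4] editor is a good example of this design philosophy. It's a cross-platform text editor with built-in Git integration. If you're new to plaintext and new to Git, Atom is the easiest way to get started.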

|

||||

|

||||

@ -64,15 +64,15 @@ Atom 当前没有在 BSD 上构建。但是,有很好的替代方法,例如

|

||||

|

||||

#### Quick tour

If you're going to work with plaintext and Git, you need to get comfortable with your editor. Atom's user interface may be more dynamic than you're used to. In fact, you can think of it more like Firefox or Chrome than like a word processor, because it has tabs and panels that can be opened or closed as needed, and it even has add-ons that you can install and configure. It isn't practical to try to cover all of Atom's many features, but you can at least get familiar with what's possible.

When Atom opens, it displays a welcome screen. If nothing else, this screen is a good introduction to Atom's tabbed interface. You can close the welcome screen by clicking the "close" icon on the tab at the top of the Atom window, and create a new file using "File > New File."

Working in plaintext is a little different from working in a word processor, so here are some tips for writing content in a way that a human can understand and that Git and computers can parse, track, and convert.

#### Write in Markdown

These days, when people talk about plaintext, they mostly mean Markdown. Markdown is more of a style than a format, meaning that it aims to give your text a predictable structure so that computers can detect natural patterns and convert the text intelligently. Markdown has many definitions, but the best technical definition and cheat sheet is on [CommonMark's website][8].

|

||||

|

||||

```

|

||||

# Chapter 1

|

||||

@ -85,9 +85,9 @@ And it can even reference an image.

|

||||

|

||||

As the example shows, Markdown doesn't read like code, but it can be treated as code. If you follow the Markdown specification defined by CommonMark, you can reliably convert your Markdown text, with one click, to .docx, .epub, .html, MediaWiki, .odt, .pdf, .rtf, and a variety of other formats *without* losing formatting.

You can think of Markdown a little like a word processor's styles. If you've ever written for a publisher with a set of styles that control how chapter titles and section headings look, this is basically the same thing, except that instead of selecting a style from a drop-down menu, you add small notations to your text. These notations look natural to any modern reader who is used to "talking in text," but when the text is rendered, they are replaced with polished text styling. This is, in fact, what word processors secretly do behind the scenes. The word processor shows bold text, but if you could see the code generated to make your text bold, it would look a lot like Markdown (actually, it's the far more complex XML). With Markdown, that barrier between code and style is removed, which looks scarier on the one hand, but on the other hand, you can write Markdown on almost anything that generates text without losing any formatting information.

The popular file extension for Markdown files is .md. If you're on a platform that doesn't know what a .md file is, you can associate the extension with Atom manually or just use the universal .txt extension. The file extension doesn't change the nature of the file; it only changes how your computer decides what to do with it. Atom and some platforms are smart enough to know that a file is plaintext no matter what extension you give it.

#### Live preview

|

||||

|

||||

@ -97,25 +97,25 @@ Atom 具有 “Markdown 预览” 插件,该插件可以向你显示正在编

|

||||

|

||||

To activate this preview pane, select "Packages > Markdown Preview > Toggle Preview" or press `Ctrl + Shift + M`.

This view gives you the best of both worlds. You get to write without the burden of styling your text, yet you also see a common example of what your text will look like, at least in a typical digital format. Of course, the point is that you can't control how your text is ultimately rendered, so don't be tempted to adjust your Markdown to force your rendered preview to look a certain way.

#### One sentence per line

Your high school writing teacher won't be reading your Markdown.

It doesn't come naturally at first, but in the digital world it makes more sense to keep one sentence per line. Markdown ignores single line breaks (when you've pressed the `Return` or `Enter` key) and only creates a new paragraph after a single blank line.

![Writing in Atom][10]

The advantage of writing one sentence per line is that your work is easier to track. That is, suppose you change one word at the start of a paragraph: Atom, Git, or any other application can highlight that change in a meaningful way if the change is limited to one line rather than to one word in one long paragraph. In other words, a change to one sentence should affect only that sentence, not the whole paragraph.

You might be thinking, "Many word processors track changes too, and they can highlight a single word that has changed." But those revision trackers are bound to the interface of that word processor, which means you can't look through revisions without opening that word processor. In a plaintext workflow, you can review revisions in plaintext, which means you can make or approve edits no matter what you have on hand, as long as that device can deal with plaintext (and most of them can).

Writers admittedly don't usually think about line numbers, but they are useful to computers and generally make a good reference point. Atom numbers the lines of your text document by default. A *line* is a line once you have pressed the `Enter` or `Return` key.

![Writing in Atom][11]

If a line (in Atom) shows a dot instead of a number in the line-number gutter, it means it is part of the previous line, wrapped because it is too long to fit on your screen.

#### Themes

|

||||

|

||||

@ -127,7 +127,7 @@ Atom 具有 “Markdown 预览” 插件,该插件可以向你显示正在编

|

||||

|

||||

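![Atom's themes][13]

To use a theme you've installed or to customize a theme to your preference, navigate to the "Themes" category in the "Settings" tab. Pick the theme you want to use from the drop-down menu. The changes take place immediately, so you can see exactly how the theme affects your environment.

You can also change your working font in the "Editor" category of the "Settings" tab. Atom defaults to monospace fonts, which programmers generally prefer. But you can use any font on your system, whether it's serif, sans, gothic, or cursive. Whatever you want to spend your day staring at is up to you.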

|

||||

|

||||

@ -139,19 +139,19 @@ Atom 具有 “Markdown 预览” 插件,该插件可以向你显示正在编

|

||||

|

||||

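When creating long documents, I find that writing one chapter per file makes more sense than writing an entire book in a single file. Also, I don't name my chapters in the obvious way, such as `chapter-1.md` or `1.example.md`, but by chapter title or keyword (such as `example.md`). To give my future self guidance about how the book is assembled, I maintain a file called `toc.md` (for "Table of Contents") that lists the (current) order of the chapters.

I do this because, no matter how convinced I am that chapter 6 could never come before chapter 1, I almost never get through a whole book without swapping the order of a chapter or two. I find that keeping things dynamic from the start helps me avoid renaming confusion, and it also helps me avoid a rigid structure.

### Git in Atom

Two things every writer has in common: they write for keeps, and their writing is a journey. You don't sit down to write and finish with a final manuscript. By definition, you have a first draft. That draft goes through revisions, each of which you carefully save in duplicate and triplicate in case one of your files turns up corrupted. Eventually, you get to what you call a final draft, but chances are that one day you'll come back to it, either to restore the good parts or to fix the bad ones.

The most exciting feature in Atom is its strong Git integration. Without ever leaving Atom, you can interact with all of Git's major features: tracking and updating your project, rolling back changes you don't like, integrating changes from a collaborator, and more. The best way to learn it is to step through it, so here is how to use Git within the Atom interface from the beginning to the end of a writing project.

First things first: Reveal the Git panel by selecting "View > Toggle Git Tab." This opens a new tab on the right side of Atom's interface. There's not much to see yet, so just keep it open for now.

#### Starting a Git project

You can think of Git as being bound to a folder. Any folder outside a Git directory doesn't know about Git, and Git doesn't know about it. Folders and files within a Git directory are ignored until you grant Git permission to track them.

You can create a Git project by creating a new project folder in Atom. Select "File > Add Project Folder" and create a new folder on your system. The folder you create appears in the "Project Panel" on the left side of your Atom window.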

|

||||

|

||||

@ -159,11 +159,11 @@ Atom 最令人兴奋的功能是其强大的 Git 集成。无需离开 Atom,

|

||||

|

||||

Right-click your new project folder and select "New File" to create a new file in it. If you have files to import into your new project, right-click the folder and select "Show in File Manager" to open the folder in your system's file viewer (Dolphin or Nautilus on Linux, Finder on Mac, Explorer on Windows), and then drag and drop your files into your project folder.

With a project file (either the empty one you created or one you've imported) open in Atom, click the "Create Repository" button in the Git tab. In the pop-up dialog box, click "Init" to initialize your project directory as a local Git repository. Git adds a `.git` directory (invisible in your system's file manager, but visible to you in Atom) to your project folder. Don't be fooled by this: the `.git` directory is for Git to manage, not you, so you'll generally stay out of it. But seeing it in Atom is a good reminder that you're working in a project managed by Git; in other words, revision history is available when you see a `.git` directory.

In your empty file, write some stuff. You're a writer, so type some words. They can be any set of words you like, but keep in mind the writing tips above.

Press `Ctrl + S` to save your file, and it appears in the "Unstaged Changes" section of the Git tab. That means the file exists in your project folder but has not yet been handed over to Git to manage. Allow Git to track the file by clicking the "Stage All" button in the top-right of the Git tab. If you've used a word processor with revision history, you can think of this step as permitting Git to record changes.
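For readers who prefer a terminal, the Atom steps above correspond roughly to the Git commands below. This is a minimal sketch, not a description of what Atom runs internally; the temporary directory and the file name `example.md` are placeholders chosen for illustration.

```shell
# Create a throwaway project folder (a stand-in for your writing project).
project="$(mktemp -d)"
cd "$project"

# Rough equivalent of "Create Repository" > "Init": adds the hidden .git directory.
git init --quiet

# Write something and save it; at this point the file is still unstaged.
printf '# Chapter 1\n\nThis is a sentence.\n' > example.md

# Rough equivalent of "Stage All": allow Git to track the new file.
git add --all

# List what is staged for the next commit.
git status --short
```

Staging is a separate step from saving, which is why the file only shows up as tracked after `git add`.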

|

||||

|

||||

#### Git commit

|

||||

|

||||

@ -171,7 +171,7 @@ Atom 最令人兴奋的功能是其强大的 Git 集成。无需离开 Atom,

|

||||

|

||||

A Git *commit* sends your file into Git's internal and permanent archive. If you're used to word processors, this is similar to naming a revision. To create a commit, enter some descriptive text in the "Commit" message box at the bottom of the Git tab. You can be vague or cheeky, but it's more useful if you enter something informative, for when you want to know in the future why the revision was made.

The first time you make a commit, you must create a *branch*. Git branches are a little like alternate realities, allowing you to switch from one timeline to another to make changes that you may or may not want to keep forever. If you end up liking the changes, you can merge one experimental branch into another, thereby unifying different versions of your project. It's an advanced process that's not worth learning up front, but you still need an active branch, so you have to create one for your first commit.

Click the "Branch" icon at the very bottom of the Git tab to create a new branch.
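On the command line, creating a branch and making the first commit might look like the sketch below. The branch name `first-draft`, the author identity, and the commit message are all made up for illustration; Atom's buttons may perform these steps differently.

```shell
# Set up a small repository with one staged file.
project="$(mktemp -d)"
cd "$project"
git init --quiet
printf 'First draft.\n' > example.md
git add --all

# Create and switch to a branch for the first commit (the name is arbitrary).
git checkout --quiet -b first-draft

# Record the staged file with a descriptive message.
# The -c options set an author identity for this one command only.
git -c user.name="A Writer" -c user.email="writer@example.com" \
    commit --quiet -m "Add opening sentence of chapter 1"

# Show the history so far, one commit per line.
git log --oneline
```

A descriptive message costs a few seconds now and makes the history readable later.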

|

||||

|

||||

@ -185,7 +185,7 @@ Git 的<ruby>提交<rt>commit</rt></ruby>会将你的文件发送到 Git 的内

|

||||

|

||||

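#### History and Git diff

A natural question is how often you should commit. There's no right answer to that. Saving a file with `Ctrl + S` and committing to Git are two separate processes, so you will continue to do both. You'll probably want to make a commit whenever you feel like you've done something significant or are about to try a crazy new idea that you may want to back out of.

To get a feel for the impact a commit has on your workflow, remove some text from your test document, add some text to the top and bottom, and make another commit. Do this a few times until you have a small history at the bottom of your Git tab, then click one of the commits to view it in Atom.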
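The same experiment can be tried in a terminal. The sketch below, with invented sentences and an invented helper function named `commit`, makes two commits and then asks Git what changed between them; because each sentence sits on its own line, only the edited sentence appears in the diff.

```shell
project="$(mktemp -d)"
cd "$project"
git init --quiet

# Hypothetical helper: commit with a fixed author identity.
commit() {
    git -c user.name="A Writer" -c user.email="writer@example.com" \
        commit --quiet -m "$1"
}

# First revision: one sentence per line.
printf 'It was a dark night.\nThe rain fell.\n' > example.md
git add --all && commit "First draft"

# Second revision: only the first sentence changes.
printf 'It was a stormy night.\nThe rain fell.\n' > example.md
git add --all && commit "Make the opening more dramatic"

# Review the history, then compare the last two commits.
git log --oneline
git diff HEAD~1 HEAD -- example.md
```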

|

||||

|

||||

@ -199,15 +199,15 @@ Git 的<ruby>提交<rt>commit</rt></ruby>会将你的文件发送到 Git 的内

|

||||

|

||||

#### Remote backup

One of the advantages of using Git is that, by design, it is distributed, meaning you can commit your work to your local repository and push your changes out to any number of servers for backup. You can also pull changes in from those servers so that whatever device you happen to be working on always has the latest changes.

For this to work, you must have an account on a Git server. There are several free hosting services out there, including GitHub, the company that produced Atom but, oddly, is not itself open source, and GitLab, which is open source. Preferring open source to proprietary software, I'll use GitLab in this example.

If you don't already have a GitLab account, sign up for one and start a new project. The project name doesn't have to match your project folder in Atom, but it probably makes sense if it does. You can leave your project private, in which case only you and anyone you give explicit permission to can access it, or you can make it public if you want it to be available to anyone on the internet who stumbles upon it.

Do not add a README to the project.

Once the project is created, it gives you instructions on how to set up the repository. This is great information if you decide to use Git in a terminal or with a separate GUI, but Atom's workflow is different.

Click the "Clone" button in the upper-right of the GitLab interface. This reveals the address you must use to access the Git repository. Copy the "SSH" address (not the "https" address).

|

||||

|

||||

@ -224,7 +224,7 @@ Git 的<ruby>提交<rt>commit</rt></ruby>会将你的文件发送到 Git 的内

|

||||

|

||||

At the bottom of the Git tab, a new button has appeared, labeled "Fetch." Since your server is brand new and has no data for you to fetch, right-click the button and select "Push." This pushes your changes to your GitLab account, and now your project is backed up on a Git server.

You can push changes to the server after each commit. It provides an immediate offsite backup and, since the amount of data is usually minimal, it's practically as fast as a local save.
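To see what a push does without needing a server account, the sketch below uses a local bare repository as a stand-in for the hosted project. With a real service you would use the SSH address copied from the "Clone" button instead of the local path; the branch name, file name, and author identity here are assumptions for illustration.

```shell
# A bare repository stands in for the project on the Git server.
remote="$(mktemp -d)/notes.git"
git init --quiet --bare "$remote"

# A local project with one commit on a branch.
project="$(mktemp -d)"
cd "$project"
git init --quiet
git checkout --quiet -b first-draft
printf 'First draft.\n' > example.md
git add --all
git -c user.name="A Writer" -c user.email="writer@example.com" \
    commit --quiet -m "First draft"

# Register the server under the conventional name "origin".
git remote add origin "$remote"

# Push the current branch and remember the remote for later pushes.
git push --quiet --set-upstream origin first-draft
```

After the first push with `--set-upstream`, a plain `git push` is enough for later commits on the same branch.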

|

||||

|

||||

### Writing and Git

|

||||

|

||||

@ -237,7 +237,7 @@ via: https://opensource.com/article/19/4/write-git

|

||||

Author: [Seth Kenlon][a]

Topic selection: [lujun9972][b]

Translator: [wxy](https://github.com/wxy)

Proofreader: [校对者ID](https://github.com/校对者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/) (Linux China)

|

||||

|

||||

@ -1,22 +1,24 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (hopefully2333)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11498-1.html)

|

||||

[#]: subject: (How DevOps professionals can become security champions)

|

||||

[#]: via: (https://opensource.com/article/19/9/devops-security-champions)

|

||||

[#]: author: (Jessica Repka https://opensource.com/users/jrepkahttps://opensource.com/users/jrepkahttps://opensource.com/users/patrickhousleyhttps://opensource.com/users/mehulrajputhttps://opensource.com/users/alanfdosshttps://opensource.com/users/marcobravo)

|

||||

[#]: author: (Jessica Repka https://opensource.com/users/jrepka)

|

||||

|

||||

How DevOps professionals can become security champions
======

![A lock on the side of a building][1]

> Breaking down silos and becoming a champion for security will help you, your career, and your organization.

Security is a misunderstood element in DevOps. Some see it as outside of DevOps' purview, while others find it important (and overlooked) enough to recommend moving to DevSecOps instead. No matter which side you agree with, it's plain that cybersecurity affects every one of us.

Each year, the [statistics on hacking][3] become more alarming. For example, there's a hacker attack every 39 seconds, which can lead to stolen records, identities, and proprietary projects you're writing for your company. It can take months (and possibly forever) for your security team to discover the who, what, where, and when behind a hack.

What should operations professionals do in the face of these dire problems? I say it is time for us to become part of the solution by becoming security champions.

### Silos and turf wars

|

||||

|

||||

@ -28,52 +30,44 @@ DevOps 专业人员如何成为网络安全拥护者

|

||||

|

||||

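To break down these silos and end the turf wars, I make a point of talking to at least one person on every security team to learn the ins and outs of daily security operations in our organization. I started doing this out of curiosity, but I've kept doing it because it always gives me a valuable, fresh perspective. For example, I learned that for every deployment stopped because of failed security, the security team is frantically trying to fix 10 other problems it sees. Their brusqueness and sharpness come from having to fix those problems within a limited time before they become one big problem.

Consider the immense amount of knowledge it takes to find, identify, and undo what has been done, or to figure out what the DevOps team is doing (without background information) and then replicate and test it. And all of this usually has to be done by a greatly understaffed security team.

This is the daily life of your security team, and your DevOps team does not see it. ITSEC's daily work means overtime hours and overwork to make sure that the company, its teams, and everyone working on those teams can work securely.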

|

||||

|

||||

### Ways to be a security champion

This is where you can help once you become a champion for your security team. It means that, for everything you work on, you must take a good, hard look at all the ways someone could log in to it and what they could take from it.

Helping your security team is helping yourself. Add tools to your workflow that combine what you know needs to be done with what they know needs to be done. Start small, such as reading up on Common Vulnerabilities and Exposures (CVEs) and adding scanning modules to your CI/CD pipeline. There is an open source scanning tool for everything you build, and adding small open source tools (such as the ones listed below) can make a project better in the long run.

|

||||

|

||||

**Container scanning tools:**

* [Anchore Engine][5]
* [Clair][6]
* [Vuls][7]
* [OpenSCAP][8]

**Code scanning tools:**

* [OWASP SonarQube][9]
* [Find Security Bugs][10]
* [Google Hacking Diggity Project][11]

**Kubernetes security tools:**

* [Project Calico][12]
* [Kube-hunter][13]
* [NeuVector][14]

|

||||

|

||||

|

||||

|

||||

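### Keep your DevOps hat on

If your job role is DevOps-related, then learning about new technologies and how to create new things with them is part of your job. Security is no different. Here is my list of ways to stay up to date on DevOps security.

* Each week, read one security-related article in your area of work.
* Check the official [CVE][15] website weekly to see what new vulnerabilities have appeared.
* Try doing a hackathon. Some companies do this once a month; if that isn't enough and you want to learn more, check out the Beginner Hack 1.0 site.
* Attend a security conference with a member of your security team at least once a year to see things from their side.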

|

||||

|

||||

|

||||

|

||||

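### Be a champion to become better

There are several reasons you should become a champion for your security team. The first is to increase your knowledge and help your career. The second is to help other teams, foster new relationships, and break down the silos that harm your organization. Building relationships across your organization has many benefits, including setting an example of teams that communicate and encouraging people to work together. You will also foster the sharing of knowledge throughout the organization and give everyone a new opportunity for better internal cooperation on security.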

|

||||

@ -87,11 +81,11 @@ via: https://opensource.com/article/19/9/devops-security-champions

|

||||

Author: [Jessica Repka][a]

Topic selection: [lujun9972][b]

Translator: [hopefully2333](https://github.com/hopefully2333)

Proofreader: [校对者ID](https://github.com/校对者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/) (Linux China)

|

||||

|

||||

[a]: https://opensource.com/users/jrepkahttps://opensource.com/users/jrepkahttps://opensource.com/users/patrickhousleyhttps://opensource.com/users/mehulrajputhttps://opensource.com/users/alanfdosshttps://opensource.com/users/marcobravo

|

||||

[a]: https://opensource.com/users/jrepka

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/BUSINESS_3reasons.png?itok=k6F3-BqA (A lock on the side of a building)

|

||||

[2]: https://opensource.com/article/19/1/what-devsecops

|

||||

@ -0,0 +1,189 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (Morisun029)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11494-1.html)

|

||||

[#]: subject: (Mutation testing by example: Failure as experimentation)

|

||||

[#]: via: (https://opensource.com/article/19/9/mutation-testing-example-failure-experimentation)

|

||||

[#]: author: (Alex Bunardzic https://opensource.com/users/alex-bunardzic)

|

||||

|

||||

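Mutation testing by example: Failure as experimentation
======

> Develop the logic for an automated cat door that opens during daylight hours and locks during the night, using the xUnit.net testing framework for .NET.

![Digital hand surrounding by objects, bike, light bulb, graphs][1]

In the [first article][2] in this series, I demonstrated how to use planned failure to ensure expected outcomes in your code. In this second article, I'll continue developing the example project: an automated cat door that opens during daylight hours and locks during the night.

As a reminder, you can use the xUnit.net testing framework for .NET by following the [instructions here][3].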

|

||||

|

||||

### What about the daylight hours?

Recall that test-driven development (TDD) centers on a healthy amount of unit tests.

The first article implemented logic that fulfills the expectations of the `Given7pmReturnNighttime` unit test. But you're not done yet. Now you need to describe what you expect to happen when the current time is past 7am. Here is the new unit test, called `Given7amReturnDaylight`:

```
[Fact]
public void Given7amReturnDaylight()
{
    var expected = "Daylight";
    var actual = dayOrNightUtility.GetDayOrNight();
    Assert.Equal(expected, actual);
}
```

The new unit test now fails (the sooner it fails, the better!):

```
Starting test execution, please wait...
[Xunit.net 00:00:01.23] unittest.UnitTest1.Given7amReturnDaylight [FAIL]
Failed unittest.UnitTest1.Given7amReturnDaylight
[...]
```

The test expected to receive the string value `Daylight` but instead received the string value `Nighttime`.

|

||||

|

||||

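### Analyze the failed test case

Upon closer inspection, the code itself seems to be the problem. It turns out that the implementation of the `GetDayOrNight` method is not testable!

Take a look at the core challenges we face:

1. `GetDayOrNight` relies on hidden input.

   The value of `dayOrNight` depends on hidden input (it obtains the time of day from the built-in system clock).

2. `GetDayOrNight` contains non-deterministic behavior.

   The value of the time obtained from the system clock is non-deterministic. It depends on the point in time when you run the code, which we must consider unpredictable.

3. The quality of the `GetDayOrNight` API is low.

   This API is tightly coupled to a concrete data source (the system `DateTime`).

4. `GetDayOrNight` violates the single responsibility principle.

   The method both consumes and processes information. Good practice is for a method to be responsible for a single duty.

5. `GetDayOrNight` has more than one reason to change.

   It is possible to imagine a scenario where the internal source of time may change. Equally, it's easy to imagine that the processing logic will change. These disparate reasons for changing must be isolated from each other.

6. The API signature of `GetDayOrNight` is not sufficient when trying to understand its behavior.

   Ideally, you should be able to understand what type of behavior to expect from an API by simply looking at its signature.

7. `GetDayOrNight` depends on global shared mutable state.

   Shared mutable state is to be avoided at all costs!

8. The behavior of the `GetDayOrNight` method cannot be predicted even after reading the source code.

   This is a serious problem. It should always be very clear from reading the source code what kind of behavior can be predicted once the system is operational.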

|

||||

|

||||

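### The principles behind the failure

Whenever you're faced with an engineering problem, it is advisable to use the time-tested strategy of *divide and conquer*. In this case, following the principle of *separation of concerns* is the way to go.

> Separation of concerns (SoC) is a design principle for separating a computer program into distinct sections, so that each section addresses a separate concern. A concern is a set of information that affects the code of a computer program. A concern can be as general as the details of the hardware the code is being optimized for, or as specific as the name of a class to instantiate. A program that embodies SoC well is called a modular program.
>
> ([source][4])

The `GetDayOrNight` method should be concerned only with deciding whether a date and time value means daylight or nighttime. It should not be concerned with finding the source of that value. That concern should be left to the calling client.

You must leave it to the calling client to obtain the current time. This approach aligns with another valuable engineering principle, *inversion of control*. Martin Fowler explores this concept in detail [here][5].

> One important characteristic of a framework is that the methods defined by the user to tailor the framework will often be called from within the framework itself, rather than from the user's application code. The framework often plays the role of the main program in coordinating and sequencing application activity. This inversion of control gives frameworks the power to serve as extensible skeletons. The methods supplied by the user tailor the generic algorithms defined in the framework for a particular application.
>
> -- [Ralph Johnson and Brian Foote][6]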

|

||||

|

||||

### Refactoring the test case

So the code needs refactoring. Get rid of the dependency on the internal clock (the `DateTime` system utility):

```
DateTime time = new DateTime();
```

Delete the above line (which should be line 7 in your file). Refactor your code further by adding an input parameter, `DateTime time`, to the `GetDayOrNight` method.

Here's the refactored class `DayOrNightUtility.cs`:

```
using System;

namespace app {
    public class DayOrNightUtility {
        public string GetDayOrNight(DateTime time) {
            string dayOrNight = "Nighttime";
            if(time.Hour >= 7 && time.Hour < 19) {
                dayOrNight = "Daylight";
            }
            return dayOrNight;
        }
    }
}
```

|

||||

|

||||

Refactoring the code requires the unit tests to change. You need to prepare test data for `nightHour` and `dayHour` and pass those values into the `GetDayOrNight` method. Here are the refactored unit tests:

```
using System;
using Xunit;
using app;

namespace unittest
{
    public class UnitTest1
    {
        DayOrNightUtility dayOrNightUtility = new DayOrNightUtility();
        DateTime nightHour = new DateTime(2019, 08, 03, 19, 00, 00);
        DateTime dayHour = new DateTime(2019, 08, 03, 07, 00, 00);

        [Fact]
        public void Given7pmReturnNighttime()
        {
            var expected = "Nighttime";
            var actual = dayOrNightUtility.GetDayOrNight(nightHour);
            Assert.Equal(expected, actual);
        }

        [Fact]
        public void Given7amReturnDaylight()
        {
            var expected = "Daylight";
            var actual = dayOrNightUtility.GetDayOrNight(dayHour);
            Assert.Equal(expected, actual);
        }

    }
}
```
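The idea behind the refactoring, that the caller supplies the time instead of the function reading the clock, is not specific to C#. As a rough shell analogue (not part of the article's project; the function name `get_day_or_night` is made up here), the same logic becomes deterministic once the hour is a parameter, which makes it easy to probe both sides of each boundary:

```shell
# Shell analogue of GetDayOrNight(DateTime time): the caller supplies the hour (0-23).
get_day_or_night() {
    if [ "$1" -ge 7 ] && [ "$1" -lt 19 ]; then
        echo "Daylight"
    else
        echo "Nighttime"
    fi
}

# Probe both sides of each boundary with fixed, repeatable inputs.
for hour in 6 7 18 19; do
    echo "$hour: $(get_day_or_night "$hour")"
done
```

Checks at 6, 7, 18, and 19 are the kind of boundary cases that would catch a mutated comparison operator, such as `-gt` in place of `-ge`.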

|

||||

|

||||

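### Lessons learned

Before moving forward with this simple scenario, take a look back and review the lessons in this exercise.

It's easy to create a trap inadvertently by implementing code that is untestable. On the surface, such code may appear to be functioning correctly. However, following test-driven development (TDD) practice, which means describing the expectations first and only then prescribing the implementation, reveals serious problems in the code.

This shows that TDD is an ideal methodology for ensuring code does not get too messy. TDD points out problem areas, such as the absence of single responsibility and the presence of hidden inputs. Also, TDD assists in removing non-deterministic code and replacing it with fully testable code that behaves deterministically.

Finally, TDD helps deliver code that is easy to read and logic that is easy to follow.

In the next article in this series, I'll demonstrate how to use the logic created during this exercise to implement functioning code and how further testing can make it even better.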

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/9/mutation-testing-example-failure-experimentation

|

||||

|

||||

Author: [Alex Bunardzic][a]

Topic selection: [lujun9972][b]

Translator: [Morisun029](https://github.com/Morisun029)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/) (Linux China)

|

||||

|

||||

[a]: https://opensource.com/users/alex-bunardzic

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/rh_003588_01_rd3os.combacktoschoolseriesk12_rh_021x_0.png?itok=fvorN0e- (Digital hand surrounding by objects, bike, light bulb, graphs)

|

||||

[2]: https://linux.cn/article-11483-1.html

|

||||

[3]: https://linux.cn/article-11468-1.html

|

||||

[4]: https://en.wikipedia.org/wiki/Separation_of_concerns

|

||||

[5]: https://martinfowler.com/bliki/InversionOfControl.html

|

||||

[6]: http://www.laputan.org/drc/drc.html

|

||||

[7]: http://www.google.com/search?q=new+msdn.microsoft.com

|

||||

@ -1,34 +1,34 @@

|

||||

[#]: collector: "lujun9972"

|

||||

[#]: translator: "way-ww"

|

||||

[#]: reviewer: " "

|

||||

[#]: publisher: " "

|

||||

[#]: url: " "

|

||||

[#]: reviewer: "wxy"

|

||||

[#]: publisher: "wxy"

|

||||

[#]: url: "https://linux.cn/article-11491-1.html"

|

||||

[#]: subject: "How to Run the Top Command in Batch Mode"

|

||||

[#]: via: "https://www.2daygeek.com/linux-run-execute-top-command-in-batch-mode/"

|

||||

[#]: author: "Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/"

|

||||

|

||||

How to run the top command in batch mode

|

||||

======

|

||||

|

||||

The [top command][1] is the best command that everyone uses to [monitor Linux system performance][2]. You probably already know most of what the `top` command can do, apart from a few options; if I'm not wrong, batch mode is one of them.

Most script writers and developers know about it, because it is mainly used for writing scripts.

If you're not familiar with it, don't worry; we cover it here.

|

||||

|

||||

### What is batch mode in the top command

Batch mode allows you to send the output of the `top` command to other programs or to a file.

In this mode, `top` does not accept input and keeps running until the number of iterations reaches the value you set with the `-n` option, or until it is killed.

If you want to fix any performance issues on a Linux server, you need to [understand the top command output][3] correctly.
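As a minimal sketch of the `-n` option described above, the following takes a single snapshot and saves it to a file, so `top` exits on its own instead of relying on a downstream command to close the pipe. The temporary file is a placeholder; the exact columns depend on your `top` version.

```shell
# Take one snapshot (-n 1) in batch mode (-b) and save it to a file.
snapshot="$(mktemp)"
top -b -n 1 > "$snapshot"

# The first lines are the summary area; the process list follows.
head -n 12 "$snapshot"
```

Limiting the iteration count this way is convenient in scripts and scheduled jobs, where nothing is available to stop `top` interactively.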

|

||||

|

||||

### 1) How to run the top command in batch mode

By default, `top` sorts its results by CPU usage, so when you run the command below in batch mode, it does the same and prints the first 35 lines:

|

||||

|

||||

```

|

||||

# top -bc | head -35

|

||||

@ -72,7 +72,7 @@ PID USER PR NI VIRT RES SHR S %CPU %MEM TIME+ COMMAND

|

||||

|

||||

### 2) How to run the top command in batch mode and sort the output by memory usage

Run the command below in batch mode to sort the results by memory usage:

|

||||

|

||||

```

|

||||

# top -bc -o +%MEM | head -n 20

|

||||

@ -99,19 +99,17 @@ KiB Swap: 1048572 total, 514640 free, 533932 used. 2475984 avail Mem

|

||||

8632 nobody 20 0 256844 25744 2216 S 0.0 0.7 0:00.03 /usr/sbin/httpd -k start

|

||||

```

|

||||

|

||||

Details of the above command:

* `-b`: batch mode option
* `-c`: shows the full command line of each running process instead of just the program name
* `-o`: specifies the field to sort by
* `head`: outputs the first part of a file
* `-n`: prints the first n lines

|

||||

|

||||

### 3) How to run the top command in batch mode and show only a specific user's processes

If you want to limit the results to the processes of a specific user, run the command below:

|

||||

|

||||

```

|

||||

# top -bc -u mysql | head -n 10

|

||||

@ -128,13 +126,11 @@ KiB Swap: 1048572 total, 514640 free, 533932 used. 2649412 avail Mem

|

||||

|

||||

### 4) How to run the top command in batch mode and sort the output by process time

Use the following `top` command in batch mode to sort the results by process time. This shows the total CPU time the task has used since it started.

If you want to check how long a process has been running on Linux, see the following article:

* [Five ways to check how long a process has been running in Linux][4]

|

||||

|

||||

```

|

||||

# top -bc -o TIME+ | head -n 20

|

||||

@ -163,7 +159,7 @@ KiB Swap: 1048572 total, 514640 free, 533932 used. 2440332 avail Mem

|

||||

|

||||

### 5) How to run the top command in batch mode and save the output to a file

If you want to share the output of the `top` command with someone for troubleshooting purposes, redirect the output to a file with the following command:

|

||||

|

||||

```

|

||||

# top -bc | head -35 > top-report.txt

|

||||

@ -209,9 +205,9 @@ KiB Swap: 1048572 total, 514640 free, 533932 used. 2659084 avail Mem

|

||||

|

||||

### How to sort the output by a specific field

In recent versions of `top`, press the `f` key to open the field management screen.

To sort by a new field, use the `up`/`down` arrow keys to select the field, then press the `s` key to sort by it. Finally, press the `q` key to exit this window.

|

||||

|

||||

```

|

||||

Fields Management for window 1:Def, whose current sort field is %CPU

|

||||

@ -269,9 +265,9 @@ Fields Management for window 1:Def, whose current sort field is %CPU

|

||||

nsUSER = USER namespace Inode

|

||||

```

|

||||

|

||||

In older versions of `top`, press `shift+f` or `shift+o` to open the field management screen for sorting.

To sort by a new field, select the letter of the corresponding sort field, then press Enter to sort.

|

||||

|

||||

```

|

||||

Current Sort Field: N for window 1:Def

|

||||

@ -323,7 +319,7 @@ via: https://www.2daygeek.com/linux-run-execute-top-command-in-batch-mode/

|

||||

Author: [Magesh Maruthamuthu][a]

Topic selection: [lujun9972][b]

Translator: [way-ww](https://github.com/way-ww)

Proofreader: [校对者ID](https://github.com/校对者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/) (Linux China)

|

||||

|

||||

@ -0,0 +1,71 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (lnrCoder)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11492-1.html)

|

||||

[#]: subject: (DevSecOps pipelines and tools: What you need to know)

|

||||

[#]: via: (https://opensource.com/article/19/10/devsecops-pipeline-and-tools)

|

||||

[#]: author: (Sagar Nangare https://opensource.com/users/sagarnangare)

|

||||

|

||||

DevSecOps pipelines and tools: What you need to know
======

> DevSecOps evolves DevOps to ensure that security remains an essential part of the process.

|

||||

|

||||

|

||||

|

||||

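DevOps is well known in the IT world by now, but it is not flawless. Imagine you have implemented all of the DevOps engineering practices in modern application delivery for a project. You have reached the end of the development pipeline, but a penetration testing team (internal or external) has detected a security flaw and produced a report. Now you have to restart all of your processes and ask developers to fix the flaw.

This is not terribly tedious in a DevOps-based software development lifecycle (SDLC) system, but it does consume time and affect the delivery schedule. If security had been integrated from the start of the SDLC, you might have tracked down the flaw and eliminated it during development. Pushing security to the end of the development pipeline, as in the scenario above, leads to a longer development lifecycle.

This is the reason for introducing DevSecOps, which consolidates the overall software delivery cycle in an automated way.

In modern DevOps methodologies, organizations widely use containers to host applications, and we see heavy use of [Kubernetes][2] and [Istio][3]. But these tools have their own vulnerabilities. For example, the Cloud Native Computing Foundation (CNCF) recently completed a [Kubernetes security audit][4] that identified several issues. All tools used in the DevOps pipeline need to undergo security checks while running in the pipeline, and DevSecOps pushes admins to monitor the tools' repositories for upgrades and patches.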

|

||||

|

||||

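### What is DevSecOps?

Like DevOps, DevSecOps is a mindset or culture that developers and IT operations teams follow while developing and deploying software applications. It integrates active and automated security audits and penetration testing into agile application development.

To use [DevSecOps][5], you need to:

* Introduce the concept of security right from the start of the SDLC to minimize vulnerabilities in software code.
* Ensure everyone (including developers and IT operations teams) shares responsibility for following security practices in their tasks.
* Integrate security controls, tools, and processes at the start of the DevOps workflow. These will enable automated security checks at each stage of software delivery.

DevOps has always been about including security, as well as quality assurance (QA), database administration, and everyone else, in the development and release process. DevSecOps is an evolution of that process to ensure security is never forgotten and remains an essential part of it.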

|

||||

|

||||

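### Understanding the DevSecOps pipeline

A typical DevOps pipeline has different stages; a typical SDLC process includes phases such as Plan, Code, Build, Test, Release, and Deploy. In DevSecOps, specific security checks are applied in each phase.

* **Plan**: Execute security analysis and create a test plan to determine where, how, and when testing will be done.
* **Code**: Deploy linting tools and Git controls to secure passwords and API keys.
* **Build**: While building code for execution, incorporate static application security testing (SAST) tools to track down flaws in the code before deploying to production. These tools are specific to programming languages.
* **Test**: Use dynamic application security testing (DAST) tools to test your application at runtime. These tools can detect errors associated with user authentication, authorization, SQL injection, and API-related endpoints.
* **Release**: Just before releasing the application, use security analysis tools to perform thorough penetration testing and vulnerability scanning.
* **Deploy**: After completing the above tests at runtime, send a secure build to production for final deployment.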
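
As a rough illustration of the Code stage in the list above, a pre-commit style check can scan text for strings that look like hard-coded credentials. The sketch below is a minimal Python example, not the implementation of any particular tool. The function name `find_secrets` and both patterns are assumptions made for illustration, and real scanners ship far richer rule sets.

```python
import re

# Hypothetical patterns for illustration only; real secret scanners
# use much larger and more precise rule sets.
SECRET_PATTERNS = [
    re.compile(r"(?i)\b(password|passwd|api[_-]?key|secret)\b\s*[:=]\s*['\"][^'\"]+['\"]"),
    re.compile(r"-----BEGIN (?:RSA |EC )?PRIVATE KEY-----"),
]


def find_secrets(text):
    """Return (line_number, line) pairs for lines matching any pattern."""
    hits = []
    for number, line in enumerate(text.splitlines(), start=1):
        if any(pattern.search(line) for pattern in SECRET_PATTERNS):
            hits.append((number, line.strip()))
    return hits


if __name__ == "__main__":
    sample = 'user = "alice"\npassword = "hunter2"\ntimeout = 30\n'
    for number, line in find_secrets(sample):
        print(f"line {number}: possible secret: {line}")
```

A check like this would typically run before a commit is accepted, so that a flagged line never reaches the shared repository.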

|

||||

|

||||

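### DevSecOps tools

Tools are available for every phase of the SDLC. Some are commercial products, but most are open source. In my next article, I will talk more about the tools to use in different stages of the pipeline.

DevSecOps will play a more crucial role as the complexity of security threats against modern IT infrastructure keeps increasing. However, the DevSecOps pipeline will need to improve over time, rather than relying on implementing all security changes simultaneously. This will eliminate the possibility of backtracking or application delivery failure.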

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/10/devsecops-pipeline-and-tools

|

||||

|

||||

Author: [Sagar Nangare][a]
Topic selection: [lujun9972][b]
Translator: [lnrCoder](https://github.com/lnrCoder)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://opensource.com/users/sagarnangare

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/LAW-Internet_construction_9401467_520x292_0512_dc.png?itok=RPkPPtDe (An intersection of pipes.)

|

||||

[2]: https://opensource.com/resources/what-is-kubernetes

|

||||

[3]: https://opensource.com/article/18/9/what-istio

|

||||

[4]: https://www.cncf.io/blog/2019/08/06/open-sourcing-the-kubernetes-security-audit/

|

||||

[5]: https://resources.whitesourcesoftware.com/blog-whitesource/devsecops

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11490-1.html)

|

||||

[#]: subject: (Bash Script to Delete Files/Folders Older Than “X” Days in Linux)

|

||||

[#]: via: (https://www.2daygeek.com/bash-script-to-delete-files-folders-older-than-x-days-in-linux/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

@ -10,29 +10,21 @@

|

||||

Bash Script to Delete Files/Folders Older Than “X” Days in Linux

|

||||

======

|

||||

|

||||

**[Disk usage][1]** monitoring tools can alert us when a given threshold is reached.

|

||||

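[Disk usage][1] monitoring tools can alert us when a given threshold is reached. But they cannot resolve the [disk usage][2] problem on their own. Manual intervention is needed to fix it.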

|

||||

|

||||

But they cannot resolve the **[disk usage][2]** problem on their own.

|

||||

What would you do if you wanted to fully automate this kind of task? Yes, it can be done with a bash script.

|

||||

|

||||

Manual intervention is needed to fix it.

What would you do if you wanted to fully automate this kind of task?

Yes, it can be done with a bash script.

|

||||

|

||||

This script prevents alerts from **[monitoring tools][3]**, because we delete old log files before they fill up the disk space.

|

||||

This script prevents alerts from [monitoring tools][3], because we delete old log files before they fill up the disk space.

|

||||

|

||||

We have written many shell scripts in the past. If you want to look at them, follow the link below.

|

||||

|

||||

* **[How to automate day-to-day activities using shell scripts?][4]**

|

||||

|

||||

|

||||

* [How to automate day-to-day activities using shell scripts?][4]

|

||||

|

||||

I have added two bash scripts to this article; both help clear out old logs.

### 1) Bash script to delete folders older than “X” days in Linux

|

||||

|

||||

We have a folder named **“/var/log/app/”** that contains 15 days of logs, and we are going to delete the folders older than 10 days.

|

||||

We have a folder named `/var/log/app/` that contains 15 days of logs, and we are going to delete the folders older than 10 days.

|

||||

|

||||

```

|

||||

$ ls -lh /var/log/app/

|

||||

@ -56,7 +48,7 @@ drwxrw-rw- 3 root root 24K Oct 15 23:52 app_log.15

|

||||

|

||||

This script deletes folders older than 10 days and sends the folder list by mail.

|

||||

|

||||

You can change the **“-mtime X”** value to suit your requirements. Also, replace our email address with your own.

|

||||

You can change the `-mtime X` value to suit your requirements. Also, replace our email address with your own.
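
To make the age test concrete, here is a small Python sketch of the kind of selection that an `-mtime` test performs: it lists the entries directly under a directory whose modification time is more than a given number of days old. It is a simplified illustration under stated assumptions. The function name `older_than` is invented for this example, it does not recurse, it only lists and never deletes anything, and it does not reproduce `find`'s rounding of ages to whole 24-hour periods.

```python
import os
import time


def older_than(directory, days, now=None):
    """Return sorted paths directly under `directory` whose mtime is
    more than `days` * 24 hours before `now`. Nothing is deleted."""
    now = time.time() if now is None else now
    cutoff = now - days * 86400
    matches = []
    with os.scandir(directory) as entries:
        for entry in entries:
            # follow_symlinks=False: judge the link itself, not its target
            if entry.stat(follow_symlinks=False).st_mtime < cutoff:
                matches.append(entry.path)
    return sorted(matches)
```

For example, `older_than("/var/log/app", 10)` would return the entries that a 10-day cleanup would consider. Reviewing that list before deleting anything is a cheap safety check.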

|

||||

|

||||

```

|

||||

# /opt/script/delete-old-folders.sh

|

||||

@ -81,7 +73,7 @@ rm $MESSAGE /tmp/folder.out

|

||||

fi

|

||||

```

|

||||

|

||||

Set executable permission on **“delete-old-folders.sh”**.

|

||||

Set executable permission on `delete-old-folders.sh`.

|

||||

|

||||

```

|

||||

# chmod +x /opt/script/delete-old-folders.sh

|

||||

@ -109,15 +101,13 @@ Oct 15 /var/log/app/app_log.15

|

||||

|

||||

### 2) Bash script to delete files older than “X” days in Linux

|

||||

|

||||

We have a folder named **“/var/log/apache/”** that contains 15 days of logs, and we are going to delete the files older than 10 days.

|

||||

We have a folder named `/var/log/apache/` that contains 15 days of logs, and we are going to delete the files older than 10 days.

|

||||

|

||||

The articles below are related to this topic, so you may be interested in reading them.

|

||||

|

||||

* **[How to find and delete files older than “X” days and “X” hours in Linux?][6]**
* **[How to find recently modified files/folders in Linux][7]**
* **[How to automatically delete or clean up /tmp folder contents in Linux?][8]**

|

||||

|

||||

|

||||

* [How to find and delete files older than “X” days and “X” hours in Linux?][6]
* [How to find recently modified files/folders in Linux][7]
* [How to automatically delete or clean up /tmp folder contents in Linux?][8]

|

||||

|

||||

```

|

||||

# ls -lh /var/log/apache/

|

||||

@ -141,7 +131,7 @@ Oct 15 /var/log/app/app_log.15

|

||||

|

||||

This script deletes files older than 10 days and sends the list by mail.

|

||||

|

||||

You can change the **“-mtime X”** value to suit your requirements. Also, replace our email address with your own.

|

||||

You can change the `-mtime X` value to suit your requirements. Also, replace our email address with your own.

|

||||

|

||||

```

|

||||

# /opt/script/delete-old-files.sh

|

||||

@ -166,7 +156,7 @@ rm $MESSAGE /tmp/file.out

|

||||

fi

|

||||

```

|

||||

|

||||

Set executable permission on **“delete-old-files.sh”**.

|

||||

Set executable permission on `delete-old-files.sh`.

|

||||

|

||||

```

|

||||

# chmod +x /opt/script/delete-old-files.sh

|

||||

@ -199,7 +189,7 @@ via: https://www.2daygeek.com/bash-script-to-delete-files-folders-older-than-x-d

|

||||

Author: [Magesh Maruthamuthu][a]

Topic selection: [lujun9972][b]

Translator: [geekpi](https://github.com/geekpi)

|

||||

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||

Proofreader: [wxy](https://github.com/wxy)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

@ -0,0 +1,79 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11495-1.html)

|

||||

[#]: subject: (Linux sudo flaw can lead to unauthorized privileges)

|

||||

[#]: via: (https://www.networkworld.com/article/3446036/linux-sudo-flaw-can-lead-to-unauthorized-privileges.html)

|

||||

[#]: author: (Sandra Henry-Stocker https://www.networkworld.com/author/Sandra-Henry_Stocker/)

|

||||

|

||||

Linux sudo flaw can lead to unauthorized privileges

|

||||

======

|

||||

|

||||

|

||||

|

||||

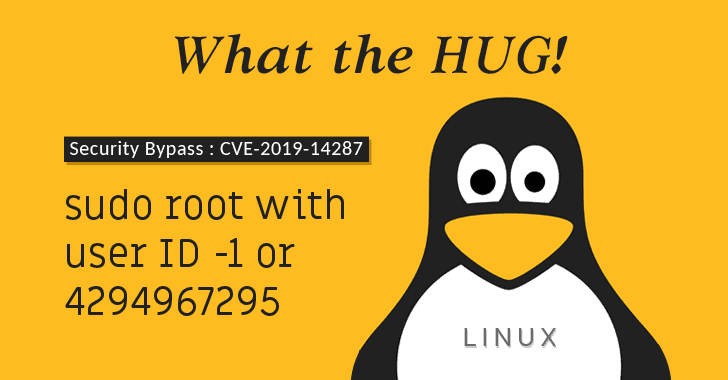

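> Exploiting a newly discovered sudo flaw in Linux can enable certain users to run commands as root despite restrictions against it.

A serious flaw was recently discovered in the [sudo][1] command that, if exploited, lets ordinary users run commands as root even when the `/etc/sudoers` file explicitly forbids them from doing so.

Updating `sudo` to version 1.8.28 should fix the problem, so Linux admins are encouraged to do so as soon as possible.

How this flaw can be exploited depends on the specific privileges granted in `/etc/sudoers`. For example, a rule that allows a user to edit files as any user except root would in practice allow that user to edit files as root as well. In this case, the flaw could lead to very serious problems.

For a user to be able to exploit this flaw, the **user** needs to be assigned privileges in `/etc/sudoers` that allow running commands as other users, and the flaw is limited to the command privileges assigned in this way.

This problem affects versions prior to 1.8.28. To check your `sudo` version, use this command: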

|

||||

|

||||

```

|

||||

$ sudo -V

|

||||

Sudo version 1.8.27 <===

|

||||

Sudoers policy plugin version 1.8.27

|

||||

Sudoers file grammar version 46

|

||||

Sudoers I/O plugin version 1.8.27

|

||||

```
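
A quick way to apply the version rule above in a script is to parse the first line of the `sudo -V` output and compare it with 1.8.28. The Python sketch below is one hedged way to do that. `parse_sudo_version` and `is_affected` are names made up for this example, and the check looks only at the version number, so a distribution package that backports the fix under an older version number would still be reported as affected.

```python
import re

FIXED_VERSION = (1, 8, 28)  # first release containing the fix, per the article


def parse_sudo_version(output):
    """Extract (major, minor, patch) from `sudo -V` output text."""
    match = re.search(r"Sudo version (\d+)\.(\d+)\.(\d+)", output)
    if match is None:
        raise ValueError("no 'Sudo version X.Y.Z' line found")
    return tuple(int(part) for part in match.groups())


def is_affected(output):
    """True if the reported version is older than 1.8.28."""
    return parse_sudo_version(output) < FIXED_VERSION
```

Fed the sample output shown above (version 1.8.27), `is_affected` returns `True`.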

|

||||

|

||||

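The flaw has been assigned [CVE-2019-14287][4] in the CVE database. The risk is that any user who has been allowed to run a command as an arbitrary user can escape the restriction, even when the rule explicitly forbids running that command as root.

The lines below give `jdoe` the ability to edit files with `vi` as any user except root (`!root` means “not root”), while `nemo` gets the right to run the `id` command as any user except root: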

|

||||

|

||||

```

|

||||

# affected entries on host "dragonfly"

|

||||

jdoe dragonfly = (ALL, !root) /usr/bin/vi

|

||||

nemo dragonfly = (ALL, !root) /usr/bin/id

|

||||

```

|

||||

|

||||

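However, because of the flaw, either of these users would be able to circumvent the restriction: `jdoe` could edit files as root, and `nemo` could run the `id` command as root.

An attacker can run commands as root by specifying the user ID as `-1` or `4294967295`.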

|

||||

|

||||

```

|

||||

sudo -u#-1 id -u

|

||||

```

|

||||

|

||||

or

|

||||

|

||||

```

|

||||

sudo -u#4294967295 id -u

|

||||

```

|

||||

|

||||

A response of `0` shows that the command is being run as root (`id -u` prints the user ID, and root's user ID is 0).
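
The two user IDs in the commands above are the same value written two ways: on systems where `uid_t` is an unsigned 32-bit integer (the usual case on Linux, and an assumption here), `-1` wraps around to `4294967295`. The arithmetic can be checked with a short Python sketch; `as_uid_t` is a name invented for this illustration.

```python
UID_BITS = 32  # assumption: uid_t is an unsigned 32-bit integer


def as_uid_t(value):
    """Reduce an integer modulo 2**32, the way a C cast to a
    32-bit unsigned uid_t would."""
    return value % (1 << UID_BITS)


if __name__ == "__main__":
    print(as_uid_t(-1))          # prints 4294967295
    print(as_uid_t(4294967295))  # prints 4294967295
```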

|

||||

|

||||

Joe Vennix of Apple Information Security found and analyzed the problem.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3446036/linux-sudo-flaw-can-lead-to-unauthorized-privileges.html

|

||||

|

||||

Author: [Sandra Henry-Stocker][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://www.networkworld.com/author/Sandra-Henry_Stocker/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.networkworld.com/article/3236499/some-tricks-for-using-sudo.html

|

||||

[4]: http://cve.mitre.org/cgi-bin/cvename.cgi?name=CVE-2019-14287

|

||||

[5]: https://www.facebook.com/NetworkWorld/

|

||||

[6]: https://www.linkedin.com/company/network-world

|

||||

@ -0,0 +1,82 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11497-1.html)

|

||||

[#]: subject: (Kubernetes networking, OpenStack Train, and more industry trends)

|

||||

[#]: via: (https://opensource.com/article/19/10/kubernetes-openstack-and-more-industry-trends)

|

||||

[#]: author: (Tim Hildred https://opensource.com/users/thildred)

|

||||

|

||||

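Weekly open source roundup: Kubernetes networking, OpenStack Train, and more industry trends
======

> A weekly look at open source community and industry trends.

![Person standing in front of a giant computer screen with numbers, data][1]

As part of my role as a senior product marketing manager at an enterprise software company with an open source development model, I publish a regular update about open source community, market, and industry trends for product marketers, managers, and other influencers. Here are five of my and their favorite articles from that update.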

|

||||

|

||||

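### A look at the most exciting features in OpenStack Train

- [The article][2]

> Given all the technical goodies that the Train release has to offer ([you can see the release highlights here][3]), you may be curious about the top features that Red Hat believes will benefit our telecommunications and enterprise customers and their use cases. Here is an overview of the features we are most excited about in this release.

**The impact**: OpenStack to me is like Shia LaBeouf: it hit peak hype a few years ago and then carried on turning out good work. The Train release looks like yet another incredible release full of innovation.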

|

||||

|

||||

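### Building Kubernetes Operators in an Ansible-native way

- [The article][4]

> Operators simplify the management of complex applications on Kubernetes. They are usually written in Go and require expertise in the internals of Kubernetes. But there is an alternative with a lower barrier to entry. Ansible is a first-class citizen in the Operator SDK. Using Ansible frees up application engineers, maximizes the time available to automate and orchestrate your applications, and works across new and existing platforms with one simple language. Here we see how.

**The impact**: In the same way that you can make pretty decent ice cream with a blender and frozen bananas, Ansible (generally considered easy to grasp) lets you do some impressive Operator magic more easily than you might expect.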

|

||||

|

||||

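### Kubernetes networking: Behind the scenes

- [The article][5]

> While there are very good resources around this topic (links [here][6]), I couldn't find a single example that connects all of the dots with the command outputs that network engineers love and hate, showing what is actually happening behind the scenes. So I decided to curate this information from a number of different sources to hopefully help you better understand how things are tied together.

**The impact**: This is a well-written piece on a complicated topic (with pictures). Guaranteed to make Kubernetes networking 10% less confusing.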

|

||||

|

||||

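### Securing the container supply chain

- [The article][7]

> With the emergence of containers, Software as a Service, and Functions as a Service, the focus has shifted to consuming existing services, functions, and container images as a way of delivering new value. Scott McCarty, Principal Product Manager for Containers at [Red Hat][8], says this focus has both advantages and disadvantages. “It allows us to focus our energy on writing new application code that is specific to our needs, while shifting the concern for the infrastructure to someone else,” says McCarty. “Containers are in a sweet spot providing enough control, but offloading a lot of tedious infrastructure work.” But containers can also create disadvantages related to security.

**The impact**: I sit among a group of about ten security people, and I can safely say that it takes a certain disposition to want to think about software security all day. When you stare into the abyss for long enough, it stares back into you. If you are a software developer who is not so disposed, take Scott's advice and make sure your supplier takes security into account.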

|

||||

|

||||

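### Fedora at 15: Why Matthew Miller sees a bright future for the Linux distribution

- [The article][9]

> In a wide-ranging interview with TechRepublic, Fedora project leader Matthew Miller discussed lessons learned from the past, the popular adoption of and competing standards for software containers, potential changes coming to Fedora, and hot-button topics, including systemd.

**The impact**: What I like about the Fedora project is its clarity; the project knows what it stands for. People like Matt are the reason the future looks bright.

*I hope you enjoyed this list of what stood out to me from last week and come back next Monday for more open source community, market, and industry trends.*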

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/10/kubernetes-openstack-and-more-industry-trends

|

||||

|

||||

Author: [Tim Hildred][a]
Topic selection: [lujun9972][b]
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://opensource.com/users/thildred

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/data_metrics_analytics_desktop_laptop.png?itok=9QXd7AUr (Person standing in front of a giant computer screen with numbers, data)

|

||||

[2]: https://www.redhat.com/en/blog/look-most-exciting-features-openstack-train

|

||||

[3]: https://releases.openstack.org/train/highlights.html

|

||||

[4]: https://www.cncf.io/webinars/building-kubernetes-operators-in-an-ansible-native-way/

|

||||

[5]: https://itnext.io/kubernetes-networking-behind-the-scenes-39a1ab1792bb

|

||||

[6]: https://github.com/nleiva/kubernetes-networking-links

|

||||

[7]: https://www.devprojournal.com/technology-trends/open-source/securing-the-container-supply-chain/

|

||||

[8]: https://www.redhat.com/en

|

||||

[9]: https://www.techrepublic.com/article/fedora-at-15-why-matthew-miller-sees-a-bright-future-for-the-linux-distribution/

|

||||

@ -0,0 +1,93 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11502-1.html)

|

||||

[#]: subject: (Pylint: Making your Python code consistent)

|

||||

[#]: via: (https://opensource.com/article/19/10/python-pylint-introduction)

|

||||

[#]: author: (Moshe Zadka https://opensource.com/users/moshez)

|

||||

|

||||

Pylint: Making your Python code consistent
======

> Pylint is your friend when you want to avoid arguing about code complexity.

|

||||

|

||||

![OpenStack source code \(Python\) in VIM][1]

|

||||

|

||||

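Pylint is a higher-level Python style enforcer. [flake8][2] and [black][3], by contrast, take care of “local” style: where the newlines occur, how comments are formatted, and issues such as commented-out code or bad practices in log formatting.

Pylint is extremely aggressive by default. It offers strong opinions on everything from whether declared interfaces are actually implemented to opportunities for refactoring duplicate code, which can be a lot for a new user. One gentle way of introducing it to a project or a team is to start by turning *all* checkers off and then enabling checkers one by one. This is especially useful if you already use flake8, black, and [mypy][4]: quite a few Pylint checkers overlap with them in functionality.

However, one of the things unique to Pylint is the ability to enforce higher-level rules: for example, the number of lines in a function or the number of methods in a class.

These numbers may differ from project to project and can depend on the development team's preferences. However, once the team agrees on the parameters, it is useful to *enforce* them with an automated tool. This is where Pylint shines.

### Configuring Pylint

To start with an empty configuration, begin your `.pylintrc` with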

|

||||

|

||||

```

|

||||

[MESSAGES CONTROL]

|

||||

|

||||

disable=all

|

||||

```

|

||||

|

||||

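This disables all Pylint messages. Since many of them are redundant, this makes sense. In Pylint, a `message` is a specific kind of warning.

You can check that all messages have been turned off by running `pylint`: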

|

||||

|

||||

```

|

||||

$ pylint <my package>

|

||||

```

|

||||

|

||||

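In general, it is not a great idea to add parameters to the `pylint` command line: the best place to configure `pylint` is `.pylintrc`. To have it do *something* useful, we need to enable some messages.

To enable messages, add the following to your `.pylintrc` under `[MESSAGES CONTROL]`: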

|

||||

|

||||

```

|

||||

enable=<message>,

|

||||

...

|

||||

```

|

||||

|

||||

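Do this for the “messages” (Pylint's term for the different kinds of warnings) that look useful. Some of my favorites include `too-many-lines`, `too-many-arguments`, and `too-many-branches`. All of these limit the complexity of modules or functions and serve as an objective measure of code complexity, with no human nitpicker needed.

A *checker* is a source of *messages*: every message belongs to exactly one checker. Many of the most useful messages are under the [design checker][5]. The default numbers are usually good, but adjusting the maximums is straightforward: we can add a section called `DESIGN` to `.pylintrc`.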

|

||||

|

||||

```

|

||||

[DESIGN]

|

||||

max-args=7

|

||||

max-locals=15

|

||||

```
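
As an illustration of what the `max-args=7` setting above would be expected to flag, the Python sketch below contrasts a function with eight parameters, which exceeds that limit and so should trigger `too-many-arguments`, with a version that groups related values into dataclasses. All names here (`Rect`, `Style`, `describe_box_flat`, `describe_box`) are hypothetical and exist only for this example.

```python
from dataclasses import dataclass


def describe_box_flat(x, y, width, height, color, border, label, unit):
    """Eight parameters: over a limit of max-args=7."""
    return f"{label}: {width}{unit} x {height}{unit} at ({x}, {y}), {color}/{border}"


@dataclass
class Rect:
    x: int
    y: int
    width: int
    height: int


@dataclass
class Style:
    color: str
    border: str


def describe_box(rect, style, label, unit):
    """Four parameters carrying the same information."""
    return (
        f"{label}: {rect.width}{unit} x {rect.height}{unit} "
        f"at ({rect.x}, {rect.y}), {style.color}/{style.border}"
    )
```

Grouping parameters this way is only one possible response to the message. Raising the limit in `.pylintrc` is equally legitimate when a team decides the longer signature is clearer.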

|

||||

|

||||

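Another good source of useful messages is the `refactoring` checker. Some of my favorite messages to enable there are `consider-using-dict-comprehension`, `stop-iteration-return` (which looks for generators that use `raise StopIteration` when `return` is the correct way to stop the iteration), and `chained-comparison`, which suggests syntax like `1 <= x < 5` rather than the less obvious `1 <= x and x < 5`.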
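
The equivalence behind `chained-comparison` is easy to check directly. In Python, `a <= x < b` means the same as `a <= x and x < b`, except that `x` is evaluated only once. A minimal sketch, with function names invented for the example:

```python
def in_range_chained(x):
    """The form that the chained-comparison message suggests."""
    return 1 <= x < 5


def in_range_explicit(x):
    """The longer form that the message flags."""
    return 1 <= x and x < 5


if __name__ == "__main__":
    for value in (0, 1, 4, 5):
        print(value, in_range_chained(value), in_range_explicit(value))
```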

|

||||

|

||||

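Finally, a checker that is expensive in terms of performance but highly useful is `similarities`. It enforces “Don't Repeat Yourself” (the DRY principle) by looking for copy-paste between different parts of the code. It has only one message to enable: `duplicate-code`. The default “minimum similarity lines” is set to 4. It can be set to a different value in `.pylintrc`.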

|

||||

|

||||

```

|

||||

[SIMILARITIES]

|

||||

min-similarity-lines=3

|

||||

```
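
To give a feel for what a duplicate-code check looks for, here is a deliberately naive Python sketch that reports blocks of `min_lines` consecutive identical lines (after stripping whitespace and dropping blank lines) shared by two pieces of source text. It illustrates the idea only and is not Pylint's actual algorithm; `shared_blocks` is a hypothetical name.

```python
def _significant_lines(source):
    """Strip surrounding whitespace and drop blank lines."""
    return [line.strip() for line in source.splitlines() if line.strip()]


def shared_blocks(source_a, source_b, min_lines=4):
    """Return the set of `min_lines`-long line windows that occur
    in both sources. Each window is a tuple of stripped lines."""
    def windows(lines):
        return {
            tuple(lines[i:i + min_lines])
            for i in range(len(lines) - min_lines + 1)
        }
    return windows(_significant_lines(source_a)) & windows(_significant_lines(source_b))
```

Lowering `min_lines`, like lowering `min-similarity-lines`, makes the check stricter: shorter repeated runs start to count as duplicates.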

|

||||

|

||||

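### Pylint makes code reviews easy

If you are sick of code reviews where you have to point out that a class is too complicated or that two different functions are basically the same, add Pylint to your [Continuous Integration][6] configuration and have the argument about your project's complexity guidelines only once.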

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/10/python-pylint-introduction

|

||||

|

||||

Author: [Moshe Zadka][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://opensource.com/users/moshez

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/openstack_python_vim_2.jpg?itok=4fza48WU (OpenStack source code (Python) in VIM)

|

||||

[2]: https://opensource.com/article/19/5/python-flake8

|

||||

[3]: https://opensource.com/article/19/5/python-black

|

||||

[4]: https://opensource.com/article/19/5/python-mypy

|

||||

[5]: https://pylint.readthedocs.io/en/latest/technical_reference/features.html#design-checker

|

||||

[6]: https://opensource.com/business/15/7/six-continuous-integration-tools

|

||||

@ -0,0 +1,183 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (lnrCoder)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11503-1.html)

|

||||

[#]: subject: (How to Get the Size of a Directory in Linux)

|

||||

[#]: via: (https://www.2daygeek.com/find-get-size-of-directory-folder-linux-disk-usage-du-command/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

|

||||

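How to Get the Size of a Directory in Linux
======

You may have noticed that when you list directory contents with the [ls command][1] in Linux, the size of a directory shows as only 4KB. Is this the right size? If not, what does it represent, and how do you get the real size of a directory or folder in Linux? That 4KB is a default size: the space used to store the directory's metadata on disk.

There are several applications on Linux that [get the actual size of a directory][2]. Among them, the disk usage (`du`) command is widely used by Linux administrators.

I will show you how to get folder sizes using various options.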

|

||||

|

||||

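### What is the du command?

The name of the [du command][3] stands for Disk Usage. It is a standard Unix program that estimates file space usage, by default in the current working directory.

It summarizes disk usage recursively to get the size of a directory and its sub-directories.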
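
The recursive summing that `du` performs can be sketched in a few lines of Python. This is only an approximation under stated assumptions: it adds up apparent file sizes (`st_size`), whereas `du` by default reports allocated disk space, so the numbers will generally differ from real `du` output. `tree_size` is a name made up for this example.

```python
import os


def tree_size(path):
    """Sum the apparent sizes (in bytes) of all regular files under
    `path`, descending into sub-directories without following symlinks."""
    total = 0
    with os.scandir(path) as entries:
        for entry in entries:
            if entry.is_dir(follow_symlinks=False):
                total += tree_size(entry.path)  # recurse into sub-directory
            elif entry.is_file(follow_symlinks=False):
                total += entry.stat(follow_symlinks=False).st_size
    return total
```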

|

||||

|

||||

As I said, the directory size shows as only 4KB when you use the `ls` command. See the output below.

|

||||

|

||||

```

|

||||

$ ls -lh | grep ^d

|

||||

|

||||

drwxr-xr-x 3 daygeek daygeek 4.0K Aug 2 13:57 Bank_Details

|

||||

drwxr-xr-x 2 daygeek daygeek 4.0K Mar 15 2019 daygeek

|

||||

drwxr-xr-x 6 daygeek daygeek 4.0K Feb 16 2019 drive-2daygeek

|

||||

drwxr-xr-x 13 daygeek daygeek 4.0K Jan 6 2019 drive-mageshm

|

||||

drwxr-xr-x 15 daygeek daygeek 4.0K Sep 29 21:32 Thanu_Photos

|

||||

```

|

||||

|

||||

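### 1) How to get only the size of the parent directory on Linux

Use the `du` command format below to get the total size of a given directory. In this example, we get the total size of the `/home/daygeek/Documents` directory.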

|

||||

|

||||

```

|

||||

$ du -hs /home/daygeek/Documents

|

||||

or

|

||||

$ du -h --max-depth=0 /home/daygeek/Documents/

|

||||

20G /home/daygeek/Documents

|

||||

```

|

||||

|

||||

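Details:

* `du` – the command itself
* `-h` – print sizes in human-readable format (e.g. 1K 234M 2G)
* `-s` – display only a total for each argument
* `--max-depth=N` – print the total for a directory only if it is N or fewer levels below the command-line argument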

|

||||

|

||||

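### 2) How to get the size of each directory on Linux

Use the `du` command format below to get the total size of each directory, including sub-directories.

In this example, we get the total size of the `/home/daygeek/Documents` directory and each of its sub-directories.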

|

||||

|

||||

```

|

||||

$ du -h /home/daygeek/Documents/ | sort -rh | head -20

|

||||

|

||||

20G /home/daygeek/Documents/

|

||||

9.6G /home/daygeek/Documents/drive-2daygeek

|

||||

6.3G /home/daygeek/Documents/Thanu_Photos

|

||||

5.3G /home/daygeek/Documents/Thanu_Photos/Camera

|

||||

5.3G /home/daygeek/Documents/drive-2daygeek/Thanu-videos

|

||||

3.2G /home/daygeek/Documents/drive-mageshm

|

||||

2.3G /home/daygeek/Documents/drive-2daygeek/Thanu-Photos

|

||||

2.2G /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month

|

||||

916M /home/daygeek/Documents/drive-mageshm/Tanisha

|

||||

454M /home/daygeek/Documents/drive-mageshm/2g-backup

|

||||

415M /home/daygeek/Documents/Thanu_Photos/WhatsApp Video

|

||||

300M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/Jan-2017

|

||||

288M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/Oct-2017

|

||||

226M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/Sep-2017

|

||||

219M /home/daygeek/Documents/Thanu_Photos/WhatsApp Documents

|

||||

213M /home/daygeek/Documents/drive-mageshm/photos

|

||||

163M /home/daygeek/Documents/Thanu_Photos/WhatsApp Video/Sent

|

||||

161M /home/daygeek/Documents/Thanu_Photos/WhatsApp Images

|

||||

154M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/June-2017

|

||||

150M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/Nov-2016

|

||||

```
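
`sort -rh` in the pipeline above orders the lines by their human-readable size, largest first. The Python sketch below mimics that ordering for the kind of values `du -h` prints. `parse_size` and `sort_by_size` are hypothetical helpers, and the parser assumes powers of 1024 and handles only the K, M, G, and T suffixes.

```python
UNITS = {"K": 1024, "M": 1024 ** 2, "G": 1024 ** 3, "T": 1024 ** 4}


def parse_size(text):
    """Convert a size such as '9.6G' or '916M' to a number of bytes."""
    text = text.strip()
    if text and text[-1].upper() in UNITS:
        return float(text[:-1]) * UNITS[text[-1].upper()]
    return float(text)


def sort_by_size(lines):
    """Sort 'SIZE PATH' lines from largest to smallest."""
    return sorted(lines, key=lambda line: parse_size(line.split()[0]), reverse=True)
```

A plain numeric sort would put `916M` ahead of `20G`; converting to bytes first is what keeps the gigabyte entries at the top.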

|

||||

|

||||

### 3) How to get a summary of each directory on Linux

Use the `du` command format below to get only the summary for each directory.

|

||||

|

||||

```

|

||||

$ du -hs /home/daygeek/Documents/* | sort -rh | head -10

|

||||

|

||||

9.6G /home/daygeek/Documents/drive-2daygeek

|

||||

6.3G /home/daygeek/Documents/Thanu_Photos

|

||||

3.2G /home/daygeek/Documents/drive-mageshm

|

||||

756K /home/daygeek/Documents/Bank_Details

|

||||

272K /home/daygeek/Documents/user-friendly-zorin-os-15-has-been-released-TouchInterface1.png

|

||||

172K /home/daygeek/Documents/user-friendly-zorin-os-15-has-been-released-NightLight.png

|

||||

164K /home/daygeek/Documents/ConfigServer Security and Firewall (csf) Cheat Sheet.pdf

|

||||

132K /home/daygeek/Documents/user-friendly-zorin-os-15-has-been-released-Todo.png

|

||||

112K /home/daygeek/Documents/user-friendly-zorin-os-15-has-been-released-ZorinAutoTheme.png

|

||||

96K /home/daygeek/Documents/distro-info.xlsx

|

||||

```

|

||||

|

||||

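### 4) How to get the size of each directory, excluding sub-directories, on Linux

Use the `du` command format below to display the total size of each directory, excluding its sub-directories.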

|

||||

|

||||

```

|

||||

$ du -hS /home/daygeek/Documents/ | sort -rh | head -20

|

||||

|

||||

5.3G /home/daygeek/Documents/Thanu_Photos/Camera

|

||||

5.3G /home/daygeek/Documents/drive-2daygeek/Thanu-videos

|

||||

2.3G /home/daygeek/Documents/drive-2daygeek/Thanu-Photos

|

||||

1.5G /home/daygeek/Documents/drive-mageshm

|

||||

831M /home/daygeek/Documents/drive-mageshm/Tanisha

|

||||

454M /home/daygeek/Documents/drive-mageshm/2g-backup

|

||||

300M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/Jan-2017

|

||||

288M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/Oct-2017

|

||||

253M /home/daygeek/Documents/Thanu_Photos/WhatsApp Video

|

||||

226M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/Sep-2017

|

||||

219M /home/daygeek/Documents/Thanu_Photos/WhatsApp Documents

|

||||

213M /home/daygeek/Documents/drive-mageshm/photos

|

||||

163M /home/daygeek/Documents/Thanu_Photos/WhatsApp Video/Sent

|

||||

154M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/June-2017

|

||||

150M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/Nov-2016

|

||||

127M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/Dec-2016

|

||||

100M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/Oct-2016

|

||||

94M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/Nov-2017

|

||||

92M /home/daygeek/Documents/Thanu_Photos/WhatsApp Images

|

||||

90M /home/daygeek/Documents/drive-2daygeek/Thanu-photos-by-month/Dec-2017

|

||||

```

|

||||

|

||||

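### 5) How to get only the size of first-level sub-directories on Linux

If you want to get the size of the first-level sub-directories of a given directory on Linux (each total includes that sub-directory's own sub-directories), use the command format below.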

|

||||

|

||||

```

|

||||

$ du -h --max-depth=1 /home/daygeek/Documents/

|

||||

|

||||

3.2G /home/daygeek/Documents/drive-mageshm

|

||||

4.0K /home/daygeek/Documents/daygeek

|

||||

756K /home/daygeek/Documents/Bank_Details

|

||||

9.6G /home/daygeek/Documents/drive-2daygeek

|

||||

6.3G /home/daygeek/Documents/Thanu_Photos

|

||||

20G /home/daygeek/Documents/

|

||||

```

|

||||

|

||||

### 6) How to get a grand total in the du command output

If you want a grand total in the `du` command output, use the `du` command format below.

|

||||

|

||||

```

|

||||

$ du -hsc /home/daygeek/Documents/* | sort -rh | head -10

|

||||

|

||||

20G total

|

||||

9.6G /home/daygeek/Documents/drive-2daygeek

|

||||

6.3G /home/daygeek/Documents/Thanu_Photos

|

||||

3.2G /home/daygeek/Documents/drive-mageshm

|

||||

756K /home/daygeek/Documents/Bank_Details

|

||||

272K /home/daygeek/Documents/user-friendly-zorin-os-15-has-been-released-TouchInterface1.png

|

||||

172K /home/daygeek/Documents/user-friendly-zorin-os-15-has-been-released-NightLight.png

|

||||

164K /home/daygeek/Documents/ConfigServer Security and Firewall (csf) Cheat Sheet.pdf

|

||||

132K /home/daygeek/Documents/user-friendly-zorin-os-15-has-been-released-Todo.png

|

||||

112K /home/daygeek/Documents/user-friendly-zorin-os-15-has-been-released-ZorinAutoTheme.png

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/find-get-size-of-directory-folder-linux-disk-usage-du-command/

|

||||

|

||||

Author: [Magesh Maruthamuthu][a]
Topic selection: [lujun9972][b]
Translator: [lnrCoder](https://github.com/lnrCoder)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]: https://www.2daygeek.com/author/magesh/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.2daygeek.com/linux-unix-ls-command-display-directory-contents/

|

||||

[2]: https://www.2daygeek.com/how-to-get-find-size-of-directory-folder-linux/

|

||||

[3]: https://www.2daygeek.com/linux-check-disk-usage-files-directories-size-du-command/

|

||||

@ -0,0 +1,94 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (MX Linux 19 Released With Debian 10.1 ‘Buster’ & Other Improvements)

|

||||

[#]: via: (https://itsfoss.com/mx-linux-19/)

|

||||

[#]: author: (Ankush Das https://itsfoss.com/author/ankush/)

|

||||

|

||||

MX Linux 19 Released With Debian 10.1 ‘Buster’ & Other Improvements

|

||||

======

|

||||

|

||||

MX Linux 18 has been one of my top recommendations for the [best Linux distributions][1], especially when considering distros other than Ubuntu.

It is based on Debian 9.6 ‘Stretch’, which offered an incredibly fast and smooth experience.

|

||||

|

||||

Now, as a major upgrade to that, MX Linux 19 brings a lot of major improvements and changes. Here, we shall take a look at the key highlights.

|

||||

|

||||

### New features in MX Linux 19

|

||||

|

||||

[Subscribe to our YouTube channel for more Linux videos][2]

|

||||

|

||||

#### Debian 10 ‘Buster’

|

||||

|

||||