mirror of https://github.com/LCTT/TranslateProject.git

synced 2025-03-30 02:40:11 +08:00

Merge pull request #2 from LCTT/master

This commit is contained in: commit 43f0d25899

published

sources/tech

* 20160516 Securing Your Server.md
* 20161012 Introduction to FirewallD on CentOS.md
* 20161024 How to Use Old Xorg Apps in Unity 8 on Ubuntu 16.10.md
* 20161201 Using the NTP time synchronization.md
* 20161205 Manage Samba4 Active Directory Infrastructure from Windows10 via RSAT – Part 3.md
* 20161207 Manage Samba4 AD Domain Controller DNS and Group Policy from Windows – Part 4.md
* 20161216 Kprobes Event Tracing on ARMv8.md
* How to Manage Samba4 AD Infrastructure from Linux Command Line – Part 2.md
* LXD

translated/tech

* 20161201 How to Configure a Firewall with UFW.md
* 20161203 Redirect a Website URL from One Server to Different Server in Apache.md
* 20161206 How to Find Recent or Today’s Modified Files in Linux.md
* 20161215 Building an Email Server on Ubuntu Linux - Part 2.md
* 20161215 Building an Email Server on Ubuntu Linux - Part 3.md
* 20161216 sshpass -An Excellent Tool for Non-Interactive SSH Login – Never Use on Production Server.md
* LXD

@ -1,4 +1,4 @@

PyCharm - The Best Linux Python IDE (Integrated Development Environment)

PyCharm - The Best Linux Python IDE

=========


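@ -12,9 +12,7 @@ PyCharm is an editor and debugger developed by [Jetbrains][3], [Jetbrains]

### How to Install PyCharm

I have written a guide on how to get PyCharm: download it, extract the archive, and then run it.

[Click the link][4].

I have [written a guide][4] on how to get PyCharm: download it, extract the archive, and then run it.

### The Welcome Screen

@ -24,7 +22,7 @@ PyCharm is an editor and debugger developed by [Jetbrains][3], [Jetbrains]

* Create a new project
* Open a project
* Version control check
* Check out from a version control repository

There is also a configure-settings option, which lets you set the default Python version and other settings.

@ -46,7 +44,7 @@ PyCharm is an editor and debugger developed by [Jetbrains][3], [Jetbrains]

* Twitter Bootstrap
* Web Starter Kit

This is not a programming tutorial, so I won't explain what these project types are. If you want to create a simple desktop application that runs on Windows, Linux and Mac, you can choose a Pure Python project and then use the QT libraries to develop a graphical application. Such an application looks native on any operating system it runs on, as if it had been developed on that system.

This is not a programming tutorial, so I won't explain what these project types are. If you want to create a simple desktop application that runs on Windows, Linux and Mac, you can choose a Pure Python project and then use the Qt libraries to develop a graphical application. Such an application looks native whatever operating system it runs on, as if it had been developed on that system.

After choosing the project type, you need to enter a project name and choose the Python version to develop with.

@ -58,15 +56,15 @@ PyCharm is an editor and debugger developed by [Jetbrains][3], [Jetbrains]

PyCharm provides the option of checking out project source code from various online resources, including [GitHub][5], [CVS][6], Git, [Mercurial][7] and [Subversion][8].

### PyCharm IDE (Integrated Development Environment)

The PyCharm IDE can be opened through a menu at the top; under this menu you can 'attach' a tab for each open project.

In the PyCharm IDE you can open the menu at the top; below this menu you can see a tab for each open project.

The right side of the screen is the debugging options area, where you can step through the code.

The left panel has a series of project files and external libraries.

The left panel has a list of project files and external libraries.

To create a new file in the project, you can 'right-click' the project name and select 'New'. You can then choose one of the following file types to add to the project:

To create a new file in the project, you can right-click the project name with the mouse and select 'New'. You can then choose one of the following file types to add to the project:

* File
* Directory

@ -101,13 +99,12 @@ The PyCharm IDE can be opened through a menu at the top; under this menu you

When execution reaches a line of code, you can watch the variables that appear in that line, so you can see when their values change.

Another good option is to run the code covered by the checker. Over the past few years the programming world has changed a lot, and it is now common for developers to practice test-driven development, so they can check every change they make to a program and make sure it does not break another part of the system.

Another good option is to run the code with the coverage checker. Over the past few years the programming world has changed a lot, and it is now common for developers to practice test-driven development, so they can check every change they make to a program and make sure it does not break another part of the system.

The checker helps you run the program and perform some tests; when the run finishes, it tells you, as a percentage, how much of the code the test run covered.

The coverage checker helps you run the program and perform some tests; when the run finishes, it tells you, as a percentage, how much of the code the test run covered.

There is also a tool that shows the names of 'class functions' or 'classes', along with the number of times an item is called and the time spent running a particular piece of code.

### Code Refactoring

A very powerful feature of PyCharm is its code refactoring option.

@ -122,7 +119,7 @@ A very powerful feature of PyCharm is the code refactoring option.

You don't have to follow all of PyCharm's rules. Most of these rules are just good coding guidelines and have nothing to do with whether your code runs correctly.

The Code menu has other refactoring options as well. For example, you can clean up code and inspect a file or project for problems.

### Summary

@ -130,11 +127,11 @@ PyCharm is an excellent editor for developing Python code on Linux systems, and

--------------------------------------------------------------------------------

via: https://www.lifewire.com/how-to-install-the-pycharm-python-ide-in-linux-4091033

via: https://www.lifewire.com/pycharm-the-best-linux-python-ide-4091045

Author: [Gary Newell ][a]

Author: [Gary Newell][a]

Translator: [ucasFL](https://github.com/ucasFL)

Proofreader: [proofreader ID](https://github.com/校对者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)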

382

sources/tech/20160516 Securing Your Server.md

Normal file


@ -0,0 +1,382 @@

translating---geekpi

Securing Your Server
============================================================

### Update Your System–Frequently

Keeping your software up to date is the single biggest security precaution you can take for any operating system. Software updates range from critical vulnerability patches to minor bug fixes, and many software vulnerabilities are actually patched by the time they become public.

### Automatic Security Updates

There are arguments for and against automatic updates on servers. [Fedora’s Wiki][15] has a good breakdown of the pros and cons, but the risk of automatic updates will be minimal if you limit them to security updates.

The practicality of automatic updates is something you must judge for yourself because it comes down to what _you_ do with your Linode. Bear in mind that automatic updates apply only to packages sourced from repositories, not self-compiled applications. You may find it worthwhile to have a test environment that replicates your production server. Updates can be applied there and reviewed for issues before being applied to the live environment.

* CentOS uses _[yum-cron][2]_ for automatic updates.
* Debian and Ubuntu use _[unattended upgrades][3]_.
* Fedora uses _[dnf-automatic][4]_.
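On Debian and Ubuntu, unattended upgrades are commonly switched on through two APT periodic settings stored in `/etc/apt/apt.conf.d/20auto-upgrades`, the file that `dpkg-reconfigure unattended-upgrades` writes. The function below is a minimal sketch under that assumption (and assuming the `unattended-upgrades` package is installed): it writes only those two settings to a path you pass in, so you can inspect the result on a scratch file before writing the real file as root. Which origins get upgraded, such as security updates only, is configured separately and is not touched here. `write_auto_upgrades` is a hypothetical name.

```
# Minimal sketch (assumes Debian/Ubuntu with unattended-upgrades installed).
# Writes the two APT::Periodic settings that enable a daily package-list
# refresh and daily unattended upgrades ("1" is the interval in days).
# The target path is a parameter so it can be tried on a scratch file first.
write_auto_upgrades() {
  local target="${1:-/etc/apt/apt.conf.d/20auto-upgrades}"
  cat > "$target" <<'EOF'
APT::Periodic::Update-Package-Lists "1";
APT::Periodic::Unattended-Upgrade "1";
EOF
}
```

For example, `write_auto_upgrades /tmp/20auto-upgrades` lets you review the output; writing the real file under `/etc/apt/apt.conf.d/` requires root.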

|

||||

|

||||

### Add a Limited User Account

|

||||

|

||||

Up to this point, you have accessed your Linode as the `root` user, which has unlimited privileges and can execute _any_ command–even one that could accidentally disrupt your server. We recommend creating a limited user account and using that at all times. Administrative tasks will be done using `sudo` to temporarily elevate your limited user’s privileges so you can administer your server.

|

||||

|

||||

> Not all Linux distributions include `sudo` on the system by default, but all the images provided by Linode have sudo in their package repositories. If you get the output `sudo: command not found`, install sudo before continuing.

|

||||

|

||||

To add a new user, first [log in to your Linode][16] via SSH.

|

||||

|

||||

### CentOS / Fedora

|

||||

|

||||

1. Create the user, replacing `example_user` with your desired username, and assign a password:

|

||||

|

||||

```

|

||||

useradd example_user && passwd example_user

|

||||

```

|

||||

|

||||

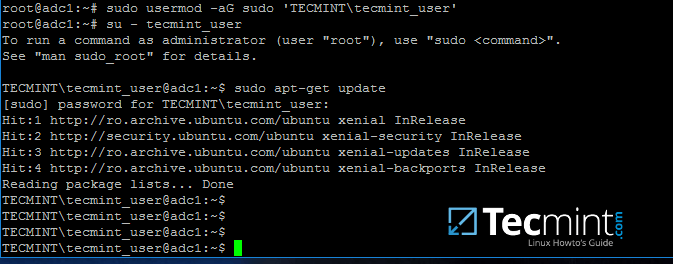

2. Add the user to the `wheel` group for sudo privileges:

|

||||

|

||||

```

|

||||

usermod -aG wheel example_user

|

||||

```

|

||||

|

||||

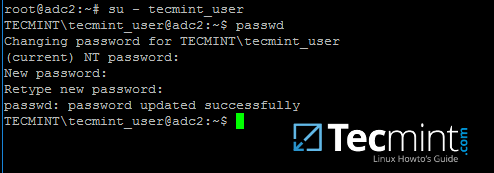

### Ubuntu

|

||||

|

||||

1. Create the user, replacing `example_user` with your desired username. You’ll then be asked to assign the user a password:

|

||||

|

||||

```

|

||||

adduser example_user

|

||||

```

|

||||

|

||||

2. Add the user to the `sudo` group so you’ll have administrative privileges:

|

||||

|

||||

|

||||

```

|

||||

adduser example_user sudo

|

||||

```

|

||||

|

||||

### Debian

|

||||

|

||||

1. Debian does not include `sudo` among their default packages. Use `apt-get` to install it:

|

||||

|

||||

|

||||

```

|

||||

apt-get install sudo

|

||||

```

|

||||

|

||||

2. Create the user, replacing `example_user` with your desired username. You’ll then be asked to assign the user a password:

|

||||

|

||||

```

|

||||

adduser example_user

|

||||

```

|

||||

|

||||

3. Add the user to the `sudo` group so you’ll have administrative privileges:

|

||||

|

||||

```

|

||||

adduser example_user sudo

|

||||

```
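The only distribution-specific difference in the steps above is the group that grants `sudo` rights. As a hedged sketch, the hypothetical helper `admin_group` below reads the `ID` field of `/etc/os-release` and prints `wheel` for CentOS/Fedora or `sudo` for Debian/Ubuntu; other distributions are deliberately reported as unsupported rather than guessed.

```
# Hypothetical helper: map the ID field of an os-release file to the group
# that grants sudo rights (wheel on CentOS/Fedora, sudo on Debian/Ubuntu).
# Returns non-zero for distributions not covered in this guide.
admin_group() {
  local os_release="${1:-/etc/os-release}" id
  # Lines look like ID=ubuntu or ID="centos"; strip the optional quotes.
  id=$(sed -n 's/^ID=//p' "$os_release" | tr -d '"')
  case "$id" in
    centos|fedora) echo wheel ;;
    debian|ubuntu) echo sudo ;;
    *) return 1 ;;
  esac
}
```

With that in place, `usermod -aG "$(admin_group)" example_user`, run as root, adds the user to the appropriate group on any of the four distributions covered here.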

After creating your limited user, disconnect from your Linode:

```
exit
```

Log back in as your new user. Replace `example_user` with your username, and the example IP address with your Linode’s IP address:

```
ssh example_user@203.0.113.10
```

Now you can administer your Linode from your new user account instead of `root`. Nearly all superuser commands can be executed with `sudo` (example: `sudo iptables -L -nv`), and those commands will be logged to `/var/log/auth.log` (`/var/log/secure` on CentOS and Fedora).
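To review what has been run through `sudo`, you can pull the `COMMAND=` entries out of that log. The sketch below assumes the usual syslog line format written by sudo (`... sudo: USER : TTY=... ; PWD=... ; USER=root ; COMMAND=...`); `sudo_commands` is a hypothetical helper name. On systems that keep only the systemd journal, `journalctl _COMM=sudo` is the usual place to look instead.

```
# Sketch: print "user: command" for each sudo COMMAND= entry in a syslog-style
# auth log (default /var/log/auth.log; pass /var/log/secure on CentOS/Fedora).
sudo_commands() {
  awk '
    / sudo: / && /COMMAND=/ {
      line = $0
      sub(/.* sudo: +/, "", line)   # drop the timestamp and host prefix
      user = line
      sub(/ *:.*/, "", user)        # the user name ends at the first " : "
      cmd = line
      sub(/.*COMMAND=/, "", cmd)    # everything after COMMAND= is the command
      print user ": " cmd
    }' "${1:-/var/log/auth.log}"
}
```

Reading the log usually needs root, for example `sudo cat /var/log/auth.log | sudo_commands /dev/stdin`.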

### Harden SSH Access

By default, password authentication is used to connect to your Linode via SSH. A cryptographic key-pair is more secure because a private key takes the place of a password, and a private key is generally much more difficult to brute-force. In this section we’ll create a key-pair and configure the Linode to not accept passwords for SSH logins.

### Create an Authentication Key-pair

1. This is done on your local computer, **not** your Linode, and will create a 4096-bit RSA key-pair. During creation, you will be given the option to encrypt the private key with a passphrase. This means that it cannot be used without entering the passphrase, unless you save it to your local desktop’s keychain manager. We suggest you use the key-pair with a passphrase, but you can leave this field blank if you don’t want to use one.

**Linux / OS X**

> If you’ve already created an RSA key-pair, this command will overwrite it, potentially locking you out of other systems. If you’ve already created a key-pair, skip this step. To check for existing keys, run `ls ~/.ssh/id_rsa*`.

```
ssh-keygen -t rsa -b 4096
```

Press **Enter** to use the default names `id_rsa` and `id_rsa.pub` in `~/.ssh` before entering your passphrase.

**Windows**

This can be done using PuTTY as outlined in our guide: [Use Public Key Authentication with SSH][6].

2. Upload the public key to your Linode. Replace `example_user` with the name of the user you plan to administer the server as, and `203.0.113.10` with your Linode’s IP address.

**Linux**

From your local computer:

```
ssh-copy-id example_user@203.0.113.10
```

**OS X**

On your Linode (while signed in as your limited user):

```
mkdir -p ~/.ssh && chmod 700 ~/.ssh
```

From your local computer (note that this replaces any existing `authorized_keys` file on the Linode):

```
scp ~/.ssh/id_rsa.pub example_user@203.0.113.10:~/.ssh/authorized_keys
```

> `ssh-copy-id` is available in [Homebrew][5] if you prefer it over SCP. Install with `brew install ssh-copy-id`.

**Windows**

* **Option 1**: This can be done using [WinSCP][1]. In the login window, enter your Linode’s public IP address as the hostname, and your non-root username and password. Click _Login_ to connect.

Once WinSCP has connected, you’ll see two main sections. The section on the left shows files on your local computer and the section on the right shows files on your Linode. Using the file explorer on the left, navigate to the folder where you’ve saved your public key, select the public key file, and click _Upload_ in the toolbar above.

You’ll be prompted to enter a path where you’d like to place the file on your Linode. Upload the file to `/home/example_user/.ssh/authorized_keys`, replacing `example_user` with your username.

* **Option 2:** Copy the public key directly from the PuTTY key generator into the terminal emulator connected to your Linode (as a non-root user):

```
mkdir -p ~/.ssh && nano ~/.ssh/authorized_keys
```

The above command will open a blank file called `authorized_keys` in a text editor. Copy the public key into the text file, making sure it is copied as a single line exactly as it was generated by PuTTY. Press **CTRL+X**, then **Y**, then **Enter** to save the file.

Finally, you’ll want to set permissions for the public key directory and the key file itself:

```
chmod 700 ~/.ssh && chmod 600 ~/.ssh/authorized_keys
```

These commands provide an extra layer of security by preventing other users from accessing the public key directory as well as the file itself. For more information on how this works, see our guide on [how to modify file permissions][7].

3. Now exit and log back into your Linode. If you specified a passphrase for your private key, you’ll need to enter it.
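The `scp` route for OS X replaces the whole `authorized_keys` file. If the account may already hold other keys, a safer pattern is to append the key only when it is missing and then apply the same 700/600 permissions. The hypothetical `install_pubkey` function below sketches that on the Linode side, assuming the public key is a single line and has already been copied to the server (for example to `/tmp/id_rsa.pub`).

```
# Sketch: add a one-line public key to authorized_keys without overwriting
# existing keys, then set the 700/600 permissions used in this guide.
install_pubkey() {
  local pubkey_file="$1" ssh_dir="${2:-$HOME/.ssh}"
  local auth="$ssh_dir/authorized_keys"
  mkdir -p "$ssh_dir"
  touch "$auth"
  # -q quiet, -x whole-line match, -F fixed string: skip keys already present
  if ! grep -qxF "$(cat "$pubkey_file")" "$auth"; then
    cat "$pubkey_file" >> "$auth"
  fi
  chmod 700 "$ssh_dir"
  chmod 600 "$auth"
}
```

Running it twice with the same key leaves a single copy in `authorized_keys`, so it is safe to repeat.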

### SSH Daemon Options

1. **Disallow root logins over SSH.** This requires all SSH connections to be made by non-root users. Once a limited user account is connected, administrative privileges are accessible either by using `sudo` or by changing to a root shell using `su -`. Set the following in `/etc/ssh/sshd_config`:

```
# Authentication:
...
PermitRootLogin no
```

2. **Disable SSH password authentication.** This requires all users connecting via SSH to use key authentication. Depending on the Linux distribution, the `PasswordAuthentication` line in `/etc/ssh/sshd_config` may need to be added, or uncommented by removing the leading `#`.

```
# Change to no to disable tunnelled clear text passwords
PasswordAuthentication no
```

> You may want to leave password authentication enabled if you connect to your Linode from many different computers. This will allow you to authenticate with a password instead of generating and uploading a key-pair for every device.

3. **Listen on only one internet protocol.** The SSH daemon listens for incoming connections over both IPv4 and IPv6 by default. Unless you need to SSH into your Linode using both protocols, disable whichever you do not need. _This does not disable the protocol system-wide; it applies only to the SSH daemon._

Use the option:

* `AddressFamily inet` to listen only on IPv4.
* `AddressFamily inet6` to listen only on IPv6.

The `AddressFamily` option is usually not in the `sshd_config` file by default. Add it to the end of the file:

```
echo 'AddressFamily inet' | sudo tee -a /etc/ssh/sshd_config
```

4. Restart the SSH service to load the new configuration.

If you’re using a Linux distribution which uses systemd (CentOS 7, Debian 8, Fedora, Ubuntu 15.10+):

```
sudo systemctl restart sshd
```

If your init system is SystemV or Upstart (CentOS 6, Debian 7, Ubuntu 14.04):

```
sudo service ssh restart
```

On CentOS 6 the service is named `sshd`, so use `sudo service sshd restart` there.
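Editing `sshd_config` by hand works, but re-running `tee -a` appends duplicate lines, and `sshd` uses the first value it reads for most keywords, so a stale earlier line can silently win. The hypothetical `set_sshd_option` helper below is a sketch that rewrites an existing (possibly commented-out) line for a keyword in place and only appends when the keyword is absent. It assumes GNU `sed` and matches keywords case-sensitively, although `sshd` itself does not; it also does not understand `Match` blocks, so check that an appended line does not land inside one. Running `sudo sshd -t` before restarting the service checks the file for errors.

```
# Sketch: set "Keyword value" in an sshd_config-style file. An existing line
# for the keyword, commented out or not, is rewritten in place; otherwise the
# line is appended. Assumes GNU sed (-i with no backup suffix).
set_sshd_option() {
  local key="$1" value="$2" file="${3:-/etc/ssh/sshd_config}"
  if grep -qE "^#?[[:space:]]*${key}[[:space:]]" "$file"; then
    sed -i -E "s|^#?[[:space:]]*${key}[[:space:]].*|${key} ${value}|" "$file"
  else
    printf '%s %s\n' "$key" "$value" >> "$file"
  fi
}
```

For example, from a root shell: `set_sshd_option PermitRootLogin no`, `set_sshd_option PasswordAuthentication no` and `set_sshd_option AddressFamily inet`, then `sshd -t` and a service restart.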

### Use Fail2Ban for SSH Login Protection

[_Fail2Ban_][17] is an application that bans IP addresses from logging into your server after too many failed login attempts. Since legitimate logins usually take no more than three tries to succeed (and with SSH keys, no more than one), a server being spammed with unsuccessful logins indicates attempted malicious access.

Fail2Ban can monitor a variety of protocols including SSH, HTTP, and SMTP. By default, Fail2Ban monitors SSH only, and is a helpful security deterrent for any server since the SSH daemon is usually configured to run constantly and listen for connections from any remote IP address.

For complete instructions on installing and configuring Fail2Ban, see our guide: [Securing Your Server with Fail2ban][18].
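To see the kind of evidence Fail2Ban acts on, you can count failed password attempts per source address yourself. This is not Fail2Ban and bans nothing; it is a quick sketch that assumes OpenSSH's usual `Failed password for ... from ADDRESS port ...` log lines in `/var/log/auth.log` (`/var/log/secure` on CentOS and Fedora). `failed_ssh_by_ip` is a hypothetical name.

```
# Sketch: count failed SSH password attempts per source IP, most first.
# The address is taken from the last "from" field, so a user name that
# happens to be "from" does not confuse it.
failed_ssh_by_ip() {
  awk '/sshd.*Failed password/ {
         for (i = NF - 1; i > 0; i--) if ($i == "from") { print $(i + 1); break }
       }' "${1:-/var/log/auth.log}" | sort | uniq -c | sort -rn
}
```

Addresses that dominate this list are the ones a Fail2Ban jail would typically end up banning.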

### Remove Unused Network-Facing Services

Most Linux distributions install with running network services which listen for incoming connections from the internet, the loopback interface, or a combination of both. Network-facing services which are not needed should be removed from the system to reduce the attack surface of both running processes and installed packages.

### Determine Running Services

To see your Linode’s running network services:

```
sudo netstat -tulpn
```

> If netstat isn’t included in your Linux distribution by default, install the package `net-tools` or use the `ss -tulpn` command instead.

The following is an example of netstat’s output. Note that because distributions run different services by default, your output will differ:

```
Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
tcp        0      0 0.0.0.0:111             0.0.0.0:*               LISTEN      7315/rpcbind
tcp        0      0 0.0.0.0:22              0.0.0.0:*               LISTEN      3277/sshd
tcp        0      0 127.0.0.1:25            0.0.0.0:*               LISTEN      3179/exim4
tcp        0      0 0.0.0.0:42526           0.0.0.0:*               LISTEN      2845/rpc.statd
tcp6       0      0 :::48745                :::*                    LISTEN      2845/rpc.statd
tcp6       0      0 :::111                  :::*                    LISTEN      7315/rpcbind
tcp6       0      0 :::22                   :::*                    LISTEN      3277/sshd
tcp6       0      0 ::1:25                  :::*                    LISTEN      3179/exim4
udp        0      0 127.0.0.1:901           0.0.0.0:*                           2845/rpc.statd
udp        0      0 0.0.0.0:47663           0.0.0.0:*                           2845/rpc.statd
udp        0      0 0.0.0.0:111             0.0.0.0:*                           7315/rpcbind
udp        0      0 192.0.2.1:123           0.0.0.0:*                           3327/ntpd
udp        0      0 127.0.0.1:123           0.0.0.0:*                           3327/ntpd
udp        0      0 0.0.0.0:123             0.0.0.0:*                           3327/ntpd
udp        0      0 0.0.0.0:705             0.0.0.0:*                           7315/rpcbind
udp6       0      0 :::111                  :::*                                7315/rpcbind
udp6       0      0 fe80::f03c:91ff:fec:123 :::*                                3327/ntpd
udp6       0      0 2001:DB8::123           :::*                                3327/ntpd
udp6       0      0 ::1:123                 :::*                                3327/ntpd
udp6       0      0 :::123                  :::*                                3327/ntpd
udp6       0      0 :::705                  :::*                                7315/rpcbind
udp6       0      0 :::60671                :::*                                2845/rpc.statd
```

Netstat tells us that services are running for [Remote Procedure Call][19] (rpc.statd and rpcbind), SSH (sshd), [NTP][20] (ntpd) and [Exim][21] (exim4).

#### TCP

See the **Local Address** column of the netstat readout. The process `rpcbind` is listening on `0.0.0.0:111` and `:::111` for a foreign address of `0.0.0.0:*` or `:::*`. This means that it’s accepting incoming TCP connections from other RPC clients on any external address, both IPv4 and IPv6, from any port and over any network interface. We see the same for SSH, while Exim is listening locally for traffic from the loopback interface, as shown by the `127.0.0.1` address.

#### UDP

UDP sockets are _[stateless][14]_, meaning they are either open or closed and every process’s connection is independent of those which occurred before and after. This is in contrast to TCP connection states such as _LISTEN_, _ESTABLISHED_ and _CLOSE_WAIT_.

Our netstat output shows that ntpd is: 1) accepting incoming traffic on the Linode’s public IP address; 2) communicating over localhost; and 3) accepting traffic from external sources on any address. All of this is over port 123, on both IPv4 and IPv6. We also see more sockets open for RPC.
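Reading the **Local Address** column by eye gets tedious on a busy server. The sketch below filters `netstat -tulpn` output down to sockets that are not bound to a loopback address (`127.x.x.x` or `::1`), which are the ones other hosts can potentially reach. It assumes the netstat column layout shown above (local address in the fourth column, PID/program last); `ss -tulpn` orders its columns differently and would need different field numbers. `exposed_sockets` is a hypothetical helper name.

```
# Sketch: from `netstat -tulpn` output on stdin, print protocol, local
# address and PID/program for every socket not bound to loopback.
exposed_sockets() {
  awk '$1 ~ /^(tcp|udp)6?$/ && $4 !~ /^127\./ && $4 !~ /^::1:/ { print $1, $4, $NF }'
}
```

Usage: `sudo netstat -tulpn | exposed_sockets`. Header lines are skipped because their first field is not a protocol name.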

### Determine Which Services to Remove

If you were to do a basic TCP and UDP [nmap][22] scan of your Linode without a firewall enabled, SSH, RPC and NTP would be present in the result with ports open. By [configuring a firewall][23] you can filter those ports, with the exception of SSH because it must allow your incoming connections. Ideally, however, the unused services should be disabled.

* You will likely be administering your server primarily through an SSH connection, so that service needs to stay. As mentioned above, [RSA keys][8] and [Fail2Ban][9] can help protect SSH.
* NTP is necessary for your server’s timekeeping, but there are alternatives to ntpd. If you prefer a time synchronization method which does not hold open network ports, and you do not need nanosecond accuracy, then you may be interested in replacing ntpd with [OpenNTPD][10].
* Exim and RPC, however, are unnecessary unless you have a specific use for them, and should be removed.

> This section focused on Debian 8. Different Linux distributions have different services enabled by default. If you are unsure of what a service does, do an internet search to understand what it is before attempting to remove or disable it.

### Uninstall the Listening Services

How to remove the offending packages will differ depending on your distribution’s package manager.

**Arch**

```
sudo pacman -Rs package_name
```

**CentOS**

```
sudo yum remove package_name
```

**Debian / Ubuntu**

```
sudo apt-get purge package_name
```

**Fedora**

```
sudo dnf remove package_name
```
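If you manage servers on more than one of these distributions, the hypothetical helper below prints (rather than runs) the matching removal command for a given `/etc/os-release` `ID`, so it can be reviewed before anything is uninstalled. The mapping simply mirrors the four commands above; any other ID is reported as unsupported.

```
# Hypothetical helper: print the package-removal command for an os-release ID.
# It only prints, so the command can be checked before it is run.
removal_command() {
  local id="$1" pkg="$2"
  case "$id" in
    arch)          echo "sudo pacman -Rs $pkg" ;;
    centos)        echo "sudo yum remove $pkg" ;;
    debian|ubuntu) echo "sudo apt-get purge $pkg" ;;
    fedora)        echo "sudo dnf remove $pkg" ;;
    *)             echo "unsupported distribution: $id" >&2; return 1 ;;
  esac
}
```

Because `/etc/os-release` uses shell variable syntax, `. /etc/os-release && removal_command "$ID" rpcbind` prints `sudo apt-get purge rpcbind` on Debian.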

Run `sudo netstat -tulpn` again. You should now only see listening services for SSH (sshd) and NTP (ntpd).

### Configure a Firewall

Using a _firewall_ to block unwanted inbound traffic to your Linode provides a highly effective security layer. By being very specific about the traffic you allow in, you can prevent intrusions and network mapping. A best practice is to allow only the traffic you need, and deny everything else. See our documentation on some of the most common firewall applications:

* [Iptables][11] is the controller for netfilter, the Linux kernel’s packet filtering framework. Iptables is included in most Linux distributions by default.
* [FirewallD][12] is the iptables controller available for the CentOS / Fedora family of distributions.
* [UFW][13] provides an iptables frontend for Debian and Ubuntu.
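As an illustration of the allow-only-what-you-need approach with UFW, the sketch below prints a default-deny policy as a list of `ufw` commands: deny incoming, allow outgoing, allow SSH on 22/tcp first so the current session is not cut off, then any extra rules you pass in, and finally enable the firewall. It only prints the commands (a dry run) so you can review them before running them by hand; `ufw_plan` is a hypothetical name, and it assumes SSH still listens on port 22.

```
# Sketch (dry run): print UFW commands for a default-deny inbound policy.
# Extra allow rules such as 80/tcp or 443/tcp are passed as arguments.
ufw_plan() {
  echo "sudo ufw default deny incoming"
  echo "sudo ufw default allow outgoing"
  echo "sudo ufw allow 22/tcp"   # keep SSH reachable before enabling
  local rule
  for rule in "$@"; do
    echo "sudo ufw allow $rule"
  done
  echo "sudo ufw enable"
}
```

For a web server, `ufw_plan 80/tcp 443/tcp` would list the two web ports after the SSH rule.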

### Next Steps

These are the most basic steps to harden any Linux server, but further security layers will depend on its intended use. Additional techniques can include application configurations, using [intrusion detection][24] or installing a form of [access control][25].

Now you can begin setting up your Linode for any purpose you choose. We have a library of documentation to assist you with a variety of topics ranging from [migration from shared hosting][26] to [enabling two-factor authentication][27] to [hosting a website][28].

--------------------------------------------------------------------------------

via: https://www.linode.com/docs/security/securing-your-server/

Author: [Phil Zona][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.linode.com/docs/security/securing-your-server/
[1]:http://winscp.net/
[2]:https://fedoraproject.org/wiki/AutoUpdates#Fedora_21_or_earlier_versions
[3]:https://help.ubuntu.com/lts/serverguide/automatic-updates.html
[4]:https://dnf.readthedocs.org/en/latest/automatic.html
[5]:http://brew.sh/
[6]:https://www.linode.com/docs/security/use-public-key-authentication-with-ssh#windows-operating-system
[7]:https://www.linode.com/docs/tools-reference/modify-file-permissions-with-chmod
[8]:https://www.linode.com/docs/security/securing-your-server/#create-an-authentication-key-pair
[9]:https://www.linode.com/docs/security/securing-your-server/#use-fail2ban-for-ssh-login-protection
[10]:https://en.wikipedia.org/wiki/OpenNTPD
[11]:https://www.linode.com/docs/security/firewalls/control-network-traffic-with-iptables
[12]:https://www.linode.com/docs/security/firewalls/introduction-to-firewalld-on-centos
[13]:https://www.linode.com/docs/security/firewalls/configure-firewall-with-ufw
[14]:https://en.wikipedia.org/wiki/Stateless_protocol
[15]:https://fedoraproject.org/wiki/AutoUpdates#Why_use_Automatic_updates.3F
[16]:https://www.linode.com/docs/getting-started#logging-in-for-the-first-time
[17]:http://www.fail2ban.org/wiki/index.php/Main_Page
[18]:https://www.linode.com/docs/security/using-fail2ban-for-security
[19]:https://en.wikipedia.org/wiki/Open_Network_Computing_Remote_Procedure_Call
[20]:http://support.ntp.org/bin/view/Main/SoftwareDownloads
[21]:http://www.exim.org/
[22]:https://nmap.org/
[23]:https://www.linode.com/docs/security/securing-your-server/#configure-a-firewall
[24]:https://linode.com/docs/security/ossec-ids-debian-7
[25]:https://en.wikipedia.org/wiki/Access_control#Access_Control
[26]:https://www.linode.com/docs/migrate-to-linode/migrate-from-shared-hosting
[27]:https://www.linode.com/docs/security/linode-manager-security-controls
[28]:https://www.linode.com/docs/websites/hosting-a-website

409

sources/tech/20161012 Introduction to FirewallD on CentOS.md

Normal file


@ -0,0 +1,409 @@

Introduction to FirewallD on CentOS
============================================================

[FirewallD][4] is a frontend controller for iptables used to implement persistent network traffic rules. It provides command line and graphical interfaces and is available in the repositories of most Linux distributions. Working with FirewallD has two main differences compared to directly controlling iptables:

1. FirewallD uses _zones_ and _services_ instead of chains and rules.
2. It manages rulesets dynamically, allowing updates without breaking existing sessions and connections.

> FirewallD is a wrapper for iptables to allow easier management of iptables rules; it is **not** an iptables replacement. While iptables commands are still available to FirewallD, it’s recommended to use only FirewallD commands with FirewallD.

This guide will introduce you to FirewallD and its notions of zones and services, and show you some basic configuration steps.

### Installing and Managing FirewallD

FirewallD is included by default with CentOS 7 and Fedora 20+, but it’s inactive. Controlling it is the same as with other systemd units.

1. To start the service and enable FirewallD on boot:

```
sudo systemctl start firewalld
sudo systemctl enable firewalld
```

To stop and disable it:

```
sudo systemctl stop firewalld
sudo systemctl disable firewalld
```

2. Check the firewall status. The output should say either `running` or `not running`.

```
sudo firewall-cmd --state
```

3. To view the status of the FirewallD daemon:

```
sudo systemctl status firewalld
```

Example output:

```
firewalld.service - firewalld - dynamic firewall daemon
   Loaded: loaded (/usr/lib/systemd/system/firewalld.service; disabled)
   Active: active (running) since Wed 2015-09-02 18:03:22 UTC; 1min 12s ago
 Main PID: 11954 (firewalld)
   CGroup: /system.slice/firewalld.service
           └─11954 /usr/bin/python -Es /usr/sbin/firewalld --nofork --nopid
```

4. To reload a FirewallD configuration:

```
sudo firewall-cmd --reload
```

### Configuring FirewallD

FirewallD is configured with XML files. Except for very specific configurations, you won’t have to deal with them; use **firewall-cmd** instead.

Configuration files are located in two directories:

* `/usr/lib/firewalld` holds default configurations like default zones and common services. Avoid updating them because those files will be overwritten by each firewalld package update.
* `/etc/firewalld` holds system configuration files. These files override the default configuration.

### Configuration Sets

FirewallD uses two _configuration sets_: Runtime and Permanent. Runtime configuration changes are not retained on reboot or upon restarting FirewallD, whereas permanent changes are not applied to a running system until FirewallD is reloaded.

By default, `firewall-cmd` commands apply to the runtime configuration, but using the `--permanent` flag will establish a persistent configuration. To add and activate a permanent rule, you can use one of two methods.

1. Add the rule to both the permanent and runtime sets.

```
sudo firewall-cmd --zone=public --add-service=http --permanent
sudo firewall-cmd --zone=public --add-service=http
```

2. Add the rule to the permanent set and reload FirewallD.

```
sudo firewall-cmd --zone=public --add-service=http --permanent
sudo firewall-cmd --reload
```

> The reload command drops all runtime configurations and applies a permanent configuration. Because firewalld manages the ruleset dynamically, it won’t break an existing connection and session.
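Method 1 can be wrapped so that the permanent and runtime commands always travel together. In this sketch, `add_service_both` and the `FIREWALL_CMD` override are hypothetical; the override defaults to `sudo firewall-cmd` and exists only so the commands can be dry-run with `echo`. The runtime command runs only if the permanent one succeeded.

```
# Sketch of method 1: add a service to a zone in both the permanent and the
# runtime configuration. FIREWALL_CMD is a hypothetical dry-run hook; it is
# deliberately left unquoted so "sudo firewall-cmd" splits into two words.
add_service_both() {
  local zone="$1" service="$2"
  local cmd="${FIREWALL_CMD:-sudo firewall-cmd}"
  $cmd --zone="$zone" --add-service="$service" --permanent &&
    $cmd --zone="$zone" --add-service="$service"
}
```

`FIREWALL_CMD=echo add_service_both public http` prints the two commands without changing anything; `add_service_both public http` applies them.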

|

||||

|

||||

### Firewall Zones

Zones are pre-constructed rulesets for various trust levels you would likely have for a given location or scenario (e.g. home, public, trusted, etc.). Different zones allow different network services and incoming traffic types while denying everything else. After enabling FirewallD for the first time, _Public_ will be the default zone.

Zones can also be applied to different network interfaces. For example, with separate interfaces for an internal network and for the Internet, you can allow DHCP on an internal zone but only HTTP and SSH on an external zone. Any interface not explicitly set to a specific zone will be attached to the default zone.

To view the default zone:

```
sudo firewall-cmd --get-default-zone
```

To change the default zone:

```
sudo firewall-cmd --set-default-zone=internal
```

To see the zones used by your network interface(s):

```
sudo firewall-cmd --get-active-zones
```

Example output:

```
public
  interfaces: eth0
```
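
Because zone names are printed flush left with their bindings indented beneath them, this output is easy to post-process. A minimal sketch, assuming the indented layout shown above (the function name `zone_of_interfaces` is hypothetical), that prints one `interface zone` pair per line:

```shell
# Read `firewall-cmd --get-active-zones` output on stdin and print
# "<interface> <zone>" for every interface bound to a zone.
zone_of_interfaces() {
  awk '
    /^[^ \t]/ { zone = $1; next }   # unindented line: a zone name
    $1 == "interfaces:" {           # indented line listing interfaces
      for (i = 2; i <= NF; i++) print $i, zone
    }
  '
}
```

Usage: `sudo firewall-cmd --get-active-zones | zone_of_interfaces` would print `eth0 public` for the example output above.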

To get all configurations for a specific zone:

```
sudo firewall-cmd --zone=public --list-all
```

Example output:

```
public (default, active)
  interfaces: ens160
  sources:
  services: dhcpv6-client http ssh
  ports: 12345/tcp
  masquerade: no
  forward-ports:
  icmp-blocks:
  rich rules:
```
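
Each field of the `--list-all` output is a `name: value` line, so a single value can be pulled out for use in scripts. A sketch, assuming the layout matches the example above; `list_all_field` is a hypothetical name:

```shell
# Print the value of one field (e.g. "services", "ports", "rich rules")
# from `firewall-cmd --list-all` output read on stdin.
list_all_field() {
  awk -v key="$1" '
    {
      line = $0
      sub(/^[ \t]+/, "", line)                 # drop the indentation
      if (index(line, key ":") == 1) {         # line starts with "<key>:"
        value = substr(line, length(key) + 2)  # everything after the colon
        sub(/^[ \t]+/, "", value)
        print value
      }
    }
  '
}
```

For the example zone above, `sudo firewall-cmd --zone=public --list-all | list_all_field services` would print `dhcpv6-client http ssh`. Matching on the start of the line keeps `ports` from also matching `forward-ports`.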

To get all configurations for all zones:

```
sudo firewall-cmd --list-all-zones
```

Example output:

```
block
  interfaces:
  sources:
  services:
  ports:
  masquerade: no
  forward-ports:
  icmp-blocks:
  rich rules:

...

work
  interfaces:
  sources:
  services: dhcpv6-client ipp-client ssh
  ports:
  masquerade: no
  forward-ports:
  icmp-blocks:
  rich rules:
```

### Working with Services

FirewallD can allow traffic based on predefined rules for specific network services. You can also create your own custom service rules and add them to any zone. The configuration files for the default supported services are located in `/usr/lib/firewalld/services`, and user-created service files go in `/etc/firewalld/services`.
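
A custom service is an XML file in `/etc/firewalld/services`. The sketch below writes a minimal single-port definition. The helper name, its optional directory argument and the `myapp` example are hypothetical, and the XML uses only the basic `short`, `description` and `port` elements:

```shell
# Write a minimal firewalld service definition NAME.xml for one port.
# Usage: write_service_file NAME PORT PROTO [DIR]
write_service_file() {
  local name="$1" port="$2" proto="$3" dir="${4:-/etc/firewalld/services}"
  cat > "$dir/$name.xml" <<EOF
<?xml version="1.0" encoding="utf-8"?>
<service>
  <short>$name</short>
  <description>Custom service $name ($port/$proto)</description>
  <port protocol="$proto" port="$port"/>
</service>
EOF
}
```

Run it as root, for example `write_service_file myapp 12345 tcp`, then `sudo firewall-cmd --reload` so FirewallD picks up the new file. After that, `sudo firewall-cmd --zone=public --add-service=myapp --permanent` works like it does for the built-in services.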

To view the default available services:

```
sudo firewall-cmd --get-services
```

As an example, to enable or disable the HTTP service in the permanent configuration (reload FirewallD to apply the change at runtime):

```
sudo firewall-cmd --zone=public --add-service=http --permanent
sudo firewall-cmd --zone=public --remove-service=http --permanent
```

### Allowing or Denying an Arbitrary Port/Protocol

As an example, to allow TCP traffic on port 12345, or to remove that permission again:

```
sudo firewall-cmd --zone=public --add-port=12345/tcp --permanent
sudo firewall-cmd --zone=public --remove-port=12345/tcp --permanent
```

### Port Forwarding

The example rule below forwards traffic from port 80 to port 12345 on **the same server**.

```
sudo firewall-cmd --zone="public" --add-forward-port=port=80:proto=tcp:toport=12345
```

To forward a port to **a different server**:

1. Activate masquerade in the desired zone.

```
sudo firewall-cmd --zone=public --add-masquerade
```

2. Add the forward rule. This example forwards traffic from local port 80 to port 8080 on _a remote server_ located at the IP address 192.0.2.9.

```
sudo firewall-cmd --zone="public" --add-forward-port=port=80:proto=tcp:toport=8080:toaddr=192.0.2.9
```

To remove the rules, substitute `--add` with `--remove`. For example:

```
sudo firewall-cmd --zone=public --remove-masquerade
```
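
When forwarding to another host, a mistyped target address is easy to miss. The sketch below checks that the target is a dotted-quad IPv4 address with every octet between 0 and 255 before running the two commands above. The function names are hypothetical, and `192.0.2.9` is a documentation-range placeholder:

```shell
# Succeed only if $1 looks like a dotted-quad IPv4 address with octets 0-255.
is_ipv4() {
  printf '%s\n' "$1" | awk -F. '
    NF != 4 { exit 1 }
    { for (i = 1; i <= 4; i++) if ($i !~ /^[0-9]+$/ || $i + 0 > 255) exit 1 }
  '
}

# Enable masquerading in ZONE and forward local TCP PORT to TOPORT on TOADDR
# (runtime configuration only, like the commands above).
# Usage: forward_to_remote ZONE PORT TOPORT TOADDR
forward_to_remote() {
  local zone="$1" port="$2" toport="$3" toaddr="$4"
  if ! is_ipv4 "$toaddr"; then
    echo "not a valid IPv4 address: $toaddr" >&2
    return 1
  fi
  sudo firewall-cmd --zone="$zone" --add-masquerade &&
    sudo firewall-cmd --zone="$zone" \
      --add-forward-port="port=$port:proto=tcp:toport=$toport:toaddr=$toaddr"
}
```

`forward_to_remote public 80 8080 192.0.2.9` runs both steps, while an address such as 123.456.78.9 is rejected before anything is changed, because 456 is not a valid octet.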

### Constructing a Ruleset with FirewallD

As an example, here is how you would use FirewallD to assign basic rules to your Linode if you were running a web server.

1. Make _dmz_ the default zone and assign eth0 to it. Of the default zones offered, dmz (demilitarized zone) is the most desirable to start with for this application because it allows only SSH and ICMP.

```
sudo firewall-cmd --set-default-zone=dmz
sudo firewall-cmd --zone=dmz --add-interface=eth0
```

2. Add permanent service rules for HTTP and HTTPS to the dmz zone:

```
sudo firewall-cmd --zone=dmz --add-service=http --permanent
sudo firewall-cmd --zone=dmz --add-service=https --permanent
```

3. Reload FirewallD so the rules take effect immediately:

```
sudo firewall-cmd --reload
```
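
The three steps can be collected into one script. This is a sketch, not the guide's own script: the function name is hypothetical, it assumes `eth0` unless another interface is passed, and it finishes by checking the zone's services with `firewall-cmd --list-services`:

```shell
# Apply the web-server ruleset from the steps above, then verify it.
# Usage: setup_dmz_webserver [INTERFACE]
setup_dmz_webserver() {
  local iface="${1:-eth0}" services s
  sudo firewall-cmd --set-default-zone=dmz &&
    sudo firewall-cmd --zone=dmz --add-interface="$iface" &&
    sudo firewall-cmd --zone=dmz --add-service=http --permanent &&
    sudo firewall-cmd --zone=dmz --add-service=https --permanent &&
    sudo firewall-cmd --reload || return 1

  # After the reload, the runtime dmz zone should list all three services.
  services=$(sudo firewall-cmd --zone=dmz --list-services) || return 1
  for s in http https ssh; do
    case " $services " in
      *" $s "*) ;;                                   # present
      *) echo "missing service: $s" >&2; return 1 ;;
    esac
  done
}
```

Stopping at the first failing command keeps a half-applied ruleset from going unnoticed, and the final check catches a reload that did not pick up the permanent rules.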

If you now run `firewall-cmd --zone=dmz --list-all`, this should be the output:

```
dmz (default)
  interfaces: eth0
  sources:
  services: http https ssh
  ports:
  masquerade: no
  forward-ports:
  icmp-blocks:
  rich rules:
```

This tells us that **dmz** is our **default** zone and that it applies to the **eth0 interface**. The empty **sources** and **ports** lines mean that no source addresses are bound to the zone and no ports are open beyond those of the listed services. Incoming HTTP (port 80), HTTPS (port 443) and SSH (port 22) traffic is allowed, and since there are no restrictions on IP version, this applies to both IPv4 and IPv6. **Masquerading** and **port forwarding** are not enabled. We have no **ICMP blocks**, so ICMP traffic is fully allowed, and no **rich rules**. All outgoing traffic is allowed.

### Advanced Configuration

Services and ports are fine for basic configuration but may be too limiting for advanced scenarios. Rich rules and the direct interface allow you to add fully custom firewall rules to any zone for any port, protocol, address and action.

### Rich Rules

Rich rule syntax is extensive but fully documented in the [firewalld.richlanguage(5)][5] man page (or see `man firewalld.richlanguage` in your terminal). Use `--add-rich-rule`, `--list-rich-rules` and `--remove-rich-rule` with the `firewall-cmd` command to manage them.

Here are some common examples:

Allow all IPv4 traffic from host 192.168.0.14.

```
sudo firewall-cmd --zone=public --add-rich-rule 'rule family="ipv4" source address=192.168.0.14 accept'
```

Deny IPv4 traffic over TCP from host 192.168.1.10 to port 22.

```
sudo firewall-cmd --zone=public --add-rich-rule 'rule family="ipv4" source address="192.168.1.10" port port=22 protocol=tcp reject'
```

Allow IPv4 traffic over TCP from host 10.1.0.3 to port 80, and forward it locally to port 6532.

```
sudo firewall-cmd --zone=public --add-rich-rule 'rule family=ipv4 source address=10.1.0.3 forward-port port=80 protocol=tcp to-port=6532'
```

Forward all IPv4 traffic on port 80 to port 8080 on host 172.31.4.2 (masquerading must be enabled on the zone).

```
sudo firewall-cmd --zone=public --add-rich-rule 'rule family=ipv4 forward-port port=80 protocol=tcp to-port=8080 to-addr=172.31.4.2'
```

To list your current rich rules:

```
sudo firewall-cmd --list-rich-rules
```
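
Since a rich rule must reach `firewall-cmd` as a single quoted argument, it can help to build it in one place. A minimal sketch for the source-plus-port pattern used above; `port_rule` is a hypothetical helper, and it only covers IPv4 with the `accept`, `reject` and `drop` actions:

```shell
# Build a rich rule matching one IPv4 source address and one port.
# Usage: port_rule SOURCE PORT PROTO ACTION
port_rule() {
  case "$4" in
    accept|reject|drop) ;;
    *) echo "unsupported action: $4" >&2; return 1 ;;
  esac
  printf 'rule family="ipv4" source address="%s" port port="%s" protocol="%s" %s\n' \
    "$1" "$2" "$3" "$4"
}
```

`sudo firewall-cmd --zone=public --add-rich-rule "$(port_rule 192.168.1.10 22 tcp reject)"` adds the same rule as the second example above; the double quotes around `$(...)` keep the whole rule as one argument.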

### iptables Direct Interface

For the most advanced usage, or for iptables experts, FirewallD provides a direct interface that allows you to pass raw iptables commands to it. Direct interface rules are not persistent unless the `--permanent` option is used.

To see all custom chains or rules added to FirewallD:

```
firewall-cmd --direct --get-all-chains
firewall-cmd --direct --get-all-rules
```
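
To illustrate the direct interface syntax, `--direct --add-rule` takes the IP family, table, chain and priority, followed by raw iptables arguments. The sketch below allows one TCP port in both the permanent and the runtime configuration. The helper name and the port are hypothetical examples:

```shell
# Accept incoming TCP traffic on PORT through a raw iptables rule,
# first in the permanent configuration and then at runtime.
# Usage: direct_allow_tcp PORT
direct_allow_tcp() {
  local port="$1" scope
  for scope in --permanent ""; do
    # $scope is left unquoted on purpose: the empty value expands to nothing.
    sudo firewall-cmd $scope --direct --add-rule ipv4 filter INPUT 0 \
      -p tcp --dport "$port" -j ACCEPT || return 1
  done
}
```

Afterwards, `firewall-cmd --direct --get-all-rules` should list the new rule.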

Discussing iptables syntax details goes beyond the scope of this guide. If you want to learn more, you can review our [iptables guide][6].

### More Information

You may wish to consult the following resources for additional information on this topic. While these are provided in the hope that they will be useful, please note that we cannot vouch for the accuracy or timeliness of externally hosted materials.

* [FirewallD Official Site][1]
* [RHEL 7 Security Guide: Introduction to FirewallD][2]
* [Fedora Wiki: FirewallD][3]

--------------------------------------------------------------------------------

via: https://www.linode.com/docs/security/firewalls/introduction-to-firewalld-on-centos

Author: [Linode][a]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.linode.com/docs/security/firewalls/introduction-to-firewalld-on-centos
[1]:http://www.firewalld.org/
[2]:https://access.redhat.com/documentation/en-US/Red_Hat_Enterprise_Linux/7/html/Security_Guide/sec-Using_Firewalls.html#sec-Introduction_to_firewalld
[3]:https://fedoraproject.org/wiki/FirewallD
[4]:http://www.firewalld.org/
[5]:https://jpopelka.fedorapeople.org/firewalld/doc/firewalld.richlanguage.html
[6]:https://www.linode.com/docs/networking/firewalls/control-network-traffic-with-iptables

@ -1,3 +1,5 @@
translating by ypingcn.

How to Use Old Xorg Apps in Unity 8 on Ubuntu 16.10
====

142

sources/tech/20161201 Using the NTP time synchronization.md

Normal file

@ -0,0 +1,142 @@

Time Synchronization with NTP
============================================================

NTP is a TCP/IP protocol for synchronizing time over a network. Typically, a client requests the current time from a server and sets its own clock according to the result.

Behind this simple description there is a lot of complexity. There are tiers of NTP servers: the tier-one NTP servers are connected to atomic clocks, and tier-two and tier-three servers spread the load of actually handling requests across the Internet. The client software is also a lot more complex than you might think: it has to factor out communication delays, and adjust the time in a way that does not upset all the other processes that run on the server. But luckily all that complexity is hidden from you!
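
The basic calculation a client uses to factor out network delay works on four timestamps from a single request/response exchange. The sketch below applies the standard offset and round-trip delay formulas from the NTP specification (RFC 5905); real clients such as ntpd add filtering and server selection on top of this, which is not shown. The function name is hypothetical:

```shell
# t0: client sends request      t1: server receives it
# t2: server sends reply        t3: client receives reply   (all in seconds)
# Usage: ntp_offset_delay T0 T1 T2 T3
ntp_offset_delay() {
  awk -v t0="$1" -v t1="$2" -v t2="$3" -v t3="$4" 'BEGIN {
    offset = ((t1 - t0) + (t2 - t3)) / 2   # positive: client clock is behind the server
    delay  = (t3 - t0) - (t2 - t1)         # round trip minus time spent on the server
    printf "offset=%g delay=%g\n", offset, delay
  }'
}
```

For example, `ntp_offset_delay 0 5 6 3` prints `offset=4 delay=2`. The exchange took 3 s by the client's clock, and 1 s of that was spent on the server, leaving a 2 s round trip. The server's clock is 4 s ahead of the client's.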

Ubuntu uses ntpdate and ntpd.

* [timedatectl][4]
* [timesyncd][5]
* [ntpdate][6]
* [timeservers][7]
* [ntpd][8]
* [Installation][9]
* [Configuration][10]
* [View status][11]
* [PPS Support][12]
* [References][13]

### timedatectl

In recent Ubuntu releases, timedatectl replaces ntpdate. By default, timedatectl syncs the time once at boot and later uses socket activation to recheck once network connections become active.

If ntpdate or ntp is installed, timedatectl steps back to let you keep your old setup. This ensures that no two time-syncing services fight each other, and it retains any old behaviour or configuration you had through an upgrade. However, it also means that after an upgrade from a former release, ntp/ntpdate might still be installed, which leaves the new systemd-based services disabled.

### timesyncd

In recent Ubuntu releases, timesyncd replaces the client portion of ntpd. By default, timesyncd regularly checks and keeps the time in sync. It also stores time updates locally, so that after a reboot the clock advances monotonically, if applicable.

The current status of time and time configuration via timedatectl and timesyncd can be checked with `timedatectl status`:

```
timedatectl status
      Local time: Fri 2016-04-29 06:32:57 UTC
  Universal time: Fri 2016-04-29 06:32:57 UTC
        RTC time: Fri 2016-04-29 07:44:02
       Time zone: Etc/UTC (UTC, +0000)
 Network time on: yes
NTP synchronized: no
 RTC in local TZ: no
```

If NTP is installed and replaces the activity of timedatectl, the line "NTP synchronized" is set to yes.
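
In scripts, the same check can be made by reading the `NTP synchronized` line. This is a sketch that assumes the label text shown in the example above (other systemd versions may word it differently). `ntp_synchronized` is a hypothetical name:

```shell
# Read `timedatectl status` output on stdin; succeed only if it reports
# "NTP synchronized: yes".
ntp_synchronized() {
  awk -F': ' '
    {
      label = $1
      sub(/^[ \t]+/, "", label)    # labels are right-aligned with spaces
    }
    label == "NTP synchronized" { found = 1; ok = ($2 == "yes") }
    END { exit !(found && ok) }
  '
}
```

Usage: `timedatectl status | ntp_synchronized && echo "clock is synchronized"`. A missing line counts as "not synchronized", so the function never reports success by accident.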

The time server that timedatectl and timesyncd fetch time from can be specified in `/etc/systemd/timesyncd.conf`, and with flexible additional config files in `/etc/systemd/timesyncd.conf.d/`.
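
For example, a drop-in file can point timesyncd at a different server without editing the main file. This is a sketch: the file name `local-ntp.conf` and the optional directory argument (useful for testing) are hypothetical. The `[Time]` section and the `NTP=` key are the ones `timesyncd.conf` uses:

```shell
# Write a timesyncd drop-in that sets the NTP server.
# Usage: set_timesyncd_server SERVER [DIR]
set_timesyncd_server() {
  local server="$1" dir="${2:-/etc/systemd/timesyncd.conf.d}"
  mkdir -p "$dir" &&
    printf '[Time]\nNTP=%s\n' "$server" > "$dir/local-ntp.conf"
}
```

Run it as root, then restart the service with `sudo systemctl restart systemd-timesyncd` so the new server is used.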

### ntpdate

ntpdate is considered deprecated in favour of timedatectl and is therefore no longer installed by default. If installed, it runs once at boot time to set your time according to Ubuntu's NTP server. Later, whenever a new interface comes up, it retries updating the time; while doing so, it tries to slew the time gradually as long as the delta it has to cover isn't too big. That behaviour can be controlled with the `-B`/`-b` switches: `-B` forces the time to be slewed, and `-b` forces it to be stepped.

```
ntpdate ntp.ubuntu.com
```

### timeservers

By default, the systemd-based tools request time information from ntp.ubuntu.com. The classic ntpd-based service uses the pool [0-3].ubuntu.pool.ntp.org. Of the pool, 2.ubuntu.pool.ntp.org, as well as ntp.ubuntu.com, also supports IPv6 if needed. If you need to force IPv6, there is also ipv6.ntp.ubuntu.com, which is not configured by default.

### ntpd

The NTP daemon ntpd calculates the drift of your system clock and continuously adjusts it, so there are no large corrections that could lead to inconsistent logs, for instance. The cost is a little processing power and memory, but for a modern server this is negligible.

### Installation

To install ntpd, from a terminal prompt enter:

```
sudo apt install ntp
```

### Configuration

Edit `/etc/ntp.conf` to add or remove `server` lines. By default these servers are configured:

```
# Use servers from the NTP Pool Project. Approved by Ubuntu Technical Board
# on 2011-02-08 (LP: #104525). See http://www.pool.ntp.org/join.html for
# more information.
server 0.ubuntu.pool.ntp.org
server 1.ubuntu.pool.ntp.org
server 2.ubuntu.pool.ntp.org
server 3.ubuntu.pool.ntp.org
```

After changing the config file you have to reload ntpd:

```
sudo systemctl reload ntp.service
```
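
Adding a server can be scripted so that running the script twice does not duplicate the line. This is a sketch with a hypothetical helper name. The optional second argument, a config file path, exists only so the helper can be tried on a copy:

```shell
# Append "server HOST" to ntp.conf unless a server line for HOST already exists.
# Usage: add_ntp_server HOST [CONF]
add_ntp_server() {
  local host="$1" conf="${2:-/etc/ntp.conf}"
  if awk -v h="$host" '$1 == "server" && $2 == h { found = 1 } END { exit !found }' "$conf"; then
    return 0          # already configured
  fi
  printf 'server %s\n' "$host" >> "$conf"
}
```

Run it as root against `/etc/ntp.conf`, then reload ntpd with the `systemctl` command above.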

### View status

Use ntpq to see more info:

```
# sudo ntpq -p
remote refid st t when poll reach delay offset jitter
==============================================================================
+stratum2-2.NTP. 129.70.130.70 2 u 5 64 377 68.461 -44.274 110.334
+ntp2.m-online.n 212.18.1.106 2 u 5 64 377 54.629 -27.318 78.882
*145.253.66.170 .DCFa. 1 u 10 64 377 83.607 -30.159 68.343
+stratum2-3.NTP. 129.70.130.70 2 u 5 64 357 68.795 -68.168 104.612
+europium.canoni 193.79.237.14 2 u 63 64 337 81.534 -67.968 92.792
```
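
Two columns are worth decoding. The first character of each line is a tally code: `*` marks the peer ntpd is currently synchronized to, and `+` marks candidates. The `reach` column is an 8-bit register, shown in octal, that records whether each of the last eight polls succeeded. The sketch below decodes both; the function names are hypothetical:

```shell
# Print the system peer (the line whose tally code is "*") from `ntpq -p` output.
ntpq_system_peer() {
  awk 'substr($0, 1, 1) == "*" { print substr($1, 2) }'
}

# Show an octal reach value as 8 bits; the rightmost bit is the most recent poll.
# Usage: reach_bits 357   -> 11101111
reach_bits() {
  local n=$((0$1)) bits="" i   # leading 0: read the value as octal
  for i in 1 2 3 4 5 6 7 8; do
    bits=$((n % 2))$bits
    n=$((n / 2))
  done
  echo "$bits"
}
```

With the output above, `sudo ntpq -p | ntpq_system_peer` would print `145.253.66.170`. `reach_bits 357` shows one missed poll among the last eight, while `377` means all eight succeeded.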

### PPS Support

Since 16.04, ntp supports PPS discipline, which can be used to augment ntp with local time sources for better accuracy. For more details on configuration, see the external PPS resource listed below.

### References

* See the [Ubuntu Time][1] wiki page for more information.
* [ntp.org, home of the Network Time Protocol project][2]
* [ntp.org faq on configuring PPS][3]

--------------------------------------------------------------------------------

via: https://help.ubuntu.com/lts/serverguide/NTP.html

Author: [Ubuntu][a]
Translator: [Translator ID](https://github.com/译者ID)
Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://help.ubuntu.com/lts/serverguide/NTP.html
[1]:https://help.ubuntu.com/community/UbuntuTime
[2]:http://www.ntp.org/
[3]:http://www.ntp.org/ntpfaq/NTP-s-config-adv.htm#S-CONFIG-ADV-PPS
[4]:https://help.ubuntu.com/lts/serverguide/NTP.html#timedatectl
[5]:https://help.ubuntu.com/lts/serverguide/NTP.html#timesyncd
[6]:https://help.ubuntu.com/lts/serverguide/NTP.html#ntpdate
[7]:https://help.ubuntu.com/lts/serverguide/NTP.html#timeservers
[8]:https://help.ubuntu.com/lts/serverguide/NTP.html#ntpd
[9]:https://help.ubuntu.com/lts/serverguide/NTP.html#ntp-installation
[10]:https://help.ubuntu.com/lts/serverguide/NTP.html#timeservers-conf
[11]:https://help.ubuntu.com/lts/serverguide/NTP.html#ntp-status
[12]:https://help.ubuntu.com/lts/serverguide/NTP.html#ntp-pps
[13]:https://help.ubuntu.com/lts/serverguide/NTP.html#ntp-references

@ -1,3 +1,5 @@
Rusking translating

Manage Samba4 Active Directory Infrastructure from Windows10 via RSAT – Part 3
============================================================

@ -1,3 +1,5 @@
Rusking translating

Manage Samba4 AD Domain Controller DNS and Group Policy from Windows – Part 4
============================================================

334

sources/tech/20161216 Kprobes Event Tracing on ARMv8.md

Normal file

@ -0,0 +1,334 @@
# Kprobes Event Tracing on ARMv8

### Introduction

Kprobes is a kernel feature that allows instrumenting the kernel by setting arbitrary breakpoints that call out to developer-supplied routines before and after the breakpointed instruction is executed (or simulated). See the kprobes documentation[[1]][2] for more information. Basic kprobes functionality is selected with `CONFIG_KPROBES`. Kprobes support was added to mainline for arm64 in the v4.8 release.

In this article we describe the use of kprobes on arm64 using the debugfs event tracing interfaces from the command line to collect dynamic trace events. This feature has been available for some time on several architectures (including arm32), and is now available on arm64. The feature allows kprobes to be used without writing any code.

### Types of Probes

The kprobes subsystem provides three different types of dynamic probes, described below.

### Kprobes

The basic probe is a software breakpoint that kprobes inserts in place of the instruction you are probing, saving the original instruction for eventual single-stepping (or simulation) when the probe point is hit.

### Kretprobes

Kretprobes is a part of kprobes that allows intercepting a returning function instead of having to set a probe (or possibly several probes) at its return points. This feature is selected whenever kprobes is selected, for supported architectures (including ARMv8).

### Jprobes

Jprobes allows intercepting a call into a function by supplying an intermediary function with the same calling signature, which will be called first. Jprobes is a programming interface only and cannot be used through the debugfs event tracing subsystem. As such, we will not discuss jprobes further here. Consult the kprobes documentation if you wish to use jprobes.

### Invoking Kprobes

Kprobes provides a set of APIs that can be called from kernel code to set up probe points and register functions to be called when probe points are hit. Kprobes is also accessible without adding code to the kernel, by writing to specific event tracing debugfs files to set the probe address and the information to be recorded in the trace log when the probe is hit. The latter is the focus of this document. Lastly, kprobes can be accessed through the perf command.

### Kprobes API

A kernel developer can write functions in the kernel (often in a dedicated debug module) to set probe points and take whatever action is desired right before and right after the probed instruction is executed. This is well documented in kprobes.txt.

### Event Tracing

The event tracing subsystem has its own documentation[[2]][3], which might be worth reading to understand the background of event tracing in general. The event tracing subsystem serves as a foundation for both tracepoints and kprobes event tracing. The event tracing documentation focuses on tracepoints, so bear that in mind when consulting it. Kprobes differs from tracepoints in that there is no predefined list of tracepoints; instead, arbitrary, dynamically created probe points trigger the collection of trace event information. The event tracing subsystem is controlled and monitored through a set of debugfs files. Event tracing (`CONFIG_EVENT_TRACING`) will be selected automatically when needed by something like the kprobe event tracing subsystem.

#### Kprobes Events

With the kprobes event tracing subsystem, the user can specify information to be reported at arbitrary breakpoints in the kernel, determined simply by specifying the address of any existing probeable instruction along with formatting information. When that breakpoint is encountered during execution, kprobes passes the requested information to the common parts of the event tracing subsystem, which formats and appends the data to the trace log, much as tracepoints do. Kprobes uses a similar but mostly separate collection of debugfs files to control and display trace event information. This feature is selected with `CONFIG_KPROBE_EVENT`. The kprobetrace documentation[[3]][4] provides the essential information on how to use kprobes event tracing and should be consulted to understand details about the examples presented below.

### Kprobes and Perf

The perf tools provide another command line interface to kprobes. In particular, `perf probe` allows probe points to be specified by source file and line number, in addition to function name plus offset, and address. The perf interface is really a wrapper around the debugfs interface for kprobes.

### Arm64 Kprobes

All of the above aspects of kprobes are now implemented for arm64; in practice, though, there are some differences from other architectures:

* Register name arguments are, of course, architecture-specific and can be found in the ARM ARM (ARM Architecture Reference Manual).

* Not all instruction types can currently be probed. Currently unprobeable instructions include mrs/msr (except DAIF read), exception generation instructions, eret, and hint (except for the nop variant). In these cases it is simplest to probe a nearby instruction instead. These instructions are blacklisted from probing for one of three reasons: the changes they cause to processor state are unsafe to make during kprobe single-stepping or instruction simulation; the single-stepping context that kprobes constructs is inconsistent with what the instruction needs; or the instruction can't tolerate the additional processing time and exception handling in kprobes (ldx/stx).

* An attempt is made to identify instructions within an ldx/stx sequence and prevent probing; however, it is theoretically possible for this check to fail, allowing a probed atomic sequence that can never succeed. Be careful when probing around atomic code sequences.

* Because of the details of the Linux ARM64 calling conventions, it is not possible to reliably duplicate the stack frame for the probed function. For that reason, no attempt is made to do so with jprobes, unlike on the majority of other architectures supporting jprobes. The callee simply does not have enough information to know for certain how much of the stack is needed.

* Note that the stack pointer information recorded from a probe will reflect the particular stack pointer in use at the time the probe was hit, be it the kernel stack pointer or the interrupt stack pointer.

* There is a list of kernel functions that cannot be probed, usually because they are called as part of kprobes processing. Part of this list is architecture-specific and also includes things like exception entry code.

### Using Kprobes Event Tracing

One common use case for kprobes is instrumenting function entry and/or exit. It is particularly easy to install probes for this, since one can just use the function name for the probe address. Kprobes event tracing will look up the symbol name and determine the address. The ARMv8 calling standard defines where the function arguments and return values can be found, and these can be printed out as part of the kprobe event processing.

### Example: Function entry probing

Instrumenting a USB ethernet driver reset function:

```
$ pwd
/sys/kernel/debug/tracing
$ cat > kprobe_events <<EOF
p ax88772_reset %x0
EOF
$ echo 1 > events/kprobes/enable
```

At this point a trace event will be recorded every time the driver's _ax88772_reset()_ function is called. The event will display the pointer to the _usbnet_ structure passed in via X0 (as per the ARMv8 calling standard) as this function's only argument. After plugging in a USB dongle requiring this ethernet driver, we see the following trace information:

```
$ cat trace
# tracer: nop
#
# entries-in-buffer/entries-written: 1/1   #P:8
#
#                              _-----=> irqs-off
#                             / _----=> need-resched
#                            | / _---=> hardirq/softirq
#                            || / _--=> preempt-depth
#                            ||| /     delay
#           TASK-PID   CPU#  ||||    TIMESTAMP  FUNCTION
#              | |       |   ||||       |         |
     kworker/0:0-4     [000] d... 10972.102939: p_ax88772_reset_0: (ax88772_reset+0x0/0x230) arg1=0xffff800064824c80
```

Here we can see the value of the pointer argument passed in to our probed function. Since we did not use the optional labelling features of kprobes event tracing, the information we requested is automatically labeled _arg1_. Note that this refers to the first value in the list of values we asked kprobes to log for this probe, not the actual position of the argument to the function. In this case it also just happens to be the first argument to the function we've probed.
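
The steps above can be wrapped in two small helpers for repeated experiments. This is a sketch: the function names are hypothetical. The `TRACING` variable defaults to the debugfs path used in this article, and it also lets the helpers be tried on an ordinary directory. The helpers disable the events before clearing, because the kernel refuses to remove probe definitions that are still enabled:

```shell
# Define one kprobe event and enable all kprobe events.
# Usage: kprobe_add 'p ax88772_reset %x0'
kprobe_add() {
  local tracing="${TRACING:-/sys/kernel/debug/tracing}"
  echo "$1" >> "$tracing/kprobe_events" &&      # ">>" keeps existing probes
    echo 1 > "$tracing/events/kprobes/enable"
}

# Disable all kprobe events, then remove every kprobe definition.
kprobe_clear() {
  local tracing="${TRACING:-/sys/kernel/debug/tracing}"
  echo 0 > "$tracing/events/kprobes/enable" &&
    echo > "$tracing/kprobe_events"             # writing an empty line clears all probes
}
```

Unlike `cat > kprobe_events` in the example, which replaces every existing definition, `kprobe_add` appends, so several probes can be active at once. `kprobe_clear` undoes everything when you are done.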

### Example: Function entry and return probing

The kretprobe feature is used specifically to probe a function return. At function entry, the kprobes subsystem will be called and will set up a hook to be called at function return, where it will record the requested event information. For the most common case, the return information, typically in the X0 register, is quite useful. The return value in %x0 can also be referred to as _$retval_. The following example also demonstrates how to provide a human-readable label to be displayed with the information of interest.

Example of instrumenting the kernel `_do_fork()` function to record arguments and results using a kprobe and a kretprobe:

```
$ cd /sys/kernel/debug/tracing
$ cat > kprobe_events <<EOF
p _do_fork %x0 %x1 %x2 %x3 %x4 %x5
r _do_fork pid=%x0
EOF
$ echo 1 > events/kprobes/enable
```

At this point every call to `_do_fork()` will produce two kprobe events recorded into the "_trace_" file, one reporting the calling argument values and one reporting the return value. The return value will be labeled "_pid_" in the trace file. Here are the contents of the trace file after three fork syscalls have been made:

```
$ cat trace
# tracer: nop
#
# entries-in-buffer/entries-written: 6/6   #P:8
#
#                              _-----=> irqs-off
#                             / _----=> need-resched
#                            | / _---=> hardirq/softirq
#                            || / _--=> preempt-depth
#                            ||| /     delay
#           TASK-PID   CPU#  ||||    TIMESTAMP  FUNCTION
#              | |       |   ||||       |         |
            bash-1671  [001] d...   204.946007: p__do_fork_0: (_do_fork+0x0/0x3e4) arg1=0x1200011 arg2=0x0 arg3=0x0 arg4=0x0 arg5=0xffff78b690d0 arg6=0x0
            bash-1671  [001] d..1   204.946391: r__do_fork_0: (SyS_clone+0x18/0x20 <- _do_fork) pid=0x724
            bash-1671  [001] d...   208.845749: p__do_fork_0: (_do_fork+0x0/0x3e4) arg1=0x1200011 arg2=0x0 arg3=0x0 arg4=0x0 arg5=0xffff78b690d0 arg6=0x0
            bash-1671  [001] d..1   208.846127: r__do_fork_0: (SyS_clone+0x18/0x20 <- _do_fork) pid=0x725
            bash-1671  [001] d...   214.401604: p__do_fork_0: (_do_fork+0x0/0x3e4) arg1=0x1200011 arg2=0x0 arg3=0x0 arg4=0x0 arg5=0xffff78b690d0 arg6=0x0
            bash-1671  [001] d..1   214.401975: r__do_fork_0: (SyS_clone+0x18/0x20 <- _do_fork) pid=0x726