mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

429a99a715

158

sources/talk/20181114 Analyzing the DNA of DevOps.md

Normal file

158

sources/talk/20181114 Analyzing the DNA of DevOps.md

Normal file

@ -0,0 +1,158 @@

|

||||

Analyzing the DNA of DevOps

|

||||

======

|

||||

How have waterfall, agile, and other development frameworks shaped the evolution of DevOps? Here's what we discovered.

|

||||

|

||||

|

||||

If you were to analyze the DNA of DevOps, what would you find in its ancestry report?

|

||||

|

||||

This article is not a methodology bake-off, so if you are looking for advice or a debate on the best approach to software engineering, you can stop reading here. Rather, we are going to explore the genetic sequences that have brought DevOps to the forefront of today's digital transformations.

|

||||

|

||||

Much of DevOps has evolved through trial and error, as companies have struggled to be responsive to customers’ demands while improving quality and standing out in an increasingly competitive marketplace. Adding to the challenge is the transition from a product-driven to a service-driven global economy that connects people in new ways. The software development lifecycle is becoming an increasingly complex system of services and microservices, both interconnected and instrumented. As DevOps is pushed further and faster than ever, the speed of change is wiping out slower traditional methodologies like waterfall.

|

||||

|

||||

We are not slamming the waterfall approach—many organizations have valid reasons to continue using it. However, mature organizations should aim to move away from wasteful processes, and indeed, many startups have a competitive edge over companies that use more traditional approaches in their day-to-day operations.

|

||||

|

||||

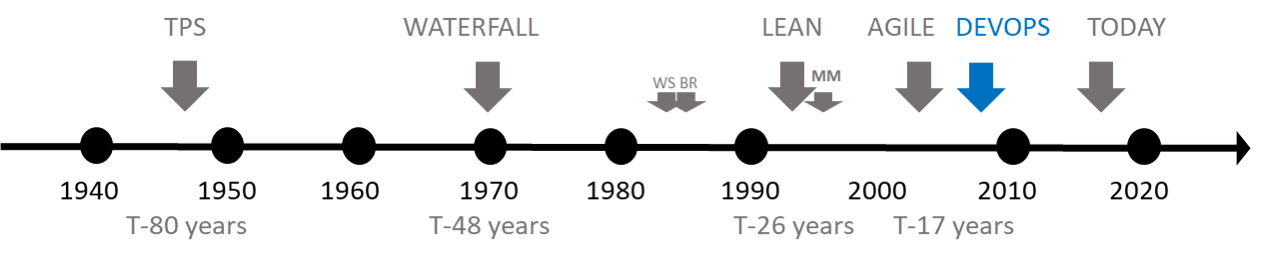

Ironically, lean, [Kanban][1], continuous, and agile principles and processes trace back to the early 1940's, so DevOps cannot claim to be a completely new idea.

|

||||

|

||||

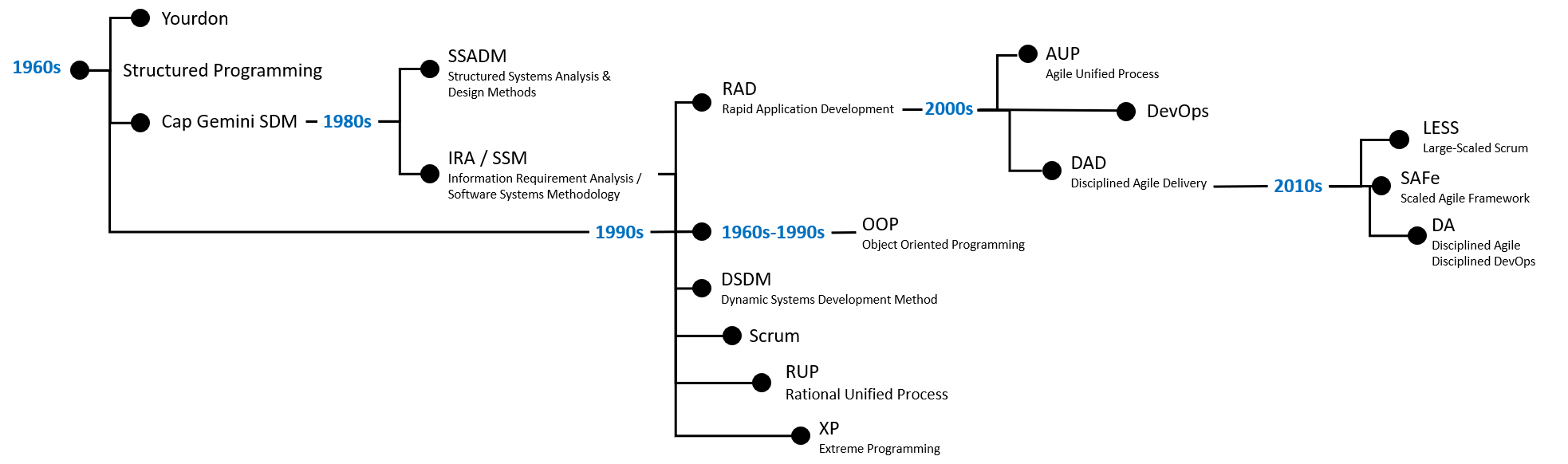

Let's start by stepping back a few years and looking at the waterfall, lean, and agile software development approaches. The figure below shows a “haplogroup” of the software development lifecycle. (Remember, we are not looking for the best approach but trying to understand which approach has positively influenced our combined 67 years of software engineering and the evolution to a DevOps mindset.)

|

||||

|

||||

|

||||

|

||||

> “A fool with a tool is still a fool.” -Mathew Mathai

|

||||

|

||||

### The traditional waterfall method

|

||||

|

||||

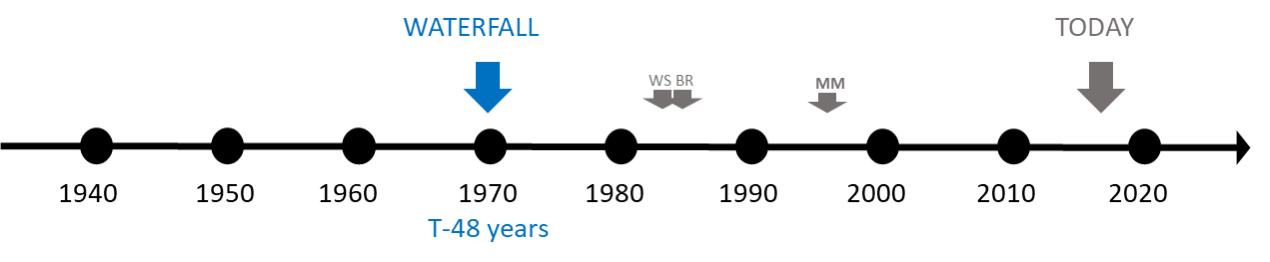

From our perspective, the oldest genetic material comes from the [waterfall][2] model, first introduced by Dr. Winston W. Royce in a paper published in the 1970's.

|

||||

|

||||

|

||||

|

||||

Like a waterfall, this approach emphasizes a logical and sequential progression through requirements, analysis, coding, testing, and operations in a single pass. You must complete each sequence, meet criteria, and obtain a signoff before you can begin the next one. The waterfall approach benefits projects that need stringent sequences and that have a detailed and predictable scope and milestone-based development. Contrary to popular belief, it also allows teams to experiment and make early design changes during the requirements, analysis, and design stages.

|

||||

|

||||

|

||||

|

||||

### Lean thinking

|

||||

|

||||

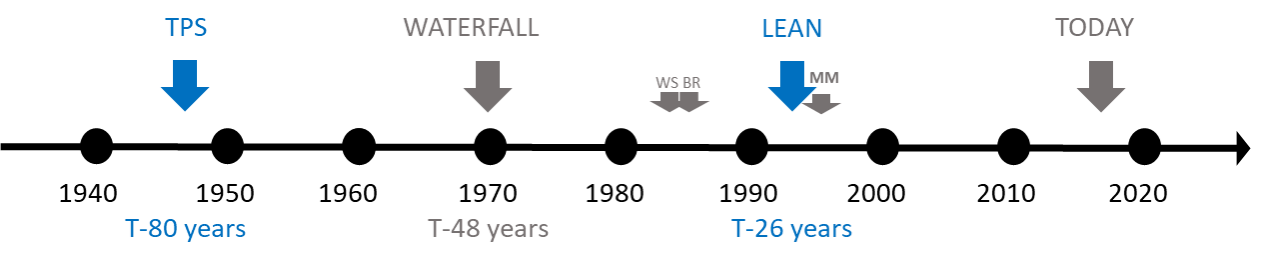

Although lean thinking dates to the Venetian Arsenal in the 1450s, we start the clock when Toyota created the [Toyota Production System][3], developed by Japanese engineers between 1948 and 1972. Toyota published an official description of the system in 1992.

|

||||

|

||||

|

||||

|

||||

Lean thinking is based on [five principles][4]: value, value stream, flow, pull, and perfection. The core of this approach is to understand and support an effective value stream, eliminate waste, and deliver continuous value to the user. It is about delighting your users without interruption.

|

||||

|

||||

|

||||

|

||||

### Kaizen

|

||||

|

||||

Kaizen is based on incremental improvements; the **Plan- >Do->Check->Act** lifecycle moved companies toward a continuous improvement mindset. Originally developed to improve the flow and processes of the assembly line, the Kaizen concept also adds value across the supply chain. The Toyota Production system was one of the early implementors of Kaizen and continuous improvement. Kaizen and DevOps work well together in environments where workflow goes from design to production. Kaizen focuses on two areas:

|

||||

|

||||

* Flow

|

||||

* Process

|

||||

|

||||

|

||||

|

||||

### Continuous delivery

|

||||

|

||||

Kaizen inspired the development of processes and tools to automate production. Companies were able to speed up production and improve the quality, design, build, test, and delivery phases by removing waste (including culture and mindset) and automating as much as possible using machines, software, and robotics. Much of the Kaizen philosophy also applies to lean business and software practices and continuous delivery deployment for DevOps principles and goals.

|

||||

|

||||

### Agile

|

||||

|

||||

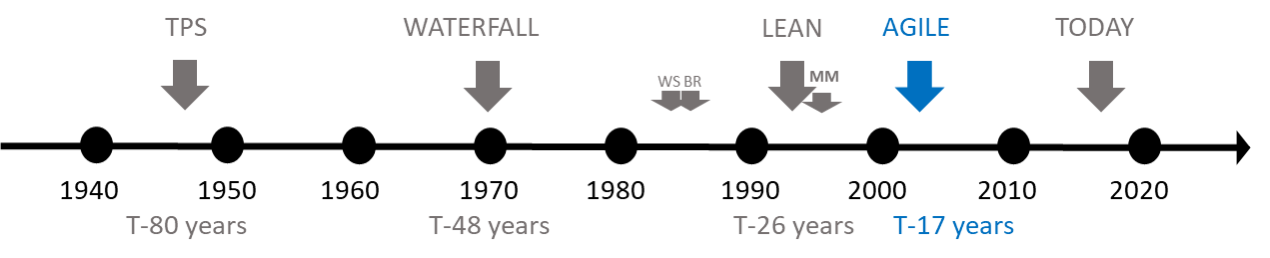

The [Manifesto for Agile Software Development][5] appeared in 2001, authored by Alistair Cockburn, Bob Martin, Jeff Sutherland, Jim Highsmith, Ken Schwaber, Kent Beck, Ward Cunningham, and others.

|

||||

|

||||

|

||||

|

||||

[Agile][6] is not about throwing caution to the wind, ditching design, or building software in the Wild West. It is about being able to create and respond to change. Agile development is [based on twelve principles][7] and a manifesto that values individuals and collaboration, working software, customer collaboration, and responding to change.

|

||||

|

||||

|

||||

|

||||

### Disciplined agile

|

||||

|

||||

Since the Agile Manifesto has remained static for 20 years, many agile practitioners have looked for ways to add choice and subjectivity to the approach. Additionally, the Agile Manifesto focuses heavily on development, so a tweak toward solutions rather than code or software is especially needed in today's fast-paced development environment. Scott Ambler and Mark Lines co-authored [Disciplined Agile Delivery][8] and [The Disciplined Agile Framework][9], based on their experiences at Rational, IBM, and organizations in which teams needed more choice or were not mature enough to implement lean practices, or where context didn't fit the lifecycle.

|

||||

|

||||

The significance of DAD and DA is that it is a [process-decision framework][10] that enables simplified process decisions around incremental and iterative solution delivery. DAD builds on the many practices of agile software development, including scrum, agile modeling, lean software development, and others. The extensive use of agile modeling and refactoring, including encouraging automation through test-driven development (TDD), lean thinking such as Kanban, [XP][11], [scrum][12], and [RUP][13] through a choice of five agile lifecycles, and the introduction of the architect owner, gives agile practitioners added mindsets, processes, and tools to successfully implement DevOps.

|

||||

|

||||

### DevOps

|

||||

|

||||

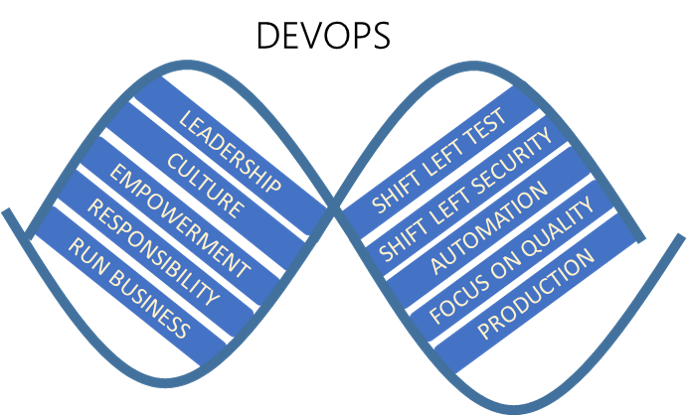

As far as we can gather, DevOps emerged during a series of DevOpsDays in Belgium in 2009, going on to become the foundation for numerous digital transformations. Microsoft principal DevOps manager [Donovan Brown][14] defines DevOps as “the union of people, process, and products to enable continuous delivery of value to our end users.”

|

||||

|

||||

|

||||

|

||||

Let's go back to our original question: What would you find in the ancestry report of DevOps if you analyzed its DNA?

|

||||

|

||||

|

||||

|

||||

We are looking at history dating back 80, 48, 26, and 17 years—an eternity in today’s fast-paced and often turbulent environment. By nature, we humans continuously experiment, learn, and adapt, inheriting strengths and resolving weaknesses from our genetic strands.

|

||||

|

||||

Under the microscope, we will find traces of waterfall, lean thinking, agile, scrum, Kanban, and other genetic material. For example, there are traces of waterfall for detailed and predictable scope, traces of lean for cutting waste, and traces of agile for promoting increments of shippable code. The genetic strands that define when and how to ship the code are where DevOps lights up in our DNA exploration.

|

||||

|

||||

|

||||

|

||||

You use the telemetry you collect from watching your solution in production to drive experiments, confirm hypotheses, and prioritize your product backlog. In other words, DevOps inherits from a variety of proven and evolving frameworks and enables you to transform your culture, use products as enablers, and most importantly, delight your customers.

|

||||

|

||||

If you are comfortable with lean thinking and agile, you will enjoy the full benefits of DevOps. If you come from a waterfall environment, you will receive help from a DevOps mindset, but your lean and agile counterparts will outperform you.

|

||||

|

||||

### eDevOps

|

||||

|

||||

|

||||

|

||||

In 2016, Brent Reed coined the term eDevOps (no Google or Wikipedia references exist to date), defining it as “a way of working (WoW) that brings continuous improvement across the enterprise seamlessly, through people, processes and tools.”

|

||||

|

||||

Brent found that agile was failing in IT: Businesses that had adopted lean thinking were not achieving the value, focus, and velocity they expected from their trusted IT experts. Frustrated at seeing an "ivory tower" in which siloed IT services were disconnected from architecture, development, operations, and help desk support teams, he applied his practical knowledge of disciplined agile delivery and added some goals and practical applications to the DAD toolset, including:

|

||||

|

||||

* Focus and drive of culture through a continuous improvement (Kaizen) mindset, bringing people together even when they are across the cubicle

|

||||

* Velocity through automation (TDD + refactoring everything possible), removing waste and adopting a [TOGAF][15], JBGE (just barely good enough) approach to documentation

|

||||

* Value through modeling (architecture modeling) and shifting left to enable right through exposing anti-patterns while sharing through collaboration patterns in a more versatile and strategic modern digital repository

|

||||

|

||||

|

||||

|

||||

Using his experience with AI at IBM, Brent designed a maturity model for eDevOps that incrementally automates dashboards for measuring and decision-making purposes so that continuous improvement through a continuous deployment (automating from development to production) is a real possibility for any organization. eDevOps in an effective transformation program based on disciplined DevOps that enables:

|

||||

|

||||

* Business to DevOps (BizDevOps),

|

||||

* Security to DevOps (SecDevOps)

|

||||

* Information to DevOps (DataDevOps)

|

||||

* Loosely coupled technical services while bringing together and delighting all stakeholders

|

||||

* Building potentially consumable solutions every two weeks or faster

|

||||

* Collecting, measuring, analyzing, displaying, and automating actionable insight through the DevOps processes from concept through live production use

|

||||

* Continuous improvement following a Kaizen and disciplined agile approach

|

||||

|

||||

|

||||

|

||||

### The next stage in the development of DevOps

|

||||

|

||||

|

||||

|

||||

Will DevOps ultimately be considered hype—a collection of more tech thrown at corporations and added to the already extensive list of buzzwords? Time, of course, will tell how DevOps will progress. However, DevOps' DNA must continue to mature and be refined, and developers must understand that it is neither a silver bullet nor a remedy to cure all ailments and solve all problems.

|

||||

|

||||

```

|

||||

DevOps != Agile != Lean Thinking != Waterfall

|

||||

|

||||

DevOps != Tools !=Technology

|

||||

|

||||

DevOps Ì Agile Ì Lean Thinking Ì Waterfall

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/11/analyzing-devops

|

||||

|

||||

作者:[Willy-Peter Schaub][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/wpschaub

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://en.wikipedia.org/wiki/Kanban

|

||||

[2]: https://airbrake.io/blog/sdlc/waterfall-model

|

||||

[3]: https://en.wikipedia.org/wiki/Toyota_Production_System

|

||||

[4]: https://www.lean.org/WhatsLean/Principles.cfm

|

||||

[5]: http://agilemanifesto.org/

|

||||

[6]: https://www.agilealliance.org/agile101

|

||||

[7]: http://agilemanifesto.org/principles.html

|

||||

[8]: https://books.google.com/books?id=CwvBEKsCY2gC

|

||||

[9]: http://www.disciplinedagiledelivery.com/books/

|

||||

[10]: https://en.wikipedia.org/wiki/Disciplined_agile_delivery

|

||||

[11]: https://en.wikipedia.org/wiki/Extreme_programming

|

||||

[12]: https://www.scrum.org/resources/what-is-scrum

|

||||

[13]: https://en.wikipedia.org/wiki/Rational_Unified_Process

|

||||

[14]: http://donovanbrown.com/

|

||||

[15]: http://www.opengroup.org/togaf

|

||||

@ -0,0 +1,76 @@

|

||||

Is your startup built on open source? 9 tips for getting started

|

||||

======

|

||||

Are open source businesses all that different from normal businesses?

|

||||

|

||||

|

||||

When I started [Gluu][1] in 2009, I had no idea how difficult it would be to start an open source software company. Using the open source development methodology seemed like a good idea, especially for infrastructure software based on protocols defined by open standards. By nature, entrepreneurs are optimistic—we underestimate the difficulty of starting a business. However, Gluu was my fourth business, so I thought I knew what I was in for. But I was in for a surprise!

|

||||

|

||||

Every business is unique. One of the challenges of serial entrepreneurship is that a truth that was core to the success of a previous business may be incorrect in your next business. Building a business around open source forced me to change my plan. How to find the right team members, how to price our offering, how to market our product—all of these aspects of starting a business (and more) were impacted by the open source mission and required an adjustment from my previous experience.

|

||||

|

||||

A few years ago, we started to question whether Gluu was pursuing the right business model. The business was growing, but not as fast as we would have liked.

|

||||

|

||||

One of the things we did at Gluu was to prepare a "business model canvas," an approach detailed in the book [Business Model Generation: A Handbook for Visionaries, Game Changers, and Challengers][2] by Yves Pigneur and Alexander Osterwalder. This is a thought-provoking exercise for any business at any stage. It helped us consider our business more holistically. A business is more than a stream of revenue. You need to think about how you segment the market, how to interact with customers, what are your sales channels, what are your key activities, what is your value proposition, what are your expenses, partnerships, and key resources. We've done this a few times over the years because a business model naturally evolves over time.

|

||||

|

||||

In 2016, I started to wonder how other open source businesses were structuring their business models. Business Model Generation talks about three types of companies: product innovation, customer relationship, and infrastructure.

|

||||

|

||||

* Product innovation companies are first to market with new products and can get a lot of market share because they are first.

|

||||

* Customer relationship companies have a wider offering and need to get "wallet share" not market share.

|

||||

* Infrastructure companies are very scalable but need established operating procedures and lots of capital.

|

||||

|

||||

|

||||

|

||||

![Open Source Underdogs podcast][4]

|

||||

|

||||

Mike Swartz, CC BY

|

||||

|

||||

It's hard to figure out what models and types of business other open source software companies are pursuing by just looking at their website. And most open source companies are private—so there are no SEC filings to examine.

|

||||

|

||||

To find out more, I went to the web. I found a [great talk][5] from Mike Olson, Founder and Chief Strategy Officer at Cloudera, about open source business models. It was recorded as part of a Stanford business lecture series. I wanted more of these kinds of talks! But I couldn't find any. That's when I got the idea to start a podcast where I interview founders of open source companies and ask them to describe what business model they are pursuing.

|

||||

|

||||

In 2018, this idea became a reality when we started a podcast called [Open Source Underdogs][6]. So far, we have recorded nine episodes. There is a lot of great content in all the episodes, but I thought it would be fun to share one piece of advice from each.

|

||||

|

||||

### Advice from 9 open source businesses

|

||||

|

||||

**Peter Wang, CTO of Anaconda: **"Investors coming in to help put more gas in your gas tank want to understand what road you're on and how far you want to go. If you can't communicate to investors on a basis that they understand about your business model and revenue model, then you have no business asking them for their money. Don't get mad at them!"

|

||||

|

||||

**Jim Thompson, Founder of Netgate: **"Businesses survive at the whim of their customers. Solving customer problems and providing value to the business is literally why you have a business!"

|

||||

|

||||

**Michael Howard, CEO of MariaDB: **"My advice to open source software startups? It depends what part of the stack you're in. If you're infrastructure, you have no choice but to be open source."

|

||||

|

||||

**Ian Tien, CEO of** **Mattermost: ** "You want to build something that people love. So start with roles that open source can play in your vision for the product, the distribution model, the community you want to build, and the business you want to build."

|

||||

|

||||

**Mike Olson, Founder and Chief Strategy Officer at Cloudera: **"A business model is a complex construct. Open source is a really important component of strategic thinking. It's a great distributed development model. It's a genius, low-cost distribution model—and those have a bunch of advantages. But you need to think about how you're going to get paid."

|

||||

|

||||

**Elliot Horowitz, Founder of MongoDB: **"The most important thing, whether it's open source or not open source, is to get incredibly close to your users."

|

||||

|

||||

**Tom Hatch, CEO of SaltStack: **"Being able to build an internal culture and a management mindset that deals with open source, and profits from open source, and functions in a stable and responsible way with regard to open source is one of the big challenges you're going to face. It's one thing to make a piece of open source software and get people to use it. It's another to build a company on top of that open source."

|

||||

|

||||

**Matt Mullenweg, CEO of Automattic: **"Open source businesses aren't that different from normal businesses. A mistake that we made, that others can avoid, is not incorporating the best leaders and team members in functions like marketing and sales."

|

||||

|

||||

**Gabriel Engel, CEO of RocketChat: **"Moving from a five-person company, where you are the center of the company, and it's easy to know what everyone is doing, and everyone relies on you for decisions, to a 40-person company—that transition is harder than expected."

|

||||

|

||||

### What we've learned

|

||||

|

||||

After recording these podcasts, we've tweaked Gluu's business model a little. It's become clearer that we need to embrace open core—we've been over-reliant on support revenue. It's a direction we had been going, but listening to our podcast's guests supported our decision.

|

||||

|

||||

We have many new episodes lined up for 2018 and 2019, including conversations with the founders of Liferay, Couchbase, TimescaleDB, Canonical, Redis, and more, who are sure to offer even more great insights about the open source software business. You can find all the podcast episodes by searching for "Open Source Underdogs" on iTunes and Google podcasts or by visiting our [website][6]. We want to hear your opinions and ideas you have to help us improve the podcast, so after you listen, please leave us a review.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/11/tips-open-source-entrepreneurs

|

||||

|

||||

作者:[Mike Schwartz][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/gluufederation

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.gluu.org/

|

||||

[2]: https://www.wiley.com/en-us/Business+Model+Generation%3A+A+Handbook+for+Visionaries%2C+Game+Changers%2C+and+Challengers-p-9780470876411

|

||||

[3]: /file/414706

|

||||

[4]: https://opensource.com/sites/default/files/uploads/underdogs_logo.jpg (Open Source Underdogs podcast)

|

||||

[5]: https://youtu.be/T_UM5PYk9NA

|

||||

[6]: https://opensourceunderdogs.com/

|

||||

@ -1,70 +0,0 @@

|

||||

translating---geekpi

|

||||

|

||||

8 great pytest plugins

|

||||

======

|

||||

|

||||

|

||||

|

||||

We are big fans of [pytest][1] and use it as our default Python testing tool for work and open source projects. For this month's Python column, we're sharing why we love pytest and some of the plugins that make testing with pytest so much fun.

|

||||

|

||||

### What is pytest?

|

||||

|

||||

As the tool's website says, "The pytest framework makes it easy to write small tests, yet scales to support complex functional testing for applications and libraries."

|

||||

|

||||

`test_*.py` and as functions that begin with `test_*`. Pytest will then find all your tests, across your whole project, and run them automatically when you run `pytest` in your console. Pytest accepts `set_trace()` function that can be entered into your test; this will pause your tests and allow you to interact with your variables and otherwise "poke around" in the console to debug your project.

|

||||

|

||||

Pytest allows you to define your tests in any file calledand as functions that begin with. Pytest will then find all your tests, across your whole project, and run them automatically when you runin your console. Pytest accepts [flags and arguments][2] that can change when the testrunner stops, how it outputs results, which tests are run, and what information is included in the output. It also includes afunction that can be entered into your test; this will pause your tests and allow you to interact with your variables and otherwise "poke around" in the console to debug your project.

|

||||

|

||||

One of the best aspects of pytest is its robust plugin ecosystem. Because pytest is such a popular testing library, over the years many plugins have been created to extend, customize, and enhance its capabilities. These eight plugins are among our favorites.

|

||||

|

||||

### Great 8

|

||||

|

||||

**1.[pytest-sugar][3]**

|

||||

`pytest-sugar` changes the default look and feel of pytest, adds a progress bar, and shows failing tests instantly. It requires no configuration; just `pip install pytest-sugar`, run your tests with `pytest`, and enjoy the prettier, more useful output.

|

||||

|

||||

**2.[pytest-cov][4]**

|

||||

`pytest-cov` adds coverage support for pytest to show which lines of code have been tested and which have not. It will also include the percentage of test coverage for your project.

|

||||

|

||||

**3.[pytest-picked][5]**

|

||||

`pytest-picked` runs tests based on code that you have modified but not committed to `git` yet. Install the library and run your tests with `pytest --picked` to test only files that have been changed since your last commit.

|

||||

|

||||

**4.[pytest-instafail][6]**

|

||||

`pytest-instafail` modifies pytest's default behavior to show failures and errors immediately instead of waiting until pytest has finished running every test.

|

||||

|

||||

**5.[pytest-tldr][7]**

|

||||

A brand-new pytest plugin that limits the output to just the things you need. `pytest-tldr` (the `tldr` stands for "too long, didn't read"), like `pytest-sugar`, requires no configuration other than basic installation. Instead of pytest's default output, which is pretty verbose, `pytest-tldr`'s default limits the output to only tracebacks for failing tests and omits the color-coding that some find annoying. Adding a `-v` flag returns the more verbose output for those who prefer it.

|

||||

|

||||

**6.[pytest-xdist][8]**

|

||||

`pytest-xdist` allows you to run multiple tests in parallel via the `-n` flag: `pytest -n 2`, for example, would run your tests on two CPUs. This can significantly speed up your tests. It also includes the `--looponfail` flag, which will automatically re-run your failing tests.

|

||||

|

||||

**7.[pytest-django][9]**

|

||||

`pytest-django` adds pytest support to Django applications and projects. Specifically, `pytest-django` introduces the ability to test Django projects using pytest fixtures, omits the need to import `unittest` and copy/paste other boilerplate testing code, and runs faster than the standard Django test suite.

|

||||

|

||||

**8.[django-test-plus][10]**

|

||||

`django-test-plus` isn't specific to pytest, but it now supports pytest. It includes its own `TestCase` class that your tests can inherit from and enables you to use fewer keystrokes to type out frequent test cases, like checking for specific HTTP error codes.

|

||||

|

||||

The libraries we mentioned above are by no means your only options for extending your pytest usage. The landscape for useful pytest plugins is vast. Check out the [Pytest Plugins Compatibility][11] page to explore on your own. Which ones are your favorites?

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/6/pytest-plugins

|

||||

|

||||

作者:[Jeff Triplett;Lacery Williams Henschel][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/sites/default/files/styles/byline_thumbnail/public/pictures/dcus-2017-bw.jpg?itok=s8PhD7Ok

|

||||

[1]:https://docs.pytest.org/en/latest/

|

||||

[2]:https://docs.pytest.org/en/latest/usage.html

|

||||

[3]:https://github.com/Frozenball/pytest-sugar

|

||||

[4]:https://github.com/pytest-dev/pytest-cov

|

||||

[5]:https://github.com/anapaulagomes/pytest-picked

|

||||

[6]:https://github.com/pytest-dev/pytest-instafail

|

||||

[7]:https://github.com/freakboy3742/pytest-tldr

|

||||

[8]:https://github.com/pytest-dev/pytest-xdist

|

||||

[9]:https://pytest-django.readthedocs.io/en/latest/

|

||||

[10]:https://django-test-plus.readthedocs.io/en/latest/

|

||||

[11]:https://plugincompat.herokuapp.com/

|

||||

@ -1,3 +1,5 @@

|

||||

translating---geekpi

|

||||

|

||||

Publishing Markdown to HTML with MDwiki

|

||||

======

|

||||

|

||||

|

||||

@ -1,75 +0,0 @@

|

||||

Translating by qhwdw

|

||||

|

||||

|

||||

Greg Kroah-Hartman Explains How the Kernel Community Is Securing Linux

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

Kernel maintainer Greg Kroah-Hartman talks about how the kernel community is hardening Linux against vulnerabilities.[Creative Commons Zero][2]

|

||||

|

||||

As Linux adoption expands, it’s increasingly important for the kernel community to improve the security of the world’s most widely used technology. Security is vital not only for enterprise customers, it’s also important for consumers, as 80 percent of mobile devices are powered by Linux. In this article, Linux kernel maintainer Greg Kroah-Hartman provides a glimpse into how the kernel community deals with vulnerabilities.

|

||||

|

||||

### There will be bugs

|

||||

|

||||

|

||||

|

||||

|

||||

Greg Kroah-Hartman[The Linux Foundation][1]

|

||||

|

||||

As Linus Torvalds once said, most security holes are bugs, and bugs are part of the software development process. As long as the software is being written, there will be bugs.

|

||||

|

||||

“A bug is a bug. We don’t know if a bug is a security bug or not. There is a famous bug that I fixed and then three years later Red Hat realized it was a security hole,” said Kroah-Hartman.

|

||||

|

||||

There is not much the kernel community can do to eliminate bugs, but it can do more testing to find them. The kernel community now has its own security team that’s made up of kernel developers who know the core of the kernel.

|

||||

|

||||

“When we get a report, we involve the domain owner to fix the issue. In some cases it’s the same people, so we made them part of the security team to speed things up,” Kroah Hartman said. But he also stressed that all parts of the kernel have to be aware of these security issues because kernel is a trusted environment and they have to protect it.

|

||||

|

||||

“Once we fix things, we can put them in our stack analysis rules so that they are never reintroduced,” he said.

|

||||

|

||||

Besides fixing bugs, the community also continues to add hardening to the kernel. “We have realized that we need to have mitigations. We need hardening,” said Kroah-Hartman.

|

||||

|

||||

Huge efforts have been made by Kees Cook and others to take the hardening features that have been traditionally outside of the kernel and merge or adapt them for the kernel. With every kernel released, Cook provides a summary of all the new hardening features. But hardening the kernel is not enough, vendors have to enable the new features and take advantage of them. That’s not happening.

|

||||

|

||||

Kroah-Hartman [releases a stable kernel every week][5], and companies pick one to support for a longer period so that device manufacturers can take advantage of it. However, Kroah-Hartman has observed that, aside from the Google Pixel, most Android phones don’t include the additional hardening features, meaning all those phones are vulnerable. “People need to enable this stuff,” he said.

|

||||

|

||||

“I went out and bought all the top of the line phones based on kernel 4.4 to see which one actually updated. I found only one company that updated their kernel,” he said. “I'm working through the whole supply chain trying to solve that problem because it's a tough problem. There are many different groups involved -- the SoC manufacturers, the carriers, and so on. The point is that they have to push the kernel that we create out to people.”

|

||||

|

||||

The good news is that unlike with consumer electronics, the big vendors like Red Hat and SUSE keep the kernel updated even in the enterprise environment. Modern systems with containers, pods, and virtualization make this even easier. It’s effortless to update and reboot with no downtime. It is, in fact, easier to keep things secure than it used to be.

|

||||

|

||||

### Meltdown and Spectre

|

||||

|

||||

No security discussion is complete without the mention of Meltdown and Spectre. The kernel community is still working on fixes as new flaws are discovered. However, Intel has changed its approach in light of these events.

|

||||

|

||||

“They are reworking on how they approach security bugs and how they work with the community because they know they did it wrong,” Kroah-Hartman said. “The kernel has fixes for almost all of the big Spectre issues, but there is going to be a long tail of minor things.”

|

||||

|

||||

The good news is that these Intel vulnerabilities proved that things are getting better for the kernel community. “We are doing more testing. With the latest round of security patches, we worked on our own for four months before releasing them to the world because we were embargoed. But once they hit the real world, it made us realize how much we rely on the infrastructure we have built over the years to do this kind of testing, which ensures that we don’t have bugs before they hit other people,” he said. “So things are certainly getting better.”

|

||||

|

||||

The increasing focus on security is also creating more job opportunities for talented people. Since security is an area that gets eyeballs, those who want to build a career in kernel space, security is a good place to get started with.

|

||||

|

||||

“If there are people who want a job to do this type of work, we have plenty of companies who would love to hire them. I know some people who have started off fixing bugs and then got hired,” Kroah-Hartman said.

|

||||

|

||||

You can hear more in the video below:

|

||||

|

||||

[视频](https://youtu.be/jkGVabyMh1I)

|

||||

|

||||

_Check out the schedule of talks for Open Source Summit Europe and sign up to receive updates:_

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/2018/10/greg-kroah-hartman-explains-how-kernel-community-securing-linux-0

|

||||

|

||||

作者:[SWAPNIL BHARTIYA][a]

|

||||

选题:[oska874][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/arnieswap

|

||||

[b]:https://github.com/oska874

|

||||

[1]:https://www.linux.com/licenses/category/linux-foundation

|

||||

[2]:https://www.linux.com/licenses/category/creative-commons-zero

|

||||

[3]:https://www.linux.com/files/images/greg-k-hpng

|

||||

[4]:https://www.linux.com/files/images/kernel-securityjpg-0

|

||||

[5]:https://www.kernel.org/category/releases.html

|

||||

@ -1,3 +1,4 @@

|

||||

[translating by ChiZelin]

|

||||

Monitoring database health and behavior: Which metrics matter?

|

||||

======

|

||||

Monitoring your database can be overwhelming or seem not important. Here's how to do it right.

|

||||

|

||||

@ -1,95 +0,0 @@

|

||||

HankChow translating

|

||||

|

||||

Gitbase: Exploring git repos with SQL

|

||||

======

|

||||

Gitbase is a Go-powered open source project that allows SQL queries to be run on Git repositories.

|

||||

|

||||

|

||||

Git has become the de-facto standard for code versioning, but its popularity didn't remove the complexity of performing deep analyses of the history and contents of source code repositories.

|

||||

|

||||

SQL, on the other hand, is a battle-tested language to query large codebases as its adoption by projects like Spark and BigQuery shows.

|

||||

|

||||

So it is just logical that at source{d} we chose these two technologies to create gitbase: the code-as-data solution for large-scale analysis of git repositories with SQL.

|

||||

|

||||

[Gitbase][1] is a fully open source project that stands on the shoulders of a series of giants which made its development possible, this article aims to point out the main ones.

|

||||

|

||||

|

||||

|

||||

The [gitbase][2] [playground][2] provides a visual way to use gitbase.

|

||||

|

||||

### Parsing SQL with Vitess

|

||||

|

||||

Gitbase's user interface is SQL. This means we need to be able to parse and understand the SQL requests that arrive through the network following the MySQL protocol. Fortunately for us, this was already implemented by our friends at YouTube and their [Vitess][3] project. Vitess is a database clustering system for horizontal scaling of MySQL.

|

||||

|

||||

We simply grabbed the pieces of code that mattered to us and made it into an [open source project][4] that allows anyone to write a MySQL server in minutes (as I showed in my [justforfunc][5] episode [CSVQL—serving CSV with SQL][6]).

|

||||

|

||||

### Reading git repositories with go-git

|

||||

|

||||

Once we've parsed a request we still need to find how to answer it by reading the git repositories in our dataset. For this, we integrated source{d}'s most successful repository [go-git][7]. Go-git is a* *highly extensible Git implementation in pure Go.

|

||||

|

||||

This allowed us to easily analyze repositories stored on disk as [siva][8] files (again an open source project by source{d}) or simply cloned with git clone.

|

||||

|

||||

### Detecting languages with enry and parsing files with babelfish

|

||||

|

||||

Gitbase does not stop its analytic power at the git history. By integrating language detection with our (obviously) open source project [enry][9] and program parsing with [babelfish][10]. Babelfish is a self-hosted server for universal source code parsing, turning code files into Universal Abstract Syntax Trees (UASTs)

|

||||

|

||||

These two features are exposed in gitbase as the user functions LANGUAGE and UAST. Together they make requests like "find the name of the function that was most often modified during the last month" possible.

|

||||

|

||||

### Making it go fast

|

||||

|

||||

Gitbase analyzes really large datasets—e.g. Public Git Archive, with 3TB of source code from GitHub ([announcement][11]) and in order to do so every CPU cycle counts.

|

||||

|

||||

This is why we integrated two more projects into the mix: Rubex and Pilosa.

|

||||

|

||||

#### Speeding up regular expressions with Rubex and Oniguruma

|

||||

|

||||

[Rubex][12] is a quasi-drop-in replacement for Go's regexp standard library package. I say quasi because they do not implement the LiteralPrefix method on the regexp.Regexp type, but I also had never heard about that method until right now.

|

||||

|

||||

#### Speeding up queries with Pilosa indexes

|

||||

|

||||

Rubex gets its performance from the highly optimized C library [Oniguruma][13] which it calls using [cgo][14]

|

||||

|

||||

Indexes are a well-known feature of basically every relational database, but Vitess does not implement them since it doesn't really need to.

|

||||

|

||||

But again open source came to the rescue with [Pilosa][15], a distributed bitmap index implemented in Go which made gitbase usable on massive datasets. Pilosa is an open source, distributed bitmap index that dramatically accelerates queries across multiple, massive datasets.

|

||||

|

||||

### Conclusion

|

||||

|

||||

I'd like to use this blog post to personally thank the open source community that made it possible for us to create gitbase in such a shorter period that anyone would have expected. At source{d} we are firm believers in open source and every single line of code under github.com/src-d (including our OKRs and investor board) is a testament to that.

|

||||

|

||||

Would you like to give gitbase a try? The fastest and easiest way is with source{d} Engine. Download it from sourced.tech/engine and get gitbase running with a single command!

|

||||

|

||||

Want to know more? Check out the recording of my talk at the [Go SF meetup][16].

|

||||

|

||||

The article was [originally published][17] on Medium and is republished here with permission.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/11/gitbase

|

||||

|

||||

作者:[Francesc Campoy][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/francesc

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://github.com/src-d/gitbase

|

||||

[2]: https://github.com/src-d/gitbase-web

|

||||

[3]: https://github.com/vitessio/vitess

|

||||

[4]: https://github.com/src-d/go-mysql-server

|

||||

[5]: http://justforfunc.com/

|

||||

[6]: https://youtu.be/bcRDXAraprk

|

||||

[7]: https://github.com/src-d/go-git

|

||||

[8]: https://github.com/src-d/siva

|

||||

[9]: https://github.com/src-d/enry

|

||||

[10]: https://github.com/bblfsh/bblfshd

|

||||

[11]: https://blog.sourced.tech/post/announcing-pga/

|

||||

[12]: https://github.com/moovweb/rubex

|

||||

[13]: https://github.com/kkos/oniguruma

|

||||

[14]: https://golang.org/cmd/cgo/

|

||||

[15]: https://github.com/pilosa/pilosa

|

||||

[16]: https://www.meetup.com/golangsf/events/251690574/

|

||||

[17]: https://medium.com/sourcedtech/gitbase-exploring-git-repos-with-sql-95ec0986386c

|

||||

@ -1,4 +1,4 @@

|

||||

4 tips for learning Golang

|

||||

translating by dianbanjiu 4 tips for learning Golang

|

||||

======

|

||||

Arriving in Golang land: A senior developer's journey.

|

||||

|

||||

|

||||

@ -0,0 +1,148 @@

|

||||

How to use systemd-nspawn for Linux system recovery

|

||||

======

|

||||

Tap into systemd's ability to launch containers to repair a damaged system's root filesystem.

|

||||

|

||||

|

||||

For as long as GNU/Linux systems have existed, system administrators have needed to recover from root filesystem corruption, accidental configuration changes, or other situations that kept the system from booting into a "normal" state.

|

||||

|

||||

Linux distributions typically offer one or more menu options at boot time (for example, in the GRUB menu) that can be used for rescuing a broken system; typically they boot the system into a single-user mode with most system services disabled. In the worst case, the user could modify the kernel command line in the bootloader to use the standard shell as the init (PID 1) process. This method is the most complex and fraught with complications, which can lead to frustration and lost time when a system needs rescuing.

|

||||

|

||||

Most importantly, these methods all assume that the damaged system has a physical console of some sort, but this is no longer a given in the age of cloud computing. Without a physical console, there are few (if any) options to influence the boot process this way. Even physical machines may be small, embedded devices that don't offer an easy-to-use console, and finding the proper serial port cables and adapters and setting up a serial terminal emulator, all to use a serial console port while dealing with an emergency, is often complicated.

|

||||

|

||||

When another system (of the same architecture and generally similar configuration) is available, a common technique to simplify the repair process is to extract the storage device(s) from the damaged system and connect them to the working system as secondary devices. With physical systems, this is usually straightforward, but most cloud computing platforms can also support this since they allow the root storage volume of the damaged instance to be mounted on another instance.

|

||||

|

||||

Once the root filesystem is attached to another system, addressing filesystem corruption is straightforward using **fsck** and other tools. Addressing configuration mistakes, broken packages, or other issues can be more complex since they require mounting the filesystem and locating and changing the correct configuration files or databases.

|

||||

|

||||

### Using systemd

|

||||

|

||||

Before **[**systemd**][1]** , editing configuration files with a text editor was a practical way to correct a configuration. Locating the necessary files and understanding their contents may be a separate challenge, which is beyond the scope of this article.

|

||||

|

||||

When the GNU/Linux system uses **systemd** though, many configuration changes are best made using the tools it provides—enabling and disabling services, for example, requires the creation or removal of symbolic links in various locations. The **systemctl** tool is used to make these changes, but using it requires a **systemd** instance to be running and listening (on D-Bus) for requests. When the root filesystem is mounted as an additional filesystem on another machine, the running **systemd** instance can't be used to make these changes.

|

||||

|

||||

Manually launching the target system's **systemd** is not practical either, since it is designed to be the PID 1 process on a system and manage all other processes, which would conflict with the already-running instance on the system used for the repairs.

|

||||

|

||||

Thankfully, **systemd** has the ability to launch containers, fully encapsulated GNU/Linux systems with their own PID 1 and environment that utilize various namespace features offered by the Linux kernel. Unlike tools like Docker and Rocket, **systemd** doen't require a container image to launch a container; it can launch one rooted at any point in the existing filesystem. This is done using the **systemd-nspawn** tool, which will create the necessary system namespaces and launch the initial process in the container, then provide a console in the container. In contrast to **chroot** , which only changes the apparent root of the filesystem, this type of container will have a separate filesystem namespace, suitable filesystems mounted on **/dev** , **/run** , and **/proc** , and a separate process namespace and IPC namespaces. Consult the **systemd-nspawn** [man page][2] to learn more about its capabilities.

|

||||

|

||||

### An example to show how it works

|

||||

|

||||

In this example, the storage device containing the damaged system's root filesystem has been attached to a running system, where it appears as **/dev/vdc**. The device name will vary based on the number of existing storage devices, the type of device, and the method used to connect it to the system. The root filesystem could use the entire storage device or be in a partition within the device; since the most common (simple) configuration places the root filesystem in the device's first partition, this example will use **/dev/vdc1.** Make sure to replace the device name in the commands below with your system's correct device name.

|

||||

|

||||

The damaged root filesystem may also be more complex than a single filesystem on a device; it may be a volume in an LVM volume set or on a set of devices combined into a software RAID device. In these cases, the necessary steps to compose and activate the logical device holding the filesystem must be performed before it will be available for mounting. Again, those steps are beyond the scope of this article.

|

||||

|

||||

#### Prerequisites

|

||||

|

||||

First, ensure the **systemd-nspawn** tool is installed—most GNU/Linux distributions don't install it by default. It's provided by the **systemd-container** package on most distributions, so use your distribution's package manager to install that package. The instructions in this example were tested using Debian 9 but should work similarly on any modern GNU/Linux distribution.

|

||||

|

||||

Using the commands below will almost certainly require root permissions, so you'll either need to log in as root, use **sudo** to obtain a shell with root permissions, or prefix each of the commands with **sudo**.

|

||||

|

||||

#### Verify and mount the fileystem

|

||||

|

||||

First, use **fsck** to verify the target filesystem's structures and content:

|

||||

|

||||

```

|

||||

$ fsck /dev/vdc1

|

||||

```

|

||||

|

||||

If it finds any problems with the filesystem, answer the questions appropriately to correct them. If the filesystem is sufficiently damaged, it may not be repairable, in which case you'll have to find other ways to extract its contents.

|

||||

|

||||

Now, create a temporary directory and mount the target filesystem onto that directory:

|

||||

|

||||

```

|

||||

$ mkdir /tmp/target-rescue

|

||||

$ mount /dev/vdc1 /tmp/target-rescue

|

||||

```

|

||||

|

||||

With the filesystem mounted, launch a container with that filesystem as its root filesystem:

|

||||

|

||||

```

|

||||

$ systemd-nspawn --directory /tmp/target-rescue --boot -- --unit rescue.target

|

||||

```

|

||||

|

||||

The command-line arguments for launching the container are:

|

||||

|

||||

* **\--directory /tmp/target-rescue** provides the path of the container's root filesystem.

|

||||

* **\--boot** searches for a suitable init program in the container's root filesystem and launches it, passing parameters from the command line to it. In this example, the target system also uses **systemd** as its PID 1 process, so the remaining parameters are intended for it. If the target system you are repairing uses any other tool as its PID 1 process, you'll need to adjust the parameters accordingly.

|

||||

* **\--** separates parameters for **systemd-nspawn** from those intended for the container's PID 1 process.

|

||||

* **\--unit rescue.target** tells **systemd** in the container the name of the target it should try to reach during the boot process. In order to simplify the rescue operations in the target system, boot it into "rescue" mode rather than into its normal multi-user mode.

|

||||

|

||||

|

||||

|

||||

If all goes well, you should see output that looks similar to this:

|

||||

|

||||

```

|

||||

Spawning container target-rescue on /tmp/target-rescue.

|

||||

Press ^] three times within 1s to kill container.

|

||||

systemd 232 running in system mode. (+PAM +AUDIT +SELINUX +IMA +APPARMOR +SMACK +SYSVINIT +UTMP +LIBCRYPTSETUP +GCRYPT +GNUTLS +ACL +XZ +LZ4 +SECCOMP +BLKID +ELFUTILS +KMOD +IDN)

|

||||

Detected virtualization systemd-nspawn.

|

||||

Detected architecture arm.

|

||||

|

||||

Welcome to Debian GNU/Linux 9 (Stretch)!

|

||||

|

||||

Set hostname to <test>.

|

||||

Failed to install release agent, ignoring: No such file or directory

|

||||

[ OK ] Reached target Swap.

|

||||

[ OK ] Listening on Journal Socket (/dev/log).

|

||||

[ OK ] Started Dispatch Password Requests to Console Directory Watch.

|

||||

[ OK ] Reached target Encrypted Volumes.

|

||||

[ OK ] Created slice System Slice.

|

||||

Mounting POSIX Message Queue File System...

|

||||

[ OK ] Listening on Journal Socket.

|

||||

Starting Set the console keyboard layout...

|

||||

Starting Restore / save the current clock...

|

||||

Starting Journal Service...

|

||||

Starting Remount Root and Kernel File Systems...

|

||||

[ OK ] Mounted POSIX Message Queue File System.

|

||||

[ OK ] Started Journal Service.

|

||||

[ OK ] Started Remount Root and Kernel File Systems.

|

||||

Starting Flush Journal to Persistent Storage...

|

||||

[ OK ] Started Restore / save the current clock.

|

||||

[ OK ] Started Flush Journal to Persistent Storage.

|

||||

[ OK ] Started Set the console keyboard layout.

|

||||

[ OK ] Reached target Local File Systems (Pre).

|

||||

[ OK ] Reached target Local File Systems.

|

||||

Starting Create Volatile Files and Directories...

|

||||

[ OK ] Started Create Volatile Files and Directories.

|

||||

[ OK ] Reached target System Time Synchronized.

|

||||

Starting Update UTMP about System Boot/Shutdown...

|

||||

[ OK ] Started Update UTMP about System Boot/Shutdown.

|

||||

[ OK ] Reached target System Initialization.

|

||||

[ OK ] Started Rescue Shell.

|

||||

[ OK ] Reached target Rescue Mode.

|

||||

Starting Update UTMP about System Runlevel Changes...

|

||||

[ OK ] Started Update UTMP about System Runlevel Changes.

|

||||

You are in rescue mode. After logging in, type "journalctl -xb" to view

|

||||

system logs, "systemctl reboot" to reboot, "systemctl default" or ^D to

|

||||

boot into default mode.

|

||||

Give root password for maintenance

|

||||

(or press Control-D to continue):

|

||||

```

|

||||

|

||||

In this output, you can see **systemd** launching as the init process in the container and detecting that it is being run inside a container so it can adjust its behavior appropriately. Various unit files are started to bring the container to a usable state, then the target system's root password is requested. You can enter the root password here if you want a shell prompt with root permissions, or you can press **Ctrl+D** to allow the startup process to continue, which will display a normal console login prompt.

|

||||

|

||||

When you have completed the necessary changes to the target system, press **Ctrl+]** three times in rapid succession; this will terminate the container and return you to your original shell. From there, you can clean up by unmounting the target system's filesystem and removing the temporary directory:

|

||||

|

||||

```

|

||||

$ umount /tmp/target-rescue

|

||||

$ rmdir /tmp/target-rescue

|

||||

```

|

||||

|

||||

That's it! You can now remove the target system's storage device(s) and return them to the target system.

|

||||

|

||||

The idea to use **systemd-nspawn** this way, especially the **\--boot parameter** , came from [a question][3] posted on StackExchange. Thanks to Shibumi and kirbyfan64sos for providing useful answers to this question!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/11/systemd-nspawn-system-recovery

|

||||

|

||||

作者:[Kevin P.Fleming][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/kpfleming

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.freedesktop.org/wiki/Software/systemd/

|

||||

[2]: https://www.freedesktop.org/software/systemd/man/systemd-nspawn.html

|

||||

[3]: https://unix.stackexchange.com/questions/457819/running-systemd-utilities-like-systemctl-under-an-nspawn

|

||||

@ -0,0 +1,260 @@

|

||||

11 Things To Do After Installing elementary OS 5 Juno

|

||||

======

|

||||

I’ve been using [elementary OS 5 Juno][1] for over a month and it has been an amazing experience. It is easily the [best Mac OS inspired Linux distribution][2] and one of the [best Linux distribution for beginners][3].

|

||||

|

||||

However, you will need to take care of a couple of things after installing it.

|

||||

In this article, we will discuss the most important things that you need to do after installing [elementary OS][4] 5 Juno.

|

||||

|

||||

### Things to do after installing elementary OS 5 Juno

|

||||

|

||||

![Things to do after installing elementary OS Juno][5]

|

||||

|

||||

Things I mentioned in this list are from my personal experience and preference. Of course, you are not restricted to these few things. You can explore and tweak the system as much as you like. However, if you follow (some of) these recommendations, things might be smoother for you.

|

||||

|

||||

#### 1.Run a System Update

|

||||

|

||||

![terminal showing system updates in elementary os 5 Juno][6]

|

||||

|

||||

Even when you download the latest version of a distribution – it is always recommended to check for the latest System updates. You might have a quick fix for an annoying bug, or, maybe there’s an important security patch that you shouldn’t ignore. So, no matter what – you should always ensure that you have everything up-to-date.

|

||||

|

||||

To do that, you need to type in the following command in the terminal:

|

||||

|

||||

```

|

||||

sudo apt-get update

|

||||

```

|

||||

|

||||

#### 2\. Set Window Hotcorner

|

||||

|

||||

![][7]

|

||||

|

||||

You wouldn’t notice the minimize button for a window. So, how do you do it?

|

||||

|

||||

Well, you can just bring up the dock and click the app icon again to minimize it or press **Windows key + H** as a shortcut to minimize the active window.

|

||||

|

||||

But, I’ll recommend something way more easy and intuitive. Maybe you already knew it, but for the users who were unaware of the “ **hotcorners** ” feature, here’s what it does:

|

||||

|

||||

Whenever you hover the cursor to any of the 4 corners of the window, you can set a preset action to happen when you do that. For example, when you move your cursor to the **left corner** of the screen you get the **multi-tasking view** to switch between apps – which acts like a “gesture“.

|

||||

|

||||

In order to utilize the functionality, you can follow the steps below:

|

||||

|

||||

1. Head to the System Settings.

|

||||

2. Click on the “ **Desktop** ” option (as shown in the image above).

|

||||

3. Next, select the “ **Hot Corner** ” section (as shown in the image below).

|

||||

4. Depending on what corner you prefer, choose an appropriate action (refer to the image below – that’s what I personally prefer as my settings)

|

||||

|

||||

|

||||

|

||||

#### 3\. Install Multimedia codecs

|

||||

|

||||

I’ve tried playing MP3/MP4 files – it just works fine. However, there are a lot of file formats when it comes to multimedia.

|

||||

|

||||

So, just to be able to play almost every format of multimedia, you should install the codecs. Here’s what you need to enter in the terminal:

|

||||

|

||||

To get certain proprietary codecs:

|

||||

|

||||

```

|

||||

sudo apt install ubuntu-restricted-extras

|

||||

```

|

||||

|

||||

To specifically install [Libav][8]:

|

||||

|

||||

```

|

||||

sudo apt install libavcodec-extra

|

||||

```

|

||||

|

||||

To install a codec in order to facilitate playing video DVDs:

|

||||

|

||||

```

|

||||

sudo apt install libdvd-pkg

|

||||

```

|

||||

|

||||

#### 4\. Install GDebi

|

||||

|

||||

You don’t get to install .deb files by just double-clicking it on elementary OS 5 Juno. It just does not let you do that.

|

||||

|

||||

So, you need an additional tool to help you install .deb files.

|

||||

|

||||

We’ll recommend you to use **GDebi**. I prefer it because it lets you know about the dependencies even before trying to install it – that way – you can be sure about what you need in order to correctly install an application.

|

||||

|

||||

Simply install GDebi and open any .deb files by performing a right-click on them **open in GDebi Package Installer.**

|

||||

|

||||

To install it, type in the following command:

|

||||

|

||||

```

|

||||

sudo apt install gdebi

|

||||

```

|

||||

|

||||

#### 5\. Add a PPA for your Favorite App

|

||||

|

||||

Yes, elementary OS 5 Juno now supports PPA (unlike its previous version). So, you no longer need to enable the support for PPAs explicitly.

|

||||

|

||||

Just grab a PPA and add it via terminal to install something you like.

|

||||

|

||||

#### 6\. Install Essential Applications

|

||||

|

||||

If you’re a Linux power user, you already know what you want and where to get it, but if you’re new to this Linux distro and looking out for some applications to have installed, I have a few recommendations:

|

||||

|

||||

**Steam app** : If you’re a gamer, this is a must-have app. You just need to type in a single command to install it:

|

||||

|

||||

```

|

||||

sudo apt install steam

|

||||

```

|

||||

|

||||

**GIMP** : It is the best photoshop alternative across every platform. Get it installed for every type of image manipulation:

|

||||

|

||||

```

|

||||

sudo apt install gimp

|

||||

```

|

||||

|

||||

**Wine** : If you want to install an application that only runs on Windows, you can try using Wine to run such Windows apps here on Linux. To install, follow the command:

|

||||

|

||||

```

|

||||

sudo apt install wine-stable

|

||||

```

|

||||

|

||||

**qBittorrent** : If you prefer downloading Torrent files, you should have this installed as your Torrent client. To install it, enter the following command:

|

||||

|

||||

```

|

||||

sudo apt install qbittorrent

|

||||

```

|

||||

|

||||

**Flameshot** : You can obviously utilize the default screenshot tool to take screenshots. But, if you want to instantly share your screenshots and the ability to annotate – install flameshot. Here’s how you can do that:

|

||||

|

||||

```

|

||||

sudo apt install flameshot

|

||||

```

|

||||

|

||||

**Chrome/Firefox: **The default browser isn’t much useful. So, you should install Chrome/Firefox – as per your choice.

|

||||

|

||||

To install chrome, enter the command:

|

||||

|

||||

```

|

||||

sudo apt install chromium-browser

|

||||

```

|

||||

|

||||

To install Firefox, enter:

|

||||

|

||||

```

|

||||

sudo apt install firefox

|

||||

```

|

||||

|

||||

These are some of the most common applications you should definitely have installed. For the rest, you should browse through the App Center or the Flathub to install your favorite applications.

|

||||

|

||||

#### 7\. Install Flatpak (Optional)

|

||||

|

||||

It’s just my personal recommendation – I find flatpak to be the preferred way to install apps on any Linux distro I use.

|

||||

|

||||

You can try it and learn more about it at its [official website][9].

|

||||

|

||||

To install flatpak, type in:

|

||||

|

||||

```

|

||||

sudo apt install flatpak

|

||||

```

|

||||

|

||||

After you are done installing flatpak, you can directly head to [Flathub][10] to install some of your favorite apps and you will also find the command/instruction to install it via the terminal.

|

||||

|

||||

In case you do not want to launch the browser, you can search for your app by typing in (example – finding Discord and installing it):

|

||||

|

||||

```

|

||||

flatpak search discord flathub

|

||||

```

|

||||

|

||||

After gettting the application ID, you can proceed installing it by typing in:

|

||||

|

||||

```

|

||||

flatpak install flathub com.discordapp.Discord

|

||||

```

|

||||

|

||||

#### 8\. Enable the Night Light

|

||||

|

||||

![Night Light in elementary OS Juno][11]

|

||||

|

||||

You might have installed Redshift as per our recommendation for [elemantary OS 0.4 Loki][12] to filter the blue light to avoid straining our eyes- but you do not need any 3rd party tool anymore.

|

||||

|

||||

It comes baked in as the “ **Night Light** ” feature.

|

||||

|

||||

You just head to System Settings and click on “ **Displays** ” (as shown in the image above).

|

||||

|

||||

Select the **Night Light** section and activate it with your preferred settings.

|

||||

|

||||

#### 9\. Install NVIDIA driver metapackage (for NVIDIA GPUs)

|

||||

|

||||

![Nvidia drivers in elementary OS juno][13]

|

||||

|

||||

The NVIDIA driver metapackage should be listed right at the App Center – so you can easily the NVIDIA driver.

|

||||

|

||||

However, it’s not the latest driver version – I have version **390.77** installed and it’s performing just fine.

|

||||

|

||||

If you want the latest version for Linux, you should check out NVIDIA’s [official download page][14].

|

||||

|

||||

Also, if you’re curious about the version installed, just type in the following command:

|

||||

|

||||

```

|

||||

nvidia-smi

|

||||

```

|

||||

|

||||

#### 10\. Install TLP for Advanced Power Management

|

||||

|

||||

We’ve said it before. And, we’ll still recommend it.

|

||||

|

||||

If you want to manage your background tasks/activity and prevent overheating of your system – you should install TLP.

|

||||

|

||||

It does not offer a GUI, but you don’t have to bother. You just install it and let it manage whatever it takes to prevent overheating.

|

||||

|

||||

It’s very helpful for laptop users.

|

||||

|

||||

To install, type in:

|

||||

|

||||

```

|

||||

supo apt install tlp tlp-rdw

|

||||

```

|

||||

|

||||

#### 11\. Perform visual customizations

|

||||

|

||||

![][15]

|

||||

|

||||

If you need to change the look of your Linux distro, you can install GNOME tweaks tool to get the options. In order to install the tweak tool, type in:

|

||||

|

||||

```

|

||||

sudo apt install gnome-tweaks

|

||||

```

|

||||

|

||||

Once you install it, head to the application launcher and search for “Tweaks”, you’ll find something like this:

|

||||

|

||||

Here, you can select the icon, theme, wallpaper, and you’ll also be able to tweak a couple more options that’s not limited to the visual elements.

|

||||

|

||||

### Wrapping Up

|

||||

|

||||

It’s the least you should do after installing elementary OS 5 Juno. However, considering that elementary OS 5 Juno comes with numerous new features – you can explore a lot more new things as well.

|

||||

|

||||

Let us know what you did first after installing elementary OS 5 Juno and how’s your experience with it so far?

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/things-to-do-after-installing-elementary-os-5-juno/

|

||||

|

||||

作者:[Ankush Das][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/ankush/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://itsfoss.com/elementary-os-juno-features/

|

||||