diff --git a/translated/talk/20190108 Hacking math education with Python.md b/published/20190108 Hacking math education with Python.md

similarity index 93%

rename from translated/talk/20190108 Hacking math education with Python.md

rename to published/20190108 Hacking math education with Python.md

index 120e56c521..0ab5baca72 100644

--- a/translated/talk/20190108 Hacking math education with Python.md

+++ b/published/20190108 Hacking math education with Python.md

@@ -1,14 +1,15 @@

[#]: collector: (lujun9972)

[#]: translator: (HankChow)

-[#]: reviewer: ( )

-[#]: publisher: ( )

-[#]: url: ( )

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-10527-1.html)

[#]: subject: (Hacking math education with Python)

[#]: via: (https://opensource.com/article/19/1/hacking-math)

[#]: author: (Don Watkins https://opensource.com/users/don-watkins)

将 Python 结合到数学教育中

======

+

> 身兼教师、开发者、作家数职的 Peter Farrell 来讲述为什么使用 Python 来讲数学课会比传统方法更加好。

@@ -19,11 +20,11 @@

Peter 的灵感来源于 Logo 语言之父 [Seymour Papert][2],他的 Logo 语言现在还存在于 Python 的 [Turtle 模块][3]中。Logo 语言中的海龟形象让 Peter 喜欢上了 Python,并且进一步将 Python 应用到数学教学中。

-Peter 在他的新书《[Python 数学奇遇记][5]》中分享了他的方法:“图文并茂地指导如何用代码探索数学”。因此我最近对他进行了一次采访,向他了解更多这方面的情况。

+Peter 在他的新书《[Python 数学奇遇记][5]》中分享了他的方法:“图文并茂地指导如何用代码探索数学”。因此我最近对他进行了一次采访,向他了解更多这方面的情况。

-**Don Watkins(译者注:本文作者):** 你的教学背景是什么?

+**Don Watkins(LCTT 译注:本文作者):** 你的教学背景是什么?

-**Peter Farrell:** 我曾经当过八年的数学老师,之后又教了十年的数学。我还在当老师的时候,就阅读过 Papert 的 《[头脑风暴][6]》并从中受到了启发,将 Logo 语言和海龟引入到了我所有的数学课上。

+**Peter Farrell:** 我曾经当过八年的数学老师,之后又做了十年的数学私教。我还在当老师的时候,就阅读过 Papert 的 《[头脑风暴][6]》并从中受到了启发,将 Logo 语言和海龟引入到了我所有的数学课上。

**DW:** 你为什么开始使用 Python 呢?

@@ -68,7 +69,7 @@ via: https://opensource.com/article/19/1/hacking-math

作者:[Don Watkins][a]

选题:[lujun9972][b]

译者:[HankChow](https://github.com/HankChow)

-校对:[校对者ID](https://github.com/校对者ID)

+校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

diff --git a/sources/talk/20190110 Toyota Motors and its Linux Journey.md b/sources/talk/20190110 Toyota Motors and its Linux Journey.md

index 1d76ffe0b6..ef3afd38a0 100644

--- a/sources/talk/20190110 Toyota Motors and its Linux Journey.md

+++ b/sources/talk/20190110 Toyota Motors and its Linux Journey.md

@@ -1,5 +1,5 @@

[#]: collector: (lujun9972)

-[#]: translator: ( )

+[#]: translator: (jdh8383)

[#]: reviewer: ( )

[#]: publisher: ( )

[#]: url: ( )

@@ -7,12 +7,11 @@

[#]: via: (https://itsfoss.com/toyota-motors-linux-journey)

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

-Toyota Motors and its Linux Journey

+丰田汽车的Linux之旅

======

-**This is a community submission from It’s FOSS reader Malcolm Dean.**

-

I spoke with Brian R Lyons of TMNA Toyota Motor Corp North America about the implementation of Linux in Toyota and Lexus infotainment systems. I came to find out there is an Automotive Grade Linux (AGL) being used by several autmobile manufacturers.

+我之前跟丰田汽车北美分公司的Brian.R.Lyons聊过天,话题是关于Linux在丰田和雷克萨斯汽车的信息娱乐系统上的实施方案。我发现一些汽车制造商使用了Automotive Grade Linux (AGL)。

I put together a short article comprising of my discussion with Brian about Toyota and its tryst with Linux. I hope that Linux enthusiasts will like this quick little chat.

diff --git a/sources/talk/20190131 4 confusing open source license scenarios and how to navigate them.md b/sources/talk/20190131 4 confusing open source license scenarios and how to navigate them.md

new file mode 100644

index 0000000000..fd93cdd9a6

--- /dev/null

+++ b/sources/talk/20190131 4 confusing open source license scenarios and how to navigate them.md

@@ -0,0 +1,59 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (4 confusing open source license scenarios and how to navigate them)

+[#]: via: (https://opensource.com/article/19/1/open-source-license-scenarios)

+[#]: author: (P.Kevin Nelson https://opensource.com/users/pkn4645)

+

+4 confusing open source license scenarios and how to navigate them

+======

+

+Before you begin using a piece of software, make sure you fully understand the terms of its license.

+

+

+

+As an attorney running an open source program office for a Fortune 500 corporation, I am often asked to look into a product or component where there seems to be confusion as to the licensing model. Under what terms can the code be used, and what obligations run with such use? This often happens when the code or the associated project community does not clearly indicate availability under a [commonly accepted open source license][1]. The confusion is understandable as copyright owners often evolve their products and services in different directions in response to market demands. Here are some of the scenarios I commonly discover and how you can approach each situation.

+

+### Multiple licenses

+

+The product is truly open source with an [Open Source Initiative][2] (OSI) open source-approved license, but has changed licensing models at least once if not multiple times throughout its lifespan. This scenario is fairly easy to address; the user simply has to decide if the latest version with its attendant features and bug fixes is worth the conditions to be compliant with the current license. If so, great. If not, then the user can move back in time to a version released under a more palatable license and start from that fork, understanding that there may not be an active community for support and continued development.

+

+### Old open source

+

+This is a variation on the multiple licenses model with the twist that current licensing is proprietary only. You have to use an older version to take advantage of open source terms and conditions. Most often, the product was released under a valid open source license up to a certain point in its development, but then the copyright holder chose to evolve the code in a proprietary fashion and offer new releases only under proprietary commercial licensing terms. So, if you want the newest capabilities, you have to purchase a proprietary license, and you most likely will not get a copy of the underlying source code. Most often the open source community that grew up around the original code line falls away once the members understand there will be no further commitment from the copyright holder to the open source branch. While this scenario is understandable from the copyright holder's perspective, it can be seen as "burning a bridge" to the open source community. It would be very difficult to again leverage the benefits of the open source contribution models once a project owner follows this path.

+

+### Open core

+

+By far the most common discovery is that a product has both an open source-licensed "community edition" and a proprietary-licensed commercial offering, commonly referred to as open core. This is often encouraging to potential consumers, as it gives them a "try before you buy" option or even a chance to influence both versions of the product by becoming an active member of the community. I usually encourage clients to begin with the community version, get involved, and see what they can achieve. Then, if the product becomes a crucial part of their business plan, they have the option to upgrade to the proprietary level at any time.

+

+### Freemium

+

+The component is not open source at all, but instead it is released under some version of the "freemium" model. A version with restricted or time-limited functionality can be downloaded with no immediate purchase required. However, since the source code is usually not provided and its accompanying license does not allow perpetual use, the creation of derivative works, nor further distribution, it is definitely not open source. In this scenario, it is usually best to pass unless you are prepared to purchase a proprietary license and accept all attendant terms and conditions of use. Users are often the most disappointed in this outcome as it has somewhat of a deceptive feel.

+

+### OSI compliant

+

+Of course, the happy path I haven't mentioned is to discover the project has a single, clear, OSI-compliant license. In those situations, open source software is as easy as downloading and going forward within appropriate use.

+

+Each of the more complex scenarios described above can present problems to potential development projects, but consultation with skilled procurement or intellectual property professionals with regard to licensing lineage can reveal excellent opportunities.

+

+An earlier version of this article was published on [OSS Law][3] and is republished with the author's permission.

+

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/1/open-source-license-scenarios

+

+作者:[P.Kevin Nelson][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/pkn4645

+[b]: https://github.com/lujun9972

+[1]: https://opensource.org/licenses

+[2]: https://opensource.org/licenses/category

+[3]: http://www.pknlaw.com/2017/06/i-thought-that-was-open-source.html

diff --git a/sources/talk/20190131 OOP Before OOP with Simula.md b/sources/talk/20190131 OOP Before OOP with Simula.md

new file mode 100644

index 0000000000..cae9d9bd3a

--- /dev/null

+++ b/sources/talk/20190131 OOP Before OOP with Simula.md

@@ -0,0 +1,203 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (OOP Before OOP with Simula)

+[#]: via: (https://twobithistory.org/2019/01/31/simula.html)

+[#]: author: (Sinclair Target https://twobithistory.org)

+

+OOP Before OOP with Simula

+======

+

+Imagine that you are sitting on the grassy bank of a river. Ahead of you, the water flows past swiftly. The afternoon sun has put you in an idle, philosophical mood, and you begin to wonder whether the river in front of you really exists at all. Sure, large volumes of water are going by only a few feet away. But what is this thing that you are calling a “river”? After all, the water you see is here and then gone, to be replaced only by more and different water. It doesn’t seem like the word “river” refers to any fixed thing in front of you at all.

+

+In 2009, Rich Hickey, the creator of Clojure, gave [an excellent talk][1] about why this philosophical quandary poses a problem for the object-oriented programming paradigm. He argues that we think of an object in a computer program the same way we think of a river—we imagine that the object has a fixed identity, even though many or all of the object’s properties will change over time. Doing this is a mistake, because we have no way of distinguishing between an object instance in one state and the same object instance in another state. We have no explicit notion of time in our programs. We just breezily use the same name everywhere and hope that the object is in the state we expect it to be in when we reference it. Inevitably, we write bugs.

+

+The solution, Hickey concludes, is that we ought to model the world not as a collection of mutable objects but a collection of processes acting on immutable data. We should think of each object as a “river” of causally related states. In sum, you should use a functional language like Clojure.

+

+![][2]

+The author, on a hike, pondering the ontological commitments

+of object-oriented programming.

+

+Since Hickey gave his talk in 2009, interest in functional programming languages has grown, and functional programming idioms have found their way into the most popular object-oriented languages. Even so, most programmers continue to instantiate objects and mutate them in place every day. And they have been doing it for so long that it is hard to imagine that programming could ever look different.

+

+I wanted to write an article about Simula and imagined that it would mostly be about when and how object-oriented constructs we are familiar with today were added to the language. But I think the more interesting story is about how Simula was originally so unlike modern object-oriented programming languages. This shouldn’t be a surprise, because the object-oriented paradigm we know now did not spring into existence fully formed. There were two major versions of Simula: Simula I and Simula 67. Simula 67 brought the world classes, class hierarchies, and virtual methods. But Simula I was a first draft that experimented with other ideas about how data and procedures could be bundled together. The Simula I model is not a functional model like the one Hickey proposes, but it does focus on processes that unfold over time rather than objects with hidden state that interact with each other. Had Simula 67 stuck with more of Simula I’s ideas, the object-oriented paradigm we know today might have looked very different indeed—and that contingency should teach us to be wary of assuming that the current paradigm will dominate forever.

+

+### Simula 0 Through 67

+

+Simula was created by two Norwegians, Kristen Nygaard and Ole-Johan Dahl.

+

+In the late 1950s, Nygaard was employed by the Norwegian Defense Research Establishment (NDRE), a research institute affiliated with the Norwegian military. While there, he developed Monte Carlo simulations used for nuclear reactor design and operations research. These simulations were at first done by hand and then eventually programmed and run on a Ferranti Mercury. Nygaard soon found that he wanted a higher-level way to describe these simulations to a computer.

+

+The kind of simulation that Nygaard commonly developed is known as a “discrete event model.” The simulation captures how a sequence of events change the state of a system over time—but the important property here is that the simulation can jump from one event to the next, since the events are discrete and nothing changes in the system between events. This kind of modeling, according to a paper that Nygaard and Dahl presented about Simula in 1966, was increasingly being used to analyze “nerve networks, communication systems, traffic flow, production systems, administrative systems, social systems, etc.” So Nygaard thought that other people might want a higher-level way to describe these simulations too. He began looking for someone that could help him implement what he called his “Simulation Language” or “Monte Carlo Compiler.”

+

+Dahl, who had also been employed by NDRE, where he had worked on language design, came aboard at this point to play Wozniak to Nygaard’s Jobs. Over the next year or so, Nygaard and Dahl worked to develop what has been called “Simula 0.” This early version of the language was going to be merely a modest extension to ALGOL 60, and the plan was to implement it as a preprocessor. The language was then much less abstract than what came later. The primary language constructs were “stations” and “customers.” These could be used to model certain discrete event networks; Nygaard and Dahl give an example simulating airport departures. But Nygaard and Dahl eventually came up with a more general language construct that could represent both “stations” and “customers” and also model a wider range of simulations. This was the first of two major generalizations that took Simula from being an application-specific ALGOL package to a general-purpose programming language.

+

+In Simula I, there were no “stations” or “customers,” but these could be recreated using “processes.” A process was a bundle of data attributes associated with a single action known as the process’ operating rule. You might think of a process as an object with only a single method, called something like `run()`. This analogy is imperfect though, because each process’ operating rule could be suspended or resumed at any time—the operating rules were a kind of coroutine. A Simula I program would model a system as a set of processes that conceptually all ran in parallel. Only one process could actually be “current” at any time, but once a process suspended itself the next queued process would automatically take over. As the simulation ran, behind the scenes, Simula would keep a timeline of “event notices” that tracked when each process should be resumed. In order to resume a suspended process, Simula needed to keep track of multiple call stacks. This meant that Simula could no longer be an ALGOL preprocessor, because ALGOL had only once call stack. Nygaard and Dahl were committed to writing their own compiler.

+

+In their paper introducing this system, Nygaard and Dahl illustrate its use by implementing a simulation of a factory with a limited number of machines that can serve orders. The process here is the order, which starts by looking for an available machine, suspends itself to wait for one if none are available, and then runs to completion once a free machine is found. There is a definition of the order process that is then used to instantiate several different order instances, but no methods are ever called on these instances. The main part of the program just creates the processes and sets them running.

+

+The first Simula I compiler was finished in 1965. The language grew popular at the Norwegian Computer Center, where Nygaard and Dahl had gone to work after leaving NDRE. Implementations of Simula I were made available to UNIVAC users and to Burroughs B5500 users. Nygaard and Dahl did a consulting deal with a Swedish company called ASEA that involved using Simula to run job shop simulations. But Nygaard and Dahl soon realized that Simula could be used to write programs that had nothing to do with simulation at all.

+

+Stein Krogdahl, a professor at the University of Oslo that has written about the history of Simula, claims that “the spark that really made the development of a new general-purpose language take off” was [a paper called “Record Handling”][3] by the British computer scientist C.A.R. Hoare. If you read Hoare’s paper now, this is easy to believe. I’m surprised that you don’t hear Hoare’s name more often when people talk about the history of object-oriented languages. Consider this excerpt from his paper:

+

+> The proposal envisages the existence inside the computer during the execution of the program, of an arbitrary number of records, each of which represents some object which is of past, present or future interest to the programmer. The program keeps dynamic control of the number of records in existence, and can create new records or destroy existing ones in accordance with the requirements of the task in hand.

+

+> Each record in the computer must belong to one of a limited number of disjoint record classes; the programmer may declare as many record classes as he requires, and he associates with each class an identifier to name it. A record class name may be thought of as a common generic term like “cow,” “table,” or “house” and the records which belong to these classes represent the individual cows, tables, and houses.

+

+Hoare does not mention subclasses in this particular paper, but Dahl credits him with introducing Nygaard and himself to the concept. Nygaard and Dahl had noticed that processes in Simula I often had common elements. Using a superclass to implement those common elements would be convenient. This also raised the possibility that the “process” idea itself could be implemented as a superclass, meaning that not every class had to be a process with a single operating rule. This then was the second great generalization that would make Simula 67 a truly general-purpose programming language. It was such a shift of focus that Nygaard and Dahl briefly considered changing the name of the language so that people would know it was not just for simulations. But “Simula” was too much of an established name for them to risk it.

+

+In 1967, Nygaard and Dahl signed a contract with Control Data to implement this new version of Simula, to be known as Simula 67. A conference was held in June, where people from Control Data, the University of Oslo, and the Norwegian Computing Center met with Nygaard and Dahl to establish a specification for this new language. This conference eventually led to a document called the [“Simula 67 Common Base Language,”][4] which defined the language going forward.

+

+Several different vendors would make Simula 67 compilers. The Association of Simula Users (ASU) was founded and began holding annual conferences. Simula 67 soon had users in more than 23 different countries.

+

+### 21st Century Simula

+

+Simula is remembered now because of its influence on the languages that have supplanted it. You would be hard-pressed to find anyone still using Simula to write application programs. But that doesn’t mean that Simula is an entirely dead language. You can still compile and run Simula programs on your computer today, thanks to [GNU cim][5].

+

+The cim compiler implements the Simula standard as it was after a revision in 1986. But this is mostly the Simula 67 version of the language. You can write classes, subclass, and virtual methods just as you would have with Simula 67. So you could create a small object-oriented program that looks a lot like something you could easily write in Python or Ruby:

+

+```

+! dogs.sim ;

+Begin

+ Class Dog;

+ ! The cim compiler requires virtual procedures to be fully specified ;

+ Virtual: Procedure bark Is Procedure bark;;

+ Begin

+ Procedure bark;

+ Begin

+ OutText("Woof!");

+ OutImage; ! Outputs a newline ;

+ End;

+ End;

+

+ Dog Class Chihuahua; ! Chihuahua is "prefixed" by Dog ;

+ Begin

+ Procedure bark;

+ Begin

+ OutText("Yap yap yap yap yap yap");

+ OutImage;

+ End;

+ End;

+

+ Ref (Dog) d;

+ d :- new Chihuahua; ! :- is the reference assignment operator ;

+ d.bark;

+End;

+```

+

+You would compile and run it as follows:

+

+```

+$ cim dogs.sim

+Compiling dogs.sim:

+gcc -g -O2 -c dogs.c

+gcc -g -O2 -o dogs dogs.o -L/usr/local/lib -lcim

+$ ./dogs

+Yap yap yap yap yap yap

+```

+

+(You might notice that cim compiles Simula to C, then hands off to a C compiler.)

+

+This was what object-oriented programming looked like in 1967, and I hope you agree that aside from syntactic differences this is also what object-oriented programming looks like in 2019. So you can see why Simula is considered a historically important language.

+

+But I’m more interested in showing you the process model that was central to Simula I. That process model is still available in Simula 67, but only when you use the `Process` class and a special `Simulation` block.

+

+In order to show you how processes work, I’ve decided to simulate the following scenario. Imagine that there is a village full of villagers next to a river. The river has lots of fish, but between them the villagers only have one fishing rod. The villagers, who have voracious appetites, get hungry every 60 minutes or so. When they get hungry, they have to use the fishing rod to catch a fish. If a villager cannot use the fishing rod because another villager is waiting for it, then the villager queues up to use the fishing rod. If a villager has to wait more than five minutes to catch a fish, then the villager loses health. If a villager loses too much health, then that villager has starved to death.

+

+This is a somewhat strange example and I’m not sure why this is what first came to mind. But there you go. We will represent our villagers as Simula processes and see what happens over a day’s worth of simulated time in a village with four villagers.

+

+The full program is [available here as a Gist][6].

+

+The last lines of my output look like the following. Here we are seeing what happens in the last few hours of the day:

+

+```

+1299.45: John is hungry and requests the fishing rod.

+1299.45: John is now fishing.

+1311.39: John has caught a fish.

+1328.96: Betty is hungry and requests the fishing rod.

+1328.96: Betty is now fishing.

+1331.25: Jane is hungry and requests the fishing rod.

+1340.44: Betty has caught a fish.

+1340.44: Jane went hungry waiting for the rod.

+1340.44: Jane starved to death waiting for the rod.

+1369.21: John is hungry and requests the fishing rod.

+1369.21: John is now fishing.

+1379.33: John has caught a fish.

+1409.59: Betty is hungry and requests the fishing rod.

+1409.59: Betty is now fishing.

+1419.98: Betty has caught a fish.

+1427.53: John is hungry and requests the fishing rod.

+1427.53: John is now fishing.

+1437.52: John has caught a fish.

+```

+

+Poor Jane starved to death. But she lasted longer than Sam, who didn’t even make it to 7am. Betty and John sure have it good now that only two of them need the fishing rod.

+

+What I want you to see here is that the main, top-level part of the program does nothing but create the four villager processes and get them going. The processes manipulate the fishing rod object in the same way that we would manipulate an object today. But the main part of the program does not call any methods or modify and properties on the processes. The processes have internal state, but this internal state only gets modified by the process itself.

+

+There are still fields that get mutated in place here, so this style of programming does not directly address the problems that pure functional programming would solve. But as Krogdahl observes, “this mechanism invites the programmer of a simulation to model the underlying system as a set of processes, each describing some natural sequence of events in that system.” Rather than thinking primarily in terms of nouns or actors—objects that do things to other objects—here we are thinking of ongoing processes. The benefit is that we can hand overall control of our program off to Simula’s event notice system, which Krogdahl calls a “time manager.” So even though we are still mutating processes in place, no process makes any assumptions about the state of another process. Each process interacts with other processes only indirectly.

+

+It’s not obvious how this pattern could be used to build, say, a compiler or an HTTP server. (On the other hand, if you’ve ever programmed games in the Unity game engine, this should look familiar.) I also admit that even though we have a “time manager” now, this may not have been exactly what Hickey meant when he said that we need an explicit notion of time in our programs. (I think he’d want something like the superscript notation [that Ada Lovelace used][7] to distinguish between the different values a variable assumes through time.) All the same, I think it’s really interesting that right there at the beginning of object-oriented programming we can find a style of programming that is not all like the object-oriented programming we are used to. We might take it for granted that object-oriented programming simply works one way—that a program is just a long list of the things that certain objects do to other objects in the exact order that they do them. Simula I’s process system shows that there are other approaches. Functional languages are probably a better thought-out alternative, but Simula I reminds us that the very notion of alternatives to modern object-oriented programming should come as no surprise.

+

+If you enjoyed this post, more like it come out every four weeks! Follow [@TwoBitHistory][8] on Twitter or subscribe to the [RSS feed][9] to make sure you know when a new post is out.

+

+Previously on TwoBitHistory…

+

+> Hey everyone! I sadly haven't had time to do any new writing but I've just put up an updated version of my history of RSS. This version incorporates interviews I've since done with some of the key people behind RSS like Ramanathan Guha and Dan Libby.

+>

+> — TwoBitHistory (@TwoBitHistory) [December 18, 2018][10]

+

+

+

+--------------------------------------------------------------------------------

+

+1. Jan Rune Holmevik, “The History of Simula,” accessed January 31, 2019, http://campus.hesge.ch/daehne/2004-2005/langages/simula.htm. ↩

+

+2. Ole-Johan Dahl and Kristen Nygaard, “SIMULA—An ALGOL-Based Simulation Langauge,” Communications of the ACM 9, no. 9 (September 1966): 671, accessed January 31, 2019, http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.95.384&rep=rep1&type=pdf. ↩

+

+3. Stein Krogdahl, “The Birth of Simula,” 2, accessed January 31, 2019, http://heim.ifi.uio.no/~steinkr/papers/HiNC1-webversion-simula.pdf. ↩

+

+4. ibid. ↩

+

+5. Ole-Johan Dahl and Kristen Nygaard, “The Development of the Simula Languages,” ACM SIGPLAN Notices 13, no. 8 (August 1978): 248, accessed January 31, 2019, https://hannemyr.com/cache/knojd_acm78.pdf. ↩

+

+6. Dahl and Nygaard (1966), 676. ↩

+

+7. Dahl and Nygaard (1978), 257. ↩

+

+8. Krogdahl, 3. ↩

+

+9. Ole-Johan Dahl, “The Birth of Object-Orientation: The Simula Languages,” 3, accessed January 31, 2019, http://www.olejohandahl.info/old/birth-of-oo.pdf. ↩

+

+10. Dahl and Nygaard (1978), 265. ↩

+

+11. Holmevik. ↩

+

+12. Krogdahl, 4. ↩

+

+

+--------------------------------------------------------------------------------

+

+via: https://twobithistory.org/2019/01/31/simula.html

+

+作者:[Sinclair Target][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://twobithistory.org

+[b]: https://github.com/lujun9972

+[1]: https://www.infoq.com/presentations/Are-We-There-Yet-Rich-Hickey

+[2]: /images/river.jpg

+[3]: https://archive.computerhistory.org/resources/text/algol/ACM_Algol_bulletin/1061032/p39-hoare.pdf

+[4]: http://web.eah-jena.de/~kleine/history/languages/Simula-CommonBaseLanguage.pdf

+[5]: https://www.gnu.org/software/cim/

+[6]: https://gist.github.com/sinclairtarget/6364cd521010d28ee24dd41ab3d61a96

+[7]: https://twobithistory.org/2018/08/18/ada-lovelace-note-g.html

+[8]: https://twitter.com/TwoBitHistory

+[9]: https://twobithistory.org/feed.xml

+[10]: https://twitter.com/TwoBitHistory/status/1075075139543449600?ref_src=twsrc%5Etfw

diff --git a/sources/talk/20190204 Config management is dead- Long live Config Management Camp.md b/sources/talk/20190204 Config management is dead- Long live Config Management Camp.md

new file mode 100644

index 0000000000..679ac9033b

--- /dev/null

+++ b/sources/talk/20190204 Config management is dead- Long live Config Management Camp.md

@@ -0,0 +1,118 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Config management is dead: Long live Config Management Camp)

+[#]: via: (https://opensource.com/article/19/2/configuration-management-camp)

+[#]: author: (Matthew Broberg https://opensource.com/users/mbbroberg)

+

+Config management is dead: Long live Config Management Camp

+======

+

+CfgMgmtCamp '19 co-organizers share their take on ops, DevOps, observability, and the rise of YoloOps and YAML engineers.

+

+

+

+Everyone goes to [FOSDEM][1] in Brussels to learn from its massive collection of talk tracks, colloquially known as developer rooms, that run the gauntlet of curiosities, covering programming languages like Rust, Go, and Python, to special topics ranging from community, to legal, to privacy. After two days of nonstop activity, many FOSDEM attendees move on to Ghent, Belgium, to join hundreds for Configuration Management Camp ([CfgMgmtCamp][2]).

+

+Kris Buytaert and Toshaan Bharvani run the popular post-FOSDEM show centered around infrastructure management, featuring hackerspaces, training, workshops, and keynotes. It's a deeply technical exploration of the who, what, and how of building resilient infrastructure. It started in 2013 as a PuppetCamp but expanded to include more communities and tools in 2014.

+

+I spoke with Kris and Toshaan, who both have a healthy sense of humor, about CfgMgmtCamp's past, present, and future. Our interview has been edited for length and clarity.

+

+**Matthew: Your opening[keynote][3] is called "CfgMgmtCamp is dead." Is config management dead? Will it live on, or will something take its place?**

+

+**Kris:** We've noticed people are jumping on the hype of containers, trying to solve the same problems in a different way. But they are still managing config, only in different ways and with other tools. Over the past couple of years, we've evolved from a conference with a focus on infrastructure-as-code tooling, such as Puppet, Chef, CFEngine, Ansible, Juju, and Salt, to a more open source infrastructure automation conference in general. So, config management is definitely not dead. Infrastructure-as-code is also not dead, but it all is evolving.

+

+**Toshaan:** We see people changing tools, jumping on hype, and communities changing; however, the basic ideas and concepts remain the same.

+

+**Matthew: It's great to see[observability as the topic][4] of one of your keynotes. Why should those who care about configuration management also care about monitoring and observability?**

+

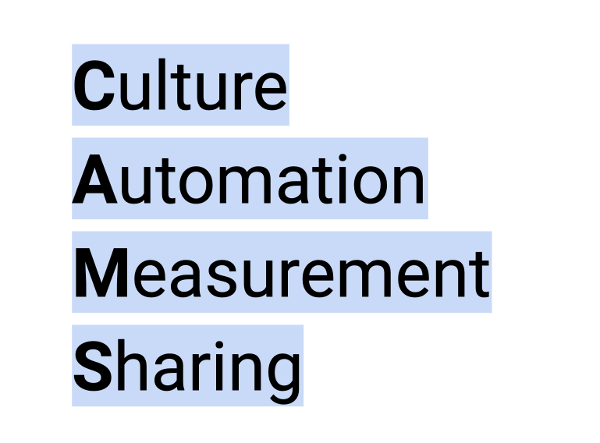

+**Kris:** While the name of the conference hasn't changed, the tools have evolved and we have expanded our horizon. Ten years ago, [Devopsdays][5] was just #devopsdays, but it evolved to focus on culture—the C of [CAMS][6] in the DevOps' core principles of Culture, Automation, Measurement, and Sharing.

+

+

+

+[Monitorama][7] filled the gap on monitoring and metrics (tackling the M in CAMS). Config Management Camp is about open source Automation, the A. Since they are all open source conferences, they fulfill the Sharing part, completing the CAMS concept.

+

+Observability sits on the line between Automation and Measurement. To go one step further, in some of my talks about open source monitoring, I describe the evolution of monitoring tools from #monitoringsucks to #monitoringlove; for lots of people (including me), the love for monitoring returned because we tied it to automation. We started to provision a service and automatically adapted the monitoring of that service to its state. Gone were the days where the monitoring tool was out of sync with reality.

+

+Looking at it from the other side, when you have an infrastructure or application so complex that you need observability in it, you'd better not be deploying manually; you will need some form of automation at that level of complexity. So, observability and infrastructure automation are tied together.

+

+**Toshaan:** Yes, while in the past we focused on configuration management, we will be looking to expand that into all types of infrastructure management. Last year, we played with this idea, and we were able to have a lot of cross-tool presentations. This year, we've taken this a step further by having more differentiated content.

+

+**Matthew: Some of my virtualization and Linux admin friends push back, saying observability is a developer's responsibility. How would you respond without just saying "DevOps?"**

+

+**Kris:** What you describe is what I call "Ooops Devs." This is a trend where the people who run the platform don't really care what they run; as long as port 80 is listening and the node pings, they are happy. It's equally bad as "Dev Ooops." "Ooops Devs" is where the devs rant about the ops folks because they are slow, not agile, and not responsive. But, to me, your job as an ops person or as a Linux admin is to keep a service running, and the only way to do that is to take on that task is as a team—with your colleagues who have different roles and insights, people who write code, people who design, etc. It is a shared responsibility. And hiding behind "that is someone else's responsibility," doesn't smell like collaboration going on.

+

+**Toshaan:** Even in the dark ages of silos, I believe a true sysadmin should have cared about observability, monitoring, and automation. I believe that the DevOps movement has made this much more widespread, and that it has become easier to get this information and expose it. On the other hand, I believe that pure operators or sysadmins have learned to be team players (or, they may have died out). I like the analogy of an army unit composed of different specialty soldiers who work together to complete a mission; we have engineers who work to deliver products or services.

+

+**Matthew: In a[Devopsdays Zurich talk][8], Kris offered an opinion that Americans build software for acquisition and Europeans build for resilience. In that light, what are the best skills for someone who wants to build meaningful infrastructure?**

+

+**Toshaan:** I believe still some people don't understand the complexity of code sprawl, and they believe that some new hype will solve this magically.

+

+**Kris:** This year, we invited [Steve Traugott][9], co-author of the 1998 USENIX paper "[Bootstrapping an Infrastructure][10]" that helped kickstart our community. So many people never read [Infrastructures.org][11], never experienced the pain of building images and image sprawl, and don't understand the evolution we went through that led us to build things the way we build them from source code.

+

+People should study topics such as idempotence, resilience, reproducibility, and surviving the tenth floor test. (As explained in "Bootstrapping an Infrastructure": "The test we used when designing infrastructures was 'Can I grab a random machine and throw it out the tenth-floor window without adversely impacting users for more than 10 minutes?' If the answer to this was 'yes,' then we knew we were doing things right.") But only after they understand the service they are building—the service is the absolute priority—can they begin working on things like: how can we run this, how can we make sure it keeps running, how can it fail and how can we prevent that, and if it disappears, how can we spin it up again fast, unnoticed by the end user.

+

+**Toshaan:** 100% uptime.

+

+**Kris:** The challenge we have is that lots of people don't have that experience yet. We've seen the rise of [YoloOps][12]—just spin it up once, fire, and forget—which results in security problems, stability problems, data loss, etc., and they often grasp onto the solutions in YoloOps, the easy way to do something quickly and move on. But understanding how things will eventually fail takes time, it's called experience.

+

+**Toshaan:** Well, when I was a student and manned the CentOS stand at FOSDEM, I remember a guy coming up to the stand and complaining that he couldn't do consulting because of the "fire once and forgot" policy of CentOS, and that it just worked too well. I like to call this ZombieOps, but YoloOps works also.

+

+**Matthew: I see you're leading the second year of YamlCamp as well. Why does a markup language need its own camp?**

+

+**Kris:** [YamlCamp][13] is a parody, it's a joke. Last year, Bob Walker ([@rjw1][14]) gave a talk titled "Are we all YAML engineers now?" that led to more jokes. We've had a discussion for years about rebranding CfgMgmtCamp; the problem is that people know our name, we have a large enough audience to keep going, and changing the name would mean effort spent on logos, website, DNS, etc. We won't change the name, but we joked that we could rebrand to YamlCamp, because for some weird reason, a lot of the talks are about YAML. :)

+

+**Matthew: Do you think systems engineers should list YAML as a skill or a language on their CV? Should companies be hiring YAML engineers, or do you have "Long live all YAML engineers" on the website in jest?**

+

+**Toshaan:** Well, the real question is whether people are willing to call themselves YAML engineers proudly, because we already have enough DevOps engineers.

+

+**Matthew: What FOSS software helps you manage the event?**

+

+**Toshaan:** I re-did the website in Hugo CMS because we were spending too much time maintaining the website manually. I chose Hugo, because I was learning Golang, and because it has been successfully used for other conferences and my own website. I also wanted a static website and iCalendar output, so we could use calendar tooling such as Giggity to have a good scheduling tool.

+

+The website now builds quite nicely, and while I still have some ideas on improvements, maintenance is now much easier.

+

+For the call for proposals (CFP), we now use [OpenCFP][15]. We want to optimize the submission, voting, selection, and extraction to be as automated as possible, while being easy and comfortable for potential speakers, reviewers, and ourselves to use. OpenCFP seems to be the tool that works; while we still have some feature requirements, I believe that, once we have some time to contribute back to OpenCFP, we'll have a fully functional and easy tool to run CFPs with.

+

+Last, we switched from EventBrite to Pretix because I wanted to be GDPR compliant and have the ability to run our questions, vouchers, and extra features. Pretix allows us to control registration of attendees, speakers, sponsors, and organizers and have a single overview of all the people coming to the event.

+

+### Wrapping up

+

+The beauty of Configuration Management Camp to me is that it continues to evolve with its audience. Configuration management is certainly at the heart of the work, but it's in service to resilient infrastructure. Keep your eyes open for the talk recordings to learn from the [line up of incredible speakers][16], and thank you to the team for running this (free) show!

+

+You can follow Kris [@KrisBuytaert][17] and Toshaan [@toshywoshy][18]. You can also see Kris' past articles [on his blog][19].

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/2/configuration-management-camp

+

+作者:[Matthew Broberg][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/mbbroberg

+[b]: https://github.com/lujun9972

+[1]: https://fosdem.org/2019/

+[2]: https://cfgmgmtcamp.eu/

+[3]: https://cfgmgmtcamp.eu/schedule/monday/intro00/

+[4]: https://cfgmgmtcamp.eu/schedule/monday/keynote0/

+[5]: https://www.devopsdays.org/

+[6]: http://devopsdictionary.com/wiki/CAMS

+[7]: http://monitorama.com/

+[8]: https://vimeo.com/272519813

+[9]: https://cfgmgmtcamp.eu/schedule/tuesday/keynote1/

+[10]: http://www.infrastructures.org/papers/bootstrap/bootstrap.html

+[11]: http://www.infrastructures.org/

+[12]: https://gist.githubusercontent.com/mariozig/5025613/raw/yolo

+[13]: https://twitter.com/yamlcamp

+[14]: https://twitter.com/rjw1

+[15]: https://github.com/opencfp/opencfp

+[16]: https://cfgmgmtcamp.eu/speaker/

+[17]: https://twitter.com/KrisBuytaert

+[18]: https://twitter.com/toshywoshy

+[19]: https://krisbuytaert.be/index.shtml

diff --git a/sources/talk/20190205 7 predictions for artificial intelligence in 2019.md b/sources/talk/20190205 7 predictions for artificial intelligence in 2019.md

new file mode 100644

index 0000000000..2e1b047a15

--- /dev/null

+++ b/sources/talk/20190205 7 predictions for artificial intelligence in 2019.md

@@ -0,0 +1,91 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (7 predictions for artificial intelligence in 2019)

+[#]: via: (https://opensource.com/article/19/2/predictions-artificial-intelligence)

+[#]: author: (Salil Sethi https://opensource.com/users/salilsethi)

+

+7 predictions for artificial intelligence in 2019

+======

+

+While 2018 was a big year for AI, the stage is set for it to make an even deeper impact in 2019.

+

+

+

+Without question, 2018 was a big year for artificial intelligence (AI) as it pushed even further into the mainstream, successfully automating more functionality than ever before. Companies are increasingly exploring applications for AI, and the general public has grown accustomed to interacting with the technology on a daily basis.

+

+The stage is set for AI to continue transforming the world as we know it. In 2019, not only will the technology continue growing in global prevalence, but it will also spawn deeper conversations around important topics, fuel innovative business models, and impact society in new ways, including the following seven.

+

+### 1\. Machine learning as a service (MLaaS) will be deployed more broadly

+

+In 2018, we witnessed major strides in MLaaS with technology powerhouses like Google, Microsoft, and Amazon leading the way. Prebuilt machine learning solutions and capabilities are becoming more attractive in the market, especially to smaller companies that don't have the necessary in-house resources or talent. For those that have the technical know-how and experience, there is a significant opportunity to sell and deploy packaged solutions that can be easily implemented by others.

+

+Today, MLaaS is sold primarily on a subscription or usage basis by cloud-computing providers. For example, Microsoft Azure's ML Studio provides developers with a drag-and-drop environment to develop powerful machine learning models. Google Cloud's Machine Learning Engine also helps developers build large, sophisticated algorithms for a variety of applications. In 2017, Amazon jumped into the realm of AI and launched Amazon SageMaker, another platform that developers can use to build, train, and deploy custom machine learning models.

+

+In 2019 and beyond, be prepared to see MLaaS offered on a much broader scale. Transparency Market Research predicts it will grow to US$20 billion at an alarming 40% CAGR by 2025.

+

+### 2\. More explainable or "transparent" AI will be developed

+

+Although there are already many examples of how AI is impacting our world, explaining the outputs and rationale of complex machine learning models remains a challenge.

+

+Unfortunately, AI continues to carry the "black box" burden, posing a significant limitation in situations where humans want to understand the rationale behind AI-supported decision making.

+

+AI democratization has been led by a plethora of open source tools and libraries, such as Scikit Learn, TensorFlow, PyTorch, and more. The open source community will lead the charge to build explainable, or "transparent," AI that can clearly document its logic, expose biases in data sets, and provide answers to follow-up questions.

+

+Before AI is widely adopted, humans need to know that the technology can perform effectively and explain its reasoning under any circumstance.

+

+### 3\. AI will impact the global political landscape

+

+In 2019, AI will play a bigger role on the global stage, impacting relationships between international superpowers that are investing in the technology. Early adopters of AI, such as the US and [China][1], will struggle to balance self-interest with collaborative R&D. Countries that have AI talent and machine learning capabilities will experience tremendous growth in areas like predictive analytics, creating a wider global technology gap.

+

+Additionally, more conversations will take place around the ethical use of AI. Naturally, different countries will approach this topic differently, which will affect political relationships. Overall, AI's impact will be small relative to other international issues, but more noticeable than before.

+

+### 4\. AI will create more jobs than it eliminates

+

+Over the long term, many jobs will be eliminated as a result of AI-enabled automation. Roles characterized by repetitive, manual tasks are being outsourced to AI more and more every day. However, in 2019, AI will create more jobs than it replaces.

+

+Rather than eliminating the need for humans entirely, AI is augmenting existing systems and processes. As a result, a new type of role is emerging. Humans are needed to support AI implementation and oversee its application. Next year, more manual labor will transition to management-type jobs that work alongside AI, a trend that will continue to 2020. Gartner predicts that in two years, [AI will create 2.3 million jobs while only eliminating 1.8 million.][2]

+

+### 5\. AI assistants will become more pervasive and useful

+

+AI assistants are nothing new to the modern world. Apple's Siri and Amazon's Alexa have been supporting humans on the road and in their homes for years. In 2019, we will see AI assistants continue to grow in their sophistication and capabilities. As they collect more behavioral data, AI assistants will become better at responding to requests and completing tasks. With advances in natural language processing and speech recognition, humans will have smoother and more useful interactions with AI assistants.

+

+In 2018, we saw companies launch promising new AI assistants. Recently, Google began rolling out its voice-enabled reservation booking service, Duplex, which can call and book appointments on behalf of users. Technology company X.ai has built two AI personal assistants, Amy and Andrew, who can interact with humans and schedule meetings for their employers. Amazon also recently announced Echo Auto, a device that enables drivers to integrate Alexa into their vehicles. However, humans will continue to place expectations ahead of reality and be disappointed at the technology's limitations.

+

+### 6\. AI/ML governance will gain importance

+

+With so many companies investing in AI, much more energy will be put towards developing effective AI governance structures. Frameworks are needed to guide data collection and management, appropriate AI use, and ethical applications. Successful and appropriate AI use involves many different stakeholders, highlighting the need for reliable and consistent governing bodies.

+

+In 2019, more organizations will create governance structures and more clearly define how AI progress and implementation are managed. Given the current gap in explainability, these structures will be tremendously important as humans continue to turn to AI to support decision-making.

+

+### 7\. AI will help companies solve AI talent shortages

+

+A [shortage of AI and machine learning talent][3] is creating an innovation bottleneck. A [survey][4] released last year from O'Reilly revealed that the biggest challenge companies are facing related to using AI is a lack of available talent. And as technological advancement continues to accelerate, it is becoming harder for companies to develop talent that can lead large-scale enterprise AI efforts.

+

+To combat this, organizations will—ironically—use AI and machine learning to help address the talent gap in 2019. For example, Google Cloud's AutoML includes machine learning products that help developers train machine learning models without having any prior AI coding experience. Amazon Personalize is another machine learning service that helps developers build sophisticated personalization systems that can be implemented in many ways by different kinds of companies. In addition, companies will use AI to find talent and fill job vacancies and propel innovation forward.

+

+### AI In 2019: bigger and better with a tighter leash

+

+Over the next year, AI will grow more prevalent and powerful than ever. Expect to see new applications and challenges and be ready for an increased emphasis on checks and balances.

+

+What do you think? How might AI impact the world in 2019? Please share your thoughts in the comments below!

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/2/predictions-artificial-intelligence

+

+作者:[Salil Sethi][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/salilsethi

+[b]: https://github.com/lujun9972

+[1]: https://www.turingtribe.com/story/china-is-achieving-ai-dominance-by-relying-on-young-blue-collar-workers-rLMsmWqLG4fGFwisQ

+[2]: https://www.gartner.com/en/newsroom/press-releases/2017-12-13-gartner-says-by-2020-artificial-intelligence-will-create-more-jobs-than-it-eliminates

+[3]: https://www.turingtribe.com/story/tencent-says-there-are-only-bTpNm9HKaADd4DrEi

+[4]: https://www.forbes.com/sites/bernardmarr/2018/06/25/the-ai-skills-crisis-and-how-to-close-the-gap/#19bafcf631f3

diff --git a/sources/talk/20190206 4 steps to becoming an awesome agile developer.md b/sources/talk/20190206 4 steps to becoming an awesome agile developer.md

new file mode 100644

index 0000000000..bad4025aef

--- /dev/null

+++ b/sources/talk/20190206 4 steps to becoming an awesome agile developer.md

@@ -0,0 +1,82 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (4 steps to becoming an awesome agile developer)

+[#]: via: (https://opensource.com/article/19/2/steps-agile-developer)

+[#]: author: (Daniel Oh https://opensource.com/users/daniel-oh)

+

+4 steps to becoming an awesome agile developer

+======

+There's no magical way to do it, but these practices will put you well on your way to embracing agile in application development, testing, and debugging.

+

+

+Enterprises are rushing into their DevOps journey through [agile][1] software development with cloud-native technologies such as [Linux containers][2], [Kubernetes][3], and [serverless][4]. Continuous integration helps enterprise developers reduce bugs, unexpected errors, and improve the quality of their code deployed in production.

+

+However, this doesn't mean all developers in DevOps automatically embrace agile for their daily work in application development, testing, and debugging. There is no magical way to do it, but the following four practical steps and best practices will put you well on your way to becoming an awesome agile developer.

+

+### Start with design thinking agile practices

+

+There are many opportunities to learn about using agile software development practices in your DevOps initiatives. Agile practices inspire people with new ideas and experiences for improving their daily work in application development with team collaboration. More importantly, those practices will help you discover the answers to questions such as: Why am I doing this? What kind of problems am I trying to solve? How do I measure the outcomes?

+

+A [domain-driven design][5] approach will help you start discovery sooner and easier. For example, the [Start At The End][6] practice helps you redesign your application and explore potential business outcomes—such as, what would happen if your application fails in production? You might also be interested in [Event Storming][7] for interactive and rapid discovery or [Impact Mapping][8] for graphical and strategic design as part of domain-driven design practices.

+

+### Use a predictive approach first

+

+In agile software development projects, enterprise developers are mainly focused on adapting to rapidly changing app development environments such as reactive runtimes, cloud-native frameworks, Linux container packaging, and the Kubernetes platform. They believe this is the best way to become an agile developer in their organization. However, this type of adaptive approach typically makes it harder for developers to understand and report what they will do in the next sprint. Developers might know the ultimate goal and, at best, the app features for a release about four months from the current sprint.

+

+In contrast, the predictive approach places more emphasis on analyzing known risks and planning future sprints in detail. For example, predictive developers can accurately report the functions and tasks planned for the entire development process. But it's not a magical way to make your agile projects succeed all the time because the predictive team depends totally on effective early-stage analysis. If the analysis does not work very well, it may be difficult for the project to change direction once it gets started.

+

+To mitigate this risk, I recommend that senior agile developers increase the predictive capabilities with a plan-driven method, and junior agile developers start with the adaptive methods for value-driven development.

+

+### Continuously improve code quality

+

+Don't hesitate to engage in [continuous integration][9] (CI) practices for improving your application before deploying code into production. To adopt modern application frameworks, such as cloud-native architecture, Linux container packaging, and hybrid cloud workloads, you have to learn about automated tools to address complex CI procedures.

+

+[Jenkins][10] is the standard CI tool for many organizations; it allows developers to build and test applications in many projects in an automated fashion. Its most important function is detecting unexpected errors during CI to prevent them from happening in production. This should increase business outcomes through better customer satisfaction.

+

+Automated CI enables agile developers to not only improve the quality of their code but their also application development agility through learning and using open source tools and patterns such as [behavior-driven development][11], [test-driven development][12], [automated unit testing][13], [pair programming][14], [code review][15], and [design pattern][16].

+

+### Never stop exploring communities

+

+Never settle, even if you already have a great reputation as an agile developer. You have to continuously take on bigger challenges to make great software in an agile way.

+

+By participating in the very active and growing open source community, you will not only improve your skills as an agile developer, but your actions can also inspire other developers who want to learn agile practices.

+

+How do you get involved in specific communities? It depends on your interests and what you want to learn. It might mean presenting specific topics at conferences or local meetups, writing technical blog posts, publishing practical guidebooks, committing code, or creating pull requests to open source projects' Git repositories. It's worth exploring open source communities for agile software development, as I've found it is a great way to share your expertise, knowledge, and practices with other brilliant developers and, along the way, help each other.

+

+### Get started

+

+These practical steps can give you a shorter path to becoming an awesome agile developer. Then you can lead junior developers in your team and organization to become more flexible, valuable, and predictive using agile principles.

+

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/2/steps-agile-developer

+

+作者:[Daniel Oh][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/daniel-oh

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/article/18/10/what-agile

+[2]: https://opensource.com/resources/what-are-linux-containers

+[3]: https://opensource.com/resources/what-is-kubernetes

+[4]: https://opensource.com/article/18/11/open-source-serverless-platforms

+[5]: https://en.wikipedia.org/wiki/Domain-driven_design

+[6]: https://openpracticelibrary.com/practice/start-at-the-end/

+[7]: https://openpracticelibrary.com/practice/event-storming/

+[8]: https://openpracticelibrary.com/practice/impact-mapping/

+[9]: https://en.wikipedia.org/wiki/Continuous_integration

+[10]: https://jenkins.io/

+[11]: https://en.wikipedia.org/wiki/Behavior-driven_development

+[12]: https://en.wikipedia.org/wiki/Test-driven_development

+[13]: https://en.wikipedia.org/wiki/Unit_testing

+[14]: https://en.wikipedia.org/wiki/Pair_programming

+[15]: https://en.wikipedia.org/wiki/Code_review

+[16]: https://en.wikipedia.org/wiki/Design_pattern

diff --git a/sources/talk/20190206 What blockchain and open source communities have in common.md b/sources/talk/20190206 What blockchain and open source communities have in common.md

new file mode 100644

index 0000000000..bc4f9464d0

--- /dev/null

+++ b/sources/talk/20190206 What blockchain and open source communities have in common.md

@@ -0,0 +1,64 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (What blockchain and open source communities have in common)

+[#]: via: (https://opensource.com/article/19/2/blockchain-open-source-communities)

+[#]: author: (Gordon Haff https://opensource.com/users/ghaff)

+

+What blockchain and open source communities have in common

+======

+Blockchain initiatives can look to open source governance for lessons on establishing trust.

+

+

+One of the characteristics of blockchains that gets a lot of attention is how they enable distributed trust. The topic of trust is a surprisingly complicated one. In fact, there's now an [entire book][1] devoted to the topic by Kevin Werbach.

+

+But here's what it means in a nutshell. Organizations that wish to work together, but do not fully trust one another, can establish a permissioned blockchain and invite business partners to record their transactions on a shared distributed ledger. Permissioned blockchains can trace assets when transactions are added to the blockchain. A permissioned blockchain implies a degree of trust (again, trust is complicated) among members of a consortium, but no single entity controls the storage and validation of transactions.

+

+The basic model is that a group of financial institutions or participants in a logistics system can jointly set up a permissioned blockchain that will validate and immutably record transactions. There's no dependence on a single entity, whether it's one of the direct participants or a third-party intermediary who set up the blockchain, to safeguard the integrity of the system. The blockchain itself does so through a variety of cryptographic mechanisms.

+

+Here's the rub though. It requires that competitors work together cooperatively—a relationship often called [coopetition][2]. The term dates back to the early 20th century, but it grew into widespread use when former Novell CEO Ray Noorda started using the term to describe the company's business strategy in the 1990s. Novell was then planning to get into the internet portal business, which required it to seek partnerships with some of the search engine providers and other companies it would also be competing against. In 1996, coopetition became the subject of a bestselling [book][3].

+

+Coopetition can be especially difficult when a blockchain network initiative appears to be driven by a dominant company. And it's hard for the dominant company not to exert outsize influence over the initiative, just as a natural consequence of how big it is. For example, the IBM-Maersk joint venture has [struggled to sign up rival shipping companies][4], in part because Maersk is the world's largest carrier by capacity, a position that makes rivals wary.

+

+We see this same dynamic in open source communities. The original creators of a project need to not only let go; they need to put governance structures in place that give competing companies confidence that there's a level playing field.

+

+For example, Sarah Novotny, now head of open source strategy at Google Cloud Platform, [told me in a 2017 interview][5] about the [Kubernetes][6] project that it isn't always easy to give up control, even when people buy into doing what is best for a project.

+

+> Google turned Kubernetes over to the Cloud Native Computing Foundation (CNCF), which sits under the Linux Foundation umbrella. As [CNCF executive director Dan Kohn puts it][7]: "One of the things they realized very early on is that a project with a neutral home is always going to achieve a higher level of collaboration. They really wanted to find a home for it where a number of different companies could participate."

+>

+> Defaulting to public may not be either natural or comfortable. "Early on, my first six, eight, or 12 weeks at Google, I think half my electrons in email were spent on: 'Why is this discussion not happening on a public mailing list? Is there a reason that this is specific to GKE [Google Container Engine]? No, there's not a reason,'" said Novotny.

+

+To be sure, some grumble that open source foundations have become too common and that many are too dominated by paying corporate members. Simon Phipps, currently the president of the Open Source Initiative, gave a talk at OSCON way back in 2015 titled ["Enough Foundations Already!"][8] in which he argued that "before we start another open source foundation, let's agree that what we need protected is software freedom and not corporate politics."

+

+Nonetheless, while not appropriate for every project, foundations with business, legal, and technical governance are increasingly the model for open source projects that require extensive cooperation among competing companies. A [2017 analysis of GitHub data by the Linux Foundation][9] found a number of different governance models in use by the highest-velocity open source projects. Unsurprisingly, quite a few remained under the control of the company that created or acquired them. However, about a third were under the auspices of a foundation.

+

+Is there a lesson here for blockchain? Quite possibly. Open source projects can be sponsored by a company while still putting systems and governance in place that are welcoming to outside contributors. However, there's a great deal of history to suggest that doing so is hard because it's hard not to exert control and leverage when you can. Furthermore, even if you make a successful case for being truly open to equal participation to outsiders today, it will be hard to allay suspicions that you might not be as welcoming tomorrow.

+

+To the degree that we can equate blockchain consortiums with open source communities, this suggests that business blockchain initiatives should look to open source governance for lessons. Dominant players in the ecosystem need to forgo control, and they need to have conversations with partners and potential partners about what types of structures would make participating easier.

+

+Many blockchain infrastructure software projects are already under foundations such as Hyperledger. But perhaps some specific production deployments of blockchain aimed at specific industries and ecosystems will benefit from formal governance structures as well.

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/2/blockchain-open-source-communities

+

+作者:[Gordon Haff][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/ghaff

+[b]: https://github.com/lujun9972

+[1]: https://mitpress.mit.edu/books/blockchain-and-new-architecture-trust

+[2]: https://en.wikipedia.org/wiki/Coopetition

+[3]: https://en.wikipedia.org/wiki/Co-opetition_(book)

+[4]: https://www.theregister.co.uk/2018/10/30/ibm_struggles_to_sign_up_shipping_carriers_to_blockchain_supply_chain_platform_reports/

+[5]: https://opensource.com/article/17/4/podcast-kubernetes-sarah-novotny

+[6]: https://kubernetes.io/

+[7]: http://bitmason.blogspot.com/2017/02/podcast-cloud-native-computing.html

+[8]: https://www.oreilly.com/ideas/enough-foundations-already

+[9]: https://www.linuxfoundation.org/blog/2017/08/successful-open-source-projects-common/

diff --git a/sources/talk/20190208 Which programming languages should you learn.md b/sources/talk/20190208 Which programming languages should you learn.md

new file mode 100644

index 0000000000..31cef16f03

--- /dev/null

+++ b/sources/talk/20190208 Which programming languages should you learn.md

@@ -0,0 +1,46 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Which programming languages should you learn?)

+[#]: via: (https://opensource.com/article/19/2/which-programming-languages-should-you-learn)

+[#]: author: (Marty Kalin https://opensource.com/users/mkalindepauledu)

+

+Which programming languages should you learn?

+======

+Learning a new programming language is a great way to get ahead in your career. But which one?

+

+

+If you want to get started or get ahead in your programming career, learning a new language is a smart idea. But the huge number of languages in active use invites the question: Which programming language is the best one to know? To answer that, let's start with a simplifying question: What sort of programming do you want to do?

+

+If you want to do web programming on the client side, then the specialized languages HTML, CSS, and JavaScript—in one of its seemingly infinite dialects—are de rigueur.

+

+If you want to do web programming on the server side, the options include all of the familiar general-purpose languages: C++, Golang, Java, C#, Node.js, Perl, Python, Ruby, and so on. As a matter of course, server-side programs interact with datastores, such as relational and other databases, which means query languages such as SQL may come into play.

+

+If you're writing native apps for mobile devices, knowing the target platform is important. For Apple devices, Swift has supplanted Objective C as the language of choice. For Android devices, Java (with dedicated libraries and toolsets) remains the dominant language. There are special languages such as Xamarin, used with C#, that can generate platform-specific code for Apple, Android, and Windows devices.

+

+What about general-purpose languages? There are various choices within the usual pigeonholes. Among the dynamic or scripting languages (e.g., Perl, Python, and Ruby), there are newer offerings such as Node.js. Java and C#, which are more alike than their fans like to admit, remain the dominant statically compiled languages targeted at a virtual machine (the JVM and CLR, respectively). Among languages that compile into native executables, C++ is still in the mix, along with later arrivals such as Golang and Rust. General-purpose functional languages abound (e.g., Clojure, Haskell, Erlang, F#, Lisp, and Scala), often with passionately devoted communities. It's worth noting that object-oriented languages such as Java and C# have added functional constructs (in particular, lambdas), and the dynamic languages have had functional constructs from the start.

+

+Let me end with a pitch for C, which is a small, elegant, and extensible language not to be confused with C++. Modern operating systems are written mostly in C, with the rest in assembly language. The standard libraries on any platform are likewise mostly in C. For example, any program that issues the Hello, world! greeting does so through a call to the C library function named **write**.

+

+C serves as a portable assembly language, exposing details about the underlying system that other high-level languages deliberately hide. To understand C is thus to gain a better grasp of how programs contend for the shared system resources (processors, memory, and I/O devices) required for execution. C is at once high-level and close-to-the-metal, so unrivaled in performance—except, of course, for assembly language. Finally, C is the lingua franca among programming languages, and almost every general-purpose language supports C calls in one form or another.

+

+For a modern introduction to C, consider my book [C Programming: Introducing Portable Assembler][1]. No matter how you go about it, learn C and you'll learn a lot more than just another programming language.

+

+What programming languages do you think are important to know? Do you agree or disagree with these recommendations? Let us know in the comments!

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/2/which-programming-languages-should-you-learn

+

+作者:[Marty Kalin][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/mkalindepauledu

+[b]: https://github.com/lujun9972

+[1]: https://www.amazon.com/dp/1977056954?ref_=pe_870760_150889320

diff --git a/sources/tech/20190129 A small notebook for a system administrator.md b/sources/tech/20190129 A small notebook for a system administrator.md

new file mode 100644

index 0000000000..45d6ba50eb

--- /dev/null

+++ b/sources/tech/20190129 A small notebook for a system administrator.md

@@ -0,0 +1,552 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (A small notebook for a system administrator)

+[#]: via: (https://habr.com/en/post/437912/)

+[#]: author: (sukhe https://habr.com/en/users/sukhe/)

+

+A small notebook for a system administrator

+======

+

+I am a system administrator, and I need a small, lightweight notebook for every day carrying. Of course, not just to carry it, but for use it to work.

+

+I already have a ThinkPad x200, but it’s heavier than I would like. And among the lightweight notebooks, I did not find anything suitable. All of them imitate the MacBook Air: thin, shiny, glamorous, and they all critically lack ports. Such notebook is suitable for posting photos on Instagram, but not for work. At least not for mine.

+

+After not finding anything suitable, I thought about how a notebook would turn out if it were developed not with design, but the needs of real users in mind. System administrators, for example. Or people serving telecommunications equipment in hard-to-reach places — on roofs, masts, in the woods, literally in the middle of nowhere.

+

+The results of my thoughts are presented in this article.

+

+

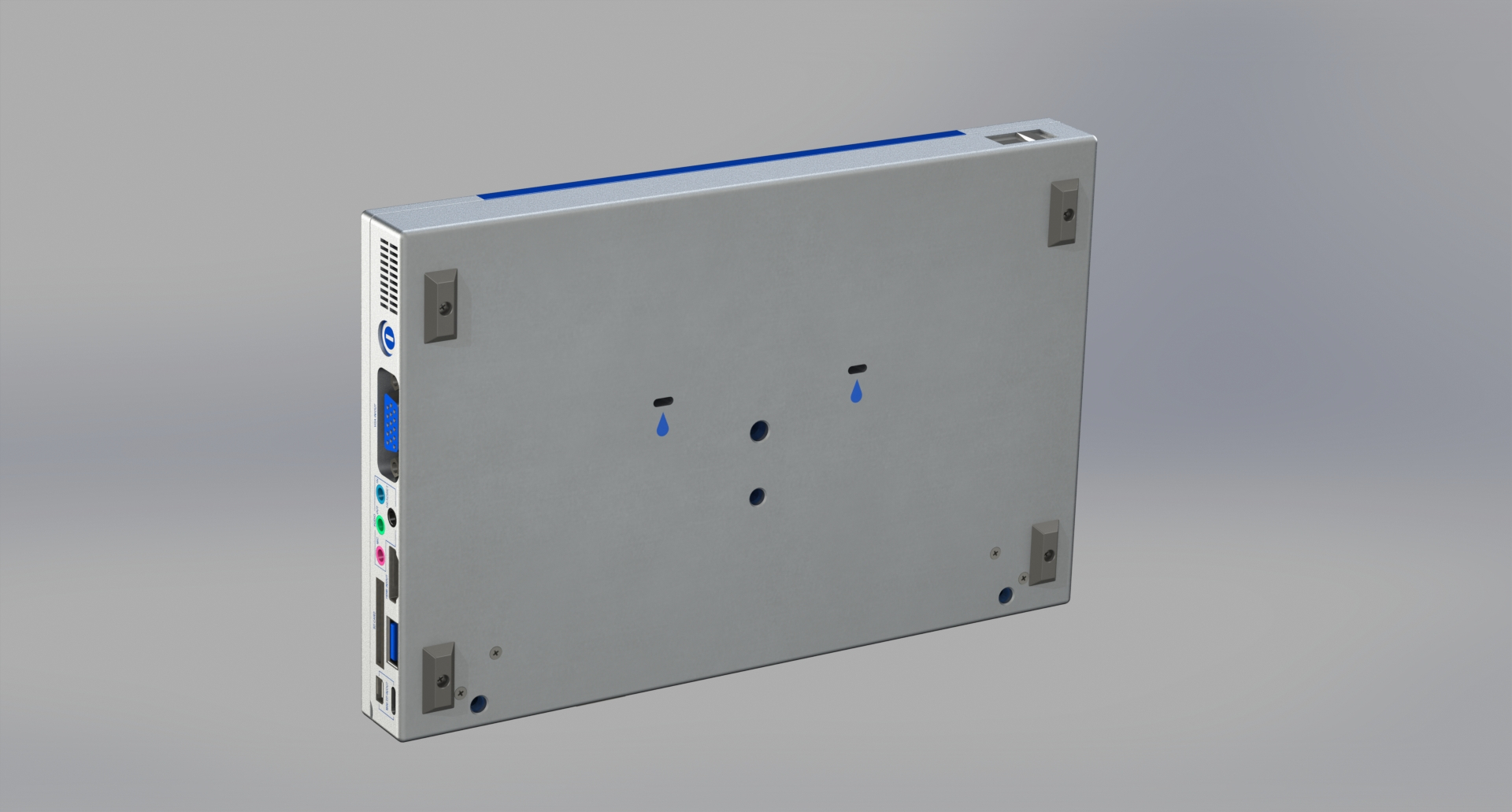

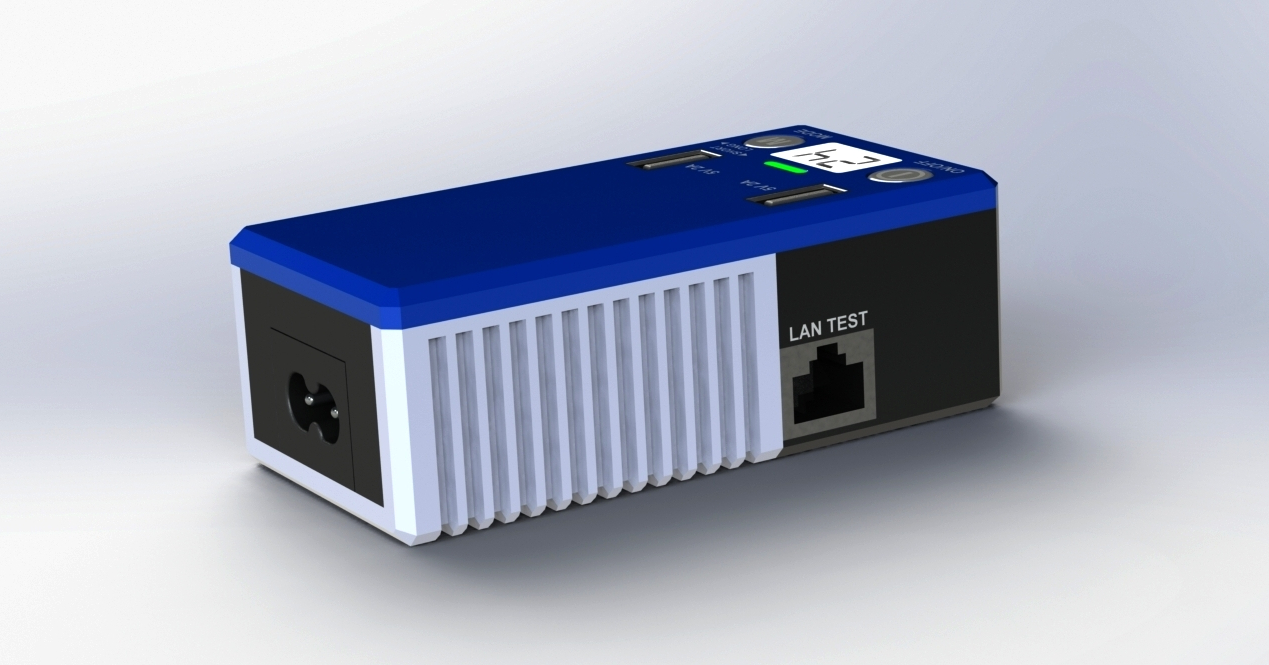

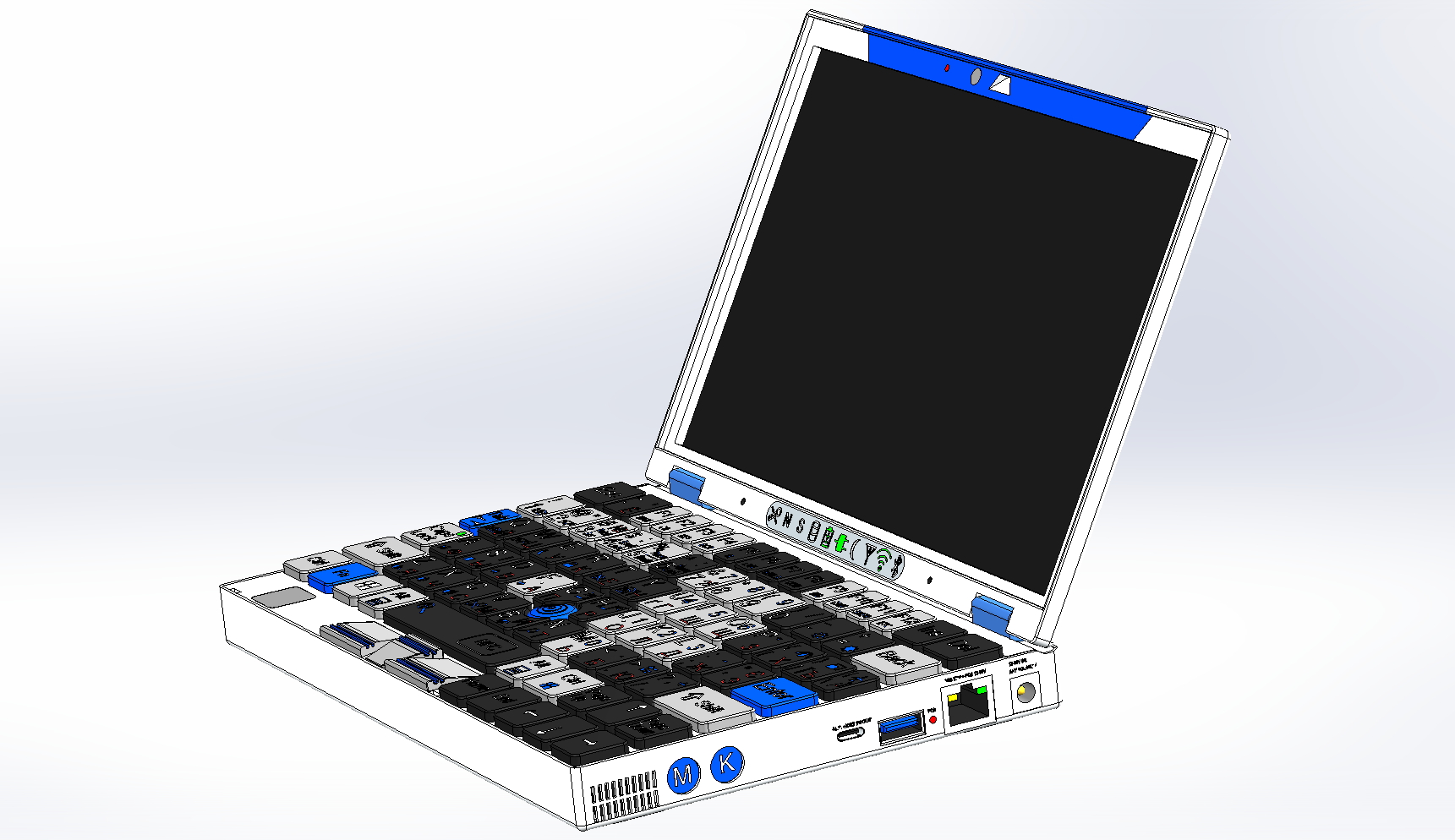

+[![Figure to attract attention][1]][2]

+

+Of course, your understanding of the admin notebook does not have to coincide with mine. But I hope you will find a couple of interesting thoughts here.

+

+Just keep in mind that «system administrator» is just the name of my position. And in fact, I have to work as a network engineer, and installer, and perform a significant part of other work related to hardware. Our company is tiny, we are far from large settlements, so all of us have to be universal specialist.

+

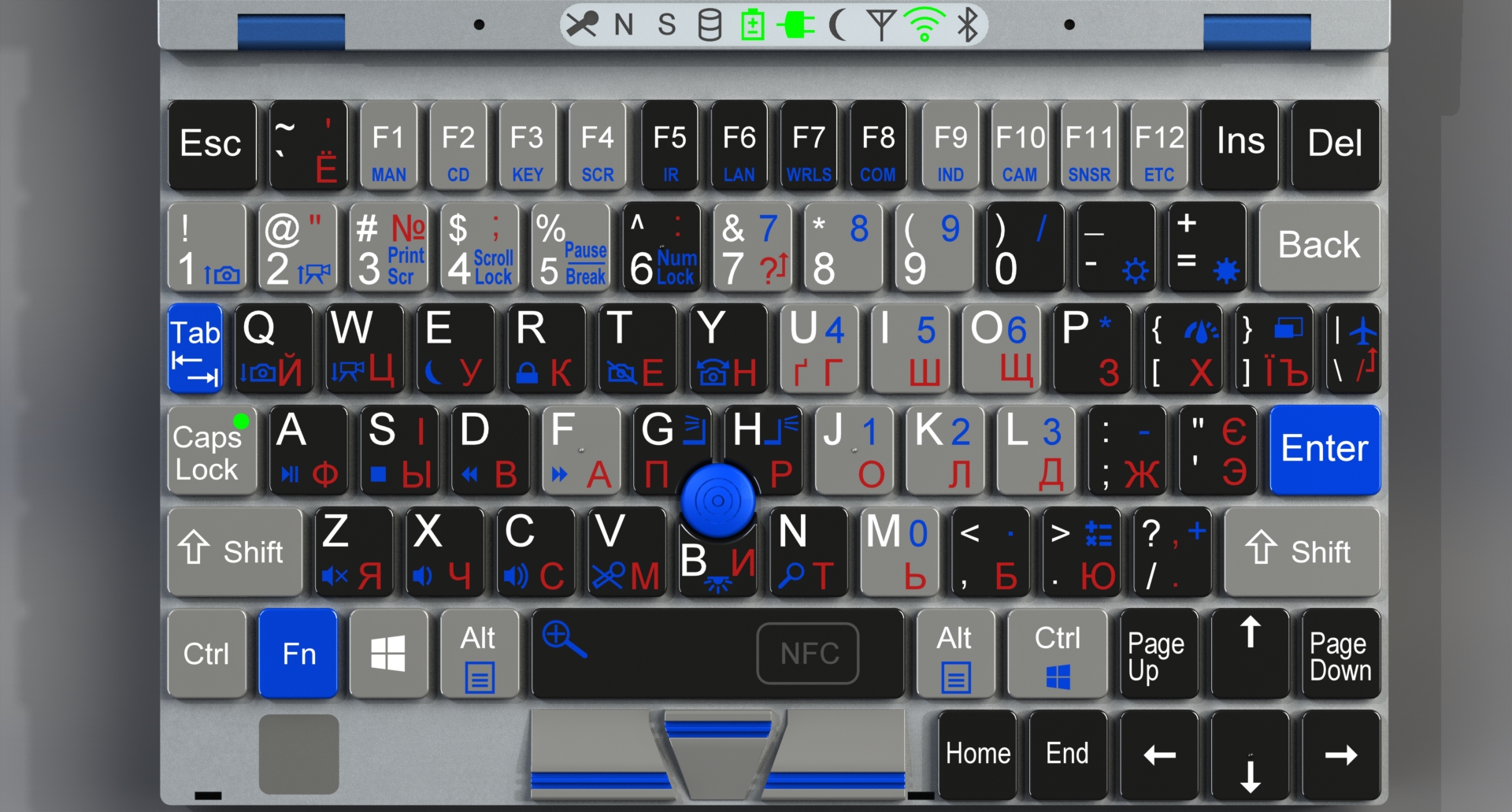

+In order not to constantly clarify «this notebook», later in the article I will call it the “adminbook”. Although it can be useful not only to administrators, but also to all who need a small, lightweight notebook with a lot of connectors. In fact, even large laptops don’t have as many connectors.

+

+So let's get started…

+

+### 1\. Dimensions and weight

+

+Of course, you want it smaller and more lightweight, but the keyboard with the screen should not be too small. And there has to be space for connectors, too.

+

+In my opinion, a suitable option is a notebook half the size of an x200. That is, approximately the size of a sheet of A5 paper (210x148mm). In addition, the side pockets of many bags and backpacks are designed for this size. This means that the adminbook doesn’t even have to be carried in the main compartment.

+