mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-25 00:50:15 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject into new

commit

3ee0f5f750

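@ -1,21 +1,17 @

How to Use the Netplan Network Configuration Tool on Linux
======

> netplan is a command-line tool for configuring networking on certain Linux distributions.

-For years, Linux administrators and users have configured their network interfaces in the same way. For example, if you are an Ubuntu user, you could configure the network connection through the desktop GUI or in the /etc/network/interfaces file. The configuration was fairly simple and never failed. In the file, the configuration looks like this:
+For years, Linux administrators and users have configured their network interfaces in the same way. For example, if you are an Ubuntu user, you could configure the network connection through the desktop GUI or in the `/etc/network/interfaces` file. The configuration was fairly simple and it worked. In the file, the configuration looks like this:

```
auto enp10s0
iface enp10s0 inet static
address 192.168.1.162
netmask 255.255.255.0
gateway 192.168.1.100
dns-nameservers 1.0.0.1,1.1.1.1
```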

@ -25,7 +21,7 @ dns-nameservers 1.0.0.1,1.1.1.1
sudo systemctl restart networking
```

Or, if you are using a distribution without systemd, you can restart networking the old-fashioned way:

```
sudo /etc/init.d/networking restart

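@ -33,13 +29,13 @ sudo /etc/init.d/networking restart

Your network will restart and the new configuration will take effect.

-That is how it has been done for years. But now, on some distributions (for example, Ubuntu Linux 18.04), the configuration and control of networking has changed considerably. Instead of the interfaces file and the /etc/init.d/networking script, we now turn to [Netplan][1]. Netplan is a command-line tool for configuring network connections on certain Linux distributions. Netplan uses YAML description files to configure network interfaces and, from those descriptions, generates the necessary configuration options for any given renderer tool.
+That is how it has been done for years. But now, on some distributions (for example, Ubuntu Linux 18.04), the configuration and control of networking has changed considerably. Instead of the `interfaces` file and the `/etc/init.d/networking` script, we now turn to [Netplan][1]. Netplan is a command-line tool for configuring network connections on certain Linux distributions. Netplan uses YAML description files to configure network interfaces and, from those descriptions, generates the necessary configuration options for any given renderer tool.

-I will show you how to use Netplan on Linux to configure a static IP address and a DHCP address. I will demonstrate on Ubuntu Server 18.04. A word of warning: the spacing in the .yaml file you create must be consistent, or it will fail. You do not have to use a specific spacing for each line; it just has to stay consistent.
+I will show you how to use Netplan on Linux to configure a static IP address and a DHCP address. I will demonstrate on Ubuntu Server 18.04. A word of warning: the indentation in the .yaml file you create must be consistent, or it will fail. You do not have to use a specific indentation width for each line; it just has to stay consistent.

### The new configuration files

-Open a terminal window (or log in to your Ubuntu server via SSH). You will find Netplan's new configuration files in the /etc/netplan directory. Change into that directory with the command cd/etc/netplan. Once in that directory, you will probably see only a single file:
+Open a terminal window (or log in to your Ubuntu server via SSH). You will find Netplan's new configuration files in the `/etc/netplan` directory. Change into that directory with the command `cd /etc/netplan`. Once in that directory, you will probably see only a single file:

```
01-netcfg.yaml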

@ -55,13 +51,11 @@ sudo cp /etc/netplan/01-netcfg.yaml /etc/netplan/01-netcfg.yaml.bak

|

||||

|

||||

### 网络设备名称

|

||||

|

||||

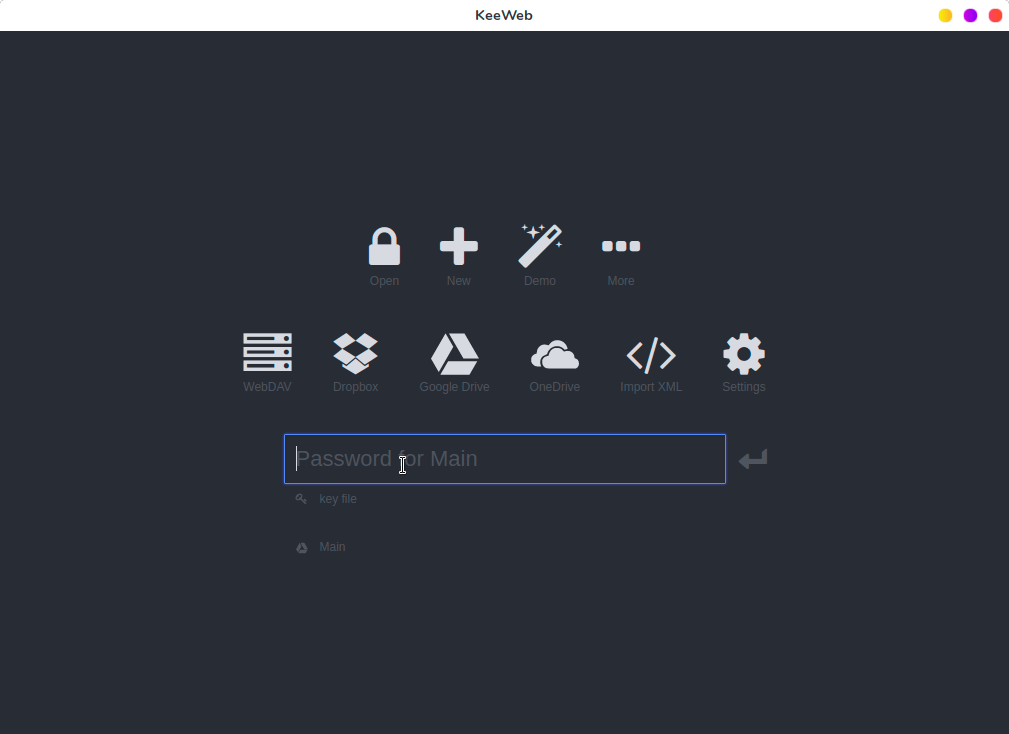

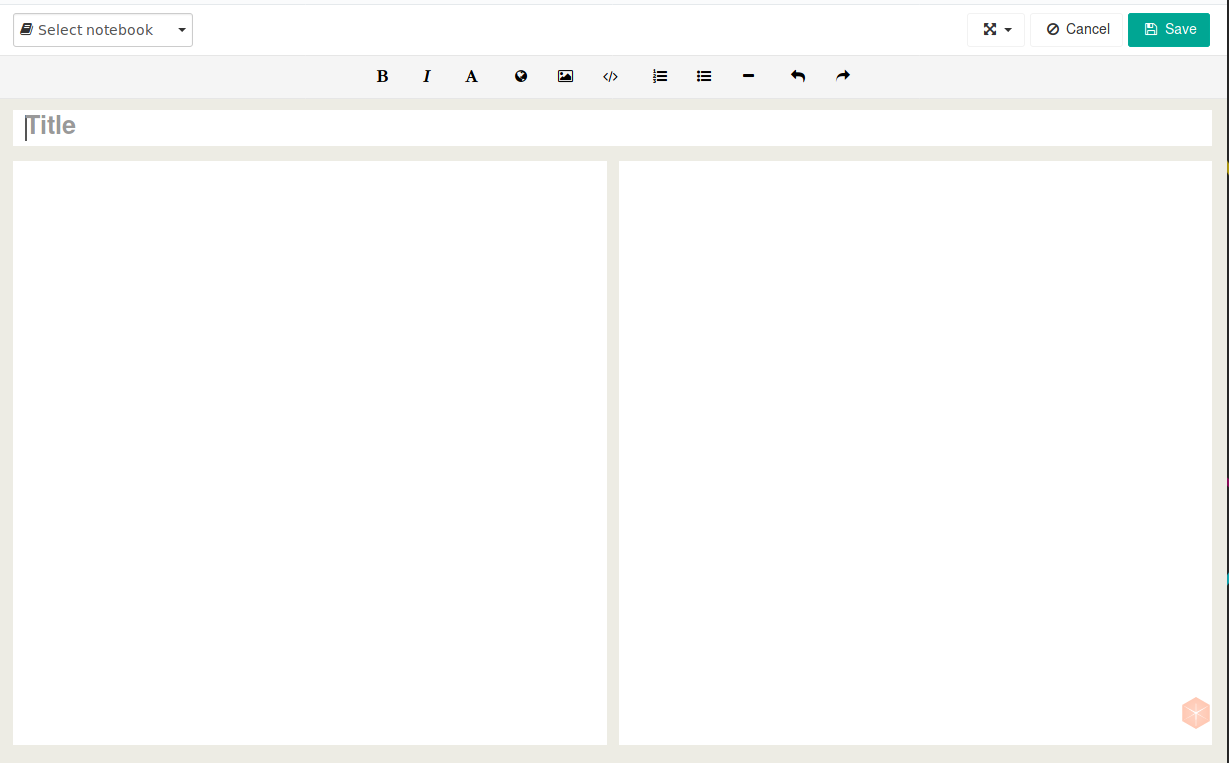

在你开始配置静态 IP 之前,你需要知道设备名称。要做到这一点,你可以使用命令 ip a,然后找出哪一个设备将会被用到(图 1)。

|

||||

在你开始配置静态 IP 之前,你需要知道设备名称。要做到这一点,你可以使用命令 `ip a`,然后找出哪一个设备将会被用到(图 1)。

|

||||

|

||||

![netplan][3]

|

||||

|

||||

图 1:使用 ip a 命令找出设备名称

|

||||

|

||||

[Used with permission][4] (译注:这是什么鬼?)

|

||||

*图 1:使用 ip a 命令找出设备名称*

|

||||

|

||||

我将为 ens5 配置一个静态的 IP。

|

||||

|

||||

@ -75,67 +69,46 @ sudo nano /etc/netplan/01-netcfg.yaml

The layout of the file looks like this:

```
network:
  version: 2
  renderer: networkd
  ethernets:
    DEVICE_NAME:
      dhcp4: yes/no
      addresses: [IP/NETMASK]
      gateway4: GATEWAY
      nameservers:
        addresses: [NAMESERVER, NAMESERVER]
```

Where:

-* DEVICE_NAME is the actual name of the device to be configured.
-* yes/no is whether to enable dhcp4.
-* IP is the IP address of the device.
-* NETMASK is the netmask of the IP address.
-* GATEWAY is the address of the gateway.
-* NAMESERVER is a comma-separated list of DNS servers.
+* `DEVICE_NAME` is the actual name of the device to be configured.
+* `yes`/`no` is whether to enable dhcp4.
+* `IP` is the IP address of the device.
+* `NETMASK` is the netmask of the IP address.
+* `GATEWAY` is the address of the gateway.
+* `NAMESERVER` is a comma-separated list of DNS servers.

Here is a sample .yaml file:

```
network:
  version: 2
  renderer: networkd
  ethernets:
    ens5:
      dhcp4: no
      addresses: [192.168.1.230/24]
      gateway4: 192.168.1.254
      nameservers:
        addresses: [8.8.4.4,8.8.8.8]
```

Edit the file above to suit your needs. Save and close the file.

-Note that the netmask is no longer configured in the form 255.255.255.0. Instead, the netmask is appended to the IP address.
+Note that the netmask is no longer configured in the form `255.255.255.0`. Instead, the netmask is appended to the IP address.
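
To make the netmask change concrete, here is a minimal TypeScript sketch that turns an old-style dotted netmask into the prefix length Netplan expects after the slash. It is not part of Netplan; the function names `netmaskToPrefix` and `toCidr` are hypothetical and exist only for this illustration.

```typescript
// Convert a dotted-decimal netmask such as "255.255.255.0" into a prefix
// length such as 24. Returns null for anything that is not a valid mask.
function netmaskToPrefix(netmask: string): number | null {
  const parts = netmask.split(".");
  if (parts.length !== 4) {
    return null;
  }
  let prefix = 0;
  let seenZero = false;
  for (let i = 0; i < parts.length; i++) {
    if (!/^\d{1,3}$/.test(parts[i])) {
      return null;
    }
    const octet = Number(parts[i]);
    if (octet > 255) {
      return null;
    }
    // Walk the octet from its highest bit to its lowest.
    for (let bit = 7; bit >= 0; bit--) {
      const isOne = ((octet >> bit) & 1) === 1;
      if (isOne && seenZero) {
        return null; // a one after a zero: the mask is not contiguous
      }
      if (isOne) {
        prefix++;
      } else {
        seenZero = true;
      }
    }
  }
  return prefix;
}

// Hypothetical helper: join an address and a netmask into IP/PREFIX form.
function toCidr(ip: string, netmask: string): string | null {
  const prefix = netmaskToPrefix(netmask);
  return prefix === null ? null : ip + "/" + prefix;
}

console.log(toCidr("192.168.1.162", "255.255.255.0")); // 192.168.1.162/24
```

The old `address 192.168.1.162` plus `netmask 255.255.255.0` pair therefore collapses into the single entry `192.168.1.162/24` under `addresses:`.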

### Testing the configuration

@ -165,20 +138,13 @ sudo netplan apply

```
network:
  version: 2
  renderer: networkd
  ethernets:
    ens5:
      addresses: []
      dhcp4: true
      optional: true
```

Save and exit. Test the file with the following command:

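@ -187,15 +153,15 @ network:
sudo netplan try
```

-Netplan should apply the DHCP configuration successfully. At this point you can use the ip a command to see the dynamically assigned address and then reconfigure a static address. Or you can simply keep using the address assigned by DHCP (but since this is a server, you probably do not want to do that).
+Netplan should apply the DHCP configuration successfully. At this point you can use the `ip a` command to see the dynamically assigned address and then reconfigure a static address. Or you can simply keep using the address assigned by DHCP (but since this is a server, you probably do not want to do that).

-If you have more than one network interface, you can name the second .yaml file 02-netcfg.yaml. Netplan applies the configuration files in numerical order, so 01 is applied before 02. Create as many configuration files as you need.
+If you have more than one network interface, you can name the second .yaml file `02-netcfg.yaml`. Netplan applies the configuration files in numerical order, so 01 is applied before 02. Create as many configuration files as you need.

### That's all there is to it

-Believe it or not, that is all there is to using Netplan. Although it is a significant change from the way we are used to configuring network addresses, not everyone is comfortable with it. But this way of configuring is worth noting... so you will get used to it.
+In any case, that is all there is to using Netplan. Although it is a significant change from the way we are used to configuring network addresses, not everyone is comfortable with it. But this way of configuring is worth noting... so you will get used to it.

-Learn more about Linux through the ["Introduction to Linux"] course from The Linux Foundation and edX.
+Learn more about Linux through the [“Introduction to Linux”][5] course from The Linux Foundation and edX.

--------------------------------------------------------------------------------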

@ -204,7 +170,7 @ via: https://www.linux.com/learn/intro-to-linux/2018/9/how-use-netplan-network-c
Author: [Jack Wallen][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [LuuMing](https://github.com/LuuMing)
-Proofreader: [校对者ID](https://github.com/校对者ID)
+Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

@ -0,0 +1,75 @

Troubleshooting Node.js Issues with llnode
======

The llnode plugin lets you inspect Node.js processes and core dumps; it adds the ability to inspect JavaScript stack frames, objects, source code and more. At [Node+JS Interactive][1], Matheus Marchini, Node.js Collaborator and Lead Software Engineer at Sthima, will host a [workshop][2] on how to use llnode to find and fix issues quickly and reliably, without bloating your application with logs or compromising performance. He explains more in this interview.

**Linux.com: What are some common issues that happen with a Node.js application in production?**

**Matheus Marchini:** One of the most common issues Node.js developers experience -- either in production or during development -- is unhandled exceptions. They happen when your code throws an error and that error is not properly handled. There's a variation of this issue with Promises, although in this case the problem is worse: if a Promise is rejected but there's no handler for that rejection, the application might enter an undefined state and start to misbehave.

The application might also crash when it's using too much memory. This usually happens when there's a memory leak in the application, although we usually don't have classic memory leaks in Node.js. Instead of unreferenced objects, we might have objects that are no longer used but are still retained by another object, so the Garbage Collector cannot reclaim them. If this happens with several objects, we can quickly exhaust our available memory.
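
The retention pattern described above can be sketched in a few lines of TypeScript. This is only an illustration under assumed names: `RequestRecord`, `seenRequests`, `handleRequest` and `handleRequestBounded` are hypothetical and do not come from the interview or from llnode.

```typescript
interface RequestRecord {
  id: number;
  payload: string;
}

// Module-level array: it stays reachable for the lifetime of the process.
const seenRequests: RequestRecord[] = [];

// Leaky handler: every record is pushed and never removed, so the garbage
// collector can never reclaim it even though nothing reads it again.
function handleRequest(id: number, payload: string): number {
  seenRequests.push({ id: id, payload: payload });
  return payload.length;
}

// Bounded variant: keep only the most recent `limit` records, so older ones
// become unreachable and can be collected.
function handleRequestBounded(
  recent: RequestRecord[],
  limit: number,
  id: number,
  payload: string
): number {
  recent.push({ id: id, payload: payload });
  while (recent.length > limit) {
    recent.shift();
  }
  return payload.length;
}

const recentRequests: RequestRecord[] = [];
for (let i = 0; i < 1000; i++) {
  handleRequest(i, "payload");
  handleRequestBounded(recentRequests, 100, i, "payload");
}

console.log(seenRequests.length, recentRequests.length); // 1000 100
```

In a long-running server the first array grows without limit, which is the kind of growth a heap inspection would show as a large number of retained objects of one type.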

Memory is not the only resource that might get exhausted. Given the asynchronous nature of Node.js and how it scales to a large number of requests, the application might start to run out of other resources, such as open file descriptors and concurrent connections to a database.

Infinite loops are not that common because we usually catch those during development, but every once in a while one manages to slip through our tests and get into our production servers. These are pretty catastrophic because they will block the main thread, rendering the entire application unresponsive.

The last issues I'd like to point out are performance issues. Those can happen for a variety of reasons, ranging from unoptimized functions to I/O latency.

**Linux.com: Are there any quick tests you can do to determine what might be happening with your Node.js application?**

**Marchini:** Node.js and V8 have several built-in tools and features that developers can use to find issues faster. For example, if you're facing performance issues, you might want to use the built-in [V8 CpuProfiler][3]. Memory issues can be tracked down with [V8 Sampling Heap Profiler][4]. All of these options are interesting because you can open their results in Chrome DevTools and get some nice graphical visualizations by default.

If you are using native modules in your project, V8 built-in tools might not give you enough insight, since they focus only on JavaScript metrics. As an alternative to V8 CpuProfiler, you can use system profiler tools, such as [perf for Linux][5] and DTrace for FreeBSD / OS X. You can grab the results from these tools and turn them into flamegraphs, making it easier to find which functions are taking more time to process.

You can use third-party tools as well: [node-report][6] is an amazing first-failure data capture tool that doesn't introduce significant overhead. When your application crashes, it will generate a report with detailed information about the state of the system, including environment variables, flags used, operating system details, etc. You can also generate this report on demand, and it is extremely useful when asking for help in forums, for example. The best part is that, after installing it through npm, you can enable it with a flag -- no need to make changes in your code!

But one of the tools I'm most amazed by is [llnode][7].

**Linux.com: When would you want to use something like llnode; and what exactly is it?**

**Marchini:** llnode is useful when debugging infinite loops, uncaught exceptions or out-of-memory issues, since it allows you to inspect the state of your application when it crashed. How does llnode do this? You can tell Node.js and your operating system to take a core dump of your application when it crashes and load it into llnode. llnode will analyze this core dump and give you useful information such as how many objects were allocated in the heap, the complete stack trace for the process (including native calls and V8 internals), pending requests and handlers in the event loop queue, etc.

The most impressive feature llnode has is its ability to inspect objects and functions: you can see which variables are available for a given function, look at the function's code and inspect which properties your objects have with their respective values. For example, you can look up which variables are available for your HTTP handler function and which parameters it received. You can also look at headers and the payload of a given request.

llnode is a plugin for [lldb][8], and it uses lldb features alongside hints provided by V8 and Node.js to recreate the process heap. It uses a few heuristics, too, so results might not be entirely correct sometimes. But most of the time the results are good enough -- and way better than not using any tool.

This technique -- which is called post-mortem debugging -- is not something new, though, and it has been part of the Node.js project since 2012. This is a common technique used by C and C++ developers, but not many dynamic runtimes support it. I'm happy we can say Node.js is one of those runtimes.

**Linux.com: What are some key items folks should know before adding llnode to their environment?**

**Marchini:** To install and use llnode you'll need to have lldb installed on your system. If you're on OS X, lldb is installed as part of Xcode. On Linux, you can install it from your distribution's repository. We recommend using LLDB 3.9 or later.

You'll also have to set up your environment to generate core dumps. First, remember to set the flag `--abort-on-uncaught-exception` when running a Node.js application; otherwise, Node.js won't generate a core dump when an uncaught exception happens. You'll also need to tell your operating system to generate core dumps when an application crashes. The most common way to do that is by running `ulimit -c unlimited`, but this will only apply to your current shell session. If you're using a process manager such as systemd, I suggest looking at the process manager docs. You can also generate on-demand core dumps of a running process with tools such as gcore.

**Linux.com: What can we expect from llnode in the future?**

**Marchini:** llnode collaborators are working on several features and improvements to make the project more accessible for developers less familiar with native debugging tools. To accomplish that, we're improving the overall user experience as well as the project's documentation and installation process. Future versions will include colorized output, more reliable output for some commands and a simplified mode focused on JavaScript information. We are also working on a JavaScript API which can be used to automate some analysis, create graphical user interfaces, etc.

If this project sounds interesting to you, and you would like to get involved, feel free to join the conversation in [our issues tracker][9] or contact me on social media at [@mmarkini][10]. I would love to help you get started!

Learn more at [Node+JS Interactive][1], coming up October 10-12, 2018 in Vancouver, Canada.

--------------------------------------------------------------------------------

via: https://www.linux.com/blog/2018/9/troubleshooting-nodejs-issues-llnode

Author: [The Linux Foundation][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.linux.com/users/ericstephenbrown
[1]: https://events.linuxfoundation.org/events/node-js-interactive-2018/?utm_source=Linux.com&utm_medium=article&utm_campaign=jsint18
[2]: http://sched.co/G285
[3]: https://nodejs.org/api/inspector.html#inspector_cpu_profiler
[4]: https://github.com/v8/sampling-heap-profiler
[5]: http://www.brendangregg.com/blog/2014-09-17/node-flame-graphs-on-linux.html
[6]: https://github.com/nodejs/node-report
[7]: https://github.com/nodejs/llnode
[8]: https://lldb.llvm.org/
[9]: https://github.com/nodejs/llnode/issues
[10]: https://twitter.com/mmarkini

@ -0,0 +1,44 @@

|

||||

Creator of the World Wide Web is Creating a New Decentralized Web

|

||||

======

|

||||

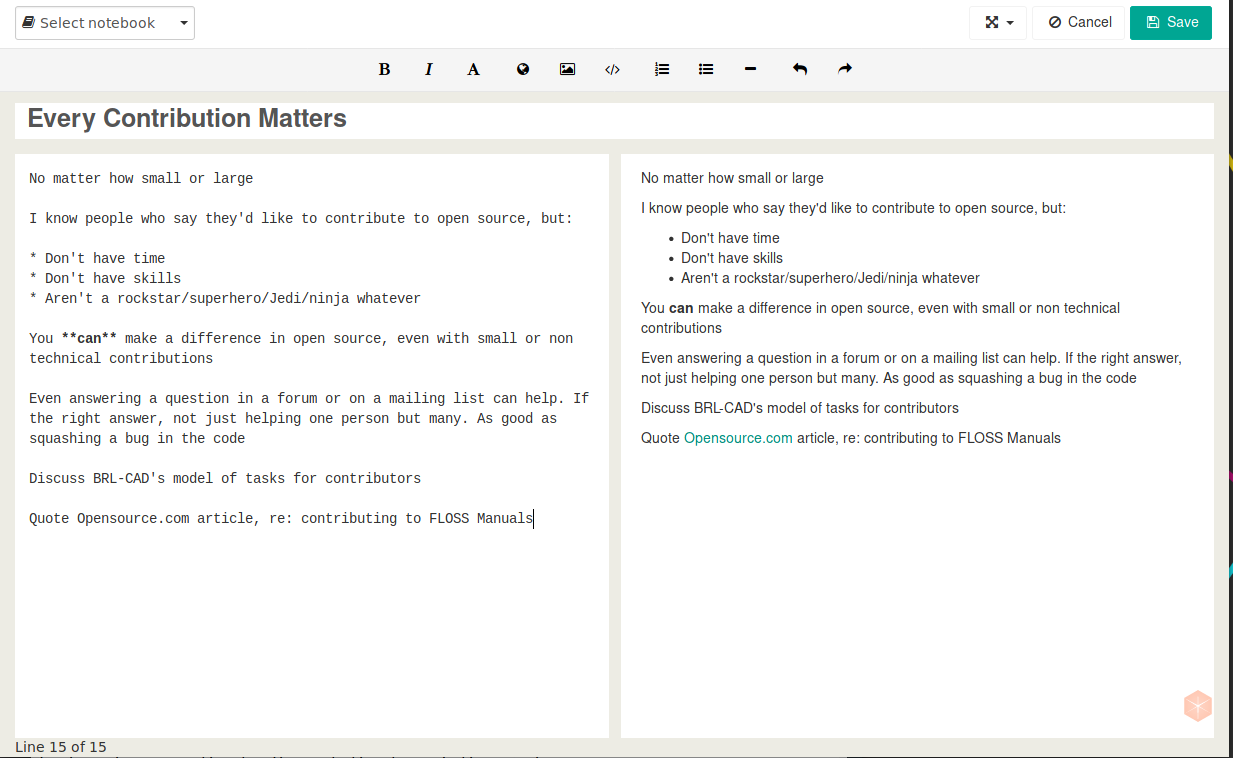

**Creator of the world wide web, Tim Berners-Lee has unveiled his plans to create a new decentralized web where the data will be controlled by the users.**

|

||||

|

||||

[Tim Berners-Lee][1] is known for creating the world wide web, i.e., the internet you know today. More than two decades later, Tim is working to free the internet from the clutches of corporate giants and give the power back to the people via a decentralized web.

|

||||

|

||||

Berners-Lee was unhappy with the way ‘powerful forces’ of the internet handle data of the users for their own agenda. So he [started working on his own open source project][2] Solid “to restore the power and agency of individuals on the web.”

|

||||

|

||||

> Solid changes the current model where users have to hand over personal data to digital giants in exchange for perceived value. As we’ve all discovered, this hasn’t been in our best interests. Solid is how we evolve the web in order to restore balance — by giving every one of us complete control over data, personal or not, in a revolutionary way.

|

||||

|

||||

![Tim Berners-Lee is creating a decentralized web with open source project Solid][3]

|

||||

|

||||

Basically, [Solid][4] is a platform built using the existing web where you create own ‘pods’ (personal data store). You decide where this pod will be hosted, who will access which data element and how the data will be shared through this pod.

|

||||

|

||||

Berners-Lee believes that Solid “will empower individuals, developers and businesses with entirely new ways to conceive, build and find innovative, trusted and beneficial applications and services.”

|

||||

|

||||

Developers need to integrate Solid into their apps and sites. Solid is still in the early stages so there are no apps for now but the project website claims that “the first wave of Solid apps are being created now.”

|

||||

|

||||

Berners-Lee has created a startup called [Inrupt][5] and has taken a sabbatical from MIT to work full-time on Solid and to take it “from the vision of a few to the reality of many.”

|

||||

|

||||

If you are interested in Solid, [learn how to create apps][6] or [contribute to the project][7] in your own way. Of course, it will take a lot of effort to build and drive the broad adoption of Solid so every bit of contribution will count to the success of a decentralized web.

|

||||

|

||||

Do you think a [decentralized web][8] will be a reality? What do you think of decentralized web in general and project Solid in particular?

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/solid-decentralized-web/

|

||||

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[1]: https://en.wikipedia.org/wiki/Tim_Berners-Lee

|

||||

[2]: https://medium.com/@timberners_lee/one-small-step-for-the-web-87f92217d085

|

||||

[3]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/tim-berners-lee-solid-project.jpeg

|

||||

[4]: https://solid.inrupt.com/

|

||||

[5]: https://www.inrupt.com/

|

||||

[6]: https://solid.inrupt.com/docs/getting-started

|

||||

[7]: https://solid.inrupt.com/community

|

||||

[8]: https://tech.co/decentralized-internet-guide-2018-02

|

||||

@ -0,0 +1,97 @

Interview With Peter Ganten, CEO of Univention GmbH
======

I have been asking the Univention team to share the behind-the-scenes story of [**Univention**][1] for a couple of months. Finally, today we got an interview with **Mr. Peter H. Ganten**, CEO of Univention GmbH. Despite his busy schedule, in this interview he shares what he thinks of the Univention project and its impact on the open source ecosystem, what open source developers and companies will need to do to keep thriving, and what the biggest challenges for open source projects are.

**OSTechNix: What’s your background and why have you founded Univention?**

**Peter Ganten:** I studied physics and psychology. In psychology I was a research assistant and coded evaluation software. I realized how important it is that results are disclosed so that they can be verified or falsified. The same goes for the code that leads to the results. This brought me into contact with Open Source Software (OSS) and Linux.

I was a kind of technical lab manager and I had the opportunity to try out a lot, which led to my book about Debian. That was still in the New Economy era, when the first business models emerged on how to make money with Open Source. When the bubble burst, I had the plan to make OSS a solid business model without venture capital but with Hanseatic business style: serious, steady, no bling-bling.

**What were the biggest challenges at the beginning?**

When I came from the university, the biggest challenge clearly was to gain entrepreneurial and business management knowledge. I quickly learned that it’s not about Open Source software as an end in itself but always about customer value, and the benefits OSS offers its customers. We all had to learn a lot.

In the beginning, we expected that Linux on the desktop would become established in a similar way as Linux on the server. However, this has not yet proven true. The replacement has happened with Android and the iPhone. Our conclusion then was to change our offerings towards ID management and enterprise servers.

**Why does UCS matter? And for whom does it make sense to use it?**

There is cool OSS in all areas, but many organizations are not capable of combining it all and making it manageable. For the basic infrastructure (Windows desktops, users, user rights, roles, ID management, apps) we need a central instance to which groupware, CRM etc. is connected. Without Univention this would have to be laboriously assembled and maintained manually. This is possible for very large companies, but far too complex for many other organizations.

[**UCS**][2] can be used out of the box and is scalable. That’s why it’s becoming more and more popular: more than 10,000 organizations already use UCS today.

**Who are your users and most important clients? What do they love most about UCS?**

The Core Edition is free of charge and used by organizations from all sectors and industries such as associations, micro-enterprises, universities or large organizations with thousands of users. In the enterprise environment, where Long Term Servicing (LTS) and professional support are particularly important, we have organizations ranging in size from 30-50 users to several thousand users. One of the target groups is the education system in Germany. UCS is used in many large cities and their school administrations, for example, in Cologne, Hannover, Bremen, Kassel and in several federal states. They are looking for manageable IT and apps for schools. That’s what we offer, because we can guarantee these authorities full control over their users’ identities.

Also, more and more cloud service providers and MSPs want to use UCS to deliver a selection of cloud-based app solutions.

**Is UCS 100% Open Source? If so, how can you run a profitable business selling it?**

Yes, UCS is 100% Open Source, every line, the whole code is OSS. You can download and use UCS Core Edition for **FREE!**

We know that large, complex organizations need vendor support and liability, LTS and SLAs, and we offer that with our Enterprise subscriptions and consulting services. We don’t offer these in the Core Edition.

**And what are you giving back to the OS community?**

A lot. We are involved in the Debian team and co-finance the LTS maintenance for Debian. For important OS components in UCS like [**OpenLDAP**][3], Samba or KVM we co-finance the development or have co-developed them ourselves. We make it all freely available.

We are also involved on the political level in ensuring that OSS is used. We are engaged, for example, in the [**Free Software Foundation Europe (FSFE)**][4] and the [**German Open Source Business Alliance**][5], of which I am the chairman. We are working hard to make OSS more successful.

**How can I get started with UCS?**

It’s easy to get started with the Core Edition, which, like the Enterprise Edition, has an App Center and can be easily installed on your own hardware or as an appliance in a virtual machine. Just [**download Univention ISO**][6] and install it as described in the link below.

Alternatively, you can try the [**UCS Online Demo**][7] to get a first impression of Univention Corporate Server without actually installing it on your system.

**What do you think are the biggest challenges for Open Source?**

There is a certain attitude you can see over and over again even in bigger projects: OSS alone is viewed as an almost mandatory prerequisite for a good, sustainable, secure and trustworthy IT solution, but just having decided to use OSS is no guarantee for success. You have to carry out projects professionally and cooperate with the manufacturers. A danger is that in complex projects people think: “Oh, OSS is free, I just put it all together by myself”. But normally you do not have the know-how to successfully implement complex software solutions. You would never proceed like this with Closed Source. There people think: “Oh, the software costs $3 million, so it’s okay if I have to spend another $300,000 on consultants.”

With OSS this is different. If such projects fail and leave burnt ground behind, we have to explain again and again that the failure of such projects is not due to the nature of OSS but to its poor implementation and organization in a specific project: You have to conclude reasonable contracts and involve partners as in the proprietary world, but you’ll gain a better solution.

Another challenge: We must stay innovative, move forward, attract new people who are enthusiastic about working on projects. That’s sometimes a challenge. For example, there are a number of proprietary cloud services that are good but lead to extremely high dependency. There are approaches to alternatives in OSS, but no suitable business models yet. So it’s hard to find and fund developers. For example, I can think of Evernote and OneNote for which there is no reasonable OSS alternative.

**And what will the future bring for Univention?**

I don’t have a crystal ball, but we are extremely optimistic. We see a very high growth potential in the education market. More OSS is being made in the public sector, because we have repeatedly experienced the dead ends that can be reached if we solely rely on Closed Source.

Overall, we will continue our organic growth at double-digit rates year after year.

UCS and its core functionalities of identity management, infrastructure management and app center will increasingly be offered and used from the cloud as a managed service. We will support our technology in this direction, e.g., through containers, so that a hypervisor or bare metal is not always necessary for operation.

**You have been the CEO of Univention for a long time. What keeps you motivated?**

I have been the CEO of Univention for more than 16 years now. My biggest motivation is to realize that something is moving. That we offer the better way for IT. That the people who go this way with us are excited to work with us. I go home satisfied in the evening (of course not every evening). It’s totally cool to work with the team I have. It motivates and pushes me whenever I need it.

I’m a techie and nerd at heart, I enjoy dealing with technology. So I’m totally happy at this place and I’m grateful to the world that I can do whatever I want every day. Not everyone can say that.

**Who gives you inspiration?**

My employees, the customers and the Open Source projects. The exchange with other people.

The motivation behind everything is that we want to make sure that mankind will be able to influence and change the IT that surrounds us, today and in the future, just the way we want and think is good. We want to make a contribution to this. That is why Univention is there. That is important to us every day.

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/interview-with-peter-ganten-ceo-of-univention-gmbh/

Author: [SK][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]: https://www.ostechnix.com/author/sk/
[1]: https://www.ostechnix.com/introduction-univention-corporate-server/
[2]: https://www.univention.com/products/ucs/
[3]: https://www.ostechnix.com/redhat-and-suse-announced-to-withdraw-support-for-openldap/
[4]: https://fsfe.org/
[5]: https://osb-alliance.de/
[6]: https://www.univention.com/downloads/download-ucs/
[7]: https://www.univention.com/downloads/ucs-online-demo/

@ -0,0 +1,637 @@

|

||||

# Compiling Lisp to JavaScript From Scratch in 350

|

||||

|

||||

In this article we will look at a from-scratch implementation of a compiler from a simple LISP-like calculator language to JavaScript. The complete source code can be found [here][7].

|

||||

|

||||

We will:

|

||||

|

||||

1. Define our language and write a simple program in it

|

||||

|

||||

2. Implement a simple parser combinator library

|

||||

|

||||

3. Implement a parser for our language

|

||||

|

||||

4. Implement a pretty printer for our language

|

||||

|

||||

5. Define a subset of JavaScript for our usage

|

||||

|

||||

6. Implement a code translator to the JavaScript subset we defined

|

||||

|

||||

7. Glue it all together

|

||||

|

||||

Let's start!

|

||||

|

||||

### 1\. Defining the language

|

||||

|

||||

The main attraction of lisps is that their syntax already represent a tree, this is why they are so easy to parse. We'll see that soon. But first let's define our language. Here's a BNF description of our language's syntax:

|

||||

|

||||

```

|

||||

program ::= expr

|

||||

expr ::= <integer> | <name> | ([<expr>])

|

||||

```

|

||||

|

||||

Basically, our language lets us define one expression at the top level, which it will evaluate. An expression is either an integer, for example `5`, a variable, for example `x`, or a list of expressions, for example `(add x 1)`.

|

||||

|

||||

An integer evaluates to itself, a variable evaluates to the value it is bound to in the current environment, and a list evaluates to a function call where the first element is the function and the rest are the arguments to the function.

|

||||

|

||||

We have some built-in special forms in our language so we can do more interesting stuff:

|

||||

|

||||

* `let` expression: lets us introduce new variables in the environment of the body of the `let`. The syntax is:

|

||||

|

||||

```

|

||||

let ::= (let ([<letarg>]) <body>)

|

||||

letarg ::= (<name> <expr>)

|

||||

body ::= <expr>

|

||||

```

|

||||

|

||||

* lambda expression: evaluates to an anonymous function definition. The syntax is:

|

||||

|

||||

```

|

||||

lambda ::= (lambda ([<name>]) <body>)

|

||||

```

|

||||

|

||||

We also have a few built-in functions: `add`, `mul`, `sub`, `div` and `print`.

|

||||

|

||||

Let's see a quick example of a program written in our language:

|

||||

|

||||

```

|

||||

(let

|

||||

((compose

|

||||

(lambda (f g)

|

||||

(lambda (x) (f (g x)))))

|

||||

(square

|

||||

(lambda (x) (mul x x)))

|

||||

(add1

|

||||

(lambda (x) (add x 1))))

|

||||

(print ((compose square add1) 5)))

|

||||

```

|

||||

|

||||

This program defines 3 functions: `compose`, `square` and `add1`, and then prints the result of the computation `((compose square add1) 5)`.
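To make the expected result concrete, the same computation can be sketched directly in Haskell. This is only an illustration of what the example program means, not part of the compiler; the Haskell names simply mirror the ones used in the program above.

```haskell
-- A direct Haskell rendering of the example program (illustration only).

-- compose takes two functions and returns their composition.
compose :: (b -> c) -> (a -> b) -> a -> c
compose f g = \x -> f (g x)

square :: Int -> Int
square x = x * x

add1 :: Int -> Int
add1 x = x + 1

-- ((compose square add1) 5) = square (add1 5) = square 6 = 36
result :: Int
result = compose square add1 5
```

So the compiled program is expected to print `36`.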

|

||||

|

||||

I hope this is enough information about the language. Let's start implementing it!

|

||||

|

||||

We can define the language in Haskell like this:

|

||||

|

||||

```

|

||||

type Name = String

|

||||

|

||||

data Expr

|

||||

= ATOM Atom

|

||||

| LIST [Expr]

|

||||

deriving (Eq, Read, Show)

|

||||

|

||||

data Atom

|

||||

= Int Int

|

||||

| Symbol Name

|

||||

deriving (Eq, Read, Show)

|

||||

```

|

||||

|

||||

We can parse programs in the language we defined to an `Expr`. Also, we are giving the new data types `Eq`, `Read` and `Show` instances to aid in testing and debugging. You'll be able to use those in the REPL, for example, to verify that all this actually works.
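As a concrete illustration of that representation, here is how two small programs would look as `Expr` values. The type definitions are repeated so the snippet stands alone; `addExample` and `squareExample` are names made up for this sketch.

```haskell
type Name = String

data Expr
  = ATOM Atom
  | LIST [Expr]
  deriving (Eq, Read, Show)

data Atom
  = Int Int
  | Symbol Name
  deriving (Eq, Read, Show)

-- The program: (add x 1)
addExample :: Expr
addExample = LIST [ATOM (Symbol "add"), ATOM (Symbol "x"), ATOM (Int 1)]

-- The program: (lambda (x) (mul x x))
-- Note that `lambda` is just a symbol at this stage, not special syntax.
squareExample :: Expr
squareExample =
  LIST
    [ ATOM (Symbol "lambda")
    , LIST [ATOM (Symbol "x")]
    , LIST [ATOM (Symbol "mul"), ATOM (Symbol "x"), ATOM (Symbol "x")]
    ]
```

Thanks to the derived instances, `read (show addExample) == addExample` holds, which is handy for quick checks in the REPL.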

|

||||

|

||||

We did not define `lambda`, `let` and the other built-in functions as part of the syntax because we can get away with it in this case. These forms are just more specific cases of a `LIST`, so I decided to leave them to a later phase.

|

||||

|

||||

Usually, you would want to define these special cases in the abstract syntax to improve error messages, to enable static analysis and optimizations and so on, but we won't do that here, so this is enough for us.

|

||||

|

||||

Another thing you would usually want to do is add some annotation to the syntax, for example the location: which file this `Expr` came from, and which row and column in that file. You can use this in later stages to print the location of errors, even if they do not occur in the parser stage.

|

||||

|

||||

* _Exercise 1_ : Add a `Program` data type to include multiple `Expr` sequentially

|

||||

|

||||

* _Exercise 2_ : Add location annotation to the syntax tree.

|

||||

|

||||

### 2\. Implement a simple parser combinator library

|

||||

|

||||

The first thing we are going to do is define an Embedded Domain Specific Language (or EDSL), which we will use to define our language's parser. This is often referred to as a parser combinator library. We are doing this strictly for learning purposes: Haskell has great parsing libraries, and you should definitely use them when building real software, or even when just experimenting. One such library is [megaparsec][8].

|

||||

|

||||

First let's talk about the idea behind our parser library implementation. In essence, our parser is a function that takes some input, might consume some or all of it, and returns the value it managed to parse along with the rest of the input it has not parsed yet, or fails with an error. Let's write that down.

|

||||

|

||||

```

|

||||

newtype Parser a

|

||||

= Parser (ParseString -> Either ParseError (a, ParseString))

|

||||

|

||||

data ParseString

|

||||

= ParseString Name (Int, Int) String

|

||||

|

||||

data ParseError

|

||||

= ParseError ParseString Error

|

||||

|

||||

type Error = String

|

||||

|

||||

```

|

||||

|

||||

Here we defined three main new types.

|

||||

|

||||

First, `Parser a`, is the parsing function we described before.

|

||||

|

||||

Second, `ParseString` is our input or state we carry along. It has three significant parts:

|

||||

|

||||

* `Name`: This is the name of the source

|

||||

|

||||

* `(Int, Int)`: This is the current location in the source

|

||||

|

||||

* `String`: This is the remaining string left to parse

|

||||

|

||||

Third, `ParseError` contains the current state of the parser and an error message.

|

||||

|

||||

Now we want our parser to be flexible, so we will define a few instances for common type classes for it. These instances will allow us to combine small parsers to make bigger parsers (hence the name 'parser combinators').

|

||||

|

||||

The first one is a `Functor` instance. We want a `Functor` instance because we want to be able to define a parser using another parser simply by applying a function on the parsed value. We will see an example of this when we define the parser for our language.

|

||||

|

||||

```

|

||||

instance Functor Parser where

|

||||

fmap f (Parser parser) =

|

||||

Parser (\str -> first f <$> parser str)

|

||||

```

|

||||

|

||||

The second instance is an `Applicative` instance. One common use case for this instance is to lift a pure function over multiple parsers.

|

||||

|

||||

```

|

||||

instance Applicative Parser where

|

||||

pure x = Parser (\str -> Right (x, str))

|

||||

(Parser p1) <*> (Parser p2) =

|

||||

Parser $

|

||||

\str -> do

|

||||

(f, rest) <- p1 str

|

||||

(x, rest') <- p2 rest

|

||||

pure (f x, rest')

|

||||

|

||||

```

|

||||

|

||||

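(Note: _The `do` block above runs in the `Either ParseError` monad, not in `Parser`. We will also implement a `Monad` instance for `Parser` below so we can use do notation with parsers._ )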

|

||||

|

||||

The third instance is an `Alternative` instance. We want to be able to supply an alternative parser in case one fails.

|

||||

|

||||

```

|

||||

instance Alternative Parser where

|

||||

empty = Parser (`throwErr` "Failed consuming input")

|

||||

(Parser p1) <|> (Parser p2) =

|

||||

Parser $

|

||||

\pstr -> case p1 pstr of

|

||||

Right result -> Right result

|

||||

Left _ -> p2 pstr

|

||||

```

|

||||

|

||||

The fourth instance is a `Monad` instance, so we'll be able to chain parsers.

|

||||

|

||||

```

|

||||

instance Monad Parser where

|

||||

(Parser p1) >>= f =

|

||||

Parser $

|

||||

\str -> case p1 str of

|

||||

Left err -> Left err

|

||||

Right (rs, rest) ->

|

||||

case f rs of

|

||||

Parser parser -> parser rest

|

||||

|

||||

```
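To see what each of the four instances buys us, here is a minimal, self-contained sketch on a stripped-down parser type. It is a simplification, not the article's `Parser`: the state is a plain `String`, errors are plain strings, and `P`, `runP`, `satisfy`, `digit`, `pair`, `digitOrZero` and `counted` are all names invented for this example.

```haskell
import Control.Applicative (Alternative (..))
import Data.Char (digitToInt, isDigit)

-- Simplified parser: no source name or position, errors are plain strings.
newtype P a = P { runP :: String -> Either String (a, String) }

instance Functor P where
  fmap f (P p) = P $ \s -> case p s of
    Left err -> Left err
    Right (x, rest) -> Right (f x, rest)

instance Applicative P where
  pure x = P $ \s -> Right (x, s)
  P pf <*> P px = P $ \s -> case pf s of
    Left err -> Left err
    Right (f, rest) -> case px rest of
      Left err -> Left err
      Right (x, rest') -> Right (f x, rest')

instance Alternative P where
  empty = P $ \_ -> Left "empty"
  -- Try the first parser; on failure, retry the second on the same input.
  P p <|> P q = P $ \s -> case p s of
    Left _ -> q s
    ok -> ok

instance Monad P where
  P p >>= f = P $ \s -> case p s of
    Left err -> Left err
    Right (x, rest) -> runP (f x) rest

-- Consume one character if it satisfies the predicate.
satisfy :: (Char -> Bool) -> P Char
satisfy ok = P $ \s -> case s of
  (c:cs) | ok c -> Right (c, cs)
  _ -> Left "unexpected input"

-- Functor: reuse a Char parser to build an Int parser.
digit :: P Int
digit = digitToInt <$> satisfy isDigit

-- Applicative: lift a pure function over two parsers run in sequence.
pair :: P (Int, Int)
pair = (,) <$> digit <*> digit

-- Alternative: fall back to a default when the first parser fails.
digitOrZero :: P Int
digitOrZero = digit <|> pure 0

-- Monad: a parsed value decides which parser runs next.
-- Here the leading digit says how many characters to read.
counted :: P String
counted = digit >>= \n -> sequenceA (replicate n (satisfy (const True)))
```

For example, `runP pair "42x"` yields `Right ((4,2),"x")`, and `runP counted "3abcd"` yields `Right ("abc","d")`. The last one cannot be written with `Applicative` alone, because the number of characters to read depends on a previously parsed value.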

|

||||

|

||||

Next, let's define a way to run a parser and a utility function for failure:

|

||||

|

||||

```

|

||||

|

||||

runParser :: String -> String -> Parser a -> Either ParseError (a, ParseString)

|

||||

runParser name str (Parser parser) = parser $ ParseString name (0,0) str

|

||||

|

||||

throwErr :: ParseString -> String -> Either ParseError a

|

||||

throwErr ps@(ParseString name (row,col) _) errMsg =

|

||||

Left $ ParseError ps $ unlines

|

||||

[ "*** " ++ name ++ ": " ++ errMsg

|

||||

, "* On row " ++ show row ++ ", column " ++ show col ++ "."

|

||||

]

|

||||

|

||||

```

|

||||

|

||||

Now we'll start implementing the combinators which are the API and heart of the EDSL.

|

||||

|

||||

First, we'll define `oneOf`. `oneOf` will succeed if one of the characters in the list supplied to it is the next character of the input and will fail otherwise.

|

||||

|

||||

```

|

||||

oneOf :: [Char] -> Parser Char

|

||||

oneOf chars =

|

||||

Parser $ \case

|

||||

ps@(ParseString name (row, col) str) ->

|

||||

case str of

|

||||

[] -> throwErr ps "Cannot read character of empty string"

|

||||

(c:cs) ->

|

||||

if c `elem` chars

|

||||

then Right (c, ParseString name (row, col+1) cs)

|

||||

else throwErr ps $ unlines ["Unexpected character " ++ [c], "Expecting one of: " ++ show chars]

|

||||

```

|

||||

|

||||

`optional` will stop a parser from throwing an error. It will just return `Nothing` on failure.

|

||||

|

||||

```

|

||||

optional :: Parser a -> Parser (Maybe a)

|

||||

optional (Parser parser) =

|

||||

Parser $

|

||||

\pstr -> case parser pstr of

|

||||

Left _ -> Right (Nothing, pstr)

|

||||

Right (x, rest) -> Right (Just x, rest)

|

||||

```

|

||||

|

||||

`many` will try to run a parser repeatedly until it fails. When it does, it'll return a list of successful parses. `many1` will do the same, but will throw an error if it fails to parse at least once.

|

||||

|

||||

```

|

||||

many :: Parser a -> Parser [a]

|

||||

many parser = go []

|

||||

where go cs = (parser >>= \c -> go (c:cs)) <|> pure (reverse cs)

|

||||

|

||||

many1 :: Parser a -> Parser [a]

|

||||

many1 parser =

|

||||

(:) <$> parser <*> many parser

|

||||

|

||||

```

|

||||

|

||||

These next few parsers use the combinators we defined to make more specific parsers:

|

||||

|

||||

```

|

||||

char :: Char -> Parser Char

|

||||

char c = oneOf [c]

|

||||

|

||||

string :: String -> Parser String

|

||||

string = traverse char

|

||||

|

||||

space :: Parser Char

|

||||

space = oneOf " \n"

|

||||

|

||||

spaces :: Parser String

|

||||

spaces = many space

|

||||

|

||||

spaces1 :: Parser String

|

||||

spaces1 = many1 space

|

||||

|

||||

withSpaces :: Parser a -> Parser a

|

||||

withSpaces parser =

|

||||

spaces *> parser <* spaces

|

||||

|

||||

parens :: Parser a -> Parser a

|

||||

parens parser =

|

||||

(withSpaces $ char '(')

|

||||

*> withSpaces parser

|

||||

<* (spaces *> char ')')

|

||||

|

||||

sepBy :: Parser a -> Parser b -> Parser [b]

|

||||

sepBy sep parser = do

|

||||

frst <- optional parser

|

||||

rest <- many (sep *> parser)

|

||||

pure $ maybe rest (:rest) frst

|

||||

|

||||

```

|

||||

|

||||

Now we have everything we need to start defining a parser for our language.

|

||||

|

||||

* _Exercise_ : implement an EOF (end of file/input) parser combinator.

|

||||

|

||||

### 3\. Implementing a parser for our language

|

||||

|

||||

To define our parser, we'll use the top-down method.

|

||||

|

||||

```

|

||||

parseExpr :: Parser Expr

|

||||

parseExpr = fmap ATOM parseAtom <|> fmap LIST parseList

|

||||

|

||||

parseList :: Parser [Expr]

|

||||

parseList = parens $ sepBy spaces1 parseExpr

|

||||

|

||||

parseAtom :: Parser Atom

|

||||

parseAtom = parseSymbol <|> parseInt

|

||||

|

||||

parseSymbol :: Parser Atom

|

||||

parseSymbol = fmap Symbol parseName

|

||||

|

||||

```

|

||||

|

||||

Notice that these four functions are a very high-level description of our language. This demonstrates why Haskell is so nice for parsing. After defining the high-level parts, we still need to define the lower-level `parseName` and `parseInt`.

|

||||

|

||||

What characters can we use as names in our language? Let's decide to use lowercase letters, digits and underscores, where the first character must be a letter.

|

||||

|

||||

```

|

||||

parseName :: Parser Name

|

||||

parseName = do

|

||||

c <- oneOf ['a'..'z']

|

||||

cs <- many $ oneOf $ ['a'..'z'] ++ "0123456789" ++ "_"

|

||||

pure (c:cs)

|

||||

```

|

||||

|

||||

For integers, we want a sequence of digits optionally preceded by '-':

|

||||

|

||||

```

|

||||

parseInt :: Parser Atom

|

||||

parseInt = do

|

||||

sign <- optional $ char '-'

|

||||

num <- many1 $ oneOf "0123456789"

|

||||

let result = read $ maybe num (:num) sign

|

||||

pure $ Int result

|

||||

```

|

||||

|

||||

Lastly, we'll define a function to run a parser and get back an `Expr` or an error message.

|

||||

|

||||

```

|

||||

runExprParser :: Name -> String -> Either String Expr

|

||||

runExprParser name str =

|

||||

case runParser name str (withSpaces parseExpr) of

|

||||

Left (ParseError _ errMsg) -> Left errMsg

|

||||

Right (result, _) -> Right result

|

||||

```
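For comparison, roughly the same grammar can be written with a ready-made library. The sketch below uses `Text.ParserCombinators.ReadP`, which ships with `base`; it is an alternative under stated assumptions, not the parser built in this article. Note that ReadP's `sepBy` takes the element parser first and the separator second, the reverse of our own `sepBy`. The names `exprP`, `listP`, `atomP`, `nameP`, `intP` and `parseExprReadP` are made up for this example, and error reporting is reduced to `Nothing`.

```haskell
import Data.Char (isDigit, isSpace)
import Text.ParserCombinators.ReadP
  ( ReadP, between, char, eof, munch, munch1, option, readP_to_S
  , satisfy, sepBy, skipSpaces, string, (<++) )

type Name = String

data Expr = ATOM Atom | LIST [Expr] deriving (Eq, Show)
data Atom = Int Int | Symbol Name deriving (Eq, Show)

-- An expression is an atom or a parenthesised list; (<++) is left-biased choice.
exprP :: ReadP Expr
exprP = (ATOM <$> atomP) <++ (LIST <$> listP)

-- Zero or more expressions separated by whitespace, wrapped in parentheses.
listP :: ReadP [Expr]
listP =
  between (char '(' *> skipSpaces) (skipSpaces *> char ')') $
    sepBy exprP (munch1 isSpace)

atomP :: ReadP Atom
atomP = (Symbol <$> nameP) <++ intP

-- Lowercase letter first, then lowercase letters, digits or underscores.
nameP :: ReadP Name
nameP = (:) <$> satisfy isLowerAscii <*> munch isNameChar
  where
    isLowerAscii c = c >= 'a' && c <= 'z'
    isNameChar c = isLowerAscii c || isDigit c || c == '_'

-- Digits with an optional leading minus sign.
intP :: ReadP Atom
intP = do
  sign <- option "" (string "-")
  digits <- munch1 isDigit
  pure (Int (read (sign ++ digits)))

-- Succeeds only if the whole input is exactly one expression.
parseExprReadP :: String -> Maybe Expr
parseExprReadP input =
  case readP_to_S (skipSpaces *> exprP <* skipSpaces <* eof) input of
    [(expr, "")] -> Just expr
    _ -> Nothing
```

With these definitions, `parseExprReadP "(add x 1)"` gives `Just (LIST [ATOM (Symbol "add"),ATOM (Symbol "x"),ATOM (Int 1)])`, while an unbalanced input such as `"(add x"` gives `Nothing`. The trade-off is that ReadP reports no error location, which is exactly what our hand-written `ParseString` tracks.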

|

||||

|

||||

* _Exercise 1_ : Write a parser for the `Program` type you defined in the first section

|

||||

|

||||

* _Exercise 2_ : Rewrite `parseName` in Applicative style

|

||||

|

||||

* _Exercise 3_ : Find a way to handle the overflow case in `parseInt` instead of using `read`.

|

||||

|

||||

### 4\. Implement a pretty printer for our language

|

||||

|

||||

One more thing we'd like to do is be able to print our programs as source code. This is useful for better error messages.

|

||||

|

||||

```

|

||||

printExpr :: Expr -> String

|

||||

printExpr = printExpr' False 0

|

||||

|

||||

printAtom :: Atom -> String

|

||||

printAtom = \case

|

||||

Symbol s -> s

|

||||

Int i -> show i

|

||||

|

||||

printExpr' :: Bool -> Int -> Expr -> String

|

||||

printExpr' doindent level = \case

|

||||

ATOM a -> indent (bool 0 level doindent) (printAtom a)
LIST [] -> indent (bool 0 level doindent) "()"

|

||||

LIST (e:es) ->

|

||||

indent (bool 0 level doindent) $

|

||||

concat

|

||||

[ "("

|

||||

, printExpr' False (level + 1) e

|

||||

, bool "\n" "" (null es)

|

||||

, intercalate "\n" $ map (printExpr' True (level + 1)) es

|

||||

, ")"

|

||||

]

|

||||

|

||||

indent :: Int -> String -> String

|

||||

indent tabs e = concat (replicate tabs " ") ++ e

|

||||

```

|

||||

|

||||

* _Exercise_ : Write a pretty printer for the `Program` type you defined in the first section

|

||||

|

||||

Okay, we wrote around 200 lines so far of what's typically called the front-end of the compiler. We have around 150 more lines to go and three more tasks: We need to define a subset of JS for our usage, define the translator from our language to that subset, and glue the whole thing together. Let's go!

|

||||

|

||||

### 5\. Define a subset of JavaScript for our usage

|

||||

|

||||

First, we'll define the subset of JavaScript we are going to use:

|

||||

|

||||

```

|

||||

data JSExpr

|

||||

= JSInt Int

|

||||

| JSSymbol Name

|

||||

| JSBinOp JSBinOp JSExpr JSExpr

|

||||

| JSLambda [Name] JSExpr

|

||||

| JSFunCall JSExpr [JSExpr]

|

||||

| JSReturn JSExpr

|

||||

deriving (Eq, Show, Read)

|

||||

|

||||

type JSBinOp = String

|

||||

```

|

||||

|

||||

This data type represents a JavaScript expression. We have two atoms, `JSInt` and `JSSymbol`, to which we'll translate our language's `Atom`; we have `JSBinOp` to represent a binary operation such as `+` or `*`; we have `JSLambda` for anonymous functions, the same as our `lambda` expression; we have `JSFunCall`, which we'll use both for calling functions and for introducing new names as in `let`; and we have `JSReturn` to return values from functions, as that's required in JavaScript.

|

||||

|

||||

This `JSExpr` type is an **abstract representation** of a JavaScript expression. We will translate our own `Expr`, which is an abstract representation of our language's expression, to `JSExpr` and from there to JavaScript. But in order to do that we need to take this `JSExpr` and produce JavaScript code from it. We'll do that by pattern matching on `JSExpr` recursively and emitting JS code as a `String`. This is basically the same thing we did in `printExpr`. We'll also track the scoping of elements so we can indent the generated code in a nice way.

|

||||

|

||||

```

|

||||

printJSOp :: JSBinOp -> String

|

||||

printJSOp op = op

|

||||

|

||||

printJSExpr :: Bool -> Int -> JSExpr -> String

|

||||

printJSExpr doindent tabs = \case

|

||||

JSInt i -> show i

|

||||

JSSymbol name -> name

|

||||

JSLambda vars expr -> (if doindent then indent tabs else id) $ unlines

|

||||

["function(" ++ intercalate ", " vars ++ ") {"

|

||||

,indent (tabs+1) $ printJSExpr False (tabs+1) expr

|

||||

] ++ indent tabs "}"

|

||||

JSBinOp op e1 e2 -> "(" ++ printJSExpr False tabs e1 ++ " " ++ printJSOp op ++ " " ++ printJSExpr False tabs e2 ++ ")"

|

||||

JSFunCall f exprs -> "(" ++ printJSExpr False tabs f ++ ")(" ++ intercalate ", " (fmap (printJSExpr False tabs) exprs) ++ ")"

|

||||

JSReturn expr -> (if doindent then indent tabs else id) $ "return " ++ printJSExpr False tabs expr ++ ";"

|

||||

```

|

||||

|

||||

* _Exercise 1_ : Add a `JSProgram` type that will hold multiple `JSExpr` and create a function `printJSExprProgram` to generate code for it.

|

||||

|

||||

* _Exercise 2_ : Add a new type of `JSExpr` - `JSIf`, and generate code for it.

|

||||

|

||||

### 6\. Implement a code translator to the JavaScript subset we defined

|

||||

|

||||

We are almost there. In this section we'll create a function to translate `Expr` to `JSExpr`.

|

||||

|

||||

The basic idea is simple: we'll translate `ATOM` to `JSSymbol` or `JSInt` and `LIST` to either a function call or a special case we'll translate later.

|

||||

|

||||

```

|

||||

type TransError = String

|

||||

|

||||

translateToJS :: Expr -> Either TransError JSExpr

|

||||

translateToJS = \case

|

||||

ATOM (Symbol s) -> pure $ JSSymbol s

|

||||

ATOM (Int i) -> pure $ JSInt i

|

||||

LIST xs -> translateList xs

|

||||

|

||||

translateList :: [Expr] -> Either TransError JSExpr

|

||||

translateList = \case

|

||||

[] -> Left "translating empty list"

|

||||

ATOM (Symbol s):xs

|

||||

| Just f <- lookup s builtins ->

|

||||

f xs

|

||||

f:xs ->

|

||||

JSFunCall <$> translateToJS f <*> traverse translateToJS xs

|

||||

|

||||

```

|

||||

|

||||

`builtins` is a list of special cases to translate, like `lambda` and `let`. Every case gets the list of arguments for it, verifies that it is syntactically valid, and translates it to the equivalent `JSExpr`.

|

||||

|

||||

```

|

||||

type Builtin = [Expr] -> Either TransError JSExpr

|

||||

type Builtins = [(Name, Builtin)]

|

||||

|

||||

builtins :: Builtins

|

||||

builtins =

|

||||

[("lambda", transLambda)

|

||||

,("let", transLet)

|

||||

,("add", transBinOp "add" "+")

|

||||

,("mul", transBinOp "mul" "*")

|

||||

,("sub", transBinOp "sub" "-")

|

||||

,("div", transBinOp "div" "/")

|

||||

,("print", transPrint)

|

||||

]

|

||||

|

||||

```

|

||||

|

||||

In our case, we treat built-in special forms as special and not first class, so we will not be able to use them as first-class functions and such.

|

||||

|

||||

We'll translate a `lambda` to an anonymous function:

|

||||

|

||||

```

|

||||

transLambda :: [Expr] -> Either TransError JSExpr

|

||||

transLambda = \case

|

||||

[LIST vars, body] -> do

|

||||

vars' <- traverse fromSymbol vars

|

||||

JSLambda vars' <$> (JSReturn <$> translateToJS body)

|

||||

|

||||

vars ->

|

||||

Left $ unlines

|

||||

["Syntax error: unexpected arguments for lambda."

|

||||

,"expecting 2 arguments, the first is the list of vars and the second is the body of the lambda."

|

||||

,"In expression: " ++ show (LIST $ ATOM (Symbol "lambda") : vars)

|

||||

]

|

||||

|

||||

fromSymbol :: Expr -> Either String Name

|

||||

fromSymbol (ATOM (Symbol s)) = Right s

|

||||

fromSymbol e = Left $ "cannot bind value to non symbol type: " ++ show e

|

||||

|

||||

```

|

||||

|

||||

We'll translate `let` to a definition of a function with the relevant named arguments and call it with the values, thus introducing the variables in that scope:

|

||||

|

||||

```

|

||||

transLet :: [Expr] -> Either TransError JSExpr

|

||||

transLet = \case

|

||||

[LIST binds, body] -> do

|

||||

(vars, vals) <- letParams binds

|

||||

vars' <- traverse fromSymbol vars

|

||||

JSFunCall . JSLambda vars' <$> (JSReturn <$> translateToJS body) <*> traverse translateToJS vals

|

||||

where

|

||||

letParams :: [Expr] -> Either Error ([Expr],[Expr])

|

||||

letParams = \case

|

||||

[] -> pure ([],[])

|

||||

LIST [x,y] : rest -> ((x:) *** (y:)) <$> letParams rest

|

||||

x : _ -> Left ("Unexpected argument in let list in expression:\n" ++ printExpr x)

|

||||

|

||||

vars ->

|

||||

Left $ unlines

|

||||

["Syntax error: unexpected arguments for let."

|

||||

,"expecting 2 arguments, the first is the list of var/val pairs and the second is the let body."

|

||||

,"In expression:\n" ++ printExpr (LIST $ ATOM (Symbol "let") : vars)

|

||||

]

|

||||

```
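The shape of that translation can be sketched at the string level. The helper below is hypothetical and much cruder than `transLet`: it assumes the binding values and the body have already been printed as JavaScript strings, and it only shows how a `let` turns into an immediately invoked function.

```haskell
import Data.List (intercalate)

-- Hypothetical helper: given (name, printed value) pairs and a printed body,
-- build a function taking the names and call it at once with the values.
letToIife :: [(String, String)] -> String -> String
letToIife binds body =
  "(function(" ++ intercalate ", " names ++ ") { return " ++ body ++ "; })("
    ++ intercalate ", " vals ++ ")"
  where
    (names, vals) = unzip binds
```

For instance, `letToIife [("x", "1"), ("y", "2")] "(x + y)"` produces `(function(x, y) { return (x + y); })(1, 2)`. One consequence of this encoding is that all values are evaluated outside the new function, so a binding cannot refer to an earlier binding in the same `let`.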

|

||||

|

||||

We'll translate an operation that can work on multiple arguments to a chain of binary operations. For example: `(add 1 2 3)` will become `((1 + 2) + 3)`

|

||||

|

||||

```

|

||||

transBinOp :: Name -> Name -> [Expr] -> Either TransError JSExpr

|

||||

transBinOp f _ [] = Left $ "Syntax error: '" ++ f ++ "' expected at least 1 argument, got: 0"

|

||||

transBinOp _ _ [x] = translateToJS x

|

||||

transBinOp _ f list = foldl1 (JSBinOp f) <$> traverse translateToJS list

|

||||

```
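The direction of the fold matters for `sub` and `div`, which are not associative. The following sketch uses a tiny stand-in expression type (`E`, `render`, `leftChain` and `rightChain` are invented for this example, not part of the compiler) to show how `foldl1` and `foldr1` nest differently.

```haskell
-- Minimal stand-in for JSExpr: integer literals and binary operations.
data E = Lit Int | Bin String E E deriving (Eq, Show)

-- Print with explicit parentheses, in the same style as printJSExpr.
render :: E -> String
render (Lit n) = show n
render (Bin op a b) = "(" ++ render a ++ " " ++ op ++ " " ++ render b ++ ")"

-- foldl1 nests to the left, foldr1 nests to the right.
-- Both are partial on the empty list, so callers must rule that out first.
leftChain, rightChain :: String -> [Int] -> E
leftChain op = foldl1 (Bin op) . map Lit
rightChain op = foldr1 (Bin op) . map Lit
```

Here `render (leftChain "-" [10, 3, 2])` gives `((10 - 3) - 2)`, which evaluates to 5, while `render (rightChain "-" [10, 3, 2])` gives `(10 - (3 - 2))`, which evaluates to 9. The left fold matches the usual reading of `(sub 10 3 2)`.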

|

||||

|

||||

And we'll translate a `print` as a call to `console.log`

|

||||

|

||||

```

|

||||

transPrint :: [Expr] -> Either TransError JSExpr

|

||||

transPrint [expr] = JSFunCall (JSSymbol "console.log") . (:[]) <$> translateToJS expr

|

||||

transPrint xs = Left $ "Syntax error. print expected 1 argument, got: " ++ show (length xs)

|

||||

|

||||

```

|

||||

|

||||

Notice that we could have skipped verifying the syntax if we had parsed those as special cases of `Expr`.

|

||||

|

||||

* _Exercise 1_ : Translate `Program` to `JSProgram`

|

||||

|

||||

* _Exercise 2_ : add a special case for `if Expr Expr Expr` and translate it to the `JSIf` case you implemented in the last exercise

|

||||

|

||||

### 7\. Glue it all together

|

||||

|

||||

Finally, we are going to glue this all together. We'll:

|

||||

|

||||

1. Read a file

|

||||

|

||||

2. Parse it to `Expr`

|

||||

|

||||

3. Translate it to `JSExpr`

|

||||

|

||||

4. Emit JavaScript code to the standard output

|

||||

|

||||

We'll also enable a few flags for testing:

|

||||

|

||||

* `--e` will parse and print the abstract representation of the expression (`Expr`)

|

||||

|

||||

* `--pp` will parse and pretty print

|

||||

|

||||

* `--jse` will parse, translate and print the abstract representation of the resulting JS (`JSExpr`)

|

||||

|

||||

* `--ppc` will parse, pretty print and compile

|

||||

|

||||

```

|

||||

main :: IO ()

|

||||

main = getArgs >>= \case

|

||||

[file] ->

|

||||

printCompile =<< readFile file

|

||||

["--e",file] ->

|

||||

either putStrLn print . runExprParser "--e" =<< readFile file

|

||||

["--pp",file] ->

|

||||

either putStrLn (putStrLn . printExpr) . runExprParser "--pp" =<< readFile file

|

||||

["--jse",file] ->

|

||||

either print (either putStrLn print . translateToJS) . runExprParser "--jse" =<< readFile file

|

||||

["--ppc",file] ->

|

||||

either putStrLn (either putStrLn putStrLn) . fmap (compile . printExpr) . runExprParser "--ppc" =<< readFile file

|

||||

_ ->

|

||||

putStrLn $ unlines

|

||||

["Usage: runghc Main.hs [ --e, --pp, --jse, --ppc ] <filename>"

|

||||

,"--e print the Expr"

|

||||

,"--pp pretty print Expr"

|

||||

,"--jse print the JSExpr"

|

||||

,"--ppc pretty print Expr and then compile"

|

||||

]

|

||||

|

||||

printCompile :: String -> IO ()

|

||||

printCompile = either putStrLn putStrLn . compile

|

||||

|

||||

compile :: String -> Either Error String

|

||||

compile str = printJSExpr False 0 <$> (translateToJS =<< runExprParser "compile" str)

|

||||

|

||||

```

|

||||

|

||||

That's it. We have a compiler from our language to JS. Again, you can view the full source file [here][9].

|

||||

|

||||

Running our compiler with the example from the first section yields this JavaScript code:

|

||||

|

||||

```

|

||||

$ runhaskell Lisp.hs example.lsp

|

||||

(function(compose, square, add1) {

|

||||

return (console.log)(((compose)(square, add1))(5));

|

||||

})(function(f, g) {

|

||||

return function(x) {

|

||||

return (f)((g)(x));

|

||||

};

|

||||

}, function(x) {

|

||||

return (x * x);

|

||||

}, function(x) {

|

||||

return (x + 1);

|

||||

})

|

||||

```

|

||||

|

||||

If you have Node.js installed on your computer, you can run this code with:

|

||||

|

||||

```

|

||||

$ runhaskell Lisp.hs example.lsp | node -p

|

||||

36

|

||||

undefined

|

||||

```

|

||||

|

||||

* _Final exercise_ : instead of compiling an expression, compile a program of multiple expressions.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://gilmi.me/blog/post/2016/10/14/lisp-to-js

|

||||

|

||||

Author: [Gil Mizrahi][a]

|

||||

Topic selection: [oska874][b]

|

||||

Translator: [translator ID](https://github.com/译者ID)

|

||||

Proofreader: [proofreader ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:https://gilmi.me/home

|

||||

[b]:https://github.com/oska874

|

||||

[1]:https://gilmi.me/blog/authors/Gil

|

||||

[2]:https://gilmi.me/blog/tags/compilers

|

||||

[3]:https://gilmi.me/blog/tags/fp

|

||||

[4]:https://gilmi.me/blog/tags/haskell

|

||||

[5]:https://gilmi.me/blog/tags/lisp

|

||||

[6]:https://gilmi.me/blog/tags/parsing

|

||||

[7]:https://gist.github.com/soupi/d4ff0727ccb739045fad6cdf533ca7dd

|

||||

[8]:https://mrkkrp.github.io/megaparsec/

|

||||

[9]:https://gist.github.com/soupi/d4ff0727ccb739045fad6cdf533ca7dd

|

||||

[10]:https://gilmi.me/blog/post/2016/10/14/lisp-to-js

|

||||

@ -1,3 +1,5 @@

|

||||

translating---geekpi

|

||||

|

||||

A Desktop GUI Application For NPM

|

||||

======

|

||||

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

Translating by qhwdw

|

||||

Complete Sed Command Guide [Explained with Practical Examples]

|

||||

======

|

||||

In a previous article, I showed the [basic usage of Sed][1], the stream editor, on a practical use case. Today, be prepared to gain more insight about Sed as we will take an in-depth tour of the sed execution model. This will be also an opportunity to make an exhaustive review of all Sed commands and to dive into their details and subtleties. So, if you are ready, launch a terminal, [download the test files][2] and sit comfortably before your keyboard: we will start our exploration right now!

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

Translating by qhwdw

|

||||

Install Oracle VirtualBox On Ubuntu 18.04 LTS Headless Server

|

||||

======

|

||||

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

Translating by qhwdw

|

||||

Setup Headless Virtualization Server Using KVM In Ubuntu 18.04 LTS

|

||||

======

|

||||

|

||||

|

||||

205

sources/tech/20180715 Why is Python so slow.md

Normal file


@ -0,0 +1,205 @@

|

||||

Why is Python so slow?

|

||||

============================================================

|

||||

|

||||

Python is booming in popularity. It is used in DevOps, Data Science, Web Development and Security.

|

||||

|

||||

It does not, however, win any medals for speed.

|

||||

|

||||

|

||||

|

||||

|

||||

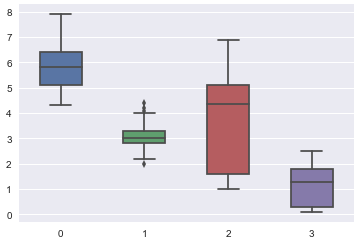

> How does Java compare in terms of speed to C or C++ or C# or Python? The answer depends greatly on the type of application you’re running. No benchmark is perfect, but The Computer Language Benchmarks Game is [a good starting point][5].

|

||||

|

||||

I’ve been referring to the Computer Language Benchmarks Game for over a decade; compared with other languages like Java, C#, Go, JavaScript, C++, Python is [one of the slowest][6]. This includes [JIT][7] (C#, Java) and [AOT][8] (C, C++) compilers, as well as interpreted languages like JavaScript.

|

||||

|

||||

_NB: When I say “Python”, I’m talking about the reference implementation of the language, CPython. I will refer to other runtimes in this article._

|

||||

|

||||

> I want to answer this question: When Python completes a comparable application 2–10x slower than another language, _why is it slow_ and can’t we _make it faster_ ?

|

||||

|

||||

Here are the top theories:

|

||||

|

||||

* “ _It’s the GIL (Global Interpreter Lock)_ ”

|

||||

|

||||

* “ _It’s because it’s interpreted and not compiled_ ”

|

||||

|

||||

* “ _It’s because it’s a dynamically typed language_ ”

|

||||

|

||||

Which one of these reasons has the biggest impact on performance?

|

||||

|

||||

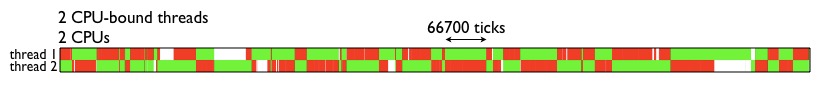

### “It’s the GIL”

|

||||

|

||||

Modern computers come with CPUs that have multiple cores, and sometimes multiple processors. In order to utilise all this extra processing power, the Operating System defines a low-level structure called a thread, where a process (e.g. Chrome Browser) can spawn multiple threads, each carrying instructions for the system to execute. That way, if one process is particularly CPU-intensive, that load can be shared across the cores, which effectively makes most applications complete tasks faster.

|

||||

|

||||

My Chrome Browser, as I’m writing this article, has 44 threads open. Keep in mind that the structure and API of threading are different between POSIX-based (e.g. Mac OS and Linux) and Windows OS. The operating system also handles the scheduling of threads.

|

||||

|

||||

If you haven’t done multi-threaded programming before, a concept you’ll need to quickly become familiar with is locks. Unlike a single-threaded process, you need to ensure that when changing variables in memory, multiple threads don’t try to access or change the same memory address at the same time.

|

||||

|

||||