Mirror of https://github.com/LCTT/TranslateProject.git, synced 2024-12-26 21:30:55 +08:00

Merge remote-tracking branch 'LCTT/master'

Commit 3e2274f58b

@ -1,56 +0,0 @@


heguangzhi translating


Linus, His Apology, And Why We Should Support Him
======


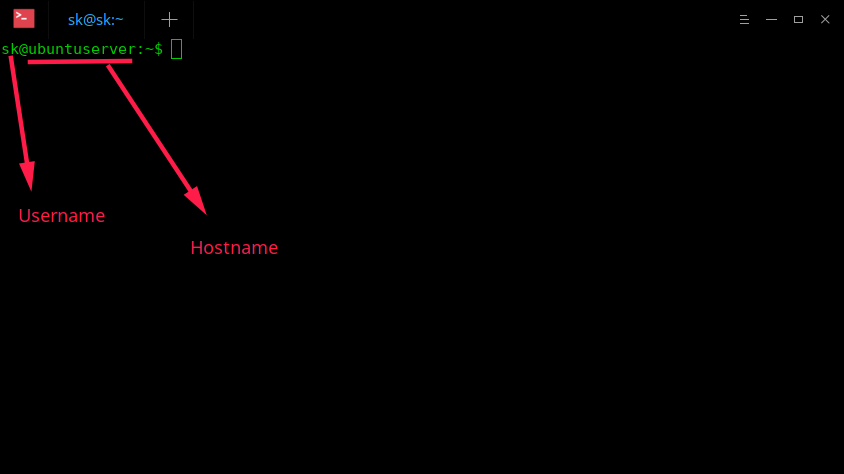

Today, Linus Torvalds, the creator of Linux, which powers everything from smartwatches to electrical grids, posted [a pretty remarkable note on the kernel mailing list][1].


As a little bit of backstory, Linus has sometimes come under fire for the ways in which he has expressed feedback, provided criticism, and reacted to various scenarios on the kernel mailing list. This criticism has been fair in many cases: he has been overly aggressive at times, and while the kernel maintainers are a tight-knit group, the optics, particularly for those new to kernel development, have often been pretty bad.


Like many conflict scenarios, this feedback has been communicated back to him in both constructive and non-constructive ways. Historically, he has seemed reluctant to really internalize this feedback, partially, I suspect, because (a) the Linux kernel is a very successful project, and (b) some of the critics have at times gone nuclear at him (which often doesn’t work as a strategy with defensive people). Well, things changed today.


In his post today he shared some self-reflection on this feedback:


> This week people in our community confronted me about my lifetime of not understanding emotions. My flippant attacks in emails have been both unprofessional and uncalled for. Especially at times when I made it personal. In my quest for a better patch, this made sense to me. I know now this was not OK and I am truly sorry.


He went on not only to admit that this has been a problem, but also to share a very personal acceptance that he struggles to understand and engage with people’s emotions:


> The above is basically a long-winded way to get to the somewhat painful personal admission that hey, I need to change some of my behavior, and I want to apologize to the people that my personal behavior hurt and possibly drove away from kernel development entirely. I am going to take time off and get some assistance on how to understand people’s emotions and respond appropriately.


His post is sure to light up the open source, Linux, and tech world for the next few weeks. For some, it will be celebrated as a step in the right direction. For others, it will be too little, too late, and their animus will remain. Still others will be cautiously supportive, but will defer judgement until they have seen his future behavior demonstrate substantive changes.


### My Take


I wouldn’t say I know Linus very closely; we have a casual relationship. I see him at conferences from time to time, and we often bump into each other and catch up. I interviewed him for my book and for the Global Learning XPRIZE. In my experience, he is a funny, genuine, friendly guy. Interestingly, and not unusually at all for open source, his online persona is rather different from his in-person persona. I’ll admit that the dust-ups I saw on LKML didn’t reflect the Linus I know. I chalked them up to a mixture of his struggles with social skills, dogmatic pragmatism, and ego.


His post today is a pretty remarkable change of posture for him, and I encourage us as a community to support him in making these changes.


**Accepting these personal challenges is tough, particularly for someone in his position**. Linux is a global phenomenon. It has resulted in billions of dollars of technology creation, powering thousands of companies, and changing the norms around how software is consumed and created. It is easy to forget that Linux was started by a quiet Finnish kid in his university dorm room. It is important to remember that **just because Linux has scaled elegantly, it doesn’t mean that Linus has been able to**. He isn’t a codebase; he is a human being, and bugs are harder to spot and fix in humans. You can’t just deploy a fix immediately. It takes time to identify the problem and to foster and grow a change. The starting point for this is to support people in that desire for change, not to re-litigate the ills of the past: that will get us nowhere quickly.


[![Young Linus Torvalds][2]][3]


I am also mindful of ego. None of us likes to admit we have an ego, but we all do. You don’t get to build one of the most fundamental technologies of the last thirty years and not have an ego. He built it…they came…and a revolution was energized because of what he created. While Linus’s ego is more subtle, and thankfully doesn’t extend to faddish self-promotion, overly expensive suits, and forays into Hollywood (quite the opposite), his ego has naturally resulted in abrupt opinions on how his project should run, sometimes with his fingers plugged in his ears against particularly challenging viewpoints from others. **His post today is a clear example of him putting Linux as a project ahead of his own personal ego**.


This is important for a few reasons. Firstly, being in such a public position and accepting your personal flaws isn’t a problem many people face, and isn’t a situation many people handle well. I work with a lot of CEOs, and they often say it is the loneliest job on the planet. I have heard American presidents say the same in interviews. This is because they are at the top of the tree, with all the responsibility and expectations on their shoulders. Put yourself in Linus’s position: his little project has blown up into a global phenomenon, and he didn’t necessarily have the social tools to handle this change. Ego pushes these internal struggles under the surface, urging us to push them down and avoid them. So, to accept them as publicly and openly as he did today is a very firm step in the right direction. Now, the true test will be results, but we all need to provide the breathing space for him to accomplish them.


So, I would encourage everyone to give Linus a shot. This doesn’t mean the frustrations of the past are erased, but he has acknowledged and apologized for these mistakes as a first step. He has accepted that he struggles with understanding others’ emotions, and has expressed a desire to improve this for the betterment of the project and himself. **He is a human, and the best tonic for humans to resolve their own internal struggles is the support and encouragement of other humans**. This applies not only to Linus, but to anyone who faces similar struggles.


All the best, Linus.


--------------------------------------------------------------------------------


via: https://www.jonobacon.com/2018/09/16/linus-his-apology-and-why-we-should-support-him/


Author: [Jono Bacon][a]


Selected by: [lujun9972](https://github.com/lujun9972)


Translator: [translator ID](https://github.com/译者ID)


Proofreader: [proofreader ID](https://github.com/校对者ID)


This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)


[a]: https://www.jonobacon.com/author/admin/


[1]: https://lkml.org/lkml/2018/9/16/167


[2]: https://i1.wp.com/www.jonobacon.com/wp-content/uploads/2018/09/linus.jpg?resize=499%2C342&ssl=1


[3]: https://i1.wp.com/www.jonobacon.com/wp-content/uploads/2018/09/linus.jpg?ssl=1


@ -1,102 +0,0 @@


Announcing the General Availability of containerd 1.0, the Industry-Standard Runtime Used by Millions of Users
============================================================


Today, we’re pleased to announce that containerd (pronounced Con-Tay-Ner-D), an industry-standard runtime for building container solutions, has reached its 1.0 milestone. containerd has already been deployed in millions of systems in production today, making it the most widely adopted runtime and an essential upstream component of the Docker platform.


Built to address the needs of modern container platforms like Docker and orchestration systems like Kubernetes, containerd ensures users have a consistent dev-to-ops experience. From [Docker’s initial announcement][22] last year that it was spinning out its core runtime to [its donation to the CNCF][23] in March 2017, the containerd project has experienced significant growth and progress over the past 12 months.


Within both the Docker and Kubernetes communities, there has been a significant uptick in contributions from independents and CNCF member companies alike, including Docker, Google, NTT, IBM, Microsoft, AWS, ZTE, Huawei and ZJU. Similarly, the maintainers have been working to add key functionality to containerd. The initial containerd donation provided everything users need to ensure a seamless container experience, including methods for:


* transferring container images,


* container execution and supervision,


* low-level local storage and network interfaces and


* the ability to work on Linux, Windows, and other platforms.


Additional work has been done to add even more powerful capabilities to containerd, including:


* Complete storage and distribution system that supports both OCI and Docker image formats


* Robust events system


* More sophisticated snapshot model to manage container filesystems


These changes helped the team build out a smaller interface for the snapshotters, while still meeting the requirements of components such as a builder. They also reduce the amount of code needed, making containerd much easier to maintain in the long run.


The containerd 1.0 milestone comes after several months of testing both the alpha and beta versions, which enabled the team to implement many performance improvements. Some of these improvements include the creation of a stress-testing system and improvements in garbage collection and shim memory usage.


“In 2017 key functionality has been added [to] containerd to address the needs of modern container platforms like Docker and orchestration systems like Kubernetes,” said Michael Crosby, Maintainer for containerd and engineer at Docker. “Since our announcement in December, we have been progressing the design of the project with the goal of making it easily embeddable in higher level systems to provide core container capabilities. We will continue to work with the community to create a runtime that’s lightweight yet powerful, balancing new functionality with the desire for code that is easy to support and maintain.”


containerd is already being used by Kubernetes for its [cri-containerd project][24], which enables users to run Kubernetes clusters using containerd as the underlying runtime. containerd is also an essential upstream component of the Docker platform and is currently used by millions of end users. There is also strong alignment with other CNCF projects: containerd exposes an API using [gRPC][25] and exposes metrics in the [Prometheus][26] format. containerd also fully leverages the Open Container Initiative (OCI) runtime and image format specifications and the OCI reference implementation ([runC][27]), and will pursue OCI certification when it is available.


Key Milestones in the progress to 1.0 include:


Notable containerd facts and figures:


* 1994 GitHub stars, 401 forks


* 108 contributors


* 8 maintainers from independents and member companies alike, including Docker, Google, IBM, ZTE and ZJU


* 3030+ commits, 26 releases


### Availability and Resources


To participate in containerd: [github.com/containerd/containerd][28]


* Getting Started with containerd: [http://mobyproject.org/blog/2017/08/15/containerd-getting-started/][8]


* Roadmap: [https://github.com/containerd/containerd/blob/master/ROADMAP.md][1]


* Scope table: [https://github.com/containerd/containerd#scope][2]


* Architecture document: [https://github.com/containerd/containerd/blob/master/design/architecture.md][3]


* APIs: [https://github.com/containerd/containerd/tree/master/api/][9].


* Learn more about containerd at KubeCon by attending Justin Cormack’s [LinuxKit & Kubernetes talk at Austin Docker Meetup][10], Patrick Chanezon’s [Moby session][11], [Phil Estes’ session][12], or the [containerd salon][13]


--------------------------------------------------------------------------------


via: https://blog.docker.com/2017/12/cncf-containerd-1-0-ga-announcement/


Author: [Patrick Chanezon][a]


Translator: [translator ID](https://github.com/译者ID)


Proofreader: [proofreader ID](https://github.com/校对者ID)


This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)


[a]:https://blog.docker.com/author/chanezon/


[1]:https://github.com/docker/containerd/blob/master/ROADMAP.md


[2]:https://github.com/docker/containerd#scope


[3]:https://github.com/docker/containerd/blob/master/design/architecture.md


[4]:http://www.linkedin.com/shareArticle?mini=true&url=http://dockr.ly/2ArQe3G&title=Announcing%20the%20General%20Availability%20of%20containerd%201.0%2C%20the%20industry-standard%20runtime%20used%20by%20millions%20of%20users&summary=Today,%20we%E2%80%99re%20pleased%20to%20announce%20that%20containerd%20(pronounced%20Con-Tay-Ner-D),%20an%20industry-standard%20runtime%20for%20building%20container%20solutions,%20has%20reached%20its%201.0%20milestone.%20containerd%20has%20already%20been%20deployed%20in%20millions%20of%20systems%20in%20production%20today,%20making%20it%20the%20most%20widely%20adopted%20runtime%20and%20an%20essential%20upstream%20component%20of%20the%20Docker%20platform.%20Built%20...


[5]:http://www.reddit.com/submit?url=http://dockr.ly/2ArQe3G&title=Announcing%20the%20General%20Availability%20of%20containerd%201.0%2C%20the%20industry-standard%20runtime%20used%20by%20millions%20of%20users


[6]:https://plus.google.com/share?url=http://dockr.ly/2ArQe3G


[7]:http://news.ycombinator.com/submitlink?u=http://dockr.ly/2ArQe3G&t=Announcing%20the%20General%20Availability%20of%20containerd%201.0%2C%20the%20industry-standard%20runtime%20used%20by%20millions%20of%20users


[8]:http://mobyproject.org/blog/2017/08/15/containerd-getting-started/


[9]:https://github.com/docker/containerd/tree/master/api/


[10]:https://www.meetup.com/Docker-Austin/events/245536895/


[11]:http://sched.co/CU6G


[12]:https://kccncna17.sched.com/event/CU6g/embedding-the-containerd-runtime-for-fun-and-profit-i-phil-estes-ibm


[13]:https://kccncna17.sched.com/event/Cx9k/containerd-salon-hosted-by-derek-mcgowan-docker-lantao-liu-google


[14]:https://blog.docker.com/author/chanezon/


[15]:https://blog.docker.com/tag/cloud-native-computing-foundation/


[16]:https://blog.docker.com/tag/cncf/


[17]:https://blog.docker.com/tag/container-runtime/


[18]:https://blog.docker.com/tag/containerd/


[19]:https://blog.docker.com/tag/cri-containerd/


[20]:https://blog.docker.com/tag/grpc/


[21]:https://blog.docker.com/tag/kubernetes/


[22]:https://blog.docker.com/2016/12/introducing-containerd/


[23]:https://blog.docker.com/2017/03/docker-donates-containerd-to-cncf/


[24]:http://blog.kubernetes.io/2017/11/containerd-container-runtime-options-kubernetes.html


[25]:http://www.grpc.io/


[26]:https://prometheus.io/


[27]:https://github.com/opencontainers/runc


[28]:http://github.com/containerd/containerd


@ -1,74 +0,0 @@


Ubuntu Updates for the Meltdown / Spectre Vulnerabilities
============================================================


* For up-to-date patch, package, and USN links, please refer to: [https://wiki.ubuntu.com/SecurityTeam/KnowledgeBase/SpectreAndMeltdown][2]


Unfortunately, you’ve probably already read about one of the most widespread security issues in modern computing history — colloquially known as “[Meltdown][5]” ([CVE-2017-5754][6]) and “[Spectre][7]” ([CVE-2017-5753][8] and [CVE-2017-5715][9]) — affecting practically every computer built in the last 10 years, running any operating system. That includes [Ubuntu][10].


I say “unfortunately”, in part because there was a coordinated release date of January 9, 2018, agreed upon by essentially every operating system, hardware, and cloud vendor in the world. By design, operating system updates would be available at the same time as the public disclosure of the security vulnerability. This kind of coordinated disclosure is an industry-standard best practice; it rarely breaks down, but it has in this case.


At its heart, this vulnerability is a CPU hardware architecture design issue. But there are billions of affected hardware devices, and replacing CPUs is simply unreasonable. As a result, operating system kernels — Windows, macOS, Linux, and many others — are being patched to mitigate the critical security vulnerability.


Canonical engineers have been working on this since we were made aware under the embargoed disclosure (November 2017) and have worked through the Christmas and New Year holidays, testing and integrating an incredibly complex patch set into a broad set of Ubuntu kernels and CPU architectures.


Ubuntu users of the 64-bit x86 architecture (aka, amd64) can expect updated kernels by the original January 9, 2018 coordinated release date, and sooner if possible. Updates will be available for:


* Ubuntu 17.10 (Artful) — Linux 4.13 HWE


* Ubuntu 16.04 LTS (Xenial) — Linux 4.4 (and 4.4 HWE)


* Ubuntu 14.04 LTS (Trusty) — Linux 3.13


* Ubuntu 12.04 ESM (Precise) — Linux 3.2
    * Note that an [Ubuntu Advantage license][1] is required for the 12.04 ESM kernel update, as Ubuntu 12.04 LTS is past its end of life.


Ubuntu 18.04 LTS (Bionic) will release in April of 2018, and will ship a 4.15 kernel, which includes the [KPTI][11] patchset as integrated upstream.


Ubuntu optimized kernels for the Amazon, Google, and Microsoft public clouds are also covered by these updates, as well as the rest of Canonical’s [Certified Public Clouds][12] including Oracle, OVH, Rackspace, IBM Cloud, Joyent, and Dimension Data.


These kernel fixes will not be [Livepatch-able][13]. The source code changes required to address this problem comprise hundreds of independent patches, touching hundreds of files and thousands of lines of code. The sheer complexity of this patchset is not compatible with the Linux kernel Livepatch mechanism. An update and a reboot will be required to activate these fixes.
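
Once a machine has been updated and rebooted, one way to see what the running kernel itself reports is the sysfs directory `/sys/devices/system/cpu/vulnerabilities/`. That interface was added in upstream Linux 4.15 and backported by some distributions, so it may be missing on older kernels; the status strings come from the kernel, while the helper names below are illustrative assumptions, not an Ubuntu tool. A minimal sketch:

```python
from pathlib import Path

# sysfs directory added in upstream Linux 4.15 (some distro kernels backport it).
VULN_DIR = Path("/sys/devices/system/cpu/vulnerabilities")


def read_mitigation_status(base=VULN_DIR):
    """Return {vulnerability_name: status_text} for each file under `base`.

    Returns an empty dict when the directory does not exist, which usually
    means the running kernel predates this interface.
    """
    base = Path(base)
    if not base.is_dir():
        return {}
    status = {}
    for entry in sorted(base.iterdir()):
        if entry.is_file():
            status[entry.name] = entry.read_text().strip()
    return status


def unmitigated(status):
    """Names whose kernel-reported status starts with 'Vulnerable'."""
    return [name for name, text in status.items() if text.startswith("Vulnerable")]


if __name__ == "__main__":
    status = read_mitigation_status()
    if not status:
        print("No vulnerabilities directory; this kernel may predate the interface.")
    for name, text in status.items():
        print(f"{name}: {text}")
    for name in unmitigated(status):
        print(f"WARNING: {name} reported as vulnerable")
```

On a patched x86 kernel with page-table isolation active, the `meltdown` entry typically reads `Mitigation: PTI`; if the directory is absent, checking the package changelog for the updated kernel version is the fallback.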


Furthermore, you can expect Ubuntu security updates for a number of other related packages, including CPU microcode, GCC and QEMU in the coming days.


We don’t have a performance analysis to share at this time, but please do stay tuned here, as we’ll follow up with that as soon as possible.


Thanks,


[@DustinKirkland][14]


VP of Product


Canonical / Ubuntu


### About the author


Dustin Kirkland is part of Canonical's Ubuntu Product and Strategy team, working for Mark Shuttleworth, and leading the technical strategy, road map, and life cycle of the Ubuntu Cloud and IoT commercial offerings. Formerly the CTO of Gazzang, a venture funded start-up acquired by Cloudera, Dustin designed and implemented an innovative key management system for the cloud, called zTrustee, and delivered comprehensive security for cloud and big data platforms with eCryptfs and other encryption technologies. Dustin is an active Core Developer of the Ubuntu Linux distribution, maintainer of 20+ open source projects, and the creator of Byobu, DivItUp.com, and LinuxSearch.org. A Fightin' Texas Aggie Class of 2001 graduate, Dustin lives in Austin, Texas, with his wife Kim, daughters, and his Australian Shepherds, Aggie and Tiger. Dustin is also an avid home brewer.


[More articles by Dustin][3]


--------------------------------------------------------------------------------


via: https://insights.ubuntu.com/2018/01/04/ubuntu-updates-for-the-meltdown-spectre-vulnerabilities/


Author: [Dustin Kirkland][a]


Translator: [translator ID](https://github.com/译者ID)


Proofreader: [proofreader ID](https://github.com/校对者ID)


This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)


[a]:https://insights.ubuntu.com/author/kirkland/


[1]:https://www.ubuntu.com/support/esm


[2]:https://wiki.ubuntu.com/SecurityTeam/KnowledgeBase/SpectreAndMeltdown


[3]:https://insights.ubuntu.com/author/kirkland/


[4]:https://insights.ubuntu.com/author/kirkland/


[5]:https://en.wikipedia.org/wiki/Meltdown_(security_vulnerability)


[6]:https://people.canonical.com/~ubuntu-security/cve/2017/CVE-2017-5754.html


[7]:https://en.wikipedia.org/wiki/Spectre_(security_vulnerability)


[8]:https://people.canonical.com/~ubuntu-security/cve/2017/CVE-2017-5753.html


[9]:https://people.canonical.com/~ubuntu-security/cve/2017/CVE-2017-5715.html


[10]:https://wiki.ubuntu.com/SecurityTeam/KnowledgeBase/SpectreAndMeltdown


[11]:https://lwn.net/Articles/742404/


[12]:https://partners.ubuntu.com/programmes/public-cloud


[13]:https://www.ubuntu.com/server/livepatch


[14]:https://twitter.com/dustinkirkland


@ -1,85 +0,0 @@


Reckoning The Spectre And Meltdown Performance Hit For HPC
============================================================


While no one has yet created an exploit to take advantage of the Spectre and Meltdown speculative execution vulnerabilities that were exposed by Google six months ago and that were revealed in early January, it is only a matter of time. The [patching frenzy has not settled down yet][2], and a big concern is not just whether these patches fill the security gaps, but at what cost they do so in terms of application performance.


To try to ascertain the performance impact of the Spectre and Meltdown patches, most people have relied on comments from Google on the negligible nature of the performance hit on its own applications and some tests done by Red Hat on a variety of workloads, [which we profiled in our initial story on the vulnerabilities][3]. This is a good starting point, but what companies really need to do is profile the performance of their applications before and after applying the patches – and in such a fine-grained way that they can use the data to debug the performance hit and see if there is any remediation they can take to alleviate the impact.


In the meantime, we are relying on researchers and vendors to figure out the performance impacts. Networking chip maker Mellanox Technologies, always eager to promote the benefits of the offload model of its switch and network interface chips, has run some tests to show the effects of the Spectre and Meltdown patches on high performance networking for various workloads and using various networking technologies, including its own Ethernet and InfiniBand devices and Intel’s OmniPath. Some HPC researchers at the University at Buffalo have also done some preliminary benchmarking of selected HPC workloads to see the effect on compute and network performance. This is a good starting point, but it is far from a complete picture of the impact that might be seen on HPC workloads after organizations deploy the Spectre and Meltdown patches to their systems.


To recap, here is what Red Hat found out when it tested the initial Spectre and Meltdown patches running its Enterprise Linux 7 release on servers using Intel’s “Haswell” Xeon E5 v3, “Broadwell” Xeon E5 v4, and “Skylake” Xeon SP processors:


* **Measurable, 8 percent to 19 percent:** Highly cached random memory, with buffered I/O, OLTP database workloads, and benchmarks with high kernel-to-user space transitions are impacted between 8 percent and 19 percent. Examples include OLTP Workloads (TPC), sysbench, pgbench, netperf (< 256 byte), and fio (random I/O to NVMe).


* **Modest, 3 percent to 7 percent:** Database analytics, Decision Support System (DSS), and Java VMs are impacted less than the Measurable category. These applications may have significant sequential disk or network traffic, but kernel/device drivers are able to aggregate requests to a moderate level of kernel-to-user transitions. Examples include SPECjbb2005, Queries/Hour and overall analytic timing (sec).


* **Small, 2 percent to 5 percent:** HPC CPU-intensive workloads are affected the least with only 2 percent to 5 percent performance impact because jobs run mostly in user space and are scheduled using CPU pinning or NUMA control. Examples include Linpack NxN on x86 and SPECcpu2006.


* **Minimal impact:** Linux accelerator technologies that generally bypass the kernel in favor of user direct access are the least affected, with less than 2 percent overhead measured. Examples tested include DPDK (VsPERF at 64 byte) and OpenOnload (STAC-N). User-space calls served by the vDSO, such as gettimeofday(), are not impacted. We expect similar minimal impact for other offloads.
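
The categories above all turn on how often a workload crosses from user space into the kernel. A crude way to gauge that per-crossing cost on your own machine, before and after patching, is to time a loop of cheap system calls against a loop that never leaves user space. The sketch below assumes Linux and Python 3.7+ (for `time.perf_counter_ns`); `os.getppid()` is used only because it is a cheap system call, and the helper names are illustrative. Interpreter overhead is included in both numbers, so the difference between them is the interesting figure, not either absolute value.

```python
import os
import time


def ns_per_call(fn, iterations=200_000):
    """Average wall-clock nanoseconds per call of `fn` over `iterations` calls."""
    start = time.perf_counter_ns()
    for _ in range(iterations):
        fn()
    elapsed = time.perf_counter_ns() - start
    return elapsed / iterations


def user_space_only():
    # Pure Python work that never enters the kernel.
    return 1 + 1


def kernel_crossing():
    # On Linux, os.getppid() issues a getppid() system call each time,
    # so every call crosses the user/kernel boundary.
    return os.getppid()


if __name__ == "__main__":
    user_ns = ns_per_call(user_space_only)
    sys_ns = ns_per_call(kernel_crossing)
    print(f"user-space call:             {user_ns:8.1f} ns")
    print(f"system call round trip:      {sys_ns:8.1f} ns")
    print(f"extra cost per kernel entry: {sys_ns - user_ns:8.1f} ns")
```

Running the same script on the same hardware with the old and the patched kernel gives a rough sense of how much more each kernel entry costs; it says nothing about how often a given application makes such calls, which is what actually determines which of the categories above it falls into.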


And just to remind you, according to Red Hat, containerized applications running atop Linux do not incur an extra Spectre or Meltdown penalty compared to applications running on bare metal, because they are implemented as generic Linux processes themselves. For applications running inside virtual machines atop hypervisors, however, Red Hat does expect the performance hit to be higher because of the increased frequency of user-to-kernel transitions. (How much higher has not yet been revealed.)


Gilad Shainer, the vice president of marketing for the InfiniBand side of the Mellanox house, shared some initial performance data from the company’s labs with regard to the Spectre and Meltdown patches. ([The presentation is available online here.][4])


In general, Shainer tells _The Next Platform_, the offload model that Mellanox employs in its InfiniBand switches (RDMA is a big component of this) and in its Ethernet gear (the RoCE clone of RDMA is used here) is a very big deal, given that the network drivers bypass the operating system kernels. The exploits take advantage, in one of three forms, of the porous barrier between the kernel and user spaces in the operating systems, so anything that is kernel heavy will be adversely affected. This, says Shainer, includes the TCP/IP protocol stack commonly run over Ethernet as well as the OmniPath protocol, which by its nature tries to have the CPUs in the system do a lot of the network processing. Intel and others who have used an onload model have contended that this allows networks to be more scalable, and clearly there are very scalable InfiniBand and OmniPath networks, with many thousands of nodes, so both approaches seem to work in production.


Here are the feeds and speeds on the systems that Mellanox tested on two sets of networking tests. For the comparison of Ethernet with RoCE added and standard TCP over Ethernet, the hardware was a two-socket server using Intel’s Xeon E5-2697A v4 running at 2.60 GHz. This machine was configured with Red Hat Enterprise Linux 7.4, with kernel versions 3.10.0-693.11.6.el7.x86_64 and 3.10.0-693.el7.x86_64. (Those numbers _are_ different – there is an _11.6_ in the middle of the first one.) The machines were equipped with ConnectX-5 server adapters with firmware 16.22.0170 and the MLNX_OFED_LINUX-4.3-0.0.5.0 driver. The workload that was tested was not a specific HPC application, but rather a very low-level, homegrown interconnect benchmark that is used to stress switch chips and NICs to see their peak _sustained_ performance, as distinct from peak _theoretical_ performance, which is the absolute ceiling. This particular test was run on a two-node cluster, passing data from one machine to the other.


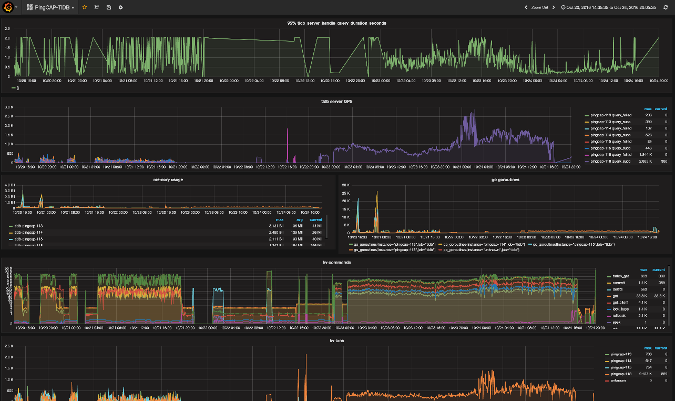

Here is how the performance stacked up before and after the Spectre and Meltdown patches were added to the systems:


[][5]


As you can see, at this very low level, there is no impact on network performance between two machines supporting RoCE on Ethernet, but running plain vanilla TCP without an offload on top of Ethernet, there are some big performance hits. Interestingly, on this low-level test, the impact was greatest on small message sizes in the TCP stack and then disappeared as the message sizes got larger.
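
One simplified way to see why a fixed per-message penalty fades as messages grow: model the time to send a message of size $s$ as a fixed per-message software cost $c$ (which includes the kernel crossings on the TCP path) plus a transfer term $s/B$ at bandwidth $B$. If the patches add a fixed extra cost $\Delta$ to every message, the relative slowdown is

$$
t(s) = c + \frac{s}{B}, \qquad
\frac{t_{\text{patched}}(s) - t(s)}{t(s)} = \frac{(c + \Delta + s/B) - (c + s/B)}{c + s/B} = \frac{\Delta}{c + s/B},
$$

which falls toward zero as $s$ grows, because the same fixed penalty is amortized over more bytes. This is an illustrative model assumed here, not Mellanox’s analysis, and it does not capture cases where the penalty grows with message size.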


On a separate round of tests pitting InfiniBand from Mellanox against OmniPath from Intel, the server nodes were configured with a pair of Intel Xeon SP Gold 6138 processors running at 2 GHz, also with Red Hat Enterprise Linux 7.4 with the 3.10.0-693.el7.x86_64 and 3.10.0-693.11.6.el7.x86_64 kernel versions. The OmniPath adapter uses the IntelOPA-IFS.RHEL74-x86_64.10.6.1.0.2 driver and the Mellanox ConnectX-5 adapter uses the MLNX_OFED 4.2 driver.


Here is how the InfiniBand and OmniPath protocols did on the tests before and after the patches:


[][6]


Again, thanks to the offload model and the fact that this was a low level benchmark that did not hit the kernel very much (and some HPC applications might cross that boundary and therefore invoke the Spectre and Meltdown performance penalties), there was no real effect on the two-node cluster running InfiniBand. With the OmniPath system, the impact was around 10 percent for small message sizes, and then grew to 25 percent or so once the message sizes transmitted reached 512 bytes.


We have no idea what the performance implications are for clusters of more than two machines using the Mellanox approach. It would be interesting to see whether the degradation compounds.


### Early HPC Performance Tests


While such low-level benchmarks provide some initial guidance on what the effect of the Spectre and Meltdown patches might be on HPC performance, what you really need is a benchmark run of real HPC applications running on clusters of various sizes, both before and after the Spectre and Meltdown patches are applied to the Linux nodes. A team of researchers led by Nikolay Simakov at the Center for Computational Research at SUNY Buffalo fired up some HPC benchmarks and a performance monitoring tool derived from the National Science Foundation’s Extreme Digital (XSEDE) program to see how the Spectre and Meltdown patches affected the amount of work they could get done, as gauged by the wall clock time needed to complete it.

The paper that Simakov and his team put together on the initial results [is found here][7]. The tool used to monitor the performance of the systems is called XD Metrics on Demand, or XDMoD; it has been open sourced and is available for anyone to use. (You might consider [Open XDMoD][8] for your own metrics to determine the performance implications of the Spectre and Meltdown patches.) The benchmarks tested by the SUNY Buffalo researchers included the NAMD molecular dynamics and NWChem computational chemistry applications; the HPC Challenge (HPCC) suite, which itself includes the STREAM memory bandwidth test; the NAS Parallel Benchmarks (NPB); and the Intel MPI Benchmarks (IMB). The researchers also ran the IOR file I/O and MDTest metadata benchmarks from Lawrence Livermore National Laboratory. The IOR and MDTest benchmarks were run in local mode and in conjunction with a GPFS parallel file system running on an external 3 PB storage cluster. (The tests with a “.local” suffix in the table were run on storage in the server nodes themselves.)

SUNY Buffalo has an experimental cluster of two-socket machines based on Intel “Nehalem” Xeon L5520 processors, for a total of eight cores per node. By our reckoning, these chips are very long in the tooth indeed, being nearly nine years old. Each node has 24 GB of main memory, and the nodes are cross-connected by 40 Gb/sec QDR InfiniBand links. The systems run the latest CentOS 7.4.1708 release, first without and then with the patches applied (the same kernel patches outlined above for the Mellanox tests). Simakov and his team ran each benchmark on a single node and then on two nodes, and the results show the difference between running a low-level benchmark and running actual applications. Take a look at the table of results:

![SUNY Buffalo Spectre and Meltdown test results][9]

Each application was run around 20 times before the patches were applied and around 50 times after. For the core HPC applications (NAMD, NWChem, and the elements of HPCC), the performance degradation on a single node was between 2 percent and 3 percent. That is consistent with what Red Hat told people to expect in the first week after the Spectre and Meltdown vulnerabilities were revealed and the initial patches became available. However, moving on to two-node configurations, where network overhead comes into play, the performance impact ranged from 5 percent to 11 percent. This is more than you would expect based on the low-level benchmarks that Mellanox has done. Just to make things interesting, on the IOR and MDTest benchmarks, moving from one to two nodes actually lessened the performance impact. Running the IOR test on the local disks resulted in a smaller performance hit than running it over the network for a single node, but the hit was not as small as for a two-node cluster running out to the GPFS file system.
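
The before-and-after comparisons in the table come down to simple arithmetic. Average the wall-clock times of each set of runs, then express the difference as a percentage of the unpatched mean; comparing means also copes with the unequal run counts. The following is a minimal bash and awk sketch of that calculation. It assumes two hypothetical input files, each holding one wall-clock time in seconds per line, and it is not the tooling the SUNY Buffalo team used.

```
#!/usr/bin/env bash
# Percentage slowdown between unpatched ("before") and patched ("after") runs.
# Input format is an assumption: one wall-clock time in seconds per line.

mean() {
  # Prints the average of the numbers in file $1 to four decimal places.
  awk '{ sum += $1; n++ } END { if (n > 0) printf "%.4f\n", sum / n }' "$1"
}

slowdown_pct() {
  # Prints (mean_after - mean_before) / mean_before * 100 to two decimals.
  local before after
  before=$(mean "$1")
  after=$(mean "$2")
  awk -v b="$before" -v a="$after" 'BEGIN { printf "%.2f\n", (a - b) / b * 100 }'
}
```

For example, before-patch times of 100 and 100 seconds and after-patch times of 102, 103 and 104 seconds give a slowdown of 3.00 percent.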

There is a lot of food for thought in this data, to say the least.

What we want to know – and what the SUNY Buffalo researchers are working on – is what happens to performance on these HPC applications when the cluster is scaled out.

“We will know that answer soon,” Simakov tells _The Next Platform_. “But there are only two scenarios that are possible. Either it is going to get worse or it is going to stay about the same as a two-node cluster. We think that it will most likely stay the same, because all of the MPI communication happens through the shared memory on a single node, and when you get to two nodes, you get it into the network fabric and at that point, you are probably paying all of the extra performance penalties.”

We will update this story with data on larger scale clusters as soon as Simakov and his team provide the data.

--------------------------------------------------------------------------------

via: https://www.nextplatform.com/2018/01/30/reckoning-spectre-meltdown-performance-hit-hpc/

Author: [Timothy Prickett Morgan][a]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.nextplatform.com/author/tpmn/

[1]:https://www.nextplatform.com/author/tpmn/

[2]:https://www.nextplatform.com/2018/01/18/datacenters-brace-spectre-meltdown-impact/

[3]:https://www.nextplatform.com/2018/01/08/cost-spectre-meltdown-server-taxes/

[4]:http://www.mellanox.com/related-docs/presentations/2018/performance/Spectre-and-Meltdown-Performance.pdf?homepage

[5]:https://3s81si1s5ygj3mzby34dq6qf-wpengine.netdna-ssl.com/wp-content/uploads/2018/01/mellanox-spectre-meltdown-roce-versus-tcp.jpg

[6]:https://3s81si1s5ygj3mzby34dq6qf-wpengine.netdna-ssl.com/wp-content/uploads/2018/01/mellanox-spectre-meltdown-infiniband-versus-omnipath.jpg

[7]:https://arxiv.org/pdf/1801.04329.pdf

[8]:http://open.xdmod.org/7.0/index.html

[9]:https://3s81si1s5ygj3mzby34dq6qf-wpengine.netdna-ssl.com/wp-content/uploads/2018/01/suny-buffalo-spectre-meltdown-test-table.jpg

@ -1,59 +0,0 @@

Louis-Philippe Véronneau - Downloading all the Critical Role podcasts in one batch
======

I've been watching [Critical Role][1]¹ for a while now. Since I started my master's degree, I haven't had much time to sit down and watch the show on YouTube as I used to.

I thus started listening to the podcasts instead; that way, I can listen to the show while I'm doing other productive tasks. Pretty quickly, I grew tired of manually downloading every episode each time I finished the last one. To make things worse, the podcast is hosted on PodBean, and they won't let you download episodes on a mobile device without their app. Grrr.

After the 10th time opening the terminal on my phone to download the podcast using some `wget` magic, I decided enough was enough: I was going to write a dumb script to download them all in one batch.

I'm a little ashamed to say it took me more time than I had intended... The PodBean website uses semi-randomized URLs, so I could not figure out a way to guess the paths to the hosted audio files. I considered using `youtube-dl` to get the DASH version of the show on YouTube, but Google has been heavily throttling DASH streams recently. Not cool, Google.

I then had the idea to use the iTunes RSS feed to get the audio files. Surely they would somehow be included there? Of course, Apple doesn't give you a simple RSS feed link on the iTunes podcast page, so I had to rummage around and eventually found out this is the link you have to use:

```
https://itunes.apple.com/lookup?id=1243705452&entity=podcast
```

Surprise, surprise: from the JSON file this link points to, I found out that the main Critical Role podcast page [has a proper RSS feed][2]. In my defense, the RSS button on the main podcast page brings you to some PodBean crap page.
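
The same lookup can be scripted. Here is a minimal sketch. It assumes the lookup response is JSON whose podcast entry carries a `feedUrl` field with unescaped slashes. grep and sed are a blunt instrument for JSON, and a real parser such as `jq` would be more robust.

```
#!/usr/bin/env bash
# Prints the first "feedUrl" value found in iTunes lookup JSON read from stdin.
# Assumption: the value contains no escaped quotes or escaped slashes.
feed_url_from_lookup() {
  grep -o '"feedUrl" *: *"[^"]*"' \
    | head -n 1 \
    | sed -E 's/^"feedUrl" *: *"//; s/"$//'
}
```

Usage: `wget -qO- 'https://itunes.apple.com/lookup?id=1243705452&entity=podcast' | feed_url_from_lookup`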

Anyway, once you have the RSS feed, it's only a matter of using `grep` and `sed` until you get what you want.

Around 20 minutes later, I had downloaded all the episodes, for a total of 22 GB! Victory dance!

Video clip loop of the Critical Role cast doing a victory dance.

### Script

Here's the bash script I wrote. You will need `recode` to run it, as the RSS feed includes some HTML entities.

```
#!/bin/bash

# Get the whole RSS feed
wget -qO /tmp/criticalrole.rss http://criticalrolepodcast.geekandsundry.com/feed/

# Extract the URLs and the episode titles, one array element per line.
# tail -n +45 starts reading at line 45 to skip the feed's header
# (presumably so the channel's own <title> isn't taken for an episode).
mapfile -t mp3s < <(grep -o "http.\+mp3" /tmp/criticalrole.rss)
mapfile -t titles < <(tail -n +45 /tmp/criticalrole.rss | grep -o "<title>.\+</title>" \
  | sed -r 's@</?title>@@g' | recode html..utf8)

# Download all the episodes under their titles
for i in "${!titles[@]}"
do
  wget -qO "${titles[$i]}.mp3" "${mp3s[$i]}"
done
```
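
Because the episode titles become file names, a title containing a `/` would make `wget -qO` fail, since Linux file names cannot contain that character. The following hypothetical helper is not part of the original script. It replaces slashes before the download, and using `-` as the replacement is an arbitrary choice.

```
#!/usr/bin/env bash
# Replaces every "/" in a title with "-" so the title can be used as a file name.
safe_name() {
  printf '%s\n' "${1//\//-}"
}
```

To use it, wrap the decoded title in the `wget -qO` call, e.g. `wget -qO "$(safe_name "$title").mp3" "$url"`, where `$title` and `$url` stand for one episode's title and MP3 address.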

¹ For those of you not familiar with Critical Role, it's a web series where a group of voice actresses and actors from LA play Dungeons & Dragons. It's so good that even people like me who have never played D&D can enjoy it.

--------------------------------------------------------------------------------

via: https://veronneau.org/downloading-all-the-critical-role-podcasts-in-one-batch.html

Author: [Louis-Philippe Véronneau][a]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://veronneau.org/

[1]:https://en.wikipedia.org/wiki/Critical_Role

[2]:http://criticalrolepodcast.geekandsundry.com/feed/

@ -1,108 +0,0 @@

Announcing NGINX Unit 1.0
============================================================

Today, April 12, marks a significant milestone in the development of [NGINX Unit][8], our dynamic web and application server. Approximately six months after its [first public release][9], we’re now happy to announce that NGINX Unit is generally available and production‑ready. NGINX Unit is our new open source initiative led by Igor Sysoev, creator of the original NGINX Open Source software, which is now used by more than [409 million websites][10].

“I set out to make an application server which will be remotely and dynamically configured, and able to switch dynamically from one language or application version to another,” explains Igor. “Dynamic configuration and switching I saw as being certainly the main problem. People want to reconfigure servers without interrupting client processing.”

NGINX Unit is dynamically configured using a REST API; there is no static configuration file. All configuration changes happen directly in memory. Configuration changes take effect without requiring process reloads or service interruptions.
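
In practice, a configuration change is an HTTP request to Unit's control API. Here is a minimal sketch. It assumes the control socket path below, which varies between packages and versions. It also assumes the Unit 1.0-era configuration layout, in which each listener names one application. The application name `blogs` and its paths are hypothetical, so check the Unit configuration documentation for your release.

```
#!/usr/bin/env bash
# Control socket location is an assumption; override it with UNIT_SOCKET=...
UNIT_SOCKET=${UNIT_SOCKET:-/var/run/control.unit.sock}

unit_config() {
  # Prints a JSON config mapping one listen port to one (hypothetical) PHP app.
  cat <<'EOF'
{
  "listeners": {
    "*:8300": { "application": "blogs" }
  },
  "applications": {
    "blogs": {
      "type": "php",
      "root": "/www/blogs/scripts",
      "index": "index.php"
    }
  }
}
EOF
}

apply_unit_config() {
  # PUTs the JSON to /config over the Unix control socket; Unit applies it
  # in memory, so no process reload is needed.
  unit_config | curl -s -X PUT --data-binary @- \
    --unix-socket "$UNIT_SOCKET" http://localhost/config
}
```

Running `apply_unit_config` replaces the whole configuration. It must be run as a user allowed to open the socket, typically root. A plain `curl --unix-socket "$UNIT_SOCKET" http://localhost/config` reads the configuration back.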

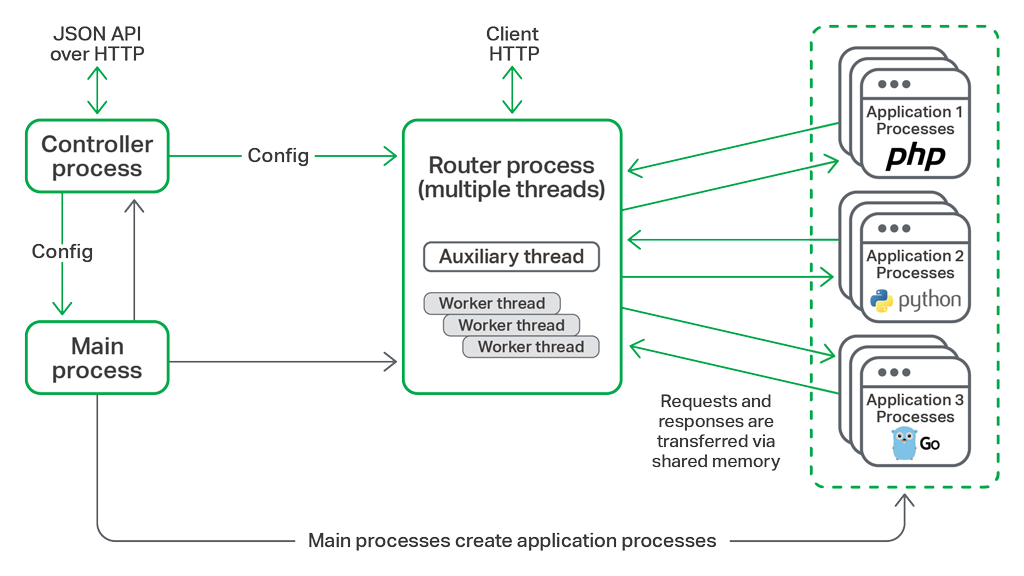

NGINX Unit runs multiple languages simultaneously

“The dynamic switching requires that we can run different languages and language versions in one server,” continues Igor.

As of Release 1.0, NGINX Unit supports Go, Perl, PHP, Python, and Ruby on the same server. Multiple language versions are also supported, so you can, for instance, run applications written for PHP 5 and PHP 7 on the same server. Support for additional languages, including Java, is planned for future NGINX Unit releases.

Note: We have an additional blog post on [how to configure NGINX, NGINX Unit, and WordPress][11] to work together.

Igor studied at Moscow State Technical University, which was a pioneer in the Russian space program, so April 12 has a special significance. “This is the anniversary of the first manned spaceflight in history, made by [Yuri Gagarin][12]. The first public version of NGINX [0.1.0] was released on [October 4, 2004][7], the anniversary of the [Sputnik][13] launch, and NGINX 1.0 was launched on April 12, 2011.”

### What Is NGINX Unit?

NGINX Unit is a dynamic web and application server, suitable for both stand‑alone applications and distributed, microservices application architectures. It launches and scales application processes on demand, executing each application instance in its own secure sandbox.

NGINX Unit manages and routes all incoming network transactions to the application through a separate “router” process, so it can rapidly implement configuration changes without interrupting service.

“The configuration is in JSON format, so users can edit it manually, and it’s very suitable for scripting. We hope to add capabilities to [NGINX Controller][14] and [NGINX Amplify][15] to work with Unit configuration too,” explains Igor.

The NGINX Unit configuration process is described thoroughly in the [documentation][16].

“Now Unit can run Python, PHP, Ruby, Perl and Go – five languages. For example, during our beta, one of our users used Unit to run a number of different PHP platform versions on a single host,” says Igor.

NGINX Unit’s ability to run multiple language runtimes is based on its internal separation between the router process, which terminates incoming HTTP requests, and groups of application processes, which implement the application runtime and execute application code.

NGINX Unit architecture

The router process is persistent – it never restarts – meaning that configuration updates can be implemented seamlessly, without any interruption in service. Each application process is deployed in its own sandbox (with support for [Linux control groups][17] [cgroups] under active development), so that NGINX Unit provides secure isolation for user code.

### What’s Next for NGINX Unit?

The next milestones for the NGINX Unit engineering team after Release 1.0 are concerned with HTTP maturity, serving static content, and additional language support.

“We plan to add SSL and HTTP/2 capabilities in Unit,” says Igor. “Also, we plan to support routing in configurations; currently, we have direct mapping from one listen port to one application. We plan to add routing using URIs and hostnames, etc.”

“In addition, we want to add more language support to Unit. We are completing the Ruby implementation, and next we will consider Node.js and Java. Java will be added in a Tomcat‑compatible fashion.”

The end goal for NGINX Unit is to create an open source platform for distributed, polyglot applications which can run application code securely, reliably, and with the best possible performance. The platform will self‑manage, with capabilities such as autoscaling to meet SLAs within resource constraints, and service discovery and internal load balancing to make it easy to create a [service mesh][18].

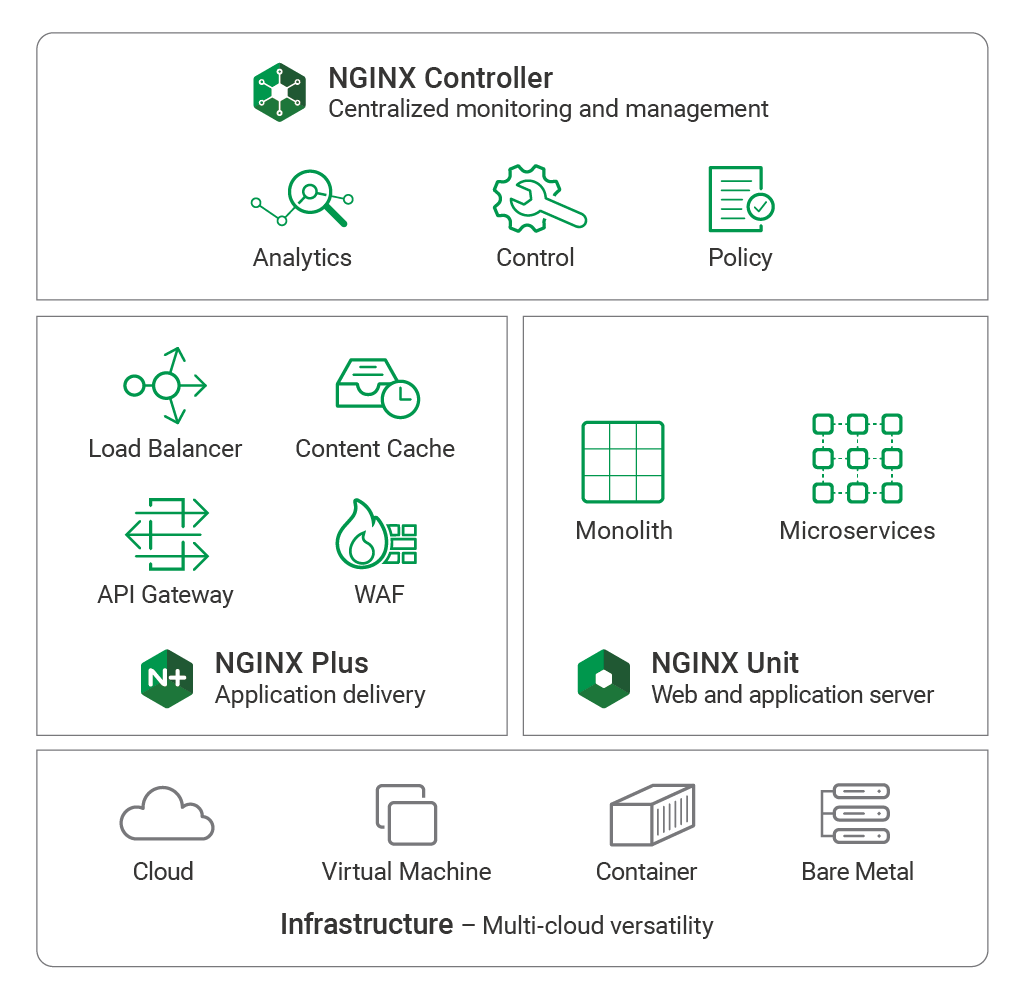

### NGINX Unit and the NGINX Application Platform

An NGINX Unit platform will typically be delivered with a front‑end tier of NGINX Open Source or NGINX Plus reverse proxies to provide ingress control, edge load balancing, and security. The joint platform (NGINX Unit and NGINX or NGINX Plus) can then be managed fully using NGINX Controller to monitor, configure, and control the entire platform.

The NGINX Application Platform is our vision for building microservices

Together, these three components – NGINX Plus, NGINX Unit, and NGINX Controller – make up the [NGINX Application Platform][19]. The NGINX Application Platform is a product suite that delivers load balancing, caching, API management, a WAF, and application serving, with rich management and control planes that simplify the tasks of operating monolithic, microservices, and transitional applications.

### Getting Started with NGINX Unit

NGINX Unit is free and open source. Please see the [installation instructions][20] to get started. We have prebuilt packages for most operating systems, including Ubuntu and Red Hat Enterprise Linux. We also make a [Docker container][21] available on Docker Hub.

The source code is available in our [Mercurial repository][22] and [mirrored on GitHub][23]. The code is available under the Apache 2.0 license. You can compile NGINX Unit yourself on most popular Linux and Unix systems.

If you have any questions, please use the [GitHub issues board][24] or the [NGINX Unit mailing list][25]. We’d love to hear how you are using NGINX Unit, and we welcome [code contributions][26] too.

We’re also happy to extend technical support for NGINX Unit to NGINX Plus customers with Professional or Enterprise support contracts. Please refer to our [Support page][27] for details of the support services we can offer.

--------------------------------------------------------------------------------

via: https://www.nginx.com/blog/nginx-unit-1-0-released/

Author: [www.nginx.com][a]

Translator: [Translator ID](https://github.com/译者ID)

Proofreader: [Proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.nginx.com

[1]:https://twitter.com/intent/tweet?text=Announcing+NGINX+Unit+1.0+by+%40nginx+https%3A%2F%2Fwww.nginx.com%2Fblog%2Fnginx-unit-1-0-released%2F

[2]:http://www.linkedin.com/shareArticle?mini=true&url=https%3A%2F%2Fwww.nginx.com%2Fblog%2Fnginx-unit-1-0-released%2F&title=Announcing+NGINX+Unit+1.0&summary=Today%2C+April+12%2C+marks+a+significant+milestone+in+the+development+of+NGINX%26nbsp%3BUnit%2C+our+dynamic+web+and+application+server.+Approximately+six+months+after+its+first+public+release%2C+we%E2%80%99re+now+happy+to+announce+that+NGINX%26nbsp%3BUnit+is+generally+available+and+production%26%238209%3Bready.+NGINX%26nbsp%3BUnit+is+our+new+open+source+initiative+led+by+Igor%26nbsp%3BSysoev%2C+creator+of+the+original+NGINX+Open+Source+%5B%26hellip%3B%5D

[3]:https://news.ycombinator.com/submitlink?u=https%3A%2F%2Fwww.nginx.com%2Fblog%2Fnginx-unit-1-0-released%2F&t=Announcing%20NGINX%20Unit%201.0&text=Today,%20April%2012,%20marks%20a%20significant%20milestone%20in%20the%20development%20of%20NGINX%C2%A0Unit,%20our%20dynamic%20web%20and%20application%20server.%20Approximately%20six%20months%20after%20its%20first%20public%20release,%20we%E2%80%99re%20now%20happy%20to%20announce%20that%20NGINX%C2%A0Unit%20is%20generally%20available%20and%20production%E2%80%91ready.%20NGINX%C2%A0Unit%20is%20our%20new%20open%20source%20initiative%20led%20by%20Igor%C2%A0Sysoev,%20creator%20of%20the%20original%20NGINX%20Open%20Source%20[%E2%80%A6]

[4]:https://www.facebook.com/sharer/sharer.php?u=https%3A%2F%2Fwww.nginx.com%2Fblog%2Fnginx-unit-1-0-released%2F

[5]:https://plus.google.com/share?url=https%3A%2F%2Fwww.nginx.com%2Fblog%2Fnginx-unit-1-0-released%2F

[6]:http://www.reddit.com/submit?url=https%3A%2F%2Fwww.nginx.com%2Fblog%2Fnginx-unit-1-0-released%2F&title=Announcing+NGINX+Unit+1.0&text=Today%2C+April+12%2C+marks+a+significant+milestone+in+the+development+of+NGINX%26nbsp%3BUnit%2C+our+dynamic+web+and+application+server.+Approximately+six+months+after+its+first+public+release%2C+we%E2%80%99re+now+happy+to+announce+that+NGINX%26nbsp%3BUnit+is+generally+available+and+production%26%238209%3Bready.+NGINX%26nbsp%3BUnit+is+our+new+open+source+initiative+led+by+Igor%26nbsp%3BSysoev%2C+creator+of+the+original+NGINX+Open+Source+%5B%26hellip%3B%5D

[7]:http://nginx.org/en/CHANGES

[8]:https://www.nginx.com/products/nginx-unit/

[9]:https://www.nginx.com/blog/introducing-nginx-unit/

[10]:https://news.netcraft.com/archives/2018/03/27/march-2018-web-server-survey.html

[11]:https://www.nginx.com/blog/installing-wordpress-with-nginx-unit/

[12]:https://en.wikipedia.org/wiki/Yuri_Gagarin

[13]:https://en.wikipedia.org/wiki/Sputnik_1

[14]:https://www.nginx.com/products/nginx-controller/

[15]:https://www.nginx.com/products/nginx-amplify/

[16]:http://unit.nginx.org/configuration/

[17]:https://en.wikipedia.org/wiki/Cgroups

[18]:https://www.nginx.com/blog/what-is-a-service-mesh/

[19]:https://www.nginx.com/products

[20]:http://unit.nginx.org/installation/

[21]:https://hub.docker.com/r/nginx/unit/

[22]:http://hg.nginx.org/unit

[23]:https://github.com/nginx/unit

[24]:https://github.com/nginx/unit/issues

[25]:http://mailman.nginx.org/mailman/listinfo/unit

[26]:https://unit.nginx.org/contribution/

[27]:https://www.nginx.com/support

[28]:https://www.nginx.com/blog/tag/releases/

[29]:https://www.nginx.com/blog/tag/nginx-unit/

@ -1,294 +0,0 @@

Things to do After Installing Ubuntu 18.04
======

**Brief: This list of things to do after installing Ubuntu 18.04 helps you get started with Bionic Beaver for a smoother desktop experience.**

[Ubuntu][1] 18.04 Bionic Beaver releases today. You are perhaps already aware of the [new features in Ubuntu 18.04 LTS][2] release. If not, here’s the video review of Ubuntu 18.04 LTS:

[Subscribe to YouTube Channel for more Ubuntu Videos][3]

If you opted to install Ubuntu 18.04, I have listed out a few recommended steps that you can follow to get started with it.

### Things to do after installing Ubuntu 18.04 Bionic Beaver

![Things to do after installing Ubuntu 18.04][4]

I should mention that the list of things to do after installing Ubuntu 18.04 depends a lot on you and your interests and needs. If you are a programmer, you’ll focus on installing programming tools. If you are a graphic designer, you’ll focus on installing graphics tools.

Still, there are a few things that should be applicable to most Ubuntu users. This list is composed of those things plus a few of my favorites.

Also, this list is for the default [GNOME desktop][5]. If you are using some other flavor like [Kubuntu][6], Lubuntu, etc., then the GNOME-specific stuff won’t be applicable to your system.

You don’t have to follow each and every point on the list blindly. You should see if the recommended action suits your requirements or not.

With that said, let’s get started with this list of things to do after installing Ubuntu 18.04.

#### 1\. Update the system

This is the first thing you should do after installing Ubuntu. Update the system without fail. It may sound strange since you just installed a fresh OS, but you should still check for updates.

In my experience, if you don’t update the system right after installing Ubuntu, you might face issues while trying to install a new program.

To update Ubuntu 18.04, press Super Key (Windows Key) to launch the Activity Overview and look for Software Updater. Run it to check for updates.

![Software Updater in Ubuntu 17.10][7]

**Alternatively**, you can use these well-known commands in the terminal (open it with Ctrl+Alt+T):

```
sudo apt update && sudo apt upgrade
```

#### 2\. Enable additional repositories for more software

[Ubuntu has several repositories][8] from where it provides software for your system. These repositories are:

* Main – Free and open-source software supported by the Ubuntu team
* Universe – Free and open-source software maintained by the community
* Restricted – Proprietary drivers for devices
* Multiverse – Software restricted by copyright or legal issues
* Canonical Partners – Software packaged by Ubuntu for their partners

Enabling all these repositories will give you access to more software and proprietary drivers.

Go to Activity Overview by pressing Super Key (Windows key), and search for Software & Updates:

![Software and Updates in Ubuntu 17.10][9]

Under the Ubuntu Software tab, make sure the Main, Universe, Restricted and Multiverse repositories are all checked.

![Setting repositories in Ubuntu 18.04][10]

Now move to the **Other Software** tab, check the option of **Canonical Partners**.

![Enable Canonical Partners repository in Ubuntu 17.10][11]

You’ll have to enter your password in order to update the software sources. Once it completes, you’ll find more applications to install in the Software Center.
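
The same repositories can be enabled from a terminal. Here is a minimal sketch. It assumes Ubuntu 18.04 (codename `bionic`, which a hypothetical `CODENAME` variable can override) and the `add-apt-repository` tool that ships with the desktop. Setting `DRY_RUN=1` prints the commands instead of running them.

```
#!/usr/bin/env bash
# Runs a command, or only prints it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

enable_repos() {
  local codename=${CODENAME:-bionic} component
  # The four components shown on the Ubuntu Software tab.
  for component in main universe restricted multiverse; do
    run sudo add-apt-repository -y "$component"
  done
  # Canonical Partners is a separate archive, keyed by release codename.
  run sudo add-apt-repository -y \
    "deb http://archive.canonical.com/ubuntu $codename partner"
  run sudo apt update
}
```

Run `DRY_RUN=1 enable_repos` first to review the commands, then `enable_repos` to apply them.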

#### 3\. Install media codecs

In order to play media files like MP3, MPEG4, AVI, etc., you’ll need to install media codecs. Ubuntu has them in its repository but doesn’t install them by default because of copyright issues in various countries.

As an individual, you can install these media codecs easily using the Ubuntu Restricted Extras package. Click on the link below to install it from the Software Center.

[Install Ubuntu Restricted Extras][12]

Alternatively, use the command below to install it:

```
sudo apt install ubuntu-restricted-extras
```

#### 4\. Install software from the Software Center

Now that you have set up the repositories and installed the codecs, it is time to get software. If you are absolutely new to Ubuntu, please follow this [guide to installing software in Ubuntu][13].

There are several ways to install software. The most convenient is the Software Center, which offers thousands of applications in various categories. You can install them in a few clicks.

![Software Center in Ubuntu 17.10 ][14]

What kind of software you install is up to you. I’ll suggest some of my favorites here.

* **VLC** – media player for videos
* **GIMP** – Photoshop alternative for Linux
* **Pinta** – Paint alternative in Linux
* **Calibre** – eBook management tool
* **Chromium** – Open Source web browser
* **Kazam** – Screen recorder tool
* [**Gdebi**][15] – Lightweight package installer for .deb packages
* **Spotify** – For streaming music
* **Skype** – For video messaging
* **Kdenlive** – [Video editor for Linux][16]
* **Atom** – [Code editor][17] for programming

You may also refer to this list of [must-have Linux applications][18] for more software recommendations.

#### 5\. Install software from the Web

Though Ubuntu has thousands of applications in the software center, you may not find some of your favorite applications despite the fact that they support Linux.

Many software vendors provide ready-to-install .deb packages. You can download these .deb files from their websites and install them by double-clicking.

[Google Chrome][19] is one such application that you can download from the web and install.
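
From a terminal, the same route is a download followed by `apt install` on the local file, which also pulls in the package's dependencies. Here is a minimal sketch. It assumes the vendor publishes a direct `.deb` link. The Chrome URL in the usage line is Google's stable-channel package address as we understand it, so double-check it on the download page. `DRY_RUN=1` prints the commands instead of running them.

```
#!/usr/bin/env bash
# Runs a command, or only prints it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

install_deb_from_url() {
  local url=$1 file
  file=/tmp/$(basename "$url")
  run wget -qO "$file" "$url"
  # Passing a path instead of a package name makes apt install the local
  # file and resolve its dependencies from the enabled repositories.
  run sudo apt install -y "$file"
}
```

Usage: `install_deb_from_url https://dl.google.com/linux/direct/google-chrome-stable_current_amd64.deb`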

#### 6\. Opt out of data collection in Ubuntu 18.04 (optional)

Ubuntu 18.04 collects some harmless statistics about your system hardware and your system installation preference. It also collects crash reports.

You’ll be given the option to not send this data to Ubuntu servers when you log in to Ubuntu 18.04 for the first time.

![Opt out of data collection in Ubuntu 18.04][20]

If you miss it then, you can disable it later by going to System Settings -> Privacy and setting Problem Reporting to Manual.

![Privacy settings in Ubuntu 18.04][21]

#### 7\. Customize the GNOME desktop (Dock, themes, extensions and more)

The GNOME desktop looks good in Ubuntu 18.04, but that doesn’t mean you cannot change it.

You can make a few visual changes from the System Settings. You can change the wallpaper of the desktop and the lock screen, the position of the dock (the launcher on the left side), power settings, Bluetooth, etc. In short, you can find many settings that you can change as needed.

![Ubuntu 17.10 System Settings][22]
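
The dock position can also be changed from a terminal. Here is a minimal sketch. It assumes that the Ubuntu Dock in 18.04, which is based on the Dash to Dock extension, still reads the `org.gnome.shell.extensions.dash-to-dock` settings schema, as far as we know. `DRY_RUN=1` prints the command instead of running it.

```
#!/usr/bin/env bash
# Runs a command, or only prints it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

set_dock_position() {
  # Accepts the positions offered in System Settings: LEFT, RIGHT or BOTTOM.
  case $1 in
    LEFT|RIGHT|BOTTOM) ;;
    *) echo "usage: set_dock_position LEFT|RIGHT|BOTTOM" >&2; return 1 ;;
  esac
  run gsettings set org.gnome.shell.extensions.dash-to-dock dock-position "$1"
}
```

For example, `set_dock_position BOTTOM` moves the dock to the bottom of the screen.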

Changing themes and icons is the main way to change the look of your system. I advise going through the lists of [best GNOME themes][23] and [icons for Ubuntu][24]. Once you have found the theme and icons of your choice, you can apply them with the GNOME Tweaks tool.

You can install GNOME Tweaks via the Software Center or you can use the command below to install it:

```
sudo apt install gnome-tweak-tool
```

Once it is installed, you can easily [install new themes and icons][25].

![Change theme is one of the must to do things after installing Ubuntu 17.10][26]
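
GNOME Tweaks stores the application and icon theme choices as GSettings keys, so they can be set from a terminal too. Here is a minimal sketch. The theme names in the usage line are placeholders for whatever themes you installed, and `DRY_RUN=1` prints the commands instead of running them.

```
#!/usr/bin/env bash
# Runs a command, or only prints it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

set_themes() {
  # $1: GTK (application) theme name, $2: icon theme name.
  run gsettings set org.gnome.desktop.interface gtk-theme "$1"
  run gsettings set org.gnome.desktop.interface icon-theme "$2"
}
```

Usage: `set_themes 'Arc-Dark' 'Papirus'`, with both names standing in for themes you have actually installed.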

You should also have a look at how to [use GNOME extensions][27] to further enhance the looks and capabilities of your system. I made this video about using GNOME extensions in 17.10, and you can follow the same steps for Ubuntu 18.04.

If you are wondering which extension to use, do take a look at this list of [best GNOME extensions][28].

I also recommend reading this article on [GNOME customization in Ubuntu][29] to get to know the GNOME desktop in detail.

#### 8\. Prolong your battery and prevent overheating

Let’s move on to [prevent overheating in Linux laptops][30]. TLP is a wonderful tool that controls CPU temperature and extends your laptop’s battery life in the long run.

Make sure that you haven’t installed any other power-saving application, such as [Laptop Mode Tools][31]. You can install TLP using the command below in a terminal:

```
sudo apt install tlp tlp-rdw
```

Once installed, run the command below to start it:

```
sudo tlp start
```

#### 9\. Save your eyes with Nightlight

Nightlight is my favorite feature in the GNOME desktop. Keeping [your eyes safe at night][32] from the computer screen is very important. Reducing blue light helps reduce eye strain at night.

![flux effect][33]

GNOME provides a built-in Night Light option, which you can activate in the System Settings.

Just go to System Settings -> Devices -> Displays and turn on the Night Light option.

![Enabling night light is a must to do in Ubuntu 17.10][34]
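
Night Light is also controlled by GSettings keys of the GNOME settings daemon, which is handy when scripting a fresh install. Here is a minimal sketch using the `night-light-enabled` key and, optionally, `night-light-temperature`, as far as we know the key names GNOME 3.28 uses. `DRY_RUN=1` prints the commands instead of running them.

```
#!/usr/bin/env bash
# Runs a command, or only prints it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

night_light() {
  # $1: true or false; optional $2: colour temperature in kelvin.
  run gsettings set org.gnome.settings-daemon.plugins.color night-light-enabled "$1"
  if [ -n "${2:-}" ]; then
    run gsettings set org.gnome.settings-daemon.plugins.color night-light-temperature "$2"
  fi
}
```

For example, `night_light true 4000` turns Night Light on with a warmer tint than `night_light true` alone would set.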

#### 9\. Disable automatic suspend for laptops

Ubuntu 18.04 comes with a new automatic suspend feature for laptops. If the system is running on battery and is inactive for 20 minutes, it will go into suspend mode.

I understand that the intention is to save battery life, but it is an inconvenience as well. You can’t keep the power plugged in all the time because it’s not good for the battery life, and you may need the system to be running even when you are not using it.

Thankfully, you can change this behavior. Go to System Settings -> Power. Under the Suspend & Power Button section, either turn off the Automatic Suspend option or extend its time period.

![Disable automatic suspend in Ubuntu 18.04][35]

You can also change the screen dimming behavior here.
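
Both settings map to keys of the GNOME settings daemon's power plugin. Here is a minimal sketch that turns off suspend on battery and idle screen dimming. The key names are the ones GNOME 3.28 uses as far as we know, so verify them with `gsettings list-keys org.gnome.settings-daemon.plugins.power` first. `DRY_RUN=1` prints the commands instead of running them.

```
#!/usr/bin/env bash
# Runs a command, or only prints it when DRY_RUN=1.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

disable_battery_suspend() {
  # 'nothing' means no action when the laptop sits idle on battery.
  run gsettings set org.gnome.settings-daemon.plugins.power sleep-inactive-battery-type nothing
  # Stop dimming the screen when the session goes idle.
  run gsettings set org.gnome.settings-daemon.plugins.power idle-dim false
}
```

To extend the timeout instead of disabling suspend, the related `sleep-inactive-battery-timeout` key takes a value in seconds.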

#### 10\. System cleaning

I have written in detail about [how to clean up your Ubuntu system][36]. I recommend reading that article to learn various ways to keep your system free of junk.

Normally, you can use this simple command to free up space on your system:

```
sudo apt autoremove
```

It’s a good idea to run this command every once in a while. If you don’t like the command line, you can use a GUI tool like [Stacer][37] or [BleachBit][38].
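
Before cleaning, you may want to see how much space the downloaded package cache takes up. A minimal sketch, assuming Ubuntu’s default cache directory `/var/cache/apt/archives`; the helper names are hypothetical, and `apt-get autoclean` (which removes cached packages that can no longer be downloaded) is an extra step beyond the command above.

```shell
#!/usr/bin/env bash
# Sketch: report the apt package cache size, then clean up.
# APT_ARCHIVES assumes Ubuntu's default cache location.

APT_ARCHIVES="/var/cache/apt/archives"

# Print the size of the downloaded-package cache, or "unknown" if absent.
apt_cache_size() {
  if [ -d "$APT_ARCHIVES" ]; then
    du -sh "$APT_ARCHIVES" 2>/dev/null | cut -f1
  else
    echo "unknown"
  fi
}

# Remove unneeded dependencies, then drop obsolete cached .deb files.
clean_system() {
  echo "apt cache before cleanup: $(apt_cache_size)"
  sudo apt autoremove
  sudo apt-get autoclean
  echo "apt cache after cleanup: $(apt_cache_size)"
}
```

Calling `apt_cache_size` on its own is harmless; `clean_system` asks for your password because both apt steps need root.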

#### 11\. Going back to Unity or Vanilla GNOME (not recommended)

If you have been using Unity or GNOME in the past, you may not like the new customized GNOME desktop in Ubuntu 18.04. Ubuntu has customized GNOME so that it resembles Unity, but at the end of the day, it is neither completely Unity nor completely GNOME.

So if you are a hardcore Unity or GNOME fan, you may want to use your favorite desktop in its ‘real’ form. I wouldn’t recommend it, but if you insist, here are some tutorials for you:

#### 12\. Can’t log in to Ubuntu 18.04 after an incorrect password? Here’s a workaround

I noticed a [little bug in Ubuntu 18.04][39] while trying to change the desktop session to the Ubuntu Community theme. It seems that if you try to change the session at the login screen, it rejects your password on the first attempt, and on the second attempt the login gets stuck. You can wait 5-10 minutes for it to recover or force a power-off.

The workaround is this: after it displays the incorrect password message, click Cancel, then click your name, and then enter your password again.

#### 13\. Experience the Community theme (optional)

Ubuntu 18.04 was supposed to have a dashing new theme developed by the community. The theme could not be completed in time, so it could not become the default look of the Bionic Beaver release. I am guessing that it will be the default theme in Ubuntu 18.10.

![Ubuntu 18.04 Communitheme][40]

You can already try out this good-looking theme today. [Installing Ubuntu Community Theme][41] is very easy. Just look for it in the Software Center, install it, restart your system, and then choose the Communitheme session at the login screen.
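
If you would rather use the terminal, the theme can also be installed as a snap. This sketch assumes the snap is published under the name `communitheme` and that `snapd` is present (it is by default on Ubuntu 18.04); the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: install the Community theme from the terminal.
# The snap name "communitheme" is an assumption; verify with `snap find communitheme`.

install_communitheme() {
  if ! command -v snap >/dev/null 2>&1; then
    echo "snapd is not installed" >&2
    return 1
  fi
  sudo snap install communitheme
  echo "Restart, then choose the Communitheme session at the login screen."
}
```

After running `install_communitheme`, the remaining steps are the same as with the Software Center: restart and pick the session at login.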

#### 14\. Get Windows 10 in VirtualBox (if you need it)

If you must use Windows for some reason, you can [install Windows in VirtualBox inside Linux][42]. It will run as a regular Ubuntu application.

It’s not the best way, but it still gives you an option. You can also [use WINE to run Windows software on Linux][43]. In both cases, I suggest trying a native Linux alternative first before jumping to a virtual machine or WINE.
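
Before setting up VirtualBox, it is worth checking that your CPU supports hardware virtualization, since 64-bit guests such as Windows 10 generally need it. The sketch below counts the `flags` lines in `/proc/cpuinfo` that include Intel VT-x (`vmx`) or AMD-V (`svm`); a nonzero count means the CPU advertises the feature, though it may still need to be enabled in the firmware settings. The function names are hypothetical, and the package names in the comments (`virtualbox`, `wine-stable`) are assumed from Ubuntu 18.04’s universe repository.

```shell
#!/usr/bin/env bash
# Sketch: check for hardware virtualization before installing VirtualBox.
# Install commands (package names assumed from Ubuntu 18.04's universe repo):
#   sudo apt install virtualbox    # virtual machine route
#   sudo apt install wine-stable   # WINE route

# Count CPU "flags" lines that list vmx (Intel VT-x) or svm (AMD-V).
virtualization_flag_count() {
  if [ -r /proc/cpuinfo ]; then
    grep -E '^flags' /proc/cpuinfo | grep -Ecw 'vmx|svm' || true
  else
    echo 0
  fi
}

# Print a short verdict based on the count.
virtualization_report() {
  if [ "$(virtualization_flag_count)" -gt 0 ]; then
    echo "CPU advertises hardware virtualization"
  else
    echo "no vmx/svm flag found; 64-bit guests may not run"
  fi
}
```

Run `virtualization_report` first; if it finds no flag, check your BIOS/UEFI settings before trying a 64-bit Windows guest.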

#### What do you do after installing Ubuntu?

Those were my suggestions for getting started with Ubuntu. You can find many more tutorials under the [Ubuntu 18.04][44] tag. You may go through them as well to see if there is something useful for you.

Enough from my side. Your turn now. What are the items on your list of **things to do after installing Ubuntu 18.04**? The comment section is all yours.

--------------------------------------------------------------------------------

via: https://itsfoss.com/things-to-do-after-installing-ubuntu-18-04/

Author: [Abhishek Prakash][a]

Topic selected by: [lujun9972](https://github.com/lujun9972)

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://itsfoss.com/author/abhishek/

[1]:https://www.ubuntu.com/

[2]:https://itsfoss.com/ubuntu-18-04-release-features/

[3]:https://www.youtube.com/c/itsfoss?sub_confirmation=1

[4]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/04/things-to-after-installing-ubuntu-18-04-featured-800x450.jpeg

[5]:https://www.gnome.org/

[6]:https://kubuntu.org/

[7]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/10/software-update-ubuntu-17-10.jpg

[8]:https://help.ubuntu.com/community/Repositories/Ubuntu

[9]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/10/software-updates-ubuntu-17-10.jpg

[10]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/04/repositories-ubuntu-18.png

[11]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/10/software-repository-ubuntu-17-10.jpeg

[12]:apt://ubuntu-restricted-extras

[13]:https://itsfoss.com/remove-install-software-ubuntu/

[14]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2017/10/Ubuntu-software-center-17-10-800x551.jpeg

[15]:https://itsfoss.com/gdebi-default-ubuntu-software-center/

[16]:https://itsfoss.com/best-video-editing-software-linux/
