mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-27 02:30:10 +08:00

commit

3dcbfd3a5b

published

- 20150522 Analyzing Linux Logs.md
- 20150602 Howto Configure OpenVPN Server-Client on Ubuntu 15.04.md

201507

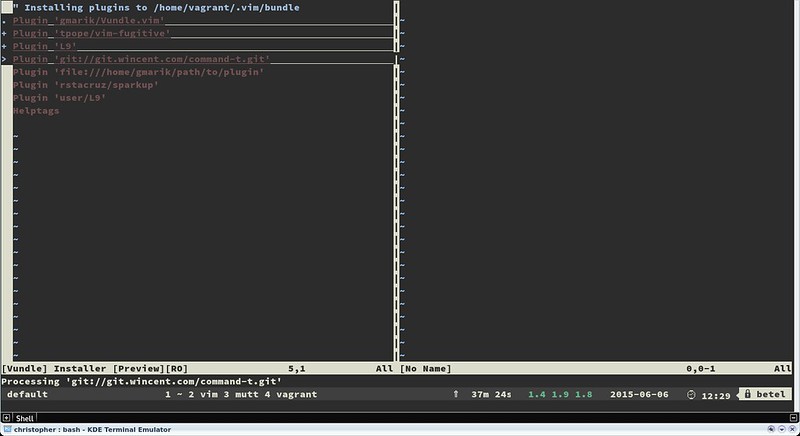

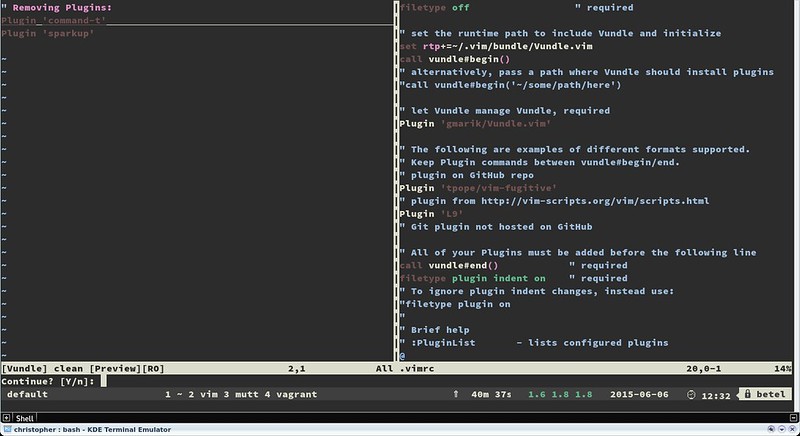

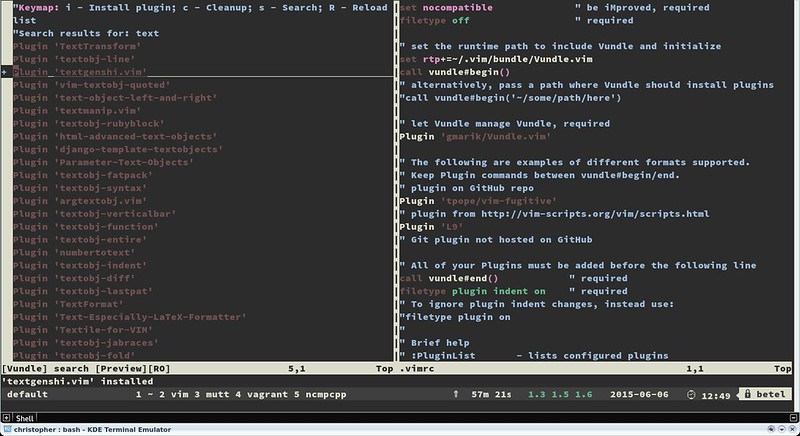

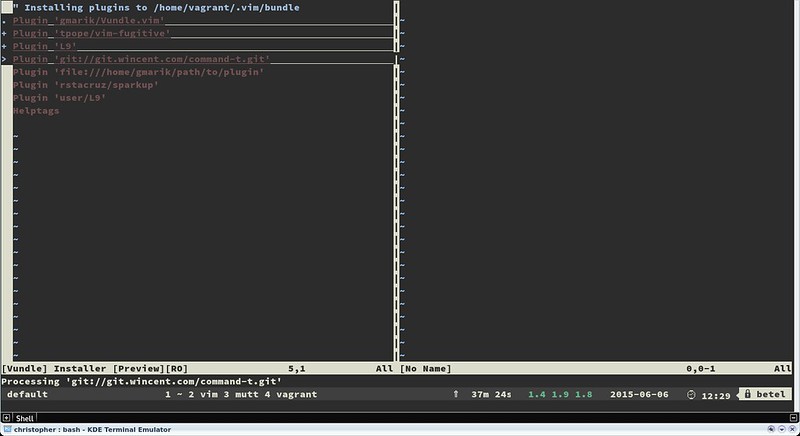

- 20150121 Syncthing--A Private And Secure Tool To Sync Files or Folders Between Computers.md
- 20150127 25 Useful Apache '.htaccess' Tricks to Secure and Customize Websites.md
- 20150227 Fix Minimal BASH like line editing is supported GRUB Error In Linux.md
- 20150309 Comparative Introduction To FreeBSD For Linux Users.md
- 20150401 ZMap Documentation.md
- 20150407 10 Truly Amusing Easter Eggs in Linux.md
- 20150410 10 Top Distributions in Demand to Get Your Dream Job.md
- 20150505 How to Manage 'Systemd' Services and Units Using 'Systemctl' in Linux.md
- 20150515 Lolcat--A Command Line Tool to Output Rainbow Of Colors in Linux Terminal.md
- 20150520 Is Linux Better than OS X GNU Open Source and Apple in History.md
- 20150526 20 Useful Terminal Emulators for Linux.md
- 20150527 Animated Wallpaper Adds Live Backgrounds To Linux Distros.md
- 20150527 How to Develop Own Custom Linux Distribution From Scratch.md
- 20150527 How to edit your documents collaboratively on Linux.md
- 20150601 How to monitor Linux servers with SNMP and Cacti.md
- 20150601 How to monitor common services with Nagios.md
- 20150603 Installing Ruby on Rails using rbenv on Ubuntu 15.04.md
- 20150604 How to access SQLite database in Perl.md
- 20150610 How to Manipulate Filenames Having Spaces and Special Characters in Linux.md
- 20150610 How to secure your Linux server.md
- 20150610 watch--Repeat Linux or Unix Commands Regular Intervals.md
- 20150612 How to Configure Apache Containers with Docker on Fedora 22.md
- 20150612 How to Configure Swarm Native Clustering for Docker.md
- 20150612 Linux_Logo--A Command Line Tool to Print Color ANSI Logos of Linux Distributions.md
- 20150615 How to combine two graphs on Cacti.md
- 20150616 Installing LAMP Linux, Apache, MariaDB, PHP or PhpMyAdmin in RHEL or CentOS 7.0.md
- 20150616 LINUX 101--POWER UP YOUR SHELL.md
- 20150616 Linux Humor on the Command-line.md
- 20150616 XBMC--build a remote control.md
- 20150617 Tor Browser--An Ultimate Web Browser for Anonymous Web Browsing in Linux.md
- 20150618 How to Setup Node.JS on Ubuntu 15.04 with Different Methods.md
- 20150618 What will be the future of Linux without Linus.md
- 20150625 Screen Capture Made Easy with these Dedicated Tools.md
- 20150629 4 Useful Tips on mkdir, tar and kill Commands in Linux.md
- 20150629 Backup with these DeDuplicating Encryption Tools.md
- 20150629 First Stable Version Of Atom Code Editor Has Been Released.md
- 20150706 PHP Security.md
- 20150709 7 command line tools for monitoring your Linux system.md
- 20150709 Install Google Hangouts Desktop Client In Linux.md
- 20150709 Linus Torvalds Says People Who Believe in AI Singularity Are on Drugs.md
- 20150709 Linux FAQs with Answers--How to fix 'tar--Exiting with failure status due to previous errors'.md
- 20150709 Linux FAQs with Answers--How to install a Brother printer on Linux.md
- 20150709 Linux FAQs with Answers--How to open multiple tabs in a GNOME terminal on Ubuntu 15.04.md
- 20150709 Why is the ibdata1 file continuously growing in MySQL.md
- 20150713 How To Fix System Program Problem Detected In Ubuntu 14.04.md
- 20150713 How to manage Vim plugins.md
- 20150716 4 CCleaner Alternatives For Ubuntu Linux.md
- 20150717 Setting Up 'XR' (Crossroads) Load Balancer for Web Servers on RHEL or CentOS.md
- 20150722 12 Useful PHP Commandline Usage Every Linux User Must Know.md
- 20150722 How to Use and Execute PHP Codes in Linux Command Line--Part 1.md
- Install Plex Media Server On Ubuntu or CentOS 7.1 or Fedora 22.md
- PHP 7 upgrading.md

sources

talk

- 20141219 2015 will be the year Linux takes over the enterprise and other predictions.md
- 20141224 The Curious Case of the Disappearing Distros.md
- 20150112 diff -u--What's New in Kernel Development.md
- 20150121 Did this JavaScript break the console.md
- 20150128 7 communities driving open source development.md
- 20150320 Revealed--The best and worst of Docker.md
- 20150709 Interviews--Linus Torvalds Answers Your Question.md
- 20150709 command line tools for monitoring your Linux system.md

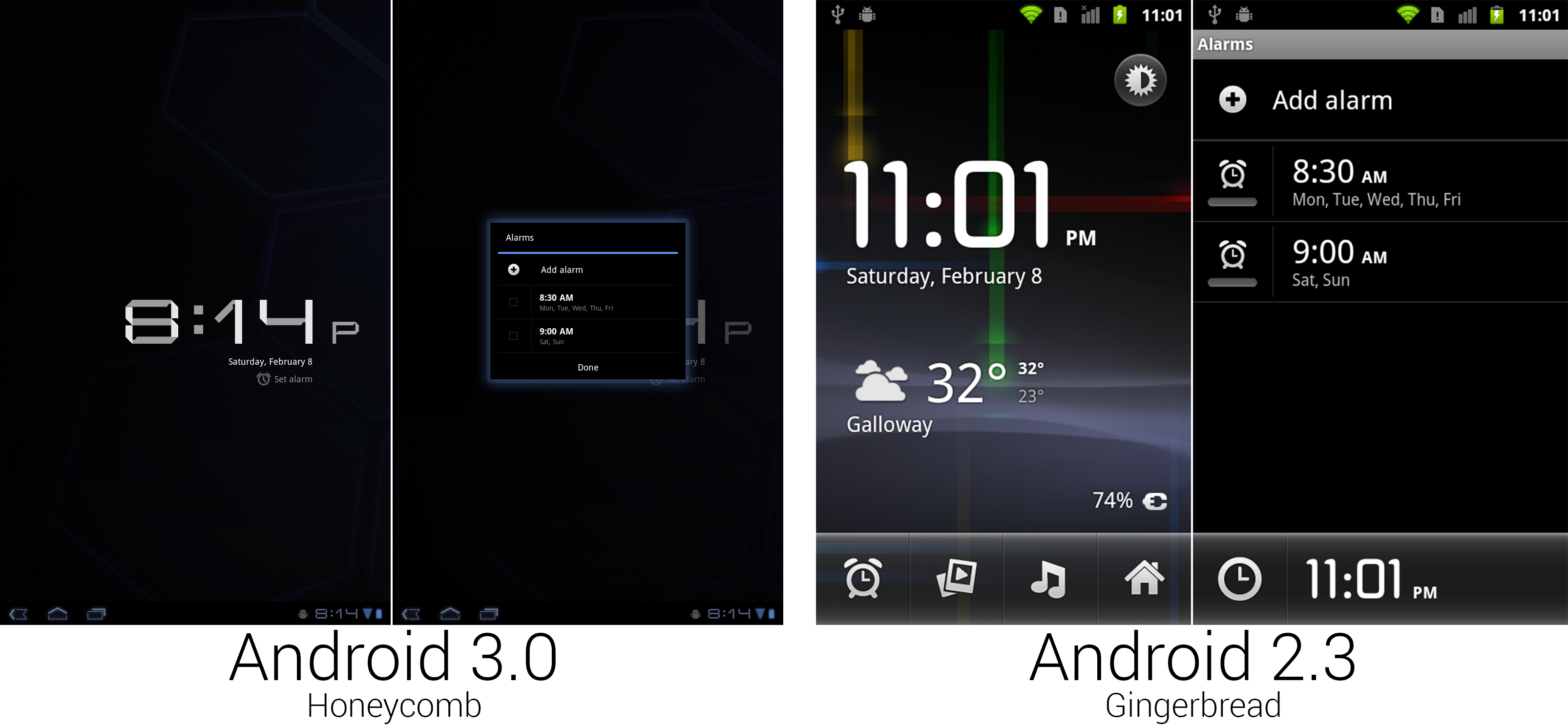

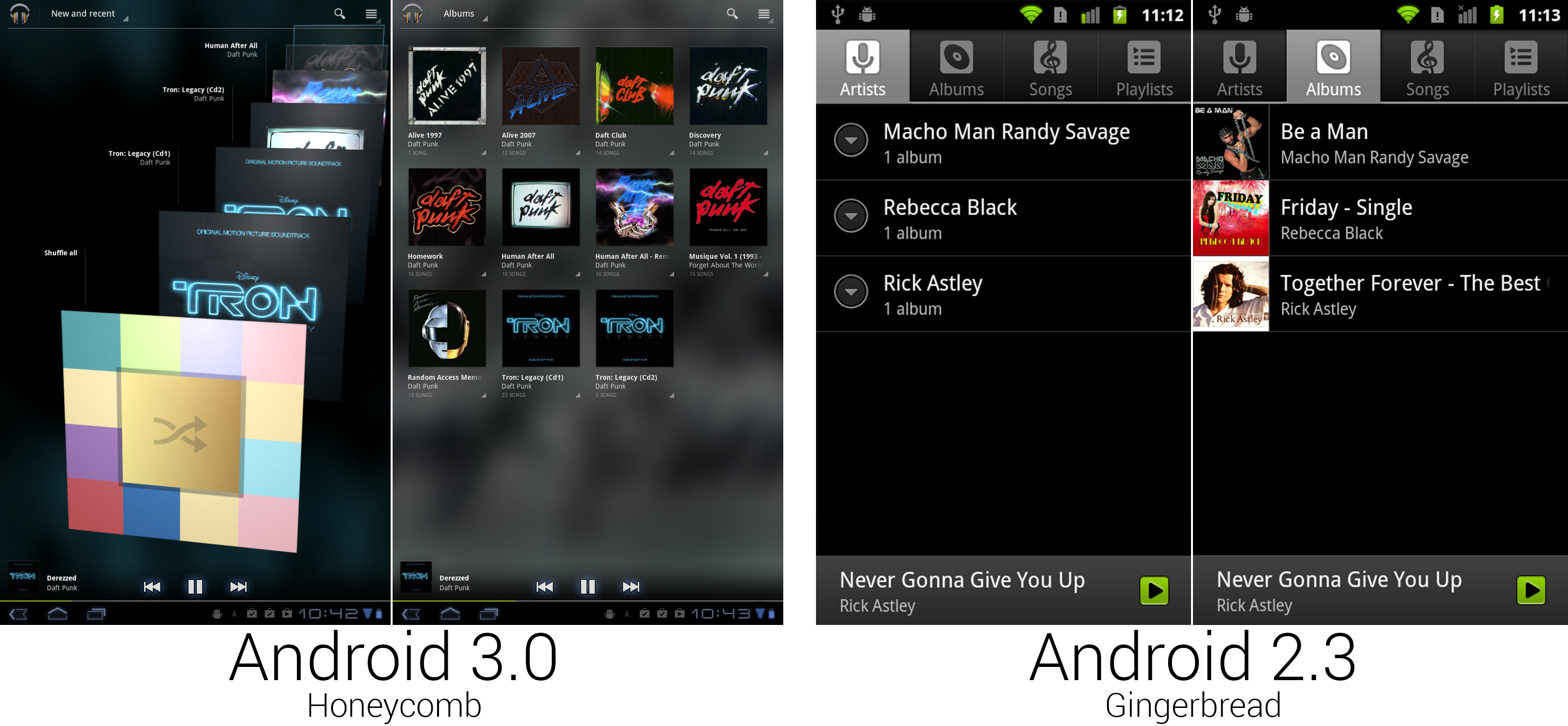

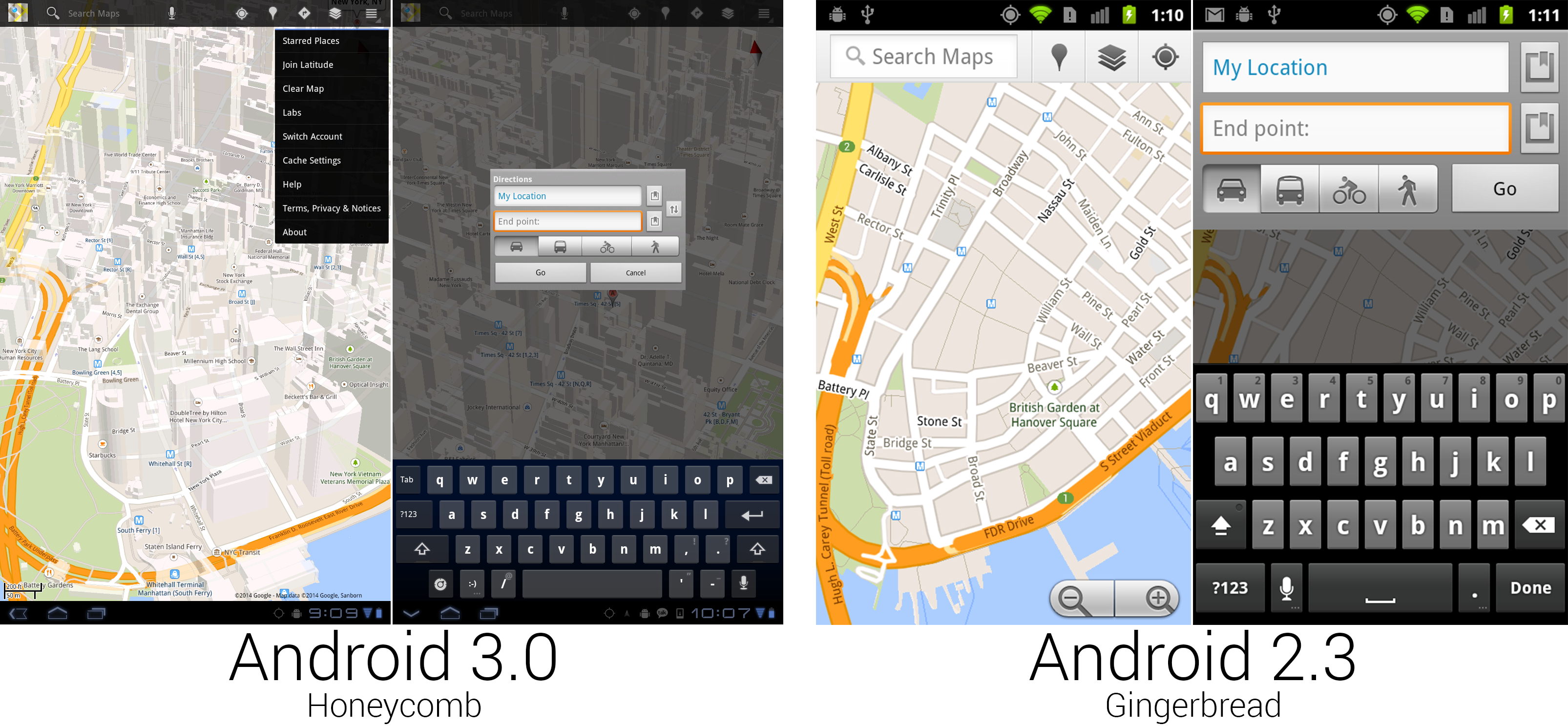

The history of Android

tech

- 20150225 How to set up IPv6 BGP peering and filtering in Quagga BGP router.md
- 20150504 How to access a Linux server behind NAT via reverse SSH tunnel.md
- 20150515 Install Plex Media Server On Ubuntu or CentOS 7.1 or Fedora 22.md
- 20150522 Analyzing Linux Logs.md
- 20150604 Nishita Agarwal Shares Her Interview Experience on Linux 'iptables' Firewall.md
- 20150612 How to Configure Swarm Native Clustering for Docker.md
- 20150625 How to Provision Swarm Clusters using Docker Machine.md
- 20150717 How to collect NGINX metrics - Part 2.md
- 20150717 How to monitor NGINX with Datadog - Part 3.md
- 20150717 Howto Configure FTP Server with Proftpd on Fedora 22.md
- 20150722 12 Useful PHP Commandline Usage Every Linux User Must Know.md
- 20150722 How To Fix 'The Update Information Is Outdated' In Ubuntu 14.04.md
- 20150722 How To Manage StartUp Applications In Ubuntu.md
- 20150722 Howto Interactively Perform Tasks with Docker using Kitematic.md
- 20150728 Process of the Linux kernel building.md
- 20150728 Understanding Shell Commands Easily Using 'Explain Shell' Script in Linux.md
- 20150730 How to Setup iTOP (IT Operational Portal) on CentOS 7.md
- 20150730 Howto Configure Nginx as Rreverse Proxy or Load Balancer with Weave and Docker.md

RHCSA Series

translated

talk

- 20150309 Comparative Introduction To FreeBSD For Linux Users.md
- 20150520 Is Linux Better than OS X GNU Open Source and Apple in History.md

The history of Android

tech

- 20150616 LINUX 101--POWER UP YOUR SHELL.md
- 20150625 How to Provision Swarm Clusters using Docker Machine.md
- 20150713 How to manage Vim plugins.md
- 20150717 How to Configure Chef (server or client) on Ubuntu 14.04 or 15.04.md
- 20150717 How to collect NGINX metrics - Part 2.md
- 20150717 Howto Configure FTP Server with Proftpd on Fedora 22.md
- 20150722 How To Fix 'The Update Information Is Outdated' In Ubuntu 14.04.md
- 20150722 How To Manage StartUp Applications In Ubuntu.md
- 20150722 Howto Interactively Perform Tasks with Docker using Kitematic.md
- 20150727 Easy Backup Restore and Migrate Containers in Docker.md
- 20150728 How To Fix--There is no command installed for 7-zip archive files.md
- 20150728 How to Update Linux Kernel for Improved System Performance.md
- 20150728 Tips to Create ISO from CD, Watch User Activity and Check Memory Usages of Browser.md

183

published/20150522 Analyzing Linux Logs.md

Normal file


@ -0,0 +1,183 @@

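How to Analyze Linux Logs
==============================================================================

There's a great deal of information in your logs for you to work with, although extracting it isn't always as easy as you'd like. In this article we'll cover some examples of basic log analysis you can do right now (just by searching). We'll also cover some more advanced analysis that takes some upfront effort to set up properly but saves you a lot of time later on. Examples of advanced analysis include generating summary counts, filtering on field values, and more.

We'll first show you how to do this at the command line with several different tools, and then show how a log management tool can automate most of the heavy lifting and make log analysis simple.

### Searching with Grep ###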

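Searching text is the most basic way to find information. The most common tool for searching text is [grep][1]. This command-line tool, available in most Linux distributions, lets you search your logs using regular expressions. A regular expression is a pattern, written in a special language, that identifies matching text. The simplest pattern is just the string you're looking for, enclosed in quotes.

#### Regular Expressions ####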

Here's an example that searches the authentication log on an Ubuntu system for entries about the user hoover:

    $ grep "hoover" /var/log/auth.log
    Accepted password for hoover from 10.0.2.2 port 4792 ssh2
    pam_unix(sshd:session): session opened for user hoover by (uid=0)
    pam_unix(sshd:session): session closed for user hoover

Building precise regular expressions can be hard. For example, if we search for a number like the port "4792", it can also match timestamps, URLs, and other unwanted data. In the following example on Ubuntu, it matched an Apache log line that we didn't want:

    $ grep "4792" /var/log/auth.log
    Accepted password for hoover from 10.0.2.2 port 4792 ssh2
    74.91.21.46 - - [31/Mar/2015:19:44:32 +0000] "GET /scripts/samples/search?q=4792 HTTP/1.0" 404 545 "-" "-"
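One way to rule out matches like the Apache line is to anchor the number to its surrounding text. The Python sketch below is our own illustration, not part of the original article: it compares a bare `4792` pattern with `\bport 4792\b`, which requires the word "port" in front of the number and a word boundary after it. The two sample lines are modeled on the output above, and `search` is a hypothetical helper. With grep itself, `grep -w "port 4792"` applies the same idea.

```python
import re

# Two sample lines modeled on the grep "4792" output above.
LINES = [
    "Accepted password for hoover from 10.0.2.2 port 4792 ssh2",
    '74.91.21.46 - - [31/Mar/2015:19:44:32 +0000] '
    '"GET /scripts/samples/search?q=4792 HTTP/1.0" 404 545 "-" "-"',
]

# A bare number matches anywhere in the line.
BARE = re.compile(r"4792")
# Requiring "port " in front and a word boundary after it narrows the
# match to the SSH port field.
PORT = re.compile(r"\bport 4792\b")


def search(pattern, lines):
    """Return the lines in which `pattern` occurs, like grep."""
    return [line for line in lines if pattern.search(line)]


if __name__ == "__main__":
    print(len(search(BARE, LINES)))  # both lines contain 4792
    print(search(PORT, LINES))       # only the sshd line has "port 4792"
```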

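#### Surround Search ####

Another useful trick is surround search with grep, which shows you the lines just before or after a match. This can help you debug what led up to an error or problem. The `-B` option shows the lines before a match, and `-A` shows the lines after it. For example, we know that when someone fails to log in as admin and their IP address also doesn't reverse-resolve, they may not have a valid domain name. That's very suspicious!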

    $ grep -B 3 -A 2 'Invalid user' /var/log/auth.log
    Apr 28 17:06:20 ip-172-31-11-241 sshd[12545]: reverse mapping checking getaddrinfo for 216-19-2-8.commspeed.net [216.19.2.8] failed - POSSIBLE BREAK-IN ATTEMPT!
    Apr 28 17:06:20 ip-172-31-11-241 sshd[12545]: Received disconnect from 216.19.2.8: 11: Bye Bye [preauth]
    Apr 28 17:06:20 ip-172-31-11-241 sshd[12547]: Invalid user admin from 216.19.2.8
    Apr 28 17:06:20 ip-172-31-11-241 sshd[12547]: input_userauth_request: invalid user admin [preauth]
    Apr 28 17:06:20 ip-172-31-11-241 sshd[12547]: Received disconnect from 216.19.2.8: 11: Bye Bye [preauth]
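To make the `-B`/`-A` behavior concrete, here is a minimal Python sketch of surround search. `grep_context` is a hypothetical helper, not a standard function: it keeps a small buffer of recent lines so it can emit them when a match appears, and it counts down the lines still owed after a match. Unlike grep, it prints no `--` separators between groups.

```python
from collections import deque


def grep_context(lines, needle, before=3, after=2):
    """Yield each line containing `needle`, plus up to `before` preceding
    and `after` following lines, roughly like grep -B/-A.

    Every line is yielded at most once, even when groups overlap.
    """
    pending = deque(maxlen=before)  # recent lines not yet yielded
    trailing = 0                    # "after" lines still owed
    for line in lines:
        if needle in line:
            yield from pending      # the "before" context
            pending.clear()
            yield line
            trailing = after
        elif trailing > 0:
            yield line              # the "after" context
            trailing -= 1
        else:
            pending.append(line)


if __name__ == "__main__":
    with open("/var/log/auth.log") as log:
        for line in grep_context(log, "Invalid user", before=3, after=2):
            print(line, end="")
```

Lines that were already printed as "after" context are never buffered again, which is why overlapping matches don't produce duplicates.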

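#### Tail ####

You can also combine grep with [tail][2] to get the last few lines of a file, or to follow a log and print new entries in real time. This is useful when you're making interactive changes, such as starting a server or testing a code change.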

    $ tail -f /var/log/auth.log | grep 'Invalid user'
    Apr 30 19:49:48 ip-172-31-11-241 sshd[6512]: Invalid user ubnt from 219.140.64.136
    Apr 30 19:49:49 ip-172-31-11-241 sshd[6514]: Invalid user admin from 219.140.64.136
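The `tail -f | grep` pipeline amounts to polling a file for newly appended lines and filtering them. The Python sketch below shows that idea under simplifying assumptions. `follow` and `matching` are hypothetical helpers. `follow` takes a `readline` callable, so it can be exercised without a real log file, and it handles neither log rotation (which `tail -F` does) nor partially written lines.

```python
import time


def follow(readline, poll_interval=1.0, max_idle_polls=None):
    """Yield lines returned by `readline`, polling when none are available.

    Mimics `tail -f` on an already-open file: pass `log.readline`.
    Stops after `max_idle_polls` consecutive empty reads; None means
    follow forever.
    """
    idle = 0
    while max_idle_polls is None or idle < max_idle_polls:
        line = readline()
        if line:
            idle = 0
            yield line.rstrip("\n")
        else:
            idle += 1
            time.sleep(poll_interval)


def matching(lines, needle):
    """Keep only lines containing `needle`, like piping into grep."""
    return (line for line in lines if needle in line)


if __name__ == "__main__":
    with open("/var/log/auth.log") as log:
        log.seek(0, 2)  # start at the end so only new entries are shown
        for line in matching(follow(log.readline), "Invalid user"):
            print(line)
```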

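A detailed introduction to grep and regular expressions is beyond the scope of this guide, but [Ryan's Tutorials][3] covers them in more depth.

Log management systems offer higher performance and more powerful search. They usually index their data and run queries in parallel, so you can search gigabytes or terabytes of logs in seconds. By comparison, grep would take minutes, and in extreme cases even hours. Log management systems also use query languages like [Lucene][4], which provide a simpler syntax for searching on numbers, fields, and more.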

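### Parsing with Cut, AWK, and Grok ###

#### Command-Line Tools ####

Linux offers several command-line tools for parsing and analyzing text. They are handy when you want to parse a small amount of data quickly, but they can take a long time to process large volumes of data.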

#### Cut ####

The [cut][5] command lets you parse fields out of delimited logs. A delimiter is a character, such as an equals sign or a comma, that separates fields or key-value pairs.

Suppose we want to parse the user out of the following log line:

    pam_unix(su:auth): authentication failure; logname=hoover uid=1000 euid=0 tty=/dev/pts/0 ruser=hoover rhost= user=root

We can use cut as follows to get the text of the eighth field, splitting on equals signs. Here is an example on an Ubuntu system:

    $ grep "authentication failure" /var/log/auth.log | cut -d '=' -f 8
    root
    hoover
    root
    nagios
    nagios
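`cut` selects by position, so the same command silently returns the wrong value if a message gains or loses an `=`. As a sketch of the alternative, the Python below extracts the user from the sample line in two ways. The first is positional and mirrors `cut -d '=' -f 8`. The second looks the value up by key, assuming values contain no spaces, which holds for this PAM message but not for every log format. `parse_kv` is a hypothetical helper for this example.

```python
def parse_kv(line):
    """Return a dict of the key=value tokens in a log line.

    Tokens without '=' are skipped, and an empty value such as 'rhost='
    becomes ''. Assumes values contain no spaces.
    """
    fields = {}
    for token in line.split():
        key, sep, value = token.partition("=")
        if sep:
            fields[key] = value
    return fields


LINE = ("pam_unix(su:auth): authentication failure; logname=hoover uid=1000 "
        "euid=0 tty=/dev/pts/0 ruser=hoover rhost= user=root")

if __name__ == "__main__":
    # Positional, like cut -d '=' -f 8 (cut counts fields from 1).
    print(LINE.split("=")[7])
    # By name, which keeps working if fields are added or reordered.
    print(parse_kv(LINE)["user"])
```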

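#### AWK ####

Alternatively, you can use [awk][6], which offers more powerful field parsing. It provides a scripting language that lets you filter out nearly everything that isn't relevant.

For example, suppose we have the following log line on an Ubuntu system, and we want to extract the name of the user who failed to log in:

    Mar 24 08:28:18 ip-172-31-11-241 sshd[32701]: input_userauth_request: invalid user guest [preauth]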

You can use awk as shown below. First, the regular expression `/sshd.*invalid user/` matches the sshd invalid user lines. Then `{ print $9 }` prints the ninth field, using the default delimiter of whitespace. This outputs the usernames.

    $ awk '/sshd.*invalid user/ { print $9 }' /var/log/auth.log
    guest
    admin
    info
    test
    ubnt
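For comparison, this Python sketch mirrors the awk one-liner: the same regular expression selects lines, and the ninth whitespace-separated field is printed. `invalid_users` is a hypothetical name. Python lists are 0-based, so awk's `$9` becomes index 8.

```python
import re

# Same pattern as the awk program. Like awk's /.../, it is case-sensitive,
# so "Invalid user ..." lines are not selected.
INVALID_USER = re.compile(r"sshd.*invalid user")


def invalid_users(lines):
    """Yield the ninth whitespace-separated field of each matching line,
    mimicking: awk '/sshd.*invalid user/ { print $9 }'."""
    for line in lines:
        if INVALID_USER.search(line):
            fields = line.split()   # awk's default: split on runs of blanks
            if len(fields) >= 9:    # awk would print an empty line instead
                yield fields[8]     # awk's $9 is 1-based


if __name__ == "__main__":
    with open("/var/log/auth.log") as log:
        for user in invalid_users(log):
            print(user)
```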

You can read more about using regular expressions and printing fields in the [Awk User's Guide][7].

#### Log Management Systems ####

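Log management systems make parsing easier, letting users quickly analyze large numbers of log files. They can automatically parse standard log formats, such as common Linux logs and web server logs. This saves a lot of time, because you don't have to think about writing your own parsing logic when troubleshooting a system problem.

Below is an example of sshd log messages with each remoteHost and user parsed out. It's a screenshot from Loggly, a cloud-based log management service.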

You can also define custom parsing for non-standard formats. A common tool for this is [Grok][8], which uses a library of common regular expressions to parse raw text into structured JSON. Below is an example configuration in which Grok, running in Logstash, parses kernel log files:

    filter{
      grok {
        match => {"message" => "%{CISCOTIMESTAMP:timestamp} %{HOST:host} %{WORD:program}%{NOTSPACE} %{NOTSPACE}%{NUMBER:duration}%{NOTSPACE} %{GREEDYDATA:kernel_logs}"}
      }
    }

The following figure shows the output after Grok parsing:
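To get a feel for what such a Grok pattern extracts, the Python sketch below approximates it with named groups, pulling the timestamp, host, program, duration, and message out of a kernel log line and serializing the result as JSON. The regular expressions are simplified stand-ins chosen for illustration, not Grok's real definitions of `CISCOTIMESTAMP`, `HOST`, and the rest, and the sample line is made up.

```python
import json
import re

# Simplified stand-ins for the Grok patterns in the Logstash config above.
KERNEL_LINE = re.compile(
    r"(?P<timestamp>[A-Z][a-z]{2} +\d{1,2} \d{2}:\d{2}:\d{2}) "  # ~ CISCOTIMESTAMP
    r"(?P<host>\S+) "                                         # ~ HOST
    r"(?P<program>\w+)\S* "                                   # WORD, then e.g. ":"
    r"\[\s*(?P<duration>\d+(?:\.\d+)?)\] "                    # "[", NUMBER, "]"
    r"(?P<kernel_logs>.*)"                                    # ~ GREEDYDATA
)


def parse_kernel_line(line):
    """Return the named fields as a dict, or None if the line doesn't match."""
    match = KERNEL_LINE.match(line)
    return match.groupdict() if match else None


# Hypothetical kernel log line, invented for this example.
SAMPLE = ("Mar 11 18:18:00 hoover-VirtualBox kernel: [   12.345678] "
          "e1000: eth0 NIC Link is Up")

if __name__ == "__main__":
    print(json.dumps(parse_kernel_line(SAMPLE), indent=2))
```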

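### Filtering with Rsyslog and AWK ###

Filtering lets you search on a specific field value instead of doing a full-text search. This makes your log analysis more accurate, because it ignores unwanted matches from other parts of the log message. To search on a field value, you first need to parse your logs, or at least have a way of searching based on the structure of the events.

#### How to Filter on an Application ####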

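Often, you only want to see the logs from one application. This is easy if your application writes its records to a single file. It's more complicated if you need to filter one application out of an aggregated or centralized log. Here are several ways to do it:

1. Use the rsyslog daemon to parse and filter logs. The following example writes logs from the sshd application to a file named sshd-messages, then discards the event so that it isn't repeated elsewhere. You can try this example by adding it to your rsyslog.conf file.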

    :programname, isequal, "sshd" /var/log/sshd-messages
    &~

2. Use command-line tools like awk to extract the values of a particular field, such as the sshd username. Here is an example on an Ubuntu system.

    $ awk '/sshd.*invalid user/ { print $9 }' /var/log/auth.log
    guest
    admin
    info
    test
    ubnt

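3. Use a log management system that parses your logs automatically, then click to filter on the application name you want. Below is a screenshot from the Loggly log management service showing the extracted syslog fields. We are filtering on the application name "sshd", as indicated by the Venn diagram icon.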
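The effect of the rsyslog rule in the first option above (route one program's messages to their own file and drop them from the general stream) can be sketched in Python as follows. This illustrates the logic only, not how rsyslog works internally. `split_by_program` is a hypothetical helper, and it assumes traditional syslog lines of the form `Mon DD HH:MM:SS host program[pid]: message`. Newer rsyslog versions use the `stop` statement in place of the legacy `&~` discard action.

```python
import re

# Program name in a traditional syslog line:
# "Mon DD HH:MM:SS host program[pid]: message"
PROGRAM = re.compile(r"^\w{3} +\d{1,2} [\d:]{8} \S+ ([^\s\[:]+)")


def split_by_program(lines, program="sshd"):
    """Return (matching, rest): the lines logged by `program`, and all others.

    Matching lines go only into the first list, much as the rsyslog rule
    writes them to their own file and then discards them.
    """
    matching, rest = [], []
    for line in lines:
        m = PROGRAM.match(line)
        if m and m.group(1) == program:
            matching.append(line)
        else:
            rest.append(line)
    return matching, rest


if __name__ == "__main__":
    with open("/var/log/syslog") as log:
        sshd_lines, others = split_by_program(log)
    print(f"{len(sshd_lines)} sshd lines, {len(others)} other lines")
```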

#### How to Filter on Errors ####

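What people most want to see in their logs is errors. Unfortunately, the default syslog configuration doesn't output the severity of errors directly, which makes it hard to filter on them.

There are two ways to solve this problem. First, you can modify your rsyslog configuration to output the severity in the log file, making it easier to read and search. In your rsyslog configuration you can add a [template][9] that uses pri-text, such as the following: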

    "<%pri-text%> : %timegenerated%,%HOSTNAME%,%syslogtag%,%msg%\n"

This example produces output in the following format. You can see that the severity of this message is err, indicating an error.

    <authpriv.err> : Mar 11 18:18:00,hoover-VirtualBox,su[5026]:, pam_authenticate: Authentication failure

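You can use awk or grep to search for just the error messages. In this example on Ubuntu, we include some of the surrounding syntax, the . and the >, so that the pattern only matches this field. The dot is escaped so that grep treats it literally rather than as "any character".

    $ grep '\.err>' /var/log/auth.log
    <authpriv.err> : Mar 11 18:18:00,hoover-VirtualBox,su[5026]:, pam_authenticate: Authentication failure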
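The grep above only finds messages whose severity is `err`. As a sketch of a slightly broader filter, the Python below reads the `<facility.severity` prefix written by the template and keeps anything at `err` or more severe (`crit`, `alert`, `emerg`). The keywords follow the standard syslog severity order; `at_least` is a hypothetical helper, and lines without a recognizable prefix are skipped.

```python
import re

# Syslog severity keywords, most severe first (numeric codes 0-7).
SEVERITIES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]

# Matches the leading "<facility.severity" written by the template above.
PRI_PREFIX = re.compile(r"^<(?P<facility>\w+)\.(?P<severity>\w+)")


def at_least(lines, threshold="err"):
    """Yield lines whose severity is `threshold` or more severe."""
    limit = SEVERITIES.index(threshold)
    for line in lines:
        m = PRI_PREFIX.match(line)
        if m and m.group("severity") in SEVERITIES:
            if SEVERITIES.index(m.group("severity")) <= limit:
                yield line


if __name__ == "__main__":
    with open("/var/log/auth.log") as log:
        for line in at_least(log):
            print(line, end="")
```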

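Your second option is to use a log management system. Good log management systems automatically parse syslog messages and extract the severity field. They also let you filter log messages on a particular severity with a single click.

Below is a screenshot from Loggly showing the syslog fields with the error severity highlighted, indicating that we are filtering on errors: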

--------------------------------------------------------------------------------

via: http://www.loggly.com/ultimate-guide/logging/analyzing-linux-logs/

Authors: [Jason Skowronski][a], [Amy Echeverri][b], [Sadequl Hussain][c]
Translator: [ictlyh](https://github.com/ictlyh)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://www.linkedin.com/in/jasonskowronski
[b]:https://www.linkedin.com/in/amyecheverri
[c]:https://www.linkedin.com/pub/sadequl-hussain/14/711/1a7

[1]:http://linux.die.net/man/1/grep
[2]:http://linux.die.net/man/1/tail
[3]:http://ryanstutorials.net/linuxtutorial/grep.php
[4]:https://lucene.apache.org/core/2_9_4/queryparsersyntax.html
[5]:http://linux.die.net/man/1/cut
[6]:http://linux.die.net/man/1/awk
[7]:http://www.delorie.com/gnu/docs/gawk/gawk_26.html#IDX155
[8]:http://logstash.net/docs/1.4.2/filters/grok
[9]:http://www.rsyslog.com/doc/v8-stable/configuration/templates.html

@ -1,18 +1,18 @@

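Configuring OpenVPN Server-Client on Ubuntu 15.04

Configuring an OpenVPN Server and Client on Ubuntu 15.04
================================================================================

A virtual private network (VPN) is a common name for several network technologies used to establish connections with other networks. It is called virtual because the connections between nodes are not made over physical wires. And because the network cannot be accessed over public lines without proper authorization from its owner, it is private.

A virtual private network (VPN) usually refers to several technologies for establishing connections over another network. It is called "virtual" because the connections between nodes are not made over physical wires, and "private" because it cannot be accessed publicly without proper authorization from the network's owner.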

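The [OpenVPN][1] software transmits data over the TCP and UDP protocols with the help of the TUN/TAP driver. The UDP protocol and the TUN driver allow users behind NAT to establish connections to an OpenVPN server. In addition, OpenVPN lets you specify a custom port. It also offers flexible configuration that can help you get around firewall restrictions.

The [OpenVPN][1] software uses the TUN/TAP driver to transmit data over the TCP and UDP protocols. The UDP protocol and the TUN driver allow users behind NAT to establish connections to an OpenVPN server. In addition, OpenVPN lets you specify a custom port. It offers even more flexible configuration, which can help you get around firewall restrictions.

In OpenVPN, security and encryption are provided by the OpenSSL library and the Transport Layer Security (TLS) protocol. TLS is an improved version of the SSL protocol.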

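OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show how to configure the server side of OpenVPN, and how to prepare for using asymmetric encryption with public keys and the TLS protocol infrastructure (PKI).

OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show how to configure the server side of OpenVPN, and how to configure asymmetric encryption and the TLS protocol using a public key infrastructure (PKI).

### Server-Side Configuration ###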

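First, we must install OpenVPN. On Ubuntu 15.04 and other systems that use the 'apt' package manager, it can be installed with the following command:

First, we must install the OpenVPN software. On Ubuntu 15.04 and other systems that use the 'apt' package manager, it can be installed with the following command:

    sudo apt-get install openvpn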

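@ -20,7 +20,7 @@ OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show

    sudo apt-get install easy-rsa

**Note**: All of the following commands must be run with superuser privileges, e.g. after running "sudo -i"; alternatively, you can prefix each of the following commands with "sudo -E".

**Note**: All of the following commands must be run with superuser privileges, e.g. after running `sudo -i`, or you can prefix each of the following commands with `sudo -E`.

Before we begin, we need to copy "easy-rsa" into the openvpn folder.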

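@ -32,15 +32,15 @@ OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show

    cd /etc/openvpn/easy-rsa/2.0

Here, we started a key generation process.

Here, we start the key generation process.

First, we edit a "var" file. To simplify the generation process, we need to specify our data in it. Here is a sample "var" file:

First, we edit the "vars" file. To simplify the generation process, we need to specify our data in it. Here is a sample "vars" file: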

    export KEY_COUNTRY="US"
    export KEY_PROVINCE="CA"
    export KEY_CITY="SanFrancisco"
    export KEY_ORG="Fort-Funston"
    export KEY_EMAIL="my@myhost.mydomain"
    export KEY_COUNTRY="CN"
    export KEY_PROVINCE="BJ"
    export KEY_CITY="Beijing"
    export KEY_ORG="Linux.CN"
    export KEY_EMAIL="open@vpn.linux.cn"
    export KEY_OU=server

Hopefully these field names are clear enough that no further explanation is needed.

@ -61,7 +61,7 @@ OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show

    ./build-ca

In the dialog, we can see the default values of the variables we specified earlier in "vars". We can check them, edit them if necessary, and press Enter a few times. The dialog looks like this:

    Generating a 2048 bit RSA private key
    .............................................+++

@ -75,14 +75,14 @@ OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show

    For some fields there will be a default value,
    If you enter '.', the field will be left blank.
    -----
    Country Name (2 letter code) [US]:
    State or Province Name (full name) [CA]:
    Locality Name (eg, city) [SanFrancisco]:
    Organization Name (eg, company) [Fort-Funston]:
    Organizational Unit Name (eg, section) [MyOrganizationalUnit]:
    Common Name (eg, your name or your server's hostname) [Fort-Funston CA]:
    Country Name (2 letter code) [CN]:
    State or Province Name (full name) [BJ]:
    Locality Name (eg, city) [Beijing]:
    Organization Name (eg, company) [Linux.CN]:
    Organizational Unit Name (eg, section) [Tech]:
    Common Name (eg, your name or your server's hostname) [Linux.CN CA]:
    Name [EasyRSA]:
    Email Address [me@myhost.mydomain]:
    Email Address [open@vpn.linux.cn]:

Next, we need to generate a server key

@ -102,14 +102,14 @@ OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show

    For some fields there will be a default value,
    If you enter '.', the field will be left blank.
    -----
    Country Name (2 letter code) [US]:
    State or Province Name (full name) [CA]:
    Locality Name (eg, city) [SanFrancisco]:
    Organization Name (eg, company) [Fort-Funston]:
    Organizational Unit Name (eg, section) [MyOrganizationalUnit]:
    Common Name (eg, your name or your server's hostname) [server]:
    Country Name (2 letter code) [CN]:
    State or Province Name (full name) [BJ]:
    Locality Name (eg, city) [Beijing]:
    Organization Name (eg, company) [Linux.CN]:
    Organizational Unit Name (eg, section) [Tech]:
    Common Name (eg, your name or your server's hostname) [Linux.CN server]:
    Name [EasyRSA]:
    Email Address [me@myhost.mydomain]:
    Email Address [open@vpn.linux.cn]:

    Please enter the following 'extra' attributes
    to be sent with your certificate request

@ -119,14 +119,14 @@ OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show

    Check that the request matches the signature
    Signature ok
    The Subject's Distinguished Name is as follows
    countryName :PRINTABLE:'US'
    stateOrProvinceName :PRINTABLE:'CA'
    localityName :PRINTABLE:'SanFrancisco'
    organizationName :PRINTABLE:'Fort-Funston'
    organizationalUnitName:PRINTABLE:'MyOrganizationalUnit'
    commonName :PRINTABLE:'server'
    countryName :PRINTABLE:'CN'
    stateOrProvinceName :PRINTABLE:'BJ'
    localityName :PRINTABLE:'Beijing'
    organizationName :PRINTABLE:'Linux.CN'
    organizationalUnitName:PRINTABLE:'Tech'
    commonName :PRINTABLE:'Linux.CN server'
    name :PRINTABLE:'EasyRSA'
    emailAddress :IA5STRING:'me@myhost.mydomain'
    emailAddress :IA5STRING:'open@vpn.linux.cn'
    Certificate is to be certified until May 22 19:00:25 2025 GMT (3650 days)
    Sign the certificate? [y/n]:y
    1 out of 1 certificate requests certified, commit? [y/n]y

@ -143,7 +143,7 @@ OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show

    Generating DH parameters, 2048 bit long safe prime, generator 2
    This is going to take a long time
    ................................+................<and many many dots>

After a long wait, we can go on to generate the last key, which is used for TLS authentication. The command is as follows:

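@ -176,7 +176,7 @@ OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show

### Client Configuration on Unix ###

Suppose we have a device running a Unix-like operating system, such as Ubuntu 15.04, with OpenVPN installed. We want to connect to the OpenVPN server from the previous section. First, we need to generate keys for the client. To do so, go to the directory on the server:

Suppose we have a device running a Unix-like operating system, such as Ubuntu 15.04, with OpenVPN installed. We want to connect to the OpenVPN server we set up earlier. First, we need to generate keys for the client. To do so, go to the corresponding directory on the server:

    cd /etc/openvpn/easy-rsa/2.0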

@ -211,7 +211,7 @@ OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show

    dev tun
    proto udp

    # IP and Port of remote host with OpenVPN server
    remote 111.222.333.444 1194

    resolv-retry infinite

@ -243,7 +243,7 @@ OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show

Configuring OpenVPN on an Android device is very similar to configuring it on a Unix system: we need a bundle containing the configuration file, keys, and certificates. The files are:

- configuration file (.ovpn),
- ca.crt,
- dh2048.pem,
- client.crt,

@ -257,7 +257,7 @@ OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show

    dev tun
    proto udp

    # IP and Port of remote host with OpenVPN server
    remote 111.222.333.444 1194

    resolv-retry infinite

@ -274,21 +274,21 @@ OpenSSL provides two encryption methods: symmetric and asymmetric. Below, we show

We need to move all of these files to our device's SD card.

Then, we need to install the [OpenVPN Connect][2] app.

Next, the configuration process is quite simple:

- Open the OpenVPN settings and select the "Import" option
- Select "Import Profile from SD card"
- In the window that opens, navigate to the folder containing the prepared files and select the .ovpn file
- The application offers to create a new profile
- Tap the "Connect" button and wait a moment

That's it. Our Android device is now connected to our private network over a secure VPN connection.

### Conclusion ###

Although the initial OpenVPN configuration takes a fair amount of time, the simple client configuration makes up for it and lets us connect from any device. In addition, OpenVPN offers a high level of security and the ability to connect from different locations, including from clients behind NAT. As a result, OpenVPN can be used both at home and in the enterprise.

--------------------------------------------------------------------------------

@ -296,7 +296,7 @@ via: http://linoxide.com/ubuntu-how-to/configure-openvpn-server-client-ubuntu-15

Author: [Ivan Zabrovskiy][a]
Translator: [GOLinux](https://github.com/GOLinux)
Proofreader: [Proofreader ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

@ -1,10 +1,10 @@

Syncthing: A Private File/Folder Secure Sync Tool Across Computers

Syncthing: A Private and Secure Tool for Syncing Files/Folders Between Computers
================================================================================

### Introduction ###

**Syncthing** is a free, open-source tool that syncs files/folders between the computers on your network. Unlike other sync tools such as **BitTorrent Sync** and **Dropbox**, it transfers data directly from one system to another, and it is fully open source, secure, and private. All of your precious data is stored on your own systems, so you have full control over your files and folders, and none of them are stored on third-party systems. Moreover, you decide where your data is stored, whether it is shared with third parties, and how it is transmitted over the Internet.

All communication is encrypted with TLS, so your data stays safe from prying eyes. Syncthing has a powerful, responsive web admin interface (WebGUI) that lets users easily add, remove, and manage the folders synced over the network. With Syncthing, you can sync multiple folders across multiple systems at once. In terms of installation and use, Syncthing is a portable, simple, yet powerful tool. Since files and folders are transferred directly from one computer to another, you don't need to pay a cloud provider for extra storage space. All you need is a stable LAN/WAN connection and enough disk space on your systems. It supports all modern operating systems, including GNU/Linux, Windows, Mac OS X, and of course Android.

### Installation ###

@ -13,7 +13,7 @@ Syncthing: A Private File/Folder Secure Sync Tool Across Computers

### System 1 Details ###

- **Operating system**: Ubuntu 14.04 LTS server;
- **Hostname**: server1.unixmen.local;
- **Hostname**: **server1**.unixmen.local;
- **IP address**: 192.168.1.150.
- **System user**: sk (you can use your own system user)
- **Sync folder**: /home/Sync/ (created by Syncthing by default)

@ -21,7 +21,7 @@ Syncthing: A Private File/Folder Secure Sync Tool Across Computers

### System 2 Details ###

- **Operating system**: Ubuntu 14.10 server;
- **Hostname**: server.unixmen.local;
- **Hostname**: **server**.unixmen.local;
- **IP address**: 192.168.1.151.
- **System user**: sk (you can use your own system user)
- **Sync folder**: /home/Sync/ (created by Syncthing by default)

@ -49,7 +49,7 @@ Syncthing: A Private File/Folder Secure Sync Tool Across Computers

    cd syncthing-linux-amd64-v0.10.20/

Copy the executable "Syncthing" into a directory on your **$PATH**:

Copy the executable "syncthing" into a directory on your **$PATH**:

    sudo cp syncthing /usr/local/bin/

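@ -57,7 +57,7 @@ Syncthing: A Private File/Folder Secure Sync Tool Across Computers

    syncthing

After you run the above command, syncthing generates a configuration and some keys, and opens an admin interface in your browser.

After you run the above command, syncthing generates a configuration along with some configuration keys, and opens an admin interface in your browser.

Sample output: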

@ -78,11 +78,11 @@ Syncthing: A Private File/Folder Secure Sync Tool Across Computers

    [BQXVO] 15:41:07 INFO: Device BQXVO3D-VEBIDRE-MVMMGJI-ECD2PC3-T5LT3JB-OK4Z45E-MPIDWHI-IRW3NAZ is "server1" at [dynamic]
    [BQXVO] 15:41:07 INFO: Completed initial scan (rw) of folder default

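Syncthing has been initialized successfully, and the web admin interface can be accessed in your browser at **http://localhost:8080**. As you can see from the output above, Syncthing has automatically created a folder named **default** in the **Sync** directory under your **home** directory.

Syncthing has been initialized successfully, and the web admin interface can be accessed by visiting **http://localhost:8080** in your browser. As you can see from the output above, Syncthing has automatically created a folder named **default** in the **Sync** directory under your **home** directory.

By default, Syncthing's web admin interface (WebGUI) can only be accessed on localhost; you need to do the following on both systems:

By default, Syncthing's web admin interface can only be accessed on localhost. To access it remotely, you need to do the following on both systems:

First, press CTRL+C to stop the Syncthing initialization process. You are now back at the terminal.

First, press CTRL+C to terminate the Syncthing initialization process. You are now back at the terminal.

Edit the **config.xml** file: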
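The exact edit is not shown in this hunk. As an illustration only, the Python sketch below assumes the common case of changing the GUI listen address from `127.0.0.1:8080` to `0.0.0.0:8080` so that the interface is reachable at `http://ip-address:8080/`, and it assumes config.xml keeps that address in an `<address>` element inside `<gui>`. `set_gui_address` and the trimmed sample XML are hypothetical.

```python
import xml.etree.ElementTree as ET


def set_gui_address(config_xml, address="0.0.0.0:8080"):
    """Return `config_xml` with the text of <gui><address> replaced.

    Assumes the GUI listen address lives in an <address> element inside
    <gui>; raises ValueError if that element is missing.
    """
    root = ET.fromstring(config_xml)
    addr = root.find("./gui/address")
    if addr is None:
        raise ValueError("no <gui><address> element found")
    addr.text = address
    return ET.tostring(root, encoding="unicode")


# Hypothetical, heavily trimmed config.xml used only for this example.
SAMPLE = """<configuration version="7">
    <gui enabled="true" tls="false">
        <address>127.0.0.1:8080</address>
    </gui>
</configuration>"""

if __name__ == "__main__":
    print(set_gui_address(SAMPLE))
```

To apply this to a real file, you would read it, pass its text in, and write the result back while Syncthing is stopped; note that ElementTree drops the XML declaration and comments. Binding to `0.0.0.0` exposes the interface to the whole network, which is why the admin password and TLS settings described below matter.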

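@ -115,17 +115,18 @@ Syncthing has been successfully initialized, and the web admin interface can also be accessed through the brow

Now, open **http://ip-address:8080/** in your browser. You will see the following screen:

The web admin interface is divided into two panes. In the left pane, you should see the list of synced folders. As mentioned earlier, the **default** folder was created automatically when you initialized Syncthing. If you want to sync more folders, click the **Add Folder** button.

In the right pane, you can see the number of connected devices. Right now there is only one: the computer you are currently working on.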

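### Setting Up Syncthing in the Web Admin Interface (WebGUI) ###

### Setting Up Syncthing in the Web Admin Interface ###

To improve security, let's enable TLS and set up an admin user and password for accessing the web admin interface. To do this, click the gear button in the top-right corner and select **Settings**.

Enter the admin username/password. I used admin/Ubuntu. You can use a more complex password.

Enter the admin username/password. I used admin/Ubuntu. You should use a more complex password.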

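@ -155,7 +156,7 @@ Syncthing has been successfully initialized, and the web admin interface can also be accessed through the brow

The following screen appears next. Paste the **System 1 ID** into the Device field. Enter a device name (optional). In the Address field, you can enter the IP address of the other system (translator's note: that is, the system whose ID was pasted, which here is System 1) or keep the default value, **dynamic**. Finally, select the folder to sync. In our example, the sync folder is **default**.

The following screen appears next. Paste the **System 1 ID** into the Device field. Enter a device name (optional). In the Address field, you can enter the IP address of the other system (LCTT translator's note: that is, the system whose ID was pasted, which here is System 1) or keep the default value, **dynamic**. Finally, select the folder to sync. In our example, the sync folder is **default**.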

@ -181,7 +182,7 @@ Syncthing has been successfully initialized, and the web admin interface can also be accessed through the brow

Now, put any files or folders into the "**default**" folder on either system. You should see them synced to the other system automatically.

@ -197,7 +198,7 @@ via: http://www.unixmen.com/syncthing-private-secure-tool-sync-filesfolders-comp

Author: [SK][a]
Translator: [XLCYun](https://github.com/XLCYun)
Proofreader: [Proofreader ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](http://linux.cn/)

@ -1,8 +1,11 @@

Fix the "Minimal BASH-like Command-Line Editing" GRUB Error in Linux

Fix the "Minimal BASH like line editing is supported..." GRUB Error in Linux
================================================================================

A couple of days ago I [installed Elementary OS in dual boot with Windows][1], and ran into a GRUB error at startup. The command line showed the following message:

**Minimal BASH like line editing is supported. For the first word, TAB lists possible command completions. anywhere else TAB lists possible device or file completions.**

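@ -10,7 +13,7 @@

In this article we'll learn **how to fix the "minimal BASH like line editing is supported" GRUB error in Ubuntu** and Ubuntu-based Linux systems.

> You can refer to this tutorial to fix a similar, frequently encountered problem: [error: partition not found, Linux grub rescue mode][3].

> You can refer to this tutorial to fix a similar, common problem: [error: partition not found, Linux grub rescue mode][3].

### Prerequisites ###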

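@ -19,11 +22,11 @@

- A LiveUSB or disk with the same version of the same OS
- A working Internet connection in the current session

Once you've confirmed that you have the prerequisites, let's see how to fix this black screen of death in Linux (if I may call it that ;) ).

### How to Fix the "minimal BASH like line editing is supported" GRUB Error in Ubuntu-Based Linux ###

I know you must be wondering: this GRUB error isn't limited to Ubuntu-based Linux distributions, so why am I emphasizing Ubuntu-based distributions? The reason is that here we will take a simple approach, and a tool called **Boot Repair**, to fix our problem. I'm not sure whether this tool is available in other distributions such as Fedora. Without wasting any more time, let's see how to fix the minimal BASH like line editing is supported GRUB error.

I know you must be wondering: this GRUB error isn't limited to Ubuntu-based Linux distributions, so why am I emphasizing Ubuntu-based distributions? The reason is that here we will take a simple approach, using a tool called **Boot Repair**, to fix our problem. I'm not sure whether this tool is available in other distributions such as Fedora. Without wasting any more time, let's see how to fix the "minimal BASH like line editing is supported" GRUB error.

### Step 1: Boot into a live session ###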

@ -75,7 +78,7 @@ via: http://itsfoss.com/fix-minimal-bash-line-editing-supported-grub-error-linux

Author: [Abhishek][a]
Translator: [martin2011qi](https://github.com/martin2011qi)
Proofreader: [Proofreader ID](https://github.com/校对者ID)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](http://linux.cn/)

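@ -0,0 +1,101 @@

How Is FreeBSD Different from Linux?
================================================================================

### Introduction ###

BSD was originally derived from UNIX, and today many Unix-like operating systems are based on BSD. FreeBSD is the most widely used open-source Berkeley Software Distribution (that is, BSD distribution). As the name implies, it is a free, open-source, Unix-like operating system, and a popular server platform. FreeBSD source code is generally released under the permissive BSD license. It has a lot in common with Linux, but we have to admit that the two still differ in many ways.

The rest of this article is organized as follows: FreeBSD is described in the first section, the similarities between FreeBSD and Linux in the second, their differences are discussed in the third, and a discussion and summary of their features follows in the last section.

### Description of FreeBSD ###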

#### 历史 ####

|

||||

|

||||

- FreeBSD的第一个版本发布于1993年,它的第一张CD-ROM是FreeBSD1.0,发行于1993年12月。接下来,FreeBSD 2.1.0在1995年发布,并且获得了所有用户的青睐。实际上许多IT公司都使用FreeBSD并且很满意,我们可以列出其中的一些:IBM、Nokia、NetApp和Juniper Network。

|

||||

|

||||

#### 许可证 ####

|

||||

|

||||

- 关于它的许可证,FreeBSD以多种开源许可证进行发布,它的名为Kernel的最新代码以两句版BSD许可证进行了发布,给予使用和重新发布FreeBSD的绝对自由。其它的代码则以三句版或四句版BSD许可证进行发布,有些是以GPL和CDDL的许可证发布的。

|

||||

|

||||

(LCTT 译注:BSD 许可证与 GPL 许可证相比,相当简短,最初只有四句规则;1999年应 RMS 请求,删除了第三句,新的许可证称作“新 BSD”或三句版BSD;原来的 BSD 许可证称作“旧 BSD”、“修订的 BSD”或四句版BSD;也有一种删除了第三、第四两句的版本,称之为两句版 BSD,等价于 MIT 许可证。)

|

||||

|

||||

#### 用户 ####

|

||||

|

||||

- FreeBSD的重要特点之一就是它的用户多样性。实际上,FreeBSD可以作为邮件服务器、Web 服务器、FTP 服务器以及路由器等,您只需要在它上运行服务相关的软件即可。而且FreeBSD还支持ARM、PowerPC、MIPS、x86、x86-64架构。

|

||||

|

||||

### FreeBSD和Linux的相似处 ###

|

||||

|

||||

FreeBSD和Linux是两个自由开源的软件。实际上,它们的用户可以很容易的检查并修改源代码,用户拥有绝对的自由。而且,FreeBSD和Linux都是类Unix系统,它们的内核、内部组件、库程序都使用从历史上的AT&T Unix继承来的算法。FreeBSD从根基上更像Unix系统,而Linux是作为自由的类Unix系统发布的。许多工具应用都可以在FreeBSD和Linux中找到,实际上,他们几乎有同样的功能。

|

||||

|

||||

此外,FreeBSD能够运行大量的Linux应用。它可以安装一个Linux的兼容层,这个兼容层可以在编译FreeBSD时加入AAC Compact Linux得到,或通过下载已编译了Linux兼容层的FreeBSD系统,其中会包括兼容程序:aac_linux.ko。不同于FreeBSD的是,Linux无法运行FreeBSD的软件。

|

||||

|

||||

最后,我们注意到虽然二者有同样的目标,但二者还是有一些不同之处,我们在下一节中列出。

|

||||

|

||||

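### Differences between FreeBSD and Linux ###

For most users there is currently no clear-cut criterion for choosing between FreeBSD and Linux, since they share many of the same applications and both are Unix-like systems.

In this section, we list some important differences between the two systems.

#### License ####

- The first difference between the two systems is their licenses. Linux is released under the GPL, which gives users the freedom to read, distribute and modify the source code, and helps ensure that software is not distributed as binaries alone. FreeBSD is released under the BSD license, which is more permissive than the GPL because derivative works do not have to be released under the same license. This means anyone can use, distribute and modify the code without having to keep the original license.

- You can choose either license according to your needs. The BSD license is more popular with users because of its particular terms: it lets you sell software released under it while keeping your own source code closed. The GPL, on the other hand, requires more care from anyone who uses software released under it.

- If you have to choose between two pieces of software released under different licenses, learn about each license and about the development methodology behind each project. You can then understand how they differ and pick the one that better suits your needs.

#### Control ####

- Because FreeBSD and Linux are released under different licenses, Linus Torvalds controls the Linux kernel, whereas FreeBSD is not controlled in this way. I personally prefer FreeBSD to Linux, because FreeBSD is completely free software, with no controlling license. There are other differences between Linux and FreeBSD, so I suggest you hold off on your choice until you have finished reading this article.

#### Operating system ####

- Linux mainly refers to the kernel, unlike FreeBSD, where the whole system is maintained. The FreeBSD kernel and a set of software developed by the FreeBSD team are maintained as a single whole. In fact, FreeBSD developers can manage the core operating system remotely and efficiently.

- On the Linux side, managing the system is somewhat harder. Because different components are maintained by different sources, Linux developers have to bring them together to achieve the same functionality.

- Both FreeBSD and Linux offer users a large selection of optional software and distributions, but they manage them differently: FreeBSD is managed as a unified whole, while the parts of Linux are maintained separately.

#### Hardware support ####

- When it comes to hardware support, Linux does better than FreeBSD. That doesn't mean FreeBSD can't support hardware the way Linux does; they simply manage it differently, and which works better usually depends on your needs. So if you are looking for the latest solutions, FreeBSD may suit you better, but if you want broader general-purpose support, you are better off with Linux.

#### Native FreeBSD vs. native Linux ####

- The native systems of the two also differ. As I said before, Linux is an alternative to Unix, written by Linus Torvalds with the help of many geeks across the Internet. Linux has all the features a modern system needs, such as virtual memory, shared libraries, dynamic loading and good memory management. It is released under the GPL.

- FreeBSD also inherits many important features of Unix. FreeBSD is a distribution of BSD, which was developed at the University of California. The main reason for developing BSD was to replace the AT&T operating system with an open-source system, so that users could use it without an AT&T license.

- Licensing is the issue developers care about most. They tried to provide an open-source system that clones Unix as closely as possible. This affects users' choice: because FreeBSD is released under the BSD license, it is freer than Linux.

#### Supported packages ####

- From the user's point of view, another difference is the availability of and support for software packages and software installed from source. Linux offers only precompiled binary packages, unlike FreeBSD, which offers both precompiled packages and a build system for compiling and installing from source. With its ports tool, FreeBSD lets you choose between precompiled packages (the default) and customizing your software at compile time. (LCTT note: This statement is inaccurate. Linux also provides packages in source form and supports building them yourself.)

- The ports let you build all software that supports FreeBSD. They are also organized hierarchically: under /usr/ports you can find where the source files live, along with documentation on using FreeBSD properly.

- These ports make it possible to produce different versions of a package. Rather than only precompiled packages, as with Linux, FreeBSD gives you both software built from source and precompiled software, and you can manage your system with either installation method.
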
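Here is a sketch of the two installation paths on FreeBSD, packages and ports. It assumes a FreeBSD host where `pkg` is bootstrapped and the ports tree is checked out under /usr/ports. `install_from_ports` is a hypothetical helper name, and `shells/bash` is only an example port origin.

```shell
#!/bin/sh
# Two ways to install software on FreeBSD (sketch).
#
# 1) Precompiled binary package, the default:
#      pkg install bash
#
# 2) Build it yourself from the ports tree. install_from_ports is a
#    hypothetical helper; it expects a port origin such as shells/bash.
install_from_ports() {
    origin="$1"
    portdir="/usr/ports/$origin"
    if [ ! -d "$portdir" ]; then
        echo "no such port: $origin" >&2
        return 1
    fi
    # Build and install the port, then remove its work directory.
    # Running "make config" in $portdir first lets you pick build options.
    make -C "$portdir" install clean
}
```

Run it as root, for example `install_from_ports shells/bash`. Building from ports is slower than `pkg install`, but the `make config` step is where the compile-time customization described above happens.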

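#### Comparison of common tools in FreeBSD and Linux ####

- Many common tools are available on FreeBSD, and, interestingly, they are owned by the FreeBSD team. Linux tools, in contrast, come from GNU, which is why there are some restrictions on their use. (LCTT note: This is also why Linux is formally called "GNU/Linux", since Linux itself essentially refers only to the kernel.)

- The BSD license adopted by FreeBSD is very beneficial and useful in practice. It lets you maintain the core operating system and control the development of these applications. Some tools resemble their ancestors, the BSD and Unix tools, unlike the GNU suite, which aims only for minimal backward compatibility.

#### Standard shell ####

- FreeBSD uses tcsh by default, an enhanced version of csh. Because FreeBSD is released under the BSD license, using bash, a GNU component, on it is not recommended. The only difference between bash and tcsh lies in tcsh's scripting features. In fact, we recommend using the sh shell on FreeBSD, because it is more reliable and avoids some of the scripting problems that occur with tcsh and csh.

#### A more hierarchical file system ####

- As mentioned before, on FreeBSD the base operating system and optional components are easy to tell apart, and this has led to conventions for managing them. On Linux, /bin, /sbin, /usr/bin and /usr/sbin are all directories for executables. FreeBSD has additional conventions for organizing them. Optional components (third-party software installed from ports or packages) go into /usr/local/bin or /usr/local/sbin, separate from the base system in /bin, /sbin, /usr/bin and /usr/sbin. This approach helps you manage the base operating system and optional components and keep them apart.
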
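The sketch below classifies an executable by its install prefix, following the FreeBSD layout described above. `classify_binary` is a hypothetical helper. Real systems may put software elsewhere, for example under /opt, and the helper reports such paths as unknown.

```shell
#!/bin/sh
# Sketch: tell base-system binaries apart from optional (ports/packages)
# software on FreeBSD by their install prefix.
# classify_binary is a hypothetical helper written for this example.
classify_binary() {
    case "$1" in
        /usr/local/bin/*|/usr/local/sbin/*) echo "optional" ;;
        /bin/*|/sbin/*|/usr/bin/*|/usr/sbin/*) echo "base" ;;
        *) echo "unknown" ;;
    esac
}

# Example: where does the bash you are running come from?
# classify_binary "$(command -v bash)"
```

On a stock FreeBSD system, bash installed from ports or packages is reported as optional, while /bin/sh is reported as base.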

### Conclusion ###

FreeBSD and Linux are both free and open-source systems, with both similarities and differences. The points listed above do not show that either system is better than the other. In fact, FreeBSD and Linux each have their own characteristics and technical specifications that set them apart from other systems. So, what do you think? Are you already using one of them? If so, please give us your feedback; if not, what do you think after reading our description? Please share your views in the comments.

--------------------------------------------------------------------------------

via: http://www.unixmen.com/comparative-introduction-freebsd-linux-users/

Author: [anismaj][a]

Translator: [wwy-hust](https://github.com/wwy-hust)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/)

[a]:https://www.unixmen.com/author/anis/

10 Top Operating System Skills to Help You Get Your Dream Job
================================================================================

We are publishing a series of five articles to make people aware of the top skills that can help them land their dream job. In this competitive world you can't rely on a single skill; you need a working knowledge of several. We can't put precise weights on these skills, but we can go by conventions and statistics that change very little.

This article and the ones that follow present a detailed survey of the skills that major IT companies around the world required of employees in the last quarter. The statistics reflect real changes in demand and in the market. We will do our best to keep this report up to date, especially when there are significant changes. The five articles in this series are:

- 10 Top Distributions in Demand to Get Your Dream Job
- [10 Famous IT Skills in Demand That Will Get You Hired][1]
- [10 Top Project Skills to Get Your Dream Job][2]
- [10 Top Networking Skills to Land Your Dream Job][3]
- [10 Top Professional Certifications to Get Your Dream Job][4]

### 1. Windows ###

Whether you like it or not, the Windows operating system developed by Microsoft not only dominates the PC market but is also the most in-demand operating system skill from a job perspective. Statistics show that demand grew by 0.1% in the last quarter.

Latest version: Windows 8.1

### 2. Red Hat Enterprise Linux ###

Red Hat Enterprise Linux is a commercial Linux distribution developed by Red Hat. It is one of the most widely used Linux distributions in the world, especially in production environments and collaborative work. Overall demand rose by 17% in the last quarter, placing it second.

Latest version: RedHat Enterprise Linux 7.1

### 7. Ubuntu ###

At number seven is Ubuntu, a Linux system developed by Canonical and designed for personal use. Demand rose by 11% in the last quarter.

Latest versions:

- Ubuntu 14.10 (9 months of security and maintenance updates).
- Ubuntu 14.04.2 LTS

### 8. Suse ###

### 9. Debian ###

At number nine is Debian, the very famous Linux operating system that is the mother of hundreds of Linux distributions and is very close to the GNU philosophy. Demand rose by 9% in the last quarter.

Latest version: Debian 7.8

### 10. HP-UX ###

At number ten is HP-UX, the proprietary UNIX operating system designed by Hewlett-Packard. Demand declined by 5% in the last quarter.

Latest version: 11i v3 Update 13

<table border="0" cellspacing="0">
<colgroup width="107"></colgroup>
<colgroup width="92"></colgroup>
</tr>
</tbody>
</table>

That's all for now. I'll be back with the next article in the series soon, so stay tuned to Tecmint. Don't forget to leave your valuable comments. If you like our articles and share your insights with us, that encourages our work.

via: http://www.tecmint.com/top-distributions-in-demand-to-get-your-dream-job/

Author: [Avishek Kumar][a]

Translator: [sevenot](https://github.com/sevenot)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](http://linux.cn/)

[a]:http://www.tecmint.com/author/avishek/
[1]:http://www.tecmint.com/famous-it-skills-in-demand-that-will-get-you-hired/
[2]:https://linux.cn/article-5303-1.html
[3]:http://www.tecmint.com/networking-protocols-skills-to-land-your-dream-job/
[4]:http://www.tecmint.com/professional-certifications-in-demand-that-will-get-you-hired/

在Linux中使用‘Systemctl’管理‘Systemd’服务和单元

|

||||

systemctl 完全指南

|

||||

================================================================================

|

||||

Systemctl是一个systemd工具,主要负责控制systemd系统和服务管理器。

|

||||

|

||||

Systemd是一个系统管理守护进程、工具和库的集合,用于取代System V初始进程。Systemd的功能是用于集中管理和配置类UNIX系统。

|

||||

|

||||

在Linux生态系统中,Systemd被部署到了大多数的标准Linux发行版中,只有位数不多的几个尚未部署。Systemd通常是所有其它守护进程的父进程,但并非总是如此。

|

||||

在Linux生态系统中,Systemd被部署到了大多数的标准Linux发行版中,只有为数不多的几个发行版尚未部署。Systemd通常是所有其它守护进程的父进程,但并非总是如此。

|

||||

|

||||

|

||||

使用Systemctl管理Linux服务

|

||||

|

||||

*使用Systemctl管理Linux服务*

|

||||

|

||||

本文旨在阐明在运行systemd的系统上“如何控制系统和服务”。

|

||||

|

||||

@ -41,11 +42,9 @@ Systemd是一个系统管理守护进程、工具和库的集合,用于取代S

|

||||

root 555 1 0 16:27 ? 00:00:00 /usr/lib/systemd/systemd-logind

|

||||

dbus 556 1 0 16:27 ? 00:00:00 /bin/dbus-daemon --system --address=systemd: --nofork --nopidfile --systemd-activation

|

||||

|

||||

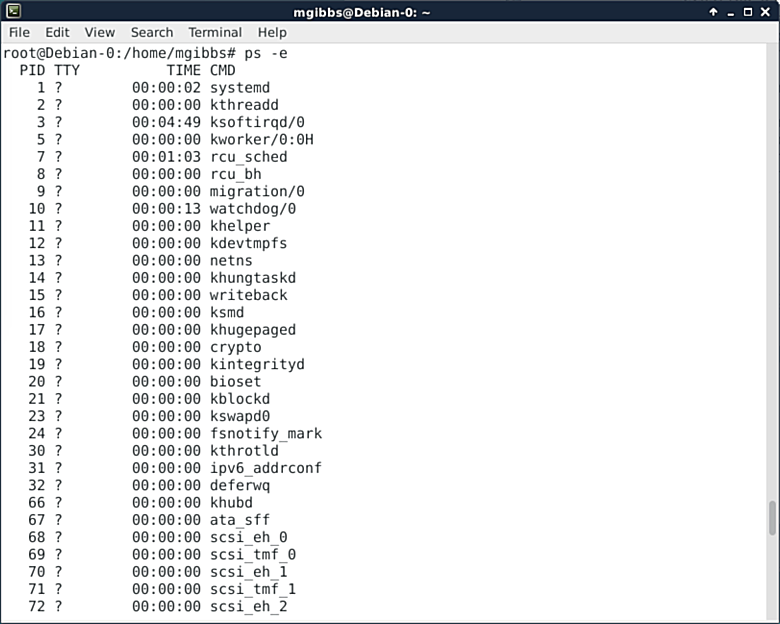

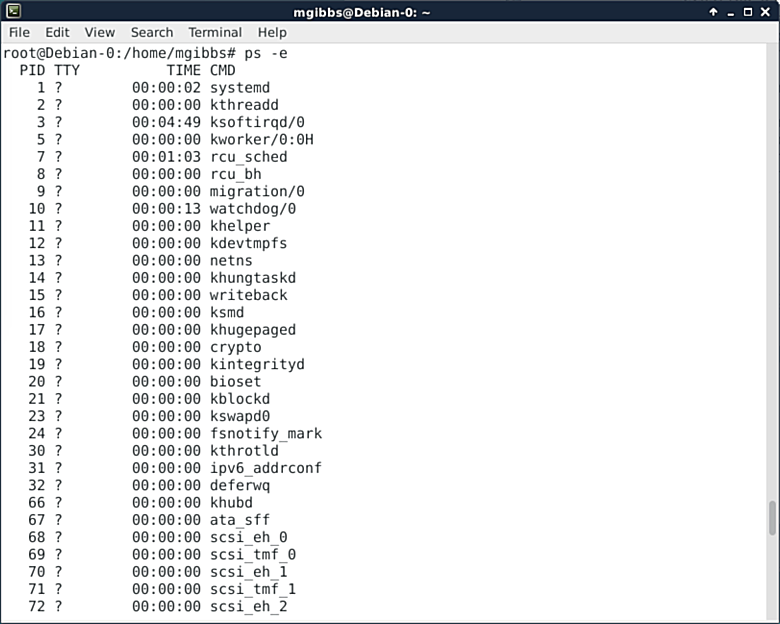

**注意**:systemd是作为父进程(PID=1)运行的。在上面带(-e)参数的ps命令输出中,选择所有进程,(-

|

||||

**注意**:systemd是作为父进程(PID=1)运行的。在上面带(-e)参数的ps命令输出中,选择所有进程,(-a)选择除会话前导外的所有进程,并使用(-f)参数输出完整格式列表(即 -eaf)。

|

||||

|

||||

a)选择除会话前导外的所有进程,并使用(-f)参数输出完整格式列表(如 -eaf)。

|

||||

|

||||

也请注意上例中后随的方括号和样例剩余部分。方括号表达式是grep的字符类表达式的一部分。

|

||||

也请注意上例中后随的方括号和例子中剩余部分。方括号表达式是grep的字符类表达式的一部分。

|

||||

|

||||
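The full grep command is not visible in this excerpt. The sketch below assumes it had the form `ps -eaf | grep [s]ystemd` and shows why the bracket trick keeps grep from listing itself. The sample lines are made up to imitate ps output.

```shell
#!/bin/sh
# Sketch: why "grep [s]ystemd" does not list the grep process itself.
# The lines below imitate ps output: a real systemd process and the grep
# command that is searching for it.
real='root 1 0 0 16:27 ? 00:00:01 /usr/lib/systemd/systemd'
plain='root 999 1 0 16:30 pts/0 00:00:00 grep systemd'
trick='root 999 1 0 16:30 pts/0 00:00:00 grep [s]ystemd'

# Plain pattern: grep's own command line contains "systemd", so it matches too.
printf '%s\n' "$real" "$plain" | grep -c 'systemd'      # prints 2

# Bracket pattern: [s] is a character class matching only "s", so the
# pattern still matches "systemd", but the literal text "[s]ystemd" in
# grep's own command line does not contain "systemd".
printf '%s\n' "$real" "$trick" | grep -c '[s]ystemd'    # prints 1
```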

#### 4. Analyzing the systemd boot process ####

1 loaded units listed. Pass --all to see loaded but inactive units, too.

To show all installed unit files use 'systemctl list-unit-files'.

#### 10. Check whether a unit (e.g. crond.service) is enabled ####

# systemctl is-enabled crond.service

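`is-enabled` only tells you whether a unit starts at boot, so it is often paired with `is-active`, which tells you whether the unit is running right now. This sketch assumes a systemd host. `unit_status` is a hypothetical helper name, and `crond.service` is the unit from the example above.

```shell
#!/bin/sh
# Sketch: report whether a unit is running now (is-active) and whether it
# starts at boot (is-enabled). unit_status is a hypothetical helper name.
unit_status() {
    unit="$1"
    active=$(systemctl is-active "$unit" 2>/dev/null)
    enabled=$(systemctl is-enabled "$unit" 2>/dev/null)
    printf '%s: active=%s enabled=%s\n' "$unit" "${active:-unknown}" "${enabled:-unknown}"
}
```

For example, `unit_status crond.service` prints one summary line. A unit that is enabled but not active will start at the next boot but is not running now.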

dbus-org.fedoraproject.FirewallD1.service enabled

....

#### 13. How to start, restart, stop, reload and check the status of a service (e.g. httpd.service) in Linux ####

# systemctl start httpd.service

# systemctl restart httpd.service

Apr 28 17:21:30 tecmint systemd[1]: Started The Apache HTTP Server.

Hint: Some lines were ellipsized, use -l to show in full.

**Note**: The systemctl start, restart, stop and reload commands print nothing to the terminal; only the status command prints output.

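Because start, restart, stop and reload are silent on success, a script has to rely on exit status instead. The sketch below restarts a unit and then confirms it is running with `systemctl is-active --quiet`. It assumes a systemd host and root privileges; `restart_and_check` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: restart a unit, then ask systemd whether it is actually running.
# restart_and_check is a hypothetical helper name; run it as root.
restart_and_check() {
    unit="$1"
    if ! systemctl restart "$unit" 2>/dev/null; then
        echo "restart of $unit failed; see: systemctl status $unit" >&2
        return 1
    fi
    # --quiet suppresses output; the exit status alone says active or not.
    if systemctl is-active --quiet "$unit"; then
        echo "$unit is active"
    else
        echo "$unit restarted but is not active" >&2
        return 1
    fi
}
```

For example, `restart_and_check httpd.service` either confirms the service is active or points you to `systemctl status` for details.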

#### 14. How to activate a service and enable or disable it at boot (i.e. start the service automatically when the system boots) ####

# systemctl is-active httpd.service

# systemctl enable httpd.service

# systemctl disable httpd.service

#### 15. How to mask (make it impossible to start) or unmask a service (e.g. httpd.service) ####

# systemctl mask httpd.service

ln -s '/dev/null' '/etc/systemd/system/httpd.service'

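As the `ln -s` line above shows, masking works by linking the unit file to /dev/null. The sketch below checks for that link directly, assuming the unit was masked in /etc/systemd/system. `is_masked_link` is a hypothetical helper; on a live system, `systemctl is-enabled httpd.service` reports `masked` as well.

```shell
#!/bin/sh
# Sketch: a masked unit is a symlink to /dev/null, so test for exactly that.
# is_masked_link is a hypothetical helper name.
is_masked_link() {
    [ -L "$1" ] && [ "$(readlink "$1")" = /dev/null ]
}

# Example:
# is_masked_link /etc/systemd/system/httpd.service && echo "httpd is masked"
```

`systemctl unmask httpd.service` removes the link again.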

# systemctl enable tmp.mount

# systemctl disable tmp.mount

#### 20. Mask (make it impossible to enable) or unmask mount points in Linux ####

# systemctl mask tmp.mount

CPUShares=2000

**Note**: When you set CPUShares for a service, systemd automatically creates a directory named after the service (e.g. httpd.service.d). It contains a file called 90-CPUShares.conf that holds the CPUShares limit, and you can view it as follows:

# vi /etc/systemd/system/httpd.service.d/90-CPUShares.conf

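Only the resulting `CPUShares=2000` line is visible in this excerpt, not the command that set it. The sketch below reads a setting back out of the drop-in, assuming it is a plain INI-style file with a `[Service]` section and `KEY=VALUE` lines. `dropin_value` is a hypothetical helper. Newer systemd releases deprecate `CPUShares=` in favor of `CPUWeight=`.

```shell
#!/bin/sh
# Sketch: read a setting back out of a systemd drop-in file.
# dropin_value is a hypothetical helper; it assumes simple KEY=VALUE lines
# and prints the last assignment, since later lines override earlier ones.
dropin_value() {
    file="$1"
    key="$2"
    sed -n "s/^[[:space:]]*$key[[:space:]]*=[[:space:]]*//p" "$file" | tail -n 1
}

# Example:
# dropin_value /etc/systemd/system/httpd.service.d/90-CPUShares.conf CPUShares
```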

#### 35. Start runlevel 5, i.e. graphical mode ####

# systemctl isolate runlevel5.target

OR

# systemctl isolate graphical.target

#### 36. Start runlevel 3, i.e. multi-user mode (command line) ####

# systemctl isolate runlevel3.target

OR

# systemctl isolate multi-user.target

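The `runlevelN.target` names are compatibility aliases for regular systemd targets. The sketch below encodes the usual mapping on a stock systemd installation: 0 is poweroff, 1 is rescue, 2 to 4 are multi-user, 5 is graphical and 6 is reboot. `runlevel_target` is a hypothetical helper, and a distribution can change what the aliases point to.

```shell
#!/bin/sh
# Sketch: translate a SysV runlevel number into the systemd target that
# runlevelN.target points to on a typical systemd installation.
# runlevel_target is a hypothetical helper written for this example.
runlevel_target() {
    case "$1" in
        0) echo poweroff.target ;;
        1) echo rescue.target ;;
        2|3|4) echo multi-user.target ;;
        5) echo graphical.target ;;
        6) echo reboot.target ;;
        *) echo "unknown runlevel: $1" >&2; return 1 ;;
    esac
}

# Example: systemctl isolate "$(runlevel_target 3)"
```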

#### 37. Set multi-user mode or graphical mode as the default runlevel ####

via: http://www.tecmint.com/manage-services-using-systemd-and-systemctl-in-linux

Author: [Avishek Kumar][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

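The Dark History of GNU, Open Source and Apple
==============================================================================

> The feud between the free software/open source community and Apple can be traced back to the 1980s, and the creator of Linux once called the core of Mac OS X "a piece of crap". Here are that and some other anecdotes from software history.

Open source advocates have had a long and rocky relationship with Microsoft; everyone knows that. But in many ways, the friction between supporters of free or open source software and Apple has been even more pronounced, although it has rarely received media attention.

To be clear, not all open source advocates dislike Apple. Anecdotally, I've seen plenty of Linux hackers playing with iPhones and iPads. In fact, many Linux users like Apple's OS X so much that they have [created many Linux distributions][1] designed to look like OS X. (Incidentally, the [North Korean government][2] did just that.)

But the relationship between Mac believers and penguin believers has not always been entirely harmonious. By penguin believers I mean the Linux community, and only that small corner of the free and open source software world. Nor is this a new phenomenon, as I discovered while researching the history of Linux and the Free Software Foundation.

### GNU vs. Apple ###

The battle goes back at least to the late 1980s. Richard Stallman's [GNU][3] project aimed to build a completely free Unix-like operating system whose source code would be freely shared. In June 1988, it [strongly condemned][4] Apple's lawsuits against [Hewlett-Packard][5] (HPQ) and [Microsoft][6] (MSFT), rejecting Apple's claim that those companies had improperly copied the look and feel of the Macintosh operating system. If Apple prevailed, GNU warned, the company would use this new public power to wipe out free software as an alternative to commercial software.

At the time, GNU fought Apple's lawsuit by issuing ["Keep Your Lawyers Off My Computer" buttons][7]. This meant, rather ironically, that GNU was siding with Microsoft, although the situation was different then. GNU also called on its supporters to boycott Apple. It warned that although Macintoshes looked like good computers, an Apple victory in court would give the company a market monopoly that would drive computer prices sharply higher.

Apple eventually [lost the lawsuit][8], but GNU did not [lift its boycott of Apple][9] until 1994. In the meantime, GNU kept criticizing Apple. In the early 1990s and later, GNU began making its software available on other personal computer platforms, including MS-DOS machines. Even so, [GNU declared][10] that it would not provide any support for Apple machines unless Apple gave up its monopolistic ambitions in the computer field and allowed user interfaces to imitate aspects of the Macintosh. (So it is ironic that OS X, the Unix-like system Apple developed in the late 1990s, includes a great deal of software from GNU. But that's another story.)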

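### Torvalds vs. Jobs ###

Setting aside his fairly laissez-faire attitude toward most distributions, Linus Torvalds, the creator of the Linux kernel, has been much friendlier toward Apple than Stallman and GNU were in the past. In his 2001 book "Just For Fun: The Story of an Accidental Revolutionary", Torvalds describes a meeting with Steve Jobs in about 1997. Jobs had invited him to discuss Mac OS X, which Apple was developing at the time but had not yet released publicly.

According to Torvalds, Jobs started out by telling him that there were only two players on the desktop, Microsoft and Apple. In Jobs's view, the best thing Torvalds could do for Linux was to join Apple and try to get open source users behind Mac OS X.

The meeting evidently annoyed Torvalds. One point of contention was Torvalds's disdain for Mach, the technology Apple was using to build the kernel of its new OS X operating system. Torvalds called Mach "a piece of crap. It contains all the design mistakes you can make, and manages to even make up a few of its own."

Even more off-putting, apparently, was the way Jobs was approaching open source in developing OS X, whose core contains a lot of open source code. As Torvalds recalls it, Jobs more or less brushed off the structural flaw. Who cares whether the base operating system, the real low-core stuff, is open source, if the Mac layer on top is not?

All in all, Torvalds concludes, Jobs didn't put forward many arguments; he simply took it for granted that Torvalds would be interested in working with Apple. Torvalds writes that Jobs was clueless, unable to imagine that anyone might not care about growing the Mac's market share. Jobs seemed genuinely surprised when Torvalds showed how little he cared how big the Mac's market was, or how big Microsoft's was.

Of course, Torvalds doesn't speak for all Linux users, and his views on OS X and Apple have softened since 2001. Still, as early as 2000, a leading figure in the Linux community showed deep contempt for Apple and the arrogance of its top brass. That says something important about how deep-rooted the conflict between the Apple world and the open source/free software world is.

These two historical anecdotes point to a major debate over the value of Apple's products. Is the company committed to improving the quality of the hardware and software it creates? Or does it simply profit from clever marketing, selling Apple products for more than their features are worth? Either way, I'll stay out of that debate for now.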

--------------------------------------------------------------------------------

via: http://thevarguy.com/open-source-application-software-companies/051815/linux-better-os-x-gnu-open-source-and-apple-

Author: [Christopher Tozzi][a]

Translator: [wi-cuckoo](https://github.com/wi-cuckoo)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]:http://thevarguy.com/author/christopher-tozzi
[1]:https://www.linux.com/news/software/applications/773516-the-mac-ifying-of-the-linux-desktop/
[2]:http://thevarguy.com/open-source-application-software-companies/010615/north-koreas-red-star-linux-os-made-apples-image
[3]:http://gnu.org/
[4]:https://www.gnu.org/bulletins/bull5.html
[5]:http://www.hp.com/
[6]:http://www.microsoft.com/
[7]:http://www.duntemann.com/AppleSnakeButton.jpg
[8]:http://www.freibrun.com/articles/articl12.htm
[9]:https://www.gnu.org/bulletins/bull18.html#SEC6
[10]:https://www.gnu.org/bulletins/bull12.html

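How to Configure a Native Docker Swarm Cluster
================================================================================

Hi everyone. Today we're going to learn about Swarm, and specifically how to use it to create a native Docker cluster. [Docker Swarm][1] is a native clustering project for Docker that turns a pool of Docker hosts into a single virtual host. Swarm serves the standard Docker API, so any tool that can talk to the Docker daemon can use Swarm to scale transparently to multiple hosts.

Like other Docker projects, Swarm follows the "batteries included but removable" principle. (LCTT note: "batteries included" was originally a compliment in the Python community, describing Python's rich, self-sufficient environment that needs nothing extra; "but removable" presumably means the components are not forcibly coupled.) It ships with a simple scheduling back end that works out of the box. As part of its initial development, it also provides an API for plugging in different back ends. The goal is a smooth out-of-the-box experience for simple use cases, while letting you swap in more powerful back ends, such as Mesos, for large-scale production deployments. Swarm is extremely easy to set up and use.

Here are some of the features Swarm 0.2 offers out of the box:

1. Swarm 0.2.0 is about 85% compatible with the Docker Engine.
2. It supports resource management.
3. It has advanced scheduling features with constraints and affinities.
4. It supports multiple discovery back ends (hubs, consul, etcd, zookeeper).
5. It uses TLS encryption for secure communication and authentication.

So, let's look at the fairly simple steps for using Swarm.

### 1. Prerequisites for running Swarm ###

Docker 1.4.0 or later must be installed on all nodes. The nodes' IP addresses don't need to be public, but the Swarm manager must be able to reach every node over the network.

**Note**: Swarm is still in beta, so its features may change, and we don't recommend using it in production.

### 2. Create the Swarm cluster ###

Now we'll create the Swarm cluster by running the command below. Each node will run a swarm node agent. The agent registers the node's Docker daemon, monitors it, and updates the discovery back end with the node's status. The command below returns a unique cluster ID token, which you'll need when starting the Swarm agents on the nodes.

On the cluster manager:

# docker run swarm create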

### 3. Start the Docker daemon on each node ###

Log in to each node that will be part of the cluster and start the Docker daemon on it with the -H flag. This ensures the Swarm manager can reach the Docker remote API on each node over TCP. To start the Docker daemon, run the following command on each node:

# docker -H tcp://0.0.0.0:2375 -d

### 4. Add the nodes ###

Once the Docker daemon is running, register the Swarm nodes with the discovery service, making sure each node's IP is reachable from the Swarm manager. To do this, run the following command:

# docker run -d swarm join --addr=<node_ip>:2375 token://<cluster_id>

**Note**: Replace <node_ip> with the node's IP address and <cluster_id> with the cluster ID obtained in step 2.

### 5. Start the Swarm manager ###

Now that the nodes have joined the cluster, start the swarm manager by running the following command on the manager node:

# docker run -d -p <swarm_port>:2375 swarm manage token://<cluster_id>

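Steps 4 and 5 repeat the same placeholders for every node, so a dry-run helper that only prints the commands can help you check them before running anything. This is a sketch: `print_swarm_commands` is hypothetical, and the ports follow the commands above.

```shell
#!/bin/sh
# Sketch: print, rather than run, the commands from steps 4 and 5 with the
# placeholders filled in, so they can be reviewed first.
# print_swarm_commands is a hypothetical helper.
#   $1 = cluster ID from "docker run swarm create"
#   $2 = port the manager should listen on (<swarm_port> in step 5)
#   remaining arguments = node IP addresses
print_swarm_commands() {
    cluster_id="$1"
    swarm_port="$2"
    shift 2
    for node_ip in "$@"; do
        # Run on the node itself (step 4).
        echo "docker run -d swarm join --addr=$node_ip:2375 token://$cluster_id"
    done
    # Run on the manager (step 5).
    echo "docker run -d -p $swarm_port:2375 swarm manage token://$cluster_id"
}
```

For example, `print_swarm_commands "$CLUSTER_ID" 4000 192.168.1.10 192.168.1.11` prints one `join` line per node, followed by the `manage` line for the manager. The variable name and addresses are placeholders.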

### 6. Check the configuration ###

Once the manager is running, you can check the configuration with the following command:

# docker -H tcp://<manager_ip:manager_port> info

**Note**: Replace <manager_ip:manager_port> with the IP address and port of the host running the swarm manager.

### 7. Use the Docker CLI to access the nodes ###

Once everything above has gone smoothly, we come to the most important part of Docker Swarm: using the Docker CLI to access the nodes and run containers on them.

# docker -H tcp://<manager_ip:manager_port> info

# docker -H tcp://<manager_ip:manager_port> run ...

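The docker client also reads the `DOCKER_HOST` environment variable, so you don't have to repeat `-H tcp://<manager_ip:manager_port>` on every command. This is a sketch meant to be sourced into your current shell. `use_swarm_manager` is a hypothetical helper, and the address in the comment is a placeholder.

```shell
#!/bin/sh
# Sketch: point the docker CLI at the Swarm manager once, instead of
# passing -H to every command. use_swarm_manager is a hypothetical helper;
# source this file (". ./file") so the exported variable stays set.
use_swarm_manager() {
    manager_ip="$1"
    manager_port="$2"
    DOCKER_HOST="tcp://$manager_ip:$manager_port"
    export DOCKER_HOST
}

# Example (placeholder values):
# use_swarm_manager 192.168.1.5 4000
# docker info        # now talks to the Swarm manager
```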

### 8. List the nodes in the cluster ###

Use the swarm list command to get a list of all running nodes:

# docker run --rm swarm list token://<cluster_id>

### Conclusion ###

Swarm is a really nice piece of Docker functionality for creating and managing clusters. It's easy to set up and use, and it gets even better when you use constraints and affinities with it. The advanced scheduler is a great feature: it can apply filters to exclude nodes by port, label or health, and it uses strategies to pick the best node. If you have any questions, comments or feedback, please write them in the comment box below so we know what to add or improve. Thank you, and enjoy :-)

--------------------------------------------------------------------------------

via: http://linoxide.com/linux-how-to/configure-swarm-clustering-docker/

Author: [Arun Pyasi][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

[a]:http://linoxide.com/author/arunp/
[1]:https://docs.docker.com/swarm/

177

published/201507/20150616 LINUX 101--POWER UP YOUR SHELL.md

Normal file


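LINUX 101: POWER UP YOUR SHELL
================================================================================

> Follow our guide to shell basics and get a more flexible, more powerful and more colorful command-line interface.

**Why do this?**

- Make life at the shell prompt easier and more efficient
- Resume previous sessions after losing a connection
- Stop pushing around that fiddly rodent!

Here's my command-line prompt setup. It may be a bit long for this small terminal window, but you can adjust it to your liking.

As a Linux user, you're probably familiar with the shell (also known as the command line). Maybe you open a terminal now and then for essential jobs that can't be done in a GUI. Or maybe you work in a tiling window manager environment, where the shell is your main way of interacting with your Linux machine.

In either case, you're probably using the Bash configuration that came with your distribution. It's good enough for most tasks, but it could be far more powerful. In this tutorial we'll show you how to make your shell more informative, more useful and more pleasant to work in. We'll customize the prompt so it gives better feedback than the default. We'll also show you how to use the fantastic `tmux` tool to manage sessions and run several programs at once. And, to go easy on the eyes, we'll look at color schemes too. So, let's get going!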

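### Make your prompt sing ###

Most distributions ship with a very simple prompt that shows some basic information, but the prompt can tell you much more. For example, on Debian 7 the default prompt looks like this:

mike@somebox:~$

It shows the user, hostname, current directory and account type symbol (if you switch to root, the **$** becomes **#**). So where is this information stored? In the **PS1** environment variable. If you type `echo $PS1`, you'll see the following at the end of the output:

\u@\h:\w\$

This looks rather ugly, and at first glance you might start screaming that it's a terrifying regular expression. But we're not going to fry our brains with these cryptic characters, because it isn't a regular expression at all. The backslashes introduce escape sequences that tell the prompt to do something special. For example, `\u` tells the prompt to show the username, and `\w` shows the working directory.

Here's a list of escape sequences you can use in the prompt:

- `\d` the current date
- `\h` the hostname
- `\n` a newline character
- `\A` the current time (HH:MM)
- `\u` the current user
- `\w` (lowercase) the full path of the working directory
- `\W` (uppercase) the short name of the working directory
- `\$` the prompt symbol, which becomes # for root
- `\!` the number of the current command in the shell history

The difference between `\w` and `\W` is that the former shows the full path of your working directory (e.g. **/usr/local/bin**), while the latter shows only the **bin** part.
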
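Bash's `${parameter@P}` expansion expands a string as if it were a prompt, which gives you a quick way to compare `\w` and `\W` without touching your real prompt. The sketch below assumes Bash 4.4 or newer. `preview_prompt` is a hypothetical helper. The script builds a throwaway usr/local/bin directory so the result does not depend on where you run it.

```bash
#!/usr/bin/env bash
# Sketch: preview how Bash expands prompt escapes such as \w and \W
# without changing your real PS1. Uses the ${var@P} prompt-expansion
# operator (Bash 4.4+). preview_prompt is a hypothetical helper name.
preview_prompt() {
    local fmt="$1"
    printf '%s\n' "${fmt@P}"
}

demo=$(mktemp -d)
trap 'rm -rf "$demo"' EXIT
mkdir -p "$demo/usr/local/bin"
cd "$demo/usr/local/bin" || exit 1
HOME=/nonexistent          # keep \w from abbreviating the path to ~

preview_prompt '\w'        # full path, ending in /usr/local/bin
preview_prompt '\W'        # just the last component: bin
preview_prompt '\$'        # "$" for normal users, "#" for root
```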

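Now, how do we change the prompt? You need to change the contents of the **PS1** environment variable. Try this:

export PS1="I am \u and it is \A $"

Now your prompt will look like this:

I am mike and it is 11:26 $

From here, you can experiment with the other escape sequences listed above. But wait: when you log out, all your hard work will be lost, because the **PS1** variable is reset every time you open a terminal. The easiest fix is to open the **.bashrc** configuration file (in your home directory) and add the full `export` command at the bottom. Bash reads **.bashrc** every time you start a new shell session, so your enhanced prompt will always be there.

You can also dress up the prompt with colors. This is a little tricky at first, because you have to use some rather odd escape sequences, but the results look great. Add the following somewhere in your **PS1** string, and the text after it will turn red:

\[\e[31m\]

Change the 31 to another number to get a different color:

- 30 Black
- 32 Green
- 33 Yellow
- 34 Blue
- 35 Magenta
- 36 Cyan
- 37 White

Let's finish this section by building a prompt from the escape sequences and colors we've seen. Take a deep breath, flex your fingers, and type in this beast:

export PS1="(\!) \[\e[31m\] \A \[\e[32m\]\u@\h \[\e[34m\]\w \[\e[30m\]$"

This gives you a Bash command-history number, the current time, a colored user@host combination, and the working directory. If you're feeling ambitious, you can also change the prompt's background and foreground colors to create striking combinations. The ever-helpful Arch wiki has a full list of color codes: [http://tinyurl.com/3gvz4ec][1].
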
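One refinement of the beast above, offered as a sketch rather than the article's own setup: wrap each color code in `\[` `\]` and reset the color with `\e[0m` after each piece. The color then doesn't spill into the commands you type, and Bash still measures the prompt width correctly. `color_seg` is a hypothetical helper name.

```bash
#!/usr/bin/env bash
# Sketch: build a colored prompt from small pieces. Each color code is
# wrapped in \[ \] so Bash knows it takes up no screen space, and every
# piece ends with \e[0m so the color does not leak into what you type.
# color_seg is a hypothetical helper written for this example.
color_seg() {
    code="$1"
    text="$2"
    printf '%s%s%s' "\\[\\e[${code}m\\]" "$text" '\[\e[0m\]'
}

# History number, red time, green user@host, blue working directory,
# then $ (or # for root) in the terminal's default color.
PS1='(\!) '"$(color_seg 31 '\A')"' '"$(color_seg 32 '\u@\h')"' '"$(color_seg 34 '\w')"' \$ '
export PS1
```

To keep the result, add the `color_seg` function and the `PS1` lines to the bottom of your **.bashrc**, as described above.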

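> **Shell essentials**
>
> If you're a complete Linux newcomer reading this magazine for the first time, you may find these tutorials a bit heavy going. So here are some basics to get you familiar with the shell. The shell usually appears in your menu as Terminal, XTerm or Konsole. Once you've started it, the most useful commands are:
>
> **ls** (list files); **cp one.txt two.txt** (copy a file); **rm file.txt** (remove a file); **mv old.txt new.txt** (move or rename a file);
>
> **cd /some/directory** (change directory); **cd ..** (go up a directory); **./program** (run a program in the current directory); **ls > list.txt** (redirect output to a file).
>
> Almost every command has a manual page explaining its options (e.g. **man ls**; press Q to quit). There you'll learn, for instance, that **ls -la** shows a detailed listing that includes hidden files. And after typing part of a file or directory name, you can press Tab to auto-complete it.
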
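If you want to try the commands from the box without risk, this sketch runs them inside a throwaway directory created with `mktemp -d`, so nothing in your home directory is touched.

```shell
#!/bin/sh
# Sketch: practise the basic commands in a throwaway directory.
cd "$(mktemp -d)" || exit 1

echo "hello" > one.txt      # create a small file
cp one.txt two.txt          # copy it
mv two.txt new.txt          # rename the copy
ls > list.txt               # redirect the listing into a file
rm one.txt                  # remove the original
ls -la                      # detailed listing, including hidden files
```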

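### Tmux: a window manager for your shell ###

A window manager in a text-mode environment sounds a bit crazy, doesn't it? Well, remember when web browsers first introduced tabbed browsing? Back then it was a big leap in usability, cutting down the clutter in the desktop taskbar and window list. Your browser needed only one button, and you switched between every site you had open inside the browser itself, instead of having a taskbar entry or icon for each site. It made a lot of sense.

If you sometimes run several virtual terminals at once, you'll be in a similar situation. Jumping between them, or hunting for the right one in the taskbar or window list every time, gets tiresome. A text-mode window manager lets you run multiple shell sessions inside a single terminal window, and even arrange those windows side by side.

There's another benefit too: you can detach and reattach these windows. The best way to see how this works is to try it yourself. In a terminal window, type `screen` (most distributions install it by default, or you'll find it in the package repositories). Some welcome text will appear; just press Enter to dismiss it. Now run an interactive text-mode program, such as `nano`, and close the terminal window.

In a normal shell session, closing the window kills every process running in that terminal, so the Nano session would be gone. With screen, that doesn't happen. Open a new terminal and enter:

screen -r

Voilà, the Nano session you opened is back!

When you ran **screen** earlier, it created a new, independent shell session that isn't tied to any particular terminal window. That session can be detached and later reattached (which is what the **-r** option does).

This is especially useful when you're working on another machine over SSH and don't want a flaky connection to wreck your progress. Suppose you're working inside a **screen** session and your connection suddenly drops, or your laptop battery dies, or your computer goes up in smoke (let's hope not). Just reconnect, recharge or buy a new computer, then log back in and run **screen -r** to reattach to the session on the remote machine and pick up where you left off.

So far we've been talking about GNU **screen**, but the title of this section mentions tmux. Essentially, **tmux** (terminal multiplexer) is like an advanced version of **screen** with lots of useful extra features, so let's focus on it from here. Some distributions include **tmux** by default. On others, it's usually just an **apt-get install**, **yum install** or **pacman -S** away.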

一旦你安装了它过后,键入 **tmux** 来启动它。接着你将注意到,在终端窗口的底部有一条绿色的信息栏,它非常像传统的窗口管理器中的任务栏: 上面显示着一个运行着的程序的列表、机器的主机名、当前时间和日期。 现在运行一个程序,同样以 Nano 为例, 敲击 Ctrl+B 后接着按 C 键, 这将在 tmux 会话中创建一个新的窗口,你便可以在终端的底部的任务栏中看到如下的信息:

|

||||

|

||||

0:nano- 1:bash*

|

||||

|

||||

每一个窗口都有一个数字,当前呈现的程序被一个星号所标记。 Ctrl+B 是与 tmux 交互的标准方式, 所以若你敲击这个按键组合并带上一个窗口序号, 那么就会切换到对应的那个窗口。你也可以使用 Ctrl+B 再加上 N 或 P 来分别切换到下一个或上一个窗口 – 或者使用 Ctrl+B 加上 L 来在最近使用的两个窗口之间来进行切换(有点类似于桌面中的经典的 Alt+Tab 组合键的效果)。 若需要知道窗口列表,使用 Ctrl+B 再加上 W。

|

||||

|

||||

目前为止,一切都还好:现在你可以在一个单独的终端窗口中运行多个程序,避免混乱(尤其是当你经常与同一个远程主机保持多个 SSH 连接时)。 当想同时看两个程序又该怎么办呢?

|

||||

|

||||

针对这种情况, 可以使用 tmux 中的窗格。 敲击 Ctrl+B 再加上 % , 则当前窗口将分为两个部分:一个在左一个在右。你可以使用 Ctrl+B 再加上 O 来在这两个部分之间切换。 这尤其在你想同时看两个东西时非常实用, – 例如一个窗格看指导手册,另一个窗格里用编辑器看一个配置文件。

|

||||

|

||||

有时,你想对一个单独的窗格进行缩放,而这需要一定的技巧。 首先你需要敲击 Ctrl+B 再加上一个 :(冒号),这将使得位于底部的 tmux 栏变为深橙色。 现在,你进入了命令模式,在这里你可以输入命令来操作 tmux。 输入 **resize-pane -R** 来使当前窗格向右移动一个字符的间距, 或使用 **-L** 来向左移动。 对于一个简单的操作,这些命令似乎有些长,但请注意,在 tmux 的命令模式(前面提到的一个分号开始的模式)下,可以使用 Tab 键来补全命令。 另外需要提及的是, **tmux** 同样也有一个命令历史记录,所以若你想重复刚才的缩放操作,可以先敲击 Ctrl+B 再跟上一个分号,并使用向上的箭头来取回刚才输入的命令。

|

||||

|

||||

最后,让我们看一下分离和重新连接 - 即我们刚才介绍的 screen 的特色功能。 在 tmux 中,敲击 Ctrl+B 再加上 D 来从当前的终端窗口中分离当前的 tmux 会话。这使得这个会话的一切工作都在后台中运行、使用 `tmux a` 可以再重新连接到刚才的会话。但若你同时有多个 tmux 会话在运行时,又该怎么办呢? 我们可以使用下面的命令来列出它们:

|

||||

|

||||

tmux ls

|

||||

|

||||

这个命令将为每个会话分配一个序号; 假如你想重新连接到会话 1, 可以使用 `tmux a -t 1`. tmux 是可以高度定制的,你可以自定义按键绑定并更改配色方案, 所以一旦你适应了它的主要功能,请钻研指导手册以了解更多的内容。

|

||||

|

||||

|

||||

|

||||

|

||||

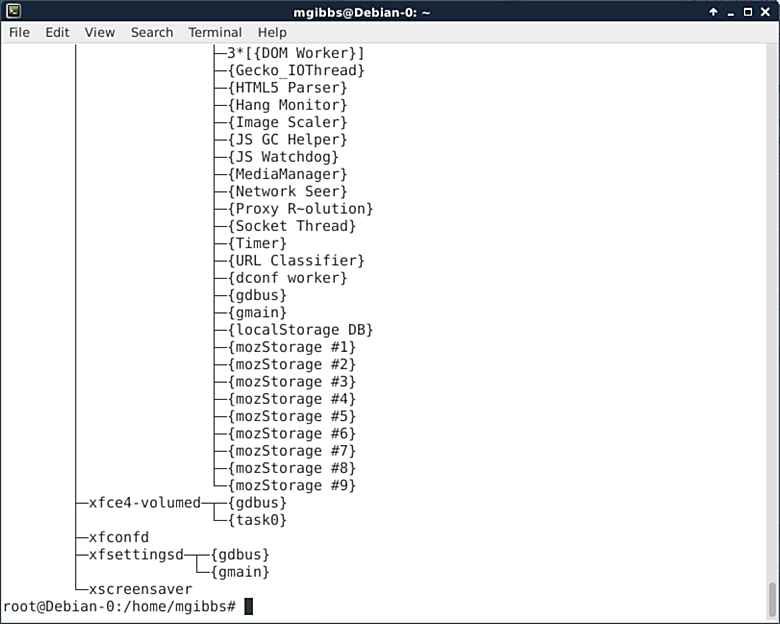

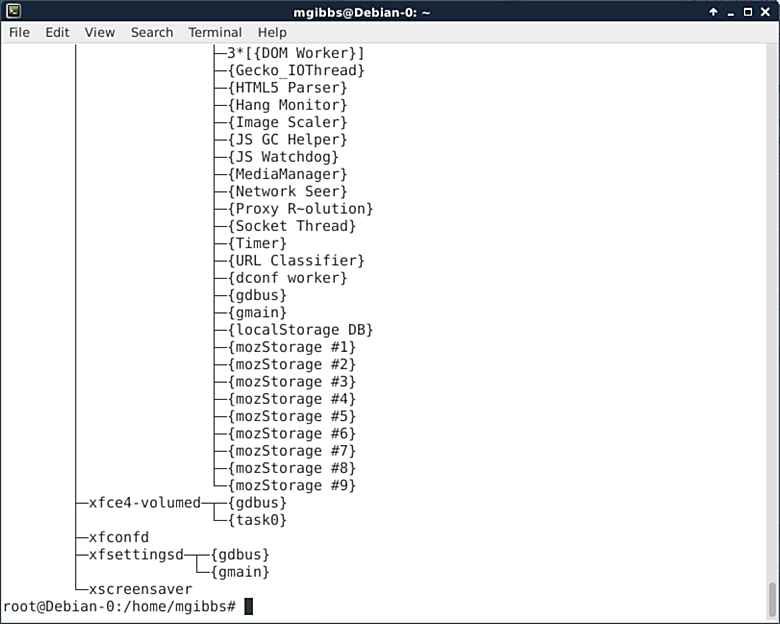

上图中, tmux 开启了两个窗格: 左边是 Vim 正在编辑一个配置文件,而右边则展示着指导手册页。

|

||||

|

||||
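You can also script the same tmux actions from the shell, which is handy for setting up a standard layout. This is a sketch. The tmux subcommands it uses (new-session, split-window, resize-pane, ls) are real, while `start_work_session` and the default session name `work` are hypothetical choices.

```shell
#!/bin/sh
# Sketch: set up a tmux session with two side-by-side panes from a script.
# start_work_session and the default session name "work" are hypothetical.
start_work_session() {
    name="${1:-work}"
    if ! command -v tmux >/dev/null 2>&1; then
        echo "tmux is not installed" >&2
        return 1
    fi
    tmux new-session -d -s "$name" || return 1   # create it already detached
    tmux split-window -h -t "$name"               # same split as Ctrl+B then %
    tmux resize-pane -t "$name" -R 5              # like ":resize-pane -R", by 5 cells
    tmux ls                                       # list sessions
}
```

After running `start_work_session`, reattach with `tmux a -t work`.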

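> **Zsh: an alternative shell**
>
> Choice is good, but standards matter too. Almost every major Linux distribution uses the Bash shell by default, although other shells exist. Bash gives you nearly everything a shell can offer, including command history, filename completion and plenty of scripting power. It's mature, reliable and well documented, but it's not your only choice.
>
> Many advanced users swear by Zsh, the Z shell. It's a replacement for Bash that offers almost all of Bash's features, plus some extras. For example, in Zsh you can type **ls -** and press Tab to get a brief description of each option **ls** accepts. No need to open the man page!
>
> Zsh also has other powerful completion features. For example, type **cd /u/lo/bi** and press Tab, and the full path **/usr/local/bin** appears (assuming no other paths match **u**, **lo** and **bi**). Or type just **cd** followed by Tab, and you'll see a colored list of directory names, which looks much nicer than Bash's plain output.
>
> Zsh is available in most major distributions; install it and type **zsh** to start it. To change your default shell from Bash to Zsh, use the **chsh** command. For more information, visit [www.zsh.org][2].
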
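Before switching with `chsh`, it's worth checking that zsh is installed and listed in /etc/shells, since chsh usually refuses unlisted shells for ordinary users. This is a sketch. `is_login_shell` is a hypothetical helper, and its optional second argument exists only so the check can be tried against a test file.

```shell
#!/bin/sh
# Sketch: check that a shell is executable and listed in /etc/shells
# before passing it to chsh. is_login_shell is a hypothetical helper.
is_login_shell() {
    shell_path="$1"
    shells_file="${2:-/etc/shells}"
    [ -x "$shell_path" ] && grep -qxF "$shell_path" "$shells_file"
}

zsh_path=$(command -v zsh)
if [ -n "$zsh_path" ] && is_login_shell "$zsh_path"; then
    echo "run: chsh -s $zsh_path"
else
    echo "install zsh first (and make sure it is listed in /etc/shells)"
fi
```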

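### The terminal of the "future" ###

You might wonder why the application that holds your command-line prompt is called a terminal. The name goes back to the early days of Unix, when people usually worked on a multi-user machine, a huge mainframe that filled a room in a building. People connected to it through screens and keyboards over some kind of wiring. These terminals were usually called "dumb terminals" because they couldn't do any significant processing on their own. They only displayed the information sent down the line from the mainframe and sent back the input from keystrokes.

Today we do almost all the real processing on our own machines, so our computers aren't terminals in the traditional sense. That's why programs such as **XTerm**, Gnome Terminal and Konsole are called "terminal emulators": they provide the same functionality as the physical terminals of old. In fact, in many ways they haven't changed much. Sure, we now have anti-aliased fonts, better colors and clickable URLs, but by and large we've been working the same way for decades.

So some programmers are trying to change that. **Terminology** ([http://tinyurl.com/osopjv9][3]) comes from the team behind the ultra-slick Enlightenment window manager. It aims to bring the terminal into the 21st century with features such as inline media display. You can run **ls** in a directory full of images and see their thumbnails, or even play videos right in your terminal. This makes the terminal a little like a file manager, so you can quickly check the contents of media files without opening them in another application.

Then there's Xiki ([www.xiki.org][4]), which describes itself as "the command revolution". It's like a cross between a traditional shell, a GUI and a wiki. You can type commands anywhere, store their output as notes for later reference, and create very powerful custom commands. It's hard to describe in a few words, so the authors have made a video showing how much potential it has (see the screencast section of the **Xiki** website).

And Xiki is no flash in the pan that will fizzle out within a few months. The authors ran a successful Kickstarter campaign that had raised over $84,000 by the end of July. Yes, you read that right: $84K to support a terminal emulator. It might be the most unusual crowdfunding campaign since some crazy guys decided to start their own Linux magazine...

### Next-generation terminals ###

Many command-line and text-based programs match their GUI counterparts feature for feature, and they are often faster and more efficient. Our recommendations:

**Irssi** (IRC client); **Mutt** (mail client); **rTorrent** (BitTorrent); **Ranger** (file manager); **htop** (process monitor). Within the limits of a terminal, Elinks does a really good job of web browsing, and it's very useful for reading text-heavy sites such as Wikipedia.

> **Fine-tune the color scheme**
>
> At Linux Voice we're not obsessed with eye candy, but we do recognize the importance of aesthetics when you spend hours every day staring at a screen. Many of us like to tweak our desktops and window managers to perfection. We adjust shadow effects and fiddle with different color schemes until we're 100% happy, then fiddle some more out of habit.
>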