Mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-03-12 01:40:10 +08:00)

Commit 3d72ed78e7

published/20181123 Three SSH GUI Tools for Linux.md

[#]: collector: (lujun9972)
[#]: translator: (wxy)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: subject: (Three SSH GUI Tools for Linux)
[#]: via: (https://www.linux.com/blog/learn/intro-to-linux/2018/11/three-ssh-guis-linux)
[#]: author: (Jack Wallen https://www.linux.com/users/jlwallen)
[#]: url: (https://linux.cn/article-10559-1.html)

Three SSH GUI Tools for Linux
======

> Take a look at these three SSH GUI tools for Linux.

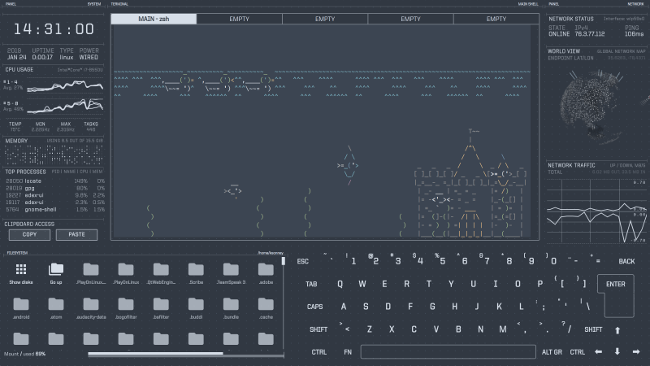

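Over the course of your career as a Linux administrator, you will use Secure Shell (SSH) to connect to remote Linux servers or desktops. You have probably, at some point, been connected over SSH to several Linux servers at once. In fact, SSH is likely one of the most-used tools in your Linux toolbox, so you should make the experience as efficient as possible. For many admins, nothing is more efficient than the command line. However, some users prefer a GUI tool, especially when they connect from a desktop machine to work on remote servers.

If you happen to like a good GUI tool, you'll be glad to know there are some excellent SSH GUI tools for Linux. Let's look at three of them and see whether one (or more) fits your needs perfectly.

I'll demonstrate these tools on [Elementary OS][1], but they are all available for most major distributions.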

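### PuTTY

Anyone with some experience knows [PuTTY][2]. In fact, PuTTY is the de facto standard tool for connecting to Linux servers over SSH from a Windows environment. But PuTTY isn't only for Windows: it can also be installed on Linux from the standard repositories. PuTTY's feature list includes:

* Saved sessions.
* Connecting via IP address or hostname.
* Using an alternative SSH port.
* Defining the connection type.
* Logging.
* Options for keyboard, bell, appearance, connection, and more.
* Configuring local and remote tunnels.
* Proxy support.
* X11 tunneling support.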

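The PuTTY GUI is mainly a way to save SSH sessions, which makes it easier to manage all the Linux servers and desktops you constantly need to remote into and out of. Once you connect, PuTTY opens a connection window to the Linux server in which you can work. At this point, you might wonder why you wouldn't just work in a terminal window. For some people, the convenience of saved sessions really does make PuTTY worth using.

Installing PuTTY on Linux is simple. For example, on a Debian-based distribution you can run:

```
sudo apt-get install -y putty
```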

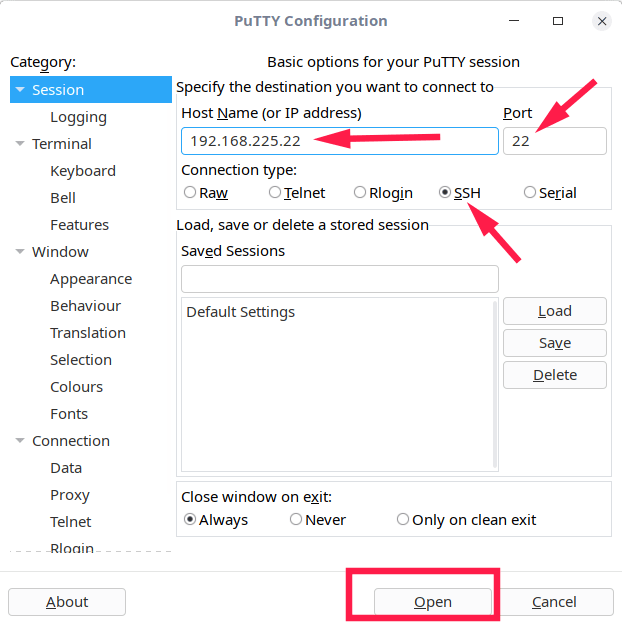

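Once it is installed, you can run the PuTTY GUI from the desktop menu or with the command `putty`. In the PuTTY Configuration window (Figure 1), type a hostname or IP address in the "Host Name (or IP address)" field, set the "Port" (if it isn't the default, 22), select SSH under "Connection type", and click "Open".

![PuTTY Connection][4]

*Figure 1: The PuTTY connection configuration window*

Once the connection is established, you'll be prompted for your user credentials on the remote server (Figure 2).

![log in][7]

*Figure 2: Logging in to a remote server with PuTTY*

To save a session (so you don't have to type in the remote server information every time), fill in the hostname (or IP address), port, and connection type. Then, before clicking "Open", type a name in the top text field of the "Saved Sessions" section and click "Save". This saves the session's configuration. To connect to a saved session, select it from the "Saved Sessions" list, click "Load", and then click "Open". You'll be prompted for your credentials on the remote server.

### EasySSH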

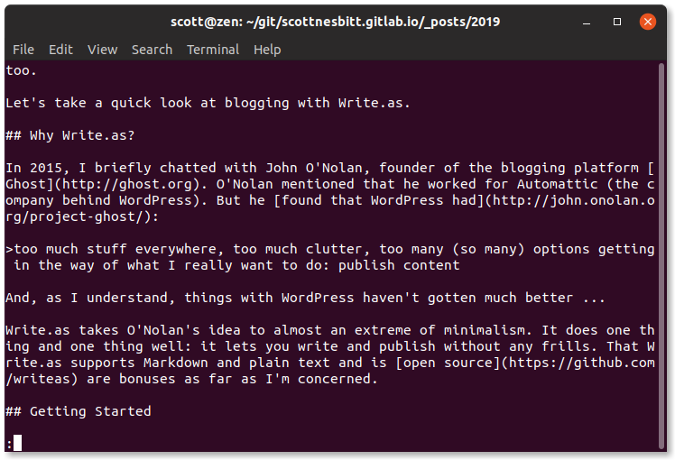

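Although [EasySSH][8] doesn't offer as many configuration options as PuTTY, it is (as the name implies) very easy to use. One of EasySSH's best features is its tabbed interface, so you can open multiple SSH connections and quickly switch between them. Other EasySSH features include:

* Groups (tabs can be grouped for a more efficient workflow).
* Saved usernames and passwords.
* Appearance options.
* Support for local and remote tunnels.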

Installing EasySSH on a Linux desktop is simple, because the app can be installed via Flatpak (which means you must have Flatpak installed on your system). Once Flatpak is installed, add EasySSH with the following commands:

```
sudo flatpak remote-add --if-not-exists flathub https://flathub.org/repo/flathub.flatpakrepo
sudo flatpak install flathub com.github.muriloventuroso.easyssh
```

Run EasySSH with the following command:

```
flatpak run com.github.muriloventuroso.easyssh
```

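The EasySSH app will open, and you can click the "+" button in the upper left corner. In the resulting window (Figure 3), configure your SSH connection as needed.

![Adding a connection][10]

*Figure 3: Adding a connection in EasySSH is easy*

Once you've added the connection, it appears in the left navigation of the main window (Figure 4).

![EasySSH][12]

*Figure 4: The EasySSH main window*

To connect to a remote server in EasySSH, select it from the left navigation and click the "Connect" button (Figure 5).

![Connecting][14]

*Figure 5: Connecting to a remote server with EasySSH*

One caveat with EasySSH is that you must save the username and password in the connection configuration (otherwise the connection will fail). This means anyone with access to the desktop running EasySSH can remotely access your servers without knowing the passwords. Because of this, you must always remember to lock your desktop screen whenever you step away (and be sure to use a strong password). Otherwise, your servers are vulnerable to unwanted logins.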

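### Terminator

(LCTT translator's note: this pick doesn't fit the topic of this article, so the section has been omitted.)

### Termius

(LCTT translator's note: this section was added based on readers' recommendations.)

Termius is a commercial SSH, Telnet, and Mosh client; it is not open source software. It supports a range of operating systems, including [Linux](https://www.termius.com/linux), Windows, Mac, iOS, and Android. It is free for a single device, and a platinum account with multi-device support requires a monthly subscription.

### Few (but worthy) options

There aren't many SSH GUI tools available for Linux. Why? Because most administrators prefer to simply open a terminal window and use the standard command-line tools to access their servers remotely. However, if you need a GUI tool, there are two solid options that make logging in to multiple machines easier. Although there are only a few choices for anyone looking for an SSH GUI tool, the ones that are available are certainly worth your time. Give one a try and see for yourself.

--------------------------------------------------------------------------------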

via: https://www.linux.com/blog/learn/intro-to-linux/2018/11/three-ssh-guis-linux

Author: [Jack Wallen][a]
Topic selection: [lujun9972][b]
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://www.linux.com/users/jlwallen
[b]: https://github.com/lujun9972
[1]: https://elementary.io/
[2]: https://www.chiark.greenend.org.uk/~sgtatham/putty/latest.html
[3]: https://www.linux.com/files/images/sshguis1jpg
[4]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ssh_guis_1.jpg?itok=DiNTz_wO (PuTTY Connection)
[5]: https://www.linux.com/licenses/category/used-permission
[6]: https://www.linux.com/files/images/sshguis2jpg
[7]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ssh_guis_2.jpg?itok=4ORsJlz3 (log in)
[8]: https://github.com/muriloventuroso/easyssh
[9]: https://www.linux.com/files/images/sshguis3jpg
[10]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ssh_guis_3.jpg?itok=bHC2zlda (Adding a connection)
[11]: https://www.linux.com/files/images/sshguis4jpg
[12]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ssh_guis_4.jpg?itok=hhJzhRIg (EasySSH)
[13]: https://www.linux.com/files/images/sshguis5jpg
[14]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ssh_guis_5.jpg?itok=piFEFYTQ (Connecting)
[15]: https://www.linux.com/files/images/sshguis6jpg
[16]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ssh_guis_6.jpg?itok=-kYl6iSE (Terminator)

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10557-1.html)
[#]: subject: (Get started with Go For It, a flexible to-do list application)
[#]: via: (https://opensource.com/article/19/1/productivity-tool-go-for-it)
[#]: author: (Kevin Sonney https://opensource.com/users/ksonney (Kevin Sonney))

Get started with Go For It, a flexible to-do list application
======

> Go For It, the tenth in our series on open source tools that will make you more productive in 2019, builds on the Todo.txt system to help you get more things done.

There seems to be a mad rush at the beginning of every year to find ways to be more productive. New Year's resolutions, the itch to start the year off right, and of course an "out with the old, in with the new" attitude all contribute to this. The usual round of recommendations is heavily biased toward closed source and proprietary software, but it doesn't have to be that way.

Here's the 10th of my picks for 19 new (or new-to-you) open source tools to help you be more productive in 2019.

### Go For It

Sometimes what you need to be productive isn't a fancy kanban board or a set of notes, but a simple, straightforward to-do list. Something as basic as "add an item to the list, check it off when it's done." For that, the [plain-text Todo.txt system][1] is possibly one of the easiest to use, and it's supported on almost every system.

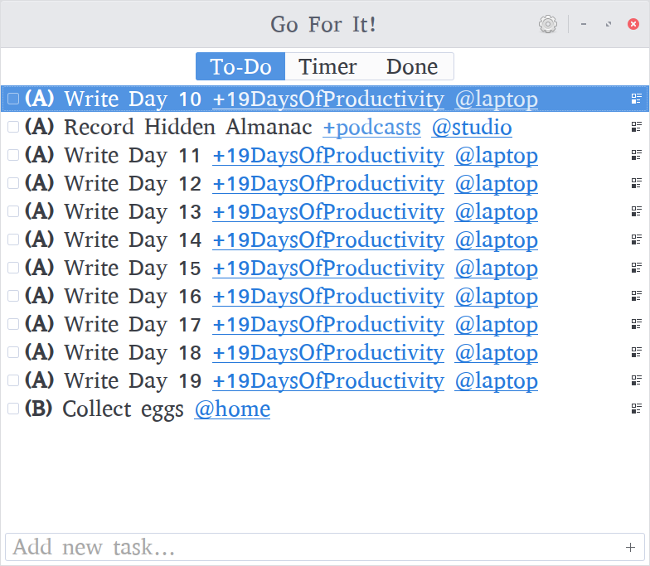

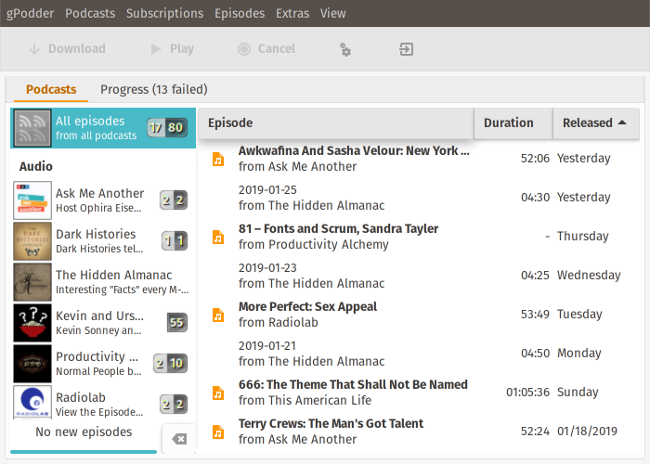

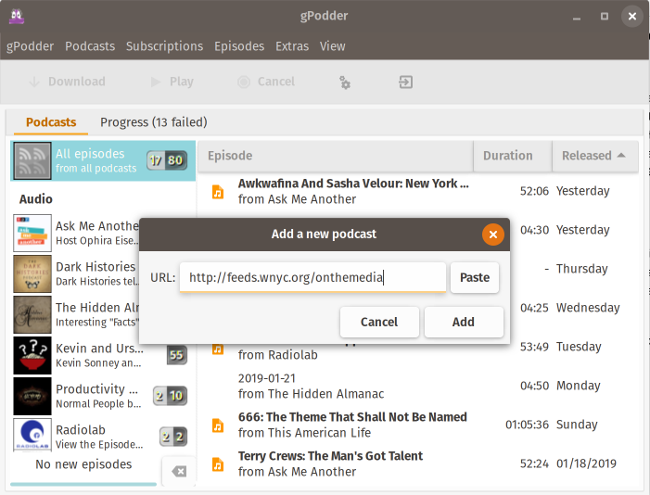

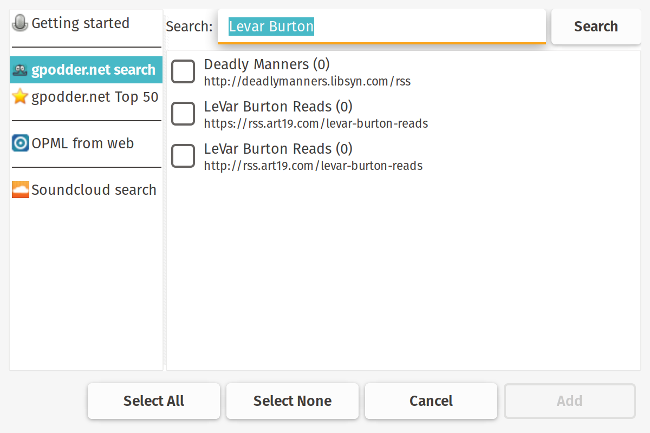

[Go For It][2] is a simple, easy-to-use graphical interface for Todo.txt. If you already use Todo.txt, it works with your existing files; if you don't, it creates both a to-do file and a done file. It allows drag-and-drop task ordering, so users can organize to-do items in the order they want to work on them. It also supports priorities, projects, and contexts, as described in the [Todo.txt format guidelines][3]. And you can filter tasks by a project or context simply by clicking on it in the task list.
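Go For It does this filtering with a single click. To show what that amounts to on the plain-text file itself, here is a minimal sketch. `filter_tasks` is a hypothetical helper, not part of Go For It or any Todo.txt tool. It assumes two Todo.txt conventions: a completed task begins with `x `, and projects (`+project`) and contexts (`@context`) are whitespace-separated words.

```shell
#!/bin/sh
# Hypothetical helper, not part of Go For It: print the open tasks in a
# Todo.txt file that carry a given +project or @context tag.
# Usage: filter_tasks FILE TAG
filter_tasks() {
    awk -v tag="$2" '
        /^x / { next }                  # skip completed tasks ("x " prefix)
        {
            for (i = 1; i <= NF; i++)   # compare each word with the tag
                if ($i == tag) { print; next }
        }
    ' "$1"
}
```

For example, `filter_tasks todo.txt +garden` prints open tasks tagged `+garden` but not tasks tagged `+gardening`, because the tag must match a whole word. Comparing whole fields instead of using a regular expression also means the `+` in project names does not need escaping.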


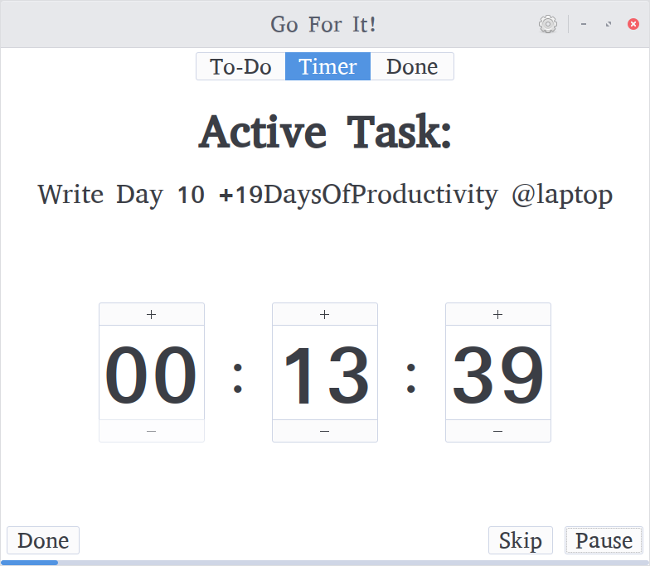

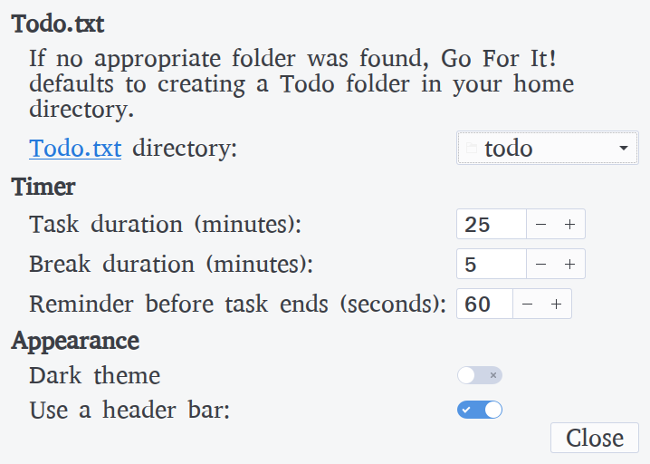

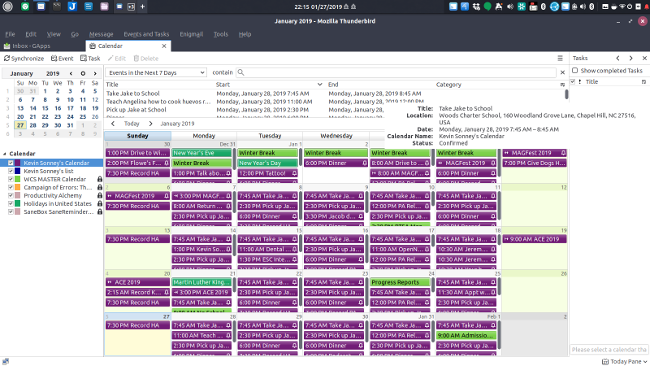

At first glance, Go For It may look like any other Todo.txt program, but looks can be deceiving. The feature that really sets Go For It apart is its built-in [Pomodoro Technique][4] timer. Select the task you want to work on, switch to the "Timer" tab, and click "Start". When the task is done, simply click "Done": the timer resets automatically and the next task on the list is selected. You can pause and restart the timer, or click "Skip" to jump to the next task (or break). It warns you when 60 seconds remain on the current task. The default time for tasks is 25 minutes, and the default time for breaks is 5 minutes. You can adjust these on the "Settings" page, along with the location of the directory that holds your Todo.txt and done.txt files.

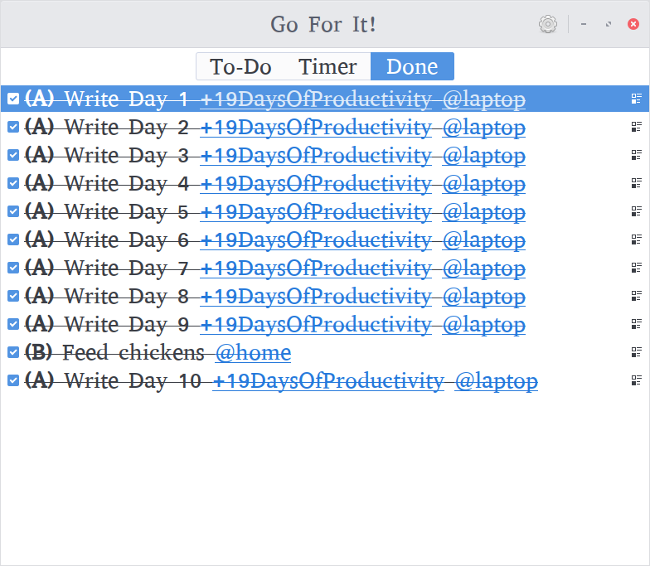

Go For It's third tab, "Done", lets you look at the tasks you've completed and clear them out when you want. Seeing what you've already accomplished can be very motivating, and it is a good way to get a sense of your progress through a longer process.

via: https://opensource.com/article/19/1/productivity-tool-go-for-it

Author: [Kevin Sonney][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

published/20190201 Top 5 Linux Distributions for New Users.md

[#]: collector: (lujun9972)
[#]: translator: (wxy)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10553-1.html)
[#]: subject: (Top 5 Linux Distributions for New Users)
[#]: via: (https://www.linux.com/blog/learn/2019/2/top-5-linux-distributions-new-users)
[#]: author: (Jack Wallen https://www.linux.com/users/jlwallen)

5 Linux Distributions for New Users
======

> Five distributions that will make new users feel right at home.

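Linux has come a long way since its early days. But no matter how often you hear how easy Linux is to use these days, there are still skeptics. To truly back up that claim, the desktop has to be simple enough for people unfamiliar with Linux to use. And the fact is that plenty of desktop distributions make this a reality.

### No Linux knowledge required

It would be easy to misread this list as yet another "best user-friendly Linux distributions" list, but that's not what we're looking at here. What's the difference? For my purposes, the dividing line is whether Linux itself actually plays a part in using the system. In other words, could you put this desktop operating system in front of a user and have them use it comfortably without any Linux knowledge?

Believe it or not, some distributions can do just that. Here I'll present five of them. You've probably heard of all of them. They may not be your distribution of choice, but I can assure you that they keep Linux itself out of the spotlight and put the user front and center.

Let's take a look at the chosen few.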

### Elementary OS


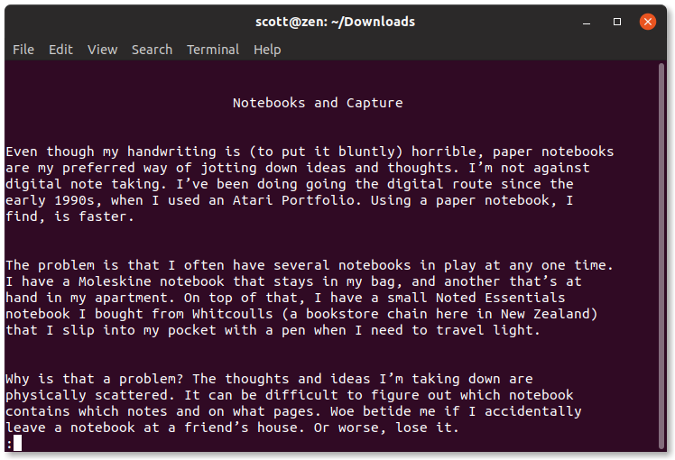

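The philosophy of [Elementary OS](https://elementary.io/) revolves around how people actually use their desktops. The developers and designers have gone out of their way to create a desktop that is as simple as possible. In the process, they've set out to de-Linux Linux. That's not to say they've removed Linux from the equation. Quite the opposite: what they've done is create an operating system that is about as neutral as you'll find. Elementary OS is streamlined so that everything makes perfect sense. From the single Dock to the application menu that is clear to everyone, this is a desktop that doesn't remind the user, "You're using Linux!" In fact, the layout itself is reminiscent of the Mac, with the addition of a simple application menu (Figure 1).

![Elementary OS Juno][2]

*Figure 1: The Elementary OS Juno application menu*

Another important reason Elementary OS makes this list is that it isn't as flexible as some other desktop distributions. Sure, some users will balk at that, but a desktop that doesn't throw every customization bell and whistle at the user makes for a very familiar environment: one that neither requires nor allows a lot of tinkering. That aspect of the OS goes a long way toward making the platform familiar to new users.

Like any modern Linux desktop distribution, Elementary OS includes an app store, called AppCenter, where users can install all the applications they need without ever touching the command line.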

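### Deepin

[Deepin](https://www.deepin.org/) not only earns praise as one of the most beautiful desktops on the market, it is also as easy to pick up as any desktop operating system. Its desktop interface is very simple, and users with no Linux experience get up to speed very quickly. In fact, you'd be hard-pressed to find a user who couldn't start using the Deepin desktop right away. The only possible hurdle might be its sidebar control center (Figure 2).

![][5]

*Figure 2: Deepin's sidebar control center*

But even that sidebar control panel is as intuitive as any other configuration tool on the market, and anyone who has used a mobile device will find the layout familiar. For opening applications, Deepin's launcher takes the macOS Launchpad approach. Its button sits in the usual far-right position on the desktop dock, so users immediately understand that it is probably similar to a standard "Start" menu.

Like Elementary OS (and most Linux distributions on the market), Deepin includes an app store (simply called "Store"), from which plenty of applications can be installed easily.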

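### Ubuntu

You knew it had to be here. [Ubuntu](https://www.ubuntu.com/) usually tops most lists of user-friendly Linux distributions, because it is one of the few desktops that can be used without knowing anything about Linux. That wasn't always the case before it adopted GNOME (and Unity was retired), because Unity often needed some tweaking to reach the point where no Linux knowledge at all was required (Figure 3). Now that Ubuntu has adopted GNOME and tweaked it to the point where you don't even need to know GNOME, this desktop makes Linux's simplicity and usability a non-issue.

![Ubuntu 18.04][7]

*Figure 3: The Ubuntu 18.04 desktop is instantly familiar*

Unlike Elementary OS, Ubuntu doesn't hold the user back, so anyone who wants more out of their desktop can have it. Even so, its out-of-the-box experience is enough for just about any kind of user. If you want a desktop that leaves users unaware of just how much power is at their fingertips, you'd be hard-pressed to do better than Ubuntu.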

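### Linux Mint

I need to preface this by saying that I've never been a big fan of [Linux Mint](https://linuxmint.com/). It's not that I don't respect what the developers are doing; it's more a matter of aesthetics. I prefer modern desktop environments. But the old-school desktop metaphor (found in the default Cinnamon desktop) is perfectly familiar to nearly everyone who uses it. With a taskbar, start button, system tray, and desktop icons (Figure 4), Linux Mint offers an interface with zero learning curve. In fact, some users may at first be fooled into thinking they're using a Windows 7 clone. Even its update warning icon will look very familiar.

![Linux Mint][9]

*Figure 4: The Linux Mint Cinnamon desktop is very much like Windows 7*

Because Linux Mint benefits from being based on Ubuntu, it is not only immediately familiar but also highly usable. Whether or not users understand anything about the underlying platform, they will feel right at home instantly.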

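### Ubuntu Budgie

Our list ends with a distribution that also lets users forget they're using Linux and makes working with the usual tools simple and beautiful. Combining Ubuntu with the Budgie desktop makes for an impressively easy-to-use distribution. The desktop layout (Figure 5) might not be quite what you're used to, but there's no doubt that getting comfortable in this environment takes no time at all. In fact, apart from the Dock sitting on the left side of the desktop by default, [Ubuntu Budgie](https://ubuntubudgie.org/) really does look like Elementary OS.

![Budgie][11]

*Figure 5: The Budgie desktop is both beautiful and simple*

The system tray/notification area in Ubuntu Budgie offers a few less common features, such as quick access to Caffeine (a tool that keeps your desktop awake), a Quick Note tool (for jotting down simple notes), a Night Lite switch, a Places drop-down menu (for quick access to folders), and of course the Raven applet/notification sidebar (similar to Deepin's control center sidebar, but less elegant). Budgie also includes an application menu (top left corner) that gives users access to all installed applications. Open an application and its icon appears in the Dock. Right-click that icon and select "Keep in Dock" for even quicker access.

Everything about Ubuntu Budgie is intuitive, so there's practically no learning curve. This distribution is as elegant as it is easy to use, and it doesn't get much better than that.

### Pick one

So there you have it: five Linux distributions that, each in its own way, offer a desktop experience any user will find instantly familiar. These might not be your choice for top distributions, but it's hard to deny their value for users who have no familiarity with Linux.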

--------------------------------------------------------------------------------

via: https://www.linux.com/blog/learn/2019/2/top-5-linux-distributions-new-users

Author: [Jack Wallen][a]
Topic selection: [lujun9972][b]
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://www.linux.com/users/jlwallen
[b]: https://github.com/lujun9972
[1]: https://www.linux.com/files/images/elementaryosjpg-2
[2]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/elementaryos_0.jpg?itok=KxgNUvMW (Elementary OS Juno)
[3]: https://www.linux.com/licenses/category/used-permission
[4]: https://www.linux.com/files/images/deepinjpg
[5]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/deepin.jpg?itok=VV381a9f
[6]: https://www.linux.com/files/images/ubuntujpg-1
[7]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/ubuntu_1.jpg?itok=bax-_Tsg (Ubuntu 18.04)
[8]: https://www.linux.com/files/images/linuxmintjpg
[9]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/linuxmint.jpg?itok=8sPon0Cq (Linux Mint)
[10]: https://www.linux.com/files/images/budgiejpg-0
[11]: https://www.linux.com/sites/lcom/files/styles/rendered_file/public/budgie_0.jpg?itok=zcf-AHmj (Budgie)
[12]: https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10554-1.html)
[#]: subject: (4 cool new projects to try in COPR for February 2019)
[#]: via: (https://fedoramagazine.org/4-cool-new-projects-to-try-in-copr-for-february-2019/)
[#]: author: (Dominik Turecek https://fedoramagazine.org)

4 Cool New Projects in the COPR Repository (February 2019)
======

COPR is a [collection][1] of personal software repositories that aren't carried in Fedora. Some software doesn't conform to the standards that allow easy packaging, or it may not meet other Fedora standards despite being free and open source. COPR can offer these projects outside the Fedora set of packages. Software in COPR isn't supported by Fedora infrastructure or signed by the project. However, it can be a neat way to try new or experimental software.

Here's a set of new and interesting projects in COPR.

### CryFS

[CryFS][2] is a cryptographic filesystem. It is designed for use with cloud storage, mainly Dropbox, although it also works with other storage providers. CryFS encrypts not only the files in the filesystem, but also their metadata, file sizes, and the directory structure.

#### Installation instructions

```
…
sudo dnf install cryfs
```

### Cheat

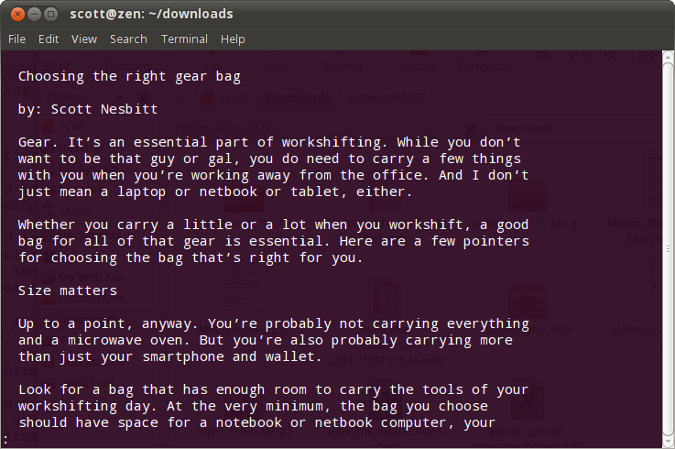

[Cheat][3] is a utility for viewing cheatsheets on the command line, to help you remember how to use programs you only use occasionally. For many Linux utilities, `cheat` provides condensed information from the man pages, focusing mainly on the most common usage examples. In addition to the built-in cheatsheets, `cheat` lets you edit existing ones or create new ones from scratch.

![][4]

#### Installation instructions

The repo currently provides `cheat` for Fedora 28, 29, and Rawhide, as well as EPEL 7. To install `cheat`, use these commands:

```
sudo dnf copr enable tkorbar/cheat
sudo dnf install cheat
```

### Setconf

[setconf][5] is a simple program for making changes to configuration files, serving as an alternative to `sed`. The only thing `setconf` does is find a key in the specified file and change its value. `setconf` provides only a few options to change its behavior, for example, uncommenting the line being changed.

#### Installation instructions

The repo currently provides `setconf` for Fedora 27, 28, and 29. To install `setconf`, use these commands:

```
sudo dnf copr enable jamacku/setconf
sudo dnf install setconf
```
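For comparison, here is roughly what the same kind of change looks like when done by hand with `sed`. This is a sketch under stated assumptions, not a description of how `setconf` works internally. `set_key` is a hypothetical helper, the file is assumed to contain `KEY=value` lines with no spaces around `=`, and the key and value must not contain characters that are special to `sed` (such as `/`, `&`, `.` or `*`). Escaping those characters correctly is the kind of chore a dedicated tool aims to spare you.

```shell
#!/bin/sh
# Hypothetical helper, not part of setconf: set KEY to VALUE in a file made
# of KEY=value lines. Assumes KEY and VALUE contain no sed metacharacters.
# Usage: set_key FILE KEY VALUE
set_key() {
    file=$1 key=$2 value=$3
    tmp=$(mktemp) || return 1
    # Replace the value on every line that starts with "KEY=", keep the rest
    sed "s/^$key=.*/$key=$value/" "$file" > "$tmp" &&
        cat "$tmp" > "$file"            # write back, keeping the file's permissions
    status=$?
    rm -f "$tmp"
    return "$status"
}
```

Running `set_key app.conf CC clang` on a file that contains `CC=gcc` would change that line to `CC=clang` and leave every other line untouched.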


### Reddit Terminal Viewer

[Reddit Terminal Viewer][6], or `rtv`, provides an interface for browsing Reddit from the terminal. It offers Reddit's basic functionality, so you can log in to your account, view subreddits, comment, upvote, and discover new topics. However, `rtv` currently doesn't support Reddit tags.

![][7]

Author: [Dominik Turecek][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[#]: collector: (lujun9972)
[#]: translator: (zhs852)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10552-1.html)
[#]: subject: (How To Install And Use PuTTY On Linux)
[#]: via: (https://www.ostechnix.com/how-to-install-and-use-putty-on-linux/)
[#]: author: (SK https://www.ostechnix.com/author/sk/)

PuTTY is a free and open source GUI client that supports a range of protocols, including SSH, Telnet, and Rlogin. Windows administrators generally use PuTTY as an SSH or Telnet client to connect a local Windows system to remote Linux servers. However, PuTTY isn't exclusive to Windows; it is popular among Linux users too. This article shows you how to install and use PuTTY on Linux.

### Installing PuTTY on Linux

### Accessing remote Linux servers with PuTTY

In the left pane, click "Session" and enter the remote hostname (or IP address). Next, select the connection type (for example, Telnet, Rlogin, or SSH). PuTTY automatically fills in the default port for the connection type you select (for example, 22 for SSH and 23 for Telnet). If the server uses a non-default port, don't forget to enter it manually in "Port". Here, I'm connecting to the remote host over SSH. After entering all the details, click "Open".

If this is your first connection to this remote host, PuTTY displays a security alert asking whether you trust the host you are connecting to. Click "Accept" to add the remote host's key to PuTTY's cache:

![PuTTY security alert][2]

#### Accessing remote hosts with key-based authentication

Some Linux administrators configure key-based authentication on their servers. For example, when accessing AWS instances with PuTTY, you need to specify the location of the key file. PuTTY uses its own format (`.ppk` files) for public key authentication.

First, enter the hostname or IP address. Then, in the "Category" pane, expand "Connection", then "SSH", and select "Auth". There you can choose your `.ppk` key file.

![][3]

Click "Accept" to dismiss the security alert. Then enter the key's passphrase (if the key is protected by one) to establish the connection.

#### Saving PuTTY sessions

Sometimes you may need to connect to the same remote host many times. You can save these sessions and open them later without entering the details again.

Enter the hostname (or IP address), give the session a name, and click "Save". If you use a key file, make sure you specify it before clicking "Save".

![][4]

Now you can start a connection by selecting it under "Saved Sessions", clicking "Load", and then clicking "Open".

#### Transferring files to remote hosts with the PuTTY Secure Copy Client (pscp)

Linux users and administrators usually use the `scp` command-line tool to transfer files from a local system to a remote host. However, PuTTY provides its own tool for the job, the PuTTY Secure Copy Client (`pscp` for short). If your local system runs Windows, this is the tool you may need. PSCP is available for both Windows and Linux.

Use this command to copy `file.txt` from the local Arch Linux system to the remote Ubuntu system:

```shell
pscp -i test.ppk file.txt sk@192.168.225.22:/home/sk/
```

Let's break down this command:

* `-i test.ppk`: the key file used to access the remote host;
* `file.txt`: the file to copy to the remote host;
* `sk@192.168.225.22`: the username and IP address of the remote host;
* `/home/sk/`: the destination path.

To copy a directory, use the `-r` (recursive) option:

```shell
pscp -i test.ppk -r dir/ sk@192.168.225.22:/home/sk/
```

To transfer a file from a Windows system with `pscp`, run a command like this:

```shell
pscp -i test.ppk c:\documents\file.txt sk@192.168.225.22:/home/sk/
```

You should now understand what PuTTY is and know how to install and use it. You have also learned how to use the `pscp` program to transfer files between local and remote hosts.

That's all for now. I hope this article was helpful.

Cheers!

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/how-to-install-and-use-putty-on-linux/

Author: [SK][a]
Topic selection: [lujun9972][b]
Translator: [zhs852](https://github.com/zhs852)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[#]: collector: (lujun9972)
[#]: translator: (wxy)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-10555-1.html)
[#]: subject: (Drinking coffee with AWK)
[#]: via: (https://opensource.com/article/19/2/drinking-coffee-awk)
[#]: author: (Moshe Zadka https://opensource.com/users/moshez)

Drinking coffee with AWK
======

> Keep track of what your coworkers owe for coffee with a simple AWK program.

```
$1 == "member" {
    …
}
```

The second rule reduces the debt when a `payment` is recorded.

```
$1 == "payment" {
    …
}
```

A `payback` is the opposite: it increases the debt. This elegantly handles the case of accidentally giving someone too much money.

```
$1 == "payback" {
    …
}
```

The most complicated part is when someone buys (`bought`) instant coffee for the coffee corner. It is treated as a `payment`, and that person's debt is reduced by the appropriate amount. Next, the program calculates the per-member fee: it iterates over all members and increases each one's debt according to their level.

```
$1 == "bought" {
    …
}

END {
    …
}
```

Together with a script that went through the members file and emailed people reminders to pay their dues (for positive balances), this system ran the coffee corner for quite a while.
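The rules above appear only as excerpts. To show roughly how they fit together, here is a self-contained sketch. It is not the article's program. The following are all assumptions: the comma-separated `type,name,amount` record format, the `coffee_balances` function name, using a member's level as a weight when splitting each purchase, and listing every `member` record before the first `bought` record.

```shell
#!/bin/sh
# A sketch of a coffee-corner ledger in AWK, wrapped in a shell function.
# Assumed record format (not the article's exact file), one per line:
#   member,NAME,LEVEL    register a member; LEVEL weights their share
#   payment,NAME,AMOUNT  NAME paid money in, so their debt goes down
#   payback,NAME,AMOUNT  money was paid back to NAME, so their debt goes up
#   bought,NAME,AMOUNT   NAME bought coffee: counts as a payment, and the
#                        cost is split among all members by level
# Assumes every member line appears before the first "bought" line.
coffee_balances() {
    awk -F, '
        $1 == "member"  { level[$2] = $3; total += $3 }
        $1 == "payment" { debt[$2] -= $3 }
        $1 == "payback" { debt[$2] += $3 }
        $1 == "bought"  {
            debt[$2] -= $3                   # the purchase counts as a payment
            if (total > 0)
                for (m in level)             # then everyone owes a weighted share
                    debt[m] += $3 * level[m] / total
        }
        END { for (m in level) printf "%s %.2f\n", m, debt[m] }
    ' "$@" | sort
}
```

For example, the input lines `member,alice,1`, `member,bob,2`, `payment,alice,10`, `bought,bob,30` and `payback,alice,2` produce `alice 2.00` and `bob -10.00`. A positive value is what a member still owes; a negative value is what the kitty owes them.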


--------------------------------------------------------------------------------

via: https://opensource.com/article/19/2/drinking-coffee-awk

Author: [Moshe Zadka][a]
Topic selection: [lujun9972][b]
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

sources/talk/20120911 Doug Bolden, Dunnet (IF).md

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Doug Bolden, Dunnet (IF))
[#]: via: (http://www.wyrmis.com/games/if/dunnet.html)
[#]: author: (W Doug Bolden http://www.wyrmis.com)

Doug Bolden, Dunnet (IF)
======

### Dunnet (IF)

#### Review

When I became a semi-serious hobbyist of IF last year, I mostly focused on Infocom, Adventures Unlimited, other Scott Adams-based games, and freeware titles. I went on to buy some from Malinche. I picked up _1893_ and _Futureboy_ and (most recently) _Treasures of a Slave Kingdom_. I downloaded a lot of free games from various sites. In all of my research and playing, I never once read anything that talked about a game being bundled with Emacs.

Partially, this is because I am a Vim guy. But I used to use Emacs. Kind of a lot. For probably my first couple of years with Linux. About as long as I have been a diehard Vim fan, now. I just never explored, it seems.

I booted up Emacs tonight, and my fonts were hosed. I still do not know exactly why. I surfed some menus to find out what was going wrong and came across a menu option called "Adventure" under Games, which I assumed (I know, I know) meant the Crowther and Woods 1977 variety. When I clicked it tonight, thinking that it had been a few months since I chased a bird around a mine with a cage so I could fight off giant snakes or something, I was greeted with text involving ends of roads and shovels. Trees that, if shaken, kill me with a coconut. This was not the game I thought it was.

I dug around (or, in purely technical terms, typed "help") and got directed to [this website][1]. Well, here was an IF I had never touched before. Brand spanking new to me. I had planned to play out some _ToaSK_ tonight, but figured that could wait. Besides, I was not quite in the mood for the jocular fun of S. John Ross's commercial IF outing. I needed something a little more direct, and this was apparently it.

Most of the game plays out just like the _Colossal Cave Adventure_ cousins of the old-school (generally commercial) IF days. There are items you pick up. Each does a single task (well, there could be one exception to this, I guess). You collect treasures. Winning is a combination of getting to the end and turning in the treasures. The game slightly tweaks the formula by allowing multiple drop-off points for the treasures. Since there is a weight limit, though, you usually have to drop them off at a particular time to avoid getting stuck. At several points, your "item cache" is flushed, so to speak, meaning you have to go back and replay earlier portions to find out how to bring things forward. Items can also be damaged in ways that stop you from continuing. Replaying is pretty much unavoidable, unless you guess outcomes just right.

It also inherits many problems from the era it came from. There is a twisty maze. I'm not sure how big it is. I just cheated and looked up a walkthrough for the maze portion. I do plan on going back, replaying up to the maze bit, and mapping it out, though. I was just mentally and physically beat when I played and knew that I would have to either call it quits for the night or cheat through the maze. I'm glad I cheated, because there are some interesting things after the maze.

It also has the same sort of stilted syntax and variable levels of description that the original _Adventure_ had. Looking at one item might give you "there is nothing special about that" while looking at another might give you a sentence of flavor text. Several things mentioned in the background do not exist to the parser, while some do. Part of the gameplay is putting up with experimenting. This includes, in some cases, a tendency for room descriptions to be written from the perspective of the first time you enter. I know that the Classroom found towards the end of the game does not mention the South exit, either. There may have been other times this occurred that I didn't notice.

Its final issue, again coming out of the era it was designed in, is random death syndrome. This is not too common, but there are a few places where actions with no initially apparent fatal outcome lead to one anyhow. In some ways, this "fatal outcome" is just the game reaching an unwinnable state. For an example of the former, type "shake trees" in the first room. For an example of the latter, send the lamp, the key, or the shovel through the ftp without switching ftp modes first. At least with the former, there is a sense of exploration in finding new ways to die. In IF, creative deaths are a form of victory in their own right.

_Dunnet_ has a couple of differences from most IF. The first difference is minor. There are odd little descriptions throughout the game, such as "This room is red," "The towel has a picture of Snoopy on it," or "There is a cliff here," that do not seem to have an immediate effect on the game. Sure, you can jump over the cliff (and die, obviously), but it still comes off as a bright spot in the standard description matrix. Towards the end, you will be forced to bring back these details. It makes for a neat little diversion of looking around and exploring things. Most of the details are cute and/or add to the surreality of the game overall.

The other big difference, and the one that greatly increased both my annoyance with and my enjoyment of the game, revolves around the two or three computer-oriented scenes in the game. You have to type commands into two different computers throughout. One is a VAX and the other is, um, something like a PC (I forget). In both cases, there are clues to be found by knowing your way around the interface. This is a game for computer folk, so most who play it will have a sense of how to type "ls" or "dir" depending on the OS. But not all will. Beating the game requires a general sense of computer literacy. You must know what transfer types ftp supports. You must know how to determine what type a file is. You must know how to read a text file on a DOS-style prompt. You must know something about the protocols and etiquette for logging into ftp servers. All this sort of thing. If you do, or are willing to learn (I looked up some of the stuff online), then you can get past this portion with no problem. But for some people this can be like the maze, requiring several replays to get things right.

The end result is a quirky but fun game that I wish I had known about before because now I have the feeling that my computer is hiding other secrets from me. Glad to have played. Will likely play again to see how many ways I can die.

--------------------------------------------------------------------------------

via: http://www.wyrmis.com/games/if/dunnet.html

Author: [W Doug Bolden][a]
Selected by: [lujun9972][b]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: http://www.wyrmis.com
[b]: https://github.com/lujun9972
[1]: http://www.driver-aces.com/ronnie.html

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Book Review: Fundamentals of Linux)
[#]: via: (https://itsfoss.com/fundamentals-of-linux-book-review)
[#]: author: (John Paul https://itsfoss.com/author/john/)

Book Review: Fundamentals of Linux
======

There are many great books that cover the basics of what Linux is and how it works. Today, I will be taking a look at one such book: [Fundamentals of Linux][1] by Oliver Pelz, published by [PacktPub][2].

[Oliver Pelz][3] has over ten years of experience as a software developer and a system administrator. He holds a degree in bioinformatics.

### What is the book ‘Fundamentals of Linux’ about?

![Fundamental of Linux books][4]

As can be guessed from the title, the goal of Fundamentals of Linux is to give the reader a strong foundation from which to learn about the Linux command line. The book is a little over two hundred pages long, so it only focuses on teaching the everyday tasks and problems that users commonly encounter. The book is designed for readers who want to become Linux administrators.

The first chapter starts out by giving an overview of virtualization. From there, the author explains how to create a virtual instance of [CentOS][5] in [VirtualBox][6], how to clone it, and how to use snapshots. You will also learn how to connect to the virtual machines via SSH.

The second chapter covers the basics of the Linux command line. This includes shell globbing, shell expansion, and how to work with file names that contain spaces or special characters. It also explains how to interpret a command’s manual page, how to use `sed` and `awk`, and how to navigate the Linux file system.
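To make a few of these topics concrete, here is a small Bash sketch; it is not an example from the book. It shows a glob matching a file name that contains a space, how leaving a variable unquoted splits that name into two words, and one-line `sed` and `awk` calls. The `count_args` helper and the sample files are hypothetical, and the script assumes Bash and `mktemp` on a typical Linux system.

```bash
#!/usr/bin/env bash
# Sketch: globbing, quoting, sed, and awk in a throwaway directory.
set -euo pipefail

# Print how many separate arguments (words) the caller passed in.
count_args() { echo "$#"; }

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
touch "$workdir/my report.txt" "$workdir/notes.txt"

# The glob expands to one word per matching file, spaces included,
# as long as the loop variable is quoted when it is used.
for f in "$workdir"/*.txt; do
  printf 'found: %s\n' "$(basename "$f")"
done

name="my report.txt"
echo "unquoted: $(count_args $name) words"   # word splitting breaks the name in two
echo "quoted:   $(count_args "$name") word"   # double quotes keep it as one word

# sed substitutes text; awk splits each line into fields ($1, $2, ...).
printf 'alice 30\nbob 25\n' > "$workdir/people.txt"
sed 's/alice/Alice/' "$workdir/people.txt"
awk '{ sum += $2 } END { print "total:", sum }' "$workdir/people.txt"
```

The unquoted call reports two words and the quoted one reports one, which is why file names with spaces are usually handled inside double quotes.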

The third chapter takes a more in-depth look at the Linux file system. You will learn how files are linked in Linux and how to search for them. You will also be given an overview of users, groups, and file permissions. Since the chapter focuses on interacting with files, it explains how to read text files from the command line and gives an overview of how to use the Vim editor.
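As a rough illustration of these themes (again a sketch, not an example taken from the book), the script below creates a hard link and a symbolic link, finds them with `find`, and sets permissions with `chmod`. The `make_links` function is hypothetical, and `stat -c` is the GNU coreutils form, so this assumes a typical Linux distribution such as CentOS.

```bash
#!/usr/bin/env bash
# Sketch: hard links, symbolic links, find, and chmod inside a scratch directory.
set -euo pipefail

# Build the demo files inside directory $1.
make_links() {
  local dir=$1
  echo "hello" > "$dir/original.txt"
  ln "$dir/original.txt" "$dir/hard.txt"    # hard link: a second name for the same inode
  ln -s original.txt "$dir/soft.txt"        # symbolic link: stores the path "original.txt"
  chmod 640 "$dir/original.txt"             # rw-r----- : owner rw, group r, others none
}

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
make_links "$workdir"

# -i shows inode numbers: the hard link matches original.txt, the symlink does not.
ls -li "$workdir"

# Search by name and by type (f = regular file, l = symbolic link).
find "$workdir" -name '*.txt' -type f
find "$workdir" -type l

# GNU stat: symbolic permissions followed by the file name.
stat -c '%A %n' "$workdir/original.txt"
```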

Chapter four focuses on using the command line. It covers important commands, such as `cat`, `sort`, `awk`, `tee`, `tar`, `rsync`, `nmap`, `htop`, and more. You will learn what processes are and how they communicate with each other. This chapter also includes an introduction to Bash shell scripting.
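The following rough sketch, not drawn from the book, strings a few of these commands into a small Bash script so that the pipes between processes are visible. The `dedupe_and_archive` function and the file names are hypothetical; it assumes GNU `tar` with gzip support.

```bash
#!/usr/bin/env bash
# Sketch: a tiny Bash script that chains cat, sort, tee, and tar.
set -euo pipefail

# Sort the lines of file $1 without duplicates, save them next to it as
# unique.txt via tee (which also prints them), then archive both files.
dedupe_and_archive() {
  local src=$1
  local dir
  dir=$(dirname "$src")
  # Three processes joined by pipes: each reads the previous one's stdout.
  cat "$src" | sort -u | tee "$dir/unique.txt"
  tar -czf "$dir/archive.tar.gz" -C "$dir" "$(basename "$src")" unique.txt
}

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
printf 'pear\napple\nfig\napple\n' > "$workdir/fruit.txt"

dedupe_and_archive "$workdir/fruit.txt"   # prints apple, fig, pear
tar -tzf "$workdir/archive.tar.gz"         # lists fruit.txt and unique.txt
```

`tee` is what lets the sorted lines reach the terminal and the file at the same time; without it, the script would need a second pass to show what was saved.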

The fifth and final chapter covers networking on Linux and other advanced command line concepts. The author discusses how Linux handles networking and gives examples using multiple virtual machines. He also covers how to install new programs and how to set up a firewall.

### Thoughts on the book

Fundamentals of Linux might seem short at five chapters and a little over two hundred pages. However, quite a bit of information is covered. You are given everything that you need to get going on the command line.

The book’s sole focus on the command line is one thing to keep in mind. You won’t get any information on how to use a graphical user interface. That is partially because Linux has so many different desktop environments and so many similar system applications that it would be hard to write a book that could cover all of the variables. It is also partially because the book is aimed at potential Linux administrators.

I was kinda surprised to see that the author used [CentOS][7] to teach Linux. I would have expected him to use a more common Linux distro, like Ubuntu, Debian, or Fedora. However, because CentOS is a distro designed for servers, very little changes over time, so it is a very stable basis for a course on Linux basics.

I’ve used Linux for over half a decade. I spent most of that time using desktop Linux. I dove into the terminal when I needed to, but didn’t spend lots of time there. I have performed many of the actions covered in this book using a mouse. Now, I know how to do the same things via the terminal. It won’t change the way I do my tasks, but it will help me understand what goes on behind the curtain.

If you have either just started using Linux or are planning to do so in the future, I would not recommend this book. It might be a little overwhelming. If you have already spent some time with Linux or can quickly grasp the technical language, this book may very well be for you.

If you think this book is apt for your learning needs, you can get it [from PacktPub][1].

We will be trying to review more Linux books in the coming months, so stay tuned.

What is your favorite introductory book on Linux? Let us know in the comments below.

If you found this article interesting, please take a minute to share it on social media, Hacker News or [Reddit][8].

--------------------------------------------------------------------------------

via: https://itsfoss.com/fundamentals-of-linux-book-review

Author: [John Paul][a]
Selected by: [lujun9972][b]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://itsfoss.com/author/john/
[b]: https://github.com/lujun9972
[1]: https://www.packtpub.com/networking-and-servers/fundamentals-linux
[2]: https://www.packtpub.com/
[3]: http://www.oliverpelz.de/index.html
[4]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/01/fundamentals-of-linux-book-review.jpeg?resize=800%2C450&ssl=1
[5]: https://centos.org/
[6]: https://www.virtualbox.org/
[7]: https://www.centos.org/
[8]: http://reddit.com/r/linuxusersgroup

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (How our non-profit works openly to make education accessible)
[#]: via: (https://opensource.com/open-organization/19/2/building-curriculahub)
[#]: author: (Tanner Johnson https://opensource.com/users/johnsontanner3)

How our non-profit works openly to make education accessible
======
To build an open access education hub, our team practiced the same open methods we teach our students.

I'm lucky to work with a team of impressive students at Duke University who are leaders in their classrooms and beyond. As members of [CSbyUs][1], a non-profit and student-run organization based at Duke, we connect university students to middle school students, mostly from [title I schools][2] across North Carolina's Research Triangle Park. Our mission is to fuel future change agents from under-resourced learning environments by fostering critical technology skills for thriving in the digital age.

The CSbyUs Tech R&D team (TRD for short) recently set an ambitious goal to build and deploy a powerful web application over the course of one fall semester. Our team of six knew we had to do something about our workflow to ship a product by winter break. In our middle school classrooms, we teach our learners to use agile methodologies and design thinking to create mobile applications. On the TRD team, we realized we needed to practice what we preach in those classrooms to ship a quality product by semester's end.

This is the story of how and why we utilized the principles we teach our students in order to deploy technology that will scale our mission and make our teaching resources open and accessible.

### Setting the scene

For the past two years, CSbyUs has operated "on the ground," connecting Duke undergraduates to Durham middle schools via after-school programming. After teaching and evaluating several iterations of our unique, student-centered mobile app development curriculum, we saw promising results. Our middle schoolers were creating functional mobile apps, connecting to their mentors, and leaving the class more confident in their computer science skills. Naturally, we wondered how to expand our programming.

We knew we should take our own advice and lean into web-based technologies to share our work, but we weren't immediately sure what problem we needed to solve. Ultimately, we decided to create a web app that serves as a centralized hub for open source and open access digital education curricula. "CurriculaHub" (name inspired by GitHub) would be the defining pillar of CSbyUs's new website, where educators could share and adapt resources.

But the vision and implementation didn't happen overnight.

Given our sense of urgency and the potential of "CurriculaHub," we wanted to start this project with a well-defined plan. The stakes were (and are) high, so planning, albeit occasionally tedious, was critical to our success. Like the curriculum we teach, we scaffolded our workflow process with design thinking and agile methodology, two critical 21st-century frameworks we often fail to practice in higher ed.

What follows is a step-wise explanation of our design thinking process, starting from inspiration and ending in a shipped prototype.

### Our Process

#### **Step 1: Pre-Work**

In order to understand the why to our what, you have to know who our team is.

The members of this team are busy. All of us contribute to CSbyUs beyond our TRD-related responsibilities. As an organization with lofty goals beyond creating a web-based platform, we have to reconcile our "on the ground" commitments (i.e., curriculum curation, research and evaluation, mentorship training and practice, presentations at conferences, etc.) with our "in the cloud" technological goals.

In addition to balancing time across our organization, we have to be flexible in the ways we communicate. As a remote member of the team, I'm writing this post from Spain, but the rest of our team is based in North Carolina, adding collaboration challenges.

Before diving into development (or even problem identification), we knew we had to set some clear expectations for how we'd operate as a team. We took a note from our curriculum team's book and started with some [rules of engagement][3]. This is actually [a well-documented approach][4] to setting up a team's [social contract][5] used by teams across the tech space. During a summer internship at IBM, I remember pre-project meetings where my manager and team spent more than an hour clarifying principles of interaction. Whenever we faced uncertainty in our team operations, we'd pull out the rules of engagement and clear things up almost immediately. (An aside: I've found this strategy to be wildly effective not only in my teams, but in all relationships).

Considering the remote nature of our team, one of our favorite tools is Slack. We use it for almost everything. We can't have sticky-note brainstorms, so we create Slack brainstorm threads. In fact, that's exactly what we did to generate our rules of engagement. One [open source principle we take to heart is transparency][6]; Slack allows us to archive and openly share our thought processes and decision-making steps with the rest of our team.

#### **Step 2: Empathy Research**

We're all here for unique reasons, but we find a common intersection: the desire to broaden equity in access to quality digital era education.

Each member of our team has been lucky enough to study at Duke. We know how it feels to have limitless opportunities and the support of talented peers and renowned professors. But we're mindful that this isn't normal. Across the country and beyond, these opportunities are few and far between. Where they do exist, they're confined within the guarded walls of higher institutes of learning or come with a lofty price tag.

While our team members' common desire to broaden access is clear, we work hard to root our decisions in research. So our team begins each semester [reviewing][7] [research][8] that justifies our existence. TRD works with CRD (curriculum research and development) and TT (teaching team), our two other CSbyUs sub-teams, to discuss current trends in digital education access, their systemic roots, and novel approaches to broaden access and make materials relevant to learners. We not only perform research collaboratively at the beginning of the semester but also implement weekly stand-up research meetings with the sub-teams. During these, CRD often presents new findings we've gleaned from interviewing current teachers and digging into the current state of access in our local community. They are our constant source of data-driven, empathy-fueling research.

Through this type of empathy-based research, we have found that educators interested in student-centered teaching and digital era education lack a centralized space for proven and adaptable curricula and lesson plans. The bureaucracy and rigid structures that shape classroom learning in the United States make reshaping curricula around the personal needs of students daunting and seemingly impossible. As students, educators, and technologists, we wondered how we might unleash the creativity and agency of others by sharing our own resources and creating an online ecosystem of support.

#### **Step 3: Defining the Problem**

We wanted to avoid [scope creep][9] caused by a poorly defined mission and vision (something that happens too often in some organizations). We needed structures to define our goals and maintain clarity in scope. Before imagining our application features, we knew we'd have to start with defining our north star. We would generate a clear problem statement to which we could refer throughout development.

This is common practice for us. Before committing to new programming, new partnerships, or new changes, the CSbyUs team always refers back to our mission and vision and asks, "Does this make sense?" (in fact, we post our mission and vision to the top of every meeting minutes document). If it fits and we have capacity to pursue it, we go for it. And if we don't, then we don't. In the case of a "no," we are always sure to document what and why because, as engineers know, [detailed logs are almost always a good decision][10]. TRD gleaned that big-picture wisdom and implemented a group-defined problem statement to guide our sub-team mission and future development decisions.

To formulate a single, succinct problem statement, we each began by posting our own takes on the problem. Then, during one of our weekly [30-minute-no-more-no-less stand-up meetings][11], we identified commonalities and differences, ultimately [merging all our ideas into one][12]. Boiled down, we identified that there exist massive barriers for educators, parents, and students to share, modify, and discuss open source and accessible curricula. And of course, our mission would be to break down those barriers with user-centered technology. This "north star" lives as a highly visible document in our Google Drive, which has influenced our feature prioritization and future directions.

#### **Step 4: Ideating a Solution**

With our problem defined and our rules of engagement established, we were ready to imagine a solution.

We believe that effective structures can ensure meritocracy and community. Sometimes, certain personalities dominate team decision-making and leave little space for collaborative input. To avoid that pitfall and maximize our equality of voice, we tend to use "offline" individual brainstorms and merge collective ideas online. It's the same process we used to create our rules of engagement and problem statement. In the case of ideating a solution, we started with "offline" brainstorms of three [S.M.A.R.T. goals][13]. Those goals would be ones we could achieve as a software development team (specifically because the CRD and TT teams offer different skill sets) and address our problem statement. Finally, we wrote these goals in a meeting minutes document, clustering common goals and ultimately identifying themes that describe our application features. In the end, we identified three: support, feedback, and open source curricula.

From here, we divided ourselves into sub-teams, repeating the goal-setting process within those teams, but in a way that was specific to our features. And if it's not obvious by now, we realized a web-based platform would be the best and most scalable solution for supporting students, educators, and parents by providing a hub for sharing and adapting proven curricula.

To work efficiently, we needed to be adaptive, reinforcing structures that worked and eliminating those that didn't. For example, we put a lot of effort into crafting meeting agendas. We strive to include only those subjects we must discuss in person and table everything else for offline discussions on Slack or individually organized calls. We practice this in real time, too. During our regular meetings on Google Hangouts, if someone brings up a topic that isn't highly relevant or urgent, the current stand-up lead (a role that rotates weekly) "parking lots" it until the end of the meeting. If we have space at the end, we pull from the parking lot, and if not, we reserve that discussion for a Slack thread.

This prioritization structure has led to massive gains in meeting efficiency and a focus on progress updates, shared technical hurdle discussions, collective decision-making, and assigning actionable tasks (the next-steps a person has committed to taking, documented with their name attached for everyone to view).

#### **Step 5: Prototyping**

This is where the fun starts.

Given our requirements—like an interactive user experience, the ability to collaborate on blogs and curricula, and the ability to receive feedback from our users—we began identifying the best technologies. Ultimately, we decided to build our web app with a ReactJS frontend and a Ruby on Rails backend. We chose these due to the extensive documentation and active community for both, and the well-maintained libraries that bridge the relationship between the two (e.g., react-on-rails). Since we chose Rails for our backend, it was obvious from the start that we'd work within a Model-View-Controller framework.

Most of us didn't have previous experience with web development, on either the frontend or the backend. So, getting up and running with either technology independently presented a steep learning curve, and gluing the two together only steepened it. To centralize our work, we use an open-access GitHub repository. Given our relatively novice experience in web development, our success hinged on extremely efficient and open collaborations.

And to explain that, we need to revisit the idea of structures. Some of ours include peer code reviews—where we can exchange best-practices and reusable solutions, maintaining up-to-date tech and user documentation so we can look back and understand design decisions—and (my personal favorite) our questions bot on Slack, which gently reminds us to post and answer questions in a separate Slack #questions channel.

We've also dabbled with other strategies, like instructional videos for generating basic React components and rendering them in Rails Views. I tried this and in my first video, [I covered a basic introduction to our repository structure][14] and best practices for generating React components. While this proved useful, our team has since realized the wealth of online resources that document various implementations of these technologies robustly. Also, we simply haven't had enough time (but we might revisit them in the future—stay tuned).

We're also excited about our cloud-based implementation. We use Heroku to host our application and manage data storage. In the next iterations, we plan both to expand upon our current features and to configure a continuous integration/continuous deployment (CI/CD) pipeline using services like Jenkins integrated with GitHub.

#### **Step 6: Testing**

Since we've [just deployed][1], we are now in a testing stage. Our goals are to collect user feedback across our feature domains and our application experience as a whole, especially as they interact with our specific audiences. Given our original constraints (namely, time and people power), this iteration is the first of many to come. For example, future iterations will allow for individual users to register accounts and post external curricula directly on our site without going through the extra steps of email. We want to scale and maximize our efficiency, and that's part of the recipe we'll deploy in future iterations. As for user testing: We collect user feedback via our contact form, via informal testing within our team, and via structured focus groups. [We welcome your constructive feedback and collaboration][15].

Our team was only able to unite new people with highly varied experience through the power of open principles and methodologies. Luckily enough, each one I described in this post is adaptable to virtually every team.

Regardless of where you work (on a software development team, in a classroom, or, heck, [even in your family][16]), principles like transparency and community are almost always the best foundation for a successful organization.

--------------------------------------------------------------------------------

via: https://opensource.com/open-organization/19/2/building-curriculahub

Author: [Tanner Johnson][a]
Selected by: [lujun9972][b]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/johnsontanner3
[b]: https://github.com/lujun9972
[1]: http://csbyus.org
[2]: https://www2.ed.gov/programs/titleiparta/index.html
[3]: https://docs.google.com/document/d/1tqV6B6Uk-QB7Psj1rX9tfCyW3E64_v6xDlhRZ-L2rq0/edit
[4]: https://www.atlassian.com/team-playbook/plays/rules-of-engagement
[5]: https://openpracticelibrary.com/practice/social-contract/
[6]: https://opensource.com/open-organization/resources/open-org-definition
[7]: https://services.google.com/fh/files/misc/images-of-computer-science-report.pdf
[8]: https://drive.google.com/file/d/1_iK0ZRAXVwGX9owtjUUjNz3_2kbyYZ79/view?usp=sharing
[9]: https://www.pmi.org/learning/library/top-five-causes-scope-creep-6675
[10]: https://www.codeproject.com/Articles/42354/The-Art-of-Logging#what
[11]: https://opensource.com/open-organization/16/2/6-steps-running-perfect-30-minute-meeting
[12]: https://docs.google.com/document/d/1wdPRvFhMKPCrwOG2CGp7kP4rKOXrJKI77CgjMfaaXnk/edit?usp=sharing
[13]: https://www.projectmanager.com/blog/how-to-create-smart-goals
[14]: https://www.youtube.com/watch?v=52kvV0plW1E
[15]: http://csbyus.org/
[16]: https://opensource.com/open-organization/15/11/what-our-families-teach-us-about-organizational-life

Translating by Cycoe

8 KDE Plasma Tips and Tricks to Improve Your Productivity
======

[#]: collector: (lujun9972)
[#]: translator: ( )
[#]: reviewer: ( )
[#]: publisher: ( )
[#]: url: ( )
[#]: subject: (Get started with Org mode without Emacs)
[#]: via: (https://opensource.com/article/19/1/productivity-tool-org-mode)
[#]: author: (Kevin Sonney https://opensource.com/users/ksonney (Kevin Sonney))

Get started with Org mode without Emacs
======
No, you don't need Emacs to use Org, the 16th in our series on open source tools that will make you more productive in 2019.

There seems to be a mad rush at the beginning of every year to find ways to be more productive. New Year's resolutions, the itch to start the year off right, and of course, an "out with the old, in with the new" attitude all contribute to this. And the usual round of recommendations is heavily biased towards closed source and proprietary software. It doesn't have to be that way.

Here's the 16th of my picks for 19 new (or new-to-you) open source tools to help you be more productive in 2019.

### Org (without Emacs)

[Org mode][1] (or just Org) is not in the least bit new, but there are still many people who have never used it. They would love to try it out to get a feel for how Org can help them be productive. But the biggest barrier is that Org is associated with Emacs, and many people think one requires the other. Not so! Org can be used with a variety of other tools and editors once you understand the basics.

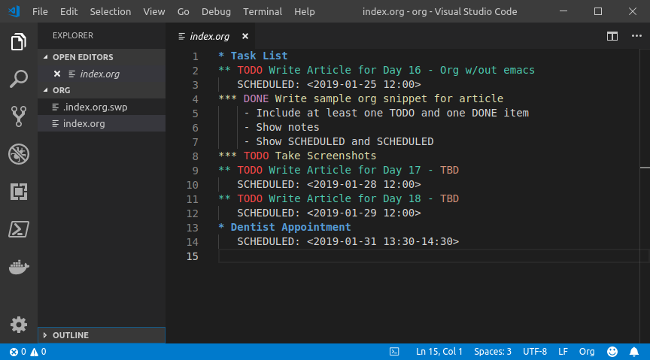

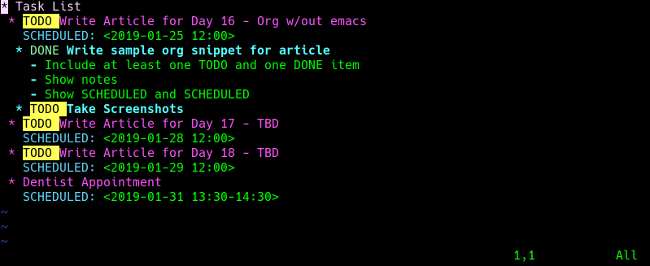

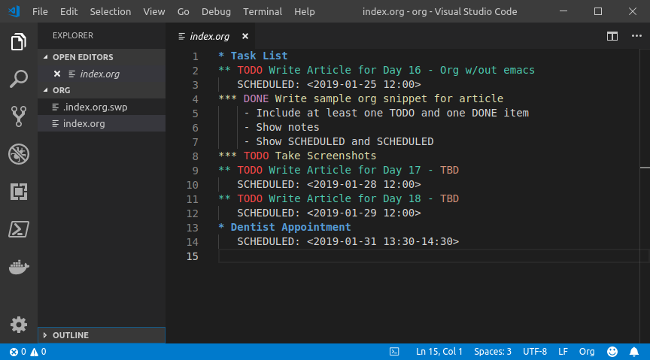

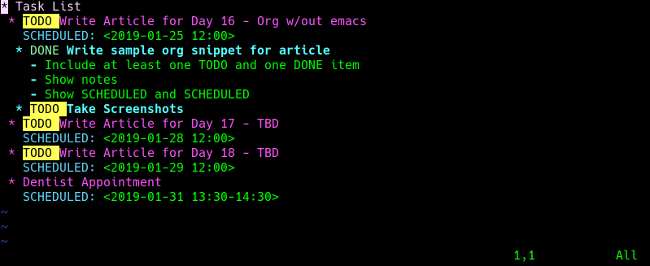

Org, at its very heart, is a structured text file. It has headers, subheaders, and keywords that allow other tools to parse files into agendas and to-do lists. Org files can be edited with any flat-text editor (e.g., [Vim][2], [Atom][3], or [Visual Studio Code][4]), and many have plugins that help create and manage Org files.

A basic Org file looks something like this:

```
* Task List
** TODO Write Article for Day 16 - Org w/out emacs
DEADLINE: <2019-01-25 12:00>
*** DONE Write sample org snippet for article
- Include at least one TODO and one DONE item
- Show notes
- Show SCHEDULED and DEADLINE
*** TODO Take Screenshots
** Dentist Appointment
SCHEDULED: <2019-01-31 13:30-14:30>
```

Org uses an outline format that uses asterisks (`*`) as bullets to indicate an item's level. Any item that begins with the word TODO (yes, in all caps) is just that: a to-do item. The word DONE indicates it is completed. SCHEDULED and DEADLINE indicate dates and times relevant to the item. If there's no time in either field, the item is considered an all-day event.
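Because Org is plain text, even standard Unix tools can read this structure. The following is a minimal sketch (the `org_outline` function name is made up for illustration, and it is not one of the Org libraries mentioned later) that uses `awk` to report each heading's level, its TODO/DONE keyword, and its title. It assumes only the two keywords described here, not custom keyword sequences.

```bash
#!/usr/bin/env bash
# Sketch: read an Org file with plain awk and print each heading's level,
# its TODO/DONE keyword (or "-"), and its title, tab-separated.
set -euo pipefail

org_outline() {
  awk '
    /^\*+ / {
      match($0, /^\*+/)                  # RLENGTH = number of leading stars
      level = RLENGTH
      title = substr($0, RLENGTH + 2)    # skip the stars and one space
      state = "-"
      if (title ~ /^(TODO|DONE) /) {     # both keywords are four letters long
        state = substr(title, 1, 4)
        title = substr(title, 6)
      }
      printf "%d\t%s\t%s\n", level, state, title
    }
  ' "$1"
}

# Demo on (part of) the sample file from the article.
sample=$(mktemp)
trap 'rm -f "$sample"' EXIT
cat > "$sample" <<'EOF'
* Task List
** TODO Write Article for Day 16 - Org w/out emacs
DEADLINE: <2019-01-25 12:00>
*** DONE Write sample org snippet for article
- Include at least one TODO and one DONE item
*** TODO Take Screenshots
** Dentist Appointment
SCHEDULED: <2019-01-31 13:30-14:30>
EOF
org_outline "$sample"
```

On this sample it prints five tab-separated lines: `Task List` at level 1, the article task at level 2 marked `TODO`, the two sub-items at level 3 marked `DONE` and `TODO`, and `Dentist Appointment` at level 2 with no keyword. Plain notes and the DEADLINE/SCHEDULED lines are skipped because they do not start with asterisks.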

With the right plugins, your favorite text editor becomes a powerhouse of productivity and organization. For example, the [vim-orgmode][5] plugin's features include functions to create Org files, syntax highlighting, and key commands to generate agendas and comprehensive to-do lists across files.
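Editor plugins do this work for you, but the basic idea behind an agenda built from several files is simple enough to sketch in the shell. The hypothetical `org_agenda` function below collects every SCHEDULED or DEADLINE line from the files it is given, pairs it with the nearest heading above it, and sorts the result by date. This is an assumption-heavy approximation, not how vim-orgmode or any other plugin is implemented: it ignores times and repeaters and uses only the first timestamp on a line.

```bash
#!/usr/bin/env bash
# Sketch: a bare-bones cross-file agenda. For every SCHEDULED or DEADLINE
# line, print "date<TAB>kind<TAB>heading<TAB>file", sorted by date.
set -euo pipefail

org_agenda() {
  awk '
    FNR == 1 { heading = "" }                         # new file: forget the last heading
    /^\*+ / { heading = $0; sub(/^\*+ +/, "", heading) }
    /(SCHEDULED|DEADLINE): *</ {
      kind = ($0 ~ /DEADLINE:/) ? "DEADLINE" : "SCHEDULED"
      if (match($0, /<[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9]/)) {
        date = substr($0, RSTART + 1, 10)             # YYYY-MM-DD after the "<"
        printf "%s\t%s\t%s\t%s\n", date, kind, heading, FILENAME
      }
    }
  ' "$@" | sort   # ISO dates sort chronologically as plain text
}

workdir=$(mktemp -d)
trap 'rm -rf "$workdir"' EXIT
printf '%s\n' '* Task List' '** TODO Write Article for Day 16 - Org w/out emacs' \
  'DEADLINE: <2019-01-25 12:00>' > "$workdir/work.org"
printf '%s\n' '* Personal' '** Dentist Appointment' \
  'SCHEDULED: <2019-01-31 13:30-14:30>' > "$workdir/home.org"
org_agenda "$workdir/home.org" "$workdir/work.org"
```

Sorting on an ISO `YYYY-MM-DD` prefix is what keeps the sketch short: a plain text sort already puts the entries in date order, regardless of which file they came from.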

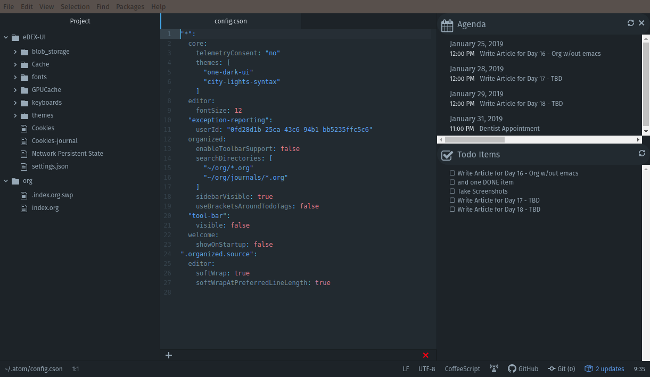

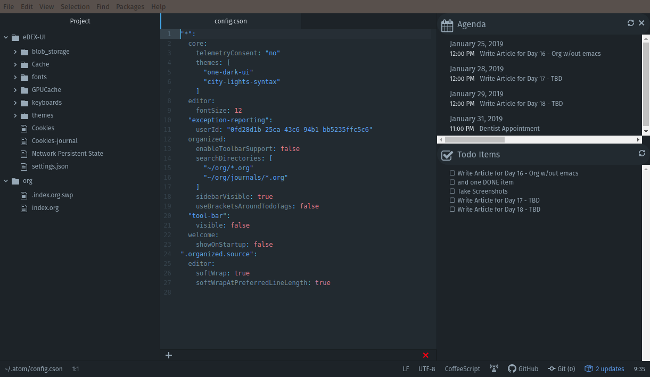

The Atom [Organized][6] plugin adds a sidebar on the right side of the screen that shows the agenda and to-do items in Org files. It can read from multiple files by default with a path set up in the configuration options. The Todo sidebar allows you to click on a to-do item to mark it done, then automatically updates the source Org file.

There are also a whole host of tools that "speak Org" to help keep you productive. With libraries in Python, Perl, PHP, NodeJS, and more, you can develop your own scripts and tools. And, of course, there is also [Emacs][7], which has Org support within the core distribution.

Org mode is one of the best tools for keeping on track with what needs to be done and when. And, contrary to myth, it doesn't need Emacs, just a text editor.

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/1/productivity-tool-org-mode

Author: [Kevin Sonney][a]

Topic selected by: [lujun9972][b]

Translator: [译者ID](https://github.com/译者ID)

Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled and translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/).

[a]: https://opensource.com/users/ksonney (Kevin Sonney)

[b]: https://github.com/lujun9972

[1]: https://orgmode.org/

[2]: https://www.vim.org/

[3]: https://atom.io/

[4]: https://code.visualstudio.com/

[5]: https://github.com/jceb/vim-orgmode

[6]: https://atom.io/packages/organized

[7]: https://www.gnu.org/software/emacs/

@ -1,158 +0,0 @@

The 5 Best Linux Distributions for Development
============================================================

Jack Wallen looks at some of the best Linux distributions for development efforts. [Creative Commons Zero][6]

When considering Linux, there are so many variables to take into account. What package manager do you wish to use? Do you prefer a modern or old-standard desktop interface? Is ease of use your priority? How flexible do you want your distribution to be? What task will the distribution serve?

It is that last question which should often be considered first. Is the distribution going to work as a desktop or a server? Will you be doing network or system audits? Or will you be developing? If you’ve spent much time considering Linux, you know that for every task there are several well-suited distributions. This certainly holds true for developers. Even though Linux, by design, is an ideal platform for developers, certain distributions rise above the rest as great operating systems for developers.
