mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-03 23:40:14 +08:00

commit

3d0a3d966a

@ -0,0 +1,37 @@

|

||||

"Fork Debian" Project Aims to Put Pressure on Debian Community and Systemd Adoption

|

||||

================================================================================

|

||||

> There is still a great deal of resistance in the Debian community towards the upcoming adoption of systemd

|

||||

|

||||

**The Debian project decided to adopt systemd a while ago and ditch the upstart counterpart. The decision was very controversial and it's still contested by some users. Now, a new proposition has been made, to fork Debian into something that doesn't have systemd.**

|

||||

|

||||

|

||||

|

||||

systemd is the replacement for the init system and it's the daemon that starts right after the Linux kernel. It's responsible for initiating all the other components in a system and it's also responsible for shutting them down in the correct order, so you might imagine why people think this is an important piece of software.

|

||||

|

||||

The discussions in the Debian community have been very heated, but systemd prevailed and it looked like the end of it. Linux distros based on it have already started to make the changes. For example, Ubuntu is already preparing to adopt systemd, although it's still pretty far off.

|

||||

|

||||

### Forking Debian, not really a solution ###

|

||||

|

||||

Developers have already forked systemd, but the projects resulted don't have a lot of support from the community. As you can imagine, systemd also has a big following and people are not giving up so easily. Now, someone has made a website called debianfork.org to advocate for a Debian without systemd, in an effort to put pressure on the developers.

|

||||

|

||||

"We are Veteran Unix Admins and we are concerned about what is happening to Debian GNU/Linux to the point of considering a fork of the project. Some of us are upstream developers, some professional sysadmins: we are all concerned peers interacting with Debian and derivatives on a daily basis. We don't want to be forced to use systemd in substitution to the traditional UNIX sysvinit init, because systemd betrays the UNIX philosophy."

|

||||

|

||||

"We contemplate adopting more recent alternatives to sysvinit, but not those undermining the basic design principles of 'do one thing and do it well' with a complex collection of dozens of tightly coupled binaries and opaque logs," reads the [website][1], among a lot of other things.

|

||||

|

||||

Basically, the new website is not actually about a Debian fork, but more like a form of pressure for the [upcoming vote][2] that will be taken for the "Re-Proposal - preserve freedom of choice of init systems." This is a general resolution made by Ian Jackson and he hopes to get enough support in order to turn back the decision made by the Technical Committee regarding systemd.

|

||||

|

||||

It's clear that the debate is still not over in the Debian community, but it remains to be seen if the decisions already made can be overturned.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Fork-Debian-Project-Started-to-Put-Pressure-on-Debian-Community-and-Systemd-Adoption-462598.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://debianfork.org/

|

||||

[2]:https://lists.debian.org/debian-vote/2014/10/msg00001.html

|

||||

@ -0,0 +1,64 @@

|

||||

Microsoft loves Linux -- for Azure's sake

|

||||

================================================================================

|

||||

|

||||

|

||||

Scott Guthrie, executive vice president, Microsoft Cloud and Enterprise group, shows how Microsoft differentiates Azure. Credit: James Niccolai/IDG News Service

|

||||

|

||||

### Microsoft adds CoreOS and Cloudera to its growing set of Azure services ###

|

||||

|

||||

Microsoft now loves Linux.

|

||||

|

||||

This was the message from Microsoft CEO Satya Nadella, standing in front of an image that read "Microsoft [heart symbol] Linux," during a Monday webcast to announce a number of services it had added to its Azure cloud, including the Cloudera Hadoop package and the CoreOS Linux distribution.

|

||||

|

||||

In addition, the company launched a marketplace portal, now in preview mode, designed to make it easier for customers to procure and manage their cloud operations.

|

||||

|

||||

Microsoft is also planning to release an Azure appliance, in conjunction with Dell, that will allow organizations to run hybrid clouds where they can easily move operations between Microsoft's Azure cloud and their own in-house version.

|

||||

|

||||

The declaration of affection for Linux indicates a growing acceptance of software that wasn't created at Microsoft, at least for the sake of making its Azure cloud platform as comprehensive as possible.

|

||||

|

||||

For decades, the company tied most of its new products and innovations to its Windows platform, and saw other OSes, such as Linux, as a competitive threat. Former CEO Steve Ballmer [once infamously called Linux a cancer][1].

|

||||

|

||||

This animosity may be evaporating as Microsoft is finding that customers want cloud services that incorporate software from other sources in addition to Microsoft. About 20 percent of the workloads run on Azure are based on Linux, Nadella admitted.

|

||||

|

||||

Now, the company considers its newest competitors to be the cloud services offered by Amazon and Google.

|

||||

|

||||

Nadella said that by early 2015, Azure will be operational in 19 regions around the world, which will provide more local coverage than either Google or Amazon.

|

||||

|

||||

He also noted that the company is investing more than $4.5 billion in data centers, which by Microsoft's estimation is twice as much as Amazon's investments and six times as much as Google's.

|

||||

|

||||

To compete, Microsoft has been adding widely-used third party software packages to Azure at a rapid clip. Nadella noted that Azure now supports all the major data integration stacks, such as those from Oracle and IBM, as well as major new entrants such as MongoDB and Hadoop.

|

||||

|

||||

The results seem to be paying off. Today Azure is generating about $4.48 billion in annual revenue for Microsoft, and we are "still at the early days," of cloud computing, Nadella said.

|

||||

|

||||

The service attracts about 10,000 new customers per week. About 2 million developers have signed on to Visual studio online since its launch. The service runs about 1.2 million SQL databases.

|

||||

|

||||

CoreOS is now actually the fifth Linux distribution that Azure offers, joining Ubuntu, CentOS, OpenSuse, and Oracle Linux (a variant of Red Hat Enterprise Linux). Customers [can also package their own Linux distributions][2] to run in Azure.

|

||||

|

||||

CoreOS was developed as [a lightweight Linux distribution][3] to be used primarily in cloud environments. Officially launched in December, CoreOS is already offered as a service by Google, Rackspace, DigitalOcean and others.

|

||||

|

||||

Cloudera is the second Hadoop distribution offered on Azure, following Hortonworks. Cloudera CEO Mike Olson joined the Microsoft executives onstage to demonstrate how easily one can use the Cloudera Hadoop software within Azure.

|

||||

|

||||

Using the new portal, Olson showed how to start up a 90-node instance of Cloudera with a few clicks. Such a deployment can be connected to an Excel spreadsheet, where the user can query the dataset using natural language.

|

||||

|

||||

Microsoft also announced a number of other services and products.

|

||||

|

||||

Azure will have a new type of virtual machine, which is being called the "G Family." These virtual machines can have up to 32 CPU cores, 450GB of working memory and 6.5TB of storage, making it in effect "the largest virtual machine in the cloud," said Scott Guthrie, who is the Microsoft executive vice president overseeing Azure.

|

||||

|

||||

This family of virtual machines is equipped to handle the much larger workloads Microsoft is anticipating its customers will want to run. It has also upped the amount of storage each virtual machine can access, to 32TB.

|

||||

|

||||

The new cloud platform appliance, available in November, will allow customers to run Azure services on-premise, which can provide a way to bridge their on-premise and cloud operations. One early customer, integrator General Dynamics, plans to use this technology to help its U.S. government customers migrate to the cloud.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.computerworld.com/article/2836315/microsoft-loves-linux-for-azures-sake.html

|

||||

|

||||

作者:[Joab Jackson][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.computerworld.com/author/Joab-Jackson/

|

||||

[1]:http://www.theregister.co.uk/2001/06/02/ballmer_linux_is_a_cancer/

|

||||

[2]:http://azure.microsoft.com/en-us/documentation/articles/virtual-machines-linux-create-upload-vhd/

|

||||

[3]:http://www.itworld.com/article/2696116/open-source-tools/coreos-linux-does-away-with-the-upgrade-cycle.html

|

||||

@ -0,0 +1,30 @@

|

||||

Red Hat acquires FeedHenry to get mobile app chops

|

||||

================================================================================

|

||||

Red Hat wants a piece of the enterprise mobile app market, so it has acquired Irish company FeedHenry for approximately $82 million.

|

||||

|

||||

The growing popularity of mobile devices has put pressure on enterprise IT departments to make existing apps available from smartphones and tablets -- a trend that Red Hat is getting in on with the FeedHenry acquisition.

|

||||

|

||||

The mobile app segment is one of the fastest growing in the enterprise software market, and organizations are looking for better tools to build mobile applications that extend and enhance traditional enterprise applications, according to Red Hat.

|

||||

|

||||

"Mobile computing for the enterprise is different than Angry Birds. Enterprise mobile applications need a backend platform that enables the mobile user to access data, build backend logic, and access corporate APIs, all in a scalable, secure manner," Craig Muzilla, senior vice president for Red Hat's Application Platform Business, said in a [blog post][1].

|

||||

|

||||

FeedHenry provides a cloud-based platform that lets users develop and deploy applications for mobile devices that meet those demands. Developers can create native apps for Android, iOS, Windows Phone and BlackBerry as well as HTML5 apps, or a mixture of native and Web apps.

|

||||

|

||||

A key building block is Node.js, an increasingly popular platform based on Chrome's JavaScript runtime for building fast and scalable applications.

|

||||

|

||||

From Red Hat's point of view, FeedHenry is a natural fit with the company's strengths in enterprise middleware and PaaS (platform-as-a-service). It adds better mobile capabilities to the JBoss Middleware portfolio and OpenShift PaaS offerings, Red Hat said.

|

||||

|

||||

Red Hat plans to continue to sell and support FeedHenry's products, and will continue to honor client contracts. For the most part, it will be business as usual, according to Red Hat. The transaction is expected to close in the third quarter of its fiscal 2015.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.computerworld.com/article/2685286/red-hat-acquires-feedhenry-to-get-mobile-app-chops.html

|

||||

|

||||

作者:[Mikael Ricknäs][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.computerworld.com/author/Mikael-Rickn%C3%A4s/

|

||||

[1]:http://www.redhat.com/en/about/blog/its-time-go-mobile

|

||||

@ -0,0 +1,28 @@

|

||||

This is the name of Ubuntu 15.04 — And It’s Not Velociraptor

|

||||

================================================================================

|

||||

**Ubuntu 14.10 may not be out of the door yet, but attention is already turning to Ubuntu 15.04. Today it got its name: ‘[Vivid Vervet][1]’.**

|

||||

|

||||

|

||||

|

||||

Announcing the monkey-themed moniker in his usual loquacious style, Mark Shuttleworth cites the ‘upstart’ and playful nature of the mascot as in tune with its own foray into the mobile space.

|

||||

|

||||

> “This is a time when every electronic thing can be an Internet thing, and that’s a chance for us to bring our platform, with its security and its long term support, to a vast and important field. In a world where almost any device can be smart, and also subverted, our shared efforts to make trusted and trustworthy systems might find fertile ground.

|

||||

|

||||

Talking of plans for the release Shuttleworth states one goal is to “show the way past a simple Internet of things, to a world of Internet things-you-can-trust.”

|

||||

|

||||

Ubuntu 15.04 is due for release in April 2015. It’s not expected to arrive with either Mir or Unity 8 by default, but given the veracious speed of acceleration in ambitions, it may find its way out for testing.

|

||||

|

||||

Do you like the name? Were you hoping for velociraptor?

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.omgubuntu.co.uk/2014/10/ubuntu-15-04-named-vivid-vervet

|

||||

|

||||

作者:[Joey-Elijah Sneddon][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://plus.google.com/117485690627814051450/?rel=author

|

||||

[1]:http://www.markshuttleworth.com/archives/1425

|

||||

34

sources/news/20141021 Ubuntu 15.04 Is Called Vivid Vervet.md

Normal file

34

sources/news/20141021 Ubuntu 15.04 Is Called Vivid Vervet.md

Normal file

@ -0,0 +1,34 @@

|

||||

Ubuntu 15.04 Is Called Vivid Vervet

|

||||

================================================================================

|

||||

> Mark Shuttleworth decided on the new name for Ubuntu 15.04

|

||||

|

||||

|

||||

|

||||

**One of Mark Shuttleworth's privileges is to decide what the code name for upcoming Ubuntu versions is. It's usually a real animal and now it's a monkey whose name starts with V and, as usual, it's probably a species you’ve never heard of before.**

|

||||

|

||||

With very few exceptions, some of the names chosen for Ubuntu releases send the older users to the Encyclopedia Britannica and the new ones to Google. Shuttleworth generally chooses animals that are less known and the names usually have something in common with the release.

|

||||

|

||||

For example, Trusty Tahr, the name of Ubuntu 14.04 LTS, followed the idea of long term support for the operating system, hence the trusty adjective. Precise Pangolin did the same for Ubuntu 12.04 LTS, and so on. Intermediary releases are not all that obvious and the Ubuntu 14.10 Utopic Unicorn is proof of that.

|

||||

|

||||

### Still thinking about the monkey whose name starts with a V? ###

|

||||

|

||||

The way the version number is chosen is pretty clear. The first part is for the year and the second one is for the month, so Ubuntu 14.10 is actually Ubuntu 2014 October. On the other hand, the names only follow a simple rule, one adjective and one animal, so the choice is rather simple. Unlike other communities, where the designation is decided by users or at least with their participation, Ubuntu is different, although it's not a singular example.

|

||||

|

||||

"Release week! Already! I wouldn't call Trusty 'vintage' just yet, but Utopic is poised to leap into the torrent stream. We've all managed to land our final touches to *buntu and are excited to bring the next wave of newness to users around the world. Glad to see the unicorn theme went down well, judging from the various desktops I see on G+."

|

||||

|

||||

"In my favourite places, the smartest thing around is a particular kind of monkey. Vexatious at times, volant and vogie at others, a vervet gets in anywhere and delights in teasing cats and dogs alike. As the upstart monkey in this business I can think of no better mascot. And so let's launch our vicenary cycle, our verist varlet, the Vivid Vervet!" says Mark Shuttleworth on his [blog][1].

|

||||

|

||||

So, there you have it, Ubuntu 15.04, the operating system that is scheduled to arrive in April 2015, will be called Vivid Vervet. I won't keep you anymore for details, I'm sure you are already looking up the vervet on Wikipedia.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Ubuntu-15-04-Is-Called-Vivid-Vervet-462621.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:http://www.markshuttleworth.com/archives/1425

|

||||

@ -1,120 +0,0 @@

|

||||

Linux Poetry Explains the Kernel, Line By Line

|

||||

================================================================================

|

||||

> Editor's Note: Feeling inspired? Send your Linux poem to [editors@linux.com][1] for your chance to win a free pass to [LinuxCon North America][2] in Chicago, Aug. 20-22. Be sure to include your name, contact information and a brief explanation of your poem. We'll draw one winner at random from all eligible entries each week through Aug. 1, 2014.

|

||||

|

||||

|

||||

|

||||

Software developer Morgan Phillips is teaching herself how the Linux kernel works by writing poetry.

|

||||

|

||||

Writing poems about the Linux kernel has been enlightening in more ways than one for software developer Morgan Phillips.

|

||||

|

||||

Over the past few months she's begun to teach herself how the Linux kernel works by studying text books, including [Understanding the Linux Kernel][3], Unix Network Programming, and The Unix Programming Environment. But instead of taking notes, she weaves the new terminology and ideas she learns into poetry about system architecture and programming concepts. (See some examples, below, and on her [Linux Poetry blog][4].)

|

||||

|

||||

It's a “pedagogical hack” she adopted in college and took up again a few years ago when she first landed a job as a data warehouse engineer at Facebook and needed to quickly learn Hadoop.

|

||||

|

||||

“I could remember bits and pieces of information but it was too rote, too rigid in my mind, so I started writing poems,” she said. “It forced me to wrap all of these bits of information into context and helped me learn things much more effectively.”

|

||||

|

||||

The Linux kernel's history, architecture, abundant terminology and complex concepts, are rich fodder for her poetry.

|

||||

|

||||

“I could probably write thousands of poems about just one subsystem in the kernel,” she said.

|

||||

|

||||

### Why learn Linux? ###

|

||||

|

||||

|

||||

Phillips publishes on her Linux Poetry blog.

|

||||

|

||||

Phillips started her software career through a somewhat unconventional route as a physics major in a research laboratory. Instead of writing journal articles she was writing Python scripts to parse research project data on active galactic nuclei. She never learned the fundamentals of computer science (CS), but picked up the information on the job, as the need arose.

|

||||

|

||||

She soon got a job doing network security research for the Army Research Laboratory in Adelphi, Maryland, working with Linux. That was her first foray into the networking stack and the lower levels of the operating system.

|

||||

|

||||

Most recently she worked at Facebook until about six months ago when she moved from the Silicon Valley back to Nashville, near her home state of Kentucky, to work for a software startup that helps major record labels manage their business.

|

||||

|

||||

“I have all this experience but I suffer from a thing that almost every person who doesn’t have an actual background in CS does: I have islands of knowledge with big gaps in between,” she said. “Every time I'd come across some concept, some data structure in the kernel, I'd have to go educate myself on it.”

|

||||

|

||||

A few weeks ago her frustration peaked. She was trying to do a form of message passing between web application processes and a web socket server she had written and found herself having to brush up on all the ways she could do interprocess communication.

|

||||

|

||||

“I was like, that's it. I'm going to start really learning everything I should have known starting at the bottom up with the Linux kernel,” she said. “So I bought some textbooks and started reading.”

|

||||

|

||||

|

||||

|

||||

### What she's learned ###

|

||||

|

||||

Over the course of a few months of reading books and writing poems she's learned about how the virtual memory subsystem works. She's learned about the data structures that hold process information, about the virtual memory layout and how pages are mapped into memory, and about memory management.

|

||||

|

||||

“I hadn't thought about a lot of things, like that a system that's multiprocessing shouldn’t bother with semaphores,” she said. “Spin locks are often more efficient.”

|

||||

|

||||

Writing poems has also given her insight into her own way of thinking about the world. In some small way she is communicating not just her knowledge of Linux systems, but also the way that she conceptualizes them.

|

||||

|

||||

“It's a deep look into my mind,” she said. “Poetry is the best way to share these abstract ideas and things that we can't possibly truly share with other people.”

|

||||

|

||||

Writing a Linux poem

|

||||

|

||||

The inspiration for her Linux poems starts with reading a textbook chapter. She hones the topics down to the key concepts that she wants to remember and what others might find interesting, as well as things she can “wrap a conceptual bubble around.”

|

||||

|

||||

A concept like demand paging is too broad to fit into a single poem, for example. “So I'm working my way down deeper in it,” she said. “Instead I'm looking at writing a poem about the actual data structure where process memory is laid out and then mapped into a page map.”

|

||||

|

||||

She hasn't had any formal training writing poetry, but writes the lines so that they are visually appealing and have a nice rhythm when they're read aloud.

|

||||

|

||||

In her poem, “The Reentrant Kernel,” Phillips writes about an important property in software that allows a function to be paused and restarted later with the same result. System calls need to have this reentrant property in order to make the scheduler run as efficiently as possible, Phillips explains. The poem also includes a program, written in C style pseudocode, to help illustrate the concept.

|

||||

|

||||

Phillips hopes her Linux poetry helps her increase her understanding enough to start contributing to the Linux kernel.

|

||||

|

||||

“I've been very intimidated for a long time by the idea of submitting a patch to the kernel, being a kernel hacker,” she said. “To me that's the pinnacle of success.

|

||||

|

||||

“My ultimate dream is that I can gain a good enough understanding of the kernel and C to submit a patch and have it accepted.”

|

||||

|

||||

The Reentrant Kernel

|

||||

|

||||

A reentrant function,

|

||||

if interrupted,

|

||||

will return a result,

|

||||

which is not perturbed.

|

||||

|

||||

int global_int;

|

||||

int is_not_reentrant(int x) {

|

||||

int x = x;

|

||||

return global_int + x; },

|

||||

depends on a global variable,

|

||||

which may change during execution.

|

||||

|

||||

int global_int;

|

||||

int is_reentrant(int x) {

|

||||

int saved = global_int;

|

||||

return saved + x; },

|

||||

mitigates external dependency,

|

||||

it is reentrant, though not thread safe.

|

||||

|

||||

UNIX kernels are reentrant,

|

||||

a process may be interrupted while in kernel mode,

|

||||

so that, for instance, time is not wasted,

|

||||

waiting on devices.

|

||||

|

||||

Process alpha requests to read from a device,

|

||||

the kernel obliges,

|

||||

CPU switches into kernel mode,

|

||||

system call begins execution.

|

||||

|

||||

Process alpha is waiting for data,

|

||||

it yields to the scheduler,

|

||||

process beta writes to a file,

|

||||

the device signals that data is available.

|

||||

|

||||

Context switches,

|

||||

process alpha continues execution,

|

||||

data is fetched,

|

||||

CPU enters user mode.

|

||||

|

||||

注:上面代码内文本发布时请参考原文排版(第一行着重,全部居中)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linux.com/news/featured-blogs/200-libby-clark/777473-linux-poetry-explains-the-kernel-line-by-line/

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:editors@linux.com

|

||||

[2]:http://events.linuxfoundation.org/events/linuxcon-north-america

|

||||

[3]:http://shop.oreilly.com/product/9780596005658.do

|

||||

[4]:http://www.linux-poetry.com/

|

||||

@ -1,5 +1,3 @@

|

||||

CNprober translating...

|

||||

|

||||

Linux Administration: A Smart Career Choice

|

||||

================================================================================

|

||||

|

||||

|

||||

@ -1,4 +1,3 @@

|

||||

Love-xuan 翻译中

|

||||

Don't Fear The Command Line

|

||||

================================================================================

|

||||

|

||||

|

||||

119

sources/talk/20141021 Interview--Thomas Voß of Mir.md

Normal file

119

sources/talk/20141021 Interview--Thomas Voß of Mir.md

Normal file

@ -0,0 +1,119 @@

|

||||

Interview: Thomas Voß of Mir

|

||||

================================================================================

|

||||

**Mir was big during the space race and it’s a big part of Canonical’s unification strategy. We talk to one of its chief architects at mission control.**

|

||||

|

||||

Not since the days of 2004, when X.org split from XFree86, have we seen such exciting developments in the normally prosaic realms of display servers. These are the bits that run behind your desktop, making sure Gnome, KDE, Xfce and the rest can talk to your graphics hardware, your screen and even your keyboard and mouse. They have a profound effect on your system’s performance and capabilities. And where we once had one, we now have two more – Wayland and Mir, and both are competing to win your affections in the battle for an X replacement.

|

||||

|

||||

We spoke to Wayland’s Daniel Stone in issue 6 of Linux Voice, so we thought it was only fair to give equal coverage to Mir, Canonical’s own in-house X replacement, and a project that has so far courted controversy with some of its decisions. Which is why we headed to Frankfurt and asked its Technical Architect, Thomas Voß, for some background context…

|

||||

|

||||

|

||||

|

||||

**Linux Voice: Let’s go right back to the beginning, and look at what X was originally designed for. X solved the problems that were present 30 years ago, where people had entirely different needs, right?**

|

||||

|

||||

**Thomas Voß**: It was mainframes. It was very expensive mainframe computers with very cheap terminals, trying to keep the price as low as possible. And one of the first and foremost goals was: “Hey, I want to be able to distribute my UI across the network, ideally compressed and using as little data as possible”. So a lot of the decisions in X were motivated by that.

|

||||

|

||||

A lot of the graphics languages that X supports even today have been motivated by that decision. The X developers started off in a 2D world; everything was a 2D graphics language, the X way of drawing rectangles. And it’s present today. So X is not necessarily bad in that respect; it still solves a lot of use cases, but it’s grown over time.

|

||||

|

||||

One of the reasons is that X is a protocol, in essence. So a lot of things got added to the protocol. The problem with adding things to a protocol is that they tend to stick. To use a 2D graphics language as an example, XVideo is something that no-one really likes today. It’s difficult to support and the GPU vendors actually cry out in pain when you start talking about XVideo. It’s somewhat bloated, and it’s just old. It’s an old proven technology – and I’m all for that. I actually like X for a lot of things, and it was a good source of inspiration. But then when you look at your current use cases and the current setup we are in, where convergence is one of the buzzwords – massively overrated obviously – but at the heart of convergence lies the fact that you want to scale across different form factors.

|

||||

|

||||

**LV: And convergence is big for Canonical isn’t it?**

|

||||

|

||||

**Thomas**: It’s big, I think, for everyone, especially over time. But convergence is a use case that was always of interest to us. So we always had this idea that we want one codebase. We don’t want a situation like Apple has with OS X and iOS, which are two different codebases. We basically said “Look, whatever we want to do, we want to do it from one codebase, because it’s more efficient.” We don’t want to end up in the situation where we have to be maintaining two, three or four separate codebases.

|

||||

|

||||

That’s where we were coming from when we were looking at X, and it was just too bloated. And we looked at a lot of alternatives. We started looking at how Mac OS X was doing things. We obviously didn’t have access to the source code, but if you see the transition from OS 9 to OS X, it was as if they entirely switched to one graphics language. It was pre-PostScript at that time. But they chose one graphics language, and that’s it. From that point on, when you choose a graphics language, things suddenly become more simple to do. Today’s graphics language is EGL ES, so there was inspiration for us to say we were converged on GL and EGL. From our perspective, that’s the least common denominator.

|

||||

|

||||

> We basically said: whatever we want to do, we want to do it from one codebase, because it’s more efficient.

|

||||

|

||||

Obviously there are disadvantages to having only one graphics language, but the benefits outweigh the disadvantages. And I think that’s a common theme in the industry. Android made the same decision to go that way. Even Wayland to a certain degree has been doing that. They have to support EGL and GL, simply because it’s very convenient for app developers and toolkit developers – an open graphics language. That was the part that inspired us, and we wanted to have this one graphics language and support it well. And that takes a lot of craft.

|

||||

|

||||

So, once you can say: no more weird 2D API, no more weird phong API, and everything is mapped out to GL, you’re way better off. And you can distill down the scope of the overall project to something more manageable. So it went from being impossible to possible. And then there was me, being very opinionated. I don’t believe in extensibility from the beginning – traditionally in Linux everything is super extensible, which has got benefits for a certain audience.

|

||||

|

||||

If you think about the audience of the display server, it’s one of the few places in the system where you’ve got three audiences. So you’ve got the users, who don’t care, or shouldn’t care, about the display server.

|

||||

|

||||

**LV: It’s transparent to them.**

|

||||

|

||||

**Thomas**: Yes, it’s pixels, right? That’s all they care about. It should be smooth. It should be super nice to use. But the display server is not their main concern. It obviously feeds into a user experience, quite significantly, but there are a lot of other parts in the system that are important as well.

|

||||

|

||||

Then you’ve got developers who care about the display server in terms of the API. Obviously we said we want to satisfy this audience, and we want to provide a super-fast experience for users. It should be rock solid and stable. People have been making fun of us and saying “yeah, every project wants to be rock solid and stable”. Cool – so many fail in doing that, so let’s get that down and just write out what we really want to achieve.

|

||||

|

||||

And then you’ve got developers, and the moment you expose an API to them, or a protocol, you sign a contract with them, essentially. So they develop to your API – well, many app developers won’t directly because they’ll be using toolkits – but at some point you’ve got developers who sign up to your API.

|

||||

|

||||

|

||||

|

||||

**LV: The developers writing the toolkits, then?**

|

||||

|

||||

**Thomas**: We do a lot of work in that arena, but in general it’s a contract that we have with normal app developers. And we said: look, we don’t want the API or contract to be super extensible and trying to satisfy every need out there. We want to understand what people really want to do, and we want to commit to one API and contract. Not five different variants of the contract, but we want to say: look, this is what we support and we, as Canonical and as the Mir maintainers, will sign up to.

|

||||

|

||||

So I think that’s a very good thing. You can buy into specific shells sitting on top of Mir, but you can always assume a certain base level of functionality that we will always provide in terms of window management, in terms of rendering capabilities, and so on and so forth. And funnily enough, that also helps with convergence. Because once you start thinking about the API as very important, you really start thinking about convergence. And what happens if we think about form factor and we transfer from a phone to a tablet to a desktop to a fridge?

|

||||

|

||||

**LV: And whatever might come!**

|

||||

|

||||

**Thomas**: Right, right. How do we account for future developments? And we said we don’t feel comfortable making Mir super extensible, because it will just grow. Either it will just grow and grow, or you will end up with an organisation that just maintains your protocol and protocol extensions.

|

||||

|

||||

**LV: So that’s looking at Mir in relation to X. The obvious question is comparing Mir to Wayland – so what is it that Mir does, that Wayland doesn’t?**

|

||||

|

||||

**Thomas**: This might sound picky, but we have to distinguish what Wayland really is. Wayland is a protocol specification which is interesting because the value proposition is somewhat difficult. You’ve got a protocol and you’ve got a reference implementation. Specifically, when we started, Weston was still a test bed and everything being developed ended up in there.

|

||||

|

||||

No one was buying into that; no one was saying, “Look, we’re moving this to production-level quality with a bona fide protocol layer that is frozen and stable for a specific version that caters to application authors”. If you look at the Ubuntu repository today, or in Debian, there’s Wayland-cursor-whatever, so they have extensions already. So that’s a bit different from our approach to Mir, from my perspective at least.

|

||||

|

||||

There was this protocol that the Wayland developers finished and back then, before we did Mir and I looked into all of this, I wrote a Wayland compositor in Go, just to get to know things.

|

||||

|

||||

**LV: As you do!**

|

||||

|

||||

**Thomas**: And I said: you know, I don’t think a protocol is a good way of approaching this because versioning a protocol in a packaging scenario is super difficult. But versioning a C API, or any sort of API that has a binary stability contract, is way easier and we are way more experienced at that. So, in that respect, we are different in that we are saying the protocol is an implementation detail, at least up to a certain point.

|

||||

|

||||

I’m pretty sure for version 1.0, which we will call a golden release, we will open up the protocol for communication purposes. Under the covers it’s Google buffers and sockets. So we’ll say: this is the API, work against that, and we’re committed to it.

|

||||

|

||||

That’s one thing, and then we said: OK, there’s Weston, but we cannot use Weston because it’s not working on Android, the driver model is not well defined, and there’s so much work that we would have to do to actually implement a Wayland compositor. And then we are in a situation where we would have to cut out a set of functionality from the Wayland protocol and commit to that, no matter what happens, and ultimately that would be a fork, over time, right?.

|

||||

|

||||

**LV: It’s a difficult concept for many end users, who just want to see something working.**

|

||||

|

||||

**Thomas**: Right, and even from a developer’s perspective – and let’s jump to the political part – I find it somewhat difficult to have a party owning a protocol definition and another party building the reference implementations. Now, Gnome and KDE do two different Wayland compositors. I don’t see the benefit in that, to be quite frank, so the value proposition is difficult to my mind.

|

||||

|

||||

The driver model in Mir and Wayland is ultimately not that different – it’s GL/EGL based. That is kind of the denominator that you will find in both things, which is actually a good thing, because if you look at the contract to application developers and toolkit developers, most of them don’t want Mir or Wayland. They talk ELG and GL, and at that point, it’s not that much of a problem to support both.

|

||||

|

||||

> If there had been a full reference implementation of Wayland, our decision might have been different.

|

||||

|

||||

So we did this work for porting the Chromium browser to Mir. We actually took the Chromium Wayland back-end, factored out all the common pieces to EGL and GL ES, and split it up into Wayland and Mir.

|

||||

|

||||

And I think from a user’s or application developer’s perspective, the difference is not there. I think, in retrospect, if there would have been something like a full reference implementation of Wayland, where a company had signed up to provide something that is working, and committed to a certain protocol version, our decision might have been different. But there just wasn’t. It was five years out there, Wayland, Wayland, Wayland, and there was nothing that we could build upon.

|

||||

|

||||

**LV: The main experience we’ve had is with RebeccaBlackOS, which has Weston and Wayland, because, like you say, there’s no that much out there running it.**

|

||||

|

||||

**Thomas**: Right. I find Wayland impressive, obviously, but I think Mir will be significantly more relevant than Wayland in two years time. We just keep on bootstrapping everything, and we’ve got things working across multiple platforms. Are there issues, and are there open questions to solve? Most likely. We never said we would come up with the perfect solution in version 1. That was not our goal. I don’t think software should be built that way. So it just should be iterated.

|

||||

|

||||

|

||||

|

||||

**LV: When was Mir originally planned for? Which Ubuntu release? Because it has been pushed back a couple of times.**

|

||||

|

||||

**Thomas**: Well, we originally planned to have it by 14.04. That was the kind of stretch goal, because it highly depends on the availability of proprietary graphics drivers. So you can’t ship an LTS [Long Term Support] release of Ubuntu on a new display server without supporting the hardware of the big guys.

|

||||

|

||||

**LV: We thought that would be quite ambitious anyway – a Long Term Support release with a whole new display server!**

|

||||

|

||||

**Thomas**: Yes, it was ambitious – but for a reason. If you don’t set a stretch goal, and probably fail in reaching it, and then re-evaluate how you move forward, it’s difficult to drive a project. So if you just keep it evolving and evolving and evolving, and you don’t have a checkpoint at some point…

|

||||

|

||||

**LV: That’s like a lot of open source projects. Inkscape is still on 0.48 or something, and it works, it’s reliable, but they never get to 1.0. Because they always say: “Oh let’s add this feature, and that feature”, and the rest of us are left thinking: just release 1.0 already!.**

|

||||

|

||||

**Thomas**: And I wouldn’t actually tie it to a version number. To me, that is secondary. To me, the question is whether we call this ready for broad public consumption on all of the hardware versions we want to support?

|

||||

|

||||

In Canonical, as a company, we have OEM contracts and we are enabling Ubuntu on a host of devices, and laptops and whatever, so we have to deliver on those contracts. And the question is, can we do that? No. Well, you never like a ‘no’.

|

||||

|

||||

> The question is whether we call this ready for broad public consumption on the hardware we want to support.

|

||||

|

||||

Usually, when you encounter a problem and you tackle it, and you start thinking how to solve the problem, that’s more beneficial than never hearing a no. That’s kind of what we were aiming for. Ubuntu 14.04 was a stretch goal – everyone was aware of that and we didn’t reach it. Fine, cool. Let’s go on.

|

||||

|

||||

So how do we stage ourself for the next cycle, until an LTS? Now we have this initiative where we have a daily testable image with Unity 8 and Mir. It’s not super usable because it’s just essentially the tethered UI that you are seeing there, but still it’s something that we didn’t have a year ago. And for me, that’s a huge gain.

|

||||

|

||||

And ultimately, before we can ship something, before any new display server can ship in an LTS release, you need to have buy-in from the GPU vendors. That’s what you need.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxvoice.com/interview-thomas-vos-of-mir/

|

||||

|

||||

作者:[Mike Saunders][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.linuxvoice.com/author/mike/

|

||||

@ -1,168 +0,0 @@

|

||||

(翻译中 by runningwater)

|

||||

Camicri Cube: An Offline And Portable Package Management System

|

||||

================================================================================

|

||||

|

||||

|

||||

As we all know, we must have an Internet connection in our System for downloading and installing applications using synaptic manager or software center. But, what if you don’t have an Internet connection, or the Internet connection is dead slow? This will be definitely a headache when installing packages using software center in your Linux desktop. Instead, you can manually download the applications from their official site, and install them. But, most of the Linux users doesn’t aware about the required dependencies for the applications that they wanted to install. What could you do if you have such situation? Leave all the worries now. Today, we introduce an awesome offline package manager called **Camicri Cube**.

|

||||

|

||||

You can use this package manager on any Internet connected system, download the list of packages you want to install, bring them back to your offline computer, and Install them. Sounds good? Yes, It is! Cube is a package manager like Synaptic and Ubuntu Software Center, but a portable one. It can be used and run in any platform (Windows, Apt-Based Linux Distributions), online and offline, in flashdrive or any removable devices. The main goal of this project is to enable the offline Linux users to download and install Linux applications easily.

|

||||

|

||||

Cube will gather complete details of your offline computer such as OS details, installed applications and more. Then, just the copy the cube application using any USB thumb drive, and use it on the other Internet connected system, and download the list of applications you want. After downloading all required packages, head back to your original computer and start installing them. Cube is developed and maintained by **Jake Capangpangan**. It is written using C++, and bundled with all necessary packages. So, you don’t have to install any extra software to use it.

|

||||

|

||||

### Installation ###

|

||||

|

||||

Now, let us download and install Cube on the Offline system which doesn’t have the Internet connection. Download Cube latest version either from the [official Launchpad Page][1] or [Sourceforge site][2]. Make sure you have downloaded the correct version depending upon your offline computer architecture. As I use 64 bit system, I downloaded the 64bit version.

|

||||

|

||||

wget http://sourceforge.net/projects/camicricube/files/Camicri%20Cube%201.0.9/cube-1.0.9.2_64bit.zip/

|

||||

|

||||

Extract the zip file and move it to your home directory or anywhere you want:

|

||||

|

||||

unzip cube-1.0.9.2_64bit.zip

|

||||

|

||||

That’s it. Now it’s time to know how to use it.

|

||||

|

||||

### Usage ###

|

||||

|

||||

Here, I will be using Two Ubuntu systems. The original (Offline – no Internet) is running with **Ubuntu 14.04**, and the Internet connected system is running with **Lubuntu 14.04** Desktop.

|

||||

|

||||

#### Steps to do On Offline system: ####

|

||||

|

||||

From the offline system, Go to the extracted Cube folder. You’ll find an executable called “cube-linux”. Double click it, and Click Execute. If it not executable, set the executable permission as shown below.

|

||||

|

||||

sudo chmod -R +x cube/

|

||||

|

||||

Then, go to the cube directory,

|

||||

|

||||

cd cube/

|

||||

|

||||

And run the following command to run it.

|

||||

|

||||

./cube-linux

|

||||

|

||||

Enter the Project name (Ex.sk) and click **Create**. As I mentioned above, this will create a new project with complete details of your system such as OS details, list of installed applications, list of repositories etc.

|

||||

|

||||

|

||||

|

||||

As you know, our system is an offline computer that means I don’t have Internet connection. So I skipped the Update Repositories process by clicking on the **Cancel** button. We will update the repositories later on an Internet connected system.

|

||||

|

||||

|

||||

|

||||

Again, I clicked **No** to skip updating the offline computer, because we don’t have Internet connection.

|

||||

|

||||

|

||||

|

||||

That’s it. Now the new project has been created. The new project will be saved on your main cube folder. Go to the Cube folder, and you’ll find a folder called Projects. This folder will hold all the essential details of your offline system.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

Now, close the cube application, and copy the entire main **cube** folder to any flash drive, and go to the Internet connected system.

|

||||

|

||||

#### Steps to do on an Internet connected system: ####

|

||||

|

||||

The following steps needs to be done on the Internet connected system. In our case, Its **Lubuntu 14.04**.

|

||||

|

||||

Make the cube folder executable as we did in the original computer.

|

||||

|

||||

sudo chmod -R +x cube/

|

||||

|

||||

Now, double click the file cube-linux to open it or you can launch it from the Terminal as shown below.

|

||||

|

||||

cd cube/

|

||||

./cube-linux

|

||||

|

||||

You will see that your project is now listed in the “Open Existing Projects” part of the window. Select your project

|

||||

|

||||

|

||||

|

||||

Then, the cube will ask if this is your project’s original computer. It’s not my original (Offline) computer, so I clicked **No**.

|

||||

|

||||

|

||||

|

||||

You’ll be asked if you want to update your repositories. Click **Ok** to update the repositories.

|

||||

|

||||

|

||||

|

||||

Next, we have to update all outdated packages/applications. Click on the “**Mark All updates**” button from the Cube’s tool bar. After that, click “**Download all marked**” button to update all updated packages/applications. As you see in the below screenshot, there are 302 packages needs to be updated in my case. Then, Click **Ok** to continue to download marked packages.

|

||||

|

||||

|

||||

|

||||

Now, Cube will start to download all marked packages.

|

||||

|

||||

|

||||

|

||||

We have completed updating repositories and packages. Now, you can download a new package if you want to install it on your offline system.

|

||||

|

||||

#### Downloading New Applications ####

|

||||

|

||||

For example, here I am going to download the **apache2** Package. Enter the name of the package in the **search** box, and hit Search button. The Cube will fetch the details of the application that you are looking for. Hit the “**Download this package now**” button, and click **Ok** to start download.

|

||||

|

||||

|

||||

|

||||

Cube will start downloading the apache2 package with all its dependencies.

|

||||

|

||||

|

||||

|

||||

If you want to search and download more packages, simply Click the button “**Mark this package**”, and do search the required packages. You can mark as many as packages you want to install on your original computer. Once you marked all packages, hit the “**Download all marked**” button on the top tool bar to start downloading them.

|

||||

|

||||

After you completed updating repositories, outdated packages, and downloading new applications, close the Cube application. Then, copy the entire Cube folder to any flash drive or external hdd, and go back to your Offline system.

|

||||

|

||||

#### Steps to do on Offline computer: ####

|

||||

|

||||

Copy the Cube folder back to your Offline system on any place you want. Go to the cube folder and double click **cube-linux** file to launch Cube application.

|

||||

|

||||

Or, you can launch it from Terminal as shown below.

|

||||

|

||||

cd cube/

|

||||

./cube-linux

|

||||

|

||||

Select your project and click Open.

|

||||

|

||||

|

||||

|

||||

Then a dialog will ask you to update your system, please click “Yes” especially when you download new repositories, because this will transfer all new repositories to your computer.

|

||||

|

||||

|

||||

|

||||

You’ll see that the repositories will be updated on your offline computer without Internet connection. Because, we already have updated the repositories on the Internet connected system. Seems cool, isn’t it?

|

||||

|

||||

After updating the repositories, let us install all downloaded packages. Click the “Mark All Downloaded” button to select all downloaded packages, and click “Install All Marked” to install all of them from the Cube main Tool bar. The Cube application will automatically open a new Terminal, and install all packages.

|

||||

|

||||

|

||||

|

||||

If you encountered with dependency problems, go to **Cube Menu -> Packages -> Install packages with complete dependencies** to install all packages.

|

||||

|

||||

If you want to install a specific package, Navigate to the List Packages, click the “Downloaded” button, and all downloaded packages will be listed.

|

||||

|

||||

|

||||

|

||||

Then, double click the desired package, and click “Install this”, or “Mark this” if you want to install it later.

|

||||

|

||||

|

||||

|

||||

By this way, you can download the required packages from any Internet connected system, and then you can install them in your offline computer without Internet connection.

|

||||

|

||||

### Conclusion ###

|

||||

|

||||

This is one of the best and useful tool ever I have used. But during testing this tool in my Ubuntu 14.04 testbox, I faced many dependency problems, and the Cube application is suddenly closed often. Also, I could use this tool only on a fresh Ubuntu 14.04 offline system without any issues. Hope all these issues wouldn’t happen on previous versions of Ubuntu. Apart from these minor issues, this tool does this job as advertised and worked like a charm.

|

||||

|

||||

Cheers!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.unixmen.com/camicri-cube-offline-portable-package-management-system/

|

||||

|

||||

原文作者:

|

||||

|

||||

|

||||

|

||||

[SK][a](Senthilkumar, aka SK, is a Linux enthusiast, FOSS Supporter & Linux Consultant from Tamilnadu, India. A passionate and dynamic person, aims to deliver quality content to IT professionals and loves very much to write and explore new things about Linux, Open Source, Computers and Internet.)

|

||||

|

||||

译者:[runningwater](https://github.com/runningwater) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.unixmen.com/author/sk/

|

||||

[1]:https://launchpad.net/camicricube

|

||||

[2]:http://sourceforge.net/projects/camicricube/

|

||||

@ -1,4 +1,3 @@

|

||||

nd0104 is translate

|

||||

Install Google Docs on Linux with Grive Tools

|

||||

================================================================================

|

||||

Google Drive is two years old now and Google’s cloud storage solution seems to be still going strong thanks to its integration with Google Docs and Gmail. There’s one thing still missing though: a lack of an official Linux client. Apparently Google has had one floating around their offices for a while now, however it’s not seen the light of day on any Linux system.

|

||||

|

||||

@ -1,5 +1,3 @@

|

||||

translating by haimingfg

|

||||

|

||||

What are useful CLI tools for Linux system admins

|

||||

================================================================================

|

||||

System administrators (sysadmins) are responsible for day-to-day operations of production systems and services. One of the critical roles of sysadmins is to ensure that operational services are available round the clock. For that, they have to carefully plan backup policies, disaster management strategies, scheduled maintenance, security audits, etc. Like every other discipline, sysadmins have their tools of trade. Utilizing proper tools in the right case at the right time can help maintain the health of operating systems with minimal service interruptions and maximum uptime.

|

||||

|

||||

@ -1,5 +1,3 @@

|

||||

chi1shi2 is translating.

|

||||

|

||||

How to use on-screen virtual keyboard on Linux

|

||||

================================================================================

|

||||

On-screen virtual keyboard is an alternative input method that can replace a real hardware keyboard. Virtual keyboard may be a necessity in various cases. For example, your hardware keyboard is just broken; you do not have enough keyboards for extra machines; your hardware does not have an available port left to connect a keyboard; you are a disabled person with difficulty in typing on a real keyboard; or you are building a touchscreen-based web kiosk.

|

||||

|

||||

@ -1,6 +1,3 @@

|

||||

>>Linchenguang is translating

|

||||

|

||||

》》延期申请

|

||||

Linux TCP/IP networking: net-tools vs. iproute2

|

||||

================================================================================

|

||||

Many sysadmins still manage and troubleshoot various network configurations by using a combination of ifconfig, route, arp and netstat command-line tools, collectively known as net-tools. Originally rooted in the BSD TCP/IP toolkit, the net-tools was developed to configure network functionality of older Linux kernels. Its development in the Linux community so far has ceased since 2001. Some Linux distros such as Arch Linux and CentOS/RHEL 7 have already deprecated net-tools in favor of iproute2.

|

||||

|

||||

@ -1,4 +1,3 @@

|

||||

|

||||

How to create a software RAID-1 array with mdadm on Linux

|

||||

================================================================================

|

||||

Redundant Array of Independent Disks (RAID) is a storage technology that combines multiple hard disks into a single logical unit to provide fault-tolerance and/or improve disk I/O performance. Depending on how data is stored in an array of disks (e.g., with striping, mirroring, parity, or any combination thereof), different RAID levels are defined (e.g., RAID-0, RAID-1, RAID-5, etc). RAID can be implemented either in software or with a hardware RAID card. On modern Linux, basic software RAID functionality is available by default.

|

||||

|

||||

@ -1,106 +0,0 @@

|

||||

[felixonmars translating...]

|

||||

|

||||

How to configure peer-to-peer VPN on Linux

|

||||

================================================================================

|

||||

A traditional VPN (e.g., OpenVPN, PPTP) is composed of a VPN server and one or more VPN clients connected to the server. When any two VPN clients talk to each other, the VPN server needs to relay VPN traffic between them. The problem of such a hub-and-spoke type of VPN topology is that the VPN server can easily become a performance bottleneck as the number of connected clients increases. The centralized VPN server is also a single point of failure in a sense that if the VPN server goes down, the entire VPN is no longer accessible to any VPN client.

|

||||

|

||||

Peer-to-peer VPN (or P2P VPN) is an alternative VPN model that addresses these problems of the traditional server-client based VPN. In a P2P VPN, there is no longer a centralized VPN server. Any node with a public IP address can bootstrap other nodes into a VPN. Once connected to a VPN, each node can communicate with any other node in the VPN directly, without going through an intermediary server node. When any one node goes down, the rest of nodes in the VPN are not affected. Inter-node latency/bandwidth and VPN scalability naturally improve in such a setting, which is desirable if you want to use a VPN for multi-player gaming or file sharing among many friends.

|

||||

|

||||

There are several open-source implementations of P2P VPN, such as [Tinc][1], peerVPN, and [n2n][2]. In this tutorial, I am going to demonstrate **how to configure a peer-to-peer VPN using** n2n **on Linux**.

|

||||

|

||||

n2n is an open-source (GPLv3) software allowing you to construct an encrypted layer-2/3 peer-to-peer VPN among users. The VPN created by n2n is "NAT-friendly," which means that two users behind different NAT routers can directly talk to each other over the VPN. n2n supports symmetric NAT type which is the most restrictive form of NAT. For that, the VPN traffic of n2n is encapsulated by UDP.

|

||||

|

||||

A n2n VPN is composed of two kinds of nodes: edge node and super node. An edge node is a computer which is connected to a VPN, potentially from behind a NAT router. A super node is a computer with a publicly reachable IP address, which assists with initial signaling for NATed edges. To create a P2P VPN among users, we need at least one super node.

|

||||

|

||||

|

||||

|

||||

### Preparation ###

|

||||

|

||||

In this tutorial, I am going to set up a P2P VPN using three nodes: one super node, and two edge nodes. The only requirement is that edge nodes be able to ping the IP address of the super node. It does not matter whether the edge nodes are behind NAT routers or not.

|

||||

|

||||

### Install n2n on Linux ###

|

||||

|

||||

To construct a P2P VPN using n2n, you need to install n2n on every edge node as well as super node.

|

||||

|

||||

Due to its minimal dependency requirements, n2n can be built easily on most Linux platforms.

|

||||

|

||||

To install n2n on Debian-based system:

|

||||

|

||||

$ sudo apt-get install subversion build-essential libssl-dev

|

||||

$ svn co https://svn.ntop.org/svn/ntop/trunk/n2n

|

||||

$ cd n2n/n2n_v2

|

||||

$ make

|

||||

$ sudo make install

|

||||

|

||||

To install n2n on Red Hat-based system:

|

||||

|

||||

$ sudo yum install subversion gcc-c++ openssl-devel

|

||||

$ svn co https://svn.ntop.org/svn/ntop/trunk/n2n

|

||||

$ cd n2n/n2n_v2

|

||||

$ make

|

||||

$ sudo make install

|

||||

|

||||

### Configure a P2P VPN with n2n ###

|

||||

|

||||

As mentioned before, we need to set up at least one super node which acts as an initial bootstraping server. We assume that the IP address of the super node is 1.1.1.1.

|

||||

|

||||

#### Super node: ####

|

||||

|

||||

On a computer which acts as a super node, run the following command. The "-l <port>" specifies the listening port of the super node. No root privilege is required to run supernode.

|

||||

|

||||

$ supernode -l 5000

|

||||

|

||||

#### Edge node: ####

|

||||

|

||||

On each edge node, use the following command to connect to a P2P VPN. The edge daemon will be running in the background.

|

||||

|

||||

Edge node #1:

|

||||

|

||||

$ sudo edge -d edge0 -a 10.0.0.10 -c mynetwork -u 1000 -g 1000 -k password -l 1.1.1.1:5000 -m ae:e0:4f:e7:47:5b

|

||||

|

||||

Edge node #2:

|

||||

|

||||

$ sudo edge -d edge0 -a 10.0.0.11 -c mynetwork -u 1000 -g 1000 -k password -l 1.1.1.1:5000 -m ae:e0:4f:e7:47:5c

|

||||

|

||||

Here are some explanations on the command-line.

|

||||

|

||||

- The "-d <name>" option specifies the name of a TAP interface being created by edge command.

|

||||

- The "-a <IP-address>" option defines (statically) the VPN IP address to be assigned to the TAP interface. If you want to use DHCP, you need to set up a DHCP server on one of edge nodes, and use "-a dhcp:0.0.0.0" option instead.

|

||||

- The "-c <community-name>" option specifies the name of a VPN group (with a length of up to 16 bytes). This option is used to create multiple VPNs among the same group of nodes.

|

||||

- The "-u" and "-g" options are used to drop root priviledge after creating a TAP interface. The edge daemon will run as the specified user/group ID.

|

||||

- The "-k <key-string>" option specifies a twofish encryption key string to be used. If you want to hide a key-string from the command-line, you can define the key in N2N_KEY environment variable.

|

||||

- The "-l <IP-address:port>" option specifies super node's listening IP address and port number. For redundancy, you can specify up to two different super nodes (e.g., -l <supernode A> -l <supernode B>).

|

||||

- The "-m <mac-address> assigns a static MAC address to a TAP interface. Without this, edge command will randomly generate a MAC address. In fact, hardcoding a static MAC address for a VPN interface is highly recommended. Otherwise, in case you restart edge daemon on a node, ARP cache of other peers will be polluted due to a newly generated MAC addess, and they will not send traffic to the node until the polluted ARP entry is evicted.

|

||||

|

||||

|

||||

|

||||

At this point, you should be able to ping from one edge node to the other using their VPN IP addresses.

|

||||

|

||||

### Troubleshooting ###

|

||||

|

||||

1. You are getting the following error while invoking edge daemon.

|

||||

|

||||

n2n[4405]: ERROR: ioctl() [Operation not permitted][-1]

|

||||

|

||||

Be aware that edge daemon requires superuser privilege when creating a TAP interface. Thus make sure to use root privilege or set SUID for edge command. You can always use "-u" and "-g" option to drop root privilege afterwards.

|

||||

|

||||

### Conclusion ###

|

||||

|

||||

n2n can be a quite practical free VPN solution for you. You can easily configure a super node from your own home network or by grabbing a publicly addressable VPS instance from [cloud hosting][3]. Instead of placing sensitive credentials and encryption keys in the hands of a third-party VPN provider, you can use n2n to set up your own low-latency, high bandwidth, scalable P2P VPN among your friends.

|

||||

|

||||

What is your thought on n2n? Share your opinion in the comment.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/configure-peer-to-peer-vpn-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/nanni

|

||||

[1]:http://xmodulo.com/how-to-install-and-configure-tinc-vpn.html

|

||||

[2]:http://www.ntop.org/products/n2n/

|

||||

[3]:http://xmodulo.com/go/digitalocean

|

||||

@ -1,86 +0,0 @@

|

||||

wangjiezhe translating...

|

||||

|

||||

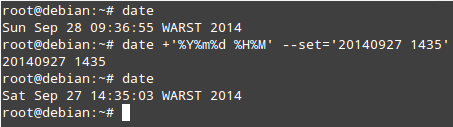

Linux FAQs with Answers--How to change date and time from the command line on Linux

|

||||

================================================================================

|

||||

> **Question**: In Linux, how can I change date and time from the command line?

|

||||

|

||||

Keeping the date and time up-to-date in a Linux system is an important responsibility of every Linux user and system administrator. Many applications rely on accurate timing information to operate properly. Besides, inaccurate date and time render timestamp information in log files meaningless, diminishing their usefulness for system inspection and troubleshooting. For production systems, accurate date and time are even more critical. For example, the production in a retail company must be accounted precisely at all times (and stored in a database server) so that the finance department can calculate the expenses and net income of the day, current week, month, and year.

|

||||

|

||||

We must note that there are two kinds of clocks in a Linux machine: the software clock (aka system clock), which is maintained by the kernel, and the (battery-driven) hardware clock, which is used to keep track of time when the machine is powered down. During boot, the kernel sets the system clock to the same time as the hardware clock. Afterwards, both clocks run independent from each other.

|

||||

|

||||

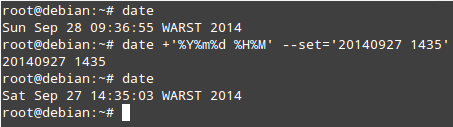

### Method One: Date Command ###

|

||||

|

||||

In Linux, you can use the date command to change the date and time of your system:

|

||||

|

||||

# date --set='NEW_DATE'

|

||||

|

||||

where NEW_DATE is a mostly free format human readable date string such as "Sun, 28 Sep 2014 16:21:42" or "2014-09-29 16:21:42".

|

||||

|

||||

The date format can also be specified to obtain more accurate results:

|

||||

|

||||

# date +FORMAT --set='NEW_DATE'

|

||||

|

||||

For example:

|

||||

|

||||

# date +’%Y%m%d %H%m’ --set='20140928 1518'

|

||||

|

||||

|

||||

|

||||

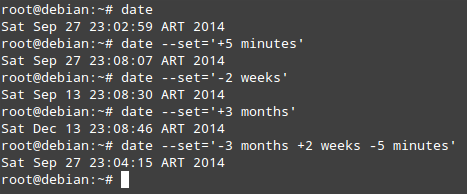

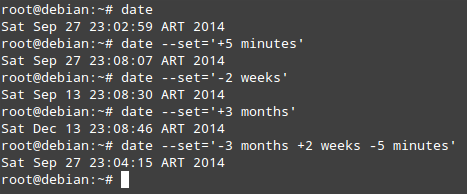

You can also increment or decrement date or time by a number of days, weeks, months or years, and seconds, minutes or hours, respectively. You may combine date and time parameters in one command as well.

|

||||

|

||||

# date --set='+5 minutes'