mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

Merge remote-tracking branch 'LCTT/master'


commit

3cdef948c8

@ -1,223 +1,272 @@

|

||||

Translated by shipsw

|

||||

20 个 OpenSSH 最佳安全实践

|

||||

======

|

||||

|

||||

20 个 OpenSSH 安全实践

|

||||

======

|

||||

![OpenSSH 安全提示][1]

|

||||

|

||||

OpenSSH 是 SSH 协议的一个实现。一般被 scp 或 sftp 用在远程登录、备份、远程文件传输等功能上。SSH能够完美保障两个网络或系统间数据传输的保密性和完整性。尽管如此,他主要用在使用公匙加密的服务器验证上。不时出现关于 OpenSSH 零日漏洞的[谣言][2]。本文描述**如何设置你的 Linux 或类 Unix 系统以提高 sshd 的安全性**。

|

||||

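OpenSSH is an implementation of the SSH protocol. It is commonly used for remote login and, through `scp` or `sftp`, for backups and remote file transfer. SSH is designed to protect the confidentiality and integrity of data exchanged between two networks or systems. Its main advantage, however, is server authentication through public-key cryptography. From time to time there are [rumors][2] of an OpenSSH zero-day vulnerability. This article describes how to set up your Linux or Unix-like system to improve sshd security.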

|

||||

|

||||

|

||||

#### OpenSSH 默认设置

|

||||

### OpenSSH 默认设置

|

||||

|

||||

* TCP 端口 - 22

|

||||

* OpenSSH 服务配置文件 - sshd_config (位于 /etc/ssh/)

|

||||

* TCP 端口 - 22

|

||||

* OpenSSH 服务配置文件 - `sshd_config` (位于 `/etc/ssh/`)

|

||||

|

||||

### 1、 基于公匙的登录

|

||||

|

||||

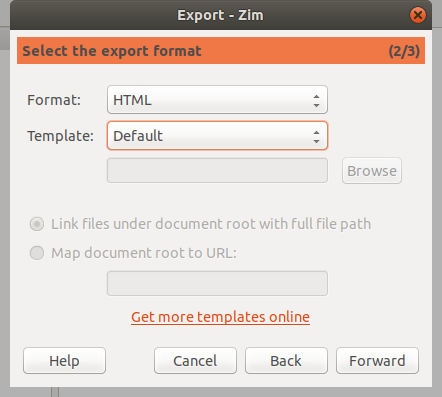

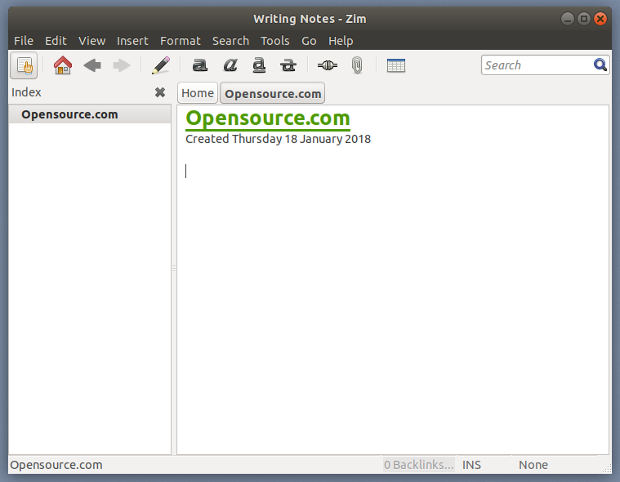

OpenSSH 服务支持各种验证方式。推荐使用公匙加密验证。首先,使用以下 `ssh-keygen` 命令在本地电脑上创建密匙对:

|

||||

|

||||

#### 1. 基于公匙的登录

|

||||

|

||||

OpenSSH 服务支持各种验证方式。推荐使用公匙加密验证。首先,使用以下 ssh-keygen 命令在本地电脑上创建密匙对:

|

||||

|

||||

低于 1024 位的 DSA 和 RSA 加密是很弱的,请不要使用。RSA 密匙主要是在考虑 ssh 客户端兼容性的时候代替 ECDSA 密匙使用的。

|

||||

> 1024 位或低于它的 DSA 和 RSA 加密是很弱的,请不要使用。当考虑 ssh 客户端向后兼容性的时候,请使用 RSA密匙代替 ECDSA 密匙。所有的 ssh 密钥要么使用 ED25519 ,要么使用 RSA,不要使用其它类型。

|

||||

|

||||

```

|

||||

$ ssh-keygen -t key_type -b bits -C "comment"

|

||||

```

|

||||

|

||||

示例:

|

||||

|

||||

```

|

||||

$ ssh-keygen -t ed25519 -C "Login to production cluster at xyz corp"

|

||||

或

|

||||

$ ssh-keygen -t rsa -b 4096 -f ~/.ssh/id_rsa_aws_$(date +%Y-%m-%d) -C "AWS key for abc corp clients"

|

||||

```
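The advice above (use only ED25519 or RSA keys) can be checked against an existing `authorized_keys` file. The following is a minimal sketch and not part of the original article: the function name `list_key_types` is made up for illustration, and it assumes one key per line with no whitespace inside any leading options field.

```shell
# Hypothetical helper (not from the article): count the key types found in
# authorized_keys-style input on stdin, so DSA or ECDSA keys stand out.
list_key_types() {
    awk '
        NF == 0 || $1 ~ /^#/ { next }            # skip blank lines and comments
        {
            # The key type is the first field that looks like one; an
            # options field may come before it.
            for (i = 1; i <= NF; i++) {
                if ($i ~ /^(ssh-ed25519|ssh-rsa|ssh-dss|ecdsa-sha2-)/) {
                    count[$i]++
                    break
                }
            }
        }
        END { for (t in count) print count[t], t }
    ' | sort -k2
}

# Usage (the path is the usual default; adjust as needed):
# list_key_types < ~/.ssh/authorized_keys
```

Any `ssh-dss` or `ecdsa-sha2-*` entries in the output are candidates for replacement with a freshly generated ED25519 or 4096-bit RSA key.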

|

||||

下一步,使用 ssh-copy-id 命令安装公匙:

|

||||

|

||||

下一步,使用 `ssh-copy-id` 命令安装公匙:

|

||||

|

||||

```

|

||||

$ ssh-copy-id -i /path/to/public-key-file user@host

|

||||

或

|

||||

$ ssh-copy-id user@remote-server-ip-or-dns-name

|

||||

```

|

||||

|

||||

示例:

|

||||

|

||||

```

|

||||

$ ssh-copy-id vivek@rhel7-aws-server

|

||||

```

|

||||

提示输入用户名和密码的时候,使用你自己的 ssh 公匙:

|

||||

`$ ssh vivek@rhel7-aws-server`

|

||||

[![OpenSSH 服务安全最佳实践][3]][3]

|

||||

|

||||

Enter the account password when prompted, then confirm that SSH public-key login works:

|

||||

|

||||

```

|

||||

$ ssh vivek@rhel7-aws-server

|

||||

```

|

||||

|

||||

[![OpenSSH 服务安全最佳实践][3]][3]

|

||||

|

||||

更多有关 ssh 公匙的信息,参照以下文章:

|

||||

|

||||

* [为备份脚本设置无密码安全登录][48]

|

||||

|

||||

* [sshpass: 使用脚本密码登录SSH服务器][49]

|

||||

|

||||

* [如何为一个 Linux/类Unix 系统设置 SSH 登录密匙][50]

|

||||

|

||||

* [如何使用 Ansible 工具上传 ssh 登录授权公匙][51]

|

||||

* [为备份脚本设置无密码安全登录][48]

|

||||

* [sshpass:使用脚本密码登录 SSH 服务器][49]

|

||||

* [如何为一个 Linux/类 Unix 系统设置 SSH 登录密匙][50]

|

||||

* [如何使用 Ansible 工具上传 ssh 登录授权公匙][51]

|

||||

|

||||

|

||||

#### 2. 禁用 root 用户登录

|

||||

### 2、 禁用 root 用户登录

|

||||

|

||||

禁用 root 用户登录前,确认普通用户可以以 root 身份登录。例如,允许用户 vivek 使用 sudo 命令以 root 身份登录。

|

||||

禁用 root 用户登录前,确认普通用户可以以 root 身份登录。例如,允许用户 vivek 使用 `sudo` 命令以 root 身份登录。

|

||||

|

||||

##### 在 Debian/Ubuntu 系统中如何将用户 vivek 添加到 sudo 组中

|

||||

#### 在 Debian/Ubuntu 系统中如何将用户 vivek 添加到 sudo 组中

|

||||

|

||||

允许 sudo 组中的用户执行任何命令。 [将用户 vivek 添加到 sudo 组中][4]:

|

||||

`$ sudo adduser vivek sudo`

|

||||

使用 [id 命令][5] 验证用户组。

|

||||

`$ id vivek`

|

||||

允许 sudo 组中的用户执行任何命令。 [将用户 vivek 添加到 sudo 组中][4]:

|

||||

|

||||

##### 在 CentOS/RHEL 系统中如何将用户 vivek 添加到 sudo 组中

|

||||

```

|

||||

$ sudo adduser vivek sudo

|

||||

```

|

||||

|

||||

使用 [id 命令][5] 验证用户组。

|

||||

|

||||

```

|

||||

$ id vivek

|

||||

```

|

||||

|

||||

#### 在 CentOS/RHEL 系统中如何将用户 vivek 添加到 sudo 组中

|

||||

|

||||

在 CentOS/RHEL 和 Fedora 系统中允许 wheel 组中的用户执行所有的命令。使用 `usermod` 命令将用户 vivek 添加到 wheel 组中:

|

||||

|

||||

在 CentOS/RHEL 和 Fedora 系统中允许 wheel 组中的用户执行所有的命令。使用 uermod 命令将用户 vivek 添加到 wheel 组中:

|

||||

```

|

||||

$ sudo usermod -aG wheel vivek

|

||||

$ id vivek

|

||||

```

|

||||

|

||||

##### 测试 sudo 权限并禁用 ssh root 登录

|

||||

#### 测试 sudo 权限并禁用 ssh root 登录

|

||||

|

||||

测试并确保用户 vivek 可以以 root 身份登录执行以下命令:

|

||||

|

||||

```

|

||||

$ sudo -i

|

||||

$ sudo /etc/init.d/sshd status

|

||||

$ sudo systemctl status httpd

|

||||

```

|

||||

添加以下内容到 sshd_config 文件中来禁用 root 登录。

|

||||

|

||||

添加以下内容到 `sshd_config` 文件中来禁用 root 登录:

|

||||

|

||||

```

|

||||

PermitRootLogin no

|

||||

ChallengeResponseAuthentication no

|

||||

PasswordAuthentication no

|

||||

UsePAM no

|

||||

```

|

||||

|

||||

更多信息参见“[如何通过禁用 Linux 的 ssh 密码登录来增强系统安全][6]” 。
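Before relying on directives such as `PermitRootLogin no`, it helps to confirm which value a configuration file actually sets, because sshd uses the first value it obtains for each keyword. The sketch below is an illustration only: `sshd_config_value` is a hypothetical helper, it reads a single file, and it deliberately ignores `Include` files and anything inside `Match` blocks. The `sshd -T` command shown later in this article remains the authoritative check.

```shell
# Hypothetical helper (not from the article): print the first value that a
# config file assigns to a keyword. Keywords are matched case-insensitively.
sshd_config_value() (
    file=$1
    want=$(printf '%s' "$2" | tr 'A-Z' 'a-z')
    awk -v want="$want" '
        $1 ~ /^#/ || NF < 2     { next }            # comments and blank lines
        tolower($1) == "match"  { exit }            # stop at first Match block
        tolower($1) == want     { print $2; exit }  # first occurrence wins
    ' "$file"
)

# Usage (assumes the default location named earlier in the article):
# sshd_config_value /etc/ssh/sshd_config PermitRootLogin
```

An empty result means the file does not set the keyword before any `Match` block, so the compiled-in default applies.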

|

||||

|

||||

#### 3. 禁用密码登录

|

||||

### 3、 禁用密码登录

|

||||

|

||||

所有的密码登录都应该禁用,仅留下公匙登录。添加以下内容到 `sshd_config` 文件中:

|

||||

|

||||

所有的密码登录都应该禁用,仅留下公匙登录。添加以下内容到 sshd_config 文件中:

|

||||

```

|

||||

AuthenticationMethods publickey

|

||||

PubkeyAuthentication yes

|

||||

```

|

||||

CentOS 6.x/RHEL 6.x 系统中老版本的 SSHD 用户可以使用以下设置:

|

||||

|

||||

CentOS 6.x/RHEL 6.x 系统中老版本的 sshd 用户可以使用以下设置:

|

||||

|

||||

```

|

||||

PubkeyAuthentication yes

|

||||

```

|

||||

|

||||

#### 4. 限制用户的 ssh 权限

|

||||

### 4、 限制用户的 ssh 访问

|

||||

|

||||

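By default, every system user can log in over SSH with a password or a public key. Sometimes you create UNIX/Linux accounts only for FTP or email, yet those users can still log in over ssh. They then get full access to system tools, including compilers and scripting languages such as Perl and Python, which can open network ports and do plenty of other damage. To allow only the users vivek and jerry to log in over SSH, add the following to `sshd_config`: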

|

||||

|

||||

```

|

||||

AllowUsers vivek jerry

|

||||

```

|

||||

|

||||

当然,你也可以添加以下内容到 `sshd_config` 文件中来达到仅拒绝一部分用户通过 SSH 登录系统的效果。

|

||||

|

||||

```

|

||||

DenyUsers root saroj anjali foo

|

||||

```

|

||||

|

||||

默认状态下,所有的系统用户都可以使用密码或公匙登录。但是有些时候需要为 FTP 或者 email 服务创建 UNIX/Linux 用户。所以,这些用户也可以使用 ssh 登录系统。他们将获得访问系统工具的完整权限,包括编译器和诸如 Perl、Python(可以打开网络端口干很多疯狂的事情) 等的脚本语言。通过添加以下内容到 sshd_config 文件中来仅允许用户 root、vivek 和 jerry 通过 SSH 登录系统:

|

||||

`AllowUsers vivek jerry`

|

||||

当然,你也可以添加以下内容到 sshd_config 文件中来达到仅拒绝一部分用户通过 SSH 登录系统的效果。

|

||||

`DenyUsers root saroj anjali foo`

|

||||

你也可以通过[配置 Linux PAM][7] 来禁用或允许用户通过 sshd 登录。也可以允许或禁止一个[用户组列表][8]通过 ssh 登录系统。

|

||||

|

||||

#### 5. 禁用空密码

|

||||

### 5、 禁用空密码

|

||||

|

||||

你需要明确禁止空密码账户远程登录系统,更新 sshd_config 文件的以下内容:

|

||||

`PermitEmptyPasswords no`

|

||||

你需要明确禁止空密码账户远程登录系统,更新 `sshd_config` 文件的以下内容:

|

||||

|

||||

#### 6. 为 ssh 用户或者密匙使用强密码

|

||||

```

|

||||

PermitEmptyPasswords no

|

||||

```

|

||||

|

||||

### 6、 为 ssh 用户或者密匙使用强密码

|

||||

|

||||

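The importance of strong passwords and passphrases for your keys cannot be overstated. Brute-force attacks work because users pick dictionary-based passwords. You can force users to avoid [dictionary passwords][9] and use the [John the Ripper tool][10] to find existing weak passwords. Here is a random password generator (put it in your `~/.bashrc`):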

|

||||

|

||||

为密匙使用强密码和短语的重要性再怎么强调都不过分。暴力破解可以起作用就是因为用户使用了基于字典的密码。你可以强制用户避开字典密码并使用[约翰的开膛手工具][10]来检测弱密码。以下是一个随机密码生成器(放到你的 ~/.bashrc 下):

|

||||

```

|

||||

genpasswd() {

|

||||

local l=$1

|

||||

[ "$l" == "" ] && l=20

|

||||

tr -dc A-Za-z0-9_ < /dev/urandom | head -c ${l} | xargs

|

||||

[ "$l" == "" ] && l=20

|

||||

tr -dc A-Za-z0-9_ < /dev/urandom | head -c ${l} | xargs

|

||||

}

|

||||

```

|

||||

|

||||

运行:

|

||||

`genpasswd 16`

|

||||

输出:

|

||||

运行:

|

||||

|

||||

```

|

||||

genpasswd 16

|

||||

```

|

||||

|

||||

输出:

|

||||

|

||||

```

|

||||

uw8CnDVMwC6vOKgW

|

||||

```

|

||||

* [使用 mkpasswd / makepasswd / pwgen 生成随机密码][52]

|

||||

|

||||

* [Linux / UNIX: 生成密码][53]

|

||||

* [使用 mkpasswd / makepasswd / pwgen 生成随机密码][52]

|

||||

* [Linux / UNIX: 生成密码][53]

|

||||

* [Linux 随机密码生成命令][54]

|

||||

|

||||

* [Linux 随机密码生成命令][54]

|

||||

### 7、 为 SSH 的 22端口配置防火墙

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

你需要更新 `iptables`/`ufw`/`firewall-cmd` 或 pf 防火墙配置来为 ssh 的 TCP 端口 22 配置防火墙。一般来说,OpenSSH 服务应该仅允许本地或者其他的远端地址访问。

|

||||

|

||||

#### 7. 为 SSH 端口 # 22 配置防火墙

|

||||

#### Netfilter(Iptables) 配置

|

||||

|

||||

你需要更新 iptables/ufw/firewall-cmd 或 pf firewall 来为 ssh TCP 端口 # 22 配置防火墙。一般来说,OpenSSH 服务应该仅允许本地或者其他的远端地址访问。

|

||||

更新 [/etc/sysconfig/iptables (Redhat 和其派生系统特有文件) ][11] 实现仅接受来自于 192.168.1.0/24 和 202.54.1.5/29 的连接,输入:

|

||||

|

||||

##### Netfilter (Iptables) 配置

|

||||

|

||||

更新 [/etc/sysconfig/iptables (Redhat和其派生系统特有文件) ][11] 实现仅接受来自于 192.168.1.0/24 和 202.54.1.5/29 的连接, 输入:

|

||||

```

|

||||

-A RH-Firewall-1-INPUT -s 192.168.1.0/24 -m state --state NEW -p tcp --dport 22 -j ACCEPT

|

||||

-A RH-Firewall-1-INPUT -s 202.54.1.5/29 -m state --state NEW -p tcp --dport 22 -j ACCEPT

|

||||

```
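The range `202.54.1.5/29` used above names a host address inside a /29 block rather than the block's network address. As a worked illustration (not from the article), the following sketch masks an IPv4 address with its prefix length to show which network the rule covers. The function name `cidr_network` is hypothetical, and the sketch assumes a well-formed `a.b.c.d/n` argument without leading zeros.

```shell
# Hypothetical helper (not from the article): print the network address of an
# IPv4 CIDR range. The body runs in a subshell so IFS and variables stay local.
cidr_network() (
    addr=${1%/*}          # dotted-quad part, e.g. 202.54.1.5
    bits=${1#*/}          # prefix length, e.g. 29
    IFS=.
    set -- $addr          # split the address into four octets: $1..$4
    ip=$(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
    mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
    net=$(( ip & mask ))
    printf '%d.%d.%d.%d/%d\n' \
        $(( (net >> 24) & 255 )) $(( (net >> 16) & 255 )) \
        $(( (net >> 8) & 255 ))  $(( net & 255 )) "$bits"
)

cidr_network 202.54.1.5/29    # prints 202.54.1.0/29
```

In other words, the second rule covers the eight addresses 202.54.1.0 through 202.54.1.7.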

|

||||

|

||||

如果同时使用 IPv6 的话,可以编辑/etc/sysconfig/ip6tables(Redhat 和其派生系统特有文件),输入:

|

||||

如果同时使用 IPv6 的话,可以编辑 `/etc/sysconfig/ip6tables` (Redhat 和其派生系统特有文件),输入:

|

||||

|

||||

```

|

||||

-A RH-Firewall-1-INPUT -s ipv6network::/ipv6mask -m tcp -p tcp --dport 22 -j ACCEPT

|

||||

|

||||

```

|

||||

|

||||

将 ipv6network::/ipv6mask 替换为实际的 IPv6 网段。

|

||||

将 `ipv6network::/ipv6mask` 替换为实际的 IPv6 网段。

|

||||

|

||||

##### Debian/Ubuntu Linux 下的 UFW

|

||||

#### Debian/Ubuntu Linux 下的 UFW

|

||||

|

||||

[UFW 是 uncomplicated firewall 的首字母缩写,主要用来管理 Linux 防火墙][12],目的是提供一种用户友好的界面。输入[以下命令使得系统进允许网段 202.54.1.5/29 接入端口 22][13]:

|

||||

`$ sudo ufw allow from 202.54.1.5/29 to any port 22`

|

||||

更多信息请参见 "[Linux: 菜鸟管理员的 25 个 Iptables Netfilter 命令][14]"。

|

||||

[UFW 是 Uncomplicated FireWall 的首字母缩写,主要用来管理 Linux 防火墙][12],目的是提供一种用户友好的界面。输入[以下命令使得系统仅允许网段 202.54.1.5/29 接入端口 22][13]:

|

||||

|

||||

##### *BSD PF 防火墙配置

|

||||

```

|

||||

$ sudo ufw allow from 202.54.1.5/29 to any port 22

|

||||

```

|

||||

|

||||

更多信息请参见 “[Linux:菜鸟管理员的 25 个 Iptables Netfilter 命令][14]”。

|

||||

|

||||

#### *BSD PF 防火墙配置

|

||||

|

||||

如果使用 PF 防火墙 [/etc/pf.conf][15] 配置如下:

|

||||

|

||||

```

|

||||

pass in on $ext_if inet proto tcp from {192.168.1.0/24, 202.54.1.5/29} to $ssh_server_ip port ssh flags S/SA synproxy state

|

||||

```

|

||||

|

||||

#### 8. 修改 SSH 端口和绑定 IP

|

||||

### 8、 修改 SSH 端口和绑定 IP

|

||||

|

||||

ssh 默认监听系统中所有可用的网卡。修改并绑定 ssh 端口有助于避免暴力脚本的连接(许多暴力脚本只尝试端口 22)。更新文件 `sshd_config` 的以下内容来绑定端口 300 到 IP 192.168.1.5 和 202.54.1.5:

|

||||

|

||||

SSH 默认监听系统中所有可用的网卡。修改并绑定 ssh 端口有助于避免暴力脚本的连接(许多暴力脚本只尝试端口 22)。更新文件 sshd_config 的以下内容来绑定端口 300 到 IP 192.168.1.5 和 202.54.1.5:

|

||||

```

|

||||

Port 300

|

||||

ListenAddress 192.168.1.5

|

||||

ListenAddress 202.54.1.5

|

||||

```

|

||||

|

||||

With these settings sshd listens on port 300 at 192.168.1.5 and 202.54.1.5 only.

|

||||

|

||||

当需要接受动态广域网地址的连接时,使用主动脚本是个不错的选择,比如 fail2ban 或 denyhosts。

|

||||

|

||||

#### 9. 使用 TCP wrappers (可选的)

|

||||

### 9、 使用 TCP wrappers (可选的)

|

||||

|

||||

TCP wrapper 是一个基于主机的访问控制系统,用来过滤来自互联网的网络访问。OpenSSH 支持 TCP wrappers。只需要更新文件 `/etc/hosts.allow` 中的以下内容就可以使得 SSH 只接受来自于 192.168.1.2 和 172.16.23.12 的连接:

|

||||

|

||||

TCP wrapper 是一个基于主机的访问控制系统,用来过滤来自互联网的网络访问。OpenSSH 支持 TCP wrappers。只需要更新文件 /etc/hosts.allow 中的以下内容就可以使得 SSH 只接受来自于 192.168.1.2 和 172.16.23.12 的连接:

|

||||

```

|

||||

sshd : 192.168.1.2 172.16.23.12

|

||||

```

|

||||

|

||||

在 Linux/Mac OS X 和类 UNIX 系统中参见 [TCP wrappers 设置和使用的常见问题][16]。

|

||||

|

||||

#### 10. 阻止 SSH 破解或暴力攻击

|

||||

### 10、 阻止 SSH 破解或暴力攻击

|

||||

|

||||

暴力破解是一种在单一或者分布式网络中使用大量组合(用户名和密码的组合)来尝试连接一个加密系统的方法。可以使用以下软件来应对暴力攻击:

|

||||

暴力破解是一种在单一或者分布式网络中使用大量(用户名和密码的)组合来尝试连接一个加密系统的方法。可以使用以下软件来应对暴力攻击:

|

||||

|

||||

* [DenyHosts][17] 是一个基于 Python SSH 安全工具。该工具通过监控授权日志中的非法登录日志并封禁原始IP的方式来应对暴力攻击。

|

||||

* RHEL / Fedora 和 CentOS Linux 下如何设置 [DenyHosts][18]。

|

||||

* [Fail2ban][19] 是另一个类似的用来预防针对 SSH 攻击的工具。

|

||||

* [sshguard][20] 是一个使用 pf 来预防针对 SSH 和其他服务攻击的工具。

|

||||

* [security/sshblock][21] 阻止滥用 SSH 尝试登录。

|

||||

* [IPQ BDB filter][22] 可以看做是 fail2ban 的一个简化版。

|

||||

* [DenyHosts][17] 是一个基于 Python SSH 安全工具。该工具通过监控授权日志中的非法登录日志并封禁原始 IP 的方式来应对暴力攻击。

|

||||

* RHEL / Fedora 和 CentOS Linux 下如何设置 [DenyHosts][18]。

|

||||

* [Fail2ban][19] 是另一个类似的用来预防针对 SSH 攻击的工具。

|

||||

* [sshguard][20] 是一个使用 pf 来预防针对 SSH 和其他服务攻击的工具。

|

||||

* [security/sshblock][21] 阻止滥用 SSH 尝试登录。

|

||||

* [IPQ BDB filter][22] 可以看做是 fail2ban 的一个简化版。
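The tools listed above share one basic idea: watch the authentication log for failed logins and act on the source addresses that fail too often. The sketch below illustrates only the counting step. It is not how any of these tools is implemented; `failed_login_counts` is a hypothetical name, and it assumes the common OpenSSH log wording `Failed password for ... from <address> port ...`.

```shell
# Hypothetical helper (not from the article): read sshd log lines on stdin and
# print "count address" pairs, most frequent offender first.
failed_login_counts() {
    awk '
        /Failed password/ {
            # Walk backwards so the last "from" on the line wins.
            for (i = NF - 1; i >= 1; i--) {
                if ($i == "from") { count[$(i + 1)]++; break }
            }
        }
        END { for (ip in count) print count[ip], ip }
    ' | sort -k1,1nr -k2,2
}

# Usage (the log path varies by distribution; this one is an assumption):
# failed_login_counts < /var/log/auth.log
```

A real blocker would go on to compare each count against a threshold inside a time window and then add a firewall rule, which is the part the tools above automate.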

|

||||

|

||||

### 11、 限制 TCP 端口 22 的传入速率(可选的)

|

||||

|

||||

netfilter 和 pf 都提供速率限制选项可以对端口 22 的传入速率进行简单的限制。

|

||||

|

||||

#### 11. 限制 TCP 端口 # 22 的传入速率 (可选的)

|

||||

|

||||

netfilter 和 pf 都提供速率限制选项可以对端口 # 22 的传入速率进行简单的限制。

|

||||

|

||||

##### Iptables 示例

|

||||

#### Iptables 示例

|

||||

|

||||

以下脚本将会阻止 60 秒内尝试登录 5 次以上的客户端的连入。

|

||||

|

||||

```

|

||||

#!/bin/bash

|

||||

inet_if=eth1

|

||||

ssh_port=22

|

||||

$IPT -I INPUT -p tcp --dport ${ssh_port} -i ${inet_if} -m state --state NEW -m recent --set

|

||||

$IPT -I INPUT -p tcp --dport ${ssh_port} -i ${inet_if} -m state --state NEW -m recent --update --seconds 60 --hitcount 5

|

||||

$IPT -I INPUT -p tcp --dport ${ssh_port} -i ${inet_if} -m state --state NEW -m recent --set

|

||||

$IPT -I INPUT -p tcp --dport ${ssh_port} -i ${inet_if} -m state --state NEW -m recent --update --seconds 60 --hitcount 5 -j DROP

|

||||

```

|

||||

|

||||

在你的 iptables 脚本中调用以上脚本。其他配置选项:

|

||||

|

||||

```

|

||||

$IPT -A INPUT -i ${inet_if} -p tcp --dport ${ssh_port} -m state --state NEW -m limit --limit 3/min --limit-burst 3 -j ACCEPT

|

||||

$IPT -A INPUT -i ${inet_if} -p tcp --dport ${ssh_port} -m state --state ESTABLISHED -j ACCEPT

|

||||

$IPT -A INPUT -i ${inet_if} -p tcp --dport ${ssh_port} -m state --state NEW -m limit --limit 3/min --limit-burst 3 -j ACCEPT

|

||||

$IPT -A INPUT -i ${inet_if} -p tcp --dport ${ssh_port} -m state --state ESTABLISHED -j ACCEPT

|

||||

$IPT -A OUTPUT -o ${inet_if} -p tcp --sport ${ssh_port} -m state --state ESTABLISHED -j ACCEPT

|

||||

# another one line example

|

||||

# $IPT -A INPUT -i ${inet_if} -m state --state NEW,ESTABLISHED,RELATED -p tcp --dport 22 -m limit --limit 5/minute --limit-burst 5 -j ACCEPT

|

||||

@ -225,9 +274,10 @@ $IPT -A OUTPUT -o ${inet_if} -p tcp --sport ${ssh_port} -m state --state ESTABLI

|

||||

|

||||

其他细节参见 iptables 用户手册。

|

||||

|

||||

##### *BSD PF 示例

|

||||

#### *BSD PF 示例

|

||||

|

||||

以下脚本将限制每个客户端的连入数量为 20,并且 5 秒内的连接不超过 15 个。如果客户端触发此规则,则将其加入 abusive_ips 表并限制该客户端连入。最后 flush 关键词杀死所有触发规则的客户端的连接。

|

||||

|

||||

以下脚本将限制每个客户端的连入数量为 20,并且 5 秒范围的连接不超过 15 个。如果客户端触发此规则则将其加入 abusive_ips 表并限制该客户端连入。最后 flush 关键词杀死所有触发规则的客户端的状态。

|

||||

```

|

||||

sshd_server_ip = "202.54.1.5"

|

||||

table <abusive_ips> persist

|

||||

@ -235,9 +285,10 @@ block in quick from <abusive_ips>

|

||||

pass in on $ext_if proto tcp to $sshd_server_ip port ssh flags S/SA keep state (max-src-conn 20, max-src-conn-rate 15/5, overload <abusive_ips> flush)

|

||||

```

|

||||

|

||||

#### 12. 使用端口敲门 (可选的)

|

||||

### 12、 使用端口敲门(可选的)

|

||||

|

||||

[端口敲门][23]是通过在一组预先指定的封闭端口上生成连接尝试,以便从外部打开防火墙上的端口的方法。一旦指定的端口连接顺序被触发,防火墙规则就被动态修改以允许发送连接的主机连入指定的端口。以下是一个使用 iptables 实现的端口敲门的示例:

|

||||

|

||||

[端口敲门][23]是通过在一组预先指定的封闭端口上生成连接尝试来从外部打开防火墙上的端口的方法。一旦指定的端口连接顺序被触发,防火墙规则就被动态修改以允许发送连接的主机连入指定的端口。以下是一个使用 iptables 实现的端口敲门的示例:

|

||||

```

|

||||

$IPT -N stage1

|

||||

$IPT -A stage1 -m recent --remove --name knock

|

||||

@ -257,24 +308,31 @@ $IPT -A INPUT -p tcp --dport 22 -m recent --rcheck --seconds 5 --name heaven -j

|

||||

$IPT -A INPUT -p tcp --syn -j door

|

||||

```

|

||||

|

||||

更多信息请参见:

|

||||

|

||||

更多信息请参见:

|

||||

[Debian / Ubuntu: 使用 Knockd and Iptables 设置端口敲门][55]

|

||||

|

||||

#### 13. 配置空闲超时注销时长

|

||||

### 13、 配置空闲超时注销时长

|

||||

|

||||

用户可以通过 ssh 连入服务器,可以配置一个超时时间间隔来避免无人值守的 ssh 会话。 打开 `sshd_config` 并确保配置以下值:

|

||||

|

||||

用户可以通过 ssh 连入服务器,可以配置一个超时时间间隔来避免无人值守的 ssh 会话。 打开 sshd_config 并确保配置以下值:

|

||||

```

|

||||

ClientAliveInterval 300

|

||||

ClientAliveCountMax 0

|

||||

```

|

||||

以秒为单位设置一个空闲超时时间(300秒 = 5分钟)。一旦空闲时间超过这个值,空闲用户就会被踢出会话。更多细节参见[如何自动注销空闲超时的 BASH / TCSH / SSH 用户][24]。

|

||||

|

||||

#### 14. 为 ssh 用户启用警示标语

|

||||

以秒为单位设置一个空闲超时时间(300秒 = 5分钟)。一旦空闲时间超过这个值,空闲用户就会被踢出会话。更多细节参见[如何自动注销空闲超时的 BASH / TCSH / SSH 用户][24]。

|

||||

|

||||

### 14、 为 ssh 用户启用警示标语

|

||||

|

||||

更新 `sshd_config` 文件如下行来设置用户的警示标语:

|

||||

|

||||

```

|

||||

Banner /etc/issue

|

||||

```

|

||||

|

||||

Sample `/etc/issue` file:

|

||||

|

||||

更新 sshd_config 文件如下来设置用户的警示标语

|

||||

`Banner /etc/issue`

|

||||

/etc/issue 示例文件:

|

||||

```

|

||||

----------------------------------------------------------------------------------------------

|

||||

You are accessing a XYZ Government (XYZG) Information System (IS) that is provided for authorized use only.

|

||||

@ -297,45 +355,61 @@ or monitoring of the content of privileged communications, or work product, rela

|

||||

or services by attorneys, psychotherapists, or clergy, and their assistants. Such communications and work

|

||||

product are private and confidential. See User Agreement for details.

|

||||

----------------------------------------------------------------------------------------------

|

||||

|

||||

```

|

||||

|

||||

以上是一个标准的示例,更多的用户协议和法律细节请咨询你的律师团队。

|

||||

|

||||

#### 15. 禁用 .rhosts 文件 (核实)

|

||||

### 15、 禁用 .rhosts 文件(需核实)

|

||||

|

||||

禁止读取用户的 `~/.rhosts` 和 `~/.shosts` 文件。更新 `sshd_config` 文件中的以下内容:

|

||||

|

||||

```

|

||||

IgnoreRhosts yes

|

||||

```

|

||||

|

||||

禁止读取用户的 ~/.rhosts 和 ~/.shosts 文件。更新 sshd_config 文件中的以下内容:

|

||||

`IgnoreRhosts yes`

|

||||

SSH 可以模拟过时的 rsh 命令,所以应该禁用不安全的 RSH 连接。

|

||||

|

||||

#### 16. 禁用 host-based 授权 (核实)

|

||||

### 16、 禁用基于主机的授权(需核实)

|

||||

|

||||

禁用 host-based 授权,更新 sshd_config 文件的以下选项:

|

||||

`HostbasedAuthentication no`

|

||||

禁用基于主机的授权,更新 `sshd_config` 文件的以下选项:

|

||||

|

||||

#### 17. 为 OpenSSH 和 操作系统打补丁

|

||||

```

|

||||

HostbasedAuthentication no

|

||||

```

|

||||

|

||||

### 17、 为 OpenSSH 和操作系统打补丁

|

||||

|

||||

推荐你使用类似 [yum][25]、[apt-get][26] 和 [freebsd-update][27] 等工具保持系统安装了最新的安全补丁。

|

||||

|

||||

#### 18. Chroot OpenSSH (将用户锁定在主目录)

|

||||

### 18、 Chroot OpenSSH (将用户锁定在主目录)

|

||||

|

||||

默认设置下用户可以浏览诸如 /etc/、/bin 等目录。可以使用 chroot 或者其他专有工具如 [rssh][28] 来保护ssh连接。从版本 4.8p1 或 4.9p1 起,OpenSSH 不再需要依赖诸如 rssh 或复杂的 chroot(1) 等第三方工具来将用户锁定在主目录中。可以使用新的 ChrootDirectory 指令将用户锁定在其主目录,参见[这篇博文][29]。

|

||||

默认设置下用户可以浏览诸如 `/etc`、`/bin` 等目录。可以使用 chroot 或者其他专有工具如 [rssh][28] 来保护 ssh 连接。从版本 4.8p1 或 4.9p1 起,OpenSSH 不再需要依赖诸如 rssh 或复杂的 chroot(1) 等第三方工具来将用户锁定在主目录中。可以使用新的 `ChrootDirectory` 指令将用户锁定在其主目录,参见[这篇博文][29]。

|

||||

|

||||

#### 19. 禁用客户端的 OpenSSH 服务

|

||||

### 19. 禁用客户端的 OpenSSH 服务

|

||||

|

||||

工作站和笔记本不需要 OpenSSH 服务。如果不需要提供 ssh 远程登录和文件传输功能的话,可以禁用 sshd 服务。CentOS / RHEL 用户可以使用 [yum 命令][30] 禁用或删除 openssh-server:

|

||||

|

||||

```

|

||||

$ sudo yum erase openssh-server

|

||||

```

|

||||

|

||||

Debian / Ubuntu 用户可以使用 [apt 命令][31]/[apt-get 命令][32] 删除 openssh-server:

|

||||

|

||||

```

|

||||

$ sudo apt-get remove openssh-server

|

||||

```

|

||||

|

||||

有可能需要更新 iptables 脚本来移除 ssh 的例外规则。CentOS / RHEL / Fedora 系统可以编辑文件 `/etc/sysconfig/iptables` 和 `/etc/sysconfig/ip6tables`。最后[重启 iptables][33] 服务:

|

||||

|

||||

工作站和笔记本不需要 OpenSSH 服务。如果不需要提供 SSH 远程登录和文件传输功能的话,可以禁用 SSHD 服务。CentOS / RHEL 用户可以使用 [yum 命令][30] 禁用或删除openssh-server:

|

||||

`$ sudo yum erase openssh-server`

|

||||

Debian / Ubuntu 用户可以使用 [apt 命令][31]/[apt-get 命令][32] 删除 openssh-server:

|

||||

`$ sudo apt-get remove openssh-server`

|

||||

有可能需要更新 iptables 脚本来移除 ssh 例外规则。CentOS / RHEL / Fedora 系统可以编辑文件 /etc/sysconfig/iptables 和 /etc/sysconfig/ip6tables。最后[重启 iptables][33] 服务:

|

||||

```

|

||||

# service iptables restart

|

||||

# service ip6tables restart

|

||||

```

|

||||

|

||||

#### 20. 来自 Mozilla 的额外提示

|

||||

### 20. 来自 Mozilla 的额外提示

|

||||

|

||||

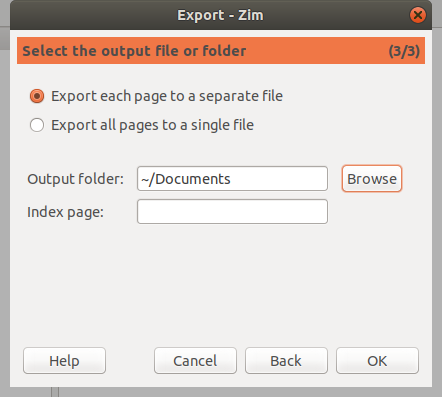

如果使用 6.7+ 版本的 OpenSSH,可以尝试下[以下设置][34]:

|

||||

|

||||

如果使用 6.7+ 版本的 OpenSSH,可以尝试下以下设置:

|

||||

```

|

||||

#################[ WARNING ]########################

|

||||

# Do not use any setting blindly. Read sshd_config #

|

||||

@ -361,10 +435,11 @@ MACs hmac-sha2-512-etm@openssh.com,hmac-sha2-256-etm@openssh.com,umac-128-etm@op

|

||||

LogLevel VERBOSE

|

||||

|

||||

# Log sftp level file access (read/write/etc.) that would not be easily logged otherwise.

|

||||

Subsystem sftp /usr/lib/ssh/sftp-server -f AUTHPRIV -l INFO

|

||||

Subsystem sftp /usr/lib/ssh/sftp-server -f AUTHPRIV -l INFO

|

||||

```

|

||||

|

||||

使用以下命令获取 OpenSSH 支持的加密方法:

|

||||

|

||||

```

|

||||

$ ssh -Q cipher

|

||||

$ ssh -Q cipher-auth

|

||||

@ -372,15 +447,25 @@ $ ssh -Q mac

|

||||

$ ssh -Q kex

|

||||

$ ssh -Q key

|

||||

```

|

||||

[![OpenSSH安全教程查询密码和算法选择][35]][35]

|

||||

|

||||

#### 如何测试 sshd_config 文件并重启/重新加载 SSH 服务?

|

||||

[![OpenSSH安全教程查询密码和算法选择][35]][35]

|

||||

|

||||

### 如何测试 sshd_config 文件并重启/重新加载 SSH 服务?

|

||||

|

||||

在重启 sshd 前检查配置文件的有效性和密匙的完整性,运行:

|

||||

|

||||

```

|

||||

$ sudo sshd -t

|

||||

```

|

||||

|

||||

扩展测试模式:

|

||||

|

||||

```

|

||||

$ sudo sshd -T

|

||||

```

|

||||

|

||||

在重启 sshd 前检查配置文件的有效性和密匙的完整性,运行:

|

||||

`$ sudo sshd -t`

|

||||

扩展测试模式:

|

||||

`$ sudo sshd -T`

|

||||

最后,根据系统的的版本[重启 Linux 或类 Unix 系统中的 sshd 服务][37]:

|

||||

|

||||

```

|

||||

$ sudo systemctl restart ssh ## Debian/Ubuntu Linux ##

|

||||

$ sudo systemctl restart sshd.service ## CentOS/RHEL/Fedora Linux ##

|

||||

@ -388,25 +473,21 @@ $ doas /etc/rc.d/sshd restart ## OpenBSD##

|

||||

$ sudo service sshd restart ## FreeBSD##

|

||||

```
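The two steps above (test the configuration, then restart) can be chained so that a broken `sshd_config` never reaches a restart. This is a minimal sketch rather than anything from the original article; `restart_if_config_ok` is a hypothetical wrapper, and the commands passed to it are whatever applies to your system.

```shell
# Hypothetical wrapper (not from the article): run the restart command only
# when the check command succeeds.
restart_if_config_ok() {
    check_cmd=$1      # e.g. "sshd -t"
    restart_cmd=$2    # e.g. "systemctl restart sshd"
    # Unquoted on purpose: each argument is a simple command line to split.
    if $check_cmd; then
        $restart_cmd
    else
        echo "configuration test failed; service not restarted" >&2
        return 1
    fi
}

# Usage (run as root; pick the restart command for your system):
# restart_if_config_ok "sshd -t" "systemctl restart sshd"
```

Keeping an existing SSH session open while restarting is still a sensible precaution, since a valid configuration can lock you out just as well as an invalid one.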

|

||||

|

||||

#### 其他建议

|

||||

### 其他建议

|

||||

|

||||

1. [使用 2FA 加强 SSH 的安全性][40] - 可以使用[OATH Toolkit][41] 或 [DuoSecurity][42] 启用多重身份验证。

|

||||

2. [基于密匙链的身份验证][43] - 密匙链是一个 bash 脚本,可以使得基于密匙的验证非常的灵活方便。相对于无密码密匙,它提供更好的安全性。

|

||||

1. [使用 2FA 加强 SSH 的安全性][40] - 可以使用 [OATH Toolkit][41] 或 [DuoSecurity][42] 启用多重身份验证。

|

||||

2. [基于密匙链的身份验证][43] - 密匙链是一个 bash 脚本,可以使得基于密匙的验证非常的灵活方便。相对于无密码密匙,它提供更好的安全性。

|

||||

|

||||

### 更多信息:

|

||||

|

||||

* [OpenSSH 官方][44] 项目。

|

||||

* 用户手册: sshd(8)、ssh(1)、ssh-add(1)、ssh-agent(1)。

|

||||

|

||||

#### 更多信息:

|

||||

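If you know of a handy tool or technique that is not mentioned here, please share it in the comments below to help fellow readers keep their OpenSSH servers secure.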

|

||||

|

||||

* [OpenSSH 官方][44] 项目.

|

||||

* 用户手册: sshd(8),ssh(1),ssh-add(1),ssh-agent(1)

|

||||

### 关于作者

|

||||

|

||||

|

||||

|

||||

如果你发现一个方便的软件或者技术,请在下面的评论中分享,以帮助读者保持 OpenSSH 的安全。

|

||||

|

||||

#### 关于作者

|

||||

|

||||

作者是 nixCraft 的创始人,一个经验丰富的系统管理员和 Linux/Unix 脚本培训师。他曾与全球客户合作,领域涉及IT,教育,国防和空间研究以及非营利部门等多个行业。请在 [Twitter][45]、[Facebook][46]、[Google+][47] 上关注他。

|

||||

作者是 nixCraft 的创始人,一个经验丰富的系统管理员和 Linux/Unix 脚本培训师。他曾与全球客户合作,领域涉及 IT,教育,国防和空间研究以及非营利部门等多个行业。请在 [Twitter][45]、[Facebook][46]、[Google+][47] 上关注他。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -414,7 +495,7 @@ via: https://www.cyberciti.biz/tips/linux-unix-bsd-openssh-server-best-practices

|

||||

|

||||

作者:[Vivek Gite][a]

|

||||

译者:[shipsw](https://github.com/shipsw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -467,7 +548,7 @@ via: https://www.cyberciti.biz/tips/linux-unix-bsd-openssh-server-best-practices

|

||||

[46]:https://facebook.com/nixcraft

|

||||

[47]:https://plus.google.com/+CybercitiBiz

|

||||

[48]:https://www.cyberciti.biz/faq/ssh-passwordless-login-with-keychain-for-scripts/

|

||||

[49]:https://www.cyberciti.biz/faq/noninteractive-shell-script-ssh-password-provider/

|

||||

[49]:https://linux.cn/article-8086-1.html

|

||||

[50]:https://www.cyberciti.biz/faq/how-to-set-up-ssh-keys-on-linux-unix/

|

||||

[51]:https://www.cyberciti.biz/faq/how-to-upload-ssh-public-key-to-as-authorized_key-using-ansible/

|

||||

[52]:https://www.cyberciti.biz/faq/generating-random-password/

|

||||

@ -0,0 +1,124 @@

|

||||

在 Linux 上安装必应桌面墙纸更换器

|

||||

======

|

||||

|

||||

你是否厌倦了 Linux 桌面背景,想要设置好看的壁纸,但是不知道在哪里可以找到?别担心,我们在这里会帮助你。

|

||||

|

||||

我们都知道必应搜索引擎,但是由于一些原因很少有人使用它,每个人都喜欢必应网站的背景壁纸,它是非常漂亮和惊人的高分辨率图像。

|

||||

|

||||

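If you want to use these images as your desktop wallpaper you can download them manually, but downloading a new image every day and setting it as the wallpaper is tedious. That is where an automatic wallpaper changer comes in.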

|

||||

|

||||

[必应桌面墙纸更换器][1]会自动下载并将桌面壁纸更改为当天的必应照片。所有的壁纸都储存在 `/home/[user]/Pictures/BingWallpapers/`。

|

||||

|

||||

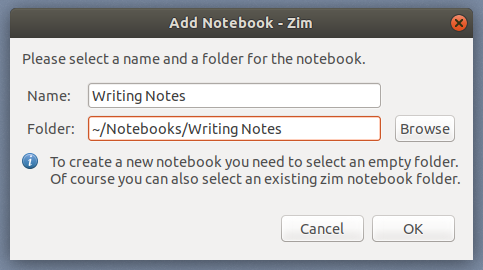

### 方法 1: 使用 Utkarsh Gupta Shell 脚本

|

||||

|

||||

这个小型 Python 脚本会自动下载并将桌面壁纸更改为当天的必应照片。该脚本在机器启动时自动运行,并工作于 GNU/Linux 上的 Gnome 或 Cinnamon 环境。它不需要手动工作,安装程序会为你做所有事情。

|

||||

|

||||

从 2.0+ 版本开始,该脚本的安装程序就可以像普通的 Linux 二进制命令一样工作,它会为某些任务请求 sudo 权限。

|

||||

|

||||

只需克隆仓库并切换到项目目录,然后运行 shell 脚本即可安装必应桌面墙纸更换器。

|

||||

|

||||

```

|

||||

$ wget https://github.com/UtkarshGpta/bing-desktop-wallpaper-changer/archive/master.zip

|

||||

$ unzip master

|

||||

$ cd bing-desktop-wallpaper-changer-master

|

||||

```

|

||||

|

||||

运行 `installer.sh` 使用 `--install` 选项来安装必应桌面墙纸更换器。它会下载并设置必应照片为你的 Linux 桌面。

|

||||

|

||||

```

|

||||

$ ./installer.sh --install

|

||||

|

||||

Bing-Desktop-Wallpaper-Changer

|

||||

BDWC Installer v3_beta2

|

||||

|

||||

GitHub:

|

||||

Contributors:

|

||||

.

|

||||

.

|

||||

[sudo] password for daygeek: ******

|

||||

.

|

||||

Where do you want to install Bing-Desktop-Wallpaper-Changer?

|

||||

Entering 'opt' or leaving input blank will install in /opt/bing-desktop-wallpaper-changer

|

||||

Entering 'home' will install in /home/daygeek/bing-desktop-wallpaper-changer

|

||||

Install Bing-Desktop-Wallpaper-Changer in (opt/home)? :Press Enter

|

||||

|

||||

Should we create bing-desktop-wallpaper-changer symlink to /usr/bin/bingwallpaper so you could easily execute it?

|

||||

Create symlink for easy execution, e.g. in Terminal (y/n)? : y

|

||||

|

||||

Should bing-desktop-wallpaper-changer needs to autostart when you log in? (Add in Startup Application)

|

||||

Add in Startup Application (y/n)? : y

|

||||

.

|

||||

.

|

||||

Executing bing-desktop-wallpaper-changer...

|

||||

|

||||

|

||||

Finished!!

|

||||

```

|

||||

|

||||

![][3]

|

||||

|

||||

要卸载该脚本:

|

||||

|

||||

```

|

||||

$ ./installer.sh --uninstall

|

||||

```

|

||||

|

||||

使用帮助页面了解更多关于此脚本的选项。

|

||||

|

||||

```

|

||||

$ ./installer.sh --help

|

||||

```

|

||||

|

||||

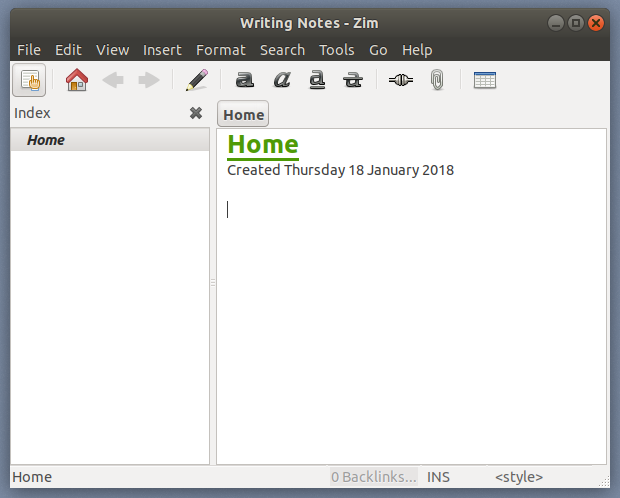

### 方法 2: 使用 GNOME Shell 扩展

|

||||

|

||||

这个轻量级 [GNOME shell 扩展][4],可将你的壁纸每天更改为微软必应的壁纸。它还会显示一个包含图像标题和解释的通知。

|

||||

|

||||

该扩展大部分基于 Elinvention 的 NASA APOD 扩展,受到了 Utkarsh Gupta 的 Bing Desktop WallpaperChanger 启发。

|

||||

|

||||

#### 特点

|

||||

|

||||

- 获取当天的必应壁纸并设置为锁屏和桌面墙纸(这两者都是用户可选的)

|

||||

- 可强制选择某个特定区域(即地区)

|

||||

- 为多个显示器自动选择最高分辨率(和最合适的墙纸)

|

||||

- 可以选择在 1 到 7 天之后清理墙纸目录(删除最旧的)

|

||||

- 只有当它们被更新时,才会尝试下载壁纸

|

||||

- 不会持续进行更新 - 每天只进行一次,启动时也要进行一次(更新是在必应更新时进行的)
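The cleanup feature in the list above amounts to removing wallpapers older than a chosen number of days. For anyone who wants the same effect by hand, the sketch below lists the candidates. It is an illustration only: `list_old_wallpapers` is a hypothetical name, and the directory in the usage line is the one this article gives for the Python script, which the extension may or may not share.

```shell
# Hypothetical helper (not from the article): list files in a directory that
# were last modified more than a given number of days ago.
list_old_wallpapers() {
    dir=$1
    days=$2
    # -mtime +N matches files last modified more than N whole days ago.
    find "$dir" -type f -mtime +"$days" -print
}

# Usage: review the list first, then delete only if it looks right.
# list_old_wallpapers "$HOME/Pictures/BingWallpapers" 7
# list_old_wallpapers "$HOME/Pictures/BingWallpapers" 7 | while IFS= read -r f; do rm -- "$f"; done
```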

|

||||

|

||||

#### 如何安装

|

||||

|

||||

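Visit [extensions.gnome.org][5], switch the toggle to “ON”, and then click the “Install” button to install the Bing Wallpaper GNOME extension. (LCTT translator's note: the page shows no ON toggle, only a Download button.)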

|

||||

|

||||

![][6]

|

||||

|

||||

安装必应壁纸 GNOME 扩展后,它会自动下载并为你的 Linux 桌面设置当天的必应照片,并显示关于壁纸的通知。

|

||||

|

||||

![][7]

|

||||

|

||||

托盘指示器将帮助你执行少量操作,也可以打开设置。

|

||||

|

||||

![][8]

|

||||

|

||||

根据你的要求自定义设置。

|

||||

|

||||

![][9]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/bing-desktop-wallpaper-changer-linux-bing-photo-of-the-day/

|

||||

|

||||

作者:[2daygeek][a]

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.2daygeek.com/author/2daygeek/

|

||||

[1]:https://github.com/UtkarshGpta/bing-desktop-wallpaper-changer

|

||||

[2]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[3]:https://www.2daygeek.com/wp-content/uploads/2017/09/bing-wallpaper-changer-linux-5.png

|

||||

[4]:https://github.com/neffo/bing-wallpaper-gnome-extension

|

||||

[5]:https://extensions.gnome.org/extension/1262/bing-wallpaper-changer/

|

||||

[6]:https://www.2daygeek.com/wp-content/uploads/2017/09/bing-wallpaper-changer-for-linux-1.png

|

||||

[7]:https://www.2daygeek.com/wp-content/uploads/2017/09/bing-wallpaper-changer-for-linux-2.png

|

||||

[8]:https://www.2daygeek.com/wp-content/uploads/2017/09/bing-wallpaper-changer-for-linux-3.png

|

||||

[9]:https://www.2daygeek.com/wp-content/uploads/2017/09/bing-wallpaper-changer-for-linux-4.png

|

||||

@ -1,28 +1,21 @@

|

||||

|

||||

如何提供有帮助的回答

|

||||

=============================

|

||||

|

||||

如果你的同事问你一个不太清晰的问题,你会怎么回答?我认为提问题是一种技巧(可以看 [如何提出有意义的问题][1]) 同时,合理地回答问题也是一种技巧。他们都是非常实用的。

|

||||

如果你的同事问你一个不太清晰的问题,你会怎么回答?我认为提问题是一种技巧(可以看 [如何提出有意义的问题][1]) 同时,合理地回答问题也是一种技巧,它们都是非常实用的。

|

||||

|

||||

一开始 - 有时向你提问的人不尊重你的时间,这很糟糕。

|

||||

|

||||

理想情况下,我们假设问你问题的人是一个理性的人并且正在尽力解决问题而你想帮助他们。和我一起工作的人是这样,我所生活的世界也是这样。当然,现实生活并不是这样。

|

||||

一开始 —— 有时向你提问的人不尊重你的时间,这很糟糕。理想情况下,我们假设问你问题的人是一个理性的人并且正在尽力解决问题,而你想帮助他们。和我一起工作的人是这样,我所生活的世界也是这样。当然,现实生活并不是这样。

|

||||

|

||||

下面是有助于回答问题的一些方法!

|

||||

|

||||

|

||||

### 如果他们提问不清楚,帮他们澄清

|

||||

### 如果他们的提问不清楚,帮他们澄清

|

||||

|

||||

通常初学者不会提出很清晰的问题,或者问一些对回答问题没有必要信息的问题。你可以尝试以下方法 澄清问题:

|

||||

|

||||

* ** 重述为一个更明确的问题 ** 来回复他们(”你是想问 X 吗?“)

|

||||

|

||||

* ** 向他们了解更具体的他们并没有提供的信息 ** (”你使用 IPv6 ?”)

|

||||

|

||||

* ** 问是什么导致了他们的问题 ** 例如,有时有些人会进入我的团队频道,询问我们的服务发现(service discovery )如何工作的。这通常是因为他们试图设置/重新配置服务。在这种情况下,如果问“你正在使用哪种服务?可以给我看看你正在处理的 pull requests 吗?”是有帮助的。

|

||||

|

||||

这些方法很多来自 [如何提出有意义的问题][2]中的要点。(尽管我永远不会对某人说“噢,你得先看完 “如何提出有意义的问题”这篇文章后再来像我提问)

|

||||

* **重述为一个更明确的问题**来回复他们(“你是想问 X 吗?”)

|

||||

* **向他们了解更具体的他们并没有提供的信息** (“你使用 IPv6 ?”)

|

||||

* **问是什么导致了他们的问题**。例如,有时有些人会进入我的团队频道,询问我们的<ruby>服务发现<rt>service discovery</rt></ruby>如何工作的。这通常是因为他们试图设置/重新配置服务。在这种情况下,如果问“你正在使用哪种服务?可以给我看看你正在处理的‘拉取请求’吗?”是有帮助的。

|

||||

|

||||

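Many of these strategies come from the points in [How to ask good questions][2]. (Though I would never say to someone “oh, you need to read *How to ask good questions* before you come and ask me”.)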

|

||||

|

||||

### 弄清楚他们已经知道了什么

|

||||

|

||||

@ -30,66 +23,54 @@

|

||||

|

||||

Harold Treen 给了我一个很好的例子:

|

||||

|

||||

> 前几天,有人请我解释“ Redux-Sagas ”。与其深入解释不如说“ 他们就像 worker threads 监听行为(actions),让你更新 Redux store 。

|

||||

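> The other day, someone asked me to explain “Redux-Sagas”. Rather than diving straight in with “they are like worker threads that listen for actions and let you update the Redux store”,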

|

||||

|

||||

> 我开始搞清楚他们对 Redux 、行为(actions)、store 以及其他基本概念了解多少。将这些概念都联系在一起再来解释会容易得多。

|

||||

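> I started by figuring out how much they knew about Redux, actions, the store, and the other fundamental concepts. Tying those concepts together made the explanation much easier.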

|

||||

|

||||

弄清楚问你问题的人已经知道什么是非常重要的。因为有时他们可能会对基础概念感到疑惑(“ Redux 是什么?“),或者他们可能是专家但是恰巧遇到了微妙的极端情况(corner case)。如果答案建立在他们不知道的概念上会令他们困惑,但如果重述他们已经知道的的又会是乏味的。

|

||||

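It is very important to figure out what the person asking already knows. Sometimes they are confused about a fundamental concept (“What is Redux?”), and sometimes they are an expert who has simply hit a subtle corner case. An answer built on concepts they do not know will confuse them, while restating what they already know is tedious.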

|

||||

|

||||

这里有一个很实用的技巧来了解他们已经知道什么 - 比如可以尝试用“你对 X 了解多少?”而不是问“你知道 X 吗?”。

|

||||

|

||||

|

||||

### 给他们一个文档

|

||||

|

||||

“RTFM” (“去读那些他妈的手册”(Read The Fucking Manual))是一个典型的无用的回答,但事实上如果向他们指明一个特定的文档会是非常有用的!当我提问题的时候,我当然很乐意翻看那些能实际解决我的问题的文档,因为它也可能解决其他我想问的问题。

|

||||

“RTFM” (<ruby>“去读那些他妈的手册”<rt>Read The Fucking Manual</rt></ruby>)是一个典型的无用的回答,但事实上如果向他们指明一个特定的文档会是非常有用的!当我提问题的时候,我当然很乐意翻看那些能实际解决我的问题的文档,因为它也可能解决其他我想问的问题。

|

||||

|

||||

我认为明确你所给的文档的确能够解决问题是非常重要的,或者至少经过查阅后确认它对解决问题有帮助。否则,你可能将以下面这种情形结束对话(非常常见):

|

||||

|

||||

* Ali:我应该如何处理 X ?

|

||||

* Jada:\<文档链接>

|

||||

* Ali: 这个没有实际解释如何处理 X ,它仅仅解释了如何处理 Y !

|

||||

|

||||

* Jada:<文档链接>

|

||||

|

||||

* Ali: That doesn't actually explain how to do X, it only explains how to do Y!

|

||||

|

||||

如果我所给的文档特别长,我会指明文档中那个我将会谈及的特定部分。[bash 手册][3] 有44000个字(真的!),所以如果只说“它在 bash 手册中有说明”是没有帮助的:)

|

||||

|

||||

如果我所给的文档特别长,我会指明文档中那个我将会谈及的特定部分。[bash 手册][3] 有 44000 个字(真的!),所以如果只说“它在 bash 手册中有说明”是没有帮助的 :)

|

||||

|

||||

### Point them to a useful search

At work, I often find that I can search for an answer using keywords that I happen to know will lead to it. For a beginner, those keywords are often far from obvious. So saying “this is the search I'd use to find the answer” can be useful. Again, check first to make sure the search actually turns up the answer they need :)

|

||||

|

||||

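### Write new documentation

People often ask my team the same questions over and over. That's obviously not their fault (how could they know that 10 people before them already asked, and what the answer was?). So instead of just answering the question directly, we try to write new documentation:

1. Write the documentation right away
2. Point the person to the new documentation we just wrote
3. Publicize it

Writing documentation often takes more time than just answering the question, but it's worth it. It is especially worthwhile when:

a. the question is asked again and again

b. the answer doesn't change much over time (if it changes every week or every month, the documentation just goes out of date and becomes frustrating)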

|

||||

|

||||

|

||||

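### Explain what you did

As a beginner in a subject, an exchange like this is really frustrating:

* New person: “Hey! How do you do X?”
* Experienced person: “I've taken care of it, and it's all sorted out.”
* New person: “...... but what did you DO?!”

If the person asking wants to learn how things work, it helps to:

* Walk them through doing the task themselves instead of doing it for them
* Tell them how you got to the answer you gave them

This may take longer than doing it yourself, but it's a learning opportunity for the person who asked, and it leaves them better equipped to solve such problems in the future.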

|

||||

@ -97,88 +78,74 @@ b. 随着时间的推移,这个答案不会变化太大(如果这个答案

|

||||

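That way, you can have a much better exchange, like this:

* New person: “I'm seeing errors on the site, what's happening?”
* Experienced person: (2 minutes later) “Oh, that's because a database failover happened.”
* New person: “How did you know that??!?!?”
* Experienced person: “Here's what I did!”:

1. Usually these errors happen because server Y is down. I checked `$PLACE` and it showed that server Y was up, so that wasn't the cause.
2. Then I looked at the dashboard for X, and this part of it showed that a database failover had happened.
3. Then I looked at the logs for the corresponding server, and they showed errors connecting to the database, so that looks like the problem.

If you're explaining how you debugged a problem, explain both how you noticed what was wrong and how you tracked it down. Even when it might look as though you simply knew the right answer, it feels better to help the other person learn, sharpen their diagnostic skills, and discover what resources are available.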

|

||||

|

||||

|

||||

### Solve the underlying problem

This one is a bit tricky. Sometimes people think they have already found the right path to a solution and just need one more piece of information to get there. But they might not be on the right path at all! For example:

* George: “I'm doing X and I got an error. How do I fix it?”
* Jasminda: “Are you actually trying to do Y? If so, you shouldn't do X, you should do Z instead.”
* George: “Oh, you're right!!! Thank you! I'll do Z instead.”

Jasminda didn't answer George's question at all! Instead, she guessed that George didn't really want to be doing X, and she was right. That is really helpful!

But this approach can also come across as condescending:

* George: “I'm doing X and I got an error. How do I fix it?”
* Jasminda: “Don't do that. If you're trying to do Y, you should do Z instead.”
* George: “Well, I'm not trying to do Y. I actually want to do X because REASONS. So how do I do X?”

So don't be condescending, and keep in mind that some askers may have wandered a long way from the underlying problem. It is perfectly reasonable to answer both the question they asked and the one they should have asked: “Well, if you want to do X then you might do it this way, but if you're using it to solve problem Y, you might be better off doing something else, and here's why that would be better.”

|

||||

|

||||

|

||||

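### Ask “Did that answer your question?”

I always like to check in after I've answered a question to see whether it really solved the problem: “Did that answer your question? Do you have any other questions?” It's good to pause and wait a bit after asking, because people often need a minute or two to work out whether they've got the answer they need.

I find this extra step of asking “Did that answer your question?” especially useful after writing documentation. When I write documentation about something I know well, I often leave out something important without realizing it.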

|

||||

|

||||

|

||||

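### Pair programming and talking face to face

I work remotely, so many of my conversations are text-based. I think of that as the default way to communicate.

Today, we live in a world of easy video calls and screen sharing! At work, I can click a button at any time and immediately be in a video call or screen-sharing session with someone!

For example, someone recently asked how to autoscale their service's capacity. I told them there were a few things we needed to clear up, but I wasn't yet sure exactly what they were. So we got on a quick video call, and 5 minutes later we had solved their problem.

I think that, especially when someone is really stuck on how to get started with a task, pair programming over video for a few minutes works much better than email or instant messaging.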

|

||||

|

||||

|

||||

### Don't act surprised

This is a rule from the Recurse Center: [no feigning surprise][4]. Here is a common scenario:

* Person A: “What's the Linux kernel?”
* Person B: “You DON'T KNOW what the Linux kernel is?!!!!?!!!????”

Person B's reaction (whether or not they are genuinely surprised) is not helpful. Mostly it just makes Person A feel bad for not knowing what the Linux kernel is.

I've been practicing acting not surprised, even when I actually am a little surprised that the person doesn't know something.

|

||||

|

||||

### Answering questions is awesome

Obviously not all of these strategies are appropriate all the time, but I hope you find some of them helpful! I find that taking the time to answer questions and teach people is really rewarding.

Special thanks to Josh Triplett for his suggestions and many helpful additions, and to Harold Treen, Vaibhav Sagar, Peter Bhat Hatkins, Wesley Aptekar Cassels, and Paul Gowder for reading or commenting.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://jvns.ca/blog/answer-questions-well/

|

||||

|

||||

Author: [Julia Evans][a]
Translator: [HardworkFish](https://github.com/HardworkFish)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

112

sources/talk/20170210 Evolutional Steps of Computer Systems.md

Normal file


@ -0,0 +1,112 @@

|

||||

Evolutional Steps of Computer Systems

|

||||

======

|

||||

Throughout the history of the modern computer, there have been several evolutional steps in the way we interact with the system. I tend to categorize those steps as follows:

|

||||

|

||||

1. Numeric Systems

|

||||

2. Application-Specific Systems

|

||||

3. Application-Centric Systems

|

||||

4. Information-Centric Systems

|

||||

5. Application-Less Systems

|

||||

|

||||

|

||||

|

||||

The following sections describe how I see those categories.

|

||||

|

||||

### Numeric Systems

|

||||

|

||||

[Early computers][1] were designed with numbers in mind. They could add, subtract, multiply, and divide. Some of them were able to perform more complex mathematical operations such as differentiation or integration.

|

||||

|

||||

If you map characters to numbers, they were able to «compute» [strings][2] as well, but this is a somewhat «creative use of numbers» rather than meaningful processing of arbitrary information.
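To make the character-to-number mapping concrete, here is a minimal sketch in Python. It assumes Unicode code points as the mapping; the early machines discussed here used their own character codes, and the function names `encode`, `decode`, and `shift` are purely illustrative.

```python
# Illustrative only: treat text as numbers, the way a purely numeric machine would.

def encode(text: str) -> list[int]:
    """Map each character to its Unicode code point."""
    return [ord(ch) for ch in text]

def decode(numbers: list[int]) -> str:
    """Map code points back to characters."""
    return "".join(chr(n) for n in numbers)

def shift(numbers: list[int], offset: int) -> list[int]:
    """'Compute' on the string with plain arithmetic: add an offset to every code point."""
    return [n + offset for n in numbers]

codes = encode("abc")
print(codes)                    # [97, 98, 99]
print(decode(shift(codes, 1)))  # bcd
```

The machine only ever adds integers here; that the result reads as text is an interpretation layered on top, which is the «creative use of numbers» described above.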

|

||||

|

||||

### Application-Specific Systems

|

||||

|

||||

For higher-level problems, pure numeric systems are not sufficient. Application-specific systems were developed to do one single task. They were very similar to numeric systems. However, with sufficiently complex number calculations, systems were able to accomplish very well-defined higher level tasks such as calculations related to scheduling problems or other optimization problems.

|

||||

|

||||

Systems of this category were built for one single purpose, one distinct problem they solved.

|

||||

|

||||

### Application-Centric Systems

|

||||

|

||||

Systems that are application-centric are the first real general purpose systems. Their main usage style is still mostly application-specific but with multiple applications working either time-sliced (one app after another) or in multi-tasking mode (multiple apps at the same time).

|

||||

|

||||

Early personal computers [from the 70s][3] of the previous century were the first application-centric systems that became popular for a wide group of people.

|

||||

|

||||

Yet modern operating systems - Windows, macOS, most GNU/Linux desktop environments - still follow the same principles.

|

||||

|

||||

Of course, there are sub-categories as well:

|

||||

|

||||

1. Strict Application-Centric Systems

|

||||

2. Loose Application-Centric Systems

|

||||

|

||||

|

||||

|

||||

Strict application-centric systems such as [Windows 3.1][4] (Program Manager and File Manager) or even the initial version of [Windows 95][5] had no pre-defined folder hierarchy. The user started text processing software like [WinWord][6] and saved the files in the program folder of WinWord. When working with a spreadsheet program, its files were saved in the application folder of the spreadsheet tool. And so on. Users did not create their own hierarchy of folders, mostly because of convenience, laziness, or because they did not see any necessity. The number of files per user was still between dozens and a few hundred.

|

||||

|

||||

For accessing information, the user typically opened an application and within the application, the files containing the generated data were retrieved using file/open.

|

||||

|

||||

It was [Windows 95][5] SP2 that introduced «[My Documents][7]» for the Windows platform. With this file hierarchy template, application designers began switching to «My Documents» as a default file save/open location instead of using the software product installation path. This made the users embrace this pattern and start to maintain folder hierarchies on their own.

|

||||

|

||||

This resulted in loose application-centric systems: typical file retrieval is done via a file manager. When a file is opened, the associated application is started by the operating system. It is a small or subtle but very important usage shift. Application-centric systems are still the dominant usage pattern for personal computers.
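The shift to opening files from a file manager can be pictured as a lookup from file type to application. The following is a rough sketch, not how any particular operating system implements it: the `ASSOCIATIONS` table and the application names in it are hypothetical.

```python
from pathlib import Path

# Hypothetical association table; real operating systems keep this in their own registries.
ASSOCIATIONS = {
    ".doc": "word-processor",
    ".xls": "spreadsheet",
    ".png": "image-viewer",
}

def application_for(filename: str) -> str:
    """Return the (hypothetical) application associated with a file's extension."""
    suffix = Path(filename).suffix.lower()
    return ASSOCIATIONS.get(suffix, "unknown")

print(application_for("projects/budget/2017.xls"))  # spreadsheet
```

In this model the user picks a file and the system picks the application, whereas in the strict application-centric model the user picks the application first and then the file.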

|

||||

|

||||

Nevertheless, this pattern comes with many disadvantages. For example, in order to prevent data retrieval problems, there is the need to maintain a strict hierarchy of folders that contain all related files of a given project. Unfortunately, nature does not fit well into a strict hierarchy of folders. Furthermore, [this does not scale well][8]. Desktop search engines and advanced data organizing tools like [tagstore][9] are able to smooth the edges a bit. As studies show, only a minority of users are using such advanced retrieval tools. Most users still navigate through the file system without using any alternative or supplemental retrieval techniques.

|

||||

|

||||

### Information-Centric Systems

|

||||

|

||||

One possible way of dealing with the issue that a certain topic needs to have a folder that holds all related files is to switch from an application-centric system to an information-centric system.

|

||||

|

||||

Instead of opening a spreadsheet application to work with the project budget, opening a word processor application to write the project report, and opening another tool to work with image files, an information-centric system combines all the information on the project in one place, in one application.

|

||||

|

||||

The calculations for the previous month are right beneath notes from a client meeting, which are right beneath a photograph of the whiteboard notes, which is right beneath some to-do tasks, without any application or file border in between.

|

||||

|

||||

Early attempts to create such an environment were IBM [OS/2][10], Microsoft [OLE][11] or [NeXT][12]. None of them were a major success for a variety of reasons. A very interesting information-centric environment is [Acme][13] from [Plan 9][14]. It combines [a wide variety of applications][15] within one application but it never reached a notable distribution even with its ports to Windows or GNU/Linux.

|

||||

|

||||

Modern approaches for an information-centric system are advanced [personal wikis][16] like [TheBrain][17] or [Microsoft OneNote][18].

|

||||

|

||||

My personal tool of choice is the [GNU/Emacs][19] platform with its [Org-mode][19] extension. I hardly leave Org-mode when I work with my computer. For accessing external data sources, I created [Memacs][20], which brings a broad variety of data into Org-mode. I love to do spreadsheet calculations right beneath scheduled tasks, in-line images, internal and external links, and so forth. It is truly an information-centric system where the user doesn't have to deal with application borders or strictly hierarchical file-system folders. Multi-classification is possible using simple or advanced tagging. All kinds of views can be derived with a single command. One of those views is my calendar, the agenda. Another derived view is the list of borrowed things. And so on. There are no limits for Org-mode users. If you can think of it, it is most likely possible within Org-mode.
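The idea of multi-classification through tags, with views derived on demand, can be sketched in a few lines of Python. This is not Org-mode syntax or its API; the `NOTES` data and the `derive_view` helper are hypothetical and only illustrate the principle.

```python
# Hypothetical notes: each carries several tags instead of living in exactly one folder.
NOTES = [
    {"title": "Q1 budget", "tags": {"projectX", "finance"}},
    {"title": "Client meeting notes", "tags": {"projectX", "meeting"}},
    {"title": "Drill lent to a neighbour", "tags": {"borrowed"}},
]

def derive_view(tag: str) -> list[str]:
    """Return the titles of all notes carrying the given tag, in stored order."""
    return [note["title"] for note in NOTES if tag in note["tags"]]

print(derive_view("projectX"))  # ['Q1 budget', 'Client meeting notes']
print(derive_view("borrowed"))  # ['Drill lent to a neighbour']
```

Because a note can carry any number of tags, the same item shows up in every view it belongs to, which a strict folder hierarchy cannot express without copies or links.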

|

||||

|

||||

Is this the end of the evolution? Certainly not.

|

||||

|

||||

### Application-Less Systems

|

||||

|

||||

I can think of a class of systems which I refer to as application-less systems. As the next logical step, there is no need to have single-domain applications even when they are as capable as Org-mode. The computer offers a nice-to-use interface to information and features, not files and applications. Even a classical operating system is not accessible.

|

||||

|

||||

Application-less systems might as well be combined with [artificial intelligence][21]. Think of it as some kind of [HAL 9000][22] from [A Space Odyssey][23]. Or [LCARS][24] from Star Trek.

|

||||

|

||||

It is hard to believe that there is a transition between our application-based, vendor-based software culture and application-less systems. Maybe the open source movement, with its slow but constant development, will be able to form a truly application-less environment to which all kinds of organizations and people contribute.

|

||||

|

||||

Information and features to retrieve and manipulate information, this is all it takes. This is all we need. Everything else is just limiting distraction.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://karl-voit.at/2017/02/10/evolution-of-systems/

|

||||

|

||||

Author: [Karl Voit][a]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://karl-voit.at

|

||||

[1]:https://en.wikipedia.org/wiki/History_of_computing_hardware

|

||||

[2]:https://en.wikipedia.org/wiki/String_%2528computer_science%2529

|

||||

[3]:https://en.wikipedia.org/wiki/Xerox_Alto

|

||||

[4]:https://en.wikipedia.org/wiki/Windows_3.1x

|

||||

[5]:https://en.wikipedia.org/wiki/Windows_95

|

||||

[6]:https://en.wikipedia.org/wiki/Microsoft_Word

|

||||

[7]:https://en.wikipedia.org/wiki/My_Documents

|

||||

[8]:http://karl-voit.at/tagstore/downloads/Voit2012b.pdf

|

||||

[9]:http://karl-voit.at/tagstore/

|

||||

[10]:https://en.wikipedia.org/wiki/OS/2

|

||||

[11]:https://en.wikipedia.org/wiki/Object_Linking_and_Embedding

|

||||

[12]:https://en.wikipedia.org/wiki/NeXT

|

||||

[13]:https://en.wikipedia.org/wiki/Acme_%2528text_editor%2529

|

||||

[14]:https://en.wikipedia.org/wiki/Plan_9_from_Bell_Labs

|

||||

[15]:https://en.wikipedia.org/wiki/List_of_Plan_9_applications

|

||||

[16]:https://en.wikipedia.org/wiki/Personal_wiki

|

||||

[17]:https://en.wikipedia.org/wiki/TheBrain

|

||||

[18]:https://en.wikipedia.org/wiki/Microsoft_OneNote

|

||||

[19]:../../../../tags/emacs

|

||||

[20]:https://github.com/novoid/Memacs

|

||||

[21]:https://en.wikipedia.org/wiki/Artificial_intelligence

|

||||

[22]:https://en.wikipedia.org/wiki/HAL_9000

|

||||

[23]:https://en.wikipedia.org/wiki/2001:_A_Space_Odyssey

|

||||

[24]:https://en.wikipedia.org/wiki/LCARS

|

||||

@ -0,0 +1,91 @@

|

||||

Why culture is the most important issue in a DevOps transformation

|

||||

======

|

||||

|

||||

|

||||

|

||||

You've been appointed the DevOps champion in your organisation: congratulations. So, what's the most important issue that you need to address?

|

||||

|

||||

It's the technology—tools and the toolchain—right? Everybody knows that unless you get the right tools for the job, you're never going to make things work. You need integration with your existing stack (though whether you go with tight or loose integration will be an interesting question), a support plan (vendor, third party, or internal), and a bug-tracking system to go with your source code management system. And that's just the start.

|

||||

|

||||

No! Don't be ridiculous: It's clearly the process that's most important. If the team doesn't agree on how stand-ups are run, who participates, the frequency and length of the meetings, and how many people are required for a quorum, then you'll never be able to institute a consistent, repeatable working pattern.

|

||||

|

||||

In fact, although both the technology and the process are important, there's a third component that is equally important, but typically even harder to get right: culture. Yup, it's that touchy-feely thing we techies tend to struggle with.1

|

||||

|

||||

### Culture

|

||||

|

||||

I was visiting a midsized government institution a few months ago (not in the UK, as it happens), and we arrived a little early to meet the CEO and CTO. We were ushered into the CEO's office and waited for a while as the two of them finished participating in the daily stand-up. They apologised for being a minute or two late, but far from being offended, I was impressed. Here was an organisation where the culture of participation was clearly infused all the way up to the top.

|

||||

|

||||

Not that culture can be imposed from the top (nor can you rely on it percolating up from the bottom3), but these two C-level execs were not only modelling the behaviour they expected from the rest of their team, but also seemed, from the brief discussion we had about the process afterwards, to be truly invested in it. If you can get management to buy into the process, and be seen buying in, you are at least less likely to have problems with other groups finding plausible excuses to keep their distance and get away with it.

|

||||

|

||||

So let's assume management believes you should give DevOps a go. Where do you start?

|

||||

|

||||

Developers may well be your easiest target group. They are often keen to try new things and find ways to move things along faster, so they are often the group that can be expected to adopt new technologies and methodologies. DevOps arguably has been driven mainly by the development community.

|

||||

|

||||

But you shouldn't assume all developers will be keen to embrace this change. For some, the way things have always been done—your Rick Parfitts of dev, if you will7—is fine. Finding ways to help them work efficiently in the new world is part of your job, not just theirs. If you have superstar developers who aren't happy with change, you risk alienating and losing them if you try to force them into your brave new world. What's worse, if they dig their heels in, you risk the adoption of your DevSecOps vision being compromised when they explain to their managers that things aren't going to change if it makes their lives more difficult and reduces their productivity.

|

||||

|

||||

Maybe you're not going to be able to move all the systems and people to DevOps immediately. Maybe you're going to need to choose which apps to start with and who will be your first DevOps champions. Maybe it's time to move slowly.

|

||||

|

||||

### Not maybe: definitely

|

||||

|

||||

No—I lied. You're definitely going to need to move slowly. Trying to change everything at once is a recipe for disaster.

|

||||

|

||||

This goes for all elements of the change—which people to choose, which technologies to choose, which applications to choose, which user base to choose, which use cases to choose—bar one. For those elements, if you try to move everything in one go, you will fail. You'll fail for a number of reasons. You'll fail for reasons I can't imagine and, more importantly, for reasons you can't imagine. But some of the reasons will include:

|

||||

|

||||

* People—most people—don't like change.

|

||||

* Technologies don't like change (you can't just switch and expect everything to still work).

|

||||

* Applications don't like change (things worked before, or at least failed in known ways). You want to change everything in one go? Well, they'll all fail in new and exciting9 ways.

|

||||

* Users don't like change.

|

||||

* Use cases don't like change.

|

||||

|

||||

|

||||

|

||||

### The one exception

|

||||

|

||||

You noticed I wrote "bar one" when discussing which elements you shouldn't choose to change all in one go? Well done.

|

||||

|

||||

What's that exception? It's the initial team. When you choose your initial application to change and you're thinking about choosing the team to make that change, select the members carefully and select a complete set. This is important. If you choose just developers, just test folks, just security folks, just ops folks, or just management—if you leave out one functional group from your list—you won't have proved anything at all. Well, you might have proved to a small section of your community that it kind of works, but you'll have missed out on a trick. And that trick is: If you choose keen people from across your functional groups, it's much harder to fail.

|

||||

|

||||

Say your first attempt goes brilliantly. How are you going to convince other people to replicate your success and adopt DevOps? Well, the company newsletter, of course. And that will convince how many people, exactly? Yes, that number.12 If, on the other hand, you have team members from across the functional parts of the organisation, when you succeed, they'll tell their colleagues and you'll get more buy-in next time.

|

||||

|

||||

If it fails, if you've chosen your team wisely—if they're all enthusiastic and know that "fail often, fail fast" is good—they'll be ready to go again.

|

||||

|

||||

Therefore, you need to choose enthusiasts from across your functional groups. They can work on the technologies and the process, and once that's working, it's the people who will create that cultural change. You can just sit back and enjoy. Until the next crisis, of course.

|

||||

|

||||

1\. OK, you're right. It should be "with which we techies tend to struggle."2

|

||||

|

||||

2\. You thought I was going to qualify that bit about techies struggling with touchy-feely stuff, didn't you? Read it again: I put "tend to." That's the best you're getting.

|

||||

|

||||

3\. Is percolating a bottom-up process? I don't drink coffee,4 so I wouldn't know.

|

||||

|

||||

4\. Do people even use percolators to make coffee anymore? Feel free to let me know in the comments. I may pretend interest if you're lucky.

|

||||

|

||||

5\. For U.S. readers (and some other countries, maybe?), please substitute "check" for "tick" here.6

|

||||

|

||||

6\. For U.S. techie readers, feel free to perform `s/tick/check/;`.

|

||||

|

||||

7\. This is a Status Quo8 reference for which I'm extremely sorry.

|

||||

|

||||

8\. For millennial readers, please consult your favourite online reference engine or just roll your eyes and move on.

|

||||

|

||||

9\. For people who say, "but I love excitement," try being on call at 2 a.m. on a Sunday at the end of the quarter when your chief financial officer calls you up to ask why all of last month's sales figures have been corrupted with the letters "DEADBEEF."10

|

||||

|

||||

10\. For people not in the know, this is a string often used by techies as test data because a) it's non-numerical; b) it's numerical (in hexadecimal); c) it's easy to search for in debug files; and d) it's funny.11

|

||||

|

||||

11\. Though see.9

|

||||

|

||||

12\. It's a low number, is all I'm saying.

|

||||

|

||||

This article originally appeared on [Alice, Eve, and Bob – a security blog][1] and is republished with permission.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/2/most-important-issue-devops-transformation

|

||||

|

||||

Author: [Mike Bursell][a]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:https://opensource.com/users/mikecamel

|

||||

[1]:https://aliceevebob.com/2018/02/06/moving-to-devops-whats-most-important/

|

||||

85

sources/talk/20180226 5 keys to building open hardware.md

Normal file


@ -0,0 +1,85 @@

|

||||

5 keys to building open hardware

|

||||

======

|

||||

|

||||

|

||||

The science community is increasingly embracing free and open source hardware ([FOSH][1]). Researchers have been busy [hacking their own equipment][2] and creating hundreds of devices based on the distributed digital manufacturing model to advance their scientific experiments.

|

||||

|

||||

A major reason for all this interest in distributed digital manufacturing of scientific FOSH is money: Research indicates that FOSH [slashes costs by 90% to 99%][3] compared to proprietary tools. Commercializing scientific FOSH with [open hardware business models][4] has supported the rapid growth of an engineering subfield to develop FOSH for science, which comes together annually at the [Gathering for Open Science Hardware][5].

|

||||

|

||||

Remarkably, not one, but [two new academic journals][6] are devoted to the topic: the [Journal of Open Hardware][7] (from Ubiquity Press, a new open access publisher that also publishes the [Journal of Open Research Software][8] ) and [HardwareX][9] (an [open access journal][10] from Elsevier, one of the world's largest academic publishers).

|

||||

|

||||

Because of the academic community's support, scientific FOSH developers can get academic credit while having fun designing open hardware and pushing science forward faster.

|

||||

|

||||

### 5 steps for scientific FOSH

|

||||

|

||||

Shane Oberloier and I co-authored a new [article][11] published in Designs, an open access engineering design journal, about the principles of designing FOSH scientific equipment. We used the example of a slide dryer, fabricated for under $20, which costs up to 300 times less than proprietary equivalents. [Scientific][1] and [medical][12] equipment tends to be complex with huge payoffs for developing FOSH alternatives.

|

||||

|

||||

I've summarized the five steps (including six design principles) that Shane and I detail in our Designs article. These design principles can be generalized to non-scientific devices, although the more complex the design or equipment, the larger the potential savings.

|

||||

|

||||

If you are interested in designing open hardware for scientific projects, these steps will maximize your project's impact.

|

||||

|

||||

1. Evaluate similar existing tools for their functions but base your FOSH design on replicating their physical effects, not pre-existing designs. If necessary, evaluate a proof of concept.

|

||||

|

||||

|

||||

2. Use the following design principles:

|

||||

|

||||

|

||||

* Use only free and open source software toolchains (e.g., open source CAD packages such as [OpenSCAD][13], [FreeCAD][14], or [Blender][15]) and open hardware for device fabrication.

|

||||

* Attempt to minimize the number and type of parts and the complexity of the tools.

|

||||

* Minimize the amount of material and the cost of production.

|

||||

* Maximize the use of components that can be distributed or digitally manufactured by using widespread and accessible tools such as the open source [RepRap 3D printer][16].

|

||||

* Create [parametric designs][17] with predesigned components, which enable others to customize your design. By making parametric designs rather than solving a specific case, all future cases can also be solved while enabling future users to alter the core variables to make the device useful for them.

|

||||

* All components that are not easily and economically fabricated with existing open hardware equipment in a distributed fashion should be chosen from off-the-shelf parts that are readily available throughout the world.

|

||||

|

||||

|

||||

3. Validate the design for the targeted function(s).

|

||||

|

||||

|

||||

4. Meticulously document the design, manufacture, assembly, calibration, and operation of the device. This should include the raw source of the design, not just the files used for production. The Open Source Hardware Association has extensive [guidelines][18] for properly documenting and releasing open source designs, which can be summarized as follows:

|

||||

|

||||

|

||||

* Share design files in a universal type.

|

||||

* Include a fully detailed bill of materials, including prices and sourcing information.

|

||||

* If software is involved, make sure the code is clear and understandable to the general public.

|

||||

* Include many photos so that nothing is obscured, and they can be used as a reference while manufacturing.

|

||||