mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-19 00:30:12 +08:00

Merge branch 'master' of https://github.com/ZTinoZ/TranslateProject.git

This commit is contained in:

commit

382f6da61b

@ -4,7 +4,7 @@

|

||||

|

||||

### Puppet 是什么? ###

|

||||

|

||||

Puppet 是一款为 IT 系统管理员和顾问设计的自动化软件,你可以用它自动化地完成诸如安装应用程序和服务、补丁管理和部署等工作。所有资源的相关配置都以“manifests”的方式保存,单台机器或者多台机器都可以使用。如果你想了解更多内容,Puppet 实验室的网站上有关于 [Puppet 及其工作原理][1]的更详细的介绍。

|

||||

Puppet 是一款为 IT 系统管理员和顾问们设计的自动化软件,你可以用它自动化地完成诸如安装应用程序和服务、补丁管理和部署等工作。所有资源的相关配置都以“manifests”的方式保存,单台机器或者多台机器都可以使用。如果你想了解更多内容,Puppet 实验室的网站上有关于 [Puppet 及其工作原理][1]的更详细的介绍。

|

||||

|

||||

### 本教程要做些什么? ###

|

||||

|

||||

@ -58,7 +58,7 @@ Puppet 是一款为 IT 系统管理员和顾问设计的自动化软件,你可

|

||||

|

||||

# chkconfig puppet on

|

||||

|

||||

Puppet 客户端需要知道 Puppet master 服务器的地址。最佳方案是使用 DNS 服务器解析 Puppet master 服务器地址。如果你没有 DNS 服务器,在 `/etc/hosts` 里添加下面这几行也可以:

|

||||

Puppet 客户端需要知道 Puppet master 服务器的地址。最佳方案是使用 DNS 服务器解析 Puppet master 服务器地址。如果你没有 DNS 服务器,在 `/etc/hosts` 里添加类似下面这几行也可以:

|

||||

|

||||

> 1.2.3.4 server.your.domain

|

||||

|

||||

@ -125,7 +125,7 @@ master 服务器名也要在 `/etc/puppet/puppet.conf` 文件的“[agent]”小

|

||||

|

||||

> runinterval = <yourtime>

|

||||

|

||||

这个选项的值可以是秒(格式比如 30 或者 30s),分钟(30m),小时(6h),天(2d)以及年(5y)。值得注意的是,0 意味着“立即执行”而不是“从不执行”。

|

||||

这个选项的值可以是秒(格式比如 30 或者 30s),分钟(30m),小时(6h),天(2d)以及年(5y)。值得注意的是,**0 意味着“立即执行”而不是“从不执行”**。

|

||||

|

||||

### 提示和技巧 ###

|

||||

|

||||

@ -139,7 +139,7 @@ master 服务器名也要在 `/etc/puppet/puppet.conf` 文件的“[agent]”小

|

||||

|

||||

# puppet agent -t --debug

|

||||

|

||||

Debug 选项会显示 Puppet 本次运行时的差不多每一个步骤,这在调试非常复杂的问题时很有用。另一个很有用的选项是:

|

||||

debug 选项会显示 Puppet 本次运行时的差不多每一个步骤,这在调试非常复杂的问题时很有用。另一个很有用的选项是:

|

||||

|

||||

# puppet agent -t --noop

|

||||

|

||||

@ -187,7 +187,7 @@ via: http://xmodulo.com/2014/08/install-puppet-server-client-centos-rhel.html

|

||||

|

||||

作者:[Jaroslav Štěpánek][a]

|

||||

译者:[sailing](https://github.com/sailing)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,15 +1,15 @@

|

||||

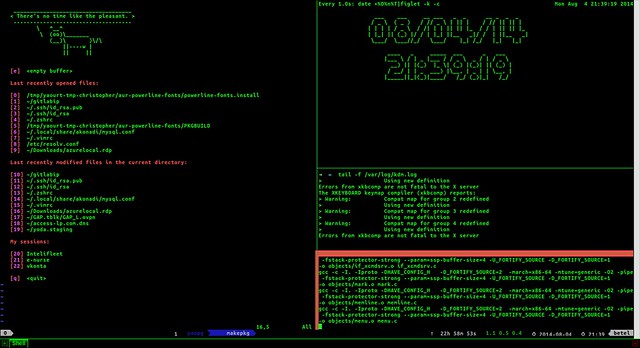

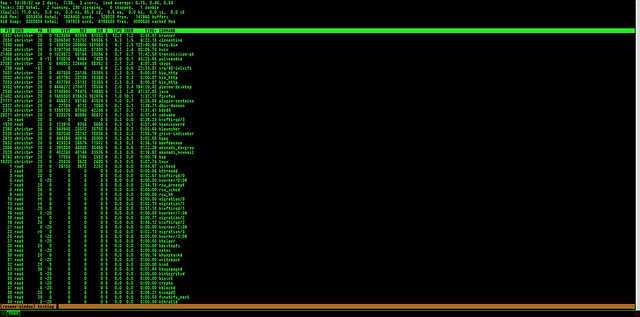

如何使用Tmux提高终端环境下的生产率

|

||||

如何使用Tmux提高终端环境下的效率

|

||||

===

|

||||

|

||||

鼠标的采用是次精彩的创新,它让电脑更加接近普通人。但从程序员和系统管理员的角度,使用电脑办公时,手一旦离开键盘,就会有些分心

|

||||

鼠标的发明是了不起的创新,它让电脑更加接近普通人。但从程序员和系统管理员的角度,使用电脑工作时,手一旦离开键盘,就会有些分心。

|

||||

|

||||

作为一名系统管理员,我大量的工作都需要在终端环境下。打开很多标签,然后在多个终端之间切换窗口会让我慢下来。而且当我的服务器出问题的时候,我不能浪费任何时间

|

||||

作为一名系统管理员,我大量的工作都需要在终端环境下。打开很多标签,然后在多个终端之间切换窗口会让我慢下来。尤其是当我的服务器出问题的时候,我不能浪费任何时间!

|

||||

|

||||

|

||||

|

||||

[Tmux][1]是我日常工作必要的工具之一。我可以借助Tmux创造出复杂的开发环境,同时还可以在一旁进行SSH远程连接。我可以开出很多窗口,拆分成很多面板,附加和分离会话等等。掌握了Tmux之后,你就可以扔掉鼠标了(只是个玩笑:D)

|

||||

[Tmux][1]是我日常工作必要的工具之一。我可以借助Tmux构建出复杂的开发环境,同时还可以在一旁进行SSH远程连接。我可以开出很多窗口,将其拆分成很多面板,接管和分离会话等等。掌握了Tmux之后,你就可以扔掉鼠标了(只是个玩笑:D)。

|

||||

|

||||

Tmux("Terminal Multiplexer"的简称)可以让我们在单个屏幕的灵活布局下开出很多终端,我们就可以协作地使用它们。举个例子,在一个面板中,我们用Vim修改一些配置文件,在另一个面板,我们使用irssi聊天,而在其余的面板,跟踪一些日志。然后,我们还可以打开新的窗口来升级系统,再开一个新窗口来进行服务器的ssh连接。在这些窗口面板间浏览切换和创建它们一样简单。它的高度可配置和可定制的,让其成为你心中的延伸

|

||||

Tmux("Terminal Multiplexer"的简称)可以让我们在单个屏幕的灵活布局下开出很多终端,我们就可以协作地使用它们。举个例子,在一个面板中,我们用Vim修改一些配置文件,在另一个面板,我们使用`irssi`聊天,而在其余的面板,可以跟踪一些日志。然后,我们还可以打开新的窗口来升级系统,再开一个新窗口来进行服务器的ssh连接。在这些窗口面板间浏览切换和创建它们一样简单。它的高度可配置和可定制的,让其成为你心中的延伸

|

||||

|

||||

### 在Linux/OSX下安装Tmux ###

|

||||

|

||||

@ -20,22 +20,21 @@ Tmux("Terminal Multiplexer"的简称)可以让我们在单个屏幕的灵活布

|

||||

# sudo brew install tmux

|

||||

# sudo port install tmux

|

||||

|

||||

### Debian/Ubuntu ###

|

||||

#### Debian/Ubuntu: ####

|

||||

|

||||

# sudo apt-get install tmux

|

||||

|

||||

RHEL/CentOS/Fedora(RHEL/CentOS 要求 [EPEL repo][2]):

|

||||

####RHEL/CentOS/Fedora(RHEL/CentOS 要求 [EPEL repo][2]):####

|

||||

|

||||

$ sudo yum install tmux

|

||||

|

||||

Archlinux:

|

||||

####Archlinux:####

|

||||

|

||||

$ sudo pacman -S tmux

|

||||

|

||||

### 使用不同会话工作 ###

|

||||

|

||||

使用Tmux的最好方式是使用不同的会话,这样你就可以以你想要的方式,将任务和应用组织到不同的会话中。如果你想改变一个会话,会话里面的任何工作都无须停止或者杀掉,让我们来看看这是怎么工作的

|

||||

|

||||

使用Tmux的最好方式是使用会话的方式,这样你就可以以你想要的方式,将任务和应用组织到不同的会话中。如果你想改变一个会话,会话里面的任何工作都无须停止或者杀掉。让我们来看看这是怎么工作的。

|

||||

|

||||

让我们开始一个叫做"session"的会话,并且运行top命令

|

||||

|

||||

@ -46,20 +45,20 @@ Archlinux:

|

||||

|

||||

$ tmux attach-session -t session

|

||||

|

||||

之后你会看到top操作仍然运行在重新连接的会话上

|

||||

之后你会看到top操作仍然运行在重新连接的会话上。

|

||||

|

||||

一些管理sessions的命令:

|

||||

|

||||

$ tmux list-session

|

||||

$ tmux new-session <session-name>

|

||||

$ tmux attach-session -t <session-name>

|

||||

$ tmux rename-session -t <session-name>

|

||||

$ tmux choose-session -t <session-name>

|

||||

$ tmux kill-session -t <session-name>

|

||||

$ tmux new-session <会话名>

|

||||

$ tmux attach-session -t <会话名>

|

||||

$ tmux rename-session -t <会话名>

|

||||

$ tmux choose-session -t <会话名>

|

||||

$ tmux kill-session -t <会话名>

|

||||

|

||||

### 使用不同的窗口工作

|

||||

|

||||

很多情况下,你需要在一个会话中运行多个命令,并且执行多个任务。我们可以在一个会话的多个窗口里组织他们。在现代化的GUI终端(比如 iTerm或者Konsole),一个窗口被视为一个标签。在会话中配置了我们默认的环境,我们就能够在一个会话中创建许多我们需要的窗口。窗口就像运行在会话中的应用程序,当我们脱离当前会话的时候,它仍在持续,让我们来看一个例子:

|

||||

很多情况下,你需要在一个会话中运行多个命令,执行多个任务。我们可以在一个会话的多个窗口里组织他们。在现代的GUI终端(比如 iTerm或者Konsole),一个窗口被视为一个标签。在会话中配置了我们默认的环境之后,我们就能够在一个会话中创建许多我们需要的窗口。窗口就像运行在会话中的应用程序,当我们脱离当前会话的时候,它仍在持续,让我们来看一个例子:

|

||||

|

||||

$ tmux new -s my_session

|

||||

|

||||

@ -67,7 +66,7 @@ Archlinux:

|

||||

|

||||

按下**CTRL-b c**

|

||||

|

||||

这将会创建一个新的窗口,然后屏幕的光标移向它。现在你就可以在新窗口下运行你的新应用。你可以写下你当前窗口的名字。在目前的案例下,我运行的top程序,所以top就是该窗口的名字

|

||||

这将会创建一个新的窗口,然后屏幕的光标移向它。现在你就可以在新窗口下运行你的新应用。你可以修改你当前窗口的名字。在目前的例子里,我运行的top程序,所以top就是该窗口的名字

|

||||

|

||||

如果你想要重命名,只需要按下:

|

||||

|

||||

@ -77,15 +76,15 @@ Archlinux:

|

||||

|

||||

|

||||

|

||||

一旦在一个会话中创建多个窗口,我们需要在这些窗口间移动的办法。窗口以数组的形式被组织在一起,每个窗口都有一个从0开始计数的号码,想要快速跳转到其余窗口:

|

||||

一旦在一个会话中创建多个窗口,我们需要在这些窗口间移动的办法。窗口像数组一样组织在一起,从0开始用数字标记每个窗口,想要快速跳转到其余窗口:

|

||||

|

||||

**CTRL-b <window number>**

|

||||

**CTRL-b <窗口号>**

|

||||

|

||||

如果我们给窗口起了名字,我们可以使用下面的命令切换:

|

||||

如果我们给窗口起了名字,我们可以使用下面的命令找到它们:

|

||||

|

||||

**CTRL-b f**

|

||||

|

||||

列出所有窗口:

|

||||

也可以列出所有窗口:

|

||||

|

||||

**CTRL-b w**

|

||||

|

||||

@ -94,21 +93,21 @@ Archlinux:

|

||||

**CTRL-b n**(到达下一个窗口)

|

||||

**CTRL-b p**(到达上一个窗口)

|

||||

|

||||

想要离开一个窗口:

|

||||

想要离开一个窗口,可以输入 exit 或者:

|

||||

|

||||

**CTRL-b &**

|

||||

|

||||

关闭窗口之前,你需要确认一下

|

||||

关闭窗口之前,你需要确认一下。

|

||||

|

||||

### 把窗口分成许多面板

|

||||

|

||||

有时候你在编辑器工作的同时,需要查看日志文件。编辑的同时追踪日志真的很有帮助。Tmux可以让我们把窗口分成许多面板。举了例子,我们可以创建一个控制台监测我们的服务器,同时拥有一个复杂的编辑器环境,这样就能同时进行编译和debug

|

||||

有时候你在编辑器工作的同时,需要查看日志文件。在编辑的同时追踪日志真的很有帮助。Tmux可以让我们把窗口分成许多面板。举个例子,我们可以创建一个控制台监测我们的服务器,同时用编辑器构造复杂的开发环境,这样就能同时进行编译和调试了。

|

||||

|

||||

让我们创建另一个Tmux会话,让其以面板的方式工作。首先,如果我们在某个会话中,那就从Tmux会话中脱离出来

|

||||

让我们创建另一个Tmux会话,让其以面板的方式工作。首先,如果我们在某个会话中,那就从Tmux会话中脱离出来:

|

||||

|

||||

**CTRL-b d**

|

||||

|

||||

开始一个叫做"panes"的新会话

|

||||

开始一个叫做"panes"的新会话:

|

||||

|

||||

$ tmux new -s panes

|

||||

|

||||

@ -120,17 +119,17 @@ Archlinux:

|

||||

|

||||

**CRTL-b %**

|

||||

|

||||

又增加了两个

|

||||

又增加了两个:

|

||||

|

||||

|

||||

|

||||

在他们之间移动:

|

||||

|

||||

**CTRL-b <Arrow keys>**

|

||||

**CTRL-b <光标键>**

|

||||

|

||||

### 结论

|

||||

|

||||

我希望这篇教程能对你有作用。作为奖励,像[Tmuxinator][3] 或者 [Tmuxifier][4]这样的工具,可以简化Tmux会话,窗口和面板的创建及加载,你可以很容易就配置Tmux。如果你没有使用过这些,尝试一下吧

|

||||

我希望这篇教程能对你有作用。此外,像[Tmuxinator][3] 或者 [Tmuxifier][4]这样的工具,可以简化Tmux会话,窗口和面板的创建及加载,你可以很容易就配置Tmux。如果你没有使用过这些,尝试一下吧!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -138,7 +137,7 @@ via: http://xmodulo.com/2014/08/improve-productivity-terminal-environment-tmux.h

|

||||

|

||||

作者:[Christopher Valerio][a]

|

||||

译者:[su-kaiyao](https://github.com/su-kaiyao)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,16 +1,15 @@

|

||||

移除Linux系统上的文件元数据

|

||||

如何在Linux上移除文件内的隐私数据

|

||||

================================================================================

|

||||

|

||||

典型的数据文件通常关联着“元数据”,其包含这个文件的描述信息,表现为一系列属性-值的集合。元数据一般包括创建者名称、生成文件的工具、文件创建/修改时期、创建位置和编辑历史等等。EXIF(镜像标准)、RDF(web资源)和DOI(数字文档)是几种流行的元数据标准。

|

||||

|

||||

典型的数据文件通常关联着“元数据”,其包含这个文件的描述信息,表现为一系列属性-值的集合。元数据一般包括创建者名称、生成文件的工具、文件创建/修改时期、创建位置和编辑历史等等。几种流行的元数据标准有 EXIF(图片)、RDF(web资源)和DOI(数字文档)等。

|

||||

|

||||

虽然元数据在数据管理领域有它的优点,但事实上它会[危害][1]你的隐私。相机图片中的EXIF格式数据会泄露出可识别的个人信息,比如相机型号、拍摄相关的GPS坐标和用户偏爱的照片编辑软件等。在文档和电子表格中的元数据包含作者/所属单位信息和相关的编辑历史。不一定这么绝对,但诸如[metagoofil][2]一类的元数据收集工具在信息收集的过程中常最作为入侵测试的一部分被利用。

|

||||

|

||||

对那些想要从共享数据中擦除一切个人元数据的用户来说,有一些方法从数据文件中移除元数据。你可以使用已有的文档或图片编辑软件,通常有自带的元数据编辑功能。在这个教程里,我会介绍一种不错的、单独的**元数据清理工具**,其目标只有一个:**匿名一切私有元数据**。

|

||||

|

||||

[MAT][3](元数据匿名工具箱)是一款专业的元数据清理器,使用Python编写。它在Tor工程旗下开发而成,在[Trails][4]上衍生出标准,后者是一种私人增强的live操作系统。【翻译得别扭,麻烦修正:)】

|

||||

[MAT][3](元数据匿名工具箱)是一款专业的元数据清理器,使用Python编写。它属于Tor旗下的项目,而且是Live 版的隐私增强操作系统 [Trails][4] 的标配应用。

|

||||

|

||||

与诸如[exiftool][5]等只能对有限数量的文件类型进行写入的工具相比,MAT支持从各种各样的文件中消除元数据:图片(png、jpg)、文档(odt、docx、pptx、xlsx和pdf)、归档文件(tar、tar.bz2)和音频(mp3、ogg、flac)等。

|

||||

与诸如[exiftool][5]等只能对有限种类的文件类型进行写入的工具相比,MAT支持从各种各样的文件中消除元数据:图片(png、jpg)、文档(odt、docx、pptx、xlsx和pdf)、归档文件(tar、tar.bz2)和音频(mp3、ogg、flac)等。

|

||||

|

||||

### 在Linux上安装MAT ###

|

||||

|

||||

@ -18,7 +17,7 @@

|

||||

|

||||

$ sudo apt-get install mat

|

||||

|

||||

在Fedora上,并没有预先生成的MAT包,所以你需要从源码生成。这是我在Fedora上生成MAT的步骤(不成功的话,请查看教程底部):

|

||||

在Fedora上,并没有预先生成的MAT软件包,所以你需要从源码生成。这是我在Fedora上生成MAT的步骤(不成功的话,请查看教程底部):

|

||||

|

||||

$ sudo yum install python-devel intltool python-pdfrw perl-Image-ExifTool python-mutagen

|

||||

$ sudo pip install hachoir-core hachoir-parser

|

||||

@ -95,7 +94,7 @@

|

||||

|

||||

### 总结 ###

|

||||

|

||||

MAT是一款简单但非常好用的工具,用来预防从元数据中无意泄露私人数据。请注意如果有必要,还是需要你去隐藏文件内容。MAT能做的是消除与文件相关的元数据,但并不会对文件本身进行任何操作。简而言之,MAT是一名救生员,因为它可以处理大多数常见的元数据移除,但不应该只指望它来保证你的隐私。[译者注:养成良好的隐私保护意识和习惯才是最好的方法]

|

||||

MAT是一款简单但非常好用的工具,用来预防从元数据中无意泄露私人数据。请注意如果有必要,文件内容也需要保护。MAT能做的是消除与文件相关的元数据,但并不会对文件本身进行任何操作。简而言之,MAT是一名救生员,因为它可以处理大多数常见的元数据移除,但不应该只指望它来保证你的隐私。[译者注:养成良好的隐私保护意识和习惯才是最好的方法]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -103,7 +102,7 @@ via: http://xmodulo.com/2014/08/remove-file-metadata-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[KayGuoWhu](https://github.com/KayGuoWhu)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,11 +1,12 @@

|

||||

在Linux中使用逻辑卷管理器构建灵活的磁盘存储——第一部分

|

||||

在Linux中使用LVM构建灵活的磁盘存储(第一部分)

|

||||

================================================================================

|

||||

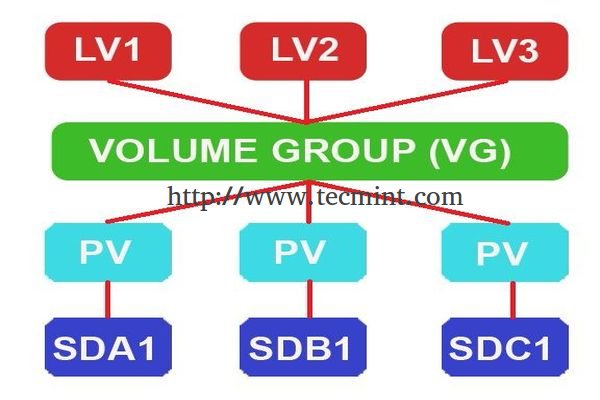

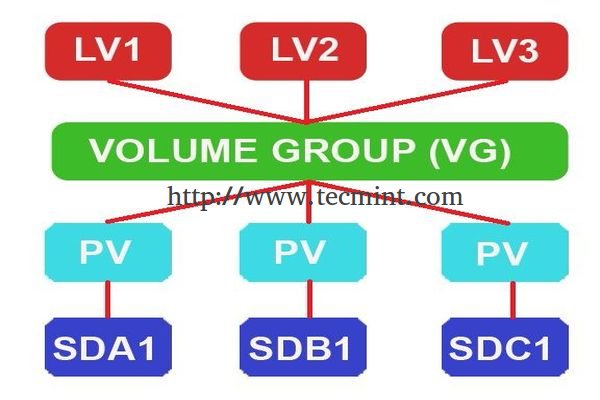

**逻辑卷管理器(LVM)**让磁盘空间管理更为便捷。如果一个文件系统需要更多的空间,它可以在它的卷组中将空闲空间添加到它的逻辑卷中,而文件系统可以根据你的意愿调整大小。如果某个磁盘启动失败,替换磁盘可以使用卷组注册成一个物理卷,而逻辑卷扩展可以将数据迁移到新磁盘而不会丢失数据。

|

||||

**逻辑卷管理器(LVM)**让磁盘空间管理更为便捷。如果一个文件系统需要更多的空间,可以在它的卷组中将空闲空间添加到其逻辑卷中,而文件系统可以根据你的意愿调整大小。如果某个磁盘启动失败,用于替换的磁盘可以使用卷组注册成一个物理卷,而逻辑卷扩展可以将数据迁移到新磁盘而不会丢失数据。

|

||||

|

||||

|

||||

在Linux中创建LVM存储

|

||||

<center></center>

|

||||

|

||||

在现代世界中,每台服务器空间都会因为我们的需求增长而不断扩展。逻辑卷可以用于RAID,SAN。单个物理卷将会被加入组以创建卷组,在卷组中,我们需要切割空间以创建逻辑卷。在使用逻辑卷时,我们可以使用某些命令来跨磁盘、跨逻辑卷扩展,或者减少逻辑卷大小,而不用重新格式化和重新对当前磁盘分区。卷可以跨磁盘抽取数据,这会增加I/O数据量。

|

||||

<center>*在Linux中创建LVM存储*</center>

|

||||

|

||||

在如今,每台服务器空间都会因为我们的需求增长而不断扩展。逻辑卷可以用于RAID,SAN。单个物理卷将会被加入组以创建卷组,在卷组中,我们需要切割空间以创建逻辑卷。在使用逻辑卷时,我们可以使用某些命令来跨磁盘、跨逻辑卷扩展,或者减少逻辑卷大小,而不用重新格式化和重新对当前磁盘分区。卷可以跨磁盘抽取数据,这会增加I/O数据量。

|

||||

|

||||

### LVM特性 ###

|

||||

|

||||

@ -27,8 +28,8 @@

|

||||

# vgs

|

||||

# lvs

|

||||

|

||||

|

||||

检查物理卷

|

||||

<center></center>

|

||||

<center>*检查物理卷*</center>

|

||||

|

||||

下面是上面截图中各个参数的说明。

|

||||

|

||||

@ -52,8 +53,8 @@

|

||||

|

||||

# fdisk -l

|

||||

|

||||

|

||||

验证添加的磁盘

|

||||

<center></center>

|

||||

<center>*验证添加的磁盘*</center>

|

||||

|

||||

- 用于操作系统(CentOS 6.5)的默认磁盘。

|

||||

- 默认磁盘上定义的分区(vda1 = swap),(vda2 = /)。

|

||||

@ -61,8 +62,8 @@

|

||||

|

||||

各个磁盘大小都是20GB,默认的卷组的PE大小为4MB,我们在该服务器上配置的卷组使用默认PE。

|

||||

|

||||

|

||||

卷组显示

|

||||

<center></center>

|

||||

<center>*卷组显示*</center>

|

||||

|

||||

- **VG Name** – 卷组名称。

|

||||

- **Format** – LVM架构使用LVM2。

|

||||

@ -82,8 +83,8 @@

|

||||

|

||||

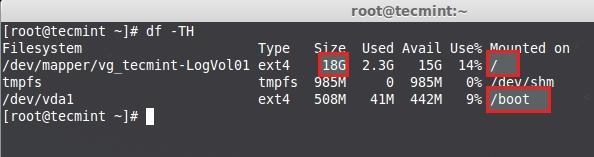

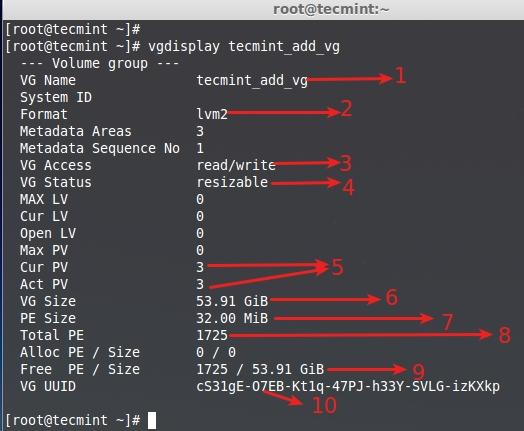

# df -TH

|

||||

|

||||

|

||||

检查磁盘空间

|

||||

<center></center>

|

||||

<center>*检查磁盘空间*</center>

|

||||

|

||||

上面的图片中显示了用于根的挂载点已使用了**18GB**,因此没有空闲空间可用了。

|

||||

|

||||

@ -91,15 +92,15 @@

|

||||

|

||||

我们可以扩展当前使用的卷组以获得更多空间。但在这里,我们将要做的是,创建新的卷组,然后在里面肆意妄为吧。过会儿,我们可以看到怎样来扩展使用中的卷组的文件系统。

|

||||

|

||||

在使用新磁盘钱,我们需要使用fdisk来对磁盘分区。

|

||||

在使用新磁盘前,我们需要使用fdisk来对磁盘分区。

|

||||

|

||||

# fdisk -cu /dev/sda

|

||||

|

||||

- **c** – 关闭DOS兼容模式,推荐使用该选项。

|

||||

- **u** – 当列出分区表时,会以扇区而不是柱面显示。

|

||||

|

||||

|

||||

创建新的物理分区

|

||||

<center></center>

|

||||

<center>*创建新的物理分区*</center>

|

||||

|

||||

接下来,请遵循以下步骤来创建新分区。

|

||||

|

||||

@ -118,8 +119,8 @@

|

||||

|

||||

# fdisk -l

|

||||

|

||||

|

||||

验证分区表

|

||||

<center></center>

|

||||

<center>*验证分区表*</center>

|

||||

|

||||

### 创建物理卷 ###

|

||||

|

||||

@ -135,8 +136,8 @@

|

||||

|

||||

# pvs

|

||||

|

||||

|

||||

创建物理卷

|

||||

<center></center>

|

||||

<center>*创建物理卷*</center>

|

||||

|

||||

### 创建卷组 ###

|

||||

|

||||

@ -152,11 +153,11 @@

|

||||

|

||||

# vgs

|

||||

|

||||

|

||||

创建卷组

|

||||

<center></center>

|

||||

<center>*创建卷组*</center>

|

||||

|

||||

|

||||

验证卷组

|

||||

<center></center>

|

||||

<center>*验证卷组*</center>

|

||||

|

||||

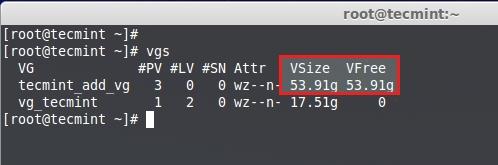

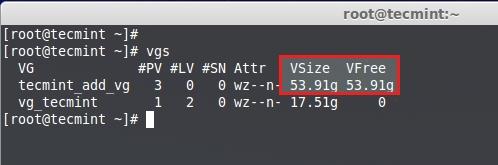

理解vgs命令输出:

|

||||

|

||||

@ -173,15 +174,15 @@

|

||||

|

||||

# vgs -v

|

||||

|

||||

|

||||

检查卷组信息

|

||||

<center></center>

|

||||

<center>*检查卷组信息*</center>

|

||||

|

||||

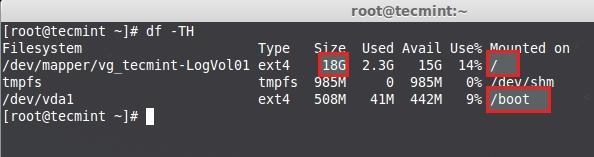

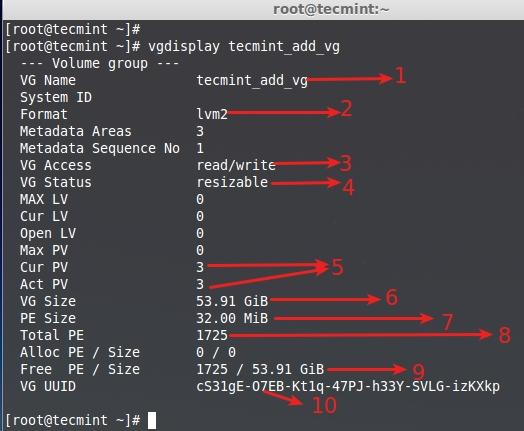

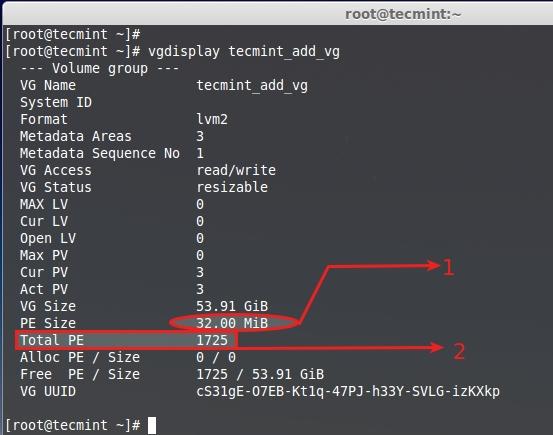

**8.** 要获取更多关于新创建的卷组信息,运行以下命令。

|

||||

|

||||

# vgdisplay tecmint_add_vg

|

||||

|

||||

|

||||

列出新卷组

|

||||

<center></center>

|

||||

<center>*列出新卷组*</center>

|

||||

|

||||

- 卷组名称

|

||||

- 使用的LVM架构。

|

||||

@ -200,15 +201,15 @@

|

||||

|

||||

# lvs

|

||||

|

||||

|

||||

列出当前卷组

|

||||

<center></center>

|

||||

<center>*列出当前卷组*</center>

|

||||

|

||||

**10.** 这些逻辑卷处于**vg_tecmint**卷组中使用**pvs**命令来列出并查看有多少空闲空间可以创建逻辑卷。

|

||||

|

||||

# pvs

|

||||

|

||||

|

||||

检查空闲空间

|

||||

<center></center>

|

||||

<center>*检查空闲空间*</center>

|

||||

|

||||

**11.** 卷组大小为**54GB**,而且未被使用,所以我们可以在该组内创建LV。让我们将卷组平均划分大小来创建3个逻辑卷,就是说**54GB**/3 = **18GB**,创建出来的单个逻辑卷应该会是18GB。

|

||||

|

||||

@ -218,8 +219,8 @@

|

||||

|

||||

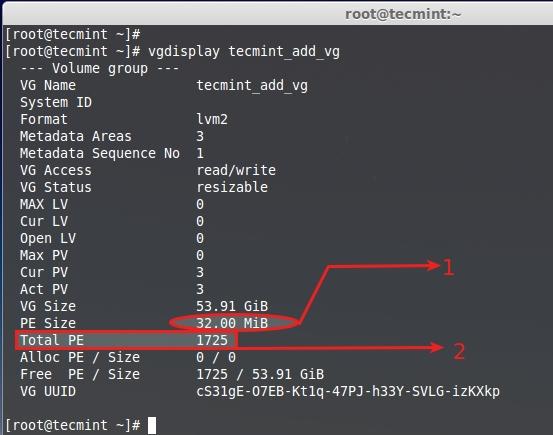

# vgdisplay tecmint_add_vg

|

||||

|

||||

|

||||

创建新逻辑卷

|

||||

<center></center>

|

||||

<center>*创建新逻辑卷*</center>

|

||||

|

||||

- 默认分配给该卷组的PE为32MB,这里单个的PE大小为32MB。

|

||||

- 总可用PE是1725。

|

||||

@ -233,8 +234,8 @@

|

||||

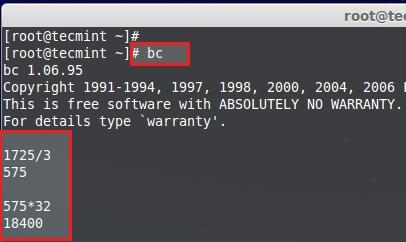

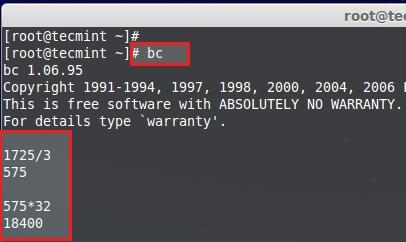

1725PE/3 = 575 PE.

|

||||

575 PE x 32MB = 18400 --> 18GB

|

||||

|

||||

|

||||

计算磁盘空间

|

||||

<center></center>

|

||||

<center>*计算磁盘空间*</center>

|

||||

|

||||

按**CRTL+D**退出**bc**。现在让我们使用575个PE来创建3个逻辑卷。

|

||||

|

||||

@ -253,8 +254,8 @@

|

||||

|

||||

# lvs

|

||||

|

||||

|

||||

列出创建的逻辑卷

|

||||

<center></center>

|

||||

<center>*列出创建的逻辑卷*</center>

|

||||

|

||||

#### 方法2: 使用GB大小创建逻辑卷 ####

|

||||

|

||||

@ -272,8 +273,8 @@

|

||||

|

||||

# lvs

|

||||

|

||||

|

||||

验证创建的逻辑卷

|

||||

<center></center>

|

||||

<center>*验证创建的逻辑卷*</center>

|

||||

|

||||

这里,我们可以看到,当创建第三个LV的时候,我们不能收集到18GB空间。这是因为尺寸有小小的改变,但在使用或者尺寸来创建LV时,这个问题会被忽略。

|

||||

|

||||

@ -287,8 +288,8 @@

|

||||

|

||||

# mkfs.ext4 /dev/tecmint_add_vg/tecmint_manager

|

||||

|

||||

|

||||

创建Ext4文件系统

|

||||

<center></center>

|

||||

<center>*创建Ext4文件系统*</center>

|

||||

|

||||

**13.** 让我们在**/mnt**下创建目录,并将已创建好文件系统的逻辑卷挂载上去。

|

||||

|

||||

@ -302,8 +303,8 @@

|

||||

|

||||

# df -h

|

||||

|

||||

|

||||

挂载逻辑卷

|

||||

<center></center>

|

||||

<center>*挂载逻辑卷*</center>

|

||||

|

||||

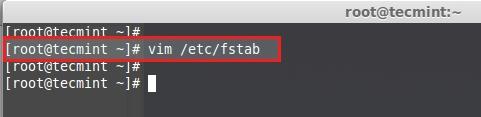

#### 永久挂载 ####

|

||||

|

||||

@ -321,32 +322,31 @@

|

||||

/dev/mapper/tecmint_add_vg-tecmint_public /mnt/tecmint_public ext4 defaults 0 0

|

||||

/dev/mapper/tecmint_add_vg-tecmint_manager /mnt/tecmint_manager ext4 defaults 0 0

|

||||

|

||||

|

||||

获取mtab挂载条目

|

||||

<center>*</center>

|

||||

<center>*获取mtab挂载条目*</center>

|

||||

|

||||

|

||||

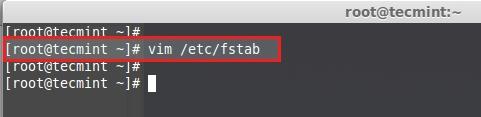

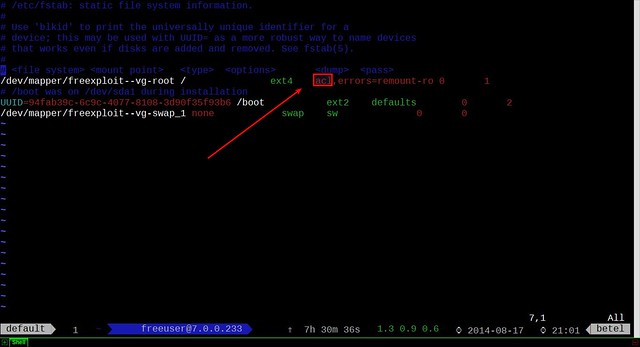

打开fstab文件

|

||||

<center></center>

|

||||

<center>*打开fstab文件*</center>

|

||||

|

||||

|

||||

添加自动挂载条目

|

||||

<center></center>

|

||||

<center>*添加自动挂载条目*</center>

|

||||

|

||||

重启前,执行mount -a命令来检查fstab条目。

|

||||

|

||||

# mount -av

|

||||

|

||||

|

||||

验证fstab条目

|

||||

<center></center>

|

||||

<center>*验证fstab条目*</center>

|

||||

|

||||

这里,我们已经了解了怎样来使用逻辑卷构建灵活的存储,从使用物理磁盘到物理卷,物理卷到卷组,卷组再到逻辑卷。

|

||||

|

||||

在我即将奉献的文章中,我将介绍如何扩展卷组、逻辑卷,减少逻辑卷,拍快照以及从快照中恢复。到那时,保持TecMint更新到这些精彩文章中的内容。

|

||||

--------------------------------------------------------------------------------

|

||||

在我即将奉献的文章中,我将介绍如何扩展卷组、逻辑卷,减少逻辑卷,拍快照以及从快照中恢复。 --------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/create-lvm-storage-in-linux/

|

||||

|

||||

作者:[Babin Lonston][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

配置Linux访问控制列表(ACL)

|

||||

配置 Linux 的访问控制列表(ACL)

|

||||

================================================================================

|

||||

使用拥有权限控制的Liunx,工作是一件轻松的任务。它可以定义任何user,group和other的权限。无论是在桌面电脑或者不会有很多用户的虚拟Linux实例,或者当用户不愿意分享他们之间的文件时,这样的工作是很棒的。然而,如果你是在一个大型组织,你运行了NFS或者Samba服务给不同的用户。然后你将会需要灵活的挑选并设置很多复杂的配置和权限去满足你的组织不同的需求。

|

||||

使用拥有权限控制的Liunx,工作是一件轻松的任务。它可以定义任何user,group和other的权限。无论是在桌面电脑或者不会有很多用户的虚拟Linux实例,或者当用户不愿意分享他们之间的文件时,这样的工作是很棒的。然而,如果你是在一个大型组织,你运行了NFS或者Samba服务给不同的用户,然后你将会需要灵活的挑选并设置很多复杂的配置和权限去满足你的组织不同的需求。

|

||||

|

||||

Linux(和其他Unix,兼容POSIX的)所以拥有访问控制列表(ACL),它是一种分配权限之外的普遍范式。例如,默认情况下你需要确认3个权限组:owner,group和other。使用ACL,你可以增加权限给其他用户或组别,而不单只是简单的"other"或者是拥有者不存在的组别。可以允许指定的用户A、B、C拥有写权限而不再是让他们整个组拥有写权限。

|

||||

Linux(和其他Unix等POSIX兼容的操作系统)有一种被称为访问控制列表(ACL)的权限控制方法,它是一种权限分配之外的普遍范式。例如,默认情况下你需要确认3个权限组:owner、group和other。而使用ACL,你可以增加权限给其他用户或组别,而不单只是简单的"other"或者是拥有者不存在的组别。可以允许指定的用户A、B、C拥有写权限而不再是让他们整个组拥有写权限。

|

||||

|

||||

ACL支持多种Linux文件系统,包括ext2, ext3, ext4, XFS, Btfrs, 等。如果你不确定你的文件系统是否支持ACL,请参考文档。

|

||||

|

||||

@ -32,15 +32,15 @@ Archlinux 中:

|

||||

|

||||

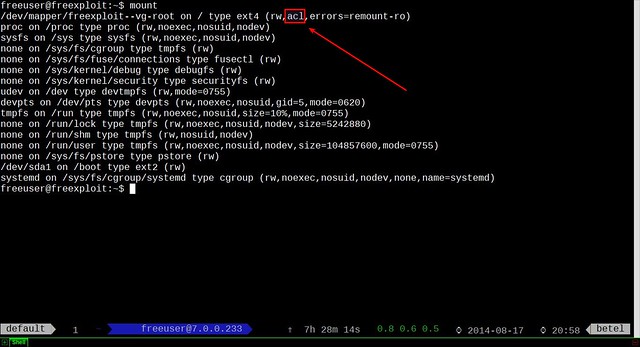

|

||||

|

||||

你可以注意到,我的root分区中ACL属性已经开启。万一你没有开启,你需要编辑/etc/fstab文件。增加acl标记,在你需要开启ACL的分区之前。

|

||||

你可以注意到,我的root分区中ACL属性已经开启。万一你没有开启,你需要编辑/etc/fstab文件,在你需要开启ACL的分区的选项前增加acl标记。

|

||||

|

||||

|

||||

|

||||

现在我们需要重新挂载分区(我喜欢完全重启,因为我不想丢掉数据),如果你对任何分区开启ACL,你必须也重新挂载它。

|

||||

现在我们需要重新挂载分区(我喜欢完全重启,因为我不想丢失数据),如果你对其它分区开启ACL,你必须也重新挂载它。

|

||||

|

||||

$ sudo mount / -o remount

|

||||

|

||||

令人敬佩!现在我们已经在我们的系统中开启ACL,让我们开始和它一起工作。

|

||||

干的不错!现在我们已经在我们的系统中开启ACL,让我们开始和它一起工作。

|

||||

|

||||

### ACL 范例 ###

|

||||

|

||||

@ -54,7 +54,6 @@ Archlinux 中:

|

||||

|

||||

我想要分享这个目录给其他两个用户test和test2,一个拥有完整权限,另一个只有读权限。

|

||||

|

||||

First, to set ACLs for user test:

|

||||

首先,为用户test设置ACL:

|

||||

|

||||

$ sudo setfacl -m u:test:rwx /shared

|

||||

@ -84,7 +83,7 @@ First, to set ACLs for user test:

|

||||

|

||||

|

||||

|

||||

你可以注意到,正常权限后多一个+标记。这表示ACL已经设置成功。为了真正读取ACL,我们需要运行:

|

||||

你可以注意到,正常权限后多一个+标记。这表示ACL已经设置成功。要具体看一下ACL,我们需要运行:

|

||||

|

||||

$ sudo getfacl /shared

|

||||

|

||||

@ -102,11 +101,11 @@ First, to set ACLs for user test:

|

||||

|

||||

|

||||

|

||||

最后一件事。在设置了ACL文件或目录工作时,cp和mv命令会改变这些设置。在cp的情况下,需要添加“p”参数来复制ACL设置。如果这不可行,它将会展示一个警告。mv默认移动ACL设置,如果这也不可行,它也会向您展示一个警告。

|

||||

最后,在设置了ACL文件或目录工作时,cp和mv命令会改变这些设置。在cp的情况下,需要添加“p”参数来复制ACL设置。如果这不可行,它将会展示一个警告。mv默认移动ACL设置,如果这也不可行,它也会向您展示一个警告。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

使用ACL给了在你想要分享的文件上巨大的权利和控制,特别是在NFS/Samba服务。此外,如果你的主管共享主机,这个工具是必备的。

|

||||

使用ACL让在你想要分享的文件上拥有更多的能力和控制,特别是在NFS/Samba服务。此外,如果你的主管共享主机,这个工具是必备的。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -114,7 +113,7 @@ via: http://xmodulo.com/2014/08/configure-access-control-lists-acls-linux.html

|

||||

|

||||

作者:[Christopher Valerio][a]

|

||||

译者:[VicYu](http://www.vicyu.net)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,9 +1,10 @@

|

||||

Linux有问必答——如何在CentOS上安装Shutter

|

||||

Linux有问必答:如何在CentOS上安装Shutter

|

||||

================================================================================

|

||||

> **问题**:我想要在我的CentOS桌面上试试Shutter屏幕截图程序,但是,当我试着用yum来安装Shutter时,它总是告诉我“没有shutter包可用”。我怎样才能在CentOS上安装Shutter啊?

|

||||

|

||||

[Shutter][1]是一个用于Linux桌面的开源(GPLv3)屏幕截图工具。它打包有大量用户友好的功能,这让它成为Linux中功能最强大的屏幕截图程序之一。你可以用Shutter来捕捉一个规则区域、一个窗口、整个桌面屏幕、或者甚至是来自任意专用地址的一个网页的截图。除此之外,你也可以用它内建的图像编辑器来对捕获的截图进行编辑,应用不同的效果,将图像导出为不同的图像格式(svg,pdf,ps),或者上传图片到公共图像主机或者FTP站点。

|

||||

Shutter is not available as a pre-built package on CentOS (as of version 7). Fortunately, there exists a third-party RPM repository called Nux Dextop, which offers Shutter package. So [enable Nux Dextop repository][2] on CentOS. Then use the following command to install Shutter.

|

||||

|

||||

Shutter 在 CentOS (截止至版本 7)上没有预先构建好的软件包。幸运的是,有一个第三方提供的叫做 Nux Dextop 的 RPM 中提供了 Shutter 软件包。 所以在 CentOS 上[启用 Nux Dextop 软件库][2],然后使用下列命令来安装它:

|

||||

|

||||

$ sudo yum --enablerepo=nux-dextop install shutter

|

||||

|

||||

@ -14,9 +15,9 @@ Shutter is not available as a pre-built package on CentOS (as of version 7). For

|

||||

via: http://ask.xmodulo.com/install-shutter-centos.html

|

||||

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://shutter-project.org/

|

||||

[2]:http://ask.xmodulo.com/enable-nux-dextop-repository-centos-rhel.html

|

||||

[2]:http://linux.cn/article-3889-1.html

|

||||

@ -1,6 +1,6 @@

|

||||

Google drive和Ubuntu 14.04 LTS的胶合

|

||||

墙外香花:Google drive和Ubuntu 14.04 LTS的胶合

|

||||

================================================================================

|

||||

Google尚未发布其**官方Linux客户端**,以用于从Ubuntu访问其drive。然开源社区却业已开发完毕非官方之软件包‘**grive-tools**’。

|

||||

Google尚未发布用于从Ubuntu访问其drive的**官方Linux客户端**。然开源社区却业已开发完毕非官方之软件包‘**grive-tools**’。

|

||||

|

||||

Grive乃是Google Drive(**在线存储服务**)的GNU/Linux系统客户端,允许你**同步**所选目录到云端,以及上传新文件到Google Drive。

|

||||

|

||||

@ -22,7 +22,7 @@ Grive乃是Google Drive(**在线存储服务**)的GNU/Linux系统客户端

|

||||

|

||||

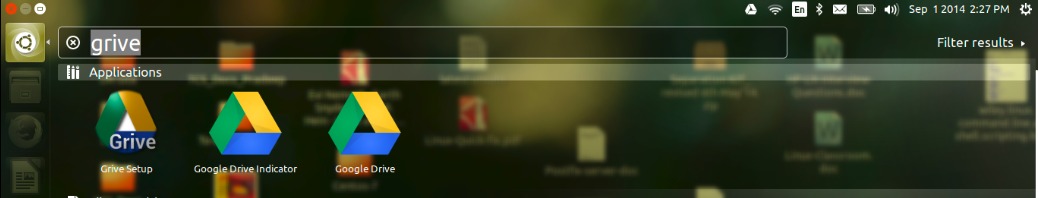

**步骤:1** 安装完了,通过输入**Grive**在**Unity Dash**搜索应用,并打开之。

|

||||

|

||||

|

||||

|

||||

|

||||

**步骤:2** 登入google drive,你将被问及访问google drive的权限。

|

||||

|

||||

@ -36,25 +36,25 @@ Grive乃是Google Drive(**在线存储服务**)的GNU/Linux系统客户端

|

||||

|

||||

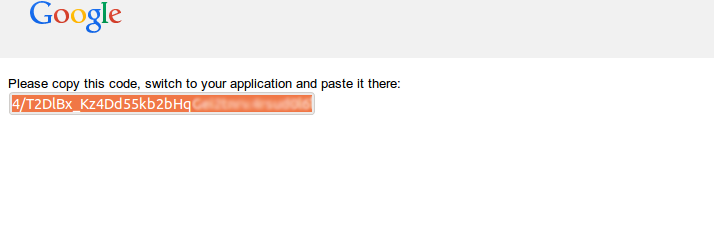

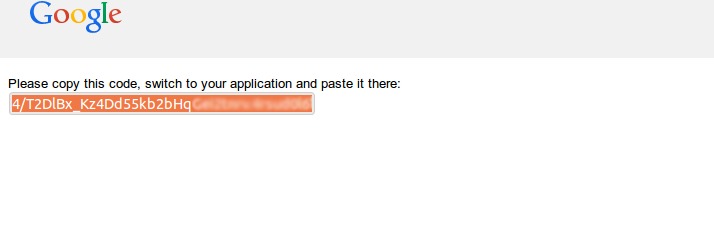

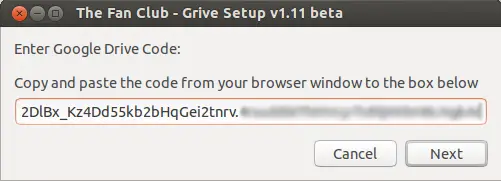

**步骤:3** 下面将提供给你一个 **google代码**,复制并粘贴到**Grive设置框**内。

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

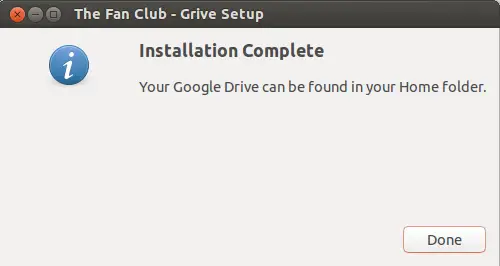

点击下一步后,将会开始同步google drive到你**家目录**下的‘**Google Drive**’文件夹。完成后,将出现如下窗口。

|

||||

|

||||

|

||||

|

||||

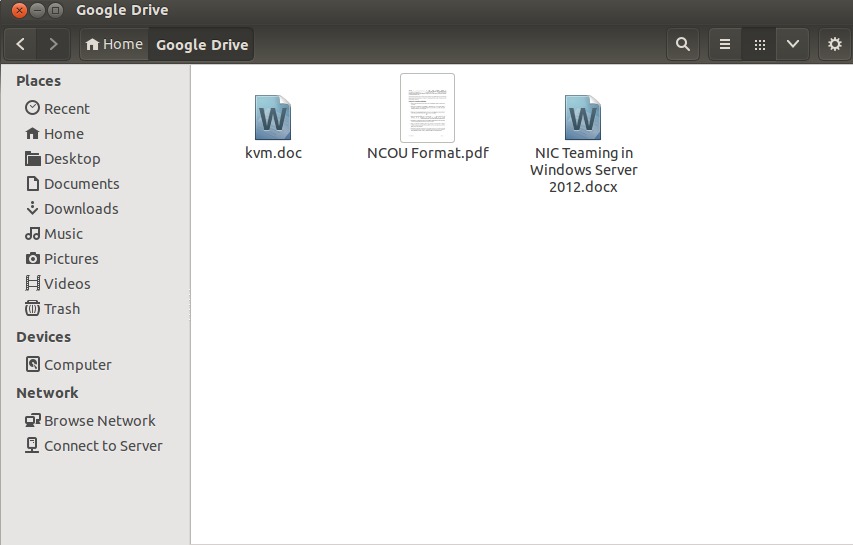

Google Drive folder created under **user's home directory**

|

||||

Google Drive 文件夹会创建在**用户的主目录**下。

|

||||

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.linuxtechi.com/mount-google-drive-in-ubuntu/

|

||||

|

||||

作者:[Pradeep Kumar ][a]

|

||||

作者:[Pradeep Kumar][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

Linux有问必答——如何使用tcpdump来捕获TCP SYN,ACK和FIN包

|

||||

Linux有问必答:如何使用tcpdump来捕获TCP SYN,ACK和FIN包

|

||||

================================================================================

|

||||

> **问题**:我想要监控TCP连接活动(如,建立连接的三次握手,以及断开连接的四次握手)。要完成此事,我只需要捕获TCP控制包,如SYN,ACK或FIN标记相关的包。我怎样使用tcpdump来仅仅捕获TCP SYN,ACK和/或FYN包?

|

||||

|

||||

作为事实上的捕获工具,tcpdump提供了强大而又灵活的包过滤功能。作为tcpdump基础的libpcap包捕获引擎支持标准的包过滤规则,如基于5重包头的过滤(如基于源/目的IP地址/端口和IP协议类型)。

|

||||

作为业界标准的捕获工具,tcpdump提供了强大而又灵活的包过滤功能。作为tcpdump基础的libpcap包捕获引擎支持标准的包过滤规则,如基于5重包头的过滤(如基于源/目的IP地址/端口和IP协议类型)。

|

||||

|

||||

tcpdump/libpcap的包过滤规则也支持更多通用分组表达式,在这些表达式中,包中的任意字节范围都可以使用关系或二进制操作符进行检查。对于字节范围表达,你可以使用以下格式:

|

||||

|

||||

@ -34,8 +34,8 @@ tcpdump/libpcap的包过滤规则也支持更多通用分组表达式,在这

|

||||

|

||||

via: http://ask.xmodulo.com/capture-tcp-syn-ack-fin-packets-tcpdump.html

|

||||

|

||||

作者:[作者名][a]

|

||||

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -1,15 +1,14 @@

|

||||

Linux FAQ - Ubuntu如何使用命令行移除PPA仓库

|

||||

Linux有问必答:Ubuntu如何使用命令行移除PPA仓库

|

||||

================================================================================

|

||||

> **问题**: 前段时间,我的Ubuntu增加了一个第三方的PPA仓库,如何才能移除这个PPA仓库呢?

|

||||

|

||||

个人软件包档案(PPA)是Ubuntu独有的解决方案,允许独立开发者和贡献者构建、贡献任何定制的软件包来作为通过启动面板的第三方APT仓库。如果你是Ubuntu用户,有可能你已经增加一些流行的第三方PPA仓库到你的Ubuntu系统。如果你需要删除掉已经预先配置好的PPA仓库,下面将教你怎么做。

|

||||

|

||||

|

||||

假如你有一个第三方PPA仓库叫“ppa:webapps/preview”增加到了你的系统中,如下。

|

||||

假如你想增加一个叫“ppa:webapps/preview”第三方PPA仓库到你的系统中,如下:

|

||||

|

||||

$ sudo add-apt-repository ppa:webapps/preview

|

||||

|

||||

如果你想要 **单独地删除一个PPA仓库**,运行下面的命令。

|

||||

如果你想要 **单独地删除某个PPA仓库**,运行下面的命令:

|

||||

|

||||

$ sudo add-apt-repository --remove ppa:someppa/ppa

|

||||

|

||||

@ -17,22 +16,22 @@ Linux FAQ - Ubuntu如何使用命令行移除PPA仓库

|

||||

|

||||

如果你想要 **完整的删除一个PPA仓库并包括来自这个PPA安装或更新过的软件包**,你需要ppa-purge命令。

|

||||

|

||||

安装ppa-purge软件包:

|

||||

首先要安装ppa-purge软件包:

|

||||

|

||||

$ sudo apt-get install ppa-purge

|

||||

|

||||

删除PPA仓库和与之相关的软件包,运行下列命令:

|

||||

然后使用如下命令删除PPA仓库和与之相关的软件包:

|

||||

|

||||

$ sudo ppa-purge ppa:webapps/preview

|

||||

|

||||

特别滴,在发行版更新后,你需要[分辨和清除已损坏的PPA仓库][1],这个方法特别有用!

|

||||

特别滴,在发行版更新后,当你[分辨和清除已损坏的PPA仓库][1]时这个方法特别有用!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/how-to-remove-ppa-repository-from-command-line-on-ubuntu.html

|

||||

|

||||

译者:[Vic___](http://www.vicyu.net)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

Linux有问必答-- 如何用Perl检测Linux的发行版本

|

||||

Linux有问必答:如何用Perl检测Linux的发行版本

|

||||

================================================================================

|

||||

> **提问**:我需要写一个Perl程序,它会包含Linux发行版相关的代码。为此,Perl程序需要能够自动检测运行中的Linux的发行版(如Ubuntu、CentOS、Debian、Fedora等等),以及它是什么版本号。如何用Perl检测Linux的发行版本?

|

||||

|

||||

如果要用Perl脚本检测Linux的发行版,你可以使用一个名为[Linux::Distribution][1]的Perl模块。该模块通过检查/etc/lsb-release以及其他特定的/etc下的发行版特定的目录来猜测底层Linux操作系统。它支持检测所有主要的Linux发行版,包括Fedora、CentOS、Arch Linux、Debian、Ubuntu、SUSE、Red Hat、Gentoo、Slackware、Knoppix和Mandrake。

|

||||

如果要用Perl脚本检测Linux的发行版,你可以使用一个名为[Linux::Distribution][1]的Perl模块。该模块通过检查/etc/lsb-release以及其他在/etc下的发行版特定的目录来猜测底层Linux操作系统。它支持检测所有主要的Linux发行版,包括Fedora、CentOS、Arch Linux、Debian、Ubuntu、SUSE、Red Hat、Gentoo、Slackware、Knoppix和Mandrake。

|

||||

|

||||

要在Perl中使用这个模块,你首先需要安装它。

|

||||

|

||||

@ -20,7 +20,7 @@ Linux有问必答-- 如何用Perl检测Linux的发行版本

|

||||

|

||||

$ sudo yum -y install perl-CPAN

|

||||

|

||||

使用这条命令来构建并安装模块:

|

||||

然后,使用这条命令来构建并安装模块:

|

||||

|

||||

$ sudo perl -MCPAN -e 'install Linux::Distribution'

|

||||

|

||||

@ -46,7 +46,7 @@ Linux::Distribution模块安装完成之后,你可以使用下面的代码片

|

||||

via: http://ask.xmodulo.com/detect-linux-distribution-in-perl.html

|

||||

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -47,6 +47,6 @@ via: http://www.unixmen.com/reset-root-password-centos-7/

|

||||

|

||||

作者:M.el Khamlichi

|

||||

译者:[su-kaiyao](https://github.com/su-kaiyao)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

@ -2,7 +2,7 @@

|

||||

================================================================================

|

||||

|

||||

|

||||

快速地向你展示**如何检查你的系统是否受到Shellshock的影响**如果有,**怎样修复你的系统免于被Bash漏洞利用**。

|

||||

快速地向你展示**如何检查你的系统是否受到Shellshock的影响**,如果有,**怎样修复你的系统免于被Bash漏洞利用**。

|

||||

|

||||

如果你正跟踪新闻,你可能已经听说过在[Bash][1]中发现了一个漏洞,这被称为**Bash Bug**或者** Shellshock**。 [红帽][2]是第一个发现这个漏洞的机构。Shellshock错误允许攻击者注入自己的代码,从而使系统开放各给种恶意软件和远程攻击。事实上,[黑客已经利用它来启动DDoS攻击][3]。

|

||||

|

||||

@ -55,7 +55,7 @@ via: http://itsfoss.com/linux-shellshock-check-fix/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,27 +1,27 @@

|

||||

7 Improvements The Linux Desktop Needs

|

||||

7个Linux桌面需要改善之处

|

||||

======================================

|

||||

|

||||

In the last fifteen years, the Linux desktop has gone from a collection of marginally adequate solutions to an unparalleled source of innovation and choice. Many of its standard features are either unavailable in Windows, or else available only as a proprietary extension. As a result, using Linux is increasingly not only a matter of principle, but of preference as well.

|

||||

在过去的15年内,Linux桌面从一个还算凑合的边缘化解决方案集合发展到了一个空前的创新和选择源。它的标准特点中有许多是要么不适用于Windows系统,要么就只适合作为一个专有的扩展。因此,使用Linux愈发变得不仅是一个原则问题,也是一种偏好。

|

||||

|

||||

Yet, despite this progress, gaps remain. Some are missing features, others missing features, and still others pie-in-the sky extras that could be easily implemented to extend the desktop metaphor without straining users' tolerance of change.

|

||||

然而,尽管Linux桌面不停在进步,但是仍然存在差距。一些正在丢失它们的特点,一些已经丢失了,还有一些如同天上掉的馅饼般的附加设备能轻易地实现在不考虑用户对于改变的容忍度的情况下来扩展桌面。

|

||||

|

||||

For instance, here are 7 improvements that would benefit the Linux desktop:

|

||||

比如说,以下是7个有利于Linux桌面发展的改善建议:

|

||||

|

||||

### 7. Easy Email Encryption

|

||||

### 7. 简单的Email加密技术

|

||||

|

||||

These days, every email reader from Alpine to Thunderbird and Kmail include email encryption. However, documentation is often either non-existent or poor.

|

||||

如今,每个Email阅读器从Alpine到Thunderbird再到Kmail,都包括了Email加密技术。然而,文件编制通常是不存在或者非常劣质。

|

||||

|

||||

But, even if you understand the theory, the practice is difficult. Controls are generally scattered throughout the configuration menus and tabs, requiring a thorough search for all the settings that you require or want. Should you fail to set up encryption properly, usually you receive no feedback about why.

|

||||

但是,即使你理论上看懂了,但是实践起来还是很困难的。控件通常分散在整个配置菜单和选项卡中,需要为所有你需要和想要的设置进行一次彻底的搜索。如果你未能进行适当的加密,你就收不到反馈。

|

||||

|

||||

The closest to an easy process is [Enigmail][1], a Thunderbird extension that includes a setup wizard aimed at beginners. But you have to know about Enigmail to use it, and the menu it adds to the composition window buries the encryption option one level down and places it with other options guaranteed to mystify everyday users.

|

||||

最新的一个简易加密进程是 [Enigmail][1] ,一个包含面向初学者设置向导的Thunderbird扩展。但是你一定要知道怎么用Enigmail,而且菜单新增了合成窗口并把加密设置项添加了进去,如果把它弄到其它的设置里势必会使每个用户都难以理解。

|

||||

|

||||

No matter what the desktop, the assumption is that, if you want encrypted email, you already understand it. Today, though, the constant media references to security and privacy have ensured that such an assumption no longer applies.

|

||||

无论桌面怎么样,假设如果你想接收加密过的Email,你就要先知道密码。可如今,不断有媒体涉及安全和隐私方面就已经确定了这样的假设不再适用。

|

||||

|

||||

### 6. Thumbnails for Virtual Workspaces

|

||||

### 6. 虚拟工作空间缩略图

|

||||

|

||||

Virtual workspaces offer more desktop space without requiring additional monitors. Yet, despite their usefulness, management of virtual workspaces hasn't changed in over a decade. On most desktops, you control them through a pager in which each workspace is represented by an unadorned rectangle that gives few indications of what might be on it except for its name or number -- or, in the case of Ubuntu's Unity, which workspace is currently active.

|

||||

虚拟工作空间提供了更多不需要额外监听的桌面空间。然而,尽管它们很实用,但是虚拟工作空间的管理并没有在过去十年发生改变。在大多数桌面产品中,你控制On most desktops, you control them through a pager in which each workspace is represented by an unadorned rectangle that gives few indications of what might be on it except for its name or number -- or, in the case of Ubuntu's Unity, which workspace is currently active.

|

||||

|

||||

True, GNOME and Cinnamon do offer better views, but the usefulness of these views is limited by the fact that they require a change of screens. Nor is KDE's written list of contents, which is jarring in the primarily graphic-oriented desktop.

|

||||

确实,GNOME和Cinnamon能提供出不错的视图,但是它们的实用性受限于它们需要显示屏大小的事实和会与主要的图形化桌面发生冲突的KDE的内容书面列表。

|

||||

|

||||

A less distracting solution might be mouseover thumbnails large enough for those with normal vision to see exactly what is on each workspace.

|

||||

|

||||

@ -71,7 +71,7 @@ For years, Stardock Systems has been selling a Windows extension called [Fences]

|

||||

|

||||

In other words, fences automate the sort of arrangements that users make on their desktop all the time. Yet aside from one or two minor functions they share with KDE's Folder Views, fences remain completely unknown on Linux desktops. Perhaps the reason is that designers are focused on mobile devices as the source of ideas, and fences are decidedly a feature of the traditional workstation desktop.

|

||||

|

||||

### Personalized Lists

|

||||

### 个性化列表

|

||||

|

||||

As I made this list, what struck me was how few of the improvements were general. Several of these improvement would appeal largely to specific audiences, and only one even implies the porting of a proprietary application. At least one is cosmetic rather than functional.

|

||||

|

||||

@ -85,7 +85,7 @@ All of which raises the question: what other improvements do you think would ben

|

||||

|

||||

via: http://www.datamation.com/open-source/7-improvements-the-linux-desktop-needs-1.html

|

||||

|

||||

译者:[译者ID](https://github.com/译者ID) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[ZTinoZ](https://github.com/ZTinoZ) 校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

shaohaolin translating

|

||||

Can Ubuntu Do This? — Answers to The 4 Questions New Users Ask Most

|

||||

================================================================================

|

||||

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

[felixonmars translating...]

|

||||

|

||||

Upstream and Downstream: why packaging takes time

|

||||

================================================================================

|

||||

Here in the KDE office in Barcelona some people spend their time on purely upstream KDE projects and some of us are primarily interested in making distros work which mean our users can get all the stuff we make. I've been asked why we don't just automate the packaging and go and do more productive things. One view of making on a distro like Kubuntu is that its just a way to package up the hard work done by others to take all the credit. I don't deny that, but there's quite a lot to the packaging of all that hard work, for a start there's a lot of it these days.

|

||||

|

||||

@ -1,41 +0,0 @@

|

||||

Linus Torvalds Started a Revolution on August 25, 1991. Happy Birthday, Linux!

|

||||

================================================================================

|

||||

|

||||

Linus Torvalds

|

||||

|

||||

**The Linux project has just turned 23 and it's now the biggest collaborative endeavor in the world, with thousands of people working on it.**

|

||||

|

||||

Back in 1991, a young programmer called Linus Torvalds wanted to make a free operating system that wasn't going to be as big as the GNU project and that was just a hobby. He started something that would turn out to be the most successful operating system on the planet, but no one would have been able to guess it back then.

|

||||

|

||||

Linus Torvalds sent an email on August 25, 1991, asking for help in testing his new operating system. Things haven't changed all that much in the meantime and he still sends emails about new Linux releases, although back then it wasn't called like that.

|

||||

|

||||

"I'm doing a (free) operating system (just a hobby, won't be big and professional like gnu) for 386(486) AT clones. This has been brewing since april, and is starting to get ready. I'd like any feedback on things people like/dislike in minix, as my OS resembles it somewhat (same physical layout of the file-system (due to practical reasons) among other things). I've currently ported bash(1.08) and gcc(1.40), and things seem to work."

|

||||

|

||||

"This implies that I'll get something practical within a few months, and I'd like to know what features most people would want. Any suggestions are welcome, but I won't promise I'll implement them :-) PS. Yes - it's free of any minix code, and it has a multi-threaded fs. It is NOT protable (uses 386 task switching etc), and it probably never will support anything other than AT-harddisks, as that's all I have :-(. " [wrote][1] Linus Torvalds.

|

||||

|

||||

This is the entire mails that started it all, although it's interesting to see how things have evolved since then. The Linux operating system caught on, especially on the server market, but the power of Linux also extended in other areas.

|

||||

|

||||

In fact, it's hard to find any technology that hasn't been influenced by a Linus OS. Phones, TVs, fridges, minicomputers, consoles, tablets, and basically everything that has a chip in it is capable of running Linux or it already has some sort of Linux-based OS installed on it.

|

||||

|

||||

Linux is omnipresent on billions of devices and its influence is growing each year on an exponential basis. You might think that Linus is also the wealthiest man on the planet, but remember, Linux is free software and anyone can use it, modify it, and make money of it. He didn't do it for the money.

|

||||

|

||||

Linus Torvalds started a revolution in 1991, but it hasn't ended. In fact, you could say that it's just getting started.

|

||||

|

||||

> Happy Anniversary, Linux! Please join us in celebrating 23 years of the free OS that has changed the world. [pic.twitter.com/mTVApV85gD][2]

|

||||

>

|

||||

> — The Linux Foundation (@linuxfoundation) [August 25, 2014][3]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/Linus-Torvalds-Started-a-Revolution-on-August-25-1991-Happy-Birthday-Linux-456212.shtml

|

||||

|

||||

作者:[Silviu Stahie][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://news.softpedia.com/editors/browse/silviu-stahie

|

||||

[1]:https://groups.google.com/forum/#!original/comp.os.minix/dlNtH7RRrGA/SwRavCzVE7gJ

|

||||

[2]:http://t.co/mTVApV85gD

|

||||

[3]:https://twitter.com/linuxfoundation/statuses/503799441900314624

|

||||

@ -1,86 +0,0 @@

|

||||

(translating by runningwater)

|

||||

Why Do Some Old Programming Languages Never Die?

|

||||

================================================================================

|

||||

> We like what we already know.

|

||||

|

||||

|

||||

|

||||

Many of today’s most well-known programming languages are old enough to vote. PHP is 20. Python is 23. HTML is 21. Ruby and JavaScript are 19. C is a whopping 42 years old.

|

||||

|

||||

Nobody could have predicted this. Not even computer scientist [Brian Kernighan][1], co-author of the very first book on C, which is still being printed today. (The language itself was the work of Kernighan's [co-author Dennis Ritchie][2], who passed away in 2011.)

|

||||

|

||||

“I dimly recall a conversation early on with the editors, telling them that we’d sell something like 5,000 copies of the book,” Kernighan told me in a recent interview. “We managed to do better than that. I didn’t think students would still be using a version of it as a textbook in 2014.”

|

||||

|

||||

What’s especially remarkable about C's persistence is that Google developed a new language, Go, specifically to more efficiently solve the problems C solves now. Still, it’s hard for Kernighan to imagine something like Go outright killing C no matter how good it is.

|

||||

|

||||

“Most languages don’t die—or at least once they get to a certain level of acceptance they don’t die," he said. "C still solves certain problems better than anything else, so it sticks around.”

|

||||

|

||||

### Write What You Know ###

|

||||

|

||||

Why do some computer languages become more successful than others? Because developers choose to use them. That’s logical enough, but it gets tricky when you want to figure out why developers choose to use the languages they do.

|

||||

|

||||

Ari Rabkin and Leo Meyerovich are researchers from, respectively, Princeton and the University of California at Berkeley who devoted two years to answering just that question. Their resulting paper, [Empirical Analysis of Programming Language Adoption][3], describes their analysis of more than 200,000 Sourceforge projects and polling of more than 13,000 programmers.

|

||||

|

||||

Their main finding? Most of the time programmers choose programming languages they know.

|

||||

|

||||

“There are languages we use because we’ve always used them,” Rabkin told me. “For example, astronomers historically use IDL [Interactive Data Language] for their computer programs, not because it has special features for stars or anything, but because it has tremendous inertia. They have good programs they’ve built with it that they want to keep.”

|

||||

|

||||

In other words, it’s partly thanks to name recognition that established languages retain monumental staying power. Of course, that doesn’t mean popular languages don’t change. Rabkin noted that the C we use today is nothing like the language Kernighan first wrote about, which probably wouldn’t be fully compatible with a modern C compiler.

|

||||

|

||||

“There’s an old, relevant joke in which an engineer is asked which language he thinks people will be using in 30 years and he says, ‘I don’t know, but it’ll be called Fortran’,” Rabkin said. “Long-lived languages are not the same as how they were when they were designed in the '70s and '80s. People have mostly added things instead of removed because that doesn’t break backwards compatibility, but many features have been fixed.”

|

||||

|

||||

This backwards compatibility means that not only can programmers continue to use languages as they update programs, they also don’t need to go back and rewrite the oldest sections. That older ‘legacy code’ keeps languages around forever, but at a cost. As long as it’s there, people’s beliefs about a language will stick around, too.

|

||||

|

||||

### PHP: A Case Study Of A Long-Lived Language ###

|

||||

|

||||

Legacy code refers to programs—or portions of programs—written in outdated source code. Think, for instance, of key programming functions for a business or engineering project that are written in a language that no one supports. They still carry out their original purpose and are too difficult or expensive to rewrite in modern code, so they stick around, forcing programmers to turn handsprings to ensure they keep working even as other code changes around them.

|

||||

|

||||

Any language that's been around more than a few years has a legacy-code problem of some sort, and PHP is no exception. PHP is an interesting example because its legacy code is distinctly different from its modern code, in what proponents say—and critics admit—is a huge improvement.

|

||||

|

||||

Andi Gutmans is a co-inventor of the Zend Engine, the compiler that became standard by the time PHP4 came around. Gutmans said he and his partner originally wanted to improve PHP3, and were so successful that the original PHP inventor, Rasmus Lerdorf, joined their project. The result was a compiler for PHP4 and its successor, PHP5.

|

||||

|

||||

As a consequence, the PHP of today is quite different from its progenitor, the original PHP. Yet in Gutmans' view, the base of legacy code written in older PHP versions keeps alive old prejudices against the language—such as the notion that PHP is riddled with security holes, or that it can't "scale" to handle large computing tasks.

|

||||

|

||||

"People who criticize PHP are usually criticizing where it was in 1998,” he says. “These people are not up-to-date with where it is today. PHP today is a very mature ecosystem.”

|

||||

|

||||

Today, Gutmans says, the most important thing for him as a steward is to encouraging people to keep updating to the latest versions. “PHP is a big enough community now that you have big legacy code bases," he says. "But generally speaking, most of our communities are on PHP5.3 at minimum.”

|

||||

|

||||

The issue is that users never fully upgrade to the latest version of any language. It’s why many Python users are still using Python 2, released in 2000, instead of Python 3, released in 2008. Even after six years major users like Google still aren’t upgrading. There are a variety of reasons for this, but it made many developers wary about taking the plunge.

|

||||

|

||||

“Nothing ever dies," Rabkin says. "Any language with legacy code will last forever. Rewrites are expensive and if it’s not broke don’t fix it.”

|

||||

|

||||

### Developer Brains As Scarce Resources ###

|

||||

|

||||

Of course, developers aren’t choosing these languages merely to maintain pesky legacy code. Rabkin and Meyerovich found that when it comes to language preference, age is just a number. As Rabkin told me:

|

||||

|

||||

> A thing that really shocked us and that I think is important is that we grouped people by age and asked them how many languages they know. Our intuition was that it would gradually rise over time; it doesn’t. Twenty-five-year-olds and 45-year-olds all know about the same number of languages. This was constant through several rewordings of the question. Your chance of knowing a given language does not vary with your age.

|

||||

|

||||

In other words, it’s not just old developers who cling to the classics; young programmers are also discovering and adopting old languages for the first time. That could be because the languages have interesting libraries and features, or because the communities these developers are a part of have adopted the language as a group.

|

||||

|

||||

“There’s a fixed amount of programmer attention in the world,” said Rabkin. “If a language delivers enough distinctive value, people will learn it and use it. If the people you exchange code and knowledge with you share a language, you’ll want to learn it. So for example, as long as those libraries are Python libraries and community expertise is Python experience, Python will do well.”

|

||||

|

||||

Communities are a huge factor in how languages do, the researchers discovered. While there's not much difference between high level languages like Python and Ruby, for example, programmers are prone to develop strong feelings about the superiority of one over the other.

|

||||

|

||||

“Rails didn’t have to be written in Ruby, but since it was, it proves there were social factors at work,” Rabkin says. “For example, the thing that resurrected Objective-C is that the Apple engineering team said, ‘Let’s use this.’ They didn’t have to pick it.”

|

||||

|

||||

Through social influence and legacy code, our oldest and most popular computer languages have powerful inertia. How could Go surpass C? If the right people and companies say it ought to.

|

||||

|

||||

“It comes down to who is better at evangelizing a language,” says Rabkin.

|

||||

|

||||

Lead image by [Blake Patterson][4]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://readwrite.com/2014/09/02/programming-language-coding-lifetime

|

||||

|

||||

作者:[Lauren Orsini][a]

|

||||

译者:[runningwater](https://github.com/runningwater)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://readwrite.com/author/lauren-orsini

|

||||

[1]:http://en.wikipedia.org/wiki/Brian_Kernighan

|

||||

[2]:http://en.wikipedia.org/wiki/Dennis_Ritchie

|

||||

[3]:http://asrabkin.bitbucket.org/papers/oopsla13.pdf

|

||||

[4]:https://www.flickr.com/photos/blakespot/2444037775/

|

||||

@ -1,3 +1,5 @@

|

||||

[felixonmars translating...]

|

||||

|

||||

10 Open Source Cloning Software For Linux Users

|

||||

================================================================================

|

||||

> These cloning software take all disk data, convert them into a single .img file and you can copy it to another hard drive.

|

||||

@ -84,4 +86,4 @@ via: http://www.efytimes.com/e1/fullnews.asp?edid=148039

|

||||

[7]:http://doclone.nongnu.org/

|

||||

[8]:http://www.macrium.com/reflectfree.aspx

|

||||

[9]:http://www.runtime.org/driveimage-xml.htm

|

||||

[10]:http://www.paragon-software.com/home/br-free/

|

||||

[10]:http://www.paragon-software.com/home/br-free/

|

||||

|

||||

@ -1,108 +0,0 @@

|

||||

barney-ro translating

|

||||

|

||||

ChromeOS vs Linux: The Good, the Bad and the Ugly

|

||||

ChromeOS 对战 Linux : 孰优孰劣 仁者见仁 智者见智

|

||||

================================================================================

|

||||

> In the battle between ChromeOS and Linux, both desktop environments have strengths and weaknesses.

|

||||

|

||||

> 在 ChromeOS 和 Linux 的斗争过程中,不管是哪一家的操作系统都是有优有劣。

|

||||

|

||||

Anyone who believes Google isn't "making a play" for desktop users isn't paying attention. In recent years, I've seen [ChromeOS][1] making quite a splash on the [Google Chromebook][2]. Exploding with popularity on sites such as Amazon.com, it looks as if ChromeOS could be unstoppable.

|

||||

|

||||

任何不关注Google的人都不会相信Google在桌面用户当中扮演这一个很重要的角色。在近几年,我们见到的[ChromeOS][1]制造的[Google Chromebook][2]相当的轰动。和同期的人气火爆的Amazon一样,似乎ChromeOS势不可挡。

|

||||

|

||||

In this article, I'm going to look at ChromeOS as a concept to market, how it's affecting Linux adoption and whether or not it's a good/bad thing for the Linux community as a whole. Plus, I'll talk about the biggest issue of all and how no one is doing anything about it.

|

||||

|

||||

在本文中,我们要了解的是ChromeOS概念的市场,ChromeOS怎么影响着Linux的使用,和整个 ChromeOS 对于一个社区来说,是好事还是坏事。另外,我将会谈到一些重大的事情,和为什么没人去为他做点什么事情。

|

||||

|

||||

### ChromeOS isn't really Linux ###

|

||||

|

||||

### ChromeOS 并不是真正的Linux ###

|

||||

|

||||

When folks ask me if ChromeOS is a Linux distribution, I usually reply that ChromeOS is to Linux what OS X is to BSD. In other words, I consider ChromeOS to be a forked operating system that uses the Linux kernel under the hood. Much of the operating system is made up of Google's own proprietary blend of code and software.

|

||||

|

||||

每当有朋友问我说是否ChromeOS 是否是Linux 的一个分支时,我都会这样回答:ChromeOS 对于Linux 就好像是 OS X 对于BSD 。换句话说,我认为,ChromeOS 是一个派生的操作系统,运行于Linux 内核的引擎之下。很多操作系统就组成了Google 的专利代码和软件。

|

||||

|

||||

So while the ChromeOS is using the Linux kernel under its hood, it's still very different from what we might find with today's modern Linux distributions.

|

||||

|

||||

尽管ChromeOS 是利用了Linux 内核引擎,但是它仍然有很大的不同和现在流行的Linux分支版本。

|

||||

|

||||

Where ChromeOS's difference becomes most apparent, however, is in the apps it offers the end user: Web applications. With everything being launched from a browser window, Linux users might find using ChromeOS to be a bit vanilla. But for non-Linux users, the experience is not all that different than what they may have used on their old PCs.

|

||||

|

||||

ChromeOS和它们最大的不同就在于它给终端用户提供的app,包括Web 应用。因为ChromeOS 每一个操作都是开始于浏览器窗口,对于Linux 用户来说,可能会有很多不一样的感受,但是,对于没有Linux 经验的用户来说,这与他们使用的旧电脑并没有什么不同。

|

||||

|

||||

For example: Anyone who is living a Google-centric lifestyle on Windows will feel right at home on ChromeOS. Odds are this individual is already relying on the Chrome browser, Google Drive and Gmail. By extension, moving over to ChromeOS feels fairly natural for these folks, as they're simply using the browser they're already used to.

|

||||

|

||||

就是说,每一个以Google-centric为生活方式的人来说,当他们回到家时在ChromeOS上的感觉将会非常良好。这样的优势就是这个人已经接受了Chrome 浏览器,Google 驱动器和Gmail 。久而久之,他们的亲朋好友也都对ChromeOs有了好感,就好像是他们很容易接受Chrome 流浪器,因为他们早已经用过。

|

||||

|

||||

Linux enthusiasts, however, tend to feel constrained almost immediately. Software choices feel limited and boxed in, plus games and VoIP are totally out of the question. Sorry, but [GooglePlus Hangouts][3] isn't a replacement for [VoIP][4] software. Not even by a long shot.

|

||||

|

||||

然而,对于Linux 爱好者来说,这样就立即带来了不适应。软件的选择是受限制的,盒装的,在加上游戏和VoIP 是完全不可能的。对不起,因为[GooglePlus Hangouts][3]是代替不了VoIP 软件的。甚至在很长的一段时间里。

|

||||

|

||||

### ChromeOS or Linux on the desktop ###

|

||||

|

||||

### ChromeOS 和Linux 的桌面化 ###

|

||||

Anyone making the claim that ChromeOS hurts Linux adoption on the desktop needs to come up for air and meet non-technical users sometime.

|

||||

|

||||

有人断言,ChromeOS 要是想在桌面系统中对Linux 产生影响,只有在Linux 停下来浮出水面换气的时候或者是满足某个非技术用户的时候。

|

||||

|

||||

Yes, desktop Linux is absolutely fine for most casual computer users. However it helps to have someone to install the OS and offer "maintenance" services like we see in the Windows and OS X camps. Sadly Linux lacks this here in the States, which is where I see ChromeOS coming into play.

|

||||

|

||||

是的,桌面Linux 对于大多数休闲型的用户来说绝对是一个好东西。它有助于有专人安装操作系统,并且提供“维修”服务,从windows 和 OS X 的阵营来看。但是,令人失望的是,在美国Linux 正好在这个方面很缺乏。所以,我们看到,ChromeOS 慢慢的走入我们的视线。

|

||||

|

||||

I've found the Linux desktop is best suited for environments where on-site tech support can manage things on the down-low. Examples include: Homes where advanced users can drop by and handle updates, governments and schools with IT departments. These are environments where Linux on the desktop is set up to be used by users of any skill level or background.

|

||||

|

||||

By contrast, ChromeOS is built to be completely maintenance free, thus not requiring any third part assistance short of turning it on and allowing updates to do the magic behind the scenes. This is partly made possible due to the ChromeOS being designed for specific hardware builds, in a similar spirit to how Apple develops their own computers. Because Google has a pulse on the hardware ChromeOS is bundled with, it allows for a generally error free experience. And for some individuals, this is fantastic!

|

||||

|

||||

Comically, the folks who exclaim that there's a problem here are not even remotely the target market for ChromeOS. In short, these are passionate Linux enthusiasts looking for something to gripe about. My advice? Stop inventing problems where none exist.

|

||||

|

||||

The point is: the market share for ChromeOS and Linux on the desktop are not even remotely the same. This could change in the future, but at this time, these two groups are largely separate.

|

||||

|

||||

### ChromeOS use is growing ###

|

||||

|

||||

No matter what your view of ChromeOS happens to be, the fact remains that its adoption is growing. New computers built for ChromeOS are being released all the time. One of the most recent ChromeOS computer releases is from Dell. Appropriately named the [Dell Chromebox][5], this desktop ChromeOS appliance is yet another shot at traditional computing. It has zero software DVDs, no anti-malware software, and offfers completely seamless updates behind the scenes. For casual users, Chromeboxes and Chromebooks are becoming a viable option for those who do most of their work from within a web browser.

|

||||

|

||||

Despite this growth, ChromeOS appliances face one huge downside – storage. Bound by limited hard drive size and a heavy reliance on cloud storage, ChromeOS isn't going to cut it for anyone who uses their computers outside of basic web browser functionality.

|

||||

|

||||

### ChromeOS and Linux crossing streams ###

|

||||

|

||||

Previously, I mentioned that ChromeOS and Linux on the desktop are in two completely separate markets. The reason why this is the case stems from the fact that the Linux community has done a horrid job at promoting Linux on the desktop offline.

|

||||

|

||||

Yes, there are occasional events where casual folks might discover this "Linux thing" for the first time. But there isn't a single entity to then follow up with these folks, making sure they’re getting their questions answered and that they're getting the most out of Linux.

|

||||

|

||||

In reality, the likely offline discovery breakdown goes something like this:

|

||||

|

||||

- Casual user finds out Linux from their local Linux event.

|

||||

- They bring the DVD/USB device home and attempt to install the OS.

|

||||

- While some folks very well may have success with the install process, I've been contacted by a number of folks with the opposite experience.

|

||||

- Frustrated, these folks are then expected to "search" online forums for help. Difficult to do on a primary computer experiencing network or video issues.

|

||||

- Completely fed up, some of the above frustrated bring their computers back into a Windows shop for "repair." In addition to Windows being re-installed, they also receive an earful about how "Linux isn't for them" and should be avoided.

|

||||

|

||||

Some of you might charge that the above example is exaggerated. I would respond with this: It's happened to people I know personally and it happens often. Wake up Linux community, our adoption model is broken and tired.

|

||||

|

||||

### Great platforms, horrible marketing and closing thoughts ###

|

||||

|

||||

If there is one thing that I feel ChromeOS and Linux on the desktop have in common...besides the Linux kernel, it's that they both happen to be great products with rotten marketing. The advantage however, goes to Google with this one, due to their ability to spend big money online and reserve shelf space at big box stores.

|

||||

|

||||

Google believes that because they have the "online advantage" that offline efforts aren't really that important. This is incredibly short-sighted and reflects one of Google's biggest missteps. The belief that if you're not exposed to their online efforts, you're not worth bothering with, is only countered by local shelf-space at select big box stores.

|

||||

|

||||

My suggestion is this – offer Linux on the desktop to the ChromeOS market through offline efforts. This means Linux User Groups need to start raising funds to be present at county fairs, mall kiosks during the holiday season and teaching free classes at community centers. This will immediately put Linux on the desktop in front of the same audience that might otherwise end up with a ChromeOS powered appliance.

|

||||

|

||||

If local offline efforts like this don't happen, not to worry. Linux on the desktop will continue to grow as will the ChromeOS market. Sadly though, it will absolutely keep the two markets separate as they are now.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.datamation.com/open-source/chromeos-vs-linux-the-good-the-bad-and-the-ugly-1.html

|

||||

|

||||

作者:[Matt Hartley][a]

|

||||

译者:[barney-ro](https://github.com/barney-ro)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.datamation.com/author/Matt-Hartley-3080.html

|

||||

[1]:http://en.wikipedia.org/wiki/Chrome_OS

|

||||

[2]:http://www.google.com/chrome/devices/features/

|

||||

[3]:https://plus.google.com/hangouts

|

||||

[4]:http://en.wikipedia.org/wiki/Voice_over_IP

|

||||

[5]:http://www.pcworld.com/article/2602845/dell-brings-googles-chrome-os-to-desktops.html

|

||||

@ -1,3 +1,5 @@

|

||||

barney-ro translating

|

||||

|

||||

What is a good subtitle editor on Linux

|

||||

================================================================================

|

||||

If you watch foreign movies regularly, chances are you prefer having subtitles rather than the dub. Grown up in France, I know that most Disney movies during my childhood sounded weird because of the French dub. If now I have the chance to be able to watch them in their original version, I know that for a lot of people subtitles are still required. And I surprise myself sometimes making subtitles for my family. Hopefully for me, Linux is not devoid of fancy and open source subtitle editors. In short, this is the non-exhaustive list of open source subtitle editors for Linux. Share your opinion on what you think of the best subtitle editor.

|

||||

@ -47,7 +49,7 @@ Which subtitle editor do you use and why? Or is there another one that you prefe

|

||||

via: http://xmodulo.com/good-subtitle-editor-linux.html

|

||||

|

||||

作者:[Adrien Brochard][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

译者:[barney-ro](https://github.com/barney-ro)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||