mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-15 01:50:08 +08:00

translating...

This commit is contained in:

parent

2614de75b7

commit

354cd21453

@ -1,50 +1,82 @@

|

||||

[kenxx](https://github.com/kenxx)

|

||||

|

||||

[Why (most) High Level Languages are Slow][7]

|

||||

[为什么(大部分)高级语言运行效率较慢Why (most) High Level Languages are Slow][7]

|

||||

============================================================

|

||||

|

||||

Contents

|

||||

内容

|

||||

|

||||

|

||||

* * [Cache costs review][1]

|

||||

* * [回顾缓存消耗问题Cache costs review][1]

|

||||

* [Why C# introduces cache misses][2]

|

||||

* [Garbage Collection][3]

|

||||

* [Closing remarks][5]

|

||||

* [垃圾回收Garbage Collection][3]

|

||||

* [结语Closing remarks][5]

|

||||

|

||||

|

||||

In the last month or two I’ve had basically the same conversation half a dozen times, both online and in real life, so I figured I’d just write up a blog post that I can refer to in the future.

|

||||

|

||||

上一两个月中,我不下六次的和线上线下的朋友讨论了这个话题,所以我干脆直接把它写在博客中,以便以后查阅。

|

||||

|

||||

The reason most high level languages are slow is usually because of two reasons:

|

||||

|

||||

大部分高级语言运行效率较慢的原因通常有两点:

|

||||

|

||||

1. They don’t play well with the cache.

|

||||

2. They have to do expensive garbage collections

|

||||

|

||||

1. 没有很好的利用缓存;

|

||||

2. 垃圾回收机制性能消耗高。

|

||||

|

||||

But really, both of these boil down to a single reason: the language heavily encourages too many allocations.

|

||||

|

||||

但事实上,这两个原因可以归因于:高级语言强烈地鼓励去使用很多的内存。

|

||||

|

||||

First, I’ll just state up front that for all of this I’m talking mostly about client-side applications. If you’re spending 99.9% of your time waiting on the network then it probably doesn’t matter how slow your language is – optimizing network is your main concern. I’m talking about applications where local execution speed is important.

|

||||

|

||||

首先,以下内容大部分是关于客户端应用的。如果你花费了 99.9% 的时间去等待网络,那么这很可能不是拖慢语言运行效率的原因——优化网络是优先考虑的问题。在本文中程序本地执行速度才是重要的。

|

||||

|

||||

I’m going to pick on C# as the specific example here for two reasons: the first is that it’s the high level language I use most often these days, and because if I used Java I’d get a bunch of C# fans telling me how it has value types and therefore doesn’t have these issues (this is wrong).

|

||||

|

||||

我将选用 C# 语言作为本文参考语言,其原因有二:首先这是个最近我常用的高级语言;其次如果我使用 Java 语言,许多使用 C# 的朋友会告诉我 C# 不会有这些问题,因为它有值类型(但这是错误的)。

|

||||

|

||||

In the following I will be talking about what happens when you write idiomatic code. When you work “with the grain” of the language. When you write code in the style of the standard libraries and tutorials. I’m not very interested in ugly workarounds as “proof” that there’s no problem. Yes, you can sometimes fight the language to avoid a particular issue, but that doesn’t make the language unproblematic.

|

||||

|

||||

接下来我将会讨论,出于编程习惯编写的代码、使用普遍编程方法(with the grain)的代码或使用库或教程中提到的常用代码来编写程序时会发生什么。我对编程时使用丑陋的解决方法来“证明”语言本身没问题这事没有兴趣,当然你可以和语言抗争来避免该语言独有的问题,但这并不能说明语言本身是没有问题的。

|

||||

|

||||

### Cache costs review

|

||||

|

||||

### 回顾缓存消耗问题

|

||||

|

||||

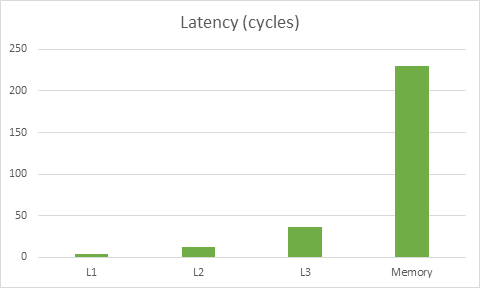

First, let’s review the importance of playing well with the cache. Here’s a graph based on [this data][10] on memory latencies for Haswell:

|

||||

|

||||

首先我们先来回顾一下合理使用缓存的重要性。下图是基于在 Haswell 架构下内存潜在因素对其影响的 [数据][10]:

|

||||

|

||||

|

||||

|

||||

The latency for this particular CPU to get to memory is about 230 cycles, meanwhile the cost of reading data from L1 is 4 cycles. The key takeaway here is that doing the wrong thing for the cache can make code ~50x slower. In fact, it may be even worse than that – modern CPUs can often do multiple things at once so you could be loading stuff from L1 while operating on stuff that’s already in registers, thus hiding the L1 load cost partially or completely.

|

||||

|

||||

内存的潜在因素对于这款 CPU 要读取内存的数据需要近 230 个运算周期,同时需要从 L1 缓冲区消耗 4 个运算周期来读取数据。此处的关键是当错误的去使用缓存可导致运行速度拖慢近 50 倍。事实上,这并不是最糟糕的——在现代的 CPU 中它们都能同时地做多种操作,所以当你一边加载 L1 缓冲区的内容的同时这个内容已经进入到了寄存器,因此数据从 L1 缓冲区加载这个过程的性能消耗被完整的隐藏了起来。

|

||||

|

||||

Without exaggerating we can say that aside from making reasonable algorithm choices, cache misses are the main thing you need to worry about for performance. Once you’re accessing data efficiently you can worry about fine tuning the actual operations you do. In comparison to cache misses, minor inefficiencies just don’t matter much.

|

||||

|

||||

撇开选择合理的算法不谈,不夸张地讲,在性能优化中你要考虑的最主要因素其实是缓存未命中。当你能够有效的访问一个数据时候,你才可以调整你的每个具体的操作。与缓存未命中问题相比,次要的低效问题对效率并没有什么过多的影响。

|

||||

|

||||

This is actually good news for language designers! You don’t _have_ to build the most efficient compiler on the planet, and you totally can get away with some extra overhead here and there for your abstractions (e.g. array bounds checking), all you need to do is make sure that your design makes it easy to write code that accesses data efficiently and programs in your language won’t have any problems running at speeds that are competitive with C.

|

||||

|

||||

这对于编程语言的设计者来说是一个好消息!你都_不需要_去建造一个最高效的编译器,你可以完全摆脱一些额外的开销(比如:数组边界检查),你只需要专注怎么设计能使你的语言访问数据更高效、又不用担心与 C 语言代码比较运行速度。

|

||||

|

||||

|

||||

### Why C# introduces cache misses

|

||||

|

||||

### 为什么介绍 C# 的缓存未命中问题

|

||||

|

||||

To put it bluntly, C# is a language that simply isn’t designed to run efficiently with modern cache realities in mind. Again, I’m now talking about the limitations of the design and the “pressure” it puts on the programmer to do things in inefficient ways. Many of these things have theoretical workarounds that you could do at great inconvenience. I’m talking about idiomatic code, what the language “wants” you to do.

|

||||

|

||||

坦率地讲,C# 在设计时就没打算在现代缓存中实现高效运行。我又一次提到程序语言设计的局限性以及其带给程序员无法编写高效的代码的“压力”。大部分的理论解决方法都非常的不便。我是在说那些编程语言“希望”你这样编写的习惯性代码。

|

||||

|

||||

The basic problem with C# is that it has very poor support for value-based programming. Yes, it has structs which are values that are stored “embedded” where they are declared (e.g. on the stack, or inside another object). But there are a several big issues with structs that make them more of a band-aid than a solution.

|

||||

|

||||

C# 最基本的问题是对于 `value-based` 低下的支持性

|

||||

|

||||

* You have to declare your data types as struct up front – which means that if you _ever_ need this type to exist as a heap allocation then _all_ of them need to be heap allocations. You could make some kind of class-wrapper for your struct and forward all the members but it’s pretty painful. It would be better if classes and structs were declared the same way and could be used in both ways on a case-by-case basis. So when something can live on the stack you declare it as a value, and when it needs to be on the heap you declare it as an object. This is how C++ works, for example. You’re not encouraged to make everything into an object-type just because there’s a few things here and there that need them on the heap.

|

||||

|

||||

* _Referencing_ values is extremely limited. You can pass values by reference to functions, but that’s about it. You can’t just grab a reference to an element in a List<int>, you have to store both a reference to the list and an index. You can’t grab a pointer to a stack-allocated value, or a value stored inside an object (or value). You can only copy them, unless you’re passing them to a function (by ref). This is all understandable, by the way. If type safety is a priority, it’s pretty difficult (though not imposible) to support flexible referencing of values while also guaranteeing type safety. The rationale behind these restrictions don’t change the fact that the restrictions are there, though.</int>

|

||||

|

||||

Loading…

Reference in New Issue

Block a user