diff --git a/published/20171216 Sysadmin 101- Troubleshooting.md b/published/20171216 Sysadmin 101- Troubleshooting.md

new file mode 100644

index 0000000000..0beb6eeb67

--- /dev/null

+++ b/published/20171216 Sysadmin 101- Troubleshooting.md

@@ -0,0 +1,138 @@

+系统管理员入门:排除故障

+======

+

+

+

+我通常会严格保持此博客的技术性,将观察、意见等内容保持在最低限度。但是,这篇和接下来的几篇文章将介绍刚进入系统管理/SRE/系统工程师/sysops/devops-ops(无论你想称自己是什么)角色的常见的基础知识。

+

+请跟我来!

+

+> “我的网站很慢!”

+

+我只是随机选择了本文的问题类型,这也可以应用于任何与系统管理员相关的故障排除。我并不是要炫耀那些可以发现最多的信息的最聪明的“金句”。它也不是一个详尽的、一步步指导的、并在最后一个方框中导向“利润”一词的“流程图”。

+

+我会通过一些例子展示常规的方法。

+

+示例场景仅用于说明本文目的。它们有时会做一些不适用于所有情况的假设,而且肯定会有很多读者在某些时候说“哦,但我觉得你会发现……”。

+

+但那可能会让我们错失重点。

+

+十多年来,我一直在从事于支持工作,或在支持机构工作,有一件事让我一次又一次地感到震惊,这促使我写下了这篇文章。

+

+**有许多技术人员在遇到问题时的本能反应,就是不管三七二十一去尝试可能的解决方案。**

+

+*“我的网站很慢,所以”,*

+

+* 我将尝试增大 `MaxClients`/`MaxRequestWorkers`/`worker_connections`

+* 我将尝试提升 `innodb_buffer_pool_size`/`effective_cache_size`

+* 我打算尝试启用 `mod_gzip`(遗憾的是,这是真实的故事)

+

+*“我曾经看过这个问题,它是因为某种原因造成的 —— 所以我估计还是这个原因,它应该能解决这个问题。”*

+

+这浪费了很多时间,并会让你在黑暗中盲目乱撞,胡乱鼓捣。

+

+你的 InnoDB 的缓冲池也许达到 100% 的利用率,但这可能只是因为有人运行了一段时间的一次性大型报告导致的。如果没有排除这种情况,那你就是在浪费时间。

+

+### 开始之前

+

+在这里,我应该说明一下,虽然这些建议同样适用于许多角色,但我是从一般的支持系统管理员的角度来撰写的。在一个成熟的内部组织中,或与规模较大的、规范管理的或“企业级”客户合作时,你通常会对一切都进行检测、测量、绘制、整理(甚至不是文字),并发出警报。那么你的方法也往往会有所不同。让我们在这里先忽略这种情况。

+

+如果你没有这种东西,那就随意了。

+

+### 澄清问题

+

+首先确定实际上是什么问题。“慢”可以是多种形式的。是收到第一个字节的时间吗?从糟糕的 Javascript 加载和每页加载要拉取 15 MB 的静态内容,这是一个完全不同类型的问题。是慢,还是比通常慢?这是两个非常不同的解决方案!

+

+在你着手做某事之前,确保你知道实际报告和遇到的问题。找到问题的根源通常很困难,但即便找不到也必须找到问题本身。

+

+否则,这相当于系统管理员带着一把刀去参加枪战。

+

+### 唾手可得

+

+首次登录可疑服务器时,你可以查找一些常见的嫌疑对象。事实上,你应该这样做!每当我登录到服务器时,我都会发出一些命令来快速检查一些事情:我们是否发生了页交换(`free` / `vmstat`),磁盘是否繁忙(`top` / `iostat` / `iotop`),是否有丢包(`netstat` / `proc` / `net` / `dev`),是否处于连接数过多的状态(`netstat`),有什么东西占用了 CPU(`top`),谁在这个服务器上(`w` / `who`),syslog 和 `dmesg` 中是否有引人注目的消息?

+

+如果你从 RAID 控制器得到 2000 条抱怨直写式缓存没有生效的消息,那么继续进行是没有意义的。

+

+这用不了半分钟。如果什么都没有引起你的注意 —— 那么继续。

+

+### 重现问题

+

+如果某处确实存在问题,并且找不到唾手可得的信息。

+

+那么采取所有步骤来尝试重现问题。当你可以重现该问题时,你就可以观察它。**当你能观察到时,你就可以解决。**如果在第一步中尚未显现出或覆盖了问题所在,询问报告问题的人需要采取哪些确切步骤来重现问题。

+

+对于由太阳耀斑或只能运行在 OS/2 上的客户端引起的问题,重现并不总是可行的。但你的第一个停靠港应该是至少尝试一下!在一开始,你所知道的是“某人认为他们的网站很慢”。对于那些人,他们可能还在用他们的 GPRS 手机,也可能正在安装 Windows 更新。你在这里挖掘得再深也是浪费时间。

+

+尝试重现!

+

+### 检查日志

+

+我对于有必要包括这一点感到很难过。但是我曾经看到有人在运行 `tail /var/log/...` 之后几分钟就不看了。大多数 *NIX 工具都特别喜欢记录日志。任何明显的错误都会在大多数应用程序日志中显得非常突出。检查一下。

+

+### 缩小范围

+

+如果没有明显的问题,但你可以重现所报告的问题,那也很棒。所以,你现在知道网站是慢的。现在你已经把范围缩小到:浏览器的渲染/错误、应用程序代码、DNS 基础设施、路由器、防火墙、网卡(所有的)、以太网电缆、负载均衡器、数据库、缓存层、会话存储、Web 服务器软件、应用程序服务器、内存、CPU、RAID 卡、磁盘等等。

+

+根据设置添加一些其他可能的罪魁祸首。它们也可能是 SAN,也不要忘记硬件 WAF!以及…… 你明白我的意思。

+

+如果问题是接收到第一个字节的时间,你当然会开始对 Web 服务器去应用上已知的修复程序,就是它响应缓慢,你也觉得几乎就是它,对吧?但是你错了!

+

+你要回去尝试重现这个问题。只是这一次,你要试图消除尽可能多的潜在问题来源。

+

+你可以非常轻松地消除绝大多数可能的罪魁祸首:你能从服务器本地重现问题吗?恭喜,你刚刚节省了自己必须尝试修复 BGP 路由的时间。

+

+如果不能,请尝试使用同一网络上的其他计算机。如果可以的话,至少你可以将防火墙移到你的嫌疑人名单上,(但是要注意一下那个交换机!)

+

+是所有的连接都很慢吗?虽然服务器是 Web 服务器,但并不意味着你不应该尝试使用其他类型的服务进行重现问题。[netcat][1] 在这些场景中非常有用(但是你的 SSH 连接可能会一直有延迟,这可以作为线索)! 如果这也很慢,你至少知道你很可能遇到了网络问题,可以忽略掉整个 Web 软件及其所有组件的问题。用这个知识(我不收 200 美元)再次从顶部开始,按你的方式由内到外地进行!

+

+即使你可以在本地复现 —— 仍然有很多“因素”留下。让我们排除一些变量。你能用普通文件重现它吗? 如果 `i_am_a_1kb_file.html` 很慢,你就知道它不是数据库、缓存层或 OS 以外的任何东西和 Web 服务器本身的问题。

+

+你能用一个需要解释或执行的 `hello_world.(py|php|js|rb..)` 文件重现问题吗?如果可以的话,你已经大大缩小了范围,你可以专注于少数事情。如果 `hello_world` 可以马上工作,你仍然学到了很多东西!你知道了没有任何明显的资源限制、任何满的队列或在任何地方卡住的 IPC 调用,所以这是应用程序正在做的事情或它正在与之通信的事情。

+

+所有页面都慢吗?或者只是从第三方加载“实时分数数据”的页面慢?

+

+**这可以归结为:你仍然可以重现这个问题所涉及的最少量的“因素”是什么?**

+

+我们的示例是一个缓慢的网站,但这同样适用于几乎所有问题。邮件投递?你能在本地投递吗?能发给自己吗?能发给<常见的服务提供者>吗?使用小的、纯文本的消息进行测试。尝试直到遇到 2MB 拥堵时。使用 STARTTLS 和不使用 STARTTLS 呢?按你的方式由内到外地进行!

+

+这些步骤中的每一步都只需要几秒钟,远远快于实施大多数“可能的”修复方案。

+

+### 隔离观察

+

+到目前为止,当你去除特定组件时无法重现问题时,你可能已经偶然发现了问题所在。

+

+但如果你还没有,或者你仍然不知道**为什么**:一旦你找到了一种方法来重现问题,你和问题之间的“东西”(某个技术术语)最少,那么就该开始隔离和观察了。

+

+请记住,许多服务可以在前台运行和/或启用调试。对于某些类别的问题,执行此操作通常非常有帮助。

+

+这也是你的传统武器库发挥作用的地方。`strace`、`lsof`、`netstat`、`GDB`、`iotop`、`valgrind`、语言分析器(cProfile、xdebug、ruby-prof ……)那些类型的工具。

+

+一旦你走到这一步,你就很少能摆脱剖析器或调试器了。

+

+[strace][2] 通常是一个非常好的起点。

+

+你可能会注意到应用程序停留在某个连接到端口 3306 的套接字文件描述符上的特定 `read()` 调用上。你会知道该怎么做。

+

+转到 MySQL 并再次从顶部开始。显而易见:“等待某某锁”、死锁、`max_connections` ……进而:是所有查询?还是只写请求?只有某些表?还是只有某些存储引擎?等等……

+

+你可能会注意到调用外部 API 资源的 `connect()` 需要五秒钟才能完成,甚至超时。你会知道该怎么做。

+

+你可能会注意到,在同一对文件中有 1000 个调用 `fstat()` 和 `open()` 作为循环依赖的一部分。你会知道该怎么做。

+

+它可能不是那些特别的东西,但我保证,你会发现一些东西。

+

+如果你只是从这一部分学到一点,那也不错;学习使用 `strace` 吧!**真的**学习它,阅读整个手册页。甚至不要跳过历史部分。`man` 每个你还不知道它做了什么的系统调用。98% 的故障排除会话以 `strace` 而终结。

+

+---------------------------------------------------------------------

+

+via: http://northernmost.org/blog/troubleshooting-101/index.html

+

+作者:[Erik Ljungstrom][a]

+译者:[wxy](https://github.com/wxy)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]:http://northernmost.org

+[1]:http://nc110.sourceforge.net/

+[2]:https://linux.die.net/man/1/strace

diff --git a/published/20180928 Quiet log noise with Python and machine learning.md b/published/20180928 Quiet log noise with Python and machine learning.md

new file mode 100644

index 0000000000..d6471b3969

--- /dev/null

+++ b/published/20180928 Quiet log noise with Python and machine learning.md

@@ -0,0 +1,106 @@

+Logreduce:用 Python 和机器学习去除日志噪音

+======

+

+> Logreduce 可以通过从大量日志数据中挑选出异常来节省调试时间。

+

+

+

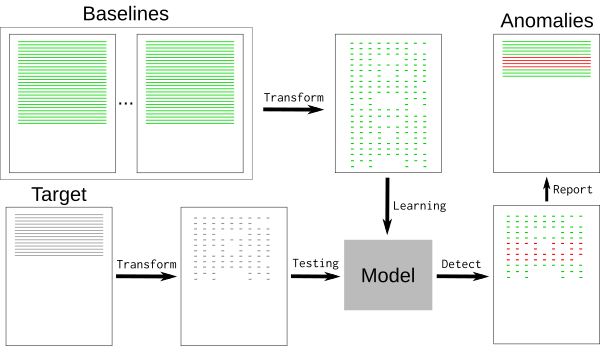

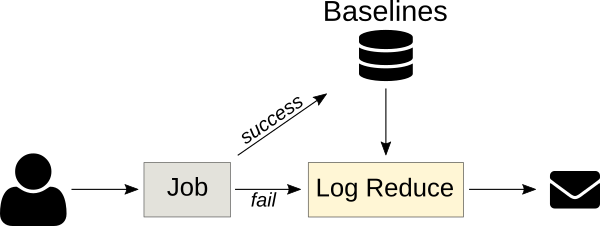

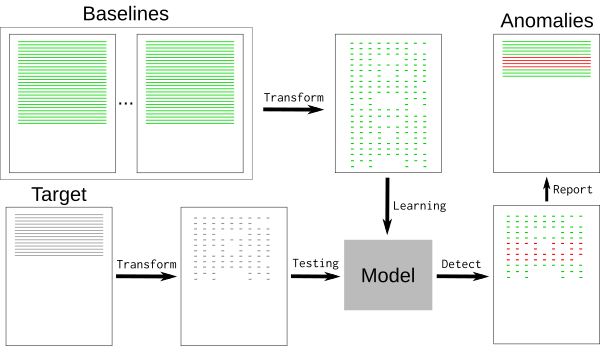

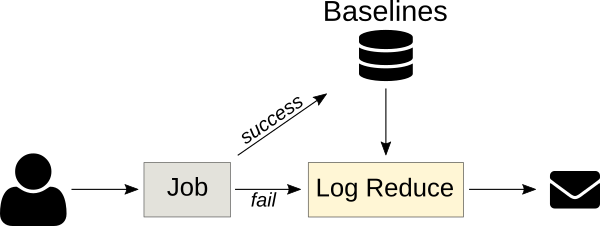

+持续集成(CI)作业会生成大量数据。当一个作业失败时,弄清楚出了什么问题可能是一个繁琐的过程,它涉及到调查日志以发现根本原因 —— 这通常只能在全部的作业输出的一小部分中找到。为了更容易地将最相关的数据与其余数据分开,可以使用先前成功运行的作业结果来训练 [Logreduce][1] 机器学习模型,以从失败的运行日志中提取异常。

+

+此方法也可以应用于其他用例,例如,从 [Journald][2] 或其他系统级的常规日志文件中提取异常。

+

+### 使用机器学习来降低噪音

+

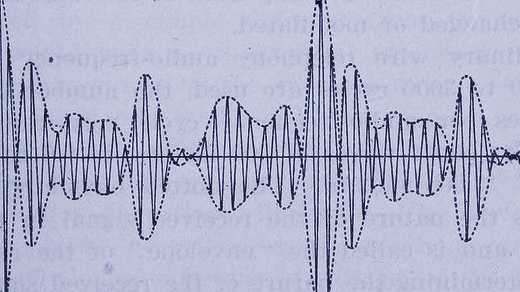

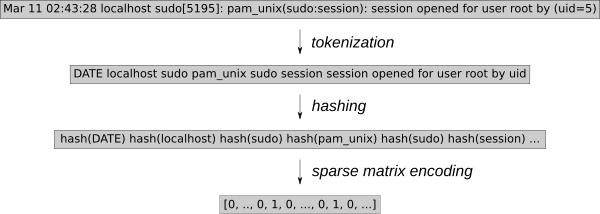

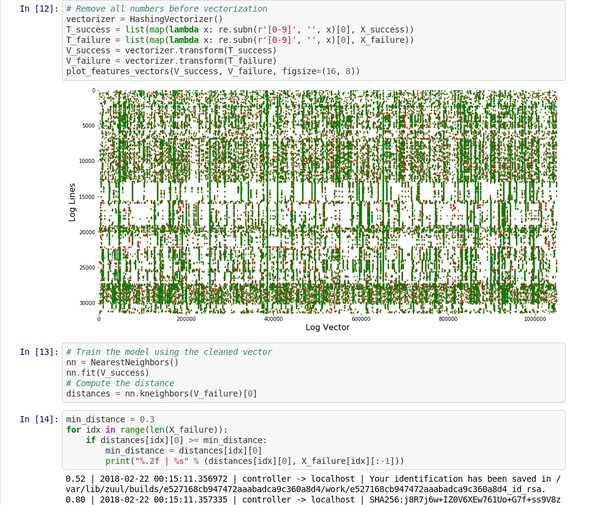

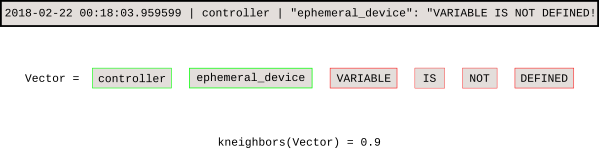

+典型的日志文件包含许多标称事件(“基线”)以及与开发人员相关的一些例外事件。基线可能包含随机元素,例如难以检测和删除的时间戳或唯一标识符。要删除基线事件,我们可以使用 [k-最近邻模式识别算法][3](k-NN)。

+

+

+

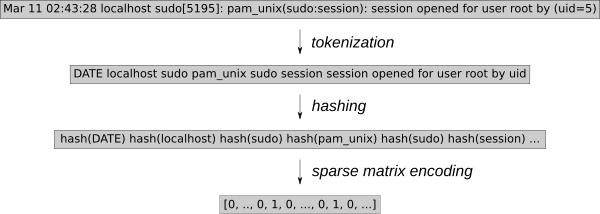

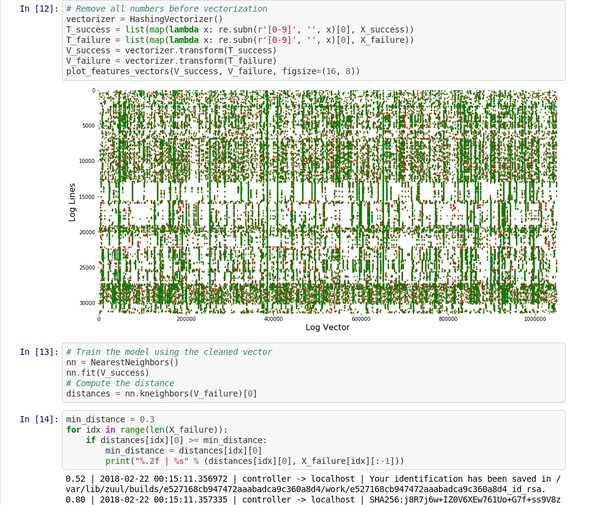

+日志事件必须转换为可用于 k-NN 回归的数值。使用通用特征提取工具 [HashingVectorizer][4] 可以将该过程应用于任何类型的日志。它散列每个单词并在稀疏矩阵中对每个事件进行编码。为了进一步减少搜索空间,这个标记化过程删除了已知的随机单词,例如日期或 IP 地址。

+

+

+

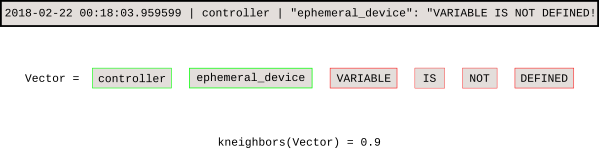

+训练模型后,k-NN 搜索可以告诉我们每个新事件与基线的距离。

+

+

+

+这个 [Jupyter 笔记本][5] 演示了该稀疏矩阵向量的处理和图形。

+

+

+

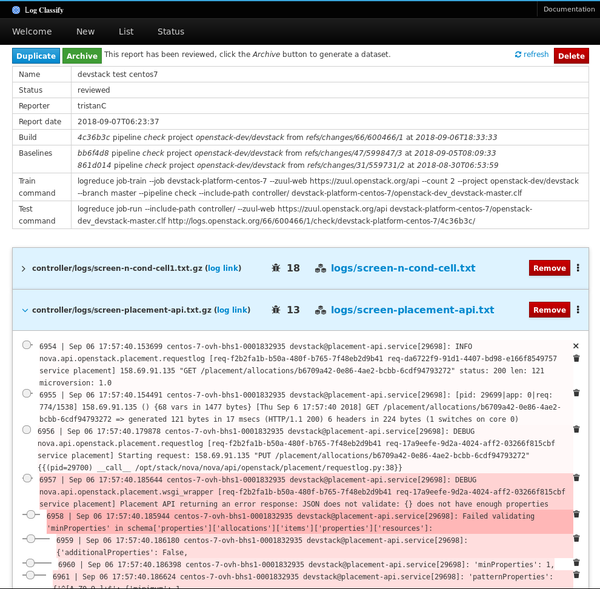

+### Logreduce 介绍

+

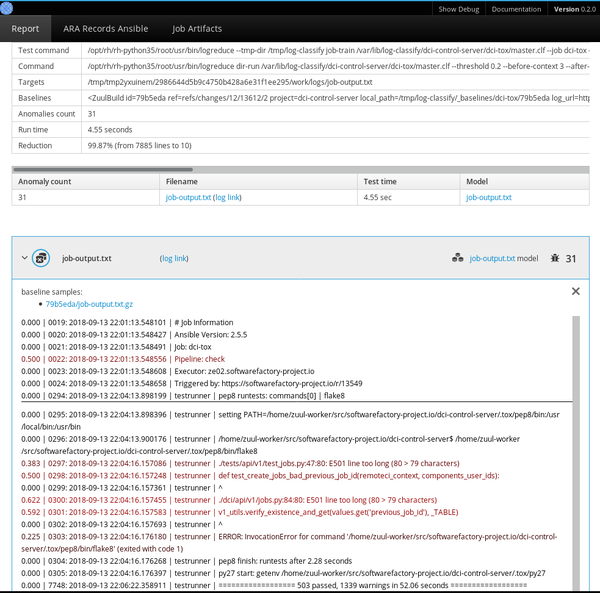

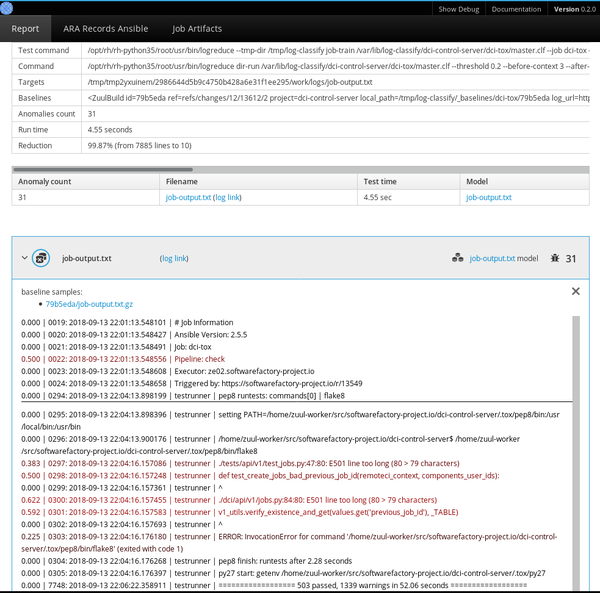

+Logreduce Python 软件透明地实现了这个过程。Logreduce 的最初目标是使用构建数据库来协助分析 [Zuul CI][6] 作业的失败问题,现在它已集成到 [Software Factory 开发车间][7]的作业日志处理中。

+

+最简单的是,Logreduce 会比较文件或目录并删除相似的行。Logreduce 为每个源文件构建模型,并使用以下语法输出距离高于定义阈值的任何目标行:`distance | filename:line-number: line-content`。

+

+```

+$ logreduce diff /var/log/audit/audit.log.1 /var/log/audit/audit.log

+INFO logreduce.Classifier - Training took 21.982s at 0.364MB/s (1.314kl/s) (8.000 MB - 28.884 kilo-lines)

+0.244 | audit.log:19963: type=USER_AUTH acct="root" exe="/usr/bin/su" hostname=managesf.sftests.com

+INFO logreduce.Classifier - Testing took 18.297s at 0.306MB/s (1.094kl/s) (5.607 MB - 20.015 kilo-lines)

+99.99% reduction (from 20015 lines to 1

+

+```

+

+更高级的 Logreduce 用法可以离线训练模型以便重复使用。可以使用基线的许多变体来拟合 k-NN 搜索树。

+

+```

+$ logreduce dir-train audit.clf /var/log/audit/audit.log.*

+INFO logreduce.Classifier - Training took 80.883s at 0.396MB/s (1.397kl/s) (32.001 MB - 112.977 kilo-lines)

+DEBUG logreduce.Classifier - audit.clf: written

+$ logreduce dir-run audit.clf /var/log/audit/audit.log

+```

+

+Logreduce 还实现了接口,以发现 Journald 时间范围(天/周/月)和 Zuul CI 作业构建历史的基线。它还可以生成 HTML 报告,该报告在一个简单的界面中将在多个文件中发现的异常进行分组。

+

+

+

+### 管理基线

+

+使用 k-NN 回归进行异常检测的关键是拥有一个已知良好基线的数据库,该模型使用数据库来检测偏离太远的日志行。此方法依赖于包含所有标称事件的基线,因为基线中未找到的任何内容都将报告为异常。

+

+CI 作业是 k-NN 回归的重要目标,因为作业的输出通常是确定性的,之前的运行结果可以自动用作基线。 Logreduce 具有 Zuul 作业角色,可以将其用作失败的作业发布任务的一部分,以便发布简明报告(而不是完整作业的日志)。只要可以提前构建基线,该原则就可以应用于其他情况。例如,标称系统的 [SoS 报告][8] 可用于查找缺陷部署中的问题。

+

+

+

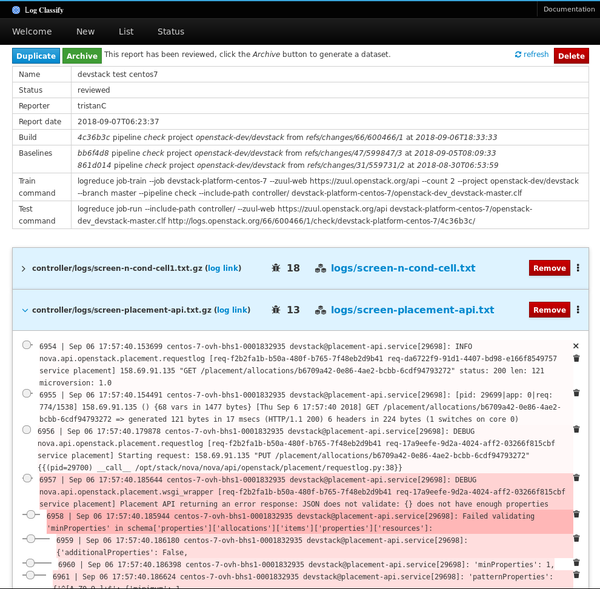

+### 异常分类服务

+

+下一版本的 Logreduce 引入了一种服务器模式,可以将日志处理卸载到外部服务,在外部服务中可以进一步分析该报告。它还支持导入现有报告和请求以分析 Zuul 构建。这些服务以异步方式运行分析,并具有 Web 界面以调整分数并消除误报。

+

+

+

+已审核的报告可以作为独立数据集存档,其中包含目标日志文件和记录在一个普通的 JSON 文件中的异常行的分数。

+

+### 项目路线图

+

+Logreduce 已经能有效使用,但是有很多机会来改进该工具。未来的计划包括:

+

+* 策划在日志文件中发现的许多带注释的异常,并生成一个公共域数据集以进行进一步研究。日志文件中的异常检测是一个具有挑战性的主题,并且有一个用于测试新模型的通用数据集将有助于识别新的解决方案。

+* 重复使用带注释的异常模型来优化所报告的距离。例如,当用户通过将距离设置为零来将日志行标记为误报时,模型可能会降低未来报告中这些日志行的得分。

+* 对存档异常取指纹特征以检测新报告何时包含已知的异常。因此,该服务可以通知用户该作业遇到已知问题,而不是报告异常的内容。解决问题后,该服务可以自动重新启动该作业。

+* 支持更多基准发现接口,用于 SOS 报告、Jenkins 构建、Travis CI 等目标。

+

+如果你有兴趣参与此项目,请通过 #log-classify Freenode IRC 频道与我们联系。欢迎反馈!

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/18/9/quiet-log-noise-python-and-machine-learning

+

+作者:[Tristan de Cacqueray][a]

+选题:[lujun9972](https://github.com/lujun9972)

+译者:[wxy](https://github.com/wxy)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/tristanc

+[1]: https://pypi.org/project/logreduce/

+[2]: http://man7.org/linux/man-pages/man8/systemd-journald.service.8.html

+[3]: https://en.wikipedia.org/wiki/K-nearest_neighbors_algorithm

+[4]: http://scikit-learn.org/stable/modules/generated/sklearn.feature_extraction.text.HashingVectorizer.html

+[5]: https://github.com/TristanCacqueray/anomaly-detection-workshop-opendev/blob/master/datasets/notebook/anomaly-detection-with-scikit-learn.ipynb

+[6]: https://zuul-ci.org

+[7]: https://www.softwarefactory-project.io

+[8]: https://sos.readthedocs.io/en/latest/

+[9]: https://www.openstack.org/summit/berlin-2018/summit-schedule/speakers/4307

+[10]: https://www.openstack.org/summit/berlin-2018/

diff --git a/published/20190304 How to Install MongoDB on Ubuntu.md b/published/20190304 How to Install MongoDB on Ubuntu.md

new file mode 100644

index 0000000000..1ba0edae1f

--- /dev/null

+++ b/published/20190304 How to Install MongoDB on Ubuntu.md

@@ -0,0 +1,235 @@

+[#]: collector: (lujun9972)

+[#]: translator: (geekpi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11175-1.html)

+[#]: subject: (How to Install MongoDB on Ubuntu)

+[#]: via: (https://itsfoss.com/install-mongodb-ubuntu)

+[#]: author: (Sergiu https://itsfoss.com/author/sergiu/)

+

+如何在 Ubuntu 上安装 MongoDB

+======

+

+> 本教程介绍了在 Ubuntu 和基于 Ubuntu 的 Linux 发行版上安装 MongoDB 的两种方法。

+

+[MongoDB][1] 是一个越来越流行的自由开源的 NoSQL 数据库,它将数据存储在类似 JSON 的灵活文档集中,这与 SQL 数据库中常见的表格形式形成对比。

+

+你很可能发现在现代 Web 应用中使用 MongoDB。它的文档模型使得使用各种编程语言能非常直观地访问和处理它。

+

+![mongodb Ubuntu][2]

+

+在本文中,我将介绍两种在 Ubuntu 上安装 MongoDB 的方法。

+

+### 在基于 Ubuntu 的发行版上安装 MongoDB

+

+1. 使用 Ubuntu 仓库安装 MongoDB。简单但不是最新版本的 MongoDB

+2. 使用其官方仓库安装 MongoDB。稍微复杂,但你能得到最新版本的 MongoDB。

+

+第一种安装方法更容易,但如果你计划使用官方支持的最新版本,那么我建议使用第二种方法。

+

+有些人可能更喜欢使用 snap 包。Ubuntu 软件中心提供了 snap,但我不建议使用它们,因为他们现在已经过期了,因此我这里不会提到。

+

+### 方法 1:从 Ubuntu 仓库安装 MongoDB

+

+这是在系统中安装 MongoDB 的简便方法,你只需输入一个命令即可。

+

+#### 安装 MongoDB

+

+首先,确保你的包是最新的。打开终端并输入:

+

+```

+sudo apt update && sudo apt upgrade -y

+```

+

+继续安装 MongoDB:

+

+```

+sudo apt install mongodb

+```

+

+这就完成了!MongoDB 现在安装到你的计算机上了。

+

+MongoDB 服务应该在安装时自动启动,但要检查服务状态:

+

+```

+sudo systemctl status mongodb

+```

+

+![Check if the MongoDB service is running.][3]

+

+你可以看到该服务是**活动**的。

+

+#### 运行 MongoDB

+

+MongoDB 目前是一个 systemd 服务,因此我们使用 `systemctl` 来检查和修改它的状态,使用以下命令:

+

+```

+sudo systemctl status mongodb

+sudo systemctl stop mongodb

+sudo systemctl start mongodb

+sudo systemctl restart mongodb

+```

+

+你也可以修改 MongoDB 是否自动随系统启动(默认:启用):

+

+```

+sudo systemctl disable mongodb

+sudo systemctl enable mongodb

+```

+

+要开始使用(创建和编辑)数据库,请输入:

+

+```

+mongo

+```

+

+这将启动 **mongo shell**。有关查询和选项的详细信息,请查看[手册][4]。

+

+**注意:**根据你计划使用 MongoDB 的方式,你可能需要调整防火墙。不过这超出了本篇的内容,并且取决于你的配置。

+

+#### 卸载 MongoDB

+

+如果你从 Ubuntu 仓库安装 MongoDB 并想要卸载它(可能要使用官方支持的方式安装),请输入:

+

+```

+sudo systemctl stop mongodb

+sudo apt purge mongodb

+sudo apt autoremove

+```

+

+这应该会完全卸载 MongoDB。确保**备份**你可能想要保留的任何集合或文档,因为它们将被删除!

+

+### 方法 2:在 Ubuntu 上安装 MongoDB 社区版

+

+这是推荐的安装 MongoDB 的方法,它使用包管理器。你需要多打几条命令,对于 Linux 新手而言,这可能会感到害怕。

+

+但没有什么可怕的!我们将一步步说明安装过程。

+

+#### 安装 MongoDB

+

+由 MongoDB Inc. 维护的包称为 `mongodb-org`,而不是 `mongodb`(这是 Ubuntu 仓库中包的名称)。在开始之前,请确保系统上未安装 `mongodb`。因为包之间会发生冲突。让我们开始吧!

+

+首先,我们必须导入公钥:

+

+```

+sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 9DA31620334BD75D9DCB49F368818C72E52529D4

+```

+

+现在,你需要在源列表中添加一个新的仓库,以便你可以安装 MongoDB 社区版并获得自动更新:

+

+```

+echo "deb [ arch=amd64 ] https://repo.mongodb.org/apt/ubuntu $(lsb_release -cs)/mongodb-org/4.0 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-4.0.list

+```

+

+要安装 `mongodb-org`,我们需要更新我们的包数据库,以便系统知道可用的新包:

+

+```

+sudo apt update

+```

+

+现在你可以安装**最新稳定版**的 MongoDB:

+

+```

+sudo apt install -y mongodb-org

+```

+

+或者某个**特定版本**(在 `=` 后面修改版本号)

+

+```

+sudo apt install -y mongodb-org=4.0.6 mongodb-org-server=4.0.6 mongodb-org-shell=4.0.6 mongodb-org-mongos=4.0.6 mongodb-org-tools=4.0.6

+```

+

+如果你选择安装特定版本,请确保在所有位置都修改了版本号。如果你修改了 `mongodb-org=4.0.6`,你将安装最新版本。

+

+默认情况下,使用包管理器(`apt-get`)更新时,MongoDB 将更新为最新的版本。要阻止这种情况发生(并冻结为已安装的版本),请使用:

+

+```

+echo "mongodb-org hold" | sudo dpkg --set-selections

+echo "mongodb-org-server hold" | sudo dpkg --set-selections

+echo "mongodb-org-shell hold" | sudo dpkg --set-selections

+echo "mongodb-org-mongos hold" | sudo dpkg --set-selections

+echo "mongodb-org-tools hold" | sudo dpkg --set-selections

+```

+

+你现在已经成功安装了 MongoDB!

+

+#### 配置 MongoDB

+

+默认情况下,包管理器将创建 `/var/lib/mongodb` 和 `/var/log/mongodb`,MongoDB 将使用 `mongodb` 用户帐户运行。

+

+我不会去更改这些默认设置,因为这超出了本指南的范围。有关详细信息,请查看[手册][5]。

+

+`/etc/mongod.conf` 中的设置在启动/重新启动 **mongodb** 服务实例时生效。

+

+##### 运行 MongoDB

+

+要启动 mongodb 的守护进程 `mongod`,请输入:

+

+```

+sudo service mongod start

+```

+

+现在你应该验证 `mongod` 进程是否已成功启动。此信息(默认情况下)保存在 `/var/log/mongodb/mongod.log` 中。我们来看看文件的内容:

+

+```

+sudo cat /var/log/mongodb/mongod.log

+```

+

+![Check MongoDB logs to see if the process is running properly.][6]

+

+只要你在某处看到:`[initandlisten] waiting for connections on port 27017`,就说明进程正常运行。

+

+**注意**:27017 是 `mongod` 的默认端口。

+

+要停止/重启 `mongod`,请输入:

+

+```

+sudo service mongod stop

+sudo service mongod restart

+```

+

+现在,你可以通过打开 **mongo shell** 来使用 MongoDB:

+

+```

+mongo

+```

+

+#### 卸载 MongoDB

+

+运行以下命令:

+

+```

+sudo service mongod stop

+sudo apt purge mongodb-org*

+```

+

+要删除**数据库**和**日志文件**(确保**备份**你要保留的内容!):

+

+```

+sudo rm -r /var/log/mongodb

+sudo rm -r /var/lib/mongodb

+```

+

+### 总结

+

+MongoDB 是一个很棒的 NoSQL 数据库,它易于集成到现代项目中。我希望本教程能帮助你在 Ubuntu 上安装它!在下面的评论中告诉我们你计划如何使用 MongoDB。

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/install-mongodb-ubuntu

+

+作者:[Sergiu][a]

+选题:[lujun9972][b]

+译者:[geekpi](https://github.com/geekpi)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://itsfoss.com/author/sergiu/

+[b]: https://github.com/lujun9972

+[1]: https://www.mongodb.com/

+[2]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/03/mongodb-ubuntu.jpeg?resize=800%2C450&ssl=1

+[3]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/02/mongodb_check_status.jpg?fit=800%2C574&ssl=1

+[4]: https://docs.mongodb.com/manual/tutorial/getting-started/

+[5]: https://docs.mongodb.com/manual/

+[6]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/02/mongodb_org_check_logs.jpg?fit=800%2C467&ssl=1

diff --git a/published/20190613 Continuous integration testing for the Linux kernel.md b/published/20190613 Continuous integration testing for the Linux kernel.md

new file mode 100644

index 0000000000..38f262eabe

--- /dev/null

+++ b/published/20190613 Continuous integration testing for the Linux kernel.md

@@ -0,0 +1,92 @@

+[#]: collector: (lujun9972)

+[#]: translator: (LazyWolfLin)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11176-1.html)

+[#]: subject: (Continuous integration testing for the Linux kernel)

+[#]: via: (https://opensource.com/article/19/6/continuous-kernel-integration-linux)

+[#]: author: (Major Hayden https://opensource.com/users/mhayden)

+

+Linux 内核的持续集成测试

+======

+

+> CKI 团队是如何防止 bug 被合并到 Linux 内核中。

+

+

+

+Linux 内核的每个发布版本包含了来自 1,700 个开发者产生的 14,000 个变更集,很显然,这使得 Linux 内核快速迭代的同时也产生了巨大的复杂性问题。内核上 Bug 有小麻烦也有大问题,有时是系统崩溃,有时是数据丢失。

+

+随着越来越多的项目对于持续集成(CI)的呼声,[内核持续集成(CKI)][2]小组秉承着一个任务目标:防止 Bug 被合并到内核当中。

+

+### Linux 测试问题

+

+许多 Linux 发行版只在需要的时候对 Linux 内核进行测试。而这种测试往往只在版本发布时或者用户发现错误时进行。

+

+有时候,出现玄学问题时,维护人员需要在包含了数万个补丁的变更中匆忙地寻找哪个补丁导致这个新的玄学 Bug。诊断 Bug 需要专业的硬件设备、一系列的触发器以及内核相关的专业知识。

+

+#### CI 和 Linux

+

+许多现代软件代码库都采用某种自动化 CI 测试机制,能够在提交进入代码存储库之前对其进行测试。这种自动化测试使得维护人员可以通过查看 CI 测试报告来发现软件质量问题以及大多数的错误。一些简单的项目,比如某个 Python 库,附带的大量工具使得整个检查过程更简单。

+

+在任何测试之前都需要配置和编译 Linux。而这么做将耗费大量的时间和计算资源。此外,Linux 内核必需在虚拟机或者裸机上启动才能进行测试。而访问某些硬件架构需要额外的开销或者非常慢的仿真。因此,人们必须确定一组能够触发错误或者验证修复的测试集。

+

+#### CKI 团队如何运作?

+

+Red Hat 公司的 CKI 团队当前正追踪来自数个内部内核分支和上游的[稳定内核分支树][3]等内核分支的更改。我们关注每个代码库的两类关键事件:

+

+ 1. 当维护人员合并 PR 或者补丁时,代码库变化后的最终结果。

+ 2. 当开发人员通过拼凑或者稳定补丁队列发起变更合并时。

+

+当这些事件发生时,自动化工具开始执行,[GitLab CI 管道][4]开始进行测试。一旦管道开始执行 [linting][5] 脚本、合并每一个补丁,并为多种硬件架构编译内核,真正的测试便开始了。我们会在六分钟内完成四种硬件架构的内核编译工作,并且通常会在两个小时或更短的时间内将反馈提交到稳定邮件列表中。(自 2019 年 1 月起)每月执行超过 100,000 次内核测试,并完成了超过 11,000 个 GitLab 管道。

+

+每个内核都会在本地硬件架构上启动,其中包含:

+

+* [aarch64][6]:64 位 [ARM][7],例如 [Cavium(当前是 Marvell)ThunderX][8]。

+* [ppc64/ppc64le][9]:大端和小端的 [IBM POWER][10] 系统。

+* [s390x][11]:[IBM Zseries][12] 大型机

+* [x86_64][13]:[Intel][14] 和 [AMD][15] 工作站、笔记本和服务器。

+

+这些内核上运行了包括 [Linux 测试项目(LTP)][16]在内的多个测试,其中包括使用常用测试工具的大量测试。我们 CKI 团队开源了超过 44 个测试并将继续开源更多测试。

+

+### 参与其中

+

+上游的内核测试工作日渐增多。包括 [Google][17]、Intel、[Linaro][18] 和 [Sony][19] 在内的许多公司为各种内核提供了测试输出。每一项工作都专注于为上游内核以及每个公司的客户群带来价值。

+

+如果你或者你的公司想要参与这一工作,请参加在 9 月份在葡萄牙里斯本举办的 [Linux Plumbers Conference 2019][20]。在会议结束后的两天加入我们的 Kernel CI hackfest 活动,并推动快速内核测试的发展。

+

+更多详细信息,[请见][21]我在 Texas Linux Fest 2019 上的演讲。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/6/continuous-kernel-integration-linux

+

+作者:[Major Hayden][a]

+选题:[lujun9972][b]

+译者:[LazyWolfLin](https://github.com/LazyWolfLin)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/mhayden

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/linux_kernel_clang_vscode.jpg?itok=fozZ4zrr "Linux kernel source code (C) in Visual Studio Code"

+[2]: https://cki-project.org/

+[3]: https://www.kernel.org/doc/html/latest/process/stable-kernel-rules.html

+[4]: https://docs.gitlab.com/ee/ci/pipelines.html

+[5]: https://en.wikipedia.org/wiki/Lint_(software)

+[6]: https://en.wikipedia.org/wiki/ARM_architecture

+[7]: https://www.arm.com/

+[8]: https://www.marvell.com/server-processors/thunderx-arm-processors/

+[9]: https://en.wikipedia.org/wiki/Ppc64

+[10]: https://www.ibm.com/it-infrastructure/power

+[11]: https://en.wikipedia.org/wiki/Linux_on_z_Systems

+[12]: https://www.ibm.com/it-infrastructure/z

+[13]: https://en.wikipedia.org/wiki/X86-64

+[14]: https://www.intel.com/

+[15]: https://www.amd.com/

+[16]: https://github.com/linux-test-project/ltp

+[17]: https://www.google.com/

+[18]: https://www.linaro.org/

+[19]: https://www.sony.com/

+[20]: https://www.linuxplumbersconf.org/

+[21]: https://docs.google.com/presentation/d/1T0JaRA0wtDU0aTWTyASwwy_ugtzjUcw_ZDmC5KFzw-A/edit?usp=sharing

diff --git a/published/20171226 Top 10 Microsoft Visio Alternatives for Linux.md b/published/201907/20171226 Top 10 Microsoft Visio Alternatives for Linux.md

similarity index 100%

rename from published/20171226 Top 10 Microsoft Visio Alternatives for Linux.md

rename to published/201907/20171226 Top 10 Microsoft Visio Alternatives for Linux.md

diff --git a/published/20180406 MX Linux- A Mid-Weight Distro Focused on Simplicity.md b/published/201907/20180406 MX Linux- A Mid-Weight Distro Focused on Simplicity.md

similarity index 100%

rename from published/20180406 MX Linux- A Mid-Weight Distro Focused on Simplicity.md

rename to published/201907/20180406 MX Linux- A Mid-Weight Distro Focused on Simplicity.md

diff --git a/published/20180529 Manage your workstation with Ansible- Configure desktop settings.md b/published/201907/20180529 Manage your workstation with Ansible- Configure desktop settings.md

similarity index 100%

rename from published/20180529 Manage your workstation with Ansible- Configure desktop settings.md

rename to published/201907/20180529 Manage your workstation with Ansible- Configure desktop settings.md

diff --git a/published/20180620 How To Find The Port Number Of A Service In Linux.md b/published/201907/20180620 How To Find The Port Number Of A Service In Linux.md

similarity index 100%

rename from published/20180620 How To Find The Port Number Of A Service In Linux.md

rename to published/201907/20180620 How To Find The Port Number Of A Service In Linux.md

diff --git a/published/20180629 100 Best Ubuntu Apps.md b/published/201907/20180629 100 Best Ubuntu Apps.md

similarity index 100%

rename from published/20180629 100 Best Ubuntu Apps.md

rename to published/201907/20180629 100 Best Ubuntu Apps.md

diff --git a/published/20180902 Learning BASIC Like It-s 1983.md b/published/201907/20180902 Learning BASIC Like It-s 1983.md

similarity index 100%

rename from published/20180902 Learning BASIC Like It-s 1983.md

rename to published/201907/20180902 Learning BASIC Like It-s 1983.md

diff --git a/published/201907/20180924 5 ways to play old-school games on a Raspberry Pi.md b/published/201907/20180924 5 ways to play old-school games on a Raspberry Pi.md

new file mode 100644

index 0000000000..f9a55eda13

--- /dev/null

+++ b/published/201907/20180924 5 ways to play old-school games on a Raspberry Pi.md

@@ -0,0 +1,173 @@

+在树莓派上玩怀旧游戏的 5 种方法

+======

+

+> 使用这些用于树莓派的开源平台来重温游戏的黄金时代。

+

+

+

+他们使它们不像过去那样子了,对吧?我是说,电子游戏。

+

+当然,现在的设备更强大了。赛达尔公主在过去每个边只有 16 个像素,而现在的图像处理能力足够处理她头上的每根头发。今天的处理器打败 1988 年的处理器简直不费吹灰之力。

+

+但是你知道缺少什么吗?乐趣。

+

+你有数之不尽的游戏,按下一个按钮就可以完成教程任务。可能有故事情节,当然杀死坏蛋也可以不需要故事情节,你需要的只是跳跃和射击。因此,毫不奇怪,树莓派最持久的流行用途之一就是重温上世纪八九十年代的 8 位和 16 位游戏的黄金时代。但从哪里开始呢?

+

+在树莓派上有几种方法可以玩怀旧游戏。每一种都有自己的优点和缺点,我将在这里讨论这些。

+

+### RetroPie

+

+[RetroPie][1] 可能是树莓派上最受欢迎的复古游戏平台。它是一个可靠的万能选手,是模拟经典桌面和控制台游戏系统的绝佳选择。

+

+

+

+#### 介绍

+

+RetroPie 构建在 [Raspbian][2] 上运行。如果你愿意,它也可以安装在现有的 Raspbian 镜像上。它使用 [EmulationStation][3] 作为开源仿真器库(包括 [Libretro][4] 仿真器)的图形前端。

+

+不过,你要玩游戏其实并不需要理解上面的任何一个词。

+

+#### 它有什么好处

+

+入门很容易。你需要做的就是将镜像刻录到 SD 卡,配置你的控制器、复制游戏,然后开始杀死坏蛋。

+

+它的庞大用户群意味着有大量的支持和信息,活跃的在线社区也可以求助问题。

+

+除了随 RetroPie 镜像一起安装的仿真器之外,还有一个可以从包管理器安装的庞大的仿真器库,并且它一直在增长。RetroPie 还提供了用户友好的菜单系统来管理这些,可以节省你的时间。

+

+从 RetroPie 菜单中可以轻松添加 Kodi 和配备了 Chromium 浏览器的 Raspbian 桌面。这意味着你的这套复古游戏装备也适于作为家庭影院、[YouTube][5]、[SoundCloud][6] 以及所有其它“休息室电脑”产品。

+

+RetroPie 还有许多其它自定义选项:你可以更改菜单中的图形,为不同的模拟器设置不同的控制手柄配置,使你的树莓派文件系统的所有内容对你的本地 Windows 网络可见等等。

+

+RetroPie 建立在 Raspbian 上,这意味着你可以探索这个树莓派最受欢迎的操作系统。你所发现的大多数树莓派项目和教程都是为 Raspbian 编写的,因此可以轻松地自定义和安装新内容。我已经使用我的 RetroPie 装备作为无线桥接器,在上面安装了 MIDI 合成器,自学了一些 Python,更重要的是,所有这些都没有影响它作为游戏机的用途。

+

+#### 它有什么不太好的

+

+RetroPie 的安装简单和易用性在某种程度上是一把双刃剑。你可以在 RetroPie 上玩了很长时间,而甚至没有学习过哪怕像 `sudo apt-get` 这样简单的东西,但这也意味着你错过了很多树莓派的体验。

+

+但不一定必须如此;当你需要时,命令行仍然存在于底层,但是也许用户与 Bash shell 有点隔离,而使它最终并没有看上去那么可怕、另外,RetroPie 的主菜单只能通过控制手柄操作,当你没有接入手柄时,这可能很烦人,因为你一直将该系统用于游戏之外的事情。

+

+#### 它适用于谁?

+

+任何想直接玩一些游戏的人,任何想拥有最大、最好的模拟器库的人,以及任何想在不玩游戏的时候开始探索 Linux 的人。

+

+### Recalbox

+

+[Recalbox][7] 是一个较新的树莓派开源模拟器套件。它还支持其它基于 ARM 的小型计算机。

+

+

+

+#### 介绍

+

+与 Retropie 一样, Recalbox 基于 EmulationStation 和 Libretro。它的不同之处在于它不是基于 Raspbian 构建的,而是基于它自己的 Linux 发行版:RecalboxOS。

+

+#### 它有什么好处

+

+Recalbox 的设置比 RetroPie 更容易。你甚至不需要做 SD 卡镜像;只需复制一些文件即可。它还为一些游戏控制器提供开箱即用的支持,可以让你更快地开始游戏。它预装了 Kodi。这是一个现成的游戏和媒体平台。

+

+#### 它有什么不太好的

+

+Recalbox 比 RetroPie 拥有更少的仿真器、更少的自定义选项和更小的用户社区。

+

+你的 Recalbox 装备可能一直用于模拟器和 Kodi,安装成什么样就是什么样。如果你想深入了解 Linux,你可能需要为 Raspbian 提供一个新的 SD 卡。

+

+#### 它适用于谁?

+

+如果你想要绝对简单的复古游戏体验,并且不想玩一些比较少见的游戏平台模拟器,或者你害怕一些技术性工作(也没有兴趣去做),那么 Recalbox 非常适合你。

+

+对于大多数读者来说,Recalbox 可能最适合推荐给你那些不太懂技术的朋友或亲戚。它超级简单的设置和几乎没什么选项甚至可以让你免去帮助他们解决问题。

+

+### 做个你自己的

+

+好,你可能已经注意到 Retropie 和 Recalbox 都是由许多相同的开源组件构建的。那么为什么不自己把它们组合在一起呢?

+

+#### 介绍

+

+无论你想要的是什么,开源软件的本质意味着你可以使用现有的模拟器套件作为起点,或者随意使用它们。

+

+#### 它有什么好处

+

+如果你想有自己的自定义界面,我想除了亲自动手别无它法。这也是安装在 RetroPie 中没有的仿真器的方法,例如 [BeebEm][8]) 或 [ArcEm][9]。

+

+#### 它有什么不太好的

+

+嗯,工作量有点大。

+

+#### 它适用于谁?

+

+喜欢鼓捣的人,有动手能力的人,开发者,经验丰富的业余爱好者等。

+

+### 原生 RISC OS 游戏体验

+

+现在有一匹黑马:[RISC OS][10],它是 ARM 设备的原始操作系统。

+

+#### 介绍

+

+在 ARM 成为世界上最受欢迎的 CPU 架构之前,它最初是作为 Acorn Archimedes 的处理器而开发的。现在看起来这像是一种被遗忘的野兽,但是那几年,它作为世界上最强大的台式计算机独领风骚了好几年,并且吸引了大量的游戏开发项目。

+

+树莓派中的 ARM 处理器是 Archimedes 的曾孙辈的 CPU,所以我们仍然可以在其上安装 RISC OS,只要做一点工作,就可以让这些游戏运行起来。这与我们到上面所介绍的仿真器方式不同,我们是在玩为该操作系统和 CPU 架构开发的游戏。

+

+#### 它有什么好处

+

+这是 RISC OS 的完美展现,这绝对是操作系统的瑰宝,非常值得一试。

+

+事实上,你使用的是和以前几乎相同的操作系统来加载和玩你的游戏,这使得你的复古游戏装备像是一个时间机器一样,这无疑为该项目增添了一些魅力和复古价值。

+

+有一些精彩的游戏只在 Archimedes 上发布过。Archimedes 的巨大硬件优势也意味着它通常拥有许多多平台游戏大作的最佳图形和最流畅的游戏体验。这类游戏的版权持有者非常慷慨,可以合法地免费下载它们。

+

+#### 它有什么不太好的

+

+安装了 RISC OS 之后,它仍然需要一些努力才能让游戏运行起来。这是 [入门指南][11]。

+

+对于休息室来说,这绝对不是一个很好的全能选手。没有什么比 [Kodi][12] 更好的了。它有一个网络浏览器 [NetSurf][13],但它在支持现代 Web 方面还需要一些努力。你不会像使用模拟器套件那样得到大量可以玩的游戏。RISC OS Open 对于爱好者来说可以免费下载和使用,而且很多源代码已经开源,尽管由于这个名字,它不是一个 100% 的开源操作系统。

+

+#### 它适用于谁?

+

+这是专为追求新奇的人,绝对怀旧的人,想要探索一个来自上世纪 80 年代的有趣的操作系统的人,怀旧过去的 Acorn 机器的人,以及想要一个完全不同的怀旧游戏项目的人而设计的。

+

+### 终端游戏

+

+你是否真的需要安装模拟器或者一个异域风情的操作系统才能重温辉煌的日子?为什么不从命令行安装一些原生 Linux 游戏呢?

+

+#### 介绍

+

+有一系列原生的 Linux 游戏经过测试可以在 [树莓派][14] 上运行。

+

+#### 它有什么好处

+

+你可以使用命令行从程序包安装其中的大部分,然后开始玩。很容易。如果你已经有了一个跑起来的 Raspbian,那么它可能是你运行游戏的最快途径。

+

+#### 它有什么不太好的

+

+严格来说,这并不是真正的复古游戏。Linux 诞生于 1991 年,过了一段时间才成为了一个游戏平台。这些不是经典的 8 位和 16 位时代的游戏体验;后来有一些移植的游戏或受复古影响的游戏。

+

+#### 它适用于谁?

+

+如果你只是想找点乐子,这没问题。但如果你想重温过去,那就不完全是这样了。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/18/9/retro-gaming-raspberry-pi

+

+作者:[James Mawson][a]

+选题:[lujun9972](https://github.com/lujun9972)

+译者:[canhetingsky](https://github.com/canhetingsky)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/dxmjames

+[1]: https://retropie.org.uk/

+[2]: https://www.raspbian.org/

+[3]: https://emulationstation.org/

+[4]: https://www.libretro.com/

+[5]: https://www.youtube.com/

+[6]: https://soundcloud.com/

+[7]: https://www.recalbox.com/

+[8]: http://www.mkw.me.uk/beebem/

+[9]: http://arcem.sourceforge.net/

+[10]: https://opensource.com/article/18/7/gentle-intro-risc-os

+[11]: https://blog.dxmtechsupport.com.au/playing-badass-acorn-archimedes-games-on-a-raspberry-pi/

+[12]: https://kodi.tv/

+[13]: https://www.netsurf-browser.org/

+[14]: https://www.raspberrypi.org/forums/viewtopic.php?f=78&t=51794

diff --git a/published/20190211 Introducing kids to computational thinking with Python.md b/published/201907/20190211 Introducing kids to computational thinking with Python.md

similarity index 100%

rename from published/20190211 Introducing kids to computational thinking with Python.md

rename to published/201907/20190211 Introducing kids to computational thinking with Python.md

diff --git a/published/20190301 Emacs for (even more of) the win.md b/published/201907/20190301 Emacs for (even more of) the win.md

similarity index 100%

rename from published/20190301 Emacs for (even more of) the win.md

rename to published/201907/20190301 Emacs for (even more of) the win.md

diff --git a/published/20190302 Create a Custom System Tray Indicator For Your Tasks on Linux.md b/published/201907/20190302 Create a Custom System Tray Indicator For Your Tasks on Linux.md

similarity index 100%

rename from published/20190302 Create a Custom System Tray Indicator For Your Tasks on Linux.md

rename to published/201907/20190302 Create a Custom System Tray Indicator For Your Tasks on Linux.md

diff --git a/published/201907/20190306 ClusterShell - A Nifty Tool To Run Commands On Cluster Nodes In Parallel.md b/published/201907/20190306 ClusterShell - A Nifty Tool To Run Commands On Cluster Nodes In Parallel.md

new file mode 100644

index 0000000000..ed9c481007

--- /dev/null

+++ b/published/201907/20190306 ClusterShell - A Nifty Tool To Run Commands On Cluster Nodes In Parallel.md

@@ -0,0 +1,295 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11147-1.html)

+[#]: subject: (ClusterShell – A Nifty Tool To Run Commands On Cluster Nodes In Parallel)

+[#]: via: (https://www.2daygeek.com/clustershell-clush-run-commands-on-cluster-nodes-remote-system-in-parallel-linux/)

+[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

+

+ClusterShell:一个在集群节点上并行运行命令的好工具

+======

+

+

+

+我们过去曾写过两篇如何并行地在多个远程服务器上运行命令的文章:[并行 SSH(PSSH)][1] 和[分布式 Shell(DSH)][2]。今天,我们将讨论相同类型的主题,但它允许我们在集群节点上执行相同的操作。你可能会想,我可以编写一个小的 shell 脚本来实现这个目的,而不是安装这些第三方软件包。

+

+当然,你是对的,如果要在十几个远程系统中运行一些命令,那么你不需要使用它。但是,你的脚本需要一些时间来完成此任务,因为它是按顺序运行的。想想你要是在一千多台服务器上运行一些命令会是什么样子?在这种情况下,你的脚本用处不大。此外,完成任务需要很长时间。所以,要克服这种问题和情况,我们需要可以在远程计算机上并行运行命令。

+

+为此,我们需要在一个并行应用程序中使用它。我希望这个解释可以解决你对并行实用程序的疑虑。

+

+### ClusterShell

+

+[ClusterShell][3] 是一个事件驱动的开源 Python 库,旨在在服务器场或大型 Linux 集群上并行运行本地或远程命令。(`clush` 即 [ClusterShell][3])。

+

+它将处理在 HPC 集群上遇到的常见问题,例如在节点组上操作,使用优化过的执行算法运行分布式命令,以及收集结果和合并相同的输出,或检索返回代码。

+

+ClusterShell 可以利用已安装在系统上的现有远程 shell 设施,如 SSH。

+

+ClusterShell 的主要目标是通过为开发人员提供轻量级、但可扩展的 Python API 来改进高性能集群的管理。它还提供了 `clush`、`clubak` 和 `cluset`/`nodeset` 等方便的命令行工具,可以让传统的 shell 脚本利用这个库的一些功能。

+

+ClusterShell 是用 Python 编写的,它需要 Python(v2.6+ 或 v3.4+)才能在你的系统上运行。

+

+### 如何在 Linux 上安装 ClusterShell?

+

+ClusterShell 包在大多数发行版的官方包管理器中都可用。因此,使用发行版包管理器工具进行安装。

+

+对于 Fedora 系统,使用 [DNF 命令][4]来安装 clustershell。

+

+```

+$ sudo dnf install clustershell

+```

+

+如果系统默认是 Python 2,这会安装 Python 2 模块和工具,可以运行以下命令安装 Python 3 开发包。

+

+```

+$ sudo dnf install python3-clustershell

+```

+

+在执行 clustershell 安装之前,请确保你已在系统上启用 [EPEL 存储库][5]。

+

+对于 RHEL/CentOS 系统,使用 [YUM 命令][6] 来安装 clustershell。

+

+```

+$ sudo yum install clustershell

+```

+

+如果系统默认是 Python 2,这会安装 Python 2 模块和工具,可以运行以下命令安装 Python 3 开发包。

+

+```

+$ sudo yum install python34-clustershell

+```

+

+对于 openSUSE Leap 系统,使用 [Zypper 命令][7] 来安装 clustershell。

+

+```

+$ sudo zypper install clustershell

+```

+

+如果系统默认是 Python 2,这会安装 Python 2 模块和工具,可以运行以下命令安装 Python 3 开发包。

+

+```

+$ sudo zypper install python3-clustershell

+```

+

+对于 Debian/Ubuntu 系统,使用 [APT-GET 命令][8] 或 [APT 命令][9] 来安装 clustershell。

+

+```

+$ sudo apt install clustershell

+```

+

+### 如何在 Linux 使用 PIP 安装 ClusterShell?

+

+可以使用 PIP 安装 ClusterShell,因为它是用 Python 编写的。

+

+在执行 clustershell 安装之前,请确保你已在系统上启用了 [Python][10] 和 [PIP][11]。

+

+```

+$ sudo pip install ClusterShell

+```

+

+### 如何在 Linux 上使用 ClusterShell?

+

+与其他实用程序(如 `pssh` 和 `dsh`)相比,它是直接了当的优秀工具。它有很多选项可以在远程并行执行。

+

+在开始使用 clustershell 之前,请确保你已启用系统上的[无密码登录][12]。

+

+以下配置文件定义了系统范围的默认值。你不需要修改这里的任何东西。

+

+```

+$ cat /etc/clustershell/clush.conf

+```

+

+如果你想要创建一个服务器组,那也可以。默认情况下有一些示例,请根据你的要求执行相同操作。

+

+```

+$ cat /etc/clustershell/groups.d/local.cfg

+```

+

+只需按以下列格式运行 clustershell 命令即可从给定节点获取信息:

+

+```

+$ clush -w 192.168.1.4,192.168.1.9 cat /proc/version

+192.168.1.9: Linux version 4.15.0-45-generic ([email protected]) (gcc version 7.3.0 (Ubuntu 7.3.0-16ubuntu3)) #48-Ubuntu SMP Tue Jan 29 16:28:13 UTC 2019

+192.168.1.4: Linux version 3.10.0-957.el7.x86_64 ([email protected]) (gcc version 4.8.5 20150623 (Red Hat 4.8.5-36) (GCC) ) #1 SMP Thu Nov 8 23:39:32 UTC 2018

+```

+

+选项:

+

+ * `-w:` 你要运行该命令的节点。

+

+你可以使用正则表达式而不是使用完整主机名和 IP:

+

+```

+$ clush -w 192.168.1.[4,9] uname -r

+192.168.1.9: 4.15.0-45-generic

+192.168.1.4: 3.10.0-957.el7.x86_64

+```

+

+或者,如果服务器位于同一 IP 系列中,则可以使用以下格式:

+

+```

+$ clush -w 192.168.1.[4-9] date

+192.168.1.6: Mon Mar 4 21:08:29 IST 2019

+192.168.1.7: Mon Mar 4 21:08:29 IST 2019

+192.168.1.8: Mon Mar 4 21:08:29 IST 2019

+192.168.1.5: Mon Mar 4 09:16:30 CST 2019

+192.168.1.9: Mon Mar 4 21:08:29 IST 2019

+192.168.1.4: Mon Mar 4 09:16:30 CST 2019

+```

+

+clustershell 允许我们以批处理模式运行命令。使用以下格式来实现此目的:

+

+```

+$ clush -w 192.168.1.4,192.168.1.9 -b

+Enter 'quit' to leave this interactive mode

+Working with nodes: 192.168.1.[4,9]

+clush> hostnamectl

+---------------

+192.168.1.4

+---------------

+ Static hostname: CentOS7.2daygeek.com

+ Icon name: computer-vm

+ Chassis: vm

+ Machine ID: 002f47b82af248f5be1d67b67e03514c

+ Boot ID: f9b37a073c534dec8b236885e754cb56

+ Virtualization: kvm

+ Operating System: CentOS Linux 7 (Core)

+ CPE OS Name: cpe:/o:centos:centos:7

+ Kernel: Linux 3.10.0-957.el7.x86_64

+ Architecture: x86-64

+---------------

+192.168.1.9

+---------------

+ Static hostname: Ubuntu18

+ Icon name: computer-vm

+ Chassis: vm

+ Machine ID: 27f6c2febda84dc881f28fd145077187

+ Boot ID: f176f2eb45524d4f906d12e2b5716649

+ Virtualization: oracle

+ Operating System: Ubuntu 18.04.2 LTS

+ Kernel: Linux 4.15.0-45-generic

+ Architecture: x86-64

+clush> free -m

+---------------

+192.168.1.4

+---------------

+ total used free shared buff/cache available

+Mem: 1838 641 217 19 978 969

+Swap: 2047 0 2047

+---------------

+192.168.1.9

+---------------

+ total used free shared buff/cache available

+Mem: 1993 352 1067 1 573 1473

+Swap: 1425 0 1425

+clush> w

+---------------

+192.168.1.4

+---------------

+ 09:21:14 up 3:21, 3 users, load average: 0.00, 0.01, 0.05

+USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

+daygeek :0 :0 06:02 ?xdm? 1:28 0.30s /usr/libexec/gnome-session-binary --session gnome-classic

+daygeek pts/0 :0 06:03 3:17m 0.06s 0.06s bash

+daygeek pts/1 192.168.1.6 06:03 52:26 0.10s 0.10s -bash

+---------------

+192.168.1.9

+---------------

+ 21:13:12 up 3:12, 1 user, load average: 0.08, 0.03, 0.00

+USER TTY FROM LOGIN@ IDLE JCPU PCPU WHAT

+daygeek pts/0 192.168.1.6 20:42 29:41 0.05s 0.05s -bash

+clush> quit

+```

+

+如果要在一组节点上运行该命令,请使用以下格式:

+

+```

+$ clush -w @dev uptime

+or

+$ clush -g dev uptime

+or

+$ clush --group=dev uptime

+

+192.168.1.9: 21:10:10 up 3:09, 1 user, load average: 0.09, 0.03, 0.01

+192.168.1.4: 09:18:12 up 3:18, 3 users, load average: 0.01, 0.02, 0.05

+```

+

+如果要在多个节点组上运行该命令,请使用以下格式:

+

+```

+$ clush -w @dev,@uat uptime

+or

+$ clush -g dev,uat uptime

+or

+$ clush --group=dev,uat uptime

+

+192.168.1.7: 07:57:19 up 59 min, 1 user, load average: 0.08, 0.03, 0.00

+192.168.1.9: 20:27:20 up 1:00, 1 user, load average: 0.00, 0.00, 0.00

+192.168.1.5: 08:57:21 up 59 min, 1 user, load average: 0.00, 0.01, 0.05

+```

+

+clustershell 允许我们将文件复制到远程计算机。将本地文件或目录复制到同一个远程节点:

+

+```

+$ clush -w 192.168.1.[4,9] --copy /home/daygeek/passwd-up.sh

+```

+

+我们可以通过运行以下命令来验证它:

+

+```

+$ clush -w 192.168.1.[4,9] ls -lh /home/daygeek/passwd-up.sh

+192.168.1.4: -rwxr-xr-x. 1 daygeek daygeek 159 Mar 4 09:00 /home/daygeek/passwd-up.sh

+192.168.1.9: -rwxr-xr-x 1 daygeek daygeek 159 Mar 4 20:52 /home/daygeek/passwd-up.sh

+```

+

+将本地文件或目录复制到不同位置的远程节点:

+

+```

+$ clush -g uat --copy /home/daygeek/passwd-up.sh --dest /tmp

+```

+

+我们可以通过运行以下命令来验证它:

+

+```

+$ clush --group=uat ls -lh /tmp/passwd-up.sh

+192.168.1.7: -rwxr-xr-x. 1 daygeek daygeek 159 Mar 6 07:44 /tmp/passwd-up.sh

+```

+

+将文件或目录从远程节点复制到本地系统:

+

+```

+$ clush -w 192.168.1.7 --rcopy /home/daygeek/Documents/magi.txt --dest /tmp

+```

+

+我们可以通过运行以下命令来验证它:

+

+```

+$ ls -lh /tmp/magi.txt.192.168.1.7

+-rw-r--r-- 1 daygeek daygeek 35 Mar 6 20:24 /tmp/magi.txt.192.168.1.7

+```

+

+--------------------------------------------------------------------------------

+

+via: https://www.2daygeek.com/clustershell-clush-run-commands-on-cluster-nodes-remote-system-in-parallel-linux/

+

+作者:[Magesh Maruthamuthu][a]

+选题:[lujun9972][b]

+译者:[wxy](https://github.com/wxy)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://www.2daygeek.com/author/magesh/

+[b]: https://github.com/lujun9972

+[1]: https://www.2daygeek.com/pssh-parallel-ssh-run-execute-commands-on-multiple-linux-servers/

+[2]: https://www.2daygeek.com/dsh-run-execute-shell-commands-on-multiple-linux-servers-at-once/

+[3]: https://cea-hpc.github.io/clustershell/

+[4]: https://www.2daygeek.com/dnf-command-examples-manage-packages-fedora-system/

+[5]: https://www.2daygeek.com/install-enable-epel-repository-on-rhel-centos-scientific-linux-oracle-linux/

+[6]: https://www.2daygeek.com/yum-command-examples-manage-packages-rhel-centos-systems/

+[7]: https://www.2daygeek.com/zypper-command-examples-manage-packages-opensuse-system/

+[8]: https://www.2daygeek.com/apt-get-apt-cache-command-examples-manage-packages-debian-ubuntu-systems/

+[9]: https://www.2daygeek.com/apt-command-examples-manage-packages-debian-ubuntu-systems/

+[10]: https://www.2daygeek.com/3-methods-to-install-latest-python3-package-on-centos-6-system/

+[11]: https://www.2daygeek.com/install-pip-manage-python-packages-linux/

+[12]: https://www.2daygeek.com/linux-passwordless-ssh-login-using-ssh-keygen/

diff --git a/published/20190320 4 cool terminal multiplexers.md b/published/201907/20190320 4 cool terminal multiplexers.md

similarity index 100%

rename from published/20190320 4 cool terminal multiplexers.md

rename to published/201907/20190320 4 cool terminal multiplexers.md

diff --git a/published/20190416 Can schools be agile.md b/published/201907/20190416 Can schools be agile.md

similarity index 100%

rename from published/20190416 Can schools be agile.md

rename to published/201907/20190416 Can schools be agile.md

diff --git a/published/20190501 Looking into Linux modules.md b/published/201907/20190501 Looking into Linux modules.md

similarity index 100%

rename from published/20190501 Looking into Linux modules.md

rename to published/201907/20190501 Looking into Linux modules.md

diff --git a/published/20190505 Blockchain 2.0 - Public Vs Private Blockchain Comparison -Part 7.md b/published/201907/20190505 Blockchain 2.0 - Public Vs Private Blockchain Comparison -Part 7.md

similarity index 100%

rename from published/20190505 Blockchain 2.0 - Public Vs Private Blockchain Comparison -Part 7.md

rename to published/201907/20190505 Blockchain 2.0 - Public Vs Private Blockchain Comparison -Part 7.md

diff --git a/published/20190513 When to be concerned about memory levels on Linux.md b/published/201907/20190513 When to be concerned about memory levels on Linux.md

similarity index 100%

rename from published/20190513 When to be concerned about memory levels on Linux.md

rename to published/201907/20190513 When to be concerned about memory levels on Linux.md

diff --git a/published/20190518 Best Linux Distributions for Beginners.md b/published/201907/20190518 Best Linux Distributions for Beginners.md

similarity index 100%

rename from published/20190518 Best Linux Distributions for Beginners.md

rename to published/201907/20190518 Best Linux Distributions for Beginners.md

diff --git a/published/201907/20190522 Convert Markdown files to word processor docs using pandoc.md b/published/201907/20190522 Convert Markdown files to word processor docs using pandoc.md

new file mode 100644

index 0000000000..381510d7a7

--- /dev/null

+++ b/published/201907/20190522 Convert Markdown files to word processor docs using pandoc.md

@@ -0,0 +1,129 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11160-1.html)

+[#]: subject: (Convert Markdown files to word processor docs using pandoc)

+[#]: via: (https://opensource.com/article/19/5/convert-markdown-to-word-pandoc)

+[#]: author: (Scott Nesbitt https://opensource.com/users/scottnesbitt/users/jason-van-gumster/users/kikofernandez)

+

+使用 pandoc 将 Markdown 转换为格式化文档

+======

+

+> 生活在普通文本世界么?以下是无需使用文字处理器而创建别人要的格式化文档的方法。

+

+![][1]

+

+如果你生活在[普通文本][2]世界里,总会有人要求你提供格式化文档。我就经常遇到这个问题,特别是在 Day JobTM。虽然我已经给与我合作的开发团队之一介绍了用于撰写和审阅发行说明的 [Docs Like Code][3] 工作流程,但是还有少数人对 GitHub 和使用 [Markdown][4] 没有兴趣,他们更喜欢为特定的专有应用格式化的文档。

+

+好消息是,你不会被卡在将未格式化的文本复制粘贴到文字处理器的问题当中。使用 [pandoc][5],你可以快速地给人们他们想要的东西。让我们看看如何使用 pandoc 将文档从 Markdown 转换为 Linux 中的文字处理器格式。

+

+请注意,pandoc 也可用于从两种 BSD([NetBSD][7] 和 [FreeBSD][8])到 Chrome OS、MacOS 和 Windows 等的各种操作系统。

+

+### 基本转换

+

+首先,在你的计算机上[安装 pandoc][9]。然后,打开控制台终端窗口,并导航到包含要转换的文件的目录。

+

+输入此命令以创建 ODT 文件(可以使用 [LibreOffice Writer][10] 或 [AbiWord][11] 等字处理器打开):

+

+```

+pandoc -t odt filename.md -o filename.odt

+```

+

+记得用实际文件名称替换 `filename`。如果你需要为其他文字处理器(你知道我的意思)创建一个文件,替换命令行的 `odt` 为 `docx`。以下是本文转换为 ODT 文件时的内容:

+

+![Basic conversion results with pandoc.][12]

+

+这些转换结果虽然可用,但有点乏味。让我们看看如何为转换后的文档添加更多样式。

+

+### 带样式转换

+

+`pandoc` 有一个漂亮的功能,使你可以在将带标记的纯文本文件转换为字处理器格式时指定样式模板。在此文件中,你可以编辑文档中的少量样式,包括控制段落、文章标题和副标题、段落标题、说明、基本的表格和超链接的样式。

+

+让我们来看看能做什么。

+

+#### 创建模板

+

+要设置文档样式,你不能只是使用任何一个模板就行。你需要生成 pandoc 称之为引用模板的文件,这是将文本文件转换为文字处理器文档时使用的模板。要创建此文件,请在终端窗口中键入以下内容:

+

+```

+pandoc -o custom-reference.odt --print-default-data-file reference.odt

+```

+

+此命令创建一个名为 `custom-reference.odt` 的文件。如果你正在使用其他文字处理程序,请将命令行中的 “odt” 更改为 “docx”。

+

+在 LibreOffice Writer 中打开模板文件,然后按 `F11` 打开 LibreOffice Writer 的 “样式” 窗格。虽然 [pandoc 手册][13]建议不要对该文件进行其他更改,但我会在必要时更改页面大小并添加页眉和页脚。

+

+#### 使用模板

+

+那么,你要如何使用刚刚创建的模板?有两种方法可以做到这一点。

+

+最简单的方法是将模板放在家目录的 `.pandoc` 文件夹中,如果该文件夹不存在,则必须先创建该文件夹。当转换文档时,`pandoc` 会使用此模板文件。如果你需要多个模板,请参阅下一节了解如何从多个模板中进行选择。

+

+使用模板的另一种方法是在命令行键入以下转换选项:

+

+```

+pandoc -t odt file-name.md --reference-doc=path-to-your-file/reference.odt -o file-name.odt

+```

+

+如果你想知道使用自定义模板转换后的文件是什么样的,这是一个示例:

+

+![A document converted using a pandoc style template.][14]

+

+#### 选择模板

+

+很多人只需要一个 `pandoc` 模板,但是,有些人需要不止一个。

+

+例如,在我的日常工作中,我使用了几个模板:一个带有 DRAFT 水印,一个带有表示内部使用的水印,另一个用于文档的最终版本。每种类型的文档都需要不同的模板。

+

+如果你有类似的需求,可以像使用单个模板一样创建文件 `custom-reference.odt`,将生成的文件重命名为例如 `custom-reference-draft.odt` 这样的名字,然后在 LibreOffice Writer 中打开它并修改样式。对你需要的每个模板重复此过程。

+

+接下来,将文件复制到家目录中。如果你愿意,你甚至可以将它们放在 `.pandoc` 文件夹中。

+

+要在转换时选择特定模板,你需要在终端中运行此命令:

+

+```

+pandoc -t odt file-name.md --reference-doc=path-to-your-file/custom-template.odt -o file-name.odt

+```

+

+改变 `custom-template.odt` 为你的模板文件名。

+

+### 结语

+

+为了不用记住我不经常使用的一组选项,我拼凑了一些简单的、非常蹩脚的单行脚本,这些脚本封装了每个模板的选项。例如,我运行脚本 `todraft.sh` 以使用带有 DRAFT 水印的模板创建文字处理器文档。你可能也想要这样做。

+

+以下是使用包含 DRAFT 水印的模板的脚本示例:

+

+```

+pandoc -t odt $1.md -o $1.odt --reference-doc=~/Documents/pandoc-templates/custom-reference-draft.odt

+```

+

+使用 pandoc 是一种不必放弃命令行生活而以人们要求的格式提供文档的好方法。此工具也不仅适用于 Markdown。我在本文中讨论的内容还可以让你在各种标记语言之间创建和转换文档。有关更多详细信息,请参阅前面链接的 [pandoc 官网][5]。

+

+--------------------------------------------------------------------------------

+

+via: https://opensource.com/article/19/5/convert-markdown-to-word-pandoc

+

+作者:[Scott Nesbitt][a]

+选题:[lujun9972][b]

+译者:[wxy](https://github.com/wxy)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensource.com/users/scottnesbitt/users/jason-van-gumster/users/kikofernandez

+[b]: https://github.com/lujun9972

+[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/computer_keyboard_laptop_development_code_woman.png?itok=vbYz6jjb

+[2]: https://plaintextproject.online/

+[3]: https://www.docslikecode.com/

+[4]: https://en.wikipedia.org/wiki/Markdown

+[5]: https://pandoc.org/

+[6]: /resources/linux

+[7]: https://www.netbsd.org/

+[8]: https://www.freebsd.org/

+[9]: https://pandoc.org/installing.html

+[10]: https://www.libreoffice.org/discover/writer/

+[11]: https://www.abisource.com/

+[12]: https://opensource.com/sites/default/files/uploads/pandoc-wp-basic-conversion_600_0.png (Basic conversion results with pandoc.)

+[13]: https://pandoc.org/MANUAL.html

+[14]: https://opensource.com/sites/default/files/uploads/pandoc-wp-conversion-with-tpl_600.png (A document converted using a pandoc style template.)

diff --git a/published/20190531 Use Firefox Send with ffsend in Fedora.md b/published/201907/20190531 Use Firefox Send with ffsend in Fedora.md

similarity index 100%

rename from published/20190531 Use Firefox Send with ffsend in Fedora.md

rename to published/201907/20190531 Use Firefox Send with ffsend in Fedora.md

diff --git a/published/20190603 How to set up virtual environments for Python on MacOS.md b/published/201907/20190603 How to set up virtual environments for Python on MacOS.md

similarity index 100%

rename from published/20190603 How to set up virtual environments for Python on MacOS.md

rename to published/201907/20190603 How to set up virtual environments for Python on MacOS.md

diff --git a/published/20190604 5G will augment Wi-Fi, not replace it.md b/published/201907/20190604 5G will augment Wi-Fi, not replace it.md

similarity index 100%

rename from published/20190604 5G will augment Wi-Fi, not replace it.md

rename to published/201907/20190604 5G will augment Wi-Fi, not replace it.md

diff --git a/published/20190606 Zorin OS Becomes Even More Awesome With Zorin 15 Release.md b/published/201907/20190606 Zorin OS Becomes Even More Awesome With Zorin 15 Release.md

similarity index 100%

rename from published/20190606 Zorin OS Becomes Even More Awesome With Zorin 15 Release.md

rename to published/201907/20190606 Zorin OS Becomes Even More Awesome With Zorin 15 Release.md

diff --git a/published/20190607 4 tools to help you drive Kubernetes.md b/published/201907/20190607 4 tools to help you drive Kubernetes.md

similarity index 100%

rename from published/20190607 4 tools to help you drive Kubernetes.md

rename to published/201907/20190607 4 tools to help you drive Kubernetes.md

diff --git a/published/20190607 Free and Open Source Trello Alternative OpenProject 9 Released.md b/published/201907/20190607 Free and Open Source Trello Alternative OpenProject 9 Released.md

similarity index 100%

rename from published/20190607 Free and Open Source Trello Alternative OpenProject 9 Released.md

rename to published/201907/20190607 Free and Open Source Trello Alternative OpenProject 9 Released.md

diff --git a/published/20190609 How to set ulimit and file descriptors limit on Linux Servers.md b/published/201907/20190609 How to set ulimit and file descriptors limit on Linux Servers.md

similarity index 100%

rename from published/20190609 How to set ulimit and file descriptors limit on Linux Servers.md

rename to published/201907/20190609 How to set ulimit and file descriptors limit on Linux Servers.md

diff --git a/published/20190610 5 Easy Ways To Free Up Space (Remove Unwanted or Junk Files) on Ubuntu.md b/published/201907/20190610 5 Easy Ways To Free Up Space (Remove Unwanted or Junk Files) on Ubuntu.md

similarity index 100%

rename from published/20190610 5 Easy Ways To Free Up Space (Remove Unwanted or Junk Files) on Ubuntu.md

rename to published/201907/20190610 5 Easy Ways To Free Up Space (Remove Unwanted or Junk Files) on Ubuntu.md

diff --git a/published/20190612 BitTorrent Client Deluge 2.0 Released- Here-s What-s New.md b/published/201907/20190612 BitTorrent Client Deluge 2.0 Released- Here-s What-s New.md

similarity index 100%

rename from published/20190612 BitTorrent Client Deluge 2.0 Released- Here-s What-s New.md

rename to published/201907/20190612 BitTorrent Client Deluge 2.0 Released- Here-s What-s New.md

diff --git a/published/20190613 IPython is still the heart of Jupyter Notebooks for Python developers.md b/published/201907/20190613 IPython is still the heart of Jupyter Notebooks for Python developers.md

similarity index 100%

rename from published/20190613 IPython is still the heart of Jupyter Notebooks for Python developers.md

rename to published/201907/20190613 IPython is still the heart of Jupyter Notebooks for Python developers.md

diff --git a/published/20190617 Use ImageGlass to quickly view JPG images as a slideshow.md b/published/201907/20190617 Use ImageGlass to quickly view JPG images as a slideshow.md

similarity index 100%

rename from published/20190617 Use ImageGlass to quickly view JPG images as a slideshow.md

rename to published/201907/20190617 Use ImageGlass to quickly view JPG images as a slideshow.md

diff --git a/published/20190618 A beginner-s guide to Linux permissions.md b/published/201907/20190618 A beginner-s guide to Linux permissions.md

similarity index 100%

rename from published/20190618 A beginner-s guide to Linux permissions.md

rename to published/201907/20190618 A beginner-s guide to Linux permissions.md

diff --git a/published/20190619 Leading in the Python community.md b/published/201907/20190619 Leading in the Python community.md

similarity index 100%

rename from published/20190619 Leading in the Python community.md

rename to published/201907/20190619 Leading in the Python community.md

diff --git a/published/20190621 Three Ways to Lock and Unlock User Account in Linux.md b/published/201907/20190621 Three Ways to Lock and Unlock User Account in Linux.md

similarity index 100%

rename from published/20190621 Three Ways to Lock and Unlock User Account in Linux.md

rename to published/201907/20190621 Three Ways to Lock and Unlock User Account in Linux.md

diff --git a/published/20190624 With Upgraded Specs, Raspberry Pi 4 Takes Aim at Desktop Segment.md b/published/201907/20190624 With Upgraded Specs, Raspberry Pi 4 Takes Aim at Desktop Segment.md

similarity index 100%

rename from published/20190624 With Upgraded Specs, Raspberry Pi 4 Takes Aim at Desktop Segment.md

rename to published/201907/20190624 With Upgraded Specs, Raspberry Pi 4 Takes Aim at Desktop Segment.md

diff --git a/published/20190625 5 tiny Linux distros to try before you die.md b/published/201907/20190625 5 tiny Linux distros to try before you die.md

similarity index 100%

rename from published/20190625 5 tiny Linux distros to try before you die.md

rename to published/201907/20190625 5 tiny Linux distros to try before you die.md

diff --git a/published/20190625 The innovation delusion.md b/published/201907/20190625 The innovation delusion.md

similarity index 100%

rename from published/20190625 The innovation delusion.md

rename to published/201907/20190625 The innovation delusion.md

diff --git a/published/20190626 Tracking down library injections on Linux.md b/published/201907/20190626 Tracking down library injections on Linux.md

similarity index 100%

rename from published/20190626 Tracking down library injections on Linux.md

rename to published/201907/20190626 Tracking down library injections on Linux.md

diff --git a/published/20190627 How to use Tig to browse Git logs.md b/published/201907/20190627 How to use Tig to browse Git logs.md

similarity index 100%

rename from published/20190627 How to use Tig to browse Git logs.md

rename to published/201907/20190627 How to use Tig to browse Git logs.md

diff --git a/published/20190628 Undo releases Live Recorder 5.0 for Linux debugging.md b/published/201907/20190628 Undo releases Live Recorder 5.0 for Linux debugging.md

similarity index 100%

rename from published/20190628 Undo releases Live Recorder 5.0 for Linux debugging.md

rename to published/201907/20190628 Undo releases Live Recorder 5.0 for Linux debugging.md

diff --git a/published/20190630 Donald Trump Now Wants to Ban End-to-End Encryption.md b/published/201907/20190630 Donald Trump Now Wants to Ban End-to-End Encryption.md

similarity index 100%

rename from published/20190630 Donald Trump Now Wants to Ban End-to-End Encryption.md

rename to published/201907/20190630 Donald Trump Now Wants to Ban End-to-End Encryption.md

diff --git a/published/20190701 Ubuntu or Fedora- Which One Should You Use and Why.md b/published/201907/20190701 Ubuntu or Fedora- Which One Should You Use and Why.md

similarity index 100%

rename from published/20190701 Ubuntu or Fedora- Which One Should You Use and Why.md

rename to published/201907/20190701 Ubuntu or Fedora- Which One Should You Use and Why.md

diff --git a/published/20190702 Jupyter and data science in Fedora.md b/published/201907/20190702 Jupyter and data science in Fedora.md

similarity index 100%

rename from published/20190702 Jupyter and data science in Fedora.md

rename to published/201907/20190702 Jupyter and data science in Fedora.md

diff --git a/published/20190702 Make Linux stronger with firewalls.md b/published/201907/20190702 Make Linux stronger with firewalls.md

similarity index 100%

rename from published/20190702 Make Linux stronger with firewalls.md

rename to published/201907/20190702 Make Linux stronger with firewalls.md

diff --git a/published/20190703 How to Manually Install Security Updates on Debian-Ubuntu.md b/published/201907/20190703 How to Manually Install Security Updates on Debian-Ubuntu.md

similarity index 100%

rename from published/20190703 How to Manually Install Security Updates on Debian-Ubuntu.md

rename to published/201907/20190703 How to Manually Install Security Updates on Debian-Ubuntu.md

diff --git a/published/20190703 Parse arguments with Python.md b/published/201907/20190703 Parse arguments with Python.md

similarity index 100%

rename from published/20190703 Parse arguments with Python.md

rename to published/201907/20190703 Parse arguments with Python.md

diff --git a/published/201907/20190705 Bash Script to Monitor Messages Log (Warning, Error and Critical) on Linux.md b/published/201907/20190705 Bash Script to Monitor Messages Log (Warning, Error and Critical) on Linux.md

new file mode 100644

index 0000000000..8f06f3a6a9

--- /dev/null

+++ b/published/201907/20190705 Bash Script to Monitor Messages Log (Warning, Error and Critical) on Linux.md

@@ -0,0 +1,109 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11171-1.html)

+[#]: subject: (Bash Script to Monitor Messages Log (Warning, Error and Critical) on Linux)

+[#]: via: (https://www.2daygeek.com/linux-bash-script-to-monitor-messages-log-warning-error-critical-send-email/)

+[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

+

+在 Linux 上用 Bash 脚本监控 messages 日志

+======

+

+目前市场上有许多开源监控工具可用于监控 Linux 系统的性能。当系统达到指定的阈值限制时,它将发送电子邮件警报。它可以监视 CPU 利用率、内存利用率、交换利用率、磁盘空间利用率等所有内容。

+

+如果你只有很少的系统并且想要监视它们,那么编写一个小的 shell 脚本可以使你的任务变得非常简单。

+

+在本教程中,我们添加了一个 shell 脚本来监视 Linux 系统上的 messages 日志。

+

+我们过去添加了许多有用的 shell 脚本。如果要查看这些内容,请导航至以下链接。

+

+- [如何使用 shell 脚本监控系统的日常活动?][1]

+

+此脚本将检查 `/var/log/messages` 文件中的 “warning“、“error” 和 “critical”,如果发现任何有关的东西,就给指定电子邮件地址发邮件。

+

+如果服务器有许多匹配的字符串,我们就不能经常运行这个可能填满收件箱的脚本,我们可以在一天内运行一次。

+

+为了解决这个问题,我让脚本以不同的方式触发电子邮件。

+

+如果 `/var/log/messages` 文件中昨天的日志中找到任何给定字符串,则脚本将向给定的电子邮件地址发送电子邮件警报。

+

+**注意:**你需要更改电子邮件地址,而不是我们的电子邮件地址。

+

+```

+# vi /opt/scripts/os-log-alert.sh

+```

+

+```

+#!/bin/bash

+#Set the variable which equal to zero

+prev_count=0

+

+count=$(grep -i "`date --date='yesterday' '+%b %e'`" /var/log/messages | egrep -wi 'warning|error|critical' | wc -l)

+

+if [ "$prev_count" -lt "$count" ] ; then

+ # Send a mail to given email id when errors found in log

+ SUBJECT="WARNING: Errors found in log on "`date --date='yesterday' '+%b %e'`""

+ # This is a temp file, which is created to store the email message.

+ MESSAGE="/tmp/logs.txt"

+ TO="2daygeek@gmail.com"

+ echo "ATTENTION: Errors are found in /var/log/messages. Please Check with Linux admin." >> $MESSAGE

+ echo "Hostname: `hostname`" >> $MESSAGE

+ echo -e "\n" >> $MESSAGE

+ echo "+------------------------------------------------------------------------------------+" >> $MESSAGE

+ echo "Error messages in the log file as below" >> $MESSAGE

+ echo "+------------------------------------------------------------------------------------+" >> $MESSAGE

+ grep -i "`date --date='yesterday' '+%b %e'`" /var/log/messages | awk '{ $3=""; print}' | egrep -wi 'warning|error|critical' >> $MESSAGE

+ mail -s "$SUBJECT" "$TO" < $MESSAGE

+ #rm $MESSAGE

+fi

+```

+

+为 `os-log-alert.sh` 文件设置可执行权限。

+

+```

+$ chmod +x /opt/scripts/os-log-alert.sh

+```

+

+最后添加一个 cron 任务来自动执行此操作。它将每天 7 点钟运行。

+

+```

+# crontab -e

+```

+

+```

+0 7 * * * /bin/bash /opt/scripts/os-log-alert.sh

+```

+

+**注意:**你将在每天 7 点收到昨天日志的电子邮件提醒。

+

+**输出:**你将收到类似下面的电子邮件提醒。

+

+```

+ATTENTION: Errors are found in /var/log/messages. Please Check with Linux admin.

+

++-----------------------------------------------------+

+Error messages in the log file as below

++-----------------------------------------------------+

+Jul 3 02:40:11 ns1 kernel: php-fpm[3175]: segfault at 299 ip 000055dfe7cc7e25 sp 00007ffd799d7d38 error 4 in php-fpm[55dfe7a89000+3a7000]

+Jul 3 02:50:14 ns1 kernel: lmtp[8249]: segfault at 20 ip 00007f9cc05295e4 sp 00007ffc57bca1a0 error 4 in libdovecot-storage.so.0.0.0[7f9cc04df000+148000]

+Jul 3 15:36:09 ns1 kernel: php-fpm[17846]: segfault at 299 ip 000055dfe7cc7e25 sp 00007ffd799d7d38 error 4 in php-fpm[55dfe7a89000+3a7000]

+Jul 3 15:45:54 ns1 pure-ftpd: (?@5.188.62.5) [WARNING] Authentication failed for user [daygeek]

+Jul 3 16:25:36 ns1 pure-ftpd: (?@104.140.148.58) [WARNING] Sorry, cleartext sessions and weak ciphers are not accepted on this server.#012Please reconnect using TLS security mechanisms.

+Jul 3 16:44:20 ns1 kernel: php-fpm[8979]: segfault at 299 ip 000055dfe7cc7e25 sp 00007ffd799d7d38 error 4 in php-fpm[55dfe7a89000+3a7000]

+```

+

+--------------------------------------------------------------------------------

+

+via: https://www.2daygeek.com/linux-bash-script-to-monitor-messages-log-warning-error-critical-send-email/

+

+作者:[Magesh Maruthamuthu][a]

+选题:[lujun9972][b]

+译者:[wxy](https://github.com/wxy)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://www.2daygeek.com/author/magesh/

+[b]: https://github.com/lujun9972

+[1]: https://www.2daygeek.com/category/shell-script/

diff --git a/published/20190705 Copy and paste at the Linux command line with xclip.md b/published/201907/20190705 Copy and paste at the Linux command line with xclip.md

similarity index 100%

rename from published/20190705 Copy and paste at the Linux command line with xclip.md

rename to published/201907/20190705 Copy and paste at the Linux command line with xclip.md

diff --git a/published/20190705 Manage your shell environment.md b/published/201907/20190705 Manage your shell environment.md

similarity index 100%

rename from published/20190705 Manage your shell environment.md

rename to published/201907/20190705 Manage your shell environment.md

diff --git a/published/20190706 How To Delete A Repository And GPG Key In Ubuntu.md b/published/201907/20190706 How To Delete A Repository And GPG Key In Ubuntu.md

similarity index 100%

rename from published/20190706 How To Delete A Repository And GPG Key In Ubuntu.md

rename to published/201907/20190706 How To Delete A Repository And GPG Key In Ubuntu.md

diff --git a/published/201907/20190706 How to enable DNS-over-HTTPS (DoH) in Firefox.md b/published/201907/20190706 How to enable DNS-over-HTTPS (DoH) in Firefox.md

new file mode 100644

index 0000000000..7a4e915b9e

--- /dev/null

+++ b/published/201907/20190706 How to enable DNS-over-HTTPS (DoH) in Firefox.md

@@ -0,0 +1,95 @@

+[#]: collector: (lujun9972)

+[#]: translator: (geekpi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11163-1.html)

+[#]: subject: (How to enable DNS-over-HTTPS (DoH) in Firefox)

+[#]: via: (https://www.zdnet.com/article/how-to-enable-dns-over-https-doh-in-firefox/)

+[#]: author: (Catalin Cimpanu https://www.zdnet.com/meet-the-team/us/catalin.cimpanu/)

+

+如何在 Firefox 中启用 DNS-over-HTTPS(DoH)

+======

+

+DNS-over-HTTPS(DoH)协议目前是谈论的焦点,Firefox 是唯一支持它的浏览器。但是,Firefox 默认不启用此功能,用户必须经历许多步骤并修改多个设置才能启动并运行 DoH。

+

+在开始如何在 Firefox 中启用 DoH 支持的分步教程之前,让我们先描述它的原理。

+

+### DNS-over-HTTPS 的工作原理

+

+DNS-over-HTTPS 协议通过获取用户在浏览器中输入的域名,并向 DNS 服务器发送查询,以了解托管该站点的 Web 服务器的 IP 地址。

+

+这也是正常 DNS 的工作原理。但是,DoH 通过 443 端口的加密 HTTPS 连接接受 DNS 查询将其发送到兼容 DoH 的 DNS 服务器(解析器),而不是在 53 端口上发送纯文本。这样,DoH 就会在常规 HTTPS 流量中隐藏 DNS 查询,因此第三方监听者将无法嗅探流量,并了解用户的 DNS 查询,从而推断他们将要访问的网站。

+

+此外,DNS-over-HTTPS 的第二个特性是该协议工作在应用层。应用可以带上内部硬编码的 DoH 兼容的 DNS 解析器列表,从而向它们发送 DoH 查询。这种操作模式绕过了系统级别的默认 DNS 设置,在大多数情况下,这些设置是由本地 Internet 服务提供商(ISP)设置的。这也意味着支持 DoH 的应用可以有效地绕过本地 ISP 流量过滤器并访问可能被本地电信公司或当地政府阻止的内容 —— 这也是 DoH 目前被誉为用户隐私和安全的福音的原因。

+

+这是 DoH 在推出后不到两年的时间里获得相当大的普及的原因之一,同时也是一群[英国 ISP 因为 Mozilla 计划支持 DoH 协议而提名它为 2019 年的“互联网恶棍” (Internet Villian)][1]的原因,ISP 认为 DoH 协议会阻碍他们过滤不良流量的努力。(LCTT 译注:后来这一奖项的提名被取消。)

+

+作为回应,并且由于英国政府阻止访问侵犯版权内容的复杂情况,以及 ISP 自愿阻止访问虐待儿童网站的情况,[Mozilla 已决定不为英国用户默认启用此功能][2]。

+

+下面的分步指南将向英国和世界各地的 Firefox 用户展示如何立即启用该功能,而不用等到 Mozilla 将来启用它 —— 如果它会这么做的话。在 Firefox 中有两种启用 DoH 支持的方法。

+

+### 方法 1:通过 Firefox 设置

+

+**步骤 1:**进入 Firefox 菜单,选择**工具**,然后选择**首选项**。 可选在 URL 栏中输入 `about:preferences`,然后按下回车。这将打开 Firefox 的首选项。

+

+**步骤 2:**在**常规**中,向下滚动到**网络设置**,然后按**设置**按钮。

+

+![DoH section in Firefox settings][3]

+

+**步骤3:**在弹出窗口中,向下滚动并选择“**Enable DNS over HTTPS**”,然后配置你需要的 DoH 解析器。你可以使用内置的 Cloudflare 解析器(该公司与 Mozilla [达成协议][4],记录更少的 Firefox 用户数据),或者你可以在[这个列表][4]中选择一个。

+

+![DoH section in Firefox settings][6]

+

+### 方法 2:通过 about:config

+

+**步骤 1:**在 URL 栏中输入 `about:config`,然后按回车访问 Firefox 的隐藏配置面板。在这里,用户需要启用和修改三个设置。

+

+**步骤 2:**第一个设置是 `network.trr.mode`。这打开了 DoH 支持。此设置支持四个值:

+

+* `0` - 标准 Firefox 安装中的默认值(当前为 5,表示禁用 DoH)

+* `1` - 启用 DoH,但 Firefox 依据哪个请求更快返回选择使用 DoH 或者常规 DNS

+* `2` - 启用 DoH,常规 DNS 作为备用

+* `3` - 启用 DoH,并禁用常规 DNS

+* `5` - 禁用 DoH

+

+值为 2 工作得最好

+

+![DoH in Firefox][7]

+

+**步骤3:**需要修改的第二个设置是 `network.trr.uri`。这是与 DoH 兼容的 DNS 服务器的 URL,Firefox 将向它发送 DoH DNS 查询。默认情况下,Firefox 使用 Cloudflare 的 DoH服务,地址是:。但是,用户可以使用自己的 DoH 服务器 URL。他们可以从[这个列表][8]中选择其中一个可用的。Mozilla 在 Firefox 中使用 Cloudflare 的原因是因为与这家公司[达成了协议][4],之后 Cloudflare 将收集来自 Firefox 用户的 DoH 查询的非常少的数据。

+

+[DoH in Firefox][9]

+

+**步骤4:**第三个设置是可选的,你可以跳过此设置。 但是如果设置不起作用,你可以使用此作为步骤 3 的备用。该选项名为 `network.trr.bootstrapAddress`,它是一个输入字段,用户可以输入步骤 3 中兼容 DoH 的 DNS 解析器的 IP 地址。对于 Cloudflare,它是 1.1.1.1。 对于 Google 服务,它是 8.8.8.8。 如果你使用了另一个 DoH 解析器的 URL,如果有必要的话,你需要追踪那台服务器的 IP 地址并输入。

+

+![DoH in Firefox][10]

+

+通常,在步骤 3 中输入的 URL 应该足够了。

+设置应该立即生效,但如果它们不起作用,请重新启动 Firefox。

+

+文章信息来源:[Mozilla Wiki][11]

+

+

+--------------------------------------------------------------------------------

+

+via: https://www.zdnet.com/article/how-to-enable-dns-over-https-doh-in-firefox/

+

+作者:[Catalin Cimpanu][a]

+选题:[lujun9972][b]

+译者:[geekpi](https://github.com/geekpi)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://www.zdnet.com/meet-the-team/us/catalin.cimpanu/

+[b]: https://github.com/lujun9972

+[1]: https://linux.cn/article-11068-1.html

+[2]: https://www.zdnet.com/article/mozilla-no-plans-to-enable-dns-over-https-by-default-in-the-uk/

+[3]: https://zdnet1.cbsistatic.com/hub/i/2019/07/07/df30c7b0-3a20-4de7-8640-3dea6d249a49/121bd379b6232e1e2a97c35ea8c7764e/doh-settings-1.png

+[4]: https://developers.cloudflare.com/1.1.1.1/commitment-to-privacy/privacy-policy/firefox/

+[6]: https://zdnet3.cbsistatic.com/hub/i/2019/07/07/8608af28-2a28-4ff1-952b-9b6d2deb1ea6/b1fc322caaa2c955b1a2fb285daf0e42/doh-settings-2.png

+[7]: https://zdnet1.cbsistatic.com/hub/i/2019/07/06/0232b3a7-82c6-4a6f-90c1-faf0c090254c/6db9b36509021c460fcc7fe825bb74c5/doh-1.png

+[8]: https://github.com/curl/curl/wiki/DNS-over-HTTPS#publicly-available-servers

+[9]: https://zdnet2.cbsistatic.com/hub/i/2019/07/06/4dd1d5c1-6fa7-4f5b-b7cd-b544748edfed/baa7a70ac084861d94a744a57a3147ad/doh-2.png

+[10]: https://zdnet1.cbsistatic.com/hub/i/2019/07/06/8ec20a28-673c-4a17-8195-16579398e90a/538fe8420f9b24724aeb4a6c8d4f0f0f/doh-3.png

+[11]: https://wiki.mozilla.org/Trusted_Recursive_Resolver

diff --git a/published/20190706 Say WHAAAT- Mozilla has Been Nominated for the -Internet Villain- Award in the UK.md b/published/201907/20190706 Say WHAAAT- Mozilla has Been Nominated for the -Internet Villain- Award in the UK.md

similarity index 100%

rename from published/20190706 Say WHAAAT- Mozilla has Been Nominated for the -Internet Villain- Award in the UK.md

rename to published/201907/20190706 Say WHAAAT- Mozilla has Been Nominated for the -Internet Villain- Award in the UK.md

diff --git a/published/20190708 10 ways to get started with Linux.md b/published/201907/20190708 10 ways to get started with Linux.md

similarity index 100%

rename from published/20190708 10 ways to get started with Linux.md

rename to published/201907/20190708 10 ways to get started with Linux.md

diff --git a/published/20190708 Command line quick tips- Permissions.md b/published/201907/20190708 Command line quick tips- Permissions.md

similarity index 100%

rename from published/20190708 Command line quick tips- Permissions.md

rename to published/201907/20190708 Command line quick tips- Permissions.md

diff --git a/published/20190708 Debian 10 (Buster) Installation Steps with Screenshots.md b/published/201907/20190708 Debian 10 (Buster) Installation Steps with Screenshots.md

similarity index 100%

rename from published/20190708 Debian 10 (Buster) Installation Steps with Screenshots.md

rename to published/201907/20190708 Debian 10 (Buster) Installation Steps with Screenshots.md

diff --git a/published/20190708 Linux Games Get A Performance Boost for AMD GPUs Thanks to Valve-s New Compiler.md b/published/201907/20190708 Linux Games Get A Performance Boost for AMD GPUs Thanks to Valve-s New Compiler.md

similarity index 100%

rename from published/20190708 Linux Games Get A Performance Boost for AMD GPUs Thanks to Valve-s New Compiler.md

rename to published/201907/20190708 Linux Games Get A Performance Boost for AMD GPUs Thanks to Valve-s New Compiler.md

diff --git a/published/20190709 From BASIC to Ruby- Life lessons from first programming languages on Command Line Heroes.md b/published/201907/20190709 From BASIC to Ruby- Life lessons from first programming languages on Command Line Heroes.md

similarity index 100%

rename from published/20190709 From BASIC to Ruby- Life lessons from first programming languages on Command Line Heroes.md

rename to published/201907/20190709 From BASIC to Ruby- Life lessons from first programming languages on Command Line Heroes.md

diff --git a/published/20190709 Pipx - Install And Run Python Applications In Isolated Environments.md b/published/201907/20190709 Pipx - Install And Run Python Applications In Isolated Environments.md

similarity index 100%

rename from published/20190709 Pipx - Install And Run Python Applications In Isolated Environments.md

rename to published/201907/20190709 Pipx - Install And Run Python Applications In Isolated Environments.md

diff --git a/published/20190709 Sysadmin vs SRE- What-s the difference.md b/published/201907/20190709 Sysadmin vs SRE- What-s the difference.md

similarity index 100%

rename from published/20190709 Sysadmin vs SRE- What-s the difference.md

rename to published/201907/20190709 Sysadmin vs SRE- What-s the difference.md

diff --git a/published/20190710 32-bit life support- Cross-compiling with GCC.md b/published/201907/20190710 32-bit life support- Cross-compiling with GCC.md

similarity index 100%

rename from published/20190710 32-bit life support- Cross-compiling with GCC.md

rename to published/201907/20190710 32-bit life support- Cross-compiling with GCC.md

diff --git a/published/20190710 How To Find Virtualbox Version From Commandline In Linux.md b/published/201907/20190710 How To Find Virtualbox Version From Commandline In Linux.md

similarity index 100%

rename from published/20190710 How To Find Virtualbox Version From Commandline In Linux.md

rename to published/201907/20190710 How To Find Virtualbox Version From Commandline In Linux.md

diff --git a/published/20190710 Test 200- Linux And Unix Operating Systems Online For Free.md b/published/201907/20190710 Test 200- Linux And Unix Operating Systems Online For Free.md

similarity index 100%

rename from published/20190710 Test 200- Linux And Unix Operating Systems Online For Free.md

rename to published/201907/20190710 Test 200- Linux And Unix Operating Systems Online For Free.md

diff --git a/published/20190711 How to install Elasticsearch on MacOS.md b/published/201907/20190711 How to install Elasticsearch on MacOS.md

similarity index 100%

rename from published/20190711 How to install Elasticsearch on MacOS.md

rename to published/201907/20190711 How to install Elasticsearch on MacOS.md

diff --git a/published/20190711 Type Linux Commands In Capital Letters To Run Them As Sudo User.md b/published/201907/20190711 Type Linux Commands In Capital Letters To Run Them As Sudo User.md

similarity index 100%

rename from published/20190711 Type Linux Commands In Capital Letters To Run Them As Sudo User.md

rename to published/201907/20190711 Type Linux Commands In Capital Letters To Run Them As Sudo User.md

diff --git a/published/201907/20190712 MTTR is dead, long live CIRT.md b/published/201907/20190712 MTTR is dead, long live CIRT.md

new file mode 100644

index 0000000000..1055d9e832

--- /dev/null

+++ b/published/201907/20190712 MTTR is dead, long live CIRT.md

@@ -0,0 +1,78 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11155-1.html)

+[#]: subject: (MTTR is dead, long live CIRT)

+[#]: via: (https://opensource.com/article/19/7/measure-operational-performance)

+[#]: author: (Julie Gunderson https://opensource.com/users/juliegund/users/kearnsjd/users/ophir)

+

+MTTR 已死,CIRT 长存

+======

+

+> 通过关注影响业务的事件,CIRT 是衡量运维绩效的更准确方法。

+

+![Green graph of measurements][1]

+

+IT 运维圈子的玩法正在发生变化,这意味着过去的规则越来越不合理。机构需要适当环境中的准确的、可理解的、且可操作的指标,以衡量运维绩效并推动关键业务转型。

+

+越多的客户使用现代工具,他们管理的事件类型的变化越多,将所有这些不同事件粉碎到一个桶中以计算平均解决时间来表示运维绩效的意义就越少,这就是 IT 一直以来在做的事情。

+

+### 历史与指标

+

+历史表明,在分析信号以防止错误和误解时,背景信息是关键。例如,在 20 世纪 80 年代,瑞典建立了一个分析水听器信号的系统,以提醒他们在瑞典当地水域出现的俄罗斯潜艇。瑞典人使用了他们认为代表一类俄罗斯潜艇的声学特征 —— 但实际上是鲱鱼在遇到潜在捕食者时释放的[气泡声][2]。这种对指标的误解加剧了各国之间的紧张关系,几乎导致了战争。

+

+![Funny fish cartoon][3]

+

+平均解决时间(MTTR)是运维经理用于获得实现目标洞察力的主要运维绩效指标。这是一项基于系统可靠性工程的古老措施。MTTR 已被许多行业采用,包括制造、设施维护以及最近的 IT 运维,它代表了解决在特定时间段内创建的事件所需的平均时间。

+

+MTTR 的计算方法是将所有事件(从事件创建时间到解决时间)所需的时间除以事件总数。

+

+![MTTR formula][4]

+

+正如它所说的,MTTR 是 **所有** 事件的平均值。MTTR 将高紧急事件和低紧急事件混为一谈。它还会重复计算每个单独的、未分组的事件,并得出有效的解决时间。它包括了在相同上下文中手动解决和自动解决的事件。它将在创建了几天(或几个月)甚至完全被忽略的事件混合在一起。最后,MTTR 包括每个小的瞬态突发事件(在 120 秒内自动关闭的事件),这些突发事件要么是非问题噪音,要么已由机器快速解决。

+

+![Variability in incident types][5]

+

+MTTR 将所有事件(无论何种类型)抛入一个桶中,将它们全部混合在一起,并计算整个集合中的“平均”解决时间。这种过于简单化的方法导致运维执行方式的的噪音、错误和误导性指示。

+

+### 一种衡量绩效的新方法

+

+关键事件响应时间(CIRT)是评估运维绩效的一种更准确的新方法。PagerDuty 创立了 CIRT 的概念,但该方法可供所有人免费使用。

+