diff --git a/published/20190826 How RPM packages are made- the source RPM.md b/published/20190826 How RPM packages are made- the source RPM.md

new file mode 100644

index 0000000000..222ec93038

--- /dev/null

+++ b/published/20190826 How RPM packages are made- the source RPM.md

@@ -0,0 +1,235 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11527-1.html)

+[#]: subject: (How RPM packages are made: the source RPM)

+[#]: via: (https://fedoramagazine.org/how-rpm-packages-are-made-the-source-rpm/)

+[#]: author: (Ankur Sinha "FranciscoD" https://fedoramagazine.org/author/ankursinha/)

+

+RPM 包是如何从源 RPM 制作的

+======

+

+![][1]

+

+在[上一篇文章中,我们研究了什么是 RPM 软件包][2]。它们是包含文件和元数据的档案文件。当安装或卸载 RPM 时,此元数据告诉 RPM 在哪里创建或删除文件。正如你将在上一篇文章中记住的,元数据还包含有关“依赖项”的信息,它可以是“运行时”或“构建时”的依赖信息。

+

+例如,让我们来看看 `fpaste`。你可以使用 `dnf` 下载该 RPM。这将下载 Fedora 存储库中可用的 `fpaste` 最新版本。在 Fedora 30 上,当前版本为 0.3.9.2:

+

+```

+$ dnf download fpaste

+

+...

+fpaste-0.3.9.2-2.fc30.noarch.rpm

+```

+

+由于这是个构建 RPM,因此它仅包含使用 `fpaste` 所需的文件:

+

+```

+$ rpm -qpl ./fpaste-0.3.9.2-2.fc30.noarch.rpm

+/usr/bin/fpaste

+/usr/share/doc/fpaste

+/usr/share/doc/fpaste/README.rst

+/usr/share/doc/fpaste/TODO

+/usr/share/licenses/fpaste

+/usr/share/licenses/fpaste/COPYING

+/usr/share/man/man1/fpaste.1.gz

+```

+

+### 源 RPM

+

+在此链条中的下一个环节是源 RPM。Fedora 中的所有软件都必须从其源代码构建。我们不包括预构建的二进制文件。因此,要制作一个 RPM 文件,RPM(工具)需要:

+

+* 给出必须要安装的文件,

+* 例如,如果要编译出这些文件,则告诉它们如何生成这些文件,

+* 告知必须在何处安装这些文件,

+* 该特定软件需要其他哪些依赖才能正常工作。

+

+源 RPM 拥有所有这些信息。源 RPM 与构建 RPM 相似,但顾名思义,它们不包含已构建的二进制文件,而是包含某个软件的源文件。让我们下载 `fpaste` 的源 RPM:

+

+```

+$ dnf download fpaste --source

+

+...

+fpaste-0.3.9.2-2.fc30.src.rpm

+```

+

+注意文件的结尾是 `src.rpm`。所有的 RPM 都是从源 RPM 构建的。你也可以使用 `dnf` 轻松检查“二进制” RPM 的源 RPM:

+

+```

+$ dnf repoquery --qf "%{SOURCERPM}" fpaste

+fpaste-0.3.9.2-2.fc30.src.rpm

+```

+

+另外,由于这是源 RPM,因此它不包含构建的文件。相反,它包含有关如何从中构建 RPM 的源代码和指令:

+

+```

+$ rpm -qpl ./fpaste-0.3.9.2-2.fc30.src.rpm

+fpaste-0.3.9.2.tar.gz

+fpaste.spec

+```

+

+这里,第一个文件只是 `fpaste` 的源代码。第二个是 spec 文件。spec 文件是个配方,可告诉 RPM(工具)如何使用源 RPM 中包含的源代码创建 RPM(档案文件)— 它包含 RPM(工具)构建 RPM(档案文件)所需的所有信息。在 spec 文件中。当我们软件包维护人员添加软件到 Fedora 中时,我们大部分时间都花在编写和完善 spec 文件上。当软件包需要更新时,我们会回过头来调整 spec 文件。你可以在 的源代码存储库中查看 Fedora 中所有软件包的 spec 文件。

+

+请注意,一个源 RPM 可能包含构建多个 RPM 的说明。`fpaste` 是一款非常简单的软件,一个源 RPM 生成一个“二进制” RPM。而 Python 则更复杂。虽然只有一个源 RPM,但它会生成多个二进制 RPM:

+

+```

+$ sudo dnf repoquery --qf "%{SOURCERPM}" python3

+python3-3.7.3-1.fc30.src.rpm

+python3-3.7.4-1.fc30.src.rpm

+

+$ sudo dnf repoquery --qf "%{SOURCERPM}" python3-devel

+python3-3.7.3-1.fc30.src.rpm

+python3-3.7.4-1.fc30.src.rpm

+

+$ sudo dnf repoquery --qf "%{SOURCERPM}" python3-libs

+python3-3.7.3-1.fc30.src.rpm

+python3-3.7.4-1.fc30.src.rpm

+

+$ sudo dnf repoquery --qf "%{SOURCERPM}" python3-idle

+python3-3.7.3-1.fc30.src.rpm

+python3-3.7.4-1.fc30.src.rpm

+

+$ sudo dnf repoquery --qf "%{SOURCERPM}" python3-tkinter

+python3-3.7.3-1.fc30.src.rpm

+python3-3.7.4-1.fc30.src.rpm

+```

+

+用 RPM 行话来讲,“python3” 是“主包”,因此该 spec 文件将称为 `python3.spec`。所有其他软件包均为“子软件包”。你可以下载 python3 的源 RPM,并查看其中的内容。(提示:补丁也是源代码的一部分):

+

+```

+$ dnf download --source python3

+python3-3.7.4-1.fc30.src.rpm

+

+$ rpm -qpl ./python3-3.7.4-1.fc30.src.rpm

+00001-rpath.patch

+00102-lib64.patch

+00111-no-static-lib.patch

+00155-avoid-ctypes-thunks.patch

+00170-gc-assertions.patch

+00178-dont-duplicate-flags-in-sysconfig.patch

+00189-use-rpm-wheels.patch

+00205-make-libpl-respect-lib64.patch

+00251-change-user-install-location.patch

+00274-fix-arch-names.patch

+00316-mark-bdist_wininst-unsupported.patch

+Python-3.7.4.tar.xz

+check-pyc-timestamps.py

+idle3.appdata.xml

+idle3.desktop

+python3.spec

+```

+

+### 从源 RPM 构建 RPM

+

+现在我们有了源 RPM,并且其中有什么内容,我们可以从中重建 RPM。但是,在执行此操作之前,我们应该设置系统以构建 RPM。首先,我们安装必需的工具:

+

+```

+$ sudo dnf install fedora-packager

+```

+

+这将安装 `rpmbuild` 工具。`rpmbuild` 需要一个默认布局,以便它知道源 RPM 中每个必需组件的位置。让我们看看它们是什么:

+

+```

+# spec 文件将出现在哪里?

+$ rpm -E %{_specdir}

+/home/asinha/rpmbuild/SPECS

+

+# 源代码将出现在哪里?

+$ rpm -E %{_sourcedir}

+/home/asinha/rpmbuild/SOURCES

+

+# 临时构建目录是哪里?

+$ rpm -E %{_builddir}

+/home/asinha/rpmbuild/BUILD

+

+# 构建根目录是哪里?

+$ rpm -E %{_buildrootdir}

+/home/asinha/rpmbuild/BUILDROOT

+

+# 源 RPM 将放在哪里?

+$ rpm -E %{_srcrpmdir}

+/home/asinha/rpmbuild/SRPMS

+

+# 构建的 RPM 将放在哪里?

+$ rpm -E %{_rpmdir}

+/home/asinha/rpmbuild/RPMS

+```

+

+我已经在系统上设置了所有这些目录:

+

+```

+$ cd

+$ tree -L 1 rpmbuild/

+rpmbuild/

+├── BUILD

+├── BUILDROOT

+├── RPMS

+├── SOURCES

+├── SPECS

+└── SRPMS

+

+6 directories, 0 files

+```

+

+RPM 还提供了一个为你全部设置好的工具:

+

+```

+$ rpmdev-setuptree

+```

+

+然后,确保已安装 `fpaste` 的所有构建依赖项:

+

+```

+sudo dnf builddep fpaste-0.3.9.2-3.fc30.src.rpm

+```

+

+对于 `fpaste`,你只需要 Python,并且它肯定已经安装在你的系统上(`dnf` 也使用 Python)。还可以给 `builddep` 命令一个 spec 文件,而不是源 RPM。在手册页中了解更多信息:

+

+```

+$ man dnf.plugin.builddep

+```

+

+现在我们有了所需的一切,从源 RPM 构建一个 RPM 就像这样简单:

+

+```

+$ rpmbuild --rebuild fpaste-0.3.9.2-3.fc30.src.rpm

+..

+..

+

+$ tree ~/rpmbuild/RPMS/noarch/

+/home/asinha/rpmbuild/RPMS/noarch/

+└── fpaste-0.3.9.2-3.fc30.noarch.rpm

+

+0 directories, 1 file

+```

+

+`rpmbuild` 将安装源 RPM 并从中构建你的 RPM。现在,你可以使用 `dnf` 安装 RPM 以使用它。当然,如前所述,如果你想在 RPM 中进行任何更改,则必须修改 spec 文件,我们将在下一篇文章中介绍 spec 文件。

+

+### 总结

+

+总结一下这篇文章有两点:

+

+* 我们通常安装使用的 RPM 是包含软件的构建版本的 “二进制” RPM

+* 构建 RPM 来自于源 RPM,源 RPM 包括用于生成二进制 RPM 所需的源代码和规范文件。

+

+如果你想开始构建 RPM,并帮助 Fedora 社区维护我们提供的大量软件,则可以从这里开始:

+

+如有任何疑问,请发邮件到 [Fedora 开发人员邮件列表][3],我们随时乐意为你提供帮助!

+

+--------------------------------------------------------------------------------

+

+via: https://fedoramagazine.org/how-rpm-packages-are-made-the-source-rpm/

+

+作者:[Ankur Sinha "FranciscoD"][a]

+选题:[lujun9972][b]

+译者:[wxy](https://github.com/wxy)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://fedoramagazine.org/author/ankursinha/

+[b]: https://github.com/lujun9972

+[1]: https://fedoramagazine.org/wp-content/uploads/2019/06/rpm.png-816x345.jpg

+[2]: https://linux.cn/article-11452-1.html

+[3]: https://lists.fedoraproject.org/archives/list/devel@lists.fedoraproject.org/

diff --git a/published/20190828 Someone Forked GIMP into Glimpse Because Gimp is an Offensive Word.md b/published/20190828 Someone Forked GIMP into Glimpse Because Gimp is an Offensive Word.md

new file mode 100644

index 0000000000..70abe7d3c9

--- /dev/null

+++ b/published/20190828 Someone Forked GIMP into Glimpse Because Gimp is an Offensive Word.md

@@ -0,0 +1,84 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11529-1.html)

+[#]: subject: (Someone Forked GIMP into Glimpse Because Gimp is an Offensive Word)

+[#]: via: (https://itsfoss.com/gimp-fork-glimpse/)

+[#]: author: (John Paul https://itsfoss.com/author/john/)

+

+由于 GIMP 是令人反感的字眼,有人将它复刻了

+======

+

+在开源应用程序世界中,当社区成员希望以与其他人不同的方向来开发应用程序时,复刻是很常见的。最新的具有新闻价值的一个复刻称为 [Glimpse][1],旨在解决用户在使用 [GNU 图像处理程序][2](通常称为 GIMP)时遇到的某些问题。

+

+### 为什么创建 GIMP 的复刻?

+

+![][3]

+

+当你访问 Glimpse 应用的[主页][1]时,它表示该项目的目标是“尝试其他设计方向并修复长期存在的错误。”这听起来并不奇怪。但是,如果你开始阅读该项目的博客文章,则是另外一种印象。

+

+根据该项目的[第一篇博客文章][4],他们创建了这个复刻是因为他们不喜欢 GIMP 这个名称。根据该帖子,“我们中的许多人不认为该软件的名称适用于所有用户,并且在拒绝该项目的 13 年后,我们决定复刻!”

+

+如果你想知道为什么这些人认为 GIMP 令人讨厌,他们在[关于页面][5]中回答该问题:

+

+> “如果英语不是你的母语,那么你可能没有意识到 ‘gimp’ 一词有问题。在某些国家,这被视为针对残疾人的侮辱和针对不受欢迎儿童的操场侮辱。它也可以与成年人同意的某些‘天黑后’活动联系起来。”

+

+他们还指出,他们并没有使这一举动脱离政治正确或过于敏感。“除了可能给边缘化社区带来的痛苦外,我们当中许多人都有过倡导自由软件的故事,比如在 GNU 图像处理程序没有被专业环境中的老板或同事视为可选项这件事上。”

+

+他们似乎在回答许多质疑,“不幸的是,我们不得不复刻整个项目来更改其名称,我们认为有关此问题的讨论陷入了僵局,而这是最积极的前进方向。 ”

+

+看起来 Glimpse 这个名称不是确定不变的。他们的 GitHub 页面上有个关于可能选择其他名称的[提案][7]。也许他们应该放弃 GNU 这个词,我认为 IMP 这个词没有不好的含义。(LCTT 译注:反讽)

+

+### 分叉之路

+

+![GIMP 2.10][8]

+

+[GIMP][6] 已经存在了 20 多年,因此任何形式的复刻都是一项艰巨的任务。当前,[他们正在计划][9]首先在 2019 年 9 月发布 Glimpse 0.1。这将是一个软复刻,这意味着在迁移到新身份时的更改将主要是装饰性的。(LCTT 译注:事实上到本译文发布时,该项目仍然处于蛋疼的 0.1 beta,也许 11 月,也许 12 月,才能发布 0.1 的正式版本。)

+

+Glimpse 1.0 将是一个硬复刻,他们将积极更改代码库并将其添加到代码库中。他们想将 1.0 移植到 GTK3 并拥有自己的文档。他们估计,直到 2020 年 GIMP 3 发布之后才能做到。

+

+除了 1.0,Glimpse 团队还计划打响自己的名声。他们计划进行“前端 UI 重写”。他们目前正在讨论[改用哪种语言][10]。D 和 Rust 似乎有很多支持者。随着时间的流逝,他们也[希望][4]“添加新功能以解决普通用户的抱怨”。

+

+### 最后的思考

+

+我过去曾经使用过一点 GIMP,但从来没有对它的名称感到困扰。老实说,我很长一段时间都不知道这意味着什么。有趣的是,当我在 Wikipedia 上搜索 GIMP 时,看到了一个 [GIMP 项目][11]的条目,这是纽约的一个现代舞蹈项目,其中包括残疾人。我想 gimp 并不是每个人视为一个贬低词汇的。

+

+对我来说,更改名称似乎需要大量工作。似乎改写 UI 的想法会使项目看起来更有价值一些。我想知道他们是否会调整它以带来更经典的 UI,例如[使用 Ctrl + S 保存到 GIMP][12] / Glimpse。让我们拭目以待。

+

+如果你对该项目感兴趣,可以在 [Twitter][14] 上关注他们,查看其 [GitHub 帐户][15],或查看其 [Patreon 页面][16]。

+

+你觉得被 GIMP 名称冒犯了吗?你是否认为值得对应用程序进行复刻,以便你可以对其进行重命名?在下面的评论中让我们知道。

+

+如果你觉得这篇文章有趣,请花一点时间在社交媒体、Hacker News 或 [Reddit][17] 上分享。

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/gimp-fork-glimpse/

+

+作者:[John Paul][a]

+选题:[lujun9972][b]

+译者:[wxy](https://github.com/wxy)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://itsfoss.com/author/john/

+[b]: https://github.com/lujun9972

+[1]: https://getglimpse.app/

+[2]: https://www.gimp.org/

+[3]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/08/gimp-fork-glimpse.png?resize=800%2C450&ssl=1

+[4]: https://getglimpse.app/posts/so-it-begins/

+[5]: https://getglimpse.app/about/

+[6]: https://itsfoss.com/gimp-2-10-release/

+[7]: https://github.com/glimpse-editor/Glimpse/issues/92

+[8]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2018/08/gimp-screenshot.jpg?resize=800%2C508&ssl=1

+[9]: https://getglimpse.app/posts/six-week-checkpoint/

+[10]: https://github.com/glimpse-editor/Glimpse/issues/70

+[11]: https://en.wikipedia.org/wiki/The_Gimp_Project

+[12]: https://itsfoss.com/how-to-solve-gimp-2-8-does-not-save-in-jpeg-or-png-format/

+[13]: https://itsfoss.com/wps-office-2016-linux/

+[14]: https://twitter.com/glimpse_editor

+[15]: https://github.com/glimpse-editor/Glimpse

+[16]: https://www.patreon.com/glimpse

+[17]: https://reddit.com/r/linuxusersgroup

diff --git a/published/20140510 Managing Digital Files (e.g., Photographs) in Files and Folders.md b/published/201910/20140510 Managing Digital Files (e.g., Photographs) in Files and Folders.md

similarity index 100%

rename from published/20140510 Managing Digital Files (e.g., Photographs) in Files and Folders.md

rename to published/201910/20140510 Managing Digital Files (e.g., Photographs) in Files and Folders.md

diff --git a/published/201910/20180706 Building a Messenger App- OAuth.md b/published/201910/20180706 Building a Messenger App- OAuth.md

new file mode 100644

index 0000000000..62b85717d5

--- /dev/null

+++ b/published/201910/20180706 Building a Messenger App- OAuth.md

@@ -0,0 +1,444 @@

+[#]: collector: (lujun9972)

+[#]: translator: (PsiACE)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11510-1.html)

+[#]: subject: (Building a Messenger App: OAuth)

+[#]: via: (https://nicolasparada.netlify.com/posts/go-messenger-oauth/)

+[#]: author: (Nicolás Parada https://nicolasparada.netlify.com/)

+

+构建一个即时消息应用(二):OAuth

+======

+

+[上一篇:模式](https://linux.cn/article-11396-1.html)。

+

+在这篇帖子中,我们将会通过为应用添加社交登录功能进入后端开发。

+

+社交登录的工作方式十分简单:用户点击链接,然后重定向到 GitHub 授权页面。当用户授予我们对他的个人信息的访问权限之后,就会重定向回登录页面。下一次尝试登录时,系统将不会再次请求授权,也就是说,我们的应用已经记住了这个用户。这使得整个登录流程看起来就和你用鼠标单击一样快。

+

+如果进一步考虑其内部实现的话,过程就会变得复杂起来。首先,我们需要注册一个新的 [GitHub OAuth 应用][2]。

+

+这一步中,比较重要的是回调 URL。我们将它设置为 `http://localhost:3000/api/oauth/github/callback`。这是因为,在开发过程中,我们总是在本地主机上工作。一旦你要将应用交付生产,请使用正确的回调 URL 注册一个新的应用。

+

+注册以后,你将会收到“客户端 id”和“安全密钥”。安全起见,请不要与任何人分享他们 👀

+

+顺便让我们开始写一些代码吧。现在,创建一个 `main.go` 文件:

+

+```

+package main

+

+import (

+ "database/sql"

+ "fmt"

+ "log"

+ "net/http"

+ "net/url"

+ "os"

+ "strconv"

+

+ "github.com/gorilla/securecookie"

+ "github.com/joho/godotenv"

+ "github.com/knq/jwt"

+ _ "github.com/lib/pq"

+ "github.com/matryer/way"

+ "golang.org/x/oauth2"

+ "golang.org/x/oauth2/github"

+)

+

+var origin *url.URL

+var db *sql.DB

+var githubOAuthConfig *oauth2.Config

+var cookieSigner *securecookie.SecureCookie

+var jwtSigner jwt.Signer

+

+func main() {

+ godotenv.Load()

+

+ port := intEnv("PORT", 3000)

+ originString := env("ORIGIN", fmt.Sprintf("http://localhost:%d/", port))

+ databaseURL := env("DATABASE_URL", "postgresql://root@127.0.0.1:26257/messenger?sslmode=disable")

+ githubClientID := os.Getenv("GITHUB_CLIENT_ID")

+ githubClientSecret := os.Getenv("GITHUB_CLIENT_SECRET")

+ hashKey := env("HASH_KEY", "secret")

+ jwtKey := env("JWT_KEY", "secret")

+

+ var err error

+ if origin, err = url.Parse(originString); err != nil || !origin.IsAbs() {

+ log.Fatal("invalid origin")

+ return

+ }

+

+ if i, err := strconv.Atoi(origin.Port()); err == nil {

+ port = i

+ }

+

+ if githubClientID == "" || githubClientSecret == "" {

+ log.Fatalf("remember to set both $GITHUB_CLIENT_ID and $GITHUB_CLIENT_SECRET")

+ return

+ }

+

+ if db, err = sql.Open("postgres", databaseURL); err != nil {

+ log.Fatalf("could not open database connection: %v\n", err)

+ return

+ }

+ defer db.Close()

+ if err = db.Ping(); err != nil {

+ log.Fatalf("could not ping to db: %v\n", err)

+ return

+ }

+

+ githubRedirectURL := *origin

+ githubRedirectURL.Path = "/api/oauth/github/callback"

+ githubOAuthConfig = &oauth2.Config{

+ ClientID: githubClientID,

+ ClientSecret: githubClientSecret,

+ Endpoint: github.Endpoint,

+ RedirectURL: githubRedirectURL.String(),

+ Scopes: []string{"read:user"},

+ }

+

+ cookieSigner = securecookie.New([]byte(hashKey), nil).MaxAge(0)

+

+ jwtSigner, err = jwt.HS256.New([]byte(jwtKey))

+ if err != nil {

+ log.Fatalf("could not create JWT signer: %v\n", err)

+ return

+ }

+

+ router := way.NewRouter()

+ router.HandleFunc("GET", "/api/oauth/github", githubOAuthStart)

+ router.HandleFunc("GET", "/api/oauth/github/callback", githubOAuthCallback)

+ router.HandleFunc("GET", "/api/auth_user", guard(getAuthUser))

+

+ log.Printf("accepting connections on port %d\n", port)

+ log.Printf("starting server at %s\n", origin.String())

+ addr := fmt.Sprintf(":%d", port)

+ if err = http.ListenAndServe(addr, router); err != nil {

+ log.Fatalf("could not start server: %v\n", err)

+ }

+}

+

+func env(key, fallbackValue string) string {

+ v, ok := os.LookupEnv(key)

+ if !ok {

+ return fallbackValue

+ }

+ return v

+}

+

+func intEnv(key string, fallbackValue int) int {

+ v, ok := os.LookupEnv(key)

+ if !ok {

+ return fallbackValue

+ }

+ i, err := strconv.Atoi(v)

+ if err != nil {

+ return fallbackValue

+ }

+ return i

+}

+```

+

+安装依赖项:

+

+```

+go get -u github.com/gorilla/securecookie

+go get -u github.com/joho/godotenv

+go get -u github.com/knq/jwt

+go get -u github.com/lib/pq

+ge get -u github.com/matoous/go-nanoid

+go get -u github.com/matryer/way

+go get -u golang.org/x/oauth2

+```

+

+我们将会使用 `.env` 文件来保存密钥和其他配置。请创建这个文件,并保证里面至少包含以下内容:

+

+```

+GITHUB_CLIENT_ID=your_github_client_id

+GITHUB_CLIENT_SECRET=your_github_client_secret

+```

+

+我们还要用到的其他环境变量有:

+

+ * `PORT`:服务器运行的端口,默认值是 `3000`。

+ * `ORIGIN`:你的域名,默认值是 `http://localhost:3000/`。我们也可以在这里指定端口。

+ * `DATABASE_URL`:Cockroach 数据库的地址。默认值是 `postgresql://root@127.0.0.1:26257/messenger?sslmode=disable`。

+ * `HASH_KEY`:用于为 cookie 签名的密钥。没错,我们会使用已签名的 cookie 来确保安全。

+ * `JWT_KEY`:用于签署 JSON 网络令牌的密钥。

+

+因为代码中已经设定了默认值,所以你也不用把它们写到 `.env` 文件中。

+

+在读取配置并连接到数据库之后,我们会创建一个 OAuth 配置。我们会使用 `ORIGIN` 信息来构建回调 URL(就和我们在 GitHub 页面上注册的一样)。我们的数据范围设置为 “read:user”。这会允许我们读取公开的用户信息,这里我们只需要他的用户名和头像就够了。然后我们会初始化 cookie 和 JWT 签名器。定义一些端点并启动服务器。

+

+在实现 HTTP 处理程序之前,让我们编写一些函数来发送 HTTP 响应。

+

+```

+func respond(w http.ResponseWriter, v interface{}, statusCode int) {

+ b, err := json.Marshal(v)

+ if err != nil {

+ respondError(w, fmt.Errorf("could not marshal response: %v", err))

+ return

+ }

+ w.Header().Set("Content-Type", "application/json; charset=utf-8")

+ w.WriteHeader(statusCode)

+ w.Write(b)

+}

+

+func respondError(w http.ResponseWriter, err error) {

+ log.Println(err)

+ http.Error(w, http.StatusText(http.StatusInternalServerError), http.StatusInternalServerError)

+}

+```

+

+第一个函数用来发送 JSON,而第二个将错误记录到控制台并返回一个 `500 Internal Server Error` 错误信息。

+

+### OAuth 开始

+

+所以,用户点击写着 “Access with GitHub” 的链接。该链接指向 `/api/oauth/github`,这将会把用户重定向到 github。

+

+```

+func githubOAuthStart(w http.ResponseWriter, r *http.Request) {

+ state, err := gonanoid.Nanoid()

+ if err != nil {

+ respondError(w, fmt.Errorf("could not generte state: %v", err))

+ return

+ }

+

+ stateCookieValue, err := cookieSigner.Encode("state", state)

+ if err != nil {

+ respondError(w, fmt.Errorf("could not encode state cookie: %v", err))

+ return

+ }

+

+ http.SetCookie(w, &http.Cookie{

+ Name: "state",

+ Value: stateCookieValue,

+ Path: "/api/oauth/github",

+ HttpOnly: true,

+ })

+ http.Redirect(w, r, githubOAuthConfig.AuthCodeURL(state), http.StatusTemporaryRedirect)

+}

+```

+

+OAuth2 使用一种机制来防止 CSRF 攻击,因此它需要一个“状态”(`state`)。我们使用 `Nanoid()` 来创建一个随机字符串,并用这个字符串作为状态。我们也把它保存为一个 cookie。

+

+### OAuth 回调

+

+一旦用户授权我们访问他的个人信息,他将会被重定向到这个端点。这个 URL 的查询字符串上将会包含状态(`state`)和授权码(`code`): `/api/oauth/github/callback?state=&code=`。

+

+```

+const jwtLifetime = time.Hour * 24 * 14

+

+type GithubUser struct {

+ ID int `json:"id"`

+ Login string `json:"login"`

+ AvatarURL *string `json:"avatar_url,omitempty"`

+}

+

+type User struct {

+ ID string `json:"id"`

+ Username string `json:"username"`

+ AvatarURL *string `json:"avatarUrl"`

+}

+

+func githubOAuthCallback(w http.ResponseWriter, r *http.Request) {

+ stateCookie, err := r.Cookie("state")

+ if err != nil {

+ http.Error(w, http.StatusText(http.StatusTeapot), http.StatusTeapot)

+ return

+ }

+

+ http.SetCookie(w, &http.Cookie{

+ Name: "state",

+ Value: "",

+ MaxAge: -1,

+ HttpOnly: true,

+ })

+

+ var state string

+ if err = cookieSigner.Decode("state", stateCookie.Value, &state); err != nil {

+ http.Error(w, http.StatusText(http.StatusTeapot), http.StatusTeapot)

+ return

+ }

+

+ q := r.URL.Query()

+

+ if state != q.Get("state") {

+ http.Error(w, http.StatusText(http.StatusTeapot), http.StatusTeapot)

+ return

+ }

+

+ ctx := r.Context()

+

+ t, err := githubOAuthConfig.Exchange(ctx, q.Get("code"))

+ if err != nil {

+ respondError(w, fmt.Errorf("could not fetch github token: %v", err))

+ return

+ }

+

+ client := githubOAuthConfig.Client(ctx, t)

+ resp, err := client.Get("https://api.github.com/user")

+ if err != nil {

+ respondError(w, fmt.Errorf("could not fetch github user: %v", err))

+ return

+ }

+

+ var githubUser GithubUser

+ if err = json.NewDecoder(resp.Body).Decode(&githubUser); err != nil {

+ respondError(w, fmt.Errorf("could not decode github user: %v", err))

+ return

+ }

+ defer resp.Body.Close()

+

+ tx, err := db.BeginTx(ctx, nil)

+ if err != nil {

+ respondError(w, fmt.Errorf("could not begin tx: %v", err))

+ return

+ }

+

+ var user User

+ if err = tx.QueryRowContext(ctx, `

+ SELECT id, username, avatar_url FROM users WHERE github_id = $1

+ `, githubUser.ID).Scan(&user.ID, &user.Username, &user.AvatarURL); err == sql.ErrNoRows {

+ if err = tx.QueryRowContext(ctx, `

+ INSERT INTO users (username, avatar_url, github_id) VALUES ($1, $2, $3)

+ RETURNING id

+ `, githubUser.Login, githubUser.AvatarURL, githubUser.ID).Scan(&user.ID); err != nil {

+ respondError(w, fmt.Errorf("could not insert user: %v", err))

+ return

+ }

+ user.Username = githubUser.Login

+ user.AvatarURL = githubUser.AvatarURL

+ } else if err != nil {

+ respondError(w, fmt.Errorf("could not query user by github ID: %v", err))

+ return

+ }

+

+ if err = tx.Commit(); err != nil {

+ respondError(w, fmt.Errorf("could not commit to finish github oauth: %v", err))

+ return

+ }

+

+ exp := time.Now().Add(jwtLifetime)

+ token, err := jwtSigner.Encode(jwt.Claims{

+ Subject: user.ID,

+ Expiration: json.Number(strconv.FormatInt(exp.Unix(), 10)),

+ })

+ if err != nil {

+ respondError(w, fmt.Errorf("could not create token: %v", err))

+ return

+ }

+

+ expiresAt, _ := exp.MarshalText()

+

+ data := make(url.Values)

+ data.Set("token", string(token))

+ data.Set("expires_at", string(expiresAt))

+

+ http.Redirect(w, r, "/callback?"+data.Encode(), http.StatusTemporaryRedirect)

+}

+```

+

+首先,我们会尝试使用之前保存的状态对 cookie 进行解码。并将其与查询字符串中的状态进行比较。如果它们不匹配,我们会返回一个 `418 I'm teapot`(未知来源)错误。

+

+接着,我们使用授权码生成一个令牌。这个令牌被用于创建 HTTP 客户端来向 GitHub API 发出请求。所以最终我们会向 `https://api.github.com/user` 发送一个 GET 请求。这个端点将会以 JSON 格式向我们提供当前经过身份验证的用户信息。我们将会解码这些内容,一并获取用户的 ID、登录名(用户名)和头像 URL。

+

+然后我们将会尝试在数据库上找到具有该 GitHub ID 的用户。如果没有找到,就使用该数据创建一个新的。

+

+之后,对于新创建的用户,我们会发出一个将用户 ID 作为主题(`Subject`)的 JSON 网络令牌,并使用该令牌重定向到前端,查询字符串中一并包含该令牌的到期日(`Expiration`)。

+

+这一 Web 应用也会被用在其他帖子,但是重定向的链接会是 `/callback?token=&expires_at=`。在那里,我们将会利用 JavaScript 从 URL 中获取令牌和到期日,并通过 `Authorization` 标头中的令牌以 `Bearer token_here` 的形式对 `/api/auth_user` 进行 GET 请求,来获取已认证的身份用户并将其保存到 localStorage。

+

+### Guard 中间件

+

+为了获取当前已经过身份验证的用户,我们设计了 Guard 中间件。这是因为在接下来的文章中,我们会有很多需要进行身份认证的端点,而中间件将会允许我们共享这一功能。

+

+```

+type ContextKey struct {

+ Name string

+}

+

+var keyAuthUserID = ContextKey{"auth_user_id"}

+

+func guard(handler http.HandlerFunc) http.HandlerFunc {

+ return func(w http.ResponseWriter, r *http.Request) {

+ var token string

+ if a := r.Header.Get("Authorization"); strings.HasPrefix(a, "Bearer ") {

+ token = a[7:]

+ } else if t := r.URL.Query().Get("token"); t != "" {

+ token = t

+ } else {

+ http.Error(w, http.StatusText(http.StatusUnauthorized), http.StatusUnauthorized)

+ return

+ }

+

+ var claims jwt.Claims

+ if err := jwtSigner.Decode([]byte(token), &claims); err != nil {

+ http.Error(w, http.StatusText(http.StatusUnauthorized), http.StatusUnauthorized)

+ return

+ }

+

+ ctx := r.Context()

+ ctx = context.WithValue(ctx, keyAuthUserID, claims.Subject)

+

+ handler(w, r.WithContext(ctx))

+ }

+}

+```

+

+首先,我们尝试从 `Authorization` 标头或者是 URL 查询字符串中的 `token` 字段中读取令牌。如果没有找到,我们需要返回 `401 Unauthorized`(未授权)错误。然后我们将会对令牌中的申明进行解码,并使用该主题作为当前已经过身份验证的用户 ID。

+

+现在,我们可以用这一中间件来封装任何需要授权的 `http.handlerFunc`,并且在处理函数的上下文中保有已经过身份验证的用户 ID。

+

+```

+var guarded = guard(func(w http.ResponseWriter, r *http.Request) {

+ authUserID := r.Context().Value(keyAuthUserID).(string)

+})

+```

+

+### 获取认证用户

+

+```

+func getAuthUser(w http.ResponseWriter, r *http.Request) {

+ ctx := r.Context()

+ authUserID := ctx.Value(keyAuthUserID).(string)

+

+ var user User

+ if err := db.QueryRowContext(ctx, `

+ SELECT username, avatar_url FROM users WHERE id = $1

+ `, authUserID).Scan(&user.Username, &user.AvatarURL); err == sql.ErrNoRows {

+ http.Error(w, http.StatusText(http.StatusTeapot), http.StatusTeapot)

+ return

+ } else if err != nil {

+ respondError(w, fmt.Errorf("could not query auth user: %v", err))

+ return

+ }

+

+ user.ID = authUserID

+

+ respond(w, user, http.StatusOK)

+}

+```

+

+我们使用 Guard 中间件来获取当前经过身份认证的用户 ID 并查询数据库。

+

+这一部分涵盖了后端的 OAuth 流程。在下一篇帖子中,我们将会看到如何开始与其他用户的对话。

+

+- [源代码][3]

+

+--------------------------------------------------------------------------------

+

+via: https://nicolasparada.netlify.com/posts/go-messenger-oauth/

+

+作者:[Nicolás Parada][a]

+选题:[lujun9972][b]

+译者:[PsiACE](https://github.com/PsiACE)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://nicolasparada.netlify.com/

+[b]: https://github.com/lujun9972

+[1]: https://nicolasparada.netlify.com/posts/go-messenger-schema/

+[2]: https://github.com/settings/applications/new

+[3]: https://github.com/nicolasparada/go-messenger-demo

diff --git a/published/20180906 What a shell dotfile can do for you.md b/published/201910/20180906 What a shell dotfile can do for you.md

similarity index 100%

rename from published/20180906 What a shell dotfile can do for you.md

rename to published/201910/20180906 What a shell dotfile can do for you.md

diff --git a/published/20190214 The Earliest Linux Distros- Before Mainstream Distros Became So Popular.md b/published/201910/20190214 The Earliest Linux Distros- Before Mainstream Distros Became So Popular.md

similarity index 100%

rename from published/20190214 The Earliest Linux Distros- Before Mainstream Distros Became So Popular.md

rename to published/201910/20190214 The Earliest Linux Distros- Before Mainstream Distros Became So Popular.md

diff --git a/published/20190301 Guide to Install VMware Tools on Linux.md b/published/201910/20190301 Guide to Install VMware Tools on Linux.md

similarity index 100%

rename from published/20190301 Guide to Install VMware Tools on Linux.md

rename to published/201910/20190301 Guide to Install VMware Tools on Linux.md

diff --git a/published/20190320 Move your dotfiles to version control.md b/published/201910/20190320 Move your dotfiles to version control.md

similarity index 100%

rename from published/20190320 Move your dotfiles to version control.md

rename to published/201910/20190320 Move your dotfiles to version control.md

diff --git a/published/20190404 How writers can get work done better with Git.md b/published/201910/20190404 How writers can get work done better with Git.md

similarity index 100%

rename from published/20190404 How writers can get work done better with Git.md

rename to published/201910/20190404 How writers can get work done better with Git.md

diff --git a/published/20190513 Blockchain 2.0 - Introduction To Hyperledger Fabric -Part 10.md b/published/201910/20190513 Blockchain 2.0 - Introduction To Hyperledger Fabric -Part 10.md

similarity index 100%

rename from published/20190513 Blockchain 2.0 - Introduction To Hyperledger Fabric -Part 10.md

rename to published/201910/20190513 Blockchain 2.0 - Introduction To Hyperledger Fabric -Part 10.md

diff --git a/published/20190614 What is a Java constructor.md b/published/201910/20190614 What is a Java constructor.md

similarity index 100%

rename from published/20190614 What is a Java constructor.md

rename to published/201910/20190614 What is a Java constructor.md

diff --git a/published/20190627 RPM packages explained.md b/published/201910/20190627 RPM packages explained.md

similarity index 100%

rename from published/20190627 RPM packages explained.md

rename to published/201910/20190627 RPM packages explained.md

diff --git a/published/20190701 Learn how to Record and Replay Linux Terminal Sessions Activity.md b/published/201910/20190701 Learn how to Record and Replay Linux Terminal Sessions Activity.md

similarity index 100%

rename from published/20190701 Learn how to Record and Replay Linux Terminal Sessions Activity.md

rename to published/201910/20190701 Learn how to Record and Replay Linux Terminal Sessions Activity.md

diff --git a/published/20190719 Buying a Linux-ready laptop.md b/published/201910/20190719 Buying a Linux-ready laptop.md

similarity index 100%

rename from published/20190719 Buying a Linux-ready laptop.md

rename to published/201910/20190719 Buying a Linux-ready laptop.md

diff --git a/published/20190805 How to Install and Configure PostgreSQL on Ubuntu.md b/published/201910/20190805 How to Install and Configure PostgreSQL on Ubuntu.md

similarity index 100%

rename from published/20190805 How to Install and Configure PostgreSQL on Ubuntu.md

rename to published/201910/20190805 How to Install and Configure PostgreSQL on Ubuntu.md

diff --git a/published/20190809 Mutation testing is the evolution of TDD.md b/published/201910/20190809 Mutation testing is the evolution of TDD.md

similarity index 100%

rename from published/20190809 Mutation testing is the evolution of TDD.md

rename to published/201910/20190809 Mutation testing is the evolution of TDD.md

diff --git a/published/20190822 A Raspberry Pi Based Open Source Tablet is in Making and it-s Called CutiePi.md b/published/201910/20190822 A Raspberry Pi Based Open Source Tablet is in Making and it-s Called CutiePi.md

similarity index 100%

rename from published/20190822 A Raspberry Pi Based Open Source Tablet is in Making and it-s Called CutiePi.md

rename to published/201910/20190822 A Raspberry Pi Based Open Source Tablet is in Making and it-s Called CutiePi.md

diff --git a/published/20190823 The lifecycle of Linux kernel testing.md b/published/201910/20190823 The lifecycle of Linux kernel testing.md

similarity index 100%

rename from published/20190823 The lifecycle of Linux kernel testing.md

rename to published/201910/20190823 The lifecycle of Linux kernel testing.md

diff --git a/published/20190824 How to compile a Linux kernel in the 21st century.md b/published/201910/20190824 How to compile a Linux kernel in the 21st century.md

similarity index 100%

rename from published/20190824 How to compile a Linux kernel in the 21st century.md

rename to published/201910/20190824 How to compile a Linux kernel in the 21st century.md

diff --git a/published/20190826 Introduction to the Linux chown command.md b/published/201910/20190826 Introduction to the Linux chown command.md

similarity index 100%

rename from published/20190826 Introduction to the Linux chown command.md

rename to published/201910/20190826 Introduction to the Linux chown command.md

diff --git a/published/20190830 How to Install Linux on Intel NUC.md b/published/201910/20190830 How to Install Linux on Intel NUC.md

similarity index 100%

rename from published/20190830 How to Install Linux on Intel NUC.md

rename to published/201910/20190830 How to Install Linux on Intel NUC.md

diff --git a/published/20190901 Best Linux Distributions For Everyone in 2019.md b/published/201910/20190901 Best Linux Distributions For Everyone in 2019.md

similarity index 100%

rename from published/20190901 Best Linux Distributions For Everyone in 2019.md

rename to published/201910/20190901 Best Linux Distributions For Everyone in 2019.md

diff --git a/published/20190911 4 open source cloud security tools.md b/published/201910/20190911 4 open source cloud security tools.md

similarity index 100%

rename from published/20190911 4 open source cloud security tools.md

rename to published/201910/20190911 4 open source cloud security tools.md

diff --git a/published/20190916 Copying large files with Rsync, and some misconceptions.md b/published/201910/20190916 Copying large files with Rsync, and some misconceptions.md

similarity index 100%

rename from published/20190916 Copying large files with Rsync, and some misconceptions.md

rename to published/201910/20190916 Copying large files with Rsync, and some misconceptions.md

diff --git a/published/20190916 Linux commands to display your hardware information.md b/published/201910/20190916 Linux commands to display your hardware information.md

similarity index 100%

rename from published/20190916 Linux commands to display your hardware information.md

rename to published/201910/20190916 Linux commands to display your hardware information.md

diff --git a/published/20190918 Adding themes and plugins to Zsh.md b/published/201910/20190918 Adding themes and plugins to Zsh.md

similarity index 100%

rename from published/20190918 Adding themes and plugins to Zsh.md

rename to published/201910/20190918 Adding themes and plugins to Zsh.md

diff --git a/published/20190920 Hone advanced Bash skills by building Minesweeper.md b/published/201910/20190920 Hone advanced Bash skills by building Minesweeper.md

similarity index 100%

rename from published/20190920 Hone advanced Bash skills by building Minesweeper.md

rename to published/201910/20190920 Hone advanced Bash skills by building Minesweeper.md

diff --git a/published/20190923 Installation Guide of Manjaro 18.1 (KDE Edition) with Screenshots.md b/published/201910/20190923 Installation Guide of Manjaro 18.1 (KDE Edition) with Screenshots.md

similarity index 100%

rename from published/20190923 Installation Guide of Manjaro 18.1 (KDE Edition) with Screenshots.md

rename to published/201910/20190923 Installation Guide of Manjaro 18.1 (KDE Edition) with Screenshots.md

diff --git a/published/20190923 Mutation testing by example- How to leverage failure.md b/published/201910/20190923 Mutation testing by example- How to leverage failure.md

similarity index 100%

rename from published/20190923 Mutation testing by example- How to leverage failure.md

rename to published/201910/20190923 Mutation testing by example- How to leverage failure.md

diff --git a/published/20190924 Fedora and CentOS Stream.md b/published/201910/20190924 Fedora and CentOS Stream.md

similarity index 100%

rename from published/20190924 Fedora and CentOS Stream.md

rename to published/201910/20190924 Fedora and CentOS Stream.md

diff --git a/published/20190924 How DevOps professionals can become security champions.md b/published/201910/20190924 How DevOps professionals can become security champions.md

similarity index 100%

rename from published/20190924 How DevOps professionals can become security champions.md

rename to published/201910/20190924 How DevOps professionals can become security champions.md

diff --git a/published/20190924 Java still relevant, Linux desktop, and more industry trends.md b/published/201910/20190924 Java still relevant, Linux desktop, and more industry trends.md

similarity index 100%

rename from published/20190924 Java still relevant, Linux desktop, and more industry trends.md

rename to published/201910/20190924 Java still relevant, Linux desktop, and more industry trends.md

diff --git a/published/20190924 Mutation testing by example- Failure as experimentation.md b/published/201910/20190924 Mutation testing by example- Failure as experimentation.md

similarity index 100%

rename from published/20190924 Mutation testing by example- Failure as experimentation.md

rename to published/201910/20190924 Mutation testing by example- Failure as experimentation.md

diff --git a/published/20190925 3 quick tips for working with Linux files.md b/published/201910/20190925 3 quick tips for working with Linux files.md

similarity index 100%

rename from published/20190925 3 quick tips for working with Linux files.md

rename to published/201910/20190925 3 quick tips for working with Linux files.md

diff --git a/published/20190925 Essential Accessories for Intel NUC Mini PC.md b/published/201910/20190925 Essential Accessories for Intel NUC Mini PC.md

similarity index 100%

rename from published/20190925 Essential Accessories for Intel NUC Mini PC.md

rename to published/201910/20190925 Essential Accessories for Intel NUC Mini PC.md

diff --git a/published/20190925 Mirror your Android screen on your computer with Guiscrcpy.md b/published/201910/20190925 Mirror your Android screen on your computer with Guiscrcpy.md

similarity index 100%

rename from published/20190925 Mirror your Android screen on your computer with Guiscrcpy.md

rename to published/201910/20190925 Mirror your Android screen on your computer with Guiscrcpy.md

diff --git a/published/20190926 How to Execute Commands on Remote Linux System over SSH.md b/published/201910/20190926 How to Execute Commands on Remote Linux System over SSH.md

similarity index 100%

rename from published/20190926 How to Execute Commands on Remote Linux System over SSH.md

rename to published/201910/20190926 How to Execute Commands on Remote Linux System over SSH.md

diff --git a/published/20190926 You Can Now Use OneDrive in Linux Natively Thanks to Insync.md b/published/201910/20190926 You Can Now Use OneDrive in Linux Natively Thanks to Insync.md

similarity index 100%

rename from published/20190926 You Can Now Use OneDrive in Linux Natively Thanks to Insync.md

rename to published/201910/20190926 You Can Now Use OneDrive in Linux Natively Thanks to Insync.md

diff --git a/published/20190927 CentOS 8 Installation Guide with Screenshots.md b/published/201910/20190927 CentOS 8 Installation Guide with Screenshots.md

similarity index 100%

rename from published/20190927 CentOS 8 Installation Guide with Screenshots.md

rename to published/201910/20190927 CentOS 8 Installation Guide with Screenshots.md

diff --git a/published/20190929 Bash Script to Generate System Uptime Reports on Linux.md b/published/201910/20190929 Bash Script to Generate System Uptime Reports on Linux.md

similarity index 100%

rename from published/20190929 Bash Script to Generate System Uptime Reports on Linux.md

rename to published/201910/20190929 Bash Script to Generate System Uptime Reports on Linux.md

diff --git a/published/20190929 How to Install and Use Cockpit on CentOS 8 - RHEL 8.md b/published/201910/20190929 How to Install and Use Cockpit on CentOS 8 - RHEL 8.md

similarity index 100%

rename from published/20190929 How to Install and Use Cockpit on CentOS 8 - RHEL 8.md

rename to published/201910/20190929 How to Install and Use Cockpit on CentOS 8 - RHEL 8.md

diff --git a/published/20191002 3 command line games for learning Bash the fun way.md b/published/201910/20191002 3 command line games for learning Bash the fun way.md

similarity index 100%

rename from published/20191002 3 command line games for learning Bash the fun way.md

rename to published/201910/20191002 3 command line games for learning Bash the fun way.md

diff --git a/published/20191002 7 Bash history shortcuts you will actually use.md b/published/201910/20191002 7 Bash history shortcuts you will actually use.md

similarity index 100%

rename from published/20191002 7 Bash history shortcuts you will actually use.md

rename to published/201910/20191002 7 Bash history shortcuts you will actually use.md

diff --git a/published/20191003 How to Run the Top Command in Batch Mode.md b/published/201910/20191003 How to Run the Top Command in Batch Mode.md

similarity index 100%

rename from published/20191003 How to Run the Top Command in Batch Mode.md

rename to published/201910/20191003 How to Run the Top Command in Batch Mode.md

diff --git a/published/20191004 9 essential GNU binutils tools.md b/published/201910/20191004 9 essential GNU binutils tools.md

similarity index 100%

rename from published/20191004 9 essential GNU binutils tools.md

rename to published/201910/20191004 9 essential GNU binutils tools.md

diff --git a/published/20191004 All That You Can Do with Google Analytics, and More.md b/published/201910/20191004 All That You Can Do with Google Analytics, and More.md

similarity index 100%

rename from published/20191004 All That You Can Do with Google Analytics, and More.md

rename to published/201910/20191004 All That You Can Do with Google Analytics, and More.md

diff --git a/published/20191004 In Fedora 31, 32-bit i686 is 86ed.md b/published/201910/20191004 In Fedora 31, 32-bit i686 is 86ed.md

similarity index 100%

rename from published/20191004 In Fedora 31, 32-bit i686 is 86ed.md

rename to published/201910/20191004 In Fedora 31, 32-bit i686 is 86ed.md

diff --git a/published/201910/20191005 Use GameHub to Manage All Your Linux Games in One Place.md b/published/201910/20191005 Use GameHub to Manage All Your Linux Games in One Place.md

new file mode 100644

index 0000000000..5c4de853c5

--- /dev/null

+++ b/published/201910/20191005 Use GameHub to Manage All Your Linux Games in One Place.md

@@ -0,0 +1,161 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wenwensnow)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11504-1.html)

+[#]: subject: (Use GameHub to Manage All Your Linux Games in One Place)

+[#]: via: (https://itsfoss.com/gamehub/)

+[#]: author: (Ankush Das https://itsfoss.com/author/ankush/)

+

+用 GameHub 集中管理你 Linux 上的所有游戏

+======

+

+你在 Linux 上是怎么[玩游戏的呢][1]? 让我猜猜,要不就是从软件中心直接安装,要不就选 Steam、GOG、Humble Bundle 等平台,对吧?但是,如果你有多个游戏启动器和客户端,又要如何管理呢?好吧,对我来说这简直令人头疼 —— 这也是我发现 [GameHub][2] 这个应用之后,感到非常高兴的原因。

+

+GameHub 是为 Linux 发行版设计的一个桌面应用,它能让你“集中管理你的所有游戏”。这听起来很有趣,是不是?下面让我来具体说明一下。

+

+![][3]

+

+### 集中管理不同平台 Linux 游戏的 GameHub

+

+让我们看看,对玩家来说,让 GameHub 成为一个[不可或缺的 Linux 应用][4]的功能,都有哪些。

+

+#### Steam、GOG & Humble Bundle 支持

+

+![][5]

+

+它支持 Steam、[GOG][6] 和 [Humble Bundle][7] 账户整合。你可以登录你的 GameHub 账号,从而在你的库管理器中管理所有游戏。

+

+对我来说,我在 Steam 上有很多游戏,Humble Bundle 上也有一些。我不能确保它支持所有平台,但可以确信的是,主流平台游戏是没有问题的。

+

+#### 支持原生游戏

+

+![][8]

+

+[有很多网站专门推荐 Linux 游戏,并支持下载][9]。你可以通过下载安装包,或者添加可执行文件,从而管理原生游戏。

+

+可惜的是,现在无法在 GameHub 内搜索 Linux 游戏。如上图所示,你需要分别下载游戏,随后再将其添加到 GameHub 中。

+

+#### 模拟器支持

+

+用模拟器,你可以在 [Linux 上玩复古游戏][10]。正如上图所示,你可以添加模拟器(并导入模拟的镜像)。

+

+你可以在 [RetroArch][11] 查看已有的模拟器,但也能根据需求添加自定义模拟器。

+

+#### 用户界面

+

+![Gamehub 界面选项][12]

+

+当然,用户体验很重要。因此,探究下用户界面都有些什么,也很有必要。

+

+我个人觉得,这一应用很容易使用,并且黑色主题是一个加分项。

+

+#### 手柄支持

+

+如果你习惯在 Linux 系统上用手柄玩游戏 —— 你可以轻松在设置里添加,启用或禁用它。

+

+#### 多个数据提供商

+

+因为它需要获取你的游戏信息(或元数据),也意味着它需要一个数据源。你可以看到下图列出的所有数据源。

+

+![Data Providers Gamehub][13]

+

+这里你什么也不用做 —— 但如果你使用的是 steam 之外的其他平台,你需要为 [IDGB 生成一个 API 密钥][14]。

+

+我建议只有出现 GameHub 中的提示/通知,或有些游戏在 GameHub 上没有任何描述/图片/状态时,再这么做。

+

+#### 兼容性选项

+

+![][15]

+

+你有不支持在 Linux 上运行的游戏吗?

+

+不用担心,GameHub 上提供了多种兼容工具,如 Wine/Proton,你可以利用它们来玩游戏。

+

+我们无法确定具体哪个兼容工具适用于你 —— 所以你需要自己亲自测试。然而,对许多游戏玩家来说,这的确是个很有用的功能。

+

+### GameHub: 如何安装它呢?

+

+![][18]

+

+首先,你可以直接在软件中心或者应用商店内搜索。 它在 “Pop!_Shop” 之下。所以,它在绝大多数官方源中都能找到。

+

+如果你在这些地方都没有找到,你可以手动添加源,并从终端上安装它,你需要输入以下命令:

+

+```

+sudo add-apt-repository ppa:tkashkin/gamehub

+sudo apt update

+sudo apt install com.github.tkashkin.gamehub

+```

+

+如果你遇到了 “add-apt-repository command not found” 这个错误,你可以看看,[add-apt-repository not found error.][19]这篇文章,它能帮你解决这一问题。

+

+这里还提供 AppImage 和 FlatPak 版本。 在[官网][2] 上,你可以针对找到其他 Linux 发行版的安装手册。

+

+同时,你还可以从它的 [GitHub 页面][20]下载之前版本的安装包.

+

+[GameHub][2]

+

+### 如何在 GameHub 上管理你的游戏?

+

+在启动程序后,你可以将自己的 Steam/GOG/Humble Bundle 账号添加进来。

+

+对于 Steam,你需要在 Linux 发行版上安装 Steam 客户端。一旦安装完成,你可以轻松将账号中的游戏导入 GameHub。

+

+![][16]

+

+对于 GOG & Humble Bundle,登录后,就能直接在 GameHub 上管理游戏了。

+

+如果你想添加模拟器或者本地安装文件,点击窗口右上角的 “+” 按钮进行添加。

+

+### 如何安装游戏?

+

+对于 Steam 游戏,它会自动启动 Steam 客户端,从而下载/安装游戏(我希望之后安装游戏,可以不用启动 Steam!)

+

+![][17]

+

+但对于 GOG/Humble Bundle,登录后就能直接、下载安装游戏。必要的话,对于那些不支持在 Linux 上运行的游戏,你可以使用兼容工具。

+

+无论是模拟器游戏,还是本地游戏,只需添加安装包或导入模拟器镜像就可以了。这里没什么其他步骤要做。

+

+### 注意

+

+GameHub 是相当灵活的一个集中游戏管理应用。 用户界面和选项设置也相当直观。

+

+你之前是否使用过这一应用呢?如果有,请在评论里写下你的感受。

+

+而且,如果你想尝试一些与此功能相似的工具/应用,请务必告诉我们。

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/gamehub/

+

+作者:[Ankush Das][a]

+选题:[lujun9972][b]

+译者:[wenwensnow](https://github.com/wenwensnow)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://itsfoss.com/author/ankush/

+[b]: https://github.com/lujun9972

+[1]: https://itsfoss.com/linux-gaming-guide/

+[2]: https://tkashkin.tk/projects/gamehub/

+[3]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-home-1.png?ssl=1

+[4]: https://itsfoss.com/essential-linux-applications/

+[5]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-platform-support.png?ssl=1

+[6]: https://www.gog.com/

+[7]: https://www.humblebundle.com/monthly?partner=itsfoss

+[8]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-native-installers.png?ssl=1

+[9]: https://itsfoss.com/download-linux-games/

+[10]: https://itsfoss.com/play-retro-games-linux/

+[11]: https://www.retroarch.com/

+[12]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-appearance.png?ssl=1

+[13]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/10/data-providers-gamehub.png?ssl=1

+[14]: https://www.igdb.com/api

+[15]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-windows-game.png?fit=800%2C569&ssl=1

+[16]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-library.png?ssl=1

+[17]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-compatibility-layer.png?ssl=1

+[18]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/10/gamehub-install.jpg?ssl=1

+[19]: https://itsfoss.com/add-apt-repository-command-not-found/

+[20]: https://github.com/tkashkin/GameHub/releases

diff --git a/published/20191006 How to Install and Configure VNC Server on Centos 8 - RHEL 8.md b/published/201910/20191006 How to Install and Configure VNC Server on Centos 8 - RHEL 8.md

similarity index 100%

rename from published/20191006 How to Install and Configure VNC Server on Centos 8 - RHEL 8.md

rename to published/201910/20191006 How to Install and Configure VNC Server on Centos 8 - RHEL 8.md

diff --git a/published/20191007 IceWM - A really cool desktop.md b/published/201910/20191007 IceWM - A really cool desktop.md

similarity index 100%

rename from published/20191007 IceWM - A really cool desktop.md

rename to published/201910/20191007 IceWM - A really cool desktop.md

diff --git a/published/20191008 7 steps to securing your Linux server.md b/published/201910/20191008 7 steps to securing your Linux server.md

similarity index 100%

rename from published/20191008 7 steps to securing your Linux server.md

rename to published/201910/20191008 7 steps to securing your Linux server.md

diff --git a/published/20191008 How to manage Go projects with GVM.md b/published/201910/20191008 How to manage Go projects with GVM.md

similarity index 100%

rename from published/20191008 How to manage Go projects with GVM.md

rename to published/201910/20191008 How to manage Go projects with GVM.md

diff --git a/published/20191009 Command line quick tips- Locate and process files with find and xargs.md b/published/201910/20191009 Command line quick tips- Locate and process files with find and xargs.md

similarity index 100%

rename from published/20191009 Command line quick tips- Locate and process files with find and xargs.md

rename to published/201910/20191009 Command line quick tips- Locate and process files with find and xargs.md

diff --git a/published/20191009 Top 10 open source video players for Linux.md b/published/201910/20191009 Top 10 open source video players for Linux.md

similarity index 100%

rename from published/20191009 Top 10 open source video players for Linux.md

rename to published/201910/20191009 Top 10 open source video players for Linux.md

diff --git a/published/20191010 DevSecOps pipelines and tools- What you need to know.md b/published/201910/20191010 DevSecOps pipelines and tools- What you need to know.md

similarity index 100%

rename from published/20191010 DevSecOps pipelines and tools- What you need to know.md

rename to published/201910/20191010 DevSecOps pipelines and tools- What you need to know.md

diff --git a/published/20191010 Viewing files and processes as trees on Linux.md b/published/201910/20191010 Viewing files and processes as trees on Linux.md

similarity index 100%

rename from published/20191010 Viewing files and processes as trees on Linux.md

rename to published/201910/20191010 Viewing files and processes as trees on Linux.md

diff --git a/published/20191011 How to Unzip a Zip File in Linux -Beginner-s Tutorial.md b/published/201910/20191011 How to Unzip a Zip File in Linux -Beginner-s Tutorial.md

similarity index 100%

rename from published/20191011 How to Unzip a Zip File in Linux -Beginner-s Tutorial.md

rename to published/201910/20191011 How to Unzip a Zip File in Linux -Beginner-s Tutorial.md

diff --git a/published/201910/20191011 How to use IoT devices to keep children safe.md b/published/201910/20191011 How to use IoT devices to keep children safe.md

new file mode 100644

index 0000000000..bf05a950f1

--- /dev/null

+++ b/published/201910/20191011 How to use IoT devices to keep children safe.md

@@ -0,0 +1,68 @@

+[#]: collector: (lujun9972)

+[#]: translator: (Morisun029)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11506-1.html)

+[#]: subject: (How to use IoT devices to keep children safe?)

+[#]: via: (https://opensourceforu.com/2019/10/how-to-use-iot-devices-to-keep-children-safe/)

+[#]: author: (Andrew Carroll https://opensourceforu.com/author/andrew-carroll/)

+

+如何使用物联网设备来确保儿童安全?

+======

+

+![][1]

+

+IoT (物联网)设备正在迅速改变我们的生活。这些设备无处不在,从我们的家庭到其它行业。根据一些预测数据,到 2020 年,将会有 100 亿个 IoT 设备。到 2025 年,该数量将增长到 220 亿。目前,物联网已经在很多领域得到了应用,包括智能家居、工业生产过程、农业甚至医疗保健领域。伴随着如此广泛的应用,物联网显然已经成为近年来的热门话题之一。

+

+多种因素促成了物联网设备在多个学科的爆炸式增长。这其中包括低成本处理器和无线连接的的可用性,以及开源平台的信息交流推动了物联网领域的创新。与传统的应用程序开发相比,物联网设备的开发成指数级增长,因为它的资源是开源的。

+

+在解释如何使用物联网设备来保护儿童之前,必须对物联网技术有基本的了解。

+

+### IoT 设备是什么?

+

+IoT 设备是指那些在没有人类参与的情况下彼此之间可以通信的设备。因此,许多专家并不将智能手机和计算机视为物联网设备。此外,物联网设备必须能够收集数据并且能将收集到的数据传送到其他设备或云端进行处理。

+

+然而,在某些领域中,我们需要探索物联网的潜力。儿童往往是脆弱的,他们很容易成为犯罪分子和其他蓄意伤害者的目标。无论在物理世界还是数字世界中,儿童都很容易面临犯罪的威胁。因为父母不能始终亲自到场保护孩子;这就是为什么需要监视工具了。

+

+除了适用于儿童的可穿戴设备外,还有许多父母监视应用程序,例如 Xnspy,可实时监控儿童并提供信息的实时更新。这些工具可确保儿童安全。可穿戴设备确保儿童身体上的安全性,而家长监控应用可确保儿童的上网安全。

+

+由于越来越多的孩子花费时间在智能手机上,毫无意外地,他们也就成为诈骗分子的主要目标。此外,由于恋童癖、网络自夸和其他犯罪在网络上的盛行,儿童也有可能成为网络欺凌的目标。

+

+这些解决方案够吗?我们需要找到物联网解决方案,以确保孩子们在网上和线下的安全。在当代,我们如何确保孩子的安全?我们需要提出创新的解决方案。 物联网可以帮助保护孩子在学校和家里的安全。

+

+### 物联网的潜力

+

+物联网设备提供的好处很多。举例来说,父母可以远程监控自己的孩子,而又不会显得太霸道。因此,儿童在拥有安全环境的同时也会有空间和自由让自己变得独立。

+

+而且,父母也不必在为孩子的安全而担忧。物联网设备可以提供 7x24 小时的信息更新。像 Xnspy 之类的监视应用程序在提供有关孩子的智能手机活动信息方面更进了一步。随着物联网设备变得越来越复杂,拥有更长使用寿命的电池只是一个时间问题。诸如位置跟踪器之类的物联网设备可以提供有关孩子下落的准确详细信息,所以父母不必担心。

+

+虽然可穿戴设备已经非常好了,但在确保儿童安全方面,这些通常还远远不够。因此,要为儿童提供安全的环境,我们还需要其他方法。许多事件表明,儿童在学校比其他任何公共场所都容易受到攻击。因此,学校需要采取安全措施,以确保儿童和教师的安全。在这一点上,物联网设备可用于检测潜在威胁并采取必要的措施来防止攻击。威胁检测系统包括摄像头。系统一旦检测到威胁,便可以通知当局,如一些执法机构和医院。智能锁等设备可用于封锁学校(包括教室),来保护儿童。除此之外,还可以告知父母其孩子的安全,并立即收到有关威胁的警报。这将需要实施无线技术,例如 Wi-Fi 和传感器。因此,学校需要制定专门用于提供教室安全性的预算。

+

+智能家居实现拍手关灯,也可以让你的家庭助手帮你关灯。同样,物联网设备也可用在屋内来保护儿童。在家里,物联网设备(例如摄像头)为父母在照顾孩子时提供 100% 的可见性。当父母不在家里时,可以使用摄像头和其他传感器检测是否发生了可疑活动。其他设备(例如连接到这些传感器的智能锁)可以锁门和窗,以确保孩子们的安全。

+

+同样,可以引入许多物联网解决方案来确保孩子的安全。

+

+### 有多好就有多坏

+

+物联网设备中的传感器会创建大量数据。数据的安全性是至关重要的一个因素。收集的有关孩子的数据如果落入不法分子手中会存在危险。因此,需要采取预防措施。IoT 设备中泄露的任何数据都可用于确定行为模式。因此,必须对提供不违反用户隐私的安全物联网解决方案投入资金。

+

+IoT 设备通常连接到 Wi-Fi,用于设备之间传输数据。未加密数据的不安全网络会带来某些风险。这样的网络很容易被窃听。黑客可以使用此类网点来入侵系统。他们还可以将恶意软件引入系统,从而使系统变得脆弱、易受攻击。此外,对设备和公共网络(例如学校的网络)的网络攻击可能导致数据泄露和私有数据盗用。 因此,在实施用于保护儿童的物联网解决方案时,保护网络和物联网设备的总体计划必须生效。

+

+物联网设备保护儿童在学校和家里的安全的潜力尚未发现有什么创新。我们需要付出更多努力来保护连接 IoT 设备的网络安全。此外,物联网设备生成的数据可能落入不法分子手中,从而造成更多麻烦。因此,这是物联网安全至关重要的一个领域。

+

+

+--------------------------------------------------------------------------------

+

+via: https://opensourceforu.com/2019/10/how-to-use-iot-devices-to-keep-children-safe/

+

+作者:[Andrew Carroll][a]

+选题:[lujun9972][b]

+译者:[Morisun029](https://github.com/Morisun029)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://opensourceforu.com/author/andrew-carroll/

+[b]: https://github.com/lujun9972

+[1]: https://i0.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Visual-Internet-of-things_EB-May18.jpg?resize=696%2C507&ssl=1 (Visual Internet of things_EB May18)

+[2]: https://i0.wp.com/opensourceforu.com/wp-content/uploads/2019/10/Visual-Internet-of-things_EB-May18.jpg?fit=900%2C656&ssl=1

diff --git a/published/201910/20191013 Object-Oriented Programming and Essential State.md b/published/201910/20191013 Object-Oriented Programming and Essential State.md

new file mode 100644

index 0000000000..2847253e32

--- /dev/null

+++ b/published/201910/20191013 Object-Oriented Programming and Essential State.md

@@ -0,0 +1,98 @@

+[#]: collector: (lujun9972)

+[#]: translator: (geekpi)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11519-1.html)

+[#]: subject: (Object-Oriented Programming and Essential State)

+[#]: via: (https://theartofmachinery.com/2019/10/13/oop_and_essential_state.html)

+[#]: author: (Simon Arneaud https://theartofmachinery.com)

+

+面向对象编程和根本状态

+======

+

+

+

+早在 2015 年,Brian Will 撰写了一篇有挑衅性的博客:[面向对象编程:一个灾难故事][1]。他随后发布了一个名为[面向对象编程很糟糕][2]的视频,该视频更加详细。我建议你花些时间观看视频,下面是我的一段总结:

+

+> OOP 的柏拉图式理想是一堆相互解耦的对象,它们彼此之间发送无状态消息。没有人真的像这样制作软件,Brian 指出这甚至没有意义:对象需要知道向哪个对象发送消息,这意味着它们需要相互引用。该视频大部分讲述的是这样一个痛点:人们试图将对象耦合以实现控制流,同时假装它们是通过设计解耦的。

+

+总的来说,他的想法与我自己的 OOP 经验产生了共鸣:对象没有问题,但是我一直不满意的是*面向*对象建模程序控制流,并且试图使代码“正确地”面向对象似乎总是在创建不必要的复杂性。

+

+有一件事我认为他无法完全解释。他直截了当地说“封装没有作用”,但在脚注后面加上“在细粒度的代码级别”,并继续承认对象有时可以奏效,并且在库和文件级别封装是可以的。但是他没有确切解释为什么有时会奏效,有时却没有奏效,以及如何和在何处划清界限。有人可能会说这使他的 “OOP 不好”的说法有缺陷,但是我认为他的观点是正确的,并且可以在根本状态和偶发状态之间划清界限。

+

+如果你以前从未听说过“根本”和“偶发”这两个术语的使用,那么你应该阅读 Fred Brooks 的经典文章《[没有银弹][3]》。(顺便说一句,他写了许多很棒的有关构建软件系统的文章。)我以前曾写过[关于根本和偶发的复杂性的文章][4],这里有一个简短的摘要:软件是复杂的。部分原因是因为我们希望软件能够解决混乱的现实世界问题,因此我们将其称为“根本复杂性”。“偶发复杂性”是所有其它的复杂性,因为我们正尝试使用硅和金属来解决与硅和金属无关的问题。例如,对于大多数程序而言,用于内存管理或在内存与磁盘之间传输数据或解析文本格式的代码都是“偶发的复杂性”。

+

+假设你正在构建一个支持多个频道的聊天应用。消息可以随时到达任何频道。有些频道特别有趣,当有新消息传入时,用户希望得到通知。而其他频道静音:消息被存储,但用户不会受到打扰。你需要跟踪每个频道的用户首选设置。

+

+一种实现方法是在频道和频道设置之间使用映射(也称为哈希表、字典或关联数组)。注意,映射是 Brian Will 所说的可以用作对象的抽象数据类型(ADT)。

+

+如果我们有一个调试器并查看内存中的映射对象,我们将看到什么?我们当然会找到频道 ID 和频道设置数据(或至少指向它们的指针)。但是我们还会找到其它数据。如果该映射是使用红黑树实现的,我们将看到带有红/黑标签和指向其他节点的指针的树节点对象。与频道相关的数据是根本状态,而树节点是偶发状态。不过,请注意以下几点:该映射有效地封装了它的偶发状态 —— 你可以用 AVL 树实现的另一个映射替换该映射,并且你的聊天程序仍然可以使用。另一方面,映射没有封装根本状态(仅使用 `get()` 和 `set()` 方法访问数据并不是封装)。事实上,映射与根本状态是尽可能不可知的,你可以使用基本相同的映射数据结构来存储与频道或通知无关的其他映射。

+

+这就是映射 ADT 如此成功的原因:它封装了偶发状态,并与根本状态解耦。如果你思考一下,Brian 用封装描述的问题就是尝试封装根本状态。其他描述的好处是封装偶发状态的好处。

+

+要使整个软件系统都达到这一理想状况相当困难,但扩展开来,我认为它看起来像这样:

+

+* 没有全局的可变状态

+* 封装了偶发状态(在对象或模块或以其他任何形式)

+* 无状态偶发复杂性封装在单独函数中,与数据解耦

+* 使用诸如依赖注入之类的技巧使输入和输出变得明确

+* 组件可由易于识别的位置完全拥有和控制

+

+其中有些违反了我很久以来的直觉。例如,如果你有一个数据库查询函数,如果数据库连接处理隐藏在该函数内部,并且唯一的参数是查询参数,那么接口会看起来会更简单。但是,当你使用这样的函数构建软件系统时,协调数据库的使用实际上变得更加复杂。组件不仅以自己的方式做事,而且还试图将自己所做的事情隐藏为“实现细节”。数据库查询需要数据库连接这一事实从来都不是实现细节。如果无法隐藏某些内容,那么显露它是更合理的。

+

+我对将面向对象编程和函数式编程放在对立的两极非常警惕,但我认为从函数式编程进入面向对象编程的另一极端是很有趣的:OOP 试图封装事物,包括无法封装的根本复杂性,而纯函数式编程往往会使事情变得明确,包括一些偶发复杂性。在大多数时候,这没什么问题,但有时候(比如[在纯函数式语言中构建自我指称的数据结构][5])设计更多的是为了函数编程,而不是为了简便(这就是为什么 [Haskell 包含了一些“逃生出口”][6])。我之前写过一篇[所谓“弱纯性”的中间立场][7]。

+

+Brian 发现封装对更大规模有效,原因有几个。一个是,由于大小的原因,较大的组件更可能包含偶发状态。另一个是“偶发”与你要解决的问题有关。从聊天程序用户的角度来看,“偶发的复杂性”是与消息、频道和用户等无关的任何事物。但是,当你将问题分解为子问题时,更多的事情就变得“根本”。例如,在解决“构建聊天应用”问题时,可以说频道名称和频道 ID 之间的映射是偶发的复杂性,而在解决“实现 `getChannelIdByName()` 函数”子问题时,这是根本复杂性。因此,封装对于子组件的作用比对父组件的作用要小。

+

+顺便说一句,在视频的结尾,Brian Will 想知道是否有任何语言支持*无法*访问它们所作用的范围的匿名函数。[D][8] 语言可以。 D 中的匿名 Lambda 通常是闭包,但是如果你想要的话,也可以声明匿名无状态函数:

+

+```

+import std.stdio;

+

+void main()

+{

+ int x = 41;

+

+ // Value from immediately executed lambda

+ auto v1 = () {

+ return x + 1;

+ }();

+ writeln(v1);

+

+ // Same thing

+ auto v2 = delegate() {

+ return x + 1;

+ }();

+ writeln(v2);

+

+ // Plain functions aren't closures

+ auto v3 = function() {

+ // Can't access x

+ // Can't access any mutable global state either if also marked pure

+ return 42;

+ }();

+ writeln(v3);

+}

+```

+

+--------------------------------------------------------------------------------

+

+via: https://theartofmachinery.com/2019/10/13/oop_and_essential_state.html

+

+作者:[Simon Arneaud][a]

+选题:[lujun9972][b]

+译者:[geekpi](https://github.com/geekpi)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://theartofmachinery.com

+[b]: https://github.com/lujun9972

+[1]: https://medium.com/@brianwill/object-oriented-programming-a-personal-disaster-1b044c2383ab

+[2]: https://www.youtube.com/watch?v=QM1iUe6IofM

+[3]: http://www.cs.nott.ac.uk/~pszcah/G51ISS/Documents/NoSilverBullet.html

+[4]: https://theartofmachinery.com/2017/06/25/compression_complexity_and_software.html

+[5]: https://wiki.haskell.org/Tying_the_Knot

+[6]: https://en.wikibooks.org/wiki/Haskell/Mutable_objects#The_ST_monad

+[7]: https://theartofmachinery.com/2016/03/28/dirtying_pure_functions_can_be_useful.html

+[8]: https://dlang.org

diff --git a/published/20191014 Use sshuttle to build a poor man-s VPN.md b/published/201910/20191014 Use sshuttle to build a poor man-s VPN.md

similarity index 100%

rename from published/20191014 Use sshuttle to build a poor man-s VPN.md

rename to published/201910/20191014 Use sshuttle to build a poor man-s VPN.md

diff --git a/published/201910/20191015 10 Ways to Customize Your Linux Desktop With GNOME Tweaks Tool.md b/published/201910/20191015 10 Ways to Customize Your Linux Desktop With GNOME Tweaks Tool.md

new file mode 100644

index 0000000000..c9adda9a5d

--- /dev/null

+++ b/published/201910/20191015 10 Ways to Customize Your Linux Desktop With GNOME Tweaks Tool.md

@@ -0,0 +1,166 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11523-1.html)

+[#]: subject: (10 Ways to Customize Your Linux Desktop With GNOME Tweaks Tool)

+[#]: via: (https://itsfoss.com/gnome-tweak-tool/)

+[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

+

+使用 GNOME 优化工具自定义 Linux 桌面的 10 种方法

+======

+

+

+![][7]

+

+你可以通过多种方法来调整 Ubuntu,以自定义其外观和行为。我发现最简单的方法是使用 [GNOME 优化工具][2]。它也被称为 GNOME Tweak 或简单地称为 Tweak(优化)。

+

+在过去的教程中,我已经多次介绍过它。在这里,我列出了你可以使用此工具执行的所有主要优化。

+

+我在这里使用的是 Ubuntu,但是这些步骤应该适用于使用 GNOME 桌面环境的任何 Linux 发行版。

+

+### 在 Ubuntu 18.04 或其它版本上安装 GNOME 优化工具

+

+GNOME 优化工具可从 [Ubuntu 中的 Universe 存储库][3]中安装,因此请确保已在“软件和更新”工具中启用了该仓库:

+

+![在 Ubuntu 中启用 Universe 存储库][4]

+

+之后,你可以从软件中心安装 GNOME 优化工具。只需打开软件中心并搜索 “GNOME Tweaks” 并从那里安装它:

+

+![从软件中心安装 GNOME 优化工具][5]

+

+或者,你也可以使用命令行通过 [apt 命令][6]安装此软件:

+

+```

+sudo apt install gnome-tweaks

+```

+

+### 用优化工具定制 GNOME 桌面

+

+GNOME 优化工具使你可以进行许多设置更改。其中的某些更改(例如墙纸更改、启动应用程序等)也可以在官方的“系统设置”工具中找到。我将重点介绍默认情况下“设置”中不可用的优化。

+

+#### 1、改变主题

+

+你可以通过各种方式[在 Ubuntu 中安装新主题][8]。但是,如果要更改为新安装的主题,则必须安装GNOME 优化工具。

+

+你可以在“外观”部分找到主题和图标设置。你可以浏览可用的主题和图标并设置你喜欢的主题和图标。更改将立即生效。

+

+![通过 GNOME 优化更改主题][9]

+

+#### 2、禁用动画以提速你的桌面体验

+

+应用程序窗口的打开、关闭、最大化等操作都有一些细微的动画。你可以禁用这些动画以稍微加快系统的速度,因为它会稍微使用一点资源。

+

+![禁用动画以获得稍快的桌面体验][10]

+

+#### 3、控制桌面图标

+

+至少在 Ubuntu 中,你会在桌面上看到“家目录”和“垃圾箱”图标。如果你不喜欢,可以选择禁用它。你还可以选择要在桌面上显示的图标。

+

+![在 Ubuntu 中控制桌面图标][11]

+

+#### 4、管理 GNOME 扩展

+

+我想你可能知道 [GNOME 扩展][12]。这些是用于桌面的小型“插件”,可扩展 GNOME 桌面的功能。有[大量的 GNOME 扩展][13],可用于在顶部面板中查看 CPU 消耗、获取剪贴板历史记录等等。

+

+我已经写了一篇[安装和使用 GNOME 扩展][14]的详细文章。在这里,我假设你已经在使用它们,如果是这样,可以从 GNOME 优化工具中对其进行管理。

+

+![管理 GNOME 扩展][15]

+

+#### 5、改变字体和缩放比例

+

+你可以[在 Ubuntu 中安装新字体][16],并使用这个优化工具在系统范围应用字体更改。如果你认为桌面上的图标和文本太小,也可以更改缩放比例。

+

+![更改字体和缩放比例][17]

+

+#### 6、控制触摸板行为,例如在键入时禁用触摸板,使触摸板右键单击可以工作

+

+GNOME 优化工具还允许你在键入时禁用触摸板。如果你在笔记本电脑上快速键入,这将很有用。手掌底部可能会触摸触摸板,并导致光标移至屏幕上不需要的位置。

+

+在键入时自动禁用触摸板可解决此问题。

+

+![键入时禁用触摸板][18]

+

+你还会注意到[当你按下触摸板的右下角以进行右键单击时,什么也没有发生][19]。你的触摸板并没有问题。这是一项系统设置,可对没有实体右键按钮的任何触摸板(例如旧的 Thinkpad 笔记本电脑)禁用这种右键单击功能。两指点击可为你提供右键单击操作。

+

+你也可以通过在“鼠标单击模拟”下设置为“区域”中而不是“手指”来找回这项功能。

+

+![修复右键单击问题][20]

+

+你可能必须[重新启动 Ubuntu][21] 来使这项更改生效。如果你是 Emacs 爱好者,还可以强制使用 Emacs 键盘绑定。

+

+#### 7、改变电源设置

+

+电源这里只有一个设置。它可以让你在盖上盖子后将笔记本电脑置于挂起模式。

+

+![GNOME 优化工具中的电源设置][22]

+

+#### 8、决定什么显示在顶部面板

+

+桌面的顶部面板显示了一些重要的信息。在这里有日历、网络图标、系统设置和“活动”选项。

+

+你还可以[显示电池百分比][23]、添加日期及时间,并显示星期数。你还可以启用鼠标热角,以便将鼠标移至屏幕的左上角时可以获得所有正在运行的应用程序的活动视图。

+

+![GNOME 优化工具中的顶部面板设置][24]

+

+如果将鼠标焦点放在应用程序窗口上,你会注意到其菜单显示在顶部面板中。如果你不喜欢这样,可以将其关闭,然后应用程序菜单将显示应用程序本身。

+

+#### 9、配置应用窗口

+

+你可以决定是否在应用程序窗口中显示最大化和最小化选项(右上角的按钮)。你也可以改变它们的位置到左边或右边。

+

+![应用程序窗口配置][25]

+

+这里还有其他一些配置选项。我不使用它们,但你可以自行探索。

+

+#### 10、配置工作区

+

+GNOME 优化工具还允许你围绕工作区配置一些内容。

+

+![在 Ubuntu 中配置工作区][26]

+

+### 总结

+

+对于任何 GNOME 用户,GNOME 优化(Tweaks)工具都是必备工具。它可以帮助你配置桌面的外观和功能。 我感到惊讶的是,该工具甚至没有出现在 Ubuntu 的主存储库中。我认为应该默认安装它,要不,你就得在 Ubuntu 中手动安装 GNOME 优化工具。

+

+如果你在 GNOME 优化工具中发现了一些此处没有讨论的隐藏技巧,为什么不与大家分享呢?

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/gnome-tweak-tool/

+

+作者:[Abhishek Prakash][a]

+选题:[lujun9972][b]

+译者:[wxy](https://github.com/wxy)

+校对:[wxy](https://github.com/wxy)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://itsfoss.com/author/abhishek/

+[b]: https://github.com/lujun9972

+[1]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/10/gnome-tweak-tool-icon.png?ssl=1

+[2]: https://wiki.gnome.org/action/show/Apps/Tweaks?action=show&redirect=Apps%2FGnomeTweakTool

+[3]: https://itsfoss.com/ubuntu-repositories/

+[4]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/03/enable-repositories-ubuntu.png?ssl=1

+[5]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/install-gnome-tweaks-tool.jpg?ssl=1

+[6]: https://itsfoss.com/apt-command-guide/

+[7]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/10/customize-gnome-with-tweak-tool.jpg?ssl=1

+[8]: https://itsfoss.com/install-themes-ubuntu/

+[9]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/change-theme-ubuntu-gnome.jpg?ssl=1

+[10]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/10/disable-animation-ubuntu-gnome.jpg?ssl=1

+[11]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/10/desktop-icons-ubuntu.jpg?ssl=1

+[12]: https://extensions.gnome.org/

+[13]: https://itsfoss.com/best-gnome-extensions/

+[14]: https://itsfoss.com/gnome-shell-extensions/

+[15]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/manage-gnome-extension-tweaks-tool.jpg?ssl=1

+[16]: https://itsfoss.com/install-fonts-ubuntu/

+[17]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/change-fonts-ubuntu-gnome.jpg?ssl=1

+[18]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/10/disable-touchpad-while-typing-ubuntu.jpg?ssl=1

+[19]: https://itsfoss.com/fix-right-click-touchpad-ubuntu/

+[20]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/10/enable-right-click-ubuntu.jpg?ssl=1

+[21]: https://itsfoss.com/schedule-shutdown-ubuntu/

+[22]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/10/power-settings-gnome-tweaks-tool.jpg?ssl=1

+[23]: https://itsfoss.com/display-battery-ubuntu/

+[24]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/10/top-panel-settings-gnome-tweaks-tool.jpg?ssl=1

+[25]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/10/windows-configuration-ubuntu-gnome-tweaks.jpg?ssl=1

+[26]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/10/configure-workspaces-ubuntu.jpg?ssl=1

diff --git a/published/20191015 4 Free and Open Source Alternatives to Adobe Photoshop.md b/published/201910/20191015 4 Free and Open Source Alternatives to Adobe Photoshop.md

similarity index 100%

rename from published/20191015 4 Free and Open Source Alternatives to Adobe Photoshop.md

rename to published/201910/20191015 4 Free and Open Source Alternatives to Adobe Photoshop.md

diff --git a/published/20191015 Bash Script to Delete Files-Folders Older Than -X- Days in Linux.md b/published/201910/20191015 Bash Script to Delete Files-Folders Older Than -X- Days in Linux.md

similarity index 100%

rename from published/20191015 Bash Script to Delete Files-Folders Older Than -X- Days in Linux.md

rename to published/201910/20191015 Bash Script to Delete Files-Folders Older Than -X- Days in Linux.md

diff --git a/published/20191016 Linux sudo flaw can lead to unauthorized privileges.md b/published/201910/20191016 Linux sudo flaw can lead to unauthorized privileges.md

similarity index 100%

rename from published/20191016 Linux sudo flaw can lead to unauthorized privileges.md

rename to published/201910/20191016 Linux sudo flaw can lead to unauthorized privileges.md

diff --git a/translated/tech/20191018 How to Configure Rsyslog Server in CentOS 8 - RHEL 8.md b/published/201910/20191018 How to Configure Rsyslog Server in CentOS 8 - RHEL 8.md

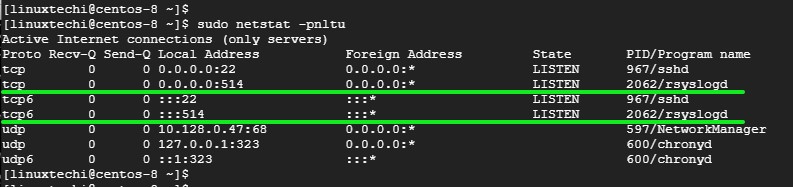

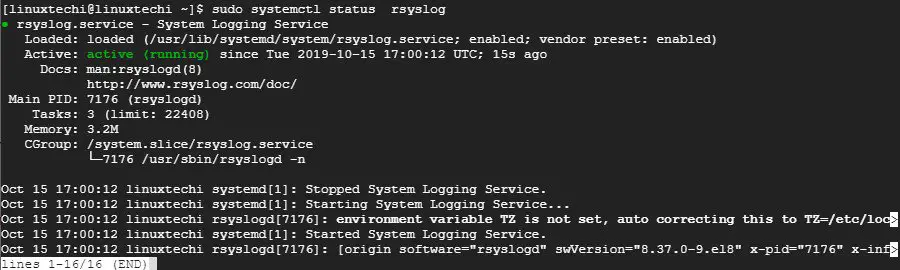

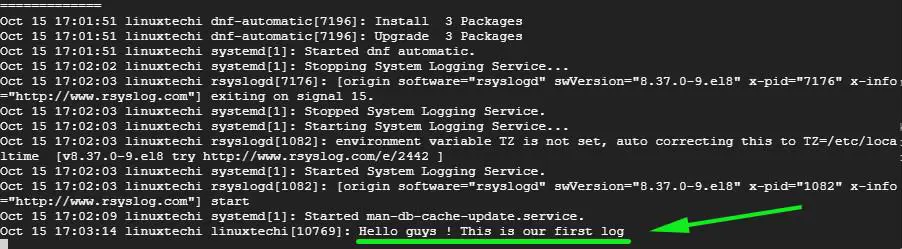

similarity index 53%

rename from translated/tech/20191018 How to Configure Rsyslog Server in CentOS 8 - RHEL 8.md

rename to published/201910/20191018 How to Configure Rsyslog Server in CentOS 8 - RHEL 8.md

index 370c68d163..ba0505daf9 100644

--- a/translated/tech/20191018 How to Configure Rsyslog Server in CentOS 8 - RHEL 8.md

+++ b/published/201910/20191018 How to Configure Rsyslog Server in CentOS 8 - RHEL 8.md

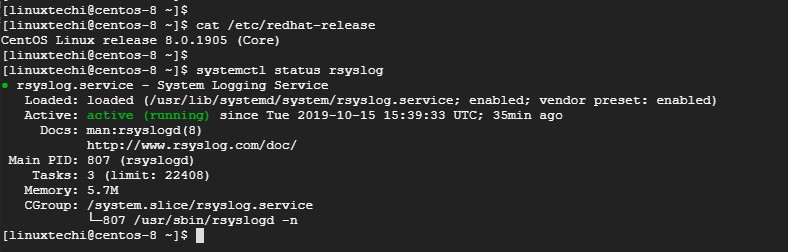

@@ -1,27 +1,27 @@

[#]: collector: (lujun9972)

[#]: translator: (geekpi)

-[#]: reviewer: ( )

-[#]: publisher: ( )

-[#]: url: ( )

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11505-1.html)

[#]: subject: (How to Configure Rsyslog Server in CentOS 8 / RHEL 8)

[#]: via: (https://www.linuxtechi.com/configure-rsyslog-server-centos-8-rhel-8/)

[#]: author: (James Kiarie https://www.linuxtechi.com/author/james/)

-如何在 CentOS 8 / RHEL 8 中配置 Rsyslog 服务器

+如何在 CentOS8/RHEL8 中配置 Rsyslog 服务器

======

-**Rsyslog** 是一个免费的开源日志记录程序,默认下在 **CentOS** 8 和 **RHEL** 8 系统上存在。它提供了一种从客户端节点到单个中央服务器的“集中日志”的简单有效的方法。日志集中化有两个好处。首先,它简化了日志查看,因为系统管理员可以在一个中心节点查看远程服务器的所有日志,而无需登录每个客户端系统来检查日志。如果需要监视多台服务器,这将非常有用,其次,如果远程客户端崩溃,你不用担心丢失日志,因为所有日志都将保存在**中央 rsyslog 服务器上**。Rsyslog 取代了仅支持 **UDP** 协议的 syslog。它以优异的功能扩展了基本的 syslog 协议,例如在传输日志时支持 **UDP** 和 **TCP**协议,增强的过滤功能以及灵活的配置选项。让我们来探讨如何在 CentOS 8 / RHEL 8 系统中配置 Rsyslog 服务器。

+

-[![configure-rsyslog-centos8-rhel8][1]][2]

+Rsyslog 是一个自由开源的日志记录程序,在 CentOS 8 和 RHEL 8 系统上默认可用。它提供了一种从客户端节点到单个中央服务器的“集中日志”的简单有效的方法。日志集中化有两个好处。首先,它简化了日志查看,因为系统管理员可以在一个中心节点查看远程服务器的所有日志,而无需登录每个客户端系统来检查日志。如果需要监视多台服务器,这将非常有用,其次,如果远程客户端崩溃,你不用担心丢失日志,因为所有日志都将保存在中心的 Rsyslog 服务器上。rsyslog 取代了仅支持 UDP 协议的 syslog。它以优异的功能扩展了基本的 syslog 协议,例如在传输日志时支持 UDP 和 TCP 协议,增强的过滤功能以及灵活的配置选项。让我们来探讨如何在 CentOS 8 / RHEL 8 系统中配置 Rsyslog 服务器。

+

+![configure-rsyslog-centos8-rhel8][2]

### 预先条件

我们将搭建以下实验环境来测试集中式日志记录过程:

- * **Rsyslog 服务器** CentOS 8 Minimal IP 地址: 10.128.0.47

- * **客户端系统** RHEL 8 Minimal IP 地址: 10.128.0.48

-

-

+ * Rsyslog 服务器 CentOS 8 Minimal IP 地址: 10.128.0.47

+ * 客户端系统 RHEL 8 Minimal IP 地址: 10.128.0.48

通过上面的设置,我们将演示如何设置 Rsyslog 服务器,然后配置客户端系统以将日志发送到 Rsyslog 服务器进行监视。

@@ -35,30 +35,30 @@

$ systemctl status rsyslog

```

-示例输出

+示例输出:

-![rsyslog-service-status-centos8][1]

+

-如果由于某种原因不存在 rsyslog,那么可以使用以下命令进行安装:

+如果由于某种原因 Rsyslog 不存在,那么可以使用以下命令进行安装:

```

$ sudo yum install rsyslog

```

-接下来,你需要修改 Rsyslog 配置文件中的一些设置。打开配置文件。

+接下来,你需要修改 Rsyslog 配置文件中的一些设置。打开配置文件:

```

$ sudo vim /etc/rsyslog.conf

```

-滚动并取消注释下面的行,以允许通过 UDP 协议接收日志

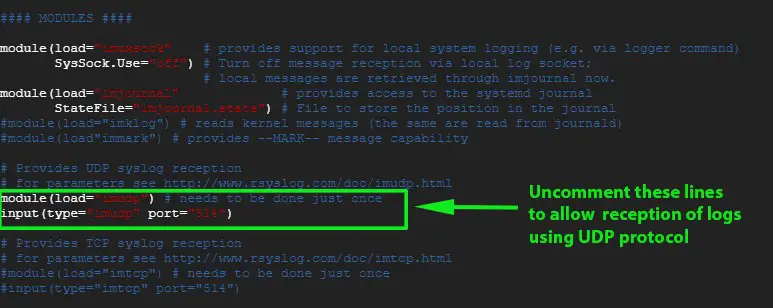

+滚动并取消注释下面的行,以允许通过 UDP 协议接收日志:

```

module(load="imudp") # needs to be done just once

input(type="imudp" port="514")

```

-![rsyslog-conf-centos8-rhel8][1]

+

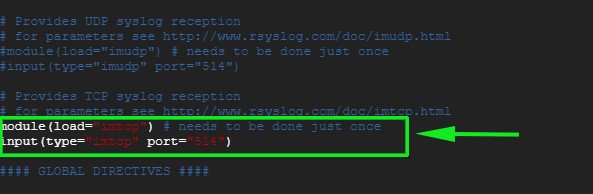

同样,如果你希望启用 TCP rsyslog 接收,请取消注释下面的行:

@@ -67,47 +67,47 @@ module(load="imtcp") # needs to be done just once

input(type="imtcp" port="514")

```

-![rsyslog-conf-tcp-centos8-rhel8][1]

+

保存并退出配置文件。

-要从客户端系统接收日志,我们需要在防火墙上打开 Rsyslog 默认端口 514。为此,请运行

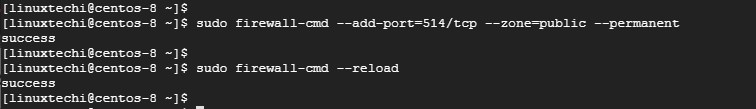

+要从客户端系统接收日志,我们需要在防火墙上打开 Rsyslog 默认端口 514。为此,请运行:

```

# sudo firewall-cmd --add-port=514/tcp --zone=public --permanent

```

-接下来,重新加载防火墙保存更改

+接下来,重新加载防火墙保存更改:

```

# sudo firewall-cmd --reload

```

-示例输出

+示例输出:

-![firewall-ports-rsyslog-centos8][1]

+

-接下来,重启 Rsyslog 服务器

+接下来,重启 Rsyslog 服务器:

```

$ sudo systemctl restart rsyslog

```

-要在启动时运行 Rsyslog,运行以下命令

+要在启动时运行 Rsyslog,运行以下命令:

```

$ sudo systemctl enable rsyslog

```

-要确认 Rsyslog 服务器正在监听 514 端口,请使用 netstat 命令,如下所示:

+要确认 Rsyslog 服务器正在监听 514 端口,请使用 `netstat` 命令,如下所示:

```

$ sudo netstat -pnltu

```

-示例输出

+示例输出:

-![netstat-rsyslog-port-centos8][1]

+

完美!我们已经成功配置了 Rsyslog 服务器来从客户端系统接收日志。

@@ -127,42 +127,42 @@ $ tail -f /var/log/messages

$ sudo systemctl status rsyslog

```

-示例输出

+示例输出:

-![client-rsyslog-service-rhel8][1]

+

-接下来,打开 rsyslog 配置文件

+接下来,打开 rsyslog 配置文件:

```

$ sudo vim /etc/rsyslog.conf

```

-在文件末尾,添加以下行

+在文件末尾,添加以下行:

```

*.* @10.128.0.47:514 # Use @ for UDP protocol

*.* @@10.128.0.47:514 # Use @@ for TCP protocol

```

-保存并退出配置文件。就像 Rsyslog 服务器一样,打开 514 端口,这是防火墙上的默认 Rsyslog 端口。

+保存并退出配置文件。就像 Rsyslog 服务器一样,打开 514 端口,这是防火墙上的默认 Rsyslog 端口:

```

$ sudo firewall-cmd --add-port=514/tcp --zone=public --permanent

```

-接下来,重新加载防火墙以保存更改

+接下来,重新加载防火墙以保存更改:

```

$ sudo firewall-cmd --reload

```

-接下来,重启 rsyslog 服务

+接下来,重启 rsyslog 服务:

```

$ sudo systemctl restart rsyslog

```

-要在启动时运行 Rsyslog,请运行以下命令

+要在启动时运行 Rsyslog,请运行以下命令:

```

$ sudo systemctl enable rsyslog

@@ -178,15 +178,15 @@ $ sudo systemctl enable rsyslog

# logger "Hello guys! This is our first log"

```

-现在进入 Rsyslog 服务器并运行以下命令来实时查看日志消息

+现在进入 Rsyslog 服务器并运行以下命令来实时查看日志消息:

```

# tail -f /var/log/messages

```

-客户端系统上命令运行的输出显示在了 Rsyslog 服务器的日志中,这意味着 Rsyslog 服务器正在接收来自客户端系统的日志。

+客户端系统上命令运行的输出显示在了 Rsyslog 服务器的日志中,这意味着 Rsyslog 服务器正在接收来自客户端系统的日志:

-![centralize-logs-rsyslogs-centos8][1]

+

就是这些了!我们成功设置了 Rsyslog 服务器来接收来自客户端系统的日志信息。

@@ -197,11 +197,11 @@ via: https://www.linuxtechi.com/configure-rsyslog-server-centos-8-rhel-8/

作者:[James Kiarie][a]

选题:[lujun9972][b]

译者:[geekpi](https://github.com/geekpi)

-校对:[校对者ID](https://github.com/校对者ID)

+校对:[wxy](https://github.com/wxy)

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

[a]: https://www.linuxtechi.com/author/james/

[b]: https://github.com/lujun9972

[1]: data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

-[2]: https://www.linuxtechi.com/wp-content/uploads/2019/10/configure-rsyslog-centos8-rhel8.jpg

\ No newline at end of file

+[2]: https://www.linuxtechi.com/wp-content/uploads/2019/10/configure-rsyslog-centos8-rhel8.jpg

diff --git a/published/20191021 Kubernetes networking, OpenStack Train, and more industry trends.md b/published/201910/20191021 Kubernetes networking, OpenStack Train, and more industry trends.md

similarity index 100%

rename from published/20191021 Kubernetes networking, OpenStack Train, and more industry trends.md

rename to published/201910/20191021 Kubernetes networking, OpenStack Train, and more industry trends.md

diff --git a/published/20191021 Pylint- Making your Python code consistent.md b/published/201910/20191021 Pylint- Making your Python code consistent.md

similarity index 100%

rename from published/20191021 Pylint- Making your Python code consistent.md

rename to published/201910/20191021 Pylint- Making your Python code consistent.md

diff --git a/published/201910/20191021 Transition to Nftables.md b/published/201910/20191021 Transition to Nftables.md

new file mode 100644

index 0000000000..71aac43603

--- /dev/null

+++ b/published/201910/20191021 Transition to Nftables.md

@@ -0,0 +1,193 @@

+[#]: collector: (lujun9972)

+[#]: translator: (wxy)

+[#]: reviewer: (wxy)

+[#]: publisher: (wxy)

+[#]: url: (https://linux.cn/article-11513-1.html)

+[#]: subject: (Transition to Nftables)

+[#]: via: (https://opensourceforu.com/2019/10/transition-to-nftables/)

+[#]: author: (Vijay Marcel D https://opensourceforu.com/author/vijay-marcel/)

+

+过渡到 nftables

+======

+

+

+

+> 开源世界中的每个主要发行版都在演进,逐渐将 nftables 作为了默认防火墙。换言之,古老的 iptables 现在已经消亡。本文是有关如何构建 nftables 的教程。

+