mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

321455a5dd

@ -1,40 +1,41 @@

|

||||

UKTools - 安装最新 Linux 内核的简便方法

|

||||

UKTools:安装最新 Linux 内核的简便方法

|

||||

======

|

||||

|

||||

Ubuntu 中有许多实用程序可以将 Linux 内核升级到最新的稳定版本。我们之前已经写过关于这些实用程序的文章,例如 Linux Kernel Utilities (LKU), Ubuntu Kernel Upgrade Utility (UKUU) 和 Ubunsys。

|

||||

Ubuntu 中有许多实用程序可以将 Linux 内核升级到最新的稳定版本。我们之前已经写过关于这些实用程序的文章,例如 Linux Kernel Utilities (LKU)、 Ubuntu Kernel Upgrade Utility (UKUU) 和 Ubunsys。

|

||||

|

||||

另外还有一些其它实用程序可供使用。我们计划在其它文章中包含这些,例如 `ubuntu-mainline-kernel.sh` 和从主线内核手动安装的方式。

|

||||

|

||||

另外还有一些其它实用程序可供使用。我们计划在其它文章中包含这些,例如 ubuntu-mainline-kernel.sh 和 manual method from mainline kernel.

|

||||

今天我们还会教你类似的使用工具 —— UKTools。你可以尝试使用这些实用程序中的任何一个来将 Linux 内核升级至最新版本。

|

||||

|

||||

今天我们还会教你类似的使用工具 -- UKTools。你可以尝试使用这些实用程序中的任何一个来将 Linux 内核升级至最新版本。

|

||||

|

||||

最新的内核版本附带了安全漏洞修复和一些改进,因此,最好保持最新的内核版本以获得可靠,安全和更好的硬件性能。

|

||||

最新的内核版本附带了安全漏洞修复和一些改进,因此,最好保持最新的内核版本以获得可靠、安全和更好的硬件性能。

|

||||

|

||||

有时候最新的内核版本可能会有一些漏洞,并且会导致系统崩溃,这是你的风险。我建议你不要在生产环境中安装它。

|

||||

|

||||

**建议阅读:**

|

||||

**(#)** [Linux 内核实用程序(LKU)- 在 Ubuntu/LinuxMint 中编译,安装和更新最新内核的一组 Shell 脚本][1]

|

||||

**(#)** [Ukuu - 在基于 Ubuntu 的系统中安装或升级 Linux 内核的简便方法][2]

|

||||

**(#)** [6 种检查系统上正在运行的 Linux 内核版本的方法][3]

|

||||

**建议阅读:**

|

||||

|

||||

- [Linux 内核实用程序(LKU)- 在 Ubuntu/LinuxMint 中编译,安装和更新最新内核的一组 Shell 脚本][1]

|

||||

- [Ukuu - 在基于 Ubuntu 的系统中安装或升级 Linux 内核的简便方法][2]

|

||||

- [6 种检查系统上正在运行的 Linux 内核版本的方法][3]

|

||||

|

||||

### 什么是 UKTools

|

||||

|

||||

[UKTools][4] 意思是 Ubuntu 内核工具,它包含两个 shell 脚本 `ukupgrade` 和 `ukpurge`。

|

||||

|

||||

ukupgrade 意思是 “Ubuntu Kernel Upgrade”,它允许用户将 Linux 内核升级到 Ubuntu/Mint 的最新稳定版本以及基于 [kernel.ubuntu.com][5] 的衍生版本。

|

||||

`ukupgrade` 意思是 “Ubuntu Kernel Upgrade”,它允许用户将 Linux 内核升级到 Ubuntu/Mint 的最新稳定版本以及基于 [kernel.ubuntu.com][5] 的衍生版本。

|

||||

|

||||

ukpurge 意思是 “Ubuntu Kernel Purge”,它允许用户在机器中删除旧的 Linux 内核镜像或头文件,用于 Ubuntu/Mint 和其衍生版本。它将只保留三个内核版本。

|

||||

`ukpurge` 意思是 “Ubuntu Kernel Purge”,它允许用户在机器中删除旧的 Linux 内核镜像或头文件,用于 Ubuntu/Mint 和其衍生版本。它将只保留三个内核版本。

|

||||

|

||||

此实用程序没有 GUI,但它看起来非常简单直接,因此,新手可以在没有任何问题的情况下进行升级。

|

||||

|

||||

我正在运行 Ubuntu 17.10,目前的内核版本如下:

|

||||

|

||||

```

|

||||

$ uname -a

|

||||

Linux ubuntu 4.13.0-39-generic #44-Ubuntu SMP Thu Apr 5 14:25:01 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

|

||||

|

||||

```

|

||||

|

||||

运行以下命令来获取系统上已安装内核的列表(Ubuntu 及其衍生产品)。目前我持有 `7` 个内核。

|

||||

|

||||

```

|

||||

$ dpkg --list | grep linux-image

|

||||

ii linux-image-4.13.0-16-generic 4.13.0-16.19 amd64 Linux kernel image for version 4.13.0 on 64 bit x86 SMP

|

||||

@ -52,7 +53,6 @@ ii linux-image-extra-4.13.0-37-generic 4.13.0-37.42 amd64 Linux kernel extra mod

|

||||

ii linux-image-extra-4.13.0-38-generic 4.13.0-38.43 amd64 Linux kernel extra modules for version 4.13.0 on 64 bit x86 SMP

|

||||

ii linux-image-extra-4.13.0-39-generic 4.13.0-39.44 amd64 Linux kernel extra modules for version 4.13.0 on 64 bit x86 SMP

|

||||

ii linux-image-generic 4.13.0.39.42 amd64 Generic Linux kernel image

|

||||

|

||||

```

|

||||

|

||||

### 如何安装 UKTools

|

||||

@ -60,18 +60,19 @@ ii linux-image-generic 4.13.0.39.42 amd64 Generic Linux kernel image

|

||||

在 Ubuntu 及其衍生产品上,只需运行以下命令来安装 UKTools 即可。

|

||||

|

||||

在你的系统上运行以下命令来克隆 UKTools 仓库:

|

||||

|

||||

```

|

||||

$ git clone https://github.com/usbkey9/uktools

|

||||

|

||||

```

|

||||

|

||||

进入 uktools 目录:

|

||||

|

||||

```

|

||||

$ cd uktools

|

||||

|

||||

```

|

||||

|

||||

运行 Makefile 以生成必要的文件。此外,这将自动安装最新的可用内核。只需重新启动系统即可使用最新的内核。

|

||||

运行 `Makefile` 以生成必要的文件。此外,这将自动安装最新的可用内核。只需重新启动系统即可使用最新的内核。

|

||||

|

||||

```

|

||||

$ sudo make

|

||||

[sudo] password for daygeek:

|

||||

@ -188,30 +189,30 @@ done

|

||||

|

||||

Thanks for using this script! Hope it helped.

|

||||

Give it a star: https://github.com/MarauderXtreme/uktools

|

||||

|

||||

```

|

||||

|

||||

重新启动系统以激活最新的内核。

|

||||

|

||||

```

|

||||

$ sudo shutdown -r now

|

||||

|

||||

```

|

||||

|

||||

一旦系统重新启动,重新检查内核版本。

|

||||

|

||||

```

|

||||

$ uname -a

|

||||

Linux ubuntu 4.16.7-041607-generic #201805021131 SMP Wed May 2 15:34:55 UTC 2018 x86_64 x86_64 x86_64 GNU/Linux

|

||||

|

||||

```

|

||||

|

||||

此 make 命令会将下面的文件放到 `/usr/local/bin` 目录中。

|

||||

|

||||

```

|

||||

do-kernel-upgrade

|

||||

do-kernel-purge

|

||||

|

||||

```

|

||||

|

||||

要移除旧内核,运行以下命令:

|

||||

|

||||

```

|

||||

$ do-kernel-purge

|

||||

|

||||

@ -364,10 +365,10 @@ run-parts: executing /etc/kernel/postrm.d/initramfs-tools 4.13.0-37-generic /boo

|

||||

run-parts: executing /etc/kernel/postrm.d/zz-update-grub 4.13.0-37-generic /boot/vmlinuz-4.13.0-37-generic

|

||||

|

||||

Thanks for using this script!!!

|

||||

|

||||

```

|

||||

|

||||

使用以下命令重新检查已安装内核的列表。它将只保留三个旧的内核。

|

||||

|

||||

```

|

||||

$ dpkg --list | grep linux-image

|

||||

ii linux-image-4.13.0-38-generic 4.13.0-38.43 amd64 Linux kernel image for version 4.13.0 on 64 bit x86 SMP

|

||||

@ -376,14 +377,13 @@ ii linux-image-extra-4.13.0-38-generic 4.13.0-38.43 amd64 Linux kernel extra mod

|

||||

ii linux-image-extra-4.13.0-39-generic 4.13.0-39.44 amd64 Linux kernel extra modules for version 4.13.0 on 64 bit x86 SMP

|

||||

ii linux-image-generic 4.13.0.39.42 amd64 Generic Linux kernel image

|

||||

ii linux-image-unsigned-4.16.7-041607-generic 4.16.7-041607.201805021131 amd64 Linux kernel image for version 4.16.7 on 64 bit x86 SMP

|

||||

|

||||

```

|

||||

|

||||

下次你可以调用 `do-kernel-upgrade` 实用程序来安装新的内核。如果有任何新内核可用,那么它将安装。如果没有,它将报告当前没有可用的内核更新。

|

||||

|

||||

```

|

||||

$ do-kernel-upgrade

|

||||

Kernel up to date. Finishing

|

||||

|

||||

```

|

||||

|

||||

再次运行 `do-kernel-purge` 命令以确认。如果发现超过三个内核,那么它将移除。如果不是,它将报告没有删除消息。

|

||||

@ -400,7 +400,6 @@ Linux Kernel 4.16.7-041607 Generic (linux-image-4.16.7-041607-generic)

|

||||

Nothing to remove!

|

||||

|

||||

Thanks for using this script!!!

|

||||

|

||||

```

|

||||

|

||||

|

||||

@ -411,7 +410,7 @@ via: https://www.2daygeek.com/uktools-easy-way-to-install-latest-stable-linux-ke

|

||||

作者:[Prakash Subramanian][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,37 +1,37 @@

|

||||

用在你终端的 4 款酷炫应用

|

||||

4 款酷炫的终端应用

|

||||

======

|

||||

|

||||

|

||||

|

||||

许多 Linux 用户认为在终端中工作太复杂或无聊,并试图逃避它。但这里有个改善 - 四款终端下的很棒的开源程序。它们既有趣又易于使用,甚至可以在你需要在命令行中工作时照亮你的生活。

|

||||

许多 Linux 用户认为在终端中工作太复杂、无聊,并试图逃避它。但这里有个改善方法 —— 四款终端下很棒的开源程序。它们既有趣又易于使用,甚至可以在你需要在命令行中工作时照亮你的生活。

|

||||

|

||||

### No More Secrets

|

||||

|

||||

这是一个简单的命令行工具,可以重现 1992 年电影 [Sneakers][1] 中所见的著名数据解密效果。该项目让你编译 nms 命令,该命令与管道数据一起使用并以混乱字符的形式打印输出。开始后,你可以按任意键,并能在输出中看到很酷的好莱坞效果的现场“解密”。

|

||||

这是一个简单的命令行工具,可以重现 1992 年电影 [Sneakers][1] 中所见的著名数据解密效果。该项目让你编译个 `nms` 命令,该命令与管道数据一起使用并以混乱字符的形式打印输出。开始后,你可以按任意键,并能在输出中看到很酷的好莱坞效果的现场“解密”。

|

||||

|

||||

![][2]

|

||||

|

||||

#### 安装说明

|

||||

|

||||

一个全新安装的 Fedora Workstation 系统已经包含了从源代码构建 No More Secrets 所需的一切。只需在终端中输入以下命令:

|

||||

|

||||

```

|

||||

git clone https://github.com/bartobri/no-more-secrets.git

|

||||

cd ./no-more-secrets

|

||||

make nms

|

||||

make sneakers ## Optional

|

||||

sudo make install

|

||||

|

||||

```

|

||||

|

||||

对于那些记得原始电影的人来说,sneakers 命令是一个小小的彩蛋,但主要的英雄是 nms。使用管道将任何 Linux 命令重定向到 nms,如下所示:

|

||||

对于那些记得原来的电影的人来说,`sneakers` 命令是一个小小的彩蛋,但主要的英雄是 `nms`。使用管道将任何 Linux 命令重定向到 `nms`,如下所示:

|

||||

|

||||

```

|

||||

systemctl list-units --type=target | nms

|

||||

|

||||

```

|

||||

|

||||

当文本停止闪烁,按任意键“解密”它。上面的 systemctl 命令只是一个例子 - 你几乎可以用任何东西替换它!

|

||||

当文本停止闪烁,按任意键“解密”它。上面的 `systemctl` 命令只是一个例子 —— 你几乎可以用任何东西替换它!

|

||||

|

||||

### Lolcat

|

||||

### lolcat

|

||||

|

||||

这是一个用彩虹为终端输出着色的命令。没什么用,但是它看起来很棒!

|

||||

|

||||

@ -39,50 +39,50 @@ systemctl list-units --type=target | nms

|

||||

|

||||

#### 安装说明

|

||||

|

||||

Lolcat 是一个 Ruby 软件包,可从官方 Ruby Gems 托管中获得。所以,你首先需要 gem 客户端:

|

||||

`lolcat` 是一个 Ruby 软件包,可从官方 Ruby Gems 托管中获得。所以,你首先需要 gem 客户端:

|

||||

|

||||

```

|

||||

sudo dnf install -y rubygems

|

||||

|

||||

```

|

||||

|

||||

然后安装 Lolcat 本身:

|

||||

然后安装 `lolcat` 本身:

|

||||

|

||||

```

|

||||

gem install lolcat

|

||||

|

||||

```

|

||||

|

||||

再说一次,使用 lolcat 命令管道任何其他命令,并在 Fedora 终端中享受彩虹(和独角兽!)。

|

||||

再说一次,使用 `lolcat` 命令管道任何其他命令,并在 Fedora 终端中享受彩虹(和独角兽!)。

|

||||

|

||||

### Chafa

|

||||

### chafa

|

||||

|

||||

![][4]

|

||||

|

||||

Chafa 是一个[命令行图像转换器和查看器][5]。它可以帮助你在不离开终端的情况下欣赏图像。语法非常简单:

|

||||

`chafa` 是一个[命令行图像转换器和查看器][5]。它可以帮助你在不离开终端的情况下欣赏图像。语法非常简单:

|

||||

|

||||

```

|

||||

chafa /path/to/your/image

|

||||

|

||||

```

|

||||

|

||||

你可以将几乎任何类型的图像投射到 Chafa,包括 JPG、PNG、TIFF、BMP 或几乎任何 ImageMagick 支持的图像 - 这是 Chafa 用于解析输入文件的引擎。最酷的部分是 Chafa 还可以在你的终端内显示非常流畅的 GIF 动画!

|

||||

你可以将几乎任何类型的图像投射到 `chafa`,包括 JPG、PNG、TIFF、BMP 或几乎任何 ImageMagick 支持的图像 - 这是 `chafa` 用于解析输入文件的引擎。最酷的部分是 `chafa` 还可以在你的终端内显示非常流畅的 GIF 动画!

|

||||

|

||||

#### 安装说明

|

||||

|

||||

Chafa 还没有为 Fedora 打包,但从源代码构建它很容易。首先,获取必要的构建依赖项:

|

||||

`chafa` 还没有为 Fedora 打包,但从源代码构建它很容易。首先,获取必要的构建依赖项:

|

||||

|

||||

```

|

||||

sudo dnf install -y autoconf automake libtool gtk-doc glib2-devel ImageMagick-devel

|

||||

|

||||

```

|

||||

|

||||

接下来,克隆代码或从项目的 Github 页面下载快照,然后 cd 到 Chafa 目录。在那之后就好了:

|

||||

接下来,克隆代码或从项目的 GitHub 页面下载快照,然后 cd 到 `chafa` 目录,这样就行了:

|

||||

|

||||

```

|

||||

git clone https://github.com/hpjansson/chafa

|

||||

./autogen.sh

|

||||

make

|

||||

sudo make install

|

||||

|

||||

```

|

||||

|

||||

大的图像在第一次运行时可能需要一段时间处理,但 Chafa 会缓存你加载的所有内容。下一次运行几乎是瞬间完成的。

|

||||

大的图像在第一次运行时可能需要一段时间处理,但 `chafa` 会缓存你加载的所有内容。下一次运行几乎是瞬间完成的。

|

||||

|

||||

### Browsh

|

||||

|

||||

@ -93,12 +93,12 @@ Browsh 是完善的终端网页浏览器。它比 Lynx 更强大,当然更引

|

||||

#### 安装说明

|

||||

|

||||

该项目为各种 Linux 发行版提供了包,包括 Fedora。以这种方式安装:

|

||||

|

||||

```

|

||||

sudo dnf install -y https://github.com/browsh-org/browsh/releases/download/v1.4.6/browsh_1.4.6_linux_amd64.rpm

|

||||

|

||||

```

|

||||

|

||||

之后,启动 browsh 命令并给它几秒钟加载。按 Ctrl+L 将焦点切换到地址栏并开始浏览 Web,就像以前一样使用!使用 Ctrl+Q 返回终端。

|

||||

之后,启动 `browsh` 命令并给它几秒钟加载。按 `Ctrl+L` 将焦点切换到地址栏并开始浏览 Web,就像以前一样使用!使用 `Ctrl+Q` 返回终端。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

@ -108,7 +108,7 @@ via: https://fedoramagazine.org/4-cool-apps-for-your-terminal/

|

||||

作者:[atolstoy][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,62 +0,0 @@

|

||||

translating by lujun9972

|

||||

How to Play Sound Through Two or More Output Devices in Linux

|

||||

======

|

||||

|

||||

|

||||

|

||||

Handling audio in Linux can be a pain. Pulseaudio has made it both better and worse. While some things work better than they did before, other things have become more complicated. Handling audio output is one of those things.

|

||||

|

||||

If you want to enable multiple audio outputs from your Linux PC, you can use a simple utility to enable your other sound devices on a virtual interface. It's a lot easier than it sounds.

|

||||

|

||||

In case you're wondering why you'd want to do this, a pretty common instance is playing video from your computer on a TV and using both the PC and TV speakers.

|

||||

|

||||

### Install Paprefs

|

||||

|

||||

The easiest way to enable audio playback from multiple sources is to use a simple graphical utility called "paprefs." It's short for PulseAudio Preferences.

|

||||

|

||||

It's available through the Ubuntu repositories, so just install it with Apt.

|

||||

```

|

||||

sudo apt install paprefs

|

||||

```

|

||||

|

||||

When the install finishes, you can just launch the program.

|

||||

|

||||

### Enable Dual Audio Playback

|

||||

|

||||

Even though the utility is graphical, it's still probably easier to launch it by typing `paprefs` in the command line as a regular user.

|

||||

|

||||

The window that opens has a few tabs with settings that you can tweak. The tab that you're looking for is the last one, "Simultaneous Output."

|

||||

|

||||

![Paprefs on Ubuntu][1]

|

||||

|

||||

There isn't a whole lot on the tab, just a checkbox to enable the setting.

|

||||

|

||||

Next, open up the regular sound preferences. It's in different places on different distributions. On Ubuntu it'll be under the GNOME system settings.

|

||||

|

||||

![Enable Simultaneous Audio][2]

|

||||

|

||||

Once you have your sound preferences open, select the "Output" tab. Select the "Simultaneous output" radio button. It's now your default output.

|

||||

|

||||

### Test It

|

||||

|

||||

To test it, you can use anything you like, but music always works. If you are using a video, like suggested earlier, you can certainly test it with that as well.

|

||||

|

||||

If everything is working well, you should hear audio out of all connected devices.

|

||||

|

||||

That's all there really is to do. This works best when there are multiple devices, like the HDMI port and the standard analog output. You can certainly try it with other configurations, too. You should also keep in mind that there will only be a single volume control, so adjust the physical output devices accordingly.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.maketecheasier.com/play-sound-through-multiple-devices-linux/

|

||||

|

||||

作者:[Nick Congleton][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.maketecheasier.com/author/nickcongleton/

|

||||

[1]:https://www.maketecheasier.com/assets/uploads/2018/01/sa-paprefs.jpg (Paprefs on Ubuntu)

|

||||

[2]:https://www.maketecheasier.com/assets/uploads/2018/01/sa-enable.jpg (Enable Simultaneous Audio)

|

||||

[3]:https://depositphotos.com/89314442/stock-photo-headphones-on-speakers.html

|

||||

@ -1,121 +0,0 @@

|

||||

|

||||

FSSlc is translating

|

||||

|

||||

netdev day 1: IPsec!

|

||||

============================================================

|

||||

|

||||

Hello! This year, like last year, I’m at the [netdev conference][3]. (here are my [notes from last year][4]).

|

||||

|

||||

Today at the conference I learned a lot about IPsec, so we’re going to talk about IPsec! There was an IPsec workshop given by Sowmini Varadhan and [Paul Wouters][5]. All of the mistakes in this post are 100% my fault though :).

|

||||

|

||||

### what’s IPsec?

|

||||

|

||||

IPsec is a protocol used to encrypt IP packets. Some VPNs are implemented with IPsec. One big thing I hadn’t really realized until today is that there isn’t just one protocol used for VPNs – I think VPN is just a general term meaning “your IP packets get encrypted and sent through another server” and VPNs can be implemented using a bunch of different protocols (OpenVPN, PPTP, SSTP, IPsec, etc) in a bunch of different ways.

|

||||

|

||||

Why is IPsec different from other VPN protocols? (like, why was there a tutorial about it at netdev and not the other protocols?) My understanding is that there are 2 things that make it different:

|

||||

|

||||

* It’s an IETF standard, documented in eg [RFC 6071][1] (did you know the IETF is the group that makes RFCs? I didn’t until today!)

|

||||

|

||||

* it’s implemented in the Linux kernel (so it makes sense that there was a netdev tutorial on it, since netdev is a Linux kernel networking conference :))

|

||||

|

||||

### How does IPsec work?

|

||||

|

||||

So let’s say your laptop is using IPsec to encrypt its packets and send them through another device. How does that work? There are 2 parts to IPsec: a userspace part, and a kernel part.

|

||||

|

||||

The userspace part of IPsec is responsible for key exchange, using a protocol called [IKE][6] (“internet key exchange”). Basically when you open a new VPN connection, you need to talk to the VPN server and negotiate a key to do encryption.

|

||||

|

||||

The kernel part of IPsec is responsible for the actual encryption of packets – once a key is generated using IKE, the userspace part of IPsec will tell the kernel which encryption key to use. Then the kernel will use that key to encrypt packets!

|

||||

|

||||

### Security Policy & Security Associations

|

||||

|

||||

The kernel part of IPSec has two databases: the security policy database(SPD) and the security association database (SAD).

|

||||

|

||||

The security policy database has IP ranges and rules for what to do to packets for that IP range (“do IPsec to it”, “drop the packet”, “let it through”). I find this a little confusing because I’m used to rules about what to do to packets in various IP ranges being in the route table (`sudo ip route list`), but apparently you can have IPsec rules too and they’re in a different place!

|

||||

|

||||

The security association database I think has the encryption keys to use for various IPs.

|

||||

|

||||

The way you inspect these databases is, extremely unintuitively, using a command called `ip xfrm`. What does xfrm mean? I don’t know!

|

||||

|

||||

```

|

||||

# security policy database

|

||||

$ sudo ip xfrm policy

|

||||

$ sudo ip x p

|

||||

|

||||

# security association database

|

||||

$ sudo ip xfrm state

|

||||

$ sudo ip x s

|

||||

|

||||

```

|

||||

|

||||

### Why is IPsec implemented in the Linux kernel and TLS isn’t?

|

||||

|

||||

For both TLS and IPsec, you need to do a key exchange when opening the connection (using Diffie-Hellman or something). For some reason that might be obvious but that I don’t understand yet (??) people don’t want to do key exchange in the kernel.

|

||||

|

||||

The reason IPsec is easier to implement in the kernel is that with IPsec, you need to negotiate key exchanges much less frequently (once for every IP address you want to open a VPN connection with), and IPsec sessions are much longer lived. So it’s easy for userspace to do a key exchange, get the key, and hand it off to the kernel which will then use that key for every IP packet.

|

||||

|

||||

With TLS, there are a couple of problems:

|

||||

|

||||

a. you’re constantly doing new key exchanges every time you open a new TLS connection, and TLS connections are shorter-lived b. there isn’t a natural protocol boundary where you need to start doing encryption – with IPsec, you just encrypt every IP packet in a given IP range, but with TLS you need to look at your TCP stream, recognize whether the TCP packet is a data packet or not, and decide to encrypt it

|

||||

|

||||

There’s actually a patch [implementing TLS in the Linux kernel][7] which lets userspace do key exchange and then pass the kernel the keys, so this obviously isn’t impossible, but it’s a much newer thing and I think it’s more complicated with TLS than with IPsec.

|

||||

|

||||

### What software do you use to do IPsec?

|

||||

|

||||

The ones I know about are Libreswan and Strongswan. Today’s tutorial focused on Libreswan.

|

||||

|

||||

Somewhat confusingly, even though Libreswan and Strongswan are different software packages, they both install a binary called `ipsec` for managing IPsec connections, and the two `ipsec` binaries are not the same program (even though they do have the same role).

|

||||

|

||||

Strongswan and Libreswan do what’s described in the “how does IPsec work” section above – they do key exchange with IKE and tell the kernel about keys to configure it to do encryption.

|

||||

|

||||

### IPsec isn’t only for VPNs!

|

||||

|

||||

At the beginning of this post I said “IPsec is a VPN protocol”, which is true, but you don’t have to use IPsec to implement VPNs! There are actually two ways to use IPsec:

|

||||

|

||||

1. “transport mode”, where the IP header is unchanged and only the contents of the IP packet are encrypted. This mode is a little more like using TLS – you talk to the server you’re communicating with directly (not through a VPN server or something), it’s just that the contents of the IP packet get encrypted

|

||||

|

||||

2. “tunnel mode”, where the IP header and its contents are all encrypted and encapsulated into another UDP packet. This is the mode that’s used for VPNs – you take your packet that you’re sending to secret_site.com, encrypt it, send it to your VPN server, and the VPN server passes it on for you.

|

||||

|

||||

### opportunistic IPsec

|

||||

|

||||

An interesting application of “transport mode” IPsec I learned about today (where you open an IPsec connection directly with the host you’re communicating with instead of some other intermediary server) is this thing called “opportunistic IPsec”. There’s an opportunistic IPsec server here:[http://oe.libreswan.org/][8].

|

||||

|

||||

I think the idea is that if you set up Libreswan and unbound up on your computer, then when you connect to [http://oe.libreswan.org][9], what happens is:

|

||||

|

||||

1. `unbound` makes a DNS query for the IPSECKEY record of oe.libreswan.org (`dig ipseckey oe.libreswan.org`) to get a public key to use for that domain. (this requires DNSSEC to be secure which when I learn about it will be a whole other blog post, but you can just run that DNS query with dig and it will work if you want to see the results)

|

||||

|

||||

2. `unbound` gives the public key to libreswan, which uses it to do a key exchange with the IKE server running on oe.libreswan.org

|

||||

|

||||

3. `libreswan` finishes the key exchange, gives the encryption key to the kernel, and tells the kernel to use that encryption key when talking to `oe.libreswan.org`

|

||||

|

||||

4. Your connection is now encrypted! Even though it’s a HTTP connection! so interesting!

|

||||

|

||||

### IPsec and TLS learn from each other

|

||||

|

||||

One interesting tidbit from the tutorial today was that the IPsec and TLS protocols have actually learned from each other over time – like they said IPsec’s IKE protocol had perfect forward secrecy before TLS, and IPsec has also learned some things from TLS. It’s neat to hear about how different internet protocols are learning & changing over time!

|

||||

|

||||

### IPsec is interesting!

|

||||

|

||||

I’ve spent quite a lot of time learning about TLS, which is obviously a super important networking protocol (let’s encrypt the internet! :D). But IPsec is an important internet encryption protocol too, and it has a different role from TLS! Apparently some mobile phone protocols (like 5G/LTE) use IPsec to encrypt their network traffic!

|

||||

|

||||

I’m happy I know a little more about it now! As usual several things in this post are probably wrong, but hopefully not too wrong :)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://jvns.ca/blog/2018/07/11/netdev-day-1--ipsec/

|

||||

|

||||

作者:[ Julia Evans][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://jvns.ca/about

|

||||

[1]:https://tools.ietf.org/html/rfc6071

|

||||

[2]:https://jvns.ca/categories/netdev

|

||||

[3]:https://www.netdevconf.org/0x12/

|

||||

[4]:https://jvns.ca/categories/netdev/

|

||||

[5]:https://nohats.ca/

|

||||

[6]:https://en.wikipedia.org/wiki/Internet_Key_Exchange

|

||||

[7]:https://blog.filippo.io/playing-with-kernel-tls-in-linux-4-13-and-go/

|

||||

[8]:http://oe.libreswan.org/

|

||||

[9]:http://oe.libreswan.org/

|

||||

@ -1,283 +0,0 @@

|

||||

FSSlc is translating

|

||||

|

||||

A sysadmin's guide to SELinux: 42 answers to the big questions

|

||||

============================================================

|

||||

|

||||

> Get answers to the big questions about life, the universe, and everything else about Security-Enhanced Linux.

|

||||

|

||||

|

||||

Image credits : [JanBaby][13], via Pixabay [CC0][14].

|

||||

|

||||

> "It is an important and popular fact that things are not always what they seem…"

|

||||

> ―Douglas Adams, _The Hitchhiker's Guide to the Galaxy_

|

||||

|

||||

Security. Hardening. Compliance. Policy. The Four Horsemen of the SysAdmin Apocalypse. In addition to our daily tasks—monitoring, backup, implementation, tuning, updating, and so forth—we are also in charge of securing our systems. Even those systems where the third-party provider tells us to disable the enhanced security. It seems like a job for _Mission Impossible_ 's [Ethan Hunt][15].

|

||||

|

||||

Faced with this dilemma, some sysadmins decide to [take the blue pill][16] because they think they will never know the answer to the big question of life, the universe, and everything else. And, as we all know, that answer is **[42][2]**.

|

||||

|

||||

In the spirit of _The Hitchhiker's Guide to the Galaxy_ , here are the 42 answers to the big questions about managing and using [SELinux][17] with your systems.

|

||||

|

||||

1. SELinux is a LABELING system, which means every process has a LABEL. Every file, directory, and system object has a LABEL. Policy rules control access between labeled processes and labeled objects. The kernel enforces these rules.

|

||||

|

||||

1. The two most important concepts are: _Labeling_ (files, process, ports, etc.) and _Type enforcement_ (which isolates processes from each other based on types).

|

||||

|

||||

1. The correct Label format is `user:role:type:level` ( _optional_ ).

|

||||

|

||||

1. The purpose of _Multi-Level Security (MLS) enforcement_ is to control processes ( _domains_ ) based on the security level of the data they will be using. For example, a secret process cannot read top-secret data.

|

||||

|

||||

1. _Multi-Category Security (MCS) enforcement_ protects similar processes from each other (like virtual machines, OpenShift gears, SELinux sandboxes, containers, etc.).

|

||||

|

||||

1. Kernel parameters for changing SELinux modes at boot:

|

||||

* `autorelabel=1` → forces the system to relabel

|

||||

|

||||

* `selinux=0` → kernel doesn't load any part of the SELinux infrastructure

|

||||

|

||||

* `enforcing=0` → boot in permissive mode

|

||||

|

||||

1. If you need to relabel the entire system:

|

||||

`# touch /.autorelabel

|

||||

#reboot`

|

||||

If the system labeling contains a large amount of errors, you might need to boot in permissive mode in order for the autorelabel to succeed.

|

||||

|

||||

1. To check if SELinux is enabled: `# getenforce`

|

||||

|

||||

1. To temporarily enable/disable SELinux: `# setenforce [1|0]`

|

||||

|

||||

1. SELinux status tool: `# sestatus`

|

||||

|

||||

1. Configuration file: `/etc/selinux/config`

|

||||

|

||||

1. How does SELinux work? Here's an example of labeling for an Apache Web Server:

|

||||

* Binary: `/usr/sbin/httpd`→`httpd_exec_t`

|

||||

|

||||

* Configuration directory: `/etc/httpd`→`httpd_config_t`

|

||||

|

||||

* Logfile directory: `/var/log/httpd` → `httpd_log_t`

|

||||

|

||||

* Content directory: `/var/www/html` → `httpd_sys_content_t`

|

||||

|

||||

* Startup script: `/usr/lib/systemd/system/httpd.service` → `httpd_unit_file_d`

|

||||

|

||||

* Process: `/usr/sbin/httpd -DFOREGROUND` → `httpd_t`

|

||||

|

||||

* Ports: `80/tcp, 443/tcp` → `httpd_t, http_port_t`

|

||||

|

||||

A process running in the `httpd_t` context can interact with an object with the `httpd_something_t` label.

|

||||

|

||||

1. Many commands accept the argument `-Z` to view, create, and modify context:

|

||||

* `ls -Z`

|

||||

|

||||

* `id -Z`

|

||||

|

||||

* `ps -Z`

|

||||

|

||||

* `netstat -Z`

|

||||

|

||||

* `cp -Z`

|

||||

|

||||

* `mkdir -Z`

|

||||

|

||||

Contexts are set when files are created based on their parent directory's context (with a few exceptions). RPMs can set contexts as part of installation.

|

||||

|

||||

1. There are four key causes of SELinux errors, which are further explained in items 15-21 below:

|

||||

* Labeling problems

|

||||

|

||||

* Something SELinux needs to know

|

||||

|

||||

* A bug in an SELinux policy/app

|

||||

|

||||

* Your information may be compromised

|

||||

|

||||

1. _Labeling problem:_ If your files in `/srv/myweb` are not labeled correctly, access might be denied. Here are some ways to fix this:

|

||||

* If you know the label:

|

||||

`# semanage fcontext -a -t httpd_sys_content_t '/srv/myweb(/.*)?'`

|

||||

|

||||

* If you know the file with the equivalent labeling:

|

||||

`# semanage fcontext -a -e /srv/myweb /var/www`

|

||||

|

||||

* Restore the context (for both cases):

|

||||

`# restorecon -vR /srv/myweb`

|

||||

|

||||

1. _Labeling problem:_ If you move a file instead of copying it, the file keeps its original context. To fix these issues:

|

||||

* Change the context command with the label:

|

||||

`# chcon -t httpd_system_content_t /var/www/html/index.html`

|

||||

|

||||

* Change the context command with the reference label:

|

||||

`# chcon --reference /var/www/html/ /var/www/html/index.html`

|

||||

|

||||

* Restore the context (for both cases): `# restorecon -vR /var/www/html/`

|

||||

|

||||

1. If _SELinux needs to know_ HTTPD listens on port 8585, tell SELinux:

|

||||

`# semanage port -a -t http_port_t -p tcp 8585`

|

||||

|

||||

1. _SELinux needs to know_ booleans allow parts of SELinux policy to be changed at runtime without any knowledge of SELinux policy writing. For example, if you want httpd to send email, enter: `# setsebool -P httpd_can_sendmail 1`

|

||||

|

||||

1. _SELinux needs to know_ booleans are just off/on settings for SELinux:

|

||||

* To see all booleans: `# getsebool -a`

|

||||

|

||||

* To see the description of each one: `# semanage boolean -l`

|

||||

|

||||

* To set a boolean execute: `# setsebool [_boolean_] [1|0]`

|

||||

|

||||

* To configure it permanently, add `-P`. For example:

|

||||

`# setsebool httpd_enable_ftp_server 1 -P`

|

||||

|

||||

1. SELinux policies/apps can have bugs, including:

|

||||

* Unusual code paths

|

||||

|

||||

* Configurations

|

||||

|

||||

* Redirection of `stdout`

|

||||

|

||||

* Leaked file descriptors

|

||||

|

||||

* Executable memory

|

||||

|

||||

* Badly built libraries

|

||||

Open a ticket (do not file a Bugzilla report; there are no SLAs with Bugzilla).

|

||||

|

||||

1. _Your information may be compromised_ if you have confined domains trying to:

|

||||

* Load kernel modules

|

||||

|

||||

* Turn off the enforcing mode of SELinux

|

||||

|

||||

* Write to `etc_t/shadow_t`

|

||||

|

||||

* Modify iptables rules

|

||||

|

||||

1. SELinux tools for the development of policy modules:

|

||||

`# yum -y install setroubleshoot setroubleshoot-server`

|

||||

Reboot or restart `auditd` after you install.

|

||||

|

||||

1. Use `journalctl` for listing all logs related to `setroubleshoot`:

|

||||

`# journalctl -t setroubleshoot --since=14:20`

|

||||

|

||||

1. Use `journalctl` for listing all logs related to a particular SELinux label. For example:

|

||||

`# journalctl _SELINUX_CONTEXT=system_u:system_r:policykit_t:s0`

|

||||

|

||||

1. Use `setroubleshoot` log when an SELinux error occurs and suggest some possible solutions. For example, from `journalctl`:

|

||||

```

|

||||

Jun 14 19:41:07 web1 setroubleshoot: SELinux is preventing httpd from getattr access on the file /var/www/html/index.html. For complete message run: sealert -l 12fd8b04-0119-4077-a710-2d0e0ee5755e

|

||||

|

||||

# sealert -l 12fd8b04-0119-4077-a710-2d0e0ee5755e

|

||||

SELinux is preventing httpd from getattr access on the file /var/www/html/index.html.

|

||||

|

||||

***** Plugin restorecon (99.5 confidence) suggests ************************

|

||||

|

||||

If you want to fix the label,

|

||||

/var/www/html/index.html default label should be httpd_syscontent_t.

|

||||

Then you can restorecon.

|

||||

Do

|

||||

# /sbin/restorecon -v /var/www/html/index.html

|

||||

```

|

||||

|

||||

1. Logging: SELinux records information all over the place:

|

||||

* `/var/log/messages`

|

||||

|

||||

* `/var/log/audit/audit.log`

|

||||

|

||||

* `/var/lib/setroubleshoot/setroubleshoot_database.xml`

|

||||

|

||||

1. Logging: Looking for SELinux errors in the audit log:

|

||||

`# ausearch -m AVC,USER_AVC,SELINUX_ERR -ts today`

|

||||

|

||||

1. To search for SELinux Access Vector Cache (AVC) messages for a particular service:

|

||||

`# ausearch -m avc -c httpd`

|

||||

|

||||

1. The `audit2allow` utility gathers information from logs of denied operations and then generates SELinux policy-allow rules. For example:

|

||||

* To produce a human-readable description of why the access was denied: `# audit2allow -w -a`

|

||||

|

||||

* To view the type enforcement rule that allows the denied access: `# audit2allow -a`

|

||||

|

||||

* To create a custom module: `# audit2allow -a -M mypolicy`

|

||||

The `-M` option creates a type enforcement file (.te) with the name specified and compiles the rule into a policy package (.pp): `mypolicy.pp mypolicy.te`

|

||||

|

||||

* To install the custom module: `# semodule -i mypolicy.pp`

|

||||

|

||||

1. To configure a single process (domain) to run permissive: `# semanage permissive -a httpd_t`

|

||||

|

||||

1. If you no longer want a domain to be permissive: `# semanage permissive -d httpd_t`

|

||||

|

||||

1. To disable all permissive domains: `# semodule -d permissivedomains`

|

||||

|

||||

1. Enabling SELinux MLS policy: `# yum install selinux-policy-mls`

|

||||

In `/etc/selinux/config:`

|

||||

`SELINUX=permissive`

|

||||

`SELINUXTYPE=mls`

|

||||

Make sure SELinux is running in permissive mode: `# setenforce 0`

|

||||

Use the `fixfiles` script to ensure that files are relabeled upon the next reboot:

|

||||

`# fixfiles -F onboot # reboot`

|

||||

|

||||

1. Create a user with a specific MLS range: `# useradd -Z staff_u john`

|

||||

Using the `useradd` command, map the new user to an existing SELinux user (in this case, `staff_u`).

|

||||

|

||||

1. To view the mapping between SELinux and Linux users: `# semanage login -l`

|

||||

|

||||

1. Define a specific range for a user: `# semanage login --modify --range s2:c100 john`

|

||||

|

||||

1. To correct the label on the user's home directory (if needed): `# chcon -R -l s2:c100 /home/john`

|

||||

|

||||

1. To list the current categories: `# chcat -L`

|

||||

|

||||

1. To modify the categories or to start creating your own, modify the file as follows:

|

||||

`/etc/selinux/_<selinuxtype>_/setrans.conf`

|

||||

|

||||

1. To run a command or script in a specific file, role, and user context:

|

||||

`# runcon -t initrc_t -r system_r -u user_u yourcommandhere`

|

||||

* `-t` is the _file context_

|

||||

|

||||

* `-r` is the _role context_

|

||||

|

||||

* `-u` is the _user context_

|

||||

|

||||

1. Containers running with SELinux disabled:

|

||||

* With Podman: `# podman run --security-opt label=disable` …

|

||||

|

||||

* With Docker: `# docker run --security-opt label=disable` …

|

||||

|

||||

1. If you need to give a container full access to the system:

|

||||

* With Podman: `# podman run --privileged` …

|

||||

|

||||

* With Docker: `# docker run --privileged` …

|

||||

|

||||

And with this, you already know the answer. So please: **Don't panic, and turn on SELinux**.

|

||||

|

||||

|

||||

### About the author

|

||||

|

||||

Alex Callejas - Alex Callejas is a Technical Account Manager of Red Hat in the LATAM region, based in Mexico City. With more than 10 years of experience as SysAdmin, he has strong expertise on Infrastructure Hardening. Enthusiast of the Open Source, supports the community sharing his knowledge in different events of public access and universities. Geek by nature, Linux by choice, Fedora of course.[More about me][11]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/7/sysadmin-guide-selinux

|

||||

|

||||

作者:[ Alex Callejas][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/darkaxl

|

||||

[1]:https://opensource.com/article/18/7/sysadmin-guide-selinux?rate=hR1QSlwcImXNksBPPrLOeP6ooSoOU7PZaR07aGFuYVo

|

||||

[2]:https://en.wikipedia.org/wiki/Phrases_from_The_Hitchhiker%27s_Guide_to_the_Galaxy#Answer_to_the_Ultimate_Question_of_Life,_the_Universe,_and_Everything_%2842%29

|

||||

[3]:https://fedorapeople.org/~dwalsh/SELinux/SELinux

|

||||

[4]:https://opensource.com/users/rhatdan

|

||||

[5]:https://opensource.com/business/13/11/selinux-policy-guide

|

||||

[6]:http://people.redhat.com/tcameron/Summit2018/selinux/SELinux_for_Mere_Mortals_Summit_2018.pdf

|

||||

[7]:http://twitter.com/thomasdcameron

|

||||

[8]:http://blog.linuxgrrl.com/2014/04/16/the-selinux-coloring-book/

|

||||

[9]:https://opensource.com/users/mairin

|

||||

[10]:https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/selinux_users_and_administrators_guide/index

|

||||

[11]:https://opensource.com/users/darkaxl

|

||||

[12]:https://opensource.com/user/219886/feed

|

||||

[13]:https://pixabay.com/en/security-secure-technology-safety-2168234/

|

||||

[14]:https://creativecommons.org/publicdomain/zero/1.0/deed.en

|

||||

[15]:https://en.wikipedia.org/wiki/Ethan_Hunt

|

||||

[16]:https://en.wikipedia.org/wiki/Red_pill_and_blue_pill

|

||||

[17]:https://en.wikipedia.org/wiki/Security-Enhanced_Linux

|

||||

[18]:https://opensource.com/users/darkaxl

|

||||

[19]:https://opensource.com/users/darkaxl

|

||||

[20]:https://opensource.com/article/18/7/sysadmin-guide-selinux#comments

|

||||

[21]:https://opensource.com/tags/security

|

||||

[22]:https://opensource.com/tags/linux

|

||||

[23]:https://opensource.com/tags/sysadmin

|

||||

@ -1,124 +0,0 @@

|

||||

FSSlc is translating

|

||||

|

||||

|

||||

netdev day 2: moving away from "as fast as possible" in networking code

|

||||

============================================================

|

||||

|

||||

Hello! Today was day 2 of netdev. I only made it to the morning of the conference, but the morning was VERY EXCITING. The highlight of this morning was a keynote by [Van Jacobson][1] about the future of congestion control on the internet (!!!) called “Evolving from As Fast As Possible: Teaching NICs about time”

|

||||

|

||||

I’m going to try to summarize what I learned from this talk. I almost certainly have some things wrong, but let’s go!

|

||||

|

||||

This talk was about how the internet has changed since 1988, why we need new algorithms today, and how we can change Linux’s networking stack to implement those algorithms more easily.

|

||||

|

||||

### what’s congestion control?

|

||||

|

||||

Everyone on the internet is sending packets all at once, all the time. The links on the internet are of dramatically different speeds (some are WAY slower than others), and sometimes they get full! When a device on the internet receives packets at a rate faster than it can handle, it drops the packets.

|

||||

|

||||

The most naive you way you could imagine sending packets is:

|

||||

|

||||

1. Send all the packets you have to send all at once

|

||||

|

||||

2. If you discover any of those packets got dropped, resend the packet right away

|

||||

|

||||

It turns out that if you implemented TCP that way, the internet would collapse and grind to a halt. We know that it would collapse because it did kinda collapse, in 1986\. To fix this, folks invented congestion control algorithms – the original paper describing how they avoided collapsing the internet is [Congestion Avoidance and Control][2], by Van Jacobson from 1988\. (30 years ago!)

|

||||

|

||||

### How has the internet changed since 1988?

|

||||

|

||||

The main thing he said has changed about the internet is – it used to be that switches would always have faster network cards than servers on the internet. So the servers in the middle of the internet would be a lot faster than the clients, and it didn’t matter as much how fast clients sent packets.

|

||||

|

||||

Today apparently that’s not true! As we all know, computers today aren’t really faster than computers 5 years ago (we ran into some problems with the speed of light). So what happens (I think) is that the big switches in routers are not really that much faster than the NICs on servers in datacenters.

|

||||

|

||||

This is bad because it means that clients are much more easily able to saturate the links in the middle, which results in the internet getting slower. (and there’s [buffer bloat][3] which results in high latency)

|

||||

|

||||

So to improve performance on the internet and not saturate all the queues on every router, clients need to be a little better behaved and to send packets a bit more slowly.

|

||||

|

||||

### sending more packets more slowly results in better performance

|

||||

|

||||

Here’s an idea that was really surprising to me – sending packets more slowly often actually results in better performance (even if you are the only one doing it). Here’s why!

|

||||

|

||||

Suppose you’re trying to send 10MB of data, and there’s a link somewhere in the middle between you and the client you’re trying to talk to that is SLOW, like 1MB/s or something. Assuming that you can tell the speed of this slow link (more on that later), you have 2 choices:

|

||||

|

||||

1. Send the entire 10MB of data at once and see what happens

|

||||

|

||||

2. Slow it down so you send it at 1MB/s

|

||||

|

||||

Now – either way, you’re probably going to end up with some packet loss. So it seems like you might as well just send all the data at once if you’re going to end up with packet loss either way, right? No!! The key observation is that packet loss in the middle of your stream is much better than packet loss at the end of your stream. If a few packets in the middle are dropped, the client you’re sending to will realize, tell you, and you can just resend them. No big deal! But if packets at the END are dropped, the client has no way of knowing you sent those packets at all! So you basically need to time out at some point when you don’t get an ACK for those packets and resend it. And timeouts typically take a long time to happen!

|

||||

|

||||

So why is sending data more slowly better? Well, if you send data faster than the bottleneck for the link, what will happen is that all the packets will pile up in a queue somewhere, the queue will get full, and then the packets at the END of your stream will get dropped. And, like we just explained, the packets at the end of the stream are the worst packets to drop! So then you have all these timeouts, and sending your 10MB of data will take way longer than if you’d just sent your packets at the correct speed in the first place.

|

||||

|

||||

I thought this was really cool because it doesn’t require cooperation from anybody else on the internet – even if everybody else is sending all their packets really fast, it’s _still_ more advantageous for you to send your packets at the correct rate (the rate of the bottleneck in the middle)

|

||||

|

||||

### how to tell the right speed to send data at: BBR!

|

||||

|

||||

Earlier I said “assuming that you can tell the speed of the slow link between your client and server…“. How do you do that? Well, some folks from Google (where Jacobson works) came up with an algorithm for measuring the speed of bottlenecks! It’s called BBR. This post is already long enough, but for more about BBR, see [BBR: Congestion-based congestion control][4] and [the summary from the morning paper][5].

|

||||

|

||||

(as an aside, [https://blog.acolyer.org][6]’s daily “the morning paper” summaries are basically the only way I learn about / understand CS papers, it’s possibly the greatest blog on the internet)

|

||||

|

||||

### networking code is designed to run “as fast as possible”

|

||||

|

||||

So! Let’s say we believe we want to send data a little more slowly, at the speed of the bottleneck in our connection. This is all very well, but networking software isn’t really designed to send data at a controlled rate! This (as far as I understand it) is how most networking stuff is designed:

|

||||

|

||||

1. There’s a queue of packets coming in

|

||||

|

||||

2. It reads off the queue and sends the packets out as as fast as possible

|

||||

|

||||

3. That’s it

|

||||

|

||||

This is pretty inflexible! Like – suppose I have one really fast connection I’m sending packets on, and one really slow connection. If all I have is a queue to put packets on, I don’t get that much control over when the packets I’m sending actually get sent out. I can’t slow down the queue!

|

||||

|

||||

### a better way: give every packet an “earliest departure time”

|

||||

|

||||

His proposal was to modify the skb data structure in the Linux kernel (which is the data structure used to represent network packets) to have a TIMESTAMP on it representing the earliest time that packet should go out.

|

||||

|

||||

I don’t know a lot about the Linux network stack, but the interesting thing to me about this proposal is that it doesn’t sound like a huge change! It’s just an extra timestamp.

|

||||

|

||||

### replace queues with timing wheels!!!

|

||||

|

||||

Once we have all these packets with times on them, how do we get them sent out at the right time? TIMING WHEELS!

|

||||

|

||||

At Papers We Love a while back ([some good links in the meetup description][7]) there was a talk about timing wheels. Timing wheels are the algorithm the Linux process scheduler decides when to run processes.

|

||||

|

||||

He said that timing wheels actually perform better than queues for scheduling work – they both offer constant time operations, but the timing wheels constant is smaller because of some stuff to do with cache performance. I didn’t really follow the performance arguments.

|

||||

|

||||

One point he made about timing wheels is that you can easily implement a queue with a timing wheel (though not vice versa!) – if every time you add a new packet, you say that you want it to be sent RIGHT NOW at the earliest, then you effectively end up with a queue. So this timing wheel approach is backwards compatible, but it makes it much easier to implement more complex traffic shaping algorithms where you send out different packets at different rates.

|

||||

|

||||

### maybe we can fix the internet by improving Linux!

|

||||

|

||||

With any internet-scale problem, the tricky thing about making progress on it is that you need cooperation from SO MANY different parties to change how internet protocols are implemented. You have Linux machines, BSD machines, Windows machines, different kinds of phones, Juniper/Cisco routers, and lots of other devices!

|

||||

|

||||

But Linux is in kind of an interesting position in the networking landscape!

|

||||

|

||||

* Android phones run Linux

|

||||

|

||||

* Most consumer wifi routers run Linux

|

||||

|

||||

* Lots of servers run Linux

|

||||

|

||||

So in any given network connection, you’re actually relatively likely to have a Linux machine at both ends (a linux server, and either a Linux router or Android device).

|

||||

|

||||

So the point is that if you want to improve congestion on the internet in general, it would make a huge difference to just change the Linux networking stack. (and maybe the iOS networking stack too) Which is why there was a keynote at this Linux networking conference about it!

|

||||

|

||||

### the internet is still changing! Cool!

|

||||

|

||||

I usually think of TCP/IP as something that we figured out in the 80s, so it was really fascinating to hear that folks think that there are still serious issues with how we’re designing our networking protocols, and that there’s work to do to design them differently.

|

||||

|

||||

And of course it makes sense – the landscape of networking hardware and the relative speeds of everything and the kinds of things people are using the internet for (netflix!) is changing all the time, so it’s reasonable that at some point we need to start designing our algorithms differently for the internet of 2018 instead of the internet of 1998.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://jvns.ca/blog/2018/07/12/netdev-day-2--moving-away-from--as-fast-as-possible/

|

||||

|

||||

作者:[Julia Evans][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://jvns.ca/about

|

||||

[1]:https://en.wikipedia.org/wiki/Van_Jacobson

|

||||

[2]:https://cs162.eecs.berkeley.edu/static/readings/jacobson-congestion.pdf

|

||||

[3]:https://apenwarr.ca/log/?m=201101#10

|

||||

[4]:https://queue.acm.org/detail.cfm?id=3022184

|

||||

[5]:https://blog.acolyer.org/2017/03/31/bbr-congestion-based-congestion-control/

|

||||

[6]:https://blog.acolyer.org/

|

||||

[7]:https://www.meetup.com/Papers-We-Love-Montreal/events/235100825/

|

||||

@ -1,73 +0,0 @@

|

||||

translating---geekpi

|

||||

|

||||

4 open source media conversion tools for the Linux desktop

|

||||

======

|

||||

|

||||

|

||||

|

||||

Ah, so many file formats—especially audio and video ones—can make for fun times if you get a file with an extension you don't recognize, if your media player doesn't play a file in that format, or if you want to use an open format.

|

||||

|

||||

So, what can a Linux user do? Turn to one of the many open source media conversion tools for the Linux desktop, of course. Let's take a look at four of them.

|

||||

|

||||

### Gnac

|

||||

|

||||

|

||||

|

||||

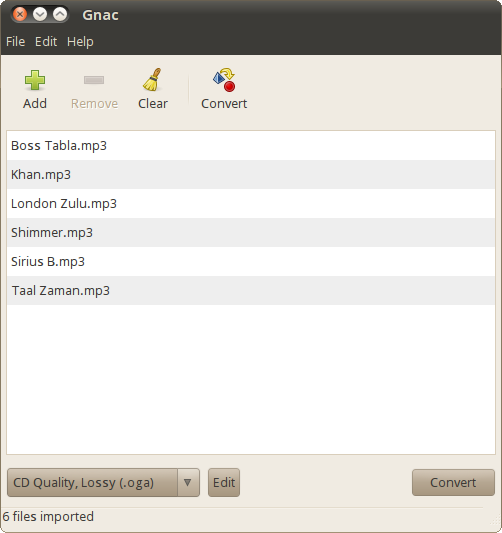

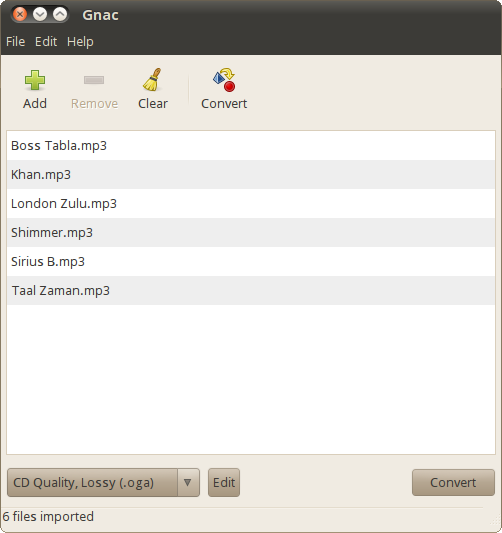

[Gnac][1] is one of my favorite audio converters and has been for years. It's easy to use, it's powerful, and it does one thing well—as any top-notch utility should.

|

||||

|

||||

How easy? You click a toolbar button to add one or more files to convert, choose a format to convert to, and then click **Convert**. The conversions are quick, and they're clean.

|

||||

|

||||

How powerful? Gnac can handle all the audio formats that the [GStreamer][2] multimedia framework supports. Out of the box, you can convert between Ogg, FLAC, AAC, MP3, WAV, and SPX. You can also change the conversion options for each format or add new ones.

|

||||

|

||||

### SoundConverter

|

||||

|

||||

|

||||

|

||||

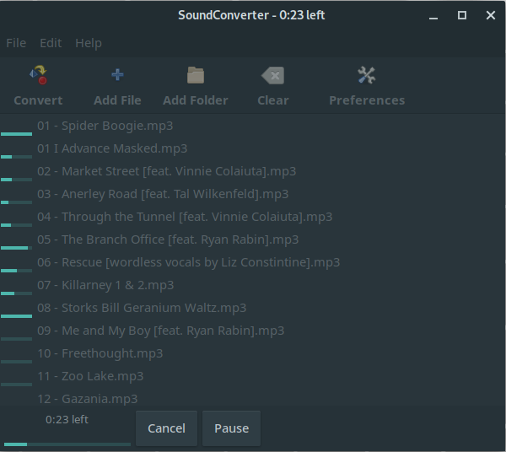

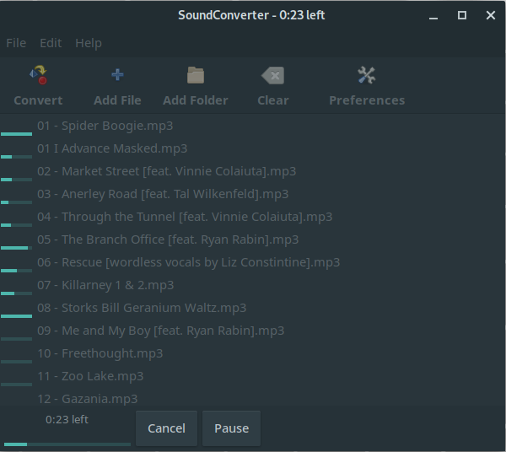

If simplicity with a few extra features is your thing, then give [SoundConverter][3] a look. As its name states, SoundConverter works its magic only on audio files. Like Gnac, it can read the formats that GStreamer supports, and it can spit out Ogg Vorbis, MP3, FLAC, WAV, AAC, and Opus files.

|

||||

|

||||

Load individual files or an entire folder by either clicking **Add File** or dragging and dropping it into the SoundConverter window. Click **Convert** , and the software powers through the conversion. It's fast, too—I've converted a folder containing a couple dozen files in about a minute.

|

||||

|

||||

SoundConverter has options for setting the quality of your converted files. You can change the way files are named (for example, include a track number or album name in the title) and create subfolders for the converted files.

|

||||

|

||||

### WinFF

|

||||

|

||||

|

||||

|

||||

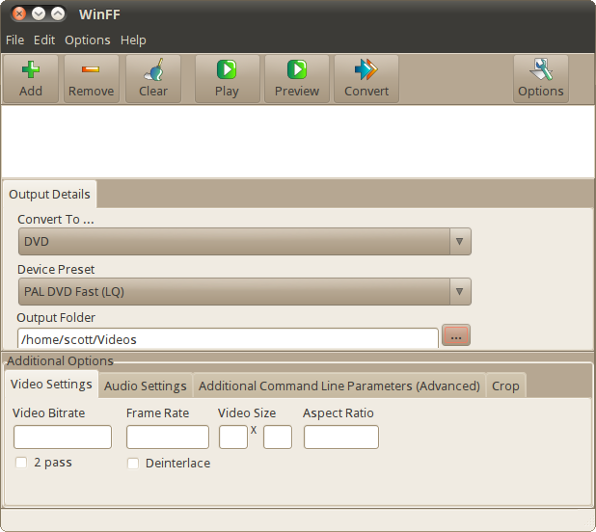

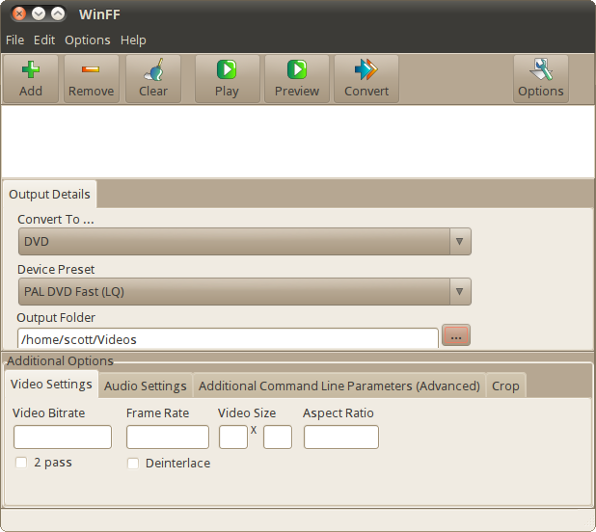

[WinFF][4], on its own, isn't a converter. It's a graphical frontend to FFmpeg, which [Tim Nugent looked at][5] for Opensource.com. While WinFF doesn't have all the flexibility of FFmpeg, it makes FFmpeg easier to use and gets the job done quickly and fairly easily.

|

||||

|

||||

Although it's not the prettiest application out there, WinFF doesn't need to be. It's more than usable. You can choose what formats to convert to from a dropdown list and select several presets. On top of that, you can specify options like bitrates and frame rates, the number of audio channels to use, and even the size at which to crop videos.

|

||||

|

||||

The conversions, especially video, take a bit of time, but the results are generally quite good. Once in a while, the conversion gets a bit mangled—but not often enough to be a concern. And, as I said earlier, using WinFF can save me a bit of time.

|

||||

|

||||

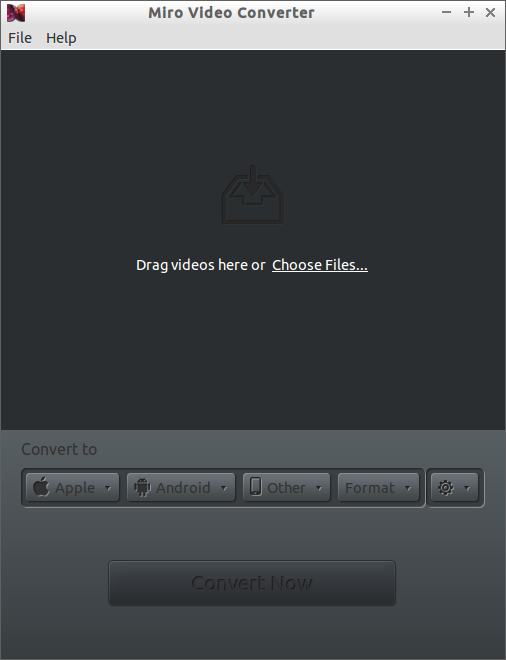

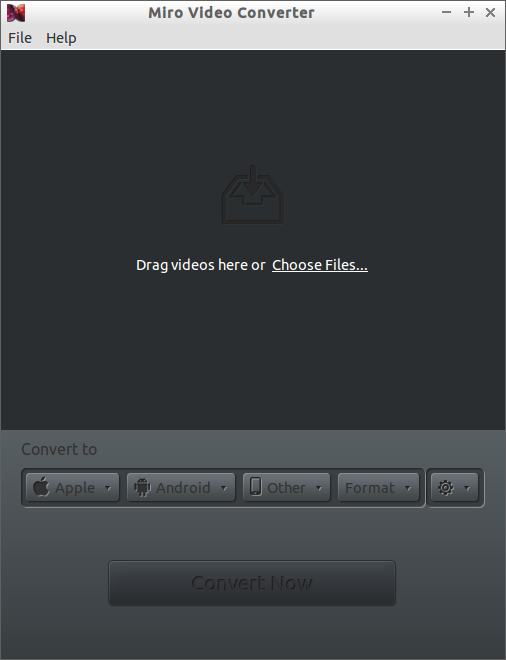

### Miro Video Converter

|

||||

|

||||

|

||||

|

||||

Not all video files are created equally. Some are in proprietary formats. Others look great on a monitor or TV screen but aren't optimized for a mobile device. That's where [Miro Video Converter][6] comes to the rescue.

|

||||

|

||||

Miro Video Converter has a heavy emphasis on mobile. It can convert video that you can play on Android phones, Apple devices, the PlayStation Portable, and the Kindle Fire. It will convert most common video formats to MP4, [WebM][7] , and [Ogg Theora][8] . You can find a full list of supported devices and formats [on Miro's website][6]

|

||||

|

||||

To use it, either drag and drop a file into the window or select the file that you want to convert. Then, click the Format menu to choose the format for the conversion. You can also click the Apple, Android, or Other menus to choose a device for which you want to convert the file. Miro Video Converter resizes the video for the device's screen resolution.

|

||||

|

||||

Do you have a favorite Linux media conversion application? Feel free to share it by leaving a comment.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/7/media-conversion-tools-linux

|

||||

|

||||

作者:[Scott Nesbitt][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/scottnesbitt

|

||||

[1]:http://gnac.sourceforge.net

|

||||

[2]:http://www.gstreamer.net/

|

||||

[3]:http://soundconverter.org/

|

||||

[4]:https://www.biggmatt.com/winff/

|

||||

[5]:https://opensource.com/article/17/6/ffmpeg-convert-media-file-formats

|

||||

[6]:http://www.mirovideoconverter.com/

|

||||

[7]:https://en.wikipedia.org/wiki/WebM

|

||||

[8]:https://en.wikipedia.org/wiki/Ogg_theora

|

||||

@ -1,3 +1,5 @@

|

||||

translating----geekpi

|

||||

|

||||

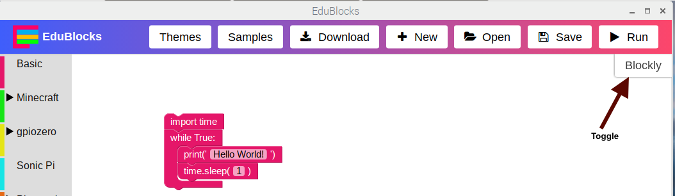

Getting started with Mu, a Python editor for beginners

|

||||

======

|

||||

|

||||

|

||||

101

sources/tech/20180803 UNIX curiosities.md

Normal file

101

sources/tech/20180803 UNIX curiosities.md

Normal file

@ -0,0 +1,101 @@

|

||||

UNIX curiosities

|

||||

======

|

||||

Recently I've been doing more UNIXy things in various tools I'm writing, and I hit two interesting issues. Neither of these are "bugs", but behaviors that I wasn't expecting.

|

||||

|

||||

### Thread-safe printf

|

||||

|

||||

I have a C application that reads some images from disk, does some processing, and writes output about these images to STDOUT. Pseudocode:

|

||||

```

|

||||

for(imagefilename in images)

|

||||

{

|

||||

results = process(imagefilename);

|

||||

printf(results);

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

The processing is independent for each image, so naturally I want to distribute this processing between various CPUs to speed things up. I usually use `fork()`, so I wrote this:

|

||||

```

|

||||

for(child in children)

|

||||

{

|

||||

pipe = create_pipe();

|

||||

worker(pipe);

|

||||

}

|

||||

|

||||

// main parent process

|

||||

for(imagefilename in images)

|

||||

{

|

||||

write(pipe[i_image % N_children], imagefilename)

|

||||

}

|

||||

|

||||

worker()

|

||||

{

|

||||

while(1)

|

||||

{

|

||||

imagefilename = read(pipe);

|

||||

results = process(imagefilename);

|

||||

printf(results);

|

||||

}

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

This is the normal thing: I make pipes for IPC, and send the child workers image filenames through these pipes. Each worker _could_ write its results back to the main process via another set of pipes, but that's a pain, so here each worker writes to the shared STDOUT directly. This works OK, but as one would expect, the writes to STDOUT clash, so the results for the various images end up interspersed. That's bad. I didn't feel like setting up my own locks, but fortunately GNU libc provides facilities for that: [`flockfile()`][1]. I put those in, and … it didn't work! Why? Because whatever `flockfile()` does internally ends up restricted to a single subprocess because of `fork()`'s copy-on-write behavior. I.e. the extra safety provided by `fork()` (compared to threads) actually ends up breaking the locks.

|

||||

|

||||

I haven't tried using other locking mechanisms (like pthread mutexes for instance), but I can imagine they'll have similar problems. And I want to keep things simple, so sending the output back to the parent for output is out of the question: this creates more work for both me the programmer, and for the computer running the program.

|

||||

|

||||

The solution: use threads instead of forks. This has a nice side effect of making the pipes redundant. Final pseudocode:

|

||||

```

|

||||

for(children)

|

||||

{

|

||||

pthread_create(worker, child_index);

|

||||

}

|

||||

for(children)

|

||||

{

|

||||

pthread_join(child);

|

||||

}

|

||||

|

||||

worker(child_index)

|

||||

{

|

||||

for(i_image = child_index; i_image < N_images; i_image += N_children)

|

||||

{

|

||||

results = process(images[i_image]);

|

||||

flockfile(stdout);

|

||||

printf(results);

|

||||

funlockfile(stdout);

|

||||

}

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

Much simpler, and actually works as desired. I guess sometimes threads are better.

|

||||

|

||||

### Passing a partly-read file to a child process

|

||||

|

||||

For various [vnlog][2] tools I needed to implement this sequence:

|

||||

|

||||

1. process opens a file with O_CLOEXEC turned off

|

||||

2. process reads a part of this file (up-to the end of the legend in the case of vnlog)

|

||||

3. process calls exec to invoke another program to process the rest of the already-opened file

|

||||

|

||||

The second program may require a file name on the commandline instead of an already-opened file descriptor because this second program may be calling open() by itself. If I pass it the filename, this new program will re-open the file, and then start reading the file from the beginning, not from the location where the original program left off. It is important for my application that this does not happen, so passing the filename to the second program does not work.

|

||||

|

||||

So I really need to pass the already-open file descriptor somehow. I'm using Linux (other OSs maybe behave differently here), so I can in theory do this by passing /dev/fd/N instead of the filename. But it turns out this does not work either. On Linux (again, maybe this is Linux-specific somehow) for normal files /dev/fd/N is a symlink to the original file. So this ends up doing exactly the same thing that passing the filename does.

|

||||

|

||||

But there's a workaround! If we're reading a pipe instead of a file, then there's nothing to symlink to, and /dev/fd/N ends up passing the original pipe down to the second process, and things then work correctly. And I can fake this by changing the open("filename") above to something like popen("cat filename"). Yuck! Is this really the best we can do? What does this look like on one of the BSDs, say?

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://notes.secretsauce.net/notes/2018/08/03_unix-curiosities.html

|

||||

|

||||

作者:[Dima Kogan][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://notes.secretsauce.net/

|

||||

[1]:https://www.gnu.org/software/libc/manual/html_node/Streams-and-Threads.html

|

||||

[2]:http://www.github.com/dkogan/vnlog

|

||||

159

sources/tech/20180804 Installing Android on VirtualBox.md

Normal file

159

sources/tech/20180804 Installing Android on VirtualBox.md

Normal file

@ -0,0 +1,159 @@

|

||||

Installing Android on VirtualBox

|

||||

======

|

||||

If you are developing mobile apps Android can be a bit of a hassle. While iOS comes with its niceties, provided you are using macOS, Android comes with just Android Studio which is designed to support more than a few Android version, including wearables.

|

||||

|

||||

Needless to say, all the binaries, SDKs, frameworks and debuggers are going to pollute your filesystem with lots and lots of files, logs and other miscellaneous objects. An efficient work around for this is installing Android on your VirtualBox which takes away one of the sluggiest aspect of Android development — The device emulator. You can use this VM to run your test application or just fiddle with Android’s internals. So without further ado let’s set on up!

|

||||

|

||||

### Getting Started

|

||||

|

||||

To get started we will need to have VirtualBox installed on our system, you can get a copy for Windows, macOS or any major distro of Linux [here][1]. Next you would need a copy of Android meant to run on x86 hardware, because that’s what VirtualBox is going to offer to a Virtual Machine an x86 or an x86_64 (a.k.a AMD64) platform to run.

|

||||

|