mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-13 22:30:37 +08:00

commit

30747f1001

2

.gitignore

vendored

2

.gitignore

vendored

@ -3,3 +3,5 @@ members.md

|

|||||||

*.html

|

*.html

|

||||||

*.bak

|

*.bak

|

||||||

.DS_Store

|

.DS_Store

|

||||||

|

sources/*/.*

|

||||||

|

translated/*/.*

|

||||||

95

published/20180128 Being open about data privacy.md

Normal file

95

published/20180128 Being open about data privacy.md

Normal file

@ -0,0 +1,95 @@

|

|||||||

|

对数据隐私持开放的态度

|

||||||

|

======

|

||||||

|

|

||||||

|

> 尽管有包括 GDPR 在内的法规,数据隐私对于几乎所有的人来说都是很重要的事情。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

今天(LCTT 译注:本文发表于 2018/1/28)是<ruby>[数据隐私日][1]<rt>Data Privacy Day</rt></ruby>,(在欧洲叫“<ruby>数据保护日<rt>Data Protection Day</rt></ruby>”),你可能会认为现在我们处于一个开源的世界中,所有的数据都应该是自由的,[就像人们想的那样][2],但是现实并没那么简单。主要有两个原因:

|

||||||

|

|

||||||

|

1. 我们中的大多数(不仅仅是在开源中)认为至少有些关于我们自己的数据是不愿意分享出去的(我在之前发表的一篇文章中列举了一些例子[3])

|

||||||

|

2. 我们很多人虽然在开源中工作,但事实上是为了一些商业公司或者其他一些组织工作,也是在合法的要求范围内分享数据。

|

||||||

|

|

||||||

|

所以实际上,数据隐私对于每个人来说是很重要的。

|

||||||

|

|

||||||

|

事实证明,在美国和欧洲之间,人们和政府认为让组织使用哪些数据的出发点是有些不同的。前者通常为商业实体(特别是愤世嫉俗的人们会指出是大型的商业实体)利用他们所收集到的关于我们的数据提供了更多的自由度。在欧洲,完全是另一观念,一直以来持有的多是有更多约束限制的观念,而且在 5 月 25 日,欧洲的观点可以说取得了胜利。

|

||||||

|

|

||||||

|

### 通用数据保护条例(GDPR)的影响

|

||||||

|

|

||||||

|

那是一个相当全面的声明,其实事实上这是 2016 年欧盟通过的一项称之为<ruby>通用数据保护条例<rt>General Data Protection Regulation</rt></ruby>(GDPR)的立法的日期。数据通用保护条例在私人数据怎样才能被保存,如何才能被使用,谁能使用,能被持有多长时间这些方面设置了严格的规则。它描述了什么数据属于私人数据——而且涉及的条目范围非常广泛,从你的姓名、家庭住址到你的医疗记录以及接通你电脑的 IP 地址。

|

||||||

|

|

||||||

|

通用数据保护条例的重要之处是它并不仅仅适用于欧洲的公司,如果你是阿根廷人、日本人、美国人或者是俄罗斯的公司而且你正在收集涉及到欧盟居民的数据,你就要受到这个条例的约束管辖。

|

||||||

|

|

||||||

|

“哼!” 你可能会这样说^注1 ,“我的业务不在欧洲:他们能对我有啥约束?” 答案很简单:如果你想继续在欧盟做任何生意,你最好遵守,因为一旦你违反了通用数据保护条例的规则,你将会受到你的全球总收入百分之四的惩罚。是的,你没听错,是全球总收入,而不是仅仅在欧盟某一国家的的收入,也不只是净利润,而是全球总收入。这将会让你去叮嘱告知你的法律团队,他们就会知会你的整个团队,同时也会立即去指引你的 IT 团队,确保你的行为在相当短的时间内合规。

|

||||||

|

|

||||||

|

看上去这和非欧盟公民没有什么相关性,但其实不然,对大多数公司来说,对所有的他们的顾客、合作伙伴以及员工实行同样的数据保护措施是件既简单又有效的事情,而不是仅针对欧盟公民实施,这将会是一件很有利的事情。^注2

|

||||||

|

|

||||||

|

然而,数据通用保护条例不久将在全球实施并不意味着一切都会变的很美好^注3 :事实并非如此,我们一直在丢弃关于我们自己的信息——而且允许公司去使用它。

|

||||||

|

|

||||||

|

有一句话是这么说的(尽管很争议):“如果你没有在付费,那么你就是产品。”这句话的意思就是如果你没有为某一项服务付费,那么其他的人就在付费使用你的数据。你有付费使用 Facebook、推特、谷歌邮箱?你觉得他们是如何赚钱的?大部分是通过广告,一些人会争论那是他们向你提供的一项服务而已,但事实上是他们在利用你的数据从广告商里获取收益。你不是一个真正的广告的顾客——只有当你从看了广告后买了他们的商品之后你才变成了他们的顾客,但直到这个发生之前,都是广告平台和广告商的关系。

|

||||||

|

|

||||||

|

有些服务是允许你通过付费来消除广告的(流媒体音乐平台声破天就是这样的),但从另一方面来讲,即使你认为付费的服务也可以启用广告(例如,亚马逊正在努力让 Alexa 发广告),除非我们想要开始为这些所有的免费服务付费,我们需要清楚我们所放弃的,而且在我们暴露的和不想暴露的之间做一些选择。

|

||||||

|

|

||||||

|

### 谁是顾客?

|

||||||

|

|

||||||

|

关于数据的另一个问题一直在困扰着我们,它是产生的数据量的直接结果。有许多组织一直在产生巨量的数据,包括公共的组织比如大学、医院或者是政府部门^注4 ——而且他们没有能力去储存这些数据。如果这些数据没有长久的价值也就没什么要紧的,但事实正好相反,随着处理大数据的工具正在开发中,而且这些组织也认识到他们现在以及在不久的将来将能够去挖掘这些数据。

|

||||||

|

|

||||||

|

然而他们面临的是,随着数据的增长和存储量无法跟上该怎么办。幸运的是——而且我是带有讽刺意味的使用了这个词^注5 ,大公司正在介入去帮助他们。“把你们的数据给我们,”他们说,“我们将免费保存。我们甚至让你随时能够使用你所收集到的数据!”这听起来很棒,是吗?这是大公司^注6 的一个极具代表性的例子,站在慈善的立场上帮助公共组织管理他们收集到的关于我们的数据。

|

||||||

|

|

||||||

|

不幸的是,慈善不是唯一的理由。他们是附有条件的:作为同意保存数据的交换条件,这些公司得到了将数据访问权限出售给第三方的权利。你认为公共组织,或者是被收集数据的人在数据被出售使用权使给第三方,以及在他们如何使用上能有发言权吗?我将把这个问题当做一个练习留给读者去思考。^注7

|

||||||

|

|

||||||

|

### 开放和积极

|

||||||

|

|

||||||

|

然而并不只有坏消息。政府中有一项在逐渐发展起来的“开放数据”运动鼓励各个部门免费开放大量他们的数据给公众或者其他组织。在某些情况下,这是专门立法的。许多志愿组织——尤其是那些接受公共资金的——正在开始这样做。甚至商业组织也有感兴趣的苗头。而且,有一些技术已经可行了,例如围绕不同的隐私和多方计算上,正在允许跨越多个数据集挖掘数据,而不用太多披露个人的信息——这个计算问题从未如现在比你想象的更容易。

|

||||||

|

|

||||||

|

这些对我们来说意味着什么呢?我之前在网站 Opensource.com 上写过关于[开源的共享福利][4],而且我越来越相信我们需要把我们的视野从软件拓展到其他区域:硬件、组织,和这次讨论有关的,数据。让我们假设一下你是 A 公司要提向另一家公司客户 B^注8 提供一项服务 。在此有四种不同类型的数据:

|

||||||

|

|

||||||

|

1. 数据完全开放:对 A 和 B 都是可得到的,世界上任何人都可以得到

|

||||||

|

2. 数据是已知的、共享的,和机密的:A 和 B 可得到,但其他人不能得到

|

||||||

|

3. 数据是公司级别上保密的:A 公司可以得到,但 B 顾客不能

|

||||||

|

4. 数据是顾客级别保密的:B 顾客可以得到,但 A 公司不能

|

||||||

|

|

||||||

|

首先,也许我们对数据应该更开放些,将数据默认放到选项 1 中。如果那些数据对所有人开放——在无人驾驶、语音识别,矿藏以及人口数据统计会有相当大的作用的。^注9 如果我们能够找到方法将数据放到选项 2、3 和 4 中,不是很好吗?——或者至少它们中的一些——在选项 1 中是可以实现的,同时仍将细节保密?这就是研究这些新技术的希望。

|

||||||

|

然而有很长的路要走,所以不要太兴奋,同时,开始考虑将你的的一些数据默认开放。

|

||||||

|

|

||||||

|

### 一些具体的措施

|

||||||

|

|

||||||

|

我们如何处理数据的隐私和开放?下面是我想到的一些具体的措施:欢迎大家评论做出更多的贡献。

|

||||||

|

|

||||||

|

* 检查你的组织是否正在认真严格的执行通用数据保护条例。如果没有,去推动实施它。

|

||||||

|

* 要默认加密敏感数据(或者适当的时候用散列算法),当不再需要的时候及时删掉——除非数据正在被处理使用,否则没有任何借口让数据清晰可见。

|

||||||

|

* 当你注册了一个服务的时候考虑一下你公开了什么信息,特别是社交媒体类的。

|

||||||

|

* 和你的非技术朋友讨论这个话题。

|

||||||

|

* 教育你的孩子、你朋友的孩子以及他们的朋友。然而最好是去他们的学校和他们的老师谈谈在他们的学校中展示。

|

||||||

|

* 鼓励你所服务和志愿贡献的组织,或者和他们沟通一些推动数据的默认开放。不是去思考为什么我要使数据开放,而是从我为什么不让数据开放开始。

|

||||||

|

* 尝试去访问一些开源数据。挖掘使用它、开发应用来使用它,进行数据分析,画漂亮的图,^注10 制作有趣的音乐,考虑使用它来做些事。告诉组织去使用它们,感谢它们,而且鼓励他们去做更多。

|

||||||

|

|

||||||

|

**注:**

|

||||||

|

|

||||||

|

1. 我承认你可能尽管不会。

|

||||||

|

2. 假设你坚信你的个人数据应该被保护。

|

||||||

|

3. 如果你在思考“极好的”的寓意,在这点上你并不孤独。

|

||||||

|

4. 事实上这些机构能够有多开放取决于你所居住的地方。

|

||||||

|

5. 假设我是英国人,那是非常非常大的剂量。

|

||||||

|

6. 他们可能是巨大的公司:没有其他人能够负担得起这么大的存储和基础架构来使数据保持可用。

|

||||||

|

7. 不,答案是“不”。

|

||||||

|

8. 尽管这个例子也同样适用于个人。看看:A 可能是 Alice,B 可能是 BOb……

|

||||||

|

9. 并不是说我们应该暴露个人的数据或者是这样的数据应该被保密,当然——不是那类的数据。

|

||||||

|

10. 我的一个朋友当她接孩子放学的时候总是下雨,所以为了避免确认失误,她在整个学年都访问天气信息并制作了图表分享到社交媒体上。

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://opensource.com/article/18/1/being-open-about-data-privacy

|

||||||

|

|

||||||

|

作者:[Mike Bursell][a]

|

||||||

|

译者:[FelixYFZ](https://github.com/FelixYFZ)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://opensource.com/users/mikecamel

|

||||||

|

[1]:https://en.wikipedia.org/wiki/Data_Privacy_Day

|

||||||

|

[2]:https://en.wikipedia.org/wiki/Information_wants_to_be_free

|

||||||

|

[3]:https://aliceevebob.wordpress.com/2017/06/06/helping-our-governments-differently/

|

||||||

|

[4]:https://opensource.com/article/17/11/commonwealth-open-source

|

||||||

|

[5]:http://www.outpost9.com/reference/jargon/jargon_40.html#TAG2036

|

||||||

@ -0,0 +1,132 @@

|

|||||||

|

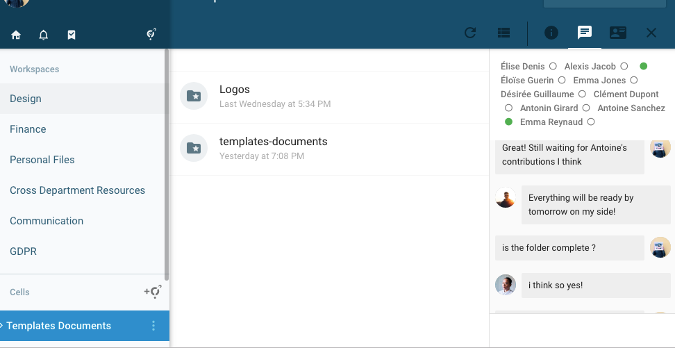

IT 自动化的下一步是什么: 6 大趋势

|

||||||

|

======

|

||||||

|

|

||||||

|

> 自动化专家分享了一点对 [自动化][6]不远的将来的看法。请将这些保留在你的视线之内。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

我们最近讨论了 [推动 IT 自动化的因素][1],可以看到[当前趋势][2]正在增长,以及那些给刚开始使用自动化部分流程的组织的 [有用的技巧][3] 。

|

||||||

|

|

||||||

|

噢,我们也分享了如何在贵公司[进行自动化的案例][4]及 [长期成功的关键][5]的专家建议。

|

||||||

|

|

||||||

|

现在,只有一个问题:自动化的下一步是什么? 我们邀请一系列专家分享一下 [自动化][6]不远的将来的看法。 以下是他们建议 IT 领域领导需密切关注的六大趋势。

|

||||||

|

|

||||||

|

### 1、 机器学习的成熟

|

||||||

|

|

||||||

|

对于关于 [机器学习][7](与“自我学习系统”相似的定义)的讨论,对于绝大多数组织的项目来说,实际执行起来它仍然为时过早。但预计这将发生变化,机器学习将在下一次 IT 自动化浪潮中将扮演着至关重要的角色。

|

||||||

|

|

||||||

|

[Advanced Systems Concepts, Inc.][8] 公司的工程总监 Mehul Amin 指出机器学习是 IT 自动化下一个关键增长领域之一。

|

||||||

|

|

||||||

|

“随着数据化的发展,自动化软件理应可以自我决策,否则这就是开发人员的责任了”,Amin 说。 “例如,开发者构建了需要执行的内容,但通过使用来自系统内部分析的软件,可以确定执行该流程的最佳系统。”

|

||||||

|

|

||||||

|

假设将这个系统延伸到其他地方中。Amin 指出,机器学习可以使自动化系统在必要的时候提供额外的资源,以需要满足时间线或 SLA,同样在不需要资源以及其他的可能性的时候退出。

|

||||||

|

|

||||||

|

显然不只有 Amin 一个人这样认为。

|

||||||

|

|

||||||

|

“IT 自动化正在走向自我学习的方向” ,[Sungard Availability Services][9] 公司首席架构师 Kiran Chitturi 表示,“系统将会能测试和监控自己,加强业务流程和软件交付能力。”

|

||||||

|

|

||||||

|

Chitturi 指出自动化测试就是个例子。脚本测试已经被广泛采用,但很快这些自动化测试流程将会更容易学习,更快发展,例如开发出新的代码或将更为广泛地影响生产环境。

|

||||||

|

|

||||||

|

### 2、 人工智能催生的自动化

|

||||||

|

|

||||||

|

上述原则同样适合与相关的(但是独立的) [人工智能][10]的领域。根据对人工智能的定义,机器学习在短时间内可能会对 IT 领域产生巨大的影响(并且我们可能会看到这两个领域的许多重叠的定义和理解)。假定新兴的人工智能技术将也会产生新的自动化机会。

|

||||||

|

|

||||||

|

[SolarWinds][11] 公司技术负责人 Patrick Hubbard 说,“人工智能和机器学习的整合普遍被认为对未来几年的商业成功起至关重要的作用。”

|

||||||

|

|

||||||

|

### 3、 这并不意味着不再需要人力

|

||||||

|

|

||||||

|

让我们试着安慰一下那些不知所措的人:前两种趋势并不一定意味着我们将失去工作。

|

||||||

|

|

||||||

|

这很可能意味着各种角色的改变,以及[全新角色][12]的创造。

|

||||||

|

|

||||||

|

但是在可预见的将来,至少,你不必需要对机器人鞠躬。

|

||||||

|

|

||||||

|

“一台机器只能运行在给定的环境变量中——它不能选择包含新的变量,在今天只有人类可以这样做,” Hubbard 解释说。“但是,对于 IT 专业人员来说,这将需要培养 AI 和自动化技能,如对程序设计、编程、管理人工智能和机器学习功能算法的基本理解,以及用强大的安全状态面对更复杂的网络攻击。”

|

||||||

|

|

||||||

|

Hubbard 分享一些新的工具或功能例子,例如支持人工智能的安全软件或机器学习的应用程序,这些应用程序可以远程发现石油管道中的维护需求。两者都可以提高效益和效果,自然不会代替需要信息安全或管道维护的人员。

|

||||||

|

|

||||||

|

“许多新功能仍需要人工监控,”Hubbard 说。“例如,为了让机器确定一些‘预测’是否可能成为‘规律’,人为的管理是必要的。”

|

||||||

|

|

||||||

|

即使你把机器学习和 AI 先放在一边,看待一般的 IT 自动化,同样原理也是成立的,尤其是在软件开发生命周期中。

|

||||||

|

|

||||||

|

[Juniper Networks][13] 公司自动化首席架构师 Matthew Oswalt ,指出 IT 自动化增长的根本原因是它通过减少操作基础设施所需的人工工作量来创造直接价值。

|

||||||

|

|

||||||

|

> 在代码上,操作工程师可以使用事件驱动的自动化提前定义他们的工作流程,而不是在凌晨 3 点来应对基础设施的问题。

|

||||||

|

|

||||||

|

“它也将操作工作流程作为代码而不再是容易过时的文档或系统知识阶段,”Oswalt 解释说。“操作人员仍然需要在[自动化]工具响应事件方面后发挥积极作用。采用自动化的下一个阶段是建立一个能够跨 IT 频谱识别发生的有趣事件的系统,并以自主方式进行响应。在代码上,操作工程师可以使用事件驱动的自动化提前定义他们的工作流程,而不是在凌晨 3 点来应对基础设施的问题。他们可以依靠这个系统在任何时候以同样的方式作出回应。”

|

||||||

|

|

||||||

|

### 4、 对自动化的焦虑将会减少

|

||||||

|

|

||||||

|

SolarWinds 公司的 Hubbard 指出,“自动化”一词本身就产生大量的不确定性和担忧,不仅仅是在 IT 领域,而且是跨专业领域,他说这种担忧是合理的。但一些随之而来的担忧可能被夸大了,甚至与科技产业本身共存。现实可能实际上是这方面的镇静力:当自动化的实际实施和实践帮助人们认识到这个列表中的第 3 项时,我们将看到第 4 项的出现。

|

||||||

|

|

||||||

|

“今年我们可能会看到对自动化焦虑的减少,更多的组织开始接受人工智能和机器学习作为增加现有人力资源的一种方式,”Hubbard 说。“自动化历史上为更多的工作创造了空间,通过降低成本和时间来完成较小任务,并将劳动力重新集中到无法自动化并需要人力的事情上。人工智能和机器学习也是如此。”

|

||||||

|

|

||||||

|

自动化还将减少令 IT 领导者神经紧张的一些焦虑:安全。正如[红帽][14]公司首席架构师 Matt Smith 最近[指出][15]的那样,自动化将越来越多地帮助 IT 部门降低与维护任务相关的安全风险。

|

||||||

|

|

||||||

|

他的建议是:“首先在维护活动期间记录和自动化 IT 资产之间的交互。通过依靠自动化,您不仅可以消除之前需要大量手动操作和手术技巧的任务,还可以降低人为错误的风险,并展示当您的 IT 组织采纳变更和新工作方法时可能发生的情况。最终,这将迅速减少对应用安全补丁的抵制。而且它还可以帮助您的企业在下一次重大安全事件中摆脱头条新闻。”

|

||||||

|

|

||||||

|

**[ 阅读全文: [12个企业安全坏习惯要打破。][16] ] **

|

||||||

|

|

||||||

|

### 5、 脚本和自动化工具将持续发展

|

||||||

|

|

||||||

|

许多组织看到了增加自动化的第一步,通常以脚本或自动化工具(有时称为配置管理工具)的形式作为“早期”工作。

|

||||||

|

|

||||||

|

但是随着各种自动化技术的使用,对这些工具的观点也在不断发展。

|

||||||

|

|

||||||

|

[DataVision][18] 首席运营官 Mark Abolafia 表示:“数据中心环境中存在很多重复性过程,容易出现人为错误,[Ansible][17] 等技术有助于缓解这些问题。“通过 Ansible ,人们可以为一组操作编写特定的步骤,并输入不同的变量,例如地址等,使过去长时间的过程链实现自动化,而这些过程以前都需要人为触摸和更长的交付时间。”

|

||||||

|

|

||||||

|

**[想了解更多关于 Ansible 这个方面的知识吗?阅读相关文章:[使用 Ansible 时的成功秘诀][19]。 ]**

|

||||||

|

|

||||||

|

另一个因素是:工具本身将继续变得更先进。

|

||||||

|

|

||||||

|

“使用先进的 IT 自动化工具,开发人员将能够在更短的时间内构建和自动化工作流程,减少易出错的编码,” ASCI 公司的 Amin 说。“这些工具包括预先构建的、预先测试过的拖放式集成,API 作业,丰富的变量使用,参考功能和对象修订历史记录。”

|

||||||

|

|

||||||

|

### 6、 自动化开创了新的指标机会

|

||||||

|

|

||||||

|

正如我们在此前所说的那样,IT 自动化不是万能的。它不会修复被破坏的流程,或者以其他方式为您的组织提供全面的灵丹妙药。这也是持续不断的:自动化并不排除衡量性能的必要性。

|

||||||

|

|

||||||

|

**[ 参见我们的相关文章 [DevOps 指标:你在衡量什么重要吗?][20] ]**

|

||||||

|

|

||||||

|

实际上,自动化应该打开了新的机会。

|

||||||

|

|

||||||

|

[Janeiro Digital][21] 公司架构师总裁 Josh Collins 说,“随着越来越多的开发活动 —— 源代码管理、DevOps 管道、工作项目跟踪等转向 API 驱动的平台,将这些原始数据拼接在一起以描绘组织效率提升的机会和图景”。

|

||||||

|

|

||||||

|

Collins 认为这是一种可能的新型“开发组织度量指标”。但不要误认为这意味着机器和算法可以突然预测 IT 所做的一切。

|

||||||

|

|

||||||

|

“无论是衡量个人资源还是整体团队,这些指标都可以很强大 —— 但应该用大量的背景来衡量。”Collins 说,“将这些数据用于高层次趋势并确认定性观察 —— 而不是临床评级你的团队。”

|

||||||

|

|

||||||

|

**想要更多这样知识, IT 领导者?[注册我们的每周电子邮件通讯][22]。**

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://enterprisersproject.com/article/2018/3/what-s-next-it-automation-6-trends-watch

|

||||||

|

|

||||||

|

作者:[Kevin Casey][a]

|

||||||

|

译者:[MZqk](https://github.com/MZqk)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://enterprisersproject.com/user/kevin-casey

|

||||||

|

[1]:https://enterprisersproject.com/article/2017/12/5-factors-fueling-automation-it-now

|

||||||

|

[2]:https://enterprisersproject.com/article/2017/12/4-trends-watch-it-automation-expands

|

||||||

|

[3]:https://enterprisersproject.com/article/2018/1/getting-started-automation-6-tips

|

||||||

|

[4]:https://enterprisersproject.com/article/2018/1/how-make-case-it-automation

|

||||||

|

[5]:https://enterprisersproject.com/article/2018/1/it-automation-best-practices-7-keys-long-term-success

|

||||||

|

[6]:https://enterprisersproject.com/tags/automation

|

||||||

|

[7]:https://enterprisersproject.com/article/2018/2/how-spot-machine-learning-opportunity

|

||||||

|

[8]:https://www.advsyscon.com/en-us/

|

||||||

|

[9]:https://www.sungardas.com/en/

|

||||||

|

[10]:https://enterprisersproject.com/tags/artificial-intelligence

|

||||||

|

[11]:https://www.solarwinds.com/

|

||||||

|

[12]:https://enterprisersproject.com/article/2017/12/8-emerging-ai-jobs-it-pros

|

||||||

|

[13]:https://www.juniper.net/

|

||||||

|

[14]:https://www.redhat.com/en?intcmp=701f2000000tjyaAAA

|

||||||

|

[15]:https://enterprisersproject.com/article/2018/2/12-bad-enterprise-security-habits-break

|

||||||

|

[16]:https://enterprisersproject.com/article/2018/2/12-bad-enterprise-security-habits-break?sc_cid=70160000000h0aXAAQ

|

||||||

|

[17]:https://opensource.com/tags/ansible

|

||||||

|

[18]:https://datavision.com/

|

||||||

|

[19]:https://opensource.com/article/18/2/tips-success-when-getting-started-ansible?intcmp=701f2000000tjyaAAA

|

||||||

|

[20]:https://enterprisersproject.com/article/2017/7/devops-metrics-are-you-measuring-what-matters?sc_cid=70160000000h0aXAAQ

|

||||||

|

[21]:https://www.janeirodigital.com/

|

||||||

|

[22]:https://enterprisersproject.com/email-newsletter?intcmp=701f2000000tsjPAAQ

|

||||||

@ -0,0 +1,134 @@

|

|||||||

|

如何轻松地检查 Ubuntu 版本以及其它系统信息

|

||||||

|

======

|

||||||

|

|

||||||

|

> 摘要:想知道你正在使用的 Ubuntu 具体是什么版本吗?这篇文档将告诉你如何检查你的 Ubuntu 版本、桌面环境以及其他相关的系统信息。

|

||||||

|

|

||||||

|

通常,你能非常容易的通过命令行或者图形界面获取你正在使用的 Ubuntu 的版本。当你正在尝试学习一篇互联网上的入门教材或者正在从各种各样的论坛里获取帮助的时候,知道当前正在使用的 Ubuntu 确切的版本号、桌面环境以及其他的系统信息将是尤为重要的。

|

||||||

|

|

||||||

|

在这篇简短的文章中,作者将展示各种检查 [Ubuntu][1] 版本以及其他常用的系统信息的方法。

|

||||||

|

|

||||||

|

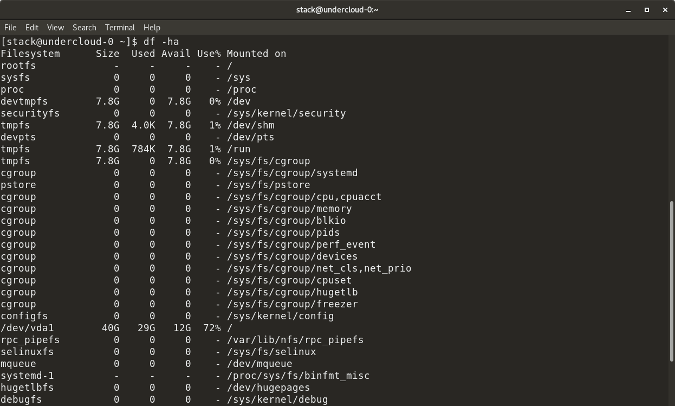

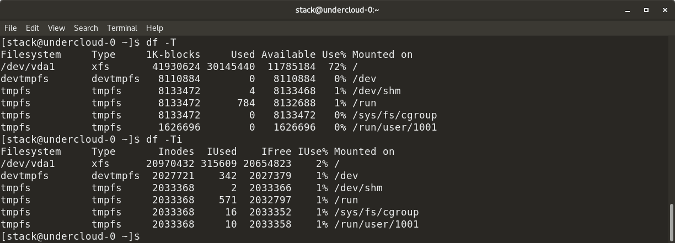

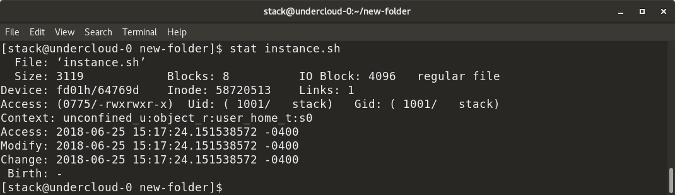

### 如何在命令行检查 Ubuntu 版本

|

||||||

|

|

||||||

|

这个是获得 Ubuntu 版本的最好的办法。我本想先展示如何用图形界面做到这一点,但是我决定还是先从命令行方法说起,因为这种方法不依赖于你使用的任何[桌面环境][2]。 你可以在 Ubuntu 的任何变种系统上使用这种方法。

|

||||||

|

|

||||||

|

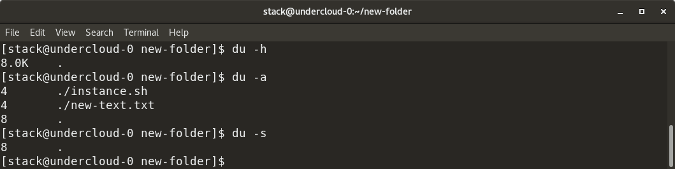

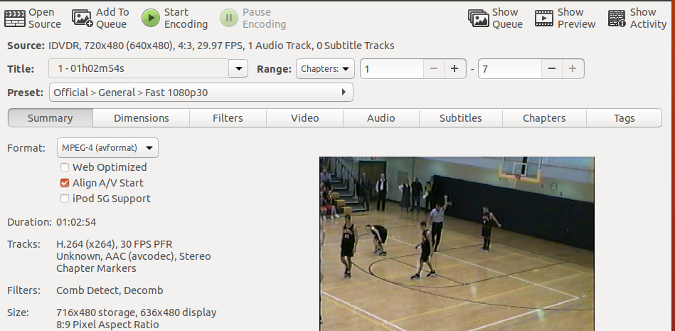

打开你的命令行终端 (`Ctrl+Alt+T`), 键入下面的命令:

|

||||||

|

|

||||||

|

```

|

||||||

|

lsb_release -a

|

||||||

|

```

|

||||||

|

|

||||||

|

上面命令的输出应该如下:

|

||||||

|

|

||||||

|

```

|

||||||

|

No LSB modules are available.

|

||||||

|

Distributor ID: Ubuntu

|

||||||

|

Description: Ubuntu 16.04.4 LTS

|

||||||

|

Release: 16.04

|

||||||

|

Codename: xenial

|

||||||

|

```

|

||||||

|

|

||||||

|

![How to check Ubuntu version in command line][3]

|

||||||

|

|

||||||

|

正像你所看到的,当前我的系统安装的 Ubuntu 版本是 Ubuntu 16.04, 版本代号: Xenial。

|

||||||

|

|

||||||

|

且慢!为什么版本描述中显示的是 Ubuntu 16.04.4 而发行版本是 16.04?到底哪个才是正确的版本?16.04 还是 16.04.4? 这两者之间有什么区别?

|

||||||

|

|

||||||

|

如果言简意赅的回答这个问题的话,那么答案应该是你正在使用 Ubuntu 16.04。这个是基准版本,而 16.04.4 进一步指明这是 16.04 的第四个补丁版本。你可以将补丁版本理解为 Windows 世界里的服务包。在这里,16.04 和 16.04.4 都是正确的版本号。

|

||||||

|

|

||||||

|

那么输出的 Xenial 又是什么?那正是 Ubuntu 16.04 的版本代号。你可以阅读下面这篇文章获取更多信息:[了解 Ubuntu 的命名惯例][4]。

|

||||||

|

|

||||||

|

#### 其他一些获取 Ubuntu 版本的方法

|

||||||

|

|

||||||

|

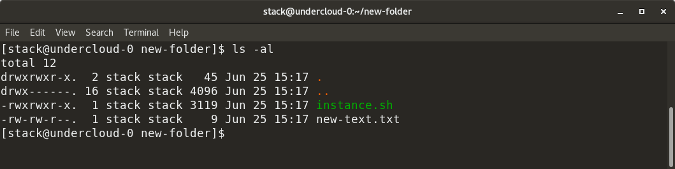

你也可以使用下面任意的命令得到 Ubuntu 的版本:

|

||||||

|

|

||||||

|

```

|

||||||

|

cat /etc/lsb-release

|

||||||

|

```

|

||||||

|

|

||||||

|

输出如下信息:

|

||||||

|

|

||||||

|

```

|

||||||

|

DISTRIB_ID=Ubuntu

|

||||||

|

DISTRIB_RELEASE=16.04

|

||||||

|

DISTRIB_CODENAME=xenial

|

||||||

|

DISTRIB_DESCRIPTION="Ubuntu 16.04.4 LTS"

|

||||||

|

```

|

||||||

|

|

||||||

|

![How to check Ubuntu version in command line][5]

|

||||||

|

|

||||||

|

|

||||||

|

你还可以使用下面的命令来获得 Ubuntu 版本:

|

||||||

|

|

||||||

|

```

|

||||||

|

cat /etc/issue

|

||||||

|

```

|

||||||

|

|

||||||

|

|

||||||

|

命令行的输出将会如下:

|

||||||

|

|

||||||

|

```

|

||||||

|

Ubuntu 16.04.4 LTS \n \l

|

||||||

|

```

|

||||||

|

|

||||||

|

不要介意输出末尾的\n \l. 这里 Ubuntu 版本就是 16.04.4,或者更加简单:16.04。

|

||||||

|

|

||||||

|

|

||||||

|

### 如何在图形界面下得到 Ubuntu 版本

|

||||||

|

|

||||||

|

在图形界面下获取 Ubuntu 版本更是小事一桩。这里我使用了 Ubuntu 18.04 的图形界面系统 GNOME 的屏幕截图来展示如何做到这一点。如果你在使用 Unity 或者别的桌面环境的话,显示可能会有所不同。这也是为什么我推荐使用命令行方式来获得版本的原因:你不用依赖形形色色的图形界面。

|

||||||

|

|

||||||

|

下面我来展示如何在桌面环境获取 Ubuntu 版本。

|

||||||

|

|

||||||

|

进入‘系统设置’并点击下面的‘详细信息’栏。

|

||||||

|

|

||||||

|

![Finding Ubuntu version graphically][6]

|

||||||

|

|

||||||

|

你将会看到系统的 Ubuntu 版本和其他和桌面系统有关的系统信息 这里的截图来自 [GNOME][7] 。

|

||||||

|

|

||||||

|

![Finding Ubuntu version graphically][8]

|

||||||

|

|

||||||

|

### 如何知道桌面环境以及其他的系统信息

|

||||||

|

|

||||||

|

你刚才学习的是如何得到 Ubuntu 的版本信息,那么如何知道桌面环境呢? 更进一步, 如果你还想知道当前使用的 Linux 内核版本呢?

|

||||||

|

|

||||||

|

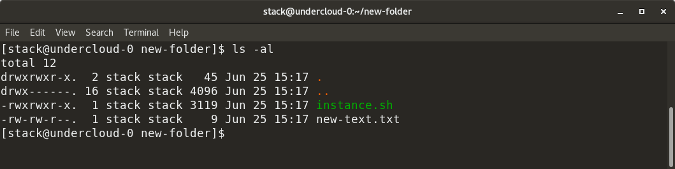

有各种各样的命令你可以用来得到这些信息,不过今天我想推荐一个命令行工具, 叫做 [Neofetch][9]。 这个工具能在命令行完美展示系统信息,包括 Ubuntu 或者其他 Linux 发行版的系统图标。

|

||||||

|

|

||||||

|

用下面的命令安装 Neofetch:

|

||||||

|

|

||||||

|

```

|

||||||

|

sudo apt install neofetch

|

||||||

|

```

|

||||||

|

|

||||||

|

安装成功后,运行 `neofetch` 将会优雅的展示系统的信息如下。

|

||||||

|

|

||||||

|

![System information in Linux terminal][10]

|

||||||

|

|

||||||

|

如你所见,`neofetch` 完全展示了 Linux 内核版本、Ubuntu 的版本、桌面系统版本以及环境、主题和图标等等信息。

|

||||||

|

|

||||||

|

|

||||||

|

希望我如上展示方法能帮到你更快的找到你正在使用的 Ubuntu 版本和其他系统信息。如果你对这篇文章有其他的建议,欢迎在评论栏里留言。

|

||||||

|

|

||||||

|

再见。:)

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://itsfoss.com/how-to-know-ubuntu-unity-version/

|

||||||

|

|

||||||

|

作者:[Abhishek Prakash][a]

|

||||||

|

选题:[lujun9972](https://github.com/lujun9972)

|

||||||

|

译者:[DavidChenLiang](https://github.com/davidchenliang)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]: https://itsfoss.com/author/abhishek/

|

||||||

|

[1]:https://www.ubuntu.com/

|

||||||

|

[2]:https://en.wikipedia.org/wiki/Desktop_environment

|

||||||

|

[3]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2013/03/check-ubuntu-version-command-line-1-800x216.jpeg

|

||||||

|

[4]:https://itsfoss.com/linux-code-names/

|

||||||

|

[5]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2013/03/check-ubuntu-version-command-line-2-800x185.jpeg

|

||||||

|

[6]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2013/03/ubuntu-version-system-settings.jpeg

|

||||||

|

[7]:https://www.gnome.org/

|

||||||

|

[8]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2013/03/checking-ubuntu-version-gui.jpeg

|

||||||

|

[9]:https://itsfoss.com/display-linux-logo-in-ascii/

|

||||||

|

[10]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2013/03/ubuntu-system-information-terminal-800x400.jpeg

|

||||||

@ -2,7 +2,8 @@ Android 工程师的一年

|

|||||||

============================================================

|

============================================================

|

||||||

|

|

||||||

|

|

||||||

>妙绝的绘画来自 [Miquel Beltran][0]

|

|

||||||

|

> 这幅妙绝的绘画来自 [Miquel Beltran][0]

|

||||||

|

|

||||||

我的技术生涯,从两年前算起。开始是 QA 测试员,一年后就转入开发人员角色。没怎么努力,也没有投入过多的个人时间。

|

我的技术生涯,从两年前算起。开始是 QA 测试员,一年后就转入开发人员角色。没怎么努力,也没有投入过多的个人时间。

|

||||||

|

|

||||||

@ -12,7 +13,7 @@ Android 工程师的一年

|

|||||||

|

|

||||||

我的第一个职位角色, Android 开发者,开始于一年前。我工作的这家公司,可以花一半的时间去尝试其它角色的工作,这给我从 QA 职位转到 Android 开发者职位创造了机会。

|

我的第一个职位角色, Android 开发者,开始于一年前。我工作的这家公司,可以花一半的时间去尝试其它角色的工作,这给我从 QA 职位转到 Android 开发者职位创造了机会。

|

||||||

|

|

||||||

这一转变归功于我在晚上和周末投入学习 Android 的时间。我通过了[ Android 基础纳米学位][3]、[Andriod 工程师纳米学位][4]课程,也获得了[ Google 开发者认证][5]。这部分的详细故事在[这儿][6]。

|

这一转变归功于我在晚上和周末投入学习 Android 的时间。我通过了 [Android 基础纳米学位][3]、[Andriod 工程师纳米学位][4]课程,也获得了 [Google 开发者认证][5]。这部分的详细故事在[这儿][6]。

|

||||||

|

|

||||||

两个月后,公司雇佣了另一位 QA,我转向全职工作。挑战从此开始!

|

两个月后,公司雇佣了另一位 QA,我转向全职工作。挑战从此开始!

|

||||||

|

|

||||||

@ -46,29 +47,27 @@ Android 工程师的一年

|

|||||||

|

|

||||||

一个例子就是拉取代码进行公开展示和代码审查。有是我会请同事私下检查我的代码,并不想被公开拉取,向任何人展示。

|

一个例子就是拉取代码进行公开展示和代码审查。有是我会请同事私下检查我的代码,并不想被公开拉取,向任何人展示。

|

||||||

|

|

||||||

其他时候,当我做代码审查时,会花好几分钟盯着"批准"按纽犹豫不决,在担心审查通过的会被其他同事找出毛病。

|

其他时候,当我做代码审查时,会花好几分钟盯着“批准”按纽犹豫不决,在担心审查通过的代码会被其他同事找出毛病。

|

||||||

|

|

||||||

当我在一些事上持反对意见时,由于缺乏相关知识,担心被坐冷板凳,从来没有大声说出来过。

|

当我在一些事上持反对意见时,由于缺乏相关知识,担心被坐冷板凳,从来没有大声说出来过。

|

||||||

|

|

||||||

> 某些时间我会请同事私下[...]检查我的代码,以避免被公开展示。

|

> 某些时间我会请同事私下[...]检查我的代码,以避免被公开展示。

|

||||||

|

|

||||||

* * *

|

|

||||||

|

|

||||||

### 新的公司,新的挑战

|

### 新的公司,新的挑战

|

||||||

|

|

||||||

后来,我手边有了个新的机会。感谢曾经和我共事的朋友,我被[ Babbel ][7]邀请去参加初级 Android 工程师职位的招聘流程。

|

后来,我手边有了个新的机会。感谢曾经和我共事的朋友,我被 [Babbel][7] 邀请去参加初级 Android 工程师职位的招聘流程。

|

||||||

|

|

||||||

我见到了他们的团队,同时自告奋勇的在他们办公室主持了一次本地会议。此事让我下定决心要申请这个职位。我喜欢公司的箴言:全民学习。其次,公司每个人都非常友善,在那儿工作看起来很愉快!但我没有马上申请,因为我认为自己不够好,所以为什么能申请呢?

|

我见到了他们的团队,同时自告奋勇的在他们办公室主持了一次本地会议。此事让我下定决心要申请这个职位。我喜欢公司的箴言:全民学习。其次,公司每个人都非常友善,在那儿工作看起来很愉快!但我没有马上申请,因为我认为自己不够好,所以为什么能申请呢?

|

||||||

|

|

||||||

还好我的朋友和搭档推动我这样做,他们给了我发送简历的力量和勇气。过后不久就进入了面试流程。这很简单:以很小的应该程序来进行编码挑战,随后是和团队一起的技术面试,之后是和招聘经理间关于团队合作的面试。

|

还好我的朋友和搭档推动我这样做,他们给了我发送简历的力量和勇气。过后不久就进入了面试流程。这很简单:以很小的程序的形式来进行编码挑战,随后是和团队一起的技术面试,之后是和招聘经理间关于团队合作的面试。

|

||||||

|

|

||||||

#### 招聘过程

|

#### 招聘过程

|

||||||

|

|

||||||

我用周未的时间来完成编码挑战的项目,并在周一就立即发送过去。不久就受邀去当场面试。

|

我用周未的时间来完成编码挑战的项目,并在周一就立即发送过去。不久就受邀去当场面试。

|

||||||

|

|

||||||

技术面试是关于编程挑战本身,我们谈论了 Android 好的不好的、我为什么以这种方式实现这功能,以及如何改进等等。随后是招聘经理进行的一次简短的关于团队合作面试,也有涉及到编程挑战的事,我们谈到了我面临的挑战,我如何解决这些问题,等等。

|

技术面试是关于编程挑战本身,我们谈论了 Android 好的不好的地方、我为什么以这种方式实现这功能,以及如何改进等等。随后是招聘经理进行的一次简短的关于团队合作面试,也有涉及到编程挑战的事,我们谈到了我面临的挑战,我如何解决这些问题,等等。

|

||||||

|

|

||||||

最后,通过面试,得到 offer, 我授受了!

|

最后,通过面试,得到 offer,我授受了!

|

||||||

|

|

||||||

我的 Android 工程师生涯的第一年,有九个月在一个公司,后面三个月在当前的公司。

|

我的 Android 工程师生涯的第一年,有九个月在一个公司,后面三个月在当前的公司。

|

||||||

|

|

||||||

@ -88,7 +87,7 @@ Android 工程师的一年

|

|||||||

|

|

||||||

两次三次后,压力就堵到胸口。为什么我还不知道?为什么就那么难理解?这种状态让我焦虑万分。

|

两次三次后,压力就堵到胸口。为什么我还不知道?为什么就那么难理解?这种状态让我焦虑万分。

|

||||||

|

|

||||||

我意识到我需要承认我确实不懂某个特定的主题,但第一步是要知道有这么个概念!有是,仅仅需要的就是更多的时间、更多的练习,最终会"在大脑中完全演绎" :-)

|

我意识到我需要承认我确实不懂某个特定的主题,但第一步是要知道有这么个概念!有时,仅仅需要的就是更多的时间、更多的练习,最终会“在大脑中完全演绎” :-)

|

||||||

|

|

||||||

例如,我常常为 Java 的接口类和抽象类所困扰,不管看了多少的例子,还是不能完全明白他们之间的区别。但一旦我使用后,即使还不能解释其工作原理,也知道了怎么使用以及什么时候使用。

|

例如,我常常为 Java 的接口类和抽象类所困扰,不管看了多少的例子,还是不能完全明白他们之间的区别。但一旦我使用后,即使还不能解释其工作原理,也知道了怎么使用以及什么时候使用。

|

||||||

|

|

||||||

@ -102,19 +101,13 @@ Android 工程师的一年

|

|||||||

|

|

||||||

工程师的角色不仅仅是编码,而是广泛的技能。 我仍然处于旅程的起点,在掌握它的道路上,我想着重于以下几点:

|

工程师的角色不仅仅是编码,而是广泛的技能。 我仍然处于旅程的起点,在掌握它的道路上,我想着重于以下几点:

|

||||||

|

|

||||||

* 交流:因为英文不是我的母语,所以有的时候我会努力传达我的想法,这在我工作中是至关重要的。我可以通过写作,阅读和交谈来解决这个问题。

|

* 交流:因为英文不是我的母语,所以有的时候我需要努力传达我的想法,这在我工作中是至关重要的。我可以通过写作,阅读和交谈来解决这个问题。

|

||||||

|

* 提有建设性的反馈意见:我想给同事有意义的反馈,这样我们一起共同发展。

|

||||||

* 提有建设性的反馈意见: 我想给同事有意义的反馈,这样我们一起共同发展。

|

* 为我的成就感到骄傲:我需要创建一个列表来跟踪各种成就,无论大小,或整体进步,所以当我挣扎时我可以回顾并感觉良好。

|

||||||

|

* 不要着迷于不知道的事情:当有很多新事物出现时很难做到都知道,所以只关注必须的,及手头项目需要的东西,这非常重要的。

|

||||||

* 为我的成就感到骄傲: 我需要创建一个列表来跟踪各种成就,无论大小,或整体进步,所以当我挣扎时我可以回顾并感觉良好。

|

* 多和同事分享知识:我是初级的并不意味着没有可以分享的!我需要持续分享我感兴趣的的文章及讨论话题。我知道同事们会感激我的。

|

||||||

|

* 耐心和持续学习:和现在一样的保持不断学习,但对自己要有更多耐心。

|

||||||

* 不要着迷于不知道的事情: 当有很多新事物出现时很难做到都知道,所以只关注必须的,及手头项目需要的东西,这非常重要的。

|

* 自我保健:随时注意休息,不要为难自己。 放松也富有成效。

|

||||||

|

|

||||||

* 多和同事分享知识。我是初级的并不意味着没有可以分享的!我需要持续分享我感兴趣的的文章及讨论话题。我知道同事们会感激我的。

|

|

||||||

|

|

||||||

* 耐心和持续学习: 和现在一样的保持不断学习,但对自己要有更多耐心。

|

|

||||||

|

|

||||||

* 自我保健: 随时注意休息,不要为难自己。 放松也富有成效。

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

@ -122,7 +115,7 @@ via: https://proandroiddev.com/a-year-as-android-engineer-55e2a428dfc8

|

|||||||

|

|

||||||

作者:[Lara Martín][a]

|

作者:[Lara Martín][a]

|

||||||

译者:[runningwater](https://github.com/runningwater)

|

译者:[runningwater](https://github.com/runningwater)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

@ -1,91 +1,92 @@

|

|||||||

搭建属于你自己的 Git 服务器

|

搭建属于你自己的 Git 服务器

|

||||||

======

|

======

|

||||||

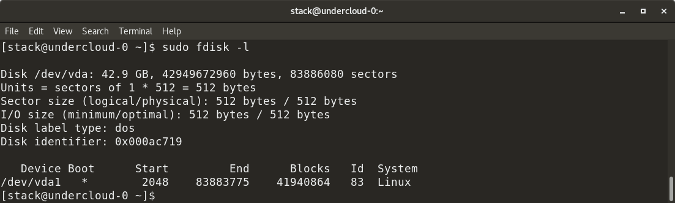

**在本文中,我们的目的是让你了解如何设置属于自己的Git服务器。**

|

|

||||||

|

|

||||||

Git 是由 Linux Torvalds 开发的一个版本控制系统,现如今正在被全世界大量开发者使用。许多公司喜欢使用基于 Git 版本控制的 GitHub 代码托管。[根据报道,GitHub 是现如今全世界最大的代码托管网站][3]。GitHub 宣称已经有 920 万用户和 2180 万个仓库。许多大型公司现如今也将代码迁移到 GitHub 上。[甚至于谷歌,一家搜索引擎公司,也正将代码迁移到 GitHub 上][4]。

|

|

||||||

|

|

||||||

|

> 在本文中,我们的目的是让你了解如何设置属于自己的Git服务器。

|

||||||

|

|

||||||

|

[Git][1] 是由 [Linux Torvalds 开发][2]的一个版本控制系统,现如今正在被全世界大量开发者使用。许多公司喜欢使用基于 Git 版本控制的 GitHub 代码托管。[根据报道,GitHub 是现如今全世界最大的代码托管网站][3]。GitHub 宣称已经有 920 万用户和 2180 万个仓库。许多大型公司现如今也将代码迁移到 GitHub 上。[甚至于谷歌,一家搜索引擎公司,也正将代码迁移到 GitHub 上][4]。

|

||||||

|

|

||||||

### 运行你自己的 Git 服务器

|

### 运行你自己的 Git 服务器

|

||||||

|

|

||||||

GitHub 能提供极佳的服务,但却有一些限制,尤其是你是单人或是一名 coding 爱好者。GitHub 其中之一的限制就是其中免费的服务没有提供代码私有化托管业务。[你不得不支付每月7美金购买5个私有仓库][5],并且想要更多的私有仓库则要交更多的钱。

|

GitHub 能提供极佳的服务,但却有一些限制,尤其是你是单人或是一名 coding 爱好者。GitHub 其中之一的限制就是其中免费的服务没有提供代码私有托管业务。[你不得不支付每月 7 美金购买 5 个私有仓库][5],并且想要更多的私有仓库则要交更多的钱。

|

||||||

|

|

||||||

|

|

||||||

万一你想要仓库私有化或需要更多权限控制,最好的方法就是在你的服务器上运行 Git。不仅你能够省去一笔钱,你还能够在你的服务器有更多的操作。在大多数情况下,大多数高级 Linux 用户已经拥有自己的服务器,并且在这些服务器上推送 Git 就像“啤酒一样免费”(LCTT 译者注:指免费软件)。

|

|

||||||

|

|

||||||

|

万一你想要私有仓库或需要更多权限控制,最好的方法就是在你的服务器上运行 Git。不仅你能够省去一笔钱,你还能够在你的服务器有更多的操作。在大多数情况下,大多数高级 Linux 用户已经拥有自己的服务器,并且在这些服务器上方式 Git 就像“啤酒一样免费”(LCTT 译注:指免费软件)。

|

||||||

|

|

||||||

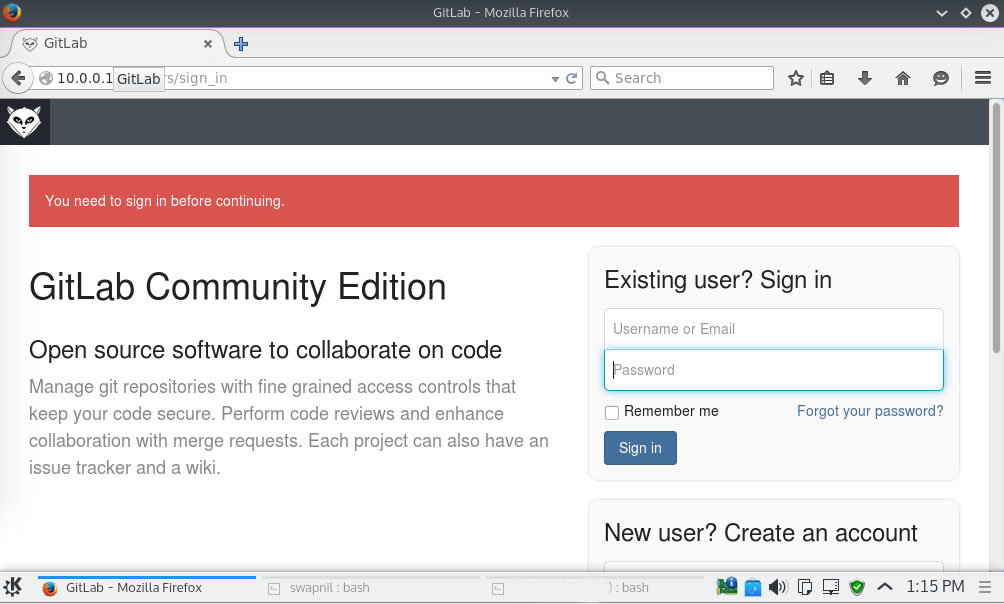

在这篇教程中,我们主要讲在你的服务器上,使用两种代码管理的方法。一种是运行一个纯 Git 服务器,另一个是使用名为 [GitLab][6] 的 GUI 工具。在本教程中,我在 VPS 上运行的操作系统是 Ubuntu 14.04 LTS。

|

在这篇教程中,我们主要讲在你的服务器上,使用两种代码管理的方法。一种是运行一个纯 Git 服务器,另一个是使用名为 [GitLab][6] 的 GUI 工具。在本教程中,我在 VPS 上运行的操作系统是 Ubuntu 14.04 LTS。

|

||||||

|

|

||||||

### 在你的服务器上安装Git

|

### 在你的服务器上安装 Git

|

||||||

|

|

||||||

在本篇教程中,我们考虑一个简单案例,我们有一个远程服务器和一台本地服务器,现在我们需要使用这两台机器来工作。为了简单起见,我们就分别叫他们为远程服务器和本地服务器。

|

在本篇教程中,我们考虑一个简单案例,我们有一个远程服务器和一台本地服务器,现在我们需要使用这两台机器来工作。为了简单起见,我们就分别叫它们为远程服务器和本地服务器。

|

||||||

|

|

||||||

首先,在两边的机器上安装Git。你可以从依赖包中安装 Git,在本文中,我们将使用更简单的方法:

|

首先,在两边的机器上安装 Git。你可以从依赖包中安装 Git,在本文中,我们将使用更简单的方法:

|

||||||

|

|

||||||

```

|

```

|

||||||

sudo apt-get install git-core

|

sudo apt-get install git-core

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

为 Git 创建一个用户。

|

为 Git 创建一个用户。

|

||||||

|

|

||||||

```

|

```

|

||||||

sudo useradd git

|

sudo useradd git

|

||||||

passwd git

|

passwd git

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

为了容易的访问服务器,我们设置一个免密 ssh 登录。首先在你本地电脑上创建一个 ssh 密钥:

|

为了容易的访问服务器,我们设置一个免密 ssh 登录。首先在你本地电脑上创建一个 ssh 密钥:

|

||||||

|

|

||||||

```

|

```

|

||||||

ssh-keygen -t rsa

|

ssh-keygen -t rsa

|

||||||

|

|

||||||

```

|

```

|

||||||

这时会要求你输入保存密钥的路径,这时只需要点击回车便保存在默认路径。第二个问题是输入访问远程服务器所需的密码。它生成两个密钥——公钥和私钥。记下您在下一步中需要使用的公钥的位置。

|

|

||||||

|

这时会要求你输入保存密钥的路径,这时只需要点击回车保存在默认路径。第二个问题是输入访问远程服务器所需的密码。它生成两个密钥——公钥和私钥。记下您在下一步中需要使用的公钥的位置。

|

||||||

|

|

||||||

现在您必须将这些密钥复制到服务器上,以便两台机器可以相互通信。在本地机器上运行以下命令:

|

现在您必须将这些密钥复制到服务器上,以便两台机器可以相互通信。在本地机器上运行以下命令:

|

||||||

|

|

||||||

|

|

||||||

```

|

```

|

||||||

cat ~/.ssh/id_rsa.pub | ssh git@remote-server "mkdir -p ~/.ssh && cat >> ~/.ssh/authorized_keys"

|

cat ~/.ssh/id_rsa.pub | ssh git@remote-server "mkdir -p ~/.ssh && cat >> ~/.ssh/authorized_keys"

|

||||||

|

|

||||||

```

|

```

|

||||||

现在,用 ssh 登录进服务器并为 Git 创建一个项目路径。你可以为你的仓库设置一个你想要的目录。

|

|

||||||

|

现在,用 `ssh` 登录进服务器并为 Git 创建一个项目路径。你可以为你的仓库设置一个你想要的目录。

|

||||||

|

|

||||||

现在跳转到该目录中:

|

现在跳转到该目录中:

|

||||||

|

|

||||||

```

|

```

|

||||||

cd /home/swapnil/project-1.git

|

cd /home/swapnil/project-1.git

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

现在新建一个空仓库:

|

现在新建一个空仓库:

|

||||||

|

|

||||||

```

|

```

|

||||||

git init --bare

|

git init --bare

|

||||||

Initialized empty Git repository in /home/swapnil/project-1.git

|

Initialized empty Git repository in /home/swapnil/project-1.git

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

现在我们需要在本地机器上新建一个基于 Git 版本控制仓库:

|

现在我们需要在本地机器上新建一个基于 Git 版本控制仓库:

|

||||||

|

|

||||||

```

|

```

|

||||||

mkdir -p /home/swapnil/git/project

|

mkdir -p /home/swapnil/git/project

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

进入我们创建仓库的目录:

|

进入我们创建仓库的目录:

|

||||||

|

|

||||||

```

|

```

|

||||||

cd /home/swapnil/git/project

|

cd /home/swapnil/git/project

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

现在在该目录中创建项目所需的文件。留在这个目录并启动 git:

|

现在在该目录中创建项目所需的文件。留在这个目录并启动 `git`:

|

||||||

|

|

||||||

```

|

```

|

||||||

git init

|

git init

|

||||||

Initialized empty Git repository in /home/swapnil/git/project

|

Initialized empty Git repository in /home/swapnil/git/project

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

把所有文件添加到仓库中:

|

把所有文件添加到仓库中:

|

||||||

|

|

||||||

```

|

```

|

||||||

git add .

|

git add .

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

现在,每次添加文件或进行更改时,都必须运行上面的 add 命令。 您还需要为每个文件更改都写入提交消息。提交消息基本上说明了我们所做的更改。

|

现在,每次添加文件或进行更改时,都必须运行上面的 `add` 命令。 您还需要为每个文件更改都写入提交消息。提交消息基本上说明了我们所做的更改。

|

||||||

|

|

||||||

```

|

```

|

||||||

git commit -m "message" -a

|

git commit -m "message" -a

|

||||||

@ -93,10 +94,9 @@ git commit -m "message" -a

|

|||||||

2 files changed, 2 insertions(+)

|

2 files changed, 2 insertions(+)

|

||||||

create mode 100644 GoT.txt

|

create mode 100644 GoT.txt

|

||||||

create mode 100644 writing.txt

|

create mode 100644 writing.txt

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

在这种情况下,我有一个名为 GoT(权力游戏审查)的文件,并且我做了一些更改,所以当我运行命令时,它指定对文件进行更改。 在上面的命令中 `-a` 选项意味着提交仓库中的所有文件。 如果您只更改了一个,则可以指定该文件的名称而不是使用 `-a`。

|

在这种情况下,我有一个名为 GoT(《权力的游戏》的点评)的文件,并且我做了一些更改,所以当我运行命令时,它指定对文件进行更改。 在上面的命令中 `-a` 选项意味着提交仓库中的所有文件。 如果您只更改了一个,则可以指定该文件的名称而不是使用 `-a`。

|

||||||

|

|

||||||

举一个例子:

|

举一个例子:

|

||||||

|

|

||||||

@ -104,124 +104,124 @@ git commit -m "message" -a

|

|||||||

git commit -m "message" GoT.txt

|

git commit -m "message" GoT.txt

|

||||||

[master e517b10] message

|

[master e517b10] message

|

||||||

1 file changed, 1 insertion(+)

|

1 file changed, 1 insertion(+)

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

到现在为止,我们一直在本地服务器上工作。现在我们必须将这些更改推送到远程服务器上,以便通过 Internet 访问,并且可以与其他团队成员进行协作。

|

到现在为止,我们一直在本地服务器上工作。现在我们必须将这些更改推送到远程服务器上,以便通过互联网访问,并且可以与其他团队成员进行协作。

|

||||||

|

|

||||||

```

|

```

|

||||||

git remote add origin ssh://git@remote-server/repo-<wbr< a="">>path-on-server..git

|

git remote add origin ssh://git@remote-server/repo-<wbr< a="">>path-on-server..git

|

||||||

|

|

||||||

```

|

```

|

||||||

现在,您可以使用 “pull” 或 “push” 选项在服务器和本地计算机之间 push 或 pull:

|

|

||||||

|

现在,您可以使用 `pull` 或 `push` 选项在服务器和本地计算机之间推送或拉取:

|

||||||

|

|

||||||

```

|

```

|

||||||

git push origin master

|

git push origin master

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

如果有其他团队成员想要使用该项目,则需要将远程服务器上的仓库克隆到其本地计算机上:

|

如果有其他团队成员想要使用该项目,则需要将远程服务器上的仓库克隆到其本地计算机上:

|

||||||

|

|

||||||

```

|

```

|

||||||

git clone git@remote-server:/home/swapnil/project.git

|

git clone git@remote-server:/home/swapnil/project.git

|

||||||

|

|

||||||

```

|

```

|

||||||

这里 /home/swapnil/project.git 是远程服务器上的项目路径,在你本机上则会改变。

|

|

||||||

|

这里 `/home/swapnil/project.git` 是远程服务器上的项目路径,在你本机上则会改变。

|

||||||

|

|

||||||

然后进入本地计算机上的目录(使用服务器上的项目名称):

|

然后进入本地计算机上的目录(使用服务器上的项目名称):

|

||||||

|

|

||||||

```

|

```

|

||||||

cd /project

|

cd /project

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

现在他们可以编辑文件,写入提交更改信息,然后将它们推送到服务器:

|

现在他们可以编辑文件,写入提交更改信息,然后将它们推送到服务器:

|

||||||

|

|

||||||

```

|

```

|

||||||

git commit -m 'corrections in GoT.txt story' -a

|

git commit -m 'corrections in GoT.txt story' -a

|

||||||

And then push changes:

|

```

|

||||||

|

|

||||||

git push origin master

|

然后推送改变:

|

||||||

|

|

||||||

```

|

```

|

||||||

|

git push origin master

|

||||||

|

```

|

||||||

|

|

||||||

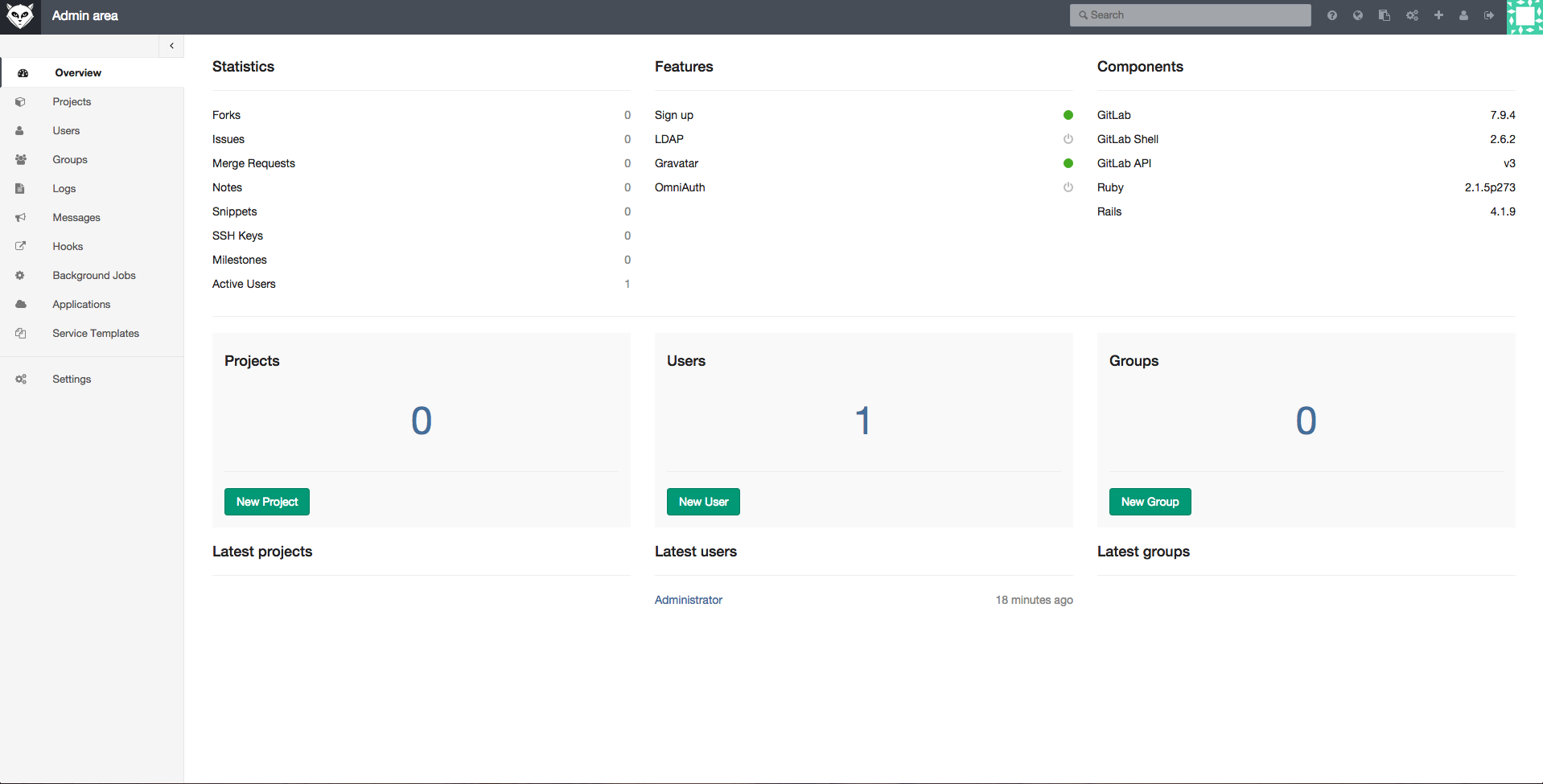

我认为这足以让一个新用户开始在他们自己的服务器上使用 Git。 如果您正在寻找一些 GUI 工具来管理本地计算机上的更改,则可以使用 GUI 工具,例如 QGit 或 GitK for Linux。

|

我认为这足以让一个新用户开始在他们自己的服务器上使用 Git。 如果您正在寻找一些 GUI 工具来管理本地计算机上的更改,则可以使用 GUI 工具,例如 QGit 或 GitK for Linux。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

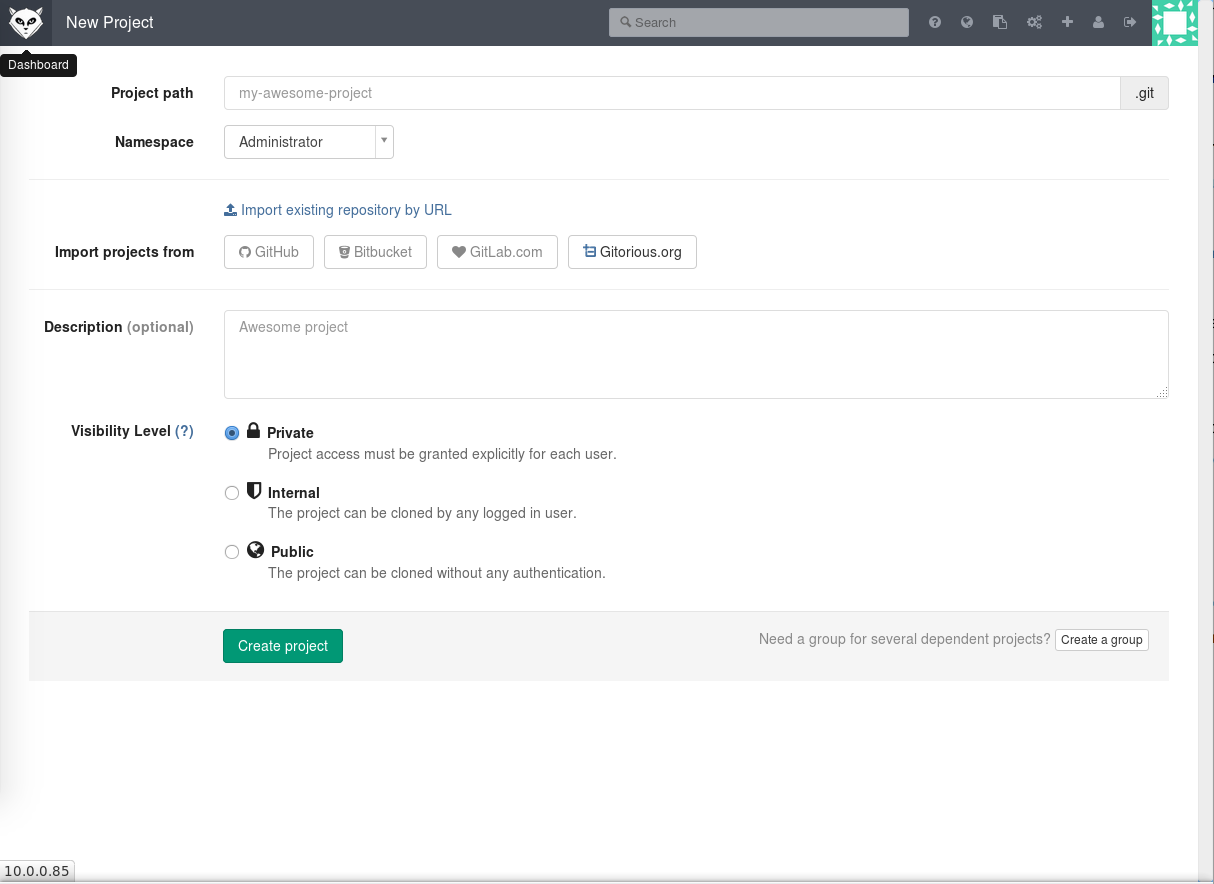

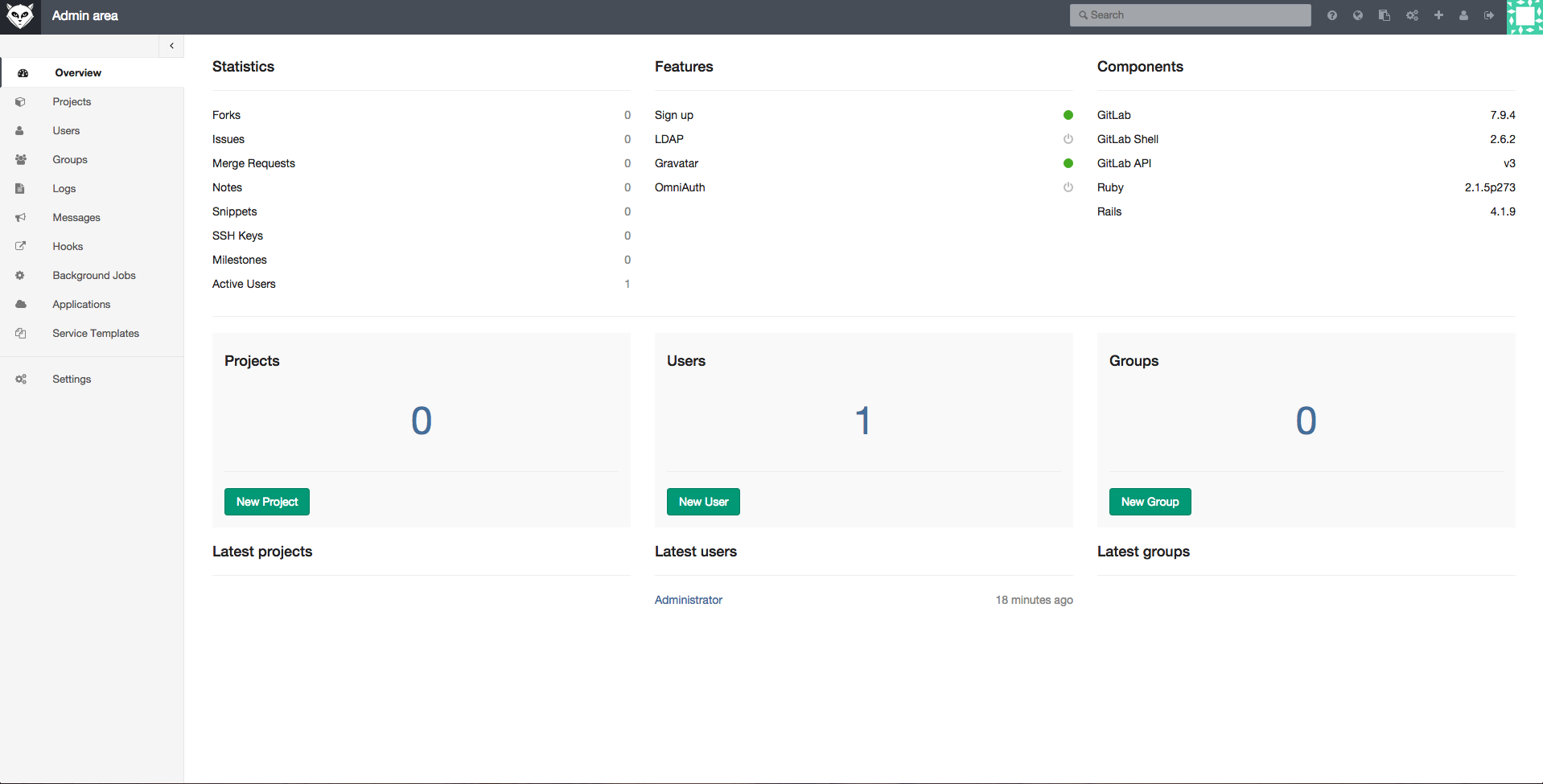

### 使用 GitLab

|

### 使用 GitLab

|

||||||

|

|

||||||

这是项目所有者和合作者的纯粹命令行解决方案。这当然不像使用 GitHub 那么简单。不幸的是,尽管 GitHub 是全球最大的代码托管商,但是它自己的软件别人却无法使用。因为它不是开源的,所以你不能获取源代码并编译你自己的 GitHub。与 WordPress 或 Drupal 不同,您无法下载 GitHub 并在您自己的服务器上运行它。

|

这是项目所有者和协作者的纯命令行解决方案。这当然不像使用 GitHub 那么简单。不幸的是,尽管 GitHub 是全球最大的代码托管商,但是它自己的软件别人却无法使用。因为它不是开源的,所以你不能获取源代码并编译你自己的 GitHub。这与 WordPress 或 Drupal 不同,您无法下载 GitHub 并在您自己的服务器上运行它。

|

||||||

|

|

||||||

|

|

||||||

像往常一样,在开源世界中,是没有终结的尽头。GitLab 是一个非常优秀的项目。这是一个开源项目,允许用户在自己的服务器上运行类似于 GitHub 的项目管理系统。

|

像往常一样,在开源世界中,是没有终结的尽头。GitLab 是一个非常优秀的项目。这是一个开源项目,允许用户在自己的服务器上运行类似于 GitHub 的项目管理系统。

|

||||||

|

|

||||||

|

|

||||||

您可以使用 GitLab 为团队成员或公司运行类似于 GitHub 的服务。您可以使用 GitLab 在公开发布之前开发私有项目。

|

您可以使用 GitLab 为团队成员或公司运行类似于 GitHub 的服务。您可以使用 GitLab 在公开发布之前开发私有项目。

|

||||||

|

|

||||||

|

|

||||||

GitLab 采用传统的开源商业模式。他们有两种产品:免费的开源软件,用户可以在自己的服务器上安装,以及类似于 GitHub 的托管服务。

|

GitLab 采用传统的开源商业模式。他们有两种产品:免费的开源软件,用户可以在自己的服务器上安装,以及类似于 GitHub 的托管服务。

|

||||||

|

|

||||||

|

|

||||||

可下载版本有两个版本,免费的社区版和付费企业版。企业版基于社区版,但附带针对企业客户的其他功能。它或多或少与 WordPress.org 或 Wordpress.com 提供的服务类似。

|

可下载版本有两个版本,免费的社区版和付费企业版。企业版基于社区版,但附带针对企业客户的其他功能。它或多或少与 WordPress.org 或 Wordpress.com 提供的服务类似。

|

||||||

|

|

||||||

|

|

||||||

社区版具有高度可扩展性,可以在单个服务器或群集上支持 25000 个用户。GitLab 的一些功能包括:Git 仓库管理,代码评论,问题跟踪,活动源和维基。它配备了 GitLab CI,用于持续集成和交付。

|

社区版具有高度可扩展性,可以在单个服务器或群集上支持 25000 个用户。GitLab 的一些功能包括:Git 仓库管理,代码评论,问题跟踪,活动源和维基。它配备了 GitLab CI,用于持续集成和交付。

|

||||||

|

|

||||||

|

Digital Ocean 等许多 VPS 提供商会为用户提供 GitLab 服务。 如果你想在你自己的服务器上运行它,你可以手动安装它。GitLab 为不同的操作系统提供了软件包。 在我们安装 GitLab 之前,您可能需要配置 SMTP 电子邮件服务器,以便 GitLab 可以在需要时随时推送电子邮件。官方推荐使用 Postfix。所以,先在你的服务器上安装 Postfix:

|

||||||

Digital Ocean 等许多 VPS 提供商会为用户提供 GitLab 服务。 如果你想在你自己的服务器上运行它,你可以手动安装它。GitLab 为不同的操作系统提供 Omnibus 软件包。 在我们安装 GitLab 之前,您可能需要配置 SMTP 电子邮件服务器,以便 GitLab 可以在需要时随时推送电子邮件。官方推荐使用 Postfix。所以,先在你的服务器上安装 Postfix:

|

|

||||||

|

|

||||||

|

|

||||||

```

|

```

|

||||||

sudo apt-get install postfix

|

sudo apt-get install postfix

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

在安装 Postfix 期间,它会问你一些问题,不要跳过它们。 如果你一不小心跳过,你可以使用这个命令来重新配置它:

|

在安装 Postfix 期间,它会问你一些问题,不要跳过它们。 如果你一不小心跳过,你可以使用这个命令来重新配置它:

|

||||||

|

|

||||||

```

|

```

|

||||||

sudo dpkg-reconfigure postfix

|

sudo dpkg-reconfigure postfix

|

||||||

|

|

||||||

```

|

```

|

||||||

运行此命令时,请选择 “Internet 站点”并为使用 Gitlab 的域名提供电子邮件ID。

|

|

||||||

|

|

||||||

|

运行此命令时,请选择 “Internet Site”并为使用 Gitlab 的域名提供电子邮件 ID。

|

||||||

|

|

||||||

我是这样输入的:

|

我是这样输入的:

|

||||||

|

|

||||||

```

|

```

|

||||||

This e-mail address is being protected from spambots. You need JavaScript enabled to view it

|

xxx@x.com

|

||||||

|

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

用 Tab 键并为 postfix 创建一个用户名。接下来将会要求你输入一个目标邮箱。

|

用 Tab 键并为 postfix 创建一个用户名。接下来将会要求你输入一个目标邮箱。

|

||||||

|

|

||||||

在剩下的步骤中,都选择默认选项。当我们安装且配置完成后,我们继续安装 GitLab。

|

在剩下的步骤中,都选择默认选项。当我们安装且配置完成后,我们继续安装 GitLab。

|

||||||

|

|

||||||

我们使用 wget 来下载软件包(用 [最新包][7] 替换下载链接):

|

我们使用 `wget` 来下载软件包(用 [最新包][7] 替换下载链接):

|

||||||

|

|

||||||

```

|

```

|

||||||

wget https://downloads-packages.s3.amazonaws.com/ubuntu-14.04/gitlab_7.9.4-omnibus.1-1_amd64.deb

|

wget https://downloads-packages.s3.amazonaws.com/ubuntu-14.04/gitlab_7.9.4-omnibus.1-1_amd64.deb

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

然后安装这个包:

|

然后安装这个包:

|

||||||

|

|

||||||

```

|

```

|

||||||

sudo dpkg -i gitlab_7.9.4-omnibus.1-1_amd64.deb

|

sudo dpkg -i gitlab_7.9.4-omnibus.1-1_amd64.deb

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

现在是时候配置并启动 GitLab 了。

|

现在是时候配置并启动 GitLab 了。

|

||||||

|

|

||||||

```

|

```

|

||||||

sudo gitlab-ctl reconfigure

|

sudo gitlab-ctl reconfigure

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

您现在需要在配置文件中配置域名,以便您可以访问 GitLab。打开文件。

|

您现在需要在配置文件中配置域名,以便您可以访问 GitLab。打开文件。

|

||||||

|

|

||||||

```

|

```

|

||||||

nano /etc/gitlab/gitlab.rb

|

nano /etc/gitlab/gitlab.rb

|

||||||

|

|

||||||

```

|

```

|

||||||

|

|

||||||

在这个文件中编辑 'external_url' 并输入服务器域名。保存文件,然后从 Web 浏览器中打开新建的一个 GitLab 站点。

|

在这个文件中编辑 `external_url` 并输入服务器域名。保存文件,然后从 Web 浏览器中打开新建的一个 GitLab 站点。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

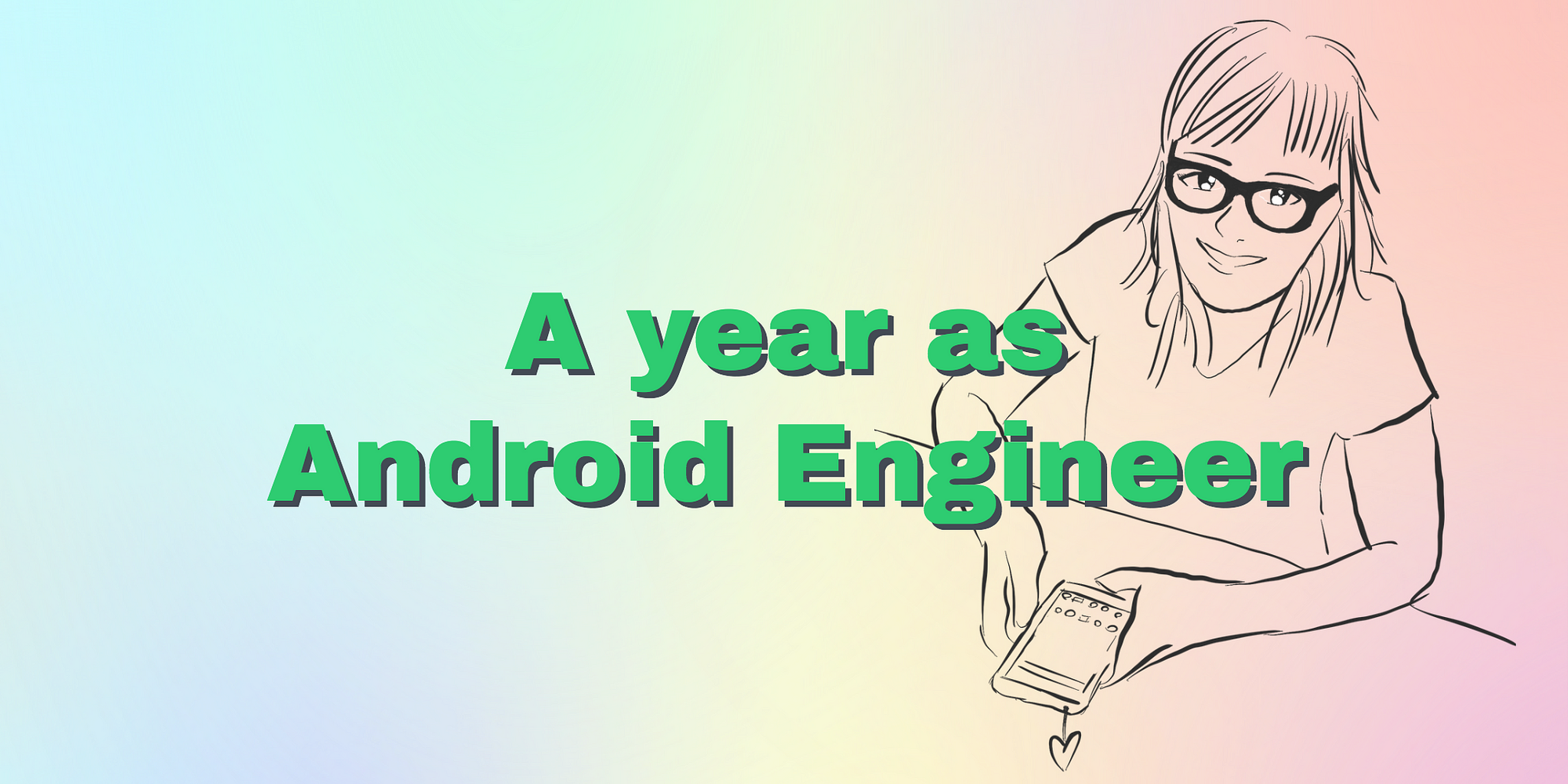

默认情况下,它会以系统管理员的身份创建 'root',并使用 '5iveL!fe' 作为密码。 登录到 GitLab 站点,然后更改密码。

|

默认情况下,它会以系统管理员的身份创建 `root`,并使用 `5iveL!fe` 作为密码。 登录到 GitLab 站点,然后更改密码。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

密码更改后,登录该网站并开始管理您的项目。

|

密码更改后,登录该网站并开始管理您的项目。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

GitLab 有很多选项和功能。最后,我借用电影“黑客帝国”中的经典台词:“不幸的是,没有人知道 GitLab 可以做什么。你必须亲自尝试一下。”

|

GitLab 有很多选项和功能。最后,我借用电影“黑客帝国”中的经典台词:“不幸的是,没有人知道 GitLab 可以做什么。你必须亲自尝试一下。”

|

||||||

|

|

||||||

|

|

||||||

@ -232,7 +232,7 @@ via: https://www.linux.com/learn/how-run-your-own-git-server

|

|||||||

作者:[Swapnil Bhartiya][a]

|

作者:[Swapnil Bhartiya][a]

|

||||||

选题:[lujun9972](https://github.com/lujun9972)

|

选题:[lujun9972](https://github.com/lujun9972)

|

||||||

译者:[wyxplus](https://github.com/wyxplus)

|

译者:[wyxplus](https://github.com/wyxplus)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

122

published/20180618 5 open source alternatives to Dropbox.md

Normal file

122

published/20180618 5 open source alternatives to Dropbox.md

Normal file

@ -0,0 +1,122 @@

|

|||||||

|

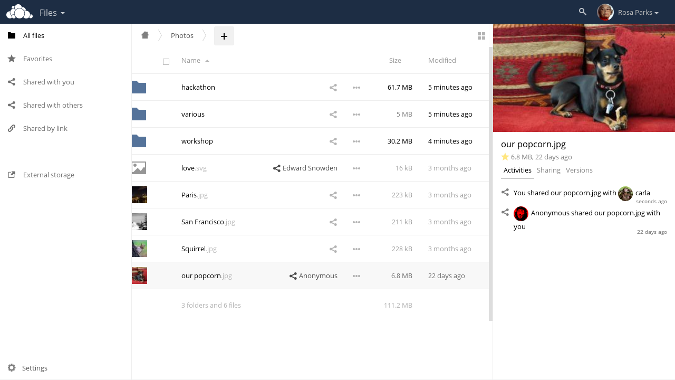

可代替 Dropbox 的 5 个开源软件

|

||||||

|

=====

|

||||||

|

|

||||||

|

> 寻找一个不会破坏你的安全、自由或银行资产的文件共享应用。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Dropbox 在文件共享应用中是个 800 磅的大猩猩。尽管它是个极度流行的工具,但你可能仍想使用一个软件去替代它。

|

||||||

|

|

||||||

|

也行你出于各种好的理由,包括安全和自由,这使你决定用[开源方式][1]。亦或是你已经被数据泄露吓坏了,或者定价计划不能满足你实际需要的存储量。

|

||||||

|

|

||||||

|

幸运的是,有各种各样的开源文件共享应用,可以提供给你更多的存储容量,更好的安全性,并且以低于 Dropbox 很多的价格来让你掌控你自己的数据。有多低呢?如果你有一定的技术和一台 Linux 服务器可供使用,那尝试一下免费的应用吧。

|

||||||

|

|

||||||

|

这里有 5 个最好的可以代替 Dropbox 的开源应用,以及其他一些,你可能想考虑使用。

|

||||||

|

|

||||||

|

### ownCloud

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

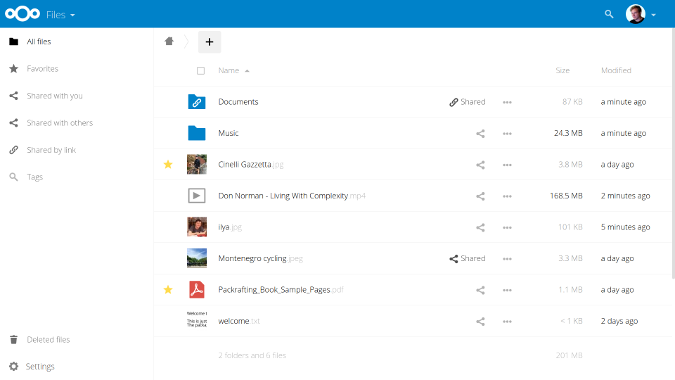

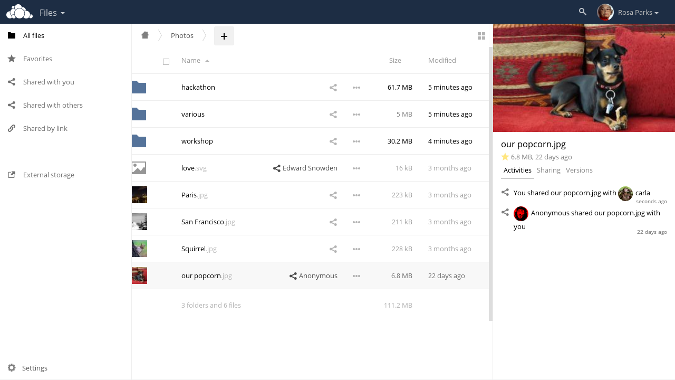

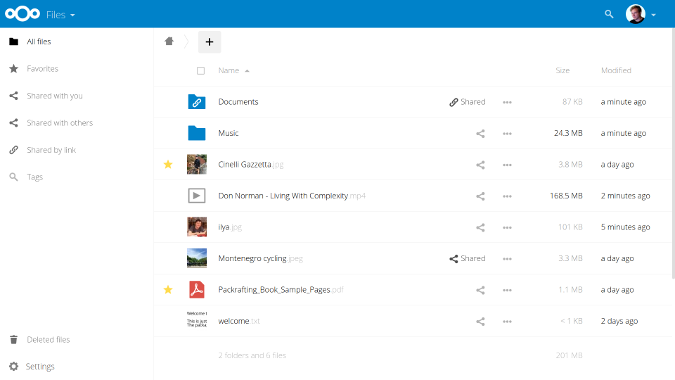

[ownCloud][2] 发布于 2010 年,是本文所列应用中最老的,但是不要被这件事蒙蔽:它仍然十分流行(根据该公司统计,有超过 150 万用户),并且由由 1100 个参与者的社区积极维护,定期发布更新。

|

||||||

|

|

||||||

|

它的主要特点——文件共享和文档写作功能和 Dropbox 的功能相似。它们的主要区别(除了它的[开源协议][3])是你的文件可以托管在你的私人 Linux 服务器或云上,给予用户对自己数据完全的控制权。(自托管是本文所列应用的一个普遍的功能。)

|

||||||

|

|

||||||

|

使用 ownCloud,你可以通过 Linux、MacOS 或 Windows 的客户端和安卓、iOS 的移动应用程序来同步和访问文件。你还可以通过带有密码保护的链接分享给其他人来协作或者上传和下载。数据传输通过端到端加密(E2EE)和 SSL 加密来保护安全。你还可以通过使用它的 [市场][4] 中的各种各样的第三方应用来扩展它的功能。当然,它也提供付费的、商业许可的企业版本。

|

||||||

|

|

||||||

|

ownCloud 提供了详尽的[文档][5],包括安装指南和针对用户、管理员、开发者的手册。你可以从 GitHub 仓库中获取它的[源码][6]。

|

||||||

|

|

||||||

|

### NextCloud

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

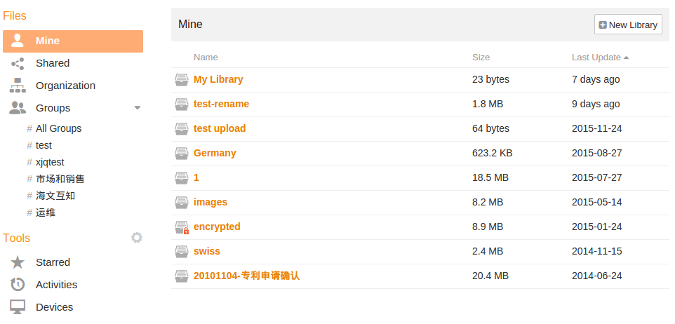

[NextCloud][7] 在 2016 年从 ownCloud 分裂出来,并且具有很多相同的功能。 NextCloud 以它的高安全性和法规遵从性作为它的一个独特的[推崇的卖点][8]。它具有 HIPAA (医疗) 和 GDPR (隐私)法规遵从功能,并提供广泛的数据策略约束、加密、用户管理和审核功能。它还在传输和存储期间对数据进行加密,并且集成了移动设备管理和身份验证机制 (包括 LDAP/AD、单点登录、双因素身份验证等)。

|

||||||

|

|

||||||

|

像本文列表里的其他应用一样, NextCloud 是自托管的,但是如果你不想在自己的 Linux 上安装 NextCloud 服务器,该公司与几个[提供商][9]达成了伙伴合作,提供安装和托管,并销售服务器、设备和服务支持。在[市场][10]中提供了大量的apps 来扩展它的功能。

|

||||||

|

|

||||||

|

NextCloud 的[文档][11]为用户、管理员和开发者提供了详细的信息,并且它的论坛、IRC 频道和社交媒体提供了基于社区的支持。如果你想贡献或者获取它的源码、报告一个错误、查看它的 AGPLv3 许可,或者想了解更多,请访问它的[GitHub 项目主页][12]。

|

||||||

|

|

||||||

|

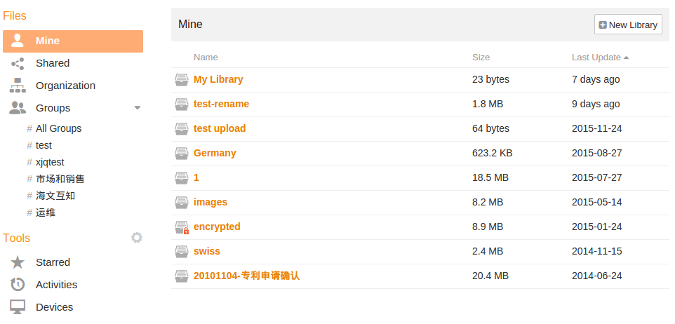

### Seafile

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

与 ownCloud 或 NextCloud 相比,[Seafile][13] 或许没有花里胡哨的卖点(app 生态),但是它能完成任务。实质上, 它充当了 Linux 服务器上的虚拟驱动器,以扩展你的桌面存储,并允许你使用密码保护和各种级别的权限(即只读或读写) 有选择地共享文件。

|

||||||

|

|

||||||

|

它的协作功能包括文件夹权限控制,密码保护的下载链接和像 Git 一样的版本控制和记录。文件使用双因素身份验证、文件加密和 AD/LDAP 集成进行保护,并且可以从 Windows、MacOS、Linux、iOS 或 Android 设备进行访问。

|

||||||

|

|

||||||

|

更多详细信息, 请访问 Seafile 的 [GitHub 仓库][14]、[服务手册][15]、[wiki][16] 和[论坛][17]。请注意, Seafile 的社区版在 [GPLv2][18] 下获得许可,但其专业版不是开源的。

|

||||||

|

|

||||||

|

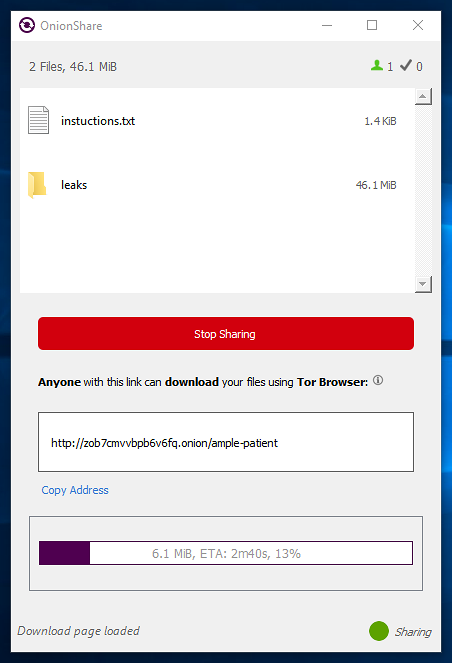

### OnionShare

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

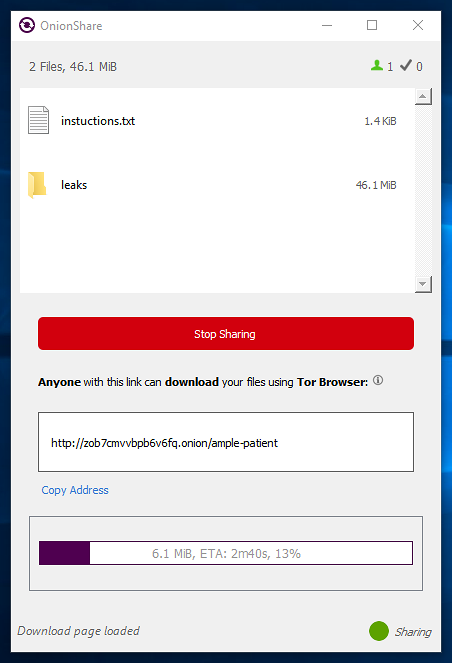

[OnionShare][19] 是一个很酷的应用:如果你想匿名,它允许你安全地共享单个文件或文件夹。不需要设置或维护服务器,所有你需要做的就是[下载和安装][20],无论是在 MacOS, Windows 还是 Linux 上。文件始终在你自己的计算机上; 当你共享文件时,OnionShare 创建一个 web 服务器,使其可作为 Tor 洋葱服务访问,并生成一个不可猜测的 .onion URL,这个 URL 允许收件人通过 [Tor 浏览器][21]获取文件。

|

||||||

|

|

||||||

|

你可以设置文件共享的限制,例如限制可以下载的次数或使用自动停止计时器,这会设置一个严格的过期日期/时间,超过这个期限便不可访问(即使尚未访问该文件)。

|

||||||

|

|

||||||

|

OnionShare 在 [GPLv3][22] 之下被许可;有关详细信息,请查阅其 [GitHub 仓库][22],其中还包括[文档][23],介绍了这个易用的文件共享软件的特点。

|

||||||

|

|

||||||

|

### Pydio Cells

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

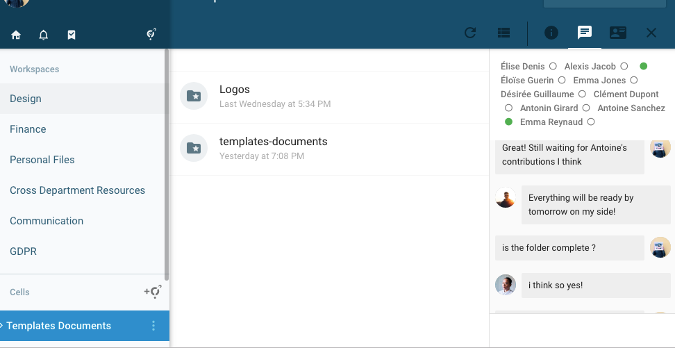

[Pydio Cells][24] 在 2018 年 5 月推出了稳定版,是对 Pydio 共享应用程序的核心服务器代码的彻底大修。由于 Pydio 的基于 PHP 的后端的限制,开发人员决定用 Go 服务器语言和微服务体系结构重写后端。(前端仍然是基于 PHP 的)。

|

||||||

|

|

||||||

|

Pydio Cells 包括通常的共享和版本控制功能,以及应用程序中的消息接受、移动应用程序(Android 和 iOS),以及一种社交网络风格的协作方法。安全性包括基于 OpenID 连接的身份验证、rest 加密、安全策略等。企业发行版中包含着高级功能,但在社区(家庭)版本中,对于大多数中小型企业和家庭用户来说,依然是足够的。

|

||||||

|

|

||||||

|

您可以 在 Linux 和 MacOS 里[下载][25] Pydio Cells。有关详细信息, 请查阅 [文档常见问题][26]、[源码库][27] 和 [AGPLv3 许可证][28]

|

||||||

|

|

||||||

|

### 其他

|

||||||

|

|

||||||

|

如果以上选择不能满足你的需求,你可能想考虑其他开源的文件共享型应用。

|

||||||

|

|

||||||

|

* 如果你的主要目的是在设备间同步文件而不是分享文件,考察一下 [Syncthing][29]。

|

||||||

|

* 如果你是一个 Git 的粉丝而不需要一个移动应用。你可能更喜欢 [SparkleShare][30]。

|

||||||

|

* 如果你主要想要一个地方聚合所有你的个人数据, 看看 [Cozy][31]。

|

||||||

|

* 如果你想找一个轻量级的或者专注于文件共享的工具,考察一下 [Scott Nesbitt's review][32]——一个罕为人知的工具。

|

||||||

|

|

||||||

|

哪个是你最喜欢的开源文件共享应用?在评论中让我们知悉。

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://opensource.com/alternatives/dropbox

|

||||||

|

|

||||||

|

作者:[Opensource.com][a]

|

||||||

|

选题:[lujun9972](https://github.com/lujun9972)

|

||||||

|

译者:[distant1219](https://github.com/distant1219)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://opensource.com

|

||||||

|

[1]:https://opensource.com/open-source-way

|

||||||

|

[2]:https://owncloud.org/

|

||||||

|

[3]:https://www.gnu.org/licenses/agpl-3.0.html

|

||||||

|

[4]:https://marketplace.owncloud.com/

|

||||||

|

[5]:https://doc.owncloud.com/

|

||||||

|

[6]:https://github.com/owncloud

|

||||||

|

[7]:https://nextcloud.com/

|

||||||

|

[8]:https://nextcloud.com/secure/

|

||||||

|

[9]:https://nextcloud.com/providers/

|

||||||

|

[10]:https://apps.nextcloud.com/

|

||||||

|

[11]:https://nextcloud.com/support/

|

||||||

|

[12]:https://github.com/nextcloud

|

||||||

|

[13]:https://www.seafile.com/en/home/

|

||||||

|

[14]:https://github.com/haiwen/seafile

|

||||||

|

[15]:https://manual.seafile.com/

|

||||||

|

[16]:https://seacloud.cc/group/3/wiki/

|

||||||

|

[17]:https://forum.seafile.com/

|

||||||

|

[18]:https://github.com/haiwen/seafile/blob/master/LICENSE.txt

|

||||||

|

[19]:https://onionshare.org/

|

||||||

|

[20]:https://onionshare.org/#downloads

|

||||||

|

[21]:https://www.torproject.org/

|

||||||

|

[22]:https://github.com/micahflee/onionshare/blob/develop/LICENSE

|

||||||

|

[23]:https://github.com/micahflee/onionshare/wiki

|

||||||

|

[24]:https://pydio.com/en

|

||||||

|

[25]:https://pydio.com/download/

|

||||||

|

[26]:https://pydio.com/en/docs/faq

|

||||||

|

[27]:https://github.com/pydio/cells

|

||||||

|

[28]:https://github.com/pydio/pydio-core/blob/develop/LICENSE

|

||||||

|

[29]:https://syncthing.net/

|

||||||

|

[30]:http://www.sparkleshare.org/

|

||||||

|

[31]:https://cozy.io/en/

|

||||||

|

[32]:https://opensource.com/article/17/3/file-sharing-tools

|

||||||

@ -0,0 +1,120 @@

|

|||||||

|

如何在 Linux 中使用一个命令升级所有软件

|

||||||

|

======

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

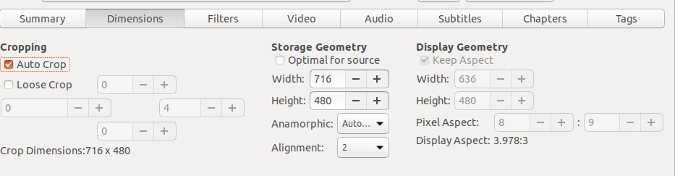

众所周知,让我们的 Linux 系统保持最新状态会用到多种包管理器。比如说,在 Ubuntu 中,你无法使用 `sudo apt update` 和 `sudo apt upgrade` 命令升级所有软件。此命令仅升级使用 APT 包管理器安装的应用程序。你有可能使用 `cargo`、[pip][1]、`npm`、`snap` 、`flatpak` 或 [Linuxbrew][2] 包管理器安装了其他软件。你需要使用相应的包管理器才能使它们全部更新。

|

||||||

|

|

||||||

|

再也不用这样了!跟 `topgrade` 打个招呼,这是一个可以一次性升级系统中所有软件的工具。

|

||||||

|

|

||||||

|

你无需运行每个包管理器来更新包。这个 `topgrade` 工具通过检测已安装的软件包、工具、插件并运行相应的软件包管理器来更新 Linux 中的所有软件,用一条命令解决了这个问题。它是自由而开源的,使用 **rust 语言**编写。它支持 GNU/Linux 和 Mac OS X.

|

||||||

|

|

||||||

|

### 在 Linux 中使用一个命令升级所有软件

|

||||||

|

|

||||||

|

`topgrade` 存在于 AUR 中。因此,你可以在任何基于 Arch 的系统中使用 [Yay][3] 助手程序安装它。

|

||||||

|

|

||||||

|

```

|

||||||

|

$ yay -S topgrade

|

||||||

|

```

|

||||||

|

|

||||||

|

在其他 Linux 发行版上,你可以使用 `cargo` 包管理器安装 `topgrade`。要安装 cargo 包管理器,请参阅以下链接:

|

||||||

|

|

||||||

|

- [在 Linux 安装 rust 语言][12]

|

||||||

|

|

||||||

|

然后,运行以下命令来安装 `topgrade`。

|

||||||

|

|

||||||

|

```

|

||||||

|

$ cargo install topgrade

|

||||||

|

```

|

||||||

|

|

||||||

|

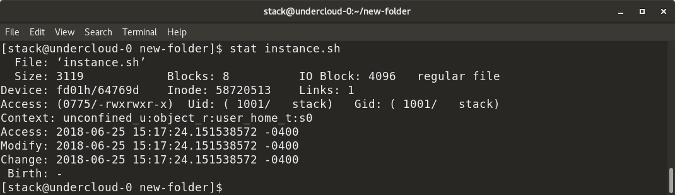

安装完成后,运行 `topgrade` 以升级 Linux 系统中的所有软件。

|

||||||

|

|

||||||

|

```

|

||||||

|

$ topgrade

|

||||||

|

```

|

||||||

|

|

||||||

|

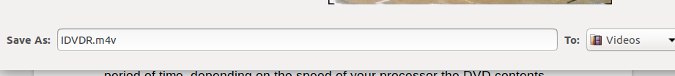

一旦调用了 `topgrade`,它将逐个执行以下任务。如有必要,系统会要求输入 root/sudo 用户密码。

|

||||||

|

|

||||||

|

1、 运行系统的包管理器:

|

||||||

|

|

||||||

|

* Arch:运行 `yay` 或者回退到 [pacman][4]

|

||||||

|

* CentOS/RHEL:运行 `yum upgrade`

|

||||||

|

* Fedora :运行 `dnf upgrade`

|

||||||

|

* Debian/Ubuntu:运行 `apt update` 和 `apt dist-upgrade`

|

||||||

|

* Linux/macOS:运行 `brew update` 和 `brew upgrade`

|

||||||

|

|

||||||

|

2、 检查 Git 是否跟踪了以下路径。如果有,则拉取它们:

|

||||||

|

|

||||||

|

* `~/.emacs.d` (无论你使用 Spacemacs 还是自定义配置都应该可用)

|

||||||

|

* `~/.zshrc`

|

||||||

|

* `~/.oh-my-zsh`

|

||||||

|

* `~/.tmux`

|

||||||

|

* `~/.config/fish/config.fish`

|

||||||

|

* 自定义路径

|

||||||

|

|

||||||

|

3、 Unix:运行 `zplug` 更新

|

||||||

|

|

||||||

|

4、 Unix:使用 TPM 升级 `tmux` 插件

|

||||||

|

|

||||||

|

5、 运行 `cargo install-update`

|

||||||

|

|

||||||

|

6、 升级 Emacs 包

|

||||||

|

|

||||||

|

7、 升级 Vim 包。对以下插件框架均可用:

|

||||||

|

|

||||||

|

* NeoBundle

|

||||||

|

* [Vundle][5]

|

||||||

|

* Plug

|

||||||

|

|

||||||

|

8、 升级 [npm][6] 全局安装的包

|

||||||

|

|

||||||

|

9、 升级 Atom 包

|

||||||

|

|

||||||

|

10、 升级 [Flatpak][7] 包

|

||||||

|

|

||||||

|

11、 升级 [snap][8] 包

|

||||||

|

|

||||||

|

12、 Linux:运行 `fwupdmgr` 显示固件升级。 (仅查看。实际不会执行升级)

|

||||||

|

|

||||||

|

13、 运行自定义命令。

|

||||||

|

|

||||||

|

最后,`topgrade` 将运行 `needrestart` 以重新启动所有服务。在 Mac OS X 中,它会升级 App Store 程序。

|

||||||

|

|

||||||

|

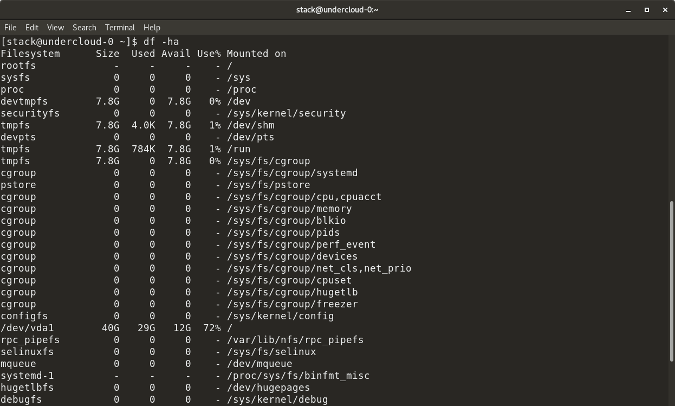

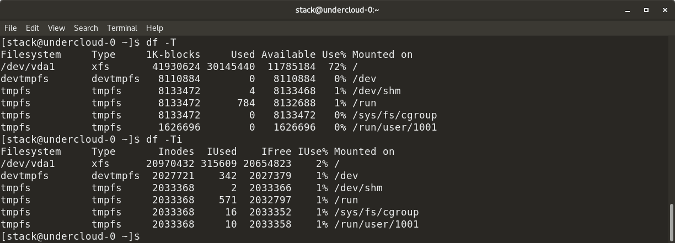

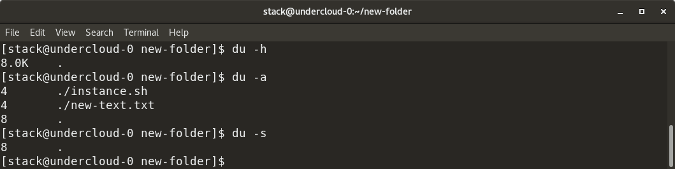

我的 Ubuntu 18.04 LTS 测试环境的示例输出:

|

||||||

|

|

||||||

|

![][10]

|

||||||

|

|

||||||

|

好处是如果一个任务失败,它将自动运行下一个任务并完成所有其他后续任务。最后,它将显示摘要,其中包含运行的任务数量,成功的数量和失败的数量等详细信息。

|

||||||

|

|

||||||

|

![][11]

|

||||||

|

|

||||||

|

**建议阅读:**

|

||||||

|

|

||||||

|

就个人而言,我喜欢创建一个像 `topgrade` 程序的想法,并使用一个命令升级使用各种包管理器安装的所有软件。我希望你也觉得它有用。还有更多的好东西。敬请关注!

|

||||||

|

|

||||||

|

干杯!

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://www.ostechnix.com/how-to-upgrade-everything-using-a-single-command-in-linux/

|

||||||

|

|

||||||

|

作者:[SK][a]

|

||||||

|

选题:[lujun9972](https://github.com/lujun9972)

|

||||||

|

译者:[geekpi](https://github.com/geekpi)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://www.ostechnix.com/author/sk/

|

||||||

|

[1]:https://www.ostechnix.com/manage-python-packages-using-pip/

|

||||||

|

[2]:https://www.ostechnix.com/linuxbrew-common-package-manager-linux-mac-os-x/

|

||||||

|

[3]:https://www.ostechnix.com/yay-found-yet-another-reliable-aur-helper/

|

||||||

|

[4]:https://www.ostechnix.com/getting-started-pacman/

|

||||||

|

[5]:https://www.ostechnix.com/manage-vim-plugins-using-vundle-linux/

|

||||||

|

[6]:https://www.ostechnix.com/manage-nodejs-packages-using-npm/

|

||||||

|

[7]:https://www.ostechnix.com/flatpak-new-framework-desktop-applications-linux/

|

||||||

|

[8]:https://www.ostechnix.com/install-snap-packages-arch-linux-fedora/

|

||||||

|

[9]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||||

|

[10]:http://www.ostechnix.com/wp-content/uploads/2018/06/topgrade-1.png

|

||||||

|

[11]:http://www.ostechnix.com/wp-content/uploads/2018/06/topgrade-2.png

|

||||||

|

[12]:https://www.ostechnix.com/install-rust-programming-language-in-linux/

|

||||||

@ -0,0 +1,67 @@

|

|||||||

|

在 Fedora 28 Workstation 使用 emoji 加速输入

|

||||||

|

======

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

Fedora 28 Workstation 添加了一个功能允许你使用键盘快速搜索、选择和输入 emoji。emoji,这种可爱的表意文字是 Unicode 的一部分,在消息传递中使用得相当广泛,特别是在移动设备上。你可能听过这样的成语:“一图胜千言”。这正是 emoji 所提供的:简单的图像供你在交流中使用。Unicode 的每个版本都增加了更多 emoji,在最近的 Unicode 版本中添加了 200 多个 emoji。本文向你展示如何使它们在你的 Fedora 系统中易于使用。

|

||||||

|

|

||||||

|

很高兴看到 emoji 的数量在增长。但与此同时,它带来了如何在计算设备中输入它们的挑战。许多人已经将这些符号用于移动设备或社交网站中的输入。

|

||||||

|

|

||||||

|

[**编者注:**本文是对此主题以前发表过的文章的更新]。

|

||||||

|

|

||||||

|

### 在 Fedora 28 Workstation 上启用 emoji 输入

|

||||||

|

|

||||||

|

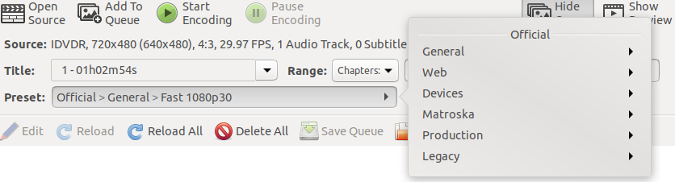

新的 emoji 输入法默认出现在 Fedora 28 Workstation 中。要使用它,必须使用“区域和语言设置”对话框启用它。从 Fedora Workstation 设置打开“区域和语言”对话框,或在“概要”中搜索它。

|

||||||

|

|

||||||

|

[![Region & Language settings tool][1]][2]

|

||||||

|

|

||||||

|

选择 `+` 控件添加输入源。出现以下对话框:

|

||||||

|

|

||||||

|

[![Adding an input source][3]][4]

|

||||||

|

|

||||||

|

选择最后选项(三个点)来完全展开选择。然后,在列表底部找到“Other”并选择它:

|

||||||

|

|

||||||

|

[![Selecting other input sources][5]][6]

|

||||||

|

|

||||||

|

在下面的对话框中,找到 “Typing Booster” 选项并选择它:

|

||||||

|

|

||||||

|

[![][7]][8]

|

||||||

|

|

||||||

|

这个高级输入法由 iBus 在背后支持。该高级输入法可通过列表右侧的齿轮图标在列表中识别。

|

||||||

|

|

||||||

|

输入法下拉菜单自动出现在 GNOME Shell 顶部栏中。确认你的默认输入法 —— 在此示例中为英语(美国) - 被选为当前输入法,你就可以输入了。

|

||||||

|

|

||||||

|

[![Input method dropdown in Shell top bar][9]][10]

|

||||||

|

|

||||||

|

### 使用新的表情符号输入法

|

||||||

|

|

||||||

|

现在 emoji 输入法启用了,按键盘快捷键 `Ctrl+Shift+E` 搜索 emoji。将出现一个弹出对话框,你可以在其中输入搜索词,例如 “smile” 来查找匹配的符号。

|

||||||

|

|

||||||

|

[![Searching for smile emoji][11]][12]

|

||||||

|

|

||||||

|

使用箭头键翻页列表。然后按回车进行选择,字形将替换输入内容。

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://fedoramagazine.org/boost-typing-emoji-fedora-28-workstation/

|

||||||

|

|

||||||

|

作者:[Paul W. Frields][a]

|

||||||

|

选题:[lujun9972](https://github.com/lujun9972)

|

||||||

|

译者:[geekpi](https://github.com/geekpi)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://fedoramagazine.org/author/pfrields/

|

||||||

|

[1]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-15-02-41-1024x718.png

|

||||||

|

[2]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-15-02-41.png

|

||||||

|

[3]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-14-33-46-1024x839.png

|

||||||

|

[4]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-14-33-46.png

|

||||||

|

[5]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-14-34-15-1024x839.png

|

||||||

|

[6]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-14-34-15.png

|

||||||

|

[7]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-14-34-41-1024x839.png

|

||||||

|

[8]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-14-34-41.png

|

||||||

|

[9]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-15-05-24-300x244.png

|

||||||

|

[10]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-15-05-24.png

|

||||||

|

[11]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-14-36-31-290x300.png

|

||||||

|

[12]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-14-36-31.png

|

||||||

@ -0,0 +1,40 @@

|

|||||||

|

在 Arch 用户仓库(AUR)中发现恶意软件

|

||||||

|

======

|

||||||

|

|

||||||

|

7 月 7 日,有一个 AUR 软件包被改入了一些恶意代码,提醒 [Arch Linux][1] 用户(以及一般的 Linux 用户)在安装之前应该尽可能检查所有由用户生成的软件包。

|

||||||

|

|

||||||

|

[AUR][3](即 Arch(Linux)用户仓库)包含包描述,也称为 PKGBUILD,它使得从源代码编译包变得更容易。虽然这些包非常有用,但它们永远不应被视为安全的,并且用户应尽可能在使用之前检查其内容。毕竟,AUR 在网页中以粗体显示 “**AUR 包是用户制作的内容。任何使用该提供的文件的风险由你自行承担。**”

|

||||||

|

|

||||||

|

这次[发现][4]包含恶意代码的 AUR 包证明了这一点。[acroread][5] 于 7 月 7 日(看起来它以前是“孤儿”,意思是它没有维护者)被一位名为 “xeactor” 的用户修改,它包含了一行从 pastebin 使用 `curl` 下载脚本的命令。然后,该脚本下载了另一个脚本并安装了一个 systemd 单元以定期运行该脚本。

|

||||||

|

|

||||||

|

**看来有[另外两个][2] AUR 包以同样的方式被修改。所有违规软件包都已删除,并暂停了用于上传它们的用户帐户(它们注册在更新软件包的同一天)。**

|

||||||

|

|

||||||

|

这些恶意代码没有做任何真正有害的事情 —— 它只是试图上传一些系统信息,比如机器 ID、`uname -a` 的输出(包括内核版本、架构等)、CPU 信息、pacman 信息,以及 `systemctl list-units`(列出 systemd 单元信息)的输出到 pastebin.com。我说“试图”是因为第二个脚本中存在错误而没有实际上传系统信息(上传函数为 “upload”,但脚本试图使用其他名称 “uploader” 调用它)。

|

||||||

|

|

||||||

|

此外,将这些恶意脚本添加到 AUR 的人将脚本中的个人 Pastebin API 密钥以明文形式留下,再次证明他们真的不明白他们在做什么。(LCTT 译注:意即这是一个菜鸟“黑客”,还不懂得如何有经验地隐藏自己。)

|

||||||

|

|

||||||

|

尝试将此信息上传到 Pastebin 的目的尚不清楚,特别是原本可以上传更加敏感信息的情况下,如 GPG / SSH 密钥。

|

||||||

|

|

||||||

|

**更新:** Reddit用户 u/xanaxdroid_ [提及][6]同一个名为 “xeactor” 的用户也发布了一些加密货币挖矿软件包,因此他推测 “xeactor” 可能正计划添加一些隐藏的加密货币挖矿软件到 AUR([两个月][7]前的一些 Ubuntu Snap 软件包也是如此)。这就是 “xeactor” 可能试图获取各种系统信息的原因。此 AUR 用户上传的所有包都已删除,因此我无法检查。

|

||||||

|

|

||||||

|

**另一个更新:**你究竟应该在那些用户生成的软件包检查什么(如 AUR 中发现的)?情况各有不同,我无法准确地告诉你,但你可以从寻找任何尝试使用 `curl`、`wget`和其他类似工具下载内容的东西开始,看看他们究竟想要下载什么。还要检查从中下载软件包源的服务器,并确保它是官方来源。不幸的是,这不是一个确切的“科学做法”。例如,对于 Launchpad PPA,事情变得更加复杂,因为你必须懂得 Debian 如何打包,并且这些源代码是可以直接更改的,因为它托管在 PPA 中并由用户上传的。使用 Snap 软件包会变得更加复杂,因为在安装之前你无法检查这些软件包(据我所知)。在后面这些情况下,作为通用解决方案,我觉得你应该只安装你信任的用户/打包器生成的软件包。

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: https://www.linuxuprising.com/2018/07/malware-found-on-arch-user-repository.html

|

||||||

|

|

||||||

|

作者:[Logix][a]

|

||||||

|

选题:[lujun9972](https://github.com/lujun9972)

|

||||||

|

译者:[geekpi](https://github.com/geekpi)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:https://plus.google.com/118280394805678839070

|

||||||

|

[1]:https://www.archlinux.org/

|

||||||

|

[2]:https://lists.archlinux.org/pipermail/aur-general/2018-July/034153.html

|

||||||

|

[3]:https://aur.archlinux.org/

|

||||||

|

[4]:https://lists.archlinux.org/pipermail/aur-general/2018-July/034152.html

|

||||||

|

[5]:https://aur.archlinux.org/cgit/aur.git/commit/?h=acroread&id=b3fec9f2f16703c2dae9e793f75ad6e0d98509bc

|

||||||

|

[6]:https://www.reddit.com/r/archlinux/comments/8x0p5z/reminder_to_always_read_your_pkgbuilds/e21iugg/

|

||||||

|