Mirror of https://github.com/LCTT/TranslateProject.git

Synced 2025-03-27 02:30:10 +08:00

Merge branch 'master' of github.com:LCTT/TranslateProject into pulls

Commit 304690cd2c

published

20131113 Your visual how-to guide for SELinux policy enforcement.md20160104 How to Change Linux IO Scheduler.md20170213 Set Up and Configure a Firewall with FirewallD on CentOS 7.md20170214 CentOS-vs-Ubuntu.md20170214 How to Install Ubuntu with Separate Root and Home Hard Drives.md20170227 How to setup a Linux server on Amazon AWS.md20170228 How to Install and Secure MariaDB 10 in CentOS 7.md20170309 How to Change Root Password of MySQL or MariaDB in Linux.md20170316 How to Install Latest Python 3.6 Version in Linux.md20170317 Kgif – A Simple Shell Script to Create a Gif File from Active Window.md20170320 ELRepo – Community Repo for Enterprise Linux RHEL CentOS SL.md

sources

talk

20170223 What a Linux Desktop Does Better.md20170315 Hire a DDoS service to take down your enemies.md20170317 Why AlphaGo Is Not AI.md20170317 Why do you use Linux and open source software.md

tech

20131113 Your visual how-to guide for SELinux policy enforcement.md20150112 Data-Oriented Hash Table.md20151127 5 ways to change GRUB background in Kali Linux.md20161027 Network management with LXD.md20161220 TypeScript the missing introduction.md201701 GraphQL In Use Building a Blogging Engine API with Golang and PostgreSQL.md20170101 FTPS vs SFTP.md20170111 Git in 2016.md20170111 NMAP Common Scans – Part One.md20170117 How to Keep Hackers out of Your Linux Machine Part 2: Three More Easy Security Tips.md20170123 Linux command line navigation tipstricks 3 - the CDPATH environment variable.md20170205 Hosting Django With Nginx and Gunicorn on Linux.md20170210 Use tmux for a more powerful terminal.md20170214 10 Best Linux Terminal Emulators For Ubuntu And Fedora.md20170214 CentOS Vs. Ubuntu.md20170214 How to Install Ubuntu with Separate Root and Home Hard Drives.md20170217 Understanding the difference between sudo and su.md20170220 An introduction to the Linux boot and startup processes.md20170227 How to setup a Linux server on Amazon AWS.md20170302 Installation of Devuan Linux Fork of Debian.md20170308 Many SQL Performance Problems Stem from Unnecessary, Mandatory Work.md20170309 How to Change Root Password of MySQL or MariaDB in Linux.md20170314 Integrate Ubuntu 16.04 to AD as a Domain Member with Samba and Winbind – Part 8.md20170316 An introduction to GRUB2 configuration for your Linux machine.md20170316 What is Linux VPS Hosting.md20170317 AWS cloud terminology.md20170317 How to Build Your Own Media Center with OpenELEC.md20170317 How to control GPIO pins and operate relays with the Raspberry Pi.md20170317 Join CentOS 7 Desktop to Samba4 AD as a Domain Member – Part 9.md20170317 Make Container Management Easy With Cockpit.md20170324 A formal spec for GitHub Flavored Markdown.md20170324 This Xfce Bug Is Wrecking Users Monitors.md

translated/tech

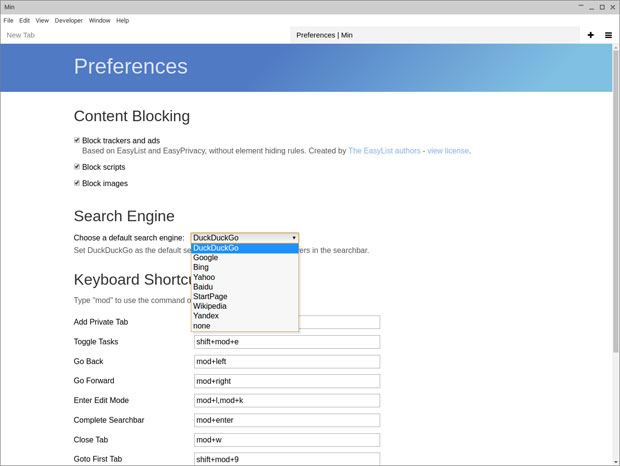

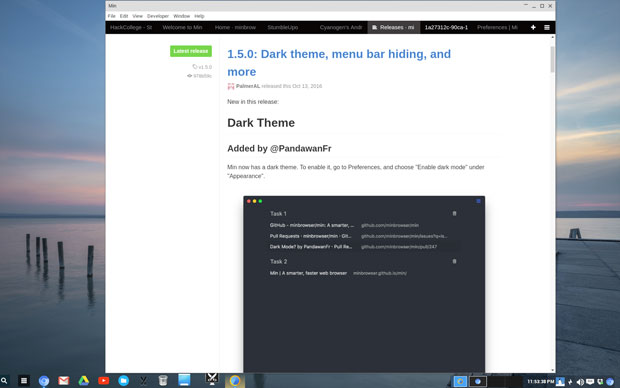

20170101 FTPS vs SFTP.md20170107 Min Browser Muffles the Web Noise.md20170111 NMAP Common Scans – Part One.md20170117 How to Keep Hackers out of Your Linux Machine Part 2 Three More Easy Security Tips.md20170118 Linux command line navigation tips- the basics of pushd and popd commands.md20170123 Linux command line navigation tipstricks 3 - the CDPATH environment variable.md20170201 Protecting Your Privacy With Firefox on Linux.md20170205 Hosting Django With Nginx and Gunicorn on Linux.md20170214 10 Best Linux Terminal Emulators For Ubuntu And Fedora.md20170217 Understanding the difference between sudo and su.md20170309 How to open port on AWS EC2 Linux server.md20170310 How to install Fedora 25 on your Raspberry Pi.md20170317 The End of the Line for EPEL-5.md20170321 How to deploy Kubernetes on the Raspberry Pi.md

Your visual how-to guide for SELinux policy enforcement
============================================================

> Image by: opensource.com

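This year we are celebrating the tenth anniversary of SELinux together (LCTT note: this article was published in 2013). That is hard to believe! SELinux was first introduced in Fedora Core 3 and later in Red Hat Enterprise Linux 4. If you have never used SELinux, you had better have a good reason...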

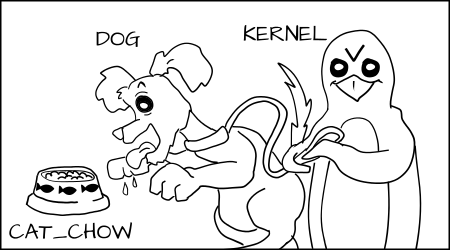

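SELinux is a labeling system. Every process has a label. Every file and directory object in the operating system also has a label. Even network ports, devices, and potentially hostnames are assigned labels. We write rules that control the access a process label has to an object label, such as the label on a file. These rules are called policy. The kernel enforces the rules. This enforcement is sometimes called Mandatory Access Control (MAC).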

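The owner of an object has no discretion over the object's security attributes. Standard Linux access control is ownership (owner/group) plus permission flags such as rwx. It is often called Discretionary Access Control (DAC). SELinux has no concept of file UIDs or ownership. Everything is controlled by labels, which means an SELinux system can be set up without an all-powerful root process.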

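**Note:** _SELinux does not let you sidestep DAC controls. SELinux is a parallel enforcement model: an application must be allowed by BOTH SELinux and DAC to perform a given action. This can confuse administrators, because the process simply gets "Permission denied". Administrators tend to read that as a DAC problem rather than a problem with the SELinux labels._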

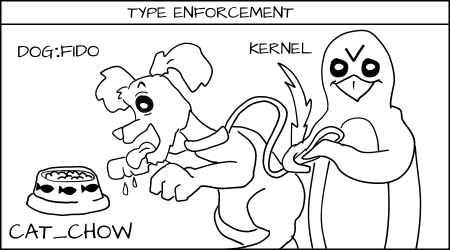

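### Type enforcement

Let's look deeper into labels. The main SELinux "model" or "enforcement" is called type enforcement. Basically, this means that a process's label is defined by its type, and a file system object's label is likewise defined by its type.

_An analogy_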

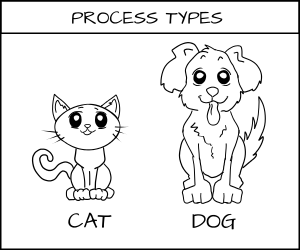

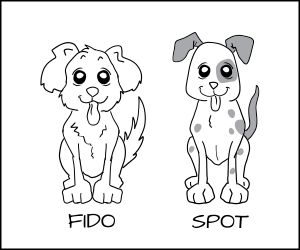

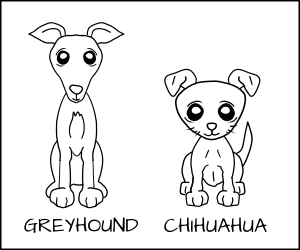

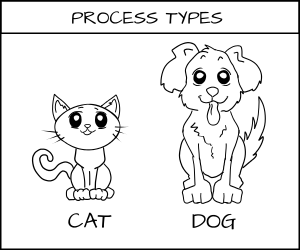

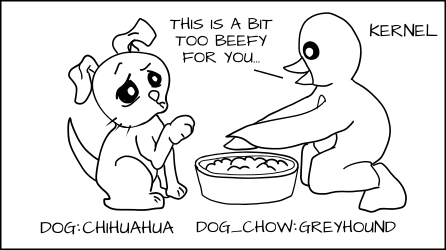

Imagine a system in which we define types on objects such as cats and dogs. A cat (CAT) and a dog (DOG) are process types.

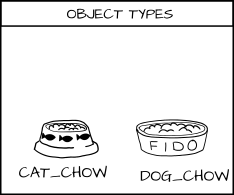

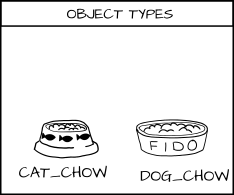

We have a class of objects that they want to interact with, which we call food. I want to add types to the food: `cat_chow` (cat food) and `dog_chow` (dog food).

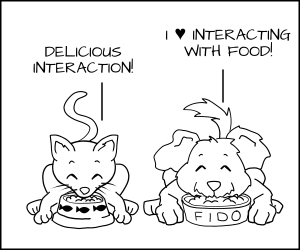

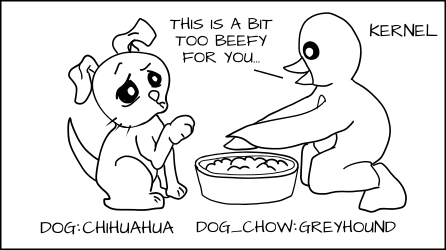

As a policy writer, I would say that a dog has permission to eat dog chow (`dog_chow`) and a cat has permission to eat cat chow (`cat_chow`). In SELinux I would write these rules in the policy:

`allow cat cat_chow:food eat;`

`allow dog dog_chow:food eat;`

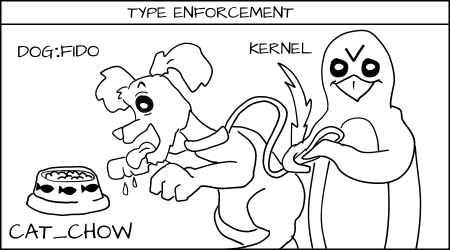

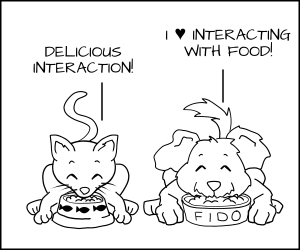

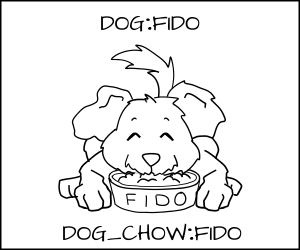

有了这些规则,内核会允许猫进程去吃打上猫粮标签 `cat_chow` 的食物,允许狗去吃打上狗粮标签 `dog_chow` 的食物。

|

||||

|

||||

|

||||

|

||||

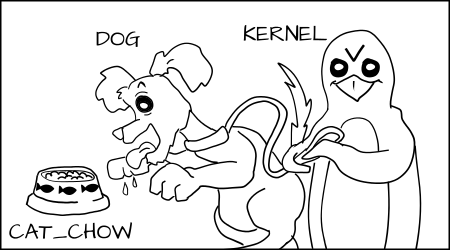

此外,在 SELinux 系统中,由于禁止是默认规则,这意味着,如果狗进程想要去吃猫粮 `cat_chow`,内核会阻止它。

|

||||

|

||||

|

||||

|

||||

同理,猫也不允许去接触狗粮。

|

||||

|

||||

|

||||

|

||||
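The default-deny logic above can be modelled in a few lines of shell. This is a toy sketch, not how the kernel evaluates policy. The `ALLOW_RULES` list and the `te_check` function are hypothetical names that simply mirror the two `allow` rules from the analogy.

```shell
#!/bin/sh
# Toy model of SELinux type enforcement; this is NOT how the kernel works.
# Each line mirrors one "allow SOURCE TARGET:CLASS PERM;" policy rule.
ALLOW_RULES='cat cat_chow:food eat
dog dog_chow:food eat'

# te_check SOURCE TARGET CLASS PERM
# Succeeds only if an exactly matching rule exists; anything that is not
# explicitly allowed is denied, which is SELinux's default.
te_check() {
    printf '%s\n' "$ALLOW_RULES" | grep -Fxq "$1 $2:$3 $4"
}
```

For example, `te_check dog cat_chow food eat || echo denied` prints `denied`, because no rule grants that access.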

_A real-world example_

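We label the Apache process as `httpd_t`, and Apache content as `httpd_sys_content_t` and `httpd_sys_content_rw_t`. Suppose we store credit card data in a MySQL database labeled `mysqld_data_t`. If an Apache process is hijacked, the attacker gains control of an `httpd_t` process. The attacker can then read `httpd_sys_content_t` files and write to `httpd_sys_content_rw_t` files. However, the attacker is not allowed to read the credit card data (`mysqld_data_t`), even if the Apache process is running as root. In this case SELinux mitigates the consequences of the break-in.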

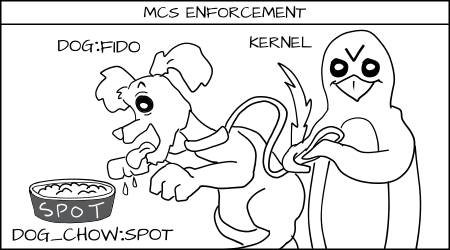

### Multi-category security enforcement

_An analogy_

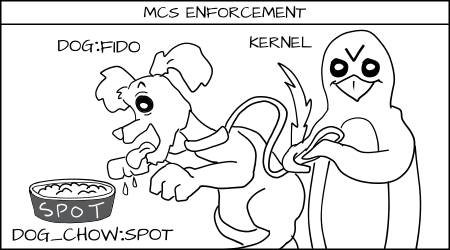

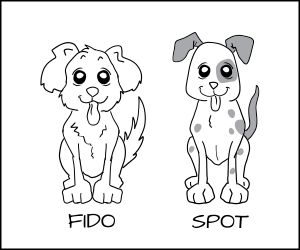

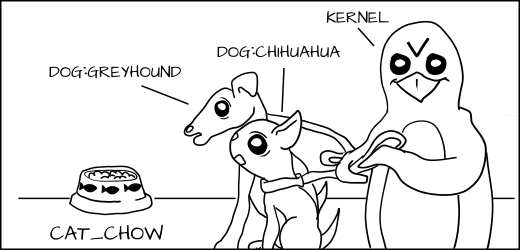

Above, we typed the dog process and the cat process. But what if you have several dog processes, Fido and Spot, and you want to stop Fido from eating Spot's `dog_chow`?

One solution would be to create lots of new types, such as `Fido_dog` and `Fido_dog_chow`. That quickly becomes unwieldy, though, because all the dogs have pretty much the same permissions.

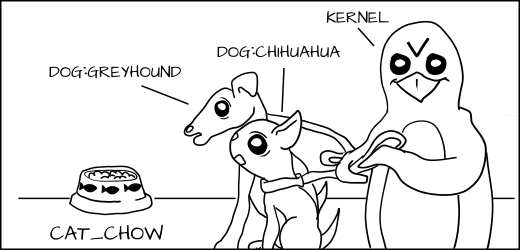

To solve this we invented a new form of enforcement called Multi Category Security (MCS). In MCS, we add another section to the labels of the dog processes and the dog food. Now we label the dog processes as `dog:random1(Fido)` and `dog:random2(Spot)`.

We label the dog food as `dog_chow:random1(Fido)` and `dog_chow:random2(Spot)`.

The MCS rule says that access is allowed only if the type enforcement rules are satisfied and the random MCS labels match exactly. Otherwise access is denied.

Fido (`dog:random1`) tries to eat `cat_chow:food` and is denied by type enforcement.

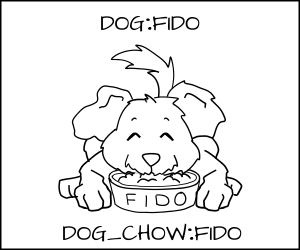

Fido (`dog:random1`) is allowed to eat `dog_chow:random1`.

Fido (`dog:random1`) is denied when it tries to eat Spot's food (`dog_chow:random2`).
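An MCS check can be sketched by extending the same toy model. It applies type enforcement first, then requires the category part of the two labels to match exactly. In this hypothetical sketch, `c1` and `c2` stand in for `random1` (Fido) and `random2` (Spot). Real SELinux labels carry more fields, which are omitted here.

```shell
#!/bin/sh
# Toy model of MCS layered on type enforcement; not real SELinux code.
# Labels are written TYPE:CATEGORY, e.g. dog:c1 (Fido) or dog_chow:c2 (Spot's food).
ALLOW_RULES='cat cat_chow:food eat
dog dog_chow:food eat'

te_check() {
    printf '%s\n' "$ALLOW_RULES" | grep -Fxq "$1 $2:$3 $4"
}

# mcs_check SUBJECT_LABEL OBJECT_LABEL CLASS PERM
# Access requires BOTH an allow rule for the types AND identical categories.
mcs_check() {
    subj_type=${1%%:*}
    subj_cat=${1#*:}
    obj_type=${2%%:*}
    obj_cat=${2#*:}
    te_check "$subj_type" "$obj_type" "$3" "$4" || return 1
    [ "$subj_cat" = "$obj_cat" ]
}
```

`mcs_check dog:c1 dog_chow:c1 food eat` succeeds. `mcs_check dog:c1 dog_chow:c2 food eat` fails, just as Fido is kept away from Spot's food.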

_A real-world example_

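In computer systems we often have many processes with the same access rights that we nevertheless want to keep separate from one another. This is sometimes called a multi-tenant environment. The best example is virtual machines. Suppose I have a server running many virtual machines and one of them is hijacked. I want to stop it from attacking the other virtual machines and their images. With type enforcement alone, however, KVM virtual machines are labeled `svirt_t` and images are labeled `svirt_image_t`. We allow `svirt_t` to read/write/delete content labeled `svirt_image_t`, so by type alone one VM could reach another VM's images. With libvirt we implemented not only type enforcement separation but also MCS separation. When libvirt is about to start a virtual machine, it picks a random MCS label such as `s0:c1,c2`. It then assigns the label `svirt_image_t:s0:c1,c2` to all the content the virtual machine needs to manage. Finally, it starts the virtual machine as `svirt_t:s0:c1,c2`. As a result, the SELinux kernel prevents `svirt_t:s0:c1,c2` from writing to `svirt_image_t:s0:c3,c4`. This holds even if an attacker takes over the virtual machine, and even if it is running as root.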
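On an SELinux host, commands such as `ps -eZ` and `ls -Z` show full labels of the form `user:role:type:level`. The level (for example `s0:c1,c2`) itself contains colons. The sketch below splits such labels and applies the simplified rule described above: a `svirt_t` process may touch a `svirt_image_t` file only when both carry the same MCS level. The helper names are hypothetical. The kernel's real check is policy-driven and more general than this exact-match comparison.

```shell
#!/bin/sh
# Split a full SELinux context USER:ROLE:TYPE:LEVEL.
# The level may itself contain colons (s0:c1,c2), so it is taken to be
# "everything after the third colon".
ctx_type() {
    rest=${1#*:}            # drop USER:
    rest=${rest#*:}         # drop ROLE:
    printf '%s\n' "${rest%%:*}"
}

ctx_level() {
    rest=${1#*:}
    rest=${rest#*:}
    printf '%s\n' "${rest#*:}"
}

# svirt_can_access PROCESS_CONTEXT FILE_CONTEXT
# Toy check mirroring the article: the types must be svirt_t -> svirt_image_t
# AND the MCS levels must be identical.
svirt_can_access() {
    [ "$(ctx_type "$1")" = svirt_t ] || return 1
    [ "$(ctx_type "$2")" = svirt_image_t ] || return 1
    [ "$(ctx_level "$1")" = "$(ctx_level "$2")" ]
}
```

With these helpers, `svirt_can_access system_u:system_r:svirt_t:s0:c1,c2 system_u:object_r:svirt_image_t:s0:c3,c4` fails. That is the cross-VM write the paragraph says SELinux blocks.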

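We use a [similar separation strategy][8] in OpenShift. Every gear (user/app process) has the same SELinux type, `openshift_t` (LCTT note: a gear is OpenShift's unit of measure). Policy rules control the access of the gear type, and a unique MCS label ensures that one gear cannot interfere with the others.

Watch [this short video][9] to see what happens when an OpenShift gear switches to root.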

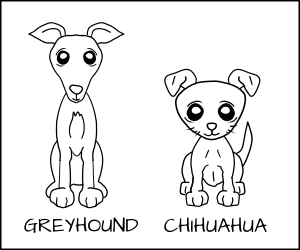

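### Multi-level security enforcement

Another, less frequently used form of SELinux enforcement is called Multi Level Security (MLS). It was developed back in the 1960s and is used mainly in trusted operating systems such as Trusted Solaris.

The core idea is to control processes based on the level of the data they use. A _secret_ process cannot read _top secret_ data.

MLS is very similar to MCS, except that it adds the concept of dominance to enforcement. MCS labels must match exactly, but one MLS label can dominate another MLS label and gain access.

_An analogy_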

Instead of talking about individual dogs by name, we now look at different breeds. We now have a Greyhound and a Chihuahua.

We might want to allow the Greyhound to eat any dog food. A Chihuahua, however, could choke if it tried to eat Greyhound dog food.

We label the Greyhound as `dog:Greyhound` and its food as `dog_chow:Greyhound`. We label the Chihuahua as `dog:Chihuahua` and its food as `dog_chow:Chihuahua`.

With the MLS policy, we can make the MLS Greyhound label dominate the Chihuahua label. This means `dog:Greyhound` is allowed to eat both `dog_chow:Greyhound` and `dog_chow:Chihuahua`.

But `dog:Chihuahua` is not allowed to eat `dog_chow:Greyhound`.

Of course, because of type enforcement, neither `dog:Greyhound` nor `dog:Chihuahua` is allowed to eat `cat_chow:Siamese`. This holds even if the MLS label Greyhound dominates Siamese.
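Dominance can be added to the toy model by giving each label a numeric level. Access is allowed when the subject's level is greater than or equal to the object's level. The mapping used here (Chihuahua = 0, Greyhound = 1) and the `mls_check` name are assumptions for illustration. The sketch models read-style access only. Type enforcement still runs first, so no dog reaches `cat_chow` whatever its level.

```shell
#!/bin/sh
# Toy model of MLS dominance layered on type enforcement; not real SELinux code.
# Labels are TYPE:LEVEL with a numeric level (hypothetical mapping:
# 0 = Chihuahua, 1 = Greyhound). A higher level dominates a lower one.
ALLOW_RULES='cat cat_chow:food eat
dog dog_chow:food eat'

te_check() {
    printf '%s\n' "$ALLOW_RULES" | grep -Fxq "$1 $2:$3 $4"
}

# mls_check SUBJECT_LABEL OBJECT_LABEL CLASS PERM
# Allowed only if TE allows the types AND the subject's level dominates
# (is greater than or equal to) the object's level.
mls_check() {
    subj_type=${1%%:*}
    subj_lvl=${1#*:}
    obj_type=${2%%:*}
    obj_lvl=${2#*:}
    te_check "$subj_type" "$obj_type" "$3" "$4" || return 1
    [ "$subj_lvl" -ge "$obj_lvl" ]
}
```

Here `mls_check dog:1 dog_chow:0 food eat` succeeds (Greyhound eats Chihuahua food). `mls_check dog:0 dog_chow:1 food eat` fails.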

_A real-world example_

Suppose there are two Apache servers: one running as `httpd_t:TopSecret` and one running as `httpd_t:Secret`. If the Apache process `httpd_t:Secret` is hijacked, the attacker can read `httpd_sys_content_t:Secret` but is prevented from reading `httpd_sys_content_t:TopSecret`.

However, if the Apache process running as `httpd_t:TopSecret` is hijacked, it can read both `httpd_sys_content_t:Secret` and `httpd_sys_content_t:TopSecret` data.

MLS is used on military systems. There, one user might be allowed to read _secret_ data, while another user on the same system can read _top secret_ data.

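### Conclusion

SELinux is a powerful labeling system that controls the access the kernel grants to each process. Its primary feature is type enforcement. In type enforcement, policy rules define a process's access based on the type the process is labeled with and the type the object is labeled with. Two additional controls were introduced: MCS, which separates processes that share the same type, and MLS, which allows dominance between processes.

_*All comics by [Máirín Duffy][6]_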

--------------------------------------------------------------------------------

About the author:

Daniel J Walsh - Daniel Walsh has worked in the computer security field for almost 30 years. Daniel joined Red Hat in August 2001.

-------------------------

via: https://opensource.com/business/13/11/selinux-policy-guide

Author: [Daniel J Walsh][a]

Translator: [xiaow6](https://github.com/xiaow6)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://opensource.com/users/rhatdan
[1]:https://opensource.com/resources/what-is-linux?src=linux_resource_menu
[2]:https://opensource.com/resources/what-are-linux-containers?src=linux_resource_menu
[3]:https://opensource.com/article/16/11/managing-devices-linux?src=linux_resource_menu
[4]:https://developers.redhat.com/promotions/linux-cheatsheet/?intcmp=7016000000127cYAAQ
[5]:https://opensource.com/tags/linux?src=linux_resource_menu
[6]:https://opensource.com/users/mairin
[7]:https://opensource.com/business/13/11/selinux-policy-guide?rate=XNCbBUJpG2rjpCoRumnDzQw-VsLWBEh-9G2hdHyB31I
[8]:http://people.fedoraproject.org/~dwalsh/SELinux/Presentations/openshift_selinux.ogv
[9]:http://people.fedoraproject.org/~dwalsh/SELinux/Presentations/openshift_selinux.ogv
[10]:https://opensource.com/user/16673/feed
[11]:https://opensource.com/business/13/11/selinux-policy-guide#comments
[12]:https://opensource.com/users/rhatdan

published/20160104 How to Change Linux IO Scheduler.md (normal file, 66 lines)

How to Change the Linux I/O Scheduler
==================================

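The Linux I/O scheduler, sometimes called the disk scheduler, is the process by which block I/O is issued to storage volumes. It works by managing a block device's request queue. It decides which I/O requests in the queue get higher priority and when to dispatch them to the block device. This reduces disk seek time and thereby improves system throughput.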

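The Linux I/O scheduling algorithms covered here are:

1. noop: commonly used for memory-based storage devices.
2. cfq: Completely Fair Queuing. Processes share I/O bandwidth evenly.
3. deadline: a latency-oriented scheduler. Every I/O request has a deadline by which it must be executed.
4. anticipatory: heuristic scheduling, similar to deadline but with an anticipation (prediction) mechanism added to improve performance.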

To check a device's current I/O scheduler:

```
# cat /sys/block/<Disk_Name>/queue/scheduler
```

Assuming the disk is `/dev/sdc`:

```
# cat /sys/block/sdc/queue/scheduler
noop anticipatory deadline [cfq]
```

The scheduler shown in square brackets is the one currently in use.
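The bracketed entry can be extracted with a short script. This sketch assumes a POSIX shell and `sed`. It reports the active scheduler for one line of input, or for every block device. `active_scheduler` and `show_schedulers` are hypothetical helper names.

```shell
#!/bin/sh
# active_scheduler: read one line in sysfs "scheduler" format on stdin and
# print the name inside square brackets (the scheduler currently in use).
active_scheduler() {
    sed -n 's/.*\[\([^]]*\)].*/\1/p'
}

# show_schedulers: print "DEVICE ACTIVE_SCHEDULER" for each block device
# that exposes a readable scheduler file; other devices are skipped.
show_schedulers() {
    for f in /sys/block/*/queue/scheduler; do
        [ -r "$f" ] || continue
        dev=${f#/sys/block/}    # e.g. sdc/queue/scheduler
        dev=${dev%%/*}          # e.g. sdc
        printf '%s %s\n' "$dev" "$(active_scheduler < "$f")"
    done
}
```

For the output shown above, `active_scheduler < /sys/block/sdc/queue/scheduler` prints `cfq`.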

### How to change a disk's I/O scheduler

Use the following command:

```
# echo {SCHEDULER-NAME} > /sys/block/<Disk_Name>/queue/scheduler
```

For example, to set the noop scheduler:

```
# echo noop > /sys/block/sdc/queue/scheduler
```
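The kernel rejects a scheduler name that the device does not list, so it can help to check the list before writing. Below is a hedged sketch of such a wrapper. The function name is hypothetical, and so is its optional third argument: an alternative sysfs root, useful only for testing against a fake directory tree.

```shell
#!/bin/sh
# set_scheduler DISK NAME [SYSFS_ROOT]
# Hypothetical helper: switch DISK (e.g. sdc) to scheduler NAME, but only if
# NAME is listed as available. SYSFS_ROOT defaults to /sys and exists only so
# the function can be exercised against a fake directory tree.
set_scheduler() {
    disk=$1
    sched=$2
    root=${3:-/sys}
    f="$root/block/$disk/queue/scheduler"

    [ -w "$f" ] || { echo "cannot write $f (are you root?)" >&2; return 1; }

    # Strip the [ ] around the active entry, then look for an exact word match.
    available=$(sed 's/[][]//g' "$f")
    case " $available " in
        *" $sched "*) ;;
        *) echo "scheduler '$sched' not available for $disk: $available" >&2
           return 1 ;;
    esac

    printf '%s\n' "$sched" > "$f"
}
```

Run it as root, for example `set_scheduler sdc noop`. Like the `echo` above, the change lasts only until the next reboot.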

This setting is lost after a reboot. To make it persistent, add `elevator=noop` to the kernel boot parameters in `/boot/grub/menu.lst`:

#### 1. Back up the menu.lst file

```
cp -p /boot/grub/menu.lst /boot/grub/menu.lst-backup
```

#### 2. Update /boot/grub/menu.lst

Append `elevator=noop` to the end of the `kernel` line, for example:

```
kernel /vmlinuz-2.6.16.60-0.91.1-smp root=/dev/sysvg/root splash=silent splash=off showopts elevator=noop
```
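Editing `menu.lst` by hand is error-prone when there are several boot entries. The following sketch assumes GRUB legacy syntax, as in the example above, and a POSIX `awk`. It prints a copy of the file with the option appended to each `kernel` line that lacks an `elevator=` setting. `add_elevator` is a hypothetical name, and the original file is left untouched.

```shell
#!/bin/sh
# add_elevator MENU_LST SCHEDULER
# Print MENU_LST with " elevator=SCHEDULER" appended to every GRUB-legacy
# "kernel" line that does not already carry an elevator= option.
# The file itself is not modified; redirect the output and review it.
add_elevator() {
    awk -v opt="elevator=$2" '
        /^[ \t]*kernel[ \t]/ && !/elevator=/ { $0 = $0 " " opt }
        { print }
    ' "$1"
}
```

For instance, run `add_elevator /boot/grub/menu.lst noop > /tmp/menu.lst.new`. Compare the two files with `diff` before copying the result into place, after taking the backup from step 1.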

--------------------------------------------------------------------------------

via: http://linuxroutes.com/change-io-scheduler-linux/

Author: [UX Techno][a]

Translator: [honpey](https://github.com/honpey)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://linuxroutes.com/change-io-scheduler-linux/

A Concise Guide to FirewallD on CentOS 7
============================================================

FirewallD is the firewall management tool available by default on CentOS 7 servers. Basically, it is a wrapper around iptables. It comes with a graphical configuration tool, firewall-config, and a command-line tool, `firewall-cmd`. With the iptables service, every change requires flushing the old rules and reading the new rules from `/etc/sysconfig/iptables`. firewalld, by contrast, applies only the parts that changed.

### FirewallD zones

FirewallD uses services and zones instead of iptables rules and chains.

By default, the following zones are available:

* **drop**: Drops all incoming network packets with no reply; only outgoing connections are possible.
* **block**: Rejects all incoming network packets with an ICMP host-prohibited message; only outgoing connections are possible.
* **public**: For use in public areas; only selected incoming connections are accepted.
* **external**: For external networks with address masquerading enabled; only selected incoming connections are accepted.
* **dmz**: For the DMZ (demilitarized zone), which has limited access to the internal network from outside; only selected incoming connections are accepted.
* **work**: For computers in your work area; only selected incoming connections are accepted.
* **home**: For computers in your home area; only selected incoming connections are accepted.
* **internal**: For computers on your internal network; only selected incoming connections are accepted.
* **trusted**: All network connections are accepted.

To list all available zones, run:

```
# firewall-cmd --get-zones
work drop internal external trusted home dmz public block
```

List the default zone:

```
# firewall-cmd --get-default-zone
public
```

Change the default zone:

```
# firewall-cmd --set-default-zone=dmz
# firewall-cmd --get-default-zone
dmz
```

### FirewallD services

FirewallD services use XML configuration files that record service information for firewalld.

List all available services:

```
# firewall-cmd --get-services
amanda-client amanda-k5-client bacula bacula-client ceph ceph-mon dhcp dhcpv6 dhcpv6-client dns docker-registry dropbox-lansync freeipa-ldap freeipa-ldaps freeipa-replication ftp high-availability http https imap imaps ipp ipp-client ipsec iscsi-target kadmin kerberos kpasswd ldap ldaps libvirt libvirt-tls mdns mosh mountd ms-wbt mysql nfs ntp openvpn pmcd pmproxy pmwebapi pmwebapis pop3 pop3s postgresql privoxy proxy-dhcp ptp pulseaudio puppetmaster radius rpc-bind rsyncd samba samba-client sane smtp smtps snmp snmptrap squid ssh synergy syslog syslog-tls telnet tftp tftp-client tinc tor-socks transmission-client vdsm vnc-server wbem-https xmpp-bosh xmpp-client xmpp-local xmpp-server
```

The XML configuration files are stored in the `/usr/lib/firewalld/services/` and `/etc/firewalld/services/` directories.

### Configuring your firewall with FirewallD

As an example, suppose you run a web server, an SSH service on port 7022, and a mail service. You can configure your server with FirewallD like this:

First, set the default zone to dmz.

```
# firewall-cmd --set-default-zone=dmz
# firewall-cmd --get-default-zone
dmz
```

Add permanent HTTP and HTTPS rules to the dmz zone:

```
# firewall-cmd --zone=dmz --add-service=http --permanent
# firewall-cmd --zone=dmz --add-service=https --permanent
```

Open port 25 (SMTP) and port 465 (SMTPS):

```
firewall-cmd --zone=dmz --add-service=smtp --permanent
firewall-cmd --zone=dmz --add-service=smtps --permanent
```

Open the IMAP, IMAPS, POP3, and POP3S ports:

```
firewall-cmd --zone=dmz --add-service=imap --permanent
firewall-cmd --zone=dmz --add-service=imaps --permanent
firewall-cmd --zone=dmz --add-service=pop3 --permanent
firewall-cmd --zone=dmz --add-service=pop3s --permanent
```

Because the SSH port has been changed to 7022, remove the ssh service (port 22) and open port 7022:

```
firewall-cmd --remove-service=ssh --permanent
firewall-cmd --add-port=7022/tcp --permanent
```

Rules added with `--permanent` go into the permanent configuration and take effect only after a reload. To apply these changes, reload the firewall:

```
firewall-cmd --reload
```
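The individual commands above can be collected into one script. This is a sketch, not part of FirewallD. The `run` and `configure_dmz` functions and the `DRY_RUN` switch are hypothetical. By default the script only prints the `firewall-cmd` commands so you can review them. To apply them, run it as root with `DRY_RUN=0`.

```shell
#!/bin/sh
# Hypothetical wrapper around the steps above. With DRY_RUN=1 (the default
# here) each firewall-cmd command is only printed; with DRY_RUN=0 it is run.
DRY_RUN=${DRY_RUN:-1}

run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "$*"
    else
        "$@"
    fi
}

configure_dmz() {
    run firewall-cmd --set-default-zone=dmz || return 1
    # Web and mail services, added permanently to the dmz zone.
    for svc in http https smtp smtps imap imaps pop3 pop3s; do
        run firewall-cmd --zone=dmz --add-service="$svc" --permanent || return 1
    done
    # SSH was moved to port 7022 in this example.
    run firewall-cmd --remove-service=ssh --permanent || return 1
    run firewall-cmd --add-port=7022/tcp --permanent || return 1
    # Permanent rules only take effect after a reload.
    run firewall-cmd --reload
}
```

Before a real run, make sure `sshd` actually listens on port 7022. Otherwise, removing the `ssh` service and reloading can lock you out of the server.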

Finally, list the rules:

```
# firewall-cmd --list-all
dmz
target: default
icmp-block-inversion: no
...
rich rules:
```

* * *

PS. If you liked this post, please leave a reply below. Thanks.

--------------------------------------------------------------------------------

via: https://www.rosehosting.com/blog/set-up-and-configure-a-firewall-with-firew

Author: [rosehosting.com][a]

Translator: [Locez](https://github.com/locez)

Proofreader: [jasminepeng](https://github.com/jasminepeng)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

published/20170214 CentOS-vs-Ubuntu.md (normal file, 83 lines)

What Is the Difference Between CentOS and Ubuntu?
============


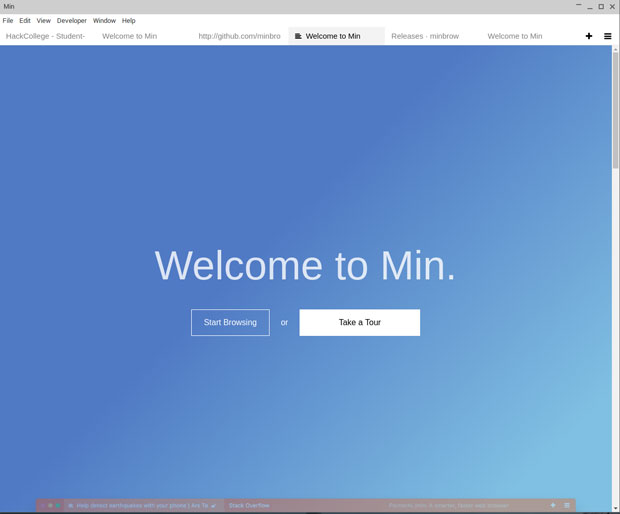

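The choices in Linux seem almost "endless", because anyone can build a Linux system by modifying an existing distribution or by [building one from scratch][7] (LFS).

When choosing a Linux distribution, the factors we care about include the user interface, file system, package distribution, new features, release cycle, and maintainability.

In this article we discuss two well-known Linux distributions. More precisely, we cover the differences between them and the areas in which one is better than the other.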

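### What is CentOS?

CentOS (Community Enterprise Operating System) is a community-supported Linux distribution derived from, and compatible with, Red Hat Enterprise Linux (RHEL). CentOS can therefore be thought of as a free version of RHEL. Each CentOS release is maintained for 10 years, and a new version comes out every 2 years. On January 8, 2014, [CentOS announced that it was officially joining Red Hat](https://linux.cn/article-2453-1.html). It is now governed by a new CentOS board but remains independent of RHEL.

Further reading: [How to install CentOS?][1]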

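#### CentOS history and first release

[CentOS][8] was first released in 2004 as cAOs Linux, an RPM-based distribution maintained and managed by the community.

It combined many aspects of Debian, Red Hat Linux/Fedora, FreeBSD, and others, so that servers and clusters could run stably for 3 to 5 years. It had a following among open source developers and was part of a larger organization, the CAOS Foundation.

In June 2006, David Parsley announced that TAO Linux, another RHEL clone he had developed, was being retired and that he was moving his full effort to CentOS development. The move did not affect existing TAO users, because they could migrate to CentOS simply by updating their systems with `yum update`.

In January 2014, Red Hat began sponsoring the CentOS project, and ownership and trademarks were transferred to Red Hat.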

#### CentOS design

Strictly speaking, CentOS is a clone of the paid RHEL (Red Hat Enterprise Linux) release. Red Hat publishes the RHEL source code, which CentOS then modifies (removing trademarks and logos) and polishes into the final product.

### Ubuntu

Ubuntu is a Debian-based Linux operating system used on desktops, servers, smartphones, tablets, and more. Ubuntu is released by a UK company called Canonical Ltd., which was founded and funded by South African entrepreneur Mark Shuttleworth.

Further reading: [10 things to do after installing Ubuntu 16.10][2]

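#### Ubuntu design

Ubuntu is an open source distribution built through the joint efforts of developers around the world. Over years of careful development, its interface has become more modern and user-friendly. The whole system runs more smoothly and securely, and thousands of applications are available for download.

Because it is based on [Debian][10], it supports .deb packages as well as the newer and more secure [snap package format (snappy)][11].

This new packaging system lets applications ship with the dependencies they need.

Further reading: [Review of Unity 8 in Ubuntu 16.10][3]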

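### Differences between CentOS and Ubuntu

* Ubuntu is based on Debian; CentOS is based on RHEL.
* Ubuntu uses .deb and .snap packages; CentOS uses .rpm and flatpak packages.
* Ubuntu uses apt for updates; CentOS uses yum.
* CentOS appears more stable because it does not update packages as routinely as Ubuntu does. That does not mean Ubuntu is less secure than CentOS.
* Ubuntu has more documentation and more free help with questions and information.
* Ubuntu Server has more support for cloud and container deployments.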
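The apt/yum difference shows up in a small script that picks the update command from `/etc/os-release`, the file both distributions use to identify themselves. This is a hedged sketch. `update_command` is a hypothetical name, the script covers only the two families discussed here, and it prints the command rather than running it.

```shell
#!/bin/sh
# update_command [OS_RELEASE_FILE]
# Read the ID= field (quoted or unquoted) from os-release and print the
# package-update command for that distribution family.
update_command() {
    file=${1:-/etc/os-release}
    id=$(sed -n 's/^ID="\{0,1\}\([^"]*\)"\{0,1\}$/\1/p' "$file")
    case "$id" in
        ubuntu|debian) echo "apt-get update && apt-get upgrade" ;;
        centos|rhel)   echo "yum update" ;;
        *) echo "unknown distribution: $id" >&2; return 1 ;;
    esac
}
```

On a CentOS 7 host, `update_command` prints `yum update`. On Ubuntu it prints the apt-get pair.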

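### Conclusion

Whichever you choose, **Ubuntu or CentOS**, both are excellent, stable distributions. If you want a shorter release cycle, choose Ubuntu. If you want packages that change less often, choose CentOS. Tell us in the comments below which one you prefer!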

--------------------------------------------------------------------------------

via: http://www.linuxandubuntu.com/home/centos-vs-ubuntu

Author: [linuxandubuntu.com][a]

Translator: [Meditator-hkx](http://www.kaixinhuang.com)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.linuxandubuntu.com/home/centos-vs-ubuntu
[1]:http://www.linuxandubuntu.com/home/how-to-install-centos
[2]:http://www.linuxandubuntu.com/home/10-things-to-do-after-installing-ubuntu-16-04-xenial-xerus
[3]:http://www.linuxandubuntu.com/home/linuxandubuntu-review-of-unity-8-preview-in-ubuntu-1610
[4]:http://www.linuxandubuntu.com/home/centos-vs-ubuntu
[5]:http://www.linuxandubuntu.com/home/centos-vs-ubuntu
[6]:http://www.linuxandubuntu.com/home/centos-vs-ubuntu#comments
[7]:http://www.linuxandubuntu.com/home/how-to-create-a-linux-distro
[8]:http://www.linuxandubuntu.com/home/10-things-to-do-after-installing-centos
[9]:http://www.linuxandubuntu.com/home/linuxandubuntu-review-of-unity-8-preview-in-ubuntu-1610
[10]:https://www.debian.org/
[11]:https://en.wikipedia.org/wiki/Snappy_(package_manager)

How to Install Ubuntu with Separate Root and Home Hard Drives
============================================================

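There are two different ways to install Linux. The first is to install it on a super-fast solid-state drive, which gives fast boot times and fast data access. The second is to install it on a slower but powerful traditional hard drive. Such a drive spins fast and has a large capacity, so it can store plenty of applications and data.

However, as many Linux users know, solid-state drives are great but expensive, while traditional hard drives are large but slow. What if I told you that you could use both kinds of drive for one Linux installation? A fast, modern SSD would run the Linux system, and a large hard drive would store everything else.

In this article I explain how to install Ubuntu with separate root and home directories: the root directory on an SSD (solid-state drive) and the home directory on a traditional hard drive.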

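### No spare hard drive? Try an SD card!

Installing Linux across multiple drives is great, and every advanced user should learn how to do it. There is also another situation that calls for this kind of installation: a laptop with little storage. Maybe you have an inexpensive laptop running Linux with no spare hard drive, but it does have an SD card slot.

This tutorial covers that kind of machine too. Instead of a second hard drive, you can buy a fast SD card for the laptop and keep your home directory on it.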

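### Making a USB boot disk

First, go to [this site][11] and download the latest version of Ubuntu Linux. Then download [Etcher][12], a USB image-writing tool. It is very easy to use and supports all major operating systems. You also need a USB drive of at least 2 GB.

Once Etcher is installed, open it. Click the "Select Image" button to start. You will be prompted to browse for an ISO image. Find the Ubuntu ISO file you downloaded earlier and select it. Next, insert the USB drive; Etcher should select it automatically. Then click the "Flash!" button, and the creation of the Ubuntu boot disk begins.

To boot Ubuntu, you need to configure the BIOS so that the computer can boot from the newly created Ubuntu boot disk. To enter the BIOS, restart the computer with the USB drive inserted and press the correct key (Del, F2, or whichever key applies to your computer). Find the option to boot from USB and enable it.

If your PC does not support booting from USB, burn the Ubuntu image to a DVD instead.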

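### Installation

When Ubuntu first loads from the boot disk, the welcome screen offers two options. Choose "Install Ubuntu". On the next page, the Ubiquity installer asks you to choose some options. These options are not mandatory and can be skipped. Ticking both is recommended, though, because it saves time after installation, especially for installing MP3 codecs and updating the system. (LCTT note: of course, if your network connection is slow, you may prefer to leave them unticked.)

After ticking both options on the "Preparing to install Ubuntu" page, choose the installation type. There are many installation types, but this tutorial needs the custom one. To reach the custom installation page, select "Something else" and click "Continue".

The Ubuntu custom partitioning tool now appears. It lists any and all disks on which Ubuntu can be installed. If both hard drives are available, both are shown, and so is an SD card if one is inserted.

Select the drive for the root file system. If it already has a partition table, the editor shows its partitions; use the partitioning tool to delete them all. If the drive is neither formatted nor partitioned, select it with the mouse and click "New Partition Table". Do this for every drive so that each has a partition table. (LCTT note: if a drive holds data you need, back it up first; repartitioning destroys it permanently.)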

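Now that every drive has a partition table (and its old partitions are deleted), you can start the configuration. Select the free space under the first drive and click the plus button to create a new partition. A "Create partition" window appears. Let the tool use the whole drive. Then go to the "Mount point" drop-down menu, select `/` (root) as the mount point, and click OK to confirm.

Do the same for the second drive, this time choosing `/home` as the mount point. Once both drives are set up, select the drive to install the boot loader on and click "Install Now". The installation then begins.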

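From here on, the installation is standard: create a user name, choose a time zone, and so on.

**Note:** Are you installing in UEFI mode? If so, you need a 512 MB FAT32 boot partition, created before any other partition. In the Ubuntu installer, set this partition to be used as an "EFI System Partition"; Ubuntu mounts it at `/boot/efi`.

If you need a swap partition, create it on the first drive before creating the `/` partition. Click the "+" button, enter the desired size, and choose "swap area" from the drop-down menu.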
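After the first boot, you can confirm that root and home really ended up on different drives. `findmnt /` and `findmnt /home` (from util-linux) show the live mounts. The sketch below reads `/etc/fstab` instead, which the installer fills in. `mount_source` is a hypothetical helper, and its fstab parsing is deliberately simple: whitespace-separated fields and `#` comments.

```shell
#!/bin/sh
# mount_source MOUNTPOINT [FSTAB]
# Print the device or UUID that FSTAB (default /etc/fstab) mounts at
# MOUNTPOINT, ignoring comment lines.
mount_source() {
    awk -v mp="$1" '$1 !~ /^#/ && $2 == mp { print $1 }' "${2:-/etc/fstab}"
}
```

On a successful install, `mount_source /` and `mount_source /home` should print two different devices or UUIDs: one on the SSD, the other on the hard drive or SD card.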

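### Conclusion

The best thing about Linux is that you can configure it to suit your needs. How many other operating systems let you split the file system across different hard drives? Not many, that's for sure. I hope this guide shows you the real power Ubuntu has to offer.

Do you use multiple drives when installing Ubuntu? Let us know in the comments below.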

--------------------------------------------------------------------------------

via: https://www.maketecheasier.com/install-ubuntu-with-different-root-home-hard-drives/

Author: [Derrik Diener][a]

Translator: [ucasFL](https://github.com/ucasFL)

Proofreader: [jasminepeng](https://github.com/jasminepeng)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.maketecheasier.com/author/derrikdiener/
[1]:https://www.maketecheasier.com/author/derrikdiener/
[2]:https://www.maketecheasier.com/install-ubuntu-with-different-root-home-hard-drives/#respond
[3]:https://www.maketecheasier.com/category/linux-tips/
[4]:http://www.facebook.com/sharer.php?u=https%3A%2F%2Fwww.maketecheasier.com%2Finstall-ubuntu-with-different-root-home-hard-drives%2F
[5]:http://twitter.com/share?url=https%3A%2F%2Fwww.maketecheasier.com%2Finstall-ubuntu-with-different-root-home-hard-drives%2F&text=How+to+Install+Ubuntu+with+Separate+Root+and+Home+Hard+Drives
[6]:mailto:?subject=How%20to%20Install%20Ubuntu%20with%20Separate%20Root%20and%20Home%20Hard%20Drives&body=https%3A%2F%2Fwww.maketecheasier.com%2Finstall-ubuntu-with-different-root-home-hard-drives%2F
[7]:https://www.maketecheasier.com/byb-dimmable-eye-care-desk-lamp/
[8]:https://www.maketecheasier.com/download-appx-files-from-windows-store/
[9]:https://support.google.com/adsense/troubleshooter/1631343
[10]:http://www.maketecheasier.com/tag/ssd
[11]:http://ubuntu.com/download
[12]:https://etcher.io/

published/20170227 How to setup a Linux server on Amazon AWS.md (normal file, 139 lines)

How to Set Up a Linux Server on Amazon AWS
============================================================

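AWS (Amazon Web Services) is one of the world's leading cloud server providers. With the AWS platform you can set up a server within a minute. On AWS you can fine-tune many technical details of the server, such as the number of CPUs, memory and disk space, and disk type (faster SSD or classic IDE). The best thing about AWS is that you pay only for the services you use. To get you started, AWS offers a special account called "Free Tier". It lets you use AWS services free of charge for one year, with some small limits. For example, you may not use more than 750 server hours per month; beyond that you will be charged. You can check all the related rules on the [AWS website][3].

Since this article is about creating a Linux server on AWS, a "Free Tier" account is a prerequisite. To sign up for one, use this [link][4]. Note that you need to enter credit card details when creating the account.

Let's assume you have already created a "Free Tier" account.

Before continuing, you need to know a few AWS terms to understand the setup:

1. EC2 (Elastic Compute Cloud): the term used for virtual machines.
2. AMI (Amazon Machine Image): the operating system image an instance is launched from.
3. EBS (Elastic Block Store): a type of storage environment in AWS.

Log in to the AWS console at [https://console.aws.amazon.com/][5].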

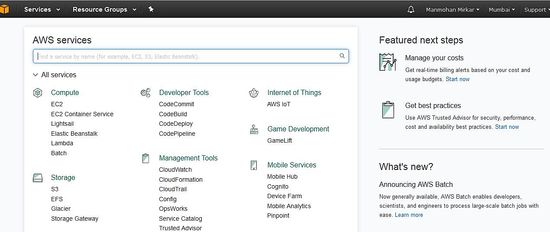

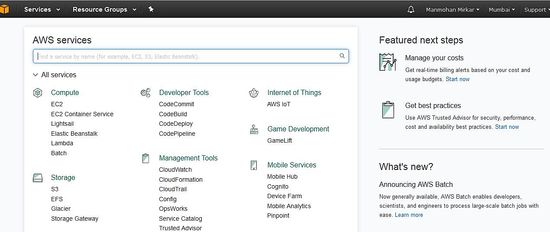

The AWS console looks like this:

### Setting up a Linux VM on AWS

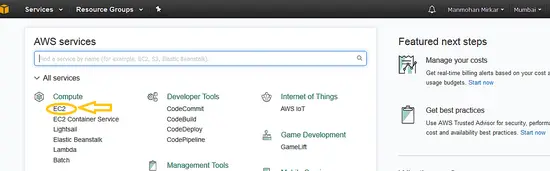

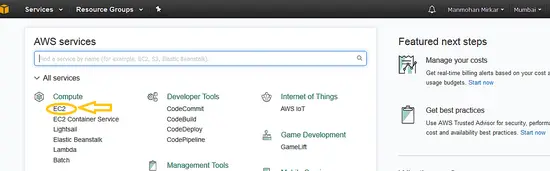

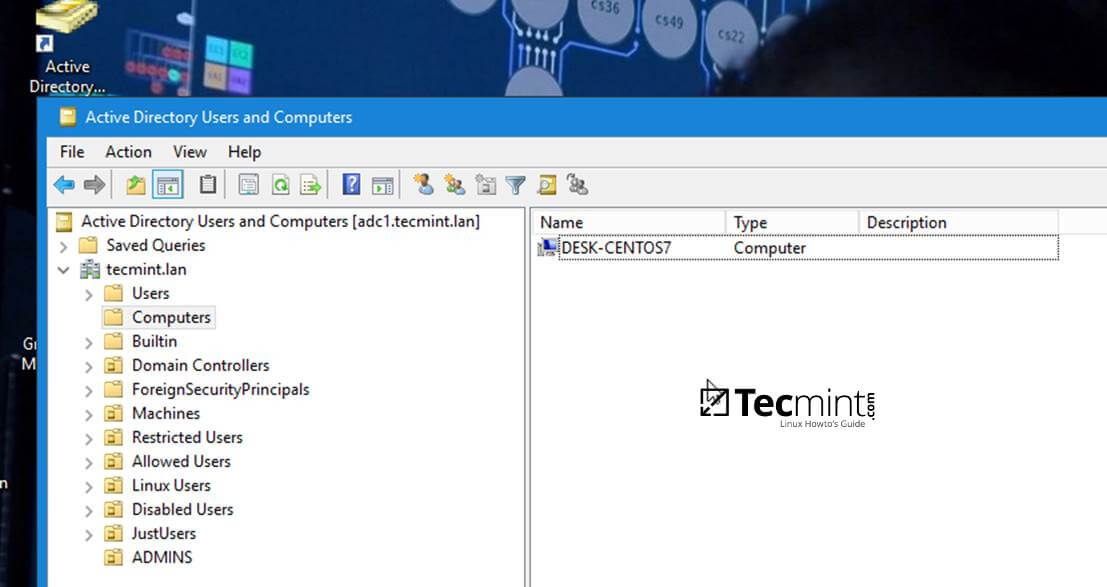

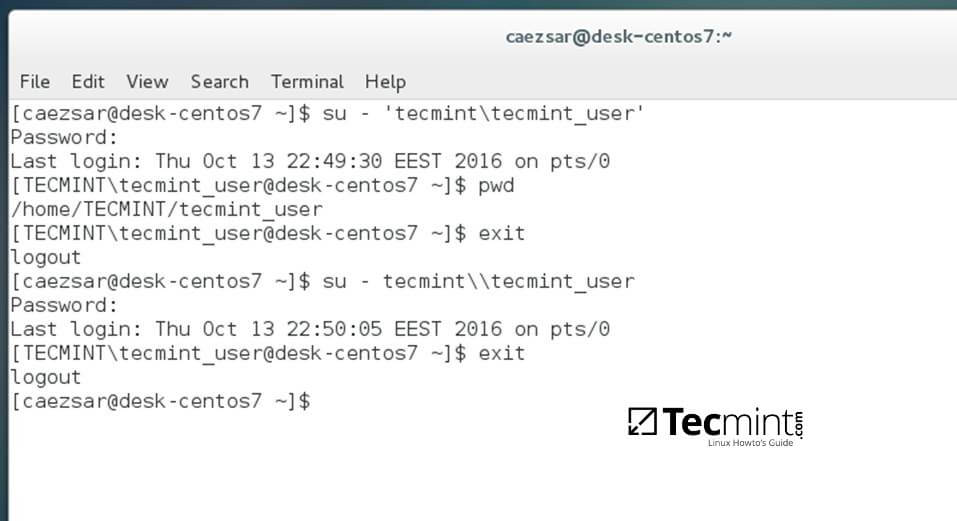

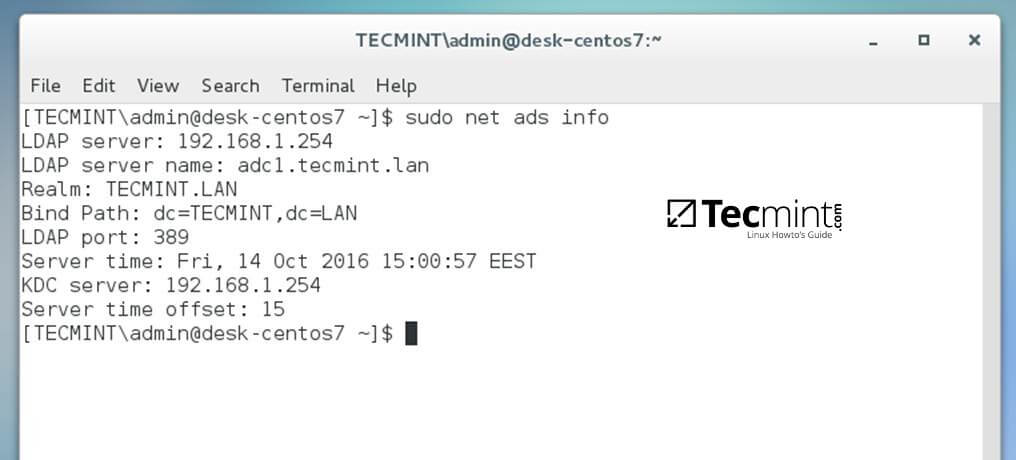

1. Create an EC2 (virtual machine) instance. Before installing the system, you must create a virtual machine in AWS. To create one, click EC2 under the "Compute" menu:

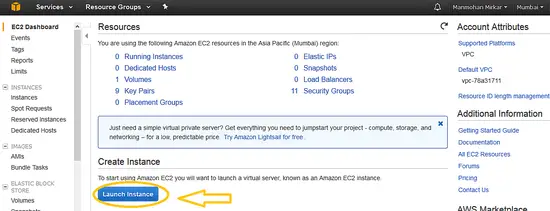

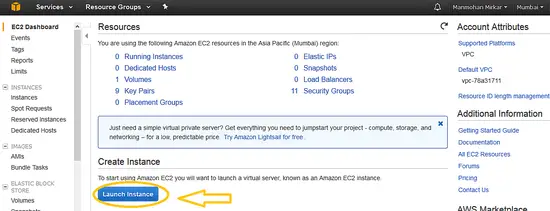

2. Under "Create Instance", click the "Launch Instance" button.

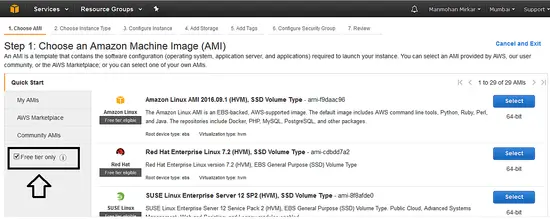

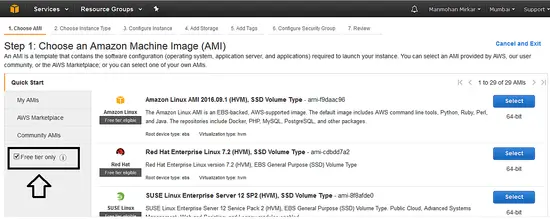

3. Since you are using a "Free Tier" account, it is best to select the "Free Tier" radio button so that AWS filters out the instances you can use for free. This keeps you from paying for AWS resources.

4. To continue, choose the following options:

a. In the classic instance wizard, choose an AMI (Amazon Machine Image), and use **Red Hat Enterprise Linux 7.2 (HVM), SSD storage**.

b. Choose "**t2.micro**" as the instance type.

c. **Configure Instance Details**: don't change anything; just click Next.

d. **Add Storage**: don't change anything; just click Next, since we will use the default 10 GiB disk for now.

e. **Add Tags**: don't change anything; just click Next.

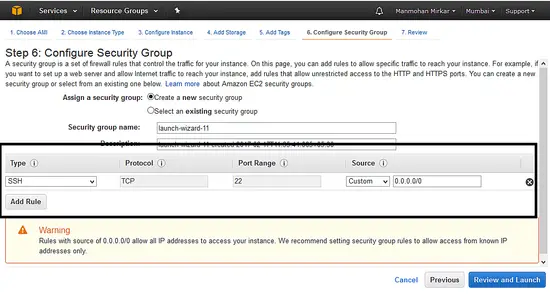

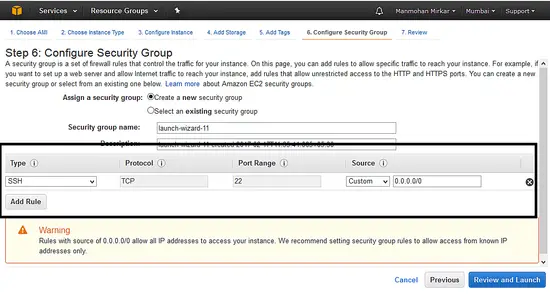

f、 **配置安全组**:现在选择用于 ssh 的 22 端口,以便你可以在任何地方访问此服务器。

|

||||

|

||||

[

|

||||

|

||||

][10]

|

||||

|

||||

g. Click the "Review and Launch" button.

h. If all the details are correct, click the "Launch" button.

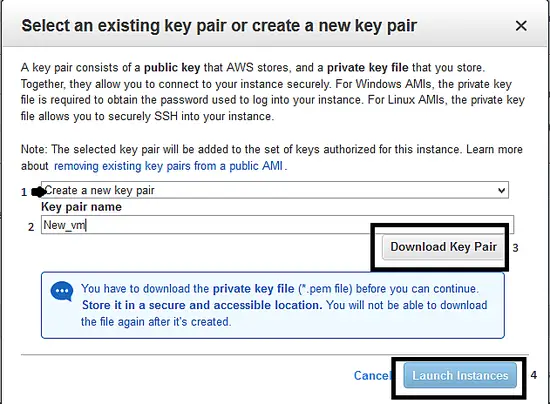

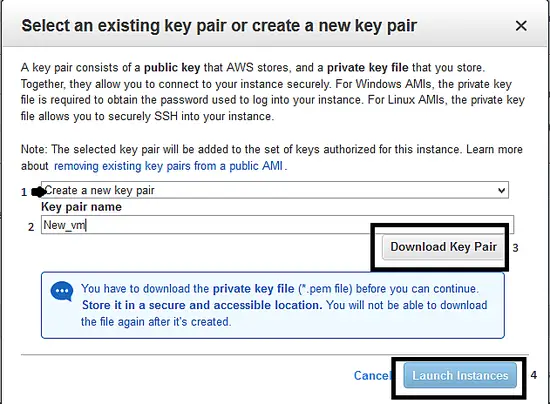

i. After you click "Launch", a window like the one below pops up for creating a key pair: select "Create a new key pair", give the key pair a name, and download it. You will need this key pair to connect to the server over SSH. Finally, click the "Launch Instances" button.

![Create a new key pair][11]

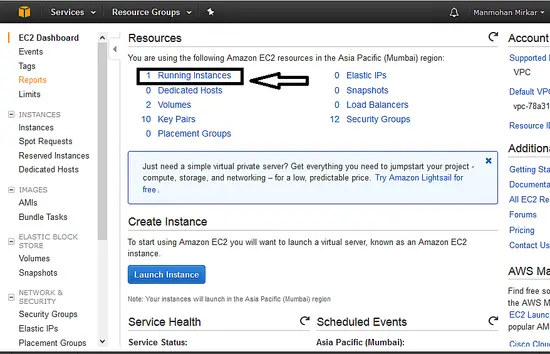

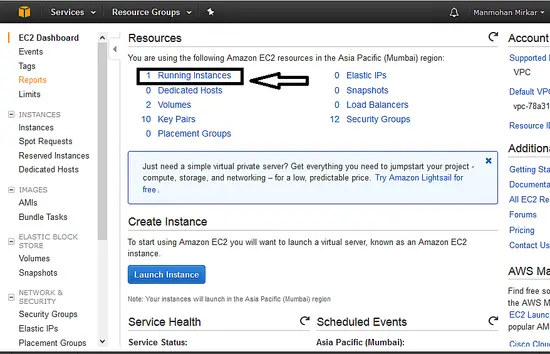

j. After clicking "Launch Instances", go to "Services" at the top left and choose "Compute" --> "EC2". Now click "Running Instances":

![Running instances][12]

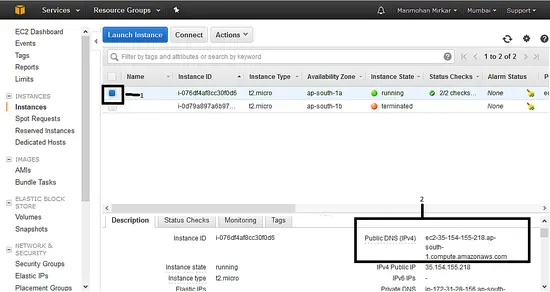

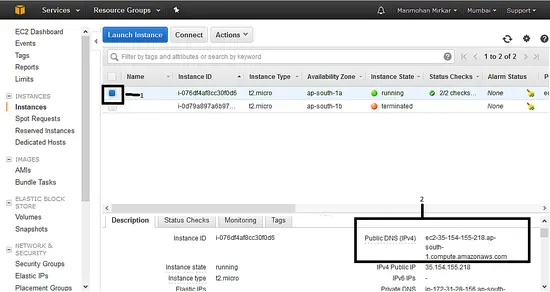

k. You can now see that the state of your new VM is "running". Select the instance and note down its "Public DNS" name, which you need to log in to the server.

![Public DNS value][13]

You have now finished creating a VM running Linux. To connect to the server, follow the steps below.
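
For readers who prefer the command line, the console wizard above can be approximated with the AWS CLI. This is a minimal sketch, not part of the original tutorial: it assumes the AWS CLI is installed and configured (`aws configure`). The function name `launch_free_tier_vm` and its three arguments (an AMI ID, the name of an existing key pair, and a security group ID that allows port 22) are hypothetical placeholders.

```shell
#!/usr/bin/env bash
# Sketch: a CLI counterpart of the console steps above. Assumes a configured AWS CLI.
# Arguments (all hypothetical placeholders):
#   $1 AMI ID (for example the RHEL 7.2 HVM image from step a)
#   $2 name of an existing key pair (step i)
#   $3 ID of a security group that allows SSH on port 22 (step f)
launch_free_tier_vm() {
    local ami_id="$1" key_name="$2" sg_id="$3" instance_id

    # Launch one t2.micro instance and keep only its instance ID.
    instance_id=$(aws ec2 run-instances \
        --image-id "$ami_id" \
        --count 1 \
        --instance-type t2.micro \
        --key-name "$key_name" \
        --security-group-ids "$sg_id" \
        --query 'Instances[0].InstanceId' \
        --output text) || return 1

    # Block until EC2 reports the instance state as "running" (step k).
    aws ec2 wait instance-running --instance-ids "$instance_id" || return 1

    # Print the Public DNS name used to log in.
    aws ec2 describe-instances \
        --instance-ids "$instance_id" \
        --query 'Reservations[0].Instances[0].PublicDnsName' \
        --output text
}
```

Usage, with placeholder IDs: `launch_free_tier_vm ami-xxxxxxxx my-key sg-xxxxxxxx`. The wizard in the console remains the simpler path for a one-off server.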

### Connect to an EC2 Instance from Windows

1. First, you need PuTTYgen and PuTTY (`putty.exe`) to connect to the server from Windows (on Linux, use the SSH command instead). You can download PuTTY from this [link][14].

2. Now open PuTTYgen: `puttygen.exe`.

3. Click the "Load" button, then browse to and select the key pair file (the .pem file) you downloaded from Amazon.

4. Select the "SSH-2 RSA" option, then click the "Save private key" button. Choose "Yes" in the pop-up window that follows.

5. Save the file with the `.ppk` extension.

6. Now open `putty.exe`. In the category tree on the left, expand "Connection", then "SSH", and select "Auth". Click the "Browse" button and select the .ppk file you saved in steps 4 and 5.

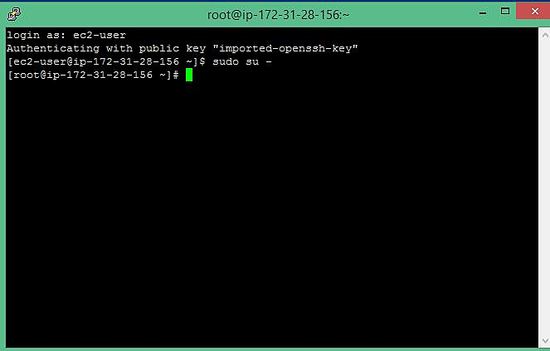

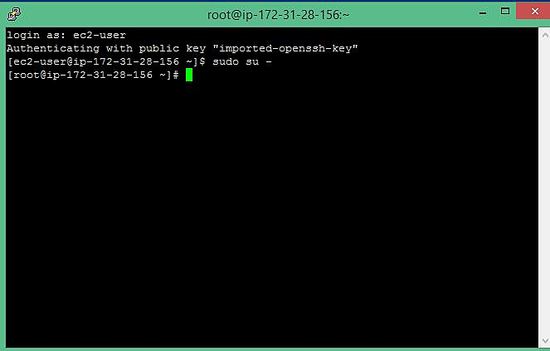

7. Now click "Session" in the category tree, paste the Public DNS value from step "k" of this tutorial into the "Host Name" field, and click the "Open" button.

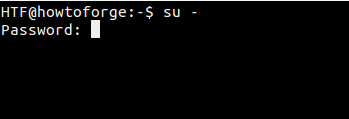

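8. When prompted for a login name, enter `ec2-user`. No password is needed because authentication uses the key you loaded in step 6. Then run the following command: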

```
$ sudo su -
```

Ha! You are now the master of a Linux server hosted in the AWS cloud.

![PuTTY session on the EC2 instance][15]
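
On Linux (or macOS), PuTTYgen is not needed, because OpenSSH can use the downloaded .pem file directly. The helper below is a hypothetical sketch: `ec2_connect`, the key path, and the host name are placeholders, and `ec2-user` is the default login name used in step 8.

```shell
#!/usr/bin/env bash
# Hypothetical helper: log in to the EC2 instance with the .pem key from step i.
#   $1 path to the downloaded key pair file, e.g. ~/my-key.pem
#   $2 the instance's Public DNS name from step k
#   $3 optional login name (defaults to ec2-user, as in step 8)
ec2_connect() {
    local key="$1" host="$2" user="${3:-ec2-user}"
    if [ ! -f "$key" ]; then
        echo "key file not found: $key" >&2
        return 1
    fi
    # OpenSSH refuses a private key that other users can read,
    # so restrict the file to its owner before using it.
    chmod 400 "$key" || return 1
    ssh -i "$key" "${user}@${host}"
}
```

For example, `ec2_connect ~/my-key.pem <public-dns-name>` opens the same shell as the PuTTY session, after which `sudo su -` works as shown above.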

--------------------------------------------------------------------------------


via: https://www.howtoforge.com/tutorial/how-to-setup-linux-server-with-aws/


Author: [MANMOHAN MIRKAR][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [jasminepeng](https://github.com/jasminepeng)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]:https://www.howtoforge.com/tutorial/how-to-setup-linux-server-with-aws/
[1]:https://www.howtoforge.com/tutorial/how-to-setup-linux-server-with-aws/#setup-a-linux-vm-in-aws
[2]:https://www.howtoforge.com/tutorial/how-to-setup-linux-server-with-aws/#connect-to-an-ec-instance-from-windows
[3]:http://aws.amazon.com/free/
[4]:http://aws.amazon.com/ec2/
[5]:https://console.aws.amazon.com/
[6]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_console.JPG
[7]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_console_ec21.png
[8]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_launch_ec2.png
[9]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_free_tier_radio1.png
[10]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_ssh_port1.png
[11]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_key_pair.png
[12]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_running_instance.png
[13]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_dns_value.png
[14]:http://www.chiark.greenend.org.uk/~sgtatham/putty/latest.html
[15]:https://www.howtoforge.com/images/how_to_setup_linux_server_with_aws/big/aws_putty1.JPG

@ -1,14 +1,13 @@

How to Install and Secure MariaDB 10 in CentOS 7
===========================================

**MariaDB** is a free and open-source fork of the MySQL database. It shares its design philosophy with MySQL and will remain free and open source in the future.

In this post, I will show how to install the stable release of **MariaDB 10.1** on the most widely used RHEL/CentOS and Fedora distributions.

For your information, Red Hat Enterprise Linux/CentOS 7.0 switched its default database from MySQL to MariaDB.

Note that in this article we assume you are working on the server as root, or can run any command with the [sudo][7] command.

### Step 1: Add the MariaDB Yum Repository

@ -39,6 +38,7 @@ baseurl = http://yum.mariadb.org/10.1/rhel7-amd64

gpgkey=https://yum.mariadb.org/RPM-GPG-KEY-MariaDB
gpgcheck=1
```

![Screenshot][8]

@ -52,19 +52,21 @@ gpgcheck=1

```
# yum install MariaDB-server MariaDB-client -y
```

![Install MariaDB in CentOS 7][9]

*Install MariaDB in CentOS 7*

3. Once the MariaDB packages are installed, start the database server daemon right away, and enable it to start automatically after the operating system reboots, with the following commands.

```
# systemctl start mariadb
# systemctl enable mariadb
# systemctl status mariadb
```

![Screenshot][10]
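
Right after `systemctl start`, the server may need a moment before it accepts connections. A small wait loop built on `mysqladmin ping` can bridge that gap before you run the security script in the next step; `mysqladmin ping` exits with status 0 whenever the server is up, even if it refuses the login. This is a sketch: the function name and the default of 30 one-second attempts are arbitrary choices.

```shell
#!/usr/bin/env bash
# Sketch: wait until the MariaDB server answers, retrying once per second.
#   $1 maximum number of attempts (default 30, an arbitrary choice)
wait_for_mariadb() {
    local tries="${1:-30}" i
    for (( i = 1; i <= tries; i++ )); do
        # "mysqladmin ping" returns 0 as soon as mysqld is running.
        if mysqladmin ping >/dev/null 2>&1; then
            echo "MariaDB is up after $i attempt(s)"
            return 0
        fi
        sleep 1
    done
    echo "MariaDB did not respond after $tries attempts" >&2
    return 1
}
```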

@ -73,7 +75,7 @@ gpgcheck=1

### Step 3: Secure MariaDB in CentOS 7

4. Now secure MariaDB: set a password for the MariaDB root account, disable remote root login, remove the test database and the anonymous test accounts, and finally reload the privileges, all with the command below.

```
# mysql_secure_installation

@ -84,13 +86,14 @@ gpgcheck=1

*Secure MySQL in CentOS 7*

5. After securing the database, you may want to check some MariaDB features, such as the installed version, the default program argument list, and logging in through the MariaDB command line, as shown below:

```
# mysql -V
# mysqld --print-defaults
# mysql -u root -p
```

![Screenshot][12]

@ -101,15 +104,15 @@ gpgcheck=1

If you are just starting to learn MySQL/MariaDB, work through the following guides:

1. [Learn MySQL / MariaDB for Beginners – Part 1][1]
2. [Learn MySQL / MariaDB for Beginners – Part 2][2]
3. [MySQL Basic Database Administration Commands – Part III][3]
4. [20 MySQL (Mysqladmin) Commands for Database Administration – Part IV][4]

Also check out the following articles to learn how to tune your MySQL/MariaDB server and use tools to monitor database activity.

1. [15 Tips to Tune and Optimize Your MySQL/MariaDB Performance][5]
2. [4 Useful Tools to Monitor MySQL/MariaDB Database Activities][6]

That's all for this article. It is a simple one, showing how to install the stable release of **MariaDB 10.1** on RHEL/CentOS and Fedora. You can send us any questions or thoughts you have using the comment form below.

@ -0,0 +1,99 @@

How to Change the Root Password of MySQL or MariaDB in Linux
============================================================

If you are [installing MySQL or MariaDB][1] for the first time, you can run the `mysql_secure_installation` script to apply basic security settings.

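One of these settings is the database root password, which must be kept secret and used only when required. At some point you may need to change it, for example when the database administrator changes, or gets fired!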

**Suggested Read:** [Recover MySQL or MariaDB Root Password in Linux][2]

This article will come in handy sooner or later. We will explain how to change the root password of a MySQL or MariaDB database server in Linux.

Although we will use a MariaDB server in this article, the instructions should also work for MySQL.

### Change the MySQL or MariaDB Root Password

Assuming you know the current root password and want to change it, first make sure MariaDB is running:

```
------------- CentOS/RHEL 7 and Fedora 22+ -------------
# systemctl is-active mariadb
------------- CentOS/RHEL 6 and Fedora -------------
# /etc/init.d/mysqld status
```

![Check MySQL Status][3]

*Check MySQL Status*

If the command above does not return the word `active`, the service is stopped, and you need to start it before proceeding:

```
------------- CentOS/RHEL 7 and Fedora 22+ -------------
# systemctl start mariadb
------------- CentOS/RHEL 6 and Fedora -------------
# /etc/init.d/mysqld start
```
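
The two cases above (systemd on CentOS/RHEL 7 and Fedora 22+, SysV init scripts on older releases) can be folded into one helper. This is a hypothetical sketch that assumes the systemd unit is named `mariadb` and the init script `mysqld`, as in the commands above. Adjust the names if your packages differ.

```shell
#!/usr/bin/env bash
# Sketch: start the database service only if it is not already running.
#   $1 systemd unit name (default: mariadb)
#   $2 SysV init script name (default: mysqld)
ensure_db_running() {
    local unit="${1:-mariadb}" script="${2:-mysqld}"
    if command -v systemctl >/dev/null 2>&1; then
        # CentOS/RHEL 7 and Fedora 22+: systemd manages the service.
        systemctl is-active --quiet "$unit" || systemctl start "$unit"
    else
        # CentOS/RHEL 6: fall back to the init script.
        "/etc/init.d/$script" status >/dev/null 2>&1 || "/etc/init.d/$script" start
    fi
}
```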

Next, log in to the database server as root:

```
# mysql -u root -p
```

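For compatibility with older versions, we will use the following statements to update the user table in the mysql database. Note that you need to replace `YourPasswordHere` with the new password you have chosen for root. On MySQL 5.7.6 and later, `mysql.user` no longer has a `password` column. On MariaDB 10.4 and later, `mysql.user` is a view that cannot be updated this way. On those versions, use `ALTER USER` instead.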

```
MariaDB [(none)]> USE mysql;
MariaDB [mysql]> UPDATE user SET password=PASSWORD('YourPasswordHere') WHERE User='root' AND Host = 'localhost';
MariaDB [mysql]> FLUSH PRIVILEGES;
```

To verify that the change worked, exit the current MariaDB session by typing:

```
MariaDB [mysql]> exit;
```

Then press Enter. You should now be able to connect to the server using the new password.

![Change MySQL/MariaDB Root Password][4]

*Change MySQL/MariaDB Root Password*
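
On newer servers, the `UPDATE` statement above no longer applies. The helper below is a hedged sketch that is not from the original article. It prints an `ALTER USER` statement for MariaDB 10.2+ and MySQL 5.7.6+, where that statement is available, and a `SET PASSWORD` statement for older releases such as the MariaDB 10.1 used here. The function names are hypothetical, and the script only prints SQL for you to paste at the MariaDB/MySQL prompt. Account-management statements like these take effect without `FLUSH PRIVILEGES`.

```shell
#!/usr/bin/env bash
# Sketch: print (not execute) SQL that changes root@localhost's password.
# Assumption: ALTER USER ... IDENTIFIED BY exists from MariaDB 10.2 and
# MySQL 5.7.6 onward; older servers get SET PASSWORD ... = PASSWORD(...).

# Escape a value for a single-quoted SQL string literal:
# double every backslash first, then double every single quote.
sql_quote() {
    printf '%s' "$1" | sed -e 's/\\/\\\\/g' -e "s/'/''/g"
}

# Succeed if version $1 (e.g. "10.1.48-MariaDB") is at least $2.$3.$4.
version_at_least() {
    local major minor patch
    IFS=. read -r major minor patch <<< "$1"
    patch="${patch%%[!0-9]*}"              # drop suffixes such as "-MariaDB"
    major=${major:-0} minor=${minor:-0} patch=${patch:-0}
    if (( major != $2 )); then (( major > $2 )); return; fi
    if (( minor != $3 )); then (( minor > $3 )); return; fi
    (( patch >= $4 ))
}

#   $1 server flavor: mariadb or mysql
#   $2 server version, e.g. 10.1.48 (as reported by SELECT VERSION();)
#   $3 the new root password
root_password_sql() {
    local flavor="$1" version="$2" quoted modern=1
    quoted=$(sql_quote "$3")
    case "$flavor" in
        mariadb) version_at_least "$version" 10 2 0 || modern=0 ;;
        mysql)   version_at_least "$version" 5 7 6  || modern=0 ;;
        *) echo "unknown flavor: $flavor" >&2; return 1 ;;
    esac
    if (( modern )); then
        printf "ALTER USER 'root'@'localhost' IDENTIFIED BY '%s';\n" "$quoted"
    else
        printf "SET PASSWORD FOR 'root'@'localhost' = PASSWORD('%s');\n" "$quoted"
    fi
}
```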

##### Summary

In this article we have explained how to change the MariaDB/MySQL root password. You may or may not have known this method already.

As always, if you have any questions or feedback, feel free to use the comment form below. We look forward to hearing from you!

--------------------------------------------------------------------------------


About the author:

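Gabriel Cánepa is a GNU/Linux sysadmin and web developer from Villa Mercedes, San Luis, Argentina. He works for a worldwide leading consumer product company and takes great pleasure in using FOSS tools to increase productivity in all areas of his daily work.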

--------------------------------------------------------------------------------


via: http://www.tecmint.com/change-mysql-mariadb-root-password/


Author: [Gabriel Cánepa][a]

Translator: [GOLinux](https://github.com/GOLinux)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]:http://www.tecmint.com/author/gacanepa/
[1]:http://www.tecmint.com/install-mariadb-in-centos-7/
[2]:http://www.tecmint.com/reset-mysql-or-mariadb-root-password/
[3]:http://www.tecmint.com/wp-content/uploads/2017/03/Check-MySQL-Status.png
[4]:http://www.tecmint.com/wp-content/uploads/2017/03/Change-MySQL-Root-Password.png
[5]:http://www.tecmint.com/author/gacanepa/
[6]:http://www.tecmint.com/10-useful-free-linux-ebooks-for-newbies-and-administrators/
[7]:http://www.tecmint.com/free-linux-shell-scripting-books/

@ -0,0 +1,111 @@

How to Install the Latest Python 3.6 Version in Linux
============================================================

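In this article I will show how to install and use Python 3.x on CentOS/RHEL 7, Debian and its derivatives such as Ubuntu (the latest Ubuntu 16.04 LTS release already ships with a recent Python 3 version), or Linux Mint. Our focus will be on installing the core language tools that can be used from the command line.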

Then we will also explain how to install Python IDLE, a GUI-based tool that allows us to run Python code and create standalone functions.

### Install Python 3.6 in Linux

At the time of this writing (mid-March 2017), the latest Python versions available in CentOS and Debian 8 are Python 3.4 and Python 3.5, respectively.

While we could install the core packages and their dependencies with [yum][1] and [aptitude][2] (or [apt-get][3]), here I will explain how to install Python from source instead.

Why? The reason is simple: this way we get the latest stable release of the language (3.6) and an installation method that does not depend on the Linux distribution.

Before installing Python on CentOS 7, make sure the system has all the necessary development dependencies:

```
# yum -y groupinstall development
# yum -y install zlib-devel
```

On Debian, we need to install gcc, make, and the zlib compression/decompression library:

```
# aptitude -y install gcc make zlib1g-dev
```

Run the following commands to install Python 3.6:

```
# wget https://www.python.org/ftp/python/3.6.0/Python-3.6.0.tar.xz
# tar xJf Python-3.6.0.tar.xz
# cd Python-3.6.0
# ./configure
# make && make install
```

Now relax, or go grab a sandwich if you are hungry, because this may take a while. When the installation is complete, use the `which` command to locate the main binary, and check its version:

```
# which python3
# python3 -V
```

The output of the commands above should be similar to this:

![Check Python Version in Linux][4]

*Check Python Version in Linux*

To exit the Python prompt, simply type:

```
quit()
# or
exit()
```

Then press Enter.

Congratulations! Python 3.6 is now installed on your system.
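
To double-check the build, the hypothetical helper below confirms two things: that the interpreter reports version 3.6 or later, and that its `zlib` module was compiled in. The `zlib` module depends on the zlib development package installed earlier. The function name and its single argument (the interpreter to test, default `python3`) are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Sketch: verify a Python build.
#   $1 interpreter to check (default: python3)
check_python() {
    local py="${1:-python3}" version major minor
    # "python3 -V" prints e.g. "Python 3.6.0" (older releases print to stderr).
    version=$("$py" -V 2>&1) || return 1
    version=${version#Python }
    IFS=. read -r major minor _ <<< "$version"
    if (( major < 3 || (major == 3 && minor < 6) )); then
        echo "found Python $version, need 3.6 or later" >&2
        return 1
    fi
    # Importing zlib fails if the zlib headers were missing at build time.
    if ! "$py" -c 'import zlib'; then
        echo "Python $version was built without zlib support" >&2
        return 1
    fi
    echo "OK: Python $version with zlib"
}
```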

### Install Python IDLE in Linux

Python IDLE is a GUI-based tool for Python. If you want to install Python IDLE, install the package called idle (Debian) or python-tools (CentOS):

```
# apt-get install idle          # On Debian
# yum install python-tools      # On CentOS
```

Type the following command to launch Python IDLE:

```
# idle
```

### Summary

In this article we have explained how to install the latest stable version of Python from source.

Last but not least, if you are coming from Python 2, you may want to look at the [official 2to3 documentation][5]. 2to3 is a program that reads Python 2 source code and transforms it into valid Python 3 code.

Do you have any questions or comments about this article? Feel free to reach us using the comment form below.

--------------------------------------------------------------------------------


About the author:

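Gabriel Cánepa is a GNU/Linux sysadmin and web developer from Villa Mercedes, San Luis, Argentina. He works for a worldwide leading consumer product company and takes great pleasure in using FOSS tools to increase productivity in all areas of his daily work.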

--------------------------------------------------------------------------------


via: http://www.tecmint.com/install-python-in-linux/


Author: [Gabriel Cánepa][a]

Translator: [ucasFL](https://github.com/ucasFL)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]:http://www.tecmint.com/author/gacanepa/
[1]:http://www.tecmint.com/20-linux-yum-yellowdog-updater-modified-commands-for-package-mangement/
[2]:http://www.tecmint.com/linux-package-management/
[3]:http://www.tecmint.com/useful-basic-commands-of-apt-get-and-apt-cache-for-package-management/
[4]:http://www.tecmint.com/wp-content/uploads/2017/03/Check-Python-Version-in-Linux.png
[5]:https://docs.python.org/3.6/library/2to3.html

@ -0,0 +1,154 @@

Kgif: A Simple Script to Create a GIF from the Active Window
============================================================

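[Kgif][2] is a simple shell script that creates a GIF file from the active window. I think the program was designed specifically for capturing terminal activity, and that is what I mostly use it for.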

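It captures the window's activity as a series of PNG images and then combines them into an animated GIF. The script takes a screenshot of the active window every 0.5 seconds. If that does not fit your requirements, you can modify the script to suit your needs.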
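
The capture-then-combine approach described above can be sketched in a few lines of shell. This is not Kgif's actual source, only a minimal illustration of the same idea under these assumptions: `scrot -u` grabs the currently focused window, and ImageMagick's `convert` joins the frames. The function names, the frame count, and the temporary file names are hypothetical.

```shell
#!/usr/bin/env bash
# Convert an interval in seconds (e.g. 0.5) to ImageMagick -delay ticks,
# which are 1/100 s by default; rounds to the nearest tick.
gif_ticks() {
    awk -v s="$1" 'BEGIN { printf "%d\n", s * 100 + 0.5 }'
}

# Sketch of the Kgif idea (not Kgif's own code).
#   $1 number of frames to capture (default 20)
#   $2 seconds between frames (default 0.5, the interval Kgif uses)
#   $3 output file (default terminal.gif, Kgif's default name)
capture_gif() {
    local frames="${1:-20}" interval="${2:-0.5}" out="${3:-terminal.gif}"
    local dir i status
    dir=$(mktemp -d) || return 1
    for (( i = 1; i <= frames; i++ )); do
        # -u captures only the currently focused window; zero-padded
        # names keep the frames in capture order when globbed below.
        scrot -u "$(printf '%s/frame-%04d.png' "$dir" "$i")" || break
        sleep "$interval"
    done
    # -delay sets the display time per frame; -loop 0 repeats forever.
    convert -delay "$(gif_ticks "$interval")" -loop 0 "$dir"/frame-*.png "$out"
    status=$?
    rm -rf "$dir"
    return "$status"
}
```

Keeping the playback delay equal to the capture interval makes the GIF play back at roughly real-time speed. Kgif's `--gifdelay` option exists to change exactly that.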

It was originally created to capture tty output and to make preview images for GitHub projects.

Make sure the scrot and ImageMagick packages are installed before running Kgif.

Suggested Read: [Peek - Create an Animated GIF Screen Recorder in Linux][3]

What is ImageMagick? ImageMagick is a command-line tool for converting and editing images. It supports a wide range of image formats (over 200), such as PNG, JPEG, JPEG-2000, GIF, TIFF, DPX, EXR, WebP, PostScript, PDF, and SVG.

What is Scrot? Scrot stands for SCReenshOT. It is an open-source command-line tool for capturing screenshots of the desktop, a terminal, or a specific window.

#### Install Dependencies

Kgif requires scrot and ImageMagick.

For Debian-based systems:

```
$ sudo apt-get install scrot imagemagick
```

For RHEL/CentOS-based systems:

```
$ sudo yum install scrot ImageMagick
```

For Fedora:

```
$ sudo dnf install scrot ImageMagick
```

For openSUSE:

```
$ sudo zypper install scrot ImageMagick
```

For Arch Linux-based systems:

```
$ sudo pacman -S scrot imagemagick
```

#### Install and Use Kgif

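Installing Kgif is easy because no installation is required. Just clone the source from the developer's GitHub page and run the `kgif.sh` file to capture the active window. By default, it waits 1 second before it starts capturing; you can change this with the `--delay` option. Finally, press `Ctrl + c` to stop capturing.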

```
$ git clone https://github.com/luminousmen/Kgif
$ cd Kgif
$ ./kgif.sh
Setting delay to 1 sec

Capturing...
^C
Stop capturing
Converting to gif...
Cleaning...
Done!
```

Check whether the dependencies are already installed on the system:

```
$ ./kgif.sh --check
OK: found scrot
OK: found imagemagick
```

Start capturing after a delay of N seconds:

```
$ ./kgif.sh --delay=5

Setting delay to 5 sec

Capturing...
^C
Stop capturing
Converting to gif...
Cleaning...
Done!
```

The script saves the output as `terminal.gif` and overwrites it every time it generates a new file, so I recommend adding the `--filename` option to save each capture under a different name.

```
$ ./kgif.sh --delay=5 --filename=2g-test.gif

Setting delay to 5 sec

Capturing...
^C
Stop capturing
Converting to gif...
Cleaning...
Done!
```

Use the `--noclean` option to keep the PNG screenshots:

```
$ ./kgif.sh --delay=5 --noclean
```

To see more options:

```
$ ./kgif.sh --help

usage: ./kgif.sh [--delay] [--filename ] [--gifdelay] [--noclean] [--check] [-h]
-h, --help Show this help, exit
--check Check if all dependencies are installed, exit
--delay= Set delay in seconds to specify how long script will wait until start capturing.
--gifdelay= Set delay in seconds to specify how fast images appears in gif.
--filename= Set file name for output gif.
--noclean Set if you don't want to delete source *.png screenshots.
```

Output captured with the default settings:

![Kgif default capture][4]

I felt the default capture was too fast, so I made some modifications and got a suitable output:

![Kgif capture with a modified delay][5]

--------------------------------------------------------------------------------


via: http://www.2daygeek.com/kgif-create-animated-gif-file-active-window-screen-recorder-capture-arch-linux-mint-fedora-ubuntu-debian-opensuse-centos/


Author: [MAGESH MARUTHAMUTHU][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]:http://www.2daygeek.com/author/magesh/
[1]:http://www.2daygeek.com/author/magesh/
[2]:https://github.com/luminousmen/Kgif
[3]:http://www.2daygeek.com/peek-create-animated-gif-screen-recorder-capture-arch-linux-mint-fedora-ubuntu/
[4]:http://www.2daygeek.com/wp-content/uploads/2017/03/kgif-test.gif
[5]:http://www.2daygeek.com/wp-content/uploads/2017/03/kgif-test-delay-modified.gif

@ -0,0 +1,100 @@

ELRepo - Community Repo for Enterprise Linux (RHEL, CentOS & SL)
============================================================

If you are using an Enterprise Linux distribution (Red Hat Enterprise Linux or one of its derivatives, such as CentOS or Scientific Linux) and need support for specific or new hardware, you are in the right place.

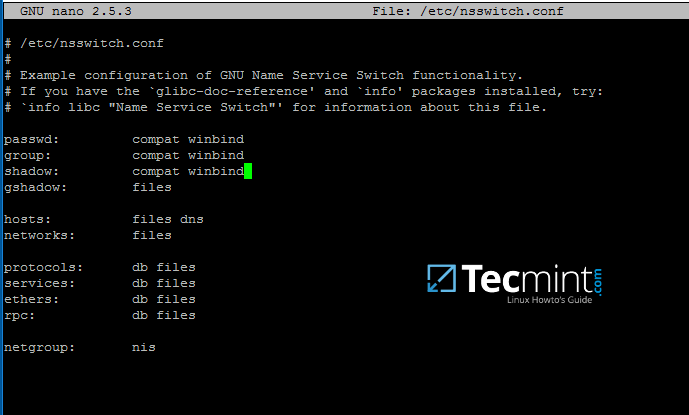

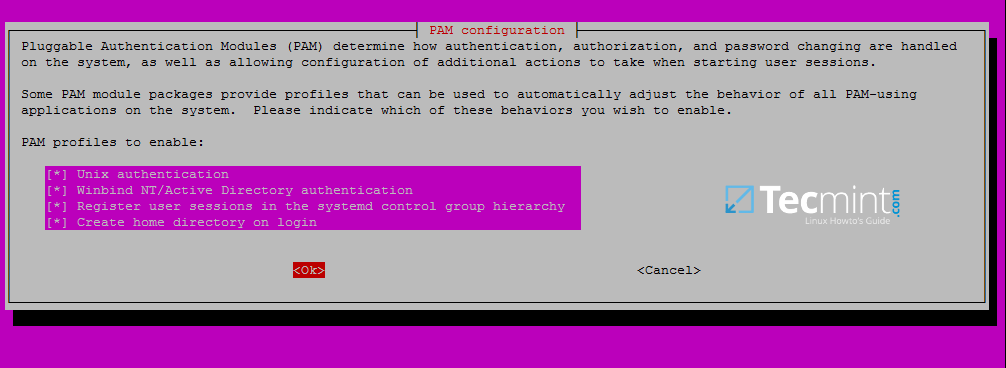

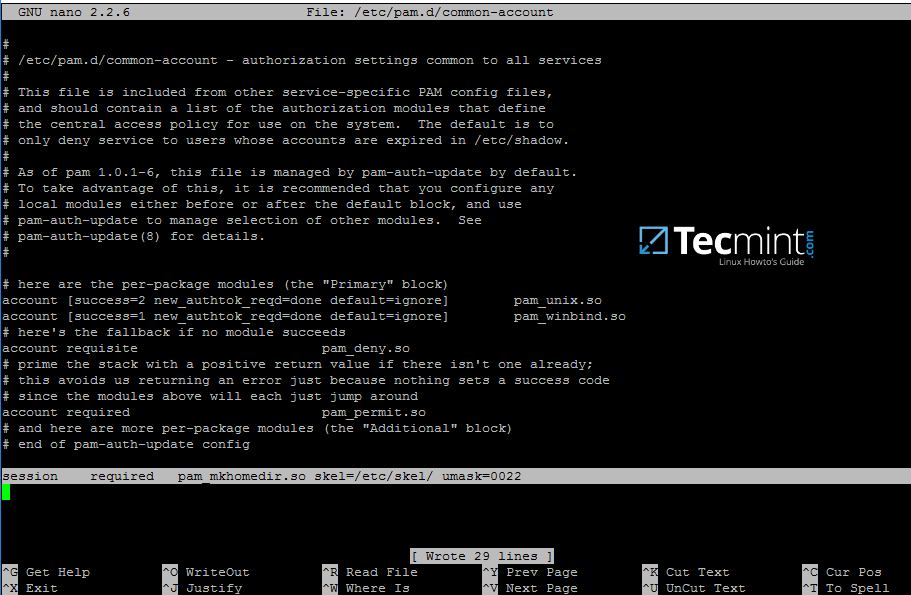

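In this article we will discuss how to enable the ELRepo repository, a software source that includes everything from filesystem drivers to webcam drivers, with support for graphics cards, network cards, sound devices, and even [new kernels][1].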

### Enabling ELRepo in Enterprise Linux

Although ELRepo is a third-party repository, it is well supported by an active community on Freenode (#elrepo) and by a mailing list for users.

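If you are still apprehensive about adding an independent repository to your software sources, note that the CentOS project lists it as reliable in its wiki ([see here][2]). If you still have concerns, feel free to ask in the comments!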

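It is important to note that ELRepo supports not only Enterprise Linux 7 but also previous versions. Considering that CentOS 5 reaches its end of life (EOL) at the end of this month (March 2017), that may not seem like a big deal, but keep in mind that CentOS 6 will not reach its EOL before March 2020.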

Regardless of your EL version, you need to import the repository's GPG key before actually enabling it:

```
# rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org
```

**Enable ELRepo in EL5:**

```
# rpm -Uvh http://www.elrepo.org/elrepo-release-5-5.el5.elrepo.noarch.rpm
```

**Enable ELRepo in EL6:**

```
# rpm -Uvh http://www.elrepo.org/elrepo-release-6-6.el6.elrepo.noarch.rpm
```

**Enable ELRepo in EL7:**

```
# rpm -Uvh http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm
```
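
If you manage machines on more than one EL release, the commands above can be wrapped in a small helper that picks the release package for the running system. This is a hypothetical sketch. It assumes the release-package URLs quoted above are still current (check elrepo.org before relying on them). It reads the EL major version from the `%{rhel}` RPM macro when no version is passed; pass the version explicitly if your system does not define that macro.

```shell
#!/usr/bin/env bash
# Print the elrepo-release package URL for EL version $1
# (defaults to the %{rhel} RPM macro of the running system).
elrepo_release_url() {
    local el="${1:-$(rpm -E '%{rhel}')}"
    case "$el" in
        5) echo "http://www.elrepo.org/elrepo-release-5-5.el5.elrepo.noarch.rpm" ;;
        6) echo "http://www.elrepo.org/elrepo-release-6-6.el6.elrepo.noarch.rpm" ;;
        7) echo "http://www.elrepo.org/elrepo-release-7.0-2.el7.elrepo.noarch.rpm" ;;
        *) echo "unsupported EL version: $el" >&2; return 1 ;;
    esac
}

# Import the ELRepo GPG key, then install the matching release package.
# Must run as root, like the commands above.
enable_elrepo() {
    local url
    url=$(elrepo_release_url "$@") || return 1
    rpm --import https://www.elrepo.org/RPM-GPG-KEY-elrepo.org &&
        rpm -Uvh "$url"
}
```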

This article covers EL7 only, and the following sections share a few examples.

### Understanding ELRepo Channels

To better organize the software hosted in this repository, ELRepo is divided into 4 separate channels:

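* elrepo is the main channel and is enabled by default. It does not contain packages that are present in the official distribution.
* elrepo-extras contains packages that replace some of those provided by the distribution. It is not enabled by default. To avoid confusion, when a package needs to be installed or updated from this channel, enable the channel temporarily as follows (replace `package` with the actual package name): `# yum --enablerepo=elrepo-extras install package`
* elrepo-testing provides packages that will eventually go into the main channel but are still being tested.
* elrepo-kernel provides both long-term and stable mainline kernels that have been specially configured for EL.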

Both elrepo-testing and elrepo-kernel are disabled by default. If we [need to install or update a package][3] from them, they can be enabled the same way as elrepo-extras.
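
Enabling a channel for a single transaction, as described above, can be wrapped in a tiny helper so that a typo in the channel name fails early instead of silently using only the default repositories. This is a hypothetical sketch. In the usage line, `kernel-ml` is the name ELRepo uses for its mainline kernel package in the elrepo-kernel channel; the long-term kernel is `kernel-lt`.

```shell
#!/usr/bin/env bash
# Install packages from one ELRepo channel, enabling it for this yum run only.
#   $1 channel: elrepo, elrepo-extras, elrepo-testing or elrepo-kernel
#   $2... package names
install_from_channel() {
    local channel="$1"
    shift
    case "$channel" in
        elrepo|elrepo-extras|elrepo-testing|elrepo-kernel) ;;
        *) echo "unknown ELRepo channel: $channel" >&2; return 1 ;;
    esac
    if [ "$#" -eq 0 ]; then
        echo "no package given" >&2
        return 1
    fi
    yum --enablerepo="$channel" install "$@"
}
```

Usage (as root): `install_from_channel elrepo-kernel kernel-ml`.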

To list the available packages in each channel, run one of the following commands:

```
# yum --disablerepo="*" --enablerepo="elrepo" list available
# yum --disablerepo="*" --enablerepo="elrepo-extras" list available
# yum --disablerepo="*" --enablerepo="elrepo-testing" list available
# yum --disablerepo="*" --enablerepo="elrepo-kernel" list available
```

The following image illustrates the first example:

![List ELRepo Available Packages][4]

*List ELRepo Available Packages*

##### Summary

In this article we have explained what ELRepo is and how to add it to your software sources.

If you have any questions or comments about this article, feel free to reach us using the comment form below. We look forward to hearing from you!

--------------------------------------------------------------------------------


About the author:

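Gabriel Cánepa is a GNU/Linux sysadmin and web developer from Villa Mercedes, San Luis, Argentina. He works for a worldwide leading consumer product company and takes great pleasure in using FOSS tools to increase productivity in all areas of his daily work.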

--------------------------------------------------------------------------------


via: http://www.tecmint.com/enable-elrepo-in-rhel-centos-scientific-linux/


Author: [Gabriel Cánepa][a]

Translator: [geekpi](https://github.com/geekpi)

Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/).

[a]:http://www.tecmint.com/author/gacanepa/
[1]:http://www.tecmint.com/install-upgrade-kernel-version-in-centos-7/
[2]:https://wiki.centos.org/AdditionalResources/Repositories
[3]:http://www.tecmint.com/auto-install-security-patches-updates-on-centos-rhel/
[4]:http://www.tecmint.com/wp-content/uploads/2017/03/List-ELRepo-Available-Packages.png
[5]:http://www.tecmint.com/author/gacanepa/
[6]:http://www.tecmint.com/10-useful-free-linux-ebooks-for-newbies-and-administrators/
[7]:http://www.tecmint.com/free-linux-shell-scripting-books/

@ -1,3 +1,4 @@


# rusking translating

What a Linux Desktop Does Better
============================================================

@ -0,0 +1,76 @@

Hire a DDoS service to take down your enemies
========================

>With the rampant availability of IoT devices, cybercriminals offer denial of service attacks to take advantage of password problems.


With the onrush of connected internet of things (IoT) devices, distributed denial-of-service attacks are becoming a dangerous trend. Similar to what happened to [DNS service provider Dyn last fall][3], anyone and everyone is in the crosshairs. The idea of using unprotected IoT devices as a way to bombard networks is gaining momentum.


The advent of DDoS-for-hire services means that even the least tech-savvy individual can exact revenge on some website. Step on up to the counter and purchase a stresser that can systematically take down a company.

According to [Neustar][4], almost three quarters of all global brands, organizations and companies have been victims of a DDoS attack. And more than 3,700 [DDoS attacks occur each day][5].


Chase Cunningham, director of cyber operations at A10 Networks, said to find IoT-enabled devices, all you have to do is go on an underground site and ask around for the Mirai scanner code. Once you have that you can scan for anything talking to the internet that can be used for that type of attack.


“Or you can go to a site like Shodan and craft a couple of simple queries to look for device specific requests. Once you get that information you just go to your DDoS for hire tool and change the configuration to point at the right target and use the right type of traffic emulator and bingo, nuke whatever you like,” he said.


“Basically everything is for sale," he added. "You can buy a 'stresser', which is just a simple botnet type offering that will allow anyone who knows how to click the start button access to a functional DDoS botnet.”


Cybersecurity vendor Imperva says for just a few dozen dollars, users can quickly get an attack up and running. The company writes on its website that these kits contain the bot payload and the CnC (command and control) files. Using these, aspiring bot masters (a.k.a. herders) can start distributing malware, infecting devices through the use of spam email, vulnerability scanners, brute force attacks and more.

Most [stressers and booters][6] have embraced a commonplace SaaS (software as a service) business model, based on subscriptions. As the Incapsula [Q2 2015 DDoS report][7] has shown, the average one hour/month DDoS package will cost $38 (with $19.99 at the lower end of the scale).


“Stresser and booter services are just a byproduct of a new reality, where services that can bring down businesses and organizations are allowed to operate in a dubious grey area,” Imperva wrote.


While cost varies, [attacks can run businesses anywhere from $14,000 to $2.35 million per incident][8]. And once a business is attacked, there’s an [82 percent chance they’ll be attacked again][9].


DDoS of Things (DoT) attacks use IoT devices to build botnets that launch large DDoS attacks. DoT attacks have leveraged hundreds of thousands of IoT devices to attack anything from large service providers to enterprises.

“Most of the reputable DDoS sellers have changeable configurations for their tool sets so you can easily set the type of attack you want to take place. I haven’t seen many yet that specifically include the option to ‘purchase’ an IoT-specific traffic emulator but I’m sure it’s coming. If it were me running the service I would definitely have that as an option,” Cunningham said.


According to an IDG News Service story, building a DDoS-for-hire service can also be easy. Often the hackers will rent six to 12 servers and use them to push out internet traffic to whatever target. In late October, HackForums.net [shut down][10] its "Server Stress Testing" section, amid concerns that hackers were peddling DDoS-for-hire services through the site for as little as $10 a month.

Also in December, law enforcement agencies in the U.S. and Europe [arrested][11] 34 suspects involved in DDoS-for-hire services.


If it is so easy to do so, why don’t these attacks happen more often?


Cunningham said that these attacks do happen all the time; in fact, they happen every second of the day. “You just don’t hear about it because a lot of these are more nuisance attacks than big time bring down the house DDoS type events,” he said.

Also, a lot of the attack platforms being sold only take systems down for an hour or a bit longer. Usually an hour-long attack on a site will cost anywhere from $15 to $50. It depends, though: for better attack platforms it can sometimes cost hundreds of dollars an hour, he said.

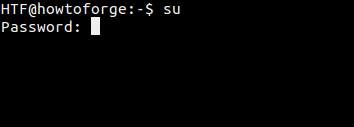

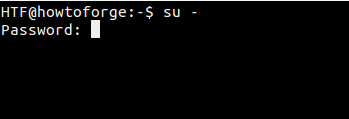

|

||||