mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

commit

2f5076d1db

@ -1,15 +1,15 @@

|

||||

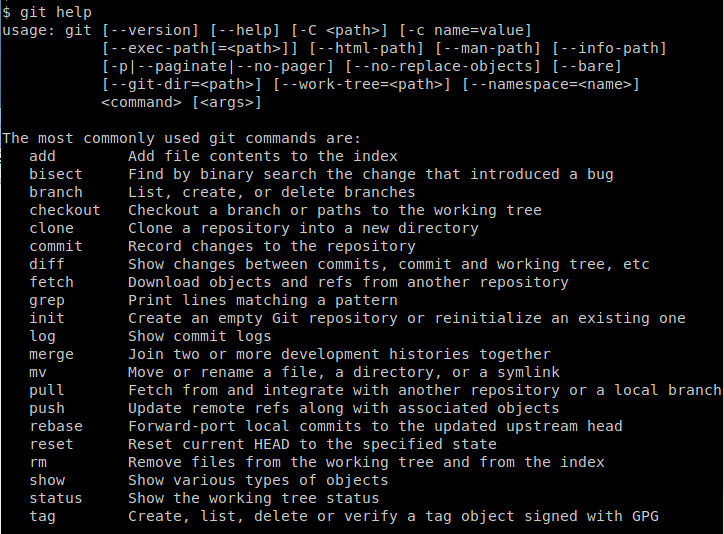

Linux Q&A -- How to install Git on Linux

|

||||

Linux Questions Answered: How to install Git on Linux

|

||||

================================================================================

|

||||

|

||||

> **Question:** I am trying to clone a project from a public Git repository, but I get the error "git: command not found". How can I install Git? [specify which Linux distribution]?

|

||||

> **Question:** I am trying to clone a project from a public Git repository, but I get the error "git: command not found". How can I install Git on such-and-such distribution?

|

||||

|

||||

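Git is a popular and open-source version control system (VCS), originally developed for the Linux environment. Unlike other version control systems such as CVS or SVN, Git's revision control is considered "distributed", in the sense that a local Git working directory can function as a fully working repository with complete history and version-tracking capabilities. In this working model, collaborators commit to their local repository (as opposed to committing directly to a central repository) and selectively push to the central repository when necessary. This gives Git, as a version control system, the scalability and redundancy that any large collaborative system requires.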

|

||||

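Git is a popular open-source version control system (VCS), originally developed for the Linux environment. Unlike other version control systems such as CVS or SVN, Git's revision control is considered "distributed", in the sense that a local Git working directory can function as a fully working repository with complete history and version-tracking capabilities. In this working model, collaborators commit to their local repository (as opposed to always committing to a central repository) and selectively push to the central repository when necessary. This gives Git, as a version control system, the scalability and redundancy that any large collaborative system requires.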

|

||||

|

||||

|

||||

|

||||

### Install Git with a Package Manager ###

|

||||

|

||||

Git is supported by all the leading Linux distributions, so the easiest way to install it is to use the package manager of your Linux distribution.

|

||||

Git is supported by all the mainstream Linux distributions, so the easiest way to install it is to use the package manager of your Linux distribution.

|

||||

|

||||

**Debian, Ubuntu, or Linux Mint**

|

||||

|

||||

@ -18,6 +18,8 @@ Git已经被所有的主力Linux发行版所支持。所以安装它最简单的

|

||||

**Fedora, CentOS, or RHEL**

|

||||

|

||||

$ sudo yum install git

|

||||

or

|

||||

$ sudo dnf install git
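On RPM-based systems it is not always obvious which of the two commands above applies. As a minimal sketch (the function names `pick_rpm_installer` and `install_git_if_missing` are made up for this example), a script could prefer dnf when it exists and fall back to yum:

```shell
# Print the RPM front end available on this machine: dnf if present,
# otherwise yum, otherwise nothing. (Hypothetical helper, not part of Git.)
pick_rpm_installer() {
    if command -v dnf >/dev/null 2>&1; then
        echo dnf
    elif command -v yum >/dev/null 2>&1; then
        echo yum
    fi
}

# Only attempt an install when git is missing and an installer was found.
install_git_if_missing() {
    if command -v git >/dev/null 2>&1; then
        echo "git is already installed"
        return 0
    fi
    installer=$(pick_rpm_installer)
    if [ -z "$installer" ]; then
        echo "no dnf or yum found" >&2
        return 1
    fi
    sudo "$installer" install -y git
}
```

Calling `install_git_if_missing` does nothing on a machine that already has git, which makes it safe to run repeatedly.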

|

||||

|

||||

**Arch Linux**

|

||||

|

||||

@ -33,7 +35,7 @@ Git已经被所有的主力Linux发行版所支持。所以安装它最简单的

|

||||

|

||||

### Install Git from Source ###

|

||||

|

||||

If for some reason you want to install Git from source, install the instructions below.

|

||||

If for some reason you want to install Git from source, follow the instructions below.

|

||||

|

||||

**Install dependencies**

|

||||

|

||||

@ -65,7 +67,7 @@ via: http://ask.xmodulo.com/install-git-linux.html

|

||||

|

||||

Author: [Dan Nanni][a]

Translator: [mr-ping](https://github.com/mr-ping)

Proofreader: [proofreader ID](https://github.com/校对者ID)

Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

@ -1,100 +0,0 @@

|

||||

Translating by ZTinoZ

|

||||

5 heroes of the Linux world

|

||||

================================================================================

|

||||

Who are these people, seen and unseen, whose work affects all of us every day?

|

||||

|

||||

|

||||

Image courtesy [Christopher Michel/Flickr][1]

|

||||

|

||||

### High-flying penguins ###

|

||||

|

||||

Linux and open source are driven by passionate people who write best-of-breed software and then release the code to the public so anyone can use it, without any strings attached. (Well, there is one string attached, and that’s the license.)

|

||||

|

||||

Who are these people? These heroes of the Linux world, whose work affects all of us every day. Allow me to introduce you.

|

||||

|

||||

|

||||

Image courtesy Swapnil Bhartiya

|

||||

|

||||

### Klaus Knopper ###

|

||||

|

||||

Klaus Knopper, an Austrian developer who lives in Germany, is the founder of Knoppix and Adriane Knoppix, which he developed for his blind wife.

|

||||

|

||||

Knoppix holds a very special place in the hearts of those Linux users who started using Linux before Ubuntu came along. What makes Knoppix so special is that it popularized the concept of the Live CD. Unlike Windows or Mac OS X, you could run the entire operating system from the CD without installing anything on the system. It allowed new users to test Linux on their systems without formatting the hard drive. The live feature alone contributed heavily to the popularity of Linux.

|

||||

|

||||

|

||||

Image courtesy [Fórum Internacional Software Live/Flickr][2]

|

||||

|

||||

### Lennart Poettering ###

|

||||

|

||||

Lennart Poettering is yet another genius from Germany. He has written so many core components of a Linux (as well as BSD) system that it’s hard to keep track. Most of his work is aimed at replacing aging or broken components of Linux systems.

|

||||

|

||||

Poettering wrote the modern init system systemd, which shook the Linux world and created a [rift in the Debian community][3].

|

||||

|

||||

While Linus Torvalds has no problems with systemd, and praises it, he is not a huge fan of the way systemd developers (including co-author Kay Sievers) respond to bug reports and criticism. At one point Linus said on the LKML (Linux Kernel Mailing List) that he would [never work with Sievers][4].

|

||||

|

||||

Lennart is also the author of PulseAudio, a sound server for Linux, and Avahi, a zero-configuration networking (zeroconf) implementation.

|

||||

|

||||

|

||||

Image courtesy [Meego Com/Flickr][5]

|

||||

|

||||

### Jim Zemlin ###

|

||||

|

||||

Jim Zemlin isn't a developer, but as founder of The Linux Foundation he is certainly one of the most important figures of the Linux world.

|

||||

|

||||

In 2007, The Linux Foundation was formed as a result of a merger between two open source bodies: the Free Standards Group and the Open Source Development Labs. Zemlin was the executive director of the Free Standards Group. After the merger, Zemlin became the executive director of The Linux Foundation and has held that position since.

|

||||

|

||||

Under his leadership, The Linux Foundation has become a central player in the modern IT world and plays a critical role in the Linux ecosystem. To ensure that key developers like Torvalds and Kroah-Hartman can focus on Linux, the foundation sponsors them as fellows.

|

||||

|

||||

Zemlin also made the foundation a bridge between companies so they can collaborate on Linux while at the same time competing in the market. The foundation also organizes many conferences around the world and [offers many courses for Linux developers][6].

|

||||

|

||||

People may think of Zemlin as Linus Torvalds' boss, but he refers to himself as "Linus Torvalds' janitor."

|

||||

|

||||

|

||||

Image courtesy [Coscup/Flickr][7]

|

||||

|

||||

### Greg Kroah-Hartman ###

|

||||

|

||||

Greg Kroah-Hartman is known as the second-in-command of the Linux kernel. The ‘gentle giant’ maintains the stable branch of the kernel as well as the staging, USB, driver core, debugfs, kref, kobject, and [sysfs][8] kernel subsystems, along with many other components of a Linux system.

|

||||

|

||||

He is also credited with much of the device driver work for Linux. One of his jobs is to travel around the globe, meet hardware makers and persuade them to make their drivers available for Linux. The next time you plug some random USB device into your system and it works out of the box, thank Kroah-Hartman. (Don't thank the distro. Some distros try to take credit for the work Kroah-Hartman or the Linux kernel did.)

|

||||

|

||||

Kroah-Hartman previously worked for Novell and then joined the Linux Foundation as a fellow, alongside Linus Torvalds.

|

||||

|

||||

Kroah-Hartman is the total opposite of Linus and never rants (at least publicly). He did cause a ripple once, when he stated that [Canonical doesn’t contribute much to the Linux kernel][9].

|

||||

|

||||

On a personal level, Kroah-Hartman is extremely helpful to new developers and users and is easily accessible.

|

||||

|

||||

|

||||

Image courtesy Swapnil Bhartiya

|

||||

|

||||

### Linus Torvalds ###

|

||||

|

||||

No collection of Linux heroes would be complete without Linus Torvalds. He is the author of the Linux kernel, the most used open source technology on the planet and beyond. His software powers everything from space stations to supercomputers, military drones to mobile devices and tiny smartwatches. Linus remains the authority on the Linux kernel and makes the final decision on which patches to merge to the kernel.

|

||||

|

||||

Linux isn't Torvalds' only contribution to open source. When he got fed up with the existing software revision control systems, which his kernel heavily relied on, he wrote his own, called Git. Git enjoys the same reputation as Linux; it is the most used version control system in the world.

|

||||

|

||||

Torvalds is also a passionate scuba diver, and when he found no decent dive log software for Linux, he wrote his own and called it Subsurface.

|

||||

|

||||

Torvalds is [well known for his rants][10] and once admitted that his ego is as big as a small planet. But he is also known for admitting his mistakes if he realizes he was wrong.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.itworld.com/article/2955001/linux/5-heros-of-the-linux-world.html

|

||||

|

||||

Author: [Swapnil Bhartiya][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://www.itworld.com/author/Swapnil-Bhartiya/

|

||||

[1]:https://flic.kr/p/siJ25M

|

||||

[2]:https://flic.kr/p/uTzj54

|

||||

[3]:http://www.itwire.com/business-it-news/open-source/66153-systemd-fallout-two-debian-technical-panel-members-resign

|

||||

[4]:http://www.linuxveda.com/2014/04/04/linus-torvalds-systemd-kay-sievers/

|

||||

[5]:https://flic.kr/p/9Lnhpu

|

||||

[6]:http://www.itworld.com/article/2951968/linux/linux-foundation-offers-cheaper-courses-and-certifications-for-india.html

|

||||

[7]:https://flic.kr/p/hBv8Pp

|

||||

[8]:https://en.wikipedia.org/wiki/Sysfs

|

||||

[9]:https://www.youtube.com/watch?v=CyHAeGBFS8k

|

||||

[10]:http://www.itworld.com/article/2873200/operating-systems/11-technologies-that-tick-off-linus-torvalds.html

|

||||

@ -1,59 +0,0 @@

|

||||

For Linux, Supercomputers R Us

|

||||

================================================================================

|

||||

|

||||

Credit: Michel Ngilen, CC BY 2.0, via Wikimedia Commons

|

||||

|

||||

> Almost all supercomputers run Linux, including the ones built from Raspberry Pi boards and PlayStation 3 game consoles

|

||||

|

||||

Supercomputers are serious things, called on to do serious computing. They tend to be engaged in serious pursuits like atomic bomb simulations, climate modeling and high-level physics. Naturally, they cost serious money. At the very top of the latest [Top500][1] supercomputer ranking is the Tianhe-2 supercomputer at China’s National University of Defense Technology. It cost about $390 million to build.

|

||||

|

||||

But then there’s the supercomputer that Joshua Kiepert, a doctoral student at Boise State’s Electrical and Computer Engineering department, [created with Raspberry Pi computers][2]. It cost less than $2,000.

|

||||

|

||||

No, I’m not making that up. It’s an honest-to-goodness supercomputer made from overclocked 1-GHz [Model B Raspberry Pi][3] ARM11 processors with Videocore IV GPUs. Each one comes with 512MB of RAM, a pair of USB ports and a 10/100 BaseT Ethernet port.

|

||||

|

||||

And what do the Tianhe-2 and the Boise State supercomputer have in common? They both run Linux. As do [486 out of the world’s fastest 500 supercomputers][4]. It’s part of a domination of the category that began over 20 years ago. And now it’s trickling down to built-on-the-cheap supercomputers. Because Kiepert’s machine isn’t the only budget number cruncher out there.

|

||||

|

||||

Gaurav Khanna, an associate professor of physics at the University of Massachusetts Dartmouth, created a [supercomputer with something shy of 200 PlayStation 3 video game consoles][5].

|

||||

|

||||

The PlayStations are powered by a 3.2-GHz PowerPC-based Power Processing Element. Each comes with 512MB of RAM. You can still buy one, although Sony will be phasing them out by year’s end, for just over $200. Khanna started with only 16 PlayStation 3s for his first supercomputer, so you too could put a supercomputer on your credit card for less than four grand.

|

||||

|

||||

These machines may be built from toys, but they’re not playthings. Khanna has done serious astrophysics on his rig. A white-hat hacking group used a similar [PlayStation 3 supercomputer in 2008 to crack the SSL MD5 hashing algorithm][6].

|

||||

|

||||

Two years later, the Air Force Research Laboratory [Condor Cluster was using 1,760 Sony PlayStation 3 processors][7] and 168 general-purpose graphics processing units. This bargain-basement supercomputer runs at about 500 TFLOPS, or 500 trillion floating point operations per second.

|

||||

|

||||

Other cheap options for home supercomputers include specialist parallel-processing boards such as the [$99 credit-card-sized Parallella board][8], and high-end graphics boards such as [Nvidia’s Titan Z][9] and [AMD’s FirePro W9100][10]. Those high-end boards, coveted by gamers with visions of a dream machine or even a chance at winning the first-place prize of over $100,000 in the [Intel Extreme Masters World Championship League of][11] [Legends][12], cost considerably more, retailing for about $3,000. On the other hand, a single one can deliver over 2.5TFLOPS all by itself, and for scientists and researchers, they offer an affordable way to get a supercomputer they can call their own.

|

||||

|

||||

As for the Linux connection, that all started in 1994 at the Goddard Space Flight Center with the first [Beowulf supercomputer][13].

|

||||

|

||||

By our standards, there wasn’t much that was super about the first Beowulf. But in its day, the first homemade supercomputer, with its 16 Intel 486DX processors and 10Mbps Ethernet for the bus, was great. [Beowulf, designed by NASA contractors Don Becker and Thomas Sterling][14], was the first “maker” supercomputer. Its “compute components,” 486DX PCs, cost only a few thousand dollars. While its speed was only in single-digit gigaflops, [Beowulf][15] showed you could build supercomputers from commercial off-the-shelf (COTS) hardware and Linux.

|

||||

|

||||

I wish I’d had a part in its creation, but I’d already left Goddard by 1994 for a career as a full-time technology journalist. Darn it!

|

||||

|

||||

But even from this side of my reporter’s notebook, I can still appreciate how COTS and open-source software changed supercomputing forever. I hope you can too. Because, whether it’s a cluster of Raspberry Pis or a monster with over 3 million Intel Ivy Bridge and Xeon Phi chips, almost all of today’s supercomputers trace their ancestry to Beowulf.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via:

|

||||

|

||||

Author: [Steven J. Vaughan-Nichols][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://www.computerworld.com/author/Steven-J.-Vaughan_Nichols/

|

||||

[1]:http://www.top500.org/

|

||||

[2]:http://www.zdnet.com/article/build-your-own-supercomputer-out-of-raspberry-pi-boards/

|

||||

[3]:https://www.raspberrypi.org/products/model-b/

|

||||

[4]:http://www.zdnet.com/article/linux-still-rules-supercomputing/

|

||||

[5]:http://www.nytimes.com/2014/12/23/science/an-economical-way-to-save-progress.html?smid=fb-nytimes&smtyp=cur&bicmp=AD&bicmlukp=WT.mc_id&bicmst=1409232722000&bicmet=1419773522000&_r=4

|

||||

[6]:http://www.computerworld.com/article/2529932/cybercrime-hacking/researchers-hack-verisign-s-ssl-scheme-for-securing-web-sites.html

|

||||

[7]:http://phys.org/news/2010-12-air-playstation-3s-supercomputer.html

|

||||

[8]:http://www.zdnet.com/article/parallella-the-99-linux-supercomputer/

|

||||

[9]:http://blogs.nvidia.com/blog/2014/03/25/titan-z/

|

||||

[10]:http://www.amd.com/en-us/press-releases/Pages/amd-flagship-professional-2014apr7.aspx

|

||||

[11]:http://en.intelextrememasters.com/news/check-out-the-intel-extreme-masters-katowice-prize-money-distribution/

|

||||

[12]:http://www.google.com/url?q=http%3A%2F%2Fen.intelextrememasters.com%2Fnews%2Fcheck-out-the-intel-extreme-masters-katowice-prize-money-distribution%2F&sa=D&sntz=1&usg=AFQjCNE6yoAGGz-Hpi2tPF4gdhuPBEckhQ

|

||||

[13]:http://www.beowulf.org/overview/history.html

|

||||

[14]:http://yclept.ucdavis.edu/Beowulf/aboutbeowulf.html

|

||||

[15]:http://www.beowulf.org/

|

||||

@ -1,183 +0,0 @@

|

||||

Translating by Ping

|

||||

How to set up a Replica Set on MongoDB

|

||||

================================================================================

|

||||

MongoDB has become one of the best-known NoSQL databases on the market. MongoDB is document-oriented, and its schema-free design makes it an attractive solution for all kinds of web applications. One of the features that I like the most is the Replica Set, where multiple copies of the same data set are maintained by a group of mongod nodes for redundancy and high availability.

|

||||

|

||||

This tutorial describes how to configure a Replica Set on MongoDB.

|

||||

|

||||

The most common configuration for a Replica Set involves one primary and multiple secondary nodes. The replication will then be initiated from the primary toward the secondaries. Replica Sets can not only provide database protection against unexpected hardware failure and service downtime, but also improve read throughput of database clients as they can be configured to read from different nodes.

|

||||

|

||||

### Set up the Environment ###

|

||||

|

||||

In this tutorial, we are going to set up a Replica Set with one primary and two secondary nodes.

|

||||

|

||||

|

||||

|

||||

To implement this lab, we will use three virtual machines (VMs) running on VirtualBox. I am going to install Ubuntu 14.04 on the VMs, and install the official packages for MongoDB.

|

||||

|

||||

I am going to set up the necessary environment on one VM instance, and then clone it to the other two VM instances. So pick one VM, which will become the primary node, and perform the following installation steps on it.

|

||||

|

||||

First, we need to add the MongoDB key for apt:

|

||||

|

||||

$ sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 7F0CEB10

|

||||

|

||||

Then we need to add the official MongoDB repository to our sources.list:

|

||||

|

||||

$ echo "deb http://repo.mongodb.org/apt/ubuntu $(lsb_release -sc)/mongodb-org/3.0 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-3.0.list

|

||||

|

||||

Let's update repositories and install MongoDB.

|

||||

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install -y mongodb-org

|

||||

|

||||

Now let's make some changes in /etc/mongodb.conf.

|

||||

|

||||

auth = true

|

||||

dbpath=/var/lib/mongodb

|

||||

logpath=/var/log/mongodb/mongod.log

|

||||

logappend=true

|

||||

keyFile=/var/lib/mongodb/keyFile

|

||||

replSet=myReplica

|

||||

|

||||

The first line enables authentication on the database. keyFile points to the key file that the MongoDB nodes use to authenticate to one another when replicating. replSet sets the name of our replica set.

|

||||

|

||||

Now we are going to create our keyfile, so that it can be in all our instances.

|

||||

|

||||

$ echo -n "MyRandomStringForReplicaSet" | md5sum > keyFile

|

||||

|

||||

This creates a keyfile that contains an MD5 string, but the file also contains some noise that we need to clean up before using it with MongoDB. Use the following command to clean it up:

|

||||

|

||||

$ echo -n "MyRandomStringForReplicaSet" | md5sum | grep -o "[0-9a-z]\+" > keyFile

|

||||

|

||||

The grep command prints only the MD5 string, without the spaces and other characters that we don't want.
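The same clean-up can be done with cut instead of grep. The following is a minimal sketch, assuming GNU md5sum is available; the function names `keyfile_digest` and `write_keyfile` are made up for this example:

```shell
# Print only the 32-character hex digest of a secret string.
# md5sum prints "<digest>  -" for stdin; cut keeps the first field.
keyfile_digest() {
    printf '%s' "$1" | md5sum | cut -d ' ' -f 1
}

# Write the digest to a key file that only its owner can read.
# umask 077 makes the file private from the moment it is created.
write_keyfile() {
    ( umask 077 && keyfile_digest "$1" > "$2" )
}

# Usage: write_keyfile "MyRandomStringForReplicaSet" keyFile
```

Keep in mind that a key derived from a guessable phrase is only as strong as the phrase itself, so a long random string is a better input.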

|

||||

|

||||

Now we are going to make the keyfile ready for use:

|

||||

|

||||

$ sudo cp keyFile /var/lib/mongodb

|

||||

$ sudo chown mongodb:nogroup /var/lib/mongodb/keyFile

$ sudo chmod 400 /var/lib/mongodb/keyFile

|

||||

|

||||

Now we have our Ubuntu VM ready to be cloned. Power it off, and clone it to the other VMs.

|

||||

|

||||

|

||||

|

||||

I name the cloned VMs secondary1 and secondary2. Make sure to reinitialize the MAC address of cloned VMs and clone full disks.

|

||||

|

||||

|

||||

|

||||

All three VM instances should be on the same network to communicate with each other. For this, we are going to attach all three VMs to an "Internal Network" in VirtualBox.

|

||||

|

||||

It is recommended that each VM instance be assigned a static IP address, as opposed to a DHCP-assigned address, so that the VMs will not lose connectivity among themselves when a DHCP server assigns different IP addresses to them.

|

||||

|

||||

Let's edit /etc/network/interfaces of each VM as follows.

|

||||

|

||||

On primary:

|

||||

|

||||

auto eth1

|

||||

iface eth1 inet static

|

||||

address 192.168.50.2

|

||||

netmask 255.255.255.0

|

||||

|

||||

On secondary1:

|

||||

|

||||

auto eth1

|

||||

iface eth1 inet static

|

||||

address 192.168.50.3

|

||||

netmask 255.255.255.0

|

||||

|

||||

On secondary2:

|

||||

|

||||

auto eth1

|

||||

iface eth1 inet static

|

||||

address 192.168.50.4

|

||||

netmask 255.255.255.0

|

||||

|

||||

Another file that needs to be set up is /etc/hosts, because we don't have DNS. We need to set the hostnames in /etc/hosts.

|

||||

|

||||

On primary:

|

||||

|

||||

127.0.0.1 localhost primary

|

||||

192.168.50.2 primary

|

||||

192.168.50.3 secondary1

|

||||

192.168.50.4 secondary2

|

||||

|

||||

On secondary1:

|

||||

|

||||

127.0.0.1 localhost secondary1

|

||||

192.168.50.2 primary

|

||||

192.168.50.3 secondary1

|

||||

192.168.50.4 secondary2

|

||||

|

||||

On secondary2:

|

||||

|

||||

127.0.0.1 localhost secondary2

|

||||

192.168.50.2 primary

|

||||

192.168.50.3 secondary1

|

||||

192.168.50.4 secondary2
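Since the three shared entries are identical on every node, they can be generated rather than typed three times. A minimal sketch, using the addresses and hostnames from this tutorial (the function name `replica_hosts` is made up for this example):

```shell
# Print the shared /etc/hosts entries for the three nodes.
# Addresses start at 192.168.50.2 and increase by one per node.
replica_hosts() {
    i=2
    for name in primary secondary1 secondary2; do
        printf '192.168.50.%d %s\n' "$i" "$name"
        i=$((i + 1))
    done
}

# To append the entries on a node (needs root):
#   replica_hosts | sudo tee -a /etc/hosts
```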

|

||||

|

||||

Check connectivity among the VMs by using the ping command:

|

||||

|

||||

$ ping primary

|

||||

$ ping secondary1

|

||||

$ ping secondary2
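Plain ping runs until it is interrupted, so checking three hosts by hand takes a few keystrokes each. The loop below is a minimal sketch that pings each host once and reports the result; `check_hosts` and the `PING_CMD` override are made up for this example, and the `-c` and `-W` options are those of the Linux ping utility:

```shell
# Ping each host once and report which ones answer.
# PING_CMD is a hypothetical override so the loop can be tried without a network.
check_hosts() {
    for host in "$@"; do
        if ${PING_CMD:-ping -c 1 -W 2} "$host" >/dev/null 2>&1; then
            echo "ok $host"
        else
            echo "FAIL $host"
        fi
    done
}

# Usage: check_hosts primary secondary1 secondary2
```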

|

||||

|

||||

### Set up a Replica Set ###

|

||||

|

||||

After verifying connectivity among VMs, we can go ahead and create the admin user so that we can start working on the Replica Set.

|

||||

|

||||

On primary node, open /etc/mongodb.conf, and comment out two lines that start with auth and replSet:

|

||||

|

||||

dbpath=/var/lib/mongodb

|

||||

logpath=/var/log/mongodb/mongod.log

|

||||

logappend=true

|

||||

#auth = true

|

||||

keyFile=/var/lib/mongodb/keyFile

|

||||

#replSet=myReplica

|

||||

|

||||

Restart mongod daemon.

|

||||

|

||||

$ sudo service mongod restart

|

||||

|

||||

Create an admin user after connecting to MongoDB:

|

||||

|

||||

> use admin

|

||||

> db.createUser({

|

||||

user:"admin",

|

||||

pwd:"

|

||||

})

|

||||

$ sudo service mongod restart

|

||||

|

||||

Connect to MongoDB and use these commands to add secondary1 and secondary2 to our Replica Set.

|

||||

|

||||

> use admin

|

||||

> db.auth("admin","myreallyhardpassword")

|

||||

> rs.initiate()

|

||||

> rs.add("secondary1:27017")

|

||||

> rs.add("secondary2:27017")
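After adding the members, it can be useful to confirm that each one reached the expected state. The following is a minimal sketch, assuming the mongo shell is installed and the admin user from this tutorial exists; the function name `rs_member_states` and its argument order are made up for this example:

```shell
# Print "<member> <state>" for every replica set member.
# $1 is the host to connect to, $2 is the admin password.
rs_member_states() {
    host=$1
    password=$2
    mongo --host "$host" --quiet \
        -u admin -p "$password" --authenticationDatabase admin \
        --eval 'rs.status().members.forEach(function (m) { print(m.name + " " + m.stateStr); })'
}

# Usage: rs_member_states primary myreallyhardpassword
```

Passing a password on the command line exposes it to other local users through the process list, so this is only suitable for a lab setup like the one described here.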

|

||||

|

||||

Now that we have our Replica Set, we can start working on our project. Consult the [official driver documentation][1] to see how to connect to a Replica Set. If you want to query from the shell, you have to connect to the primary instance to insert into or query the database. Secondary nodes will not let you do that. If you attempt to access the database on a secondary node, you will get this error message:

|

||||

|

||||

myReplica:SECONDARY>

|

||||

myReplica:SECONDARY> show databases

|

||||

2015-05-10T03:09:24.131+0000 E QUERY Error: listDatabases failed:{ "note" : "from execCommand", "ok" : 0, "errmsg" : "not master" }

|

||||

at Error ()

|

||||

at Mongo.getDBs (src/mongo/shell/mongo.js:47:15)

|

||||

at shellHelper.show (src/mongo/shell/utils.js:630:33)

|

||||

at shellHelper (src/mongo/shell/utils.js:524:36)

|

||||

at (shellhelp2):1:1 at src/mongo/shell/mongo.js:47

|

||||

|

||||

I hope you find this tutorial useful. You can use Vagrant to automate your local environments and help you code faster.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/setup-replica-set-mongodb.html

|

||||

|

||||

Author: [Christopher Valerio][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://xmodulo.com/author/valerio

|

||||

[1]:http://docs.mongodb.org/ecosystem/drivers/

|

||||

@ -1,117 +0,0 @@

|

||||

translation by strugglingyouth

|

||||

Troubleshooting with Linux Logs

|

||||

================================================================================

|

||||

Troubleshooting is the main reason people create logs. Often you’ll want to diagnose why a problem happened with your Linux system or application. An error message or a sequence of events can give you clues to the root cause, indicate how to reproduce the issue, and point out ways to fix it. Here are a few use cases for things you might want to troubleshoot in your logs.

|

||||

|

||||

### Cause of Login Failures ###

|

||||

|

||||

If you want to check if your system is secure, you can check your authentication logs for failed login attempts and unfamiliar successes. Authentication failures occur when someone passes incorrect or otherwise invalid login credentials, often to ssh for remote access or su for local access to another user’s permissions. These are logged by the [pluggable authentication modules][1], or PAM for short. Look in your logs for strings like Failed password and user unknown. Successful authentication records include strings like Accepted password and session opened.

|

||||

|

||||

Failure Examples:

|

||||

|

||||

pam_unix(sshd:auth): authentication failure; logname= uid=0 euid=0 tty=ssh ruser= rhost=10.0.2.2

|

||||

Failed password for invalid user hoover from 10.0.2.2 port 4791 ssh2

|

||||

pam_unix(sshd:auth): check pass; user unknown

|

||||

PAM service(sshd) ignoring max retries; 6 > 3

|

||||

|

||||

Success Examples:

|

||||

|

||||

Accepted password for hoover from 10.0.2.2 port 4792 ssh2

|

||||

pam_unix(sshd:session): session opened for user hoover by (uid=0)

|

||||

pam_unix(sshd:session): session closed for user hoover

|

||||

|

||||

You can use grep to find which user accounts have the most failed logins. These are the accounts that potential attackers are trying and failing to access. This example is for an Ubuntu system.

|

||||

|

||||

$ grep "invalid user" /var/log/auth.log | cut -d ' ' -f 10 | sort | uniq -c | sort -nr

|

||||

23 oracle

|

||||

18 postgres

|

||||

17 nagios

|

||||

10 zabbix

|

||||

6 test
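The cut command above depends on the username being exactly the tenth space-separated field, which breaks when the day of the month is padded with an extra space. As a minimal sketch of a less position-sensitive variant (the function name `count_invalid_users` is made up for this example), the username can be taken relative to the matched phrase instead:

```shell
# Count failed SSH logins per unknown username from auth log text on stdin.
# Output: "<count> <user>" lines, most frequent first.
count_invalid_users() {
    grep -o 'Failed password for invalid user [^ ]*' \
        | awk '{ print $6 }' \
        | sort | uniq -c | sort -nr \
        | awk '{ print $1, $2 }'
}

# Usage: count_invalid_users < /var/log/auth.log
```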

|

||||

|

||||

You’ll need to write a different command for each application and message because there is no standard format. Log management systems that automatically parse logs will effectively normalize them and help you extract key fields like username.

|

||||

|

||||

Log management systems can extract the usernames from your Linux logs using automated parsing. This lets you see an overview of the users and filter on them with a single click. In this example, we can see that the root user logged in over 2,700 times because we are filtering the logs to show login attempts only for the root user.

|

||||

|

||||

|

||||

|

||||

Log management systems also let you view graphs over time to spot unusual trends. If someone had one or two failed logins within a few minutes, it might be that a real user forgot his or her password. However, if there are hundreds of failed logins or they are all for different usernames, it’s more likely that someone is trying to attack the system. Here you can see that on March 12, someone tried to log in as test and nagios several hundred times. This is clearly not a legitimate use of the system.

|

||||

|

||||

|

||||

|

||||

### Cause of Reboots ###

|

||||

|

||||

Sometimes a server can stop due to a system crash or reboot. How do you know when it happened and who did it?

|

||||

|

||||

#### Shutdown Command ####

|

||||

|

||||

If someone ran the shutdown command manually, you can see it in the auth log file. Here you can see that someone remotely logged in from the IP 50.0.134.125 as the user ubuntu and then shut the system down.

|

||||

|

||||

Mar 19 18:36:41 ip-172-31-11-231 sshd[23437]: Accepted publickey for ubuntu from 50.0.134.125 port 52538 ssh

|

||||

Mar 19 18:36:41 ip-172-31-11-231 sshd[23437]: pam_unix(sshd:session): session opened for user ubuntu by (uid=0)

|

||||

Mar 19 18:37:09 ip-172-31-11-231 sudo: ubuntu : TTY=pts/1 ; PWD=/home/ubuntu ; USER=root ; COMMAND=/sbin/shutdown -r now
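To pull only the shutdown events out of a busy auth log, the sudo lines can be filtered and reduced to a timestamp and a username. This is a minimal sketch that assumes the syslog line layout shown above; the function name `sudo_shutdowns` is made up for this example:

```shell
# List who ran shutdown through sudo.
# Reads auth log text on stdin, prints "<month> <day> <time> <user>".
sudo_shutdowns() {
    awk '/sudo:/ && /COMMAND=.*shutdown/ { print $1, $2, $3, $6 }'
}

# Usage: sudo_shutdowns < /var/log/auth.log
```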

|

||||

|

||||

#### Kernel Initializing ####

|

||||

|

||||

If you want to see when the server restarted regardless of the reason (including crashes), you can search for the messages the kernel logs while initializing. Search for messages from the kernel facility that contain Initializing cpu.

|

||||

|

||||

Mar 19 18:39:30 ip-172-31-11-231 kernel: [ 0.000000] Initializing cgroup subsys cpuset

|

||||

Mar 19 18:39:30 ip-172-31-11-231 kernel: [ 0.000000] Initializing cgroup subsys cpu

|

||||

Mar 19 18:39:30 ip-172-31-11-231 kernel: [ 0.000000] Linux version 3.8.0-44-generic (buildd@tipua) (gcc version 4.6.3 (Ubuntu/Linaro 4.6.3-1ubuntu5) ) #66~precise1-Ubuntu SMP Tue Jul 15 04:01:04 UTC 2014 (Ubuntu 3.8.0-44.66~precise1-generic 3.8.13.25)
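The kernel prints its version banner once per boot, so the timestamps of those lines give a list of boot times. A minimal sketch, assuming the syslog layout shown above (the function name `boot_times` is made up for this example):

```shell
# Print the timestamp of every kernel version banner found on stdin.
# One banner is logged per boot, so each output line is one boot.
boot_times() {
    awk '/kernel:/ && /Linux version/ { print $1, $2, $3 }'
}

# Usage: boot_times < /var/log/syslog
```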

|

||||

|

||||

### Detect Memory Problems ###

|

||||

|

||||

There are lots of reasons a server might crash, but one common cause is running out of memory.

|

||||

|

||||

When your system is low on memory, processes are killed, typically in the order of which ones will release the most resources. The error occurs when your system is using all of its memory and a new or existing process attempts to access additional memory. Look in your log files for strings like Out of Memory or for kernel warnings like to kill. These strings indicate that your system intentionally killed the process or application rather than allowing the process to crash.

|

||||

|

||||

Examples:

|

||||

|

||||

[33238.178288] Out of memory: Kill process 6230 (firefox) score 53 or sacrifice child

|

||||

[29923450.995084] select 5230 (docker), adj 0, size 708, to kill

|

||||

|

||||

You can find these logs using a tool like grep. This example is for Ubuntu:

|

||||

|

||||

$ grep "Out of memory" /var/log/syslog

|

||||

[33238.178288] Out of memory: Kill process 6230 (firefox) score 53 or sacrifice child
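When there are many such lines, it helps to reduce each one to the process that was killed. The following is a minimal sketch that matches the message wording shown above (other kernel versions may phrase the message differently); the function name `oom_victims` is made up for this example:

```shell
# Print "<pid> <process name>" for each out-of-memory kill found on stdin.
oom_victims() {
    sed -n 's/.*Out of memory: Kill process \([0-9][0-9]*\) (\([^)]*\)).*/\1 \2/p'
}

# Usage: oom_victims < /var/log/syslog
```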

|

||||

|

||||

Keep in mind that grep itself uses memory, so you might cause an out of memory error just by running grep. This is another reason it’s a fabulous idea to centralize your logs!

|

||||

|

||||

### Log Cron Job Errors ###

|

||||

|

||||

The cron daemon is a scheduler that runs processes at specified dates and times. If the process fails to run or fails to finish, then a cron error appears in your log files. You can find these files in /var/log/cron, /var/log/messages, and /var/log/syslog depending on your distribution. There are many reasons a cron job can fail. Usually the problems lie with the process rather than the cron daemon itself.

|

||||

|

||||

By default, cron mails each job's output to the job's owner through the local mail system, which is Postfix in this example. Here is a log showing that an email was sent. Unfortunately, you cannot see the contents of the message here.

|

||||

|

||||

Mar 13 16:35:01 PSQ110 postfix/pickup[15158]: C3EDC5800B4: uid=1001 from=<hoover>

|

||||

Mar 13 16:35:01 PSQ110 postfix/cleanup[15727]: C3EDC5800B4: message-id=<20150310110501.C3EDC5800B4@PSQ110>

|

||||

Mar 13 16:35:01 PSQ110 postfix/qmgr[15159]: C3EDC5800B4: from=<hoover@loggly.com>, size=607, nrcpt=1 (queue active)

|

||||

Mar 13 16:35:05 PSQ110 postfix/smtp[15729]: C3EDC5800B4: to=<hoover@loggly.com>, relay=gmail-smtp-in.l.google.com[74.125.130.26]:25, delay=4.1, delays=0.26/0/2.2/1.7, dsn=2.0.0, status=sent (250 2.0.0 OK 1425985505 f16si501651pdj.5 - gsmtp)

|

||||

|

||||

You should consider logging the cron standard output to help debug problems. Here is how you can redirect your cron standard output to syslog using the logger command. Replace the echo command with your own script and helloCron with whatever you want to set the appName to.

|

||||

|

||||

*/5 * * * * echo 'Hello World!' 2>&1 | /usr/bin/logger -t helloCron

|

||||

|

||||

This creates the log entries:

|

||||

|

||||

Apr 28 22:20:01 ip-172-31-11-231 CRON[15296]: (ubuntu) CMD (echo 'Hello World!' 2>&1 | /usr/bin/logger -t helloCron)

|

||||

Apr 28 22:20:01 ip-172-31-11-231 helloCron: Hello World!

|

||||

|

||||

Each cron job will log differently based on the specific type of job and how it outputs data. Hopefully there are clues to the root cause of problems within the logs, or you can add additional logging as needed.
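Once cron output is tagged with logger, the tagged entries can be pulled back out of the log. The following is a minimal sketch; the function name `cron_entries_for_tag` is an assumption, and it expects the `TAG: message` layout shown in the example entries above. Some logger configurations write `TAG[pid]:` instead, which this simple match would miss.

```shell
# cron_entries_for_tag: print syslog lines written by `logger -t TAG`.
# $1 = tag (helloCron in the example above), $2 = log file to search
cron_entries_for_tag() {
    # logger writes "<timestamp> <host> TAG: message"; -F matches the tag literally
    grep -F " $1: " "$2"
}
```

For example, `cron_entries_for_tag helloCron /var/log/syslog` prints only the lines the job itself produced, not the CRON line that records the command being started.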

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.loggly.com/ultimate-guide/logging/troubleshooting-with-linux-logs/

|

||||

|

||||

Author: [Jason Skowronski][a1]

Author: [Amy Echeverri][a2]

Author: [Sadequl Hussain][a3]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a1]:https://www.linkedin.com/in/jasonskowronski

|

||||

[a2]:https://www.linkedin.com/in/amyecheverri

|

||||

[a3]:https://www.linkedin.com/pub/sadequl-hussain/14/711/1a7

|

||||

[1]:http://linux.die.net/man/8/pam.d

|

||||

@ -1,3 +1,4 @@

|

||||

Translating by ZTinoZ

|

||||

Installation Guide for Puppet on Ubuntu 15.04

|

||||

================================================================================

|

||||

Hi everyone, today in this article we'll learn how to install Puppet to manage a server infrastructure running Ubuntu 15.04. Puppet is an open source configuration management tool, developed and maintained by Puppet Labs, that lets us automate the provisioning, configuration and management of a server infrastructure. Whether we're managing just a few servers or thousands of physical and virtual machines, Puppet automates tasks that system administrators often do manually, which frees up time and mental space so sysadmins can work on improving other aspects of the overall setup. It ensures consistency, reliability and stability of the automated jobs. It also facilitates closer collaboration between sysadmins and developers, enabling more efficient delivery of cleaner, better-designed code. Puppet comes in two editions for configuration management and data center automation: **Puppet Open Source and Puppet Enterprise**. Puppet Open Source is a flexible, customizable solution available under the Apache 2.0 license, designed to help system administrators automate the many repetitive tasks they regularly perform. Puppet Enterprise is a proven commercial solution for diverse enterprise IT environments that gives us all the benefits of open source Puppet, plus Puppet apps, commercial-only enhancements, supported modules and integrations, and the assurance of a fully supported platform. Puppet uses SSL certificates to authenticate communication between master and agent nodes.

|

||||

@ -426,4 +427,4 @@ via: http://linoxide.com/linux-how-to/install-puppet-ubuntu-15-04/

|

||||

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:http://linoxide.com/author/arunp/

|

||||

[1]:https://docs.puppetlabs.com/puppet/latest/reference/config_file_main.html

|

||||

[1]:https://docs.puppetlabs.com/puppet/latest/reference/config_file_main.html

|

||||

|

||||

@ -0,0 +1,203 @@

|

||||

How to Install Snort and Usage in Ubuntu 15.04

|

||||

================================================================================

|

||||

Intrusion detection in a network is important for IT security. An Intrusion Detection System (IDS) is used to detect illegal and malicious attempts in the network. Snort is a well-known open source intrusion detection system. A web interface (Snorby) can be used for better analysis of alerts. Snort can also be used as an intrusion prevention system together with an iptables/pf firewall. In this article, we will install and configure the open source IDS Snort.

|

||||

|

||||

### Snort Installation ###

|

||||

|

||||

#### Prerequisite ####

|

||||

|

||||

Snort uses the Data Acquisition library (DAQ) to make abstract calls to packet capture libraries. It is available on the Snort website. The download process is shown in the following screenshot.

|

||||

|

||||

|

||||

|

||||

Extract it and run the ./configure, make and make install commands to install DAQ. However, DAQ requires other tools, so the ./configure script will generate the following errors.

|

||||

|

||||

flex and bison error

|

||||

|

||||

|

||||

|

||||

libpcap error.

|

||||

|

||||

|

||||

|

||||

Therefore, first install flex/bison and libpcap before installing DAQ, as shown in the figure.
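Before rerunning ./configure, a quick pre-flight check can show which build tools are still missing. This is only a sketch with a hypothetical function name, `missing_tools`; it can detect commands such as flex and bison, but not a library such as libpcap, so the ./configure output remains the authoritative check. On Ubuntu these are normally provided by the flex, bison and libpcap-dev packages.

```shell
# missing_tools: print each named command that is not found on PATH.
# Arguments: one or more command names to look for
missing_tools() {
    for tool in "$@"; do
        # command -v is the POSIX way to test whether a command exists
        command -v "$tool" >/dev/null 2>&1 || printf '%s\n' "$tool"
    done
}
```

Running `missing_tools flex bison` prints nothing when both tools are installed, and otherwise lists the ones that still need to be installed.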

|

||||

|

||||

|

||||

|

||||

Installation of libpcap development library is shown below

|

||||

|

||||

|

||||

|

||||

After installing the necessary tools, run the ./configure script again; it should show the following output.

|

||||

|

||||

|

||||

|

||||

The results of the make and make install commands are shown in the following screenshots.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

After the successful installation of DAQ, we will now install Snort. Downloading it with wget is shown in the figure below.

|

||||

|

||||

|

||||

|

||||

Extract the compressed package using the command below.

# tar -xvzf snort-2.9.7.3.tar.gz

|

||||

|

||||

|

||||

|

||||

Create the installation directory and set the prefix parameter of the configure script. It is also recommended to enable the sourcefire flag for Packet Performance Monitoring (PPM).

# mkdir /usr/local/snort

# ./configure --prefix=/usr/local/snort/ --enable-sourcefire

|

||||

|

||||

|

||||

|

||||

The configure script generates errors because the libpcre-dev, libdumbnet-dev and zlib development libraries are missing.

|

||||

|

||||

error due to missing libpcre library.

|

||||

|

||||

|

||||

|

||||

error due to missing dnet (libdumbnet) library.

|

||||

|

||||

|

||||

|

||||

error due to missing zlib library.

|

||||

|

||||

|

||||

|

||||

The installation of all required development libraries is shown in the next screenshots.

|

||||

|

||||

# aptitude install libpcre3-dev

|

||||

|

||||

|

||||

|

||||

# aptitude install libdumbnet-dev

|

||||

|

||||

|

||||

|

||||

# aptitude install zlib1g-dev

|

||||

|

||||

|

||||

|

||||

After installing the libraries required by Snort, run the configure script again; it should now complete without errors.

Run the make and make install commands to compile Snort and install it in the /usr/local/snort directory.

# make

|

||||

|

||||

|

||||

|

||||

# make install

|

||||

|

||||

|

||||

|

||||

Finally, run snort from the /usr/local/snort/bin directory. Here it runs in promiscuous mode (packet dump mode), capturing all traffic on the eth0 interface.

|

||||

|

||||

|

||||

|

||||

The traffic dumped by snort on the interface is shown in the following figure.

|

||||

|

||||

|

||||

|

||||

#### Rules and Configuration of Snort ####

|

||||

|

||||

Installing Snort from source code requires rules and configuration settings, so we will now copy the rules and configuration under the /etc/snort directory. We have created a single bash script for the rules and configuration settings. It performs the following Snort setup steps.

|

||||

|

||||

- Creation of a snort user for the snort IDS service on Linux.
- Creation of directories and files under the /etc directory for snort configuration.
- Permission setting and copying of data from the etc directory of the snort source code.
- Commenting out (adding #) the rule include lines in the snort.conf file, so that rule sets can be enabled selectively afterwards.

|

||||

|

||||

#!/bin/bash
## PATH of source code of snort
snort_src="/home/test/Downloads/snort-2.9.7.3"
echo "adding group and user for snort..."
groupadd snort &> /dev/null
useradd snort -r -s /sbin/nologin -d /var/log/snort -c snort_idps -g snort &> /dev/null
## snort configuration
echo "Configuring snort..."
mkdir -p /etc/snort
mkdir -p /etc/snort/rules
touch /etc/snort/rules/black_list.rules
touch /etc/snort/rules/white_list.rules
touch /etc/snort/rules/local.rules
# -p keeps the script from failing if it is run a second time
mkdir -p /etc/snort/preproc_rules
mkdir -p /var/log/snort
mkdir -p /usr/local/lib/snort_dynamicrules
chmod -R 775 /etc/snort
chmod -R 775 /var/log/snort
chmod -R 775 /usr/local/lib/snort_dynamicrules
chown -R snort:snort /etc/snort
chown -R snort:snort /var/log/snort
chown -R snort:snort /usr/local/lib/snort_dynamicrules
### copy configuration and rules from etc directory under source code of snort
echo "copying from snort source to /etc/snort ....."
echo "$snort_src"
echo "-------------"
cp "$snort_src"/etc/*.conf* /etc/snort
cp "$snort_src"/etc/*.map /etc/snort
## comment out the rule include lines; the rule sets you need are re-enabled in snort.conf later
sed -i 's/include \$RULE_PATH/#include \$RULE_PATH/' /etc/snort/snort.conf
echo "---DONE---"

|

||||

|

||||

Change the snort source directory in the script and run it. The following output appears on success.

|

||||

|

||||

|

||||

|

||||

The script above copies the following files/directories from the snort source into the /etc/snort configuration directory.

|

||||

|

||||

|

||||

|

||||

The Snort configuration file is very complex; however, the following changes in snort.conf are necessary for the IDS to work properly.

|

||||

|

||||

ipvar HOME_NET 192.168.1.0/24 # LAN side

|

||||

|

||||

----------

|

||||

|

||||

ipvar EXTERNAL_NET !$HOME_NET # WAN side

|

||||

|

||||

|

||||

|

||||

var RULE_PATH /etc/snort/rules # snort signature path

|

||||

var SO_RULE_PATH /etc/snort/so_rules #rules in shared libraries

|

||||

var PREPROC_RULE_PATH /etc/snort/preproc_rules # Preprocessor rules path

|

||||

var WHITE_LIST_PATH /etc/snort/rules # dont scan

|

||||

var BLACK_LIST_PATH /etc/snort/rules # Must scan

|

||||

|

||||

|

||||

|

||||

include $RULE_PATH/local.rules # file for custom rules

|

||||

|

||||

Remove the comment sign (#) from the other rule includes you need, such as ftp.rules and exploit.rules.
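To see which rule files are currently enabled after editing, the active include lines can be listed. This is a small sketch with a hypothetical function name, `active_rule_files`; it assumes include lines of the form shown above, starting at the beginning of the line and referring to `$RULE_PATH`.

```shell
# active_rule_files: list rule files that snort.conf includes through
# $RULE_PATH; commented-out include lines are skipped.
# $1 = path to snort.conf
active_rule_files() {
    # Keep only the file name that follows "include $RULE_PATH/"
    sed -n 's|^include \$RULE_PATH/\([^ #]*\.rules\).*|\1|p' "$1"
}
```

Running `active_rule_files /etc/snort/snort.conf` before `snort -T` gives a quick view of what the configuration test is about to load.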

|

||||

|

||||

|

||||

|

||||

Now [download the community][1] rules and extract them under the /etc/snort/rules directory. Enable the community and emerging threats rules in the snort.conf file.

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

Run the following command to test the configuration file after making the changes above.

# snort -T -c /etc/snort/snort.conf

|

||||

|

||||

|

||||

|

||||

### Conclusion ###

|

||||

|

||||

In this article, our focus was on the installation and configuration of the open source IDPS Snort on the Ubuntu distribution. By default it is used to monitor events; however, it can be configured in inline mode to protect the network. Snort rules can be tested and analysed in offline mode using a pcap capture file.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/security/install-snort-usage-ubuntu-15-04/

|

||||

|

||||

Author: [nido][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:http://linoxide.com/author/naveeda/

|

||||

[1]:https://www.snort.org/downloads/community/community-rules.tar.gz

|

||||

@ -0,0 +1,99 @@

|

||||

How to download apk files from Google Play Store on Linux

|

||||

================================================================================

|

||||

Suppose you want to install an Android app on your Android device. However, for whatever reason, you cannot access Google Play Store on the Android device. What can you do then? One way to install the app without Google Play Store access is to download its APK file using some other means, and then [install the APK][1] file on the Android device manually.

|

||||

|

||||

There are several ways to download official APK files from Google Play Store on non-Android devices such as regular computers and laptops. For example, there are browser plugins (e.g., for [Chrome][2] or [Firefox][3]) or online APK archives that allow you to download APK files using a web browser. If you do not trust these closed-source plugins or third-party APK repositories, there is yet another way to download official APK files manually, and that is via an open-source Linux app called [GooglePlayDownloader][4].

|

||||

|

||||

GooglePlayDownloader is a Python-based GUI application that enables you to search for and download APK files from Google Play Store. Since it is completely open-source, you can use it with more confidence. In this tutorial, I am going to show how to download an APK file from Google Play Store using GooglePlayDownloader in a Linux environment.

|

||||

|

||||

### Python requirement ###

|

||||

|

||||

GooglePlayDownloader requires Python with SNI (Server Name Indication) support for SSL/TLS communication. This feature comes with Python 2.7.9 or higher. This leaves out older distributions such as Debian 7 Wheezy or earlier, Ubuntu 14.04 or earlier, or CentOS/RHEL 7 or earlier. Assuming that you have a Linux distribution with Python 2.7.9 or higher, proceed to install GooglePlayDownloader as follows.
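The version requirement above can be checked with a small comparison helper. The following is a sketch under stated assumptions: the function name `version_ge` is hypothetical, and it relies on `sort -V` (version sort), which GNU coreutils provides on typical Linux systems.

```shell
# version_ge: succeed when version $1 is greater than or equal to version $2.
version_ge() {
    # sort -V orders version strings; if $2 sorts first (or equal), $1 >= $2
    [ "$(printf '%s\n%s\n' "$2" "$1" | sort -V | head -n 1)" = "$2" ]
}

# Example use against the 2.7.9 threshold named above (not run here):
#   py_version=$(python -c 'import platform; print(platform.python_version())')
#   version_ge "$py_version" 2.7.9 && echo "Python is new enough for SNI"
```

A plain string comparison would wrongly rank 2.7.10 below 2.7.9, which is why the helper sorts by version instead.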

|

||||

|

||||

### Install GooglePlayDownloader on Ubuntu ###

|

||||

|

||||

On Ubuntu, you can use the official deb build. One catch is that you may need to install one required dependency manually.

|

||||

|

||||

#### On Ubuntu 14.10 ####

|

||||

|

||||

Download [python-ndg-httpsclient][5] deb package, which is a missing dependency on older Ubuntu distributions. Also download GooglePlayDownloader's official deb package.

|

||||

|

||||

$ wget http://mirrors.kernel.org/ubuntu/pool/main/n/ndg-httpsclient/python-ndg-httpsclient_0.3.2-1ubuntu4_all.deb

|

||||

$ wget http://codingteam.net/project/googleplaydownloader/download/file/googleplaydownloader_1.7-1_all.deb

|

||||

|

||||

We are going to use [gdebi command][6] to install those two deb files as follows. The gdebi command will automatically handle any other dependencies.

|

||||

|

||||

$ sudo apt-get install gdebi-core

|

||||

$ sudo gdebi python-ndg-httpsclient_0.3.2-1ubuntu4_all.deb

|

||||

$ sudo gdebi googleplaydownloader_1.7-1_all.deb

|

||||

|

||||

#### On Ubuntu 15.04 or later ####

|

||||

|

||||

Recent Ubuntu distributions ship all required dependencies, and thus the installation is straightforward as follows.

|

||||

|

||||

$ wget http://codingteam.net/project/googleplaydownloader/download/file/googleplaydownloader_1.7-1_all.deb

|

||||

$ sudo apt-get install gdebi-core

|

||||

$ sudo gdebi googleplaydownloader_1.7-1_all.deb

|

||||

|

||||

### Install GooglePlayDownloader on Debian ###

|

||||

|

||||

Due to its Python requirement, GooglePlayDownloader cannot be installed on Debian 7 Wheezy or earlier unless you upgrade its stock Python.

|

||||

|

||||

#### On Debian 8 Jessie and higher: ####

|

||||

|

||||

$ wget http://codingteam.net/project/googleplaydownloader/download/file/googleplaydownloader_1.7-1_all.deb

|

||||

$ sudo apt-get install gdebi-core

|

||||

$ sudo gdebi googleplaydownloader_1.7-1_all.deb

|

||||

|

||||

### Install GooglePlayDownloader on Fedora ###

|

||||

|

||||

Since GooglePlayDownloader was originally developed for Debian based distributions, you need to install it from the source if you want to use it on Fedora.

|

||||

|

||||

First, install necessary dependencies.

|

||||

|

||||

$ sudo yum install python-pyasn1 wxPython python-ndg_httpsclient protobuf-python python-requests

|

||||

|

||||

Then install it as follows.

|

||||

|

||||

$ wget http://codingteam.net/project/googleplaydownloader/download/file/googleplaydownloader_1.7.orig.tar.gz

|

||||

$ tar -xvf googleplaydownloader_1.7.orig.tar.gz

|

||||

$ cd googleplaydownloader-1.7

|

||||

$ chmod o+r -R .

|

||||

$ sudo python setup.py install

|

||||

$ sudo sh -c "echo 'python /usr/lib/python2.7/site-packages/googleplaydownloader-1.7-py2.7.egg/googleplaydownloader/googleplaydownloader.py' > /usr/bin/googleplaydownloader"

$ sudo chmod +x /usr/bin/googleplaydownloader

|

||||

|

||||

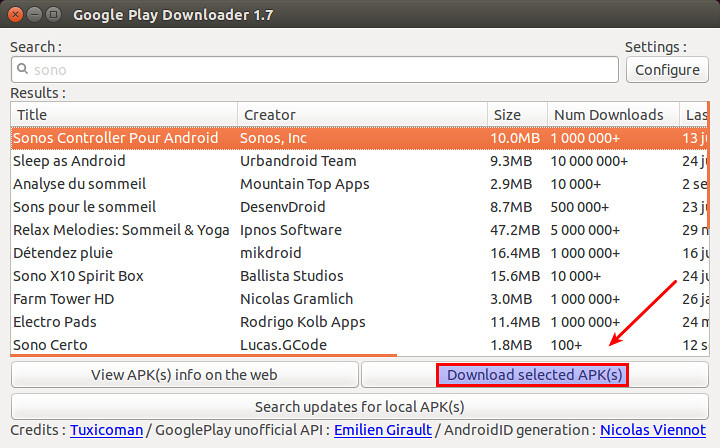

### Download APK Files from Google Play Store with GooglePlayDownloader ###

|

||||

|

||||

Once you have installed GooglePlayDownloader, you can download APK files from Google Play Store as follows.

|

||||

|

||||

First launch the app by typing:

|

||||

|

||||

$ googleplaydownloader

|

||||

|

||||

|

||||

|

||||

At the search bar, type the name of the app you want to download from Google Play Store.

|

||||

|

||||

|

||||

|

||||

Once you find the app in the search list, select it and click on the "Download selected APK(s)" button. You will find the downloaded APK file in your home directory. Now you can move the APK file to the Android device of your choice and install it manually.

|

||||

|

||||

Hope this helps.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/download-apk-files-google-play-store.html

|

||||

|

||||

Author: [Dan Nanni][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:http://xmodulo.com/author/nanni

|

||||

[1]:http://xmodulo.com/how-to-install-apk-file-on-android-phone-or-tablet.html

|

||||

[2]:https://chrome.google.com/webstore/detail/apk-downloader/cgihflhdpokeobcfimliamffejfnmfii

|

||||

[3]:https://addons.mozilla.org/en-us/firefox/addon/apk-downloader/

|

||||

[4]:http://codingteam.net/project/googleplaydownloader

|

||||

[5]:http://packages.ubuntu.com/vivid/python-ndg-httpsclient

|

||||

[6]:http://xmodulo.com/how-to-install-deb-file-with-dependencies.html

|

||||

@ -1,3 +1,5 @@

|

||||

FSSlc translating

|

||||

|

||||

RHCSA Series: Editing Text Files with Nano and Vim / Analyzing text with grep and regexps – Part 4

|

||||

================================================================================

|

||||

Every system administrator has to deal with text files as part of their daily responsibilities. That includes editing existing files (most likely configuration files) or creating new ones. It has been said that if you want to start a holy war in the Linux world, you can ask sysadmins what their favorite text editor is and why. We are not going to do that in this article, but we will present a few tips for using two of the most widely used text editors in RHEL 7: nano (for its simplicity and ease of use, especially for new users) and vi/m (for the many features that make it more than a simple editor). I am sure that you can find many more reasons to use one or the other, or perhaps some other editor such as emacs or pico. It’s entirely up to you.

|

||||

@ -251,4 +253,4 @@ via: http://www.tecmint.com/rhcsa-exam-how-to-use-nano-vi-editors/

|

||||

[2]:http://www.tecmint.com/file-and-directory-management-in-linux/

|

||||

[3]:http://www.tecmint.com/rhcsa-exam-reviewing-essential-commands-system-documentation/

|

||||

[4]:http://www.nano-editor.org/

|

||||

[5]:http://www.vim.org/

|

||||

[5]:http://www.vim.org/

|

||||

|

||||

59

translated/talk/20150810 For Linux, Supercomputers R Us.md

Normal file

@ -0,0 +1,59 @@

|

||||

For Linux, Supercomputers R Us

|

||||

================================================================================

|

||||

|

||||

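Lead image credit: By Michel Ngilen, [ CC BY 2.0 ], via Wikimedia Commons

> Almost all supercomputers run Linux, including the ones built from Raspberry Pi boards and PlayStation 3 game consoles.

Supercomputers are serious tools built for serious computing. They tend to be put to serious pursuits such as atomic bomb simulations, climate modeling and advanced physics. Naturally, they also cost serious money. In the latest [Top500][1] supercomputer ranking, Tianhe-2, developed by China's National University of Defense Technology, took first place. Tianhe-2 cost about $390 million.

But there is also a supercomputer that Joshua Kiepert, a doctoral student in Boise State University's Electrical and Computer Engineering department, [built from Raspberry Pi boards][2]. It cost less than $2,000 to build.

No, I'm not making this up. It is a real supercomputer, made up of [Raspberry Pi Model B][3] boards with ARM11 processors overclocked to 1GHz and VideoCore IV GPUs. Each one comes with 512MB of RAM, a pair of USB ports and a 10/100 BaseT Ethernet port.

So what do Tianhe-2 and the Boise State supercomputer have in common? They both run Linux. So do [486 of the world's 500][4] fastest supercomputers. It is part of a domination that began more than 20 years ago. Now Linux is showing up in low-cost supercomputers too, because Kiepert's machine is not the only budget number cruncher.

Gaurav Khanna, an associate professor of physics at the University of Massachusetts Dartmouth, built a supercomputer out of [fewer than 200 PlayStation 3 video game consoles][5].

Each PlayStation is powered by a 3.2 GHz PowerPC-based Power Processing Element and comes with 512MB of RAM. You can still buy one for $200, although Sony will phase them out by the end of the year. Khanna built his first supercomputer with only 16 PlayStation 3s, so you too could have your own supercomputer for less than $4,000.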

|

||||

|

||||

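These machines may be built from toys, but they are not toys. Khanna has done serious astrophysics research with his. A group of white-hat hackers used a similar [PlayStation 3 supercomputer in 2008 to crack the MD5 hashing algorithm used for SSL][6].

Two years later, the US Air Force Research Laboratory built the [Condor Cluster, using 1,760 Sony PlayStation 3 processors][7] and 168 general-purpose graphics processing units. This low-cost supercomputer runs at about 500 TFLOPS, or 500 trillion floating-point operations per second.

Other inexpensive building blocks suited to home supercomputers include specialized parallel processing boards, such as the credit-card-sized [$99 Parallella board][8], and high-end graphics cards such as [Nvidia's Titan Z][9] and [AMD's FirePro W9100][10]. These high-end boards retail for about $3,000 and are coveted as dream machines by gamers hoping to win the [Intel Extreme Masters World Championship League of Legends competition][11], where first place [reportedly][12] pays more than $100,000. On the other hand, a single board can deliver more than 2.5 TFLOPS on its own, which gives scientists and researchers an economical way to have a supercomputer of their very own.

As for the Linux connection, that all started in 1994 at the Goddard Space Flight Center with the first [Beowulf supercomputer][13].

By our standards, the Beowulf was nothing special. But in its day, as the first homemade supercomputer, its 16 Intel 486DX processors and 10Mbps Ethernet bus were a great feat. [Beowulf, designed by NASA contractors Don Becker and Thomas Sterling][14], was the first "maker" supercomputer. Its "compute components," 486DX PCs, cost only a few thousand dollars. Although its floating-point speed was only in the single digits, [Beowulf][15] showed that you could build a supercomputer from commercial off-the-shelf (COTS) hardware and Linux.

I wish I'd had a hand in creating it, but I had already left Goddard in 1994 to start my career as a full-time technology journalist. Damn.

But even as a journalist who only uses a laptop, I can still appreciate how COTS and open source software changed supercomputing forever. I hope that you, reading this, can too. Because whether it is a cluster of Raspberry Pis or a behemoth with more than 3 million Intel Ivy Bridge and Xeon Phi cores, almost all of today's supercomputers can trace their ancestry back to Beowulf.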

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via:

|

||||

|

||||

Author: [Steven J. Vaughan-Nichols][a]

Translator: [xiaoyu33](https://github.com/xiaoyu33)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:http://www.computerworld.com/author/Steven-J.-Vaughan_Nichols/

|

||||

[1]:http://www.top500.org/

|

||||

[2]:http://www.zdnet.com/article/build-your-own-supercomputer-out-of-raspberry-pi-boards/

|

||||

[3]:https://www.raspberrypi.org/products/model-b/

|

||||

[4]:http://www.zdnet.com/article/linux-still-rules-supercomputing/

|

||||

[5]:http://www.nytimes.com/2014/12/23/science/an-economical-way-to-save-progress.html?smid=fb-nytimes&smtyp=cur&bicmp=AD&bicmlukp=WT.mc_id&bicmst=1409232722000&bicmet=1419773522000&_r=4

|

||||

[6]:http://www.computerworld.com/article/2529932/cybercrime-hacking/researchers-hack-verisign-s-ssl-scheme-for-securing-web-sites.html

|

||||

[7]:http://phys.org/news/2010-12-air-playstation-3s-supercomputer.html

|

||||

[8]:http://www.zdnet.com/article/parallella-the-99-linux-supercomputer/

|

||||

[9]:http://blogs.nvidia.com/blog/2014/03/25/titan-z/

|

||||

[10]:http://www.amd.com/en-us/press-releases/Pages/amd-flagship-professional-2014apr7.aspx

|

||||

[11]:http://en.intelextrememasters.com/news/check-out-the-intel-extreme-masters-katowice-prize-money-distribution/

|

||||

[12]:http://www.google.com/url?q=http%3A%2F%2Fen.intelextrememasters.com%2Fnews%2Fcheck-out-the-intel-extreme-masters-katowice-prize-money-distribution%2F&sa=D&sntz=1&usg=AFQjCNE6yoAGGz-Hpi2tPF4gdhuPBEckhQ

|

||||

[13]:http://www.beowulf.org/overview/history.html

|

||||

[14]:http://yclept.ucdavis.edu/Beowulf/aboutbeowulf.html

|

||||

[15]:http://www.beowulf.org/

|

||||

@ -0,0 +1,183 @@

|

||||

How to Configure a MongoDB Replica Set

|

||||

================================================================================

|

||||

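MongoDB has become the best-known NoSQL database on the market. MongoDB is document-oriented, and its schema-free design makes it popular in all kinds of web applications. One of the features I like most is its replica set, which keeps multiple copies of the same data on a group of mongod nodes to provide redundancy and high availability.

This tutorial shows you how to configure a MongoDB replica set.

The most common replica set configuration consists of one primary node and multiple secondary nodes. Replication then flows from the primary to the secondaries. A replica set not only protects the database against unexpected hardware failures and downtime, it also improves the read throughput of database clients by providing more nodes to read from.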

|

||||

|

||||

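### Set Up the Environment ###

In this tutorial, we will configure a replica set with one primary node and two secondary nodes.

To do that, we use three virtual machines running on VirtualBox. I will install Ubuntu 14.04 on these virtual machines and install the official MongoDB packages.

I will set up the required environment on one virtual machine instance and then clone it to the other instances. So pick a virtual machine named master and perform the following installation steps.

First, we need to add the MongoDB key to apt: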

|

||||

|

||||

$ sudo apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv 7F0CEB10

|

||||

|

||||

Then add the official MongoDB repository to the apt sources list:

|

||||

|

||||

$ sudo su

|

||||

# echo "deb http://repo.mongodb.org/apt/ubuntu "$(lsb_release -sc)"/mongodb-org/3.0 multiverse" | sudo tee /etc/apt/sources.list.d/mongodb-org-3.0.list

|

||||

|

||||

Next, update the apt repositories and install MongoDB.

|

||||

|

||||

$ sudo apt-get update

|

||||

$ sudo apt-get install -y mongodb-org

|

||||

|

||||

Now make some changes to /etc/mongodb.conf:

|

||||

|

||||

auth = true

|

||||

dbpath=/var/lib/mongodb

|

||||

logpath=/var/log/mongodb/mongod.log

|

||||

logappend=true

|

||||

keyFile=/var/lib/mongodb/keyFile

|

||||

replSet=myReplica

|

||||

|

||||

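The first line ensures that our database requires authentication before it can be used. keyFile sets the key file used for replication between MongoDB nodes. replSet sets a name for the replica set.

Next, we create a key file to be used by all instances.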

|

||||

|

||||

$ echo -n "MyRandomStringForReplicaSet" | md5sum > keyFile

|

||||

|

||||

This creates a key file containing an MD5 string, but it also contains some noise that we need to clean up before using it in MongoDB.

|

||||

|

||||

$ echo -n "MyReplicaSetKey" | md5sum|grep -o "[0-9a-z]\+" > keyFile

|

||||

|

||||

The grep command prints the MD5 string with the whitespace and other unwanted content filtered out.
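The two commands above can be wrapped into one helper that shows what the filter keeps. This is a sketch only: the function name `make_replica_key` is an assumption, and it follows the same md5sum and grep approach as the text (GNU grep is assumed for the `\+` operator).

```shell
# make_replica_key: derive a 32-character hexadecimal key from a passphrase.
# $1 = passphrase (any string; the ones used in the text are just examples)
make_replica_key() {
    # md5sum prints "<hash>  -"; grep -o keeps only the hexadecimal run,
    # dropping the spaces and the trailing "-" that md5sum adds
    printf '%s' "$1" | md5sum | grep -o '[0-9a-f]\+'
}
```

For instance, `make_replica_key "MyReplicaSetKey" > keyFile` writes a single clean line, which is what the cleaned-up command in the text produces.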

|

||||

|

||||

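Now we prepare the key file so that it is actually usable. Note that the ownership and permissions are set on the copy under /var/lib/mongodb, which is the file mongod reads.

$ sudo cp keyFile /var/lib/mongodb
$ sudo chown mongodb:nogroup /var/lib/mongodb/keyFile
$ sudo chmod 400 /var/lib/mongodb/keyFile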

|

||||

|

||||

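Next, shut down this virtual machine and clone its Ubuntu system to the other virtual machines.

These are secondary node 1 and secondary node 2 after cloning. Make sure that you reinitialize their MAC addresses and clone the full disks.

Note that the three virtual machine instances need to be on the same network to communicate with each other. Therefore, we attach all of them to the "Internet" network.

It is recommended to give each virtual machine a static IP address rather than using DHCP, so that they do not lose connectivity when DHCP reassigns their IP addresses.

Edit the /etc/network/interfaces file of each virtual machine as follows.

On the primary node: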

|

||||

|

||||

auto eth1

|

||||

iface eth1 inet static

|

||||

address 192.168.50.2

|

||||

netmask 255.255.255.0

|

||||

|

||||

On secondary node 1:

|

||||

|

||||

auto eth1

|

||||

iface eth1 inet static

|

||||

address 192.168.50.3

|

||||

netmask 255.255.255.0

|

||||

|

||||

On secondary node 2:

|

||||

|

||||

auto eth1

|

||||

iface eth1 inet static

|

||||

address 192.168.50.4

|

||||

netmask 255.255.255.0

|

||||

|

||||

Since we have no DNS service, we also need to edit the /etc/hosts file and add the host names to it manually.

On the primary node:

|

||||

|

||||

127.0.0.1 localhost primary

|

||||

192.168.50.2 primary

|

||||

192.168.50.3 secondary1

|

||||

192.168.50.4 secondary2

|

||||

|

||||

On secondary node 1:

|

||||

|

||||

127.0.0.1 localhost secondary1

|

||||

192.168.50.2 primary

|

||||

192.168.50.3 secondary1

|

||||

192.168.50.4 secondary2

|

||||

|

||||

On secondary node 2:

|

||||

|

||||

127.0.0.1 localhost secondary2

|

||||

192.168.50.2 primary

|

||||

192.168.50.3 secondary1

|

||||

192.168.50.4 secondary2

|

||||

|

||||

Use the ping command to check connectivity between the nodes.

|

||||

|

||||

$ ping primary

|

||||

$ ping secondary1

|

||||

$ ping secondary2
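If a ping fails because a name does not resolve, the hosts file on that node is the first thing to check. The sketch below uses a hypothetical function name, `missing_hosts`, and simply reports which of the expected names have no entry in a given hosts file.

```shell
# missing_hosts: print each host name that has no entry in a hosts file.
# $1 = hosts file (normally /etc/hosts); remaining arguments = names to check
missing_hosts() {
    hosts_file=$1
    shift
    for name in "$@"; do
        # A name counts as present when it appears as a field after the
        # address on a line that is not a comment.
        awk -v n="$name" '
            /^[[:space:]]*#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit found ? 0 : 1 }
        ' "$hosts_file" || printf '%s\n' "$name"
    done
}
```

On each node, `missing_hosts /etc/hosts primary secondary1 secondary2` should print nothing once the entries listed above are in place.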

|

||||

|

||||

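### Configure the Replica Set ###

Once we have verified that the nodes can reach each other, we can create an admin user for the replica set operations that follow.

On the primary node, open the /etc/mongodb.conf file and comment out the auth and replSet lines.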

|

||||

|

||||

dbpath=/var/lib/mongodb

|

||||

logpath=/var/log/mongodb/mongod.log

|

||||

logappend=true

|

||||

#auth = true

|

||||

keyFile=/var/lib/mongodb/keyFile

|

||||

#replSet=myReplica

|

||||

|

||||

Restart the mongod process.

|

||||

|

||||

$ sudo service mongod restart

|

||||

|

||||

After connecting to MongoDB, create the admin user.

|

||||

|

||||

> use admin

|

||||

> db.createUser({

|

||||

user:"admin",

|

||||

pwd:"

|

||||

})

|

||||

$ sudo service mongod restart

|

||||

|

||||

Connect to MongoDB and use the following commands to add the secondary1 and secondary2 nodes to our replica set.

> use admin
> db.auth("admin","myreallyhardpassword")
> rs.initiate()
> rs.add("secondary1:27017")
> rs.add("secondary2:27017")

|

||||

|

||||

|

||||

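Now that the replica set is ready, we can start our project. See the [official driver documentation][1] to learn how to connect to a replica set. If you want to query data from the shell, you need to connect to the primary node to insert or query data; the secondary nodes will not do. If you insist on trying this on a secondary node, you will be greeted with the following error message.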

|

||||

|

||||

myReplica:SECONDARY>

|

||||

myReplica:SECONDARY> show databases

|

||||

2015-05-10T03:09:24.131+0000 E QUERY Error: listDatabases failed:{ "note" : "from execCommand", "ok" : 0, "errmsg" : "not master" }

|

||||

at Error ()

|

||||

at Mongo.getDBs (src/mongo/shell/mongo.js:47:15)

|

||||

at shellHelper.show (src/mongo/shell/utils.js:630:33)

|

||||

at shellHelper (src/mongo/shell/utils.js:524:36)

|

||||

at (shellhelp2):1:1 at src/mongo/shell/mongo.js:47

|

||||

|

||||

I hope this tutorial has been helpful. You can use Vagrant to automate the setup of your local environment and speed up your development.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/setup-replica-set-mongodb.html

|

||||

|

||||

Author: [Christopher Valerio][a]

Translator: [mr-ping](https://github.com/mr-ping)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)

|

||||

|

||||

[a]:http://xmodulo.com/author/valerio

|

||||

[1]:http://docs.mongodb.org/ecosystem/drivers/

|

||||

117

translated/tech/20150803 Troubleshooting with Linux Logs.md

Normal file

@ -0,0 +1,117 @@

|

||||

Troubleshooting with Linux Logs

|

||||

================================================================================

|

||||

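Troubleshooting is the main reason people create logs. Often you'll want to diagnose why a problem happened on your Linux system or application. An error message or a sequence of events can give you clues to the root cause, indicate how the problem occurred, and point to how to fix it. Here are a few examples of using logs to troubleshoot.

### Cause of Login Failures ###

If you want to check whether your system is secure, you can check your authentication logs for failed login attempts and for successful but suspicious logins. Authentication failures occur when someone passes incorrect or otherwise invalid login credentials, often to SSH for remote access or to su for local access to another user's permissions. These are logged by the [pluggable authentication module][1], or PAM for short. Look in your logs for strings like Failed password and user unknown. Successful authentication records include strings like Accepted password and session opened.

Failure examples: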

|

||||

|

||||

pam_unix(sshd:auth): authentication failure; logname= uid=0 euid=0 tty=ssh ruser= rhost=10.0.2.2

|

||||

Failed password for invalid user hoover from 10.0.2.2 port 4791 ssh2

|

||||

pam_unix(sshd:auth): check pass; user unknown

|

||||

PAM service(sshd) ignoring max retries; 6 > 3
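Failure lines like the ones above can be summarized per source address to spot repeated attempts. The following is a minimal sketch; the function name `failed_logins_by_source` is an assumption, and it expects sshd's "Failed password ... from ADDRESS port ..." layout as shown in the examples. The log location depends on the distribution, for example /var/log/auth.log on Ubuntu.

```shell
# failed_logins_by_source: count "Failed password" lines per source address.
# $1 = authentication log to read
failed_logins_by_source() {
    awk '
        /Failed password/ {
            # the address is the field that follows the word "from"
            for (i = 1; i < NF; i++) if ($i == "from") count[$(i + 1)]++
        }
        END { for (ip in count) print count[ip], ip }
    ' "$1" | sort -rn
}
```

Each output line holds a count followed by an address, with the most frequent source first, which makes a burst of attempts from one host easy to see.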

|

||||

|

||||

Successful examples:

|

||||