mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-24 02:20:09 +08:00

commit

2f2a16aa3c

@ -0,0 +1,146 @@

|

||||

如何使用 yum-cron 自动更新 RHEL/CentOS Linux

|

||||

======

|

||||

|

||||

`yum` 命令是 RHEL / CentOS Linux 系统中用来安装和更新软件包的一个工具。我知道如何使用 [yum 命令行][1] 更新系统,但是我想用 cron 任务自动更新软件包。该如何配置才能使得 `yum` 使用 [cron 自动更新][2]系统补丁或更新呢?

|

||||

|

||||

首先需要安装 yum-cron 软件包。该软件包提供以 cron 命令运行 `yum` 更新所需的文件。如果你想要每晚通过 cron 自动更新可以安装这个软件包。

|

||||

|

||||

### CentOS/RHEL 6.x/7.x 上安装 yum cron

|

||||

|

||||

输入以下 [yum 命令][3]:

|

||||

|

||||

```

|

||||

$ sudo yum install yum-cron

|

||||

```

|

||||

|

||||

|

||||

|

||||

使用 CentOS/RHEL 7.x 上的 `systemctl` 启动服务:

|

||||

|

||||

```

|

||||

$ sudo systemctl enable yum-cron.service

|

||||

$ sudo systemctl start yum-cron.service

|

||||

$ sudo systemctl status yum-cron.service

|

||||

```

|

||||

|

||||

在 CentOS/RHEL 6.x 系统中,运行:

|

||||

|

||||

```

|

||||

$ sudo chkconfig yum-cron on

|

||||

$ sudo service yum-cron start

|

||||

```

|

||||

|

||||

|

||||

|

||||

`yum-cron` 是 `yum` 的一个替代方式。使得 cron 调用 `yum` 变得非常方便。该软件提供了元数据更新、更新检查、下载和安装等功能。`yum-cron` 的各种功能可以使用配置文件配置,而不是输入一堆复杂的命令行参数。

|

||||

|

||||

### 配置 yum-cron 自动更新 RHEL/CentOS Linux

|

||||

|

||||

使用 vi 等编辑器编辑文件 `/etc/yum/yum-cron.conf` 和 `/etc/yum/yum-cron-hourly.conf`:

|

||||

|

||||

```

|

||||

$ sudo vi /etc/yum/yum-cron.conf

|

||||

```

|

||||

|

||||

确保更新可用时自动更新:

|

||||

|

||||

```

|

||||

apply_updates = yes

|

||||

```

|

||||

|

||||

可以设置通知 email 的发件地址。注意: localhost` 将会被 `system_name` 的值代替。

|

||||

|

||||

```

|

||||

email_from = root@localhost

|

||||

```

|

||||

|

||||

列出发送到的 email 地址。

|

||||

|

||||

```

|

||||

email_to = your-it-support@some-domain-name

|

||||

```

|

||||

|

||||

发送 email 信息的主机名。

|

||||

|

||||

```

|

||||

email_host = localhost

|

||||

```

|

||||

|

||||

[CentOS/RHEL 7.x][4] 上不想更新内核的话,添加以下内容:

|

||||

|

||||

```

|

||||

exclude=kernel*

|

||||

```

|

||||

|

||||

RHEL/CentOS 6.x 下[添加以下内容来禁用内核更新][5]:

|

||||

|

||||

```

|

||||

YUM_PARAMETER=kernel*

|

||||

```

|

||||

|

||||

[保存并关闭文件][6]。如果想每小时更新系统的话修改文件 `/etc/yum/yum-cron-hourly.conf`,否则文件 `/etc/yum/yum-cron.conf` 将使用以下命令每天运行一次(使用 [cat 命令][7] 查看):

|

||||

|

||||

```

|

||||

$ cat /etc/cron.daily/0yum-daily.cron

|

||||

```

|

||||

|

||||

示例输出:

|

||||

|

||||

```

|

||||

#!/bin/bash

|

||||

|

||||

# Only run if this flag is set. The flag is created by the yum-cron init

|

||||

# script when the service is started -- this allows one to use chkconfig and

|

||||

# the standard "service stop|start" commands to enable or disable yum-cron.

|

||||

if [[ ! -f /var/lock/subsys/yum-cron ]]; then

|

||||

exit 0

|

||||

fi

|

||||

|

||||

# Action!

|

||||

exec /usr/sbin/yum-cron /etc/yum/yum-cron-hourly.conf

|

||||

[root@centos7-box yum]# cat /etc/cron.daily/0yum-daily.cron

|

||||

#!/bin/bash

|

||||

|

||||

# Only run if this flag is set. The flag is created by the yum-cron init

|

||||

# script when the service is started -- this allows one to use chkconfig and

|

||||

# the standard "service stop|start" commands to enable or disable yum-cron.

|

||||

if [[ ! -f /var/lock/subsys/yum-cron ]]; then

|

||||

exit 0

|

||||

fi

|

||||

|

||||

# Action!

|

||||

exec /usr/sbin/yum-cron

|

||||

```

|

||||

|

||||

完成配置。现在你的系统将每天自动更新一次。更多细节请参照 yum-cron 的说明手册。

|

||||

|

||||

```

|

||||

$ man yum-cron

|

||||

```

|

||||

|

||||

### 关于作者

|

||||

|

||||

作者是 nixCraft 的创始人,一个经验丰富的系统管理员和 Linux/Unix 脚本培训师。他曾与全球客户合作,领域涉及IT,教育,国防和空间研究以及非营利部门等多个行业。请在 [Twitter][9]、[Facebook][10]、[Google+][11] 上关注他。获取更多有关系统管理、Linux/Unix 和开源话题请关注[我的 RSS/XML 地址][12]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.cyberciti.biz/faq/fedora-automatic-update-retrieval-installation-with-cron/

|

||||

|

||||

作者:[Vivek Gite][a]

|

||||

译者:[shipsw](https://github.com/shipsw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.cyberciti.biz/

|

||||

[1]:https://www.cyberciti.biz/faq/rhel-centos-fedora-linux-yum-command-howto/

|

||||

[2]:https://www.cyberciti.biz/faq/how-do-i-add-jobs-to-cron-under-linux-or-unix-oses

|

||||

[3]:https://www.cyberciti.biz/faq/rhel-centos-fedora-linux-yum-command-howto/ [4]:https://www.cyberciti.biz/faq/yum-update-except-kernel-package-command/

|

||||

[5]:https://www.cyberciti.biz/faq/redhat-centos-linux-yum-update-exclude-packages/

|

||||

[6]:https://www.cyberciti.biz/faq/linux-unix-vim-save-and-quit-command/

|

||||

[7]:https://www.cyberciti.biz/faq/linux-unix-appleosx-bsd-cat-command-examples/

|

||||

[8]:https://www.cyberciti.biz/faq/how-do-i-add-jobs-to-cron-under-linux-or-unix-oses

|

||||

[9]:https://twitter.com/nixcraft

|

||||

[10]:https://facebook.com/nixcraft

|

||||

[11]:https://plus.google.com/+CybercitiBiz

|

||||

[12]:https://www.cyberciti.biz/atom/atom.xml

|

||||

@ -1,18 +1,20 @@

|

||||

使用一个命令重置 Linux 桌面到默认设置

|

||||

使用一个命令重置 Linux 桌面为默认设置

|

||||

======

|

||||

|

||||

|

||||

|

||||

前段时间,我们分享了一篇关于 [**Resetter**][1] 的文章 - 这是一个有用的软件,可以在几分钟内将 Ubuntu 重置为出厂默认设置。使用 Resetter,任何人都可以轻松地将 Ubuntu 重置为第一次安装时的状态。今天,我偶然发现了一个类似的东西。不,它不是一个应用程序,而是一个单行的命令来重置你的 Linux 桌面设置、调整和定制到默认状态。

|

||||

前段时间,我们分享了一篇关于 [Resetter][1] 的文章 - 这是一个有用的软件,可以在几分钟内将 Ubuntu 重置为出厂默认设置。使用 Resetter,任何人都可以轻松地将 Ubuntu 重置为第一次安装时的状态。今天,我偶然发现了一个类似的东西。不,它不是一个应用程序,而是一个单行的命令来重置你的 Linux 桌面设置、调整和定制到默认状态。

|

||||

|

||||

### 将 Linux 桌面重置为默认设置

|

||||

|

||||

这个命令会将 Ubuntu Unity、Gnome 和 MATE 桌面重置为默认状态。我在我的 **Arch Linux MATE** 和 **Ubuntu 16.04 Unity** 上测试了这个命令。它可以在两个系统上工作。我希望它也能在其他桌面上运行。在写这篇文章的时候,我还没有安装 GNOME 的 Linux 桌面,因此我无法确认。但是,我相信它也可以在 Gnome 桌面环境中使用。

|

||||

这个命令会将 Ubuntu Unity、Gnome 和 MATE 桌面重置为默认状态。我在我的 Arch Linux MATE 和 Ubuntu 16.04 Unity 上测试了这个命令。它可以在两个系统上工作。我希望它也能在其他桌面上运行。在写这篇文章的时候,我还没有安装 GNOME 的 Linux 桌面,因此我无法确认。但是,我相信它也可以在 Gnome 桌面环境中使用。

|

||||

|

||||

**一句忠告:**请注意,此命令将重置你在系统中所做的所有定制和调整,包括 Unity 启动器或 Dock 中的固定应用程序、桌面小程序、桌面指示器、系统字体、GTK主题、图标主题、显示器分辨率、键盘快捷键、窗口按钮位置、菜单和启动器行为等。

|

||||

**一句忠告:**请注意,此命令将重置你在系统中所做的所有定制和调整,包括 Unity 启动器或 Dock 中固定的应用程序、桌面小程序、桌面指示器、系统字体、GTK主题、图标主题、显示器分辨率、键盘快捷键、窗口按钮位置、菜单和启动器行为等。

|

||||

|

||||

好的是它只会重置桌面设置。它不会影响其他不使用 dconf 的程序。此外,它不会删除你的个人资料。

|

||||

好的是它只会重置桌面设置。它不会影响其他不使用 `dconf` 的程序。此外,它不会删除你的个人资料。

|

||||

|

||||

现在,让我们开始。要将 Ubuntu Unity 或其他带有 GNOME/MATE 环境的 Linux 桌面重置,运行下面的命令:

|

||||

|

||||

```

|

||||

dconf reset -f /

|

||||

```

|

||||

@ -29,12 +31,13 @@ dconf reset -f /

|

||||

|

||||

看见了么?现在,我的 Ubuntu 桌面已经回到了出厂设置。

|

||||

|

||||

有关 “dconf” 命令的更多详细信息,请参阅手册页。

|

||||

有关 `dconf` 命令的更多详细信息,请参阅手册页。

|

||||

|

||||

```

|

||||

man dconf

|

||||

```

|

||||

|

||||

在重置桌面上我个人更喜欢 “Resetter” 而不是 “dconf” 命令。因为,Resetter 给用户提供了更多的选择。用户可以决定删除哪些应用程序、保留哪些应用程序、是保留现有用户帐户还是创建新用户等等。如果你懒得安装 Resetter,你可以使用这个 “dconf” 命令在几分钟内将你的 Linux 系统重置为默认设置。

|

||||

在重置桌面上我个人更喜欢 “Resetter” 而不是 `dconf` 命令。因为,Resetter 给用户提供了更多的选择。用户可以决定删除哪些应用程序、保留哪些应用程序、是保留现有用户帐户还是创建新用户等等。如果你懒得安装 Resetter,你可以使用这个 `dconf` 命令在几分钟内将你的 Linux 系统重置为默认设置。

|

||||

|

||||

就是这样了。希望这个有帮助。我将很快发布另一篇有用的指导。敬请关注!

|

||||

|

||||

@ -48,12 +51,12 @@ via: https://www.ostechnix.com/reset-linux-desktop-default-settings-single-comma

|

||||

|

||||

作者:[Edwin Arteaga][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com

|

||||

[1]:https://www.ostechnix.com/reset-ubuntu-factory-defaults/

|

||||

[1]:https://linux.cn/article-9217-1.html

|

||||

[2]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2017/10/Before-resetting-Ubuntu-to-default-1.png ()

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2017/10/After-resetting-Ubuntu-to-default-1.png ()

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2017/10/Before-resetting-Ubuntu-to-default-1.png

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2017/10/After-resetting-Ubuntu-to-default-1.png

|

||||

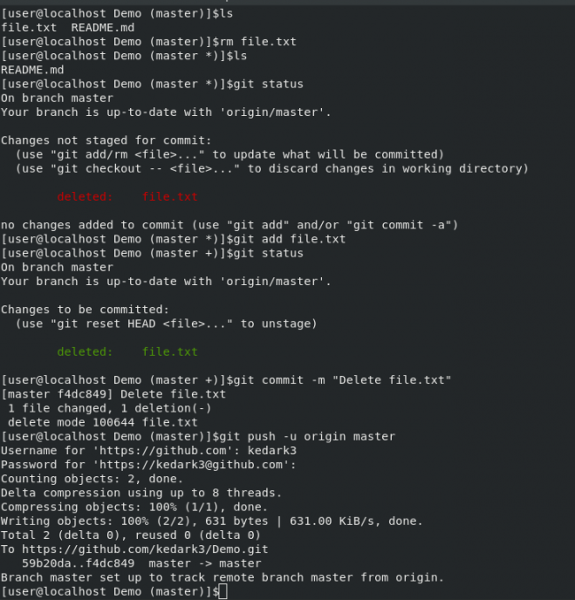

@ -0,0 +1,137 @@

|

||||

如何使用 GNU Stow 来管理从源代码安装的程序和点文件

|

||||

=====

|

||||

|

||||

### 目的

|

||||

|

||||

使用 GNU Stow 轻松管理从源代码安装的程序和点文件(LCTT 译注:<ruby>点文件<rt>dotfile</rt></ruby>,即以 `.` 开头的文件,在 *nix 下默认为隐藏文件,常用于存储程序的配置信息。)

|

||||

|

||||

### 要求

|

||||

|

||||

* root 权限

|

||||

|

||||

### 难度

|

||||

|

||||

简单

|

||||

|

||||

### 约定

|

||||

|

||||

* `#` - 给定的命令要求直接以 root 用户身份或使用 `sudo` 命令以 root 权限执行

|

||||

* `$` - 给定的命令将作为普通的非特权用户来执行

|

||||

|

||||

### 介绍

|

||||

|

||||

有时候我们必须从源代码安装程序,因为它们也许不能通过标准渠道获得,或者我们可能需要特定版本的软件。 GNU Stow 是一个非常不错的<ruby>符号链接工厂<rt>symlinks factory</rt></ruby>程序,它可以帮助我们保持文件的整洁,易于维护。

|

||||

|

||||

### 获得 stow

|

||||

|

||||

你的 Linux 发行版本很可能包含 `stow`,例如在 Fedora,你安装它只需要:

|

||||

|

||||

```

|

||||

# dnf install stow

|

||||

```

|

||||

|

||||

在 Ubuntu/Debian 中,安装 `stow` 需要执行:

|

||||

|

||||

```

|

||||

# apt install stow

|

||||

```

|

||||

|

||||

在某些 Linux 发行版中,`stow` 在标准库中是不可用的,但是可以通过一些额外的软件源(例如 RHEL 和 CentOS7 中的EPEL )轻松获得,或者,作为最后的手段,你可以从源代码编译它。只需要很少的依赖关系。

|

||||

|

||||

### 从源代码编译

|

||||

|

||||

最新的可用 stow 版本是 `2.2.2`。源码包可以在这里下载:`https://ftp.gnu.org/gnu/stow/`。

|

||||

|

||||

一旦你下载了源码包,你就必须解压它。切换到你下载软件包的目录,然后运行:

|

||||

|

||||

```

|

||||

$ tar -xvpzf stow-2.2.2.tar.gz

|

||||

```

|

||||

|

||||

解压源文件后,切换到 `stow-2.2.2` 目录中,然后编译该程序,只需运行:

|

||||

|

||||

```

|

||||

$ ./configure

|

||||

$ make

|

||||

```

|

||||

|

||||

最后,安装软件包:

|

||||

|

||||

```

|

||||

# make install

|

||||

```

|

||||

|

||||

默认情况下,软件包将安装在 `/usr/local/` 目录中,但是我们可以改变它,通过配置脚本的 `--prefix` 选项指定目录,或者在运行 `make install` 时添加 `prefix="/your/dir"`。

|

||||

|

||||

此时,如果所有工作都按预期工作,我们应该已经在系统上安装了 `stow`。

|

||||

|

||||

### stow 是如何工作的?

|

||||

|

||||

`stow` 背后主要的概念在程序手册中有很好的解释:

|

||||

|

||||

> Stow 使用的方法是将每个软件包安装到自己的目录树中,然后使用符号链接使它看起来像文件一样安装在公共的目录树中

|

||||

|

||||

为了更好地理解这个软件的运作,我们来分析一下它的关键概念:

|

||||

|

||||

#### stow 文件目录

|

||||

|

||||

stow 目录是包含所有 stow 软件包的根目录,每个包都有自己的子目录。典型的 stow 目录是 `/usr/local/stow`:在其中,每个子目录代表一个软件包。

|

||||

|

||||

#### stow 软件包

|

||||

|

||||

如上所述,stow 目录包含多个“软件包”,每个软件包都位于自己单独的子目录中,通常以程序本身命名。包就是与特定软件相关的文件和目录列表,作为一个实体进行管理。

|

||||

|

||||

#### stow 目标目录

|

||||

|

||||

stow 目标目录解释起来是一个非常简单的概念。它是包文件应该安装到的目录。默认情况下,stow 目标目录被视作是调用 stow 的目录。这种行为可以通过使用 `-t` 选项( `--target` 的简写)轻松改变,这使我们可以指定一个替代目录。

|

||||

|

||||

### 一个实际的例子

|

||||

|

||||

我相信一个好的例子胜过 1000 句话,所以让我来展示 `stow` 如何工作。假设我们想编译并安装 `libx264`,首先我们克隆包含其源代码的仓库:

|

||||

|

||||

```

|

||||

$ git clone git://git.videolan.org/x264.git

|

||||

```

|

||||

|

||||

运行该命令几秒钟后,将创建 `x264` 目录,它将包含准备编译的源代码。我们切换到 `x264` 目录中并运行 `configure` 脚本,将 `--prefix` 指定为 `/usr/local/stow/libx264` 目录。

|

||||

|

||||

```

|

||||

$ cd x264 && ./configure --prefix=/usr/local/stow/libx264

|

||||

```

|

||||

|

||||

然后我们构建该程序并安装它:

|

||||

|

||||

```

|

||||

$ make

|

||||

# make install

|

||||

```

|

||||

|

||||

`x264` 目录应该创建在 `stow` 目录内:它包含了所有通常直接安装在系统中的东西。 现在,我们所要做的就是调用 `stow`。 我们必须从 `stow` 目录内运行这个命令,通过使用 `-d` 选项来手动指定 `stow` 目录的路径(默认为当前目录),或者通过如前所述用 `-t` 指定目标。我们还应该提供要作为参数存储的软件包的名称。 在这里,我们从 `stow` 目录运行程序,所以我们需要输入的内容是:

|

||||

|

||||

```

|

||||

# stow libx264

|

||||

```

|

||||

|

||||

libx264 软件包中包含的所有文件和目录现在已经在调用 stow 的父目录 (/usr/local) 中进行了符号链接,因此,例如在 `/usr/local/ stow/x264/bin` 中包含的 libx264 二进制文件现在符号链接在 `/usr/local/bin` 之中,`/usr/local/stow/x264/etc` 中的文件现在符号链接在 `/usr/local/etc` 之中等等。通过这种方式,系统将显示文件已正常安装,并且我们可以容易地跟踪我们编译和安装的每个程序。要反转该操作,我们只需使用 `-D` 选项:

|

||||

|

||||

```

|

||||

# stow -d libx264

|

||||

```

|

||||

|

||||

完成了!符号链接不再存在:我们只是“卸载”了一个 stow 包,使我们的系统保持在一个干净且一致的状态。 在这一点上,我们应该清楚为什么 stow 还可以用于管理点文件。 通常的做法是在 git 仓库中包含用户特定的所有配置文件,以便轻松管理它们并使它们在任何地方都可用,然后使用 stow 将它们放在适当位置,如放在用户主目录中。

|

||||

|

||||

stow 还会阻止你错误地覆盖文件:如果目标文件已经存在,并且没有指向 stow 目录中的包时,它将拒绝创建符号链接。 这种情况在 stow 术语中称为冲突。

|

||||

|

||||

就是这样!有关选项的完整列表,请参阅 stow 帮助页,并且不要忘记在评论中告诉我们你对此的看法。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://linuxconfig.org/how-to-use-gnu-stow-to-manage-programs-installed-from-source-and-dotfiles

|

||||

|

||||

作者:[Egidio Docile][a]

|

||||

译者:[MjSeven](https://github.com/MjSeven)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://linuxconfig.org

|

||||

@ -0,0 +1,129 @@

|

||||

Linux 容器安全的 10 个层面

|

||||

======

|

||||

|

||||

> 应用这些策略来保护容器解决方案的各个层面和容器生命周期的各个阶段的安全。

|

||||

|

||||

|

||||

|

||||

容器提供了打包应用程序的一种简单方法,它实现了从开发到测试到投入生产系统的无缝传递。它也有助于确保跨不同环境的连贯性,包括物理服务器、虚拟机、以及公有云或私有云。这些好处使得一些组织为了更方便地部署和管理为他们提升业务价值的应用程序,而快速地采用了容器技术。

|

||||

|

||||

|

||||

|

||||

企业需要高度安全,在容器中运行核心服务的任何人都会问,“容器安全吗?”以及“我们能信任运行在容器中的应用程序吗?”

|

||||

|

||||

对容器进行安全保护就像是对运行中的进程进行安全保护一样。在你部署和运行你的容器之前,你需要去考虑整个解决方案各个层面的安全。你也需要去考虑贯穿了应用程序和容器整个生命周期的安全。

|

||||

|

||||

请尝试从这十个关键的因素去确保容器解决方案栈不同层面、以及容器生命周期的不同阶段的安全。

|

||||

|

||||

### 1. 容器宿主机操作系统和多租户环境

|

||||

|

||||

由于容器将应用程序和它的依赖作为一个单元来处理,使得开发者构建和升级应用程序变得更加容易,并且,容器可以启用多租户技术将许多应用程序和服务部署到一台共享主机上。在一台单独的主机上以容器方式部署多个应用程序、按需启动和关闭单个容器都是很容易的。为完全实现这种打包和部署技术的优势,运营团队需要运行容器的合适环境。运营者需要一个安全的操作系统,它能够在边界上保护容器安全、从容器中保护主机内核,以及保护容器彼此之间的安全。

|

||||

|

||||

容器是隔离而资源受限的 Linux 进程,允许你在一个共享的宿主机内核上运行沙盒化的应用程序。保护容器的方法与保护你的 Linux 中运行的任何进程的方法是一样的。降低权限是非常重要的,也是保护容器安全的最佳实践。最好使用尽可能小的权限去创建容器。容器应该以一个普通用户的权限来运行,而不是 root 权限的用户。在 Linux 中可以使用多个层面的安全加固手段,Linux 命名空间、安全强化 Linux([SELinux][1])、[cgroups][2] 、capabilities(LCTT 译注:Linux 内核的一个安全特性,它打破了传统的普通用户与 root 用户的概念,在进程级提供更好的安全控制)、以及安全计算模式( [seccomp][3] ),这五种 Linux 的安全特性可以用于保护容器的安全。

|

||||

|

||||

### 2. 容器内容(使用可信来源)

|

||||

|

||||

在谈到安全时,首先要考虑你的容器里面有什么?例如 ,有些时候,应用程序和基础设施是由很多可用组件所构成的。它们中的一些是开源的软件包,比如,Linux 操作系统、Apache Web 服务器、Red Hat JBoss 企业应用平台、PostgreSQL,以及 Node.js。这些软件包的容器化版本已经可以使用了,因此,你没有必要自己去构建它们。但是,对于你从一些外部来源下载的任何代码,你需要知道这些软件包的原始来源,是谁构建的它,以及这些包里面是否包含恶意代码。

|

||||

|

||||

### 3. 容器注册(安全访问容器镜像)

|

||||

|

||||

你的团队的容器构建于下载的公共容器镜像,因此,访问和升级这些下载的容器镜像以及内部构建镜像,与管理和下载其它类型的二进制文件的方式是相同的,这一点至关重要。许多私有的注册库支持容器镜像的存储。选择一个私有的注册库,可以帮你将存储在它的注册中的容器镜像实现策略自动化。

|

||||

|

||||

### 4. 安全性与构建过程

|

||||

|

||||

在一个容器化环境中,软件构建过程是软件生命周期的一个阶段,它将所需的运行时库和应用程序代码集成到一起。管理这个构建过程对于保护软件栈安全来说是很关键的。遵守“一次构建,到处部署”的原则,可以确保构建过程的结果正是生产系统中需要的。保持容器的恒定不变也很重要 — 换句话说就是,不要对正在运行的容器打补丁,而是,重新构建和部署它们。

|

||||

|

||||

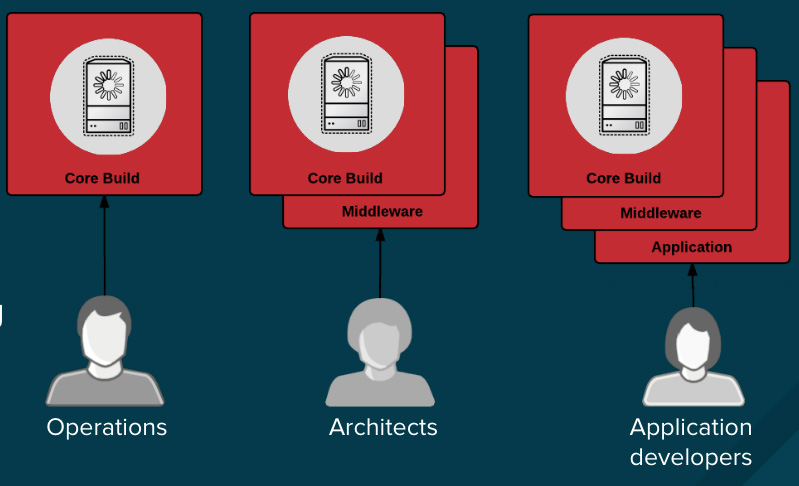

不论是因为你处于一个高强度监管的行业中,还是只希望简单地优化你的团队的成果,设计你的容器镜像管理以及构建过程,可以使用容器层的优势来实现控制分离,因此,你应该去这么做:

|

||||

|

||||

* 运营团队管理基础镜像

|

||||

* 架构师管理中间件、运行时、数据库,以及其它解决方案

|

||||

* 开发者专注于应用程序层面,并且只写代码

|

||||

|

||||

|

||||

|

||||

最后,标记好你的定制构建容器,这样可以确保在构建和部署时不会搞混乱。

|

||||

|

||||

### 5. 控制好在同一个集群内部署应用

|

||||

|

||||

如果是在构建过程中出现的任何问题,或者在镜像被部署之后发现的任何漏洞,那么,请在基于策略的、自动化工具上添加另外的安全层。

|

||||

|

||||

我们来看一下,一个应用程序的构建使用了三个容器镜像层:内核、中间件,以及应用程序。如果在内核镜像中发现了问题,那么只能重新构建镜像。一旦构建完成,镜像就会被发布到容器平台注册库中。这个平台可以自动检测到发生变化的镜像。对于基于这个镜像的其它构建将被触发一个预定义的动作,平台将自己重新构建应用镜像,合并该修复的库。

|

||||

|

||||

一旦构建完成,镜像将被发布到容器平台的内部注册库中。在它的内部注册库中,会立即检测到镜像发生变化,应用程序在这里将会被触发一个预定义的动作,自动部署更新镜像,确保运行在生产系统中的代码总是使用更新后的最新的镜像。所有的这些功能协同工作,将安全功能集成到你的持续集成和持续部署(CI/CD)过程和管道中。

|

||||

|

||||

### 6. 容器编配:保护容器平台安全

|

||||

|

||||

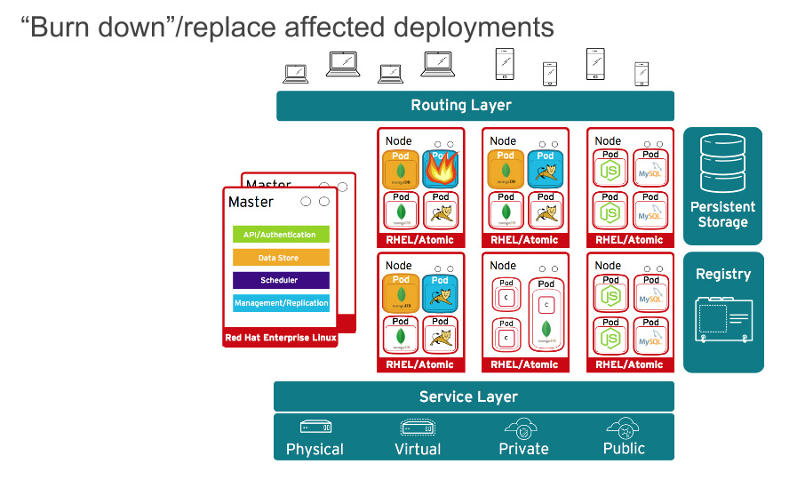

当然了,应用程序很少会以单一容器分发。甚至,简单的应用程序一般情况下都会有一个前端、一个后端、以及一个数据库。而在容器中以微服务模式部署的应用程序,意味着应用程序将部署在多个容器中,有时它们在同一台宿主机上,有时它们是分布在多个宿主机或者节点上,如下面的图所示:

|

||||

|

||||

|

||||

|

||||

在大规模的容器部署时,你应该考虑:

|

||||

|

||||

* 哪个容器应该被部署在哪个宿主机上?

|

||||

* 那个宿主机应该有什么样的性能?

|

||||

* 哪个容器需要访问其它容器?它们之间如何发现彼此?

|

||||

* 你如何控制和管理对共享资源的访问,像网络和存储?

|

||||

* 如何监视容器健康状况?

|

||||

* 如何去自动扩展性能以满足应用程序的需要?

|

||||

* 如何在满足安全需求的同时启用开发者的自助服务?

|

||||

|

||||

考虑到开发者和运营者的能力,提供基于角色的访问控制是容器平台的关键要素。例如,编配管理服务器是中心访问点,应该接受最高级别的安全检查。API 是规模化的自动容器平台管理的关键,可以用于为 pod、服务,以及复制控制器验证和配置数据;在入站请求上执行项目验证;以及调用其它主要系统组件上的触发器。

|

||||

|

||||

### 7. 网络隔离

|

||||

|

||||

在容器中部署现代微服务应用,经常意味着跨多个节点在多个容器上部署。考虑到网络防御,你需要一种在一个集群中的应用之间的相互隔离的方法。一个典型的公有云容器服务,像 Google 容器引擎(GKE)、Azure 容器服务,或者 Amazon Web 服务(AWS)容器服务,是单租户服务。他们让你在你初始化建立的虚拟机集群上运行你的容器。对于多租户容器的安全,你需要容器平台为你启用一个单一集群,并且分割流量以隔离不同的用户、团队、应用、以及在这个集群中的环境。

|

||||

|

||||

使用网络命名空间,容器内的每个集合(即大家熟知的 “pod”)都会得到它自己的 IP 和绑定的端口范围,以此来从一个节点上隔离每个 pod 网络。除使用下面所述的方式之外,默认情况下,来自不同命名空间(项目)的 pod 并不能发送或者接收其它 pod 上的包和不同项目的服务。你可以使用这些特性在同一个集群内隔离开发者环境、测试环境,以及生产环境。但是,这样会导致 IP 地址和端口数量的激增,使得网络管理更加复杂。另外,容器是被设计为反复使用的,你应该在处理这种复杂性的工具上进行投入。在容器平台上比较受欢迎的工具是使用 [软件定义网络][4] (SDN) 提供一个定义的网络集群,它允许跨不同集群的容器进行通讯。

|

||||

|

||||

### 8. 存储

|

||||

|

||||

容器即可被用于无状态应用,也可被用于有状态应用。保护外加的存储是保护有状态服务的一个关键要素。容器平台对多种受欢迎的存储提供了插件,包括网络文件系统(NFS)、AWS 弹性块存储(EBS)、GCE 持久磁盘、GlusterFS、iSCSI、 RADOS(Ceph)、Cinder 等等。

|

||||

|

||||

一个持久卷(PV)可以通过资源提供者支持的任何方式装载到一个主机上。提供者有不同的性能,而每个 PV 的访问模式被设置为特定的卷支持的特定模式。例如,NFS 能够支持多路客户端同时读/写,但是,一个特定的 NFS 的 PV 可以在服务器上被发布为只读模式。每个 PV 有它自己的一组反应特定 PV 性能的访问模式的描述,比如,ReadWriteOnce、ReadOnlyMany、以及 ReadWriteMany。

|

||||

|

||||

### 9. API 管理、终端安全、以及单点登录(SSO)

|

||||

|

||||

保护你的应用安全,包括管理应用、以及 API 的认证和授权。

|

||||

|

||||

Web SSO 能力是现代应用程序的一个关键部分。在构建它们的应用时,容器平台带来了开发者可以使用的多种容器化服务。

|

||||

|

||||

API 是微服务构成的应用程序的关键所在。这些应用程序有多个独立的 API 服务,这导致了终端服务数量的激增,它就需要额外的管理工具。推荐使用 API 管理工具。所有的 API 平台应该提供多种 API 认证和安全所需要的标准选项,这些选项既可以单独使用,也可以组合使用,以用于发布证书或者控制访问。

|

||||

|

||||

这些选项包括标准的 API key、应用 ID 和密钥对,以及 OAuth 2.0。

|

||||

|

||||

### 10. 在一个联合集群中的角色和访问管理

|

||||

|

||||

在 2016 年 7 月份,Kubernetes 1.3 引入了 [Kubernetes 联合集群][5]。这是一个令人兴奋的新特性之一,它是在 Kubernetes 上游、当前的 Kubernetes 1.6 beta 中引用的。联合是用于部署和访问跨多集群运行在公有云或企业数据中心的应用程序服务的。多个集群能够用于去实现应用程序的高可用性,应用程序可以跨多个可用区域,或者去启用部署公共管理,或者跨不同的供应商进行迁移,比如,AWS、Google Cloud、以及 Azure。

|

||||

|

||||

当管理联合集群时,你必须确保你的编配工具能够提供你所需要的跨不同部署平台的实例的安全性。一般来说,认证和授权是很关键的 —— 不论你的应用程序运行在什么地方,将数据安全可靠地传递给它们,以及管理跨集群的多租户应用程序。Kubernetes 扩展了联合集群,包括对联合的秘密数据、联合的命名空间、以及 Ingress objects 的支持。

|

||||

|

||||

### 选择一个容器平台

|

||||

|

||||

当然,它并不仅关乎安全。你需要提供一个你的开发者团队和运营团队有相关经验的容器平台。他们需要一个安全的、企业级的基于容器的应用平台,它能够同时满足开发者和运营者的需要,而且还能够提高操作效率和基础设施利用率。

|

||||

|

||||

想从 Daniel 在 [欧盟开源峰会][7] 上的 [容器安全的十个层面][6] 的演讲中学习更多知识吗?这个峰会已于 10 月 23 - 26 日在 Prague 举行。

|

||||

|

||||

### 关于作者

|

||||

|

||||

Daniel Oh;Microservives;Agile;Devops;Java Ee;Container;Openshift;Jboss;Evangelism

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/10/10-layers-container-security

|

||||

|

||||

作者:[Daniel Oh][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/daniel-oh

|

||||

[1]:https://en.wikipedia.org/wiki/Security-Enhanced_Linux

|

||||

[2]:https://en.wikipedia.org/wiki/Cgroups

|

||||

[3]:https://en.wikipedia.org/wiki/Seccomp

|

||||

[4]:https://en.wikipedia.org/wiki/Software-defined_networking

|

||||

[5]:https://kubernetes.io/docs/concepts/cluster-administration/federation/

|

||||

[6]:https://osseu17.sched.com/mobile/#session:f2deeabfc1640d002c1d55101ce81223

|

||||

[7]:http://events.linuxfoundation.org/events/open-source-summit-europe

|

||||

140

published/20171102 What is huge pages in Linux.md

Normal file

140

published/20171102 What is huge pages in Linux.md

Normal file

@ -0,0 +1,140 @@

|

||||

Linux 中的“大内存页”(hugepage)是个什么?

|

||||

======

|

||||

|

||||

> 学习 Linux 中的<ruby>大内存页<rt>hugepage</rt></ruby>。理解什么是“大内存页”,如何进行配置,如何查看当前状态以及如何禁用它。

|

||||

|

||||

![Huge Pages in Linux][1]

|

||||

|

||||

本文中我们会详细介绍<ruby>大内存页<rt>huge page</rt></ruby>,让你能够回答:Linux 中的“大内存页”是什么?在 RHEL6、RHEL7、Ubuntu 等 Linux 中,如何启用/禁用“大内存页”?如何查看“大内存页”的当前值?

|

||||

|

||||

首先让我们从“大内存页”的基础知识开始讲起。

|

||||

|

||||

### Linux 中的“大内存页”是个什么玩意?

|

||||

|

||||

“大内存页”有助于 Linux 系统进行虚拟内存管理。顾名思义,除了标准的 4KB 大小的页面外,它们还能帮助管理内存中的巨大的页面。使用“大内存页”,你最大可以定义 1GB 的页面大小。

|

||||

|

||||

在系统启动期间,你能用“大内存页”为应用程序预留一部分内存。这部分内存,即被“大内存页”占用的这些存储器永远不会被交换出内存。它会一直保留其中,除非你修改了配置。这会极大地提高像 Oracle 数据库这样的需要海量内存的应用程序的性能。

|

||||

|

||||

### 为什么使用“大内存页”?

|

||||

|

||||

在虚拟内存管理中,内核维护一个将虚拟内存地址映射到物理地址的表,对于每个页面操作,内核都需要加载相关的映射。如果你的内存页很小,那么你需要加载的页就会很多,导致内核会加载更多的映射表。而这会降低性能。

|

||||

|

||||

使用“大内存页”,意味着所需要的页变少了。从而大大减少由内核加载的映射表的数量。这提高了内核级别的性能最终有利于应用程序的性能。

|

||||

|

||||

简而言之,通过启用“大内存页”,系统具只需要处理较少的页面映射表,从而减少访问/维护它们的开销!

|

||||

|

||||

### 如何配置“大内存页”?

|

||||

|

||||

运行下面命令来查看当前“大内存页”的详细内容。

|

||||

|

||||

```

|

||||

root@kerneltalks # grep Huge /proc/meminfo

|

||||

AnonHugePages: 0 kB

|

||||

HugePages_Total: 0

|

||||

HugePages_Free: 0

|

||||

HugePages_Rsvd: 0

|

||||

HugePages_Surp: 0

|

||||

Hugepagesize: 2048 kB

|

||||

```

|

||||

|

||||

从上面输出可以看到,每个页的大小为 2MB(`Hugepagesize`),并且系统中目前有 `0` 个“大内存页”(`HugePages_Total`)。这里“大内存页”的大小可以从 `2MB` 增加到 `1GB`。

|

||||

|

||||

运行下面的脚本可以知道系统当前需要多少个巨大页。该脚本取之于 Oracle。

|

||||

|

||||

```

|

||||

#!/bin/bash

|

||||

#

|

||||

# hugepages_settings.sh

|

||||

#

|

||||

# Linux bash script to compute values for the

|

||||

# recommended HugePages/HugeTLB configuration

|

||||

#

|

||||

# Note: This script does calculation for all shared memory

|

||||

# segments available when the script is run, no matter it

|

||||

# is an Oracle RDBMS shared memory segment or not.

|

||||

# Check for the kernel version

|

||||

KERN=`uname -r | awk -F. '{ printf("%d.%d\n",$1,$2); }'`

|

||||

# Find out the HugePage size

|

||||

HPG_SZ=`grep Hugepagesize /proc/meminfo | awk {'print $2'}`

|

||||

# Start from 1 pages to be on the safe side and guarantee 1 free HugePage

|

||||

NUM_PG=1

|

||||

# Cumulative number of pages required to handle the running shared memory segments

|

||||

for SEG_BYTES in `ipcs -m | awk {'print $5'} | grep "[0-9][0-9]*"`

|

||||

do

|

||||

MIN_PG=`echo "$SEG_BYTES/($HPG_SZ*1024)" | bc -q`

|

||||

if [ $MIN_PG -gt 0 ]; then

|

||||

NUM_PG=`echo "$NUM_PG+$MIN_PG+1" | bc -q`

|

||||

fi

|

||||

done

|

||||

# Finish with results

|

||||

case $KERN in

|

||||

'2.4') HUGETLB_POOL=`echo "$NUM_PG*$HPG_SZ/1024" | bc -q`;

|

||||

echo "Recommended setting: vm.hugetlb_pool = $HUGETLB_POOL" ;;

|

||||

'2.6' | '3.8' | '3.10' | '4.1' ) echo "Recommended setting: vm.nr_hugepages = $NUM_PG" ;;

|

||||

*) echo "Unrecognized kernel version $KERN. Exiting." ;;

|

||||

esac

|

||||

# End

|

||||

```

|

||||

|

||||

将它以 `hugepages_settings.sh` 为名保存到 `/tmp` 中,然后运行之:

|

||||

|

||||

```

|

||||

root@kerneltalks # sh /tmp/hugepages_settings.sh

|

||||

Recommended setting: vm.nr_hugepages = 124

|

||||

```

|

||||

|

||||

你的输出类似如上结果,只是数字会有一些出入。

|

||||

|

||||

这意味着,你系统需要 124 个每个 2MB 的“大内存页”!若你设置页面大小为 4MB,则结果就变成了 62。你明白了吧?

|

||||

|

||||

### 配置内核中的“大内存页”

|

||||

|

||||

本文最后一部分内容是配置上面提到的 [内核参数 ][2] ,然后重新加载。将下面内容添加到 `/etc/sysctl.conf` 中,然后输入 `sysctl -p` 命令重新加载配置。

|

||||

|

||||

```

|

||||

vm.nr_hugepages=126

|

||||

```

|

||||

|

||||

注意我们这里多加了两个额外的页,因为我们希望在实际需要的页面数量之外多一些额外的空闲页。

|

||||

|

||||

现在,内核已经配置好了,但是要让应用能够使用这些“大内存页”还需要提高内存的使用阀值。新的内存阀值应该为 126 个页 x 每个页 2 MB = 252 MB,也就是 258048 KB。

|

||||

|

||||

你需要编辑 `/etc/security/limits.conf` 中的如下配置:

|

||||

|

||||

```

|

||||

soft memlock 258048

|

||||

hard memlock 258048

|

||||

```

|

||||

|

||||

某些情况下,这些设置是在指定应用的文件中配置的,比如 Oracle DB 就是在 `/etc/security/limits.d/99-grid-oracle-limits.conf` 中配置的。

|

||||

|

||||

这就完成了!你可能还需要重启应用来让应用来使用这些新的巨大页。

|

||||

|

||||

### 如何禁用“大内存页”?

|

||||

|

||||

“大内存页”默认是开启的。使用下面命令来查看“大内存页”的当前状态。

|

||||

|

||||

```

|

||||

root@kerneltalks# cat /sys/kernel/mm/transparent_hugepage/enabled

|

||||

[always] madvise never

|

||||

```

|

||||

|

||||

输出中的 `[always]` 标志说明系统启用了“大内存页”。

|

||||

|

||||

若使用的是基于 RedHat 的系统,则应该要查看的文件路径为 `/sys/kernel/mm/redhat_transparent_hugepage/enabled`。

|

||||

|

||||

若想禁用“大内存页”,则在 `/etc/grub.conf` 中的 `kernel` 行后面加上 `transparent_hugepage=never`,然后重启系统。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://kerneltalks.com/services/what-is-huge-pages-in-linux/

|

||||

|

||||

作者:[Shrikant Lavhate][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://kerneltalks.com

|

||||

[1]:https://a1.kerneltalks.com/wp-content/uploads/2017/11/hugepages-in-linux.png

|

||||

[2]:https://kerneltalks.com/linux/how-to-tune-kernel-parameters-in-linux/

|

||||

@ -0,0 +1,46 @@

|

||||

如何使用看板(kanban)创建更好的文档

|

||||

======

|

||||

> 通过卡片分类和看板来给用户提供他们想要的信息。

|

||||

|

||||

|

||||

|

||||

如果你正在处理文档、网站或其他面向用户的内容,那么了解用户希望找到的内容(包括他们想要的信息以及信息的组织和结构)很有帮助。毕竟,如果人们无法找到他们想要的东西,那么再出色的内容也没有用。

|

||||

|

||||

卡片分类是一种简单而有效的方式,可以从用户那里收集有关菜单界面和页面的内容。最简单的实现方式是在计划在网站或文档中的部分分类标注一些索引卡,并要求用户按照查找信息的方式对卡片进行分类。一个变体是让人们编写自己的菜单标题或内容元素。

|

||||

|

||||

我们的目标是了解用户的期望以及他们希望在哪里找到它,而不是自己弄清楚菜单和布局。当与用户处于相同的物理位置时,这是相对简单的,但当尝试从多个位置的人员获得反馈时,这会更具挑战性。

|

||||

|

||||

我发现[<ruby>看板<rt>kanban</rt></ruby>][1]对于这些情况是一个很好的工具。它允许人们轻松拖动虚拟卡片进行分类和排名,而且与专门卡片分类软件不同,它们是多用途的。

|

||||

|

||||

我经常使用 Trello 进行卡片分类,但有几种你可能想尝试的[开源替代品][2]。

|

||||

|

||||

### 怎么运行的

|

||||

|

||||

我最成功的看板体验是在写 [Gluster][3] 文档的时候 —— 这是一个自由开源的可扩展的网络存储文件系统。我需要携带大量随着时间而增长的文档,并将其分成若干类别以创建导航系统。由于我没有必要的技术知识来分类,我向 Gluster 团队和开发人员社区寻求指导。

|

||||

|

||||

首先,我创建了一个共享看板。我列出了一些通用名称,这些名称可以为我计划在文档中涵盖的所有主题排序和创建卡片。我标记了一些不同颜色的卡片,以表明某个主题缺失并需要创建,或者它存在并需要删除。然后,我把所有卡片放入“未排序”一列,并要求人们将它们拖到他们认为这些卡片应该组织到的地方,然后给我一个他们认为是理想状态的截图。

|

||||

|

||||

处理所有截图是最棘手的部分。我希望有一个合并或共识功能可以帮助我汇总每个人的数据,而不必检查一堆截图。幸运的是,在第一个人对卡片进行分类之后,人们或多或少地对该结构达成一致,而只做了很小的修改。当对某个主题的位置有不同意见时,我发起一个快速会议,让人们可以解释他们的想法,并且可以排除分歧。

|

||||

|

||||

### 使用数据

|

||||

|

||||

在这里,很容易将捕捉到的信息转换为菜单并对其进行优化。如果用户认为项目应该成为子菜单,他们通常会在评论中或在电话聊天时告诉我。对菜单组织的看法因人们的工作任务而异,所以从来没有完全达成一致意见,但用户进行测试意味着你不会对人们使用什么以及在哪里查找有很多盲点。

|

||||

|

||||

将卡片分类与分析功能配对,可以让你更深入地了解人们在寻找什么。有一次,当我对一些我正在写的培训文档进行分析时,我惊讶地发现搜索量最大的页面是关于资本的。所以我在顶层菜单层面上显示了该页面,即使我的“逻辑”设置将它放在了子菜单中。

|

||||

|

||||

我发现看板卡片分类是一种很好的方式,可以帮助我创建用户想要查看的内容,并将其放在希望被找到的位置。你是否发现了另一种对用户友好的组织内容的方法?或者看板的另一种有趣用途是什么?如果有的话,请在评论中分享你的想法。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/17/11/kanban-boards-card-sorting

|

||||

|

||||

作者:[Heidi Waterhouse][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/hwaterhouse

|

||||

[1]:https://en.wikipedia.org/wiki/Kanban

|

||||

[2]:https://opensource.com/alternatives/trello

|

||||

[3]:https://www.gluster.org/

|

||||

@ -1,59 +1,64 @@

|

||||

使用 Showterm 录制和共享终端会话

|

||||

使用 Showterm 录制和分享终端会话

|

||||

======

|

||||

|

||||

|

||||

|

||||

你可以使用几乎所有的屏幕录制程序轻松录制终端会话。但是,你很可能会得到超大的视频文件。Linux 中有几种终端录制程序,每种录制程序都有自己的优点和缺点。Showterm 是一个可以非常容易地记录终端会话,上传,共享,并将它们嵌入到任何网页中。一个优点是,你不会有巨大的文件来处理。

|

||||

你可以使用几乎所有的屏幕录制程序轻松录制终端会话。但是,你很可能会得到超大的视频文件。Linux 中有几种终端录制程序,每种录制程序都有自己的优点和缺点。Showterm 是一个可以非常容易地记录终端会话、上传、分享,并将它们嵌入到任何网页中的工具。一个优点是,你不会有巨大的文件来处理。

|

||||

|

||||

Showterm 是开源的,该项目可以在这个[ GitHub 页面][1]上找到。

|

||||

Showterm 是开源的,该项目可以在这个 [GitHub 页面][1]上找到。

|

||||

|

||||

**相关**:[2 个简单的将你的终端会话录制为视频的 Linux 程序][2]

|

||||

|

||||

### 在 Linux 中安装 Showterm

|

||||

|

||||

Showterm 要求你在计算机上安装了 Ruby。以下是如何安装该程序。

|

||||

|

||||

```

|

||||

gem install showterm

|

||||

```

|

||||

|

||||

如果你没有在 Linux 上安装 Ruby:

|

||||

如果你没有在 Linux 上安装 Ruby,可以这样:

|

||||

|

||||

```

|

||||

sudo curl showterm.io/showterm > ~/bin/showterm

|

||||

sudo chmod +x ~/bin/showterm

|

||||

```

|

||||

|

||||

如果你只是想运行程序而不是安装:

|

||||

|

||||

```

|

||||

bash <(curl record.showterm.io)

|

||||

```

|

||||

|

||||

你可以在终端输入 `showterm --help` 得到帮助页面。如果没有出现帮助页面,那么可能是未安装 showterm。现在你已安装了 Showterm(或正在运行独立版本),让我们开始使用该工具进行录制。

|

||||

你可以在终端输入 `showterm --help` 得到帮助页面。如果没有出现帮助页面,那么可能是未安装 `showterm`。现在你已安装了 Showterm(或正在运行独立版本),让我们开始使用该工具进行录制。

|

||||

|

||||

**相关**:[如何在 Ubuntu 中录制终端会话][3]

|

||||

**相关**:[如何在 Ubuntu 中录制终端会话][3]

|

||||

|

||||

### 录制终端会话

|

||||

|

||||

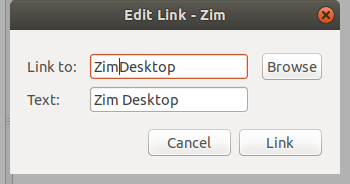

![showterm terminal][4]

|

||||

|

||||

录制终端会话非常简单。从命令行运行 `showterm`。这会在后台启动终端录制。所有从命令行输入的命令都由 Showterm 记录。完成录制后,请按 Ctrl + D 或在命令行中输入`exit` 停止录制。

|

||||

录制终端会话非常简单。从命令行运行 `showterm`。这会在后台启动终端录制。所有从命令行输入的命令都由 Showterm 记录。完成录制后,请按 `Ctrl + D` 或在命令行中输入`exit` 停止录制。

|

||||

|

||||

Showterm 会上传你的视频并输出一个看起来像 http://showterm.io/<一长串字符> 的链接的视频。不幸的是,终端会话会立即上传,而没有任何提示。请不要惊慌!你可以通过输入 `showterm --delete <recording URL>` 删除任何已上传的视频。在上传视频之前,你可以通过在 showterm 命令中添加 `-e` 选项来改变计时。如果视频无法上传,你可以使用 `showterm --retry <script> <times>` 强制重试。

|

||||

Showterm 会上传你的视频并输出一个看起来像 `http://showterm.io/<一长串字符>` 的链接的视频。不幸的是,终端会话会立即上传,而没有任何提示。请不要惊慌!你可以通过输入 `showterm --delete <recording URL>` 删除任何已上传的视频。在上传视频之前,你可以通过在 `showterm` 命令中添加 `-e` 选项来改变计时。如果视频无法上传,你可以使用 `showterm --retry <script> <times>` 强制重试。

|

||||

|

||||

在查看录制内容时,还可以通过在 URL 中添加 “#slow”、“#fast” 或 “#stop” 来控制视频的计时。slow 让视频以正常速度播放、fast 是速度加倍、stop,如名称所示,停止播放视频。

|

||||

在查看录制内容时,还可以通过在 URL 中添加 `#slow`、`#fast` 或 `#stop` 来控制视频的计时。`#slow` 让视频以正常速度播放、`#fast` 是速度加倍、`#stop`,如名称所示,停止播放视频。

|

||||

|

||||

Showterm 终端录制视频可以通过 iframe 轻松嵌入到网页中。这可以通过将 iframe 源添加到 showterm 视频地址来实现,如下所示。

|

||||

|

||||

![showtermio][5]

|

||||

|

||||

作为开源工具,Showterm 允许进一步定制。例如,要运行你自己的 Showterm 服务器,你需要运行以下命令:

|

||||

|

||||

```

|

||||

export SHOWTERM_SERVER=https://showterm.myorg.local/

|

||||

```

|

||||

这样你的客户端可以和它通信。还有额外的功能只需很少的编程知识就可添加。Showterm 服务器项目可在此[ GitHub 页面][1]获得。

|

||||

|

||||

这样你的客户端可以和它通信。还有额外的功能只需很少的编程知识就可添加。Showterm 服务器项目可在此 [GitHub 页面][1]获得。

|

||||

|

||||

### 结论

|

||||

|

||||

如果你想与同事分享一些命令行教程,请务必记得 Showterm。Showterm 是基于文本的。因此,与其他屏幕录制机相比,它将产生相对较小的视频。该工具本身尺寸相当小 - 只有几千字节。

|

||||

如果你想与同事分享一些命令行教程,请务必记得 Showterm。Showterm 是基于文本的。因此,与其他屏幕录制机相比,它将产生相对较小的视频。该工具本身尺寸相当小 —— 只有几千字节。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -61,7 +66,7 @@ via: https://www.maketecheasier.com/record-terminal-session-showterm/

|

||||

|

||||

作者:[Bruno Edoh][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,198 @@

|

||||

使用 pelican 和 Github pages 来搭建博客

|

||||

===============================

|

||||

|

||||

今天我将谈一下[我这个博客][a]是如何搭建的。在我们开始之前,我希望你熟悉使用 Github 并且可以搭建一个 Python 虚拟环境来进行开发。如果你不能做到这些,我推荐你去学习一下 [Django Girls 教程][2],它包含以上和更多的内容。

|

||||

|

||||

这是一篇帮助你发布由 Github 托管的个人博客的教程。为此,你需要一个正常的 Github 用户账户 (而不是一个工程账户)。

|

||||

|

||||

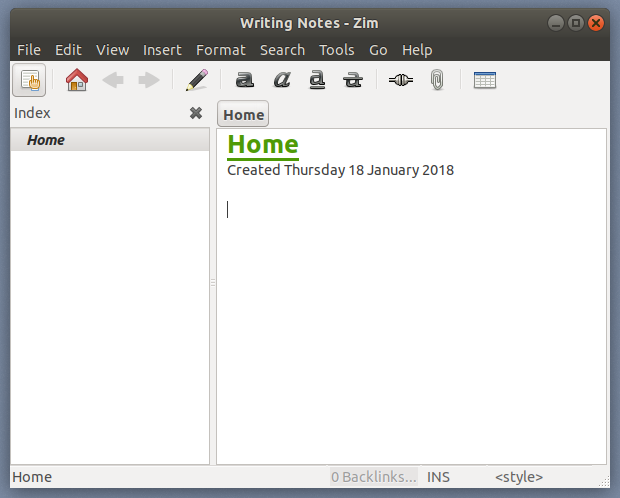

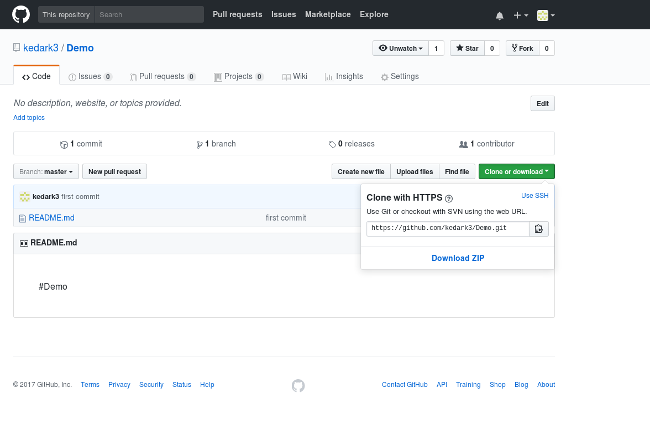

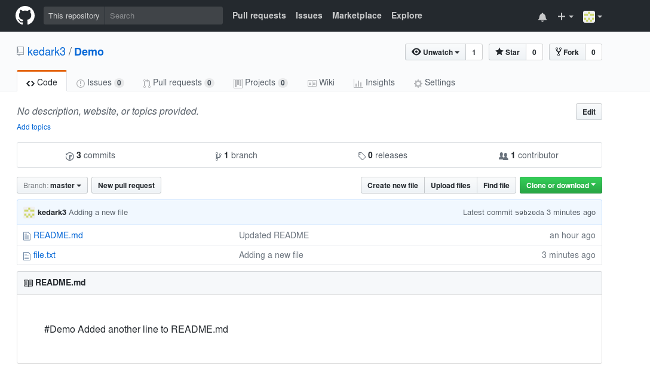

你要做的第一件事是创建一个放置代码的 Github 仓库。如果你想要你的博客仅仅指向你的用户名 (比如 rsip22.github.io) 而不是一个子文件夹 (比如 rsip22.github.io/blog),你必须创建一个带有全名的仓库。

|

||||

|

||||

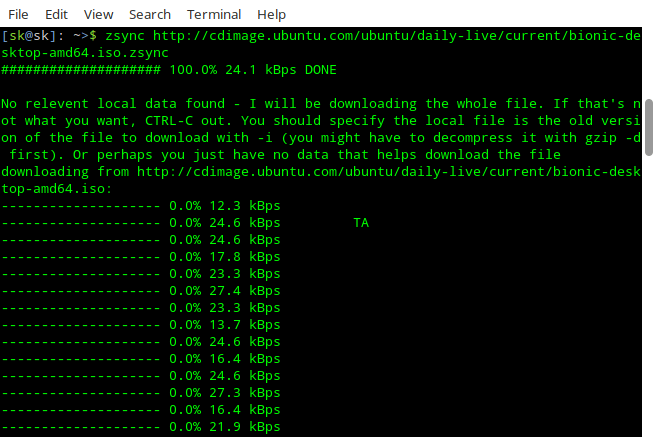

![][3]

|

||||

|

||||

*Github 截图,打开了创建新仓库的菜单,正在以 'rsip22.github.io' 名字创建一个新的仓库*

|

||||

|

||||

我推荐你使用 `README`、用于 Python 的 `.gitignore` 和 [一个自由软件许可证][4] 初始化你的仓库。如果你使用自由软件许可证,你仍然拥有这些代码,但是你使得其他人能从中受益,允许他们学习和复用它,并且更重要的是允许他们享有这些代码。

|

||||

|

||||

既然仓库已经创建好了,那我们就克隆到本机中将用来保存代码的文件夹下:

|

||||

|

||||

```

|

||||

$ git clone https://github.com/YOUR_USERNAME/YOUR_USERNAME.github.io.git

|

||||

```

|

||||

|

||||

并且切换到新的目录:

|

||||

|

||||

```

|

||||

$ cd YOUR_USERNAME.github.io

|

||||

```

|

||||

|

||||

因为 Github Pages 偏好运行的方式是从 master 分支提供文件,你必须将你的源代码放到新的分支,防止 Pelican 产生的静态文件输出到 master 分支。为此,你必须创建一个名为 source 的分支。

|

||||

|

||||

```

|

||||

$ git checkout -b source

|

||||

```

|

||||

|

||||

用你的系统所安装的 Pyhton 3 创建该虚拟环境(virtualenv)。

|

||||

|

||||

在 GNU/Linux 系统中,命令可能如下:

|

||||

|

||||

```

|

||||

$ python3 -m venv venv

|

||||

```

|

||||

|

||||

或者像这样:

|

||||

|

||||

```

|

||||

$ virtualenv --python=python3.5 venv

|

||||

```

|

||||

|

||||

并且激活它:

|

||||

|

||||

```

|

||||

$ source venv/bin/activate

|

||||

```

|

||||

|

||||

在虚拟环境里,你需要安装 pelican 和它的依赖包。你也应该安装 ghp-import (来帮助我们发布到 Github 上)和 Markdown (为了使用 markdown 语法来写文章)。运行如下命令:

|

||||

|

||||

```

|

||||

(venv)$ pip install pelican markdown ghp-import

|

||||

```

|

||||

|

||||

一旦完成,你就可以使用 `pelican-quickstart` 开始创建你的博客了:

|

||||

|

||||

```

|

||||

(venv)$ pelican-quickstart

|

||||

```

|

||||

|

||||

这将会提示我们一系列的问题。在回答它们之前,请看一下如下我的答案:

|

||||

|

||||

```

|

||||

> Where do you want to create your new web site? [.] ./

|

||||

> What will be the title of this web site? Renata's blog

|

||||

> Who will be the author of this web site? Renata

|

||||

> What will be the default language of this web site? [pt] en

|

||||

> Do you want to specify a URL prefix? e.g., http://example.com (Y/n) n

|

||||

> Do you want to enable article pagination? (Y/n) y

|

||||

> How many articles per page do you want? [10] 10

|

||||

> What is your time zone? [Europe/Paris] America/Sao_Paulo

|

||||

> Do you want to generate a Fabfile/Makefile to automate generation and publishing? (Y/n) Y **# PAY ATTENTION TO THIS!**

|

||||

> Do you want an auto-reload & simpleHTTP script to assist with theme and site development? (Y/n) n

|

||||

> Do you want to upload your website using FTP? (y/N) n

|

||||

> Do you want to upload your website using SSH? (y/N) n

|

||||

> Do you want to upload your website using Dropbox? (y/N) n

|

||||

> Do you want to upload your website using S3? (y/N) n

|

||||

> Do you want to upload your website using Rackspace Cloud Files? (y/N) n

|

||||

> Do you want to upload your website using GitHub Pages? (y/N) y

|

||||

> Is this your personal page (username.github.io)? (y/N) y

|

||||

Done. Your new project is available at /home/username/YOUR_USERNAME.github.io

|

||||

```

|

||||

|

||||

关于时区,应该指定为 TZ 时区(这里是全部列表: [tz 数据库时区列表][5])。

|

||||

|

||||

现在,继续往下走并开始创建你的第一篇博文!你可能想在你喜爱的代码编辑器里打开工程目录并且找到里面的 `content` 文件夹。然后创建一个新文件,它可以被命名为 `my-first-post.md` (别担心,这只是为了测试,以后你可以改变它)。在文章内容之前,应该以元数据开始,这些元数据标识标题、日期、目录及更多,像下面这样:

|

||||

|

||||

```

|

||||

.lang="markdown" # DON'T COPY this line, it exists just for highlighting purposes

|

||||

|

||||

Title: My first post

|

||||

Date: 2017-11-26 10:01

|

||||

Modified: 2017-11-27 12:30

|

||||

Category: misc

|

||||

Tags: first, misc

|

||||

Slug: My-first-post

|

||||

Authors: Your name

|

||||

Summary: What does your post talk about? Write here.

|

||||

|

||||

This is the *first post* from my Pelican blog. **YAY!**

|

||||

```

|

||||

|

||||

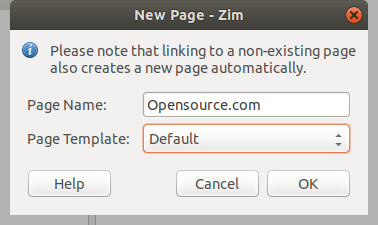

让我们看看它长什么样?

|

||||

|

||||

进入终端,产生静态文件并且启动服务器。要这么做,使用下面命令:

|

||||

|

||||

```

|

||||

(venv)$ make html && make serve

|

||||

```

|

||||

|

||||

当这条命令正在运行,你应该可以在你喜爱的 web 浏览器地址栏中键入 `localhost:8000` 来访问它。

|

||||

|

||||

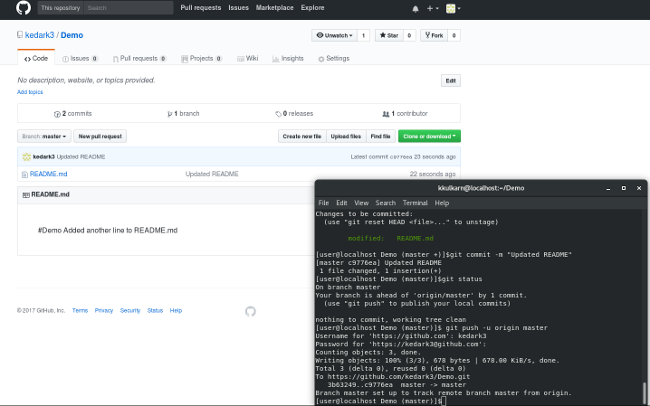

![][6]

|

||||

|

||||

*博客主页的截图。它有一个带有 Renata's blog 标题的头部,第一篇博文在左边,文章的信息在右边,链接和社交在底部*

|

||||

|

||||

相当简洁,对吧?

|

||||

|

||||

现在,如果你想在文章中放一张图片,该怎么做呢?好,首先你在放置文章的内容目录里创建一个目录。为了引用简单,我们将这个目录命名为 `image`。现在你必须让 Pelican 使用它。找到 `pelicanconf.py` 文件,这个文件是你配置系统的地方,并且添加一个包含你的图片目录的变量:

|

||||

|

||||

```

|

||||

.lang="python" # DON'T COPY this line, it exists just for highlighting purposes

|

||||

|

||||

STATIC_PATHS = ['images']

|

||||

```

|

||||

|

||||

保存它。打开文章并且以如下方式添加图片:

|

||||

|

||||

```

|

||||

.lang="markdown" # DON'T COPY this line, it exists just for highlighting purposes

|

||||

|

||||

|

||||

```

|

||||

|

||||

你可以在终端中随时按下 `CTRL+C` 来中断服务器。但是你应该再次启动它并检查图片是否正确。你能记住怎么样做吗?

|

||||

|

||||

```

|

||||

(venv)$ make html && make serve

|

||||

```

|

||||

|

||||

在你代码完工之前的最后一步:你应该确保任何人都可以使用 ATOM 或 RSS 流来读你的文章。找到 `pelicanconf.py` 文件,这个文件是你配置系统的地方,并且编辑关于 RSS 流产生的部分:

|

||||

|

||||

```

|

||||

.lang="python" # DON'T COPY this line, it exists just for highlighting purposes

|

||||

|

||||

FEED_ALL_ATOM = 'feeds/all.atom.xml'

|

||||

FEED_ALL_RSS = 'feeds/all.rss.xml'

|

||||

AUTHOR_FEED_RSS = 'feeds/%s.rss.xml'

|

||||

RSS_FEED_SUMMARY_ONLY = False

|

||||

```

|

||||

|

||||

保存所有,这样你才可以将代码上传到 Github 上。你可以通过添加所有文件,使用一个信息(“first commit”)来提交它,并且使用 `git push`。你将会被问起你的 Github 登录名和密码。

|

||||

|

||||

```

|

||||

$ git add -A && git commit -a -m 'first commit' && git push --all

|

||||

```

|

||||

|

||||

还有...记住在最开始的时候,我给你说的怎样防止 Pelican 产生的静态文件输出 master 分支吗。现在对你来说是时候产生它们了:

|

||||

|

||||

```

|

||||

$ make github

|

||||

```

|

||||

|

||||

你将会被再次问及 Github 登录名和密码。好了!你的新博客应该创建在 `https://YOUR_USERNAME.github.io`。

|

||||

|

||||

如果你在过程中任何一步遇到一个错误,请重新读一下这篇手册,尝试并看看你是否能发现错误发生的部分,因为这是调试的第一步。有时甚至一些简单的东西比如一个错字或者 Python 中错误的缩进都可以给我们带来麻烦。说出来并向网上或你的社区求助。

|

||||

|

||||

对于如何使用 Markdown 来写文章,你可以读一下 [Daring Fireball Markdown 指南][7]。

|

||||

|

||||

为了获取其它主题,我建议你访问 [Pelican 主题][8]。

|

||||

|

||||

这篇文章改编自 [Adrien Leger 的使用一个 Bottstrap3 主题来搭建由 Github 托管的 Pelican 博客][9]。

|

||||

|

||||

-----------------------------------------------------------

|

||||

|

||||

via: https://rsip22.github.io/blog/create-a-blog-with-pelican-and-github-pages.html

|

||||

|

||||

作者:[rsip22][a]

|

||||

译者:[liuxinyu123](https://github.com/liuxinyu123)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://rsip22.github.io

|

||||

[1]:https://rsip22.github.io/blog/category/blog.html

|

||||

[2]:https://tutorial.djangogirls.org

|

||||

[3]:https://rsip22.github.io/blog/img/create_github_repository.png

|

||||

[4]:https://www.gnu.org/licenses/license-list.html

|

||||

[5]:https://en.wikipedia.org/wiki/List_of_tz_database_time_zones

|

||||

[6]:https://rsip22.github.io/blog/img/blog_screenshot.png

|

||||

[7]:https://daringfireball.net/projects/markdown/syntax

|

||||

[8]:http://www.pelicanthemes.com/

|

||||

[9]:https://a-slide.github.io/blog/github-pelican

|

||||

110

published/20171214 IPv6 Auto-Configuration in Linux.md

Normal file

110

published/20171214 IPv6 Auto-Configuration in Linux.md

Normal file

@ -0,0 +1,110 @@

|

||||

在 Linux 中自动配置 IPv6 地址

|

||||

======

|

||||

|

||||

|

||||

|

||||

在 [KVM 中测试 IPv6 网络:第 1 部分][1] 一文中,我们学习了关于<ruby>唯一本地地址<rt>unique local addresses</rt></ruby>(ULA)的相关内容。在本文中,我们将学习如何为 ULA 自动配置 IP 地址。

|

||||

|

||||

### 何时使用唯一本地地址

|

||||

|

||||

<ruby>唯一本地地址<rt>unique local addresses</rt></ruby>(ULA)使用 `fd00::/8` 地址块,它类似于我们常用的 IPv4 的私有地址:`10.0.0.0/8`、`172.16.0.0/12`、以及 `192.168.0.0/16`。但它们并不能直接替换。IPv4 的私有地址分类和网络地址转换(NAT)功能是为了缓解 IPv4 地址短缺的问题,这是个明智的解决方案,它延缓了本该被替换的 IPv4 的生命周期。IPv6 也支持 NAT,但是我想不出使用它的理由。IPv6 的地址数量远远大于 IPv4;它是不一样的,因此需要做不一样的事情。

|

||||

|

||||

那么,ULA 存在的意义是什么呢?尤其是在我们已经有了<ruby>本地链路地址<rt>link-local addresses</rt></ruby>(`fe80::/10`)时,到底需不需要我们去配置它们呢?它们之间(LCTT 译注:指的是唯一本地地址和本地链路地址)有两个重要的区别。一是,本地链路地址是不可路由的,因此,你不能跨子网使用它。二是,ULA 是你自己管理的;你可以自己选择它用于子网的地址范围,并且它们是可路由的。

|

||||

|

||||

使用 ULA 的另一个好处是,如果你只是在局域网中“混日子”的话,你不需要为它们分配全局单播 IPv6 地址。当然了,如果你的 ISP 已经为你分配了 IPv6 的<ruby>全局单播地址<rt>global unicast addresses</rt></ruby>,就不需要使用 ULA 了。你也可以在同一个网络中混合使用全局单播地址和 ULA,但是,我想不出这样使用的一个好理由,并且要一定确保你不使用网络地址转换(NAT)以使 ULA 可公共访问。在我看来,这是很愚蠢的行为。

|

||||

|

||||

ULA 是仅为私有网络使用的,并且应该阻止其流出你的网络,不允许进入因特网。这很简单,在你的边界设备上只要阻止整个 `fd00::/8` 范围的 IPv6 地址即可实现。

|

||||

|

||||

### 地址自动配置

|

||||

|

||||

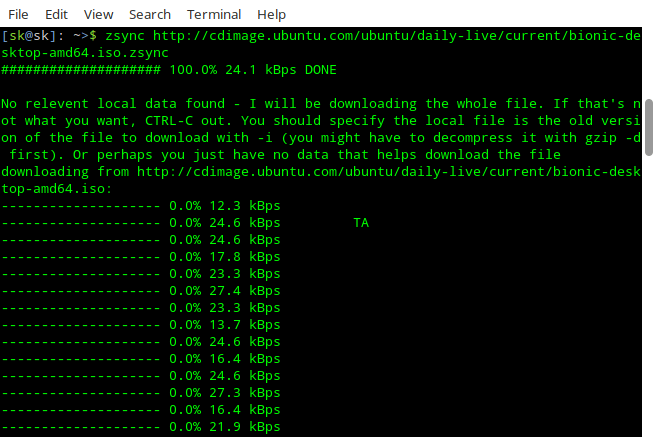

ULA 不像本地链路地址那样自动配置的,但是使用 radvd 设置自动配置是非常容易的,radva 是路由器公告守护程序。在你开始之前,运行 `ifconfig` 或者 `ip addr show` 去查看你现有的 IP 地址。

|

||||

|

||||

在生产系统上使用时,你应该将 radvd 安装在一台单独的路由器上,如果只是测试使用,你可以将它安装在你的网络中的任意 Linux PC 上。在我的小型 KVM 测试实验室中,我使用 `apt-get install radvd` 命令把它安装在 Ubuntu 上。安装完成之后,我先不启动它,因为它还没有配置文件:

|

||||

|

||||

```

|

||||

$ sudo systemctl status radvd

|

||||

● radvd.service - LSB: Router Advertising Daemon

|

||||

Loaded: loaded (/etc/init.d/radvd; bad; vendor preset: enabled)

|

||||

Active: active (exited) since Mon 2017-12-11 20:08:25 PST; 4min 59s ago

|

||||

Docs: man:systemd-sysv-generator(8)

|

||||

|

||||

Dec 11 20:08:25 ubunut1 systemd[1]: Starting LSB: Router Advertising Daemon...

|

||||

Dec 11 20:08:25 ubunut1 radvd[3541]: Starting radvd:

|

||||

Dec 11 20:08:25 ubunut1 radvd[3541]: * /etc/radvd.conf does not exist or is empty.

|

||||

Dec 11 20:08:25 ubunut1 radvd[3541]: * See /usr/share/doc/radvd/README.Debian

|

||||

Dec 11 20:08:25 ubunut1 radvd[3541]: * radvd will *not* be started.

|

||||

Dec 11 20:08:25 ubunut1 systemd[1]: Started LSB: Router Advertising Daemon.

|

||||

```

|

||||

|

||||

这些所有的消息有点让人困惑,实际上 radvd 并没有运行,你可以使用经典命令 `ps | grep radvd` 来验证这一点。因此,我们现在需要去创建 `/etc/radvd.conf` 文件。拷贝这个示例,将第一行的网络接口名替换成你自己的接口名字:

|

||||

|

||||

```

|

||||

interface ens7 {

|

||||

AdvSendAdvert on;

|

||||

MinRtrAdvInterval 3;

|

||||

MaxRtrAdvInterval 10;

|

||||

prefix fd7d:844d:3e17:f3ae::/64

|

||||

{

|

||||

AdvOnLink on;

|

||||

AdvAutonomous on;

|

||||

};

|

||||

|

||||

};

|

||||

```

|

||||

|

||||

前缀(`prefix`)定义了你的网络地址,它是地址的前 64 位。前两个字符必须是 `fd`,前缀接下来的剩余部分你自己定义它,最后的 64 位留空,因为 radvd 将去分配最后的 64 位。前缀后面的 16 位用来定义子网,剩余的地址定义为主机地址。你的子网必须总是 `/64`。RFC 4193 要求地址必须随机生成;查看 [在 KVM 中测试 IPv6 Networking:第 1 部分][1] 学习创建和管理 ULAs 的更多知识。

|

||||

|

||||

### IPv6 转发

|

||||

|

||||

IPv6 转发必须要启用。下面的命令去启用它,重启后生效:

|

||||

|

||||

```

|

||||

$ sudo sysctl -w net.ipv6.conf.all.forwarding=1

|

||||

```

|

||||

|

||||

取消注释或者添加如下的行到 `/etc/sysctl.conf` 文件中,以使它永久生效:

|

||||

|

||||

```

|

||||

net.ipv6.conf.all.forwarding = 1

|

||||

```

|

||||

|

||||

启动 radvd 守护程序:

|

||||

|

||||

```

|

||||

$ sudo systemctl stop radvd

|

||||

$ sudo systemctl start radvd

|

||||

```

|

||||

|

||||

这个示例在我的 Ubuntu 测试系统中遇到了一个怪事;radvd 总是停止,我查看它的状态却没有任何问题,做任何改变之后都需要重新启动 radvd。

|

||||

|

||||

启动成功后没有任何输出,并且失败也是如此,因此,需要运行 `sudo systemctl status radvd` 去查看它的运行状态。如果有错误,`systemctl` 会告诉你。一般常见的错误都是 `/etc/radvd.conf` 中的语法错误。

|

||||

|

||||

在 Twitter 上抱怨了上述问题之后,我学到了一件很酷的技巧:当你运行 ` journalctl -xe --no-pager` 去调试 `systemctl` 错误时,你的输出会被换行,然后,你就可以看到错误信息。

|

||||

|

||||

现在检查你的主机,查看它们自动分配的新地址:

|

||||

|

||||

```

|

||||

$ ifconfig

|

||||

ens7 Link encap:Ethernet HWaddr 52:54:00:57:71:50

|

||||

[...]

|

||||

inet6 addr: fd7d:844d:3e17:f3ae:9808:98d5:bea9:14d9/64 Scope:Global

|

||||

[...]

|

||||

```

|

||||

|

||||

本文到此为止,下周继续学习如何为 ULA 管理 DNS,这样你就可以使用一个合适的主机名来代替这些长长的 IPv6 地址。

|

||||

|

||||

通过来自 Linux 基金会和 edX 的 [“Linux 入门”][2] 免费课程学习更多 Linux 的知识。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/learn/intro-to-linux/2017/12/ipv6-auto-configuration-linux

|

||||

|

||||

作者:[Carla Schroder][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/cschroder

|

||||

[1]:https://www.linux.com/learn/intro-to-linux/2017/11/testing-ipv6-networking-kvm-part-1

|

||||

[2]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

|

||||

@ -1,44 +1,47 @@

|

||||

什么是容器?为什么我们关注它?

|

||||

======

|

||||

|

||||

|

||||

|

||||

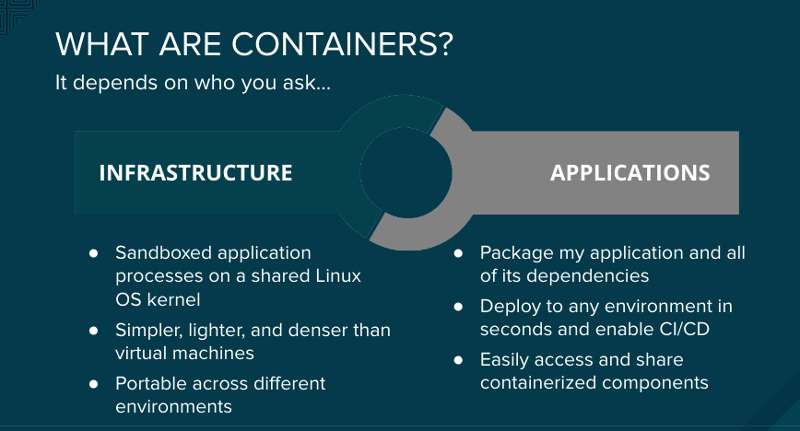

什么是容器?你需要它们吗?为什么?在这篇文章中,我们会回答这些基本问题。

|

||||

|

||||

但是,为了回答这些问题,我们要提出更多的问题。当你开始考虑怎么用容器适配你的工作时,你需要弄清楚:你在哪开发应用?你在哪测试它?你在哪使用它?

|

||||

|

||||

你可能在你的笔记本电脑上开发应用,你的电脑上已经装好了所需要的库文件,扩展包,开发工具,和开发框架。它在一个模拟生产环境的机器上进行测试,然后被用于生产。问题是这三种环境不一定都是一样的;他们没有同样的工具,框架,和库。你在你机器上开发的应用不一定可以在生产环境中正常工作。

|

||||

你可能在你的笔记本电脑上开发应用,你的电脑上已经装好了所需要的库文件、扩展包、开发工具和开发框架。它在一个模拟生产环境的机器上进行测试,然后被用于生产环境。问题是这三种环境不一定都是一样的;它们没有同样的工具、框架和库。你在你机器上开发的应用不一定可以在生产环境中正常工作。

|

||||

|

||||

容器解决了这个问题。正如 Docker 解释的,“容器镜像是软件的一个轻量的,独立的,可执行的包,包括了执行它所需要的所有东西:代码,运行环境,系统工具,系统库,设置。”

|

||||

容器解决了这个问题。正如 Docker 解释的,“容器镜像是软件的一个轻量的、独立的、可执行的包,包括了执行它所需要的所有东西:代码、运行环境、系统工具、系统库、设置。”

|

||||

|

||||

这代表着,一旦一个应用被封装成容器,那么它所依赖的下层环境就不再重要了。它可以在任何地方运行,甚至在混合云环境下也可以。这是容器在开发者,执行团队,甚至 CIO (信息主管)中变得如此流行的原因之一。

|

||||

这代表着,一旦一个应用被封装成容器,那么它所依赖的下层环境就不再重要了。它可以在任何地方运行,甚至在混合云环境下也可以。这是容器在开发人员,执行团队,甚至 CIO (信息主管)中变得如此流行的原因之一。

|

||||

|

||||

### 容器对开发者的好处

|

||||

### 容器对开发人员的好处

|

||||

|

||||

现在开发者或执行者不再需要关注他们要使用什么平台来运行应用。开发者不会再说:“这在我的系统上运行得好好的。”

|

||||

现在开发人员或运维人员不再需要关注他们要使用什么平台来运行应用。开发人员不会再说:“这在我的系统上运行得好好的。”

|

||||

|

||||

容器的另一个重大优势时它的隔离性和安全性。因为容器将应用和运行平台隔离开了,应用以及它周边的东西都会变得安全。同时,不同的团队可以在一台设备上同时运行不同的应用——对于传统应用来说这是不可以的。

|

||||

容器的另一个重大优势是它的隔离性和安全性。因为容器将应用和运行平台隔离开了,应用以及它周边的东西都会变得安全。同时,不同的团队可以在一台设备上同时运行不同的应用——对于传统应用来说这是不可以的。

|

||||

|

||||

这不是虚拟机( VM )所提供的吗?是的,也不是。虚拟机可以隔离应用,但它负载太高了。[在一份文献中][1],Canonical 比较了容器和虚拟机,结果是:“容器提供了一种新的虚拟化方法,它有着和传统虚拟机几乎相同的资源隔离水平。但容器的负载更小,它占用更少的内存,更为高效。这意味着可以实现高密度的虚拟化:一旦安装,你可以在相同的硬件上运行更多应用。”另外,虚拟机启动前需要更多的准备,而容器只需几秒就能运行,可以瞬间启动。

|

||||

这不是虚拟机( VM )所提供的吗?既是,也不是。虚拟机可以隔离应用,但它负载太高了。[在一份文献中][1],Canonical 比较了容器和虚拟机,结果是:“容器提供了一种新的虚拟化方法,它有着和传统虚拟机几乎相同的资源隔离水平。但容器的负载更小,它占用更少的内存,更为高效。这意味着可以实现高密度的虚拟化:一旦安装,你可以在相同的硬件上运行更多应用。”另外,虚拟机启动前需要更多的准备,而容器只需几秒就能运行,可以瞬间启动。

|

||||

|

||||

### 容器对应用生态的好处

|

||||

|

||||

现在,一个庞大的,由供应商和解决方案组成的生态系统已经允许公司大规模地运用容器,不管是用于编排,监控,记录,或者生命周期管理。

|

||||

现在,一个庞大的,由供应商和解决方案组成的生态系统已经可以让公司大规模地运用容器,不管是用于编排、监控、记录或者生命周期管理。

|

||||

|

||||

为了保证容器可以运行在任何地方,容器生态系统一起成立了[开源容器倡议][2](OCI)。这是一个 Linux 基金会的项目,目标在于创建关于容器运行环境和容器镜像格式这两个容器核心部分的规范。这两个规范确保容器空间中不会有任何碎片。

|

||||

为了保证容器可以运行在任何地方,容器生态系统一起成立了[开源容器倡议][2](OCI)。这是一个 Linux 基金会的项目,目标在于创建关于容器运行环境和容器镜像格式这两个容器核心部分的规范。这两个规范确保容器领域中不会有任何不一致。

|

||||

|

||||

在很长的一段时间里,容器是专门用于 Linux 内核的,但微软和 Docker 的密切合作将容器带到了微软平台上。现在你可以在 Linux,Windows,Azure,AWS,Google 计算引擎,Rackspace,以及大型计算机上使用容器。甚至 VMware 也正在发展容器,它的 [vSphere Integrated Container][3](VIC)允许 IT 专业人员在他们平台的传统工作负载上运行容器。

|

||||

在很长的一段时间里,容器是专门用于 Linux 内核的,但微软和 Docker 的密切合作将容器带到了微软平台上。现在你可以在 Linux、Windows、Azure、AWS、Google 计算引擎、Rackspace,以及大型计算机上使用容器。甚至 VMware 也正在发展容器,它的 [vSphere Integrated Container][3](VIC)允许 IT 专业人员在他们平台的传统工作负载上运行容器。

|

||||

|

||||

### 容器对 CIO 的好处

|

||||

|

||||

容器在开发者中因为以上的原因而变得十分流行,同时他们也给CIO提供了很大的便利。将工作负载迁移到容器中的优势正在改变着公司运行的模式。

|

||||

容器在开发人员中因为以上的原因而变得十分流行,同时他们也给 CIO 提供了很大的便利。将工作负载迁移到容器中的优势正在改变着公司运行的模式。

|

||||

|

||||

传统的应用有大约十年的生命周期。新版本的发布需要多年的努力,因为应用是独立于平台的,有时需要经过几年的努力才能看到生产效果。由于这个生命周期,开发者会尽可能在应用里塞满各种功能,这会使应用变得庞大笨拙,漏洞百出。

|

||||

传统的应用有大约十年的生命周期。新版本的发布需要多年的努力,因为应用是依赖于平台的,有时几年也不能到达产品阶段。由于这个生命周期,开发人员会尽可能在应用里塞满各种功能,这会使应用变得庞大笨拙,漏洞百出。

|

||||

|

||||

这个过程影响了公司内部的创新文化。当人们几个月甚至几年都不能看到他们的创意被实现时,他们就不再有动力了。

|

||||

|

||||

容器解决了这个问题。因为你可以将应用切分成更小的微服务。你可以在几周或几天内开发,测试和部署。新特性可以添加成为新的容器。他们可以在测试结束后以最快的速度被投入生产。公司可以更快转型,超过他们的竞争者。因为想法可以被很快转化为容器并部署,这个方式使得创意爆炸式增长。

|

||||

容器解决了这个问题。因为你可以将应用切分成更小的微服务。你可以在几周或几天内开发、测试和部署。新特性可以添加成为新的容器。他们可以在测试结束后以最快的速度被投入生产。公司可以更快转型,超过他们的竞争者。因为想法可以被很快转化为容器并部署,这个方式使得创意爆炸式增长。

|

||||

|

||||

### 结论

|

||||

|

||||

容器解决了许多传统工作负载所面对的问题。但是,它并不能解决所有 IT 专业人员面对的问题。它只是众多解决方案中的一个。在下一篇文章中,我们将会覆盖一些容器的基本属于,然后我们会解释如何开始构建容器。

|

||||

容器解决了许多传统工作负载所面对的问题。但是,它并不能解决所有 IT 专业人员面对的问题。它只是众多解决方案中的一个。在下一篇文章中,我们将会覆盖一些容器的基本术语,然后我们会解释如何开始构建容器。

|

||||

|

||||

通过 Linux 基金会和 edX 提供的免费的 ["Introduction to Linux" ][4] 课程学习更多 Linux 知识。

|

||||

|

||||

@ -46,9 +49,9 @@

|

||||

|

||||

via: https://www.linux.com/blog/intro-to-Linux/2017/12/what-are-containers-and-why-should-you-care

|

||||

|

||||

作者:[wapnil Bhartiya][a]

|

||||

作者:[SWAPNIL BHARTIYA][a]

|

||||

译者:[lonaparte](https://github.com/lonaparte)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,98 @@

|

||||

Tlog:录制/播放终端 IO 和会话的工具

|

||||

======

|

||||

|

||||

Tlog 是 Linux 中终端 I/O 录制和回放软件包。它用于实现一个集中式用户会话录制。它将所有经过的消息录制为 JSON 消息。录制为 JSON 格式的主要目的是将数据传送到 ElasticSearch 之类的存储服务,可以从中搜索和查询,以及回放。同时,它们保留所有通过的数据和时序。

|

||||

|

||||

Tlog 包含三个工具,分别是 `tlog-rec`、tlog-rec-session` 和 `tlog-play`。

|

||||

|

||||

* `tlog-rec` 工具一般用于录制终端、程序或 shell 的输入或输出。

|

||||

* `tlog-rec-session` 工具用于录制整个终端会话的 I/O,包括录制的用户。

|

||||

* `tlog-play` 工具用于回放录制。

|

||||

|

||||

在本文中,我将解释如何在 CentOS 7.4 服务器上安装 Tlog。

|

||||

|

||||

### 安装

|

||||

|

||||

在安装之前,我们需要确保我们的系统满足编译和安装程序的所有软件要求。在第一步中,使用以下命令更新系统仓库和软件包。

|

||||

|

||||

```

|

||||

# yum update

|

||||

```

|

||||

|

||||

我们需要安装此软件安装所需的依赖项。在安装之前,我已经使用这些命令安装了所有依赖包。

|

||||

|

||||

```

|

||||

# yum install wget gcc

|

||||

# yum install systemd-devel json-c-devel libcurl-devel m4

|

||||

```

|

||||

|

||||

完成这些安装后,我们可以下载该工具的[源码包][1]并根据需要将其解压到服务器上:

|

||||

|

||||

```

|

||||

# wget https://github.com/Scribery/tlog/releases/download/v3/tlog-3.tar.gz

|

||||

# tar -xvf tlog-3.tar.gz

|

||||

# cd tlog-3

|

||||

```

|

||||

|

||||

现在,你可以使用我们通常的配置和编译方法开始构建此工具。

|

||||

|

||||

```

|

||||

# ./configure --prefix=/usr --sysconfdir=/etc && make

|

||||

# make install

|

||||

# ldconfig

|

||||

```

|

||||

|

||||

最后,你需要运行 `ldconfig`。它对命令行中指定目录、`/etc/ld.so.conf` 文件,以及信任的目录( `/lib` 和 `/usr/lib`)中最近的共享库创建必要的链接和缓存。

|

||||

|

||||

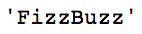

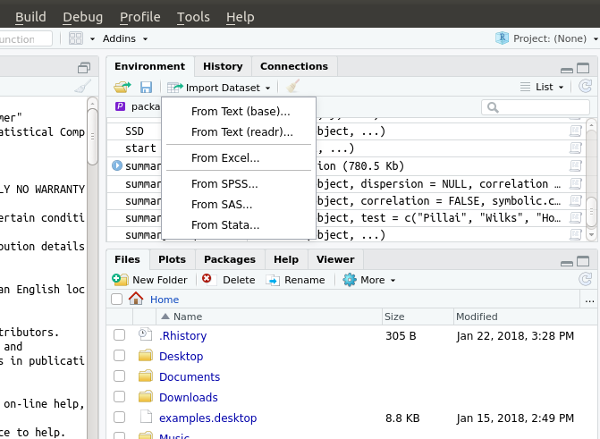

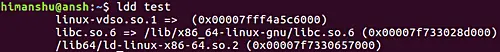

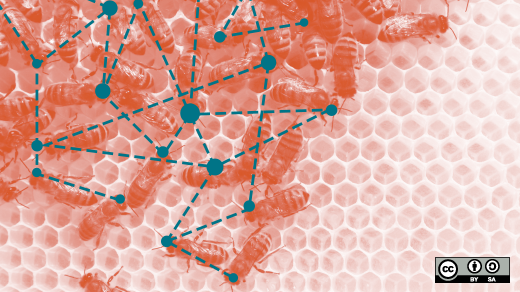

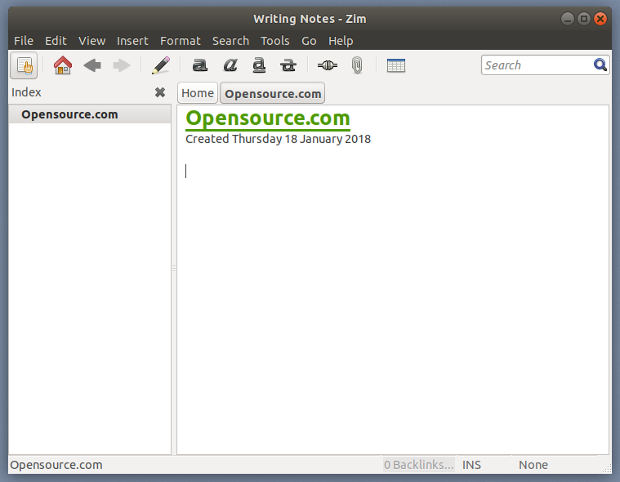

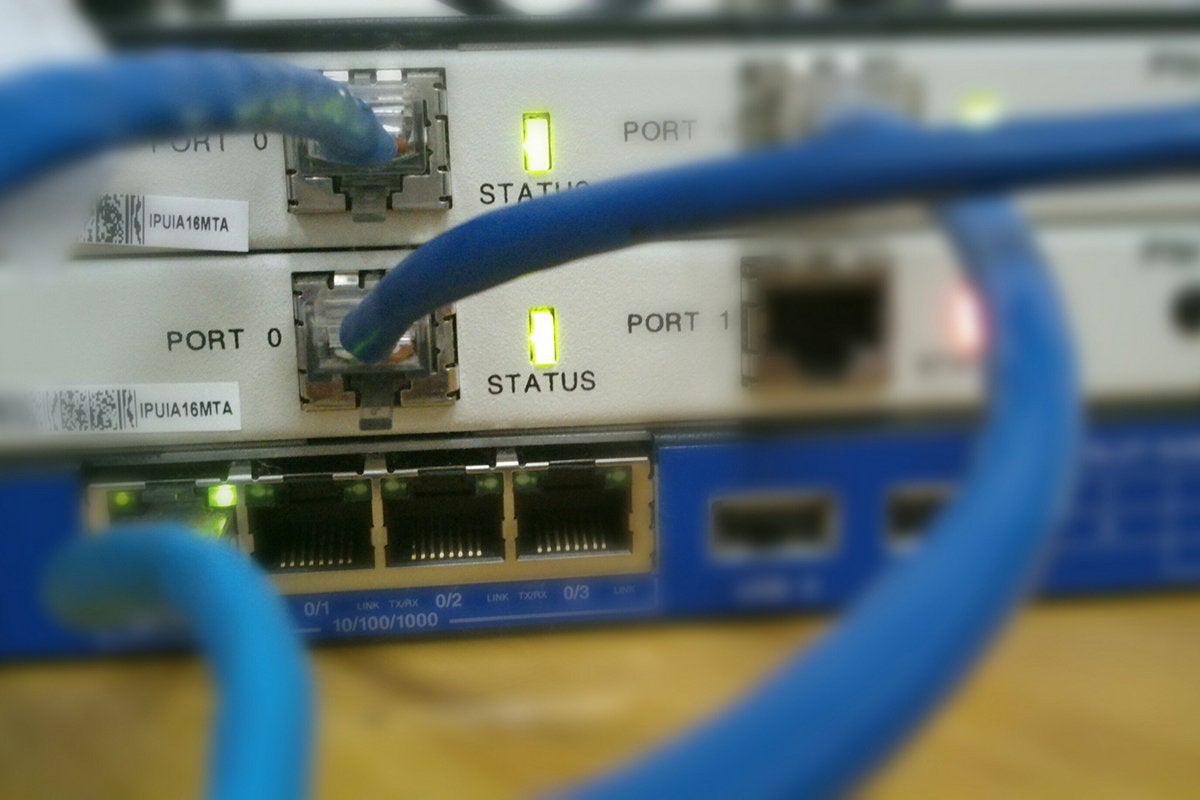

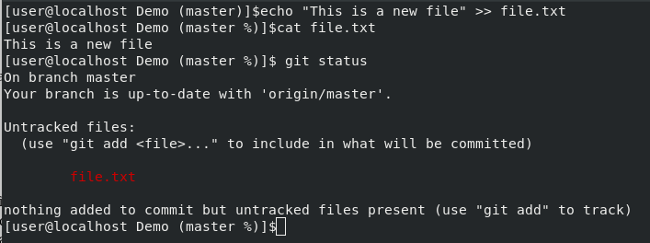

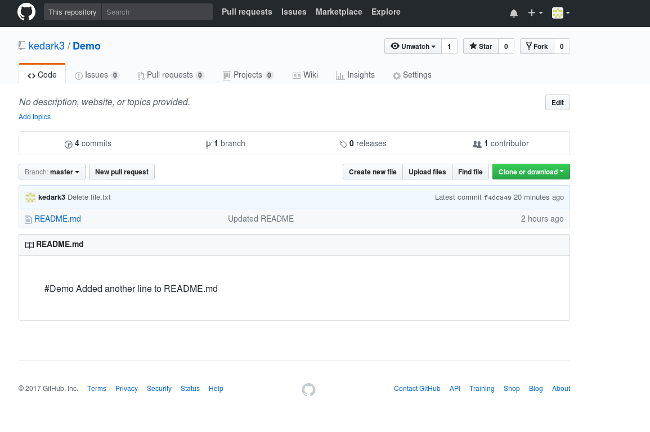

### Tlog 工作流程图

|

||||

|

||||

![Tlog working process][2]

|

||||

|

||||

首先,用户通过 PAM 进行身份验证登录。名称服务交换器(NSS)提供的 `tlog` 信息是用户的 shell。这初始化了 tlog 部分,并从环境变量/配置文件收集关于实际 shell 的信息,并在 PTY 中启动实际的 shell。然后通过 syslog 或 sd-journal 开始录制在终端和 PTY 之间传递的所有内容。

|

||||

|

||||

### 用法

|

||||

|

||||

你可以使用 `tlog-rec` 录制一个会话并使用 `tlog-play` 回放它,以测试新安装的 tlog 是否能够正常录制和回放会话。

|

||||

|

||||

#### 录制到文件中

|

||||

|

||||

要将会话录制到文件中,请在命令行中执行 `tlog-rec`,如下所示:

|

||||

|

||||

```

|

||||

tlog-rec --writer=file --file-path=tlog.log

|

||||

```

|

||||

|

||||

该命令会将我们的终端会话录制到名为 `tlog.log` 的文件中,并将其保存在命令中指定的路径中。

|

||||

|

||||

#### 从文件中回放

|

||||

|

||||

你可以在录制过程中或录制后使用 `tlog-play` 命令回放录制的会话。

|

||||

|

||||

```

|

||||

tlog-play --reader=file --file-path=tlog.log

|

||||

```

|

||||

|

||||

该命令从指定的路径读取先前录制的文件 `tlog.log`。

|

||||

|

||||

### 总结

|

||||

|

||||

Tlog 是一个开源软件包,可用于实现集中式用户会话录制。它主要是作为一个更大的用户会话录制解决方案的一部分使用,但它被设计为独立且可重用的。该工具可以帮助录制用户所做的一切,并将其存储在服务器的某个位置,以备将来参考。你可以从这个[文档][3]中获得关于这个软件包使用的更多细节。我希望这篇文章对你有用。请发表你的宝贵建议和意见。

|

||||

|

||||

**关于 Saheetha Shameer (作者)**

|

||||

|

||||

我正在担任高级系统管理员。我是一名快速学习者,有轻微的倾向跟随行业中目前和正在出现的趋势。我的爱好包括听音乐、玩策略游戏、阅读和园艺。我对尝试各种美食也有很高的热情 :-)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://linoxide.com/linux-how-to/tlog-tool-record-play-terminal-io-sessions/

|

||||

|

||||

作者:[Saheetha Shameer][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://linoxide.com/author/saheethas/

|

||||

[1]:https://github.com/Scribery/tlog/releases/download/v3/tlog-3.tar.gz

|

||||

[2]:https://linoxide.com/wp-content/uploads/2018/01/Tlog-working-process.png

|

||||

[3]:https://github.com/Scribery/tlog/blob/master/README.md

|

||||

@ -0,0 +1,180 @@

|

||||

Ansible:像系统管理员一样思考的自动化框架

|

||||

======

|

||||

|

||||

这些年来,我已经写了许多关于 DevOps 工具的文章,也培训了这方面的人员。尽管这些工具很棒,但很明显,大多数都是按照开发人员的思路设计出来的。这也没有什么问题,因为以编程的方式接近配置管理是重点。不过,直到我开始接触 Ansible,我才觉得这才是系统管理员喜欢的东西。

|

||||

|

||||

喜欢的一部分原因是 Ansible 与客户端计算机通信的方式,是通过 SSH 的。作为系统管理员,你们都非常熟悉通过 SSH 连接到计算机,所以从单词“去”的角度来看,相对于其它选择,你更容易理解 Ansible。

|

||||

|

||||

考虑到这一点,我打算写一些文章,探讨如何使用 Ansible。这是一个很好的系统,但是当我第一次接触到这个系统的时候,不知道如何开始。这并不是学习曲线陡峭。事实上,问题是在开始使用 Ansible 之前,我并没有太多的东西要学,这才是让人感到困惑的。例如,如果您不必安装客户端程序(Ansible 没有在客户端计算机上安装任何软件),那么您将如何启动?

|

||||

|

||||

### 踏出第一步

|

||||

|

||||

起初 Ansible 对我来说非常困难的原因在于配置服务器/客户端的关系是非常灵活的,我不知道我该从何入手。事实是,Ansible 并不关心你如何设置 SSH 系统。它会利用你现有的任何配置。需要考虑以下几件事情:

|

||||

|

||||

1. Ansible 需要通过 SSH 连接到客户端计算机。

|

||||

2. 连接后,Ansible 需要提升权限才能配置系统,安装软件包等等。

|

||||

|

||||

不幸的是,这两个考虑真的带来了一堆蠕虫。连接到远程计算机并提升权限是一件可怕的事情。当您在远程计算机上安装代理并使用 Chef 或 Puppet 处理特权升级问题时,似乎感觉就没那么可怕了。 Ansible 并非不安全,而是安全的决定权在你手中。

|

||||

|

||||

接下来,我将列出一系列潜在的配置,以及每个配置的优缺点。这不是一个详尽的清单,但是你会受到正确的启发,去思考在你自己的环境中什么是理想的配置。也需要注意,我不会提到像 Vagrant 这样的系统,因为尽管 Vagrant 在构建测试和开发的敏捷架构时非常棒,但是和一堆服务器是非常不同的,因此考虑因素是极不相似的。

|

||||

|

||||

### 一些 SSH 场景

|

||||

|

||||

#### 1)在 Ansible 配置中,root 用户以密码进入远程计算机。

|

||||

|

||||

拥有这个想法是一个非常可怕的开始。这个设置的“优点”是它消除了对特权提升的需要,并且远程服务器上不需要其他用户帐户。 但是,这种便利的成本是不值得的。 首先,大多数系统不会让你在不改变默认配置的情况下以 root 身份进行 SSH 登录。默认的配置之所以如此,坦率地说,是因为允许 root 用户远程连接是一个不好的主意。 其次,将 root 密码放在 Ansible 机器上的纯文本配置文件中是不合适的。 真的,我提到了这种可能性,因为这是可以的,但这是应该避免的。 请记住,Ansible 允许你自己配置连接,它可以让你做真正愚蠢的事情。 但是请不要这么做。

|

||||

|

||||

#### 2)使用存储在 Ansible 配置中的密码,以普通用户的身份进入远程计算机。

|

||||

|

||||

这种情况的一个优点是它不需要太多的客户端配置。 大多数用户默认情况下都可以使用 SSH,因此 Ansible 应该能够使用用户凭据并且能够正常登录。 我个人不喜欢在配置文件中以纯文本形式存储密码,但至少它不是 root 密码。 如果您使用此方法,请务必考虑远程服务器上的权限提升方式。 我知道我还没有谈到权限提升,但是如果你在配置文件中配置了一个密码,这个密码可能会被用来获得 sudo 访问权限。 因此,一旦发生泄露,您不仅已经泄露了远程用户的帐户,还可能泄露整个系统。

|

||||

|

||||

#### 3)使用具有空密码的密钥对进行身份验证,以普通用户身份进入远程计算机。

|

||||

|

||||

这消除了将密码存储在配置文件中的弊端,至少在登录的过程中消除了。 没有密码的密钥对并不理想,但这是我经常做的事情。 在我的个人内部网络中,我通常使用没有密码的密钥对来自动执行许多事情,如需要身份验证的定时任务。 这不是最安全的选择,因为私钥泄露意味着可以无限制地访问远程用户的帐户,但是相对于在配置文件中存储密码我更喜欢这种方式。

|

||||

|

||||

#### 4)使用通过密码保护的密钥对进行身份验证,以普通用户的身份通过 SSH 连接到远程计算机。

|

||||

|

||||

这是处理远程访问的一种非常安全的方式,因为它需要两种不同的身份验证因素来解密:私钥和密码。 如果你只是以交互方式运行 Ansible,这可能是理想的设置。 当你运行命令时,Ansible 会提示你输入私钥的密码,然后使用密钥对登录到远程系统。 是的,只需使用标准密码登录并且不用在配置文件中指定密码即可完成,但是如果不管怎样都要在命令行上输入密码,那为什么不在保护层添加密钥对呢?

|

||||

|

||||

#### 5)使用密码保护密钥对进行 SSH 连接,但是使用 ssh-agent “解锁”私钥。

|

||||

|

||||

这并不能完美地解决无人值守、自动化的 Ansible 命令的问题,但是它确实也使安全设置变得相当方便。 ssh-agent 程序一次验证密码,然后使用该验证进行后续连接。当我使用 Ansible 时,这是我想要做的事情。如果我是完全值得信任的,我通常仍然使用没有密码的密钥对,但是这通常是因为我在我的家庭服务器上工作,是不是容易受到攻击的。

|

||||

|

||||

在配置 SSH 环境时还要记住一些其他注意事项。 也许你可以限制 Ansible 用户(通常是你的本地用户),以便它只能从一个特定的 IP 地址登录。 也许您的 Ansible 服务器可以位于不同的子网中,位于强大的防火墙之后,因此其私钥更难以远程访问。 也许 Ansible 服务器本身没有安装 SSH 服务器,所以根本没法访问。 同样,Ansible 的优势之一是它使用 SSH 协议进行通信,而且这是一个你用了多年的协议,你已经把你的系统调整到最适合你的环境了。 我不是宣传“最佳实践”的忠实粉丝,因为实际上最好的做法是考虑你的环境,并选择最适合你情况的设置。

|

||||

|

||||

### 权限提升

|

||||

|

||||

一旦您的 Ansible 服务器通过 SSH 连接到它的客户端,就需要能够提升特权。 如果你选择了上面的选项 1,那么你已经是 root 了,这是一个有争议的问题。 但是由于没有人选择选项 1(对吧?),您需要考虑客户端计算机上的普通用户如何获得访问权限。 Ansible 支持各种权限提升的系统,但在 Linux 中,最常用的选项是 `sudo` 和 `su`。 和 SSH 一样,有几种情况需要考虑,虽然肯定还有其他选择。

|

||||

|

||||

#### 1)使用 su 提升权限。

|

||||

|

||||

对于 RedHat/CentOS 用户来说,可能默认是使用 `su` 来获得系统访问权限。 默认情况下,这些系统在安装过程中配置了 root 密码,要想获得特殊访问权限,您需要输入该密码。使用 `su` 的问题在于,虽说它可以给了您完全访问远程系统,而您确实也可以完全访问远程系统。 (是的,这是讽刺。)另外,`su` 程序没有使用密钥对进行身份验证的能力,所以密码必须以交互方式输入或存储在配置文件中。 由于它实际上是 root 密码,因此将其存储在配置文件中听起来像、也确实是一个可怕的想法。

|

||||

|

||||

#### 2)使用 sudo 提升权限。

|

||||

|

||||

这就是 Debian/Ubuntu 系统的配置方式。 正常用户组中的用户可以使用 `sudo` 命令并使用 root 权限执行该命令。 随之而来的是,这仍然存在密码存储或交互式输入的问题。 由于在配置文件中存储用户的密码看起来不太可怕,我猜这是使用 `su` 的一个进步,但是如果密码被泄露,仍然可以完全访问系统。 (毕竟,输入 `sudo` 和 `su -` 都将允许用户成为 root 用户,就像拥有 root 密码一样。)

|

||||

|

||||

#### 3) 使用 sudo 提升权限,并在 sudoers 文件中配置 NOPASSWD。

|

||||

|

||||

再次,在我的本地环境中,我就是这么做的。 这并不完美,因为它给予用户帐户无限制的 root 权限,并且不需要任何密码。 但是,当我这样做并且使用没有密码短语的 SSH 密钥对时,我可以让 Ansible 命令更轻松的自动化。 再次提示,虽然这很方便,但这不是一个非常安全的想法。

|

||||

|

||||

#### 4)使用 sudo 提升权限,并在特定的可执行文件上配置 NOPASSWD。

|

||||

|

||||

这个想法可能是安全性和便利性的最佳折衷。 基本上,如果你知道你打算用 Ansible 做什么,那么你可以为远程用户使用的那些应用程序提供 NOPASSWD 权限。 这可能会让人有些困惑,因为 Ansible 使用 Python 来处理很多事情,但是经过足够的尝试和错误,你应该能够弄清原理。 这是额外的工作,但确实消除了一些明显的安全漏洞。

|

||||

|

||||

### 计划实施

|

||||

|

||||

一旦你决定如何处理 Ansible 认证和权限提升,就需要设置它。 在熟悉 Ansible 之后,您可能会使用该工具来帮助“引导”新客户端,但首先手动配置客户端非常重要,以便您知道发生了什么事情。 将你熟悉的事情变得自动化比从头开始自动化要好。

|

||||

|

||||

我已经写过关于 SSH 密钥对的文章,网上有无数的设置类的文章。 来自 Ansible 服务器的简短版本看起来像这样:

|

||||

|

||||

```

|

||||

# ssh-keygen

|

||||

# ssh-copy-id -i .ssh/id_dsa.pub remoteuser@remote.computer.ip

|

||||

# ssh remoteuser@remote.computer.ip

|

||||

```

|

||||

|

||||

如果您在创建密钥对时选择不使用密码,最后一步您应该可以直接进入远程计算机,而不用输入密码或密钥串。

|

||||

|

||||

为了在 `sudo` 中设置权限提升,您需要编辑 `sudoers` 文件。 你不应该直接编辑文件,而是使用:

|

||||

|

||||

```

|

||||

# sudo visudo

|

||||

```

|

||||

|

||||

这将打开 `sudoers` 文件并允许您安全地进行更改(保存时会进行错误检查,所以您不会意外地因为输入错误将自己锁住)。 这个文件中有一些例子,所以你应该能够弄清楚如何分配你想要的确切的权限。

|

||||

|

||||

一旦配置完成,您应该在使用 Ansible 之前进行手动测试。 尝试 SSH 到远程客户端,然后尝试使用您选择的任何方法提升权限。 一旦你确认配置的方式可以连接,就可以安装 Ansible 了。

|

||||

|

||||

### 安装 Ansible

|

||||

|

||||

由于 Ansible 程序仅安装在一台计算机上,因此开始并不是一件繁重的工作。 Red Hat/Ubuntu 系统的软件包安装有点不同,但都不是很困难。

|

||||

|

||||

在 Red Hat/CentOS 中,首先启用 EPEL 库:

|

||||

|

||||

```

|

||||

sudo yum install epel-release

|

||||

```

|

||||

|

||||

然后安装 Ansible:

|

||||

|

||||

```

|

||||

sudo yum install ansible

|

||||

```

|

||||

|

||||

在 Ubuntu 中,首先启用 Ansible PPA:

|

||||

|

||||

```

|

||||

sudo apt-add-repository spa:ansible/ansible

|

||||

(press ENTER to access the key and add the repo)

|

||||

```

|

||||

|

||||

然后安装 Ansible:

|

||||

|

||||

```

|

||||

sudo apt-get update

|

||||

sudo apt-get install ansible

|

||||

```

|

||||

|

||||

### Ansible 主机文件配置

|

||||

|

||||

Ansible 系统无法知道您希望它控制哪个客户端,除非您给它一个计算机列表。 该列表非常简单,看起来像这样:

|

||||

|

||||

```

|

||||

# file /etc/ansible/hosts

|

||||

|

||||

[webservers]

|

||||

blogserver ansible_host=192.168.1.5

|

||||

wikiserver ansible_host=192.168.1.10

|

||||

|

||||

[dbservers]

|

||||

mysql_1 ansible_host=192.168.1.22

|

||||

pgsql_1 ansible_host=192.168.1.23

|

||||

```

|

||||

|

||||

方括号内的部分是指定的组。 单个主机可以列在多个组中,而 Ansible 可以指向单个主机或组。 这也是配置文件,比如纯文本密码的东西将被存储,如果这是你计划的那种设置。 配置文件中的每一行配置一个主机地址,并且可以在 `ansible_host` 语句之后添加多个声明。 一些有用的选项是:

|

||||

|

||||

```

|

||||

ansible_ssh_pass

|

||||

ansible_become

|

||||

ansible_become_method

|

||||

ansible_become_user

|

||||

ansible_become_pass

|

||||

```

|

||||

|

||||

### Ansible <ruby>保险库<rt>Vault</rt></ruby>

|

||||

|

||||

(LCTT 译注:Vault 作为 ansible 的一项新功能可将例如密码、密钥等敏感数据文件进行加密,而非明文存放)

|

||||

|

||||

我也应该注意到,尽管安装程序比较复杂,而且这不是在您首次进入 Ansible 世界时可能会做的事情,但该程序确实提供了一种加密保险库中的密码的方法。 一旦您熟悉 Ansible,并且希望将其投入生产,将这些密码存储在加密的 Ansible 保险库中是非常理想的。 但是本着先学会爬再学会走的精神,我建议首先在非生产环境下使用无密码方法。

|

||||

|

||||

### 系统测试

|

||||

|

||||

最后,你应该测试你的系统,以确保客户端可以正常连接。 `ping` 测试将确保 Ansible 计算机可以 `ping` 每个主机:

|

||||

|

||||

```

|

||||

ansible -m ping all

|

||||

```

|

||||

|

||||

运行后,如果 `ping` 成功,您应该看到每个定义的主机显示 `ping` 的消息:`pong`。 这实际上并没有测试认证,只是测试网络连接。 试试这个来测试你的认证:

|

||||

|

||||

```

|