mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-27 02:30:10 +08:00

20141008-3 topic selection

This commit is contained in:

parent

91801190ea

commit

2ae0cf3468

@ -0,0 +1,273 @@

How to configure HTTP load balancer with HAProxy on Linux
================================================================================

Growing demand for web-based applications and services is putting more and more weight on the shoulders of IT administrators. When faced with unexpected traffic spikes, organic traffic growth, or internal challenges such as hardware failures and urgent maintenance, your web application must remain available, no matter what. Even modern devops and continuous delivery practices can threaten the reliability and consistent performance of your web service.

Unpredictable or inconsistent performance is not something you can afford. But how can we eliminate these downsides? In most cases a proper load balancing solution will do the job. Today I will show you how to set up an HTTP load balancer using [HAProxy][1].

### What is HTTP load balancing? ###


HTTP load balancing is a networking solution responsible for distributing incoming HTTP or HTTPS traffic among servers hosting the same application content. By balancing application requests across multiple available servers, a load balancer prevents any application server from becoming a single point of failure, thus improving overall application availability and responsiveness. It also allows you to easily scale in/out an application deployment by adding or removing extra application servers with changing workloads.


### Where and when to use load balancing? ###


Because load balancers improve server utilization and maximize availability, you should use one whenever your servers start to come under high load. If you are just planning the architecture for a bigger project, it is a good habit to plan for a load balancer up front. It will prove useful in the future when you need to scale your environment.

### What is HAProxy? ###


HAProxy is a popular open-source load balancer and proxy for TCP/HTTP servers on GNU/Linux platforms. Built on a single-threaded, event-driven architecture, HAProxy can handle [10G NIC line rate][2] easily, and is used extensively in many production environments. Its features include automatic health checks, customizable load balancing algorithms, HTTPS/SSL support, and session rate limiting.

### What are we going to achieve in this tutorial? ###


In this tutorial, we will go through the process of configuring an HAProxy-based load balancer for HTTP web servers.

### Prerequisites ###


You will need at least one, or preferably two, web servers to verify the functionality of your load balancer. We assume that the backend HTTP web servers are already [up and running][3].

### Install HAProxy on Linux ###


For most distributions, you can install HAProxy using your distribution's package manager.

#### Install HAProxy on Debian ####


In Debian we need to add backports for Wheezy. To do that, please create a new file called "backports.list" in /etc/apt/sources.list.d, with the following content:

    deb http://cdn.debian.net/debian wheezy-backports main

Refresh your repository data and install HAProxy.

    # apt-get update
    # apt-get install haproxy

#### Install HAProxy on Ubuntu ####

    # apt-get install haproxy

#### Install HAProxy on CentOS and RHEL ####

    # yum install haproxy

### Configure HAProxy ###


In this tutorial, we assume that there are two HTTP web servers up and running with IP addresses 192.168.100.2 and 192.168.100.3. We also assume that the load balancer will be configured at a server with IP address 192.168.100.4.


To make HAProxy functional, you need to change a number of items in /etc/haproxy/haproxy.cfg. These changes are described in this section. In case some configuration differs for different GNU/Linux distributions, it will be noted in the paragraph.


#### 1. Configure Logging ####


One of the first things you should do is to set up proper logging for your HAProxy, which will be useful for future debugging. Log configuration can be found in the global section of /etc/haproxy/haproxy.cfg. The following are distro-specific instructions for configuring logging for HAProxy.


**CentOS or RHEL:**


To enable logging on CentOS/RHEL, replace:

    log 127.0.0.1 local2

with:

    log 127.0.0.1 local0

The next step is to set up separate log files for HAProxy in /var/log. For that, we need to modify our current rsyslog configuration. To make the configuration simple and clear, we will create a new file called haproxy.conf in /etc/rsyslog.d/ with the following content.

    $ModLoad imudp
    $UDPServerRun 514
    $template Haproxy,"%msg%\n"
    local0.=info /var/log/haproxy.log;Haproxy
    local0.notice /var/log/haproxystatus.log;Haproxy
    local0.* ~

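With this configuration, rsyslog formats HAProxy messages with the Haproxy $template and writes them to separate files in /var/log: info messages go to haproxy.log, and messages of severity notice or higher go to haproxystatus.log. Now restart rsyslog to apply the changes.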
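To make the selector semantics concrete, here is a minimal shell sketch (not rsyslog itself) that mimics how the three rules route a local0 message by severity. The function name `route_local0` is a hypothetical helper, and the sketch assumes the usual syslog behaviour that `.=info` matches exactly one severity while `.notice` matches that severity and anything more severe.

```shell
# Hypothetical helper: mimic how the rsyslog rules route a local0 message.
route_local0() {
    case "$1" in
        info)
            # local0.=info matches the info severity only
            echo "/var/log/haproxy.log" ;;
        notice|warning|err|crit|alert|emerg)
            # local0.notice matches notice and every more severe level
            echo "/var/log/haproxystatus.log" ;;
        *)
            # local0.* ~ discards whatever is left (here: debug)
            echo "discarded" ;;
    esac
}

# Show where a few sample severities would end up.
for sev in debug info notice err; do
    printf '%-7s -> %s\n' "$sev" "$(route_local0 "$sev")"
done
```

The final discard rule is what keeps HAProxy messages from also being picked up by later, more general rules.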

    # service rsyslog restart

**Debian or Ubuntu:**


To enable logging for HAProxy on Debian or Ubuntu, replace:

    log /dev/log local0
    log /dev/log local1 notice

with:

    log 127.0.0.1 local0

Next, to configure separate log files for HAProxy, edit a file called haproxy.conf (or 49-haproxy.conf in Debian) in /etc/rsyslog.d/ with the following content.

    $ModLoad imudp
    $UDPServerRun 514
    $template Haproxy,"%msg%\n"
    local0.=info /var/log/haproxy.log;Haproxy
    local0.notice /var/log/haproxystatus.log;Haproxy
    local0.* ~

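With this configuration, rsyslog formats HAProxy messages with the Haproxy $template and writes them to separate files in /var/log: info messages go to haproxy.log, and messages of severity notice or higher go to haproxystatus.log. Now restart rsyslog to apply the changes.

    # service rsyslog restart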

#### 2. Setting Defaults ####


The next step is to set default variables for HAProxy. Find the defaults section in /etc/haproxy/haproxy.cfg, and replace it with the following configuration.

    defaults
        log global
        mode http
        option httplog
        option dontlognull
        retries 3
        option redispatch
        maxconn 20000
        contimeout 5000
        clitimeout 50000
        srvtimeout 50000

The configuration stated above is recommended for HTTP load balancer use, but it may not be the optimal solution for your environment. In that case, feel free to explore HAProxy man pages to tweak it.
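One thing to watch for when tweaking: `contimeout`, `clitimeout`, and `srvtimeout` are the legacy spellings, and later HAProxy releases deprecated them in favour of `timeout connect`, `timeout client`, and `timeout server`. If your version warns about or rejects the old names, a sketch like the following could translate them. The function name `modernize_timeouts` is an assumption for illustration, and the result should be checked against the documentation for your HAProxy version.

```shell
# Hypothetical helper: rewrite legacy timeout keywords read from stdin,
# preserving each line's leading indentation.
modernize_timeouts() {
    sed -e 's/^\([[:space:]]*\)contimeout[[:space:]]\{1,\}/\1timeout connect /' \
        -e 's/^\([[:space:]]*\)clitimeout[[:space:]]\{1,\}/\1timeout client /' \
        -e 's/^\([[:space:]]*\)srvtimeout[[:space:]]\{1,\}/\1timeout server /'
}

# Demonstrate on the three lines from the defaults section.
printf '    contimeout 5000\n    clitimeout 50000\n    srvtimeout 50000\n' | modernize_timeouts
```

You could run it as `modernize_timeouts < /etc/haproxy/haproxy.cfg` and review the output before replacing the file. Running `haproxy -c -f /etc/haproxy/haproxy.cfg` checks a configuration file for errors without starting the service.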


#### 3. Webfarm Configuration ####


Webfarm configuration defines the pool of available HTTP servers. Most of the settings for our load balancer will be placed here. We will now create a basic configuration that defines our nodes. Replace everything from the frontend section to the end of the file with the following configuration:

    listen webfarm *:80
        mode http
        stats enable
        stats uri /haproxy?stats
        stats realm Haproxy\ Statistics
        stats auth haproxy:stats
        balance roundrobin
        cookie LBN insert indirect nocache
        option httpclose
        option forwardfor
        server web01 192.168.100.2:80 cookie node1 check
        server web02 192.168.100.3:80 cookie node2 check

The line "listen webfarm *:80" defines on which interfaces our load balancer will listen. For the sake of the tutorial, I've set that to "*" which makes the load balancer listen on all our interfaces. In a real world scenario, this might be undesirable and should be replaced with an interface that is accessible from the internet.

    stats enable
    stats uri /haproxy?stats
    stats realm Haproxy\ Statistics
    stats auth haproxy:stats

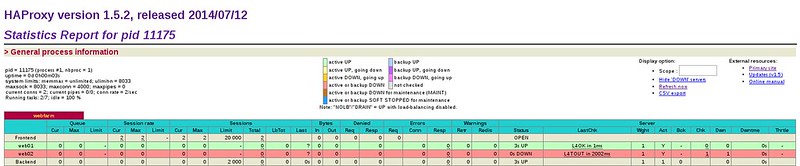

The above settings declare that our load balancer statistics can be accessed on http://<load-balancer-IP>/haproxy?stats. The access is secured with a simple HTTP authentication with login name "haproxy" and password "stats". These settings should be replaced with your own credentials. If you don't need to have these statistics available, then completely disable them.

|

||||

|

||||

Here is an example of HAProxy statistics.

|

||||

|

||||

|

||||

|

||||

The line "balance roundrobin" defines the type of load balancing we will use. In this tutorial we use the simple round robin algorithm, which is sufficient for basic HTTP load balancing. HAProxy also offers other types of load balancing:

- **leastconn**: sends each new connection to the server with the lowest number of connections.
- **source**: hashes the source IP address and divides it by the total weight of the running servers to decide which server will receive the request.
- **uri**: hashes the left part of the URI (before the question mark) and divides it by the total weight of the running servers. The result determines which server will receive the request.
- **url_param**: looks up the URL parameter specified in the argument in the query string of each HTTP GET request. This lets you pin requests to a specific backend node with a crafted URL.
- **hdr(name)**: looks up the HTTP header <name> in each HTTP request and uses it to direct the request to a specific node.
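As a rough illustration of how two of these algorithms choose a server, the sketch below implements a round robin pick and a source-hash pick in plain shell. This is not HAProxy's code: the function names, the unweighted server list, and the use of `cksum` as the hash are all assumptions made for the example.

```shell
# Illustrative pool: the two backends used in this tutorial, equal weights.
SERVERS="192.168.100.2 192.168.100.3"

# Print the server at zero-based position $1 in $SERVERS.
nth_server() {
    i=0
    for s in $SERVERS; do
        if [ "$i" -eq "$1" ]; then echo "$s"; return 0; fi
        i=$((i + 1))
    done
    return 1
}

# Print how many servers are in the pool.
server_count() {
    set -- $SERVERS
    echo "$#"
}

# Round robin: request number N goes to server (N mod pool size).
pick_roundrobin() {
    nth_server $(( $1 % $(server_count) ))
}

# Source hashing: the same client address always maps to the same server.
pick_source() {
    h=$(printf '%s' "$1" | cksum | cut -d ' ' -f 1)
    nth_server $(( h % $(server_count) ))
}

for req in 0 1 2 3; do
    echo "request $req -> $(pick_roundrobin "$req")"
done
echo "client 10.0.0.7 -> $(pick_source 10.0.0.7)"
```

Round robin spreads requests evenly regardless of who sends them, while the source pick trades even spread for stickiness per client address.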


The line "cookie LBN insert indirect nocache" makes our load balancer store persistent cookies, which allows us to pinpoint which node from the pool is used for a particular session. These node cookies will be stored with a defined name. In our case, I used "LBN", but you can specify any name you like. The node will store its string as a value for this cookie.

    server web01 192.168.100.2:80 cookie node1 check
    server web02 192.168.100.3:80 cookie node2 check

The above part is the definition of our pool of web server nodes. Each server is represented by its internal name (e.g., web01, web02), IP address, and unique cookie string. The cookie string can be anything you want. I am using simple node1, node2 ... node(n).
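If the pool grows, typing these lines by hand gets tedious. The following sketch generates them from a list of addresses using the same naming pattern (web01/node1, web02/node2, and so on). The function name `gen_servers` and the fixed port 80 are assumptions for illustration.

```shell
# Hypothetical helper: print one "server" line per backend address.
gen_servers() {
    n=1
    for ip in "$@"; do
        # webNN is the internal name, nodeN the cookie value for that server.
        printf '    server web%02d %s:80 cookie node%d check\n' "$n" "$ip" "$n"
        n=$((n + 1))
    done
}

gen_servers 192.168.100.2 192.168.100.3
```

The output can be pasted at the end of the webfarm section in /etc/haproxy/haproxy.cfg.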


### Start HAProxy ###


When you are done with the configuration, it's time to start HAProxy and verify that everything is working as intended.


#### Start HAProxy on CentOS/RHEL ####

Enable HAProxy to be started after boot and turn it on using:

    # chkconfig haproxy on
    # service haproxy start

And of course don't forget to enable port 80 in the firewall as follows.


**Firewall on CentOS/RHEL 7:**

    # firewall-cmd --permanent --zone=public --add-port=80/tcp
    # firewall-cmd --reload

**Firewall on CentOS/RHEL 6:**


Add the following line to the section that begins with ":OUTPUT ACCEPT" in /etc/sysconfig/iptables:

    -A INPUT -m state --state NEW -m tcp -p tcp --dport 80 -j ACCEPT

and restart **iptables**:

    # service iptables restart

#### Start HAProxy on Debian ####


Start HAProxy with:

    # service haproxy start

Don't forget to enable port 80 in the firewall by adding the following line into /etc/iptables.up.rules:

    -A INPUT -p tcp --dport 80 -j ACCEPT

#### Start HAProxy on Ubuntu ####


Enable HAProxy to be started after boot by setting "ENABLED" option to "1" in /etc/default/haproxy:

    ENABLED=1

Start HAProxy:

    # service haproxy start

and enable port 80 in the firewall:

    # ufw allow 80

### Test HAProxy ###


To check whether HAProxy is working properly, we can do the following.

First, prepare a test.php file with the following content:

    <?php
    header('Content-Type: text/plain');
    echo "Server IP: ".$_SERVER['SERVER_ADDR'];
    echo "\nX-Forwarded-for: ".$_SERVER['HTTP_X_FORWARDED_FOR'];
    ?>

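This PHP file reports which backend web server handled the request (Server IP) and the contents of the X-Forwarded-For header, which HAProxy's "option forwardfor" sets to the address of the client that sent the request. In the sample output below the header shows 192.168.100.4, which is what you would see if curl is run on the load balancer host itself.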

Place this PHP file in the root directory of both backend web servers. Now use the curl command to fetch this PHP file through the load balancer (192.168.100.4).

    $ curl http://192.168.100.4/test.php

When we run this command multiple times, we should see the following two outputs alternate (due to the round robin algorithm).

    Server IP: 192.168.100.2
    X-Forwarded-for: 192.168.100.4

----------

    Server IP: 192.168.100.3
    X-Forwarded-for: 192.168.100.4

If we stop one of the two backend web servers, the curl command should still work, directing requests to the other available web server.
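To check the distribution over more than a couple of requests, you could tally the "Server IP" lines instead of reading them by eye. The sketch below is one way to do that. The helper name `tally_backends` is an assumption, and the canned responses stand in for real curl output so that the example runs without a live setup.

```shell
# Hypothetical helper: count how many responses each backend produced.
# Reads test.php responses on stdin and prints "<backend IP> <count>" lines.
tally_backends() {
    awk '/^Server IP:/ { count[$3]++ }
         END { for (ip in count) print ip, count[ip] }' | sort
}

# Against a live setup you could feed it real responses, for example:
#   for i in 1 2 3 4 5 6; do curl -s http://192.168.100.4/test.php; echo; done | tally_backends
# Canned responses are used here instead.
printf 'Server IP: 192.168.100.2\nX-Forwarded-for: 192.168.100.4\n' > /dev/null
{
    printf 'Server IP: 192.168.100.2\nX-Forwarded-for: 192.168.100.4\n'
    printf 'Server IP: 192.168.100.3\nX-Forwarded-for: 192.168.100.4\n'
    printf 'Server IP: 192.168.100.2\nX-Forwarded-for: 192.168.100.4\n'
} | tally_backends
```

With round robin and both backends healthy, the counts should stay within one of each other. After stopping one backend, all requests should be attributed to the remaining server.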


### Summary ###


By now you should have a fully operational load balancer that supplies your web nodes with requests in round robin mode. As always, feel free to experiment with the configuration to make it more suitable for your infrastructure. I hope this tutorial helps you make your web projects more resilient and available.

As most of you have already noticed, this tutorial contains settings for only one load balancer, which means that we have just replaced one single point of failure with another. In real-life scenarios you should deploy at least two or three load balancers to cover for any failures that might happen, but that is outside the scope of this tutorial.

If you have any questions or suggestions, feel free to post them in the comments and I will do my best to answer or advise.

--------------------------------------------------------------------------------


via: http://xmodulo.com/haproxy-http-load-balancer-linux.html


Author: [Jaroslav Štěpánek][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://xmodulo.com/author/jaroslav
[1]:http://www.haproxy.org/
[2]:http://www.haproxy.org/10g.html
[3]:http://xmodulo.com/how-to-install-lamp-server-on-ubuntu.html

@ -0,0 +1,125 @@

How to configure a host intrusion detection system on CentOS
================================================================================

One of the first safety measures that any sysadmin may want to implement in their production servers is a mechanism to detect file tampering - not only the content of files, but also their attributes.


[AIDE][1] (short for "Advanced Intrusion Detection Environment") is an open source host-based intrusion detection system. AIDE checks the integrity of system binaries and essential configuration files by checking for inconsistencies in a number of file attributes, including permissions, file type, inode, number of links, link name, user, group, file size, block count, mtime, atime, ctime, acl, SELinux security context, xattrs, and md5/sha checksums.


AIDE builds a database of file attributes by scanning the file system of an (untampered) Linux server. It then checks the server's file attributes against the database, and reports any changes made to the indexed files while the server is running. For this very reason, AIDE must reindex protected files every time the system is updated or configuration files are changed for legitimate reasons.

Some clients' security policies may mandate that some sort of IDS be installed on their servers. But whether a client requires it or not, it is good sysadmin practice to have an IDS in place.

### Installing AIDE on CentOS or RHEL ###


An initial installation (along with the first run) of AIDE is best performed in a system where the operating system has just been installed, and none of the services is exposed to the Internet or even to a local area network. At this early stage, we can minimize the risk of any break-ins and tampering attempts coming from outside. In fact, it is the only way to ensure that the system is clean at the time AIDE builds its initial database.


For that reason, after we install AIDE using:

    # yum install aide

we need to unplug our machine from the network, and perform some basic configuration tasks as described next.


### Configuring AIDE ###


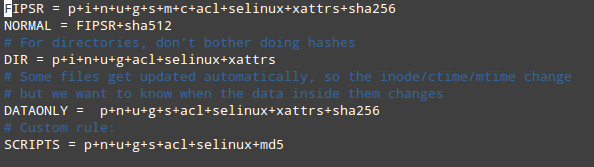

The default configuration file can be found in /etc/aide.conf. This file presents several example protection rules (e.g., FIPSR, NORMAL, DIR, DATAONLY), each of which is followed by an equal sign and a list of file attributes to check for, or any predefined rules (delimited by a plus sign). You can also define any custom rules using this format.

    FIPSR = p+i+n+u+g+s+m+c+acl+selinux+xattrs+sha256
    NORMAL = FIPSR+sha512

For example, the above means that the NORMAL rule will check for inconsistencies in the following attributes: permissions (p), inode (i), number of links (n), user (u), group (g), size (s), mtime (m), ctime (c), ACL (acl), SELinux (selinux), xattrs (xattrs), and SHA256/SHA512 checksums (sha256 and sha512).
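To see how a rule built from another rule expands, the sketch below mimics the substitution in plain shell. AIDE resolves rule names itself when it reads /etc/aide.conf; the variables and the `expand_rule` helper here are only an illustration of the "+"-delimited format.

```shell
# The two rule definitions from the example, written as shell variables.
FIPSR="p+i+n+u+g+s+m+c+acl+selinux+xattrs+sha256"
NORMAL="$FIPSR+sha512"

# Hypothetical helper: split a rule on "+" and print one attribute per line.
expand_rule() {
    printf '%s\n' "$1" | tr '+' '\n'
}

expand_rule "$NORMAL"
echo "NORMAL checks $(expand_rule "$NORMAL" | wc -l | tr -d ' ') attributes"
```

The same pattern applies to any custom rule you define: it is the union of the attributes and rules listed on its right-hand side.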


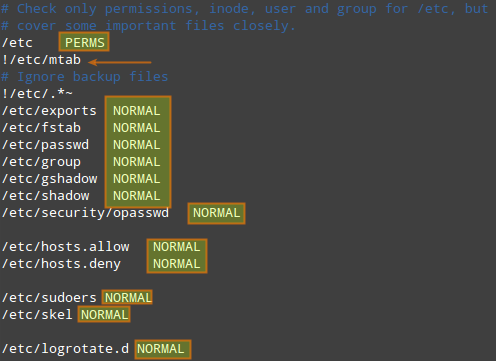

The defined rules can be applied flexibly to different directories and files (denoted with regular expressions).

An exclamation sign (!) preceding an entry tells AIDE to ignore a subdirectory (or files inside a directory) for which another rule can be defined.


In the above example, PERMS is the default rule for /etc and its subdirectories and files. However, no rule will be applied to backup files in /etc (i.e., /etc/.*~) nor to the /etc/mtab file. For some selected subdirectories or files in /etc, the NORMAL rule is applied instead, overriding the default rule PERMS.

Defining and applying the right rules to the right locations in your system is perhaps the most difficult part of using AIDE, but good judgement is a solid start. As a rule of thumb, do not check for more attributes than necessary. For example, checking mtime for files inside /var/log or /var/spool will certainly produce a lot of false positives, because many applications and daemons write to these locations often, which is perfectly fine. In addition, checking multiple checksums may enhance security at the expense of a longer AIDE running time.

Optionally, you can have the results of the check mailed to you if you specify your email address with the MAILTO variable. Place the following line anywhere in /etc/aide.conf.

    MAILTO=root@localhost

### Running AIDE for the First Time ###


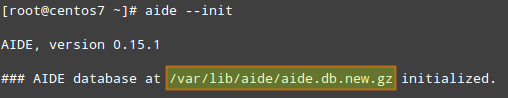

Run the following command to initialize the AIDE database:

    # aide --init

The /var/lib/aide/aide.db.new.gz file, as per /etc/aide.conf, needs to be renamed to /var/lib/aide/aide.db.gz, so as to enable AIDE to read it:

    # mv /var/lib/aide/aide.db.new.gz /var/lib/aide/aide.db.gz

Now it's time to perform our first system check against the database. For that, simply run:

    # aide

When called with no options, AIDE assumes --check.


If no change has been made since the database was created, AIDE will finish with an OK message.

### Managing AIDE in a Production Environment ###


After constructing an initial AIDE database, you often need to update a protected server for various legitimate reasons as part of ongoing system administration activities. Every time your server has been updated, you must re-build the AIDE database to incorporate the change in the database. For that, use this command:

    # aide --update
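As with the initial run, the refreshed database is written to aide.db.new.gz under the stock configuration, so it has to be moved into place before the next check can use it. The sketch below strings the two steps together. The function name `aide_refresh`, the `RUN` and `AIDE_DB_DIR` variables, and the dry-run default are assumptions for illustration; the paths are the ones used earlier in this tutorial.

```shell
# Hypothetical wrapper: update the AIDE database, then move it into place.
# By default it only prints the commands (dry run).
aide_refresh() {
    db_dir="${AIDE_DB_DIR:-/var/lib/aide}"
    run="${RUN:-echo}"      # "echo" prints each command instead of running it
    $run aide --update
    $run mv "$db_dir/aide.db.new.gz" "$db_dir/aide.db.gz"
}

aide_refresh
```

To execute the commands for real, you could call it as root with `RUN=command aide_refresh`. Review the report printed by the update step first, since accepting the new database also accepts every change it records.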


To protect a production system with AIDE, it is probably best to schedule a cron job for checking for any inconsistencies with AIDE periodically. For example, to schedule AIDE to run and have the result mailed to an email address on a daily basis:

    # crontab -e

----------

    0 0 * * * /usr/sbin/aide --check | /usr/bin/mail -s "AIDE run for $HOSTNAME" your@email.com

### Testing AIDE for File Tampering ###


The following test scenarios demonstrate how AIDE checks the integrity of our files.

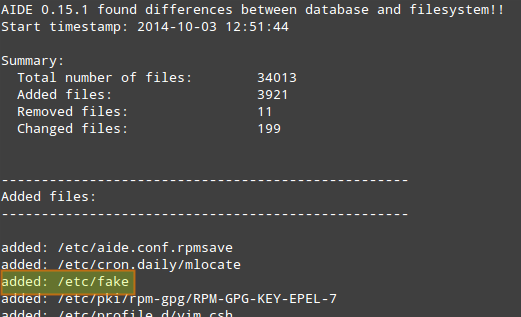

#### Test Scenario 1 ####


Let's add a new file (e.g., /etc/fake).

    # cat /dev/null > /etc/fake

#### Test Scenario 2 ####


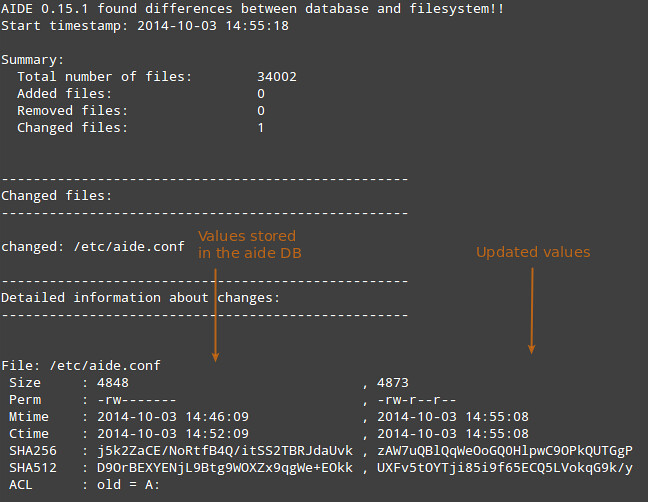

Let's change file permissions, and see if it can be detected.

    # chmod 644 /etc/aide.conf

#### Test Scenario 3 ####


Finally, let's change file content (e.g., by adding a comment line to /etc/aide.conf):

    # echo "#This is a comment" >> /etc/aide.conf

In the screenshot above, the first column shows the file attributes, the second column the values present in the AIDE database, and the third column the updated values for those attributes. An empty space in the third column indicates that the attribute has not changed (ACL in this example).

### Conclusion ###


If you ever find yourself in a situation where you have good reasons to think that a system has been tampered with, but are unable to determine what might have been changed at first sight, a host-based intrusion detection system like AIDE can be very helpful in that it can help you quickly identify what has changed instead of guessing and wasting valuable time.


--------------------------------------------------------------------------------


via: http://xmodulo.com/host-intrusion-detection-system-centos.html


Author: [Gabriel Cánepa][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://xmodulo.com/author/gabriel
[1]:http://aide.sourceforge.net/

@ -0,0 +1,117 @@

How to speed up slow apt-get install on Debian or Ubuntu
================================================================================

If you feel that package installation by **apt-get** or **aptitude** is often too slow on your Debian or Ubuntu system, there are several ways to improve the situation. Have you considered switching the default mirror sites being used? Have you checked the upstream bandwidth of your Internet connection to see if that is the bottleneck?

If nothing else helps, you can try a third option: the [apt-fast][1] tool. apt-fast is a shell script wrapper around apt-get and aptitude that can accelerate package downloads. Internally, apt-fast uses the [aria2][2] download utility, which can download a file in chunks from multiple mirrors simultaneously (as in a BitTorrent download).

### Install apt-fast on Debian or Ubuntu ###


Here are the steps to install apt-fast on Debian-based Linux.


#### Debian ####

    $ sudo apt-get install aria2
    $ wget https://github.com/ilikenwf/apt-fast/archive/master.zip
    $ unzip master.zip
    $ cd apt-fast-master
    $ sudo cp apt-fast /usr/bin
    $ sudo cp apt-fast.conf /etc
    $ sudo cp ./man/apt-fast.8 /usr/share/man/man8
    $ sudo gzip /usr/share/man/man8/apt-fast.8
    $ sudo cp ./man/apt-fast.conf.5 /usr/share/man/man5
    $ sudo gzip /usr/share/man/man5/apt-fast.conf.5

#### Ubuntu 14.04 and higher ####

    $ sudo add-apt-repository ppa:saiarcot895/myppa
    $ sudo apt-get update
    $ sudo apt-get install apt-fast

#### Ubuntu 11.04 to 13.10 ####

    $ sudo add-apt-repository ppa:apt-fast/stable
    $ sudo apt-get update
    $ sudo apt-get install apt-fast

During installation on Ubuntu, you will be asked to choose a default package manager (e.g., apt-get, aptitude), and other settings. You can always change the settings later by editing a configuration file /etc/apt-fast.conf.


### Configure apt-fast ###


After installation, you need to configure a list of mirrors used by **apt-fast** in /etc/apt-fast.conf.


You can find a list of Debian/Ubuntu mirrors to choose from at the following URLs.


- **Debian**: [http://www.debian.org/mirror/list][3]
- **Ubuntu**: [https://launchpad.net/ubuntu/+archivemirrors][4]

After choosing mirrors which are geographically close to your location, add those chosen mirrors to /etc/apt-fast.conf in the following format.

    $ sudo vi /etc/apt-fast.conf

Debian:

    MIRRORS=('http://ftp.us.debian.org/debian/,http://carroll.aset.psu.edu/pub/linux/distributions/debian/,http://debian.gtisc.gatech.edu/debian/,http://debian.lcs.mit.edu/debian/,http://mirror.cc.columbia.edu/debian/')

Ubuntu/Mint:

    MIRRORS=('http://us.archive.ubuntu.com/ubuntu,http://mirror.cc.columbia.edu/pub/linux/ubuntu/archive/,http://mirror.cc.vt.edu/pub2/ubuntu/,http://mirror.umd.edu/ubuntu/,http://mirrors.mit.edu/ubuntu/')

As shown above, individual mirrors for a particular archive should be separated by commas. It is recommended that you include the default mirror site specified in /etc/apt/sources.list in the MIRRORS string.
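Because the whole list lives in one quoted, comma-separated string, it is easy to drop a comma or a quote when editing by hand. The sketch below builds the line from individual mirror URLs. The function name `build_mirrors` is an assumption for illustration, and it covers the single-archive case shown above.

```shell
# Hypothetical helper: join mirror URLs into a MIRRORS line for apt-fast.conf.
build_mirrors() {
    (
        # Inside the subshell, "$*" joins the arguments with the first
        # character of IFS, so setting IFS to a comma gives the desired list.
        IFS=,
        printf "MIRRORS=('%s')\n" "$*"
    )
}

build_mirrors http://ftp.us.debian.org/debian/ http://debian.lcs.mit.edu/debian/
```

The printed line can then be pasted into /etc/apt-fast.conf in place of the existing MIRRORS entry.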


### Install a Package with apt-fast ###


Now you are ready to test the power of apt-fast. Here is the command-line usage of **apt-fast**:

    apt-fast [apt-get options and arguments]
    apt-fast [aptitude options and arguments]
    apt-fast { { install | upgrade | dist-upgrade | build-dep | download | source } [ -y | --yes | --assume-yes | --assume-no ] ... | clean }

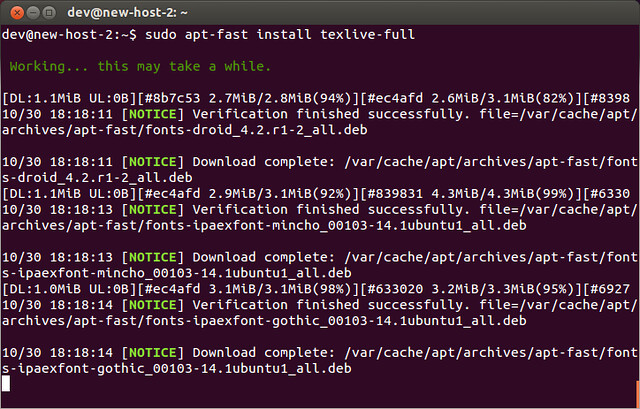

To install a package with **apt-fast**:

    $ sudo apt-fast install texlive-full

To download a package in the current directory without installing it:

    $ sudo apt-fast download texlive-full

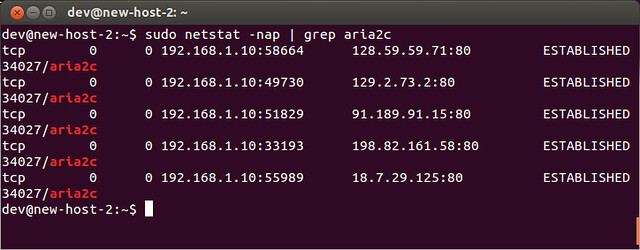

As mentioned earlier, parallel downloading in apt-fast is done by aria2. You can verify parallel downloads from multiple mirrors as follows.

    $ sudo netstat -nap | grep aria2c

Note that **apt-fast** does not make "apt-get update" faster. Parallel downloading gets triggered only for "install", "upgrade", "dist-upgrade" and "build-dep" operations. For other operations, apt-fast simply falls back to the default package manager **apt-get** or **aptitude**.


### How Fast is apt-fast? ###


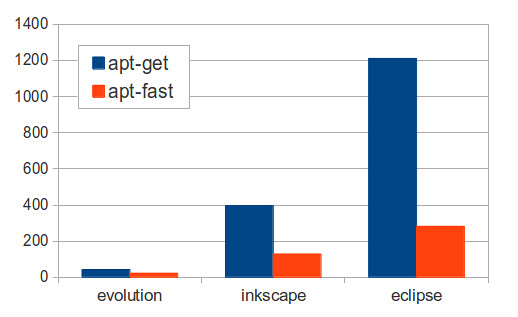

To compare apt-fast and apt-get, I tried installing several packages using two methods on two identical Ubuntu instances. The following graph shows total package installation time (in seconds).


As you can see, **apt-fast** is substantially faster (e.g., 3 to 4 times faster) than **apt-get**, especially when a bulky package is installed.

Be aware that the performance improvement will of course vary, depending on your upstream Internet connectivity. In my case, I had ample spare bandwidth in my upstream connection, which is why I saw a dramatic improvement from parallel downloads.

--------------------------------------------------------------------------------


via: http://xmodulo.com/speed-slow-apt-get-install-debian-ubuntu.html


Author: [Dan Nanni][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](http://linux.cn/)

[a]:http://xmodulo.com/author/nanni
[1]:https://github.com/ilikenwf/apt-fast
[2]:http://aria2.sourceforge.net/
[3]:http://www.debian.org/mirror/list
[4]:https://launchpad.net/ubuntu/+archivemirrors
