mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-03 23:40:14 +08:00

commit

2a2021d324

@ -2,8 +2,6 @@

|

||||

================================================================================

|

||||

|

||||

|

||||

图片来源 : opensource.com

|

||||

|

||||

经过了一整天的Opensource.com[社区版主][1]年会,最后一项日程提了上来,内容只有“特邀嘉宾:待定”几个字。作为[Opensource.com][3]的项目负责人和社区管理员,[Jason Hibbets][2]起身解释道,“因为这个嘉宾有可能无法到场,因此我不想提前说是谁。在几个月前我问他何时有空过来,他给了我两个时间点,我选了其中一个。今天是这三周中Jim唯一能来的一天”。(译者注:Jim是指下文中提到的Jim Whitehurst,即红帽公司总裁兼首席执行官)

|

||||

|

||||

这句话在版主们(Moderators)中引起一阵轰动,他们从世界各地赶来参加此次的[拥抱开源大会(All Things Open Conference)][4]。版主们纷纷往前挪动椅子,仔细聆听。

|

||||

@ -14,7 +12,7 @@

|

||||

|

||||

“大家好!”,这个家伙开口了。他没穿正装,只是衬衫和休闲裤。

|

||||

|

||||

这时会场中第二高个子的人,红帽全球意识部门(Global Awareness)的高级主管[Jeff Mackanic][5],告诉他大部分社区版本今天都在场,然后让每个人开始作简单的自我介绍。

|

||||

这时会场中第二高个子的人,红帽全球意识部门(Global Awareness)的高级主管[Jeff Mackanic][5],告诉他大部分社区版主今天都在场,然后让每个人开始作简单的自我介绍。

|

||||

|

||||

“我叫[Jen Wike Huger][6],负责Opensource.com的内容管理,很高兴见到大家。”

|

||||

|

||||

@ -22,13 +20,13 @@

|

||||

|

||||

“我叫[Robin][9],从2013年开始参与版主项目。我在OSDC做了一些事情,工作是在[City of the Hague][10]维护[网站][11]。”

|

||||

|

||||

“我叫[Marcus Hanwell][12],来自英格兰,在[Kitware][13]工作。同时,我是FOSS科学软件的技术总监,和国家实验室在[Titan][14] Z和[Gpu programming][15]方面合作。我主要使用[Gentoo][16]和[KDE][17]。最后,我很激动能加入FOSS和开源科学。”

|

||||

“我叫[Marcus Hanwell][12],来自英格兰,在[Kitware][13]工作。同时,我是FOSS science software的技术总监,和国家实验室在[Titan][14] Z和[Gpu programming][15]方面合作。我主要使用[Gentoo][16]和[KDE][17]。最后,我很激动能参与到FOSS和开源科学。”

|

||||

|

||||

“我叫[Phil Shapiro][18],是华盛顿的一个小图书馆28个Linux工作站的管理员。我视各位为我的同事。非常高兴能一起交流分享,贡献力量。我主要关注FOSS和自豪感的关系,以及FOSS如何提升自豪感。”

|

||||

“我叫[Phil Shapiro][18],是华盛顿的一个小图书馆的28个Linux工作站的管理员。我视各位为我的同事。非常高兴能一起交流分享,贡献力量。我主要关注FOSS和自豪感的关系,以及FOSS如何提升自豪感。”

|

||||

|

||||

“我叫[Joshua Holm][19]。我大多数时间都在关注系统更新,以及帮助人们在网上找工作。”

|

||||

|

||||

“我叫[Mel Chernoff][20],在红帽工作,和[Jason Hibbets]和[Mark Bohannon]一起主要关注政府渠道方面。”

|

||||

“我叫[Mel Chernoff][20],在红帽工作,和[Jason Hibbets][22]和[Mark Bohannon][23]一起主要关注[政府][21]渠道方面。”

|

||||

|

||||

“我叫[Scott Nesbitt][24],写过很多东西,使用FOSS很久了。我是个普通人,不是系统管理员,也不是程序员,只希望能更加高效工作。我帮助人们在商业和生活中使用FOSS。”

|

||||

|

||||

@ -38,41 +36,41 @@

|

||||

|

||||

“你在[新FOSS Minor][30]教书?!”,Jim说道,“很酷!”

|

||||

|

||||

“我叫[Jason Baker][31]。我是红慢的一个云专家,主要做[OpenStack][32]方面的工作。”

|

||||

“我叫[Jason Baker][31]。我是红帽的一个云专家,主要做[OpenStack][32]方面的工作。”

|

||||

|

||||

“我叫[Mark Bohannan][33],是红帽全球开放协议的一员,在华盛顿外工作。和Mel一样,我花了相当多时间写作,也从法律和政府部门中找合作者。我做了一个很好的小册子来讨论正在发生在政府中的积极变化。”

|

||||

|

||||

“我叫[Jason Hibbets][34],我组织了这次会议。”

|

||||

“我叫[Jason Hibbets][34],我组织了这次讨论。”

|

||||

|

||||

会场中一片笑声。

|

||||

|

||||

“我也组织了这片讨论,可以这么说,”这个棕红色头发笑容灿烂的家伙说道。笑声持续一会逐渐平息。

|

||||

“我也组织了这个讨论,可以这么说,”这个棕红色头发笑容灿烂的家伙说道。笑声持续一会逐渐平息。

|

||||

|

||||

我当时在他左边,时不时从转录空隙中抬头看一眼,然后从眼神中注意到微笑背后暗示的那个自2008年1月起开始领导公司的人,红帽的CEO[Jim Whitehurst][35]。

|

||||

我当时在他左边,时不时从记录的间隙中抬头看一眼,我注意到淡淡微笑背后的那个令人瞩目的人,是自2008年1月起开始领导红帽公司的CEO [Jim Whitehurst][35]。

|

||||

|

||||

“我有世界上最好的工作,”稍稍向后靠、叉腿抱头,Whitehurst开始了演讲。“我开始领导红帽,在世界各地旅行到处看看情况。在这里的七年中,FOSS和广泛的开源创新所发生的美好的事情是开源已经脱离了条条框框。我现在认为,IT正处在FOSS之前所在的位置。我们可以预见FOSS从一个替代走向创新驱动力。”用户也看到了这一点。他们用FOSS并不是因为它便宜,而是因为它能提供和创新的解决方案。这也十一个全球现象。比如,我刚才还在印度,然后发现那里的用户拥抱开源的两个理由:一个是创新,另一个是那里的市场有些特殊,需要完全的控制。

|

||||

“我有世界上最好的工作,”稍稍向后靠、叉腿抱头,Whitehurst开始了演讲。“我开始领导红帽,在世界各地旅行到处看看情况。在这里的七年中,FOSS和广泛的开源创新所发生的最美好的事情是开源已经脱离了条条框框。我现在认为,信息技术正处在FOSS之前所在的位置。我们可以预见FOSS从一个替代品走向创新驱动力。我们的用户也看到了这一点。他们用FOSS并不是因为它便宜,而是因为它能带来可控和创新的解决方案。这也是个全球现象。比如,我刚才还在印度,然后发现那里的用户拥抱开源的两个理由:一个是创新,另一个是那里的市场有些特殊,需要完全的可控。”

|

||||

|

||||

“[孟买证券交易所][36]想得到源代码并加以控制,五年前这在证券交易领域闻所未闻。那时FOSS正在重复发明轮子。今天看来,FOSS正在做几乎所有的结合了大数据的事物。几乎所有的新框架,语言和方法论,包括流动(尽管不包括设备),都首先发生在开源世界。”

|

||||

“[孟买证券交易所][36]想得到源代码并加以控制,五年前这种事情在证券交易领域就没有听说过。那时FOSS正在重复发明轮子。今天看来,实际上大数据的每件事情都出现在FOSS领域。几乎所有的新框架,语言和方法论,包括移动通讯(尽管不包括设备),都首先发生在开源世界。”

|

||||

|

||||

“这是因为用户数量已经达到了相当的规模。这不只是红帽遇到的情况,[Google][37],[Amazon][38],[Facebook][39]等也出现这样的情况。他们想解决自己的问题,用开源的方式。忘掉协议吧,开源绝不仅如此。我们建立了一个交通工具,一套规则,例如[Hadoop][40],[Cassandra][41]和其他工具。事实上,开源驱动创新。例如,Hadoop在厂商们意识的规模带来的问题。他们实际上有足够的资和资源金来解决自己的问题。”开源是许多领域的默认技术方案。这在一个更加注重内容的世界中更是如此,例如[3D打印][42]和其他使用信息内容的物理产品。”

|

||||

“这是因为用户数量已经达到了相当的规模。这不只是红帽遇到的情况,[Google][37],[Amazon][38],[Facebook][39]等也出现这样的情况。他们想解决自己的问题,用开源的方式。忘掉许可协议吧,开源绝不仅如此。我们建立了一个交通工具,一套规则,例如[Hadoop][40],[Cassandra][41]和其他工具。事实上,开源驱动创新。例如,Hadoop是在厂商们意识到规模带来的问题时的一个解决方案。他们实际上有足够的资金和资源来解决自己的问题。开源是许多领域的默认技术方案。这在一个更加注重内容的世界中更是如此,例如[3D打印][42]和其他使用信息内容的实体产品。”

|

||||

|

||||

“源代码的开源确实很酷,但开源不应当仅限于此。在各行各业不同领域开源仍有可以用武之地。我们要问下自己:‘开源能够为教育,政府,法律带来什么?其它的呢?其它的领域如何能学习我们?’”

|

||||

|

||||

“还有内容的问题。内容在现在是免费的,当然我们可以投资更多的免费内容,不过我们也需要商业模式围绕的内容。这是我们更应该关注的。如果你相信开放的创新能带来更好,那么我们需要更多的商业模式。”

|

||||

“还有内容的问题。内容在现在是免费的,当然我们可以投资更多的免费内容,不过我们也需要商业模式围绕的内容。这是我们更应该关注的。如果你相信开放的创新更好,那么我们需要更多的商业模式。”

|

||||

|

||||

“教育让我担心其相比与‘社区’它更关注‘内容’。例如,无论我走到哪里,大学校长们都会说,‘等等,难道教育将会免费?!’对于下游来说FOSS免费很棒,但别忘了上游很强大。免费课程很棒,但我们同样需要社区来不断迭代和完善。这是很多人都在做的事情,Opensource.com是一个提供交流的社区。问题不是‘我们如何控制内容’,也不是‘如何建立和分发内容’,而是要确保它处在不断的完善当中,而且能给其他领域提供有价值的参考。”

|

||||

“教育让我担心,其相比与‘社区’它更关注‘内容’。例如,无论我走到哪里,大学的校长们都会说,‘等等,难道教育将会免费?!’对于下游来说FOSS免费很棒,但别忘了上游很强大。免费课程很棒,但我们同样需要社区来不断迭代和完善。这是很多人都在做的事情,Opensource.com是一个提供交流的社区。问题不是‘我们如何控制内容’,也不是‘如何建立和分发内容’,而是要确保它处在不断的完善当中,而且能给其他领域提供有价值的参考。”

|

||||

|

||||

“改变世界的潜力是无穷无尽的,我们已经取得了很棒的进步。”六年前我们痴迷于制定宣言,我们说‘我们是领导者’。我们用错词了,因为那潜在意味着控制。积极的参与者们同样也不能很好理解……[Máirín Duffy][43]提出了[催化剂][44]这个词。然后我们组成了红帽,不断地促进行动,指引方向。”

|

||||

|

||||

“Opensource.com也是其他领域的催化剂,而这正是它的本义所在,我希望你们也这样认为。当时的内容质量和现在比起来都令人难以置信。你可以看到每季度它都在进步。谢谢你们的时间!谢谢成为了催化剂!这是一个让世界变得更好的机会。我想听听你们的看法。”

|

||||

“Opensource.com也是其他领域的催化剂,而这正是它的本义所在,我希望你们也这样认为。当时的内容质量和现在比起来都令人难以置信。你可以看到每季度它都在进步。谢谢你们付出的时间!谢谢成为了催化剂!这是一个让世界变得更好的机会。我想听听你们的看法。”

|

||||

|

||||

我瞥了一下桌子,发现几个人眼中带泪。

|

||||

|

||||

然后Whitehurst又回顾了大会的开放教育议题。“极端一点看,如果你有一门[Ulysses][45]的公开课。在这里你能和一群人一起合作体验课堂。这样就和代码块一样的:大家一起努力,代码随着时间不断改进。”

|

||||

|

||||

在这一点上,我有发言权。当谈论其FOSS和学术团体之间的差异,向基础和可能的不调和这些词语都跳了出来。

|

||||

在这一点上,我有发言权。当谈论其FOSS和学术团体之间的差异,像“基础”和“可能不调和”这些词语都跳了出来。

|

||||

|

||||

**Remy**: “倒退带来死亡。如果你在论文或者发布的代码中烦了一个错误,有可能带来十分严重的后果。学校一直都是避免失败寻求正确答案的地方。复制意味着抄袭。轮子在一遍遍地教条地被发明。FOSS你能快速失败,但在学术界,你只能带来无效的结果。”

|

||||

**Remy**: “倒退带来死亡。如果你在论文或者发布的代码中犯了一个错误,有可能带来十分严重的后果。学校一直都是避免失败寻求正确答案的地方。复制意味着抄袭。轮子在一遍遍地教条地被发明。FOSS让你能快速失败,但在学术界,你只能带来无效的结果。”

|

||||

|

||||

**Nicole**: “学术界有太多自我的家伙,你们需要一个发布经理。”

|

||||

|

||||

@ -80,20 +78,21 @@

|

||||

|

||||

**Luis**: “团队和分享应该优先考虑,红帽可以多向它们强调这一点。”

|

||||

|

||||

**Jim**: “还有公司在其中扮演积极角色吗?”

|

||||

**Jim**: “还有公司在其中扮演积极角色了吗?”

|

||||

|

||||

[Phil Shapiro][46]: “我对FOSS的临界点感兴趣。联邦没有改用[LibreOffice][47]把我逼疯了。我们没有在软件上花税款,也不应当在字处理软件或者微软的Office上浪费税钱。”

|

||||

[Phil Shapiro][46]: “我对FOSS的临界点感兴趣。Fed没有改用[LibreOffice][47]把我逼疯了。我们没有在软件上花税款,也不应当在字处理软件或者微软的Office上浪费税钱。”

|

||||

|

||||

**Jim**: “我们经常提倡这一点。我们能做更多吗?这是个问题。首先,我们在我们的产品涉足的地方取得了进步。我们在政府中有坚实的专营权。我们比私有公司平均话费更多。银行和电信业都和政府挨着。我们在欧洲做的更好,我认为在那工作又更低的税。下一代计算就像‘终结者’,我们到处取得了进步,但仍然需要忧患意识。”

|

||||

**Jim**: “我们经常提倡这一点。我们能做更多吗?这是个问题。首先,我们在我们的产品涉足的地方取得了进步。我们在政府中有坚实的专营权。我们比私有公司平均花费更多。银行和电信业都和政府挨着。我们在欧洲做的更好,我认为在那工作有更低的税。下一代计算就像‘终结者’,我们到处取得了进步,但仍然需要忧患意识。”

|

||||

|

||||

突然,门开了。Jim转身向门口站着的执行助理点头。他要去参加下一场会了。他并拢双腿,站着向前微倾。然后,他再次向每个人的工作和奉献表示感谢,微笑着出了门……留给我们更多的激励。

|

||||

|

||||

突然,门开了。Jim转身向门口站着的执行助理点头。他要去参加下一场会了。他并拢双腿,站着向前微倾。然后,他再次向每个人的工作和奉献表示感谢,微笑着出了门……留给我们更多的激励。

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/business/14/12/jim-whitehurst-inspiration-open-source

|

||||

|

||||

作者:[Remy][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[fyh](https://github.com/fyh)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,53 +1,54 @@

|

||||

Autojump – 一个高级的‘cd’命令用以快速浏览 Linux 文件系统

|

||||

Autojump:一个可以在 Linux 文件系统快速导航的高级 cd 命令

|

||||

================================================================================

|

||||

对于那些主要通过控制台或终端使用 Linux 命令行来工作的 Linux 用户来说,他们真切地感受到了 Linux 的强大。 然而在 Linux 的分层文件系统中进行浏览有时或许是一件头疼的事,尤其是对于那些新手来说。

|

||||

|

||||

对于那些主要通过控制台或终端使用 Linux 命令行来工作的 Linux 用户来说,他们真切地感受到了 Linux 的强大。 然而在 Linux 的分层文件系统中进行导航有时或许是一件头疼的事,尤其是对于那些新手来说。

|

||||

|

||||

现在,有一个用 Python 写的名为 `autojump` 的 Linux 命令行实用程序,它是 Linux ‘[cd][1]’命令的高级版本。

|

||||

|

||||

|

||||

|

||||

Autojump – 浏览 Linux 文件系统的最快方式

|

||||

*Autojump – Linux 文件系统导航的最快方式*

|

||||

|

||||

这个应用原本由 Joël Schaerer 编写,现在由 +William Ting 维护。

|

||||

|

||||

Autojump 应用从用户那里学习并帮助用户在 Linux 命令行中进行更轻松的目录浏览。与传统的 `cd` 命令相比,autojump 能够更加快速地浏览至目的目录。

|

||||

Autojump 应用可以从用户那里学习并帮助用户在 Linux 命令行中进行更轻松的目录导航。与传统的 `cd` 命令相比,autojump 能够更加快速地导航至目的目录。

|

||||

|

||||

#### autojump 的特色 ####

|

||||

|

||||

- 免费且开源的应用,在 GPL V3 协议下发布。

|

||||

- 自主学习的应用,从用户的浏览习惯中学习。

|

||||

- 更快速地浏览。不必包含子目录的名称。

|

||||

- 对于大多数的标准 Linux 发行版本,能够在软件仓库中下载得到,它们包括 Debian (testing/unstable), Ubuntu, Mint, Arch, Gentoo, Slackware, CentOS, RedHat and Fedora。

|

||||

- 自由开源的应用,在 GPL V3 协议下发布。

|

||||

- 自主学习的应用,从用户的导航习惯中学习。

|

||||

- 更快速地导航。不必包含子目录的名称。

|

||||

- 对于大多数的标准 Linux 发行版本,能够在软件仓库中下载得到,它们包括 Debian (testing/unstable), Ubuntu, Mint, Arch, Gentoo, Slackware, CentOS, RedHat 和 Fedora。

|

||||

- 也能在其他平台中使用,例如 OS X(使用 Homebrew) 和 Windows (通过 Clink 来实现)

|

||||

- 使用 autojump 你可以跳至任何特定的目录或一个子目录。你还可以打开文件管理器来到达某个目录,并查看你在某个目录中所待时间的统计数据。

|

||||

- 使用 autojump 你可以跳至任何特定的目录或一个子目录。你还可以用文件管理器打开某个目录,并查看你在某个目录中所待时间的统计数据。

|

||||

|

||||

#### 前提 ####

|

||||

|

||||

- 版本号不低于 2.6 的 Python

|

||||

|

||||

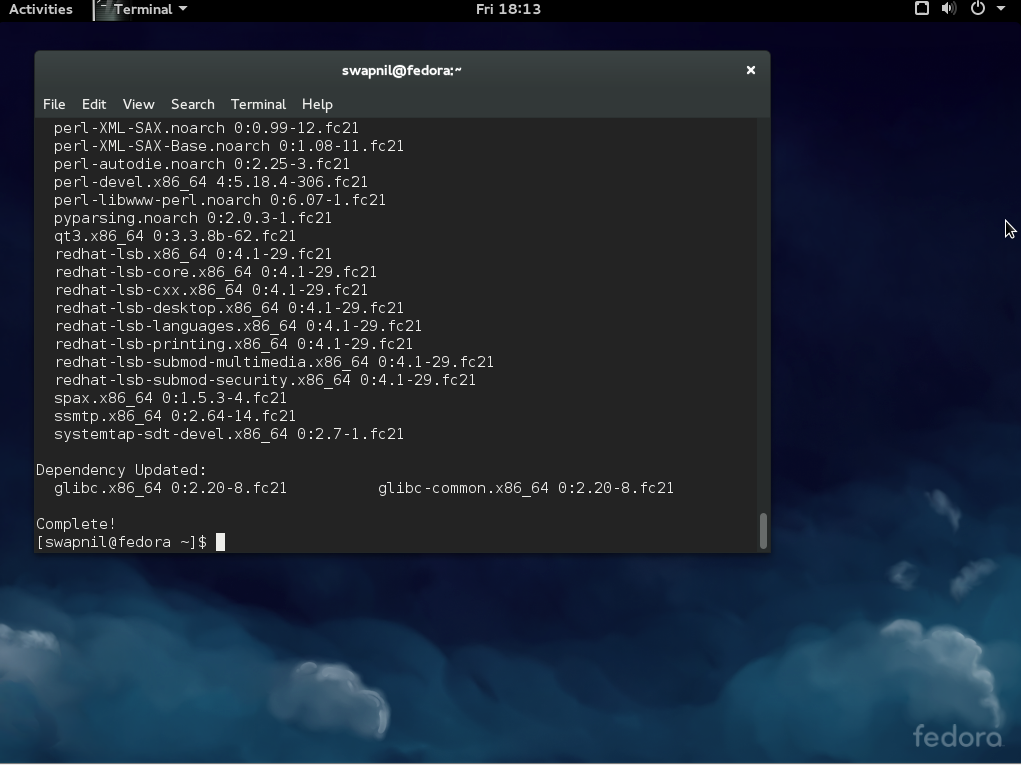

### 第 1 步: 做一次全局系统升级 ###

|

||||

### 第 1 步: 做一次完整的系统升级 ###

|

||||

|

||||

1. 以 **root** 用户的身份,做一次系统更新或升级,以此保证你安装有最新版本的 Python。

|

||||

1、 以 **root** 用户的身份,做一次系统更新或升级,以此保证你安装有最新版本的 Python。

|

||||

|

||||

# apt-get update && apt-get upgrade && apt-get dist-upgrade [APT based systems]

|

||||

# yum update && yum upgrade [YUM based systems]

|

||||

# dnf update && dnf upgrade [DNF based systems]

|

||||

# apt-get update && apt-get upgrade && apt-get dist-upgrade [基于 APT 的系统]

|

||||

# yum update && yum upgrade [基于 YUM 的系统]

|

||||

# dnf update && dnf upgrade [基于 DNF 的系统]

|

||||

|

||||

**注** : 这里特别提醒,在基于 YUM 或 DNF 的系统中,更新和升级执行相同的行动,大多数时间里它们是通用的,这点与基于 APT 的系统不同。

|

||||

|

||||

### 第 2 步: 下载和安装 Autojump ###

|

||||

|

||||

2. 正如前面所言,在大多数的 Linux 发行版本的软件仓库中, autojump 都可获取到。通过包管理器你就可以安装它。但若你想从源代码开始来安装它,你需要克隆源代码并执行 python 脚本,如下面所示:

|

||||

2、 正如前面所言,在大多数的 Linux 发行版本的软件仓库中, autojump 都可获取到。通过包管理器你就可以安装它。但若你想从源代码开始来安装它,你需要克隆源代码并执行 python 脚本,如下面所示:

|

||||

|

||||

#### 从源代码安装 ####

|

||||

|

||||

若没有安装 git,请安装它。我们需要使用它来克隆 git 仓库。

|

||||

|

||||

# apt-get install git [APT based systems]

|

||||

# yum install git [YUM based systems]

|

||||

# dnf install git [DNF based systems]

|

||||

# apt-get install git [基于 APT 的系统]

|

||||

# yum install git [基于 YUM 的系统]

|

||||

# dnf install git [基于 DNF 的系统]

|

||||

|

||||

一旦安装完 git,以常规用户身份登录,然后像下面那样来克隆 autojump:

|

||||

一旦安装完 git,以普通用户身份登录,然后像下面那样来克隆 autojump:

|

||||

|

||||

$ git clone git://github.com/joelthelion/autojump.git

|

||||

|

||||

@ -55,29 +56,29 @@ Autojump 应用从用户那里学习并帮助用户在 Linux 命令行中进行

|

||||

|

||||

$ cd autojump

|

||||

|

||||

下载,赋予脚本文件可执行权限,并以 root 用户身份来运行安装脚本。

|

||||

下载,赋予安装脚本文件可执行权限,并以 root 用户身份来运行安装脚本。

|

||||

|

||||

# chmod 755 install.py

|

||||

# ./install.py

|

||||

|

||||

#### 从软件仓库中安装 ####

|

||||

|

||||

3. 假如你不想麻烦,你可以以 **root** 用户身份从软件仓库中直接安装它:

|

||||

3、 假如你不想麻烦,你可以以 **root** 用户身份从软件仓库中直接安装它:

|

||||

|

||||

在 Debian, Ubuntu, Mint 及类似系统中安装 autojump :

|

||||

|

||||

# apt-get install autojump (注: 这里原文为 autojumo, 应该为 autojump)

|

||||

# apt-get install autojump

|

||||

|

||||

为了在 Fedora, CentOS, RedHat 及类似系统中安装 autojump, 你需要启用 [EPEL 软件仓库][2]。

|

||||

|

||||

# yum install epel-release

|

||||

# yum install autojump

|

||||

OR

|

||||

或

|

||||

# dnf install autojump

|

||||

|

||||

### 第 3 步: 安装后的配置 ###

|

||||

|

||||

4. 在 Debian 及其衍生系统 (Ubuntu, Mint,…) 中, 激活 autojump 应用是非常重要的。

|

||||

4、 在 Debian 及其衍生系统 (Ubuntu, Mint,…) 中, 激活 autojump 应用是非常重要的。

|

||||

|

||||

为了暂时激活 autojump 应用,即直到你关闭当前会话或打开一个新的会话之前让 autojump 均有效,你需要以常规用户身份运行下面的命令:

|

||||

|

||||

@ -89,7 +90,7 @@ Autojump 应用从用户那里学习并帮助用户在 Linux 命令行中进行

|

||||

|

||||

### 第 4 步: Autojump 的预测试和使用 ###

|

||||

|

||||

5. 如先前所言, autojump 将只跳到先前 `cd` 命令到过的目录。所以在我们开始测试之前,我们要使用 `cd` 切换到一些目录中去,并创建一些目录。下面是我所执行的命令。

|

||||

5、 如先前所言, autojump 将只跳到先前 `cd` 命令到过的目录。所以在我们开始测试之前,我们要使用 `cd` 切换到一些目录中去,并创建一些目录。下面是我所执行的命令。

|

||||

|

||||

$ cd

|

||||

$ cd

|

||||

@ -120,45 +121,45 @@ Autojump 应用从用户那里学习并帮助用户在 Linux 命令行中进行

|

||||

|

||||

现在,我们已经切换到过上面所列的目录,并为了测试创建了一些目录,一切准备就绪,让我们开始吧。

|

||||

|

||||

**需要记住的一点** : `j` 是 autojump 的一个包装,你可以使用 j 来代替 autojump, 相反亦可。

|

||||

**需要记住的一点** : `j` 是 autojump 的一个封装,你可以使用 j 来代替 autojump, 相反亦可。

|

||||

|

||||

6. 使用 -v 选项查看安装的 autojump 的版本。

|

||||

6、 使用 -v 选项查看安装的 autojump 的版本。

|

||||

|

||||

$ j -v

|

||||

or

|

||||

或

|

||||

$ autojump -v

|

||||

|

||||

|

||||

|

||||

查看 Autojump 的版本

|

||||

*查看 Autojump 的版本*

|

||||

|

||||

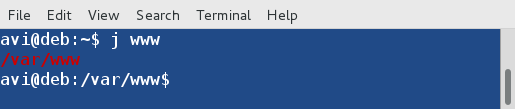

7. 跳到先前到过的目录 ‘/var/www‘。

|

||||

7、 跳到先前到过的目录 ‘/var/www‘。

|

||||

|

||||

$ j www

|

||||

|

||||

|

||||

|

||||

跳到目录

|

||||

*跳到目录*

|

||||

|

||||

8. 跳到先前到过的子目录‘/home/avi/autojump-test/b‘ 而不键入子目录的全名。

|

||||

8、 跳到先前到过的子目录‘/home/avi/autojump-test/b‘ 而不键入子目录的全名。

|

||||

|

||||

$ jc b

|

||||

|

||||

|

||||

|

||||

跳到子目录

|

||||

*跳到子目录*

|

||||

|

||||

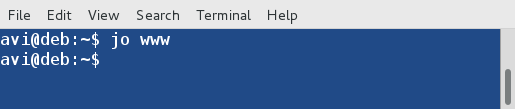

9. 使用下面的命令,你就可以从命令行打开一个文件管理器,例如 GNOME Nautilus ,而不是跳到一个目录。

|

||||

9、 使用下面的命令,你就可以从命令行打开一个文件管理器,例如 GNOME Nautilus ,而不是跳到一个目录。

|

||||

|

||||

$ jo www

|

||||

|

||||

|

||||

|

||||

|

||||

跳到目录

|

||||

*打开目录*

|

||||

|

||||

|

||||

|

||||

在文件管理器中打开目录

|

||||

*在文件管理器中打开目录*

|

||||

|

||||

你也可以在一个文件管理器中打开一个子目录。

|

||||

|

||||

@ -166,19 +167,19 @@ Autojump 应用从用户那里学习并帮助用户在 Linux 命令行中进行

|

||||

|

||||

|

||||

|

||||

打开子目录

|

||||

*打开子目录*

|

||||

|

||||

|

||||

|

||||

在文件管理器中打开子目录

|

||||

*在文件管理器中打开子目录*

|

||||

|

||||

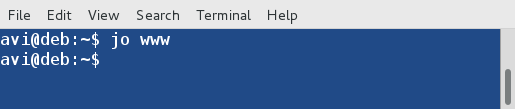

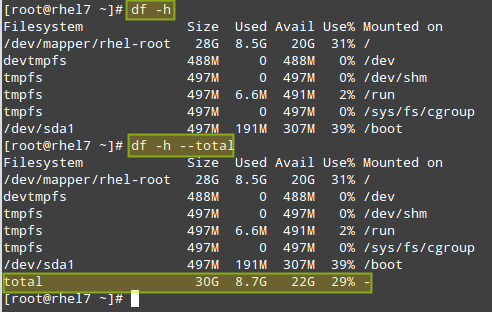

10. 查看每个文件夹的关键权重和在所有目录权重中的总关键权重的相关统计数据。文件夹的关键权重代表在这个文件夹中所花的总时间。 目录权重是列表中目录的数目。(注: 在这一句中,我觉得原文中的 if 应该为 is)

|

||||

10、 查看每个文件夹的权重和全部文件夹计算得出的总权重的统计数据。文件夹的权重代表在这个文件夹中所花的总时间。 文件夹权重是该列表中目录的数字。(LCTT 译注: 在这一句中,我觉得原文中的 if 应该为 is)

|

||||

|

||||

$ j --stat

|

||||

|

||||

|

||||

|

||||

|

||||

查看目录统计数据

|

||||

*查看文件夹统计数据*

|

||||

|

||||

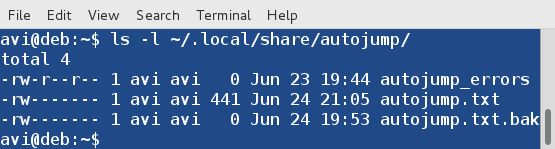

**提醒** : autojump 存储其运行日志和错误日志的地方是文件夹 `~/.local/share/autojump/`。千万不要重写这些文件,否则你将失去你所有的统计状态结果。

|

||||

|

||||

@ -186,15 +187,15 @@ Autojump 应用从用户那里学习并帮助用户在 Linux 命令行中进行

|

||||

|

||||

|

||||

|

||||

Autojump 的日志

|

||||

*Autojump 的日志*

|

||||

|

||||

11. 假如需要,你只需运行下面的命令就可以查看帮助 :

|

||||

11、 假如需要,你只需运行下面的命令就可以查看帮助 :

|

||||

|

||||

$ j --help

|

||||

|

||||

|

||||

|

||||

Autojump 的帮助和选项

|

||||

*Autojump 的帮助和选项*

|

||||

|

||||

### 功能需求和已知的冲突 ###

|

||||

|

||||

@ -204,18 +205,19 @@ Autojump 的帮助和选项

|

||||

|

||||

### 结论: ###

|

||||

|

||||

假如你是一个命令行用户, autojump 是你必备的实用程序。它可以简化许多事情。它是一个在命令行中浏览 Linux 目录的绝佳的程序。请自行尝试它,并在下面的评论框中让我知晓你宝贵的反馈。保持联系,保持分享。喜爱并分享,帮助我们更好地传播。

|

||||

假如你是一个命令行用户, autojump 是你必备的实用程序。它可以简化许多事情。它是一个在命令行中导航 Linux 目录的绝佳的程序。请自行尝试它,并在下面的评论框中让我知晓你宝贵的反馈。保持联系,保持分享。喜爱并分享,帮助我们更好地传播。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.tecmint.com/autojump-a-quickest-way-to-navigate-linux-filesystem/

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.tecmint.com/author/avishek/

|

||||

[1]:http://www.tecmint.com/cd-command-in-linux/

|

||||

[2]:http://www.tecmint.com/how-to-enable-epel-repository-for-rhel-centos-6-5/

|

||||

[2]:https://linux.cn/article-2324-1.html

|

||||

[3]:http://www.tecmint.com/manage-linux-filenames-with-special-characters/

|

||||

@ -1,6 +1,6 @@

|

||||

如何在Ubuntu 14.04/15.04上配置Chef(服务端/客户端)

|

||||

如何在 Ubuntu 上安装配置管理系统 Chef (大厨)

|

||||

================================================================================

|

||||

Chef是对于信息技术专业人员的一款配置管理和自动化工具,它可以配置和管理你的设备无论它在本地还是在云上。它可以用于加速应用部署并协调多个系统管理员和开发人员的工作,涉及到成百甚至上千的服务器和程序来支持大量的客户群。chef最有用的是让设备变成代码。一旦你掌握了Chef,你可以获得一流的网络IT支持来自动化管理你的云端设备或者终端用户。

|

||||

Chef是面对IT专业人员的一款配置管理和自动化工具,它可以配置和管理你的基础设施,无论它在本地还是在云上。它可以用于加速应用部署并协调多个系统管理员和开发人员的工作,这涉及到可支持大量的客户群的成百上千的服务器和程序。chef最有用的是让基础设施变成代码。一旦你掌握了Chef,你可以获得一流的网络IT支持来自动化管理你的云端基础设施或者终端用户。

|

||||

|

||||

下面是我们将要在本篇中要设置和配置Chef的主要组件。

|

||||

|

||||

@ -10,34 +10,13 @@ Chef是对于信息技术专业人员的一款配置管理和自动化工具,

|

||||

|

||||

我们将在下面的基础环境下设置Chef配置管理系统。

|

||||

|

||||

注:表格

|

||||

<table width="701" style="height: 284px;">

|

||||

<tbody>

|

||||

<tr>

|

||||

<td width="660" colspan="2"><strong>管理和配置工具:Chef</strong></td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="220"><strong>基础操作系统</strong></td>

|

||||

<td width="492">Ubuntu 14.04.1 LTS (x86_64)</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="220"><strong>Chef Server</strong></td>

|

||||

<td width="492">Version 12.1.0</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="220"><strong>Chef Manage</strong></td>

|

||||

<td width="492">Version 1.17.0</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="220"><strong>Chef Development Kit</strong></td>

|

||||

<td width="492">Version 0.6.2</td>

|

||||

</tr>

|

||||

<tr>

|

||||

<td width="220"><strong>内存和CPU</strong></td>

|

||||

<td width="492">4 GB , 2.0+2.0 GHZ</td>

|

||||

</tr>

|

||||

</tbody>

|

||||

</table>

|

||||

|管理和配置工具:Chef||

|

||||

|-------------------------------|---|

|

||||

|基础操作系统|Ubuntu 14.04.1 LTS (x86_64)|

|

||||

|Chef Server|Version 12.1.0|

|

||||

|Chef Manage|Version 1.17.0|

|

||||

|Chef Development Kit|Version 0.6.2|

|

||||

|内存和CPU|4 GB , 2.0+2.0 GHz|

|

||||

|

||||

### Chef服务端的安装和配置 ###

|

||||

|

||||

@ -45,15 +24,15 @@ Chef服务端是核心组件,它存储配置以及其他和工作站交互的

|

||||

|

||||

我使用下面的命令来下载和安装它。

|

||||

|

||||

**1) 下载Chef服务端**

|

||||

####1) 下载Chef服务端

|

||||

|

||||

root@ubuntu-14-chef:/tmp# wget https://web-dl.packagecloud.io/chef/stable/packages/ubuntu/trusty/chef-server-core_12.1.0-1_amd64.deb

|

||||

|

||||

**2) 安装Chef服务端**

|

||||

####2) 安装Chef服务端

|

||||

|

||||

root@ubuntu-14-chef:/tmp# dpkg -i chef-server-core_12.1.0-1_amd64.deb

|

||||

|

||||

**3) 重新配置Chef服务端**

|

||||

####3) 重新配置Chef服务端

|

||||

|

||||

现在运行下面的命令来启动所有的chef服务端服务,这步也许会花费一些时间,因为它有许多不同一起工作的服务组成来创建一个正常运作的系统。

|

||||

|

||||

@ -64,35 +43,35 @@ chef服务端启动命令'chef-server-ctl reconfigure'需要运行两次,这

|

||||

Chef Client finished, 342/350 resources updated in 113.71139964 seconds

|

||||

opscode Reconfigured!

|

||||

|

||||

**4) 重启系统 **

|

||||

####4) 重启系统

|

||||

|

||||

安装完成后重启系统使系统能最好的工作,不然我们或许会在创建用户的时候看到下面的SSL连接错误。

|

||||

|

||||

ERROR: Errno::ECONNRESET: Connection reset by peer - SSL_connect

|

||||

|

||||

**5) 创建心的管理员**

|

||||

####5) 创建新的管理员

|

||||

|

||||

运行下面的命令来创建一个新的用它自己的配置的管理员账户。创建过程中,用户的RSA私钥会自动生成并需要被保存到一个安全的地方。--file选项会保存RSA私钥到指定的路径下。

|

||||

运行下面的命令来创建一个新的管理员账户及其配置。创建过程中,用户的RSA私钥会自动生成,它需要保存到一个安全的地方。--filename选项会保存RSA私钥到指定的路径下。

|

||||

|

||||

root@ubuntu-14-chef:/tmp# chef-server-ctl user-create kashi kashi kashi kashif.fareedi@gmail.com kashi123 --filename /root/kashi.pem

|

||||

|

||||

### Chef服务端的管理设置 ###

|

||||

|

||||

Chef Manage是一个针对企业Chef用户的管理控制台,它启用了可视化的web用户界面并可以管理节点、数据包、规则、环境、配置和基于角色的访问控制(RBAC)

|

||||

Chef Manage是一个针对企业Chef用户的管理控制台,它提供了可视化的web用户界面,可以管理节点、数据包、规则、环境、Cookbook 和基于角色的访问控制(RBAC)

|

||||

|

||||

**1) 下载Chef Manage**

|

||||

####1) 下载Chef Manage

|

||||

|

||||

从官网复制链接病下载chef manage的安装包。

|

||||

从官网复制链接并下载chef manage的安装包。

|

||||

|

||||

root@ubuntu-14-chef:~# wget https://web-dl.packagecloud.io/chef/stable/packages/ubuntu/trusty/opscode-manage_1.17.0-1_amd64.deb

|

||||

|

||||

**2) 安装Chef Manage**

|

||||

####2) 安装Chef Manage

|

||||

|

||||

使用下面的命令在root的家目录下安装它。

|

||||

|

||||

root@ubuntu-14-chef:~# chef-server-ctl install opscode-manage --path /root

|

||||

|

||||

**3) 重启Chef Manage和服务端**

|

||||

####3) 重启Chef Manage和服务端

|

||||

|

||||

安装完成后我们需要运行下面的命令来重启chef manage和服务端。

|

||||

|

||||

@ -101,28 +80,27 @@ Chef Manage是一个针对企业Chef用户的管理控制台,它启用了可

|

||||

|

||||

### Chef Manage网页控制台 ###

|

||||

|

||||

我们可以使用localhost访问网页控制台以及fqdn,并用已经创建的管理员登录

|

||||

我们可以使用localhost或它的全称域名来访问网页控制台,并用已经创建的管理员登录

|

||||

|

||||

|

||||

|

||||

**1) Chef Manage创建新的组织 **

|

||||

####1) Chef Manage创建新的组织

|

||||

|

||||

你或许被要求创建新的组织或者接受其他阻止的邀请。如下所示,使用缩写和全名来创建一个新的组织。

|

||||

你或许被要求创建新的组织,或者也可以接受其他组织的邀请。如下所示,使用缩写和全名来创建一个新的组织。

|

||||

|

||||

|

||||

|

||||

**2) 用命令行创建心的组织 **

|

||||

####2) 用命令行创建新的组织

|

||||

|

||||

We can also create new Organization from the command line by executing the following command.

|

||||

我们同样也可以运行下面的命令来创建新的组织。

|

||||

|

||||

root@ubuntu-14-chef:~# chef-server-ctl org-create linux Linoxide Linux Org. --association_user kashi --filename linux.pem

|

||||

|

||||

### 设置工作站 ###

|

||||

|

||||

我们已经完成安装chef服务端,现在我们可以开始创建任何recipes、cookbooks、属性和其他任何的我们想要对Chef的修改。

|

||||

我们已经完成安装chef服务端,现在我们可以开始创建任何recipes([基础配置元素](https://docs.chef.io/recipes.html))、cookbooks([基础配置集](https://docs.chef.io/cookbooks.html))、attributes([节点属性](https://docs.chef.io/attributes.html))和其他任何的我们想要对Chef做的修改。

|

||||

|

||||

**1) 在Chef服务端上创建新的用户和组织 **

|

||||

####1) 在Chef服务端上创建新的用户和组织

|

||||

|

||||

为了设置工作站,我们用命令行创建一个新的用户和组织。

|

||||

|

||||

@ -130,25 +108,23 @@ We can also create new Organization from the command line by executing the follo

|

||||

|

||||

root@ubuntu-14-chef:~# chef-server-ctl org-create blogs Linoxide Blogs Inc. --association_user bloger --filename blogs.pem

|

||||

|

||||

**2) 下载工作站入门套件 **

|

||||

####2) 下载工作站入门套件

|

||||

|

||||

Now Download and Save starter-kit from the chef manage web console on a workstation and use it to work with Chef server.

|

||||

在工作站的网页控制台中下面并保存入门套件用于与服务端协同工作

|

||||

在工作站的网页控制台中下载保存入门套件,它用于与服务端协同工作

|

||||

|

||||

|

||||

|

||||

**3) 点击"Proceed"下载套件 **

|

||||

####3) 下载套件后,点击"Proceed"

|

||||

|

||||

|

||||

|

||||

### 对于工作站的Chef开发套件设置 ###

|

||||

### 用于工作站的Chef开发套件设置 ###

|

||||

|

||||

Chef开发套件是一款包含所有开发chef所需工具的软件包。它捆绑了由Chef开发的带Chef客户端的工具。

|

||||

Chef开发套件是一款包含开发chef所需的所有工具的软件包。它捆绑了由Chef开发的带Chef客户端的工具。

|

||||

|

||||

**1) 下载 Chef DK**

|

||||

####1) 下载 Chef DK

|

||||

|

||||

We can Download chef development kit from its official web link and choose the required operating system to get its chef development tool kit.

|

||||

我们可以从它的官网链接中下载开发包,并选择操作系统来得到chef开发包。

|

||||

我们可以从它的官网链接中下载开发包,并选择操作系统来下载chef开发包。

|

||||

|

||||

|

||||

|

||||

@ -156,13 +132,13 @@ We can Download chef development kit from its official web link and choose the r

|

||||

|

||||

root@ubuntu-15-WKS:~# wget https://opscode-omnibus-packages.s3.amazonaws.com/ubuntu/12.04/x86_64/chefdk_0.6.2-1_amd64.deb

|

||||

|

||||

**1) Chef开发套件安装**

|

||||

####2) Chef开发套件安装

|

||||

|

||||

使用dpkg命令安装开发套件

|

||||

|

||||

root@ubuntu-15-WKS:~# dpkg -i chefdk_0.6.2-1_amd64.deb

|

||||

|

||||

**3) Chef DK 验证**

|

||||

####3) Chef DK 验证

|

||||

|

||||

使用下面的命令验证客户端是否已经正确安装。

|

||||

|

||||

@ -195,7 +171,7 @@ We can Download chef development kit from its official web link and choose the r

|

||||

Verification of component 'chefspec' succeeded.

|

||||

Verification of component 'package installation' succeeded.

|

||||

|

||||

**连接Chef服务端**

|

||||

####4) 连接Chef服务端

|

||||

|

||||

我们将创建 ~/.chef并从chef服务端复制两个用户和组织的pem文件到chef的文件到这个目录下。

|

||||

|

||||

@ -209,7 +185,7 @@ We can Download chef development kit from its official web link and choose the r

|

||||

kashi.pem 100% 1678 1.6KB/s 00:00

|

||||

linux.pem 100% 1678 1.6KB/s 00:00

|

||||

|

||||

** 编辑配置来管理chef环境 **

|

||||

####5) 编辑配置来管理chef环境

|

||||

|

||||

现在使用下面的内容创建"~/.chef/knife.rb"。

|

||||

|

||||

@ -231,13 +207,13 @@ We can Download chef development kit from its official web link and choose the r

|

||||

|

||||

root@ubuntu-15-WKS:/# mkdir cookbooks

|

||||

|

||||

**测试Knife配置**

|

||||

####6) 测试Knife配置

|

||||

|

||||

运行“knife user list”和“knife client list”来验证knife是否在工作。

|

||||

|

||||

root@ubuntu-15-WKS:/.chef# knife user list

|

||||

|

||||

第一次运行的时候可能会得到下面的错误,这是因为工作站上还没有chef服务端的SSL证书。

|

||||

第一次运行的时候可能会看到下面的错误,这是因为工作站上还没有chef服务端的SSL证书。

|

||||

|

||||

ERROR: SSL Validation failure connecting to host: 172.25.10.173 - SSL_connect returned=1 errno=0 state=SSLv3 read server certificate B: certificate verify failed

|

||||

ERROR: Could not establish a secure connection to the server.

|

||||

@ -245,24 +221,24 @@ We can Download chef development kit from its official web link and choose the r

|

||||

If your Chef Server uses a self-signed certificate, you can use

|

||||

`knife ssl fetch` to make knife trust the server's certificates.

|

||||

|

||||

要从上面的命令中恢复,运行下面的命令来获取ssl整数并重新运行knife user和client list,这时候应该就可以了。

|

||||

要从上面的命令中恢复,运行下面的命令来获取ssl证书,并重新运行knife user和client list,这时候应该就可以了。

|

||||

|

||||

root@ubuntu-15-WKS:/.chef# knife ssl fetch

|

||||

WARNING: Certificates from 172.25.10.173 will be fetched and placed in your trusted_cert

|

||||

directory (/.chef/trusted_certs).

|

||||

|

||||

knife没有办法验证这些是有效的证书。你应该在下载时候验证这些证书的真实性。

|

||||

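Knife has no means to verify these are the correct certificates. You should verify the authenticity of these certificates after downloading.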

|

||||

|

||||

在/.chef/trusted_certs/ubuntu-14-chef_test_com.crt下面添加ubuntu-14-chef.test.com的证书。

|

||||

Adding certificate for ubuntu-14-chef.test.com in /.chef/trusted_certs/ubuntu-14-chef_test_com.crt

|

||||

|

||||

在上面的命令取得ssl证书后,接着运行下面的命令。

|

||||

|

||||

root@ubuntu-15-WKS:/.chef#knife client list

|

||||

kashi-linux

|

||||

|

||||

### 与chef服务端交互的新的节点 ###

|

||||

### 配置与chef服务端交互的新节点 ###

|

||||

|

||||

节点是执行所有设备自动化的chef客户端。因此是时侯添加新的服务端到我们的chef环境下,在配置完chef-server和knife工作站后配置新的节点与chef-server交互。

|

||||

节点是执行所有基础设施自动化的chef客户端。因此,在配置完chef-server和knife工作站后,通过配置新的与chef-server交互的节点,来添加新的服务端到我们的chef环境下。

|

||||

|

||||

我们使用下面的命令来添加新的节点与chef服务端工作。

|

||||

|

||||

@ -291,16 +267,16 @@ We can Download chef development kit from its official web link and choose the r

|

||||

172.25.10.170 to file /tmp/install.sh.26024/metadata.txt

|

||||

172.25.10.170 trying wget...

|

||||

|

||||

之后我们可以在knife节点列表下看到新创建的节点,也会新节点列表下创建新的客户端。

|

||||

之后我们可以在knife节点列表下看到新创建的节点,它也会在新节点创建新的客户端。

|

||||

|

||||

root@ubuntu-15-WKS:~# knife node list

|

||||

mydns

|

||||

|

||||

相似地我们只要提供ssh证书通过上面的knife命令来创建多个节点到chef设备上。

|

||||

相似地我们只要提供ssh证书通过上面的knife命令,就可以在chef设施上创建多个节点。

|

||||

|

||||

### 总结 ###

|

||||

|

||||

本篇我们学习了chef管理工具并通过安装和配置设置浏览了它的组件。我希望你在学习安装和配置Chef服务端以及它的工作站和客户端节点中获得乐趣。

|

||||

本篇我们学习了chef管理工具并通过安装和配置设置基本了解了它的组件。我希望你在学习安装和配置Chef服务端以及它的工作站和客户端节点中获得乐趣。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -308,7 +284,7 @@ via: http://linoxide.com/ubuntu-how-to/install-configure-chef-ubuntu-14-04-15-04

|

||||

|

||||

作者:[Kashif Siddique][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

178

published/20150717 How to collect NGINX metrics - Part 2.md

Normal file


@ -0,0 +1,178 @@


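How to collect NGINX metrics (Part 2)
================================================================================

### How to get the NGINX metrics you need ###

How you capture metrics depends on which version of NGINX you are running and which metrics you want to see. (See [How to monitor NGINX (Part 1)][1] for an in-depth look at NGINX metrics.) Both the free, open-source NGINX and the commercial NGINX Plus ship status modules that report metrics, and NGINX can also be configured to write certain metrics to its logs:

**Metric availability**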

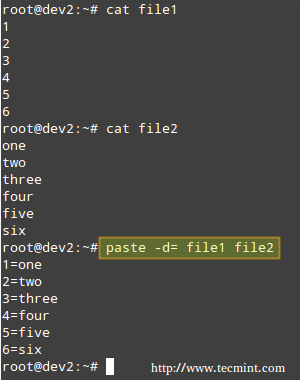

| Metric | [NGINX (open-source)](https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/#open-source) | [NGINX Plus](https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/#plus) | [NGINX logs](https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/#logs) |
|-----|------|-------|-----|
| accepts / accepted | x | x | |
| handled | x | x | |
| dropped | x | x | |
| active | x | x | |
| requests / total | x | x | |
| 4xx codes | | x | x |
| 5xx codes | | x | x |
| request time | | | x |

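#### Metrics collection: NGINX (open-source) ####

Open-source NGINX exposes several basic metrics about server activity on a simple status page, provided that you have the HTTP [stub status module][2] enabled. To check whether the module is already enabled, run:

    nginx -V 2>&1 | grep -o with-http_stub_status_module

If you see **with-http_stub_status_module** in the terminal output, the status module is enabled.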
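
The same check can be scripted. The following is a minimal sketch, not part of NGINX itself: the function names `stub_status_enabled` and `read_nginx_build_info` are made up for illustration, and it assumes an `nginx` binary is on the `PATH`. Note that `nginx -V` writes its build information to stderr, which is why the shell command needs `2>&1`.

```python
import subprocess

# Configure flag that indicates the stub status module was compiled in.
STUB_STATUS_FLAG = "--with-http_stub_status_module"


def stub_status_enabled(build_info: str) -> bool:
    """Return True if the `nginx -V` text lists the stub status configure flag."""
    return STUB_STATUS_FLAG in build_info.split()


def read_nginx_build_info(binary: str = "nginx") -> str:
    """Run `nginx -V` and return everything it printed.

    The binary name is an assumption; nginx prints the configure arguments
    to stderr, so both streams are captured and joined.
    """
    result = subprocess.run(
        [binary, "-V"], capture_output=True, text=True, check=False
    )
    return result.stdout + result.stderr
```

On a machine with NGINX installed, `stub_status_enabled(read_nginx_build_info())` should give the same answer as the `grep` pipeline.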


If that command returns no output, you will need to enable the status module. You can use the `--with-http_stub_status_module` configuration parameter when [building NGINX from source][3]:

    ./configure \
    … \
    --with-http_stub_status_module
    make
    sudo make install

After verifying that the module is enabled, or enabling it yourself, you will also need to modify your NGINX configuration to set up a locally accessible URL (e.g., /nginx_status) for the status page:

    server {
        location /nginx_status {
            stub_status on;

            access_log off;
            allow 127.0.0.1;
            deny all;
        }
    }

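Note: The server blocks of an NGINX configuration are usually found not in the main configuration file (e.g., /etc/nginx/nginx.conf) but in supplemental configuration files that the main config loads. To find the main configuration file, first run:

    nginx -t

Open the main configuration file that is listed, and look for lines beginning with include near the end of the http block, such as:

    include /etc/nginx/conf.d/*.conf;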
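
If the main configuration file is long, listing its include directives programmatically can save some scrolling. This is only a rough sketch: the helper names are hypothetical, the regular expression handles simple one-line `include` directives and skips commented-out ones, and relative patterns are expanded against the current working directory, which may differ from how NGINX itself resolves them.

```python
import glob
import re

# Matches `include <pattern>;` at the start of a line (leading spaces allowed).
INCLUDE_RE = re.compile(r"^\s*include\s+([^;#]+);", re.MULTILINE)


def include_patterns(config_text: str) -> list[str]:
    """Return the path pattern of every include directive, in file order."""
    return [match.strip() for match in INCLUDE_RE.findall(config_text)]


def included_files(config_text: str) -> list[str]:
    """Expand each include pattern into the files that exist on disk."""
    files: list[str] = []
    for pattern in include_patterns(config_text):
        files.extend(sorted(glob.glob(pattern)))
    return files
```

Reading the main configuration file into a string and passing it to `included_files` gives a list of candidate files to search for the main server block.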


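Within one of the included config files you should find the main **server** block, which you can modify as shown above to configure NGINX metrics reporting. After changing any configuration, reload the configs by executing:

    nginx -s reload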

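Now you can view the status page to see your metrics:

    Active connections: 24
    server accepts handled requests
    1156958 1156958 4491319
    Reading: 0 Writing: 18 Waiting : 6

Note that if you are trying to access the status page from a remote machine, you will need to whitelist the remote machine's IP address in your status configuration, just as 127.0.0.1 is whitelisted in the configuration snippet above.

The NGINX status page is an easy way to get a quick snapshot of your metrics, but for continuous monitoring you will need to automatically record that data at regular intervals. Parsers for NGINX status pages already exist for monitoring tools such as [Nagios][4] and [Datadog][5], as well as for the statistics collection daemon [collectD][6].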
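
To illustrate what such a parser does, here is a minimal sketch that turns the status page text into numbers. It is not how any of the tools above are implemented. The function names and the default URL `http://127.0.0.1/nginx_status` are assumptions that match the example configuration, and `dropped` is not reported by the page itself: the sketch derives it as accepts minus handled.

```python
import re
from urllib.request import urlopen

# Field layout of the stub status page; whitespace around ":" is tolerated.
STATUS_RE = re.compile(
    r"Active connections:\s*(?P<active>\d+)\s+"
    r"server accepts handled requests\s+"
    r"(?P<accepts>\d+)\s+(?P<handled>\d+)\s+(?P<requests>\d+)\s+"
    r"Reading\s*:\s*(?P<reading>\d+)\s+"
    r"Writing\s*:\s*(?P<writing>\d+)\s+"
    r"Waiting\s*:\s*(?P<waiting>\d+)"
)


def parse_stub_status(text: str) -> dict[str, int]:
    """Parse stub status text into a dict of integer metrics."""
    match = STATUS_RE.search(text)
    if match is None:
        raise ValueError("unrecognized stub_status output")
    metrics = {name: int(value) for name, value in match.groupdict().items()}
    # Derived metric: connections accepted but never handled.
    metrics["dropped"] = metrics["accepts"] - metrics["handled"]
    return metrics


def fetch_stub_status(url: str = "http://127.0.0.1/nginx_status") -> dict[str, int]:
    """Fetch and parse the status page (the URL is an assumed example)."""
    with urlopen(url, timeout=5) as response:
        return parse_stub_status(response.read().decode("utf-8"))
```

Because accepts, handled, and requests are counters that only grow, a recorder would typically call `fetch_stub_status` on a fixed schedule and store the difference between consecutive samples.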


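#### Metrics collection: NGINX Plus ####

The commercial NGINX Plus provides [many more metrics][7] through its ngx_http_status_module than are available in open-source NGINX. Among the additional metrics exposed by NGINX Plus are bytes streamed, as well as information about upstream systems and caches. NGINX Plus also reports counts of all HTTP status code types (1XX, 2XX, 3XX, 4XX, 5XX). A sample NGINX Plus status board is available [here][8].

Note: the "Active" connections on the NGINX Plus status dashboard are defined slightly differently from the "Active" connections collected via the open-source NGINX stub status module. In NGINX Plus metrics, "Active" connections do not include connections in the Waiting state (i.e., "Idle" connections).

NGINX Plus also reports [metrics in JSON format][9] for easy integration with other monitoring systems. With NGINX Plus, you can see the metrics and health status [for a given upstream grouping of servers][10], or drill down to get a count of just the response codes [from a single server][11] in that upstream:

    {"1xx":0,"2xx":3483032,"3xx":0,"4xx":23,"5xx":0,"total":3483055}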
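
As a small illustration of why the JSON form is convenient, the sketch below computes each status class's share of all responses from a payload shaped like the sample above. The function name is hypothetical, and the payload shape is assumed to be exactly the flat object shown; real responses may be nested inside a larger document.

```python
import json


def response_code_shares(payload: str) -> dict[str, float]:
    """Return each status class's share of the total response count."""
    counts = json.loads(payload)
    total = counts["total"]
    classes = {key: value for key, value in counts.items() if key != "total"}
    if total == 0:
        # Avoid dividing by zero on a server that has not answered anything yet.
        return {key: 0.0 for key in classes}
    return {key: value / total for key, value in classes.items()}
```

These counts are cumulative since startup, so an error rate over a recent window would need the difference between two polls rather than a single snapshot.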


To enable the NGINX Plus metrics dashboard, you can add a status server block inside the http block of your NGINX configuration. (See the section above on collecting metrics from open-source NGINX for instructions on locating the relevant config files.) For example, to set up a status dashboard at http://your.ip.address:8080/status.html and a JSON interface at http://your.ip.address:8080/status, you would add the following server block:

    server {
        listen 8080;
        root /usr/share/nginx/html;

        location /status {
            status;
        }

        location = /status.html {
        }
    }

The status pages should be live once you reload your NGINX configuration:

    nginx -s reload

The official NGINX Plus docs have [more details][13] on how to configure the expanded status module.

#### 指标收集:NGINX 日志 ####

|

||||

|

||||

NGINX 的 [日志模块][14] 会把可自定义的访问日志写到你配置的指定位置。你可以通过[添加或移除变量][15]来自定义日志的格式和包含的数据。要存储详细的日志,最简单的方法是添加下面一行在你配置文件的 server 块中(参见上上节,为收集开源版 NGINX 指标而如何查找相关的配置文件的说明。):

|

||||

|

||||

access_log logs/host.access.log combined;

|

||||

|

||||

更改 NGINX 配置文件后,执行如下命令重新加载配置文件:

|

||||

|

||||

nginx -s reload

|

||||

|

||||

默认包含的 “combined” 的日志格式,会包括[一系列关键的数据][17],如实际的 HTTP 请求和相应的响应代码。在下面的示例日志中,NGINX 记录了请求 /index.html 时的 200(成功)状态码和访问不存在的请求文件 /fail 的 404(未找到)错误。

|

||||

|

||||

127.0.0.1 - - [19/Feb/2015:12:10:46 -0500] "GET /index.html HTTP/1.1" 200 612 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/40.0.2214.111 Safari/537.36"
127.0.0.1 - - [19/Feb/2015:12:11:05 -0500] "GET /fail HTTP/1.1" 404 570 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/40.0.2214.111 Safari/537.36"

|

||||

|

||||

你可以通过在 NGINX 配置文件中的 http 块添加一个新的日志格式来记录请求处理时间:

|

||||

|

||||

log_format nginx '$remote_addr - $remote_user [$time_local] '
                 '"$request" $status $body_bytes_sent $request_time '
                 '"$http_referer" "$http_user_agent"';

|

||||

|

||||

并修改配置文件中 **server** 块的 access_log 行:

|

||||

|

||||

access_log logs/host.access.log nginx;

|

||||

|

||||

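After reloading the configuration (run `nginx -s reload`), your access log will include the request processing time, as shown below. The value is in seconds with millisecond resolution. In this example, the server received a request for /big.pdf and returned a 206 (Partial Content, a success status) after sending 33973115 bytes. Processing the request took 0.202 seconds (202 milliseconds):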

|

||||

|

||||

127.0.0.1 - - [19/Feb/2015:15:50:36 -0500] "GET /big.pdf HTTP/1.1" 206 33973115 0.202 "-" "Mozilla/5.0 (Macintosh; Intel Mac OS X 10_10_1) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/40.0.2214.111 Safari/537.36"

|

||||

|

||||

你可以使用各种工具和服务来解析和分析 NGINX 日志。例如,[rsyslog][18] 可以监视你的日志,并将其传递给多个日志分析服务;你也可以使用自由开源工具,比如 [logstash][19] 来收集和分析日志;或者你可以使用一个统一日志记录层,如 [Fluentd][20] 来收集和解析你的 NGINX 日志。
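Before setting up one of those tools, a rough summary of the request times can be pulled out of the log with awk. This is only a sketch under stated assumptions: it expects the custom `nginx` log format defined above, and it assumes request paths contain no spaces, so that the status code is field 9 and `$request_time` is field 11. The function name and the second sample line (including its `curl/7.0` user agent) are made up for illustration.

```shell
#!/bin/sh
# Summarise the request time of access-log lines read on stdin.
# Field 9 is the status code, field 11 the request time in seconds.
summarize_request_times() {
    awk '
        BEGIN { max = 0 }
        {
            n++
            total += $11
            if ($11 + 0 > max) max = $11 + 0
            if ($9 >= 500) errors++
        }
        END {
            if (n > 0)
                printf "requests=%d errors_5xx=%d avg=%.3f max=%.3f\n", n, errors, total / n, max
        }
    '
}

# Two shortened sample lines in the custom format.
printf '%s\n' \
    '127.0.0.1 - - [19/Feb/2015:15:50:36 -0500] "GET /big.pdf HTTP/1.1" 206 33973115 0.202 "-" "curl/7.0"' \
    '127.0.0.1 - - [19/Feb/2015:15:50:40 -0500] "GET /index.html HTTP/1.1" 200 612 0.100 "-" "curl/7.0"' |
    summarize_request_times
```

Against a real log you would run something like `summarize_request_times < logs/host.access.log`, using whatever path your `access_log` directive points to.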

|

||||

|

||||

### 结论 ###

|

||||

|

||||

监视 NGINX 的哪一项指标将取决于你可用的工具,以及监控指标所提供的信息是否满足你们的需要。举例来说,错误率的收集是否足够重要到需要你们购买 NGINX Plus ,还是架设一个可以捕获和分析日志的系统就够了?

|

||||

|

||||

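At Datadog, we have built integrations with both NGINX and NGINX Plus so that you can begin collecting and monitoring metrics from all your web servers with minimal setup. Learn how to monitor NGINX with Datadog [in this post][21], and get started right away with a [free trial of Datadog][22].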

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/

|

||||

|

||||

作者:K Young

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:https://www.datadoghq.com/blog/how-to-monitor-nginx/

|

||||

[2]:http://nginx.org/en/docs/http/ngx_http_stub_status_module.html

|

||||

[3]:http://wiki.nginx.org/InstallOptions

|

||||

[4]:https://exchange.nagios.org/directory/Plugins/Web-Servers/nginx

|

||||

[5]:http://docs.datadoghq.com/integrations/nginx/

|

||||

[6]:https://collectd.org/wiki/index.php/Plugin:nginx

|

||||

[7]:http://nginx.org/en/docs/http/ngx_http_status_module.html#data

|

||||

[8]:http://demo.nginx.com/status.html

|

||||

[9]:http://demo.nginx.com/status

|

||||

[10]:http://demo.nginx.com/status/upstreams/demoupstreams

|

||||

[11]:http://demo.nginx.com/status/upstreams/demoupstreams/0/responses

|

||||

[12]:https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/#open-source

|

||||

[13]:http://nginx.org/en/docs/http/ngx_http_status_module.html#example

|

||||

[14]:http://nginx.org/en/docs/http/ngx_http_log_module.html

|

||||

[15]:http://nginx.org/en/docs/http/ngx_http_log_module.html#log_format

|

||||

[16]:https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/#open-source

|

||||

[17]:http://nginx.org/en/docs/http/ngx_http_log_module.html#log_format

|

||||

[18]:http://www.rsyslog.com/

|

||||

[19]:https://www.elastic.co/products/logstash

|

||||

[20]:http://www.fluentd.org/

|

||||

[21]:https://www.datadoghq.com/blog/how-to-monitor-nginx-with-datadog/

|

||||

[22]:https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/#sign-up

|

||||

[23]:https://github.com/DataDog/the-monitor/blob/master/nginx/how_to_collect_nginx_metrics.md

|

||||

[24]:https://github.com/DataDog/the-monitor/issues

|

||||

@ -1,16 +1,16 @@

|

||||

在Linux中利用"Explain Shell"脚本更容易地理解Shell命令

|

||||

轻松使用“Explain Shell”脚本来理解 Shell 命令

|

||||

================================================================================

|

||||

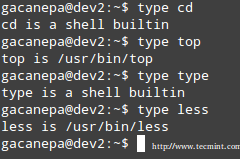

在某些时刻, 当我们在Linux平台上工作时我们所有人都需要shell命令的帮助信息。 尽管内置的帮助像man pages、whatis命令是有帮助的, 但man pages的输出非常冗长, 除非是个有linux经验的人,不然从大量的man pages中获取帮助信息是非常困难的,而whatis命令的输出很少超过一行, 这对初学者来说是不够的。

|

||||

我们在Linux上工作时,每个人都会遇到需要查找shell命令的帮助信息的时候。 尽管内置的帮助像man pages、whatis命令有所助益, 但man pages的输出非常冗长, 除非是个有linux经验的人,不然从大量的man pages中获取帮助信息是非常困难的,而whatis命令的输出很少超过一行, 这对初学者来说是不够的。

|

||||

|

||||

|

||||

|

||||

在Linux Shell中解释Shell命令

|

||||

*在Linux Shell中解释Shell命令*

|

||||

|

||||

有一些第三方应用程序, 像我们在[Commandline Cheat Sheet for Linux Users][1]提及过的'cheat'命令。Cheat是个杰出的应用程序,即使计算机没有联网也能提供shell命令的帮助, 但是它仅限于预先定义好的命令。

|

||||

有一些第三方应用程序, 像我们在[Linux 用户的命令行速查表][1]提及过的'cheat'命令。cheat是个优秀的应用程序,即使计算机没有联网也能提供shell命令的帮助, 但是它仅限于预先定义好的命令。

|

||||

|

||||

Jackson写了一小段代码,它能非常有效地在bash shell里面解释shell命令,可能最美之处就是你不需要安装第三方包了。他把包含这段代码的的文件命名为”explain.sh“。

|

||||

Jackson写了一小段代码,它能非常有效地在bash shell里面解释shell命令,可能最美之处就是你不需要安装第三方包了。他把包含这段代码的的文件命名为“explain.sh”。

|

||||

|

||||

#### Explain工具的特性 ####

|

||||

#### explain.sh工具的特性 ####

|

||||

|

||||

- 易嵌入代码。

|

||||

- 不需要安装第三方工具。

|

||||

@ -18,22 +18,22 @@ Jackson写了一小段代码,它能非常有效地在bash shell里面解释she

|

||||

- 需要网络连接才能工作。

|

||||

- 纯命令行工具。

|

||||

- 可以解释bash shell里面的大部分shell命令。

|

||||

- 无需root账户参与。

|

||||

- 无需使用root账户。

|

||||

|

||||

**先决条件**

|

||||

|

||||

唯一的条件就是'curl'包了。 在如今大多数Linux发行版里面已经预安装了culr包, 如果没有你可以按照下面的命令来安装。

|

||||

唯一的条件就是'curl'包了。 在如今大多数Linux发行版里面已经预安装了curl包, 如果没有你可以按照下面的命令来安装。

|

||||

|

||||

# apt-get install curl [On Debian systems]

|

||||

# yum install curl [On CentOS systems]

|

||||

|

||||

### 在Linux上安装explain.sh工具 ###

|

||||

|

||||

我们要将下面这段代码插入'~/.bashrc'文件(LCTT注: 若没有该文件可以自己新建一个)中。我们必须为每个用户以及对应的'.bashrc'文件插入这段代码,笔者建议你不要加在root用户下。

|

||||

我们要将下面这段代码插入'~/.bashrc'文件(LCTT译注: 若没有该文件可以自己新建一个)中。我们要为每个用户以及对应的'.bashrc'文件插入这段代码,但是建议你不要加在root用户下。

|

||||

|

||||

我们注意到.bashrc文件的第一行代码以(#)开始, 这个是可选的并且只是为了区分余下的代码。

|

||||

|

||||

# explain.sh 标记代码的开始, 我们将代码插入.bashrc文件的底部。

|

||||

\# explain.sh 标记代码的开始, 我们将代码插入.bashrc文件的底部。

|

||||

|

||||

# explain.sh begins

|

||||

explain () {

|

||||

@ -53,7 +53,7 @@ Jackson写了一小段代码,它能非常有效地在bash shell里面解释she

|

||||

|

||||

### explain.sh工具的使用 ###

|

||||

|

||||

在插入代码并保存之后,你必须退出当前的会话然后重新登录来使改变生效(LCTT注:你也可以直接使用命令“source~/.bashrc”来让改变生效)。每件事情都是交由‘curl’命令处理, 它负责将需要解释的命令以及命令选项传送给mankier服务,然后将必要的信息打印到Linux命令行。不必说的就是使用这个工具你总是需要连接网络。

|

||||

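After inserting the code and saving the file, you must log out of the current session and log back in for the change to take effect (LCTT translator's note: you can also run `source ~/.bashrc` to apply the change immediately). Everything is handled by the 'curl' command, which sends the command and options to be explained to the mankier service and prints the relevant information to the Linux command line. It goes without saying that this tool always needs a working network connection.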

|

||||

|

||||

让我们用explain.sh脚本测试几个笔者不懂的命令例子。

|

||||

|

||||

@ -63,7 +63,7 @@ Jackson写了一小段代码,它能非常有效地在bash shell里面解释she

|

||||

|

||||

|

||||

|

||||

获得du命令的帮助

|

||||

*获得du命令的帮助*

|

||||

|

||||

**2.如果你忘了'tar -zxvf'的作用,你可以简单地如此做:**

|

||||

|

||||

@ -71,7 +71,7 @@ Jackson写了一小段代码,它能非常有效地在bash shell里面解释she

|

||||

|

||||

|

||||

|

||||

Tar命令帮助

|

||||

*Tar命令帮助*

|

||||

|

||||

**3.我的一个朋友经常对'whatis'以及'whereis'命令的使用感到困惑,所以我建议他:**

|

||||

|

||||

@ -86,7 +86,7 @@ Tar命令帮助

|

||||

|

||||

|

||||

|

||||

Whatis/Whereis命令的帮助

|

||||

*Whatis/Whereis命令的帮助*

|

||||

|

||||

你只需要使用“Ctrl+c”就能退出交互模式。

|

||||

|

||||

@ -96,11 +96,11 @@ Whatis/Whereis命令的帮助

|

||||

|

||||

|

||||

|

||||

获取多条命令的帮助

|

||||

*获取多条命令的帮助*

|

||||

|

||||

同样地,你可以请求你的shell来解释任何shell命令。 前提是你需要一个可用的网络。输出的信息是基于解释的需要从服务器中生成的,因此输出的结果是不可定制的。

|

||||

同样地,你可以请求你的shell来解释任何shell命令。 前提是你需要一个可用的网络。输出的信息是基于需要解释的命令,从服务器中生成的,因此输出的结果是不可定制的。

|

||||

|

||||

对于我来说这个工具真的很有用并且它已经荣幸地添加在我的.bashrc文件中。你对这个项目有什么想法?它对你有用么?它的解释令你满意吗?请让我知道吧!

|

||||

对于我来说这个工具真的很有用,并且它已经荣幸地添加在我的.bashrc文件中。你对这个项目有什么想法?它对你有用么?它的解释令你满意吗?请让我知道吧!

|

||||

|

||||

请在下面评论为我们提供宝贵意见,喜欢并分享我们以及帮助我们得到传播。

|

||||

|

||||

@ -110,7 +110,7 @@ via: http://www.tecmint.com/explain-shell-commands-in-the-linux-shell/

|

||||

|

||||

作者:[Avishek Kumar][a]

|

||||

译者:[dingdongnigetou](https://github.com/dingdongnigetou)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,11 +1,12 @@

|

||||

新手应知应会的Linux命令

|

||||

================================================================================

|

||||

|

||||

在Fedora上通过命令行使用dnf来管理系统更新

|

||||

|

||||

基于Linux的系统的优点之一,就是你可以通过终端中使用命令该ing来管理整个系统。使用命令行的优势在于,你可以使用相同的知识和技能来管理随便哪个Linux发行版。

|

||||

*在Fedora上通过命令行使用dnf来管理系统更新*

|

||||

|

||||

对于各个发行版以及桌面环境(DE)而言,要一致地使用图形化用户界面(GUI)却几乎是不可能的,因为它们都提供了各自的用户界面。要明确的是,有那么些情况,你需要在不同的发行版上使用不同的命令来部署某些特定的任务,但是,或多或少它们的概念和意图却仍然是一致的。

|

||||

基于Linux的系统最美妙的一点,就是你可以在终端中使用命令行来管理整个系统。使用命令行的优势在于,你可以使用相同的知识和技能来管理随便哪个Linux发行版。

|

||||

|

||||

对于各个发行版以及桌面环境(DE)而言,要一致地使用图形化用户界面(GUI)却几乎是不可能的,因为它们都提供了各自的用户界面。要明确的是,有些情况下在不同的发行版上需要使用不同的命令来执行某些特定的任务,但是,基本来说它们的思路和目的是一致的。

|

||||

|

||||

在本文中,我们打算讨论Linux用户应当掌握的一些基本命令。我将给大家演示怎样使用命令行来更新系统、管理软件、操作文件以及切换到root,这些操作将在三个主要发行版上进行:Ubuntu(也包括其定制版和衍生版,还有Debian),openSUSE,以及Fedora。

|

||||

|

||||

@ -15,7 +16,7 @@

|

||||

|

||||

Linux是基于安全设计的,但事实上是,任何软件都有缺陷,会导致安全漏洞。所以,保持你的系统更新到最新是十分重要的。这么想吧:运行过时的操作系统,就像是你坐在全副武装的坦克里头,而门却没有锁。武器会保护你吗?任何人都可以进入开放的大门,对你造成伤害。同样,在你的系统中也有没有打补丁的漏洞,这些漏洞会危害到你的系统。开源社区,不像专利世界,在漏洞补丁方面反应是相当快的,所以,如果你保持系统最新,你也获得了安全保证。

|

||||

|

||||

留意新闻站点,了解安全漏洞。如果发现了一个漏洞,请阅读之,然后在补丁出来的第一时间更新。不管怎样,在生产机器上,你每星期必须至少运行一次更新命令。如果你运行这一台复杂的服务器,那么就要额外当心了。仔细阅读变更日志,以确保更新不会搞坏你的自定义服务。

|

||||

留意新闻站点,了解安全漏洞。如果发现了一个漏洞,了解它,然后在补丁出来的第一时间更新。不管怎样,在生产环境上,你每星期必须至少运行一次更新命令。如果你运行着一台复杂的服务器,那么就要额外当心了。仔细阅读变更日志,以确保更新不会搞坏你的自定义服务。

|

||||

|

||||

**Ubuntu**:牢记一点:你在升级系统或安装不管什么软件之前,都必须要刷新仓库(也就是repos)。在Ubuntu上,你可以使用下面的命令来更新系统,第一个命令用于刷新仓库:

|

||||

|

||||

@ -29,7 +30,7 @@ Linux是基于安全设计的,但事实上是,任何软件都有缺陷,会

|

||||

|

||||

sudo apt-get dist-upgrade

|

||||

|

||||

**openSUSE**:如果你是在openSUSE上,你可以使用以下命令来更新系统(照例,第一个命令的意思是更新仓库)

|

||||

**openSUSE**:如果你是在openSUSE上,你可以使用以下命令来更新系统(照例,第一个命令的意思是更新仓库):

|

||||

|

||||

sudo zypper refresh

|

||||

sudo zypper up

|

||||

@ -42,7 +43,7 @@ Linux是基于安全设计的,但事实上是,任何软件都有缺陷,会

|

||||

### 软件安装与移除 ###

|

||||

|

||||

你只可以安装那些你系统上启用的仓库中可用的包,各个发行版默认都附带有并启用了一些官方或者第三方仓库。

|

||||

**Ubuntu**: To install any package on Ubuntu, first update the repo and then use this syntax:

|

||||

|

||||

**Ubuntu**:要在Ubuntu上安装包,首先更新仓库,然后使用下面的语句:

|

||||

|

||||

sudo apt-get install [package_name]

|

||||

@ -75,9 +76,9 @@ Linux是基于安全设计的,但事实上是,任何软件都有缺陷,会

|

||||

|

||||

### 如何管理第三方软件? ###

|

||||

|

||||

在一个庞大的开发者社区中,这些开发者们为用户提供了许多的软件。不同的发行版有不同的机制来使用这些第三方软件,将它们提供给用户。同时也取决于开发者怎样将这些软件提供给用户,有些开发者会提供二进制包,而另外一些开发者则将软件发布到仓库中。

|

||||

在一个庞大的开发者社区中,这些开发者们为用户提供了许多的软件。不同的发行版有不同的机制来将这些第三方软件提供给用户。当然,同时也取决于开发者怎样将这些软件提供给用户,有些开发者会提供二进制包,而另外一些开发者则将软件发布到仓库中。

|

||||

|

||||

Ubuntu严重依赖于PPA(个人包归档),但是,不幸的是,它却没有提供一个内建工具来帮助用于搜索这些PPA仓库。在安装软件前,你将需要通过Google搜索PPA,然后手工添加该仓库。下面就是添加PPA到系统的方法:

|

||||

Ubuntu很多地方都用到PPA(个人包归档),但是,不幸的是,它却没有提供一个内建工具来帮助用于搜索这些PPA仓库。在安装软件前,你将需要通过Google搜索PPA,然后手工添加该仓库。下面就是添加PPA到系统的方法:

|

||||

|

||||

sudo add-apt-repository ppa:<repository-name>

|

||||

|

||||

@ -85,7 +86,7 @@ Ubuntu严重依赖于PPA(个人包归档),但是,不幸的是,它却

|

||||

|

||||

sudo add-apt-repository ppa:libreoffice/ppa

|

||||

|

||||

它会要你按下回车键来导入秘钥。完成后,使用'update'命令来刷新仓库,然后安装该包。

|

||||

它会要你按下回车键来导入密钥。完成后,使用'update'命令来刷新仓库,然后安装该包。

|

||||

|

||||

openSUSE拥有一个针对第三方应用的优雅的解决方案。你可以访问software.opensuse.org,一键点击搜索并安装相应包,它会自动将对应的仓库添加到你的系统中。如果你想要手工添加仓库,可以使用该命令:

|

||||

|

||||

@ -97,13 +98,13 @@ openSUSE拥有一个针对第三方应用的优雅的解决方案。你可以访

|

||||

sudo zypper refresh

|

||||

sudo zypper install libreoffice

|

||||

|

||||

Fedora用户只需要添加RPMFusion(free和non-free仓库一起),该仓库包含了大量的应用。如果你需要添加仓库,命令如下:

|

||||

Fedora用户只需要添加RPMFusion(包括自由软件和非自由软件仓库),该仓库包含了大量的应用。如果你需要添加该仓库,命令如下:

|

||||

|

||||

dnf config-manager --add-repo http://www.example.com/example.repo

|

||||

dnf config-manager --add-repo http://www.example.com/example.repo

|

||||

|

||||

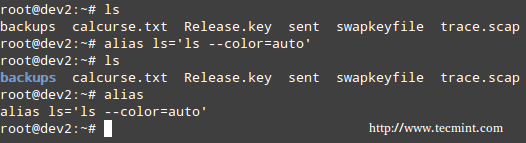

### 一些基本命令 ###

|

||||

|

||||

我已经写了一些关于使用CLI来管理你系统上的文件的[文章][1],下面介绍一些基本米ing令,这些命令在所有发行版上都经常会用到。

|

||||

我已经写了一些关于使用CLI来管理你系统上的文件的[文章][1],下面介绍一些基本命令,这些命令在所有发行版上都经常会用到。

|

||||

|

||||

拷贝文件或目录到一个新的位置:

|

||||

|

||||

@ -113,13 +114,13 @@ dnf config-manager --add-repo http://www.example.com/example.repo

|

||||

|

||||

cp path_of_files/* path_of_the_directory_where_you_want_to_copy/

|

||||

|

||||

将一个文件从某个位置移动到另一个位置(尾斜杠是说在该目录中):

|

||||

将一个文件从某个位置移动到另一个位置(尾斜杠是说放在该目录中):

|

||||

|

||||

mv path_of_file_1 path_of_the_directory_where_you_want_to_move/

|

||||

mv path_of_file_1 path_of_the_directory_where_you_want_to_move/

|

||||

|

||||

将所有文件从一个位置移动到另一个位置:

|

||||

|

||||

mv path_of_directory_where_files_are/* path_of_the_directory_where_you_want_to_move/

|

||||

mv path_of_directory_where_files_are/* path_of_the_directory_where_you_want_to_move/

|

||||

|

||||

删除一个文件:

|

||||

|

||||

@ -135,11 +136,11 @@ dnf config-manager --add-repo http://www.example.com/example.repo

|

||||

|

||||

### 创建新目录 ###

|

||||

|

||||

要创建一个新目录,首先输入你要创建的目录的位置。比如说,你想要在你的Documents目录中创建一个名为'foundation'的文件夹。让我们使用 cd (即change directory,改变目录)命令来改变目录:

|

||||

要创建一个新目录,首先进入到你要创建该目录的位置。比如说,你想要在你的Documents目录中创建一个名为'foundation'的文件夹。让我们使用 cd (即change directory,改变目录)命令来改变目录:

|

||||

|

||||

cd /home/swapnil/Documents

|

||||

|

||||

(替换'swapnil'为你系统中的用户)

|

||||

(替换'swapnil'为你系统中的用户名)

|

||||

|

||||

然后,使用 mkdir 命令来创建该目录:

|

||||

|

||||

@ -149,13 +150,13 @@ dnf config-manager --add-repo http://www.example.com/example.repo

|

||||

|

||||

mkdir /home/swapnil/Documents/foundation

|

||||

|

||||

如果你想要创建父-子目录,那是指目录中的目录,那么可以使用 -p 选项。它会在指定路径中创建所有目录:

|

||||

如果你想要连父目录一起创建,那么可以使用 -p 选项。它会在指定路径中创建所有目录:

|

||||

|

||||

mkdir -p /home/swapnil/Documents/linux/foundation
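The difference the -p option makes is easy to try out safely. The sketch below works inside a throwaway directory created with `mktemp -d`, so nothing under your real home directory is touched; the variable names are assumptions chosen for this example.

```shell
#!/bin/sh
# Work in a throwaway directory so the real home directory is left alone.
workdir=$(mktemp -d)

# Without -p, mkdir refuses to create foundation because linux does not exist yet.
if mkdir "$workdir/linux/foundation" 2>/dev/null; then
    plain_result="created"
else
    plain_result="failed"
fi

# With -p, every missing directory along the path is created.
mkdir -p "$workdir/linux/foundation"
if [ -d "$workdir/linux/foundation" ]; then
    p_result="created"
else
    p_result="failed"
fi

echo "mkdir:    $plain_result"
echo "mkdir -p: $p_result"

# Clean up the throwaway directory.
rm -rf "$workdir"
```

Running `mkdir -p` on a path that already exists is not an error, which makes it convenient in scripts.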

|

||||

|

||||

### 成为root ###

|

||||

|

||||

你或许需要成为root,或者具有sudo权力的用户,来实施一些管理任务,如管理软件包或者对根目录或其下的文件进行一些修改。其中一个例子就是编辑'fstab'文件,该文件记录了挂载的硬件驱动器。它在'etc'目录中,而该目录又在根目录中,你只能作为超级用户来修改该文件。在大多数的发行版中,你可以通过'切换用户'来成为root。比如说,在openSUSE上,我想要成为root,因为我要在根目录中工作,你可以使用下面的命令之一:

|

||||

你或许需要成为root,或者具有sudo权力的用户,来实施一些管理任务,如管理软件包或者对根目录或其下的文件进行一些修改。其中一个例子就是编辑'fstab'文件,该文件记录了挂载的硬盘驱动器。它在'etc'目录中,而该目录又在根目录中,你只能作为超级用户来修改该文件。在大多数的发行版中,你可以通过'su'来成为root。比如说,在openSUSE上,我想要成为root,因为我要在根目录中工作,你可以使用下面的命令之一:

|

||||

|

||||

sudo su -

|

||||

|

||||

@ -165,7 +166,7 @@ dnf config-manager --add-repo http://www.example.com/example.repo

|

||||

|

||||

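The command asks for a password, after which you have root privileges. Keep one thing in mind: never work as the root user unless you know what you are doing. Another important point is that files or directories you modify as root change ownership from the original user or service to root. You must restore the ownership of those files, otherwise that service or user will no longer be able to access or write to them. To change the ownership, use the following command: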

|

||||

|

||||

sudo chown -R user:user /path_of_file_or_directory

|

||||

sudo chown -R user:group file_or_directory

|

||||

|

||||

当你将其它发行版上的分区挂载到系统中时,你可能经常需要该操作。当你试着访问这些分区上的文件时,你可能会碰到权限拒绝错误,你只需要改变这些分区的拥有关系就可以访问它们了。需要额外当心的是,不要改变根目录的权限或者拥有关系。

|

||||

|

||||

@ -177,7 +178,7 @@ via: http://www.linux.com/learn/tutorials/842251-must-know-linux-commands-for-ne

|

||||

|

||||

作者:[Swapnil Bhartiya][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

88

published/20150803 Linux Logging Basics.md

Normal file


@ -0,0 +1,88 @@

|

||||

Linux 日志基础

|

||||

================================================================================

|

||||

首先,我们将描述有关 Linux 日志是什么,到哪儿去找它们,以及它们是如何创建的基础知识。如果你已经知道这些,请随意跳至下一节。

|

||||

|

||||

### Linux 系统日志 ###

|

||||

|

||||

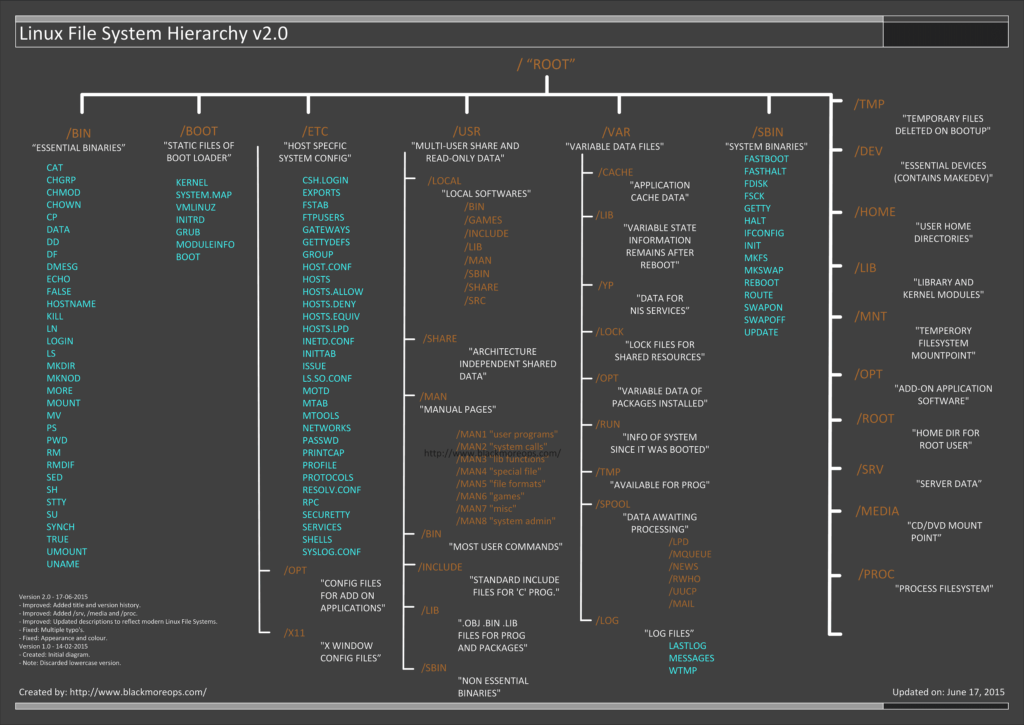

许多有价值的日志文件都是由 Linux 自动地为你创建的。你可以在 `/var/log` 目录中找到它们。下面是在一个典型的 Ubuntu 系统中这个目录的样子:

|

||||

|

||||

|

||||

|

||||

一些最为重要的 Linux 系统日志包括:

|

||||

|

||||

- `/var/log/syslog` 或 `/var/log/messages` 存储所有的全局系统活动数据,包括开机信息。基于 Debian 的系统如 Ubuntu 在 `/var/log/syslog` 中存储它们,而基于 RedHat 的系统如 RHEL 或 CentOS 则在 `/var/log/messages` 中存储它们。

|

||||

- `/var/log/auth.log` 或 `/var/log/secure` 存储来自可插拔认证模块(PAM)的日志,包括成功的登录,失败的登录尝试和认证方式。Ubuntu 和 Debian 在 `/var/log/auth.log` 中存储认证信息,而 RedHat 和 CentOS 则在 `/var/log/secure` 中存储该信息。

|

||||

- `/var/log/kern` 存储内核的错误和警告数据,这对于排除与定制内核相关的故障尤为实用。

|

||||

- `/var/log/cron` 存储有关 cron 作业的信息。使用这个数据来确保你的 cron 作业正成功地运行着。

|

||||

|

||||

Digital Ocean 有一个关于这些文件的完整[教程][1],介绍了 rsyslog 如何在常见的发行版本如 RedHat 和 CentOS 中创建它们。

|

||||

|

||||

应用程序也会在这个目录中写入日志文件。例如像 Apache,Nginx,MySQL 等常见的服务器程序可以在这个目录中写入日志文件。其中一些日志文件由应用程序自己创建,其他的则通过 syslog (具体见下文)来创建。

|

||||

|

||||

### 什么是 Syslog? ###

|

||||

|

||||

Linux 系统日志文件是如何创建的呢?答案是通过 syslog 守护程序,它在 syslog 套接字 `/dev/log` 上监听日志信息,然后将它们写入适当的日志文件中。

|

||||

|

||||

单词“syslog” 代表几个意思,并经常被用来简称如下的几个名称之一:

|

||||

|

||||

1. **Syslog 守护进程** — 一个用来接收、处理和发送 syslog 信息的程序。它可以[远程发送 syslog][2] 到一个集中式的服务器或写入到一个本地文件。常见的例子包括 rsyslogd 和 syslog-ng。在这种使用方式中,人们常说“发送到 syslog”。

|

||||

1. **Syslog 协议** — 一个指定日志如何通过网络来传送的传输协议和一个针对 syslog 信息(具体见下文) 的数据格式的定义。它在 [RFC-5424][3] 中被正式定义。对于文本日志,标准的端口是 514,对于加密日志,端口是 6514。在这种使用方式中,人们常说“通过 syslog 传送”。

|

||||

1. **Syslog 信息** — syslog 格式的日志信息或事件,它包括一个带有几个标准字段的消息头。在这种使用方式中,人们常说“发送 syslog”。

|

||||

|

||||

Syslog 信息或事件包括一个带有几个标准字段的消息头,可以使分析和路由更方便。它们包括时间戳、应用程序的名称、在系统中信息来源的分类或位置、以及事件的优先级。

|

||||

|

||||

下面展示的是一个包含 syslog 消息头的日志信息,它来自于控制着到该系统的远程登录的 sshd 守护进程,这个信息描述的是一次失败的登录尝试:

|

||||

|

||||

<34>1 2003-10-11T22:14:15.003Z server1.com sshd - - pam_unix(sshd:auth): authentication failure; logname= uid=0 euid=0 tty=ssh ruser= rhost=10.0.2.2

|

||||

|

||||

### Syslog 格式和字段 ###

|

||||

|

||||

每条 syslog 信息包含一个带有字段的信息头,这些字段是结构化的数据,使得分析和路由事件更加容易。下面是我们使用的用来产生上面的 syslog 例子的格式,你可以将每个值匹配到一个特定的字段的名称上。

|

||||

|

||||

<%pri%>%protocol-version% %timestamp:::date-rfc3339% %HOSTNAME% %app-name% %procid% %msgid% %msg%\n
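To see how the sample message lines up with that template, the header can be split on whitespace. This is a rough sketch rather than a full RFC 5424 parser: it ignores structured data and assumes none of the header fields contain spaces. The function name `parse_syslog_header` and the output key names are assumptions, and the message body is shortened.

```shell
#!/bin/sh
# Split the header of a syslog line (read from stdin) into named fields.
parse_syslog_header() {
    awk '{
        pri = $1
        sub(/^</, "", pri)
        sub(/>.*/, "", pri)
        version = $1
        sub(/^<[0-9]+>/, "", version)
        printf "pri=%s version=%s timestamp=%s hostname=%s app=%s procid=%s msgid=%s\n", pri, version, $2, $3, $4, $5, $6
    }'
}

# Header of the sshd example above, with a shortened message body.
printf '%s\n' '<34>1 2003-10-11T22:14:15.003Z server1.com sshd - - pam_unix(sshd:auth): authentication failure' |
    parse_syslog_header
```

The two `-` values are placeholders that the sender uses when it has no process ID or message ID to report.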

|

||||

|

||||

下面,你将看到一些在查找或排错时最常使用的 syslog 字段:

|

||||

|

||||

#### 时间戳 ####

|

||||

|

||||

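The [timestamp][4] (2003-10-11T22:14:15.003Z in the example above) indicates the date and time at which the message was generated on the sending system. That time can differ from the time at which another system receives the message. The example timestamp breaks down as follows: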

|

||||

|

||||

- **2003-10-11** 年,月,日。

|

||||

- **T** 为时间戳的必需元素,它将日期和时间分隔开。

|

||||

- **22:14:15.003** 是 24 小时制的时间,包括进入下一秒的毫秒数(**003**)。

|

||||

- **Z** 是一个可选元素,指的是 UTC 时间,除了 Z,这个例子还可以包括一个偏移量,例如 -08:00,这意味着时间从 UTC 偏移 8 小时,即 PST 时间。

|

||||

|

||||

#### 主机名 ####

|

||||

|

||||

The [hostname][5] field (server1.com in the example above) is the name of the host or system that sent the message.

|

||||

|

||||

#### 应用名 ####

|

||||

|

||||

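The [app-name][6] field (`sshd` in the header of the example above; the `sshd:auth` that appears later in the line is part of the message body) is the name of the application that sent the message.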

|

||||

|

||||

#### 优先级 ####

|

||||

|

||||

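The priority field, or [pri][7] for short (<34> in the example above), tells us how urgent or severe the event is. It is a combination of two numeric fields: the facility and the severity. The severity ranges from 7 for debug-level events down to 0 for emergencies. The facility describes which kind of process created the event; it ranges from 0 for kernel messages to 23 for local application use.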

|

||||

|

||||

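Pri can be output in two ways. The first is as a single number, calculated by multiplying the facility value by 8 and adding the severity value: (facility × 8) + severity. The second is pri-text, which is output in the string format "facility.severity". The latter format is easier to read and search, but it takes up more storage space.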
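The arithmetic can be checked against the <34> in the sshd example with plain shell arithmetic. The helper names below are assumptions for illustration. In RFC 5424, facility 4 is security/authorization messages and severity 2 is critical.

```shell
#!/bin/sh
# Combine a facility and a severity into the numeric pri value.
encode_pri() {
    echo $(( $1 * 8 + $2 ))
}

# Split a numeric pri value back into its facility and severity.
decode_pri() {
    echo "facility=$(( $1 / 8 )) severity=$(( $1 % 8 ))"
}

encode_pri 4 2    # prints 34
decode_pri 34     # prints facility=4 severity=2
```

Because the severity always fits in the low three bits, integer division and remainder by 8 are enough to recover both values.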

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.loggly.com/ultimate-guide/logging/linux-logging-basics/

|

||||

|

||||

作者:[Jason Skowronski][a1],[Amy Echeverri][a2],[Sadequl Hussain][a3]

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a1]:https://www.linkedin.com/in/jasonskowronski

|

||||

[a2]:https://www.linkedin.com/in/amyecheverri

|

||||

[a3]:https://www.linkedin.com/pub/sadequl-hussain/14/711/1a7

|

||||

[1]:https://www.digitalocean.com/community/tutorials/how-to-view-and-configure-linux-logs-on-ubuntu-and-centos

|

||||

[2]:https://docs.google.com/document/d/11LXZxWlkNSHkcrCWTUdnLRf_CiZz9kK0cr3yGM_BU_0/edit#heading=h.y2e9tdfk1cdb

|

||||

[3]:https://tools.ietf.org/html/rfc5424

|

||||

[4]:https://tools.ietf.org/html/rfc5424#section-6.2.3

|

||||

[5]:https://tools.ietf.org/html/rfc5424#section-6.2.4

|

||||

[6]:https://tools.ietf.org/html/rfc5424#section-6.2.5

|

||||

[7]:https://tools.ietf.org/html/rfc5424#section-6.2.1

|

||||

@ -1,15 +1,15 @@

|

||||

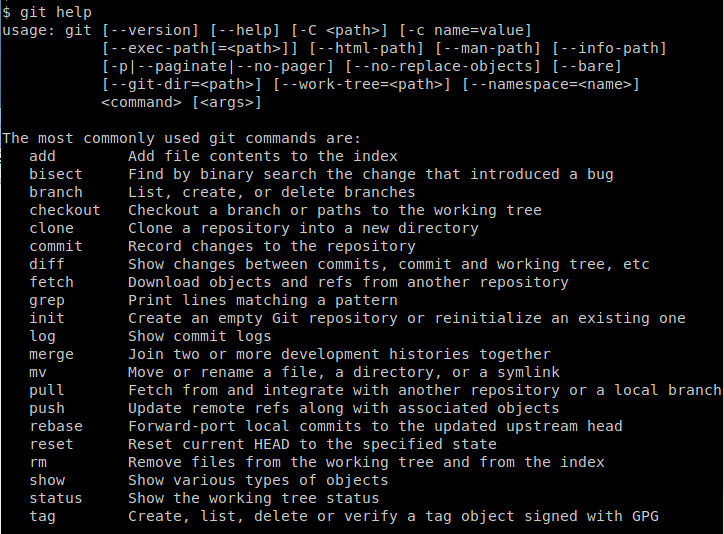

Linux问答 -- 如何在Linux上安装Git

|

||||

Linux有问必答:如何在Linux上安装Git

|

||||

================================================================================

|

||||

|

||||

> **问题:** 我尝试从一个Git公共仓库克隆项目,但出现了这样的错误提示:“git: command not found”。 请问我该如何安装Git? [注明一下是哪个Linux发行版]?

|

||||

> **问题:** 我尝试从一个Git公共仓库克隆项目,但出现了这样的错误提示:“git: command not found”。 请问我该如何在某某发行版上安装Git?

|

||||

|

||||

Git是一个流行的并且开源的版本控制系统(VCS),最初是为Linux环境开发的。跟CVS或者SVN这些版本控制系统不同的是,Git的版本控制被认为是“分布式的”,某种意义上,git的本地工作目录可以作为一个功能完善的仓库来使用,它具备完整的历史记录和版本追踪能力。在这种工作模型之下,各个协作者将内容提交到他们的本地仓库中(与之相对的会直接提交到核心仓库),如果有必要,再有选择性地推送到核心仓库。这就为Git这个版本管理系统带来了大型协作系统所必须的可扩展能力和冗余能力。

|

||||

Git是一个流行的开源版本控制系统(VCS),最初是为Linux环境开发的。跟CVS或者SVN这些版本控制系统不同的是,Git的版本控制被认为是“分布式的”,某种意义上,git的本地工作目录可以作为一个功能完善的仓库来使用,它具备完整的历史记录和版本追踪能力。在这种工作模型之下,各个协作者将内容提交到他们的本地仓库中(与之相对的会总是提交到核心仓库),如果有必要,再有选择性地推送到核心仓库。这就为Git这个版本管理系统带来了大型协作系统所必须的可扩展能力和冗余能力。

|

||||

|

||||

|

||||

|

||||

### 使用包管理器安装Git ###

|

||||

|

||||

Git已经被所有的主力Linux发行版所支持。所以安装它最简单的方法就是使用各个Linux发行版的包管理器。

|

||||

Git已经被所有的主流Linux发行版所支持。所以安装它最简单的方法就是使用各个Linux发行版的包管理器。

|

||||

|

||||

**Debian, Ubuntu, 或 Linux Mint**

|

||||

|

||||

@ -18,6 +18,8 @@ Git已经被所有的主力Linux发行版所支持。所以安装它最简单的

|

||||

**Fedora, CentOS 或 RHEL**

|

||||

|

||||

$ sudo yum install git

|

||||

或

|

||||

$ sudo dnf install git

|

||||

|

||||

**Arch Linux**

|

||||

|

||||

@ -33,7 +35,7 @@ Git已经被所有的主力Linux发行版所支持。所以安装它最简单的

|

||||

|

||||

### 从源码安装Git ###

|

||||

|

||||

如果由于某些原因,你希望从源码安装Git,安装如下介绍操作。

|

||||

如果由于某些原因,你希望从源码安装Git,按照如下介绍操作。

|

||||

|

||||

**安装依赖包**

|

||||

|

||||

@ -65,7 +67,7 @@ via: http://ask.xmodulo.com/install-git-linux.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[mr-ping](https://github.com/mr-ping)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

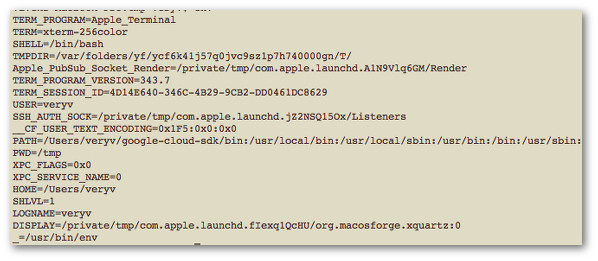

如何在 Linux 和类 Unix 系统上临时清空 Bash 环境变量

|

||||

如何在 Linux 上运行命令前临时清空 Bash 环境变量

|

||||

================================================================================

|

||||

我是个 bash shell 用户。我想临时清空 bash shell 环境变量。但我不想删除或者 unset 一个 export 环境变量。我怎样才能在 bash 或 ksh shell 的临时环境中运行程序呢?

|

||||

我是个 bash shell 用户。我想临时清空 bash shell 环境变量。但我不想删除或者 unset 一个输出的环境变量。我怎样才能在 bash 或 ksh shell 的临时环境中运行程序呢?

|

||||

|

||||

你可以在 Linux 或类 Unix 系统中使用 env 命令设置并打印环境。env 命令将环境修改为命令行指定的那样之后再执行程序。

|

||||

你可以在 Linux 或类 Unix 系统中使用 env 命令设置并打印环境。env 命令可以按命令行指定的变量来修改环境,之后再执行程序。

|

||||

|

||||

### 如何显示当前环境? ###

|

||||

|

||||

@ -17,29 +17,30 @@

|

||||

输出样例:

|

||||

|

||||

|

||||

Fig.01: Unix/Linux: 列出所有环境变量

|

||||

|

||||

*Fig.01: Unix/Linux: 列出所有环境变量*

|

||||

|

||||

### 统计环境变量数目 ###

|

||||

|

||||

输入下面的命令:

|

||||

|

||||

env | wc -l

|

||||

printenv | wc -l

|

||||

printenv | wc -l # 或者

|

||||

|

||||

输出样例:

|

||||

|

||||

20

|

||||

|

||||

### 在 bash/ksh/zsh 干净环境中运行程序 ###

|

||||

### 在干净的 bash/ksh/zsh 环境中运行程序 ###

|

||||

|

||||

语法如下所示:

|

||||

|

||||

env -i your-program-name-here arg1 arg2 ...

|

||||

|

||||

例如,不使用 http_proxy 和/或任何其它变量运行 wget 程序。临时清除所有 bash/ksh/zsh 环境变量并运行 wget 程序:

|

||||

例如,要在不使用 http_proxy 和/或任何其它环境变量的情况下运行 wget 程序。临时清除所有 bash/ksh/zsh 环境变量并运行 wget 程序:

|

||||

|

||||

env -i /usr/local/bin/wget www.cyberciti.biz

|

||||

env -i wget www.cyberciti.biz

|

||||

env -i wget www.cyberciti.biz # 或者

|

||||

|

||||

这当你想忽视任何已经设置的环境变量来运行命令时非常有用。我每天都会多次使用这个命令,以便忽视 http_proxy 和其它我设置的环境变量。

|

||||

|

||||

@ -66,12 +67,12 @@ Fig.01: Unix/Linux: 列出所有环境变量

|

||||

index.html.1 [ <=> ] 36.17K 115KB/s in 0.3s

|

||||

2015-08-03 23:25:18 (115 KB/s) - 'index.html.1' saved [37041]

|

||||

|

||||

-i 选项使 env 命令完全忽视它继承的环境。但是,它并不阻止你的命令(例如 wget 或 curl)设置新的变量。同时,也要注意运行 bash/ksh shell 的副作用:

|

||||

-i 选项使 env 命令完全忽视它继承的环境。但是,它并不会阻止你的命令(例如 wget 或 curl)设置新的变量。同时,也要注意运行 bash/ksh shell 的副作用:

|

||||

|

||||

env -i env | wc -l ## empty ##

|

||||

# Now run bash ##

|

||||

env -i env | wc -l ## 空的 ##

|

||||

# 现在运行 bash ##

|

||||

env -i bash

|

||||

## New enviroment set by bash program ##

|

||||

## bash 设置了新的环境变量 ##

|

||||

env | wc -l
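The same comparison can be wrapped in two small functions, which makes the side effect easier to see. This is a sketch; the function names are assumptions, and the second count depends on which shell `sh` is on your system, so no particular value is claimed for it.

```shell
#!/bin/sh
# Count the variables seen by a child process started with an empty environment.
count_clean_env() {
    env -i env | wc -l | tr -d ' '
}

# Count the variables a freshly started shell ends up with after env -i.
# Shells typically set a few variables of their own, such as PWD.
count_shell_env() {
    env -i sh -c 'env | wc -l' | tr -d ' '
}

echo "inherited after env -i: $(count_clean_env)"
echo "after starting sh:      $(count_shell_env)"
```

The first line should report 0, because `env -i` passes nothing on. Any variables counted in the second line were created by the new shell itself, not inherited.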

|

||||

|

||||

#### 例子:设置一个环境变量 ####

|

||||

@ -79,13 +80,12 @@ Fig.01: Unix/Linux: 列出所有环境变量

|

||||

语法如下:

|

||||

|

||||

env var=value /path/to/command arg1 arg2 ...

|

||||

## OR ##

|

||||

## 或 ##

|

||||

var=value /path/to/command arg1 arg2 ...

|

||||

|

||||

例如设置 http_proxy:

|

||||

|

||||

env http_proxy="http://USER:PASSWORD@server1.cyberciti.biz:3128/" \

|

||||

/usr/local/bin/wget www.cyberciti.biz

|

||||

env http_proxy="http://USER:PASSWORD@server1.cyberciti.biz:3128/" /usr/local/bin/wget www.cyberciti.biz
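To confirm that a variable set this way reaches only the one command and not the calling shell, you can replace wget with a child shell that prints what it sees. This is a sketch; the proxy URL `http://proxy.example:3128/` and the `print_proxy` variable are placeholders made up for this example.

```shell
#!/bin/sh
# Command run in a child shell: report http_proxy as the child sees it.
print_proxy='echo "http_proxy=${http_proxy:-unset}"'

# Start from a known state in the calling shell.
unset http_proxy

# Both forms set the variable for the one command only.
env http_proxy="http://proxy.example:3128/" sh -c "$print_proxy"
http_proxy="http://proxy.example:3128/" sh -c "$print_proxy"

# The calling shell is unaffected, so a later child sees nothing.
sh -c "$print_proxy"
```

The first two commands print the proxy URL and the last one prints `http_proxy=unset`.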

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -93,6 +93,6 @@ via: http://www.cyberciti.biz/faq/linux-unix-temporarily-clearing-environment-va

|

||||

|

||||

作者:Vivek Gite

|

||||

译者:[ictlyh](https://github.com/ictlyh)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

61

published/20150810 For Linux, Supercomputers R Us.md

Normal file


@ -0,0 +1,61 @@

|

||||

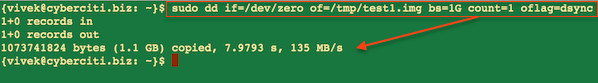

有了 Linux,你就可以搭建自己的超级计算机

|

||||

================================================================================

|

||||

|

||||

> 几乎所有超级计算机上运行的系统都是 Linux,其中包括那些由树莓派(Raspberry Pi)板卡和 PlayStation 3游戏机组成的计算机。

|

||||

|

||||

|

||||

|

||||

*题图来源:By Michel Ngilen,[ CC BY 2.0 ], via Wikimedia Commons*

|

||||

|

||||

超级计算机是一种严肃的工具,做的都是高大上的计算。它们往往从事于严肃的用途,比如原子弹模拟、气候模拟和高等物理学。当然,它们的花费也很高大上。在最新的超级计算机 [Top500][1] 排名中,中国国防科技大学研制的天河 2 号位居第一,而天河 2 号的建造耗资约 3.9 亿美元!

|

||||

|

||||

但是,也有一个超级计算机,是由博伊西州立大学电气和计算机工程系的一名在读博士 Joshua Kiepert [用树莓派构建完成][2]的,其建造成本低于2000美元。

|

||||

|

||||

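No, I am not making this up. It is a real supercomputer, built from [Raspberry Pi Model B][3] boards whose ARM11 processors are overclocked to 1GHz and paired with a VideoCore IV GPU. Each board comes with 512MB of RAM, a pair of USB ports, and a 10/100 BaseT Ethernet port.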

|

||||

|

||||

那么天河 2 号和博伊西州立大学的超级计算机有什么共同点吗?它们都运行 Linux 系统。世界最快的超级计算机[前 500 强中有 486][4] 个也同样运行的是 Linux 系统。这是从 20 多年前就开始的格局。而现在的趋势是超级计算机开始由廉价单元组成,因为 Kiepert 的机器并不是唯一一个无所谓预算的超级计算机。

|

||||

|

||||

麻省大学达特茅斯分校的物理学副教授 Gaurav Khanna 创建了一台超级计算机仅用了[不足 200 台的 PlayStation3 视频游戏机][5]。

|

||||

|

||||

PlayStation 游戏机由一个 3.2 GHz 的基于 PowerPC 的 Power 处理器所驱动。每个都配有 512M 的内存。你现在仍然可以花 200 美元买到一个,尽管索尼将在年底逐步淘汰它们。Khanna 仅用了 16 个 PlayStation 3 构建了他第一台超级计算机,所以你也可以花费不到 4000 美元就拥有你自己的超级计算机。

|

||||

|

||||

这些机器可能是用玩具建成的,但他们不是玩具。Khanna 已经用它做了严肃的天体物理学研究。一个白帽子黑客组织使用了类似的 [PlayStation 3 超级计算机在 2008 年破解了 SSL 的 MD5 哈希算法][6]。

|

||||

|

||||

两年后,美国空军研究实验室研制的 [Condor Cluster,使用了 1760 个索尼的 PlayStation 3 的处理器][7]和168 个通用的图形处理单元。这个低廉的超级计算机,每秒运行约 500 TFLOP ,即每秒可进行 500 万亿次浮点运算。

|

||||

|

||||

其他的一些便宜且适用于构建家庭超级计算机的构件包括,专业并行处理板卡,比如信用卡大小的 [99 美元的 Parallella 板卡][8],以及高端显卡,比如 [Nvidia 的 Titan Z][9] 和 [ AMD 的 FirePro W9100][10]。这些高端板卡的市场零售价约 3000 美元,一些想要一台梦幻般的机器的玩家为此参加了[英特尔极限大师赛:英雄联盟世界锦标赛][11],要是甚至有机会得到了第一名的话,能获得超过 10 万美元奖金。另一方面,一个能够自己提供超过 2.5TFLOPS 计算能力的计算机,对于科学家和研究人员来说,这为他们提供了一个可以拥有自己专属的超级计算机的经济的方法。

|

||||

|

||||

As for the connection between supercomputers and Linux, it all started in 1994 at the Goddard Space Flight Center with the first [Beowulf supercomputer][13].

|

||||

|

||||