mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-15 01:50:08 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject into new

This commit is contained in:

commit

29d84d9051

@ -16,12 +16,8 @@ Linux DNS 查询剖析(第四部分)

|

||||

|

||||

在第四部分中,我将介绍容器如何完成 DNS 查询。你想的没错,也不是那么简单。

|

||||

|

||||

* * *

|

||||

|

||||

### 1) Docker 和 DNS

|

||||

|

||||

============================================================

|

||||

|

||||

在 [Linux DNS 查询剖析(第三部分)][3] 中,我们介绍了 `dnsmasq`,其工作方式如下:将 DNS 查询指向到 localhost 地址 `127.0.0.1`,同时启动一个进程监听 `53` 端口并处理查询请求。

|

||||

|

||||

在按上述方式配置 DNS 的主机上,如果运行了一个 Docker 容器,容器内的 `/etc/resolv.conf` 文件会是怎样的呢?

|

||||

@ -72,29 +68,29 @@ google.com. 112 IN A 172.217.23.14

|

||||

|

||||

在这个问题上,Docker 的解决方案是忽略所有可能的复杂情况,即无论主机中使用什么 DNS 服务器,容器内都使用 Google 的 DNS 服务器 `8.8.8.8` 和 `8.8.4.4` 完成 DNS 查询。

|

||||

|

||||

_我的经历:在 2013 年,我遇到了使用 Docker 以来的第一个问题,与 Docker 的这种 DNS 解决方案密切相关。我们公司的网络屏蔽了 `8.8.8.8` 和 `8.8.4.4`,导致容器无法解析域名。_

|

||||

_我的经历:在 2013 年,我遇到了使用 Docker 以来的第一个问题,与 Docker 的这种 DNS 解决方案密切相关。我们公司的网络屏蔽了 `8.8.8.8` 和 `8.8.4.4`,导致容器无法解析域名。_

|

||||

|

||||

这就是 Docker 容器的情况,但对于包括 Kubernetes 在内的容器 _<ruby>编排引擎<rt>orchestrators</rt></ruby>_,情况又有些不同。

|

||||

这就是 Docker 容器的情况,但对于包括 Kubernetes 在内的容器 <ruby>编排引擎<rt>orchestrators</rt></ruby>,情况又有些不同。

|

||||

|

||||

### 2) Kubernetes 和 DNS

|

||||

|

||||

在 Kubernetes 中,最小部署单元是 `pod`;`pod` 是一组相互协作的容器,共享 IP 地址(和其它资源)。

|

||||

在 Kubernetes 中,最小部署单元是 pod;它是一组相互协作的容器,共享 IP 地址(和其它资源)。

|

||||

|

||||

Kubernetes 面临的一个额外的挑战是,将 Kubernetes 服务请求(例如,`myservice.kubernetes.io`)通过对应的<ruby>解析器<rt>resolver</rt></ruby>,转发到具体服务地址对应的<ruby>内网地址<rt>private network</rt></ruby>。这里提到的服务地址被称为归属于“<ruby>集群域<rt>cluster domain</rt></ruby>”。集群域可由管理员配置,根据配置可以是 `cluster.local` 或 `myorg.badger` 等。

|

||||

|

||||

在 Kubernetes 中,你可以为 `pod` 指定如下四种 `pod` 内 DNS 查询的方式。

|

||||

在 Kubernetes 中,你可以为 pod 指定如下四种 pod 内 DNS 查询的方式。

|

||||

|

||||

* Default

|

||||

**Default**

|

||||

|

||||

在这种(名称容易让人误解)的方式中,`pod` 与其所在的主机采用相同的 DNS 查询路径,与前面介绍的主机 DNS 查询一致。我们说这种方式的名称容易让人误解,因为该方式并不是默认选项!`ClusterFirst` 才是默认选项。

|

||||

在这种(名称容易让人误解)的方式中,pod 与其所在的主机采用相同的 DNS 查询路径,与前面介绍的主机 DNS 查询一致。我们说这种方式的名称容易让人误解,因为该方式并不是默认选项!`ClusterFirst` 才是默认选项。

|

||||

|

||||

如果你希望覆盖 `/etc/resolv.conf` 中的条目,你可以添加到 `kubelet` 的配置中。

|

||||

|

||||

* ClusterFirst

|

||||

**ClusterFirst**

|

||||

|

||||

在 `ClusterFirst` 方式中,遇到 DNS 查询请求会做有选择的转发。根据配置的不同,有以下两种方式:

|

||||

|

||||

第一种方式配置相对古老但更简明,即采用一个规则:如果请求的域名不是集群域的子域,那么将其转发到 `pod` 所在的主机。

|

||||

第一种方式配置相对古老但更简明,即采用一个规则:如果请求的域名不是集群域的子域,那么将其转发到 pod 所在的主机。

|

||||

|

||||

第二种方式相对新一些,你可以在内部 DNS 中配置选择性转发。

|

||||

|

||||

@ -115,27 +111,27 @@ data:

|

||||

|

||||

在 `stubDomains` 条目中,可以为特定域名指定特定的 DNS 服务器;而 `upstreamNameservers` 条目则给出,待查询域名不是集群域子域情况下用到的 DNS 服务器。

|

||||

|

||||

这是通过在一个 `pod` 中运行我们熟知的 `dnsmasq` 实现的。

|

||||

这是通过在一个 pod 中运行我们熟知的 `dnsmasq` 实现的。

|

||||

|

||||

|

||||

|

||||

剩下两种选项都比较小众:

|

||||

|

||||

* ClusterFirstWithHostNet

|

||||

**ClusterFirstWithHostNet**

|

||||

|

||||

适用于 `pod` 使用主机网络的情况,例如绕开 Docker 网络配置,直接使用与 `pod` 对应主机相同的网络。

|

||||

适用于 pod 使用主机网络的情况,例如绕开 Docker 网络配置,直接使用与 pod 对应主机相同的网络。

|

||||

|

||||

* None

|

||||

**None**

|

||||

|

||||

`None` 意味着不改变 DNS,但强制要求你在 `pod` <ruby>规范文件<rt>specification</rt></ruby>的 `dnsConfig` 条目中指定 DNS 配置。

|

||||

|

||||

### CoreDNS 即将到来

|

||||

|

||||

除了上面提到的那些,一旦 `CoreDNS` 取代Kubernetes 中的 `kube-dns`,情况还会发生变化。`CoreDNS` 相比 `kube-dns` 具有可配置性更高、效率更高等优势。

|

||||

除了上面提到的那些,一旦 `CoreDNS` 取代 Kubernetes 中的 `kube-dns`,情况还会发生变化。`CoreDNS` 相比 `kube-dns` 具有可配置性更高、效率更高等优势。

|

||||

|

||||

如果想了解更多,参考[这里][5]。

|

||||

|

||||

如果你对 OpenShift 的网络感兴趣,我曾写过一篇[文章][6]可供你参考。但文章中 OpenShift 的版本是 `3.6`,可能有些过时。

|

||||

如果你对 OpenShift 的网络感兴趣,我曾写过一篇[文章][6]可供你参考。但文章中 OpenShift 的版本是 3.6,可能有些过时。

|

||||

|

||||

### 第四部分总结

|

||||

|

||||

@ -152,14 +148,14 @@ via: https://zwischenzugs.com/2018/08/06/anatomy-of-a-linux-dns-lookup-part-iv/

|

||||

|

||||

作者:[zwischenzugs][a]

|

||||

译者:[pinewall](https://github.com/pinewall)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://zwischenzugs.com/

|

||||

[1]:https://zwischenzugs.com/2018/06/08/anatomy-of-a-linux-dns-lookup-part-i/

|

||||

[2]:https://zwischenzugs.com/2018/06/18/anatomy-of-a-linux-dns-lookup-part-ii/

|

||||

[3]:https://zwischenzugs.com/2018/07/06/anatomy-of-a-linux-dns-lookup-part-iii/

|

||||

[1]:https://linux.cn/article-9943-1.html

|

||||

[2]:https://linux.cn/article-9949-1.html

|

||||

[3]:https://linux.cn/article-9972-1.html

|

||||

[4]:https://kubernetes.io/docs/tasks/administer-cluster/dns-custom-nameservers/#impacts-on-pods

|

||||

[5]:https://coredns.io/

|

||||

[6]:https://zwischenzugs.com/2017/10/21/openshift-3-6-dns-in-pictures/

|

||||

@ -0,0 +1,78 @@

|

||||

|

||||

Steam 让我们在 Linux 上玩 Windows 的游戏更加容易

|

||||

======

|

||||

|

||||

![Steam Wallpaper][1]

|

||||

|

||||

总所周知,[Linux 游戏][2]库中的游戏只有 Windows 游戏库中的一部分,实际上,许多人甚至都不会考虑将操作系统[转换为 Linux][3],原因很简单,因为他们喜欢的游戏,大多数都不能在这个平台上运行。

|

||||

|

||||

在撰写本文时,Steam 上已有超过 5000 种游戏可以在 Linux 上运行,而 Steam 上的游戏总数已经接近 27000 种了。现在 5000 种游戏可能看起来很多,但还没有达到 27000 种,确实没有。

|

||||

|

||||

虽然几乎所有的新的<ruby>独立游戏<rt>indie game</rt></ruby>都是在 Linux 中推出的,但我们仍然无法在这上面玩很多的 [3A 大作][4]。对我而言,虽然这其中有很多游戏我都很希望能有机会玩,但这从来都不是一个非黑即白的问题。因为我主要是玩独立游戏和[复古游戏][5],所以几乎所有我喜欢的游戏都可以在 Linux 系统上运行。

|

||||

|

||||

### 认识 Proton,Steam 的一个 WINE 复刻

|

||||

|

||||

现在,这个问题已经成为过去式了,因为本周 Valve [宣布][6]要对 Steam Play 进行一次更新,此次更新会将一个名为 Proton 的 Wine 复刻版本添加到 Linux 客户端中。是的,这个工具是开源的,Valve 已经在 [GitHub][7] 上开源了源代码,但该功能仍然处于测试阶段,所以你必须使用测试版的 Steam 客户端才能使用这项功能。

|

||||

|

||||

#### 使用 proton ,可以在 Linux 系统上通过 Steam 运行更多 Windows 游戏

|

||||

|

||||

这对我们这些 Linux 用户来说,实际上意味着什么?简单来说,这意味着我们可以在 Linux 电脑上运行全部 27000 种游戏,而无需配置像 [PlayOnLinux][8] 或 [Lutris][9] 这样的东西。我要告诉你的是,配置这些东西有时候会非常让人头疼。

|

||||

|

||||

对此更为复杂的答案是,某种原因听起来非常美好。虽然在理论上,你可以用这种方式在 Linux 上玩所有的 Windows 平台上的游戏。但只有一少部分游戏在推出时会正式支持 Linux。这少部分游戏包括 《DOOM》、《最终幻想 VI》、《铁拳 7》、《星球大战:前线 2》,和其他几个。

|

||||

|

||||

#### 你可以在 Linux 上玩所有的 Windows 游戏(理论上)

|

||||

|

||||

虽然目前该列表只有大约 30 个游戏,你可以点击“为所有游戏启用 Steam Play”复选框来强制使用 Steam 的 Proton 来安装和运行任意游戏。但你最好不要有太高的期待,它们的稳定性和性能表现不一定有你希望的那么好,所以请把期望值压低一点。

|

||||

|

||||

![Steam Play][10]

|

||||

|

||||

据[这份报告][13],已经有超过一千个游戏可以在 Linux 上玩了。按[此指南][14]来了解如何启用 Steam Play 测试版本。

|

||||

|

||||

#### 体验 Proton,没有我想的那么烂

|

||||

|

||||

例如,我安装了一些难度适中的游戏,使用 Proton 来进行安装。其中一个是《上古卷轴 4:湮没》,在我玩这个游戏的两个小时里,它只崩溃了一次,而且几乎是紧跟在游戏教程的自动保存点之后。

|

||||

|

||||

我有一块英伟达 Gtx 1050 Ti 的显卡。所以我可以使用 1080P 的高配置来玩这个游戏。而且我没有遇到除了这次崩溃之外的任何问题。我唯一真正感到不爽的只有它的帧数没有原本的高。在 90% 的时间里,游戏的帧数都在 60 帧以上,但我知道它的帧数应该能更高。

|

||||

|

||||

我安装和运行的其他所有游戏都运行得很完美,虽然我还没有较长时间地玩过它们中的任何一个。我安装的游戏中包括《森林》、《丧尸围城 4》和《刺客信条 2》。(你觉得我这是喜欢恐怖游戏吗?)

|

||||

|

||||

#### 为什么 Steam(仍然)要下注在 Linux 上?

|

||||

|

||||

现在,一切都很好,这件事为什么会发生呢?为什么 Valve 要花费时间,金钱和资源来做这样的事?我倾向于认为,他们这样做是因为他们懂得 Linux 社区的价值,但是如果要我老实地说,我不相信我们和它有任何的关系。

|

||||

|

||||

如果我一定要在这上面花钱,我想说 Valve 开发了 Proton,因为他们还没有放弃 [Steam Machine][11]。因为 [Steam OS][12] 是基于 Linux 的发行版,在这类东西上面投资可以获取最大的利润,Steam OS 上可用的游戏越多,就会有更多的人愿意购买 Steam Machine。

|

||||

|

||||

可能我是错的,但是我敢打赌啊,我们会在不远的未来看到新一批的 Steam Machine。可能我们会在一年内看到它们,也有可能我们再等五年都见不到,谁知道呢!

|

||||

|

||||

无论哪种方式,我所知道的是,我终于能兴奋地从我的 Steam 游戏库里玩游戏了。这个游戏库是多年来我通过各种慈善包、促销码和不定时地买的游戏慢慢积累的,只不过是想试试让它在 Lutris 中运行。

|

||||

|

||||

#### 为 Linux 上越来越多的游戏而激动?

|

||||

|

||||

你怎么看?你对此感到激动吗?或者说你会害怕只有很少的开发者会开发 Linux 平台上的游戏,因为现在几乎没有需求?Valve 喜欢 Linux 社区,还是说他们喜欢钱?请在下面的评论区告诉我们您的想法,然后重新搜索来查看更多类似这样的开源软件方面的文章。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/steam-play-proton/

|

||||

|

||||

作者:[Phillip Prado][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[hopefully2333](https://github.com/hopefully2333)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://itsfoss.com/author/phillip/

|

||||

[1]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/steam-wallpaper.jpeg

|

||||

[2]:https://itsfoss.com/linux-gaming-guide/

|

||||

[3]:https://itsfoss.com/reasons-switch-linux-windows-xp/

|

||||

[4]:https://itsfoss.com/triplea-game-review/

|

||||

[5]:https://itsfoss.com/play-retro-games-linux/

|

||||

[6]:https://steamcommunity.com/games/221410

|

||||

[7]:https://github.com/ValveSoftware/Proton/

|

||||

[8]:https://www.playonlinux.com/en/

|

||||

[9]:https://lutris.net/

|

||||

[10]:https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/08/SteamProton.jpg

|

||||

[11]:https://store.steampowered.com/sale/steam_machines

|

||||

[12]:https://itsfoss.com/valve-annouces-linux-based-gaming-operating-system-steamos/

|

||||

[13]:https://spcr.netlify.com/

|

||||

[14]:https://itsfoss.com/steam-play/

|

||||

@ -0,0 +1,173 @@

|

||||

每位 Ubuntu 18.04 用户都应该知道的快捷键

|

||||

======

|

||||

|

||||

了解快捷键能够提升您的生产力。这里有一些实用的 Ubuntu 快捷键助您像专业人士一样使用 Ubuntu。

|

||||

|

||||

您可以用键盘和鼠标组合来使用操作系统。

|

||||

|

||||

> 注意:本文中提到的键盘快捷键适用于 Ubuntu 18.04 GNOME 版。 通常,它们中的大多数(或者全部)也适用于其他的 Ubuntu 版本,但我不能够保证。

|

||||

|

||||

![Ubuntu keyboard shortcuts][1]

|

||||

|

||||

### 实用的 Ubuntu 快捷键

|

||||

|

||||

让我们来看一看 Ubuntu GNOME 必备的快捷键吧!通用的快捷键如 `Ctrl+C`(复制)、`Ctrl+V`(粘贴)或者 `Ctrl+S`(保存)不再赘述。

|

||||

|

||||

注意:Linux 中的 Super 键即键盘上带有 Windows 图标的键,本文中我使用了大写字母,但这不代表你需要按下 `shift` 键,比如,`T` 代表键盘上的 ‘t’ 键,而不代表 `Shift+t`。

|

||||

|

||||

#### 1、 Super 键:打开活动搜索界面

|

||||

|

||||

使用 `Super` 键可以打开活动菜单。如果你只能在 Ubuntu 上使用一个快捷键,那只能是 `Super` 键。

|

||||

|

||||

想要打开一个应用程序?按下 `Super` 键然后搜索应用程序。如果搜索的应用程序未安装,它会推荐来自应用中心的应用程序。

|

||||

|

||||

想要看看有哪些正在运行的程序?按下 `Super` 键,屏幕上就会显示所有正在运行的 GUI 应用程序。

|

||||

|

||||

想要使用工作区吗?只需按下 `Super` 键,您就可以在屏幕右侧看到工作区选项。

|

||||

|

||||

#### 2、 Ctrl+Alt+T:打开 Ubuntu 终端窗口

|

||||

|

||||

![Ubuntu Terminal Shortcut][2]

|

||||

|

||||

*使用 Ctrl+alt+T 来打开终端窗口*

|

||||

|

||||

想要打开一个新的终端,您只需使用快捷键 `Ctrl+Alt+T`。这是我在 Ubuntu 中最喜欢的键盘快捷键。 甚至在我的许多 FOSS 教程中,当需要打开终端窗口是,我都会提到这个快捷键。

|

||||

|

||||

#### 3、 Super+L 或 Ctrl+Alt+L:锁屏

|

||||

|

||||

当您离开电脑时锁定屏幕,是最基本的安全习惯之一。您可以使用 `Super+L` 快捷键,而不是繁琐地点击屏幕右上角然后选择锁定屏幕选项。

|

||||

|

||||

有些系统也会使用 `Ctrl+Alt+L` 键锁定屏幕。

|

||||

|

||||

#### 4、 Super+D or Ctrl+Alt+D:显示桌面

|

||||

|

||||

按下 `Super+D` 可以最小化所有正在运行的应用程序窗口并显示桌面。

|

||||

|

||||

再次按 `Super+D` 将重新打开所有正在运行的应用程序窗口,像之前一样。

|

||||

|

||||

您也可以使用 `Ctrl+Alt+D` 来实现此目的。

|

||||

|

||||

#### 5、 Super+A:显示应用程序菜单

|

||||

|

||||

您可以通过单击屏幕左下角的 9 个点打开 Ubuntu 18.04 GNOME 中的应用程序菜单。 但是一个更快捷的方法是使用 `Super+A` 快捷键。

|

||||

|

||||

它将显示应用程序菜单,您可以在其中查看或搜索系统上已安装的应用程序。

|

||||

|

||||

您可以使用 `Esc` 键退出应用程序菜单界面。

|

||||

|

||||

#### 6、 Super+Tab 或 Alt+Tab:在运行中的应用程序间切换

|

||||

|

||||

如果您运行的应用程序不止一个,则可以使用 `Super+Tab` 或 `Alt+Tab` 快捷键在应用程序之间切换。

|

||||

|

||||

按住 `Super` 键同时按下 `Tab` 键,即可显示应用程序切换器。 按住 `Super` 的同时,继续按下 `Tab` 键在应用程序之间进行选择。 当光标在所需的应用程序上时,松开 `Super` 和 `Tab` 键。

|

||||

|

||||

默认情况下,应用程序切换器从左向右移动。 如果要从右向左移动,可使用 `Super+Shift+Tab` 快捷键。

|

||||

|

||||

在这里您也可以用 `Alt` 键代替 `Super` 键。

|

||||

|

||||

> 提示:如果有多个应用程序实例,您可以使用 Super+` 快捷键在这些实例之间切换。

|

||||

|

||||

#### 7、 Super+箭头:移动窗口位置

|

||||

|

||||

<https://player.vimeo.com/video/289091549>

|

||||

|

||||

这个快捷键也适用于 Windows 系统。 使用应用程序时,按下 `Super+左箭头`,应用程序将贴合屏幕的左边缘,占用屏幕的左半边。

|

||||

|

||||

同样,按下 `Super+右箭头`会使应用程序贴合右边缘。

|

||||

|

||||

按下 `Super+上箭头`将最大化应用程序窗口,`Super+下箭头`将使应用程序恢复到其正常的大小。

|

||||

|

||||

#### 8、 Super+M:切换到通知栏

|

||||

|

||||

GNOME 中有一个通知栏,您可以在其中查看系统和应用程序活动的通知,这里也有一个日历。

|

||||

|

||||

![Notification Tray Ubuntu 18.04 GNOME][3]

|

||||

|

||||

*通知栏*

|

||||

|

||||

使用 `Super+M` 快捷键,您可以打开此通知栏。 如果再次按这些键,将关闭打开的通知托盘。

|

||||

|

||||

使用 `Super+V` 也可实现相同的功能。

|

||||

|

||||

#### 9、 Super+空格:切换输入法(用于多语言设置)

|

||||

|

||||

如果您使用多种语言,可能您的系统上安装了多个输入法。 例如,我需要在 Ubuntu 上同时使用[印地语] [4]和英语,所以我安装了印地语(梵文)输入法以及默认的英语输入法。

|

||||

|

||||

如果您也使用多语言设置,则可以使用 `Super+空格` 快捷键快速更改输入法。

|

||||

|

||||

#### 10、 Alt+F2:运行控制台

|

||||

|

||||

这适用于高级用户。 如果要运行快速命令,而不是打开终端并在其中运行命令,则可以使用 `Alt+F2` 运行控制台。

|

||||

|

||||

![Alt+F2 to run commands in Ubuntu][5]

|

||||

|

||||

*控制台*

|

||||

|

||||

当您使用只能在终端运行的应用程序时,这尤其有用。

|

||||

|

||||

#### 11、 Ctrl+Q:关闭应用程序窗口

|

||||

|

||||

如果您有正在运行的应用程序,可以使用 `Ctrl+Q` 快捷键关闭应用程序窗口。您也可以使用 `Ctrl+W` 来实现此目的。

|

||||

|

||||

`Alt+F4` 是关闭应用程序窗口更“通用”的快捷方式。

|

||||

|

||||

它不适用于一些应用程序,如 Ubuntu 中的默认终端。

|

||||

|

||||

#### 12、 Ctrl+Alt+箭头:切换工作区

|

||||

|

||||

![Workspace switching][6]

|

||||

|

||||

*切换工作区*

|

||||

|

||||

如果您是使用工作区的重度用户,可以使用 `Ctrl+Alt+上箭头`和 `Ctrl+Alt+下箭头`在工作区之间切换。

|

||||

|

||||

#### 13、 Ctrl+Alt+Del:注销

|

||||

|

||||

不!在 Linux 中使用著名的快捷键 `Ctrl+Alt+Del` 并不会像在 Windows 中一样打开任务管理器(除非您使用自定义快捷键)。

|

||||

|

||||

![Log Out Ubuntu][7]

|

||||

|

||||

*注销*

|

||||

|

||||

在普通的 GNOME 桌面环境中,您可以使用 `Ctrl+Alt+Del` 键打开关机菜单,但 Ubuntu 并不总是遵循此规范,因此当您在 Ubuntu 中使用 `Ctrl+Alt+Del` 键时,它会打开注销菜单。

|

||||

|

||||

### 在 Ubuntu 中使用自定义键盘快捷键

|

||||

|

||||

您不是只能使用默认的键盘快捷键,您可以根据需要创建自己的自定义键盘快捷键。

|

||||

|

||||

转到“设置->设备->键盘”,您将在这里看到系统的所有键盘快捷键。向下滚动到底部,您将看到“自定义快捷方式”选项。

|

||||

|

||||

![Add custom keyboard shortcut in Ubuntu][8]

|

||||

|

||||

您需要提供易于识别的快捷键名称、使用快捷键时运行的命令,以及您自定义的按键组合。

|

||||

|

||||

### Ubuntu 中你最喜欢的键盘快捷键是什么?

|

||||

|

||||

快捷键无穷无尽。如果需要,你可以看一看所有可能的 [GNOME 快捷键][9],看其中有没有你需要用到的快捷键。

|

||||

|

||||

您可以学习使用您经常使用应用程序的快捷键,这是很有必要的。例如,我使用 Kazam 进行[屏幕录制][10],键盘快捷键帮助我方便地暂停和开始录像。

|

||||

|

||||

您最喜欢、最离不开的 Ubuntu 快捷键是什么?

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/ubuntu-shortcuts/

|

||||

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[XiatianSummer](https://github.com/XiatianSummer)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[1]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/ubuntu-keyboard-shortcuts.jpeg

|

||||

[2]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/ubuntu-terminal-shortcut.jpg

|

||||

[3]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/notification-tray-ubuntu-gnome.jpeg

|

||||

[4]: https://itsfoss.com/type-indian-languages-ubuntu/

|

||||

[5]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/console-alt-f2-ubuntu-gnome.jpeg

|

||||

[6]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/workspace-switcher-ubuntu.png

|

||||

[7]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/log-out-ubuntu.jpeg

|

||||

[8]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/custom-keyboard-shortcut.jpg

|

||||

[9]: https://wiki.gnome.org/Design/OS/KeyboardShortcuts

|

||||

[10]: https://itsfoss.com/best-linux-screen-recorders/

|

||||

@ -3,7 +3,7 @@

|

||||

|

||||

|

||||

|

||||

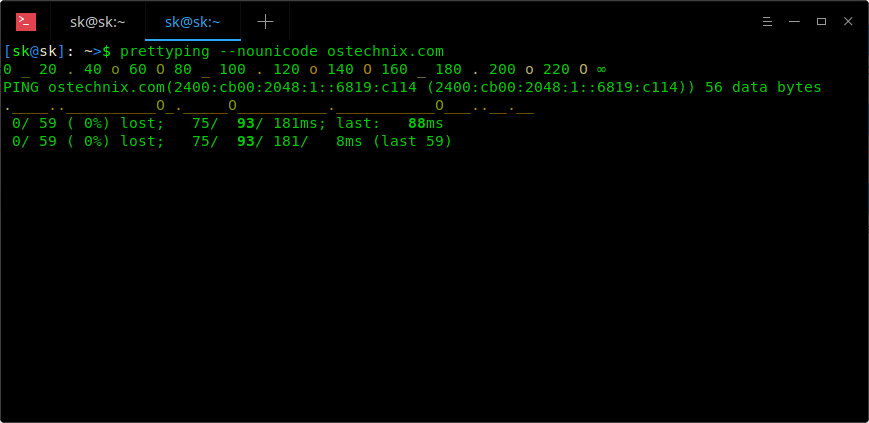

**APT**, 是 **A** dvanced **P** ackage **T** ool 的缩写,是基于 Debian 的系统的默认包管理器。我们可以使用 APT 安装、更新、升级和删除应用程序。最近,我一直遇到一个奇怪的错误。每当我尝试更新我的 Ubuntu 16.04 时,我都会收到此错误 - **“0% [Connecting to in.archive.ubuntu.com (2001:67c:1560:8001::14)]”** ,同时更新流程会卡住很长时间。我的网络连接没问题,我可以 ping 通所有网站,包括 Ubuntu 官方网站。在搜索了一番谷歌后,我意识到 Ubuntu 镜像有时无法通过 IPv6 访问。在我强制将 APT 包管理器在更新系统时使用 IPv4 代替 IPv6 访问 Ubuntu 镜像后,此问题得以解决。如果你遇到过此错误,可以按照以下说明解决。

|

||||

**APT**, 是 **A** dvanced **P** ackage **T** ool 的缩写,是基于 Debian 的系统的默认包管理器。我们可以使用 APT 安装、更新、升级和删除应用程序。最近,我一直遇到一个奇怪的错误。每当我尝试更新我的 Ubuntu 16.04 时,我都会收到此错误 - **“0% [Connecting to in.archive.ubuntu.com (2001:67c:1560:8001::14)]”** ,同时更新流程会卡住很长时间。我的网络连接没问题,我可以 ping 通所有网站,包括 Ubuntu 官方网站。在搜索了一番谷歌后,我意识到 Ubuntu 镜像站点有时无法通过 IPv6 访问。在我强制将 APT 包管理器在更新系统时使用 IPv4 代替 IPv6 访问 Ubuntu 镜像站点后,此问题得以解决。如果你遇到过此错误,可以按照以下说明解决。

|

||||

|

||||

### 强制 APT 包管理器在 Ubuntu 16.04 中使用 IPv4

|

||||

|

||||

@ -11,13 +11,12 @@

|

||||

|

||||

```

|

||||

$ sudo apt-get -o Acquire::ForceIPv4=true update

|

||||

|

||||

$ sudo apt-get -o Acquire::ForceIPv4=true upgrade

|

||||

```

|

||||

|

||||

瞧!这次更新很快就完成了。

|

||||

|

||||

你还可以使用以下命令在 **/etc/apt/apt.conf.d/99force-ipv4** 中添加以下行,以便将来对所有 **apt-get** 事务保持持久性:

|

||||

你还可以使用以下命令在 `/etc/apt/apt.conf.d/99force-ipv4` 中添加以下行,以便将来对所有 `apt-get` 事务保持持久性:

|

||||

|

||||

```

|

||||

$ echo 'Acquire::ForceIPv4 "true";' | sudo tee /etc/apt/apt.conf.d/99force-ipv4

|

||||

@ -25,7 +24,7 @@ $ echo 'Acquire::ForceIPv4 "true";' | sudo tee /etc/apt/apt.conf.d/99force-ipv4

|

||||

|

||||

**免责声明:**

|

||||

|

||||

我不知道最近是否有人遇到这个问题,但我今天在我的 Ubuntu 16.04 LTS 虚拟机中遇到了至少四五次这样的错误,我按照上面的说法解决了这个问题。我不确定这是推荐的解决方案。请浏览 Ubuntu 论坛来确保此方法合法。由于我只是一个 VM,我只将它用于测试和学习目的,我不介意这种方法的真实性。请自行承担使用风险。

|

||||

我不知道最近是否有人遇到这个问题,但我今天在我的 Ubuntu 16.04 LTS 虚拟机中遇到了至少四、五次这样的错误,我按照上面的说法解决了这个问题。我不确定这是推荐的解决方案。请浏览 Ubuntu 论坛来确保此方法合法。由于我只是一个 VM,我只将它用于测试和学习目的,我不介意这种方法的真实性。请自行承担使用风险。

|

||||

|

||||

希望这有帮助。还有更多的好东西。敬请关注!

|

||||

|

||||

@ -40,7 +39,7 @@ via: https://www.ostechnix.com/how-to-force-apt-package-manager-to-use-ipv4-in-u

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,74 @@

|

||||

CPU Power Manager – Control And Manage CPU Frequency In Linux

|

||||

======

|

||||

|

||||

|

||||

|

||||

If you are a laptop user, you probably know that power management on Linux isn’t really as good as on other OSes. While there are tools like **TLP** , [**Laptop Mode Tools** and **powertop**][1] to help reduce power consumption, overall battery life on Linux isn’t as good as Windows or Mac OS. Another way to reduce power consumption is to limit the frequency of your CPU. While this is something that has always been doable, it generally requires complicated terminal commands, making it inconvenient. But fortunately, there’s a gnome extension that helps you easily set and manage your CPU’s frequency – **CPU Power Manager**. CPU Power Manager uses the **intel_pstate** frequency scaling driver (supported by almost every Intel CPU) to control and manage CPU frequency in your GNOME desktop.

|

||||

|

||||

Another reason to use this extension is to reduce heating in your system. There are many systems out there which can get uncomfortably hot in normal usage. Limiting your CPU’s frequency could reduce heating. It will also decrease the wear and tear on your CPU and other components.

|

||||

|

||||

### Installing CPU Power Manager

|

||||

|

||||

First, go to the [**extension’s page**][2], and install the extension.

|

||||

|

||||

Once the extension has installed, you’ll get a CPU icon at the right side of the Gnome top bar. Click the icon, and you get an option to install the extension:

|

||||

|

||||

|

||||

|

||||

If you click **“Attempt Installation”** , you’ll get a password prompt. The extension needs root privileges to add policykit rule for controlling CPU frequency. This is what the prompt looks like:

|

||||

|

||||

|

||||

|

||||

Type in your password and Click **“Authenticate”** , and that finishes installation. The last action adds a policykit file – **mko.cpupower.setcpufreq.policy** at **/usr/share/polkit-1/actions**.

|

||||

|

||||

After installation is complete, if you click the CPU icon at the top right, you’ll get something like this:

|

||||

|

||||

|

||||

|

||||

### Features

|

||||

|

||||

* **See the current CPU frequency:** Obviously, you can use this window to see the frequency that your CPU is running at.

|

||||

* **Set maximum and minimum frequency:** With this extension, you can set maximum and minimum frequency limits in terms of percentage of max frequency. Once these limits are set, the CPU will operate only in this range of frequencies.

|

||||

* **Turn Turbo Boost On and Off:** This is my favorite feature. Most Intel CPU’s have “Turbo Boost” feature, whereby the one of the cores of the CPU is boosted past the normal maximum frequency for extra performance. While this can make your system more performant, it also increases power consumption a lot. So if you aren’t doing anything intensive, it’s nice to be able to turn off Turbo Boost and save power. In fact, in my case, I have Turbo Boost turned off most of the time.

|

||||

* **Make Profiles:** You can make profiles with max and min frequency that you can turn on/off easily instead of fiddling with max and frequencies.

|

||||

|

||||

|

||||

|

||||

### Preferences

|

||||

|

||||

You can also customize the extension via the preferences window:

|

||||

|

||||

|

||||

|

||||

As you can see, you can set whether CPU frequency is to be displayed, and whether to display it in **Mhz** or **Ghz**.

|

||||

|

||||

You can also edit and create/delete profiles:

|

||||

|

||||

|

||||

|

||||

You can set maximum and minimum frequencies, and turbo boost for each profile.

|

||||

|

||||

### Conclusion

|

||||

|

||||

As I said in the beginning, power management on Linux is not the best, and many people are always looking to eek out a few minutes more out of their Linux laptop. If you are one of those, check out this extension. This is a unconventional method to save power, but it does work. I certainly love this extension, and have been using it for a few months now.

|

||||

|

||||

What do you think about this extension? Put your thoughts in the comments below!

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/cpu-power-manager-control-and-manage-cpu-frequency-in-linux/

|

||||

|

||||

作者:[EDITOR][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.ostechnix.com/author/editor/

|

||||

[1]: https://www.ostechnix.com/improve-laptop-battery-performance-linux/

|

||||

[2]: https://extensions.gnome.org/extension/945/cpu-power-manager/

|

||||

@ -0,0 +1,80 @@

|

||||

How to Install Cinnamon Desktop on Ubuntu

|

||||

======

|

||||

**This tutorial shows you how to install Cinnamon desktop environment on Ubuntu.**

|

||||

|

||||

[Cinnamon][1] is the default desktop environment of [Linux Mint][2]. Unlike Unity desktop environment in Ubuntu, Cinnamon is more traditional but elegant looking desktop environment with the bottom panel and app menu etc. Many Windows migrants [prefer Linux Mint over Ubuntu][3] because of Cinnamon desktop and its Windows-resembling user interface.

|

||||

|

||||

Now, you don’t need to [install Linux Mint][4] just for trying Cinnamon. In this tutorial, I’ll show you **how to install Cinnamon in Ubuntu 18.04, 16.04 and 14.04**.

|

||||

|

||||

You should note something before you install Cinnamon desktop on Ubuntu. Sometimes, installing additional desktop environments leads to conflict between the desktop environments. This may result in a broken session, broken applications and features etc. This is why you should be careful in making this choice.

|

||||

|

||||

### How to Install Cinnamon on Ubuntu

|

||||

|

||||

![How to install cinnamon desktop on Ubuntu Linux][5]

|

||||

|

||||

There used to be a-sort-of official PPA from Cinnamon team for Ubuntu but it doesn’t exist anymore. Don’t lose heart. There is an unofficial PPA available and it works perfectly. This PPA consists of the latest Cinnamon version.

|

||||

|

||||

Open a terminal and use the following commands:

|

||||

|

||||

```

|

||||

sudo add-apt-repository ppa:embrosyn/cinnamon

|

||||

sudo apt update && sudo apt install cinnamon

|

||||

|

||||

```

|

||||

|

||||

It will download files of around 150 MB in size (if I remember correctly). This also provides you with Nemo (Nautilus fork) and Cinnamon Control Center. This bonus stuff gives a closer feel of Linux Mint.

|

||||

|

||||

### Using Cinnamon desktop environment in Ubuntu

|

||||

|

||||

Once you have installed Cinnamon, log out of the current session. At the login screen, click on the Ubuntu symbol beside the username:

|

||||

|

||||

|

||||

|

||||

When you do this, it will give you all the desktop environments available for your system. No need to tell you that you have to choose Cinnamon:

|

||||

|

||||

|

||||

|

||||

Now you should be logged in to Ubuntu with Cinnamon desktop environment. Remember, you can do the same to switch back to Unity. Here is a quick screenshot of what it looked like to run **Cinnamon in Ubuntu** :

|

||||

|

||||

|

||||

|

||||

Looks completely like Linux Mint, isn’t it? I didn’t find any compatibility issue between Cinnamon and Unity. I switched back and forth between Unity and Cinnamon and both worked perfectly.

|

||||

|

||||

#### Remove Cinnamon from Ubuntu

|

||||

|

||||

It is understandable that you might want to uninstall Cinnamon. We will use PPA Purge for this purpose. Let’s install PPA Purge first:

|

||||

|

||||

```

|

||||

sudo apt-get install ppa-purge

|

||||

|

||||

```

|

||||

|

||||

Afterward, use the following command to purge the PPA:

|

||||

|

||||

```

|

||||

sudo ppa-purge ppa:embrosyn/cinnamon

|

||||

|

||||

```

|

||||

|

||||

In related articles, I suggest you to read more about [how to remove PPA in Linux][6].

|

||||

|

||||

I hope this post helps you to **install Cinnamon in Ubuntu**. Do share your experience with Cinnamon.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/install-cinnamon-on-ubuntu/

|

||||

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[1]: http://cinnamon.linuxmint.com/

|

||||

[2]: http://www.linuxmint.com/

|

||||

[3]: https://itsfoss.com/linux-mint-vs-ubuntu/

|

||||

[4]: https://itsfoss.com/guide-install-linux-mint-16-dual-boot-windows/

|

||||

[5]: https://4bds6hergc-flywheel.netdna-ssl.com/wp-content/uploads/2018/09/install-cinnamon-ubuntu.png

|

||||

[6]: https://itsfoss.com/how-to-remove-or-delete-ppas-quick-tip/

|

||||

@ -1,3 +1,4 @@

|

||||

Translating by bayar199468

|

||||

7 Best eBook Readers for Linux

|

||||

======

|

||||

**Brief:** In this article, we are covering some of the best ebook readers for Linux. These apps give a better reading experience and some will even help in managing your ebooks.

|

||||

|

||||

@ -1,182 +0,0 @@

|

||||

How to Install and Use Wireshark on Debian 9 / Ubuntu 16.04 / 17.10

|

||||

============================================================

|

||||

|

||||

by [Pradeep Kumar][1] · Published November 29, 2017 · Updated November 29, 2017

|

||||

|

||||

[][2]

|

||||

|

||||

Wireshark is free and open source, cross platform, GUI based Network packet analyzer that is available for Linux, Windows, MacOS, Solaris etc. It captures network packets in real time & presents them in human readable format. Wireshark allows us to monitor the network packets up to microscopic level. Wireshark also has a command line utility called ‘tshark‘ that performs the same functions as Wireshark but through terminal & not through GUI.

|

||||

|

||||

Wireshark can be used for network troubleshooting, analyzing, software & communication protocol development & also for education purposed. Wireshark uses a library called ‘pcap‘ for capturing the network packets.

|

||||

|

||||

Wireshark comes with a lot of features & some those features are;

|

||||

|

||||

* Support for a hundreds of protocols for inspection,

|

||||

|

||||

* Ability to capture packets in real time & save them for later offline analysis,

|

||||

|

||||

* A number of filters to analyzing data,

|

||||

|

||||

* Data captured can be compressed & uncompressed on the fly,

|

||||

|

||||

* Various file formats for data analysis supported, output can also be saved to XML, CSV, plain text formats,

|

||||

|

||||

* data can be captured from a number of interfaces like ethernet, wifi, bluetooth, USB, Frame relay , token rings etc.

|

||||

|

||||

In this article, we will discuss how to install Wireshark on Ubuntu/Debain machines & will also learn to use Wireshark for capturing network packets.

|

||||

|

||||

#### Installation of Wireshark on Ubuntu 16.04 / 17.10

|

||||

|

||||

Wireshark is available with default Ubuntu repositories & can be simply installed using the following command. But there might be chances that you will not get the latest version of wireshark.

|

||||

|

||||

```

|

||||

linuxtechi@nixworld:~$ sudo apt-get update

|

||||

linuxtechi@nixworld:~$ sudo apt-get install wireshark -y

|

||||

```

|

||||

|

||||

So to install latest version of wireshark we have to enable or configure official wireshark repository.

|

||||

|

||||

Use the beneath commands one after the another to configure repository and to install latest version of Wireshark utility

|

||||

|

||||

```

|

||||

linuxtechi@nixworld:~$ sudo add-apt-repository ppa:wireshark-dev/stable

|

||||

linuxtechi@nixworld:~$ sudo apt-get update

|

||||

linuxtechi@nixworld:~$ sudo apt-get install wireshark -y

|

||||

```

|

||||

|

||||

Once the Wireshark is installed execute the below command so that non-root users can capture live packets of interfaces,

|

||||

|

||||

```

|

||||

linuxtechi@nixworld:~$ sudo setcap 'CAP_NET_RAW+eip CAP_NET_ADMIN+eip' /usr/bin/dumpcap

|

||||

```

|

||||

|

||||

#### Installation of Wireshark on Debian 9

|

||||

|

||||

Wireshark package and its dependencies are already present in the default debian 9 repositories, so to install latest and stable version of Wireshark on Debian 9, use the following command:

|

||||

|

||||

```

|

||||

linuxtechi@nixhome:~$ sudo apt-get update

|

||||

linuxtechi@nixhome:~$ sudo apt-get install wireshark -y

|

||||

```

|

||||

|

||||

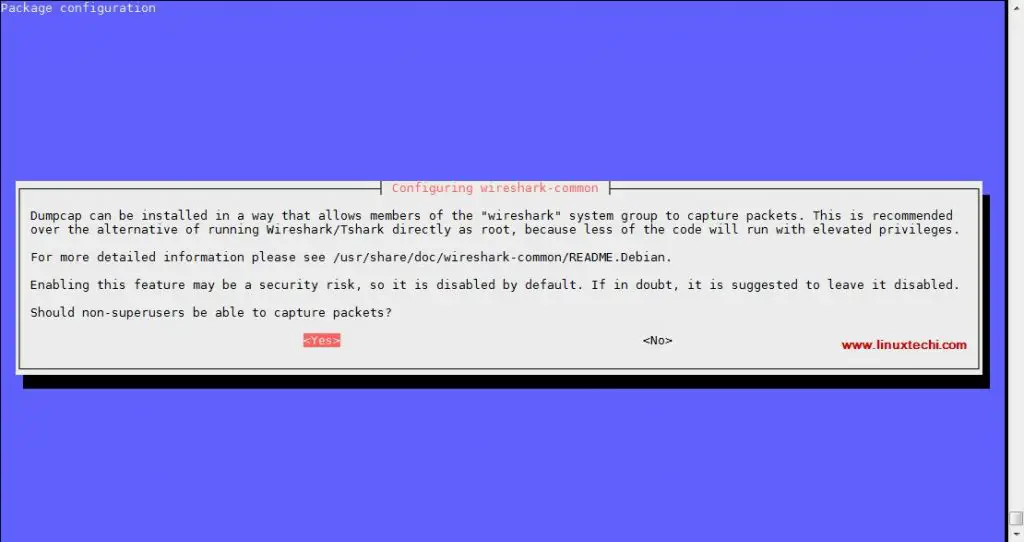

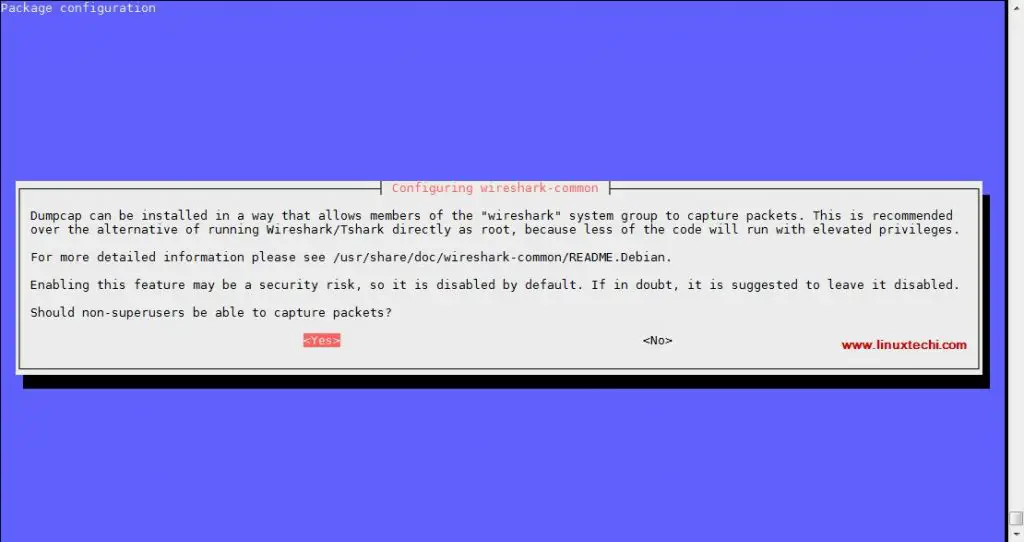

During the installation, it will prompt us to configure dumpcap for non-superusers,

|

||||

|

||||

Select ‘yes’ and then hit enter.

|

||||

|

||||

[][3]

|

||||

|

||||

Once the Installation is completed, execute the below command so that non-root users can also capture the live packets of the interfaces.

|

||||

|

||||

```

|

||||

linuxtechi@nixhome:~$ sudo chmod +x /usr/bin/dumpcap

|

||||

```

|

||||

|

||||

We can also use the latest source package to install the wireshark on Ubuntu/Debain & many other Linux distributions.

|

||||

|

||||

#### Installing Wireshark using source code on Debian / Ubuntu Systems

|

||||

|

||||

Firstly download the latest source package (which is 2.4.2 at the time for writing this article), use the following command,

|

||||

|

||||

```

|

||||

linuxtechi@nixhome:~$ wget https://1.as.dl.wireshark.org/src/wireshark-2.4.2.tar.xz

|

||||

```

|

||||

|

||||

Next extract the package & enter into the extracted directory,

|

||||

|

||||

```

|

||||

linuxtechi@nixhome:~$ tar -xf wireshark-2.4.2.tar.xz -C /tmp

|

||||

linuxtechi@nixhome:~$ cd /tmp/wireshark-2.4.2

|

||||

```

|

||||

|

||||

Now we will compile the code with the following commands,

|

||||

|

||||

```

|

||||

linuxtechi@nixhome:/tmp/wireshark-2.4.2$ ./configure --enable-setcap-install

|

||||

linuxtechi@nixhome:/tmp/wireshark-2.4.2$ make

|

||||

```

|

||||

|

||||

Lastly install the compiled packages to install Wireshark on the system,

|

||||

|

||||

```

|

||||

linuxtechi@nixhome:/tmp/wireshark-2.4.2$ sudo make install

|

||||

linuxtechi@nixhome:/tmp/wireshark-2.4.2$ sudo ldconfig

|

||||

```

|

||||

|

||||

Upon installation a separate group for Wireshark will also be created, we will now add our user to the group so that it can work with wireshark otherwise you might get ‘permission denied‘ error when starting wireshark.

|

||||

|

||||

To add the user to the wireshark group, execute the following command,

|

||||

|

||||

```

|

||||

linuxtechi@nixhome:~$ sudo usermod -a -G wireshark linuxtechi

|

||||

```

|

||||

|

||||

Now we can start wireshark either from GUI Menu or from terminal with this command,

|

||||

|

||||

```

|

||||

linuxtechi@nixhome:~$ wireshark

|

||||

```

|

||||

|

||||

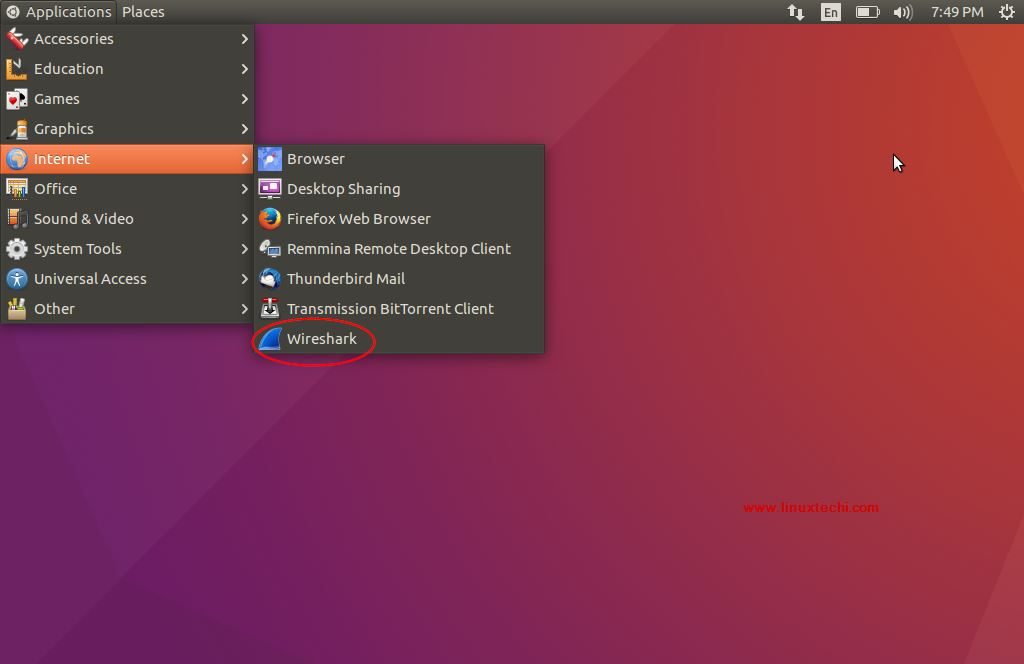

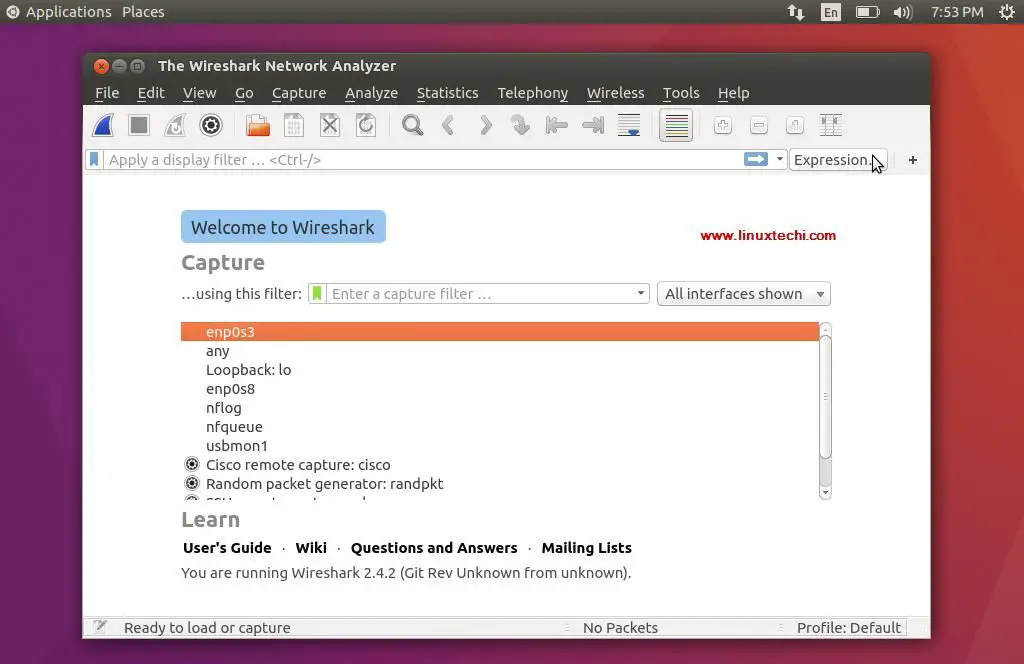

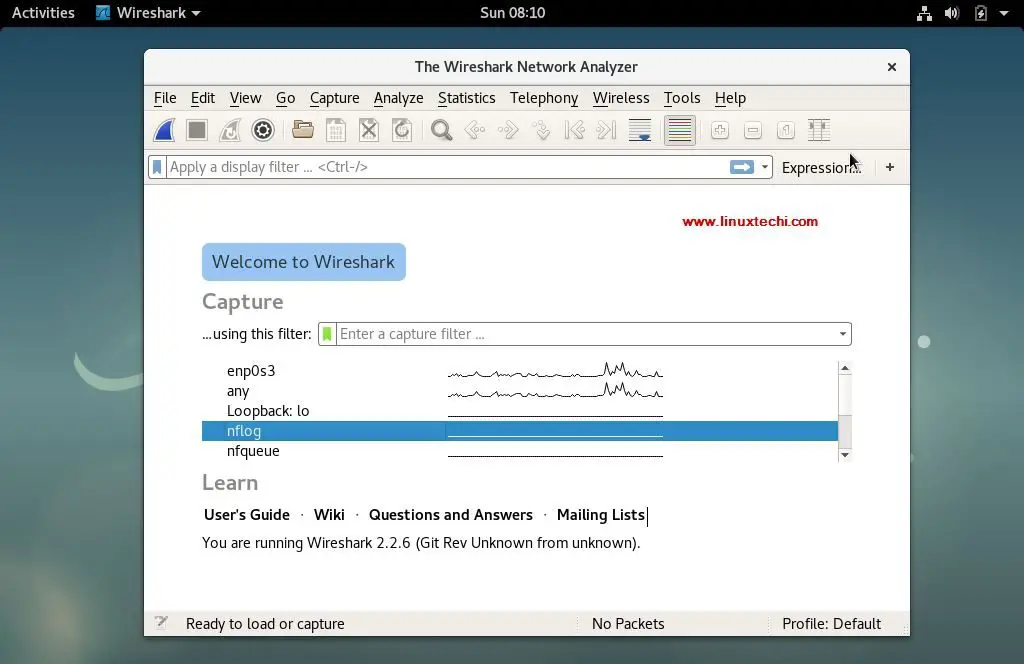

#### Access Wireshark on Debian 9 System

|

||||

|

||||

[][4]

|

||||

|

||||

Click on Wireshark icon

|

||||

|

||||

[][5]

|

||||

|

||||

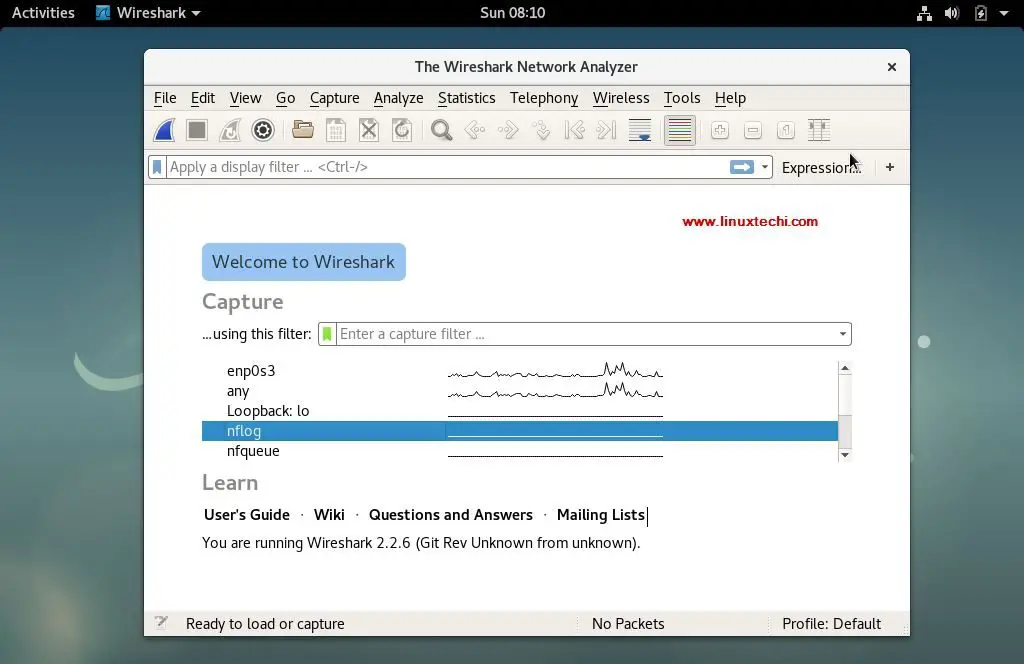

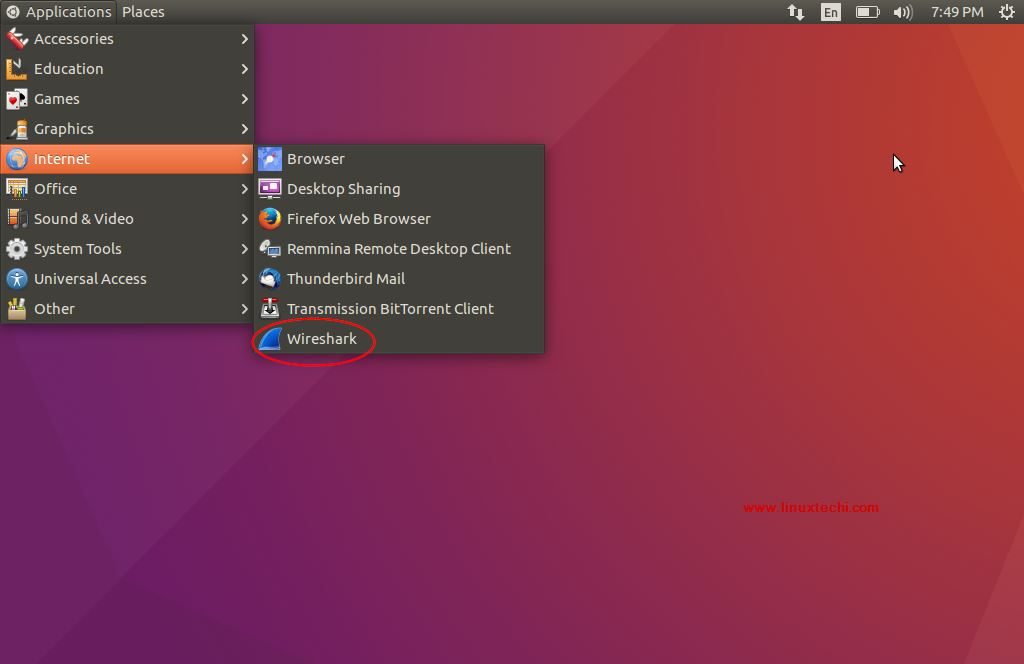

#### Access Wireshark on Ubuntu 16.04 / 17.10

|

||||

|

||||

[][6]

|

||||

|

||||

Click on Wireshark icon

|

||||

|

||||

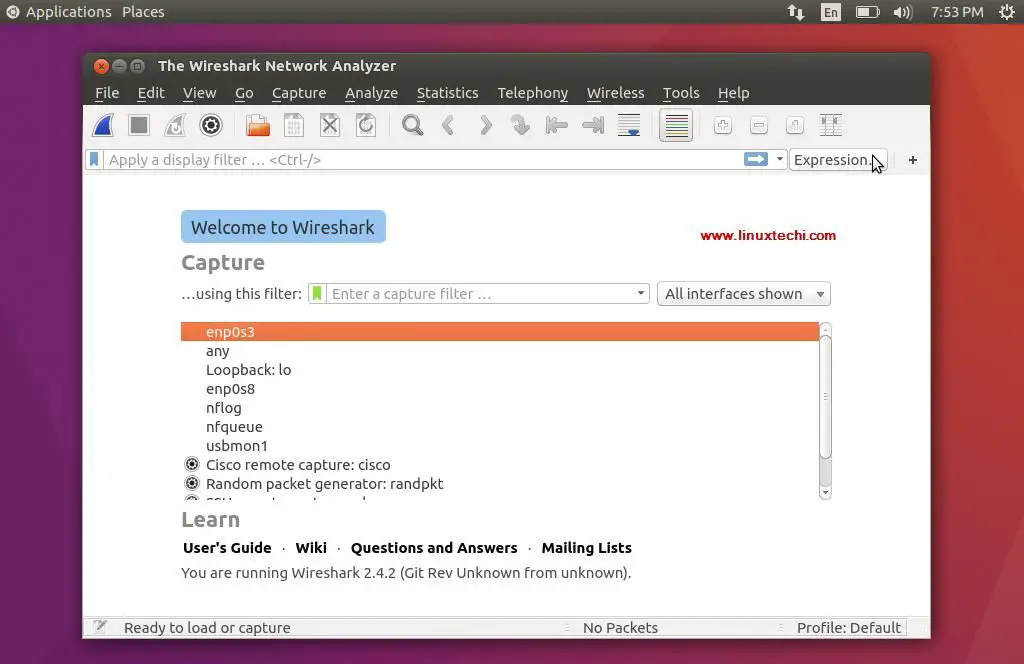

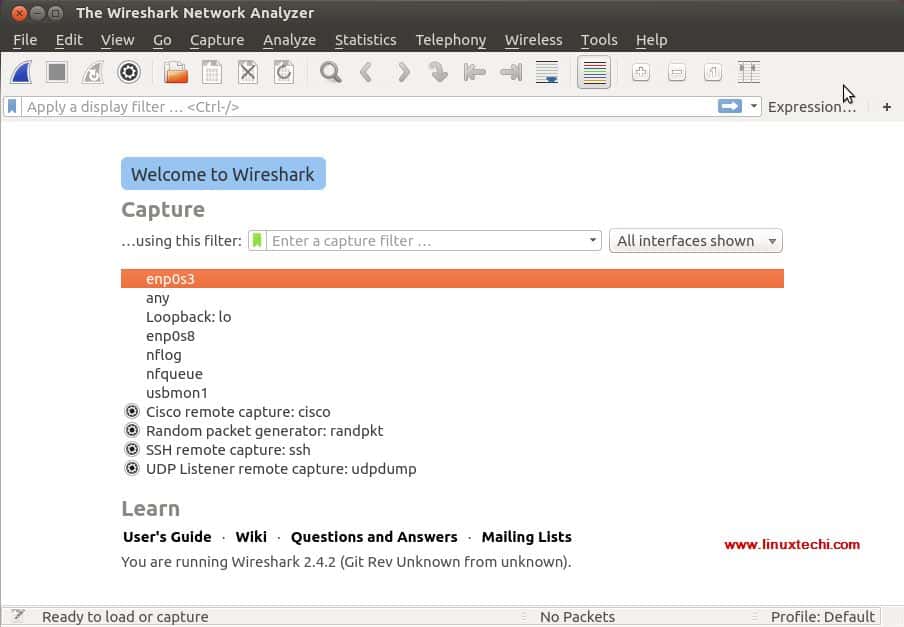

[][7]

|

||||

|

||||

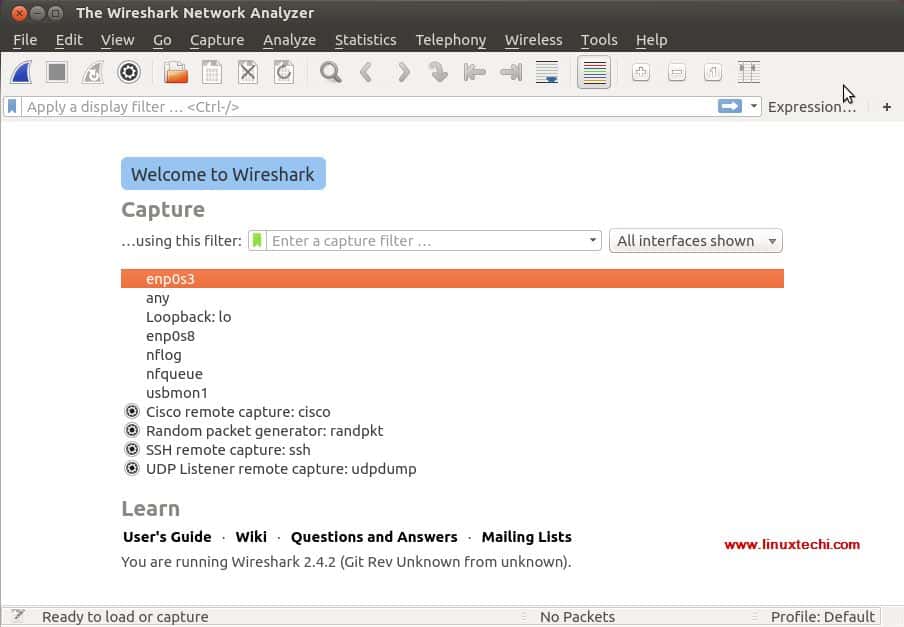

#### Capturing and Analyzing packets

|

||||

|

||||

Once the wireshark has been started, we should be presented with the wireshark window, example is shown above for Ubuntu and Debian system.

|

||||

|

||||

[][8]

|

||||

|

||||

All these are the interfaces from where we can capture the network packets. Based on the interfaces you have on your system, this screen might be different for you.

|

||||

|

||||

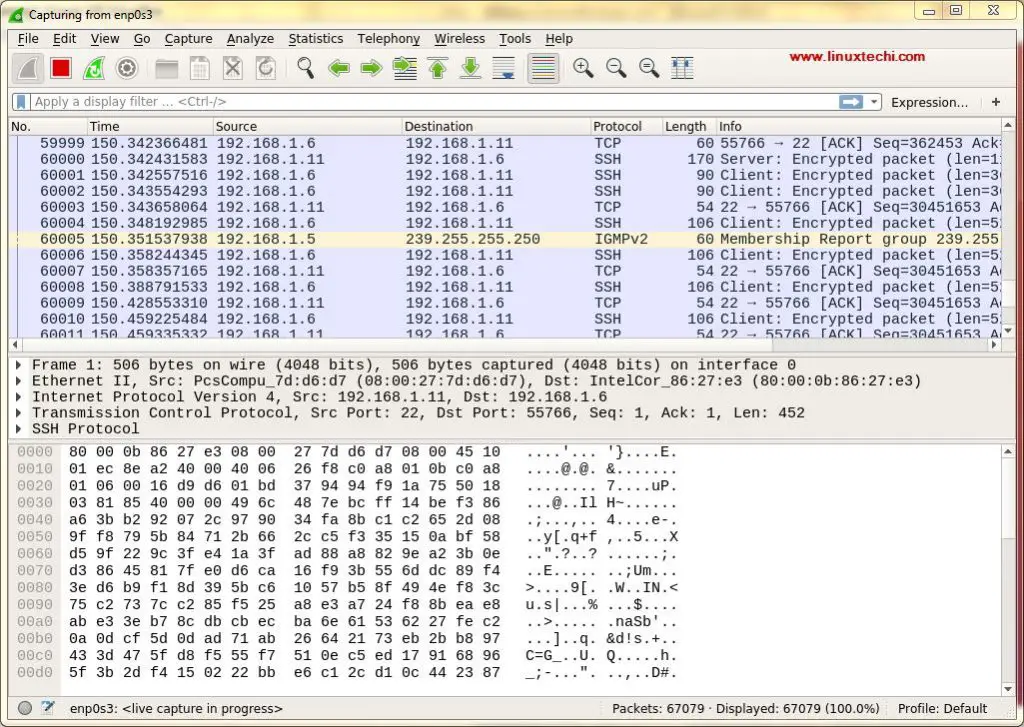

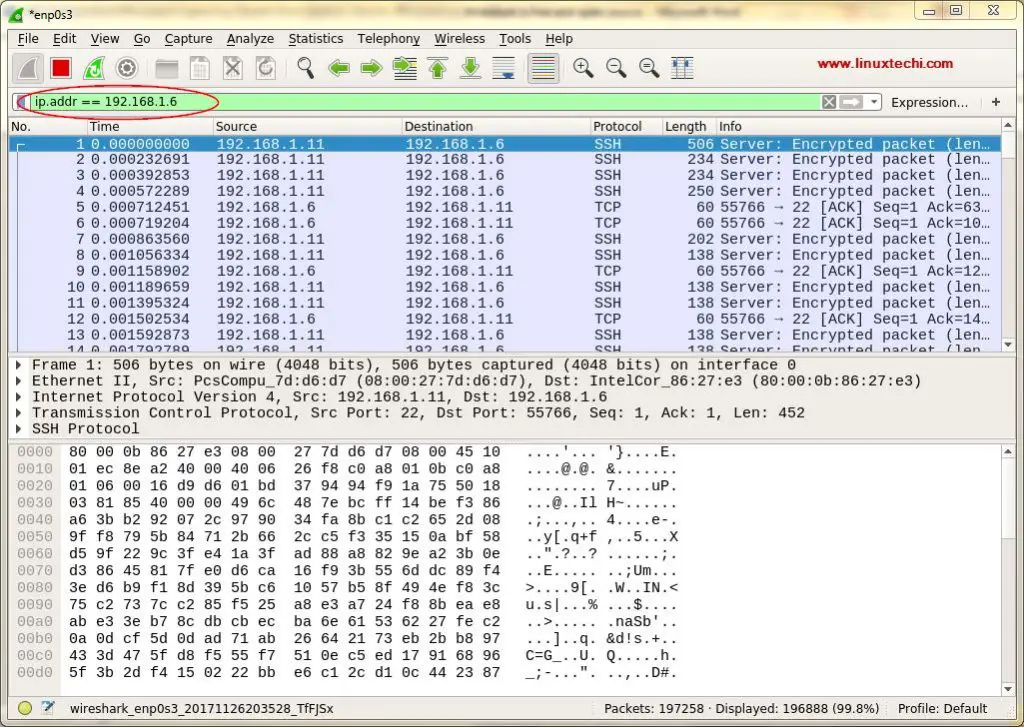

We are selecting ‘enp0s3’ for capturing the network traffic for that inteface. After selecting the inteface, network packets for all the devices on our network start to populate (refer to screenshot below)

|

||||

|

||||

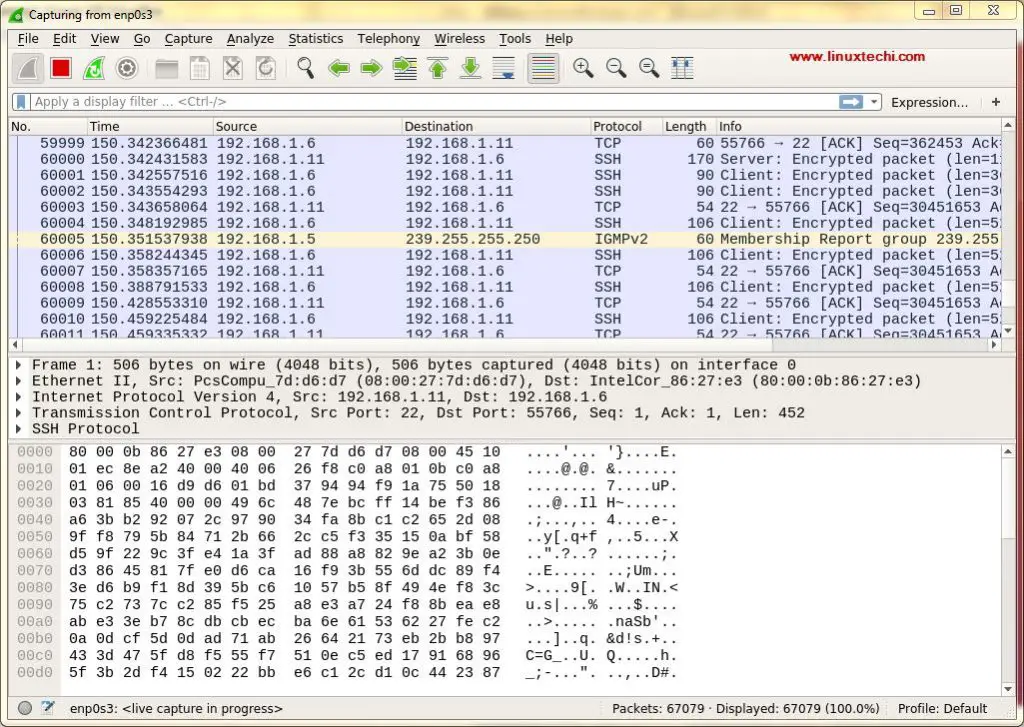

[][9]

|

||||

|

||||

First time we see this screen we might get overwhelmed by the data that is presented in this screen & might have thought how to sort out this data but worry not, one the best features of Wireshark is its filters.

|

||||

|

||||

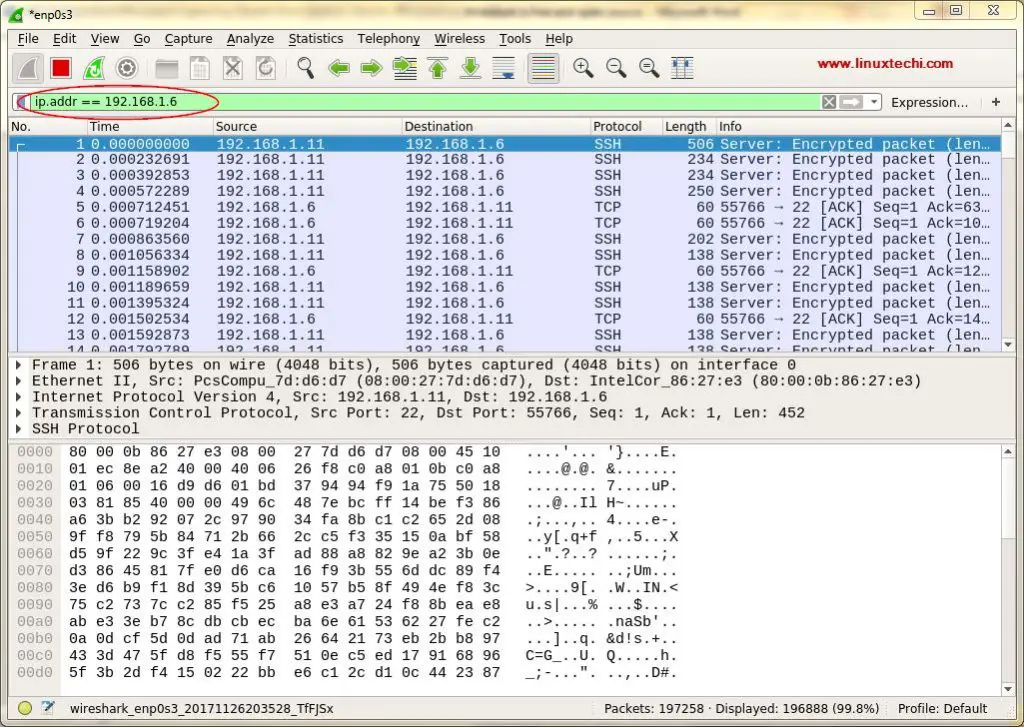

We can sort/filter out the data based on IP address, Port number, can also used source & destination filters, packet size etc & can also combine 2 or more filters together to create more comprehensive searches. We can either write our filters in ‘Apply a Display Filter‘ tab , or we can also select one of already created rules. To select pre-built filter, click on ‘flag‘ icon , next to ‘Apply a Display Filter‘ tab,

|

||||

|

||||

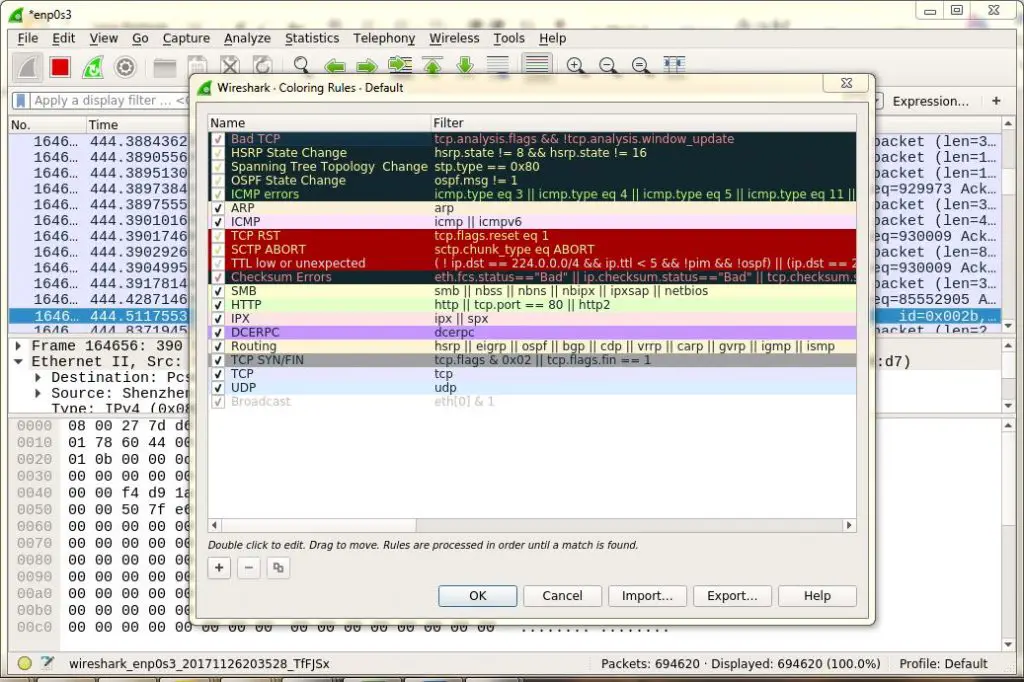

[][10]

|

||||

|

||||

We can also filter data based on the color coding, By default, light purple is TCP traffic, light blue is UDP traffic, and black identifies packets with errors , to see what these codes mean, click View -> Coloring Rules, also we can change these codes.

|

||||

|

||||

[][11]

|

||||

|

||||

After we have the results that we need, we can then click on any of the captured packets to get more details about that packet, this will show all the data about that network packet.

|

||||

|

||||

Wireshark is an extremely powerful tool takes some time to getting used to & make a command over it, this tutorial will help you get started. Please feel free to drop in your queries or suggestions in the comment box below.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linuxtechi.com

|

||||

|

||||

作者:[Pradeep Kumar][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linuxtechi.com/author/pradeep/

|

||||

[1]:https://www.linuxtechi.com/author/pradeep/

|

||||

[2]:https://www.linuxtechi.com/wp-content/uploads/2017/11/wireshark-Debian-9-Ubuntu-16.04-17.10.jpg

|

||||

[3]:https://www.linuxtechi.com/wp-content/uploads/2017/11/Configure-Wireshark-Debian9.jpg

|

||||

[4]:https://www.linuxtechi.com/wp-content/uploads/2017/11/Access-wireshark-debian9.jpg

|

||||

[5]:https://www.linuxtechi.com/wp-content/uploads/2017/11/Wireshark-window-debian9.jpg

|

||||

[6]:https://www.linuxtechi.com/wp-content/uploads/2017/11/Access-wireshark-Ubuntu.jpg

|

||||

[7]:https://www.linuxtechi.com/wp-content/uploads/2017/11/Wireshark-window-Ubuntu.jpg

|

||||

[8]:https://www.linuxtechi.com/wp-content/uploads/2017/11/wireshark-Linux-system.jpg

|

||||

[9]:https://www.linuxtechi.com/wp-content/uploads/2017/11/Capturing-Packet-from-enp0s3-Ubuntu-Wireshark.jpg

|

||||

[10]:https://www.linuxtechi.com/wp-content/uploads/2017/11/Filter-in-wireshark-Ubuntu.jpg

|

||||

[11]:https://www.linuxtechi.com/wp-content/uploads/2017/11/Packet-Colouring-Wireshark.jpg

|

||||

@ -1,308 +0,0 @@

|

||||

translating by Flowsnow

|

||||

What is behavior-driven Python?

|

||||

======

|

||||

|

||||

Have you heard about [behavior-driven development][1] (BDD) and wondered what all the buzz is about? Maybe you've caught team members talking in "gherkin" and felt left out of the conversation. Or perhaps you're a Pythonista looking for a better way to test your code. Whatever the circumstance, learning about BDD can help you and your team achieve better collaboration and test automation, and Python's `behave` framework is a great place to start.

|

||||

|

||||

### What is BDD?

|

||||

|

||||

* Submitting forms on a website

|

||||

* Searching for desired results

|

||||

* Saving a document

|

||||

* Making REST API calls

|

||||

* Running command-line interface commands

|

||||

|

||||

|

||||

|

||||

In software, a behavior is how a feature operates within a well-defined scenario of inputs, actions, and outcomes. Products can exhibit countless behaviors, such as:

|

||||

|

||||

Defining a product's features based on its behaviors makes it easier to describe them, develop them, and test them. This is the heart of BDD: making behaviors the focal point of software development. Behaviors are defined early in development using a [specification by example][2] language. One of the most common behavior spec languages is [Gherkin][3], the Given-When-Then scenario format from the [Cucumber][4] project. Behavior specs are basically plain-language descriptions of how a behavior works, with a little bit of formal structure for consistency and focus. Test frameworks can easily automate these behavior specs by "gluing" step texts to code implementations.

|

||||

|

||||

Below is an example of a behavior spec written in Gherkin:

|

||||

```

|

||||

Scenario: Basic DuckDuckGo Search

|

||||

|

||||

Given the DuckDuckGo home page is displayed

|

||||

|

||||

When the user searches for "panda"

|

||||

|

||||

Then results are shown for "panda"

|

||||

|

||||

```

|

||||

|

||||

At a quick glance, the behavior is intuitive to understand. Except for a few keywords, the language is freeform. The scenario is concise yet meaningful. A real-world example illustrates the behavior. Steps declaratively indicate what should happen—without getting bogged down in the details of how.

|

||||

|

||||

The [main benefits of BDD][5] are good collaboration and automation. Everyone can contribute to behavior development, not just programmers. Expected behaviors are defined and understood from the beginning of the process. Tests can be automated together with the features they cover. Each test covers a singular, unique behavior in order to avoid duplication. And, finally, existing steps can be reused by new behavior specs, creating a snowball effect.

|

||||

|

||||

### Python's behave framework

|

||||

|

||||

`behave` is one of the most popular BDD frameworks in Python. It is very similar to other Gherkin-based Cucumber frameworks despite not holding the official Cucumber designation. `behave` has two primary layers:

|

||||

|

||||

1. Behavior specs written in Gherkin `.feature` files

|

||||

2. Step definitions and hooks written in Python modules that implement Gherkin steps

|

||||

|

||||

|

||||

|

||||

As shown in the example above, Gherkin scenarios use a three-part format:

|

||||

|

||||

1. Given some initial state

|

||||

2. When an action is taken

|

||||

3. Then verify the outcome

|

||||

|

||||

|

||||

|

||||

Each step is "glued" by decorator to a Python function when `behave` runs tests.

|

||||

|

||||

### Installation

|

||||

|

||||

As a prerequisite, make sure you have Python and `pip` installed on your machine. I strongly recommend using Python 3. (I also recommend using [`pipenv`][6], but the following example commands use the more basic `pip`.)

|

||||

|

||||

Only one package is required for `behave`:

|

||||

```

|

||||

pip install behave

|

||||

|

||||

```

|

||||

|

||||

Other packages may also be useful, such as:

|

||||

```

|

||||

pip install requests # for REST API calls

|

||||

|

||||

pip install selenium # for Web browser interactions

|

||||

|

||||

```

|

||||

|

||||

The [behavior-driven-Python][7] project on GitHub contains the examples used in this article.

|

||||

|

||||

### Gherkin features

|

||||

|

||||

The Gherkin syntax that `behave` uses is practically compliant with the official Cucumber Gherkin standard. A `.feature` file has Feature sections, which in turn have Scenario sections with Given-When-Then steps. Below is an example:

|

||||

```

|

||||

Feature: Cucumber Basket

|

||||

|

||||

As a gardener,

|

||||

|

||||

I want to carry many cucumbers in a basket,

|

||||

|

||||

So that I don’t drop them all.

|

||||

|

||||

|

||||

|

||||

@cucumber-basket

|

||||

|

||||

Scenario: Add and remove cucumbers

|

||||

|

||||

Given the basket is empty

|

||||

|

||||

When "4" cucumbers are added to the basket

|

||||

|

||||

And "6" more cucumbers are added to the basket

|

||||

|

||||

But "3" cucumbers are removed from the basket

|

||||

|

||||

Then the basket contains "7" cucumbers

|

||||

|

||||

```

|

||||

|

||||

There are a few important things to note here:

|

||||

|

||||

* Both the Feature and Scenario sections have [short, descriptive titles][8].

|

||||

* The lines immediately following the Feature title are comments ignored by `behave`. It is a good practice to put the user story there.

|

||||

* Scenarios and Features can have tags (notice the `@cucumber-basket` mark) for hooks and filtering (explained below).

|

||||

* Steps follow a [strict Given-When-Then order][9].

|

||||

* Additional steps can be added for any type using `And` and `But`.

|

||||

* Steps can be parametrized with inputs—notice the values in double quotes.

|

||||

|

||||

|

||||

|

||||

Scenarios can also be written as templates with multiple input combinations by using a Scenario Outline:

|

||||

```

|

||||

Feature: Cucumber Basket

|

||||

|

||||

|

||||

|

||||

@cucumber-basket

|

||||

|

||||

Scenario Outline: Add cucumbers

|

||||

|

||||

Given the basket has “<initial>” cucumbers

|

||||

|

||||

When "<more>" cucumbers are added to the basket

|

||||

|

||||

Then the basket contains "<total>" cucumbers

|

||||

|

||||

|

||||

|

||||

Examples: Cucumber Counts

|

||||

|

||||

| initial | more | total |

|

||||

|

||||

| 0 | 1 | 1 |

|

||||

|

||||

| 1 | 2 | 3 |

|

||||

|

||||

| 5 | 4 | 9 |

|

||||

|

||||

```

|

||||

|

||||

Scenario Outlines always have an Examples table, in which the first row gives column titles and each subsequent row gives an input combo. The row values are substituted wherever a column title appears in a step surrounded by angle brackets. In the example above, the scenario will be run three times because there are three rows of input combos. Scenario Outlines are a great way to avoid duplicate scenarios.

|

||||

|

||||

There are other elements of the Gherkin language, but these are the main mechanics. To learn more, read the Automation Panda articles [Gherkin by Example][10] and [Writing Good Gherkin][11].

|

||||

|

||||

### Python mechanics

|

||||

|

||||

Every Gherkin step must be "glued" to a step definition, a Python function that provides the implementation. Each function has a step type decorator with the matching string. It also receives a shared context and any step parameters. Feature files must be placed in a directory named `features/`, while step definition modules must be placed in a directory named `features/steps/`. Any feature file can use step definitions from any module—they do not need to have the same names. Below is an example Python module with step definitions for the cucumber basket features.

|

||||

```

|

||||

from behave import *

|

||||

|

||||

from cucumbers.basket import CucumberBasket

|

||||

|

||||

|

||||

|

||||

@given('the basket has "{initial:d}" cucumbers')

|

||||

|

||||

def step_impl(context, initial):

|

||||

|

||||

context.basket = CucumberBasket(initial_count=initial)

|

||||

|

||||

|

||||

|

||||

@when('"{some:d}" cucumbers are added to the basket')

|

||||

|

||||

def step_impl(context, some):

|

||||

|

||||

context.basket.add(some)

|

||||

|

||||

|

||||

|

||||

@then('the basket contains "{total:d}" cucumbers')

|

||||

|

||||

def step_impl(context, total):

|

||||

|

||||

assert context.basket.count == total

|

||||

|

||||

```

|

||||

|

||||

Three [step matchers][12] are available: `parse`, `cfparse`, and `re`. The default and simplest marcher is `parse`, which is shown in the example above. Notice how parametrized values are parsed and passed into the functions as input arguments. A common best practice is to put double quotes around parameters in steps.

|

||||

|

||||

Each step definition function also receives a [context][13] variable that holds data specific to the current scenario being run, such as `feature`, `scenario`, and `tags` fields. Custom fields may be added, too, to share data between steps. Always use context to share data—never use global variables!

|

||||

|

||||

`behave` also supports [hooks][14] to handle automation concerns outside of Gherkin steps. A hook is a function that will be run before or after a step, scenario, feature, or whole test suite. Hooks are reminiscent of [aspect-oriented programming][15]. They should be placed in a special `environment.py` file under the `features/` directory. Hook functions can check the current scenario's tags, as well, so logic can be selectively applied. The example below shows how to use hooks to set up and tear down a Selenium WebDriver instance for any scenario tagged as `@web`.

|

||||

```

|

||||

from selenium import webdriver

|

||||

|

||||

|

||||

|

||||

def before_scenario(context, scenario):

|

||||

|

||||

if 'web' in context.tags:

|

||||

|

||||

context.browser = webdriver.Firefox()

|

||||

|

||||

context.browser.implicitly_wait(10)

|

||||

|

||||

|

||||

|

||||

def after_scenario(context, scenario):

|

||||

|

||||

if 'web' in context.tags:

|

||||

|

||||

context.browser.quit()

|

||||

|

||||

```

|

||||

|

||||

Note: Setup and cleanup can also be done with [fixtures][16] in `behave`.

|

||||

|

||||

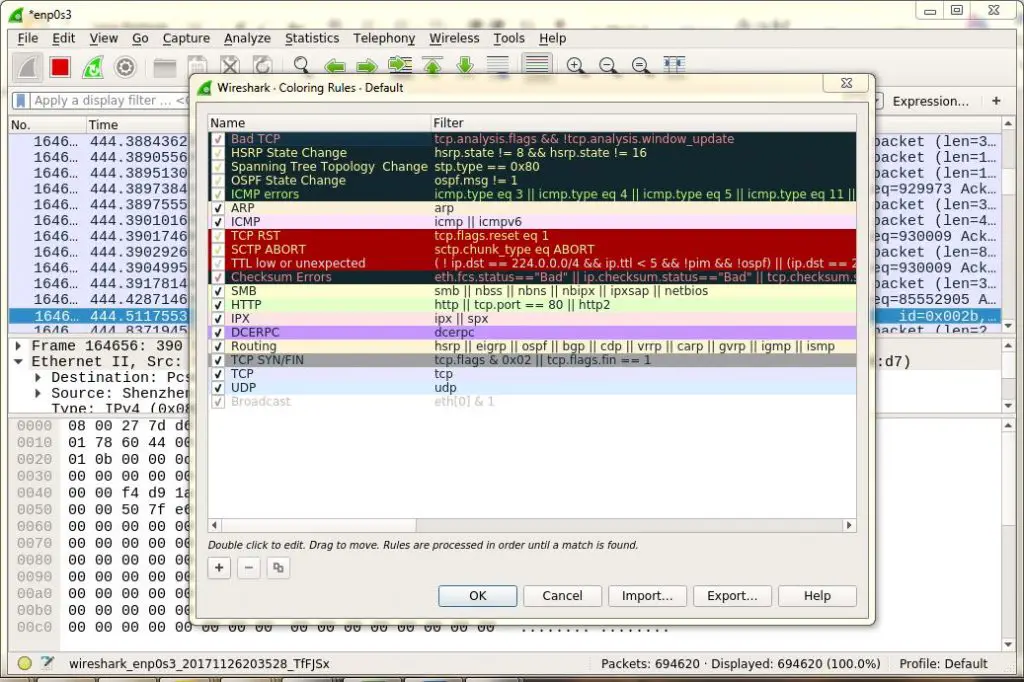

To offer an idea of what a `behave` project should look like, here's the example project's directory structure:

|

||||

|

||||

|

||||

|

||||

Any Python packages and custom modules can be used with `behave`. Use good design patterns to build a scalable test automation solution. Step definition code should be concise.

|

||||

|

||||

### Running tests

|

||||

|

||||

To run tests from the command line, change to the project's root directory and run the `behave` command. Use the `–help` option to see all available options.

|

||||

|

||||

Below are a few common use cases:

|

||||

```

|

||||

# run all tests

|

||||

|

||||

behave

|

||||

|

||||

|

||||

|

||||

# run the scenarios in a feature file

|

||||

|

||||

behave features/web.feature

|

||||

|

||||

|

||||

|

||||

# run all tests that have the @duckduckgo tag

|

||||

|

||||

behave --tags @duckduckgo

|

||||

|

||||

|

||||

|

||||

# run all tests that do not have the @unit tag

|

||||

|

||||

behave --tags ~@unit

|

||||

|

||||

|

||||

|

||||

# run all tests that have @basket and either @add or @remove

|

||||

|

||||

behave --tags @basket --tags @add,@remove

|

||||

|

||||

```

|

||||

|

||||

For convenience, options may be saved in [config][17] files.

|

||||

|

||||

### Other options

|

||||

|

||||

`behave` is not the only BDD test framework in Python. Other good frameworks include:

|

||||

|

||||

* `pytest-bdd` , a plugin for `pytest``behave`, it uses Gherkin feature files and step definition modules, but it also leverages all the features and plugins of `pytest`. For example, it can run Gherkin scenarios in parallel using `pytest-xdist`. BDD and non-BDD tests can also be executed together with the same filters. `pytest-bdd` also offers a more flexible directory layout.

|

||||

|

||||

* `radish` is a "Gherkin-plus" framework—it adds Scenario Loops and Preconditions to the standard Gherkin language, which makes it more friendly to programmers. It also offers rich command line options like `behave`.

|

||||

|

||||

* `lettuce` is an older BDD framework very similar to `behave`, with minor differences in framework mechanics. However, GitHub shows little recent activity in the project (as of May 2018).

|

||||

|

||||

|

||||

|

||||

Any of these frameworks would be good choices.

|

||||

|

||||

Also, remember that Python test frameworks can be used for any black box testing, even for non-Python products! BDD frameworks are great for web and service testing because their tests are declarative, and Python is a [great language for test automation][18].

|

||||

|

||||

This article is based on the author's [PyCon Cleveland 2018][19] talk, [Behavior-Driven Python][20].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/5/behavior-driven-python

|

||||

|

||||

作者:[Andrew Knight][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/andylpk247

|

||||

[1]:https://automationpanda.com/bdd/

|

||||

[2]:https://en.wikipedia.org/wiki/Specification_by_example

|

||||

[3]:https://automationpanda.com/2017/01/26/bdd-101-the-gherkin-language/

|

||||

[4]:https://cucumber.io/

|

||||

[5]:https://automationpanda.com/2017/02/13/12-awesome-benefits-of-bdd/

|

||||

[6]:https://docs.pipenv.org/

|

||||

[7]:https://github.com/AndyLPK247/behavior-driven-python

|

||||

[8]:https://automationpanda.com/2018/01/31/good-gherkin-scenario-titles/

|

||||

[9]:https://automationpanda.com/2018/02/03/are-gherkin-scenarios-with-multiple-when-then-pairs-okay/

|

||||

[10]:https://automationpanda.com/2017/01/27/bdd-101-gherkin-by-example/

|

||||

[11]:https://automationpanda.com/2017/01/30/bdd-101-writing-good-gherkin/

|

||||

[12]:http://behave.readthedocs.io/en/latest/api.html#step-parameters

|

||||

[13]:http://behave.readthedocs.io/en/latest/api.html#detecting-that-user-code-overwrites-behave-context-attributes

|

||||

[14]:http://behave.readthedocs.io/en/latest/api.html#environment-file-functions

|

||||

[15]:https://en.wikipedia.org/wiki/Aspect-oriented_programming

|

||||

[16]:http://behave.readthedocs.io/en/latest/api.html#fixtures

|

||||

[17]:http://behave.readthedocs.io/en/latest/behave.html#configuration-files

|

||||

[18]:https://automationpanda.com/2017/01/21/the-best-programming-language-for-test-automation/

|

||||

[19]:https://us.pycon.org/2018/

|

||||

[20]:https://us.pycon.org/2018/schedule/presentation/87/

|

||||

@ -1,3 +1,4 @@

|

||||

Translating by qhwdw

|

||||

What's all the C Plus Fuss? Bjarne Stroustrup warns of dangerous future plans for his C++

|

||||

======

|

||||

|

||||

|

||||

@ -1,3 +1,6 @@

|

||||

Translating by MjSeven

|

||||

|

||||

|

||||

An introduction to the Django Python web app framework

|

||||

======

|

||||

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

translating by Flowsnow

|

||||

|

||||

How to build rpm packages

|

||||

======

|

||||

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

translating---geekpi

|

||||

|

||||

Backup Installed Packages And Restore Them On Freshly Installed Ubuntu

|

||||

======

|

||||

|

||||

|

||||

@ -0,0 +1,113 @@

|

||||

Distributed tracing in a microservices world

|

||||

======

|

||||

What is distributed tracing and why is it so important in a microservices environment?

|

||||

|

||||

|

||||

|

||||

[Microservices][1] have become the default choice for greenfield applications. After all, according to practitioners, microservices provide the type of decoupling required for a full digital transformation, allowing individual teams to innovate at a far greater speed than ever before.

|

||||

|

||||

Microservices are nothing more than regular distributed systems, only at a larger scale. Therefore, they exacerbate the well-known problems that any distributed system faces, like lack of visibility into a business transaction across process boundaries.

|

||||

|

||||

Given that it's extremely common to have multiple versions of a single service running in production at the same time—be it in a [A/B testing][2] scenario or as part of rolling out a new release following the [Canary release][3] technique—when we account for the fact that we are talking about hundreds of services, it's clear that what we have is chaos. It's almost impossible to map the interdependencies and understand the path of a business transaction across services and their versions.

|

||||

|

||||

### Observability

|

||||

|

||||

This chaos ends up being a good thing, as long as we can observe what's going on and diagnose the problems that will eventually occur.

|

||||

|

||||

A system is said to be observable when we can understand its state based on the [metrics, logs, and traces][4] it emits. Given that we are talking about distributed systems, knowing the state of a single instance of a single service isn't enough; we need to be able to aggregate the metrics for all instances of a given service, perhaps grouped by version. Metrics solutions like [Prometheus][5] are very popular in tackling this aspect of the observability problem. Similarly, we need logs to be stored in a central location, as it's impossible to analyze the logs from the individual instances of each service. [Logstash][6] is usually applied here, in combination with a backing storage like [Elasticsearch][7]. And finally, we need to get end-to-end traces to understand the path a given transaction has taken. This is where distributed tracing solutions come into play.

|

||||

|

||||

### Distributed tracing

|

||||

|

||||

In monolithic web applications, logging frameworks provide enough capabilities to do a basic root-cause analysis when something fails. A developer just needs to place log statements in the code. Information like "context" (usually "thread") and "timestamp" are automatically added to the log entry, making it easier to understand the execution of a given request and correlate the entries.

|

||||

|

||||

```

|

||||

Thread-1 2018-09-03T15:52:54+02:00 Request started

|

||||

Thread-2 2018-09-03T15:52:55+02:00 Charging credit card x321

|

||||

Thread-1 2018-09-03T15:52:55+02:00 Order submitted

|

||||

Thread-1 2018-09-03T15:52:56+02:00 Charging credit card x123

|

||||

Thread-1 2018-09-03T15:52:57+02:00 Changing order status

|

||||

Thread-1 2018-09-03T15:52:58+02:00 Dispatching event to inventory

|

||||

Thread-1 2018-09-03T15:52:59+02:00 Request finished

|

||||

```

|

||||

|

||||

We can safely say that the second log entry above is not related to the other entries, as it's being executed in a different thread.

|

||||

|

||||

In microservices architectures, logging alone fails to deliver the complete picture. Is this service the first one in the call chain? And what happened at the inventory service (where we apparently dispatched an event)?

|

||||

|

||||

A common strategy to answer this question is creating an identifier at the very first building block of our transaction and propagating this identifier across all the calls, probably by sending it as an HTTP header whenever a remote call is made.

|

||||

|

||||

In a central log collector, we could then see entries like the ones below. Note how we could log the correlation ID (the first column in our example), so we know that the second entry is not related to the other entries.

|

||||

|

||||

```

|

||||

abc123 Order 2018-09-03T15:52:58+02:00 Dispatching event to inventory

|

||||

def456 Order 2018-09-03T15:52:58+02:00 Dispatching event to inventory

|

||||

abc123 Inventory 2018-09-03T15:52:59+02:00 Received `order-submitted` event

|

||||

abc123 Inventory 2018-09-03T15:53:00+02:00 Checking inventory status

|

||||

abc123 Inventory 2018-09-03T15:53:01+02:00 Updating inventory

|

||||

abc123 Inventory 2018-09-03T15:53:02+02:00 Preparing order manifest

|

||||

```

|

||||

|

||||

This technique is one of the concepts at the core of any modern distributed tracing solution, but it's not really new; correlating log entries is decades old, probably as old as "distributed systems" itself.

|

||||

|

||||

What sets distributed tracing apart from regular logging is that the data structure that holds tracing data is more specialized, so we can also identify causality. Looking at the log entries above, it's hard to tell if the last step was caused by the previous entry, if they were performed concurrently, or if they share the same caller. Having a dedicated data structure also allows distributed tracing to record not only a message in a single point in time but also the start and end time of a given procedure.

|

||||

|

||||

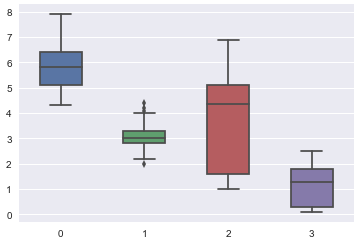

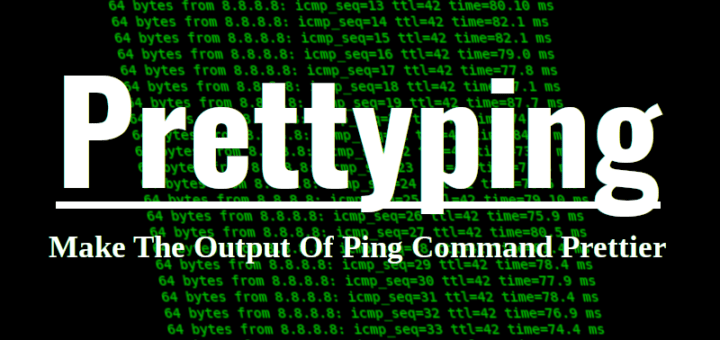

![Trace showing spans][9]

|

||||

|

||||

Trace showing spans similar to the logs described above

|

||||

|

||||

[Click to enlarge][10]

|

||||

|

||||

Most of the modern distributed tracing tools are inspired by a 2010 [paper about Dapper][11], the distributed tracing solution used at Google. In that paper, the data structure described above was called a span, and you can see nine of them in the image above. This particular "forest" of spans is called a trace and is equivalent to the correlated log entries we've seen before.

|

||||

|

||||

The image above is a screenshot of a trace displayed in [Jaeger][12], an open source distributed tracing solution hosted by the [Cloud Native Computing Foundation (CNCF)][13]. It marks each service with a color to make it easier to see the process boundaries. Timing information can be easily visualized, both by looking at the macro timeline at the top of the screen or at the individual spans, giving a sense of how long each span takes and how impactful it is in this particular execution. It's also easy to observe when processes are asynchronous and therefore may outlive the initial request.

|

||||

|

||||

Like with logging, we need to annotate or instrument our code with the data we want to record. Unlike logging, we record spans instead of messages and do some demarcation to know when the span starts and finishes so we can get accurate timing information. As we would probably like to have our business code independent from a specific distributed tracing implementation, we can use an API such as [OpenTracing][14], leaving the decision about the concrete implementation as a packaging or runtime concern. Following is pseudo-Java code showing such demarcation.

|

||||

|

||||

```

|

||||

try (Scope scope = tracer.buildSpan("submitOrder").startActive(true)) {

|

||||

scope.span().setTag("order-id", "c85b7644b6b5");

|

||||

chargeCreditCard();

|

||||

changeOrderStatus();

|

||||

dispatchEventToInventory();

|

||||

}

|

||||

```

|

||||

|

||||

Given the nature of the distributed tracing concept, it's clear the code executed "between" our business services can also be part of the trace. For instance, we could [turn on][15] the distributed tracing integration for [Istio][16], a service mesh solution that helps in the communication between microservices, and we'll suddenly have a better picture about the network latency and routing decisions made at this layer. Another example is the work done in the OpenTracing community to provide instrumentation for popular stacks, frameworks, and APIs, such as Java's [JAX-RS][17], [Spring Cloud][18], or [JDBC][19]. This enables us to see how our business code interacts with the rest of the middleware, understand where a potential problem might be happening, and identify the best areas to improve. In fact, today's middleware instrumentation is so rich that it's common to get started with distributed tracing by using only the so-called "framework instrumentation," leaving the business code free from any tracing-related code.

|

||||

|

||||

While a microservices architecture is almost unavoidable nowadays for established companies to innovate faster and for ambitious startups to achieve web scale, it's easy to feel helpless while conducting a root cause analysis when something eventually fails and the right tools aren't available. The good news is tools like Prometheus, Logstash, OpenTracing, and Jaeger provide the pieces to bring observability to your application.

|

||||

|

||||

Juraci Paixão Kröhling will present [What are My Microservices Doing?][20] at [Open Source Summit Europe][21], October 22-24 in Edinburgh, Scotland.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/9/distributed-tracing-microservices-world

|

||||

|

||||

作者:[Juraci Paixão Kröhling][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||