mirror of

https://github.com/LCTT/TranslateProject.git

synced 2024-12-26 21:30:55 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

29060bb4f9

@ -1,6 +1,7 @@

|

||||

如何在双系统引导下替换 Linux 发行版

|

||||

======

|

||||

在双系统引导的状态下,你可以将已安装的 Linux 发行版替换为另一个发行版,同时还可以保留原本的个人数据。

|

||||

|

||||

> 在双系统引导的状态下,你可以将已安装的 Linux 发行版替换为另一个发行版,同时还可以保留原本的个人数据。

|

||||

|

||||

![How to Replace One Linux Distribution With Another From Dual Boot][1]

|

||||

|

||||

@ -26,11 +27,9 @@

|

||||

* 需要安装的 Linux 发行版的 USB live 版

|

||||

* 在外部磁盘备份 Windows 和 Linux 中的重要文件(并非必要,但建议备份一下)

|

||||

|

||||

|

||||

|

||||

#### 在替换 Linux 发行版时要记住保留你的 home 目录

|

||||

|

||||

如果想让个人文件在安装新 Linux 系统的过程中不受影响,原有的 Linux 系统必须具有单独的 root 目录和 home 目录。你可能会发现我的[双系统引导教程][8]在安装过程中不选择“与 Windows 一起安装”选项,而选择“其它”选项,然后手动创建 root 和 home 分区。所以,手动创建单独的 home 分区也算是一个磨刀不误砍柴工的操作。因为如果要在不丢失文件的情况下,将现有的 Linux 发行版替换为另一个发行版,需要将 home 目录存放在一个单独的分区上。

|

||||

如果想让个人文件在安装新 Linux 系统的过程中不受影响,原有的 Linux 系统必须具有单独的 root 目录和 home 目录。你可能会发现我的[双系统引导教程][8]在安装过程中不选择“与 Windows 共存”选项,而选择“其它”选项,然后手动创建 root 和 home 分区。所以,手动创建单独的 home 分区也算是一个磨刀不误砍柴工的操作。因为如果要在不丢失文件的情况下,将现有的 Linux 发行版替换为另一个发行版,需要将 home 目录存放在一个单独的分区上。

|

||||

|

||||

不过,你必须记住现有 Linux 系统的用户名和密码才能使用与新系统中相同的 home 目录。

|

||||

|

||||

@ -51,69 +50,80 @@

|

||||

在安装过程中,进入“安装类型”界面时,选择“其它”选项。

|

||||

|

||||

![Replacing one Linux with another from dual boot][10]

|

||||

(在这里选择“其它”选项)

|

||||

|

||||

*在这里选择“其它”选项*

|

||||

|

||||

#### 步骤 3:准备分区操作

|

||||

|

||||

下图是分区界面。你会看到使用 Ext4 文件系统类型来安装 Linux。

|

||||

|

||||

![Identifying Linux partition in dual boot][11]

|

||||

(确定 Linux 的安装位置)

|

||||

|

||||

*确定 Linux 的安装位置*

|

||||

|

||||

在上图中,标记为 Linux Mint 19 的 Ext4 分区是 root 分区,大小为 82691 MB 的第二个 Ext4 分区是 home 分区。在这里我这里没有使用[交换空间][12]。

|

||||

|

||||

如果你只有一个 Ext4 分区,就意味着你的 home 目录与 root 目录位于同一分区。在这种情况下,你就无法保留 home 目录中的文件了,这个时候我建议将重要文件复制到外部磁盘,否则这些文件将不会保留。

|

||||

|

||||

然后是删除 root 分区。选择 root 分区,然后点击 - 号,这个操作释放了一些磁盘空间。

|

||||

然后是删除 root 分区。选择 root 分区,然后点击 `-` 号,这个操作释放了一些磁盘空间。

|

||||

|

||||

![Delete root partition of your existing Linux install][13]

|

||||

(删除 root 分区)

|

||||

|

||||

磁盘空间释放出来后,点击 + 号。

|

||||

*删除 root 分区*

|

||||

|

||||

磁盘空间释放出来后,点击 `+` 号。

|

||||

|

||||

![Create root partition for the new Linux][14]

|

||||

(创建新的 root 分区)

|

||||

|

||||

*创建新的 root 分区*

|

||||

|

||||

现在已经在可用空间中创建一个新分区。如果你之前的 Linux 系统中只有一个 root 分区,就应该在这里创建 root 分区和 home 分区。如果需要,还可以创建交换分区。

|

||||

|

||||

如果你之前已经有 root 分区和 home 分区,那么只需要从已删除的 root 分区创建 root 分区就可以了。

|

||||

|

||||

![Create root partition for the new Linux][15]

|

||||

(创建 root 分区)

|

||||

|

||||

你可能有疑问,为什么要经过“删除”和“添加”两个过程,而不使用“更改”选项。这是因为以前使用“更改”选项好像没有效果,所以我更喜欢用 - 和 +。这是迷信吗?也许是吧。

|

||||

*创建 root 分区*

|

||||

|

||||

你可能有疑问,为什么要经过“删除”和“添加”两个过程,而不使用“更改”选项。这是因为以前使用“更改”选项好像没有效果,所以我更喜欢用 `-` 和 `+`。这是迷信吗?也许是吧。

|

||||

|

||||

这里有一个重要的步骤,对新创建的 root 分区进行格式化。在没有更改分区大小的情况下,默认是不会对分区进行格式化的。如果分区没有被格式化,之后可能会出现问题。

|

||||

|

||||

![][16]

|

||||

(格式化 root 分区很重要)

|

||||

|

||||

*格式化 root 分区很重要*

|

||||

|

||||

如果你在新的 Linux 系统上已经划分了单独的 home 分区,选中它并点击更改。

|

||||

|

||||

![Recreate home partition][17]

|

||||

(修改已有的 home 分区)

|

||||

|

||||

*修改已有的 home 分区*

|

||||

|

||||

然后指定将其作为 home 分区挂载即可。

|

||||

|

||||

![Specify the home mount point][18]

|

||||

(指定 home 分区的挂载点)

|

||||

|

||||

*指定 home 分区的挂载点*

|

||||

|

||||

如果你还有交换分区,可以重复与 home 分区相同的步骤,唯一不同的是要指定将空间用作交换空间。

|

||||

|

||||

现在的状态应该是有一个 root 分区(将被格式化)和一个 home 分区(如果需要,还可以使用交换分区)。点击“立即安装”可以开始安装。

|

||||

|

||||

![Verify partitions while replacing one Linux with another][19]

|

||||

(检查分区情况)

|

||||

|

||||

*检查分区情况*

|

||||

|

||||

接下来的几个界面就很熟悉了,要重点注意的是创建用户和密码的步骤。如果你之前有一个单独的 home 分区,并且还想使用相同的 home 目录,那你必须使用和之前相同的用户名和密码,至于设备名称则可以任意指定。

|

||||

|

||||

![To keep the home partition intact, use the previous user and password][20]

|

||||

(要保持 home 分区不变,请使用之前的用户名和密码)

|

||||

|

||||

*要保持 home 分区不变,请使用之前的用户名和密码*

|

||||

|

||||

接下来只要静待安装完成,不需执行任何操作。

|

||||

|

||||

![Wait for installation to finish][21]

|

||||

(等待安装完成)

|

||||

|

||||

*等待安装完成*

|

||||

|

||||

安装完成后重新启动系统,你就能使用新的 Linux 发行版。

|

||||

|

||||

@ -126,7 +136,7 @@ via: https://itsfoss.com/replace-linux-from-dual-boot/

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[HankChow](https://github.com/HankChow)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,15 +1,15 @@

|

||||

系统管理员需知的 16 个 iptables 使用技巧

|

||||

=======

|

||||

|

||||

iptables 是一款控制系统进出流量的强大配置工具。

|

||||

> iptables 是一款控制系统进出流量的强大配置工具。

|

||||

|

||||

|

||||

|

||||

现代 Linux 内核带有一个叫 [Netfilter][1] 的数据包过滤框架。Netfilter 提供了允许、禁止以及修改等操作来控制进出系统的流量数据包。基于 Netfilter 框架的用户层命令行工具 **iptables** 提供了强大的防火墙配置功能,允许你添加规则来构建防火墙策略。[iptables][2] 丰富复杂的功能以及其巴洛克式命令语法可能让人难以驾驭。我们就来探讨一下其中的一些功能,提供一些系统管理员解决某些问题需要的使用技巧。

|

||||

现代 Linux 内核带有一个叫 [Netfilter][1] 的数据包过滤框架。Netfilter 提供了允许、丢弃以及修改等操作来控制进出系统的流量数据包。基于 Netfilter 框架的用户层命令行工具 `iptables` 提供了强大的防火墙配置功能,允许你添加规则来构建防火墙策略。[iptables][2] 丰富复杂的功能以及其巴洛克式命令语法可能让人难以驾驭。我们就来探讨一下其中的一些功能,提供一些系统管理员解决某些问题需要的使用技巧。

|

||||

|

||||

### 避免封锁自己

|

||||

|

||||

应用场景:假设你将对公司服务器上的防火墙规则进行修改,需要避免封锁你自己以及其他同事的情况(这将会带来一定时间和金钱的损失,也许一旦发生马上就有部门打电话找你了)

|

||||

应用场景:假设你将对公司服务器上的防火墙规则进行修改,你需要避免封锁你自己以及其他同事的情况(这将会带来一定时间和金钱的损失,也许一旦发生马上就有部门打电话找你了)

|

||||

|

||||

#### 技巧 #1: 开始之前先备份一下 iptables 配置文件。

|

||||

|

||||

@ -17,7 +17,6 @@ iptables 是一款控制系统进出流量的强大配置工具。

|

||||

|

||||

```

|

||||

/sbin/iptables-save > /root/iptables-works

|

||||

|

||||

```

|

||||

#### 技巧 #2: 更妥当的做法,给文件加上时间戳。

|

||||

|

||||

@ -25,28 +24,24 @@ iptables 是一款控制系统进出流量的强大配置工具。

|

||||

|

||||

```

|

||||

/sbin/iptables-save > /root/iptables-works-`date +%F`

|

||||

|

||||

```

|

||||

|

||||

然后你就可以生成如下名字的文件:

|

||||

|

||||

```

|

||||

/root/iptables-works-2018-09-11

|

||||

|

||||

```

|

||||

|

||||

这样万一使得系统不工作了,你也可以很快的利用备份文件恢复原状:

|

||||

|

||||

```

|

||||

/sbin/iptables-restore < /root/iptables-works-2018-09-11

|

||||

|

||||

```

|

||||

|

||||

#### 技巧 #3: 每次创建 iptables 配置文件副本时,都创建一个指向 `latest` 的文件的链接。

|

||||

#### 技巧 #3: 每次创建 iptables 配置文件副本时,都创建一个指向最新的文件的链接。

|

||||

|

||||

```

|

||||

ln –s /root/iptables-works-`date +%F` /root/iptables-works-latest

|

||||

|

||||

```

|

||||

|

||||

#### 技巧 #4: 将特定规则放在策略顶部,底部放置通用规则。

|

||||

@ -55,19 +50,17 @@ ln –s /root/iptables-works-`date +%F` /root/iptables-works-latest

|

||||

|

||||

```

|

||||

iptables -A INPUT -p tcp --dport 22 -j DROP

|

||||

|

||||

```

|

||||

|

||||

你在规则中指定的条件越多,封锁自己的可能性就越小。不要使用上面暗中通用规则,而是使用如下的规则:

|

||||

你在规则中指定的条件越多,封锁自己的可能性就越小。不要使用上面非常通用的规则,而是使用如下的规则:

|

||||

|

||||

```

|

||||

iptables -A INPUT -p tcp --dport 22 –s 10.0.0.0/8 –d 192.168.100.101 -j DROP

|

||||

|

||||

```

|

||||

|

||||

此规则表示在 **INPUT** 链尾追加一条新规则,将源地址为 **10.0.0.0/8**、 目的地址是 **192.168.100.101**、目的端口号是 **22** (**\--dport 22** ) 的 **tcp**(**-p tcp** )数据包通通丢弃掉。

|

||||

此规则表示在 `INPUT` 链尾追加一条新规则,将源地址为 `10.0.0.0/8`、 目的地址是 `192.168.100.101`、目的端口号是 `22` (`--dport 22` ) 的 TCP(`-p tcp` )数据包通通丢弃掉。

|

||||

|

||||

还有很多方法可以设置更具体的规则。例如,使用 **-i eth0** 将会限制这条规则作用于 **eth0** 网卡,对 **eth1** 网卡则不生效。

|

||||

还有很多方法可以设置更具体的规则。例如,使用 `-i eth0` 将会限制这条规则作用于 `eth0` 网卡,对 `eth1` 网卡则不生效。

|

||||

|

||||

#### 技巧 #5: 在策略规则顶部将你的 IP 列入白名单。

|

||||

|

||||

@ -75,10 +68,9 @@ iptables -A INPUT -p tcp --dport 22 –s 10.0.0.0/8 –d 192.168.100.101 -j DROP

|

||||

|

||||

```

|

||||

iptables -I INPUT -s <your IP> -j ACCEPT

|

||||

|

||||

```

|

||||

|

||||

你需要将该规则添加到策略首位置。**-I** 表示则策略首部插入规则,**-A** 表示在策略尾部追加规则。

|

||||

你需要将该规则添加到策略首位置。`-I` 表示则策略首部插入规则,`-A` 表示在策略尾部追加规则。

|

||||

|

||||

#### 技巧 #6: 理解现有策略中的所有规则。

|

||||

|

||||

@ -100,7 +92,7 @@ iptables -I INPUT -s <your IP> -j ACCEPT

|

||||

|

||||

#### 技巧 #2: 将用户完成工作所需的最少量服务设置为允许

|

||||

|

||||

该策略需要允许工作站能通过 DHCP (**-p udp --dport 67:68 -sport 67:68**)来获取 IP 地址、子网掩码以及其他一些信息。对于远程操作,需要允许 SSH 服务(**-dport 22**),邮件服务(**--dport 25**),DNS服务(**--dport 53**),ping 功能(**-p icmp**),NTP 服务(**--dport 123 --sport 123**)以及HTTP 服务(**-dport 80**)和 HTTPS 服务(**--dport 443**)。

|

||||

该策略需要允许工作站能通过 DHCP(`-p udp --dport 67:68 -sport 67:68`)来获取 IP 地址、子网掩码以及其他一些信息。对于远程操作,需要允许 SSH 服务(`-dport 22`),邮件服务(`--dport 25`),DNS 服务(`--dport 53`),ping 功能(`-p icmp`),NTP 服务(`--dport 123 --sport 123`)以及 HTTP 服务(`-dport 80`)和 HTTPS 服务(`--dport 443`)。

|

||||

|

||||

```

|

||||

# Set a default policy of DROP

|

||||

@ -144,7 +136,7 @@ COMMIT

|

||||

|

||||

### 限制 IP 地址范围

|

||||

|

||||

应用场景:贵公司的 CEO 认为员工在 Facebook 上花费过多的时间,需要采取一些限制措施。CEO 命令下达给 CIO,CIO 命令CISO,最终任务由你来执行。你决定阻止一切到 Facebook 的访问连接。首先你使用 `host` 或者 `whois` 命令来获取 Facebook 的 IP 地址。

|

||||

应用场景:贵公司的 CEO 认为员工在 Facebook 上花费过多的时间,需要采取一些限制措施。CEO 命令下达给 CIO,CIO 命令 CISO,最终任务由你来执行。你决定阻止一切到 Facebook 的访问连接。首先你使用 `host` 或者 `whois` 命令来获取 Facebook 的 IP 地址。

|

||||

|

||||

```

|

||||

host -t a www.facebook.com

|

||||

@ -153,33 +145,33 @@ star.c10r.facebook.com has address 31.13.65.17

|

||||

whois 31.13.65.17 | grep inetnum

|

||||

inetnum: 31.13.64.0 - 31.13.127.255

|

||||

```

|

||||

然后使用 [CIDR to IPv4转换][3] 页面来将其转换为 CIDR 表示法。然后你得到 **31.13.64.0/18** 的地址。输入以下命令来阻止对 Facebook 的访问:

|

||||

|

||||

然后使用 [CIDR 到 IPv4 转换][3] 页面来将其转换为 CIDR 表示法。然后你得到 `31.13.64.0/18` 的地址。输入以下命令来阻止对 Facebook 的访问:

|

||||

|

||||

```

|

||||

iptables -A OUTPUT -p tcp -i eth0 –o eth1 –d 31.13.64.0/18 -j DROP

|

||||

```

|

||||

|

||||

### 按时间规定做限制-场景1

|

||||

### 按时间规定做限制 - 场景1

|

||||

|

||||

应用场景:公司员工强烈反对限制一切对 Facebook 的访问,这导致了 CEO 放宽了要求(考虑到员工的反对以及他的助理提醒说她将 HIS Facebook 页面保持在最新状态)。然后 CEO 决定允许在午餐时间访问 Facebook(中午12点到下午1点之间)。假设默认规则是丢弃,使用 iptables 的时间功能便可以实现。

|

||||

应用场景:公司员工强烈反对限制一切对 Facebook 的访问,这导致了 CEO 放宽了要求(考虑到员工的反对以及他的助理提醒说她负责更新他的 Facebook 页面)。然后 CEO 决定允许在午餐时间访问 Facebook(中午 12 点到下午 1 点之间)。假设默认规则是丢弃,使用 iptables 的时间功能便可以实现。

|

||||

|

||||

```

|

||||

iptables –A OUTPUT -p tcp -m multiport --dport http,https -i eth0 -o eth1 -m time --timestart 12:00 –timestop 13:00 –d 31.13.64.0/18 -j ACCEPT

|

||||

```

|

||||

|

||||

该命令中指定在中午12点(**\--timestart 12:00**)到下午1点(**\--timestop 13:00**)之间允许(**-j ACCEPT**)到 Facebook.com (**-d [31.13.64.0/18][5]**)的 http 以及 https (**-m multiport --dport http,https**)的访问。

|

||||

该命令中指定在中午12点(`--timestart 12:00`)到下午 1 点(`--timestop 13:00`)之间允许(`-j ACCEPT`)到 Facebook.com (`-d [31.13.64.0/18][5]`)的 http 以及 https (`-m multiport --dport http,https`)的访问。

|

||||

|

||||

### 按时间规定做限制-场景2

|

||||

### 按时间规定做限制 - 场景2

|

||||

|

||||

应用场景

|

||||

Scenario: 在计划系统维护期间,你需要设置凌晨2点到3点之间拒绝所有的 TCP 和 UDP 访问,这样维护任务就不会受到干扰。使用两个 iptables 规则可实现:

|

||||

应用场景:在计划系统维护期间,你需要设置凌晨 2 点到 3 点之间拒绝所有的 TCP 和 UDP 访问,这样维护任务就不会受到干扰。使用两个 iptables 规则可实现:

|

||||

|

||||

```

|

||||

iptables -A INPUT -p tcp -m time --timestart 02:00 --timestop 03:00 -j DROP

|

||||

iptables -A INPUT -p udp -m time --timestart 02:00 --timestop 03:00 -j DROP

|

||||

```

|

||||

|

||||

该规则禁止(**-j DROP**)在凌晨2点(**\--timestart 02:00**)到凌晨3点(**\--timestop 03:00**)之间的 TCP 和 UDP (**-p tcp and -p udp**)的数据进入(**-A INPUT**)访问。

|

||||

该规则禁止(`-j DROP`)在凌晨2点(`--timestart 02:00`)到凌晨3点(`--timestop 03:00`)之间的 TCP 和 UDP (`-p tcp and -p udp`)的数据进入(`-A INPUT`)访问。

|

||||

|

||||

### 限制连接数量

|

||||

|

||||

@ -189,11 +181,11 @@ iptables -A INPUT -p udp -m time --timestart 02:00 --timestop 03:00 -j DROP

|

||||

iptables –A INPUT –p tcp –syn -m multiport -–dport http,https –m connlimit -–connlimit-above 20 –j REJECT -–reject-with-tcp-reset

|

||||

```

|

||||

|

||||

分析一下上面的命令。如果单个主机在一分钟之内新建立(**-p tcp -syn**)超过20个(**-connlimit-above 20**)到你的 web 服务器(**--dport http,https**)的连接,服务器将拒绝(**-j REJECT**)建立新的连接,然后通知该主机新建连接被拒绝(**--reject-with-tcp-reset**)。

|

||||

分析一下上面的命令。如果单个主机在一分钟之内新建立(`-p tcp -syn`)超过 20 个(`-connlimit-above 20`)到你的 web 服务器(`--dport http,https`)的连接,服务器将拒绝(`-j REJECT`)建立新的连接,然后通知对方新建连接被拒绝(`--reject-with-tcp-reset`)。

|

||||

|

||||

### 监控 iptables 规则

|

||||

|

||||

应用场景:由于数据包会遍历链中的规则,iptables遵循 ”首次匹配获胜“ 的原则,因此经常匹配的规则应该靠近策略的顶部,而不太频繁匹配的规则应该接近底部。 你怎么知道哪些规则使用最多或最少,可以在顶部或底部附近监控?

|

||||

应用场景:由于数据包会遍历链中的规则,iptables 遵循 “首次匹配获胜” 的原则,因此经常匹配的规则应该靠近策略的顶部,而不太频繁匹配的规则应该接近底部。 你怎么知道哪些规则使用最多或最少,可以在顶部或底部附近监控?

|

||||

|

||||

#### 技巧 #1: 查看规则被访问了多少次

|

||||

|

||||

@ -203,7 +195,7 @@ iptables –A INPUT –p tcp –syn -m multiport -–dport http,https –m connl

|

||||

iptables -L -v -n –line-numbers

|

||||

```

|

||||

|

||||

用 **-L** 选项列出链中的所有规则。因为没有指定具体哪条链,所有链规则都会被输出,使用 **-v** 选项显示详细信息,**-n** 选项则显示数字格式的数据包和字节计数器,每个规则开头的数值表示该规则在链中的位置。

|

||||

用 `-L` 选项列出链中的所有规则。因为没有指定具体哪条链,所有链规则都会被输出,使用 `-v` 选项显示详细信息,`-n` 选项则显示数字格式的数据包和字节计数器,每个规则开头的数值表示该规则在链中的位置。

|

||||

|

||||

根据数据包和字节计数的结果,你可以将访问频率最高的规则放到顶部,将访问频率最低的规则放到底部。

|

||||

|

||||

@ -215,17 +207,17 @@ iptables -L -v -n –line-numbers

|

||||

iptables -nvL | grep -v "0 0"

|

||||

```

|

||||

|

||||

注意:两个数字0之间不是 Tab 键,而是 5 个空格。

|

||||

注意:两个数字 0 之间不是 Tab 键,而是 **5** 个空格。

|

||||

|

||||

#### 技巧 #3: 监控正在发生什么

|

||||

|

||||

可能你也想像使用 **top** 命令一样来实时监控 iptables 的情况。使用如下命令来动态监视 iptables 中的活动,并仅显示正在遍历的规则:

|

||||

可能你也想像使用 `top` 命令一样来实时监控 iptables 的情况。使用如下命令来动态监视 iptables 中的活动,并仅显示正在遍历的规则:

|

||||

|

||||

```

|

||||

watch --interval=5 'iptables -nvL | grep -v "0 0"'

|

||||

```

|

||||

|

||||

**watch** 命令通过参数 **iptables -nvL | grep -v “0 0“** 每隔 5s 输出 iptables 的动态。这条命令允许你查看数据包和字节计数的变化。

|

||||

`watch` 命令通过参数 `iptables -nvL | grep -v “0 0“` 每隔 5 秒输出 iptables 的动态。这条命令允许你查看数据包和字节计数的变化。

|

||||

|

||||

### 输出日志

|

||||

|

||||

@ -239,7 +231,7 @@ watch --interval=5 'iptables -nvL | grep -v "0 0"'

|

||||

|

||||

### 不要满足于允许和丢弃规则

|

||||

|

||||

本文中已经涵盖了 iptables 的很多方面,从避免封锁自己、iptables 配置防火墙以及监控 iptables 中的活动等等方面介绍了 iptables。你可以从这里开始探索 iptables 甚至获取更多的使用技巧。

|

||||

本文中已经涵盖了 iptables 的很多方面,从避免封锁自己、配置 iptables 防火墙以及监控 iptables 中的活动等等方面介绍了 iptables。你可以从这里开始探索 iptables 甚至获取更多的使用技巧。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -247,8 +239,8 @@ via: https://opensource.com/article/18/10/iptables-tips-and-tricks

|

||||

|

||||

作者:[Gary Smith][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[jrg](https://github.com/jrglinu)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[jrg](https://github.com/jrglinux)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,7 +1,7 @@

|

||||

在 Linux 命令行中使用 ls 列出文件的提示

|

||||

在 Linux 命令行中使用 ls 列出文件的技巧

|

||||

======

|

||||

|

||||

学习一些 Linux `ls` 命令最有用的变化。

|

||||

> 学习一些 Linux `ls` 命令最有用的变化。

|

||||

|

||||

|

||||

|

||||

@ -3,30 +3,30 @@

|

||||

|

||||

|

||||

|

||||

前段时间,我写了一篇在安装 Windows 后在 Arch Linux 上**[如何重新安装 Grub][1]的教程。**

|

||||

前段时间,我写了一篇在安装 Windows 后在 Arch Linux 上[如何重新安装 Grub][1]的教程。

|

||||

|

||||

几周前,我不得不在我的笔记本上从头开始重新安装 **Arch Linux**,同时我发现安装 **Grub** 并不像我想的那么简单。

|

||||

几周前,我不得不在我的笔记本上从头开始重新安装 Arch Linux,同时我发现安装 Grub 并不像我想的那么简单。

|

||||

|

||||

出于这个原因,由于在新安装 **Arch Linux** 时**在 UEFI bios 中安装 Grub** 并不容易,所以我要写这篇教程。

|

||||

出于这个原因,由于在新安装 Arch Linux 时在 UEFI bios 中安装 Grub 并不容易,所以我要写这篇教程。

|

||||

|

||||

### 定位 EFI 分区

|

||||

|

||||

在 **Arch Linux** 上安装 **Grub** 的第一件重要事情是定位 **EFI** 分区。让我们运行以下命令以找到此分区:

|

||||

在 Arch Linux 上安装 Grub 的第一件重要事情是定位 EFI 分区。让我们运行以下命令以找到此分区:

|

||||

|

||||

```

|

||||

# fdisk -l

|

||||

```

|

||||

|

||||

我们需要检查标记为 **EFI System** 的分区,我这里是 **/dev/sda2**。

|

||||

我们需要检查标记为 EFI System 的分区,我这里是 `/dev/sda2`。

|

||||

|

||||

之后,我们需要在例如 /boot/efi 上挂载这个分区:

|

||||

之后,我们需要在例如 `/boot/efi` 上挂载这个分区:

|

||||

|

||||

```

|

||||

# mkdir /boot/efi

|

||||

# mount /dev/sdb2 /boot/efi

|

||||

```

|

||||

|

||||

另一件重要的事情是将此分区添加到 **/etc/fstab** 中。

|

||||

另一件重要的事情是将此分区添加到 `/etc/fstab` 中。

|

||||

|

||||

#### 安装 Grub

|

||||

|

||||

@ -39,7 +39,7 @@

|

||||

|

||||

#### 自动将 Windows 添加到 Grub 菜单中

|

||||

|

||||

为了自动将**Windows 条目添加到 Grub 菜单**,我们需要安装 **os-prober**:

|

||||

为了自动将 Windows 条目添加到 Grub 菜单,我们需要安装 os-prober:

|

||||

|

||||

```

|

||||

# pacman -Sy os-prober

|

||||

@ -62,7 +62,7 @@ via: http://fasterland.net/how-to-install-grub-on-arch-linux-uefi.html

|

||||

作者:[Francesco Mondello][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,75 +0,0 @@

|

||||

(translating by runningwater)

|

||||

CPU Power Manager – Control And Manage CPU Frequency In Linux

|

||||

======

|

||||

|

||||

|

||||

|

||||

If you are a laptop user, you probably know that power management on Linux isn’t really as good as on other OSes. While there are tools like **TLP** , [**Laptop Mode Tools** and **powertop**][1] to help reduce power consumption, overall battery life on Linux isn’t as good as Windows or Mac OS. Another way to reduce power consumption is to limit the frequency of your CPU. While this is something that has always been doable, it generally requires complicated terminal commands, making it inconvenient. But fortunately, there’s a gnome extension that helps you easily set and manage your CPU’s frequency – **CPU Power Manager**. CPU Power Manager uses the **intel_pstate** frequency scaling driver (supported by almost every Intel CPU) to control and manage CPU frequency in your GNOME desktop.

|

||||

|

||||

Another reason to use this extension is to reduce heating in your system. There are many systems out there which can get uncomfortably hot in normal usage. Limiting your CPU’s frequency could reduce heating. It will also decrease the wear and tear on your CPU and other components.

|

||||

|

||||

### Installing CPU Power Manager

|

||||

|

||||

First, go to the [**extension’s page**][2], and install the extension.

|

||||

|

||||

Once the extension has installed, you’ll get a CPU icon at the right side of the Gnome top bar. Click the icon, and you get an option to install the extension:

|

||||

|

||||

|

||||

|

||||

If you click **“Attempt Installation”** , you’ll get a password prompt. The extension needs root privileges to add policykit rule for controlling CPU frequency. This is what the prompt looks like:

|

||||

|

||||

|

||||

|

||||

Type in your password and Click **“Authenticate”** , and that finishes installation. The last action adds a policykit file – **mko.cpupower.setcpufreq.policy** at **/usr/share/polkit-1/actions**.

|

||||

|

||||

After installation is complete, if you click the CPU icon at the top right, you’ll get something like this:

|

||||

|

||||

|

||||

|

||||

### Features

|

||||

|

||||

* **See the current CPU frequency:** Obviously, you can use this window to see the frequency that your CPU is running at.

|

||||

* **Set maximum and minimum frequency:** With this extension, you can set maximum and minimum frequency limits in terms of percentage of max frequency. Once these limits are set, the CPU will operate only in this range of frequencies.

|

||||

* **Turn Turbo Boost On and Off:** This is my favorite feature. Most Intel CPU’s have “Turbo Boost” feature, whereby the one of the cores of the CPU is boosted past the normal maximum frequency for extra performance. While this can make your system more performant, it also increases power consumption a lot. So if you aren’t doing anything intensive, it’s nice to be able to turn off Turbo Boost and save power. In fact, in my case, I have Turbo Boost turned off most of the time.

|

||||

* **Make Profiles:** You can make profiles with max and min frequency that you can turn on/off easily instead of fiddling with max and frequencies.

|

||||

|

||||

|

||||

|

||||

### Preferences

|

||||

|

||||

You can also customize the extension via the preferences window:

|

||||

|

||||

|

||||

|

||||

As you can see, you can set whether CPU frequency is to be displayed, and whether to display it in **Mhz** or **Ghz**.

|

||||

|

||||

You can also edit and create/delete profiles:

|

||||

|

||||

|

||||

|

||||

You can set maximum and minimum frequencies, and turbo boost for each profile.

|

||||

|

||||

### Conclusion

|

||||

|

||||

As I said in the beginning, power management on Linux is not the best, and many people are always looking to eek out a few minutes more out of their Linux laptop. If you are one of those, check out this extension. This is a unconventional method to save power, but it does work. I certainly love this extension, and have been using it for a few months now.

|

||||

|

||||

What do you think about this extension? Put your thoughts in the comments below!

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/cpu-power-manager-control-and-manage-cpu-frequency-in-linux/

|

||||

|

||||

作者:[EDITOR][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[runningwater](https://github.com/runningwater)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.ostechnix.com/author/editor/

|

||||

[1]: https://www.ostechnix.com/improve-laptop-battery-performance-linux/

|

||||

[2]: https://extensions.gnome.org/extension/945/cpu-power-manager/

|

||||

@ -1,639 +0,0 @@

|

||||

BriFuture is translating this article

|

||||

|

||||

# Compiling Lisp to JavaScript From Scratch in 350

|

||||

|

||||

In this article we will look at a from-scratch implementation of a compiler from a simple LISP-like calculator language to JavaScript. The complete source code can be found [here][7].

|

||||

|

||||

We will:

|

||||

|

||||

1. Define our language and write a simple program in it

|

||||

|

||||

2. Implement a simple parser combinator library

|

||||

|

||||

3. Implement a parser for our language

|

||||

|

||||

4. Implement a pretty printer for our language

|

||||

|

||||

5. Define a subset of JavaScript for our usage

|

||||

|

||||

6. Implement a code translator to the JavaScript subset we defined

|

||||

|

||||

7. Glue it all together

|

||||

|

||||

Let's start!

|

||||

|

||||

### 1\. Defining the language

|

||||

|

||||

The main attraction of lisps is that their syntax already represent a tree, this is why they are so easy to parse. We'll see that soon. But first let's define our language. Here's a BNF description of our language's syntax:

|

||||

|

||||

```

|

||||

program ::= expr

|

||||

expr ::= <integer> | <name> | ([<expr>])

|

||||

```

|

||||

|

||||

Basically, our language let's us define one expression at the top level which it will evaluate. An expression is composed of either an integer, for example `5`, a variable, for example `x`, or a list of expressions, for example `(add x 1)`.

|

||||

|

||||

An integer evaluate to itself, a variable evaluates to what it's bound in the current environment, and a list evaluates to a function call where the first argument is the function and the rest are the arguments to the function.

|

||||

|

||||

We have some built-in special forms in our language so we can do more interesting stuff:

|

||||

|

||||

* let expression let's us introduce new variables in the environment of the body of the let. The syntax is:

|

||||

|

||||

```

|

||||

let ::= (let ([<letarg>]) <body>)

|

||||

letargs ::= (<name> <expr>)

|

||||

body ::= <expr>

|

||||

```

|

||||

|

||||

* lambda expression: evaluates to an anonymous function definition. The syntax is:

|

||||

|

||||

```

|

||||

lambda ::= (lambda ([<name>]) <body>)

|

||||

```

|

||||

|

||||

We also have a few built in functions: `add`, `mul`, `sub`, `div` and `print`.

|

||||

|

||||

Let's see a quick example of a program written in our language:

|

||||

|

||||

```

|

||||

(let

|

||||

((compose

|

||||

(lambda (f g)

|

||||

(lambda (x) (f (g x)))))

|

||||

(square

|

||||

(lambda (x) (mul x x)))

|

||||

(add1

|

||||

(lambda (x) (add x 1))))

|

||||

(print ((compose square add1) 5)))

|

||||

```

|

||||

|

||||

This program defines 3 functions: `compose`, `square` and `add1`. And then prints the result of the computation:`((compose square add1) 5)`

|

||||

|

||||

I hope this is enough information about the language. Let's start implementing it!

|

||||

|

||||

We can define the language in Haskell like this:

|

||||

|

||||

```

|

||||

type Name = String

|

||||

|

||||

data Expr

|

||||

= ATOM Atom

|

||||

| LIST [Expr]

|

||||

deriving (Eq, Read, Show)

|

||||

|

||||

data Atom

|

||||

= Int Int

|

||||

| Symbol Name

|

||||

deriving (Eq, Read, Show)

|

||||

```

|

||||

|

||||

We can parse programs in the language we defined to an `Expr`. Also, we are giving the new data types `Eq`, `Read`and `Show` instances to aid in testing and debugging. You'll be able to use those in the REPL for example to verify all this actually works.

|

||||

|

||||

The reason we did not define `lambda`, `let` and the other built-in functions as part of the syntax is because we can get away with it in this case. These functions are just a more specific case of a `LIST`. So I decided to leave this to a later phase.

|

||||

|

||||

Usually, you would like to define these special cases in the abstract syntax - to improve error messages, to unable static analysis and optimizations and such, but we won't do that here so this is enough for us.

|

||||

|

||||

Another thing you would like to do usually is add some annotation to the syntax. For example the location: Which file did this `Expr` come from and which row and col in the file. You can use this in later stages to print the location of errors, even if they are not in the parser stage.

|

||||

|

||||

* _Exercise 1_ : Add a `Program` data type to include multiple `Expr` sequentially

|

||||

|

||||

* _Exercise 2_ : Add location annotation to the syntax tree.

|

||||

|

||||

### 2\. Implement a simple parser combinator library

|

||||

|

||||

First thing we are going to do is define an Embedded Domain Specific Language (or EDSL) which we will use to define our languages' parser. This is often referred to as parser combinator library. The reason we are doing it is strictly for learning purposes, Haskell has great parsing libraries and you should definitely use them when building real software, or even when just experimenting. One such library is [megaparsec][8].

|

||||

|

||||

First let's talk about the idea behind our parser library implementation. In it's essence, our parser is a function that takes some input, might consume some or all of the input, and returns the value it managed to parse and the rest of the input it didn't parse yet, or throws an error if it failed. Let's write that down.

|

||||

|

||||

```

|

||||

newtype Parser a

|

||||

= Parser (ParseString -> Either ParseError (a, ParseString))

|

||||

|

||||

data ParseString

|

||||

= ParseString Name (Int, Int) String

|

||||

|

||||

data ParseError

|

||||

= ParseError ParseString Error

|

||||

|

||||

type Error = String

|

||||

|

||||

```

|

||||

|

||||

Here we defined three main new types.

|

||||

|

||||

First, `Parser a`, is the parsing function we described before.

|

||||

|

||||

Second, `ParseString` is our input or state we carry along. It has three significant parts:

|

||||

|

||||

* `Name`: This is the name of the source

|

||||

|

||||

* `(Int, Int)`: This is the current location in the source

|

||||

|

||||

* `String`: This is the remaining string left to parse

|

||||

|

||||

Third, `ParseError` contains the current state of the parser and an error message.

|

||||

|

||||

Now we want our parser to be flexible, so we will define a few instances for common type classes for it. These instances will allow us to combine small parsers to make bigger parsers (hence the name 'parser combinators').

|

||||

|

||||

The first one is a `Functor` instance. We want a `Functor` instance because we want to be able to define a parser using another parser simply by applying a function on the parsed value. We will see an example of this when we define the parser for our language.

|

||||

|

||||

```

|

||||

instance Functor Parser where

|

||||

fmap f (Parser parser) =

|

||||

Parser (\str -> first f <$> parser str)

|

||||

```

|

||||

|

||||

The second instance is an `Applicative` instance. One common use case for this instance instance is to lift a pure function on multiple parsers.

|

||||

|

||||

```

|

||||

instance Applicative Parser where

|

||||

pure x = Parser (\str -> Right (x, str))

|

||||

(Parser p1) <*> (Parser p2) =

|

||||

Parser $

|

||||

\str -> do

|

||||

(f, rest) <- p1 str

|

||||

(x, rest') <- p2 rest

|

||||

pure (f x, rest')

|

||||

|

||||

```

|

||||

|

||||

(Note: _We will also implement a Monad instance so we can use do notation here._ )

|

||||

|

||||

The third instance is an `Alternative` instance. We want to be able to supply an alternative parser in case one fails.

|

||||

|

||||

```

|

||||

instance Alternative Parser where

|

||||

empty = Parser (`throwErr` "Failed consuming input")

|

||||

(Parser p1) <|> (Parser p2) =

|

||||

Parser $

|

||||

\pstr -> case p1 pstr of

|

||||

Right result -> Right result

|

||||

Left _ -> p2 pstr

|

||||

```

|

||||

|

||||

The forth instance is a `Monad` instance. So we'll be able to chain parsers.

|

||||

|

||||

```

|

||||

instance Monad Parser where

|

||||

(Parser p1) >>= f =

|

||||

Parser $

|

||||

\str -> case p1 str of

|

||||

Left err -> Left err

|

||||

Right (rs, rest) ->

|

||||

case f rs of

|

||||

Parser parser -> parser rest

|

||||

|

||||

```

|

||||

|

||||

Next, let's define a way to run a parser and a utility function for failure:

|

||||

|

||||

```

|

||||

|

||||

runParser :: String -> String -> Parser a -> Either ParseError (a, ParseString)

|

||||

runParser name str (Parser parser) = parser $ ParseString name (0,0) str

|

||||

|

||||

throwErr :: ParseString -> String -> Either ParseError a

|

||||

throwErr ps@(ParseString name (row,col) _) errMsg =

|

||||

Left $ ParseError ps $ unlines

|

||||

[ "*** " ++ name ++ ": " ++ errMsg

|

||||

, "* On row " ++ show row ++ ", column " ++ show col ++ "."

|

||||

]

|

||||

|

||||

```

|

||||

|

||||

Now we'll start implementing the combinators which are the API and heart of the EDSL.

|

||||

|

||||

First, we'll define `oneOf`. `oneOf` will succeed if one of the characters in the list supplied to it is the next character of the input and will fail otherwise.

|

||||

|

||||

```

|

||||

oneOf :: [Char] -> Parser Char

|

||||

oneOf chars =

|

||||

Parser $ \case

|

||||

ps@(ParseString name (row, col) str) ->

|

||||

case str of

|

||||

[] -> throwErr ps "Cannot read character of empty string"

|

||||

(c:cs) ->

|

||||

if c `elem` chars

|

||||

then Right (c, ParseString name (row, col+1) cs)

|

||||

else throwErr ps $ unlines ["Unexpected character " ++ [c], "Expecting one of: " ++ show chars]

|

||||

```

|

||||

|

||||

`optional` will stop a parser from throwing an error. It will just return `Nothing` on failure.

|

||||

|

||||

```

|

||||

optional :: Parser a -> Parser (Maybe a)

|

||||

optional (Parser parser) =

|

||||

Parser $

|

||||

\pstr -> case parser pstr of

|

||||

Left _ -> Right (Nothing, pstr)

|

||||

Right (x, rest) -> Right (Just x, rest)

|

||||

```

|

||||

|

||||

`many` will try to run a parser repeatedly until it fails. When it does, it'll return a list of successful parses. `many1`will do the same, but will throw an error if it fails to parse at least once.

|

||||

|

||||

```

|

||||

many :: Parser a -> Parser [a]

|

||||

many parser = go []

|

||||

where go cs = (parser >>= \c -> go (c:cs)) <|> pure (reverse cs)

|

||||

|

||||

many1 :: Parser a -> Parser [a]

|

||||

many1 parser =

|

||||

(:) <$> parser <*> many parser

|

||||

|

||||

```

|

||||

|

||||

These next few parsers use the combinators we defined to make more specific parsers:

|

||||

|

||||

```

|

||||

char :: Char -> Parser Char

|

||||

char c = oneOf [c]

|

||||

|

||||

string :: String -> Parser String

|

||||

string = traverse char

|

||||

|

||||

space :: Parser Char

|

||||

space = oneOf " \n"

|

||||

|

||||

spaces :: Parser String

|

||||

spaces = many space

|

||||

|

||||

spaces1 :: Parser String

|

||||

spaces1 = many1 space

|

||||

|

||||

withSpaces :: Parser a -> Parser a

|

||||

withSpaces parser =

|

||||

spaces *> parser <* spaces

|

||||

|

||||

parens :: Parser a -> Parser a

|

||||

parens parser =

|

||||

(withSpaces $ char '(')

|

||||

*> withSpaces parser

|

||||

<* (spaces *> char ')')

|

||||

|

||||

sepBy :: Parser a -> Parser b -> Parser [b]

|

||||

sepBy sep parser = do

|

||||

frst <- optional parser

|

||||

rest <- many (sep *> parser)

|

||||

pure $ maybe rest (:rest) frst

|

||||

|

||||

```

|

||||

|

||||

Now we have everything we need to start defining a parser for our language.

|

||||

|

||||

* _Exercise_ : implement an EOF (end of file/input) parser combinator.

|

||||

|

||||

### 3\. Implementing a parser for our language

|

||||

|

||||

To define our parser, we'll use the top-bottom method.

|

||||

|

||||

```

|

||||

parseExpr :: Parser Expr

|

||||

parseExpr = fmap ATOM parseAtom <|> fmap LIST parseList

|

||||

|

||||

parseList :: Parser [Expr]

|

||||

parseList = parens $ sepBy spaces1 parseExpr

|

||||

|

||||

parseAtom :: Parser Atom

|

||||

parseAtom = parseSymbol <|> parseInt

|

||||

|

||||

parseSymbol :: Parser Atom

|

||||

parseSymbol = fmap Symbol parseName

|

||||

|

||||

```

|

||||

|

||||

Notice that these four function are a very high-level description of our language. This demonstrate why Haskell is so nice for parsing. Still, after defining the high-level parts, we still need to define the lower-level `parseName` and `parseInt`.

|

||||

|

||||

What characters can we use as names in our language? Let's decide to use lowercase letters, digits and underscores, where the first character must be a letter.

|

||||

|

||||

```

|

||||

parseName :: Parser Name

|

||||

parseName = do

|

||||

c <- oneOf ['a'..'z']

|

||||

cs <- many $ oneOf $ ['a'..'z'] ++ "0123456789" ++ "_"

|

||||

pure (c:cs)

|

||||

```

|

||||

|

||||

For integers, we want a sequence of digits optionally preceding by '-':

|

||||

|

||||

```

|

||||

parseInt :: Parser Atom

|

||||

parseInt = do

|

||||

sign <- optional $ char '-'

|

||||

num <- many1 $ oneOf "0123456789"

|

||||

let result = read $ maybe num (:num) sign of

|

||||

pure $ Int result

|

||||

```

|

||||

|

||||

Lastly, we'll define a function to run a parser and get back an `Expr` or an error message.

|

||||

|

||||

```

|

||||

runExprParser :: Name -> String -> Either String Expr

|

||||

runExprParser name str =

|

||||

case runParser name str (withSpaces parseExpr) of

|

||||

Left (ParseError _ errMsg) -> Left errMsg

|

||||

Right (result, _) -> Right result

|

||||

```

|

||||

|

||||

* _Exercise 1_ : Write a parser for the `Program` type you defined in the first section

|

||||

|

||||

* _Exercise 2_ : Rewrite `parseName` in Applicative style

|

||||

|

||||

* _Exercise 3_ : Find a way to handle the overflow case in `parseInt` instead of using `read`.

|

||||

|

||||

### 4\. Implement a pretty printer for our language

|

||||

|

||||

One more thing we'd like to do is be able to print our programs as source code. This is useful for better error messages.

|

||||

|

||||

```

|

||||

printExpr :: Expr -> String

|

||||

printExpr = printExpr' False 0

|

||||

|

||||

printAtom :: Atom -> String

|

||||

printAtom = \case

|

||||

Symbol s -> s

|

||||

Int i -> show i

|

||||

|

||||

printExpr' :: Bool -> Int -> Expr -> String

|

||||

printExpr' doindent level = \case

|

||||

ATOM a -> indent (bool 0 level doindent) (printAtom a)

|

||||

LIST (e:es) ->

|

||||

indent (bool 0 level doindent) $

|

||||

concat

|

||||

[ "("

|

||||

, printExpr' False (level + 1) e

|

||||

, bool "\n" "" (null es)

|

||||

, intercalate "\n" $ map (printExpr' True (level + 1)) es

|

||||

, ")"

|

||||

]

|

||||

|

||||

indent :: Int -> String -> String

|

||||

indent tabs e = concat (replicate tabs " ") ++ e

|

||||

```

|

||||

|

||||

* _Exercise_ : Write a pretty printer for the `Program` type you defined in the first section

|

||||

|

||||

Okay, we wrote around 200 lines so far of what's typically called the front-end of the compiler. We have around 150 more lines to go and three more tasks: We need to define a subset of JS for our usage, define the translator from our language to that subset, and glue the whole thing together. Let's go!

|

||||

|

||||

### 5\. Define a subset of JavaScript for our usage

|

||||

|

||||

First, we'll define the subset of JavaScript we are going to use:

|

||||

|

||||

```

|

||||

data JSExpr

|

||||

= JSInt Int

|

||||

| JSSymbol Name

|

||||

| JSBinOp JSBinOp JSExpr JSExpr

|

||||

| JSLambda [Name] JSExpr

|

||||

| JSFunCall JSExpr [JSExpr]

|

||||

| JSReturn JSExpr

|

||||

deriving (Eq, Show, Read)

|

||||

|

||||

type JSBinOp = String

|

||||

```

|

||||

|

||||

This data type represent a JavaScript expression. We have two atoms - `JSInt` and `JSSymbol` to which we'll translate our languages' `Atom`, We have `JSBinOp` to represent a binary operation such as `+` or `*`, we have `JSLambda`for anonymous functions same as our `lambda expression`, We have `JSFunCall` which we'll use both for calling functions and introducing new names as in `let`, and we have `JSReturn` to return values from functions as that's required in JavaScript.

|

||||

|

||||

This `JSExpr` type is an **abstract representation** of a JavaScript expression. We will translate our own `Expr`which is an abstract representation of our languages' expression to `JSExpr` and from there to JavaScript. But in order to do that we need to take this `JSExpr` and produce JavaScript code from it. We'll do that by pattern matching on `JSExpr` recursively and emit JS code as a `String`. This is basically the same thing we did in `printExpr`. We'll also track the scoping of elements so we can indent the generated code in a nice way.

|

||||

|

||||

```

|

||||

printJSOp :: JSBinOp -> String

|

||||

printJSOp op = op

|

||||

|

||||

printJSExpr :: Bool -> Int -> JSExpr -> String

|

||||

printJSExpr doindent tabs = \case

|

||||

JSInt i -> show i

|

||||

JSSymbol name -> name

|

||||

JSLambda vars expr -> (if doindent then indent tabs else id) $ unlines

|

||||

["function(" ++ intercalate ", " vars ++ ") {"

|

||||

,indent (tabs+1) $ printJSExpr False (tabs+1) expr

|

||||

] ++ indent tabs "}"

|

||||

JSBinOp op e1 e2 -> "(" ++ printJSExpr False tabs e1 ++ " " ++ printJSOp op ++ " " ++ printJSExpr False tabs e2 ++ ")"

|

||||

JSFunCall f exprs -> "(" ++ printJSExpr False tabs f ++ ")(" ++ intercalate ", " (fmap (printJSExpr False tabs) exprs) ++ ")"

|

||||

JSReturn expr -> (if doindent then indent tabs else id) $ "return " ++ printJSExpr False tabs expr ++ ";"

|

||||

```

|

||||

|

||||

* _Exercise 1_ : Add a `JSProgram` type that will hold multiple `JSExpr` and create a function `printJSExprProgram` to generate code for it.

|

||||

|

||||

* _Exercise 2_ : Add a new type of `JSExpr` - `JSIf`, and generate code for it.

|

||||

|

||||

### 6\. Implement a code translator to the JavaScript subset we defined

|

||||

|

||||

We are almost there. In this section we'll create a function to translate `Expr` to `JSExpr`.

|

||||

|

||||

The basic idea is simple, we'll translate `ATOM` to `JSSymbol` or `JSInt` and `LIST` to either a function call or a special case we'll translate later.

|

||||

|

||||

```

|

||||

type TransError = String

|

||||

|

||||

translateToJS :: Expr -> Either TransError JSExpr

|

||||

translateToJS = \case

|

||||

ATOM (Symbol s) -> pure $ JSSymbol s

|

||||

ATOM (Int i) -> pure $ JSInt i

|

||||

LIST xs -> translateList xs

|

||||

|

||||

translateList :: [Expr] -> Either TransError JSExpr

|

||||

translateList = \case

|

||||

[] -> Left "translating empty list"

|

||||

ATOM (Symbol s):xs

|

||||

| Just f <- lookup s builtins ->

|

||||

f xs

|

||||

f:xs ->

|

||||

JSFunCall <$> translateToJS f <*> traverse translateToJS xs

|

||||

|

||||

```

|

||||

|

||||

`builtins` is a list of special cases to translate, like `lambda` and `let`. Every case gets the list of arguments for it, verify that its syntactically valid and translates it to the equivalent `JSExpr`.

|

||||

|

||||

```

|

||||

type Builtin = [Expr] -> Either TransError JSExpr

|

||||

type Builtins = [(Name, Builtin)]

|

||||

|

||||

builtins :: Builtins

|

||||

builtins =

|

||||

[("lambda", transLambda)

|

||||

,("let", transLet)

|

||||

,("add", transBinOp "add" "+")

|

||||

,("mul", transBinOp "mul" "*")

|

||||

,("sub", transBinOp "sub" "-")

|

||||

,("div", transBinOp "div" "/")

|

||||

,("print", transPrint)

|

||||

]

|

||||

|

||||

```

|

||||

|

||||

In our case, we treat built-in special forms as special and not first class, so will not be able to use them as first class functions and such.

|

||||

|

||||

We'll translate a Lambda to an anonymous function:

|

||||

|

||||

```

|

||||

transLambda :: [Expr] -> Either TransError JSExpr

|

||||

transLambda = \case

|

||||

[LIST vars, body] -> do

|

||||

vars' <- traverse fromSymbol vars

|

||||

JSLambda vars' <$> (JSReturn <$> translateToJS body)

|

||||

|

||||

vars ->

|

||||

Left $ unlines

|

||||

["Syntax error: unexpected arguments for lambda."

|

||||

,"expecting 2 arguments, the first is the list of vars and the second is the body of the lambda."

|

||||

,"In expression: " ++ show (LIST $ ATOM (Symbol "lambda") : vars)

|

||||

]

|

||||

|

||||

fromSymbol :: Expr -> Either String Name

|

||||

fromSymbol (ATOM (Symbol s)) = Right s

|

||||

fromSymbol e = Left $ "cannot bind value to non symbol type: " ++ show e

|

||||

|

||||

```

|

||||

|

||||

We'll translate let to a definition of a function with the relevant named arguments and call it with the values, Thus introducing the variables in that scope:

|

||||

|

||||

```

|

||||

transLet :: [Expr] -> Either TransError JSExpr

|

||||

transLet = \case

|

||||

[LIST binds, body] -> do

|

||||

(vars, vals) <- letParams binds

|

||||

vars' <- traverse fromSymbol vars

|

||||

JSFunCall . JSLambda vars' <$> (JSReturn <$> translateToJS body) <*> traverse translateToJS vals

|

||||

where

|

||||

letParams :: [Expr] -> Either Error ([Expr],[Expr])

|

||||

letParams = \case

|

||||

[] -> pure ([],[])

|

||||

LIST [x,y] : rest -> ((x:) *** (y:)) <$> letParams rest

|

||||

x : _ -> Left ("Unexpected argument in let list in expression:\n" ++ printExpr x)

|

||||

|

||||

vars ->

|

||||

Left $ unlines

|

||||

["Syntax error: unexpected arguments for let."

|

||||

,"expecting 2 arguments, the first is the list of var/val pairs and the second is the let body."

|

||||

,"In expression:\n" ++ printExpr (LIST $ ATOM (Symbol "let") : vars)

|

||||

]

|

||||

```

|

||||

|

||||

We'll translate an operation that can work on multiple arguments to a chain of binary operations. For example: `(add 1 2 3)` will become `1 + (2 + 3)`

|

||||

|

||||

```

|

||||

transBinOp :: Name -> Name -> [Expr] -> Either TransError JSExpr

|

||||

transBinOp f _ [] = Left $ "Syntax error: '" ++ f ++ "' expected at least 1 argument, got: 0"

|

||||

transBinOp _ _ [x] = translateToJS x

|

||||

transBinOp _ f list = foldl1 (JSBinOp f) <$> traverse translateToJS list

|

||||

```

|

||||

|

||||

And we'll translate a `print` as a call to `console.log`

|

||||

|

||||

```

|

||||

transPrint :: [Expr] -> Either TransError JSExpr

|

||||

transPrint [expr] = JSFunCall (JSSymbol "console.log") . (:[]) <$> translateToJS expr

|

||||

transPrint xs = Left $ "Syntax error. print expected 1 arguments, got: " ++ show (length xs)

|

||||

|

||||

```

|

||||

|

||||

Notice that we could have skipped verifying the syntax if we'd parse those as special cases of `Expr`.

|

||||

|

||||

* _Exercise 1_ : Translate `Program` to `JSProgram`

|

||||

|

||||

* _Exercise 2_ : add a special case for `if Expr Expr Expr` and translate it to the `JSIf` case you implemented in the last exercise

|

||||

|

||||

### 7\. Glue it all together

|

||||

|

||||

Finally, we are going to glue this all together. We'll:

|

||||

|

||||

1. Read a file

|

||||

|

||||

2. Parse it to `Expr`

|

||||

|

||||

3. Translate it to `JSExpr`

|

||||

|

||||

4. Emit JavaScript code to the standard output

|

||||

|

||||

We'll also enable a few flags for testing:

|

||||

|

||||

* `--e` will parse and print the abstract representation of the expression (`Expr`)

|

||||

|

||||

* `--pp` will parse and pretty print

|

||||

|

||||

* `--jse` will parse, translate and print the abstract representation of the resulting JS (`JSExpr`)

|

||||

|

||||

* `--ppc` will parse, pretty print and compile

|

||||

|

||||

```

|

||||

main :: IO ()

|

||||

main = getArgs >>= \case

|

||||

[file] ->

|

||||

printCompile =<< readFile file

|

||||

["--e",file] ->

|

||||

either putStrLn print . runExprParser "--e" =<< readFile file

|

||||

["--pp",file] ->

|

||||

either putStrLn (putStrLn . printExpr) . runExprParser "--pp" =<< readFile file

|

||||

["--jse",file] ->

|

||||

either print (either putStrLn print . translateToJS) . runExprParser "--jse" =<< readFile file

|

||||

["--ppc",file] ->

|

||||

either putStrLn (either putStrLn putStrLn) . fmap (compile . printExpr) . runExprParser "--ppc" =<< readFile file

|

||||

_ ->

|

||||

putStrLn $ unlines

|

||||

["Usage: runghc Main.hs [ --e, --pp, --jse, --ppc ] <filename>"

|

||||

,"--e print the Expr"

|

||||

,"--pp pretty print Expr"

|

||||

,"--jse print the JSExpr"

|

||||

,"--ppc pretty print Expr and then compile"

|

||||

]

|

||||

|

||||

printCompile :: String -> IO ()

|

||||

printCompile = either putStrLn putStrLn . compile

|

||||

|

||||

compile :: String -> Either Error String

|

||||

compile str = printJSExpr False 0 <$> (translateToJS =<< runExprParser "compile" str)

|

||||

|

||||

```

|

||||

|

||||

That's it. We have a compiler from our language to JS. Again, you can view the full source file [here][9].

|

||||

|

||||

Running our compiler with the example from the first section yields this JavaScript code:

|

||||

|

||||

```

|

||||

$ runhaskell Lisp.hs example.lsp

|

||||

(function(compose, square, add1) {

|

||||

return (console.log)(((compose)(square, add1))(5));

|

||||

})(function(f, g) {

|

||||

return function(x) {

|

||||

return (f)((g)(x));

|

||||

};

|

||||

}, function(x) {

|

||||

return (x * x);

|

||||

}, function(x) {

|

||||

return (x + 1);

|

||||

})

|

||||

```

|

||||

|

||||

If you have node.js installed on your computer, you can run this code by running:

|

||||

|

||||

```

|

||||

$ runhaskell Lisp.hs example.lsp | node -p

|

||||

36

|

||||

undefined

|

||||

```

|

||||

|

||||

* _Final exercise_ : instead of compiling an expression, compile a program of multiple expressions.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://gilmi.me/blog/post/2016/10/14/lisp-to-js

|

||||

|

||||

作者:[ Gil Mizrahi ][a]

|

||||

选题:[oska874][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://gilmi.me/home

|

||||

[b]:https://github.com/oska874

|

||||

[1]:https://gilmi.me/blog/authors/Gil

|

||||

[2]:https://gilmi.me/blog/tags/compilers

|

||||

[3]:https://gilmi.me/blog/tags/fp

|

||||

[4]:https://gilmi.me/blog/tags/haskell

|

||||

[5]:https://gilmi.me/blog/tags/lisp

|

||||

[6]:https://gilmi.me/blog/tags/parsing

|

||||

[7]:https://gist.github.com/soupi/d4ff0727ccb739045fad6cdf533ca7dd

|

||||

[8]:https://mrkkrp.github.io/megaparsec/

|

||||

[9]:https://gist.github.com/soupi/d4ff0727ccb739045fad6cdf533ca7dd

|

||||

[10]:https://gilmi.me/blog/post/2016/10/14/lisp-to-js

|

||||

@ -1,100 +0,0 @@

|

||||

translating by dianbanjiu

|

||||

Download an OS with GNOME Boxes

|

||||

======

|

||||

|

||||

|

||||

|

||||

Boxes is the GNOME application for running virtual machines. Recently Boxes added a new feature that makes it easier to run different Linux distributions. You can now automatically install these distros in Boxes, as well as operating systems like FreeBSD and FreeDOS. The list even includes Red Hat Enterprise Linux. The Red Hat Developer Program includes a [no-cost subscription to Red Hat Enterprise Linux][1]. With a [Red Hat Developer][2] account, Boxes can automatically set up a RHEL virtual machine entitled to the Developer Suite subscription. Here’s how it works.

|

||||

|

||||

### Red Hat Enterprise Linux

|

||||

|

||||

To create a Red Hat Enterprise Linux virtual machine, launch Boxes and click New. Select Download an OS from the source selection list. At the top, pick Red Hat Enterprise Linux. This opens a web form at [developers.redhat.com][2]. Sign in with an existing Red Hat Developer Account, or create a new one.

|

||||

|

||||

![][3]

|

||||

|

||||

If this is a new account, Boxes requires some additional information before continuing. This step is required to enable the Developer Subscription on the account. Be sure to [accept the Terms & Conditions][4] now too. This saves a step later during registration.

|

||||

|

||||

![][5]

|

||||

|

||||

Click Submit and the installation disk image starts to download. The download can take a while, depending on your Internet connection. This is a great time to go fix a cup of tea or coffee!

|

||||

|

||||

![][6]

|

||||

|

||||

Once the media has downloaded (conveniently to ~/Downloads), Boxes offers to perform an Express Install. Fill in the account and password information and click Continue. Click Create after you verify the virtual machine details. The Express Install automatically performs the entire installation! (Now is a great time to enjoy a second cup of tea or coffee, if so inclined.)

|

||||

|

||||

![][7]

|

||||

|

||||

![][8]

|

||||

|

||||

![][9]

|

||||

|

||||

Once the installation is done, the virtual machine reboots and logs directly into the desktop. Inside the virtual machine, launch the Red Hat Subscription Manager via the Applications menu, under System Tools. Enter the root password to launch the utility.

|

||||

|

||||

![][10]

|

||||

|

||||

Click the Register button and follow the steps through the registration assistant. Log in with your Red Hat Developers account when prompted.

|

||||

|

||||

![][11]

|

||||

|

||||

![][12]

|

||||

|

||||

Now you can download and install updates through any normal update method, such as yum or GNOME Software.

|

||||

|

||||

![][13]

|

||||

|

||||

### FreeDOS anyone?

|

||||

|

||||

Boxes can install a lot more than just Red Hat Enterprise Linux, too. As a front end to KVM and qemu, Boxes supports a wide variety of operating systems. Using [libosinfo][14], Boxes can automatically download (and in some cases, install) quite a few different ones.

|

||||

|

||||

![][15]

|

||||

|

||||

To install an OS from the list, select it and finish creating the new virtual machine. Some OSes, like FreeDOS, do not support an Express Install. In those cases the virtual machine boots from the installation media. You can then manually install.

|

||||

|

||||

![][16]

|

||||

|

||||

![][17]

|

||||

|

||||

### Popular operating systems on Boxes

|

||||

|

||||

These are just a few of the popular choices available in Boxes today.

|

||||

|

||||

![][18]![][19]![][20]![][21]![][22]![][23]

|

||||

|

||||

Fedora updates its osinfo-db package regularly. Be sure to check back frequently for new OS options.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://fedoramagazine.org/download-os-gnome-boxes/

|

||||

|

||||

作者:[Link Dupont][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://fedoramagazine.org/author/linkdupont/

|

||||

[1]:https://developers.redhat.com/blog/2016/03/31/no-cost-rhel-developer-subscription-now-available/

|

||||

[2]:http://developers.redhat.com

|

||||

[3]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-14-33-13.png

|

||||

[4]:https://www.redhat.com/wapps/tnc/termsack?event%5B%5D=signIn

|

||||

[5]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-14-34-37.png

|

||||

[6]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-14-37-27.png

|

||||

[7]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-15-09-11.png

|

||||

[8]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-15-15-19-1024x815.png

|

||||

[9]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-15-21-53-1024x815.png

|

||||

[10]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-15-26-29-1024x815.png

|

||||

[11]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-15-30-48-1024x815.png

|

||||

[12]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-15-31-17-1024x815.png

|

||||

[13]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-15-32-29-1024x815.png

|

||||

[14]:https://libosinfo.org

|

||||

[15]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-20-02-56.png

|

||||

[16]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-15-40-25.png

|

||||

[17]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-15-43-02-1024x815.png

|

||||

[18]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-16-55-20-1024x815.png

|

||||

[19]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-16-28-28-1024x815.png

|

||||

[20]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-16-11-43-1024x815.png

|

||||

[21]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-16-58-09-1024x815.png

|

||||

[22]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-17-46-38-1024x815.png

|

||||

[23]:https://fedoramagazine.org/wp-content/uploads/2018/05/Screenshot-from-2018-05-25-18-34-11-1024x815.png

|

||||

@ -1,3 +1,4 @@

|

||||

Translateing By DavidChenLiang

|

||||

Top Linux developers' recommended programming books

|

||||

======

|

||||

Without question, Linux was created by brilliant programmers who employed good computer science knowledge. Let the Linux programmers whose names you know share the books that got them started and the technology references they recommend for today's developers. How many of them have you read?

|

||||

|

||||

@ -1,61 +0,0 @@

|

||||

translating by belitex

|

||||

|

||||

A sysadmin's guide to containers

|

||||

======

|

||||

|

||||

|

||||

|

||||

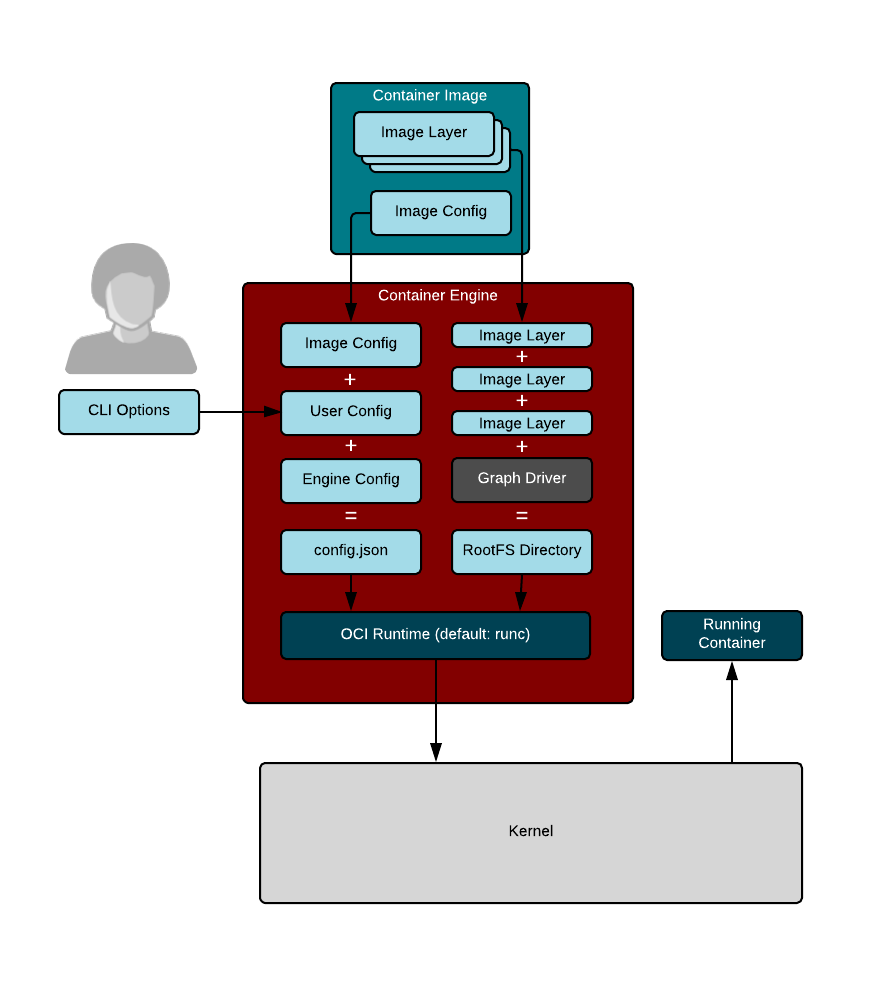

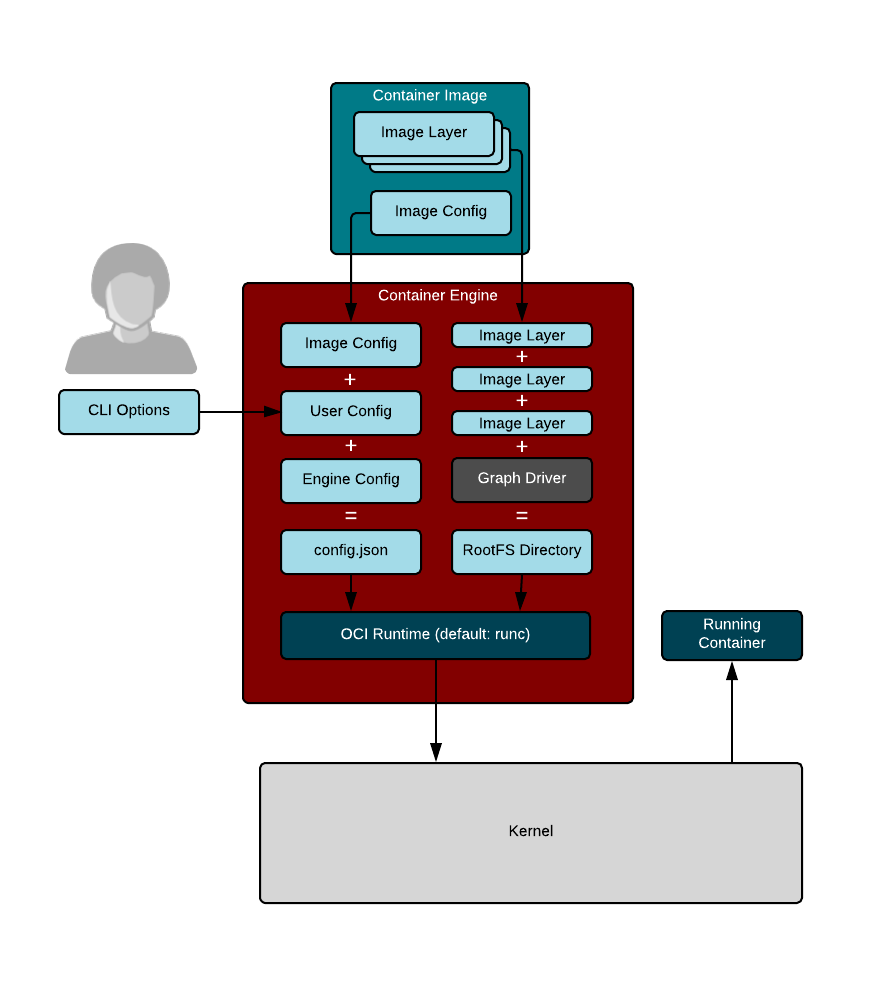

The term "containers" is heavily overused. Also, depending on the context, it can mean different things to different people.

|

||||

|

||||

Traditional Linux containers are really just ordinary processes on a Linux system. These groups of processes are isolated from other groups of processes using resource constraints (control groups [cgroups]), Linux security constraints (Unix permissions, capabilities, SELinux, AppArmor, seccomp, etc.), and namespaces (PID, network, mount, etc.).

|

||||

|

||||

If you boot a modern Linux system and took a look at any process with `cat /proc/PID/cgroup`, you see that the process is in a cgroup. If you look at `/proc/PID/status`, you see capabilities. If you look at `/proc/self/attr/current`, you see SELinux labels. If you look at `/proc/PID/ns`, you see the list of namespaces the process is in. So, if you define a container as a process with resource constraints, Linux security constraints, and namespaces, by definition every process on a Linux system is in a container. This is why we often say [Linux is containers, containers are Linux][1]. **Container runtimes** are tools that modify these resource constraints, security, and namespaces and launch the container.

|

||||

|

||||

Docker introduced the concept of a **container image** , which is a standard TAR file that combines:

|

||||

|

||||

* **Rootfs (container root filesystem):** A directory on the system that looks like the standard root (`/`) of the operating system. For example, a directory with `/usr`, `/var`, `/home`, etc.

|

||||

* **JSON file (container configuration):** Specifies how to run the rootfs; for example, what **command** or **entrypoint** to run in the rootfs when the container starts; **environment variables** to set for the container; the container's **working directory** ; and a few other settings.

|

||||

|

||||

|

||||

|

||||

Docker "`tar`'s up" the rootfs and the JSON file to create the **base image**. This enables you to install additional content on the rootfs, create a new JSON file, and `tar` the difference between the original image and the new image with the updated JSON file. This creates a **layered image**.

|

||||

|

||||

The definition of a container image was eventually standardized by the [Open Container Initiative (OCI)][2] standards body as the [OCI Image Specification][3].

|

||||

|

||||

Tools used to create container images are called **container image builders**. Sometimes container engines perform this task, but several standalone tools are available that can build container images.

|

||||

|

||||

Docker took these container images ( **tarballs** ) and moved them to a web service from which they could be pulled, developed a protocol to pull them, and called the web service a **container registry**.

|

||||

|

||||

**Container engines** are programs that can pull container images from container registries and reassemble them onto **container storage**. Container engines also launch **container runtimes** (see below).

|

||||

|

||||

|

||||

|

||||

Container storage is usually a **copy-on-write** (COW) layered filesystem. When you pull down a container image from a container registry, you first need to untar the rootfs and place it on disk. If you have multiple layers that make up your image, each layer is downloaded and stored on a different layer on the COW filesystem. The COW filesystem allows each layer to be stored separately, which maximizes sharing for layered images. Container engines often support multiple types of container storage, including `overlay`, `devicemapper`, `btrfs`, `aufs`, and `zfs`.

|

||||

|

||||

|

||||