mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-13 22:30:37 +08:00

commit

29058e1e77

@ -0,0 +1,105 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12173-1.html)

|

||||

[#]: subject: (Create Stunning Pixel Art With Free and Open Source Editor Pixelorama)

|

||||

[#]: via: (https://itsfoss.com/pixelorama/)

|

||||

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

|

||||

|

||||

使用 Pixelorama 创建令人惊叹的像素艺术

|

||||

======

|

||||

|

||||

> Pixelorama 是一个跨平台、自由开源的 2D 精灵编辑器。它在一个整洁的用户界面中提供了创建像素艺术所有必要工具。

|

||||

|

||||

### Pixelorama:开源 Sprite 编辑器

|

||||

|

||||

[Pixelorama][1] 是 [Orama 互动][2]公司的年轻游戏开发人员创建的一个工具。他们已经开发了一些 2D 游戏,其中一些使用了像素艺术。

|

||||

|

||||

虽然 Orama 主要从事于游戏开发,但开发人员也创建实用工具,帮助他们(和其他人)创建这些游戏。

|

||||

|

||||

自由开源的<ruby>精灵<rt>Sprite</rt></ruby>编辑器 Pixelorama 就是这样一个实用工具。它构建在 [Godot 引擎][3]之上,非常适合创作像素艺术。

|

||||

|

||||

![Pixelorama screenshot][4]

|

||||

|

||||

你看到上面截图中的像素艺术了吗?它是使用 Pixelorama 创建的。这段视频展示了制作上述图片的时间推移视频。

|

||||

|

||||

### Pixelorama 的功能

|

||||

|

||||

以下是 Pixelorama 提供的主要功能:

|

||||

|

||||

* 多种工具,如铅笔、橡皮擦、填充桶、取色器等

|

||||

* 多层系统,你可以根据需要添加、删除、上下移动、克隆和合并多个层

|

||||

* 支持 Spritesheets

|

||||

* 导入图像并在 Pixelorama 中编辑它们

|

||||

* 带有 [Onion Skinning][5] 的动画时间线

|

||||

* 自定义画笔

|

||||

* 以 Pixelorama 的自定义文件格式 .pxo 保存并打开你的项目

|

||||

* 水平和垂直镜像绘图

|

||||

* 用于创建图样的磁贴模式

|

||||

* 拆分屏幕模式和迷你画布预览

|

||||

* 使用鼠标滚轮缩放

|

||||

* 无限次撤消和重做

|

||||

* 缩放、裁剪、翻转、旋转、颜色反转和去饱和图像

|

||||

* 键盘快捷键

|

||||

* 提供多种语言

|

||||

* 支持 Linux、Windows 和 macOS

|

||||

|

||||

### 在 Linux 上安装 Pixelorama

|

||||

|

||||

Pixelorama 提供 Snap 应用,如果你使用的是 Ubuntu,那么可以在软件中心找到它。

|

||||

|

||||

![Pixelorama is available in Ubuntu Software Center][6]

|

||||

|

||||

或者,如果你在 [Linux 发行版上启用了 Snap 支持][7],那么可以使用此命令安装它:

|

||||

|

||||

```

|

||||

sudo snap install pixelorama

|

||||

```

|

||||

|

||||

如果你不想使用 Snap,不用担心。你可以从[他们的 GitHub 仓库][8]下载最新版本的 Pixelorama,[解压 zip 文件][9],你会看到一个可执行文件。授予此文件执行权限,并双击它运行应用。

|

||||

|

||||

- [下载 Pixelorama][10]

|

||||

|

||||

### 总结

|

||||

|

||||

![Pixelorama Welcome Screen][11]

|

||||

|

||||

在 Pixeloaram 的功能中,它说你可以导入图像并对其进行编辑。我想,这只是对某些类型的文件,因为当我尝试导入 PNG 或 JPEG 文件,程序崩溃了。

|

||||

|

||||

然而,我可以像一个 3 岁的孩子那样随意涂鸦并制作像素艺术。我对艺术不是很感兴趣,但我认为这[对 Linux 上的数字艺术家是个有用的工具][12]。

|

||||

|

||||

我喜欢这样的想法:尽管是游戏开发人员,但他们创建的工具,可以帮助其他游戏开发人员和艺术家。这就是开源的精神。

|

||||

|

||||

如果你喜欢这个项目,并且会使用它,请考虑通过捐赠来支持他们。[It’s FOSS 捐赠了][13] 25 美元,以感谢他们的努力。

|

||||

|

||||

- [向 Pixelorama 捐赠(主要开发者的个人 Paypal 账户)][14]

|

||||

|

||||

你喜欢 Pixelorama 吗?你是否使用其他开源精灵编辑器?请随时在评论栏分享你的观点。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

via: https://itsfoss.com/pixelorama/

|

||||

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.orama-interactive.com/pixelorama

|

||||

[2]: https://www.orama-interactive.com/

|

||||

[3]: https://godotengine.org/

|

||||

[4]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/03/pixelorama-v6.jpg?ssl=1

|

||||

[5]: https://en.wikipedia.org/wiki/Onion_skinning

|

||||

[6]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/03/pixelorama-ubuntu-software-center.jpg?ssl=1

|

||||

[7]: https://itsfoss.com/install-snap-linux/

|

||||

[8]: https://github.com/Orama-Interactive/Pixelorama

|

||||

[9]: https://itsfoss.com/unzip-linux/

|

||||

[10]: https://github.com/Orama-Interactive/Pixelorama/releases

|

||||

[11]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/03/pixelorama.jpg?ssl=1

|

||||

[12]: https://itsfoss.com/best-linux-graphic-design-software/

|

||||

[13]: https://itsfoss.com/donations-foss/

|

||||

[14]: https://www.paypal.me/erevos

|

||||

@ -1,16 +1,16 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12171-1.html)

|

||||

[#]: subject: (Rambox is an All-in-one Messenger for Linux)

|

||||

[#]: via: (https://itsfoss.com/rambox/)

|

||||

[#]: author: (Ankush Das https://itsfoss.com/author/ankush/)

|

||||

|

||||

Rambox 是 Linux 中多合一的消息收发工具

|

||||

Rambox:Linux 中多合一的消息收发工具

|

||||

======

|

||||

|

||||

_**简介:Rambox 是一个多合一消息收发工具,允许你将多种服务(如 Discord、Slack、Facebook Messenger)和数百个此类服务结合在一起。**_

|

||||

> Rambox 是一个多合一消息收发工具,允许你将多种服务(如 Discord、Slack、Facebook Messenger)和数百个此类服务结合在一起。

|

||||

|

||||

### Rambox:在单个应用中添加多个消息服务

|

||||

|

||||

@ -18,7 +18,7 @@ _**简介:Rambox 是一个多合一消息收发工具,允许你将多种服

|

||||

|

||||

Rambox 是通过安装单个应用管理多个通信服务的最佳方式之一。你可以在一个界面使用[多个消息服务][2],如 Facebook Messenger、Gmail chats、AOL、Discord、Google Duo、[Viber][3] 等。

|

||||

|

||||

这样,你就不需要安装单独的应用或者在浏览器中保持打开。你可以使用主密码锁定 Rambox 应用。你还可以使用"请勿打扰"功能。

|

||||

这样,你就不需要安装单独的应用或者在浏览器中一直打开着。你可以使用主密码锁定 Rambox 应用。你还可以使用“请勿打扰”功能。

|

||||

|

||||

Rambox 提供可免费使用的[开源社区版][4]。付费专业版允许你访问 600 多个应用,而社区版则包含 99 多个应用。专业版本具有额外的功能,如主题、休眠、ad-block、拼写检查和高级支持。

|

||||

|

||||

@ -44,24 +44,22 @@ Rambox 提供可免费使用的[开源社区版][4]。付费专业版允许你

|

||||

* Ad-block (**专业版**)

|

||||

* 休眠支持 (**专业版**)

|

||||

* 主题支持(**专业版**)

|

||||

* 移动视图 (**专业版**)

|

||||

* 移动设备视图 (**专业版**)

|

||||

* 拼写检查 (**专业版**)

|

||||

* 工作时间 - 计划传入通知时间 (**专业版**)

|

||||

* 代理支持 (**专业版**)

|

||||

|

||||

|

||||

* 工作时间 - 计划传入通知的时间 (**专业版**)

|

||||

* 支持代理 (**专业版**)

|

||||

|

||||

除了我在这里列出的内容外,你还可以在 Rambox Pro 版本中找到更多功能。要了解有关它的更多信息,你可以参考[正式功能列表][6]。

|

||||

|

||||

还值得注意的是,你不能有超过 3 个活跃并发设备连接。

|

||||

还值得注意的是,你不能超过 3 个活跃并发设备的连接。

|

||||

|

||||

### 在 Linux 上安装 Rambox

|

||||

|

||||

你可以在[官方下载页][4]获取 **.AppImage** 文件来运行 Rambox。如果你好奇,你可以参考我们的指南,了解如何[在 Linux 上使用 AppImage 文件][7]。

|

||||

你可以在[官方下载页][4]获取 .AppImage 文件来运行 Rambox。如果你不清楚,你可以参考我们的指南,了解如何[在 Linux 上使用 AppImage 文件][7]。

|

||||

|

||||

另外,你也可以从 [Snap 商店][8]获取它。此外,请查看其 [GitHub release][9] 部分的 **.deb / .rpm** 或其他包。

|

||||

另外,你也可以从 [Snap 商店][8]获取它。此外,请查看其 [GitHub release][9] 部分的 .deb / .rpm 或其他包。

|

||||

|

||||

[Download Rambox Community Edition][4]

|

||||

- [下载 Rambox 社区版][4]

|

||||

|

||||

### 总结

|

||||

|

||||

@ -69,7 +67,7 @@ Rambox 提供可免费使用的[开源社区版][4]。付费专业版允许你

|

||||

|

||||

还有一个类似的应用称为 [Franz][10],它也像 Rambox 部分开源、部分高级版。

|

||||

|

||||

尽管像 Rambox 或 Franz 这样的解决方案非常有用,但它们并不总是资源友好,特别是如果你同时使用数十个服务。因此,请留意系统资源(如果你注意到对性能的影响)。

|

||||

尽管像 Rambox 或 Franz 这样的解决方案非常有用,但它们并不总是节约资源,特别是如果你同时使用数十个服务。因此,请留意系统资源(如果你注意到对性能的影响)。

|

||||

|

||||

除此之外,这是一个令人印象深刻的应用。你有试过了么?欢迎随时让我知道你的想法!

|

||||

|

||||

@ -80,7 +78,7 @@ via: https://itsfoss.com/rambox/

|

||||

作者:[Ankush Das][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,20 +1,22 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12161-1.html)

|

||||

[#]: subject: (The Difference Between DNF and YUM, Why is Yum Replaced by DNF?)

|

||||

[#]: via: (https://www.2daygeek.com/comparison-difference-between-dnf-vs-yum/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

|

||||

DNF 和 YUM 的区别,为什么 YUM 会被 DNF 取代?

|

||||

DNF 和 Yum 的区别,为什么 Yum 会被 DNF 取代?

|

||||

======

|

||||

|

||||

由于 Yum 中许多长期存在的问题仍未得到解决,因此 [Yum 包管理器][1]已被 [DNF 包管理器][2]取代。这些问题包括性能差、内存占用过多、依赖解析速度变慢等。

|

||||

|

||||

DNF 使用 `libsolv` 进行依赖解析,由 SUSE 开发和维护,旨在提高性能。DNF 主要是用 Python 编写的,它有自己的应对依赖解析的方法。

|

||||

DNF 使用 `libsolv` 进行依赖解析,由 SUSE 开发和维护,旨在提高性能。

|

||||

|

||||

Yum 是 RPM 的前端工具,它管理依赖关系和资源库,然后使用 RPM 来安装、下载和删除包。它的 API 没有完整的文档,它的扩展系统只允许 Python 插件。

|

||||

Yum 主要是用 Python 编写的,它有自己的应对依赖解析的方法。它的 API 没有完整的文档,它的扩展系统只允许 Python 插件。

|

||||

|

||||

Yum 是 RPM 的前端工具,它管理依赖关系和资源库,然后使用 RPM 来安装、下载和删除包。

|

||||

|

||||

为什么他们要建立一个新的工具,而不是修复现有的问题呢?

|

||||

|

||||

@ -33,17 +35,17 @@ Ales Kozamblak 解释说,这个修复在技术上是不可行的,而且 Yum

|

||||

2 | API 有完整的文档 | API 没有完整的文档

|

||||

3 | 由 C、C++、Python 编写的 | 只用 Python 编写

|

||||

4 | DNF 目前在 Fedora、RHEL 8、CentOS 8、OEL 8 和 Mageia 6/7 中使用 | YUM 目前在 RHEL 6/7、CentOS 6/7、OEL 6/7 中使用

|

||||

5 | DNf 支持各种扩展 | Yum 只支持基于 Python 的扩展

|

||||

5 | DNF 支持各种扩展 | Yum 只支持基于 Python 的扩展

|

||||

6 | API 有良好的文档,因此很容易创建新的功能 | 因为 API 没有正确的文档化,所以创建新功能非常困难

|

||||

7 | DNF 在同步存储库的元数据时,使用的内存较少 | 在同步存储库的元数据时,YUM 使用了过多的内存

|

||||

8 | DNF 使用满足性算法来解决依赖关系解析(它是用字典的方法来存储和检索包和依赖信息)| 由于使用公开 API 的原因,Yum 依赖性解析变得迟钝

|

||||

9 | 从内存使用量和版本库元数据的依赖性解析来看,性能都不错 | 总的来说,在很多因素的影响下,表现不佳

|

||||

10 | DNF 更新:在 DNF 更新过程中,如果包中包含不相关的依赖,则不会更新 | YUM 将在没有验证的情况下更新软件包

|

||||

11 | 如果启用的存储库没有响应,DNF 将跳过它,并继续使用可用的存储库出来事务 | 如果有存储库不可用,YUM 会立即停止

|

||||

11 | 如果启用的存储库没有响应,DNF 将跳过它,并继续使用可用的存储库处理事务 | 如果有存储库不可用,YUM 会立即停止

|

||||

12 | `dnf update` 和 `dnf upgrade` 是等价的 | 在 Yum 中则不同

|

||||

13 | 安装包的依赖关系不更新 | Yum 为这种行为提供了一个选项

|

||||

14 | 清理删除的包:当删除一个包时,DNF 会自动删除任何没有被用户明确安装的依赖包 | Yum 不会这样做

|

||||

15 | 存储库缓存更新计划:默认情况下,系统启动后 10 分钟后,DNF 每小时检查一次对配置的存储库的更新。这个动作由系统定时器单元 `/usr/lib/systemd/system/system/dnf-makecache.timer` 控制 | Yum 也会这样做

|

||||

15 | 存储库缓存更新计划:默认情况下,系统启动后 10 分钟后,DNF 每小时会对配置的存储库检查一次更新。这个动作由系统定时器单元 `dnf-makecache.timer` 控制 | Yum 也会这样做

|

||||

16 | 内核包不受 DNF 保护。不像 Yum,你可以删除所有的内核包,包括运行中的内核包 | Yum 不允许你删除运行中的内核

|

||||

17 | libsolv:用于解包和读取资源库。hawkey: 为 libsolv 提供简化的 C 和 Python API 库。librepo: 提供 C 和 Python(类似 libcURL)API 的库,用于下载 Linux 存储库元数据和软件包。libcomps: 是 yum.comps 库的替代品。它是用纯 C 语言编写的库,有 Python 2 和 Python 3 的绑定。| Yum 不使用单独的库来执行这些功能

|

||||

18 | DNF 包含 29000 行代码 | Yum 包含 56000 行代码

|

||||

@ -56,7 +58,7 @@ via: https://www.2daygeek.com/comparison-difference-between-dnf-vs-yum/

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,34 +1,30 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12165-1.html)

|

||||

[#]: subject: (How to Check the Available Network Interfaces, Associated IP Addresses, MAC Addresses, and Interface Speed on Linux)

|

||||

[#]: via: (https://www.2daygeek.com/linux-unix-check-network-interfaces-names-nic-speed-ip-mac-address/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

|

||||

如何在 Linux 上检查可用的网络接口、关联的 IP 地址、MAC 地址和接口速度

|

||||

如何在 Linux 上检查网卡信息

|

||||

======

|

||||

|

||||

默认在设置服务器时,你将配置主网络接口。

|

||||

|

||||

|

||||

这是每个人所做的构建工作的一部分。

|

||||

默认情况下,在设置服务器时你会配置主网络接口。这是每个人所做的构建工作的一部分。有时出于各种原因,你可能需要配置额外的网络接口。

|

||||

|

||||

有时出于各种原因,你可能需要配置额外的网络接口。

|

||||

这可以是通过网络<ruby>绑定<rt>bonding</rt><ruby>/<ruby>协作<rt>teaming</rt></ruby>来提供高可用性,也可以是用于应用需求或备份的单独接口。

|

||||

|

||||

这可以是网络绑定/团队合作或高可用性,也可以是用于应用需求或备份的单独接口。

|

||||

为此,你需要知道计算机有多少接口以及它们的速度来配置它们。

|

||||

|

||||

为此,你需要知道计算机有多少接口以及它们的配置速度。

|

||||

|

||||

有许多命令可检查可用的网络接口,但是我们仅使用 IP 命令。

|

||||

|

||||

稍后,我们将使用所有这些工具编写单独的文章。

|

||||

有许多命令可检查可用的网络接口,但是我们仅使用 `ip` 命令。以后,我们会另外写一篇文章来全部介绍这些工具。

|

||||

|

||||

在本教程中,我们将向你显示可用网络网卡(NIC)信息,例如接口名称、关联的 IP 地址、MAC 地址和接口速度。

|

||||

|

||||

### 什么是 IP 命令

|

||||

### 什么是 ip 命令

|

||||

|

||||

**[IP 命令][1]**类似于 ifconfig, 用于分配静态 IP 地址、路由和默认网关等。

|

||||

[ip 命令][1] 类似于 `ifconfig`, 用于分配静态 IP 地址、路由和默认网关等。

|

||||

|

||||

```

|

||||

# ip a

|

||||

@ -47,15 +43,15 @@

|

||||

|

||||

### 什么是 ethtool 命令

|

||||

|

||||

ethtool 用于查询或控制网络驱动或硬件设置。

|

||||

`ethtool` 用于查询或控制网络驱动或硬件设置。

|

||||

|

||||

```

|

||||

# ethtool eth0

|

||||

```

|

||||

|

||||

### 1)如何在 Linux 上使用 IP 命令检查可用的网络接口

|

||||

### 1)如何在 Linux 上使用 ip 命令检查可用的网络接口

|

||||

|

||||

在不带任何参数的情况下运行 IP 命令时,它会提供大量信息,但是,如果仅需要可用的网络接口,请使用以下定制的 IP 命令。

|

||||

在不带任何参数的情况下运行 `ip` 命令时,它会提供大量信息,但是,如果仅需要可用的网络接口,请使用以下定制的 `ip` 命令。

|

||||

|

||||

```

|

||||

# ip a |awk '/state UP/{print $2}'

|

||||

@ -64,13 +60,13 @@ eth0:

|

||||

eth1:

|

||||

```

|

||||

|

||||

### 2)如何在 Linux 上使用 IP 命令检查网络接口的 IP 地址

|

||||

### 2)如何在 Linux 上使用 ip 命令检查网络接口的 IP 地址

|

||||

|

||||

如果只想查看 IP 地址分配给了哪个接口,请使用以下定制的 IP 命令。

|

||||

如果只想查看 IP 地址分配给了哪个接口,请使用以下定制的 `ip` 命令。

|

||||

|

||||

```

|

||||

# ip -o a show | cut -d ' ' -f 2,7

|

||||

or

|

||||

或

|

||||

ip a |grep -i inet | awk '{print $7, $2}'

|

||||

|

||||

lo 127.0.0.1/8

|

||||

@ -78,18 +74,18 @@ lo 127.0.0.1/8

|

||||

192.168.1.102/24

|

||||

```

|

||||

|

||||

### 3)如何在 Linux 上使用 IP 命令检查网卡的 MAC 地址

|

||||

### 3)如何在 Linux 上使用 ip 命令检查网卡的 MAC 地址

|

||||

|

||||

如果只想查看网络接口名称和相应的 MAC 地址,请使用以下格式。

|

||||

|

||||

检查特定的网络接口的 MAC 地址。

|

||||

检查特定的网络接口的 MAC 地址:

|

||||

|

||||

```

|

||||

# ip link show dev eth0 |awk '/link/{print $2}'

|

||||

00:00:00:55:43:5c

|

||||

```

|

||||

|

||||

检查所有网络接口的 MAC 地址。

|

||||

检查所有网络接口的 MAC 地址,创建该脚本:

|

||||

|

||||

```

|

||||

# vi /opt/scripts/mac-addresses.sh

|

||||

@ -97,12 +93,12 @@ lo 127.0.0.1/8

|

||||

#!/bin/sh

|

||||

ip a |awk '/state UP/{print $2}' | sed 's/://' | while read output;

|

||||

do

|

||||

echo $output:

|

||||

ethtool -P $output

|

||||

echo $output:

|

||||

ethtool -P $output

|

||||

done

|

||||

```

|

||||

|

||||

运行下面的 shell 脚本获取多个网络接口的 MAC 地址。

|

||||

运行该脚本获取多个网络接口的 MAC 地址:

|

||||

|

||||

```

|

||||

# sh /opt/scripts/mac-addresses.sh

|

||||

@ -115,9 +111,9 @@ Permanent address: 00:00:00:55:43:5d

|

||||

|

||||

### 4)如何在 Linux 上使用 ethtool 命令检查网络接口速度

|

||||

|

||||

如果要在 Linux 上检查网络接口速度,请使用 ethtool 命令。

|

||||

如果要在 Linux 上检查网络接口速度,请使用 `ethtool` 命令。

|

||||

|

||||

检查特定网络接口的速度。

|

||||

检查特定网络接口的速度:

|

||||

|

||||

```

|

||||

# ethtool eth0 |grep "Speed:"

|

||||

@ -125,7 +121,7 @@ Permanent address: 00:00:00:55:43:5d

|

||||

Speed: 10000Mb/s

|

||||

```

|

||||

|

||||

检查所有网络接口速度。

|

||||

检查所有网络接口速度,创建该脚本:

|

||||

|

||||

```

|

||||

# vi /opt/scripts/port-speed.sh

|

||||

@ -133,12 +129,12 @@ Speed: 10000Mb/s

|

||||

#!/bin/sh

|

||||

ip a |awk '/state UP/{print $2}' | sed 's/://' | while read output;

|

||||

do

|

||||

echo $output:

|

||||

ethtool $output |grep "Speed:"

|

||||

echo $output:

|

||||

ethtool $output |grep "Speed:"

|

||||

done

|

||||

```

|

||||

|

||||

运行以下 shell 脚本获取多个网络接口速度。

|

||||

运行该脚本获取多个网络接口速度:

|

||||

|

||||

```

|

||||

# sh /opt/scripts/port-speed.sh

|

||||

@ -151,7 +147,7 @@ Speed: 10000Mb/s

|

||||

|

||||

### 5)验证网卡信息的 Shell 脚本

|

||||

|

||||

通过此 **[shell 脚本][2]**,你可以收集上述所有信息,例如网络接口名称、网络接口的 IP 地址,网络接口的 MAC 地址以及网络接口的速度。

|

||||

通过此 shell 脚本你可以收集上述所有信息,例如网络接口名称、网络接口的 IP 地址,网络接口的 MAC 地址以及网络接口的速度。创建该脚本:

|

||||

|

||||

```

|

||||

# vi /opt/scripts/nic-info.sh

|

||||

@ -161,14 +157,14 @@ hostname

|

||||

echo "-------------"

|

||||

for iname in $(ip a |awk '/state UP/{print $2}')

|

||||

do

|

||||

echo "$iname"

|

||||

ip a | grep -A2 $iname | awk '/inet/{print $2}'

|

||||

ip a | grep -A2 $iname | awk '/link/{print $2}'

|

||||

ethtool $iname |grep "Speed:"

|

||||

echo "$iname"

|

||||

ip a | grep -A2 $iname | awk '/inet/{print $2}'

|

||||

ip a | grep -A2 $iname | awk '/link/{print $2}'

|

||||

ethtool $iname |grep "Speed:"

|

||||

done

|

||||

```

|

||||

|

||||

运行以下 shell 脚本检查网卡信息。

|

||||

运行该脚本检查网卡信息:

|

||||

|

||||

```

|

||||

# sh /opt/scripts/nic-info.sh

|

||||

@ -192,7 +188,7 @@ via: https://www.2daygeek.com/linux-unix-check-network-interfaces-names-nic-spee

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12168-1.html)

|

||||

[#]: subject: (What Happened to IPv5? Why there is IPv4, IPv6 but no IPv5?)

|

||||

[#]: via: (https://itsfoss.com/what-happened-to-ipv5/)

|

||||

[#]: author: (John Paul https://itsfoss.com/author/john/)

|

||||

@ -20,19 +20,19 @@ IPv5 发生了什么?为什么有 IPv4、IPv6 但没有 IPv5?

|

||||

|

||||

![ARPA Logical Map in 1977 | Image courtesy: Wikipedia][1]

|

||||

|

||||

在 1960 年代后期,美国国防部的[高级研究计划局][2] (ARPA) 发起了一个[项目][3]来连接全国的计算机。最初的目标是创建一个由全国 ARPA 资助的所有计算机组成的网络系统。

|

||||

在 1960 年代后期,美国国防部的[高级研究计划局][2](DARPA)发起了一个[项目][3]来连接全国的计算机。最初的目标是创建一个由全国 ARPA 资助的所有计算机组成的网络系统。

|

||||

|

||||

由于这是第一次将如此规模的网络整合在一起,因此他们也在不断发展自己的技术和硬件。他们的第一件工作是名为[传输控制协议][4] (TCP) 的互联网协议 (IP)。该协议“可靠、有序、并会对通过 IP 网络传输的八进制(字节)流错误检测”。基本上,它确保数据安全到达。

|

||||

由于这是第一次将如此规模的网络整合在一起,因此他们也在不断发展自己的技术和硬件。他们首先做的工作之一就是开发名为<ruby>[传输控制协议][4]<rt>Transmission Control Protocol</rt></ruby>(TCP)的<ruby>互联网协议<rt>Internet Protocol</rt></ruby>(IP)。该协议“可靠、有序,并会对运行于通过 IP 网络传输的主机上的应用的八进制(字节)流通讯进行错误检测”。简单来说,它可以确保数据安全到达。

|

||||

|

||||

最初,TCP 被设计为[“主机级别的端到端协议以及打包和路由协议”][5]。但是,他们意识到他们需要拆分协议以使其更易于管理。于是决定由 IP 处理打包和路由。

|

||||

最初,TCP 被设计为[“主机级别的端到端协议以及封装和路由协议”][5]。但是,他们意识到他们需要拆分协议以使其更易于管理。于是决定由 IP 协议处理封装和路由。

|

||||

|

||||

那时,TCP 已经经历了三个版本,因此新协议被称为 IPv4。

|

||||

|

||||

### IPv5 的诞生

|

||||

|

||||

IPv5 以不同的名称开始使用:互联网流协议(或 ST)。它是[由 Apple、NeXT 和 Sun Microsystems][6] 创建用于实验流式传输语音和视频。

|

||||

IPv5 开始时有个不同的名字:<ruby>互联网流协议<rt>Internet Stream Protocol</rt></ruby>(ST)。它是[由 Apple、NeXT 和 Sun Microsystems][6] 为试验流式语音和视频而创建的。

|

||||

|

||||

该新协议能够“在保持通信的同时在特定频率上传输数据包”。

|

||||

该新协议能够“在保持通信的同时,以特定频率传输数据包”。

|

||||

|

||||

### 那么 IPv5 发生了什么?

|

||||

|

||||

@ -40,15 +40,15 @@ IPv5 以不同的名称开始使用:互联网流协议(或 ST)。它是[

|

||||

|

||||

IPv5 从未被接受为正式的互联网协议。这主要是由于 32 位限制。

|

||||

|

||||

IPV5 使用与 IPv4 相同的寻址系统。每个地址由 0 到 255 之间的四组数字组成。这将可能的地址数量限制为 [43 亿][6]。

|

||||

IPV5 使用与 IPv4 相同的寻址系统。每个地址由 0 到 255 之间的四组数字组成。这将可能的地址数量限制为 [43 亿][6]个。

|

||||

|

||||

在 1970 年代初,这似乎比全世界所需要的还要多。但是,互联网的爆炸性增长证明了这一想法是错误的。2011年,世界正式耗尽了 IPv4 地址。

|

||||

在 1970 年代初,这似乎比全世界所需要的还要多。但是,互联网的爆炸性增长证明了这一想法是错误的。2011 年,世界上的IPv4地址正式用完了。

|

||||

|

||||

在 1990 年代,一个新项目开始致力于下一代互联网协议 (IPng)。这导致了 128 位的 IPv6。IPv6 地址包含 [“8 组 4 字符的十六进制数字”][6],它可以包含从 0 到 9 的数字和从 A 到 F 的字母。与 IPv4 不同,IPv6 拥有数万亿个可能的地址,因此我们应该能安全一阵子。

|

||||

在 1990 年代,一个新项目开始致力于研究下一代互联网协议(IPng)。这形成了 128 位的 IPv6。IPv6 地址包含 [“8 组 4 字符的十六进制数字”][6],它可以包含从 0 到 9 的数字和从 A 到 F 的字母。与 IPv4 不同,IPv6 拥有数万亿个可能的地址,因此我们应该能安全一阵子。

|

||||

|

||||

同时,IPv5 奠定了 VoIP 的基础,而该技术已被我们用于当今世界范围内的通信。**因此,我想往小了说,你可以说 IPv5 仍然可以保留到了今天**。

|

||||

同时,IPv5 奠定了 VoIP 的基础,而该技术已被我们用于当今世界范围内的通信。**因此,我想在某种程度上,你可以说 IPv5 仍然可以保留到了今天**。

|

||||

|

||||

希望你喜欢有关互联网历史的轶事。你可以阅读其他[关于 Linux 和技术的琐事文章] [8]。

|

||||

希望你喜欢有关互联网历史的轶事。你可以阅读其他[关于 Linux 和技术的琐事文章][8]。

|

||||

|

||||

如果你觉得这篇文章有趣,请花一点时间在社交媒体、Hacker News 或 [Reddit][9] 上分享它。

|

||||

|

||||

@ -59,7 +59,7 @@ via: https://itsfoss.com/what-happened-to-ipv5/

|

||||

作者:[John Paul][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

77

published/202004/20200428 Fedora 32 is officially here.md

Normal file

77

published/202004/20200428 Fedora 32 is officially here.md

Normal file

@ -0,0 +1,77 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12164-1.html)

|

||||

[#]: subject: (Fedora 32 is officially here!)

|

||||

[#]: via: (https://fedoramagazine.org/announcing-fedora-32/)

|

||||

[#]: author: (Matthew Miller https://fedoramagazine.org/author/mattdm/)

|

||||

|

||||

Fedora 32 正式发布!

|

||||

======

|

||||

|

||||

![][1]

|

||||

|

||||

它来了! 我们很荣幸地宣布 Fedora 32 的发布。感谢成千上万的 Fedora 社区成员和贡献者的辛勤工作,我们又一次准时发布了。

|

||||

|

||||

如果你只想马上就能拿到它,请马上访问 <https://getfedora.org/>。更多详情,请继续阅读本文。

|

||||

|

||||

### Fedora 的全部变种

|

||||

|

||||

Fedora Editions 是针对特定的“展示”用途输出的。

|

||||

|

||||

Fedora Workstation 专注于桌面系统。特别是,它面向的是那些希望获得“可以工作的” Linux 操作系统体验的软件开发者。这个版本采用了 [GNOME 3.36][2],一如既往地有很多很棒的改进。我最喜欢的是新的锁屏!

|

||||

|

||||

Fedora Server 以一种易于部署的方式为系统管理员带来了新锐的开源服务器软件。对于边缘计算用例,[Fedora IoT][3] 为 IoT 生态系统提供了坚实的基础。

|

||||

|

||||

Fedora CoreOS 是一个新兴的 Fedora Edition。它是一个自动更新的、最小化的操作系统,用于安全地大规模运行容器化工作负载。它提供了几个[更新流][4],遵循大约每两周一次的自动更新。目前,next 流是基于 Fedora 32,后续还有 testing 流和 stable 流。你可以从[下载页面][5]中找到关于按 next 流发布的工件的信息,并在 [Fedora CoreOS 文档][6]中找到关于如何使用这些工件的信息。

|

||||

|

||||

当然,我们制作的不仅仅是 Editions。[Fedora Spins][7] 和[实验室][8]针对的是不同的受众和用例,包括[Fedora 天文学实验室][9],它为业余和专业的天文学家带来了完整的开源工具链,还有像 [KDE Plasma][10] 和 [Xfce][11] 这样的桌面环境。Fedora 32 中新增的 [计算神经科学实验室][12] 是由我们的神经科学特别兴趣小组开发的,它可以实现计算神经科学。

|

||||

|

||||

还有,别忘了我们的备用架构,[ARM AArch64、Power 和 S390x][13]。特别值得一提的是,我们改进了对 Rockchip 系统级芯片的支持,包括 Rock960、RockPro64 和 Rock64。

|

||||

|

||||

### 一般性的改进

|

||||

|

||||

无论你使用 Fedora 的哪个变体,你都能获得最新的开源世界。遵循我们的“[First][14]”理念,我们更新了关键的编程语言和系统库包,包括 GCC 10、Ruby 2.7 和 Python 3.8。当然,随着 Python 2 已经过了报废期,我们已经从 Fedora 中删除了大部分 Python 2 包,但我们为仍然需要它的开发者和用户提供了一个遗留的 python27 包。在 Fedora Workstation 中,我们默认启用了 EarlyOOM 服务,以改善低内存情况下的用户体验。

|

||||

|

||||

我们非常期待你能尝试一下新版本的使用体验! 现在就去 <https://getfedora.org/> 下载它。或者如果你已经在运行 Fedora 操作系统,请按照简单的[升级说明][15]进行升级。

|

||||

|

||||

### 万一出现问题……

|

||||

|

||||

如果你遇到问题,请查看[Fedora 32 常见错误][16]页面,如果有问题,请访问我们的 [Askedora][17] 用户支持平台。

|

||||

|

||||

### 谢谢大家

|

||||

|

||||

感谢在这个发布周期中为 Fedora 项目做出贡献的成千上万的人,特别是感谢那些在大流行期间为又一次准时发布而付出额外努力的人。Fedora 是一个社区,很高兴看到我们彼此之间的支持。我邀请大家参加 4 月28-29 日的[红帽峰会虚拟体验][18],了解更多关于 Fedora 和其他社区的信息。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://fedoramagazine.org/announcing-fedora-32/

|

||||

|

||||

作者:[Matthew Miller][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://fedoramagazine.org/author/mattdm/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://fedoramagazine.org/wp-content/uploads/2020/04/f32-final-816x345.png

|

||||

[2]: https://www.gnome.org/news/2020/03/gnome-3-36-released/

|

||||

[3]: https://iot.fedoraproject.org/

|

||||

[4]: https://docs.fedoraproject.org/en-US/fedora-coreos/update-streams/

|

||||

[5]: https://getfedora.org/en/coreos/download?stream=next

|

||||

[6]: https://docs.fedoraproject.org/en-US/fedora-coreos/getting-started/

|

||||

[7]: https://spins.fedoraproject.org/

|

||||

[8]: https://labs.fedoraproject.org/

|

||||

[9]: https://labs.fedoraproject.org/en/astronomy/

|

||||

[10]: https://spins.fedoraproject.org/en/kde/

|

||||

[11]: https://spins.fedoraproject.org/en/xfce/

|

||||

[12]: https://labs.fedoraproject.org/en/comp-neuro

|

||||

[13]: https://alt.fedoraproject.org/alt/

|

||||

[14]: https://docs.fedoraproject.org/en-US/project/#_first

|

||||

[15]: https://docs.fedoraproject.org/en-US/quick-docs/upgrading/

|

||||

[16]: https://fedoraproject.org/wiki/Common_F32_bugs

|

||||

[17]: http://ask.fedoraproject.org

|

||||

[18]: https://www.redhat.com/en/summit

|

||||

@ -0,0 +1,110 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12166-1.html)

|

||||

[#]: subject: (Manjaro 20 Lysia Arrives with ZFS and Snap Support)

|

||||

[#]: via: (https://itsfoss.com/manjaro-20-release/)

|

||||

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

|

||||

|

||||

Manjaro 20 Lysia 到来,支持 ZFS 和 Snap

|

||||

======

|

||||

|

||||

|

||||

|

||||

> Manjaro Linux 刷新了其 Manjaro 20 “Lysia” 的 ISO。现在在 Pamac 中支持了 Snap 和 Flatpak 软件包。在 Manjaro Architect 安装程序中增加了 ZFS 选项,并使用最新的内核 5.6 作为基础。

|

||||

|

||||

最近新的发行版的发布像下雨一样。在上周发布了 [Ubuntu 20.04 LTS](https://linux.cn/article-12142-1.html) ,紧接着 [Fedora 32](https://linux.cn/article-12164-1.html) 也刚刚发布,而现在 [Manjaro 发布了版本 20][1],代号为 Lysia。

|

||||

|

||||

### Manjaro 20 Lysia 有什么新东西?

|

||||

|

||||

其实有很多。让我给大家介绍一下 Manjaro 20 中的一些主要新功能。

|

||||

|

||||

#### 新的抹茶主题

|

||||

|

||||

Manjaro 20 有一个新的默认主题,名为 Matcha(抹茶)。它让桌面看起来更有质感。

|

||||

|

||||

![][2]

|

||||

|

||||

#### 对 Snap 和 Flatpak 的支持

|

||||

|

||||

Snap 和 Flatpak 软件包的支持得到了改进。如果你愿意,你可以在命令行中使用它们。

|

||||

|

||||

你还可以在 Pamac 图形界面包管理器中启用 Snap 和 Flatpak 支持。

|

||||

|

||||

![Enable Snap support in Pamac Manjaro][3]

|

||||

|

||||

启用后,你可以在 Pamac 软件管理器中找到并安装 Snap/Flatpak 应用程序。

|

||||

|

||||

![Snap applications in Pamac][4]

|

||||

|

||||

#### Pamac 提供了基于搜索安装新软件的方式(在 GNOME 中)

|

||||

|

||||

在 GNOME 变种中,如果你搜索某个东西,Pamac 软件管理器会提供安装符合查询的软件。在其他使用 GNOME 桌面的发行版中,GNOME 软件中心也会这样做。

|

||||

|

||||

#### ZFS 支持登陆了 Manjaro Architect

|

||||

|

||||

现在,你可以在 Manjaro Linux 中轻松地使用 ZFS 作为根文件系统。在 [Manjaro Architect][6] 中提供了对 [ZFS 文件系统][5]的支持。

|

||||

|

||||

请注意,我说的是 Manjaro Architect,即基于终端的安装程序。它和普通的图形化的 [Calamares 安装程序][7]不一样。

|

||||

|

||||

![][8]

|

||||

|

||||

#### Linux kernel 5.6

|

||||

|

||||

最新的稳定版 [Linux 内核 5.6][9] 带来了更多的硬件支持,如 thunderbolt、Nvidia 和 USB4。你也可以使用 [WireGuard VPN][10]。

|

||||

|

||||

![][11]

|

||||

|

||||

#### 其他杂项变化

|

||||

|

||||

* 新的桌面环境版本:Xfce 4.14、GNOME 3.36 和 KDE Plasma 5.18。

|

||||

* 新的默认 shell 是 zsh。

|

||||

* Display-Profiles 允许你存储一个或多个配置文件,用于你的首选显示配置。

|

||||

* 改进后的 Gnome-Layout-Switcher。

|

||||

* 最新的驱动程序。

|

||||

* 改进和完善了 Manjaro 工具。

|

||||

|

||||

### 如何取得 Manjaro 20 Lysia?

|

||||

|

||||

如果你已经在使用 Manjaro,只需更新你的 Manjaro Linux 系统,你就应该已经在使用 Lysia 了。

|

||||

|

||||

Manjaro 采用了滚动发布模式,这意味着你不必手动从一个版本升级到另一个版本。只要有新的版本发布,不需要重新安装就可以使用了。

|

||||

|

||||

既然 Manjaro 是滚动发布的,为什么每隔一段时间就会发布一个新版本呢?这是因为他们要刷新 ISO,这样下载 Manjaro 的新用户就不用再安装过去几年的更新了。这就是为什么 Arch Linux 也会每个月刷新一次 ISO 的原因。

|

||||

|

||||

Manjaro 的“ISO 刷新”是有代号和版本的,因为它可以帮助开发者清楚地标明每个开发阶段的发展方向。

|

||||

|

||||

所以,如果你已经在使用它,只需使用 Pamac 或命令行[更新你的 Manjaro Linux 系统][12]即可。

|

||||

|

||||

如果你想尝试 Manjaro 或者想使用 ZFS,那么你可以通过从它的网站上[下载 ISO][14] 来[安装 Manjaro][13]。

|

||||

|

||||

愿你喜欢新的 Manjaro Linux 发布。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/manjaro-20-release/

|

||||

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://forum.manjaro.org/t/manjaro-20-0-lysia-released/138633

|

||||

[2]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/04/manjaro-20-lysia.jpeg?resize=800%2C440&ssl=1

|

||||

[3]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/04/enable-snap-in-pamac-manjaro.jpg?resize=800%2C490&ssl=1

|

||||

[4]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/04/snap-app-pacman.jpg?resize=800%2C489&ssl=1

|

||||

[5]: https://itsfoss.com/what-is-zfs/

|

||||

[6]: https://itsfoss.com/manjaro-architect-review/

|

||||

[7]: https://calamares.io/

|

||||

[8]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/04/pacman-prompts-install-apps.jpg?resize=800%2C331&ssl=1

|

||||

[9]: https://itsfoss.com/linux-kernel-5-6/

|

||||

[10]: https://itsfoss.com/wireguard/

|

||||

[11]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/04/manjaro-20-neofetch-screen.jpg?resize=800%2C495&ssl=1

|

||||

[12]: https://itsfoss.com/update-arch-linux/

|

||||

[13]: https://itsfoss.com/install-manjaro-linux/

|

||||

[14]: https://manjaro.org/download/

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12180-1.html)

|

||||

[#]: subject: (4 Git scripts I can't live without)

|

||||

[#]: via: (https://opensource.com/article/20/4/git-extras)

|

||||

[#]: author: (Vince Power https://opensource.com/users/vincepower)

|

||||

@ -12,11 +12,11 @@

|

||||

|

||||

> Git Extras 版本库包含了 60 多个脚本,它们是 Git 基本功能的补充。以下是如何安装、使用和贡献的方法。

|

||||

|

||||

![Person using a laptop][1]

|

||||

|

||||

|

||||

2005 年,[Linus Torvalds][2] 创建了 [Git][3],以取代他之前用于维护 Linux 内核的专有的分布式源码控制管理解决方案。从那时起,Git 已经成为开源和云原生开发团队的主流版本控制解决方案。

|

||||

2005 年,[Linus Torvalds][2] 创建了 [Git][3],以取代他之前用于维护 Linux 内核的分布式源码控制管理的专有解决方案。从那时起,Git 已经成为开源和云原生开发团队的主流版本控制解决方案。

|

||||

|

||||

但即使是像 Git 这样功能丰富的应用程序,也没有人们想要或需要的每个功能,所以人们会花大力气去创建这些功能。就 Git 而言,这个人就是 [TJ Holowaychuk][4]。他的 [Git Extras][5] 项目承载了 60 多个“附加功能”,这些功能扩展了 Git 的基本功能。

|

||||

但即使是像 Git 这样功能丰富的应用程序,也没有人们想要或需要的每个功能,所以会有人花大力气去创建这些缺少的功能。就 Git 而言,这个人就是 [TJ Holowaychuk][4]。他的 [Git Extras][5] 项目承载了 60 多个“附加功能”,这些功能扩展了 Git 的基本功能。

|

||||

|

||||

### 使用 Git 附加功能

|

||||

|

||||

@ -24,9 +24,9 @@

|

||||

|

||||

#### git-ignore

|

||||

|

||||

`git ignore` 是一个方便的附加功能,它可以让你手动添加文件类型和注释到 `.git-ignore` 文件中,而不需要打开文本编辑器。它可以操作你的个人用户帐户的全局忽略文件和单独用于你正在工作的版本库的忽略文件。

|

||||

`git ignore` 是一个方便的附加功能,它可以让你手动添加文件类型和注释到 `.git-ignore` 文件中,而不需要打开文本编辑器。它可以操作你的个人用户帐户的全局忽略文件和单独用于你正在工作的版本库中的忽略文件。

|

||||

|

||||

在没有参数的情况下执行 `git ignore` 会先列出全局忽略文件,然后是本地的忽略文件。

|

||||

在不提供参数的情况下执行 `git ignore` 会先列出全局忽略文件,然后是本地的忽略文件。

|

||||

|

||||

```

|

||||

$ git ignore

|

||||

@ -105,7 +105,7 @@ branch.master.merge=refs/heads/master

|

||||

* `git mr` 检出来自 GitLab 的合并请求。

|

||||

* `git pr` 检出来自 GitHub 的拉取请求。

|

||||

|

||||

无论是哪种情况,你只需要合并请求号、拉取请求号或完整的 URL,它就会抓取远程引用,检出分支,并调整配置,这样 Git 就知道要替换哪个分支了。

|

||||

无论是哪种情况,你只需要合并请求号/拉取请求号或完整的 URL,它就会抓取远程引用,检出分支,并调整配置,这样 Git 就知道要替换哪个分支了。

|

||||

|

||||

```

|

||||

$ git mr 51

|

||||

@ -142,7 +142,7 @@ $ git extras --help

|

||||

$ brew install git-extras

|

||||

```

|

||||

|

||||

在 Linux 上,每个平台的原生包管理器中都有 Git Extras。有时,你需要启用一个额外的仓库,比如在 CentOS 上的 [EPEL][10],然后运行一条命令。

|

||||

在 Linux 上,每个平台原生的包管理器中都包含有 Git Extras。有时,你需要启用额外的仓库,比如在 CentOS 上的 [EPEL][10],然后运行一条命令。

|

||||

|

||||

```

|

||||

$ sudo yum install git-extras

|

||||

@ -152,9 +152,9 @@ $ sudo yum install git-extras

|

||||

|

||||

### 贡献

|

||||

|

||||

你是否你认为 Git 中有缺少的功能,并且已经构建了一个脚本来处理它?为什么不把它作为 Git Extras 发布版的一部分,与全世界分享呢?

|

||||

你是否认为 Git 中有缺少的功能,并且已经构建了一个脚本来处理它?为什么不把它作为 Git Extras 发布版的一部分,与全世界分享呢?

|

||||

|

||||

要做到这一点,请将该功能贡献到 Git Extras 仓库中。更多具体细节请参见仓库中的 [CONTRIBUTING.md][12] 文件,但基本的操作方法很简单。

|

||||

要做到这一点,请将该功能贡献到 Git Extras 仓库中。更多具体细节请参见仓库中的 [CONTRIBUTING.md][12] 文件,但基本的操作方法很简单:

|

||||

|

||||

1. 创建一个处理该功能的 Bash 脚本。

|

||||

2. 创建一个基本的 man 文件,让大家知道如何使用它。

|

||||

@ -171,7 +171,7 @@ via: https://opensource.com/article/20/4/git-extras

|

||||

作者:[Vince Power][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12172-1.html)

|

||||

[#]: subject: (Using Python to visualize COVID-19 projections)

|

||||

[#]: via: (https://opensource.com/article/20/4/python-data-covid-19)

|

||||

[#]: author: (AnuragGupta https://opensource.com/users/999anuraggupta)

|

||||

@ -10,11 +10,11 @@

|

||||

使用 Python 来可视化 COVID-19 预测

|

||||

======

|

||||

|

||||

> 我将演示如何使用开源库利用提供的全球病毒传播的开放数据来创建两个可视效果。

|

||||

> 我将演示如何利用提供的全球病毒传播的开放数据,使用开源库来创建两个可视效果。

|

||||

|

||||

![Colorful sound wave graph][1]

|

||||

|

||||

|

||||

使用 [Python][2] 和一些图形库,你可以预测出 COVID-19 确诊病例的总数,也可以显示一个国家(本文以印度为例)在给定日期的死亡总数。人们有时需要帮助解释和处理数据的意义,所以本文还演示了如何为五个国家创建一个动画横条形图,以显示按日期显示病例的变化。

|

||||

使用 [Python][2] 和一些图形库,你可以预测 COVID-19 确诊病例总数,也可以显示一个国家(本文以印度为例)在给定日期的死亡总数。人们有时需要帮助解释和处理数据的意义,所以本文还演示了如何为五个国家创建一个动画横条形图,以显示按日期显示病例的变化。

|

||||

|

||||

### 印度的确诊病例和死亡人数预测

|

||||

|

||||

@ -28,7 +28,6 @@

|

||||

|

||||

直接将数据加载到 Pandas `DataFrame` 中。Pandas 提供了一个函数 `read_csv()`,它可以获取一个 URL 并返回一个 `DataFrame` 对象,如下所示。

|

||||

|

||||

|

||||

```

|

||||

import pycountry

|

||||

import plotly.express as px

|

||||

@ -87,8 +86,8 @@ print(df_india.head(3))

|

||||

|

||||

在这里,我们创建一个条形图。我们将把日期放在 X 轴上,把确诊的病例数和死亡人数放在 Y 轴上。这一部分的脚本有以下几个值得注意的地方。

|

||||

|

||||

* `plt.rcParams["_figure.figure.figsize"_]=20,20` 这一行代码只适用于 Jupyter。所以如果你使用其他 IDE,请删除它。

|

||||

* 注意这行代码:`ax1 = plt.gca()`。为了确保两个图,即确诊病例和死亡病例的图都被绘制在同一个图上,我们需要给第二个图的 `ax` 对象。所以我们使用 `gca()` 来完成这个任务。(顺便说一下,`gca` 代表“get current axis”)

|

||||

* `plt.rcParams["figure.figsize"]=20,20` 这一行代码只适用于 Jupyter。所以如果你使用其他 IDE,请删除它。

|

||||

* 注意这行代码:`ax1 = plt.gca()`。为了确保两个图,即确诊病例和死亡病例的图都被绘制在同一个图上,我们需要给第二个图的 `ax` 对象。所以我们使用 `gca()` 来完成这个任务。(顺便说一下,`gca` 代表 “<ruby>获取当前坐标轴<rt>get current axis</rt></ruby>”)

|

||||

|

||||

完整的脚本如下所示。

|

||||

|

||||

@ -120,9 +119,9 @@ plt.show()

|

||||

|

||||

整个脚本[可在 GitHub 上找到][4]。

|

||||

|

||||

#### 为五个国家创建一个动画水平条形图

|

||||

### 为五个国家创建一个动画水平条形图

|

||||

|

||||

关于 Jupyter 的注意事项:要在 Jupyter 中以动态动画的形式运行,而不是静态 png 的形式,你需要在单元格的开头添加一个神奇的命令,即: `%matplotlib notebook`。这将使图形保持动态,而不是显示静态的 png 文件,因此也可以显示动画。如果你在其他 IDE 上,请删除这一行。

|

||||

关于 Jupyter 的注意事项:要在 Jupyter 中以动态动画的形式运行,而不是静态 png 的形式,你需要在单元格的开头添加一个神奇的命令,即: `%matplotlib notebook`。这将使图形保持动态,而不是显示为静态的 png 文件,因此也可以显示动画。如果你在其他 IDE 上,请删除这一行。

|

||||

|

||||

#### 1、下载数据

|

||||

|

||||

@ -130,11 +129,11 @@ plt.show()

|

||||

|

||||

#### 2、创建一个所有日期的列表

|

||||

|

||||

如果你检查你下载的数据,你会发现它有一列 `Date`。现在,这一列对每个国家都有一个日期值。因此,同一个日期会出现多次。我们需要创建一个只具有唯一值的日期列表。这会用在我们条形图的 X 轴上。我们有一行代码,如 `list_dates = df[_'Date'_].unique()`。`unique()` 方法将只提取每个日期的唯一值。

|

||||

如果你检查你下载的数据,你会发现它有一列 `Date`。现在,这一列对每个国家都有一个日期值。因此,同一个日期会出现多次。我们需要创建一个只具有唯一值的日期列表。这会用在我们条形图的 X 轴上。我们有一行代码,如 `list_dates = df[‘Date’].unique()`。`unique()` 方法将只提取每个日期的唯一值。

|

||||

|

||||

#### 3、挑选五个国家并创建一个 `ax` 对象。

|

||||

|

||||

做一个五个国家的名单。(你可以选择你喜欢的国家,甚至可以增加或减少国家的数量。)我也做了一个五个颜色的列表,每个国家的条形图的颜色对应一种。(如果你喜欢的话,也可以改一下。)这里有一行重要的代码是:`fig, ax = plt.subplots(figsize=(15, 8))`。这是创建一个 `ax` 对象所需要的。

|

||||

做一个五个国家的名单。(你可以选择你喜欢的国家,也可以增加或减少国家的数量。)我也做了一个五个颜色的列表,每个国家的条形图的颜色对应一种。(如果你喜欢的话,也可以改一下。)这里有一行重要的代码是:`fig, ax = plt.subplots(figsize=(15, 8))`。这是创建一个 `ax` 对象所需要的。

|

||||

|

||||

#### 4、编写回调函数

|

||||

|

||||

@ -148,7 +147,7 @@ plt.show()

|

||||

|

||||

```

|

||||

my_anim = animation.FuncAnimation(fig = fig, func = plot_bar,

|

||||

frames= list_dates, blit=True,

|

||||

frames = list_dates, blit = True,

|

||||

interval=20)

|

||||

```

|

||||

|

||||

@ -226,7 +225,7 @@ via: https://opensource.com/article/20/4/python-data-covid-19

|

||||

作者:[AnuragGupta][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12170-1.html)

|

||||

[#]: subject: (Difference Between YUM and RPM Package Manager)

|

||||

[#]: via: (https://www.2daygeek.com/comparison-difference-between-yum-vs-rpm/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

@ -10,6 +10,8 @@

|

||||

YUM 和 RPM 包管理器的不同之处

|

||||

======

|

||||

|

||||

|

||||

|

||||

软件包管理器在 Linux 系统中扮演着重要的角色。它允许你安装、更新、查看、搜索和删除软件包,以满足你的需求。

|

||||

|

||||

每个发行版都有自己的一套包管理器,依据你的 Linux 发行版来分别使用它们。

|

||||

@ -18,7 +20,7 @@ RPM 是最古老的传统软件包管理器之一,它是为基于 Red Hat 的

|

||||

|

||||

> 如果你想知道 [YUM 和 DNF 包管理器的区别][1]请参考该文章。

|

||||

|

||||

这意味着 yum 可以自动下载并安装所有需要的依赖项,但 rpm 会告诉你安装一个依赖项列表,然后你必须手动安装。

|

||||

这意味着 `yum` 可以自动下载并安装所有需要的依赖项,但 `rpm` 会告诉你安装一个依赖项列表,然后你必须手动安装。

|

||||

|

||||

当你想用 [rpm 命令][2] 安装一组包时,这实际上是不可能的,而且很费时间。

|

||||

|

||||

@ -76,13 +78,13 @@ via: https://www.2daygeek.com/comparison-difference-between-yum-vs-rpm/

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.2daygeek.com/author/magesh/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.2daygeek.com/comparison-difference-between-dnf-vs-yum/

|

||||

[1]: https://linux.cn/article-12161-1.html

|

||||

[2]: https://www.2daygeek.com/linux-rpm-command-examples-manage-packages-fedora-centos-rhel-systems/

|

||||

[3]: https://www.2daygeek.com/linux-yum-command-examples-manage-packages-rhel-centos-systems/

|

||||

[4]: https://www.2daygeek.com/list-of-command-line-package-manager-for-linux/

|

||||

@ -0,0 +1,283 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (qfzy1233)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12183-1.html)

|

||||

[#]: subject: (16 Things to do After Installing Ubuntu 20.04)

|

||||

[#]: via: (https://itsfoss.com/things-to-do-after-installing-ubuntu-20-04/)

|

||||

[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

|

||||

|

||||

安装完 Ubuntu 20.04 后要做的 16 件事

|

||||

======

|

||||

|

||||

> 以下是安装 Ubuntu 20.04 之后需要做的一些调整和事项,它将使你获得更流畅、更好的桌面 Linux 体验。

|

||||

|

||||

[Ubuntu 20.04 LTS(长期支持版)带来了许多新的特性][1]和观感上的变化。如果你要安装 Ubuntu 20.04,让我向你展示一些推荐步骤便于你的使用。

|

||||

|

||||

### 安装完 Ubuntu 20.04 LTS “Focal Fossa” 后要做的 16 件事

|

||||

|

||||

![][2]

|

||||

|

||||

我在这里提到的步骤仅是我的建议。如果一些定制或调整不适合你的需要和兴趣,你可以忽略它们。

|

||||

|

||||

同样的,有些步骤看起来很简单,但是对于一个 Ubuntu 新手来说是必要的。

|

||||

|

||||

这里的一些建议适用于启用 GNOME 作为默认桌面 Ubuntu 20.04,所以请检查 [Ubuntu 版本][3]和[桌面环境][4]。

|

||||

|

||||

以下列表便是安装了代号为 Focal Fossa 的 Ubuntu 20.04 LTS 之后要做的事。

|

||||

|

||||

#### 1、通过更新和启用额外的软件仓库来准备你的系统

|

||||

|

||||

安装 Ubuntu 或任何其他 Linux 发行版之后,你应该做的第一件事就是更新它。Linux 的运作是建立在本地的可用软件包数据库上,而这个缓存需要同步以便你能够安装软件。

|

||||

|

||||

升级 Ubuntu 非常简单。你可以运行软件更新从菜单(按 `Super` 键并搜索 “software updater”):

|

||||

|

||||

![Ubuntu 20.04 的软件升级器][5]

|

||||

|

||||

你也可以在终端使用以下命令更新你的系统:

|

||||

|

||||

```

|

||||

sudo apt update && sudo apt upgrade

|

||||

```

|

||||

|

||||

接下来,你应该确保启用了 [universe(宇宙)和 multiverse(多元宇宙)软件仓库][6]。使用这些软件仓库,你可以访问更多的软件。我还推荐阅读关于 [Ubuntu 软件仓库][6]的文章,以了解它背后的基本概念。

|

||||

|

||||

在菜单中搜索 “Software & Updates”:

|

||||

|

||||

![软件及更新设置项][7]

|

||||

|

||||

请务必选中软件仓库前面的勾选框:

|

||||

|

||||

![启用额外的软件仓库][8]

|

||||

|

||||

#### 2、安装媒体解码器来播放 MP3、MPEG4 和其他格式媒体文件

|

||||

|

||||

如果你想播放媒体文件,如 MP3、MPEG4、AVI 等,你需要安装媒体解码器。由于各个国家的版权问题, Ubuntu 在默认情况下不会安装它。

|

||||

|

||||

作为个人,你可以[使用 Ubuntu Restricted Extra 安装包][9]很轻松地安装这些媒体编解码器。这将[在你的 Ubuntu 系统安装][10]媒体编解码器、Adobe Flash 播放器和微软 True Type 字体等。

|

||||

|

||||

你可以通过[点击这个链接][11]来安装它(它会要求在软件中心打开它),或者使用以下命令:

|

||||

|

||||

```

|

||||

sudo apt install ubuntu-restricted-extras

|

||||

```

|

||||

|

||||

如果遇到 EULA 或许可证界面,请记住使用 `tab` 键在选项之间进行选择,然后按回车键确认你的选择。

|

||||

|

||||

![按 tab 键选择 OK 并按回车键][12]

|

||||

|

||||

#### 3、从软件中心或网络上安装软件

|

||||

|

||||

现在已经设置好了软件仓库并更新了软件包缓存,应该开始安装所需的软件了。

|

||||

|

||||

在 Ubuntu 中安装应用程序有几种方法,最简单和正式的方法是使用软件中心。

|

||||

|

||||

![Ubuntu 软件中心][14]

|

||||

|

||||

如果你想要一些关于软件的建议,请参考这个[丰富的各种用途的 Ubuntu 应用程序列表][15]。

|

||||

|

||||

一些软件供应商提供了 .deb 文件来方便地安装他们的应用程序。你可以从他们的网站获得 .deb 文件。例如,要[在 Ubuntu 上安装谷歌 Chrome][16],你可以从它的网站上获得 .deb 文件,双击它开始安装。

|

||||

|

||||

#### 4、享受 Steam Proton 和 GameModeEnjoy 上的游戏

|

||||

|

||||

[在 Linux 上进行游戏][17]已经有了长足的发展。你不再受限于自带的少数游戏。你可以[在 Ubuntu 上安装 Steam][18]并享受许多游戏。

|

||||

|

||||

[Steam 新的 Proton 项目][19]可以让你在 Linux 上玩许多只适用于 Windows 的游戏。除此之外,Ubuntu 20.04 还默认安装了 [Feral Interactive 的 GameMode][20]。

|

||||

|

||||

GameMode 会自动调整 Linux 系统的性能,使游戏具有比其他后台进程更高的优先级。

|

||||

|

||||

这意味着一些支持 GameMode 的游戏(如[古墓丽影·崛起][21])在 Ubuntu 上的性能应该有所提高。

|

||||

|

||||

#### 5、管理自动更新(适用于进阶用户和专家)

|

||||

|

||||

最近,Ubuntu 已经开始自动下载并安装对你的系统至关重要的安全更新。这是一个安全功能,作为一个普通用户,你应该让它保持默认开启。

|

||||

|

||||

但是,如果你喜欢自己进行配置更新,而这个自动更新经常导致你[“无法锁定管理目录”错误][22],也许你可以改变自动更新行为。

|

||||

|

||||

你可以选择“立即显示”,这样一有安全更新就会立即通知你,而不是自动安装。

|

||||

|

||||

![管理自动更新设置][23]

|

||||

|

||||

#### 6、控制电脑的自动挂起和屏幕锁定

|

||||

|

||||

如果你在笔记本电脑上使用 Ubuntu 20.04,那么你可能需要注意一些电源和屏幕锁定设置。

|

||||

|

||||

如果你的笔记本电脑处于电池模式,Ubuntu 会在 20 分钟不活动后休眠系统。这样做是为了节省电池电量。就我个人而言,我不喜欢它,因此我禁用了它。

|

||||

|

||||

类似地,如果你离开系统几分钟,它会自动锁定屏幕。我也不喜欢这种行为,所以我宁愿禁用它。

|

||||

|

||||

![Ubuntu 20.04 的电源设置][24]

|

||||

|

||||

#### 7、享受夜间模式

|

||||

|

||||

[Ubuntu 20.04 中最受关注的特性][25]之一是夜间模式。你可以通过进入设置并在外观部分中选择它来启用夜间模式。

|

||||

|

||||

![开启夜间主题 Ubuntu][26]

|

||||

|

||||

你可能需要做一些[额外的调整来获得完整的 Ubuntu 20.04 夜间模式][27]。

|

||||

|

||||

#### 8、控制桌面图标和启动程序

|

||||

|

||||

如果你想要一个最简的桌面,你可以禁用桌面上的图标。你还可以从左侧禁用启动程序,并在顶部面板中禁用软件状态栏。

|

||||

|

||||

所有这些都可以通过默认的新 GNOME 扩展来控制,该程序默认情况下已经可用。

|

||||

|

||||

![禁用 Ubuntu 20 04 的 Dock][28]

|

||||

|

||||

顺便说一下,你也可以通过“设置”->“外观”来将启动栏的位置改变到底部或者右边。

|

||||

|

||||

#### 9、使用表情符和特殊字符,或从搜索中禁用它

|

||||

|

||||

Ubuntu 提供了一个使用表情符号的简单方法。在默认情况下,有一个专用的应用程序叫做“字符”。它基本上可以为你提供表情符号的 [Unicode][29]。

|

||||

|

||||

不仅是表情符号,你还可以使用它来获得法语、德语、俄语和拉丁语字符的 unicode。单击符号你可以复制 unicode,当你粘贴该代码时,你所选择的符号便被插入。

|

||||

|

||||

![Ubuntu 表情符][30]

|

||||

|

||||

你也能在桌面搜索中找到这些特殊的字符和表情符号。也可以从搜索结果中复制它们。

|

||||

|

||||

![表情符出现在桌面搜索中][31]

|

||||

|

||||

如果你不想在搜索结果中看到它们,你应该禁用搜索功能对它们的访问。下一节将讨论如何做到这一点。

|

||||

|

||||

#### 10、掌握桌面搜索

|

||||

|

||||

GNOME 桌面拥有强大的搜索功能,大多数人使用它来搜索已安装的应用程序,但它不仅限于此。

|

||||

|

||||

按 `Super` 键并搜索一些东西,它将显示与搜索词匹配的任何应用程序,然后是系统设置和软件中心提供的匹配应用程序。

|

||||

|

||||

![桌面搜索][32]

|

||||

|

||||

不仅如此,搜索还可以找到文件中的文本。如果你正在使用日历,它也可以找到你的会议和提醒。你甚至可以在搜索中进行快速计算并复制其结果。

|

||||

|

||||

![Ubuntu搜索的快速计算][33]

|

||||

|

||||

你可以进入“设置”中来控制可以搜索的内容和顺序。

|

||||

|

||||

![][34]

|

||||

|

||||

#### 11、使用夜灯功能,减少夜间眼睛疲劳

|

||||

|

||||

如果你在晚上使用电脑或智能手机,你应该使用夜灯功能来减少眼睛疲劳。我觉得这很有帮助。

|

||||

|

||||

夜灯的特点是在屏幕上增加了一种黄色的色调,比白光少了一些挤压感。

|

||||

|

||||

你可以在“设置”->“显示”切换到夜灯选项卡来开启夜光功能。你可以根据自己的喜好设置“黄度”。

|

||||

|

||||

![夜灯功能][35]

|

||||

|

||||

#### 12、使用 2K/4K 显示器?使用分辨率缩放得到更大的图标和字体

|

||||

|

||||

如果你觉得图标、字体、文件夹在你的高分辨率屏幕上看起来都太小了,你可以利用分辨率缩放。

|

||||

|

||||

启用分辨率缩放可以让你有更多的选项来从 100% 增加到 200%。你可以选择适合自己喜好的缩放尺寸。

|

||||

|

||||

![在设置->显示中启用高分缩放][36]

|

||||

|

||||

#### 13、探索 GNOME 扩展功能以扩展 GNOME 桌面可用性

|

||||

|

||||

GNOME 桌面有称为“扩展”的小插件或附加组件。你应该[学会使用 GNOME 扩展][37]来扩展系统的可用性。

|

||||

|

||||

如下图所示,天气扩展顶部面板中显示了天气信息。不起眼但十分有用。你也可以在这里查看一些[最佳 GNOME 扩展][38]。不需要全部安装,只使用那些对你有用的。

|

||||

|

||||

![天气扩展][39]

|

||||

|

||||

#### 14、启用“勿扰”模式,专注于工作

|

||||

|

||||

如果你想专注于工作,禁用桌面通知会很方便。你可以轻松地启用“勿扰”模式,并静音所有通知。

|

||||

|

||||

![启用“请勿打扰”清除桌面通知][40]

|

||||

|

||||

这些通知仍然会在消息栏中,以便你以后可以阅读它们,但是它们不会在桌面上弹出。

|

||||

|

||||

#### 15、清理你的系统

|

||||

|

||||

这是你安装 Ubuntu 后不需要马上做的事情。但是记住它会对你有帮助。

|

||||

|

||||

随着时间的推移,你的系统将有大量不再需要的包。你可以用这个命令一次性删除它们:

|

||||

|

||||

```

|

||||

sudo apt autoremove

|

||||

```

|

||||

|

||||

还有其他[清理 Ubuntu 以释放磁盘空间的方法][41],但这是最简单和最安全的。

|

||||

|

||||

#### 16、根据你的喜好调整和定制 GNOME 桌面

|

||||

|

||||

我强烈推荐[安装 GNOME 设置工具][42]。这将让你可以通过额外的设置来进行定制。

|

||||

|

||||

![Gnome 设置工具][43]

|

||||

|

||||

比如,你可以[以百分比形式显示电池容量][44]、[修正在触摸板右键问题][45]、改变 Shell 主题、改变鼠标指针速度、显示日期和星期数、改变应用程序窗口行为等。

|

||||

|

||||

定制是没有尽头的,我可能仅使用了它的一小部分功能。这就是为什么我推荐[阅读这些][42]关于[自定义 GNOME 桌面][46]的文章。

|

||||

|

||||

你也可以[在 Ubuntu 中安装新主题][47],不过就我个人而言,我喜欢这个版本的默认主题。这是我第一次在 Ubuntu 发行版中使用默认的图标和主题。

|

||||

|

||||

#### 安装 Ubuntu 之后你会做什么?

|

||||

|

||||

如果你是 Ubuntu 的初学者,我建议你[阅读这一系列 Ubuntu 教程][48]开始学习。

|

||||

|

||||

这就是我的建议。安装 Ubuntu 之后你要做什么?分享你最喜欢的东西,我可能根据你的建议来更新这篇文章。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://itsfoss.com/things-to-do-after-installing-ubuntu-20-04/

|

||||

|

||||

作者:[Abhishek Prakash][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[qfzy1233](https://github.com/qfzy1233)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://itsfoss.com/author/abhishek/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://linux.cn/article-12146-1.html

|

||||

[2]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/04/things-to-do-after-installing-ubuntu-20-04.jpg?ssl=1

|

||||

[3]: https://linux.cn/article-9872-1.html

|

||||

[4]: https://linux.cn/article-12124-1.html

|

||||

[5]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/04/software-updater-ubuntu-20-04.jpg?ssl=1

|

||||

[6]: https://itsfoss.com/ubuntu-repositories/

|

||||

[7]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/04/software-updates-settings-ubuntu-20-04.jpg?ssl=1

|

||||

[8]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/04/extra-repositories-ubuntu-20.jpg?ssl=1

|

||||

[9]: https://linux.cn/article-11906-1.html

|

||||

[10]: https://linux.cn/article-12074-1.html

|

||||

[11]: //ubuntu-restricted-extras/

|

||||

[12]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/02/installing_ubuntu_restricted_extras.jpg?ssl=1

|

||||

[13]: https://itsfoss.com/remove-install-software-ubuntu/

|

||||

[14]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/04/software-center-ubuntu-20.png?resize=800%2C509&ssl=1

|

||||

[15]: https://itsfoss.com/best-ubuntu-apps/

|

||||

[16]: https://itsfoss.com/install-chrome-ubuntu/

|

||||

[17]: https://linux.cn/article-7316-1.html

|

||||

[18]: https://itsfoss.com/install-steam-ubuntu-linux/

|

||||

[19]: https://linux.cn/article-10054-1.html

|

||||

[20]: https://github.com/FeralInteractive/gamemode

|

||||

[21]: https://en.wikipedia.org/wiki/Rise_of_the_Tomb_Raider

|

||||

[22]: https://itsfoss.com/could-not-get-lock-error/

|

||||

[23]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/04/auto-updates-ubuntu.png?resize=800%2C361&ssl=1

|

||||

[24]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/04/power-settings-ubuntu-20-04.png?fit=800%2C591&ssl=1

|

||||

[25]: https://www.youtube.com/watch?v=lpq8pm_xkSE

|

||||

[26]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/04/enable-dark-theme-ubuntu.png?ssl=1

|

||||

[27]: https://linux.cn/article-12098-1.html

|

||||

[28]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2020/04/disable-dock-ubuntu-20-04.png?ssl=1

|

||||

[29]: https://en.wikipedia.org/wiki/List_of_Unicode_characters

|

||||

[30]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/04/emoji-ubuntu.jpg?ssl=1

|

||||

[31]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/04/emojis-desktop-search-ubuntu.jpg?ssl=1

|

||||

[32]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/04/ubuntu-desktop-search-1.jpg?ssl=1

|

||||

[33]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/04/quick-calculations-ubuntu-search.jpg?ssl=1

|

||||

[34]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/04/search-settings-control-ubuntu.png?resize=800%2C534&ssl=1

|

||||

[35]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/04/nightlight-ubuntu-20-04.png?ssl=1

|

||||

[36]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/04/fractional-scaling-ubuntu.jpg?ssl=1

|

||||

[37]: https://itsfoss.com/gnome-shell-extensions/

|

||||

[38]: https://itsfoss.com/best-gnome-extensions/

|

||||

[39]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2020/04/weather-extension-ubuntu.jpg?ssl=1

|

||||

[40]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/03/do-not-distrub-option-ubuntu-20-04.png?ssl=1

|

||||

[41]: https://itsfoss.com/free-up-space-ubuntu-linux/

|

||||

[42]: https://itsfoss.com/gnome-tweak-tool/

|

||||

[43]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2020/04/gnome-tweaks-tool-ubuntu-20-04.png?fit=800%2C551&ssl=1

|

||||

[44]: https://itsfoss.com/display-battery-ubuntu/

|

||||

[45]: https://itsfoss.com/fix-right-click-touchpad-ubuntu/

|

||||

[46]: https://itsfoss.com/gnome-tricks-ubuntu/

|

||||

[47]: https://itsfoss.com/install-themes-ubuntu/

|

||||

[48]: https://itsfoss.com/getting-started-with-ubuntu/

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (lxbwolf)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12176-1.html)

|

||||

[#]: subject: (Inlining optimisations in Go)

|

||||

[#]: via: (https://dave.cheney.net/2020/04/25/inlining-optimisations-in-go)

|

||||

[#]: author: (Dave Cheney https://dave.cheney.net/author/davecheney)

|

||||

@ -10,33 +10,35 @@

|

||||

Go 中的内联优化

|

||||

======

|

||||

|

||||

本文讨论 Go 编译器是如何实现内联的以及这种优化方法如何影响你的 Go 代码。

|

||||

> 本文讨论 Go 编译器是如何实现内联的,以及这种优化方法如何影响你的 Go 代码。

|

||||

|

||||

*请注意:*本文重点讨论 *gc*,实际上是 [golang.org](https://github.com/golang/go) 的 Go 编译器。讨论到的概念可以广泛用于其他 Go 编译器,如 gccgo 和 llgo,但它们在实现方式和功能上可能有所差异。

|

||||

|

||||

|

||||

*请注意:*本文重点讨论 *gc*,这是来自 [golang.org](https://github.com/golang/go) 的事实标准的 Go 编译器。讨论到的概念可以广泛适用于其它 Go 编译器,如 gccgo 和 llgo,但它们在实现方式和功效上可能有所差异。

|

||||

|

||||

### 内联是什么?

|

||||

|

||||

内联就是把简短的函数在调用它的地方展开。在计算机发展历程的早期,这个优化是由程序员手动实现的。现在,内联已经成为编译过程中自动实现的基本优化过程的其中一步。

|

||||

<ruby>内联<rt>inlining</rt></ruby>就是把简短的函数在调用它的地方展开。在计算机发展历程的早期,这个优化是由程序员手动实现的。现在,内联已经成为编译过程中自动实现的基本优化过程的其中一步。

|

||||

|

||||

### 为什么内联很重要?

|

||||

|

||||

有两个原因。第一个是它消除了函数调用本身的虚耗。第二个是它使得编译器能更高效地执行其他的优化策略。

|

||||

有两个原因。第一个是它消除了函数调用本身的开销。第二个是它使得编译器能更高效地执行其他的优化策略。

|

||||

|

||||

#### 函数调用的虚耗

|

||||

#### 函数调用的开销

|

||||

|

||||

在任何语言中,调用一个函数 [1][2] 都会有消耗。把参数编组进寄存器或放入栈中(取决于 ABI),在返回结果时倒序取出时会有虚耗。引入一次函数调用会导致程序计数器从指令流的一点跳到另一点,这可能导致管道阻塞。函数内部通常有前置处理,需要为函数执行准备新的栈帧,还有与前置相似的后续处理,需要在返回给调用方之前释放栈帧空间。

|

||||

在任何语言中,调用一个函数 [^1] 都会有消耗。把参数编组进寄存器或放入栈中(取决于 ABI),在返回结果时的逆反过程都会有开销。引入一次函数调用会导致程序计数器从指令流的一点跳到另一点,这可能导致管道滞后。函数内部通常有<ruby>前置处理<rt>preamble</rt></ruby>,需要为函数执行准备新的栈帧,还有与前置相似的<ruby>后续处理<rt>epilogue</rt></ruby>,需要在返回给调用方之前释放栈帧空间。

|

||||

|

||||

在 Go 中函数调用会消耗额外的资源来支持栈的动态增长。在进入函数时,goroutine 可用的栈空间与函数需要的空间大小相等。如果可用空间不同,前置处理就会跳到把数据复制到一块新的、更大的空间的运行时逻辑,而这会导致栈空间变大。当这个复制完成后,运行时跳回到原来的函数入口,再执行栈空间检查,函数调用继续执行。这种方式下,goroutine 开始时可以申请很小的栈空间,在有需要时再申请更大的空间。[2][3]

|

||||

在 Go 中函数调用会消耗额外的资源来支持栈的动态增长。在进入函数时,goroutine 可用的栈空间与函数需要的空间大小进行比较。如果可用空间不同,前置处理就会跳到<ruby>运行时<rt>runtime</rt></ruby>的逻辑中,通过把数据复制到一块新的、更大的空间的来增长栈空间。当这个复制完成后,运行时就会跳回到原来的函数入口,再执行栈空间检查,现在通过了检查,函数调用继续执行。这种方式下,goroutine 开始时可以申请很小的栈空间,在有需要时再申请更大的空间。[^2]

|

||||

|

||||

这个检查消耗很小 — 只有几个指令 — 而且由于 goroutine 是成几何级数增长的,因此这个检查很少失败。这样,现代处理器的分支预测单元会通过假定检查肯定会成功来隐藏栈空间检查的消耗。当处理器预测错了栈空间检查,必须要抛弃它推测性执行的操作时,与为了增加 goroutine 的栈空间运行时所需的操作消耗的资源相比,管道阻塞的代价更小。

|

||||

这个检查消耗很小,只有几个指令,而且由于 goroutine 的栈是成几何级数增长的,因此这个检查很少失败。这样,现代处理器的分支预测单元可以通过假定检查肯定会成功来隐藏栈空间检查的消耗。当处理器预测错了栈空间检查,不得不放弃它在推测性执行所做的操作时,与为了增加 goroutine 的栈空间运行时所需的操作消耗的资源相比,管道滞后的代价更小。

|

||||

|

||||

虽然现代处理器可以用预测性执行技术优化每次函数调用中的泛型和 Go 特定的元素的虚耗,但那些虚耗不能被完全消除,因此在每次函数调用执行必要的工作过程中都会有性能消耗。一次函数调用本身的虚耗是固定的,与更大的函数相比,调用小函数的代价更大,因为在每次调用过程中它们做的有用的工作更少。

|

||||

虽然现代处理器可以用预测性执行技术优化每次函数调用中的泛型和 Go 特定的元素的开销,但那些开销不能被完全消除,因此在每次函数调用执行必要的工作过程中都会有性能消耗。一次函数调用本身的开销是固定的,与更大的函数相比,调用小函数的代价更大,因为在每次调用过程中它们做的有用的工作更少。

|

||||

|

||||

消除这些虚耗的方法必须是要消除函数调用本身,Go 的编译器就是这么做的,在某些条件下通过用函数的内容来替换函数调用来实现。这个过程被称为*内联*,因为它在函数调用处把函数体展开了。

|

||||

因此,消除这些开销的方法必须是要消除函数调用本身,Go 的编译器就是这么做的,在某些条件下通过用函数的内容来替换函数调用来实现。这个过程被称为*内联*,因为它在函数调用处把函数体展开了。

|

||||

|

||||

#### 改进的优化机会

|

||||

|

||||

Cliff Click 博士把内联描述为现代编译器做的优化措施,像常量传播(译注:此处作者笔误,原文为 constant proportion,修正为 constant propagation)和死码消除一样,都是编译器的基本优化方法。实际上,内联可以让编译器看得更深,使编译器可以观察调用的特定函数的上下文内容,可以看到能继续简化或彻底消除的逻辑。由于可以递归地执行内联,因此不仅可以在每个独立的函数上下文处进行这种优化,也可以在整个函数调用链中进行。

|

||||

Cliff Click 博士把内联描述为现代编译器做的优化措施,像常量传播(LCTT 译注:此处作者笔误,原文为 constant proportion,修正为 constant propagation)和死代码消除一样,都是编译器的基本优化方法。实际上,内联可以让编译器看得更深,使编译器可以观察调用的特定函数的上下文内容,可以看到能继续简化或彻底消除的逻辑。由于可以递归地执行内联,因此不仅可以在每个独立的函数上下文处进行这种优化决策,也可以在整个函数调用链中进行。

|

||||

|

||||

### 实践中的内联

|

||||

|

||||

@ -66,14 +68,14 @@ func BenchmarkMax(b *testing.B) {

|

||||

}

|

||||

```

|

||||

|

||||

运行这个基准,会得到如下结果:[3][4]

|

||||

运行这个基准,会得到如下结果:[^3]

|

||||

|

||||

```bash

|

||||

% go test -bench=.

|

||||

BenchmarkMax-4 530687617 2.24 ns/op

|

||||

```

|

||||

|

||||

在我的 2015 MacBook Air 上 `max(-1, i)` 的耗时约为 2.24 纳秒。现在去掉 `//go:noinline` 编译指令,再看下结果:

|

||||

在我的 2015 MacBook Air 上 `max(-1, i)` 的耗时约为 2.24 纳秒。现在去掉 `//go:noinline` 编译指令,再看下结果:

|

||||

|

||||

```bash

|

||||

% go test -bench=.

|

||||

@ -90,7 +92,7 @@ Max-4 2.21ns ± 1% 0.49ns ± 6% -77.96% (p=0.000 n=18+19)

|

||||

|

||||

这个提升是从哪儿来的呢?

|

||||

|

||||

首先,移除掉函数调用以及与之关联的前置处理 [4][5] 是主要因素。把 `max` 函数的函数体在调用处展开,减少了处理器执行的指令数量并且消除了一些分支。

|

||||

首先,移除掉函数调用以及与之关联的前置处理 [^4] 是主要因素。把 `max` 函数的函数体在调用处展开,减少了处理器执行的指令数量并且消除了一些分支。

|

||||

|

||||

现在由于编译器优化了 `BenchmarkMax`,因此它可以看到 `max` 函数的内容,进而可以做更多的提升。当 `max` 被内联后,`BenchmarkMax` 呈现给编译器的样子,看起来是这样的:

|

||||

|

||||

@ -116,7 +118,7 @@ name old time/op new time/op delta

|

||||

Max-4 2.21ns ± 1% 0.48ns ± 3% -78.14% (p=0.000 n=18+18)

|

||||

```

|

||||

|

||||

现在编译器能看到在 `BenchmarkMax` 里内联 `max` 的结果,可以执行以前不能执行的优化措施。例如,编译器注意到 `i` 初始值为 `0`,仅做自增操作,因此所有与 `i` 的比较都可以假定 `i` 不是负值。这样条件表达式 `-1 > i` 永远不是 true。[5][6]

|

||||

现在编译器能看到在 `BenchmarkMax` 里内联 `max` 的结果,可以执行以前不能执行的优化措施。例如,编译器注意到 `i` 初始值为 `0`,仅做自增操作,因此所有与 `i` 的比较都可以假定 `i` 不是负值。这样条件表达式 `-1 > i` 永远不是 `true`。[^5]

|

||||

|

||||

证明了 `-1 > i` 永远不为 true 后,编译器可以把代码简化为:

|

||||

|

||||

@ -150,7 +152,7 @@ func BenchmarkMax(b *testing.B) {

|

||||

|

||||

### 内联的限制

|

||||

|

||||

本文中我论述的内联称作*叶子*内联;把函数调用栈中最底层的函数在调用它的函数处展开的行为。内联是个递归的过程,当把函数内联到调用它的函数 A 处后,编译器会把内联后的结果代码再内联到 A 的调用方,这样持续内联下去。例如,下面的代码:

|

||||

本文中我论述的内联称作<ruby>叶子内联<rt>leaf inlining</rt></ruby>:把函数调用栈中最底层的函数在调用它的函数处展开的行为。内联是个递归的过程,当把函数内联到调用它的函数 A 处后,编译器会把内联后的结果代码再内联到 A 的调用方,这样持续内联下去。例如,下面的代码:

|

||||

|

||||

```go

|

||||

func BenchmarkMaxMaxMax(b *testing.B) {

|

||||

@ -166,11 +168,11 @@ func BenchmarkMaxMaxMax(b *testing.B) {

|

||||

|

||||

下一篇文章中,我会论述当 Go 编译器想要内联函数调用栈中间的某个函数时选用的另一种内联策略。最后我会论述编译器为了内联代码准备好要达到的极限,这个极限 Go 现在的能力还达不到。

|

||||

|

||||

1. 在 Go 中,一个方法就是一个有预先定义的形参和接受者的函数。假设这个方法不是通过接口调用的,调用一个无消耗的函数所消耗的代价与引入一个方法是相同的。[][7]

|

||||

2. 在 Go 1.14 以前,栈检查的前置处理也被 gc 用于 STW,通过把所有活跃的 goroutine 栈空间设为 0,来强制它们切换为下一次函数调用时的运行时状态。这个机制[最近被替换][8]为一种新机制,新机制下运行时可以不用等 goroutine 进行函数调用就可以暂停 goroutine。[][9]

|

||||

3. 我用 `//go:noinline` 编译指令来阻止编译器内联 `max`。这是因为我想把内联 `max` 的影响与其他影响隔离开,而不是用 `-gcflags='-l -N'` 选项在全局范围内禁止优化。关于 `//go:` 注释在[这篇文章][10]中详细论述。[][11]

|

||||

4. 你可以自己通过比较 `go test -bench=. -gcflags=-S`有无 `//go:noinline` 注释时的不同结果来验证一下。[][12]

|

||||

5. 你可以用 `-gcflags=-d=ssa/prove/debug=on` 选项来自己验证一下。[][13]

|

||||

[^1]: 在 Go 中,一个方法就是一个有预先定义的形参和接受者的函数。假设这个方法不是通过接口调用的,调用一个无消耗的函数所消耗的代价与引入一个方法是相同的。

|

||||

[^2]: 在 Go 1.14 以前,栈检查的前置处理也被垃圾回收器用于 STW,通过把所有活跃的 goroutine 栈空间设为 0,来强制它们切换为下一次函数调用时的运行时状态。这个机制[最近被替换][8]为一种新机制,新机制下运行时可以不用等 goroutine 进行函数调用就可以暂停 goroutine。

|

||||

[^3]: 我用 `//go:noinline` 编译指令来阻止编译器内联 `max`。这是因为我想把内联 `max` 的影响与其他影响隔离开,而不是用 `-gcflags='-l -N'` 选项在全局范围内禁止优化。关于 `//go:` 注释在[这篇文章][10]中详细论述。

|

||||

[^4]: 你可以自己通过比较 `go test -bench=. -gcflags=-S` 有无 `//go:noinline` 注释时的不同结果来验证一下。

|

||||

[^5]: 你可以用 `-gcflags=-d=ssa/prove/debug=on` 选项来自己验证一下。

|

||||

|

||||

#### 相关文章:

|

||||

|

||||

@ -186,7 +188,7 @@ via: https://dave.cheney.net/2020/04/25/inlining-optimisations-in-go

|

||||

作者:[Dave Cheney][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[lxbwolf](https://github.com/lxbwolf)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,165 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (lxbwolf)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-12181-1.html)

|

||||

[#]: subject: (Three Methods Boot CentOS/RHEL 7/8 Systems in Single User Mode)

|

||||

[#]: via: (https://www.2daygeek.com/boot-centos-7-8-rhel-7-8-single-user-mode/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

|

||||

以单用户模式启动 CentOS/RHEL 7/8 的三种方法

|

||||

======

|

||||

|

||||

|

||||

|

||||

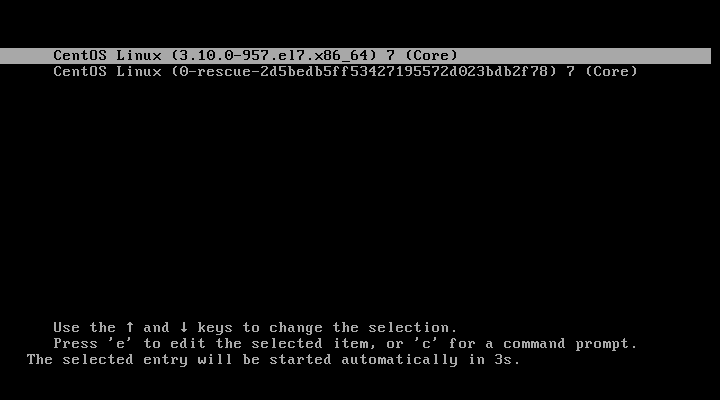

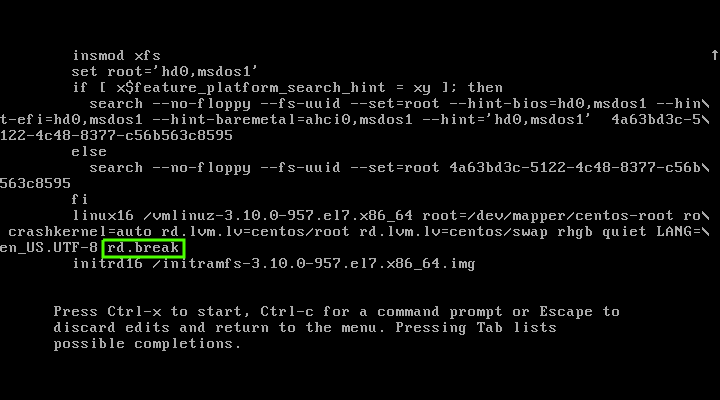

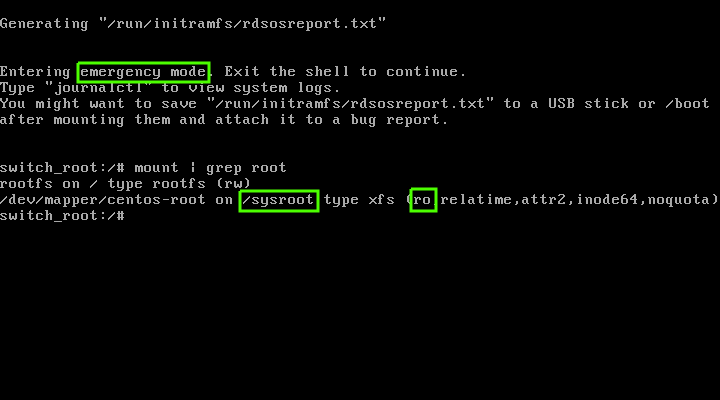

单用户模式,也被称为维护模式,超级用户可以在此模式下恢复/修复系统问题。

|

||||

|

||||

通常情况下,这类问题在多用户环境中修复不了。系统可以启动但功能不能正常运行或者你登录不了系统。

|

||||

|

||||

在基于 [Red Hat][1](RHEL)7/8 的系统中,使用 `runlevel1.target` 或 `rescue.target` 来实现。

|

||||

|

||||

在此模式下,系统会挂载所有的本地文件系统,但不开启网络接口。

|

||||

|

||||

系统仅启动特定的几个服务和修复系统必要的尽可能少的功能。

|

||||

|

||||

当你想运行文件系统一致性检查来修复损坏的文件系统,或忘记 root 密码后重置密码,或要修复系统上的一个挂载点问题时,这个方法会很有用。

|

||||

|

||||

你可以用下面三种方法以单用户模式启动 [CentOS][2]/[RHEL][3] 7/8 系统。

|

||||

|

||||