mirror of

https://github.com/LCTT/TranslateProject.git

synced 2024-12-26 21:30:55 +08:00

commit

26b7683344

145

published/20160518 Cleaning Up Your Linux Startup Process.md

Normal file

145

published/20160518 Cleaning Up Your Linux Startup Process.md

Normal file

@ -0,0 +1,145 @@

|

||||

Linux 系统开机启动项清理

|

||||

=======

|

||||

|

||||

|

||||

|

||||

一般情况下,常规用途的 Linux 发行版在开机启动时拉起各种相关服务进程,包括许多你可能无需使用的服务,例如<ruby>蓝牙<rt>bluetooth</rt></ruby>、Avahi、 <ruby>调制解调管理器<rt>ModemManager</rt></ruby>、ppp-dns(LCTT 译注:此处作者笔误 ppp-dns 应该为 pppd-dns) 等服务进程,这些都是什么东西?用于哪里,有何功能?

|

||||

|

||||

Systemd 提供了许多很好的工具用于查看系统启动情况,也可以控制在系统启动时运行什么。在这篇文章中,我将说明在 Systemd 类发行版中如何关闭一些令人讨厌的进程。

|

||||

|

||||

### 查看开机启动项

|

||||

|

||||

在过去,你能很容易通过查看 `/etc/init.d` 了解到哪些服务进程会在引导时启动。Systemd 以不同的方式展现,你可以使用如下命令罗列允许开机启动的服务进程。

|

||||

|

||||

```

|

||||

$ systemctl list-unit-files --type=service | grep enabled

|

||||

accounts-daemon.service enabled

|

||||

anacron-resume.service enabled

|

||||

anacron.service enabled

|

||||

bluetooth.service enabled

|

||||

brltty.service enabled

|

||||

[...]

|

||||

```

|

||||

|

||||

在此列表顶部,对我来说,蓝牙服务是冗余项,因为在该电脑上我不需要使用蓝牙功能,故无需运行此服务。下面的命令将停止该服务进程,并且使其开机不启动。

|

||||

|

||||

```

|

||||

$ sudo systemctl stop bluetooth.service

|

||||

$ sudo systemctl disable bluetooth.service

|

||||

```

|

||||

|

||||

你可以通过下面命令确定是否操作成功。

|

||||

|

||||

```

|

||||

$ systemctl status bluetooth.service

|

||||

bluetooth.service - Bluetooth service

|

||||

Loaded: loaded (/lib/systemd/system/bluetooth.service; disabled; vendor preset: enabled)

|

||||

Active: inactive (dead)

|

||||

Docs: man:bluetoothd(8)

|

||||

```

|

||||

|

||||

停用的服务进程仍然能够被另外一个服务进程启动。如果你真的想在任何情况下系统启动时都不启动该进程,无需卸载该它,只需要把它掩盖起来就可以阻止该进程在任何情况下开机启动。

|

||||

|

||||

```

|

||||

$ sudo systemctl mask bluetooth.service

|

||||

Created symlink from /etc/systemd/system/bluetooth.service to /dev/null.

|

||||

```

|

||||

|

||||

一旦你对禁用该进程启动而没有出现负面作用感到满意,你也可以选择卸载该程序。

|

||||

|

||||

通过执行命令可以获得如下服务列表:

|

||||

|

||||

```

|

||||

$ systemctl list-unit-files --type=service

|

||||

UNIT FILE STATE

|

||||

accounts-daemon.service enabled

|

||||

acpid.service disabled

|

||||

alsa-restore.service static

|

||||

alsa-utils.service masked

|

||||

```

|

||||

|

||||

你不能启用或禁用静态服务,因为静态服务被其他的进程所依赖,并不意味着它们自己运行。

|

||||

|

||||

### 哪些服务能够禁止?

|

||||

|

||||

如何知道你需要哪些服务,而哪些又是可以安全地禁用的呢?它总是依赖于你的个性化需求。

|

||||

|

||||

这里举例了几个服务进程的作用。许多服务进程都是发行版特定的,所以你应该看看你的发行版文档(比如通过 google 或 StackOverflow)。

|

||||

|

||||

- **accounts-daemon.service** 是一个潜在的安全风险。它是 AccountsService 的一部分,AccountsService 允许程序获得或操作用户账户信息。我不认为有好的理由能使我允许这样的后台操作,所以我选择<ruby>掩盖<rt>mask</rt></ruby>该服务进程。

|

||||

- **avahi-daemon.service** 用于零配置网络发现,使电脑超容易发现网络中打印机或其他的主机,我总是禁用它,别漏掉它。

|

||||

- **brltty.service** 提供布莱叶盲文设备支持,例如布莱叶盲文显示器。

|

||||

- **debug-shell.service** 开放了一个巨大的安全漏洞(该服务提供了一个无密码的 root shell ,用于帮助 调试 systemd 问题),除非你正在使用该服务,否则永远不要启动服务。

|

||||

- **ModemManager.service** 该服务是一个被 dbus 激活的守护进程,用于提供移动<ruby>宽频<rt>broadband</rt></ruby>(2G/3G/4G)接口,如果你没有该接口,无论是内置接口,还是通过如蓝牙配对的电话,以及 USB 适配器,那么你也无需该服务。

|

||||

- **pppd-dns.service** 是一个计算机发展的遗物,如果你使用拨号接入互联网的话,保留它,否则你不需要它。

|

||||

- **rtkit-daemon.service** 听起来很可怕,听起来像是 rootkit。 但是你需要该服务,因为它是一个<ruby>实时内核调度器<rt>real-time kernel scheduler</rt></ruby>。

|

||||

- **whoopsie.service** 是 Ubuntu 错误报告服务。它用于收集 Ubuntu 系统崩溃报告,并发送报告到 https://daisy.ubuntu.com 。 你可以放心地禁止其启动,或者永久的卸载它。

|

||||

- **wpa_supplicant.service** 仅在你使用 Wi-Fi 连接时需要。

|

||||

|

||||

### 系统启动时发生了什么?

|

||||

|

||||

Systemd 提供了一些命令帮助调试系统开机启动问题。该命令会重演你的系统启动的所有消息。

|

||||

|

||||

```

|

||||

$ journalctl -b

|

||||

|

||||

-- Logs begin at Mon 2016-05-09 06:18:11 PDT,

|

||||

end at Mon 2016-05-09 10:17:01 PDT. --

|

||||

May 16 06:18:11 studio systemd-journal[289]:

|

||||

Runtime journal (/run/log/journal/) is currently using 8.0M.

|

||||

Maximum allowed usage is set to 157.2M.

|

||||

Leaving at least 235.9M free (of currently available 1.5G of space).

|

||||

Enforced usage limit is thus 157.2M.

|

||||

[...]

|

||||

```

|

||||

|

||||

通过命令 `journalctl -b -1` 可以复审前一次启动,`journalctl -b -2` 可以复审倒数第 2 次启动,以此类推。

|

||||

|

||||

该命令会打印出大量的信息,你可能并不关注所有信息,只是关注其中问题相关部分。为此,系统提供了几个过滤器,用于帮助你锁定目标。让我们以进程号为 1 的进程为例,该进程是所有其它进程的父进程。

|

||||

|

||||

```

|

||||

$ journalctl _PID=1

|

||||

|

||||

May 08 06:18:17 studio systemd[1]: Starting LSB: Raise network interfaces....

|

||||

May 08 06:18:17 studio systemd[1]: Started LSB: Raise network interfaces..

|

||||

May 08 06:18:17 studio systemd[1]: Reached target System Initialization.

|

||||

May 08 06:18:17 studio systemd[1]: Started CUPS Scheduler.

|

||||

May 08 06:18:17 studio systemd[1]: Listening on D-Bus System Message Bus Socket

|

||||

May 08 06:18:17 studio systemd[1]: Listening on CUPS Scheduler.

|

||||

[...]

|

||||

```

|

||||

|

||||

这些打印消息显示了什么被启动,或者是正在尝试启动。

|

||||

|

||||

一个最有用的命令工具之一 `systemd-analyze blame`,用于帮助查看哪个服务进程启动耗时最长。

|

||||

|

||||

```

|

||||

$ systemd-analyze blame

|

||||

8.708s gpu-manager.service

|

||||

8.002s NetworkManager-wait-online.service

|

||||

5.791s mysql.service

|

||||

2.975s dev-sda3.device

|

||||

1.810s alsa-restore.service

|

||||

1.806s systemd-logind.service

|

||||

1.803s irqbalance.service

|

||||

1.800s lm-sensors.service

|

||||

1.800s grub-common.service

|

||||

```

|

||||

|

||||

这个特定的例子没有出现任何异常,但是如果存在系统启动瓶颈,则该命令将能发现它。

|

||||

|

||||

你也能通过如下资源了解 Systemd 如何工作:

|

||||

|

||||

- [理解和使用 Systemd](https://www.linux.com/learn/understanding-and-using-systemd)

|

||||

- [介绍 Systemd 运行级别和服务管理命令](https://www.linux.com/learn/intro-systemd-runlevels-and-service-management-commands)

|

||||

- [再次前行,另一个 Linux 初始化系统:Systemd 介绍](https://www.linux.com/learn/here-we-go-again-another-linux-init-intro-systemd)

|

||||

|

||||

----

|

||||

|

||||

via: https://www.linux.com/learn/cleaning-your-linux-startup-process

|

||||

|

||||

作者:[David Both](https://www.linux.com/users/cschroder)

|

||||

译者:[penghuster](https://github.com/penghuster)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 LCTT 原创编译,Linux中国 荣誉推出

|

||||

@ -0,0 +1,196 @@

|

||||

一周工作所用的日常 Git 命令

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

像大多数新手一样,我一开始是在 StackOverflow 上搜索 Git 命令,然后把答案复制粘贴,并没有真正理解它们究竟做了什么。

|

||||

|

||||

|

||||

|

||||

*Image credit: [XKCD][7]*

|

||||

|

||||

我曾经想过:“如果有一个最常见的 Git 命令的列表,以及它们的功能是什么,这不是极好的吗?”

|

||||

|

||||

多年之后,我编制了这样一个列表,并且给出了一些最佳实践,让新手们甚至中高级开发人员都能从中发现有用的东西。

|

||||

|

||||

为了保持实用性,我将这个列表与我过去一周实际使用的 Git 命令进行了比较。

|

||||

|

||||

几乎每个开发人员都在使用 Git,当然很可能是 GitHub。但大多数开发者大概有 99% 的时间只是使用这三个命令:

|

||||

|

||||

```

|

||||

git add --all

|

||||

git commit -am "<message>"

|

||||

git push origin master

|

||||

```

|

||||

|

||||

如果你只是单枪匹马,或者参加一场黑客马拉松或开发一次性的应用时,它工作得很好,但是当稳定性和可维护性开始成为一个优先考虑的事情后,清理提交、坚持分支策略和提交信息的规范性就变得很重要。

|

||||

|

||||

我将从常用命令的列表开始,使新手更容易了解 Git 能做什么,然后进入更高级的功能和最佳实践。

|

||||

|

||||

### 经常使用的命令

|

||||

|

||||

要想在仓库(repo)中初始化 Git,你只需输入以下命令即可。如果你没有初始化 Git,则不能在该仓库内运行任何其他的 Git 命令。

|

||||

|

||||

```

|

||||

git init

|

||||

```

|

||||

|

||||

如果你在使用 GitHub,而且正在将代码推送到在线存储的 GitHub 仓库中,那么你正在使用的就是远程(remote)仓库。该远程仓库的默认名称(也称为别名)为 `origin`。如果你已经从 Github 复制了一个项目,它就有了一个 `origin`。你可以使用命令 `git remote -v` 查看该 `origin`,该命令将列出远程仓库的 URL。

|

||||

|

||||

如果你初始化了自己的 Git 仓库,并希望将其与 GitHub 仓库相关联,则必须在 GitHub 上创建一个,复制新仓库提供的 URL,并使用 `git remote add origin <URL>` 命令,这里使用 GitHub 提供的 URL 替换 `<URL>`。这样,你就可以添加、提交和推送更改到你的远程仓库了。

|

||||

|

||||

最后一条命令用在当你需要更改远程仓库时。如果你从其他人那里复制了一个仓库,并希望将远程仓库从原始所有者更改为你自己的 GitHub 帐户。除了改用 `set-url` 来更改远程仓库外,流程与 `git remote add origin` 相同。

|

||||

|

||||

```

|

||||

git remote -v

|

||||

git remote add origin <url>

|

||||

git remote set-url origin <url>

|

||||

```

|

||||

|

||||

复制仓库最常见的方式是使用 `git clone`,后跟仓库的 URL。

|

||||

|

||||

请记住,远程仓库将连接到克隆仓库原属于的帐户。所以,如果你克隆了一个属于别人的仓库,你将无法推送到 GitHub,除非你使用上面的命令改变了 `origin`。

|

||||

|

||||

```

|

||||

git clone <url>

|

||||

```

|

||||

|

||||

你很快就会发现自己正在使用分支。如果你还不理解什么是分支,有许多其他更深入的教程,你应该先阅读它们,再继续下面的操作。([这里是一个教程][8])

|

||||

|

||||

命令 `git branch` 列出了本地机器上的所有分支。如果要创建一个新的分支,可以使用命令 `git branch <name>`,其中 `<name>` 表示分支的名字,比如说 `master`。

|

||||

|

||||

`git checkout <name>` 命令可以切换到现有的分支。你也可以使用 `git checkout -b` 命令创建一个新的分支并立即切换到它。大多数人都使用此命令而不是单独的 `branch` 和 `checkout` 命令。

|

||||

|

||||

```

|

||||

git branch

|

||||

git branch <name>

|

||||

git checkout <name>

|

||||

git checkout -b <name>

|

||||

```

|

||||

|

||||

如果你对一个分支进行了一系列的更改,假如说此分支名为 `develop`,如果想要将该分支合并回主分支(`master`)上,则使用 `git merge <branch>` 命令。你需要先检出(`checkout`)主分支,然后运行 `git merge develop` 将 `develop` 合并到主分支中。

|

||||

|

||||

```

|

||||

git merge <branch>

|

||||

```

|

||||

|

||||

如果你正在与多个人进行协作,你会发现有时 GitHub 的仓库上已经更新了,但你的本地却没有做相应的更改。如果是这样,你可以使用 `git pull origin <branch>` 命令从远程分支中拉取最新的更改。

|

||||

|

||||

```

|

||||

git pull origin <branch>

|

||||

```

|

||||

|

||||

如果您好奇地想看到哪些文件已被更改以及哪些内存正在被跟踪,可以使用 `git status` 命令。如果要查看每个文件的更改,可以使用 `git diff` 来查看每个文件中更改的行。

|

||||

|

||||

```

|

||||

git status

|

||||

git diff --stat

|

||||

```

|

||||

|

||||

### 高级命令和最佳实践

|

||||

|

||||

很快你会到达一个阶段,这时你希望你的提交看起来整洁一致。你可能还需要调整你的提交记录,使得提交更容易理解或者能还原一个意外的有破坏性的更改。

|

||||

|

||||

`git log` 命令可以输出提交的历史记录。你将使用它来查看提交的历史记录。

|

||||

|

||||

你的提交会附带消息和一个哈希值,哈希值是一串包含数字和字母的随机序列。一个哈希值示例如下:`c3d882aa1aa4e3d5f18b3890132670fbeac912f7`。

|

||||

|

||||

```

|

||||

git log

|

||||

```

|

||||

|

||||

假设你推送了一些可能破坏了你应用程序的东西。你最好回退一个提交然后再提交一次正确的,而不是修复它和推送新的东西。

|

||||

|

||||

如果你希望及时回退并从之前的提交中检出(`checkout`)你的应用程序,则可以使用该哈希作为分支名直接执行此操作。这将使你的应用程序与当前版本分离(因为你正在编辑历史记录的版本,而不是当前版本)。

|

||||

|

||||

```

|

||||

git checkout c3d88eaa1aa4e4d5f

|

||||

```

|

||||

|

||||

然后,如果你在那个历史分支中做了更改,并且想要再次推送,你必须使用强制推送。

|

||||

|

||||

**注意**:强制推送是危险的,只有在绝对必要的时候才能执行它。它将覆盖你的应用程序的历史记录,你将失去之后版本的任何信息。

|

||||

|

||||

```

|

||||

git push -f origin master

|

||||

```

|

||||

|

||||

在其他时候,将所有内容保留在一个提交中是不现实的。也行你想在尝试有潜在风险的操作之前保存当前进度,或者也许你犯了一个错误,但希望在你的版本历史中避免尴尬地留着这个错误。对此,我们有 `git rebase`。

|

||||

|

||||

假设你在本地历史记录上有 4 个提交(没有推送到 GitHub),你要回退这是个提交。你的提交记录看起来很乱很拖拉。这时你可以使用 `rebase` 将所有这些提交合并到一个简单的提交中。

|

||||

|

||||

```

|

||||

git rebase -i HEAD~4

|

||||

```

|

||||

|

||||

上面的命令会打开你计算机的默认编辑器(默认为 Vim,除非你将默认修改为其他的),提供了几个你准备如何修改你的提交的选项。它看起来就像下面的代码:

|

||||

|

||||

```

|

||||

pick 130deo9 oldest commit message

|

||||

pick 4209fei second oldest commit message

|

||||

pick 4390gne third oldest commit message

|

||||

pick bmo0dne newest commit message

|

||||

```

|

||||

|

||||

为了合并这些提交,我们需要将 `pick` 选项修改为 `fixup`(如代码下面的文档所示),以将该提交合并并丢弃该提交消息。请注意,在 Vim 中,你需要按下 `a` 或 `i` 才能编辑文本,要保存退出,你需要按下 `Esc` 键,然后按 `shift + z + z`。不要问我为什么,它就是这样。

|

||||

|

||||

```

|

||||

pick 130deo9 oldest commit message

|

||||

fixup 4209fei second oldest commit message

|

||||

fixup 4390gne third oldest commit message

|

||||

fixup bmo0dne newest commit message

|

||||

```

|

||||

|

||||

这将把你的所有提交合并到一个提交中,提交消息为 `oldest commit message`。

|

||||

|

||||

下一步是重命名你的提交消息。这完全是一个建议的操作,但只要你一直遵循一致的模式,都可以做得很好。这里我建议使用 [Google 为 Angular.js 提供的提交指南][9]。

|

||||

|

||||

为了更改提交消息,请使用 `amend` 标志。

|

||||

|

||||

```

|

||||

git commit --amend

|

||||

```

|

||||

|

||||

这也会打开 Vim,文本编辑和保存规则如上所示。为了给出一个良好的提交消息的例子,下面是遵循该指南中规则的提交消息:

|

||||

|

||||

```

|

||||

feat: add stripe checkout button to payments page

|

||||

|

||||

- add stripe checkout button

|

||||

- write tests for checkout

|

||||

```

|

||||

|

||||

保持指南中列出的类型(type)的一个优点是它使编写更改日志更加容易。你还可以在页脚(footer)(再次,在指南中规定的)中包含信息来引用问题(issue)。

|

||||

|

||||

**注意:**如果你正在协作一个项目,并将代码推送到了 GitHub,你应该避免重新引用(`rebase`)并压缩(`squash`)你的提交。如果你开始在人们的眼皮子底下更改版本历史,那么你可能会遇到难以追踪的错误,从而给每个人都带来麻烦。

|

||||

|

||||

Git 有无数的命令,但这里介绍的命令可能是您最初几年编程所需要知道的所有。

|

||||

|

||||

* * *

|

||||

|

||||

Sam Corcos 是 [Sightline Maps][10] 的首席开发工程师和联合创始人,Sightline Maps 是最直观的 3D 打印地形图的平台,以及用于构建 Phoenix 和 React 的可扩展生产应用程序的中级高级教程网站 [LearnPhoenix.io][11]。使用优惠码:free_code_camp 取得 LearnPhoenix 的20美元。

|

||||

|

||||

(题图:[GitHub Octodex][6])

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://medium.freecodecamp.org/git-cheat-sheet-and-best-practices-c6ce5321f52

|

||||

|

||||

作者:[Sam Corcos][a]

|

||||

译者:[firmianay](https://github.com/firmianay)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://medium.freecodecamp.org/@SamCorcos?source=post_header_lockup

|

||||

[1]:https://medium.freecodecamp.org/tagged/git?source=post

|

||||

[2]:https://medium.freecodecamp.org/tagged/github?source=post

|

||||

[3]:https://medium.freecodecamp.org/tagged/programming?source=post

|

||||

[4]:https://medium.freecodecamp.org/tagged/software-development?source=post

|

||||

[5]:https://medium.freecodecamp.org/tagged/web-development?source=post

|

||||

[6]:https://octodex.github.com/

|

||||

[7]:https://xkcd.com/1597/

|

||||

[8]:https://guides.github.com/introduction/flow/

|

||||

[9]:https://github.com/angular/angular.js/blob/master/CONTRIBUTING.md#-git-commit-guidelines

|

||||

[10]:http://sightlinemaps.com/

|

||||

[11]:http://learnphoenix.io/

|

||||

@ -1,18 +1,17 @@

|

||||

我对 Go 的错误处理有哪些不满,以及我是如何处理的

|

||||

======================

|

||||

|

||||

写 Go 的人往往对它的错误处理模式有一定的看法。根据你对其他语言的经验,你可能习惯于不同的方法。这就是为什么我决定要写这篇文章,尽管有点固执己见,但我认为吸收我的经验在辩论中是有用的。 我想要解决的主要问题是,很难去强制良好的错误处理实践,错误没有堆栈追踪,并且错误处理本身太冗长。不过,我已经看到了一些潜在的解决方案或许能帮助解决一些问题。

|

||||

写 Go 的人往往对它的错误处理模式有一定的看法。按不同的语言经验,人们可能有不同的习惯处理方法。这就是为什么我决定要写这篇文章,尽管有点固执己见,但我认为听取我的经验是有用的。我想要讲的主要问题是,很难去强制执行良好的错误处理实践,错误经常没有堆栈追踪,并且错误处理本身太冗长。不过,我已经看到了一些潜在的解决方案,或许能帮助解决一些问题。

|

||||

|

||||

### 与其他语言的快速比较

|

||||

|

||||

|

||||

[在 Go 中,所有的错误是值][1]。因为这点,相当多的函数最后会返回一个 `error`, 看起来像这样:

|

||||

[在 Go 中,所有的错误都是值][1]。因为这点,相当多的函数最后会返回一个 `error`, 看起来像这样:

|

||||

|

||||

```

|

||||

func (s *SomeStruct) Function() (string, error)

|

||||

```

|

||||

|

||||

由于这点,调用代码常规上会使用 `if` 语句来检查它们:

|

||||

因此这导致调用代码通常会使用 `if` 语句来检查它们:

|

||||

|

||||

```

|

||||

bytes, err := someStruct.Function()

|

||||

@ -21,13 +20,13 @@ if err != nil {

|

||||

}

|

||||

```

|

||||

|

||||

另外一种是在其他语言中如 Java、C#、Javascript、Objective C、Python 等使用的 `try-catch` 模式。如下你可以看到与先前的 Go 示例类似的 Java 代码,声明 `throws` 而不是返回 `error`:

|

||||

另外一种方法,是在其他语言中,如 Java、C#、Javascript、Objective C、Python 等使用的 `try-catch` 模式。如下你可以看到与先前的 Go 示例类似的 Java 代码,声明 `throws` 而不是返回 `error`:

|

||||

|

||||

```

|

||||

public String function() throws Exception

|

||||

```

|

||||

|

||||

`try-catch` 而不是 `if err != nil`:

|

||||

它使用的是 `try-catch` 而不是 `if err != nil`:

|

||||

|

||||

```

|

||||

try {

|

||||

@ -39,11 +38,11 @@ catch (Exception e) {

|

||||

}

|

||||

```

|

||||

|

||||

当然,还有其他的不同。不如,`error` 不会使你的程序崩溃,然而 `Exception` 会。还有其他的一些,我希望在在本篇中专注在这些上。

|

||||

当然,还有其他的不同。例如,`error` 不会使你的程序崩溃,然而 `Exception` 会。还有其他的一些,在本篇中会专门提到这些。

|

||||

|

||||

### 实现集中式错误处理

|

||||

|

||||

退一步,让我们看看为什么以及如何在一个集中的地方处理错误。

|

||||

退一步,让我们看看为什么要在一个集中的地方处理错误,以及如何做到。

|

||||

|

||||

大多数人或许会熟悉的一个例子是 web 服务 - 如果出现了一些未预料的的服务端错误,我们会生成一个 5xx 错误。在 Go 中,你或许会这么实现:

|

||||

|

||||

@ -68,7 +67,7 @@ func viewCompanies(w http.ResponseWriter, r *http.Request) {

|

||||

}

|

||||

```

|

||||

|

||||

这并不是一个好的解决方案,因为我们不得不重复在所有的处理函数中处理错误。为了能更好地维护,最好能在一处地方处理错误。幸运的是,[在 Go 的博客中,Andrew Gerrand 提供了一个替代方法][2]可以完美地实现。我们可以错见一个处理错误的类型:

|

||||

这并不是一个好的解决方案,因为我们不得不重复地在所有的处理函数中处理错误。为了能更好地维护,最好能在一处地方处理错误。幸运的是,[在 Go 语言的官方博客中,Andrew Gerrand 提供了一个替代方法][2],可以完美地实现。我们可以创建一个处理错误的 Type:

|

||||

|

||||

```

|

||||

type appHandler func(http.ResponseWriter, *http.Request) error

|

||||

@ -89,11 +88,11 @@ func init() {

|

||||

}

|

||||

```

|

||||

|

||||

接着我们需要做的是修改处理函数的签名来使它们返回 `errors`。这个方法很好,因为我们做到了 [dry][3] 原则,并且没有重复使用不必要的代码 - 现在我们可以在一处返回默认错误了。

|

||||

接着我们需要做的是修改处理函数的签名来使它们返回 `errors`。这个方法很好,因为我们做到了 [DRY][3] 原则,并且没有重复使用不必要的代码 - 现在我们可以在单独一个地方返回默认错误了。

|

||||

|

||||

### 错误上下文

|

||||

|

||||

在先前的例子中,我们可能会收到许多潜在的错误,它们的任何一个都可能在调用堆栈的许多部分生成。这时候事情就变得棘手了。

|

||||

在先前的例子中,我们可能会收到许多潜在的错误,它们中的任何一个都可能在调用堆栈的许多环节中生成。这时候事情就变得棘手了。

|

||||

|

||||

为了演示这点,我们可以扩展我们的处理函数。它可能看上去像这样,因为模板执行并不是唯一一处会发生错误的地方:

|

||||

|

||||

@ -109,9 +108,9 @@ func viewUsers(w http.ResponseWriter, r *http.Request) error {

|

||||

|

||||

调用链可能会相当深,在整个过程中,各种错误可能在不同的地方实例化。[Russ Cox][4]的这篇文章解释了如何避免遇到太多这类问题的最佳实践:

|

||||

|

||||

在 Go 中错误报告的部分约定是函数包含相关的上下文、包含正在尝试的操作(比如函数名和它的参数)

|

||||

> “在 Go 中错误报告的部分约定是函数包含相关的上下文,包括正在尝试的操作(比如函数名和它的参数)。”

|

||||

|

||||

给出的例子是 OS 包中的一个调用:

|

||||

这个给出的例子是对 OS 包的一个调用:

|

||||

|

||||

```

|

||||

err := os.Remove("/tmp/nonexist")

|

||||

@ -140,11 +139,11 @@ if err != nil {

|

||||

}

|

||||

```

|

||||

|

||||

这意味着错误发生时它们没有交流。

|

||||

这意味着错误何时发生并没有被传递出来。

|

||||

|

||||

应该注意的是,所有这些错误都可以在 `Exception` 驱动的模型中发生 - 糟糕的错误信息、隐藏异常等。那么为什么我认为该模型更有用?

|

||||

|

||||

如果我们在处理一个糟糕的异常消息,_我们仍然能够了解堆栈中发生了什么_。因为堆栈跟踪,这引发了一些我对 Go 不了解的部分 - 你知道 Go 的 `panic` 包含了堆栈追踪,但是 `error` 没有。我认为推论是 `panic` 可能会使你的程序崩溃,因此需要一个堆栈追踪,而处理错误并不会,因为它会假定你在它发生的地方做一些事。

|

||||

即便我们在处理一个糟糕的异常消息,_我们仍然能够了解它发生在调用堆栈中什么地方_。因为堆栈跟踪,这引发了一些我对 Go 不了解的部分 - 你知道 Go 的 `panic` 包含了堆栈追踪,但是 `error` 没有。我推测可能是 `panic` 会使你的程序崩溃,因此需要一个堆栈追踪,而处理错误并不会,因为它会假定你在它发生的地方做一些事。

|

||||

|

||||

所以让我们回到之前的例子 - 一个有糟糕错误信息的第三方库,它只是输出了调用链。你认为调试会更容易吗?

|

||||

|

||||

@ -170,17 +169,17 @@ github.com/Org/app/core/vendor/github.com/rusenask/goproxy.FuncReqHandler.Handle

|

||||

如果我们使用 Java 作为一个随意的例子,其中人们犯的一个最愚蠢的错误是不记录堆栈追踪:

|

||||

|

||||

```

|

||||

LOGGER.error(ex.getMessage()) // Doesn't log stack trace

|

||||

LOGGER.error(ex.getMessage(), ex) // Does log stack trace

|

||||

LOGGER.error(ex.getMessage()) // 不记录堆栈追踪

|

||||

LOGGER.error(ex.getMessage(), ex) // 记录堆栈追踪

|

||||

```

|

||||

|

||||

但是 Go 设计中似乎没有这个信息

|

||||

但是 Go 似乎在设计中就没有这个信息。

|

||||

|

||||

在获取上下文信息方面 - Russ 还提到了社区正在讨论一些潜在的接口用于剥离上下文错误。了解更多这点或许会很有趣。

|

||||

在获取上下文信息方面 - Russ 还提到了社区正在讨论一些潜在的接口用于剥离上下文错误。关于这点,了解更多或许会很有趣。

|

||||

|

||||

### 堆栈追踪问题解决方案

|

||||

|

||||

幸运的是,在做了一些查找后,我发现了这个出色的[ Go 错误][5]库来帮助解决这个问题,来给错误添加堆栈跟踪:

|

||||

幸运的是,在做了一些查找后,我发现了这个出色的 [Go 错误][5]库来帮助解决这个问题,来给错误添加堆栈跟踪:

|

||||

|

||||

```

|

||||

if errors.Is(err, crashy.Crashed) {

|

||||

@ -188,7 +187,7 @@ if errors.Is(err, crashy.Crashed) {

|

||||

}

|

||||

```

|

||||

|

||||

不过,我认为这个功能能成为语言的一等公民将是一个改进,这样你就不必对类型做一些修改了。此外,如果我们像先前的例子那样使用第三方库,那就可能不必使用 `crashy` - 我们仍有相同的问题。

|

||||

不过,我认为这个功能如果能成为语言的<ruby>第一类公民<rt>first class citizenship</rt></ruby>将是一个改进,这样你就不必做一些类型修改了。此外,如果我们像先前的例子那样使用第三方库,它可能没有使用 `crashy` - 我们仍有相同的问题。

|

||||

|

||||

### 我们对错误应该做什么?

|

||||

|

||||

@ -201,7 +200,7 @@ if err != nil {

|

||||

}

|

||||

```

|

||||

|

||||

如果我们想要调用大量会返回错误的方法时会发生什么,在同一个地方处理它们么?看上去像这样:

|

||||

如果我们想要调用大量方法,它们会产生错误,然后在一个地方处理所有错误,这时会发生什么?看上去像这样:

|

||||

|

||||

```

|

||||

err := doSomething()

|

||||

@ -222,7 +221,7 @@ func doSomething() error {

|

||||

}

|

||||

```

|

||||

|

||||

这感觉有点冗余,然而在其他语言中你可以将多条语句作为一个整体处理。

|

||||

这感觉有点冗余,在其他语言中你可以将多条语句作为一个整体处理。

|

||||

|

||||

```

|

||||

try {

|

||||

@ -245,9 +244,9 @@ public void doSomething() throws SomeErrorToPropogate {

|

||||

}

|

||||

```

|

||||

|

||||

我个人认为这两个例子实现了一件事情,只有 `Exception` 模式更少冗余更加弹性。如果有什么,我发现 `if err!= nil` 感觉像样板。也许有一种方法可以清理?

|

||||

我个人认为这两个例子实现了一件事情,只是 `Exception` 模式更少冗余,更加弹性。如果有什么的话,我觉得 `if err!= nil` 感觉像样板。也许有一种方法可以清理?

|

||||

|

||||

### 将多条语句像一个整体那样发生错误

|

||||

### 将失败的多条语句做为一个整体处理错误

|

||||

|

||||

首先,我做了更多的阅读,并[在 Rob Pike 写的 Go 博客中][7]发现了一个比较务实的解决方案。

|

||||

|

||||

@ -280,7 +279,7 @@ if ew.err != nil {

|

||||

}

|

||||

```

|

||||

|

||||

这也是一个很好的方案,但是我感觉缺少了点什么 - 因为我们不能重复使用这个模式。如果我们想要一个含有字符串参数的方法,我们就不得不改变函数签名。或者如果我们不想执行写会怎样?我们可以尝试使它更通用:

|

||||

这也是一个很好的方案,但是我感觉缺少了点什么 - 因为我们不能重复使用这个模式。如果我们想要一个含有字符串参数的方法,我们就不得不改变函数签名。或者如果我们不想执行写操作会怎样?我们可以尝试使它更通用:

|

||||

|

||||

```

|

||||

type errWrapper struct {

|

||||

@ -317,11 +316,11 @@ if err != nil {

|

||||

}

|

||||

```

|

||||

|

||||

这可以用,但是并没有帮助太大,因为它最后比标准的 `if err != nil` 检查带来了更多的冗余。我有兴趣听到有人能提供其他解决方案。或许语言本身需要一些方法来以不那么臃肿的方式的传递或者组合错误 - 但是感觉似乎是特意设计成不那么做。

|

||||

这可以用,但是并没有太大帮助,因为它最终比标准的 `if err != nil` 检查带来了更多的冗余。如果有人能提供其他解决方案,我会很有兴趣听。或许这个语言本身需要一些方法来以不那么臃肿的方式传递或者组合错误 - 但是感觉似乎是特意设计成不那么做。

|

||||

|

||||

### 总结

|

||||

|

||||

看完这些之后,你可能会认为我反对在 Go 中使用 `error`。但事实并非如此,我只是描述了如何将它与 `try catch` 模型的经验进行比较。它是一个用于系统编程很好的语言,并且已经出现了一些优秀的工具。仅举几例有 [Kubernetes][8]、[Docker][9]、[Terraform][10]、[Hoverfly][11] 等。还有小型、高性能、本地二进制的优点。但是,`error` 难以适应。 我希望我的推论是有道理的,而且一些方案和解决方法可能会有帮助。

|

||||

看完这些之后,你可能会认为我在对 `error` 挑刺儿,由此推论我反对 Go。事实并非如此,我只是将它与我使用 `try catch` 模型的经验进行比较。它是一个用于系统编程很好的语言,并且已经出现了一些优秀的工具。仅举几例,有 [Kubernetes][8]、[Docker][9]、[Terraform][10]、[Hoverfly][11] 等。还有小型、高性能、本地二进制的优点。但是,`error` 难以适应。 我希望我的推论是有道理的,而且一些方案和解决方法可能会有帮助。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -336,7 +335,7 @@ via: https://opencredo.com/why-i-dont-like-error-handling-in-go

|

||||

|

||||

作者:[Andrew Morgan][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[jasminepeng](https://github.com/jasminepeng)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

79

published/20170531 DNS Infrastructure at GitHub.md

Normal file

79

published/20170531 DNS Infrastructure at GitHub.md

Normal file

@ -0,0 +1,79 @@

|

||||

GitHub 的 DNS 基础设施

|

||||

============================================================

|

||||

|

||||

在 GitHub,我们最近从头改进了 DNS。这包括了我们[如何与外部 DNS 提供商交互][4]以及我们如何在内部向我们的主机提供记录。为此,我们必须设计和构建一个新的 DNS 基础设施,它可以随着 GitHub 的增长扩展并跨越多个数据中心。

|

||||

|

||||

以前,GitHub 的 DNS 基础设施相当简单直接。它包括每台服务器上本地的、只具备转发功能的 DNS 缓存服务器,以及一对被所有这些主机使用的缓存服务器和权威服务器主机。这些主机在内部网络以及公共互联网上都可用。我们在缓存守护程序中配置了<ruby>区域<rt>zone</rt></ruby><ruby>存根<rt>stub</rt></ruby>,以在本地进行查询,而不是在互联网上进行递归。我们还在我们的 DNS 提供商处设置了 NS 记录,它们将特定的内部<ruby>域<rt>domain</rt></ruby>指向这对主机的公共 IP,以便我们网络外部的查询。

|

||||

|

||||

这个配置使用了很多年,但它并非没有缺点。许多程序对于解析 DNS 查询非常敏感,我们遇到的任何性能或可用性问题在最好的情况下也会导致服务排队和性能降级,而最坏情况下客户会遭遇服务中断。配置和代码的更改可能会导致查询率发生大幅度的意外变化。因此超出这两台主机的扩展成为了一个问题。由于这些主机的网络配置,如果我们只是继续添加 IP 和主机的话存在一些本身的问题。在试图解决和补救这些问题的同时,由于缺乏测量指标和可见性,老旧的系统难以识别问题的原因。在许多情况下,我们使用 `tcpdump` 来识别有问题的流量和查询。另一个问题是在公共 DNS 服务器上运行,我们处于泄露内部网络信息的风险之下。因此,我们决定建立更好的东西,并开始确定我们对新系统的要求。

|

||||

|

||||

我们着手设计一个新的 DNS 基础设施,以改善上述包括扩展和可见性在内的运维问题,并引入了一些额外的需求。我们希望通过外部 DNS 提供商继续运行我们的公共 DNS 域,因此我们构建的系统需要与供应商无关。此外,我们希望该系统能够服务于我们的内部和外部域,这意味着内部域仅在我们的内部网络上可用,除非另有特别配置,而外部域也不用离开我们的内部网络就可解析。我们希望新的 DNS 架构不但可以[基于部署的工作流进行更改][5],并可以通过我们的仓库和配置系统使用 API 自动更改 DNS 记录。新系统不能有任何外部依赖,太依赖于 DNS 功能将会陷入级联故障,这包括连接到其他数据中心和其中可能有的 DNS 服务。我们的旧系统将缓存服务器和权威服务器在同一台主机上混合使用。我们想转到具有独立角色的分层设计。最后,我们希望系统能够支持多数据中心环境,无论是 EC2 还是裸机。

|

||||

|

||||

### 实现

|

||||

|

||||

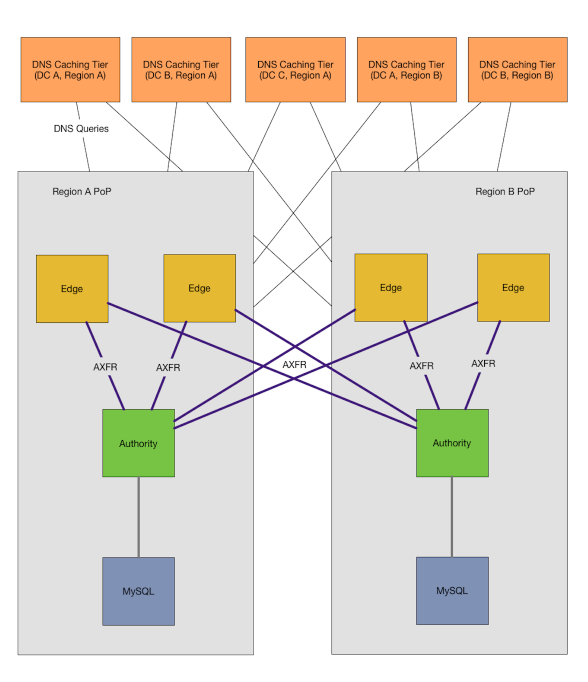

|

||||

|

||||

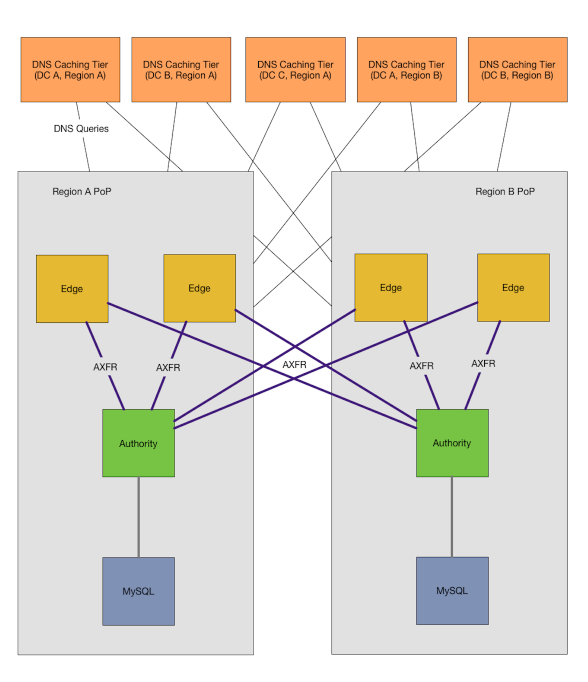

为了构建这个系统,我们确定了三类主机:<ruby>缓存主机<rt>cache</rt></ruby>、<ruby>边缘主机<rt>edge</rt></ruby>和<ruby>权威主机<rt>authority</rt></ruby>。缓存主机作为<ruby>递归解析器<rt>recursive resolver</rt></ruby>和 DNS “路由器” 缓存来自边缘层的响应。边缘层运行 DNS 权威守护程序,用于响应缓存层对 DNS <ruby>区域<rt>zone</rt></ruby>的请求,其被配置为来自权威层的<ruby>区域传输<rt>zone transfer</rt></ruby>。权威层作为隐藏的 DNS <ruby>主服务器<rt>master</rt></ruby>,作为 DNS 数据的规范来源,为来自边缘主机的<ruby>区域传输<rt>zone transfer</rt></ruby>提供服务,并提供用于创建、修改或删除记录的 HTTP API。

|

||||

|

||||

在我们的新配置中,缓存主机存在于每个数据中心中,这意味着应用主机不需要穿过数据中心边界来检索记录。缓存主机被配置为将<ruby>区域<rt>zone</rt></ruby>映射到其<ruby>地域<rt>region</rt></ruby>内的边缘主机,以便将我们的内部<ruby>区域<rt>zone</rt></ruby>路由到我们自己的主机。未明确配置的任何<ruby>区域<rt>zone</rt></ruby>将通过互联网递归解析。

|

||||

|

||||

边缘主机是地域性的主机,存在我们的网络边缘 PoP(<ruby>存在点<rt>Point of Presence</rt></ruby>)内。我们的 PoP 有一个或多个依赖于它们进行外部连接的数据中心,没有 PoP 数据中心将无法访问互联网,互联网也无法访问它们。边缘主机对所有的权威主机执行<ruby>区域传输<rt>zone transfer</rt></ruby>,无论它们存在什么<ruby>地域<rt>region</rt></ruby>或<ruby>位置<rt>location</rt></ruby>,并将这些区域存在本地的磁盘上。

|

||||

|

||||

我们的权威主机也是地域性的主机,只包含适用于其所在<ruby>地域<rt>region</rt></ruby>的<ruby>区域<rt>zone</rt></ruby>。我们的仓库和配置系统决定一个<ruby>区域<rt>zone</rt></ruby>存放在哪个<ruby>地域性权威主机<rt>regional authority</rt></ruby>,并通过 HTTP API 服务来创建和删除记录。 OctoDNS 将区域映射到地域性权威主机,并使用相同的 API 创建静态记录,以及确保动态源处于同步状态。对于外部域 (如 github.com),我们有另外一个单独的权威主机,以允许我们可以在连接中断期间查询我们的外部域。所有记录都存储在 MySQL 中。

|

||||

|

||||

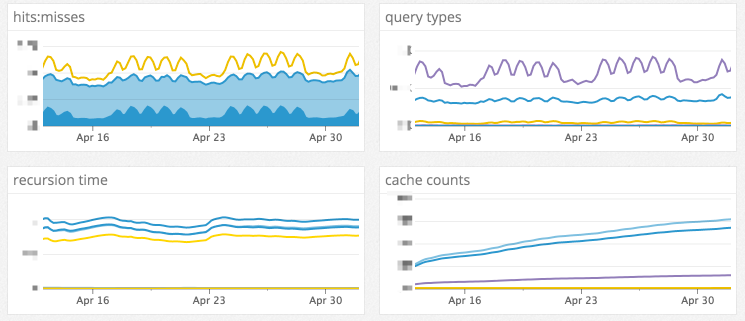

### 可运维性

|

||||

|

||||

|

||||

|

||||

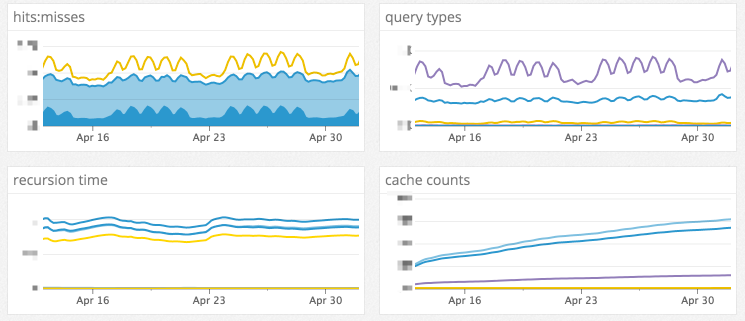

迁移到更现代的 DNS 基础设施的巨大好处是可观察性。我们的旧 DNS 系统几乎没有指标,只有有限的日志。决定使用哪些 DNS 服务器的一个重要因素是它们所产生的指标的广度和深度。我们最终用 [Unbound][6] 作为缓存主机,[NSD][7] 作为边缘主机,[PowerDNS][8] 作为权威主机,所有这些都已在比 GitHub 大得多的 DNS 基础架构中得到了证实。

|

||||

|

||||

当在我们的裸机数据中心运行时,缓存通过私有的<ruby>[任播][9]<rt>anycast</rt></ruby> IP 访问,从而使之可以到达最近的可用缓存主机。缓存主机已经以机架感知的方式部署,在它们之间提供了一定程度的平衡负载,并且与一些电源和网络故障模式相隔离。当缓存主机出现故障时,通常将用其进行 DNS 查询的服务器现在将自动路由到下一个最接近的缓存主机,以保持低延迟并提供对某些故障模式的容错。任播允许我们扩展单个 IP 地址后面的缓存数量,这与先前的配置不同,使得我们能够按 DNS 需求量运行尽可能多的缓存主机。

|

||||

|

||||

无论地域或位置如何,边缘主机使用权威层进行区域传输。我们的<ruby>区域<rt>zone</rt></ruby>并没有大到在每个<ruby>地域<rt>region</rt></ruby>保留所有<ruby>区域<rt>zone</rt></ruby>的副本成为问题。(LCTT 译注:此处原文“Our zones are not large enough that keeping a copy of all of them in every region is a problem.”,根据上下文理解而翻译。)这意味着对于每个区域,即使某个地域处于脱机状态,或者上游服务提供商存在连接问题,所有缓存服务器都可以访问具备所有区域的本地副本的本地边缘服务器。这种变化在面对连接问题方面已被证明是相当有弹性的,并且在不久前本来会导致客户面临停止服务的故障期间帮助保持 GitHub 可用。

|

||||

|

||||

那些区域传输包括了内部和外部域从它们相应的权威服务器进行的传输。正如你可能会猜想像 github.com 这样的区域是外部的,像 github.net 这样的区域通常是内部的。它们之间的区别仅在于我们使用的类型和存储在其中的数据。了解哪些区域是内部和外部的,为我们在配置中提供了一些灵活性。

|

||||

|

||||

```

|

||||

$ dig +short github.com

|

||||

192.30.253.112

|

||||

192.30.253.113

|

||||

```

|

||||

|

||||

公共<ruby>区域<rt>zone</rt></ruby>被[同步][10]到外部 DNS 提供商,并且是 GitHub 用户每天使用的 DNS 记录。另外,公共区域在我们的网络中是完全可解析的,而不需要与我们的外部提供商进行通信。这意味着需要查询 `api.github.com` 的任何服务都可以这样做,而无需依赖外部网络连接。我们还使用了 Unbound 的 `stub-first` 配置选项,它给了我们第二次查询的机会,如果我们的内部 DNS 服务由于某些原因在外部查询失败,则可以进行第二次查找。

|

||||

|

||||

```

|

||||

$ dig +short time.github.net

|

||||

10.127.6.10

|

||||

```

|

||||

|

||||

大部分的 `github.net` 区域是完全私有的,无法从互联网访问,它只包含 [RFC 1918][11] 中规定的 IP 地址。每个地域和站点都划分了私有区域。每个地域和/或站点都具有适用于该位置的一组子区域,子区域用于管理网络、服务发现、特定的服务记录,并且还包括在我们仓库中的配置主机。私有区域还包括 PTR 反向查找区域。

|

||||

|

||||

### 总结

|

||||

|

||||

用一个新系统替换可以为数百万客户提供服务的旧系统并不容易。使用实用的、基于需求的方法来设计和实施我们的新 DNS 系统,才能打造出一个能够迅速有效地运行、并有望与 GitHub 一起成长的 DNS 基础设施。

|

||||

|

||||

想帮助 GitHub SRE 团队解决有趣的问题吗?我们很乐意你加入我们。[在这申请][12]。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://githubengineering.com/dns-infrastructure-at-github/

|

||||

|

||||

作者:[Joe Williams][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://github.com/joewilliams

|

||||

[1]:https://githubengineering.com/dns-infrastructure-at-github/

|

||||

[2]:https://github.com/joewilliams

|

||||

[3]:https://github.com/joewilliams

|

||||

[4]:https://githubengineering.com/enabling-split-authority-dns-with-octodns/

|

||||

[5]:https://githubengineering.com/enabling-split-authority-dns-with-octodns/

|

||||

[6]:https://unbound.net/

|

||||

[7]:https://www.nlnetlabs.nl/projects/nsd/

|

||||

[8]:https://powerdns.com/

|

||||

[9]:https://en.wikipedia.org/wiki/Anycast

|

||||

[10]:https://githubengineering.com/enabling-split-authority-dns-with-octodns/

|

||||

[11]:http://www.faqs.org/rfcs/rfc1918.html

|

||||

[12]:https://boards.greenhouse.io/github/jobs/669805#.WPVqJlPyvUI

|

||||

@ -0,0 +1,384 @@

|

||||

Samba 系列(十五):用 SSSD 和 Realm 集成 Ubuntu 到 Samba4 AD DC

|

||||

============================================================

|

||||

|

||||

本教程将告诉你如何将 Ubuntu 桌面版机器加入到带有 SSSD 和 Realm 服务的 Samba4 活动目录域中,以在活动目录中认证用户。

|

||||

|

||||

### 要求:

|

||||

|

||||

1. [在 Ubuntu 上用 Samba4 创建一个活动目录架构][1]

|

||||

|

||||

### 第 1 步:初始配置

|

||||

|

||||

1、 在把 Ubuntu 加入活动目录前确保主机名被正确设置了。使用 `hostnamectl` 命令设置机器名字或者手动编辑 `/etc/hostname` 文件。

|

||||

|

||||

```

|

||||

$ sudo hostnamectl set-hostname your_machine_short_hostname

|

||||

$ cat /etc/hostname

|

||||

$ hostnamectl

|

||||

```

|

||||

|

||||

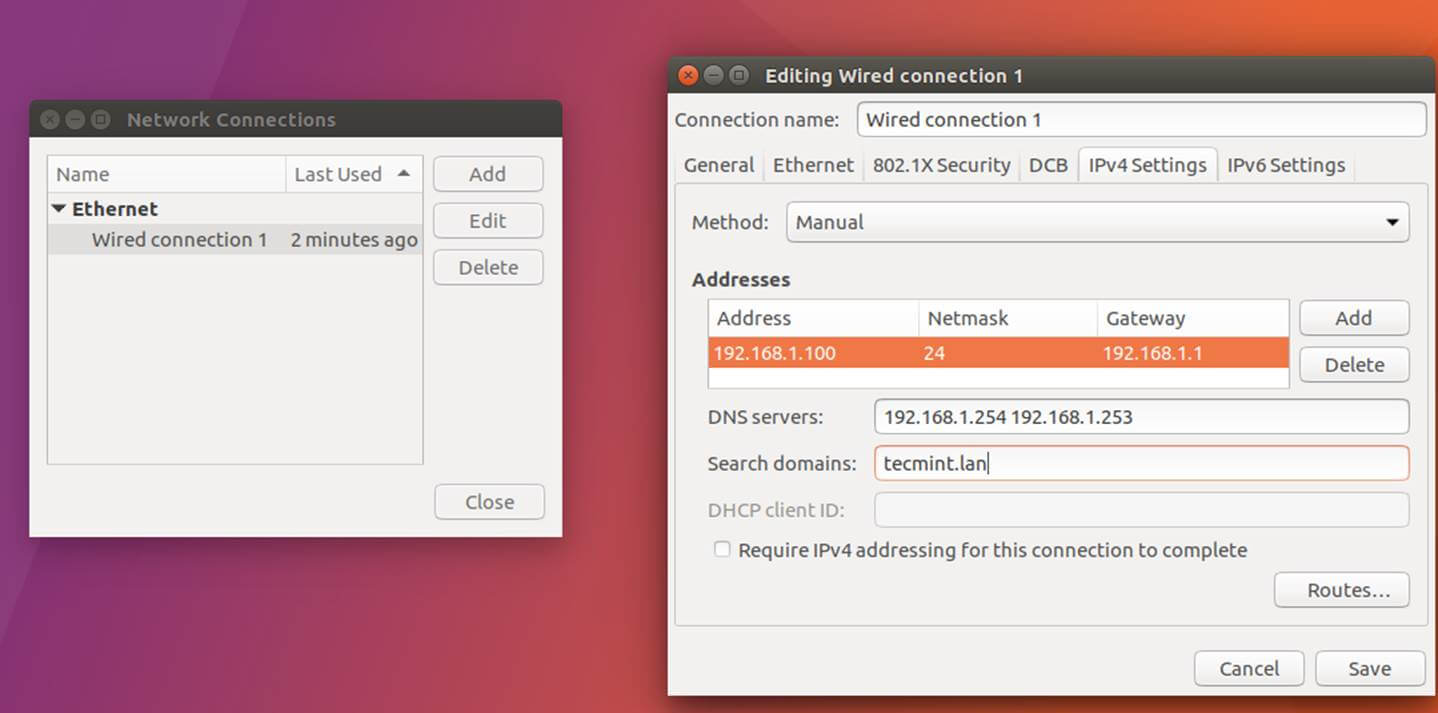

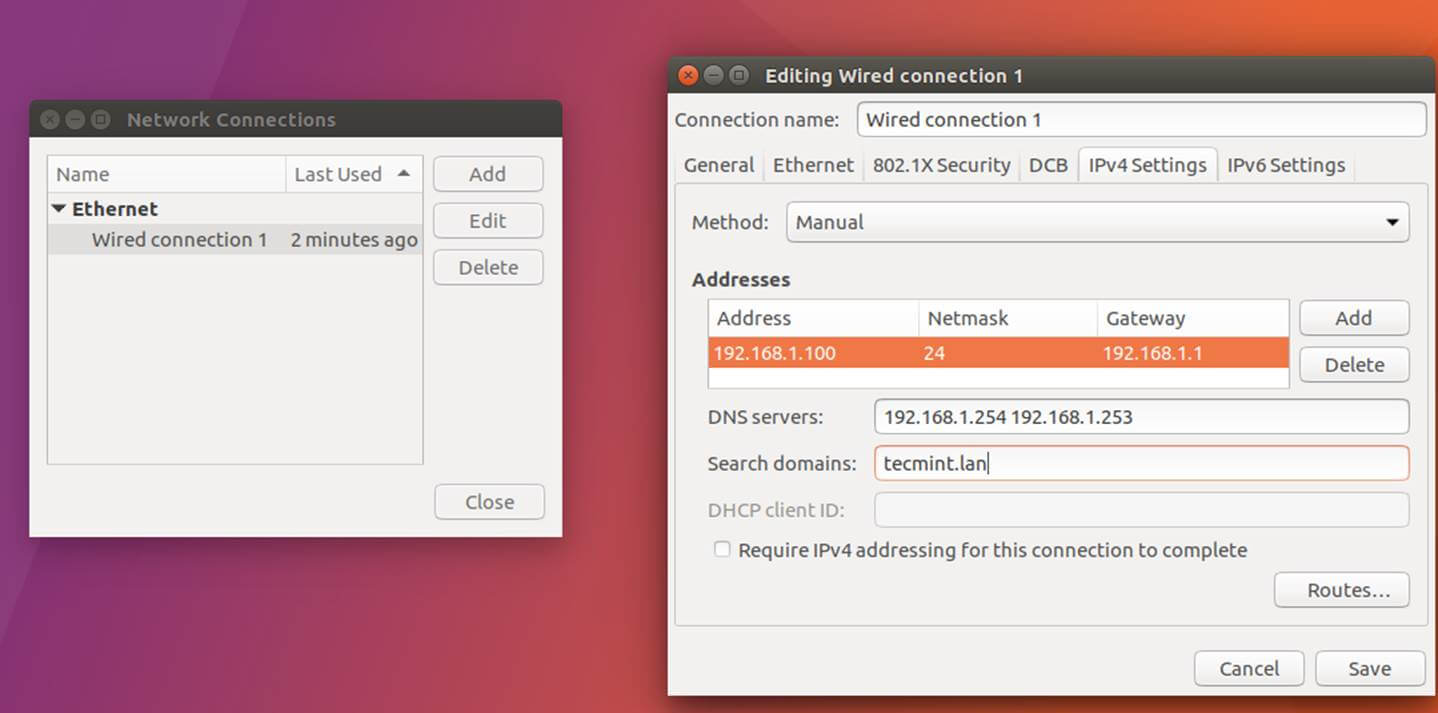

2、 接下来,编辑机器网络接口设置并且添加合适的 IP 设置,并将正确的 DNS IP 服务器地址指向 Samba 活动目录域控制器,如下图所示。

|

||||

|

||||

如果你已经配置了 DHCP 服务来为局域网机器自动分配包括合适的 AD DNS IP 地址的 IP 设置,那么你可以跳过这一步。

|

||||

|

||||

[][2]

|

||||

|

||||

*设置网络接口*

|

||||

|

||||

上图中,`192.168.1.254` 和 `192.168.1.253` 代表 Samba4 域控制器的 IP 地址。

|

||||

|

||||

3、 用 GUI(图形用户界面)或命令行重启网络服务来应用修改,并且对你的域名发起一系列 ping 请求来测试 DNS 解析如预期工作。 也用 `host` 命令来测试 DNS 解析。

|

||||

|

||||

```

|

||||

$ sudo systemctl restart networking.service

|

||||

$ host your_domain.tld

|

||||

$ ping -c2 your_domain_name

|

||||

$ ping -c2 adc1

|

||||

$ ping -c2 adc2

|

||||

```

|

||||

|

||||

4、 最后, 确保机器时间和 Samba4 AD 同步。安装 `ntpdate` 包并用下列指令和 AD 同步时间。

|

||||

|

||||

```

|

||||

$ sudo apt-get install ntpdate

|

||||

$ sudo ntpdate your_domain_name

|

||||

```

|

||||

|

||||

### 第 2 步:安装需要的包

|

||||

|

||||

5、 这一步将安装将 Ubuntu 加入 Samba4 活动目录域控制器所必须的软件和依赖:Realmd 和 SSSD 服务。

|

||||

|

||||

```

|

||||

$ sudo apt install adcli realmd krb5-user samba-common-bin samba-libs samba-dsdb-modules sssd sssd-tools libnss-sss libpam-sss packagekit policykit-1

|

||||

```

|

||||

|

||||

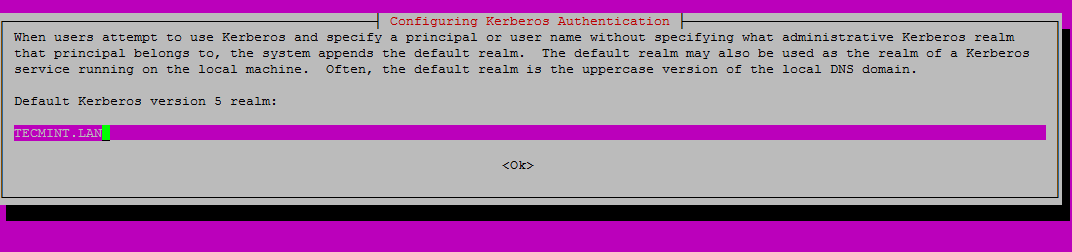

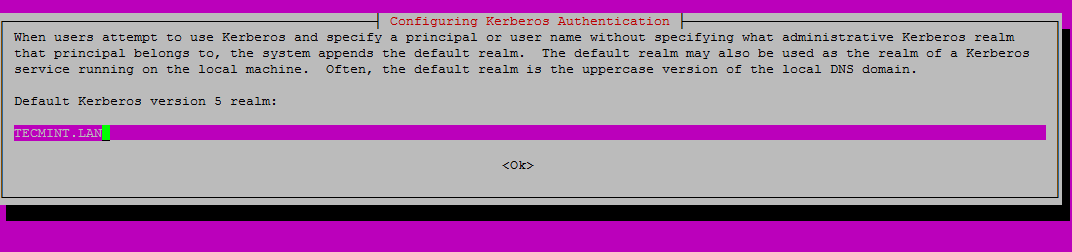

6、 输入大写的默认 realm 名称,然后按下回车继续安装。

|

||||

|

||||

[][3]

|

||||

|

||||

*输入 Realm 名称*

|

||||

|

||||

7、 接着,创建包含以下内容的 SSSD 配置文件。

|

||||

|

||||

```

|

||||

$ sudo nano /etc/sssd/sssd.conf

|

||||

```

|

||||

|

||||

加入下面的内容到 `sssd.conf` 文件。

|

||||

|

||||

```

|

||||

[nss]

|

||||

filter_groups = root

|

||||

filter_users = root

|

||||

reconnection_retries = 3

|

||||

[pam]

|

||||

reconnection_retries = 3

|

||||

[sssd]

|

||||

domains = tecmint.lan

|

||||

config_file_version = 2

|

||||

services = nss, pam

|

||||

default_domain_suffix = TECMINT.LAN

|

||||

[domain/tecmint.lan]

|

||||

ad_domain = tecmint.lan

|

||||

krb5_realm = TECMINT.LAN

|

||||

realmd_tags = manages-system joined-with-samba

|

||||

cache_credentials = True

|

||||

id_provider = ad

|

||||

krb5_store_password_if_offline = True

|

||||

default_shell = /bin/bash

|

||||

ldap_id_mapping = True

|

||||

use_fully_qualified_names = True

|

||||

fallback_homedir = /home/%d/%u

|

||||

access_provider = ad

|

||||

auth_provider = ad

|

||||

chpass_provider = ad

|

||||

access_provider = ad

|

||||

ldap_schema = ad

|

||||

dyndns_update = true

|

||||

dyndsn_refresh_interval = 43200

|

||||

dyndns_update_ptr = true

|

||||

dyndns_ttl = 3600

|

||||

```

|

||||

|

||||

确保你对应地替换了下列参数的域名:

|

||||

|

||||

```

|

||||

domains = tecmint.lan

|

||||

default_domain_suffix = TECMINT.LAN

|

||||

[domain/tecmint.lan]

|

||||

ad_domain = tecmint.lan

|

||||

krb5_realm = TECMINT.LAN

|

||||

```

|

||||

|

||||

8、 接着,用下列命令给 SSSD 配置文件适当的权限:

|

||||

|

||||

```

|

||||

$ sudo chmod 700 /etc/sssd/sssd.conf

|

||||

```

|

||||

|

||||

9、 现在,打开并编辑 Realmd 配置文件,输入下面这行:

|

||||

|

||||

```

|

||||

$ sudo nano /etc/realmd.conf

|

||||

```

|

||||

|

||||

`realmd.conf` 文件摘录:

|

||||

|

||||

```

|

||||

[active-directory]

|

||||

os-name = Linux Ubuntu

|

||||

os-version = 17.04

|

||||

[service]

|

||||

automatic-install = yes

|

||||

[users]

|

||||

default-home = /home/%d/%u

|

||||

default-shell = /bin/bash

|

||||

[tecmint.lan]

|

||||

user-principal = yes

|

||||

fully-qualified-names = no

|

||||

```

|

||||

|

||||

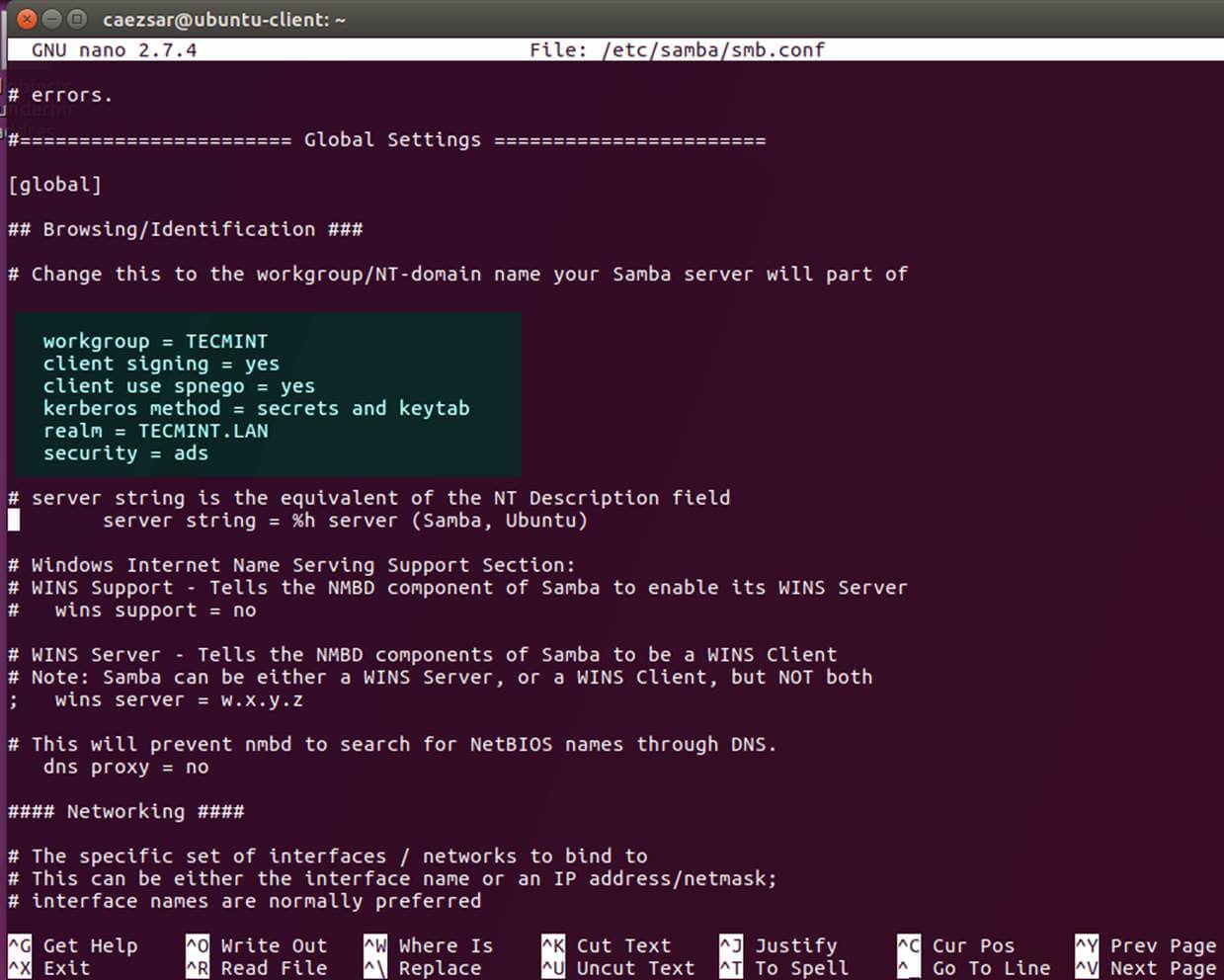

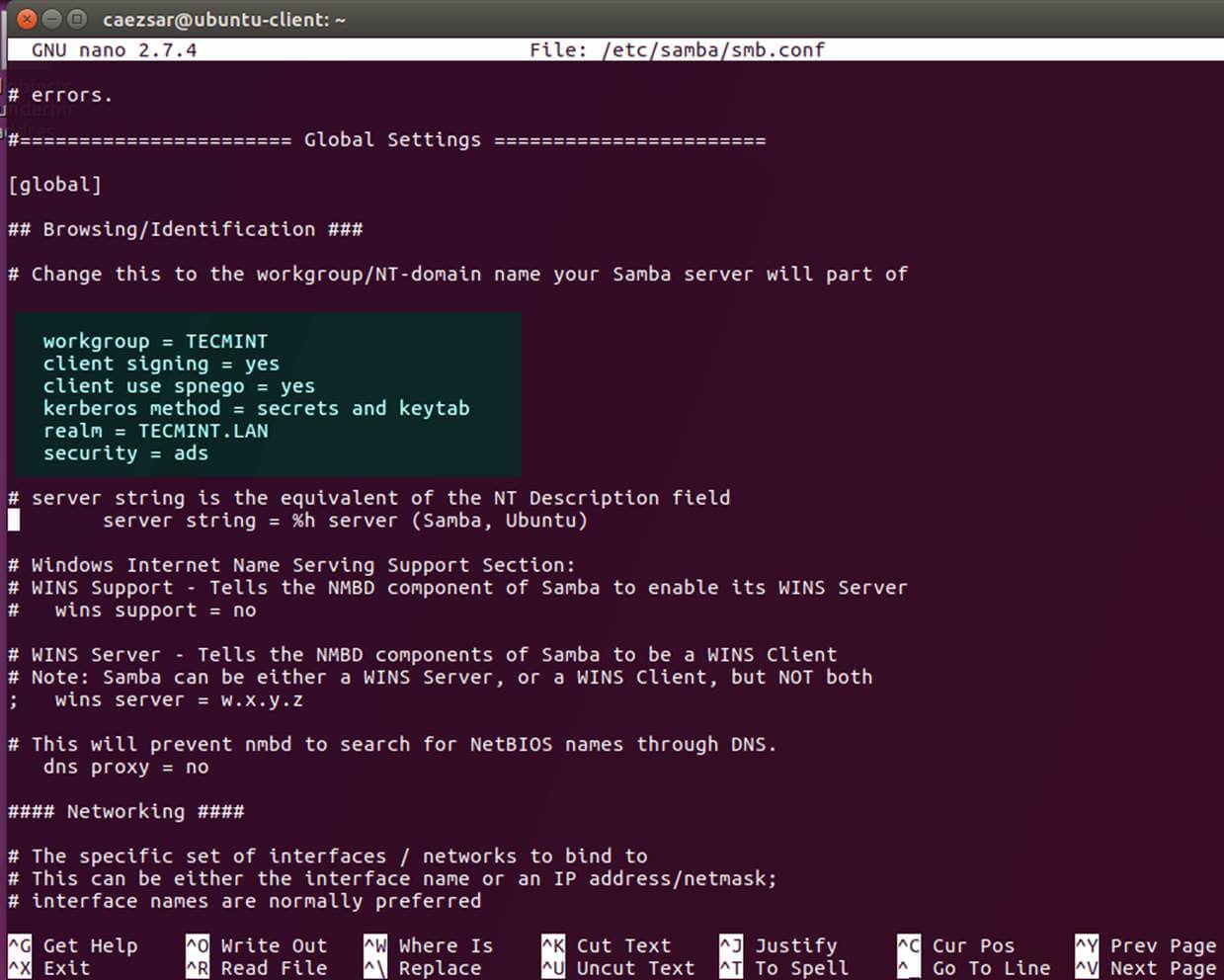

10、 最后需要修改的文件属于 Samba 守护进程。 打开 `/etc/samba/smb.conf` 文件编辑,然后在文件开头加入下面这块代码,在 `[global]` 之后的部分如下图所示。

|

||||

|

||||

```

|

||||

workgroup = TECMINT

|

||||

client signing = yes

|

||||

client use spnego = yes

|

||||

kerberos method = secrets and keytab

|

||||

realm = TECMINT.LAN

|

||||

security = ads

|

||||

```

|

||||

|

||||

[][4]

|

||||

|

||||

*配置 Samba 服务器*

|

||||

|

||||

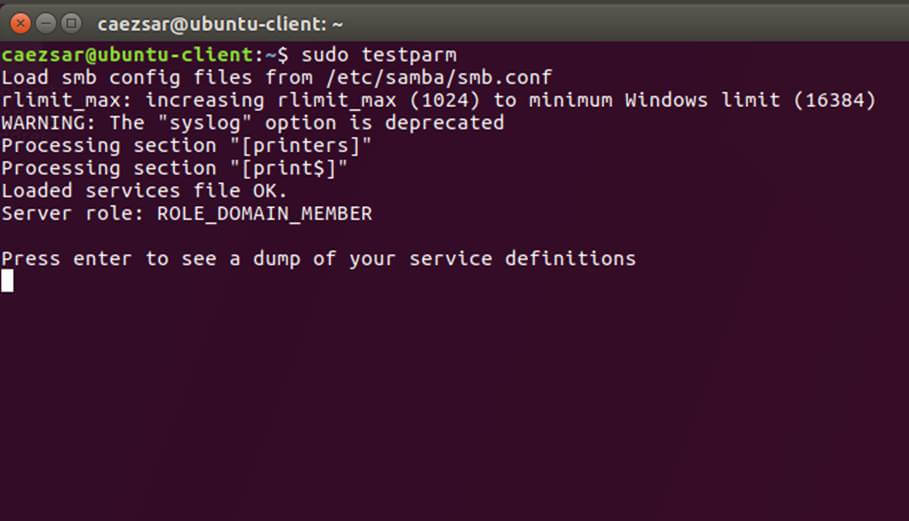

确保你替换了域名值,特别是对应域名的 realm 值,并运行 `testparm` 命令检验设置文件是否包含错误。

|

||||

|

||||

```

|

||||

$ sudo testparm

|

||||

```

|

||||

|

||||

[][5]

|

||||

|

||||

*测试 Samba 配置*

|

||||

|

||||

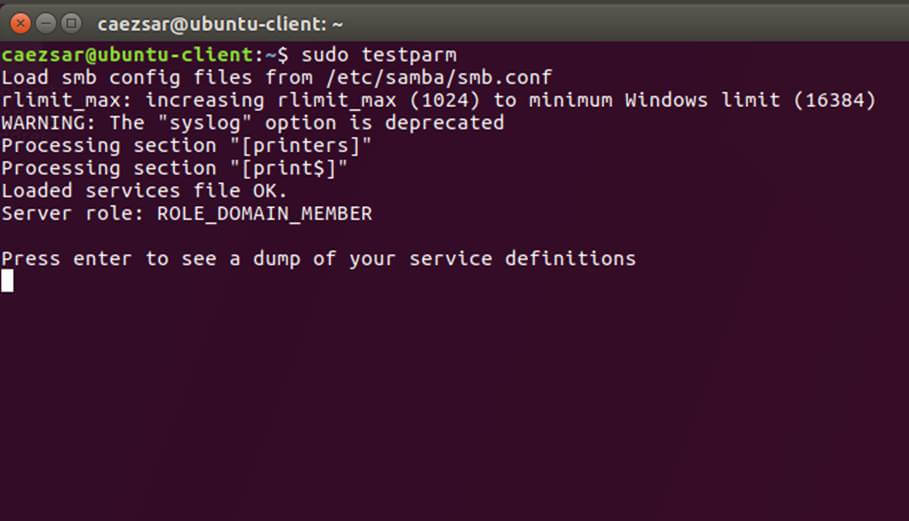

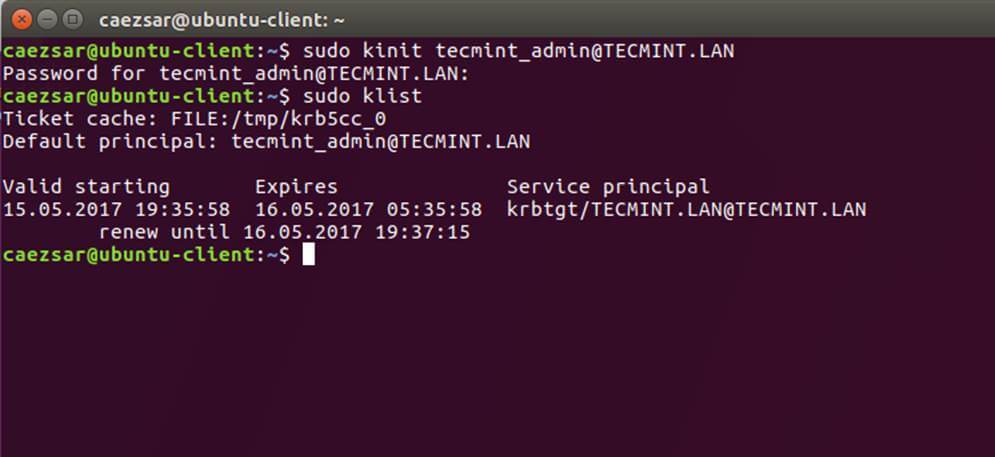

11、 在做完所有必需的修改之后,用 AD 管理员帐号验证 Kerberos 认证并用下面的命令列出票据。

|

||||

|

||||

```

|

||||

$ sudo kinit ad_admin_user@DOMAIN.TLD

|

||||

$ sudo klist

|

||||

```

|

||||

|

||||

[][6]

|

||||

|

||||

*检验 Kerberos 认证*

|

||||

|

||||

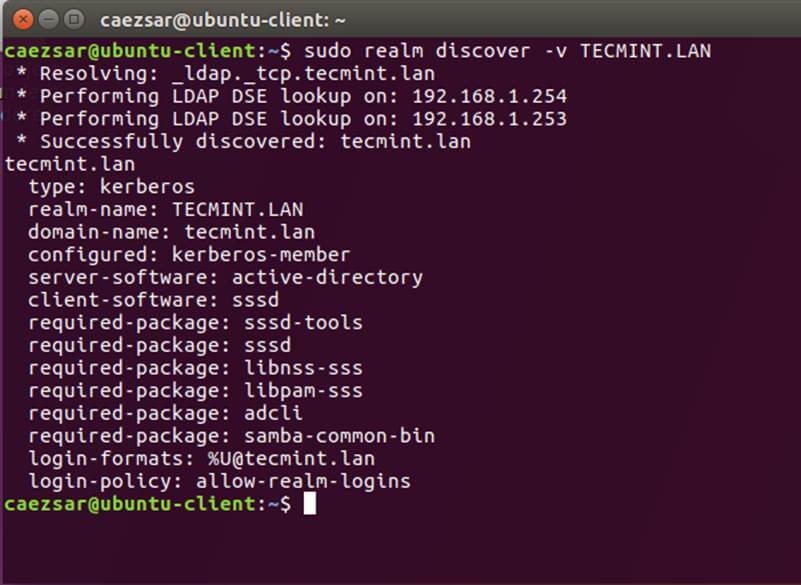

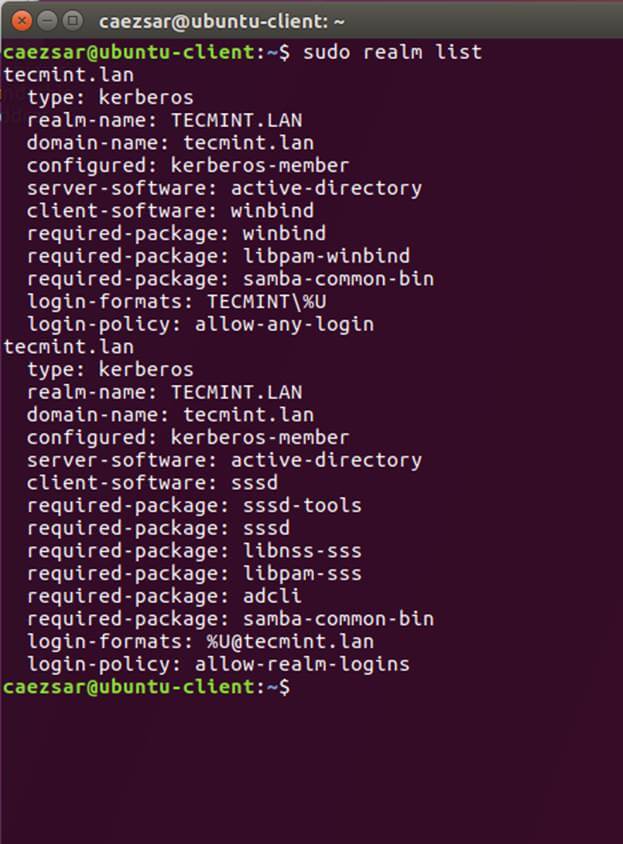

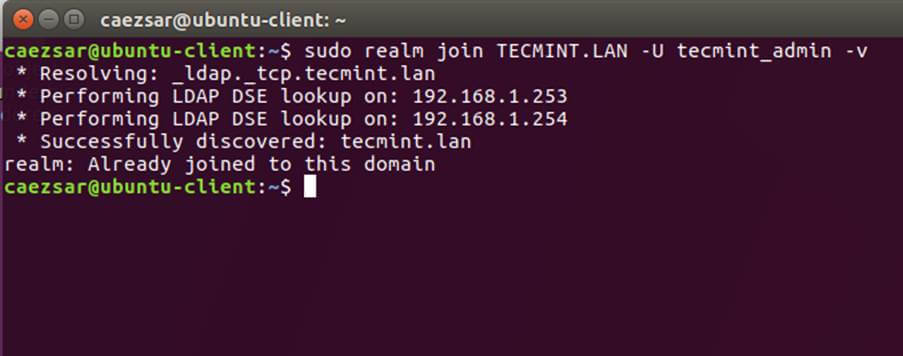

### 第 3 步: 加入 Ubuntu 到 Samba4 Realm

|

||||

|

||||

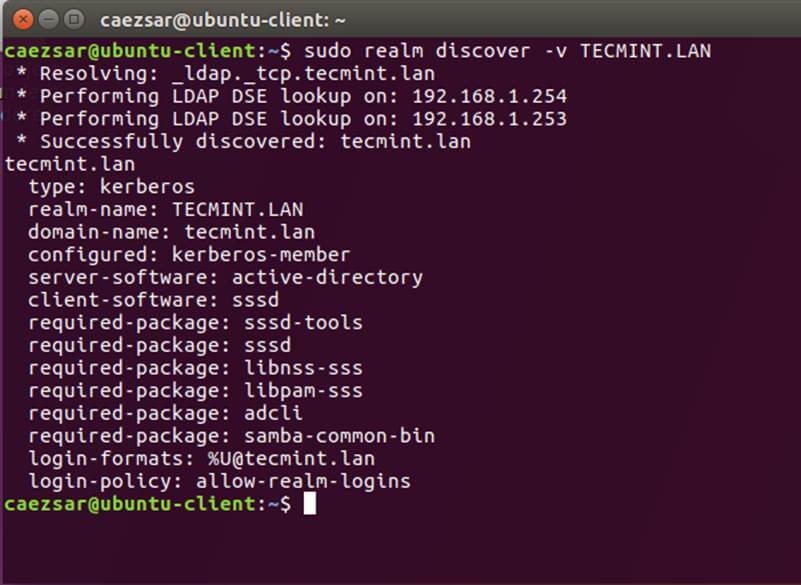

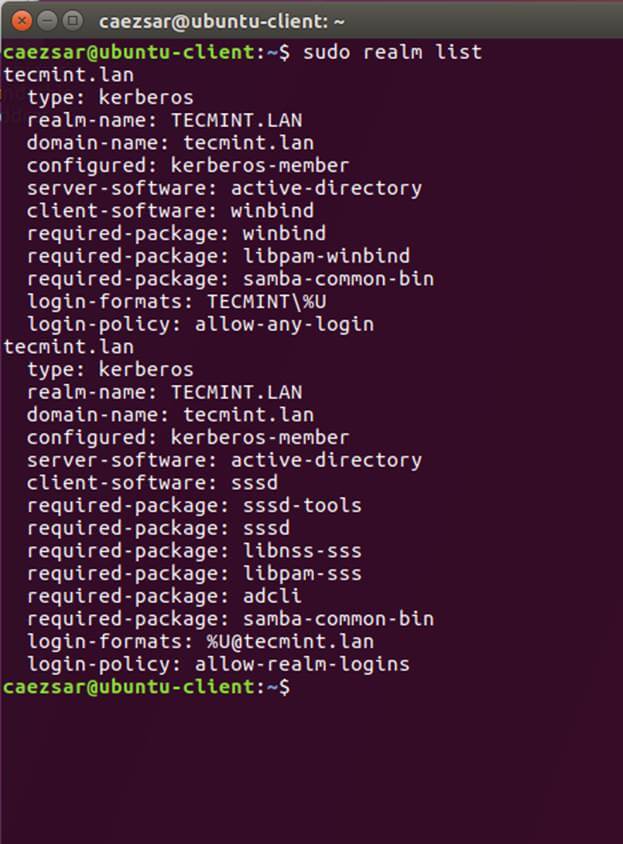

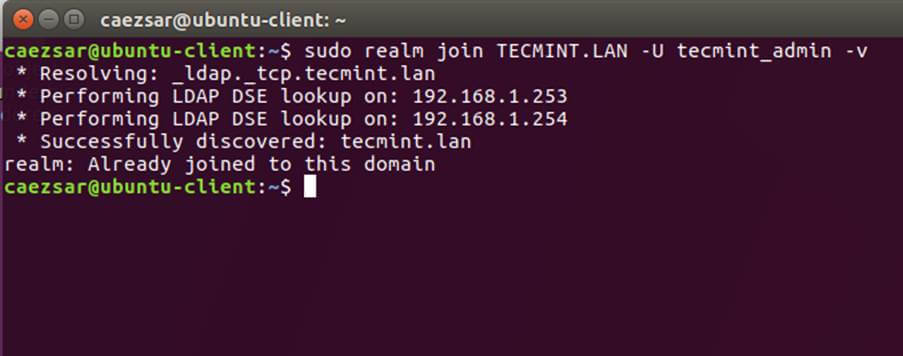

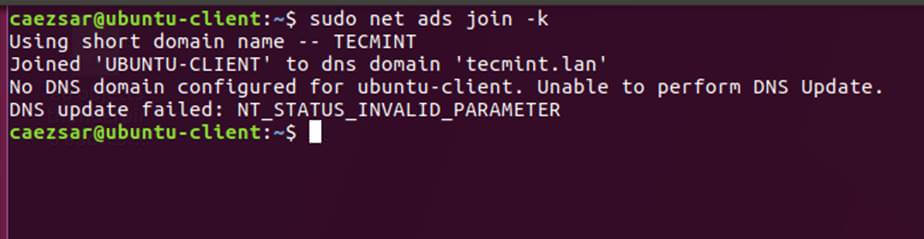

12、 键入下列命令将 Ubuntu 机器加入到 Samba4 活动目录。用有管理员权限的 AD DC 账户名字,以便绑定 realm 可以如预期般工作,并替换对应的域名值。

|

||||

|

||||

```

|

||||

$ sudo realm discover -v DOMAIN.TLD

|

||||

$ sudo realm list

|

||||

$ sudo realm join TECMINT.LAN -U ad_admin_user -v

|

||||

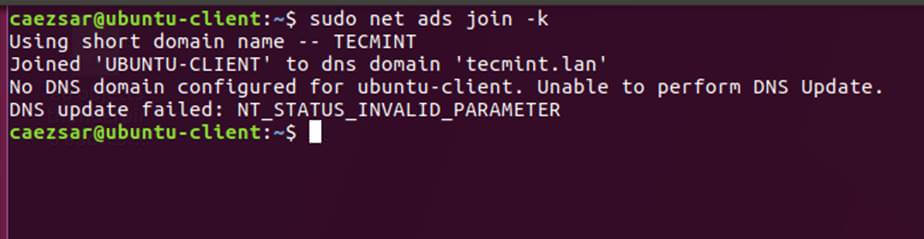

$ sudo net ads join -k

|

||||

```

|

||||

|

||||

[][7]

|

||||

|

||||

*加入 Ubuntu 到 Samba4 Realm*

|

||||

|

||||

[][8]

|

||||

|

||||

*列出 Realm Domain 信息*

|

||||

|

||||

[][9]

|

||||

|

||||

*添加用户到 Realm Domain*

|

||||

|

||||

[][10]

|

||||

|

||||

*添加 Domain 到 Realm*

|

||||

|

||||

13、 区域绑定好了之后,运行下面的命令确保所有域账户允许在这台机器上认证。

|

||||

|

||||

```

|

||||

$ sudo realm permit -all

|

||||

```

|

||||

|

||||

然后你可以使用下面举例的 `realm` 命令允许或者禁止域用户帐号或群组访问。

|

||||

|

||||

```

|

||||

$ sudo realm deny -a

|

||||

$ realm permit --groups ‘domain.tld\Linux Admins’

|

||||

$ realm permit user@domain.lan

|

||||

$ realm permit DOMAIN\\User2

|

||||

```

|

||||

|

||||

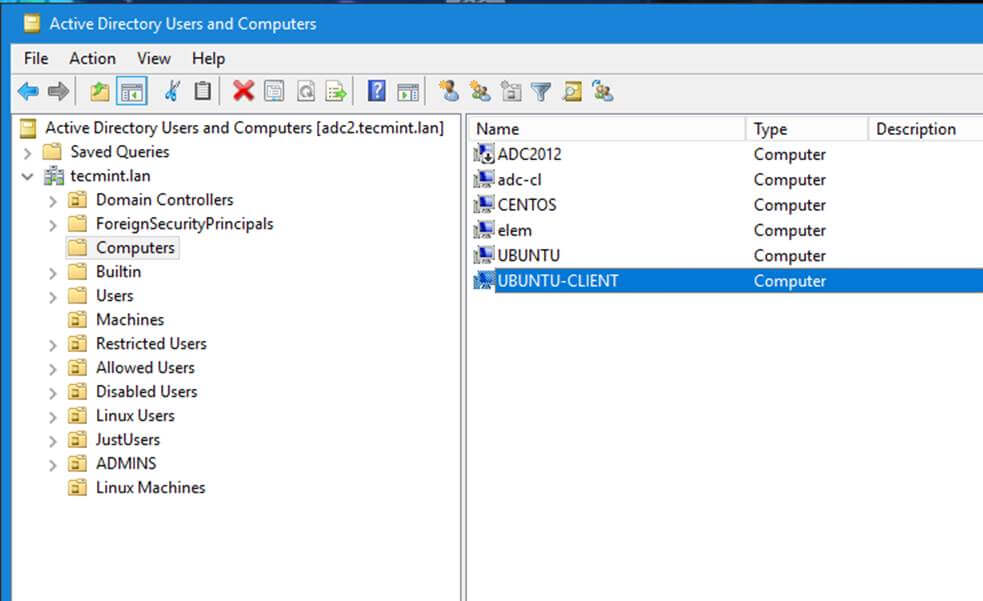

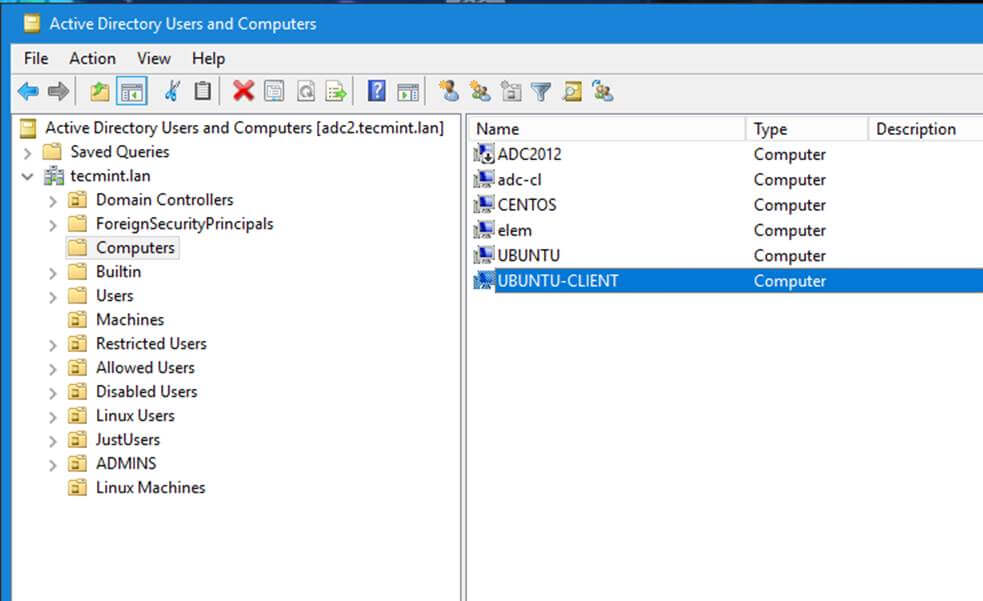

14、 从一个 [安装了 RSAT 工具的][11] Windows 机器上你可以打开 AD UC 并浏览“<ruby>电脑<rt>computers</rt></ruby>”容器,并检验是否有一个使用你机器名的对象帐号已经创建。

|

||||

|

||||

[][12]

|

||||

|

||||

*确保域被加入 AD DC*

|

||||

|

||||

### 第 4 步:配置 AD 账户认证

|

||||

|

||||

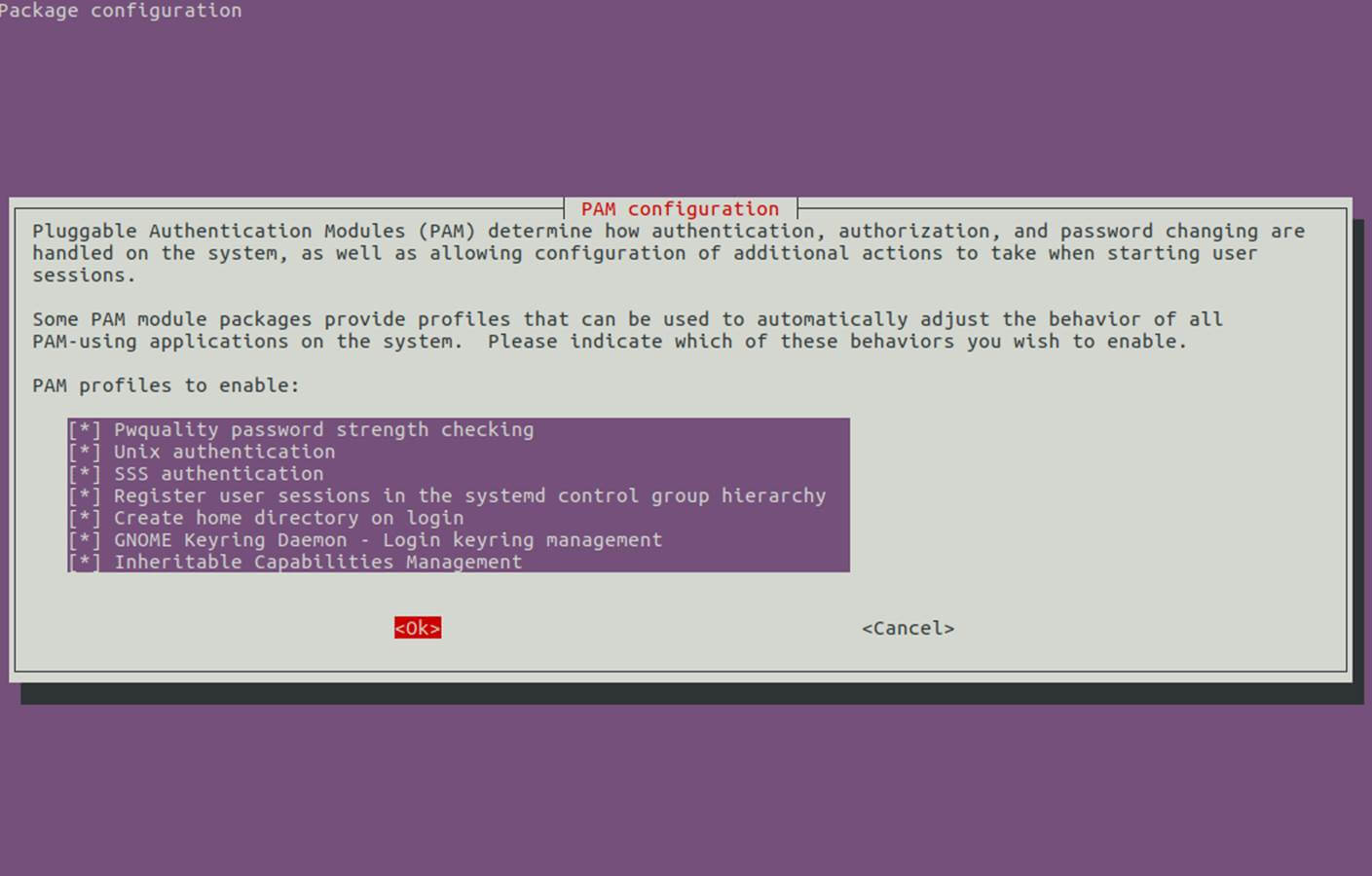

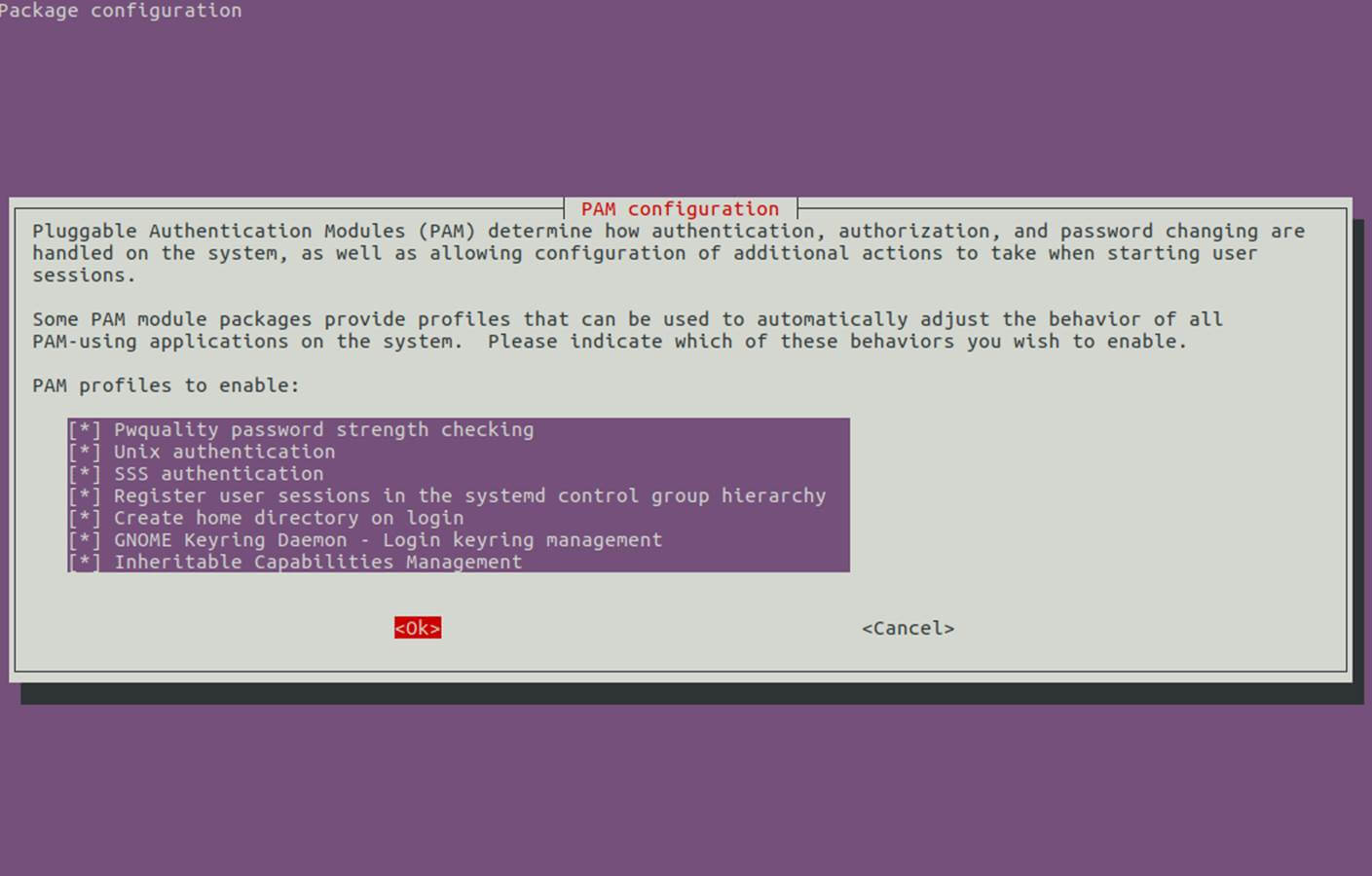

15、 为了在 Ubuntu 机器上用域账户认证,你需要用 root 权限运行 `pam-auth-update` 命令并允许所有 PAM 配置文件,包括为每个域账户在第一次注册的时候自动创建家目录的选项。

|

||||

|

||||

按 [空格] 键检验所有配置项并点击 ok 来应用配置。

|

||||

|

||||

```

|

||||

$ sudo pam-auth-update

|

||||

```

|

||||

|

||||

[][13]

|

||||

|

||||

*PAM 配置*

|

||||

|

||||

16、 在系统上手动编辑 `/etc/pam.d/common-account` 文件,下面这几行是为了给认证过的域用户自动创建家目录。

|

||||

|

||||

```

|

||||

session required pam_mkhomedir.so skel=/etc/skel/ umask=0022

|

||||

```

|

||||

|

||||

17、 如果活动目录用户不能用 linux 命令行修改他们的密码,打开 `/etc/pam.d/common-password` 文件并在 `password` 行移除 `use_authtok` 语句,最后如下:

|

||||

|

||||

```

|

||||

password [success=1 default=ignore] pam_winbind.so try_first_pass

|

||||

```

|

||||

|

||||

18、 最后,用下面的命令重启并启用以应用 Realmd 和 SSSD 服务的修改:

|

||||

|

||||

```

|

||||

$ sudo systemctl restart realmd sssd

|

||||

$ sudo systemctl enable realmd sssd

|

||||

```

|

||||

|

||||

19、 为了测试 Ubuntu 机器是是否成功集成到 realm ,安装 winbind 包并运行 `wbinfo` 命令列出域账户和群组,如下所示。

|

||||

|

||||

```

|

||||

$ sudo apt-get install winbind

|

||||

$ wbinfo -u

|

||||

$ wbinfo -g

|

||||

```

|

||||

|

||||

[][14]

|

||||

|

||||

*列出域账户*

|

||||

|

||||

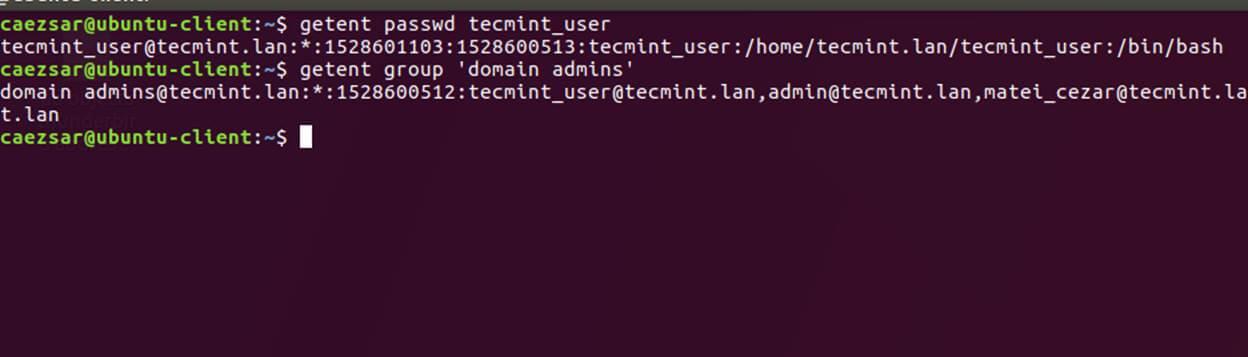

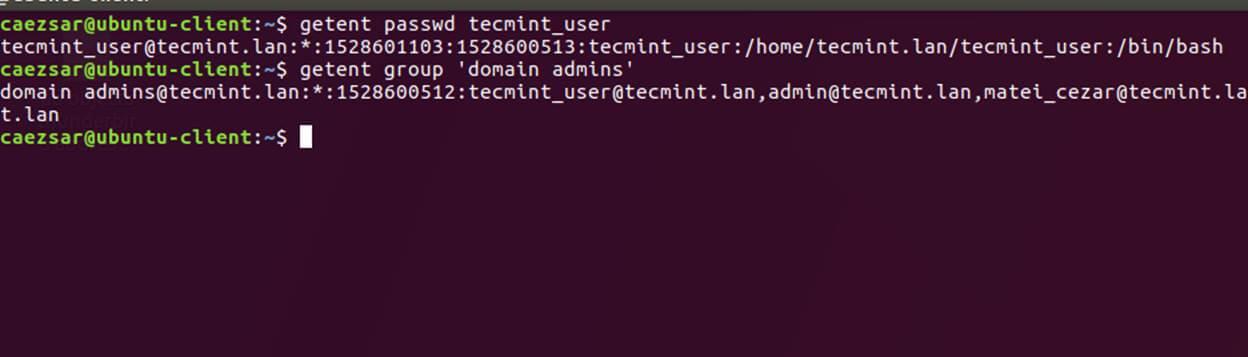

20、 同样,也可以针对特定的域用户或群组使用 `getent` 命令检验 Winbind nsswitch 模块。

|

||||

|

||||

```

|

||||

$ sudo getent passwd your_domain_user

|

||||

$ sudo getent group ‘domain admins’

|

||||

```

|

||||

|

||||

[][15]

|

||||

|

||||

*检验 Winbind Nsswitch*

|

||||

|

||||

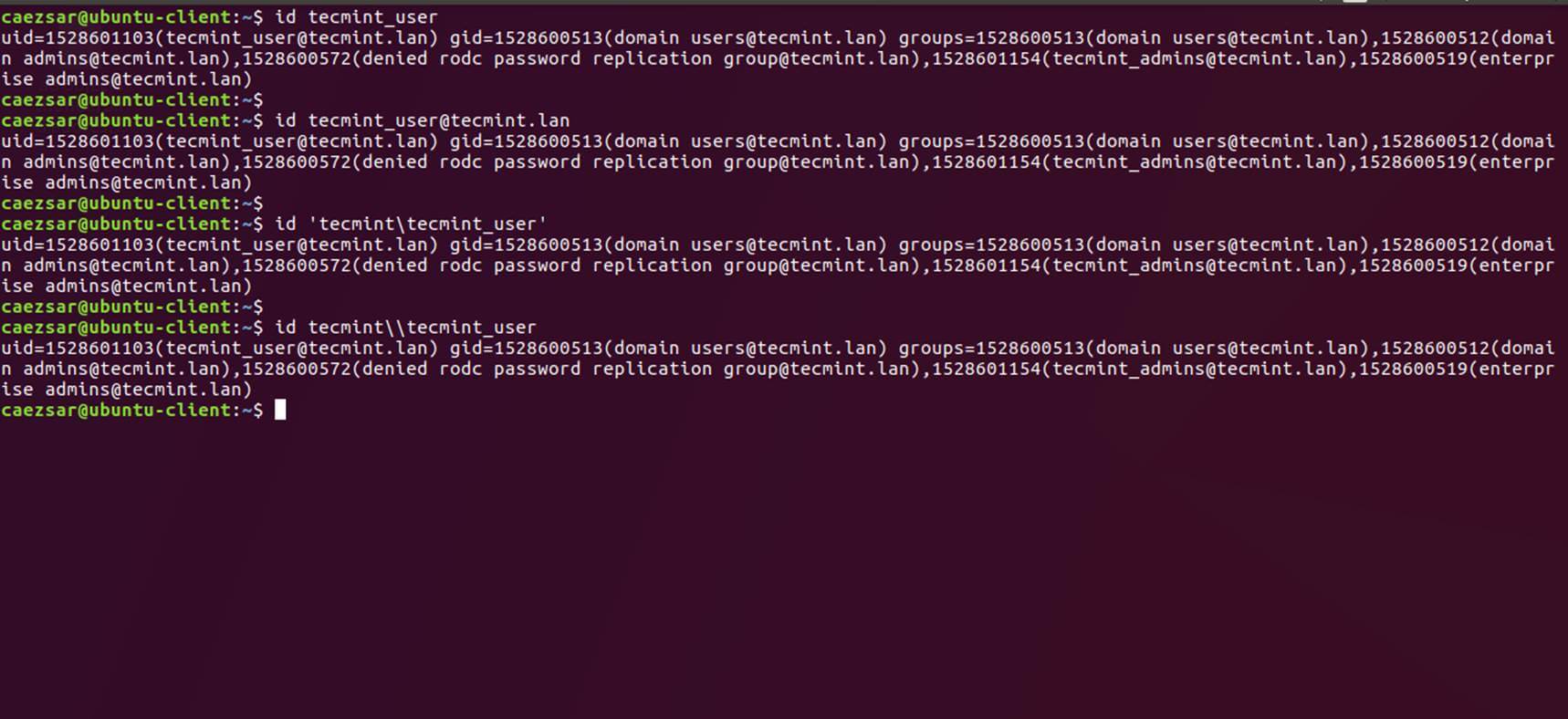

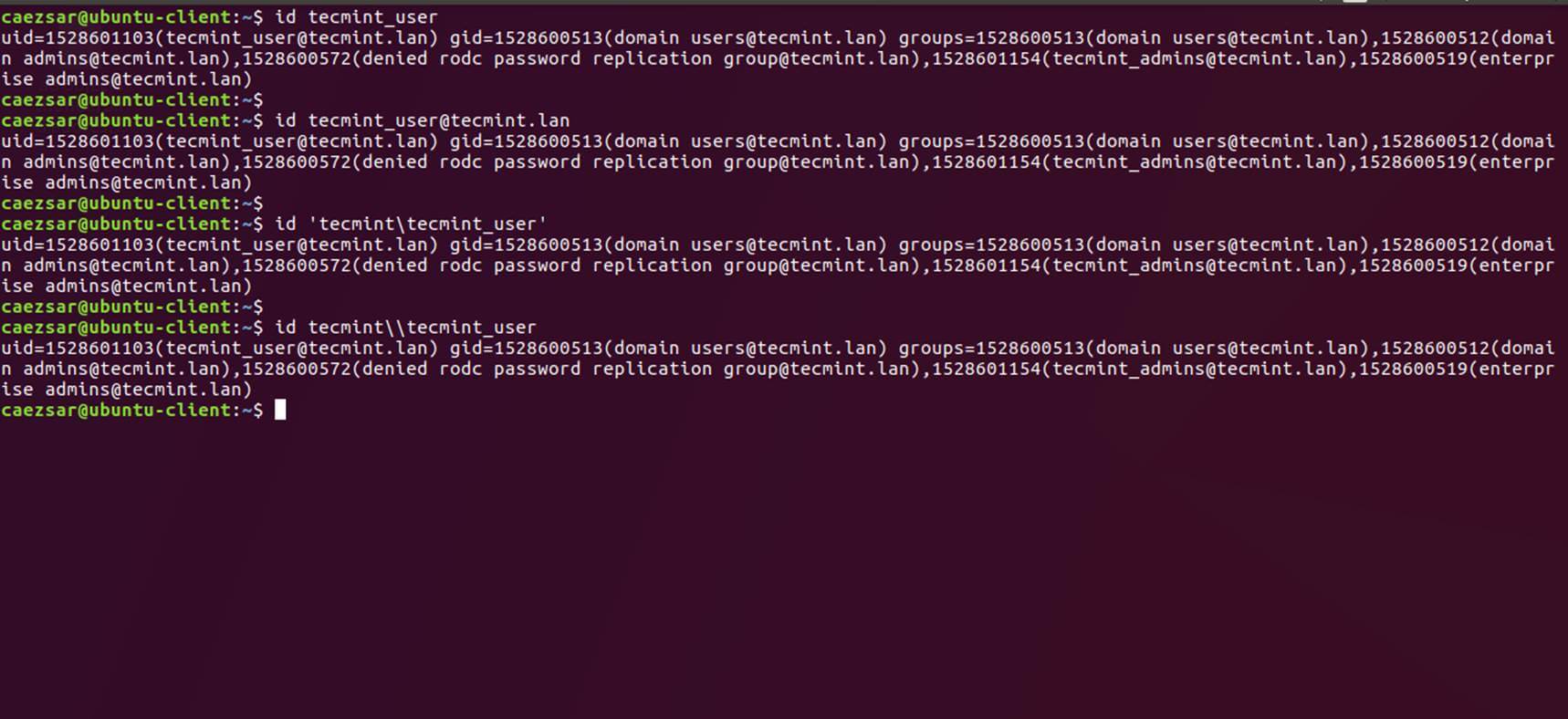

21、 你也可以用 Linux `id` 命令获取 AD 账户的信息,命令如下:

|

||||

|

||||

```

|

||||

$ id tecmint_user

|

||||

```

|

||||

|

||||

[][16]

|

||||

|

||||

*检验 AD 用户信息*

|

||||

|

||||

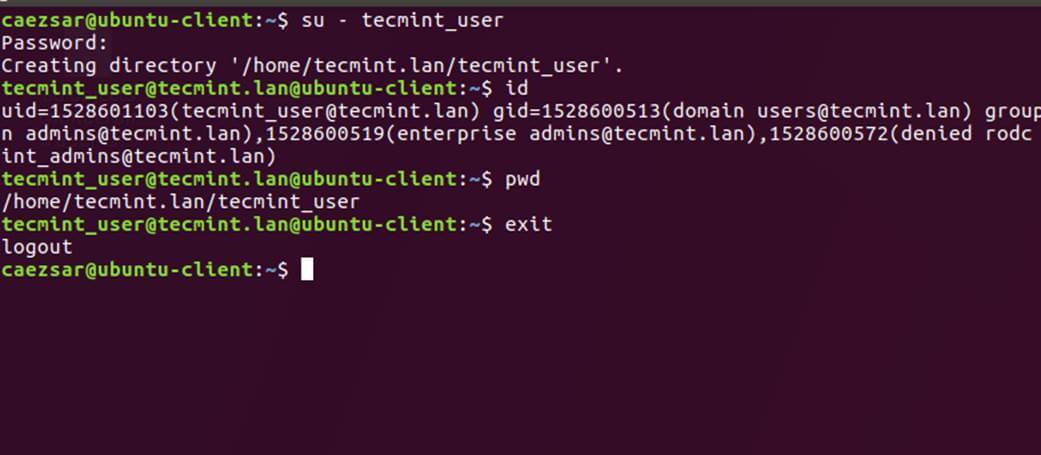

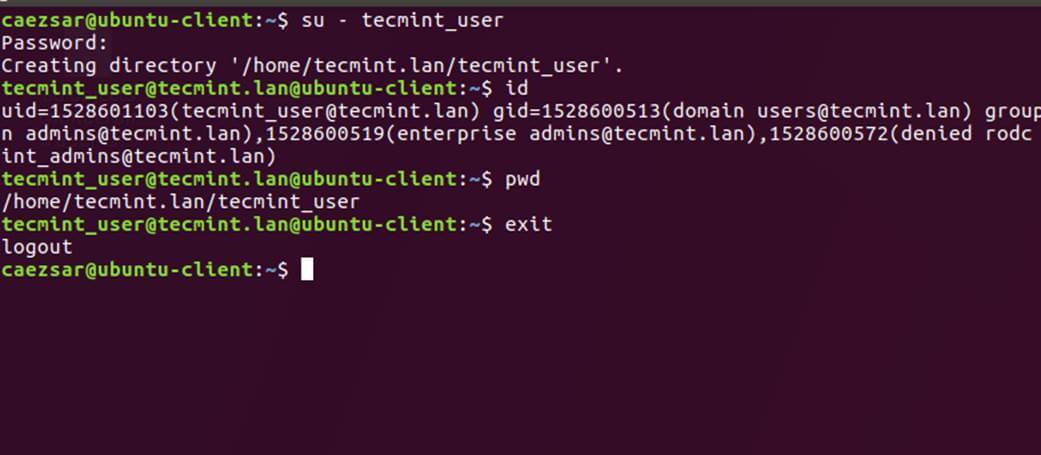

22、 用 `su -` 后跟上域用户名参数来认证 Ubuntu 主机的一个 Samba4 AD 账户。运行 `id` 命令获取该 AD 账户的更多信息。

|

||||

|

||||

```

|

||||

$ su - your_ad_user

|

||||

```

|

||||

|

||||

[][17]

|

||||

|

||||

*AD 用户认证*

|

||||

|

||||

用 `pwd` 命令查看你的域用户当前工作目录,和用 `passwd` 命令修改密码。

|

||||

|

||||

23、 在 Ubuntu 上使用有 root 权限的域账户,你需要用下面的命令添加 AD 用户名到 sudo 系统群组:

|

||||

|

||||

```

|

||||

$ sudo usermod -aG sudo your_domain_user@domain.tld

|

||||

```

|

||||

|

||||

用域账户登录 Ubuntu 并运行 `apt update` 命令来更新你的系统以检验 root 权限。

|

||||

|

||||

24、 给一个域群组 root 权限,用 `visudo` 命令打开并编辑 `/etc/sudoers` 文件,并加入如下行:

|

||||

|

||||

```

|

||||

%domain\ admins@tecmint.lan ALL=(ALL:ALL) ALL

|

||||

```

|

||||

|

||||

25、 要在 Ubuntu 桌面使用域账户认证,通过编辑 `/usr/share/lightdm/lightdm.conf.d/50-ubuntu.conf` 文件来修改 LightDM 显示管理器,增加以下两行并重启 lightdm 服务或重启机器应用修改。

|

||||

|

||||

```

|

||||

greeter-show-manual-login=true

|

||||

greeter-hide-users=true

|

||||

```

|

||||

|

||||

域账户用“你的域用户”或“你的域用户@你的域” 格式来登录 Ubuntu 桌面。

|

||||

|

||||

26、 为使用 Samba AD 账户的简称格式,编辑 `/etc/sssd/sssd.conf` 文件,在 `[sssd]` 块加入如下几行命令。

|

||||

|

||||

```

|

||||

full_name_format = %1$s

|

||||

```

|

||||

|

||||

并重启 SSSD 守护进程应用改变。

|

||||

|

||||

```

|

||||

$ sudo systemctl restart sssd

|

||||

```

|

||||

|

||||

你会注意到 bash 提示符会变成了没有附加域名部分的 AD 用户名。

|

||||

|

||||

27、 万一你因为 `sssd.conf` 里的 `enumerate=true` 参数设定而不能登录,你得用下面的命令清空 sssd 缓存数据:

|

||||

|

||||

```

|

||||

$ rm /var/lib/sss/db/cache_tecmint.lan.ldb

|

||||

```

|

||||

|

||||

这就是全部了!虽然这个教程主要集中于集成 Samba4 活动目录,同样的步骤也能被用于把使用 Realm 和 SSSD 服务的 Ubuntu 整合到微软 Windows 服务器活动目录。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Matei Cezar - 我是一名网瘾少年,开源和基于 linux 系统软件的粉丝,有4年经验在 linux 发行版桌面、服务器和 bash 脚本。

|

||||

|

||||

------------------

|

||||

|

||||

via: https://www.tecmint.com/integrate-ubuntu-to-samba4-ad-dc-with-sssd-and-realm/

|

||||

|

||||

作者:[Matei Cezar][a]

|

||||

译者:[XYenChi](https://github.com/XYenChi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.tecmint.com/author/cezarmatei/

|

||||

[1]:https://linux.cn/article-8065-1.html

|

||||

[2]:https://www.tecmint.com/wp-content/uploads/2017/07/Configure-Network-Interface.jpg

|

||||

[3]:https://www.tecmint.com/wp-content/uploads/2017/07/Set-realm-name.png

|

||||

[4]:https://www.tecmint.com/wp-content/uploads/2017/07/Configure-Samba-Server.jpg

|

||||

[5]:https://www.tecmint.com/wp-content/uploads/2017/07/Test-Samba-Configuration.jpg

|

||||

[6]:https://www.tecmint.com/wp-content/uploads/2017/07/Check-Kerberos-Authentication.jpg

|

||||

[7]:https://www.tecmint.com/wp-content/uploads/2017/07/Join-Ubuntu-to-Samba4-Realm.jpg

|

||||

[8]:https://www.tecmint.com/wp-content/uploads/2017/07/List-Realm-Domain-Info.jpg

|

||||

[9]:https://www.tecmint.com/wp-content/uploads/2017/07/Add-User-to-Realm-Domain.jpg

|

||||

[10]:https://www.tecmint.com/wp-content/uploads/2017/07/Add-Domain-to-Realm.jpg

|

||||

[11]:https://linux.cn/article-8097-1.html

|

||||

[12]:https://www.tecmint.com/wp-content/uploads/2017/07/Confirm-Domain-Added.jpg

|

||||

[13]:https://www.tecmint.com/wp-content/uploads/2017/07/PAM-Configuration.jpg

|

||||

[14]:https://www.tecmint.com/wp-content/uploads/2017/07/List-Domain-Accounts.jpg

|

||||

[15]:https://www.tecmint.com/wp-content/uploads/2017/07/check-Winbind-nsswitch.jpg

|

||||

[16]:https://www.tecmint.com/wp-content/uploads/2017/07/Check-AD-User-Info.jpg

|

||||

[17]:https://www.tecmint.com/wp-content/uploads/2017/07/AD-User-Authentication.jpg

|

||||

[18]:https://www.tecmint.com/author/cezarmatei/

|

||||

[19]:https://www.tecmint.com/10-useful-free-linux-ebooks-for-newbies-and-administrators/

|

||||

[20]:https://www.tecmint.com/free-linux-shell-scripting-books/

|

||||

216

published/20170813 An Intro to Compilers.md

Normal file

216

published/20170813 An Intro to Compilers.md

Normal file

@ -0,0 +1,216 @@

|

||||

编译器简介: 在 Siri 前时代如何与计算机对话

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

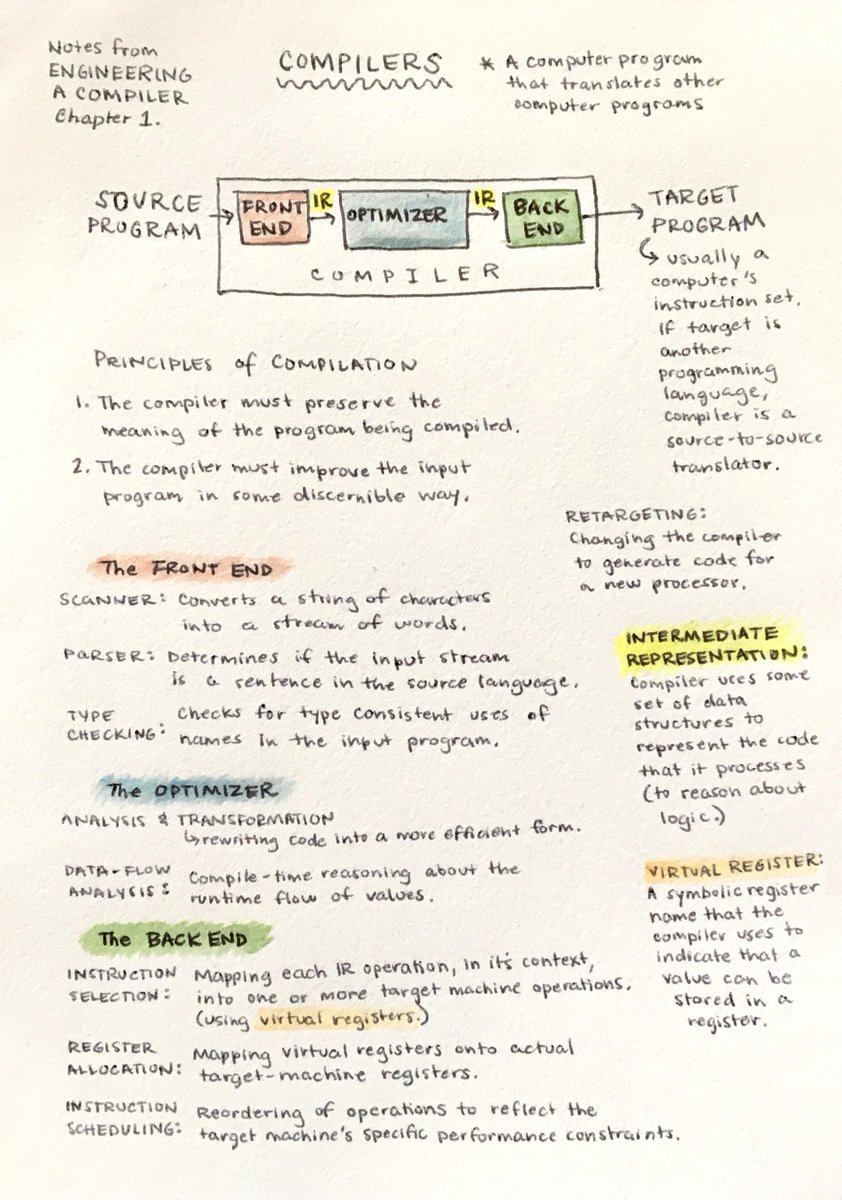

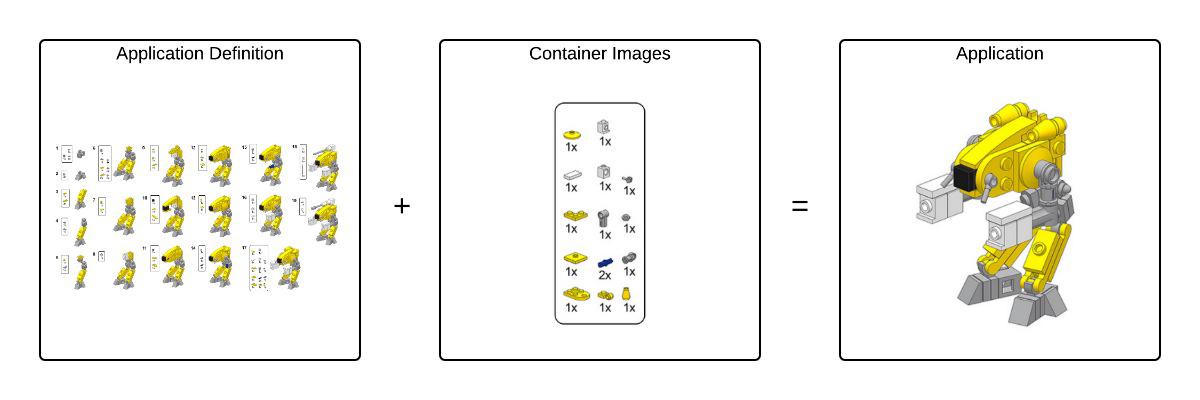

简单说来,一个<ruby>编译器<rt>compiler</rt></ruby>不过是一个可以翻译其他程序的程序。传统的编译器可以把源代码翻译成你的计算机能够理解的可执行机器代码。(一些编译器将源代码翻译成别的程序语言,这样的编译器称为源到源翻译器或<ruby>转化器<rt>transpilers</rt></ruby>。)[LLVM][7] 是一个广泛使用的编译器项目,包含许多模块化的编译工具。

|

||||

|

||||

传统的编译器设计包含三个部分:

|

||||

|

||||

|

||||

|

||||

* <ruby>前端<rt>Frontend</rt></ruby>将源代码翻译为<ruby>中间表示<rt>intermediate representation </rt></ruby> (IR)* 。[clang][1] 是 LLVM 中用于 C 家族语言的前端工具。

|

||||

* <ruby>优化器<rt>Optimizer</rt></ruby>分析 IR 然后将其转化为更高效的形式。[opt][2] 是 LLVM 的优化工具。

|

||||

* <ruby>后端<rt>Backend</rt></ruby>通过将 IR 映射到目标硬件指令集从而生成机器代码。[llc][3] 是 LLVM 的后端工具。

|

||||

|

||||

注:LLVM 的 IR 是一种和汇编类似的低级语言。然而,它抽离了特定硬件信息。

|

||||

|

||||

### Hello, Compiler

|

||||

|

||||

下面是一个打印 “Hello, Compiler!” 到标准输出的简单 C 程序。C 语法是人类可读的,但是计算机却不能理解,不知道该程序要干什么。我将通过三个编译阶段使该程序变成机器可执行的程序。

|

||||

|

||||

```

|

||||

// compile_me.c

|

||||

// Wave to the compiler. The world can wait.

|

||||

|

||||

#include <stdio.h>

|

||||

|

||||

int main() {

|

||||

printf("Hello, Compiler!\n");

|

||||

return 0;

|

||||

}

|

||||

```

|

||||

|

||||

#### 前端

|

||||

|

||||

正如我在上面所提到的,`clang` 是 LLVM 中用于 C 家族语言的前端工具。Clang 包含 <ruby>C 预处理器<rt>C preprocessor</rt></ruby>、<ruby>词法分析器<rt>lexer</rt></ruby>、<ruby>语法解析器<rt>parser</rt></ruby>、<ruby>语义分析器<rt>semantic analyzer</rt></ruby>和 <ruby>IR 生成器<rt>IR generator</rt></ruby>。

|

||||

|

||||

**C 预处理器**在将源程序翻译成 IR 前修改源程序。预处理器处理外部包含文件,比如上面的 `#include <stdio.h>`。 它将会把这一行替换为 `stdio.h` C 标准库文件的完整内容,其中包含 `printf` 函数的声明。

|

||||

|

||||

通过运行下面的命令来查看预处理步骤的输出:

|

||||

|

||||

```

|

||||

clang -E compile_me.c -o preprocessed.i

|

||||

```

|

||||

|

||||

**词法分析器**(或<ruby>扫描器<rt>scanner</rt></ruby>或<ruby>分词器<rt>tokenizer</rt></ruby>)将一串字符转化为一串单词。每一个单词或<ruby>记号<rt>token</rt></ruby>,被归并到五种语法类别之一:标点符号、关键字、标识符、文字或注释。

|

||||

|

||||

compile_me.c 的分词过程:

|

||||

|

||||

|

||||

|

||||

**语法分析器**确定源程序中的单词流是否组成了合法的句子。在分析记号流的语法后,它会输出一个<ruby>抽象语法树<rt>abstract syntax tree</rt></ruby>(AST)。Clang 的 AST 中的节点表示声明、语句和类型。

|

||||

|

||||

compile_me.c 的语法树:

|

||||

|

||||

|

||||

|

||||

**语义分析器**会遍历抽象语法树,从而确定代码语句是否有正确意义。这个阶段会检查类型错误。如果 `compile_me.c` 的 main 函数返回 `"zero"`而不是 `0`, 那么语义分析器将会抛出一个错误,因为 `"zero"` 不是 `int` 类型。

|

||||

|

||||

**IR 生成器**将抽象语法树翻译为 IR。

|

||||

|

||||

对 compile_me.c 运行 clang 来生成 LLVM IR:

|

||||

|

||||

```

|

||||

clang -S -emit-llvm -o llvm_ir.ll compile_me.c

|

||||

```

|

||||

|

||||

在 `llvm_ir.ll` 中的 main 函数:

|

||||

|

||||

```

|

||||

; llvm_ir.ll

|

||||

@.str = private unnamed_addr constant [18 x i8] c"Hello, Compiler!\0A\00", align 1

|

||||

|

||||

define i32 @main() {

|

||||

%1 = alloca i32, align 4 ; <- memory allocated on the stack

|

||||

store i32 0, i32* %1, align 4

|

||||

%2 = call i32 (i8*, ...) @printf(i8* getelementptr inbounds ([18 x i8], [18 x i8]* @.str, i32 0, i32 0))

|

||||

ret i32 0

|

||||

}

|

||||

|

||||

declare i32 @printf(i8*, ...)

|

||||

```

|

||||

|

||||

#### 优化程序

|

||||

|

||||

优化程序的工作是基于其对程序的运行时行为的理解来提高代码效率。优化程序将 IR 作为输入,然后生成改进后的 IR 作为输出。LLVM 的优化工具 `opt` 将会通过标记 `-O2`(大写字母 `o`,数字 2)来优化处理器速度,通过标记 `Os`(大写字母 `o`,小写字母 `s`)来减少指令数目。

|

||||

|

||||

看一看上面的前端工具生成的 LLVM IR 代码和运行下面的命令生成的结果之间的区别:

|

||||

|

||||

```

|

||||

opt -O2 -S llvm_ir.ll -o optimized.ll

|

||||

```

|

||||

|

||||

在 `optimized.ll` 中的 main 函数:

|

||||

|

||||

```

|

||||

optimized.ll

|

||||

|

||||

@str = private unnamed_addr constant [17 x i8] c"Hello, Compiler!\00"

|

||||

|

||||

define i32 @main() {

|

||||

%puts = tail call i32 @puts(i8* getelementptr inbounds ([17 x i8], [17 x i8]* @str, i64 0, i64 0))

|

||||

ret i32 0

|

||||

}

|

||||

|

||||

declare i32 @puts(i8* nocapture readonly)

|

||||

```

|

||||

|

||||

优化后的版本中, main 函数没有在栈中分配内存,因为它不使用任何内存。优化后的代码中调用 `puts` 函数而不是 `printf` 函数,因为程序中并没有使用 `printf` 函数的格式化功能。

|

||||

|

||||

当然,优化程序不仅仅知道何时可以把 `printf` 函数用 `puts` 函数代替。优化程序也能展开循环并内联简单计算的结果。考虑下面的程序,它将两个整数相加并打印出结果。

|

||||

|

||||

```

|

||||

// add.c

|

||||

#include <stdio.h>

|

||||

|

||||

int main() {

|

||||

int a = 5, b = 10, c = a + b;

|

||||

printf("%i + %i = %i\n", a, b, c);

|

||||

}

|

||||

```

|

||||

|

||||

下面是未优化的 LLVM IR:

|

||||

|

||||

```

|

||||

@.str = private unnamed_addr constant [14 x i8] c"%i + %i = %i\0A\00", align 1

|

||||

|

||||

define i32 @main() {

|

||||

%1 = alloca i32, align 4 ; <- allocate stack space for var a

|

||||

%2 = alloca i32, align 4 ; <- allocate stack space for var b

|

||||

%3 = alloca i32, align 4 ; <- allocate stack space for var c

|

||||

store i32 5, i32* %1, align 4 ; <- store 5 at memory location %1

|

||||

store i32 10, i32* %2, align 4 ; <- store 10 at memory location %2

|

||||

%4 = load i32, i32* %1, align 4 ; <- load the value at memory address %1 into register %4

|

||||

%5 = load i32, i32* %2, align 4 ; <- load the value at memory address %2 into register %5

|

||||

%6 = add nsw i32 %4, %5 ; <- add the values in registers %4 and %5\. put the result in register %6

|

||||

store i32 %6, i32* %3, align 4 ; <- put the value of register %6 into memory address %3

|

||||

%7 = load i32, i32* %1, align 4 ; <- load the value at memory address %1 into register %7

|

||||

%8 = load i32, i32* %2, align 4 ; <- load the value at memory address %2 into register %8

|

||||

%9 = load i32, i32* %3, align 4 ; <- load the value at memory address %3 into register %9

|

||||

%10 = call i32 (i8*, ...) @printf(i8* getelementptr inbounds ([14 x i8], [14 x i8]* @.str, i32 0, i32 0), i32 %7, i32 %8, i32 %9)

|

||||

ret i32 0

|

||||

}

|

||||

|

||||

declare i32 @printf(i8*, ...)

|

||||

```

|

||||

|

||||

下面是优化后的 LLVM IR:

|

||||

|

||||

```

|

||||

@.str = private unnamed_addr constant [14 x i8] c"%i + %i = %i\0A\00", align 1

|

||||

|

||||

define i32 @main() {

|

||||

%1 = tail call i32 (i8*, ...) @printf(i8* getelementptr inbounds ([14 x i8], [14 x i8]* @.str, i64 0, i64 0), i32 5, i32 10, i32 15)

|

||||

ret i32 0

|

||||

}

|

||||

|

||||

declare i32 @printf(i8* nocapture readonly, ...)

|

||||

```

|

||||

|

||||

优化后的 main 函数本质上是未优化版本的第 17 行和 18 行,伴有变量值内联。`opt` 计算加法,因为所有的变量都是常数。很酷吧,对不对?

|

||||

|

||||

#### 后端

|

||||

|

||||

LLVM 的后端工具是 `llc`。它分三个阶段将 LLVM IR 作为输入生成机器代码。

|

||||

|

||||

* **指令选择**是将 IR 指令映射到目标机器的指令集。这个步骤使用虚拟寄存器的无限名字空间。

|

||||

* **寄存器分配**是将虚拟寄存器映射到目标体系结构的实际寄存器。我的 CPU 是 x86 结构,它只有 16 个寄存器。然而,编译器将会尽可能少的使用寄存器。

|

||||

* **指令安排**是重排操作,从而反映出目标机器的性能约束。

|

||||

|

||||

运行下面这个命令将会产生一些机器代码:

|

||||

|

||||

```

|

||||

llc -o compiled-assembly.s optimized.ll

|

||||

```

|

||||

|

||||

```

|

||||

_main:

|

||||

pushq %rbp

|

||||

movq %rsp, %rbp

|

||||

leaq L_str(%rip), %rdi

|

||||

callq _puts

|

||||

xorl %eax, %eax

|

||||

popq %rbp

|

||||

retq

|

||||

L_str:

|

||||

.asciz "Hello, Compiler!"

|

||||

```

|

||||

|

||||

这个程序是 x86 汇编语言,它是计算机所说的语言,并具有人类可读语法。某些人最后也许能理解我。

|

||||

|

||||

* * *

|

||||

|

||||

相关资源:

|

||||

|

||||

1. [设计一个编译器][4]

|

||||

2. [开始探索 LLVM 核心库][5]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://nicoleorchard.com/blog/compilers

|

||||

|

||||

作者:[Nicole Orchard][a]

|

||||

译者:[ucasFL](https://github.com/ucasFL)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://nicoleorchard.com/

|

||||

[1]:http://clang.llvm.org/

|

||||

[2]:http://llvm.org/docs/CommandGuide/opt.html

|

||||

[3]:http://llvm.org/docs/CommandGuide/llc.html

|

||||

[4]:https://www.amazon.com/Engineering-Compiler-Second-Keith-Cooper/dp/012088478X

|

||||

[5]:https://www.amazon.com/Getting-Started-LLVM-Core-Libraries/dp/1782166920

|

||||

[6]:https://twitter.com/norchard/status/864246049266958336

|

||||

[7]:http://llvm.org/

|

||||

@ -1,20 +1,20 @@

|

||||

Getting Started with Headless Chrome

|

||||

Headless Chrome 入门

|

||||

============================================================

|

||||

|

||||

|

||||

### TL;DR

|

||||

### 摘要

|

||||

|

||||

[Headless Chrome][9] is shipping in Chrome 59\. It's a way to run the Chrome browser in a headless environment. Essentially, running Chrome without chrome! It brings **all modern web platform features** provided by Chromium and the Blink rendering engine to the command line.

|

||||

在 Chrome 59 中开始搭载 [Headless Chrome][9]。这是一种在<ruby>无需显示<rt>headless</rt></ruby>的环境下运行 Chrome 浏览器的方式。从本质上来说,就是不用 chrome 浏览器来运行 Chrome 的功能!它将 Chromium 和 Blink 渲染引擎提供的所有现代 Web 平台的功能都带入了命令行。

|

||||

|

||||

Why is that useful?

|

||||

它有什么用?

|

||||

|

||||

A headless browser is a great tool for automated testing and server environments where you don't need a visible UI shell. For example, you may want to run some tests against a real web page, create a PDF of it, or just inspect how the browser renders an URL.

|

||||

<ruby>无需显示<rt>headless</rt></ruby>的浏览器对于自动化测试和不需要可视化 UI 界面的服务器环境是一个很好的工具。例如,你可能需要对真实的网页运行一些测试,创建一个 PDF,或者只是检查浏览器如何呈现 URL。

|

||||

|

||||

<aside class="caution" style="box-sizing: inherit; font-size: 14px; margin-top: 16px; margin-bottom: 16px; padding: 12px 24px 12px 60px; background: rgb(255, 243, 224); color: rgb(221, 44, 0);">**Caution:** Headless mode is available on Mac and Linux in **Chrome 59**. [Windows support][2] is coming in Chrome 60\. To check what version of Chrome you have, open `chrome://version`.</aside>

|

||||

> **注意:** Mac 和 Linux 上的 Chrome 59 都可以运行无需显示模式。[对 Windows 的支持][2]将在 Chrome 60 中提供。要检查你使用的 Chrome 版本,请在浏览器中打开 `chrome://version`。

|

||||

|

||||

### Starting Headless (CLI)

|

||||

### 开启<ruby>无需显示<rt>headless</rt></ruby>模式(命令行界面)

|

||||

|

||||

The easiest way to get started with headless mode is to open the Chrome binary from the command line. If you've got Chrome 59+ installed, start Chrome with the `--headless` flag:

|

||||

开启<ruby>无需显示<rt>headless</rt></ruby>模式最简单的方法是从命令行打开 Chrome 二进制文件。如果你已经安装了 Chrome 59 以上的版本,请使用 `--headless` 标志启动 Chrome:

|

||||

|

||||

```

|

||||

chrome \

|

||||

@ -24,11 +24,11 @@ chrome \

|

||||

https://www.chromestatus.com # URL to open. Defaults to about:blank.

|

||||

```

|

||||

|

||||

<aside class="note" style="box-sizing: inherit; font-size: 14px; margin-top: 16px; margin-bottom: 16px; padding: 12px 24px 12px 60px; background: rgb(225, 245, 254); color: rgb(2, 136, 209);">**Note:** Right now, you'll also want to include the `--disable-gpu` flag. That will eventually go away.</aside>

|

||||

> **注意:**目前你仍然需要使用 `--disable-gpu` 标志。但它最终会不需要的。

|

||||

|

||||

`chrome` should point to your installation of Chrome. The exact location will vary from platform to platform. Since I'm on Mac, I created convenient aliases for each version of Chrome that I have installed.

|

||||

`chrome` 二进制文件应该指向你安装 Chrome 的位置。确切的位置会因平台差异而不同。当前我在 Mac 上操作,所以我为安装的每个版本的 Chrome 都创建了方便使用的别名。

|

||||

|

||||

If you're on the stable channel of Chrome and cannot get the Beta, I recommend using `chrome-canary`:

|

||||

如果您使用 Chrome 的稳定版,并且无法获得测试版,我建议您使用 `chrome-canary` 版本:

|

||||

|

||||

```

|

||||

alias chrome="/Applications/Google\ Chrome.app/Contents/MacOS/Google\ Chrome"

|

||||

@ -36,31 +36,31 @@ alias chrome-canary="/Applications/Google\ Chrome\ Canary.app/Contents/MacOS/Goo

|

||||

alias chromium="/Applications/Chromium.app/Contents/MacOS/Chromium"

|

||||

```

|

||||

|

||||

Download Chrome Canary [here][10].

|

||||

在[这里][10]下载 Chrome Cannary。

|

||||

|

||||

### Command line features

|

||||

### 命令行的功能

|

||||

|

||||

In some cases, you may not need to [programmatically script][11] Headless Chrome. There are some [useful command line flags][12] to perform common tasks.

|

||||

在某些情况下,你可能不需要[以脚本编程的方式][11]操作 Headless Chrome。可以使用一些[有用的命令行标志][12]来执行常见的任务。

|

||||

|

||||

### Printing the DOM

|

||||

#### 打印 DOM

|

||||

|

||||

The `--dump-dom` flag prints `document.body.innerHTML` to stdout:

|

||||

`--dump-dom` 标志将打印 `document.body.innerHTML` 到标准输出:

|

||||

|

||||

```

|

||||

chrome --headless --disable-gpu --dump-dom https://www.chromestatus.com/

|

||||

```

|

||||

|

||||

### Create a PDF

|

||||

#### 创建一个 PDF

|

||||

|

||||

The `--print-to-pdf` flag creates a PDF of the page:

|

||||

`--print-to-pdf` 标志将页面转出为 PDF 文件:

|

||||

|

||||

```

|

||||

chrome --headless --disable-gpu --print-to-pdf https://www.chromestatus.com/

|

||||

```

|

||||

|

||||

### Taking screenshots

|

||||

#### 截图

|

||||

|

||||

To capture a screenshot of a page, use the `--screenshot` flag:

|

||||

要捕获页面的屏幕截图,请使用 `--screenshot` 标志:

|

||||

|

||||

```

|

||||

chrome --headless --disable-gpu --screenshot https://www.chromestatus.com/

|

||||

@ -72,11 +72,11 @@ chrome --headless --disable-gpu --screenshot --window-size=1280,1696 https://www

|

||||

chrome --headless --disable-gpu --screenshot --window-size=412,732 https://www.chromestatus.com/

|

||||

```

|

||||

|

||||

Running with `--screenshot` will produce a file named `screenshot.png` in the current working directory. If you're looking for full page screenshots, things are a tad more involved. There's a great blog post from David Schnurr that has you covered. Check out [Using headless Chrome as an automated screenshot tool ][13].

|

||||

使用 `--screenshot` 标志运行 Headless Chrome 将在当前工作目录中生成一个名为 `screenshot.png` 的文件。如果你正在寻求整个页面的截图,那么会涉及到很多事情。来自 David Schnurr 的一篇很棒的博文已经介绍了这一内容。请查看 [使用 headless Chrome 作为自动截屏工具][13]。

|

||||

|

||||

### REPL mode (read-eval-print loop)

|

||||

#### REPL 模式 (read-eval-print loop)

|

||||

|

||||

The `--repl` flag runs Headless in a mode where you can evaluate JS expressions in the browser, right from the command line:

|

||||

`--repl` 标志可以使 Headless Chrome 运行在一个你可以使用浏览器评估 JS 表达式的模式下。执行下面的命令:

|

||||

|

||||

```

|

||||

$ chrome --headless --disable-gpu --repl https://www.chromestatus.com/

|

||||

@ -84,35 +84,35 @@ $ chrome --headless --disable-gpu --repl https://www.chromestatus.com/

|

||||

>>> location.href

|

||||

{"result":{"type":"string","value":"https://www.chromestatus.com/features"}}

|

||||

>>> quit

|

||||

$

|

||||

```

|

||||

|

||||

### Debugging Chrome without a browser UI?

|

||||

### 在没有浏览器界面的情况下调试 Chrome

|

||||

|

||||

When you run Chrome with `--remote-debugging-port=9222`, it starts an instance with the [DevTools protocol][14] enabled. The protocol is used to communicate with Chrome and drive the headless browser instance. It's also what tools like Sublime, VS Code, and Node use for remote debugging an application. #synergy

|

||||

当你使用 `--remote-debugging-port=9222` 运行 Chrome 时,它会启动一个支持 [DevTools 协议][14]的实例。该协议用于与 Chrome 进行通信,并且驱动 Headless Chrome 浏览器实例。它也是一个类似 Sublime、VS Code 和 Node 的工具,可用于应用程序的远程调试。#协同效应

|

||||

|

||||

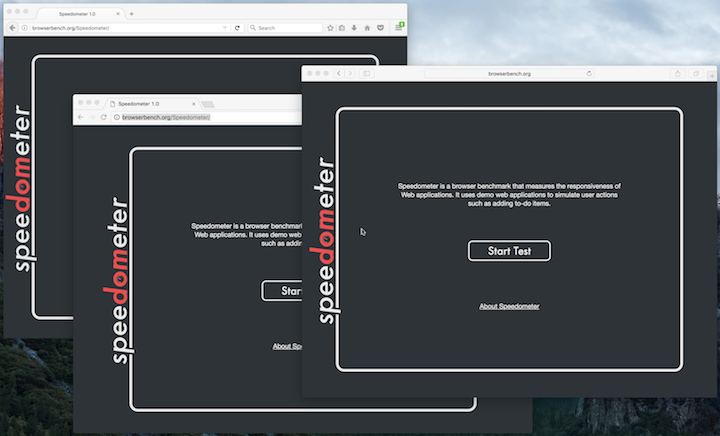

Since you don't have browser UI to see the page, navigate to `http://localhost:9222` in another browser to check that everything is working. You'll see a list of inspectable pages where you can click through and see what Headless is rendering:

|

||||

由于你没有浏览器用户界面可用来查看网页,请在另一个浏览器中输入 `http://localhost:9222`,以检查一切是否正常。你将会看到一个<ruby>可检查的<rt>inspectable</rt></ruby>页面的列表,可以点击它们来查看 Headless Chrome 正在呈现的内容:

|

||||

|

||||

|

||||

DevTools remote debugging UI

|

||||

|

||||

From here, you can use the familiar DevTools features to inspect, debug, and tweak the page as you normally would. If you're using Headless programmatically, this page is also a powerful debugging tool for seeing all the raw DevTools protocol commands going across the wire, communicating with the browser.

|

||||

*DevTools 远程调试界面*

|

||||

|

||||

### Using programmatically (Node)

|

||||

从这里,你就可以像往常一样使用熟悉的 DevTools 来检查、调试和调整页面了。如果你以编程方式使用 Headless Chrome,这个页面也是一个功能强大的调试工具,用于查看所有通过网络与浏览器交互的原始 DevTools 协议命令。

|

||||

|

||||

### The Puppeteer API

|

||||

### 使用编程模式 (Node)

|

||||

|

||||

[Puppeteer][15] is a Node library developed by the Chrome team. It provides a high-level API to control headless (or full) Chrome. It's similar to other automated testing libraries like Phantom and NightmareJS, but it only works with the latest versions of Chrome.

|

||||

#### Puppeteer 库 API

|

||||

|

||||

Among other things, Puppeteer can be used to easily take screenshots, create PDFs, navigate pages, and fetch information about those pages. I recommend the library if you want to quickly automate browser testing. It hides away the complexities of the DevTools protocol and takes care of redundant tasks like launching a debug instance of Chrome.

|

||||

[Puppeteer][15] 是一个由 Chrome 团队开发的 Node 库。它提供了一个高层次的 API 来控制无需显示版(或 完全版)的 Chrome。它与其他自动化测试库,如 Phantom 和 NightmareJS 相类似,但是只适用于最新版本的 Chrome。

|

||||

|

||||

Install it:

|

||||

除此之外,Puppeteer 还可用于轻松截取屏幕截图,创建 PDF,页面间导航以及获取有关这些页面的信息。如果你想快速地自动化进行浏览器测试,我建议使用该库。它隐藏了 DevTools 协议的复杂性,并可以处理诸如启动 Chrome 调试实例等繁冗的任务。

|

||||

|

||||

安装:

|

||||

|

||||

```

|

||||

yarn add puppeteer

|

||||

```

|

||||

|

||||

**Example** - print the user agent

|

||||

**例子** - 打印用户代理:

|

||||

|

||||

```

|

||||

const puppeteer = require('puppeteer');

|

||||

@ -124,7 +124,7 @@ const puppeteer = require('puppeteer');

|

||||

})();

|

||||

```

|

||||

|

||||

**Example** - taking a screenshot of the page

|

||||

**例子** - 获取页面的屏幕截图:

|

||||

|

||||

```

|

||||

const puppeteer = require('puppeteer');

|

||||

@ -140,19 +140,19 @@ browser.close();

|

||||

})();

|

||||

```

|

||||

|

||||

Check out [Puppeteer's documentation][16] to learn more about the full API.

|

||||

查看 [Puppeteer 的文档][16],了解完整 API 的更多信息。

|

||||

|

||||

### The CRI library

|

||||

#### CRI 库

|

||||

|

||||

[chrome-remote-interface][17] is a lower-level library than Puppeteer's API. I recommend it if you want to be close to the metal and use the [DevTools protocol][18] directly.

|

||||

[chrome-remote-interface][17] 是一个比 Puppeteer API 更低层次的库。如果你想要更接近原始信息和更直接地使用 [DevTools 协议][18]的话,我推荐使用它。

|

||||

|

||||

#### Launching Chrome

|

||||

**启动 Chrome**

|

||||

|

||||

chrome-remote-interface doesn't launch Chrome for you, so you'll have to take care of that yourself.

|

||||

chrome-remote-interface 不会为你启动 Chrome,所以你要自己启动它。

|

||||

|

||||