mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

Merge branch 'master' of https://github.com/LCTT/TranslateProject

This commit is contained in:

commit 265a1c0f0d

@@ -1,12 +1,14 @@

Automated Deployment of a Docker-Based Rails Application
================================================================================

[TL;DR] This is the third post in a series about how my company moved its infrastructure from a PaaS to Docker.

- [Part 1][1]: covers my experience before I got into Docker;
- [Part 2][2]: sets up a secure, private registry step by step.

----------

In this final post of the series, we will walk through a real example to learn how to automate the entire deployment process.

### A Basic Rails Application ###

@@ -18,99 +20,97 @@

    $ rvm use 2.2.0
    $ rails new docker-test && cd docker-test

Create a basic controller:

    $ rails g controller welcome index

...and then edit `routes.rb` so that the project's root points to the newly created welcome#index action:

    root 'welcome#index'

Run `rails s` in the terminal, then open a browser and visit [http://localhost:3000][3]. You should see the index page. We are not going to add anything fancy to the app. It is just a basic example that we will use to verify that everything works once we build and deploy the container.

### Installing the Web Server ###

We are going to use Unicorn as our web server. Add `gem 'unicorn'` and `gem 'foreman'` to the Gemfile, then bundle them (run `bundle install`).

Unicorn needs to be configured before the Rails application starts, so we put a **unicorn.rb** file in the **config** directory. [Here is an example Unicorn configuration file][4]; you can simply copy and paste the contents of the Gist.

Next, add a Procfile with the following content to the root of the project so that we can start the app with foreman:

    web: bundle exec unicorn -p $PORT -c ./config/unicorn.rb

Now run **foreman start** to launch the app. Everything should work, and you should see the app running at [http://localhost:5000][5].

### Building a Docker Image ###

Now let's build an image to run our app. In the root directory of the Rails project, create a file named **Dockerfile** and paste in the following:

    # Base image with ruby 2.2.0
    FROM ruby:2.2.0

    # Install required libraries and dependencies
    RUN apt-get update && apt-get install -qy nodejs postgresql-client sqlite3 --no-install-recommends && rm -rf /var/lib/apt/lists/*

    # Set Rails version
    ENV RAILS_VERSION 4.1.1

    # Install Rails
    RUN gem install rails --version "$RAILS_VERSION"

    # Create directory from where the code will run
    RUN mkdir -p /usr/src/app
    WORKDIR /usr/src/app

    # Make webserver reachable to the outside world
    EXPOSE 3000

    # Set ENV variables
    ENV PORT=3000

    # Start the web app
    CMD ["foreman","start"]

    # Install the necessary gems
    ADD Gemfile /usr/src/app/Gemfile
    ADD Gemfile.lock /usr/src/app/Gemfile.lock
    RUN bundle install --without development test

    # Add rails project (from same dir as Dockerfile) to project directory
    ADD ./ /usr/src/app

    # Run rake tasks
    RUN RAILS_ENV=production rake db:create db:migrate

Using the Dockerfile above, run the following command to build an image (make sure **boot2docker** is up and running):

    $ docker build -t localhost:5000/your_username/docker-test .

Then, if everything went well, the last line of the long log output should look similar to this:

    Successfully built 82e48769506c
    $ docker images
    REPOSITORY                                 TAG      IMAGE ID       CREATED              VIRTUAL SIZE
    localhost:5000/your_username/docker-test   latest   82e48769506c   About a minute ago   884.2 MB

Let's try running the container!

    $ docker run -d -p 3000:3000 --name docker-test localhost:5000/your_username/docker-test

You should be able to reach your Rails app, running inside the Docker container, on port 3000 of your boot2docker VM (in my case [http://192.168.59.103:3000][6]). If you don't know your boot2docker VM's IP address, run `$ boot2docker ip` to find it.

### Automating Deployment with Shell Scripts ###

The previous posts (Parts 1 and 2) already showed you how to push a newly built image to a private registry and deploy it on a server, so we will skip that part and go straight to automating the process.

We are going to define three shell scripts and finally tie them together with rake.

### Cleaning ###

Whenever we build an image, we want to:

- stop and restart boot2docker;
- remove orphaned Docker images (images that have no tag and are no longer used by any container).

Put the following commands in a **clean.sh** file in your project's root directory.

@@ -132,22 +132,22 @@ You should be able to reach your Rails app running inside the Docker container a

    $ chmod +x clean.sh
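The clean.sh commands are not shown in this excerpt of the diff. Below is a minimal sketch of the "remove orphan images" rule, assuming the default `docker images` table layout shown earlier. An orphan (dangling) image is listed with `<none>` as both its REPOSITORY and its TAG. The function name `dangling_image_ids` is hypothetical:

```shell
#!/bin/sh
# Print the IMAGE IDs of dangling ("orphan") images from `docker images`
# output read on stdin. Such images show <none> as both REPOSITORY and TAG.
# Assumes the default column layout: REPOSITORY TAG IMAGE ID CREATED SIZE.
dangling_image_ids() {
  # NR > 1 skips the header row; whitespace splitting puts the ID in $3.
  awk 'NR > 1 && $1 == "<none>" && $2 == "<none>" { print $3 }'
}

# Possible use inside clean.sh (not run here):
#   docker images | dangling_image_ids | xargs docker rmi
# Docker can also make the same selection itself:
#   docker images -q -f dangling=true | xargs docker rmi
```

The awk filter simply spells out the rule from the list above. In practice, Docker's built-in `dangling=true` filter is the more robust choice because it does not depend on the table layout.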

### Building ###

The build step is basically the same as what we did earlier (docker build). Create a **build.sh** script in the project's root directory with the following content:

    docker build -t localhost:5000/your_username/docker-test .

Remember to make the script executable.
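build.sh tags the image as `localhost:5000/your_username/docker-test`, so deploy.sh has to push exactly that name. One way to keep the two scripts from drifting apart is to compose the name in one place. Here is a minimal sketch; the helper `image_name` and its defaults (the article's placeholder values) are hypothetical:

```shell
#!/bin/sh
# Compose the registry-qualified image name in one place so build.sh and
# deploy.sh always tag and push the same image. The defaults are the
# placeholder values used in this article.
image_name() {
  local registry="${1:-localhost:5000}"
  local user="${2:-your_username}"
  local app="${3:-docker-test}"
  printf '%s/%s/%s\n' "$registry" "$user" "$app"
}

# build.sh:   docker build -t "$(image_name)" .
# deploy.sh:  docker push "$(image_name)"
```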

### Deploying ###

Finally, create a **deploy.sh** script with the following content:

    # Open SSH connection from boot2docker to private registry
    boot2docker ssh "ssh -o 'StrictHostKeyChecking no' -i /Users/username/.ssh/id_boot2docker -N -L 5000:localhost:5000 root@your-registry.com &" &

    # Wait to make sure the SSH tunnel is open before pushing...
    echo Waiting 5 seconds before pushing image.

    echo 5...

@@ -165,7 +165,7 @@ You should be able to reach your Rails app running inside the Docker container a

    echo Starting push!
    docker push localhost:5000/your_username/docker-test

If you don't understand what all this means, please read [Part 2][2] carefully first.

Make the script executable.
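deploy.sh waits for the SSH tunnel by echoing a countdown before pushing; only `echo 5...` is visible in this excerpt. A loop expresses the same wait more compactly. This is a sketch: `countdown` and the `SLEEP_CMD` override are hypothetical, and the override exists only so the loop can be tested without actually waiting:

```shell
#!/bin/sh
# Count down from N to 1, one line per second, before the push.
# SLEEP_CMD is a hypothetical override (defaults to `sleep`) so the loop
# can be exercised without actually waiting.
countdown() {
  local n="$1"
  while [ "$n" -gt 0 ]; do
    echo "$n..."
    ${SLEEP_CMD:-sleep} 1
    n=$((n - 1))
  done
}

# In deploy.sh:
#   echo Waiting 5 seconds before pushing image.
#   countdown 5
#   echo Starting push!
```

A fixed delay is still only a guess that the tunnel is up. If the push sometimes fails, raising the count is the simplest adjustment.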

@@ -179,10 +179,9 @@ You should be able to reach your Rails app running inside the Docker container a

This takes hardly any effort at all, but developers are lazier than you might think! So let's be lazier still!

To wrap things up, we will now tie the three scripts together with rake.

To make things even simpler, you can add a few lines to the Rakefile that already exists in the project's root directory. Open the Rakefile and paste in the following:

    namespace :docker do
      desc "Remove docker container"

@@ -221,34 +220,27 @@ Deploy depends on build, and build depends on clean. So every time we type the command to run

    $ rake docker:deploy
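The Rakefile body is cut off in this diff. Chaining the tasks ensures that clean, build and deploy always run in that order, and that a failure stops the chain before anything is pushed. Below is a plain-sh sketch of that behaviour. The `run_steps` helper is hypothetical and is not the author's Rakefile:

```shell
#!/bin/sh
# Run each step in order, stopping at the first one that fails, so a
# failed clean or build never leads to a push.
run_steps() {
  local step
  for step in "$@"; do
    echo "==> $step"
    if ! "$step"; then
      echo "step failed: $step" >&2
      return 1
    fi
  done
}

# From the project root:
#   run_steps ./clean.sh ./build.sh ./deploy.sh
```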

Now comes the magic. Once the image has been uploaded (the first time may take quite a while), you can ssh into the production server, pull the Docker image onto it (through the SSH tunnel) and run it. How easy is that!

It may take a while to get used to, but once it works, it is almost as easy as deploying with Heroku.

P.S. As always, please let me know what you think. I can't promise that this is the best, fastest or safest way to develop with Docker, but it has certainly worked for us.

- Click [here][10] to learn how to set up a private registry.

--------------------------------------------------------------------------------

via: http://cocoahunter.com/2015/01/23/docker-3/

Author: [Michelangelo Chasseur][a]
Translator: [DongShuaike](https://github.com/DongShuaike)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/).

[a]:http://cocoahunter.com/author/michelangelo/
[1]:https://linux.cn/article-5339-1.html
[2]:https://linux.cn/article-5379-1.html
[3]:http://localhost:3000/
[4]:https://gist.github.com/chasseurmic/0dad4d692ff499761b20
[5]:http://localhost:5000/
[6]:http://192.168.59.103:3000/
[7]:http://cocoahunter.com/2015/01/23/docker-3/#fn:1
[8]:http://cocoahunter.com/2015/01/23/docker-3/#fn:2
[9]:http://cocoahunter.com/2015/01/23/docker-2/
[10]:http://cocoahunter.com/2015/01/23/docker-2/

@@ -1,4 +1,4 @@

How to Fix the "apt-get update cannot be used to add new CD-ROMs" Error
================================================================================

@@ -63,8 +63,8 @@

via: http://itsfoss.com/fix-failed-fetch-cdrom-aptget-update-add-cdroms/

Author: [Abhishek][a]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](http://linux.cn/).

@@ -10,12 +10,14 @@

#### On 64-bit Ubuntu 15.04 ####

    $ wget http://kernel.ubuntu.com/~kernel-ppa/mainline/v4.0-vivid/linux-image-4.0.0-040000-generic_4.0.0-040000.201504121935_amd64.deb
    $ wget http://kernel.ubuntu.com/~kernel-ppa/mainline/v4.0-vivid/linux-headers-4.0.0-040000-generic_4.0.0-040000.201504121935_amd64.deb

    $ sudo dpkg -i linux-headers-4.0.0*.deb linux-image-4.0.0*.deb

#### On 32-bit Ubuntu 15.04 ####

    $ wget http://kernel.ubuntu.com/~kernel-ppa/mainline/v4.0-vivid/linux-image-4.0.0-040000-generic_4.0.0-040000.201504121935_i386.deb
    $ wget http://kernel.ubuntu.com/~kernel-ppa/mainline/v4.0-vivid/linux-headers-4.0.0-040000-generic_4.0.0-040000.201504121935_i386.deb

    $ sudo dpkg -i linux-headers-4.0.0*.deb linux-image-4.0.0*.deb
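The two sets of commands differ only in the architecture suffix of the package names. The following is a sketch of choosing that suffix automatically from `uname -m`. The helper names `deb_arch` and `kernel_image_url` are hypothetical, and only the two architectures covered above are handled:

```shell
#!/bin/sh
# Map `uname -m` output to the .deb architecture suffix used by the
# mainline kernel packages above (only the two cases covered here).
deb_arch() {
  case "$1" in
    x86_64) echo amd64 ;;
    i386|i486|i586|i686) echo i386 ;;
    *) echo "unsupported machine type: $1" >&2; return 1 ;;
  esac
}

# Build the linux-image package URL for the given machine type.
kernel_image_url() {
  local arch base
  arch=$(deb_arch "$1") || return 1
  base='http://kernel.ubuntu.com/~kernel-ppa/mainline/v4.0-vivid'
  echo "$base/linux-image-4.0.0-040000-generic_4.0.0-040000.201504121935_${arch}.deb"
}

# Usage: wget "$(kernel_image_url "$(uname -m)")"
```

The headers package follows the same naming pattern, with `linux-headers` in place of `linux-image`.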

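@@ -0,0 +1,41 @@

EvilAP_Defender: A Tool That Warns About and Attacks Evil Twin Wi-Fi Access Points
===============================================================================

**The developer says EvilAP_Defender can even attack rogue Wi-Fi access points**

A new open-source tool can periodically scan an area for rogue Wi-Fi access points and alert network administrators if any are found.

The tool, called EvilAP_Defender, was designed specifically to detect malicious access points set up by attackers. These access points impersonate legitimate ones by using their names, in order to lure users into connecting.

Such access points are known as evil twins, and they allow hackers to snoop on the Internet traffic of the devices that connect to them. This can be used to steal credentials, spoof websites and more.

Most users configure their computers and devices to connect automatically to certain wireless networks, such as the ones at home or at work. A device may face two wireless networks with the same name (the same SSID), and sometimes even the same MAC address (BSSID). In that case, most devices will automatically connect to the one with the stronger signal.

This makes evil twin attacks easy to carry out, because both the SSID and the BSSID can be spoofed.

[EvilAP_Defender][1] was written in Python by a developer named Mohamed Idris and published on GitHub. It uses a computer's wireless network card to discover rogue access points that copy a real access point's SSID, BSSID and even other parameters such as channel, cipher, privacy protocol and authentication.

The tool first runs in learning mode to discover the legitimate access points (APs) and add them to a whitelist. It can then be switched to normal mode to start scanning for unauthorized access points.

If a rogue AP is found, the tool alerts the network administrator by email. The developer also plans to add SMS alerts in the future.

The tool also has a preventive mode, in which the application launches a denial-of-service (DoS) attack against the rogue access point to buy the administrator some time to take defensive measures.

“The DoS will only target rogue APs with the same SSID but a different BSSID (the AP's MAC address) or a different channel,” Idris said in the tool's documentation. “This is to avoid attacking your legitimate network.”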
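To make the targeting rule concrete, here is a hypothetical sh illustration of it. It is not EvilAP_Defender's code, which is written in Python. It assumes that the whitelist built in learning mode is a plain file of `SSID BSSID CHANNEL` lines, and that SSIDs contain no spaces:

```shell
#!/bin/sh
# Hypothetical illustration of the quoted rule, not EvilAP_Defender code.
# whitelist: file of "SSID BSSID CHANNEL" lines learned in learning mode.
# Returns 0 (suspicious) when the SSID is whitelisted but no whitelisted
# entry for it has both the same BSSID and the same channel.
is_evil_twin() {
  local ssid="$1" bssid="$2" channel="$3" whitelist="$4"
  awk -v s="$ssid" -v b="$bssid" -v c="$channel" '
    $1 == s {
      known = 1
      if (tolower($2) == tolower(b) && $3 == c) legit = 1
    }
    END { exit !(known && !legit) }
  ' "$whitelist"
}
```

An AP whose SSID is not in the whitelist at all is never flagged. This matches the documentation's intent of leaving unrelated networks alone.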

|

||||

|

||||

尽管如此,用户应该切记在许多国家,攻击别人的接入点很多时候都是非法的,甚至是一个看起来像是攻击者操控的恶意接入点。

|

||||

|

||||

要能够运行这款工具,需要Aircrack-ng无线网套装,一个支持Aircrack-ng的无线网卡,MySQL和Python运行环境。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.infoworld.com/article/2905725/security0/this-tool-can-alert-you-about-evil-twin-access-points-in-the-area.html

|

||||

|

||||

作者:[Lucian Constantin][a]

|

||||

译者:[wi-cuckoo](https://github.com/wi-cuckoo)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.infoworld.com/author/Lucian-Constantin/

|

||||

[1]:https://github.com/moha99sa/EvilAP_Defender/blob/master/README.TXT

|

||||

@ -1,8 +1,8 @@

|

||||

在 RedHat/CentOS 7.x 中使用 cmcli 命令管理网络

|

||||

在 RedHat/CentOS 7.x 中使用 nmcli 命令管理网络

|

||||

===============

|

||||

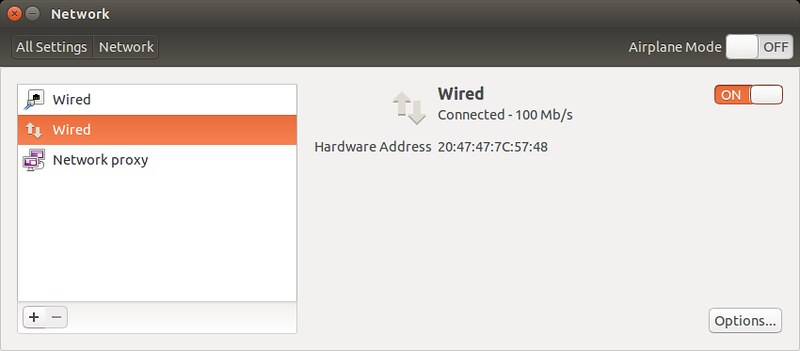

[**Red Hat Enterprise Linux 7** 与 **CentOS 7**][1] 中默认的网络服务由 **NetworkManager** 提供,这是动态控制及配置网络的守护进程,它用于保持当前网络设备及连接处于工作状态,同时也支持传统的 ifcfg 类型的配置文件。

|

||||

NetworkManager 可以用于以下类型的连接:

|

||||

Ethernet,VLANS,Bridges,Bonds,Teams,Wi-Fi,mobile boradband(如移动3G)以及 IP-over-InfiniBand。针对与这些网络类型,NetworkManager 可以配置他们的网络别名,IP 地址,静态路由,DNS,VPN连接以及很多其它的特殊参数。

|

||||

|

||||

NetworkManager 可以用于以下类型的连接:Ethernet,VLANS,Bridges,Bonds,Teams,Wi-Fi,mobile boradband(如移动3G)以及 IP-over-InfiniBand。针对与这些网络类型,NetworkManager 可以配置他们的网络别名,IP 地址,静态路由,DNS,VPN连接以及很多其它的特殊参数。

|

||||

|

||||

可以用命令行工具 nmcli 来控制 NetworkManager。

|

||||

|

||||

@ -24,19 +24,21 @@ Ethernet,VLANS,Bridges,Bonds,Teams,Wi-Fi,mobile boradband(如移

|

||||

|

||||

显示所有连接。

|

||||

|

||||

# nmcli connection show -a

|

||||

# nmcli connection show -a

|

||||

|

||||

仅显示当前活动的连接。

|

||||

|

||||

# nmcli device status

|

||||

|

||||

列出通过 NetworkManager 验证的设备列表及他们的状态。

|

||||

列出 NetworkManager 识别出的设备列表及他们的状态。

|

||||

|

||||

|

||||

|

||||

### 启动/停止 网络接口###

|

||||

|

||||

使用 nmcli 工具启动或停止网络接口,与 ifconfig 的 up/down 是一样的。使用下列命令停止某个接口:

|

||||

使用 nmcli 工具启动或停止网络接口,与 ifconfig 的 up/down 是一样的。

|

||||

|

||||

使用下列命令停止某个接口:

|

||||

|

||||

# nmcli device disconnect eno16777736

|

||||

|

||||

@ -50,7 +52,7 @@ Ethernet,VLANS,Bridges,Bonds,Teams,Wi-Fi,mobile boradband(如移

|

||||

|

||||

# nmcli connection add type ethernet con-name NAME_OF_CONNECTION ifname interface-name ip4 IP_ADDRESS gw4 GW_ADDRESS

|

||||

|

||||

根据你需要的配置更改 NAME_OF_CONNECTION,IP_ADDRESS, GW_ADDRESS参数(如果不需要网关的话可以省略最后一部分)。

|

||||

根据你需要的配置更改 NAME\_OF\_CONNECTION,IP\_ADDRESS, GW\_ADDRESS参数(如果不需要网关的话可以省略最后一部分)。

|

||||

|

||||

# nmcli connection add type ethernet con-name NEW ifname eno16777736 ip4 192.168.1.141 gw4 192.168.1.1

|

||||

|

||||

@ -68,9 +70,11 @@ Ethernet,VLANS,Bridges,Bonds,Teams,Wi-Fi,mobile boradband(如移

|

||||

|

||||

|

||||

|

||||

###增加一个使用 DHCP 的新连接

|

||||

|

||||

增加新的连接,使用DHCP自动分配IP地址,网关,DNS等,你要做的就是将命令行后 ip/gw 地址部分去掉就行了,DHCP会自动分配这些参数。

|

||||

|

||||

例,在 eno 16777736 设备上配置一个 名为 NEW_DHCP 的 DHCP 连接

|

||||

例,在 eno 16777736 设备上配置一个 名为 NEW\_DHCP 的 DHCP 连接

|

||||

|

||||

# nmcli connection add type ethernet con-name NEW_DHCP ifname eno16777736
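The static and DHCP variants above are the same command with optional trailing parts. Here is a sketch that assembles it. The helper `nmcli_add_cmd` is hypothetical and only prints the command, so you can review it before running it as root:

```shell
#!/bin/sh
# Print the `nmcli connection add` command for an Ethernet connection.
#   name + interface only  -> DHCP connection (no ip4/gw4 options)
#   + address              -> static connection
#   + gateway (optional)   -> adds gw4
nmcli_add_cmd() {
  local name="$1" ifname="$2" ip="$3" gw="$4"
  local cmd="nmcli connection add type ethernet con-name $name ifname $ifname"
  if [ -n "$ip" ]; then
    cmd="$cmd ip4 $ip"
    if [ -n "$gw" ]; then
      cmd="$cmd gw4 $gw"
    fi
  fi
  echo "$cmd"
}
```

The address may also carry a prefix length, for example `192.168.1.141/24`.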

|

||||

|

||||

@ -79,8 +83,8 @@ Ethernet,VLANS,Bridges,Bonds,Teams,Wi-Fi,mobile boradband(如移

|

||||

via: http://linoxide.com/linux-command/nmcli-tool-red-hat-centos-7/

|

||||

|

||||

作者:[Adrian Dinu][a]

|

||||

译者:[SPccman](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

译者:[SPccman](https://github.com/SPccman)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,3 +1,4 @@

|

||||

[translating by KayGuoWhu]

|

||||

Enjoy Android Apps on Ubuntu using ARChon Runtime

|

||||

================================================================================

|

||||

Before, we gave try to many android app emulating tools like Genymotion, Virtualbox, Android SDK, etc to try to run android apps on it. But, with this new Chrome Android Runtime, we are able to run Android Apps on our Chrome Browser. So, here are the steps we'll need to follow to install Android Apps on Ubuntu using ARChon Runtime.

|

||||

|

||||

@ -1,161 +0,0 @@

|

||||

[bazz222]

|

||||

How to set up networking between Docker containers

|

||||

================================================================================

|

||||

As you may be aware, Docker container technology has emerged as a viable lightweight alternative to full-blown virtualization. There are a growing number of use cases of Docker that the industry adopted in different contexts, for example, enabling rapid build environment, simplifying configuration of your infrastructure, isolating applications in multi-tenant environment, and so on. While you can certainly deploy an application sandbox in a standalone Docker container, many real-world use cases of Docker in production environments may involve deploying a complex multi-tier application in an ensemble of multiple containers, where each container plays a specific role (e.g., load balancer, LAMP stack, database, UI).

|

||||

|

||||

There comes the problem of **Docker container networking**: How can we interconnect different Docker containers spawned potentially across different hosts when we do not know beforehand on which host each container will be created?

|

||||

|

||||

One pretty neat open-source solution for this is [weave][1]. This tool makes interconnecting multiple Docker containers pretty much hassle-free. When I say this, I really mean it.

|

||||

|

||||

In this tutorial, I am going to demonstrate **how to set up Docker networking across different hosts using weave**.

|

||||

|

||||

### How Weave Works ###

|

||||

|

||||

|

||||

|

||||

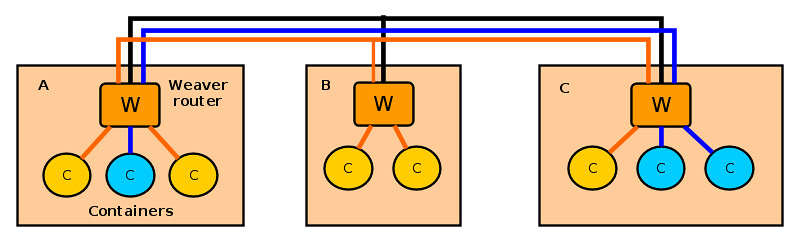

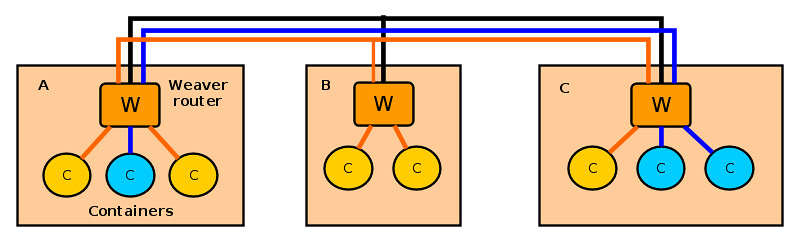

Let's first see how weave works. Weave creates a network of "peers", where each peer is a virtual router container called "weave router" residing on a distinct host. The weave routers on different hosts maintain TCP connections among themselves to exchange topology information. They also establish UDP connections among themselves to carry inter-container traffic. A weave router on each host is then connected via a bridge to all other Docker containers created on the host. When two containers on different hosts want to exchange traffic, a weave router on each host captures their traffic via a bridge, encapsulates the traffic with UDP, and forwards it to the other router over a UDP connection.

|

||||

|

||||

Each weave router maintains up-to-date weave router topology information, as well as container's MAC address information (similar to switch's MAC learning), so that it can make forwarding decision on container traffic. Weave is able to route traffic between containers created on hosts which are not directly reachable, as long as two hosts are interconnected via an intermediate weave router on weave topology. Optionally, weave routers can be set to encrypt both TCP control data and UDP data traffic based on public key cryptography.

|

||||

|

||||

### Prerequisite ###

|

||||

|

||||

Before using weave on Linux, of course you need to set up Docker environment on each host where you want to run [Docker][2] containers. Check out [these][3] [tutorials][4] on how to create Docker containers on Ubuntu or CentOS/Fedora.

|

||||

|

||||

Once Docker environment is set up, install weave on Linux as follows.

|

||||

|

||||

$ wget https://github.com/zettio/weave/releases/download/latest_release/weave

|

||||

$ chmod a+x weave

|

||||

$ sudo cp weave /usr/local/bin

|

||||

|

||||

Make sure that /usr/local/bin is include in your PATH variable by appending the following in /etc/profile.

|

||||

|

||||

export PATH="$PATH:/usr/local/bin"
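Appending to PATH unconditionally adds a duplicate entry whenever /etc/profile ends up being sourced more than once, or when /usr/local/bin is already present. Here is a minimal sketch of a guard, assuming a POSIX sh /etc/profile. The `path_contains` helper name is hypothetical:

```shell
#!/bin/sh
# Return 0 if directory $1 is one of the colon-separated entries in $2.
# Wrapping both sides in colons makes first, middle and last entries match
# the same way and prevents partial matches such as /usr/local/bin2.
path_contains() {
  case ":$2:" in
    *":$1:"*) return 0 ;;
    *) return 1 ;;
  esac
}

# In /etc/profile, append only when missing:
#   path_contains /usr/local/bin "$PATH" || export PATH="$PATH:/usr/local/bin"
```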

|

||||

|

||||

Repeat weave installation on every host where Docker containers will be deployed.

|

||||

|

||||

Weave uses TCP/UDP 6783 port. If you are using firewall, make sure that these port numbers are not blocked by the firewall.

|

||||

|

||||

### Launch Weave Router on Each Host ###

|

||||

|

||||

When you want to interconnect Docker containers across multiple hosts, the first step is to launch a weave router on every host.

|

||||

|

||||

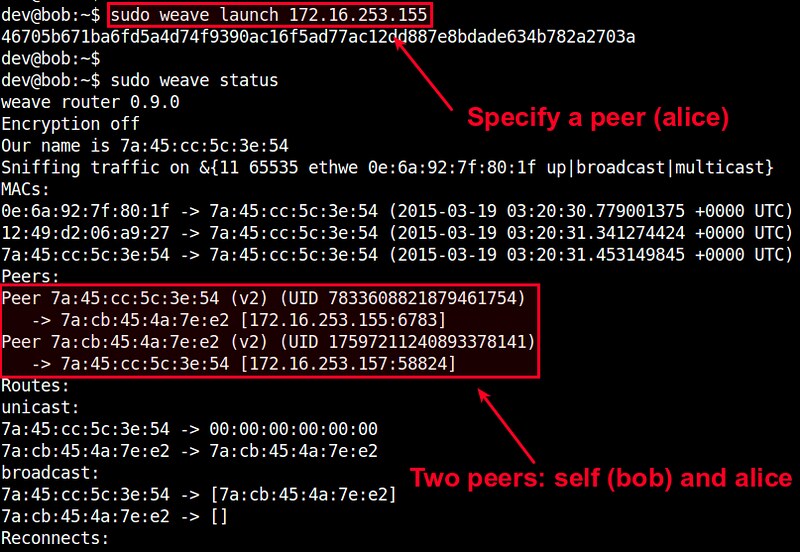

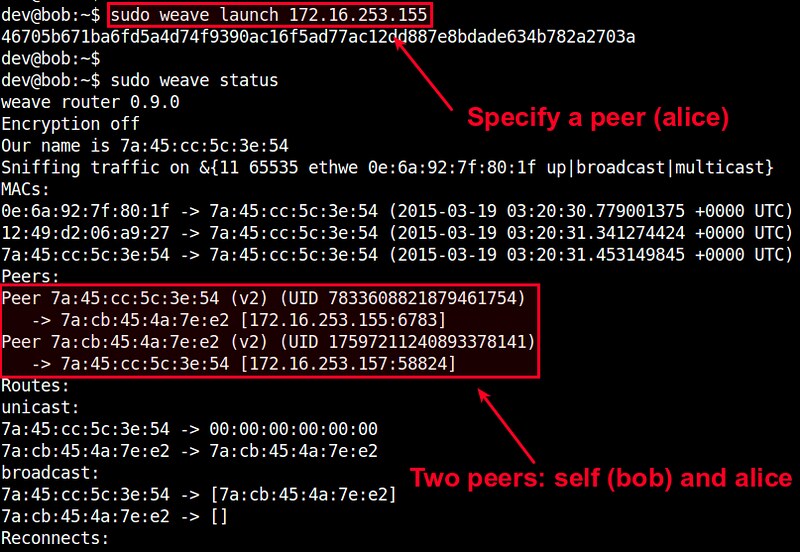

On the first host, run the following command, which will create and start a weave router container.

|

||||

|

||||

$ sudo weave launch

|

||||

|

||||

The first time you run this command, it will take a couple of minutes to download a weave image before launching a router container. On successful launch, it will print the ID of a launched weave router.

|

||||

|

||||

To check the status of the router, use this command:

|

||||

|

||||

$ sudo weave status

|

||||

|

||||

|

||||

|

||||

Since this is the first weave router launched, there will be only one peer in the peer list.

|

||||

|

||||

You can also verify the launch of a weave router by using docker command.

|

||||

|

||||

$ docker ps

|

||||

|

||||

|

||||

|

||||

On the second host, run the following command, where we specify the IP address of the first host as a peer to join.

|

||||

|

||||

$ sudo weave launch <first-host-IP-address>

|

||||

|

||||

When you check the status of the router, you will see two peers: the current host and the first host.

|

||||

|

||||

|

||||

|

||||

As you launch more routers on subsequent hosts, the peer list will grow accordingly. When launching a router, just make sure that you specify any previously launched peer's IP address.
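The launch order above boils down to one rule: the first host starts with no peer, and every later host names a router that is already up. Here is a sketch that prints that plan for a list of host IPs. The helper `weave_launch_plan` is hypothetical. It assumes you reach each host over ssh by its IP and always join via the first host, and it only prints the commands without running them:

```shell
#!/bin/sh
# Print, in order, the launch command for each host in a new weave network.
# The first host starts with no peer; every later host joins by naming a
# router that is already up (here, always the first host).
weave_launch_plan() {
  [ "$#" -gt 0 ] || return 1
  local first="$1" host
  shift
  echo "ssh $first sudo weave launch"
  for host in "$@"; do
    echo "ssh $host sudo weave launch $first"
  done
}
```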

|

||||

|

||||

At this point, you should have a weave network up and running, which consists of multiple weave routers across different hosts.

|

||||

|

||||

### Interconnect Docker Containers across Multiple Hosts ###

|

||||

|

||||

Now it is time to launch Docker containers on different hosts, and interconnect them on a virtual network.

|

||||

|

||||

Let's say we want to create a private network 10.0.0.0/24, to interconnect two Docker containers. We will assign random IP addressses from this subnet to the containers.

|

||||

|

||||

When you create a Docker container to deploy on a weave network, you need to use weave command, not docker command. Internally, the weave command uses docker command to create a container, and then sets up Docker networking on it.

|

||||

|

||||

Here is how to create a Ubuntu container on hostA, and attach the container to 10.0.0.0/24 subnet with an IP addresss 10.0.0.1.

|

||||

|

||||

hostA:~$ sudo weave run 10.0.0.1/24 -t -i ubuntu

|

||||

|

||||

On successful run, it will print the ID of a created container. You can use this ID to attach to the running container and access its console as follows.

|

||||

|

||||

hostA:~$ docker attach <container-id>

|

||||

|

||||

Move to hostB, and let's create another container. Attach it to the same subnet (10.0.0.0/24) with a different IP address 10.0.0.2.

|

||||

|

||||

hostB:~$ sudo weave run 10.0.0.2/24 -t -i ubuntu

|

||||

|

||||

Let's attach to the second container's console as well:

|

||||

|

||||

hostB:~$ docker attach <container-id>

|

||||

|

||||

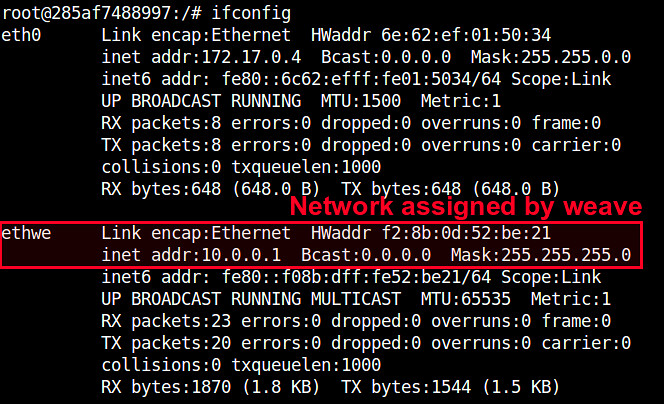

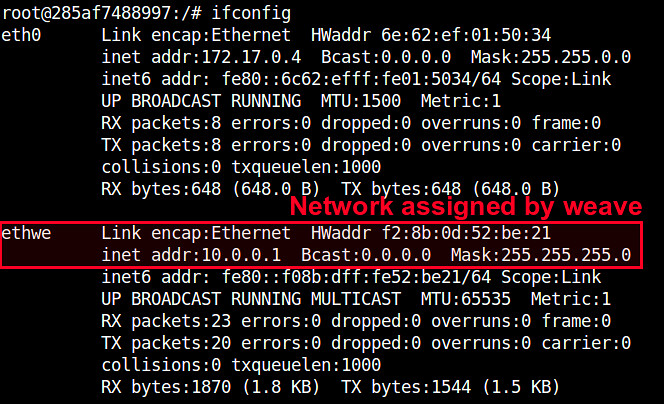

At this point, those two containers should be able to ping each other via the other's IP address. Verify that from each container's console.

|

||||

|

||||

|

||||

|

||||

If you check the interfaces of each container, you will see an interface named "ethwe" which is assigned an IP address (e.g., 10.0.0.1 and 10.0.0.2) you specified.

|

||||

|

||||

|

||||

|

||||

### Other Advanced Usages of Weave ###

|

||||

|

||||

Weave offers a number of pretty neat features. Let me briefly cover a few here.

|

||||

|

||||

#### Application Isolation ####

|

||||

|

||||

Using weave, you can create multiple virtual networks and dedicate each network to a distinct application. For example, create 10.0.0.0/24 for one group of containers, and 10.10.0.0/24 for another group of containers, and so on. Weave automatically takes care of provisioning these networks, and isolating container traffic on each network. Going further, you can flexibly detach a container from one network, and attach it to another network without restarting containers. For example:

|

||||

|

||||

First launch a container on 10.0.0.0/24:

|

||||

|

||||

$ sudo weave run 10.0.0.2/24 -t -i ubuntu

|

||||

|

||||

Detach the container from 10.0.0.0/24:

|

||||

|

||||

$ sudo weave detach 10.0.0.2/24 <container-id>

|

||||

|

||||

Re-attach the container to another network 10.10.0.0/24:

|

||||

|

||||

$ sudo weave attach 10.10.0.2/24 <container-id>

|

||||

|

||||

|

||||

|

||||

Now this container should be able to communicate with other containers on 10.10.0.0/24. This is a pretty useful feature when network information is not available at the time you create a container.
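Whether two addresses share a weave network comes down to subnet membership. This is why 10.0.0.2 and 10.10.0.2 end up isolated from each other even though their last octets match. The sketch below only illustrates that address arithmetic; it does not query weave. The helper names `ip_to_int` and `ip_in_subnet` are hypothetical:

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer (no validation;
# octets with leading zeros would be read as octal by $(( ))).
ip_to_int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) | ($2 << 16) | ($3 << 8) | $4 ))
}

# Return 0 if IPv4 address $1 lies inside CIDR subnet $2 (e.g. 10.0.0.0/24):
# both addresses must agree on the top $bits bits.
ip_in_subnet() {
  local net="${2%/*}" bits="${2#*/}" mask
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}
```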

#### Integrate Weave Networks with Host Network ####

Sometimes you may need to allow containers on a virtual weave network to access the physical host network. Conversely, hosts may want to access containers on a weave network. To support this requirement, weave allows weave networks to be integrated with the host network.

For example, on hostA, where a container is running on network 10.0.0.0/24, run the following command.

    hostA:~$ sudo weave expose 10.0.0.100/24

This will assign the IP address 10.0.0.100 to hostA, so that hostA itself is also connected to the 10.0.0.0/24 network. Obviously, you need to choose an IP address that is not used by any other container on the network.
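To pick an address for `weave expose` that no container already uses, you need the list of taken addresses. Here is a small sketch under that assumption: you maintain the list yourself, and weave is not queried. The helper `first_free_ip` is hypothetical:

```shell
#!/bin/sh
# Print the first host address in PREFIX.1 .. PREFIX.254 (a /24 such as
# 10.0.0) that is not in the list of addresses already in use.
first_free_ip() {
  [ "$#" -gt 0 ] || return 1
  local prefix="$1" i=1 candidate taken used
  shift
  while [ "$i" -le 254 ]; do
    candidate="$prefix.$i"
    used=no
    for taken in "$@"; do
      if [ "$taken" = "$candidate" ]; then used=yes; fi
    done
    if [ "$used" = no ]; then
      echo "$candidate"
      return 0
    fi
    i=$((i + 1))
  done
  return 1
}

# first_free_ip 10.0.0 10.0.0.1 10.0.0.2   # prints 10.0.0.3
```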

|

||||

|

||||

At this point, hostA should be able to access any containers on 10.0.0.0/24, whether or not the containers are residing on hostA. Pretty neat!

|

||||

|

||||

### Conclusion ###

|

||||

|

||||

As you can see, weave is a pretty useful Docker networking tool. This tutorial only covers a glimpse of [its powerful features][5]. If you are more ambitious, you can try its multi-hop routing, which can be pretty useful in multi-cloud environment, dynamic re-routing, which is a neat fault-tolerance feature, or even its distributed DNS service which allows you to name containers on weave networks. If you decide to use this gem in your environment, feel free to share your use case!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://xmodulo.com/networking-between-docker-containers.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/nanni

|

||||

[1]:https://github.com/zettio/weave

|

||||

[2]:http://xmodulo.com/recommend/dockerbook

|

||||

[3]:http://xmodulo.com/manage-linux-containers-docker-ubuntu.html

|

||||

[4]:http://xmodulo.com/docker-containers-centos-fedora.html

|

||||

[5]:http://zettio.github.io/weave/features.html

|

||||

@ -1,57 +0,0 @@

|

||||

Vic020

|

||||

|

||||

Linux FAQs with Answers--How to configure PCI-passthrough on virt-manager

|

||||

================================================================================

|

||||

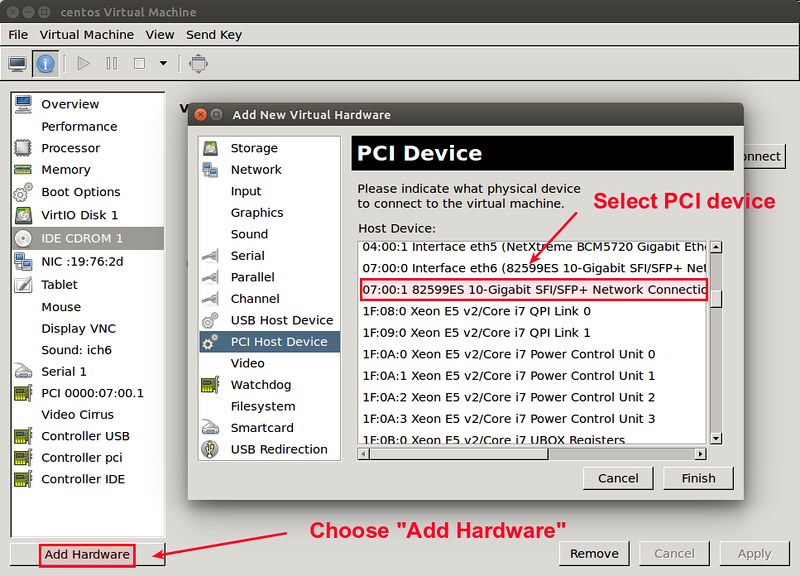

> **Question**: I would like to dedicate a physical network interface card to one of my guest VMs created by KVM. For that, I am trying to enable PCI passthrough of the NIC for the VM. How can I add a PCI device to a guest VM with PCI passthrough on virt-manager?

|

||||

|

||||

Modern hypervisors enable efficient resource sharing among multiple guest operating systems by virtualizing and emulating hardware resources. However, such virtualized resource sharing may not always be desirable, or even should be avoided when VM performance is a great concern, or when a VM requires full DMA control of a hardware device. One technique used in this case is so-called "PCI passthrough," where a guest VM is granted an exclusive access to a PCI device (e.g., network/sound/video card). Essentially, PCI passthrough bypasses the virtualization layer, and directly exposes a PCI device to a VM. No other VM can access the PCI device.

|

||||

|

||||

### Requirement for Enabling PCI Passthrough ###

|

||||

|

||||

If you want to enable PCI passthrough for an HVM guest (e.g., a fully-virtualized VM created by KVM), your system (both CPU and motherboard) must meet the following requirement. If your VM is paravirtualized (created by Xen), you can skip this step.

|

||||

|

||||

In order to enable PCI passthrough for an HVM guest VM, your system must support **VT-d** (for Intel processors) or **AMD-Vi** (for AMD processors). Intel's VT-d ("Intel Virtualization Technology for Directed I/O") is available on most high-end Nehalem processors and its successors (e.g., Westmere, Sandy Bridge, Ivy Bridge). Note that VT-d and VT-x are two independent features. A list of Intel/AMD processors with VT-d/AMD-Vi capability can be found [here][1].

|

||||

|

||||

After you verify that your host hardware supports VT-d/AMD-Vi, you then need to do two things on your system. First, make sure that VT-d/AMD-Vi is enabled in system BIOS. Second, enable IOMMU on your kernel during booting. The IOMMU service, which is provided by VT-d,/AMD-Vi, protects host memory access by a guest VM, and is a requirement for PCI passthrough for fully-virtualized guest VMs.

|

||||

|

||||

To enable IOMMU on the kernel for Intel processors, pass "**intel_iommu=on**" boot parameter on your Linux. Follow [this tutorial][2] to find out how to add a kernel boot parameter via GRUB.
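After rebooting, you can confirm that the parameter actually reached the kernel by inspecting `/proc/cmdline`, which holds the command line the running kernel was booted with. Here is a minimal sketch. The helper `has_intel_iommu` is hypothetical, and it matches only the exact `intel_iommu=on` token used in this article:

```shell
#!/bin/sh
# Return 0 if the given kernel command line contains the intel_iommu=on
# token. Padding with spaces makes the first and last tokens match too.
has_intel_iommu() {
  case " $1 " in
    *" intel_iommu=on "*) return 0 ;;
    *) return 1 ;;
  esac
}

# On the host, after rebooting:
#   has_intel_iommu "$(cat /proc/cmdline)" && echo "intel_iommu=on is set"
```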

|

||||

|

||||

After configuring the boot parameter, reboot your host.

|

||||

|

||||

### Add a PCI Device to a VM on Virt-Manager ###

|

||||

|

||||

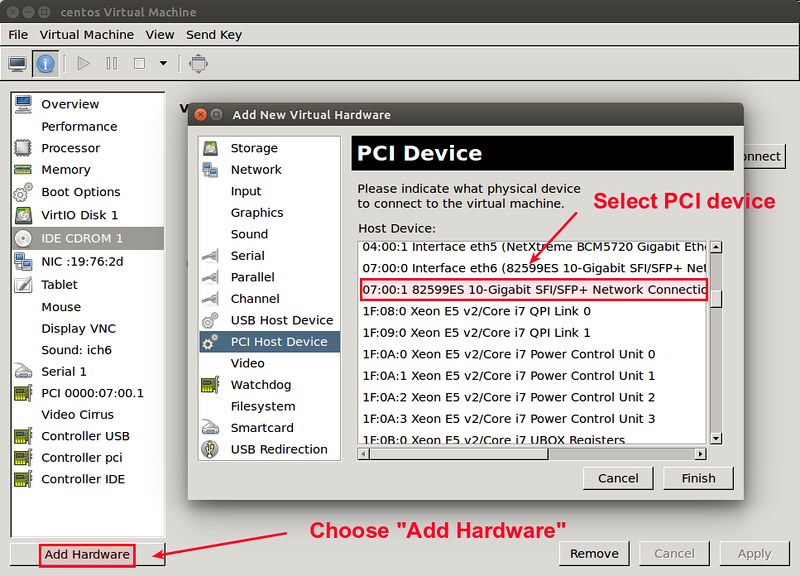

Now we are ready to enable PCI passthrough. In fact, assigning a PCI device to a guest VM is straightforward on virt-manager.

|

||||

|

||||

Open the VM's settings on virt-manager, and click on "Add Hardware" button on the left sidebar.

|

||||

|

||||

Choose a PCI device to assign from a PCI device list, and click on "Finish" button.

|

||||

|

||||

|

||||

|

||||

Finally, power on the guest. At this point, the host PCI device should be directly visible inside the guest VM.

|

||||

|

||||

### Troubleshooting ###

|

||||

|

||||

If you see either of the following errors while powering on a guest VM, the error may be because VT-d (or IOMMU) is not enabled on your host.

|

||||

|

||||

Error starting domain: unsupported configuration: host doesn't support passthrough of host PCI devices

|

||||

|

||||

----------

|

||||

|

||||

Error starting domain: Unable to read from monitor: Connection reset by peer

|

||||

|

||||

Make sure that "**intel_iommu=on**" boot parameter is passed to the kernel during boot as described above.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://ask.xmodulo.com/pci-passthrough-virt-manager.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://ask.xmodulo.com/author/nanni

|

||||

[1]:http://wiki.xenproject.org/wiki/VTdHowTo

|

||||

[2]:http://xmodulo.com/add-kernel-boot-parameters-via-grub-linux.html

|

||||

@ -1,3 +1,5 @@

|

||||

translating by createyuan

|

||||

|

||||

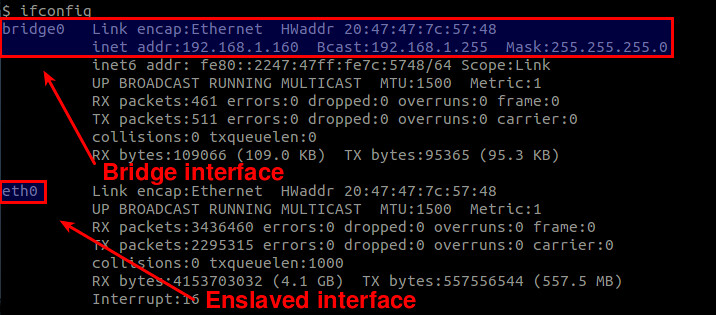

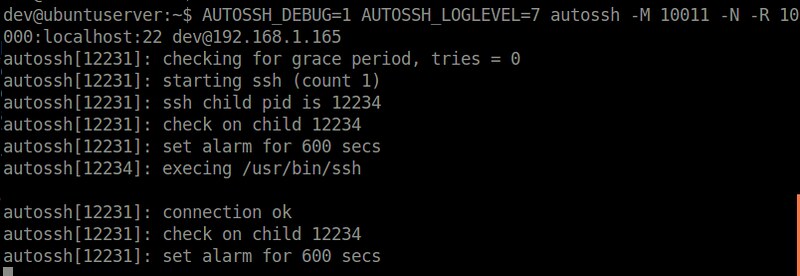

How to access a Linux server behind NAT via reverse SSH tunnel

|

||||

================================================================================

|

||||

You are running a Linux server at home, which is behind a NAT router or restrictive firewall. Now you want to SSH to the home server while you are away from home. How would you set that up? SSH port forwarding will certainly be an option. However, port forwarding can become tricky if you are dealing with multiple nested NAT environments. Besides, it can be interfered with under various ISP-specific conditions, such as restrictive ISP firewalls which block forwarded ports, or carrier-grade NAT which shares IPv4 addresses among users.

|

||||

@ -127,4 +129,4 @@ via: http://xmodulo.com/access-linux-server-behind-nat-reverse-ssh-tunnel.html

|

||||

[a]:http://xmodulo.com/author/nanni

|

||||

[1]:http://xmodulo.com/go/digitalocean

|

||||

[2]:http://xmodulo.com/how-to-enable-ssh-login-without.html

|

||||

[3]:http://ask.xmodulo.com/install-autossh-linux.html

|

||||

|

||||

@ -0,0 +1,40 @@

|

||||

Bodhi Linux Introduces Moksha Desktop

|

||||

================================================================================

|

||||

|

||||

|

||||

Ubuntu-based lightweight Linux distribution [Bodhi Linux][1] is working on a desktop environment of its own. This new desktop environment will be called Moksha (Sanskrit for ‘complete freedom’). Moksha will replace the usual [Enlightenment desktop environment][2].

|

||||

|

||||

### Why Moksha instead of Enlightenment? ###

|

||||

|

||||

Jeff Hoogland of Bodhi Linux [says][3] that he had been unhappy with the newer versions of Enlightenment in the recent past. Up to E17, Enlightenment was very stable and suited the needs of a lightweight Linux OS well, but E18 was so full of bugs that Bodhi Linux skipped it altogether.

|

||||

|

||||

While the latest [Bodhi Linux 3.0.0 release][4] uses E19 (except for the legacy mode, meant for older hardware, which still uses E17), Jeff is not happy with E19 either. He writes:

|

||||

|

||||

> On top of the performance issues, E19 did not allow for me personally to have the same workflow I enjoyed under E17 due to features it no longer had. Because of this I had changed to using the E17 on all of my Bodhi 3 computers – even my high end ones. This got me to thinking how many of our existing Bodhi users felt the same way, so I [opened a discussion about it on our user forums][5].

|

||||

|

||||

### Moksha is a continuation of the E17 desktop ###

|

||||

|

||||

Moksha will be a continuation of Bodhi’s favorite E17 desktop. Jeff further mentions:

|

||||

|

||||

> We will start by integrating all of the Bodhi changes we have simply been patching into the source code over the years and fixing the few issues the desktop has. Once this is done we will begin back porting a few of the more useful features E18 and E19 introduced to the Enlightenment desktop and finally, we will introduce a few new things we think will improve the end user experience.

|

||||

|

||||

### When will Moksha release? ###

|

||||

|

||||

The next update to Bodhi will be Bodhi 3.1.0 in August this year. This new release will ship Moksha on all of its default ISOs. Let’s wait and see whether Moksha turns out to be a good decision.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://itsfoss.com/bodhi-linux-introduces-moksha-desktop/

|

||||

|

||||

Author: [Abhishek][a]

|

||||

Translator: [译者ID](https://github.com/译者ID)

|

||||

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://itsfoss.com/author/abhishek/

|

||||

[1]:http://www.bodhilinux.com/

|

||||

[2]:https://www.enlightenment.org/

|

||||

[3]:http://www.bodhilinux.com/2015/04/28/introducing-the-moksha-desktop/

|

||||

[4]:http://itsfoss.com/bodhi-linux-3/

|

||||

[5]:http://forums.bodhilinux.com/index.php?/topic/12322-e17-vs-e19-which-are-you-using-and-why/

|

||||

@ -0,0 +1,459 @@

|

||||

First Step Guide for Learning Shell Scripting

|

||||

================================================================================

|

||||

|

||||

|

||||

Usually when people say "shell scripting" they have in mind bash, ksh, sh, ash or a similar Linux/Unix scripting language. Scripting is another way to communicate with a computer. With a graphical windowed interface (no matter whether Windows or Linux), the user moves the mouse and clicks on various objects such as buttons, lists, check boxes and so on. But this is a very inconvenient way, which requires the user's participation and accuracy each time he would like to ask the computer / server to do the same tasks (let's say to convert photos or download new movies, mp3s, etc.). To make all these things easily accessible and automated, we can use shell scripts.

|

||||

|

||||

Some programming languages, like Pascal, FoxPro, C and Java, need to be compiled before they can be executed. They need an appropriate compiler to turn our code into something that does the job.

|

||||

|

||||

Other programming languages, like PHP, JavaScript and Visual Basic, do not need a compiler. Instead they need an interpreter, and we can run our program without compiling the code.

|

||||

|

||||

Shell scripts are also interpreted, but they are usually used to call external compiled programs, then capture their output and exit codes and act accordingly.

|

||||

|

||||

One of the most popular shell scripting languages in the Linux world is bash. And I think (this is my own opinion) this is because the bash shell lets the user easily navigate through the command history (previously executed commands) by default, as opposed to ksh, which requires some tuning in .profile or remembering some "magic" key combination to walk through the history and amend commands.

|

||||

|

||||

OK, I think this is enough for an introduction, and I leave it to you to judge which environment is most comfortable for you. From now on I will speak only about bash and scripting. In the following examples I will use CentOS 6.6 and bash-4.1.2. Just make sure you have the same or a later version.

|

||||

|

||||

### Shell Script Streams ###

|

||||

|

||||

Shell scripting is something like a conversation between several persons. Just imagine that all commands are like persons who are able to do something if you ask them properly. Let's say you would like to write a document. First of all you need paper, then you need to dictate the content to someone to write it down, and finally you would like to store it somewhere. Or you would like to build a house, so you ask the appropriate persons to clean up the space. After they say "it's done", other engineers can build the walls for you. And finally, when the engineers also say "it's done", you can ask the painters to paint your house. And what would happen if you asked the painters to paint your walls before they are built? I think they would start to complain. Almost all commands, like persons, can speak: if they did their job without any issues, they speak to "standard output". If they can't do what you are asking, they speak to "standard error". And finally, all commands listen to you through "standard input".

|

||||

|

||||

A quick example: when you open a Linux terminal and type some text, you are speaking to bash through "standard input". So ask the bash shell **who am i**:

|

||||

|

||||

[root@localhost ~]# who am i <--- you are speaking to the bash shell through standard input

|

||||

root pts/0 2015-04-22 20:17 (192.168.1.123) <--- the answer comes back to you through standard output

|

||||

|

||||

Now let's ask something that bash will not understand:

|

||||

|

||||

[root@localhost ~]# blablabla <--- again, you are speaking through standard input

|

||||

-bash: blablabla: command not found <--- bash complains through standard error

|

||||

|

||||

The first word before ":" is usually the command that is complaining to you. Actually, each of these streams has its own index number:

|

||||

|

||||

- standard input (**stdin**) - 0

|

||||

- standard output (**stdout**) - 1

|

||||

- standard error (**stderr**) - 2
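A script can choose which stream it speaks to. By default `echo` writes to stream 1, and the `>&2` redirection sends a line to stream 2 instead. The function `speak` below is a made-up example that says one thing on each stream, so the two can be told apart without needing a failing command:

```shell
#!/bin/bash
# Hypothetical demo function: one line on stdout (1), one on stderr (2)
speak() {
    echo "this goes to stdout"        # file descriptor 1 (the default for echo)
    echo "this goes to stderr" >&2    # >&2 sends this line to file descriptor 2
}

errfile=$(mktemp)

# $( ) captures stream 1 only; stream 2 is redirected into a file
only_out=$(speak 2>"$errfile")
only_err=$(cat "$errfile")

echo "stdout said: $only_out"
echo "stderr said: $only_err"
```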

|

||||

|

||||

If you would really like to know which stream a command used to say something, you need to redirect that speech to a file (put the "greater than" symbol ">" after the command, prefixed with the stream index):

|

||||

|

||||

[root@localhost ~]# blablabla 1> output.txt

|

||||

-bash: blablabla: command not found

|

||||

|

||||

In this example we tried to redirect stream 1 (**stdout**) to a file named output.txt. Let's look at the content of that file. We use the cat command for that:

|

||||

|

||||

[root@localhost ~]# cat output.txt

|

||||

[root@localhost ~]#

|

||||

|

||||

It seems to be empty. OK, now let's try to redirect stream 2 (**stderr**):

|

||||

|

||||

[root@localhost ~]# blablabla 2> error.txt

|

||||

[root@localhost ~]#

|

||||

|

||||

OK, we see that the complaint is gone. Let's check the file:

|

||||

|

||||

[root@localhost ~]# cat error.txt

|

||||

-bash: blablabla: command not found

|

||||

[root@localhost ~]#

|

||||

|

||||

Exactly! We see that all complaints were recorded in the error.txt file.

|

||||

|

||||

Sometimes commands produce **stdout** and **stderr** simultaneously. To redirect them to separate files we can use the following syntax:

|

||||

|

||||

command 1>out.txt 2>err.txt

|

||||

|

||||

To shorten this syntax a bit we can skip the "1", as the **stdout** stream is the one redirected by default:

|

||||

|

||||

command >out.txt 2>err.txt

|

||||

|

||||

OK, let's try to do something "bad": let's remove file1 and folder1 with the rm command:

|

||||

|

||||

[root@localhost ~]# rm -vf folder1 file1 > out.txt 2>err.txt

|

||||

|

||||

Now check our output files:

|

||||

|

||||

[root@localhost ~]# cat out.txt

|

||||

removed `file1'

|

||||

[root@localhost ~]# cat err.txt

|

||||

rm: cannot remove `folder1': Is a directory

|

||||

[root@localhost ~]#

|

||||

|

||||

As we see, the streams were separated into different files. Sometimes this is not handy, as usually we want to see the sequence in which the errors appeared: before or after some actions. For that we can redirect both streams to the same file:

|

||||

|

||||

command >>out_err.txt 2>>out_err.txt

|

||||

|

||||

Note: Please notice that I use ">>" instead of ">". It appends to the file instead of overwriting it.
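The difference between the two operators is easy to check with a throwaway file (created with `mktemp`, so nothing in the current folder is touched):

```shell
#!/bin/bash
log=$(mktemp)

echo "first run"  > "$log"   # '>' truncates the file, then writes
echo "second run" > "$log"   # truncated again: only "second run" remains
echo "third run" >> "$log"   # '>>' appends after the existing content

cat "$log"                   # shows "second run" followed by "third run"
```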

|

||||

|

||||

We can also redirect one stream to another:

|

||||

|

||||

command >out_err.txt 2>&1

|

||||

|

||||

Let me explain. All stdout of the command will be redirected to out_err.txt. The error output (stream 2) will be redirected to the 1st stream, which (as I explained above) is redirected to the same file. Let's see an example:

|

||||

|

||||

[root@localhost ~]# rm -fv folder2 file2 >out_err.txt 2>&1

|

||||

[root@localhost ~]# cat out_err.txt

|

||||

rm: cannot remove `folder2': Is a directory

|

||||

removed `file2'

|

||||

[root@localhost ~]#

|

||||

|

||||

Looking at the combined output, we can see that the **rm** command first tried to remove folder2 and did not succeed, as Linux requires the **-r** option for the **rm** command to remove folders. Second, file2 was removed. By providing the **-v** (verbose) option to the **rm** command, we ask rm to inform us about each removed file or folder.
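One detail is easy to miss: the order of the redirections matters. The shell processes them from left to right, and `2>&1` makes stream 2 point wherever stream 1 points at that moment. In the sketch below (the `speak` function is a made-up helper), only the first form sends both lines to the file. In the second form stderr was copied from the terminal before stdout was moved, so the error line still appears on the screen.

```shell
#!/bin/bash
# Hypothetical helper that writes one line to each stream
speak() {
    echo "normal output"
    echo "an error" >&2
}

dir=$(mktemp -d)

# Usual order: stdout goes to the file first, then stderr copies stdout
speak >"$dir/both.txt" 2>&1

# Reversed order: stderr copies the *current* stdout (the terminal),
# and only afterwards is stdout sent to the file
speak 2>&1 >"$dir/only_out.txt"

echo "--- both.txt ---";     cat "$dir/both.txt"
echo "--- only_out.txt ---"; cat "$dir/only_out.txt"
```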

|

||||

|

||||

This is almost all you need to know about redirection. I say almost, because there is one more very important redirection, called "piping". With the | (pipe) symbol we usually redirect the **stdout** stream.

|

||||

|

||||

Let's say we have this text file:

|

||||

|

||||

[root@localhost ~]# cat text_file.txt

|

||||

This line does not contain H e l l o word

|

||||

This lilne contains Hello

|

||||

This also containd Hello

|

||||

This one no due to HELLO all capital

|

||||

Hello bash world!

|

||||

|

||||

and we need to find the lines in it that contain the word "Hello". Linux has the **grep** command for that:

|

||||

|

||||

[root@localhost ~]# grep Hello text_file.txt

|

||||

This lilne contains Hello

|

||||

This also containd Hello

|

||||

Hello bash world!

|

||||

[root@localhost ~]#
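Note that `grep` is case-sensitive, which is why the line with HELLO in capitals is not matched. Two standard options are handy here: `-i` ignores case, and `-c` prints the number of matching lines instead of the lines themselves. The sketch below recreates the sample file in a temporary location (same contents as above):

```shell
#!/bin/bash
file=$(mktemp)
cat >"$file" <<'EOF'
This line does not contain H e l l o word
This lilne contains Hello
This also containd Hello
This one no due to HELLO all capital
Hello bash world!
EOF

grep -c Hello "$file"      # case-sensitive count: prints 3
grep -ci hello "$file"     # -i ignores case, so HELLO counts too: prints 4
```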

|

||||

|

||||

This is fine when we have a file and would like to search in it. But what do we do if we need to find something in the output of another command? Of course, we can redirect the output to a file and then look in it:

|

||||

|

||||

[root@localhost ~]# fdisk -l>fdisk.out

|

||||

[root@localhost ~]# grep "Disk /dev" fdisk.out

|

||||

Disk /dev/sda: 8589 MB, 8589934592 bytes

|

||||

Disk /dev/mapper/VolGroup-lv_root: 7205 MB, 7205814272 bytes

|

||||

Disk /dev/mapper/VolGroup-lv_swap: 855 MB, 855638016 bytes

|

||||

[root@localhost ~]#

|

||||

|

||||

If you are going to grep for something that contains white space, enclose it in " quotes!
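The quotes matter because the shell splits an unquoted command line on white space before grep ever sees it. Without quotes, `grep Disk /dev fdisk.out` would search for the word `Disk` in two files, `/dev` and `fdisk.out`. A tiny made-up function that only counts its arguments shows the difference:

```shell
#!/bin/bash
# Hypothetical helper: print how many arguments it received
count_args() {
    echo "$#"
}

count_args Disk /dev fdisk.out      # prints 3: the pattern was split in two
count_args "Disk /dev" fdisk.out    # prints 2: the quoted pattern stays one argument
```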

|

||||

|

||||

Note: the fdisk command shows information about the disk drives and their partitions on Linux.

|

||||

|

||||

As we see, this way is not very handy, as we will soon clutter the space with temporary files. For that we can use pipes. They allow us to redirect one command's **stdout** to another command's **stdin** stream:

|

||||

|

||||

[root@localhost ~]# fdisk -l | grep "Disk /dev"

|

||||

Disk /dev/sda: 8589 MB, 8589934592 bytes

|

||||

Disk /dev/mapper/VolGroup-lv_root: 7205 MB, 7205814272 bytes

|

||||

Disk /dev/mapper/VolGroup-lv_swap: 855 MB, 855638016 bytes

|

||||

[root@localhost ~]#

|

||||

|

||||

As we see, we get the same result without any temporary files. We have redirected the **fdisk stdout** to the **grep stdin**.

|

||||

|

||||

**Note**: Pipe redirection always goes from left to right.
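A pipe only carries **stdout**; messages on **stderr** still go straight to the terminal. To send both streams through a pipe, combine the `2>&1` redirection described earlier with `|` (bash 4 and later also accept `|&` as a shorthand). A short sketch with a made-up `speak` function:

```shell
#!/bin/bash
# Hypothetical helper that writes one line to each stream
speak() {
    echo "normal output"
    echo "an error" >&2
}

# Only stdout enters the pipe; "an error" is printed directly to the terminal
speak | grep -c .

# Both streams enter the pipe, so grep counts two lines
speak 2>&1 | grep -c .
```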

|

||||

|

||||

There are several other redirections, but we will talk about them later.

|

||||

|

||||

### Displaying custom messages in the shell ###

|

||||

|

||||

As we already know, communication with and within the shell usually takes the form of a dialog. So let's create a real script that will also speak with us. It will allow you to learn some simple commands and better understand the scripting concept.

|

||||

|

||||

Imagine we are working in some company as a help desk manager and we would like to create a shell script to register call information: phone number, user name and a brief description of the issue. We are going to store it in the plain text file data.txt for future statistics. The script itself should work as a dialog to make life easy for help desk workers. So first of all we need to display the questions. For displaying messages there are the echo and printf commands. Both of them display messages, but printf is more powerful, as we can nicely format the output to align it to the right or left, or leave dedicated space for the message. Let's start with a simple one. Use your favorite text editor (kate, nano, vi, ...) to create a file named note.sh with this command inside:

|

||||

|

||||

echo "Phone number ?"

|

||||

|

||||

### Script execution ###

|

||||

|

||||

After you have saved the file, we can run it with the bash command by providing our file as an argument:

|

||||

|

||||

[root@localhost ~]# bash note.sh

|

||||

Phone number ?

|

||||

|

||||

Actually, this way of executing a script is not handy. It would be more comfortable to just execute the script without the **bash** command as a prefix. To make it executable we can use the **chmod** command:

|

||||

|

||||

[root@localhost ~]# ls -la note.sh

|

||||

-rw-r--r--. 1 root root 22 Apr 23 20:52 note.sh

|

||||

[root@localhost ~]# chmod +x note.sh

|

||||

[root@localhost ~]# ls -la note.sh

|

||||

-rwxr-xr-x. 1 root root 22 Apr 23 20:52 note.sh

|

||||

[root@localhost ~]#

|

||||

|

||||

|

||||

|

||||

**Note**: The ls command displays the files in the current folder. With the -la options it shows a long listing (permissions, owner, size, date) that also includes hidden files.

|

||||

|

||||

As we see, before the **chmod** command was executed, the script had only read (r) and write (w) permissions. After **chmod +x** it got execute (x) permissions. (I am going to describe permissions in more detail in the next article.) Now we can simply run it:

|

||||

|

||||

[root@localhost ~]# ./note.sh

|

||||

Phone number ?

|

||||

|

||||

Before the script name I have added the ./ combination. The . (dot) in the Unix world means the current position (current folder), and the / (slash) is the folder separator. (In Windows we use \ (backslash) for the same purpose.) So this whole combination means: "from the current folder, execute the note.sh script". I think it will be clearer if I run this script with its full path:

|

||||

|

||||

[root@localhost ~]# /root/note.sh

|

||||

Phone number ?

|

||||

[root@localhost ~]#

|

||||

|

||||

It also works.

|

||||

|

||||

Everything would be fine if all Linux users had the same default shell. If we simply execute this script as it is, the shell we start it from will typically be used to parse the script content and run the commands. Different shells have slightly different syntax, internal commands, etc. So to guarantee that **bash** is used for our script, we should add **#!/bin/bash** as the first line. When the script is executed, the system reads this line and starts **/bin/bash**, which then executes the following commands in the script:

|

||||

|

||||

[root@localhost ~]# cat note.sh

|

||||

#!/bin/bash

|

||||

echo "Phone number ?"

|

||||

|

||||

Now we can be sure that **bash** will be used to parse our script content whenever the script is executed directly (running it as `sh note.sh` would still ignore this line). Let's move on.

|

||||

|

||||

### Reading the inputs ###

|

||||

|

||||

After displaying the message, the script should wait for an answer from the user. The **read** command does exactly that:

|

||||

|

||||

#!/bin/bash

|

||||

echo "Phone number ?"

|

||||

read phone

|

||||

|

||||

When executed, the script will wait for user input until the [ENTER] key is pressed:

|

||||

|

||||

[root@localhost ~]# ./note.sh

|

||||

Phone number ?

|

||||

12345 <--- here is my input

|

||||

[root@localhost ~]#

|

||||

|

||||

Everything you have entered will be stored in the variable **phone**. To display the value of the variable we can use the same **echo** command:

|

||||

|

||||

[root@localhost ~]# cat note.sh

|

||||

#!/bin/bash

|

||||

echo "Phone number ?"

|

||||

read phone

|

||||

echo "You have entered $phone as a phone number"

|

||||

[root@localhost ~]# ./note.sh

|

||||

Phone number ?

|

||||

123456

|

||||

You have entered 123456 as a phone number

|

||||

[root@localhost ~]#

|

||||

|

||||

In the **bash** shell we use the **$** (dollar) sign to refer to a variable's value, except when reading into a variable and in a few other cases (I will describe them later).
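Two small habits make `read` and variables more robust: `read -r` keeps backslashes in the input literally, and putting the variable inside double quotes (`"$phone"`) stops the shell from splitting the value on spaces. Braces (`${phone}`) are the long form of `$phone`, useful when text follows the name directly. The sketch below feeds `read` from a here-string (`<<<`) instead of the keyboard, so it runs without typing anything:

```shell
#!/bin/bash
# Feed input from a here-string instead of typing it at the keyboard
read -r phone <<< "123456"
echo "You have entered $phone as a phone number"

# -r keeps the backslash; without -r, read would remove it
read -r issue <<< 'C:\temp is full'
echo "Issue: $issue"

# Braces separate the variable name from the text that follows it
echo "Saved to ${phone}_call.txt"
```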

|

||||

|

||||

OK, now we are ready to add the remaining questions:

|

||||

|

||||

#!/bin/bash

|

||||

echo "Phone number?"

|

||||

read phone

|

||||

echo "Name?"

|

||||

read name

|

||||

echo "Issue?"

|

||||

read issue

|

||||

[root@localhost ~]# ./note.sh

|

||||

Phone number?

|

||||

123

|

||||

Name?

|

||||

Jim

|

||||

Issue?

|

||||

script is not working.

|

||||

[root@localhost ~]#

|

||||

|

||||

### Using stream redirection ###

|

||||

|

||||

Perfect! All that is left is to redirect everything to the file data.txt. We are going to use the / (slash) symbol as a field separator.

|

||||

|

||||

**Note**: You can choose any separator you think is best, but make sure the content will not contain that symbol. Otherwise it will create extra fields in the line.
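Here is what goes wrong when the separator appears in the data. In the sketch below the record is made up, and the standard `cut` command is used with `-d` to set the delimiter and `-f` to pick a field. The issue text contains a slash, so reading only field 3 loses everything after it:

```shell
#!/bin/bash
# A made-up record whose issue text itself contains the "/" separator
record="987/Jimmy/Keyboard and/or mouse broken"

echo "$record" | cut -d"/" -f3    # prints "Keyboard and": the issue was cut in half
echo "$record" | cut -d"/" -f3-   # "3-" means field 3 to the end of the line
```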

|

||||

|

||||

Do not forget to use ">>" instead of ">", as we would like to append the output to the end of the file!

|

||||

|

||||

[root@localhost ~]# tail -2 note.sh

|

||||

read issue

|

||||

echo "$phone/$name/$issue">>data.txt

|

||||

[root@localhost ~]# ./note.sh

|

||||

Phone number?

|

||||

987

|

||||

Name?

|

||||

Jimmy

|

||||

Issue?

|

||||

Keybord issue.

|

||||

[root@localhost ~]# cat data.txt

|

||||

987/Jimmy/Keybord issue.

|

||||

[root@localhost ~]#

|

||||

|

||||

**Note**: The **tail** command displays the last lines of a file; **-2** (short for **-n 2**) shows the last two.

|

||||

|

||||

Bingo. Let's run it once again:

|

||||

|

||||

[root@localhost ~]# ./note.sh

|

||||

Phone number?

|

||||

556

|

||||

Name?

|

||||

Janine

|

||||

Issue?

|

||||

Mouse was broken.

|

||||

[root@localhost ~]# cat data.txt

|

||||

987/Jimmy/Keybord issue.

|

||||

556/Janine/Mouse was broken.

|

||||

[root@localhost ~]#

|

||||

|

||||

Our file is growing. Let's add the date at the front of each line. This will be useful later when working with the data to calculate statistics. For that we can use the date command and give it a format, as I do not like the default one:

|

||||

|

||||

[root@localhost ~]# date

|

||||

Thu Apr 23 21:33:14 EEST 2015 <---- default output of the date command

|

||||

[root@localhost ~]# date "+%Y.%m.%d %H:%M:%S"

|

||||

2015.04.23 21:33:18 <---- formatted output

|

||||

|

||||

There are several ways to read command output into a variable. In this simple situation we will use ` (backquotes):

|

||||

|

||||

[root@localhost ~]# cat note.sh

|

||||

#!/bin/bash

|

||||

now=`date "+%Y.%m.%d %H:%M:%S"`

|

||||

echo "Phone number?"

|

||||

read phone

|

||||

echo "Name?"

|

||||

read name

|

||||

echo "Issue?"

|

||||

read issue

|

||||

echo "$now/$phone/$name/$issue">>data.txt

|

||||

[root@localhost ~]# ./note.sh

|

||||

Phone number?

|

||||

123

|

||||

Name?

|

||||

Jim

|

||||

Issue?

|

||||

Script hanging.

|

||||

[root@localhost ~]# cat data.txt

|

||||

2015.04.23 21:38:56/123/Jim/Script hanging.

|

||||

[root@localhost ~]#
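Bash also supports the `$( ... )` form of command substitution. It does the same job as backquotes but is easier to read and can be nested without extra escaping. The line below is an equivalent of the backquote version used in the script:

```shell
#!/bin/bash
# Same result as: now=`date "+%Y.%m.%d %H:%M:%S"`
now=$(date "+%Y.%m.%d %H:%M:%S")
echo "$now"

# Nesting works without escaping, e.g. the name of the current directory
here=$(basename "$(pwd)")
echo "$here"
```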

|

||||

|

||||

Hmmm... Our script looks a bit ugly. Let's prettify it a bit. If you read the manual for the **read** command, you will find that read can also display a message. For this we use the -p option followed by the message:

|

||||

|

||||

[root@localhost ~]# cat note.sh

|

||||

#!/bin/bash

|

||||

now=`date "+%Y.%m.%d %H:%M:%S"`

|

||||

read -p "Phone number: " phone

|

||||

read -p "Name: " name

|

||||

read -p "Issue: " issue

|

||||

echo "$now/$phone/$name/$issue">>data.txt

|

||||

|

||||

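You can find a lot of interesting information about each command directly from the console. Just type: **man read, man echo, man date, man ....** For bash built-in commands such as **read**, **help read** shows the built-in's own documentation.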

|

||||

|

||||

You will agree it looks much better!

|

||||

|

||||

[root@localhost ~]# ./note.sh

|

||||

Phone number: 321

|

||||

Name: Susane

|

||||

Issue: Mouse was stolen

|

||||

[root@localhost ~]# cat data.txt

|

||||

2015.04.23 21:38:56/123/Jim/Script hanging.

|

||||

2015.04.23 21:43:50/321/Susane/Mouse was stolen

|

||||

[root@localhost ~]#

|

||||

|

||||

And the cursor stays right after the message (not on a new line), which makes more sense.

|

||||

### Loop ###

|

||||

|

||||

Time to improve our script. If a user handles calls all day, it is not very handy to run it each time. Let's put all these actions in a never-ending loop:

|

||||

|

||||

[root@localhost ~]# cat note.sh

|

||||

#!/bin/bash

|

||||

while true

|

||||

do

|

||||

read -p "Phone number: " phone

|

||||

now=`date "+%Y.%m.%d %H:%M:%S"`

|

||||

read -p "Name: " name

|

||||

read -p "Issue: " issue

|

||||

echo "$now/$phone/$name/$issue">>data.txt

|

||||

done

|

||||

|

||||

I have swapped the **read phone** and **now=** lines. This is because I would like to get the time right after the phone number is entered. If I had left **now=** as the first line in the loop, the variable would get the time right after the previous record was stored in the file. That is not good, as the next call could come 20 minutes later or so.

|

||||

|

||||

[root@localhost ~]# ./note.sh

|

||||

Phone number: 123

|

||||

Name: Jim

|

||||

Issue: Script still not works.

|

||||

Phone number: 777

|

||||

Name: Daniel

|

||||

Issue: I broke my monitor

|

||||

Phone number: ^C

|

||||

[root@localhost ~]# cat data.txt

|

||||

2015.04.23 21:38:56/123/Jim/Script hanging.

|

||||

2015.04.23 21:43:50/321/Susane/Mouse was stolen

|

||||

2015.04.23 21:47:55/123/Jim/Script still not works.

|

||||

2015.04.23 21:48:16/777/Daniel/I broke my monitor

|

||||

[root@localhost ~]#

|

||||

|

||||

NOTE: To exit the never-ending loop, press the [Ctrl]+[C] keys. The shell displays ^ for the Ctrl key.
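Instead of interrupting the script, you can let the loop stop at the end of input: `read` returns a non-zero status at end of file, so using it as the loop condition ends the loop when you press [Ctrl]+[D] on an empty prompt. The sketch below is a variation of the script, not the author's version; the function name `take_calls` and its file argument are assumptions. Because `read -p` only shows its prompt when input comes from a terminal, the same function can also be fed from a pipe for a quick test.

```shell
#!/bin/bash
# Hypothetical variant of note.sh: append one record per call to the
# file given as $1, and stop cleanly at end of input ([Ctrl]+[D]).
take_calls() {
    local datafile="$1" phone name issue now
    while read -r -p "Phone number: " phone; do
        now=$(date "+%Y.%m.%d %H:%M:%S")
        read -r -p "Name: " name || break
        read -r -p "Issue: " issue || break
        echo "$now/$phone/$name/$issue" >> "$datafile"
    done
}

# Usage: interactive (press Ctrl+D at "Phone number:" to finish)
#   take_calls data.txt
# Or non-interactive:
#   printf '123\nJim\nScript hanging.\n' | take_calls data.txt
```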

|

||||

|

||||

### Using pipe redirection ###

|

||||

|

||||

Let's add more functionality to our "Frankenstein". I would like the script to display some statistics after each call. Let's say we want to see how many times each number has called us. For that we should cat the data.txt file:

|

||||

|

||||

[root@localhost ~]# cat data.txt

|

||||

2015.04.23 21:38:56/123/Jim/Script hanging.

|

||||

2015.04.23 21:43:50/321/Susane/Mouse was stolen

|

||||

2015.04.23 21:47:55/123/Jim/Script still not works.

|

||||

2015.04.23 21:48:16/777/Daniel/I broke my monitor

|

||||

2015.04.23 22:02:14/123/Jimmy/New script also not working!!!

|

||||

[root@localhost ~]#

|

||||

|

||||

Now we can redirect all this output to the **cut** command to cut each line into chunks (our delimiter is "/") and print the second field:

|

||||

|

||||

[root@localhost ~]# cat data.txt | cut -d"/" -f2

|

||||

123

|

||||

321

|

||||

123

|

||||

777

|

||||

123

|

||||

[root@localhost ~]#

|

||||

|

||||

Now we can redirect this output to another command, **sort**:

|

||||

|

||||

[root@localhost ~]# cat data.txt | cut -d"/" -f2|sort

|

||||

123

|

||||

123

|

||||

123

|

||||

321

|

||||

777

|

||||

[root@localhost ~]#

|

||||

|

||||

Then we can pipe it to **uniq** to leave only unique lines. To count the unique entries, just add the **-c** option to the **uniq** command:

|

||||

|

||||

[root@localhost ~]# cat data.txt | cut -d"/" -f2 | sort | uniq -c

|

||||

3 123

|

||||

1 321

|

||||

1 777

|

||||

[root@localhost ~]#
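Two small refinements are common here: `cut` can read the file directly, so the leading `cat` is not needed, and a final `sort -rn` (reverse numeric) puts the most frequent caller at the top. A sketch on a temporary copy of the sample data shown above:

```shell
#!/bin/bash
data=$(mktemp)
cat >"$data" <<'EOF'
2015.04.23 21:38:56/123/Jim/Script hanging.
2015.04.23 21:43:50/321/Susane/Mouse was stolen
2015.04.23 21:47:55/123/Jim/Script still not works.
2015.04.23 21:48:16/777/Daniel/I broke my monitor
2015.04.23 22:02:14/123/Jimmy/New script also not working!!!
EOF

# Field 2 is the phone number; count each number, busiest caller first
cut -d"/" -f2 "$data" | sort | uniq -c | sort -rn
```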

|

||||

|

||||

Just add this to the end of our loop:

|

||||

|

||||

#!/bin/bash

|

||||

while true

|

||||

do

|

||||

read -p "Phone number: " phone

|

||||

now=`date "+%Y.%m.%d %H:%M:%S"`

|

||||

read -p "Name: " name

|

||||

read -p "Issue: " issue

|

||||

echo "$now/$phone/$name/$issue">>data.txt

|

||||

echo "===== We got calls from ====="

|

||||

cat data.txt | cut -d"/" -f2 | sort | uniq -c

|

||||

echo "--------------------------------"

|

||||

done

|

||||

|

||||

Run it:

|

||||

|

||||

[root@localhost ~]# ./note.sh

|

||||

Phone number: 454

|

||||

Name: Malini

|

||||

Issue: Windows license expired.

|

||||

===== We got calls from =====

|

||||

3 123

|

||||

1 321

|

||||

1 454

|

||||

1 777

|

||||

--------------------------------

|

||||

Phone number: ^C

|

||||

|

||||

|

||||

|

||||

The current scenario goes through these well-known steps:

|

||||

|

||||

- Display message

|

||||

- Get user input

|

||||

- Store values to the file

|

||||

- Do something with stored data

|

||||

|

||||

But what if the user has several responsibilities and sometimes needs to input data, sometimes to calculate statistics, or maybe to find something in the stored data? For that we need to implement switches / cases. In the next article I will show you how to use them and how to nicely format the output. This is useful when "drawing" tables in the shell.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://linoxide.com/linux-shell-script/guide-start-learning-shell-scripting-scratch/

|

||||

|

||||

Author: [Petras Liumparas][a]

|

||||

Translator: [译者ID](https://github.com/译者ID)

|

||||

Proofreader: [校对者ID](https://github.com/校对者ID)

|

||||

|

||||

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

|

||||

|

||||

[a]:http://linoxide.com/author/petrasl/

|

||||

@ -0,0 +1,170 @@

|

||||

Translating by wwy-hust

|

||||

|

||||

|

||||

How to Securely Store Passwords and API Keys Using Vault

|

||||

================================================================================

|

||||

Vault is a tool used to access secret information securely, whether it is a password, an API key, a certificate or anything else. Vault provides a unified interface to secret information through a strong access control mechanism and extensive logging of events.

|

||||

|

||||

Granting access to critical information is quite a difficult problem when we have multiple roles, and individuals across different roles require various critical information, such as login details for databases with different privileges, API keys for external services, credentials for service-oriented architecture communication, etc. The situation gets even worse when access to secret information is managed across different platforms with custom settings, so rolling credentials, storing them securely and managing the audit logs becomes almost impossible. Vault provides a solution to such a complex situation.

|

||||

|

||||

### Salient Features ###

|

||||

|

||||

**Data Encryption**: Vault can encrypt and decrypt data without having to store it. Developers can store encrypted data without developing their own encryption techniques, and security teams can define the security parameters.

|

||||

|

||||

**Secure Secret Storage**: Vault encrypts the secret information (API keys, passwords or certificates) before writing it to persistent (secondary) storage. So even if somebody gets access to the stored information by chance, it will be of no use unless it is decrypted.

|

||||

|

||||

**Dynamic Secrets**: Secrets are generated on demand for systems like AWS and SQL databases. If an application needs to access an S3 bucket, for instance, it requests an AWS key pair from Vault, which grants the required secret information along with a lease time. The secret information stops working once the lease time has expired.

|

||||

|

||||

**Leasing and Renewal**: Vault grants secrets with a lease limit and revokes them as soon as the lease expires; the lease can be renewed through the APIs if required.

|

||||

|

||||

**Revocation**: When the lease period expires, Vault can revoke a single secret or a tree of secrets.

|

||||

|

||||

### Installing Vault ###

|

||||

|

||||

There are two ways to use Vault.

|

||||

|

||||

**1. Pre-compiled Vault Binary** can be downloaded for all Linux flavors from the following sources. Once downloaded, unzip it and place it in a directory on the system PATH where other binaries are kept, so that it can be invoked easily.

|

||||

|

||||

- [Download Precompiled Vault Binary (32-bit)][1]

|

||||

- [Download Precompiled Vault Binary (64-bit)][2]

|

||||

- [Download Precompiled Vault Binary (ARM)][3]

|