mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-13 22:30:37 +08:00

commit

259425c35e

@ -1,8 +1,11 @@

|

|||||||

修复Linux中的提供最小化类BASH命令行编辑GRUB错误

|

修复Linux中的“提供类似行编辑的袖珍BASH...”的GRUB错误

|

||||||

================================================================================

|

================================================================================

|

||||||

|

|

||||||

这两天我[安装了Elementary OS和Windows双系统][1],在启动的时候遇到了一个Grub错误。命令行中呈现如下信息:

|

这两天我[安装了Elementary OS和Windows双系统][1],在启动的时候遇到了一个Grub错误。命令行中呈现如下信息:

|

||||||

|

|

||||||

**提供最小化类BASH命令行编辑。对于第一个词,TAB键补全可以使用的命令。除此之外,TAB键补全可用的设备或文件。**

|

**Minimal BASH like line editing is supported. For the first word, TAB lists possible command completions. anywhere else TAB lists possible device or file completions.**

|

||||||

|

|

||||||

|

**提供类似行编辑的袖珍 BASH。TAB键补全第一个词,列出可以使用的命令。除此之外,TAB键补全可以列出可用的设备或文件。**

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

@ -10,7 +13,7 @@

|

|||||||

|

|

||||||

通过这篇文章里我们可以学到基于Linux系统**如何修复Ubuntu中出现的“minimal BASH like line editing is supported” Grub错误**。

|

通过这篇文章里我们可以学到基于Linux系统**如何修复Ubuntu中出现的“minimal BASH like line editing is supported” Grub错误**。

|

||||||

|

|

||||||

> 你可以参阅这篇教程来修复类似的高频问题,[错误:分区未找到Linux grub救援模式][3]。

|

> 你可以参阅这篇教程来修复类似的常见问题,[错误:分区未找到Linux grub救援模式][3]。

|

||||||

|

|

||||||

### 先决条件 ###

|

### 先决条件 ###

|

||||||

|

|

||||||

@ -19,11 +22,11 @@

|

|||||||

- 一个包含相同版本、相同OS的LiveUSB或磁盘

|

- 一个包含相同版本、相同OS的LiveUSB或磁盘

|

||||||

- 当前会话的Internet连接正常工作

|

- 当前会话的Internet连接正常工作

|

||||||

|

|

||||||

在确认了你拥有先决条件了之后,让我们看看如何修复Linux的死亡黑屏(如果我可以这样的称呼它的话;))。

|

在确认了你拥有先决条件了之后,让我们看看如何修复Linux的死亡黑屏(如果我可以这样的称呼它的话 ;) )。

|

||||||

|

|

||||||

### 如何在基于Ubuntu的Linux中修复“minimal BASH like line editing is supported” Grub错误 ###

|

### 如何在基于Ubuntu的Linux中修复“minimal BASH like line editing is supported” Grub错误 ###

|

||||||

|

|

||||||

我知道你一定疑问这种Grub错误并不局限于在基于Ubuntu的Linux发行版上发生,那为什么我要强调在基于Ubuntu的发行版上呢?原因是,在这里我们将采用一个简单的方法并叫作**Boot Repair**的工具来修复我们的问题。我并不确定在其他的诸如Fedora的发行版中是否有这个工具可用。不再浪费时间,我们来看如何修复minimal BASH like line editing is supported Grub错误。

|

我知道你一定疑问这种Grub错误并不局限于在基于Ubuntu的Linux发行版上发生,那为什么我要强调在基于Ubuntu的发行版上呢?原因是,在这里我们将采用一个简单的方法,用个叫做**Boot Repair**的工具来修复我们的问题。我并不确定在其他的诸如Fedora的发行版中是否有这个工具可用。不再浪费时间,我们来看如何修复“minimal BASH like line editing is supported” Grub错误。

|

||||||

|

|

||||||

### 步骤 1: 引导进入lives会话 ###

|

### 步骤 1: 引导进入lives会话 ###

|

||||||

|

|

||||||

@ -75,7 +78,7 @@ via: http://itsfoss.com/fix-minimal-bash-line-editing-supported-grub-error-linux

|

|||||||

|

|

||||||

作者:[Abhishek][a]

|

作者:[Abhishek][a]

|

||||||

译者:[martin2011qi](https://github.com/martin2011qi)

|

译者:[martin2011qi](https://github.com/martin2011qi)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

177

published/20150616 LINUX 101--POWER UP YOUR SHELL.md

Normal file

177

published/20150616 LINUX 101--POWER UP YOUR SHELL.md

Normal file

@ -0,0 +1,177 @@

|

|||||||

|

LINUX 101: 让你的 SHELL 更强大

|

||||||

|

================================================================================

|

||||||

|

> 在我们的关于 shell 基础的指导下, 得到一个更灵活,功能更强大且多彩的命令行界面

|

||||||

|

|

||||||

|

**为何要这样做?**

|

||||||

|

|

||||||

|

- 使得在 shell 提示符下过得更轻松,高效

|

||||||

|

- 在失去连接后恢复先前的会话

|

||||||

|

- Stop pushing around that fiddly rodent!

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

这是我的命令行提示符的设置。对于这个小的终端窗口来说,这或许有些长。但你可以根据你的喜好来调整它。

|

||||||

|

|

||||||

|

作为一个 Linux 用户, 你可能熟悉 shell (又名为命令行)。 或许你需要时不时的打开终端来完成那些不能在 GUI 下处理的必要任务,抑或是因为你处在一个将窗口铺满桌面的环境中,而 shell 是你与你的 linux 机器交互的主要方式。

|

||||||

|

|

||||||

|

在上面那些情况下,你可能正在使用你所使用的发行版本自带的 Bash 配置。 尽管对于大多数的任务而言,它足够好了,但它可以更加强大。 在本教程中,我们将向你展示如何使得你的 shell 提供更多有用信息、更加实用且更适合工作。 我们将对提示符进行自定义,让它比默认情况下提供更好的反馈,并向你展示如何使用炫酷的 `tmux` 工具来管理会话并同时运行多个程序。 并且,为了让眼睛舒服一点,我们还将关注配色方案。那么,进击吧,少女!

|

||||||

|

|

||||||

|

### 让提示符更美妙 ###

|

||||||

|

|

||||||

|

大多数的发行版本配置有一个非常简单的提示符,它们大多向你展示了一些基本信息, 但提示符可以为你提供更多的内容。例如,在 Debian 7 下,默认的提示符是这样的:

|

||||||

|

|

||||||

|

mike@somebox:~$

|

||||||

|

|

||||||

|

上面的提示符展示出了用户、主机名、当前目录和账户类型符号(假如你切换到 root 账户, **$** 会变为 **#**)。 那这些信息是在哪里存储的呢? 答案是:在 **PS1** 环境变量中。 假如你键入 `echo $PS1`, 你将会在这个命令的输出字符串的最后有如下的字符:

|

||||||

|

|

||||||

|

\u@\h:\w$

|

||||||

|

|

||||||

|

这看起来有一些丑陋,并在瞥见它的第一眼时,你可能会开始尖叫,认为它是令人恐惧的正则表达式,但我们不打算用这些复杂的字符来煎熬我们的大脑。这不是正则表达式,这里的斜杠是转义序列,它告诉提示符进行一些特别的处理。 例如,上面的 **u** 部分,告诉提示符展示用户名, 而 w 则展示工作路径.

|

||||||

|

|

||||||

|

下面是一些你可以在提示符中用到的字符的列表:

|

||||||

|

|

||||||

|

- d 当前的日期

|

||||||

|

- h 主机名

|

||||||

|

- n 代表换行的字符

|

||||||

|

- A 当前的时间 (HH:MM)

|

||||||

|

- u 当前的用户

|

||||||

|

- w (小写) 整个工作路径的全称

|

||||||

|

- W (大写) 工作路径的简短名称

|

||||||

|

- $ 一个提示符号,对于 root 用户为 # 号

|

||||||

|

- ! 当前命令在 shell 历史记录中的序号

|

||||||

|

|

||||||

|

下面解释 **w** 和 **W** 选项的区别: 对于前者,你将看到你所在的工作路径的完整地址,(例如 **/usr/local/bin**),而对于后者, 它则只显示 **bin** 这一部分。

|

||||||

|

|

||||||

|

现在,我们该怎样改变提示符呢? 你需要更改 **PS1** 环境变量的内容,试试下面这个:

|

||||||

|

|

||||||

|

export PS1="I am \u and it is \A $"

|

||||||

|

|

||||||

|

现在,你的提示符将会像下面这样:

|

||||||

|

|

||||||

|

I am mike and it is 11:26 $

|

||||||

|

|

||||||

|

从这个例子出发,你就可以按照你的想法来试验一下上面列出的其他转义序列。 但等等 – 当你登出后,你的这些努力都将消失,因为在你每次打开终端时,**PS1** 环境变量的值都会被重置。解决这个问题的最简单方式是打开 **.bashrc** 配置文件(在你的家目录下) 并在这个文件的最下方添加上完整的 `export` 命令。在每次你启动一个新的 shell 会话时,这个 **.bashrc** 会被 `Bash` 读取, 所以你的加强的提示符就可以一直出现。你还可以使用额外的颜色来装扮提示符。刚开始,这将有点棘手,因为你必须使用一些相当奇怪的转义序列,但结果是非常漂亮的。 将下面的字符添加到你的 **PS1**字符串中的某个位置,最终这将把文本变为红色:

|

||||||

|

|

||||||

|

\[\e[31m\]

|

||||||

|

|

||||||

|

你可以将这里的 31 更改为其他的数字来获得不同的颜色:

|

||||||

|

|

||||||

|

- 30 黑色

|

||||||

|

- 32 绿色

|

||||||

|

- 33 黄色

|

||||||

|

- 34 蓝色

|

||||||

|

- 35 洋红色

|

||||||

|

- 36 青色

|

||||||

|

- 37 白色

|

||||||

|

|

||||||

|

所以,让我们使用先前看到的转义序列和颜色来创造一个提示符,以此来结束这一小节的内容。深吸一口气,弯曲你的手指,然后键入下面这只“野兽”:

|

||||||

|

|

||||||

|

export PS1="(\!) \[\e[31m\] \[\A\] \[\e[32m\]\u@\h \[\e[34m\]\w \[\e[30m\]$"

|

||||||

|

|

||||||

|

上面的命令提供了一个 Bash 命令历史序号、当前的时间、彩色的用户或主机名组合、以及工作路径。假如你“野心勃勃”,利用一些惊人的组合,你还可以更改提示符的背景色和前景色。非常有用的 Arch wiki 有一个关于颜色代码的完整列表:[http://tinyurl.com/3gvz4ec][1]。

|

||||||

|

|

||||||

|

> **Shell 精要**

|

||||||

|

>

|

||||||

|

> 假如你是一个彻底的 Linux 新手并第一次阅读这份杂志,或许你会发觉阅读这些教程有些吃力。 所以这里有一些基础知识来让你熟悉一些 shell。 通常在你的菜单中, shell 指的是 Terminal、 XTerm 或 Konsole, 当你启动它后, 最为实用的命令有这些:

|

||||||

|

>

|

||||||

|

> **ls** (列出文件名); **cp one.txt two.txt** (复制文件); **rm file.txt** (移除文件); **mv old.txt new.txt** (移动或重命名文件);

|

||||||

|

>

|

||||||

|

> **cd /some/directory** (改变目录); **cd ..** (回到上级目录); **./program** (在当前目录下运行一个程序); **ls > list.txt** (重定向输出到一个文件)。

|

||||||

|

>

|

||||||

|

> 几乎每个命令都有一个手册页用来解释其选项(例如 **man ls** – 按 Q 来退出)。在那里,你可以知晓命令的选项,这样你就知道 **ls -la** 展示一个详细的列表,其中也列出了隐藏文件, 并且在键入一个文件或目录的名字的一部分后, 可以使用 Tab 键来自动补全。

|

||||||

|

|

||||||

|

### Tmux: 针对 shell 的窗口管理器 ###

|

||||||

|

|

||||||

|

在文本模式的环境中使用一个窗口管理器 – 这听起来有点不可思议, 是吧? 然而,你应该记得当 Web 浏览器第一次实现分页浏览的时候吧? 在当时, 这是在可用性上的一个重大进步,它减少了桌面任务栏的杂乱无章和繁多的窗口列表。 对于你的浏览器来说,你只需要一个按钮便可以在浏览器中切换到你打开的每个单独网站, 而不是针对每个网站都有一个任务栏或导航图标。 这个功能非常有意义。

|

||||||

|

|

||||||

|

若有时你同时运行着几个虚拟终端,你便会遇到相似的情况; 在这些终端之间跳转,或每次在任务栏或窗口列表中找到你所需要的那一个终端,都可能会让你觉得麻烦。 拥有一个文本模式的窗口管理器不仅可以让你像在同一个终端窗口中运行多个 shell 会话,而且你甚至还可以将这些窗口排列在一起。

|

||||||

|

|

||||||

|

另外,这样还有另一个好处:可以将这些窗口进行分离和重新连接。想要看看这是如何运行的最好方式是自己尝试一下。在一个终端窗口中,输入 `screen` (在大多数发行版本中,它已经默认安装了或者可以在软件包仓库中找到)。 某些欢迎的文字将会出现 – 只需敲击 Enter 键这些文字就会消失。 现在运行一个交互式的文本模式的程序,例如 `nano`, 并关闭这个终端窗口。

|

||||||

|

|

||||||

|

在一个正常的 shell 对话中, 关闭窗口将会终止所有在该终端中运行的进程 – 所以刚才的 Nano 编辑对话也就被终止了, 但对于 screen 来说,并不是这样的。打开一个新的终端并输入如下命令:

|

||||||

|

|

||||||

|

screen -r

|

||||||

|

|

||||||

|

瞧,你刚开打开的 Nano 会话又回来了!

|

||||||

|

|

||||||

|

当刚才你运行 **screen** 时, 它会创建了一个新的独立的 shell 会话, 它不与某个特定的终端窗口绑定在一起,所以可以在后面被分离并重新连接(即 **-r** 选项)。

|

||||||

|

|

||||||

|

当你正使用 SSH 去连接另一台机器并做着某些工作时, 但并不想因为一个脆弱的连接而影响你的进度,这个方法尤其有用。假如你在一个 **screen** 会话中做着某些工作,并且你的连接突然中断了(或者你的笔记本没电了,又或者你的电脑报废了——不是这么悲催吧),你只需重新连接或给电脑充电或重新买一台电脑,接着运行 **screen -r** 来重新连接到远程的电脑,并在刚才掉线的地方接着开始。

|

||||||

|

|

||||||

|

现在,我们都一直在讨论 GNU 的 **screen**,但这个小节的标题提到的是 tmux。 实质上, **tmux** (terminal multiplexer) 就像是 **screen** 的一个进阶版本,带有许多有用的额外功能,所以现在我们开始关注 tmux。 某些发行版本默认包含了 **tmux**; 在其他的发行版本上,通常只需要一个 **apt-get、 yum install** 或 **pacman -S** 命令便可以安装它。

|

||||||

|

|

||||||

|

一旦你安装了它过后,键入 **tmux** 来启动它。接着你将注意到,在终端窗口的底部有一条绿色的信息栏,它非常像传统的窗口管理器中的任务栏: 上面显示着一个运行着的程序的列表、机器的主机名、当前时间和日期。 现在运行一个程序,同样以 Nano 为例, 敲击 Ctrl+B 后接着按 C 键, 这将在 tmux 会话中创建一个新的窗口,你便可以在终端的底部的任务栏中看到如下的信息:

|

||||||

|

|

||||||

|

0:nano- 1:bash*

|

||||||

|

|

||||||

|

每一个窗口都有一个数字,当前呈现的程序被一个星号所标记。 Ctrl+B 是与 tmux 交互的标准方式, 所以若你敲击这个按键组合并带上一个窗口序号, 那么就会切换到对应的那个窗口。你也可以使用 Ctrl+B 再加上 N 或 P 来分别切换到下一个或上一个窗口 – 或者使用 Ctrl+B 加上 L 来在最近使用的两个窗口之间来进行切换(有点类似于桌面中的经典的 Alt+Tab 组合键的效果)。 若需要知道窗口列表,使用 Ctrl+B 再加上 W。

|

||||||

|

|

||||||

|

目前为止,一切都还好:现在你可以在一个单独的终端窗口中运行多个程序,避免混乱(尤其是当你经常与同一个远程主机保持多个 SSH 连接时)。 当想同时看两个程序又该怎么办呢?

|

||||||

|

|

||||||

|

针对这种情况, 可以使用 tmux 中的窗格。 敲击 Ctrl+B 再加上 % , 则当前窗口将分为两个部分:一个在左一个在右。你可以使用 Ctrl+B 再加上 O 来在这两个部分之间切换。 这尤其在你想同时看两个东西时非常实用, – 例如一个窗格看指导手册,另一个窗格里用编辑器看一个配置文件。

|

||||||

|

|

||||||

|

有时,你想对一个单独的窗格进行缩放,而这需要一定的技巧。 首先你需要敲击 Ctrl+B 再加上一个 :(冒号),这将使得位于底部的 tmux 栏变为深橙色。 现在,你进入了命令模式,在这里你可以输入命令来操作 tmux。 输入 **resize-pane -R** 来使当前窗格向右移动一个字符的间距, 或使用 **-L** 来向左移动。 对于一个简单的操作,这些命令似乎有些长,但请注意,在 tmux 的命令模式(前面提到的一个分号开始的模式)下,可以使用 Tab 键来补全命令。 另外需要提及的是, **tmux** 同样也有一个命令历史记录,所以若你想重复刚才的缩放操作,可以先敲击 Ctrl+B 再跟上一个分号,并使用向上的箭头来取回刚才输入的命令。

|

||||||

|

|

||||||

|

最后,让我们看一下分离和重新连接 - 即我们刚才介绍的 screen 的特色功能。 在 tmux 中,敲击 Ctrl+B 再加上 D 来从当前的终端窗口中分离当前的 tmux 会话。这使得这个会话的一切工作都在后台中运行、使用 `tmux a` 可以再重新连接到刚才的会话。但若你同时有多个 tmux 会话在运行时,又该怎么办呢? 我们可以使用下面的命令来列出它们:

|

||||||

|

|

||||||

|

tmux ls

|

||||||

|

|

||||||

|

这个命令将为每个会话分配一个序号; 假如你想重新连接到会话 1, 可以使用 `tmux a -t 1`. tmux 是可以高度定制的,你可以自定义按键绑定并更改配色方案, 所以一旦你适应了它的主要功能,请钻研指导手册以了解更多的内容。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

上图中, tmux 开启了两个窗格: 左边是 Vim 正在编辑一个配置文件,而右边则展示着指导手册页。

|

||||||

|

|

||||||

|

> **Zsh: 另一个 shell**

|

||||||

|

>

|

||||||

|

> 选择是好的,但标准同样重要。 你要知道几乎每个主流的 Linux 发行版本都默认使用 Bash shell – 尽管还存在其他的 shell。 Bash 为你提供了一个 shell 能够给你提供的几乎任何功能,包括命令历史记录,文件名补全和许多脚本编程的能力。它成熟、可靠并文档丰富 – 但它不是你唯一的选择。

|

||||||

|

>

|

||||||

|

> 许多高级用户热衷于 Zsh, 即 Z shell。 这是 Bash 的一个替代品并提供了 Bash 的几乎所有功能,另外还提供了一些额外的功能。 例如, 在 Zsh 中,你输入 **ls** ,并敲击 Tab 键可以得到 **ls** 可用的各种不同选项的一个大致描述。 而不需要再打开 man page 了!

|

||||||

|

>

|

||||||

|

> Zsh 还支持其他强大的自动补全功能: 例如,输入 **cd /u/lo/bi** 再敲击 Tab 键, 则完整的路径名 **/usr/local/bin** 就会出现(这里假设没有其他的路径包含 **u**, **lo** 和 **bi** 等字符)。 或者只输入 **cd** 再跟上 Tab 键,则你将看到着色后的路径名的列表 – 这比 Bash 给出的简单的结果好看得多。

|

||||||

|

>

|

||||||

|

> Zsh 在大多数的主要发行版本上都可以得到了; 安装它后并输入 **zsh** 便可启动它。 要将你的默认 shell 从 Bash 改为 Zsh, 可以使用 **chsh** 命令。 若需了解更多的信息,请访问 [www.zsh.org][2]。

|

||||||

|

|

||||||

|

### “未来”的终端 ###

|

||||||

|

|

||||||

|

你或许会好奇为什么包含你的命令行提示符的应用被叫做终端。 这需要追溯到 Unix 的早期, 那时人们一般工作在一个多用户的机器上,这个巨大的电脑主机将占据一座建筑中的一个房间, 人们通过某些线路,使用屏幕和键盘来连接到这个主机, 这些终端机通常被称为“哑终端”, 因为它们不能靠自己做任何重要的执行任务 – 它们只展示通过线路从主机传来的信息,并输送回从键盘的敲击中得到的输入信息。

|

||||||

|

|

||||||

|

今天,我们在自己的机器上执行几乎所有的实际操作,所以我们的电脑不是传统意义下的终端,这就是为什么诸如 **XTerm**、 Gnome Terminal、 Konsole 等程序被称为“终端模拟器” 的原因 – 他们提供了同昔日的物理终端一样的功能。事实上,在许多方面它们并没有改变多少。诚然,现在我们有了反锯齿字体,更好的颜色和点击网址的能力,但总的来说,几十年来我们一直以同样的方式在工作。

|

||||||

|

|

||||||

|

所以某些程序员正尝试改变这个状况。 **Terminology** ([http://tinyurl.com/osopjv9][3]), 它来自于超级时髦的 Enlightenment 窗口管理器背后的团队,旨在让终端步入到 21 世纪,例如带有在线媒体显示功能。你可以在一个充满图片的目录里输入 **ls** 命令,便可以看到它们的缩略图,或甚至可以直接在你的终端里播放视频。 这使得一个终端有点类似于一个文件管理器,意味着你可以快速地检查媒体文件的内容而不必用另一个应用来打开它们。

|

||||||

|

|

||||||

|

接着还有 Xiki ([www.xiki.org][4]),它自身的描述为“命令的革新”。它就像是一个传统的 shell、一个 GUI 和一个 wiki 之间的过渡;你可以在任何地方输入命令,并在后面将它们的输出存储为笔记以作为参考,并可以创建非常强大的自定义命令。用几句话是很能描述它的,所以作者们已经创作了一个视频来展示它的潜力是多么的巨大(请看 **Xiki** 网站的截屏视频部分)。

|

||||||

|

|

||||||

|

并且 Xiki 绝不是那种在几个月之内就消亡的昙花一现的项目,作者们成功地进行了一次 Kickstarter 众筹,在七月底已募集到超过 $84,000。 是的,你没有看错 – $84K 来支持一个终端模拟器。这可能是最不寻常的集资活动了,因为某些疯狂的家伙已经决定开始创办它们自己的 Linux 杂志 ......

|

||||||

|

|

||||||

|

### 下一代终端 ###

|

||||||

|

|

||||||

|

许多命令行和基于文本的程序在功能上与它们的 GUI 程序是相同的,并且常常更加快速和高效。我们的推荐有:

|

||||||

|

**Irssi** (IRC 客户端); **Mutt** (mail 客户端); **rTorrent** (BitTorrent); **Ranger** (文件管理器); **htop** (进程监视器)。 若给定在终端的限制下来进行 Web 浏览, Elinks 确实做的很好,并且对于阅读那些以文字为主的网站例如 Wikipedia 来说。它非常实用。

|

||||||

|

|

||||||

|

> **微调配色方案**

|

||||||

|

>

|

||||||

|

> 在《Linux Voice》杂志社中,我们并不迷恋那些养眼的东西,但当你每天花费几个小时盯着屏幕看东西时,我们确实认识到美学的重要性。我们中的许多人都喜欢调整我们的桌面和窗口管理器来达到完美的效果,调整阴影效果、摆弄不同的配色方案,直到我们 100% 的满意(然后出于习惯,摆弄更多的东西)。

|

||||||

|

>

|

||||||

|

> 但我们倾向于忽视终端窗口,它理应也获得我们的喜爱,并且在 [http://ciembor.github.io/4bit][5] 你将看到一个极其棒的配色方案设计器,对于所有受欢迎的终端模拟器(**XTerm, Gnome Terminal, Konsole 和 Xfce4 Terminal 等都是支持的应用。**),它可以输出其设定。移动滑块直到你看到配色方案最佳, 然后点击位于该页面右上角的 `得到方案` 按钮。

|

||||||

|

>

|

||||||

|

> 相似的,假如你在一个文本编辑器,如 Vim 或 Emacs 上花费了很多的时间,使用一个精心设计的调色板也是非常值得的。 **Solarized** [http://ethanschoonover.com/solarized][6] 是一个卓越的方案,它不仅漂亮,而且因追求最大的可用性而设计,在其背后有着大量的研究和测试。

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: http://www.linuxvoice.com/linux-101-power-up-your-shell-8/

|

||||||

|

|

||||||

|

作者:[Ben Everard][a]

|

||||||

|

译者:[FSSlc](https://github.com/FSSlc)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:http://www.linuxvoice.com/author/ben_everard/

|

||||||

|

[1]:http://tinyurl.com/3gvz4ec

|

||||||

|

[2]:http://www.zsh.org/

|

||||||

|

[3]:http://tinyurl.com/osopjv9

|

||||||

|

[4]:http://www.xiki.org/

|

||||||

|

[5]:http://ciembor.github.io/4bit

|

||||||

|

[6]:http://ethanschoonover.com/solarized

|

||||||

@ -0,0 +1,83 @@

|

|||||||

|

监控 Linux 系统的 7 个命令行工具

|

||||||

|

================================================================================

|

||||||

|

**这里有一些基本的命令行工具,让你能更简单地探索和操作Linux。**

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

### 深入 ###

|

||||||

|

|

||||||

|

关于Linux最棒的一件事之一是你能深入操作系统,来探索它是如何工作的,并寻找机会来微调性能或诊断问题。这里有一些基本的命令行工具,让你能更简单地探索和操作Linux。大多数的这些命令是在你的Linux系统中已经内建的,但假如它们没有的话,就用谷歌搜索命令名和你的发行版名吧,你会找到哪些包需要安装(注意,一些命令是和其它命令捆绑起来打成一个包的,你所找的包可能写的是其它的名字)。如果你知道一些你所使用的其它工具,欢迎评论。

|

||||||

|

|

||||||

|

|

||||||

|

### 我们怎么开始 ###

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

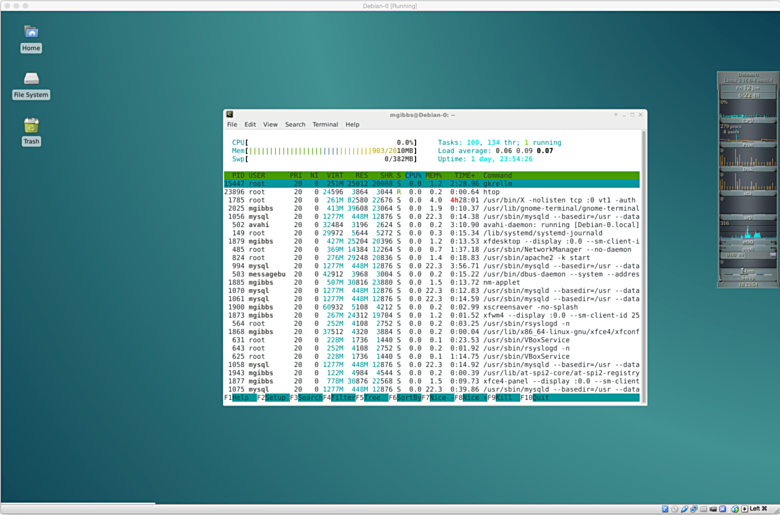

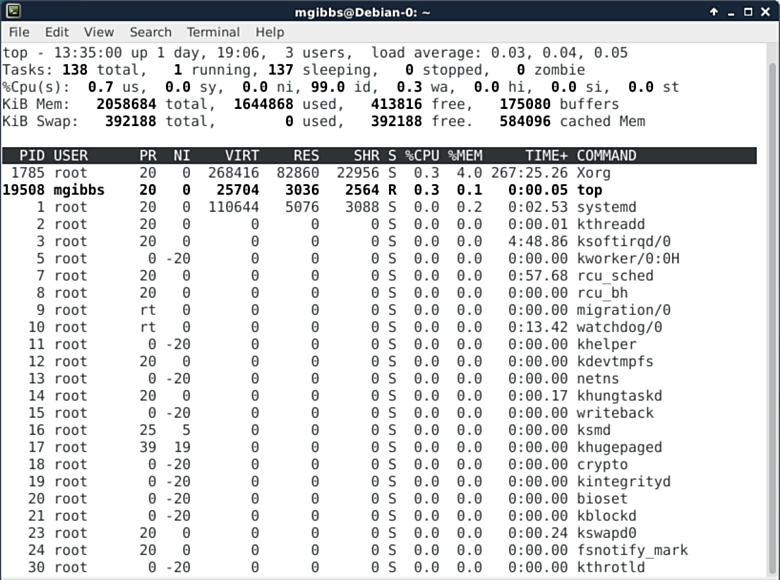

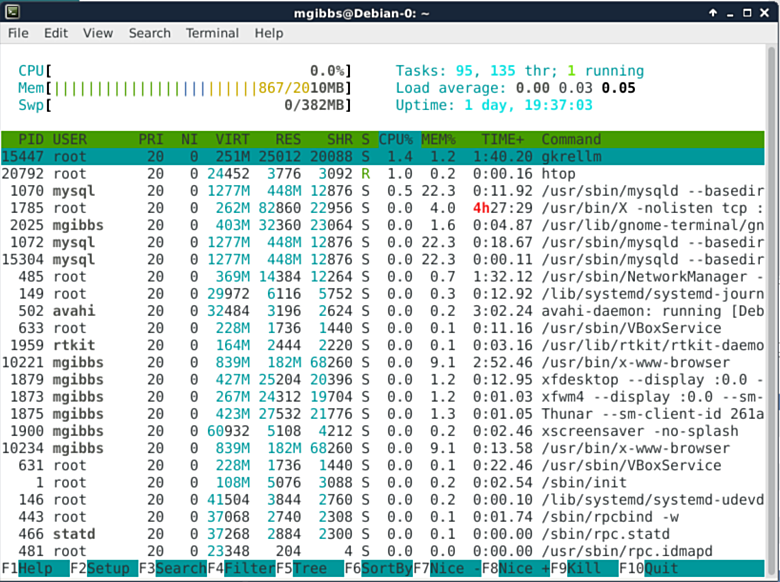

须知: 本文中的截图取自一台[Debian Linux 8.1][1] (“Jessie”),其运行在[OS X 10.10.3][3] (“Yosemite”)操作系统下的[Oracle VirtualBox 4.3.28][2]中的一台虚拟机里。想要建立你的Debian虚拟机,可以看看我的这篇教程——“[如何在 VirtualBox VM 下安装 Debian][4]”。

|

||||||

|

|

||||||

|

|

||||||

|

### Top ###

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

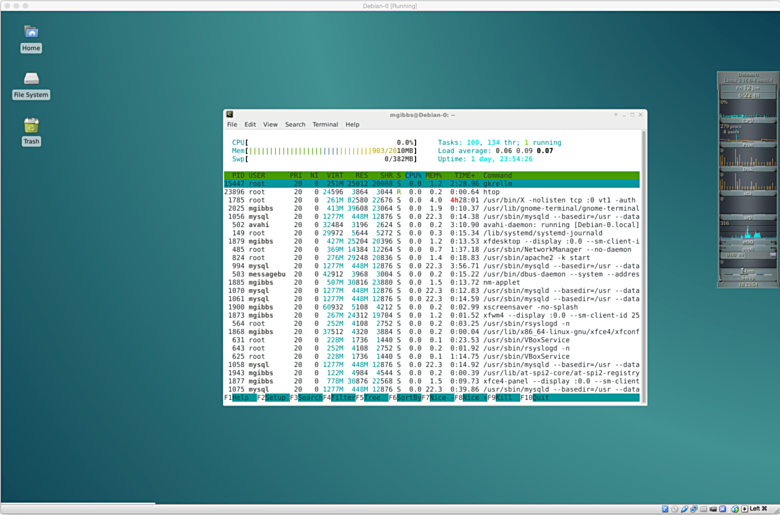

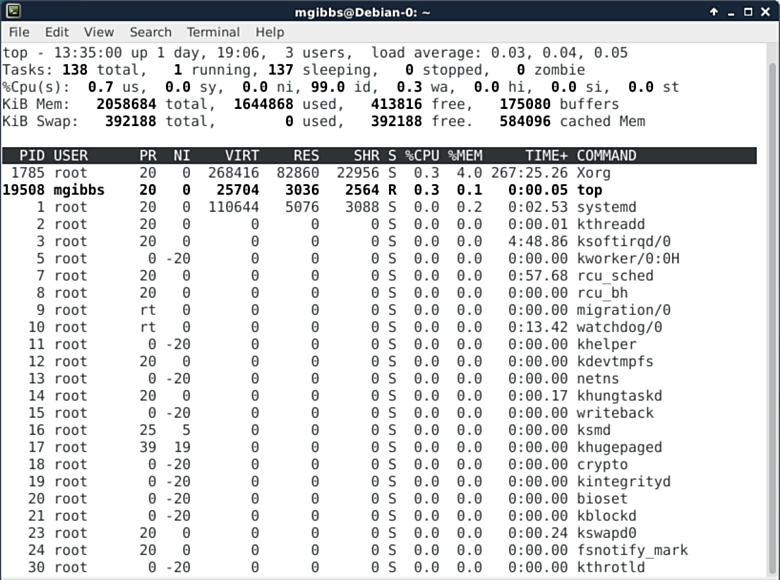

作为Linux系统监控工具中比较易用的一个,**top命令**能带我们一览Linux中的几乎每一处。以下这张图是它的默认界面,但是按“z”键可以切换不同的显示颜色。其它热键和命令则有其它的功能,例如显示概要信息和内存信息(第四行第二个),根据各种不一样的条件排序、终止进程任务等等(你可以在[这里][5]找到完整的列表)。

|

||||||

|

|

||||||

|

|

||||||

|

### htop ###

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

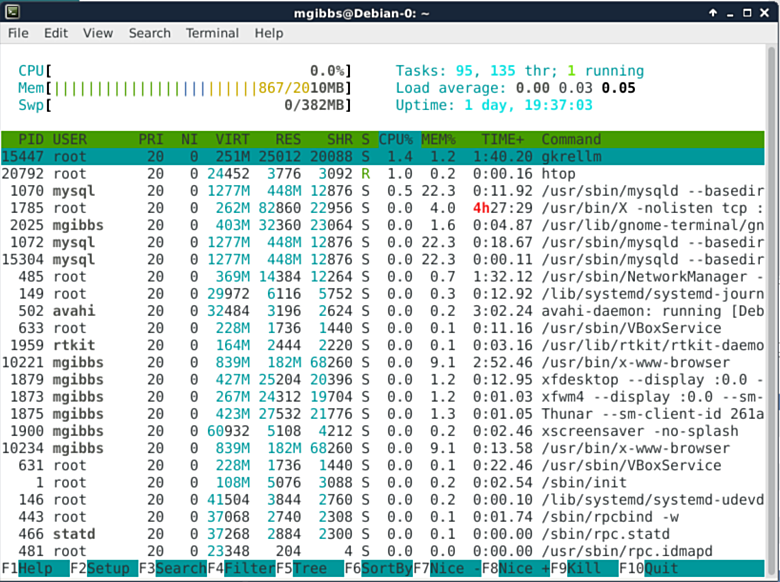

相比top,它的替代品Htop则更为精致。维基百科是这样描述的:“用户经常会部署htop以免Unix top不能提供关于系统进程的足够信息,比如说当你在尝试发现应用程序里的一个小的内存泄露问题,Htop一般也能作为一个系统监听器来使用。相比top,它提供了一个更方便的光标控制界面来向进程发送信号。” (想了解更多细节猛戳[这里][6])

|

||||||

|

|

||||||

|

|

||||||

|

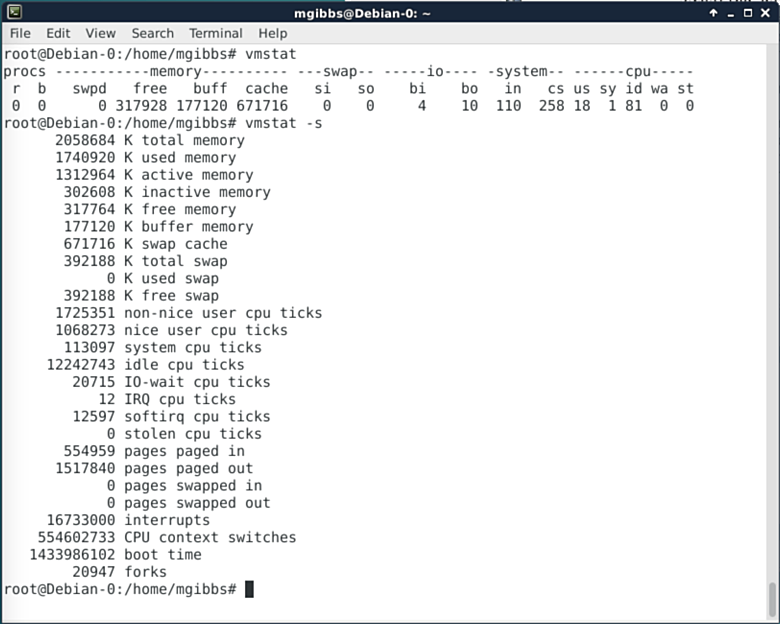

### Vmstat ###

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

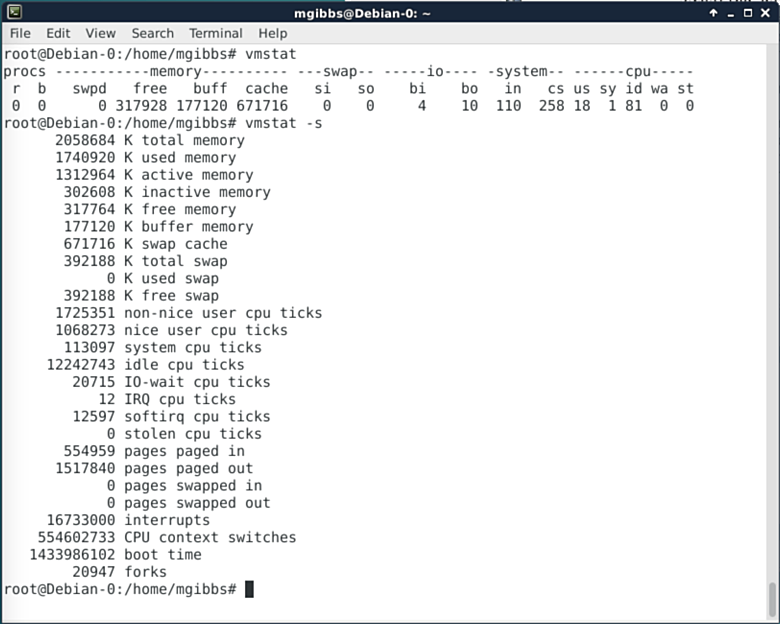

Vmstat是一款监控Linux系统性能数据的简易工具,这让它更合适使用在shell脚本中。使出你的正则表达式绝招,用vmstat和cron作业来做一些激动人心的事情吧。“后面的报告给出的是上一次系统重启之后的均值,另外一份报告给出的则是从前一个报告起间隔周期中的信息。其它的进程和内存报告是那个瞬态的情况”(猛戳[这里][7]获取更多信息)。

|

||||||

|

|

||||||

|

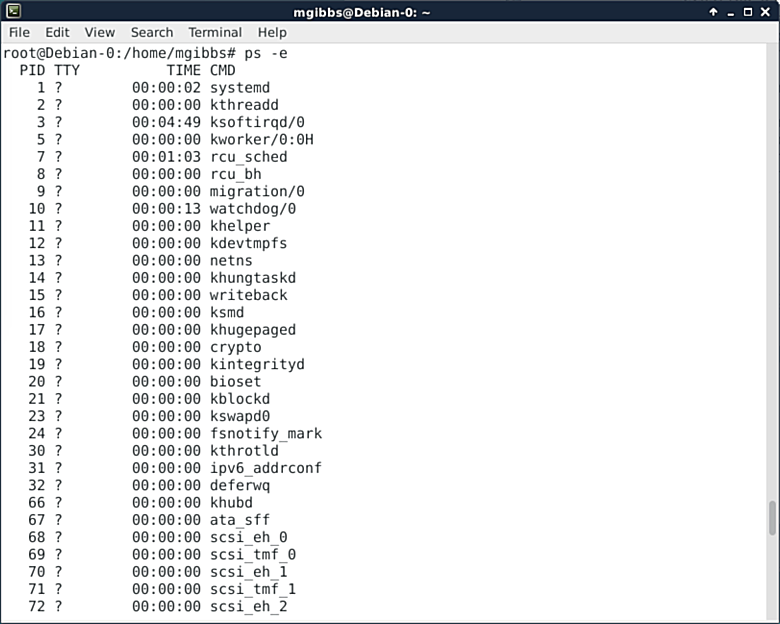

### ps ###

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

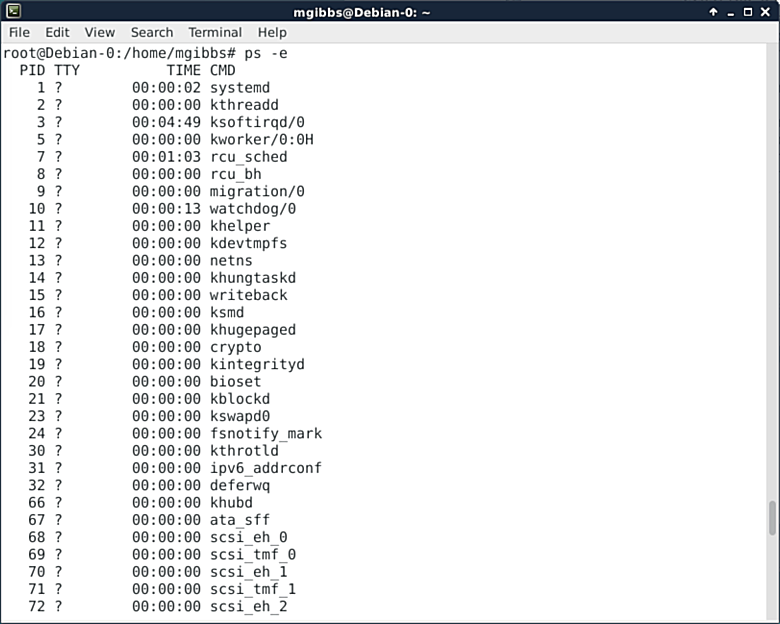

ps命令展现的是正在运行中的进程列表。在这种情况下,我们用“-e”选项来显示每个进程,也就是所有正在运行的进程了(我把列表滚动到了前面,否则列名就看不到了)。这个命令有很多选项允许你去按需格式化输出。只要使用上述一点点的正则表达式技巧,你就能得到一个强大的工具了。猛戳[这里][8]获取更多信息。

|

||||||

|

|

||||||

|

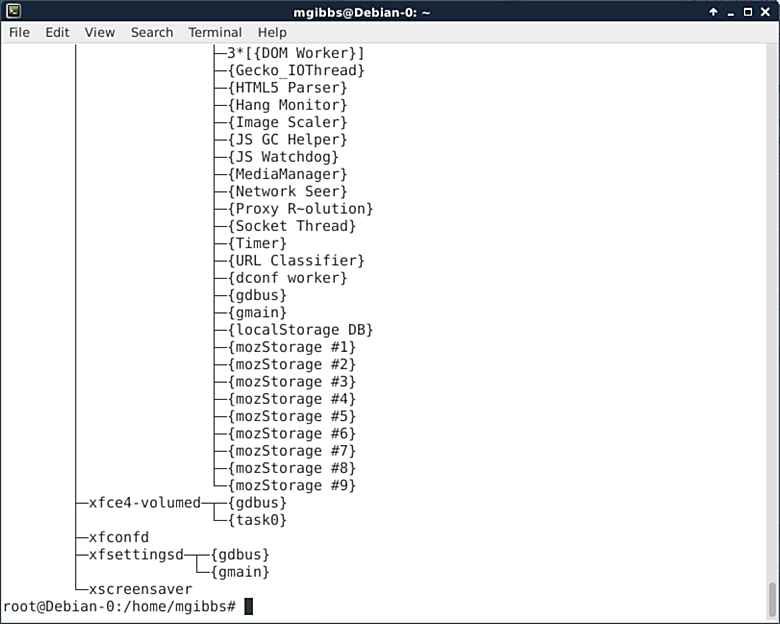

### Pstree ###

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

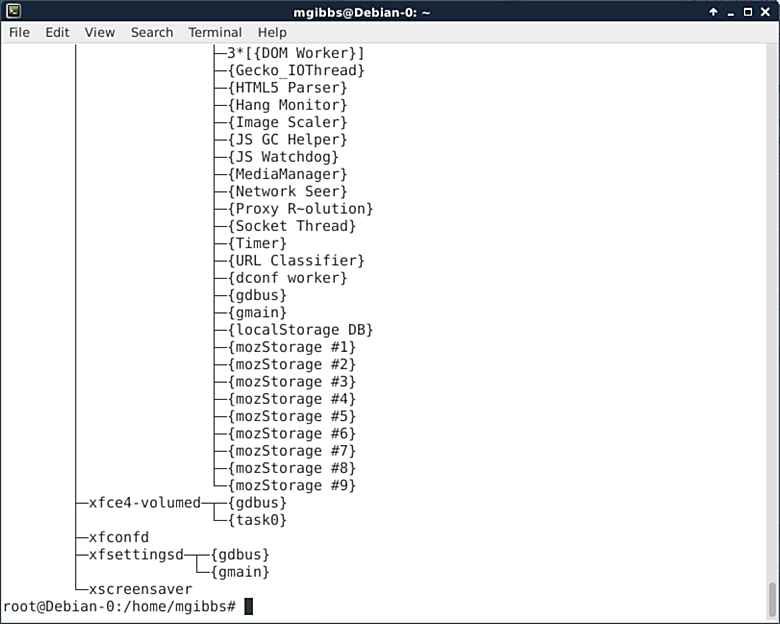

Pstree“以树状图显示正在运行中的进程。这个进程树是以某个 pid 为根节点的,如果pid被省略的话那树是以init为根节点的。如果指定用户名,那所有进程树都会以该用户所属的进程为父进程进行显示。”以树状图来帮你将进程之间的所属关系进行分类,这的确是个很有效的工具(戳[这里][9])。

|

||||||

|

|

||||||

|

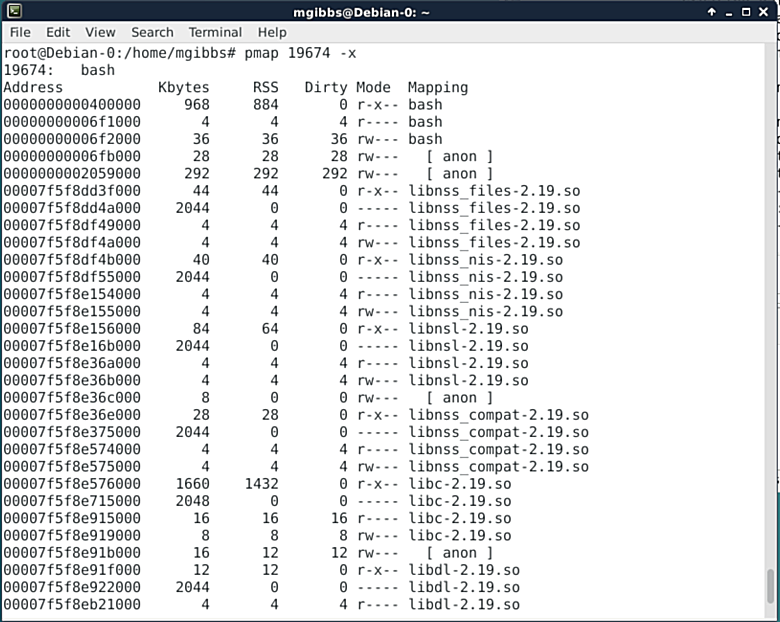

### pmap ###

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

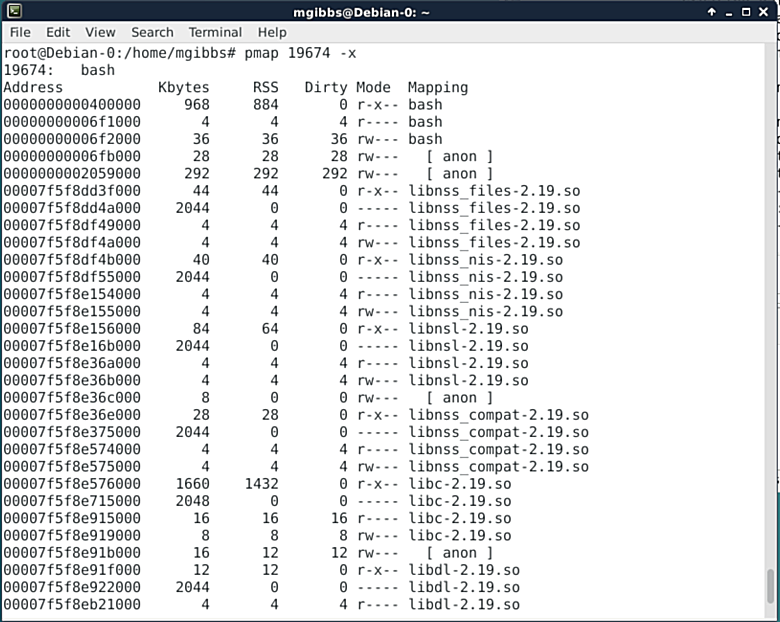

在调试过程中,理解一个应用程序如何使用内存是至关重要的,而pmap的作用就是当给出一个进程ID时显示出相关信息。上面的截图展示的是使用“-x”选项所产生的部分输出,你也可以用pmap的“-X”选项来获取更多的细节信息,但是前提是你要有个更宽的终端窗口。

|

||||||

|

|

||||||

|

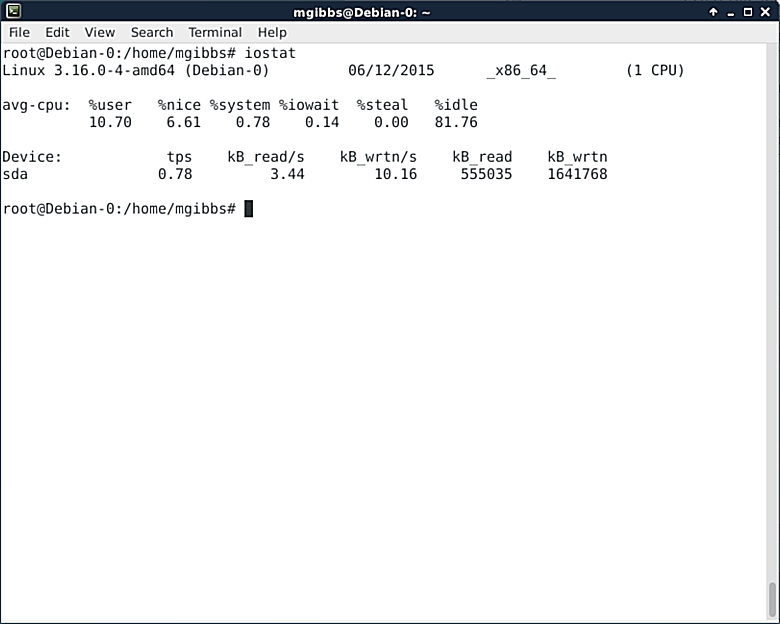

### iostat ###

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

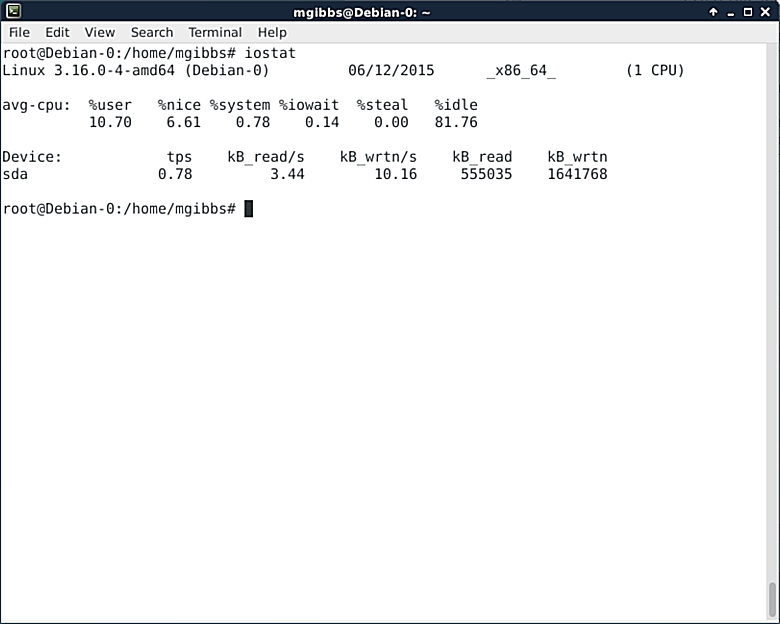

Linux系统的一个至关重要的性能指标是处理器和存储的使用率,它也是iostat命令所报告的内容。如同ps命令一样,iostat有很多选项允许你选择你需要的输出格式,除此之外还可以在某一段时间范围内的重复采样几次。详情请戳[这里][10]。

|

||||||

|

|

||||||

|

--------------------------------------------------------------------------------

|

||||||

|

|

||||||

|

via: http://www.networkworld.com/article/2937219/linux/7-command-line-tools-for-monitoring-your-linux-system.html

|

||||||

|

|

||||||

|

作者:[Mark Gibbs][a]

|

||||||

|

译者:[ZTinoZ](https://github.com/ZTinoZ)

|

||||||

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

|

[a]:http://www.networkworld.com/author/Mark-Gibbs/

|

||||||

|

[1]:https://www.debian.org/releases/stable/

|

||||||

|

[2]:https://www.virtualbox.org/

|

||||||

|

[3]:http://www.apple.com/osx/

|

||||||

|

[4]:http://www.networkworld.com/article/2937148/how-to-install-debian-linux-8-1-in-a-virtualbox-vm

|

||||||

|

[5]:http://linux.die.net/man/1/top

|

||||||

|

[6]:http://linux.die.net/man/1/htop

|

||||||

|

[7]:http://linuxcommand.org/man_pages/vmstat8.html

|

||||||

|

[8]:http://linux.die.net/man/1/ps

|

||||||

|

[9]:http://linux.die.net/man/1/pstree

|

||||||

|

[10]:http://linux.die.net/man/1/iostat

|

||||||

@ -1,24 +1,25 @@

|

|||||||

在 Linux 中安装 Google 环聊桌面客户端

|

在 Linux 中安装 Google 环聊桌面客户端

|

||||||

================================================================================

|

================================================================================

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

先前,我们已经介绍了如何[在 Linux 中安装 Facebook Messenger][1] 和[WhatsApp 桌面客户端][2]。这些应用都是非官方的应用。今天,我将为你推荐另一款非官方的应用,它就是 [Google 环聊][3]

|

先前,我们已经介绍了如何[在 Linux 中安装 Facebook Messenger][1] 和[WhatsApp 桌面客户端][2]。这些应用都是非官方的应用。今天,我将为你推荐另一款非官方的应用,它就是 [Google 环聊][3]

|

||||||

|

|

||||||

当然,你可以在 Web 浏览器中使用 Google 环聊,但相比于此,使用桌面客户端会更加有趣。好奇吗?那就跟着我看看如何 **在 Linux 中安装 Google 环聊** 以及如何使用它把。

|

当然,你可以在 Web 浏览器中使用 Google 环聊,但相比于此,使用桌面客户端会更加有趣。好奇吗?那就跟着我看看如何 **在 Linux 中安装 Google 环聊** 以及如何使用它吧。

|

||||||

|

|

||||||

### 在 Linux 中安装 Google 环聊 ###

|

### 在 Linux 中安装 Google 环聊 ###

|

||||||

|

|

||||||

我们将使用一个名为 [yakyak][4] 的开源项目,它是一个针对 Linux,Windows 和 OS X 平台的非官方 Google 环聊客户端。我将向你展示如何在 Ubuntu 中使用 yakyak,但我相信在其他的 Linux 发行版本中,你可以使用同样的方法来使用它。在了解如何使用它之前,让我们先看看 yakyak 的主要特点:

|

我们将使用一个名为 [yakyak][4] 的开源项目,它是一个针对 Linux,Windows 和 OS X 平台的非官方 Google 环聊客户端。我将向你展示如何在 Ubuntu 中使用 yakyak,但我相信在其他的 Linux 发行版本中,你可以使用同样的方法来使用它。在了解如何使用它之前,让我们先看看 yakyak 的主要特点:

|

||||||

|

|

||||||

- 发送和接受聊天信息

|

- 发送和接受聊天信息

|

||||||

- 创建和更改对话 (重命名, 添加人物)

|

- 创建和更改对话 (重命名, 添加参与者)

|

||||||

- 离开或删除对话

|

- 离开或删除对话

|

||||||

- 桌面提醒通知

|

- 桌面提醒通知

|

||||||

- 打开或关闭通知

|

- 打开或关闭通知

|

||||||

- 针对图片上传,支持拖放,复制粘贴或使用上传按钮

|

- 对于图片上传,支持拖放,复制粘贴或使用上传按钮

|

||||||

- Hangupsbot 房间同步(实际的用户图片) (注: 这里翻译不到位,希望改善一下)

|

- Hangupsbot 房间同步(使用用户实际的图片)

|

||||||

- 展示行内图片

|

- 展示行内图片

|

||||||

- 历史回放

|

- 翻阅历史

|

||||||

|

|

||||||

听起来不错吧,你可以从下面的链接下载到该软件的安装文件:

|

听起来不错吧,你可以从下面的链接下载到该软件的安装文件:

|

||||||

|

|

||||||

@ -36,7 +37,7 @@

|

|||||||

|

|

||||||

|

|

||||||

|

|

||||||

假如你想看看对话的配置图,你可以选择 `查看-> 展示对话缩略图`

|

假如你想在联系人里面显示用户头像,你可以选择 `查看-> 展示对话缩略图`

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

@ -54,7 +55,7 @@ via: http://itsfoss.com/install-google-hangouts-linux/

|

|||||||

|

|

||||||

作者:[Abhishek][a]

|

作者:[Abhishek][a]

|

||||||

译者:[FSSlc](https://github.com/FSSlc)

|

译者:[FSSlc](https://github.com/FSSlc)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

@ -1,10 +1,9 @@

|

|||||||

|

如何修复 ubuntu 中检测到系统程序错误的问题

|

||||||

如何修复ubuntu 14.04中检测到系统程序错误的问题

|

|

||||||

================================================================================

|

================================================================================

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

|

在过去的几个星期,(几乎)每次都有消息 **Ubuntu 15.04在启动时检测到系统程序错误** 跑出来“欢迎”我。那时我是直接忽略掉它的,但是这种情况到了某个时刻,它就让人觉得非常烦人了!

|

||||||

在过去的几个星期,(几乎)每次都有消息 **Ubuntu 15.04在启动时检测到系统程序错误(system program problem detected on startup in Ubuntu 15.04)** 跑出来“欢迎”我。那时我是直接忽略掉它的,但是这种情况到了某个时刻,它就让人觉得非常烦人了!

|

|

||||||

|

|

||||||

> 检测到系统程序错误(System program problem detected)

|

> 检测到系统程序错误(System program problem detected)

|

||||||

>

|

>

|

||||||

@ -18,15 +17,16 @@

|

|||||||

|

|

||||||

#### 那么这个通知到底是关于什么的? ####

|

#### 那么这个通知到底是关于什么的? ####

|

||||||

|

|

||||||

大体上讲,它是在告知你,你的系统的一部分崩溃了。可别因为“崩溃”这个词而恐慌。这不是一个严重的问题,你的系统还是完完全全可用的。只是在以前的某个时刻某个程序崩溃了,而Ubuntu想让你决定要不要把这个问题报告给开发者,这样他们就能够修复这个问题。

|

大体上讲,它是在告知你,你的系统的一部分崩溃了。可别因为“崩溃”这个词而恐慌。这不是一个严重的问题,你的系统还是完完全全可用的。只是在之前的某个时刻某个程序崩溃了,而Ubuntu想让你决定要不要把这个问题报告给开发者,这样他们就能够修复这个问题。

|

||||||

|

|

||||||

#### 那么,我们点了“报告错误”的按钮后,它以后就不再显示了?####

|

#### 那么,我们点了“报告错误”的按钮后,它以后就不再显示了?####

|

||||||

|

|

||||||

|

不,不是的!即使你点了“报告错误”按钮,最后你还是会被一个如下的弹窗再次“欢迎”一下:

|

||||||

|

|

||||||

不,不是的!即使你点了“报告错误”按钮,最后你还是会被一个如下的弹窗再次“欢迎”:

|

|

||||||

|

|

||||||

|

|

||||||

[对不起,Ubuntu发生了一个内部错误(Sorry, Ubuntu has experienced an internal error)][1]是一个Apport(Apport是Ubuntu中错误信息的收集报告系统,详见Ubuntu Wiki中的Apport篇,译者注),它将会进一步的打开网页浏览器,然后你可以通过登录或创建[Launchpad][2]帐户来填写一份漏洞(Bug)报告文件。你看,这是一个复杂的过程,它要花整整四步来完成.

|

[对不起,Ubuntu发生了一个内部错误][1]是个Apport(LCTT 译注:Apport是Ubuntu中错误信息的收集报告系统,详见Ubuntu Wiki中的Apport篇),它将会进一步的打开网页浏览器,然后你可以通过登录或创建[Launchpad][2]帐户来填写一份漏洞(Bug)报告文件。你看,这是一个复杂的过程,它要花整整四步来完成。

|

||||||

|

|

||||||

#### 但是我想帮助开发者,让他们知道这个漏洞啊 !####

|

#### 但是我想帮助开发者,让他们知道这个漏洞啊 !####

|

||||||

|

|

||||||

你这样想的确非常地周到体贴,而且这样做也是正确的。但是这样做的话,存在两个问题。第一,存在非常高的概率,这个漏洞已经被报告过了;第二,即使你报告了个这次崩溃,也无法保证你不会再看到它。

|

你这样想的确非常地周到体贴,而且这样做也是正确的。但是这样做的话,存在两个问题。第一,存在非常高的概率,这个漏洞已经被报告过了;第二,即使你报告了个这次崩溃,也无法保证你不会再看到它。

|

||||||

@ -34,35 +34,38 @@

|

|||||||

#### 那么,你的意思就是说别报告这次崩溃了?####

|

#### 那么,你的意思就是说别报告这次崩溃了?####

|

||||||

|

|

||||||

对,也不对。如果你想的话,在你第一次看到它的时候报告它。你可以在上面图片显示的“显示细节(Show Details)”中,查看崩溃的程序。但是如果你总是看到它,或者你不想报告漏洞(Bug),那么我建议你还是一次性摆脱这个问题吧。

|

对,也不对。如果你想的话,在你第一次看到它的时候报告它。你可以在上面图片显示的“显示细节(Show Details)”中,查看崩溃的程序。但是如果你总是看到它,或者你不想报告漏洞(Bug),那么我建议你还是一次性摆脱这个问题吧。

|

||||||

|

|

||||||

### 修复Ubuntu中“检测到系统程序错误”的错误 ###

|

### 修复Ubuntu中“检测到系统程序错误”的错误 ###

|

||||||

|

|

||||||

这些错误报告被存放在Ubuntu中目录/var/crash中。如果你翻看这个目录的话,应该可以看到有一些以crash结尾的文件。

|

这些错误报告被存放在Ubuntu中目录/var/crash中。如果你翻看这个目录的话,应该可以看到有一些以crash结尾的文件。

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

我的建议是删除这些错误报告。打开一个终端,执行下面的命令:

|

我的建议是删除这些错误报告。打开一个终端,执行下面的命令:

|

||||||

|

|

||||||

sudo rm /var/crash/*

|

sudo rm /var/crash/*

|

||||||

|

|

||||||

这个操作会删除所有在/var/crash目录下的所有内容。这样你就不会再被这些报告以前程序错误的弹窗所扰。但是如果有一个程序又崩溃了,你就会再次看到“检测到系统程序错误”的错误。你可以再次删除这些报告文件,或者你可以禁用Apport来彻底地摆脱这个错误弹窗。

|

这个操作会删除所有在/var/crash目录下的所有内容。这样你就不会再被这些报告以前程序错误的弹窗所扰。但是如果又有一个程序崩溃了,你就会再次看到“检测到系统程序错误”的错误。你可以再次删除这些报告文件,或者你可以禁用Apport来彻底地摆脱这个错误弹窗。

|

||||||

|

|

||||||

#### 彻底地摆脱Ubuntu中的系统错误弹窗 ####

|

#### 彻底地摆脱Ubuntu中的系统错误弹窗 ####

|

||||||

|

|

||||||

如果你这样做,系统中任何程序崩溃时,系统都不会再通知你。如果你想问问我的看法的话,我会说,这不是一件坏事,除非你愿意填写错误报告。如果你不想填写错误报告,那么这些错误通知存不存在都不会有什么区别。

|

如果你这样做,系统中任何程序崩溃时,系统都不会再通知你。如果你想问问我的看法的话,我会说,这不是一件坏事,除非你愿意填写错误报告。如果你不想填写错误报告,那么这些错误通知存不存在都不会有什么区别。

|

||||||

|

|

||||||

要禁止Apport,并且彻底地摆脱Ubuntu系统中的程序崩溃报告,打开一个终端,输入以下命令:

|

要禁止Apport,并且彻底地摆脱Ubuntu系统中的程序崩溃报告,打开一个终端,输入以下命令:

|

||||||

|

|

||||||

gksu gedit /etc/default/apport

|

gksu gedit /etc/default/apport

|

||||||

|

|

||||||

这个文件的内容是:

|

这个文件的内容是:

|

||||||

|

|

||||||

# set this to 0 to disable apport, or to 1 to enable it

|

# 设置0表示禁用Apportw,或者1开启它。

|

||||||

# 设置0表示禁用Apportw,或者1开启它。译者注,下同。

|

|

||||||

# you can temporarily override this with

|

|

||||||

# 你可以用下面的命令暂时关闭它:

|

# 你可以用下面的命令暂时关闭它:

|

||||||

# sudo service apport start force_start=1

|

# sudo service apport start force_start=1

|

||||||

enabled=1

|

enabled=1

|

||||||

|

|

||||||

把**enabled=1**改为**enabled=0**.保存并关闭文件。完成之后你就再也不会看到弹窗报告错误了。很显然,如果我们想重新开启错误报告功能,只要再打开这个文件,把enabled设置为1就可以了。

|

把**enabled=1**改为**enabled=0**。保存并关闭文件。完成之后你就再也不会看到弹窗报告错误了。很显然,如果我们想重新开启错误报告功能,只要再打开这个文件,把enabled设置为1就可以了。

|

||||||

|

|

||||||

#### 你的有效吗? ####

|

#### 你的有效吗? ####

|

||||||

|

|

||||||

我希望这篇教程能够帮助你修复Ubuntu 14.04和Ubuntu 15.04中检测到系统程序错误的问题。如果这个小窍门帮你摆脱了这个烦人的问题,请让我知道。

|

我希望这篇教程能够帮助你修复Ubuntu 14.04和Ubuntu 15.04中检测到系统程序错误的问题。如果这个小窍门帮你摆脱了这个烦人的问题,请让我知道。

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

--------------------------------------------------------------------------------

|

||||||

@ -71,7 +74,7 @@ via: http://itsfoss.com/how-to-fix-system-program-problem-detected-ubuntu/

|

|||||||

|

|

||||||

作者:[Abhishek][a]

|

作者:[Abhishek][a]

|

||||||

译者:[XLCYun](https://github.com/XLCYun)

|

译者:[XLCYun](https://github.com/XLCYun)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

@ -1,10 +1,12 @@

|

|||||||

Linux命令行中使用和执行PHP代码——第一部分

|

在 Linux 命令行中使用和执行 PHP 代码(一)

|

||||||

================================================================================

|

================================================================================

|

||||||

PHP是一个开元服务器端脚本语言,最初这三个字母代表的是“Personal Home Page”,而现在则代表的是“PHP:Hypertext Preprocessor”,它是个递归首字母缩写。它是一个跨平台脚本语言,深受C、C++和Java的影响。

|

PHP是一个开源服务器端脚本语言,最初这三个字母代表的是“Personal Home Page”,而现在则代表的是“PHP:Hypertext Preprocessor”,它是个递归首字母缩写。它是一个跨平台脚本语言,深受C、C++和Java的影响。

|

||||||

|

|

||||||

Linux命令行中运行PHP代码——第一部分

|

|

||||||

|

|

||||||

PHP的语法和C、Java以及带有一些PHP特性的Perl变成语言中的语法十分相似,它眼下大约正被2.6亿个网站所使用,当前最新的稳定版本是PHP版本5.6.10。

|

|

||||||

|

|

||||||

|

*在 Linux 命令行中运行 PHP 代码*

|

||||||

|

|

||||||

|

PHP的语法和C、Java以及带有一些PHP特性的Perl变成语言中的语法十分相似,它当下大约正被2.6亿个网站所使用,当前最新的稳定版本是PHP版本5.6.10。

|

||||||

|

|

||||||

PHP是HTML的嵌入脚本,它便于开发人员快速写出动态生成的页面。PHP主要用于服务器端(而Javascript则用于客户端)以通过HTTP生成动态网页,然而,当你知道可以在Linux终端中不需要网页浏览器来执行PHP时,你或许会大为惊讶。

|

PHP是HTML的嵌入脚本,它便于开发人员快速写出动态生成的页面。PHP主要用于服务器端(而Javascript则用于客户端)以通过HTTP生成动态网页,然而,当你知道可以在Linux终端中不需要网页浏览器来执行PHP时,你或许会大为惊讶。

|

||||||

|

|

||||||

@ -12,40 +14,44 @@ PHP是HTML的嵌入脚本,它便于开发人员快速写出动态生成的页

|

|||||||

|

|

||||||

**1. 在安装完PHP和Apache2后,我们需要安装PHP命令行解释器。**

|

**1. 在安装完PHP和Apache2后,我们需要安装PHP命令行解释器。**

|

||||||

|

|

||||||

# apt-get install php5-cli [Debian and alike System)

|

# apt-get install php5-cli [Debian 及类似系统]

|

||||||

# yum install php-cli [CentOS and alike System)

|

# yum install php-cli [CentOS 及类似系统]

|

||||||

|

|

||||||

接下来我们通常要做的是,在‘/var/www/html‘(这是 Apache2 在大多数发行版中的工作目录)这个位置创建一个内容为 ‘<?php phpinfo(); ?>‘,名为 ‘infophp.php‘ 的文件来测试(是否安装正确),执行以下命令即可。

|

接下来我们通常要做的是,在`/var/www/html`(这是 Apache2 在大多数发行版中的工作目录)这个位置创建一个内容为 `<?php phpinfo(); ?>`,名为 `infophp.php` 的文件来测试(PHP是否安装正确),执行以下命令即可。

|

||||||

|

|

||||||

# echo '<?php phpinfo(); ?>' > /var/www/html/infophp.php

|

# echo '<?php phpinfo(); ?>' > /var/www/html/infophp.php

|

||||||

|

|

||||||

然后,将浏览器指向http://127.0.0.1/infophp.php, 这将会在网络浏览器中打开该文件。

|

然后,将浏览器访问 http://127.0.0.1/infophp.php ,这将会在网络浏览器中打开该文件。

|

||||||

|

|

||||||

|

|

||||||

检查PHP信息

|

|

||||||

|

|

||||||

不需要任何浏览器,在Linux终端中也可以获得相同的结果。在Linux命令行中执行‘/var/www/html/infophp.php‘,如:

|

*检查PHP信息*

|

||||||

|

|

||||||

|

不需要任何浏览器,在Linux终端中也可以获得相同的结果。在Linux命令行中执行`/var/www/html/infophp.php`,如:

|

||||||

|

|

||||||

# php -f /var/www/html/infophp.php

|

# php -f /var/www/html/infophp.php

|

||||||

|

|

||||||

|

|

||||||

从命令行检查PHP信息

|

|

||||||

|

|

||||||

由于输出结果太大,我们可以通过管道将上述输出结果输送给 ‘less‘ 命令,这样就可以一次输出一屏了,命令如下:

|

*从命令行检查PHP信息*

|

||||||

|

|

||||||

|

由于输出结果太大,我们可以通过管道将上述输出结果输送给 `less` 命令,这样就可以一次输出一屏了,命令如下:

|

||||||

|

|

||||||

# php -f /var/www/html/infophp.php | less

|

# php -f /var/www/html/infophp.php | less

|

||||||

|

|

||||||

|

|

||||||

检查所有PHP信息

|

|

||||||

|

|

||||||

这里,‘-f‘选项解析病执行命令后跟随的文件。

|

*检查所有PHP信息*

|

||||||

|

|

||||||

|

这里,‘-f‘选项解析并执行命令后跟随的文件。

|

||||||

|

|

||||||

**2. 我们可以直接在Linux命令行使用`phpinfo()`这个十分有价值的调试工具而不需要从文件来调用,只需执行以下命令:**

|

**2. 我们可以直接在Linux命令行使用`phpinfo()`这个十分有价值的调试工具而不需要从文件来调用,只需执行以下命令:**

|

||||||

|

|

||||||

# php -r 'phpinfo();'

|

# php -r 'phpinfo();'

|

||||||

|

|

||||||

|

|

||||||

PHP调试工具

|

|

||||||

|

*PHP调试工具*

|

||||||

|

|

||||||

这里,‘-r‘ 选项会让PHP代码在Linux终端中不带`<`和`>`标记直接执行。

|

这里,‘-r‘ 选项会让PHP代码在Linux终端中不带`<`和`>`标记直接执行。

|

||||||

|

|

||||||

@ -74,13 +80,14 @@ PHP调试工具

|

|||||||

输入 ‘exit‘ 或者按下 ‘ctrl+c‘ 来关闭PHP交互模式。

|

输入 ‘exit‘ 或者按下 ‘ctrl+c‘ 来关闭PHP交互模式。

|

||||||

|

|

||||||

|

|

||||||

启用PHP交互模式

|

|

||||||

|

*启用PHP交互模式*

|

||||||

|

|

||||||

**4. 你可以仅仅将PHP脚本作为shell脚本来运行。首先,创建在你当前工作目录中创建一个PHP样例脚本。**

|

**4. 你可以仅仅将PHP脚本作为shell脚本来运行。首先,创建在你当前工作目录中创建一个PHP样例脚本。**

|

||||||

|

|

||||||

# echo -e '#!/usr/bin/php\n<?php phpinfo(); ?>' > phpscript.php

|

# echo -e '#!/usr/bin/php\n<?php phpinfo(); ?>' > phpscript.php

|

||||||

|

|

||||||

注意,我们在该PHP脚本的第一行使用#!/usr/bin/php,就像在shell脚本中那样(/bin/bash)。第一行的#!/usr/bin/php告诉Linux命令行将该脚本文件解析到PHP解释器中。

|

注意,我们在该PHP脚本的第一行使用`#!/usr/bin/php`,就像在shell脚本中那样(`/bin/bash`)。第一行的`#!/usr/bin/php`告诉Linux命令行用 PHP 解释器来解析该脚本文件。

|

||||||

|

|

||||||

其次,让该脚本可执行:

|

其次,让该脚本可执行:

|

||||||

|

|

||||||

@ -96,7 +103,7 @@ PHP调试工具

|

|||||||

|

|

||||||

# php -a

|

# php -a

|

||||||

|

|

||||||

创建一个函授,将它命名为 addition。同时,声明两个变量 $a 和 $b。

|

创建一个函数,将它命名为 `addition`。同时,声明两个变量 `$a` 和 `$b`。

|

||||||

|

|

||||||

php > function addition ($a, $b)

|

php > function addition ($a, $b)

|

||||||

|

|

||||||

@ -133,7 +140,8 @@ PHP调试工具

|

|||||||

12.3NULL

|

12.3NULL

|

||||||

|

|

||||||

|

|

||||||

创建PHP函数

|

|

||||||

|

*创建PHP函数*

|

||||||

|

|

||||||

你可以一直运行该函数,直至退出交互模式(ctrl+z)。同时,你也应该注意到了,上面输出结果中返回的数据类型为 NULL。这个问题可以通过要求 php 交互 shell用 return 代替 echo 返回结果来修复。

|

你可以一直运行该函数,直至退出交互模式(ctrl+z)。同时,你也应该注意到了,上面输出结果中返回的数据类型为 NULL。这个问题可以通过要求 php 交互 shell用 return 代替 echo 返回结果来修复。

|

||||||

|

|

||||||

@ -152,11 +160,12 @@ PHP调试工具

|

|||||||

这里是一个样例,在该样例的输出结果中返回了正确的数据类型。

|

这里是一个样例,在该样例的输出结果中返回了正确的数据类型。

|

||||||

|

|

||||||

|

|

||||||

PHP函数

|

|

||||||

|

*PHP函数*

|

||||||

|

|

||||||

永远都记住,用户定义的函数不会从一个shell会话保留到下一个shell会话,因此,一旦你退出交互shell,它就会丢失了。

|

永远都记住,用户定义的函数不会从一个shell会话保留到下一个shell会话,因此,一旦你退出交互shell,它就会丢失了。

|

||||||

|

|

||||||

希望你喜欢此次会话。保持连线,你会获得更多此类文章。保持关注,保持健康。请在下面的评论中为我们提供有价值的反馈。点赞并分享,帮助我们扩散。

|

希望你喜欢此次教程。保持连线,你会获得更多此类文章。保持关注,保持健康。请在下面的评论中为我们提供有价值的反馈。点赞并分享,帮助我们扩散。

|

||||||

|

|

||||||

还请阅读: [12个Linux终端中有用的的PHP命令行用法——第二部分][1]

|

还请阅读: [12个Linux终端中有用的的PHP命令行用法——第二部分][1]

|

||||||

|

|

||||||

@ -164,9 +173,9 @@ PHP函数

|

|||||||

|

|

||||||

via: http://www.tecmint.com/run-php-codes-from-linux-commandline/

|

via: http://www.tecmint.com/run-php-codes-from-linux-commandline/

|

||||||

|

|

||||||

作者:[vishek Kumar][a]

|

作者:[Avishek Kumar][a]

|

||||||

译者:[GOLinux](https://github.com/GOLinux)

|

译者:[GOLinux](https://github.com/GOLinux)

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

校对:[wxy](https://github.com/wxy)

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||||

|

|

||||||

@ -1,74 +0,0 @@

|

|||||||

2015 will be the year Linux takes over the enterprise (and other predictions)

|

|

||||||

================================================================================

|

|

||||||

> Jack Wallen removes his rose-colored glasses and peers into the crystal ball to predict what 2015 has in store for Linux.

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

The crystal ball has been vague and fuzzy for quite some time. Every pundit and voice has opined on what the upcoming year will mean to whatever topic it is they hold dear to their heart. In my case, we're talking Linux and open source.

|

|

||||||

|

|

||||||

In previous years, I'd don the rose-colored glasses and make predictions that would shine a fantastic light over the Linux landscape and proclaim 20** will be the year of Linux on the _____ (name your platform). Many times, those predictions were wrong, and Linux would wind up grinding on in the background.

|

|

||||||

|

|

||||||

This coming year, however, there are some fairly bold predictions to be made, some of which are sure things. Read on and see if you agree.

|

|

||||||

|

|

||||||

### Linux takes over big data ###

|

|

||||||

|

|

||||||

This should come as no surprise, considering the advancements Linux and open source has made over the previous few years. With the help of SuSE, Red Hat, and SAP Hana, Linux will hold powerful sway over big data in 2015. In-memory computing and live kernel patching will be the thing that catapults big data into realms of uptime and reliability never before known. SuSE will lead this charge like a warrior rushing into a battle it cannot possibly lose.

|

|

||||||

|

|

||||||

This rise of Linux in the world of big data will have serious trickle down over the rest of the business world. We already know how fond enterprise businesses are of Linux and big data. What we don't know is how this relationship will alter the course of Linux with regards to the rest of the business world.

|

|

||||||

|

|

||||||

My prediction is that the success of Linux with big data will skyrocket the popularity of Linux throughout the business landscape. More contracts for SuSE and Red Hat will equate to more deployments of Linux servers that handle more tasks within the business world. This will especially apply to the cloud, where OpenStack should easily become an overwhelming leader.

|

|

||||||

|

|

||||||

As the end of 2015 draws to a close, Linux will continue its take over of more backend services, which may include the likes of collaboration servers, security, and much more.

|

|

||||||

|

|

||||||

### Smart machines ###

|

|

||||||

|

|

||||||

Linux is already leading the trend for making homes and autos more intelligent. With improvements in the likes of Nest (which currently uses an embedded Linux), the open source platform is poised to take over your machines. Because 2015 should see a massive rise in smart machines, it goes without saying that Linux will be a huge part of that growth. I firmly believe more homes and businesses will take advantage of such smart controls, and that will lead to more innovations (all of which will be built on Linux).

|

|

||||||

|

|

||||||

One of the issues facing Nest, however, is that it was purchased by Google. What does this mean for the thermostat controller? Will Google continue using the Linux platform -- or will it opt to scrap that in favor of Android? Of course, a switch would set the Nest platform back a bit.

|

|

||||||

|

|

||||||

The upcoming year will see Linux lead the rise in popularity of home automation. Wink, Iris, Q Station, Staples Connect, and more (similar) systems will help to bridge Linux and home users together.

|

|

||||||

|

|

||||||

### The desktop ###

|

|

||||||

|

|

||||||

The big question, as always, is one that tends to hang over the heads of the Linux community like a dark cloud. That question is in relation to the desktop. Unfortunately, my predictions here aren't nearly as positive. I believe that the year 2015 will remain quite stagnant for Linux on the desktop. That complacency will center around Ubuntu.

|

|

||||||

|

|

||||||

As much as I love Ubuntu (and the Unity desktop), this particular distribution will continue to drag the Linux desktop down. Why?

|

|

||||||

|

|

||||||

Convergence... or the lack thereof.

|

|

||||||

|

|

||||||

Canonical has been so headstrong about converging the desktop and mobile experience that they are neglecting the current state of the desktop. The last two releases of Ubuntu (one being an LTS release) have been stagnant (at best). The past year saw two of the most unexciting releases of Ubuntu that I can recall. The reason? Because the developers of Ubuntu are desperately trying to make Unity 8/Mir and the ubiquitous Ubuntu Phone a reality. The vaporware that is the Ubuntu Phone will continue on through 2015, and Unity 8/Mir may or may not be released.

|

|

||||||

|

|

||||||

When the new iteration of the Ubuntu Unity desktop is finally released, it will suffer a serious setback, because there will be so little hardware available to truly show it off. [System76][1] will sell their outstanding [Sable Touch][2], which will probably become the flagship system for Unity 8/Mir. As for the Ubuntu Phone? How many reports have you read that proclaimed "Ubuntu Phone will ship this year"?

|

|

||||||

|

|

||||||

I'm now going on the record to predict that the Ubuntu Phone will not ship in 2015. Why? Canonical created partnerships with two OEMs over a year ago. Those partnerships have yet to produce a single shippable product. The closest thing to a shippable product is the Meizu MX4 phone. The "Pro" version of that phone was supposed to have a formal launch of Sept 25. Like everything associated with the Ubuntu Phone, it didn't happen.

|

|

||||||

|

|

||||||

Unless Canonical stops putting all of its eggs in one vaporware basket, desktop Linux will take a major hit in 2015. Ubuntu needs to release something major -- something to make heads turn -- otherwise, 2015 will be just another year where we all look back and think "we could have done something special."

|

|

||||||

|

|

||||||

Outside of Ubuntu, I do believe there are some outside chances that Linux could still make some noise on the desktop. I think two distributions, in particular, will bring something rather special to the table:

|

|

||||||

|

|

||||||

- [Evolve OS][3] -- a ChromeOS-like Linux distribution

|

|

||||||

- [Quantum OS][4] -- a Linux distribution that uses Android's Material Design specs

|

|

||||||

|

|

||||||

Both of these projects are quite exciting and offer unique, user-friendly takes on the Linux desktop. This is quickly become a necessity in a landscape being dragged down by out-of-date design standards (think the likes of Cinnamon, Mate, XFCE, LXCE -- all desperately clinging to the past).

|

|

||||||

|

|

||||||

This is not to say that Linux on the desktop doesn't have a chance in 2015. It does. In order to grasp the reins of that chance, it will have to move beyond the past and drop the anchors that prevent it from moving out to deeper, more viable waters.

|

|

||||||

|

|

||||||

Linux stands to make more waves in 2015 than it has in a very long time. From enterprise to home automation -- the world could be the oyster that Linux uses as a springboard to the desktop and beyond.

|

|

||||||

|

|

||||||

What are your predictions for Linux and open source in 2015? Share your thoughts in the discussion thread below.

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

|

||||||

|

|

||||||

via: http://www.techrepublic.com/article/2015-will-be-the-year-linux-takes-over-the-enterprise-and-other-predictions/

|

|

||||||

|

|

||||||

作者:[Jack Wallen][a]

|

|

||||||

译者:[barney-ro](https://github.com/barney-ro)

|

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

|

||||||

|

|

||||||

[a]:http://www.techrepublic.com/search/?a=jack+wallen

|

|

||||||

[1]:https://system76.com/

|

|

||||||

[2]:https://system76.com/desktops/sable

|

|

||||||

[3]:https://evolve-os.com/

|

|

||||||

[4]:http://quantum-os.github.io/

|

|

||||||

@ -1,120 +0,0 @@

|

|||||||

The Curious Case of the Disappearing Distros

|

|

||||||

================================================================================

|

|

||||||

|

|

||||||

|

|

||||||

"Linux is a big game now, with billions of dollars of profit, and it's the best thing since sliced bread, but corporations are taking control, and slowly but systematically, community distros are being killed," said Google+ blogger Alessandro Ebersol. "Linux is slowly becoming just like BSD, where companies use and abuse it and give very little in return."

|

|

||||||

|

|

||||||

Well the holidays are pretty much upon us at last here in the Linux blogosphere, and there's nowhere left to hide. The next two weeks or so promise little more than a blur of forced social occasions and too-large meals, punctuated only -- for the luckier ones among us -- by occasional respite down at the Broken Windows Lounge.

|

|

||||||

|

|

||||||

Perhaps that's why Linux bloggers seized with such glee upon the good old-fashioned mystery that came up recently -- delivered in the nick of time, as if on cue.

|

|

||||||

|

|

||||||

"Why is the Number of Linux Distros Declining?" is the [question][1] posed over at Datamation, and it's just the distraction so many FOSS fans have been needing.

|

|

||||||

|

|

||||||

"Until about 2011, the number of active distributions slowly increased by a few each year," wrote author Bruce Byfield. "By contrast, the last three years have seen a 12 percent decline -- a decrease too high to be likely to be coincidence.

|

|

||||||

|

|

||||||

"So what's happening?" Byfield wondered.

|

|

||||||

|

|

||||||

It would be difficult to imagine a more thought-provoking question with which to spend the Northern hemisphere's shortest days.

|

|

||||||

|

|

||||||

### 'There Are Too Many Distros' ###

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

"That's an easy question," began blogger [Robert Pogson][2]. "There are too many distros."

|

|

||||||

|

|

||||||

After all, "if a fanatic like me can enjoy life having sampled only a dozen distros, why have any more?" Pogson explained. "If someone has a concept different from the dozen or so most common distros, that concept can likely be demonstrated by documenting the tweaks and package-lists and, perhaps, some code."

|

|

||||||

|

|

||||||

Trying to compete with some 40,000 package repositories like Debian's, however, is "just silly," he said.

|

|

||||||

|

|

||||||

"No startup can compete with such a distro," Pogson asserted. "Why try? Just use it to do what you want and tell the world about it."

|

|

||||||

|

|

||||||

### 'I Don't Distro-Hop Anymore' ###

|

|

||||||

|

|

||||||

The major existing distros are doing a good job, so "we don't need so many derivative works," Google+ blogger Kevin O'Brien agreed.

|

|

||||||

|

|

||||||

"I know I don't 'distro-hop' anymore, and my focus is on using my computer to get work done," O'Brien added.

|

|

||||||

|

|

||||||

"If my apps run fine every day, that is all that I need," he said. "Right now I am sticking with Ubuntu LTS 14.04, and probably will until 2016."

|

|

||||||

|

|

||||||

### 'The More Distros, the Better' ###

|

|

||||||

|

|

||||||

It stands to reason that "as distros get better, there will be less reasons to roll your own," concurred [Linux Rants][3] blogger Mike Stone.

|

|

||||||

|

|

||||||

"I think the modern Linux distros cover the bases of a larger portion of the Linux-using crowd, so fewer and fewer people are starting their own distribution to compensate for something that the others aren't satisfying," he explained. "Add to that the fact that corporations are more heavily involved in the development of Linux now than they ever have been, and they're going to focus their resources."

|

|

||||||

|

|

||||||

So, the decline isn't necessarily a bad thing, as it only points to the strength of the current offerings, he asserted.

|

|

||||||

|

|

||||||

At the same time, "I do think there are some negative consequences as well," Stone added. "Variation in the distros is a way that Linux grows and evolves, and with a narrower field, we're seeing less opportunity to put new ideas out there. In my mind, the more distros, the better -- hopefully the trend reverses soon."

|

|

||||||

|

|

||||||

### 'I Hope Some Diversity Survives' ###

|

|

||||||

|

|

||||||

Indeed, "the era of novelty and experimentation is over," Google+ blogger Gonzalo Velasco C. told Linux Girl.

|

|

||||||

|

|

||||||

"Linux is 20+ years old and got professional," he noted. "There is always room for experimentation, but the top 20 are here since more than a decade ago.

|

|

||||||

|

|

||||||

"Godspeed GNU/Linux," he added. "I hope some diversity survives -- especially distros without Systemd; on the other hand, some standards are reached through consensus."

|

|

||||||

|

|

||||||

### A Question of Package Managers ###

|

|

||||||

|

|

||||||

There are two trends at work here, suggested consultant and [Slashdot][4] blogger Gerhard Mack.

|

|

||||||

|

|

||||||

First, "there are fewer reasons to start a new distro," he said. "The basic nuts and bolts are mostly done, installation is pretty easy across most distros, and it's not difficult on most hardware to get a working system without having to resort to using the command line."

|

|

||||||

|

|

||||||

The second thing is that "we are seeing a reduction of distros with inferior package managers," Mack suggested. "It is clear that .deb-based distros had fewer losses and ended up with a larger overall share."

|

|

||||||

|

|

||||||

### Survival of the Fittest ###

|

|

||||||

|

|

||||||

It's like survival of the fittest, suggested consultant Rodolfo Saenz, who is certified in Linux, IBM Tivoli Storage Manager and Microsoft Active Directory.

|

|

||||||

|

|

||||||

"I prefer to see a strong Linux with less distros," Saenz added. "Too many distros dilutes development efforts and can confuse potential future users."

|

|

||||||

|

|

||||||

Fewer distros, on the other hand, "focuses development efforts into the stronger distros and also attracts new potential users with clear choices for their needs," he said.

|

|

||||||

|

|

||||||

### All About the Money ###

|

|

||||||

|

|

||||||

Google+ blogger Alessandro Ebersol also saw survival of the fittest at play, but he took a darker view.

|

|

||||||

|

|

||||||

"Linux is a big game now, with billions of dollars of profit, and it's the best thing since sliced bread," Ebersol began. "But corporations are taking control, and slowly but systematically, community distros are being killed."

|

|

||||||

|

|

||||||

It's difficult for community distros to keep pace with the ever-changing field, and cash is a necessity, he conceded.

|

|

||||||

|

|

||||||

Still, "Linux is slowly becoming just like BSD, where companies use and abuse it and give very little in return," Ebersol said. "It saddens me, but GNU/Linux's best days were 10 years ago, circa 2002 to 2004. Now, it's the survival of the fittest -- and of course, the ones with more money will prevail."

|

|

||||||

|

|

||||||

### 'Fewer Devs Care' ###

|

|

||||||

|

|

||||||

SoylentNews blogger hairyfeet focused on today's altered computing landscape.

|

|

||||||

|

|

||||||

"The reason there are fewer distros is simple: With everybody moving to the Google Playwall of Android, and Windows 10 looking to be the next XP, fewer devs care," hairyfeet said.

|

|

||||||

|

|

||||||

"Why should they?" he went on. "The desktop wars are over, MSFT won, and the mobile wars are gonna be proprietary Google, proprietary Apple and proprietary MSFT. The money is in apps and services, and with a slow economy, there just isn't time for pulling a Taco Bell and rerolling yet another distro.

|

|

||||||

|

|

||||||

"For the few that care about Linux desktops you have Ubuntu, Mint and Cent, and that is plenty," hairyfeet said.

|

|

||||||

|

|

||||||

### 'No Less Diversity' ###

|

|

||||||

|

|

||||||

Last but not least, Chris Travers, a [blogger][5] who works on the [LedgerSMB][6] project, took an optimistic view.

|

|

||||||

|

|

||||||

"Ever since I have been around Linux, there have been a few main families -- [SuSE][7], [Red Hat][8], Debian, Gentoo, Slackware -- and a number of forks of these," Travers said. "The number of major families of distros has been declining for some time -- Mandrake and Connectiva merging, for example, Caldera disappearing -- but each of these families is ending up with fewer members as well.

|

|

||||||

|

|

||||||

"I think this is a good thing," he concluded.

|

|

||||||

|

|

||||||

"The big community distros -- Debian, Slackware, Gentoo, Fedora -- are going strong and picking up a lot of the niche users that other distros catered to," he pointed out. "Many of these distros are making it easier to come up with customized variants for niche markets. So what you have is a greater connectedness within the big distros, and no less diversity."

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

|

||||||

|

|

||||||

via: http://www.linuxinsider.com/story/The-Curious-Case-of-the-Disappearing-Distros-81518.html

|

|

||||||

|

|

||||||

作者:Katherine Noyes

|

|

||||||

译者:[译者ID](https://github.com/译者ID)

|

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

|

||||||

|

|

||||||

[1]:http://www.datamation.com/open-source/why-is-the-number-of-linux-distros-declining.html

|

|

||||||

[2]:http://mrpogson.com/

|

|

||||||

[3]:http://linuxrants.com/

|

|

||||||

[4]:http://slashdot.org/

|

|

||||||

[5]:http://ledgersmbdev.blogspot.com/

|

|

||||||

[6]:http://www.ledgersmb.org/

|

|

||||||

[7]:http://www.novell.com/linux

|

|

||||||

[8]:http://www.redhat.com/

|

|

||||||

@ -1,36 +0,0 @@

|

|||||||

diff -u: What's New in Kernel Development

|

|

||||||

================================================================================

|

|

||||||

**David Drysdale** wanted to add Capsicum security features to Linux after he noticed that FreeBSD already had Capsicum support. Capsicum defines fine-grained security privileges, not unlike filesystem capabilities. But as David discovered, Capsicum also has some controversy surrounding it.

|

|

||||||

|

|

||||||

Capsicum has been around for a while and was described in a USENIX paper in 2010: [http://www.cl.cam.ac.uk/research/security/capsicum/papers/2010usenix-security-capsicum-website.pdf][1].

|

|

||||||

|

|

||||||

Part of the controversy is just because of the similarity with capabilities. As Eric Biderman pointed out during the discussion, it would be possible to implement features approaching Capsicum's as an extension of capabilities, but implementing Capsicum directly would involve creating a whole new (and extensive) abstraction layer in the kernel. Although David argued that capabilities couldn't actually be extended far enough to match Capsicum's fine-grained security controls.

|

|

||||||

|

|

||||||

Capsicum also was controversial within its own developer community. For example, as Eric described, it lacked a specification for how to revoke privileges. And, David pointed out that this was because the community couldn't agree on how that could best be done. David quoted an e-mail sent by Ben Laurie to the cl-capsicum-discuss mailing list in 2011, where Ben said, "It would require additional book-keeping to find and revoke outstanding capabilities, which requires knowing how to reach capabilities, and then whether they are derived from the capability being revoked. It also requires an authorization model for revocation. The former two points mean additional overhead in terms of data structure operations and synchronisation."

|

|

||||||

|

|

||||||

Given the ongoing controversy within the Capsicum developer community and the corresponding lack of specification of key features, and given the existence of capabilities that already perform a similar function in the kernel and the invasiveness of Capsicum patches, Eric was opposed to David implementing Capsicum in Linux.

|

|

||||||

|

|

||||||

But, given the fact that capabilities are much coarser-grained than Capsicum's security features, to the point that capabilities can't really be extended far enough to mimic Capsicum's features, and given that FreeBSD already has Capsicum implemented in its kernel, showing that it can be done and that people might want it, it seems there will remain a lot of folks interested in getting Capsicum into the Linux kernel.

|

|

||||||

|

|

||||||

Sometimes it's unclear whether there's a bug in the code or just a bug in the written specification. Henrique de Moraes Holschuh noticed that the Intel Software Developer Manual (vol. 3A, section 9.11.6) said quite clearly that microcode updates required 16-byte alignment for the P6 family of CPUs, the Pentium 4 and the Xeon. But, the code in the kernel's microcode driver didn't enforce that alignment.

|

|

||||||

|

|

||||||

In fact, Henrique's investigation uncovered the fact that some Intel chips, like the Xeon X5550 and the second-generation i5 chips, needed only 4-byte alignment in practice, and not 16. However, to conform to the documented specification, he suggested fixing the kernel code to match the spec.

|

|

||||||

|

|

||||||

Borislav Petkov objected to this. He said Henrique was looking for problems where there weren't any. He said that Henrique simply had discovered a bug in Intel's documentation, because the alignment issue clearly wasn't a problem in the real world. He suggested alerting the Intel folks to the documentation problem and moving on. As he put it, "If the processor accepts the non-16-byte-aligned update, why do you care?"

|

|

||||||

|

|

||||||

But, as H. Peter Anvin remarked, the written spec was Intel's guarantee that certain behaviors would work. If the kernel ignored the spec, it could lead to subtle bugs later on. And, Bill Davidsen said that if the kernel ignored the alignment requirement, and "if the requirement is enforced in some future revision, and updates then fail in some insane way, the vendor is justified in claiming 'I told you so'."

|

|

||||||

|

|

||||||

The end result was that Henrique sent in some patches to make the microcode driver enforce the 16-byte alignment requirement.

|

|

||||||

|

|

||||||

--------------------------------------------------------------------------------

|

|

||||||

|

|

||||||

via: http://www.linuxjournal.com/content/diff-u-whats-new-kernel-development-6

|

|

||||||

|

|

||||||

作者:[Zack Brown][a]

|

|

||||||

译者:[译者ID](https://github.com/译者ID)

|

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

|

||||||

|

|

||||||

[a]:http://www.linuxjournal.com/user/801501

|

|

||||||

[1]:http://www.cl.cam.ac.uk/research/security/capsicum/papers/2010usenix-security-capsicum-website.pdf

|

|

||||||

@ -1,91 +0,0 @@

|

|||||||

Did this JavaScript break the console?

|

|

||||||

---------

|

|

||||||

|

|

||||||

#Q:

|

|

||||||

|

|

||||||

Just doing some JavaScript stuff in google chrome (don't want to try in other browsers for now, in case this is really doing real damage) and I'm not sure why this seemed to break my console.

|

|

||||||

|

|

||||||

```javascript

|

|

||||||

>var x = "http://www.foo.bar/q?name=%%this%%";

|

|

||||||

<undefined

|

|

||||||

>x

|

|

||||||

```

|

|

||||||

|

|

||||||

After x (and enter) the console stops working... I restarted chrome and now when I do a simple

|

|

||||||

|

|

||||||

```javascript

|

|

||||||

console.clear();

|

|

||||||

```

|

|

||||||

|

|

||||||

It's giving me

|

|

||||||

|

|

||||||

```javascript

|

|

||||||

Console was cleared

|

|

||||||

```

|

|

||||||

|

|

||||||

And not clearing the console. Now in my scripts console.log's do not register and I'm wondering what is going on. 99% sure it has to do with the double percent signs (%%).

|

|

||||||

|

|

||||||

Anyone know what I did wrong or better yet, how to fix the console?

|

|

||||||

|

|

||||||

[A bug report for this issue has been filed here.][1]

|

|

||||||

Edit: Feeling pretty dumb, but I had Preserve log checked... That's why the console wasn't clearing.

|

|

||||||

|

|

||||||

#A:

|

|

||||||

|

|

||||||

As discussed in the comments, there are actually many different ways of constructing a string that causes this issue, and it is not necessary for there to be two percent signs in most cases.

|

|

||||||

|

|

||||||

```TXT

|

|

||||||

http://example.com/%

|

|

||||||

http://%%%

|

|

||||||

http://ab%

|

|

||||||

http://%ab

|

|

||||||

http://%zz

|

|

||||||

```

|

|

||||||

|

|

||||||

However, it's not just the presence of a percent sign that breaks the Chrome console, as when we enter the following well-formed URL, the console continues to work properly and produces a clickable link.

|

|

||||||

|

|

||||||

```TXT

|

|

||||||

http://ab%20cd

|

|

||||||

```

|

|

||||||

|

|

||||||

Additionally, the strings `http://%`, and `http://%%` will also print properly, since Chrome will not auto-link a URL-link string unless the [`http://`][2] is followed by at least 3 characters.

|

|

||||||

|

|

||||||

From here I hypothesized that the issue must be in the process of linking a URL string in the console, likely in the process of decoding a malformed URL. I remembered that the JavaScript function `decodeURI` will throw an exception if given a malformed URL, and since Chrome's developer tools are largely written in JavaScript, could this be the issue that is evidently crashing the developer console?

|

|

||||||

|

|

||||||

To test this theory, I ran Chrome by the command link, to see if any errors were being logged.

|

|

||||||

|

|

||||||

Indeed, the same error you would see if you ran decodeURI on a malformed URL (i.e. decodeURI('http://example.com/%')) was being printed to the console:

|

|

||||||

|

|

||||||

>[4810:1287:0107/164725:ERROR:CONSOLE(683)] "Uncaught URIError: URI malformed", source: chrome-devtools://devtools/bundled/devtools.js (683)

|

|

||||||

>So, I opened the URL 'chrome-devtools://devtools/bundled/devtools.js' in Chrome, and on line 683, I found the following.

|

|

||||||

|

|

||||||

```javascript

|

|

||||||

{var parsedURL=new WebInspector.ParsedURL(decodeURI(url));var origin;var folderPath;var name;if(parsedURL.isValid){origin=parsedURL.scheme+"://"+parsedURL.host;if(parsedURL.port)

|

|

||||||

```

|

|

||||||

|

|

||||||

As we can see, `decodeURI(url)` is being called on the URL without any error checking, thus throwing the exception and crashing the developer console.

|

|

||||||

|

|

||||||

A real fix for this issue will come from adding error handling to the Chrome console code, but in the meantime, one way to avoid the issue would be to wrap the string in a complex data type like an array to prevent parsing when logging.

|

|

||||||

|

|

||||||

```javascript

|

|

||||||

var x = "http://example.com/%";

|

|

||||||

console.log([x]);

|

|

||||||

```

|

|

||||||

|

|

||||||

Thankfully, the broken console issue does not persist once the tab is closed, and will not affect other tabs.

|

|

||||||

|

|

||||||

###Update:

|

|

||||||

|

|

||||||

Apparently, the issue can persist across tabs and restarts if Preserve Log is checked. Uncheck this if you are having this issue.

|

|

||||||

|

|

||||||

via:[stackoverflow](http://stackoverflow.com/questions/27828804/did-this-javascript-break-the-console/27830948#27830948)

|

|

||||||

|

|

||||||

作者:[Alexander O'Mara][a]

|

|

||||||

译者:[译者ID](https://github.com/译者ID)

|

|

||||||

校对:[校对者ID](https://github.com/校对者ID)

|

|

||||||

|

|

||||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

|

||||||

|

|

||||||

[a]:http://stackoverflow.com/users/3155639/alexander-omara

|

|

||||||

[1]:https://code.google.com/p/chromium/issues/detail?id=446975

|

|

||||||

[2]:https://developer.mozilla.org/en-US/docs/Web/JavaScript/Reference/Global_Objects/decodeURI

|

|

||||||

@ -1,66 +0,0 @@

|

|||||||

Revealed: The best and worst of Docker

|

|

||||||

================================================================================

|

|

||||||

|

|

||||||

Credit: [Shutterstock][1]

|

|

||||||

|

|

||||||