mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-25 00:50:15 +08:00

commit

244b6791f9

480

published/20180330 Go on very small hardware Part 1.md

Normal file

480

published/20180330 Go on very small hardware Part 1.md

Normal file

@ -0,0 +1,480 @@

|

||||

Go 语言在极小硬件上的运用(一)

|

||||

=========

|

||||

|

||||

Go 语言,能在多低下的配置上运行并发挥作用呢?

|

||||

|

||||

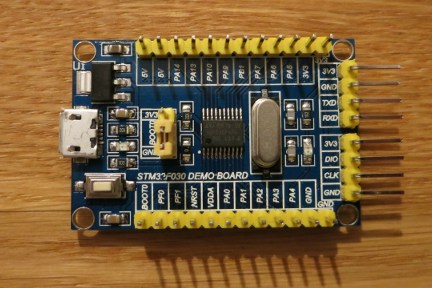

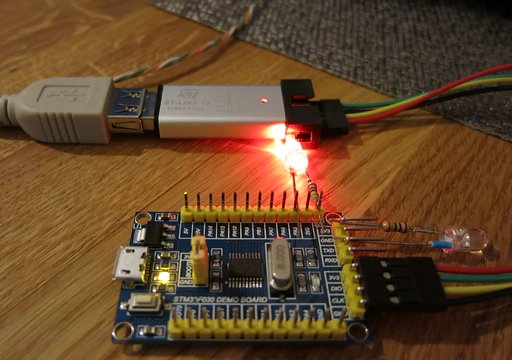

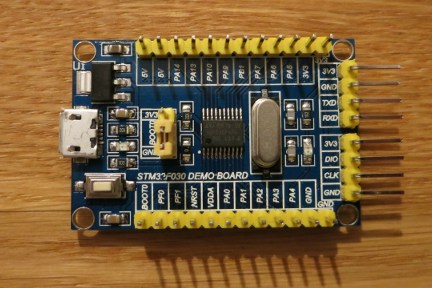

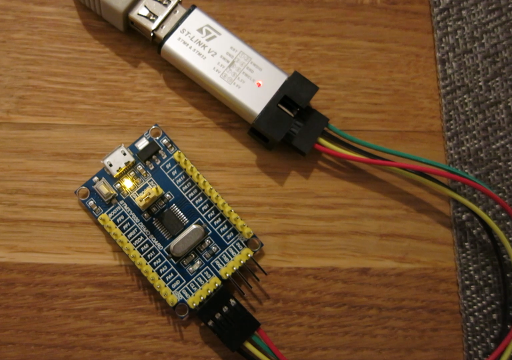

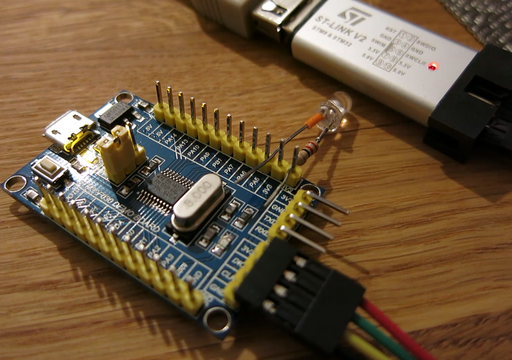

我最近购买了一个特别便宜的开发板:

|

||||

|

||||

|

||||

|

||||

我购买它的理由有三个。首先,我(作为程序员)从未接触过 STM320 系列的开发板。其次,STM32F10x 系列使用也有点少了。STM320 系列的 MCU 很便宜,有更新一些的外设,对系列产品进行了改进,问题修复也做得更好了。最后,为了这篇文章,我选用了这一系列中最低配置的开发板,整件事情就变得有趣起来了。

|

||||

|

||||

### 硬件部分

|

||||

|

||||

[STM32F030F4P6][3] 给人留下了很深的印象:

|

||||

|

||||

* CPU: [Cortex M0][1] 48 MHz(最低配置,只有 12000 个逻辑门电路)

|

||||

* RAM: 4 KB,

|

||||

* Flash: 16 KB,

|

||||

* ADC、SPI、I2C、USART 和几个定时器

|

||||

|

||||

以上这些采用了 TSSOP20 封装。正如你所见,这是一个很小的 32 位系统。

|

||||

|

||||

### 软件部分

|

||||

|

||||

如果你想知道如何在这块开发板上使用 [Go][4] 编程,你需要反复阅读硬件规范手册。你必须面对这样的真实情况:在 Go 编译器中给 Cortex-M0 提供支持的可能性很小。而且,这还仅仅只是第一个要解决的问题。

|

||||

|

||||

我会使用 [Emgo][5],但别担心,之后你会看到,它如何让 Go 在如此小的系统上尽可能发挥作用。

|

||||

|

||||

在我拿到这块开发板之前,对 [stm32/hal][6] 系列下的 F0 MCU 没有任何支持。在简单研究[参考手册][7]后,我发现 STM32F0 系列是 STM32F3 削减版,这让在新端口上开发的工作变得容易了一些。

|

||||

|

||||

如果你想接着本文的步骤做下去,需要先安装 Emgo

|

||||

|

||||

```

|

||||

cd $HOME

|

||||

git clone https://github.com/ziutek/emgo/

|

||||

cd emgo/egc

|

||||

go install

|

||||

```

|

||||

|

||||

然后设置一下环境变量

|

||||

|

||||

```

|

||||

export EGCC=path_to_arm_gcc # eg. /usr/local/arm/bin/arm-none-eabi-gcc

|

||||

export EGLD=path_to_arm_linker # eg. /usr/local/arm/bin/arm-none-eabi-ld

|

||||

export EGAR=path_to_arm_archiver # eg. /usr/local/arm/bin/arm-none-eabi-ar

|

||||

|

||||

export EGROOT=$HOME/emgo/egroot

|

||||

export EGPATH=$HOME/emgo/egpath

|

||||

|

||||

export EGARCH=cortexm0

|

||||

export EGOS=noos

|

||||

export EGTARGET=f030x6

|

||||

```

|

||||

|

||||

更详细的说明可以在 [Emgo][8] 官网上找到。

|

||||

|

||||

要确保 `egc` 在你的 `PATH` 中。 你可以使用 `go build` 来代替 `go install`,然后把 `egc` 复制到你的 `$HOME/bin` 或 `/usr/local/bin` 中。

|

||||

|

||||

现在,为你的第一个 Emgo 程序创建一个新文件夹,随后把示例中链接器脚本复制过来:

|

||||

|

||||

```

|

||||

mkdir $HOME/firstemgo

|

||||

cd $HOME/firstemgo

|

||||

cp $EGPATH/src/stm32/examples/f030-demo-board/blinky/script.ld .

|

||||

```

|

||||

|

||||

### 最基本程序

|

||||

|

||||

在 `main.go` 文件中创建一个最基本的程序:

|

||||

|

||||

```

|

||||

package main

|

||||

|

||||

func main() {

|

||||

}

|

||||

```

|

||||

|

||||

文件编译没有出现任何问题:

|

||||

|

||||

```

|

||||

$ egc

|

||||

$ arm-none-eabi-size cortexm0.elf

|

||||

text data bss dec hex filename

|

||||

7452 172 104 7728 1e30 cortexm0.elf

|

||||

```

|

||||

|

||||

第一次编译可能会花点时间。编译后产生的二进制占用了 7624 个字节的 Flash 空间(文本 + 数据)。对于一个什么都没做的程序来说,占用的空间有些大。还剩下 8760 字节,可以用来做些有用的事。

|

||||

|

||||

不妨试试传统的 “Hello, World!” 程序:

|

||||

|

||||

```

|

||||

package main

|

||||

|

||||

import "fmt"

|

||||

|

||||

func main() {

|

||||

fmt.Println("Hello, World!")

|

||||

}

|

||||

```

|

||||

|

||||

不幸的是,这次结果有些糟糕:

|

||||

|

||||

```

|

||||

$ egc

|

||||

/usr/local/arm/bin/arm-none-eabi-ld: /home/michal/P/go/src/github.com/ziutek/emgo/egpath/src/stm32/examples/f030-demo-board/blog/cortexm0.elf section `.text' will not fit in region `Flash'

|

||||

/usr/local/arm/bin/arm-none-eabi-ld: region `Flash' overflowed by 10880 bytes

|

||||

exit status 1

|

||||

```

|

||||

|

||||

“Hello, World!” 需要 STM32F030x6 上至少 32KB 的 Flash 空间。

|

||||

|

||||

`fmt` 包强制包含整个 `strconv` 和 `reflect` 包。这三个包,即使在精简版本中的 Emgo 中,占用空间也很大。我们不能使用这个例子了。有很多的应用不需要好看的文本输出。通常,一个或多个 LED,或者七段数码管显示就足够了。不过,在第二部分,我会尝试使用 `strconv` 包来格式化,并在 UART 上显示一些数字和文本。

|

||||

|

||||

### 闪烁

|

||||

|

||||

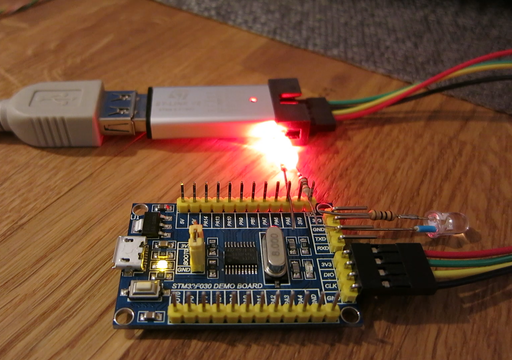

我们的开发板上有一个与 PA4 引脚和 VCC 相连的 LED。这次我们的代码稍稍长了一些:

|

||||

|

||||

```

|

||||

package main

|

||||

|

||||

import (

|

||||

"delay"

|

||||

|

||||

"stm32/hal/gpio"

|

||||

"stm32/hal/system"

|

||||

"stm32/hal/system/timer/systick"

|

||||

)

|

||||

|

||||

var led gpio.Pin

|

||||

|

||||

func init() {

|

||||

system.SetupPLL(8, 1, 48/8)

|

||||

systick.Setup(2e6)

|

||||

|

||||

gpio.A.EnableClock(false)

|

||||

led = gpio.A.Pin(4)

|

||||

|

||||

cfg := &gpio.Config{Mode: gpio.Out, Driver: gpio.OpenDrain}

|

||||

led.Setup(cfg)

|

||||

}

|

||||

|

||||

func main() {

|

||||

for {

|

||||

led.Clear()

|

||||

delay.Millisec(100)

|

||||

led.Set()

|

||||

delay.Millisec(900)

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

按照惯例,`init` 函数用来初始化和配置外设。

|

||||

|

||||

`system.SetupPLL(8, 1, 48/8)` 用来配置 RCC,将外部的 8 MHz 振荡器的 PLL 作为系统时钟源。PLL 分频器设置为 1,倍频数设置为 48/8 =6,这样系统时钟频率为 48MHz。

|

||||

|

||||

`systick.Setup(2e6)` 将 Cortex-M SYSTICK 时钟作为系统时钟,每隔 2e6 次纳秒运行一次(每秒钟 500 次)。

|

||||

|

||||

`gpio.A.EnableClock(false)` 开启了 GPIO A 口的时钟。`False` 意味着这一时钟在低功耗模式下会被禁用,但在 STM32F0 系列中并未实现这一功能。

|

||||

|

||||

`led.Setup(cfg)` 设置 PA4 引脚为开漏输出。

|

||||

|

||||

`led.Clear()` 将 PA4 引脚设为低,在开漏设置中,打开 LED。

|

||||

|

||||

`led.Set()` 将 PA4 设为高电平状态,关掉LED。

|

||||

|

||||

编译这个代码:

|

||||

|

||||

```

|

||||

$ egc

|

||||

$ arm-none-eabi-size cortexm0.elf

|

||||

text data bss dec hex filename

|

||||

9772 172 168 10112 2780 cortexm0.elf

|

||||

```

|

||||

|

||||

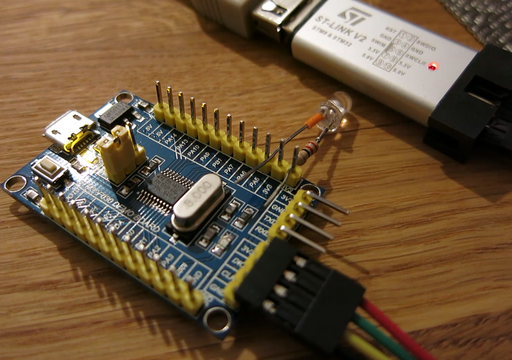

正如你所看到的,这个闪烁程序占用了 2320 字节,比最基本程序占用空间要大。还有 6440 字节的剩余空间。

|

||||

|

||||

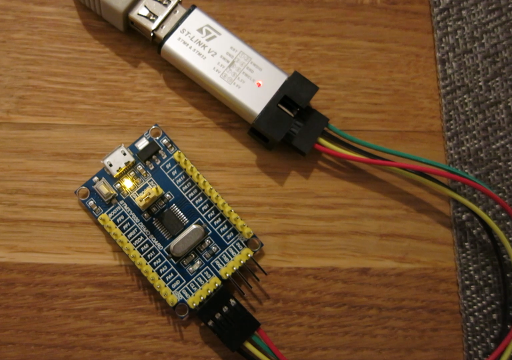

看看代码是否能运行:

|

||||

|

||||

```

|

||||

$ openocd -d0 -f interface/stlink.cfg -f target/stm32f0x.cfg -c 'init; program cortexm0.elf; reset run; exit'

|

||||

Open On-Chip Debugger 0.10.0+dev-00319-g8f1f912a (2018-03-07-19:20)

|

||||

Licensed under GNU GPL v2

|

||||

For bug reports, read

|

||||

http://openocd.org/doc/doxygen/bugs.html

|

||||

debug_level: 0

|

||||

adapter speed: 1000 kHz

|

||||

adapter_nsrst_delay: 100

|

||||

none separate

|

||||

adapter speed: 950 kHz

|

||||

target halted due to debug-request, current mode: Thread

|

||||

xPSR: 0xc1000000 pc: 0x0800119c msp: 0x20000da0

|

||||

adapter speed: 4000 kHz

|

||||

** Programming Started **

|

||||

auto erase enabled

|

||||

target halted due to breakpoint, current mode: Thread

|

||||

xPSR: 0x61000000 pc: 0x2000003a msp: 0x20000da0

|

||||

wrote 10240 bytes from file cortexm0.elf in 0.817425s (12.234 KiB/s)

|

||||

** Programming Finished **

|

||||

adapter speed: 950 kHz

|

||||

```

|

||||

|

||||

在这篇文章中,这是我第一次,将一个短视频转换成[动画 PNG][9]。我对此印象很深,再见了 YouTube。 对于 IE 用户,我很抱歉,更多信息请看 [apngasm][10]。我本应该学习 HTML5,但现在,APNG 是我最喜欢的,用来播放循环短视频的方法了。

|

||||

|

||||

|

||||

|

||||

### 更多的 Go 语言编程

|

||||

|

||||

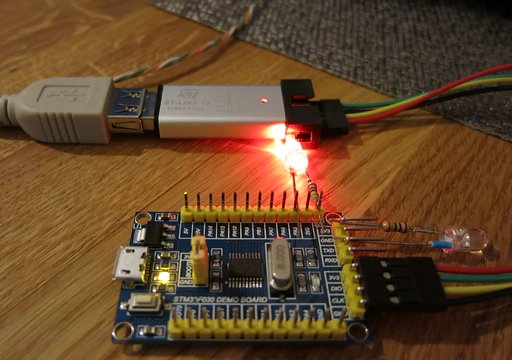

如果你不是一个 Go 程序员,但你已经听说过一些关于 Go 语言的事情,你可能会说:“Go 语法很好,但跟 C 比起来,并没有明显的提升。让我看看 Go 语言的通道和协程!”

|

||||

|

||||

接下来我会一一展示:

|

||||

|

||||

```

|

||||

import (

|

||||

"delay"

|

||||

|

||||

"stm32/hal/gpio"

|

||||

"stm32/hal/system"

|

||||

"stm32/hal/system/timer/systick"

|

||||

)

|

||||

|

||||

var led1, led2 gpio.Pin

|

||||

|

||||

func init() {

|

||||

system.SetupPLL(8, 1, 48/8)

|

||||

systick.Setup(2e6)

|

||||

|

||||

gpio.A.EnableClock(false)

|

||||

led1 = gpio.A.Pin(4)

|

||||

led2 = gpio.A.Pin(5)

|

||||

|

||||

cfg := &gpio.Config{Mode: gpio.Out, Driver: gpio.OpenDrain}

|

||||

led1.Setup(cfg)

|

||||

led2.Setup(cfg)

|

||||

}

|

||||

|

||||

func blinky(led gpio.Pin, period int) {

|

||||

for {

|

||||

led.Clear()

|

||||

delay.Millisec(100)

|

||||

led.Set()

|

||||

delay.Millisec(period - 100)

|

||||

}

|

||||

}

|

||||

|

||||

func main() {

|

||||

go blinky(led1, 500)

|

||||

blinky(led2, 1000)

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

代码改动很小: 添加了第二个 LED,上一个例子中的 `main` 函数被重命名为 `blinky` 并且需要提供两个参数。 `main` 在新的协程中先调用 `blinky`,所以两个 LED 灯在并行使用。值得一提的是,`gpio.Pin` 可以同时访问同一 GPIO 口的不同引脚。

|

||||

|

||||

Emgo 还有很多不足。其中之一就是你需要提前规定 `goroutines(tasks)` 的最大执行数量。是时候修改 `script.ld` 了:

|

||||

|

||||

```

|

||||

ISRStack = 1024;

|

||||

MainStack = 1024;

|

||||

TaskStack = 1024;

|

||||

MaxTasks = 2;

|

||||

|

||||

INCLUDE stm32/f030x4

|

||||

INCLUDE stm32/loadflash

|

||||

INCLUDE noos-cortexm

|

||||

```

|

||||

|

||||

栈的大小需要靠猜,现在还不用关心这一点。

|

||||

|

||||

```

|

||||

$ egc

|

||||

$ arm-none-eabi-size cortexm0.elf

|

||||

text data bss dec hex filename

|

||||

10020 172 172 10364 287c cortexm0.elf

|

||||

```

|

||||

|

||||

另一个 LED 和协程一共占用了 248 字节的 Flash 空间。

|

||||

|

||||

|

||||

|

||||

### 通道

|

||||

|

||||

通道是 Go 语言中协程之间相互通信的一种[推荐方式][11]。Emgo 甚至能允许通过*中断处理*来使用缓冲通道。下一个例子就展示了这种情况。

|

||||

|

||||

```

|

||||

package main

|

||||

|

||||

import (

|

||||

"delay"

|

||||

"rtos"

|

||||

|

||||

"stm32/hal/gpio"

|

||||

"stm32/hal/irq"

|

||||

"stm32/hal/system"

|

||||

"stm32/hal/system/timer/systick"

|

||||

"stm32/hal/tim"

|

||||

)

|

||||

|

||||

var (

|

||||

leds [3]gpio.Pin

|

||||

timer *tim.Periph

|

||||

ch = make(chan int, 1)

|

||||

)

|

||||

|

||||

func init() {

|

||||

system.SetupPLL(8, 1, 48/8)

|

||||

systick.Setup(2e6)

|

||||

|

||||

gpio.A.EnableClock(false)

|

||||

leds[0] = gpio.A.Pin(4)

|

||||

leds[1] = gpio.A.Pin(5)

|

||||

leds[2] = gpio.A.Pin(9)

|

||||

|

||||

cfg := &gpio.Config{Mode: gpio.Out, Driver: gpio.OpenDrain}

|

||||

for _, led := range leds {

|

||||

led.Set()

|

||||

led.Setup(cfg)

|

||||

}

|

||||

|

||||

timer = tim.TIM3

|

||||

pclk := timer.Bus().Clock()

|

||||

if pclk < system.AHB.Clock() {

|

||||

pclk *= 2

|

||||

}

|

||||

freq := uint(1e3) // Hz

|

||||

timer.EnableClock(true)

|

||||

timer.PSC.Store(tim.PSC(pclk/freq - 1))

|

||||

timer.ARR.Store(700) // ms

|

||||

timer.DIER.Store(tim.UIE)

|

||||

timer.CR1.Store(tim.CEN)

|

||||

|

||||

rtos.IRQ(irq.TIM3).Enable()

|

||||

}

|

||||

|

||||

func blinky(led gpio.Pin, period int) {

|

||||

for range ch {

|

||||

led.Clear()

|

||||

delay.Millisec(100)

|

||||

led.Set()

|

||||

delay.Millisec(period - 100)

|

||||

}

|

||||

}

|

||||

|

||||

func main() {

|

||||

go blinky(leds[1], 500)

|

||||

blinky(leds[2], 500)

|

||||

}

|

||||

|

||||

func timerISR() {

|

||||

timer.SR.Store(0)

|

||||

leds[0].Set()

|

||||

select {

|

||||

case ch <- 0:

|

||||

// Success

|

||||

default:

|

||||

leds[0].Clear()

|

||||

}

|

||||

}

|

||||

|

||||

//c:__attribute__((section(".ISRs")))

|

||||

var ISRs = [...]func(){

|

||||

irq.TIM3: timerISR,

|

||||

}

|

||||

```

|

||||

|

||||

与之前例子相比较下的不同:

|

||||

|

||||

1. 添加了第三个 LED,并连接到 PA9 引脚(UART 头的 TXD 引脚)。

|

||||

2. 时钟(`TIM3`)作为中断源。

|

||||

3. 新函数 `timerISR` 用来处理 `irq.TIM3` 的中断。

|

||||

4. 新增容量为 1 的缓冲通道是为了 `timerISR` 和 `blinky` 协程之间的通信。

|

||||

5. `ISRs` 数组作为*中断向量表*,是更大的*异常向量表*的一部分。

|

||||

6. `blinky` 中的 `for` 语句被替换成 `range` 语句。

|

||||

|

||||

为了方便起见,所有的 LED,或者说它们的引脚,都被放在 `leds` 这个数组里。另外,所有引脚在被配置为输出之前,都设置为一种已知的初始状态(高电平状态)。

|

||||

|

||||

在这个例子里,我们想让时钟以 1 kHz 的频率运行。为了配置 TIM3 预分频器,我们需要知道它的输入时钟频率。通过参考手册我们知道,输入时钟频率在 `APBCLK = AHBCLK` 时,与 `APBCLK` 相同,反之等于 2 倍的 `APBCLK`。

|

||||

|

||||

如果 CNT 寄存器增加 1 kHz,那么 ARR 寄存器的值等于*更新事件*(重载事件)在毫秒中的计数周期。 为了让更新事件产生中断,必须要设置 DIER 寄存器中的 UIE 位。CEN 位能启动时钟。

|

||||

|

||||

时钟外设在低功耗模式下必须启用,为了自身能在 CPU 处于休眠时保持运行: `timer.EnableClock(true)`。这在 STM32F0 中无关紧要,但对代码可移植性却十分重要。

|

||||

|

||||

`timerISR` 函数处理 `irq.TIM3` 的中断请求。`timer.SR.Store(0)` 会清除 SR 寄存器里的所有事件标志,无效化向 [NVIC][12] 发出的所有中断请求。凭借经验,由于中断请求无效的延时性,需要在程序一开始马上清除所有的中断标志。这避免了无意间再次调用处理。为了确保万无一失,需要先清除标志,再读取,但是在我们的例子中,清除标志就已经足够了。

|

||||

|

||||

下面的这几行代码:

|

||||

|

||||

```

|

||||

select {

|

||||

case ch <- 0:

|

||||

// Success

|

||||

default:

|

||||

leds[0].Clear()

|

||||

}

|

||||

```

|

||||

|

||||

是 Go 语言中,如何在通道上非阻塞地发送消息的方法。中断处理程序无法一直等待通道中的空余空间。如果通道已满,则执行 `default`,开发板上的LED就会开启,直到下一次中断。

|

||||

|

||||

`ISRs` 数组包含了中断向量表。`//c:__attribute__((section(".ISRs")))` 会导致链接器将数组插入到 `.ISRs` 节中。

|

||||

|

||||

`blinky` 的 `for` 循环的新写法:

|

||||

|

||||

```

|

||||

for range ch {

|

||||

led.Clear()

|

||||

delay.Millisec(100)

|

||||

led.Set()

|

||||

delay.Millisec(period - 100)

|

||||

}

|

||||

```

|

||||

|

||||

等价于:

|

||||

|

||||

```

|

||||

for {

|

||||

_, ok := <-ch

|

||||

if !ok {

|

||||

break // Channel closed.

|

||||

}

|

||||

led.Clear()

|

||||

delay.Millisec(100)

|

||||

led.Set()

|

||||

delay.Millisec(period - 100)

|

||||

}

|

||||

```

|

||||

|

||||

注意,在这个例子中,我们不在意通道中收到的值,我们只对其接受到的消息感兴趣。我们可以在声明时,将通道元素类型中的 `int` 用空结构体 `struct{}` 来代替,发送消息时,用 `struct{}{}` 结构体的值代替 0,但这部分对新手来说可能会有些陌生。

|

||||

|

||||

让我们来编译一下代码:

|

||||

|

||||

```

|

||||

$ egc

|

||||

$ arm-none-eabi-size cortexm0.elf

|

||||

text data bss dec hex filename

|

||||

11096 228 188 11512 2cf8 cortexm0.elf

|

||||

```

|

||||

|

||||

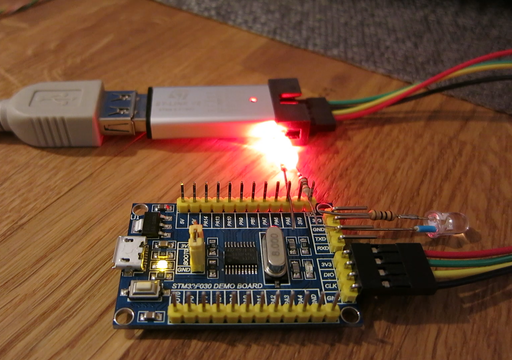

新的例子占用了 11324 字节的 Flash 空间,比上一个例子多占用了 1132 字节。

|

||||

|

||||

采用现在的时序,两个闪烁协程从通道中获取数据的速度,比 `timerISR` 发送数据的速度要快。所以它们在同时等待新数据,你还能观察到 `select` 的随机性,这也是 [Go 规范][13]所要求的。

|

||||

|

||||

|

||||

|

||||

开发板上的 LED 一直没有亮起,说明通道从未出现过溢出。

|

||||

|

||||

我们可以加快消息发送的速度,将 `timer.ARR.Store(700)` 改为 `timer.ARR.Store(200)`。 现在 `timerISR` 每秒钟发送 5 条消息,但是两个接收者加起来,每秒也只能接受 4 条消息。

|

||||

|

||||

|

||||

|

||||

正如你所看到的,`timerISR` 开启黄色 LED 灯,意味着通道上已经没有剩余空间了。

|

||||

|

||||

第一部分到这里就结束了。你应该知道,这一部分并未展示 Go 中最重要的部分,接口。

|

||||

|

||||

协程和通道只是一些方便好用的语法。你可以用自己的代码来替换它们,这并不容易,但也可以实现。接口是Go 语言的基础。这是文章中 [第二部分][14]所要提到的.

|

||||

|

||||

在 Flash 上我们还有些剩余空间。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://ziutek.github.io/2018/03/30/go_on_very_small_hardware.html

|

||||

|

||||

作者:[Michał Derkacz][a]

|

||||

译者:[wenwensnow](https://github.com/wenwensnow)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://ziutek.github.io/

|

||||

[1]:https://en.wikipedia.org/wiki/ARM_Cortex-M#Cortex-M0

|

||||

[2]:https://ziutek.github.io/2018/03/30/go_on_very_small_hardware.html

|

||||

[3]:http://www.st.com/content/st_com/en/products/microcontrollers/stm32-32-bit-arm-cortex-mcus/stm32-mainstream-mcus/stm32f0-series/stm32f0x0-value-line/stm32f030f4.html

|

||||

[4]:https://golang.org/

|

||||

[5]:https://github.com/ziutek/emgo

|

||||

[6]:https://github.com/ziutek/emgo/tree/master/egpath/src/stm32/hal

|

||||

[7]:http://www.st.com/resource/en/reference_manual/dm00091010.pdf

|

||||

[8]:https://github.com/ziutek/emgo

|

||||

[9]:https://en.wikipedia.org/wiki/APNG

|

||||

[10]:http://apngasm.sourceforge.net/

|

||||

[11]:https://blog.golang.org/share-memory-by-communicating

|

||||

[12]:http://infocenter.arm.com/help/topic/com.arm.doc.ddi0432c/Cihbecee.html

|

||||

[13]:https://golang.org/ref/spec#Select_statements

|

||||

[14]:https://ziutek.github.io/2018/04/14/go_on_very_small_hardware2.html

|

||||

252

published/20181227 Linux commands for measuring disk activity.md

Normal file

252

published/20181227 Linux commands for measuring disk activity.md

Normal file

@ -0,0 +1,252 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (laingke)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11387-1.html)

|

||||

[#]: subject: (Linux commands for measuring disk activity)

|

||||

[#]: via: (https://www.networkworld.com/article/3330497/linux/linux-commands-for-measuring-disk-activity.html)

|

||||

[#]: author: (Sandra Henry-Stocker https://www.networkworld.com/author/Sandra-Henry_Stocker/)

|

||||

|

||||

用于测量磁盘活动的 Linux 命令

|

||||

======

|

||||

> Linux 发行版提供了几个度量磁盘活动的有用命令。让我们了解一下其中的几个。

|

||||

|

||||

|

||||

|

||||

Linux 系统提供了一套方便的命令,帮助你查看磁盘有多忙,而不仅仅是磁盘有多满。在本文中,我们将研究五个非常有用的命令,用于查看磁盘活动。其中两个命令(`iostat` 和 `ioping`)可能必须添加到你的系统中,这两个命令一样要求你使用 sudo 特权,所有这五个命令都提供了查看磁盘活动的有用方法。

|

||||

|

||||

这些命令中最简单、最直观的一个可能是 `dstat` 了。

|

||||

|

||||

### dtstat

|

||||

|

||||

尽管 `dstat` 命令以字母 “d” 开头,但它提供的统计信息远远不止磁盘活动。如果你只想查看磁盘活动,可以使用 `-d` 选项。如下所示,你将得到一个磁盘读/写测量值的连续列表,直到使用 `CTRL-c` 停止显示为止。注意,在第一个报告信息之后,显示中的每个后续行将在接下来的时间间隔内报告磁盘活动,缺省值仅为一秒。

|

||||

|

||||

```

|

||||

$ dstat -d

|

||||

-dsk/total-

|

||||

read writ

|

||||

949B 73k

|

||||

65k 0 <== first second

|

||||

0 24k <== second second

|

||||

0 16k

|

||||

0 0 ^C

|

||||

```

|

||||

|

||||

在 `-d` 选项后面包含一个数字将把间隔设置为该秒数。

|

||||

|

||||

```

|

||||

$ dstat -d 10

|

||||

-dsk/total-

|

||||

read writ

|

||||

949B 73k

|

||||

65k 81M <== first five seconds

|

||||

0 21k <== second five second

|

||||

0 9011B ^C

|

||||

```

|

||||

|

||||

请注意,报告的数据可能以许多不同的单位显示——例如,M(Mb)、K(Kb)和 B(字节)。

|

||||

|

||||

如果没有选项,`dstat` 命令还将显示许多其他信息——指示 CPU 如何使用时间、显示网络和分页活动、报告中断和上下文切换。

|

||||

|

||||

```

|

||||

$ dstat

|

||||

You did not select any stats, using -cdngy by default.

|

||||

--total-cpu-usage-- -dsk/total- -net/total- ---paging-- ---system--

|

||||

usr sys idl wai stl| read writ| recv send| in out | int csw

|

||||

0 0 100 0 0| 949B 73k| 0 0 | 0 3B| 38 65

|

||||

0 0 100 0 0| 0 0 | 218B 932B| 0 0 | 53 68

|

||||

0 1 99 0 0| 0 16k| 64B 468B| 0 0 | 64 81 ^C

|

||||

```

|

||||

|

||||

`dstat` 命令提供了关于整个 Linux 系统性能的有价值的见解,几乎可以用它灵活而功能强大的命令来代替 `vmstat`、`netstat`、`iostat` 和 `ifstat` 等较旧的工具集合,该命令结合了这些旧工具的功能。要深入了解 `dstat` 命令可以提供的其它信息,请参阅这篇关于 [dstat][1] 命令的文章。

|

||||

|

||||

### iostat

|

||||

|

||||

`iostat` 命令通过观察设备活动的时间与其平均传输速率之间的关系,帮助监视系统输入/输出设备的加载情况。它有时用于评估磁盘之间的活动平衡。

|

||||

|

||||

```

|

||||

$ iostat

|

||||

Linux 4.18.0-041800-generic (butterfly) 12/26/2018 _x86_64_ (2 CPU)

|

||||

|

||||

avg-cpu: %user %nice %system %iowait %steal %idle

|

||||

0.07 0.01 0.03 0.05 0.00 99.85

|

||||

|

||||

Device tps kB_read/s kB_wrtn/s kB_read kB_wrtn

|

||||

loop0 0.00 0.00 0.00 1048 0

|

||||

loop1 0.00 0.00 0.00 365 0

|

||||

loop2 0.00 0.00 0.00 1056 0

|

||||

loop3 0.00 0.01 0.00 16169 0

|

||||

loop4 0.00 0.00 0.00 413 0

|

||||

loop5 0.00 0.00 0.00 1184 0

|

||||

loop6 0.00 0.00 0.00 1062 0

|

||||

loop7 0.00 0.00 0.00 5261 0

|

||||

sda 1.06 0.89 72.66 2837453 232735080

|

||||

sdb 0.00 0.02 0.00 48669 40

|

||||

loop8 0.00 0.00 0.00 1053 0

|

||||

loop9 0.01 0.01 0.00 18949 0

|

||||

loop10 0.00 0.00 0.00 56 0

|

||||

loop11 0.00 0.00 0.00 7090 0

|

||||

loop12 0.00 0.00 0.00 1160 0

|

||||

loop13 0.00 0.00 0.00 108 0

|

||||

loop14 0.00 0.00 0.00 3572 0

|

||||

loop15 0.01 0.01 0.00 20026 0

|

||||

loop16 0.00 0.00 0.00 24 0

|

||||

```

|

||||

|

||||

当然,当你只想关注磁盘时,Linux 回环设备上提供的所有统计信息都会使结果显得杂乱无章。不过,该命令也确实提供了 `-p` 选项,该选项使你可以仅查看磁盘——如以下命令所示。

|

||||

|

||||

```

|

||||

$ iostat -p sda

|

||||

Linux 4.18.0-041800-generic (butterfly) 12/26/2018 _x86_64_ (2 CPU)

|

||||

|

||||

avg-cpu: %user %nice %system %iowait %steal %idle

|

||||

0.07 0.01 0.03 0.05 0.00 99.85

|

||||

|

||||

Device tps kB_read/s kB_wrtn/s kB_read kB_wrtn

|

||||

sda 1.06 0.89 72.54 2843737 232815784

|

||||

sda1 1.04 0.88 72.54 2821733 232815784

|

||||

```

|

||||

|

||||

请注意 `tps` 是指每秒的传输量。

|

||||

|

||||

你还可以让 `iostat` 提供重复的报告。在下面的示例中,我们使用 `-d` 选项每五秒钟进行一次测量。

|

||||

|

||||

```

|

||||

$ iostat -p sda -d 5

|

||||

Linux 4.18.0-041800-generic (butterfly) 12/26/2018 _x86_64_ (2 CPU)

|

||||

|

||||

Device tps kB_read/s kB_wrtn/s kB_read kB_wrtn

|

||||

sda 1.06 0.89 72.51 2843749 232834048

|

||||

sda1 1.04 0.88 72.51 2821745 232834048

|

||||

|

||||

Device tps kB_read/s kB_wrtn/s kB_read kB_wrtn

|

||||

sda 0.80 0.00 11.20 0 56

|

||||

sda1 0.80 0.00 11.20 0 56

|

||||

```

|

||||

|

||||

如果你希望省略第一个(自启动以来的统计信息)报告,请在命令中添加 `-y`。

|

||||

|

||||

```

|

||||

$ iostat -p sda -d 5 -y

|

||||

Linux 4.18.0-041800-generic (butterfly) 12/26/2018 _x86_64_ (2 CPU)

|

||||

|

||||

Device tps kB_read/s kB_wrtn/s kB_read kB_wrtn

|

||||

sda 0.80 0.00 11.20 0 56

|

||||

sda1 0.80 0.00 11.20 0 56

|

||||

```

|

||||

|

||||

接下来,我们看第二个磁盘驱动器。

|

||||

|

||||

```

|

||||

$ iostat -p sdb

|

||||

Linux 4.18.0-041800-generic (butterfly) 12/26/2018 _x86_64_ (2 CPU)

|

||||

|

||||

avg-cpu: %user %nice %system %iowait %steal %idle

|

||||

0.07 0.01 0.03 0.05 0.00 99.85

|

||||

|

||||

Device tps kB_read/s kB_wrtn/s kB_read kB_wrtn

|

||||

sdb 0.00 0.02 0.00 48669 40

|

||||

sdb2 0.00 0.00 0.00 4861 40

|

||||

sdb1 0.00 0.01 0.00 35344 0

|

||||

```

|

||||

|

||||

### iotop

|

||||

|

||||

`iotop` 命令是类似 `top` 的实用程序,用于查看磁盘 I/O。它收集 Linux 内核提供的 I/O 使用信息,以便你了解哪些进程在磁盘 I/O 方面的要求最高。在下面的示例中,循环时间被设置为 5 秒。显示将自动更新,覆盖前面的输出。

|

||||

|

||||

```

|

||||

$ sudo iotop -d 5

|

||||

Total DISK READ: 0.00 B/s | Total DISK WRITE: 1585.31 B/s

|

||||

Current DISK READ: 0.00 B/s | Current DISK WRITE: 12.39 K/s

|

||||

TID PRIO USER DISK READ DISK WRITE SWAPIN IO> COMMAND

|

||||

32492 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.12 % [kworker/u8:1-ev~_power_efficient]

|

||||

208 be/3 root 0.00 B/s 1585.31 B/s 0.00 % 0.11 % [jbd2/sda1-8]

|

||||

1 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % init splash

|

||||

2 be/4 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [kthreadd]

|

||||

3 be/0 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [rcu_gp]

|

||||

4 be/0 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [rcu_par_gp]

|

||||

8 be/0 root 0.00 B/s 0.00 B/s 0.00 % 0.00 % [mm_percpu_wq]

|

||||

```

|

||||

|

||||

### ioping

|

||||

|

||||

`ioping` 命令是一种完全不同的工具,但是它可以报告磁盘延迟——也就是磁盘响应请求需要多长时间,而这有助于诊断磁盘问题。

|

||||

|

||||

```

|

||||

$ sudo ioping /dev/sda1

|

||||

4 KiB <<< /dev/sda1 (block device 111.8 GiB): request=1 time=960.2 us (warmup)

|

||||

4 KiB <<< /dev/sda1 (block device 111.8 GiB): request=2 time=841.5 us

|

||||

4 KiB <<< /dev/sda1 (block device 111.8 GiB): request=3 time=831.0 us

|

||||

4 KiB <<< /dev/sda1 (block device 111.8 GiB): request=4 time=1.17 ms

|

||||

^C

|

||||

--- /dev/sda1 (block device 111.8 GiB) ioping statistics ---

|

||||

3 requests completed in 2.84 ms, 12 KiB read, 1.05 k iops, 4.12 MiB/s

|

||||

generated 4 requests in 3.37 s, 16 KiB, 1 iops, 4.75 KiB/s

|

||||

min/avg/max/mdev = 831.0 us / 947.9 us / 1.17 ms / 158.0 us

|

||||

```

|

||||

|

||||

### atop

|

||||

|

||||

`atop` 命令,像 `top` 一样提供了大量有关系统性能的信息,包括有关磁盘活动的一些统计信息。

|

||||

|

||||

```

|

||||

ATOP - butterfly 2018/12/26 17:24:19 37d3h13m------ 10ed

|

||||

PRC | sys 0.03s | user 0.01s | #proc 179 | #zombie 0 | #exit 6 |

|

||||

CPU | sys 1% | user 0% | irq 0% | idle 199% | wait 0% |

|

||||

cpu | sys 1% | user 0% | irq 0% | idle 99% | cpu000 w 0% |

|

||||

CPL | avg1 0.00 | avg5 0.00 | avg15 0.00 | csw 677 | intr 470 |

|

||||

MEM | tot 5.8G | free 223.4M | cache 4.6G | buff 253.2M | slab 394.4M |

|

||||

SWP | tot 2.0G | free 2.0G | | vmcom 1.9G | vmlim 4.9G |

|

||||

DSK | sda | busy 0% | read 0 | write 7 | avio 1.14 ms |

|

||||

NET | transport | tcpi 4 | tcpo stall 8 | udpi 1 | udpo 0swout 2255 |

|

||||

NET | network | ipi 10 | ipo 7 | ipfrw 0 | deliv 60.67 ms |

|

||||

NET | enp0s25 0% | pcki 10 | pcko 8 | si 1 Kbps | so 3 Kbp0.73 ms |

|

||||

|

||||

PID SYSCPU USRCPU VGROW RGROW ST EXC THR S CPUNR CPU CMD 1/1673e4 |

|

||||

3357 0.01s 0.00s 672K 824K -- - 1 R 0 0% atop

|

||||

3359 0.01s 0.00s 0K 0K NE 0 0 E - 0% <ps>

|

||||

3361 0.00s 0.01s 0K 0K NE 0 0 E - 0% <ps>

|

||||

3363 0.01s 0.00s 0K 0K NE 0 0 E - 0% <ps>

|

||||

31357 0.00s 0.00s 0K 0K -- - 1 S 1 0% bash

|

||||

3364 0.00s 0.00s 8032K 756K N- - 1 S 1 0% sleep

|

||||

2931 0.00s 0.00s 0K 0K -- - 1 I 1 0% kworker/u8:2-e

|

||||

3356 0.00s 0.00s 0K 0K -E 0 0 E - 0% <sleep>

|

||||

3360 0.00s 0.00s 0K 0K NE 0 0 E - 0% <sleep>

|

||||

3362 0.00s 0.00s 0K 0K NE 0 0 E - 0% <sleep>

|

||||

```

|

||||

|

||||

如果你*只*想查看磁盘统计信息,则可以使用以下命令轻松进行管理:

|

||||

|

||||

```

|

||||

$ atop | grep DSK

|

||||

DSK | sda | busy 0% | read 122901 | write 3318e3 | avio 0.67 ms |

|

||||

DSK | sdb | busy 0% | read 1168 | write 103 | avio 0.73 ms |

|

||||

DSK | sda | busy 2% | read 0 | write 92 | avio 2.39 ms |

|

||||

DSK | sda | busy 2% | read 0 | write 94 | avio 2.47 ms |

|

||||

DSK | sda | busy 2% | read 0 | write 99 | avio 2.26 ms |

|

||||

DSK | sda | busy 2% | read 0 | write 94 | avio 2.43 ms |

|

||||

DSK | sda | busy 2% | read 0 | write 94 | avio 2.43 ms |

|

||||

DSK | sda | busy 2% | read 0 | write 92 | avio 2.43 ms |

|

||||

^C

|

||||

```

|

||||

|

||||

### 了解磁盘 I/O

|

||||

|

||||

Linux 提供了足够的命令,可以让你很好地了解磁盘的工作强度,并帮助你关注潜在的问题或减缓。希望这些命令中的一个可以告诉你何时需要质疑磁盘性能。偶尔使用这些命令将有助于确保当你需要检查磁盘,特别是忙碌或缓慢的磁盘时可以显而易见地发现它们。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.networkworld.com/article/3330497/linux/linux-commands-for-measuring-disk-activity.html

|

||||

|

||||

作者:[Sandra Henry-Stocker][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[laingke](https://github.com/laingke)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.networkworld.com/author/Sandra-Henry_Stocker/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.networkworld.com/article/3291616/linux/examining-linux-system-performance-with-dstat.html

|

||||

[2]: https://www.facebook.com/NetworkWorld/

|

||||

[3]: https://www.linkedin.com/company/network-world

|

||||

@ -0,0 +1,227 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (laingke)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11373-1.html)

|

||||

[#]: subject: (Create an online store with this Java-based framework)

|

||||

[#]: via: (https://opensource.com/article/19/1/scipio-erp)

|

||||

[#]: author: (Paul Piper https://opensource.com/users/madppiper)

|

||||

|

||||

使用 Java 框架 Scipio ERP 创建一个在线商店

|

||||

======

|

||||

|

||||

> Scipio ERP 具有包罗万象的应用程序和功能。

|

||||

|

||||

|

||||

|

||||

如果,你想在网上销售产品或服务,但要么找不到合适的软件,要么觉得定制成本太高?那么,[Scipio ERP][1] 也许正是你想要的。

|

||||

|

||||

Scipio ERP 是一个基于 Java 的开源的电子商务框架,具有包罗万象的应用程序和功能。这个项目于 2014 年从 [Apache OFBiz][2] 分叉而来,侧重于更好的定制和更现代的吸引力。这个电子商务组件非常丰富,可以在多商店环境中工作,同时支持国际化,具有琳琅满目的产品配置,而且它还兼容现代 HTML 框架。该软件还为许多其他业务场景提供标准应用程序,例如会计、仓库管理或销售团队自动化。它都是高度标准化的,因此易于定制,如果你想要的不仅仅是一个虚拟购物车,这是非常棒的。

|

||||

|

||||

该系统也使得跟上现代 Web 标准变得非常容易。所有界面都是使用系统的“[模板工具包][3]”构建的,这是一个易于学习的宏集,可以将 HTML 与所有应用程序分开。正因为如此,每个应用程序都已经标准化到核心。听起来令人困惑?它真的不是 HTML——它看起来很像 HTML,但你写的内容少了很多。

|

||||

|

||||

### 初始安装

|

||||

|

||||

在你开始之前,请确保你已经安装了 Java 1.8(或更高版本)的 SDK 以及一个 Git 客户端。完成了?太棒了!接下来,切换到 Github 上的主分支:

|

||||

|

||||

```

|

||||

git clone https://github.com/ilscipio/scipio-erp.git

|

||||

cd scipio-erp

|

||||

git checkout master

|

||||

```

|

||||

|

||||

要安装该系统,只需要运行 `./install.sh` 并从命令行中选择任一选项。在开发过程中,最好一直使用 “installation for development”(选项 1),它还将安装一系列演示数据。对于专业安装,你可以修改初始配置数据(“种子数据”),以便自动为你设置公司和目录数据。默认情况下,系统将使用内部数据库运行,但是它[也可以配置][4]使用各种关系数据库,比如 PostgreSQL 和 MariaDB 等。

|

||||

|

||||

![安装向导][6]

|

||||

|

||||

*按照安装向导完成初始配置*

|

||||

|

||||

通过命令 `./start.sh` 启动系统然后打开链接 <https://localhost:8443/setup/> 完成配置。如果你安装了演示数据, 你可以使用用户名 `admin` 和密码 `scipio` 进行登录。在安装向导中,你可以设置公司简介、会计、仓库、产品目录、在线商店和额外的用户配置信息。暂时在产品商店配置界面上跳过网站实体的配置。系统允许你使用不同的底层代码运行多个在线商店;除非你想这样做,一直选择默认值是最简单的。

|

||||

|

||||

祝贺你,你刚刚安装了 Scipio ERP!在界面上操作一两分钟,感受一下它的功能。

|

||||

|

||||

### 捷径

|

||||

|

||||

在你进入自定义之前,这里有一些方便的命令可以帮助你:

|

||||

|

||||

* 创建一个 shop-override:`./ant create-component-shop-override`

|

||||

* 创建一个新组件:`./ant create-component`

|

||||

* 创建一个新主题组件:`./ant create-theme`

|

||||

* 创建管理员用户:`./ant create-admin-user-login`

|

||||

* 各种其他实用功能:`./ant -p`

|

||||

* 用于安装和更新插件的实用程序:`./git-addons help`

|

||||

|

||||

另外,请记下以下位置:

|

||||

|

||||

* 将 Scipio 作为服务运行的脚本:`/tools/scripts/`

|

||||

* 日志输出目录:`/runtime/logs`

|

||||

* 管理应用程序:`<https://localhost:8443/admin/>`

|

||||

* 电子商务应用程序:`<https://localhost:8443/shop/>`

|

||||

|

||||

最后,Scipio ERP 在以下五个主要目录中构建了所有代码:

|

||||

|

||||

* `framework`: 框架相关的源,应用程序服务器,通用界面和配置

|

||||

* `applications`: 核心应用程序

|

||||

* `addons`: 第三方扩展

|

||||

* `themes`: 修改界面外观

|

||||

* `hot-deploy`: 你自己的组件

|

||||

|

||||

除了一些配置,你将在 `hot-deploy` 和 `themes` 目录中进行开发。

|

||||

|

||||

### 在线商店定制

|

||||

|

||||

要真正使系统成为你自己的系统,请开始考虑使用[组件][7]。组件是一种模块化方法,可以覆盖、扩展和添加到系统中。你可以将组件视为独立 Web 模块,可以捕获有关数据库([实体][8])、功能([服务][9])、界面([视图][10])、[事件和操作][11]和 Web 应用程序等的信息。由于组件功能,你可以添加自己的代码,同时保持与原始源兼容。

|

||||

|

||||

运行命令 `./ant create-component-shop-override` 并按照步骤创建你的在线商店组件。该操作将会在 `hot-deploy` 目录内创建一个新目录,该目录将扩展并覆盖原始的电子商务应用程序。

|

||||

|

||||

![组件目录结构][13]

|

||||

|

||||

*一个典型的组件目录结构。*

|

||||

|

||||

你的组件将具有以下目录结构:

|

||||

|

||||

* `config`: 配置

|

||||

* `data`: 种子数据

|

||||

* `entitydef`: 数据库表定义

|

||||

* `script`: Groovy 脚本的位置

|

||||

* `servicedef`: 服务定义

|

||||

* `src`: Java 类

|

||||

* `webapp`: 你的 web 应用程序

|

||||

* `widget`: 界面定义

|

||||

|

||||

此外,`ivy.xml` 文件允许你将 Maven 库添加到构建过程中,`ofbiz-component.xml` 文件定义整个组件和 Web 应用程序结构。除了一些在当前目录所能够看到的,你还可以在 Web 应用程序的 `WEB-INF` 目录中找到 `controller.xml` 文件。这允许你定义请求实体并将它们连接到事件和界面。仅对于界面来说,你还可以使用内置的 CMS 功能,但优先要坚持使用核心机制。在引入更改之前,请熟悉 `/applications/shop/`。

|

||||

|

||||

#### 添加自定义界面

|

||||

|

||||

还记得[模板工具包][3]吗?你会发现它在每个界面都有使用到。你可以将其视为一组易于学习的宏,它用来构建所有内容。下面是一个例子:

|

||||

|

||||

```

|

||||

<@section title="Title">

|

||||

<@heading id="slider">Slider</@heading>

|

||||

<@row>

|

||||

<@cell columns=6>

|

||||

<@slider id="" class="" controls=true indicator=true>

|

||||

<@slide link="#" image="https://placehold.it/800x300">Just some content…</@slide>

|

||||

<@slide title="This is a title" link="#" image="https://placehold.it/800x300"></@slide>

|

||||

</@slider>

|

||||

</@cell>

|

||||

<@cell columns=6>Second column</@cell>

|

||||

</@row>

|

||||

</@section>

|

||||

```

|

||||

|

||||

不是很难,对吧?同时,主题包含 HTML 定义和样式。这将权力交给你的前端开发人员,他们可以定义每个宏的输出,并坚持使用自己的构建工具进行开发。

|

||||

|

||||

我们快点试试吧。首先,在你自己的在线商店上定义一个请求。你将修改此代码。一个内置的 CMS 系统也可以通过 <https://localhost:8443/cms/> 进行访问,它允许你以更有效的方式创建新模板和界面。它与模板工具包完全兼容,并附带可根据你的喜好采用的示例模板。但是既然我们试图在这里理解系统,那么首先让我们采用更复杂的方法。

|

||||

|

||||

打开你商店 `webapp` 目录中的 [controller.xml][14] 文件。控制器会跟踪请求事件并相应地执行操作。下面的操作将会在 `/shop/test` 下创建一个新的请求:

|

||||

|

||||

```

|

||||

<!-- Request Mappings -->

|

||||

<request-map uri="test">

|

||||

<security https="true" auth="false"/>

|

||||

<response name="success" type="view" value="test"/>

|

||||

</request-map>

|

||||

```

|

||||

|

||||

你可以定义多个响应,如果需要,可以在请求中使用事件或服务调用来确定你可能要使用的响应。我选择了“视图”类型的响应。视图是渲染的响应;其他类型是请求重定向、转发等。系统附带各种渲染器,可让你稍后确定输出;为此,请添加以下内容:

|

||||

|

||||

```

|

||||

<!-- View Mappings -->

|

||||

<view-map name="test" type="screen" page="component://mycomponent/widget/CommonScreens.xml#test"/>

|

||||

```

|

||||

|

||||

用你自己的组件名称替换 `my-component`。然后,你可以通过在 `widget/CommonScreens.xml` 文件的标签内添加以下内容来定义你的第一个界面:

|

||||

|

||||

```

|

||||

<screen name="test">

|

||||

<section>

|

||||

<actions>

|

||||

</actions>

|

||||

<widgets>

|

||||

<decorator-screen name="CommonShopAppDecorator" location="component://shop/widget/CommonScreens.xml">

|

||||

<decorator-section name="body">

|

||||

<platform-specific><html><html-template location="component://mycomponent/webapp/mycomponent/test/test.ftl"/></html></platform-specific>

|

||||

</decorator-section>

|

||||

</decorator-screen>

|

||||

</widgets>

|

||||

</section>

|

||||

</screen>

|

||||

```

|

||||

|

||||

商店界面实际上非常模块化,由多个元素组成([小部件、动作和装饰器][15])。为简单起见,请暂时保留原样,并通过添加第一个模板工具包文件来完成新网页。为此,创建一个新的 `webapp/mycomponent/test/test.ftl` 文件并添加以下内容:

|

||||

|

||||

```

|

||||

<@alert type="info">Success!</@alert>

|

||||

```

|

||||

|

||||

![自定义的界面][17]

|

||||

|

||||

*一个自定义的界面。*

|

||||

|

||||

打开 <https://localhost:8443/shop/control/test/> 并惊叹于你自己的成就。

|

||||

|

||||

#### 自定义主题

|

||||

|

||||

通过创建自己的主题来修改商店的界面外观。所有主题都可以作为组件在 `themes` 文件夹中找到。运行命令 `./ant create-theme` 来创建你自己的主题。

|

||||

|

||||

![主题组件布局][19]

|

||||

|

||||

*一个典型的主题组件布局。*

|

||||

|

||||

以下是最重要的目录和文件列表:

|

||||

|

||||

* 主题配置:`data/*ThemeData.xml`

|

||||

* 特定主题封装的 HTML:`includes/*.ftl`

|

||||

* 模板工具包 HTML 定义:`includes/themeTemplate.ftl`

|

||||

* CSS 类定义:`includes/themeStyles.ftl`

|

||||

* CSS 框架: `webapp/theme-title/`

|

||||

|

||||

快速浏览工具包中的 Metro 主题;它使用 Foundation CSS 框架并且充分利用了这个框架。然后,然后,在新构建的 `webapp/theme-title` 目录中设置自己的主题并开始开发。Foundation-shop 主题是一个非常简单的特定于商店的主题实现,你可以将其用作你自己工作的基础。

|

||||

|

||||

瞧!你已经建立了自己的在线商店,准备个性化定制吧!

|

||||

|

||||

![搭建完成的 Scipio ERP 在线商店][21]

|

||||

|

||||

*一个搭建完成的基于 Scipio ERP的在线商店。*

|

||||

|

||||

### 接下来是什么?

|

||||

|

||||

Scipio ERP 是一个功能强大的框架,可简化复杂的电子商务应用程序的开发。为了更完整的理解,请查看项目[文档][7],尝试[在线演示][22],或者[加入社区][23].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/1/scipio-erp

|

||||

|

||||

作者:[Paul Piper][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[laingke](https://github.com/laingke)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/madppiper

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.scipioerp.com

|

||||

[2]: https://ofbiz.apache.org/

|

||||

[3]: https://www.scipioerp.com/community/developer/freemarker-macros/

|

||||

[4]: https://www.scipioerp.com/community/developer/installation-configuration/configuration/#database-configuration

|

||||

[5]: /file/419711

|

||||

[6]: https://opensource.com/sites/default/files/uploads/setup_step5_sm.jpg (Setup wizard)

|

||||

[7]: https://www.scipioerp.com/community/developer/architecture/components/

|

||||

[8]: https://www.scipioerp.com/community/developer/entities/

|

||||

[9]: https://www.scipioerp.com/community/developer/services/

|

||||

[10]: https://www.scipioerp.com/community/developer/views-requests/

|

||||

[11]: https://www.scipioerp.com/community/developer/events-actions/

|

||||

[12]: /file/419716

|

||||

[13]: https://opensource.com/sites/default/files/uploads/component_structure.jpg (component directory structure)

|

||||

[14]: https://www.scipioerp.com/community/developer/views-requests/request-controller/

|

||||

[15]: https://www.scipioerp.com/community/developer/views-requests/screen-widgets-decorators/

|

||||

[16]: /file/419721

|

||||

[17]: https://opensource.com/sites/default/files/uploads/success_screen_sm.jpg (Custom screen)

|

||||

[18]: /file/419726

|

||||

[19]: https://opensource.com/sites/default/files/uploads/theme_structure.jpg (theme component layout)

|

||||

[20]: /file/419731

|

||||

[21]: https://opensource.com/sites/default/files/uploads/finished_shop_1_sm.jpg (Finished Scipio ERP shop)

|

||||

[22]: https://www.scipioerp.com/demo/

|

||||

[23]: https://forum.scipioerp.com/

|

||||

269

published/20190822 How to move a file in Linux.md

Normal file

269

published/20190822 How to move a file in Linux.md

Normal file

@ -0,0 +1,269 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11380-1.html)

|

||||

[#]: subject: (How to move a file in Linux)

|

||||

[#]: via: (https://opensource.com/article/19/8/moving-files-linux-depth)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/sethhttps://opensource.com/users/doni08521059)

|

||||

|

||||

在 Linux 中如何移动文件

|

||||

======

|

||||

|

||||

> 无论你是刚接触 Linux 的文件移动的新手还是已有丰富的经验,你都可以通过此深入的文章中学到一些东西。

|

||||

|

||||

|

||||

|

||||

在 Linux 中移动文件看似比较简单,但是可用的选项却比大多数人想象的要多。本文介绍了初学者如何在 GUI 和命令行中移动文件,还介绍了底层实际上发生了什么,并介绍了许多有一定经验的用户也很少使用的命令行选项。

|

||||

|

||||

### 移动什么?

|

||||

|

||||

在研究移动文件之前,有必要仔细研究*移动*文件系统对象时实际发生的情况。当文件创建后,会将其分配给一个<ruby>索引节点<rt>inode</rt></ruby>,这是文件系统中用于数据存储的固定点。你可以使用 [ls][2] 命令看到文件对应的索引节点:

|

||||

|

||||

```

|

||||

$ ls --inode example.txt

|

||||

7344977 example.txt

|

||||

```

|

||||

|

||||

移动文件时,实际上并没有将数据从一个索引节点移动到另一个索引节点,只是给文件对象分配了新的名称或文件路径而已。实际上,文件在移动时会保留其权限,因为移动文件不会更改或重新创建文件。(LCTT 译注:在不跨卷、分区和存储器时,移动文件是不会重新创建文件的;反之亦然)

|

||||

|

||||

文件和目录的索引节点并没有暗示这种继承关系,而是由文件系统本身决定的。索引节点的分配是基于文件创建时的顺序分配的,并且完全独立于你组织计算机文件的方式。一个目录“内”的文件的索引节点号可能比其父目录的索引节点号更低或更高。例如:

|

||||

|

||||

```

|

||||

$ mkdir foo

|

||||

$ mv example.txt foo

|

||||

$ ls --inode

|

||||

7476865 foo

|

||||

$ ls --inode foo

|

||||

7344977 example.txt

|

||||

```

|

||||

|

||||

但是,将文件从一个硬盘驱动器移动到另一个硬盘驱动器时,索引节点基本上会更改。发生这种情况是因为必须将新数据写入新文件系统。因此,在 Linux 中,移动和重命名文件的操作实际上是相同的操作。无论你将文件移动到另一个目录还是在同一目录使用新名称,这两个操作均由同一个底层程序执行。

|

||||

|

||||

本文重点介绍将文件从一个目录移动到另一个目录。

|

||||

|

||||

### 用鼠标移动文件

|

||||

|

||||

图形用户界面是大多数人都熟悉的友好的抽象层,位于复杂的二进制数据集合之上。这也是在 Linux 桌面上移动文件的首选方法,也是最直观的方法。从一般意义上来说,如果你习惯使用台式机,那么你可能已经知道如何在硬盘驱动器上移动文件。例如,在 GNOME 桌面上,将文件从一个窗口拖放到另一个窗口时的默认操作是移动文件而不是复制文件,因此这可能是该桌面上最直观的操作之一:

|

||||

|

||||

![Moving a file in GNOME.][3]

|

||||

|

||||

而 KDE Plasma 桌面中的 Dolphin 文件管理器默认情况下会提示用户以执行不同的操作。拖动文件时按住 `Shift` 键可强制执行移动操作:

|

||||

|

||||

![Moving a file in KDE.][4]

|

||||

|

||||

### 在命令行移动文件

|

||||

|

||||

用于在 Linux、BSD、Illumos、Solaris 和 MacOS 上移动文件的 shell 命令是 `mv`。不言自明,简单的命令 `mv <source> <destination>` 会将源文件移动到指定的目标,源和目标都由[绝对][5]或[相对][6]文件路径定义。如前所述,`mv` 是 [POSIX][7] 用户的常用命令,其有很多不为人知的附加选项,因此,无论你是新手还是有经验的人,本文都会为你带来一些有用的选项。

|

||||

|

||||

但是,不是所有 `mv` 命令都是由同一个人编写的,因此取决于你的操作系统,你可能拥有 GNU `mv`、BSD `mv` 或 Sun `mv`。命令的选项因其实现而异(BSD `mv` 根本没有长选项),因此请参阅你的 `mv` 手册页以查看支持的内容,或安装你的首选版本(这是开源的奢侈之处)。

|

||||

|

||||

#### 移动文件

|

||||

|

||||

要使用 `mv` 将文件从一个文件夹移动到另一个文件夹,请记住语法 `mv <source> <destination>`。 例如,要将文件 `example.txt` 移到你的 `Documents` 目录中:

|

||||

|

||||

```

|

||||

$ touch example.txt

|

||||

$ mv example.txt ~/Documents

|

||||

$ ls ~/Documents

|

||||

example.txt

|

||||

```

|

||||

|

||||

就像你通过将文件拖放到文件夹图标上来移动文件一样,此命令不会将 `Documents` 替换为 `example.txt`。相反,`mv` 会检测到 `Documents` 是一个文件夹,并将 `example.txt` 文件放入其中。

|

||||

|

||||

你还可以方便地在移动文件时重命名该文件:

|

||||

|

||||

```

|

||||

$ touch example.txt

|

||||

$ mv example.txt ~/Documents/foo.txt

|

||||

$ ls ~/Documents

|

||||

foo.txt

|

||||

```

|

||||

|

||||

这很重要,这使你不用将文件移动到另一个位置,也可以重命名文件,例如:

|

||||

|

||||

```

|

||||

$ touch example.txt

|

||||

$ mv example.txt foo2.txt

|

||||

$ ls foo2.txt`

|

||||

```

|

||||

|

||||

#### 移动目录

|

||||

|

||||

不像 [cp][8] 命令,`mv` 命令处理文件和目录没有什么不同,你可以用同样的格式移动目录或文件:

|

||||

|

||||

```

|

||||

$ touch file.txt

|

||||

$ mkdir foo_directory

|

||||

$ mv file.txt foo_directory

|

||||

$ mv foo_directory ~/Documents

|

||||

```

|

||||

|

||||

#### 安全地移动文件

|

||||

|

||||

如果你移动一个文件到一个已有同名文件的地方,默认情况下,`mv` 会用你移动的文件替换目标文件。这种行为被称为<ruby>清除<rt>clobbering</rt></ruby>,有时候这就是你想要的结果,而有时则不是。

|

||||

|

||||

一些发行版将 `mv` 别名定义为 `mv --interactive`(你也可以[自己写一个][9]),这会提醒你确认是否覆盖。而另外一些发行版没有这样做,那么你可以使用 `--interactive` 或 `-i` 选项来确保当两个文件有一样的名字而发生冲突时让 `mv` 请你来确认。

|

||||

|

||||

```

|

||||

$ mv --interactive example.txt ~/Documents

|

||||

mv: overwrite '~/Documents/example.txt'?

|

||||

```

|

||||

|

||||

如果你不想手动干预,那么可以使用 `--no-clobber` 或 `-n`。该选项会在发生冲突时静默拒绝移动操作。在这个例子当中,一个名为 `example.txt` 的文件以及存在于 `~/Documents`,所以它不会如命令要求从当前目录移走。

|

||||

|

||||

```

|

||||

$ mv --no-clobber example.txt ~/Documents

|

||||

$ ls

|

||||

example.txt

|

||||

```

|

||||

|

||||

#### 带备份的移动

|

||||

|

||||

如果你使用 GNU `mv`,有一个备份选项提供了另外一种安全移动的方式。要为任何冲突的目标文件创建备份文件,可以使用 `-b` 选项。

|

||||

|

||||

```

|

||||

$ mv -b example.txt ~/Documents

|

||||

$ ls ~/Documents

|

||||

example.txt example.txt~

|

||||

```

|

||||

|

||||

这个选项可以确保 `mv` 完成移动操作,但是也会保护目录位置的已有文件。

|

||||

|

||||

另外的 GNU 备份选项是 `--backup`,它带有一个定义了备份文件如何命名的参数。

|

||||

|

||||

* `existing`:如果在目标位置已经存在了编号备份文件,那么会创建编号备份。否则,会使用 `simple` 方式。

|

||||

* `none`:即使设置了 `--backup`,也不会创建备份。当 `mv` 被别名定义为带有备份选项时,这个选项可以覆盖这种行为。

|

||||

* `numbered`:给目标文件名附加一个编号。

|

||||

* `simple`:给目标文件附加一个 `~`,当你日常使用带有 `--ignore-backups` 选项的 [ls][2] 时,这些文件可以很方便地隐藏起来。

|

||||

|

||||

简单来说:

|

||||

|

||||

```

|

||||

$ mv --backup=numbered example.txt ~/Documents

|

||||

$ ls ~/Documents

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:23 example.txt

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:20 example.txt.~1~

|

||||

```

|

||||

|

||||

可以使用环境变量 `VERSION_CONTROL` 设置默认的备份方案。你可以在 `~/.bashrc` 文件中设置该环境变量,也可以在命令前动态设置:

|

||||

|

||||

```

|

||||

$ VERSION_CONTROL=numbered mv --backup example.txt ~/Documents

|

||||

$ ls ~/Documents

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:23 example.txt

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:20 example.txt.~1~

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:22 example.txt.~2~

|

||||

```

|

||||

|

||||

`--backup` 选项仍然遵循 `--interactive` 或 `-i` 选项,因此即使它在执行备份之前创建了备份,它仍会提示你覆盖目标文件:

|

||||

|

||||

```

|

||||

$ mv --backup=numbered example.txt ~/Documents

|

||||

mv: overwrite '~/Documents/example.txt'? y

|

||||

$ ls ~/Documents

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:24 example.txt

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:20 example.txt.~1~

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:22 example.txt.~2~

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:23 example.txt.~3~

|

||||

```

|

||||

|

||||

你可以使用 `--force` 或 `-f` 选项覆盖 `-i`。

|

||||

|

||||

```

|

||||

$ mv --backup=numbered --force example.txt ~/Documents

|

||||

$ ls ~/Documents

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:26 example.txt

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:20 example.txt.~1~

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:22 example.txt.~2~

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:24 example.txt.~3~

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:25 example.txt.~4~

|

||||

```

|

||||

|

||||

`--backup` 选项在 BSD `mv` 中不可用。

|

||||

|

||||

#### 一次性移动多个文件

|

||||

|

||||

移动多个文件时,`mv` 会将最终目录视为目标:

|

||||

|

||||

```

|

||||

$ mv foo bar baz ~/Documents

|

||||

$ ls ~/Documents

|

||||

foo bar baz

|

||||

```

|

||||

|

||||

如果最后一个项目不是目录,则 `mv` 返回错误:

|

||||

|

||||

```

|

||||

$ mv foo bar baz

|

||||

mv: target 'baz' is not a directory

|

||||

```

|

||||

|

||||

GNU `mv` 的语法相当灵活。如果无法把目标目录作为提供给 `mv` 命令的最终参数,请使用 `--target-directory` 或 `-t` 选项:

|

||||

|

||||

```

|

||||

$ mv --target-directory=~/Documents foo bar baz

|

||||

$ ls ~/Documents

|

||||

foo bar baz

|

||||

```

|

||||

|

||||

当从某些其他命令的输出构造 `mv` 命令时(例如 `find` 命令、`xargs` 或 [GNU Parallel][10]),这特别有用。

|

||||

|

||||

#### 基于修改时间移动

|

||||

|

||||

使用 GNU `mv`,你可以根据要移动的文件是否比要替换的目标文件新来定义移动动作。该方式可以通过 `--update` 或 `-u` 选项使用,在BSD `mv` 中不可用:

|

||||

|

||||

```

|

||||

$ ls -l ~/Documents

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:32 example.txt

|

||||

$ ls -l

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:42 example.txt

|

||||

$ mv --update example.txt ~/Documents

|

||||

$ ls -l ~/Documents

|

||||

-rw-rw-r--. 1 seth users 128 Aug 1 17:42 example.txt

|

||||

$ ls -l

|

||||

```

|

||||

|

||||

此结果仅基于文件的修改时间,而不是两个文件的差异,因此请谨慎使用。只需使用 `touch` 命令即可愚弄 `mv`:

|

||||

|

||||

```

|

||||

$ cat example.txt

|

||||

one

|

||||

$ cat ~/Documents/example.txt

|

||||

one

|

||||

two

|

||||

$ touch example.txt

|

||||

$ mv --update example.txt ~/Documents

|

||||

$ cat ~/Documents/example.txt

|

||||

one

|

||||

```

|

||||

|

||||

显然,这不是最智能的更新功能,但是它提供了防止覆盖最新数据的基本保护。

|

||||

|

||||

### 移动

|

||||

|

||||

除了 `mv` 命令以外,还有更多的移动数据的方法,但是作为这项任务的默认程序,`mv` 是一个很好的通用选择。现在你知道了有哪些可以使用的选项,可以比以前更智能地使用 `mv` 了。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/8/moving-files-linux-depth

|

||||

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/sethhttps://opensource.com/users/doni08521059

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/files_documents_paper_folder.png?itok=eIJWac15 (Files in a folder)

|

||||

[2]: https://opensource.com/article/19/7/master-ls-command

|

||||

[3]: https://opensource.com/sites/default/files/uploads/gnome-mv.jpg (Moving a file in GNOME.)

|

||||

[4]: https://opensource.com/sites/default/files/uploads/kde-mv.jpg (Moving a file in KDE.)

|

||||

[5]: https://opensource.com/article/19/7/understanding-file-paths-and-how-use-them

|

||||

[6]: https://opensource.com/article/19/7/navigating-filesystem-relative-paths

|

||||

[7]: https://opensource.com/article/19/7/what-posix-richard-stallman-explains

|

||||

[8]: https://opensource.com/article/19/7/copying-files-linux

|

||||

[9]: https://opensource.com/article/19/7/bash-aliases

|

||||

[10]: https://opensource.com/article/18/5/gnu-parallel

|

||||

82

published/20190830 git exercises- navigate a repository.md

Normal file

82

published/20190830 git exercises- navigate a repository.md

Normal file

@ -0,0 +1,82 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11379-1.html)

|

||||

[#]: subject: (git exercises: navigate a repository)

|

||||

[#]: via: (https://jvns.ca/blog/2019/08/30/git-exercises--navigate-a-repository/)

|

||||

[#]: author: (Julia Evans https://jvns.ca/)

|

||||

|

||||

Git 练习:存储库导航

|

||||

======

|

||||

|

||||

我觉得前几天的 [curl 练习][1]进展顺利,所以今天我醒来后,想尝试编写一些 Git 练习。Git 是一大块需要学习的技能,可能要花几个小时才能学会,所以我分解练习的第一个思路是从“导航”一个存储库开始的。

|

||||

|

||||

我本来打算使用一个玩具测试库,但后来我想,为什么不使用真正的存储库呢?这样更有趣!因此,我们将浏览 Ruby 编程语言的存储库。你无需了解任何 C 即可完成此练习,只需熟悉一下存储库中的文件随时间变化的方式即可。

|

||||

|

||||

### 克隆存储库

|

||||

|

||||

开始之前,需要克隆存储库:

|

||||

|

||||

```

|

||||

git clone https://github.com/ruby/ruby

|

||||

```

|

||||

|

||||

与实际使用的大多数存储库相比,该存储库的最大不同之处在于它没有分支,但是它有很多标签,它们与分支相似,因为它们都只是指向一个提交的指针而已。因此,我们将使用标签而不是分支进行练习。*改变*标签的方式和分支非常不同,但*查看*标签和分支的方式完全相同。

|

||||

|

||||

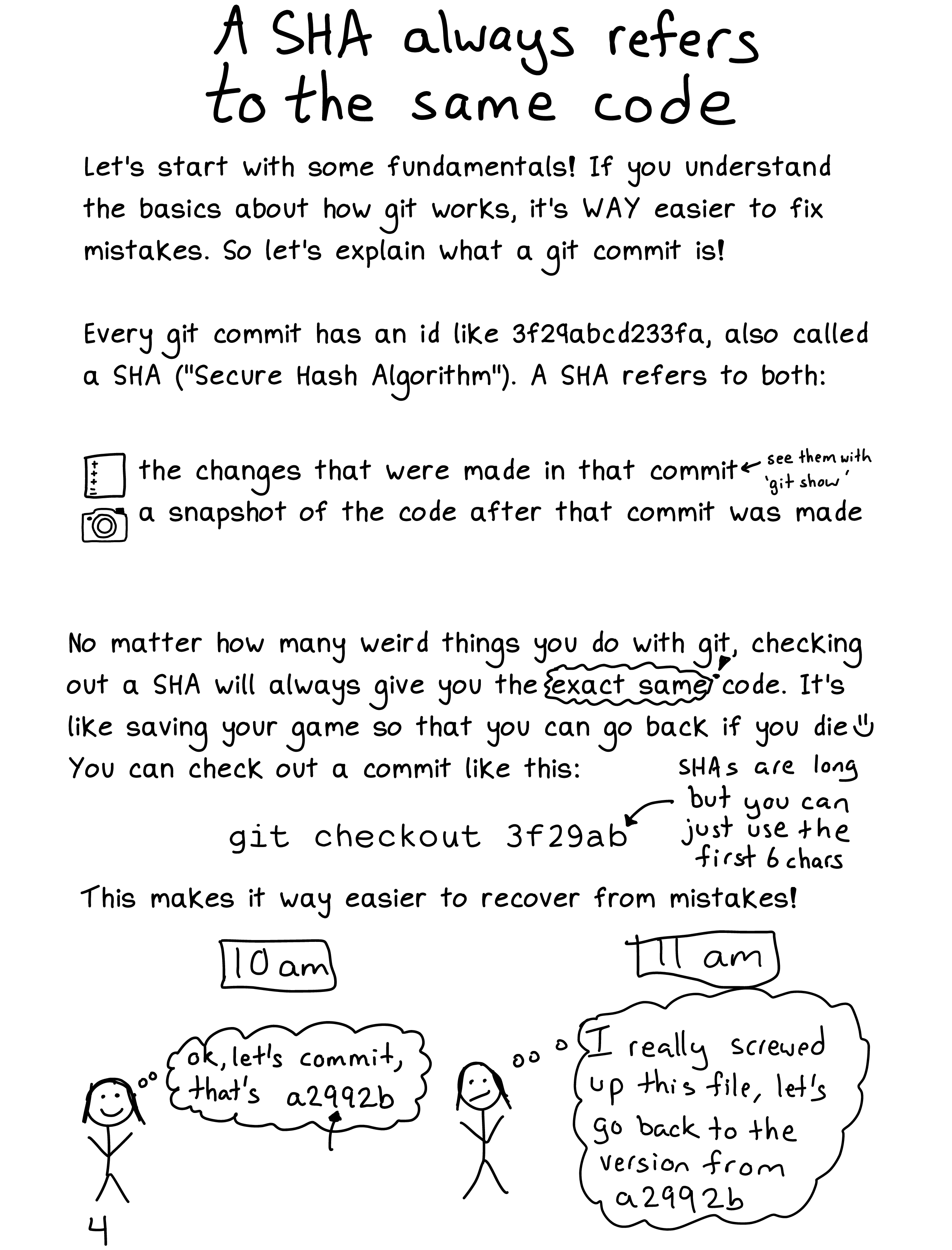

### Git SHA 总是引用同一个代码

|

||||

|

||||

执行这些练习时要记住的最重要的一点是,如本页面所述,像`9e3d9a2a009d2a0281802a84e1c5cc1c887edc71` 这样的 Git SHA 始终引用同一个的代码。下图摘自我与凯蒂·西勒·米勒撰写的一本杂志,名为《[Oh shit, git!][2]》。(她还有一个名为 <https://ohshitgit.com/> 的很棒的网站,启发了该杂志。)

|

||||

|

||||

|

||||

|

||||

我们将在练习中大量使用 Git SHA,以使你习惯于使用它们,并帮助你了解它们与标签和分支的对应关系。

|

||||

|

||||

### 我们将要使用的 Git 子命令

|

||||

|

||||

所有这些练习仅使用这 5 个 Git 子命令:

|

||||

|

||||

```

|

||||

git checkout

|

||||

git log (--oneline, --author, and -S will be useful)

|

||||

git diff (--stat will be useful)

|

||||

git show

|

||||

git status

|

||||

```

|

||||

|

||||

### 练习

|

||||

|

||||

1. 查看 matz 从 1998 年开始的 Ruby 提交。提交 ID 为 ` 3db12e8b236ac8f88db8eb4690d10e4a3b8dbcd4`。找出当时 Ruby 的代码行数。

|

||||

2. 检出当前的 master 分支。

|

||||

3. 查看文件 `hash.c` 的历史记录。更改该文件的最后一个提交 ID 是什么?

|

||||

4. 了解最近 20 年来 `hash.c` 的变化:将 master 分支上的文件与提交 `3db12e8b236ac8f88db8eb4690d10e4a3b8dbcd4` 的文件进行比较。

|

||||

5. 查找最近更改了 `hash.c` 的提交,并查看该提交的差异。

|

||||

6. 对于每个 Ruby 版本,该存储库都有一堆**标签**。获取所有标签的列表。

|

||||

7. 找出在标签 `v1_8_6_187` 和标签 `v1_8_6_188` 之间更改了多少文件。

|

||||

8. 查找 2015 年的提交(任何一个提交)并将其检出,简单地查看一下文件,然后返回 master 分支。

|

||||

9. 找出标签 `v1_8_6_187` 对应的提交。

|

||||

10. 列出目录 `.git/refs/tags`。运行 `cat .git/refs/tags/v1_8_6_187` 来查看其中一个文件的内容。

|

||||

11. 找出当前 `HEAD` 对应的提交 ID。

|

||||

12. 找出已经对 `test/` 目录进行了多少次提交。

|

||||

13. 提交 `65a5162550f58047974793cdc8067a970b2435c0` 和 `9e3d9a2a009d2a0281802a84e1c5cc1c887edc71` 之间的 `lib/telnet.rb` 的差异。该文件更改了几行?

|

||||

14. 在 Ruby 2.5.1 和 2.5.2 之间进行了多少次提交(标记为 `v2_5_1` 和 `v2_5_3`)(这一步有点棘手,步骤不只一步)

|

||||

15. “matz”(Ruby 的创建者)作了多少提交?

|

||||

16. 最近包含 “tkutil” 一词的提交是什么?

|

||||

17. 检出提交 `e51dca2596db9567bd4d698b18b4d300575d3881` 并创建一个指向该提交的新分支。

|

||||

18. 运行 `git reflog` 以查看你到目前为止完成的所有存储库导航操作。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://jvns.ca/blog/2019/08/30/git-exercises--navigate-a-repository/

|

||||

|

||||

作者:[Julia Evans][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://jvns.ca/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://jvns.ca/blog/2019/08/27/curl-exercises/

|

||||

[2]: https://wizardzines.com/zines/oh-shit-git/

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11374-1.html)

|

||||

[#]: subject: (How to put an HTML page on the internet)

|

||||

[#]: via: (https://jvns.ca/blog/2019/09/06/how-to-put-an-html-page-on-the-internet/)

|

||||

[#]: author: (Julia Evans https://jvns.ca/)

|

||||

@ -10,19 +10,21 @@

|

||||

如何在互联网放置 HTML 页面

|

||||

======

|

||||

|

||||

|

||||

|

||||

我喜欢互联网的一点是在互联网放置静态页面是如此简单。今天有人问我该怎么做,所以我想我会快速地写下来!

|

||||

|

||||

### 只是一个 HTML 页面

|

||||

|

||||

我的所有网站都只是静态 HTML 和 CSS。我的网页设计技巧相对不高(<https://wizardzines.com>是我自己开发的最复杂的网站),因此保持我所有的网站相对简单意味着我可以做一些改变/修复,而不会花费大量时间。

|

||||

我的所有网站都只是静态 HTML 和 CSS。我的网页设计技巧相对不高(<https://wizardzines.com> 是我自己开发的最复杂的网站),因此保持我所有的网站相对简单意味着我可以做一些改变/修复,而不会花费大量时间。

|

||||

|

||||

因此,我们将在此文章中采用尽可能简单的方式 - 只需一个 HTML 页面。

|

||||

因此,我们将在此文章中采用尽可能简单的方式 —— 只需一个 HTML 页面。

|

||||

|

||||

### HTML 页面

|

||||

|

||||

我们要放在互联网上的网站只是一个名为 `index.html` 的文件。你可以在 <https://github.com/jvns/website-example> 找到它,它是一个 Github 仓库,其中只包含一个文件。

|

||||

|

||||

HTML 文件中包含一些 CSS,使其看起来不那么无聊,部分复制自< https://example.com>。

|

||||

HTML 文件中包含一些 CSS,使其看起来不那么无聊,部分复制自 <https://example.com>。

|

||||

|

||||

### 如何将 HTML 页面放在互联网上

|

||||

|

||||

@ -32,22 +34,19 @@ HTML 文件中包含一些 CSS,使其看起来不那么无聊,部分复制

|

||||

2. 将 index.html 复制到你自己 neocities 站点的 index.html 中

|

||||

3. 完成

|

||||

|

||||

|

||||

|

||||

上面的 index.html 页面位于 [julia-example-website.neocities.com][2] 中,如果你查看源代码,你将看到它与 github 仓库中的 HTML 相同。

|

||||

上面的 `index.html` 页面位于 [julia-example-website.neocities.com][2] 中,如果你查看源代码,你将看到它与 github 仓库中的 HTML 相同。

|

||||

|

||||

我认为这可能是将 HTML 页面放在互联网上的最简单的方法(这是一次回归 Geocities,它是我在 2003 年制作我的第一个网站的方式):)。我也喜欢 Neocities (像 [glitch][3],我也喜欢)它能实验、学习,并有乐趣。

|

||||

|

||||

### 其他选择

|

||||

|

||||

这绝不是唯一简单的方式 - 在你推送 Git 仓库时,Github pages 和 Gitlab pages 以及 Netlify 都将会自动发布站点,并且它们都非常易于使用(只需将它们连接到你的 github 仓库即可)。我个人使用 Git 仓库的方式,因为 Git 没有东西让我感到紧张 - 我想知道我实际推送的页面发生了什么更改。但我想你如果第一次只想将 HTML/CSS 制作的站点放到互联网上,那么 Neocities 就是一个非常好的方法。

|

||||

|

||||

这绝不是唯一简单的方式,在你推送 Git 仓库时,Github pages 和 Gitlab pages 以及 Netlify 都将会自动发布站点,并且它们都非常易于使用(只需将它们连接到你的 GitHub 仓库即可)。我个人使用 Git 仓库的方式,因为 Git 不会让我感到紧张,我想知道我实际推送的页面发生了什么更改。但我想你如果第一次只想将 HTML/CSS 制作的站点放到互联网上,那么 Neocities 就是一个非常好的方法。

|

||||

|

||||

如果你不只是玩,而是要将网站用于真实用途,那么你或许会需要买一个域名,以便你将来可以更改托管服务提供商,但这有点不那么简单。

|

||||

|

||||

### 这是学习 HTML 的一个很好的起点

|

||||

|

||||

如果你熟悉在 Git 中编辑文件,同时想练习 HTML/CSS 的话,我认为将它放在网站中是一个有趣的方式!我真的很喜欢它的简单性 - 实际上这只有一个文件,所以没有其他花哨的东西需要去理解。

|

||||

如果你熟悉在 Git 中编辑文件,同时想练习 HTML/CSS 的话,我认为将它放在网站中是一个有趣的方式!我真的很喜欢它的简单性 —— 实际上这只有一个文件,所以没有其他花哨的东西需要去理解。

|

||||

|

||||

还有很多方法可以复杂化/扩展它,比如这个博客实际上是用 [Hugo][4] 生成的,它生成了一堆 HTML 文件并放在网络中,但从基础开始总是不错的。

|

||||

|

||||

@ -58,7 +57,7 @@ via: https://jvns.ca/blog/2019/09/06/how-to-put-an-html-page-on-the-internet/

|

||||

作者:[Julia Evans][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,8 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (amwps290 )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: translator: (amwps290)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11371-1.html)

|

||||

[#]: subject: (How to set up a TFTP server on Fedora)

|

||||

[#]: via: (https://fedoramagazine.org/how-to-set-up-a-tftp-server-on-fedora/)

|

||||

[#]: author: (Curt Warfield https://fedoramagazine.org/author/rcurtiswarfield/)

|

||||

@ -12,9 +12,9 @@

|

||||

|

||||

![][1]

|

||||

|

||||

**TFTP** 即简单文本传输协议,允许用户通过 [UDP][2] 协议在系统之间传输文件。默认情况下,协议使用的是 UDP 的 69 号端口。TFTP 协议广泛用于无盘设备的远程启动。因此,在你的本地网络建立一个 TFTP 服务器,这样你就可以进行 [Fedora 的安装][3]和其他无盘设备的一些操作,这将非常有趣。

|

||||

TFTP 即<ruby>简单文本传输协议<rt>Trivial File Transfer Protocol</rt></ruby>,允许用户通过 [UDP][2] 协议在系统之间传输文件。默认情况下,协议使用的是 UDP 的 69 号端口。TFTP 协议广泛用于无盘设备的远程启动。因此,在你的本地网络建立一个 TFTP 服务器,这样你就可以对 [安装好的 Fedora][3] 和其他无盘设备做一些操作,这将非常有趣。

|

||||

|

||||

TFTP 仅仅能够从远端系统读取数据或者向远端系统写入数据。但它并没有列出远端服务器上文件的能力,同时也没有修改远端服务器的能力(译者注:感觉和前一句话矛盾)。用户身份验证也没有规定。 由于安全隐患和缺乏高级功能,TFTP 通常仅用于局域网(LAN)。

|

||||

TFTP 仅仅能够从远端系统读取数据或者向远端系统写入数据,而没有列出远端服务器上文件的能力。它也没提供用户身份验证。由于安全隐患和缺乏高级功能,TFTP 通常仅用于局域网内部(LAN)。

|

||||

|

||||

### 安装 TFTP 服务器

|

||||

|

||||

@ -23,23 +23,24 @@ TFTP 仅仅能够从远端系统读取数据或者向远端系统写入数据。

|

||||

```

|

||||

dnf install tftp-server tftp -y

|

||||

```

|

||||

上述的这条命令会为 [systemd][4] 在 _/usr/lib/systemd/system_ 目录下创建 _tftp.service_ 和 _tftp.socket_ 文件。

|

||||

|

||||

上述的这条命令会在 `/usr/lib/systemd/system` 目录下为 [systemd][4] 创建 `tftp.service` 和 `tftp.socket` 文件。

|

||||

|

||||

```

|

||||

/usr/lib/systemd/system/tftp.service

|

||||

/usr/lib/systemd/system/tftp.socket

|

||||

```

|

||||

|

||||

接下来,将这两个文件复制到 _/etc/systemd/system_ 目录下,并重新命名。

|

||||

接下来,将这两个文件复制到 `/etc/systemd/system` 目录下,并重新命名。

|

||||

|

||||

```

|

||||

cp /usr/lib/systemd/system/tftp.service /etc/systemd/system/tftp-server.service

|

||||

|

||||

cp /usr/lib/systemd/system/tftp.socket /etc/systemd/system/tftp-server.socket

|

||||

```

|

||||

|

||||

### 修改文件

|

||||

|

||||

当你把这些文件复制和重命名后,你就可以去添加一些额外的参数,下面是 _tftp-server.service_ 刚开始的样子:

|

||||

当你把这些文件复制和重命名后,你就可以去添加一些额外的参数,下面是 `tftp-server.service` 刚开始的样子:

|

||||

|

||||

```

|

||||

[Unit]

|

||||

@ -55,13 +56,13 @@ StandardInput=socket

|

||||

Also=tftp.socket

|

||||

```

|

||||

|

||||

在 _[Unit]_ 部分添加如下内容:

|

||||

在 `[Unit]` 部分添加如下内容:

|

||||

|

||||

```

|

||||

Requires=tftp-server.socket

|

||||

```

|

||||

|

||||

修改 _[ExecStart]_ 行:

|

||||

修改 `[ExecStart]` 行:

|

||||

|

||||

```

|

||||

ExecStart=/usr/sbin/in.tftpd -c -p -s /var/lib/tftpboot

|

||||

@ -69,13 +70,14 @@ ExecStart=/usr/sbin/in.tftpd -c -p -s /var/lib/tftpboot

|

||||

|

||||

下面是这些选项的意思:

|

||||

|

||||

* _**-c**_ 选项允许创建新的文件

|

||||

* _**-p**_ 选项用于指明在正常系统提供的权限检查之上没有其他额外的权限检查

|

||||

* _**-s**_ 建议使用该选项以确保安全性以及与某些引导 ROM 的兼容性,这些引导 ROM 在其请求中不容易包含目录名。

|

||||

* `-c` 选项允许创建新的文件

|

||||

* `-p` 选项用于指明在正常系统提供的权限检查之上没有其他额外的权限检查

|

||||

* `-s` 建议使用该选项以确保安全性以及与某些引导 ROM 的兼容性,这些引导 ROM 在其请求中不容易包含目录名。

|

||||

|

||||

默认的上传和下载位置位于 _/var/lib/tftpboot_。

|

||||

默认的上传和下载位置位于 `/var/lib/tftpboot`。

|

||||

|

||||

下一步,修改 `[Install]` 部分的内容

|

||||

|

||||

下一步,修改 _[Install}_ 部分的内容

|

||||

```

|

||||

[Install]

|

||||

WantedBy=multi-user.target

|

||||

@ -84,7 +86,8 @@ Also=tftp-server.socket

|

||||

|

||||

不要忘记保存你的修改。

|

||||

|

||||

下面是 _/etc/systemd/system/tftp-server.service_ 文件的完整内容:

|

||||

下面是 `/etc/systemd/system/tftp-server.service` 文件的完整内容:

|

||||

|

||||

```

|

||||

[Unit]

|

||||

Description=Tftp Server

|

||||

@ -109,11 +112,13 @@ systemctl daemon-reload

|

||||

```

|

||||

|

||||

启动服务器: