mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-12 01:40:10 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

22bd8d5320

@ -0,0 +1,67 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (wxy)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11664-1.html)

|

||||

[#]: subject: (Dell XPS 13 7390 Review: The Best Laptop For Desktop Linux Users)

|

||||

[#]: via: (https://www.linux.com/articles/dell-xps-13-7390-review-the-best-laptop-for-desktop-linux-user/)

|

||||

[#]: author: (Swapnil Bhartiya https://www.linux.com/author/swapnil/)

|

||||

|

||||

Dell XPS 13 7390:最好的 Linux 桌面笔记本

|

||||

======

|

||||

|

||||

|

||||

|

||||

曾经,我们必须进行大量研究、阅读大量评论,才能找到一种在所选的 Linux 桌面发行版上可以以最少的麻烦工作的机器。而如今,这种日子已经一去不复返了,几乎每台机器都可以运行 Linux。Linux 内核社区在设备驱动程序支持方面做得非常出色,可以使一切都开箱即用。

|

||||

|

||||

不过,有的是**可以**运行 Linux d 机器,有的是运行 Linux 的机器。戴尔计算机属于后一类。五年前,Barton George 在戴尔内部启动了一项计划,将桌面版 Linux 引入到消费级的高端戴尔系统。从一台机器开始,到现在整套从产品线的高端笔记本电脑和台式机都可以运行 Linux。

|

||||

|

||||

在这些机器中,XPS 13 是我的最爱。尽管我需要一个功能强大的台式机来处理 4K UHD、多机位视频制作,但我还需要一台超便携的笔记本电脑,可以随身携带,而不必担心笨重的背包和充电器。XPS 13 也是我的第一台笔记本电脑,陪了我 7 年多。因此,是的,这还有一个怀旧因素。

|

||||

|

||||

戴尔几乎每年都会更新其 XPS 产品线,并且最新的[产品展示宣布于 10 月][3]。[XPS 13(7390)] [4] 是该系列的增量更新,而且戴尔非常乐意向我寄来一台测评设备。

|

||||

|

||||

|

||||

|

||||

它由 6 核 Core i7-10710U CPU 所支持。它配备 16GB 内存和 1TB SSD。在 1.10 GHz 的基本频率(可以超频到 4.1 GHz)的情况下,这是一台用于常规工作负载的出色机器。它没有使用任何专用的 GPU,因此它并不适合进行游戏或从源代码进行编译的 Gentoo Linux 或 Arch Linux。但是,我确实设法在上面运行了一些 Steam 游戏。

|

||||

|

||||

如果你想运行 Kubernetes 集群、AI 框架或虚拟现实,那么 Precision 系列中还有更强大的机器,这些机器可以运行 Red Hat Enterprise Linux 和 Ubuntu。

|

||||

|

||||

该机器的底盘与上一代相同。边框保持与上一代一样的薄,依旧比 MacBook 和微软的 Surface Pro 薄。

|

||||

|

||||

它具有三个端口,其中两个是 USB-C Thunderbolt 3,可用于连接 4K 显示器、USB 附件以及用于对等网络的计算机之间的高速数据传输。

|

||||

|

||||

它还具有一个 microSD 插槽。作为视频记者,SD 卡插槽会更有用。大量使用树莓派的用户也会喜欢这种卡。

|

||||

|

||||

它具有 4 个麦克风和一个改进的摄像头,该摄像头现在位于顶部(再见,鼻孔摄像头!)。

|

||||

|

||||

XPS 13(7390)光滑纤薄。它的重量仅为 2.7 磅(1.2kg),可以与苹果的 MacBook Air 相提并论。 这台机器可以成为你的旅行伴侣,并且可以执行日常任务,例如检查电子邮件、浏览网络和写作。

|

||||

|

||||

其 4K UHD 屏幕支持 HDR,这意味着你将可以尽享《The Mandalorian》的全部美妙之处。另外,车载扬声器并没有那么好,听起来有些沉闷。它们适合进行视频聊天或休闲的 YouTube 观看,但是如果你想在今年晚些时候观看《The Witcher》剧集,或者想欣赏 Amazon、Apple Music 或 YouTube Music 的音乐,则需要耳机或外接扬声器。

|

||||

|

||||

|

||||

|

||||

但是,在插入充电线之前,你可以能使用这台机器多少时间?在正常工作量的情况下,它为我提供了大约 7-8 个小时的电池续航时间:我打开了几个选项卡浏览网络,只是看看电影或听音乐。多任务处理,尤其是各种 Web 活动,都会加速消耗电池电量。在 Linux 上进行一些微调可能会给你带来更多的续航时间,而在 Windows 10 上,我可以使用 10 多个小时呢!

|

||||

|

||||

作为仍在从事大量写作工作的视频记者,我非常喜欢键盘。但是,我们这么多年来在 Linux 台式机上听到的触控板故事一直没变:它与 MacBook 或 Windows 上的品质相差甚远。这或许有一天能改变。值得称道的是,他们确实发布了可增强体验的触控板驱动程序,但我没有运行此系统随附的提供的 Ubuntu 18.04 LTS。我全新安装了 Ubuntu 19.10,因为 Gnome 在 18.04 中的运行速度非常慢。我尝试过 openSUSE Tumbleweed、Zorin OS、elementary OS、Fedora、KDE neon 和 Arch Linux。一切正常,尽管有些需要额外的努力才能运行。

|

||||

|

||||

那么,该系统适用于谁?显然,这是给那些想要设计精良的、他们信赖的品牌的高端机器的专业人士打造的。适用于喜欢 MacBook Air,但更喜欢 Linux 台式机生态系统的用户。适用于那些希望使用 Linux 来工作,而不是使 Linux 可以工作的人。

|

||||

|

||||

我使用这台机器一周的时间,进一步说明了为什么我如此喜欢戴尔的 XPS 系列。它们是目前最好的 Linux 笔记本电脑。这款 XPS 13(7390),你值得拥有!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/articles/dell-xps-13-7390-review-the-best-laptop-for-desktop-linux-user/

|

||||

|

||||

作者:[Swapnil Bhartiya][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[wxy](https://github.com/wxy)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.linux.com/author/swapnil/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.linux.com/wp-content/uploads/2019/12/dell-xps-13-7390-1068x665.jpg (dell-xps-13-7390)

|

||||

[2]: https://www.linux.com/wp-content/uploads/2019/12/dell-xps-13-7390.jpg

|

||||

[3]: https://bartongeorge.io/2019/08/21/please-welcome-the-9th-generation-of-the-xps-13-developer-edition/

|

||||

[4]: https://blog.dell.com/en-us/dells-new-consumer-pc-portfolio-unveiled-ifa-2019/

|

||||

@ -0,0 +1,225 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (lxbwolf)

|

||||

[#]: reviewer: (wxy)

|

||||

[#]: publisher: (wxy)

|

||||

[#]: url: (https://linux.cn/article-11663-1.html)

|

||||

[#]: subject: (6 Ways to Send Email from the Linux Command Line)

|

||||

[#]: via: (https://www.2daygeek.com/6-ways-to-send-email-from-the-linux-command-line/)

|

||||

[#]: author: (Magesh Maruthamuthu https://www.2daygeek.com/author/magesh/)

|

||||

|

||||

Linux 命令行发送邮件的 5 种方法

|

||||

======

|

||||

|

||||

当你需要在 shell 脚本中创建邮件时,就需要用到命令行发送邮件的知识。Linux 中有很多命令可以实现发送邮件。本教程中包含了最流行的 5 个命令行邮件客户端,你可以选择其中一个。这 5 个命令分别是:

|

||||

|

||||

* `mail` / `mailx`

|

||||

* `mutt`

|

||||

* `mpack`

|

||||

* `sendmail`

|

||||

* `ssmtp`

|

||||

|

||||

### 工作原理

|

||||

|

||||

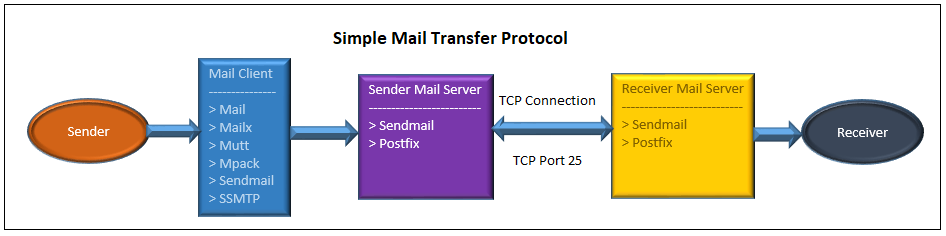

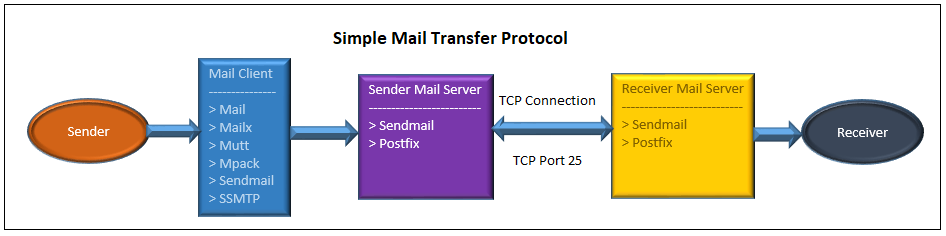

我先从整体上来解释下 Linux 中邮件命令怎么把邮件传递给收件人的。邮件命令撰写邮件并发送给一个本地邮件传输代理(MTA,如 sendmail、Postfix)。邮件服务器和远程邮件服务器之间通信以实际发送和接收邮件。下面的流程可以看得更详细。

|

||||

|

||||

|

||||

|

||||

### 1) 如何在 Linux 上安装 mail/mailx 命令

|

||||

|

||||

`mail` 命令是 Linux 终端发送邮件用的最多的命令。`mailx` 是 `mail` 命令的更新版本,基于 Berkeley Mail 8.1,意在提供 POSIX `mailx` 命令的功能,并支持 MIME、IMAP、POP3、SMTP 和 S/MIME 扩展。mailx 在某些交互特性上更加强大,如缓冲邮件消息、垃圾邮件评分和过滤等。在 Linux 发行版上,`mail` 命令是 `mailx` 命令的软链接。可以运行下面的命令从官方发行版仓库安装 `mail` 命令。

|

||||

|

||||

对于 Debian/Ubuntu 系统,使用 [APT-GET 命令][3] 或 [APT 命令][4] 安装 mailutils。

|

||||

|

||||

```

|

||||

$ sudo apt-get install mailutils

|

||||

```

|

||||

|

||||

对于 RHEL/CentOS 系统,使用 [YUM 命令][5] 安装 mailx。

|

||||

|

||||

```

|

||||

$ sudo yum install mailx

|

||||

```

|

||||

|

||||

对于 Fedora 系统,使用 [DNF 命令][6] 安装 mailx。

|

||||

|

||||

```

|

||||

$ sudo dnf install mailx

|

||||

```

|

||||

|

||||

#### 1a) 如何在 Linux 上使用 mail 命令发送邮件

|

||||

|

||||

`mail` 命令简单易用。如果你不需要发送附件,使用下面的 `mail` 命令格式就可以发送邮件了:

|

||||

|

||||

```

|

||||

$ echo "This is the mail body" | mail -s "Subject" 2daygeek@gmail.com

|

||||

```

|

||||

|

||||

如果你要发送附件,使用下面的 `mail` 命令格式:

|

||||

|

||||

```

|

||||

$ echo "This is the mail body" | mail -a test1.txt -s "Subject" 2daygeek@gmail.com

|

||||

```

|

||||

|

||||

- `-a`:用于在基于 Red Hat 的系统上添加附件。

|

||||

- `-A`:用于在基于 Debian 的系统上添加附件。

|

||||

- `-s`:指定消息标题。

|

||||

|

||||

### 2) 如何在 Linux 上安装 mutt 命令

|

||||

|

||||

`mutt` 是另一个很受欢迎的在 Linux 终端发送邮件的命令。`mutt` 是一个小而强大的基于文本的程序,用来在 unix 操作系统下阅读和发送电子邮件,并支持彩色终端、MIME、OpenPGP 和按邮件线索排序的模式。可以运行下面的命令从官方发行版仓库安装 `mutt` 命令。

|

||||

|

||||

对于 Debian/Ubuntu 系统,使用 [APT-GET 命令][3] 或 [APT 命令][4] 安装 mutt。

|

||||

|

||||

```

|

||||

$ sudo apt-get install mutt

|

||||

```

|

||||

|

||||

对于 RHEL/CentOS 系统,使用 [YUM 命令][5] 安装 mutt。

|

||||

|

||||

```

|

||||

$ sudo yum install mutt

|

||||

```

|

||||

|

||||

对于 Fedora 系统,使用 [DNF 命令][6] 安装 mutt。

|

||||

|

||||

```

|

||||

$ sudo dnf install mutt

|

||||

```

|

||||

|

||||

#### 2b) 如何在 Linux 上使用 mutt 命令发送邮件

|

||||

|

||||

`mutt` 一样简单易用。如果你不需要发送附件,使用下面的 `mutt` 命令格式就可以发送邮件了:

|

||||

|

||||

```

|

||||

$ echo "This is the mail body" | mutt -s "Subject" 2daygeek@gmail.com

|

||||

```

|

||||

|

||||

如果你要发送附件,使用下面的 `mutt` 命令格式:

|

||||

|

||||

```

|

||||

$ echo "This is the mail body" | mutt -s "Subject" 2daygeek@gmail.com -a test1.txt

|

||||

```

|

||||

|

||||

### 3) 如何在 Linux 上安装 mpack 命令

|

||||

|

||||

`mpack` 是另一个很受欢迎的在 Linux 终端上发送邮件的命令。`mpack` 程序会在一个或多个 MIME 消息中对命名的文件进行编码。编码后的消息被发送到一个或多个收件人。可以运行下面的命令从官方发行版仓库安装 `mpack` 命令。

|

||||

|

||||

对于 Debian/Ubuntu 系统,使用 [APT-GET 命令][3] 或 [APT 命令][4] 安装 mpack。

|

||||

|

||||

```

|

||||

$ sudo apt-get install mpack

|

||||

```

|

||||

|

||||

对于 RHEL/CentOS 系统,使用 [YUM 命令][5] 安装 mpack。

|

||||

|

||||

```

|

||||

$ sudo yum install mpack

|

||||

```

|

||||

|

||||

对于 Fedora 系统,使用 [DNF 命令][6] 安装 mpack。

|

||||

|

||||

```

|

||||

$ sudo dnf install mpack

|

||||

```

|

||||

|

||||

#### 3a) 如何在 Linux 上使用 mpack 命令发送邮件

|

||||

|

||||

`mpack` 同样简单易用。如果你不需要发送附件,使用下面的 `mpack` 命令格式就可以发送邮件了:

|

||||

|

||||

```

|

||||

$ echo "This is the mail body" | mpack -s "Subject" 2daygeek@gmail.com

|

||||

```

|

||||

|

||||

如果你要发送附件,使用下面的 mpack 命令格式:

|

||||

|

||||

```

|

||||

$ echo "This is the mail body" | mpack -s "Subject" 2daygeek@gmail.com -a test1.txt

|

||||

```

|

||||

|

||||

### 4) 如何在 Linux 上安装 sendmail 命令

|

||||

|

||||

sendmail 是一个上广泛使用的通用 SMTP 服务器,你也可以从命令行用 `sendmail` 发邮件。可以运行下面的命令从官方发行版仓库安装 `sendmail` 命令。

|

||||

|

||||

对于 Debian/Ubuntu 系统,使用 [APT-GET 命令][3] 或 [APT 命令][4]安装 sendmail。

|

||||

|

||||

```

|

||||

$ sudo apt-get install sendmail

|

||||

```

|

||||

|

||||

对于 RHEL/CentOS 系统,使用 [YUM 命令][5] 安装 sendmail。

|

||||

|

||||

```

|

||||

$ sudo yum install sendmail

|

||||

```

|

||||

|

||||

对于 Fedora 系统,使用 [DNF 命令][6] 安装 sendmail。

|

||||

|

||||

```

|

||||

$ sudo dnf install sendmail

|

||||

```

|

||||

|

||||

#### 4a) 如何在 Linux 上使用 sendmail 命令发送邮件

|

||||

|

||||

`sendmail` 同样简单易用。使用下面的 `sendmail` 命令发送邮件。

|

||||

|

||||

```

|

||||

$ echo -e "Subject: Test Mail\nThis is the mail body" > /tmp/send-mail.txt

|

||||

```

|

||||

|

||||

```

|

||||

$ sendmail 2daygeek@gmail.com < send-mail.txt

|

||||

```

|

||||

|

||||

### 5) 如何在 Linux 上安装 ssmtp 命令

|

||||

|

||||

`ssmtp` 是类似 `sendmail` 的一个只发送不接收的工具,可以把邮件从本地计算机传递到配置好的 邮件主机(mailhub)。用户可以在 Linux 命令行用 `ssmtp` 把邮件发送到 SMTP 服务器。可以运行下面的命令从官方发行版仓库安装 `ssmtp` 命令。

|

||||

|

||||

对于 Debian/Ubuntu 系统,使用 [APT-GET 命令][3] 或 [APT 命令][4]安装 ssmtp。

|

||||

|

||||

```

|

||||

$ sudo apt-get install ssmtp

|

||||

```

|

||||

|

||||

对于 RHEL/CentOS 系统,使用 [YUM 命令][5] 安装 ssmtp。

|

||||

|

||||

```

|

||||

$ sudo yum install ssmtp

|

||||

```

|

||||

|

||||

对于 Fedora 系统,使用 [DNF 命令][6] 安装 ssmtp。

|

||||

|

||||

```

|

||||

$ sudo dnf install ssmtp

|

||||

```

|

||||

|

||||

### 5a) 如何在 Linux 上使用 ssmtp 命令发送邮件

|

||||

|

||||

`ssmtp` 同样简单易用。使用下面的 `ssmtp` 命令格式发送邮件。

|

||||

|

||||

```

|

||||

$ echo -e "Subject: Test Mail\nThis is the mail body" > /tmp/ssmtp-mail.txt

|

||||

```

|

||||

|

||||

```

|

||||

$ ssmtp 2daygeek@gmail.com < /tmp/ssmtp-mail.txt

|

||||

```

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.2daygeek.com/6-ways-to-send-email-from-the-linux-command-line/

|

||||

|

||||

作者:[Magesh Maruthamuthu][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[lxbwolf](https://github.com/lxbwolf)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.2daygeek.com/author/magesh/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[2]: https://www.2daygeek.com/wp-content/uploads/2019/12/smtp-simple-mail-transfer-protocol.png

|

||||

[3]: https://www.2daygeek.com/apt-get-apt-cache-command-examples-manage-packages-debian-ubuntu-systems/

|

||||

[4]: https://www.2daygeek.com/apt-command-examples-manage-packages-debian-ubuntu-systems/

|

||||

[5]: https://www.2daygeek.com/yum-command-examples-manage-packages-rhel-centos-systems/

|

||||

[6]: https://www.2daygeek.com/dnf-command-examples-manage-packages-fedora-system/

|

||||

@ -0,0 +1,65 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (KubeCon gets bigger, the kernel gets better, and more industry trends)

|

||||

[#]: via: (https://opensource.com/article/19/12/kubecon-bigger-kernel-better-more-industry-trends)

|

||||

[#]: author: (Tim Hildred https://opensource.com/users/thildred)

|

||||

|

||||

KubeCon gets bigger, the kernel gets better, and more industry trends

|

||||

======

|

||||

A weekly look at open source community, market, and industry trends.

|

||||

![Person standing in front of a giant computer screen with numbers, data][1]

|

||||

|

||||

As part of my role as a senior product marketing manager at an enterprise software company with an open source development model, I publish a regular update about open source community, market, and industry trends for product marketers, managers, and other influencers. Here are five of my and their favorite articles from that update.

|

||||

|

||||

## [KubeCon showed Kubernetes is big, but is it a Unicorn?][2]

|

||||

|

||||

> It’s hard to remember now but there was a time when Kubernetes was a distant No. 3 in terms of container orchestrators being used in the market. It’s also eye opening to now realize that [the firms][3] that hatched the two platforms that [towered over][4] Kubernetes have had to completely re-jigger their business models under the Kubernetes onslaught.

|

||||

>

|

||||

> And full credit to the CNCF for attempting to diffuse some of that attention from Kubernetes by spending the vast majority of the KubeCon opening keynote address touting some of the nearly two dozen graduated, incubating, and sandbox projects it also hosts. But, it was really the Big K that stole the show.

|

||||

|

||||

**The impact:** Open source is way more than the source code; governance is a big deal and can be the difference between longevity and irrelevance. Gathering, organizing, and maintaining humans is an entirely different skill set than doing the same for bits, but can have just as big an influence on the success of a project.

|

||||

|

||||

## [Report: Kubernetes use on the rise][5]

|

||||

|

||||

> At the same time, the Datadog report notes that container churn rates are approximately 10 times higher in orchestrated environments. Churn rates in container environments that lack an orchestration platform such as Kubernetes have increased in the last year as well. The average container lifespan at a typical company running infrastructure without orchestration is about two days, down from about six days in mid-2018. In 19% of those environments not running orchestration, the average container lifetime exceeded 30 days. That compares to only 3% of organizations running containers longer than 30 days in Kubernetes environments, according to the report’s findings.

|

||||

|

||||

**The impact**: If your containers aren't churning, you're probably not getting the full benefit of the technology you've adopted.

|

||||

|

||||

## [Upcoming Linux 5.5 kernel improves live patching, scheduling][6]

|

||||

|

||||

> A new WFX Wi-Fi driver for the Silicon Labs WF200 ASIC transceiver is coming to Linux kernel 5.5. This particular wireless transceiver is geared toward low-power IoT devices and uses a 2.4 GHz 802.11b/g/n radio optimized for low power RF performance in crowded RF environments. This new driver can interface via both Serial Peripheral Interface (SPI) and Secure Digital Input Output (SDIO).

|

||||

|

||||

**The impact**: The kernel's continued relevance is a direct result of the never-ending grind to keep being where people need it to be (i.e. basically everywhere).

|

||||

|

||||

## [DigitalOcean Currents: December 2019][7]

|

||||

|

||||

> In that spirit, this fall’s installment of our seasonal Currents report is dedicated to open source for the second year running. We surveyed more than 5800 developers around the world on the overall health and direction of the open source community. When we last checked in with the community in [2018][8], more than half of developers reported contributing to open source projects, and most felt the community was healthy and growing.

|

||||

|

||||

**The impact**: While the good news outweighs the bad, there are a couple of things to keep an eye on: namely, making open source more inclusive and mitigating potential negative impact of big money.

|

||||

|

||||

_I hope you enjoyed this list of what stood out to me from last week and come back next Monday for more open source community, market, and industry trends._

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/12/kubecon-bigger-kernel-better-more-industry-trends

|

||||

|

||||

作者:[Tim Hildred][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/thildred

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/data_metrics_analytics_desktop_laptop.png?itok=9QXd7AUr (Person standing in front of a giant computer screen with numbers, data)

|

||||

[2]: https://www.sdxcentral.com/articles/opinion-editorial/kubecon-showed-kubernetes-is-big-but-is-it-a-unicorn/2019/11/

|

||||

[3]: https://www.sdxcentral.com/articles/news/docker-unloads-enterprise-biz-to-mirantis/2019/11/

|

||||

[4]: https://www.sdxcentral.com/articles/news/mesosphere-is-now-d2iq-and-kubernetes-is-its-game/2019/08/

|

||||

[5]: https://containerjournal.com/topics/container-ecosystems/report-kubernetes-use-on-the-rise/

|

||||

[6]: https://thenewstack.io/upcoming-linux-5-5-kernel-improves-live-patching-scheduling/

|

||||

[7]: https://blog.digitalocean.com/digitalocean-currents-december-2019/

|

||||

[8]: https://www.digitalocean.com/currents/october-2018/

|

||||

@ -0,0 +1,103 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (LibreCorps mentors humanitarian startups on how to run the open source way)

|

||||

[#]: via: (https://opensource.com/article/19/12/humanitarian-startups-open-source)

|

||||

[#]: author: (Justin W. Flory https://opensource.com/users/jflory)

|

||||

|

||||

LibreCorps mentors humanitarian startups on how to run the open source way

|

||||

======

|

||||

NGOs and nonprofits can increase their reach by building open source

|

||||

communities.

|

||||

![Two diverse hands holding a globe][1]

|

||||

|

||||

Free and open source software are no longer workplace taboos, at least not in the same way they were fifteen years ago. Today, distributed collaboration platforms and tools empower people around the world to contribute code, documentation, design, leadership, and other skills to open source projects. But do newcomers actually have a deep understanding of free and open source software?

|

||||

|

||||

If you hang around in open source communities for long enough, you realize there is more to open source than slapping a free software license on a project and throwing it over an imaginary fence to wait for contributors who never come. To address this problem in the humanitarian sector, the LibreCorps program, led by Rochester Institute of Technology's FOSS initiative at the [Center for Media, Arts, Interaction & Creativity][2] (MAGIC,) partnered with UNICEF to develop a set of resources to help new open source maintainers chart an "open source roadmap" to build a community.

|

||||

|

||||

![Grassroots presentation in UNICEF office ][3]

|

||||

|

||||

### What is LibreCorps?

|

||||

|

||||

[LibreCorps][4] connects RIT students interested in open source to humanitarian and civic coding opportunities; specifically, opportunities for co-operative education placements (co-ops,) which are full-time paid internships included in the university's graduation requirements.

|

||||

|

||||

LibreCorps students work in two major areas on co-ops. The first, not surprisingly, is technology. The second is FOSS community and processes. Many NGOs and civic organizations put openly licensed work in repositories but need a plan to build and maintain a community of contributors around their technology.

|

||||

|

||||

LibreCorps has worked with numerous humanitarian projects over the years. Recently, LibreCorps was contracted by [UNICEF Innovation][5] to support the [Innovation Fund][6] by mentoring several cohorts of international start-ups in adopting best practices to meet the open source requirement of their funding.

|

||||

|

||||

Periodically, the UNICEF Innovation Fund invites the companies together for cohort workshops, with hands-on mentorship a primary component of the workshop. [Stephen Jacobs][7] and [Justin W. Flory][8] represented LibreCorps at [two UNICEF Innovation Fund workshops][9] to help these teams better understand free and open source, as well as how to successfully build communities and teams that operate as [open organizatio][10][ns][10]. Most of these teams have either never worked in open source projects or only have a basic understanding of licenses and GitHub. Often, for these teams, working on your code in a way where anyone can see what you are doing is a radical shift in process.

|

||||

|

||||

UNICEF Innovation has engaged with open source for years and currently provides funded teams with a course on [open source business models][11].

|

||||

|

||||

> "We'd approached open-source pretty tentatively and definitely naively. We were keen to move to open source for transparency and perception but, beyond that, had no plans around deriving or creating value, and we were nervous about the perceived risks. The mentorship from Mike Nolan has given us clear direction and a deeper understanding that open source isn't a compromise but a communication channel and a way to build a community, and he's giving us the tools that enable that." — Michael Nunan, Director at Tupaia.

|

||||

|

||||

### How do we create workable community strategy guides?

|

||||

|

||||

This past summer, LibreCorps began developing and evolving resources to help these teams take the complex and difficult challenge of building an open source community and break it down into smaller, more manageable steps. [Mike Nolan][12] and [Kent Reese][13] developed a roadmap template for teams to evaluate their current status in maintainership best practices and chart out milestones for where to go next. For a cohort working in open source for the first time, the LibreCorps team offers advice and suggestions on crafting a mission statement, choosing the right license for your project, and more. The rubrics provide an interactive, color-coded reference to unlock a deeper understanding of their progress towards each milestone.

|

||||

|

||||

Let's explore each resource in more detail to understand how they work:

|

||||

|

||||

### Roadmap template

|

||||

|

||||

The [roadmap template][14] is a resource that gets hands-on and personal to a specific open source project. There are five tracks within the roadmaps with different tasks to gradually ramp up the areas of focus for community management.

|

||||

|

||||

The first track includes milestones like writing a mission statement, choosing a free software license, and establishing a code of conduct, and provides a set of open source tools or frameworks for users to learn more about. The second track includes gradually more advanced milestones like documenting how to set up a development environment, learning the pull request workflow, choosing a project hosting platform, and more. Further tracks include milestones like implementing continuous integration (CI,) organizing community events, and gathering user testimonials.

|

||||

|

||||

The LibreCorps team works with each Innovation Fund team within the cohort to create a rubric specific to their projects. The cohort identifies their current position based on which milestone they are currently working toward. Then, we work together to determine next steps for growing their open source community, then create a strategy to reach and accomplish those goals.

|

||||

|

||||

### Self-evaluation rubric

|

||||

|

||||

The milestone template provides high-level guidance on which direction to go, but the [self-evaluation rubric][15] is a feedback mechanism to give cohorts a picture of exceptional, acceptable, and poor implementations for each assigned task. The self-evaluation rubrics enable LibreCorps cohorts to independently self-evaluate progress towards building a sustainable and open community.

|

||||

|

||||

The rubric is organized into five tracks:

|

||||

|

||||

* Community outreach

|

||||

* Continuous integration and health checks

|

||||

* Documentation

|

||||

* Project management

|

||||

* Workflow

|

||||

|

||||

|

||||

|

||||

Each track includes detailed sets of sub-tasks or specific components of building an open source community. For example, documentation includes writing guidelines for how to contribute, and community outreach includes maintaining a project website and engaging with an upstream project community, if one exists.

|

||||

|

||||

The LibreCorps team mostly works with the rubric to evaluate whether Innovation Fund cohort teams sufficiently meet the open source requirement for their project. Some teams make use of the rubric to get a more detailed understanding of whether they are heading in the right direction with their community work.

|

||||

|

||||

### Contribute to these resources

|

||||

|

||||

Do these resources sound useful or interesting? Fortunately, the LibreCorps content is licensed under Creative Commons licenses. Learn more about LibreCorps on the [FOSS@MAGIC website][4] and keep up with what we are doing on [our GitHub][16]. To get in touch with us, visit our community Discourse forums on [fossrit.community][17].

|

||||

|

||||

A few months ago, we profiled open source projects working to make the world a better place. In...

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/12/humanitarian-startups-open-source

|

||||

|

||||

作者:[Justin W. Flory][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/jflory

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/world_hands_diversity.png?itok=zm4EDxgE (Two diverse hands holding a globe)

|

||||

[2]: https://www.rit.edu/magic/

|

||||

[3]: https://opensource.com/sites/default/files/uploads/unicef-flory_0.jpg (Grassroots presentation in UNICEF office )

|

||||

[4]: https://fossrit.github.io/librecorps/

|

||||

[5]: https://www.wired.com/story/wired25-stories-people-racing-to-save-us/

|

||||

[6]: https://unicefinnovationfund.org/

|

||||

[7]: https://www.rit.edu/magic/affiliate-spotlight-stephen-jacobs

|

||||

[8]: https://justinwflory.com/

|

||||

[9]: https://fossrit.github.io/announcements/2019/04/01/unicef-foss-community-building/

|

||||

[10]: https://opensource.com/open-organization/resources/open-org-maturity-model

|

||||

[11]: https://www.google.com/url?q=https://agora.unicef.org/course/info.php?id%3D18096&sa=D&ust=1573658770972000&usg=AFQjCNGemgWoJ3kCoKImLCDok7opIo2RCA

|

||||

[12]: https://nolski.rocks/

|

||||

[13]: https://kentr.itch.io/

|

||||

[14]: https://docs.google.com/document/d/1M2nVwh7ArjAU31M7QZWP4AQz1cOyMhw90iP8Wg9lZNo/edit?usp=sharing

|

||||

[15]: https://docs.google.com/spreadsheets/d/11DaQxbiOv9_EiZEozEkUapf2AsVQ4vBFelhnZ0a8R4w/edit?usp=sharing

|

||||

[16]: https://github.com/librecorps/

|

||||

[17]: https://fossrit.community/

|

||||

@ -0,0 +1,106 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Italian job: Translating our mission statement in the open)

|

||||

[#]: via: (https://opensource.com/open-organization/19/12/translating-mission-statement)

|

||||

[#]: author: (Antonella Iecle https://opensource.com/users/aiecle)

|

||||

|

||||

Italian job: Translating our mission statement in the open

|

||||

======

|

||||

A seemingly straightforward translation exercise turned into a lesson

|

||||

about the power of open decision making.

|

||||

![Yellow arrows going both ways with texture][1]

|

||||

|

||||

At Red Hat, part of my job is to ensure that company messages maintain their meaning and effectiveness in my native language—Italian—so that customers in my region can learn not only about our products and services but also about [our organizational values][2].

|

||||

|

||||

The work tends to be simple and straightforward. But in an open organization, even the tasks that seem small can present big opportunities for learning about the power of working the open way.

|

||||

|

||||

That was the case for me recently, when what I thought would be a quick translation exercise turned into a lesson in the [benefits of open decision making][3].

|

||||

|

||||

### A crowdsourced decision

|

||||

|

||||

A few months ago, I noticed a post on our company's internal collaboration platform that seemed to be calling my name. Colleagues from around the world were leaving comments on translated versions of one particular (and very important) corporate message: the [company's mission statement][4]. And they had questions about the Italian translation.

|

||||

|

||||

So I joined the conversation with no hesitation, assuming I'd engage in a quick exchange of opinions and reach a conclusion about the best way to translate Red Hat's mission statement:

|

||||

|

||||

_To be the catalyst in communities of customers, contributors, and partners creating better technology the open source way._

|

||||

|

||||

That's a single sentence consisting of less than 20 words. Translating it into another language should be a no-brainer, right? If anything, the work should take no longer than a few minutes: Read it out loud, spot room for improvement, swap a word for a more effective synonym, maybe rephrase a bit, and you're done!

|

||||

|

||||

As a matter of fact, that's not always the case.

|

||||

|

||||

Translations of the mission statement in a few languages were already available, but comments from colleagues reflected a need for some review. And as more Red Hatters from different parts of the globe joined the discussion and shared their perspectives, I began to see many possibilities for solving this translation problem—and the challenges that come with this abundance of ideas.

|

||||

|

||||

Seeing so many inspiring comments can make reaching a decision much more complicated. One might put concepts into words in a _number_ of ways, but how do we all agree on the _best_ way? How do we deliver a message that reflects our common goal? How do we make sure that all employees can identify with it? And how do we ensure that our customers understand how we can help them grow?

|

||||

|

||||

It's true: When you're about to make a decision that has an impact on other people, you have to ask yourself—and each other—a lot of questions.

|

||||

|

||||

It's true: When you're about to make a decision that has an impact on other people, you have to ask yourself—and each other—a lot of questions.

|

||||

|

||||

### Found in translation

|

||||

|

||||

Localising a message for our readers around the world means more than just translating it word by word. A literal translation may not be fully understandable, especially when the original message was written with English native speakers in mind. On the other hand, a creative translation may be just one of many possible interpretations of the original text. And then there are personal preferences: Which translation sounds better to me? Is there a particular reason? If so, how do I get my point across?

|

||||

|

||||

Linguistic debates can highlight complex aspects of communication, from the subtle nuances in the meaning of a particular word to the cultural traits, mindsets, and levels of familiarity with a topic that define how the same message can be perceived by readers from different countries. Our own conversations delved into known linguistic dilemmas like the use of anglicisms and the disambiguation of expressions that can have more than one interpretation.

|

||||

|

||||

Localisation teams must ensure that messages are delivered accurately and consistently. Working collaboratively is essential to achieving this goal.

|

||||

|

||||

To get started, we only had to look at how our original mission statement had come together in the first place. Since we were trying to do something that someone else had done before, we needed to ask: "[How did Red Hat find its mission][5]?"

|

||||

|

||||

The answer? By asking the whole company what it should be.

|

||||

|

||||

Opening up the conversation doesn't always accelerate the decision making process.

|

||||

|

||||

It may be counterintuitive, but engaging the broader community can be a very efficient way to overcome uncertainties and address problems you weren't even aware of. Opening up the conversation doesn't always accelerate the decision making process, but by bringing people together you allow them to draw a clearer picture of the problem and provide you with the elements you need to make better decisions.

|

||||

|

||||

With that in mind, all you have to do is ask other teams to contribute, then give them a reasonable amount of time to make room in their busy schedule and think about how they can help. Suggestions will come, and how you will channel the incoming information is up to you.

|

||||

|

||||

Surveys are often a great way to gather feedback and keep track of incoming responses, and this is the approach we chose. We submitted a new translation, and asked our teams in Italy to tell us if they were happy with it. The majority of the respondents were. However, a few participants had their own versions to share (and valid comments to add).

|

||||

|

||||

After considering everyone's input, we submitted a second survey. We asked the participants to choose between the solution we offered in the first survey and a _newer_ version, one we obtained by incorporating the feedback we'd received from our recent collaborative discussion.

|

||||

|

||||

The second option was the winner.

|

||||

|

||||

The initial translation did not represent Red Hat's mission as clearly and faithfully as the improved statement did. For example, in the original version, Red Hat was a “point of reference” rather than a "catalyst," and worked with "a community" rather than a larger and more diverse number of "communities." We were using the word "collaborators" instead of "contributors," and our actions were following a set of "principles" rather than the open source "way."

|

||||

|

||||

The changes we made reaffirmed Red Hat's role in the communities and its commitment to open source technology, but also to open source as a way of working.

|

||||

|

||||

Don't be afraid to jump into a conversation—or even to take the lead—if you think you can help to make better decisions.

|

||||

|

||||

So here is some advice this experience taught me: Don't be afraid to jump into a conversation—or even to take the lead—if you think you can help to make better decisions. Because as Jim Whitehurst likes to say, in the open source world, we believe [the best ideas should win][6], no matter where they come from.

|

||||

|

||||

In short, here is my five-point checklist for open decision-making, regardless of the task you face:

|

||||

|

||||

1. Encourage wide participation.

|

||||

2. Welcome all responses as they arrive.

|

||||

3. Identify the best ideas and get the most out of everyone's suggestions.

|

||||

4. Answer questions and address concerns.

|

||||

5. Communicate the outcome, and summarize the steps of the decision process. In doing so, reassert the value of broad collaboration.

|

||||

|

||||

|

||||

|

||||

One more thing (I know I said I only had five more points, but don't switch off yet): Keep listening to what people might have to say about the decision you just made together.

|

||||

|

||||

The best ideas may take longer to brew.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/open-organization/19/12/translating-mission-statement

|

||||

|

||||

作者:[Antonella Iecle][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/aiecle

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/arrows_translation_lead.jpg?itok=S4vAh9CP (Yellow arrows going both ways with texture)

|

||||

[2]: https://www.redhat.com/en/book-of-red-hat#our-values

|

||||

[3]: https://opensource.com/open-organization/resources/open-decision-framework

|

||||

[4]: https://www.redhat.com/en/book-of-red-hat#our-vision-intro

|

||||

[5]: https://www.managementexchange.com/blog/how-red-hat-used-open-source-way-develop-company-mission

|

||||

[6]: https://opensource.com/open-organization/16/8/how-make-meritocracy-work

|

||||

@ -1,241 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (14 SCP Command Examples to Securely Transfer Files in Linux)

|

||||

[#]: via: (https://www.linuxtechi.com/scp-command-examples-in-linux/)

|

||||

[#]: author: (Pradeep Kumar https://www.linuxtechi.com/author/pradeep/)

|

||||

|

||||

14 SCP Command Examples to Securely Transfer Files in Linux

|

||||

======

|

||||

|

||||

**SCP** (Secure Copy) is command line tool in Linux and Unix like systems which is used to transfer files and directories across the systems securely over the network. When we use scp command to copy files and directories from our local system to remote system then in the backend it makes **ssh connection** to remote system. In other words, we can say scp uses the same **SSH security mechanism** in the backend, it needs either password or keys for authentication.

|

||||

|

||||

[![scp-command-examples-linux][1]][2]

|

||||

|

||||

In this tutorial we will discuss 14 useful Linux scp command examples.

|

||||

|

||||

**Syntax of scp command:**

|

||||

|

||||

### scp <options> <files_or_directories> [root@linuxtechi][3]_host:/<folder>

|

||||

|

||||

### scp <options> [root@linuxtechi][3]_host:/files <folder_local_system>

|

||||

|

||||

First syntax of scp command demonstrate how to copy files or directories from local system to target host under the specific folder.

|

||||

|

||||

Second syntax of scp command demonstrate how files from target host is copied into local system.

|

||||

|

||||

Some of the most widely used options in scp command are listed below,

|

||||

|

||||

* -C Enable Compression

|

||||

* -i identity File or private key

|

||||

* -l limit the bandwidth while copying

|

||||

* -P ssh port number of target host

|

||||

* -p Preserves permissions, modes and access time of files while copying

|

||||

* -q Suppress warning message of SSH

|

||||

* -r Copy files and directories recursively

|

||||

* -v verbose output

|

||||

|

||||

|

||||

|

||||

Let’s jump into the examples now!!!!

|

||||

|

||||

###### Example:1) Copy a file from local system to remote system using scp

|

||||

|

||||

Let’s assume we want to copy jdk rpm package from our local Linux system to remote system (172.20.10.8) using scp command, use the following command,

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]$ scp jdk-linux-x64_bin.rpm root@linuxtechi:/opt

|

||||

root@linuxtechi's password:

|

||||

jdk-linux-x64_bin.rpm 100% 10MB 27.1MB/s 00:00

|

||||

[root@linuxtechi ~]$

|

||||

```

|

||||

|

||||

Above command will copy jdk rpm package file to remote system under /opt folder.

|

||||

|

||||

###### Example:2) Copy a file from remote System to local system using scp

|

||||

|

||||

Let’s suppose we want to copy a file from remote system to our local system under the /tmp folder, execute the following scp command,

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]$ scp root@linuxtechi:/root/Technical-Doc-RHS.odt /tmp

|

||||

root@linuxtechi's password:

|

||||

Technical-Doc-RHS.odt 100% 1109KB 31.8MB/s 00:00

|

||||

[root@linuxtechi ~]$ ls -l /tmp/Technical-Doc-RHS.odt

|

||||

-rwx------. 1 pkumar pkumar 1135521 Oct 19 11:12 /tmp/Technical-Doc-RHS.odt

|

||||

[root@linuxtechi ~]$

|

||||

```

|

||||

|

||||

###### Example:3) Verbose Output while transferring files using scp (-v)

|

||||

|

||||

In scp command, we can enable the verbose output using -v option, using verbose output we can easily find what exactly is happening in the background. This becomes very useful in **debugging connection**, **authentication** and **configuration problems**.

|

||||

|

||||

```

|

||||

root@linuxtechi ~]$ scp -v jdk-linux-x64_bin.rpm root@linuxtechi:/opt

|

||||

Executing: program /usr/bin/ssh host 172.20.10.8, user root, command scp -v -t /opt

|

||||

OpenSSH_7.8p1, OpenSSL 1.1.1 FIPS 11 Sep 2018

|

||||

debug1: Reading configuration data /etc/ssh/ssh_config

|

||||

debug1: Reading configuration data /etc/ssh/ssh_config.d/05-redhat.conf

|

||||

debug1: Reading configuration data /etc/crypto-policies/back-ends/openssh.config

|

||||

debug1: /etc/ssh/ssh_config.d/05-redhat.conf line 8: Applying options for *

|

||||

debug1: Connecting to 172.20.10.8 [172.20.10.8] port 22.

|

||||

debug1: Connection established.

|

||||

…………

|

||||

debug1: Next authentication method: password

|

||||

root@linuxtechi's password:

|

||||

```

|

||||

|

||||

###### Example:4) Transfer multiple files to remote system

|

||||

|

||||

Multiple files can be copied / transferred to remote system using scp command in one go, in scp command specify the multiple files separated by space, example is shown below

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]$ scp install.txt index.html jdk-linux-x64_bin.rpm root@linuxtechi:/mnt

|

||||

root@linuxtechi's password:

|

||||

install.txt 100% 0 0.0KB/s 00:00

|

||||

index.html 100% 85KB 7.2MB/s 00:00

|

||||

jdk-linux-x64_bin.rpm 100% 10MB 25.3MB/s 00:00

|

||||

[root@linuxtechi ~]$

|

||||

```

|

||||

|

||||

###### Example:5) Transfer files across two remote hosts

|

||||

|

||||

Using scp command we can copy files and directories between two remote hosts, let’s suppose we have a local Linux system which can connect to two remote Linux systems, so from my local linux system I can use scp command to copy files across these two systems,

|

||||

|

||||

Syntax:

|

||||

|

||||

### scp [root@linuxtechi][3]_hosts1:/<files_to_transfer> [root@linuxtechi][3]_host2:/<folder>

|

||||

|

||||

Example is shown below,

|

||||

|

||||

```

|

||||

# scp root@linuxtechi:~/backup-Oct.zip root@linuxtechi:/tmp

|

||||

# ssh root@linuxtechi "ls -l /tmp/backup-Oct.zip"

|

||||

-rwx------. 1 root root 747438080 Oct 19 12:02 /tmp/backup-Oct.zip

|

||||

```

|

||||

|

||||

###### Example:6) Copy files and directories recursively (-r)

|

||||

|

||||

Use -r option in scp command to recursively copy the entire directory from one system to another, example is shown below,

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]$ scp -r Downloads root@linuxtechi:/opt

|

||||

```

|

||||

|

||||

Use below command to verify whether Download folder is copied to remote system or not,

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]$ ssh root@linuxtechi "ls -ld /opt/Downloads"

|

||||

drwxr-xr-x. 2 root root 75 Oct 19 12:10 /opt/Downloads

|

||||

[root@linuxtechi ~]$

|

||||

```

|

||||

|

||||

###### Example:7) Increase transfer speed by enabling compression (-C)

|

||||

|

||||

In scp command, we can increase the transfer speed by enabling the compression using -C option, it will automatically enable compression at source and decompression at destination host.

|

||||

|

||||

```

|

||||

root@linuxtechi ~]$ scp -r -C Downloads root@linuxtechi:/mnt

|

||||

```

|

||||

|

||||

In the above example we are transferring the Download directory with compression enabled.

|

||||

|

||||

###### Example:8) Limit bandwidth while copying ( -l )

|

||||

|

||||

Use ‘-l’ option in scp command to put limit on bandwidth usage while copying. Bandwidth is specified in Kbit/s, example is shown below,

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]$ scp -l 500 jdk-linux-x64_bin.rpm root@linuxtechi:/var

|

||||

```

|

||||

|

||||

###### Example:9) Specify different ssh port while scp ( -P)

|

||||

|

||||

There can be some scenario where ssh port is changed on destination host, so while using scp command we can specify the ssh port number using ‘-P’ option.

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]$ scp -P 2022 jdk-linux-x64_bin.rpm root@linuxtechi:/var

|

||||

```

|

||||

|

||||

In above example, ssh port for remote host is “2022”

|

||||

|

||||

###### Example:10) Preserves permissions, modes and access time of files while copying (-p)

|

||||

|

||||

Use “-p” option in scp command to preserve permissions, access time and modes while copying from source to destination

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]$ scp -p jdk-linux-x64_bin.rpm root@linuxtechi:/var/tmp

|

||||

jdk-linux-x64_bin.rpm 100% 10MB 13.5MB/s 00:00

|

||||

[root@linuxtechi ~]$

|

||||

```

|

||||

|

||||

###### Example:11) Transferring files in quiet mode ( -q) in scp

|

||||

|

||||

Use ‘-q’ option in scp command to suppress transfer progress, warning and diagnostic messages of ssh. Example is shown below,

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]$ scp -q -r Downloads root@linuxtechi:/var/tmp

|

||||

[root@linuxtechi ~]$

|

||||

```

|

||||

|

||||

###### Example:12) Use Identify file in scp while transferring ( -i )

|

||||

|

||||

In most of the Linux environments, keys-based authentication is preferred. In scp command we specify the identify file or private key file using ‘-i’ option, example is shown below,

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]$ scp -i my_key.pem -r Downloads root@linuxtechi:/root

|

||||

```

|

||||

|

||||

In above example, “my_key.pem” is the identity file or private key file.

|

||||

|

||||

###### Example:13) Use different ‘ssh_config’ file in scp ( -F)

|

||||

|

||||

There are some scenarios where you use different networks to connect to Linux systems, may be some network is behind proxy servers, so in that case we must have different **ssh_config** file.

|

||||

|

||||

Different ssh_config file in scp command is specified via ‘-F’ option, example is shown below

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]$ scp -F /home/pkumar/new_ssh_config -r Downloads root@linuxtechi:/root

|

||||

root@linuxtechi's password:

|

||||

jdk-linux-x64_bin.rpm 100% 10MB 16.6MB/s 00:00

|

||||

backup-Oct.zip 100% 713MB 41.9MB/s 00:17

|

||||

index.html 100% 85KB 6.6MB/s 00:00

|

||||

[root@linuxtechi ~]$

|

||||

```

|

||||

|

||||

###### Example:14) Use Different Cipher in scp command (-c)

|

||||

|

||||

By default, scp uses ‘AES-128’ cipher to encrypt the files. If you want to use another cipher in scp command then use ‘-c’ option followed by cipher name,

|

||||

|

||||

Let’s suppose we want to use ‘3des-cbc’ cipher in scp command while transferring the files, run the following scp command

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]# scp -c 3des-cbc -r Downloads root@linuxtechi:/root

|

||||

```

|

||||

|

||||

Use the below command to list ssh and scp ciphers,

|

||||

|

||||

```

|

||||

[root@linuxtechi ~]# ssh -Q cipher localhost | paste -d , -s -

|

||||

3des-cbc,aes128-cbc,aes192-cbc,aes256-cbc,root@linuxtechi,aes128-ctr,aes192-ctr,aes256-ctr,root@linuxtechi,root@linuxtechi,root@linuxtechi

|

||||

[root@linuxtechi ~]#

|

||||

```

|

||||

|

||||

That’s all from this tutorial, to get more details about scp command, kindly refer its man page. Please do share your feedback and comments in comments section below.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linuxtechi.com/scp-command-examples-in-linux/

|

||||

|

||||

作者:[Pradeep Kumar][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.linuxtechi.com/author/pradeep/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[2]: https://www.linuxtechi.com/wp-content/uploads/2019/10/scp-command-examples-linux.jpg

|

||||

[3]: https://www.linuxtechi.com/cdn-cgi/l/email-protection

|

||||

@ -1,65 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Dell XPS 13 7390 Review: The Best Laptop For Desktop Linux Users)

|

||||

[#]: via: (https://www.linux.com/articles/dell-xps-13-7390-review-the-best-laptop-for-desktop-linux-user/)

|

||||

[#]: author: (Swapnil Bhartiya https://www.linux.com/author/swapnil/)

|

||||

|

||||

Dell XPS 13 7390 Review: The Best Laptop For Desktop Linux Users

|

||||

======

|

||||

|

||||

[![][1]][2]

|

||||

|

||||

[![][1]][2]

|

||||

|

||||

Gone are the days when we had to do a lot of research and read a lot of reviews to find a machine that would work with the least amount of trouble with the desktop Linux distribution of choice. Today, almost every machine out there can run Linux. The kernel community has done an incredible job with device driver support to make everything work out of the box.

|

||||

|

||||

Still, there are machines that can run Linux, and then there are machines that run Linux. Dell machines fall in the latter category. Five years ago, Barton George started a program within Dell to bring desktop Linux to consumer grade, high-end Dell systems. What started as one machine is now an entire line of high-end laptops and desktops.

|

||||

|

||||

Among these machines, XPS 13 is my favorite. While I need a really powerful desktop to handle my 4K UHD, multicam video production, I also need an ultra-portable laptop that I can bring with me anywhere without having to worry about a bulky backpack and charger. XPS 13 was also my very first laptop, which lasted me more than 7 years. So, yes, there is that nostalgic factor, too.

|

||||

|

||||

Dell updates the XPS line almost every year and the latest [rollout was announced in October][3] (link to video interview). [XPS 13 (7390)][4] is an incremental update to the series, and Dell was kind enough to send me a review unit.

|

||||

|

||||

It is powered by a 6-core, Core i7-10710U CPU. It comes with 16GB of memory and 1TB SSD. At the base frequency of 1.10 GHz, which can boost to 4.1 GHz, this is a great machine for average workloads. It doesn’t have any dedicated GPU, so it’s not meant for gaming or compiling from source for Gentoo Linux or Arch Linux. However, I did manage to run some Steam games on it.

|

||||

|

||||

If you are looking to run your Kubernetes clusters, AI frameworks or Virtual Reality, then there are more powerful machines from the Precision line, which are certified to run Red Hat Enterprise Linux and Ubuntu.

|

||||

|

||||

The machine’s chassis is identical to the previous generation. The bezels remains as thin as they were in the previous generation, still thinner than MacBook and Microsoft’s Surface Pro.

|

||||

|

||||

It has three ports, two of which are USB-C Thunderbolt 3, which can be used to connect to 4K monitors, USB accessories, and high-speed data transfer between machines with peer-to-peer networking.

|

||||

|

||||

It also has a microSD slot. As a video journalist, a slot for an SD card would have been more useful. Heavy users of Raspberry Pis would also love this card.

|

||||

|

||||

It has 4 microphones and an improved camera, which is now located at the top (goodbye, nosecam!).

|

||||

|

||||

XPS 13 (7390) is sleek and slim. At 2.7 lbs, it is certainly comparable to Apple’s MacBook Air. This machine is meant to be your travel companion and for everyday tasks like checking emails, browsing the web, and writing.

|

||||

|

||||

Its 4K UHD screen supports HDR, which means you will be able to enjoy Mandalorian in all its full glory. That said, the on-board speakers are not that great and sound muffled. They are OK for video chats or casual YouTube viewing, but if you want to enjoy the The Witcher later this year, or if you want to enjoy music from Amazon, Apple Music or YouTube Music, you need headphones or external speakers.

|

||||

|

||||

But how much fun can you get out of this machine before you reach for charging cable? It gave me around 7-8 hours of battery life with average workload: browsing the web with a few tabs, just watching a movie or listening to music. Multi-tasking, especially any kind of web activity, will drain the battery. Some fine-tuning on Linux may give you even more life. On Windows 10, I was able to get more than 10 hours!

|

||||

|

||||

As a video journalist who is still doing a fair amount of writing, I really like the keyboard. However, the trackpad is the same story that we have been hearing on desktop Linux for ages: it’s nowhere near the quality on MacBook or Windows machines. Maybe one day. To Dell’s credit, they do release drivers for the trackpad that enhances the experience, but I am not running the stock Ubuntu 18.04 LTS that came with this system. I did a fresh install of Ubuntu 19.10 because Gnome is painfully slow in 18.04. I tried openSUSE Tumbleweed, Zorin OS, elementary OS, Fedora, KDE’s neon and Arch Linux. All worked, although some needed extra effort to run.

|

||||

|

||||

So, who is this system for? It’s certainly for professionals who want a well designed, high-end machine from a brand they can trust. It’s for those who like a MacBook Air, but prefer the desktop Linux ecosystem. It’s for those who want to use Linux for work, instead of working on it to make it work.

|

||||

|

||||

Spending a week with this machine reinforced why I love Dell’s XPS series so much. They are the best Linux laptops out there. And this XPS 13 (7390) packs a punch.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/articles/dell-xps-13-7390-review-the-best-laptop-for-desktop-linux-user/

|

||||

|

||||

作者:[Swapnil Bhartiya][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://www.linux.com/author/swapnil/

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://www.linux.com/wp-content/uploads/2019/12/dell-xps-13-7390-1068x665.jpg (dell-xps-13-7390)

|

||||

[2]: https://www.linux.com/wp-content/uploads/2019/12/dell-xps-13-7390.jpg

|

||||

[3]: https://bartongeorge.io/2019/08/21/please-welcome-the-9th-generation-of-the-xps-13-developer-edition/

|

||||

[4]: https://blog.dell.com/en-us/dells-new-consumer-pc-portfolio-unveiled-ifa-2019/

|

||||

@ -1,95 +0,0 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: (geekpi)

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (Pekwm: A lightweight Linux desktop)

|

||||

[#]: via: (https://opensource.com/article/19/12/pekwm-linux-desktop)

|

||||

[#]: author: (Seth Kenlon https://opensource.com/users/seth)

|

||||

|

||||

Pekwm: A lightweight Linux desktop

|

||||

======

|

||||

This article is part of a special series of 24 days of Linux desktops.

|

||||

If you're a minimalist who finds traditional desktops get in your way,

|

||||

try the Pekwm Linux desktop.

|

||||

![Penguin with green background][1]

|

||||

|

||||

Let's say you want a lightweight desktop environment, with just enough to get graphics on the screen, move some windows around, and not much else. You find traditional desktops get in your way, with their notifications and taskbars and system trays. You want to live your life primarily from a terminal, but you also want the luxury of launching graphical applications. If that sounds like you, then [Pekwm][2] may be what you've been looking for all along.

|

||||

|

||||

Pekwm is, presumably, inspired by the likes of Window Maker and Fluxbox. It provides an application menu, window decoration, and not a whole lot more. It's ideal for minimalists—users who want to conserve resources and users who prefer to work from a terminal.

|

||||

|

||||

Install Pekwm from your distribution's software repository. After installing, log out of your current desktop session so you can log into your new desktop. By default, your session manager (KDM, GDM, LightDM, or XDM, depending on your setup) will continue to log you into your previous desktop, so you must override that before logging in.

|

||||

|

||||

To override the previous desktop on GDM:

|

||||

|

||||

![Selecting your desktop in GDM][3]

|

||||

|

||||

And on KDM:

|

||||

|

||||

![Selecting your desktop in KDM][4]

|

||||

|

||||

The first time you log into Pekwm, you may encounter nothing but a black screen. Believe it or not, that's normal. What you're seeing is a blank desktop without background wallpaper. You can set a wallpaper with the **feh** command (you may need to install it from your repository). This command has a few options for setting the background, including **\--bg-fill** to fill the screen with your wallpaper, **\--bg-scale** to scale it to fit, and so on.

|

||||

|

||||

|

||||

```

|

||||

`$ feh --bg-fill ~/Pictures/wallpapers/mybackground.jpg`

|

||||

```

|

||||

|

||||

### Application menu

|

||||

|

||||

By default, Pekwm auto-generates a menu, available with a right-click anywhere on the desktop, that gives you access to applications. This menu also provides a few preference settings, such as the ability to pick a theme and to log out of your Pekwm session.

|

||||

|

||||

![Pekwm running on Fedora][5]

|

||||

|

||||

### Configuration

|

||||

|

||||

Pekwm is primarily configured in text config files stored in **$HOME/.pekwm**. The **menu** file defines your application menu, the **keys** file defines keyboard shortcuts, and so on.

|

||||

|

||||

The **start** file is a shell script that is executed after Pekwm launches. It is analogous to the **rc.local** file on a traditional Unix system; it's intentionally last in line, so whatever you put into it overrides everything that came before it. This is an important file—it's probably where you want to set your background so that _your_ choice overrides the default on the theme you're using.

|

||||

|

||||

The **start** file is also where you can launch dockapps. Dockapps are tiny applets that rose to prominence with Window Maker and Fluxbox. They usually provide network monitors, a clock, audio settings, and other things you might be used to seeing in a system tray or as a KDE plasmoid or widget in a full desktop environment. You might find some dockapps in your distribution's repository, or you can look for them online at [dockapps.net][6].

|

||||

|

||||

You can launch dockapps at startup by listing them in the **start** file followed by an **&** symbol:

|

||||

|

||||

|

||||

```

|

||||

feh --bg-fill ~/Pictures/wallpapers/mybackground.jpg

|

||||

wmnd &

|

||||

bubblemon -d &

|

||||

```

|

||||

|

||||

The **start** file must be [marked executable][7] for it to run when Pekwm starts.

|

||||

|

||||

|

||||

```

|

||||

`$ chmod +x $HOME/.pekwm/start`

|

||||

```

|

||||

|

||||

### Features

|

||||

|

||||

There's not much to Pekwm, and that's the beauty of it. If you want extra services to run on your desktop, it's up to you to launch them. If you're still learning Linux, this is a great way to learn about the minute GUI components you usually don't think about when they come bundled with a complete desktop environment (like [a taskbar][8]). It's also a good way to get used to some of the Linux commands, like [nmcli][9], that you've been meaning to learn.

|

||||

|

||||

Pekwm is a fun window manager. It's sparse, terse, and very lightweight. Give it a try!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/19/12/pekwm-linux-desktop

|

||||

|

||||

作者:[Seth Kenlon][a]

|

||||

选题:[lujun9972][b]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: https://opensource.com/users/seth

|

||||

[b]: https://github.com/lujun9972

|

||||

[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/linux_penguin_green.png?itok=ENdVzW22 (Penguin with green background)

|

||||

[2]: http://www.pekwm.org/

|

||||

[3]: https://opensource.com/sites/default/files/uploads/advent-gdm_1.jpg (Selecting your desktop in GDM)

|

||||

[4]: https://opensource.com/sites/default/files/uploads/advent-enlightenment-kdm_0.jpg (Selecting your desktop in KDM)

|

||||

[5]: https://opensource.com/sites/default/files/uploads/advent-pekwm.jpg (Pekwm running on Fedora)

|

||||

[6]: http://dockapps.net

|

||||

[7]: https://opensource.com/article/19/6/understanding-linux-permissions

|

||||

[8]: https://opensource.com/article/19/1/productivity-tool-tint2

|

||||

[9]: https://opensource.com/article/19/5/set-static-network-connection-linux

|

||||

@ -0,0 +1,74 @@

|

||||

[#]: collector: (lujun9972)

|

||||

[#]: translator: ( )

|

||||

[#]: reviewer: ( )

|

||||

[#]: publisher: ( )

|

||||

[#]: url: ( )

|

||||

[#]: subject: (What's your favorite terminal emulator?)

|

||||

[#]: via: (https://opensource.com/article/19/12/favorite-terminal-emulator)

|

||||

[#]: author: (Opensource.com https://opensource.com/users/admin)

|

||||

|

||||

What's your favorite terminal emulator?

|

||||

======

|

||||

We asked our community to tell us about their experience with terminal

|

||||

emulators. Here are a few of the responses we received. Take our poll to

|

||||

weigh in on your favorite.

|

||||

![Terminal window with green text][1]

|

||||

|

||||

Preference of a terminal emulator can say a lot about a person's workflow. Is the ability to drive mouseless a must-have? Do you like to navigate between tabs or windows? There's something to be said about how it makes you feel, too. Does it have that cool factor? Tell us about your favorite terminal emulator by taking our poll or leaving us a comment. How many have you tried?

|

||||

|

||||

We asked our community to tell us about their experience with terminal emulators. Here are a few of the responses we received.

|

||||

|

||||

"My favorite terminal emulator is Tilix, customized with Powerline. I love that it supports multiple terminals open in a single window." —Dan Arel

|

||||

|

||||

"urxvt ([rxvt-unicode][2]). It's simple to configure via files, is lightweight, and readily available in most package manager repositories." —Brian Tomlinson

|

||||

|

||||

"gnome-terminal is still my go-to even though I don't use GNOME anymore. :)" —Justin W. Flory

|

||||

|

||||