mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-04 22:00:34 +08:00

commit

211b61a557

@ -15,7 +15,7 @@

|

||||

|

||||

|

||||

|

||||

当计算机还是快乐、安全的时代时,在机器中的几乎每个进程上,那些段的起始虚拟地址都是**完全相同**的。这将使远程挖掘安全漏洞变得容易。漏洞利用经常需要去引用绝对内存位置:比如在栈中的一个地址,一个库函数的地址,等等。远程攻击闭着眼睛也会选择这个地址,因为地址空间都是相同的。当攻击者们这样做的时候,人们就会受到伤害。因此,地址空间随机化开始流行起来。Linux 会通过在其起始地址上增加偏移量来随机化[栈][3]、[内存映射段][4]、以及[堆][5]。不幸的是,32 位的地址空间是非常拥挤的,为地址空间随机化留下的空间不多,因此 [妨碍了地址空间随机化的效果][6]。

|

||||

当计算机还是快乐、安全的时代时,在机器中的几乎每个进程上,那些段的起始虚拟地址都是**完全相同**的。这将使远程挖掘安全漏洞变得容易。漏洞利用经常需要去引用绝对内存位置:比如在栈中的一个地址,一个库函数的地址,等等。远程攻击可以闭着眼睛选择这个地址,因为地址空间都是相同的。当攻击者们这样做的时候,人们就会受到伤害。因此,地址空间随机化开始流行起来。Linux 会通过在其起始地址上增加偏移量来随机化[栈][3]、[内存映射段][4]、以及[堆][5]。不幸的是,32 位的地址空间是非常拥挤的,为地址空间随机化留下的空间不多,因此 [妨碍了地址空间随机化的效果][6]。

|

||||

|

||||

在进程地址空间中最高的段是栈,在大多数编程语言中它存储本地变量和函数参数。调用一个方法或者函数将推送一个新的<ruby>栈帧<rt>stack frame</rt></ruby>到这个栈。当函数返回时这个栈帧被删除。这个简单的设计,可能是因为数据严格遵循 [后进先出(LIFO)][7] 的次序,这意味着跟踪栈内容时不需要复杂的数据结构 —— 一个指向栈顶的简单指针就可以做到。推入和弹出也因此而非常快且准确。也可能是,持续的栈区重用往往会在 [CPU 缓存][8] 中保持活跃的栈内存,这样可以加快访问速度。进程中的每个线程都有它自己的栈。

|

||||

|

||||

@ -25,7 +25,7 @@

|

||||

|

||||

在栈的下面,有内存映射段。在这里,内核将文件内容直接映射到内存。任何应用程序都可以通过 Linux 的 [`mmap()`][12] 系统调用( [代码实现][13])或者 Windows 的 [`CreateFileMapping()`][14] / [`MapViewOfFile()`][15] 来请求一个映射。内存映射是实现文件 I/O 的方便高效的方式。因此,它经常被用于加载动态库。有时候,也被用于去创建一个并不匹配任何文件的匿名内存映射,这种映射经常被用做程序数据的替代。在 Linux 中,如果你通过 [`malloc()`][16] 去请求一个大的内存块,C 库将会创建这样一个匿名映射而不是使用堆内存。这里所谓的“大”表示是超过了`MMAP_THRESHOLD` 设置的字节数,它的缺省值是 128 kB,可以通过 [`mallopt()`][17] 去调整这个设置值。

|

||||

|

||||

接下来讲的是“堆”,就在我们接下来的地址空间中,堆提供运行时内存分配,像栈一样,但又不同于栈的是,它分配的数据生存期要长于分配它的函数。大多数编程语言都为程序提供了堆管理支持。因此,满足内存需要是编程语言运行时和内核共同来做的事情。在 C 中,堆分配的接口是 [`malloc()`][18] 一族,然而在垃圾回收式编程语言中,像 C#,这个接口使用 `new` 关键字。

|

||||

接下来讲的是“堆”,就在我们接下来的地址空间中,堆提供运行时内存分配,像栈一样,但又不同于栈的是,它分配的数据生存期要长于分配它的函数。大多数编程语言都为程序提供了堆管理支持。因此,满足内存需要是编程语言运行时和内核共同来做的事情。在 C 中,堆分配的接口是 [`malloc()`][18] 一族,然而在支持垃圾回收的编程语言中,像 C#,这个接口使用 `new` 关键字。

|

||||

|

||||

如果在堆中有足够的空间可以满足内存请求,它可以由编程语言运行时来处理内存分配请求,而无需内核参与。否则将通过 [`brk()`][19] 系统调用([代码实现][20])来扩大堆以满足内存请求所需的大小。堆管理是比较 [复杂的][21],在面对我们程序的混乱分配模式时,它通过复杂的算法,努力在速度和内存使用效率之间取得一种平衡。服务一个堆请求所需要的时间可能是非常可观的。实时系统有一个 [特定用途的分配器][22] 去处理这个问题。堆也会出现 _碎片化_ ,如下图所示:

|

||||

|

||||

@ -51,7 +51,7 @@

|

||||

|

||||

via: http://duartes.org/gustavo/blog/post/anatomy-of-a-program-in-memory/

|

||||

|

||||

作者:[gustavo][a]

|

||||

作者:[Gustavo Duarte][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

239

published/20160625 Trying out LXD containers on our Ubuntu.md

Normal file

239

published/20160625 Trying out LXD containers on our Ubuntu.md

Normal file

@ -0,0 +1,239 @@

|

||||

在 Ubuntu 上体验 LXD 容器

|

||||

======

|

||||

|

||||

本文的主角是容器,一种类似虚拟机但更轻量级的构造。你可以轻易地在你的 Ubuntu 桌面系统中创建一堆容器!

|

||||

|

||||

虚拟机会虚拟出整个电脑让你来安装客户机操作系统。**相比之下**,容器**复用**了主机的 Linux 内核,只是简单地 **包容** 了我们选择的根文件系统(也就是运行时环境)。Linux 内核有很多功能可以将运行的 Linux 容器与我们的主机分割开(也就是我们的 Ubuntu 桌面)。

|

||||

|

||||

Linux 本身需要一些手工操作来直接管理他们。好在,有 LXD(读音为 Lex-deeh),这是一款为我们管理 Linux 容器的服务。

|

||||

|

||||

我们将会看到如何:

|

||||

|

||||

1. 在我们的 Ubuntu 桌面上配置容器,

|

||||

2. 创建容器,

|

||||

3. 安装一台 web 服务器,

|

||||

4. 测试一下这台 web 服务器,以及

|

||||

5. 清理所有的东西。

|

||||

|

||||

### 设置 Ubuntu 容器

|

||||

|

||||

如果你安装的是 Ubuntu 16.04,那么你什么都不用做。只要安装下面所列出的一些额外的包就行了。若你安装的是 Ubuntu 14.04.x 或 Ubuntu 15.10,那么按照 [LXD 2.0 系列(二):安装与配置][1] 来进行一些操作,然后再回来。

|

||||

|

||||

确保已经更新了包列表:

|

||||

|

||||

```

|

||||

sudo apt update

|

||||

sudo apt upgrade

|

||||

```

|

||||

|

||||

安装 `lxd` 包:

|

||||

|

||||

```

|

||||

sudo apt install lxd

|

||||

```

|

||||

|

||||

若你安装的是 Ubuntu 16.04,那么还可以让你的容器文件以 ZFS 文件系统的格式进行存储。Ubuntu 16.04 的 Linux kernel 包含了支持 ZFS 必要的内核模块。若要让 LXD 使用 ZFS 进行存储,我们只需要安装 ZFS 工具包。没有 ZFS,容器会在主机文件系统中以单独的文件形式进行存储。通过 ZFS,我们就有了写入时拷贝等功能,可以让任务完成更快一些。

|

||||

|

||||

安装 `zfsutils-linux` 包(若你安装的是 Ubuntu 16.04.x):

|

||||

|

||||

```

|

||||

sudo apt install zfsutils-linux

|

||||

```

|

||||

|

||||

安装好 LXD 后,包安装脚本应该会将你加入 `lxd` 组。该组成员可以使你无需通过 `sudo` 就能直接使用 LXD 管理容器。根据 Linux 的习惯,**你需要先登出桌面会话然后再登录** 才能应用 `lxd` 的组成员关系。(若你是高手,也可以通过在当前 shell 中执行 `newgrp lxd` 命令,就不用重登录了)。

|

||||

|

||||

在开始使用前,LXD 需要初始化存储和网络参数。

|

||||

|

||||

运行下面命令:

|

||||

|

||||

```

|

||||

$ sudo lxd init

|

||||

Name of the storage backend to use (dir or zfs): zfs

|

||||

Create a new ZFS pool (yes/no)? yes

|

||||

Name of the new ZFS pool: lxd-pool

|

||||

Would you like to use an existing block device (yes/no)? no

|

||||

Size in GB of the new loop device (1GB minimum): 30

|

||||

Would you like LXD to be available over the network (yes/no)? no

|

||||

Do you want to configure the LXD bridge (yes/no)? yes

|

||||

> You will be asked about the network bridge configuration. Accept all defaults and continue.

|

||||

Warning: Stopping lxd.service, but it can still be activated by:

|

||||

lxd.socket

|

||||

LXD has been successfully configured.

|

||||

$ _

|

||||

```

|

||||

|

||||

我们在一个(单独)的文件而不是块设备(即分区)中构建了一个文件系统来作为 ZFS 池,因此我们无需进行额外的分区操作。在本例中我指定了 30GB 大小,这个空间取之于根(`/`) 文件系统中。这个文件就是 `/var/lib/lxd/zfs.img`。

|

||||

|

||||

行了!最初的配置完成了。若有问题,或者想了解其他信息,请阅读 https://www.stgraber.org/2016/03/15/lxd-2-0-installing-and-configuring-lxd-212/ 。

|

||||

|

||||

### 创建第一个容器

|

||||

|

||||

所有 LXD 的管理操作都可以通过 `lxc` 命令来进行。我们通过给 `lxc` 不同参数来管理容器。

|

||||

|

||||

```

|

||||

lxc list

|

||||

```

|

||||

|

||||

可以列出所有已经安装的容器。很明显,这个列表现在是空的,但这表示我们的安装是没问题的。

|

||||

|

||||

```

|

||||

lxc image list

|

||||

```

|

||||

|

||||

列出可以用来启动容器的(已经缓存的)镜像列表。很明显这个列表也是空的,但这也说明我们的安装是没问题的。

|

||||

|

||||

```

|

||||

lxc image list ubuntu:

|

||||

```

|

||||

|

||||

列出可以下载并启动容器的远程镜像。而且指定了显示 Ubuntu 镜像。

|

||||

|

||||

```

|

||||

lxc image list images:

|

||||

```

|

||||

|

||||

列出可以用来启动容器的(已经缓存的)各种发行版的镜像列表。这会列出各种发行版的镜像比如 Alpine、Debian、Gentoo、Opensuse 以及 Fedora。

|

||||

|

||||

让我们启动一个 Ubuntu 16.04 容器,并称之为 `c1`:

|

||||

|

||||

```

|

||||

$ lxc launch ubuntu:x c1

|

||||

Creating c1

|

||||

Starting c1

|

||||

$

|

||||

```

|

||||

|

||||

我们使用 `launch` 动作,然后选择镜像 `ubuntu:x` (`x` 表示 Xenial/16.04 镜像),最后我们使用名字 `c1` 作为容器的名称。

|

||||

|

||||

让我们来看看安装好的首个容器,

|

||||

|

||||

```

|

||||

$ lxc list

|

||||

|

||||

+---------|---------|----------------------|------|------------|-----------+

|

||||

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |

|

||||

+---------|---------|----------------------|------|------------|-----------+

|

||||

| c1 | RUNNING | 10.173.82.158 (eth0) | | PERSISTENT | 0 |

|

||||

+---------|---------|----------------------|------|------------|-----------+

|

||||

```

|

||||

|

||||

我们的首个容器 c1 已经运行起来了,它还有自己的 IP 地址(可以本地访问)。我们可以开始用它了!

|

||||

|

||||

### 安装 web 服务器

|

||||

|

||||

我们可以在容器中运行命令。运行命令的动作为 `exec`。

|

||||

|

||||

```

|

||||

$ lxc exec c1 -- uptime

|

||||

11:47:25 up 2 min,0 users,load average:0.07,0.05,0.04

|

||||

$ _

|

||||

```

|

||||

|

||||

在 `exec` 后面,我们指定容器、最后输入要在容器中运行的命令。该容器的运行时间只有 2 分钟,这是个新出炉的容器:-)。

|

||||

|

||||

命令行中的 `--` 跟我们 shell 的参数处理过程有关。若我们的命令没有任何参数,则完全可以省略 `-`。

|

||||

|

||||

```

|

||||

$ lxc exec c1 -- df -h

|

||||

```

|

||||

|

||||

这是一个必须要 `-` 的例子,由于我们的命令使用了参数 `-h`。若省略了 `-`,会报错。

|

||||

|

||||

然后我们运行容器中的 shell 来更新包列表。

|

||||

|

||||

```

|

||||

$ lxc exec c1 bash

|

||||

root@c1:~# apt update

|

||||

Ign http://archive.ubuntu.com trusty InRelease

|

||||

Get:1 http://archive.ubuntu.com trusty-updates InRelease [65.9 kB]

|

||||

Get:2 http://security.ubuntu.com trusty-security InRelease [65.9 kB]

|

||||

...

|

||||

Hit http://archive.ubuntu.com trusty/universe Translation-en

|

||||

Fetched 11.2 MB in 9s (1228 kB/s)

|

||||

Reading package lists... Done

|

||||

root@c1:~# apt upgrade

|

||||

Reading package lists... Done

|

||||

Building dependency tree

|

||||

...

|

||||

Processing triggers for man-db (2.6.7.1-1ubuntu1) ...

|

||||

Setting up dpkg (1.17.5ubuntu5.7) ...

|

||||

root@c1:~# _

|

||||

```

|

||||

|

||||

我们使用 nginx 来做 web 服务器。nginx 在某些方面要比 Apache web 服务器更酷一些。

|

||||

|

||||

```

|

||||

root@c1:~# apt install nginx

|

||||

Reading package lists... Done

|

||||

Building dependency tree

|

||||

...

|

||||

Setting up nginx-core (1.4.6-1ubuntu3.5) ...

|

||||

Setting up nginx (1.4.6-1ubuntu3.5) ...

|

||||

Processing triggers for libc-bin (2.19-0ubuntu6.9) ...

|

||||

root@c1:~# _

|

||||

```

|

||||

|

||||

让我们用浏览器访问一下这个 web 服务器。记住 IP 地址为 10.173.82.158,因此你需要在浏览器中输入这个 IP。

|

||||

|

||||

[![lxd-nginx][2]][3]

|

||||

|

||||

让我们对页面文字做一些小改动。回到容器中,进入默认 HTML 页面的目录中。

|

||||

|

||||

```

|

||||

root@c1:~# cd /var/www/html/

|

||||

root@c1:/var/www/html# ls -l

|

||||

total 2

|

||||

-rw-r--r-- 1 root root 612 Jun 25 12:15 index.nginx-debian.html

|

||||

root@c1:/var/www/html#

|

||||

```

|

||||

|

||||

使用 nano 编辑文件,然后保存:

|

||||

|

||||

[![lxd-nginx-nano][4]][5]

|

||||

|

||||

之后,再刷一下页面看看,

|

||||

|

||||

[![lxd-nginx-modified][6]][7]

|

||||

|

||||

### 清理

|

||||

|

||||

让我们清理一下这个容器,也就是删掉它。当需要的时候我们可以很方便地创建一个新容器出来。

|

||||

|

||||

```

|

||||

$ lxc list

|

||||

+---------+---------+----------------------+------+------------+-----------+

|

||||

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |

|

||||

+---------+---------+----------------------+------+------------+-----------+

|

||||

| c1 | RUNNING | 10.173.82.169 (eth0) | | PERSISTENT | 0 |

|

||||

+---------+---------+----------------------+------+------------+-----------+

|

||||

$ lxc stop c1

|

||||

$ lxc delete c1

|

||||

$ lxc list

|

||||

+---------+---------+----------------------+------+------------+-----------+

|

||||

| NAME | STATE | IPV4 | IPV6 | TYPE | SNAPSHOTS |

|

||||

+---------+---------+----------------------+------+------------+-----------+

|

||||

+---------+---------+----------------------+------+------------+-----------+

|

||||

```

|

||||

|

||||

我们停止(关闭)这个容器,然后删掉它了。

|

||||

|

||||

本文至此就结束了。关于容器有很多玩法。而这只是配置 Ubuntu 并尝试使用容器的第一步而已。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://blog.simos.info/trying-out-lxd-containers-on-our-ubuntu/

|

||||

|

||||

作者:[Simos Xenitellis][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://blog.simos.info/author/simos/

|

||||

[1]:https://linux.cn/article-7687-1.html

|

||||

[2]:https://i2.wp.com/blog.simos.info/wp-content/uploads/2016/06/lxd-nginx.png?resize=564%2C269&ssl=1

|

||||

[3]:https://i2.wp.com/blog.simos.info/wp-content/uploads/2016/06/lxd-nginx.png?ssl=1

|

||||

[4]:https://i2.wp.com/blog.simos.info/wp-content/uploads/2016/06/lxd-nginx-nano.png?resize=750%2C424&ssl=1

|

||||

[5]:https://i2.wp.com/blog.simos.info/wp-content/uploads/2016/06/lxd-nginx-nano.png?ssl=1

|

||||

[6]:https://i1.wp.com/blog.simos.info/wp-content/uploads/2016/06/lxd-nginx-modified.png?resize=595%2C317&ssl=1

|

||||

[7]:https://i1.wp.com/blog.simos.info/wp-content/uploads/2016/06/lxd-nginx-modified.png?ssl=1

|

||||

@ -1,52 +1,47 @@

|

||||

当你在 Linux 上启动一个进程时会发生什么?

|

||||

===========================================================

|

||||

|

||||

|

||||

本文是关于 fork 和 exec 是如何在 Unix 上工作的。你或许已经知道,也有人还不知道。几年前当我了解到这些时,我惊叹不已。

|

||||

|

||||

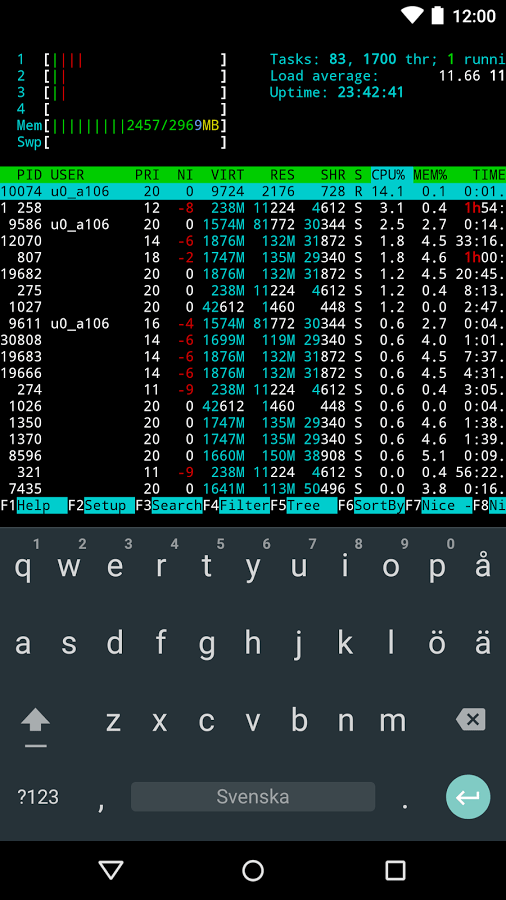

我们要做的是启动一个进程。我们已经在博客上讨论了很多关于**系统调用**的问题,每当你启动一个进程或者打开一个文件,这都是一个系统调用。所以你可能会认为有这样的系统调用:

|

||||

|

||||

```

|

||||

start_process(["ls", "-l", "my_cool_directory"])

|

||||

|

||||

```

|

||||

|

||||

这是一个合理的想法,显然这是它在 DOS 或 Windows 中的工作原理。我想说的是,这并不是 Linux 上的工作原理。但是,我查阅了文档,确实有一个 [posix_spawn][2] 的系统调用基本上是这样做的,不过这不在本文的讨论范围内。

|

||||

|

||||

### fork 和 exec

|

||||

|

||||

Linux 上的 `posix_spawn` 是通过两个系统调用实现的,分别是 `fork` 和 `exec`(实际上是 execve),这些都是人们常常使用的。尽管在 OS X 上,人们使用 `posix_spawn`,而 fork 和 exec 是不提倡的,但我们将讨论的是 Linux。

|

||||

Linux 上的 `posix_spawn` 是通过两个系统调用实现的,分别是 `fork` 和 `exec`(实际上是 `execve`),这些都是人们常常使用的。尽管在 OS X 上,人们使用 `posix_spawn`,而 `fork` 和 `exec` 是不提倡的,但我们将讨论的是 Linux。

|

||||

|

||||

Linux 中的每个进程都存在于“进程树”中。你可以通过运行 `pstree` 命令查看进程树。树的根是 `init`,进程号是 1。每个进程(init 除外)都有一个父进程,一个进程都可以有很多子进程。

|

||||

Linux 中的每个进程都存在于“进程树”中。你可以通过运行 `pstree` 命令查看进程树。树的根是 `init`,进程号是 1。每个进程(`init` 除外)都有一个父进程,一个进程都可以有很多子进程。

|

||||

|

||||

所以,假设我要启动一个名为 `ls` 的进程来列出一个目录。我是不是只要发起一个进程 `ls` 就好了呢?不是的。

|

||||

|

||||

我要做的是,创建一个子进程,这个子进程是我本身的一个克隆,然后这个子进程的“大脑”被替代,变成 `ls`。

|

||||

我要做的是,创建一个子进程,这个子进程是我(`me`)本身的一个克隆,然后这个子进程的“脑子”被吃掉了,变成 `ls`。

|

||||

|

||||

开始是这样的:

|

||||

|

||||

```

|

||||

my parent

|

||||

|- me

|

||||

|

||||

```

|

||||

|

||||

然后运行 `fork()`,生成一个子进程,是我自己的一份克隆:

|

||||

然后运行 `fork()`,生成一个子进程,是我(`me`)自己的一份克隆:

|

||||

|

||||

```

|

||||

my parent

|

||||

|- me

|

||||

|-- clone of me

|

||||

|

||||

```

|

||||

|

||||

然后我让子进程运行 `exec("ls")`,变成这样:

|

||||

然后我让该子进程运行 `exec("ls")`,变成这样:

|

||||

|

||||

```

|

||||

my parent

|

||||

|- me

|

||||

|-- ls

|

||||

|

||||

```

|

||||

|

||||

当 ls 命令结束后,我几乎又变回了我自己:

|

||||

@ -55,24 +50,22 @@ my parent

|

||||

my parent

|

||||

|- me

|

||||

|-- ls (zombie)

|

||||

|

||||

```

|

||||

|

||||

在这时 ls 其实是一个僵尸进程。这意味着它已经死了,但它还在等我,以防我需要检查它的返回值(使用 `wait` 系统调用)。一旦我获得了它的返回值,我将再次恢复独自一人的状态。

|

||||

在这时 `ls` 其实是一个僵尸进程。这意味着它已经死了,但它还在等我,以防我需要检查它的返回值(使用 `wait` 系统调用)。一旦我获得了它的返回值,我将再次恢复独自一人的状态。

|

||||

|

||||

```

|

||||

my parent

|

||||

|- me

|

||||

|

||||

```

|

||||

|

||||

### fork 和 exec 的代码实现

|

||||

|

||||

如果你要编写一个 shell,这是你必须做的一个练习(这是一个非常有趣和有启发性的项目。Kamal 在 Github 上有一个很棒的研讨会:[https://github.com/kamalmarhubi/shell-workshop][3])

|

||||

如果你要编写一个 shell,这是你必须做的一个练习(这是一个非常有趣和有启发性的项目。Kamal 在 Github 上有一个很棒的研讨会:[https://github.com/kamalmarhubi/shell-workshop][3])。

|

||||

|

||||

事实证明,有了 C 或 Python 的技能,你可以在几个小时内编写一个非常简单的 shell,例如 bash。(至少如果你旁边能有个人多少懂一点,如果没有的话用时会久一点。)我已经完成啦,真的很棒。

|

||||

事实证明,有了 C 或 Python 的技能,你可以在几个小时内编写一个非常简单的 shell,像 bash 一样。(至少如果你旁边能有个人多少懂一点,如果没有的话用时会久一点。)我已经完成啦,真的很棒。

|

||||

|

||||

这就是 fork 和 exec 在程序中的实现。我写了一段 C 的伪代码。请记住,[fork 也可能会失败哦。][4]

|

||||

这就是 `fork` 和 `exec` 在程序中的实现。我写了一段 C 的伪代码。请记住,[fork 也可能会失败哦。][4]

|

||||

|

||||

```

|

||||

int pid = fork();

|

||||

@ -80,7 +73,7 @@ int pid = fork();

|

||||

// “我”是谁呢?可能是子进程也可能是父进程

|

||||

if (pid == 0) {

|

||||

// 我现在是子进程

|

||||

// 我的大脑将被替代,然后变成一个完全不一样的进程“ls”

|

||||

// “ls” 吃掉了我脑子,然后变成一个完全不一样的进程

|

||||

exec(["ls"])

|

||||

} else if (pid == -1) {

|

||||

// 天啊,fork 失败了,简直是灾难!

|

||||

@ -89,59 +82,48 @@ if (pid == 0) {

|

||||

// 继续做一个酷酷的美男子吧

|

||||

// 需要的话,我可以等待子进程结束

|

||||

}

|

||||

|

||||

```

|

||||

|

||||

### 上文提到的“大脑被替代“是什么意思呢?

|

||||

### 上文提到的“脑子被吃掉”是什么意思呢?

|

||||

|

||||

进程有很多属性:

|

||||

|

||||

* 打开的文件(包括打开的网络连接)

|

||||

|

||||

* 环境变量

|

||||

|

||||

* 信号处理程序(在程序上运行 Ctrl + C 时会发生什么?)

|

||||

|

||||

* 内存(你的“地址空间”)

|

||||

|

||||

* 寄存器

|

||||

|

||||

* 可执行文件(/proc/$pid/exe)

|

||||

|

||||

* 可执行文件(`/proc/$pid/exe`)

|

||||

* cgroups 和命名空间(与 Linux 容器相关)

|

||||

|

||||

* 当前的工作目录

|

||||

|

||||

* 运行程序的用户

|

||||

|

||||

* 其他我还没想到的

|

||||

|

||||

当你运行 `execve` 并让另一个程序替代你的时候,实际上几乎所有东西都是相同的! 你们有相同的环境变量、信号处理程序和打开的文件等等。

|

||||

当你运行 `execve` 并让另一个程序吃掉你的脑子的时候,实际上几乎所有东西都是相同的! 你们有相同的环境变量、信号处理程序和打开的文件等等。

|

||||

|

||||

唯一改变的是,内存、寄存器以及正在运行的程序,这可是件大事。

|

||||

|

||||

### 为何 fork 并非那么耗费资源(写入时复制)

|

||||

|

||||

你可能会问:“如果我有一个使用了 2 GB 内存的进程,这是否意味着每次我启动一个子进程,所有 2 GB 的内存都要被复制一次?这听起来要耗费很多资源!“

|

||||

你可能会问:“如果我有一个使用了 2GB 内存的进程,这是否意味着每次我启动一个子进程,所有 2 GB 的内存都要被复制一次?这听起来要耗费很多资源!”

|

||||

|

||||

事实上,Linux 为 fork() 调用实现了写入时复制(copy on write),对于新进程的 2 GB 内存来说,就像是“看看旧的进程就好了,是一样的!”。然后,当如果任一进程试图写入内存,此时系统才真正地复制一个内存的副本给该进程。如果两个进程的内存是相同的,就不需要复制了。

|

||||

事实上,Linux 为 `fork()` 调用实现了<ruby>写时复制<rt>copy on write</rt></ruby>,对于新进程的 2GB 内存来说,就像是“看看旧的进程就好了,是一样的!”。然后,当如果任一进程试图写入内存,此时系统才真正地复制一个内存的副本给该进程。如果两个进程的内存是相同的,就不需要复制了。

|

||||

|

||||

### 为什么你需要知道这么多

|

||||

|

||||

你可能会说,好吧,这些琐事听起来很厉害,但为什么这么重要?关于信号处理程序或环境变量的细节会被继承吗?这对我的日常编程有什么实际影响呢?

|

||||

你可能会说,好吧,这些细节听起来很厉害,但为什么这么重要?关于信号处理程序或环境变量的细节会被继承吗?这对我的日常编程有什么实际影响呢?

|

||||

|

||||

有可能哦!比如说,在 Kamal 的博客上有一个很有意思的 [bug][5]。它讨论了 Python 如何使信号处理程序忽略了 SIGPIPE。也就是说,如果你从 Python 里运行一个程序,默认情况下它会忽略 SIGPIPE!这意味着,程序从 Python 脚本和从 shell 启动的表现会**有所不同**。在这种情况下,它会造成一个奇怪的问题。

|

||||

有可能哦!比如说,在 Kamal 的博客上有一个很有意思的 [bug][5]。它讨论了 Python 如何使信号处理程序忽略了 `SIGPIPE`。也就是说,如果你从 Python 里运行一个程序,默认情况下它会忽略 `SIGPIPE`!这意味着,程序从 Python 脚本和从 shell 启动的表现会**有所不同**。在这种情况下,它会造成一个奇怪的问题。

|

||||

|

||||

所以,你的程序的环境(环境变量、信号处理程序等)可能很重要,都是从父进程继承来的。知道这些,在调试时是很有用的。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://jvns.ca/blog/2016/10/04/exec-will-eat-your-brain/

|

||||

|

||||

作者:[ Julia Evans][a]

|

||||

作者:[Julia Evans][a]

|

||||

译者:[jessie-pang](https://github.com/jessie-pang)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,19 +1,21 @@

|

||||

让 History 命令显示日期和时间

|

||||

让 history 命令显示日期和时间

|

||||

======

|

||||

我们都对 History 命令很熟悉。它将终端上 bash 执行过的所有命令存储到 `.bash_history` 文件中,来帮助我们复查用户之前执行过的命令。

|

||||

|

||||

默认情况下 history 命令直接显示用户执行的命令而不会输出运行命令时的日期和时间,即使 history 命令记录了这个时间。

|

||||

我们都对 `history` 命令很熟悉。它将终端上 bash 执行过的所有命令存储到 `.bash_history` 文件中,来帮助我们复查用户之前执行过的命令。

|

||||

|

||||

运行 history 命令时,它会检查一个叫做 `HISTTIMEFORMAT` 的环境变量,这个环境变量指明了如何格式化输出 history 命令中记录的这个时间。

|

||||

默认情况下 `history` 命令直接显示用户执行的命令而不会输出运行命令时的日期和时间,即使 `history` 命令记录了这个时间。

|

||||

|

||||

若该值为 null 或者根本没有设置,则它跟大多数系统默认显示的一样,不会现实日期和时间。

|

||||

运行 `history` 命令时,它会检查一个叫做 `HISTTIMEFORMAT` 的环境变量,这个环境变量指明了如何格式化输出 `history` 命令中记录的这个时间。

|

||||

|

||||

`HISTTIMEFORMAT` 使用 strftime 来格式化显示时间 (strftime - 将日期和时间转换为字符串)。history 命令输出日期和时间能够帮你更容易地追踪问题。

|

||||

若该值为 null 或者根本没有设置,则它跟大多数系统默认显示的一样,不会显示日期和时间。

|

||||

|

||||

* **%T:** 替换为时间 ( %H:%M:%S )。

|

||||

* **%F:** 等同于 %Y-%m-%d (ISO 8601:2000 标准日期格式)。

|

||||

`HISTTIMEFORMAT` 使用 `strftime` 来格式化显示时间(`strftime` - 将日期和时间转换为字符串)。`history` 命令输出日期和时间能够帮你更容易地追踪问题。

|

||||

|

||||

* `%T`: 替换为时间(`%H:%M:%S`)。

|

||||

* `%F`: 等同于 `%Y-%m-%d` (ISO 8601:2000 标准日期格式)。

|

||||

|

||||

下面是 `history` 命令默认的输出。

|

||||

|

||||

下面是 history 命令默认的输出。

|

||||

```

|

||||

# history

|

||||

1 yum install -y mysql-server mysql-client

|

||||

@ -46,36 +48,36 @@

|

||||

28 sysdig

|

||||

29 yum install httpd mysql

|

||||

30 service httpd start

|

||||

|

||||

```

|

||||

|

||||

根据需求,有三种不同的方法设置环境变量。

|

||||

根据需求,有三种不同的设置环境变量的方法。

|

||||

|

||||

* 临时设置当前用户的环境变量

|

||||

* 永久设置当前/其他用户的环境变量

|

||||

* 永久设置所有用户的环境变量

|

||||

* 临时设置当前用户的环境变量

|

||||

* 永久设置当前/其他用户的环境变量

|

||||

* 永久设置所有用户的环境变量

|

||||

|

||||

**注意:** 不要忘了在最后那个单引号前加上空格,否则输出会很混乱的。

|

||||

|

||||

### 方法 -1:

|

||||

### 方法 1:

|

||||

|

||||

运行下面命令为为当前用户临时设置 `HISTTIMEFORMAT` 变量。这会一直生效到下次重启。

|

||||

|

||||

运行下面命令为为当前用户临时设置 HISTTIMEFORMAT 变量。这会一直生效到下次重启。

|

||||

```

|

||||

# export HISTTIMEFORMAT='%F %T '

|

||||

|

||||

```

|

||||

|

||||

### 方法 -2:

|

||||

### 方法 2:

|

||||

|

||||

将 `HISTTIMEFORMAT` 变量加到 `.bashrc` 或 `.bash_profile` 文件中,让它永久生效。

|

||||

|

||||

将 HISTTIMEFORMAT 变量加到 `.bashrc` 或 `.bash_profile` 文件中,让它永久生效。

|

||||

```

|

||||

# echo 'HISTTIMEFORMAT="%F %T "' >> ~/.bashrc

|

||||

或

|

||||

# echo 'HISTTIMEFORMAT="%F %T "' >> ~/.bash_profile

|

||||

|

||||

```

|

||||

|

||||

运行下面命令来让文件中的修改生效。

|

||||

|

||||

```

|

||||

# source ~/.bashrc

|

||||

或

|

||||

@ -83,21 +85,22 @@

|

||||

|

||||

```

|

||||

|

||||

### 方法 -3:

|

||||

### 方法 3:

|

||||

|

||||

将 `HISTTIMEFORMAT` 变量加入 `/etc/profile` 文件中,让它对所有用户永久生效。

|

||||

|

||||

将 HISTTIMEFORMAT 变量加入 `/etc/profile` 文件中,让它对所有用户永久生效。

|

||||

```

|

||||

# echo 'HISTTIMEFORMAT="%F %T "' >> /etc/profile

|

||||

|

||||

```

|

||||

|

||||

运行下面命令来让文件中的修改生效。

|

||||

|

||||

```

|

||||

# source /etc/profile

|

||||

|

||||

```

|

||||

|

||||

输出结果为。

|

||||

输出结果为:

|

||||

|

||||

```

|

||||

# history

|

||||

1 2017-08-16 15:30:15 yum install -y mysql-server mysql-client

|

||||

@ -130,7 +133,6 @@

|

||||

28 2017-08-16 15:30:15 sysdig

|

||||

29 2017-08-16 15:30:15 yum install httpd mysql

|

||||

30 2017-08-16 15:30:15 service httpd start

|

||||

|

||||

```

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -138,7 +140,7 @@ via: https://www.2daygeek.com/display-date-time-linux-bash-history-command/

|

||||

|

||||

作者:[2daygeek][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,8 +1,9 @@

|

||||

如何方便地寻找 GitHub 上超棒的项目和资源

|

||||

如何轻松地寻找 GitHub 上超棒的项目和资源

|

||||

======

|

||||

|

||||

|

||||

在 **GitHub** 网站上每天都会新增上百个项目。由于 GitHub 上有成千上万的项目,要在上面搜索好的项目简直要累死人。好在,有那么一伙人已经创建了一些这样的列表。其中包含的类别五花八门,如编程,数据库,编辑器,游戏,娱乐等。这使得我们寻找在 GitHub 上托管的项目,软件,资源,裤,书籍等其他东西变得容易了很多。有一个 GitHub 用户更进了一步,创建了一个名叫 `Awesome-finder` 的命令行工具,用来在 awesome 系列的仓库中寻找超棒的项目和资源。该工具帮助我们不需要离开终端(当然也就不需要使用浏览器了)的情况下浏览 awesome 列表。

|

||||

|

||||

|

||||

在 GitHub 网站上每天都会新增上百个项目。由于 GitHub 上有成千上万的项目,要在上面搜索好的项目简直要累死人。好在,有那么一伙人已经创建了一些这样的列表。其中包含的类别五花八门,如编程、数据库、编辑器、游戏、娱乐等。这使得我们寻找在 GitHub 上托管的项目、软件、资源、库、书籍等其他东西变得容易了很多。有一个 GitHub 用户更进了一步,创建了一个名叫 `Awesome-finder` 的命令行工具,用来在 awesome 系列的仓库中寻找超棒的项目和资源。该工具可以让我们不需要离开终端(当然也就不需要使用浏览器了)的情况下浏览 awesome 列表。

|

||||

|

||||

在这篇简单的说明中,我会向你演示如何方便地在类 Unix 系统中浏览 awesome 列表。

|

||||

|

||||

@ -12,12 +13,14 @@

|

||||

|

||||

使用 `pip` 可以很方便地安装该工具,`pip` 是一个用来安装使用 Python 编程语言开发的程序的包管理器。

|

||||

|

||||

在 **Arch Linux** 一起衍生发行版中(比如 **Antergos**,**Manjaro Linux**),你可以使用下面命令安装 `pip`:

|

||||

在 Arch Linux 及其衍生发行版中(比如 Antergos,Manjaro Linux),你可以使用下面命令安装 `pip`:

|

||||

|

||||

```

|

||||

sudo pacman -S python-pip

|

||||

```

|

||||

|

||||

在 **RHEL**,**CentOS** 中:

|

||||

在 RHEL,CentOS 中:

|

||||

|

||||

```

|

||||

sudo yum install epel-release

|

||||

```

|

||||

@ -25,32 +28,33 @@ sudo yum install epel-release

|

||||

sudo yum install python-pip

|

||||

```

|

||||

|

||||

在 **Fedora** 上:

|

||||

在 Fedora 上:

|

||||

|

||||

```

|

||||

sudo dnf install epel-release

|

||||

```

|

||||

```

|

||||

sudo dnf install python-pip

|

||||

```

|

||||

|

||||

在 **Debian**,**Ubuntu**,**Linux Mint** 上:

|

||||

在 Debian,Ubuntu,Linux Mint 上:

|

||||

|

||||

```

|

||||

sudo apt-get install python-pip

|

||||

```

|

||||

|

||||

在 **SUSE**,**openSUSE** 上:

|

||||

在 SUSE,openSUSE 上:

|

||||

```

|

||||

sudo zypper install python-pip

|

||||

```

|

||||

|

||||

PIP 安装好后,用下面命令来安装 'Awesome-finder'。

|

||||

`pip` 安装好后,用下面命令来安装 'Awesome-finder'。

|

||||

|

||||

```

|

||||

sudo pip install awesome-finder

|

||||

```

|

||||

|

||||

#### 用法

|

||||

|

||||

Awesome-finder 会列出 GitHub 网站中如下这些主题(其实就是仓库)的内容:

|

||||

Awesome-finder 会列出 GitHub 网站中如下这些主题(其实就是仓库)的内容:

|

||||

|

||||

* awesome

|

||||

* awesome-android

|

||||

@ -66,83 +70,84 @@ Awesome-finder 会列出 GitHub 网站中如下这些主题(其实就是仓库)

|

||||

* awesome-scala

|

||||

* awesome-swift

|

||||

|

||||

|

||||

该列表会定期更新。

|

||||

|

||||

比如,要查看 `awesome-go` 仓库中的列表,只需要输入:

|

||||

|

||||

```

|

||||

awesome go

|

||||

```

|

||||

|

||||

你就能看到用 “Go” 写的所有流行的东西了,而且这些东西按字母顺序进行了排列。

|

||||

|

||||

[![][1]][2]

|

||||

![][2]

|

||||

|

||||

你可以通过 **上/下** 箭头在列表中导航。一旦找到所需要的东西,只需要选中它,然后按下 **回车** 键就会用你默认的 web 浏览器打开相应的链接了。

|

||||

你可以通过 上/下 箭头在列表中导航。一旦找到所需要的东西,只需要选中它,然后按下回车键就会用你默认的 web 浏览器打开相应的链接了。

|

||||

|

||||

类似的,

|

||||

|

||||

* "awesome android" 命令会搜索 **awesome-android** 仓库。

|

||||

* "awesome awesome" 命令会搜索 **awesome** 仓库。

|

||||

* "awesome elixir" 命令会搜索 **awesome-elixir**。

|

||||

* "awesome go" 命令会搜索 **awesome-go**。

|

||||

* "awesome ios" 命令会搜索 **awesome-ios**。

|

||||

* "awesome java" 命令会搜索 **awesome-java**。

|

||||

* "awesome javascript" 命令会搜索 **awesome-javascript**。

|

||||

* "awesome php" 命令会搜索 **awesome-php**。

|

||||

* "awesome python" 命令会搜索 **awesome-python**。

|

||||

* "awesome ruby" 命令会搜索 **awesome-ruby**。

|

||||

* "awesome rust" 命令会搜索 **awesome-rust**。

|

||||

* "awesome scala" 命令会搜索 **awesome-scala**。

|

||||

* "awesome swift" 命令会搜索 **awesome-swift**。

|

||||

* `awesome android` 命令会搜索 awesome-android 仓库。

|

||||

* `awesome awesome` 命令会搜索 awesome 仓库。

|

||||

* `awesome elixir` 命令会搜索 awesome-elixir。

|

||||

* `awesome go` 命令会搜索 awesome-go。

|

||||

* `awesome ios` 命令会搜索 awesome-ios。

|

||||

* `awesome java` 命令会搜索 awesome-java。

|

||||

* `awesome javascript` 命令会搜索 awesome-javascript。

|

||||

* `awesome php` 命令会搜索 awesome-php。

|

||||

* `awesome python` 命令会搜索 awesome-python。

|

||||

* `awesome ruby` 命令会搜索 awesome-ruby。

|

||||

* `awesome rust` 命令会搜索 awesome-rust。

|

||||

* `awesome scala` 命令会搜索 awesome-scala。

|

||||

* `awesome swift` 命令会搜索 awesome-swift。

|

||||

|

||||

而且,它还会随着你在提示符中输入的内容而自动进行筛选。比如,当我输入 "dj" 后,他会显示与 Django 相关的内容。

|

||||

而且,它还会随着你在提示符中输入的内容而自动进行筛选。比如,当我输入 `dj` 后,他会显示与 Django 相关的内容。

|

||||

|

||||

[![][1]][3]

|

||||

![][3]

|

||||

|

||||

若你想从最新的 `awesome-<topic>`( 而不是用缓存中的数据) 中搜索,使用 `-f` 或 `-force` 标志:

|

||||

|

||||

```

|

||||

awesome <topic> -f (--force)

|

||||

|

||||

```

|

||||

|

||||

**像这样:**

|

||||

像这样:

|

||||

|

||||

```

|

||||

awesome python -f

|

||||

```

|

||||

|

||||

或,

|

||||

|

||||

```

|

||||

awesome python --force

|

||||

```

|

||||

|

||||

上面命令会显示 **awesome-python** GitHub 仓库中的列表。

|

||||

上面命令会显示 awesome-python GitHub 仓库中的列表。

|

||||

|

||||

很棒,对吧?

|

||||

|

||||

要退出这个工具的话,按下 **ESC** 键。要显示帮助信息,输入:

|

||||

要退出这个工具的话,按下 ESC 键。要显示帮助信息,输入:

|

||||

|

||||

```

|

||||

awesome -h

|

||||

```

|

||||

|

||||

本文至此就结束了。希望本文能对你产生帮助。如果你觉得我们的文章对你有帮助,请将他们分享到你的社交网络中去,造福大众。我们马上还有其他好东西要来了。敬请期待!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[2]:http://www.ostechnix.com/wp-content/uploads/2017/09/sk@sk_008-1.png ()

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2017/09/sk@sk_009.png ()

|

||||

[2]:http://www.ostechnix.com/wp-content/uploads/2017/09/sk@sk_008-1.png

|

||||

[3]:http://www.ostechnix.com/wp-content/uploads/2017/09/sk@sk_009.png

|

||||

[4]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=reddit (Click to share on Reddit)

|

||||

[5]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=twitter (Click to share on Twitter)

|

||||

[6]:https://www.ostechnix.com/easily-find-awesome-projects-resources-hosted-github/?share=facebook (Click to share on Facebook)

|

||||

@ -1,57 +1,61 @@

|

||||

微服务和容器:需要去防范的 5 个“坑”

|

||||

======

|

||||

|

||||

> 微服务与容器天生匹配,但是你需要避开一些常见的陷阱。

|

||||

|

||||

|

||||

|

||||

因为微服务和容器是 [天生的“一对”][1],所以一起来使用它们,似乎也就不会有什么问题。当我们将这对“天作之合”投入到生产系统后,你就会发现,随着你的 IT 基础的提升,等待你的将是大幅上升的成本。是不是这样的?

|

||||

|

||||

(让我们等一下,等人们笑声过去)

|

||||

|

||||

是的,很遗憾,这并不是你所希望的结果。虽然这两种技术的组合是非常强大的,但是,如果没有很好的规划和适配,它们并不能发挥出强大的性能来。在前面的文章中,我们整理了如果你想 [使用它们你应该掌握的知识][2]。但是,那些都是组织在容器中使用微服务时所遇到的常见问题。

|

||||

|

||||

事先了解这些可能出现的问题,可以为你的成功奠定更坚实的基础。

|

||||

事先了解这些可能出现的问题,能够帮你避免这些问题,为你的成功奠定更坚实的基础。

|

||||

|

||||

微服务和容器技术的出现是基于组织的需要、知识、资源等等更多的现实的要求。Mac Browning 说,“他们最常犯的一个 [错误] 是试图一次就想“搞定”一切”,他是 [DigitalOcean][3] 的工程部经理。“而真正需要面对的问题是,你的公司应该采用什么样的容器和微服务。”

|

||||

微服务和容器技术的出现是基于组织的需要、知识、资源等等更多的现实的要求。Mac Browning 说,“他们最常犯的一个 [错误] 是试图一次就想‘搞定’一切”,他是 [DigitalOcean][3] 的工程部经理。“而真正需要面对的问题是,你的公司应该采用什么样的容器和微服务。”

|

||||

|

||||

**[ 努力向你的老板和同事去解释什么是微服务?阅读我们的入门读本[如何简单明了地解释微服务][4]。]**

|

||||

|

||||

Browning 和其他的 IT 专业人员分享了他们遇到的,在组织中使用容器化微服务时的五个陷阱,特别是在他们的生产系统生命周期的早期时候。在你的组织中需要去部署微服务和容器时,了解这些知识,将有助于你去评估微服务和容器化的部署策略。

|

||||

|

||||

### 1. 在部署微服务和容器化上,试图同时从零开始

|

||||

### 1、 在部署微服务和容器化上,试图同时从零开始

|

||||

|

||||

如果你刚开始从完全的实体服务器上开始改变,或者如果你的组织在微服务和容器化上还没有足够的知识储备,那么,请记住:微服务和容器化并不是拴在一起,不可分别部署的。这就意味着,你可以发挥你公司内部专家的技术特长,先从部署其中的一个开始。Kevin McGrath,CTO, [Sungard 服务可用性][5] 资深设计师,他建议,通过首先使用容器化来为你的团队建立知识和技能储备,通过对现有应用或者新应用进行容器化部署,接着再将它们迁移到微服务架构,这样才能在最后的阶段感受到它们的优势所在。

|

||||

如果你刚开始从完全的单例应用开始改变,或者如果你的组织在微服务和容器化上还没有足够的知识储备,那么,请记住:微服务和容器化并不是拴在一起、不可分别部署的。这就意味着,你可以发挥你公司内部专家的技术特长,先从部署其中的一个开始。Sungard Availability Services][5] 的资深 CTO 架构师 Kevin McGrath 建议,通过首先使用容器化来为你的团队建立知识和技能储备,通过对现有应用或者新应用进行容器化部署,接着再将它们迁移到微服务架构,这样才能最终感受到它们的优势所在。

|

||||

|

||||

McGrath 说,“微服务要想运行的很好,需要公司经过多年的反复迭代,这样才能实现快速部署和迁移”,“如果组织不能实现快速迁移,那么支持微服务将很困难。实现快速迁移,容器化可以帮助你,这样就不用担心业务整体停机”

|

||||

McGrath 说,“微服务要想运行的很好,需要公司经过多年的反复迭代,这样才能实现快速部署和迁移”,“如果组织不能实现快速迁移,那么支持微服务将很困难。实现快速迁移,容器化可以帮助你,这样就不用担心业务整体停机”。

|

||||

|

||||

### 2. 从一个面向客户的或者关键的业务应用开始

|

||||

### 2、 从一个面向客户的或者关键的业务应用开始

|

||||

|

||||

对组织来说,一个相关陷阱恰恰就是引入容器、微服务、或者同时两者都引入的这个开端:在尝试征服一片丛林中的雄狮之前,你应该先去征服处于食物链底端的一些小动物,以取得一些实践经验。

|

||||

对组织来说,一个相关陷阱恰恰就是从容器、微服务、或者两者同时起步:在尝试征服一片丛林中的雄狮之前,你应该先去征服处于食物链底端的一些小动物,以取得一些实践经验。

|

||||

|

||||

在你的学习过程中预期会有一些错误出现 - 你是希望这些错误发生在面向客户的关键业务应用上,还是,仅对 IT 或者其他内部团队可见的低风险应用上?

|

||||

在你的学习过程中可以预期会有一些错误出现 —— 你是希望这些错误发生在面向客户的关键业务应用上,还是,仅对 IT 或者其他内部团队可见的低风险应用上?

|

||||

|

||||

DigitalOcean 的 Browning 说,“如果整个生态系统都是新的,为了获取一些微服务和容器方面的操作经验,那么,将它们先应用到影响面较低的区域,比如像你的持续集成系统或者内部工具,可能是一个低风险的做法。”你获得这方面的经验以后,当然会将这些技术应用到为客户提供服务的生产系统上。而现实情况是,不论你准备的如何周全,都不可避免会遇到问题,因此,需要提前为可能出现的问题制定应对之策。

|

||||

|

||||

### 3. 在没有合适的团队之前引入了太多的复杂性

|

||||

### 3、 在没有合适的团队之前引入了太多的复杂性

|

||||

|

||||

由于微服务架构的弹性,它可能会产生复杂的管理需求。

|

||||

|

||||

作为 [Red Hat][6] 技术的狂热拥护者,[Gordon Haff][7] 最近写道,“一个符合 OCI 标准的容器运行时本身管理单个容器是很擅长的,但是,当你开始使用多个容器和容器化应用时,并将它们分解为成百上千个节点后,管理和编配它们将变得极为复杂。最终,你将回过头来需要将容器分组来提供服务 - 比如,跨容器的网络、安全、测控”

|

||||

作为 [Red Hat][6] 技术的狂热拥护者,[Gordon Haff][7] 最近写道,“一个符合 OCI 标准的容器运行时本身管理单个容器是很擅长的,但是,当你开始使用多个容器和容器化应用时,并将它们分解为成百上千个节点后,管理和编配它们将变得极为复杂。最终,你将需要回过头来将容器分组来提供服务 —— 比如,跨容器的网络、安全、测控”。

|

||||

|

||||

Haff 提示说,“幸运的是,由于容器是可移植的,并且,与之相关的管理栈也是可移植的”。“这时出现的编配技术,比如像 [Kubernetes][8] ,使得这种 IT 需求变得简单化了”(更多内容请查阅 Haff 的文章:[容器化为编写应用带来的 5 个优势][1])

|

||||

Haff 提示说,“幸运的是,由于容器是可移植的,并且,与之相关的管理栈也是可移植的”。“这时出现的编配技术,比如像 [Kubernetes][8] ,使得这种 IT 需求变得简单化了”(更多内容请查阅 Haff 的文章:[容器化为编写应用带来的 5 个优势][1])。

|

||||

|

||||

另外,你需要合适的团队去做这些事情。如果你已经有 [DevOps shop][9],那么,你可能比较适合做这种转换。因为,从一开始你已经聚集了相关技能的人才。

|

||||

|

||||

Mike Kavis 说,“随着时间的推移,会有越来越多的服务得以部署,管理起来会变得很不方便”,他是 [Cloud Technology Partners][10] 的副总裁兼首席云架构设计师。他说,“在 DevOps 的关键过程中,确保各个领域的专家 - 开发、测试、安全、运营等等 - 全部者参与进来,并且在基于容器的微服务中,在构建、部署、运行、安全方面实现协作。”

|

||||

Mike Kavis 说,“随着时间的推移,部署了越来越多的服务,管理起来会变得很不方便”,他是 [Cloud Technology Partners][10] 的副总裁兼首席云架构设计师。他说,“在 DevOps 的关键过程中,确保各个领域的专家 —— 开发、测试、安全、运营等等 —— 全部都参与进来,并且在基于容器的微服务中,在构建、部署、运行、安全方面实现协作。”

|

||||

|

||||

### 4. 忽视重要的需求:自动化

|

||||

### 4、 忽视重要的需求:自动化

|

||||

|

||||

除了具有一个合适的团队之外,那些在基于容器化的微服务部署比较成功的组织都倾向于以“实现尽可能多的自动化”来解决固有的复杂性。

|

||||

|

||||

Carlos Sanchez 说,“实现分布式架构并不容易,一些常见的挑战,像数据持久性、日志、排错等等,在微服务架构中都会变得很复杂”,他是 [CloudBees][11] 的资深软件工程师。根据定义,Sanchez 提到的分布式架构,随着业务的增长,将变成一个巨大无比的繁重的运营任务。“服务和组件的增殖,将使得运营自动化变成一项非常强烈的需求”。Sanchez 警告说。“手动管理将限制服务的规模”

|

||||

Carlos Sanchez 说,“实现分布式架构并不容易,一些常见的挑战,像数据持久性、日志、排错等等,在微服务架构中都会变得很复杂”,他是 [CloudBees][11] 的资深软件工程师。根据定义,Sanchez 提到的分布式架构,随着业务的增长,将变成一个巨大无比的繁重的运营任务。“服务和组件的增殖,将使得运营自动化变成一项非常强烈的需求”。Sanchez 警告说。“手动管理将限制服务的规模”。

|

||||

|

||||

### 5. 随着时间的推移,微服务变得越来越臃肿

|

||||

### 5、 随着时间的推移,微服务变得越来越臃肿

|

||||

|

||||

在一个容器中运行一个服务或者软件组件并不神奇。但是,这样做并不能证明你就一定在使用微服务。Manual Nedbal, [ShieldX Networks][12] 的 CTO,它警告说,IT 专业人员要确保,随着时间的推移,微服务仍然是微服务。

|

||||

在一个容器中运行一个服务或者软件组件并不神奇。但是,这样做并不能证明你就一定在使用微服务。Manual Nedbal, [ShieldX Networks][12] 的 CTO,他警告说,IT 专业人员要确保,随着时间的推移,微服务仍然是微服务。

|

||||

|

||||

Nedbal 说,“随着时间的推移,一些软件组件积累了大量的代码和特性,将它们将在一个容器中将会产生并不需要的微服务,也不会带来相同的优势”,也就是说,“随着组件的变大,工程师需要找到合适的时机将它们再次分解”

|

||||

Nedbal 说,“随着时间的推移,一些软件组件积累了大量的代码和特性,将它们放在一个容器中将会产生并不需要的微服务,也不会带来相同的优势”,也就是说,“随着组件的变大,工程师需要找到合适的时机将它们再次分解”。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -59,7 +63,7 @@ via: https://enterprisersproject.com/article/2017/9/using-microservices-containe

|

||||

|

||||

作者:[Kevin Casey][a]

|

||||

译者:[qhwdw](https://github.com/qhwdw)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,17 +1,23 @@

|

||||

让我们使用 PC 键盘在终端演奏钢琴

|

||||

======

|

||||

厌倦了工作?那么来吧,让我们弹弹钢琴!是的,你没有看错。谁需要真的钢琴啊?我们可以用 PC 键盘在命令行下就能弹钢琴。向你们介绍一下 **Piano-rs** - 这是一款用 Rust 语言编写的,可以让你用 PC 键盘在终端弹钢琴的简单工具。它免费,开源,而且基于 MIT 协议。你可以在任何支持 Rust 的操作系统中使用它。

|

||||

|

||||

|

||||

### Piano-rs:使用 PC 键盘在终端弹钢琴

|

||||

厌倦了工作?那么来吧,让我们弹弹钢琴!是的,你没有看错,根本不需要真的钢琴。我们可以用 PC 键盘在命令行下就能弹钢琴。向你们介绍一下 `piano-rs` —— 这是一款用 Rust 语言编写的,可以让你用 PC 键盘在终端弹钢琴的简单工具。它自由开源,基于 MIT 协议。你可以在任何支持 Rust 的操作系统中使用它。

|

||||

|

||||

### piano-rs:使用 PC 键盘在终端弹钢琴

|

||||

|

||||

#### 安装

|

||||

|

||||

确保系统已经安装了 Rust 编程语言。若还未安装,运行下面命令来安装它。

|

||||

|

||||

```

|

||||

curl https://sh.rustup.rs -sSf | sh

|

||||

```

|

||||

|

||||

安装程序会问你是否默认安装还是自定义安装还是取消安装。我希望默认安装,因此输入 **1** (数字一)。

|

||||

(LCTT 译注:这种直接通过 curl 执行远程 shell 脚本是一种非常危险和不成熟的做法。)

|

||||

|

||||

安装程序会问你是否默认安装还是自定义安装还是取消安装。我希望默认安装,因此输入 `1` (数字一)。

|

||||

|

||||

```

|

||||

info: downloading installer

|

||||

|

||||

@ -43,7 +49,7 @@ default host triple: x86_64-unknown-linux-gnu

|

||||

1) Proceed with installation (default)

|

||||

2) Customize installation

|

||||

3) Cancel installation

|

||||

**1**

|

||||

1

|

||||

|

||||

info: syncing channel updates for 'stable-x86_64-unknown-linux-gnu'

|

||||

223.6 KiB / 223.6 KiB (100 %) 215.1 KiB/s ETA: 0 s

|

||||

@ -72,9 +78,10 @@ environment variable. Next time you log in this will be done automatically.

|

||||

To configure your current shell run source $HOME/.cargo/env

|

||||

```

|

||||

|

||||

登出然后重启系统来将 cargo 的 bin 目录纳入 PATH 变量中。

|

||||

登出然后重启系统来将 cargo 的 bin 目录纳入 `PATH` 变量中。

|

||||

|

||||

校验 Rust 是否正确安装:

|

||||

|

||||

```

|

||||

$ rustc --version

|

||||

rustc 1.21.0 (3b72af97e 2017-10-09)

|

||||

@ -83,40 +90,44 @@ rustc 1.21.0 (3b72af97e 2017-10-09)

|

||||

太棒了!Rust 成功安装了。是时候构建 piano-rs 应用了。

|

||||

|

||||

使用下面命令克隆 Piano-rs 仓库:

|

||||

|

||||

```

|

||||

git clone https://github.com/ritiek/piano-rs

|

||||

```

|

||||

|

||||

上面命令会在当前工作目录创建一个名为 "piano-rs" 的目录并下载所有内容到其中。进入该目录:

|

||||

上面命令会在当前工作目录创建一个名为 `piano-rs` 的目录并下载所有内容到其中。进入该目录:

|

||||

|

||||

```

|

||||

cd piano-rs

|

||||

```

|

||||

|

||||

最后,运行下面命令来构建 Piano-rs:

|

||||

|

||||

```

|

||||

cargo build --release

|

||||

```

|

||||

|

||||

编译过程要花上一阵子。

|

||||

|

||||

#### Usage

|

||||

#### 用法

|

||||

|

||||

编译完成后,在 `piano-rs` 目录中运行下面命令:

|

||||

|

||||

编译完成后,在 **piano-rs** 目录中运行下面命令:

|

||||

```

|

||||

./target/release/piano-rs

|

||||

```

|

||||

|

||||

这就我们在终端上的钢琴键盘了!可以开始弹指一些音符了。按下按键可以弹奏相应音符。使用 **左/右** 方向键可以在弹奏时调整音频。而,使用 **上/下** 方向键可以在弹奏时调整音长。

|

||||

这就是我们在终端上的钢琴键盘了!可以开始弹指一些音符了。按下按键可以弹奏相应音符。使用 **左/右** 方向键可以在弹奏时调整音频。而,使用 **上/下** 方向键可以在弹奏时调整音长。

|

||||

|

||||

[![][1]][2]

|

||||

![][2]

|

||||

|

||||

Piano-rs 使用与 [**multiplayerpiano.com**][3] 一样的音符和按键。另外,你可以使用[**这些音符 **][4] 来学习弹指各种流行歌曲。

|

||||

Piano-rs 使用与 [multiplayerpiano.com][3] 一样的音符和按键。另外,你可以使用[这些音符][4] 来学习弹指各种流行歌曲。

|

||||

|

||||

要查看帮助。输入:

|

||||

|

||||

```

|

||||

$ ./target/release/piano-rs -h

|

||||

```

|

||||

```

|

||||

|

||||

piano-rs 0.1.0

|

||||

Ritiek Malhotra <ritiekmalhotra123@gmail.com>

|

||||

Play piano in the terminal using PC keyboard.

|

||||

@ -141,19 +152,18 @@ OPTIONS:

|

||||

此致敬礼!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/let-us-play-piano-terminal-using-pc-keyboard/

|

||||

|

||||

作者:[SK][a]

|

||||

译者:[lujun9972](https://github.com/lujun9972)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:data:image/gif;base64,R0lGODlhAQABAIAAAAAAAP///yH5BAEAAAAALAAAAAABAAEAAAIBRAA7

|

||||

[2]:http://www.ostechnix.com/wp-content/uploads/2017/10/Piano.png ()

|

||||

[2]:http://www.ostechnix.com/wp-content/uploads/2017/10/Piano.png

|

||||

[3]:http://www.multiplayerpiano.com/

|

||||

[4]:https://pastebin.com/CX1ew0uB

|

||||

@ -0,0 +1,51 @@

|

||||

autorandr:自动调整屏幕布局

|

||||

======

|

||||

|

||||

像许多笔记本用户一样,我经常将笔记本插入到不同的显示器上(桌面上有多台显示器,演示时有投影机等)。运行 `xrandr` 命令或点击界面非常繁琐,编写脚本也不是很好。

|

||||

|

||||

最近,我遇到了 [autorandr][1],它使用 EDID(和其他设置)检测连接的显示器,保存 `xrandr` 配置并恢复它们。它也可以在加载特定配置时运行任意脚本。我已经打包了它,目前仍在 NEW 状态。如果你不能等待,[这是 deb][2],[这是 git 仓库][3]。

|

||||

|

||||

要使用它,只需安装软件包,并创建你的初始配置(我这里用的名字是 `undocked`):

|

||||

|

||||

```

|

||||

autorandr --save undocked

|

||||

```

|

||||

|

||||

然后,连接你的笔记本(或者插入你的外部显示器),使用 `xrandr`(或其他任何)更改配置,然后保存你的新配置(我这里用的名字是 workstation):

|

||||

|

||||

```

|

||||

autorandr --save workstation

|

||||

```

|

||||

|

||||

对你额外的配置(或当你有新的配置)进行重复操作。

|

||||

|

||||

`autorandr` 有 `udev`、`systemd` 和 `pm-utils` 钩子,当新的显示器出现时 `autorandr --change` 应该会立即运行。如果需要,也可以手动运行 `autorandr --change` 或 `autorandr - load workstation`。你也可以在加载配置后在 `~/.config/autorandr/$PROFILE/postswitch` 添加自己的脚本来运行。由于我运行 i3,我的工作站配置如下所示:

|

||||

|

||||

```

|

||||

#!/bin/bash

|

||||

|

||||

xrandr --dpi 92

|

||||

xrandr --output DP2-2 --primary

|

||||

i3-msg '[workspace="^(1|4|6)"] move workspace to output DP2-2;'

|

||||

i3-msg '[workspace="^(2|5|9)"] move workspace to output DP2-3;'

|

||||

i3-msg '[workspace="^(3|8)"] move workspace to output DP2-1;'

|

||||

```

|

||||

|

||||

它适当地修正了 dpi,设置主屏幕(可能不需要?),并移动 i3 工作区。你可以通过在配置文件目录中添加一个 `block` 钩子来安排配置永远不会运行。

|

||||

|

||||

如果你定期更换显示器,请看一下!

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.donarmstrong.com/posts/autorandr/

|

||||

|

||||

作者:[Don Armstrong][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.donarmstrong.com

|

||||

[1]:https://github.com/phillipberndt/autorandr

|

||||

[2]:https://www.donarmstrong.com/autorandr_1.2-1_all.deb

|

||||

[3]:https://git.donarmstrong.com/deb_pkgs/autorandr.git

|

||||

135

published/20171215 How to find and tar files into a tar ball.md

Normal file

135

published/20171215 How to find and tar files into a tar ball.md

Normal file

@ -0,0 +1,135 @@

|

||||

如何找出并打包文件成 tar 包

|

||||

======

|

||||

|

||||

Q:我想找出所有的 *.doc 文件并将它们创建成一个 tar 包,然后存储在 `/nfs/backups/docs/file.tar` 中。是否可以在 Linux 或者类 Unix 系统上查找并 tar 打包文件?

|

||||

|

||||

`find` 命令用于按照给定条件在目录层次结构中搜索文件。`tar` 命令是用于 Linux 和类 Unix 系统创建 tar 包的归档工具。

|

||||

|

||||

[![How to find and tar files on linux unix][1]][1]

|

||||

|

||||

让我们看看如何将 `tar` 命令与 `find` 命令结合在一个命令行中创建一个 tar 包。

|

||||

|

||||

### Find 命令

|

||||

|

||||

语法是:

|

||||

|

||||

```

|

||||

find /path/to/search -name "file-to-search" -options

|

||||

## 找出所有 Perl(*.pl)文件 ##

|

||||

find $HOME -name "*.pl" -print

|

||||

## 找出所有 *.doc 文件 ##

|

||||

find $HOME -name "*.doc" -print

|

||||

## 找出所有 *.sh(shell 脚本)并运行 ls -l 命令 ##

|

||||

find . -iname "*.sh" -exec ls -l {} +

|

||||

```

|

||||

|

||||

最后一个命令的输出示例:

|

||||

|

||||

```

|

||||

-rw-r--r-- 1 vivek vivek 1169 Apr 4 2017 ./backups/ansible/cluster/nginx.build.sh

|

||||

-rwxr-xr-x 1 vivek vivek 1500 Dec 6 14:36 ./bin/cloudflare.pure.url.sh

|

||||

lrwxrwxrwx 1 vivek vivek 13 Dec 31 2013 ./bin/cmspostupload.sh -> postupload.sh

|

||||

lrwxrwxrwx 1 vivek vivek 12 Dec 31 2013 ./bin/cmspreupload.sh -> preupload.sh

|

||||

lrwxrwxrwx 1 vivek vivek 14 Dec 31 2013 ./bin/cmssuploadimage.sh -> uploadimage.sh

|

||||

lrwxrwxrwx 1 vivek vivek 13 Dec 31 2013 ./bin/faqpostupload.sh -> postupload.sh

|

||||

lrwxrwxrwx 1 vivek vivek 12 Dec 31 2013 ./bin/faqpreupload.sh -> preupload.sh

|

||||

lrwxrwxrwx 1 vivek vivek 14 Dec 31 2013 ./bin/faquploadimage.sh -> uploadimage.sh

|

||||

-rw-r--r-- 1 vivek vivek 778 Nov 6 14:44 ./bin/mirror.sh

|

||||

-rwxr-xr-x 1 vivek vivek 136 Apr 25 2015 ./bin/nixcraft.com.301.sh

|

||||

-rwxr-xr-x 1 vivek vivek 547 Jan 30 2017 ./bin/paypal.sh

|

||||

-rwxr-xr-x 1 vivek vivek 531 Dec 31 2013 ./bin/postupload.sh

|

||||

-rwxr-xr-x 1 vivek vivek 437 Dec 31 2013 ./bin/preupload.sh

|

||||

-rwxr-xr-x 1 vivek vivek 1046 May 18 2017 ./bin/purge.all.cloudflare.domain.sh

|

||||

lrwxrwxrwx 1 vivek vivek 13 Dec 31 2013 ./bin/tipspostupload.sh -> postupload.sh

|

||||

lrwxrwxrwx 1 vivek vivek 12 Dec 31 2013 ./bin/tipspreupload.sh -> preupload.sh

|

||||

lrwxrwxrwx 1 vivek vivek 14 Dec 31 2013 ./bin/tipsuploadimage.sh -> uploadimage.sh

|

||||

-rwxr-xr-x 1 vivek vivek 1193 Oct 18 2013 ./bin/uploadimage.sh

|

||||

-rwxr-xr-x 1 vivek vivek 29 Nov 6 14:33 ./.vim/plugged/neomake/tests/fixtures/errors.sh

|

||||

-rwxr-xr-x 1 vivek vivek 215 Nov 6 14:33 ./.vim/plugged/neomake/tests/helpers/trap.sh

|

||||

```

|

||||

|

||||

### Tar 命令

|

||||

|

||||

要[创建 /home/vivek/projects 目录的 tar 包][2],运行:

|

||||

|

||||

```

|

||||

$ tar -cvf /home/vivek/projects.tar /home/vivek/projects

|

||||

```

|

||||

|

||||

### 结合 find 和 tar 命令

|

||||

|

||||

语法是:

|

||||

|

||||

```

|

||||

find /dir/to/search/ -name "*.doc" -exec tar -rvf out.tar {} \;

|

||||

```

|

||||

|

||||

或者

|

||||

|

||||

```

|

||||

find /dir/to/search/ -name "*.doc" -exec tar -rvf out.tar {} +

|

||||

```

|

||||

|

||||

例子:

|

||||

|

||||

```

|

||||

find $HOME -name "*.doc" -exec tar -rvf /tmp/all-doc-files.tar "{}" \;

|

||||

```

|

||||

|

||||

或者

|

||||

|

||||

```

|

||||

find $HOME -name "*.doc" -exec tar -rvf /tmp/all-doc-files.tar "{}" +

|

||||

```

|

||||

|

||||

这里,find 命令的选项:

|

||||

|

||||

* `-name "*.doc"`:按照给定的模式/标准查找文件。在这里,在 $HOME 中查找所有 *.doc 文件。

|

||||

* `-exec tar ...` :对 `find` 命令找到的所有文件执行 `tar` 命令。

|

||||

|

||||

这里,`tar` 命令的选项:

|

||||

|

||||

* `-r`:将文件追加到归档末尾。参数与 `-c` 选项具有相同的含义。

|

||||

* `-v`:详细输出。

|

||||

* `-f out.tar` : 将所有文件追加到 out.tar 中。

|

||||

|

||||

也可以像下面这样将 `find` 命令的输出通过管道输入到 `tar` 命令中:

|

||||

|

||||

```

|

||||

find $HOME -name "*.doc" -print0 | tar -cvf /tmp/file.tar --null -T -

|

||||

```

|

||||

|

||||

传递给 `find` 命令的 `-print0` 选项处理特殊的文件名。`--null` 和 `-T` 选项告诉 `tar` 命令从标准输入/管道读取输入。也可以使用 `xargs` 命令:

|

||||

|

||||

```

|

||||

find $HOME -type f -name "*.sh" | xargs tar cfvz /nfs/x230/my-shell-scripts.tgz

|

||||

```

|

||||

|

||||

有关更多信息,请参阅下面的 man 页面:

|

||||

|

||||

```

|

||||

$ man tar

|

||||

$ man find

|

||||

$ man xargs

|

||||

$ man bash

|

||||

```

|

||||

|

||||

------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

作者是 nixCraft 的创造者,是一名经验丰富的系统管理员,也是 Linux 操作系统/Unix shell 脚本培训师。他曾与全球客户以及 IT、教育、国防和太空研究以及非营利部门等多个行业合作。在 Twitter、Facebook 和 Google+ 上关注他。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.cyberciti.biz/faq/linux-unix-find-tar-files-into-tarball-command/

|

||||

|

||||

作者:[Vivek Gite][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.cyberciti.biz

|

||||

[1]:https://www.cyberciti.biz/media/new/faq/2017/12/How-to-find-and-tar-files-on-linux-unix.jpg

|

||||

[2]:https://www.cyberciti.biz/faq/creating-a-tar-file-linux-command-line/

|

||||

127

published/20171226 How to Configure Linux for Children.md

Normal file

127

published/20171226 How to Configure Linux for Children.md

Normal file

@ -0,0 +1,127 @@

|

||||

如何配置一个小朋友使用的 Linux

|

||||

======

|

||||

|

||||

|

||||

|

||||

如果你接触电脑有一段时间了,提到 Linux,你应该会联想到一些特定的人群。你觉得哪些人在使用 Linux?别担心,这就告诉你。

|

||||

|

||||

Linux 是一个可以深度定制的操作系统。这就赋予了用户高度控制权。事实上,家长们可以针对小朋友设置出一个专门的 Linux 发行版,确保让孩子不会在不经意间接触那些高危地带。但是相比 Windows,这些设置显得更费时,但是一劳永逸。Linux 的开源免费,让教室或计算机实验室系统部署变得容易。

|

||||

|

||||

### 小朋友的 Linux 发行版

|

||||

|

||||

这些为儿童而简化的 Linux 发行版,界面对儿童十分友好。家长只需要先安装和设置,孩子就可以完全独立地使用计算机了。你将看见多彩的图形界面,丰富的图画,简明的语言。

|

||||

|

||||

不过,不幸的是,这类发行版不会经常更新,甚至有些已经不再积极开发了。但也不意味着不能使用,只是故障发生率可能会高一点。

|

||||

|

||||

![qimo-gcompris][1]

|

||||

|

||||

#### 1. Edubuntu

|

||||

|

||||

[Edubuntu][2] 是 Ubuntu 的一个分支版本,专用于教育事业。它拥有丰富的图形环境和大量教育软件,易于更新维护。它被设计成初高中学生专用的操作系统。

|

||||

|

||||

#### 2. Ubermix

|

||||

|

||||

[Ubermix][3] 是根据教育需求而被设计出来的。Ubermix 将学生从复杂的计算机设备中解脱出来,就像手机一样简单易用,而不会牺牲性能和操作系统的全部能力。一键开机、五分钟安装、二十秒钟快速还原机制,以及超过 60 个的免费预装软件,ubermix 就可以让你的硬件变成功能强大的学习设备。

|

||||

|

||||

#### 3. Sugar

|

||||

|

||||

[Sugar][4] 是为“每个孩子一台笔记本(OLPC)计划”而设计的操作系统。Sugar 和普通桌面 Linux 大不相同,它更专注于学生课堂使用和教授编程能力。

|

||||

|

||||

**注意** :很多为儿童开发的 Linux 发行版我并没有列举,因为它们大都不再积极维护或是被长时间遗弃。

|

||||

|

||||

### 为小朋友过筛选内容的 Linux

|

||||

|

||||

只有你,最能保护孩子拒绝访问少儿不宜的内容,但是你不可能每分每秒都在孩子身边。但是你可以设置“限制访问”的 URL 到内容过滤代理服务器(通过软件)。这里有两个主要的软件可以帮助你。

|

||||

|

||||

![儿童内容过滤 Linux][5]

|

||||

|

||||

#### 1、 DansGuardian

|

||||

|

||||

[DansGuardian][6],一个开源内容过滤软件,几乎可以工作在任何 Linux 发行版上,灵活而强大,需要你通过命令行设置你的代理。如果你不深究代理服务器的设置,这可能是最强力的选择。

|

||||

|

||||

配置 DansGuardian 可不是轻松活儿,但是你可以跟着安装说明按步骤完成。一旦设置完成,它将是过滤不良内容的高效工具。

|

||||

|

||||

#### 2、 Parental Control: Family Friendly Filter

|

||||

|

||||

[Parental Control: Family Friendly Filter][7] 是 Firefox 的插件,允许家长屏蔽包含色情内容在内的任何少儿不宜的网站。你也可以设置不良网站黑名单,将其一直屏蔽。

|

||||

|

||||

![firefox 内容过滤插件][8]

|

||||

|

||||

你使用的老版本的 Firefox 可能不支持 [网页插件][9],那么你可以使用 [ProCon Latte 内容过滤器][10]。家长们添加网址到预设的黑名单内,然后设置密码,防止设置被篡改。

|

||||

|

||||

#### 3、 Blocksi 网页过滤

|

||||

|

||||

[Blocksi 网页过滤][11] 是 Chrome 浏览器插件,能有效过滤网页和 Youtube。它也提供限时服务,这样你可以限制家里小朋友的上网时间。

|

||||

|

||||

### 闲趣

|

||||

|

||||

![Linux 儿童游戏:tux kart][12]

|

||||

|

||||

给孩子们使用的计算机,不管是否是用作教育,最好都要有一些游戏。虽然 Linux 没有 Windows 那么好的游戏性,但也在奋力追赶。这有建议几个有益的游戏,你可以安装到孩子们的计算机上。

|

||||

|

||||

* [Super Tux Kart][21](竞速卡丁车)

|

||||

* [GCompris][22](适合教育的游戏)

|

||||

* [Secret Maryo Chronicles][23](超级马里奥)

|

||||

* [Childsplay][24](教育/记忆力游戏)

|

||||

* [EToys][25](儿童编程)

|

||||

* [TuxTyping][26](打字游戏)

|

||||

* [Kalzium][27](元素周期表)

|

||||

* [Tux of Math Command][28](数学游戏)

|

||||

* [Pink Pony][29](Tron 风格竞速游戏)

|

||||

* [KTuberling][30](创造游戏)

|

||||

* [TuxPaint][31](绘画)

|

||||

* [Blinken][32]([记忆力][33] 游戏)

|

||||

* [KTurtle][34](编程指导环境)

|

||||

* [KStars][35](天文馆)

|

||||

* [Marble][36](虚拟地球)

|

||||

* [KHangman][37](猜单词)

|

||||

|

||||

### 结论:为什么给孩子使用 Linux?

|

||||

|

||||

Linux 以复杂著称。那为什么给孩子使用 Linux?这是为了让孩子适应 Linux。在 Linux 上工作给了解系统运行提供了很多机会。当孩子长大,他们就有随自己兴趣探索的机会。得益于 Linux 如此开放的平台,孩子们才能得到这么一个极佳的场所发现自己对计算机的毕生之恋。

|

||||

|

||||

本文于 2010 年 7 月首发,2017 年 12 月更新。

|

||||

|

||||

图片来自 [在校学生][13]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.maketecheasier.com/configure-linux-for-children/

|

||||

|

||||

作者:[Alexander Fox][a]

|

||||

译者:[CYLeft](https://github.com/CYLeft)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.maketecheasier.com/author/alexfox/

|

||||

[1]:https://www.maketecheasier.com/assets/uploads/2010/08/qimo-gcompris.jpg (qimo-gcompris)

|

||||

[2]:http://www.edubuntu.org

|

||||

[3]:http://www.ubermix.org/

|

||||

[4]:http://wiki.sugarlabs.org/go/Downloads

|

||||

[5]:https://www.maketecheasier.com/assets/uploads/2017/12/linux-for-children-content-filtering.png (linux-for-children-content-filtering)

|

||||

[6]:https://help.ubuntu.com/community/DansGuardian

|

||||

[7]:https://addons.mozilla.org/en-US/firefox/addon/family-friendly-filter/

|

||||

[8]:https://www.maketecheasier.com/assets/uploads/2017/12/firefox-content-filter-addon.png (firefox-content-filter-addon)

|

||||

[9]:https://www.maketecheasier.com/best-firefox-web-extensions/

|

||||

[10]:https://addons.mozilla.org/en-US/firefox/addon/procon-latte/

|

||||

[11]:https://chrome.google.com/webstore/detail/blocksi-web-filter/pgmjaihnmedpcdkjcgigocogcbffgkbn?hl=en

|

||||

[12]:https://www.maketecheasier.com/assets/uploads/2017/12/linux-for-children-tux-kart-e1513389774535.jpg (linux-for-children-tux-kart)

|

||||

[13]:https://www.flickr.com/photos/lupuca/8720604364

|

||||

[21]:http://supertuxkart.sourceforge.net/

|

||||

[22]:http://gcompris.net/

|

||||

[23]:http://www.secretmaryo.org/

|

||||

[24]:http://www.schoolsplay.org/

|

||||

[25]:http://www.squeakland.org/about/intro/

|

||||

[26]:http://tux4kids.alioth.debian.org/tuxtype/index.php

|

||||

[27]:http://edu.kde.org/kalzium/

|

||||

[28]:http://tux4kids.alioth.debian.org/tuxmath/index.php

|

||||

[29]:http://code.google.com/p/pink-pony/

|

||||

[30]:http://games.kde.org/game.php?game=ktuberling

|

||||

[31]:http://www.tuxpaint.org/

|

||||

[32]:https://www.kde.org/applications/education/blinken/

|

||||

[33]:https://www.ebay.com/sch/i.html?_nkw=memory

|

||||

[34]:https://www.kde.org/applications/education/kturtle/

|

||||

[35]:https://www.kde.org/applications/education/kstars/

|

||||

[36]:https://www.kde.org/applications/education/marble/

|

||||

[37]:https://www.kde.org/applications/education/khangman/

|

||||

@ -0,0 +1,56 @@

|

||||

4 artificial intelligence trends to watch

|

||||

======

|

||||

|

||||

|

||||

|

||||

However much your IT operation is using [artificial intelligence][1] today, expect to be doing more with it in 2018. Even if you have never dabbled in AI projects, this may be the year talk turns into action, says David Schatsky, managing director at [Deloitte][2]. "The number of companies doing something with AI is on track to rise," he says.

|

||||

|

||||

Check out his AI predictions for the coming year:

|

||||

|

||||

### 1. Expect more enterprise AI pilot projects

|

||||

|

||||

Many of today's off-the-shelf applications and platforms that companies already routinely use incorporate AI. "But besides that, a growing number of companies are experimenting with machine learning or natural language processing to solve particular problems or help understand their data, or automate internal processes, or improve their own products and services," Schatsky says.

|

||||

|

||||

**[ What IT jobs will be hot in the AI age? See our related article, [8 emerging AI jobs for IT pros][3]. ]**

|

||||

|

||||

"Beyond that, the intensity with which companies are working with AI will rise," he says. "Companies that are early adopters already mostly have five or fewer projects underway, but we think that number will rise to having 10 or more pilots underway." One reason for this prediction, he says, is that AI technologies are getting better and easier to use.

|

||||

|

||||

### 2. AI will help with data science talent crunch

|

||||

|

||||

Talent is a huge problem in data science, where most large companies are struggling to hire the data scientists they need. AI can take up some of the load, Schatsky says. "The practice of data science is increasingly automatable with tools offered both by startups and large, established technology vendors," he says. A lot of data science work is repetitive and tedious, and ripe for automation, he explains. "Data scientists aren't going away, but they're going to get much more productive. So a company that can only do a few data science projects without automation will be able to do much more with automation, even if it can't hire any more data scientists."

|

||||

|

||||

### 3. Synthetic data models will ease bottlenecks

|

||||

|

||||

Before you can train a machine learning model, you have to get the data to train it on, Schatsky notes. That's not always easy. "That's often a business bottleneck, not a production bottleneck," he says. In some cases you can't get the data because of regulations governing things like health records and financial information.

|

||||

|

||||

Synthetic data models can take a smaller set of data and use it to generate the larger set that may be needed, he says. "If you used to need 10,000 data points to train a model but could only get 2,000, you can now generate the missing 8,000 and go ahead and train your model."

|

||||

|

||||

### 4. AI decision-making will become more transparent

|

||||

|

||||

One of the business problems with AI is that it often operates as a black box. That is, once you train a model, it will spit out answers that you can't necessarily explain. "Machine learning can automatically discover patterns in data that a human can't see because it's too much data or too complex," Schatsky says. "Having discovered these patterns, it can make predictions about new data it hasn't seen."

|

||||

|

||||

The problem is that sometimes you really do need to know the reasons behind an AI finding or prediction. "You feed in a medical image and the model says, based on the data you've given me, there's a 90 percent chance that there's a tumor in this image," Schatsky says. "You say, 'Why do you think so?' and the model says, 'I don't know, that's what the data would suggest.'"

|

||||

|

||||

If you follow that data, you're going to have to do exploratory surgery on a patient, Schatsky says. That's a tough call to make when you can't explain why. "There are a lot of situations where even though the model produces very accurate results, if it can't explain how it got there, nobody wants to trust it."

|

||||

|

||||

There are also situations where because of regulations, you literally can't use data that you can't explain. "If a bank declines a loan application, it needs to be able to explain why," Schatsky says. "That's a regulation, at least in the U.S. Traditionally, a human underwriter makes that call. A machine learning model could be more accurate, but if it can't explain its answer, it can't be used."

|

||||

|

||||

Most algorithms were not designed to explain their reasoning. "So researchers are finding clever ways to get AI to spill its secrets and explain what variables make it more likely that this patient has a tumor," he says. "Once they do that, a human can look at the answers and see why it came to that conclusion."

|

||||

|

||||

That means AI findings and decisions can be used in many areas where they can't be today, he says. "That will make these models more trustworthy and more usable in the business world."

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://enterprisersproject.com/article/2018/1/4-ai-trends-watch

|

||||

|

||||

作者:[Minda Zetlin][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://enterprisersproject.com/user/minda-zetlin

|

||||

[1]:https://enterprisersproject.com/tags/artificial-intelligence

|

||||

[2]:https://www2.deloitte.com/us/en.html

|

||||

[3]:https://enterprisersproject.com/article/2017/12/8-emerging-ai-jobs-it-pros?sc_cid=70160000000h0aXAAQ

|

||||

@ -1,82 +0,0 @@

|

||||

AI and machine learning bias has dangerous implications

|

||||

======

|

||||

translating

|

||||

|

||||

|

||||

|

||||

Image by : opensource.com

|

||||

|

||||

Algorithms are everywhere in our world, and so is bias. From social media news feeds to streaming service recommendations to online shopping, computer algorithms--specifically, machine learning algorithms--have permeated our day-to-day world. As for bias, we need only examine the 2016 American election to understand how deeply--both implicitly and explicitly--it permeates our society as well.

|

||||

|

||||

What's often overlooked, however, is the intersection between these two: bias in computer algorithms themselves.

|

||||

|

||||

Contrary to what many of us might think, technology is not objective. AI algorithms and their decision-making processes are directly shaped by those who build them--what code they write, what data they use to "[train][1]" the machine learning models, and how they [stress-test][2] the models after they're finished. This means that the programmers' values, biases, and human flaws are reflected in the software. If I fed an image-recognition algorithm the faces of only white researchers in my lab, for instance, it [wouldn't recognize non-white faces as human][3]. Such a conclusion isn't the result of a "stupid" or "unsophisticated" AI, but to a bias in training data: a lack of diverse faces. This has dangerous consequences.

|

||||

|

||||

There's no shortage of examples. [State court systems][4] across the country use "black box" algorithms to recommend prison sentences for convicts. [These algorithms are biased][5] against black individuals because of the data that trained them--so they recommend longer sentences as a result, thus perpetuating existing racial disparities in prisons. All this happens under the guise of objective, "scientific" decision-making.

|

||||

|

||||

The United States federal government uses machine-learning algorithms to calculate welfare payouts and other types of subsidies. But [information on these algorithms][6], such as their creators and their training data, is extremely difficult to find--which increases the risk of public officials operating under bias and meting out systematically unfair payments.

|

||||

|

||||