[@TimSweeneyEpic][8] will probably like this 😊 [pic.twitter.com/7mt9fXt7TH][9]

+>

+> — Lutris Gaming (@LutrisGaming) [April 17, 2019][10]

+

+As an avid gamer and Linux user, I immediately jumped upon this news and installed Lutris to run Epic Games on it.

+

+**Note:** _I used [Ubuntu 19.04][11] to test the Epic Games Store on Linux._

+

+### Using Epic Games Store on Linux with Lutris

+

+To install Epic Games Store on your Linux system, make sure that you have [Lutris][4] installed along with its prerequisites, Wine and Python 3. So, first [install Wine on Ubuntu][12] or whichever Linux distribution you are using, and then [download Lutris from its website][13].

+


+

+#### Installing Epic Games Store

+

+Once the installation of Lutris is successful, simply launch it.

+

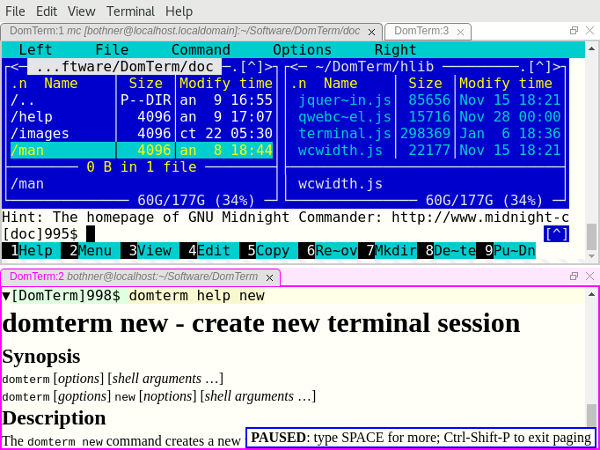

+When I tried this, nothing happened when I launched Lutris from the GUI. However, when I typed “ **lutris** ” in the terminal to launch it instead, I noticed an error that looked like this:

+

+![][15]

+

+Thanks to Abhishek, I learned that this is a common issue (you can check that on [GitHub][16]).

+

+So, to fix it, all I had to do was type a command in the terminal:

+

+```

+export LC_ALL=C

+```

+

+If you face the same issue, enter this command in your terminal. You will then be able to open Lutris from that terminal.

+

+**Note:** _You’ll have to enter this command every time you launch Lutris, so it is better to add it to your .bashrc or to your list of environment variables._
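The note above can be sketched in shell. This is only a minimal illustration, assuming a Bash setup that reads `~/.bashrc`; the helper name `add_lc_all` is made up for this example and is not part of Lutris. Keep in mind that exporting `LC_ALL=C` from your startup file changes the locale for every program started from that shell, so the one-off form may be the safer choice.

```shell
# One-off: set the variable only for this single launch
#   LC_ALL=C lutris

# Persistent: append the export to a shell startup file, but only once.
# add_lc_all is a hypothetical helper name used for illustration.
add_lc_all() {
    rc=${1:-"$HOME/.bashrc"}
    # -x matches the whole line, -F treats the pattern as a fixed string
    grep -qxF 'export LC_ALL=C' "$rc" 2>/dev/null ||
        echo 'export LC_ALL=C' >> "$rc"
}
```

Calling `add_lc_all` with no argument targets `~/.bashrc`; open a new terminal (or run `source ~/.bashrc`) afterwards so the variable takes effect.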

+

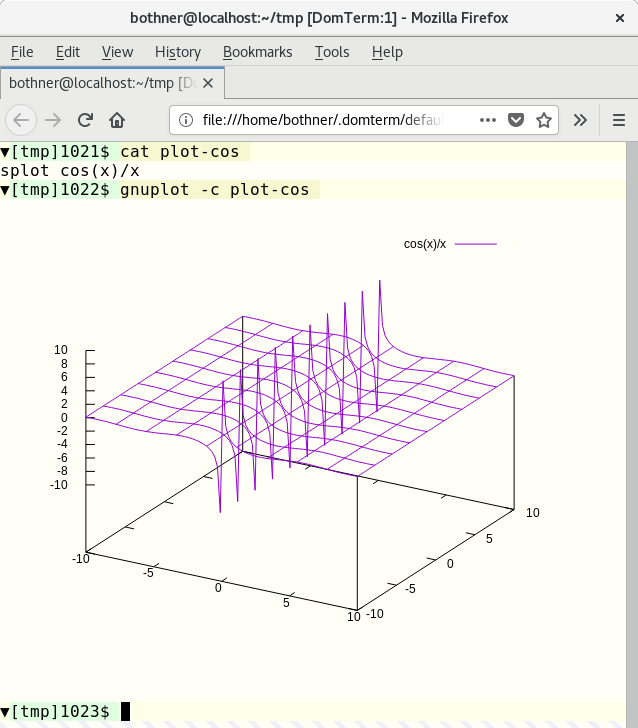

+Once that is done, simply launch it and search for “ **Epic Games Store** ” as shown in the image below:

+

+![Epic Games Store in Lutris][17]

+

+In my case, it is already installed. On a fresh setup, you will get the option to “Install” it, and Lutris will then automatically ask you to install the packages that it needs. You just have to proceed in order to successfully install it. That’s it – no rocket science involved.

+

+#### Playing a Game on Epic Games Store

+

+![Epic Games Store][18]

+

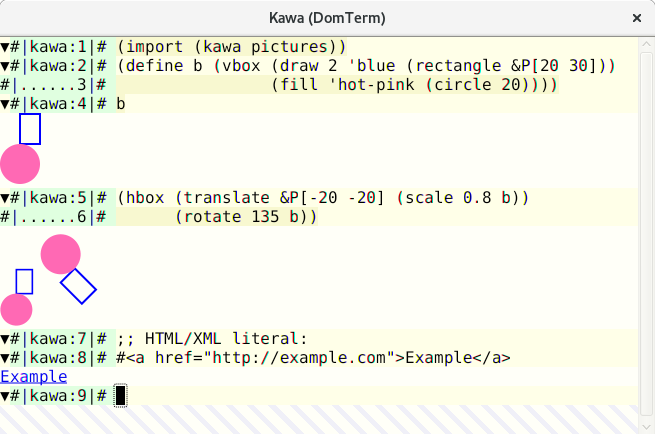

+Now that we have Epic Games store via Lutris on Linux, simply launch it and log in to your account to get started.

+

+But, does it really work?

+

+_Yes, the Epic Games Store does work._ **But not all the games do.**

+

+Well, I haven’t tried everything, but I grabbed a free game (Transistor – a turn-based ARPG) to check if that works.

+

+![Transistor – Epic Games Store][19]

+

+Unfortunately, it didn’t. It says that it is “Running” when I launch it, but then nothing happens.

+

+As of now, I’m not aware of any solutions to that – so I’ll try to keep you guys updated if I find a fix.

+


+

+**Wrapping Up**

+

+It’s good to see the gaming scene improve on Linux thanks to solutions like Lutris. However, there’s still a lot of work to be done.

+

+Running a game hassle-free on Linux is still a challenge. You can run into issues like the ones I encountered. But it’s going in the right direction, even if it has issues.

+

+What do you think of Epic Games Store on Linux via Lutris? Have you tried it yet? Let us know your thoughts in the comments below.

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/epic-games-lutris-linux/

+

+作者:[Ankush Das][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://itsfoss.com/author/ankush/

+[b]: https://github.com/lujun9972

+[1]: https://itsfoss.com/linux-gaming-guide/

+[2]: https://itsfoss.com/steam-play/

+[3]: https://itsfoss.com/steam-play-proton/

+[4]: https://lutris.net/

+[5]: https://itsfoss.com/wp-content/uploads/2019/04/epic-games-store-lutris-linux-800x450.png

+[6]: https://www.epicgames.com/store/en-US/

+[7]: https://twitter.com/EpicGames?ref_src=twsrc%5Etfw

+[8]: https://twitter.com/TimSweeneyEpic?ref_src=twsrc%5Etfw

+[9]: https://t.co/7mt9fXt7TH

+[10]: https://twitter.com/LutrisGaming/status/1118552969816018948?ref_src=twsrc%5Etfw

+[11]: https://itsfoss.com/ubuntu-19-04-release-features/

+[12]: https://itsfoss.com/install-latest-wine/

+[13]: https://lutris.net/downloads/

+[14]: https://itsfoss.com/ubuntu-mate-entroware/

+[15]: https://itsfoss.com/wp-content/uploads/2019/04/lutris-error.jpg

+[16]: https://github.com/lutris/lutris/issues/660

+[17]: https://itsfoss.com/wp-content/uploads/2019/04/lutris-epic-games-store-800x520.jpg

+[18]: https://itsfoss.com/wp-content/uploads/2019/04/epic-games-store-800x450.jpg

+[19]: https://itsfoss.com/wp-content/uploads/2019/04/transistor-game-epic-games-store-800x410.jpg

+[20]: https://itsfoss.com/skpe-alpha-linux/

diff --git a/sources/tech/20190423 How to identify same-content files on Linux.md b/sources/tech/20190423 How to identify same-content files on Linux.md

new file mode 100644

index 0000000000..8d9b34b30a

--- /dev/null

+++ b/sources/tech/20190423 How to identify same-content files on Linux.md

@@ -0,0 +1,260 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (How to identify same-content files on Linux)

+[#]: via: (https://www.networkworld.com/article/3390204/how-to-identify-same-content-files-on-linux.html#tk.rss_all)

+[#]: author: (Sandra Henry-Stocker https://www.networkworld.com/author/Sandra-Henry_Stocker/)

+

+How to identify same-content files on Linux

+======

+Copies of files sometimes represent a big waste of disk space and can cause confusion if you want to make updates. Here are six commands to help you identify these files.

+![Vinoth Chandar \(CC BY 2.0\)][1]

+

+In a recent post, we looked at [how to identify and locate files that are hard links][2] (i.e., that point to the same disk content and share inodes). In this post, we'll check out commands for finding files that have the same _content_ , but are not otherwise connected.

+

+Hard links are helpful because they allow files to exist in multiple places in the file system while not taking up any additional disk space. Copies of files, on the other hand, sometimes represent a big waste of disk space and run some risk of causing some confusion if you want to make updates. In this post, we're going to look at multiple ways to identify these files.

+

+**[ Two-Minute Linux Tips: [Learn how to master a host of Linux commands in these 2-minute video tutorials][3] ]**

+

+### Comparing files with the diff command

+

+Probably the easiest way to compare two files is to use the **diff** command. The output will show you the differences between the two files. The < and > signs indicate whether the extra lines are in the first (<) or second (>) file provided as arguments. In this example, the extra lines are in backup.html.

+

+```

+$ diff index.html backup.html

+2438a2439,2441

+>

+> That's all there is to report.

+>

+```

+

+If diff shows no output, that means the two files are the same.

+

+```

+$ diff home.html index.html

+$

+```

+

+The only drawbacks to diff are that it can only compare two files at a time, and you have to identify the files to compare. Some commands we will look at in this post can find the duplicate files for you.
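When you only need a yes/no answer rather than a list of differences, the standard `cmp -s` command compares two files silently and reports the result through its exit status. A minimal sketch follows; the wrapper name `same_content` is made up for illustration.

```shell
# same_content is a hypothetical wrapper name used for illustration.
same_content() {
    # cmp -s prints nothing for differing files; exit status 0 means identical
    cmp -s "$1" "$2"
}
```

With the files from the example above, `same_content home.html index.html && echo identical` would print "identical" only when the two files match byte for byte.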

+

+### Using checksums

+

+The **cksum** (checksum) command computes checksums for files. A checksum is a mathematical reduction of a file's contents to a lengthy number (like 2819078353). The cksum command prints that number followed by the file's size in bytes (like 228029). While not absolutely unique, the chance that files that are not identical in content would result in the same checksum and size is extremely small.

+

+```

+$ cksum *.html

+2819078353 228029 backup.html

+4073570409 227985 home.html

+4073570409 227985 index.html

+```

+

+In the example above, you can see how the second and third files yield the same checksum and can be assumed to be identical.

+

+### Using the find command

+

+While the find command doesn't have an option for finding duplicate files, it can be used to search files by name or type and run the cksum command. For example:

+

+```

+$ find . -name "*.html" -exec cksum {} \;

+4073570409 227985 ./home.html

+2819078353 228029 ./backup.html

+4073570409 227985 ./index.html

+```
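The output above still has to be scanned by eye for matching checksums. Here is a rough sketch of letting the shell do the grouping, assuming standard `find`, `sort` and `awk`; the function name `list_dupes` is made up for illustration, and file names containing newlines are not handled.

```shell
# list_dupes is a hypothetical helper name used for illustration.
list_dupes() {
    # cksum prints "<checksum> <bytes> <path>" for each file; sorting in the
    # C locale puts lines with the same checksum and size next to each other.
    find "${1:-.}" -type f -exec cksum {} + |
        LC_ALL=C sort |
        awk '{
            key = $1 " " $2
            if (key == prev) {
                if (!shown) print prevline   # first member of the group
                print                        # current member
                shown = 1
            } else {
                shown = 0
            }
            prev = key
            prevline = $0
        }'
}
```

Running `list_dupes .` prints only the files whose checksum and size match at least one other file. Since a matching checksum is strong evidence rather than proof, a final `cmp` on any pair you intend to delete is a cheap safeguard.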

+

+### Using the fslint command

+

+The **fslint** command can be used to specifically find duplicate files. Note that we give it a starting location. The command can take quite some time to complete if it needs to run through a large number of files. Here's output from a very modest search. Note how it lists the duplicate files and also looks for other issues, such as empty directories and bad IDs.

+

+```

+$ fslint .

+-----------------------------------file name lint

+-------------------------------Invalid utf8 names

+-----------------------------------file case lint

+----------------------------------DUPlicate files <==

+home.html

+index.html

+-----------------------------------Dangling links

+--------------------redundant characters in links

+------------------------------------suspect links

+--------------------------------Empty Directories

+./.gnupg

+----------------------------------Temporary Files

+----------------------duplicate/conflicting Names

+------------------------------------------Bad ids

+-------------------------Non Stripped executables

+```

+

+You may have to install **fslint** on your system. You will probably have to add it to your search path, as well:

+

+```

+$ export PATH=$PATH:/usr/share/fslint/fslint

+```

+

+### Using the rdfind command

+

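+The **rdfind** command will also look for duplicate (same content) files. The name stands for "redundant data find," and the command ranks the files it finds to decide which ones are the originals, which is helpful if you choose to delete the duplicates. According to its man page, the ranking depends on the order of the directories given on the command line, then on directory depth, then on the order in which the files were found, so check which copy it treats as the original before deleting anything.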

+

+```

+$ rdfind ~

+Now scanning "/home/shark", found 12 files.

+Now have 12 files in total.

+Removed 1 files due to nonunique device and inode.

+Total size is 699498 bytes or 683 KiB

+Removed 9 files due to unique sizes from list.2 files left.

+Now eliminating candidates based on first bytes:removed 0 files from list.2 files left.

+Now eliminating candidates based on last bytes:removed 0 files from list.2 files left.

+Now eliminating candidates based on sha1 checksum:removed 0 files from list.2 files left.

+It seems like you have 2 files that are not unique

+Totally, 223 KiB can be reduced.

+Now making results file results.txt

+```

+

+You can also run this command in "dryrun" mode (i.e., only report the changes that might otherwise be made).

+

+```

+$ rdfind -dryrun true ~

+(DRYRUN MODE) Now scanning "/home/shark", found 12 files.

+(DRYRUN MODE) Now have 12 files in total.

+(DRYRUN MODE) Removed 1 files due to nonunique device and inode.

+(DRYRUN MODE) Total size is 699352 bytes or 683 KiB

+Removed 9 files due to unique sizes from list.2 files left.

+(DRYRUN MODE) Now eliminating candidates based on first bytes:removed 0 files from list.2 files left.

+(DRYRUN MODE) Now eliminating candidates based on last bytes:removed 0 files from list.2 files left.

+(DRYRUN MODE) Now eliminating candidates based on sha1 checksum:removed 0 files from list.2 files left.

+(DRYRUN MODE) It seems like you have 2 files that are not unique

+(DRYRUN MODE) Totally, 223 KiB can be reduced.

+(DRYRUN MODE) Now making results file results.txt

+```

+

+The rdfind command also provides options for things such as ignoring empty files (-ignoreempty) and following symbolic links (-followsymlinks). Check out the man page for explanations.

+

+```

+-ignoreempty ignore empty files

+-minsize ignore files smaller than specified size

+-followsymlinks follow symbolic links

+-removeidentinode remove files referring to identical inode

+-checksum identify checksum type to be used

+-deterministic determines how to sort files

+-makesymlinks turn duplicate files into symbolic links

+-makehardlinks replace duplicate files with hard links

+-makeresultsfile create a results file in the current directory

+-outputname provide name for results file

+-deleteduplicates delete/unlink duplicate files

+-sleep set sleep time between reading files (milliseconds)

+-n, -dryrun display what would have been done, but don't do it

+```

+

+Note that the rdfind command offers an option to delete duplicate files with the **-deleteduplicates true** setting. Hopefully the command's modest problem with grammar won't irritate you. ;-)

+

+```

+$ rdfind -deleteduplicates true .

+...

+Deleted 1 files. <==

+```

+

+You will likely have to install the rdfind command on your system. It's probably a good idea to experiment with it to get comfortable with how it works.

+

+### Using the fdupes command

+

+The **fdupes** command also makes it easy to identify duplicate files and provides a large number of useful options — like **-r** for recursion. In its simplest form, it groups duplicate files together like this:

+

+```

+$ fdupes ~

+/home/shs/UPGRADE

+/home/shs/mytwin

+

+/home/shs/lp.txt

+/home/shs/lp.man

+

+/home/shs/penguin.png

+/home/shs/penguin0.png

+/home/shs/hideme.png

+```

+

+Here's an example using recursion. Note that many of the duplicate files are important (users' .bashrc and .profile files) and should clearly not be deleted.

+

+```

+# fdupes -r /home

+/home/shark/home.html

+/home/shark/index.html

+

+/home/dory/.bashrc

+/home/eel/.bashrc

+

+/home/nemo/.profile

+/home/dory/.profile

+/home/shark/.profile

+

+/home/nemo/tryme

+/home/shs/tryme

+

+/home/shs/arrow.png

+/home/shs/PNGs/arrow.png

+

+/home/shs/11/files_11.zip

+/home/shs/ERIC/file_11.zip

+

+/home/shs/penguin0.jpg

+/home/shs/PNGs/penguin.jpg

+/home/shs/PNGs/penguin0.jpg

+

+/home/shs/Sandra_rotated.png

+/home/shs/PNGs/Sandra_rotated.png

+```

+

+The fdupes command's many options are listed below. Use the **fdupes -h** command, or read the man page for more details.

+

+```

+-r --recurse recurse

+-R --recurse: recurse through specified directories

+-s --symlinks follow symlinked directories

+-H --hardlinks treat hard links as duplicates

+-n --noempty ignore empty files

+-f --omitfirst omit the first file in each set of matches

+-A --nohidden ignore hidden files

+-1 --sameline list matches on a single line

+-S --size show size of duplicate files

+-m --summarize summarize duplicate files information

+-q --quiet hide progress indicator

+-d --delete prompt user for files to preserve

+-N --noprompt when used with --delete, preserve the first file in set

+-I --immediate delete duplicates as they are encountered

+-p --permissions don't consider files with different owner/group or

+ permission bits as duplicates

+-o --order=WORD order files according to specification

+-i --reverse reverse order while sorting

+-v --version display fdupes version

+-h --help displays help

+```

+

+The fdupes command is another one that you're likely to have to install and work with for a while to become familiar with its many options.

+

+### Wrap-up

+

+Linux systems provide a good selection of tools for locating and potentially removing duplicate files, along with options for where you want to run your search and what you want to do with duplicate files when you find them.

+

+**[ Also see: [Invaluable tips and tricks for troubleshooting Linux][4] ]**

+

+Join the Network World communities on [Facebook][5] and [LinkedIn][6] to comment on topics that are top of mind.

+

+--------------------------------------------------------------------------------

+

+via: https://www.networkworld.com/article/3390204/how-to-identify-same-content-files-on-linux.html#tk.rss_all

+

+作者:[Sandra Henry-Stocker][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://www.networkworld.com/author/Sandra-Henry_Stocker/

+[b]: https://github.com/lujun9972

+[1]: https://images.idgesg.net/images/article/2019/04/chairs-100794266-large.jpg

+[2]: https://www.networkworld.com/article/3387961/how-to-identify-duplicate-files-on-linux.html

+[3]: https://www.youtube.com/playlist?list=PL7D2RMSmRO9J8OTpjFECi8DJiTQdd4hua

+[4]: https://www.networkworld.com/article/3242170/linux/invaluable-tips-and-tricks-for-troubleshooting-linux.html

+[5]: https://www.facebook.com/NetworkWorld/

+[6]: https://www.linkedin.com/company/network-world

diff --git a/sources/tech/20190425 Automate backups with restic and systemd.md b/sources/tech/20190425 Automate backups with restic and systemd.md

new file mode 100644

index 0000000000..46c71ae313

--- /dev/null

+++ b/sources/tech/20190425 Automate backups with restic and systemd.md

@@ -0,0 +1,132 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Automate backups with restic and systemd)

+[#]: via: (https://fedoramagazine.org/automate-backups-with-restic-and-systemd/)

+[#]: author: (Link Dupont https://fedoramagazine.org/author/linkdupont/)

+

+Automate backups with restic and systemd

+======

+

+![][1]

+

+Timely backups are important. So much so that [backing up software][2] is a common topic of discussion, even [here on the Fedora Magazine][3]. This article demonstrates how to automate backups with **restic** using only systemd unit files.

+

+For an introduction to restic, be sure to check out our article [Use restic on Fedora for encrypted backups][4]. Then read on for more details.

+

+Two systemd services are required in order to automate taking snapshots and keeping data pruned. The first service runs the _backup_ command and needs to be run at a regular frequency. The second service takes care of data pruning.

+

+If you’re not familiar with systemd at all, there’s never been a better time to learn. Check out [the series on systemd here at the Magazine][5], starting with this primer on unit files:

+

+> [systemd unit file basics][6]

+

+If you haven’t installed restic already, note it’s in the official Fedora repositories. To install use this command [with sudo][7]:

+

+```

+$ sudo dnf install restic

+```

+

+### Backup

+

+First, create the _~/.config/systemd/user/restic-backup.service_ file. Copy and paste the text below into the file for best results.

+

+```

+[Unit]

+Description=Restic backup service

+[Service]

+Type=oneshot

+ExecStart=restic backup --verbose --one-file-system --tag systemd.timer $BACKUP_EXCLUDES $BACKUP_PATHS

+ExecStartPost=restic forget --verbose --tag systemd.timer --group-by "paths,tags" --keep-daily $RETENTION_DAYS --keep-weekly $RETENTION_WEEKS --keep-monthly $RETENTION_MONTHS --keep-yearly $RETENTION_YEARS

+EnvironmentFile=%h/.config/restic-backup.conf

+```

+

+This service references an environment file in order to load secrets (such as _RESTIC_PASSWORD_ ). Create the _~/.config/restic-backup.conf_ file. Copy and paste the content below for best results. This example uses Backblaze B2 buckets. Adjust the ID, key, repository, and password values accordingly.

+

+```

+BACKUP_PATHS="/home/rupert"

+BACKUP_EXCLUDES="--exclude-file /home/rupert/.restic_excludes --exclude-if-present .exclude_from_backup"

+RETENTION_DAYS=7

+RETENTION_WEEKS=4

+RETENTION_MONTHS=6

+RETENTION_YEARS=3

+B2_ACCOUNT_ID=XXXXXXXXXXXXXXXXXXXXXXXXX

+B2_ACCOUNT_KEY=XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

+RESTIC_REPOSITORY=b2:XXXXXXXXXXXXXXXXXX:/

+RESTIC_PASSWORD=XXXXXXXXXXXXXXXXXXXXXXXXXXXXXXXX

+```
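Because this file holds the repository password and the B2 key in plain text, it is worth making sure only your own user can read it. A minimal sketch, assuming the file path used above; the helper name `lock_down` is made up for illustration.

```shell
# lock_down is a hypothetical helper name used for illustration.
lock_down() {
    conf=${1:-"$HOME/.config/restic-backup.conf"}
    chmod 600 "$conf"   # read/write for the owner, no access for anyone else
    ls -l "$conf"       # show the resulting permissions
}
```

Run `lock_down` once after creating the file; the listing should start with `-rw-------`.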

+

+Now that the service is installed, reload systemd: _systemctl --user daemon-reload_. Try running the service manually to create a backup: _systemctl --user start restic-backup_.

+

+Because the service is a _oneshot_ , it will run once and exit. After verifying that the service runs and creates snapshots as desired, set up a timer to run this service regularly. For example, to run the _restic-backup.service_ daily, create _~/.config/systemd/user/restic-backup.timer_ as follows. Again, copy and paste this text:

+

+```

+[Unit]

+Description=Backup with restic daily

+[Timer]

+OnCalendar=daily

+Persistent=true

+[Install]

+WantedBy=timers.target

+```

+

+Enable it by running this command:

+

+```

+$ systemctl --user enable --now restic-backup.timer

+```

+

+### Prune

+

+While the main service runs the _forget_ command to only keep snapshots within the keep policy, the data is not actually removed from the restic repository. The _prune_ command inspects the repository and current snapshots, and deletes any data not associated with a snapshot. Because _prune_ can be a time-consuming process, it is not necessary to run every time a backup is run. This is the perfect scenario for a second service and timer. First, create the file _~/.config/systemd/user/restic-prune.service_ by copying and pasting this text:

+

+```

+[Unit]

+Description=Restic backup service (data pruning)

+[Service]

+Type=oneshot

+ExecStart=restic prune

+EnvironmentFile=%h/.config/restic-backup.conf

+```

+

+Similarly to the main _restic-backup.service_ , _restic-prune_ is a oneshot service and can be run manually. Once the service has been set up, create and enable a corresponding timer at _~/.config/systemd/user/restic-prune.timer_ :

+

+```

+[Unit]

+Description=Prune data from the restic repository monthly

+[Timer]

+OnCalendar=monthly

+Persistent=true

+[Install]

+WantedBy=timers.target

+```
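Enabling the prune timer follows the same pattern as the backup timer. The sketch below wraps the steps in a function so that nothing runs until you call it; the name `enable_prune_timer` is made up for illustration, while the `systemctl` subcommands are the standard ones.

```shell
# enable_prune_timer is a hypothetical helper name used for illustration.
enable_prune_timer() {
    systemctl --user daemon-reload                     # pick up the new unit files
    systemctl --user enable --now restic-prune.timer   # enable and start the timer
    systemctl --user list-timers                       # confirm what is scheduled
}
```

After calling `enable_prune_timer`, the `list-timers` output should include both restic-backup.timer and restic-prune.timer along with their next run times.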

+

+That’s it! Restic will now run daily and prune data monthly.

+

+* * *

+

+_Photo by _[ _Samuel Zeller_][8]_ on _[_Unsplash_][9]_._

+

+--------------------------------------------------------------------------------

+

+via: https://fedoramagazine.org/automate-backups-with-restic-and-systemd/

+

+作者:[Link Dupont][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://fedoramagazine.org/author/linkdupont/

+[b]: https://github.com/lujun9972

+[1]: https://fedoramagazine.org/wp-content/uploads/2019/04/restic-systemd-816x345.jpg

+[2]: https://restic.net/

+[3]: https://fedoramagazine.org/?s=backup

+[4]: https://fedoramagazine.org/use-restic-encrypted-backups/

+[5]: https://fedoramagazine.org/series/systemd-series/

+[6]: https://fedoramagazine.org/systemd-getting-a-grip-on-units/

+[7]: https://fedoramagazine.org/howto-use-sudo/

+[8]: https://unsplash.com/photos/JuFcQxgCXwA?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText

+[9]: https://unsplash.com/search/photos/archive?utm_source=unsplash&utm_medium=referral&utm_content=creditCopyText

diff --git a/sources/tech/20190425 Debian has a New Project Leader.md b/sources/tech/20190425 Debian has a New Project Leader.md

new file mode 100644

index 0000000000..00f114b907

--- /dev/null

+++ b/sources/tech/20190425 Debian has a New Project Leader.md

@@ -0,0 +1,106 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Debian has a New Project Leader)

+[#]: via: (https://itsfoss.com/debian-project-leader-election/)

+[#]: author: (Shirish https://itsfoss.com/author/shirish/)

+

+Debian has a New Project Leader

+======

+

+As it does every year, the Debian Secretary announced a call for nominations for the post of Debian Project Leader (commonly known as DPL) in early March. Soon, five candidates put forward their nominations. One of them backed out for personal reasons, leaving [four candidates][1], as can be seen in the Nomination section of the Vote page.

+

+### Sam Hartman, the new Debian Project Leader

+

+![][2]

+

+While I will not go into much detail, as Sam has already outlined his position on his [platform][3], it is good to see that most Debian developers recognize that technical excellence is no longer the only thing that needs to be looked at. I do hope he is able to create more teams, which would leave more time in the DPL’s hands and less stress going forward.

+

+As he has shared, he also intends to help the other DPL candidates, all of whom presented initiatives to make Debian better.

+

+Apart from this, there have been some excellent suggestions, for example modernizing debian-installer, giving lists.debian.org a [Mailman 3][4] instance, modernizing Debian packaging and many more.

+

+While a year is probably too short a time for any of the deliverables that Debian people are thinking of, some sort of push or start should enable Debian to reach greater heights than today.

+

+### A brief history of DPL elections

+

+In the beginning, Debian was similar to many distributions which have a [BDFL][5], although from the very start Debian had a sort of rolling leadership. While I won’t go through the whole history, in October 1998 an idea [germinated][6] to have a Debian Constitution.

+

+After quite a bit of discussion between Debian users, contributors, developers and others, the [Debian 1.0 Constitution][7] was released on December 2nd, 1998. One of the big changes was that it formalised the selection of the Debian Project Leader via elections.

+

+From 1998 to 2019, 13 Debian Project Leaders have been elected, with Sam Hartman being the latest (2019).

+

+Before Sam, [Chris Lamb][8] was DPL in 2017 and stood for re-election in 2018. One of the biggest changes in Chris’s tenure was a stronger push for outreach than ever before. This made it possible to hold many more mini-DebConfs all around the world, increasing the number of Debian users and potential Debian Developers.

+


+

+### Duties and Responsibilities of the Debian Project Leader

+

+![][10]

+

+The Debian Project Leader (DPL) position is unpaid, which means that the DPL doesn’t get a salary or any monetary benefits in the traditional sense, but it’s a prestigious position.

+

+Curious what a DPL does? Here are some of the duties, responsibilities, prestige and perks associated with this position.

+

+#### Travelling

+

+As the DPL is the public face of the project, she/he is expected to travel to many places around the world to talk about Debian. While the travel may be a perk, it is offset by the fact that the DPL is not paid for the time spent articulating Debian’s position in various free software and other communities. Travel, language and the politics of free software are also among the stress points that any DPL has to deal with.

+

+#### Communication

+

+A DPL is expected to have excellent verbal and non-verbal communication skills, as she/he is expected to share Debian’s vision of computing with technical and non-technical people. As she/he is also expected to weigh in on many sensitive matters, the Project Leader has to make choices about which communications should be made public and which should be private.

+

+#### Budgeting

+

+Quite a bit of the time, the Debian Project Leader has to look into the finances along with the Secretary and take a call on various initiatives mooted by the larger community. The Project Leader has to ask questions and then make informed decisions on them.

+

+#### Delegation

+

+One of the important tasks of the DPL is to delegate different tasks to suitable people. Some sensitive delegations include ftp-master, ftp-assistant, list-managers, debian-mirror, debian-infrastructure and so on.

+

+#### Influence

+

+Last but not least, just like in any other election, the people who contest for DPL have a platform where they share their ideas about where they would like to see the Debian project heading and how they would go about getting there.

+

+This is by no means an exhaustive list. I suggest reading Lucas Nussbaum’s [mail][11], in which he outlines some more responsibilities of a Debian Project Leader.

+


+

+**In the end…**

+

+I wish Sam Hartman all the luck. I look forward to seeing how Debian grows under his leadership.

+

+I also hope that you learned a few non-technical things about Debian. If you are an [ardent Debian user][13], stuff like this makes you feel more involved with the Debian project. What do you say?

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/debian-project-leader-election/

+

+作者:[Shirish][a]

+选题:[lujun9972][b]

+译者:[译者ID](https://github.com/译者ID)

+校对:[校对者ID](https://github.com/校对者ID)

+

+本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

+

+[a]: https://itsfoss.com/author/shirish/

+[b]: https://github.com/lujun9972

+[1]: https://www.debian.org/vote/2019/vote_001

+[2]: https://itsfoss.com/wp-content/uploads/2019/04/Debian-Project-Leader-election-800x450.png

+[3]: https://www.debian.org/vote/2019/platforms/hartmans

+[4]: http://docs.mailman3.org/en/latest/

+[5]: https://en.wikipedia.org/wiki/Benevolent_dictator_for_life

+[6]: https://lists.debian.org/debian-devel/1998/09/msg00506.html

+[7]: https://www.debian.org/devel/constitution.1.0

+[8]: https://www.debian.org/vote/2017/platforms/lamby

+[9]: https://itsfoss.com/semicode-os-linux/

+[10]: https://itsfoss.com/wp-content/uploads/2019/04/leadership-800x450.jpg

+[11]: https://lists.debian.org/debian-vote/2019/03/msg00023.html

+[12]: https://itsfoss.com/bodhi-linux-5/

+[13]: https://itsfoss.com/reasons-why-i-love-debian/

diff --git a/sources/tech/20190426 NomadBSD, a BSD for the Road.md b/sources/tech/20190426 NomadBSD, a BSD for the Road.md

new file mode 100644

index 0000000000..d31f9b4a90

--- /dev/null

+++ b/sources/tech/20190426 NomadBSD, a BSD for the Road.md

@@ -0,0 +1,125 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (NomadBSD, a BSD for the Road)

+[#]: via: (https://itsfoss.com/nomadbsd/)

+[#]: author: (John Paul https://itsfoss.com/author/john/)

+

+NomadBSD, a BSD for the Road

+======

+

+As regular It’s FOSS readers should know, I like diving into the world of BSDs. Recently, I came across an interesting BSD that is designed to live on a thumb drive. Let’s take a look at NomadBSD.

+

+### What is NomadBSD?

+

+![Nomadbsd Desktop][1]

+

+[NomadBSD][2] is different from most available BSDs. NomadBSD is a live system based on FreeBSD. It comes with automatic hardware detection and an initial config tool. NomadBSD is designed to “be used as a desktop system that works out of the box, but can also be used for data recovery, for educational purposes, or to test FreeBSD’s hardware compatibility.”

+

+This German BSD comes with an [OpenBox][3]-based desktop with the Plank application dock. NomadBSD makes use of the [DSB project][4]. DSB stands for “Desktop Suite (for) (Free)BSD” and consists of a collection of programs designed to create a simple and working environment without needing a ton of dependencies to use one tool. DSB is created by [Marcel Kaiser][5], one of the lead devs of NomadBSD.

+

+Just like the original BSD projects, you can contact the NomadBSD developers via a [mailing list][6].

+


+

+#### Included Applications

+

+NomadBSD comes with the following software installed:

+

+ * Thunar file manager

+ * Asunder CD ripper

+ * Bash 5.0

+ * Filezilla FTP client

+ * Firefox web browser

+ * Fish Command line

+ * Gimp

+ * Qpdfview

+ * Git

+

+

+ * Hexchat IRC client

+ * Leafpad text editor

+ * Midnight Commander file manager

+ * PaleMoon web browser

+ * PCManFM file manager

+ * Pidgin messaging client

+ * Transmission BitTorrent client

+

+

+ * Redshift

+ * Sakura terminal emulator

+ * Slim login manager

+ * Thunderbird email client

+ * VLC media player

+ * Plank application dock

+ * Z Shell

+

+

+

+You can see a complete list of the pre-installed applications in the [MANIFEST file][8].

+

+![Nomadbsd Openbox Menu][9]

+

+#### Version 1.2 Released

+

+NomadBSD recently released version 1.2 on April 21, 2019. This means that NomadBSD is now based on FreeBSD 12.0-p3. TRIM is now enabled by default. One of the biggest changes is that the initial command-line setup was replaced with a Qt graphical interface. They also added a Qt5 tool to install NomadBSD to your hard drive. A number of fixes were included to improve graphics support. They also added support for creating 32-bit images.

+

+### Installing NomadBSD

+

+Since NomadBSD is designed to be a live system, we will need to add the BSD to a USB drive. First, you will need to [download it][11]. There are several options to choose from: 64-bit, 32-bit, or 64-bit Mac.

+

+You will need a USB drive with at least 4GB of space. The system that you are installing to should have a 1.2 GHz processor and 1GB of RAM to run NomadBSD comfortably. Both BIOS and UEFI are supported.

+

+All of the images available for download are compressed as `.lzma` files. So, once you have downloaded the file, you will need to extract the `.img` file. On Linux, you can use either of these commands: `lzma -d nomadbsd-x.y.z.img.lzma` or `xzcat nomadbsd-x.y.z.img.lzma > nomadbsd-x.y.z.img`. (Be sure to replace x.y.z with the version in the name of the file you just downloaded.)

+

+Before we proceed, we need to find out the device name of your USB drive. (Hopefully, you have inserted it by now.) I use the `lsblk` command to find my USB drive, which in my case is `sdb`. To write the image file, use this command: `sudo dd if=nomadbsd-x.y.z.img of=/dev/sdb bs=1M conv=sync`. (Again, don’t forget to correct the file name, and double-check the device name, because `dd` overwrites whatever is on the target drive.) If you are uncomfortable using `dd`, you can use [Etcher][12]. If you have Windows, you will need to use [7-zip][13] to extract the image file and Etcher or [Rufus][14] to write the image to the USB drive.
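+
+The Linux steps above can be sketched as one short sequence. This is only a sketch under assumptions: `/dev/sdX` is a placeholder for whatever device `lsblk` reports on your machine, `x.y.z` stands for the downloaded version, and `status=progress` assumes a reasonably recent GNU `dd`.
+
+```
+# Placeholders (assumptions): adjust both before running anything.
+img=nomadbsd-x.y.z.img    # replace x.y.z with the version you downloaded
+dev=/dev/sdX              # replace with the USB drive shown by lsblk
+
+lzma -dk "$img.lzma"      # extract the image; -k keeps the .lzma file
+lsblk -o NAME,SIZE,MODEL,TRAN "$dev"   # double-check that this is the USB drive
+sudo dd if="$img" of="$dev" bs=1M conv=sync status=progress
+sync                      # flush pending writes before unplugging
+```
+
+Decompressing to a file first, rather than piping into `dd`, keeps `conv=sync` from padding short reads in the middle of the image.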

+

+When you boot from the USB drive, you will encounter a simple config tool. Once you answer the required questions, you will be greeted with a simple Openbox desktop.

+

+### Thoughts on NomadBSD

+

+I first discovered NomadBSD back in January when they released 1.2-RC1. At the time, I had been unable to install [Project Trident][15] on my laptop and was very frustrated with BSDs. I downloaded NomadBSD and tried it out. I initially ran into issues reaching the desktop, but RC2 fixed that issue. However, I was unable to get on the internet, even though I had an Ethernet cable plugged in. Luckily, I found the wifi manager in the menu and was able to connect to my wifi.

+

+Overall, my experience with NomadBSD was pleasant. Once I figured out a few things, I was good to go. I hope that NomadBSD is the first of a new generation of BSDs that focus on mobility and ease of use. BSD has conquered the server world; it’s about time the BSDs figured out how to be more user-friendly.

+

+Have you ever used NomadBSD? What is your favorite BSD? Please let us know in the comments below.

+

+If you found this article interesting, please take a minute to share it on social media, Hacker News or [Reddit][16].

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/nomadbsd/

+

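+Author: [John Paul][a]

+Topic selection: [lujun9972][b]

+Translator: [translator ID](https://github.com/译者ID)

+Proofreader: [proofreader ID](https://github.com/校对者ID)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)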

+

+[a]: https://itsfoss.com/author/john/

+[b]: https://github.com/lujun9972

+[1]: https://itsfoss.com/wp-content/uploads/2019/04/NomadBSD-desktop-800x500.jpg

+[2]: http://nomadbsd.org/

+[3]: http://openbox.org/wiki/Main_Page

+[4]: https://freeshell.de/%7Emk/projects/dsb.html

+[5]: https://github.com/mrclksr

+[6]: http://nomadbsd.org/contact.html

+[7]: https://itsfoss.com/netflix-freebsd-cdn/

+[8]: http://nomadbsd.org/download/nomadbsd-1.2.manifest

+[9]: https://itsfoss.com/wp-content/uploads/2019/04/NomadBSD-Openbox-menu-800x500.jpg

+[10]: https://itsfoss.com/why-use-bsd/

+[11]: http://nomadbsd.org/download.html

+[12]: https://www.balena.io/etcher/

+[13]: https://www.7-zip.org/

+[14]: https://rufus.ie/

+[15]: https://itsfoss.com/project-trident-interview/

+[16]: http://reddit.com/r/linuxusersgroup

diff --git a/sources/tech/20190427 Monitoring CPU and GPU Temperatures on Linux.md b/sources/tech/20190427 Monitoring CPU and GPU Temperatures on Linux.md

new file mode 100644

index 0000000000..89f942ce66

--- /dev/null

+++ b/sources/tech/20190427 Monitoring CPU and GPU Temperatures on Linux.md

@@ -0,0 +1,166 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Monitoring CPU and GPU Temperatures on Linux)

+[#]: via: (https://itsfoss.com/monitor-cpu-gpu-temp-linux/)

+[#]: author: (It's FOSS Community https://itsfoss.com/author/itsfoss/)

+

+Monitoring CPU and GPU Temperatures on Linux

+======

+

+_**Brief: This article discusses two simple ways of monitoring CPU and GPU temperatures from the Linux command line.**_

+

+Because of **[Steam][1]** (including _[Steam Play][2]_ , aka _Proton_ ) and other developments, **GNU/Linux** is becoming the gaming platform of choice for more and more computer users every day. A good number of users are also going for **GNU/Linux** when it comes to other resource-consuming computing tasks such as [video editing][3] or graphic design ( _Kdenlive_ and _[Blender][4]_ are good examples of programs for these).

+

+Whether you are one of those users or otherwise, you are bound to have wondered how hot your computer’s CPU and GPU can get (even more so if you do overclocking). If that is the case, keep reading. We will be looking at a couple of very simple commands to monitor CPU and GPU temps.

+

+My setup includes a [Slimbook Kymera][5] and two displays (a TV set and a PC monitor) which allows me to use one for playing games and the other to keep an eye on the temperatures. Also, since I use [Zorin OS][6] I will be focusing on **Ubuntu** and **Ubuntu** derivatives.

+

+To monitor the behaviour of both CPU and GPU we will be making use of the useful `watch` command to have dynamic readings every certain number of seconds.

+

+![][7]

+

+### Monitoring CPU Temperature in Linux

+

+For CPU temps, we will combine `watch` with the `sensors` command. A [GUI version of this tool has already been covered on It’s FOSS][8]. However, we will use the terminal version here:

+

+```

+watch -n 2 sensors

+```

+

+`watch` reruns the command every 2 seconds (this value can, of course, be changed to whatever best fits your needs):

+

+```

+Every 2,0s: sensors

+

+iwlwifi-virtual-0

+Adapter: Virtual device

+temp1: +39.0°C

+

+acpitz-virtual-0

+Adapter: Virtual device

+temp1: +27.8°C (crit = +119.0°C)

+temp2: +29.8°C (crit = +119.0°C)

+

+coretemp-isa-0000

+Adapter: ISA adapter

+Package id 0: +37.0°C (high = +82.0°C, crit = +100.0°C)

+Core 0: +35.0°C (high = +82.0°C, crit = +100.0°C)

+Core 1: +35.0°C (high = +82.0°C, crit = +100.0°C)

+Core 2: +33.0°C (high = +82.0°C, crit = +100.0°C)

+Core 3: +36.0°C (high = +82.0°C, crit = +100.0°C)

+Core 4: +37.0°C (high = +82.0°C, crit = +100.0°C)

+Core 5: +35.0°C (high = +82.0°C, crit = +100.0°C)

+```

+

+Amongst other things, we get the following information:

+

+ * The CPU has 6 cores, Core 0 through Core 5 (with the current highest temperature being 37.0ºC).

+ * Values higher than 82.0ºC are considered high.

+ * A value over 100.0ºC is deemed critical.

+

+

+

+The values above lead us to the conclusion that the computer’s workload is very light at the moment.
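+
+If only the CPU lines are of interest, the output can be filtered before `watch` displays it. A minimal sketch, assuming the `lm-sensors` package provides `sensors` (as it does on Ubuntu) and that your chip labels its readings `Package id N` and `Core N` like the sample above; other drivers use different labels:
+
+```
+# Install the tools and probe for sensor chips (only needed once).
+sudo apt install lm-sensors
+sudo sensors-detect
+
+# Refresh every 2 seconds, keeping only the package and per-core lines.
+watch -n 2 "sensors | grep -E '^(Package id|Core) [0-9]+:'"
+```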

+

+### Monitoring GPU Temperature in Linux

+

+Let us turn to the graphics card now. I have never used an **AMD** dedicated graphics card, so I will be focusing on **Nvidia** ones. The first thing to do is download the appropriate, current driver through [additional drivers in Ubuntu][10].

+

+On **Ubuntu** (and its forks such as **Zorin** or **Linux Mint** ), going to _Software & Updates_ > _Additional Drivers_ and selecting the most recent one normally suffices. Additionally, you can add/enable the official _ppa_ for graphics cards (either through the command line or via _Software & Updates_ > _Other Software_ ). After installing the driver you will have at your disposal the _Nvidia X Server_ gui application along with the command line utility _nvidia-smi_ (Nvidia System Management Interface). So we will use `watch` and `nvidia-smi`:

+

+```

+watch -n 2 nvidia-smi

+```

+

+And — the same as for the CPU — we will get updated readings every two seconds:

+

+```

+Every 2,0s: nvidia-smi

+

+Fri Apr 19 20:45:30 2019

++-----------------------------------------------------------------------------+

+| Nvidia-SMI 418.56 Driver Version: 418.56 CUDA Version: 10.1 |

+|-------------------------------+----------------------+----------------------+

+| GPU Name Persistence-M| Bus-Id Disp.A | Volatile Uncorr. ECC |

+| Fan Temp Perf Pwr:Usage/Cap| Memory-Usage | GPU-Util Compute M. |

+|===============================+======================+======================|

+| 0 GeForce GTX 106... Off | 00000000:01:00.0 On | N/A |

+| 0% 54C P8 10W / 120W | 433MiB / 6077MiB | 4% Default |

++-------------------------------+----------------------+----------------------+

+

++-----------------------------------------------------------------------------+

+| Processes: GPU Memory |

+| GPU PID Type Process name Usage |

+|=============================================================================|

+| 0 1557 G /usr/lib/xorg/Xorg 190MiB |

+| 0 1820 G /usr/bin/gnome-shell 174MiB |

+| 0 7820 G ...equest-channel-token=303407235874180773 65MiB |

++-----------------------------------------------------------------------------+

+```

+

+The chart gives the following information about the graphics card:

+

+ * it is using the proprietary Nvidia driver, version 418.56.

+ * the current temperature of the card is 54.0ºC — with the fan at 0% of its capacity.

+ * the power consumption is very low: only 10W.

+ * out of 6 GB of vram (video random access memory), it is only using 433 MB.

+ * the used vram is being taken by three processes whose IDs are — respectively — 1557, 1820 and 7820.

+

+

+

+Most of these values clearly show that we are not playing any resource-consuming games or dealing with heavy workloads. Should we start playing a game, processing a video or the like, the values would start to go up.
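+
+When only a few values matter, `nvidia-smi` can print them as CSV instead of the full table. A small sketch, assuming a driver recent enough to support `--query-gpu` (run `nvidia-smi --help-query-gpu` to see which fields your version offers):
+
+```
+# Temperature, fan speed, power draw and used memory, refreshed every 2 seconds.
+nvidia-smi --query-gpu=temperature.gpu,fan.speed,power.draw,memory.used \
+           --format=csv,noheader --loop=2
+```
+
+Here `--loop=2` makes `nvidia-smi` repeat the query itself, so `watch` is not needed.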

+

+#### Conclusion

+

+Although there are GUI tools, I find these two commands very handy to check on your hardware in real time.

+

+What do you make of them? You can learn more about the utilities involved by reading their man pages.

+

+Do you have other preferences? Share them with us in the comments, ;).

+

+Halof!!! (Have a lot of fun!!!).

+

+![avatar][12]

+

+### Alejandro Egea-Abellán

+

+It’s FOSS Community Contributor

+

+I developed a liking for electronics, linguistics, herpetology and computers (particularly GNU/Linux and FOSS). I am LPIC-2 certified and currently work as a technical consultant and Moodle administrator in the Department for Lifelong Learning at the Ministry of Education in Murcia, Spain. I am a firm believer in lifelong learning, the sharing of knowledge and computer-user freedom.

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/monitor-cpu-gpu-temp-linux/

+

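+Author: [It's FOSS Community][a]

+Topic selection: [lujun9972][b]

+Translator: [translator ID](https://github.com/译者ID)

+Proofreader: [proofreader ID](https://github.com/校对者ID)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)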

+

+[a]: https://itsfoss.com/author/itsfoss/

+[b]: https://github.com/lujun9972

+[1]: https://itsfoss.com/install-steam-ubuntu-linux/

+[2]: https://itsfoss.com/steam-play-proton/

+[3]: https://itsfoss.com/best-video-editing-software-linux/

+[4]: https://www.blender.org/

+[5]: https://slimbook.es/

+[6]: https://zorinos.com/

+[7]: https://itsfoss.com/wp-content/uploads/2019/04/monitor-cpu-gpu-temperature-linux-800x450.png

+[8]: https://itsfoss.com/check-laptop-cpu-temperature-ubuntu/

+[9]: https://itsfoss.com/best-command-line-games-linux/

+[10]: https://itsfoss.com/install-additional-drivers-ubuntu/

+[11]: https://itsfoss.com/review-googler-linux/

+[12]: https://itsfoss.com/wp-content/uploads/2019/04/EGEA-ABELLAN-Alejandro.jpg

diff --git a/sources/tech/20190428 Installing Budgie Desktop on Ubuntu -Quick Guide.md b/sources/tech/20190428 Installing Budgie Desktop on Ubuntu -Quick Guide.md

new file mode 100644

index 0000000000..11659592fb

--- /dev/null

+++ b/sources/tech/20190428 Installing Budgie Desktop on Ubuntu -Quick Guide.md

@@ -0,0 +1,116 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Installing Budgie Desktop on Ubuntu [Quick Guide])

+[#]: via: (https://itsfoss.com/install-budgie-ubuntu/)

+[#]: author: (Atharva Lele https://itsfoss.com/author/atharva/)

+

+Installing Budgie Desktop on Ubuntu [Quick Guide]

+======

+

+_**Brief: Learn how to install Budgie desktop on Ubuntu in this step-by-step tutorial.**_

+

+Among all the [various Ubuntu versions][1], [Ubuntu Budgie][2] is the most underrated one. It looks elegant and it’s not heavy on resources.

+

+Read this [Ubuntu Budgie review][3] or simply watch this video to see what Ubuntu Budgie 18.04 looks like.

+

+[Subscribe to our YouTube channel for more Linux Videos][4]

+

+If you like [Budgie desktop][5] but you are using some other version of Ubuntu such as the default Ubuntu with GNOME desktop, I have good news for you. You can install Budgie on your current Ubuntu system and switch the desktop environments.

+

+In this post, I’m going to tell you exactly how to do that. But first, a little introduction to Budgie for those who are unaware of it.

+

+Budgie desktop environment is developed mainly by the [Solus Linux team][6]. It is designed with a focus on elegance and modern usage. Budgie is available for all major Linux distributions for users to try and experience this new desktop environment. Budgie is pretty mature by now and provides a great desktop experience.

+

+Warning

+

+Installing multiple desktops on the same system MAY result in conflicts, and you may see some issues like missing icons in the panel or multiple icons of the same program.

+

+You may also not see any issues at all. It’s your call if you want to try a different desktop.

+

+### Install Budgie on Ubuntu

+

+This method is not tested on Linux Mint, so I recommend that you not follow this guide for Mint.

+

+For those on Ubuntu, Budgie is now a part of the Ubuntu repositories by default. Hence, we don’t need to add any PPAs in order to get Budgie.

+

+To install Budgie, simply run these commands in a terminal. The first one makes sure that the system is fully updated.

+

+```

+sudo apt update && sudo apt upgrade

+sudo apt install ubuntu-budgie-desktop

+```

+

+When everything is done downloading, you will get a prompt to choose your display manager. Select ‘lightdm’ to get the full Budgie experience.

+

+![Select lightdm][7]
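+
+If you pick the wrong entry or want to change it later, the display manager can be checked and switched again. A sketch assuming the usual Debian/Ubuntu mechanism, where the active display manager is recorded in `/etc/X11/default-display-manager`:
+
+```
+# Show which display manager is currently the default.
+cat /etc/X11/default-display-manager
+
+# Bring the selection prompt back to choose between lightdm and gdm3.
+sudo dpkg-reconfigure lightdm
+```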

+

+After the installation is complete, reboot your computer. You will then be greeted by the Budgie login screen. Enter your password to go to the home screen.

+

+![Budgie Desktop Home][8]

+

+### Switching to other desktop environments

+

+![Budgie login screen][9]

+

+You can click the Budgie icon next to your name to get options for login. From there you can select between the installed Desktop Environments (DEs). In my case, I see Budgie and the default Ubuntu (GNOME) DEs.

+

+![Select your DE][10]

+

+Hence whenever you feel like logging into GNOME, you can do so using this menu.

+

+### How to Remove Budgie

+

+If you don’t like Budgie or just want to go back to your regular old Ubuntu, you can switch back to your regular desktop as described in the above section.

+

+However, if you really want to remove Budgie and its components, you can run the following commands to get back to a clean slate.

+

+_**Switch to some other desktop environment before using these commands:**_

+

+```

+sudo apt remove ubuntu-budgie-desktop 'ubuntu-budgie*' lightdm

+sudo apt autoremove

+sudo apt install --reinstall gdm3

+```

+

+After running all the commands successfully, reboot your computer.

+

+Now, you will be back to GNOME or whichever desktop environment you had.

+

+**What do you think of Budgie?**

+

+Budgie is one of the [best desktop environments for Linux][12]. Hope this short guide helped you install the awesome Budgie desktop on your Ubuntu system.

+

+If you did install Budgie, what do you like about it the most? Let us know in the comments below. And as usual, any questions or suggestions are always welcome.

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/install-budgie-ubuntu/

+

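+Author: [Atharva Lele][a]

+Topic selection: [lujun9972][b]

+Translator: [translator ID](https://github.com/译者ID)

+Proofreader: [proofreader ID](https://github.com/校对者ID)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)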

+

+[a]: https://itsfoss.com/author/atharva/

+[b]: https://github.com/lujun9972

+[1]: https://itsfoss.com/which-ubuntu-install/

+[2]: https://ubuntubudgie.org/

+[3]: https://itsfoss.com/ubuntu-budgie-18-review/

+[4]: https://www.youtube.com/c/itsfoss?sub_confirmation=1

+[5]: https://github.com/solus-project/budgie-desktop

+[6]: https://getsol.us/home/

+[7]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/04/budgie_install_select_dm.png?fit=800%2C559&ssl=1

+[8]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/04/budgie_homescreen.jpg?fit=800%2C500&ssl=1

+[9]: https://i2.wp.com/itsfoss.com/wp-content/uploads/2019/04/budgie_install_lockscreen.png?fit=800%2C403&ssl=1

+[10]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/04/budgie_install_lockscreen_select_de.png?fit=800%2C403&ssl=1

+[11]: https://itsfoss.com/snapd-error-ubuntu/

+[12]: https://itsfoss.com/best-linux-desktop-environments/

diff --git a/sources/tech/20190429 Awk utility in Fedora.md b/sources/tech/20190429 Awk utility in Fedora.md

new file mode 100644

index 0000000000..21e40641f7

--- /dev/null

+++ b/sources/tech/20190429 Awk utility in Fedora.md

@@ -0,0 +1,177 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Awk utility in Fedora)

+[#]: via: (https://fedoramagazine.org/awk-utility-in-fedora/)

+[#]: author: (Stephen Snow https://fedoramagazine.org/author/jakfrost/)

+

+Awk utility in Fedora

+======

+

+![][1]

+

+Fedora provides _awk_ as part of its default installation in all its editions, including immutable ones like Silverblue. But you may be asking, what is _awk_ and why would you need it?

+

+_Awk_ is a data driven programming language that acts when it matches a pattern. On Fedora, and most other distributions, GNU _awk_ or _gawk_ is used. Read on for more about this language and how to use it.

+

+### A brief history of awk

+

+_Awk_ began at Bell Labs in 1977. Its name is an acronym from the initials of the designers: Alfred V. Aho, Peter J. Weinberger, and Brian W. Kernighan.

+

+> The specification for _awk_ in the POSIX Command Language and Utilities standard further clarified the language. Both the _gawk_ designers and the original _awk_ designers at Bell Laboratories provided feedback for the POSIX specification.

+>

+> From [The GNU Awk User’s Guide][2]

+

+For a more in-depth look at how _awk/gawk_ ended up being as powerful and useful as it is, follow the link above. Numerous individuals have contributed to the current state of _gawk_. Among those are:

+

+ * Arnold Robbins and David Trueman, the creators of _gawk_

+ * Michael Brennan, the creator of _mawk_ , which later was merged with _gawk_

+ * Jurgen Kahrs, who added networking capabilities to _gawk_ in 1997

+ * John Haque, who rewrote the _gawk_ internals and added an _awk_ -level debugger in 2011

+

+

+

+### Using awk

+

+The following sections show various ways of using _awk_ in Fedora.

+

+#### At the command line

+

+The simplest way to invoke _awk_ is at the command line. You can search a text file for a particular pattern and, if it is found, print out the line(s) of the file that contain the pattern anywhere. As an example, use _cat_ to take a look at the command history file in your home directory:

+

+```

+$ cat ~/.bash_history

+```

+

+There are probably many lines scrolling by right now.

+

+_Awk_ helps with this type of file quite easily. Instead of printing the entire file out to the terminal like _cat_ , you can use _awk_ to find something of specific interest. For this example, type the following at the command line if you’re running a standard Fedora edition:

+

+```

+$ awk '/dnf/' ~/.bash_history

+```

+

+If you’re running Silverblue, try this instead:

+

+```

+$ awk '/rpm-ostree/' ~/.bash_history

+```

+

+In both cases, more data likely appears than what you really want. That’s no problem for _awk_ since it can accept regular expressions. Using the previous example, you can narrow the pattern so that it matches installs only. Try changing the search pattern to one of these:

+

+```

+$ awk '/rpm-ostree install/' ~/.bash_history

+$ awk '/dnf install/' ~/.bash_history

+```

+

+All the entries of your bash command line history that contain the specified pattern, at any position along the line, appear. _Awk_ works on one line of a data file at a time. It matches the pattern, performs an action, then moves to the next line until the end of file (EOF) is reached.
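+
+The pattern can also be paired with an explicit action in braces. A short sketch against the same history file (the `dnf install` pattern is just the example from above; use `rpm-ostree install` on Silverblue):
+
+```
+# Print each matching line prefixed with its line number (NR).
+awk '/dnf install/ { print NR ": " $0 }' ~/.bash_history
+
+# Count the matching lines and print the total once EOF is reached.
+awk '/dnf install/ { n++ } END { print n + 0 }' ~/.bash_history
+```
+
+When no action is given, as in the earlier commands, _awk_ defaults to printing the matching line.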

+

+#### From an _awk_ program

+

+Using awk at the command line as above is not much different than piping output to _grep_ , like this:

+

+```

+$ cat ~/.bash_history | grep 'dnf install'

+```

+

+The end result of printing to standard output ( _stdout_ ) is the same with both methods.

+

+Awk is a programming language, and the command _awk_ is an interpreter of that language. The real power and flexibility of _awk_ is that you can make programs with it, and combine them with shell scripts to create even more powerful programs. For more feature-rich development with _awk_ , you can also incorporate C or C++ code using [Dynamic-Extensions][3].

+

+Next, to show the power of _awk_ , let’s make a couple of program files to print the header and draw five numbers for the first row of a bingo card. To do this we’ll create two awk program files.

+

+The first file prints out the header of the bingo card. For this example it is called _bingo-title.awk_. Use your favorite editor to save this text as that file name:

+

+```

+BEGIN {

+    print "B\tI\tN\tG\tO"

+}

+```

+

+Now the title program is ready. You could try it out with this command:

+

+```

+$ awk -f bingo-title.awk

+```

+

+The program prints the word BINGO, with a tab ( _\t_ ) between the characters. For the number selection, let’s use one of awk’s built-in numeric functions, _rand()_, and the _for_ control statement. (A _switch_ statement was also planned, but the editor changed my program, so none is used this time.)

+

+The title of the second awk program is _bingo-num.awk_. Enter the following into your favorite editor and save with that file name:

+

+```

+@include "bingo-title.awk"

+BEGIN {

+    for (i = 1; i <= 5; i++) {

+        b = int(rand() * 15) + (15*(i-1))

+        printf "%s\t", b

+    }

+    print

+}

+```

+

+The _@include_ statement in the file tells the interpreter to process the included file first. In this case the interpreter processes the _bingo-title.awk_ file so the title prints out first.

+

+#### Running the test program

+

+Now enter the command to pick a row of bingo numbers:

+

+```

+$ awk -f bingo-num.awk

+```

+

+Output appears similar to the following. Note that the _rand()_ function in _awk_ is not ideal for truly random numbers. It’s used here only for example purposes.

+

+```

+$ awk -f bingo-num.awk

+B I N G O

+13 23 34 53 71

+```

+

+In the example, we created two programs with only _BEGIN_ sections, whose actions manipulate data generated within the awk program itself. To satisfy the rules of Bingo, more work is needed. The reader is encouraged to fix the programs so they reliably pick bingo numbers; the awk function _srand()_ is a good place to start.
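+
+One possible way to approach that exercise, offered as a sketch rather than the author's solution: seed the generator with `srand()` and shift each draw by one so that column `i` covers `15*(i-1)+1` through `15*i`. It still draws only one number per column, so a full card would also need a check for duplicates.
+
+```
+awk 'BEGIN {
+    srand()                          # seed from the current time of day
+    print "B\tI\tN\tG\tO"
+    for (i = 1; i <= 5; i++) {
+        # int(rand() * 15) is 0..14, so column i yields 15*(i-1)+1 .. 15*i
+        b = int(rand() * 15) + 1 + 15 * (i - 1)
+        printf "%d%s", b, (i < 5 ? "\t" : "\n")
+    }
+}'
+```
+
+Because `srand()` without an argument seeds from the clock in whole seconds, two runs within the same second print the same row.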

+

+### Final examples

+

+_Awk_ can be useful even for mundane daily search tasks, like listing all the flatpaks from _org.gnome_ on the _Flathub_ repository (provided you have the Flathub repository set up). The command to do that would be:

+

+```

+$ flatpak remote-ls flathub --system | awk '/org\.gnome/'

+```

+

+A listing appears that shows all output from _remote-ls_ that matches the _org.gnome_ pattern. To see flatpaks already installed from org.gnome, enter this command:

+

+```

+$ flatpak list --system | awk '/org\.gnome/'

+```

+

+Awk is a powerful and flexible programming language that fills a niche with text file manipulation exceedingly well.

+

+--------------------------------------------------------------------------------

+

+via: https://fedoramagazine.org/awk-utility-in-fedora/

+

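+Author: [Stephen Snow][a]

+Topic selection: [lujun9972][b]

+Translator: [translator ID](https://github.com/译者ID)

+Proofreader: [proofreader ID](https://github.com/校对者ID)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)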

+

+[a]: https://fedoramagazine.org/author/jakfrost/

+[b]: https://github.com/lujun9972

+[1]: https://fedoramagazine.org/wp-content/uploads/2019/04/awk-816x345.jpg

+[2]: https://www.gnu.org/software/gawk/manual/gawk.html#Foreword3

+[3]: https://www.gnu.org/software/gawk/manual/gawk.html#Dynamic-Extensions

diff --git a/sources/tech/20190429 How To Turn On And Shutdown The Raspberry Pi -Absolute Beginner Tip.md b/sources/tech/20190429 How To Turn On And Shutdown The Raspberry Pi -Absolute Beginner Tip.md

new file mode 100644

index 0000000000..ce667a1dff

--- /dev/null

+++ b/sources/tech/20190429 How To Turn On And Shutdown The Raspberry Pi -Absolute Beginner Tip.md

@@ -0,0 +1,111 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (How To Turn On And Shutdown The Raspberry Pi [Absolute Beginner Tip])

+[#]: via: (https://itsfoss.com/turn-on-raspberry-pi/)

+[#]: author: (Chinmay https://itsfoss.com/author/chinmay/)

+

+How To Turn On And Shutdown The Raspberry Pi [Absolute Beginner Tip]

+======

+

+_**Brief: This quick tip teaches you how to turn on Raspberry Pi and how to shut it down properly afterwards.**_

+

+The [Raspberry Pi][1] is one of the [most popular SBCs (single-board computers)][2]. If you are interested in this topic, I believe that you’ve finally got a Pi device. I also advise getting all the [additional Raspberry Pi accessories][3] to get started with your device.

+

+You’re ready to turn it on and start to tinker around with it. It has its own similarities and differences compared to traditional computers like desktops and laptops.

+

+Today, let’s go ahead and learn how to turn on and shut down a Raspberry Pi, as it doesn’t really feature a ‘power button’ of sorts.

+

+For this article I’m using a Raspberry Pi 3B+, but it’s the same for all the Raspberry Pi variants.

+

+### Turn on Raspberry Pi

+

+![Micro USB port for Power][7]

+

+The micro USB port powers the Raspberry Pi, so you turn it on by plugging the power cable into the micro USB port. But before you do that, make sure that you have done the following things:

+

+ * Prepare the micro SD card with Raspbian according to the official [guide][8] and insert it into the micro SD card slot.

+ * Plug in the HDMI cable, a USB keyboard and a mouse.

+ * Plug in the Ethernet cable (optional).

+

+

+

+Once you have done the above, plug in the power cable. This turns on the Raspberry Pi and the display will light up and load the Operating System.

+

+### Shutting Down the Pi

+

+Shutting down the Pi is pretty straightforward: click the menu button and choose shutdown.

+

+![Turn off Raspberry Pi graphically][9]

+

+Alternatively, you can use the [shutdown command][10] in the terminal:

+

+```

+sudo shutdown now

+```

+

+Once the shutdown process has started, **wait** until it completely finishes, and then you can cut the power. Once the Pi shuts down, there is no real way to turn it back on without turning the power off and on again. You could use the GPIO pins to turn on the Pi from the shutdown state, but that requires additional modding.
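+
+A few common variants of the command, as a sketch (these are standard `shutdown` options on Raspbian and other Linux systems):
+
+```
+sudo shutdown -h now    # shut down immediately
+sudo shutdown -h +5     # shut down in five minutes
+sudo shutdown -c        # cancel a pending scheduled shutdown
+sudo shutdown -r now    # reboot instead of shutting down
+```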

+

+_Note: Micro USB ports tend to be fragile, hence turn-off/on the power at source instead of frequently unplugging and plugging into the micro USB port._

+

+Well, that’s about all you should know about turning on and shutting down the Pi. What do you plan to use it for? Let me know in the comments.

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/turn-on-raspberry-pi/

+

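+Author: [Chinmay][a]

+Topic selection: [lujun9972][b]

+Translator: [translator ID](https://github.com/译者ID)

+Proofreader: [proofreader ID](https://github.com/校对者ID)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)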

+

+[a]: https://itsfoss.com/author/chinmay/

+[b]: https://github.com/lujun9972

+[1]: https://www.raspberrypi.org/

+[2]: https://itsfoss.com/raspberry-pi-alternatives/

+[3]: https://itsfoss.com/things-you-need-to-get-your-raspberry-pi-working/

+[4]: https://www.amazon.com/CanaKit-Raspberry-Starter-Premium-Black/dp/B07BCC8PK7?SubscriptionId=AKIAJ3N3QBK3ZHDGU54Q&tag=chmod7mediate-20&linkCode=xm2&camp=2025&creative=165953&creativeASIN=B07BCC8PK7&keywords=raspberry%20pi%20kit (CanaKit Raspberry Pi 3 B+ (B Plus) Starter Kit (32 GB EVO+ Edition, Premium Black Case))

+[5]: https://www.amazon.com/gp/prime/?tag=chmod7mediate-20 (Amazon Prime)

+[6]: https://www.amazon.com/CanaKit-Raspberry-Premium-Clear-Supply/dp/B07BC7BMHY?SubscriptionId=AKIAJ3N3QBK3ZHDGU54Q&tag=chmod7mediate-20&linkCode=xm2&camp=2025&creative=165953&creativeASIN=B07BC7BMHY&keywords=raspberry%20pi%20kit (CanaKit Raspberry Pi 3 B+ (B Plus) with Premium Clear Case and 2.5A Power Supply)

+[7]: https://i0.wp.com/itsfoss.com/wp-content/uploads/2019/04/raspberry-pi-3-microusb.png?fit=800%2C532&ssl=1

+[8]: https://www.raspberrypi.org/documentation/installation/installing-images/README.md

+[9]: https://i1.wp.com/itsfoss.com/wp-content/uploads/2019/04/Raspbian-ui-menu.jpg?fit=800%2C492&ssl=1

+[10]: https://linuxhandbook.com/linux-shutdown-command/

diff --git a/sources/tech/20190430 The Awesome Fedora 30 is Here- Check Out the New Features.md b/sources/tech/20190430 The Awesome Fedora 30 is Here- Check Out the New Features.md

new file mode 100644

index 0000000000..3d158c7031

--- /dev/null

+++ b/sources/tech/20190430 The Awesome Fedora 30 is Here- Check Out the New Features.md

@@ -0,0 +1,115 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (The Awesome Fedora 30 is Here! Check Out the New Features)

+[#]: via: (https://itsfoss.com/fedora-30/)

+[#]: author: (Abhishek Prakash https://itsfoss.com/author/abhishek/)

+

+The Awesome Fedora 30 is Here! Check Out the New Features

+======

+

+The latest and greatest release of Fedora is here. Fedora 30 brings some visual as well as performance improvements.

+

+Fedora releases a new version every six months and each release is supported for thirteen months.

+

+Before you decide to download or upgrade Fedora, let’s first see what’s new in Fedora 30.

+

+### New Features in Fedora 30

+

+![Fedora 30 Release][1]

+

+Here’s what’s new in the latest release of Fedora.

+

+#### GNOME 3.32 brings a brand new look, new features and performance improvements

+

+The latest release of GNOME brings a lot of visual improvements.

+

+GNOME 3.32 has refreshed icons and a refreshed UI, and it almost looks like a brand new version of GNOME.

+

+![Gnome 3.32 icons | Image Credit][2]

+

+GNOME 3.32 also brings several other features, such as fractional scaling, permission control for each application and granular control over Night Light intensity, among many other changes.

+

+GNOME 3.32 also brings some performance improvements. You’ll see faster file and app searches and smoother scrolling.

+

+#### Improved performance for DNF

+

+Fedora 30 will see a faster [DNF][3] (the default package manager for Fedora) thanks to the [zchunk][4] compression algorithm.

+

+The zchunk algorithm splits a file into independent chunks. This helps in dealing with ‘deltas’, or changes: when you download a new version of a file, you fetch only the chunks that have changed.

+

+With zchunk, DNF will only download the difference between the metadata of the current version and the earlier versions.

+

+#### Fedora 30 brings two new desktop environments into the fold

+

+Fedora already offers several desktop environment choices. Fedora 30 extends the offering with [elementary OS][5]’s Pantheon desktop environment and Deepin Linux’s [DeepinDE][6].

+

+So now you can enjoy the look and feel of elementary OS and Deepin Linux in Fedora. How cool is that!

+

+#### Linux Kernel 5

+

+Fedora 30 ships with Linux kernel 5.0.9, which has improved hardware support and some performance improvements. You may check out the [features of Linux kernel 5.0 in this article][7].

+

+[][8]

+

+Suggested read The Featureful Release of Nextcloud 14 Has Two New Security Features

+

+#### Updated software

+

+You’ll also get newer versions of software. Some of the major ones are:

+

+ * GCC 9.0.1

+ * [Bash Shell 5.0][9]

+ * GNU C Library 2.29

+ * Ruby 2.6

+ * Golang 1.12

+ * Mesa 19.0.2

+

+

+ * Vagrant 2.2

+ * JDK 12

+ * PHP 7.3

+ * Fish 3.0

+ * Erlang 21

+ * Python 3.7.3

+

+

+

+### Getting Fedora 30

+

+If you are already using Fedora 29, you can upgrade to the latest release from your current install. You may follow this guide to learn [how to upgrade a Fedora version][10].

+

+Fedora 29 users will still get updates for seven more months, so if you don’t feel like upgrading, you may skip it for now. Fedora 28 users have no choice, because Fedora 28 reaches end of life next month, which means there will be no more security or maintenance updates. For them, upgrading to a newer version is no longer optional.

+

+You always have the option to download the ISO of Fedora 30 and install it afresh. You can download Fedora from its official website. It’s only available for 64-bit systems and the ISO is 1.9 GB in size.

+

+[Download Fedora 30 Workstation][11]

+

+What do you think of Fedora 30? Are you planning to upgrade or at least try it out? Do share your thoughts in the comment section.

+

+--------------------------------------------------------------------------------

+

+via: https://itsfoss.com/fedora-30/

+

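+Author: [Abhishek Prakash][a]

+Topic selection: [lujun9972][b]

+Translator: [Translator ID](https://github.com/译者ID)

+Proofreader: [Proofreader ID](https://github.com/校对者ID)

+

+This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux China](https://linux.cn/)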

+

+[a]: https://itsfoss.com/author/abhishek/

+[b]: https://github.com/lujun9972

+[1]: https://itsfoss.com/wp-content/uploads/2019/04/fedora-30-release-800x450.png

+[2]: https://itsfoss.com/wp-content/uploads/2019/04/gnome-3-32-icons.png

+[3]: https://fedoraproject.org/wiki/DNF?rd=Dnf

+[4]: https://github.com/zchunk/zchunk

+[5]: https://itsfoss.com/elementary-os-juno-features/

+[6]: https://www.deepin.org/en/dde/

+[7]: https://itsfoss.com/linux-kernel-5/

+[8]: https://itsfoss.com/nextcloud-14-release/

+[9]: https://itsfoss.com/bash-5-release/

+[10]: https://itsfoss.com/upgrade-fedora-version/

+[11]: https://getfedora.org/en/workstation/

diff --git a/sources/tech/20190430 Upgrading Fedora 29 to Fedora 30.md b/sources/tech/20190430 Upgrading Fedora 29 to Fedora 30.md

new file mode 100644

index 0000000000..f6d819c754

--- /dev/null

+++ b/sources/tech/20190430 Upgrading Fedora 29 to Fedora 30.md

@@ -0,0 +1,96 @@

+[#]: collector: (lujun9972)

+[#]: translator: ( )

+[#]: reviewer: ( )

+[#]: publisher: ( )

+[#]: url: ( )

+[#]: subject: (Upgrading Fedora 29 to Fedora 30)

+[#]: via: (https://fedoramagazine.org/upgrading-fedora-29-to-fedora-30/)

+[#]: author: (Ryan Lerch https://fedoramagazine.org/author/ryanlerch/)

+

+Upgrading Fedora 29 to Fedora 30

+======

+

+![][1]

+

+Fedora 30 [is available now][2]. You’ll likely want to upgrade your system to the latest version of Fedora. Fedora Workstation has a graphical upgrade method. Alternatively, Fedora offers a command-line method for upgrading Fedora 29 to Fedora 30.

+

+### Upgrading Fedora 29 Workstation to Fedora 30

+

+Soon after release time, a notification appears to tell you an upgrade is available. You can click the notification to launch the **GNOME Software** app. Or you can choose Software from GNOME Shell.

+

+Choose the _Updates_ tab in GNOME Software and you should see a screen informing you that _Fedora 30 is Now Available_.

+

+If you don’t see anything on this screen, try using the reload button at the top left. It may take some time after release for all systems to be able to see an upgrade available.

+

+Choose _Download_ to fetch the upgrade packages. You can continue working while they download, until you reach a stopping point and the download is complete. Then use GNOME Software to restart your system and apply the upgrade. Upgrading takes time, so you may want to grab a coffee and come back to the system later.

+

+### Using the command line

+

+If you’ve upgraded from past Fedora releases, you are likely familiar with the _dnf upgrade_ plugin. This method is the recommended and supported way to upgrade from Fedora 29 to Fedora 30. Using this plugin will make your upgrade to Fedora 30 simple and easy.

+

+##### 1\. Update software and back up your system

+

+Before you begin the upgrade process, make sure you have the latest software for Fedora 29. To update your software, use _GNOME Software_ or enter the following command in a terminal.

+

+```

+sudo dnf upgrade --refresh

+```

+

+Additionally, make sure you back up your system before proceeding. For help with taking a backup, see [the backup series][3] on Fedora Magazine.

+

+##### 2\. Install the DNF plugin