mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-06 01:20:12 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

2051ab6c8b

@ -0,0 +1,64 @@

|

||||

Open Source Certification: Preparing for the Exam

|

||||

======

|

||||

Open source is the new normal in tech today, with open components and platforms driving mission-critical processes at organizations everywhere. As open source has become more pervasive, it has also profoundly impacted the job market. Across industries [the skills gap is widening, making it ever more difficult to hire people][1] with much needed job skills. That’s why open source training and certification are more important than ever, and this series aims to help you learn more and achieve your own certification goals.

|

||||

|

||||

In the [first article in the series][2], we explored why certification matters so much today. In the [second article][3], we looked at the kinds of certifications that are making a difference. This story will focus on preparing for exams, what to expect during an exam, and how testing for open source certification differs from traditional types of testing.

|

||||

|

||||

Clyde Seepersad, General Manager of Training and Certification at The Linux Foundation, stated, “For many of you, if you take the exam, it may well be the first time that you've taken a performance-based exam and it is quite different from what you might have been used to with multiple choice, where the answer is on screen and you can identify it. In performance-based exams, you get what's called a prompt.”

|

||||

|

||||

As a matter of fact, many Linux-focused certification exams literally prompt test takers at the command line. The idea is to demonstrate skills in real time in a live environment, and the best preparation for this kind of exam is practice, backed by training.

|

||||

|

||||

### Know the requirements

|

||||

|

||||

"Get some training," Seepersad emphasized. "Get some help to make sure that you're going to do well. We sometimes find folks have very deep skills in certain areas, but then they're light in other areas. If you go to the website for [Linux Foundation training and certification][4], for the [LFCS][5] and the [LFCE][6] certifications, you can scroll down the page and see the details of the domains and tasks, which represent the knowledge areas you're supposed to know.”

|

||||

|

||||

Once you’ve identified the skills you need, “really spend some time on those and try to identify whether you think there are areas where you have gaps. You can figure out what the right training or practice regimen is going to be to help you get prepared to take the exam," Seepersad said.

|

||||

|

||||

### Practice, practice, practice

|

||||

|

||||

"Practice is important, of course, for all exams," he added. "We deliver the exams in a bit of a unique way -- through your browser. We're using a terminal emulator on your browser and you're being proctored, so there's a live human who is watching you via video cam, your screen is being recorded, and you're having to work through the exam console using the browser window. You're going to be asked to do something live on the system, and then at the end, we're going to evaluate that system to see if you were successful in accomplishing the task"

|

||||

|

||||

What if you run out of time on your exam, or simply don’t pass because you couldn’t perform the required skills? “I like the phrase, exam insurance,” Seepersad said. “The way we take the stress out is by offering a ‘no questions asked’ retake. If you take either exam, LFCS, LFCE and you do not pass on your first attempt, you are automatically eligible to have a free second attempt.”

|

||||

|

||||

The Linux Foundation intentionally maintains separation between its training and certification programs and uses an independent proctoring solution to monitor candidates. It also requires that all certifications be renewed every two years, which gives potential employers confidence that skills are current and have been recently demonstrated.

|

||||

|

||||

### Free certification guide

|

||||

|

||||

Becoming a Linux Foundation Certified System Administrator or Engineer is no small feat, so the Foundation has created [this free certification guide][7] to help you with your preparation. In this guide, you’ll find:

|

||||

|

||||

* Critical things to keep in mind on test day

|

||||

|

||||

|

||||

* An array of both free and paid study resources to help you be as prepared as possible

|

||||

|

||||

* A few tips and tricks that could make the difference at exam time

|

||||

|

||||

* A checklist of all the domains and competencies covered in the exam

|

||||

|

||||

|

||||

|

||||

|

||||

With certification playing a more important role in securing a rewarding long-term career, careful planning and preparation are key. Stay tuned for the next article in this series that will answer frequently asked questions pertaining to open source certification and training.

|

||||

|

||||

[Learn more about Linux training and certification.][8]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/blog/sysadmin-cert/2018/7/open-source-certification-preparing-exam

|

||||

|

||||

作者:[Sam Dean][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/sam-dean

|

||||

[1]:https://www.linux.com/blog/os-jobs-report/2017/9/demand-open-source-skills-rise

|

||||

[2]:https://www.linux.com/blog/sysadmin-cert/2018/7/5-reasons-open-source-certification-matters-more-ever

|

||||

[3]:https://www.linux.com/blog/sysadmin-cert/2018/7/tips-success-open-source-certification

|

||||

[4]:https://training.linuxfoundation.org/

|

||||

[5]:https://training.linuxfoundation.org/certification/linux-foundation-certified-sysadmin-lfcs/

|

||||

[6]:https://training.linuxfoundation.org/certification/linux-foundation-certified-engineer-lfce/

|

||||

[7]:https://training.linuxfoundation.org/download-free-certification-prep-guide

|

||||

[8]:https://training.linuxfoundation.org/certification/

|

||||

@ -0,0 +1,71 @@

|

||||

Why moving all your workloads to the cloud is a bad idea

|

||||

======

|

||||

|

||||

|

||||

|

||||

As we've been exploring in this series, cloud hype is everywhere, telling you that migrating your applications to the cloud—including hybrid cloud and multicloud—is the way to ensure a digital future for your business. This hype rarely dives into the pitfalls of moving to the cloud, nor considers the daily work of enhancing your customer's experience and agile delivery of new and legacy applications.

|

||||

|

||||

In [part one][1] of this series, we covered basic definitions (to level the playing field). We outlined our views on hybrid cloud and multi-cloud, making sure to show the dividing lines between the two. This set the stage for [part two][2], where we discussed the first of three pitfalls: Why cost is not always the obvious motivator for moving to the cloud.

|

||||

|

||||

In part three, we'll look at the second pitfall: Why moving all your workloads to the cloud is a bad idea.

|

||||

|

||||

### Everything's better in the cloud?

|

||||

|

||||

There's a misconception that everything will benefit from running in the cloud. All workloads are not equal, and not all workloads will see a measurable effect on the bottom line from moving to the cloud.

|

||||

|

||||

As [InformationWeek wrote][3], "Not all business applications should migrate to the cloud, and enterprises must determine which apps are best suited to a cloud environment." This is a hard fact that the utility company in part two of this series learned when labor costs rose while trying to move applications to the cloud. Discovering this was not a viable solution, the utility company backed up and reevaluated its applications. It found some applications were not heavily used and others had data ownership and compliance issues. Some of its applications were not certified for use in a cloud environment.

|

||||

|

||||

Sometimes running applications in the cloud is not physically possible, but other times it's not financially viable to run in the cloud.

|

||||

|

||||

Imagine a fictional online travel company. As its business grew, it expanded its on-premises hosting capacity to over 40,000 servers. It eventually became a question of expanding resources by purchasing a data center at a time, not a rack at a time. Its business consumes bandwidth at such volumes that cloud pricing models based on bandwidth usage remain prohibitive.

|

||||

|

||||

### Get a baseline

|

||||

|

||||

Sometimes running applications in the cloud is not physically possible, but other times it's not financially viable to run in the cloud.

|

||||

|

||||

As these examples show, nothing is more important than having a thorough understanding of your application landscape. Along with a having good understanding of what applications need to migrate to the cloud, you also need to understand current IT environments, know your present level of resources, and estimate your costs for moving.

|

||||

|

||||

As these examples show, nothing is more important than having a thorough understanding of your application landscape. Along with a having good understanding of what applications need to migrate to the cloud, you also need to understand current IT environments, know your present level of resources, and estimate your costs for moving.

|

||||

|

||||

Understanding your baseline–each application's current situation and performance requirements (network, storage, CPU, memory, application and infrastructure behavior under load, etc.)–gives you the tools to make the right decision.

|

||||

|

||||

If you're running servers with single-digit CPU utilization due to complex acquisition processes, a cloud with on-demand resourcing might be a great idea. However, first ask these questions:

|

||||

|

||||

* How long did this low-utilization exist?

|

||||

* Why wasn't it caught earlier?

|

||||

* Isn't there a process or effective monitoring in place?

|

||||

* Do you really need a cloud to fix this? Or just a better process for both getting and managing your resources?

|

||||

* Will you have a better process in the cloud?

|

||||

|

||||

|

||||

|

||||

### Are containers necessary?

|

||||

|

||||

Many believe you need containers to be successful in the cloud. This popular [catchphrase][4] sums it up nicely, "We crammed this monolith into a container and called it a microservice."

|

||||

|

||||

Containers are a means to an end, and using containers doesn't mean your organization is capable of running maturely in the cloud. It's not about the technology involved, it's about applications that often were written in days gone by with technology that's now outdated. If you put a tire fire into a container and then put that container on a container platform to ship, it's still functionality that someone is using.

|

||||

|

||||

Is that fire easier to extinguish now? These container fires just create more challenges for your DevOps teams, who are already struggling to keep up with all the changes being pushed through an organization moving everything into the cloud.

|

||||

|

||||

Note, it's not necessarily a bad decision to move legacy workloads into the cloud, nor is it a bad idea to containerize them. It's about weighing the benefits and the downsides, assessing the options available, and making the right choices for each of your workloads.

|

||||

|

||||

### Coming up

|

||||

|

||||

In part four of this series, we'll describe the third and final pitfall everyone should avoid with hybrid multi-cloud. Find out what the cloud means for your data.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/7/why-you-cant-move-everything-cloud

|

||||

|

||||

作者:[Eric D.Schabell][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/eschabell

|

||||

[1]:https://opensource.com/article/18/4/pitfalls-hybrid-multi-cloud

|

||||

[2]:https://opensource.com/article/18/6/reasons-move-to-cloud

|

||||

[3]:https://www.informationweek.com/cloud/10-cloud-migration-mistakes-to-avoid/d/d-id/1318829

|

||||

[4]:https://speakerdeck.com/caseywest/containercon-north-america-cloud-anti-patterns?slide=22

|

||||

@ -1,450 +0,0 @@

|

||||

MjSeven is translating

|

||||

|

||||

|

||||

Docker Guide: Dockerizing Python Django Application

|

||||

============================================================

|

||||

|

||||

### On this page

|

||||

|

||||

1. [What we will do?][6]

|

||||

|

||||

2. [Step 1 - Install Docker-ce][7]

|

||||

|

||||

3. [Step 2 - Install Docker-compose][8]

|

||||

|

||||

4. [Step 3 - Configure Project Environment][9]

|

||||

1. [Create a New requirements.txt file][1]

|

||||

|

||||

2. [Create the Nginx virtual host file django.conf][2]

|

||||

|

||||

3. [Create the Dockerfile][3]

|

||||

|

||||

4. [Create Docker-compose script][4]

|

||||

|

||||

5. [Configure Django project][5]

|

||||

|

||||

5. [Step 4 - Build and Run the Docker image][10]

|

||||

|

||||

6. [Step 5 - Testing][11]

|

||||

|

||||

7. [Reference][12]

|

||||

|

||||

Docker is an open-source project that provides an open platform for developers and sysadmins to build, package, and run applications anywhere as a lightweight container. Docker automates the deployment of applications inside software containers.

|

||||

|

||||

Django is a web application framework written in python that follows the MVC (Model-View-Controller) architecture. It is available for free and released under an open source license. It is fast and designed to help developers get their application online as quickly as possible.

|

||||

|

||||

In this tutorial, I will show you step-by-step how to create a docker image for an existing Django application project in Ubuntu 16.04\. We will learn about dockerizing a python Django application, and then deploy the application as a container to the docker environment using a docker-compose script.

|

||||

|

||||

In order to deploy our python Django application, we need additional docker images. We need an nginx docker image for the web server and PostgreSQL image for the database.

|

||||

|

||||

### What we will do?

|

||||

|

||||

1. Install Docker-ce

|

||||

|

||||

2. Install Docker-compose

|

||||

|

||||

3. Configure Project Environment

|

||||

|

||||

4. Build and Run

|

||||

|

||||

5. Testing

|

||||

|

||||

### Step 1 - Install Docker-ce

|

||||

|

||||

In this tutorial, we will install docker-ce community edition from the docker repository. We will install docker-ce community edition and docker-compose that support compose file version 3.

|

||||

|

||||

Before installing docker-ce, install docker dependencies needed using the apt command.

|

||||

|

||||

```

|

||||

sudo apt install -y \

|

||||

apt-transport-https \

|

||||

ca-certificates \

|

||||

curl \

|

||||

software-properties-common

|

||||

```

|

||||

|

||||

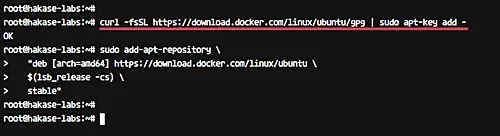

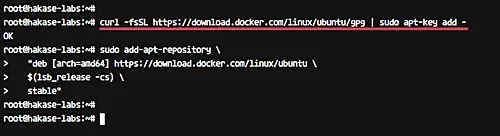

Now add the docker key and repository by running commands below.

|

||||

|

||||

```

|

||||

curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo apt-key add -

|

||||

sudo add-apt-repository \

|

||||

"deb [arch=amd64] https://download.docker.com/linux/ubuntu \

|

||||

$(lsb_release -cs) \

|

||||

stable"

|

||||

```

|

||||

|

||||

[][14]

|

||||

|

||||

Update the repository and install docker-ce.

|

||||

|

||||

```

|

||||

sudo apt update

|

||||

sudo apt install -y docker-ce

|

||||

```

|

||||

|

||||

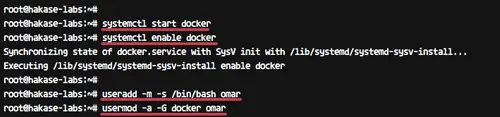

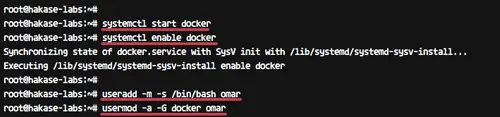

After the installation is complete, start the docker service and enable it to launch every time at system boot.

|

||||

|

||||

```

|

||||

systemctl start docker

|

||||

systemctl enable docker

|

||||

```

|

||||

|

||||

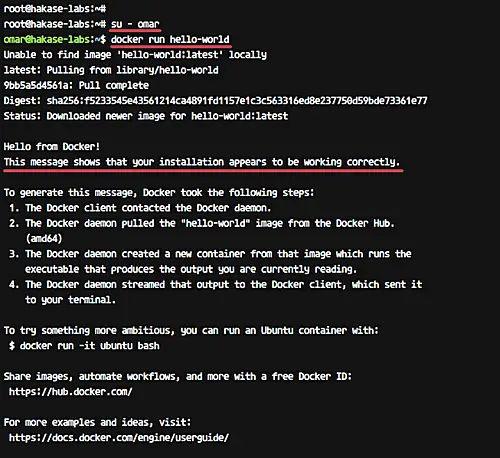

Next, we will add a new user named 'omar' and add it to the docker group.

|

||||

|

||||

```

|

||||

useradd -m -s /bin/bash omar

|

||||

usermod -a -G docker omar

|

||||

```

|

||||

|

||||

[][15]

|

||||

|

||||

Login as the omar user and run docker command as shown below.

|

||||

|

||||

```

|

||||

su - omar

|

||||

docker run hello-world

|

||||

```

|

||||

|

||||

Make sure you get the hello-world message from Docker.

|

||||

|

||||

[][16]

|

||||

|

||||

Docker-ce installation has been completed.

|

||||

|

||||

### Step 2 - Install Docker-compose

|

||||

|

||||

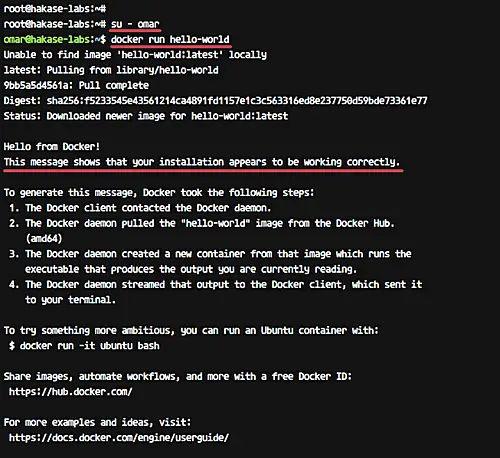

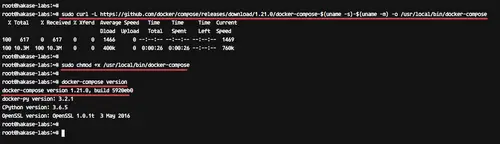

In this tutorial, we will be using the latest docker-compose support for compose file version 3\. We will install docker-compose manually.

|

||||

|

||||

Download the latest version of docker-compose using curl command to the '/usr/local/bin' directory and make it executable using chmod.

|

||||

|

||||

Run commands below.

|

||||

|

||||

```

|

||||

sudo curl -L https://github.com/docker/compose/releases/download/1.21.0/docker-compose-$(uname -s)-$(uname -m) -o /usr/local/bin/docker-compose

|

||||

sudo chmod +x /usr/local/bin/docker-compose

|

||||

```

|

||||

|

||||

Now check the docker-compose version.

|

||||

|

||||

```

|

||||

docker-compose version

|

||||

```

|

||||

|

||||

And make sure you get the latest version of the docker-compose 1.21.

|

||||

|

||||

[][17]

|

||||

|

||||

The docker-compose latest version that supports compose file version 3 has been installed.

|

||||

|

||||

### Step 3 - Configure Project Environment

|

||||

|

||||

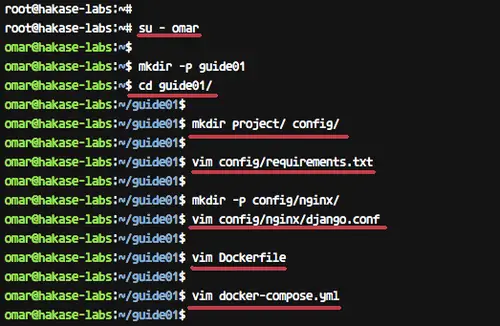

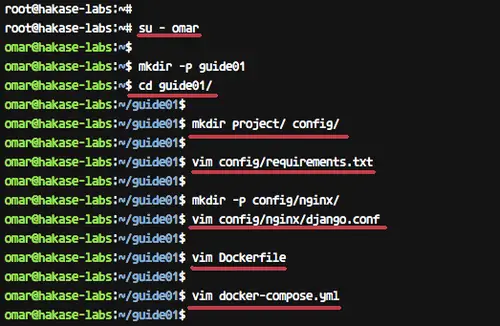

In this step, we will configure the python Django project environment. We will create new directory 'guide01' and make it as the main directory for our project files, such as a Dockerfile, Django project, nginx configuration file etc.

|

||||

|

||||

Login to the 'omar' user.

|

||||

|

||||

```

|

||||

su - omar

|

||||

```

|

||||

|

||||

Create new directory 'guide01' and go to the directory.

|

||||

|

||||

```

|

||||

mkdir -p guide01

|

||||

cd guide01/

|

||||

```

|

||||

|

||||

Now inside the 'guide01' directory, create new directories 'project' and 'config'.

|

||||

|

||||

```

|

||||

mkdir project/ config/

|

||||

```

|

||||

|

||||

Note:

|

||||

|

||||

* Directory 'project': All our python Django project files will be placed in that directory.

|

||||

|

||||

* Directory 'config': Directory for the project configuration files, including nginx configuration file, python pip requirements file etc.

|

||||

|

||||

### Create a New requirements.txt file

|

||||

|

||||

Next, create new file 'requirements.txt' inside the 'config' directory using vim command.

|

||||

|

||||

```

|

||||

vim config/requirements.txt

|

||||

```

|

||||

|

||||

Paste the configuration below.

|

||||

|

||||

```

|

||||

Django==2.0.4

|

||||

gunicorn==19.7.0

|

||||

psycopg2==2.7.4

|

||||

```

|

||||

|

||||

Save and exit.

|

||||

|

||||

### Create the Nginx virtual host file django.conf

|

||||

|

||||

Under the config directory, create the 'nginx' configuration directory and add the virtual host configuration file django.conf.

|

||||

|

||||

```

|

||||

mkdir -p config/nginx/

|

||||

vim config/nginx/django.conf

|

||||

```

|

||||

|

||||

Paste the following configuration there.

|

||||

|

||||

```

|

||||

upstream web {

|

||||

ip_hash;

|

||||

server web:8000;

|

||||

}

|

||||

|

||||

# portal

|

||||

server {

|

||||

location / {

|

||||

proxy_pass http://web/;

|

||||

}

|

||||

listen 8000;

|

||||

server_name localhost;

|

||||

|

||||

location /static {

|

||||

autoindex on;

|

||||

alias /src/static/;

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

Save and exit.

|

||||

|

||||

### Create the Dockerfile

|

||||

|

||||

Create new 'Dockerfile' inside the 'guide01' directory.

|

||||

|

||||

Run the command below.

|

||||

|

||||

```

|

||||

vim Dockerfile

|

||||

```

|

||||

|

||||

Now paste Dockerfile script below.

|

||||

|

||||

```

|

||||

FROM python:3.5-alpine

|

||||

ENV PYTHONUNBUFFERED 1

|

||||

|

||||

RUN apk update && \

|

||||

apk add --virtual build-deps gcc python-dev musl-dev && \

|

||||

apk add postgresql-dev bash

|

||||

|

||||

RUN mkdir /config

|

||||

ADD /config/requirements.txt /config/

|

||||

RUN pip install -r /config/requirements.txt

|

||||

RUN mkdir /src

|

||||

WORKDIR /src

|

||||

```

|

||||

|

||||

Save and exit.

|

||||

|

||||

Note:

|

||||

|

||||

We want to build the Docker images for our Django project based on Alpine Linux, the smallest size of Linux. Our Django project will run Alpine Linux with python 3.5 installed on top of it and add the postgresql-dev package for the PostgreSQL database support. And then we will install all python packages listed on the 'requirements.txt' file using python pip command, and create new '/src' for our project.

|

||||

|

||||

### Create Docker-compose script

|

||||

|

||||

Create the 'docker-compose.yml' file under the 'guide01' directory using [vim][18] command below.

|

||||

|

||||

```

|

||||

vim docker-compose.yml

|

||||

```

|

||||

|

||||

Paste the following configuration there.

|

||||

|

||||

```

|

||||

version: '3'

|

||||

services:

|

||||

db:

|

||||

image: postgres:10.3-alpine

|

||||

container_name: postgres01

|

||||

nginx:

|

||||

image: nginx:1.13-alpine

|

||||

container_name: nginx01

|

||||

ports:

|

||||

- "8000:8000"

|

||||

volumes:

|

||||

- ./project:/src

|

||||

- ./config/nginx:/etc/nginx/conf.d

|

||||

depends_on:

|

||||

- web

|

||||

web:

|

||||

build: .

|

||||

container_name: django01

|

||||

command: bash -c "python manage.py makemigrations && python manage.py migrate && python manage.py collectstatic --noinput && gunicorn hello_django.wsgi -b 0.0.0.0:8000"

|

||||

depends_on:

|

||||

- db

|

||||

volumes:

|

||||

- ./project:/src

|

||||

expose:

|

||||

- "8000"

|

||||

restart: always

|

||||

```

|

||||

|

||||

Save and exit.

|

||||

|

||||

Note:

|

||||

|

||||

With this docker-compose file script, we will create three services. Create the database service named 'db' using the PostgreSQL alpine Linux, create the 'nginx' service using the Nginx alpine Linux again, and create our python Django container using the custom docker images generated from our Dockerfile.

|

||||

|

||||

[][19]

|

||||

|

||||

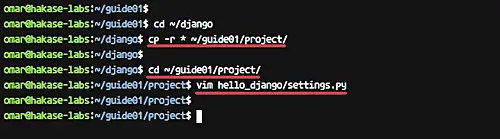

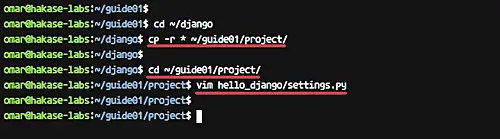

### Configure Django project

|

||||

|

||||

Copy your Django project files to the 'project' directory.

|

||||

|

||||

```

|

||||

cd ~/django

|

||||

cp -r * ~/guide01/project/

|

||||

```

|

||||

|

||||

Go to the 'project' directory and edit the application setting 'settings.py'.

|

||||

|

||||

```

|

||||

cd ~/guide01/project/

|

||||

vim hello_django/settings.py

|

||||

```

|

||||

|

||||

Note:

|

||||

|

||||

We will deploy simple Django application called 'hello_django' app.

|

||||

|

||||

On the 'ALLOW_HOSTS' line, add the service name 'web'.

|

||||

|

||||

```

|

||||

ALLOW_HOSTS = ['web']

|

||||

```

|

||||

|

||||

Now change the database settings. We will be using the PostgreSQL database that runs as a service named 'db' with default user and password.

|

||||

|

||||

```

|

||||

DATABASES = {

|

||||

'default': {

|

||||

'ENGINE': 'django.db.backends.postgresql_psycopg2',

|

||||

'NAME': 'postgres',

|

||||

'USER': 'postgres',

|

||||

'HOST': 'db',

|

||||

'PORT': 5432,

|

||||

}

|

||||

}

|

||||

```

|

||||

|

||||

And for the 'STATIC_ROOT' configuration directory, add this line to the end of the line of the file.

|

||||

|

||||

```

|

||||

STATIC_ROOT = os.path.join(BASE_DIR, 'static/')

|

||||

```

|

||||

|

||||

Save and exit.

|

||||

|

||||

[][20]

|

||||

|

||||

Now we're ready to build and run the Django project under the docker container.

|

||||

|

||||

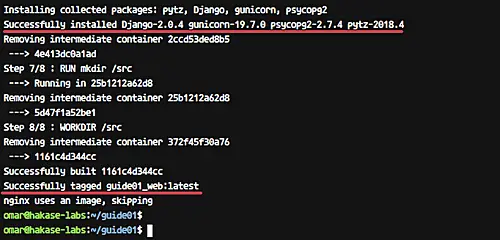

### Step 4 - Build and Run the Docker image

|

||||

|

||||

In this step, we want to build a Docker image for our Django project using the configuration on the 'guide01' directory.

|

||||

|

||||

Go to the 'guide01' directory.

|

||||

|

||||

```

|

||||

cd ~/guide01/

|

||||

```

|

||||

|

||||

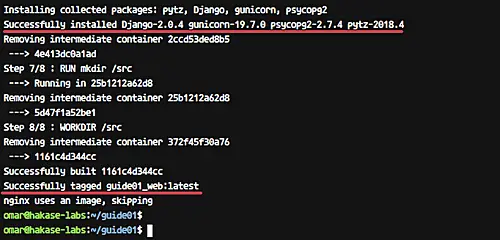

Now build the docker images using the docker-compose command.

|

||||

|

||||

```

|

||||

docker-compose build

|

||||

```

|

||||

|

||||

[][21]

|

||||

|

||||

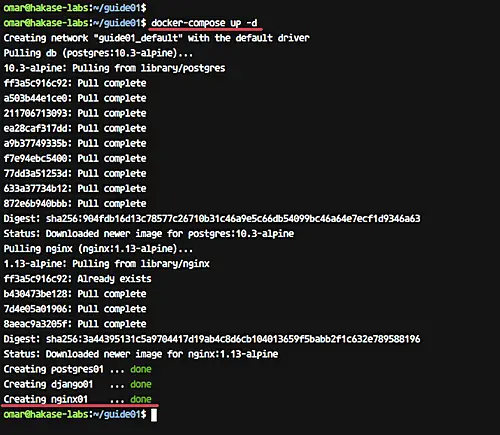

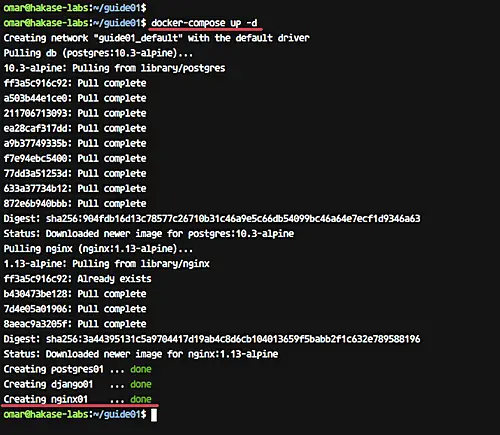

Start all services inside the docker-compose script.

|

||||

|

||||

```

|

||||

docker-compose up -d

|

||||

```

|

||||

|

||||

Wait for some minutes for Docker to build our Python image and download the nginx and postgresql docker images.

|

||||

|

||||

[][22]

|

||||

|

||||

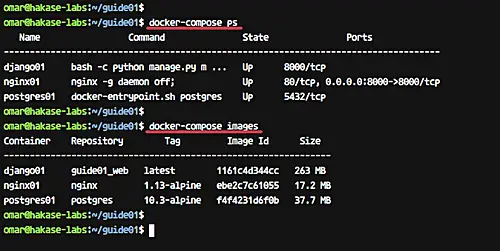

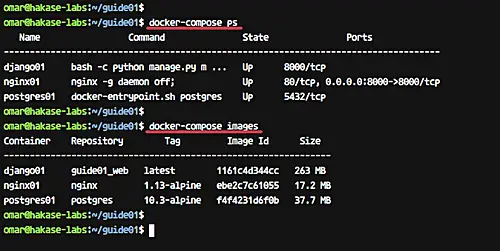

And when it's complete, check running container and list docker images on the system using following commands.

|

||||

|

||||

```

|

||||

docker-compose ps

|

||||

docker-compose images

|

||||

```

|

||||

|

||||

And now you will get three containers running and list of Docker images on the system as shown below.

|

||||

|

||||

[][23]

|

||||

|

||||

Our Python Django Application is now running inside the docker container, and docker images for our service have been created.

|

||||

|

||||

### Step 5 - Testing

|

||||

|

||||

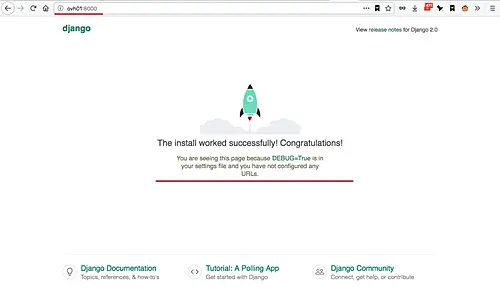

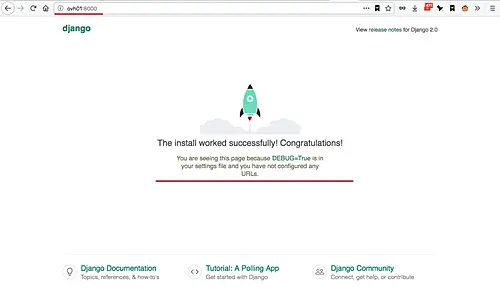

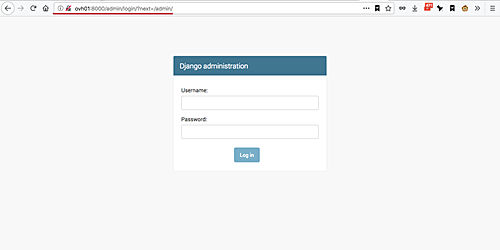

Open your web browser and type the server address with port 8000, mine is: http://ovh01:8000/

|

||||

|

||||

Now you will get the default Django home page.

|

||||

|

||||

[][24]

|

||||

|

||||

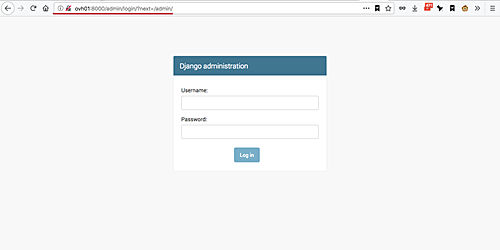

Next, test the admin page by adding the '/admin' path on the URL.

|

||||

|

||||

http://ovh01:8000/admin/

|

||||

|

||||

And you will see the Django admin login page.

|

||||

|

||||

[][25]

|

||||

|

||||

The Dockerizing Python Django Application has been completed successfully.

|

||||

|

||||

### Reference

|

||||

|

||||

* [https://docs.docker.com/][13]

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/

|

||||

|

||||

作者:[Muhammad Arul][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/

|

||||

[1]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/#create-a-new-requirementstxt-file

|

||||

[2]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/#create-the-nginx-virtual-host-file-djangoconf

|

||||

[3]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/#create-the-dockerfile

|

||||

[4]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/#create-dockercompose-script

|

||||

[5]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/#configure-django-project

|

||||

[6]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/#what-we-will-do

|

||||

[7]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/#step-install-dockerce

|

||||

[8]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/#step-install-dockercompose

|

||||

[9]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/#step-configure-project-environment

|

||||

[10]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/#step-build-and-run-the-docker-image

|

||||

[11]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/#step-testing

|

||||

[12]:https://www.howtoforge.com/tutorial/docker-guide-dockerizing-python-django-application/#reference

|

||||

[13]:https://docs.docker.com/

|

||||

[14]:https://www.howtoforge.com/images/docker_guide_dockerizing_python_django_application/big/1.png

|

||||

[15]:https://www.howtoforge.com/images/docker_guide_dockerizing_python_django_application/big/2.png

|

||||

[16]:https://www.howtoforge.com/images/docker_guide_dockerizing_python_django_application/big/3.png

|

||||

[17]:https://www.howtoforge.com/images/docker_guide_dockerizing_python_django_application/big/4.png

|

||||

[18]:https://www.howtoforge.com/vim-basics

|

||||

[19]:https://www.howtoforge.com/images/docker_guide_dockerizing_python_django_application/big/5.png

|

||||

[20]:https://www.howtoforge.com/images/docker_guide_dockerizing_python_django_application/big/6.png

|

||||

[21]:https://www.howtoforge.com/images/docker_guide_dockerizing_python_django_application/big/7.png

|

||||

[22]:https://www.howtoforge.com/images/docker_guide_dockerizing_python_django_application/big/8.png

|

||||

[23]:https://www.howtoforge.com/images/docker_guide_dockerizing_python_django_application/big/9.png

|

||||

[24]:https://www.howtoforge.com/images/docker_guide_dockerizing_python_django_application/big/10.png

|

||||

[25]:https://www.howtoforge.com/images/docker_guide_dockerizing_python_django_application/big/11.png

|

||||

@ -1,3 +1,5 @@

|

||||

translating by bestony

|

||||

|

||||

How to use Fio (Flexible I/O Tester) to Measure Disk Performance in Linux

|

||||

======

|

||||

|

||||

|

||||

322

sources/tech/20180705 Testing Node.js in 2018.md

Normal file

322

sources/tech/20180705 Testing Node.js in 2018.md

Normal file

@ -0,0 +1,322 @@

|

||||

BriFuture is translating

|

||||

|

||||

|

||||

Testing Node.js in 2018

|

||||

============================================================

|

||||

|

||||

|

||||

|

||||

[Stream][4] powers feeds for over 300+ million end users. With all of those users relying on our infrastructure, we’re very good about testing everything that gets pushed into production. Our primary codebase is written in Go, with some remaining bits of Python.

|

||||

|

||||

Our recent showcase application, [Winds 2.0][5], is built with Node.js and we quickly learned that our usual testing methods in Go and Python didn’t quite fit. Furthermore, creating a proper test suite requires a bit of upfront work in Node.js as the frameworks we are using don’t offer any type of built-in test functionality.

|

||||

|

||||

Setting up a good test framework can be tricky regardless of what language you’re using. In this post, we’ll uncover the hard parts of testing with Node.js, the various tooling we decided to utilize in Winds 2.0, and point you in the right direction for when it comes time for you to write your next set of tests.

|

||||

|

||||

### Why Testing is so Important

|

||||

|

||||

We’ve all pushed a bad commit to production and faced the consequences. It’s not a fun thing to have happen. Writing a solid test suite is not only a good sanity check, but it allows you to completely refactor code and feel confident that your codebase is still functional. This is especially important if you’ve just launched.

|

||||

|

||||

If you’re working with a team, it’s extremely important that you have test coverage. Without it, it’s nearly impossible for other developers on the team to know if their contributions will result in a breaking change (ouch).

|

||||

|

||||

Writing tests also encourage you and your teammates to split up code into smaller pieces. This makes it much easier to understand your code, and fix bugs along the way. The productivity gains are even bigger, due to the fact that you catch bugs early on.

|

||||

|

||||

Finally, without tests, your codebase might as well be a house of cards. There is simply zero certainty that your code is stable.

|

||||

|

||||

### The Hard Parts

|

||||

|

||||

In my opinion, most of the testing problems we ran into with Winds were specific to Node.js. The ecosystem is always growing. For example, if you are on macOS and run “brew upgrade” (with homebrew installed), your chances of seeing a new version of Node.js are quite high. With Node.js moving quickly and libraries following close behind, keeping up to date with the latest libraries is difficult.

|

||||

|

||||

Below are a few pain points that immediately come to mind:

|

||||

|

||||

1. Testing in Node.js is very opinionated and un-opinionated at the same time. Many people have different views on how a test infrastructure should be built and measured for success. The sad part is that there is no golden standard (yet) for how you should approach testing.

|

||||

|

||||

2. There are a large number of frameworks available to use in your application. However, they are generally minimal with no well-defined configuration or boot process. This leads to side effects that are very common, and yet hard to diagnose; so, you’ll likely end up writing your own test runner from scratch.

|

||||

|

||||

3. It’s almost guaranteed that you will be _required_ to write your own test runner (we’ll get to this in a minute).

|

||||

|

||||

The situations listed above are not ideal and it’s something that the Node.js community needs to address sooner rather than later. If other languages have figured it out, I think it’s time for Node.js, a widely adopted language, to figure it out as well.

|

||||

|

||||

### Writing Your Own Test Runner

|

||||

|

||||

So… you’re probably wondering what a test runner _is_ . To be honest, it’s not that complicated. A test runner is the highest component in the test suite. It allows for you to specify global configurations and environments, as well as import fixtures. One would assume this would be simple and easy to do… Right? Not so fast…

|

||||

|

||||

What we learned is that, although there is a solid number of test frameworks out there, not a single one for Node.js provides a unified way to construct your test runner. Sadly, it’s up to the developer to do so. Here’s a quick breakdown of the requirements for a test runner:

|

||||

|

||||

* Ability to load different configurations (e.g. local, test, development) and ensure that you _NEVER_ load a production configuration — you can guess what goes wrong when that happens.

|

||||

|

||||

* Lift and seed a database with dummy data for testing. This must work for various databases, whether it be MySQL, PostgreSQL, MongoDB, or any other, for that matter.

|

||||

|

||||

* Ability to load fixtures (files with seed data for testing in a development environment).

|

||||

|

||||

With Winds, we chose to use Mocha as our test runner. Mocha provides an easy and programmatic way to run tests on an ES6 codebase via command-line tools (integrated with Babel).

|

||||

|

||||

To kick off the tests, we register the Babel module loader ourselves. This provides us with finer grain greater control over which modules are imported before Babel overrides Node.js module loading process, giving us the opportunity to mock modules before any tests are run.

|

||||

|

||||

Additionally, we also use Mocha’s test runner feature to pre-assign HTTP handlers to specific requests. We do this because the normal initialization code is not run during tests (server interactions are mocked by the Chai HTTP plugin) and run some safety check to ensure we are not connecting to production databases.

|

||||

|

||||

While this isn’t part of the test runner, having a fixture loader is an important part of our test suite. We examined existing solutions; however, we settled on writing our own helper so that it was tailored to our requirements. With our solution, we can load fixtures with complex data-dependencies by following an easy ad-hoc convention when generating or writing fixtures by hand.

|

||||

|

||||

### Tooling for Winds

|

||||

|

||||

Although the process was cumbersome, we were able to find the right balance of tools and frameworks to make proper testing become a reality for our backend API. Here’s what we chose to go with:

|

||||

|

||||

### Mocha ☕

|

||||

|

||||

[Mocha][6], described as a “feature-rich JavaScript test framework running on Node.js”, was our immediate choice of tooling for the job. With well over 15k stars, many backers, sponsors, and contributors, we knew it was the right framework for the job.

|

||||

|

||||

### Chai 🥃

|

||||

|

||||

Next up was our assertion library. We chose to go with the traditional approach, which is what works best with Mocha — [Chai][7]. Chai is a BDD and TDD assertion library for Node.js. With a simple API, Chai was easy to integrate into our application and allowed for us to easily assert what we should _expect_ tobe returned from the Winds API. Best of all, writing tests feel natural with Chai. Here’s a short example:

|

||||

|

||||

```

|

||||

describe('retrieve user', () => {

|

||||

let user;

|

||||

|

||||

before(async () => {

|

||||

await loadFixture('user');

|

||||

user = await User.findOne({email: authUser.email});

|

||||

expect(user).to.not.be.null;

|

||||

});

|

||||

|

||||

after(async () => {

|

||||

await User.remove().exec();

|

||||

});

|

||||

|

||||

describe('valid request', () => {

|

||||

it('should return 200 and the user resource, including the email field, when retrieving the authenticated user', async () => {

|

||||

const response = await withLogin(request(api).get(`/users/${user._id}`), authUser);

|

||||

|

||||

expect(response).to.have.status(200);

|

||||

expect(response.body._id).to.equal(user._id.toString());

|

||||

});

|

||||

|

||||

it('should return 200 and the user resource, excluding the email field, when retrieving another user', async () => {

|

||||

const anotherUser = await User.findOne({email: 'another_user@email.com'});

|

||||

|

||||

const response = await withLogin(request(api).get(`/users/${anotherUser.id}`), authUser);

|

||||

|

||||

expect(response).to.have.status(200);

|

||||

expect(response.body._id).to.equal(anotherUser._id.toString());

|

||||

expect(response.body).to.not.have.an('email');

|

||||

});

|

||||

|

||||

});

|

||||

|

||||

describe('invalid requests', () => {

|

||||

|

||||

it('should return 404 if requested user does not exist', async () => {

|

||||

const nonExistingId = '5b10e1c601e9b8702ccfb974';

|

||||

expect(await User.findOne({_id: nonExistingId})).to.be.null;

|

||||

|

||||

const response = await withLogin(request(api).get(`/users/${nonExistingId}`), authUser);

|

||||

expect(response).to.have.status(404);

|

||||

});

|

||||

});

|

||||

|

||||

});

|

||||

```

|

||||

|

||||

### Sinon 🧙

|

||||

|

||||

With the ability to work with any unit testing framework, [Sinon][8] was our first choice for a mocking library. Again, a super clean integration with minimal setup, Sinon turns mocking requests into a simple and easy process. Their website has an extremely friendly user experience and offers up easy steps to integrate Sinon with your test suite.

|

||||

|

||||

### Nock 🔮

|

||||

|

||||

For all external HTTP requests, we use [nock][9], a robust HTTP mocking library that really comes in handy when you have to communicate with a third party API (such as [Stream’s REST API][10]). There’s not much to say about this little library aside from the fact that it is awesome at what it does, and that’s why we like it. Here’s a quick example of us calling our [personalization][11] engine for Stream:

|

||||

|

||||

```

|

||||

nock(config.stream.baseUrl)

|

||||

.get(/winds_article_recommendations/)

|

||||

.reply(200, { results: [{foreign_id:`article:${article.id}`}] });

|

||||

```

|

||||

|

||||

### Mock-require 🎩

|

||||

|

||||

The library [mock-require][12] allows dependencies on external code. In a single line of code, you can replace a module and mock-require will step in when some code attempts to import that module. It’s a small and minimalistic, but robust library, and we’re big fans.

|

||||

|

||||

### Istanbul 🔭

|

||||

|

||||

[Istanbul][13] is a JavaScript code coverage tool that computes statement, line, function and branch coverage with module loader hooks to transparently add coverage when running tests. Although we have similar functionality with CodeCov (see next section), this is a nice tool to have when running tests locally.

|

||||

|

||||

### The End Result — Working Tests

|

||||

|

||||

_With all of the libraries, including the test runner mentioned above, let’s have a look at what a full test looks like (you can have a look at our entire test suite _ [_here_][14] _):_

|

||||

|

||||

```

|

||||

import nock from 'nock';

|

||||

import { expect, request } from 'chai';

|

||||

|

||||

import api from '../../src/server';

|

||||

import Article from '../../src/models/article';

|

||||

import config from '../../src/config';

|

||||

import { dropDBs, loadFixture, withLogin } from '../utils.js';

|

||||

|

||||

describe('Article controller', () => {

|

||||

let article;

|

||||

|

||||

before(async () => {

|

||||

await dropDBs();

|

||||

await loadFixture('initial-data', 'articles');

|

||||

article = await Article.findOne({});

|

||||

expect(article).to.not.be.null;

|

||||

expect(article.rss).to.not.be.null;

|

||||

});

|

||||

|

||||

describe('get', () => {

|

||||

it('should return the right article via /articles/:articleId', async () => {

|

||||

let response = await withLogin(request(api).get(`/articles/${article.id}`));

|

||||

expect(response).to.have.status(200);

|

||||

});

|

||||

});

|

||||

|

||||

describe('get parsed article', () => {

|

||||

it('should return the parsed version of the article', async () => {

|

||||

const response = await withLogin(

|

||||

request(api).get(`/articles/${article.id}`).query({ type: 'parsed' })

|

||||

);

|

||||

expect(response).to.have.status(200);

|

||||

});

|

||||

});

|

||||

|

||||

describe('list', () => {

|

||||

it('should return the list of articles', async () => {

|

||||

let response = await withLogin(request(api).get('/articles'));

|

||||

expect(response).to.have.status(200);

|

||||

});

|

||||

});

|

||||

|

||||

describe('list from personalization', () => {

|

||||

after(function () {

|

||||

nock.cleanAll();

|

||||

});

|

||||

|

||||

it('should return the list of articles', async () => {

|

||||

nock(config.stream.baseUrl)

|

||||

.get(/winds_article_recommendations/)

|

||||

.reply(200, { results: [{foreign_id:`article:${article.id}`}] });

|

||||

|

||||

const response = await withLogin(

|

||||

request(api).get('/articles').query({

|

||||

type: 'recommended',

|

||||

})

|

||||

);

|

||||

expect(response).to.have.status(200);

|

||||

expect(response.body.length).to.be.at.least(1);

|

||||

expect(response.body[0].url).to.eq(article.url);

|

||||

});

|

||||

});

|

||||

});

|

||||

```

|

||||

|

||||

### Continuous Integration

|

||||

|

||||

There are a lot of continuous integration services available, but we like to use [Travis CI][15] because they love the open-source environment just as much as we do. Given that Winds is open-source, it made for a perfect fit.

|

||||

|

||||

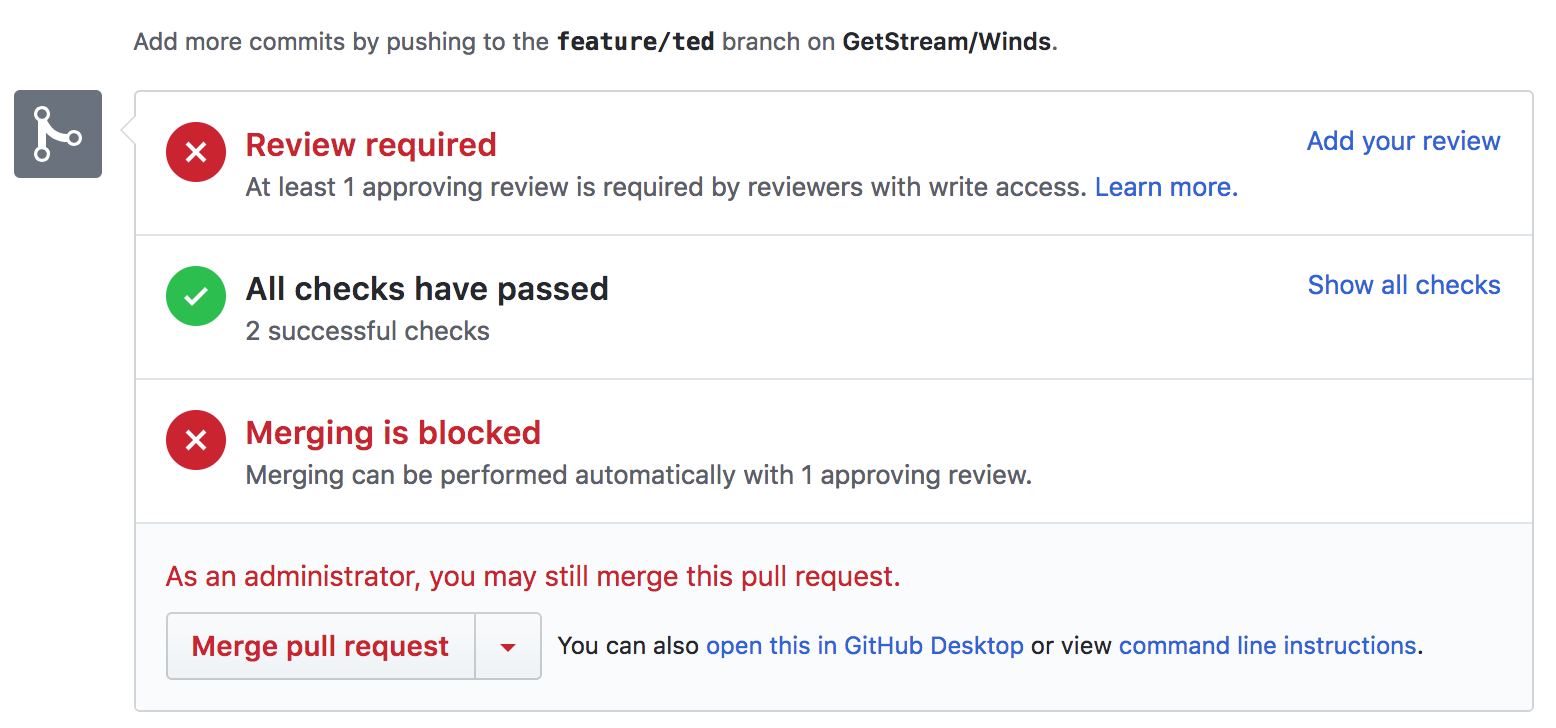

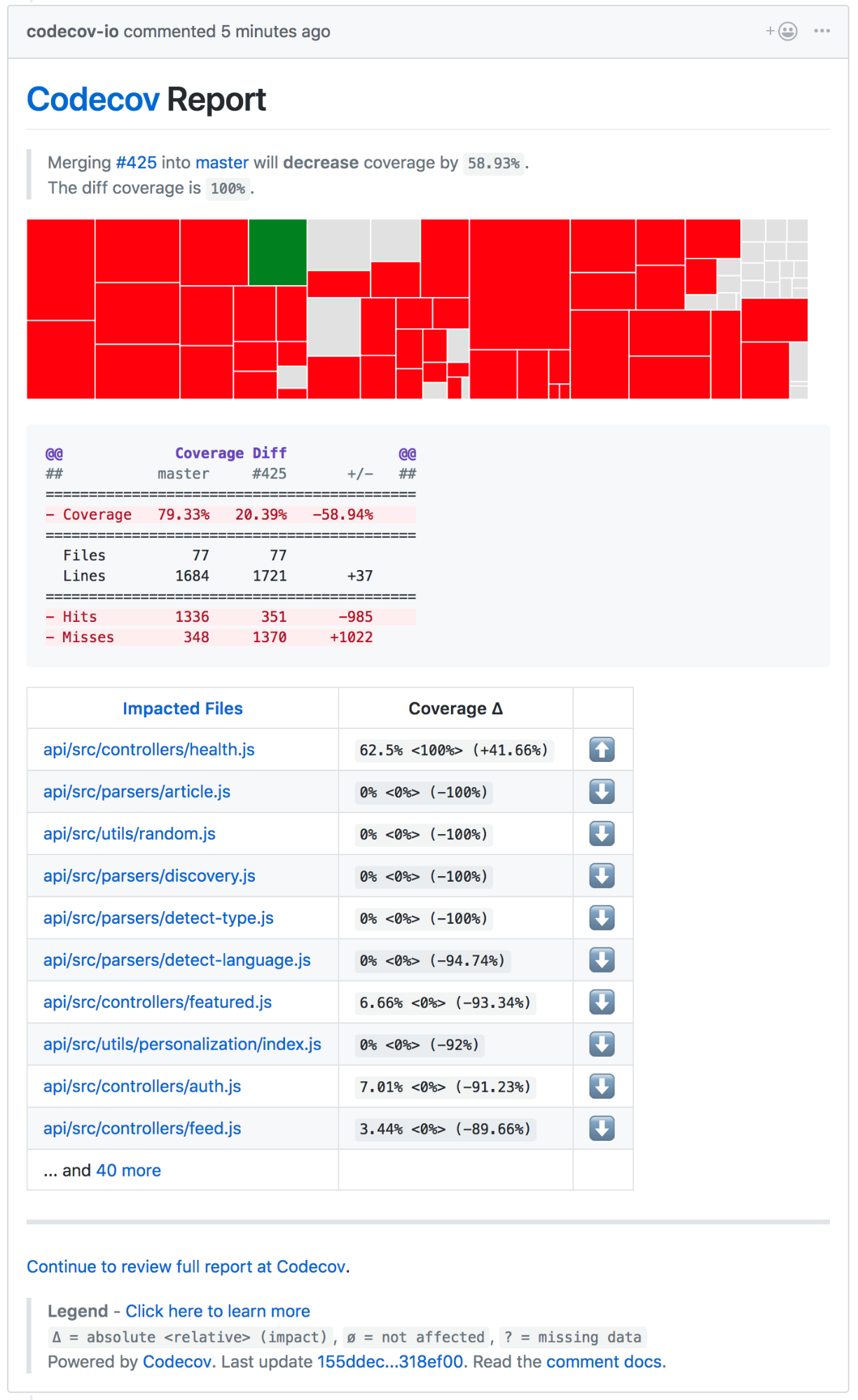

Our integration is rather simple — we have a [.travis.yml][16] file that sets up the environment and kicks off our tests via a simple [npm][17] command. The coverage reports back to GitHub, where we have a clear picture of whether or not our latest codebase or PR passes our tests. The GitHub integration is great, as it is visible without us having to go to Travis CI to look at the results. Below is a screenshot of GitHub when viewing the PR (after tests):

|

||||

|

||||

|

||||

|

||||

In addition to Travis CI, we use a tool called [CodeCov][18]. CodeCov is similar to [Istanbul][19], however, it’s a visualization tool that allows us to easily see code coverage, files changed, lines modified, and all sorts of other goodies. Though visualizing this data is possible without CodeCov, it’s nice to have everything in one spot.

|

||||

|

||||

### What We Learned

|

||||

|

||||

|

||||

|

||||

We learned a lot throughout the process of developing our test suite. With no “correct” way of doing things, we decided to set out and create our own test flow by sorting through the available libraries to find ones that were promising enough to add to our toolbox.

|

||||

|

||||

What we ultimately learned is that testing in Node.js is not as easy as it may sound. Hopefully, as Node.js continues to grow, the community will come together and build a rock solid library that handles everything test related in a “correct” manner.

|

||||

|

||||

Until then, we’ll continue to use our test suite, which is open-source on the [Winds GitHub repository][20].

|

||||

|

||||

### Limitations

|

||||

|

||||

#### No Easy Way to Create Fixtures

|

||||

|

||||

Frameworks and languages, such as Python’s Django, have easy ways to create fixtures. With Django, for example, you can use the following commands to automate the creation of fixtures by dumping data into a file:

|

||||

|

||||

The Following command will dump the whole database into a db.json file:

|

||||

./manage.py dumpdata > db.json

|

||||

|

||||

The Following command will dump only the content in django admin.logentry table:

|

||||

./manage.py dumpdata admin.logentry > logentry.json

|

||||

|

||||

The Following command will dump the content in django auth.user table: ./manage.py dumpdata auth.user > user.json

|

||||

|

||||

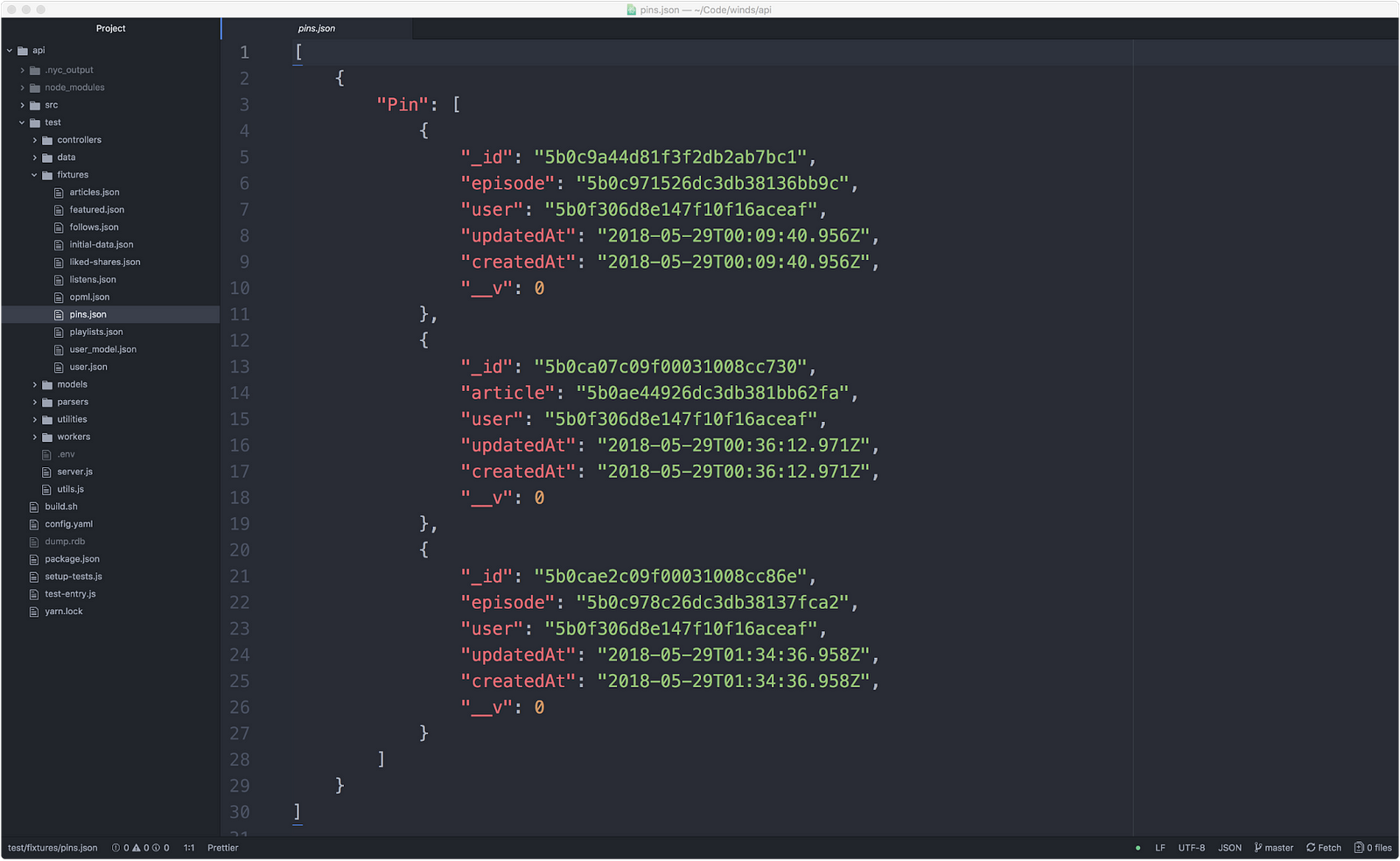

There’s no easy way to create a fixture in Node.js. What we ended up doing is using MongoDB Compass and exporting JSON from there. This resulted in a nice fixture, as shown below (however, it was a tedious process and prone to error):

|

||||

|

||||

|

||||

|

||||

|

||||

#### Unintuitive Module Loading When Using Babel, Mocked Modules, and Mocha Test-Runner

|

||||

|

||||

To support a broader variety of node versions and have access to latest additions to Javascript standard, we are using Babel to transpile our ES6 codebase to ES5\. Node.js module system is based on the CommonJS standard whereas the ES6 module system has different semantics.

|

||||

|

||||

Babel emulates ES6 module semantics on top of the Node.js module system, but because we are interfering with module loading by using mock-require, we are embarking on a journey through weird module loading corner cases, which seem unintuitive and can lead to multiple independent versions of the module imported and initialized and used throughout the codebase. This complicates mocking and global state management during testing.

|

||||

|

||||

#### Inability to Mock Functions Used Within the Module They Are Declared in When Using ES6 Modules

|

||||

|

||||

When a module exports multiple functions where one calls the other, it’s impossible to mock the function being used inside the module. The reason is that when you require an ES6 module you are presented with a separate set of references from the one used inside the module. Any attempt to rebind the references to point to new values does not really affect the code inside the module, which will continue to use the original function.

|

||||

|

||||

### Final Thoughts

|

||||

|

||||

Testing Node.js applications is a complicated process because the ecosystem is always evolving. It’s important to stay on top of the latest and greatest tools so you don’t fall behind.

|

||||

|

||||

There are so many outlets for JavaScript related news these days that it’s hard to keep up to date with all of them. Following email newsletters such as [JavaScript Weekly][21] and [Node Weekly][22] is a good start. Beyond that, joining a subreddit such as [/r/node][23] is a great idea. If you like to stay on top of the latest trends, [State of JS][24] does a great job at helping developers visualize trends in the testing world.

|

||||

|

||||

Lastly, here are a couple of my favorite blogs where articles often popup:

|

||||

|

||||

* [Hacker Noon][1]

|

||||

|

||||

* [Free Code Camp][2]

|

||||

|

||||

* [Bits and Pieces][3]

|

||||

|

||||

Think I missed something important? Let me know in the comments, or on Twitter – [@NickParsons][25].

|

||||

|

||||

Also, if you’d like to check out Stream, we have a great 5 minute tutorial on our website. Give it a shot [here][26].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

作者简介:

|

||||

|

||||

Nick Parsons

|

||||

|

||||

Dreamer. Doer. Engineer. Developer Evangelist https://getstream.io.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://hackernoon.com/testing-node-js-in-2018-10a04dd77391

|

||||

|

||||

作者:[Nick Parsons][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://hackernoon.com/@nparsons08?source=post_header_lockup

|

||||

[1]:https://hackernoon.com/

|

||||

[2]:https://medium.freecodecamp.org/

|

||||

[3]:https://blog.bitsrc.io/

|

||||

[4]:https://getstream.io/

|

||||

[5]:https://getstream.io/winds

|

||||

[6]:https://github.com/mochajs/mocha

|

||||

[7]:http://www.chaijs.com/

|

||||

[8]:http://sinonjs.org/

|

||||

[9]:https://github.com/node-nock/nock

|

||||

[10]:https://getstream.io/docs_rest/

|

||||

[11]:https://getstream.io/personalization

|

||||

[12]:https://github.com/boblauer/mock-require

|

||||

[13]:https://github.com/gotwarlost/istanbul

|

||||

[14]:https://github.com/GetStream/Winds/tree/master/api/test

|

||||

[15]:https://travis-ci.org/

|

||||

[16]:https://github.com/GetStream/Winds/blob/master/.travis.yml

|

||||

[17]:https://www.npmjs.com/

|

||||

[18]:https://codecov.io/#features

|

||||

[19]:https://github.com/gotwarlost/istanbul

|

||||

[20]:https://github.com/GetStream/Winds/tree/master/api/test

|

||||

[21]:https://javascriptweekly.com/

|

||||

[22]:https://nodeweekly.com/

|

||||

[23]:https://www.reddit.com/r/node/

|

||||

[24]:https://stateofjs.com/2017/testing/results/

|

||||

[25]:https://twitter.com/@nickparsons

|

||||

[26]:https://getstream.io/try-the-api

|

||||

@ -1,127 +0,0 @@

|

||||

Translating by qhwdw

|

||||

How to Run Windows Apps on Android with Wine

|

||||

======

|

||||

|

||||

|

||||

|

||||

Wine (on Linux, not the one you drink) is a free and open-source compatibility layer for running Windows programs on Unix-like operating systems. Begun in 1993, it could run a wide variety of Windows programs on Linux and macOS, although sometimes with modification. Now the Wine Project has rolled out version 3.0 which is compatible with your Android devices.

|

||||

|

||||

In this article we will show you how you can run Windows apps on your Android device with WINE.

|

||||

|

||||

**Related** : [How to Easily Install Windows Games on Linux with Winepak][1]

|

||||

|

||||

### What can you run on Wine?

|

||||

|

||||

Wine is only a compatibility layer, not a full-blown emulator, so you need an x86 Android device to take full advantage of it. However, most Androids in the hands of consumers are ARM-based.

|

||||

|

||||

Since most of you are using an ARM-based Android device, you will only be able to use Wine to run apps that have been adapted to run on Windows RT. There is a limited, but growing, list of software available for ARM devices. You can find a list of these apps that are compatible in this [thread][2] on XDA Developers Forums.

|

||||

|

||||

Some examples of apps you will be able to run on ARM are:

|

||||

|

||||

* [Keepass Portable][3]: A password storage wallet

|

||||

* [Paint.NET][4]: An image manipulation program

|

||||

* [SumatraPDF][5]: A document reader for PDFs and possibly some other document types

|

||||

* [Audacity][6]: A digital audio recording and editing program

|

||||

|

||||

|

||||

|

||||

There are also some open-source retro games available like [Doom][7] and [Quake 2][8], as well as the open-source clone, [OpenTTD][9], a version of Transport Tycoon.

|

||||

|

||||

The list of programs that Wine can run on Android ARM devices is bound to grow as the popularity of Wine on Android expands. The Wine project is working on using QEMU to emulate x86 CPU instructions on ARM, and when that is complete, the number of apps your Android will be able to run should grow rapidly.

|

||||

|

||||

### Installing Wine

|

||||

|

||||

To install Wine you must first make sure that your device’s settings allow it to download and install APKs from other sources than the Play Store. To do this you’ll need to give your device permission to download apps from unknown sources.

|

||||

|

||||

1\. Open Settings on your phone and select your Security options.

|

||||

|

||||

|

||||

![wine-android-security][10]

|

||||

|

||||

2\. Scroll down and click on the switch next to “Unknown Sources.”

|

||||

|

||||

![wine-android-unknown-sources][11]

|

||||

|

||||

3\. Accept the risks in the warning.

|

||||

|

||||

![wine-android-unknown-sources-warning][12]

|

||||

|

||||

4\. Open the [Wine installation site][13], and tap the first checkbox in the list. The download will automatically begin.

|

||||

|

||||

![wine-android-download-button][14]

|

||||

|

||||

5\. Once the download completes, open it from your Downloads folder, or pull down the notifications menu and click on the completed download there.

|

||||

|

||||

6\. Install the program. It will notify you that it needs access to recording audio and to modify, delete, and read the contents of your SD card. You may also need to give access for audio recording for some apps you will use in the program.

|

||||

|

||||

![wine-android-app-access][15]

|

||||

|

||||

7\. When the installation completes, click on the icon to open the program.

|

||||

|

||||

![wine-android-icon-small][16]

|

||||

|

||||

When you open Wine, the desktop mimics Windows 7.

|

||||

|

||||

![wine-android-desktop][17]

|

||||

|

||||

One drawback of Wine is that you have to have an external keyboard available to type. An external mouse may also be useful if you are running it on a small screen and find it difficult to tap small buttons.

|

||||

|

||||

You can tap the Start button to open two menus – Control Panel and Run.

|

||||

|

||||

![wine-android-start-button][18]

|

||||

|

||||

### Working with Wine

|

||||

|

||||

When you tap “Control panel” you will see three choices – Add/Remove Programs, Game Controllers, and Internet Settings.

|

||||

|

||||

Using “Run,” you can open a dialogue box to issue commands. For instance, launch Internet Explorer by entering `iexplore`.

|

||||

|

||||

![wine-android-run][19]

|

||||

|

||||

### Installing programs on Wine

|

||||

|

||||

1\. Download the application (or sync via the cloud) to your Android device. Take note of where you save it.

|

||||

|

||||

2\. Open the Wine Command Prompt window.

|

||||

|

||||

3\. Type the path to the location of the program. If you have saved it to the Download folder on your SD card, type:

|

||||

|

||||

4\. To run the file in Wine for Android, simply input the name of the EXE file.

|

||||

|

||||

If the ARM-ready file is compatible, it should run. If not, you’ll see a bunch of error messages. At this stage, installing Windows software on Android in Wine can be hit or miss.

|

||||

|

||||

There are still a lot of issues with this new version of Wine for Android. It doesn’t work on all Android devices. It worked on my Galaxy S6 Edge but not on my Galaxy Tab 4. Many games won’t work because the graphics driver doesn’t support Direct3D yet. You need an external keyboard and mouse to be able to easily manipulate the screen because touch-screen is not fully developed yet.

|

||||

|

||||

Even with these issues in the early stages of release, the possibilities for this technology are thought-provoking. It’s certainly likely that it will take some time yet before you can launch Windows programs on your Android smartphone using Wine without a hitch.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.maketecheasier.com/run-windows-apps-android-with-wine/

|

||||

|

||||

作者:[Tracey Rosenberger][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.maketecheasier.com/author/traceyrosenberger/

|

||||

[1]:https://www.maketecheasier.com/winepak-install-windows-games-linux/ (How to Easily Install Windows Games on Linux with Winepak)

|

||||

[2]:https://forum.xda-developers.com/showthread.php?t=2092348

|

||||

[3]:http://downloads.sourceforge.net/keepass/KeePass-2.20.1.zip

|

||||

[4]:http://forum.xda-developers.com/showthread.php?t=2411497

|

||||

[5]:http://forum.xda-developers.com/showthread.php?t=2098594

|

||||

[6]:http://forum.xda-developers.com/showthread.php?t=2103779

|

||||

[7]:http://forum.xda-developers.com/showthread.php?t=2175449

|

||||

[8]:http://forum.xda-developers.com/attachment.php?attachmentid=1640830&d=1358070370

|

||||

[9]:http://forum.xda-developers.com/showpost.php?p=36674868&postcount=151

|

||||

[10]:https://www.maketecheasier.com/assets/uploads/2018/07/Wine-Android-security.png (wine-android-security)

|

||||

[11]:https://www.maketecheasier.com/assets/uploads/2018/07/Wine-Android-unknown-sources.jpg (wine-android-unknown-sources)

|

||||

[12]:https://www.maketecheasier.com/assets/uploads/2018/07/Wine-Android-unknown-sources-warning.png (wine-android-unknown-sources-warning)

|

||||

[13]:https://dl.winehq.org/wine-builds/android/

|

||||

[14]:https://www.maketecheasier.com/assets/uploads/2018/07/Wine-Android-download-button.png (wine-android-download-button)

|

||||

[15]:https://www.maketecheasier.com/assets/uploads/2018/07/Wine-Android-app-access.jpg (wine-android-app-access)

|

||||

[16]:https://www.maketecheasier.com/assets/uploads/2018/07/Wine-Android-icon-small.jpg (wine-android-icon-small)

|

||||

[17]:https://www.maketecheasier.com/assets/uploads/2018/07/Wine-Android-desktop.png (wine-android-desktop)

|

||||

[18]:https://www.maketecheasier.com/assets/uploads/2018/07/Wine-Android-start-button.png (wine-android-start-button)

|

||||

[19]:https://www.maketecheasier.com/assets/uploads/2018/07/Wine-Android-Run.png (wine-android-run)

|

||||

@ -1,70 +0,0 @@

|

||||

translating---geekpi

|

||||

|

||||

Boost your typing with emoji in Fedora 28 Workstation

|

||||

======

|

||||

|

||||

|

||||

|

||||

Fedora 28 Workstation ships with a feature that allows you to quickly search, select and input emoji using your keyboard. Emoji, cute ideograms that are part of Unicode, are used fairly widely in messaging and especially on mobile devices. You may have heard the idiom “A picture is worth a thousand words.” This is exactly what emoji provide: simple images for you to use in communication. Each release of Unicode adds more, with over 200 new ones added in past releases of Unicode. This article shows you how to make them easy to use in your Fedora system.

|

||||

|

||||

It’s great to see emoji numbers growing. But at the same time it brings the challenge of how to input them in a computing device. Many people already use these symbols for input in mobile devices or social networking sites.

|

||||

|

||||

[**Editors’ note: **This article is an update to a previously published piece on this topic.]

|

||||

|

||||

### Enabling Emoji input on Fedora 28 Workstation

|

||||

|

||||

The new emoji input method ships by default in Fedora 28 Workstation. To use it, you must enable it using the Region and Language settings dialog. Open the Region and Language dialog from the main Fedora Workstation settings, or search for it in the Overview.

|

||||

|

||||

[![Region & Language settings tool][1]][2]

|

||||

|

||||

Choose the + control to add an input source. The following dialog appears:

|

||||

|

||||

[![Adding an input source][3]][4]

|

||||

|

||||

Choose the final option (three dots) to expand the selections fully. Then, find Other at the bottom of the list and select it:

|

||||

|

||||

[![Selecting other input sources][5]][6]

|

||||

|

||||

In the next dialog, find the Typing booster choice and select it:

|

||||

|

||||

[![][7]][8]

|

||||

|

||||

This advanced input method is powered behind the scenes by iBus. The advanced input methods are identifiable in the list by the cogs icon on the right of the list.

|

||||

|

||||

The Input Method drop-down automatically appears in the GNOME Shell top bar. Ensure your default method — in this example, English (US) — is selected as the current method, and you’ll be ready to input.

|

||||

|

||||

[![Input method dropdown in Shell top bar][9]][10]

|

||||

|

||||

## Using the new Emoji input method

|

||||

|

||||

Now the Emoji input method is enabled, search for emoji by pressing the keyboard shortcut **Ctrl+Shift+E**. A pop-over dialog appears where you can type a search term, such as smile, to find matching symbols.

|

||||

|

||||

[![Searching for smile emoji][11]][12]

|

||||

|

||||

Use the arrow keys to navigate the list. Then, hit **Enter** to make your selection, and the glyph will be placed as input.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://fedoramagazine.org/boost-typing-emoji-fedora-28-workstation/

|

||||

|

||||

作者:[Paul W. Frields][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://fedoramagazine.org/author/pfrields/

|

||||

[1]:https://fedoramagazine.org/wp-content/uploads/2018/07/Screenshot-from-2018-07-08-15-02-41-1024x718.png

|

||||