mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-02-19 00:30:12 +08:00

commit

1fb55e0b02

@ -0,0 +1,128 @@

How to Install Fedora 25 on the Raspberry Pi
============================================================

> Get to know the first Fedora release with official Raspberry Pi support

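> Image by opensource.com

In October 2016, Fedora 25 Beta was released, and with it came initial [support for the Raspberry Pi 2 and 3][6]. The final "general availability" release of Fedora 25 came out a month later, and since then I have been trying out the different Fedora spins on my Raspberry Pi.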

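This article is not only a review of Fedora 25 on the Raspberry Pi 3. It is also a collection of tips, screenshots, and my personal thoughts on the first Fedora release to officially support the Pi.

Before I start, it's worth mentioning that all of the work for this article was done on my personal laptop running Fedora 25. I used a microSD card in an SD adapter to copy and edit all of the Fedora images onto a 32GB microSD card, which I then used to boot a Raspberry Pi 3 connected to a Samsung TV. Because Fedora 25 does not yet support the built-in Wi-Fi, the Raspberry Pi 3 used an Ethernet cable for networking. Finally, I used a Logitech K410 wireless keyboard and touchpad for input.

If you can't use an Ethernet cable to play with Fedora 25 on your Raspberry Pi, I have used an Edimax Wi-Fi USB adapter, which also works on Fedora 25. For this article, however, I used only the Ethernet connection.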

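### Before you install Fedora 25 on your Raspberry Pi

Read the [Raspberry Pi support documentation][7] on the Fedora Project wiki. You can download the images needed for a Fedora 25 installation from the wiki, which also lists everything that is and isn't supported.

Also keep in mind that this is an initial support release, and a lot of new work and support will arrive with Fedora 26. Feel free to report bugs and to share feedback about your experience with Fedora 25 on the Raspberry Pi via [Bugzilla][8], Fedora's [ARM mailing list][9], or the Freenode IRC channel #fedora-arm.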

|

||||

|

||||

### 安装

|

||||

|

||||

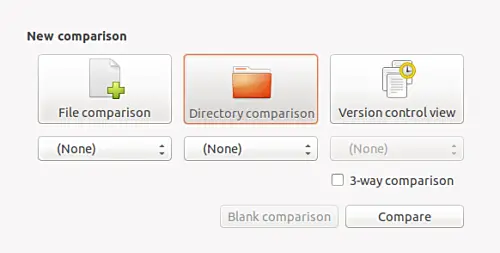

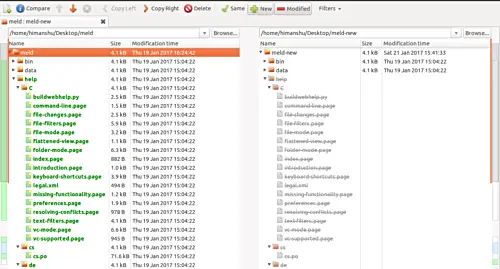

我下载并安装了五个不同的 Fedora 25 spin:GNOME(默认工作站)、KDE、Minimal、LXDE 和 Xfce。在多数情况下,它们都有一致和易于遵循的步骤,以确保我的树莓派 3 上启动正常。有的 spin 有已知 bug 的正在解决之中,而有的按照 Fedora wik 遵循标准操作程序即可。

*Fedora 25 Workstation, GNOME edition, on the Raspberry Pi 3*

### Installation steps

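1. On your laptop, download a Fedora 25 image for the Raspberry Pi from the links on the support documentation page.

2. On your laptop, copy the image to the microSD card using either `fedora-arm-installer` or the following command line: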

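```
# Decompress the image and write it straight to the card (run as root).
# WARNING: dd overwrites the target device; use your own card's device name.
xzcat Fedora-Workstation-armhfp-25-1.3-sda.raw.xz | dd bs=4M status=progress of=/dev/mmcblk0
```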

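Note: `/dev/mmcblk0` is the device name my microSD card (in its SD adapter) gets on my laptop. Although I use Fedora on my laptop and could use `fedora-arm-installer`, I prefer the command line.

3. Once the image is copied, _don't boot your system yet_. I know it's tempting, but you still need to make a couple of tweaks.

4. To keep the image file as small as possible for downloading, the root filesystem on the image is tiny, so you need to grow it. If you don't, you can still boot your Pi, but as soon as you run `dnf update` to upgrade your system it will fill up the filesystem and bad things will happen. So, while the microSD card is still in your laptop, grow the partition and its filesystem: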

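```
# Grow partition 4 (the root partition on this image) to fill the card...
growpart /dev/mmcblk0 4
# ...then grow the filesystem on it to fill the enlarged partition
resize2fs /dev/mmcblk0p4
```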

Note: On Fedora, the `growpart` command is provided by the `cloud-utils-growpart.noarch` RPM.

5. Once the filesystem is updated, you need to blacklist the `vc4` module. [More information about this bug is here.][10]

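I recommend doing this before you boot the Raspberry Pi, because different spins behave differently. For example (at least in my case), without blacklisting `vc4`, GNOME came up after my first boot, but after a system update it no longer appeared. The KDE spin didn't bring up KDE at all on the first boot. So we may need to blacklist `vc4` before our very first boot, until this bug is resolved.

The blacklist needs to go in two different places. First, on your microSD root partition, create a `vc4.conf` file under `etc/modprobe.d/` with the content `blacklist vc4`. Second, on your microSD boot partition, add `rd.driver.blacklist=vc4` to the end of the `append` line in the `extlinux/extlinux.conf` file.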
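If you prefer to script these two changes while the card is still in your laptop, here is a minimal sketch. It assumes both partitions are already mounted (the mount points you pass in, such as `/mnt/sdroot` and `/mnt/sdboot`, are hypothetical) and that GNU `sed` is available. `blacklist_vc4` is only an illustrative name; it touches only `append` lines that don't already carry the option, so running it twice is harmless.

```shell
#!/bin/sh
# Blacklist vc4 on a Fedora 25 microSD card (sketch).
# $1: mount point of the card's root partition (hypothetical, e.g. /mnt/sdroot)
# $2: mount point of the card's boot partition (hypothetical, e.g. /mnt/sdboot)
blacklist_vc4() {
    root=$1
    boot=$2
    # 1. Tell modprobe on the installed system never to load vc4
    mkdir -p "$root/etc/modprobe.d"
    echo "blacklist vc4" > "$root/etc/modprobe.d/vc4.conf"
    # 2. Add the kernel argument to every "append" line that lacks it
    sed -i '/^[[:space:]]*append /{/rd\.driver\.blacklist=vc4/!s/$/ rd.driver.blacklist=vc4/;}' \
        "$boot/extlinux/extlinux.conf"
}
```

Run it as root, for example `sudo sh -c '. ./blacklist-vc4.sh && blacklist_vc4 /mnt/sdroot /mnt/sdboot'`, and look over `extlinux.conf` before unmounting the card.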

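6. Now you can boot your Raspberry Pi.

### Booting

Be patient, especially with the GNOME and KDE spins. In an age of SSDs (solid-state drives) and near-instant boots, it's easy to get impatient with how long the Pi takes to boot, especially the first time. Before the window manager starts for the first time, an initial setup screen lets you configure the root password, a regular user, the time zone, and the network. Once that's done, you should be able to SSH into your Raspberry Pi, which makes debugging display problems much easier.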

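### System updates

Once Fedora 25 is running on your Raspberry Pi, you'll eventually (or immediately) want to update the system.

First, when upgrading the kernel, get familiar with your `/boot/extlinux/extlinux.conf` file. After a kernel upgrade, the next boot will most likely go into Rescue mode unless you manually select the correct kernel. The best way to avoid this is to move the five lines that define the Rescue image in your `extlinux.conf` to the bottom of the file, so the latest kernel boots automatically next time. You can edit `/boot/extlinux/extlinux.conf` directly on the Pi or by mounting the card on your laptop: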

```
label Fedora 25 Rescue fdcb76d0032447209f782a184f35eebc (4.9.9-200.fc25.armv7hl)
kernel /vmlinuz-0-rescue-fdcb76d0032447209f782a184f35eebc
append ro root=UUID=c19816a7-cbb8-4cbb-8608-7fec6d4994d0 rd.driver.blacklist=vc4
fdtdir /dtb-4.9.9-200.fc25.armv7hl/
initrd /initramfs-0-rescue-fdcb76d0032447209f782a184f35eebc.img
```
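Moving those lines can also be scripted. This sketch assumes, as in the Fedora 25 file above, that every boot entry begins with a `label` line and runs until the next `label` line, and that only the rescue entry's label contains the word `Rescue`. `move_rescue_last` is a hypothetical helper that prints the reordered file instead of editing it in place, so you can review the result first.

```shell
#!/bin/sh
# Print extlinux.conf with the "Rescue" entry moved to the end (sketch).
# An entry starts at a line beginning with "label" and lasts until the next
# such line; any header lines before the first label stay where they are.
move_rescue_last() {
    awk '
        /^[ \t]*label[ \t]/ { in_rescue = ($0 ~ /Rescue/) }  # new entry starts
        in_rescue { rescue = rescue $0 "\n"; next }           # hold rescue lines back
        { print }                                             # everything else, in order
        END { printf "%s", rescue }                           # rescue entry goes last
    ' "$1"
}
```

For example: `cp extlinux.conf extlinux.conf.bak && move_rescue_last extlinux.conf.bak > extlinux.conf`, keeping the backup until the Pi has booted the new kernel.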

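Second, if for whatever reason your display goes dark again after an upgrade and you are sure you have blacklisted `vc4`, run `lsmod | grep vc4` to check whether the module was loaded anyway. You can also boot into multi-user mode instead of graphical mode and run `startx` from the command line. Read the contents of `/etc/inittab` for directions on how to switch targets.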
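Both checks fit in a few lines. In this sketch, `vc4_loaded` is a hypothetical helper that reads `/proc/modules`, the kernel file that `lsmod` formats, and the commented `systemctl set-default` commands are the standard systemd way to change the boot target, which is what the comments in Fedora's `/etc/inittab` describe.

```shell
#!/bin/sh
# Succeed if the vc4 module is loaded. Reads /proc/modules (the data lsmod
# prints) unless another file is passed, which also makes it easy to test.
vc4_loaded() {
    grep -qs '^vc4[[:space:]]' "${1:-/proc/modules}"
}

if vc4_loaded; then
    echo "vc4 is loaded despite the blacklist: check etc/modprobe.d/vc4.conf and the extlinux append line" >&2
fi

# Boot to a text console by default, then start X by hand with startx:
#   systemctl set-default multi-user.target
# Go back to the graphical login later with:
#   systemctl set-default graphical.target
```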

|

||||

|

||||

|

||||

|

||||

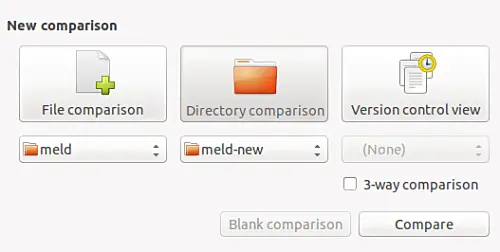

*树莓派 3 上的 Fedora 25 workstation、 KDE 版本*

|

||||

|

||||

### Fedora Spin

|

||||

|

||||

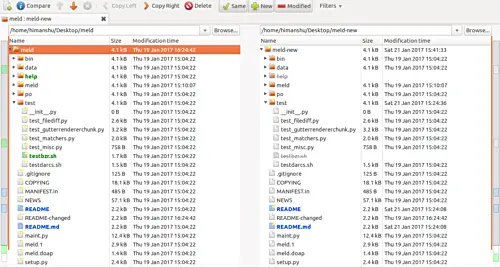

在我尝试过的所有 Fedora Spin 中,唯一有问题的是 XFCE spin,我相信这是由于这个[已知的 bug][11] 导致的。

|

||||

|

||||

按照我在这里分享的步骤操作,GNOME、KDE、LXDE 和 minimal 都运行得很好。考虑到 KDE 和 GNOME 会占用更多资源,我会推荐想要在树莓派上使用 Fedora 25 的人使用 LXDE 和 Minimal。如果你是一位系统管理员,想要一台廉价的 SELinux 支持的服务器来满足你的安全考虑,而且只是想要使用树莓派作为你的服务器,开放 22 端口以及 vi 可用,那就用 Minimal 版本。对于开发人员或刚开始学习 Linux 的人来说,LXDE 可能是更好的方式,因为它可以快速方便地访问所有基于 GUI 的工具,如浏览器、IDE 和你可能需要的客户端。

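*Fedora 25 Workstation, LXDE edition, on the Raspberry Pi 3*

It's fantastic to see more and more Linux distributions become available for the ARM-based Raspberry Pi. For its first supported release, the Fedora team has delivered a good experience for the everyday Linux user. I'm looking forward to the improvements and bug fixes in Fedora 26.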

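--------------------------------------------------------------------------------

About the author:

Anderson Silva - Anderson started using Linux back in 1996, Red Hat Linux, to be more precise. In 2007, his main professional dream came true when he joined Red Hat as a release engineer in IT. Since then he has worked in several different roles at Red Hat, from release engineer to system administrator, senior manager, and information systems engineer. He is an RHCE and RHCA and an active Fedora package maintainer.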

----------------

via: https://opensource.com/article/17/3/how-install-fedora-on-raspberry-pi

Author: [Anderson Silva][a]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [jasminepeng](https://github.com/jasminepeng)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://opensource.com/users/ansilva
[1]:https://opensource.com/tags/raspberry-pi?src=raspberry_pi_resource_menu
[2]:https://opensource.com/resources/what-raspberry-pi?src=raspberry_pi_resource_menu
[3]:https://opensource.com/article/16/12/getting-started-raspberry-pi?src=raspberry_pi_resource_menu
[4]:https://opensource.com/article/17/2/raspberry-pi-submit-your-article?src=raspberry_pi_resource_menu
[5]:https://opensource.com/article/17/3/how-install-fedora-on-raspberry-pi?rate=gIIRltTrnOlwo4h81uDvdAjAE3V2rnwoqH0s_Dx44mE
[6]:https://fedoramagazine.org/raspberry-pi-support-fedora-25-beta/
[7]:https://fedoraproject.org/wiki/Raspberry_Pi
[8]:https://bugzilla.redhat.com/show_bug.cgi?id=245418
[9]:https://lists.fedoraproject.org/admin/lists/arm%40lists.fedoraproject.org/
[10]:https://bugzilla.redhat.com/show_bug.cgi?id=1387733
[11]:https://bugzilla.redhat.com/show_bug.cgi?id=1389163
[12]:https://opensource.com/user/26502/feed
[13]:https://opensource.com/article/17/3/how-install-fedora-on-raspberry-pi#comments
[14]:https://opensource.com/users/ansilva

152

sources/tech/ 20170422 FEWER MALLOCS IN CURL.md

Normal file

@ -0,0 +1,152 @@

FEWER MALLOCS IN CURL
===========================================================

Today I landed yet [another small change][4] to libcurl internals that further reduces the number of small mallocs we do. This time the generic linked list functions got converted to become malloc-less (the way linked list functions should behave, really).

### Instrument mallocs

I started out my quest a few weeks ago by instrumenting our memory allocations. This is easy, since we have had our own memory debug and logging system in curl for many years. Using a debug build of curl, I run this script in my build dir:

```
#!/bin/sh
# Make the debug build of curl log every memory call to this file
export CURL_MEMDEBUG=$HOME/tmp/curlmem.log
./src/curl http://localhost
# Summarize the log: counts per allocation type, frees, peak memory
./tests/memanalyze.pl -v $HOME/tmp/curlmem.log
```

For curl 7.53.1, this counted about 115 memory allocations. Is that many or a few?

The memory log is very basic. To give you an idea of what it looks like, here's an example snippet:

```
MEM getinfo.c:70 free((nil))
MEM getinfo.c:73 free((nil))
MEM url.c:294 free((nil))
MEM url.c:297 strdup(0x559e7150d616) (24) = 0x559e73760f98
MEM url.c:294 free((nil))
MEM url.c:297 strdup(0x559e7150d62e) (22) = 0x559e73760fc8
MEM multi.c:302 calloc(1,480) = 0x559e73760ff8
MEM hash.c:75 malloc(224) = 0x559e737611f8
MEM hash.c:75 malloc(29152) = 0x559e737a2bc8
MEM hash.c:75 malloc(3104) = 0x559e737a9dc8
```
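memanalyze.pl does the real accounting, but the one-line-per-call format also makes quick ad hoc tallies easy. As a sketch, assuming every line looks like the ones shown (`MEM file:line function(args) ...`), this awk program wrapped in a hypothetical `count_calls` function counts calls per function name. Unlike memanalyze.pl, it does not track sizes or match frees to allocations.

```shell
#!/bin/sh
# Count calls per memory function in a curl memdebug log (sketch).
# Expects lines like: MEM url.c:297 strdup(0x559e7150d62e) (22) = 0x559e73760fc8
count_calls() {
    awk '$1 == "MEM" {
             fn = $3
             sub(/\(.*/, "", fn)        # "strdup(0x...)" -> "strdup"
             count[fn]++
         }
         END { for (fn in count) printf "%s %d\n", fn, count[fn] }' "$1" | sort
}
```

Run on the ten-line snippet above, it prints `calloc 1`, `free 4`, `malloc 3` and `strdup 2`.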

### Check the log

I then studied the log more closely and realized that there were many small memory allocations done from the same code lines. We clearly had some rather silly code patterns where we would allocate a struct and then add that struct to a linked list or a hash, and that code would then subsequently add yet another small struct and similar – and then often do that in a loop. (I say _we_ here to avoid blaming anyone, but of course I myself am to blame for most of this…)

Those two allocations would always happen in pairs and they would be freed at the same time. I decided to address those. Doing very small allocations (less than, say, 32 bytes) is also wasteful simply because of the large amount of data, in proportion, that the malloc system uses just to keep track of that tiny memory area. Not to mention heap fragmentation.
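Spotting such repeated call sites is a matter of grouping the log by `file:line`. Under the same log-format assumption as before, this sketch (again a hypothetical helper, `alloc_sites`) counts `malloc`, `calloc`, `realloc` and `strdup` calls per source location and lists the busiest ones first.

```shell
#!/bin/sh
# List allocation call sites in a curl memdebug log, busiest first (sketch).
# Output: <number of allocations> <file:line>
alloc_sites() {
    awk '$1 == "MEM" && $3 ~ /^(malloc|calloc|realloc|strdup)\(/ { n[$2]++ }
         END { for (site in n) printf "%d %s\n", n[site], site }' "$1" |
        sort -k1,1nr -k2,2
}
```

On the snippet above it ranks `hash.c:75` first, with three allocations.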

So fixing the hash code and the linked list code to not use mallocs were immediate and easy ways to remove over 20% of the mallocs for a plain and simple ‘curl http://localhost’ transfer.

At this point I sorted all allocations based on size and checked all the smallest ones. One that stood out was one we made in _curl_multi_wait()_, a function that is called over and over in a typical curl transfer main loop. I converted it over to [use the stack][5] for most typical use cases. Avoiding mallocs in functions that are called very repeatedly is a good thing.
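Sorting by size works the same way. This sketch extracts the requested size from `malloc(N)` and `calloc(n,size)` lines (it skips `strdup` and `realloc`, whose sizes are logged differently) and prints the smallest requests first, each with its call site. As before, the function name is hypothetical, and the log format is assumed to match the snippet shown earlier.

```shell
#!/bin/sh
# Print malloc/calloc requests from a curl memdebug log, smallest first (sketch).
# Output: <bytes requested> <file:line>
smallest_allocs() {
    awk '$1 == "MEM" && $3 ~ /^malloc\(/ {
             size = $3
             gsub(/[^0-9]/, "", size)          # "malloc(224)" -> "224"
             print size, $2
         }
         $1 == "MEM" && $3 ~ /^calloc\(/ {
             split(substr($3, 8), a, /[,)]/)   # "calloc(1,480)" -> a[1]=1, a[2]=480
             print a[1] * a[2], $2
         }' "$1" | sort -n
}
```

For the earlier snippet, the first line of output is `224 hash.c:75`.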

### Recount

Today, the script from above shows that the same “curl localhost” command is down to 80 allocations from the 115 that curl 7.53.1 used. Without really sacrificing anything. An easy 30% reduction. Not bad at all!

But okay, since I modified curl_multi_wait() I also wanted to see how it actually improves things for a slightly more advanced transfer. I took the [multi-double.c][6] example code, added the call to initiate the memory logging, made it use curl_multi_wait(), and had it download these two URLs in parallel:

```
http://www.example.com/
http://localhost/512M
```

The second one is just 512 megabytes of zeroes, and the first is a public HTML page of around 600 bytes. Here's the [count-malloc.c code][7].

First, I brought out 7.53.1, built the example against it, and had the memanalyze script check it:

```
Mallocs: 33901
Reallocs: 5
Callocs: 24
Strdups: 31
Wcsdups: 0
Frees: 33956
Allocations: 33961
Maximum allocated: 160385
```

Okay, so it used at most 160KB of memory and it did over 33,900 allocations. But then again, it downloaded over 512 megabytes of data, so that's one malloc per 15KB of data. Good or bad?

Back to git master, the version we call 7.54.1-DEV right now – since we're not quite sure which version number it'll become when we do the next release. It can become 7.54.1 or 7.55.0; that has not been determined yet. But I digress. I ran the same modified multi-double.c example again, ran memanalyze on the memory log again, and it now reported…

```
Mallocs: 69
Reallocs: 5
Callocs: 24
Strdups: 31
Wcsdups: 0
Frees: 124
Allocations: 129
Maximum allocated: 153247
```

I had to look twice. Did I do something wrong? I'd better run it again just to double-check. The results are the same no matter how many times I run it…

### 33,961 vs 129

curl_multi_wait() is called a lot of times in a typical transfer, and it had at least one of the memory allocations we normally did during a transfer, so removing that single tiny allocation had a pretty dramatic impact on the counter. A normal transfer also moves things in and out of linked lists and hashes a bit, but those operations are now mostly malloc-less too. Simply put: the remaining allocations are not done in the transfer loop, so they're way less important.

The old curl did 263 times the number of allocations the current one does for this example. Or the other way around: the new one does roughly 0.38% of the number of allocations the old one did…

As an added bonus, the new one also needs less memory: its peak allocation dropped by about 7KB (7,138 bytes, or roughly 4.5%).
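These ratios follow directly from the two memanalyze summaries. Here is a small sketch that recomputes them with awk from the `Allocations:` and `Maximum allocated:` values copied from the outputs above (`compare_runs` is a hypothetical helper):

```shell
#!/bin/sh
# Compare two memanalyze summaries: allocation ratio and peak-memory savings.
# Arguments: old_allocations new_allocations old_max_bytes new_max_bytes
compare_runs() {
    awk -v oa="$1" -v na="$2" -v om="$3" -v nm="$4" 'BEGIN {
        printf "ratio: %.0fx\n", oa / na                      # old / new allocations
        printf "share: %.2f%%\n", 100 * na / oa               # new as a % of old
        printf "saved: %d bytes (%.1f%%)\n", om - nm, 100 * (om - nm) / om
    }'
}

compare_runs 33961 129 160385 153247
```

It prints `ratio: 263x`, `share: 0.38%` and `saved: 7138 bytes (4.5%)`.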

### Are mallocs important?

In this day and age, with many gigabytes of RAM and all, do a few mallocs in a transfer really make a notable difference for mere mortals? What is the impact of 33,832 extra mallocs done for 512MB of data?

To measure what impact these changes have, I decided to compare HTTP transfers from localhost and see if we can see any speed difference. localhost is fine for this test since there's no network speed limit, so the faster curl is, the faster the download will be. The server side will be equally fast/slow, since I'll use the same setup for both tests.

I built curl 7.53.1 and curl 7.54.1-DEV identically and ran this command line:

```
curl http://localhost/80GB -o /dev/null
```
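To turn a run like this into a number, curl can report its own average download speed through the `--write-out` (`-w`) variable `%{speed_download}`, in bytes per second. The sketch below wraps that in a hypothetical `speed_of` helper and adds `percent_faster` for the old-versus-new comparison. The paths to the two curl builds are up to you.

```shell
#!/bin/sh
# Average download speed in bytes/second, as measured by curl itself.
# $1: curl binary to test, $2: URL (e.g. http://localhost/80GB)
speed_of() {
    "$1" -s -o /dev/null -w '%{speed_download}\n' "$2"
}

# How many percent faster speed $2 is than speed $1, rounded.
percent_faster() {
    awk -v old="$1" -v new="$2" 'BEGIN { printf "%.0f\n", 100 * (new - old) / old }'
}
```

For example, `percent_faster "$(speed_of ./old/src/curl http://localhost/80GB)" "$(speed_of ./new/src/curl http://localhost/80GB)"`. With the 2200 and 2900 MB/sec figures quoted in the next paragraphs, `percent_faster 2200 2900` prints `32`.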

80 gigabytes downloaded as fast as possible and written into the void.

The exact numbers I got for this may not be that interesting, as they depend on the CPU in the machine, which HTTP server serves the file, the optimization level I build curl with, and so on. But the relative numbers should still be highly relevant: the old code versus the new.

7.54.1-DEV repeatedly performed 30% faster! The 2200MB/sec in my build of the earlier release increased to over 2900 MB/sec with the current version.

The point here is of course not that it can easily transfer HTTP at over 20 Gbit/sec using a single core on my machine, since very few users actually do transfers that fast with curl. The point is rather that curl now uses less CPU per byte transferred, which leaves more CPU for the rest of the system to do whatever it needs to do. Or saves battery if the device is a portable one.

On the cost of malloc: the 512MB test I did resulted in 33,832 more allocations using the old code. The old code transferred HTTP at a rate of about 2200MB/sec. That equals 145,827 mallocs/second – that are now removed! A 600 MB/sec improvement means that curl managed to transfer 4300 bytes extra for each malloc it didn't do, each second.

### Was removing these mallocs hard?

Not at all, it was all straightforward. It is, however, interesting that there's still room for changes like this in a project this old. I've had this idea for some years and I'm glad I finally took the time to make it happen. Thanks to our test suite, I could make this level of “drastic” internal change with a fairly high degree of confidence that I wasn't introducing any too terrible regressions. Thanks to our APIs being good at hiding internals, this change could be done completely without changing anything for old or new applications.

(Yeah, I haven't shipped the entire change in a release yet, so there's of course a risk that I'll have to regret my “this was easy” statement…)

### Caveats on the numbers

There have been 213 commits in the curl git repo from 7.53.1 until today. There's a chance that one or more commits other than the pure alloc changes have had a performance impact, even if I can't think of any.

### More?

Are there more “low-hanging fruits” to pick in a similar vein?

Perhaps. We don't do a lot of performance measurements or comparisons, so who knows, we might still be doing silly things that we could stop doing and do even better. One thing I've always wanted to do, but never got around to, was to add daily “monitoring” of memory/mallocs used and of how fast curl performs, in order to better track when we unknowingly regress in these areas.

--------------------------------------------------------------------------------

via: https://daniel.haxx.se/blog/2017/04/22/fewer-mallocs-in-curl/

Author: [DANIEL STENBERG][a]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://daniel.haxx.se/blog/author/daniel/
[1]:https://daniel.haxx.se/blog/author/daniel/
[2]:https://daniel.haxx.se/blog/2017/04/22/fewer-mallocs-in-curl/
[3]:https://daniel.haxx.se/blog/2017/04/22/fewer-mallocs-in-curl/#comments
[4]:https://github.com/curl/curl/commit/cbae73e1dd95946597ea74ccb580c30f78e3fa73
[5]:https://github.com/curl/curl/commit/5f1163517e1597339d
[6]:https://github.com/curl/curl/commit/5f1163517e1597339d
[7]:https://gist.github.com/bagder/dc4a42cb561e791e470362da7ef731d3

@ -1,3 +1,4 @@

ictlyh Translating
GraphQL In Use: Building a Blogging Engine API with Golang and PostgreSQL
============================================================

@ -1,3 +1,4 @@

geekrainy translating
A look at 6 iconic open source brands
============================================================

@ -1,226 +0,0 @@

How to protect your server with badIPs.com and report IPs with Fail2ban on Debian
============================================================

### On this page

1. [Use the badIPs list][4]
   1. [Define your security level and category][1]
2. [Let's create the script][5]
3. [Report IP addresses to badIPs with Fail2ban][6]
   1. [Fail2ban >= 0.8.12][2]
   2. [Fail2ban < 0.8.12][3]
4. [Statistics of your IP reporting][7]

This tutorial documents the process of using the badIPs abuse tracker in conjunction with Fail2ban to protect your server or computer. I've tested it on Debian 8 Jessie and Debian 7 Wheezy systems.

**What is badIPs?**

badIPs is a list of IP addresses that have been reported as bad, used in combination with [fail2ban][8].

This tutorial has two parts: the first deals with using the list, and the second deals with submitting data to it.

### Use the badIPs list

### Define your security level and category

You can get the IP address list simply by using the REST API.

First, when you GET this URL: [https://www.badips.com/get/categories][9], you'll see all the different categories available on the service.

Second, determine which score is right for you. Here is a quote from badIPs that should help (personally, I took score = 3):

* If you'd like to compile a statistic or use the data for some experiment etc. you may start with score 0.
* If you'd like to firewall your private server or website, go with scores from 2. Maybe combined with your own results, even if they do not have a score above 0 or 1.
* If you're about to protect a webshop or high traffic, money-earning e-commerce server, we recommend to use values from 3 or 4. Maybe as well combined with your own results (key / sync).
* If you're paranoid, take 5.

Now that you have your two variables, build your link by concatenating them:

`https://www.badips.com/get/list/{{SERVICE}}/{{LEVEL}}`

Note: like me, you can take all the services. In this case, change the name of the service to "any".

The resulting URL is:

`https://www.badips.com/get/list/any/3`
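The URL can also be assembled in a script. Here is a minimal sketch, assuming the scores run from 0 to 5 as in the quote above. `badips_list_url` is a hypothetical helper that defaults to the `any` service and the score 3 used in this tutorial, and it rejects scores outside that range.

```shell
#!/bin/sh
# Build the badIPs list URL from a service (category) and a score from 0 to 5.
# Defaults: service "any" (all categories), score 3.
badips_list_url() {
    service=${1:-any}
    level=${2:-3}
    case $level in
        [0-5]) ;;                                            # valid score
        *) echo "score must be 0-5, got '$level'" >&2; return 1 ;;
    esac
    echo "https://www.badips.com/get/list/${service}/${level}"
}
```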

### Let's create the script

Alright, once that's done, we'll create a simple script that will:

1. Put our list in a temporary file.
2. (Only once) create a chain in iptables.
3. Flush all the data linked to our chain (old entries).
4. Link each IP to our new chain.
5. When that's done, block all INPUT / OUTPUT / FORWARD traffic that's linked to our chain.
6. Remove our temp file.

Now we'll create the script for that:

`cd /home/<user>/`

`vi myBlacklist.sh`

Enter the following content into that file:

```
#!/bin/sh
# based on this version http://www.timokorthals.de/?p=334
# adapted by Stéphane T.

_ipt=/sbin/iptables  # Location of iptables (check yours with: command -v iptables)
_input=badips.db     # Name of database (will be downloaded with this name)
_pub_if=eth0         # Device which is connected to the internet (see "ip link" or ifconfig)
_droplist=droplist   # Name of chain in iptables (only change this if you already have a chain with this name)
_level=3             # Block level: not so bad/false report (0) over confirmed bad (3) to quite aggressive (5) (see www.badips.com for that)
_service=any         # Logged service (see www.badips.com for that)

# Get the bad IPs
wget -qO- "https://www.badips.com/get/list/${_service}/${_level}" > "$_input" || { echo "$0: Unable to download ip list."; exit 1; }

### Set up our black list ###
# Create the chain; this fails harmlessly if it already exists from an earlier run
$_ipt -N "$_droplist" 2>/dev/null
# Then flush it to remove the old entries
$_ipt --flush "$_droplist"

# Filter out comments and blank lines, then add each IP to $_droplist:
# first a rule that logs the packet, then one that drops it
grep -Ev '^[[:space:]]*(#|$)' "$_input" | while read -r ip
do
    $_ipt -A "$_droplist" -i "$_pub_if" -s "$ip" -j LOG --log-prefix "Drop Bad IP List "
    $_ipt -A "$_droplist" -i "$_pub_if" -s "$ip" -j DROP
done

# Finally, hook our black list into the built-in chains. -C checks whether the
# jump already exists, so daily runs don't stack up duplicate jump rules.
for _chain in INPUT OUTPUT FORWARD
do
    $_ipt -C "$_chain" -j "$_droplist" 2>/dev/null || $_ipt -I "$_chain" -j "$_droplist"
done

# Delete your temp file
rm "$_input"
exit 0
```

When that's done, you should create a cron job that will update our blacklist.

For this, I used crontab and run the script every day at 11:30 PM (just before my delayed backup).

`crontab -e`

```
# m  h  dom mon dow  command
30 23 *   *   *    /home/<user>/myBlacklist.sh #Block BAD IPS
```

Don't forget to make your script executable:

`chmod +x myBlacklist.sh`

Now that's done, your server/computer should be a little bit safer.

You can also run the script manually like this:

`cd /home/<user>/`

`./myBlacklist.sh`

It may take some time… so don't interrupt the script. In fact, its real value lies in the last lines, which are the ones that actually hook the blacklist into iptables.

### Report IP addresses to badIPs with Fail2ban

In the second part of this tutorial, I will show you how to report bad IP addresses back to the badips.com website by using Fail2ban.

### Fail2ban >= 0.8.12

The reporting is done with Fail2ban. Depending on your Fail2ban version, you must use either the first or the second section of this chapter. This first section applies if you have Fail2ban version 0.8.12 or later. Check your version with:

`fail2ban-server --version`
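If you want a script to pick the right section automatically, the version number can be compared with GNU `sort -V` (version sort). In this sketch, `version_ge` and `fail2ban_version` are hypothetical helpers. Because the exact wording of the `--version` banner differs between releases, the second one just grabs the first dotted number it finds.

```shell
#!/bin/sh
# Succeed if version $1 is greater than or equal to version $2 (GNU sort -V).
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# First dotted version number printed by fail2ban-server --version.
fail2ban_version() {
    fail2ban-server --version 2>/dev/null | grep -Eo '[0-9]+(\.[0-9]+)+' | head -n 1
}
```

For example, `version_ge "$(fail2ban_version)" 0.8.12 && echo "use this section"`. If Fail2ban isn't installed, the version is empty and the check fails.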

In each category that you want to report, simply add an action:

```
[ssh]
enabled = true
# The second action is a continuation line, so it must be indented
action = iptables-multiport
         badips[category=ssh]
port = ssh
filter = sshd
logpath = /var/log/auth.log
maxretry = 6
```

As you can see, the category is SSH. Take a look at [https://www.badips.com/get/categories][11] to find the correct category.

### Fail2ban < 0.8.12

If your version is older than 0.8.12, you'll have to create an action. It can be downloaded here: [https://www.badips.com/asset/fail2ban/badips.conf][12].

`wget https://www.badips.com/asset/fail2ban/badips.conf -O /etc/fail2ban/action.d/badips.conf`

With the badips.conf from above, you can either activate it per category as above, or you can enable it globally:

`cd /etc/fail2ban/`

`vi jail.conf`

```
[DEFAULT]
...
banaction = iptables-multiport
            badips
```

Now restart Fail2ban; it should start reporting from now on.

`service fail2ban restart`

### Statistics of your IP reporting

Last step, and not strictly necessary: you can create a key, which is useful if you want to see your data. Just copy and paste this command and a JSON response will appear on your console:

`wget https://www.badips.com/get/key -qO -`

```
{
  "err":"",
  "suc":"new key 5f72253b673eb49fc64dd34439531b5cca05327f has been set.",
  "key":"5f72253b673eb49fc64dd34439531b5cca05327f"
}
```
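If you want to reuse the key in scripts, you can pull it out of that response. This sed sketch assumes the response keeps the flat `"key":"<hex>"` form shown above. `extract_key` is a hypothetical helper, and for anything more complex a real JSON parser such as `jq` would be more robust.

```shell
#!/bin/sh
# Print the value of "key" from a badIPs /get/key JSON response read on stdin.
# The "suc" message also mentions the key, but not as a quoted "key" field,
# so only the real field matches.
extract_key() {
    sed -n 's/.*"key"[[:space:]]*:[[:space:]]*"\([0-9a-fA-F]*\)".*/\1/p'
}
```

For example: `wget https://www.badips.com/get/key -qO - | extract_key`.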

Then go to the [badips][13] website, enter your "key" and click "statistics".

Here we go… all your stats by category.

--------------------------------------------------------------------------------

via: https://www.howtoforge.com/tutorial/protect-your-server-computer-with-badips-and-fail2ban/

Author: [Stephane T][a]
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.howtoforge.com/tutorial/protect-your-server-computer-with-badips-and-fail2ban/
[1]:https://www.howtoforge.com/tutorial/protect-your-server-computer-with-badips-and-fail2ban/#define-your-security-level-and-category
[2]:https://www.howtoforge.com/tutorial/protect-your-server-computer-with-badips-and-fail2ban/#failban-gt-
[3]:https://www.howtoforge.com/tutorial/protect-your-server-computer-with-badips-and-fail2ban/#failban-ltnbsp
[4]:https://www.howtoforge.com/tutorial/protect-your-server-computer-with-badips-and-fail2ban/#use-the-badips-list
[5]:https://www.howtoforge.com/tutorial/protect-your-server-computer-with-badips-and-fail2ban/#lets-create-the-script
[6]:https://www.howtoforge.com/tutorial/protect-your-server-computer-with-badips-and-fail2ban/#report-ip-addresses-to-badips-with-failban
[7]:https://www.howtoforge.com/tutorial/protect-your-server-computer-with-badips-and-fail2ban/#statistics-of-your-ip-reporting
[8]:http://www.fail2ban.org/
[9]:https://www.badips.com/get/categories
[10]:http://www.timokorthals.de/?p=334
[11]:https://www.badips.com/get/categories
[12]:https://www.badips.com/asset/fail2ban/badips.conf
[13]:https://www.badips.com/

@ -0,0 +1,438 @@

Writing a Time Series Database from Scratch
============================================================

I work on monitoring, in particular on [Prometheus][2], a monitoring system that includes a custom time series database, and on its integration with [Kubernetes][3].

In many ways Kubernetes represents all the things Prometheus was designed for. It makes continuous deployments, auto scaling, and other features of highly dynamic environments easily accessible. The query language and operational model, among many other conceptual decisions, make Prometheus particularly well-suited for such environments. Yet, if monitored workloads become significantly more dynamic, this also puts new strains on the monitoring system itself. With this in mind, rather than doubling back on problems Prometheus already solves well, we specifically aim to increase its performance in environments with highly dynamic, or transient, services.

Prometheus's storage layer has historically shown outstanding performance, where a single server is able to ingest up to one million samples per second as several million time series, all while occupying a surprisingly small amount of disk space. While the current storage has served us well, I propose a newly designed storage subsystem that corrects for shortcomings of the existing solution and is equipped to handle the next order of scale.

> Note: I have no background in databases. What I say might be wrong and misleading. You can channel your criticism towards me (fabxc) in #prometheus on Freenode.

### Problems, Problems, Problem Space

First, a quick outline of what we are trying to accomplish and what key problems it raises. For each, we take a look at Prometheus' current approach, what it does well, and which problems we aim to address with the new design.

### Time series data

We have a system that collects data points over time.

```
identifier -> (t0, v0), (t1, v1), (t2, v2), (t3, v3), ....
```

Each data point is a tuple of a timestamp and a value. For the purpose of monitoring, the timestamp is an integer and the value any number. A 64-bit float turns out to be a good representation for counter as well as gauge values, so we go with that. A sequence of data points with strictly monotonically increasing timestamps is a series, which is addressed by an identifier. Our identifier is a metric name with a dictionary of _label dimensions_. Label dimensions partition the measurement space of a single metric. Each metric name plus a unique set of labels is its own _time series_ that has a value stream associated with it.

This is a typical set of series identifiers that are part of metric counting requests:

```
requests_total{path="/status", method="GET", instance="10.0.0.1:80"}
requests_total{path="/status", method="POST", instance="10.0.0.3:80"}
requests_total{path="/", method="GET", instance="10.0.0.2:80"}
```

Let's simplify this representation right away: a metric name can be treated as just another label dimension (`__name__` in our case). At the query level, it might be treated specially, but that doesn't concern our way of storing it, as we will see later.

```
{__name__="requests_total", path="/status", method="GET", instance="10.0.0.1:80"}
{__name__="requests_total", path="/status", method="POST", instance="10.0.0.3:80"}
{__name__="requests_total", path="/", method="GET", instance="10.0.0.2:80"}
```

|

||||

|

||||

When querying time series data, we want to do so by selecting series by their labels. In the simplest case `{__name__="requests_total"}` selects all series belonging to the `requests_total` metric. For all selected series, we retrieve data points within a specified time window.


In more complex queries, we may wish to select series satisfying several label selectors at once and also represent more complex conditions than equality, for example negative matching (`method!="GET"`) or regular expression matching (`method=~"PUT|POST"`).
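
The three kinds of selectors can be sketched in Go as follows. `Matcher`, `NewMatcher`, and `Select` are hypothetical names, anchoring the regular expression to the whole label value is an assumption, and `Select` deliberately scans every series, which is exactly the brute-force cost an index is meant to avoid.

```go
package main

import (
	"fmt"
	"regexp"
)

// MatchType enumerates the selector operators mentioned above.
type MatchType int

const (
	MatchEqual    MatchType = iota // name="value"
	MatchNotEqual                  // name!="value"
	MatchRegexp                    // name=~"regex"
)

// Matcher is a single label selector.
type Matcher struct {
	Type        MatchType
	Name, Value string
	re          *regexp.Regexp
}

// NewMatcher builds a matcher. Regular expressions are anchored here, so
// "PUT|POST" has to match the whole label value (an assumption of this sketch).
func NewMatcher(t MatchType, name, value string) (*Matcher, error) {
	m := &Matcher{Type: t, Name: name, Value: value}
	if t == MatchRegexp {
		re, err := regexp.Compile("^(?:" + value + ")$")
		if err != nil {
			return nil, err
		}
		m.re = re
	}
	return m, nil
}

// Matches reports whether a label set satisfies the matcher.
// A missing label is treated as the empty string.
func (m *Matcher) Matches(labels map[string]string) bool {
	v := labels[m.Name]
	switch m.Type {
	case MatchEqual:
		return v == m.Value
	case MatchNotEqual:
		return v != m.Value
	default:
		return m.re.MatchString(v)
	}
}

// Select naively scans every series and keeps those matching all matchers (AND).
func Select(series []map[string]string, ms ...*Matcher) []map[string]string {
	var out []map[string]string
	for _, s := range series {
		ok := true
		for _, m := range ms {
			if !m.Matches(s) {
				ok = false
				break
			}
		}
		if ok {
			out = append(out, s)
		}
	}
	return out
}

func main() {
	series := []map[string]string{
		{"__name__": "requests_total", "method": "GET"},
		{"__name__": "requests_total", "method": "POST"},
		{"__name__": "requests_total", "method": "PUT"},
	}
	name, _ := NewMatcher(MatchEqual, "__name__", "requests_total")
	write, _ := NewMatcher(MatchRegexp, "method", "PUT|POST")
	fmt.Println(len(Select(series, name, write))) // 2
}
```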


This largely defines the stored data and how it is recalled.


### Vertical and Horizontal


In a simplified view, all data points can be laid out on a two-dimensional plane. The _horizontal_ dimension represents the time and the series identifier space spreads across the _vertical_ dimension.

```
series
  ^
  │ . . . . . . . . . . . . . . . . . . . . . .   {__name__="request_total", method="GET"}
  │ . . . . . . . . . . . . . . . . . . . . . .   {__name__="request_total", method="POST"}
  │ . . . . . . .
  │ . . . . . . . . . . . . . . . . . . .         ...
  │ . . . . . . . . . . . . . . . . . . . . .
  │ . . . . . . . . . . . . . . . . . . . . .     {__name__="errors_total", method="POST"}
  │ . . . . . . . . . . . . . . . . .             {__name__="errors_total", method="GET"}
  │ . . . . . . . . . . . . . .
  │ . . . . . . . . . . . . . . . . . . .         ...
  │ . . . . . . . . . . . . . . . . . . . .
  v
    <-------------------- time --------------------->
```

Prometheus retrieves data points by periodically scraping the current values for a set of time series. The entity from which we retrieve such a batch is called a _target_. Thus, the write pattern is completely vertical and highly concurrent, as samples from each target are ingested independently.


To provide some measurement of scale: a single Prometheus instance collects data points from tens of thousands of _targets_, which expose hundreds to thousands of different time series each.


At the scale of collecting millions of data points per second, batching writes is a non-negotiable performance requirement. Writing single data points scattered across our disk would be painfully slow. Thus, we want to write larger chunks of data in sequence.


This is an unsurprising fact for spinning disks, as their head would have to physically move to different sections all the time. While SSDs are known for fast random writes, they actually can't modify individual bytes but only write in _pages_ of 4KiB or more. This means writing a 16-byte sample is equivalent to writing a full 4KiB page. This behavior is part of what is known as [_write amplification_][4], which as a bonus causes your SSD to wear out: it wouldn't just be slow, but would literally destroy your hardware within a few days or weeks.


For more in-depth information on the problem, the ["Coding for SSDs" blog series][5] is an excellent resource. Let's just consider the main takeaway: sequential and batched writes are the ideal write pattern for spinning disks and SSDs alike. A simple rule to stick to.


The querying pattern is significantly more differentiated than the write pattern. We can query a single data point for a single series, a single data point for 10000 series, weeks of data points for a single series, weeks of data points for 10000 series, etc. So on our two-dimensional plane, queries are neither fully vertical nor horizontal, but a rectangular combination of the two.


[Recording rules][6] mitigate the problem for known queries but are not a general solution for ad-hoc queries, which still have to perform reasonably well.


We know that we want to write in batches, but the only batches we get are vertical sets of data points across series. When querying data points for a series over a time window, not only would it be hard to figure out where the individual points can be found, we'd also have to read from a lot of random places on disk. With possibly millions of touched samples per query, this is slow even on the fastest SSDs. Reads will also retrieve more data from our disk than the requested 16-byte sample: SSDs will load a full page, and HDDs will at least read an entire sector. Either way, we are wasting precious read throughput.


So ideally, samples for the same series would be stored sequentially so we can just scan through them with as few reads as possible. On top of that, we only need to know where this sequence starts to access all data points.


There's obviously a strong tension between the ideal pattern for writing collected data to disk and the layout that would be significantly more efficient for serving queries. It is _the_ fundamental problem our TSDB has to solve.


#### Current solution


Time to take a look at how Prometheus's current storage, let's call it "V2", addresses this problem.


We create one file per time series that contains all of its samples in sequential order. As appending single samples to all those files every few seconds is expensive, we batch up 1KiB chunks of samples for a series in memory and append those chunks to the individual files once they are full. This approach solves a large part of the problem. Writes are now batched, and samples are stored sequentially. It also enables incredibly efficient compression formats, based on the property that a given sample changes only very little with respect to the previous sample in the same series. Facebook's paper on their Gorilla TSDB describes a similar chunk-based approach and [introduces a compression format][7] that reduces 16-byte samples to an average of 1.37 bytes. The V2 storage uses various compression formats, including a variation of Gorilla’s.
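
The property that consecutive samples barely differ is what makes Gorilla-style encoding work: timestamps are stored as delta-of-deltas, and values as the XOR of their bit pattern with the previous value. The Go sketch below only computes these intermediate quantities (the helper names are hypothetical); the paper's variable-length bit packing, and whatever variant V2 uses, is omitted.

```go
package main

import (
	"fmt"
	"math"
	"math/bits"
)

// deltaOfDeltas returns, for every sample after the second, the difference
// between its timestamp delta and the previous delta. With a fixed scrape
// interval almost all of these are zero and need very few bits to store.
func deltaOfDeltas(ts []int64) []int64 {
	var dods []int64
	for i := 2; i < len(ts); i++ {
		dods = append(dods, (ts[i]-ts[i-1])-(ts[i-1]-ts[i-2]))
	}
	return dods
}

// xorMeaningfulBits XORs each value's IEEE 754 bits with the previous value's
// and returns how many bits lie between the leading and trailing zeros of the
// result. Identical consecutive values yield 0; similar values yield few bits.
func xorMeaningfulBits(vs []float64) []int {
	var out []int
	for i := 1; i < len(vs); i++ {
		x := math.Float64bits(vs[i]) ^ math.Float64bits(vs[i-1])
		if x == 0 {
			out = append(out, 0)
			continue
		}
		out = append(out, 64-bits.LeadingZeros64(x)-bits.TrailingZeros64(x))
	}
	return out
}

func main() {
	fmt.Println(deltaOfDeltas([]int64{1000, 1015, 1030, 1045, 1061})) // [0 0 1]
	fmt.Println(xorMeaningfulBits([]float64{42, 42, 42}))               // [0 0]
}
```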

```
  ┌──────────┬─────────┬─────────┬─────────┬─────────┐           series A
  └──────────┴─────────┴─────────┴─────────┴─────────┘
         ┌──────────┬─────────┬─────────┬─────────┬─────────┐    series B
         └──────────┴─────────┴─────────┴─────────┴─────────┘
                              . . .
 ┌──────────┬─────────┬─────────┬─────────┬─────────┬─────────┐   series XYZ
 └──────────┴─────────┴─────────┴─────────┴─────────┴─────────┘
   chunk 1    chunk 2   chunk 3     ...
```

While the chunk-based approach is great, keeping a separate file for each series is troubling the V2 storage for various reasons:


* We actually need a lot more files than the number of time series we are currently collecting data for. More on that in the section on "Series Churn". With several million files, sooner or later we may run out of [inodes][1] on our filesystem. This is a condition we can only recover from by reformatting our disks, which is as invasive and disruptive as it could be. We generally want to avoid formatting disks specifically to fit a single application.


* Even when chunked, several thousand chunks per second are completed and ready to be persisted. This still requires thousands of individual disk writes every second. While it is alleviated by also batching up several completed chunks for a series, this in return increases the total memory footprint of data which is waiting to be persisted.


* It's infeasible to keep all files open for reads and writes, in particular because ~99% of data is never queried again after 24 hours. If it is queried, though, we have to open up to thousands of files, find and read relevant data points into memory, and close them again. As this would result in high query latencies, data chunks are cached rather aggressively, leading to problems outlined further in the section on "Resource Consumption".


* Eventually, old data has to be deleted and data needs to be removed from the front of millions of files. This means that deletions are actually write-intensive operations. Additionally, cycling through millions of files and analyzing them makes this a process that often takes hours. By the time it completes, it might have to start over again. Oh yeah, and deleting the old files will cause further write amplification for your SSD!


* Chunks that are currently accumulating are only held in memory. If the application crashes, data will be lost. To avoid this, the memory state is periodically checkpointed to disk, which may take significantly longer than the window of data loss we are willing to accept. Restoring the checkpoint may also take several minutes, causing painfully long restart cycles.


The key takeaway from the existing design is the concept of chunks, which we most certainly want to keep. The most recent chunks always being held in memory is also generally good. After all, the most recent data is queried the most by a large margin.


Having one file per time series is a concept we would like to find an alternative to.


### Series Churn


In the Prometheus context, we use the term _series churn_ to describe that a set of time series becomes inactive, i.e. receives no more data points, and a new set of active series appears instead.


For example, all series exposed by a given microservice instance have a respective “instance” label attached that identifies its origin. If we perform a rolling update of our microservice and swap out every instance with a newer version, series churn occurs. In more dynamic environments those events may happen on an hourly basis. Cluster orchestration systems like Kubernetes allow continuous auto-scaling and frequent rolling updates of applications, potentially creating tens of thousands of new application instances, and with them completely new sets of time series, every day.

```
series
  ^
  │   . . . . . .
  │   . . . . . .
  │   . . . . . .
  │               . . . . . . .
  │               . . . . . . .
  │               . . . . . . .
  │                             . . . . . .
  │                             . . . . . .
  │                                         . . . . .
  │                                         . . . . .
  │                                         . . . . .
  v
    <-------------------- time --------------------->
```

So even if the entire infrastructure roughly remains constant in size, over time there's a linear growth of time series in our database. While a Prometheus server will happily collect data for 10 million time series, query performance is significantly impacted if data has to be found among a billion series.


#### Current solution


The current V2 storage of Prometheus has an index based on LevelDB for all series that are currently stored. It allows querying series containing a given label pair, but lacks a scalable way to combine results from different label selections.


For example, selecting all series with label `__name__="requests_total"` works efficiently, but selecting all series with `instance="A" AND __name__="requests_total"` has scalability problems. We will later revisit what causes this and which tweaks are necessary to improve lookup latencies.


This problem is in fact what spawned the initial hunt for a better storage system. Prometheus needed an improved indexing approach for quickly searching hundreds of millions of time series.


### Resource consumption


Resource consumption is a recurring topic when trying to scale Prometheus (or anything, really). But it's not actually the absolute resource hunger that is troubling users. In fact, Prometheus manages an incredible throughput given its requirements. The problem is rather its relative unpredictability and instability in the face of changes. By its architecture, the V2 storage slowly builds up chunks of sample data, which causes the memory consumption to ramp up over time. As chunks get completed, they are written to disk and can be evicted from memory. Eventually, Prometheus's memory usage reaches a steady state. That is, until the monitored environment changes: _series churn_ increases the usage of memory, CPU, and disk IO every time we scale an application or do a rolling update.


If the change is ongoing, it will yet again reach a steady state eventually but it will be significantly higher than in a more static environment. Transition periods are often multiple hours long and it is hard to determine what the maximum resource usage will be.


The approach of having a single file per time series also makes it way too easy for a single query to knock out the Prometheus process. When querying data that is not cached in memory, the files for queried series are opened and the chunks containing relevant data points are read into memory. If the amount of data exceeds the memory available, Prometheus quits rather ungracefully by getting OOM-killed.


After the query is completed the loaded data can be released again but it is generally cached much longer to serve subsequent queries on the same data faster. The latter is a good thing obviously.


Lastly, we looked at write amplification in the context of SSDs and how Prometheus mitigates it by batching up writes. Nonetheless, in several places it still causes write amplification by using batches that are too small and by not aligning data precisely on page boundaries. For larger Prometheus servers, a reduced hardware lifetime was observed in the real world. Chances are that this is still rather normal for database applications with high write throughput, but we should keep an eye on whether we can mitigate it.


### Starting Over


By now we have a good idea of our problem domain, how the V2 storage solves it, and where its design has issues. We also saw some great concepts that we want to adopt more or less seamlessly. A fair amount of V2's problems can be addressed with improvements and partial redesigns, but to keep things fun (and after carefully evaluating my options, of course), I decided to take a stab at writing an entire time series database from scratch, i.e. down to writing bytes to the file system.


The critical concerns of performance and resource usage are a direct consequence of the chosen storage format. We have to find the right set of algorithms and disk layout for our data to implement a well-performing storage layer.


This is where I take the shortcut and drive straight to the solution, skipping the headaches, failed ideas, endless sketching, tears, and despair.


### V3 — Macro Design


What's the macro layout of our storage? In short, everything that is revealed when running `tree` on our data directory. Just looking at that gives us a surprisingly good picture of what is going on.

```
$ tree ./data
./data
├── b-000001
│   ├── chunks
│   │   ├── 000001
│   │   ├── 000002
│   │   └── 000003
│   ├── index
│   └── meta.json
├── b-000004
│   ├── chunks
│   │   └── 000001
│   ├── index
│   └── meta.json
├── b-000005
│   ├── chunks
│   │   └── 000001
│   ├── index
│   └── meta.json
└── b-000006
    ├── meta.json
    └── wal
        ├── 000001
        ├── 000002
        └── 000003
```

At the top level, we have a sequence of numbered blocks, prefixed with `b-`. Each block obviously holds a file containing an index and a "chunks" directory holding more numbered files. The “chunks” directory contains nothing but raw chunks of data points for various series. Just as for V2, this makes reading series data over a time window very cheap and allows us to apply the same efficient compression algorithms. The concept has proven to work well and we stick with it. Obviously, there is no longer a single file per series; instead, a handful of files hold chunks for many of them.


The existence of an “index” file should not be surprising. Let's just assume it contains a lot of black magic allowing us to find labels, their possible values, entire time series and the chunks holding their data points.


But why are there several directories containing the layout of index and chunk files? And why does the last one contain a "wal" directory instead? Understanding the answers to those two questions solves about 90% of our problems.


#### Many Little Databases


We partition our _horizontal_ dimension, i.e. the time space, into non-overlapping blocks. Each block acts as a fully independent database containing all time series data for its time window. Hence, it has its own index and set of chunk files.

```
t0            t1             t2             t3            now
 ┌───────────┐  ┌───────────┐  ┌───────────┐  ┌───────────┐
 │           │  │           │  │           │  │           │                 ┌────────────┐
 │           │  │           │  │           │  │  mutable  │ <─── write ──── ┤ Prometheus │
 │           │  │           │  │           │  │           │                 └────────────┘
 └───────────┘  └───────────┘  └───────────┘  └───────────┘                        ^
       └──────────────┴───────┬──────┴──────────────┘                              │
                              │                                                  query
                              │                                                    │
                            merge ─────────────────────────────────────────────────┘
```

Every persisted block of data is immutable. Of course, we must be able to add new series and samples to the most recent block as we collect new data. For this block, all new data is written to an in-memory database that provides the same lookup properties as our persistent blocks. The in-memory data structures can be updated efficiently. To prevent data loss, all incoming data is also written to a temporary _write-ahead log_, which is the set of files in our “wal” directory, from which we can re-populate the in-memory database on restart.


All these files have their own serialization format, which comes with all the things one would expect: lots of flags, offsets, varints, and CRC32 checksums. Good fun to come up with, rather boring to read about.
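
As an illustration of that kind of framing, here is a hypothetical Go sketch of a log record: a uvarint length prefix, the payload, and a CRC32 checksum. This is not the actual WAL format of the V3 storage; the layout, the Castagnoli polynomial, and all names are assumptions, and the encoding of series and samples inside the payload is left out.

```go
package main

import (
	"bytes"
	"encoding/binary"
	"errors"
	"fmt"
	"hash/crc32"
	"io"
)

// castagnoli is the CRC32 table used by this sketch; the polynomial choice is an assumption.
var castagnoli = crc32.MakeTable(crc32.Castagnoli)

var errChecksum = errors.New("record checksum mismatch")

// writeRecord frames a payload as: uvarint(len) | payload | CRC32(payload) as 4 big-endian bytes.
func writeRecord(w io.Writer, payload []byte) error {
	var hdr [binary.MaxVarintLen64]byte
	n := binary.PutUvarint(hdr[:], uint64(len(payload)))
	var sum [4]byte
	binary.BigEndian.PutUint32(sum[:], crc32.Checksum(payload, castagnoli))
	for _, part := range [][]byte{hdr[:n], payload, sum[:]} {
		if _, err := w.Write(part); err != nil {
			return err
		}
	}
	return nil
}

// readRecord decodes one record and verifies its checksum, so a torn or
// corrupted write (for example after a crash) is detected during replay.
func readRecord(r *bytes.Reader) ([]byte, error) {
	size, err := binary.ReadUvarint(r)
	if err != nil {
		return nil, err
	}
	if size > uint64(r.Len()) {
		return nil, io.ErrUnexpectedEOF
	}
	payload := make([]byte, size)
	if _, err := io.ReadFull(r, payload); err != nil {
		return nil, err
	}
	var sum [4]byte
	if _, err := io.ReadFull(r, sum[:]); err != nil {
		return nil, err
	}
	if binary.BigEndian.Uint32(sum[:]) != crc32.Checksum(payload, castagnoli) {
		return nil, errChecksum
	}
	return payload, nil
}

// encodeAll frames several payloads back to back, as a log segment would hold them.
func encodeAll(payloads ...[]byte) []byte {
	var buf bytes.Buffer
	for _, p := range payloads {
		_ = writeRecord(&buf, p) // bytes.Buffer.Write always returns a nil error
	}
	return buf.Bytes()
}

// decodeAll replays records until the data is exhausted or a record is invalid.
func decodeAll(data []byte) ([][]byte, error) {
	r := bytes.NewReader(data)
	var out [][]byte
	for r.Len() > 0 {
		rec, err := readRecord(r)
		if err != nil {
			return out, err
		}
		out = append(out, rec)
	}
	return out, nil
}

func main() {
	recs, err := decodeAll(encodeAll([]byte("sample 1"), []byte("sample 2")))
	fmt.Println(len(recs), string(recs[1]), err) // 2 sample 2 <nil>
}
```

On restart, replaying records like these is what re-populates the in-memory block; stopping at the first invalid record is one simple recovery policy, assumed here.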


This layout allows us to fan out queries to all blocks relevant to the queried time range. The partial results from each block are merged back together to form the overall result.
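
A minimal Go sketch of this fan-out and merge, under simplifying assumptions: a hypothetical `Block` type with a half-open time range `[MinT, MaxT)`, and per-block results reduced to sorted lists of series names. Blocks outside the queried range are skipped, and the sorted partial results are merged pairwise while dropping duplicates, since a series usually appears in several blocks.

```go
package main

import "fmt"

// Block is a hypothetical, simplified block: a half-open time range
// [MinT, MaxT) and the sorted names of the series it holds data for.
type Block struct {
	MinT, MaxT int64
	Series     []string
}

// overlapping returns the blocks whose time range intersects [mint, maxt).
func overlapping(blocks []Block, mint, maxt int64) []Block {
	var out []Block
	for _, b := range blocks {
		if b.MinT < maxt && mint < b.MaxT {
			out = append(out, b)
		}
	}
	return out
}

// mergeTwo merges two sorted slices into one sorted slice without duplicates.
func mergeTwo(a, b []string) []string {
	out := make([]string, 0, len(a)+len(b))
	i, j := 0, 0
	for i < len(a) || j < len(b) {
		var next string
		switch {
		case j == len(b) || (i < len(a) && a[i] < b[j]):
			next = a[i]
			i++
		case i == len(a) || b[j] < a[i]:
			next = b[j]
			j++
		default: // a[i] == b[j]
			next = a[i]
			i++
			j++
		}
		if len(out) == 0 || out[len(out)-1] != next {
			out = append(out, next)
		}
	}
	return out
}

// query fans out to the relevant blocks and merges their partial results.
func query(blocks []Block, mint, maxt int64) []string {
	var result []string
	for _, b := range overlapping(blocks, mint, maxt) {
		result = mergeTwo(result, b.Series)
	}
	return result
}

func main() {
	blocks := []Block{
		{MinT: 0, MaxT: 100, Series: []string{"a", "b"}},
		{MinT: 100, MaxT: 200, Series: []string{"b", "c"}},
		{MinT: 200, MaxT: 300, Series: []string{"d"}},
	}
	fmt.Println(query(blocks, 50, 150)) // [a b c]
}
```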


This horizontal partitioning adds a few great capabilities:


* When querying a time range, we can easily ignore all data blocks outside of this range. It trivially addresses the problem of _series churn_ by reducing the set of inspected data to begin with.


* When completing a block, we can persist the data from our in-memory database by sequentially writing just a handful of larger files. We avoid any write amplification and serve SSDs and HDDs equally well.


* We keep the good property of V2 that recent chunks, which are queried most, are always hot in memory.


* Nicely enough, we are also no longer bound to the fixed 1KiB chunk size to better align data on disk. We can pick any size that makes the most sense for the individual data points and chosen compression format.


* Deleting old data becomes extremely cheap and instantaneous. We merely have to delete a single directory. Remember, in the old storage we had to analyze and re-write up to hundreds of millions of files, which could take hours to converge.


Each block also contains a `meta.json` file. It simply holds human-readable information about the block to easily understand the state of our storage and the data it contains.


##### mmap


Moving from millions of small files to a handful of larger ones allows us to keep all files open with little overhead. This unblocks the usage of [`mmap(2)`][8], a system call that allows us to transparently back a virtual memory region by file contents. For simplicity, you might want to think of it like swap space, just that all our data is on disk already and no writes occur when swapping data out of memory.


This means we can treat all contents of our database as if they were in memory without occupying any physical RAM. Only when we access certain byte ranges in our database files does the operating system lazily load pages from disk. This puts the operating system in charge of all memory management related to our persisted data. Generally, it is more qualified to make such decisions, as it has the full view of the entire machine and all its processes. Queried data can be rather aggressively cached in memory, yet under memory pressure the pages will be evicted. If the machine has unused memory, Prometheus will now happily cache the entire database, yet will immediately return it once another application needs it.


Therefore, queries can no longer easily OOM our process by querying more persisted data than fits into RAM. The memory cache size becomes fully adaptive and data is only loaded once the query actually needs it.
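
Here is a small Go sketch of reading persisted data through `mmap`. It uses the standard `syscall.Mmap` and `syscall.Munmap` functions, so it only builds on Unix-like systems such as Linux and macOS; the helper names and the demo file are hypothetical. Mapping a zero-length file fails, which a real implementation would have to handle.

```go
package main

import (
	"fmt"
	"os"
	"syscall"
)

// mapFile maps a whole (non-empty) file read-only into the address space.
// The slice is backed by the page cache: the kernel loads pages lazily on
// first access and may evict them again under memory pressure.
func mapFile(path string) ([]byte, func() error, error) {
	f, err := os.Open(path)
	if err != nil {
		return nil, nil, err
	}
	defer f.Close() // the mapping remains valid after the descriptor is closed
	fi, err := f.Stat()
	if err != nil {
		return nil, nil, err
	}
	data, err := syscall.Mmap(int(f.Fd()), 0, int(fi.Size()), syscall.PROT_READ, syscall.MAP_SHARED)
	if err != nil {
		return nil, nil, err
	}
	return data, func() error { return syscall.Munmap(data) }, nil
}

// readAt slices into the mapping; no read call is issued, and touching the
// bytes may fault the containing page in from disk.
func readAt(path string, off, n int) (string, error) {
	data, unmap, err := mapFile(path)
	if err != nil {
		return "", err
	}
	defer unmap()
	if off < 0 || n < 0 || off+n > len(data) {
		return "", fmt.Errorf("range [%d, %d) outside file of %d bytes", off, off+n, len(data))
	}
	return string(data[off : off+n]), nil // string() copies before unmapping
}

// demo writes a small temporary file and reads a byte range through mmap.
func demo() (string, error) {
	f, err := os.CreateTemp("", "mmap-demo-*")
	if err != nil {
		return "", err
	}
	defer os.Remove(f.Name())
	if _, err := f.WriteString("hello, chunks"); err != nil {
		f.Close()
		return "", err
	}
	if err := f.Close(); err != nil {
		return "", err
	}
	return readAt(f.Name(), 7, 6)
}

func main() {
	fmt.Println(demo()) // chunks <nil>
}
```

A long-running process would keep the mapping open for the lifetime of a block instead of mapping per read; the per-call mapping here just keeps the example short.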


From my understanding, this is how a lot of databases work today and an ideal way to do it if the disk format allows, unless one is confident of outsmarting the OS from within the process. We certainly get a lot of capabilities with little work on our side.


#### Compaction


The storage has to periodically "cut" a new block and write the previous one, which is now completed, onto disk. Only after the block has been successfully persisted are the write-ahead log files, which are used to restore in-memory blocks, deleted.


We are interested in keeping each block reasonably short (about two hours for a typical setup) to avoid accumulating too much data in memory. When querying multiple blocks, we have to merge their results into an overall result. This merge procedure obviously comes with a cost and a week-long query should not have to merge 80+ partial results.


To achieve both, we introduce _compaction_. Compaction describes the process of taking one or more blocks of data and writing them into a single, potentially larger, block. It can also modify existing data along the way, e.g. dropping deleted data, or restructuring our sample chunks for improved query performance.

```
t0             t1            t2             t3             t4            now
 ┌────────────┐  ┌──────────┐  ┌───────────┐  ┌───────────┐  ┌───────────┐
 │ 1          │  │ 2        │  │ 3         │  │ 4         │  │ 5 mutable │    before
 └────────────┘  └──────────┘  └───────────┘  └───────────┘  └───────────┘
 ┌─────────────────────────────────────────┐  ┌───────────┐  ┌───────────┐
 │ 1              compacted                │  │ 4         │  │ 5 mutable │    after (option A)
 └─────────────────────────────────────────┘  └───────────┘  └───────────┘
 ┌──────────────────────────┐  ┌──────────────────────────┐  ┌───────────┐
 │ 1       compacted        │  │ 3      compacted         │  │ 5 mutable │    after (option B)
 └──────────────────────────┘  └──────────────────────────┘  └───────────┘
```

In this example we have the sequential blocks `[1, 2, 3, 4]`. Blocks 1, 2, and 3 can be compacted together and the new layout is `[1, 4]`. Alternatively, they can be compacted in pairs of two into `[1, 3]`. All time series data still exists, but now in fewer blocks overall. This significantly reduces the merging cost at query time, as fewer partial query results have to be merged.
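
The two compaction options can be sketched in Go at the level of block metadata only. `BlockMeta`, `compact`, and `compactPairs` are hypothetical, the actual work of rewriting index and chunk files is omitted, and keeping the ID of the first input block simply mirrors the naming in the example.

```go
package main

import (
	"errors"
	"fmt"
)

// BlockMeta is a hypothetical block descriptor with a half-open range [MinT, MaxT).
type BlockMeta struct {
	ID         int
	MinT, MaxT int64
}

var errNotAdjacent = errors.New("blocks must be sorted and adjacent")

// compact merges sequential blocks into one block spanning their combined
// time range. Only metadata is computed; no index or chunk data is rewritten.
func compact(blocks []BlockMeta) (BlockMeta, error) {
	if len(blocks) == 0 {
		return BlockMeta{}, errors.New("nothing to compact")
	}
	for i := 1; i < len(blocks); i++ {
		if blocks[i].MinT != blocks[i-1].MaxT {
			return BlockMeta{}, errNotAdjacent
		}
	}
	return BlockMeta{ID: blocks[0].ID, MinT: blocks[0].MinT, MaxT: blocks[len(blocks)-1].MaxT}, nil
}

// compactPairs implements "option B": compact blocks in pairs of two; a
// trailing odd block is passed through unchanged.
func compactPairs(blocks []BlockMeta) ([]BlockMeta, error) {
	var out []BlockMeta
	for i := 0; i < len(blocks); i += 2 {
		end := i + 2
		if end > len(blocks) {
			end = len(blocks)
		}
		b, err := compact(blocks[i:end])
		if err != nil {
			return nil, err
		}
		out = append(out, b)
	}
	return out, nil
}

func main() {
	// Immutable blocks 1 to 4; the mutable block 5 is never compacted.
	blocks := []BlockMeta{{1, 0, 2}, {2, 2, 4}, {3, 4, 6}, {4, 6, 8}}
	a, _ := compact(blocks[:3])
	fmt.Println(a) // {1 0 6}
	b, _ := compactPairs(blocks)
	fmt.Println(b) // [{1 0 4} {3 4 8}]
}
```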


#### Retention


We saw that deleting old data was a slow process in the V2 storage and took a toll on CPU, memory, and disk alike. How can we drop old data in our block-based design? Quite simply, by deleting the directory of a block that has no data within our configured retention window. In the example below, block 1 can safely be deleted, whereas block 2 has to stick around until it falls fully behind the boundary.
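
In code, the retention check boils down to comparing each block's end time with the boundary. This Go sketch uses a hypothetical `Block` type with a half-open range `[MinT, MaxT)`; actually deleting a dropped block would then be a single `os.RemoveAll` on its directory.

```go
package main

import "fmt"

// Block is a hypothetical block descriptor with a half-open range [MinT, MaxT).
type Block struct {
	Dir        string
	MinT, MaxT int64
}

// expired splits blocks into those lying entirely before the retention
// boundary, which can be dropped, and those to keep. A block straddling the
// boundary is kept until it falls fully behind it.
func expired(blocks []Block, boundary int64) (drop, keep []Block) {
	for _, b := range blocks {
		if b.MaxT <= boundary {
			drop = append(drop, b)
		} else {
			keep = append(keep, b)
		}
	}
	return drop, keep
}

func main() {
	blocks := []Block{{"b-1", 0, 10}, {"b-2", 10, 20}, {"b-3", 20, 30}}
	drop, keep := expired(blocks, 15)
	fmt.Println(len(drop), len(keep)) // 1 2
}
```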

```
                      |
 ┌────────────┐  ┌────┼─────┐  ┌───────────┐  ┌───────────┐  ┌───────────┐
 │ 1          │  │ 2  |     │  │ 3         │  │ 4         │  │ 5         │   . . .
 └────────────┘  └────┼─────┘  └───────────┘  └───────────┘  └───────────┘
                      |
                      |
             retention boundary
```

The older data gets, the larger the blocks may become as we keep compacting previously compacted blocks. An upper limit has to be applied so blocks don’t grow to span the entire database and thus diminish the original benefits of our design.


Conveniently, this also limits the total disk overhead of blocks that are partially inside and partially outside of the retention window, i.e. block 2 in the example above. When setting the maximum block size at 10% of the total retention window, our total overhead of keeping block 2 around is also bounded by 10%.
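
The 10% figure follows from a short bound. Let $R$ be the retention window and $B_{\max}$ the maximum time span of a block (symbols introduced here for illustration), and assume a roughly constant ingestion rate so that time span is proportional to disk usage. Because blocks do not overlap, at most one block straddles the boundary, and less than $B_{\max}$ of it lies behind the boundary:

$$
\text{overhead} \;=\; \frac{\text{span of the straddling block behind the boundary}}{R} \;<\; \frac{B_{\max}}{R} \;=\; \frac{0.1\,R}{R} \;=\; 10\%
$$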


Summed up, retention deletion goes from very expensive to practically free.


> _If you've come this far and have some background in databases, you might be asking one thing by now: Is any of this new? Not really, and probably for the better._
>
> _The pattern of batching data up in memory, tracked in a write-ahead log, and periodically flushed to disk is ubiquitous today._
>
> _The benefits we have seen apply almost universally, regardless of the data's domain specifics. Prominent open source examples following this approach are LevelDB, Cassandra, InfluxDB, or HBase. The key takeaway is to avoid reinventing an inferior wheel, to research proven methods, and to apply them with the right twist._
>
> _Running out of places to add your own magic dust later is an unlikely scenario._


### The Index


The problems brought by _series churn_ were the initial motivation to investigate storage improvements. The block-based layout reduces the total number of series that have to be considered for serving a query. So assuming our index lookup was of complexity _O(n^2)_, we managed to reduce the _n_ a fair amount and now have an improved complexity of _O(n^2)_... uhm, wait... damnit.


A quick flashback to "Algorithms 101" reminds us that this, in theory, did not buy us anything. If things were bad before, they are just as bad now. Theory can be depressing.


In practice, most of our queries will already be answered significantly faster. Yet, queries spanning the full time range remain slow even if they just need to find a handful of series. My original idea, dating back way before all this work was started, was a solution to exactly this problem: we need a more capable [_inverted index_][9].


An inverted index provides a fast lookup of data items based on a subset of their contents. Simply put, I can look up all series that have a label `app="nginx"` without having to walk through every single series and check whether it contains that label.


For that, each series is assigned a unique ID by which it can be retrieved in constant time, i.e. _O(1)_. In this case the ID is our _forward index_.


> Example: If the series with IDs 10, 29, and 9 contain the label `app="nginx"`, the inverted index for the label `app="nginx"` is the simple list `[10, 29, 9]`, which can be used to quickly retrieve all series containing the label. Even if there were 20 billion further series, it would not affect the speed of this lookup.
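
Building such an index takes little code. In this hypothetical Go sketch, the forward index maps series IDs to their labels, and the inverted index maps each label pair to a postings list of IDs. Unlike the `[10, 29, 9]` example, the lists are sorted here, because Go map iteration order is random; that is a choice of the sketch.

```go
package main

import (
	"fmt"
	"sort"
)

// Label is a name/value pair such as app="nginx".
type Label struct{ Name, Value string }

// InvertedIndex maps each label pair to the sorted IDs of the series
// containing it (a "postings list").
type InvertedIndex map[Label][]uint64

// Build creates an inverted index from a forward index (series ID -> labels).
func Build(series map[uint64][]Label) InvertedIndex {
	idx := InvertedIndex{}
	for id, labels := range series {
		for _, l := range labels {
			idx[l] = append(idx[l], id)
		}
	}
	// Map iteration order is random, so sort each postings list.
	for l := range idx {
		ids := idx[l]
		sort.Slice(ids, func(i, j int) bool { return ids[i] < ids[j] })
	}
	return idx
}

func main() {
	idx := Build(map[uint64][]Label{
		9:  {{"app", "nginx"}},
		10: {{"app", "nginx"}},
		29: {{"app", "nginx"}},
		42: {{"app", "redis"}},
	})
	fmt.Println(idx[Label{"app", "nginx"}]) // [9 10 29]
}
```

A lookup is then a single map access whose cost does not depend on how many other series exist.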


In short, if _n_ is our total number of series, and _m_ is the result size for a given query, the complexity of our query using the index is now _O(m)_. Queries scaling with the amount of data they retrieve (_m_) instead of the data body being searched (_n_) is a great property, as _m_ is generally significantly smaller.


For brevity, let’s assume we can retrieve the inverted index list itself in constant time.


Actually, this is almost exactly the kind of inverted index V2 has, and a minimum requirement to serve performant queries across millions of series. The keen observer will have noticed that, in the worst case, a label exists in all series and thus _m_ is, again, in _O(n)_. This is expected and perfectly fine. If you query all data, it naturally takes longer. Things become problematic once we get involved with more complex queries.


#### Combining Labels


Labels associated with millions of series are common. Suppose a horizontally scaling “foo” microservice with hundreds of instances with thousands of series each. Every single series will have the label `app="foo"`. Of course, one generally won't query all series but restrict the query by further labels, e.g. I want to know how many requests my service instances received and query `__name__="requests_total" AND app="foo"`.


To find all series satisfying both label selectors, we take the inverted index list for each and intersect them. The resulting set will typically be orders of magnitude smaller than each input list individually. As each input list has the worst-case size _O(n)_, the brute-force solution of nested iteration over both lists has a runtime of _O(n^2)_. The same cost applies for other set operations, such as the union (`app="foo" OR app="bar"`). When adding further label selectors to the query, the exponent increases with each one, to _O(n^3)_, _O(n^4)_, _O(n^5)_, ... _O(n^k)_. A lot of tricks can be played to minimize the effective runtime by changing the execution order. The more sophisticated they are, the more knowledge about the shape of the data and the relationships between labels is needed. This introduces a lot of complexity, yet does not decrease our algorithmic worst-case runtime.


This is essentially the approach in the V2 storage, and luckily a seemingly slight modification is enough to gain significant improvements. What happens if we assume that the IDs in our inverted indices are sorted?


Suppose these are the lists for our initial query:

```
__name__="requests_total"   ->   [ 999, 1000, 1001, 2000000, 2000001, 2000002, 2000003 ]
app="foo"                   ->   [ 1, 3, 10, 11, 12, 100, 311, 320, 1000, 1001, 10002 ]

intersection   =>   [ 1000, 1001 ]
```

The intersection is fairly small. We can find it by setting a cursor at the beginning of each list and always advancing the one at the smaller number. When both numbers are equal, we add the number to our result and advance both cursors. Overall, we scan both lists in this zig-zag pattern and thus have a total cost of _O(2n) = O(n)_ as we only ever move forward in either list.
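
The zig-zag walk translates directly into Go. The function name is hypothetical, and the input mirrors the example lists, with the `requests_total` list in ascending order as the procedure requires.

```go
package main

import "fmt"

// intersect walks two sorted postings lists with one cursor each, always
// advancing the cursor at the smaller ID. Each step moves at least one cursor
// forward, so the runtime is O(len(a) + len(b)).
func intersect(a, b []uint64) []uint64 {
	var out []uint64
	i, j := 0, 0
	for i < len(a) && j < len(b) {
		switch {
		case a[i] < b[j]:
			i++
		case a[i] > b[j]:
			j++
		default: // equal IDs: part of the result, advance both cursors
			out = append(out, a[i])
			i++
			j++
		}
	}
	return out
}

func main() {
	name := []uint64{999, 1000, 1001, 2000000, 2000001, 2000002, 2000003}
	app := []uint64{1, 3, 10, 11, 12, 100, 311, 320, 1000, 1001, 10002}
	fmt.Println(intersect(name, app)) // [1000 1001]
}
```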


The procedure for more than two lists of different set operations works similarly. So the number _k_ of set operations merely modifies the factor (_O(k*n)_) instead of the exponent (_O(n^k)_) of our worst-case lookup runtime. A great improvement.
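
For several lists, one straightforward approach (an assumption here, not necessarily how V3 implements it) is to fold the lists pairwise. The Go sketch below adds a linear-time `union` for `OR` and an `intersectAll` helper for `AND`. Each pairwise step is linear in its inputs, and intersecting never grows the intermediate result, so _k_ lists cost _O(k*n)_.

```go
package main

import "fmt"

// union merges two sorted postings lists (label OR), dropping duplicates.
func union(a, b []uint64) []uint64 {
	out := make([]uint64, 0, len(a)+len(b))
	i, j := 0, 0
	for i < len(a) && j < len(b) {
		switch {
		case a[i] < b[j]:
			out = append(out, a[i])
			i++
		case a[i] > b[j]:
			out = append(out, b[j])
			j++
		default:
			out = append(out, a[i])
			i++
			j++
		}
	}
	out = append(out, a[i:]...) // at most one of these tails is non-empty
	return append(out, b[j:]...)
}

// intersect is the two-cursor zig-zag intersection (label AND).
func intersect(a, b []uint64) []uint64 {
	var out []uint64
	i, j := 0, 0
	for i < len(a) && j < len(b) {
		switch {
		case a[i] < b[j]:
			i++
		case a[i] > b[j]:
			j++
		default:
			out = append(out, a[i])
			i++
			j++
		}
	}
	return out
}

// intersectAll folds k sorted lists pairwise into their common IDs.
func intersectAll(lists ...[]uint64) []uint64 {
	if len(lists) == 0 {
		return nil
	}
	out := lists[0]
	for _, l := range lists[1:] {
		out = intersect(out, l)
	}
	return out
}

func main() {
	fmt.Println(union([]uint64{1, 3, 5}, []uint64{2, 3, 6}))                                // [1 2 3 5 6]
	fmt.Println(intersectAll([]uint64{1, 2, 3, 4}, []uint64{2, 3, 4}, []uint64{3, 4, 5})) // [3 4]
}
```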


What I described here is a simplified version of the canonical search index used by practically any [full text search engine][10] out there. Every series descriptor is treated as a short "document", and every label (name + fixed value) as a "word" inside of it. We can ignore a lot of additional data typically encountered in search engine indices, such as word position and frequency data.


Seemingly endless research exists on approaches improving the practical runtime, often making some assumptions about the input data. Unsurprisingly, there are also plenty of techniques to compress inverted indices that come with their own benefits and drawbacks. As our "documents" are tiny and the “words” are hugely repetitive across all series, compression becomes almost irrelevant. For example, a real-world dataset of ~4.4 million series with about 12 labels each has fewer than 5,000 unique labels. For our initial storage version, we stick to the basic approach without compression, adding just a few simple tweaks to skip over large ranges of non-intersecting IDs.
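
One common technique for skipping such ranges, shown here as a hypothetical Go sketch and not necessarily the tweak V3 uses, is a "seek" that binary-searches forward to the first ID not smaller than a target instead of stepping one element at a time. When a list of length _m_ is intersected with a much longer list of length _n_, this brings the cost down to roughly _O(m log n)_.

```go
package main

import (
	"fmt"
	"sort"
)

// seek returns the smallest index k >= from with list[k] >= id, or len(list)
// if there is none, using binary search over the remaining part of the list.
func seek(list []uint64, from int, id uint64) int {
	return from + sort.Search(len(list)-from, func(k int) bool { return list[from+k] >= id })
}

// intersectSeek intersects two sorted lists, jumping over runs of IDs that
// cannot match instead of advancing one element at a time.
func intersectSeek(a, b []uint64) []uint64 {
	var out []uint64
	i, j := 0, 0
	for i < len(a) && j < len(b) {
		switch {
		case a[i] < b[j]:
			i = seek(a, i, b[j])
		case a[i] > b[j]:
			j = seek(b, j, a[i])
		default:
			out = append(out, a[i])
			i++
			j++
		}
	}
	return out
}

func main() {
	long := make([]uint64, 0, 500000)
	for id := uint64(0); id < 1000000; id += 2 { // all even IDs below one million
		long = append(long, id)
	}
	fmt.Println(intersectSeek([]uint64{5, 500000}, long)) // [500000]
}
```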

While keeping the IDs sorted may sound simple, it is not always a trivial invariant to maintain. For instance, the V2 storage assigns hashes as IDs to new series, so we cannot efficiently build up sorted inverted indices.
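The following sketch illustrates why hash-based IDs get in the way. It appends the ID of each newly created series to one postings list, once with a counter and once with a hash of the descriptor. FNV-1a from Go's `hash/fnv` is an arbitrary stand-in, not necessarily the hash the V2 storage used, and `hashID`, `isSorted` and `buildPostings` are hypothetical helpers:

```go
package main

import (
	"fmt"
	"hash/fnv"
	"sort"
)

// hashID derives a series ID by hashing its descriptor. FNV-1a is an
// arbitrary stand-in chosen for illustration.
func hashID(descriptor string) uint64 {
	h := fnv.New64a()
	h.Write([]byte(descriptor))
	return h.Sum64()
}

func isSorted(ids []uint64) bool {
	return sort.SliceIsSorted(ids, func(i, j int) bool { return ids[i] < ids[j] })
}

// buildPostings appends the ID of each new series, in creation order, to a
// single postings list, as an index would while series keep arriving.
func buildPostings(descriptors []string, id func(i int, d string) uint64) []uint64 {
	var postings []uint64
	for i, d := range descriptors {
		postings = append(postings, id(i, d))
	}
	return postings
}

func main() {
	var descriptors []string
	for n := 0; n < 1000; n++ {
		descriptors = append(descriptors, fmt.Sprintf(`requests_total{instance="host-%d"}`, n))
	}

	// Counter-based IDs: appending keeps the list sorted for free.
	seq := buildPostings(descriptors, func(i int, _ string) uint64 { return uint64(i) })
	// Hashed IDs: values carry no creation order, so appends scatter them.
	hashed := buildPostings(descriptors, func(_ int, d string) uint64 { return hashID(d) })

	fmt.Println("sequential sorted:", isSorted(seq))
	fmt.Println("hashed sorted:", isSorted(hashed))

	// Restoring the invariant means re-sorting (O(n log n)) or inserting
	// mid-list every time new series arrive.
	sort.Slice(hashed, func(i, j int) bool { return hashed[i] < hashed[j] })
	fmt.Println("hashed sorted after re-sort:", isSorted(hashed))
}
```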

Another daunting task is modifying the indices on disk as data gets deleted or updated. Typically, the easiest approach is to simply recompute and rewrite them, but this has to happen while keeping the database queryable and consistent. The V3 storage does exactly this by having a separate immutable index per block that is only modified via rewrite on compaction. Only the indices for the mutable blocks, which are held entirely in memory, need to be updated.

### Benchmarking

I started initial development of the storage with a benchmark based on ~4.4 million series descriptors extracted from a real-world dataset, and generated synthetic data points to feed into those series. This iteration tested only the stand-alone storage and was crucial for quickly identifying performance bottlenecks and triggering deadlocks that only occur under highly concurrent load.
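For a feel of what such a stand-alone benchmark involves, here is a small Go sketch that feeds synthetic samples from several goroutines into a hypothetical sharded in-memory store (`memStore`) and reports throughput. It is not the Prometheus storage, and its numbers are not comparable to the figures in this article:

```go
package main

import (
	"fmt"
	"math/rand"
	"sync"
	"time"
)

// sample is one synthetic data point: a timestamp in ms and a value.
type sample struct {
	t int64
	v float64
}

// memStore is a hypothetical stand-in for the storage under test. Series are
// spread over 16 shards, each guarded by its own mutex.
type memStore struct {
	shards [16]struct {
		mu     sync.Mutex
		series map[uint64][]sample
	}
}

func newMemStore() *memStore {
	s := &memStore{}
	for i := range s.shards {
		s.shards[i].series = make(map[uint64][]sample)
	}
	return s
}

// Append adds one sample to series id, locking only that series' shard.
func (s *memStore) Append(id uint64, smp sample) {
	sh := &s.shards[id%uint64(len(s.shards))]
	sh.mu.Lock()
	sh.series[id] = append(sh.series[id], smp)
	sh.mu.Unlock()
}

// Len counts all stored samples.
func (s *memStore) Len() int {
	total := 0
	for i := range s.shards {
		sh := &s.shards[i]
		sh.mu.Lock()
		for _, pts := range sh.series {
			total += len(pts)
		}
		sh.mu.Unlock()
	}
	return total
}

// run splits numSeries series across workers goroutines; each worker writes
// samplesPerSeries random points per series at a 15s interval. It returns the
// number of samples written and the elapsed wall-clock time.
func run(store *memStore, numSeries, samplesPerSeries, workers int) (int, time.Duration) {
	start := time.Now()
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func(w int) {
			defer wg.Done()
			// One source per goroutine: a *rand.Rand is not safe for concurrent use.
			rng := rand.New(rand.NewSource(int64(w)))
			for step := 0; step < samplesPerSeries; step++ {
				for id := w; id < numSeries; id += workers {
					store.Append(uint64(id), sample{t: int64(step) * 15000, v: rng.Float64()})
				}
			}
		}(w)
	}
	wg.Wait()
	return numSeries * samplesPerSeries, time.Since(start)
}

func main() {
	store := newMemStore()
	n, elapsed := run(store, 10000, 100, 8)
	fmt.Printf("%d samples in %v (%.0f samples/s)\n", n, elapsed, float64(n)/elapsed.Seconds())
}
```

Running it with `go run -race` enables Go's race detector, which helps surface the kind of concurrency bugs that only show up under contention.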

After the conceptual implementation was done, the benchmark could sustain a write throughput of 20 million data points per second on my MacBook Pro, all while a dozen Chrome tabs and Slack were running. So while this all sounded great, it also indicated that there was no further point in pushing this benchmark (or running it in a less random environment, for that matter). After all, it is synthetic and thus not worth much beyond a good first impression. Starting out about 20x above the initial design target, it was time to embed this into an actual Prometheus server, adding all the practical overhead and flakes only experienced in more realistic environments.

We actually had no reproducible benchmarking setup for Prometheus, in particular none that allowed A/B testing of different versions. Concerning in hindsight, but [now we have one][11]!

Our tool allows us to declaratively define a benchmarking scenario, which is then deployed to a Kubernetes cluster on AWS. While this is not the best environment for all-out benchmarking, it certainly reflects our user base better than dedicated bare metal servers with 64 cores and 128GB of memory.
