Mirror of https://github.com/LCTT/TranslateProject.git, synced 2025-03-06 01:20:12 +08:00

Commit 1e7f9a6126

@ -0,0 +1,84 @@

Why isn't open source hot among computer science students?
======

Image by: opensource.com

The technical savvy and inventive energy of young programmers is alive and well.

This was clear from the diligent work that I witnessed while participating in this year's [PennApps][1], the nation's largest college hackathon. Over the course of 48 hours, my high school- and college-age peers created projects ranging from a [blink-based communication device for shut-in patients][2] to a [burrito maker with IoT connectivity][3]. The spirit of open source was tangible throughout the event, as diverse groups bonded over a mutual desire to build, the free flow of ideas and tech know-how, fearless experimentation and rapid prototyping, and an overwhelming eagerness to participate.

Why then, I wondered, wasn't open source a hot topic among my tech geek peers?

To learn more about what college students think when they hear "open source," I surveyed several college students who are members of the same professional computer science organization I belong to. All members of this community must apply during high school or college and are selected based on their computer science-specific achievements and leadership, whether that means leading a school robotics team, founding a nonprofit to bring coding into underfunded classrooms, or some other worthy endeavor. Given these individuals' accomplishments in computer science, I thought their perspectives would help in understanding what young programmers find appealing (or unappealing) about open source projects.

The online survey I prepared and distributed included the following questions:

* Do you like to code personal projects? Have you ever contributed to an open source project?
* Do you feel it's more beneficial to you to start your own programming projects, or to contribute to existing open source efforts?
* How would you compare the prestige associated with coding for an organization that produces open source software versus proprietary software?

Though the overwhelming majority said that they at least occasionally enjoyed coding personal projects in their spare time, most had never contributed to an open source project. When I explored this trend further, a few common preconceptions about open source projects and organizations came to light. To persuade my peers that open source projects are worth their time, and to give educators and open source organizations insight into their students, I'll address the top three preconceptions.

### Preconception #1: Creating personal projects from scratch is better experience than contributing to an existing open source project.

Of the college-age programmers I surveyed, 24 out of 26 asserted that starting their own personal projects felt potentially more beneficial than building on open source ones.

As a bright-eyed freshman in computer science, I believed this too. I had often heard from older peers that personal projects would make me more appealing to intern recruiters. No one ever mentioned the possibility of contributing to open source projects, so in my mind, it wasn't relevant.

I now realize that open source projects offer powerful preparation for the real world. Contributing to open source projects cultivates [an awareness of how tools and languages piece together][4] in a way that even individual projects cannot. Moreover, open source is an exercise in coordination and collaboration, building students' [professional skills in communication, teamwork, and problem-solving][5].

### Preconception #2: My coding skills just won't cut it.

A few respondents said they were intimidated by open source projects, unsure of where to contribute, or fearful of stunting project progress. Unfortunately, feelings of inferiority, which too often especially affect female programmers, do not disappear at the door of the open source community. In fact, "Imposter Syndrome" may even be magnified, because [open source advocates typically reject bureaucracy][6]; as difficult as bureaucracy makes internal mobility, it helps newcomers know their place in an organization.

I remember how intimidated I felt by contribution guidelines while looking through open source projects on GitHub for the first time. However, guidelines are not meant to encourage exclusivity but to provide a [guiding hand][7]. To that end, I think of guidelines as a way of establishing expectations without relying on a hierarchical structure.

Several open source projects actively carve out a place for new contributors. [TEAMMATES][8], an educational feedback management tool, is one of the many open source projects that mark issues "up for grabs" for first-timers. In the comments, programmers of all skill levels iron out implementation details, demonstrating that open source is a place for eager new programmers and seasoned software veterans alike. For young programmers who are still hesitant, [a few open source projects][9] have been thoughtful enough to adopt an [Imposter Syndrome disclaimer][10].

### Preconception #3: Proprietary software firms do better work than open source software organizations.

Only five of the 26 respondents I surveyed thought that open and proprietary software organizations were considered equal in prestige. This is likely due to the misperception that "open" means "profitless," and thus low-quality (see [Doesn't 'open source' just mean something is free of charge?][11]).

However, open source software and profitable software are not mutually exclusive. In fact, small and large businesses alike often pay for free open source software to receive technical support services. As [Red Hat CEO Jim Whitehurst explains][12], "We have engineering teams that track every single change--a bug fix, security enhancement, or whatever--made to Linux, and ensure our customers' mission-critical systems remain up-to-date and stable."

Moreover, openness facilitates rather than hinders quality by enabling more people to examine source code. [Igor Faletski, CEO of Mobify][13], writes that Mobify's team of "25 software developers and quality assurance professionals" is "no match for all the software developers in the world who might make use of [Mobify's open source] platform. Each of them is a potential tester of, or contributor to, the project."

Another problem may be that young programmers are not aware of the open source software they interact with every day. I used many tools, including MySQL, Eclipse, Atom, Audacity, and WordPress, for months or even years without realizing they were open source. College students, who often rush to download syllabus-specified software to complete class assignments, may be unaware of which software is open source. This makes open source seem more foreign than it is.

So students, don't knock open source before you try it. Check out this [list of beginner-friendly projects][14] and [these six starting points][15] to begin your open source journey.

Educators, remind your students of the open source community's history of successful innovation, and lead them toward open source projects outside the classroom. You will help develop sharper, better-prepared, and more confident students.

### About the author

Susie Choi - Susie is an undergraduate student studying computer science at Duke University. She is interested in the implications of technological innovation and open source principles for issues relating to education and socioeconomic inequality.

--------------------------------------------------------------------------------

via: https://opensource.com/article/17/12/students-and-open-source-3-common-preconceptions

Author: [Susie Choi][a]

Translator: [translator ID](https://github.com/译者ID)

Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://opensource.com/users/susiechoi
[1]:http://pennapps.com/
[2]:https://devpost.com/software/blink-9o2iln
[3]:https://devpost.com/software/daburrito
[4]:https://hackernoon.com/benefits-of-contributing-to-open-source-2c97b6f529e9
[5]:https://opensource.com/education/16/8/5-reasons-student-involvement-open-source
[6]:https://opensource.com/open-organization/17/7/open-thinking-curb-bureaucracy
[7]:https://opensource.com/life/16/3/contributor-guidelines-template-and-tips
[8]:https://github.com/TEAMMATES/teammates/issues?q=is%3Aissue+is%3Aopen+label%3Ad.FirstTimers
[9]:https://github.com/adriennefriend/imposter-syndrome-disclaimer/blob/master/examples.md
[10]:https://github.com/adriennefriend/imposter-syndrome-disclaimer
[11]:https://opensource.com/resources/what-open-source
[12]:https://hbr.org/2013/01/yes-you-can-make-money-with-op
[13]:https://hbr.org/2012/10/open-sourcing-may-be-worth
[14]:https://github.com/MunGell/awesome-for-beginners
[15]:https://opensource.com/life/16/1/6-beginner-open-source

@ -61,6 +61,8 @@ Composition of LCTT

* 2017/03/16 Promoted GHLandy, bestony, and rusking to Core members. Created the Comic group.
* 2017/04/11 Introduced a title system and awarded titles to key members.
* 2017/11/21 In recognition of qhwdw's fast, high-quality translations, promoted qhwdw to Core member.
* 2017/11/19 wxy gave a talk at the 2017 China Open Source Conference, held at Shanghai Jiao Tong University: "[How to Take Part in the Open Source Community by Contributing Translations](https://linux.cn/article-9084-1.html)".
* 2018/01/11 Promoted lujun9972 to Core member and added lujun9972 to the topic selection team.

Core Members
-------------------------------

@ -88,6 +90,7 @@ Composition of LCTT

- Core member @ucasFL,
- Core member @rusking,
- Core member @qhwdw,
- Core member @lujun9972,
- Former topic selector @DeadFire,
- Former proofreader @reinoir222,
- Former proofreader @PurlingNayuki,

@ -0,0 +1,231 @@

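Adding a New Hard Disk to a VMware Linux Guest Without Rebooting
======

As a system administrator, I often need extra hard disks to expand storage space or to separate system data from user data. This guide shows how to add a hard disk on the host to a Linux guest virtualized with VMware, that is, how to attach a physical block device to a virtualized guest.

You can explicitly add or remove a SCSI device, or rescan the entire SCSI bus, without rebooting the Linux virtual machine. This guide was tested with VMware Server and VMware Workstation v6.0 (older versions should also work). All commands were tested on RHEL, Fedora, CentOS, and Ubuntu Linux guest/host operating systems.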

### Step 1: Add a new hard disk to the virtual guest

First, add the hard disk through the VMware hardware settings menu. Click "VM > Settings":

![Fig.01: VMware Virtual Machine Settings][1]

Alternatively, press `CTRL + D` to open the settings dialog.

Click "Add" to add a new hard disk to the guest:

![Fig.02: VMware adding new hardware][2]

Select the hardware type "Hard disk" and click "Next":

![Fig.03: VMware Adding a new disk wizard][3]

Select "create a new virtual disk" and click "Next":

![Fig.04: VMware Wizard Disk][4]

Set the virtual disk type to "SCSI" and click "Next":

![Fig.05: VMware Virtual Disk][5]

Set the maximum disk size as needed and click "Next":

![Fig.06: Finalizing Disk Virtual Addition][6]

Finally, choose the file location and click "Finish".

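### Step 2: Rescan the SCSI bus to add a SCSI device without rebooting the VM

Type the following commands to rescan the SCSI bus:

```
# replace host# with the SCSI host number, e.g. host0 (see below)
echo "- - -" > /sys/class/scsi_host/host#/scan
# list the disks; the new one should now appear
fdisk -l
# watch the kernel messages (on Debian/Ubuntu the file is /var/log/syslog)
tail -f /var/log/messages
```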

Sample output:

![Linux Vmware Rescan New Scsi Disk Without Reboot][7]

You need to replace `host#` with the actual value, such as `host0`. You can find it with the following command:

`# ls /sys/class/scsi_host`

Output:

```
host0
```

Then type the following commands to request a rescan:

```
echo "- - -" > /sys/class/scsi_host/host0/scan
fdisk -l
tail -f /var/log/messages
```

Sample output:

```
Jul 18 16:29:39 localhost kernel: Vendor: VMware, Model: VMware Virtual S Rev: 1.0
Jul 18 16:29:39 localhost kernel: Type: Direct-Access ANSI SCSI revision: 02
Jul 18 16:29:39 localhost kernel: target0:0:1: Beginning Domain Validation
Jul 18 16:29:39 localhost kernel: target0:0:1: Domain Validation skipping write tests
Jul 18 16:29:39 localhost kernel: target0:0:1: Ending Domain Validation
Jul 18 16:29:39 localhost kernel: target0:0:1: FAST-40 WIDE SCSI 80.0 MB/s ST (25 ns, offset 127)
Jul 18 16:29:39 localhost kernel: SCSI device sdb: 2097152 512-byte hdwr sectors (1074 MB)
Jul 18 16:29:39 localhost kernel: sdb: Write Protect is off
Jul 18 16:29:39 localhost kernel: sdb: cache data unavailable
Jul 18 16:29:39 localhost kernel: sdb: assuming drive cache: write through
Jul 18 16:29:39 localhost kernel: SCSI device sdb: 2097152 512-byte hdwr sectors (1074 MB)
Jul 18 16:29:39 localhost kernel: sdb: Write Protect is off
Jul 18 16:29:39 localhost kernel: sdb: cache data unavailable
Jul 18 16:29:39 localhost kernel: sdb: assuming drive cache: write through
Jul 18 16:29:39 localhost kernel: sdb: unknown partition table
Jul 18 16:29:39 localhost kernel: sd 0:0:1:0: Attached scsi disk sdb
Jul 18 16:29:39 localhost kernel: sd 0:0:1:0: Attached scsi generic sg1 type 0
Jul 18 16:29:39 localhost kernel: Vendor: VMware, Model: VMware Virtual S Rev: 1.0
Jul 18 16:29:39 localhost kernel: Type: Direct-Access ANSI SCSI revision: 02
Jul 18 16:29:39 localhost kernel: target0:0:2: Beginning Domain Validation
Jul 18 16:29:39 localhost kernel: target0:0:2: Domain Validation skipping write tests
Jul 18 16:29:39 localhost kernel: target0:0:2: Ending Domain Validation
Jul 18 16:29:39 localhost kernel: target0:0:2: FAST-40 WIDE SCSI 80.0 MB/s ST (25 ns, offset 127)
Jul 18 16:29:39 localhost kernel: SCSI device sdc: 2097152 512-byte hdwr sectors (1074 MB)
Jul 18 16:29:39 localhost kernel: sdc: Write Protect is off
Jul 18 16:29:39 localhost kernel: sdc: cache data unavailable
Jul 18 16:29:39 localhost kernel: sdc: assuming drive cache: write through
Jul 18 16:29:39 localhost kernel: SCSI device sdc: 2097152 512-byte hdwr sectors (1074 MB)
Jul 18 16:29:39 localhost kernel: sdc: Write Protect is off
Jul 18 16:29:39 localhost kernel: sdc: cache data unavailable
Jul 18 16:29:39 localhost kernel: sdc: assuming drive cache: write through
Jul 18 16:29:39 localhost kernel: sdc: unknown partition table
Jul 18 16:29:39 localhost kernel: sd 0:0:2:0: Attached scsi disk sdc
Jul 18 16:29:39 localhost kernel: sd 0:0:2:0: Attached scsi generic sg2 type 0
```
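A guest may have more than one SCSI host adapter, and then it is not always obvious which `hostN` the new disk sits behind. One option is to write the same `- - -` wildcard (all channels, all targets, all LUNs) to every host's `scan` file. Below is a minimal POSIX sh sketch of that loop, assuming it runs as root. The function name `rescan_all_scsi_hosts` is hypothetical. So is its optional sysfs-root argument, which is there only so the loop can be tried against a scratch directory instead of the real `/sys`.

```shell
#!/bin/sh
# Hypothetical helper: trigger a SCSI rescan on every host adapter.
# $1 (optional) is the sysfs mount point; it defaults to /sys.
rescan_all_scsi_hosts() {
    sysfs=${1:-/sys}
    found=0
    for scan in "$sysfs"/class/scsi_host/host*/scan; do
        # If the glob matched nothing it stays unexpanded; skip it.
        [ -e "$scan" ] || continue
        # "- - -" = all channels, all targets, all LUNs
        echo "- - -" > "$scan" || return 1
        echo "rescanned ${scan%/scan}"
        found=1
    done
    # Fail if no SCSI host was found at all.
    [ "$found" -eq 1 ]
}
```

On a real guest you would call `rescan_all_scsi_hosts` as root with no argument, then check `fdisk -l` and the kernel log as shown above.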

#### How do I remove the /dev/sdc device?

Instead of rescanning the entire bus, you can also delete a specific existing device with the following command:

```
# echo 1 > /sys/block/devName/device/delete
# echo 1 > /sys/block/sdc/device/delete
```

#### How do I add the /dev/sdc device?

Use the following syntax to add a specific device:

```
# echo "scsi add-single-device <H> <B> <T> <L>" > /proc/scsi/scsi
```

Where:

* `<H>`: host
* `<B>`: bus (channel)
* `<T>`: target (Id)
* `<L>`: LUN number

For example, to add `/dev/sdc` with `host#0`, `bus#0`, `target#2`, and `LUN#0`, type:

```
# echo "scsi add-single-device 0 0 2 0" > /proc/scsi/scsi
# fdisk -l
# cat /proc/scsi/scsi
```

Sample output:

```
Attached devices:
Host: scsi0 Channel: 00 Id: 00 Lun: 00
Vendor: VMware, Model: VMware Virtual S Rev: 1.0
Type: Direct-Access ANSI SCSI revision: 02
Host: scsi0 Channel: 00 Id: 01 Lun: 00
Vendor: VMware, Model: VMware Virtual S Rev: 1.0
Type: Direct-Access ANSI SCSI revision: 02
Host: scsi0 Channel: 00 Id: 02 Lun: 00
Vendor: VMware, Model: VMware Virtual S Rev: 1.0
Type: Direct-Access ANSI SCSI revision: 02
```
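You can often get the `<H> <B> <T> <L>` values from sysfs instead of reading them out of the kernel log. On Linux, `/sys/block/<dev>/device` is a symlink whose last path component is the `H:B:T:L` address; for `/dev/sdc` above that is `0:0:2:0`, matching the `sd 0:0:2:0` log line. The POSIX sh sketch below turns that address into the `scsi add-single-device` line. The function name `scsi_add_cmd_for` and its optional sysfs-root argument are hypothetical. Record the line before deleting a disk, because the `/sys/block/<dev>` entry goes away once the device is removed.

```shell
#!/bin/sh
# Hypothetical helper: print the "scsi add-single-device H B T L" line
# for a block device such as sdc. $2 (optional) is the sysfs root.
scsi_add_cmd_for() {
    dev=$1
    sysfs=${2:-/sys}
    link="$sysfs/block/$dev/device"
    [ -e "$link" ] || { echo "no such device: $dev" >&2; return 1; }
    # The symlink target ends in the H:B:T:L address, e.g. .../0:0:2:0
    hbtl=$(basename "$(readlink -f "$link")")
    # Turn "0:0:2:0" into "0 0 2 0"
    echo "scsi add-single-device $(echo "$hbtl" | tr ':' ' ')"
}
```

On the guest from the log above, `scsi_add_cmd_for sdc` would be expected to print `scsi add-single-device 0 0 2 0`. You could save that output before running the delete command and echo it into `/proc/scsi/scsi` later.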

### Step 3: Format the new disk

Now create a partition with [fdisk and format it with mkfs.ext3][8]:

```
# fdisk /dev/sdc
### [if you want ext3 fs] ###
# mkfs.ext3 /dev/sdc3
### [if you want ext4 fs] ###
# mkfs.ext4 /dev/sdc3
```

### Step 4: Create a mount point and update /etc/fstab

```
# mkdir /disk3
```

Open the `/etc/fstab` file:

```
# vi /etc/fstab
```

Append the following line:

```
/dev/sdc3 /disk3 ext3 defaults 1 2
```

For an ext4 file system, append this instead:

```
/dev/sdc3 /disk3 ext4 defaults 1 2
```

Save and close the file.
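If you script this step, it helps not to append the same line twice when the script is re-run. Here is a minimal POSIX sh sketch, assuming the fstab layout shown above. The name `add_fstab_entry` is hypothetical, and the file is a parameter so you can try it on a copy before touching the real `/etc/fstab`.

```shell
#!/bin/sh
# Hypothetical helper: append an fstab line unless the mount point is
# already listed. Usage: add_fstab_entry FILE DEVICE MOUNTPOINT FSTYPE
add_fstab_entry() {
    file=$1 dev=$2 mnt=$3 fstype=$4
    # Field 2 of an fstab line is the mount point; skip comment lines.
    if awk -v m="$mnt" '$1 !~ /^#/ && $2 == m { found = 1 } END { exit !found }' "$file"; then
        echo "$mnt is already in $file" >&2
        return 0
    fi
    printf '%s %s %s defaults 1 2\n' "$dev" "$mnt" "$fstype" >> "$file"
}
```

For the disk in this article, the call would be `add_fstab_entry /etc/fstab /dev/sdc3 /disk3 ext4` (as root). Running `mount -a` afterwards mounts everything listed in `/etc/fstab`, so a mistyped entry shows up right away rather than at the next boot.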

#### Optional: label the partition

[You can label a partition with the e2label command][9]. For example, to give the new partition the label `/backupDisk`, type:

```
# e2label /dev/sdc3 /backupDisk
```

See "[how to label a Linux partition][10]" for more information.

### About the author

The author is the founder of nixCraft, an experienced system administrator, and a trainer for the Linux operating system/Unix shell scripting. He has worked with global clients and in various industries, including IT, education, defense and space research, and the nonprofit sector. Follow him on [Twitter][11], [Facebook][12], and [Google+][13].

--------------------------------------------------------------------------------

via: https://www.cyberciti.biz/tips/vmware-add-a-new-hard-disk-without-rebooting-guest.html

Author: [Vivek Gite][a]

Translator: [lujun9972](https://github.com/lujun9972)

Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://www.cyberciti.biz
[1]:https://www.cyberciti.biz/media/new/tips/2009/07/virtual-machine-settings-1.png (Vmware Virtual Machine Settings)
[2]:https://www.cyberciti.biz/media/new/tips/2009/07/vmware-add-hardware-wizard-2.png (VMWare adding a new hardware)
[3]:https://www.cyberciti.biz/media/new/tips/2009/07/vmware-add-hardware-anew-disk-3.png (VMware Adding a new disk wizard)
[4]:https://www.cyberciti.biz/media/new/tips/2009/07/vmware-add-hardware-4.png (Vmware Wizard Disk)
[5]:https://www.cyberciti.biz/media/new/tips/2009/07/add-hardware-5.png (Vmware Virtual Disk)
[6]:https://www.cyberciti.biz/media/new/tips/2009/07/vmware-final-disk-file-add-hdd-6.png (Finalizing Disk Virtual Addition)
[7]:https://www.cyberciti.biz/media/new/tips/2009/07/vmware-linux-rescan-hard-disk.png (Linux Vmware Rescan New Scsi Disk Without Reboot)
[8]:https://www.cyberciti.biz/faq/linux-disk-format/
[9]:https://www.cyberciti.biz/faq/linux-modify-partition-labels-command-to-change-diskname/
[10]:https://www.cyberciti.biz/faq/linux-partition-howto-set-labels/
[11]:https://twitter.com/nixcraft
[12]:https://facebook.com/nixcraft
[13]:https://plus.google.com/+CybercitiBiz

@ -1,33 +1,43 @@

translating by lujun9972

30 Handy Bash Shell Aliases For Linux / Unix / Mac OS X
======

A bash alias is nothing but a shortcut to commands. The alias command allows the user to launch any command or group of commands (including options and filenames) by entering a single word. Use the alias command to display a list of all defined aliases. You can add user-defined aliases to the [~/.bashrc][1] file. You can cut down typing time with these aliases, work smartly, and increase productivity at the command prompt.

This post shows how to create and use aliases, including 30 practical examples of bash shell aliases.

[![30 Useful Bash Shell Aliases For Linux/Unix Users][2]][2]

## More about bash alias

The general syntax for the alias command for the bash shell is as follows:

```
alias [alias-name[=string]...]
```

### How to list bash aliases

Type the following [alias command][3]:

```
alias
```

Sample outputs:

```
alias ..='cd ..'
alias amazonbackup='s3backup'
alias apt-get='sudo apt-get'
...
```

By default, the alias command lists the aliases defined in the current shell, which usually come from the current user's startup files such as ~/.bashrc.

### How to define or create a bash shell alias

To [create an alias][4], use the following syntax:

```
alias name=value
alias name='command'
alias name='command arg1 arg2'
alias name='/path/to/script'
alias name='/path/to/script.pl arg1'
```
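The syntax above has no spaces around `=`, and that matters. With spaces, bash hands `alias` three separate words (`c`, `=` and `clear`) and tries to look each one up as an existing alias, so the command fails instead of defining anything. The short bash script below is a sketch that shows the difference.

```shell
#!/usr/bin/env bash
# Correct: no spaces around '='.
alias c='clear'
alias c                      # prints: alias c='clear'

# Wrong: bash sees the separate words "c", "=" and "clear" and looks each
# one up as an existing alias. "=" and "clear" are not aliases, so the
# builtin reports them as not found and returns a non-zero status.
if ! alias c = 'clear' 2>/dev/null; then
    echo "spaces around '=' do not define an alias"
fi
```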

In this example, create the alias **c** for the commonly used clear command, which clears the screen, by typing the following command and then pressing the ENTER key:

```
alias c='clear'
```

Then, to clear the screen, instead of typing clear, you only have to type the letter 'c' and press the [ENTER] key:

```
c
```

### How to disable a bash alias temporarily

An [alias can be disabled temporarily][5] using the following syntax:

```
## path/to/full/command
/usr/bin/clear

@ -61,37 +71,43 @@ An [alias can be disabled temporarily][5] using the following syntax:

command ls
```
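The small bash sketch below shows why these forms get around an alias. Alias expansion only applies to the first word of a simple command. Quoting that word with a leading backslash skips the alias, and so does putting `command` in front of it. The alias on `echo` is made up purely for the demonstration. Scripts do not expand aliases unless `expand_aliases` is set, which is why the sketch turns it on; you do not need that line at an interactive prompt.

```shell
#!/usr/bin/env bash
# Scripts do not expand aliases unless this option is set.
shopt -s expand_aliases

# Made-up alias that shadows the echo builtin, for demonstration only.
alias echo='echo [aliased]'

echo hi            # alias expanded: prints "[aliased] hi"
\echo hi           # backslash quotes the word, so no alias: prints "hi"
command echo hi    # echo is not the first word, so no alias: prints "hi"

# Remove the demo alias again.
unalias echo
```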

### How to delete/remove a bash alias

You need to use the [unalias command to remove aliases][6]. Its syntax is as follows:

```
unalias aliasname
unalias foo
```

In this example, remove the alias c, which was created in an earlier example:

```
unalias c
```

You also need to delete the alias from the [~/.bashrc file][1] using a text editor (see next section).

### How to make bash shell aliases permanent

The alias c remains in effect only during the current login session. Once you log out or reboot the system, the alias c will be gone. To avoid this problem, add the alias to your [~/.bashrc file][1]. Enter:

```
vi ~/.bashrc
```

The alias c can be made permanent for the current user by entering the following line:

```
alias c='clear'
```

Save and close the file. System-wide aliases (i.e. aliases for all users) can be put in the /etc/bashrc file (/etc/bash.bashrc on Debian and Ubuntu). Please note that the alias command is built into various shells, including ksh, tcsh/csh, ash, bash, and others.
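If you add aliases from a setup script rather than by hand in vi, a guard against duplicate lines keeps `~/.bashrc` tidy when the script runs more than once. This is a POSIX sh sketch only. `add_alias_to_rc` is a hypothetical name, and the sketch assumes the alias value contains no single quotes.

```shell
#!/bin/sh
# Hypothetical helper: add an alias definition to a startup file once.
# Usage: add_alias_to_rc NAME VALUE [FILE]   (FILE defaults to ~/.bashrc)
add_alias_to_rc() {
    name=$1 value=$2 rc=${3:-$HOME/.bashrc}
    line="alias $name='$value'"
    # -x: whole-line match, -F: fixed string, -q: no output
    if grep -qxF -- "$line" "$rc" 2>/dev/null; then
        return 0
    fi
    printf '%s\n' "$line" >> "$rc"
}
```

For example, `add_alias_to_rc c clear` followed by `source ~/.bashrc` (or `. ~/.bashrc`) loads the new alias into the current shell without logging out.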

### A note about privileged access

You can add code as follows in ~/.bashrc:

```
# if user is not root, pass all commands via sudo #
if [ $UID -ne 0 ]; then

@ -100,9 +116,10 @@ if [ $UID -ne 0 ]; then

fi
```

### A note about OS-specific aliases

You can add code as follows in ~/.bashrc [using the case statement][7]:

```
### Get os name via uname ###
_myos="$(uname)"

@ -116,13 +133,14 @@ case $_myos in

esac
```

## 30 bash shell aliases examples

You can define various types of aliases as follows to save time and increase productivity.

### #1: Control ls command output

The [ls command lists directory contents][8] and you can colorize the output:

```
## Colorize the ls output ##
alias ls='ls --color=auto'

@ -134,7 +152,8 @@ alias ll = 'ls -la'

alias l.='ls -d .* --color=auto'
```

### #2: Control cd command behavior

```
## get rid of command not found ##
alias cd..='cd ..'

@ -148,9 +167,10 @@ alias .4= 'cd ../../../../'

alias .5='cd ../../../../..'
```

### #3: Control grep command output

[grep command is a command-line utility for searching][9] plain-text files for lines matching a regular expression:

```
## Colorize the grep command output for ease of use (good for log files)##
alias grep='grep --color=auto'

@ -158,44 +178,51 @@ alias egrep = 'egrep --color=auto'

alias fgrep='fgrep --color=auto'
```

### #4: Start calculator with math support

```
alias bc='bc -l'
```

### #4: Generate sha1 digest

```
alias sha1='openssl sha1'
```

### #5: Create parent directories on demand

[mkdir command][10] is used to create a directory:

```
alias mkdir='mkdir -pv'
```

### #6: Colorize diff output

You can [compare files line by line using diff][11] and use a tool called colordiff to colorize diff output:

```
# install colordiff package :)
alias diff='colordiff'
```

### #7: Make mount command output pretty and human-readable

```
alias mount='mount |column -t'
```

### #8: Command short cuts to save time

```
# handy short cuts #
alias h='history'
alias j='jobs -l'
```

### #9: Create a new set of commands

```
alias path='echo -e ${PATH//:/\\n}'
alias now='date +"%T"'

@ -203,7 +230,8 @@ alias nowtime =now

alias nowdate='date +"%d-%m-%Y"'
```
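The `path` alias relies on bash's `${PATH//:/\\n}` substitution, which replaces every `:` with a `\n` escape, plus `echo -e` to turn that escape into a newline. A more portable alternative pipes the value through `tr` instead, which avoids depending on how a particular `echo` handles escapes. It is sketched here as a hypothetical POSIX sh function named `path_lines`, which also accepts any colon-separated string as an argument.

```shell
#!/bin/sh
# Hypothetical helper: print each entry of a colon-separated list on its
# own line. With no argument it lists $PATH, like the "path" alias.
path_lines() {
    printf '%s\n' "${1:-$PATH}" | tr ':' '\n'
}

path_lines /usr/local/bin:/usr/bin:/bin   # prints the three directories, one per line
```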

### #10: Set vim as default

```
alias vi=vim
alias svi='sudo vi'

@ -211,7 +239,8 @@ alias vis = 'vim "+set si"'

alias edit='vim'
```

### #11: Control output of networking tool called ping

```
# Stop after sending count ECHO_REQUEST packets #
alias ping='ping -c 5'

@ -220,16 +249,18 @@ alias ping = 'ping -c 5'

alias fastping='ping -c 100 -i .2'
```

### #12: Show open ports

Use the [netstat command][12] to quickly list all TCP/UDP ports on the server:

```
alias ports='netstat -tulanp'
```

### #13: Wake up sleeping servers

[Wake-on-LAN (WOL) is an Ethernet networking][13] standard that allows a server to be turned on by a network message. You can [quickly wake up NAS devices][14] and servers using the following aliases:

```
## replace mac with your actual server mac address #
alias wakeupnas01='/usr/bin/wakeonlan 00:11:32:11:15:FC'

@ -237,9 +268,10 @@ alias wakeupnas02 = '/usr/bin/wakeonlan 00:11:32:11:15:FD'

alias wakeupnas03='/usr/bin/wakeonlan 00:11:32:11:15:FE'
```
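For context on what `wakeonlan` sends: a Wake-on-LAN "magic packet" is 6 bytes of `0xFF` followed by the target's 6-byte MAC address repeated 16 times, 102 bytes in all. It is usually sent as a UDP broadcast. The bash sketch below only builds that payload as a hex string and does not send anything, which can be handy for checking a MAC address before putting it in an alias. The function name `magic_packet_hex` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build the hex payload of a Wake-on-LAN magic packet.
magic_packet_hex() {
    local mac payload i
    # Drop ':' or '-' separators and lowercase the hex digits.
    mac=$(printf '%s' "$1" | tr -d ':-' | tr 'A-F' 'a-f')
    # A MAC address is 6 bytes, i.e. 12 hex digits.
    if [[ ! $mac =~ ^[0-9a-f]{12}$ ]]; then
        echo "invalid MAC: $1" >&2
        return 1
    fi
    payload=ffffffffffff               # 6 bytes of 0xFF
    for ((i = 0; i < 16; i++)); do     # then the MAC repeated 16 times
        payload+=$mac
    done
    printf '%s\n' "$payload"
}

# prints ffffffffffff followed by 0011321115fc sixteen times
magic_packet_hex 00:11:32:11:15:FC
```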

### #14: Control firewall (iptables) output

[Netfilter is a host-based firewall][15] for Linux operating systems. It is included as part of the Linux distribution, and it is activated by default. This [post lists the most common iptables solutions][16] a new Linux user needs to secure his or her Linux operating system from intruders.

```
## shortcut for iptables and pass it via sudo#
alias ipt='sudo /sbin/iptables'

@ -252,7 +284,8 @@ alias iptlistfw = 'sudo /sbin/iptables -L FORWARD -n -v --line-numbers'

alias firewall=iptlist
```

### #15: Debug web server / CDN problems with curl

```
# get web server headers #
alias header='curl -I'

@ -261,7 +294,8 @@ alias header = 'curl -I'

|

||||

alias headerc = 'curl -I --compress'

|

||||

```
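
When you are checking a CDN, the HTTP status code is often the only thing you need. Below is a minimal sketch of a hypothetical `httpstatus` function built on curl's `-s`, `-o` and `-w` options. A function is used so that it can loop over several URLs and print one status line per URL:

```shell
# Print "<status code> <final URL>" for each URL given as an argument.
# -s silences the progress meter, -o /dev/null discards the body, and
# -w prints curl's http_code and url_effective variables after each transfer.
httpstatus() {
    local url
    for url in "$@"; do
        curl -s -o /dev/null -w '%{http_code} %{url_effective}\n' "$url"
    done
}
```

Usage: `httpstatus https://www.cyberciti.biz/ https://www.cyberciti.biz/faq/`.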

### #16: Add safety nets

```
# do not delete / or prompt if deleting more than 3 files at a time #
alias rm='rm -I --preserve-root'
@ -277,9 +311,10 @@ alias chmod = 'chmod --preserve-root'
alias chgrp='chgrp --preserve-root'
```
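
With these safety nets in place, you will occasionally want the plain command back. The shell only looks up an alias when the command word is unquoted, so a leading backslash skips the alias for that one invocation. Running the command through `command` has the same effect. Here is a short sketch that uses a throwaway temporary file:

```shell
alias rm='rm -I --preserve-root'

# Throwaway file created only for this demonstration
tmpfile=$(mktemp)

# The backslash quotes the command word, so alias expansion is skipped
\rm -f "$tmpfile"

# Only the first word ("command") is checked for an alias here,
# so the rm alias does not apply either
command rm -f "$tmpfile"
```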

### #17: Update Debian Linux server

The [apt-get command][17] is used for installing packages over the internet (ftp or http). You can also upgrade all packages in a single operation:

```
# distro specific - Debian / Ubuntu and friends #
# install with apt-get
@ -290,25 +325,27 @@ alias updatey = "sudo apt-get --yes"
alias update='sudo apt-get update && sudo apt-get upgrade'
```

### #18: Update RHEL / CentOS / Fedora Linux server

The [yum command][18] is a package management tool for RHEL / CentOS / Fedora Linux and friends:

```
## distro specific RHEL/CentOS ##
alias update='yum update'
alias updatey='yum -y update'
```
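
If the same `~/.bashrc` is shared between Debian-based and Red Hat-based servers, the two `update` aliases above collide. Below is a sketch that defines whichever alias fits the current machine. It assumes only that the matching package manager is on the `PATH`. Newer Fedora and RHEL releases ship `dnf` as the successor to `yum`:

```shell
# Define "update" for whichever package manager this machine actually has.
if command -v apt-get >/dev/null 2>&1; then
    alias update='sudo apt-get update && sudo apt-get upgrade'
elif command -v dnf >/dev/null 2>&1; then
    alias update='sudo dnf upgrade'
elif command -v yum >/dev/null 2>&1; then
    alias update='sudo yum update'
fi
```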

### #19: Tune sudo and su

```
# become root #
alias root='sudo -i'
alias su='sudo -i'
```

### #20: Pass halt/reboot via sudo

The [shutdown command][19] brings the Linux / Unix system down:

```
# reboot / halt / poweroff
alias reboot='sudo /sbin/reboot'
@ -317,7 +354,8 @@ alias halt = 'sudo /sbin/halt'
alias shutdown='sudo /sbin/shutdown'
```
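
There is a related gotcha. Aliases are only expanded for the first word of a command, so `sudo ports` does not pick up the `ports` alias defined earlier. When an alias value ends in a blank, Bash (like other POSIX shells) also checks the next word for an alias. A one-line sketch uses this:

```shell
# The trailing space makes the shell check the word after "sudo" for an alias,
# so "sudo ports" runs "sudo netstat -tulanp" when the ports alias exists.
alias sudo='sudo '
```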

### #21: Control web servers

```
# also pass it via sudo so whoever is admin can reload it without calling you #
alias nginxreload='sudo /usr/local/nginx/sbin/nginx -s reload'
@ -328,7 +366,8 @@ alias httpdreload = 'sudo /usr/sbin/apachectl -k graceful'
alias httpdtest='sudo /usr/sbin/apachectl -t && /usr/sbin/apachectl -t -D DUMP_VHOSTS'
```

### #22: Alias into our backup stuff

```
# if cron fails or if you want backup on demand just run these commands #
# again pass it via sudo so whoever is in admin group can start the job #
@ -343,7 +382,8 @@ alias rsnapshotmonthly = 'sudo /home/scripts/admin/scripts/backup/wrapper.rsnaps
alias amazonbackup=s3backup
```

### #23: Desktop specific - play avi/mp3 files on demand

```
## play video files in the current directory ##
# cd ~/Download/movie-name
@ -365,10 +405,10 @@ alias nplaymp3 = 'for i in /nas/multimedia/mp3/*.mp3; do mplayer "$i"; done'
alias music='mplayer --shuffle *'
```

### #24: Set default interfaces for sys admin related commands

[vnstat is a console-based network][20] traffic monitor. [dnstop is a console tool][21] to analyze DNS traffic. The [tcptrack and iftop commands display][22] information about the TCP/UDP connections seen on a network interface and the bandwidth usage on an interface by host, respectively.

```
## On all of our servers, eth1 is connected to the Internet via vlan / router etc ##
alias dnstop='dnstop -l 5 eth1'
@ -382,7 +422,8 @@ alias ethtool = 'ethtool eth1'
alias iwconfig='iwconfig wlan0'
```
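
Hard-coding `eth1` in every alias is awkward on machines where the interface has another name, for example under systemd's predictable naming scheme (`enp3s0` and similar). One option is to choose the interface once and build the aliases from it. This sketch uses a hypothetical `NET_IF` variable. The double quotes expand `$NET_IF` when `~/.bashrc` is read, not each time an alias runs:

```shell
# Hypothetical variable: set NET_IF before this file is sourced (e.g. NET_IF=enp3s0);
# otherwise eth1 is used, as in the aliases above.
NET_IF=${NET_IF:-eth1}

alias dnstop="dnstop -l 5 $NET_IF"
alias vnstat="vnstat -i $NET_IF"
alias iftop="iftop -i $NET_IF"
alias tcptrack="tcptrack -i $NET_IF"
alias ethtool="ethtool $NET_IF"
```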

### #25: Get system memory, cpu usage, and gpu memory info quickly

```
## pass options to free ##
alias meminfo='free -m -l -t'
@ -405,9 +446,10 @@ alias cpuinfo = 'lscpu'
alias gpumeminfo='grep -i --color memory /var/log/Xorg.0.log'
```

### #26: Control Home Router

The curl command can be used to [reboot Linksys routers][23].

```
# Reboot my home Linksys WAG160N / WAG54 / WAG320 / WAG120N Router / Gateway from *nix.
alias rebootlinksys="curl -u 'admin:my-super-password' 'http://192.168.1.2/setup.cgi?todo=reboot'"
@ -416,15 +458,17 @@ alias rebootlinksys = "curl -u 'admin:my-super-password' 'http://192.168.1.2/set
alias reboottomato="ssh admin@192.168.1.1 /sbin/reboot"
```

### #27 Resume wget by default

[GNU Wget is a free utility for non-interactive download][25] of files from the Web. It supports the HTTP, HTTPS, and FTP protocols, and it can resume interrupted downloads too:

```
## this one saved my butt so many times ##
alias wget='wget -c'
```

### #28 Use different browser for testing website

```
## this one saved my butt so many times ##
alias ff4='/opt/firefox4/firefox'
@ -439,9 +483,10 @@ alias ff =ff13
alias browser=chrome
```

### #29: A note about ssh alias

Do not create ssh aliases. Instead, use `~/.ssh/config`, the OpenSSH SSH client configuration file, which offers more options. An example:

```
Host server10
  Hostname 1.2.3.4
  IdentityFile ~/backups/.ssh/id_dsa
  User foobar
  Port 30000
  ForwardX11Trusted yes
  TCPKeepAlive yes
```

You can now connect to server10 using the following syntax:

```
$ ssh server10
```

### #30: It's your turn to share…

```
## set some other defaults ##
@ -487,21 +533,18 @@ alias cdnmdel = '/home/scripts/admin/cdn/purge_cdn_cache --profile akamai --stdi
alias amzcdnmdel='/home/scripts/admin/cdn/purge_cdn_cache --profile amazon --stdin'
```

## Conclusion

This post summarizes several types of uses for *nix bash aliases:

1. Setting default options for a command (e.g. set eth0 as the default option for the ethtool command via `alias ethtool='ethtool eth0'`).
2. Correcting typos (`cd..` will act as `cd ..` via `alias cd..='cd ..'`).
3. Reducing the amount of typing.
4. Setting the default path of a command that exists in several versions on a system (e.g. GNU grep is located at `/usr/local/bin/grep` and Unix grep is located at `/bin/grep`. To use GNU grep, use `alias grep='/usr/local/bin/grep'`).
5. Adding safety nets to Unix by making commands interactive through default options (e.g. `rm`, `mv`, and other commands).
6. Compatibility, by creating commands for users of older operating systems such as MS-DOS or other Unix-like operating systems (e.g. `alias del=rm`).

I've shared the aliases I have used over the years to reduce repetitive command-line typing. If you know and use any other bash/ksh/csh aliases that can reduce typing, share them below in the comments.

--------------------------------------------------------------------------------

@ -509,33 +552,33 @@ via: https://www.cyberciti.biz/tips/bash-aliases-mac-centos-linux-unix.html

Author: [nixCraft][a]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://www.cyberciti.biz
[1]:https://bash.cyberciti.biz/guide/~/.bashrc
[2]:https://www.cyberciti.biz/tips/wp-content/uploads/2012/06/Getting-Started-With-Bash-Shell-Aliases-For-Linux-Unix.jpg
[3]:https://www.cyberciti.biz/tips/bash-aliases-mac-centos-linux-unix.html (See Linux/Unix alias command examples for more info)
[4]:https://bash.cyberciti.biz/guide/Create_and_use_aliases
[5]:https://www.cyberciti.biz/faq/bash-shell-temporarily-disable-an-alias/
[6]:https://bash.cyberciti.biz/guide/Create_and_use_aliases#How_do_I_remove_the_alias.3F
[7]:https://bash.cyberciti.biz/guide/The_case_statement
[8]:https://www.cyberciti.biz/faq/ls-command-to-examining-the-filesystem/
[9]:https://www.cyberciti.biz/faq/howto-use-grep-command-in-linux-unix/
[10]:https://www.cyberciti.biz/faq/linux-make-directory-command/
[11]:https://www.cyberciti.biz/faq/how-do-i-compare-two-files-under-linux-or-unix/
[12]:https://www.cyberciti.biz/faq/how-do-i-find-out-what-ports-are-listeningopen-on-my-linuxfreebsd-server/
[13]:https://www.cyberciti.biz/tips/linux-send-wake-on-lan-wol-magic-packets.html
[14]:https://bash.cyberciti.biz/misc-shell/simple-shell-script-to-wake-up-nas-devices-computers/
[15]:https://www.cyberciti.biz/faq/rhel-fedorta-linux-iptables-firewall-configuration-tutorial/ (iptables CentOS/RHEL/Fedora tutorial)
[16]:https://www.cyberciti.biz/tips/linux-iptables-examples.html
[17]:https://www.cyberciti.biz/tips/linux-debian-package-management-cheat-sheet.html
[18]:https://www.cyberciti.biz/faq/rhel-centos-fedora-linux-yum-command-howto/
[19]:https://www.cyberciti.biz/faq/howto-shutdown-linux/
[20]:https://www.cyberciti.biz/tips/keeping-a-log-of-daily-network-traffic-for-adsl-or-dedicated-remote-linux-box.html
[21]:https://www.cyberciti.biz/faq/dnstop-monitor-bind-dns-server-dns-network-traffic-from-a-shell-prompt/
[22]:https://www.cyberciti.biz/faq/check-network-connection-linux/
[23]:https://www.cyberciti.biz/faq/reboot-linksys-wag160n-wag54-wag320-wag120n-router-gateway/
[24]:/cdn-cgi/l/email-protection
[25]:https://www.cyberciti.biz/tips/wget-resume-broken-download.html

@ -1,8 +1,8 @@

How to use curl command with proxy username/password on Linux/Unix
======

My sysadmin provided me the following proxy details:

```
IP: 202.54.1.1
Port: 3128
@ -10,15 +10,16 @@ Username: foo
Password: bar
```

The settings worked perfectly with the Google Chrome and Firefox browsers. How do I use them with the curl command? How do I tell the curl command to use the proxy settings from my Google Chrome browser?

Many Linux and Unix command line tools, such as the curl, wget, and lynx commands, use the environment variables `http_proxy`, `https_proxy`, and `ftp_proxy` to find the proxy details. These variables let you connect text-based sessions and applications via the proxy server, with or without a username/password. **This page shows how to perform HTTP/HTTPS requests with the cURL CLI using a proxy server.**

## Unix and Linux curl command with proxy syntax

The syntax is:

```
## Set the proxy address of your uni/company/vpn network ##
export http_proxy=http://your-ip-address:port/
@ -31,8 +32,8 @@ export https_proxy=https://your-ip-address:port/
export https_proxy=https://user:password@your-proxy-ip-address:port/
```

Another option is to pass the `-x` option to the curl command to use the specified proxy:

```
curl -x <[protocol://][user:password@]proxyhost[:port]> url
--proxy <[protocol://][user:password@]proxyhost[:port]> url
@ -40,9 +41,10 @@ curl -x <[protocol://][user:password@]proxyhost[:port]> url
-x http://user:password@Your-Ip-Here:Port url
```

## Using the curl command with a proxy on Linux

First set the `http_proxy`:

```
## proxy server, 202.54.1.1, port: 3128, user: foo, password: bar ##
export http_proxy=http://foo:bar@202.54.1.1:3128/
@ -51,7 +53,8 @@ export https_proxy=$http_proxy
curl -I https://www.cyberciti.biz
curl -v -I https://www.cyberciti.biz
```

Sample outputs:

```
* Rebuilt URL to: www.cyberciti.biz/
@ -98,44 +101,55 @@ Connection: keep-alive
* Connection #0 to host 10.12.249.194 left intact
```

In this example, I'm downloading a pdf file:

```
$ export http_proxy="vivek:myPasswordHere@10.12.249.194:3128/"
$ curl -v -O http://dl.cyberciti.biz/pdfdownloads/b8bf71be9da19d3feeee27a0a6960cb3/569b7f08/cms/631.pdf
```

OR use the -x option:

```
curl -x 'http://vivek:myPasswordHere@10.12.249.194:3128' -v -O https://dl.cyberciti.biz/pdfdownloads/b8bf71be9da19d3feeee27a0a6960cb3/569b7f08/cms/631.pdf
```

Sample outputs:

[![Fig.01: curl in action][1]][2]

## How to use the specified proxy server with curl on Unix

```
$ curl -x http://prox_server_vpn:3128/ -I https://www.cyberciti.biz/faq/howto-nginx-customizing-404-403-error-page/
```

## How to use the SOCKS protocol?

The syntax is the same:

```
curl -x socks5://[user:password@]proxyhost[:port]/ url
curl --socks5 192.168.1.254:3099 https://www.cyberciti.biz/
```

## How do I configure and set up curl to permanently use a proxy connection?

Update/edit your ~/.curlrc file using a text editor such as vim:

```
$ vi ~/.curlrc
```

Append the following:

```
proxy = server1.cyberciti.biz:3128
proxy-user = "foo:bar"
```
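
Once `~/.curlrc` sets a proxy, every curl invocation uses it. curl's `-q` (`--disable`) option stops curl from reading the config file, but only when it is the very first argument on the command line. A small wrapper keeps that ordering right. The name `curl_norc` is hypothetical:

```shell
# -q must come first on the command line, otherwise curl still reads ~/.curlrc
curl_norc() {
    curl -q "$@"
}
```

Usage: `curl_norc -I https://www.cyberciti.biz/`.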

Save and close the file. Another option is to create a bash shell alias in your ~/.bashrc file:

```
## alias for curl command
## set proxy-server and port, the syntax is
@ -143,7 +157,7 @@ Save and close the file. Another option is create a bash shell alias in your ~/.
alias curl="curl -x server1.cyberciti.biz:3128"
```

Remember, the proxy string can be specified with a protocol:// prefix to specify alternative proxy protocols. Use socks4://, socks4a://, socks5:// or socks5h:// to request the specific SOCKS version to be used. No protocol specified, http:// and all others will be treated as HTTP proxies. If the port number is not specified in the proxy string, it is assumed to be 1080. The -x option overrides existing environment variables that set the proxy to use. If there's an environment variable setting a proxy, you can set proxy to "" to override it. See curl command man page [here for more info][3].
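
Sometimes `http_proxy`/`https_proxy` are exported but one request must go direct, for example to an intranet host. curl's `--noproxy` option takes a comma-separated list of hosts that should bypass the proxy, and a single `*` disables the proxy for all hosts. Here is a sketch of a hypothetical `curl_direct` wrapper:

```shell
# Skip the proxy for every host on this one call; the quotes keep the shell
# from expanding * as a filename glob before curl sees it.
curl_direct() {
    curl --noproxy '*' "$@"
}
```

Usage: `curl_direct -I https://www.cyberciti.biz/`.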


--------------------------------------------------------------------------------

@ -152,11 +166,11 @@ via: https://www.cyberciti.biz/faq/linux-unix-curl-command-with-proxy-username-p

Author: [Vivek Gite][a]
Translator: [lujun9972](https://github.com/lujun9972)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://www.cyberciti.biz
[1]:https://www.cyberciti.biz/media/new/faq/2016/01/curl-download-output-300x141.jpg
[2]:https://www.cyberciti.biz/media/new/faq/2016/01/curl-download-output.jpg
[3]:https://curl.haxx.se/docs/manpage.html

published/20170131 Book review Ours to Hack and to Own.md

@ -0,0 +1,64 @@

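Book review: Ours to Hack and to Own
============================================================

Image by : opensource.com

The era of ownership seems to have come to an end. I'm not just talking about the devices and software that many of us bring into our homes and our lives; I'm also talking about the platforms and services that those devices and apps depend on.

While many of the services we use are free, we have no control over them. In essence, these companies control what we see, hear, and read. Not only that, many of them are also changing the nature of work. They are using closed platforms to fuel a shift away from full-time work to the [gig economy][2], which offers little security or certainty.

This move has wide-ranging implications for the Internet and for everyone who uses and relies on it. The vision of an open Internet from just over 20 years ago is fading and is rapidly being replaced by an impenetrable curtain.

One remedy that is becoming popular is building [platform cooperatives][3], which are digital platforms that their users own. As the book [Ours to Hack and to Own][4] explains, the ideas behind platform cooperatives share many of the same roots as open source.

Scholar Trebor Scholz and writer Nathan Schneider have collected 40 essays discussing the rise of, and the need for, platform cooperatives as tools ordinary people can use to promote openness and to push back against the opacity and limitations of closed systems.

### Where open source fits in

At or near the core of any platform cooperative lies open source. Open source technologies are necessary, and so are the principles and ethos that make up open source: openness, transparency, cooperation, and sharing.

In his introduction to the book, Trebor Scholz points out:

> In opposition to the black-box systems of the Snowden-era Internet, these platforms need to distinguish themselves by making their data flows transparent. They need to show where the data about customers and workers are stored, to whom they are sold, and for what purpose.

It's that transparency, so essential to open source, that makes platform cooperatives so appealing and a refreshing change from many of the platforms that exist today.

Open source software is bound to play an important part in the vision of platform cooperatives that Ours to Hack and to Own shares. Open source software can give groups a fast, inexpensive way to build the technical infrastructure that powers their cooperatives.

In an essay, Mickey Metts puts it this way: "Meet your friendly neighborhood tech co-op." Metts works for a company called Agaric, which uses Drupal to build platforms for groups and small businesses that they could not build on their own. Beyond that, Metts encourages anyone who wants to build and run their own business or cooperative to embrace free and open source software. Why? Because it's high quality, inexpensive, and customizable, and it connects you with a large community of helpful, passionate people.

### Not always about open source, but open source is always there

Not all of the essays in the book focus on or mention open source. However, the key elements of the open source way (cooperation, community, open governance, and digital freedom) are always there, just below the surface.

In fact, as many of the essays in Ours to Hack and to Own argue, platform cooperatives can become important building blocks of a more open, commons-based economy and society. In Douglas Rushkoff's words, that could mean organizations like Creative Commons compensating "for the privatization of shared knowledge resources." It could also mean what Francesca Bria, Barcelona's CTO, describes as cities running their own "distributed common data infrastructure" with "systems that ensure the security, privacy, and rights of citizens' data."

### Final thought

If you're looking for a blueprint for changing the Internet and the way we work, Ours to Hack and to Own isn't it. The book is less a user guide than a manifesto. As the book itself puts it, Ours to Hack and to Own offers a glimpse of what we could do if we applied the principles of the open source way to society and to the wider world.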

--------------------------------------------------------------------------------

About the author:

Scott Nesbitt: writer, editor, soldier of fortune, ocelot wrangler, husband and father, blogger, collector of pottery. Scott is a few of these things. He's also a long-time open source user who writes extensively about open source software in articles and on his blog. You can find him on Twitter and GitHub.

--------------------------------------------------------------------------------

via: https://opensource.com/article/17/1/review-book-ours-to-hack-and-own

Author: [Scott Nesbitt][a]
Translator: [darsh8](https://github.com/darsh8)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://opensource.com/users/scottnesbitt
[1]:https://opensource.com/article/17/1/review-book-ours-to-hack-and-own?rate=dgkFEuCLLeutLMH2N_4TmUupAJDjgNvFpqWqYCbQb-8
[2]:https://en.wikipedia.org/wiki/Access_economy
[3]:https://en.wikipedia.org/wiki/Platform_cooperative
[4]:http://www.orbooks.com/catalog/ours-to-hack-and-to-own/
[5]:https://opensource.com/user/14925/feed
[6]:https://opensource.com/users/scottnesbitt

published/20170209 INTRODUCING DOCKER SECRETS MANAGEMENT.md

@ -0,0 +1,99 @@

|

||||

Docker 涉密信息管理介绍

|

||||

====================================

|

||||

|

||||

容器正在改变我们对应用程序和基础设施的看法。无论容器内的代码量是大还是小,容器架构都会引起代码如何与硬件相互作用方式的改变 —— 它从根本上将其从基础设施中抽象出来。对于容器安全来说,在 Docker 中,容器的安全性有三个关键组成部分,它们相互作用构成本质上更安全的应用程序。

|

||||

|

||||

|

||||

|

||||

构建更安全的应用程序的一个关键因素是与系统和其他应用程序进行安全通信,这通常需要证书、令牌、密码和其他类型的验证信息凭证 —— 通常称为应用程序<ruby>涉密信息<rt>secrets</rt></ruby>。我们很高兴可以推出 Docker Secrets,这是一个容器原生的解决方案,它是加强容器安全的<ruby>可信赖交付<rt>Trusted Delivery</rt></ruby>组件,用户可以在容器平台上直接集成涉密信息分发功能。

|

||||

|

||||

有了容器,现在应用程序是动态的,可以跨越多种环境移植。这使得现存的涉密信息分发的解决方案略显不足,因为它们都是针对静态环境。不幸的是,这导致了应用程序涉密信息管理不善的增加,在不安全的、土造的方案中(如将涉密信息嵌入到 GitHub 这样的版本控制系统或者同样糟糕的其它方案),这种情况十分常见。

|

||||

|

||||

### Docker 涉密信息管理介绍

|

||||

|

||||

根本上我们认为,如果有一个标准的接口来访问涉密信息,应用程序就更安全了。任何好的解决方案也必须遵循安全性实践,例如在传输的过程中,对涉密信息进行加密;在不用的时候也对涉密数据进行加密;防止涉密信息在应用最终使用时被无意泄露;并严格遵守最低权限原则,即应用程序只能访问所需的涉密信息,不能多也不能不少。

|

||||

|

||||

通过将涉密信息整合到 Docker 编排,我们能够在遵循这些确切的原则下为涉密信息的管理问题提供一种解决方案。

|

||||

|

||||

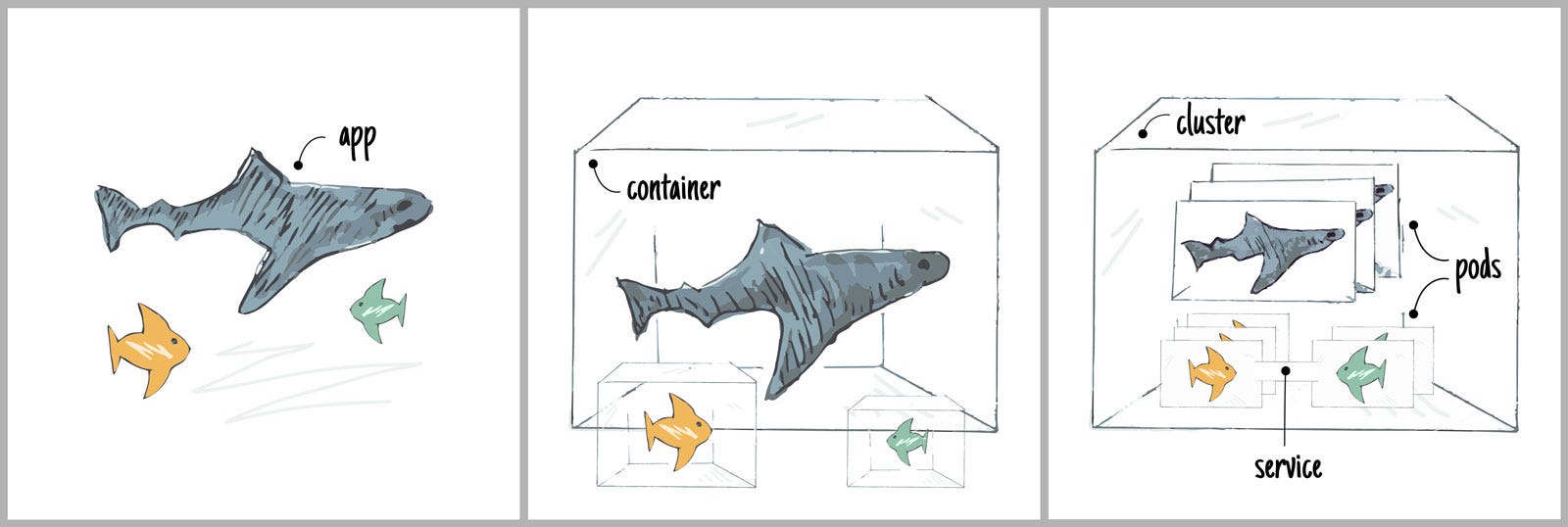

下图提供了一个高层次视图,并展示了 Docker swarm 模式体系架构是如何将一种新类型的对象 —— 一个涉密信息对象,安全地传递给我们的容器。

|

||||

|

||||

|

||||

|

||||

在 Docker 中,涉密信息是任意的数据块,比如密码、SSH 密钥、TLS 凭证,或者任何其他本质上敏感的数据。当你将一个涉密信息加入 swarm 集群(通过执行 `docker secret create` )时,利用在引导新集群时自动创建的[内置证书颁发机构][17],Docker 通过相互认证的 TLS 连接将密钥发送给 swarm 集群管理器。

|

||||

|

||||

```

|

||||

$ echo "This is a secret" | docker secret create my_secret_data -

|

||||

```

|

||||

|

||||

一旦,涉密信息到达某个管理节点,它将被保存到内部的 Raft 存储区中。该存储区使用 NACL 开源加密库中的 Salsa20、Poly1305 加密算法生成的 256 位密钥进行加密,以确保从来不会把任何涉密信息数据写入未加密的磁盘。将涉密信息写入到内部存储,赋予了涉密信息跟其它 swarm 集群数据一样的高可用性。

|

||||

|

||||

当 swarm 集群管理器启动时,包含涉密信息的加密 Raft 日志通过每一个节点独有的数据密钥进行解密。此密钥以及用于与集群其余部分通信的节点 TLS 证书可以使用一个集群级的加密密钥进行加密。该密钥称为“解锁密钥”,也使用 Raft 进行传递,将且会在管理器启动的时候使用。

|

||||

|

||||

当授予新创建或运行的服务权限访问某个涉密信息权限时,其中一个管理器节点(只有管理器可以访问被存储的所有涉密信息)会通过已经建立的 TLS 连接将其分发给正在运行特定服务的节点。这意味着节点自己不能请求涉密信息,并且只有在管理器提供给他们的时候才能访问这些涉密信息 —— 严格地控制请求涉密信息的服务。

|

||||

|

||||

```

|

||||

$ docker service create --name="redis" --secret="my_secret_data" redis:alpine

|

||||

```

|

||||

|

||||

未加密的涉密信息被挂载到一个容器,该容器位于 `/run/secrets/<secret_name>` 的内存文件系统中。

|

||||

|

||||

```

|

||||

$ docker exec $(docker ps --filter name=redis -q) ls -l /run/secrets

|

||||

total 4

|

||||

-r--r--r-- 1 root root 17 Dec 13 22:48 my_secret_data

|

||||

```
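
Each secret simply appears as a file, so an application or entrypoint script can read it with ordinary file I/O. Below is a minimal sketch of a hypothetical `read_secret` helper. Its `SECRETS_DIR` override exists only so the function can be tried outside a swarm service, and it defaults to `/run/secrets`:

```shell
# Print the named secret, or fail with a message if it was not granted
# to this container (or has since been removed from the service).
read_secret() {
    secret_file="${SECRETS_DIR:-/run/secrets}/$1"
    if [ -r "$secret_file" ]; then
        cat "$secret_file"
    else
        echo "secret '$1' not available in ${SECRETS_DIR:-/run/secrets}" >&2
        return 1
    fi
}

# Example use inside the redis service: REDIS_PASSWORD=$(read_secret my_secret_data)
```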

If a service is deleted or rescheduled elsewhere, the swarm manager immediately notifies all the nodes that no longer need access to that secret, and those nodes lose access to the application's secret.

```
$ docker service update --secret-rm="my_secret_data" redis

$ docker exec -it $(docker ps --filter name=redis -q) cat /run/secrets/my_secret_data

cat: can't open '/run/secrets/my_secret_data': No such file or directory
```

Check out the [Docker secrets docs][18] for more information and examples on how to create and manage your secrets. Special thanks also go to [Laurens Van Houtven](https://www.lvh.io/) for working with the Docker security and core teams to make this feature a reality.

### Safer applications with Docker

Docker secrets is designed to be easy for developers and IT ops teams to use when building and running safer applications. It is the first container feature designed both to keep secrets secure and to make them available only to the specific containers that need them. Developers can define applications and secrets with Docker Compose, and IT admins can deploy that Compose file directly in Docker Datacenter. Throughout, secrets, networks, and volumes are encrypted and transported securely along with the application.

More resources:

* [New in Docker Datacenter 1.13: secrets, security scanning, content cache, and more][7]
* [Download Docker][8] and get started
* [Try secrets in Docker Datacenter][9]
* [Read the documentation][10]
* Attend an [upcoming webinar][11]

--------------------------------------------------------------------------------

via: https://blog.docker.com/2017/02/docker-secrets-management/

Author: [Ying Li][a]
Translator: [HardworkFish](https://github.com/HardworkFish)
Proofreaders: [imquanquan](https://github.com/imquanquan), [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]:https://blog.docker.com/author/yingli/
[1]:http://www.linkedin.com/shareArticle?mini=true&url=http://dockr.ly/2k6gnOB&title=Introducing%20Docker%20Secrets%20Management&summary=Containers%20are%20changing%20how%20we%20view%20apps%20and%20infrastructure.%20Whether%20the%20code%20inside%20containers%20is%20big%20or%20small,%20container%20architecture%20introduces%20a%20change%20to%20how%20that%20code%20behaves%20with%20hardware%20-%20it%20fundamentally%20abstracts%20it%20from%20the%20infrastructure.%20Docker%20believes%20that%20there%20are%20three%20key%20components%20to%20container%20security%20and%20...
