# zypper help install

+ install (in) [options] {capability | rpm_file_uri}

+

+ Install packages with specified capabilities or RPM files with specified

+ location. A capability is NAME[.ARCH][OP], where OP is one

+ of <, <=, =, >=, >.

+

+ Command options:

+ --from Select packages from the specified repository.

+ -r, --repo Load only the specified repository.

+ -t, --type Type of package (package, patch, pattern, product, srcpackage).

+ Default: package.

+ -n, --name Select packages by plain name, not by capability.

+ -C, --capability Select packages by capability.

+ -f, --force Install even if the item is already installed (reinstall),

+ downgraded or changes vendor or architecture.

+ --oldpackage Allow to replace a newer item with an older one.

+ Handy if you are doing a rollback. Unlike --force

+ it will not enforce a reinstall.

+ --replacefiles Install the packages even if they replace files from other,

+ already installed, packages. Default is to treat file conflicts

+ as an error. --download-as-needed disables the fileconflict check.

+ ......

+

+3. 安装之前搜索一个安转包(以 gnome-desktop 为例 )

+

+

# zypper se gnome-desktop

+

+ Retrieving repository 'openSUSE-13.2-Debug' metadata ............................................................[done]

+ Building repository 'openSUSE-13.2-Debug' cache .................................................................[done]

+ Retrieving repository 'openSUSE-13.2-Non-Oss' metadata ......................................................... [done]

+ Building repository 'openSUSE-13.2-Non-Oss' cache ...............................................................[done]

+ Retrieving repository 'openSUSE-13.2-Oss' metadata ..............................................................[done]

+ Building repository 'openSUSE-13.2-Oss' cache ...................................................................[done]

+ Retrieving repository 'openSUSE-13.2-Update' metadata ...........................................................[done]

+ Building repository 'openSUSE-13.2-Update' cache ................................................................[done]

+ Retrieving repository 'openSUSE-13.2-Update-Non-Oss' metadata ...................................................[done]

+ Building repository 'openSUSE-13.2-Update-Non-Oss' cache ........................................................[done]

+ Loading repository data...

+ Reading installed packages...

+

+ S | Name | Summary | Type

+ --+---------------------------------------+-----------------------------------------------------------+-----------

+ | gnome-desktop2-lang | Languages for package gnome-desktop2 | package

+ | gnome-desktop2 | The GNOME Desktop API Library | package

+ | libgnome-desktop-2-17 | The GNOME Desktop API Library | package

+ | libgnome-desktop-3-10 | The GNOME Desktop API Library | package

+ | libgnome-desktop-3-devel | The GNOME Desktop API Library -- Development Files | package

+ | libgnome-desktop-3_0-common | The GNOME Desktop API Library -- Common data files | package

+ | gnome-desktop-debugsource | Debug sources for package gnome-desktop | package

+ | gnome-desktop-sharp2-debugsource | Debug sources for package gnome-desktop-sharp2 | package

+ | gnome-desktop2-debugsource | Debug sources for package gnome-desktop2 | package

+ | libgnome-desktop-2-17-debuginfo | Debug information for package libgnome-desktop-2-17 | package

+ | libgnome-desktop-3-10-debuginfo | Debug information for package libgnome-desktop-3-10 | package

+ | libgnome-desktop-3_0-common-debuginfo | Debug information for package libgnome-desktop-3_0-common | package

+ | libgnome-desktop-2-17-debuginfo-32bit | Debug information for package libgnome-desktop-2-17 | package

+ | libgnome-desktop-3-10-debuginfo-32bit | Debug information for package libgnome-desktop-3-10 | package

+ | gnome-desktop-sharp2 | Mono bindings for libgnome-desktop | package

+ | libgnome-desktop-2-devel | The GNOME Desktop API Library -- Development Files | packag

+ | gnome-desktop-lang | Languages for package gnome-desktop | package

+ | libgnome-desktop-2-17-32bit | The GNOME Desktop API Library | package

+ | libgnome-desktop-3-10-32bit | The GNOME Desktop API Library | package

+ | gnome-desktop | The GNOME Desktop API Library | srcpackage

+

+4. 获取一个模式包的信息(以 lamp_server 为例)。

+

+

# zypper info -t pattern lamp_server

+

+ Loading repository data...

+ Reading installed packages...

+

+

+ Information for pattern lamp_server:

+ ------------------------------------

+ Repository: openSUSE-13.2-Update

+ Name: lamp_server

+ Version: 20141007-5.1

+ Arch: x86_64

+ Vendor: openSUSE

+ Installed: No

+ Visible to User: Yes

+ Summary: Web and LAMP Server

+ Description:

+ Software to set up a Web server that is able to serve static, dynamic, and interactive content (like a Web shop). This includes Apache HTTP Server, the database management system MySQL,

+ and scripting languages such as PHP, Python, Ruby on Rails, or Perl.

+ Contents:

+

+ S | Name | Type | Dependency

+ --+-------------------------------+---------+-----------

+ | apache2-mod_php5 | package |

+ | php5-iconv | package |

+ i | patterns-openSUSE-base | package |

+ i | apache2-prefork | package |

+ | php5-dom | package |

+ | php5-mysql | package |

+ i | apache2 | package |

+ | apache2-example-pages | package |

+ | mariadb | package |

+ | apache2-mod_perl | package |

+ | php5-ctype | package |

+ | apache2-doc | package |

+ | yast2-http-server | package |

+ | patterns-openSUSE-lamp_server | package |

# zypper ref

+ Repository 'openSUSE-13.2-0' is up to date.

+ Repository 'openSUSE-13.2-Debug' is up to date.

+ Repository 'openSUSE-13.2-Non-Oss' is up to date.

+ Repository 'openSUSE-13.2-Oss' is up to date.

+ Repository 'openSUSE-13.2-Update' is up to date.

+ Repository 'openSUSE-13.2-Update-Non-Oss' is up to date.

+ All repositories have been refreshed.

+

+10. 刷新一个指定的软件库(以 'repo-non-oss' 为例 )。

+

+

# zypper refresh repo-non-oss

+ Repository 'openSUSE-13.2-Non-Oss' is up to date.

+ Specified repositories have been refreshed.

+

+11. 强制更新一个软件库(以 'repo-non-oss' 为例 )。

+

+

# zypper ref -f repo-non-oss

+ Forcing raw metadata refresh

+ Retrieving repository 'openSUSE-13.2-Non-Oss' metadata ............................................................[done]

+ Forcing building of repository cache

+ Building repository 'openSUSE-13.2-Non-Oss' cache ............................................................[done]

+ Specified repositories have been refreshed.

# zypper mr -rk -p 85 repo-non-oss

+ Repository 'repo-non-oss' priority has been left unchanged (85)

+ Nothing to change for repository 'repo-non-oss'.

+

+15. 对所有的软件库关闭 rpm 文件缓存。

+

+

# zypper mr -Ka

+ RPM files caching has been disabled for repository 'openSUSE-13.2-0'.

+ RPM files caching has been disabled for repository 'repo-debug'.

+ RPM files caching has been disabled for repository 'repo-debug-update'.

+ RPM files caching has been disabled for repository 'repo-debug-update-non-oss'.

+ RPM files caching has been disabled for repository 'repo-non-oss'.

+ RPM files caching has been disabled for repository 'repo-oss'.

+ RPM files caching has been disabled for repository 'repo-source'.

+ RPM files caching has been disabled for repository 'repo-update'.

+ RPM files caching has been disabled for repository 'repo-update-non-oss'.

+

+16. 对所有的软件库开启 rpm 文件缓存。

+

# zypper mr -ka

+ RPM files caching has been enabled for repository 'openSUSE-13.2-0'.

+ RPM files caching has been enabled for repository 'repo-debug'.

+ RPM files caching has been enabled for repository 'repo-debug-update'.

+ RPM files caching has been enabled for repository 'repo-debug-update-non-oss'.

+ RPM files caching has been enabled for repository 'repo-non-oss'.

+ RPM files caching has been enabled for repository 'repo-oss'.

+ RPM files caching has been enabled for repository 'repo-source'.

+ RPM files caching has been enabled for repository 'repo-update'.

+ RPM files caching has been enabled for repository 'repo-update-non-oss'.

+

+17. 关闭远程库的 rpm 文件缓存

+

# zypper mr -Kt

+ RPM files caching has been disabled for repository 'repo-debug'.

+ RPM files caching has been disabled for repository 'repo-debug-update'.

+ RPM files caching has been disabled for repository 'repo-debug-update-non-oss'.

+ RPM files caching has been disabled for repository 'repo-non-oss'.

+ RPM files caching has been disabled for repository 'repo-oss'.

+ RPM files caching has been disabled for repository 'repo-source'.

+ RPM files caching has been disabled for repository 'repo-update'.

+ RPM files caching has been disabled for repository 'repo-update-non-oss'.

+

+18. 开启远程软件库的 rpm 文件缓存。

+

# zypper mr -kt

+ RPM files caching has been enabled for repository 'repo-debug'.

+ RPM files caching has been enabled for repository 'repo-debug-update'.

+ RPM files caching has been enabled for repository 'repo-debug-update-non-oss'.

+ RPM files caching has been enabled for repository 'repo-non-oss'.

+ RPM files caching has been enabled for repository 'repo-oss'.

+ RPM files caching has been enabled for repository 'repo-source'.

+ RPM files caching has been enabled for repository 'repo-update'.

+ RPM files caching has been enabled for repository 'repo-update-non-oss'.

# zypper in 'gcc<5.1'

+ Loading repository data...

+ Reading installed packages...

+ Resolving package dependencies...

+

+ The following 13 NEW packages are going to be installed:

+ cpp cpp48 gcc gcc48 libasan0 libatomic1-gcc49 libcloog-isl4 libgomp1-gcc49 libisl10 libitm1-gcc49 libmpc3 libmpfr4 libtsan0-gcc49

+

+ 13 new packages to install.

+ Overall download size: 14.5 MiB. Already cached: 0 B After the operation, additional 49.4 MiB will be used.

+ Continue? [y/n/? shows all options] (y): y

+

+24. 为特定的CPU架构安装软件包(以兼容 i586 的 gcc 为例)。

+

+

# zypper in gcc.i586

+ Loading repository data...

+ Reading installed packages...

+ Resolving package dependencies...

+

+ The following 13 NEW packages are going to be installed:

+ cpp cpp48 gcc gcc48 libasan0 libatomic1-gcc49 libcloog-isl4 libgomp1-gcc49 libisl10 libitm1-gcc49 libmpc3 libmpfr4 libtsan0-gcc49

+

+ 13 new packages to install.

+ Overall download size: 14.5 MiB. Already cached: 0 B After the operation, additional 49.4 MiB will be used.

+ Continue? [y/n/? shows all options] (y): y

+ Retrieving package libasan0-4.8.3+r212056-2.2.4.x86_64 (1/13), 74.2 KiB (166.9 KiB unpacked)

+ Retrieving: libasan0-4.8.3+r212056-2.2.4.x86_64.rpm .......................................................................................................................[done (79.2 KiB/s)]

+ Retrieving package libatomic1-gcc49-4.9.0+r211729-2.1.7.x86_64 (2/13), 14.3 KiB ( 26.1 KiB unpacked)

+ Retrieving: libatomic1-gcc49-4.9.0+r211729-2.1.7.x86_64.rpm ...............................................................................................................[done (55.3 KiB/s)]

# zypper in amarok upd:libxine1

+ Loading repository data...

+ Reading installed packages...

+ Resolving package dependencies...

+ The following 202 NEW packages are going to be installed:

+ amarok bundle-lang-kde-en clamz cups-libs enscript fontconfig gdk-pixbuf-query-loaders ghostscript-fonts-std gptfdisk gstreamer gstreamer-plugins-base hicolor-icon-theme

+ hicolor-icon-theme-branding-openSUSE htdig hunspell hunspell-tools icoutils ispell ispell-american kde4-filesystem kdebase4-runtime kdebase4-runtime-branding-openSUSE kdelibs4

+ kdelibs4-branding-openSUSE kdelibs4-core kdialog libakonadi4 l

+ .....

+

+27. 通过指定软件包的名字安装软件包。

+

+

# zypper in -n git

+ Loading repository data...

+ Reading installed packages...

+ Resolving package dependencies...

+

+ The following 35 NEW packages are going to be installed:

+ cvs cvsps fontconfig git git-core git-cvs git-email git-gui gitk git-svn git-web libserf-1-1 libsqlite3-0 libXft2 libXrender1 libXss1 perl-Authen-SASL perl-Clone perl-DBD-SQLite perl-DBI

+ perl-Error perl-IO-Socket-SSL perl-MLDBM perl-Net-Daemon perl-Net-SMTP-SSL perl-Net-SSLeay perl-Params-Util perl-PlRPC perl-SQL-Statement perl-Term-ReadKey subversion subversion-perl tcl

+ tk xhost

+

+ The following 13 recommended packages were automatically selected:

+ git-cvs git-email git-gui gitk git-svn git-web perl-Authen-SASL perl-Clone perl-MLDBM perl-Net-Daemon perl-Net-SMTP-SSL perl-PlRPC perl-SQL-Statement

+

+ The following package is suggested, but will not be installed:

+ git-daemon

+

+ 35 new packages to install.

+ Overall download size: 15.6 MiB. Already cached: 0 B After the operation, additional 56.7 MiB will be used.

+ Continue? [y/n/? shows all options] (y): y

+

+28. 通过通配符来安装软件包,例如,安装所有 php5 的软件包。

+

+

# zypper in php5*

+ Loading repository data...

+ Reading installed packages...

+ Resolving package dependencies...

+

+ Problem: php5-5.6.1-18.1.x86_64 requires smtp_daemon, but this requirement cannot be provided

+ uninstallable providers: exim-4.83-3.1.8.x86_64[openSUSE-13.2-0]

+ postfix-2.11.0-5.2.2.x86_64[openSUSE-13.2-0]

+ sendmail-8.14.9-2.2.2.x86_64[openSUSE-13.2-0]

+ exim-4.83-3.1.8.i586[repo-oss]

+ msmtp-mta-1.4.32-2.1.3.i586[repo-oss]

+ postfix-2.11.0-5.2.2.i586[repo-oss]

+ sendmail-8.14.9-2.2.2.i586[repo-oss]

+ exim-4.83-3.1.8.x86_64[repo-oss]

+ msmtp-mta-1.4.32-2.1.3.x86_64[repo-oss]

+ postfix-2.11.0-5.2.2.x86_64[repo-oss]

+ sendmail-8.14.9-2.2.2.x86_64[repo-oss]

+ postfix-2.11.3-5.5.1.i586[repo-update]

+ postfix-2.11.3-5.5.1.x86_64[repo-update]

+ Solution 1: Following actions will be done:

+ do not install php5-5.6.1-18.1.x86_64

+ do not install php5-pear-Auth_SASL-1.0.6-7.1.3.noarch

+ do not install php5-pear-Horde_Http-2.0.1-6.1.3.noarch

+ do not install php5-pear-Horde_Image-2.0.1-6.1.3.noarch

+ do not install php5-pear-Horde_Kolab_Format-2.0.1-6.1.3.noarch

+ do not install php5-pear-Horde_Ldap-2.0.1-6.1.3.noarch

+ do not install php5-pear-Horde_Memcache-2.0.1-7.1.3.noarch

+ do not install php5-pear-Horde_Mime-2.0.2-6.1.3.noarch

+ do not install php5-pear-Horde_Oauth-2.0.0-6.1.3.noarch

+ do not install php5-pear-Horde_Pdf-2.0.1-6.1.3.noarch

+ ....

+

+29. 使用模式名称(模式名称是一类软件包的名字)来批量安装软件包。

+

+

# zypper in -t pattern lamp_server

+ ading repository data...

+ Reading installed packages...

+ Resolving package dependencies...

+

+ The following 29 NEW packages are going to be installed:

+ apache2 apache2-doc apache2-example-pages apache2-mod_perl apache2-prefork patterns-openSUSE-lamp_server perl-Data-Dump perl-Encode-Locale perl-File-Listing perl-HTML-Parser

+ perl-HTML-Tagset perl-HTTP-Cookies perl-HTTP-Daemon perl-HTTP-Date perl-HTTP-Message perl-HTTP-Negotiate perl-IO-HTML perl-IO-Socket-SSL perl-libwww-perl perl-Linux-Pid

+ perl-LWP-MediaTypes perl-LWP-Protocol-https perl-Net-HTTP perl-Net-SSLeay perl-Tie-IxHash perl-TimeDate perl-URI perl-WWW-RobotRules yast2-http-server

+

+ The following NEW pattern is going to be installed:

+ lamp_server

+

+ The following 10 recommended packages were automatically selected:

+ apache2 apache2-doc apache2-example-pages apache2-mod_perl apache2-prefork perl-Data-Dump perl-IO-Socket-SSL perl-LWP-Protocol-https perl-TimeDate yast2-http-server

+

+ 29 new packages to install.

+ Overall download size: 7.2 MiB. Already cached: 1.2 MiB After the operation, additional 34.7 MiB will be used.

+ Continue? [y/n/? shows all options] (y):

+

+30. 使用一行命令安装一个软件包同时卸载另一个软件包,例如在安装 nano 的同时卸载 vi

+

+

# zypper in nano -vi

+ Loading repository data...

+ Reading installed packages...

+ '-vi' not found in package names. Trying capabilities.

+ Resolving package dependencies...

+

+ The following 2 NEW packages are going to be installed:

+ nano nano-lang

+

+ The following package is going to be REMOVED:

+ vim

+

+ The following recommended package was automatically selected:

+ nano-lang

+

+ 2 new packages to install, 1 to remove.

+ Overall download size: 550.0 KiB. Already cached: 0 B After the operation, 463.3 KiB will be freed.

+ Continue? [y/n/? shows all options] (y):

+ ...

+

+31. 使用 zypper 安装 rpm 软件包。

+

+

# zypper in teamviewer*.rpm

+ Loading repository data...

+ Reading installed packages...

+ Resolving package dependencies...

+

+ The following 24 NEW packages are going to be installed:

+ alsa-oss-32bit fontconfig-32bit libasound2-32bit libexpat1-32bit libfreetype6-32bit libgcc_s1-gcc49-32bit libICE6-32bit libjpeg62-32bit libpng12-0-32bit libpng16-16-32bit libSM6-32bit

+ libuuid1-32bit libX11-6-32bit libXau6-32bit libxcb1-32bit libXdamage1-32bit libXext6-32bit libXfixes3-32bit libXinerama1-32bit libXrandr2-32bit libXrender1-32bit libXtst6-32bit

+ libz1-32bit teamviewer

+

+ The following recommended package was automatically selected:

+ alsa-oss-32bit

+

+ 24 new packages to install.

+ Overall download size: 41.2 MiB. Already cached: 0 B After the operation, additional 119.7 MiB will be used.

+ Continue? [y/n/? shows all options] (y):

+ ..

zypper up apache2 openssh

+ Loading repository data...

+ Reading installed packages...

+ No update candidate for 'apache2-2.4.10-19.1.x86_64'. The highest available version is already installed.

+ No update candidate for 'openssh-6.6p1-5.1.3.x86_64'. The highest available version is already installed.

+ Resolving package dependencies...

+

+ Nothing to do.

+

+35. 安装一个软件库,例如 mariadb,如果该库存在则更新之。

+

+

# zypper in mariadb

+ Loading repository data...

+ Reading installed packages...

+ 'mariadb' is already installed.

+ No update candidate for 'mariadb-10.0.13-2.6.1.x86_64'. The highest available version is already installed.

+ Resolving package dependencies...

+

+ Nothing to do.

# zypper si mariadb

+ Reading installed packages...

+ Loading repository data...

+ Resolving package dependencies...

+

+ The following 36 NEW packages are going to be installed:

+ autoconf automake bison cmake cpp cpp48 gcc gcc48 gcc48-c++ gcc-c++ libaio-devel libarchive13 libasan0 libatomic1-gcc49 libcloog-isl4 libedit-devel libevent-devel libgomp1-gcc49 libisl10

+ libitm1-gcc49 libltdl7 libmpc3 libmpfr4 libopenssl-devel libstdc++48-devel libtool libtsan0-gcc49 m4 make ncurses-devel pam-devel readline-devel site-config tack tcpd-devel zlib-devel

+

+ The following source package is going to be installed:

+ mariadb

+

+ 36 new packages to install, 1 source package.

+ Overall download size: 71.5 MiB. Already cached: 129.5 KiB After the operation, additional 183.9 MiB will be used.

+ Continue? [y/n/? shows all options] (y): y

+

+37. 仅为某一个软件包安装源文件,例如 mariadb

+

+

# zypper in -D mariadb

+ Loading repository data...

+ Reading installed packages...

+ 'mariadb' is already installed.

+ No update candidate for 'mariadb-10.0.13-2.6.1.x86_64'. The highest available version is already installed.

+ Resolving package dependencies...

+

+ Nothing to do.

+

+38. 仅为某一个软件包安装依赖关系,例如 mariadb

+

+

# zypper si -d mariadb

+ Reading installed packages...

+ Loading repository data...

+ Resolving package dependencies...

+

+ The following 36 NEW packages are going to be installed:

+ autoconf automake bison cmake cpp cpp48 gcc gcc48 gcc48-c++ gcc-c++ libaio-devel libarchive13 libasan0 libatomic1-gcc49 libcloog-isl4 libedit-devel libevent-devel libgomp1-gcc49 libisl10

+ libitm1-gcc49 libltdl7 libmpc3 libmpfr4 libopenssl-devel libstdc++48-devel libtool libtsan0-gcc49 m4 make ncurses-devel pam-devel readline-devel site-config tack tcpd-devel zlib-devel

+

+ The following package is recommended, but will not be installed due to conflicts or dependency issues:

+ readline-doc

+

+ 36 new packages to install.

+ Overall download size: 33.7 MiB. Already cached: 129.5 KiB After the operation, additional 144.3 MiB will be used.

+ Continue? [y/n/? shows all options] (y): y

# zypper --non-interactive in mariadb

+ Loading repository data...

+ Reading installed packages...

+ 'mariadb' is already installed.

+ No update candidate for 'mariadb-10.0.13-2.6.1.x86_64'. The highest available version is already installed.

+ Resolving package dependencies...

+

+ Nothing to do.

+

+40. 卸载一个软件包,并且在卸载过程中跳过与用户的交互,例如 mariadb

+

+

# zypper --non-interactive rm mariadb

+ Loading repository data...

+ Reading installed packages...

+ Resolving package dependencies...

+

+ The following package is going to be REMOVED:

+ mariadb

+

+ 1 package to remove.

+ After the operation, 71.8 MiB will be freed.

+ Continue? [y/n/? shows all options] (y): y

+ (1/1) Removing mariadb-10.0.13-2.6.1 .............................................................................[done]

# zypper --quiet in mariadb

+ The following NEW package is going to be installed:

+ mariadb

+

+ 1 new package to install.

+ Overall download size: 0 B. Already cached: 7.8 MiB After the operation, additional 71.8 MiB will be used.

+ Continue? [y/n/? shows all options] (y):

+ ...

# zypper dist-upgrade

+ You are about to do a distribution upgrade with all enabled repositories. Make sure these repositories are compatible before you continue. See 'man zypper' for more information about this command.

+ Building repository 'openSUSE-13.2-0' cache .....................................................................[done]

+ Retrieving repository 'openSUSE-13.2-Debug' metadata ............................................................[done]

+ Building repository 'openSUSE-13.2-Debug' cache .................................................................[done]

+ Retrieving repository 'openSUSE-13.2-Non-Oss' metadata ..........................................................[done]

+ Building repository 'openSUSE-13.2-Non-Oss' cache ...............................................................[done]

The page you request is not present. Check the URL you have typed

"

-

-The above representation is also correct which places the string representing a usual html file.

-

-#### 4. Setting/Unsetting Apache server environment variables ####

-

-In .htaccess file you can set or unset the global environment variables that server allow to be modified by the hosters of the websites. For setting or unsetting the environment variables you need to add the following lines to your .htaccess files.

-

-**Setting the Environment variables**

-

- SetEnv OWNER “Gunjit Khera”

-

-Unsetting the Environment variables

-

- UnsetEnv OWNER

-

-#### 5. Defining different MIME types for files ####

-

-MIME (Multipurpose Internet Multimedia Extensions) are the types that are recognized by the browser by default when running any web page. You can define MIME types for your website in .htaccess files, so that different types of files as defined by you can be recognized and run by the server.

-

-

- AddType application/javascript js

- AddType application/x-font-ttf ttf ttc

-

-

-Here, mod_mime.c is the module for controlling definitions of different MIME types and if you have this module installed on your system then you can use this module to define different MIME types for different extensions used in your website so that server can understand them.

-

-#### 6. How to Limit the size of Uploads and Downloads in Apache ####

-

-.htaccess files allow you the feature to control the amount of data being uploaded or downloaded by a particular client from your website. For this you just need to append the following lines to your .htaccess file:

-

- php_value upload_max_filesize 20M

- php_value post_max_size 20M

- php_value max_execution_time 200

- php_value max_input_time 200

-

-The above lines set maximum upload size, maximum size of data being posted, maximum execution time i.e. the maximum time the a user is allowed to execute a website on his local machine, maximum time constrain within on the input time.

-

-#### 7. Making Users to download .mp3 and other files before playing on your website. ####

-

-Mostly, people play songs on websites before downloading them to check the song quality etc. Being a smart seller you can add a feature that can come in very handy for you which will not let any user play songs or videos online and users have to download them for playing. This is very useful as online playing of songs and videos consumes a lot of bandwidth.

-

-Following lines are needed to be added to be added to your .htaccess file:

-

- AddType application/octet-stream .mp3 .zip

-

-#### 8. Setting Directory Index for Website ####

-

-Most of website developers would already know that the first page that is displayed i.e. the home page of a website is named as ‘index.html’ .Many of us would have seen this also. But how is this set?

-

-.htaccess file provides a way to list a set of pages which would be scanned in order when a client requests to visit home page of the website and accordingly any one of the listed set of pages if found would be listed as the home page of the website and displayed to the user.

-

-Following line is needed to be added to produce the desired effect.

-

- DirectoryIndex index.html index.php yourpage.php

-

-The above line specifies that if any request for visiting the home page comes by any visitor then the above listed pages will be searched in order in the directory firstly: index.html which if found will be displayed as the sites home page, otherwise list will proceed to the next page i.e. index.php and so on till the last page you have entered in the list.

-

-#### 9. How to enable GZip compression for Files to save site’s bandwidth. ####

-

-This is a common observation that heavy sites generally run bit slowly than light weight sites that take less amount of space. This is just because for a heavy site it takes time to load the huge script files and images before displaying them on the client’s web browser.

-

-This is a common mechanism that when a browser requests a web page, server provides the browser with that webpage and now to locally display that web page, the browser has to download that page and then run the script inside that page.

-

-What GZip compression does here is saving the time required to serve a single customer thus increasing the bandwidth. The source files of the website on the server are kept in compressed form and when the request comes from a user then these files are transferred in compressed form which are then uncompressed and executed on the server. This improves the bandwidth constrain.

-

-Following lines can allow you to compress the source files of your website but this requires mod_deflate.c module to be installed on your server.

-

-

- AddOutputFilterByType DEFLATE text/plain

- AddOutputFilterByType DEFLATE text/html

- AddOutputFilterByType DEFLATE text/xml

- AddOutputFilterByType DEFLATE application/html

- AddOutputFilterByType DEFLATE application/javascript

- AddOutputFilterByType DEFLATE application/x-javascript

-

-

-#### 10. Playing with the File types. ####

-

-There are certain conditions that the server assumes by default. Like: .php files are run on the server, similarly .txt files say for example are meant to be displayed. Like this we can make some executable cgi-scripts or files to be simply displayed as the source code on our website instead of being executed.

-

-To do this observe the following lines from a .htaccess file.

-

- RemoveHandler cgi-script .php .pl .py

- AddType text/plain .php .pl .py

-

-These lines tell the server that .pl (perl script), .php (PHP file) and .py (Python file) are meant to just be displayed and not executed as cgi-scripts.

-

-#### 11. Setting the Time Zone for Apache server ####

-

-The power and importance of .htaccess files can be seen by the fact that this can be used to set the Time Zone of the server accordingly. This can be done by setting a global Environment variable ‘TZ’ of the list of global environment variables that are provided by the server to each of the hosted website for modification.

-

-Due to this reason only, we can see time on the websites (that display it) according to our time zone. May be some other person hosting his website on the server would have the timezone set according to the location where he lives.

-

-Following lines set the Time Zone of the Server.

-

- SetEnv TZ India/Kolkata

-

-#### 12. How to enable Cache Control on Website ####

-

-A very interesting feature of browser, most have observed is that on opening one website simultaneously more than one time, the latter one opens fast as compared to the first time. But how is this possible? Well in this case, the browser stores some frequently visited pages in its cache for faster access later on.

-

-But for how long? Well this answer depends on you i.e. on the time you set in your .htaccess file for Cache control. The .htaccess file can specify the amount of time for which the pages of website can stay in the browser’s cache and after expiration of time, it must revalidate i.e. pages would be deleted from the Cache and recreated the next time user visits the site.

-

-Following lines implement Cache Control for your website.

-

-

- Header Set Cache-Control "max-age=3600, public"

-

-

- Header Set Cache-Control "public"

- Header Set Expires "Sat, 24 Jan 2015 16:00:00 GMT"

-

-

-The above lines allow caching of the pages which are inside the directory in which .htaccess files are placed for 1 hour.

-

-#### 13. Configuring a single file, the option. ####

-

-Usually the content in .htaccess files apply to all the files and folders inside the directory in which the file is placed, but you can also provide some special permissions to a special file, like denying access to that file only or so on.

-

-For this you need to add tag to your file in a way like this:

-

-

- Order allow, deny

- Deny from 188.100.100.0

-

-

-This is a simple case of denying a file ‘conf.html’ from access by IP 188.100.100.0, but you can add any or every feature described for .htaccess file till now including the features yet to be described to the file like: Cache-control, GZip compression.

-

-This feature is used by most of the servers to secure .htaccess files which is the reason why we are not able to see the .htaccess files on the browsers. How the files are authenticated is demonstrated in subsequent heading.

-

-#### 14. Enabling CGI scripts to run outside of cgi-bin folder. ####

-

-Usually servers run CGI scripts that are located inside the cgi-bin folder but, you can enable running of CGI scripts located in your desired folder but just adding following lines to .htaccess file located in the desired folder and if not, then creating one, appending following lines:

-

- AddHandler cgi-script .cgi

- Options +ExecCGI

-

-#### 15. How to enable SSI on Website with .htaccess ####

-

-Server side includes as the name suggests would be related to something included at the server side. But what? Generally when we have many pages in our website and we have a navigation menu on our home page that displays links to other pages then, we can enable SSI (Server Size Includes) option that allows all the pages displayed in the navigation menu to be included with the home page completely.

-

-The SSI allows inclusion of multiple pages as if content they contain is a part of a single page so that any editing needed to be done is done in one file only which saves a lot of disk space. This option is by default enabled on servers but for .shtml files.

-

-In case you want to enable it for .html files you need to add following lines:

-

- AddHandler server-parsed .html

-

-After this following in the html file would lead to SSI.

-

-

-

-#### 16. How to Prevent website Directory Listing ####

-

-To prevent any client being able to list the directories of the website on the server at his local machine add following lines to the file inside the directory you don’t want to get listed.

-

- Options -Indexes

-

-#### 17. Changing Default charset and language headers. ####

-

-.htaccess files allow you to modify the character set used i.e. ASCII or UNICODE, UTF-8 etc. for your website along with the default language used for the display of content.

-

-Following server’s global environment variables allow you to achieve above feature.

-

- AddDefaultCharset UTF-8

- DefaultLanguage en-US

-

-**Re-writing URL’s: Redirection Rules**

-

-Re-writing feature simply means replacing the long and un-rememberable URL’s with short and easy to remember ones. But, before going into this topic there are some rules and some conventions for special symbols used later on in this article.

-

-**Special Symbols:**

-

- Symbol Meaning

- ^ - Start of the string

- $ - End of the String

- | - Or [a|b] – a or b

- [a-z] - Any of the letter between a to z

- + - One or more occurrence of previous letter

- * - Zero or more occurrence of previous letter

- ? - Zero or one occurrence of previous letter

-

-**Constants and their meaning:**

-

- Constant Meaning

- NC - No-case or case sensitive

- L - Last rule – stop processing further rules

- R - Temporary redirect to new URL

- R=301 - Permanent redirect to new URL

- F - Forbidden, send 403 header to the user

- P - Proxy – grab remote content in substitution section and return it

- G - Gone, no longer exists

- S=x - Skip next x rules

- T=mime-type - Force specified MIME type

- E=var:value - Set environment variable var to value

- H=handler - Set handler

- PT - Pass through – in case of URL’s with additional headers.

- QSA - Append query string from requested to substituted URL

-

-#### 18. Redirecting a non-www URL to a www URL. ####

-

-Before starting with the explanation, lets first see the lines that are needed to be added to .htaccess file to enable this feature.

-

- RewriteEngine ON

- RewriteCond %{HTTP_HOST} ^abc\.net$

- RewriteRule (.*) http://www.abc.net/$1 [R=301,L]

-

-The above lines enable the Rewrite Engine and then in second line check all those URL’s that pertain to host abc.net or have the HTTP_HOST environment variable set to “abc.net”.

-

-For all such URL’s the code permanently redirects them (as R=301 rule is enabled) to the new URL http://www.abc.net/$1 where $1 is the non-www URL having host as abc.net. The non-www URL is the one in bracket and is referred by $1.

-

-#### 19. Redirecting entire website to https. ####

-

-Following lines will help you transfer entire website to https:

-

- RewriteEngine ON

- RewriteCond %{HTTPS} !on

- RewriteRule (.*) https://%{HTTP_HOST}%{REQUEST_URI}

-

-The above lines enable the re-write engine and then check the value of HTTPS environment variable. If it is on then re-write the entire pages of the website to https.

-

-#### 20. A custom redirection example ####

-

-For example, redirect url ‘http://www.abc.net?p=100&q=20 ‘ to ‘http://www.abc.net/10020pq’.

-

- RewriteEngine ON

- RewriteRule ^http://www.abc.net/([0-9]+)([0-9]+)pq$ ^http://www.abc.net?p=$1&q=$2

-

-In above lines, $1 represents the first bracket and $2 represents the second bracket.

-

-#### 21. Renaming the htaccess file ####

-

-For preventing the .htaccess file from the intruders and other people from viewing those files you can rename that file so that it is not accessed by client’s browser. The line that does this is:

-

- AccessFileName htac.cess

-

-#### 22. How to Prevent Image Hotlinking for your Website ####

-

-Another problem that is major factor of large bandwidth consumption by the websites is the problem of hot links which are links to your websites by other websites for display of images mostly of your website which consumes your bandwidth. This problem is also called as ‘bandwidth theft’.

-

-A common observation is when a site displays the image contained in some other site due to this hot-linking your site needs to be loaded and at the stake of your site’s bandwidth, the other site’s images are displayed. To prevent this for like: images such as: .gif, .jpeg etc. following lines of code would help:

-

- RewriteEngine ON

- RewriteCond %{HTTP_REFERER} !^$

- RewriteCond %{HTTP_REFERERER} !^http://(www\.)?mydomain.com/.*$ [NC]

- RewriteRule \.(gif|jpeg|png)$ - [F].

-

-The above lines check if the HTTP_REFERER is not set to blank or not set to any of the links in your websites. If this is happening then all the images in your page are replaced by 403 forbidden.

-

-#### 23. How to Redirect Users to Maintenance Page. ####

-

-In case your website is down for maintenance and you want to notify all your clients that need to access your websites about this then for such cases you can add following lines to your .htaccess websites that allow only admin access and replace the site pages having links to any .jpg, .css, .gif, .js etc.

-

- RewriteCond %{REQUEST_URI} !^/admin/ [NC]

- RewriteCond %{REQUEST_URI} !^((.*).css|(.*).js|(.*).png|(.*).jpg) [NC]

- RewriteRule ^(.*)$ /ErrorDocs/Maintainence_Page.html

- [NC,L,U,QSA]

-

-These lines check if the Requested URL contains any request for any admin page i.e. one starting with ‘/admin/’ or any request to ‘.png, .jpg, .js, .css’ pages and for any such requests it replaces that page to ‘ErrorDocs/Maintainence_Page.html’.

-

-#### 24. Mapping IP Address to Domain Name ####

-

-Name servers are the servers that convert a specific IP Address to a domain name. This mapping can also be specified in the .htaccess files in the following manner.

-

- For Mapping L.M.N.O address to a domain name www.hellovisit.com

- RewriteCond %{HTTP_HOST} ^L\.M\.N\.O$ [NC]

- RewriteRule ^(.*)$ http://www.hellovisit.com/$1 [L,R=301]

-

-The above lines check if the host for any page is having the IP Address as: L.M.N.O and if so the page is mapped to the domain name http://www.hellovisit.com by the third line by permanent redirection.

-

-#### 25. FilesMatch Tag ####

-

-Like tag that is used to apply conditions to a single file, can be used to match to a group of files and apply some conditions to the group of files as below:

-

-

- Order Allow, Deny

- Deny from All

-

-

-### Conclusion ###

-

-The list of tricks that can be done with .htaccess files is much more. Thus, this gives us an idea how powerful this file is and how much security and dynamicity and other features it can give to your website.

-

-We’ve tried our best to cover as much as htaccess tricks in this article, but incase if we’ve missed any important trick, or you most welcome to post your htaccess ideas and tricks that you know via comments section below – we will include those in our article too…

-

---------------------------------------------------------------------------------

-

-via: http://www.tecmint.com/apache-htaccess-tricks/

-

-作者:[Gunjit Khera][a]

-译者:[译者ID](https://github.com/译者ID)

-校对:[校对者ID](https://github.com/校对者ID)

-

-本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

-

-[a]:http://www.tecmint.com/author/gunjitk94/

\ No newline at end of file

diff --git a/sources/tech/20150128 Docker-2 Setting up a private Docker registry.md b/sources/tech/20150128 Docker-2 Setting up a private Docker registry.md

deleted file mode 100644

index 9a9341b4b7..0000000000

--- a/sources/tech/20150128 Docker-2 Setting up a private Docker registry.md

+++ /dev/null

@@ -1,241 +0,0 @@

-Setting up a private Docker registry

-================================================================================

-

-

-[TL;DR] This is the second post in a series of 3 on how my company moved its infrastructure from PaaS to Docker based deployment.

-

-- [First part][1]: where I talk about the process we went thru before approaching Docker;

-- [Third pard][2]: where I show how to automate the entire process of building images and deploying a Rails app with Docker.

-

-----------

-

-Why would ouy want ot set up a provate registry? Well, for starters, Docker Hub only allows you to have one free private repo. Other companies are beginning to offer similar services, but they are all not very cheap. In addition, if you need to deploy production ready applications built with Docker, you might not want to publish those images on the public Docker Hub.

-

-This is a very pragmatic approach to dealing with the intricacies of setting up a private Docker registry. For the tutorial we will be using a small 512MB instance on DigitalOcean (from now on DO). I also assume you already know the basics of Docker since I will be concentrating on some more complicated stuff.

-

-### Local set up ###

-

-First of all you need to install **boot2docker** and docker CLI. If you already have your basic Docker environment up and running, you can just skip to the next section.

-

-From the terminal run the following command[1][3]:

-

- brew install boot2docker docker

-

-If everything is ok[2][4], you will now be able to start the VM inside which Docker will run with the following command:

-

- boot2docker up

-

-Follow the instructions, copy and paste the export commands that boot2docker will print in the terminal. If you now run `docker ps` you should be greeted by the following line

-

- CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

-

-Ok, Docker is ready to go. This will be enough for the moment. Let's go back to setting up the registry.

-

-### Creating the server ###

-

-Log into you DO account and create a new Droplet by selecting an image with Docker pre-installed[^n].

-

-

-

-You should receive your root credentials via email. Log into your instance and run `docker ps` to see if eveything is ok.

-

-### Setting up AWS S3 ###

-

-We are going to use Amazon Simple Storage Service (S3) as the storage layer for our registry / repository. We will need to create a bucket and user credentials to allow our docker container accessoing it.

-

-Login into your AWS account (if you don't have one you can set one up at [http://aws.amazon.com/][5]) and from the console select S3 (Simple Storage Service).

-

-

-

-Click on **Create Bucket**, enter a unique name for your bucket (and write it down, we're gonna need it later), then click on **Create**.

-

-

-

-That's it! We're done setting up the storage part.

-

-### Setup AWS access credentials ###

-

-We are now going to create a new user. Go back to your AWS console and select IAM (Identity & Access Management).

-

-

-

-In the dashboard, on the left side of the webpage, you should click on Users. Then select **Create New Users**.

-

-You should be presented with the following screen:

-

-

-

-Enter a name for your user (e.g. docker-registry) and click on Create. Write down (or download the csv file with) your Access Key and Secret Access Key that we'll need when running the Docker container. Go back to your users list and select the one you just created.

-

-Under the Permission section, click on Attach User Policy. In the next screen, you will be presented with multiple choices: select Custom Policy.

-

-

-

-Here's the content of the custom policy:

-

- {

- "Version": "2012-10-17",

- "Statement": [

- {

- "Sid": "SomeStatement",

- "Effect": "Allow",

- "Action": [

- "s3:*"

- ],

- "Resource": [

- "arn:aws:s3:::docker-registry-bucket-name/*",

- "arn:aws:s3:::docker-registry-bucket-name"

- ]

- }

- ]

- }

-

-This will allow the user (i.e. the registry) to manage (read/write) content on the bucket (make sure to use the bucket name you previously defined when setting up AWS S3). To sum it up: when you'll be pushing Docker images from your local machine to your repository, the server will be able to upload them to S3.

-

-### Installing the registry ###

-

-Now let's head back to our DO server and SSH into it. We are going to use[^n] one of the [official Docker registry images][6].

-

-Let's start our registry with the following command:

-

- docker run \

- -e SETTINGS_FLAVOR=s3 \

- -e AWS_BUCKET=bucket-name \

- -e STORAGE_PATH=/registry \

- -e AWS_KEY=your_aws_key \

- -e AWS_SECRET=your_aws_secret \

- -e SEARCH_BACKEND=sqlalchemy \

- -p 5000:5000 \

- --name registry \

- -d \

- registry

-

-Docker should pull the required fs layers from the Docker Hub and eventually start the daemonised container.

-

-### Testing the registry ###

-

-If everything worked out, you should now be able to test the registry by pinging it and by searching its content (though for the time being it's still empty).

-

-Our registry is very basic and it does not provide any means of authentication. Since there are no easy ways of adding authentication (at least none that I'm aware of that are easy enough to implment in order to justify the effort), I've decided that the easiest way of querying / pulling / pushing the registry is an unsecure (over HTTP) connection tunneled thru SSH.

-

-Opening an SSH tunnel from your local machine is straightforward:

-

- ssh -N -L 5000:localhost:5000 root@your_registry.com

-

-The command is tunnelling connections over SSH from port 5000 of the registry server (which is the one we exposed with the `docker run` command in the previous paragraph) to port 5000 on the localhost.

-

-If you now browse to the following address [http://localhost:5000/v1/_ping][7] you should get the following very simple response

-

- {}

-

-This just means that the registry is working correctly. You can also list the whole content of the registry by browsing to [http://localhost:5000/v1/search][8] that will get you a similar response:

-

- {

- "num_results": 2,

- "query": "",

- "results": [

- {

- "description": "",

- "name": "username/first-repo"

- },

- {

- "description": "",

- "name": "username/second-repo"

- }

- ]

- }

-

-### Building an image ###

-

-Let's now try and build a very simple Docker image to test our newly installed registry. On your local machine, create a Dockerfile with the following content[^n]:

-

- # Base image with ruby 2.2.0

- FROM ruby:2.2.0

-

- MAINTAINER Michelangelo Chasseur

-

-...and build it:

-

- docker build -t localhost:5000/username/repo-name .

-

-The `localhost:5000` part is especially important: the first part of the name of a Docker image will tell the `docker push` command the endpoint towards which we are trying to push our image. In our case, since we are connecting to our remote private registry via an SSH tunnel, `localhost:5000` represents exactly the reference to our registry.

-

-If everything works as expected, when the command returns, you should be able to list your newly created image with the `docker images` command. Run it and see it for yourself.

-

-### Pushing to the registry ###

-

-Now comes the trickier part. It took a me a while to realize what I'm about to describe, so just be patient if you don't get it the first time you read and try to follow along. I know that all this stuff will seem pretty complicated (and it would be if you didn't automate the process), but I promise in the end it will all make sense. In the next post I will show a couple of shell scripts and Rake tasks that will automate the whole process and will let you deploy a Rails to your registry app with a single easy command.

-

-The docker command you are running from your terminal is actually using the boot2docker VM to run the containers and do all the magic stuff. So when we run a command like `docker push some_repo` what is actually happening is that it's the boot2docker VM that is reacing out for the registry, not our localhost.

-

-This is an extremely important point to understand: in order to push the Docker image to the remote private registry, the SSH tunnel needs to be established from the boot2docker VM and not from your local machine.

-

-There are a couple of ways to go with it. I will show you the shortest one (which is not probably the easiest to understand, but it's the one that will let us automate the process with shell scripts).

-

-First of all though we need to sort one last thing with SSH.

-

-### Setting up SSH ###

-

-Let's add our boot2docker SSH key to our remote server (registry) known hosts. We can do so using the ssh-copy-id utility that you can install with the following command shouldn't you already have it:

-

- brew install ssh-copy-id

-

-Then run:

-

- ssh-copy-id -i /Users/username/.ssh/id_boot2docker root@your-registry.com

-

-Make sure to substitute `/Users/username/.ssh/id_boot2docker` with the correct path of your ssh key.

-

-This will allow us to connect via SSH to our remote registry without being prompted for the password.

-

-Finally let's test it out:

-

- boot2docker ssh "ssh -o 'StrictHostKeyChecking no' -i /Users/michelangelo/.ssh/id_boot2docker -N -L 5000:localhost:5000 root@registry.touchwa.re &" &

-

-To break things out a little bit:

-

-- `boot2docker ssh` lets you pass a command as a parameter that will be executed by the boot2docker VM;

-- the final `&` indicates that we want our command to be executed in the background;

-- `ssh -o 'StrictHostKeyChecking no' -i /Users/michelangelo/.ssh/id_boot2docker -N -L 5000:localhost:5000 root@registry.touchwa.re &` is the actual command our boot2docker VM will run;

- - the `-o 'StrictHostKeyChecking no'` will make sure that we are not prompted with security questions;

- - the `-i /Users/michelangelo/.ssh/id_boot2docker` indicates which SSH key we want our VM to use for authentication purposes (note that this should be the key you added to your remote registry in the previous step);

- - finally we are opening a tunnel on mapping port 5000 to localhost:5000.

-

-### Pulling from another server ###

-

-You should now be able to push your image to the remote registry by simply issuing the following command:

-

- docker push localhost:5000/username/repo_name

-

-In the [next post][9] we'll se how to automate some of this stuff and we'll containerize a real Rails application. Stay tuned!

-

-P.S. Please use the comments to let me know of any inconsistencies or fallacies in my tutorial. Hope you enjoyed it!

-

-1. I'm also assuming you are running on OS X.

-1. For a complete list of instructions to set up your docker environment and requirements, please visit [http://boot2docker.io/][10]

-1. Select Image > Applications > Docker 1.4.1 on 14.04 at the time of this writing.

-1. [https://github.com/docker/docker-registry/][11]

-1. This is just a stub, in the next post I will show you how to bundle a Rails application into a Docker container.

-

---------------------------------------------------------------------------------

-

-via: http://cocoahunter.com/2015/01/23/docker-2/

-

-作者:[Michelangelo Chasseur][a]

-译者:[译者ID](https://github.com/译者ID)

-校对:[校对者ID](https://github.com/校对者ID)

-

-本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

-

-[a]:http://cocoahunter.com/author/michelangelo/

-[1]:http://cocoahunter.com/2015/01/23/docker-1/

-[2]:http://cocoahunter.com/2015/01/23/docker-3/

-[3]:http://cocoahunter.com/2015/01/23/docker-2/#fn:1

-[4]:http://cocoahunter.com/2015/01/23/docker-2/#fn:2

-[5]:http://aws.amazon.com/

-[6]:https://registry.hub.docker.com/_/registry/

-[7]:http://localhost:5000/v1/_ping

-[8]:http://localhost:5000/v1/search

-[9]:http://cocoahunter.com/2015/01/23/docker-3/

-[10]:http://boot2docker.io/

-[11]:https://github.com/docker/docker-registry/

\ No newline at end of file

diff --git a/sources/tech/20150128 Docker-3 Automated Docker-based Rails deployments.md b/sources/tech/20150128 Docker-3 Automated Docker-based Rails deployments.md

deleted file mode 100644

index f450361a68..0000000000

--- a/sources/tech/20150128 Docker-3 Automated Docker-based Rails deployments.md

+++ /dev/null

@@ -1,253 +0,0 @@

-Automated Docker-based Rails deployments

-================================================================================

-

-

-[TL;DR] This is the third post in a series of 3 on how my company moved its infrastructure from PaaS to Docker based deployment.

-

-- [First part][1]: where I talk about the process we went thru before approaching Docker;

-- [Second part][2]: where I explain how setting up a private registry for in house secure deployments.

-

-----------

-

-In this final part we will see how to automate the whole deployment process with a real world (though very basic) example.

-

-### Basic Rails app ###

-

-Let's dive into the topic right away and bootstrap a basic Rails app. For the purpose of this demonstration I'm going to use Ruby 2.2.0 and Rails 4.1.1

-

-From the terminal run:

-

- $ rvm use 2.2.0

- $ rails new && cd docker-test

-

-Let's create a basic controller:

-

- $ rails g controller welcome index

-

-...and edit `routes.rb` so that the root of the project will point to our newly created welcome#index method:

-

- root 'welcome#index'

-

-Running `rails s` from the terminal and browsing to [http://localhost:3000][3] should bring you to the index page. We're not going to make anything fancier to the app, it's just a basic example to prove that when we'll build and deploy the container everything is working.

-

-### Setup the webserver ###

-

-We are going to use Unicorn as our webserver. Add `gem 'unicorn'` and `gem 'foreman'` to the Gemfile and bundle it up (run `bundle install` from the command line).

-

-Unicorn needs to be configured when the Rails app launches, so let's put a **unicorn.rb** file inside the **config** directory. [Here is an example][4] of a Unicorn configuration file. You can just copy & paste the content of the Gist.

-

-Let's also add a Procfile with the following content inside the root of the project so that we will be able to start the app with foreman:

-

- web: bundle exec unicorn -p $PORT -c ./config/unicorn.rb

-

-If you now try to run the app with **foreman start** everything should work as expected and you should have a running app on [http://localhost:5000][5]

-

-### Building a Docker image ###

-

-Now let's build the image inside which our app is going to live. In the root of our Rails project, create a file named **Dockerfile** and paste in it the following:

-

- # Base image with ruby 2.2.0

- FROM ruby:2.2.0

-

- # Install required libraries and dependencies

- RUN apt-get update && apt-get install -qy nodejs postgresql-client sqlite3 --no-install-recommends && rm -rf /var/lib/apt/lists/*

-

- # Set Rails version

- ENV RAILS_VERSION 4.1.1

-

- # Install Rails

- RUN gem install rails --version "$RAILS_VERSION"

-

- # Create directory from where the code will run

- RUN mkdir -p /usr/src/app

- WORKDIR /usr/src/app

-

- # Make webserver reachable to the outside world

- EXPOSE 3000

-

- # Set ENV variables

- ENV PORT=3000

-

- # Start the web app

- CMD ["foreman","start"]

-

- # Install the necessary gems

- ADD Gemfile /usr/src/app/Gemfile

- ADD Gemfile.lock /usr/src/app/Gemfile.lock

- RUN bundle install --without development test

-

- # Add rails project (from same dir as Dockerfile) to project directory

- ADD ./ /usr/src/app

-

- # Run rake tasks

- RUN RAILS_ENV=production rake db:create db:migrate

-

-Using the provided Dockerfile, let's try and build an image with the following command[1][7]:

-

- $ docker build -t localhost:5000/your_username/docker-test .

-

-And again, if everything worked out correctly, the last line of the long log output should read something like:

-

- Successfully built 82e48769506c

- $ docker images

- REPOSITORY TAG IMAGE ID CREATED VIRTUAL SIZE

- localhost:5000/your_username/docker-test latest 82e48769506c About a minute ago 884.2 MB

-

-Let's try and run the container!

-

- $ docker run -d -p 3000:3000 --name docker-test localhost:5000/your_username/docker-test

-

-You should be able to reach your Rails app running inside the Docker container at port 3000 of your boot2docker VM[2][8] (in my case [http://192.168.59.103:3000][6]).

-

-### Automating with shell scripts ###

-

-Since you should already know from the previous post3 how to push your newly created image to a private regisitry and deploy it on a server, let's skip this part and go straight to automating the process.

-

-We are going to define 3 shell scripts and finally tie it all together with rake.

-

-### Clean ###

-

-Every time we build our image and deploy we are better off always clean everything. That means the following:

-

-- stop (if running) and restart boot2docker;

-- remove orphaned Docker images (images that are without tags and that are no longer used by your containers).

-

-Put the following into a **clean.sh** file in the root of your project.

-

- echo Restarting boot2docker...

- boot2docker down

- boot2docker up

-

- echo Exporting Docker variables...

- sleep 1

- export DOCKER_HOST=tcp://192.168.59.103:2376

- export DOCKER_CERT_PATH=/Users/user/.boot2docker/certs/boot2docker-vm

- export DOCKER_TLS_VERIFY=1

-

- sleep 1

- echo Removing orphaned images without tags...

- docker images | grep "" | awk '{print $3}' | xargs docker rmi

-

-Also make sure to make the script executable:

-

- $ chmod +x clean.sh

-

-### Build ###

-

-The build process basically consists in reproducing what we just did before (docker build). Create a **build.sh** script at the root of your project with the following content:

-

- docker build -t localhost:5000/your_username/docker-test .

-

-Make the script executable.

-

-### Deploy ###

-

-Finally, create a **deploy.sh** script with this content:

-

- # Open SSH connection from boot2docker to private registry

- boot2docker ssh "ssh -o 'StrictHostKeyChecking no' -i /Users/username/.ssh/id_boot2docker -N -L 5000:localhost:5000 root@your-registry.com &" &

-

- # Wait to make sure the SSH tunnel is open before pushing...

- echo Waiting 5 seconds before pushing image.

-

- echo 5...

- sleep 1

- echo 4...

- sleep 1

- echo 3...

- sleep 1

- echo 2...

- sleep 1

- echo 1...

- sleep 1

-

- # Push image onto remote registry / repo

- echo Starting push!

- docker push localhost:5000/username/docker-test

-

-If you don't understand what's going on here, please make sure you've read thoroughfully [part 2][9] of this series of posts.

-

-Make the script executable.

-

-### Tying it all together with rake ###

-

-Having 3 scripts would now require you to run them individually each time you decide to deploy your app:

-

-1. clean

-1. build

-1. deploy / push

-

-That wouldn't be much of an effort, if it weren't for the fact that developers are lazy! And lazy be it, then!

-

-The final step to wrap things up, is tying the 3 parts together with rake.

-

-To make things even simpler you can just append a bunch of lines of code to the end of the already present Rakefile in the root of your project. Open the Rakefile file - pun intended :) - and paste the following:

-

- namespace :docker do

- desc "Remove docker container"

- task :clean do

- sh './clean.sh'

- end

-

- desc "Build Docker image"

- task :build => [:clean] do

- sh './build.sh'

- end

-

- desc "Deploy Docker image"

- task :deploy => [:build] do

- sh './deploy.sh'

- end

- end

-

-Even if you don't know rake syntax (which you should, because it's pretty awesome!), it's pretty obvious what we are doing. We have declared 3 tasks inside a namespace (docker).

-

-This will create the following 3 tasks:

-

-- rake docker:clean

-- rake docker:build

-- rake docker:deploy

-

-Deploy is dependent on build, build is dependent on clean. So every time we run from the command line

-

- $ rake docker:deploy

-

-All the script will be executed in the required order.

-

-### Test it ###

-

-To see if everything is working, you just need to make a small change in the code of your app and run

-

- $ rake docker:deploy

-

-and see the magic happening. Once the image has been uploaded (and the first time it could take quite a while), you can ssh into your production server and pull (thru an SSH tunnel) the docker image onto the server and run. It's that easy!

-

-Well, maybe it takes a while to get accustomed to how everything works, but once it does, it's almost (almost) as easy as deploying with Heroku.

-

-P.S. As always, please let me have your ideas. I'm not sure this is the best, or the fastest, or the safest way of doing devops with Docker, but it certainly worked out for us.

-

-- make sure to have **boot2docker** up and running.

-- If you don't know your boot2docker VM address, just run `$ boot2docker ip`

-- if you don't, you can read it [here][10]

-

---------------------------------------------------------------------------------

-

-via: http://cocoahunter.com/2015/01/23/docker-3/

-

-作者:[Michelangelo Chasseur][a]

-译者:[译者ID](https://github.com/译者ID)

-校对:[校对者ID](https://github.com/校对者ID)

-

-本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

-

-[a]:http://cocoahunter.com/author/michelangelo/

-[1]:http://cocoahunter.com/docker-1

-[2]:http://cocoahunter.com/2015/01/23/docker-2/

-[3]:http://localhost:3000/

-[4]:https://gist.github.com/chasseurmic/0dad4d692ff499761b20

-[5]:http://localhost:5000/

-[6]:http://192.168.59.103:3000/

-[7]:http://cocoahunter.com/2015/01/23/docker-3/#fn:1

-[8]:http://cocoahunter.com/2015/01/23/docker-3/#fn:2

-[9]:http://cocoahunter.com/2015/01/23/docker-2/

-[10]:http://cocoahunter.com/2015/01/23/docker-2/

\ No newline at end of file

diff --git a/sources/tech/20150202 How to Bind Apache Tomcat to IPv4 in Centos or Redhat.md b/sources/tech/20150202 How to Bind Apache Tomcat to IPv4 in Centos or Redhat.md

deleted file mode 100644

index 92ac657b5a..0000000000

--- a/sources/tech/20150202 How to Bind Apache Tomcat to IPv4 in Centos or Redhat.md

+++ /dev/null

@@ -1,79 +0,0 @@

-How to Bind Apache Tomcat to IPv4 in Centos / Redhat

-================================================================================

-Hi all, today we'll learn how to bind tomcat to ipv4 in CentOS 7 Linux Distribution.

-

-**Apache Tomcat** is an open source web server and servlet container developed by the [Apache Software Foundation][1]. It implements the Java Servlet, JavaServer Pages (JSP), Java Unified Expression Language and Java WebSocket specifications from Sun Microsystems and provides a web server environment for Java code to run in.

-

-Binding Tomcat to IPv4 is necessary if we have our server not working due to the default binding of our tomcat server to IPv6. As we know IPv6 is the modern way of assigning IP address to a device and is not in complete practice these days but may come into practice in soon future. So, currently we don't need to switch our tomcat server to IPv6 due to no use and we should bind it to IPv4.

-

-Before thinking to bind to IPv4, we should make sure that we've got tomcat installed in our CentOS 7. Here's is a quick tutorial on [how to install tomcat 8 in CentOS 7.0 Server][2].

-

-### 1. Switching to user tomcat ###

-

-First of all, we'll gonna switch user to **tomcat** user. We can do that by running **su - tomcat** in a shell or terminal.

-

- # su - tomcat

-

-

-

-### 2. Finding Catalina.sh ###

-

-Now, we'll First Go to bin directory inside the directory of Apache Tomcat installation which is usually under **/usr/share/apache-tomcat-8.0.x/bin/** where x is sub version of the Apache Tomcat Release. In my case, its **/usr/share/apache-tomcat-8.0.18/bin/** as I have version 8.0.18 installed in my CentOS 7 Server.

-

- $ cd /usr/share/apache-tomcat-8.0.18/bin

-

-**Note: Please replace 8.0.18 to the version of Apache Tomcat installed in your system. **

-

-Inside the bin folder, there is a script file named catalina.sh . Thats the script file which we'll gonna edit and add a line of configuration which will bind tomcat to IPv4 . You can see that file by running **ls** into a terminal or shell.

-

- $ ls

-

-

-

-### 3. Configuring Catalina.sh ###

-

-Now, we'll add **JAVA_OPTS= "$JAVA_OPTS -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Addresses"** to that scripting file catalina.sh at the end of the file as shown in the figure below. We can edit the file using our favorite text editing software like nano, vim, etc. Here, we'll gonna use nano.

-

- $ nano catalina.sh

-

-

-

-Then, add to the file as shown below:

-

-**JAVA_OPTS= "$JAVA_OPTS -Djava.net.preferIPv4Stack=true -Djava.net.preferIPv4Addresses"**

-

-

-

-Now, as we've added the configuration to the file, we'll now save and exit nano.

-

-### 4. Restarting ###

-

-Now, we'll restart our tomcat server to get our configuration working. We'll need to first execute shutdown.sh and then startup.sh .

-

- $ ./shutdown.sh

-

-Now, well run execute startup.sh as:

-

- $ ./startup.sh

-

-

-

-This will restart our tomcat server and the configuration will be loaded which will ultimately bind the server to IPv4.

-

-### Conclusion ###

-

-Hurray, finally we'have got our tomcat server bind to IPv4 running in our CentOS 7 Linux Distribution. Binding to IPv4 is easy and is necessary if your Tomcat server is bind to IPv6 which will infact will make your tomcat server not working as IPv6 is not used these days and may come into practice in coming future. If you have any questions, comments, feedback please do write on the comment box below and let us know what stuffs needs to be added or improved. Thank You! Enjoy :-)

-

---------------------------------------------------------------------------------

-

-via: http://linoxide.com/linux-how-to/bind-apache-tomcat-ipv4-centos/

-

-作者:[Arun Pyasi][a]

-译者:[译者ID](https://github.com/译者ID)

-校对:[校对者ID](https://github.com/校对者ID)

-

-本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

-

-[a]:http://linoxide.com/author/arunp/

-[1]:http://www.apache.org/

-[2]:http://linoxide.com/linux-how-to/install-tomcat-8-centos-7/

\ No newline at end of file

diff --git a/sources/tech/20150202 How to filter BGP routes in Quagga BGP router.md b/sources/tech/20150202 How to filter BGP routes in Quagga BGP router.md

deleted file mode 100644

index d92c47c774..0000000000

--- a/sources/tech/20150202 How to filter BGP routes in Quagga BGP router.md

+++ /dev/null

@@ -1,201 +0,0 @@

-How to filter BGP routes in Quagga BGP router

-================================================================================

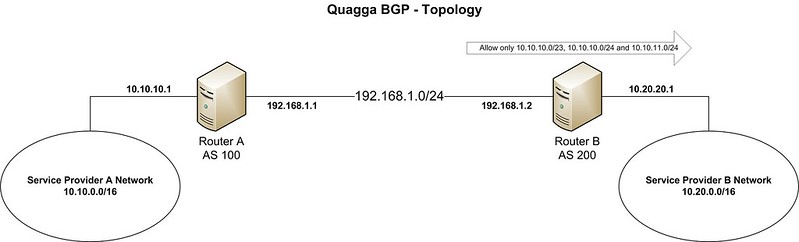

-In the [previous tutorial][1], we demonstrated how to turn a CentOS box into a BGP router using Quagga. We also covered basic BGP peering and prefix exchange setup. In this tutorial, we will focus on how we can control incoming and outgoing BGP prefixes by using **prefix-list** and **route-map**.

-

-As described in earlier tutorials, BGP routing decisions are made based on the prefixes received/advertised. To ensure error-free routing, it is recommended that you use some sort of filtering mechanism to control these incoming and outgoing prefixes. For example, if one of your BGP neighbors starts advertising prefixes which do not belong to them, and you accept such bogus prefixes by mistake, your traffic can be sent to that wrong neighbor, and end up going nowhere (so-called "getting blackholed"). To make sure that such prefixes are not received or advertised to any neighbor, you can use prefix-list and route-map. The former is a prefix-based filtering mechanism, while the latter is a more general prefix-based policy mechanism used to fine-tune actions.

-

-We will show you how to use prefix-list and route-map in Quagga.

-

-### Topology and Requirement ###

-

-In this tutorial, we assume the following topology.

-

-

-

-Service provider A has already established an eBGP peering with service provider B, and they are exchanging routing information between them. The AS and prefix details are as stated below.

-

-- **Peering block**: 192.168.1.0/24

-- **Service provider A**: AS 100, prefix 10.10.0.0/16

-- **Service provider B**: AS 200, prefix 10.20.0.0/16

-

-In this scenario, service provider B wants to receive only prefixes 10.10.10.0/23, 10.10.10.0/24 and 10.10.11.0/24 from provider A.

-

-### Quagga Installation and BGP Peering ###

-

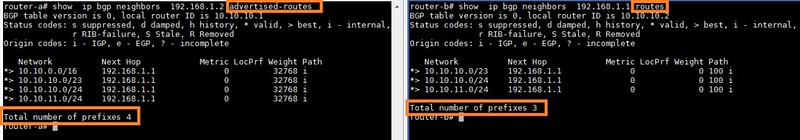

-In the [previous tutorial][1], we have already covered the method of installing Quagga and setting up BGP peering. So we will not go through the details here. Nonetheless, I am providing a summary of BGP configuration and prefix advertisements:

-

-

-

-The above output indicates that the BGP peering is up. Router-A is advertising multiple prefixes towards router-B. Router-B, on the other hand, is advertising a single prefix 10.20.0.0/16 to router-A. Both routers are receiving the prefixes without any problems.

-

-### Creating Prefix-List ###

-

-In a router, a prefix can be blocked with either an ACL or prefix-list. Using prefix-list is often preferred to ACLs since prefix-list is less processor intensive than ACLs. Also, prefix-list is easier to create and maintain.

-

- ip prefix-list DEMO-PRFX permit 192.168.0.0/23

-

-The above command creates prefix-list called 'DEMO-FRFX' that allows only 192.168.0.0/23.

-

-Another great feature of prefix-list is that we can specify a range of subnet mask(s). Take a look at the following example:

-

- ip prefix-list DEMO-PRFX permit 192.168.0.0/23 le 24

-

-The above command creates prefix-list called 'DEMO-PRFX' that permits prefixes between 192.168.0.0/23 and /24, which are 192.168.0.0/23, 192.168.0.0/24 and 192.168.1.0/24. The 'le' operator means less than or equal to. You can also use 'ge' operator for greater than or equal to.

-

-A single prefix-list statement can have multiple permit/deny actions. Each statement is assigned a sequence number which can be determined automatically or specified manually.

-

-Multiple prefix-list statements are parsed one by one in the increasing order of sequence numbers. When configuring prefix-list, we should keep in mind that there is always an **implicit deny** at the end of all prefix-list statements. This means that anything that is not explicitly allowed will be denied.

-

-To allow everything, we can use the following prefix-list statement which allows any prefix starting from 0.0.0.0/0 up to anything with subnet mask /32.

-

- ip prefix-list DEMO-PRFX permit 0.0.0.0/0 le 32

-

-Now that we know how to create prefix-list statements, we will create prefix-list called 'PRFX-LST' that will allow prefixes required in our scenario.

-

- router-b# conf t

- router-b(config)# ip prefix-list PRFX-LST permit 10.10.10.0/23 le 24

-

-### Creating Route-Map ###

-

-Besides prefix-list and ACLs, there is yet another mechanism called route-map, which can control prefixes in a BGP router. In fact, route-map can fine-tune possible actions more flexibly on the prefixes matched with an ACL or prefix-list.

-

-Similar to prefix-list, a route-map statement specifies permit or deny action, followed by a sequence number. Each route-map statement can have multiple permit/deny actions with it. For example:

-

- route-map DEMO-RMAP permit 10

-

-The above statement creates route-map called 'DEMO-RMAP', and adds permit action with sequence 10. Now we will use match command under sequence 10.

-

- router-a(config-route-map)# match (press ? in the keyboard)

-

-----------

-

- as-path Match BGP AS path list

- community Match BGP community list

- extcommunity Match BGP/VPN extended community list

- interface match first hop interface of route

- ip IP information

- ipv6 IPv6 information

- metric Match metric of route

- origin BGP origin code

- peer Match peer address

- probability Match portion of routes defined by percentage value

- tag Match tag of route

-

-As we can see, route-map can match many attributes. We will match a prefix in this tutorial.

-

- route-map DEMO-RMAP permit 10

- match ip address prefix-list DEMO-PRFX

-

-The match command will match the IP addresses permitted by the prefix-list 'DEMO-PRFX' created earlier (i.e., prefixes 192.168.0.0/23, 192.168.0.0/24 and 192.168.1.0/24).

-

-Next, we can modify the attributes by using the set command. The following example shows possible use cases of set.

-

- route-map DEMO-RMAP permit 10

- match ip address prefix-list DEMO-PRFX

- set (press ? in keyboard)

-

-----------

-

- aggregator BGP aggregator attribute

- as-path Transform BGP AS-path attribute

- atomic-aggregate BGP atomic aggregate attribute

- comm-list set BGP community list (for deletion)

- community BGP community attribute