mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-04-02 02:50:11 +08:00

commit

1aa0a41995

README.md

published

20151019 Gaming On Linux--All You Need To Know.md20151202 KDE vs GNOME vs XFCE Desktop.md20151227 Upheaval in the Debian Live project.md20160204 An Introduction to SELinux.md

201604

20151028 10 Tips for 10x Application Performance.md20151109 How to Configure Tripwire IDS on Debian.md20151114 How to Setup Drone - a Continuous Integration Service in Linux.md20151119 Going Beyond Hello World Containers is Hard Stuff.md20151126 Microsoft and Linux--True Romance or Toxic Love.md20160212 How to Add New Disk in Linux CentOS 7 Without Rebooting.md20160220 Make Sudo Insult User For Each Incorrect Password Attempt.md20160223 BeeGFS Parallel File System Goes Open Source.md20160226 How to use Python to hack your Eclipse IDE.md20160405 Ubuntu Budgie Could Be the New Flavor of Ubuntu Linux, as Part of Ubuntu 16.10.md20160414 Linux Kernel 3.12 to Be Supported Until 2017 Because of SUSE Linux Enterprise 12.md

sources

news

talk

20150820 Which Open Source Linux Distributions Would Presidential Hopefuls Run.md20151227 Upheaval in the Debian Live project.md20160505 Confessions of a cross-platform developer.md

my-open-source-story

tech

20160204 An Introduction to SELinux.md20160218 7 Steps to Start Your Linux SysAdmin Career.md20160218 9 Key Trends in Hybrid Cloud Computing.md20160218 Tizen 3.0 Joins Growing List of Raspberry Pi 2 Distributions.md20160220 Convergence Becomes Real With First Ubuntu Tablet.md20160301 The Evolving Market for Commercial Software Built On Open Source.md20160429 Master OpenStack with 5 new tutorials.md20160502 The intersection of Drupal, IoT, and open hardware.md

LFCS

Part 14 - Monitor Linux Processes Resource Usage and Set Process Limits on a Per-User Basis.mdPart 7 - LFCS--Managing System Startup Process and Services SysVinit Systemd and Upstart.md

LXD

translated

talk

20151124 Review--5 memory debuggers for Linux coding.md20151202 KDE vs GNOME vs XFCE Desktop.md

my-open-source-story

tech

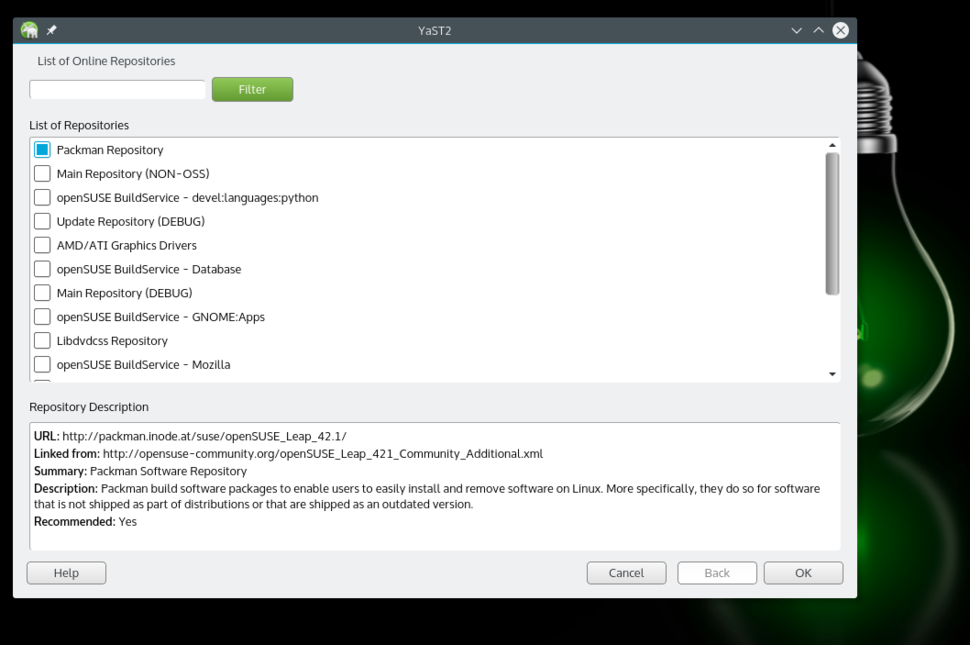

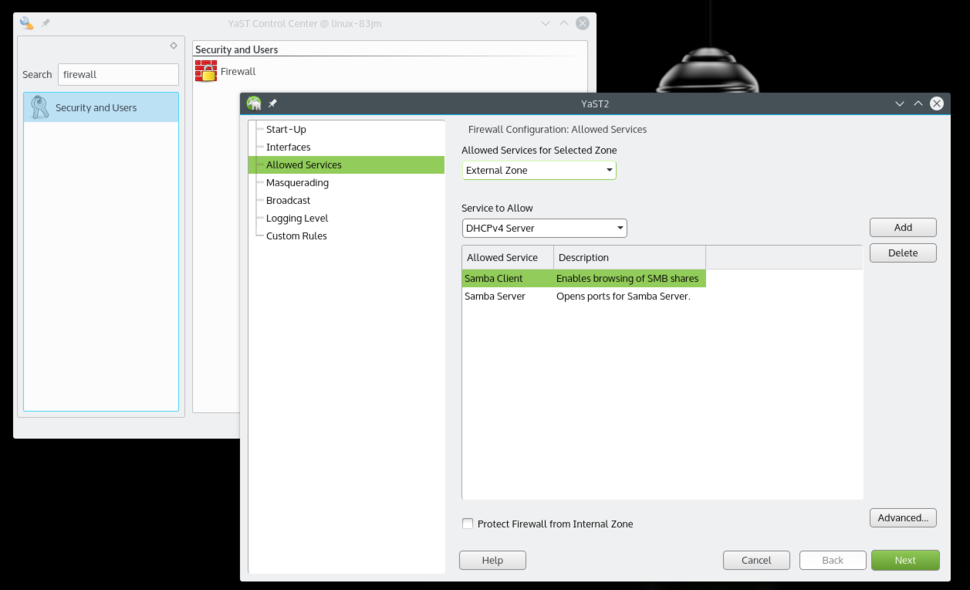

20151122 Doubly linked list in the Linux Kernel.md20151130 Useful Linux and Unix Tape Managements Commands For Sysadmins.md20151202 8 things to do after installing openSUSE Leap 42.1.md20151207 5 great Raspberry Pi projects for the classroom.md20160218 7 Steps to Start Your Linux SysAdmin Career.md20160218 9 Key Trends in Hybrid Cloud Computing.md20160226 How to use Python to hack your Eclipse IDE.md20160301 The Evolving Market for Commercial Software Built On Open Source.md

LFCS

LXD

76

README.md


@ -1,9 +1,9 @@

|

||||

简介

|

||||

-------------------------------

|

||||

|

||||

LCTT是“Linux中国”([http://linux.cn/](http://linux.cn/))的翻译组,负责从国外优秀媒体翻译Linux相关的技术、资讯、杂文等内容。

|

||||

LCTT是“Linux中国”([https://linux.cn/](https://linux.cn/))的翻译组,负责从国外优秀媒体翻译Linux相关的技术、资讯、杂文等内容。

|

||||

|

||||

LCTT已经拥有近百余名活跃成员,并欢迎更多的Linux志愿者加入我们的团队。

|

||||

LCTT已经拥有几百名活跃成员,并欢迎更多的Linux志愿者加入我们的团队。

|

||||

|

||||

|

||||

|

||||

@ -52,13 +52,15 @@ LCTT的组成

|

||||

* 2015/04/19 发起 LFS-BOOK-7.7-systemd 项目。

|

||||

* 2015/06/09 提升ictlyh和dongfengweixiao为Core Translators成员。

|

||||

* 2015/11/10 提升strugglingyouth、FSSlc、Vic020、alim0x为Core Translators成员。

|

||||

* 2016/05/09 提升PurlingNayuki为校对。

|

||||

|

||||

活跃成员

|

||||

-------------------------------

|

||||

|

||||

目前 TP 活跃成员有:

|

||||

- CORE @wxy,

|

||||

- CORE @DeadFire,

|

||||

- Leader @wxy,

|

||||

- Source @oska874,

|

||||

- Proofreader @PurlingNayuki,

|

||||

- CORE @geekpi,

|

||||

- CORE @GOLinux,

|

||||

- CORE @ictlyh,

|

||||

@ -71,6 +73,7 @@ LCTT的组成

|

||||

- CORE @Vic020,

|

||||

- CORE @dongfengweixiao,

|

||||

- CORE @alim0x,

|

||||

- Senior @DeadFire,

|

||||

- Senior @reinoir,

|

||||

- Senior @tinyeyeser,

|

||||

- Senior @vito-L,

|

||||

@ -80,41 +83,42 @@ LCTT的组成

|

||||

- ZTinoZ,

|

||||

- theo-l,

|

||||

- luoxcat,

|

||||

- disylee,

|

||||

- martin2011qi,

|

||||

- wi-cuckoo,

|

||||

- disylee,

|

||||

- haimingfg,

|

||||

- KayGuoWhu,

|

||||

- wwy-hust,

|

||||

- martin2011qi,

|

||||

- cvsher,

|

||||

- felixonmars,

|

||||

- su-kaiyao,

|

||||

- ivo-wang,

|

||||

- GHLandy,

|

||||

- cvsher,

|

||||

- wyangsun,

|

||||

- DongShuaike,

|

||||

- flsf,

|

||||

- SPccman,

|

||||

- Stevearzh

|

||||

- mr-ping,

|

||||

- Linchenguang,

|

||||

- oska874

|

||||

- Linux-pdz,

|

||||

- 2q1w2007,

|

||||

- felixonmars,

|

||||

- wyangsun,

|

||||

- MikeCoder,

|

||||

- mr-ping,

|

||||

- xiqingongzi

|

||||

- H-mudcup,

|

||||

- zhangboyue,

|

||||

- cposture,

|

||||

- xiqingongzi,

|

||||

- goreliu,

|

||||

- DongShuaike,

|

||||

- NearTan,

|

||||

- TxmszLou,

|

||||

- ZhouJ-sh,

|

||||

- wangjiezhe,

|

||||

- NearTan,

|

||||

- icybreaker,

|

||||

- shipsw,

|

||||

- johnhoow,

|

||||

- soooogreen,

|

||||

- linuhap,

|

||||

- boredivan,

|

||||

- blueabysm,

|

||||

- liaoishere,

|

||||

- boredivan,

|

||||

- name1e5s,

|

||||

- yechunxiao19,

|

||||

- l3b2w1,

|

||||

- XLCYun,

|

||||

@ -122,43 +126,55 @@ LCTT的组成

|

||||

- tenght,

|

||||

- coloka,

|

||||

- luoyutiantang,

|

||||

- yupmoon,

|

||||

- sonofelice,

|

||||

- jiajia9linuxer,

|

||||

- scusjs,

|

||||

- tnuoccalanosrep,

|

||||

- woodboow,

|

||||

- 1w2b3l,

|

||||

- JonathanKang,

|

||||

- crowner,

|

||||

- mtunique,

|

||||

- dingdongnigetou,

|

||||

- CNprober,

|

||||

- JonathanKang,

|

||||

- Medusar,

|

||||

- hyaocuk,

|

||||

- szrlee,

|

||||

- KnightJoker,

|

||||

- Xuanwo,

|

||||

- nd0104,

|

||||

- jerryling315,

|

||||

- xiaoyu33,

|

||||

- guodongxiaren,

|

||||

- zzlyzq,

|

||||

- yujianxuechuan,

|

||||

- ailurus1991,

|

||||

- ynmlml,

|

||||

- kylepeng93,

|

||||

- ggaaooppeenngg,

|

||||

- Ricky-Gong,

|

||||

- zky001,

|

||||

- Flowsnow,

|

||||

- lfzark,

|

||||

- 213edu,

|

||||

- Tanete,

|

||||

- liuaiping,

|

||||

- jerryling315,

|

||||

- bestony,

|

||||

- Timeszoro,

|

||||

- rogetfan,

|

||||

- itsang,

|

||||

- JeffDing,

|

||||

- Yuking-net,

|

||||

|

||||

- MikeCoder,

|

||||

- zhangboyue,

|

||||

- liaoishere,

|

||||

- yupmoon,

|

||||

- Medusar,

|

||||

- zzlyzq,

|

||||

- yujianxuechuan,

|

||||

- ailurus1991,

|

||||

- tomatoKiller,

|

||||

- stduolc,

|

||||

- shaohaolin,

|

||||

- Timeszoro,

|

||||

- rogetfan,

|

||||

- FineFan,

|

||||

- kingname,

|

||||

- jasminepeng,

|

||||

- JeffDing,

|

||||

- CHINAANSHE,

|

||||

|

||||

(按提交行数排名前百)

|

||||

@ -173,7 +189,7 @@ LFS 项目活跃成员有:

|

||||

- @KevinSJ

|

||||

- @Yuking-net

|

||||

|

||||

(更新于2015/11/29)

|

||||

(更新于2016/05/09)

|

||||

|

||||

谢谢大家的支持!

|

||||

|

||||

|

||||

@ -2,29 +2,29 @@ Linux上的游戏:所有你需要知道的

|

||||

================================================================================

|

||||

|

||||

|

||||

** 我能在 Linux 上玩游戏吗 ?**

|

||||

**我能在 Linux 上玩游戏吗 ?**

|

||||

|

||||

这是打算[投奔 Linux 阵营][1]的人最经常问的问题之一。毕竟,在 Linux 上面玩游戏经常被认为有点难以实现。事实上,一些人甚至考虑他们能不能在 Linux 上看电影或者听音乐。考虑到这些,关于 Linux 的平台的游戏的问题是很现实的。「

|

||||

这是打算[投奔 Linux 阵营][1]的人最经常问的问题之一。毕竟,在 Linux 上面玩游戏经常被认为有点难以实现。事实上,一些人甚至考虑他们能不能在 Linux 上看电影或者听音乐。考虑到这些,关于 Linux 的平台的游戏的问题是很现实的。

|

||||

|

||||

在本文中,我将解答大多数 Linux 新手关于在 Linux 打游戏的问题。例如 Linux 下能不能玩游戏,如果能的话,在**哪里下载游戏**或者如何获取有关游戏的信息。

|

||||

在本文中,我将解答大多数 Linux 新手关于在 Linux 中打游戏的问题。例如 Linux 下能不能玩游戏,如果能的话,在哪里**下载游戏**或者如何获取有关游戏的信息。

|

||||

|

||||

但是在此之前,我需要说明一下。我不是一个 PC 上的玩家或者说我不认为我是一个在 Linux 桌面上完游玩戏的家伙。我更喜欢在 PS4 上玩游戏并且我不关心 PC 上的游戏甚至也不关心手机上的游戏(我没有给我的任何一个朋友安利糖果传奇)。这也就是你很少在 It's FOSS 上很少看见关于 [Linux 上的游戏][2]的部分。

|

||||

但是在此之前,我需要说明一下。我不是一个 PC 上的玩家或者说我不认为我是一个在 Linux 桌面游戏玩家。我更喜欢在 PS4 上玩游戏并且我不关心 PC 上的游戏甚至也不关心手机上的游戏(我没有给我的任何一个朋友安利糖果传奇)。这也就是你很少能在 It's FOSS 上很少看见关于 [Linux 上的游戏][2]的原因。

|

||||

|

||||

所以我为什么要写这个主题?

|

||||

所以我为什么要提到这个主题?

|

||||

|

||||

因为别人问过我几次有关 Linux 上的游戏的问题并且我想要写出来一个能解答这些问题的 Linux 上的游戏指南。注意,在这里我不只是讨论 Ubuntu 上的游戏。我讨论的是在所有的 Linux 上的游戏。

|

||||

因为别人问过我几次有关 Linux 上的游戏的问题并且我想要写出来一个能解答这些问题的 Linux 游戏指南。注意,在这里我不只是讨论在 Ubuntu 上玩游戏。我讨论的是在所有的 Linux 上的游戏。

|

||||

|

||||

### 我能在 Linux 上玩游戏吗 ? ###

|

||||

|

||||

是,但不是完全是。

|

||||

|

||||

“是”,是指你能在Linux上玩游戏;“不完全是”,是指你不能在 Linux 上玩 ’所有的游戏‘。

|

||||

“是”,是指你能在Linux上玩游戏;“不完全是”,是指你不能在 Linux 上玩 ‘所有的游戏’。

|

||||

|

||||

什么?你是拒绝的?不必这样。我的意思是你能在 Linux 上玩很多流行的游戏,比如[反恐精英以及地铁:最后的曙光][3]等。但是你可能不能玩到所有在 Windows 上流行的最新游戏,比如[实况足球2015][4]。

|

||||

感到迷惑了吗?不必这样。我的意思是你能在 Linux 上玩很多流行的游戏,比如[反恐精英以及地铁:最后的曙光][3]等。但是你可能不能玩到所有在 Windows 上流行的最新游戏,比如[实况足球 2015 ][4]。

|

||||

|

||||

在我看来,造成这种情况的原因是 Linux 在桌面系统中仅占不到 2%,这占比使得大多数开发者没有在 Linux 上发布他们的游戏的打算。

|

||||

在我看来,造成这种情况的原因是 Linux 在桌面系统中仅占不到 2%,这样的占比使得大多数开发者没有开发其游戏的 Linux 版的动力。

|

||||

|

||||

这就意味指大多数近年来被提及的比较多的游戏很有可能不能在 Linux 上玩。不要灰心。我们能以某种方式在 Linux 上玩这些游戏,我们将在下面的章节中讨论这些方法。但是,在此之前,让我们看看在 Linux 上能玩的游戏的种类。

|

||||

这就意味指大多数近年来被提及的比较多的游戏很有可能不能在 Linux 上玩。不要灰心。还有别的方式在 Linux 上玩这些游戏,我们将在下面的章节中讨论这些方法。但是,在此之前,让我们看看在 Linux 上能玩的游戏的种类。

|

||||

|

||||

要我说的话,我会把那些游戏分为四类:

|

||||

|

||||

@ -33,7 +33,7 @@ Linux上的游戏:所有你需要知道的

|

||||

3. 浏览器里的游戏

|

||||

4. 终端里的游戏

|

||||

|

||||

让我们以最重要的 Linux 的原生游戏开始。

|

||||

让我们以最重要的一类, Linux 的原生游戏开始。

|

||||

|

||||

---------

|

||||

|

||||

@ -41,15 +41,15 @@ Linux上的游戏:所有你需要知道的

|

||||

|

||||

原生游戏指的是官方支持 Linux 的游戏。这些游戏有原生的 Linux 客户端并且能像在 Linux 上的其他软件一样不需要附加的步骤就能安装在 Linux 上面(我们将在下一节讨论)。

|

||||

|

||||

所以,如你所见,这里有一些为 Linux 开发的游戏,下一个问题就是在哪能找到这些游戏以及如何安装。我将列出来一些让你玩到游戏的渠道了。

|

||||

所以,如你所见,有一些为 Linux 开发的游戏,下一个问题就是在哪能找到这些游戏以及如何安装。我将列出一些让你玩到游戏的渠道。

|

||||

|

||||

#### Steam ####

|

||||

|

||||

|

||||

|

||||

“[Steam][5] 是一个游戏的分发平台。就如同 Kindle 是电子书的分发平台,iTunes 是音乐的分发平台一样,Steam 也具有那样的功能。它给了你购买和安装游戏,玩多人游戏以及在它的平台上关注其他游戏的选项。这些游戏被[ DRM ][6]所保护。”

|

||||

“[Steam][5] 是一个游戏的分发平台。就如同 Kindle 是电子书的分发平台, iTunes 是音乐的分发平台一样, Steam 也具有那样的功能。它提供购买和安装游戏,玩多人游戏以及在它的平台上关注其他游戏的选项。其上的游戏被[ DRM ][6]所保护。”

|

||||

|

||||

两年以前,游戏平台 Steam 宣布支持 Linux,这在当时是一个大新闻。这是 Linux 上玩游戏被严肃的对待的一个迹象。尽管这个决定更多地影响了他们自己的基于 Linux 游戏平台[ Steam OS][7]。这仍然是令人欣慰的事情,因为它给 Linux 带来了一大堆游戏。

|

||||

两年以前,游戏平台 Steam 宣布支持 Linux ,这在当时是一个大新闻。这是 Linux 上玩游戏被严肃对待的一个迹象。尽管这个决定更多地影响了他们自己的基于 Linux 游戏平台以及一个独立 Linux 发行版[ Steam OS][7] ,这仍然是令人欣慰的事情,因为它给 Linux 带来了一大堆游戏。

|

||||

|

||||

我已经写了一篇详细的关于安装以及使用 Steam 的文章。如果你想开始使用 Steam 的话,读读那篇文章。

|

||||

|

||||

@ -57,23 +57,23 @@ Linux上的游戏:所有你需要知道的

|

||||

|

||||

#### GOG.com ####

|

||||

|

||||

[GOG.com][9] 失灵一个与 Steam 类似的平台。与 Steam 一样,你能在这上面找到数以百计的 Linux 游戏,你可以购买和安装它们。如果游戏支持好几个平台,尼卡一在多个操作系统上安装他们。你买到你账户的游戏你可以随时玩。捏可以在你想要下载的任何时间下载。

|

||||

[GOG.com][9] 是另一个与 Steam 类似的平台。与 Steam 一样,你能在这上面找到数以百计的 Linux 游戏,并购买和安装它们。如果游戏支持好几个平台,你可以在多个操作系统上安装他们。你可以随时游玩使用你的账户购买的游戏。你也可以在任何时间下载。

|

||||

|

||||

GOG.com 与 Steam 不同的是前者仅提供没有 DRM 保护的游戏以及电影。而且,GOG.com 完全是基于网页的,所以你不需要安装类似 Steam 的客户端。你只需要用浏览器下载游戏然后安装到你的系统上。

|

||||

|

||||

#### Portable Linux Games ####

|

||||

|

||||

[Portable Linux Games][10] 是一个集聚了不少 Linux 游戏的网站。这家网站最特别以及最好的就是你能离线安装这些游戏。

|

||||

[Portable Linux Games][10] 是一个集聚了不少 Linux 游戏的网站。这家网站最特别以及最好的点就是你能离线安装这些游戏。

|

||||

|

||||

你下载到的文件包含所有的依赖(仅需 Wine 以及 Perl)并且他们也是与平台无关的。你所需要的仅仅是下载文件并且双击来启动安装程序。你也可以把文件储存起来以用于将来的安装,如果你网速不够快的话我很推荐您这样做。

|

||||

你下载到的文件包含所有的依赖(仅需 Wine 以及 Perl)并且他们也是与平台无关的。你所需要的仅仅是下载文件并且双击来启动安装程序。你也可以把文件储存起来以用于将来的安装。如果你网速不够快的话,我很推荐你这样做。

|

||||

|

||||

#### Game Drift 游戏商店 ####

|

||||

|

||||

[Game Drift][11] 是一个只专注于游戏的基于 Ubuntu 的 Linux 发行版。但是如果你不想只为游戏就去安装这个发行版的话,你也可以经常上线看哪个游戏可以在 Linux 上运行并且安装他们。

|

||||

[Game Drift][11] 是一个只专注于游戏的基于 Ubuntu 的 Linux 发行版。但是如果你不想只为游戏就去安装这个发行版的话,你也可以经常去它的在线游戏商店去看哪个游戏可以在 Linux 上运行并且安装他们。

|

||||

|

||||

#### Linux Game Database ####

|

||||

|

||||

如其名字所示,[Linux Game Database][12]是一个收集了很多 Linux 游戏的网站。你能在这里浏览诸多类型的游戏并从游戏开发者的网站下载/安装这些游戏。作为这家网站的会员,你甚至可以为游戏打分。LGDB,有点像 Linux 游戏界的 IMDB 或者 IGN.

|

||||

如其名字所示,[Linux Game Database][12]是一个收集了很多 Linux 游戏的网站。你能在这里浏览诸多类型的游戏并从游戏开发者的网站下载/安装这些游戏。作为这家网站的会员,你甚至可以为游戏打分。 LGDB 有点像 Linux 游戏界的 IMDB 或者 IGN.

|

||||

|

||||

#### Penguspy ####

|

||||

|

||||

@ -81,7 +81,7 @@ GOG.com 与 Steam 不同的是前者仅提供没有 DRM 保护的游戏以及电

|

||||

|

||||

#### 软件源 ####

|

||||

|

||||

看看你自己的发行版的软件源。那里可能有一些游戏。如果你用 Ubuntu 的话,它的软件中心里有一个游戏的分类。在一些其他的发行版里也有,比如 Liux Mint 等。

|

||||

看看你自己的发行版的软件源。其中可能有一些游戏。如果你用 Ubuntu 的话,它的软件中心里有一个游戏的分类。在一些其他的发行版里也有,比如 Linux Mint 等。

|

||||

|

||||

----------

|

||||

|

||||

@ -89,19 +89,19 @@ GOG.com 与 Steam 不同的是前者仅提供没有 DRM 保护的游戏以及电

|

||||

|

||||

|

||||

|

||||

到现在为止,我们一直在讨论 Linux 的原生游戏。但是并没有很多 Linux 上的原生游戏,或者说,火的不要不要的游戏大多不支持 Linux,但是都支持 Windows PC。所以,如何在 Linux 上玩 Wendows 的游戏?

|

||||

到现在为止,我们一直在讨论 Linux 的原生游戏。但是并没有很多 Linux 上的原生游戏,或者更准确地说,火的不要不要的游戏大多不支持 Linux,但是都支持 Windows PC 。所以,如何在 Linux 上玩 Windows 的游戏?

|

||||

|

||||

幸好,由于我们有 Wine, PlayOnLinux 和 CrossOver 等工具,我们能在 Linux 上玩不少的 Wendows 游戏。

|

||||

幸好,由于我们有 Wine 、 PlayOnLinux 和 CrossOver 等工具,我们能在 Linux 上玩不少的 Windows 游戏。

|

||||

|

||||

#### Wine ####

|

||||

|

||||

Wine 是一个能使 Wendows 应用在类似 Linux, BSD 和 OS X 上运行的兼容层。在 Wine 的帮助下,你可以在 Linux 下安装以及使用很多 Windows 下的应用。

|

||||

Wine 是一个能使 Windows 应用在类似 Linux , BSD 和 OS X 上运行的兼容层。在 Wine 的帮助下,你可以在 Linux 下安装以及使用很多 Windows 下的应用。

|

||||

|

||||

[在 Ubuntu 上安装 Wine][14]或者在其他 Linux 上安装 Wine 是很简单的,因为大多数发行版的软件源里都有它。这里也有一个很大的[ Wine 支持的应用的数据库][15]供您浏览。

|

||||

|

||||

#### CrossOver ####

|

||||

|

||||

[CrossOver][16] 是 Wine 的增强版,它给 Wine 提供了专业的技术上的支持。但是与 Wine 不同, CrossOver 不是免费的。你需要购买许可。好消息是它会把更新也贡献到 Wine 的开发者那里并且事实上加速了 Wine 的开发使得 Wine 能支持更多的 Windows 上的游戏和应用。如果你可以一年支付 48 美元,你可以购买 CrossOver 并得到他们提供的技术支持。

|

||||

[CrossOver][16] 是 Wine 的增强版,它给 Wine 提供了专业的技术上的支持。但是与 Wine 不同, CrossOver 不是免费的。你需要购买许可。好消息是它会把更新也贡献到 Wine 的开发者那里并且事实上加速了 Wine 的开发使得 Wine 能支持更多的 Windows 上的游戏和应用。如果你可以接受每年支付 48 美元,你可以购买 CrossOver 并得到他们提供的技术支持。

|

||||

|

||||

### PlayOnLinux ###

|

||||

|

||||

@ -113,9 +113,9 @@ PlayOnLinux 也基于 Wine 但是执行程序的方式略有不同。它有着

|

||||

|

||||

|

||||

|

||||

不必说你也应该知道有非常多的基于网页的游戏,这些游戏都可以在任何操作系统里运行,无论是 Windows,Linux,还是 OS X。大多数让人上瘾的手机游戏,比如[帝国之战][18]就有官方的网页版。

|

||||

不必说你也应该知道有非常多的基于网页的游戏,这些游戏都可以在任何操作系统里运行,无论是 Windows ,Linux ,还是 OS X 。大多数让人上瘾的手机游戏,比如[帝国之战][18]就有官方的网页版。

|

||||

|

||||

除了这些,还有 [Google Chrome在线商店][19],你可以在 Linux 上玩更多的这些游戏。这些 Chrome 上的游戏可以像一个单独的应用一样安装并从应用菜单中打开,一些游戏就算是离线也能运行。

|

||||

除了这些,还有 [Google Chrome 在线商店][19],你可以在 Linux 上玩更多的这些游戏。这些 Chrome 上的游戏可以像一个单独的应用一样安装并从应用菜单中打开,一些游戏就算是离线也能运行。

|

||||

|

||||

----------

|

||||

|

||||

@ -123,7 +123,7 @@ PlayOnLinux 也基于 Wine 但是执行程序的方式略有不同。它有着

|

||||

|

||||

|

||||

|

||||

使用 Linux 的一个附加优势就是可以使用命令行终端玩游戏。我知道这不是最好的玩游戏的 方法,但是在终端里玩[贪吃蛇][20]或者 [2048][21] 很有趣。在[这个博客][21]中有一些好玩的的终端游戏。你可以浏览并安装你喜欢的游戏。

|

||||

使用 Linux 的一个附加优势就是可以使用命令行终端玩游戏。我知道这不是最好的玩游戏的方法,但是在终端里玩[贪吃蛇][20]或者 [2048][21] 很有趣。在[这个博客][21]中有一些好玩的的终端游戏。你可以浏览并安装你喜欢的游戏。

|

||||

|

||||

----------

|

||||

|

||||

@ -131,21 +131,21 @@ PlayOnLinux 也基于 Wine 但是执行程序的方式略有不同。它有着

|

||||

|

||||

当你了解了不少的在 Linux 上你可以玩到的游戏以及你如何使用他们,下一个问题就是如何保持游戏的版本是最新的。对于这件事,我建议你看看下面的博客,这些博客能告诉你 Linux 游戏世界的最新消息:

|

||||

|

||||

- [Gaming on Linux][23]:我认为我把它叫做 Linux 游戏的门户并没有错误。在这你可以得到关于 Linux 的游戏的最新的传言以及新闻。最近, Gaming on Linux 有了一个由 Linux 游戏爱好者组成的漂亮的社区。

|

||||

- [Gaming on Linux][23]:我认为我把它叫做 Linux 游戏专业门户并没有错误。在这你可以得到关于 Linux 的游戏的最新的传言以及新闻。它经常更新, 还有由 Linux 游戏爱好者组成的优秀社区。

|

||||

- [Free Gamer][24]:一个专注于免费开源的游戏的博客。

|

||||

- [Linux Game News][25]:一个提供很多的 Linux 游戏的升级的 Tumbler 博客。

|

||||

|

||||

#### 还有别的要说的吗? ####

|

||||

|

||||

我认为让你知道如何开始在 Linux 上的游戏人生是一个好事。如果你仍然不能被说服。我推荐你做个[双系统][26],把 Linux 作为你的主要桌面系统,当你想玩游戏时,重启到 Windows。这是一个对游戏妥协的解决办法。

|

||||

我认为让你知道如何开始在 Linux 上的游戏人生是一个好事。如果你仍然不能被说服,我推荐你做个[双系统][26],把 Linux 作为你的主要桌面系统,当你想玩游戏时,重启到 Windows。这是一个对游戏妥协的解决办法。

|

||||

|

||||

现在,这里是你说出你自己的状况的时候了。你在 Linux 上玩游戏吗?你最喜欢什么游戏?你关注了哪些游戏博客?

|

||||

现在,这里是你说出你自己的想法的时候了。你在 Linux 上玩游戏吗?你最喜欢什么游戏?你关注了哪些游戏博客?

|

||||

|

||||

|

||||

投票项目:

|

||||

你怎样在 Linux 上玩游戏?

|

||||

|

||||

- 我玩原生 Linux 游戏,我也用 Wine 以及 PlayOnLinux 运行 Windows 游戏

|

||||

- 我玩原生 Linux 游戏,也用 Wine 以及 PlayOnLinux 运行 Windows 游戏

|

||||

- 我喜欢网页游戏

|

||||

- 我喜欢终端游戏

|

||||

- 我只玩原生 Linux 游戏

|

||||

@ -167,7 +167,7 @@ via: http://itsfoss.com/linux-gaming-guide/

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[name1e5s](https://github.com/name1e5s)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[PurlingNayuki](https://github.com/PurlingNayuki)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

53

published/20151202 KDE vs GNOME vs XFCE Desktop.md

Normal file


@ -0,0 +1,53 @@

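KDE vs GNOME vs XFCE Desktop
================================================================================

For many years, a lot of people have used the KDE or GNOME desktop environment on their Linux desktops. While these two environments have kept evolving over the years, other desktops have continued to grow their user bases as well. For example, among lightweight desktop environments XFCE has become the most popular one: compared with LXDE, which lacks visual polish, XFCE in its default configuration wins on looks. XFCE offers all the features that were available in GNOME 2, yet its lightweight nature lets it perform better on older computers.

### Desktop Theming ###

Right after installation, XFCE may look a little dull, because it lacks some visual appeal. Don't get me wrong: XFCE still has a nice-looking desktop, but to most people who are new to it, it may look plain vanilla. The good news is that installing a new theme on XFCE is an easy process: you can quickly find an XFCE theme you like and then extract it into the appropriate directory. From there, XFCE ships an important graphical tool, found under "Appearance", that makes it easy to select the theme you want; it is probably the most convenient tool for this on XFCE today. If users follow the advice above, anyone who wants to try XFCE should have no difficulty.

On the GNOME desktop, users can follow a similar approach. The main difference is that, before doing so, the user must manually download and install GNOME Tweak Tool. That is no real barrier to use, but not having to download and install extra tweaking tools in order to install and activate themes is an advantage of XFCE that users may find hard to ignore. On GNOME, even after GNOME Tweak Tool has been downloaded and installed, you still have to make sure that the "User Themes" extension is installed.

As with XFCE, users need to search for and download a theme they like. Then they can open GNOME Tweak Tool again and click the "Appearance" button on the left side of the tool. Next, look at the bottom of the page and click the file browser button, then browse to the zipped folder and open it. Once this is done, a dialog tells the user that the theme was applied successfully, and the theme is installed. From then on, users can simply use the drop-down menu to select the theme they want. As with XFCE, activating a theme is very simple; however, having to download an application that is not preinstalled just to use a new theme is something to take into account.

Finally, there is the theming process on the KDE desktop. As with XFCE, there is no need to download extra tools to install themes. On this point, it feels as if KDE might beat XFCE. Not only can themes be installed entirely through the graphical interface on KDE, the user only has to click the "Get New Themes" button to find, preview, and finally install new themes automatically.

However, we should note that KDE is a more robust and complete desktop environment than XFCE. Of course, for a desktop whose main goal is minimalism, it makes some sense to leave out some extra functionality. So KDE earns points for offering such an excellent feature.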

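### MATE Is Not a Lightweight Desktop Environment ###

Before continuing the comparison of XFCE, GNOME 3, and KDE, we should make clear to experienced users that we are not including the MATE desktop environment in this comparison. MATE can be regarded as another descendant of GNOME 2, but it was not created primarily as a lightweight or fast desktop. Instead, its main goal is to be a more traditional and comfortable desktop environment in which users feel at home.

XFCE, on the other hand, was created with its own set of goals. XFCE offers its users a lighter desktop environment that still keeps an attractive visual experience. Some people consider MATE a lightweight desktop environment as well, but being lightweight is not really MATE's aim. Both options look very attractive once a good theme is installed.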

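### Desktop Navigation ###

Beyond the desktop itself, XFCE offers obvious navigation. Anyone who has used a traditional Windows or GNOME 2/MATE desktop can find their way around a freshly installed XFCE desktop without any help. Adding applets to the panel is just as obvious. To find an installed application, simply open the launcher and click the icon of the application you want to run. Apart from LXDE and MATE, no other desktop makes navigation this simple. Better still, the control panel is very easy to use, which is a great benefit for users who are new to this desktop. If users prefer to use their desktop the old-fashioned way, GNOME is not a good fit. With hot corners in place of the minimize button, plus its different way of arranging applications, it can still be easy for most new users to pick up.

Users coming from a desktop environment like Windows need to drop some habits: they cannot simply right-click to add an applet to the top of their workspace. Instead, this is done through extensions. GNOME extensions are available and very easy to install; it only takes flipping the on/off switch on the GNOME extensions page. Users have to know that this exists, though, before they can actually use it.

On the other hand, GNOME expresses its design philosophy through its appearance, namely giving users an intuitive and easy-to-use control panel. You might think that is no big deal, but in my view it is something worth praising and worth mentioning. KDE gives its users a more traditional desktop experience and caters to users coming from Windows by offering a familiar launcher and a more familiar way of getting to software. Adding widgets or applets to the KDE desktop is very simple: just right-click on the desktop. The only problem is that this feature is hard to discover; like other things in KDE, it seems hidden from the user. KDE users may disagree with me, but I stand by my statement.

To add a widget, right-click on "My Panel" to see the panel options, but this is not an intuitive way to install widgets. You will not see "Add Widgets" unless you first select "Panel Options". This is not a problem for me, but for some users it becomes needless confusion. To complicate matters, once users find the widget area, they then discover a new term called "Activities". It lives in the same place as widgets, yet it behaves differently in its own area.

Now, don't get me wrong: the Activities feature in KDE is nice and valuable, but from a usability standpoint it would be better placed in a different menu so that it does not confuse newcomers. Users differ, but letting new users test it for a while would help improve it. Criticism of Activities aside, KDE's approach to adding new widgets really is great. As with KDE themes, users can browse and automatically install widgets through the provided graphical interface. It is a somewhat magical feature, and it works. KDE's control panel may not look the way users would like, and it is not simple enough. But one thing is clear: this will be an area they work on improving.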

### So XFCE Is the Best Desktop Environment, Right? ###

For my part, I use GNOME and KDE on my computers, and Xfce on my office and home machines. I also have some older machines running Openbox and LXDE. Each desktop experience offers me something useful and helps me use each machine in the way that suits it. Xfce has a special place in my heart, because it is a desktop environment I have used for years. But this article was written on my daily machine, which in fact runs GNOME.

The main idea of this article is that for users looking for a stable, traditional, easy-to-understand desktop environment, I still think Xfce offers a somewhat better user experience. You are welcome to share your opinions with us in the comments section.

--------------------------------------------------------------------------------

via: http://www.unixmen.com/kde-vs-gnome-vs-xfce-desktop/

Author: [M.el Khamlichi][a]
Translator: [kylepeng93](https://github.com/kylepeng93)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:http://www.unixmen.com/author/pirat9/

67

published/20151227 Upheaval in the Debian Live project.md

Normal file


@ -0,0 +1,67 @@

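Upheaval in the Debian Live project
==================================================================================

Despite all the drama surrounding the Debian Live project, the [announcement][1] of the end of the [Debian Live project][2] made even less of a splash than the announcement of the project's launch. The departure of the lead developer is the most obvious loss, and the community's attitude toward him and his project is puzzling, but the project may well continue in some other form. So Debian will still have more tools for creating live CDs and other media. Regrettable as the way it happened may be, the long-running dispute between project founder Daniel Baumann and the Debian CD and Debian Installer teams has been "resolved".

On November 9, Baumann published an announcement titled "The sudden end of the Debian Live project". In that short note, he listed various events over the nearly 10 years since the [project was started][3] that showed, in his view, that his efforts on Debian Live had not been valued, or not valued enough. The decisive factor was a conflict over an "intent to package" (ITP): Iain R. Learmonth [filed][4] one for a new package name that encroached on the namespace used by Debian Live.

Given that one of the main Debian Live packages is called live-build, and the new package name Learmonth requested is live-build-ng, this was plainly a challenge to live-build. live-build-ng is meant to be a wrapper around the [vmdebootstrap][5] tool (translator's note: a tool for creating Debian disk images for real and virtual machines), intended for creating live media (CDs and USB sticks), which is also what Debian Live is chiefly about. But when Baumann [asked][6] Learmonth to choose a different name for his package, he got an "interesting" [reply][7]:

```
It should be noted that live-build is not a Debian project; it is an external project that claims to be an official Debian project. This is a problem that we need to fix.
This is not a namespace issue. We will build on the currently maintained live-config and live-boot packages and bring them into Debian as native projects. If we have to, this will mean forks, but I hope that does not happen, so that we can integrate these packages into Debian and continue developing them collaboratively.
live-build has been dropped by debian-cd, and live-build-ng will replace it. At least in a lean Debian environment, live-build will be abandoned. We (the development team) are working with the debian-cd and Debian Installer teams on live-build-ng.
```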

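Debian Live is an "official" Debian project (as far as "official" can be narrowly defined), although it has drawn controversy over differences in approach. Beyond that, Neil Williams, the maintainer of vmdebootstrap, [offered the following explanation][8] for moving away from Debian Live:

```
vmdebootstrap will certainly be extended to better support a replacement for live-build. This work will be led by the debian-cd team, in order to solve the problems that currently exist with live-build. These include reliability problems and poor support for multiple kinds of machines and UEFI. vmdebootstrap has these problems too; we use the support from live-boot and live-config to provide vmdebootstrap's functionality.
```

These complaints sound reasonable, but they could perhaps have been addressed within the existing project. Instead, a somewhat secret project clearly intended to replace live-build. As Baumann [pointed out][9], these plans were never posted to the debian-live mailing list. People in the Debian Live project first learned of them through this ITP, so it looks like a "secret plan", which is something that does not sit well in a project like Debian.

As one might guess, many posts supported Baumann's request to [rename][10] live-build-ng, followed by dismay at his decision to stop working on Debian Live. Learmonth and Williams, however, insisted that replacing live-build was necessary. Learmonth gave live-build-ng a perhaps less contentious name: live-wrapper. He said his goal is to add the new tool to the Debian Live project (and to "bring the Debian Live project into Debian"), but that getting there will still take a lot of work.

```
I apologize to everyone who has been troubled by the ITP. We have communicated that live-wrapper is not yet ready to fully replace live-build, and that development is ongoing in order to gather feedback. Despite this work, the feedback we received was not the feedback we needed.
```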

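The strong opposition to replacing live-build could perhaps have been anticipated. Communication is crucial in free-software communities, so a plan to replace the core of a project easily stirs controversy, all the more so when the plan had been kept out of view. Baumann, for his part, is certainly not perfect: he delayed the wheezy release by uploading an inappropriate [syslinux package][11], and after that he was temporarily [demoted][12] from Debian Developer to Debian Maintainer. But that does not mean he deserved this kind of treatment. And of course other people are involved in the project, so Baumann is not the only one affected.

Ben Armstrong is one of those other participants. He has handled things diplomatically during this episode and has tried to come through it unscathed. He started with an [email][13] celebrating the project and what he and the team had achieved over the years. As he noted, the [list of downstream projects][14] of Debian Live is quite impressive. In another email, he also [pointed out][15] that the project is not dead:

```
If the Debian CD team's efforts produce a viable, reliable, well-tested alternative that is a suitable candidate to replace live-build, that can only be good for Debian. If they keep at it, they will not "replace live-build with an officially endorsed but unreliable and barely tested candidate". So far I have seen no sign that they would do that. In the meantime, live-build remains in the archive; it is still in good shape, and with no improved successor to replace it, there is no need for the team to rush to remove it.
```

On November 24, Armstrong also [posted][17] an update about Debian Live on [his blog][16]. It shows encouraging progress in the two weeks since Baumann's exit. There are even signs of cooperation between the Debian Live project and the live-wrapper developers. The blog also has a [to-do list][18], along with the inevitable call for more help. This gives reason to believe that the drama surrounding the project was just a bit of friction: perhaps unavoidable, but by no means as bad as it looks now.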

---------------------------------

via: https://lwn.net/Articles/665839/

Author: Jake Edge
Translator: [vim-kakali](https://github.com/vim-kakali)
Proofreader: [PurlingNayuki](https://github.com/PurlingNayuki)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[1]: https://lwn.net/Articles/666127/
[2]: http://live.debian.net/
[3]: https://www.debian.org/News/weekly/2006/08/
[4]: https://bugs.debian.org/cgi-bin/bugreport.cgi?bug=804315
[5]: http://liw.fi/vmdebootstrap/
[6]: https://lwn.net/Articles/666173/
[7]: https://lwn.net/Articles/666176/
[8]: https://lwn.net/Articles/666181/
[9]: https://lwn.net/Articles/666208/
[10]: https://lwn.net/Articles/666321/
[11]: https://bugs.debian.org/cgi-bin/bugreport.cgi?bug=699808
[12]: https://nm.debian.org/public/process/14450
[13]: https://lwn.net/Articles/666336/
[14]: http://live.debian.net/project/downstream/
[15]: https://lwn.net/Articles/666338/
[16]: https://lwn.net/Articles/666340/
[17]: http://syn.theti.ca/2015/11/24/debian-live-after-debian-live/
[18]: https://wiki.debian.org/DebianLive/TODO

127

published/20160204 An Introduction to SELinux.md

Normal file


@ -0,0 +1,127 @@

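An Introduction to SELinux
===============================

Back in the Kernel 2.6 days, a new security system was introduced to provide a mechanism for access-control security policies. That system is [Security Enhanced Linux (SELinux)][1], contributed by the [US National Security Agency (NSA)][2]; it brought a robust Mandatory Access Control architecture into the subsystems of the Linux kernel.

If you have spent your Linux career so far disabling or ignoring SELinux, this article is for you: it is an introduction to the SELinux system that lives beneath your Linux desktop or server, which can limit privileges and even eliminate the possibility of damage when programs or daemons turn out to be vulnerable.

Before I begin, you should know that SELinux is primarily a tool for Red Hat Linux and its derivatives. Ubuntu and SUSE (and their derivatives), by contrast, use AppArmor. SELinux and AppArmor differ significantly. You can install SELinux on SUSE, openSUSE, Ubuntu, and so on, but it is an incredibly challenging task unless you are very well versed in Linux.

With that said, let me introduce you to SELinux.

### DAC vs. MAC

The traditional access-control standard on Linux is Discretionary Access Control (DAC). Under this model, a piece of software or a daemon runs as a User ID (UID) or Set owner User ID (SUID) and holds that user's permissions on objects (files, sockets, and other processes). This makes it easy for malicious code to run with a particular set of permissions and thereby gain access to critical subsystems.

Mandatory Access Control (MAC), on the other hand, enforces the separation of information based on confidentiality and integrity in order to limit damage. The constraining unit operates independently of the traditional Linux security mechanisms and has no concept of a superuser.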

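### How SELinux works

Consider these SELinux concepts:

- Subjects
- Objects
- Policy
- Mode

When a subject (such as a program) attempts to access an object (such as a file), the SELinux Security Server (inside the kernel) runs a check against the Policy Database. Based on the current mode, if the SELinux Security Server grants permission, the subject can access the object. If the SELinux Security Server denies permission, a denial message is logged in /var/log/messages (on systems running the audit daemon, denials typically go to /var/log/audit/audit.log instead).

Sounds relatively simple, doesn't it? The actual process is more complicated, but to keep this introduction simple, only the important steps are listed.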
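The check described above can be modeled in a few lines of Python. This is only a toy sketch under stated assumptions: the `Rule` class, the `check_access` function, and the subject and object names are all hypothetical, and real SELinux evaluates security contexts inside the kernel rather than comparing plain strings. It relies on the three modes (enforcing, permissive, disabled) that the next section describes.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    """One hypothetical 'allow' rule: subject may perform action on obj."""
    subject: str
    obj: str
    action: str


def check_access(policy, mode, subject, obj, action, log):
    """Return True if the access goes ahead, False if it is blocked.

    policy is a set of Rule objects, mode is 'enforcing', 'permissive'
    or 'disabled', and log is a list that collects denial messages.
    """
    if mode == "disabled":
        return True  # no checks and no logging at all
    if Rule(subject, obj, action) in policy:
        return True  # the policy grants this access
    # Enforcing and permissive both record the denial ...
    log.append(f"denied: {subject} -> {obj} ({action})")
    # ... but only enforcing actually blocks the access.
    return mode != "enforcing"


# Hypothetical policy: the web server may read web pages, nothing else.
policy = {Rule("web_server", "web_page", "read")}
log = []

print(check_access(policy, "enforcing", "web_server", "web_page", "read", log))
print(check_access(policy, "enforcing", "web_server", "password_file", "read", log))
print(check_access(policy, "permissive", "web_server", "password_file", "read", log))
print(log)
```

The point of the sketch is the difference between the last two calls: the same request is denied and logged in enforcing mode, but only logged in permissive mode.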

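### Modes

SELinux has three modes (which can be set by the user). These modes determine how SELinux responds when a subject makes a request. The modes are:

- Enforcing: SELinux policy is enforced; subjects are granted or denied access to objects based on the SELinux policy rules
- Permissive: SELinux policy is not enforced; access is not actually denied, but denials are written to the log
- Disabled: SELinux is completely disabled

*Figure 1: The getenforce command shows that SELinux is in Enforcing mode.*

By default, most systems have SELinux set to Enforcing. How do you know what mode your system is currently in? You can use a simple command to find out: `getenforce`. It is incredibly easy to use (because its only job is to report the SELinux mode). To use it, open a terminal window and run `getenforce`. The command returns Enforcing, Permissive, or Disabled (see Figure 1 above).

Setting the SELinux mode is actually simple, depending on which mode you want to set. Remember: **disabling SELinux is never recommended**. Why? Once you do, there is a chance that files on your disk will end up mislabeled and will need to be relabeled to fix the problem. Also, you cannot change the mode of a system that was booted in Disabled mode. Your best choices are Enforcing or Permissive.

You can change the SELinux mode from the command line or in the `/etc/selinux/config` file. To set the mode from the command line, use the `setenforce` tool. To set Enforcing mode, do the following:

1. Open a terminal window
2. Run `su` and enter your administrator password
3. Run `setenforce 1`
4. Run `getenforce` to confirm the mode is set correctly (Figure 2)

*Figure 2: Setting the SELinux mode to Enforcing.*

To set the mode to Permissive, do this:

1. Open a terminal window
2. Run `su` and enter your administrator password
3. Run `setenforce 0`
4. Run `getenforce` to confirm the mode is set correctly (Figure 3)

*Figure 3: Setting the SELinux mode to Permissive.*

Note: a mode set from the command line overrides the setting in the SELinux configuration file, but only until the next reboot.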

If you would rather set the mode in the SELinux configuration file, open that file in your favorite editor and find this line:

    SELINUX=permissive

You can set the mode to whatever you prefer, then save the file.

There is also a third way to change the SELinux mode (via the bootloader), but I don't recommend it for new users.

### Policy types

There are two types of SELinux policy:

- Targeted: only targeted network daemons (dhcpd, httpd, named, nscd, ntpd, portmap, snmpd, squid, and syslogd) are protected
- Strict: full SELinux protection for all processes

You can change the policy type in the `/etc/selinux/config` file. Open the file in your favorite editor and find this line:

    SELINUXTYPE=targeted

Change this option to targeted or strict to suit your needs.
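To make the two settings above concrete, here is a minimal Python sketch that reads `SELINUX` and `SELINUXTYPE` out of configuration text. It is an illustration only: `parse_selinux_config` is a hypothetical helper, and the sample text merely mimics the two lines quoted from `/etc/selinux/config`; a real file may contain other entries.

```python
def parse_selinux_config(text):
    """Collect KEY=value pairs, skipping blank lines and '#' comments."""
    settings = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        key, sep, value = line.partition("=")
        if sep:  # only keep lines that actually contain '='
            settings[key.strip()] = value.strip()
    return settings


# Sample text modeled on the two lines shown above (not a real file).
sample = """\
# example contents
SELINUX=permissive
SELINUXTYPE=targeted
"""

config = parse_selinux_config(sample)
print(config["SELINUX"])      # permissive
print(config["SELINUXTYPE"])  # targeted
```

On a real system the same function could be applied to the contents of `/etc/selinux/config`, but editing that file still requires administrator rights, and the change takes effect on the next boot.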

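### Checking the full SELinux status

There is a handy SELinux tool you may want to use to get a detailed status report for your SELinux-enabled system. Run this command in a terminal:

    sestatus -v

You should see output like that in Figure 4.

*Figure 4: Output of the sestatus -v command.*

### Just scratching the surface

As you might expect, I have only scratched the surface of SELinux. It is a complex system indeed, and gaining a solid understanding of how it works, and of how to make it work better for your desktop or server, takes deeper study. I have not covered troubleshooting or creating custom SELinux policies.

SELinux is a powerful tool that every Linux administrator should know. Now that you have been introduced to it, I highly recommend coming back to Linux.com (as more articles on this topic are published) or taking a look at the [NSA SELinux documentation][3] for more in-depth guides.

LCTT related reading: [鸟哥的 Linux 私房菜——程序管理与 SELinux 初探][4]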

--------------------------------------------------------------------------------

via: https://www.linux.com/learn/docs/ldp/883671-an-introduction-to-selinux

Author: [Jack Wallen][a]
Translator: [alim0x](https://github.com/alim0x)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.linux.com/community/forums/person/93
[1]: http://selinuxproject.org/page/Main_Page
[2]: https://www.nsa.gov/research/selinux/
[3]: https://www.nsa.gov/research/selinux/docs.shtml
[4]: http://vbird.dic.ksu.edu.tw/linux_basic/0440processcontrol_5.php

@ -1,42 +1,41 @@

|

||||

要超越Hello World 容器是件困难的事情

|

||||

从 Hello World 容器进阶是件困难的事情

|

||||

================================================================================

|

||||

|

||||

在[我的上一篇文章里][1], 我介绍了Linux 容器背后的技术的概念。我写了我知道的一切。容器对我来说也是比较新的概念。我写这篇文章的目的就是鼓励我真正的来学习这些东西。

|

||||

在[我的上一篇文章里][1], 我介绍了 Linux 容器背后的技术的概念。我写了我知道的一切。容器对我来说也是比较新的概念。我写这篇文章的目的就是鼓励我真正的来学习这些东西。

|

||||

|

||||

我打算在使用中学习。首先实践,然后上手并记录下我是怎么走过来的。我假设这里肯定有很多想"Hello World" 这种类型的知识帮助我快速的掌握基础。然后我能够更进一步,构建一个微服务容器或者其它东西。

|

||||

我打算在使用中学习。首先实践,然后上手并记录下我是怎么走过来的。我假设这里肯定有很多像 "Hello World" 这种类型的知识帮助我快速的掌握基础。然后我能够更进一步,构建一个微服务容器或者其它东西。

|

||||

|

||||

我的意思是还会比着更难吗,对吧?

|

||||

我想,它应该不会有多难的。

|

||||

|

||||

错了。

|

||||

但是我错了。

|

||||

|

||||

可能对某些人来说这很简单,因为他们会耗费大量的时间专注在操作工作上。但是对我来说实际上是很困难的,可以从我在Facebook 上的状态展示出来的挫折感就可以看出了。

|

||||

可能对某些人来说这很简单,因为他们在运维工作方面付出了大量的时间。但是对我来说实际上是很困难的,可以从我在Facebook 上的状态展示出来的挫折感就可以看出了。

|

||||

|

||||

但是还有一个好消息:我最终让它工作了。而且他工作的还不错。所以我准备分享向你分享我如何制作我的第一个微服务容器。我的痛苦可能会节省你不少时间呢。

|

||||

但是还有一个好消息:我最终搞定了。而且它工作的还不错。所以我准备分享向你分享我如何制作我的第一个微服务容器。我的痛苦可能会节省你不少时间呢。

|

||||

|

||||

如果你曾经发现或者从来都没有发现自己处在这种境地:像我这样的人在这里解决一些你不需要解决的问题。

|

||||

如果你曾经发现你也处于过这种境地,不要害怕:像我这样的人都能搞定,所以你也肯定行。

|

||||

|

||||

让我们开始吧。

|

||||

|

||||

|

||||

### 一个缩略图微服务 ###

|

||||

|

||||

我设计的微服务在理论上很简单。以JPG 或者PNG 格式在HTTP 终端发布一张数字照片,然后获得一个100像素宽的缩略图。

|

||||

The microservice I designed is simple in theory: POST a digital photo in JPG or PNG format to an HTTP endpoint and get back a thumbnail 100 pixels wide.

|

||||

|

||||

下面是它实际的效果:

|

||||

下面是它的流程:

|

||||

|

||||

|

||||

|

||||

我决定使用NodeJS 作为我的开发语言,使用[ImageMagick][2] 来转换缩略图。

|

||||

我决定使用 NodeJS 作为我的开发语言,使用 [ImageMagick][2] 来转换缩略图。

|

||||

|

||||

我的服务的第一版的逻辑如下所示:

|

||||

|

||||

|

||||

|

||||

我下载了[Docker Toolbox][3],用它安装了Docker 的快速启动终端。Docker 快速启动终端使得创建容器更简单了。终端会启动一个装好了Docker 的Linux 虚拟机,它允许你在一个终端里运行Docker 命令。

|

||||

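I downloaded [Docker Toolbox][3] and used it to install the Docker Quickstart Terminal, which makes creating containers easier. The terminal starts a Linux virtual machine with Docker preinstalled and lets you run Docker commands from a terminal window.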

|

||||

|

||||

虽然在我的例子里,我的操作系统是Mac OS X。但是Windows 下也有相同的工具。

|

||||

虽然在我的例子里,我的操作系统是 Mac OS X。但是 Windows 下也有相同的工具。

|

||||

|

||||

我准备使用Docker 快速启动终端里为我的微服务创建一个容器镜像,然后从这个镜像运行容器。

|

||||

我准备使用 Docker 快速启动终端里为我的微服务创建一个容器镜像,然后从这个镜像运行容器。

|

||||

|

||||

Docker 快速启动终端就运行在你使用的普通终端里,就像这样:

|

||||

|

||||

@ -44,11 +43,11 @@ Docker 快速启动终端就运行在你使用的普通终端里,就像这样

|

||||

|

||||

### 第一个小问题和第一个大问题###

|

||||

|

||||

所以我用NodeJS 和ImageMagick 瞎搞了一通然后让我的服务在本地运行起来了。

|

||||

我用 NodeJS 和 ImageMagick 瞎搞了一通,然后让我的服务在本地运行起来了。

|

||||

|

||||

然后我创建了Dockerfile,这是Docker 用来构建容器的配置脚本。(我会在后面深入介绍构建和Dockerfile)

|

||||

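Then I created the Dockerfile, the configuration script Docker uses to build a container image. (I will go into the build process and the Dockerfile in more depth later.)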

|

||||

|

||||

这是我运行Docker 快速启动终端的命令:

|

||||

Here is the command I ran in the Docker Quickstart Terminal:

|

||||

|

||||

$ docker build -t thumbnailer:0.1

|

||||

|

||||

@ -58,32 +57,31 @@ Docker 快速启动终端就运行在你使用的普通终端里,就像这样

|

||||

|

||||

呃。

|

||||

|

||||

我估摸着过了15分钟:我忘记了在末尾参数输入一个点`.`。

|

||||

It took me about 15 minutes to figure it out: I had forgotten the dot `.` at the end of the command.

|

||||

|

||||

正确的指令应该是这样的:

|

||||

|

||||

$ docker build -t thumbnailer:0.1 .

|

||||

|

||||

但是这不是我遇到的最后一个问题。

|

||||

|

||||

但是这不是我最后一个问题。

|

||||

|

||||

我让这个镜像构建好了,然后我Docker 快速启动终端输入了[`run` 命令][4]来启动容器,名字叫`thumbnailer:0.1`:

|

||||

With the image built, I entered the [`run` command][4] in the Docker Quickstart Terminal to start a container from the image `thumbnailer:0.1`:

|

||||

|

||||

$ docker run -d -p 3001:3000 thumbnailer:0.1

|

||||

|

||||

参数`-p 3001:3000` 让NodeJS 微服务在Docker 内运行在端口3000,而在主机上则是3001。

|

||||

The `-p 3001:3000` argument publishes the container's port 3000, where the NodeJS microservice listens, on port 3001 of the host.
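
The order of the two numbers in `-p 3001:3000` is easy to get backwards. As a minimal sketch in plain Python, the `host:container` convention and the URL it leads to look like this. The `split_port_mapping` and `service_url` helpers are made up for illustration and are not part of Docker, and only the simple two-port form of `-p` is handled.

```python
def split_port_mapping(mapping):
    """Split a 'HOST:CONTAINER' string, as passed to `docker run -p`, into two ints."""
    host_port, container_port = mapping.split(":")
    return int(host_port), int(container_port)


def service_url(machine_ip, mapping):
    """Build the URL to open from outside the container: it uses the host-side port."""
    host_port, _container_port = split_port_mapping(mapping)
    return "http://{}:{}/".format(machine_ip, host_port)


# The values used in this article: the service listens on 3000 inside the
# container and is reached on port 3001 of the Docker virtual machine.
print(service_url("192.168.99.100", "3001:3000"))
```

The first number is always the side you type into the browser; the second is the port the application itself binds to.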

|

||||

|

||||

到目前卡起来都很好,对吧?

|

||||

到目前看起来都很好,对吧?

|

||||

|

||||

错了。事情要马上变糟了。

|

||||

|

||||

我指定了在Docker 快速启动中端里用命令`docker-machine` 运行的Docker 虚拟机的ip地址:

|

||||

I looked up the IP address of the virtual machine created by the Docker Quickstart Terminal by running the `docker-machine` command:

|

||||

|

||||

$ docker-machine ip default

|

||||

|

||||

这句话返回了默认虚拟机的IP地址,即运行docker 的虚拟机。对于我来说,这个ip 地址是192.168.99.100。

|

||||

This returns the IP address of the default virtual machine, the one started by the Docker Quickstart Terminal. For me, that address was 192.168.99.100.

|

||||

|

||||

我浏览网页http://192.168.99.100:3001/ ,然后找到了我创建的上传图片的网页:

|

||||

我浏览网页 http://192.168.99.100:3001/ ,然后找到了我创建的上传图片的网页:

|

||||

|

||||

|

||||

|

||||

@ -91,13 +89,13 @@ Docker 快速启动终端就运行在你使用的普通终端里,就像这样

|

||||

|

||||

但是它并没有工作。

|

||||

|

||||

终端告诉我他无法找到我的微服务需要的`/upload` 目录。

|

||||

The terminal told me that it could not find the `/upload` directory my microservice needed.

|

||||

|

||||

现在开始记住,我已经在此耗费了将近一天的时间-从浪费时间到研究问题。我此时感到了一些挫折感。

|

||||

现在,你要知道,我已经在此耗费了将近一天的时间-从浪费时间到研究问题。我此时感到了一些挫折感。

|

||||

|

||||

然后灵光一闪。某人记起来微服务不应该自己做任何数据持久化的工作!保存数据应该是另一个服务的工作。

|

||||

|

||||

所以容器找不到目录`/upload` 的原因到底是什么?这个问题的根本就是我的微服务在基础设计上就有问题。

|

||||

所以容器找不到目录 `/upload` 的原因到底是什么?这个问题的根本就是我的微服务在基础设计上就有问题。

|

||||

|

||||

让我们看看另一幅图:

|

||||

|

||||

@ -109,7 +107,7 @@ Docker 快速启动终端就运行在你使用的普通终端里,就像这样

|

||||

|

||||

|

||||

|

||||

这是我用NodeJS 写的在内存工作、生成缩略图的代码:

|

||||

这是我用 NodeJS 写的在内存运行、生成缩略图的代码:

|

||||

|

||||

// Bind to the packages

|

||||

var express = require('express');

|

||||

@ -171,19 +169,19 @@ Docker 快速启动终端就运行在你使用的普通终端里,就像这样

|

||||

|

||||

module.exports = router;

|

||||

|

||||

好了,回到正轨,已经可以在我的本地机器正常工作了。我该去休息了。

|

||||

好了,一切回到了正轨,已经可以在我的本地机器正常工作了。我该去休息了。

|

||||

|

||||

但是,在我测试把这个微服务当作一个普通的Node 应用运行在本地时...

|

||||

但是,在我测试把这个微服务当作一个普通的 Node 应用运行在本地时...

|

||||

|

||||

|

||||

|

||||

它工作的很好。现在我要做的就是让他在容器里面工作。

|

||||

它工作的很好。现在我要做的就是让它在容器里面工作。

|

||||

|

||||

第二天我起床后喝点咖啡,然后创建一个镜像——这次没有忘记那个"."!

|

||||

|

||||

$ docker build -t thumbnailer:0.1 .

|

||||

|

||||

我从缩略图工程的根目录开始构建。构建命令使用了根目录下的Dockerfile。它是这样工作的:把Dockerfile 放到你想构建镜像的地方,然后系统就默认使用这个Dockerfile。

|

||||

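I ran the build from the root directory of the thumbnailer project. The build command uses the Dockerfile in that root directory. This is how it works: put the Dockerfile in the directory you build the image from, and it is used by default.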

|

||||

|

||||

下面是我使用的Dockerfile 的内容:

|

||||

|

||||

@ -209,7 +207,7 @@ Docker 快速启动终端就运行在你使用的普通终端里,就像这样

|

||||

|

||||

### 第二个大问题 ###

|

||||

|

||||

我运行了`build` 命令,然后出了这个错:

|

||||

我运行了 `build` 命令,然后出了这个错:

|

||||

|

||||

Do you want to continue? [Y/n] Abort.

|

||||

|

||||

@ -217,7 +215,7 @@ Docker 快速启动终端就运行在你使用的普通终端里,就像这样

|

||||

|

||||

我猜测微服务出错了。我回到本地机器,从本机启动微服务,然后试着上传文件。

|

||||

|

||||

然后我从NodeJS 获得了这个错误:

|

||||

然后我从 NodeJS 获得了这个错误:

|

||||

|

||||

Error: spawn convert ENOENT

|

||||

|

||||

@ -225,25 +223,25 @@ Docker 快速启动终端就运行在你使用的普通终端里,就像这样

|

||||

|

||||

我搜索了我能想到的所有的错误原因。差不多4个小时后,我想:为什么不重启一下机器呢?

|

||||

|

||||

重启了,你猜猜结果?错误消失了!(译注:万能的重启)

|

||||

重启了,你猜猜结果?错误消失了!(LCTT 译注:万能的“重启试试”)

|

||||

|

||||

继续。

|

||||

|

||||

### 将精灵关进瓶子 ###

|

||||

### 将精灵关进瓶子里 ###

|

||||

|

||||

跳回正题:我需要完成构建工作。

|

||||

|

||||

我使用[`rm` 命令][5]删除了虚拟机里所有的容器。

|

||||

我使用 [`rm` 命令][5]删除了虚拟机里所有的容器。

|

||||

|

||||

$ docker rm -f $(docker ps -a -q)

|

||||

|

||||

The `-f` flag forces removal of containers that are still running.

|

||||

|

||||

然后删除了全部Docker 镜像,用的是[命令`rmi`][6]:

|

||||

然后删除了全部 Docker 镜像,用的是[命令 `rmi`][6]:

|

||||

|

||||

$ docker rmi -f $(docker images | tail -n +2 | awk '{print $3}')

|

||||

|

||||

我重新执行了命令构建镜像,安装容器,运行微服务。然后过了一个充满自我怀疑和沮丧的一个小时,我告诉我自己:这个错误可能不是微服务的原因。

|

||||

我重新执行了重新构建镜像、安装容器、运行微服务的整个过程。然后过了一个充满自我怀疑和沮丧的一个小时,我告诉我自己:这个错误可能不是微服务的原因。

|

||||

|

||||

所以我重新看到了这个错误:

|

||||

|

||||

@ -251,19 +249,17 @@ Docker 快速启动终端就运行在你使用的普通终端里,就像这样

|

||||

|

||||

The command '/bin/sh -c apt-get install imagemagick libmagickcore-dev libmagickwand-dev' returned a non-zero code: 1

|

||||

|

||||

这太打击我了:构建脚本好像需要有人从键盘输入Y! 但是,这是一个非交互的Dockerfile 脚本啊。这里并没有键盘。

|

||||

Then it hit me: the build script was waiting for someone to type Y at the keyboard! But this was a non-interactive Dockerfile script. There was no keyboard.

|

||||

|

||||

回到Dockerfile,脚本元来时这样的:

|

||||

Going back to the Dockerfile, the script originally looked like this:

|

||||

|

||||

    RUN apt-get update
    RUN apt-get install -y nodejs nodejs-legacy npm
    RUN apt-get install imagemagick libmagickcore-dev libmagickwand-dev
    RUN apt-get clean

|

||||

|

||||

The second `apt-get` command is missing the `-y` flag which causes "yes" to be given automatically where usually it would be prompted for.

|

||||

第二个`apt-get` 忘记了`-y` 标志,这才是错误的根本原因。

|

||||

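The second `apt-get install` command was missing the `-y` flag, which automatically answers "yes" wherever a prompt would normally appear. That was the root cause of the error.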
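
This kind of mistake is easy to catch mechanically. The following is a minimal sketch in plain Python, not a Docker feature: the `find_interactive_installs` helper is hypothetical, and it only understands simple single-line `RUN apt-get install ...` instructions with `-y`, `--yes`, or `--assume-yes` given as a separate argument.

```python
def find_interactive_installs(dockerfile_text):
    """Return (line_number, line) pairs for RUN apt-get install lines lacking a yes flag."""
    yes_flags = {"-y", "--yes", "--assume-yes"}
    problems = []
    for line_no, line in enumerate(dockerfile_text.splitlines(), start=1):
        words = line.split()
        # Only look at instructions of the form: RUN apt-get ... install ...
        if len(words) < 3 or words[0].upper() != "RUN" or words[1] != "apt-get":
            continue
        if "install" not in words:
            continue
        if not yes_flags.intersection(words):
            problems.append((line_no, line.strip()))
    return problems


# The four instructions from the Dockerfile discussed in this article.
dockerfile = """\
RUN apt-get update
RUN apt-get install -y nodejs nodejs-legacy npm
RUN apt-get install imagemagick libmagickcore-dev libmagickwand-dev
RUN apt-get clean
"""

for line_no, line in find_interactive_installs(dockerfile):
    print("line {}: missing -y: {}".format(line_no, line))
```

Run against the Dockerfile above, the check flags only the ImageMagick line, which is the one that aborted the build.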

|

||||

|

||||

I added the missing `-y` to the command:

|

||||

I added the missing `-y` to that command:

|

||||

|

||||

RUN apt-get update

|

||||

@ -281,7 +277,6 @@ I added the missing `-y` to the command:

|

||||

|

||||

$ docker run -d -p 3001:3000 thumbnailer:0.1

|

||||

|

||||

Got the IP address of the Virtual Machine:

|

||||

获取了虚拟机的IP 地址:

|

||||

|

||||

$ docker-machine ip default

|

||||

@ -298,11 +293,11 @@ Got the IP address of the Virtual Machine:

|

||||

|

||||

在容器里面工作了,我的第一次啊!

|

||||

|

||||

### 这意味着什么? ###

|

||||

### 这让我学到了什么? ###

|

||||

|

||||

很久以前,我接受了这样一个道理:当你刚开始尝试某项技术时,即使是最简单的事情也会变得很困难。因此,我压抑了要成为房间里最聪明的人的欲望。然而最近几天尝试容器的过程就是一个充满自我怀疑的旅程。

|

||||

很久以前,我接受了这样一个道理:当你刚开始尝试某项技术时,即使是最简单的事情也会变得很困难。因此,我不会把自己当成最聪明的那个人,然而最近几天尝试容器的过程就是一个充满自我怀疑的旅程。

|

||||

|

||||

但是你想知道一些其它的事情吗?这篇文章是我在凌晨2点完成的,而每一个折磨的小时都值得了。为什么?因为这段时间你将自己全身心投入了喜欢的工作里。这件事很难,对于所有人来说都不是很容易就获得结果的。但是不要忘记:你在学习技术,运行世界的技术。

|

||||

但是你想知道一些其它的事情吗?这篇文章是我在凌晨2点完成的,而每一个受折磨的时刻都值得了。为什么?因为这段时间你将自己全身心投入了喜欢的工作里。这件事很难,对于所有人来说都不是很容易就获得结果的。但是不要忘记:你在学习技术,运行世界的技术。

|

||||

|

||||

P.S. 了解一下Hello World 容器的两段视频,这里会有 [Raziel Tabib’s][7] 的精彩工作内容。

|

||||

|

||||

@ -320,12 +315,12 @@ via: https://deis.com/blog/2015/beyond-hello-world-containers-hard-stuff

|

||||

|

||||

作者:[Bob Reselman][a]

|

||||

译者:[Ezio](https://github.com/oska874)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://deis.com/blog

|

||||

[1]:http://deis.com/blog/2015/developer-journey-linux-containers

|

||||

[1]:https://linux.cn/article-6594-1.html

|

||||

[2]:https://github.com/rsms/node-imagemagick

|

||||

[3]:https://www.docker.com/toolbox

|

||||

[4]:https://docs.docker.com/reference/commandline/run/

|

||||

@ -0,0 +1,209 @@

|

||||

How to use Python to hack your Eclipse IDE

|

||||

==============================================

|

||||

|

||||

|

||||

|

||||

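The Eclipse Advanced Scripting Environment ([EASE][1]) project is still under development, but it is already a powerful way to quickly customize your own Eclipse development environment.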

|

||||

|

||||

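Eclipse is a powerful framework that can be extended in many ways through its built-in plugin system. However, writing and deploying a new plugin is cumbersome even if all you need is one small extra feature. With EASE, you can now do that conveniently without writing a single line of Java. EASE is a platform for automating this kind of functionality with scripting languages such as Python or JavaScript.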

|

||||

|

||||

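In this article, based on my [talk][2] at this year's EclipseCon North America, I cover how to set up your Eclipse environment with Python and EASE, and look at ways to supercharge your IDE with the power of Python.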

|

||||

|

||||

### Install and run "Hello World"

|

||||

|

||||

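The examples in this article use Jython, the Java implementation of Python. You can install EASE directly into your existing Eclipse IDE. This example uses [Eclipse Mars][3] and installs EASE itself, its modules, and the Jython engine.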

|

||||

|

||||

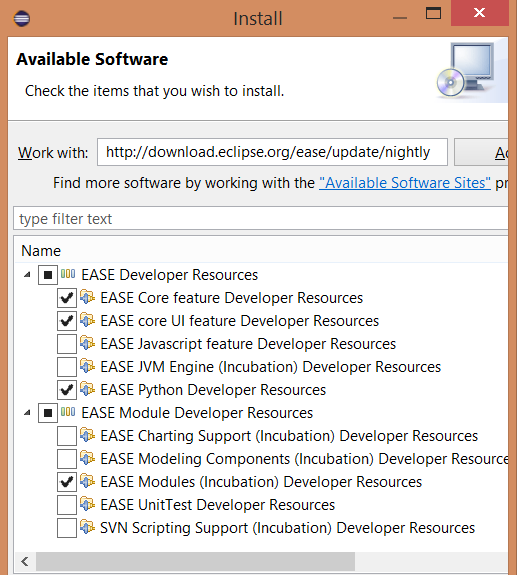

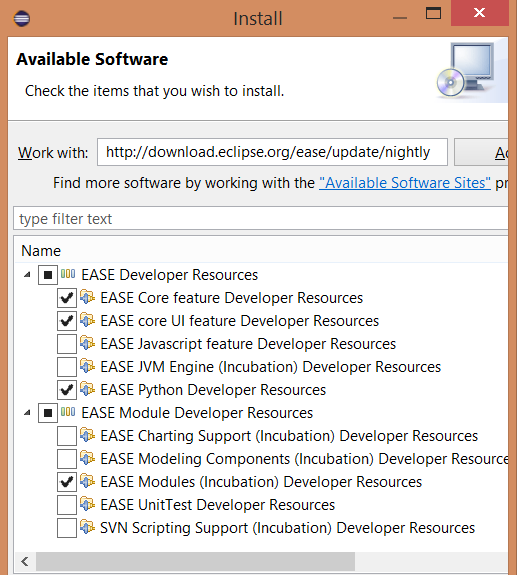

In the Eclipse install dialog (`Help > Install New Software...`), install EASE from [http://download.eclipse.org/ease/update/nightly][4] and select the following components:

|

||||

|

||||

- EASE Core feature
- EASE core UI feature
- EASE Python Developer Resources
- EASE modules (Incubation)

|

||||

|

||||

This installs EASE and its modules. The one of interest here is the Resource module, which gives access to the Eclipse workspace, project, and file APIs.

|

||||

|

||||

|

||||

|

||||

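After these have installed successfully, install the EASE Jython engine from [https://dl.bintray.com/pontesegger/ease-jython/][5]. Once that is done, test it: create a new project and a new file named hello.py with this content: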

|

||||

|

||||

```
print "hello world"
```

|

||||

|

||||

Select the file, right-click, and choose "Run as -> EASE script". You should see "hello world" printed in the console.

|

||||

|

||||

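Now you can write Python scripts that access the workspace and projects. This approach can be used for all kinds of customization; the following are just a few ideas.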

|

||||

|

||||

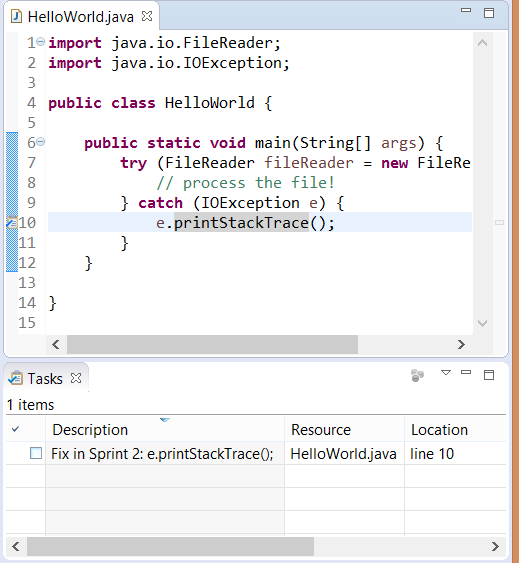

### Improve your code quality

|

||||

|

||||

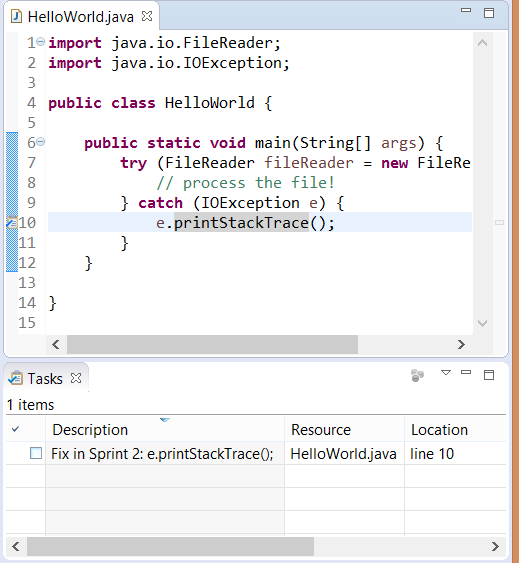

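Maintaining good code quality can be tiresome, especially with a large codebase or when many developers are involved. Scripts can ease some of that pain, for example by batch-formatting a set of files or by [changing the Unix-style line endings in certain files][6] so that diffs are easier to read in a source control system such as git. Another nice use is a script that generates Eclipse markers to highlight code that could be improved. Here is an example script that adds task markers for every "printStackTrace" call it finds in Java files. See the [source code][7].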

|

||||

|

||||

To run it, copy the file into your workspace, then right-click it and choose "Run as -> EASE script".

|

||||

|

||||

```
loadModule('/System/Resources')

from org.eclipse.core.resources import IMarker

for ifile in findFiles("*.java"):
    file_name = str(ifile.getLocation())
    print "Processing " + file_name
    with open(file_name) as f:
        for line_no, line in enumerate(f, start=1):
            if "printStackTrace" in line:
                marker = ifile.createMarker(IMarker.TASK)
                marker.setAttribute(IMarker.TRANSIENT, True)
                marker.setAttribute(IMarker.LINE_NUMBER, line_no)
                marker.setAttribute(IMarker.MESSAGE, "Fix in Sprint 2: " + line.strip())
```

|

||||

|

||||

If any of your Java files contain printStackTrace calls, you will see the newly added markers in the Tasks view and in the editor margin.
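
The line-ending clean-up mentioned at the start of this section needs no Eclipse API at all. As a rough sketch in plain Python (the function names are made up for illustration, and this is not the linked recipe), converting a file between Windows and Unix line endings could look like this:

```python
def convert_line_endings(data, newline=b"\n"):
    """Return the bytes in `data` with every line ending replaced by `newline`."""
    # Collapse Windows (CRLF) and old Mac (CR) endings to LF first,
    # so that files with mixed endings come out consistent.
    normalized = data.replace(b"\r\n", b"\n").replace(b"\r", b"\n")
    if newline != b"\n":
        normalized = normalized.replace(b"\n", newline)
    return normalized


def convert_file(path, newline=b"\n"):
    """Rewrite the file at `path` in place, only if its line endings change."""
    with open(path, "rb") as f:
        original = f.read()
    converted = convert_line_endings(original, newline)
    if converted != original:
        with open(path, "wb") as f:
            f.write(converted)
```

Inside an EASE script, the paths could come from the same `findFiles` loop used in the marker script, with `str(ifile.getLocation())` passed to `convert_file`.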

|

||||

|

||||

|

||||

|

||||

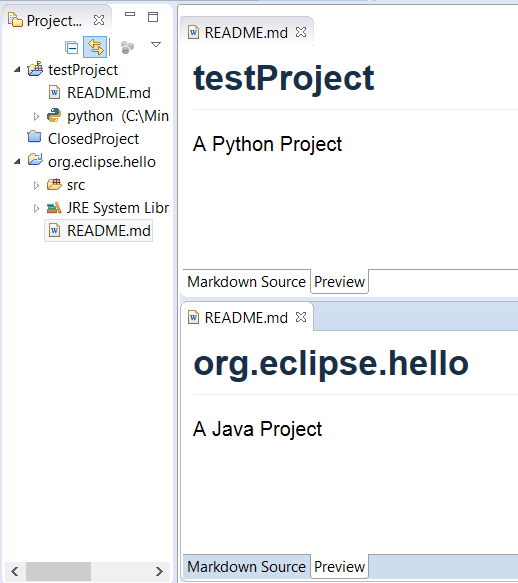

### Automate tedious tasks

|

||||

|

||||

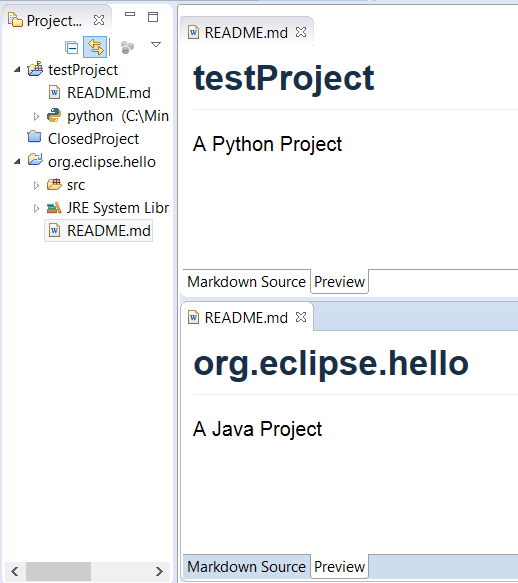

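When you work on several projects at once, you inevitably have to carry out tedious, repetitive tasks. Perhaps you need to add a copyright header to every source file, or update source files when adopting a new framework. For example, when we first switched to Tycho and Maven, we had to add a pom.xml file to each project, which a few lines of Python handle easily. Later, when Tycho gained support for pom-less builds, we had to remove the unneeded pom files; again, a few lines of script did the job. As an example, here is a script that adds a README.md to every open project in the workspace. See the source code, [add_readme.py][8].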

|

||||

|

||||

To run it, copy the file into your workspace, then right-click it and choose "Run as -> EASE script".

|

||||

|

||||

```
loadModule('/System/Resources')

for iproject in getWorkspace().getProjects():
    if not iproject.isOpen():
        continue

    ifile = iproject.getFile("README.md")

    if not ifile.exists():
        contents = "# " + iproject.getName() + "\n\n"
        if iproject.hasNature("org.eclipse.jdt.core.javanature"):
            contents += "A Java Project\n"
        elif iproject.hasNature("org.python.pydev.pythonNature"):
            contents += "A Python Project\n"
        writeFile(ifile, contents)
```

|

||||

|

||||

Running the script adds a README.md to every open project; Java and Python projects also get a one-line description.
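
Adding a copyright header, the other chore mentioned in this section, follows the same pattern. Here is a minimal sketch of the text-handling part in plain Python; the `with_header` helper and the header text are placeholders invented for this example, not something taken from the scripts linked in this article.

```python
# Placeholder header text; substitute your project's real notice.
HEADER = "// Copyright (c) Example Corp. All rights reserved.\n"


def with_header(source, header=HEADER):
    """Return `source` with `header` prepended, unless it is already there."""
    if source.startswith(header):
        # Already present: return the text unchanged so reruns are harmless.
        return source
    return header + source


java_source = "class A {}\n"
print(with_header(java_source))
```

Because the function leaves files that already start with the header alone, the script can be run repeatedly over the same workspace without stacking up duplicate headers.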

|

||||

|

||||

|

||||

|

||||

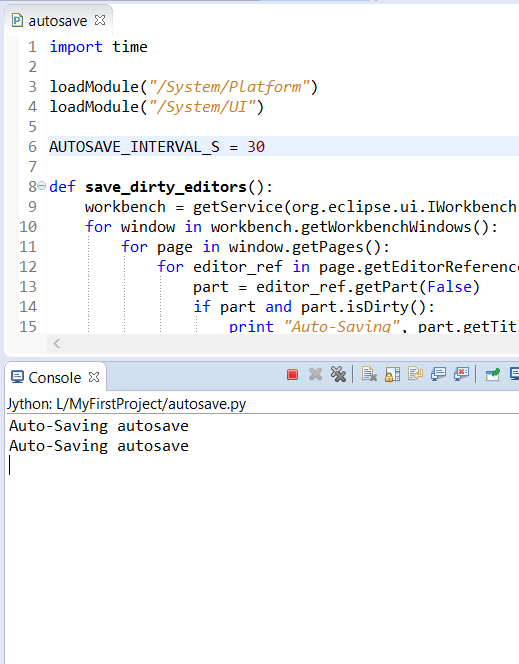

### Prototype new features

|

||||

|

||||

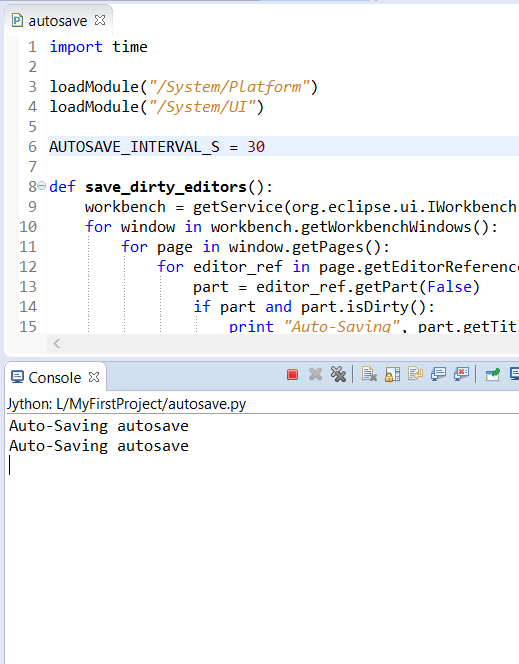

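You can use a Python script to hack together a feature you urgently need, or to build a prototype that shows your team and users what you have in mind. For example, one feature Eclipse does not currently support is auto-saving the files you are working on. Although this feature is on its way, you can have an editor right now that auto-saves every 30 seconds or whenever it goes into the background. Below is a snippet of the main method. See the full source: [autosave.py][9].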

|

||||

|

||||

```
def save_dirty_editors():
    workbench = getService(org.eclipse.ui.IWorkbench)
    for window in workbench.getWorkbenchWindows():
        for page in window.getPages():
            for editor_ref in page.getEditorReferences():
                part = editor_ref.getPart(False)
                if part and part.isDirty():
                    print "Auto-Saving", part.getTitle()
                    part.doSave(None)
```

|

||||

|

||||

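Before running the script, you need to enable the 'Allow Scripts to run code in UI thread' setting under Window > Preferences > Scripting. Then add the script to your workspace, right-click it, and choose "Run as > EASE Script". Each time an editor is auto-saved, a message is printed to the console. To turn auto-save off, stop the script by clicking the red square stop button in the console.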
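
The snippet above saves the dirty editors once; the "every 30 seconds" part is a matter of calling it repeatedly. How autosave.py schedules this is not shown here, so the following is only a sketch of one possible approach in plain Python, using the standard `threading` module. The `run_periodically` helper is hypothetical.

```python
import threading


def run_periodically(task, interval_seconds, stop_event):
    """Call task() every interval_seconds until stop_event is set."""
    # Event.wait() returns False when the timeout expires (time to run the
    # task again) and True as soon as the event has been set (time to stop).
    while not stop_event.wait(interval_seconds):
        task()


# Small demonstration with a short interval: stop after three runs.
runs = []
stop = threading.Event()


def demo_task():
    runs.append(len(runs) + 1)
    if len(runs) == 3:
        stop.set()


run_periodically(demo_task, 0.01, stop)
print(runs)
```

In an EASE script the task would be `save_dirty_editors` and the interval 30. Waiting on an event rather than sleeping means the loop can be stopped promptly instead of only after the current interval has elapsed.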

|

||||

|

||||

|

||||

|

||||

### Quickly extend the user interface

|

||||

|

||||

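One of the best things about EASE is that it lets you hook your scripts up to UI elements of the IDE (for example, a new button or menu item). There is no need to write Java or install a new plugin; just add a few header lines to the top of your script.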

|

||||

|

||||

Here is a simple example script that creates three new projects.

|

||||

|

||||

```
# name : Create fruit projects
# toolbar : Project Explorer
# description : Create fruit projects

loadModule("/System/Resources")

for name in ["banana", "pineapple", "mango"]:
    createProject(name)
```

|

||||

|

||||

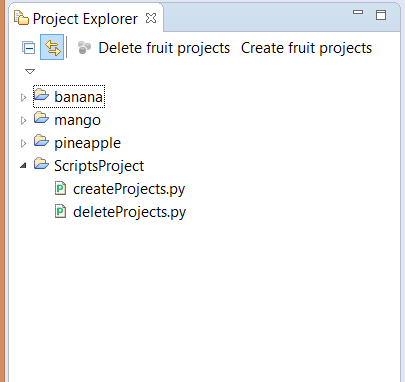

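The header comments above tell EASE to add a button to the Project Explorer toolbar. The next script adds a button that deletes the same three projects. See the source files [createProjects.py][10] and [deleteProjects.py][11].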

|

||||

|

||||

```
# name : Delete fruit projects
# toolbar : Project Explorer
# description : Get rid of the fruit projects

loadModule("/System/Resources")

for name in ["banana", "pineapple", "mango"]:
    project = getProject(name)
    project.delete(0, None)
```

|

||||

|

||||

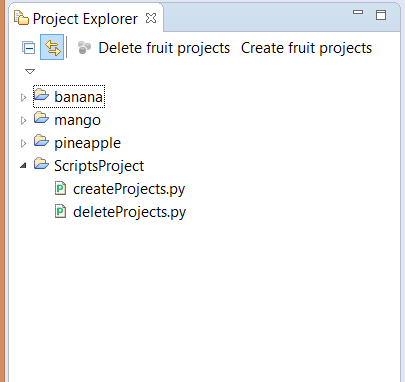

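To make the buttons appear, add both scripts to a new project, say 'ScriptsProject'. Then go to Window > Preferences > Scripting > Script Locations, click the 'Add Workspace' button, and select the ScriptsProject project. This project now becomes a default location for scripts. The two buttons should show up in the Project Explorer, so you can quickly create and delete the projects with them.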
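
To make the header-comment convention concrete, here is a small sketch in plain Python that reads `key : value` pairs from the comment lines at the top of a script. It is an illustration only: `parse_script_header` is a made-up helper and not the parser EASE itself uses.

```python
def parse_script_header(script_text):
    """Collect 'key : value' pairs from the comment lines at the top of a script."""
    keywords = {}
    for line in script_text.splitlines():
        stripped = line.strip()
        if not stripped.startswith("#"):
            # The header ends at the first line that is not a comment.
            break
        key, separator, value = stripped.lstrip("#").partition(":")
        if separator:
            keywords[key.strip()] = value.strip()
    return keywords


script = """\
# name : Create fruit projects
# toolbar : Project Explorer
# description : Create fruit projects

loadModule("/System/Resources")
"""

print(parse_script_header(script))
```

Everything after the first non-comment line is ordinary script code, which is why the keywords have to sit at the very top of the file.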

|

||||

|

||||

|

||||

|

||||

### Integrate with third-party tools

|

||||

|

||||

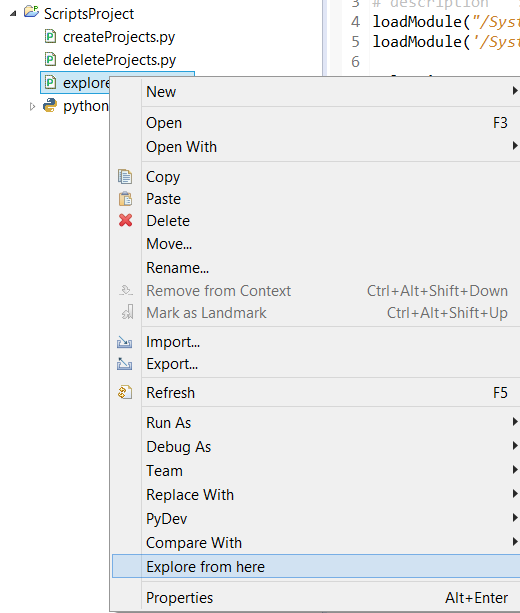

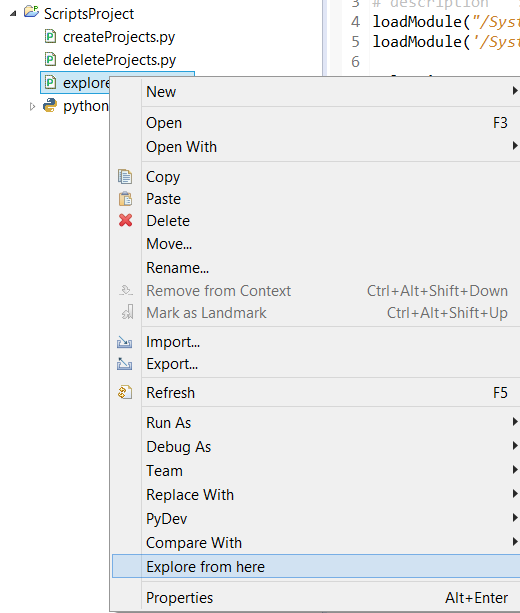

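Sooner or later you will need a tool that lives outside the Eclipse ecosystem (sad but true: Eclipse has a lot, but it does not have everything). In those cases it can be very convenient to wrap the call to the tool in a script. Here is a simple example that integrates the file explorer and adds it to the context menu, so that one click opens a file browser at the current selection. See the source, [explorer.py][12].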

|

||||

|

||||

```
# name : Explore from here
# popup : enableFor(org.eclipse.core.resources.IResource)
# description : Start a file browser using current selection
loadModule("/System/Platform")
loadModule('/System/UI')

selection = getSelection()
if isinstance(selection, org.eclipse.jface.viewers.IStructuredSelection):
    selection = selection.getFirstElement()

if not isinstance(selection, org.eclipse.core.resources.IResource):
    selection = adapt(selection, org.eclipse.core.resources.IResource)

if isinstance(selection, org.eclipse.core.resources.IFile):
    selection = selection.getParent()

if isinstance(selection, org.eclipse.core.resources.IContainer):
    runProcess("explorer.exe", [selection.getLocation().toFile().toString()])
```

|

||||

|

||||

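To make the menu item appear, add the file to a new project as before, say 'ScriptsProject'. Then go to Window > Preferences > Scripting > Script Locations, click 'Add Workspace', and select the 'ScriptsProject' project. When you right-click a file, the context menu now contains the new entry; click it to open a file explorer. (Note that this functionality already exists in Eclipse, but you can swap in any other third-party tool in this example.)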
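
The script above hard-codes `explorer.exe`, so as written it only works on Windows. As a hedged sketch of how the tool could be chosen per platform in plain Python: the `file_browser_command` helper is hypothetical, and it assumes the `open` command on macOS and `xdg-open` on a Linux desktop.

```python
import sys


def file_browser_command(directory, platform=sys.platform):
    """Return the argument list that opens `directory` in the native file browser."""
    if platform.startswith("win"):
        return ["explorer.exe", directory]
    if platform == "darwin":
        return ["open", directory]
    # Assumption: a desktop Linux system that provides xdg-open.
    return ["xdg-open", directory]


print(file_browser_command("/tmp/project", "darwin"))
```

The first element of the returned list is the program and the rest are its arguments, which matches the shape of the `runProcess` call in the script above.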

|

||||

|

||||

|

||||

|

||||

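The Eclipse Advanced Scripting Environment (EASE) provides a great set of extension capabilities that make it easy to extend the Eclipse IDE with Python. The project is still in its early days, but [more great features][13] are on the way; if you would like to contribute, join the discussion on the [forum][14].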

|

||||

|

||||

I will be presenting more details on EASE at [EclipseCon North America][15] 2016. My talk, [Scripting Eclipse with Python][16], covers not only Jython but also C-Python, and how this functionality can be extended for scientific use cases.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/life/16/2/how-use-python-hack-your-ide

|

||||

|

||||

Author: [Tracy Miranda][a]
Translator: [VicYu/Vic020](http://vicyu.net)
Proofreader: [wxy](https://github.com/wxy)

This article was translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux.cn](https://linux.cn/).

|

||||

|

||||

[a]:https://opensource.com/users/tracymiranda
[1]: https://eclipse.org/ease/
[2]: https://www.eclipsecon.org/na2016/session/scripting-eclipse-python
[3]: https://www.eclipse.org/downloads/packages/eclipse-ide-eclipse-committers-451/mars1
[4]: http://download.eclipse.org/ease/update/nightly
[5]: https://dl.bintray.com/pontesegger/ease-jython/
[6]: http://code.activestate.com/recipes/66434-change-line-endings/
[7]: https://gist.github.com/tracymiranda/6556482e278c9afc421d
[8]: https://gist.github.com/tracymiranda/f20f233b40f1f79b1df2
[9]: https://gist.github.com/tracymiranda/e9588d0976c46a987463
[10]: https://gist.github.com/tracymiranda/55995daaea9a4db584dc
[11]: https://gist.github.com/tracymiranda/baa218fc2c1a8e898194
[12]: https://gist.github.com/tracymiranda/8aa3f0fc4bf44f4a5cd3
[13]: https://eclipse.org/ease/
[14]: https://dev.eclipse.org/mailman/listinfo/ease-dev
[15]: https://www.eclipsecon.org/na2016
[16]: https://www.eclipsecon.org/na2016/session/scripting-eclipse-python

|

||||

@ -1,32 +0,0 @@

|

||||

ownCloud 9.0 Enterprise Edition Arrives with Extensive File Control Capabilities

|

||||

==================================================================================

|

||||

|

||||

>ownCloud, Inc. has had the great pleasure of [announcing][1] the availability of the Enterprise Edition (EE) of its powerful ownCloud 9.0 self-hosting cloud server solution.

|

||||

|

||||

Engineered exclusively for small- and medium-sized business, as well as major organizations and enterprises, [ownCloud 9.0 Enterprise Edition][2] is now available with extensive file control capabilities and all the cool new features that made the open-source version of the project famous amongst Linux users.

|

||||

|

||||

Prominent new features in ownCloud 9.0 Enterprise Edition are built-in Auto-Tagging and File Firewall apps, which have been based on some of the new features of ownCloud 9.0, such as file tags, file comments, as well as notifications and activities enhancements. This offers system administrators the ability to set rules and classifications for shared documents based on user- or system-applied tags.

|

||||

|

||||

"To illustrate how this can work, imagine working in a publicly traded company which has to be very careful not to release financial information ahead of official disclosure," reads the [announcement][3]. "While sharing this information internally it could end up in a folder which is shared through a public link. By assigning a special system tag, admins can configure the system to ensure the files are not available for download despite this mistake."

|

||||

|

||||

### Used by over 8 million people around the globe

|

||||

|

||||

ownCloud 9.0 is the best release of the open-source self-hosting cloud server software so far, which is currently used by over 8 million users around the globe. The release has brought a huge number of new features, such as code signing, as well as dozens of under-the-hood improvements and cosmetic changes. ownCloud 9.0 also got its first point release, version 9.0.1, last week, which [introduced even more enhancements][4].

|

||||

|

||||

And now, enterprises can take advantage of ownCloud 9.0's new features to differentiate between storage type and location, as well as the location of users, groups, and clients. ownCloud 9.0 Enterprise Edition gives them extensive access control, which is perfect if they have strict company guidelines or work with all sorts of regulations and rules. Below, you can see the File Firewall and Auto-Tagging apps in action.

|

||||

|

||||

------------------------------------------------------------------------------

|

||||

|

||||

via: http://news.softpedia.com/news/owncloud-9-0-enterprise-edition-arrives-with-extensive-file-control-capabilities-502985.shtml

|

||||

|

||||

作者:[Marius Nestor][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]: http://news.softpedia.com/editors/browse/marius-nestor

|

||||

[1]: https://owncloud.com/blog-introducing-owncloud-9-enterprise-edition/

|

||||

[2]: https://owncloud.com/

|

||||

[3]: https://owncloud.org/blog/owncloud-9-0-enterprise-edition-is-now-available/

|

||||

[4]: http://news.softpedia.com/news/owncloud-9-0-gets-its-first-point-release-over-120-improvements-introduced-502698.shtml

|

||||

@ -1,3 +1,5 @@

|

||||

vim-kakali translating

|

||||

|

||||

Which Open Source Linux Distributions Would Presidential Hopefuls Run?

|

||||

================================================================================

|

||||

![Republican presidential candidate Donald Trump

|

||||

@ -50,4 +52,4 @@ via: http://thevarguy.com/open-source-application-software-companies/081715/whic

|

||||

[4]:http://relax-and-recover.org/

|

||||

[5]:http://thevarguy.com/open-source-application-software-companies/061614/hps-machine-open-source-os-truly-revolutionary

|

||||

[6]:http://hp.com/

|

||||

[7]:http://ubuntu.com/

|

||||

[7]:http://ubuntu.com/

|

||||

|

||||

@ -1,68 +0,0 @@

|

||||

vim-kakali is translating.

|

||||

|

||||

While the event had a certain amount of drama surrounding it, the [announcement][1] of the end for the [Debian Live project][2] seems likely to have less of an impact than it first appeared. The loss of the lead developer will certainly be felt—and the treatment he and the project received seems rather baffling—but the project looks like it will continue in some form. So Debian will still have tools to create live CDs and other media going forward, but what appears to be a long-simmering dispute between project founder and leader Daniel Baumann and the Debian CD and installer teams has been "resolved", albeit in an unfortunate fashion.

|

||||

|

||||

The November 9 announcement from Baumann was titled "An abrupt End to Debian Live". In that message, he pointed to a number of different events over the nearly ten years since the [project was founded][3] that indicated to him that his efforts on Debian Live were not being valued, at least by some. The final straw, it seems, was an "intent to package" (ITP) bug [filed][4] by Iain R. Learmonth that impinged on the namespace used by Debian Live.

|

||||

|

||||

Given that one of the main Debian Live packages is called "live-build", the new package's name, "live-build-ng", was fairly confrontational in and of itself. Live-build-ng is meant to be a wrapper around the [vmdebootstrap][5] tool for creating live media (CDs and USB sticks), which is precisely the role Debian Live is filling. But when Baumann [asked][6] Learmonth to choose a different name for his package, he got an "interesting" [reply][7]:

|

||||

|

||||

```

|

||||

It is worth noting that live-build is not a Debian project, it is an external project that claims to be an official Debian project. This is something that needs to be fixed.

|

||||

There is no namespace issue, we are building on the existing live-config and live-boot packages that are maintained and bringing these into Debian as native projects. If necessary, these will be forks, but I'm hoping that won't have to happen and that we can integrate these packages into Debian and continue development in a collaborative manner.

|

||||

live-build has been deprecated by debian-cd, and live-build-ng is replacing it. In a purely Debian context at least, live-build is deprecated. live-build-ng is being developed in collaboration with debian-cd and D-I [Debian Installer].

|

||||

```

|

||||

|

||||

Whether or not Debian Live is an "official" Debian project (or even what "official" means in this context) has been disputed in the thread. Beyond that, though, Neil Williams (who is the maintainer of vmdebootstrap) [provided some][8] explanation for the switch away from Debian Live:

|

||||

|

||||

```

|

||||

vmdebootstrap is being extended explicitly to provide support for a replacement for live-build. This work is happening within the debian-cd team to be able to solve the existing problems with live-build. These problems include reliability issues, lack of multiple architecture support and lack of UEFI support. vmdebootstrap has all of these, we do use support from live-boot and live-config as these are out of the scope for vmdebootstrap.

|

||||

```

|

||||

|

||||

Those seem like legitimate complaints, but ones that could have been fixed within the existing project. Instead, though, something of a stealth project was evidently undertaken to replace live-build. As Baumann [pointed out][9], nothing was posted to the debian-live mailing list about the plans. The ITP was the first notice that anyone from the Debian Live project got about the plans, so it all looks like a "secret plan"—something that doesn't sit well in a project like Debian.

|

||||

|

||||

As might be guessed, there were multiple postings that supported Baumann's request to rename "live-build-ng", followed by many that expressed dismay at his decision to stop working on Debian Live. But Learmonth and Williams were adamant that replacing live-build is needed. Learmonth did [rename][10] live-build-ng to a perhaps less confrontational name: live-wrapper. He noted that his aim had been to add the new tool to the Debian Live project (and "bring the Debian Live project into Debian"), but things did not play out that way.

|

||||

|

||||

```

|

||||

I apologise to everyone that has been upset by the ITP bug. The software is not yet ready for use as a full replacement for live-build, and it was filed to let people know that the work was ongoing and to collect feedback. This sort of worked, but the feedback wasn't the kind I was looking for.

|

||||

```

|

||||

|

||||

The backlash could perhaps have been foreseen. Communication is a key aspect of free-software communities, so a plan to replace the guts of a project seems likely to be controversial—more so if it is kept under wraps. For his part, Baumann has certainly not been perfect—he delayed the "wheezy" release by [uploading an unsuitable syslinux package][11] and [dropped down][12] from a Debian Developer to a Debian Maintainer shortly thereafter—but that doesn't mean he deserves this kind of treatment. There are others involved in the project as well, of course, so it is not just Baumann who is affected.

|

||||

|

||||

One of those other people is Ben Armstrong, who has been something of a diplomat during the event and has tried to smooth the waters. He started with a [post][13] that celebrated the project and what Baumann and the team had accomplished over the years. As he noted, the [list of downstream projects][14] for Debian Live is quite impressive. In another post, he also [pointed out][15] that the project is not dead:

|

||||

|

||||

```

|

||||

If the Debian CD team succeeds in their efforts and produces a replacement that is viable, reliable, well-tested, and a suitable candidate to replace live-build, this can only be good for Debian. If they are doing their job, they will not "[replace live-build with] an officially improved, unreliable, little-tested alternative". I've seen no evidence so far that they operate that way. And in the meantime, live-build remains in the archive -- there is no hurry to remove it, so long as it remains in good shape, and there is not yet an improved successor to replace it.

|

||||

```

|

||||

|

||||

On November 24, Armstrong also [posted][16] an update (and to [his blog][17]) on Debian Live. It shows some good progress made in the two weeks since Baumann's exit; there are even signs of collaboration between the project and the live-wrapper developers. There is also a [to-do list][18], as well as the inevitable call for more help. That gives reason to believe that all of the drama surrounding the project was just a glitch—avoidable, perhaps, but not quite as dire as it might have seemed.

|

||||

|

||||

|

||||

---------------------------------

|

||||

|

||||

via: https://lwn.net/Articles/665839/

|

||||

|

||||

作者:Jake Edge

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

|

||||

[1]: https://lwn.net/Articles/666127/

|

||||

[2]: http://live.debian.net/

|

||||

[3]: https://www.debian.org/News/weekly/2006/08/

|

||||