mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-01-25 23:11:02 +08:00

commit

19117ecabf

@ -3,15 +3,15 @@ Beginner's Guide to Linux LAN Routing: Part 2

Last week [we learned about IPv4 addresses][1] and how to use `ipcalc`, the network administrator's indispensable tool. Today we move on to something more exciting: LAN routers.

VirtualBox and KVM are great tools for testing routing, and all the examples in this article were run in KVM. If you prefer to test on physical hardware, you need three computers: one to act as the router and two to represent two different networks. You also need two Ethernet switches and the necessary cabling.

We assume a wired Ethernet LAN in these examples. To keep the scenario realistic we'll also pretend there are some bridged wireless access points, although we won't do anything with them. (I also won't cover all-wireless routing, or mixed setups where a mobile broadband device is connected to an Ethernet LAN port, because those need additional installation and configuration.)

### Network segments

The simplest network segment is two computers in the same address space connected to the same switch. These two computers can talk to each other without a router. A useful term here is "broadcast domain", which describes a group of hosts that are all in the same network. They may be connected to a single Ethernet switch or to several switches. A broadcast domain may include two different networks joined by an Ethernet bridge, which makes the two networks behave as one. Wireless access points are usually bridged to a wired Ethernet network.

A broadcast domain can talk to a different broadcast domain only when the two are connected by a network router.

@ -22,12 +22,13 @@ VirtualBox and KVM are great tools for testing routing, and all the examples in this article

A broadcast domain needs a router to talk to other broadcast domains. We'll illustrate this with two computers and the `ip` command. Our two computers are 192.168.110.125 and 192.168.110.126, both plugged into the same Ethernet switch. In VirtualBox or KVM, a virtual switch is created automatically when you configure a new network, so assigning a network to a virtual machine is like plugging it into a switch. Use `ip addr show` to see your addresses and network interface names. At this point the two hosts can ping each other.

Now add an address from a different network to one of the hosts:

```
# ip addr add 192.168.120.125/24 dev ens3
```

You must specify the network interface name, which is `ens3` in this example. The command also works without the network prefix, `/24` in this case, but `ip` then assigns a `/32` host address, so being explicit is the safer habit. You can check your configuration with the `ip` command. The sample output below is trimmed for clarity:

```
$ ip addr show
ens3:
@ -35,7 +36,6 @@ ens3:
 valid_lft 875sec preferred_lft 875sec
 inet 192.168.120.125/24 scope global ens3
 valid_lft forever preferred_lft forever
```

The host at 192.168.120.125 can ping itself (`ping 192.168.120.125`), which is a basic check that the address is configured correctly, but the second computer cannot ping that address.
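Why the second ping fails can be checked by hand: with a `/24` prefix the first three octets name the network, and a host only talks directly to addresses in a network where it has an interface. The sketch below is a hypothetical helper (the names `net24` and `same_net24` are made up for illustration and are not part of `ip`), and it assumes every address uses a `/24` prefix.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for illustration; they assume a /24 prefix everywhere.

# Print the /24 network an IPv4 address belongs to.
net24() {
    echo "${1%.*}.0/24"
}

# Succeed when both addresses sit in the same /24 network.
same_net24() {
    [ "$(net24 "$1")" = "$(net24 "$2")" ]
}

# The second computer only has an address in 192.168.110.0/24.
for target in 192.168.110.125 192.168.120.125; do
    if same_net24 192.168.110.126 "$target"; then
        echo "$target: same network, reachable directly"
    else
        echo "$target: different network, needs a router"
    fi
done
```

The second address lands in 192.168.120.0/24, which is exactly the situation a router is meant to solve.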


@ -45,30 +45,27 @@ ens3:

* First network: 192.168.110.0/24
* Second network: 192.168.120.0/24

Next, your router must be configured to forward packets. Packet forwarding is disabled by default, which you can check with the `sysctl` command:

```
$ sysctl net.ipv4.ip_forward
net.ipv4.ip_forward = 0
```

`0` means forwarding is disabled. Enable it with the following command:

```
# echo 1 > /proc/sys/net/ipv4/ip_forward
```
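Writing to `/proc` only changes the running kernel, so the setting is lost at the next reboot. As a rough sketch, the script below reads the current value without needing root and lists, as comments, the usual ways to change it. The helper name `forward_state` and the file name `99-ip-forward.conf` are assumptions chosen for illustration; check how your distribution handles `/etc/sysctl.d` before relying on it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: describe a net.ipv4.ip_forward value in words.
forward_state() {
    case "$1" in
        0) echo "disabled" ;;
        1) echo "enabled" ;;
        *) echo "unknown" ;;
    esac
}

# Read the live value when /proc is available (Linux only); no root needed.
proc_file=/proc/sys/net/ipv4/ip_forward
if [ -r "$proc_file" ]; then
    echo "IPv4 forwarding is $(forward_state "$(cat "$proc_file")")"
fi

# To enable forwarding until the next reboot (as root), either form works:
#   sysctl -w net.ipv4.ip_forward=1
#   echo 1 > /proc/sys/net/ipv4/ip_forward
# To keep it across reboots, many distributions read /etc/sysctl.d/*.conf.
# The file name here is an assumption:
#   echo 'net.ipv4.ip_forward = 1' > /etc/sysctl.d/99-ip-forward.conf
```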

Next, configure your other host to be part of the second network. You can do this by reassigning one of the hosts from the 192.168.110.0/24 network to the 192.168.120.0/24 virtual network, then rebooting the two "networked" hosts, but not the router. (Or restart the network service on the hosts. I'm old and a bit lazy and can't remember those odd commands for restarting services, so I'd just as soon reboot.) After the reboot, the machines should have these addresses:

* Host 1: 192.168.110.125
* Host 2: 192.168.120.135
* Router: 192.168.110.126 and 192.168.120.136

Now ping away: try to ping every machine from every other machine. Virtual machines and the various Linux distributions have their quirks, so sometimes the pings succeed and sometimes they don't. A failure is not a bad thing, because it means you get to create a static route by hand. First, look at the existing routing tables. The routing tables for Host 1 and Host 2 look like this:

```
$ ip route show
default via 192.168.110.1 dev ens3 proto static metric 100
@ -82,26 +79,25 @@ default via 192.168.120.1 dev ens3 proto static metric 101
 src 192.168.110.126 metric 100
192.168.120.0/24 dev ens9 proto kernel scope link
 src 192.168.120.136 metric 100
```

This shows the default routes assigned by KVM. The 169.* address is an automatic link-local address, which we can ignore. Then come two routes, the ones that point to our router. You can have multiple routes, and this example shows how to add a non-default route on Host 1:

```
# ip route add 192.168.120.0/24 via 192.168.110.126 dev ens3
```

This means Host 1 can reach the 192.168.120.0/24 network through the router interface 192.168.110.126. See how it works? Host 1 and the router must be in the same address space, and the router then forwards to the other network.
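How a host chooses between its default route and the new static route can be sketched in a few lines. The kernel prefers the most specific (longest) matching prefix. The script below is a simplified illustration and not how `ip` is implemented. The function names and the word `direct` for on-link networks are assumptions, and the table is a trimmed-down version of Host 1's routes.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
    local IFS=.
    local a b c d
    read -r a b c d <<< "$1"
    echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Succeed if address $1 falls inside network $2 (CIDR notation).
in_network() {
    local addr net prefix mask
    addr=$(ip_to_int "$1")
    net=$(ip_to_int "${2%/*}")
    prefix=${2#*/}
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    [ $(( addr & mask )) -eq $(( net & mask )) ]
}

# Print the gateway for destination $1, given "network gateway" pairs,
# preferring the longest matching prefix.
next_hop() {
    local dest=$1 best_len=-1 best_gw="unreachable" net gw len
    shift
    while [ $# -ge 2 ]; do
        net=$1
        gw=$2
        shift 2
        len=${net#*/}
        if in_network "$dest" "$net" && [ "$len" -gt "$best_len" ]; then
            best_len=$len
            best_gw=$gw
        fi
    done
    echo "$best_gw"
}

# Host 1's table after adding the static route (simplified).
routes=(
    0.0.0.0/0        192.168.110.1
    192.168.110.0/24 direct
    192.168.120.0/24 192.168.110.126
)

next_hop 192.168.120.135 "${routes[@]}"   # Host 2: goes via the router
next_hop 192.168.110.126 "${routes[@]}"   # same network: on-link
next_hop 8.8.8.8 "${routes[@]}"           # everything else: default gateway
```

Traffic for Host 2 matches both the default route and the `/24` static route, and the more specific one wins, which is why it is sent to 192.168.110.126.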

Use the following command to delete a route:

```
# ip route del 192.168.120.0/24
```

In the real world you would not configure a router by hand like this. You would run a router daemon and advertise the router over DHCP. Still, understanding the fundamentals is important. Next time we'll learn how to set up an easy-to-use router daemon that does this work for you.

Learn more about Linux through the free ["Introduction to Linux"][2] course from The Linux Foundation and edX.

--------------------------------------------------------------------------------

@ -109,10 +105,10 @@ via: https://www.linux.com/learn/intro-to-linux/2018/3/linux-lan-routing-beginne

Author: [CARLA SCHRODER][a]
Translator: [qhwdw](https://github.com/qhwdw)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://www.linux.com/users/cschroder
[1]:https://linux.cn/article-9657-1.html
[2]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

@ -2,7 +2,8 @@

======

Font Finder is a Rust implementation of the older [Typecatcher][1], used to easily search for and install Google web fonts from [Google's font archive][2]. It helps you install hundreds of free and open source fonts on your Linux desktop. If you are looking for good-looking fonts for your web projects, apps, or anything else, Font Finder makes them easy to get. It is a free, open source GTK3 application written in the Rust programming language. Unlike Typecatcher, which is written in Python, Font Finder can filter fonts by category, has no Python runtime dependencies, and offers better performance and lower resource consumption.

In this brief tutorial, we'll see how to install and use Font Finder on Linux.

@ -11,25 +12,25 @@

Since Font Finder is written in Rust, you need to install Rust on your system as described below.

After installing Rust, run the following command to install Font Finder:

```
$ cargo install fontfinder
```

Font Finder is also available as a [flatpak app][3]. First install Flatpak on your system as described in the link below.

Then install Font Finder with this command:

```
$ flatpak install flathub io.github.mmstick.FontFinder
```
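If you are not sure which of the two routes is open to you, a small check like the one below can help. It is only a sketch: the function name `fontfinder_install_cmd` is made up for illustration, and the script prints the matching command from this article instead of running it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the install command for the first tool found.
# Nothing is installed; the commands are the ones shown in this article.
fontfinder_install_cmd() {
    if command -v cargo >/dev/null 2>&1; then
        echo "cargo install fontfinder"
    elif command -v flatpak >/dev/null 2>&1; then
        echo "flatpak install flathub io.github.mmstick.FontFinder"
    else
        echo "neither cargo nor flatpak found" >&2
        return 1
    fi
}

fontfinder_install_cmd || true
```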

### Search and install Google web fonts on Linux with Font Finder

You can launch Font Finder from the application launcher, or run the following command to start it.

```
$ flatpak run io.github.mmstick.FontFinder
```

This is what Font Finder's default interface looks like.

@ -42,7 +43,7 @@ $ flatpak run io.github.mmstick.FontFinder

![][6]

To install a font, just select it and click the "Install" button at the top.

![][7]

@ -50,7 +51,7 @@ $ flatpak run io.github.mmstick.FontFinder

![][8]

Likewise, to remove a font, select it in the Font Finder panel and click the "Uninstall" button. It's that simple!

The settings button (the gear icon) in the top-left corner offers an option to switch to a dark preview.

@ -62,8 +63,6 @@ $ flatpak run io.github.mmstick.FontFinder

Cheers!

--------------------------------------------------------------------------------

via: https://www.ostechnix.com/font-finder-easily-search-and-install-google-web-fonts-in-linux/

@ -71,7 +70,7 @@ via: https://www.ostechnix.com/font-finder-easily-search-and-install-google-web-

Author: [SK][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

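@ -0,0 +1,50 @@

LikeCoin, a cryptocurrency for creators of openly licensed content
======

> Licensing your work under Creative Commons and earning money from it no longer have to be at odds.

Conventional wisdom says that writers, photographers, artists, and other creators who share their content for free under Creative Commons and other open licenses won't get paid. That means most independent creators can't make money by publishing their work on the internet. Enter [LikeCoin][1]: a new open source project that aims to make this convention, in which artists often have to compromise or sacrifice in order to contribute, a thing of the past.

The LikeCoin protocol is designed to monetize creative content so that creators can focus on making great content rather than on selling it.

The protocol is also based on decentralized technologies. It tracks when content is used and rewards its creators with LikeCoin, an [Ethereum ERC-20][2] cryptocurrency token. It operates through a "Proof of Creativity" algorithm, which assigns LikeCoin based partly on how many "likes" a work receives and partly on how many works are derived from it. Because openly licensed content has more opportunities to be reused and to earn LikeCoin tokens, the system encourages content creators to publish under Creative Commons licenses.

### How it works

When a creative piece is uploaded through the LikeCoin protocol, the content creator also includes the work's metadata, including author information and its InterPlanetary Linked Data ([IPLD][3]). This data forms a family graph of derivative works, and we call the relationship between a work and its derivatives the "content footprint." This structure makes it easy to trace a piece of content's inheritance tree back to the original work.

LikeCoin tokens are distributed to creators using information about a work's derivation history. Since every creative work contains metadata about its author's wallet, the corresponding LikeCoin shares can be calculated by the algorithm and distributed.

LikeCoin can be awarded in two ways: directly by individuals who want to show appreciation by paying a content creator, or through the Creators Pool, which collects viewers' "likes" and distributes LikeCoin according to a piece of content's LikeRank. Based on the content tracking in the LikeCoin protocol, LikeRank measures the importance of a work (or its creativity, as we define it in this context). In general, the more derivative works a piece has, the more creative it is considered to be, and the higher its LikeRank. LikeRank is the quantifier of a piece of content's creativity.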
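To make the idea of a "content footprint" concrete, here is a toy sketch that counts how many works descend from a given piece. It is emphatically not the LikeRank or Proof of Creativity algorithm, which the article does not spell out. The work names, the `work:parent` encoding, and the function names are all invented for illustration.

```shell
#!/usr/bin/env bash
# Toy derivation graph: each entry is "work:parent". All names are made up.
edges="remix:original translation:original cover:remix"

# Print the parent of a work, or nothing if it is an original.
parent_of() {
    local pair
    for pair in $edges; do
        if [ "${pair%%:*}" = "$1" ]; then
            echo "${pair#*:}"
            return 0
        fi
    done
}

# Count every work that has $1 somewhere in its chain of ancestors.
count_derivatives() {
    local target=$1 pair p n=0
    for pair in $edges; do
        p=${pair#*:}
        while [ -n "$p" ]; do
            if [ "$p" = "$target" ]; then
                n=$((n + 1))
                break
            fi
            p=$(parent_of "$p")
        done
    done
    echo "$n"
}

count_derivatives original   # counts remix, translation, and cover
count_derivatives remix      # counts cover only
```

Walking each work's ancestor chain is the same kind of traversal that lets a derivative be traced back to the original work.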

### Want to get involved?

LikeCoin is still very new. We expect to launch our first decentralized application later in 2018 to reward Creative Commons content and to connect seamlessly with a larger community.

Most of LikeCoin's code is available in the [LikeCoin GitHub][4] repository under the [GPL 3.0 license][5]. Because the project is still under active development, some experimental code is not yet public, but we will open it as soon as we can.

We welcome feature requests, pull requests, forks, and stars. Please join our development on GitHub and our discussion group on [Telegram][6]. We also post news about our progress on [Medium][7], [Facebook][8], [Twitter][9], and our website, [like.co][1].

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/5/likecoin

Author: [Kin Ko][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://opensource.com/users/ckxpress
[1]:https://like.co/
[2]:https://en.wikipedia.org/wiki/ERC20
[3]:https://ipld.io/
[4]:https://github.com/likecoin
[5]:https://www.gnu.org/licenses/gpl-3.0.en.html
[6]:https://t.me/likecoin
[7]:http://medium.com/likecoin
[8]:http://fb.com/likecoin.foundation
[9]:https://twitter.com/likecoin_fdn

@ -0,0 +1,75 @@

Linux vs. Unix: What's the difference?
======

If you are a software developer in your 20s or 30s, you've grown up in a world dominated by Linux. It has been a significant player in the data center for decades, and while it's hard to find definitive operating system market share reports, Linux's share of data center operating systems could be as high as 70%, with Windows variants carrying nearly all the remaining percentage. Developers using any major public cloud can expect the target system will run Linux. Evidence that Linux is everywhere has grown in recent years when you add in Android and Linux-based embedded systems in smartphones, TVs, automobiles, and many other devices.

Even so, most software developers, even those who have grown up during this venerable "Linux revolution," have at least heard of Unix. It sounds similar to Linux, and you've probably heard people use these terms interchangeably. Or maybe you've heard Linux called a "Unix-like" operating system.

So, what is this Unix? The caricatures speak of wizard-like "graybeards" sitting behind glowing green screens, writing C code and shell scripts, powered by old-fashioned, drip-brewed coffee. But Unix has a much richer history beyond those bearded C programmers from the 1970s. While articles detailing the history of Unix and "Unix vs. Linux" comparisons abound, this article will offer a high-level background and a list of major differences between these complementary worlds.

### Unix's beginnings

The history of Unix begins at AT&T Bell Labs in the late 1960s with a small team of programmers looking to write a multi-tasking, multi-user operating system for the PDP-7. Two of the most notable members of this team at the Bell Labs research facility were Ken Thompson and Dennis Ritchie. While many of Unix's concepts were derivative of its predecessor ([Multics][1]), the Unix team's decision early in the 1970s to rewrite this small operating system in the C language is what separated Unix from all others. At the time, operating systems were rarely, if ever, portable. Instead, by nature of their design and low-level source language, operating systems were tightly linked to the hardware platform for which they had been authored. Once Unix had been rewritten in the C programming language, it could be ported to many hardware architectures.

In addition to this new portability, which allowed Unix to quickly expand beyond Bell Labs to other research, academic, and even commercial uses, several of the operating system's key design tenets were attractive to users and programmers. For one, Ken Thompson's [Unix philosophy][2] became a powerful model of modular software design and computing. The Unix philosophy recommended utilizing small, purpose-built programs in combination to do complex overall tasks. Since Unix was designed around files and pipes, this model of "piping" inputs and outputs of programs together into a linear set of operations on the input is still in vogue today. In fact, the current cloud functions-as-a-service (FaaS)/serverless computing model owes much of its heritage to the Unix philosophy.
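A classic way to see this philosophy at work is a word-frequency count built entirely from small standard tools joined by pipes. The sketch below wraps the pipeline in a function whose name, `top_words`, is just an illustrative choice; each stage does one job and hands its output to the next.

```shell
#!/usr/bin/env bash
# Print the N most frequent words on standard input (default 3).
# Each stage is a small, single-purpose program joined by a pipe.
top_words() {
    tr -cs '[:alpha:]' '\n' |        # split the text into one word per line
        tr '[:upper:]' '[:lower:]' | # normalize case
        sort |                       # group identical words together
        uniq -c |                    # count each group
        sort -rn |                   # most frequent first
        head -n "${1:-3}"            # keep the top N
}

printf 'The cat and the hat and the bat\n' | top_words 2
```

Swapping any stage for a different tool changes the result without touching the rest of the chain, which is the modularity the paragraph above describes.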

### Rapid growth and competition

Through the late 1970s and 80s, Unix became the root of a family tree that expanded across research, academia, and a growing commercial Unix operating system business. Unix was not open source software, and the Unix source code was licensable via agreements with its owner, AT&T. The first known software license was sold to the University of Illinois in 1975.

Unix grew quickly in academia, with Berkeley becoming a significant center of activity, given Ken Thompson's sabbatical there in the '70s. With all the activity around Unix at Berkeley, a new delivery of Unix software was born: the Berkeley Software Distribution, or BSD. Initially, BSD was not an alternative to AT&T's Unix, but an add-on with additional software and capabilities. By the time 2BSD (the Second Berkeley Software Distribution) arrived in 1979, Bill Joy, a Berkeley grad student, had added now-famous programs such as `vi` and the C shell (`/bin/csh`).

In addition to BSD, which became one of the most popular branches of the Unix family, Unix's commercial offerings exploded through the 1980s and into the '90s with names like HP-UX, IBM's AIX, Sun's Solaris, Sequent, and Xenix. As the branches grew from the original root, the "[Unix wars][3]" began, and standardization became a new focus for the community. The POSIX standard was born in 1988, as well as other standardization follow-ons via The Open Group into the 1990s.

Around this time AT&T and Sun released System V Release 4 (SVR4), which was adopted by many commercial vendors. Separately, the BSD family of operating systems had grown over the years, leading to some open source variations that were released under the now-familiar [BSD license][4]. This included FreeBSD, OpenBSD, and NetBSD, each with a slightly different target market in the Unix server industry. These Unix variants continue to have some usage today, although many have seen their server market share dwindle into the single digits (or lower). BSD may have the largest install base of any modern Unix system today. Also, every Apple Mac hardware unit shipped in recent history can be claimed by BSD, as its OS X (now macOS) operating system is a BSD derivative.

While the full history of Unix and its academic and commercial variants could take many more pages, for the sake of our article focus, let's move on to the rise of Linux.

### Enter Linux

What we call the Linux operating system today is really the combination of two efforts from the early 1990s. Richard Stallman was looking to create a truly free and open source alternative to the proprietary Unix system. He was working on the utilities and programs under the name GNU, a recursive acronym meaning "GNU's not Unix!" Although there was a kernel project underway, it turned out to be difficult going, and without a kernel, the free and open source operating system dream could not be realized. It was Linus Torvalds's work, producing a working and viable kernel that he called Linux, that brought the complete operating system to life. Given that Linus was using several GNU tools (e.g., the GNU Compiler Collection, or [GCC][5]), the marriage of the GNU tools and the Linux kernel was a perfect match.

Linux distributions came to life with the components of GNU, the Linux kernel, MIT's X Window System GUI, and other BSD components that could be used under the open source BSD license. The early popularity of distributions like Slackware and then Red Hat gave the "common PC user" of the 1990s access to the Linux operating system and, with it, many of the proprietary Unix system capabilities and utilities they used in their work or academic lives.

Because of the free and open source standing of all the Linux components, anyone could create a Linux distribution with a bit of effort, and soon the total number of distros reached into the hundreds. Today, [distrowatch.com][6] lists 312 unique Linux distributions available in some form. Of course, many developers utilize Linux either via cloud providers or by using popular free distributions like Fedora, Canonical's Ubuntu, Debian, Arch Linux, Gentoo, and many other variants. Commercial Linux offerings, which provide support on top of the free and open source components, became viable as many enterprises, including IBM, migrated from proprietary Unix to offering middleware and software solutions atop Linux. Red Hat built a model of commercial support around Red Hat Enterprise Linux, as did German provider SUSE with SUSE Linux Enterprise Server (SLES).

### Comparing Unix and Linux

So far, we've looked at the history of Unix and the rise of Linux and the GNU/Free Software Foundation underpinnings of a free and open source alternative to Unix. Let's examine the differences between these two operating systems that share much of the same heritage and many of the same goals.

From a user experience perspective, not very much is different! Much of the attraction of Linux was the operating system's availability across many hardware architectures (including the modern PC) and ability to use tools familiar to Unix system administrators and users.

Because of POSIX standards and compliance, software written on Unix could be compiled for a Linux operating system with a usually limited amount of porting effort. Shell scripts could be used directly on Linux in many cases. While some tools had slightly different flag/command-line options between Unix and Linux, many operated the same on both.
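When a script does need to cope with those differing options, a common pattern is to branch on the kernel name reported by `uname -s`. The sketch below groups a few well-known values. The function name `os_family` and the family labels are assumptions made for illustration, and the list is far from complete.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a `uname -s` value to a rough family label.
os_family() {
    case "$1" in
        Linux) echo "linux" ;;
        FreeBSD|OpenBSD|NetBSD|Darwin) echo "bsd-derived" ;;
        AIX|HP-UX|SunOS) echo "proprietary-unix" ;;
        *) echo "unknown" ;;
    esac
}

# A portable script can pick tool options based on the family it runs on.
echo "This system looks like: $(os_family "$(uname -s)")"
```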

One side note is that the popularity of the macOS hardware and operating system as a platform for development that mainly targets Linux may be attributed to the BSD-like macOS operating system. Many tools and scripts meant for a Linux system work easily within the macOS terminal. Many open source software components available on Linux are easily available through tools like [Homebrew][7].

The remaining differences between Linux and Unix are mainly related to the licensing model: open source vs. proprietary, licensed software. Also, the lack of a common kernel within Unix distributions has implications for software and hardware vendors. For Linux, a vendor can create a device driver for a specific hardware device and expect that, within reason, it will operate across most distributions. Because of the commercial and academic branches of the Unix tree, a vendor might have to write different drivers for variants of Unix and have licensing and other concerns related to access to an SDK or a distribution model for the software as a binary device driver across many Unix variants.

As both communities have matured over the past decade, many of the advancements in Linux have been adopted in the Unix world. Many GNU utilities were made available as add-ons for Unix systems where developers wanted features from GNU programs that aren't part of Unix. For example, IBM's AIX offered an AIX Toolbox for Linux Applications with hundreds of GNU software packages (like Bash, GCC, OpenLDAP, and many others) that could be added to an AIX installation to ease the transition between Linux and Unix-based AIX systems.

Proprietary Unix is still alive and well and, with many major vendors promising support for their current releases well into the 2020s, it goes without saying that Unix will be around for the foreseeable future. Also, the BSD branch of the Unix tree is open source, and NetBSD, OpenBSD, and FreeBSD all have strong user bases and open source communities that may not be as visible or active as Linux's, but are holding their own in recent server share reports, with numbers well above those of proprietary Unix in areas like web serving.

Where Linux has shown a significant advantage over proprietary Unix is in its availability across a vast number of hardware platforms and devices. The Raspberry Pi, popular with hobbyists and enthusiasts, is Linux-driven and has opened the door for an entire spectrum of IoT devices running Linux. We've already mentioned Android devices, autos (with Automotive Grade Linux), and smart TVs, where Linux has large market share. Every cloud provider on the planet offers virtual servers running Linux, and many of today's most popular cloud-native stacks are Linux-based, whether you're talking about container runtimes or Kubernetes or many of the serverless platforms that are gaining popularity.

One of the most revealing representations of Linux's ascendancy is Microsoft's transformation in recent years. If you told software developers a decade ago that the Windows operating system would "run Linux" in 2016, most of them would have laughed hysterically. But the existence and popularity of the Windows Subsystem for Linux (WSL), as well as more recently announced capabilities like the Windows port of Docker, including LCOW (Linux containers on Windows) support, are evidence of the impact that Linux has had, and clearly will continue to have, across the software world.

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/5/differences-between-linux-and-unix

Author: [Phil Estes][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [译者ID](https://github.com/译者ID)
Proofreader: [校对者ID](https://github.com/校对者ID)

This article was originally compiled by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/)

[a]:https://opensource.com/users/estesp
[1]:https://en.wikipedia.org/wiki/Multics
[2]:https://en.wikipedia.org/wiki/Unix_philosophy
[3]:https://en.wikipedia.org/wiki/Unix_wars
[4]:https://en.wikipedia.org/wiki/BSD_licenses
[5]:https://en.wikipedia.org/wiki/GNU_Compiler_Collection
[6]:https://distrowatch.com/
[7]:https://brew.sh/

@ -0,0 +1,175 @@

|

||||

The Best Linux Tools for Teachers and Students

|

||||

======

|

||||

Linux is a platform ready for everyone. If you have a niche, Linux is ready to meet or exceed the needs of said niche. One such niche is education. If you are a teacher or a student, Linux is ready to help you navigate the waters of nearly any level of the educational system. From study aids, to writing papers, to managing classes, to running an entire institution, Linux has you covered.

|

||||

|

||||

If you’re unsure how, let me introduce you to a few tools Linux has at the ready. Some of these tools require little to no learning curve, whereas others require a full blown system administrator to install, setup, and manage. We’ll start with the simple and make our way to the complex.

|

||||

|

||||

### Study aids

|

||||

|

||||

Everyone studies a bit differently and every class requires a different type and level of studying. Fortunately, Linux has plenty of study aids. Let’s take a look at a few examples:

|

||||

|

||||

Flash Cards ─ [KWordQuiz][1] (Figure 1) is one of the many flashcard applications available for the Linux platform. KWordQuiz uses the kvtml file format and you can download plenty of pre-made, contributed files to use [here][2]. KWordQuiz is part of the KDE desktop environment, but can be installed on other desktops (KDE dependencies will be installed alongside the flashcard app).

|

||||

|

||||

|

||||

|

||||

### Language tools

|

||||

|

||||

Thanks to an ever-shrinking world, foreign language has become a crucial element of education. You’ll find plenty of language tools, including [Kiten][3] (Figure 2) the kanji browser for the KDE desktop.

|

||||

|

||||

|

||||

|

||||

If Japanese isn’t your language, you could try [Jargon Informatique][4]. This dictionary is entirely in French and, so if you’re new to the language, you might want to stick with something like [Google Translate][5].

|

||||

|

||||

### Writing Aids/ Note Taking

|

||||

|

||||

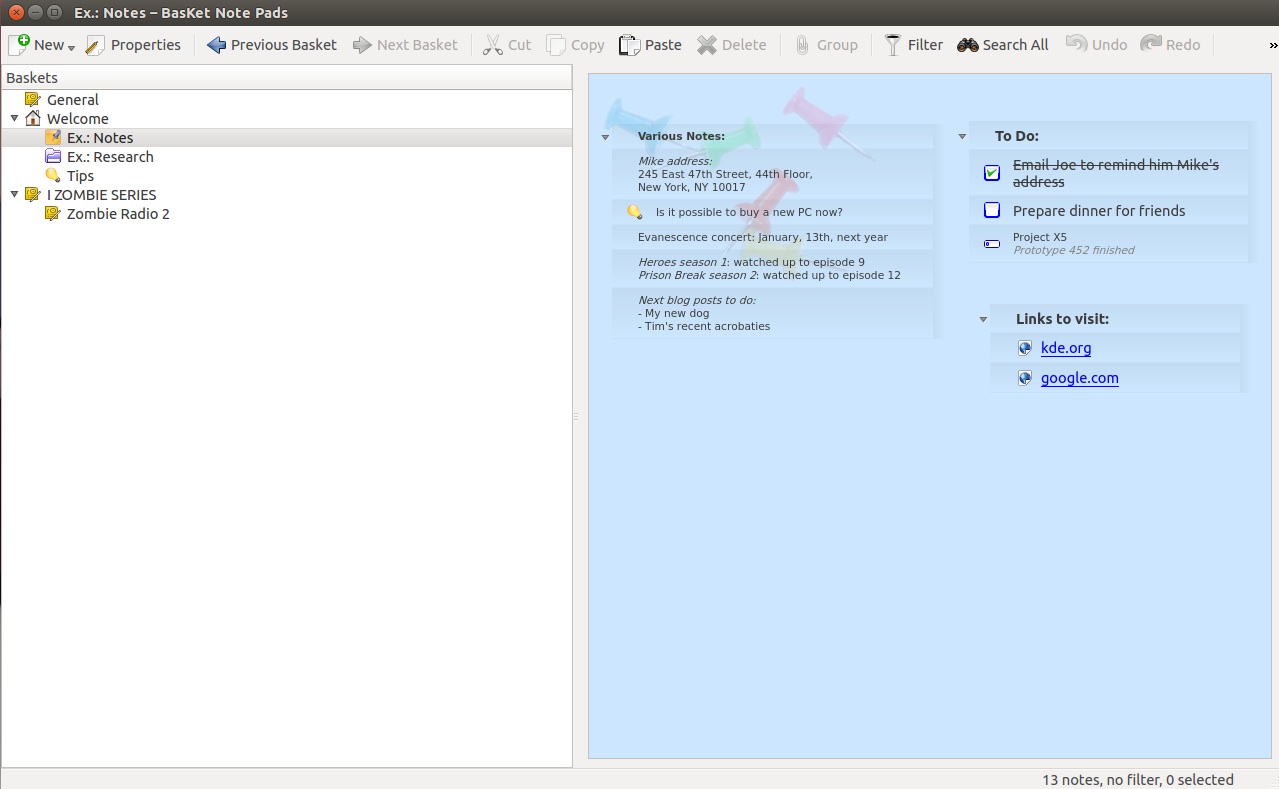

Linux has everything you need to keep notes on a subject and write those term papers. Let’s start with taking notes. If you’re familiar with Microsoft OneNote, you'll love [BasKet Note Pads][6]. With this app, you can create baskets for subjects and add just about anything ─ notes, links, images, cross references (to other baskets ─ Figure 3), app launchers, load from file, and more.

|

||||

|

||||

|

||||

|

||||

You can create baskets that are free-form, so elements can be moved around to suit your need. If you prefer a more ordered feel, create a columned basket to retain those notes walled in.

|

||||

|

||||

Of course, the mother of all writing aids for Linux would be [LibreOffice][7]. The default office suite on most Linux distributions, LibreOffice has your text documents, spreadsheets, presentations, databases, formula, and drawing covered.

|

||||

|

||||

The one caveat to using LibreOffice in an educational environment, is that you will most likely have to save your documents in the MS Office format.

|

||||

|

||||

### Education-specific distribution

With all of this said about Linux applications geared toward the student, it might behoove you to take a look at one of the distributions created specifically for education. The best of breed is [Edubuntu][8]. This grassroots Linux distribution aims at getting Linux into schools, homes, and communities. Edubuntu uses the default Ubuntu desktop (the Unity shell) and adds the following software:

+ KDE Education Suite
+ GCompris
+ Celestia
+ Tux4Kids
+ Epoptes
+ LTSP
+ GBrainy
+ and much more.

Edubuntu isn’t the only game in town. If you’d rather test other education-specific Linux distributions, here’s the short list:

+ Debian-Edu
+ Fedora Education Spin
+ Guadalinux-Edu
+ OpenSuse-Edu
+ Qimo for Kids
+ Uberstudent.

### Classroom/institutional administration

|

||||

|

||||

This is where the Linux platform really shines. There are a number of tools geared specifically for administering. Let’s first look at tools specific to the classroom.

|

||||

|

||||

[iTalc][9] is a powerful didactical environment for the classroom. With this tool, teachers can view and control students desktops (supporting Linux and Windows). The iTalc system allows teachers to view what’s happening on a student's desktop, take control of their desktop, lock their desktop, show demonstrations to desktops, power on/off desktops, send text messages to students' desktops, and much more.

|

||||

|

||||

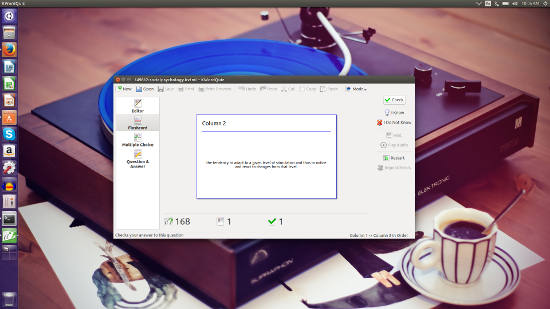

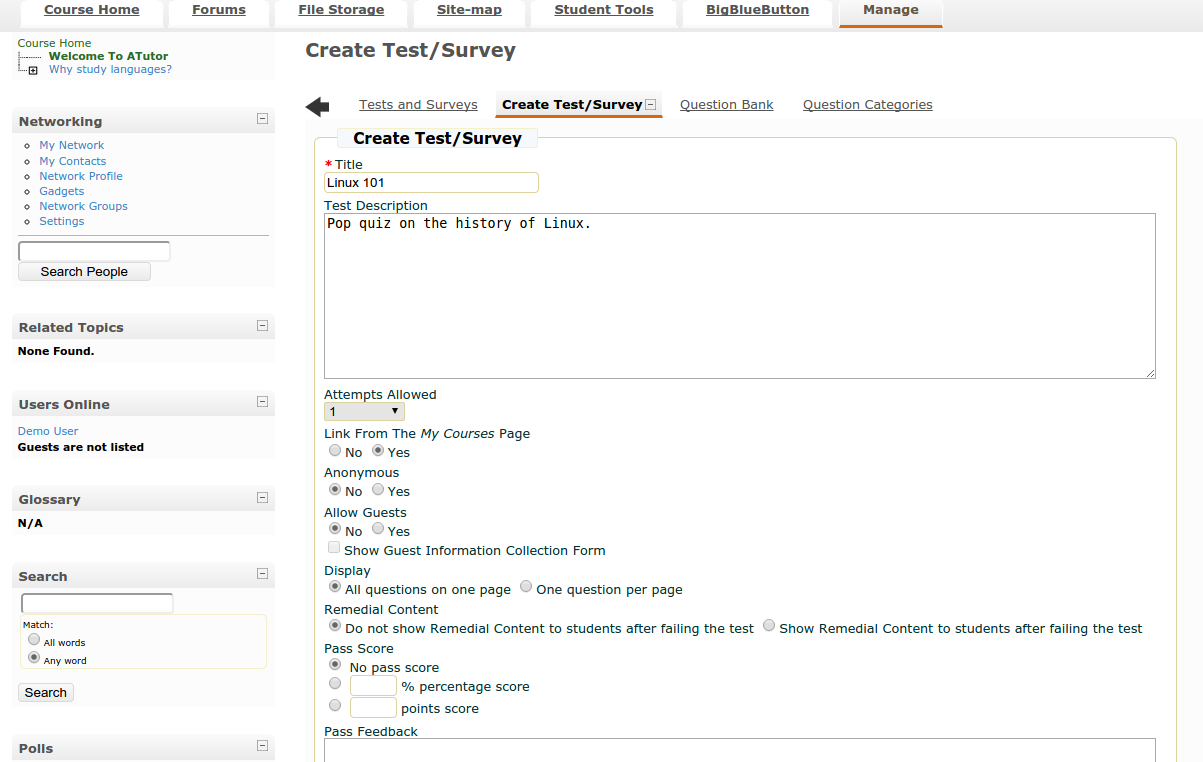

[aTutor][10] (Figure 4) is an open source Learning Management System (LMS) focused on developing online courses and e-learning content. Where aTutor really shines is the creation and management of online tests and quizzes. Of course, aTutor is not limited to testing purposes. With this powerful software, students and teachers can enjoy:

|

||||

|

||||

* Social networking

|

||||

|

||||

* Profiles

|

||||

|

||||

* Messaging

|

||||

|

||||

* Adaptive navigation

|

||||

|

||||

* Work groups

|

||||

|

||||

* File storage

|

||||

|

||||

* Group blogs

|

||||

|

||||

* and much more.

|

||||

|

||||

|

||||

|

||||

|

||||

Course material is easy to create and deploy (you can even assign tests/quizzes to specific study groups).

|

||||

|

||||

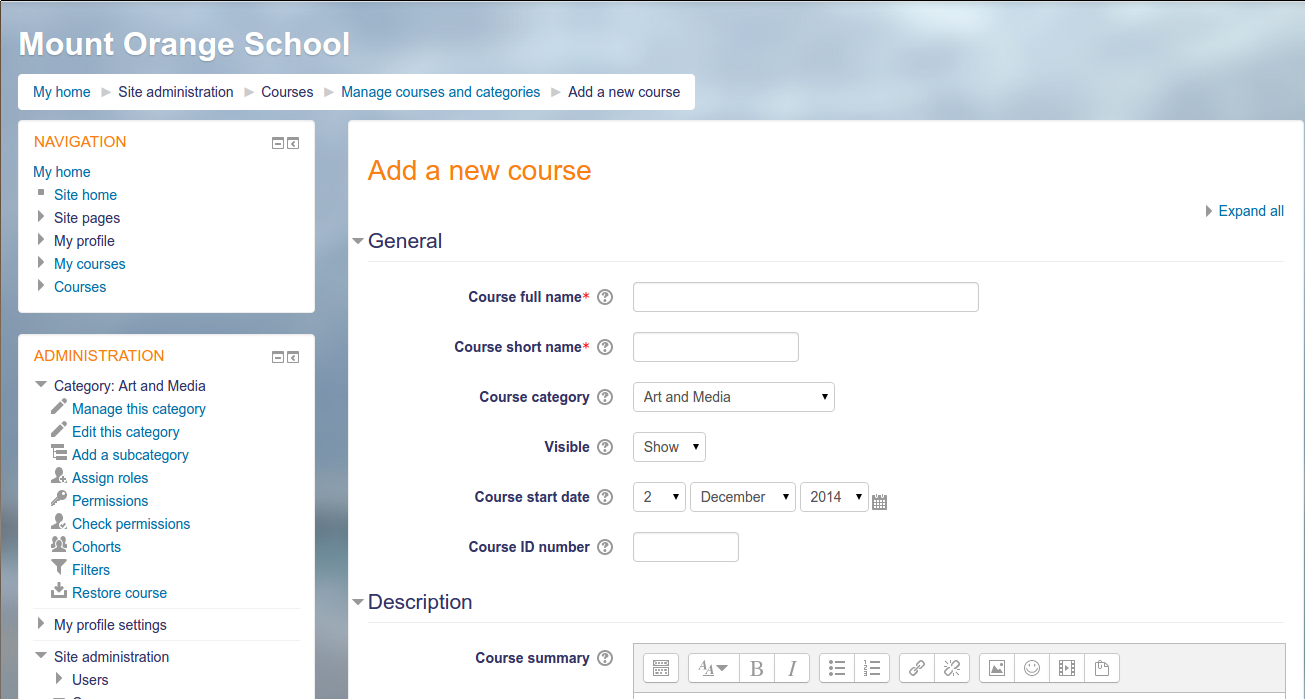

[Moodle][11] is one of the most widely used educational management software titles available. With Moodle you can manage, teach, learn, and even participate in your child’s education. This powerhouse software offers collaborative tools for teachers and students, exams, calendars, forums, file management, course management (Figure 5), notifications, progress tracking, mass enrollment, bulk course creation, attendance, and much more.

|

||||

|

||||

|

||||

|

||||

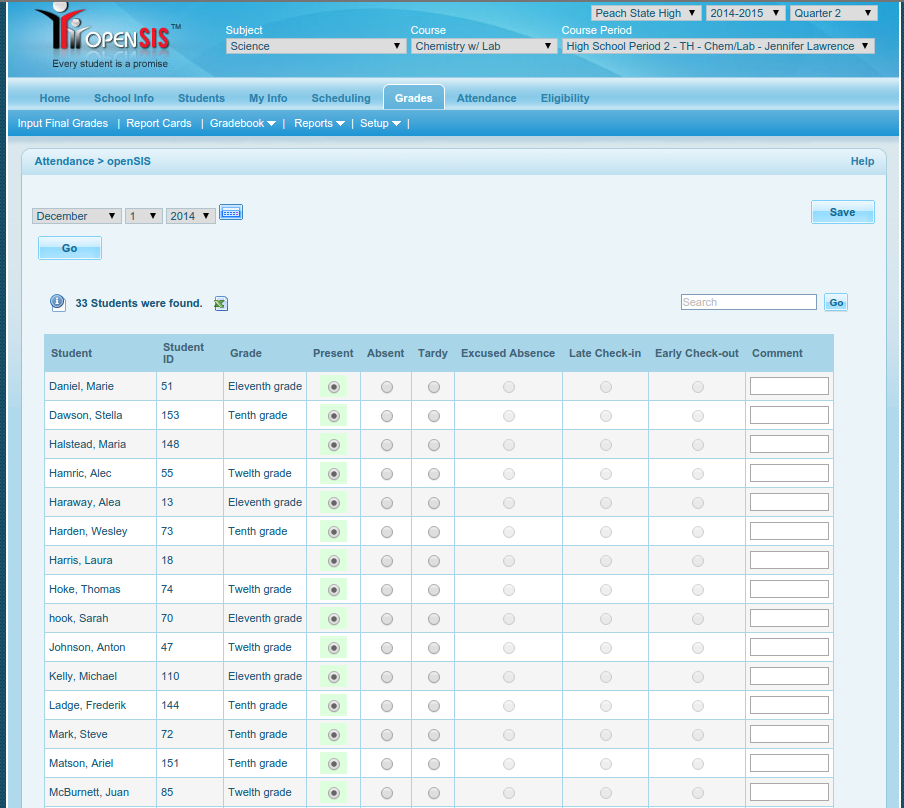

[OpenSIS][12] stands for Open Source Student Information System and does a great job of managing your educational institution. There is a free community edition, but even with the paid version you can look forward to reducing ownership costs for a school district by up to 75 percent (when compared to proprietary solutions).

|

||||

|

||||

OpenSIS includes the following features/modules:

|

||||

|

||||

* Attendance (Figure 6)

|

||||

|

||||

* Contact information

|

||||

|

||||

* Student demographics

|

||||

|

||||

* Gradebook

|

||||

|

||||

* Scheduling

|

||||

|

||||

* Health records

|

||||

|

||||

* Report cards.

|

||||

|

||||

|

||||

|

||||

|

||||

There are four editions of OpenSIS. Check out the feature comparison matrix [here][13].

|

||||

|

||||

[vufind][14] is an outstanding library management system that allows students and teachers to easily browse for library resources such as:

* Catalog Records
* Locally Cached Journals
* Digital Library Items
* Institutional Repository
* Institutional Bibliography
* Other Library Collections and Resources.

The vufind system allows user login where authenticated users can save resources for quick recall and enjoy “more like this” results.

|

||||

|

||||

This list just barely scratches the surface of what is available for Linux in the educational arena. And, as you might expect, each tool is highly customizable and open source, so if the software doesn’t precisely meet your needs, you are free (in most cases) to modify the source and change it.

|

||||

|

||||

Linux and education go hand in hand. Whether you are a teacher, a student, or an administrator, you’ll find plenty of tools to help make the institution of education open, flexible, and powerful.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/news/best-linux-tools-teachers-and-students

|

||||

|

||||

作者:[Jack Wallen][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/jlwallen

|

||||

[1]:https://edu.kde.org/kwordquiz/

|

||||

[2]:http://kde-files.org/index.php?xcontentmode=694

|

||||

[3]:https://edu.kde.org/kiten/

|

||||

[4]:http://jargon.asher256.com/index.php

|

||||

[5]:https://translate.google.com/

|

||||

[6]:http://basket.kde.org/

|

||||

[7]:http://www.libreoffice.com

|

||||

[8]:http://www.edubuntu.org/

|

||||

[9]:http://italc.sourceforge.net/

|

||||

[10]:http://www.atutor.ca/

|

||||

[11]:https://moodle.org/

|

||||

[12]:http://www.opensis.com/

|

||||

[13]:http://www.opensis.com/compare_edition.php

|

||||

[14]:http://vufind-org.github.io/vufind/

|

||||

@ -1,198 +0,0 @@

|

||||

translating by wyxplus

|

||||

4 Tools for Network Snooping on Linux

|

||||

======

|

||||

Computer networking data has to be exposed, because packets can't travel blindfolded, so join us as we use `whois`, `dig`, `nmcli`, and `nmap` to snoop networks.

|

||||

|

||||

Do be polite and don't run `nmap` on any network but your own, because probing other people's networks can be interpreted as a hostile act.

|

||||

|

||||

### Thin and Thick whois

|

||||

|

||||

You may have noticed that our beloved old `whois` command doesn't seem to give the level of detail that it used to. Check out this example for Linux.com:

|

||||

```
$ whois linux.com
Domain Name: LINUX.COM
Registry Domain ID: 4245540_DOMAIN_COM-VRSN
Registrar WHOIS Server: whois.namecheap.com
Registrar URL: http://www.namecheap.com
Updated Date: 2018-01-10T12:26:50Z
Creation Date: 1994-06-02T04:00:00Z
Registry Expiry Date: 2018-06-01T04:00:00Z
Registrar: NameCheap Inc.
Registrar IANA ID: 1068
Registrar Abuse Contact Email: abuse@namecheap.com
Registrar Abuse Contact Phone: +1.6613102107
Domain Status: ok https://icann.org/epp#ok
Name Server: NS5.DNSMADEEASY.COM
Name Server: NS6.DNSMADEEASY.COM
Name Server: NS7.DNSMADEEASY.COM
DNSSEC: unsigned
[...]
```

|

||||

|

||||

There is quite a bit more, mainly annoying legalese. But where is the contact information? It is sitting on whois.namecheap.com (see the third line of output above):

|

||||

```
$ whois -h whois.namecheap.com linux.com
```

|

||||

|

||||

I won't print the output here, as it is very long, containing the Registrant, Admin, and Tech contact information. So what's the deal, Lucille? Some registries, such as .com and .net, are "thin" registries that store only a limited subset of domain data. To get the complete record, use the `-h` (or `--host`) option to query the server named on the `Registrar WHOIS Server` line.
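The two-step lookup can be sketched in Python. This is only an illustration under stated assumptions: the function name `registrar_whois_server` is made up for this example, and the parsing assumes the `Key: value` layout of the thin output shown above. It does not run `whois` itself; it only builds the follow-up command.

```python
from typing import Optional


def registrar_whois_server(thin_output: str) -> Optional[str]:
    """Return the host named on the 'Registrar WHOIS Server:' line, if any."""
    for line in thin_output.splitlines():
        key, sep, value = line.partition(":")
        if sep and key.strip().lower() == "registrar whois server":
            return value.strip() or None
    return None


# Sample lines copied from the thin-registry output shown earlier.
sample = """\
Domain Name: LINUX.COM
Registrar WHOIS Server: whois.namecheap.com
Registrar URL: http://www.namecheap.com
"""

server = registrar_whois_server(sample)
# Build the second, "thick" query against the registrar's own server.
print(f"whois -h {server} linux.com")
```

For a thick registry the line may be absent, in which case the function returns `None` and no second query is needed.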

|

||||

|

||||

Most of the other top-level domains are thick registries, such as .info. Try `whois blockchain.info` to see an example.

|

||||

|

||||

Want to get rid of the obnoxious legalese? Use the `-H` option.

|

||||

|

||||

### Digging DNS

|

||||

|

||||

Use the `dig` command to compare the results from different name servers to check for stale entries. DNS records are cached all over the place, and different servers have different refresh intervals. This is the simplest usage:

|

||||

```
$ dig linux.com
; <<>> DiG 9.10.3-P4-Ubuntu <<>> linux.com
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 13694
;; flags: qr rd ra; QUERY: 1, ANSWER: 4, AUTHORITY: 0, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 1440
;; QUESTION SECTION:
;linux.com. IN A

;; ANSWER SECTION:
linux.com. 10800 IN A 151.101.129.5
linux.com. 10800 IN A 151.101.65.5
linux.com. 10800 IN A 151.101.1.5
linux.com. 10800 IN A 151.101.193.5

;; Query time: 92 msec
;; SERVER: 127.0.1.1#53(127.0.1.1)
;; WHEN: Tue Jan 16 15:17:04 PST 2018
;; MSG SIZE rcvd: 102
```

|

||||

|

||||

Take notice of the `SERVER: 127.0.1.1#53(127.0.1.1)` line near the end of the output. This is your default caching resolver. When the address is a loopback address, as it is here, that means there is a DNS server running on your own machine. In my case that is Dnsmasq, which is being used by Network Manager:

|

||||

```
$ ps ax|grep dnsmasq
2842 ? S 0:00 /usr/sbin/dnsmasq --no-resolv --keep-in-foreground
--no-hosts --bind-interfaces --pid-file=/var/run/NetworkManager/dnsmasq.pid
--listen-address=127.0.1.1
```

|

||||

|

||||

By default, `dig` returns A records, which map a domain name to its IPv4 addresses. IPv6 addresses are stored in AAAA records:

|

||||

```
$ dig linux.com AAAA
[...]
;; ANSWER SECTION:
linux.com. 60 IN AAAA 64:ff9b::9765:105
linux.com. 60 IN AAAA 64:ff9b::9765:4105
linux.com. 60 IN AAAA 64:ff9b::9765:8105
linux.com. 60 IN AAAA 64:ff9b::9765:c105
[...]
```

|

||||

|

||||

Check it out, Linux.com has IPv6 addresses. Very good! If your Internet service provider supports IPv6 then you can connect over IPv6. (Sadly, my overpriced mobile broadband does not.)

|

||||

|

||||

Suppose you make some DNS changes to your domain, or you're seeing `dig` results that don't look right. Try querying with a public DNS service, like OpenNIC:

|

||||

```
$ dig @69.195.152.204 linux.com
[...]
;; Query time: 231 msec
;; SERVER: 69.195.152.204#53(69.195.152.204)
```

|

||||

|

||||

`dig` confirms that you're getting your lookup from 69.195.152.204. You can query all kinds of servers and compare results.
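Comparing results from several servers by eye gets tedious, so here is a minimal Python sketch of the idea. It assumes you have already captured the text output of two `dig` runs; the helper name `answer_records` is made up for this example, and the second sample (its TTL and record order) is hypothetical. TTLs are ignored on purpose, because caching resolvers count them down.

```python
def answer_records(dig_output: str) -> set:
    """Collect (name, type, value) tuples from the ANSWER SECTION of dig output."""
    records = set()
    in_answer = False
    for line in dig_output.splitlines():
        if line.startswith(";; ANSWER SECTION:"):
            in_answer = True
            continue
        if in_answer:
            if not line.strip() or line.startswith(";"):
                break  # a blank line or the next ';' header ends the section
            parts = line.split(None, 4)  # name, TTL, class, type, value
            if len(parts) == 5:
                name, _ttl, _cls, rtype, value = parts
                records.add((name, rtype, value))
    return records


# Trimmed sample from the local resolver output shown earlier.
local = """\
;; ANSWER SECTION:
linux.com. 10800 IN A 151.101.129.5
linux.com. 10800 IN A 151.101.65.5

;; Query time: 92 msec
"""

# Hypothetical answer from a second resolver: same records, different TTL and order.
public = """\
;; ANSWER SECTION:
linux.com. 7200 IN A 151.101.65.5
linux.com. 7200 IN A 151.101.129.5

;; Query time: 231 msec
"""

stale = answer_records(local) ^ answer_records(public)  # records seen by only one server
print("servers agree" if not stale else f"servers differ: {sorted(stale)}")
```

A non-empty difference is a hint that one of the servers is still handing out a cached, stale entry.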

|

||||

|

||||

### Upstream Name Servers

|

||||

|

||||

I want to know what my upstream name servers are. To find this, I first look in `/etc/resolv.conf`:

|

||||

```
$ cat /etc/resolv.conf
# Dynamic resolv.conf(5) file for glibc resolver(3) generated by resolvconf(8)
# DO NOT EDIT THIS FILE BY HAND -- YOUR CHANGES WILL BE OVERWRITTEN
nameserver 127.0.1.1
```

|

||||

|

||||

Thanks, but I already knew that. Your Linux distribution may be configured differently, and you'll see your upstream servers. Let's try `nmcli`, the Network Manager command-line tool:

|

||||

```
$ nmcli dev show | grep DNS
IP4.DNS[1]: 192.168.1.1
```

|

||||

|

||||

Now we're getting somewhere, as that is the address of my mobile hotspot, and I should have thought of that myself. I can log in to its weird little Web admin panel to see its upstream servers. A lot of consumer Internet gateways don't let you view or change these settings, so try an external service such as [What's my DNS server?][1]
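The `resolv.conf` check from the start of this section can also be sketched in Python. This is an illustration only: the function name `nameservers` is made up, and the sample text is the file content shown above. If every entry is a loopback address, the real upstream servers are hidden behind a local caching resolver and you have to look elsewhere, as I did.

```python
import ipaddress


def nameservers(resolv_conf_text: str) -> list:
    """Return the addresses listed on 'nameserver' lines, in file order."""
    servers = []
    for line in resolv_conf_text.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[0] == "nameserver":
            servers.append(fields[1])
    return servers


# File content copied from the example above.
sample = """\
# Dynamic resolv.conf(5) file for glibc resolver(3) generated by resolvconf(8)
# DO NOT EDIT THIS FILE BY HAND -- YOUR CHANGES WILL BE OVERWRITTEN
nameserver 127.0.1.1
"""

servers = nameservers(sample)
only_local = all(ipaddress.ip_address(s).is_loopback for s in servers)
print(servers, "local caching resolver only" if only_local else "upstream servers listed")
```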

|

||||

|

||||

### List IPv4 Addresses on your Network

|

||||

|

||||

Which IPv4 addresses are up and in use on your network?

|

||||

```
$ nmap -sn 192.168.1.0/24
Starting Nmap 7.01 ( https://nmap.org ) at 2018-01-14 14:03 PST
Nmap scan report for Mobile.Hotspot (192.168.1.1)
Host is up (0.011s latency).
Nmap scan report for studio (192.168.1.2)
Host is up (0.000071s latency).
Nmap scan report for nellybly (192.168.1.3)
Host is up (0.015s latency).
Nmap done: 256 IP addresses (2 hosts up) scanned in 2.23 seconds
```
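If you wonder where the "256 IP addresses" in the last line comes from, a short Python sketch with the standard `ipaddress` module shows what the `/24` range covers. Nothing here talks to the network; it only does the address arithmetic.

```python
import ipaddress

network = ipaddress.ip_network("192.168.1.0/24")
print(network.num_addresses)  # every address in the range, as counted by nmap

hosts = list(network.hosts())  # excludes the network and broadcast addresses
print(hosts[0], hosts[-1])

# Writing the target as a host address with a prefix names the same range.
same = ipaddress.ip_network("192.168.1.1/24", strict=False)
print(same == network)
```

That last comparison is why `192.168.1.0/24` and `192.168.1.1/24` scan the same set of addresses.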

|

||||

|

||||

Everyone wants to scan their network for open ports. This example looks for services and their versions:

|

||||

```
$ nmap -sV 192.168.1.1/24

Starting Nmap 7.01 ( https://nmap.org ) at 2018-01-14 16:46 PST
Nmap scan report for Mobile.Hotspot (192.168.1.1)
Host is up (0.0071s latency).
Not shown: 997 closed ports
PORT STATE SERVICE VERSION
22/tcp filtered ssh
53/tcp open domain dnsmasq 2.55
80/tcp open http GoAhead WebServer 2.5.0

Nmap scan report for studio (192.168.1.102)
Host is up (0.000087s latency).
Not shown: 998 closed ports
PORT STATE SERVICE VERSION
22/tcp open ssh OpenSSH 7.2p2 Ubuntu 4ubuntu2.2 (Ubuntu Linux; protocol 2.0)
631/tcp open ipp CUPS 2.1
Service Info: OS: Linux; CPE: cpe:/o:linux:linux_kernel

Service detection performed. Please report any incorrect results at https://nmap.org/submit/ .
Nmap done: 256 IP addresses (2 hosts up) scanned in 11.65 seconds
```

|

||||

|

||||

These are interesting results. Let's try the same run from a different Internet account, to see if any of these services are exposed to the big bad Internet. You have a second network if you have a smartphone. There are probably apps you can download for this, or you can use your phone as a hotspot for your faithful Linux computer. Fetch the WAN IP address from the hotspot control panel and try again:

|

||||

```
$ nmap -sV 12.34.56.78

Starting Nmap 7.01 ( https://nmap.org ) at 2018-01-14 17:05 PST
Nmap scan report for 12.34.56.78
Host is up (0.0061s latency).
All 1000 scanned ports on 12.34.56.78 are closed
```

|

||||

|

||||

That's what I like to see. Consult the fine man pages for these commands to learn more fun snooping techniques.

|

||||

|

||||

Learn more about Linux through the free ["Introduction to Linux"][2] course from The Linux Foundation and edX.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.linux.com/learn/intro-to-linux/2018/1/4-tools-network-snooping-linux

|

||||

|

||||

作者:[Carla Schroder][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.linux.com/users/cschroder

|

||||

[1]:http://www.whatsmydnsserver.com/

|

||||

[2]:https://training.linuxfoundation.org/linux-courses/system-administration-training/introduction-to-linux

|

||||

@ -0,0 +1,166 @@

|

||||

Token ERC Comparison for Fungible Tokens – Blockchainers

|

||||

======

|

||||

“The good thing about standards is that there are so many to choose from.” [_Andrew S. Tanenbaum_][1]

|

||||

|

||||

### Current State of Token Standards

|

||||

|

||||

The current state of Token standards on the Ethereum platform is surprisingly simple: ERC-20 Token Standard is the only accepted and adopted (as [EIP-20][2]) standard for a Token interface.

|

||||

|

||||

Proposed in 2015, it was finally accepted at the end of 2017.

|

||||

|

||||

In the meantime, many Ethereum Requests for Comments (ERCs) have been proposed that address shortcomings of ERC-20. Some of these shortcomings stem from changes in the Ethereum platform itself, e.g. the fix for the call depth attack with [EIP-150][3]. Other ERCs propose enhancements to the ERC-20 Token model. These enhancements grew out of experience gained through the broad adoption of the Ethereum blockchain and the ERC-20 Token standard. Actual usage of the ERC-20 Token interface produced new demands, including non-functional requirements such as permissioning and operations.

|

||||

|

||||

This blogpost should give a superficial, but complete, overview of all proposals for Token(-like) standards on the Ethereum platform. This comparison tries to be objective but most certainly will fail in doing so.

|

||||

|

||||

### The Mother of all Token Standards: ERC-20

|

||||

|

||||

There are dozens of [very good][4] and detailed descriptions of ERC-20, which will not be repeated here. Only the core concepts relevant for comparing the proposals are mentioned in this post.

|

||||

|

||||

#### The Withdraw Pattern

|

||||

|

||||

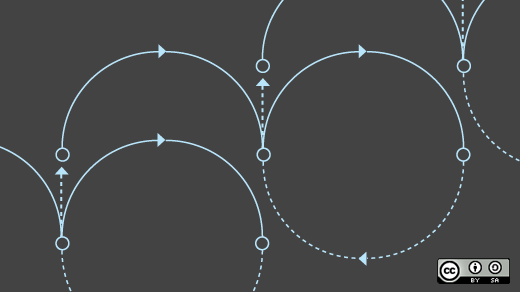

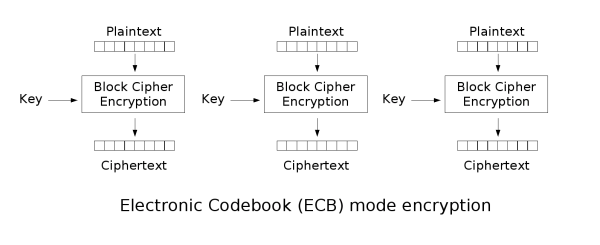

Users trying to understand the ERC-20 interface, and especially the usage pattern for _transferring_ Tokens _from_ an externally owned account (EOA), i.e. an end user (“Alice”), to a smart contract, have a hard time getting the approve/transferFrom pattern right.

|

||||

|

||||

![][5]

|

||||

|

||||

From a software engineering perspective, this withdraw pattern is very similar to the [Hollywood principle][6] (“Don’t call us, we’ll call you!”). The idea is that the call chain is reversed: during the ERC-20 Token transfer, the Token doesn’t call the contract, but the contract does the call transferFrom on the Token.
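To make the reversed call chain tangible, here is a toy Python model of the approve/transferFrom flow. It is a sketch under stated assumptions, not contract code: the classes `ERC20` and `Shop` and all addresses are invented for illustration, and the caller (`msg.sender` on a real chain) is passed explicitly as the first argument.

```python
class ERC20:
    """Toy in-memory model of an ERC-20 ledger (not real contract code)."""

    def __init__(self, balances):
        self.balances = dict(balances)
        self.allowances = {}  # (owner, spender) -> remaining allowance

    def transfer(self, sender, to, value):
        if self.balances.get(sender, 0) < value:
            raise ValueError("insufficient balance")
        self.balances[sender] -= value
        self.balances[to] = self.balances.get(to, 0) + value

    def approve(self, owner, spender, value):
        self.allowances[(owner, spender)] = value

    def transfer_from(self, spender, owner, to, value):
        if self.allowances.get((owner, spender), 0) < value:
            raise ValueError("allowance too low")
        self.transfer(owner, to, value)  # raises before any state changes
        self.allowances[(owner, spender)] -= value


class Shop:
    """Stand-in for a receiving smart contract that pulls its payment."""

    def __init__(self, token, address="shop"):
        self.token = token
        self.address = address
        self.paid = {}

    def pay(self, customer, value):
        # The contract calls the token, not the other way around.
        self.token.transfer_from(self.address, customer, self.address, value)
        self.paid[customer] = self.paid.get(customer, 0) + value


token = ERC20({"alice": 100})
shop = Shop(token)
token.approve("alice", "shop", 30)  # transaction 1: Alice authorises the shop
shop.pay("alice", 30)               # transaction 2: the shop withdraws the Tokens
print(token.balances, shop.paid)
```

Because the shop itself triggers the withdrawal, it knows about the payment. The price is two transactions from Alice's point of view.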

|

||||

|

||||

While the Hollywood Principle is often used to implement Separation of Concerns (SoC), in Ethereum it is a security pattern that keeps the Token contract from calling an unknown function on an external contract. This behaviour was necessary because of the [Call Depth Attack][7] until [EIP-150][3] was activated. After this hard fork, the call depth attack was no longer possible, and the withdraw pattern did not provide any more security than calling the Token directly.

|

||||

|

||||

But why should this be a problem now? The usage might be somewhat clumsy, but we can fix this in the DApp frontend, right?

|

||||

|

||||

So, let’s see what happens if a user used transfer to send Tokens to a smart contract. Alice calls transfer on the Token contract with the contract address

|

||||

|

||||

**….aaaaand it’s gone!**

|

||||

|

||||

That’s right, the Tokens are gone. Most likely, nobody will ever get the Tokens back. And Alice is not alone: as Dexaran, inventor of ERC-223, found out, about $400,000 in tokens (let’s just say _a lot_, given the high volatility of ETH) are irretrievably lost for all of us because users accidentally sent Tokens to smart contracts.

|

||||

|

||||

Even if the contract developer was extremely user friendly and altruistic, he couldn’t create the contract so that it could react to getting Tokens transferred to it and eg. return them, as the contract will never be notified of this transfer and the event is only emitted on the Token contract.

|

||||

|

||||

From a software engineering perspective that’s a severe shortcoming of ERC-20. If an event occurs (and for the sake of simplicity, we are now assuming Ethereum transactions are actually events), there should be a notification to the parties involved. However, there is an event, but it’s triggered in the Token smart contract which the receiving contract cannot know.

|

||||

|

||||

Currently, it’s not possible to prevent users sending Tokens to smart contracts and losing them forever using the unintuitive transfer on the ERC-20 Token contract.

|

||||

|

||||

### The Empire Strikes Back: ERC-223

|

||||

|

||||

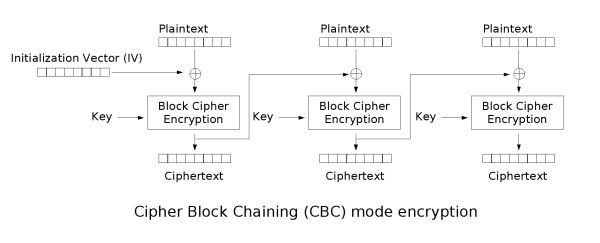

The first attempt at fixing the problems of ERC-20 was proposed by [Dexaran][8]. The main issue solved by this proposal is the different handling of EOA and smart contract accounts.

|

||||

|

||||

The compelling strategy is to reverse the calling chain (which is possible now that [EIP-150][3] is active) and use a pre-defined callback (tokenFallback) on the receiving smart contract. If this callback is not implemented, the transfer will fail (costing the sender all gas, a common criticism of ERC-223).
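The callback idea can be sketched with another toy Python model. Everything here is an assumption made for illustration: the class names, the `deploy` registry that distinguishes contracts from EOAs, and the snapshot that stands in for an EVM revert. It also simplifies one point: a receiver without `token_fallback` simply fails here, whereas on a real chain the contract's fallback function may run instead.

```python
class Token223:
    """Toy model of the ERC-223 transfer flow (not real contract code)."""

    def __init__(self, balances):
        self.balances = dict(balances)
        self.contracts = {}  # address -> contract object; anything else is an EOA

    def deploy(self, address, contract):
        self.contracts[address] = contract

    def transfer(self, sender, to, value, data=b""):
        if self.balances.get(sender, 0) < value:
            raise ValueError("insufficient balance")
        snapshot = dict(self.balances)
        try:
            self.balances[sender] -= value
            self.balances[to] = self.balances.get(to, 0) + value
            if to in self.contracts:
                # Raises AttributeError when the receiver has no such hook.
                self.contracts[to].token_fallback(sender, value, data)
        except Exception:
            self.balances = snapshot  # mimic an EVM revert
            raise


class Wallet:
    """Hypothetical receiver that implements the callback."""

    def __init__(self):
        self.received = []

    def token_fallback(self, sender, value, data):
        self.received.append((sender, value))


class LegacyContract:
    """Written without ERC-223 in mind, so it has no token_fallback."""


token = Token223({"alice": 100})
wallet = Wallet()
token.deploy("wallet", wallet)
token.deploy("legacy", LegacyContract())

token.transfer("alice", "wallet", 40)      # accepted and recorded by the wallet
try:
    token.transfer("alice", "legacy", 10)  # rejected, balances restored
except AttributeError:
    print("transfer to legacy contract reverted")
print(token.balances, wallet.received)
```

In this model Alice keeps her Tokens when the receiver cannot handle them, which is exactly the failure mode that plain ERC-20 `transfer` does not catch.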

|

||||

|

||||

![][9]

|

||||

|

||||

#### Pros:

|

||||

|

||||

* Establishes a new interface, intentionally not compliant with ERC-20 with respect to the deprecated functions
* Allows contract developers to handle incoming tokens (e.g. accept/reject), since the event pattern is followed
* Uses one transaction instead of two (transfer vs. approve/transferFrom) and thus saves gas and Blockchain storage

|

||||

|

||||

|

||||

|

||||

|

||||

#### Cons:

|

||||

|

||||

* If tokenFallback doesn’t exist, the contract’s fallback function is executed, which might have unintended side effects
* If contracts assume that transfer works with Tokens, e.g. for sending Tokens to specific contracts like multi-sig wallets, this would fail with ERC-223 Tokens, making it impossible to move them (i.e. they are lost)

|

||||

|

||||

|

||||

### The Pragmatic Programmer: ERC-677

|

||||

|

||||

The [ERC-677 transferAndCall Token Standard][10] tries to marry ERC-20 and ERC-223. The idea is to add a transferAndCall function to ERC-20 but keep the standard as is. ERC-223 is intentionally not completely backwards compatible, since the approve/allowance pattern is not needed anymore and was therefore removed.

|

||||

|

||||

The main goal of ERC-677 is backward compatibility, providing a safe way for new contracts to transfer tokens to external contracts.
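A toy Python model shows how transferAndCall sits next to an untouched ERC-20 `transfer`. This is a sketch under assumptions: the classes and addresses are invented, and the receiver hook is named `on_token_transfer` here purely for illustration, since the text above only names the transferAndCall function.

```python
class Token677:
    """Toy ERC-20-style ledger with an added transfer_and_call (not real contract code)."""

    def __init__(self, balances):
        self.balances = dict(balances)

    def transfer(self, sender, to, value):
        # Unchanged ERC-20 behaviour: balances move, nobody is notified.
        if self.balances.get(sender, 0) < value:
            raise ValueError("insufficient balance")
        self.balances[sender] -= value
        self.balances[to] = self.balances.get(to, 0) + value

    def transfer_and_call(self, sender, receiver, value, data=b""):
        # Same transfer, followed by a notification to the receiving contract.
        self.transfer(sender, receiver.address, value)
        receiver.on_token_transfer(sender, value, data)


class Escrow:
    """Hypothetical receiving contract that records what it was sent."""

    address = "escrow"

    def __init__(self):
        self.deposits = []

    def on_token_transfer(self, sender, value, data):
        self.deposits.append((sender, value, data))


token = Token677({"alice": 50})
escrow = Escrow()
token.transfer("alice", "bob", 10)                        # plain ERC-20 path still works
token.transfer_and_call("alice", escrow, 15, b"order-7")  # notified path
print(token.balances, escrow.deposits)
```

Existing callers of `transfer` see no change, while new contracts can opt into the notified path.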

|

||||

|

||||

![][11]

|

||||

|

||||

#### Pros:

|

||||

|

||||

* Easy to adapt for new Tokens
* Compatible with ERC-20
* Adapter for ERC-20 to use ERC-20 safely

|

||||

|

||||

#### Cons:

|

||||

|

||||

* No real innovations. A compromise of ERC-20 and ERC-223
* Current implementation [is not finished][12]

|

||||

|

||||

|

||||

### The Reunion: ERC-777

|

||||

|

||||

[ERC-777 A New Advanced Token Standard][13] was introduced to establish an evolved Token standard which learned from misconceptions like approve() with a value and the aforementioned send-tokens-to-contract-issue.

|

||||

|

||||

Additionally, the ERC-777 uses the new standard [ERC-820: Pseudo-introspection using a registry contract][14] which allows for registering meta-data for contracts to provide a simple type of introspection. This allows for backwards compatibility and other functionality extensions, depending on the ITokenRecipient returned by a EIP-820 lookup on the to address, and the functions implemented by the target contract.

|

||||

|

||||

ERC-777 adds a lot of learnings from using ERC-20 Tokens, eg. white-listed operators, providing Ether-compliant interfaces with send(…), using the ERC-820 to override and adapt functionality for backwards compatibility.

|

||||

|

||||

![][15]

|

||||

|

||||

#### Pros:

|

||||

|

||||

* Well-thought-out, evolved interface for tokens, built on lessons from ERC-20 usage
* Uses the new standard request ERC-820 for introspection, allowing for added functionality
* White-listed operators are very useful and are more necessary than approve/allowance, which was often left infinite

|

||||

|

||||

|

||||

#### Cons:

|

||||

|

||||

* Is just starting; a complex construction with dependent contract calls
* Dependencies raise the probability of security issues: the first security issues have been [identified (and solved)][16] not in ERC-777, but in the even newer ERC-820

|

||||

|

||||

|

||||

|

||||

|

||||

### (Pure Subjective) Conclusion

|

||||

|

||||

For now, if you want to go with the “industry standard” you have to choose ERC-20. It is widely supported and well understood. However, it has its flaws, the biggest one being the risk of non-professional users actually losing money due to design and specification issues. ERC-223 is a very good and theoretically founded answer for the issues in ERC-20 and should be considered a good alternative standard. Implementing both interfaces in a new token is not complicated and allows for reduced gas usage.

|

||||

|

||||

A pragmatic solution to the event and money loss problem is ERC-677, however it doesn’t offer enough innovation to establish itself as a standard. It could however be a good candidate for an ERC-20 2.0.

|

||||

|

||||

ERC-777 is an advanced token standard which should be the legitimate successor to ERC-20, it offers great concepts which are needed on the matured Ethereum platform, like white-listed operators, and allows for extension in an elegant way. Due to its complexity and dependency on other new standards, it will take time till the first ERC-777 tokens will be on the Mainnet.

|

||||

|

||||

### Links

|

||||

|

||||

[1] Security Issues with approve/transferFrom-Pattern in ERC-20: <https://drive.google.com/file/d/0ByMtMw2hul0EN3NCaVFHSFdxRzA/view>

|

||||

|

||||

[2] No Event Handling in ERC-20: <https://docs.google.com/document/d/1Feh5sP6oQL1-1NHi-X1dbgT3ch2WdhbXRevDN681Jv4>

|

||||

|

||||

[3] Statement for ERC-20 failures and history: <https://github.com/ethereum/EIPs/issues/223#issuecomment-317979258>

|

||||

|

||||

[4] List of differences ERC-20/223: <https://ethereum.stackexchange.com/questions/17054/erc20-vs-erc223-list-of-differences>

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://blockchainers.org/index.php/2018/02/08/token-erc-comparison-for-fungible-tokens/

|

||||

|

||||

作者:[Alexander Culum][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://blockchainers.org/index.php/author/alex/

|

||||

[1]:https://www.goodreads.com/quotes/589703-the-good-thing-about-standards-is-that-there-are-so

|

||||

[2]:https://github.com/ethereum/EIPs/blob/master/EIPS/eip-20.md

|

||||

[3]:https://github.com/ethereum/EIPs/blob/master/EIPS/eip-150.md

|

||||

[4]:https://medium.com/@jgm.orinoco/understanding-erc-20-token-contracts-a809a7310aa5

|

||||

[5]:http://blockchainers.org/wp-content/uploads/2018/02/ERC-20-Token-Transfer-2.png

|

||||

[6]:http://matthewtmead.com/blog/hollywood-principle-dont-call-us-well-call-you-4/

|

||||

[7]:https://consensys.github.io/smart-contract-best-practices/known_attacks/

|

||||

[8]:https://github.com/Dexaran

|

||||

[9]:http://blockchainers.org/wp-content/uploads/2018/02/ERC-223-Token-Transfer-1.png

|

||||

[10]:https://github.com/ethereum/EIPs/issues/677

|

||||

[11]:http://blockchainers.org/wp-content/uploads/2018/02/ERC-677-Token-Transfer.png

|

||||

[12]:https://github.com/ethereum/EIPs/issues/677#issuecomment-353871138

|

||||

[13]:https://github.com/ethereum/EIPs/issues/777

|

||||

[14]:https://github.com/ethereum/EIPs/issues/820

|

||||

[15]:http://blockchainers.org/wp-content/uploads/2018/02/ERC-777-Token-Transfer.png

|

||||

[16]:https://github.com/ethereum/EIPs/issues/820#issuecomment-362049573

|

||||

72

sources/tech/20180301 Best Websites For Programmers.md

Normal file

@ -0,0 +1,72 @@

|

||||

Best Websites For Programmers

|

||||

======

|

||||

![][1]

|

||||

|

||||

As a programmer, you will often find yourself as a permanent visitor of some websites. These can be tutorial, reference or forums websites. So here in this article let us have a look at the best websites for programmers.

|

||||

|

||||

### W3Schools

|

||||

W3Schools is one of the best websites for beginners as well as experienced web developers to learn various programming languages. You can learn HTML5, CSS3, PHP, JavaScript, ASP, etc.

|

||||

|

||||

More importantly, the website holds a lot of resources and references for web developers.

|

||||

|

||||

[![w3schools logo][2]][3]

|

||||

|

||||

You can quickly see various keywords and what they do. The website is very interactive and it allows you to try and practice the code in an embedded editor on the website itself. The website is one of those few that you will frequently visit as a web developer.

|

||||

|

||||

### GeeksforGeeks

|

||||

GeeksforGeeks is a website mostly focused on computer science. It has a huge collection of algorithms, solutions and programming questions.

|

||||

|

||||

[![geeksforgeeks programming support][4]][5]

|

||||

|

||||

The website also has a good stock of the most frequently asked interview questions. Since the website is about computer science in general, you will find solutions to programming problems in most popular languages.

|

||||

|

||||

### TutorialsPoint

|

||||

The de facto place for learning anything. TutorialsPoint has some of the finest and easiest tutorials, which can teach you any programming language. What I really love about this website is that it is not limited to generic programming languages.

|

||||

|

||||

|

||||

|

||||

You can find tutorials for almost all frameworks of all languages on the planet.

|

||||

|

||||

### StackOverflow

|

||||

You probably already know that Stack Overflow is the place where programmers meet. If you ever get stuck on some of your code, just ask a question on Stack Overflow and programmers from all over the internet will be there to help you.

|

||||

|

||||

[![stackoverflow linux programming website][6]][7]

|

||||

|

||||

The best part about Stack Overflow is that almost all questions get answered. You may also receive answers from several different points of view.

|

||||

|

||||

### HackerRank

|

||||

HackerRank is a website where you can participate in various coding competitions and test your competitive abilities.

|

||||

|

||||

[![hackerrank programming forums][8]][9]

There are various contests organized in various programming languages, and winning them increases your score. This score can get you into the top ranks and increase your chance of getting noticed by a software company.

|

||||

|

||||

### Codebeautify

|

||||

Since we are programmers, beauty isn’t something we look after. Many a time our code can be difficult to read by someone else. Codebeautify can make your code easy to read.

|

||||

|

||||

|

||||

|

||||

The website has most languages that it can beautify. Alternatively, if you wish to make your code not readable by someone you can also do that.

|

||||

|

||||

So these were some of my picks for the best websites for programmers. If you frequently visit a site that I haven’t mentioned, do let me know in the comment section below.

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.theitstuff.com/best-websites-programmers

|

||||

|

||||

作者:[Rishabh Kandari][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.theitstuff.com/author/reevkandari

|

||||

[1]:http://www.theitstuff.com/wp-content/uploads/2017/12/best-websites-for-programmers.jpg

|

||||

[2]:http://www.theitstuff.com/wp-content/uploads/2017/12/w3schools-logo-550x110.png

|

||||

[3]:http://www.theitstuff.com/wp-content/uploads/2017/12/w3schools-logo.png

|

||||

[4]:http://www.theitstuff.com/wp-content/uploads/2017/12/geeksforgeeks-programming-support-550x152.png

|

||||

[5]:http://www.theitstuff.com/wp-content/uploads/2017/12/geeksforgeeks-programming-support.png

|

||||

[6]:http://www.theitstuff.com/wp-content/uploads/2017/12/stackoverflow-linux-programming-website-550x178.png

|

||||

[7]:http://www.theitstuff.com/wp-content/uploads/2017/12/stackoverflow-linux-programming-website.png

|

||||

[8]:http://www.theitstuff.com/wp-content/uploads/2017/12/hackerrank-programming-forums-550x118.png

|

||||

[9]:http://www.theitstuff.com/wp-content/uploads/2017/12/hackerrank-programming-forums.png

|

||||

@ -1,3 +1,4 @@

|

||||

translating by wyxplus

|

||||

Things You Should Know About Ubuntu 18.04

|

||||

======

|

||||

[Ubuntu 18.04 release][1] is just around the corner. I can see lots of questions from Ubuntu users in various Facebook groups and forums. I also organized Q&A sessions on Facebook and Instagram to know what Ubuntu users are wondering about Ubuntu 18.04.

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

pinewall translating

|

||||

|

||||

A reading list for Linux and open source fans

|

||||

======

|

||||

|

||||

|

||||

@ -1,123 +0,0 @@

|

||||

apply for translation.

|

||||

|

||||

How to kill a process or stop a program in Linux

|

||||

======

|

||||

|

||||

|

||||

When a process misbehaves, you might sometimes want to terminate or kill it. In this post, we'll explore a few ways to terminate a process or an application from the command line as well as from a graphical interface, using [gedit][1] as a sample application.

|

||||

|

||||

### Using the command line/termination characters

|

||||

|

||||

#### Ctrl + C

|

||||

|

||||

One problem with invoking `gedit` from the command line (if you are not using `gedit &`) is that it does not free up the prompt, so that shell session is blocked. In such cases, Ctrl+C (the Control key in combination with 'C') comes in handy. It terminates `gedit`, and all work is lost (unless the file was saved). Ctrl+C sends the `SIGINT` signal to `gedit`. This is an interrupt signal whose default action is to terminate the process. `gedit` exits, the shell returns to its main loop, and you get the prompt back.

|

||||

```
$ gedit
^C
```

|

||||

|

||||

#### Ctrl + Z

|

||||

|

||||

This is called a suspend character. It sends a `SIGTSTP` signal to the process. This is also a stop signal, but the default action is not to kill but to suspend the process.

It will stop (suspend, not terminate) `gedit` and return the shell prompt.

|

||||

```
$ gedit
^Z
[1]+ Stopped gedit
$
```

|

||||

|

||||

Once the process is suspended (in this case, `gedit`), it is not possible to write or do anything in `gedit`. In the background, the process becomes a job. This can be verified by the `jobs` command.

|

||||

```
$ jobs
[1]+ Stopped gedit
```

|

||||

|

||||

Job control allows you to manage multiple processes within a single shell session, and `jobs` lists them. You can stop, resume, and move jobs to the background or foreground as needed.

|

||||

|

||||

Let's resume `gedit` in the background and free up a prompt to run other commands. You can do this using the `bg` command, followed by job ID (notice `[1]` from the output of `jobs` above. `[1]` is the job ID).

|

||||

```
$ bg 1
[1]+ gedit &
```

|

||||

|

||||

This is similar to starting `gedit` with `&`:

|

||||

```
$ gedit &
```

|

||||

|

||||

### Using kill

|

||||

|

||||

`kill` allows fine control over signals, enabling you to signal a process by specifying either a signal name or a signal number, followed by a process ID, or PID.

|

||||

|

||||

What I like about `kill` is that it can also work with job IDs. Let's start `gedit` in the background using `gedit &`. Assuming I have a job ID of `gedit` from the `jobs` command, let's send `SIGINT` to `gedit`:

|

||||

```
$ kill -s SIGINT %1
```

|

||||

|

||||

Note that the job ID should be prefixed with `%`, or `kill` will consider it a PID.
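The same signalling can be done from a script. The following Python sketch is an illustration, not a replacement for `kill`: it starts a throwaway child process (a Python one-liner that sleeps, standing in for `gedit`), sends it `SIGTERM`, which is the signal `kill` sends when you name none, and then reads back how the child ended. It assumes a POSIX system.

```python
import signal
import subprocess
import sys

# Start a child that would otherwise sleep for a minute.
child = subprocess.Popen([sys.executable, "-c", "import time; time.sleep(60)"])

# Equivalent in spirit to `kill -s SIGTERM <PID>`.
child.send_signal(signal.SIGTERM)
child.wait(timeout=10)

# A negative return code -N means the child was ended by signal N.
ended_by = signal.Signals(-child.returncode)
print(child.pid, child.returncode, ended_by.name)
```

Note that Python works with PIDs only. Job IDs such as `%1` are a shell feature and have no meaning outside the shell session that created them.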

|

||||

|