mirror of https://github.com/LCTT/TranslateProject.git (synced 2025-02-28 01:01:09 +08:00)

translated (#4996)

* Create 20170113 How to install a Ceph Storage Cluster on Ubuntu 16.04.md: translated
* Delete 20170113 How to install a Ceph Storage Cluster on Ubuntu 16.04.md: delete sources

parent 6f34b7b027

commit 186f499a83

@@ -1,465 +0,0 @@

wyangsun translating

How to install a Ceph Storage Cluster on Ubuntu 16.04
============================================================

### On this page

1. [Step 1 - Configure All Nodes][1]
2. [Step 2 - Configure the SSH Server][2]
3. [Step 3 - Configure the Ubuntu Firewall][3]
4. [Step 4 - Configure the Ceph OSD Nodes][4]
5. [Step 5 - Build the Ceph Cluster][5]
6. [Step 6 - Testing Ceph][6]
7. [Reference][7]

Ceph is an open source storage platform that provides high performance, reliability, and scalability. It's a free distributed storage system that provides an interface for object, block, and file-level storage and can operate without a single point of failure.

In this tutorial, I will guide you through installing and building a Ceph cluster on Ubuntu 16.04 server. A Ceph cluster consists of these components:

* **Ceph OSDs (ceph-osd)** - Handle the data storage, data replication, and recovery. A Ceph cluster needs at least two Ceph OSD servers. We will use three Ubuntu 16.04 servers in this setup.
* **Ceph Monitor (ceph-mon)** - Monitors the cluster state and maintains the OSD map and CRUSH map. We will use one server here.
* **Ceph Meta Data Server (ceph-mds)** - This is needed if you want to use Ceph as a File System.

### **Prerequisites**

* 6 server nodes with Ubuntu 16.04 server installed
* Root privileges on all nodes

I will use the following hostname / IP setup:

| hostname | IP address |
| --- | --- |
| ceph-admin | 10.0.15.10 |
| mon1 | 10.0.15.11 |
| ceph-osd1 | 10.0.15.21 |
| ceph-osd2 | 10.0.15.22 |
| ceph-osd3 | 10.0.15.23 |
| ceph-client | 10.0.15.15 |

### Step 1 - Configure All Nodes

In this step, we will configure all 6 nodes to prepare them for the installation of the Ceph cluster software. Run the commands below on all nodes, and make sure that ssh-server is installed on all of them.

**Create the Ceph User**

Create a new user named '**cephuser**' on all nodes.

    useradd -m -s /bin/bash cephuser
    passwd cephuser

After creating the new user, we need to configure **cephuser** for passwordless sudo privileges. This means that 'cephuser' can run commands with sudo without having to enter a password first.

Run the commands below to achieve that.

    echo "cephuser ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephuser
    chmod 0440 /etc/sudoers.d/cephuser
    sed -i 's/Defaults requiretty/#Defaults requiretty/g' /etc/sudoers
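The sudoers step above can be wrapped in a small function, sketched here under a few assumptions: the function name `write_sudoers_dropin` and its arguments are hypothetical, and the `visudo -c -f` syntax check only runs when `visudo` is installed. It is an illustration of the idea, not part of the original tutorial.

```shell
#!/usr/bin/env bash
# write_sudoers_dropin USER FILE
# Writes a passwordless-sudo rule for USER into FILE with mode 0440.
# The function name and its arguments are illustrative only.
write_sudoers_dropin() {
  local user="$1" file="$2"
  printf '%s ALL = (root) NOPASSWD:ALL\n' "$user" > "$file" || return 1
  chmod 0440 "$file" || return 1
  # Syntax-check the drop-in when visudo is available; a broken
  # sudoers file can make sudo unusable.
  if command -v visudo >/dev/null 2>&1; then
    visudo -c -f "$file" >/dev/null
  fi
}
```

Run as root on each node, a call such as `write_sudoers_dropin cephuser /etc/sudoers.d/cephuser` would replace the `echo | tee` and `chmod` pair.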

**Install and Configure NTP**

Install NTP to synchronize the date and time on all nodes. Run the ntpdate command to set the date and time via NTP. We will use the US pool NTP servers. Then start the NTP service and enable it to run at boot time.

    sudo apt-get install -y ntp ntpdate ntp-doc
    ntpdate 0.us.pool.ntp.org
    hwclock --systohc
    systemctl enable ntp
    systemctl start ntp

**Install Open-vm-tools**

If you are running all nodes inside VMware, you need to install this virtualization utility.

    sudo apt-get install -y open-vm-tools

**Install Python and parted**

In this tutorial, we need Python packages for building the Ceph cluster. Install python, python-pip and parted.

    sudo apt-get install -y python python-pip parted

**Configure the Hosts File**

Edit the hosts file on all nodes with the vim editor.

    vim /etc/hosts

Paste the configuration below:

```
10.0.15.10 ceph-admin
10.0.15.11 mon1
10.0.15.21 ceph-osd1
10.0.15.22 ceph-osd2
10.0.15.23 ceph-osd3
10.0.15.15 ceph-client
```

Save the hosts file and exit the vim editor.
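Editing the hosts file by hand on six machines is easy to get wrong, so the same entries could be appended by a script instead. The following is a minimal sketch; the function names `add_host_entry` and `add_cluster_hosts` are hypothetical helpers, and the IP addresses are the ones from the hosts configuration above.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME
# Appends "IP NAME" to FILE unless NAME already appears in it.
add_host_entry() {
  local file="$1" ip="$2" name="$3"
  # -w matches NAME as a whole word, so "mon1" does not match "mon10";
  # -F treats NAME as a fixed string rather than a regular expression.
  if ! grep -qwF -- "$name" "$file" 2>/dev/null; then
    printf '%s %s\n' "$ip" "$name" >> "$file"
  fi
}

# add_cluster_hosts FILE
# Adds the six nodes used in this tutorial to FILE.
add_cluster_hosts() {
  local file="$1"
  add_host_entry "$file" 10.0.15.10 ceph-admin
  add_host_entry "$file" 10.0.15.11 mon1
  add_host_entry "$file" 10.0.15.21 ceph-osd1
  add_host_entry "$file" 10.0.15.22 ceph-osd2
  add_host_entry "$file" 10.0.15.23 ceph-osd3
  add_host_entry "$file" 10.0.15.15 ceph-client
}
```

As root, `add_cluster_hosts /etc/hosts` can be run more than once without duplicating lines. Note that the check looks for the name anywhere in the file, so an existing line such as `127.0.1.1 ceph-admin` would cause that entry to be skipped.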

|

||||

|

||||

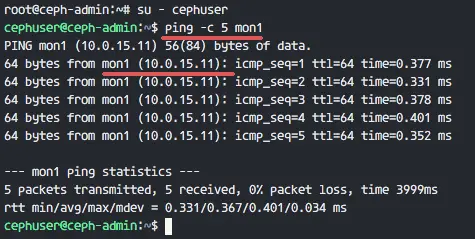

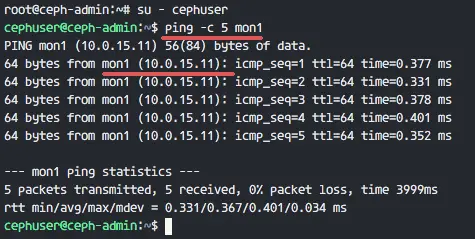

Now you can try to ping between the server hostnames to test the network connectivity.

|

||||

|

||||

ping -c 5 mon1
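To test every hostname rather than only mon1, the ping can be put in a loop. This is a sketch, assuming the six hostnames from the hosts file; the `check_nodes` function and the `PING` override (useful for a dry run) are conventions invented for this example.

```shell
#!/usr/bin/env bash
# Hostnames taken from the /etc/hosts entries used in this tutorial.
NODES="ceph-admin mon1 ceph-osd1 ceph-osd2 ceph-osd3 ceph-client"

# check_nodes
# Pings every node once and reports which ones answer.
# PING may be set to another command (for example PING=true) for a dry
# run; that variable is an assumption of this sketch.
check_nodes() {
  local node failed=0
  for node in $NODES; do
    if ${PING:-ping -c 1 -W 2} "$node" >/dev/null 2>&1; then
      echo "ok   $node"
    else
      echo "FAIL $node"
      failed=1
    fi
  done
  return "$failed"
}
```

Calling `check_nodes` prints one line per node and returns a non-zero status if any node did not answer.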

|

||||

|

||||

[

|

||||

|

||||

][8]

|

||||

|

||||

### Step 2 - Configure the SSH Server

|

||||

|

||||

In this step, we will configure the **ceph-admin node**. The admin node is used for configuring the monitor node and osd nodes. Login to the ceph-admin node and access the '**cephuser**'.

|

||||

|

||||

ssh root@ceph-admin

|

||||

su - cephuser

|

||||

|

||||

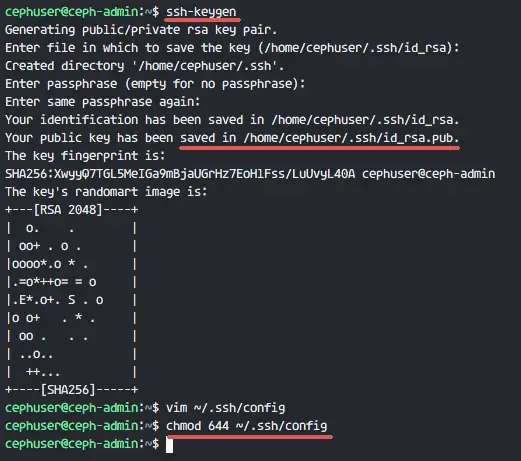

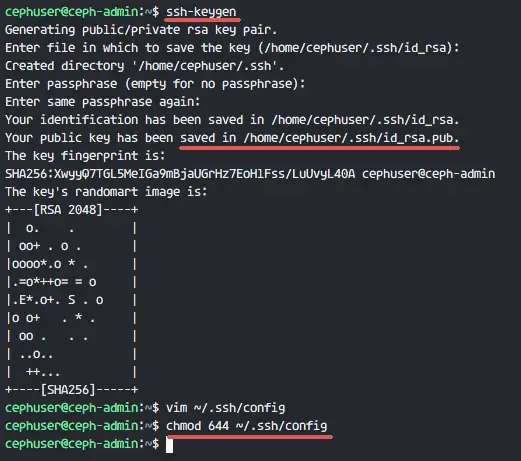

The admin node is used for installing and configuring all cluster node, so the user on the ceph-admin node must have privileges to connect to all nodes without a password. We need to configure password-less SSH access for 'cephuser' on the 'ceph-admin' node.

|

||||

|

||||

Generate the ssh keys for '**cephuser**'.

|

||||

|

||||

ssh-keygen

|

||||

|

||||

Leave passphrase is blank/empty.

|

||||

|

||||

Next, create a configuration file for the ssh config.

|

||||

|

||||

vim ~/.ssh/config

|

||||

|

||||

Paste the configuration below:

|

||||

|

||||

```

|

||||

Host ceph-admin

|

||||

Hostname ceph-admin

|

||||

User cephuser

|

||||

|

||||

Host mon1

|

||||

Hostname mon1

|

||||

User cephuser

|

||||

|

||||

Host ceph-osd1

|

||||

Hostname ceph-osd1

|

||||

User cephuser

|

||||

|

||||

Host ceph-osd2

|

||||

Hostname ceph-osd2

|

||||

User cephuser

|

||||

|

||||

Host ceph-osd3

|

||||

Hostname ceph-osd3

|

||||

User cephuser

|

||||

|

||||

Host ceph-client

|

||||

Hostname ceph-client

|

||||

User cephuser

|

||||

```

|

||||

|

||||

Save the file and exit vim.
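Since every `Host` block in the configuration above follows the same pattern, the file could also be generated. A minimal sketch, assuming the hypothetical helper name `render_ssh_config`:

```shell
#!/usr/bin/env bash
# render_ssh_config USER HOST...
# Prints one "Host" block per host name, in the same layout as the
# hand-written configuration.
render_ssh_config() {
  local user="$1" host
  shift
  for host in "$@"; do
    printf 'Host %s\n    Hostname %s\n    User %s\n\n' "$host" "$host" "$user"
  done
}
```

For example, `render_ssh_config cephuser ceph-admin mon1 ceph-osd1 ceph-osd2 ceph-osd3 ceph-client > ~/.ssh/config` would write the six blocks. The redirect overwrites any existing config, so use `>>` if the file already has other entries.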

|

||||

|

||||

[

|

||||

|

||||

][9]

|

||||

|

||||

Change the permission of the config file to 644.

|

||||

|

||||

chmod 644 ~/.ssh/config

|

||||

|

||||

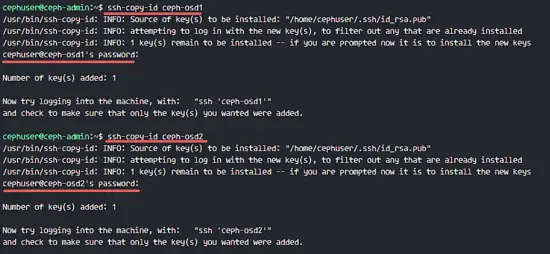

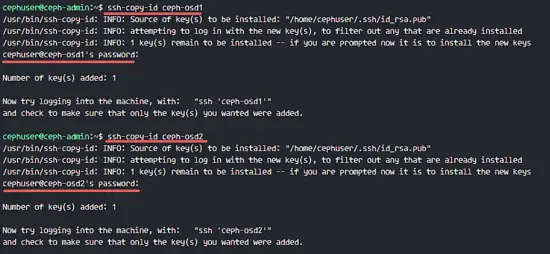

Now add the key to all nodes with the ssh-copy-id command.

|

||||

|

||||

ssh-keyscan ceph-osd1 ceph-osd2 ceph-osd3 ceph-client mon1 >> ~/.ssh/known_hosts

|

||||

ssh-copy-id ceph-osd1

|

||||

ssh-copy-id ceph-osd2

|

||||

ssh-copy-id ceph-osd3

|

||||

ssh-copy-id mon1

|

||||

|

||||

Type in your cephuser password when requested.
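The repeated ssh-copy-id calls can be expressed as a loop. The sketch below assumes a hypothetical function `copy_key_to_nodes`; the `DRY_RUN` variable is a convention of this example and not an ssh-copy-id feature.

```shell
#!/usr/bin/env bash
# copy_key_to_nodes NODE...
# Runs ssh-copy-id for each node and stops at the first failure.
# With DRY_RUN=1 the commands are only printed, not executed.
copy_key_to_nodes() {
  local node
  for node in "$@"; do
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "ssh-copy-id $node"
    else
      ssh-copy-id "$node" || return 1
    fi
  done
}
```

Running `DRY_RUN=1 copy_key_to_nodes ceph-osd1 ceph-osd2 ceph-osd3 mon1` first shows what would be executed; dropping `DRY_RUN=1` performs the copy and still prompts for the cephuser password on each node.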

|

||||

|

||||

[

|

||||

|

||||

][10]

|

||||

|

||||

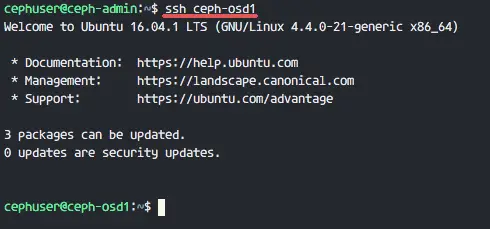

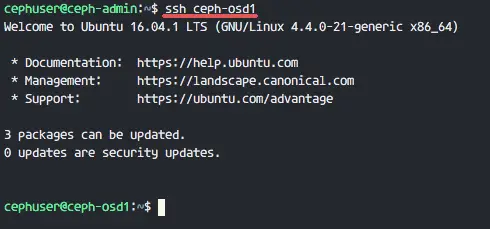

Now try to access the osd1 server from the ceph-admin node to test if the password-less login works.

|

||||

|

||||

ssh ceph-osd1

|

||||

|

||||

[

|

||||

|

||||

][11]

|

||||

|

||||

### Step 3 - Configure the Ubuntu Firewall

|

||||

|

||||

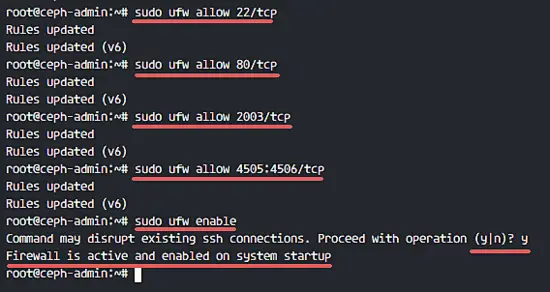

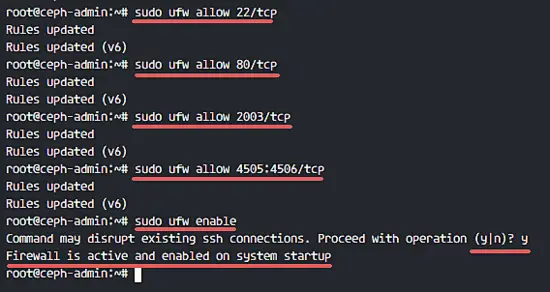

For security reasons, we need to turn on the firewall on the servers. Preferably we use Ufw (Uncomplicated Firewall), the default Ubuntu firewall, to protect the system. In this step, we will enable ufw on all nodes, then open the ports needed by ceph-admin, ceph-mon and ceph-osd.

|

||||

|

||||

Login to the ceph-admin node and install the ufw packages.

|

||||

|

||||

ssh root@ceph-admin

|

||||

sudo apt-get install -y ufw

|

||||

|

||||

Open port 80, 2003 and 4505-4506, then reload firewalld.

|

||||

|

||||

sudo ufw allow 22/tcp

|

||||

sudo ufw allow 80/tcp

|

||||

sudo ufw allow 2003/tcp

|

||||

sudo ufw allow 4505:4506/tcp

|

||||

|

||||

Start and enable ufw to start at boot time.

|

||||

|

||||

sudo ufw enable

|

||||

|

||||

[

|

||||

|

||||

][12]

|

||||

|

||||

From the ceph-admin node, login to the monitor node 'mon1' and install ufw.

|

||||

|

||||

ssh mon1

|

||||

sudo apt-get install -y ufw

|

||||

|

||||

Open the ports for the ceph monitor node and start ufw.

|

||||

|

||||

sudo ufw allow 22/tcp

|

||||

sudo ufw allow 6789/tcp

|

||||

sudo ufw enable

|

||||

|

||||

Finally, open these ports on each osd node: ceph-osd1, ceph-osd2 and ceph-osd3 - port 6800-7300.

|

||||

|

||||

Login to each of the ceph-osd nodes from the ceph-admin, and install ufw.

|

||||

|

||||

ssh ceph-osd1

|

||||

sudo apt-get install -y ufw

|

||||

|

||||

Open the ports on the osd nodes and reload firewalld.

|

||||

|

||||

sudo ufw allow 22/tcp

|

||||

sudo ufw allow 6800:7300/tcp

|

||||

sudo ufw enable

|

||||

|

||||

The ufw firewall configuration is finished.
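The port lists above differ only by node role, which can be captured in one place. This is a sketch: the role labels (`admin`, `mon`, `osd`) and the function names are made up for the example, while the ports are the ones opened in this step. The functions only print the commands so they can be reviewed before being run.

```shell
#!/usr/bin/env bash
# ports_for_role ROLE
# Prints the ufw port specs this tutorial opens for a node role.
# The role names are labels chosen for this sketch.
ports_for_role() {
  case "$1" in
    admin) echo "22/tcp 80/tcp 2003/tcp 4505:4506/tcp" ;;
    mon)   echo "22/tcp 6789/tcp" ;;
    osd)   echo "22/tcp 6800:7300/tcp" ;;
    *)     echo "unknown role: $1" >&2; return 1 ;;
  esac
}

# ufw_commands ROLE
# Prints the ufw commands for ROLE, one per line.
ufw_commands() {
  local ports port
  ports=$(ports_for_role "$1") || return 1
  for port in $ports; do
    echo "sudo ufw allow $port"
  done
  echo "sudo ufw enable"
}
```

For instance, `ufw_commands osd` prints the three commands for an OSD node. Keeping port 22 in every role matters because `ufw enable` applies the rules immediately, and it may ask for confirmation when run over an SSH session.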

|

||||

|

||||

### Step 4 - Configure the Ceph OSD Nodes

|

||||

|

||||

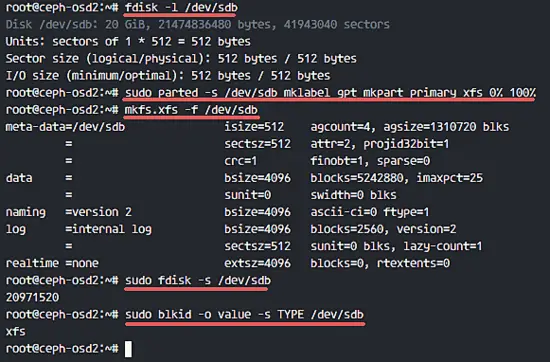

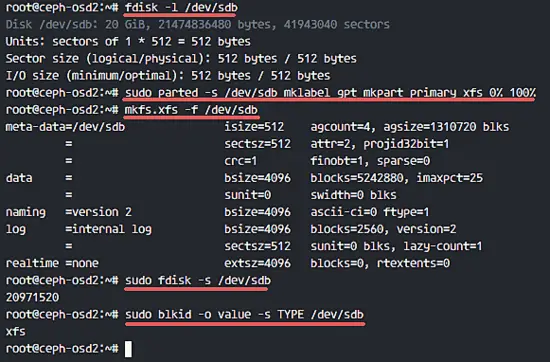

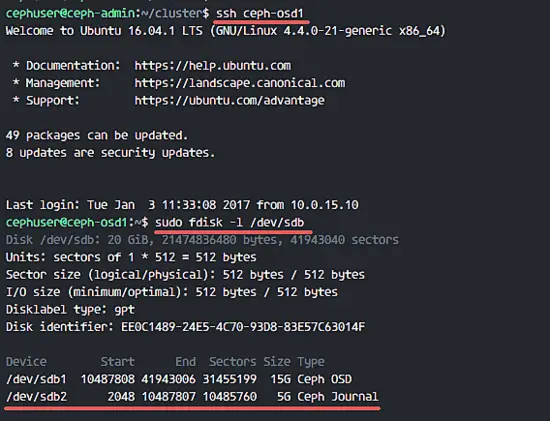

In this tutorial, we have 3 OSD nodes, each of these nodes has two hard disk partitions.

|

||||

|

||||

1. **/dev/sda** for root partition

|

||||

2. **/dev/sdb** is empty partition - 20GB

|

||||

|

||||

We will use **/dev/sdb** for the ceph disk. From the ceph-admin node, login to all OSD nodes and format the /dev/sdb partition with **XFS** file system.

|

||||

|

||||

ssh ceph-osd1

|

||||

ssh ceph-osd2

|

||||

ssh ceph-osd3

|

||||

|

||||

Check the partition scheme with the fdisk command.

|

||||

|

||||

sudo fdisk -l /dev/sdb

|

||||

|

||||

Format the /dev/sdb partition with an XFS filesystem and with a GPT partition table by using the parted command.

|

||||

|

||||

sudo parted -s /dev/sdb mklabel gpt mkpart primary xfs 0% 100%

|

||||

|

||||

Next, format the partition in XFS format with the mkfs command.

|

||||

|

||||

sudo mkfs.xfs -f /dev/sdb

|

||||

|

||||

Now check the partition, and you will see a XFS /dev/sdb partition.

|

||||

|

||||

sudo fdisk -s /dev/sdb

|

||||

sudo blkid -o value -s TYPE /dev/sdb

|

||||

|

||||

[

|

||||

|

||||

][13]

|

||||

|

||||

### Step 5 - Build the Ceph Cluster

|

||||

|

||||

In this step, we will install Ceph on all nodes from the ceph-admin. To get started, login to the ceph-admin node.

|

||||

|

||||

ssh root@ceph-admin

|

||||

su - cephuser

|

||||

|

||||

**Install ceph-deploy on ceph-admin node**

|

||||

|

||||

In the first step we've already installed python and python-pip on to the system. Now we need to install the Ceph deployment tool '**ceph-deploy**' from the pypi python repository.

|

||||

|

||||

Install ceph-deploy on the ceph-admin node with the pip command.

|

||||

|

||||

sudo pip install ceph-deploy

|

||||

|

||||

Note: Make sure all nodes are updated.

|

||||

|

||||

After the ceph-deploy tool has been installed, create a new directory for the Ceph cluster configuration.

|

||||

|

||||

**Create a new Cluster**

|

||||

|

||||

Create a new cluster directory.

|

||||

|

||||

mkdir cluster

|

||||

cd cluster/

|

||||

|

||||

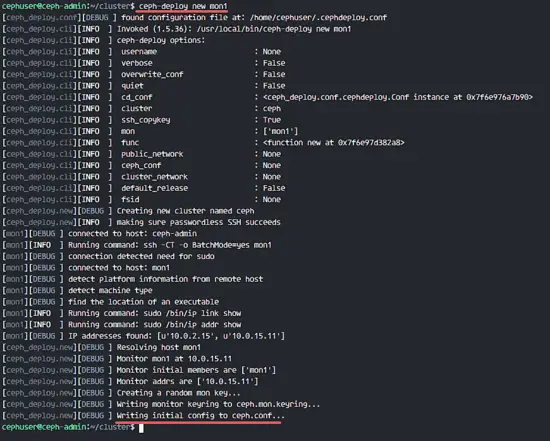

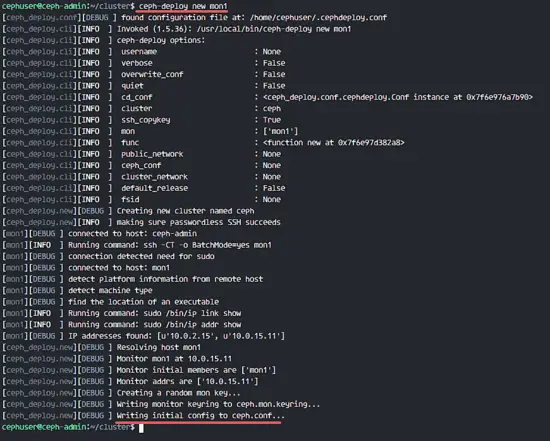

Next, create a new cluster with the '**ceph-deploy**' command by defining the monitor node '**mon1**'.

|

||||

|

||||

ceph-deploy new mon1

|

||||

|

||||

The command will generate the Ceph cluster configuration file 'ceph.conf' in cluster directory.

|

||||

|

||||

[

|

||||

|

||||

][14]

|

||||

|

||||

Edit the ceph.conf file with vim.

|

||||

|

||||

vim ceph.conf

|

||||

|

||||

Under the [global] block, paste the configuration below.

|

||||

|

||||

```

|

||||

# Your network address

|

||||

public network = 10.0.15.0/24

|

||||

osd pool default size = 2

|

||||

```

|

||||

|

||||

Save the file and exit the editor.

|

||||

|

||||

**Install Ceph on All Nodes**

|

||||

|

||||

Now install Ceph on all nodes from the ceph-admin node with a single command.

|

||||

|

||||

ceph-deploy install ceph-admin ceph-osd1 ceph-osd2 ceph-osd3 mon1

|

||||

|

||||

The command will automatically install Ceph on all nodes: mon1, osd1-3 and ceph-admin - The installation will take some time.

|

||||

|

||||

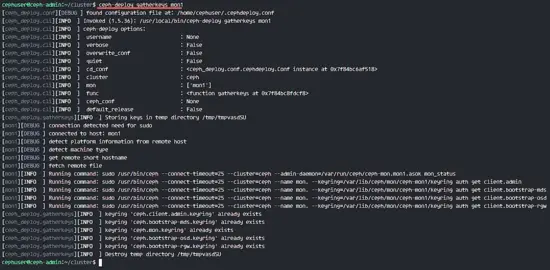

Now deploy the monitor node on the mon1 node.

|

||||

|

||||

ceph-deploy mon create-initial

|

||||

|

||||

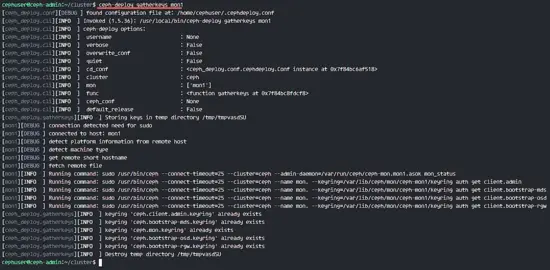

The command will create a monitor key, check the key with this ceph command.

|

||||

|

||||

ceph-deploy gatherkeys mon1

|

||||

|

||||

[

|

||||

|

||||

][15]

|

||||

|

||||

**Adding OSDS to the Cluster**

|

||||

|

||||

After Ceph has been installed on all nodes, now we can add the OSD daemons to the cluster. OSD Daemons will create the data and journal partition on the disk /dev/sdb.

|

||||

|

||||

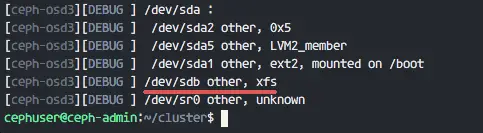

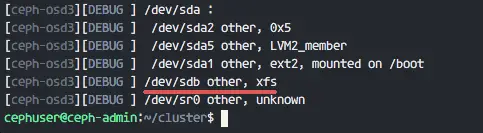

Check the available disk /dev/sdb on all osd nodes.

|

||||

|

||||

ceph-deploy disk list ceph-osd1 ceph-osd2 ceph-osd3

|

||||

|

||||

[

|

||||

|

||||

][16]

|

||||

|

||||

You will see /dev/sdb with the XFS format that we created before.

|

||||

|

||||

Next, delete the partition tables on all nodes with the zap option.

|

||||

|

||||

ceph-deploy disk zap ceph-osd1:/dev/sdb ceph-osd2:/dev/sdb ceph-osd3:/dev/sdb

|

||||

|

||||

The command will delete all data on /dev/sdb on the Ceph OSD nodes.

|

||||

|

||||

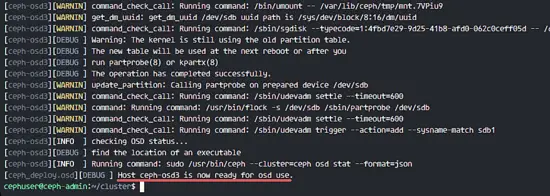

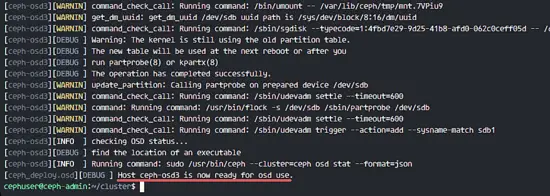

Now prepare all OSD nodes and ensure that there are no errors in the results.

|

||||

|

||||

ceph-deploy osd prepare ceph-osd1:/dev/sdb ceph-osd2:/dev/sdb ceph-osd3:/dev/sdb

|

||||

|

||||

When you see the ceph-osd1-3 is ready for OSD use in the result, then the command was successful.

|

||||

|

||||

[

|

||||

|

||||

][17]

|

||||

|

||||

Activate the OSD'S with the command below:

|

||||

|

||||

ceph-deploy osd activate ceph-osd1:/dev/sdb ceph-osd2:/dev/sdb ceph-osd3:/dev/sdb
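The zap, prepare and activate commands all take the same `node:disk` arguments, so the argument list can be built once. The sketch below uses hypothetical helper names (`osd_targets`, `osd_commands`) and only prints the commands for review. It assumes the `node:disk` syntax shown in this tutorial; other ceph-deploy releases may use a different OSD syntax, so check `ceph-deploy osd --help` on your system.

```shell
#!/usr/bin/env bash
# osd_targets DISK NODE...
# Prints the space-separated "node:disk" arguments used by the
# ceph-deploy commands in this tutorial.
osd_targets() {
  local disk="$1" node out=""
  shift
  for node in "$@"; do
    # Add a separating space only when out is already non-empty.
    out="$out${out:+ }$node:$disk"
  done
  echo "$out"
}

# osd_commands DISK NODE...
# Prints the zap / prepare / activate sequence in order.
osd_commands() {
  local targets step
  targets=$(osd_targets "$@")
  for step in "disk zap" "osd prepare" "osd activate"; do
    echo "ceph-deploy $step $targets"
  done
}
```

For example, `osd_commands /dev/sdb ceph-osd1 ceph-osd2 ceph-osd3` prints the same three commands that were typed by hand above.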

|

||||

|

||||

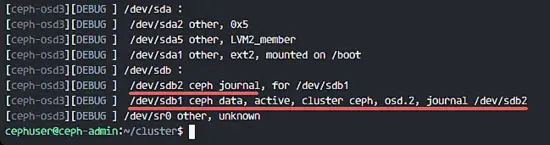

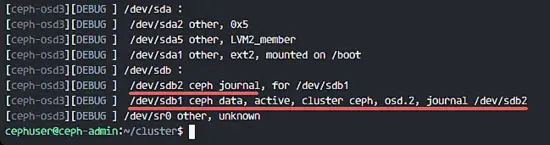

Now you can check the sdb disk on OSDS nodes again.

|

||||

|

||||

ceph-deploy disk list ceph-osd1 ceph-osd2 ceph-osd3

|

||||

|

||||

[

|

||||

|

||||

][18]

|

||||

|

||||

The result is that /dev/sdb has two partitions now:

|

||||

|

||||

1. **/dev/sdb1** - Ceph Data

|

||||

2. **/dev/sdb2** - Ceph Journal

|

||||

|

||||

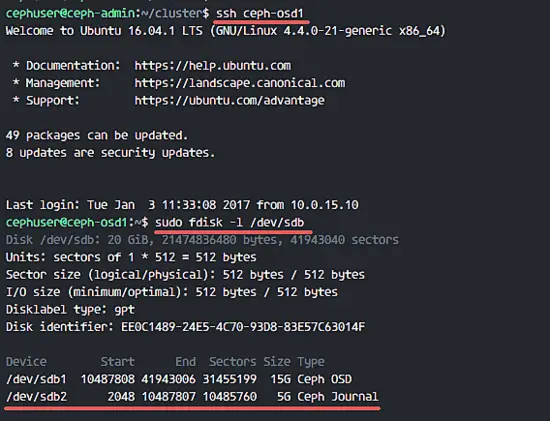

Or you check it directly on the OSD node.

|

||||

|

||||

ssh ceph-osd1

|

||||

sudo fdisk -l /dev/sdb

|

||||

|

||||

[

|

||||

|

||||

][19]

|

||||

|

||||

Next, deploy the management-key to all associated nodes.

|

||||

|

||||

ceph-deploy admin ceph-admin mon1 ceph-osd1 ceph-osd2 ceph-osd3

|

||||

|

||||

Change the permission of the key file by running the command below on all nodes.

|

||||

|

||||

sudo chmod 644 /etc/ceph/ceph.client.admin.keyring

|

||||

|

||||

The Ceph Cluster on Ubuntu 16.04 has been created.

|

||||

|

||||

### Step 6 - Testing Ceph

|

||||

|

||||

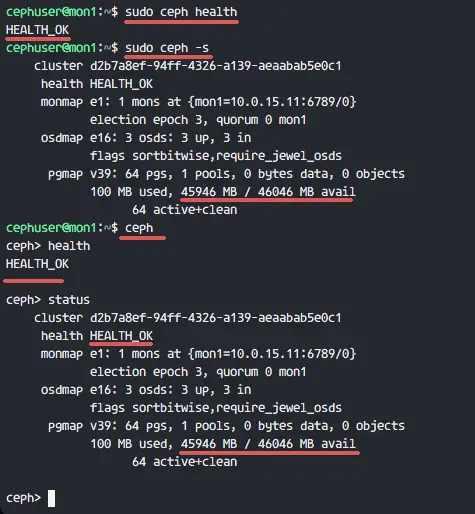

In step 4, we've installed and created a new Ceph cluster, and added OSDS nodes to the cluster. Now we should test the cluster to make sure that it works as intended.

|

||||

|

||||

From the ceph-admin node, log in to the Ceph monitor server '**mon1**'.

|

||||

|

||||

ssh mon1

|

||||

|

||||

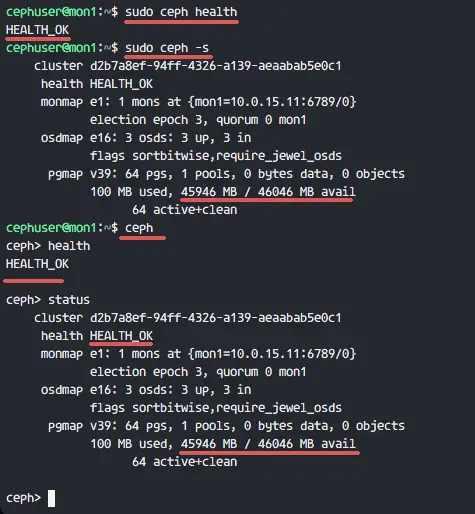

Run the command below to check the cluster health.

|

||||

|

||||

sudo ceph health

|

||||

|

||||

Now check the cluster status.

|

||||

|

||||

sudo ceph -s
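Right after the OSDs are activated the cluster may take a moment to settle, so the health check can be repeated until it reports `HEALTH_OK`. A minimal sketch: the function name `wait_for_health_ok`, its default of 30 attempts 10 seconds apart, and the `CEPH` override are all assumptions of this example.

```shell
#!/usr/bin/env bash
# wait_for_health_ok [ATTEMPTS] [DELAY_SECONDS]
# Polls "ceph health" until the output starts with HEALTH_OK.
# CEPH can name a different command to run; that variable is an
# assumption of this sketch.
wait_for_health_ok() {
  local attempts="${1:-30}" delay="${2:-10}" i=1 status
  while [ "$i" -le "$attempts" ]; do
    status=$(${CEPH:-ceph} health 2>/dev/null)
    case "$status" in
      HEALTH_OK*) echo "healthy after $i check(s)"; return 0 ;;
    esac
    echo "attempt $i: ${status:-no answer}"
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}
```

On mon1, `CEPH="sudo ceph" wait_for_health_ok 12 5` would poll for up to a minute and return a non-zero status if the cluster never becomes healthy.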

You can see the results below:

[Image][20]

Make sure the Ceph health is **OK** and there is a monitor node '**mon1**' with IP address '**10.0.15.11**'. There are **3 OSD** servers and all are **up** and running, and there should be an available disk space of **45GB** - 3x15GB Ceph Data OSD partition.
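The 45GB figure is raw capacity. Because ceph.conf sets `osd pool default size = 2`, each object is stored twice, so the space usable for data is roughly half of that. The arithmetic can be sketched as follows; `usable_gb` is a hypothetical helper, and the estimate ignores filesystem overhead and Ceph's full-ratio limits.

```shell
#!/usr/bin/env bash
# usable_gb OSD_COUNT GB_PER_OSD REPLICAS
# Rough usable capacity: raw capacity divided by the replica count.
# Ignores filesystem overhead and Ceph's full-ratio limits.
usable_gb() {
  awk -v n="$1" -v size="$2" -v rep="$3" \
    'BEGIN { printf "%.1f\n", (n * size) / rep }'
}
```

With the values from this tutorial, `usable_gb 3 15 2` prints `22.5`.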

We have successfully built a new Ceph cluster on Ubuntu 16.04.

--------------------------------------------------------------------------------

via: https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/

Author: [Muhammad Arul][a]
Translator: [translator ID](https://github.com/译者ID)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/) (Linux China)

[a]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/
[1]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#step-configure-all-nodes
[2]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#step-configure-the-ssh-server
[3]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#step-configure-the-ubuntu-firewall
[4]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#step-configure-the-ceph-osd-nodes
[5]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#step-build-the-ceph-cluster
[6]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#step-testing-ceph
[7]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#reference
[8]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/1.png
[9]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/2.png
[10]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/3.png
[11]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/4.png
[12]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/5.png
[13]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/6.png
[14]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/7.png
[15]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/8.png
[16]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/9.png
[17]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/10.png
[18]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/11.png
[19]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/12.png
[20]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/13.png

@@ -0,0 +1,466 @@

How to Install a Ceph Storage Cluster on Ubuntu 16.04
============================================================

### On this page

1. [Step 1 - Configure All Nodes][1]
2. [Step 2 - Configure the SSH Server][2]
3. [Step 3 - Configure the Ubuntu Firewall][3]
4. [Step 4 - Configure the Ceph OSD Nodes][4]
5. [Step 5 - Build the Ceph Cluster][5]
6. [Step 6 - Testing Ceph][6]
7. [Reference][7]

Ceph is an open source storage platform offering high performance, reliability, and scalability. It is a free distributed storage system that provides object, block, and file-level storage interfaces and can keep running when a node is lost.

In this tutorial, I will guide you through installing and building a Ceph cluster on Ubuntu 16.04 server. A Ceph cluster consists of these components:

* **Ceph OSDs (ceph-osd)** - Handle data storage, data replication, and recovery. A Ceph cluster needs at least two Ceph OSD servers. We will use three Ubuntu 16.04 servers in this setup.
* **Ceph Monitor (ceph-mon)** - Monitors the cluster state and maintains the OSD map and CRUSH map. We will use one server here.
* **Ceph Meta Data Server (ceph-mds)** - This is needed if you want to use Ceph as a file system.

### **Prerequisites**

* 6 server nodes with Ubuntu 16.04 installed
* Root privileges on all nodes

I will use the following hostname / IP setup:

| hostname | IP address |
| --- | --- |
| ceph-admin | 10.0.15.10 |
| mon1 | 10.0.15.11 |
| ceph-osd1 | 10.0.15.21 |
| ceph-osd2 | 10.0.15.22 |
| ceph-osd3 | 10.0.15.23 |
| ceph-client | 10.0.15.15 |

### Step 1 - Configure All Nodes

In this step, I will configure all 6 nodes to prepare them for installing the Ceph cluster software, so you must run the commands below on all nodes. Also make sure that ssh-server is installed on every node.

**Create the Ceph User**

Create a new user named '**cephuser**' on all nodes.

    useradd -m -s /bin/bash cephuser
    passwd cephuser

After creating the new user, we need to configure passwordless sudo privileges for **cephuser**. This means 'cephuser' can run commands and obtain sudo privileges without entering a password first.

Run the commands below to complete the configuration.

    echo "cephuser ALL = (root) NOPASSWD:ALL" | sudo tee /etc/sudoers.d/cephuser
    chmod 0440 /etc/sudoers.d/cephuser
    sed -i 's/Defaults requiretty/#Defaults requiretty/g' /etc/sudoers

**Install and Configure NTP**

Install NTP to synchronize the date and time on all nodes. Run the ntpdate command to set the date via NTP. We will use the US pool NTP servers. Then start the NTP service and enable it at boot time.

    sudo apt-get install -y ntp ntpdate ntp-doc
    ntpdate 0.us.pool.ntp.org
    hwclock --systohc
    systemctl enable ntp
    systemctl start ntp

**Install Open-vm-tools**

If you are running all nodes inside VMware, you need to install this virtualization utility.

    sudo apt-get install -y open-vm-tools

**Install Python and parted**

In this tutorial, we need Python packages to build the Ceph cluster. Install python, python-pip and parted.

    sudo apt-get install -y python python-pip parted

**Configure the Hosts File**

Edit the hosts file on all nodes with the vim editor.

    vim /etc/hosts

Paste the configuration below:

```
10.0.15.10 ceph-admin
10.0.15.11 mon1
10.0.15.21 ceph-osd1
10.0.15.22 ceph-osd2
10.0.15.23 ceph-osd3
10.0.15.15 ceph-client
```

Save the hosts file and exit the vim editor.

Now you can try pinging the hostnames between servers to test network connectivity.

    ping -c 5 mon1

[Image][8]

### Step 2 - Configure the SSH Server

In this step, we will configure the **ceph-admin node**. The admin node is used to configure the monitor node and the OSD nodes. Log in to the ceph-admin node and switch to '**cephuser**'.

    ssh root@ceph-admin
    su - cephuser

The admin node is used to install and configure all cluster nodes, so the user on ceph-admin must be able to connect to all nodes without a password. We need to configure password-less SSH login for the 'cephuser' user on the 'ceph-admin' node.

Generate the ssh keys for '**cephuser**'.

    ssh-keygen

Leave the passphrase empty.

Next, create a configuration file for ssh.

    vim ~/.ssh/config

Paste the configuration below:

```
Host ceph-admin
    Hostname ceph-admin
    User cephuser

Host mon1
    Hostname mon1
    User cephuser

Host ceph-osd1
    Hostname ceph-osd1
    User cephuser

Host ceph-osd2
    Hostname ceph-osd2
    User cephuser

Host ceph-osd3
    Hostname ceph-osd3
    User cephuser

Host ceph-client
    Hostname ceph-client
    User cephuser
```

Save the file and exit vim.

[Image][9]

Change the permission of the config file to 644.

    chmod 644 ~/.ssh/config

Now add the key to the nodes with the ssh-copy-id command.

    ssh-keyscan ceph-osd1 ceph-osd2 ceph-osd3 ceph-client mon1 >> ~/.ssh/known_hosts
    ssh-copy-id ceph-osd1
    ssh-copy-id ceph-osd2
    ssh-copy-id ceph-osd3
    ssh-copy-id mon1

Type in your cephuser password when prompted.

[Image][10]

Now try logging in to the ceph-osd1 server from the ceph-admin node to test whether the password-less login works.

    ssh ceph-osd1

[Image][11]

### Step 3 - Configure the Ubuntu Firewall

For security reasons, we need to turn on the firewall on the servers. We will use Ufw (Uncomplicated Firewall), the default Ubuntu firewall, to protect the system. In this step, we enable ufw on all nodes, then open the ports needed by ceph-admin, ceph-mon and ceph-osd.

Log in to the ceph-admin node and install the ufw package.

    ssh root@ceph-admin
    sudo apt-get install -y ufw

Open ports 22, 80, 2003 and 4505-4506.

    sudo ufw allow 22/tcp
    sudo ufw allow 80/tcp
    sudo ufw allow 2003/tcp
    sudo ufw allow 4505:4506/tcp

Start ufw and enable it at boot time.

    sudo ufw enable

[Image][12]

From the ceph-admin node, log in to the monitor node 'mon1' and install ufw.

    ssh mon1
    sudo apt-get install -y ufw

Open the ports for the Ceph monitor node and start ufw.

    sudo ufw allow 22/tcp
    sudo ufw allow 6789/tcp
    sudo ufw enable

Finally, open ports 6800-7300 on each OSD node: ceph-osd1, ceph-osd2 and ceph-osd3.

Log in to each ceph-osd node from ceph-admin and install ufw.

    ssh ceph-osd1
    sudo apt-get install -y ufw

Open the ports on the OSD nodes and enable ufw.

    sudo ufw allow 22/tcp
    sudo ufw allow 6800:7300/tcp
    sudo ufw enable

The ufw firewall configuration is finished.

### Step 4 - Configure the Ceph OSD Nodes

In this tutorial, we have 3 OSD nodes, and each node has two hard disks.

1. **/dev/sda** for the root partition
2. **/dev/sdb** is an empty 20GB disk

We will use **/dev/sdb** as the Ceph disk. From the ceph-admin node, log in to all OSD nodes and format /dev/sdb with the **XFS** file system.

    ssh ceph-osd1
    ssh ceph-osd2
    ssh ceph-osd3

Check the partition table with the fdisk command.

    sudo fdisk -l /dev/sdb

Create a GPT partition table with a single XFS partition on /dev/sdb by using the parted command.

    sudo parted -s /dev/sdb mklabel gpt mkpart primary xfs 0% 100%

Next, format the disk with XFS using the mkfs command.

    sudo mkfs.xfs -f /dev/sdb

Now check the disk, and you will see that /dev/sdb is formatted as XFS.

    sudo fdisk -s /dev/sdb
    sudo blkid -o value -s TYPE /dev/sdb

[Image][13]

### Step 5 - Build the Ceph Cluster

In this step, we will install Ceph on all nodes from ceph-admin. To get started, log in to the ceph-admin node.

    ssh root@ceph-admin
    su - cephuser

**Install ceph-deploy on the ceph-admin node**

We already installed python and python-pip on the system in the first step. Now we need to install the Ceph deployment tool '**ceph-deploy**' from the PyPI Python repository.

Install ceph-deploy on the ceph-admin node with the pip command.

    sudo pip install ceph-deploy

Note: Make sure all nodes are updated.

After the ceph-deploy tool has been installed, create a new directory for the Ceph cluster configuration.

**Create a new Cluster**

Create a new cluster directory.

    mkdir cluster
    cd cluster/

Next, create a new cluster with the '**ceph-deploy**' command by defining the monitor node '**mon1**'.

    ceph-deploy new mon1

The command will generate the Ceph cluster configuration file 'ceph.conf' in the cluster directory.

[Image][14]

Edit ceph.conf with vim.

    vim ceph.conf

Under the [global] block, paste the configuration below.

```
# Your network address
public network = 10.0.15.0/24
osd pool default size = 2
```

Save the file and exit the editor.

**Install Ceph on All Nodes**

Now install Ceph on all nodes from the ceph-admin node with a single command.

    ceph-deploy install ceph-admin ceph-osd1 ceph-osd2 ceph-osd3 mon1

The command will automatically install Ceph on all nodes: mon1, ceph-osd1-3 and ceph-admin. The installation will take some time.

Now deploy the monitor on the mon1 node.

    ceph-deploy mon create-initial

The command creates a monitor key. Gather and check the keys with this ceph-deploy command.

    ceph-deploy gatherkeys mon1

[Image][15]

**Adding OSDs to the Cluster**

After Ceph has been installed on all nodes, we can add the OSD daemons to the cluster. The OSD daemons will create the data and journal partitions on the disk /dev/sdb.

Check that the /dev/sdb disk is available on all OSD nodes.

    ceph-deploy disk list ceph-osd1 ceph-osd2 ceph-osd3

[Image][16]

You will see /dev/sdb with the XFS format that we created before.

Next, delete the partition tables on all nodes with the zap option.

    ceph-deploy disk zap ceph-osd1:/dev/sdb ceph-osd2:/dev/sdb ceph-osd3:/dev/sdb

This command will delete all data on /dev/sdb on the Ceph OSD nodes.

Now prepare all OSD nodes and make sure there are no errors in the results.

    ceph-deploy osd prepare ceph-osd1:/dev/sdb ceph-osd2:/dev/sdb ceph-osd3:/dev/sdb

When you see in the result that ceph-osd1-3 are ready for OSD use, the command was successful.

[Image][17]

Activate the OSDs with the command below:

    ceph-deploy osd activate ceph-osd1:/dev/sdb ceph-osd2:/dev/sdb ceph-osd3:/dev/sdb

Now you can check the sdb disk on the OSD nodes again.

    ceph-deploy disk list ceph-osd1 ceph-osd2 ceph-osd3

[Image][18]

The result is that /dev/sdb now has two partitions:

1. **/dev/sdb1** - Ceph Data
2. **/dev/sdb2** - Ceph Journal

Or you can check it directly on the OSD node.

    ssh ceph-osd1
    sudo fdisk -l /dev/sdb

[Image][19]

Next, deploy the management key to all associated nodes.

    ceph-deploy admin ceph-admin mon1 ceph-osd1 ceph-osd2 ceph-osd3

Change the permission of the key file by running the command below on all nodes.

    sudo chmod 644 /etc/ceph/ceph.client.admin.keyring

The Ceph cluster on Ubuntu 16.04 has been created.

### Step 6 - Testing Ceph

In step 5, we installed and created a new Ceph cluster, and added the OSD nodes to the cluster. Now we should test the cluster to make sure it works as expected.

From the ceph-admin node, log in to the Ceph monitor server '**mon1**'.

    ssh mon1

Run the command below to check the cluster health.

    sudo ceph health

Now check the cluster status.

    sudo ceph -s

You can see the results below:

[Image][20]

Make sure the Ceph health is **OK** and there is a monitor node '**mon1**' with IP address '**10.0.15.11**'. There are **3 OSD** servers, all **up** and running, and the available disk space should be **45GB** - 3x15GB Ceph Data OSD partition.

We have successfully built a new Ceph cluster on Ubuntu 16.04.

### Reference

* http://docs.ceph.com/docs/jewel/

--------------------------------------------------------------------------------

via: https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/

Author: [Muhammad Arul][a]
Translator: [wyangsun](https://github.com/wyangsun)
Proofreader: [proofreader ID](https://github.com/校对者ID)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and is proudly presented by [Linux中国](https://linux.cn/) (Linux China)

[a]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/
[1]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#step-configure-all-nodes
[2]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#step-configure-the-ssh-server
[3]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#step-configure-the-ubuntu-firewall
[4]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#step-configure-the-ceph-osd-nodes
[5]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#step-build-the-ceph-cluster
[6]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#step-testing-ceph
[7]:https://www.howtoforge.com/tutorial/how-to-install-a-ceph-cluster-on-ubuntu-16-04/#reference
[8]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/1.png
[9]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/2.png
[10]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/3.png
[11]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/4.png
[12]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/5.png
[13]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/6.png
[14]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/7.png
[15]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/8.png
[16]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/9.png
[17]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/10.png
[18]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/11.png
[19]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/12.png
[20]:https://www.howtoforge.com/images/how-to-install-a-ceph-cluster-on-ubuntu-16-04/big/13.png
