
[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11354-1.html)
[#]: subject: (Firefox 69 available in Fedora)
[#]: via: (https://fedoramagazine.org/firefox-69-available-in-fedora/)
[#]: author: (Paul W. Frields https://fedoramagazine.org/author/pfrields/)

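Firefox 69 available in Fedora
======

![][1]

When you install Fedora Workstation, you'll find it includes the world-renowned Firefox browser. The Mozilla Foundation is dedicated to developing Firefox and other projects that promote an open, safe, and private Internet. Firefox has a fast browsing engine and numerous privacy features.

A community of developers continues to improve and enhance Firefox. The latest version, Firefox 69, was released recently, and you can get it for your stable Fedora system (30 and later). Read on for more details.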

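### New features in Firefox 69

The newest version of Firefox includes [Enhanced Tracking Protection][2] (ETP). When you use Firefox 69 with a new (or reset) settings profile, the browser makes it harder for sites to track your information or misuse your computer resources.

For instance, less scrupulous websites use scripts that make your system do lots of intense calculations to produce cryptocurrency, a practice called [cryptomining][3]. Cryptomining happens without your knowledge or permission and is therefore a misuse of your system. The new standard setting in Firefox 69 prevents sites from this kind of abuse.

Firefox 69 has additional settings to prevent sites from identifying or fingerprinting your browser for later use. These improvements give you additional protection from having your activities tracked online.

Another common annoyance is videos that start playing without being asked to. Video playback also uses more CPU, and you may not want it happening on your laptop without permission. Firefox already prevents this with the [Block Autoplay][4] feature, and Firefox 69 also lets you stop videos that start playing silently. This feature prevents unwanted sudden noise. It also solves more of the real problem: having your computer's resources used without permission.

There are numerous other new features in the new release. Read more about them in the [Firefox release notes][5].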

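### How to get the update

Firefox 69 is available in the stable Fedora 30 and pre-release Fedora 31 repositories, as well as Rawhide. The update is provided by Fedora's maintainers of the Firefox package. The maintainers also ensured an update to Mozilla's Network Security Services (the nss package). We appreciate the hard work of the Mozilla project and the Firefox community in providing this new release.

If you're using Fedora 30 or later, use the *Software* tool on Fedora Workstation, or run the following command on any Fedora system:

```
$ sudo dnf --refresh upgrade firefox
```

If you're on Fedora 29, [help test the update][6] so it can become stable and easily available for all users.

Firefox may prompt you to upgrade your profile to use the new settings. To take advantage of the new features, you should do this.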
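After upgrading, you may want to confirm that the installed package really is version 69 or newer. The following is a minimal sketch, not part of the original instructions: the helper name `version_at_least` is hypothetical, and it assumes GNU `sort` with version ordering (`-V`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) if version $1 is >= version $2.
# GNU sort -V orders version strings; if the smaller of the two sorts
# first and equals $2, then $1 is at least $2.
version_at_least() {
    local have=$1 want=$2
    [ "$(printf '%s\n' "$want" "$have" | sort -V | head -n 1)" = "$want" ]
}
```

For example, on a Fedora system you could run `version_at_least "$(rpm -q --queryformat '%{VERSION}' firefox)" 69 && echo "Firefox is up to date"`.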

--------------------------------------------------------------------------------

via: https://fedoramagazine.org/firefox-69-available-in-fedora/

Author: [Paul W. Frields][a]
Topic selection: [lujun9972][b]
Translator: [geekpi](https://github.com/geekpi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://fedoramagazine.org/author/pfrields/
[b]: https://github.com/lujun9972
[1]: https://fedoramagazine.org/wp-content/uploads/2019/09/firefox-v69-816x345.jpg
[2]: https://blog.mozilla.org/blog/2019/09/03/todays-firefox-blocks-third-party-tracking-cookies-and-cryptomining-by-default/
[3]: https://www.webopedia.com/TERM/C/cryptocurrency-mining.html
[4]: https://support.mozilla.org/kb/block-autoplay
[5]: https://www.mozilla.org/en-US/firefox/69.0/releasenotes/
[6]: https://bodhi.fedoraproject.org/updates/FEDORA-2019-89ae5bb576


区块链能如何补充开源

|

||||

======

|

||||

|

||||

> 了解区块链如何成为去中心化的开源补贴模型。

|

||||

|

||||

|

||||

|

||||

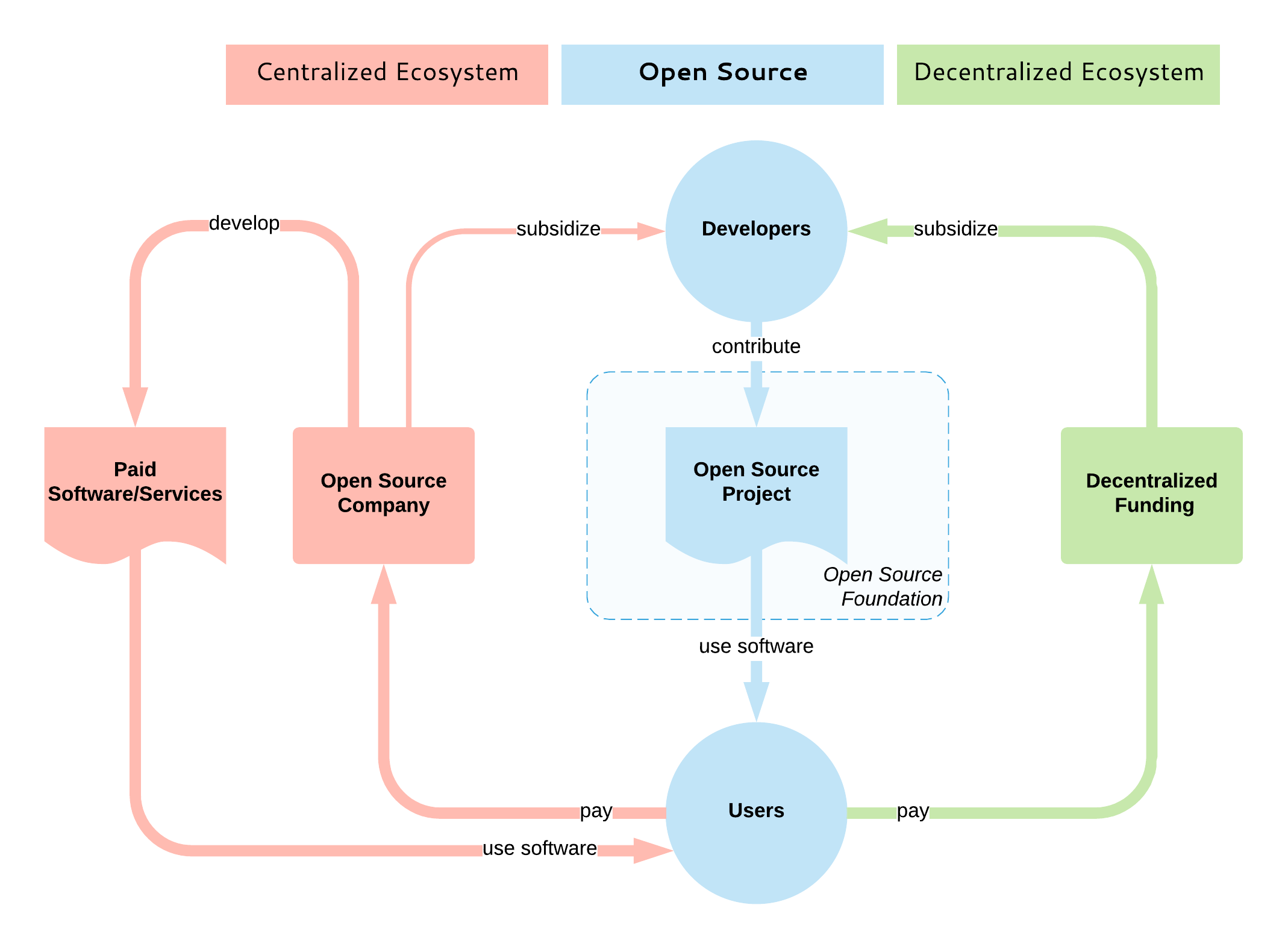

《<ruby>[大教堂与集市][1]<rt>The Cathedral and The Bazaar</rt></ruby>》是 20 年前由<ruby>埃里克·史蒂文·雷蒙德<rt>Eric Steven Raymond<rt></ruby>(ESR)撰写的经典开源故事。在这个故事中,ESR 描述了一种新的革命性的软件开发模型,其中复杂的软件项目是在没有(或者很少的)集中管理的情况下构建的。这个新模型就是<ruby>开源<rt>open source</rt></ruby>。

|

||||

|

||||

ESR 的故事比较了两种模式:

|

||||

|

||||

* 经典模型(由“大教堂”所代表),其中软件由一小群人在封闭和受控的环境中通过缓慢而稳定的发布制作而成。

|

||||

* 以及新模式(由“集市”所代表),其中软件是在开放的环境中制作的,个人可以自由参与,但仍然可以产生一个稳定和连贯的系统。

|

||||

|

||||

开源如此成功的一些原因可以追溯到 ESR 所描述的创始原则。尽早发布、经常发布,并接受许多头脑必然比一个更好的事实,让开源项目进入全世界的人才库(很少有公司能够使用闭源模式与之匹敌)。

|

||||

|

||||

在 ESR 对黑客社区的反思分析 20 年后,我们看到开源成为占据主导地位的的模式。它不再仅仅是为了满足开发人员的个人喜好,而是创新发生的地方。甚至是全球[最大][2]软件公司也正在转向这种模式,以便继续占据主导地位。

|

||||

|

||||

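### A barter system

If we look closely at how the open source model works in practice, we realize that it is a closed system, open only to open source developers and techies. The only way to influence the direction of a project is to join the open source community, understand the written and unwritten rules, learn how to contribute, the coding standards, and so on, and do the work yourself.

This is how the bazaar works, and it is where the barter system analogy comes from. A barter system is a method of exchanging services and goods in return for other services and goods. In the bazaar (where the software is built), this means that in order to take something, you must also be a producer yourself and give something back: by exchanging your time and knowledge to get something done. The bazaar is a place where open source developers interact with other open source developers and produce open source software the open source way.

The barter system is a great step forward, an evolution from the state of self-sufficiency in which everybody must be a jack of all trades. The bazaar (the open source model) using the barter system allows people with common interests and different skills to gather, collaborate, and create something no individual could create alone. The barter system is simple and free of the complexities of modern monetary systems, but it also has some limitations, such as:

* Lack of divisibility: In the absence of a common medium of exchange, a large, indivisible commodity or value cannot be exchanged for a smaller one. For example, if you want to make even a small change in an open source project, you may sometimes still have to get past a high barrier to entry.
* Storing value: If a project is important to your company, you may want to make a large investment or commitment in it. But because it is a barter system among open source developers, the only way to have a strong say is to employ many open source contributors, and that is not always possible.
* Transferring value: If you have invested in a project (trained employees, hired open source developers) and want to shift your focus to another project, it is not possible to quickly transfer the expertise, reputation, and influence you built up in the previous project.
* Temporal decoupling: The barter system does not provide a good mechanism for deferred or advance commitments. In the open source world, this means a user cannot express commitment or interest in a project in a measurable way, either in advance or for future periods.

Below, we will explore how to address these limitations using the back door to the bazaar.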

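### A currency system

People hang out at the bazaar for different reasons: some are there to learn, some to scratch a developer's personal itch, and some work for large software factories. Because the only way to have a say in the bazaar is to become part of the open source community and join the barter system, many large software companies gain credibility in the open source world by employing these developers and paying them in money. This represents the use of a currency system to influence the bazaar. Open source is no longer only about scratching a developer's personal itch; it also accounts for a significant part of overall software production worldwide, and there are many who want to have an influence.

Open source sets the guiding principles through which developers interact and build a coherent system in a distributed way. It dictates how a project is governed, how software is built, and how the output is distributed to users. It is an open consensus model for decentralized entities to build quality software together. But the open source model does not cover how open source is subsidized. Whether it is sponsored, directly or indirectly, through intrinsic or extrinsic motivators, is irrelevant to the bazaar.

Currently, there is no equivalent of the decentralized open source development model for subsidization purposes. Most open source subsidization is centralized: typically, one company dominates a project by employing most of the project's open source developers. To be honest, this is currently the best-case scenario, because it guarantees that the developers will be paid for a long period and the project will continue to flourish.

There are exceptions to the project-monopoly scenario. For example, some Cloud Native Computing Foundation (CNCF) projects are developed by a large number of competing companies. Also, the Apache Software Foundation (ASF) aims to keep the projects it manages from being dominated by a single vendor by encouraging diverse contributors, but in reality, most of the popular projects are still single-vendor projects.

What we are missing is an open, decentralized model that works like the bazaar, without central coordination and ownership, where consumers (open source users) and producers (open source developers) interact with each other, driven by market forces and open source values. To complement open source, such a model must also be open and decentralized, which is why I think blockchain technology would [fit best here][3].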

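Most of the existing blockchain (and non-blockchain) platforms that aim to subsidize open source development primarily target bug bounties and small, piecemeal tasks. A few also focus on funding new open source projects. But not many aim to provide mechanisms for sustaining the continued development of open source projects. Basically, such a system would emulate the behavior of an open source service provider company or an open core, open source-based SaaS product company: ensuring that developers receive continued and predictable incentives, and guiding the project's development according to the priorities of the incentivizers (that is, the users). Such a model would address the limitations of the barter system listed above:

* Allow divisibility: If you want something small fixed, you can pay a small amount rather than the full cost of becoming an open source developer on the project.
* Storing value: You can invest a large amount in a project and ensure both its continued development and that your voice is heard.
* Transferring value: At any point, you can stop investing in the project and move your funds to other projects.
* Temporal decoupling: Allow regular recurring payments and subscriptions.

There would also be other benefits, purely because such a blockchain-based system is transparent and decentralized: quantifying a project's value or usefulness based on its users' commitment, open roadmap commitments, decentralized decision-making, and so on.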

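### Conclusion

On the one hand, we see large companies hiring open source developers and acquiring open source startups and even foundational platforms (such as Microsoft buying GitHub). Many, if not most, long-running successful open source projects are centralized around a single vendor. The significance of open source and its centralization is a fact.

On the other hand, the challenges of [sustaining open source software][4] are becoming more apparent, and many people are investigating this space and its foundational issues more deeply. There are a few projects with high visibility and a large number of contributors, but there are also many other important projects that lack enough contributors and maintainers.

There are [many efforts][3] trying to address the challenges of open source through blockchain. These projects should improve transparency, decentralization, and subsidization, and establish a direct link between open source users and developers. This space is still very young, but it is progressing quickly, and with time, the bazaar is going to have a cryptocurrency system.

Given enough time and adequate technology, decentralization happens at many levels:

* The Internet is a decentralized medium that has unlocked the world's potential for sharing and acquiring knowledge.
* Open source is a decentralized collaboration model that has unlocked the world's potential for innovation.
* Similarly, blockchain can complement open source and become the decentralized open source subsidization model.

Follow me on [Twitter][5] for other posts in this space.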

--------------------------------------------------------------------------------

via: https://opensource.com/article/18/9/barter-currency-system

Author: [Bilgin Ibryam][a]
Topic selection: [lujun9972](https://github.com/lujun9972)
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/bibryam
[1]: http://catb.org/
[2]: http://oss.cash/
[3]: https://opensource.com/article/18/8/open-source-tokenomics
[4]: https://www.youtube.com/watch?v=VS6IpvTWwkQ
[5]: http://twitter.com/bibryam


[#]: collector: (lujun9972)
[#]: translator: (wxy)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11342-1.html)
[#]: subject: (Use Git as the backend for chat)
[#]: via: (https://opensource.com/article/19/4/git-based-chat)
[#]: author: (Seth Kenlon https://opensource.com/users/seth)

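Use Git as the backend for chat
======

> GIC is a prototype chat application that showcases a novel way to use Git.

[Git][2] is one of those rare applications that has managed to encapsulate so much of modern computing into one program that it can serve as the computational engine for many other applications. While it's best known for tracking source code changes in software development, it has many other uses that can make your life easier and more organized. In this Git series, we're sharing seven little-known ways to use Git.

Today, we'll look at GIC, a Git-based chat application.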

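### Meet GIC

While the authors of Git probably expected frontends to be created for Git, they undoubtedly never expected Git would become the backend for, say, a chat client. Yet that's exactly what developer Ephi Gabay did with his experimental proof of concept, [GIC][3]: a chat client written in [Node.js][4] that uses Git as its backend database.

GIC is by no means intended for production use. It's purely a programming exercise, but it demonstrates the flexibility of open source technology. What's astonishing is that the client consists of just 300 lines of code, excluding the Node libraries and Git itself. That's one of the best things about this chat client and about open source: the ability to build on existing work. Seeing is believing, so you should take a look at GIC for yourself.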

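### Get set up

GIC uses Git as its engine, so you need an empty Git repository to serve as its chatroom and logger. The repository can be hosted anywhere, as long as you and everyone who needs access to the chat service can reach it. For instance, you can set up a Git repository on a free Git hosting service like GitLab and grant chat users contributor access to it. (They must be able to make commits to the repository, because each chat message is literally a commit.)

If you're hosting it yourself, create a centralized bare repository. Each user in the chat must have an account on the server where the bare repository is located. You can create Git-specific accounts with Git hosting software like [Gitolite][5] or [Gitea][6], or you can give them individual user accounts on your server, possibly using `git-shell` to restrict their access to Git.

Performance is best on a self-hosted instance. Whether you host your own or use a hosting service, the Git repository you create must have an active branch, or GIC won't be able to make commits as users chat, because there is no Git HEAD. The easiest way to ensure that a branch is initialized and active is to commit a `README` or license file when you create the repository. If you didn't do that, you can create and commit one after the fact: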

```
$ echo "chat logs" > README
$ git add README
$ git commit -m 'just creating a HEAD ref'
$ git push -u origin HEAD
```
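For the self-hosted case, the whole setup can be sketched in one script: create the bare repository, seed it with a first commit from a scratch clone, and confirm that `HEAD` now resolves. This is a minimal sketch under stated assumptions: it uses a throwaway temporary directory instead of a real server path such as `/home/gitchat/chatdemo.git`, and the committer name and e-mail address are placeholders.

```shell
#!/usr/bin/env bash
# Sketch: create a bare chat repository that already has an active branch.
# Uses a temporary directory; on a real server the bare repository would
# live somewhere like /home/gitchat/chatdemo.git (assumption).
set -euo pipefail

workdir=$(mktemp -d)
bare="$workdir/chatdemo.git"

# 1. The shared, central bare repository (no working tree).
git init --quiet --bare "$bare"

# 2. A scratch clone used only to create the first commit.
#    Cloning an empty repository prints a warning, which is discarded.
git clone --quiet "$bare" "$workdir/seed" 2>/dev/null
cd "$workdir/seed"
echo "chat logs" > README
git add README
# Placeholder identity so the commit works even without a global Git config.
git -c user.name="Chat Admin" -c user.email="admin@example.com" \
    commit --quiet -m 'just creating a HEAD ref'
git push --quiet -u origin HEAD

# 3. HEAD in the bare repository now points at a real branch.
git --git-dir="$bare" rev-parse --verify --quiet HEAD >/dev/null && echo "HEAD ok"
```

Chat users would then clone or configure the bare repository's real location rather than the temporary one used here.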

### Install GIC

Since GIC is based on Git and written in Node.js, you must first install Git, Node.js, and the Node package manager, npm (which should be bundled with Node). The command to install them differs depending on your Linux or BSD distribution; here's an example command on Fedora:

```
$ sudo dnf install git nodejs
```

If you're not running Linux or BSD, follow the installation instructions on [git-scm.com][7] and [nodejs.org][8].

Beyond that, GIC has no installation process. Each user (Alice and Bob, in this example) must clone the repository to their hard drive:

```
$ git clone https://github.com/ephigabay/GIC GIC
```

Change into the GIC directory and install the Node.js dependencies with `npm`:

```
$ cd GIC
$ npm install
```

Wait for the Node modules to download and install.

### Configure GIC

The only configuration GIC requires is the location of your Git chat repository. Edit the `config.js` file:

```
module.exports = {
    gitRepo: 'seth@example.com:/home/gitchat/chatdemo.git',
    messageCheckInterval: 500,
    branchesCheckInterval: 5000
};
```

Test your connection to the Git repository before trying GIC, to make sure your configuration is sane:

```
$ git clone --quiet seth@example.com:/home/gitchat/chatdemo.git > /dev/null
```

Assuming you get no errors, you're ready to start chatting.
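If you'd rather not make a throwaway clone just to test the connection, `git ls-remote` can check both things GIC depends on at once: that the repository is reachable, and that it has an active branch for `HEAD` to point at. A minimal sketch follows; `check_chat_repo` is a hypothetical helper name, not part of GIC.

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that a chat repository is reachable AND has a
# HEAD ref (an active branch), without cloning it.
check_chat_repo() {
    local url=$1
    # --exit-code makes ls-remote exit with status 2 when no matching ref is
    # found (e.g. a brand-new empty repository); connection errors also fail.
    if git ls-remote --exit-code "$url" HEAD >/dev/null 2>&1; then
        echo "ok: $url has an active branch"
    else
        echo "problem: $url is unreachable or has no active branch" >&2
        return 1
    fi
}
```

For example: `check_chat_repo seth@example.com:/home/gitchat/chatdemo.git`. An empty repository fails the check until someone pushes a first commit, such as the `README` shown earlier.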

### Chat with Git

Start the chat client from within the GIC directory:

```
$ npm start
```

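When the client first launches, it must clone the chat repository. Since it's a nearly empty repository, that won't take long. Type your message and press Enter to send it.

![GIC][10]

*A Git-based chat client. What will they think of next?*

As the greeting message says, a branch in Git serves as a chatroom or channel in GIC. There's no way to create a new branch from within the GIC UI, but if you create one in another terminal session or in a web UI, it shows up immediately in GIC. It wouldn't take much work to add some IRC-style commands to GIC.

After chatting for a while, take a look at your Git repository. Since the chat happens in Git, the repository itself is also a chat log: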

```
$ git log --pretty=format:"%p %cn %s"
4387984 Seth Kenlon Hey Chani, did you submit a talk for All Things Open this year?
36369bb Chani No I didn't get a chance. Did you?
[...]
```
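Because every message is a commit, turning the repository into a readable transcript is mostly a matter of choosing a `git log` format. In this sketch (the function name `show_chat_log` is hypothetical), messages are printed oldest first using the author name (`%an`) and the commit subject (`%s`), which, as the log above suggests, is where the message text ends up.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a chat repository as a transcript,
# oldest message first, in "author: message" form.
# $1: path to a local clone of the chat repository (default: current dir)
# $2: branch, i.e. chatroom, to read (default: HEAD)
show_chat_log() {
    local repo=${1:-.} room=${2:-HEAD}
    git -C "$repo" log --reverse --format='%an: %s' "$room"
}
```

Passing a branch name as the second argument reads a different chatroom, since GIC maps chatrooms to branches.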

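### Quit GIC

Not since Vim has there been an application as hard to quit as GIC. You see, there is no way to stop GIC from within it. It keeps running until it is killed. When you're ready to stop GIC, open another terminal tab or window and issue this command:

```
$ kill `pgrep npm`
```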

GIC is a novelty. It's a great example of how the open source ecosystem encourages and enables creativity and exploration, and challenges us to look at applications from different angles. Try GIC out. Maybe it will give you some ideas. At the very least, it's a great excuse to spend an afternoon with Git.

--------------------------------------------------------------------------------

via: https://opensource.com/article/19/4/git-based-chat

Author: [Seth Kenlon][a]
Topic selection: [lujun9972][b]
Translator: [wxy](https://github.com/wxy)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://opensource.com/users/seth
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/talk_chat_team_mobile_desktop.png?itok=d7sRtKfQ (Team communication, chat)
[2]: https://git-scm.com/
[3]: https://github.com/ephigabay/GIC
[4]: https://nodejs.org/en/
[5]: http://gitolite.com
[6]: http://gitea.io
[7]: http://git-scm.com
[8]: http://nodejs.org
[9]: mailto:seth@example.com
[10]: https://opensource.com/sites/default/files/uploads/gic.jpg (GIC)


[#]: collector: (lujun9972)
[#]: translator: (MjSeven)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11344-1.html)
[#]: subject: (Working with variables on Linux)
[#]: via: (https://www.networkworld.com/article/3387154/working-with-variables-on-linux.html#tk.rss_all)
[#]: author: (Sandra Henry-Stocker https://www.networkworld.com/author/Sandra-Henry_Stocker/)

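Working with variables on Linux
======

> Variables often look like `$var`, but they also come in forms like `$1`, `$*`, `$?` and `$$`. Let's take a look at what all these `$` values can tell you.

A lot of important values are stored on Linux systems in what we call "variables", but there are actually several types of variables, and some interesting commands can help you work with them. In a previous post, we looked at [environment variables][2] and where they are defined. In this post, we're going to look at variables that are used on the command line and within scripts.

### User variables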

While it's quite easy to set up a variable on the command line, there are a few interesting tricks. To set up a variable, all you need to do is something like this:

```
$ myvar=11
$ myvar2="eleven"
```

To display the values, you simply do this:

```
$ echo $myvar
11
$ echo $myvar2
eleven
```

You can also work with your variables. For example, to increment a numeric variable, you could use any of these commands:

```
$ myvar=$((myvar+1))
$ echo $myvar
12
$ ((myvar=myvar+1))
$ echo $myvar
13
$ ((myvar+=1))
$ echo $myvar
14
$ ((myvar++))
$ echo $myvar
15
$ let "myvar=myvar+1"
$ echo $myvar
16
$ let "myvar+=1"
$ echo $myvar
17
$ let "myvar++"
$ echo $myvar
18
```
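What all of these forms have in common is an arithmetic context: `$((...))`, `((...))` or `let`. Without one, Bash treats the right-hand side of an assignment as plain text, which is an easy mistake to make. A short illustration:

```shell
#!/usr/bin/env bash
# Without an arithmetic context, "+1" is simply appended as text.
myvar=11
myvar=$myvar+1
echo "$myvar"          # prints 11+1

# Inside $(( )), the same expression is evaluated as a number.
myvar=11
myvar=$((myvar + 1))
echo "$myvar"          # prints 12
```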

With some of these, you can add more than 1 to a variable's value. For example:

```
$ myvar0=0
$ ((myvar0++))
$ echo $myvar0
1
$ ((myvar0+=10))
$ echo $myvar0
11
```

With all these choices, you'll probably find at least one that is easy to remember and convenient to use.

You can also *unset* a variable, which basically means undefining it:

```
$ unset myvar
$ echo $myvar
```
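As the empty output shows, an unset variable simply expands to nothing, which looks the same as a variable set to an empty string. When the difference matters, Bash can tell them apart with the `-v` test (available in Bash 4.2 and later), and `${var:-default}` supplies a fallback value. A small sketch:

```shell
#!/usr/bin/env bash
myvar=""
[[ -v myvar ]] && echo "myvar is set (to an empty string)"

unset myvar
[[ -v myvar ]] || echo "myvar is not set at all"

# ${var:-word} substitutes "word" when var is unset or empty.
echo "${myvar:-no value}"     # prints: no value
```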

Another interesting option is that you can set up a variable and make it **read-only**. In other words, once a variable is set to read-only, its value cannot be changed (at least not without some very tricky command-line wizardry). That also means you can't unset it.

```
$ readonly myvar3=1
$ echo $myvar3
1
$ ((myvar3++))
-bash: myvar3: readonly variable
$ unset myvar3
-bash: unset: myvar3: cannot unset: readonly variable
```

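You can use any of these setting and incrementing options to assign and manipulate variables within scripts, but there are also some very useful *internal variables* for working within scripts. Note that you can't reassign their values or increment them.

### Internal variables

There are quite a few variables that can be used within scripts to evaluate arguments and display information about the script itself.

* `$1`, `$2`, `$3` etc. represent the first, second, third, etc. arguments to the script.
* `$#` represents the number of arguments.
* `$*` represents all of the arguments.
* `$0` represents the name of the script itself.
* `$?` represents the return code of the previously run command (0 means success).
* `$$` shows the process ID of the script.
* `$PPID` shows the process ID of your shell (the parent process of the script).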

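Some of these variables also work on the command line, but show related information:

* `$0` shows the name of the shell you're using (e.g., -bash).
* `$$` shows the process ID of the shell.
* `$PPID` shows the process ID of the shell's parent process (for me, this is sshd).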
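A closely related variable not listed above is `$@`. Unquoted, `$*` and `$@` behave the same, but when quoted, `"$*"` joins all the arguments into a single word while `"$@"` keeps each argument separate, which matters as soon as an argument contains a space. This sketch uses `set --` to simulate the arguments a script would receive; the function `show_args` is just for illustration.

```shell
#!/usr/bin/env bash
# Print each argument received on its own line, in brackets.
show_args() { printf '[%s]\n' "$@"; }

# Simulate a script called as: tryme "one two" three
set -- "one two" three

show_args "$*"    # one argument:  [one two three]
show_args "$@"    # two arguments: [one two] then [three]
```

When a script passes its arguments on to another command, `"$@"` is usually the form you want.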

If we throw all of these variables into a script just to see the results, we might do this:

```
#!/bin/bash

echo $0
echo $1
echo $2
echo $#
echo $*
echo $?
echo $$
echo $PPID
```

When we call this script, we'll see something like this:

```
$ tryme one two three
/home/shs/bin/tryme     <== script name
one                     <== first argument
two                     <== second argument
3                       <== number of arguments
one two three           <== all arguments
0                       <== return code from the previous echo command
10410                   <== script's process ID
10109                   <== parent process ID
```

If we check the shell's process ID once the script has finished running, we can see that it matches the PPID displayed within the script:

```
$ echo $$
10109                   <== shell's process ID
```
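One subtlety worth knowing: `$$` always reports the process ID of the main shell or script, even inside a subshell. Bash (version 4 and later) provides `$BASHPID` when you need the ID of the process actually running the current code. A quick check:

```shell
#!/usr/bin/env bash
outer=$$
(
    # Inside a ( ) subshell, $$ still holds the parent's PID...
    echo "\$\$ in subshell:      $$ (same as $outer)"
    # ...but BASHPID is the subshell's own PID.
    echo "BASHPID in subshell: $BASHPID"
)
```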

Of course, we're more likely to use these variables in considerably more useful ways than simply displaying their values. Let's look at some ways we might do that.

Checking whether arguments have been provided:

```
if [ $# -eq 0 ]; then
    echo "Usage: $0 filename"
    exit 1
fi
```

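Checking whether a particular process is running:

```
# '[a]pache2' still matches "apache2", but not the line for this grep
# command itself, so $? really reflects whether Apache is running.
ps -ef | grep '[a]pache2' > /dev/null
if [ $? -ne 0 ]; then
    echo Apache is not running
    exit 1
fi
```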

Verifying that a file exists before trying to access it:

```
if [ $# -lt 2 ]; then
    echo "Usage: $0 lines filename"
    exit 1
fi

if [ ! -f "$2" ]; then
    echo "Error: File $2 not found"
    exit 2
else
    head -n "$1" "$2"
fi
```

And in this little script, we check whether the correct number of arguments has been provided, whether the first argument is numeric, and whether the second argument is an existing file.

```
#!/bin/bash

if [ $# -lt 2 ]; then
    echo "Usage: $0 lines filename"
    exit 1
fi

# The whole argument must consist of digits
if ! [[ $1 =~ ^[0-9]+$ ]]; then
    echo "Error: $1 is not numeric"
    exit 2
fi

if [ ! -f "$2" ]; then
    echo "Error: File $2 not found"
    exit 3
else
    echo top of file
    head -n "$1" "$2"
fi
```

### Renaming variables

When writing a complicated script, it's often useful to assign names to the script's arguments rather than continuing to refer to them as `$1`, `$2`, and so on. By the 35th line, someone reading your script might have forgotten what `$2` represents. They'll have a much easier time if you assign an important parameter's value to `$filename` or `$numlines`.

```
#!/bin/bash

if [ $# -lt 2 ]; then
    echo "Usage: $0 lines filename"
    exit 1
else
    numlines=$1
    filename=$2
fi

# The whole argument must consist of digits
if ! [[ $numlines =~ ^[0-9]+$ ]]; then
    echo "Error: $numlines is not numeric"
    exit 2
fi

if [ ! -f "$filename" ]; then
    echo "Error: File $filename not found"
    exit 3
else
    echo top of file
    head -n "$numlines" "$filename"
fi
```

Of course, this example script does nothing more than run the `head` command to display the first x lines of a file, but it is meant to show how internal parameters can be used within scripts to help ensure that the script runs well, or that when it fails, it's clear why.

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3387154/working-with-variables-on-linux.html

Author: [Sandra Henry-Stocker][a]
Topic selection: [lujun9972][b]
Translator: [MjSeven](https://github.com/MjSeven)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://www.networkworld.com/author/Sandra-Henry_Stocker/
[b]: https://github.com/lujun9972
[1]: https://images.idgesg.net/images/article/2019/04/variable-key-keyboard-100793080-large.jpg
[2]: https://linux.cn/article-10916-1.html
[3]: https://www.youtube.com/playlist?list=PL7D2RMSmRO9J8OTpjFECi8DJiTQdd4hua
[4]: https://www.facebook.com/NetworkWorld/
[5]: https://www.linkedin.com/company/network-world


[#]: collector: (lujun9972)
[#]: translator: (heguangzhi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11336-1.html)
[#]: subject: (How to manage logs in Linux)
[#]: via: (https://www.networkworld.com/article/3428361/how-to-manage-logs-in-linux.html)
[#]: author: (Sandra Henry-Stocker https://www.networkworld.com/author/Sandra-Henry_Stocker/)

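How to manage logs in Linux
======

> Log files on Linux systems contain a **lot** of information, more than you'll ever have time to view. Here are some tips on how to make proper use of them without drowning in them.

![Greg Lobinski \(CC BY 2.0\)][1]

Managing log files on Linux systems can be incredibly easy or painful. It all depends on what you mean by log management.

If all you mean is making sure that your log files don't eat up all the disk space on your Linux server, the issue is generally quite straightforward. Log files on Linux systems automatically roll over, and the system keeps only a fixed number of the rolled-over logs. Even so, glancing over what can easily be a group of a hundred files can be overwhelming. In this post, we'll look at how log rotation works and at some of the most relevant log files.

### Automatic log rotation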

Log files are rotated frequently. The current log gets a slightly different file name, and a new log file is started. Take the syslog file as an example. This file is something of a catch-all for a lot of normal system messages. If you `cd` over to `/var/log` and take a look, you'll probably see a series of syslog files like this:

```
$ ls -l syslog*
-rw-r----- 1 syslog adm 28996 Jul 30 07:40 syslog
-rw-r----- 1 syslog adm 71212 Jul 30 00:00 syslog.1
-rw-r----- 1 syslog adm  5449 Jul 29 00:00 syslog.2.gz
-rw-r----- 1 syslog adm  6152 Jul 28 00:00 syslog.3.gz
-rw-r----- 1 syslog adm  7031 Jul 27 00:00 syslog.4.gz
-rw-r----- 1 syslog adm  5602 Jul 26 00:00 syslog.5.gz
-rw-r----- 1 syslog adm  5995 Jul 25 00:00 syslog.6.gz
-rw-r----- 1 syslog adm 32924 Jul 24 00:00 syslog.7.gz
```

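Rotation happens every night at midnight, and old log files are kept for a week, after which the oldest syslog file is removed. The `syslog.7.gz` file is removed from the system, and `syslog.6.gz` is renamed to `syslog.7.gz`. The rest of the log files are renamed in turn, until `syslog` becomes `syslog.1` and a new `syslog` file is created. Some syslog files will be larger than others, but in general, none is likely to get very large, and you'll never see more than eight of them. This gives you just over a week to review any data they collect.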
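To make the renaming sequence concrete, here is a simplified simulation of it in Bash. It is only a sketch of the scheme described above (seven old copies, with the most recent old copy left uncompressed); it is not how `logrotate` itself is implemented, and the function name `rotate_log` is hypothetical.

```shell
#!/usr/bin/env bash
# Simplified simulation of the rotation scheme described above:
#   syslog -> syslog.1 -> syslog.2.gz -> ... -> syslog.7.gz -> deleted
# Illustration only; this is NOT how logrotate itself is implemented.
# $1: log file name, $2: number of old copies to keep (default 7)
rotate_log() {
    local log=$1 keep=${2:-7} i
    rm -f "$log.$keep.gz"                      # the oldest copy is deleted
    for (( i = keep - 1; i >= 2; i-- )); do    # .6.gz -> .7.gz, ..., .2.gz -> .3.gz
        if [[ -e $log.$i.gz ]]; then
            mv "$log.$i.gz" "$log.$((i + 1)).gz"
        fi
    done
    if [[ -e $log.1 ]]; then                   # yesterday's log gets compressed
        gzip -c "$log.1" > "$log.2.gz" && rm "$log.1"
    fi
    if [[ -e $log ]]; then                     # the current log becomes .1
        mv "$log" "$log.1"
    fi
    : > "$log"                                 # and a fresh, empty log starts
}
```

Run in a scratch directory, `echo day1 > syslog; rotate_log syslog` leaves an empty `syslog` plus a `syslog.1` containing `day1`; after eight or more rotations there are exactly eight files, matching the listing above.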

The number of files kept for a particular log depends on the log itself. For some, you may have as many as 13. Notice how the older files, for both `syslog` and `dpkg`, are gzipped to save space. The thinking here is that you'll be most interested in the recent logs, while older logs can be unzipped with `gunzip` as needed.

```
# ls -t dpkg*
dpkg.log       dpkg.log.3.gz  dpkg.log.6.gz  dpkg.log.9.gz   dpkg.log.12.gz
dpkg.log.1     dpkg.log.4.gz  dpkg.log.7.gz  dpkg.log.10.gz
dpkg.log.2.gz  dpkg.log.5.gz  dpkg.log.8.gz  dpkg.log.11.gz
```

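Log files can be rotated based on time as well as size. Keep this in mind as you examine your log files.

Although the defaults work for most Linux sysadmins, log file rotation can be configured differently if you are so inclined. Take a look at files such as `/etc/rsyslog.conf` and `/etc/logrotate.conf`.

### Using log files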

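Managing log files also includes using them from time to time. The first step toward making use of log files should probably be getting familiar with what each log file can tell you about how your system is working and what problems it may have run into. Reading log files from top to bottom is almost never a good option, but knowing how to pull information out of them can be of great benefit when you want to get a sense of how well your system is working or need to track down a problem. This also requires that you have a general idea of what kind of information is stored in each file. For example (run from `/var/log`):

```
$ who wtmp | tail -10        # show the most recent logins
$ who wtmp | grep shark      # show recent logins for a particular user
$ grep "sudo:" auth.log      # see who is using sudo
$ tail dmesg                 # look at the (recent) kernel messages
$ tail dpkg.log              # see recently installed and updated packages
$ more ufw.log               # see firewall activity (if you are using ufw)
```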
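Commands like these read only the current file. Because the older copies are gzipped, a search that should cover the whole retention period has to decompress them on the fly. One way to do that, sketched below, is `gzip -cdf`: with `-f`, `gzip` copies files that are not compressed through unchanged, so plain and `.gz` logs can go through the same pipeline. The helper name `search_logs` is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: search a log and all of its rotated copies,
# compressed or not.
# $1: base log file (e.g. /var/log/auth.log), $2: grep pattern
search_logs() {
    local base=$1 pattern=$2
    # -c: write to stdout, -d: decompress, -f: pass uncompressed files through
    gzip -cdf "$base" "$base".* 2>/dev/null | grep -- "$pattern"
}
```

For example, `search_logs /var/log/auth.log "sudo:"` would look for `sudo` entries in `auth.log`, `auth.log.1` and any `auth.log.N.gz` files. Reading these files may require root privileges or membership in the `adm` group, depending on their permissions.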

Some commands you run also pull information from log files. If you want to see a list of system reboots, for example, you could use a command like this:

```
$ last reboot
reboot   system boot  5.0.0-20-generic Tue Jul 16 13:19   still running
reboot   system boot  5.0.0-15-generic Sat May 18 17:26 - 15:19 (21+21:52)
reboot   system boot  5.0.0-13-generic Mon Apr 29 10:55 - 15:34 (18+04:39)
```

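### Using more advanced log managers

While you can write scripts to make it easier to find interesting information in log files, you should also be aware that some very sophisticated tools are available for log file analysis. Some correlate information from multiple sources to give a fuller picture of what's happening on your network. They may provide real-time monitoring as well. Tools such as [Solarwinds Log & Event Manager][3] and [PRTG Network Monitor][4] (which includes log monitoring) come to mind.

There are also some free tools that can help with analyzing log files. These include:

* Logwatch: a program that scans system logs for interesting lines
* Logcheck: a system log analyzer and reporter

I'll provide some insights and help on these tools in upcoming posts.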

--------------------------------------------------------------------------------

via: https://www.networkworld.com/article/3428361/how-to-manage-logs-in-linux.html

Author: [Sandra Henry-Stocker][a]
Topic selection: [lujun9972][b]
Translator: [heguangzhi](https://github.com/heguangzhi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux China](https://linux.cn/)

[a]: https://www.networkworld.com/author/Sandra-Henry_Stocker/
[b]: https://github.com/lujun9972
[1]: https://images.idgesg.net/images/article/2019/07/logs-100806633-large.jpg
[2]: https://www.youtube.com/playlist?list=PL7D2RMSmRO9J8OTpjFECi8DJiTQdd4hua
[3]: https://www.esecurityplanet.com/products/solarwinds-log-event-manager-siem.html
[4]: https://www.paessler.com/prtg
[5]: https://www.networkworld.com/article/3242170/linux/invaluable-tips-and-tricks-for-troubleshooting-linux.html
[6]: https://www.facebook.com/NetworkWorld/
[7]: https://www.linkedin.com/company/network-world


[#]: collector: (lujun9972)
[#]: translator: (LazyWolfLin)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11339-1.html)
[#]: subject: (Why const Doesn't Make C Code Faster)
[#]: via: (https://theartofmachinery.com/2019/08/12/c_const_isnt_for_performance.html)
[#]: author: (Simon Arneaud https://theartofmachinery.com)

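Why const Doesn't Make C Code Faster
======

In a post a few months ago, I said that "[it's a popular myth that `const` is helpful for enabling compiler optimizations in C and C++][1]". I figured I should explain that one, especially because I used to believe it was obviously true myself. I'll start off with some theory and artificial examples, then do some experiments and benchmarks on a real codebase: `Sqlite`.

### A simple test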

Let's start with what I used to think was the simplest and most obvious example of how `const` can make C code faster. First, suppose we have these two function declarations:

```
void func(int *x);
void constFunc(const int *x);
```

And suppose we have these two versions of some code:

```
void byArg(int *x)
{
    printf("%d\n", *x);
    func(x);
    printf("%d\n", *x);
}

void constByArg(const int *x)
{
    printf("%d\n", *x);
    constFunc(x);
    printf("%d\n", *x);
}
```

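To call `printf()`, the CPU has to fetch the value of `*x` from RAM through the pointer. Obviously, `constByArg()` will be slightly faster, because the compiler knows that `*x` is constant, so there's no need to load its value again after the call to `constFunc()`. It's just printing the same thing. Right? Let's look at the assembly code GCC generates with these options:

```
$ gcc -S -Wall -O3 test.c
$ view test.s
```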

Here's the full assembly output for `byArg()`:

```
byArg:
.LFB23:
    .cfi_startproc
    pushq   %rbx
    .cfi_def_cfa_offset 16
    .cfi_offset 3, -16
    movl    (%rdi), %edx
    movq    %rdi, %rbx
    leaq    .LC0(%rip), %rsi
    movl    $1, %edi
    xorl    %eax, %eax
    call    __printf_chk@PLT
    movq    %rbx, %rdi
    call    func@PLT  # the only instruction that differs in constByArg()
    movl    (%rbx), %edx
    leaq    .LC0(%rip), %rsi
    xorl    %eax, %eax
    movl    $1, %edi
    popq    %rbx
    .cfi_def_cfa_offset 8
    jmp     __printf_chk@PLT
    .cfi_endproc
```

The only difference between the assembly generated for `byArg()` and for `constByArg()` is that `constByArg()` has a `call constFunc@PLT`, exactly as the source code asked. The `const` keyword itself makes literally zero difference.

OK, that's GCC. Maybe we just need a smarter compiler. Does Clang do any better?

```
$ clang -S -Wall -O3 -emit-llvm test.c
$ view test.ll
```

Here's the `IR` (LCTT note: LLVM's intermediate representation). It's more compact than assembly, so I can dump both functions, and you can see exactly what I mean by "literally zero difference except for the call":

```
; Function Attrs: nounwind uwtable
define dso_local void @byArg(i32*) local_unnamed_addr #0 {
  %2 = load i32, i32* %0, align 4, !tbaa !2
  %3 = tail call i32 (i8*, ...) @printf(i8* getelementptr inbounds ([4 x i8], [4 x i8]* @.str, i64 0, i64 0), i32 %2)
  tail call void @func(i32* %0) #4
  %4 = load i32, i32* %0, align 4, !tbaa !2
  %5 = tail call i32 (i8*, ...) @printf(i8* getelementptr inbounds ([4 x i8], [4 x i8]* @.str, i64 0, i64 0), i32 %4)
  ret void
}

; Function Attrs: nounwind uwtable
define dso_local void @constByArg(i32*) local_unnamed_addr #0 {
  %2 = load i32, i32* %0, align 4, !tbaa !2
  %3 = tail call i32 (i8*, ...) @printf(i8* getelementptr inbounds ([4 x i8], [4 x i8]* @.str, i64 0, i64 0), i32 %2)
  tail call void @constFunc(i32* %0) #4
  %4 = load i32, i32* %0, align 4, !tbaa !2
  %5 = tail call i32 (i8*, ...) @printf(i8* getelementptr inbounds ([4 x i8], [4 x i8]* @.str, i64 0, i64 0), i32 %4)
  ret void
}
```

### Something that does work

Here's some code where `const` actually does make a difference:

```
void localVar()
{
    int x = 42;
    printf("%d\n", x);
    constFunc(&x);
    printf("%d\n", x);
}

void constLocalVar()
{
    const int x = 42;  // const on the local variable
    printf("%d\n", x);
    constFunc(&x);
    printf("%d\n", x);
}
```

Here's the assembly for `localVar()`, which has two instructions that are optimized out of `constLocalVar()`:

```
localVar:
.LFB25:
    .cfi_startproc
    subq    $24, %rsp
    .cfi_def_cfa_offset 32
    movl    $42, %edx
    movl    $1, %edi
    movq    %fs:40, %rax
    movq    %rax, 8(%rsp)
    xorl    %eax, %eax
    leaq    .LC0(%rip), %rsi
    movl    $42, 4(%rsp)
    call    __printf_chk@PLT
    leaq    4(%rsp), %rdi
    call    constFunc@PLT
    movl    4(%rsp), %edx  # not in constLocalVar()
    xorl    %eax, %eax
    movl    $1, %edi
    leaq    .LC0(%rip), %rsi  # not in constLocalVar()
    call    __printf_chk@PLT
    movq    8(%rsp), %rax
    xorq    %fs:40, %rax
    jne     .L9
    addq    $24, %rsp
    .cfi_remember_state
    .cfi_def_cfa_offset 8
    ret
.L9:
    .cfi_restore_state
    call    __stack_chk_fail@PLT
    .cfi_endproc
```

The LLVM IR is a little clearer. The `load` just before the second `printf()` call is optimized out of `constLocalVar()`:

```
; Function Attrs: nounwind uwtable
define dso_local void @localVar() local_unnamed_addr #0 {
  %1 = alloca i32, align 4
  %2 = bitcast i32* %1 to i8*
  call void @llvm.lifetime.start.p0i8(i64 4, i8* nonnull %2) #4
  store i32 42, i32* %1, align 4, !tbaa !2
  %3 = tail call i32 (i8*, ...) @printf(i8* getelementptr inbounds ([4 x i8], [4 x i8]* @.str, i64 0, i64 0), i32 42)
  call void @constFunc(i32* nonnull %1) #4
  %4 = load i32, i32* %1, align 4, !tbaa !2
  %5 = call i32 (i8*, ...) @printf(i8* getelementptr inbounds ([4 x i8], [4 x i8]* @.str, i64 0, i64 0), i32 %4)
  call void @llvm.lifetime.end.p0i8(i64 4, i8* nonnull %2) #4
  ret void
}
```

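OK, so `constLocalVar()` has successfully elided the reload of `*x`, but you may have noticed something a bit confusing: `localVar()` and `constLocalVar()` make the same `constFunc()` call in their bodies. If the compiler can deduce that `constFunc()` doesn't modify `*x` in `constLocalVar()`, why can't it deduce that the exact same function call doesn't modify `*x` in `localVar()`?

The explanation gets closer to the heart of why C's `const` is impractical as an optimization aid. C's `const` effectively has two meanings: it can mean that the variable is a read-only alias to some data that may or may not be constant, or it can mean that the variable really is constant. If you cast away `const` from a pointer to a constant value and then write through it, the result is undefined behavior. On the other hand, it's fine if it's just a `const` pointer to a value that isn't constant.

This possible implementation of `constFunc()` shows what that means: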

```

// Forward declaration so constFunc() can call doubleIt() before its definition
void doubleIt(int *x);

// x is a read-only pointer to something that may or may not be a constant
void constFunc(const int *x)
{
    // local_var is a genuine constant
    const int local_var = 42;

    // Undefined behavior by the rules of C
    doubleIt((int*)&local_var);
    // Who knows whether this is undefined behavior?
    doubleIt((int*)x);
}

void doubleIt(int *x)
{
    *x *= 2;
}
```

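`localVar()` passes `constFunc()` a `const` pointer to a non-`const` variable. Because the variable itself is not constant, `constFunc()` can lie and forcibly modify it without triggering undefined behavior. So the compiler cannot assume the variable still has the same value after `constFunc()` returns.

The variable in `constLocalVar()`, however, really is constant. If `constFunc()` cast away its `const` and wrote to it, that *would* be undefined behavior. The compiler can therefore assume the variable will not change.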


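The functions `byArg()` and `constByArg()` from the first example are hopeless, because the compiler has no way of knowing whether `*x` really is constant.

> Addendum (and digression): Quite a few readers have correctly pointed out two things. With `const int *x`, the pointer itself is not qualified as constant; only the data it aliases is. And `const int * const extra_const` is a pointer that is "const both ways". But the constness of the pointer itself is independent of the constness of the data it aliases, so the result is the same. `*(int*const)extra_const = 0` is undefined behavior only if `extra_const` points to an object that was defined with `const`. (In fact, `*(int*)extra_const = 0` is no worse.) Spelling out the difference every time takes a whole sentence: a pointer that is itself `const`, versus a read-only alias to an object that may or may not be constant. So I'll keep loosely saying "const pointer". (End of digression.)

But why the inconsistency? If the compiler can infer that the `constFunc()` call in `constLocalVar()` does not modify its argument, surely it can apply the same optimization to other `constFunc()` calls, right? No. The compiler cannot assume that `constLocalVar()` is ever run at all. If it isn't (for example, because it is just some unused extra output of a code generator or macro), `constFunc()` can sneakily modify data without ever triggering undefined behavior.

You might need to read the explanation and example above a few times. Don't worry: it sounds absurd, but it is correct. Unfortunately, writing to `const` variables is the worst kind of undefined behavior: most of the time, the compiler cannot even know whether it would be undefined behavior. So whenever the compiler sees `const`, it usually has to assume that someone, somewhere could cast it away, which means it cannot use `const` for optimization. This holds in practice, because real-world C code does cast away `const` when its authors believe they know what they are doing.

In short, many things can prevent the compiler from using `const` for optimization, including receiving data from another scope through a pointer, or allocating data on the heap. Worse, in most of the cases where the compiler *can* use `const`, it isn't even needed. For example, any decent compiler can work out that `x` is constant in the following code, even without `const`:

```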
int x = 42, y = 0;
printf("%d %d\n", x, y);
y += x;
printf("%d %d\n", x, y);
```

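To sum up, `const` is almost useless for optimization because:

1. Except in special cases, the compiler has to ignore it, because other code might legally cast it away
2. In most of the exceptions to #1, the compiler can work out that the variable is constant anyway

### C++

If you are using C++, there is another way `const` can affect code generation: function overloading. You can overload the same function with `const` and non-`const` parameters. The programmer (not the compiler) can then implement the two versions differently, for example so that one of them does less copying.

```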
void foo(int *p)
{
    // Needs to do more copying of data
}

void foo(const int *p)
{
    // Doesn't need defensive copies
}

int main()
{
    const int x = 42;
    // const-ness affects which overload gets called
    foo(&x);
    return 0;
}
```

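On the one hand, I don't think this is used much in practical C++ code. On the other hand, to make a real difference, the programmer has to make assumptions that the compiler cannot make, because the language does not guarantee them.

### Experimenting with SQLite3

That's enough theory and examples. How much effect does `const` have on a real codebase? I decided to run a test on SQLite (version 3.30.0) because:

* It actually uses `const`
* It is a non-trivial codebase (over 200,000 lines of code)
* As a database, it covers a range of work, from string processing to arithmetic to date handling
* It can be load-tested with CPU-bound workloads

In addition, the author and contributors have already spent years on performance optimization, so I can assume they haven't missed any obvious optimizations.

#### Setup

I made two copies of the [source code][2] and compiled one normally. For the other copy, I inserted this hacky preprocessor snippet to turn `const` into a no-op:

```
#define const
```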

(GNU) `sed` can add it to the top of each file with something like `sed -i '1i#define const' *.c *.h`.

SQLite makes this slightly more complicated by generating code with scripts at build time. Fortunately, compilers produce a lot of warnings when `const` and non-`const` code are mixed. That made it easy to spot when this happened and to tweak the scripts to include my anti-`const` snippet.

Directly diffing the compiled results is pointless. Any tiny change can shift the whole memory layout, which in turn changes pointers and function calls throughout the code. Instead, I fingerprinted each function using the binary size and mnemonic of each of its instructions (from `objdump -d libsqlite3.so.0.8.6`). For example, this function:

```
000000000005d570 <sqlite3_blob_read>:
   5d570:   4c 8d 05 59 a2 ff ff    lea    -0x5da7(%rip),%r8    # 577d0 <sqlite3BtreePayloadChecked>
   5d577:   e9 04 fe ff ff          jmpq   5d380 <blobReadWrite>
   5d57c:   0f 1f 40 00             nopl   0x0(%rax)
```

turns into this:

```
sqlite3_blob_read 7lea 5jmpq 4nopl
```


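When compiling, I kept all of SQLite's build settings unchanged.

#### Analyzing the compiled output

The `const` version of `libsqlite3.so` is 4,740,704 bytes, about 0.1% (3,992 bytes) larger than the 4,736,712-byte non-`const` version. Of the 1,374 exported functions (not counting low-level helpers such as those in the PLT), 13 have fingerprints that differ between the two builds.

Some of the changes come from the preprocessor hack itself. For example, here is one of the functions that changed (with some SQLite-specific definitions removed):

```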
#define LARGEST_INT64 (0xffffffff|(((int64_t)0x7fffffff)<<32))
#define SMALLEST_INT64 (((int64_t)-1) - LARGEST_INT64)

static int64_t doubleToInt64(double r){
  /*
  ** Many compilers we encounter do not define constants for the
  ** minimum and maximum 64-bit integers, or they define them
  ** inconsistently. And many do not understand the "LL" notation.
  ** So we define our own static constants here using nothing
  ** larger than a 32-bit integer constant.
  */
  static const int64_t maxInt = LARGEST_INT64;
  static const int64_t minInt = SMALLEST_INT64;

  if( r<=(double)minInt ){
    return minInt;
  }else if( r>=(double)maxInt ){
    return maxInt;
  }else{
    return (int64_t)r;
  }
}
```

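Removing `const` turns these constants into plain `static` variables. I don't see why anyone who didn't care about `const` would make these variables `static` in the first place. Removing both `static` and `const` makes GCC treat them as constants again, and we get the same output. Three of the 13 functions had spurious changes because of local `static const` variables like these, but I didn't bother fixing any of them.

SQLite uses a lot of global variables, and that is where most of the real `const` optimizations came from. Typically, they were things like:

- replacing a comparison against a variable with a comparison against a constant, or
- partially unrolling a loop by one step.

(The [Radare toolkit][3] was handy for working out what these optimizations did.) A few of the changes were underwhelming. `sqlite3ParseUri()` is 487 instructions long, but the only difference `const` made was to take this pair of comparisons:

```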
test %al, %al
je <sqlite3ParseUri+0x717>
cmp $0x23, %al
je <sqlite3ParseUri+0x717>
```

and swap their order:

```
cmp $0x23, %al
je <sqlite3ParseUri+0x717>
test %al, %al
je <sqlite3ParseUri+0x717>
```

|

||||

|

||||

#### 基准测试

|

||||

|

||||

`Sqlite` 自带了一个性能回归测试,因此我尝试每个版本的代码执行一百次,仍然使用默认的 `Sqlite` 编译设置。以秒为单位的测试结果如下:

|

||||

|

||||

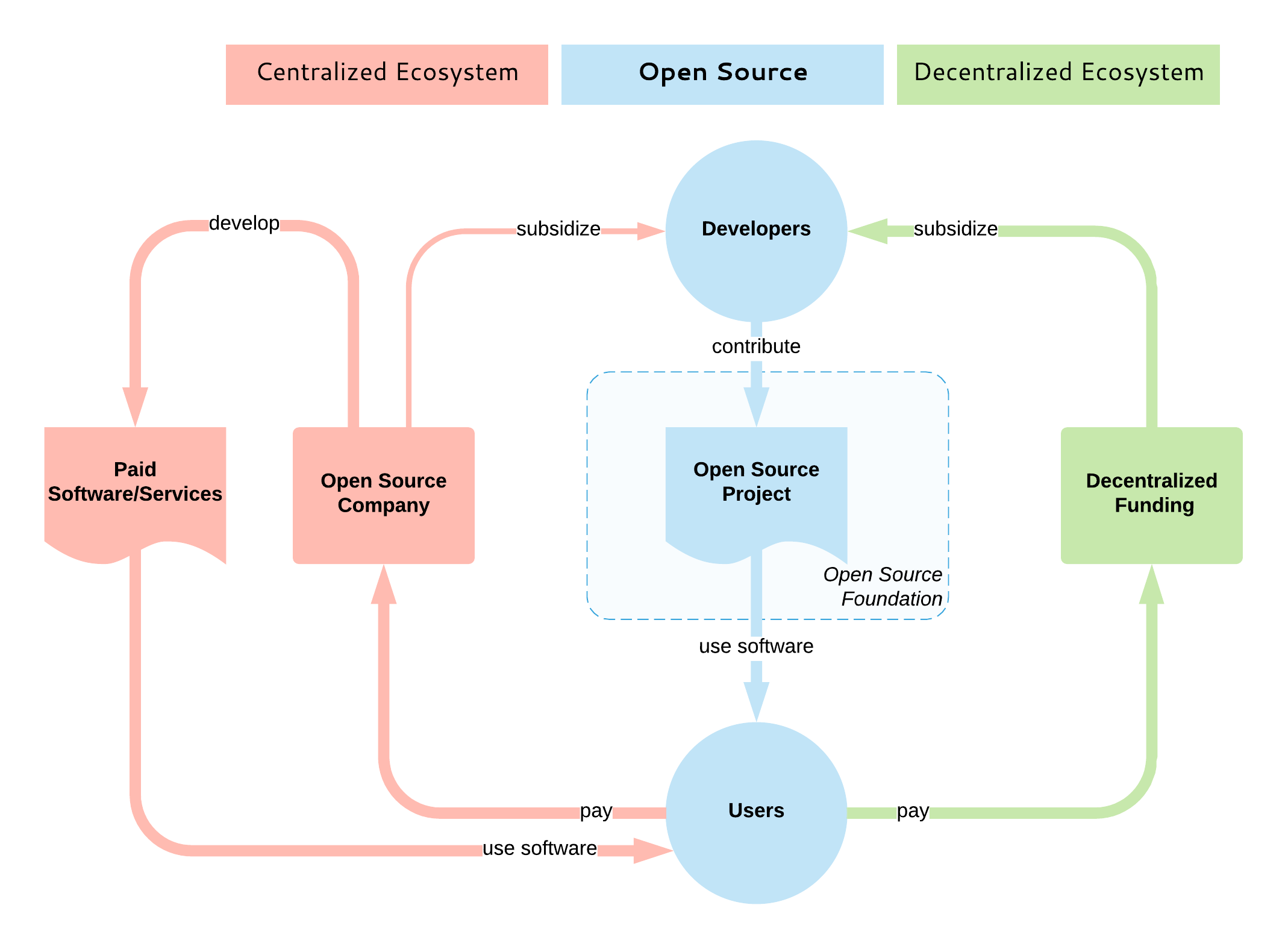

| | const | 非 const

|

||||

---|---|---

|

||||

最小值 | 10.658s | 10.803s

|

||||

中间值 | 11.571s | 11.519s

|

||||

最大值 | 11.832s | 11.658s

|

||||

平均值 | 11.531s | 11.492s

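Personally, I don't see enough evidence here to care about the difference. I removed `const` from the entire program, so if it made a significant difference, I would expect that difference to be obvious. But maybe you care about any tiny difference because you are doing something where absolute performance matters. So let's try some statistical analysis.

I like to use something like the Mann-Whitney U test for this. It is similar to the better-known t-test. However, it is more robust to the kind of complex random variation you get when timing things on a computer, caused by unpredictable context switches, page faults and so on. Here are the results:

| | const | Non-const |
|---|---|---|
| N | 100 | 100 |
| Mean rank | 121.38 | 79.62 |

| | |
|---|---|
| Mann-Whitney U | 2912 |
| Z | -5.10 |
| 2-sided p value | < 10⁻⁶ |
| HL median difference | -0.056s |
| 95% confidence interval | -0.077s to -0.038s |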


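The U test detects a statistically significant difference in performance. Surprisingly, though, it is the non-`const` version that is faster, by about 60ms, or 0.5%. It seems the small number of "optimizations" that `const` enabled were not worth the cost of the extra code. It's not as if `const` enabled any major optimization such as auto-vectorization. Of course, your results may vary with compiler flags, compiler versions, codebases and so on. Still, I think it is fair to say that if `const` were effective at improving C performance, we would have seen it by now.

### So, what is const for?

For all its flaws, `const` in C/C++ is still useful for type safety. In particular, combined with C++ move semantics and `std::unique_ptr`, `const` can make pointer ownership explicit. I know from experience that ambiguous pointer ownership is a huge pain in old C++ codebases of over 100,000 lines.

However, I used to go beyond using `const` for meaningful type safety. I had heard that, for performance reasons, it was best to use `const` as much as possible. I had heard that when performance mattered, it was important to refactor code to add more `const`, even in ways that made the code less readable. **That made sense at the time, but I have since learned that it isn't true.**

--------------------------------------------------------------------------------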

via: https://theartofmachinery.com/2019/08/12/c_const_isnt_for_performance.html

Author: [Simon Arneaud][a]
Topic selection: [lujun9972][b]
Translator: [LazyWolfLin](https://github.com/LazyWolfLin)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://theartofmachinery.com
[b]: https://github.com/lujun9972
[1]: https://theartofmachinery.com/2019/04/05/d_as_c_replacement.html#const-and-immutable
[2]: https://sqlite.org/src/doc/trunk/README.md
[3]: https://rada.re/r/

[#]: collector: (lujun9972)
[#]: translator: (heguangzhi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11356-1.html)
[#]: subject: (Managing Ansible environments on MacOS with Conda)
[#]: via: (https://opensource.com/article/19/8/using-conda-ansible-administration-macos)
[#]: author: (James Farrell https://opensource.com/users/jamesf)

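Managing Ansible environments on MacOS with Conda
=====

> Conda corrals everything you need for Ansible into a virtual environment and keeps it separate from your other projects.

If you are a Python developer who uses MacOS and is involved with Ansible administration, you may want to use the Conda package manager. It keeps your Ansible work separate from your core OS and other local projects.

Ansible is based on Python. Conda is not required to make Ansible work on MacOS, but it does make managing Python versions and package dependencies easier. With it, you can use an upgraded Python version on MacOS and keep Python package dependencies separate between your system, Ansible, and other programming projects.

There are other ways to install Ansible on MacOS. You could use [Homebrew][2]. But if you are into Python development (or Ansible development), you might find that managing Ansible in an independent Python virtual environment reduces some confusion. I find this simpler. Rather than loading a Python version and dependencies into the system or into `/usr/local`, I use Conda to corral everything Ansible needs into a virtual environment, completely separate from other projects.

This article focuses on using Conda to manage Ansible as a Python project, keeping it clean and separate from other projects. Read on to learn how to install Conda, create a new virtual environment, install Ansible, and test it.

### Prelude

Recently, I wanted to learn [Ansible][3], so I needed to figure out the best way to install it.

I am generally wary of installing things on my daily-use workstation. I especially dislike applying manual updates to the vendor's default OS installation, a habit from years of Unix system administration. I really wanted to use Python 3.7, but MacOS ships the older Python 2.7. I was not going to install any global Python packages that might interfere with the core MacOS system.

So I started my Ansible work on a local Ubuntu 18.04 virtual machine. This provided genuine, safe isolation, but I soon found managing it tedious. I set out to find a flexible but isolated way to run Ansible natively on MacOS.

Since Ansible is based on Python, Conda seemed to be the ideal solution.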

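### Installing Conda

Conda is open source software that provides convenient package and environment management. It can help you:

- manage multiple versions of Python
- install package dependencies
- perform upgrades
- maintain project isolation

If you are managing Python virtual environments by hand, Conda will help streamline and organize your work. Browse the [Conda documentation][4] for more details.

I chose the [Miniconda][5] Python 3.7 installer for my workstation because I wanted the latest Python version. Whichever version you choose, you can always create new virtual environments with other versions of Python.

To install Conda, download the PKG file, double-click it as usual, and select the "Install for me only" option. The installation took about 158MB on my system.

After the installation, bring up a terminal to see what you have. You should see:

* A `miniconda3` directory in your home directory
* The shell prompt modified to show `(base)`
* Your `.bash_profile` file updated with some Conda-specific settings

Now that the base is installed, you have your first Python virtual environment. Running the usual Python version check confirms this, and your `PATH` now points to the new location:

```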
(base) $ which python
/Users/jfarrell/miniconda3/bin/python
(base) $ python --version
Python 3.7.1
```

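Now that Conda is installed, the next step is to set up a virtual environment, then install Ansible and get it running.

### Creating a virtual environment for Ansible

I want to keep Ansible separate from my other Python projects, so I created a new virtual environment and switched over to it:

```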
(base) $ conda create --name ansible-env --clone base
(base) $ conda activate ansible-env
(ansible-env) $ conda env list
```

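The first command clones the Conda base environment into a new virtual environment called `ansible-env`. The clone brings in Python 3.7 and a set of default Python modules that you can add to, remove, or upgrade as needed.

The second command switches the shell context to this new environment. It sets the proper paths for Python and the modules it contains. Notice that your shell prompt changes after the `conda activate ansible-env` command.

The third command is not required. It lists your Conda environments and marks the active one with an asterisk. (To see which Python modules are installed in the active environment, along with their versions, use `conda list`.)

You can switch to another virtual environment at any time with Conda's `activate` command. This command takes you back to the base environment: `conda activate base`.

### Installing Ansible

There are various ways to install Ansible, but using Conda keeps the Ansible version and all the required dependencies packaged in one place. Conda lets you keep everything separate and also add new environments as needed (as I'll demonstrate later).

To install a relatively recent version of Ansible, use:

```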
(base) $ conda activate ansible-env
(ansible-env) $ conda install -c conda-forge ansible
```

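Ansible is not part of Conda's default channel, so `-c` tells Conda to search and install from an alternate channel (here, `conda-forge`). Ansible is now installed into the `ansible-env` virtual environment and ready to use.

### Using Ansible

Now that you have installed a Conda virtual environment, you are ready to use it. First, make sure each node you want to control has your workstation's SSH key installed for the right user account.

Bring up a new shell and run some basic Ansible commands:

```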
(base) $ conda activate ansible-env
(ansible-env) $ ansible --version
ansible 2.8.1
  config file = None
  configured module search path = ['/Users/jfarrell/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']
  ansible python module location = /Users/jfarrell/miniconda3/envs/ansibleTest/lib/python3.7/site-packages/ansible
  executable location = /Users/jfarrell/miniconda3/envs/ansibleTest/bin/ansible
  python version = 3.7.1 (default, Dec 14 2018, 13:28:58) [Clang 4.0.1 (tags/RELEASE_401/final)]
(ansible-env) $ ansible all -m ping -u ansible
192.168.99.200 | SUCCESS => {
    "ansible_facts": {
        "discovered_interpreter_python": "/usr/bin/python"
    },
    "changed": false,
    "ping": "pong"
}
```

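Now that Ansible is working, you can pull your playbooks out of source control and start using them from your MacOS workstation.

### Cloning the Ansible environment for Ansible development

This part is entirely optional. You only need it if you want extra virtual environments for modifying Ansible or for safely experimenting with questionable Python modules. You can clone your main Ansible environment into a development copy with:

```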
(ansible-env) $ conda create --name ansible-dev --clone ansible-env
(ansible-env) $ conda activate ansible-dev
(ansible-dev) $
```

### Problems to watch out for

Occasionally you may run into trouble with Conda. You can usually delete a bad environment with:

```
$ conda activate base
$ conda remove --name ansible-dev --all
```

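If you get errors you cannot resolve, you can usually delete an environment directly: find it in `~/miniconda3/envs` and remove its entire directory. If the base environment becomes corrupted, you can remove the whole `~/miniconda3` directory and reinstall from the PKG file. Just be sure to preserve any environments you want to keep in `~/miniconda3/envs`, or use the Conda tools to export the environment configuration and recreate it later.

MacOS does not include the `sshpass` program. You only need it if your Ansible work requires you to give Ansible an SSH login password. You can find the current [sshpass source code][6] on SourceForge.

Finally, the base Conda Python module list may lack some Python modules you need for your work. If you need to install a module, the preferred command is `conda install package`. You can also use `pip` if needed, and Conda will recognize the modules it installs.

### Conclusion

Ansible is a powerful automation tool that is well worth learning. Conda is a simple and effective tool for managing Python virtual environments.

Keeping software installations separate on MacOS is a prudent way to keep your daily work environment stable and sane. Conda is especially helpful for:

- upgrading your Python version
- separating Ansible from your other projects
- working on Ansible safely

--------------------------------------------------------------------------------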

via: https://opensource.com/article/19/8/using-conda-ansible-administration-macos

Author: [James Farrell][a]
Topic selection: [lujun9972][b]
Translator: [heguangzhi](https://github.com/heguangzhi)
Proofreader: [wxy](https://github.com/wxy)

This article was originally translated by [LCTT](https://github.com/LCTT/TranslateProject) and proudly presented by [Linux中国](https://linux.cn/)

[a]: https://opensource.com/users/jamesf
[b]: https://github.com/lujun9972
[1]: https://opensource.com/sites/default/files/styles/image-full-size/public/lead-images/cicd_continuous_delivery_deployment_gears.png?itok=kVlhiEkc (CICD with gears)
[2]: https://brew.sh/
[3]: https://docs.ansible.com/?extIdCarryOver=true&sc_cid=701f2000001OH6uAAG
[4]: https://conda.io/projects/conda/en/latest/index.html
[5]: https://docs.conda.io/en/latest/miniconda.html
[6]: https://sourceforge.net/projects/sshpass/

[#]: collector: (lujun9972)
[#]: translator: (geekpi)
[#]: reviewer: (wxy)
[#]: publisher: (wxy)
[#]: url: (https://linux.cn/article-11333-1.html)
[#]: subject: (Getting started with HTTPie for API testing)
[#]: via: (https://opensource.com/article/19/8/getting-started-httpie)
[#]: author: (Moshe Zadka https://opensource.com/users/moshez)

Getting started with HTTPie for API testing
======

![Raspberry pie with slice missing][1]

> Debug APIs with HTTPie, an easy-to-use command-line tool written in Python.

[HTTPie][2] is a very easy-to-use and easy-to-upgrade HTTP client. Pronounced "aitch-tee-tee-pie" and run as the `http` command, it is a command-line tool written in Python for accessing the web.

This is a how-to about an HTTP client, so you need an HTTP server to try it out. Here, we use [httpbin.org][3], a simple, open source HTTP request-and-response service. The httpbin.org site is a powerful way to test web APIs, and it carefully manages and displays request and response details. For now, though, let's focus on the power of HTTPie.

### An alternative to Wget and cURL

You might have heard of the venerable [Wget][4] or the slightly newer [cURL][5], tools that let you access the web from the command line. They were written for accessing websites, whereas HTTPie is for accessing web APIs.

Website requests take place between a computer and an end user who reads and responds to what they see, so they do not depend much on structured responses. API requests, however, make *structured* calls between two computers. No human is part of the exchange, and the arguments of a command-line tool like HTTPie handle this effectively.

### Installing HTTPie

There are several ways to install HTTPie. You can get it through a package manager, whether you use `brew`, `apt`, `yum`, or `dnf`. However, if you have configured [virtualenvwrapper][6], you can manage your own installation:

```
$ mkvirtualenv httpie
...
(httpie) $ deactivate
$ alias http=~/.virtualenvs/httpie/bin/http
$ http -b GET https://httpbin.org/get
{
    "args": {},
    "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Host": "httpbin.org",
        "User-Agent": "HTTPie/1.0.2"
    },
    "origin": "104.220.242.210, 104.220.242.210",
    "url": "https://httpbin.org/get"
}
```

Because `http` is aliased directly to the command inside the virtual environment, you can run it even when the virtual environment is not active. You can put the `alias` command in `.bash_profile` or `.bashrc`, and then upgrade HTTPie with:

```
$ ~/.virtualenvs/httpie/bin/pip install -U httpie
```

### Querying a website with HTTPie

HTTPie makes querying and testing APIs simpler. The command above used one option, `-b` (short for `--body`). Without it, HTTPie prints the entire response by default, including the response headers:

```
$ http GET https://httpbin.org/get
HTTP/1.1 200 OK
Access-Control-Allow-Credentials: true
Access-Control-Allow-Origin: *
...
X-XSS-Protection: 1; mode=block

{
    "args": {},
    "headers": {
        "Accept": "*/*",
        "Accept-Encoding": "gzip, deflate",
        "Host": "httpbin.org",
        "User-Agent": "HTTPie/1.0.2"
    },
    "origin": "104.220.242.210, 104.220.242.210",
    "url": "https://httpbin.org/get"
}
```

This is crucial when debugging an API service, because a lot of information is sent in the response headers. For example, it is often important to see which cookies are being sent. For testing purposes, httpbin.org lets you set cookies through the URL path. The following sets a cookie named `opensource` with the value `awesome`:

```
$ http GET https://httpbin.org/cookies/set/opensource/awesome
HTTP/1.1 302 FOUND
Access-Control-Allow-Credentials: true
Access-Control-Allow-Origin: *
...
<a href="/cookies">/cookies</a>. If not click the link.
```

Notice the `Set-Cookie: opensource=awesome; Path=/` response header. It shows that the cookie you wanted was set correctly, with a path of `/`. Also notice that even though you got a `302` redirect, `http` did not follow it. To follow redirects, you need to ask for it explicitly with the `--follow` flag:

```
$ http --follow GET https://httpbin.org/cookies/set/opensource/awesome
HTTP/1.1 200 OK
Access-Control-Allow-Credentials: true
Access-Control-Allow-Origin: *
...
X-XSS-Protection: 1; mode=block

{
    "cookies": {
        "opensource": "awesome"
    }
}
```

But now you cannot see the original `Set-Cookie` header. To see the intermediate responses, you need to use `--all`:

```
$ http --headers --all --follow GET https://httpbin.org/cookies/set/opensource/awesome
HTTP/1.1 302 FOUND
Access-Control-Allow-Credentials: true
Access-Control-Allow-Origin: *
...
Connection: keep-alive
```

Printing the body is not very interesting, because you mostly care about the cookie. To see only the headers of the intermediate responses, while still seeing the body of the final response, you can use:

```
$ http --print hb --history-print h --all --follow GET https://httpbin.org/cookies/set/opensource/awesome
HTTP/1.1 302 FOUND
Access-Control-Allow-Credentials: true
Access-Control-Allow-Origin: *
...
Connection: keep-alive

{
    "cookies": {
        "opensource": "awesome"
    }
}
```

You can control exactly what is printed with `--print` (`h`: response headers; `b`: response body). Use `--history-print` to override what is printed for the intermediate requests.

### Downloading binary files with HTTPie

Sometimes the response body is not text and needs to be saved to a file that a different application can open:

```
$ http GET https://httpbin.org/image/jpeg
HTTP/1.1 200 OK
Access-Control-Allow-Credentials: true
Access-Control-Allow-Origin: *
...
X-Frame-Options: DENY
X-XSS-Protection: 1; mode=block

+-----------------------------------------+
| NOTE: binary data not shown in terminal |
+-----------------------------------------+
```

To get the actual image, you need to save it to a file: