mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-09 01:30:10 +08:00

Merge remote-tracking branch 'LCTT/master'

This commit is contained in:

commit

15e609b9fe

@ -1,127 +0,0 @@

|

||||

translated by hopefully2333

|

||||

|

||||

Using machine learning to color cartoons

|

||||

======

|

||||

|

||||

|

||||

A big problem with supervised machine learning is the need for huge amounts of labeled data. It's a big problem especially if you don't have the labeled data—and even in a world awash with big data, most of us don't.

|

||||

|

||||

Although a few companies have access to enormous quantities of certain kinds of labeled data, for most organizations and many applications, creating sufficient quantities of the right kind of labeled data is cost prohibitive or impossible. Sometimes the domain is one in which there just isn't much data (for example, when diagnosing a rare disease or determining whether a signature matches a few known exemplars). Other times the volume of data needed multiplied by the cost of human labeling by [Amazon Turkers][1] or summer interns is just too high. Paying to label every frame of a movie-length video adds up fast, even at a penny a frame.

|

||||

|

||||

### The big problem of big data requirements

|

||||

|

||||

The specific problem our group set out to solve was: Can we train a model to automate applying a simple color scheme to a black and white character without hand-drawing hundreds or thousands of examples as training data?

|

||||

|

||||

* A rule-based strategy for extreme augmentation of small datasets

|

||||

* A borrowed TensorFlow image-to-image translation model, Pix2Pix, to automate cartoon coloring with very limited training data

|

||||

|

||||

|

||||

|

||||

In this experiment (which we called DragonPaint), we confronted the problem of deep learning's enormous labeled-data requirements using:

|

||||

|

||||

I had seen [Pix2Pix][2], a machine learning image-to-image translation model described in a paper ("Image-to-Image Translation with Conditional Adversarial Networks," by Isola, et al.), that colorizes landscapes after training on AB pairs where A is the grayscale version of landscape B. My problem seemed similar. The only problem was training data.

|

||||

|

||||

I needed the training data to be very limited because I didn't want to draw and color a lifetime supply of cartoon characters just to train the model. The tens of thousands (or hundreds of thousands) of examples often required by deep-learning models were out of the question.

|

||||

|

||||

Based on Pix2Pix's examples, we would need at least 400 to 1,000 sketch/colored pairs. How many was I willing to draw? Maybe 30. I drew a few dozen cartoon flowers and dragons and asked whether I could somehow turn this into a training set.

|

||||

|

||||

### The 80% solution: color by component

|

||||

|

||||

|

||||

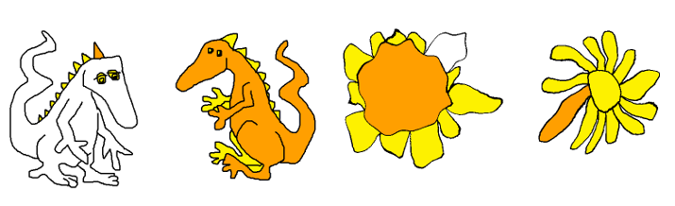

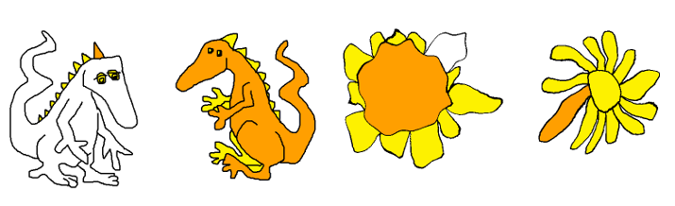

![Characters colored by component rules][4]

|

||||

|

||||

Characters colored by component rules

|

||||

|

||||

When faced with a shortage of training data, the first question to ask is whether there is a good non-machine-learning based approach to our problem. If there's not a complete solution, is there a partial solution, and would a partial solution do us any good? Do we even need machine learning to color flowers and dragons? Or can we specify geometric rules for coloring?

|

||||

|

||||

|

||||

![How to color by components][6]

|

||||

|

||||

How to color by components

|

||||

|

||||

There is a non-machine-learning approach to solving my problem. I could tell a kid how I want my drawings colored: Make the flower's center orange and the petals yellow. Make the dragon's body orange and the spikes yellow.

|

||||

|

||||

At first, that doesn't seem helpful because our computer doesn't know what a center or a petal or a body or a spike is. But it turns out we can define the flower or dragon parts in terms of connected components and get a geometric solution for coloring about 80% of our drawings. Although 80% isn't enough, we can bootstrap from that partial-rule-based solution to 100% using strategic rule-breaking transformations, augmentations, and machine learning.

|

||||

|

||||

Connected components are what is colored when you use Windows Paint (or a similar application). For example, when coloring a binary black and white image, if you click on a white pixel, the white pixels that are be reached without crossing over black are colored the new color. In a "rule-conforming" cartoon dragon or flower sketch, the biggest white component is the background. The next biggest is the body (plus the arms and legs) or the flower's center. The rest are spikes or petals, except for the dragon's eye, which can be distinguished by its distance from the background.

|

||||

|

||||

### Using strategic rule breaking and Pix2Pix to get to 100%

|

||||

|

||||

Some of my sketches aren't rule-conforming. A sloppily drawn line might leave a gap. A back limb will get colored like a spike. A small, centered daisy will switch a petal and the center's coloring rules.

|

||||

|

||||

|

||||

|

||||

For the 20% we couldn't color with the geometric rules, we needed something else. We turned to Pix2Pix, which requires a minimum training set of 400 to 1,000 sketch/colored pairs (i.e., the smallest training sets in the [Pix2Pix paper][7]) including rule-breaking pairs.

|

||||

|

||||

So, for each rule-breaking example, we finished the coloring by hand (e.g., back limbs) or took a few rule-abiding sketch/colored pairs and broke the rule. We erased a bit of a line in A or we transformed a fat, centered flower pair A and B with the same function (f) to create a new pair f(A) and f(B)—a small, centered flower. That got us to a training set.

|

||||

|

||||

### Extreme augmentations with gaussian filters and homeomorphisms

|

||||

|

||||

It's common in computer vision to augment an image training set with geometric transformations, such as rotation, translation, and zoom.

|

||||

|

||||

But what if we need to turn sunflowers into daisies or make a dragon's nose bulbous or pointy?

|

||||

|

||||

Or what if we just need an enormous increase in data volume without overfitting? Here we need a dataset 10 to 30 times larger than what we started with.

|

||||

|

||||

![Sunflower turned into a daisy with r -> r cubed][9]

|

||||

|

||||

Sunflower turned into a daisy with r -> r cubed

|

||||

|

||||

![Gaussian filter augmentations][11]

|

||||

|

||||

Gaussian filter augmentations

|

||||

|

||||

Certain homeomorphisms of the unit disk make good daisies (e.g., r -> r cubed) and Gaussian filters change a dragon's nose. Both were extremely useful for creating augmentations for our dataset and produced the augmentation volume we needed, but they also started to change the style of the drawings in ways that an [affine transformation][12] could not.

|

||||

|

||||

This inspired questions beyond how to automate a simple coloring scheme: What defines an artist's style, either to an outside viewer or the artist? When does an artist adopt as their own a drawing they could not have made without the algorithm? When does the subject matter become unrecognizable? What's the difference between a tool, an assistant, and a collaborator?

|

||||

|

||||

### How far can we go?

|

||||

|

||||

How little can we draw for input and how much variation and complexity can we create while staying within a subject and style recognizable as the artist's? What would we need to do to make an infinite parade of giraffes or dragons or flowers? And if we had one, what could we do with it?

|

||||

|

||||

Those are questions we'll continue to explore in future work.

|

||||

|

||||

But for now, the rules, augmentations, and Pix2Pix model worked. We can color flowers really well, and the dragons aren't bad.

|

||||

|

||||

|

||||

![Results: flowers colored by model trained on flowers][14]

|

||||

|

||||

Results: Flowers colored by model trained on flowers

|

||||

|

||||

|

||||

![Results: dragons trained on model trained on dragons][16]

|

||||

|

||||

Results: Dragons trained on model trained on dragons

|

||||

|

||||

To learn more, attend Gretchen Greene's talk, [DragonPaint – bootstrapping small data to color cartoons][17], at [PyCon Cleveland 2018][18].

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/4/dragonpaint-bootstrapping

|

||||

|

||||

作者:[K. Gretchen Greene][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/kggreene

|

||||

[1]:https://www.mturk.com/

|

||||

[2]:https://phillipi.github.io/pix2pix/

|

||||

[3]:/file/393246

|

||||

[4]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/dragonpaint2.png?itok=qw_q72A5 (Characters colored by component rules)

|

||||

[5]:/file/393251

|

||||

[6]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/dragonpaint3.png?itok=JK3TPcvp (How to color by components)

|

||||

[7]:https://arxiv.org/abs/1611.07004

|

||||

[8]:/file/393261

|

||||

[9]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/dragonpaint5.png?itok=GvipU8l8 (Sunflower turned into a daisy with r -> r cubed)

|

||||

[10]:/file/393266

|

||||

[11]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/dragonpaint6.png?itok=r14I2Fyz (Gaussian filter augmentations)

|

||||

[12]:https://en.wikipedia.org/wiki/Affine_transformation

|

||||

[13]:/file/393271

|

||||

[14]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/dragonpaint7.png?itok=xKWvyi_T (Results: flowers colored by model trained on flowers)

|

||||

[15]:/file/393276

|

||||

[16]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/dragonpaint8.png?itok=fSM5ovBT (Results: dragons trained on model trained on dragons)

|

||||

[17]:https://us.pycon.org/2018/schedule/presentation/113/

|

||||

[18]:https://us.pycon.org/2018/

|

||||

@ -1,124 +0,0 @@

|

||||

translating---geekpi

|

||||

|

||||

The Source Code Line Counter And Analyzer

|

||||

======

|

||||

|

||||

|

||||

|

||||

**Ohcount** is simple command line utility that analyzes the source code and prints the total number lines of a source code file. It is not just source code line counter, but also detects the popular open source licenses, such as GPL, within a large directory of source code. Additionally, Ohcount can also detect code that targets a particular programming API such as KDE or Win32. As of writing this guide, Ohcount currently supports over 70 popular programming languages. It is written in **C** programming language and is originally developed by **Ohloh** for generating the reports at [www.openhub.net][1].

|

||||

|

||||

In this brief tutorial, we are going to how to install and use Ohcount to analyze source code files in Debian, Ubuntu and its variants like Linux Mint.

|

||||

|

||||

### Ohcount – The source code line counter

|

||||

|

||||

**Installation**

|

||||

|

||||

Ohcount is available in the default repositories in Debian and Ubuntu and its derivatives, so you can install it using APT package manager as shown below.

|

||||

```

|

||||

$ sudo apt-get install ohcount

|

||||

|

||||

```

|

||||

|

||||

**Usage**

|

||||

|

||||

Ohcount usage is extremely simple.

|

||||

|

||||

All you have to do is go to the directory where you have the source code that you want to analyze and ohcount program.

|

||||

|

||||

Say for example, I am going to analyze the source of code of [**coursera-dl**][2] program.

|

||||

```

|

||||

$ cd coursera-dl-master/

|

||||

|

||||

$ ohcount

|

||||

|

||||

```

|

||||

|

||||

Here is the line count summary of Coursera-dl program:

|

||||

|

||||

![][4]

|

||||

|

||||

As you can see, the source code of Coursera-dl program contains 141 files in total. The first column specifies the name of programming languages that the source code consists of. The second column displays the number of files in each programming languages. The third column displays the total number of lines in each programming language. The fourth and fifth lines displays how many lines of comments and its percentage in the code. The sixth column displays the number of blank lines. And the final and seventh column displays total line of codes in each language and the gross total of coursera-dl program.

|

||||

|

||||

Alternatively, mention the complete path directly like below.

|

||||

```

|

||||

$ ohcount coursera-dl-master/

|

||||

|

||||

```

|

||||

|

||||

The path can be any number of individual files or directories. Directories will be probed recursively. If no path is given, the current directory will be used.

|

||||

|

||||

If you don’t want to mention the whole directory path each time, just CD into it and use ohcount utility to analyze the codes in that directory.

|

||||

|

||||

To count lines of code per file, use **-i** flag.

|

||||

```

|

||||

$ ohcount -i

|

||||

|

||||

```

|

||||

|

||||

**Sample output:**

|

||||

|

||||

![][5]

|

||||

|

||||

Ohcount utility can also show the annotated source code when you use **-a** flag.

|

||||

```

|

||||

$ ohcount -a

|

||||

|

||||

```

|

||||

|

||||

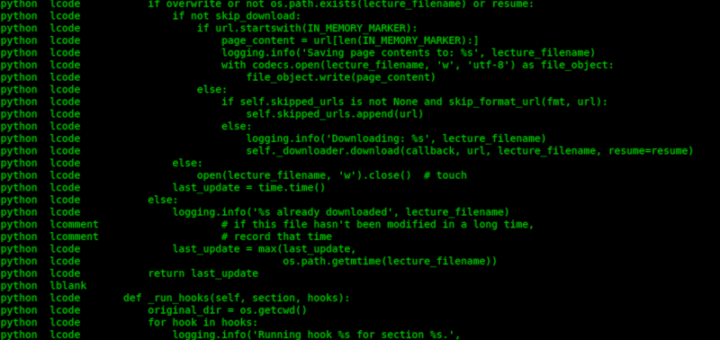

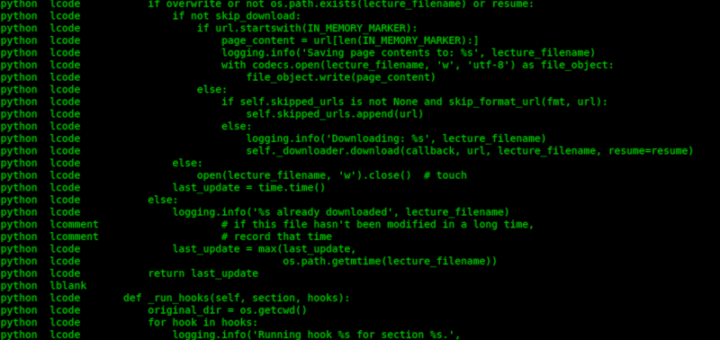

![][6]

|

||||

|

||||

As you can see, the contents of all source code files found in this directory is displayed. Each line is prefixed with a tab-delimited language name and semantic categorization (code, comment, or blank).

|

||||

|

||||

Some times, you just want to know the license used in the source code. To do so, use **-l** flag.

|

||||

```

|

||||

$ ohcount -l

|

||||

lgpl3, coursera_dl.py

|

||||

gpl coursera_dl.py

|

||||

|

||||

```

|

||||

|

||||

Another available option is **-re** , which is used to print raw entity information to the screen (mainly for debugging).

|

||||

```

|

||||

$ ohcount -re

|

||||

|

||||

```

|

||||

|

||||

To find all source code files within the given paths recursively, use **-d** flag.

|

||||

```

|

||||

$ ohcount -d

|

||||

|

||||

```

|

||||

|

||||

The above command will display all source code files in the current working directory and the each file name will be prefixed with a tab-delimited language name.

|

||||

|

||||

To know more details and supported options, run:

|

||||

```

|

||||

$ ohcount --help

|

||||

|

||||

```

|

||||

|

||||

Ohcount is quite useful for developers who wants to analysis the code written by themselves or other developers, and check how many lines that code contains, which languages have been used to write those codes, and the license details of the code etc.

|

||||

|

||||

And, that’s all for now. Hope this was useful. More good stuffs to come. Stay tuned!

|

||||

|

||||

Cheers!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/ohcount-the-source-code-line-counter-and-analyzer/

|

||||

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:http://www.openhub.net

|

||||

[2]:https://www.ostechnix.com/coursera-dl-a-script-to-download-coursera-videos/

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2018/05/ohcount-2.png

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2018/05/ohcount-1-5.png

|

||||

[6]:http://www.ostechnix.com/wp-content/uploads/2018/05/ohcount-2-2.png

|

||||

@ -1,3 +1,5 @@

|

||||

translating---geekpi

|

||||

|

||||

How To Test A Package Without Installing It In Linux

|

||||

======

|

||||

|

||||

|

||||

@ -0,0 +1,127 @@

|

||||

|

||||

使用机器学习来进行卡通上色

|

||||

======

|

||||

|

||||

|

||||

监督式机器学习的一个大问题是需要大量的标签数据,特别是如果你没有这些数据时——即使这是一个充斥着大数据的世界,我们大多数人依然没有大数据——这就真的是一个大问题了。

|

||||

|

||||

尽管少数公司可以访问某些类型的大量标签数据,但对于大多数的组织和应用来说,创造足够的正确类型的标签数据,花费还是太高了,以至于近乎不可能。在某些时候,这个领域还是一个没有太多数据的领域(比如说,当我们诊断一种稀有的疾病,或者判断一个标签是否匹配我们已知的那一点点样本时)。其他时候,通过 Amazon Turkers 或者暑假工这些人工方式来给我们需要的数据打标签,这样做的花费太高了。对于一部电影长度的视频,因为要对每一帧做标签,所以成本上涨得很快,即使是一帧一美分。

|

||||

|

||||

### 大数据需求的一个大问题

|

||||

|

||||

我们团队目前打算解决一个问题是:我们能不能在没有手绘的数百或者数千训练数据的情况下,训练出一个模型,来自动化地为黑白像素图片提供简单的配色方案。

|

||||

|

||||

* 对小数据集的快速增长使用基于规则的的策略。

|

||||

* 模仿 tensorflow 图像转换的模型,Pix2Pix 框架,从而在训练数据有限的情况下实现自动化卡通渲染。

|

||||

|

||||

|

||||

|

||||

在这个实验中(我们称这个实验为龙画),面对深度学习庞大的对标签数据的需求,我们使用以下这种方法:

|

||||

|

||||

我曾见过 Pix2Pix 框架,在一篇论文(由 Isola 等人撰写的“Image-to-Image Translation with Conditional Adversarial Networks”)中描述的机器学习图像转换模型,现在设 A 图片是 B 的灰色版,在对 AB 对进行训练后,再给风景图片进行上色。我的问题和这是类似的,唯一的问题就是训练数据。

|

||||

|

||||

我需要的训练数据非常有限,因为我不想为了训练这个模型,一辈子画画和上色来为它提供彩色图片,深度学习模型需要成千上万(或者成百上千)的训练数据。

|

||||

|

||||

基于 Pix2Pix 的案例,我们需要至少 400 到 1000 的黑白、彩色成对的数据。你问我愿意画多少?可能就只有 30。我画了一小部分卡通花和卡通龙,然后去确认我是否可以把他们放进数据集中。

|

||||

|

||||

### 80% 的解决方案:按组件上色

|

||||

|

||||

|

||||

![Characters colored by component rules][4]

|

||||

|

||||

按组件规则对黑白像素进行上色

|

||||

|

||||

当面对训练数据的短缺时,要问的第一个问题就是,是否有一个好的非机器学习的方法来解决我们的问题,如果没有一个完整的解决方案,那是否有一个部分的解决方案,这个部分解决方案对我们是否有好处?我们真的需要机器学习的方法来为花和龙上色吗?或者我们能为上色指定几何规则吗?

|

||||

|

||||

|

||||

![How to color by components][6]

|

||||

|

||||

如何按组件进行上色

|

||||

|

||||

现在有一种非机器学习的方法来解决我的问题。我可以告诉一个孩子,我想怎么给我的画上色:把花的中心画成橙色,把花瓣画成黄色,把龙的身体画成橙色,把龙的尖刺画成黄色。

|

||||

|

||||

开始的时候,这似乎没有什么帮助,因为我们的电脑不知道什么是中心,什么是花瓣,什么是身体,什么是尖刺。但事实证明,我们可以依据连接组件来定义花和龙的部分,然后得到一个几何解决方案为我们 80% 的画来上色,虽然 80% 还不够,我们可以使用战略性违规转换、参数和机器学习来引导基于部分规则的解决方案达到 100%。

|

||||

|

||||

连接的组件使用的是 Windows 画图(或者类似的应用)上的色,例如,当我们对一个二进制黑白图像上色时,如果你单击一个白色像素,这个白色像素会在不穿过黑色的情况下变成一种新的颜色。在一个规则相同的卡通龙或者花的素描中,最大的白色组件就是背景,下一个最大的组件就是身体(加上手臂和腿)或者花的中心,其余的部分就是尖刺和花瓣,除了龙眼睛,它可以通过和背景的距离来做区分。

|

||||

|

||||

### 使用战略规则和 Pix2Pix 来达到 100%

|

||||

|

||||

|

||||

我的一部分素描不符合规则,一条粗心画下的线可能会留下一个缺口,一条后肢可能会上成尖刺的颜色,一个小的,居中的雏菊会交换花瓣和中心的上色规则。

|

||||

|

||||

|

||||

|

||||

对于那 20% 我们不能用几何规则进行上色的部分,我们需要其他的方法来对它进行处理,我们转向 Pix2Pix 模型,它至少需要 400 到 1000 的素描/彩色对作为数据集(在 Pix2Pix 论文里的最小的数据集),里面包括违反规则的例子。

|

||||

|

||||

所以,对于每个违反规则的例子,我们最后都会通过手工的方式进行上色(比如后肢)或者选取一些符合规则的素描 / 彩色对来打破规则。我们在 A 中删除一些线,或者我们多转换一些,居中的花朵 A 和 B 使用相同的函数 (f) 来创造新的一对,f(A) 和 f(B),一个小而居中的花朵,这可以加入到数据集。

|

||||

|

||||

### 使用高斯滤波器和同胚增大到最大

|

||||

|

||||

在计算机视觉中使用几何转换增强数据集是很常见的做法。例如循环,平移,和缩放。

|

||||

|

||||

但是如果我们需要把向日葵转换为雏菊或者把龙的鼻子变成球型和尖刺型呢?

|

||||

|

||||

或者如果说我们只需要大量增加数据量而不管过拟合?那我们需要比我们一开始使用的数据集大 10 到 30 倍的数据集。

|

||||

|

||||

![Sunflower turned into a daisy with r -> r cubed][9]

|

||||

|

||||

向日葵通过 r -> r 立方体方式变成一个雏菊

|

||||

|

||||

![Gaussian filter augmentations][11]

|

||||

|

||||

高斯滤波器增强

|

||||

|

||||

单位盘的某些同胚可以形成很好的雏菊(比如 r -> r 立方体,高斯滤波器可以改变龙的鼻子。这两者对于数据集的快速增长是非常有用的,并且产生的大量数据都是我们需要的。但是他们也会开始用一种不能仿射转换的方式来改变画的风格。

|

||||

|

||||

之前我们考虑的是如何自动化地设计一个简单的上色方案,上述内容激发了一个在这之外的问题:什么东西定义了艺术家的风格,不管是外部的观察者还是艺术家自己?他们什么时候确定了自己的的绘画风格呢?他们不可能没有自己画画的算法?工具、助手和合作者之间的区别是什么?

|

||||

|

||||

### 我们可以走多远?

|

||||

|

||||

我们画画的投入可以有多低?保持在一个主题之内并且风格可以辨认出为某个艺术家的作品,在这个范围内我们可以创造出多少的变化和复杂性?我们需要做什么才能完成一个有无限的长颈鹿、龙、花的游行画卷?如果我们有了这样一幅,我们可以用它来做什么?

|

||||

|

||||

这些都是我们会继续在后面的工作中进行探索的问题。

|

||||

|

||||

但是现在,规则、增强和 Pix2Pix 模型起作用了。我们可以很好地为花上色了,给龙上色也不错。

|

||||

|

||||

|

||||

![Results: flowers colored by model trained on flowers][14]

|

||||

|

||||

结果:通过花这方面的模型训练来给花上色。

|

||||

|

||||

|

||||

![Results: dragons trained on model trained on dragons][16]

|

||||

|

||||

结果:龙的模型训练的训练结果。

|

||||

|

||||

想了解更多,参与 Gretchen Greene's talk, DragonPaint – bootstrapping small data to color cartoons, 在 PyCon Cleveland 2018.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://opensource.com/article/18/4/dragonpaint-bootstrapping

|

||||

|

||||

作者:[K. Gretchen Greene][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[hopefully2333](https://github.com/hopefully2333)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://opensource.com/users/kggreene

|

||||

[1]:https://www.mturk.com/

|

||||

[2]:https://phillipi.github.io/pix2pix/

|

||||

[3]:/file/393246

|

||||

[4]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/dragonpaint2.png?itok=qw_q72A5 (Characters colored by component rules)

|

||||

[5]:/file/393251

|

||||

[6]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/dragonpaint3.png?itok=JK3TPcvp (How to color by components)

|

||||

[7]:https://arxiv.org/abs/1611.07004

|

||||

[8]:/file/393261

|

||||

[9]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/dragonpaint5.png?itok=GvipU8l8 (Sunflower turned into a daisy with r -> r cubed)

|

||||

[10]:/file/393266

|

||||

[11]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/dragonpaint6.png?itok=r14I2Fyz (Gaussian filter augmentations)

|

||||

[12]:https://en.wikipedia.org/wiki/Affine_transformation

|

||||

[13]:/file/393271

|

||||

[14]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/dragonpaint7.png?itok=xKWvyi_T (Results: flowers colored by model trained on flowers)

|

||||

[15]:/file/393276

|

||||

[16]:https://opensource.com/sites/default/files/styles/panopoly_image_original/public/u128651/dragonpaint8.png?itok=fSM5ovBT (Results: dragons trained on model trained on dragons)

|

||||

[17]:https://us.pycon.org/2018/schedule/presentation/113/

|

||||

[18]:https://us.pycon.org/2018/

|

||||

@ -0,0 +1,122 @@

|

||||

源代码行计数器和分析器

|

||||

======

|

||||

|

||||

|

||||

|

||||

**Ohcount** 是一个简单的命令行工具,可用于分析源代码并打印代码的总行数。它不仅仅是代码行计数器,还可以在含有大量代码的目录中检测流行的开源许可证,例如 GPL。此外,Ohcount 还可以检测针对特定编程 API(例如 KDE 或 Win32)的代码。在编写本指南时,Ohcount 目前支持 70 多种流行的编程语言。它用 **C** 语言编写,最初由 **Ohloh** 开发,用于在 [www.openhub.net][1] 中生成报告。

|

||||

|

||||

在这篇简短的教程中,我们将介绍如何安装和使用 Ohcount 来分析 Debian、Ubuntu 及其变体(如 Linux Mint)中的源代码文件。

|

||||

|

||||

### Ohcount – 代码行计数器

|

||||

|

||||

**安装**

|

||||

|

||||

Ohcount 存在于 Debian 和 Ubuntu 及其派生版的默认仓库中,因此你可以使用 APT 软件包管理器来安装它,如下所示。

|

||||

```

|

||||

$ sudo apt-get install ohcount

|

||||

|

||||

```

|

||||

|

||||

**用法**

|

||||

|

||||

Ohcount 的使用非常简单。

|

||||

|

||||

你所要做的就是进入你想要分析代码的目录并执行程序。

|

||||

|

||||

举例来说,我将分析 [**coursera-dl**][2] 程序的源代码。

|

||||

```

|

||||

$ cd coursera-dl-master/

|

||||

|

||||

$ ohcount

|

||||

|

||||

```

|

||||

|

||||

以下是 Coursera-dl 的行数摘要:

|

||||

|

||||

![][4]

|

||||

|

||||

如你所见,Coursera-dl 的源代码总共包含 141 个文件。第一列说明源码含有的编程语言的名称。第二列显示每种编程语言的文件数量。第三列显示每种编程语言的总行数。第四行和第五行显示代码中由多少行注释及其百分比。第六列显示空行的数量。最后一列和第七列显示每种语言的全部代码行数以及 coursera-dl 的总行数。

|

||||

|

||||

或者,直接使用下面的完整路径。

|

||||

```

|

||||

$ ohcount coursera-dl-master/

|

||||

|

||||

```

|

||||

|

||||

路径可以是任何数量的单个文件或目录。目录将被递归探测。如果没有给出路径,则使用当前目录。

|

||||

|

||||

如果你不想每次都输入完整目录路径,只需 cd 进入它,然后使用 ohcount 来分析该目录中的代码。

|

||||

|

||||

要计算每个文件的代码行数,请使用 **-i** 标志。

|

||||

```

|

||||

$ ohcount -i

|

||||

|

||||

```

|

||||

|

||||

**示例输出:**

|

||||

|

||||

![][5]

|

||||

|

||||

当您使用 **-a** 标志时,ohcount 还可以显示带标注的源码。

|

||||

```

|

||||

$ ohcount -a

|

||||

|

||||

```

|

||||

|

||||

![][6]

|

||||

|

||||

如你所见,显示了目录中所有源代码的内容。每行都以制表符分隔的语言名称和语义分类(代码、注释或空白)为前缀。

|

||||

|

||||

有时候,你只是想知道源码中使用的许可证。为此,请使用 **-l** 标志。

|

||||

```

|

||||

$ ohcount -l

|

||||

lgpl3, coursera_dl.py

|

||||

gpl coursera_dl.py

|

||||

|

||||

```

|

||||

|

||||

另一个可用选项是 **-re**,用于将原始实体信息打印到屏幕(主要用于调试)。

|

||||

```

|

||||

$ ohcount -re

|

||||

|

||||

```

|

||||

|

||||

要递归地查找给定路径内的所有源码文件,请使用 **-d** 标志。

|

||||

```

|

||||

$ ohcount -d

|

||||

|

||||

```

|

||||

|

||||

上述命令将显示当前工作目录中的所有源码文件,每个文件名将以制表符分隔的语言名称为前缀。

|

||||

|

||||

要了解更多详细信息和支持的选项,请运行:

|

||||

```

|

||||

$ ohcount --help

|

||||

|

||||

```

|

||||

|

||||

对于想要分析自己或其他开发人员开发的代码,并检查代码的行数,用于编写这些代码的语言以及代码的许可证详细信息等,ohcount 非常有用。

|

||||

|

||||

就是这些了。希望对你有用。会有更好的东西。敬请关注!

|

||||

|

||||

干杯!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.ostechnix.com/ohcount-the-source-code-line-counter-and-analyzer/

|

||||

|

||||

作者:[SK][a]

|

||||

选题:[lujun9972](https://github.com/lujun9972)

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创编译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:https://www.ostechnix.com/author/sk/

|

||||

[1]:http://www.openhub.net

|

||||

[2]:https://www.ostechnix.com/coursera-dl-a-script-to-download-coursera-videos/

|

||||

[4]:http://www.ostechnix.com/wp-content/uploads/2018/05/ohcount-2.png

|

||||

[5]:http://www.ostechnix.com/wp-content/uploads/2018/05/ohcount-1-5.png

|

||||

[6]:http://www.ostechnix.com/wp-content/uploads/2018/05/ohcount-2-2.png

|

||||

Loading…

Reference in New Issue

Block a user