mirror of

https://github.com/LCTT/TranslateProject.git

synced 2025-03-21 02:10:11 +08:00

commit

15dec4d7be

@ -1,12 +1,12 @@

|

||||

7 个驱动开源发展的社区

|

||||

================================================================================

|

||||

不久前,开源模式还被成熟的工业厂商以怀疑的态度认作是叛逆小孩的玩物。如今,开放的倡议和基金会在一长列的供应商提供者的支持下正蓬勃发展,而他们将开源模式视作创新的关键。

|

||||

不久前,开源模式还被成熟的工业级厂商以怀疑的态度认作是叛逆小孩的玩物。如今,开源的促进会和基金会在一长列的供应商提供者的支持下正蓬勃发展,而他们将开源模式视作创新的关键。

|

||||

|

||||

|

||||

|

||||

### 技术的开放发展驱动着创新 ###

|

||||

|

||||

在过去的 20 几年间,技术的开放发展已被视作驱动创新的关键因素。即使那些以前将开源视作威胁的公司也开始接受这个观点 — 例如微软,如今它在一系列的开源倡议中表现活跃。到目前为止,大多数的开放发展都集中在软件方面,但甚至这个也正在改变,因为社区已经开始向开源硬件倡议方面聚拢。这里有 7 个成功地在硬件和软件方面同时促进和发展开源技术的组织。

|

||||

在过去的 20 几年间,技术的开源推进已被视作驱动创新的关键因素。即使那些以前将开源视作威胁的公司也开始接受这个观点 — 例如微软,如今它在一系列的开源的促进会中表现活跃。到目前为止,大多数的开源推进都集中在软件方面,但甚至这个也正在改变,因为社区已经开始向开源硬件倡议方面聚拢。这里介绍 7 个成功地在硬件和软件方面同时促进和发展开源技术的组织。

|

||||

|

||||

### OpenPOWER 基金会 ###

|

||||

|

||||

@ -16,21 +16,21 @@

|

||||

|

||||

IBM 通过开放其基于 Power 架构的硬件和软件技术,向使用 Power IP 的独立硬件产品提供许可证等方式为基金会的建立播下种子。如今超过 70 个成员共同协作来为基于 Linux 的数据中心提供自定义的开放服务器,组件和硬件。

|

||||

|

||||

今年四月,在比最新基于 x86 系统快 50 倍的数据分析能力的新的 POWER8 处理器的服务器的基础上, OpenPOWER 推出了一个技术路线图。七月, IBM 和 Google 发布了一个固件堆栈。十月见证了 NVIDIA GPU 带来加速 POWER8 系统的能力和来自 Tyan 的第一个 OpenPOWER 参考服务器。

|

||||

去年四月,在比最新基于 x86 系统快 50 倍的数据分析能力的新的 POWER8 处理器的服务器的基础上, OpenPOWER 推出了一个技术路线图。七月, IBM 和 Google 发布了一个固件堆栈。去年十月见证了 NVIDIA GPU 带来加速 POWER8 系统的能力和来自 Tyan 的第一个 OpenPOWER 参考服务器。

|

||||

|

||||

### Linux 基金会 ###

|

||||

|

||||

|

||||

|

||||

于 2000 年建立的 [Linux 基金会][2] 如今成为掌控着历史上最大的开源协同发展成果,它有着超过 180 个合作成员和许多独立成员及学生成员。它赞助核心 Linux 开发者的工作并促进、保护和推进 Linux 操作系统和协作软件的开发。

|

||||

于 2000 年建立的 [Linux 基金会][2] 如今成为掌控着历史上最大的开源协同开发成果,它有着超过 180 个合作成员和许多独立成员及学生成员。它赞助 Linux 核心开发者的工作并促进、保护和推进 Linux 操作系统,并协调软件的协作开发。

|

||||

|

||||

它最为成功的协作项目包括 Code Aurora Forum (一个拥有为移动无线产业服务的企业财团),MeeGo (一个为移动设备和 IVI (注:指的是车载消息娱乐设备,为 In-Vehicle Infotainment 的简称) 构建一个基于 Linux 内核的操作系统的项目) 和 Open Virtualization Alliance (开放虚拟化联盟,它促进自由和开源软件虚拟化解决方案的采用)。

|

||||

它最为成功的协作项目包括 Code Aurora Forum (一个拥有为移动无线产业服务的企业财团),MeeGo (一个为移动设备和 IVI [注:指的是车载消息娱乐设备,为 In-Vehicle Infotainment 的简称] 构建一个基于 Linux 内核的操作系统的项目) 和 Open Virtualization Alliance (开放虚拟化联盟,它促进自由和开源软件虚拟化解决方案的采用)。

|

||||

|

||||

### 开放虚拟化联盟 ###

|

||||

|

||||

|

||||

|

||||

[开放虚拟化联盟(OVA)][3] 的存在目的为:通过提供使用案例和对具有互操作性的通用接口和 API 的发展提供支持,来促进自由、开源软件的虚拟化解决方案例如 KVM 的采用。KVM 将 Linux 内核转变为一个虚拟机管理程序。

|

||||

[开放虚拟化联盟(OVA)][3] 的存在目的为:通过提供使用案例和对具有互操作性的通用接口和 API 的发展提供支持,来促进自由、开源软件的虚拟化解决方案,例如 KVM 的采用。KVM 将 Linux 内核转变为一个虚拟机管理程序。

|

||||

|

||||

如今, KVM 已成为和 OpenStack 共同使用的最为常见的虚拟机管理程序。

|

||||

|

||||

@ -40,31 +40,31 @@ IBM 通过开放其基于 Power 架构的硬件和软件技术,向使用 Power

|

||||

|

||||

原本作为一个 IaaS(基础设施即服务) 产品由 NASA 和 Rackspace 于 2010 年启动,[OpenStack 基金会][4] 已成为最大的开源项目聚居地之一。它拥有超过 200 家公司成员,其中包括 AT&T, AMD, Avaya, Canonical, Cisco, Dell 和 HP。

|

||||

|

||||

大约以 6 个月为一个发行周期,基金会的 OpenStack 项目被发展用来通过一个基于 Web 的仪表盘,命令行工具或一个 RESTful 风格的 API 来控制或调配流经一个数据中心的处理存储池和网络资源。至今为止,基金会支持的协作发展已经孕育出了一系列 OpenStack 组件,其中包括 OpenStack Compute(一个云计算网络控制器,它是一个 IaaS 系统的主要部分),OpenStack Networking(一个用以管理网络和 IP 地址的系统) 和 OpenStack Object Storage(一个可扩展的冗余存储系统)。

|

||||

大约以 6 个月为一个发行周期,基金会的 OpenStack 项目开发用于通过一个基于 Web 的仪表盘,命令行工具或一个 RESTful 风格的 API 来控制或调配流经一个数据中心的处理存储池和网络资源。至今为止,基金会支持的协同开发已经孕育出了一系列 OpenStack 组件,其中包括 OpenStack Compute(一个云计算网络控制器,它是一个 IaaS 系统的主要部分),OpenStack Networking(一个用以管理网络和 IP 地址的系统) 和 OpenStack Object Storage(一个可扩展的冗余存储系统)。

|

||||

|

||||

### OpenDaylight ###

|

||||

|

||||

|

||||

|

||||

作为来自 Linux 基金会的另一个协作项目, [OpenDaylight][5] 是一个由诸如 Dell, HP, Oracle 和 Avaya 等行业厂商于 2013 年 4 月建立的联合倡议。它的任务是建立一个由社区主导,开放,有工业支持的针对 Software-Defined Networking (SDN) 的包含代码和蓝图的框架。其思路是提供一个可直接部署的全功能 SDN 平台,而不需要其他组件,供应商可提供附件组件和增强组件。

|

||||

作为来自 Linux 基金会的另一个协作项目, [OpenDaylight][5] 是一个由诸如 Dell, HP, Oracle 和 Avaya 等行业厂商于 2013 年 4 月建立的联合倡议。它的任务是建立一个由社区主导、开源、有工业支持的针对软件定义网络( SDN: Software-Defined Networking)的包含代码和蓝图的框架。其思路是提供一个可直接部署的全功能 SDN 平台,而不需要其他组件,供应商可提供附件组件和增强组件。

|

||||

|

||||

### Apache 软件基金会 ###

|

||||

|

||||

|

||||

|

||||

[Apache 软件基金会 (ASF)][7] 是将近 150 个顶级项目的聚居地,这些项目涵盖从开源企业级自动化软件到与 Apache Hadoop 相关的分布式计算的整个生态系统。这些项目分发企业级、可免费获取的软件产品,而 Apache 协议则是为了让无论是商业用户还是个人用户更方便地部署 Apache 的产品。

|

||||

[Apache 软件基金会 (ASF)][7] 是将近 150 个顶级项目的聚居地,这些项目涵盖从开源的企业级自动化软件到与 Apache Hadoop 相关的分布式计算的整个生态系统。这些项目分发企业级、可免费获取的软件产品,而 Apache 协议则是为了让无论是商业用户还是个人用户更方便地部署 Apache 的产品。

|

||||

|

||||

ASF 于 1999 年作为一个会员制,非盈利公司注册,其核心为精英 — 要成为它的成员,你必须首先在基金会的一个或多个协作项目中做出积极贡献。

|

||||

ASF 是 1999 年成立的一个会员制,非盈利公司,以精英为其核心 — 要成为它的成员,你必须首先在基金会的一个或多个协作项目中做出积极贡献。

|

||||

|

||||

### 开放计算项目 ###

|

||||

|

||||

|

||||

|

||||

作为 Facebook 重新设计其 Oregon 数据中心的副产物, [开放计算项目][7] 旨在发展针对数据中心的开放硬件解决方案。 OCP 是一个由廉价、无浪费的服务器,针对 Open Rack(为数据中心设计的机架标准,来让机架集成到数据中心的基础设施中) 的模块化 I/O 存储和一个相对 "绿色" 的数据中心设计方案等构成。

|

||||

作为 Facebook 重新设计其 Oregon 数据中心的副产物, [开放计算项目][7] 旨在发展针对数据中心的开源硬件解决方案。 OCP 是一个由廉价无浪费的服务器、针对 Open Rack(为数据中心设计的机架标准,来让机架集成到数据中心的基础设施中) 的模块化 I/O 存储和一个相对 "绿色" 的数据中心设计方案等构成。

|

||||

|

||||

OCP 董事会成员包括来自 Facebook,Intel,Goldman Sachs,Rackspace 和 Microsoft 的代表。

|

||||

|

||||

OCP 最近宣布了许可证的两个选择: 一个类似 Apache 2.0 的允许衍生工作的许可证和一个更规范的鼓励回滚到原有软件的更改的许可证。

|

||||

OCP 最近宣布了有两种可选的许可证: 一个类似 Apache 2.0 的允许衍生工作的许可证,和一个更规范的鼓励将更改回馈到原有软件的许可证。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -72,7 +72,7 @@ via: http://www.networkworld.com/article/2866074/opensource-subnet/7-communities

|

||||

|

||||

作者:[Thor Olavsrud][a]

|

||||

译者:[FSSlc](https://github.com/FSSlc)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,20 +1,20 @@

|

||||

如何在 Ubuntu 中管理和使用 LVM(Logical Volume Management,逻辑卷管理)

|

||||

如何在 Ubuntu 中管理和使用 逻辑卷管理 LVM

|

||||

================================================================================

|

||||

|

||||

|

||||

在我们之前的文章中,我们介绍了[什么是 LVM 以及能用 LVM 做什么][1],今天我们会给你介绍一些 LVM 的主要管理工具,使得你在设置和扩展安装时更游刃有余。

|

||||

|

||||

正如之前所述,LVM 是介于你的操作系统和物理硬盘驱动器之间的抽象层。这意味着你的物理硬盘驱动器和分区不再依赖于他们所在的硬盘驱动和分区。而是,你的操作系统所见的硬盘驱动和分区可以是由任意数目的独立硬盘驱动汇集而成或是一个软件磁盘阵列。

|

||||

正如之前所述,LVM 是介于你的操作系统和物理硬盘驱动器之间的抽象层。这意味着你的物理硬盘驱动器和分区不再依赖于他们所在的硬盘驱动和分区。而是你的操作系统所见的硬盘驱动和分区可以是由任意数目的独立硬盘汇集而成的或是一个软件磁盘阵列。

|

||||

|

||||

要管理 LVM,这里有很多可用的 GUI 工具,但要真正理解 LVM 配置发生的事情,最好要知道一些命令行工具。这当你在一个服务器或不提供 GUI 工具的发行版上管理 LVM 时尤为有用。

|

||||

|

||||

LVM 的大部分命令和彼此都非常相似。每个可用的命令都由以下其中之一开头:

|

||||

|

||||

- Physical Volume = pv

|

||||

- Volume Group = vg

|

||||

- Logical Volume = lv

|

||||

- Physical Volume (物理卷) = pv

|

||||

- Volume Group (卷组)= vg

|

||||

- Logical Volume (逻辑卷)= lv

|

||||

|

||||

物理卷命令用于在卷组中添加或删除硬盘驱动。卷组命令用于为你的逻辑卷操作更改显示的物理分区抽象集。逻辑卷命令会以分区形式显示卷组使得你的操作系统能使用指定的空间。

|

||||

物理卷命令用于在卷组中添加或删除硬盘驱动。卷组命令用于为你的逻辑卷操作更改显示的物理分区抽象集。逻辑卷命令会以分区形式显示卷组,使得你的操作系统能使用指定的空间。

|

||||

|

||||

### 可下载的 LVM 备忘单 ###

|

||||

|

||||

@ -26,7 +26,7 @@ LVM 的大部分命令和彼此都非常相似。每个可用的命令都由以

|

||||

|

||||

### 如何查看当前 LVM 信息 ###

|

||||

|

||||

你首先需要做的事情是检查你的 LVM 设置。s 和 display 命令和物理卷(pv)、卷组(vg)以及逻辑卷(lv)一起使用,是一个找出当前设置好的开始点。

|

||||

你首先需要做的事情是检查你的 LVM 设置。s 和 display 命令可以和物理卷(pv)、卷组(vg)以及逻辑卷(lv)一起使用,是一个找出当前设置的好起点。

|

||||

|

||||

display 命令会格式化输出信息,因此比 s 命令更易于理解。对每个命令你会看到名称和 pv/vg 的路径,它还会给出空闲和已使用空间的信息。

|

||||

|

||||

@ -40,17 +40,17 @@ display 命令会格式化输出信息,因此比 s 命令更易于理解。对

|

||||

|

||||

#### 创建物理卷 ####

|

||||

|

||||

我们会从一个完全新的没有任何分区和信息的硬盘驱动开始。首先找出你将要使用的磁盘。(/dev/sda, sdb, 等)

|

||||

我们会从一个全新的没有任何分区和信息的硬盘开始。首先找出你将要使用的磁盘。(/dev/sda, sdb, 等)

|

||||

|

||||

> 注意:记住所有的命令都要以 root 身份运行或者在命令前面添加 'sudo' 。

|

||||

|

||||

fdisk -l

|

||||

|

||||

如果之前你的硬盘驱动从没有格式化或分区,在 fdisk 的输出中你很可能看到类似下面的信息。这完全正常,因为我们会在下面的步骤中创建需要的分区。

|

||||

如果之前你的硬盘从未格式化或分区过,在 fdisk 的输出中你很可能看到类似下面的信息。这完全正常,因为我们会在下面的步骤中创建需要的分区。

|

||||

|

||||

|

||||

|

||||

我们的新磁盘位置是 /dev/sdb,让我们用 fdisk 命令在驱动上创建一个新的分区。

|

||||

我们的新磁盘位置是 /dev/sdb,让我们用 fdisk 命令在磁盘上创建一个新的分区。

|

||||

|

||||

这里有大量能创建新分区的 GUI 工具,包括 [Gparted][2],但由于我们已经打开了终端,我们将使用 fdisk 命令创建需要的分区。

|

||||

|

||||

@ -62,9 +62,9 @@ display 命令会格式化输出信息,因此比 s 命令更易于理解。对

|

||||

|

||||

|

||||

|

||||

以指定的顺序输入命令创建一个使用新硬盘驱动 100% 空间的主分区并为 LVM 做好了准备。如果你需要更改分区的大小或相应多个分区,我建议使用 GParted 或自己了解关于 fdisk 命令的使用。

|

||||

以指定的顺序输入命令创建一个使用新硬盘 100% 空间的主分区并为 LVM 做好了准备。如果你需要更改分区的大小或想要多个分区,我建议使用 GParted 或自己了解一下关于 fdisk 命令的使用。

|

||||

|

||||

**警告:下面的步骤会格式化你的硬盘驱动。确保在进行下面步骤之前你的硬盘驱动中没有任何信息。**

|

||||

**警告:下面的步骤会格式化你的硬盘驱动。确保在进行下面步骤之前你的硬盘驱动中没有任何有用的信息。**

|

||||

|

||||

- n = 创建新分区

|

||||

- p = 创建主分区

|

||||

@ -79,9 +79,9 @@ display 命令会格式化输出信息,因此比 s 命令更易于理解。对

|

||||

- t = 更改分区类型

|

||||

- 8e = 更改为 LVM 分区类型

|

||||

|

||||

核实并将信息写入硬盘驱动器。

|

||||

核实并将信息写入硬盘。

|

||||

|

||||

- p = 查看分区设置使得写入更改到磁盘之前可以回看

|

||||

- p = 查看分区设置使得在写入更改到磁盘之前可以回看

|

||||

- w = 写入更改到磁盘

|

||||

|

||||

|

||||

@ -102,7 +102,7 @@ display 命令会格式化输出信息,因此比 s 命令更易于理解。对

|

||||

|

||||

|

||||

|

||||

Vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称,但建议标签以 vg 开头,以便后面你使用它时能意识到这是一个卷组。

|

||||

vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称,但建议标签以 vg 开头,以便后面你使用它时能意识到这是一个卷组。

|

||||

|

||||

#### 创建逻辑卷 ####

|

||||

|

||||

@ -112,7 +112,7 @@ Vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称

|

||||

|

||||

|

||||

|

||||

-L 命令指定逻辑卷的大小,在该情况中是 3 GB,-n 命令指定卷的名称。 指定 vgpool 所以 lvcreate 命令知道从什么卷获取空间。

|

||||

-L 命令指定逻辑卷的大小,在该情况中是 3 GB,-n 命令指定卷的名称。 指定 vgpool 以便 lvcreate 命令知道从什么卷获取空间。

|

||||

|

||||

#### 格式化并挂载逻辑卷 ####

|

||||

|

||||

@ -131,7 +131,7 @@ Vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称

|

||||

|

||||

#### 重新设置逻辑卷大小 ####

|

||||

|

||||

逻辑卷的一个好处是你能使你的共享物理变大或变小而不需要移动所有东西到一个更大的硬盘驱动。另外,你可以添加新的硬盘驱动并同时扩展你的卷组。或者如果你有一个不使用的硬盘驱动,你可以从卷组中移除它使得逻辑卷变小。

|

||||

逻辑卷的一个好处是你能使你的存储物理地变大或变小,而不需要移动所有东西到一个更大的硬盘。另外,你可以添加新的硬盘并同时扩展你的卷组。或者如果你有一个不使用的硬盘,你可以从卷组中移除它使得逻辑卷变小。

|

||||

|

||||

这里有三个用于使物理卷、卷组和逻辑卷变大或变小的基础工具。

|

||||

|

||||

@ -147,9 +147,9 @@ Vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称

|

||||

|

||||

按照上面创建新分区并更改分区类型为 LVM(8e) 的步骤安装一个新硬盘驱动。然后用 pvcreate 命令创建一个 LVM 能识别的物理卷。

|

||||

|

||||

#### 添加新硬盘驱动到卷组 ####

|

||||

#### 添加新硬盘到卷组 ####

|

||||

|

||||

要添加新的硬盘驱动到一个卷组,你只需要知道你的新分区,在我们的例子中是 /dev/sdc1,以及想要添加到的卷组的名称。

|

||||

要添加新的硬盘到一个卷组,你只需要知道你的新分区,在我们的例子中是 /dev/sdc1,以及想要添加到的卷组的名称。

|

||||

|

||||

这会添加新物理卷到已存在的卷组中。

|

||||

|

||||

@ -189,7 +189,7 @@ Vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称

|

||||

|

||||

1. 调整文件系统大小 (调整之前确保已经移动文件到硬盘驱动安全的地方)

|

||||

1. 减小逻辑卷 (除了 + 可以扩展大小,你也可以用 - 压缩大小)

|

||||

1. 用 vgreduce 从卷组中移除硬盘驱动

|

||||

1. 用 vgreduce 从卷组中移除硬盘

|

||||

|

||||

#### 备份逻辑卷 ####

|

||||

|

||||

@ -197,7 +197,7 @@ Vgpool 是新创建的卷组的名称。你可以使用任何你喜欢的名称

|

||||

|

||||

|

||||

|

||||

LVM 获取快照的时候,会有一张和逻辑卷完全相同的照片,该照片可以用于在不同的硬盘驱动上进行备份。生成一个备份的时候,任何需要添加到逻辑卷的新信息会如往常一样写入磁盘,但会跟踪更改使得原始快照永远不会损毁。

|

||||

LVM 获取快照的时候,会有一张和逻辑卷完全相同的“照片”,该“照片”可以用于在不同的硬盘上进行备份。生成一个备份的时候,任何需要添加到逻辑卷的新信息会如往常一样写入磁盘,但会跟踪更改使得原始快照永远不会损毁。

|

||||

|

||||

要创建一个快照,我们需要创建拥有足够空闲空间的逻辑卷,用于保存我们备份的时候会写入该逻辑卷的任何新信息。如果驱动并不是经常写入,你可以使用很小的一个存储空间。备份完成的时候我们只需要移除临时逻辑卷,原始逻辑卷会和往常一样。

|

||||

|

||||

@ -209,7 +209,7 @@ LVM 获取快照的时候,会有一张和逻辑卷完全相同的照片,该

|

||||

|

||||

|

||||

|

||||

这里我们创建了一个只有 512MB 的逻辑卷,因为驱动实际上并不会使用。512MB 的空间会保存备份时产生的任何新数据。

|

||||

这里我们创建了一个只有 512MB 的逻辑卷,因为该硬盘实际上并不会使用。512MB 的空间会保存备份时产生的任何新数据。

|

||||

|

||||

#### 挂载新快照 ####

|

||||

|

||||

@ -222,7 +222,7 @@ LVM 获取快照的时候,会有一张和逻辑卷完全相同的照片,该

|

||||

|

||||

#### 复制快照和删除逻辑卷 ####

|

||||

|

||||

你剩下需要做的是从 /mnt/lvstuffbackup/ 中复制所有文件到一个外部的硬盘驱动或者打包所有文件到一个文件。

|

||||

你剩下需要做的是从 /mnt/lvstuffbackup/ 中复制所有文件到一个外部的硬盘或者打包所有文件到一个文件。

|

||||

|

||||

**注意:tar -c 会创建一个归档文件,-f 要指出归档文件的名称和路径。要获取 tar 命令的帮助信息,可以在终端中输入 man tar。**

|

||||

|

||||

@ -230,7 +230,7 @@ LVM 获取快照的时候,会有一张和逻辑卷完全相同的照片,该

|

||||

|

||||

|

||||

|

||||

记住备份发生的时候写到 lvstuff 的所有文件都会在我们之前创建的临时逻辑卷中被跟踪。确保备份的时候你有足够的空闲空间。

|

||||

记住备份时候写到 lvstuff 的所有文件都会在我们之前创建的临时逻辑卷中被跟踪。确保备份的时候你有足够的空闲空间。

|

||||

|

||||

备份完成后,卸载卷并移除临时快照。

|

||||

|

||||

@ -259,10 +259,10 @@ LVM 获取快照的时候,会有一张和逻辑卷完全相同的照片,该

|

||||

via: http://www.howtogeek.com/howto/40702/how-to-manage-and-use-lvm-logical-volume-management-in-ubuntu/

|

||||

|

||||

译者:[ictlyh](https://github.com/ictlyh)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://www.howtogeek.com/howto/36568/what-is-logical-volume-management-and-how-do-you-enable-it-in-ubuntu/

|

||||

[1]:https://linux.cn/article-5953-1.html

|

||||

[2]:http://www.howtogeek.com/howto/17001/how-to-format-a-usb-drive-in-ubuntu-using-gparted/

|

||||

[3]:http://www.howtogeek.com/howto/33552/htg-explains-which-linux-file-system-should-you-choose/

|

||||

@ -1,18 +1,18 @@

|

||||

如何通过反向 SSH 隧道访问 NAT 后面的 Linux 服务器

|

||||

================================================================================

|

||||

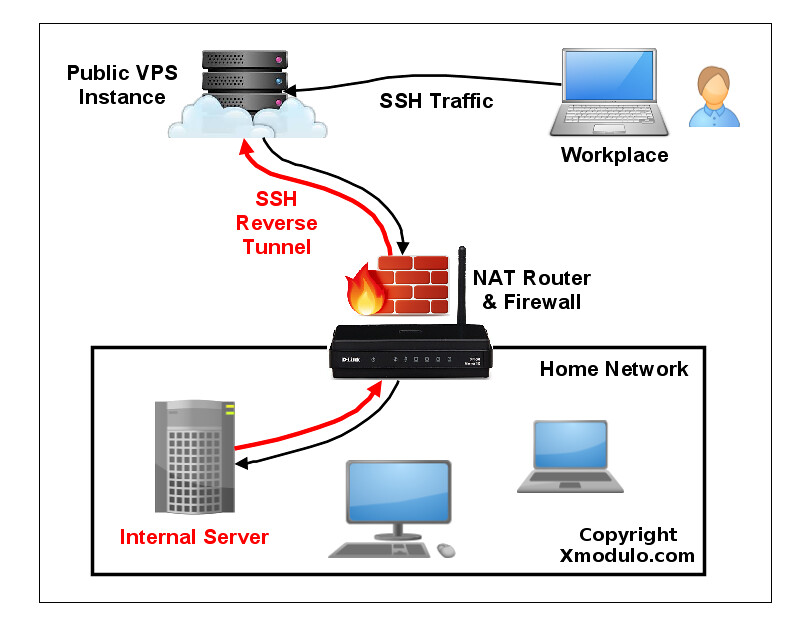

你在家里运行着一台 Linux 服务器,访问它需要先经过 NAT 路由器或者限制性防火墙。现在你想不在家的时候用 SSH 登录到这台服务器。你如何才能做到呢?SSH 端口转发当然是一种选择。但是,如果你需要处理多个嵌套的 NAT 环境,端口转发可能会变得非常棘手。另外,在多种 ISP 特定条件下可能会受到干扰,例如阻塞转发端口的限制性 ISP 防火墙、或者在用户间共享 IPv4 地址的运营商级 NAT。

|

||||

你在家里运行着一台 Linux 服务器,它放在一个 NAT 路由器或者限制性防火墙后面。现在你想在外出时用 SSH 登录到这台服务器。你如何才能做到呢?SSH 端口转发当然是一种选择。但是,如果你需要处理多级嵌套的 NAT 环境,端口转发可能会变得非常棘手。另外,在多种 ISP 特定条件下可能会受到干扰,例如阻塞转发端口的限制性 ISP 防火墙、或者在用户间共享 IPv4 地址的运营商级 NAT。

|

||||

|

||||

### 什么是反向 SSH 隧道? ###

|

||||

|

||||

SSH 端口转发的一种替代方案是 **反向 SSH 隧道**。反向 SSH 隧道的概念非常简单。对于此,在限制性家庭网络之外你需要另一台主机(所谓的“中继主机”),你能从当前所在地通过 SSH 登录。你可以用有公共 IP 地址的 [VPS 实例][1] 配置一个中继主机。然后要做的就是从你家庭网络服务器中建立一个到公共中继主机的永久 SSH 隧道。有了这个隧道,你就可以从中继主机中连接“回”家庭服务器(这就是为什么称之为 “反向” 隧道)。不管你在哪里、你家庭网络中的 NAT 或 防火墙限制多么严重,只要你可以访问中继主机,你就可以连接到家庭服务器。

|

||||

SSH 端口转发的一种替代方案是 **反向 SSH 隧道**。反向 SSH 隧道的概念非常简单。使用这种方案,在你的受限的家庭网络之外你需要另一台主机(所谓的“中继主机”),你能从当前所在地通过 SSH 登录到它。你可以用有公网 IP 地址的 [VPS 实例][1] 配置一个中继主机。然后要做的就是从你的家庭网络服务器中建立一个到公网中继主机的永久 SSH 隧道。有了这个隧道,你就可以从中继主机中连接“回”家庭服务器(这就是为什么称之为 “反向” 隧道)。不管你在哪里、你的家庭网络中的 NAT 或 防火墙限制多么严格,只要你可以访问中继主机,你就可以连接到家庭服务器。

|

||||

|

||||

|

||||

|

||||

### 在 Linux 上设置反向 SSH 隧道 ###

|

||||

|

||||

让我们来看看怎样创建和使用反向 SSH 隧道。我们有如下假设。我们会设置一个从家庭服务器到中继服务器的反向 SSH 隧道,然后我们可以通过中继服务器从客户端计算机 SSH 登录到家庭服务器。**中继服务器** 的公共 IP 地址是 1.1.1.1。

|

||||

让我们来看看怎样创建和使用反向 SSH 隧道。我们做如下假设:我们会设置一个从家庭服务器(homeserver)到中继服务器(relayserver)的反向 SSH 隧道,然后我们可以通过中继服务器从客户端计算机(clientcomputer) SSH 登录到家庭服务器。本例中的**中继服务器** 的公网 IP 地址是 1.1.1.1。

|

||||

|

||||

在家庭主机上,按照以下方式打开一个到中继服务器的 SSH 连接。

|

||||

在家庭服务器上,按照以下方式打开一个到中继服务器的 SSH 连接。

|

||||

|

||||

homeserver~$ ssh -fN -R 10022:localhost:22 relayserver_user@1.1.1.1

|

||||

|

||||

@ -20,11 +20,11 @@ SSH 端口转发的一种替代方案是 **反向 SSH 隧道**。反向 SSH 隧

|

||||

|

||||

“-R 10022:localhost:22” 选项定义了一个反向隧道。它转发中继服务器 10022 端口的流量到家庭服务器的 22 号端口。

|

||||

|

||||

用 “-fN” 选项,当你用一个 SSH 服务器成功通过验证时 SSH 会进入后台运行。当你不想在远程 SSH 服务器执行任何命令、就像我们的例子中只想转发端口的时候非常有用。

|

||||

用 “-fN” 选项,当你成功通过 SSH 服务器验证时 SSH 会进入后台运行。当你不想在远程 SSH 服务器执行任何命令,就像我们的例子中只想转发端口的时候非常有用。

|

||||

|

||||

运行上面的命令之后,你就会回到家庭主机的命令行提示框中。

|

||||

|

||||

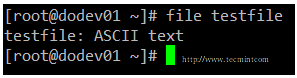

登录到中继服务器,确认 127.0.0.1:10022 绑定到了 sshd。如果是的话就表示已经正确设置了反向隧道。

|

||||

登录到中继服务器,确认其 127.0.0.1:10022 绑定到了 sshd。如果是的话就表示已经正确设置了反向隧道。

|

||||

|

||||

relayserver~$ sudo netstat -nap | grep 10022

|

||||

|

||||

@ -36,13 +36,13 @@ SSH 端口转发的一种替代方案是 **反向 SSH 隧道**。反向 SSH 隧

|

||||

|

||||

relayserver~$ ssh -p 10022 homeserver_user@localhost

|

||||

|

||||

需要注意的一点是你在本地输入的 SSH 登录/密码应该是家庭服务器的,而不是中继服务器的,因为你是通过隧道的本地端点登录到家庭服务器。因此不要输入中继服务器的登录/密码。成功登陆后,你就在家庭服务器上了。

|

||||

需要注意的一点是你在上面为localhost输入的 SSH 登录/密码应该是家庭服务器的,而不是中继服务器的,因为你是通过隧道的本地端点登录到家庭服务器,因此不要错误输入中继服务器的登录/密码。成功登录后,你就在家庭服务器上了。

|

||||

|

||||

### 通过反向 SSH 隧道直接连接到网络地址变换后的服务器 ###

|

||||

|

||||

上面的方法允许你访问 NAT 后面的 **家庭服务器**,但你需要登录两次:首先登录到 **中继服务器**,然后再登录到**家庭服务器**。这是因为中继服务器上 SSH 隧道的端点绑定到了回环地址(127.0.0.1)。

|

||||

|

||||

事实上,有一种方法可以只需要登录到中继服务器就能直接访问网络地址变换之后的家庭服务器。要做到这点,你需要让中继服务器上的 sshd 不仅转发回环地址上的端口,还要转发外部主机的端口。这通过指定中继服务器上运行的 sshd 的 **网关端口** 实现。

|

||||

事实上,有一种方法可以只需要登录到中继服务器就能直接访问NAT之后的家庭服务器。要做到这点,你需要让中继服务器上的 sshd 不仅转发回环地址上的端口,还要转发外部主机的端口。这通过指定中继服务器上运行的 sshd 的 **GatewayPorts** 实现。

|

||||

|

||||

打开**中继服务器**的 /etc/ssh/sshd_conf 并添加下面的行。

|

||||

|

||||

@ -74,23 +74,23 @@ SSH 端口转发的一种替代方案是 **反向 SSH 隧道**。反向 SSH 隧

|

||||

|

||||

tcp 0 0 1.1.1.1:10022 0.0.0.0:* LISTEN 1538/sshd: dev

|

||||

|

||||

不像之前的情况,现在隧道的端点是 1.1.1.1:10022(中继服务器的公共 IP 地址),而不是 127.0.0.1:10022。这就意味着从外部主机可以访问隧道的端点。

|

||||

不像之前的情况,现在隧道的端点是 1.1.1.1:10022(中继服务器的公网 IP 地址),而不是 127.0.0.1:10022。这就意味着从外部主机可以访问隧道的另一端。

|

||||

|

||||

现在在任何其它计算机(客户端计算机),输入以下命令访问网络地址变换之后的家庭服务器。

|

||||

|

||||

clientcomputer~$ ssh -p 10022 homeserver_user@1.1.1.1

|

||||

|

||||

在上面的命令中,1.1.1.1 是中继服务器的公共 IP 地址,家庭服务器用户必须是和家庭服务器相关联的用户账户。这是因为你真正登录到的主机是家庭服务器,而不是中继服务器。后者只是中继你的 SSH 流量到家庭服务器。

|

||||

在上面的命令中,1.1.1.1 是中继服务器的公共 IP 地址,homeserver_user必须是家庭服务器上的用户账户。这是因为你真正登录到的主机是家庭服务器,而不是中继服务器。后者只是中继你的 SSH 流量到家庭服务器。

|

||||

|

||||

### 在 Linux 上设置一个永久反向 SSH 隧道 ###

|

||||

|

||||

现在你已经明白了怎样创建一个反向 SSH 隧道,然后把隧道设置为 “永久”,这样隧道启动后就会一直运行(不管临时的网络拥塞、SSH 超时、中继主机重启,等等)。毕竟,如果隧道不是一直有效,你不可能可靠的登录到你的家庭服务器。

|

||||

现在你已经明白了怎样创建一个反向 SSH 隧道,然后把隧道设置为 “永久”,这样隧道启动后就会一直运行(不管临时的网络拥塞、SSH 超时、中继主机重启,等等)。毕竟,如果隧道不是一直有效,你就不能可靠的登录到你的家庭服务器。

|

||||

|

||||

对于永久隧道,我打算使用一个叫 autossh 的工具。正如名字暗示的,这个程序允许你不管任何理由自动重启 SSH 会话。因此对于保存一个反向 SSH 隧道有效非常有用。

|

||||

对于永久隧道,我打算使用一个叫 autossh 的工具。正如名字暗示的,这个程序可以让你的 SSH 会话无论因为什么原因中断都会自动重连。因此对于保持一个反向 SSH 隧道非常有用。

|

||||

|

||||

第一步,我们要设置从家庭服务器到中继服务器的[无密码 SSH 登录][2]。这样的话,autossh 可以不需要用户干预就能重启一个损坏的反向 SSH 隧道。

|

||||

|

||||

下一步,在初始化隧道的家庭服务器上[安装 autossh][3]。

|

||||

下一步,在建立隧道的家庭服务器上[安装 autossh][3]。

|

||||

|

||||

在家庭服务器上,用下面的参数运行 autossh 来创建一个连接到中继服务器的永久 SSH 隧道。

|

||||

|

||||

@ -113,7 +113,7 @@ SSH 端口转发的一种替代方案是 **反向 SSH 隧道**。反向 SSH 隧

|

||||

|

||||

### 总结 ###

|

||||

|

||||

在这篇博文中,我介绍了你如何能从外部中通过反向 SSH 隧道访问限制性防火墙或 NAT 网关之后的 Linux 服务器。尽管我介绍了家庭网络中的一个使用事例,在企业网络中使用时你尤其要小心。这样的一个隧道可能被视为违反公司政策,因为它绕过了企业的防火墙并把企业网络暴露给外部攻击。这很可能被误用或者滥用。因此在使用之前一定要记住它的作用。

|

||||

在这篇博文中,我介绍了你如何能从外部通过反向 SSH 隧道访问限制性防火墙或 NAT 网关之后的 Linux 服务器。这里我介绍了家庭网络中的一个使用事例,但在企业网络中使用时你尤其要小心。这样的一个隧道可能被视为违反公司政策,因为它绕过了企业的防火墙并把企业网络暴露给外部攻击。这很可能被误用或者滥用。因此在使用之前一定要记住它的作用。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -121,11 +121,11 @@ via: http://xmodulo.com/access-linux-server-behind-nat-reverse-ssh-tunnel.html

|

||||

|

||||

作者:[Dan Nanni][a]

|

||||

译者:[ictlyh](https://github.com/ictlyh)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](http://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://xmodulo.com/author/nanni

|

||||

[1]:http://xmodulo.com/go/digitalocean

|

||||

[2]:http://xmodulo.com/how-to-enable-ssh-login-without.html

|

||||

[3]:http://ask.xmodulo.com/install-autossh-linux.html

|

||||

[2]:https://linux.cn/article-5444-1.html

|

||||

[3]:https://linux.cn/article-5459-1.html

|

||||

231

published/20150717 How to monitor NGINX- Part 1.md

Normal file

231

published/20150717 How to monitor NGINX- Part 1.md

Normal file

@ -0,0 +1,231 @@

|

||||

如何监控 NGINX(第一篇)

|

||||

================================================================================

|

||||

|

||||

|

||||

### NGINX 是什么? ###

|

||||

|

||||

[NGINX][1] (发音为 “engine X”) 是一种流行的 HTTP 和反向代理服务器。作为一个 HTTP 服务器,NGINX 可以使用较少的内存非常高效可靠地提供静态内容。作为[反向代理][2],它可以用作多个后端服务器或类似缓存和负载平衡这样的其它应用的单一访问控制点。NGINX 是一个自由开源的产品,并有一个具备更全的功能的叫做 NGINX Plus 的商业版。

|

||||

|

||||

NGINX 也可以用作邮件代理和通用的 TCP 代理,但本文并不直接讨论 NGINX 的那些用例的监控。

|

||||

|

||||

### NGINX 主要指标 ###

|

||||

|

||||

通过监控 NGINX 可以 捕获到两类问题:NGINX 本身的资源问题,和出现在你的基础网络设施的其它问题。大多数 NGINX 用户会用到以下指标的监控,包括**每秒请求数**,它提供了一个由所有最终用户活动组成的上层视图;**服务器错误率** ,这表明你的服务器已经多长没有处理看似有效的请求;还有**请求处理时间**,这说明你的服务器处理客户端请求的总共时长(并且可以看出性能降低或当前环境的其他问题)。

|

||||

|

||||

更一般地,至少有三个主要的指标类别来监视:

|

||||

|

||||

- 基本活动指标

|

||||

- 错误指标

|

||||

- 性能指标

|

||||

|

||||

下面我们将分析在每个类别中最重要的 NGINX 指标,以及用一个相当普遍但是值得特别提到的案例来说明:使用 NGINX Plus 作反向代理。我们还将介绍如何使用图形工具或可选择的监控工具来监控所有的指标。

|

||||

|

||||

本文引用指标术语[来自我们的“监控 101 系列”][3],,它提供了一个指标收集和警告框架。

|

||||

|

||||

#### 基本活跃指标 ####

|

||||

|

||||

无论你在怎样的情况下使用 NGINX,毫无疑问你要监视服务器接收多少客户端请求和如何处理这些请求。

|

||||

|

||||

NGINX Plus 上像开源 NGINX 一样可以报告基本活跃指标,但它也提供了略有不同的辅助模块。我们首先讨论开源的 NGINX,再来说明 NGINX Plus 提供的其他指标的功能。

|

||||

|

||||

**NGINX**

|

||||

|

||||

下图显示了一个客户端连接的过程,以及开源版本的 NGINX 如何在连接过程中收集指标。

|

||||

|

||||

|

||||

|

||||

Accepts(接受)、Handled(已处理)、Requests(请求)是一直在增加的计数器。Active(活跃)、Waiting(等待)、Reading(读)、Writing(写)随着请求量而增减。

|

||||

|

||||

| 名称 | 描述| [指标类型](https://www.datadoghq.com/blog/monitoring-101-collecting-data/)|

|

||||

|-----------|-----------------|-------------------------------------------------------------------------------------------------------------------------|

|

||||

| Accepts | NGINX 所接受的客户端连接数 | 资源: 功能 |

|

||||

| Handled | 成功的客户端连接数 | 资源: 功能 |

|

||||

| Active | 当前活跃的客户端连接数| 资源: 功能 |

|

||||

| Dropped(已丢弃,计算得出)| 丢弃的连接数(接受 - 已处理)| 工作:错误*|

|

||||

| Requests | 客户端请求数 | 工作:吞吐量 |

|

||||

|

||||

|

||||

_*严格的来说,丢弃的连接是 [一个资源饱和指标](https://www.datadoghq.com/blog/monitoring-101-collecting-data/#resource-metrics),但是因为饱和会导致 NGINX 停止服务(而不是延后该请求),所以,“已丢弃”视作 [一个工作指标](https://www.datadoghq.com/blog/monitoring-101-collecting-data/#work-metrics) 比较合适。_

|

||||

|

||||

NGINX worker 进程接受 OS 的连接请求时 **Accepts** 计数器增加,而**Handled** 是当实际的请求得到连接时(通过建立一个新的连接或重新使用一个空闲的)。这两个计数器的值通常都是相同的,如果它们有差别则表明连接被**Dropped**,往往这是由于资源限制,比如已经达到 NGINX 的[worker_connections][4]的限制。

|

||||

|

||||

一旦 NGINX 成功处理一个连接时,连接会移动到**Active**状态,在这里对客户端请求进行处理:

|

||||

|

||||

Active状态

|

||||

|

||||

- **Waiting**: 活跃的连接也可以处于 Waiting 子状态,如果有在此刻没有活跃请求的话。新连接可以绕过这个状态并直接变为到 Reading 状态,最常见的是在使用“accept filter(接受过滤器)” 和 “deferred accept(延迟接受)”时,在这种情况下,NGINX 不会接收 worker 进程的通知,直到它具有足够的数据才开始响应。如果连接设置为 keep-alive ,那么它在发送响应后将处于等待状态。

|

||||

|

||||

- **Reading**: 当接收到请求时,连接离开 Waiting 状态,并且该请求本身使 Reading 状态计数增加。在这种状态下 NGINX 会读取客户端请求首部。请求首部是比较小的,因此这通常是一个快速的操作。

|

||||

|

||||

- **Writing**: 请求被读取之后,其使 Writing 状态计数增加,并保持在该状态,直到响应返回给客户端。这意味着,该请求在 Writing 状态时, 一方面 NGINX 等待来自上游系统的结果(系统放在 NGINX “后面”),另外一方面,NGINX 也在同时响应。请求往往会在 Writing 状态花费大量的时间。

|

||||

|

||||

通常,一个连接在同一时间只接受一个请求。在这种情况下,Active 连接的数目 == Waiting 的连接 + Reading 请求 + Writing 。然而,较新的 SPDY 和 HTTP/2 协议允许多个并发请求/响应复用一个连接,所以 Active 可小于 Waiting 的连接、 Reading 请求、Writing 请求的总和。 (在撰写本文时,NGINX 不支持 HTTP/2,但预计到2015年期间将会支持。)

|

||||

|

||||

**NGINX Plus**

|

||||

|

||||

正如上面提到的,所有开源 NGINX 的指标在 NGINX Plus 中是可用的,但另外也提供其他的指标。本节仅说明了 NGINX Plus 可用的指标。

|

||||

|

||||

|

||||

|

||||

|

||||

Accepted (已接受)、Dropped,总数是不断增加的计数器。Active、 Idle(空闲)和处于 Current(当前)处理阶段的各种状态下的连接或请求的当前数量随着请求量而增减。

|

||||

|

||||

| 名称 | 描述| [指标类型](https://www.datadoghq.com/blog/monitoring-101-collecting-data/)|

|

||||

|-----------|-----------------|-------------------------------------------------------------------------------------------------------------------------|

|

||||

| Accepted | NGINX 所接受的客户端连接数 | 资源: 功能 |

|

||||

| Dropped |丢弃的连接数(接受 - 已处理)| 工作:错误*|

|

||||

| Active | 当前活跃的客户端连接数| 资源: 功能 |

|

||||

| Idle | 没有当前请求的客户端连接| 资源: 功能 |

|

||||

| Total(全部) | 客户端请求数 | 工作:吞吐量 |

|

||||

|

||||

_*严格的来说,丢弃的连接是 [一个资源饱和指标](https://www.datadoghq.com/blog/monitoring-101-collecting-data/#resource-metrics),但是因为饱和会导致 NGINX 停止服务(而不是延后该请求),所以,“已丢弃”视作 [一个工作指标](https://www.datadoghq.com/blog/monitoring-101-collecting-data/#work-metrics) 比较合适。_

|

||||

|

||||

当 NGINX Plus worker 进程接受 OS 的连接请求时 **Accepted** 计数器递增。如果 worker 进程为请求建立连接失败(通过建立一个新的连接或重新使用一个空闲),则该连接被丢弃, **Dropped** 计数增加。通常连接被丢弃是因为资源限制,如 NGINX Plus 的[worker_connections][4]的限制已经达到。

|

||||

|

||||

**Active** 和 **Idle** 和[如上所述][5]的开源 NGINX 的“active” 和 “waiting”状态是相同的,但是有一点关键的不同:在开源 NGINX 上,“waiting”状态包括在“active”中,而在 NGINX Plus 上“idle”的连接被排除在“active” 计数外。**Current** 和开源 NGINX 是一样的也是由“reading + writing” 状态组成。

|

||||

|

||||

**Total** 为客户端请求的累积计数。请注意,单个客户端连接可涉及多个请求,所以这个数字可能会比连接的累计次数明显大。事实上,(total / accepted)是每个连接的平均请求数量。

|

||||

|

||||

**开源 和 Plus 之间指标的不同**

|

||||

|

||||

|NGINX (开源) |NGINX Plus|

|

||||

|-----------------------|----------------|

|

||||

| accepts | accepted |

|

||||

| dropped 通过计算得来| dropped 直接得到 |

|

||||

| reading + writing| current|

|

||||

| waiting| idle|

|

||||

| active (包括 “waiting”状态) | active (排除 “idle” 状态)|

|

||||

| requests| total|

|

||||

|

||||

**提醒指标: 丢弃连接**

|

||||

|

||||

被丢弃的连接数目等于 Accepts 和 Handled 之差(NGINX 中),或是可直接得到标准指标(NGINX Plus 中)。在正常情况下,丢弃连接数应该是零。如果在每个单位时间内丢弃连接的速度开始上升,那么应该看看是否资源饱和了。

|

||||

|

||||

|

||||

|

||||

**提醒指标: 每秒请求数**

|

||||

|

||||

按固定时间间隔采样你的请求数据(开源 NGINX 的**requests**或者 NGINX Plus 中**total**) 会提供给你单位时间内(通常是分钟或秒)所接受的请求数量。监测这个指标可以查看进入的 Web 流量尖峰,无论是合法的还是恶意的,或者突然的下降,这通常都代表着出现了问题。每秒请求数若发生急剧变化可以提醒你的环境出现问题了,即使它不能告诉你确切问题的位置所在。请注意,所有的请求都同样计数,无论 URL 是什么。

|

||||

|

||||

|

||||

|

||||

**收集活跃指标**

|

||||

|

||||

开源的 NGINX 提供了一个简单状态页面来显示基本的服务器指标。该状态信息以标准格式显示,实际上任何图形或监控工具可以被配置去解析这些相关数据,以用于分析、可视化、或提醒。NGINX Plus 提供一个 JSON 接口来供给更多的数据。阅读相关文章“[NGINX 指标收集][6]”来启用指标收集的功能。

|

||||

|

||||

#### 错误指标 ####

|

||||

|

||||

| 名称 | 描述| [指标类型](https://www.datadoghq.com/blog/monitoring-101-collecting-data/)| 可用于 |

|

||||

|-----------|-----------------|--------------------------------------------------------------------------------------------------------|----------------|

|

||||

| 4xx 代码 | 客户端错误计数 | 工作:错误 | NGINX 日志, NGINX Plus|

|

||||

| 5xx 代码| 服务器端错误计数 | 工作:错误 | NGINX 日志, NGINX Plus|

|

||||

|

||||

NGINX 错误指标告诉你服务器是否经常返回错误而不是正常工作。客户端错误返回4XX状态码,服务器端错误返回5XX状态码。

|

||||

|

||||

**提醒指标: 服务器错误率**

|

||||

|

||||

服务器错误率等于在单位时间(通常为一到五分钟)内5xx错误状态代码的总数除以[状态码][7](1XX,2XX,3XX,4XX,5XX)的总数。如果你的错误率随着时间的推移开始攀升,调查可能的原因。如果突然增加,可能需要采取紧急行动,因为客户端可能收到错误信息。

|

||||

|

||||

|

||||

|

||||

关于客户端错误的注意事项:虽然监控4XX是很有用的,但从该指标中你仅可以捕捉有限的信息,因为它只是衡量客户的行为而不捕捉任何特殊的 URL。换句话说,4xx出现的变化可能是一个信号,例如网络扫描器正在寻找你的网站漏洞时。

|

||||

|

||||

**收集错误度量**

|

||||

|

||||

虽然开源 NGINX 不能马上得到用于监测的错误率,但至少有两种方法可以得到:

|

||||

|

||||

- 使用商业支持的 NGINX Plus 提供的扩展状态模块

|

||||

- 配置 NGINX 的日志模块将响应码写入访问日志

|

||||

|

||||

关于这两种方法,请阅读相关文章“[NGINX 指标收集][6]”。

|

||||

|

||||

#### 性能指标 ####

|

||||

|

||||

| 名称 | 描述| [指标类型](https://www.datadoghq.com/blog/monitoring-101-collecting-data/)| 可用于 |

|

||||

|-----------|-----------------|--------------------------------------------------------------------------------------------------------|----------------|

|

||||

| request time (请求处理时间)| 处理每个请求的时间,单位为秒 | 工作:性能 | NGINX 日志|

|

||||

|

||||

**提醒指标: 请求处理时间**

|

||||

|

||||

请求处理时间指标记录了 NGINX 处理每个请求的时间,从读到客户端的第一个请求字节到完成请求。较长的响应时间说明问题在上游。

|

||||

|

||||

**收集处理时间指标**

|

||||

|

||||

NGINX 和 NGINX Plus 用户可以通过添加 $request_time 变量到访问日志格式中来捕捉处理时间数据。关于配置日志监控的更多细节在[NGINX指标收集][6]。

|

||||

|

||||

#### 反向代理指标 ####

|

||||

|

||||

| 名称 | 描述| [指标类型](https://www.datadoghq.com/blog/monitoring-101-collecting-data/)| 可用于 |

|

||||

|-----------|-----------------|--------------------------------------------------------------------------------------------------------|----------------|

|

||||

| 上游服务器的活跃链接 | 当前活跃的客户端连接 | 资源:功能 | NGINX Plus |

|

||||

| 上游服务器的 5xx 错误代码| 服务器错误 | 工作:错误 | NGINX Plus |

|

||||

| 每个上游组的可用服务器 | 服务器传递健康检查 | 资源:可用性| NGINX Plus

|

||||

|

||||

[反向代理][9]是 NGINX 最常见的使用方法之一。商业支持的 NGINX Plus 显示了大量有关后端(或“上游 upstream”)的服务器指标,这些与反向代理设置相关的。本节重点介绍了几个 NGINX Plus 用户可用的关键上游指标。

|

||||

|

||||

NGINX Plus 首先将它的上游指标按组分开,然后是针对单个服务器的。因此,例如,你的反向代理将请求分配到五个上游的 Web 服务器上,你可以一眼看出是否有单个服务器压力过大,也可以看出上游组中服务器的健康状况,以确保良好的响应时间。

|

||||

|

||||

**活跃指标**

|

||||

|

||||

**每上游服务器的活跃连接**的数量可以帮助你确认反向代理是否正确的分配工作到你的整个服务器组上。如果你正在使用 NGINX 作为负载均衡器,任何一台服务器处理的连接数的明显偏差都可能表明服务器正在努力消化请求,或者是你配置使用的负载均衡的方法(例如[round-robin 或 IP hashing][10])不是最适合你流量模式的。

|

||||

|

||||

**错误指标**

|

||||

|

||||

错误指标,上面所说的高于5XX(服务器错误)状态码,是监控指标中有价值的一个,尤其是响应码部分。 NGINX Plus 允许你轻松地提取**每个上游服务器的 5xx 错误代码**的数量,以及响应的总数量,以此来确定某个特定服务器的错误率。

|

||||

|

||||

**可用性指标**

|

||||

|

||||

对于 web 服务器的运行状况,还有另一种角度,NGINX 可以通过**每个组中当前可用服务器的总量**很方便监控你的上游组的健康。在一个大的反向代理上,你可能不会非常关心其中一个服务器的当前状态,就像你只要有可用的服务器组能够处理当前的负载就行了。但监视上游组内的所有工作的服务器总量可为判断 Web 服务器的健康状况提供一个更高层面的视角。

|

||||

|

||||

**收集上游指标**

|

||||

|

||||

NGINX Plus 上游指标显示在内部 NGINX Plus 的监控仪表盘上,并且也可通过一个JSON 接口来服务于各种外部监控平台。在我们的相关文章“[NGINX指标收集][6]”中有个例子。

|

||||

|

||||

### 结论 ###

|

||||

|

||||

在这篇文章中,我们已经谈到了一些有用的指标,你可以使用表格来监控 NGINX 服务器。如果你是刚开始使用 NGINX,监控下面提供的大部分或全部指标,可以让你很好的了解你的网络基础设施的健康和活跃程度:

|

||||

|

||||

- [已丢弃的连接][12]

|

||||

- [每秒请求数][13]

|

||||

- [服务器错误率][14]

|

||||

- [请求处理数据][15]

|

||||

|

||||

最终,你会学到更多,更专业的衡量指标,尤其是关于你自己基础设施和使用情况的。当然,监控哪一项指标将取决于你可用的工具。参见相关的文章来[逐步指导你的指标收集][6],不管你使用 NGINX 还是 NGINX Plus。

|

||||

|

||||

在 Datadog 中,我们已经集成了 NGINX 和 NGINX Plus,这样你就可以以最少的设置来收集和监控所有 Web 服务器的指标。 [在本文中][17]了解如何用 NGINX Datadog来监控,并开始[免费试用 Datadog][18]吧。

|

||||

|

||||

### 诚谢 ###

|

||||

|

||||

在文章发表之前非常感谢 NGINX 团队审阅这篇,并提供重要的反馈和说明。

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: https://www.datadoghq.com/blog/how-to-monitor-nginx/

|

||||

|

||||

作者:K Young

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[1]:http://nginx.org/en/

|

||||

[2]:http://nginx.com/resources/glossary/reverse-proxy-server/

|

||||

[3]:https://www.datadoghq.com/blog/monitoring-101-collecting-data/

|

||||

[4]:http://nginx.org/en/docs/ngx_core_module.html#worker_connections

|

||||

[5]:https://www.datadoghq.com/blog/how-to-monitor-nginx/#active-state

|

||||

[6]:https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/

|

||||

[7]:http://www.w3.org/Protocols/rfc2616/rfc2616-sec10.html

|

||||

[8]:https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/

|

||||

[9]:https://en.wikipedia.org/wiki/Reverse_proxy

|

||||

[10]:http://nginx.com/blog/load-balancing-with-nginx-plus/

|

||||

[11]:https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/

|

||||

[12]:https://www.datadoghq.com/blog/how-to-monitor-nginx/#dropped-connections

|

||||

[13]:https://www.datadoghq.com/blog/how-to-monitor-nginx/#requests-per-second

|

||||

[14]:https://www.datadoghq.com/blog/how-to-monitor-nginx/#server-error-rate

|

||||

[15]:https://www.datadoghq.com/blog/how-to-monitor-nginx/#request-processing-time

|

||||

[16]:https://www.datadoghq.com/blog/how-to-collect-nginx-metrics/

|

||||

[17]:https://www.datadoghq.com/blog/how-to-monitor-nginx-with-datadog/

|

||||

[18]:https://www.datadoghq.com/blog/how-to-monitor-nginx/#sign-up

|

||||

[19]:https://github.com/DataDog/the-monitor/blob/master/nginx/how_to_monitor_nginx.md

|

||||

[20]:https://github.com/DataDog/the-monitor/issues

|

||||

@ -1,32 +1,32 @@

|

||||

无忧之道:Docker中容器的备份、恢复和迁移

|

||||

================================================================================

|

||||

今天,我们将学习如何快速地对docker容器进行快捷备份、恢复和迁移。[Docker][1]是一个开源平台,用于自动化部署应用,以通过快捷的途径在称之为容器的轻量级软件层下打包、发布和运行这些应用。它使得应用平台独立,因为它扮演了Linux上一个额外的操作系统级虚拟化的自动化抽象层。它通过其组件cgroups和命名空间利用Linux内核的资源分离特性,达到避免虚拟机开销的目的。它使得用于部署和扩展web应用、数据库和后端服务的大规模构建块无需依赖于特定的堆栈或供应者。

|

||||

今天,我们将学习如何快速地对docker容器进行快捷备份、恢复和迁移。[Docker][1]是一个开源平台,用于自动化部署应用,以通过快捷的途径在称之为容器的轻量级软件层下打包、发布和运行这些应用。它使得应用平台独立,因为它扮演了Linux上一个额外的操作系统级虚拟化的自动化抽象层。它通过其组件cgroups和命名空间利用Linux内核的资源分离特性,达到避免虚拟机开销的目的。它使得用于部署和扩展web应用、数据库和后端服务的大规模构建组件无需依赖于特定的堆栈或供应者。

|

||||

|

||||

所谓的容器,就是那些创建自Docker镜像的软件层,它包含了独立的Linux文件系统和开箱即用的应用程序。如果我们有一个在盒子中运行着的Docker容器,并且想要备份这些容器以便今后使用,或者想要迁移这些容器,那么,本教程将帮助你掌握在Linux操作系统中备份、恢复和迁移Docker容器。

|

||||

所谓的容器,就是那些创建自Docker镜像的软件层,它包含了独立的Linux文件系统和开箱即用的应用程序。如果我们有一个在机器中运行着的Docker容器,并且想要备份这些容器以便今后使用,或者想要迁移这些容器,那么,本教程将帮助你掌握在Linux操作系统中备份、恢复和迁移Docker容器的方法。

|

||||

|

||||

我们怎样才能在Linux中备份、恢复和迁移Docker容器呢?这里为您提供了一些便捷的步骤。

|

||||

|

||||

### 1. 备份容器 ###

|

||||

|

||||

首先,为了备份Docker中的容器,我们会想看看我们想要备份的容器列表。要达成该目的,我们需要在我们运行这Docker引擎,并已创建了容器的Linux机器中运行 docker ps 命令。

|

||||

首先,为了备份Docker中的容器,我们会想看看我们想要备份的容器列表。要达成该目的,我们需要在我们运行着Docker引擎,并已创建了容器的Linux机器中运行 docker ps 命令。

|

||||

|

||||

# docker ps

|

||||

|

||||

|

||||

|

||||

在此之后,我们要选择我们想要备份的容器,然后我们会去创建该容器的快照。我们可以使用 docker commit 命令来创建快照。

|

||||

在此之后,我们要选择我们想要备份的容器,然后去创建该容器的快照。我们可以使用 docker commit 命令来创建快照。

|

||||

|

||||

# docker commit -p 30b8f18f20b4 container-backup

|

||||

|

||||

|

||||

|

||||

该命令会生成一个作为Docker镜像的容器快照,我们可以通过运行 docker images 命令来查看Docker镜像,如下。

|

||||

该命令会生成一个作为Docker镜像的容器快照,我们可以通过运行 `docker images` 命令来查看Docker镜像,如下。

|

||||

|

||||

# docker images

|

||||

|

||||

|

||||

|

||||

正如我们所看见的,上面做的快照已经作为Docker镜像保存了。现在,为了备份该快照,我们有两个选择,一个是我们可以登陆进Docker注册中心,并推送该镜像;另一个是我们可以将Docker镜像打包成tarball备份,以供今后使用。

|

||||

正如我们所看见的,上面做的快照已经作为Docker镜像保存了。现在,为了备份该快照,我们有两个选择,一个是我们可以登录进Docker注册中心,并推送该镜像;另一个是我们可以将Docker镜像打包成tar包备份,以供今后使用。

|

||||

|

||||

如果我们想要在[Docker注册中心][2]上传或备份镜像,我们只需要运行 docker login 命令来登录进Docker注册中心,然后推送所需的镜像即可。

|

||||

|

||||

@ -39,23 +39,23 @@

|

||||

|

||||

|

||||

|

||||

如果我们不想备份到docker注册中心,而是想要将此镜像保存在本地机器中,以供日后使用,那么我们可以将其作为tarball备份。要完成该操作,我们需要运行以下 docker save 命令。

|

||||

如果我们不想备份到docker注册中心,而是想要将此镜像保存在本地机器中,以供日后使用,那么我们可以将其作为tar包备份。要完成该操作,我们需要运行以下 `docker save` 命令。

|

||||

|

||||

# docker save -o ~/container-backup.tar container-backup

|

||||

|

||||

|

||||

|

||||

要验证tarball时候已经生成,我们只需要在保存tarball的目录中运行 ls 命令。

|

||||

要验证tar包是否已经生成,我们只需要在保存tar包的目录中运行 ls 命令即可。

|

||||

|

||||

### 2. 恢复容器 ###

|

||||

|

||||

接下来,在我们成功备份了我们的Docker容器后,我们现在来恢复这些被快照成Docker镜像的容器。如果我们已经在注册中心推送了这些Docker镜像,那么我们仅仅需要把那个Docker镜像拖回并直接运行即可。

|

||||

接下来,在我们成功备份了我们的Docker容器后,我们现在来恢复这些制作了Docker镜像快照的容器。如果我们已经在注册中心推送了这些Docker镜像,那么我们仅仅需要把那个Docker镜像拖回并直接运行即可。

|

||||

|

||||

# docker pull arunpyasi/container-backup:test

|

||||

|

||||

|

||||

|

||||

但是,如果我们将这些Docker镜像作为tarball文件备份到了本地,那么我们只要使用 docker load 命令,后面加上tarball的备份路径,就可以加载该Docker镜像了。

|

||||

但是,如果我们将这些Docker镜像作为tar包文件备份到了本地,那么我们只要使用 docker load 命令,后面加上tar包的备份路径,就可以加载该Docker镜像了。

|

||||

|

||||

# docker load -i ~/container-backup.tar

|

||||

|

||||

@ -63,7 +63,7 @@

|

||||

|

||||

# docker images

|

||||

|

||||

在镜像被加载后,我们将从加载的镜像去运行Docker容器。

|

||||

在镜像被加载后,我们将用加载的镜像去运行Docker容器。

|

||||

|

||||

# docker run -d -p 80:80 container-backup

|

||||

|

||||

@ -71,11 +71,11 @@

|

||||

|

||||

### 3. 迁移Docker容器 ###

|

||||

|

||||

迁移容器同时涉及到了上面两个操作,备份和恢复。我们可以将任何一个Docker容器从一台机器迁移到另一台机器。在迁移过程中,首先我们将容器的备份作为快照Docker镜像。然后,该Docker镜像或者是被推送到了Docker注册中心,或者被作为tarball文件保存到了本地。如果我们将镜像推送到了Docker注册中心,我们简单地从任何我们想要的机器上使用 docker run 命令来恢复并运行该容器。但是,如果我们将镜像打包成tarball备份到了本地,我们只需要拷贝或移动该镜像到我们想要的机器上,加载该镜像并运行需要的容器即可。

|

||||

迁移容器同时涉及到了上面两个操作,备份和恢复。我们可以将任何一个Docker容器从一台机器迁移到另一台机器。在迁移过程中,首先我们将把容器备份为Docker镜像快照。然后,该Docker镜像或者是被推送到了Docker注册中心,或者被作为tar包文件保存到了本地。如果我们将镜像推送到了Docker注册中心,我们简单地从任何我们想要的机器上使用 docker run 命令来恢复并运行该容器。但是,如果我们将镜像打包成tar包备份到了本地,我们只需要拷贝或移动该镜像到我们想要的机器上,加载该镜像并运行需要的容器即可。

|

||||

|

||||

### 尾声 ###

|

||||

|

||||

最后,我们已经学习了如何快速地备份、恢复和迁移Docker容器,本教程适用于各个成功运行Docker的操作系统平台。真的,Docker是一个相当简单易用,然而功能却十分强大的工具。它的命令相当易记,这些命令都非常短,带有许多简单而强大的标记和参数。上面的方法让我们备份容器时很是安逸,使得我们可以在日后很轻松地恢复它们。这会帮助我们恢复我们的容器和镜像,即便主机系统崩溃,甚至意外地被清除。如果你还有很多问题、建议、反馈,请在下面的评论框中写出来吧,可以帮助我们改进或更新我们的内容。谢谢大家!享受吧 :-)

|

||||

最后,我们已经学习了如何快速地备份、恢复和迁移Docker容器,本教程适用于各个可以成功运行Docker的操作系统平台。真的,Docker是一个相当简单易用,然而功能却十分强大的工具。它的命令相当易记,这些命令都非常短,带有许多简单而强大的标记和参数。上面的方法让我们备份容器时很是安逸,使得我们可以在日后很轻松地恢复它们。这会帮助我们恢复我们的容器和镜像,即便主机系统崩溃,甚至意外地被清除。如果你还有很多问题、建议、反馈,请在下面的评论框中写出来吧,可以帮助我们改进或更新我们的内容。谢谢大家!享受吧 :-)

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

@ -83,7 +83,7 @@ via: http://linoxide.com/linux-how-to/backup-restore-migrate-containers-docker/

|

||||

|

||||

作者:[Arun Pyasi][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -5,10 +5,12 @@

|

||||

我试着在Ubuntu中安装Emerald图标主题,而这个主题被打包成了.7z归档包。和以往一样,我试着通过在GUI中右击并选择“提取到这里”来将它解压缩。但是Ubuntu 15.04却并没有解压文件,取而代之的,却是丢给了我一个下面这样的错误信息:

|

||||

|

||||

> Could not open this file

|

||||

>

|

||||

> 无法打开该文件

|

||||

>

|

||||

> There is no command installed for 7-zip archive files. Do you want to search for a command to open this file?

|

||||

> 没有安装用于7-zip归档文件的命令。你是否想要搜索命令来打开该文件?

|

||||

>

|

||||

> 没有安装用于7-zip归档文件的命令。你是否想要搜索用于来打开该文件的命令?

|

||||

|

||||

错误信息看上去是这样的:

|

||||

|

||||

@ -42,7 +44,7 @@ via: http://itsfoss.com/fix-there-is-no-command-installed-for-7-zip-archive-file

|

||||

|

||||

作者:[Abhishek][a]

|

||||

译者:[GOLinux](https://github.com/GOLinux)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -1,26 +1,25 @@

|

||||

什么是逻辑分区管理工具,它怎么在Ubuntu启用?

|

||||

什么是逻辑分区管理 LVM ,如何在Ubuntu中使用?

|

||||

================================================================================

|

||||

> 逻辑分区管理(LVM)是每一个主流Linux发行版都含有的磁盘管理选项。无论你是否需要设置存储池或者只需要动态创建分区,LVM就是你正在寻找的。

|

||||

|

||||

> 逻辑分区管理(LVM)是每一个主流Linux发行版都含有的磁盘管理选项。无论是你需要设置存储池,还是只想动态创建分区,那么LVM就是你正在寻找的。

|

||||

|

||||

### 什么是 LVM? ###

|

||||

|

||||

逻辑分区管理是一个存在于磁盘/分区和操作系统之间的一个抽象层。在传统的磁盘管理中,你的操作系统寻找有哪些磁盘可用(/dev/sda、/dev/sdb等等)接着这些磁盘有哪些可用的分区(如/dev/sda1、/dev/sda2等等)。

|

||||

逻辑分区管理是一个存在于磁盘/分区和操作系统之间的一个抽象层。在传统的磁盘管理中,你的操作系统寻找有哪些磁盘可用(/dev/sda、/dev/sdb等等),并且这些磁盘有哪些可用的分区(如/dev/sda1、/dev/sda2等等)。

|

||||

|

||||

在LVM下,磁盘和分区可以抽象成一个设备中含有多个磁盘和分区。你的操作系统将不会知道这些区别,因为LVM只会给操作系统展示你设置的卷组(磁盘)和逻辑卷(分区)

|

||||

在LVM下,磁盘和分区可以抽象成一个含有多个磁盘和分区的设备。你的操作系统将不会知道这些区别,因为LVM只会给操作系统展示你设置的卷组(磁盘)和逻辑卷(分区)

|

||||

|

||||

,因此可以很容易地动态调整和创建新的磁盘和分区。除此之外,LVM带来你的文件系统不具备的功能。比如,ext3不支持实时快照,但是如果你正在使用LVM你可以不卸载磁盘的情况下做一个逻辑卷的快照。

|

||||

因为卷组和逻辑卷并不物理地对应到影片,因此可以很容易地动态调整和创建新的磁盘和分区。除此之外,LVM带来了你的文件系统所不具备的功能。比如,ext3不支持实时快照,但是如果你正在使用LVM你可以不卸载磁盘的情况下做一个逻辑卷的快照。

|

||||

|

||||

### 你什么时候该使用LVM? ###

|

||||

|

||||

在使用LVM之前首先得考虑的一件事是你要用你的磁盘和分区完成什么。一些发行版如Fedora已经默认安装了LVM。

|

||||

在使用LVM之前首先得考虑的一件事是你要用你的磁盘和分区来做什么。注意,一些发行版如Fedora已经默认安装了LVM。

|

||||

|

||||

如果你使用的是一台只有一块磁盘的Ubuntu笔记本电脑,并且你不需要像实时快照这样的扩展功能,那么你或许不需要LVM。如果I想要轻松地扩展或者想要将多块磁盘组成一个存储池,那么LVM或许正式你郑寻找的。

|

||||

如果你使用的是一台只有一块磁盘的Ubuntu笔记本电脑,并且你不需要像实时快照这样的扩展功能,那么你或许不需要LVM。如果你想要轻松地扩展或者想要将多块磁盘组成一个存储池,那么LVM或许正是你所寻找的。

|

||||

|

||||

### 在Ubuntu中设置LVM ###

|

||||

|

||||

使用LVM首先要了解的一件事是没有简单的方法将已经存在传统的分区转换成逻辑分区。可以将它移到一个使用LVM的新分区下,但是这并不会在本篇中提到;反之我们将全新安装一台Ubuntu 10.10来设置LVM

|

||||

|

||||

|

||||

使用LVM首先要了解的一件事是,没有一个简单的方法可以将已有的传统分区转换成逻辑卷。可以将数据移到一个使用LVM的新分区下,但是这并不会在本篇中提到;在这里,我们将全新安装一台Ubuntu 10.10来设置LVM。(LCTT 译注:本文针对的是较老的版本,新的版本已经不需如此麻烦了)

|

||||

|

||||

要使用LVM安装Ubuntu你需要使用另外的安装CD。从下面的链接中下载并烧录到CD中或者[使用unetbootin创建一个USB盘][1]。

|

||||

|

||||

@ -64,7 +63,7 @@ via: http://www.howtogeek.com/howto/36568/what-is-logical-volume-management-and-

|

||||

|

||||

作者:[How-To Geek][a]

|

||||

译者:[geekpi](https://github.com/geekpi)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

@ -0,0 +1,65 @@

|

||||

使用 Find 命令来帮你找到那些需要清理的文件

|

||||

================================================================================

|

||||

|

||||

|

||||

*Credit: Sandra H-S*

|

||||

|

||||

有一个问题几乎困扰着所有的文件系统 -- 包括 Unix 和其他的 -- 那就是文件的不断积累。几乎没有人愿意花时间清理掉他们不再使用的文件和整理文件系统,结果,文件变得很混乱,很难找到有用的东西,要使它们运行良好、维护备份、易于管理,这将是一种持久的挑战。

|

||||

|

||||

我见过的一种解决问题的方法是建议使用者将所有的数据碎屑创建一个文件集合的总结报告或"概况",来报告诸如所有的文件数量;最老的,最新的,最大的文件;并统计谁拥有这些文件等数据。如果有人看到五年前的一个包含五十万个文件的文件夹,他们可能会去删除哪些文件 -- 或者,至少会归档和压缩。主要问题是太大的文件夹会使人担心误删一些重要的东西。如果有一个描述文件夹的方法能帮助显示文件的性质,那么你就可以去清理它了。

|

||||

|

||||

当我准备做 Unix 文件系统的总结报告时,几个有用的 Unix 命令能提供一些非常有用的统计信息。要计算目录中的文件数,你可以使用这样一个 find 命令。

|

||||

|

||||

$ find . -type f | wc -l

|

||||

187534

|

||||

|

||||

虽然查找最老的和最新的文件是比较复杂,但还是相当方便的。在下面的命令,我们使用 find 命令再次查找文件,以文件时间排序并按年-月-日的格式显示,在列表顶部的显然是最老的。

|

||||

|

||||

在第二个命令,我们做同样的,但打印的是最后一行,这是最新的。

|

||||

|

||||

$ find -type f -printf '%T+ %p\n' | sort | head -n 1

|

||||

2006-02-03+02:40:33 ./skel/.xemacs/init.el

|

||||

$ find -type f -printf '%T+ %p\n' | sort | tail -n 1

|

||||

2015-07-19+14:20:16 ./.bash_history

|

||||

|

||||

printf 命令输出 %T(文件日期和时间)和 %P(带路径的文件名)参数。

|

||||

|

||||

如果我们在查找家目录时,无疑会发现,history 文件(如 .bash_history)是最新的,这并没有什么用。你可以通过 "un-grepping" 来忽略这些文件,也可以忽略以.开头的文件,如下图所示的。

|

||||

|

||||

$ find -type f -printf '%T+ %p\n' | grep -v "\./\." | sort | tail -n 1

|

||||

2015-07-19+13:02:12 ./isPrime

|

||||

|

||||

寻找最大的文件使用 %s(大小)参数,包括文件名(%f),因为这就是我们想要在报告中显示的。

|

||||

|

||||

$ find -type f -printf '%s %f \n' | sort -n | uniq | tail -1

|

||||

20183040 project.org.tar

|

||||

|

||||

统计文件的所有者,使用%u(所有者)

|

||||

|

||||

$ find -type f -printf '%u \n' | grep -v "\./\." | sort | uniq -c

|

||||

180034 shs

|

||||

7500 jdoe

|

||||

|

||||

如果文件系统能记录上次的访问日期,也将是非常有用的,可以用来看该文件有没有被访问过,比方说,两年之内没访问过。这将使你能明确分辨这些文件的价值。这个最后访问(%a)参数这样使用:

|

||||

|

||||

$ find -type f -printf '%a+ %p\n' | sort | head -n 1

|

||||

Fri Dec 15 03:00:30 2006+ ./statreport

|

||||

|

||||

当然,如果大多数最近访问的文件也是在很久之前的,这看起来你需要处理更多文件了。

|

||||

|

||||

$ find -type f -printf '%a+ %p\n' | sort | tail -n 1

|

||||

Wed Nov 26 03:00:27 2007+ ./my-notes

|

||||

|

||||

要想层次分明,可以为一个文件系统或大目录创建一个总结报告,显示这些文件的日期范围、最大的文件、文件所有者们、最老的文件和最新访问时间,可以帮助文件拥有者判断当前有哪些文件夹是重要的哪些该清理了。

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.itworld.com/article/2949898/linux/profiling-your-file-systems.html

|

||||

|

||||

作者:[Sandra Henry-Stocker][a]

|

||||

译者:[strugglingyouth](https://github.com/strugglingyouth)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.itworld.com/author/Sandra-Henry_Stocker/

|

||||

@ -0,0 +1,75 @@

|

||||

选择成为软件开发工程师的5个原因

|

||||

================================================================================

|

||||

|

||||

|

||||

|

||||

这个星期我将给本地一所高中做一次有关于程序猿是怎样工作的演讲。我是志愿(由 [Transfer][1] 组织的)来到这所学校谈论我的工作的。这个学校本周将有一个技术主题日,并且他们很想听听科技行业是怎样工作的。因为我是从事软件开发的,这也是我将和学生们讲的内容。演讲的其中一部分是我为什么觉得软件开发是一个很酷的职业。主要原因如下:

|

||||

|

||||

### 5个原因 ###

|

||||

|

||||

**1、创造性**

|

||||

|

||||

如果你问别人创造性的工作有哪些,别人通常会说像作家,音乐家或者画家那样的(工作)。但是极少有人知道软件开发也是一项非常具有创造性的工作。它是最符合创造性定义的了,因为你创造了一个以前没有的新功能。这种解决方案可以在整体和细节上以很多形式来展现。我们经常会遇到一些需要做权衡的场景(比如说运行速度与内存消耗的权衡)。当然前提是这种解决方案必须是正确的。这些所有的行为都是需要强大的创造性的。

|

||||

|

||||

**2、协作性**

|

||||

|

||||

另外一个表象是程序猿们独自坐在他们的电脑前,然后撸一天的代码。但是软件开发事实上通常总是一个团队努力的结果。你会经常和你的同事讨论编程问题以及解决方案,并且和产品经理、测试人员、客户讨论需求以及其他问题。

|

||||

经常有人说,结对编程(2个开发人员一起在一个电脑上编程)是一种流行的最佳实践。

|

||||

|

||||

**3、高需性**

|

||||

|

||||

世界上越来越多的人在用软件,正如 [Marc Andreessen](https://en.wikipedia.org/wiki/Marc_Andreessen) 所说 " [软件正在吞噬世界][2] "。虽然程序猿现在的数量非常巨大(在斯德哥尔摩,程序猿现在是 [最普遍的职业][3] ),但是,需求量一直处于供不应求的局面。据软件公司说,他们最大的挑战之一就是 [找到优秀的程序猿][4] 。我也经常接到那些想让我跳槽的招聘人员打来的电话。我知道至少除软件行业之外的其他行业的雇主不会那么拼(的去招聘)。

|

||||

|

||||

**4、高酬性**

|

||||

|

||||

软件开发可以带来不菲的收入。卖一份你已经开发好的软件的额外副本是没有 [边际成本][5] 的。这个事实与对程序猿的高需求意味着收入相当可观。当然还有许多更捞金的职业,但是相比一般人群,我认为软件开发者确实“日进斗金”(知足吧!骚年~~)。

|

||||

|

||||

**5、前瞻性**

|

||||

|

||||

有许多工作岗位消失,往往是由于它们可以被计算机和软件代替。但是所有这些新的程序依然需要开发和维护,因此,程序猿的前景还是相当好的。

|

||||

|

||||

### 但是...###

|

||||

|

||||

**外包又是怎么一回事呢?**

|

||||

|

||||

难道所有外包到其他国家的软件开发的薪水都很低吗?这是一个理想丰满,现实骨感的例子(有点像 [瀑布开发模型][6] )。软件开发基本上跟设计的工作一样,是一个探索发现的工作。它受益于强有力的合作。更进一步说,特别当你的主打产品是软件的时候,你所掌握的开发知识是绝对的优势。知识在整个公司中分享的越容易,那么公司的发展也将越来越好。

|

||||

|

||||

换一种方式去看待这个问题。软件外包已经存在了相当一段时间了。但是对本土程序猿的需求量依旧非常高。因为许多软件公司看到了雇佣本土程序猿的带来的收益要远远超过了相对较高的成本(其实还是赚了)。

|

||||

|

||||

### 如何成为人生大赢家 ###

|

||||

|

||||

虽然我有许多我认为软件开发是一件非常有趣的事情的理由 (详情见: [为什么我热爱编程][7] )。但是这些理由,并不适用于所有人。幸运的是,尝试编程是一件非常容易的事情。在互联网上有数不尽的学习编程的资源。例如,[Coursera][8] 和 [Udacity][9] 都拥有很好的入门课程。如果你从来没有撸过码,可以尝试其中一个免费的课程,找找感觉。

|

||||

|

||||

寻找一个既热爱又能谋生的事情至少有2个好处。首先,由于你天天去做,工作将比你简单的只为谋生要有趣的多。其次,如果你真的非常喜欢,你将更好的擅长它。我非常喜欢下面一副关于伟大工作组成的韦恩图(作者 [@eskimon)][10]) 。因为编码的薪水确实相当不错,我认为如果你真的喜欢它,你将有一个很好的机会,成为人生的大赢家!

|

||||

|

||||

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://henrikwarne.com/2014/12/08/5-reasons-why-software-developer-is-a-great-career-choice/

|

||||

|

||||

作者:[Henrik Warne][a]

|

||||

译者:[mousycoder](https://github.com/mousycoder)

|

||||

校对:[wxy](https://github.com/wxy)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

|

||||

[a]:http://henrikwarne.com/

|

||||

[1]:http://www.transfer.nu/omoss/transferinenglish.jspx?pageId=23

|

||||

[2]:http://www.wsj.com/articles/SB10001424053111903480904576512250915629460

|

||||

[3]:http://www.di.se/artiklar/2014/6/12/jobbet-som-tar-over-landet/

|

||||

[4]:http://computersweden.idg.se/2.2683/1.600324/examinationstakten-racker-inte-for-branschens-behov

|

||||

[5]:https://en.wikipedia.org/wiki/Marginal_cost

|

||||

[6]:https://en.wikipedia.org/wiki/Waterfall_model

|

||||

[7]:http://henrikwarne.com/2012/06/02/why-i-love-coding/

|

||||

[8]:https://www.coursera.org/

|

||||

[9]:https://www.udacity.com/

|

||||

[10]:https://eskimon.wordpress.com/about/

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

|

||||

@ -1,3 +1,5 @@

|

||||

martin

|

||||

|

||||

Interview: Larry Wall

|

||||

================================================================================

|

||||

> Perl 6 has been 15 years in the making, and is now due to be released at the end of this year. We speak to its creator to find out what’s going on.

|

||||

@ -122,4 +124,4 @@ via: http://www.linuxvoice.com/interview-larry-wall/

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.linuxvoice.com/author/mike/

|

||||

[a]:http://www.linuxvoice.com/author/mike/

|

||||

|

||||

100

sources/talk/20150806 5 heroes of the Linux world.md

Normal file

100

sources/talk/20150806 5 heroes of the Linux world.md

Normal file

@ -0,0 +1,100 @@

|

||||

Translating by ZTinoZ

|

||||

5 heroes of the Linux world

|

||||

================================================================================

|

||||

Who are these people, seen and unseen, whose work affects all of us every day?

|

||||

|

||||

|

||||

Image courtesy [Christopher Michel/Flickr][1]

|

||||

|

||||

### High-flying penguins ###

|

||||

|

||||

Linux and open source is driven by passionate people who write best-of-breed software and then release the code to the public so anyone can use it, without any strings attached. (Well, there is one string attached and that’s licence.)

|

||||

|

||||

Who are these people? These heroes of the Linux world, whose work affects all of us every day. Allow me to introduce you.

|

||||

|

||||

|

||||

Image courtesy Swapnil Bhartiya

|

||||

|

||||

### Klaus Knopper ###

|

||||

|

||||

Klaus Knopper, an Austrian developer who lives in Germany, is the founder of Knoppix and Adriana Linux, which he developed for his blind wife.

|

||||

|

||||

Knoppix holds a very special place in heart of those Linux users who started using Linux before Ubuntu came along. What makes Knoppix so special is that it popularized the concept of Live CD. Unlike Windows or Mac OS X, you could run the entire operating system from the CD without installing anything on the system. It allowed new users to test Linux on their systems without formatting the hard drive. The live feature of Linux alone contributed heavily to its popularity.

|

||||

|

||||

|

||||

Image courtesy [Fórum Internacional Software Live/Flickr][2]

|

||||

|

||||

### Lennart Pottering ###

|

||||

|

||||

Lennart Pottering is yet another genius from Germany. He has written so many core components of a Linux (as well as BSD) system that it’s hard to keep track. Most of his work is towards the successors of aging or broken components of the Linux systems.

|

||||

|

||||

Pottering wrote the modern init system systemd, which shook the Linux world and created a [rift in the Debian community][3].

|

||||

|

||||

While Linus Torvalds has no problems with systemd, and praises it, he is not a huge fan of the way systemd developers (including the co-author Kay Sievers,) respond to bug reports and criticism. At one point Linus said on the LKML (Linux Kernel Mailing List) that he would [never work with Sievers][4].

|

||||

|

||||

Lennart is also the author of Pulseaudio, sound server on Linux and Avahi, zero-configuration networking (zeroconf) implementation.

|

||||

|

||||

|

||||

Image courtesy [Meego Com/Flickr][5]

|

||||

|

||||

### Jim Zemlin ###

|

||||

|

||||

Jim Zemlin isn't a developer, but as founder of The Linux Foundation he is certainly one of the most important figures of the Linux world.

|

||||

|

||||

In 2007, The Linux Foundation was formed as a result of merger between two open source bodies: the Free Standards Group and the Open Source Development Labs. Zemlin was the executive director of the Free Standards Group. Post-merger Zemlin became the executive director of The Linux Foundation and has held that position since.

|

||||

|

||||

Under his leadership, The Linux Foundation has become the central figure in the modern IT world and plays a very critical role for the Linux ecosystem. In order to ensure that key developers like Torvalds and Kroah-Hartman can focus on Linux, the foundation sponsors them as fellows.

|

||||

|

||||

Zemlin also made the foundation a bridge between companies so they can collaborate on Linux while at the same time competing in the market. The foundation also organizes many conferences around the world and [offers many courses for Linux developers][6].

|

||||

|

||||

People may think of Zemlin as Linus Torvalds' boss, but he refers to himself as "Linus Torvalds' janitor."

|

||||

|

||||

|

||||

Image courtesy [Coscup/Flickr][7]

|

||||

|

||||

### Greg Kroah-Hartman ###

|

||||

|

||||

Greg Kroah-Hartman is known as second-in-command of the Linux kernel. The ‘gentle giant’ is the maintainer of the stable branch of the kernel and of staging subsystem, USB, driver core, debugfs, kref, kobject, and the [sysfs][8] kernel subsystems along with many other components of a Linux system.

|

||||

|

||||

He is also credited for device drivers for Linux. One of his jobs is to travel around the globe, meet hardware makers and persuade them to make their drivers available for Linux. The next time you plug some random USB device to your system and it works out of the box, thank Kroah-Hartman. (Don't thank the distro. Some distros try to take credit for the work Kroah-Hartman or the Linux kernel did.)

|

||||

|

||||

Kroah-Hartman previously worked for Novell and then joined the Linux Foundation as a fellow, alongside Linus Torvalds.

|

||||

|

||||

Kroah-Hartman is the total opposite of Linus and never rants (at least publicly). One time there was some ripple was when he stated that [Canonical doesn’t contribute much to the Linux kernel][9].

|

||||

|

||||

On a personal level, Kroah-Hartman is extremely helpful to new developers and users and is easily accessible.

|

||||

|

||||

|

||||

Image courtesy Swapnil Bhartiya

|

||||

|

||||

### Linus Torvalds ###

|

||||

|

||||

No collection of Linux heroes would be complete without Linus Torvalds. He is the author of the Linux kernel, the most used open source technology on the planet and beyond. His software powers everything from space stations to supercomputers, military drones to mobile devices and tiny smartwatches. Linus remains the authority on the Linux kernel and makes the final decision on which patches to merge to the kernel.

|

||||

|

||||

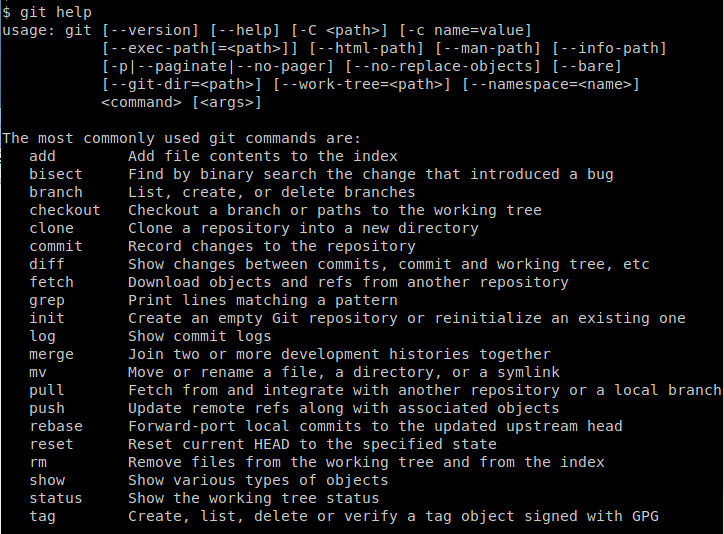

Linux isn't Torvalds' only contribution open source. When he got fed-up with the existing software revision control systems, which his kernel heavily relied on, he wrote his own, called Git. Git enjoys the same reputation as Linux; it is the most used version control system in the world.

|

||||

|

||||

Torvalds is also a passionate scuba diver and when he found no decent dive logs for Linux, he wrote his own and called it SubSurface.

|

||||

|

||||

Torvalds is [well known for his rants][10] and once admitted that his ego is as big as a small planet. But he is also known for admitting his mistakes if he realizes he was wrong.

|

||||

|

||||

--------------------------------------------------------------------------------

|

||||

|

||||

via: http://www.itworld.com/article/2955001/linux/5-heros-of-the-linux-world.html

|

||||

|

||||

作者:[Swapnil Bhartiya][a]

|

||||

译者:[译者ID](https://github.com/译者ID)

|

||||

校对:[校对者ID](https://github.com/校对者ID)

|

||||

|

||||

本文由 [LCTT](https://github.com/LCTT/TranslateProject) 原创翻译,[Linux中国](https://linux.cn/) 荣誉推出

|

||||

|

||||

[a]:http://www.itworld.com/author/Swapnil-Bhartiya/

|

||||

[1]:https://flic.kr/p/siJ25M

|

||||

[2]:https://flic.kr/p/uTzj54

|

||||

[3]:http://www.itwire.com/business-it-news/open-source/66153-systemd-fallout-two-debian-technical-panel-members-resign

|

||||

[4]:http://www.linuxveda.com/2014/04/04/linus-torvalds-systemd-kay-sievers/

|

||||

[5]:https://flic.kr/p/9Lnhpu

|

||||

[6]:http://www.itworld.com/article/2951968/linux/linux-foundation-offers-cheaper-courses-and-certifications-for-india.html

|

||||

[7]:https://flic.kr/p/hBv8Pp

|

||||

[8]:https://en.wikipedia.org/wiki/Sysfs

|

||||

[9]:https://www.youtube.com/watch?v=CyHAeGBFS8k

|

||||

[10]:http://www.itworld.com/article/2873200/operating-systems/11-technologies-that-tick-off-linus-torvalds.html

|

||||

@ -0,0 +1,81 @@

|

||||

Torvalds 2.0: Patricia Torvalds on computing, college, feminism, and increasing diversity in tech

|

||||

================================================================================

|

||||

|

||||

Image by : Photo by Becky Svartström. Modified by Opensource.com. [CC BY-SA 4.0][1]

|

||||

|

||||

Patricia Torvalds isn't the Torvalds name that pops up in Linux and open source circles. Yet.

|

||||

|

||||

|

||||

|

||||

At 18, Patricia is a feminist with a growing list of tech achievements, open source industry experience, and her sights set on diving into her freshman year of college at Duke University's Pratt School of Engineering. She works for [Puppet Labs][2] in Portland, Oregon, as an intern, but soon she'll head to Durham, North Carolina, to start the fall semester of college.

|

||||

|

||||

In this exclusive interview, Patricia explains what got her interested in computer science and engineering (spoiler alert: it wasn't her father), what her high school did "right" with teaching tech, the important role feminism plays in her life, and her thoughts on the lack of diversity in technology.

|

||||

|

||||

|

||||

|

||||

### What made you interested in studying computer science and engineering? ###

|

||||

|

||||

My interest in tech really grew throughout high school. I wanted to go into biology for a while, until around my sophomore year. I had a web design internship at the Portland VA after my sophomore year. And I took an engineering class called Exploratory Ventures, which sent an ROV into the Pacific ocean late in my sophomore year, but the turning point was probably when I was named a regional winner and national runner up for the [NCWIT Aspirations in Computing][3] award halfway through my junior year.

|

||||

|

||||

The award made me feel validated in my interest, of course, but I think the most important part of it was getting to join a Facebook group for all the award winners. The girls who have won the award are absolutely incredible and so supportive of each other. I was definitely interested in computer science before I won the award, because of my work in XV and at the VA, but having these girls to talk to solidified my interest and has kept it really strong. Teaching XV—more on that later—my junior and senior year, also, made engineering and computer science really fun for me.

|

||||

|

||||

### What do you plan to study? And do you already know what you want to do after college? ###

|

||||

|

||||

I hope to major in either Mechanical or Electrical and Computer Engineering as well as Computer Science, and minor in Women's Studies. After college, I hope to work for a company that supports or creates technology for social good, or start my own company.

|

||||

|

||||

### My daughter had one high school programming class—Visual Basic. She was the only girl in her class, and she ended up getting harassed and having a miserable experience. What was your experience like? ###

|

||||

|

||||

My high school began offering computer science classes my senior year, and I took Visual Basic as well! The class wasn't bad, but I was definitely one of three or four girls in the class of 20 or so students. Other computing classes seemed to have similar gender breakdowns. However, my high school was extremely small and the teacher was supportive of inclusivity in tech, so there was no harassment that I noticed. Hopefully the classes become more diverse in future years.

|

||||

|

||||

### What did your schools do right technology-wise? And how could they have been better? ###

|

||||

|

||||

My high school gave us consistent access to computers, and teachers occasionally assigned technology-based assignments in unrelated classes—we had to create a website for a social studies class a few times—which I think is great because it exposes everyone to tech. The robotics club was also pretty active and well-funded, but fairly small; I was not a member. One very strong component of the school's technology/engineering program is actually a student-taught engineering class called Exploratory Ventures, which is a hands-on class that tackles a new engineering or computer science problem every year. I taught it for two years with a classmate of mine, and have had students come up to me and tell me they're interested in pursuing engineering or computer science as a result of the class.

|

||||

|

||||

However, my high school was not particularly focused on deliberately including young women in these programs, and it isn't very racially diverse. The computing-based classes and clubs were, by a vast majority, filled with white male students. This could definitely be improved on.

|

||||

|

||||

### Growing up, how did you use technology at home? ###

|

||||

|

||||

Honestly, when I was younger I used my computer time (my dad created a tracker, which logged us off after an hour of Internet use) to play Neopets or similar games. I guess I could have tried to mess with the tracker or played on the computer without Internet use, but I just didn't. I sometimes did little science projects with my dad, and I remember once printing "Hello world" in the terminal with him a thousand times, but mostly I just played online games with my sisters and didn't get my start in computing until high school.

|

||||

|

||||

### You were active in the Feminism Club at your high school. What did you learn from that experience? What feminist issues are most important to you now? ###

|

||||

|

||||

My friend and I co-founded Feminism Club at our high school late in our sophomore year. We did receive lots of resistance to the club at first, and while that never entirely went away, by the time we graduated feminist ideals were absolutely a part of the school's culture. The feminist work we did at my high school was generally on a more immediate scale and focused on issues like the dress code.

|

||||

|

||||

Personally, I'm very focused on intersectional feminism, which is feminism as it applies to other aspects of oppression like racism and classism. The Facebook page [Guerrilla Feminism][4] is a great example of an intersectional feminism and has done so much to educate me. I currently run the Portland branch.

|

||||

|

||||

Feminism is also important to me in terms of diversity in tech, although as an upper-class white woman with strong connections in the tech world, the problems here affect me much less than they do other people. The same goes for my involvement in intersectional feminism. Publications like [Model View Culture][5] are very inspiring to me, and I admire Shanley Kane so much for what she does.

|

||||

|

||||

### What advice would you give parents who want to teach their children how to program? ###

|

||||

|

||||

Honestly, nobody ever pushed me into computer science or engineering. Like I said, for a long time I wanted to be a geneticist. I got a summer internship doing web design for the VA the summer after my sophomore year and totally changed my mind. So I don't know if I can fully answer that question.

|

||||

|

||||

I do think genuine interest is important, though. If my dad had sat me down in front of the computer and told me to configure a webserver when I was 12, I don't think I'd be interested in computer science. Instead, my parents gave me a lot of free reign to do what I wanted, which was mostly coding terrible little HTML sites for my Neopets. Neither of my younger sisters are interested in engineering or computer science, and my parents don't care. I'm really lucky my parents have given me and my sisters the encouragement and resources to explore our interests.

|

||||

|

||||

Still, I grew up saying my future career would be "like my dad's"—even when I didn't know what he did. He has a pretty cool job. Also, one time when I was in middle school, I told him that and he got a little choked up and said I wouldn't think that in high school. So I guess that motivated me a bit.

|

||||

|

||||

### What suggestions do you have for leaders in open source communities to help them attract and maintain a more diverse mix of contributors? ###

|

||||

|

||||

I'm actually not active in particular open source communities. I feel much more comfortable discussing computing with other women; I'm a member of the [NCWIT Aspirations in Computing][6] network and it's been one of the most important aspects of my continued interest in technology, as well as the Facebook group [Ladies Storm Hackathons][7].

|

||||

|

||||

I think this applies well to attracting and maintaining a talented and diverse mix of contributors: Safe spaces are important. I have seen the misogynistic and racist comments made in some open source communities, and subsequent dismissals when people point out the issues. I think that in maintaining a professional community there have to be strong standards on what constitutes harassment or inappropriate conduct. Of course, people can—and will—have a variety of opinions on what they should be able to express in open source communities, or any community. However, if community leaders actually want to attract and maintain diverse talent, they need to create a safe space and hold community members to high standards.

|

||||

|

||||

I also think that some community leaders just don't value diversity. It's really easy to argue that tech is a meritocracy, and the reason there are so few marginalized people in tech is just that they aren't interested, and that the problem comes from earlier on in the pipeline. They argue that if someone is good enough at their job, their gender or race or sexual orientation doesn't matter. That's the easy argument. But I was raised not to make excuses for mistakes. And I think the lack of diversity is a mistake, and that we should be taking responsibility for it and actively trying to make it better.

|

||||

|

||||